Abstract

We reconsider the Schröder–Siegel problem of conjugating an analytic map in \(\mathbb {C}\) in the neighborhood of a fixed point to its linear part, extending it to the case of dimension \(n> 1\). Assuming a condition on the eigenvalues \(\lambda _1,\ldots ,\lambda _n\) of the linear part that is equivalent to Bruno’s, we show that the convergence radius \(\rho \) of the conjugating transformation satisfies \(\ln \rho (\lambda )\ge -C\Gamma (\lambda )+C'\), where \(\Gamma (\lambda )\) characterizes the eigenvalues \(\lambda \), \(C'\) is a constant not depending on \(\lambda \), and \(C=1\). This improves the previous results for \(n> 1\), where the known proofs give \(C=2\). We also recall that \(C=1\) is known to be the optimal value for \(n=1\).



1 Introduction and statement of the result

We reconsider the classical problem of iteration of analytic functions, previously investigated by Schröder [14] and Siegel [15], extending it to higher dimension. Our aim is to improve the existing results on the convergence radius of the analytic near the identity transformation that conjugates the map to its linear part, thus producing general and possibly optimal estimates.

We consider an analytic map of the complex space \({\mathbb {C}}^n\) into itself that leaves the origin fixed, so that it may be written as

$$\begin{aligned} x'=\varLambda x+\sum _{s\ge 1}v_s(x)\,, \end{aligned}\tag{1}$$

where \(\varLambda \) is an \(n\times n\) complex matrix and \(v_s(x)\) is a homogeneous polynomial of degree \(s+1\). The series is assumed to be convergent in a neighborhood of the origin of \({\mathbb {C}}^n\). We also assume that \(\varLambda =\hbox {diag}(\lambda _1,\ldots ,\lambda _n)\) is a diagonal complex matrix with non-resonant eigenvalues, in a sense to be made precise shortly.

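The problem raised by Schröder is the following: to find an analytic near the identity coordinate transformation written as a convergent power series

$$\begin{aligned} y_j=x_j+\sum _{s\ge 1}\varphi _{s,j}(x)\,,\qquad j=1,\ldots ,n\,, \end{aligned}\tag{2}$$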

with \(\varphi _{s,j}\) of degree \(s+1\) which conjugates the map (1) to its linear part, namely in the new coordinates \(y\) the map takes the form

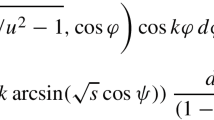

Let us write the eigenvalues of the complex matrix \(\varLambda \) in exponential form, namely as \(\lambda _j=e^{\mu _j+i\omega _j}\), with real \(\mu _j\) and \(\omega _j\). We define the sequence \(\{\beta _r\}_{r\ge 0}\) of non-negative real numbers as

The eigenvalues are said to be non-resonant if \(\beta _r\ne 0\) for \(r\ge 1\). This suffices to answer Schröder’s question positively in the formal sense, i.e., disregarding the question of convergence.

We briefly recall some known results that motivate the present work. For a detailed discussion of the problem, we refer to Arnold’s book [1]. The eigenvalues are said to belong to the Poincaré domain in case all \(\mu _j\)’s have the same sign (i.e., the eigenvalues \(\lambda \) are all inside or all outside the unit circle in the complex plane). The complement of the Poincaré domain is named the Siegel domain. In the latter case, the problem raised by Schröder is a paradigmatic example of a perturbation series involving small divisors, since the sequence \(\{\beta _r\}_{r\ge 0}\) above has zero as its lower limit.

The formal solution of the problem has been established by Schröder [14] for the case \(n=1\), when the Siegel domain is the unit circle, i.e., \(\lambda =e^{i\omega }\). The problem of convergence in this case has been solved by Siegel [15], assuming a strong non-resonance condition of diophantine type. Siegel’s proof is worked out using the classical method of majorants introduced by Cauchy and makes use of a delicate number-theoretical lemma, often called Siegel’s lemma, putting particular emphasis on the relevance of Diophantine approximations. This was actually the first proof of convergence of a meaningful problem involving small divisors.

A condition of diophantine type was also used by Kolmogorov in his proof of the persistence of invariant tori under small perturbations of integrable Hamiltonian systems, and it has become a standard one in KAM theory. It should be noted that Kolmogorov also introduced an iteration scheme exhibiting fast convergence, which he described as “similar to Newton’s method” and which is often referred to as the “quadratic method.” The fast convergence scheme also applies to the case of maps discussed here: see, e.g., [1], section 28.

In the last decades, two problems have been raised (among many others). The first one concerns the optimal non-resonance condition, and the second one the size of the convergence radius of the transformation to normal form. In the case \(n=1\), both questions have been answered by exploiting the geometric renormalization approach introduced by Yoccoz (see [2, 16, 17]). The optimal set of rotation numbers for which an analytic transformation to linear normal form exists has been identified with the set of Bruno numbers. These numbers obey a non-resonance condition weaker than the diophantine one introduced by Siegel.

Bruno’s condition in case \(n> 1\) may be stated as follows. Let us introduce the sequence \(\{\alpha _r\}_{r\ge 0}\) defined as

The latter sequence is monotonically non-increasing and, if the eigenvalues belong to the Siegel domain, it has zero as its lower limit. The non-resonance condition is

The series expansion of the transformation that solves Schröder’s problem is proved to be convergent in a disk \(\varDelta _{\rho }\) centered at the origin with radius \(\rho (\lambda )\) satisfying

where \(C'\) is a constant independent of \(\lambda \). For \(n=1\), the optimal value has been proved to be \(C=1\). However, the geometric renormalization methods cannot be extended to the case \(n> 1\), for which only the value \(C=2\) has been found. A proof that gives \(C=1\) in the case \(n=1\) using the majorant method has been given in [3], but has not been extended to the case \(n> 1\).

In this paper, we prove that the same bound with \(C=1\) holds true in any finite dimension. We obtain this result by implementing a representation of the map with the method of Lie series and Lie transforms and producing convergence estimates in the spirit of Cauchy’s majorants method. The formal implementation of the representation of maps may be found in [11].

This paper extends to maps a similar previous result obtained for the Poincaré–Siegel center problem [9], namely the linearization of an analytic system of differential equations in the neighborhood of a fixed point. We stress that the interest of the method is not limited to the cases mentioned here; e.g., for applications to KAM theory see [4, 5] and [12]; a proof of Lyapounov’s theorem on the existence of periodic orbits in the neighborhood of the equilibrium is given in [10]; extensions of Lyapounov’s theorem may be found in [6] and [8].

We come now to a formal statement of our result. We assume the following

Condition \(\varvec{\tau }\): The sequence \(\alpha _r\) above satisfies

Theorem 1

Consider the map (1) and assume that the eigenvalues of \(\varLambda \) are non-resonant and satisfy condition \(\varvec{\tau }\). Then there exists a near the identity coordinate transformation \(y=x+\psi (x)\), with \(\psi \) analytic at least in the polydisk of radius \(B^{-1}e^{-\Gamma }\), where \(B>0\) is a universal constant, which transforms the map into the normal form \(y'=\varLambda y\).
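Read against the bound \(\ln \rho (\lambda )\ge -C\Gamma (\lambda )+C'\) stated in the abstract, the radius in Theorem 1 fixes both constants at once. Since the convergence radius \(\rho (\lambda )\) is at least the radius of the polydisk where \(\psi \) is analytic, taking logarithms gives (a one-line consequence of the theorem, not an additional result)

$$\begin{aligned} \ln \rho (\lambda )\ \ge \ \ln \bigl (B^{-1}e^{-\Gamma (\lambda )}\bigr ) = -\Gamma (\lambda )-\ln B\,, \end{aligned}$$

that is, \(C=1\) and \(C'=-\ln B\), with \(\Gamma (\lambda )\) the quantity appearing in condition \(\varvec{\tau }\).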

Our condition \(\varvec{\tau }\) is equivalent to Bruno’s. Indeed, we have

However, our formulation of condition \(\varvec{\tau }\) comes out naturally from our analysis of accumulation of small divisors and turns out to be the key that allows us to find the estimate with \(C=1\). For a brief discussion of the relations between the two conditions, see [9].

The paper is organized as follows. In Sect. 2, we include the essential information on the formal algorithm, referring to [11] for details. In Sect. 3, we give the technical estimates that lead to the proof of convergence of the formal algorithm. In particular, we include a complete discussion of the mechanism of accumulation of small divisors which allows us to have an accurate control. We remark in particular that we do not need to use the Siegel lemma, because the latter controls combinations of small divisors that do not occur in our scheme. The estimates of Sect. 3 are used in Sect. 4 in order to complete the proof of the main theorem. A technical appendix follows.

2 Formal algorithm

We represent a map of type (1) using Lie transforms. A detailed exposition of the representation algorithm and of the method of reduction to normal form is given in [11], where the problem is treated at a formal level. We refer to that paper for details concerning the formal setting, while in this paper, we pay particular attention to the problem of convergence. Here, we just include the definitions that we will need later, so that also the notations will be fixed. Some relevant lemmas are reported in Appendix A.

We start with the definition of Lie series and Lie transform. Let \(X_s(x)\) be a vector field on \({\mathbb {C}}^n\) whose components are homogeneous polynomials of degree \(s+1\). We will say that \(X_s(x)\) is of order \(s\), as indicated by the label. Moreover, in the following, we will denote by \(X_{s,j}\) the \(j\)th component of the vector field \(X_s\). The Lie series operator is defined as

where \(L_{X_s}\) is the Lie derivative with respect to the vector field \(X_s\).

Let now \(X=\{X_j\}_{j\ge 1}\) be a sequence of polynomial vector fields of degree \(j+1\). The Lie transform operator \(T_X\) is defined as

where the sequence \(E^{X}_s\) of linear operators is recursively defined as

The superscript in \(E^{X}\) is introduced in order to specify which sequence of vector fields is intended. By letting the sequence have only one vector field different from zero, e.g., \(X=\{0,\ldots ,0,X_k,0,\ldots \}\), it is easily seen that one gets \(T_X=\exp \bigl (L_{X_k}\bigr )\).
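The Lie series and Lie transform operators can be prototyped on polynomials with exact rational arithmetic. The sketch below is only an illustration, not the authors’ implementation: polynomials are dictionaries mapping exponent tuples to coefficients, a vector field is a list of component polynomials, and all function names are hypothetical. Since the recursive formula for \(E^X_s\) is not reproduced in this section, the sketch assumes the standard recursion of the Lie transform formalism, \(E^X_0=1\), \(E^X_s=\sum _{j=1}^{s}\frac{j}{s}L_{X_j}E^X_{s-j}\). It checks the remark above: with a single nonzero field \(X_k\), the truncated \(T_X\) coincides with the truncated Lie series \(\exp \bigl (L_{X_k}\bigr )\).

```python
from fractions import Fraction

# Polynomials in n variables: {exponent tuple: coefficient}.
# Vector fields: list of n such polynomials (one per component).

def poly_add(p, q, c=1):
    """Return p + c*q, dropping zero coefficients."""
    out = dict(p)
    for k, v in q.items():
        out[k] = out.get(k, 0) + c * v
        if out[k] == 0:
            del out[k]
    return out

def lie_derivative(X, f):
    """L_X f = sum_j X_j * df/dx_j."""
    out = {}
    for j, Xj in enumerate(X):
        for k, c in f.items():
            if k[j] == 0:
                continue
            dk = list(k)
            dk[j] -= 1  # exponent of the monomial after differentiating in x_j
            for kx, cx in Xj.items():
                m = tuple(a + b for a, b in zip(dk, kx))
                out[m] = out.get(m, 0) + k[j] * c * cx
    return {m: v for m, v in out.items() if v != 0}

def lie_series(Xk, f, terms):
    """Truncated Lie series exp(L_Xk) f = sum_{m=0}^{terms} L_Xk^m f / m!."""
    total, term = dict(f), dict(f)
    for m in range(1, terms + 1):
        # term_m = L(term_{m-1}) / m = L^m f / m!
        term = {k: Fraction(v) / m for k, v in lie_derivative(Xk, term).items()}
        total = poly_add(total, term)
    return total

def lie_transform(X, f, order):
    """Truncated Lie transform T_X f = sum_{s=0}^{order} E_s f, using the ASSUMED
    recursion E_0 = 1, E_s = sum_{j=1}^{s} (j/s) L_{X_j} E_{s-j}.
    X maps j >= 1 to the field X_j; missing keys stand for X_j = 0."""
    E = [dict(f)]
    for s in range(1, order + 1):
        Es = {}
        for j in range(1, s + 1):
            if j in X:
                Es = poly_add(Es, lie_derivative(X[j], E[s - j]), Fraction(j, s))
        E.append(Es)
    total = {}
    for Es in E:
        total = poly_add(total, Es)
    return total

# Example in C^2: X_2 has components of degree 3 (order 2), f is linear.
X2 = [{(1, 2): 1}, {(3, 0): -2}]
f = {(1, 0): 1, (0, 1): 3}
# Only E_0, E_2, E_4, E_6 are nonzero, so order 6 matches 3 terms of exp(L_{X_2}).
same = lie_transform({2: X2}, f, 6) == lie_series(X2, f, 3)
```

Exact fractions are used so that the comparison between the two truncations is an identity check rather than a floating-point tolerance test.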

2.1 Representation and conjugation of maps

We recall the representation of maps introduced in [11] together with some formal results that we are going to use here. Let \(\varLambda =e^{\mathsf{A}}\), i.e., in our case, \(\mathsf{A}=\hbox {diag}(\mu _1+i\omega _1,\ldots ,\mu _n+i\omega _n)\) with \(\lambda _j=e^{\mu _j+i\omega _j}\). Remark that we may express the linear part of the map as a Lie series by introducing the exponential operator \(\mathsf{R}=\exp \bigl (L_{\mathsf{A}x}\bigr )\). The action of the operator \(\mathsf{R}\) on a function \(f\) or on a vector field \(V\) is easily calculated as
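For functions, the action of \(\mathsf{R}\) can be checked numerically. For \(\mathsf{A}=\hbox {diag}(a_1,\ldots ,a_n)\) one has \(L_{\mathsf{A}x}=\sum _j a_jx_j\,\partial /\partial x_j\), so every monomial is an eigenfunction, \(L_{\mathsf{A}x}x^k=\langle k,a\rangle x^k\), and the Lie series gives \(\mathsf{R}x^k=e^{\langle k,a\rangle }x^k=\lambda ^kx^k\), i.e., \(\mathsf{R}f(x)=f(\varLambda x)\). The Python sketch below (hypothetical helper names, truncated series in floating point, sample eigenvalues chosen arbitrarily) confirms this for one polynomial; the action on vector fields is not covered.

```python
import cmath

def lie_derivative_linear(a, f):
    """L_{Ax} f for A = diag(a): x_j d/dx_j multiplies x^k by k_j,
    so each monomial x^k is multiplied by <k, a>."""
    return {k: c * sum(kj * aj for kj, aj in zip(k, a)) for k, c in f.items()}

def apply_R(a, f, terms=80):
    """Truncated Lie series exp(L_{Ax}) f, summing L^m f / m! for m < terms."""
    total, term = dict(f), dict(f)
    for m in range(1, terms):
        term = {k: c / m for k, c in lie_derivative_linear(a, term).items()}
        for k, c in term.items():
            total[k] += c
    return total

def evaluate(f, x):
    """Evaluate the polynomial f at the point x."""
    result = 0j
    for k, c in f.items():
        monomial = c
        for xj, kj in zip(x, k):
            monomial *= xj ** kj
        result += monomial
    return result

a = [complex(0.1, 0.7), complex(-0.1, 0.3)]  # sample a_j = mu_j + i omega_j
lam = [cmath.exp(aj) for aj in a]             # lambda_j = e^{a_j}
f = {(2, 1): 1.5, (0, 3): -0.5j}
x = [0.3 + 0.2j, -0.4 + 0.1j]
lhs = evaluate(apply_R(a, f), x)                          # (R f)(x) via the Lie series
rhs = evaluate(f, [lj * xj for lj, xj in zip(lam, x)])    # f(Lambda x)
```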

The first result is concerned with the representation of the map (1) using a Lie transform.

Lemma 1

There exist generating sequences of vector fields \(V= \bigl \{V_s(x)\bigr \}_{s\ge 1}\) and \(W =\bigl \{W_s(x)\bigr \}_{s\ge 1}\) with \(W_s =\mathsf{R}V_s\) such that the map (1) is represented as

The second result is concerned with the composition of Lie transforms.

Lemma 2

Let \(X,\,Y\) be generating sequences. Then, one has \(T_X\circ T_Y = T_Z\) where \(Z\) is the generating sequence recursively defined as

The latter formula is reminiscent of the well-known Baker–Campbell–Hausdorff composition of exponentials. The difference is that the result is expressed as a Lie transform instead of an exponential, which makes the formula more effective for our purposes.

The third result gives the algorithm that we will use in order to conjugate the map (1) to its linear part. We formulate it in a more general form, looking for a conjugation between two maps. Let two maps

be given, where \(W=\bigl \{W_s\bigr \}_{s\ge 1}\), \(Z=\bigl \{Z_s\bigr \}_{s\ge 1}\) are generating sequences. We say that the maps are conjugated up to order \(r\) in case there exists a finite generating sequence \(X=\bigl \{X_1,\ldots ,X_r\bigr \}\) such that the transformation \(y=S^{(r)}_{X}x\) makes the difference between the maps of order higher than \(r\), i.e.,

where \(S^{(r)}_{X} =\exp \bigl (L_{X_r}\bigr )\circ \ldots \circ \exp \bigl (L_{X_1}\bigr )\). Suppose that we have \(W_1=Z_1,\ldots ,W_{r}=Z_{r}\). Then, the maps are conjugated up to order \(r\), since one has \(T_{W} x - T_{Z} x = \mathcal{O}(r+1)\).

Lemma 3

Let the generating sequences of the maps (14) coincide up to order \(r-1\) and let \(X_r\) be a vector field of order \(r\) generating the near the identity transformation \(y=\exp \bigl (L_{X_r}\bigr )x\). Then, the maps are conjugated up to order \(r\) if

The vector field \(X_r\) must satisfy the equation

2.2 Construction of the normal form

Following Schröder and Siegel, we want to conjugate the map (1) to its linear part; that is, writing the map as

with a known sequence \(W^{(0)}\) of vector fields, we want to reduce it to the linear normal form

To this end, we look for a generating sequence \(\{X_r\}_{r\ge 1}\) of vector fields and a corresponding sequence \(\{W^{(r)}\}_{r\ge 1}\) satisfying \(W^{(r)}_1=\ldots =W^{(r)}_r=0\). We emphasize that the map \(x' =T_{W^{(r)}}\circ \mathsf{R}x\) is conjugated to (18) up to order \(r\). We say that \(W^{(r)}\) is in normal form up to order \(r\).

According to (16), we should solve for \(X_r\) the equation

The operator \(\mathsf{D}\) is diagonal on the basis of monomials \(x^k\mathbf{e}_j = x_1^{k_1}\cdot \ldots \cdot x_n^{k_n}\mathbf{e}_j\), where \((\mathbf{e}_1,\ldots ,\mathbf{e}_n)\) is the canonical basis of \({\mathbb {C}}^n\). Indeed, we have

Thus, provided the eigenvalues of \(\mathsf{D}\) are not zero, the vector field \(X_r\) is determined as

where \(w_{j,k}\) are the coefficients of \(W^{(r-1)}_r\).

Next, we need an explicit form for the transformed sequence \(W^{(r)}\). We use the conjugation formula (15) replacing \(W\) and \(Z\) with \(W^{(r-1)}\) and \(W^{(r)}\), respectively. It is convenient to introduce an auxiliary vector field \(V^{(r)}\) and to split the formula as

A more explicit form comes from Lemma 2, recalling that \(T_{X}=\exp (L_{X_r})\) if one considers the generating sequence \(X=\{0,\ldots ,0,X_r,0,\ldots \}\). The auxiliary vector field \(V^{(r)}\) is determined as

where we use the simplified notation \(E^{(r-1)}\) in place of \(E^{(W^{(r-1)})}\). Having so determined the sequence \(V^{(r)}\), we calculate the transformed sequence \(W^{(r)}\) as

Here, a remark is in order. The formulæ above define the sequences \(V^{(r)}_r,V^{(r)}_{r+1},\ldots \) and \(W^{(r)}_{r+1},W^{(r)}_{r+2},\ldots \) starting with terms of order \(r\) and \(r+1\), respectively. This is fully natural, because all terms of lower order vanish due to \(W^{(r)}\) being in normal form up to order \(r\). Moreover, we emphasize that in view of (16), we should determine \(X_r\) by solving the equation \(\mathsf{D}X_r=W^{(r-1)}_r\), since we want \(W^{(r)}_r=0\).

3 Quantitative estimates

Our aim now is to complete the formal algorithm of the previous section with quantitative estimates that will lead to the proof of convergence of the transformation to normal form. The main result of this section is the iteration lemma of Sect. 3.3 below. However, we must first introduce a few technical tools.

3.1 Norms on vector fields and generalized Cauchy estimates

For a homogeneous polynomial \(f(x)=\sum _{|k|=s}f_kx^k\) (using multiindex notation and with \(|k|=|k_1|+\cdots +|k_n|\)) with complex coefficients \(f_k\) and for a homogeneous polynomial vector field \(X_s=(X_{s,1},\ldots ,X_{s,n})\), we use the polynomial norm

The following lemma allows us to control the norms of Lie derivatives of functions and vector fields.

Lemma 4

Let \(X_r\) be a homogeneous polynomial vector field of degree \(r+1\). Let \(f_s\) and \(v_s\) be a homogeneous polynomial and vector field, respectively, of degree \(s+1\). Then, we have

Proof

Write \(f_s=\sum _{|k|=s+1} b_k x^k\) with complex coefficients \(b_k\). Similarly, write the \(j\)th component of the vector field \(X_r\) as \(X_{r,j}=\sum _{|k'|=r+1} c_{j,k'} x^{k'}\). Recalling that \(L_{X_r}f_s=\sum _{j=1}^{n}X_{r,j}{{{\partial }{f_s}}\over {{\partial }{x_j}}}\), we have

Thus, in view of \(|k_j|\le s+1\), we have

namely the first of (25). In order to prove the second inequality, recall that the \(j\)th component of the Lie derivative of the vector field \(v_s\) is

Then using the first of (25), we have

which readily gives the wanted inequality in view of the definition (24) of the polynomial norm of a vector field.\(\square \)
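The first inequality of Lemma 4 can be probed numerically. The sketch below assumes that the polynomial norm (24) is the sum of the absolute values of the coefficients, summed over the components for a vector field (our reading; the display is not reproduced here), and that the first of (25) reads \(\Vert L_{X_r}f_s\Vert \le (s+1)\Vert X_r\Vert \,\Vert f_s\Vert \), as the proof indicates. It tests random complex homogeneous polynomials; the one-variable example \(X_r=x^{r+1}\), \(f_s=x^{s+1}\) shows that the factor \(s+1\) cannot be lowered. Helper names are hypothetical.

```python
import random
from itertools import combinations_with_replacement

def monomials(n, degree):
    """All exponent tuples k in N^n with |k| = degree."""
    for combo in combinations_with_replacement(range(n), degree):
        k = [0] * n
        for j in combo:
            k[j] += 1
        yield tuple(k)

def random_poly(n, degree, rng):
    """Homogeneous polynomial with random complex coefficients."""
    return {k: complex(rng.uniform(-1, 1), rng.uniform(-1, 1)) for k in monomials(n, degree)}

def norm(p):
    """ASSUMED polynomial norm: sum of |coefficients|."""
    return sum(abs(c) for c in p.values())

def field_norm(X):
    """ASSUMED vector-field norm: sum of the component norms."""
    return sum(norm(Xj) for Xj in X)

def lie_derivative(X, f):
    """L_X f = sum_j X_j * df/dx_j."""
    out = {}
    for j, Xj in enumerate(X):
        for k, c in f.items():
            if k[j] == 0:
                continue
            dk = list(k)
            dk[j] -= 1
            for kx, cx in Xj.items():
                m = tuple(a + b for a, b in zip(dk, kx))
                out[m] = out.get(m, 0) + k[j] * c * cx
    return out

def lemma4_ratio(X, f, s):
    """||L_X f|| / ((s+1) ||X|| ||f||) for f of degree s+1; Lemma 4 says this is <= 1."""
    return norm(lie_derivative(X, f)) / ((s + 1) * field_norm(X) * norm(f))

rng = random.Random(0)
worst = 0.0
for _ in range(200):
    n, r, s = rng.randint(1, 3), rng.randint(1, 3), rng.randint(1, 3)
    X = [random_poly(n, r + 1, rng) for _ in range(n)]  # X_r: degree r+1
    f = random_poly(n, s + 1, rng)                      # f_s: degree s+1
    worst = max(worst, lemma4_ratio(X, f, s))
```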

Lemma 5

Let \(V_r\) be a homogeneous polynomial vector field of degree \(r+1\). Then the solution \(X_r\) of the equation \(\mathsf{D}X_r = V_r\) satisfies

with the sequence \(\alpha _r\) defined by (5).

Proof

The first inequality is a straightforward consequence of the definition (24) of the norm and of the definition of the sequence \(\alpha _r\) in terms of \(\beta _r\) defined by (4): if \(v_{j,k}\) are the coefficients of \(V_r\), then the coefficients of \(X_r\) are bounded by \(|v_{j,k}|/\beta _r\le |v_{j,k}|/\alpha _r\). The second inequality follows from the identity \(\mathsf{R}X_r = X_r+V_r\) by the triangle inequality, combined with the first one.\(\square \)

3.2 Accumulation of small divisors

Lemma 5 shows that solving the equation for every vector field of the generating sequence introduces a divisor \(\alpha _r\). Such divisors do accumulate, and our aim now is to analyze in detail the process of accumulation. It will be convenient to introduce a further sequence \(\{\sigma _r\}_{r\ge 0}\) defined as

which will play a major role in the rest of the proof. The extra factor \(1/r^2\) can be interpreted as due to the generalized Cauchy estimates for derivatives, which are also a source of divergence in perturbation processes. The quantities \(\sigma _r\) are the actual small divisors that we must deal with. Here, we follow the scheme presented in [9], with a few variations. However, since this is a crucial part of the proof, we include it in a detailed and self-contained form.

The guiding remark is that the small divisors \(\sigma _r\) propagate and accumulate through the formal construction due to the use of the recursive formulæ (22) and (23); e.g., the expression \(L_{X_r}V_s^{(r)}\) will contain the product of the divisors contained in both \(X_r\) and \(V_s^{(r)}\), with no extra divisors generated by the Lie derivative. The explicit constructive form of the algorithm allows us to have a quite precise control on the accumulation process. It is an easy remark that unfolding the recursive formulæ (22) and (11) will produce an estimate of \(\Vert {W^{(r)}_{s}}\Vert \) as a sum of many terms every one of which contains as denominator a product of \(q\) divisors of the form \(\sigma _{j_1}\ldots \sigma _{j_q}\), with some indexes \(j_1,\ldots ,j_q\) and some \(q\) to be found. This is what we call the accumulation of small divisors, and the problem is to identify the worst product among them. The key of our argument is to focus our attention on the indexes rather than on the actual values of the divisors.

We call \(I=\{j_1,\ldots ,j_s\}\) with non-negative integers \(j_1,\ldots ,j_s\) a set of indexes; repeated indexes are allowed and are counted with their multiplicity. We introduce a partial ordering as follows. Let \(I=\{j_1,\ldots ,j_s\}\) and \(I'=\{j'_1,\ldots ,j'_s\}\) be two sets of indexes with the same number \(s\) of elements. We say that \(I\triangleleft I'\) in case there is a permutation of the indexes such that the relation \(j_m\le j'_m\) holds true for \(m=1,\ldots ,s\,\). If two sets of indexes contain a different number of elements, we pad the shorter one with zeros and use the same definition. We also define the special sets of indexes

Lemma 6

For the sets of indexes \(I_s^*\), the following statements hold true:

-

(i)

the maximal index is \(j_\mathrm{max}=\bigl \lfloor \frac{s}{2}\bigr \rfloor \,\);

-

(ii)

for every \(k\in \{1,\ldots ,j_{\max }\}\) the index \(k\) appears exactly \(\bigl \lfloor \frac{s}{k}\bigr \rfloor -\bigl \lfloor \frac{s}{k+1}\bigr \rfloor \) times;

-

(iii)

for \(0< r\le s\) one has

$$\begin{aligned} \bigl (\{r\}\cup I^*_r\cup I^*_s\bigr ) \triangleleft I^*_{r+s}. \end{aligned}$$

Proof

The claim (i) is a trivial consequence of the definition.

(ii) For each fixed value of \(s>0\) and \(1\le k\le \lfloor s/2\rfloor \,\), we have to determine the cardinality of the set \(M_{k,s}=\{m\in {\mathbb {N}}:\ 2\le m\le s\,,\ \lfloor s/m\rfloor =k\}\). For this purpose, we use the trivial inequalities

After rewriting the same relations with \(k+1\) in place of \(k\,\), one immediately realizes that an index \(m\) belongs to \(M_{k,s}\) if and only if \(m\le \lfloor s/k\rfloor \) and \(m\ge \lfloor s/(k+1)\rfloor +1\); therefore \(\#M_{k,s}=\bigl \lfloor \frac{s}{k}\bigr \rfloor -\bigl \lfloor \frac{s}{k+1}\bigr \rfloor \).

(iii) Since \(r\le s\), the definition in (28) implies that neither \(\{r\}\cup I^*_r\cup I^*_s\) nor \(I^*_{r+s}\) can include any index exceeding \(\bigl \lfloor (r+s)/2\bigr \rfloor \). Thus, let us define some finite sequences of non-negative integers as follows:

where \(1\le k \le \lfloor (r+s)/2\rfloor \). When \(k< r\), the property (ii) of the present lemma allows us to write

Using the elementary estimate \(\lfloor x\rfloor + \lfloor y\rfloor \le \lfloor x+y\rfloor \,\), it follows from the equations above that \(M_k \ge N_k\) for \(1\le k<r\,\). In the remaining cases, i.e., when \(r\le k\le \lfloor (r+s)/2\rfloor \,\), we have that

Therefore, \(M_k=1+R_k+S_k\ge N_k\). Since we have just shown that \(M_k \ge N_k\) for all \(1\le k\le \lfloor (r+s)/2\rfloor \), it is now an easy matter to complete the proof. Let us first reorder both sets of indexes \(\{r\}\cup I^*_r\cup I^*_s\) and \(I^*_{r+s}\) in increasing order; moreover, let us recall that \(\#\big (\{r\}\cup I^*_r\cup I^*_s\big )=\# I^*_{r+s}=r+s-1\), because of the definition in (28). Thus, since \(M_1\ge N_1\), every element equal to \(1\) in \(\{r\}\cup I^*_r\cup I^*_s\) has a corresponding index in \(I^*_{r+s}\) whose value is at least \(1\,\). Analogously, since \(M_2\ge N_2\), every index \(2\) in \(\{r\}\cup I^*_r\cup I^*_s\) has a corresponding index in \(I^*_{r+s}\) which is at least 2, and so on up to \(k =\lfloor (r+s)/2\rfloor \). We conclude that \(\{r\}\cup I^*_r\cup I^*_s\triangleleft I^*_{r+s}\).\(\square \)
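Lemma 6 is easy to test by brute force. The Python sketch below assumes that the special sets (28) are the multisets \(I^*_s=\{\lfloor s/m\rfloor :\ 2\le m\le s\}\) (empty for \(s=1\)), which is what the proof of (ii) and the count \(\#I^*_{r+s}=r+s-1\) indicate. It implements \(\triangleleft \) through an equivalent test: after padding the shorter multiset with zeros and sorting both, compare them elementwise. It then checks statements (i), (ii) and (iii) for \(0<r\le s\le 60\). Function names are hypothetical.

```python
from collections import Counter

def I_star(s):
    """ASSUMED form of the special sets (28): the multiset {floor(s/m) : 2 <= m <= s}."""
    return sorted(s // m for m in range(2, s + 1))

def precedes(I, J):
    """I <| J: pad the shorter multiset with zeros; a pairing with i <= j exists
    exactly when the two sorted lists satisfy the inequality elementwise."""
    size = max(len(I), len(J))
    a = sorted(list(I) + [0] * (size - len(I)))
    b = sorted(list(J) + [0] * (size - len(J)))
    return all(x <= y for x, y in zip(a, b))

def check_lemma6(smax):
    """Brute-force check of Lemma 6 (i)-(iii) up to s = smax."""
    for s in range(2, smax + 1):
        I = I_star(s)
        assert len(I) == s - 1
        assert max(I) == s // 2                          # statement (i)
        counts = Counter(I)
        for k in range(1, s // 2 + 1):                   # statement (ii)
            assert counts[k] == s // k - s // (k + 1)
    for s in range(1, smax + 1):
        for r in range(1, s + 1):                        # statement (iii)
            assert precedes([r] + I_star(r) + I_star(s), I_star(r + s))
    return True

check_lemma6(60)
```

For instance, with \(r=s=3\) the padded lists are \([1,1,1,1,3]\) and \([1,1,1,2,3]\), so the relation holds with one unit of slack in the fourth position.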

We now identify the sets of indexes that describe the allowed combinations of small divisors. These are the sets

We will refer to condition \(I\triangleleft I^*_s\) as the selection rule \(\mathsf{S}\). The relevant properties are stated by the following

Lemma 7

For the sets of indexes \(\mathcal{J}_{r,s}\) the following statements hold true:

-

(i)

\(\mathcal{J}_{r-1,s}\subset \mathcal{J}_{r,s}\);

-

(ii)

if \(I\in \mathcal{J}_{r-1,r}\) and \(I'\in \mathcal{J}_{r,s}\) then we have \(\bigl (\{r\} \cup I \cup I'\bigr )\in \mathcal{J}_{r,r+s}\).

Remark

Property (ii) plays a major role in controlling the accumulation of small divisors, since it tells us how the indexes accumulate. Indeed, it reflects the fact that a Lie derivative, e.g., \(L_{X_r}V_s^{(r)}\), contains the union of the divisors in \(V_s^{(r)}\) and in \(X_r\), taking also into account that \(X_r\) contains an extra divisor in view of Lemma 5.

Proof of Lemma 7

(i) is immediately checked in view of the definition of \(\mathcal{J}_{r,s}\).

(ii) We have \(\#\bigl (\{r\}\cup \, I\cup \, I'\bigr ) = 1+\#(I)+\#(I') = 1+r-1+s-1 = r+s-1\,\). For \(j\in \{r\}\cup \, I\cup \, I'\,\), we also have \(1\le j\le r\) because this is true for all \(j\in I\) and for all \(j\in I'\), and we just add an extra index \(r\). Since \(r< s\), we also have \(r =\min \bigl (r,(r+s)/2\bigr )\), as required. Coming to the selection rule \(\mathsf{S}\), we remark that \(\{r\}\cup I\cup I'\triangleleft \{r\}\cup I^*_r\cup I^*_s\) readily follows from \(I\triangleleft I^*_r\) and \(I'\triangleleft I^*_s\), which are true in view of \(I\in \mathcal{J}_{r-1,r}\) and \(I'\in \mathcal{J}_{r,s}\), so that the claim follows from property (iii) of Lemma 6.\(\square \)

We now consider the accumulation of small divisors. Recall the definition (27) of the sequence \(\sigma _r\). We associate to the sets of indexes \(\mathcal{J}_{r,s}\) the sequence of positive real numbers \(T_{r,s}\) defined as

Lemma 8

The sequence \(T_{r,s}\) satisfies the following properties for \(1\le r\le s\):

-

(i)

\(T_{r-1,s} \le T_{r,s}\);

-

(ii)

\(\frac{1}{\sigma _r} T_{r-1,r} T_{r,s} \le T_{r,r+s}\).

Proof

(i) From property (i) of Lemma 7, we readily get

since the maximum is evaluated over a larger set of indexes.

(ii) Compute

where in the inequality of the last line property (ii) of Lemma 7 has been used. \(\square \)

The final estimate uses condition \(\varvec{\tau }\) and the definition (27) of the sequence \(\sigma _r\,\).

Lemma 9

Let \(\lambda \) satisfy condition \(\varvec{\tau }\). Then the sequence \(T_{r,s}\) is bounded by

with some positive constant \(\gamma \) not depending on \(\lambda \).

Proof

In view of \(\sigma _s\le 1\), it is enough to prove the second inequality. We use the property (ii) of Lemma 7 and the selection rule \(\mathsf{S}\). We readily get

By property (ii) of Lemma 6, the latter product is evaluated as

where \(q_k =\bigl \lfloor \frac{s}{k}\bigr \rfloor -\bigl \lfloor \frac{s}{k+1}\bigr \rfloor \) is the number of indexes in \(I_s^*\) which are equal to \(k\). In view of the definition (27) of the sequence \(\sigma _s\), we have

where \(a=\sum _{k\ge 1} \frac{2\ln k}{k(k+1)}<\infty \) is clearly independent of \(\lambda \). The claim follows by just setting \(\gamma =e^a\).\(\square \)
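The \(\lambda \)-independent part of this bound can be checked numerically. Assuming, as the remark after (27) suggests, that \(\sigma _j\) carries the factor \(1/j^2\) (e.g., \(\sigma _j=\alpha _j/j^2\)), the factor \(\prod _{j\in I^*_s}j^2\) of \(\prod _{j\in I^*_s}\sigma _j^{-1}\) should not exceed \(e^{sa}\). The Python sketch below, again with the inferred form \(I^*_s=\{\lfloor s/m\rfloor :\ 2\le m\le s\}\) and hypothetical function names, compares \(\ln \prod _{j\in I^*_s}j^2\) with \(s\,a_N\), where \(a_N\) is a partial sum of \(a\); since \(a_N<a\), passing the check is a slightly stronger statement.

```python
import math

def a_partial(N):
    """Partial sum a_N = sum_{k=1}^{N} 2 ln k / (k (k+1)); the full series a is finite."""
    return sum(2.0 * math.log(k) / (k * (k + 1)) for k in range(1, N + 1))

def log_prod_squares(s):
    """ln prod_{j in I*_s} j^2, with the ASSUMED I*_s = {floor(s/m) : 2 <= m <= s}."""
    return sum(2.0 * math.log(s // m) for m in range(2, s + 1))

a_N = a_partial(10**5)
gamma_lower = math.exp(a_N)  # gamma = e^a is slightly larger than this value
ok = all(log_prod_squares(s) <= s * a_N for s in range(1, 501))
```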

3.3 Iteration lemma

This is the main lemma, which allows us to control the norms of the sequence of vector fields \(\{X_r\}_{r\ge 1}\,\).

Lemma 10

Assume that the sequence \(W^{(0)}\) of vector fields satisfies \(\Vert {W^{(0)}_s}\Vert \le \frac{C_0^{s-1}A}{s}\) with some constants \(A> 0\) and \(C_0\ge 0\). Then the sequence of vector fields \(\{X_r\}_{r\ge 1}\) that for every \(r\) give the normal form \(W^{(r)}\) satisfies the following estimates: there exists a bounded monotonically non-decreasing sequence \(\{C_r\}_{r\ge 1}\) of positive constants, with \(C_r\rightarrow C_{\infty }<\infty \) for \(r\rightarrow \infty \), such that we have

The sequence may be recursively defined as

so that one has \(C_r> 16A\).

Proof

The proof proceeds by induction. For \(r=0\), the inequality for \(W^{(0)}_s\) is nothing but the initial hypothesis, recalling that by definition we have \(T_{0,s}=1\). From this, the corresponding inequality for \(X_1\) immediately follows by Lemma 5. The induction requires two main steps, namely (a) estimating \(\Vert {V^{(r)}_s}\Vert \) as defined by (22) and (b) estimating \(\Vert {W^{(r)}_s}\Vert \) as defined by (23).

The estimate for \(X_r\) is determined by solving (19). In view of the induction hypothesis (31), we rewrite the claim of Lemma 5 as

In order to estimate \(\Vert {V^{(r)}_s}\Vert \), we first prove that for \(s> r\) we have

The proof of the latter estimates is based on the general inequality

where we have introduced the quantities

The inequality (36) follows by repeatedly applying Lemma 4 to the non-recursive expression (44) for \(E^{(r-1)}_{s-r}\) given in Appendix A. We describe this process in detail. First estimate

which follows from the induction hypothesis (31), from (33), and from Lemma 4. Remark that the factor \(j_1+r+2\) is the degree of the vector field \(L_{W^{(r-1)}_{j_1}}\mathsf{R}X_r\). The same estimate is repeated for \(L_{W^{(r-1)}_{j_2}}L_{W^{(r-1)}_{j_1}}\mathsf{R}X_r,\ldots \), thus yielding

In view of the expression (44) of \(E^{(r-1)}_{s-r}\), we get (36) and (37). Here, we need an estimate for \(\Theta (r,s,k)\), that we defer to Appendix A.3. Putting \(m=2\) in (55), we get

Furthermore, we isolate the contribution of the quantities \(T_{r-1,\cdot }\) that control the small divisors. With an appropriate use of property (ii) of Lemma 8, we have

Thus, using also \(\alpha _r\le 1\) which is true in view of the definition of \(\alpha _r\), we replace (36) with

For \(r=1\), the latter formula readily gives (34). For \(r> 1\), we use \(1+\frac{1}{r}< 2\) and \(\frac{8A}{C_{r-1}}< 1\), which follows from the choice of the sequence \(C_r\) in the statement of the Lemma, so that (35) is easily recovered.

We come now to the estimate of \(\Vert {V^{(r)}_s}\Vert \). Here, it is convenient to separate the case \(r=1\). Putting (34) and the induction hypothesis (31) in (22) we get

Thus, we may write

For \(r> 1\) we put (35) in (22), and we get

Thus, we conclude

Now we look for an estimate of \(\Vert {W^{(r)}_s}\Vert \) as given by (11). Recall that we have \(s> r\), because \(W^{(r)}_r=0\) by construction. We use (40) and (41) together with Lemma 4, and get

Here, we use again the statement (ii) of Lemma 8. By the trivial inequality

and remarking that \(\hat{C}_r> 4A\), the latter estimate yields

We conclude

In view of (40) and (41), this proves the claim of the Lemma.\(\square \)

4 Proof of the main theorem

Having established the estimate of the iteration Lemma 10 on the sequence of generating vector fields, it is now a standard matter to complete the proof of Theorem 1. Hence, this section will be less detailed than the previous ones.

In view of the iteration lemma, we are given an infinite sequence \(\{X_r\}_{r\ge 1}\) of generating vector fields with \(X_r\) homogeneous polynomial of degree \(r+1\) satisfying

By Lemma 9, we have \(\frac{T_{r-1,r}}{\alpha _r}\le \gamma ^r e^{r\Gamma }\) with \(\Gamma \) as in condition \(\varvec{\tau }\) and \(\gamma \) a constant independent of \(\lambda \). Moreover, still by Lemma 10, we have \(C_r\le C_{\infty }\), a positive constant independent of \(\lambda \). Thus, we have

with positive constants \(\eta \) and \(K\) independent of \(\lambda \).

We refer now to the analytic setting that we recall in Appendix A.2. Every vector field \(X_r\) generates a near the identity transformation \(y=\exp \bigl (L_{X_r}\bigr )x\) which transforms the generating sequence \(W^{(r-1)}\) into \(W^{(r)}\) according to the algorithm of Sect. 2.2. Thus, the near the identity transformation to normal form is generated by the limit \(S_X\) of the sequence of operators \(S^{(r)}_X =\exp (L_{X_r})\circ \ldots \circ \exp (L_{X_1})\). We apply Proposition 2 of Appendix A.2. In view of (43), in a polydisk \(\varDelta _\rho \) of radius \(\rho \) centered at the origin of \({\mathbb {C}}^n\), we have

where \(\bigl |X_r\bigr |_{\rho }\) is the supremum norm. Thus, condition (52) of Proposition 2 reads

which is true if we take, e.g., \(\rho <\overline{\rho }=\frac{3}{2}B^{-1}e^{-\Gamma }\) with a constant \(B\) independent of \(\lambda \). Thus, Proposition 2 applies with, e.g., \(\delta =\overline{\rho }/3\), and we conclude that the near the identity transformation that gives the map the wanted linear normal form is analytic at least in a polydisk of radius \(B^{-1}e^{-\Gamma }\), as claimed. This concludes the proof of the main theorem.
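The passage from the coefficient bounds to the supremum norm on the polydisk \(\varDelta _\rho \) rests on the elementary estimate \(|f(x)|\le \Vert f\Vert \rho ^{d}\) for a homogeneous polynomial \(f\) of degree \(d\) and \(|x_j|\le \rho \), valid when \(\Vert f\Vert \) is the sum of the absolute values of the coefficients (our assumed reading of (24)); it applies to each component of \(X_r\), of degree \(r+1\). A quick Python check with hypothetical helper names, including a polynomial with positive coefficients for which the bound is attained at \(x=(\rho ,\ldots ,\rho )\):

```python
import cmath
import random
from itertools import combinations_with_replacement

def monomials(n, degree):
    """All exponent tuples k in N^n with |k| = degree."""
    for combo in combinations_with_replacement(range(n), degree):
        k = [0] * n
        for j in combo:
            k[j] += 1
        yield tuple(k)

def evaluate(f, x):
    """Evaluate the polynomial f at the point x."""
    total = 0j
    for k, c in f.items():
        term = c
        for xj, kj in zip(x, k):
            term *= xj ** kj
        total += term
    return total

def coeff_norm(f):
    """ASSUMED polynomial norm: sum of |coefficients|."""
    return sum(abs(c) for c in f.values())

def point_in_polydisk(n, rho, rng):
    """Random point with |x_j| < rho for every j."""
    return [rho * rng.random() * cmath.exp(2j * cmath.pi * rng.random()) for _ in range(n)]

rng = random.Random(1)
rho, n, d = 0.7, 3, 4
f = {k: complex(rng.uniform(-1, 1), rng.uniform(-1, 1)) for k in monomials(n, d)}
bound = coeff_norm(f) * rho ** d
samples = [abs(evaluate(f, point_in_polydisk(n, rho, rng))) for _ in range(1000)]
```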

References

Arnold, V.I.: Geometrical Methods in the Theory of Ordinary Differential Equations. Springer, Berlin (1980)

Buff, X., Chéritat, A.: The Brjuno function continuously estimates the size of quadratic Siegel disks. Ann. Math. 164, 265–312 (2006)

Carletti, T., Marmi, S.: Linearization of analytic and non-analytic germs of diffeomorphisms of \((\mathbb{C},0)\). Bull. Soc. Math. France 128, 69–85 (2000)

Giorgilli, A., Locatelli, U.: On classical series expansions for quasi-periodic motions. Math. Phys. Electron. J. 3(5) (1997)

Giorgilli, A., Locatelli, U.: A classical self-contained proof of Kolmogorov’s theorem on invariant tori. In: Simó, C. (ed.) Hamiltonian Systems with Three or More Degrees of Freedom. NATO ASI series C, vol. 533. Kluwer Academic, Dordrecht (1999)

Giorgilli, A.: Unstable equilibria of Hamiltonian systems. Disc. Cont. Dyn. Syst. 7(4), 855–871 (2001)

Giorgilli, A.: Notes on exponential stability of Hamiltonian systems. In: Marmi, S. (ed.) Dynamical Systems, Part I: Hamiltonian systems and Celestial Mechanics, pp. 87–198. Pubblicazioni del Centro di Ricerca Matematica Ennio De Giorgi, Pisa (2003)

Giorgilli, A., Muraro, D.: Exponentially stable manifolds in the neighbourhood of elliptic equilibria. Boll. Unione Mat. Ital. Sez. B 9, 1–20 (2006)

Giorgilli, A., Marmi, S.: Convergence radius in the Poincaré–Siegel problem. DCDS Ser. S 3, 601–621 (2010)

Giorgilli, A.: On a theorem of Lyapounov. Rendiconti dell’Istituto Lombardo Accademia di Scienze e Lettere, Classe di Scienze Matematiche e Naturali (to appear) (see also arXiv:1303.7322)

Giorgilli, A.: On the representation of maps by Lie transforms. Rendiconti dell’Istituto Lombardo Accademia di Scienze e Lettere, Classe di Scienze Matematiche e Naturali (to appear) (see also arXiv:1401.6529)

Giorgilli, A., Locatelli, U., Sansottera, M.: On the convergence of an algorithm constructing the normal form for lower dimensional elliptic tori in planetary systems, preprint (2013)

Gröbner, W.: Die Lie-Reihen und ihre Anwendungen. Mathematische Monographien, vol. 3. VEB Deutscher Verlag der Wissenschaften (1960). Italian translation: Serie di Lie e loro applicazioni. Cremonese, Roma (1973)

Schröder, E.: Über iterierte Functionen. Math. Ann. 3, 296–322 (1871)

Siegel, C.L.: Iteration of analytic functions. Ann. Math. 43, 607–612 (1942)

Yoccoz, J.-C.: Théorème de Siegel, nombres de Bruno et polynômes quadratiques. Astérisque 231, 3–88 (1995)

Yoccoz, J.-C.: Analytic linearisation of circle diffeomorphisms in “Dynamical Systems and Small Divisors”. Lect. Notes Math. 1784, 125–173 (2002)

Acknowledgments

This research is partially supported by PRIN-MIUR 2010JJ4KPA “Teorie geometriche e analitiche dei sistemi Hamiltoniani in dimensioni finite e infinite.” M.S. has also been partially supported by an FSR Incoming Postdoctoral Fellowship of the Académie universitaire Louvain, co-funded by the Marie Curie Actions of the European Commission.

Appendix

A. Technicalities

This appendix is mainly devoted to recalling some definitions and properties related to Lie series and Lie transforms that are used in the paper. The estimate of the sequence (37) is also included.

A.1 Lie series and Lie transforms

We recall here some formal properties of the Lie series and Lie transforms as defined by (8) and (9). The Lie derivative with respect to the vector field \(X\) is defined as

where \(\phi _X^t\) is the flow generated by \(X\). We also recall that the explicit forms of the Lie derivatives of a function \(f(x)\) and of a vector field \(v(x)\) are, respectively,

where the l.h.s. is the \(j\)th component of the vector field \(L_{X}v\).
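For polynomial data these explicit forms are easy to compute exactly. The sketch below is a minimal illustration and makes several assumptions. The formula \(L_Xf=\sum _kX_k\,\partial f/\partial x_k\) follows from the flow definition. The displayed formula for vector fields was lost in extraction, so the convention \(L_Xv=[X,v]\), with \((L_Xv)_j=\sum _k\bigl (X_k\,\partial v_j/\partial x_k-v_k\,\partial X_j/\partial x_k\bigr )\), is assumed and may differ in sign from the original. The fields \(X\), \(v\) and the functions \(f\), \(g\) are hypothetical toy data in \({\mathbb {C}}^2\). A polynomial is stored as a dictionary that maps exponent tuples to exact rational coefficients.

```python
from fractions import Fraction

# Polynomial in x_1..x_n: {exponent tuple: coefficient}; a vector field is a list of n polynomials.
Poly = dict[tuple[int, ...], Fraction]

def add(p: Poly, q: Poly, sign: int = 1) -> Poly:
    """Return p + sign * q with zero coefficients removed."""
    r = dict(p)
    for e, c in q.items():
        r[e] = r.get(e, Fraction(0)) + sign * c
    return {e: c for e, c in r.items() if c != 0}

def mul(p: Poly, q: Poly) -> Poly:
    """Product of two polynomials."""
    r: Poly = {}
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            e = tuple(a + b for a, b in zip(e1, e2))
            r[e] = r.get(e, Fraction(0)) + c1 * c2
    return {e: c for e, c in r.items() if c != 0}

def diff(p: Poly, k: int) -> Poly:
    """Partial derivative with respect to the variable of 0-based index k."""
    r: Poly = {}
    for e, c in p.items():
        if e[k] > 0:
            r[e[:k] + (e[k] - 1,) + e[k + 1:]] = c * e[k]
    return r

def lie_f(X: list[Poly], f: Poly) -> Poly:
    """L_X f = sum_k X_k * df/dx_k."""
    r: Poly = {}
    for k, Xk in enumerate(X):
        r = add(r, mul(Xk, diff(f, k)))
    return r

def lie_v(X: list[Poly], v: list[Poly]) -> list[Poly]:
    """Assumed convention: (L_X v)_j = sum_k (X_k dv_j/dx_k - v_k dX_j/dx_k) = [X, v]_j."""
    return [add(lie_f(X, vj), lie_f(v, Xj), sign=-1) for vj, Xj in zip(v, X)]

def mono(c: int, *e: int) -> Poly:
    """The monomial c * x^e."""
    return {tuple(e): Fraction(c)}

# Hypothetical data: X = (x1^2, x1 x2), v = (x2, 3 x1^2), f = x1 x2, g = x1 + x2^2
X = [mono(1, 2, 0), mono(1, 1, 1)]
v = [mono(1, 0, 1), mono(3, 2, 0)]
f, g = mono(1, 1, 1), add(mono(1, 1, 0), mono(1, 0, 2))

print(lie_f(X, f))  # {(2, 1): Fraction(2, 1)}, i.e. L_X f = 2 x1^2 x2
print(lie_v(X, v))
```

The checks below confirm the Leibniz rule for functions and the antisymmetry \(L_Xv=-L_vX\) of the assumed commutator convention.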

A non-recursive formula for the operator \(E^{X}_s\) defined by (10) is given by

Lemma 11

Let \(X=\{X_l\}_{l\ge 1}\) be a sequence of vector fields and \(T_X=\sum _{s\ge 0} E^{X}_s\) as in (10). Then for \(s> 0\) we have

If moreover \(X_1=\cdots =X_{r-1}=0\) then we get the same formula with the limitation \(j_1,\ldots ,j_k\ge r\) on the indices \(j\).

Proof

The formula may be written down directly by combinatorial considerations. A proof by induction goes as follows. Since \(s=j_1+\cdots +j_k\), we may write the denominator in the equivalent form \( {s(s-j_k)\cdot \ldots \cdot (s-j_k-\ldots -j_2)}\,\). For \(s=1\), both the formula and the recursive definition (10) give \(E^{X}_1=L_{X_1}\). Assuming the formula holds up to \(s-1\), for \(l=1,\ldots ,s-1\) write the corresponding term in the r.h.s. of (10) as

while for \(l=s\) we have the sole term \(L_{X_s}\). Renaming the index \(l\) as \(j_{k+1}\), the corresponding term \(\frac{l}{s}L_{X_l}\) is included in the sum over the indices \(j_1,\ldots ,j_{k+1}\) with the condition \(j_1+\cdots +j_{k+1}=s\). Writing \(k\) in place of \(k+1\), the first sum runs from \(2\) to \(s\) and becomes \(\sum _{k=2}^{s}\sum _{j_1,\ldots ,j_k}\cdots \), so that only the term \(k=1\) is missing. For \(k=1\) the second sum contains the sole term \(j_1=s\), corresponding to \(L_{X_s}\), which is precisely the term \(l=s\) of (10). Adding it, we recover (10).\(\square \)
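The induction can also be checked mechanically. The sketch below is not part of the original text. It assumes that the recursive definition (10) reads \(E^X_0=\mathrm{Id}\), \(E^X_s=\sum _{l=1}^{s}\frac{l}{s}L_{X_l}E^X_{s-l}\). This form is consistent with the proof above, since it gives \(E^X_1=L_{X_1}\) and the sole term \(L_{X_s}\) for \(l=s\). The operators \(L_{X_l}\) do not commute, so each product is recorded as a word of indices. The coefficients produced by the recursion are then compared with the closed form, in which the word \(L_{X_{j_k}}\cdots L_{X_{j_1}}\) carries the coefficient \(j_1\cdots j_k/\bigl (j_1(j_1+j_2)\cdots (j_1+\cdots +j_k)\bigr )\). This denominator is the equivalent form used in the proof. Setting \(X_l=0\) for \(l<r\) checks the restricted version with \(j_1,\ldots ,j_k\ge r\).

```python
from fractions import Fraction
from math import prod

# A word (l_1, ..., l_k) stands for the operator L_{X_{l_1}} L_{X_{l_2}} ... L_{X_{l_k}}
# (outermost operator first); since the L_{X_l} do not commute, the order matters.
Word = tuple[int, ...]

def recursive_E(smax: int, r: int = 1) -> list[dict[Word, Fraction]]:
    """Assumed form of (10): E_0 = Id, E_s = sum_{l=1}^{s} (l/s) L_{X_l} E_{s-l}, with X_l = 0 for l < r."""
    E: list[dict[Word, Fraction]] = [{(): Fraction(1)}]
    for s in range(1, smax + 1):
        Es: dict[Word, Fraction] = {}
        for l in range(r, s + 1):
            for w, c in E[s - l].items():
                Es[(l,) + w] = Es.get((l,) + w, Fraction(0)) + Fraction(l, s) * c
        E.append(Es)
    return E

def compositions(s: int, r: int = 1):
    """Yield all tuples (j_1, ..., j_k), k >= 0, of integers >= r with sum s."""
    if s == 0:
        yield ()
        return
    for j in range(r, s + 1):
        for rest in compositions(s - j, r):
            yield (j,) + rest

def closed_form_E(s: int, r: int = 1) -> dict[Word, Fraction]:
    """Lemma 11: L_{X_{j_k}} ... L_{X_{j_1}} has coefficient j_1...j_k / (j_1 (j_1+j_2) ... (j_1+...+j_k))."""
    Es: dict[Word, Fraction] = {}
    for j in compositions(s, r):
        partial_sums = [sum(j[:m]) for m in range(1, len(j) + 1)]
        Es[tuple(reversed(j))] = Fraction(prod(j), prod(partial_sums))
    return Es

for r in (1, 2, 3):
    E = recursive_E(10, r)
    for s in range(1, 11):
        assert E[s] == closed_form_E(s, r), (r, s)
print("recursion and closed form agree for s <= 10 and r = 1, 2, 3")
```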

The Lie series and Lie transform are linear operators acting on the space of holomorphic functions and of holomorphic vector fields. They preserve products between functions and commutators between vector fields, i.e., if \(f,\,g\) are functions and \(v,\,w\) are vector fields then one has

Here, replacing \(T_X\) with \(\exp \bigl (L_{X}\bigr )\) gives the corresponding property for Lie series. Moreover, both operators are invertible. The inverse of \(\exp \bigl (L_{X}\bigr )\) is \(\exp \bigl (L_{-X}\bigr )\), which is a natural fact if one recalls the origin of Lie series as a solution of an autonomous system of differential equations.

We now come to a remarkable property that justifies the usefulness of Lie methods in perturbation theory. Following Gröbner, we call it the exchange theorem. Let \(f\) be a function and \(v\) be a vector field. Consider the transformation \(y = T_X x\), i.e., in coordinates,

and denote by \(\mathsf{J}\) its differential, namely, in coordinates, the Jacobian matrix with elements \(J_{j,k} =\frac{\partial y_j}{\partial x_k}\). Then one has

The same statement holds true for a transformation generated by a Lie series.
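A one-dimensional toy computation illustrates two properties in the Lie series case: the inverse \(\exp (L_{-X})\) and the exchange theorem for functions. It is a sketch under stated assumptions. The field \(X=x^2\,\partial /\partial x\) and the test function \(f\) are hypothetical choices. Power series are truncated at a fixed degree \(N\) and use exact rational arithmetic. The truncation is harmless because \(X\) vanishes to second order at the origin, so each application of \(L_X\) raises the degree by one. Here \(\exp (L_X)x=x/(1-x)\) and \(\exp (L_{-X})y=y/(1+y)\), and their composition is the identity.

```python
from fractions import Fraction
from math import factorial

N = 12  # truncation degree: a series is the list of its coefficients c_0, ..., c_N

def series(coeffs: dict[int, int]) -> list[Fraction]:
    """Build a truncated power series from {degree: coefficient}."""
    return [Fraction(coeffs.get(k, 0)) for k in range(N + 1)]

def mul(a: list[Fraction], b: list[Fraction]) -> list[Fraction]:
    """Product of two truncated series, dropping terms of degree > N."""
    c = [Fraction(0)] * (N + 1)
    for i, ai in enumerate(a):
        if ai:
            for j in range(N + 1 - i):
                c[i + j] += ai * b[j]
    return c

def lie_derivative(X, f):
    """L_X f = X(x) f'(x) for the one-dimensional vector field X(x) d/dx."""
    df = [k * f[k] for k in range(1, N + 1)] + [Fraction(0)]
    return mul(X, df)

def lie_series(X, f):
    """exp(L_X) f = sum_s L_X^s f / s!, exact up to degree N when X = O(x^2)."""
    total, term = [Fraction(0)] * (N + 1), list(f)
    for s in range(N + 1):
        total = [t + u / factorial(s) for t, u in zip(total, term)]
        term = lie_derivative(X, term)
    return total

def compose(f, g):
    """f(g(x)) truncated at degree N (Horner scheme); requires g(0) = 0."""
    out = [Fraction(0)] * (N + 1)
    for coeff in reversed(f):
        out = mul(out, g)
        out[0] += coeff
    return out

x = series({1: 1})                      # the coordinate function x
X = series({2: 1})                      # hypothetical toy field X(x) = x^2
minus_X = [-c for c in X]
f = series({0: 1, 1: 3, 3: 1, 5: -2})   # hypothetical test function 1 + 3x + x^3 - 2x^5

y = lie_series(X, x)                    # y = exp(L_X) x = x + x^2 + ... = x / (1 - x)
assert y == series({k: 1 for k in range(1, N + 1)})
assert compose(f, y) == lie_series(X, f)          # exchange theorem: f(y) = exp(L_X) f
assert compose(lie_series(minus_X, x), y) == x    # exp(L_{-X}) inverts the transformation
print("exchange theorem and inverse checked up to degree", N)
```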

The main difference between Lie series and Lie transforms is that a near the identity transformation of the form (2) can be represented via a Lie transform with a suitable sequence of vector fields. This is not true for a single Lie series, but the same result can be achieved by composing Lie series. Again, see [11] for details.

A.2 Analyticity of the compositions of Lie series

We include here some quantitative estimates which lead to the convergence of a near the identity coordinate transformation defined by composition of Lie series. The results here are basically an extension of Chapter 1 of Gröbner’s book [13]. The reader may also find more details in [7], where the Hamiltonian case is treated.

We consider a polydisk of radius \(\rho \) centered at the origin

For a function \(f\) and a vector field \(X\), it is convenient to consider the usual supremum norm

The following lemma states the Cauchy inequality for Lie derivatives in \(\varDelta _{\rho }\).

Lemma 12

For \(0\le \delta ' <\delta <\rho \) the following inequalities apply to Lie derivatives:

and, for \(s\ge 1\),

The proof is a straightforward adaptation of the Cauchy estimates for derivatives of analytic functions and is left to the reader. The proof for the Hamiltonian case in [7] may serve as a guide.
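The basic estimate behind Lemma 12 is the one-variable Cauchy inequality \(|\partial f/\partial z_j|_{\rho -\delta }\le |f|_\rho /\delta \) for the supremum norm on polydisks. The sketch below is illustrative and does not reproduce the lemma itself. It estimates \(|f|_\rho \) by sampling the torus \(|z_1|=\cdots =|z_n|=\rho \), where the maximum modulus principle places the supremum over the closed polydisk, and checks the inequality for a hypothetical polynomial in \({\mathbb {C}}^2\). The number of sample angles \(m\) is an arbitrary choice. Sampling gives a lower bound that converges to \(|f|_\rho \) as \(m\) grows.

```python
import cmath
import itertools
import math

def sup_norm_polydisk(f, rho: float, n: int, m: int = 64) -> float:
    """Approximate |f|_rho, the sup of |f| over the closed polydisk of radius rho in C^n.

    By the maximum modulus principle the supremum is attained on the torus
    |z_1| = ... = |z_n| = rho; sampling m angles per variable gives a lower
    bound that converges to |f|_rho as m grows.
    """
    angles = [2 * math.pi * k / m for k in range(m)]
    best = 0.0
    for theta in itertools.product(angles, repeat=n):
        z = [rho * cmath.exp(1j * t) for t in theta]
        best = max(best, abs(f(*z)))
    return best

def f(z1: complex, z2: complex) -> complex:
    """Hypothetical test function on C^2."""
    return z1 * z2 + 0.5 * z1 ** 2

def df_dz1(z1: complex, z2: complex) -> complex:
    """Its partial derivative with respect to z1."""
    return z2 + z1

rho, delta = 1.0, 0.25
lhs = sup_norm_polydisk(df_dz1, rho - delta, 2)  # |df/dz1|_{rho - delta}
rhs = sup_norm_polydisk(f, rho, 2) / delta       # |f|_rho / delta
print(f"{lhs:.4f} <= {rhs:.4f}")                 # Cauchy estimate
assert lhs <= rhs
```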

The following proposition essentially restates Cauchy’s existence theorem for solutions of differential equations in the analytic case.

Proposition 1

Let \(X\) be an analytic vector field on the interior of \(\varDelta _{\rho }\) and bounded on \(\varDelta _{\rho }\). Then, for every positive \(\delta \le \rho /2\) the following statement holds true: if \(|X|_{\rho }<\delta (e-1)/e^2\) then the map

is bianalytic and satisfies

so that we have

Proof

Using Lemma 12, compute

namely (48). The same estimates apply to the inverse \(\exp \bigl (L_{-X}\bigr )\).\(\square \)

Now let \(\{X_1,\,X_2,\ldots \}\) be a sequence of analytic vector fields. Consider the sequence of transformations \(\{S^{(0)}_X,\,S^{(1)}_X,\,S^{(2)}_X,\ldots \}\) recursively defined as

with inverse

In a formal sense, we may consider the composition of Lie series as the limit

with a similar formal limit for the sequence \(\tilde{S}_X^{(r)}\) giving the inverse \(S^{-1}_X\).

Proposition 2

Let the sequence \(X=\{X_r\}_{r\ge 1}\) of vector fields be analytic in \(\varDelta _{\rho }\). Then for every positive \(\delta <\rho /2\) the following statement holds true: if the convergence condition

is satisfied, then the maps \(S^{(0)}_X,\,S^{(1)}_X,\,S^{(2)}_X,\ldots \) defined by (49) are bianalytic, and the sequence converges to a bianalytic map \(S_X\) satisfying

Proof

Consider the sequence of positive numbers

so that \(\sum _{s\ge 1}\delta _s=\delta \). By definition, we have \(|X_s|_{\rho }< \frac{\delta _s}{2e}\). Since \(\frac{1}{2e}<\frac{e-1}{e^2}\), which is equivalent to \(e>2\), Proposition 1 applies to every vector field \(X_s\,\). Consider also the two sequences

so that we have \(\rho ^-_s\mathop {\longrightarrow }\limits _{s\rightarrow +\infty }\rho -2\delta \) and \(\rho ^+_s\mathop {\longrightarrow }\limits _{s\rightarrow +\infty }\rho \). Applying Proposition 1, we see that the map \(S^{(1)}\) is analytic and satisfies \(\varDelta _{\rho ^-_1}\subset S^{(1)}\varDelta _{\rho _0}\subset \varDelta _{\rho ^+_1}\). Its inverse \(\tilde{S}^{(1)}\) is also analytic and satisfies \(\varDelta _{\rho ^-_1}\subset \tilde{S}^{(1)}\varDelta _{\rho _0}\subset \varDelta _{\rho ^+_1}\,\). Proceeding by induction, it is straightforward to check that the same claim holds true for \(S^{(r)}\) and \(\tilde{S}^{(r)}\) for every \(r> 1\), with \(\rho ^-_1\) and \(\rho ^+_1\) replaced by \(\rho ^-_r\) and \(\rho ^+_r\), respectively. Moreover, the inequalities \(\bigl |S^{(r)}x-S^{(r-1)}x\bigr |< \delta _r\) and \(\bigl |\tilde{S}^{(r)}x-\tilde{S}^{(r-1)}x\bigr |< \delta _r\) hold true. Since \(\sum _{r\ge 1}\delta _r=\delta <\infty \), the sequences \(S^{(r)}\) and \(\tilde{S}^{(r)}\) of maps are uniformly convergent in any compact subset of \(\varDelta _{\rho _0}=\varDelta _{\rho -\delta }\). By Weierstrass’ theorem, the limits \(S_X\) and \(S^{-1}_X\) exist and are analytic maps satisfying (53).\(\square \)
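A toy example, not taken from the paper, shows the mechanism of this proof in one dimension. Take the hypothetical fields \(X_r=c_rx^2\,\partial /\partial x\) with \(c_r=0.4\cdot 2^{-r}\). The time-one map of each field is \(x\mapsto x/(1-c_rx)\). These fields commute, so the order of composition is irrelevant and \(S^{(r)}x=x/\bigl (1-(c_1+\cdots +c_r)x\bigr )\). Since \(|X_r|_\rho =c_r\rho ^2\) is summable, this summability plays the role of the convergence condition. The increments \(|S^{(r)}x-S^{(r-1)}x|\), sampled on \(|x|=1\), decay geometrically, and the limit is the analytic map \(x/(1-Cx)\) with \(C=\sum _rc_r\).

```python
import cmath
import math

def time_one_map(c: float, x: complex) -> complex:
    """exp(L_X) x for the toy field X = c x^2 d/dx: the flow of x' = c x^2 at t = 1."""
    return x / (1 - c * x)

coeffs = [0.4 * 0.5 ** r for r in range(1, 41)]                 # hypothetical c_r = 0.4 * 2^{-r}
points = [cmath.exp(2j * math.pi * k / 32) for k in range(32)]  # samples of the circle |x| = 1

S = list(points)   # S^{(0)} = identity
increments = []    # max over samples of |S^{(r)} x - S^{(r-1)} x|
for c in coeffs:
    S_next = [time_one_map(c, s) for s in S]
    increments.append(max(abs(a - b) for a, b in zip(S_next, S)))
    S = S_next

C = sum(coeffs)    # commuting fields: S^{(r)} x = x / (1 - (c_1 + ... + c_r) x)
limit = [x / (1 - C * x) for x in points]
error = max(abs(a - b) for a, b in zip(S, limit))
print(f"distance to closed-form limit: {error:.1e}")
print("first increments:", [f"{d:.3g}" for d in increments[:4]])
```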

A.3 Estimate of the sequence (37)

We introduce a parameter \(m\ge 0\), which is arbitrary but fixed, and rewrite the sequence (37) as

with \(s\ge kr\) and \(1\le k\le \lfloor s/r\rfloor \). With a straightforward calculation, we get the uniform estimate

which holds true because \(j_1,\ldots ,j_k\ge r\). Thus, we get

Writing \(j_1=r+q_1,\ldots ,j_k=r+q_k\), the number of terms in the sum is equal to the number of non-negative integer vectors \(q=(q_1,\ldots ,q_k)\) with \(|q|= s-kr\), which is known to be \(\binom{s-kr+k-1}{k-1}\).

We conclude
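The counting step rests on the classical stars-and-bars formula. The number of vectors \((q_1,\ldots ,q_k)\) of non-negative integers with \(|q|=s-kr\) is \(\binom{s-kr+k-1}{k-1}\). The sketch below is only a sanity check over a small illustrative range of \(s\), \(k\) and \(r\). It compares this formula with a brute-force count of the tuples \((j_1,\ldots ,j_k)\) with \(j_i\ge r\) and \(j_1+\cdots +j_k=s\), for \(1\le k\le \lfloor s/r\rfloor \) as in the text.

```python
import itertools
from math import comb

def count_brute_force(s: int, k: int, r: int) -> int:
    """Number of tuples (j_1, ..., j_k) with every j_i >= r and j_1 + ... + j_k = s."""
    top = s - (k - 1) * r  # largest value a single j_i can take
    return sum(1 for j in itertools.product(range(r, top + 1), repeat=k) if sum(j) == s)

def count_formula(s: int, k: int, r: int) -> int:
    """Stars and bars with q_i = j_i - r >= 0 and |q| = s - k r."""
    return comb(s - k * r + k - 1, k - 1)

for r in (1, 2, 3):
    for s in range(1, 11):
        for k in range(1, s // r + 1):  # 1 <= k <= floor(s / r), as in the text
            assert count_brute_force(s, k, r) == count_formula(s, k, r)
print("counts agree for s <= 10 and r = 1, 2, 3")
```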

Cite this article

Giorgilli, A., Locatelli, U. & Sansottera, M. Improved convergence estimates for the Schröder–Siegel problem. Annali di Matematica 194, 995–1013 (2015). https://doi.org/10.1007/s10231-014-0408-4
