Abstract

We discuss differential equations depending non-smoothly on the integration time of the form

where \(n\in \mathbb N , n>0\), and \(F, \sigma \) are piecewise-\({\fancyscript{C}}^{\infty }\) periodic functions. The main results deal with the existence of periodic solutions of such equations as well as their computation by explicit formulas. No infinite series appear, and it is indeed established that these periodic solutions are explicitly computable by means of finitely many Euler polynomials. We also introduce a wider class of piecewise-\({\fancyscript{C}}^{\infty }\) equations where the problem of finding periodic solutions is finitely solvable as well.

1 Introduction

1.1 Non-smooth differential equations

A modern approach to differential equations with discontinuous right-hand sides has arisen from applications (see [1]), and an early theoretical framework for their study can be found in [2], later enhanced by the formulation of [9]. Such equations are increasingly used to describe a large variety of physical phenomena (refer to [20, 21]). Mathematically, these systems are often described by sets of piecewise-smooth ordinary differential equations whose phase space is partitioned by a set of switching manifolds into different regions (see [4, 11, 12]). They commonly correspond to real electrical models, even in the presence of quite complicated singularities (see [5, 15]).

We start our analysis from a very simple model, which will turn out to be an instance of a distinguished class of differential equations.

Let us consider an automatic train that commutes between two stations and changes its direction at given times. We assume that the absolute value of its acceleration is constant, its sign switching whenever the train changes direction. We wish to identify conditions under which the train behaves periodically.

We assume that the variable \(y\) indicates the position of the train (algebraic distance from a given origin) and \(t\) the time and that the switching times are given by the zeros of a periodic function \(t\mapsto \sigma (t)\). Without loss of generality, we assume that \(\sigma \) is a piecewise-\({\fancyscript{C}}^{\infty }\) function, \(2\,\tau \)-periodic (\(\tau \in \mathbb R , \tau >0\)), such that \(\sigma (0)=0, \sigma (t)>0\) on \(]0,\tau [\) and \(\sigma (t)<0\) on \(]-\tau ,0[\).

Let us also introduce the sign function \(t\mapsto \mathop {\text{ sgn}}\nolimits (t)\) :

It is worth remarking that \(\mathop {\text{ sgn}}\nolimits \) models various electronic devices such as a physical relay, or corresponds to the output of a flip-flop device (bistable switch). Relays and bistable switches are very common in electronic items (for instance, digital clocks). We mention that a large class of autonomous systems involving \(\mathop {\text{ sgn}}\nolimits \), introduced in [2] as relay systems, has been studied. Finding conditions for the existence of periodic solutions is an important issue, since periodic solutions are time-persistent phenomena. Another challenge is to give explicit formulas or algorithms to compute these solutions (see [10, 12, 13, 16]). The dynamics of non-autonomous equations is in general much more complex, especially when the system is forced by an external excitation (see the case of a simple model of a ship autopilot in [14]).

Returning to our setup, the problem of the train mentioned above corresponds, up to constants, to the non-autonomous second order equation

$$\begin{aligned} y^{\prime \prime }=\mathrm{sgn}\big (\sigma (t)\big ). \end{aligned}$$

For such a simple second order equation, the solutions can be found readily, just by computing some integration constants. It then appears (see Sect. 2.5) that periodic solutions correspond to a specific velocity at time \(0\), and that there is only one periodic solution once the position at the origin of time is fixed.

Our main goal in what follows, however, is to show, by examining order \(n\) equations, that this type of equation actually belongs to a larger class of non-smooth differential equations whose periodic solutions are given explicitly. Such equations may of course be treated by means of Fourier transforms, but the issue here is to obtain explicit formulas expressing the solutions exactly with a finite number of terms.

1.2 From smooth to non-smooth order \(n\) equations

In many applications (in electronics, for instance), a smooth parameter of a differential equation can easily be replaced by a non-smooth one, simply by using semiconductors. Mathematically, the effect is to jump from smooth dynamical systems theory to non-smooth dynamical systems theory, and the methods change drastically.

A large part of the dynamical systems theory linked to applications is concerned with periodicity: differential equations involving periodic excitations or periodic coefficient functions (refer for instance to [18]) and of course periodic solutions.

So let \(F\) be a \({\fancyscript{C}}^{\infty }\), \(2\,\tau _{F}\)-periodic function (where \(\tau _{F}\in \mathbb R , \tau _{F}>0\)) and let \(n\) be a strictly positive integer. Let us remark that the solutions of a smooth differential equation of the type

$$\begin{aligned} y^{(n)}=F(t) \end{aligned}$$

are expressed by:

$$\begin{aligned} y(t)=\varTheta _F^{[n]}(t)+P_{n-1}(t), \end{aligned}$$

where \(\varTheta _F^{[n]}\) is the \(n\)-primitive of \(F\) vanishing at \(0\) defined in Sect. 2.3, and \(P_{n-1}\) is an arbitrary polynomial of degree at most \(n-1\). Moreover, \(\varTheta _F^{[n]}\) is periodic (of period \(2\,\tau _{F}\)) if and only if the average value of \(F\)

is equal to \(0\) (see Lemma 9).

Under this hypothesis, there is only one periodic solution vanishing at the origin, namely \(\varTheta _F^{[n]}\). In general, however, computing \(\varTheta _F^{[n]}\) algorithmically, by means of a finite number of expressions, is an intractable problem. Two natural questions arise. What happens if \(F\) is no longer \({\fancyscript{C}}^{\infty }\)? Are periodic solutions then given by explicit formulas?

In our approach, we start from non-smooth differential equations which are piecewise-autonomous: the switches cut the time axis into subintervals, and between two switches one has to deal with an autonomous equation. After solving the problem for these basic differential equations, we perturb them by a general periodic function, thus leaving the piecewise-autonomous world. After analyzing the effects of such perturbations, we exhibit a general class of equations for which the periodic solutions can be found explicitly.

1.3 Differential equations involving sawtooth functions

We should remark that, in the model proposed above, the regularity of the periodic function \(\sigma \) is not an issue: we only use the fact that its sign alternates from one half-period to the next.

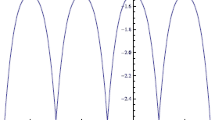

It is convenient to introduce the sawtooth function \(\mathop {\text{ sw}}\nolimits \), whose graph is given in Fig. 1. It is defined as follows. If \(t\in \mathbb N \), we put \(\mathop {\text{ sw}}\nolimits (t)=0\). If \(t> 0, t\notin \mathbb N \), there exists a unique \(m\in \mathbb N \) such that \(m< t <m+1\). We then define \(\mathop {\text{ sw}}\nolimits (t)=t-m\) if \(m\) is even, and \(\mathop {\text{ sw}}\nolimits (t)=t-m-1\) if \(m\) is odd. If \(t<0\), we put \(\mathop {\text{ sw}}\nolimits (t)=-\mathop {\text{ sw}}\nolimits (-t)\).

Let us denote by \(O(\mathbb Z )\) the set of odd integers, and by \(\mathop {\text{ frac}}\nolimits \) the fractional part function (with our notation, when \(t>0, t\notin \mathbb N , \mathop {\text{ frac}}\nolimits (t)=t-m\)).

The function \(\mathop {\text{ sw}}\nolimits \) is 2-periodic and coincides with the identity on \(]-1,1[\). It is discontinuous at the points of \(O(\mathbb Z )\), and for all \(t\in \mathbb R \setminus O(\mathbb Z )\), we have

Observe that in electronics such a function can be produced from the periodic discharge of a capacitor.
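The case analysis defining \(\mathop {\text{ sw}}\nolimits \) translates directly into code. The Python sketch below is only an illustration: the helper names `sgn` and `sw` are hypothetical, and the convention \(\mathop {\text{ sgn}}\nolimits (0)=0\) is an assumption, since the definition of \(\mathop {\text{ sgn}}\nolimits \) is not reproduced here. It also evaluates the switching signal \(t\mapsto \mathop {\text{ sgn}}\nolimits \big (\mathop {\text{ sw}}\nolimits (t\,\tau ^{-1})\big )\) for an arbitrary illustrative value of \(\tau \).

```python
import math

# Hypothetical helper names; this is an illustration, not code from the paper.


def sgn(t):
    """Sign function; the value sgn(0) = 0 is an assumed convention."""
    return (t > 0) - (t < 0)


def sw(t):
    """Sawtooth function: 2-periodic, identity on ]-1, 1[, zero at integers."""
    if t < 0:
        return -sw(-t)          # odd extension: sw(t) = -sw(-t)
    m = math.floor(t)
    if t == m:                  # t is a natural number
        return 0.0
    # Here m < t < m + 1: subtract m or m + 1 depending on the parity of m
    return t - m if m % 2 == 0 else t - m - 1


tau = 2.0  # illustrative half-period
switching_signal = [sgn(sw(t / tau)) for t in (0.5, 1.5, 2.5, 3.5, 4.5)]
# switching_signal == [1, 1, -1, -1, 1]: +1 on ]0, tau[, -1 on ]tau, 2 tau[
```

The recursion for negative arguments mirrors the odd extension in the definition, so the code stays a literal transcription rather than a closed-form shortcut.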

In all that follows, the notation \(\tau \) shall represent a strictly positive real, and our basic \(2\,\tau \)-periodic function \(\sigma \) will simply be \(t\mapsto \mathop {\text{ sw}}\nolimits (t\,\tau ^{-1})\).

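The basic equation from which we start is a discontinuous equation of order \(n\):

$$\begin{aligned} y^{(n)}-\eta \,\mathrm{sgn}\big (\mathrm{sw}(t\,\tau ^{-1})\big )=0, \end{aligned}\tag{1}$$

where \(\eta \) is a nonzero real constant.
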
Notice that the function \(t\mapsto \mathop {\text{ sgn}}\nolimits \big (\mathop {\text{ sw}}\nolimits (t\,\tau ^{-1})\big )\) is piecewise-constant. So, on each interval on which \(\mathop {\text{ sgn}}\nolimits \big (\mathop {\text{ sw}}\nolimits (t\,\tau ^{-1})\big )\) has a constant value, Eq. (1) appears as an autonomous differential equation.

We are interested in solutions of maximal regularity, namely solutions which are \({\fancyscript{C}}^{n-1}\) at the points of a countable set (including the origin) and \({\fancyscript{C}}^{\infty }\) everywhere else, in particular on a dense set. Observe that at any point \(t_0\) there are only two possibilities: either the solution is not \({\fancyscript{C}}^n\) at \(t_0\), or it is \({\fancyscript{C}}^{\infty }\) there.

1.4 Euler polynomials

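We introduce here the Euler polynomials, which will appear in the resolution of our problem. For all \(n\in \mathbb N \), the Euler polynomial \(E_n\) is the degree \(n\) polynomial defined by the generating function:

$$\begin{aligned} \frac{2\,e^{t\,x}}{e^{x}+1}=\sum _{n=0}^{\infty }E_n(t)\,\frac{x^n}{n!}. \end{aligned}$$
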
For instance, \(E_0(t)=1, E_1(t)=t-\frac{1}{2}, E_2(t)=t^2-t\).
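As a quick check of these examples, the Euler polynomials can be generated with exact rational arithmetic. This sketch is not taken from the paper: it uses the recurrence \(E_n(t)=t^n-\frac{1}{2}\sum _{k<n}\binom{n}{k}E_k(t)\), which follows from items (a) and (c) of Lemma 3 (Sect. 3.2), since item (a) gives the Taylor expansion \(E_n(t+1)=\sum _{k\le n}\binom{n}{k}E_k(t)\). The function names are hypothetical.

```python
from fractions import Fraction
from math import comb

# Hypothetical helper names; a sketch, not the authors' implementation.


def euler_polynomials(n_max):
    """Return [E_0, ..., E_{n_max}] as coefficient lists, constant term first.

    Recurrence (from E_n(t + 1) + E_n(t) = 2 t^n and the Taylor expansion
    E_n(t + 1) = sum_k C(n, k) E_k(t)):
        E_n(t) = t^n - 1/2 * sum_{k < n} C(n, k) E_k(t).
    """
    polys = []
    for n in range(n_max + 1):
        coeffs = [Fraction(0)] * (n + 1)
        coeffs[n] = Fraction(1)                 # leading term t^n
        for k in range(n):
            weight = Fraction(comb(n, k), 2)
            for i, c in enumerate(polys[k]):
                coeffs[i] -= weight * c
        polys.append(coeffs)
    return polys


def evaluate(coeffs, t):
    """Horner evaluation of a coefficient list (constant term first) at t."""
    result = Fraction(0)
    for c in reversed(coeffs):
        result = result * t + c
    return result


E = euler_polynomials(4)
# E[1] == [-1/2, 1] and E[2] == [0, -1, 1], i.e. E_1(t) = t - 1/2, E_2(t) = t^2 - t
```

Exact fractions avoid any rounding, so identities such as \(E_n(t+1)+E_n(t)=2\,t^n\) can be compared with `==` at rational points.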

These Euler polynomials have been extensively studied, mainly in number theory. We refer the reader to [3, 6–8] and [17] (Chapter VI) for details concerning this subject. Some of their properties are recalled in Sect. 3.2. In the present work, these polynomials will be generalized to piecewise-polynomial functions (see Sects. 2.2 and 3.2).

1.5 Finite formulas expressing periodic solutions and final remarks

As previously described, this work deals with the periodic solutions of piecewise-\({\fancyscript{C}}^{\infty }\) differential equations. We show below that, up to normalization, the periodic solutions of our order \(n\) equations are expressed very directly in terms of the Euler polynomial of degree \(n\).

The interest of this formulation in terms of Euler polynomials is twofold. On the one hand, although Euler polynomials are well known and have been extensively studied, this is the first time that they appear in non-smooth dynamical systems theory. On the other hand, they allow us to write our solutions explicitly, without the numerical approximation that truncating an infinite series would entail. In this sense, this approach is conceptually different from the classical one based on Fourier series theory.

A closer look at this first result reveals the need for a new class of piecewise-\({\fancyscript{C}}^{\infty }\) periodic functions, the periodic switched Euler polynomials, which are themselves built from Euler polynomials. This new class of functions leads naturally to a generalized class of differential equations for which the periodic solutions can be found and expressed with finitely many terms.

Observe that we give a characterization of the periodic solutions either by means of an explicit formula (see Theorem 1) or by means of the initial conditions in order to compute a periodic solution by numerical integration (see Corollary 1).

The paper is organized in the following way. In Sect. 2, we introduce the periodic switched Euler polynomials and present the main results. We state and prove several lemmas in Sect. 3, and finally, we prove our main results in Sect. 4.

2 Main results

2.1 Basic free equation

We state and discuss the major results which will be proved in Sect. 4. We begin our study by proving that the basic Eq. (1) has periodic solutions and that these periodic solutions have an explicit form expressed in terms of the Euler polynomial of degree \(n\). Moreover, if the value at one point is fixed, then the periodic solution is unique.

Theorem 1

For all \(y_0\in \mathbb R \), there exists a unique \(2\,\tau \)-periodic solution of class \({\fancyscript{C}}^{n-1}\) of Eq. (1) such that \(y(0)=y_0\). Furthermore, for all \(t\in ]-\tau ,\tau [\), this solution is given by

where \(E_n\) is the Euler polynomial of degree \(n\).

This characterization of the solution in terms of Euler polynomials is important: these polynomials occur in number theory, and a great deal is known about their behavior.

Since the restrictions of the solutions to \([0,\tau [\) (resp. \(]-\tau ,0]\)) are uniquely determined by the values at \(0\) of \((y^{(i)}, i\in [0,n-1])\), we get (by means of a recursive application of item (a), Lemma 3) the following corollary.

Corollary 1

For all \(y_0\in \mathbb R \), there exists a unique \(2\,\tau \)-periodic solution of class \({\fancyscript{C}^{n-1}}\) of Eq. (1) such that \(y(0)=y_0\). Furthermore, this solution is such that

This corollary is useful if one wants to compute the periodic solution directly by numerical integration.
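Corollary 1 can be turned into an exact check. In the sketch below (our own illustration, not the authors' code, with hypothetical function names) we assume that Eq. (1) reads \(y^{(n)}=\eta \,\mathop {\text{ sgn}}\nolimits \big (\mathop {\text{ sw}}\nolimits (t\,\tau ^{-1})\big )\), as the change of variable (6) of Lemma 1 indicates, and that the initial conditions of the periodic solution are \(y^{(i)}(0)=\eta \,\tau ^{n-i}\,E_{n-i}(0)/(n-i)!\) for \(i\in [1,n-1]\), which is what Lemmas 1 and 7, together with item (a) of Lemma 3, give in our reading. Since the right-hand side is constant on each half-period, a Taylor polynomial integrates the equation exactly, so one period can be followed with rational arithmetic.

```python
from fractions import Fraction
from math import comb, factorial

# Hypothetical helper names. Assumption (our reading): Eq. (1) is
# y^(n) = eta * sgn(sw(t / tau)) and the periodic initial data are
# y^(i)(0) = eta * tau^(n-i) * E_{n-i}(0) / (n-i)!  for 1 <= i <= n-1.


def euler_at_zero(n_max):
    """Values E_m(0), m = 0..n_max, via E_m(0) = 0^m - 1/2 * sum_{k<m} C(m, k) E_k(0)."""
    values = []
    for m in range(n_max + 1):
        value = Fraction(1 if m == 0 else 0)
        for k in range(m):
            value -= Fraction(comb(m, k), 2) * values[k]
        values.append(value)
    return values


def periodic_initial_conditions(n, eta, tau, y0=0):
    """(y(0), y'(0), ..., y^(n-1)(0)) of the periodic solution, in our reading of Corollary 1."""
    e0 = euler_at_zero(n)
    derivs = [Fraction(y0)]
    for i in range(1, n):
        derivs.append(Fraction(eta) * Fraction(tau) ** (n - i) * e0[n - i] / factorial(n - i))
    return derivs


def step_constant_rhs(derivs, c, h):
    """Exact update of (y, ..., y^(n-1)) over a step of length h when y^(n) = c is constant."""
    c, h = Fraction(c), Fraction(h)
    n = len(derivs)
    new = []
    for i in range(n):
        value = sum(derivs[k] * h ** (k - i) / factorial(k - i) for k in range(i, n))
        value += c * h ** (n - i) / factorial(n - i)
        new.append(value)
    return new


def one_period(n, eta, tau, y0=0):
    """Follow the solution of y^(n) = eta * sgn(sw(t / tau)) from t = 0 to t = 2 tau."""
    start = periodic_initial_conditions(n, eta, tau, y0)
    middle = step_constant_rhs(start, eta, tau)   # sgn(sw(t / tau)) = +1 on ]0, tau[
    end = step_constant_rhs(middle, -eta, tau)    # sgn(sw(t / tau)) = -1 on ]tau, 2 tau[
    return start, end


start, end = one_period(n=5, eta=3, tau=Fraction(1, 2))
assert start == end   # the initial data are reproduced after one period
```

Perturbing any of these initial derivatives breaks the equality after one period, which is consistent with the uniqueness statement of Corollary 1.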

2.2 Periodic switched Euler polynomials

For all \(n\in \mathbb N \), let us introduce (see Sect. 3.2 for more details) the non-smooth function \(\psi _n\) such that, for all \(t\in \mathbb R \),

The restriction of \(\psi _n\) to \([0,+\infty [\) (resp. \(]-\infty ,0]\)) is a polynomial function. We call \(\psi _n\) a switched Euler polynomial of degree \(n\). The graphs of the restrictions of \(\psi _n\) to \([-1,1]\), for \(n\) up to \(5\), are shown in Fig. 2. Observe that \(\psi _0=\mathop {\text{ sgn}}\nolimits \) and that, for \(n>0\), \(\psi _n\) is \({\fancyscript{C}}^{n-1}\) at \(0\) (see Lemma 5) but not \({\fancyscript{C}}^{n}\) at \(0\). Our aim is to construct from \(\psi _n\) a \(2\)-periodic piecewise-\({\fancyscript{C}}^{\infty }\) function of maximal regularity.

From Lemma 8, provided \(\tau =1\) and \(n>0\), the periodic solution of Eq. (1) vanishing at \(0\), given in Theorem 1, is defined for all \(t\in \mathbb R \setminus O(\mathbb Z )\) by

The function \(\psi _n\circ \,\mathop {\text{ sw}}\nolimits \) may be discontinuous at the points of \(O(\mathbb Z )\), since \(\mathop {\text{ sw}}\nolimits \) is involved. However, by Lemma 8, there exists a unique function \(\psi _n^{*}\) which is \({\fancyscript{C}}^{n-1}\) at the points of \(\mathbb Z \), \({\fancyscript{C}}^\infty \) everywhere else, and coincides with \(\psi _n\circ \,\mathop {\text{ sw}}\nolimits \) on \(\mathbb R \setminus O(\mathbb Z )\).

We call these functions \(\psi _n^{*}\) periodic switched Euler polynomials; they play a central role in our study. Note that, by convention, we also set \(\psi _0^{*}=\psi _0\circ \,\mathop {\text{ sw}}\nolimits \), which remains discontinuous at the points of \(\mathbb Z \).

2.3 Forced equations

Because of their physical meaning, free differential equations are very often forced by a periodic term in the right-hand side (see for instance [21]). Our next goal is therefore the study of some deformations of our basic equation (1) of arbitrary order. Furthermore, we assume that these deformations are only piecewise-\({\fancyscript{C}}^\infty \). So we consider equations of the form:

$$\begin{aligned} y^{(n)}-\eta \,\mathrm{sgn}\big (\mathrm{sw}(t\,\tau ^{-1})\big )=F(t), \end{aligned}\tag{2}$$

where \(n\in \mathbb N , n> 0, F : \mathbb R \rightarrow \mathbb R \) is piecewise-\({\fancyscript{C}}^\infty , 2\,\tau _{F}\)-periodic (\(\tau _{F}\in \mathbb R , \tau _{F}>0\)) with null average value.

We define the \(n\)-primitive of \(F\) (vanishing at \(0\)) in the following way:

Note that a non-recursive expression of \(\varTheta _{F}^{[n]}\) is:

and that the \(n\)th derivative of \(\varTheta _{F}^{[n]}\) is exactly \(F\).

Let us recall that we assume that \(F\) has null average value, so that \(\varTheta _{F}^{[n]}\) remains periodic (see Lemma 9).

The following result states that the forced equation has periodic solutions exactly when \(\tau \) is an integer multiple of the half-period \(\tau _F\) of \(F\).

Theorem 2

Equation (2) has periodic solutions if and only if the half-periods \(\tau _F\) of \(F\) and \(\tau \) satisfy \(\tau _{F}^{-1}\,\tau \in \mathbb N \).

In that case, for all \(y_0\in \mathbb R \), Eq. (2) has a unique periodic solution of class \({\fancyscript{C}}^{n-1}\) such that \(y(0)=y_0\). Furthermore, this solution is given, for all \(t\in \mathbb R \), by

A consequence is the existence of a countable family of periodic solutions vanishing at \(0\). Observe that the only required condition is \(\tau _F^{-1}\,\tau \in \mathbb N \), which is completely different from the case studied in [14], where such a condition is necessary but not sufficient.

Let us denote by \(\mathbf{i }\) the complex number such that \(\mathbf{i }^2=-1\). When \(F\) has a finite Fourier series, we get an explicit and computable formula for the solution, as described in the next corollary:

Corollary 2

Provided that \(F\) has a finite Fourier series of the form

and that \(\beta \,\tau \in \mathbb N \), then for all \(y_0\in \mathbb R \), Eq. (2) has a unique periodic solution of class \({\fancyscript{C}}^{n-1}\) such that \(y(0)=y_0\). Furthermore, this solution is given, for all \(t\in \mathbb R \), by

We illustrate in Figs. 6, 7, and 8 the periodic solutions of \(y^{\prime \prime }=\mathop {\text{ sgn}}\nolimits \big (\mathop {\text{ sw}}\nolimits (t)\big )+\alpha \,\cos (\pi \,\beta \,t)\) vanishing at \(0\) and their corresponding phase portraits, for different values of \((\alpha ,\beta )\).
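Such solutions can also be approximated by ordinary numerical integration. The sketch below (ours, with hypothetical function names) integrates \(y^{\prime \prime }=\mathop {\text{ sgn}}\nolimits \big (\mathop {\text{ sw}}\nolimits (t)\big )+\alpha \,\cos (\pi \,\beta \,t)\) with the classical fourth-order Runge-Kutta scheme, restarting at every integer so that the jump of the sign term never falls inside a step. The initial slope \(y^{\prime }(0)=-\frac{1}{2}\) is an assumption on our part: it is the value we derive for \(\tau =1\), unit coefficient and a second primitive of \(F\) whose derivative vanishes at \(0\). With integer \(\beta \) the state at \(t=2\) should then return to \((0,-\frac{1}{2})\) up to discretization error.

```python
import math

# Hypothetical helper names. Assumption: y(0) = 0, y'(0) = -1/2 (our derivation
# for tau = 1 and unit coefficient in front of sgn(sw(t))).


def rk4_interval(y, v, t0, length, sign, alpha, beta, steps=1000):
    """Classical RK4 for y'' = sign + alpha*cos(pi*beta*t) on [t0, t0 + length].

    'sign' is the constant value of sgn(sw(t)) on the open interval, so the
    discontinuity of the forcing is never crossed inside a step.
    """
    h = length / steps

    def accel(t):
        return sign + alpha * math.cos(math.pi * beta * t)

    for j in range(steps):
        t = t0 + j * h
        # first-order system: y' = v, v' = accel(t)
        k1y, k1v = v, accel(t)
        k2y, k2v = v + 0.5 * h * k1v, accel(t + 0.5 * h)
        k3y, k3v = v + 0.5 * h * k2v, accel(t + 0.5 * h)
        k4y, k4v = v + h * k3v, accel(t + h)
        y += h / 6 * (k1y + 2 * k2y + 2 * k3y + k4y)
        v += h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
    return y, v


def sample_orbit(alpha, beta, y0=0.0, v0=-0.5, periods=1):
    """Return the states (y, y') at t = 0, 1, 2, ..., 2 * periods."""
    points = [(y0, v0)]
    y, v = y0, v0
    for k in range(2 * periods):
        sign = 1 if k % 2 == 0 else -1          # sgn(sw(t)) on ]k, k + 1[
        y, v = rk4_interval(y, v, float(k), 1.0, sign, alpha, beta)
        points.append((y, v))
    return points


points = sample_orbit(alpha=0.7, beta=3)
y_end, v_end = points[-1]
# For integer beta the orbit closes: (y_end, v_end) is close to (0.0, -0.5)
```

With a non-integer value such as \(\beta =\frac{1}{2}\), the same initial data do not return to their starting point after time \(2\), in line with the condition \(\beta \,\tau \in \mathbb N \) of Corollary 2.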

2.4 Equations involving periodic switched Euler polynomials

The solutions of Eq. (2) are built in a simple manner from the Euler polynomials. Moreover, Euler polynomials have very particular differential properties: by item (a) of Lemma 3, the derivative of an Euler polynomial is, up to a constant factor, again an Euler polynomial, so that their set is closed under differentiation up to constant factors. This leads us to consider a distinguished class of non-smooth differential equations for which the problem of finding periodic solutions is solvable.

Let us study now differential equations of the form:

where \(F\) is \(2\,\tau _F\)-periodic, with null average value.

Observe that the case \(F=0\) and \(k=0\) corresponds to Eq. (1), already treated in Theorem 1. The following theorem generalizes this previous result.

Theorem 3

Provided \(\tau _F^{-1}\,\tau \in \mathbb N \), for all \(y_0\in \mathbb R \), Eq. (3) has a unique periodic solution of class \({\fancyscript{C}^{n+k-1}}\) such that \(y(0)=y_0\). Furthermore, this solution is given, for all \(t\in \mathbb R \), by

Some examples are represented in Figs. 3 and 4.

Observe that the functions \(F\) such that \(F(t)=\psi _l^{*}\big (t\,\tau ^{-1}\big ), l\in \mathbb N \), are themselves piecewise-\({\fancyscript{C}}^{\infty }\) and periodic. Moreover, the average value of a periodic switched Euler polynomial is zero (see Lemma 10). So we can consider differential equations which involve exclusively periodic switched Euler polynomials:

Moreover, the \(n\)-primitive of a switched Euler polynomial is itself a switched Euler polynomial (see Lemma 8). By induction on the number of switched polynomials, we get:

Corollary 3

Equation (4) has a unique periodic solution of class \({\fancyscript{C}^{n+k_0-1}}\) such that \(y(0)=0\), given by:

2.5 The particular case of a second order equation

Second order equations are the most frequent in applications, due to their physical meaning. In that case, it is also possible to draw phase portraits, which are very useful for a complete understanding of the periodic solutions.

Let us consider a \(2\,\tau _{F}\)-periodic function \(F\), of null average value, and the following equation:

By Theorem 2, Eq. (5) has periodic solutions if and only if the half-periods \(\tau _F\) of \(F\) and \(\tau \) are such that \(\tau _{F}^{-1}\,\tau \in \mathbb N \). Then, for all \(y_0\in \mathbb R \), Eq. (5) has a unique periodic solution \(y\) such that \(y(0)=y_0\). It is defined outside \(\tau \,O(\mathbb Z )\) by

Provided that \(y(0)\) is fixed, this unique solution is also determined by the initial conditions:

The solutions of different equations are illustrated in Figs. 5, 6, 7 and 8.

If \(F=0, y_0=0\), the solution of Eq. (5) and its derivative are given for all \(t\in ]-\tau ,\tau [\) respectively, by:

Provided that \(\varepsilon \ne 0, t\) can be eliminated from the second equation, and we get

which is another description of the periodic solution of Eq. (5) (when \(F=0\)) such that \(y(0)=0\). This implies that \((y,y^{\prime })\) belongs to the semi-algebraic set

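In the simplest normalization (our choice, not necessarily the constants used in Eq. (5)), namely \(\tau =1\), unit coefficient in front of \(\mathop {\text{ sgn}}\nolimits \) and \(F=0\), Lemmas 1 and 7 give, in our reading, \(y(t)=\frac{1}{2}\,\psi _2(t)\) on \(]-1,1[\), that is \(y(t)=\mathop {\text{ sgn}}\nolimits (t)\,(t^2-|t|)/2\) and \(y^{\prime }(t)=|t|-\frac{1}{2}\). Eliminating \(t\) gives \(2\,|y|+y^{\prime 2}=\frac{1}{4}\) with \(|y^{\prime }|\le \frac{1}{2}\), a closed curve made of two parabolic arcs. The short Python check below verifies this relation exactly on rational sample points; the function name is hypothetical.

```python
from fractions import Fraction

# Hypothetical helper name; normalized case tau = 1, unit coefficient, F = 0 (our assumption).


def periodic_state(t):
    """(y(t), y'(t)) for y'' = sgn(sw(t)), y(0) = 0, on ]-1, 1[."""
    a = abs(t)
    s = (t > 0) - (t < 0)
    y = s * (t * t - a) / 2     # E_2(t)/2 for t > 0, -E_2(-t)/2 for t < 0
    dy = a - Fraction(1, 2)
    return y, dy


samples = [Fraction(k, 20) for k in range(-19, 20)]
states = [periodic_state(t) for t in samples]
invariant = {2 * abs(y) + dy * dy for y, dy in states}
# invariant == {Fraction(1, 4)}: every sampled state lies on 2|y| + y'^2 = 1/4
```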
3 Conditions for the existence of periodic solutions

In this section, we present several lemmas which will be used for proving our main results.

3.1 Reduction and resolution of the basic equation

As said in the introduction, we start the discussion from Eq. (1), and we search for solutions on the interval \(]-\tau ,\tau [\). We reformulate the problem by means of the following lemma.

Lemma 1

(a) \(y\) is a solution of Eq. (1) if and only if \(z\) defined by

$$\begin{aligned} z(t)=\frac{n!}{\eta \,\tau ^n}\,y\big ({t}\,{\tau }\big ) \end{aligned}\tag{6}$$

is a solution of

$$\begin{aligned} z^{(n)}-n!\, \mathrm{sgn}\big (\mathrm{sw}(t)\big ) =0. \end{aligned}\tag{7}$$

(b) If \(y\) is a \(2\,\tau \)-periodic \({\fancyscript{C}}^{n-1}\) solution of Eq. (1) vanishing at \(0\), then \(z\) defined by formula (6) is a \({\fancyscript{C}}^{n-1}\) solution of the boundary problem

$$\begin{aligned} \left\{ \begin{array}{l} \forall t \in [-1,1], \ \ z^{(n)}(t)-n!\,\mathrm{sgn}(t)=0 \\ z(0)=0 \\ \text{for all integers } i\in [0,n-1], \ \ z^{(i)}(1)=z^{(i)}(-1). \end{array}\right. \end{aligned}\tag{8}$$

Proof

(a) Straightforward from the computation of \(z^{(n)}\): by (6), \(z^{(n)}(t)=\frac{n!}{\eta }\,y^{(n)}(t\,\tau )\).

(b) By item (a), Eq. (1) is equivalent to Eq. (7), which simplifies on \(]-1,1[\) to:

$$\begin{aligned} z^{(n)}-n!\,\mathop {\text{ sgn}}\nolimits (t)=0. \end{aligned}\tag{9}$$

It is straightforward that if \(y\) is \(2\,\tau \)-periodic, then \(z\) is 2-periodic. So for all \(i\in [0,n-1], z^{(i)}(1)=z^{(i)}(-1+2)=z^{(i)}(-1)\).

\(\square \)

Observe that no solution of (9) can be \({\fancyscript{C}}^{n}\) at the origin. We restrict ourselves to solutions which are \({\fancyscript{C}}^{n-1}\) everywhere, that is, of maximal regularity. In the following lemma, we establish the existence and uniqueness of such a solution of Eq. (9) vanishing at the origin and satisfying the boundary constraints.

Lemma 2

Let us consider Eq. (9) on \([-1,1]\). There exists a unique solution \(z\) of Eq. (9) such that

- \(z(0)=0\);
- \(z\) is \({\fancyscript{C}}^{n-1}\) on \([-1,1]\);
- for all \(i\in [0,n-1], z^{(i)}(1)=z^{(i)}(-1)\).

Observe that outside \(0\) any solution of Eq. (9) is \({\fancyscript{C}}^{\infty }\).

Proof

We search for solutions of Eq. (9) vanishing at the origin.

Let \(z_{+}\) (resp. \(z_{-}\)) be a solution on \([0,1]\) (resp. \([-1,0]\)) vanishing at \(0\) of the equation \(z^{(n)}=n!\) (resp. \(z^{(n)}=-n!\)). It is immediate that both \(z_{+}\) and \(z_{-}\) are polynomials of degree \(n\).

We wish to construct a solution \(z\) on \([-1,1]\) such that:

The solution \(z_{+}\) (resp. \(z_{-}\)) is right (resp. left) differentiable at any order at \(0\). To get a solution which is \({\fancyscript{C}}^{n-1}\) at \(0\), we must have \(z_{+}^{(i)}(0)=z_{-}^{(i)}(0)\) for all \(i\in [0,n-1]\). This implies that \(z_{+}\) and \(z_{-}\) have the same coefficients, except for the leading ones, which are \(1\) for \(z_{+}\) and \(-1\) for \(z_{-}\).

So a solution of Eq. (9) vanishing at \(0\) has the general form:

Hence, for all \(t\ne 0\), for all \(j\in [0,n-1]\), the \(j\)-th derivative of \(z\) at \(t\) is:

Furthermore, for all \(j\in [0,n-1]\), we impose the condition \(z^{(j)}(1)=z^{(j)}(-1)\) which corresponds to the equation \(J(j)=0\) where:

Observe that \(J(n-1)=0\). Moreover, when \(i=j\), we have \((1-(-1)^{i-j})=0\). Hence, for all \(j\in [0,n-2], J(j)\) reduces to:

Observe that for all \(j\in [0,n-2], J(j)\) involves \((a_i, i\in [j+1,n-1])\) and the coefficient of \(a_{j+1}\) in \(J(j)\) is \(2\,(j+1)! \ne 0\).

Assume first that \(n\) is even, \(n\ge 2\). Let us solve the system:

with respect to the unknowns \((a_i, i\in [1,n-1])\).

Let us split the equations of the linear system (10) into two subsystems:

- We first consider the subsystem of all equations corresponding to even orders of derivation:

$$\begin{aligned} \left\{ J(2j)=0 ,\ \text{for all } j \in \left[0, \frac{n}{2}-1\right] \right\} . \end{aligned}\tag{11}$$

If one writes

$$\begin{aligned} \tilde{J}(j) = \sum _{i=j+1}^{n-1} \frac{i!}{(i-j)!}\,\big (1-(-1)^{i-j}\big )\,a_{i}, \end{aligned}$$

system (11) is equivalent to

$$\begin{aligned} \left\{ \begin{array}{l} \tilde{J}(0)=-2\\ \tilde{J}(2)= -2\,n\,(n-1)\\ \ldots \\ { \tilde{J}(2j) = -\frac{2\,n!}{(n-2j)!}} \text{ for all } 0 \le j \le \frac{n}{2}-1\\ \ldots \\ \tilde{J}(n-2) =-{n!} \end{array}\right. \end{aligned}\tag{12}$$

For all \(j\in [0,\frac{n}{2}-1]\), the coefficient of the variable \(a_{2j+1}\) in \(\tilde{J}(2j)\) is \(2\,(2j+1)! \ne 0\), and the right-hand side of (12) is nonzero. So (12) is a triangular system of \(\frac{n}{2}\) equations in \(\frac{n}{2}\) unknowns. Since it is non-singular and non-homogeneous, it has a unique, non-trivial solution with respect to the unknowns \((a_{2j+1},{j\in [0,\frac{n}{2}-1]})\). The conclusion is the same for (11).

- We then consider the subsystem of all equations corresponding to odd orders of derivation:

$$\begin{aligned} \left\{ J(2j+1)=0 ,\ \text{for all } j \in \Big [0,\frac{n}{2}-2\Big ] \right\} . \end{aligned}\tag{13}$$

System (13) is also a triangular system, of \(\frac{n}{2}-1\) equations in \(\frac{n}{2}-1\) unknowns. It is non-singular as well (for all \(j\in [1,\frac{n}{2}-1]\), the coefficient of \(a_{2j}\) in the equation \(J(2j-1)=0\) is \(2\,(2j)! \ne 0\)), but its right-hand side is zero. So its unique solution with respect to the unknowns \((a_{2j}, {j\in [1,\frac{n}{2}-1]})\) is the trivial one.

Since (10) is equivalent to (11) and (13), it has a unique solution. Moreover, all the even coefficients are zero. Note that the polynomial part of degree \(n-1\) of the solution \(z\) is an odd polynomial.

The case \(n\) odd is similar. The subsystem corresponding to even derivatives has a unique trivial solution, while the subsystem corresponding to odd derivatives has a unique non-trivial one. In that case, the polynomial part of degree \(n-1\) of the solution \(z\) is an even polynomial. \(\square \)
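The displays defining \(J(j)\) are not reproduced here. Writing, as in the proof, \(z(t)=\mathop {\text{ sgn}}\nolimits (t)\,t^n+\sum _{i=1}^{n-1}a_i\,t^i\), we obtain (our own transcription) the system \(\tilde{J}(j)=-\frac{n!}{(n-j)!}\,\big (1+(-1)^{n-j}\big )\) for \(j\in [0,n-2]\), which agrees with (12) when \(n\) and \(j\) are even. The sketch below, with hypothetical helper names, solves this triangular system by back substitution in exact arithmetic and, anticipating Lemma 7, compares the result with the coefficients of \(E_n(t)-E_n(0)\).

```python
from fractions import Fraction
from math import comb, factorial

# Hypothetical helper names; our transcription of the system J(j) = 0.


def solve_boundary_coefficients(n):
    """Coefficients [a_0 = 0, a_1, ..., a_{n-1}] of z(t) = sgn(t) t^n + sum_i a_i t^i.

    For j = n-2 down to 0 we solve
        sum_{i=j+1}^{n-1} i!/(i-j)! (1 - (-1)^(i-j)) a_i = -n!/(n-j)! (1 + (-1)^(n-j)),
    whose pivot (coefficient of a_{j+1}) is 2 (j+1)!.
    """
    a = [Fraction(0)] * n  # a[0] = 0 because z(0) = 0
    for j in range(n - 2, -1, -1):
        rhs = Fraction(-factorial(n) * (1 + (-1) ** (n - j)), factorial(n - j))
        for i in range(j + 2, n):
            coeff = Fraction(factorial(i) * (1 - (-1) ** (i - j)), factorial(i - j))
            rhs -= coeff * a[i]
        a[j + 1] = rhs / (2 * factorial(j + 1))
    return a


def euler_polynomials(n_max):
    """Coefficient lists of E_0..E_{n_max} (constant term first), via
    E_n(t) = t^n - 1/2 * sum_{k<n} C(n, k) E_k(t)."""
    polys = []
    for n in range(n_max + 1):
        coeffs = [Fraction(0)] * (n + 1)
        coeffs[n] = Fraction(1)
        for k in range(n):
            for i, c in enumerate(polys[k]):
                coeffs[i] -= Fraction(comb(n, k), 2) * c
        polys.append(coeffs)
    return polys


E = euler_polynomials(8)
for n in range(1, 9):
    a = solve_boundary_coefficients(n)
    assert a[1:] == E[n][1:n]  # matches E_n(t) - E_n(0) below degree n
```

The parity pattern established in the proof (even coefficients vanish for even \(n\), odd ones for odd \(n\)) can be read off the returned lists.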

3.2 Euler polynomials and periodic switched Euler polynomials

Let us recall some well-known properties of the Euler polynomials \(E_n\), already defined in Sect. 1.4.

Lemma 3

For all \(t\in \mathbb R \), for all \(n\in \mathbb N \):

(a) \(E_n^{\prime }(t)=n\,E_{n-1}(t), n\ge 1\);

(b) \(E_n(1-t)=(-1)^n\, E_n(t), n\ge 0\);

(c) \(E_n(1+t)+E_n(t)=2\,t^n, n\ge 0\);

(d) \((-1)^n\,E_n(-t)=2\,t^n-E_n(t), n\ge 0\).

Proof

Refer to [19] for (c), and to [3] for (a), (b).

Observe that (d) is a direct consequence of (b) (changing \(t\) into \(-t\)) and (c). \(\square \)

This implies that the Euler polynomials of positive even index vanish at both \(0\) and \(1\):

Lemma 4

If \(n\ge 1\) then \(E_{2n}(1)=E_{2n}(0)=0\).

Proof

By item (d), Lemma 3, \((-1)^{2n}\,E_{2n}(0)=-E_{2n}(0)\). So \(E_{2n}(0)=-E_{2n}(0)\), and \(E_{2n}(0)=0\). By item (b), Lemma 3, \(E_{2n}(1)=(-1)^{2n}\, E_{2n}(0)\), which implies \(E_{2n}(1)=0\). \(\square \)
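Lemmas 3 and 4 are classical, but they are also easy to test mechanically. The sketch below (ours; helper names hypothetical) regenerates the Euler polynomials exactly from the recurrence \(E_n(t)=t^n-\frac{1}{2}\sum _{k<n}\binom{n}{k}E_k(t)\) and checks items (a) to (d) at rational points, together with Lemma 4. Since that recurrence is itself derived from items (a) and (c), the check is a consistency test rather than an independent proof.

```python
from fractions import Fraction
from math import comb

# Hypothetical helper names; a consistency check, not a proof.


def euler_polynomials(n_max):
    """Coefficient lists of E_0..E_{n_max}, constant term first."""
    polys = []
    for n in range(n_max + 1):
        coeffs = [Fraction(0)] * (n + 1)
        coeffs[n] = Fraction(1)
        for k in range(n):
            for i, c in enumerate(polys[k]):
                coeffs[i] -= Fraction(comb(n, k), 2) * c
        polys.append(coeffs)
    return polys


def evaluate(coeffs, t):
    """Horner evaluation at t."""
    result = Fraction(0)
    for c in reversed(coeffs):
        result = result * t + c
    return result


def derivative(coeffs):
    """Coefficient list of the derivative."""
    return [i * c for i, c in enumerate(coeffs)][1:]


N = 10
E = euler_polynomials(N)
points = [Fraction(k, 7) for k in range(-10, 11)]

for n in range(1, N + 1):
    assert derivative(E[n]) == [n * c for c in E[n - 1]]                          # (a)
for n in range(N + 1):
    for t in points:
        assert evaluate(E[n], 1 - t) == (-1) ** n * evaluate(E[n], t)             # (b)
        assert evaluate(E[n], 1 + t) + evaluate(E[n], t) == 2 * t ** n            # (c)
        assert (-1) ** n * evaluate(E[n], -t) == 2 * t ** n - evaluate(E[n], t)   # (d)
for n in range(1, N // 2 + 1):
    assert evaluate(E[2 * n], 0) == 0 == evaluate(E[2 * n], 1)                    # Lemma 4
```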

We investigate now some properties of a piecewise-polynomial function built from the Euler polynomial. For all \(n\in \mathbb N \), let \(\psi _n\) be defined by

Observe that \(\psi _0=\mathop {\text{ sgn}}\nolimits \).

Lemma 5

For all \(n\in \mathbb N , n>0, \psi _n\) is \({\fancyscript{C}}^{n-1}\) at \(0, {\fancyscript{C}}^{\infty }\) everywhere else.

Proof

Let us call \((\psi _{n})_{+}\) (resp. \((\psi _n)_{-}\)) the restriction of \(\psi _n\) to \(]0,+\infty [\) (resp. \(]-\infty ,0[\)). The function \((\psi _n)_{+}\) (resp. \((\psi _n)_{-}\)) is right (resp. left) differentiable at any order at \(0\), and \(\psi _n\) is a \({\fancyscript{C}}^{n-1}\) function at \(0\) if and only if, for all \(j\in [0,n-1], (\psi _n)_{+}^{(j)}(0)=(\psi _n)_{-}^{(j)}(0)\).

For \(j=0\), we have \(E_n(0)\) on the right and \((-1)^{n+1}\,E_n(0)\) on the left. These quantities are equal if and only if \(E_n(0) \, (1+ (-1)^n)=0\). This is trivial if \(n\) is odd, and results from Lemma 4 if \(n\) is even.

For all \(j\in [1,n-1]\) and all \(t<0\), the chain rule gives \(\psi _{n}^{(j)}(t)=(-1)^{n+1+j}\, E_n^{(j)}(-t) \). So,

But, for all \(j\in [1,n-1]\), we have

Hence, for all \(j\in [1,n-1], (\psi _n)_{-}^{(j)}(0^{-})=(\psi _n)_{+}^{(j)}(0^{+}) \) if and only if

which leads to

Condition (14) is trivially satisfied if \(n\) and \(j\) have different parity. And if \(n\) and \(j\) have the same parity, \(n-j\) is even with \(n-j\ge 1\). So \(n-j\ge 2\), and by Lemma 4, \(E_{n-j}(0)=0\). For all \(j\in [1,n-1]\), condition (14) is satisfied. Hence, \(\psi _n\) is \({\fancyscript{C}}^{n-1}\) at \(0\). It is \({\fancyscript{C}}^{\infty }\) outside \(0\) since it coincides on an open interval with a polynomial function. \(\square \)

Lemma 6

For all \(n\ge 1\), for all \(j\in [0,n-1], \psi _n^{(j)}(1)=\psi _n^{(j)}(-1)\).

Proof

If \(j=0, \psi _n(1)=\psi _n(-1)\) if and only if \(E_{n}(1)=(-1)^{n+1}\,E_{n}(1)\). If \(n\) is odd, this relation holds. If \(n\) is even, \(n\ge 2\), by Lemma 4, \(E_{n}(1)=0\) and the above relation still holds.

As in Lemma 5, we derive that for all \(j\in [1,n-1], \psi _n^{(j)}(1)=\psi _n^{(j)}(-1)\) if and only if

which leads to \( \left(1+ \left(-1\right)^{n+j}\right)\, E_{n-j}\left(1\right)=0 \ . \) If \(n\) and \(j\) have the same parity, we use the equality \(E_{n-j}(1)=0\) established in Lemma 4. \(\square \)
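Lemmas 5 and 6 compare one-sided derivatives of two polynomials, which can be checked exactly. In this sketch (ours; the helper names are hypothetical) we take \(\psi _n(t)=E_n(t)\) for \(t>0\) and \(\psi _n(t)=(-1)^{n+1}E_n(-t)\) for \(t<0\), the two pieces used in the proof of Lemma 5, and verify that the derivatives of order \(j<n\) match at \(0\) and between \(1\) and \(-1\), while the \(n\)-th derivatives at \(0\) are \(n!\) and \(-n!\).

```python
from fractions import Fraction
from math import comb, factorial

# Hypothetical helper names; psi_n is represented by its two polynomial pieces.


def euler_polynomials(n_max):
    """Coefficient lists of E_0..E_{n_max}, constant term first."""
    polys = []
    for n in range(n_max + 1):
        coeffs = [Fraction(0)] * (n + 1)
        coeffs[n] = Fraction(1)
        for k in range(n):
            for i, c in enumerate(polys[k]):
                coeffs[i] -= Fraction(comb(n, k), 2) * c
        polys.append(coeffs)
    return polys


def evaluate(coeffs, t):
    result = Fraction(0)
    for c in reversed(coeffs):
        result = result * t + c
    return result


def nth_derivative(coeffs, j):
    """Coefficient list of the j-th derivative."""
    for _ in range(j):
        coeffs = [i * c for i, c in enumerate(coeffs)][1:]
    return coeffs


def psi_pieces(n, E):
    """Polynomials (in t) giving psi_n on t > 0 and on t < 0."""
    right = list(E[n])                                              # E_n(t)
    left = [(-1) ** (n + 1 + i) * c for i, c in enumerate(E[n])]    # (-1)^(n+1) E_n(-t)
    return right, left


E = euler_polynomials(9)
for n in range(1, 10):
    right, left = psi_pieces(n, E)
    for j in range(n):
        # Lemma 5: one-sided derivatives of order j < n agree at 0
        assert evaluate(nth_derivative(right, j), 0) == evaluate(nth_derivative(left, j), 0)
        # Lemma 6: derivatives of order j < n agree at 1 and -1
        assert evaluate(nth_derivative(right, j), 1) == evaluate(nth_derivative(left, j), -1)
    # the n-th derivative jumps from -n! to n! at 0, so psi_n is not C^n there
    assert evaluate(nth_derivative(right, n), 0) == factorial(n)
    assert evaluate(nth_derivative(left, n), 0) == -factorial(n)
```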

In the following lemma, we show that the Euler polynomial \(E_n\) is involved in the periodic solutions of Eq. (1).

Lemma 7

Let \(y\) be a periodic solution of Eq. (1), of period \(2\,\tau , \tau \in \mathbb R , \tau > 0\), such that \(y(0)=0\). Then, for all \(t\in [-\tau ,\tau ]\)

Proof

We start by using Lemma 1, item (a), to pass from Eq. (1) to Eq. (7), which reduces to Eq. (9) on \(]-1,1[\).

For \(n\in \mathbb N , n>0\), let us consider \(\psi _n\). By Lemma 5, \(\psi _n\) is \({\fancyscript{C}}^{\infty }\) outside \(0\) and \({\fancyscript{C}}^{n-1}\) at \(0\). Applying item (a), Lemma 3, recursively, we get for all \(t > 0\): \( E^{(n)}_n(t)=n!\, E_{0}(t)=n! \), while for all \(t<0\), the chain rule gives \( \big ((-1)^{n+1}\,E_n(-t)\big )^{(n)}=(-1)^{n+1}\,(-1)^{n}\,E^{(n)}_n(-t)=(-1)^{2n+1}\, n!\, E_{0}(-t)= - n! \). So for all \(t\ne 0, \psi _n^{(n)}(t)=\mathop {\text{ sgn}}\nolimits (t)\, n!\).

By Lemma 6, for all \(j\in [0,n-1], \psi _n\) satisfies \(\psi _n^{(j)}(1)=\psi _n^{(j)}(-1)\).

Let us denote \(u=\psi _n-E_n(0)\). We have \(u(0)=0\), and \(u\) is a solution of the boundary problem (8). Consequently, by Lemma 2, \(u\) is the unique solution of (8). \(\square \)

We can give a shorter form of the solution of Eq. (7) as described in the next lemma.

Lemma 8

For all \(n\in \mathbb N , n>0\), there exists a unique \({\fancyscript{C}}^{n-1}\) function \(\psi _n^{*}\) such that, for all \(t\in \mathbb R \setminus O(\mathbb Z )\),

Moreover, \(\psi _n^{*}\) is the unique solution of Eq. (7) vanishing at \(0\). And for all \(k\in \mathbb N , k\le n\),

Proof

Observe that for \(t\in ]-1,1[, \psi _n^{*}\) is expressed by the formula:

and it is a solution of Eq. (7) vanishing at \(0\). Using Lemma 6, at every point of \(O(\mathbb Z )\), the formula valid on \(\mathbb R \setminus O(\mathbb Z )\) extends, together with its first \(n-1\) derivatives, from both sides. The statement about the derivatives of \(\psi _{n}^{*}\) is a direct consequence of item (d), Lemma 3. The rest of the proof is straightforward. \(\square \)

By convention, we define \(\psi _0^{*}=\psi _0\circ \,\mathop {\text{ sw}}\nolimits \).

We then show that the \(n\)-primitive of a function is periodic if and only if its average value (defined in Sect. 1.2) is zero:

Lemma 9

Let \(F\) be a periodic piecewise-\({\fancyscript{C}}^\infty \) function. For all \(n\in \mathbb N , n>0, \varTheta _{F}^{[n]}\) is periodic if and only if the average value of \(F\) is equal to \(0\).

Proof

Since \(F\) is periodic piecewise-\({\fancyscript{C}}^\infty \), it equals its Fourier series almost everywhere. So for almost every \(t\),

and the average value of \(F\) is the constant term \(2\,\tau _F\, a_0\). In \(\varTheta _{F}^{[n]}\), the term \(a_0\) integrates, up to lower-degree terms, as the monomial \(a_0\,t^{n}/n!\), which is periodic if and only if \(a_0=0\). \(\square \)
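For \(n=1\) the mechanism of Lemma 9 is easy to see numerically: \(\varTheta (t)=\int _0^t F\) changes by the integral of \(F\) over one period each time \(t\) advances by a period, so it is periodic exactly when that integral vanishes. The sketch below uses a hypothetical 2-periodic test function with constant Fourier term \(a_0\) and composite Simpson quadrature; it only illustrates the case \(n=1\) and is not part of the proof.

```python
import math


def simpson(f, a, b, m=4000):
    """Composite Simpson rule on [a, b] with an even number m of subintervals."""
    h = (b - a) / m
    s = f(a) + f(b)
    s += 4 * sum(f(a + i * h) for i in range(1, m, 2))
    s += 2 * sum(f(a + i * h) for i in range(2, m, 2))
    return s * h / 3


def make_F(a0):
    """Hypothetical 2-periodic test function: constant term a0 plus zero-mean harmonics."""
    return lambda t: a0 + math.cos(math.pi * t) + 0.5 * math.sin(2 * math.pi * t)


def period_defect(a0, t):
    """Theta(t + 2) - Theta(t) for Theta(t) = integral of F from 0 to t (case n = 1)."""
    F = make_F(a0)
    return simpson(F, 0.0, t + 2) - simpson(F, 0.0, t)
```

Here `period_defect(0.0, t)` vanishes up to quadrature error, while `period_defect(a0, t)` is close to \(2\,a_0\) for every \(t\): the harmonics integrate to zero over a period and only the constant term produces a drift.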

Indeed, the functions \(\psi _n^{*}\) have zero average value:

Lemma 10

For all \(n\in \mathbb N \), the average value of \(\psi _n^{*}\) on any interval of length \(2\) is zero.

Proof

Without loss of generality, let us integrate on the interval \([-1,1]\):

If \(n\) is even, the result is zero.

Now assume that \(n\) is odd. By item (d) of Lemma 3, a primitive of \((n+1)\, E_n\) is \(E_{n+1}\), so

By item (b) of Lemma 3, since \(n+1\) is even, \(E_{n+1}(1)=(-1)^{n+1}E_{n+1}(0)=E_{n+1}(0)\), so the average value is zero in this case as well. \(\square \)
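Lemma 10 can be confirmed exactly for small \(n\) under the same assumed two-piece form of \(\psi _n\), whose 2-periodic extension is taken to be \(\psi _n^{*}\): split \(\int _{-1}^{1}\) at \(0\) and integrate each polynomial piece with rational arithmetic. For even \(n\) the two halves cancel because \(\psi _n\) is then odd; for odd \(n\) each half vanishes because \(\int _0^1 E_n=(E_{n+1}(1)-E_{n+1}(0))/(n+1)=0\). The helper names are hypothetical.

```python
from fractions import Fraction
from math import comb


def euler_at_zero(n_max):
    """Values E_k(0), k = 0..n_max, from E_k(0) + E_k(1) = 2 * 0**k."""
    e = []
    for k in range(n_max + 1):
        s = sum(comb(k, j) * e[j] for j in range(k))
        e.append((Fraction(2 if k == 0 else 0) - s) / 2)
    return e


def euler_poly(n):
    """Coefficients of E_n in increasing powers of x."""
    e = euler_at_zero(n)
    return [comb(n, i) * e[n - i] for i in range(n + 1)]


def psi_pieces(n):
    """Assumed pieces of psi_n: E_n(t) for t > 0, (-1)^(n+1) E_n(-t) for t < 0."""
    right = euler_poly(n)
    sign = 1 if n % 2 == 1 else -1  # (-1)^(n+1)
    left = [sign * (-1) ** i * c for i, c in enumerate(right)]
    return right, left


def integrate(p, a, b):
    """Exact integral over [a, b] of the polynomial with coefficient list p."""
    a, b = Fraction(a), Fraction(b)
    return sum(c * (b ** (i + 1) - a ** (i + 1)) / (i + 1) for i, c in enumerate(p))


def integral_over_period(n):
    """Integral of psi_n over [-1, 1], split at the switching point 0."""
    right, left = psi_pieces(n)
    return integrate(right, 0, 1) + integrate(left, -1, 0)
```

Note that for even \(n\) the half-integral \(\int _0^1 E_n\) need not vanish (it equals \(-\frac{1}{6}\) for \(n=2\)); only the sum over \([-1,1]\) does.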

4 Proofs of the main results

4.1 Proof of Theorem 1

Proof

By Lemma 1, item (a), we replace Eq. (1) by Eq. (7). By Lemma 7, Eq. (7) has a unique solution vanishing at \(0\).

It is straightforward to see that \(z\) is a solution of Eq. (7) vanishing at \(0\) if and only if \(\tilde{z}=z+z_0\) (\(z_0\in \mathbb R \)) is a solution of Eq. (7) such that \(\tilde{z}(0)=z_0\).\(\square \)

4.2 Proof of Theorem 2

Proof

As in the proof of Theorem 1, we keep the same unknown \(z\) (see Lemma 1, item (a)) and replace Eq. (2) by an equivalent equation

We first consider solutions \(z\) of Eq. (15) such that \(z(0)=0\).

For all \(t\), we have \(z{}^{(n)}(t+2)=z{}^{(n)}(t)\), so \(F(t\,\tau +2\,\tau )=F(t\,\tau )\). This implies that \(\tau _F^{-1}\,\tau \in \mathbb N \).

Up to changing \(F\), we can assume that \(\eta =\tau =1\). By Theorem 1, \(z\) defined on \(]-1,1[\) by \(z(t)=\psi _n(t)-E_n(0)+n!\,\varTheta _F^{[n]}(t)\) is a solution of Eq. (15) on \(]-1,1[\). It follows that \(t\mapsto \psi _n^{*}(t)-E_n(0)+n!\,\varTheta _F^{[n]}(t)\) is a 2-periodic solution of Eq. (15) vanishing at \(0\).

Assume that \(t\mapsto u(t)\) is another 2-periodic solution of Eq. (15) such that \(u(0)=0\). Then \(u-n!\,\varTheta _F^{[n]}\) and \(\psi _n^{*}-E_n(0)\) are both 2-periodic solutions of Eq. (7) vanishing at \(0\). By the uniqueness part of Theorem 1 they coincide, so \(u=\psi _n^{*}-E_n(0)+n!\,\varTheta _F^{[n]}\). It follows that Eq. (15) has a unique 2-periodic solution vanishing at \(0\).

It is straightforward to deduce that \(z\) is a solution of Eq. (15) vanishing at \(0\) if and only if \(u=z+z_0\) (\(z_0\in \mathbb R \)) is a solution of Eq. (15) such that \(u(0)=z_0\).\(\square \)

4.3 Proof of Theorem 3

Proof

The first parts of the proofs of Theorem 3 and Theorem 2 are the same.

Let us assume that \(F=0\) and \(n>0\).

As in the proof of Theorem 2, we replace the initial equation by an equivalent equation

in terms of an unknown \(z\) such that

We solve Eq. (16) separately on the intervals \(]0,1[\) and \(]-1,0[\).

For \(t\in ]0,1[\), we have \(k!\, z^{(n)}(t)=(n+k)!\, E_k(t)\). Differentiating \(k\) times and using \(E_k^{(k)}=k!\), we get \(z^{(n+k)}(t)=(n+k)!\).

On the interval \(]-1,0[\), we have \(k!\, z^{(n)}(t)=(n+k)!\, (-1)^{k+1} E_k(-t)\).

Differentiating \(k\) times, each derivative of \(t\mapsto E_k(-t)\) contributes a factor \(-1\), so \(z^{(n+k)}(t)=(-1)^{2k+1} \, (n+k)!= -(n+k)!\).

It follows that \(z\) must be a 2-periodic solution, vanishing at \(0\), of

By Theorem 1, Eq. (17) has a unique 2-periodic solution vanishing at \(0\), namely,

Conversely, it is immediate to check that the function above is a 2-periodic solution of Eq. (16) vanishing at \(0\).

Finally, the proof in the case \(F\ne 0\) is similar to the proof of Theorem 2. \(\square \)

References

[1] Andronov, A.A., Vitt, A.A., Khaikin, S.E.: Theory of Oscillators (translated from the Russian by F. Immirzi; translation edited and abridged by W. Fishwick). Pergamon Press, Oxford-New York-Toronto (1966)

[2] Anosov, D.V.: Stability of the equilibrium positions in relay systems. Autom. Remote Control 20(2), 130–143 (1959)

[3] Brillhart, J.: On the Euler and Bernoulli polynomials. J. Reine Angew. Math. 234, 45–64 (1969)

[4] di Bernardo, M., Budd, C.J., Champneys, A.R., Kowalczyk, P.: Piecewise-Smooth Dynamical Systems: Theory and Applications. Applied Mathematical Sciences 163. Springer-Verlag London Ltd., London (2008)

[5] Colombo, A., di Bernardo, M., Fossas, E.: Two-fold singularity in nonsmooth electrical systems. In: Proceedings of 2011 IEEE International Symposium on Circuits and Systems (ISCAS), pp. 2713–2716 (2011)

[6] Delange, H.: On the real roots of Euler polynomials. Monatsh. Math. 106(2), 115–138 (1988)

[7] Dilcher, K.: Bernoulli and Euler Polynomials. In: NIST Handbook of Mathematical Functions, pp. 587–599. U.S. Department of Commerce, Washington, DC (2010)

[8] Howard, F.T.: Roots of the Euler polynomials. Pac. J. Math. 64(1), 181–191 (1976)

[9] Filippov, A.F.: Differential Equations with Discontinuous Righthand Sides (translated from the Russian). Mathematics and its Applications (Soviet Series) 18. Kluwer Academic Publishers Group, Dordrecht (1988)

[10] Jacquemard, A., Tonon, D.J.: Coupled systems of non-smooth differential equations. Bull. Sci. Math. 136(3), 239–255 (2012)

[11] Jacquemard, A., Teixeira, M.A.: On singularities of discontinuous vector fields. Bull. Sci. Math. 127(7), 611–633 (2003)

[12] Jacquemard, A., Teixeira, M.A.: Invariant varieties of discontinuous vector fields. Nonlinearity 18(1), 21–43 (2005)

[13] Jacquemard, A., Teixeira, M.A.: Computer analysis of periodic orbits of discontinuous vector fields. Computer Algebra and Computer Analysis (Berlin, 2001). J. Symb. Comput. 35(5), 617–636 (2003)

[14] Jacquemard, A., Teixeira, M.A.: Periodic solutions of a class of non-autonomous second order differential equations with discontinuous right-hand side. Physica D: Nonlinear Phenomena 241(22), 2003–2009 (2012)

[15] Jacquemard, A., Teixeira, M.A., Tonon, D.J.: Piecewise smooth reversible dynamical systems at a two-fold singularity. Int. J. Bifurcation Chaos 22 (2012). doi:10.1142/S0218127412501921

[16] Jacquemard, A., Pereira, W.F.: On periodic orbits of polynomial relay systems. Discret. Contin. Dyn. Syst. 17(2), 331–347 (2007)

[17] Jordan, C.: Calculus of Finite Differences. Chelsea Publishing Co., New York (1965)

[18] Minorsky, N.: Nonlinear Oscillations. D. Van Nostrand Co., Inc., Princeton, N.J.-Toronto-London-New York (1962)

[19] Wu, K.J., Sun, Z.W., Pan, H.: Some identities for Bernoulli and Euler polynomials. Fibonacci Quart. 42(4), 295–299 (2004)

[20] Zelikin, M.I., Borisov, V.F.: Theory of Chattering Control. With Applications to Astronautics, Robotics, Economics, and Engineering. Systems and Control: Foundations and Applications. Birkhäuser Boston, Inc., Boston, MA (1994)

[21] Zhusubaliyev, Z.T., Mosekilde, E.: Bifurcations and Chaos in Piecewise-Smooth Dynamical Systems. World Scientific Series on Nonlinear Science. Series A: Monographs and Treatises 44. World Scientific Publishing Co., Inc., River Edge, NJ (2003)

Acknowledgments

The origin of this work is due to the France–Brazil cooperation agreement. The second author wishes to thank the Institut de Mathématiques de Toulouse (France) for support. The authors are grateful to G.M. Vago for helpful remarks.


Cite this article

Bertolim, M.A., Jacquemard, A. Time switched differential equations and the Euler polynomials. Annali di Matematica 193, 1147–1165 (2014). https://doi.org/10.1007/s10231-012-0321-7
