Abstract

Metacognitive accuracy is understood as the congruency of subjective evaluation and objectively measured learning performance. With reference to the cue utilisation framework and the embedded-processes model of working memory, we proposed that prompts impact attentional processes during learning. Through guided prompting, learners place their attention on specific information during the learning process. We assumed that the information will be taken into account when comprehension judgments are formed. Subsequently, metacognitive accuracy will be altered. Based on the results of this online study with pre-service biology teachers, we can neither confirm nor reject our main hypothesis and assume small effects of prompting on metacognitive accuracy if there are any. Learning performance and judgment of comprehension were not found to be impacted by the use of resource- and deficit-oriented prompting. Other measurements of self-evaluation (i.e. satisfaction with learning outcome and prediction about prolonged comprehension) were not influenced through prompting. The study provides merely tentative evidence for altered metacognitive accuracy and effects on information processing through prompting. Results are discussed in light of online learning settings in which the effectiveness of prompt implementation might have been restricted compared to a classroom environment. We provide recommendations for the use of prompts in learning settings with the aim to facilitate their effectiveness, so that both resource-oriented and deficit-oriented prompts can contribute to metacognitive skill development if they are applied appropriately.

Background

Metacognitive accuracy and metacognition

Our modern and rapidly changing world demands flexible and fast learning in many areas of life (e.g. grasping new software features after changing a job or developing communication skills in order to optimise work efficiency). The abilities to set learning goals, to watch our progress and to assess goal attainment are vital to adapt to new challenges. Evolving the ability to evaluate one’s own learning process accurately is inherent to learners’ skill development towards lifelong self-regulated learning (e.g. de Boer et al., 2018). The congruency of subjective evaluation of one’s own learning and objectively measured learning performance can be defined as metacognitive accuracy. It is located within the evaluating domain of metacognitive processes that have been investigated since the introduction of the term ‘metacognition’ into educational research and practice in the 1970s (Flavell, 1979). Generally, metacognition refers to three domains of learning: planning, monitoring and evaluating (Schraw & Moshman, 1995). These domains incorporate metacognitive knowledge (i.e. knowledge about person variables, task features and learning strategies) and active regulation of cognition (i.e. skills and processes that guide, monitor, control and regulate learning; Veenman, 2012).

The importance of metacognitive accuracy and the impact of instructional practices

Metacognitive evaluations and their accuracy can drive future learning behaviour and its continuity. This has been observed in experimental studies (e.g. Mazzoni et al., 1990; Mazzoni & Cornoldi, 1993; Metcalfe & Finn, 2008; Mitchum et al., 2016; Rhodes & Castel, 2009) and suggested by theoretical approaches like the ‘region of proximal learning model of study time allocation’ (Metcalfe & Kornell, 2005) and ‘discrepancy-reduction’ models (summarised in Thiede et al., 2003). Metacognitive accuracy has been shown to influence regulation of learning and learning performance (Thiede et al., 2003). At the same time, metacognitive evaluations are prone to errors (Dunlosky & Lipko, 2007; Dunning et al., 2004), and metacognitive accuracy might be comparably low without specific instructional practices aiming to improve accuracy. For example, summarising, re-reading, retrieval practice or delaying time between study phase and metacognitive evaluation (delayed judgment of learning effect) lead to an increase in metacognitive accuracy (Miller & Geraci, 2014; Nelson & Dunlosky, 1991; Rawson et al., 2000; Thiede et al., 2003; Thiede et al., 2005). In detail, delaying judgment intervals and retention time, matching judgment of learning items with test questions, and applying cued recall tasks in learning tests are associated with higher metacognitive accuracy (Rhodes & Tauber, 2011).

Given the far-reaching consequences of inaccurate metacognitive evaluations (e.g. stopping study efforts without realising that expectations have not yet been met due to overestimation), continuous investigation of influencing factors is needed (for a prior summary, see Thiede et al., 2003). Research about underlying cognitive processes through specific instructional practices contributes to a better understanding of the formation of metacognitive evaluations, their accuracy and the link to adaptive and effective learning behaviour.

Metacognitive evaluation and information processing via cue utilisation

According to the cue-utilisation framework (Koriat, 1997), the formation of metacognitive evaluations relies on ‘incoming’ information (‘cues’) during learning instead of memory traces being directly utilised as proposed by King et al. (1980). Such cues might include task-specific, content-specific, emotion-related and behaviour-related information. Other information might be drawn from past experiences or expectations about the future (see Table 1 for examples). Because of the large number of cues that learners might focus on, even on multiple cues simultaneously (Undorf et al., 2018), it seems plausible that some cues are more predictive of future performance than others. Indeed, it was shown that comprehension-based cues, such as the self-judged ability to explain a text, are more predictive of performance than information about the quality of a text itself (Thiede et al., 2010). It is assumed that the use of less predictive cues relates to lower metacognitive accuracy (Prinz-Weiß et al., 2022; Serra & Dunlosky, 2010; Thiede et al., 2010). Nevertheless, learners’ attention might be directed towards more predictive cues through instructional practices (e.g. use of prompts). We assume that such deliberate and specific guidance of information processing impacts metacognitive accuracy via the allocation of attention.

Prompts and their link to performance outcome and metacognitive accuracy

Prompts are typically applied by teachers to guide information processing and scaffold students’ learning. Metacognitive prompts can take the shape of questions or cues and target learners’ monitoring abilities. Metacognitive prompts are the most widely studied practice of metacognitive instructions (Zohar & Barzilai, 2013). They can be combined with cognitive instructions (e.g. Berthold et al., 2007; Hübner et al., 2006) or broader instructional approaches such as context-based learning (e.g. Dori et al., 2018). Recent research shows a special interest in computer-based learning (Bannert & Mengelkamp, 2013; Bannert & Reimann, 2012; Daumiller & Dresel, 2019; Van den Boom et al., 2004; Zheng, 2016) and self-directed metacognitive prompts (Bannert et al., 2015; Engelmann et al., 2021). Individual studies show that metacognitive prompts aligned with cognitive tasks improved learning outcome in psychology students (Berthold et al., 2007) and biology students (Großschedl & Harms, 2013). Metacognitive prompts in context-based learning improved scientific understanding in chemistry students (Dori et al., 2018). Metacognitive prompts also increased understanding of the nature of science in pupils (Peters & Kitsantas, 2010). Some studies did not observe effects on learning outcome (e.g. McCarthy et al., 2018; Moser et al., 2017; van Alten et al., 2020) but on task completion rate (van Alten et al., 2020) and qualitative reports of goal setting (McCarthy et al., 2018). Metacognitive processes (i.e. self-awareness) were improved through generic prompts during learning (Kramarski & Kohen, 2017). One study emphasises that metacognitive prompts are only effective when they are used regularly and with elaborate note-taking (Moser et al., 2017).
In general, an increasing number of studies provide evidence for enhanced learning performance and increased metacognitive activity through metacognitive prompts (Devolder et al., 2012; Donker et al., 2014; Haller et al., 1988; Kim et al., 2018; Zohar & Barzilai, 2013).

Less is known about the effectiveness of prompts on metacognitive accuracy, and findings are inconclusive. For example, cognitive and metacognitive prompts were not found to increase metacognitive accuracy (Berthold et al., 2007). At the same time, accuracy ranged widely in different prompting conditions in this study. A more recent study suggests alterations in cue-use through variation of prompt frequency (Vangsness & Young, 2021). However, questions about the link to metacognitive accuracy remain unanswered. Potential effects of prompting on metacognitive accuracy might be derived from the embedded-processes model of working memory (Cowan, 1988).

Information processing via cue utilisation, prompts and attention allocation

The embedded-processes model of working memory (Cowan, 1988) proposes that information needs to obtain a state of availability in order to be utilised for the execution of a task (here: formation of metacognitive evaluations). Information might reach different, hierarchically structured states of availability—from long-term memory (a) and an activated state of long-term memory (b) to a ‘focus of attention’ (c). At the highest level of the hierarchy, information is highly accessible if it reaches the state of ‘focus of attention’. Attention can be controlled by voluntary (central executive function) and involuntary processes (attentional orienting system). We expect that prompts impact these attentional processes.

We assume that prompts initially provide a stimulus to the attentional orienting system: they are able to direct learners’ attention to specific cues during learning. Ideally, attention is drawn to cues that are predictive of performance. Simultaneously, prompts stimulate the central executive and ‘encourage’ regulation of attention. Information processing shifts from bottom-up to top-down regulation. In this way, prompts stimulate metacognitive activity and actions of self-regulated learning.

Which information is made available for the subsequent formation of metacognitive evaluation is determined by the nature of a prompt.

Resource-oriented and deficit-oriented prompts can be derived from the field of developmental psychology (Petermann & Schmidt, 2006). Both types of prompts might be applied during learning, i.e. ‘What have I already understood’ vs. ‘What have I not yet understood?’. Both prompts are comprehension-based, non-specific and versatile in their application (for practical use of these questions see: Schraw, 1998). If these prompts are applied during text-reading, we assume attention to be discriminatively allocated.

If the resource-oriented prompt is applied, the focus of attention is directed towards one’s own comprehension (i.e. content of the topic that is already known to the learner). This includes currently acquired knowledge and also prior knowledge acquired through previous learning opportunities. Although comprehension-based information is likely to recede from the ‘focus of attention’ after reading, it will remain in an increased state of availability (activated state of working memory) for subsequent evaluations and serve as information that improves metacognitive accuracy. Because we expect learners to internally repeat topic content, the effects of resource-oriented prompts are potentially comparable to the effects of retrieval practice (Miller & Geraci, 2014). Resource-oriented prompts are therefore expected to lead to similar improvements in metacognitive accuracy.

If the deficit-oriented prompt is applied, information processes might be altered in multiple ways. First, attention might initially be directed towards one’s own comprehension as a benchmark measure in order to identify what has not yet been understood, as suggested by discrepancy-reduction models (see: Thiede et al., 2003). The effects are likely to be similar to those following the use of resource-oriented prompts. Second, applying the deficit-oriented prompt might direct learners’ attention away from internal comprehension-based information towards text passages that have not yet been understood (external information). Immediate regulation of learning behaviour takes place, and learners focus their attention on ‘new’ content. This might increase the total amount of available comprehension-based cues and may enhance metacognitive accuracy beyond the effects of resource-oriented prompts. Effects on metacognitive accuracy might be similar to those of re-reading methods (Rawson et al., 2000). While these first two mechanisms might occur in parallel and increase metacognitive accuracy, a third mechanism might be detrimental to metacognitive accuracy. Instead of being redirected towards well-understood content or passages that are yet to be understood, attention might be directed to a lack of understanding (void of comprehension). Because this lack of understanding is not itself valid information on which attention could be placed, information processing will be interrupted (or even stopped). Learning behaviour is not regulated, and metacognitive accuracy will either be unaffected or negatively affected.

Study aim and hypotheses

This study aims to extend current scientific evidence about instructional practices that are applied to increase metacognitive accuracy. We investigate the effects of two comprehension-based and non-specific prompts (i.e., resource-oriented and deficit-oriented) on the congruency of subjective evaluation and objectively measured text comprehension after reading a biology text. This study tests the hypothesis that prompts direct learners’ attention and impact metacognitive accuracy. In detail, we hypothesise that applying resource-oriented prompts leads to an increase in metacognitive accuracy, and we propose multiple mechanisms when applying a deficit-oriented prompt.

Methods

Participants

A total of 162 pre-service biology teachers took part in this study. On average, these university students were 25.18 years old (SD = 3.62 years); 80.2% were female, 17.9% were male, 0.6% were non-binary and 1.2% made no gender specification. At the time of the assessment, students studied biology education for various school forms in Germany: vocational college (‘Berufsschule’, n = 2), elementary school (‘Grundschule’, n = 7), non-academic track secondary school (‘Hauptschule, Realschule, Sekundarstufe, Gesamtschule’, n = 45), academic track grammar school (‘Gymnasium/Gesamtschule’, n = 61) and special needs education (‘Sonderpädagogische Förderung’, n = 47). Eleven students attended the bachelor’s programme in biology teaching, and 151 attended the equivalent master’s programme.

General study design

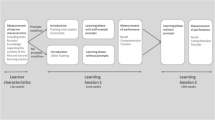

This study was designed as an online learning experiment and distributed via a survey link. The online survey was designed with SoSci Survey (Leiner, 2022). The experiment included four parts: gathering of demographic information (1), text-reading in either one of three conditions (2), self-evaluation of the learning process (3) and the measurement of learning performance (4).

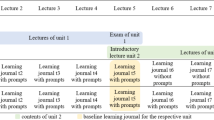

In part 2 (text-reading), participants were randomly assigned to one of three groups, i.e. two experimental groups that were prompted to use a metacognitive question and one control group without the use of prompts (see Fig. 1 for participant allocation). In one experimental group, participants were prompted to apply the resource-oriented metacognitive question ‘What have I already understood?’. In the other experimental group, participants were prompted to apply the deficit-oriented metacognitive question ‘What have I not yet understood?’. Prompts were placed at four positions throughout the text: at the beginning, after the first and the second third of the text and at the end of the text. Participants in both experimental groups were prompted to apply and answer the respective metacognitive question for themselves. Fifty-five participants were prompted to use a resource-oriented metacognitive question, 50 participants were prompted to use a deficit-oriented metacognitive question and 57 participants were not prompted to use a metacognitive question.

Procedure

For this study, pre-service biology teachers were recruited from a university course in biology education in Germany. Pre-service teachers were invited to take part in this online learning experiment via email. They were also asked to share the survey link with any biology pre-service teacher interested in participating in the study. Participants were informed about the general research aim, the study procedure including study length and a planned learning test, criteria of eligibility, voluntariness and the non-risk character of the study. Protection of data privacy was ensured, and participants were informed about the possibility to withdraw from participation at any time. Participants gave their consent after reading the study information by clicking ‘continue’. We gathered demographic information including age, gender, school form, and second subject. Participants were then randomly assigned to one of three groups (i.e. two experimental groups that were prompted to use a metacognitive question and one control group without the application of prompts). In accordance with recommendations for metacognition teaching (Schraw, 1998), a short written introduction about metacognition in learning settings was given to both experimental groups to inform them about the usefulness of metacognitive learning strategies. Participants in the control group received neither an introduction about the usefulness of metacognitive learning strategies nor any prompts to apply a metacognitive question. Subsequently, participants were asked to read a book chapter of approximately 1500 words retrieved from a teaching book about ‘epigenetics’ (Knippers, 2017). The chapter discussed the origin of epigenetics, DNA methylation and differences between identical genes. We expected a reading duration of approximately 15 min. To ensure thorough reading, participants were informed that they can continue to the next survey page when they have spent at least 10 min on the text-containing page. 
After text-reading, all participants were asked to self-evaluate their learning process and to take a short learning test about the text content (i.e. epigenetics). After data submission, participants were shown correct answers to the learning test question.

Instruments

Self-evaluation of the learning process

Single self-report questions were applied to measure self-evaluation in different manifestations. Prior knowledge about epigenetics and interest in the topic were gathered. Beyond that, frequency of the use of a metacognitive question, satisfaction with the learning outcome and students’ judgment of comprehension were measured. Students were also asked to make a prediction of prolonged comprehension. Prior knowledge about epigenetics was evaluated on a visual analogue scale from 0 to 100% (‘How much of the text have you known before reading?’). Interest in epigenetics and satisfaction with learning outcome were rated on a visual analogue scale from ‘not at all’ (= 0) to ‘completely’ (= 100) through agreement with the statements ‘I find the topic epigenetics interesting’ and ‘I am satisfied with my learning outcome’. The use frequency of a metacognitive question was captured by the question ‘How often did you apply the/a metacognitive question?’ on an eight-stepped scale (‘never’, ‘one time’, ‘two times’, ‘three times’, ‘four times’, ‘five times’, ‘six times’, ‘more than six times’). The control group received a one-sentence explanation about the meaning of metacognitive questions to account for a potential lack of knowledge about metacognitive learning strategies. Judgment of comprehension and a prediction about prolonged comprehension were made on a visual analogue scale from 0 to 100% (‘How much of the content did you comprehend?’ and ‘How much of the content will you still know in one week?’).

Learning performance

Text comprehension was measured with a learning test about epigenetics. This learning test was designed based on the chapter that students were asked to read (Knippers, 2017). It consisted of seven closed, single-choice questions and eight open-ended questions (see supplementary material for learning test questions and assessment criteria). Sample questions are ‘Early observations suggest a relationship between methylation of cytosine bases and the gene regulation. Describe these observations!’ and ‘Which answer is correct? Patterns of methylation …’ with the response options ‘…vary from cell to cell’, ‘…vary from person to person’, ‘…can alter during the course of a life’ and ‘all of these answers are correct’. One point was assigned to each correctly answered closed single-choice question. Two points were assigned to each correctly answered open-ended question, and one point to each partially correct open-ended answer. Incorrect answers received no points. All open-ended questions were rated by one rater. Additionally, two staff members in research positions rated twenty percent of the material. Based on mean ratings (k = 3), intraclass correlation coefficients were calculated using a one-way random model in SPSS version 28.0. According to the criteria of Koo and Li (2016), intraclass estimates revealed moderate to excellent agreement between the three raters across all eight open-ended questions (.66 to .96). Learning performance was measured as a sum score that was transformed into a percentage value between 0 and 100%. As a measurement of reliability, we report Cronbach’s α of 0.79 across all 15 items of the learning test.
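The scoring rule described above can be illustrated with a short sketch. This is not the authors' scoring procedure; the function name `percent_score` and the example responses are hypothetical, and the maximum of 23 points is an assumption derived from the stated rules (seven closed questions at one point each plus eight open-ended questions at up to two points each).

```python
# Minimal sketch of the stated scoring rule (hypothetical helper, not the authors' script).
N_CLOSED = 7   # closed single-choice questions, 1 point each
N_OPEN = 8     # open-ended questions, 0, 1 (partially correct) or 2 points each
MAX_POINTS = N_CLOSED * 1 + N_OPEN * 2  # assumed maximum: 23 points


def percent_score(closed_correct, open_points):
    """Return the sum score as a percentage between 0 and 100."""
    if len(closed_correct) != N_CLOSED or len(open_points) != N_OPEN:
        raise ValueError("expected 7 closed answers and 8 open-ended ratings")
    if any(p not in (0, 1, 2) for p in open_points):
        raise ValueError("open-ended ratings must be 0, 1 or 2")
    total = sum(1 for correct in closed_correct if correct) + sum(open_points)
    return 100.0 * total / MAX_POINTS


# Hypothetical participant: 5 of 7 closed questions correct, 9 open-ended points.
example = percent_score([True] * 5 + [False] * 2, [2, 1, 0, 2, 1, 1, 0, 2])
print(round(example, 2))
```

Expressing the sum score relative to the maximum keeps learning performance on the same 0 to 100% scale as the judgment of comprehension.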

Data preparation and data analyses

Prior to data acquisition, power analyses were carried out to determine the optimal number of participants. A priori power analyses for a one-way ANOVA with three groups, an expected effect size of f = 0.25, α-level of .05 and a favoured power of 0.80, resulted in a total sample size of 159 participants. Actual sample size reached 162 participants.

As a measurement of metacognitive accuracy, we subtracted learning performance from judgment of comprehension. It reflects the discrepancy between subjective evaluation of one’s own learning and objectively measured learning performance. A value of zero indicates complete congruity. A negative value indicates an underestimation. A positive value indicates an overestimation.
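As a minimal sketch of this difference score, assuming both values are expressed on the same 0 to 100% scale, the computation and its interpretation could look as follows. The function names and the example values are hypothetical and are not taken from the study data.

```python
# Minimal sketch of the difference score (hypothetical names and values).
def accuracy_bias(judgment_pct, performance_pct):
    """Judgment of comprehension minus learning performance, both in percent."""
    return judgment_pct - performance_pct


def classify_bias(bias):
    """Interpret the sign of the difference score."""
    if bias > 0:
        return "overestimation"
    if bias < 0:
        return "underestimation"
    return "congruity"


# Hypothetical learner who judges 70% comprehension but scores 50% on the test.
bias = accuracy_bias(70.0, 50.0)
print(bias, classify_bias(bias))
```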

Prior to data analyses, all data were checked for extreme values. Values that exceeded the threshold of two standard deviations from the group mean were excluded from analyses (Simmons et al., 2011). For inferential statistical analyses, the respective assumptions were tested. In case of a violation, alternative tests were applied and are reported where they were used. In accordance with recommendations by Doering and Bortz (2016, pp. 673–674) and well-known criticism about the use and interpretation of p-values (e.g. Gardner & Altman, 1986; Wasserstein & Lazar, 2016), we report 95% confidence intervals for mean values and effect sizes in addition to typical p-value interpretation. For inferential statistics in which the null hypothesis was ‘favoured’, we carried out analyses at an α-level of 0.10 because increasing the α-level indirectly reduces the β-error in statistical analyses (Doering & Bortz, 2016, pp. 885–888). We adjusted p-values for multiple testing in all analyses in which the alternative hypothesis was favoured, using the Bonferroni-Holm method (Hemmerich, 2016; Holm, 1979). Original and adjusted values are reported where an adjustment was applied. Most statistical analyses were carried out with IBM SPSS Statistics, version 28.0. If not provided by SPSS, effect sizes were calculated using https://www.psychometrica.de. Data are openly available at DOI 10.17605/OSF.IO/YKZVG.
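The Bonferroni-Holm adjustment can be sketched in a few lines. This is an illustrative implementation, not the tool used in the study; the function name `holm_adjust` is hypothetical. Treating the seven p-values reported in the Results as one family of tests is an assumption made for this example, not something the text states explicitly.

```python
# Minimal sketch of the Bonferroni-Holm step-down adjustment (hypothetical helper).
def holm_adjust(p_values):
    """Return Holm-adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # Multiply the rank-th smallest p-value by the number of remaining tests.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: an adjusted value never falls below an earlier one.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Assumption: the seven unadjusted p-values reported in the Results form one family.
reported = [0.020, 0.021, 0.184, 0.238, 0.378, 0.648, 0.901]
print([round(p, 3) for p in holm_adjust(reported)])
```

Under that assumption, the smallest p-value is multiplied by seven, the next by six, and so on, with adjusted values capped at 1.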

Results

Metacognitive accuracy—congruency of judgment of comprehension and learning performance

This study aimed to investigate potential effects of resource-oriented and deficit-oriented prompting on pre-service teachers’ metacognitive accuracy after text-reading. Metacognitive accuracy reflects the discrepancy between subjective evaluation of one’s own learning and objectively measured learning performance. A value of zero indicates complete congruity. A negative value indicates an underestimation. A positive value indicates an overestimation (see Table 2 for descriptive data and inferential statistics). Our results show overall positive means, indicating overestimation, in all three groups (resource-oriented prompting: M = 18.24, 95% CI (12.34; 24.15), SD = 21.85, n = 55; deficit-oriented prompting: M = 28.40, 95% CI (22.93; 33.87), SD = 18.64, n = 47; no prompting: M = 18.54, 95% CI (11.82; 25.26), SD = 24.14, n = 52). We compared pre-service teachers’ metacognitive accuracy between the three groups. After adjusting for multiple testing, we did not observe statistically detectable differences between resource-oriented prompting, deficit-oriented prompting and no prompting using a Welch ANOVA (applied because of heterogeneity of variances), F(2, 100.17) = 4.07; p = .020, padj. = .140; η2 = .044. We also investigated the effect of the use frequency of the metacognitive question as an indicator of instruction efficacy. A rank analysis of covariance (Quade, 1967) with use frequency as covariate yielded results similar to those of the Welch ANOVA (F(2, 100.17) = 4.04; p = .021, padj. = .140), unexpectedly suggesting no meaningful impact of use frequency of a metacognitive question.
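As a cross-check, the Welch statistic can be recomputed from the group means, standard deviations and sample sizes reported above. The sketch below uses the standard Welch (1951) formula in plain Python; it is not the SPSS procedure itself, the function name is hypothetical, and because it works from rounded summary values it only approximates an analysis of the raw data. The p-value is omitted because it would require an F distribution routine.

```python
# Minimal sketch: Welch's one-way ANOVA from summary statistics (hypothetical helper).
def welch_anova_from_summary(groups):
    """groups: list of (mean, sd, n) tuples. Returns (F, df1, df2)."""
    k = len(groups)
    weights = [n / sd ** 2 for _, sd, n in groups]  # precision weights n_i / s_i^2
    total_w = sum(weights)
    # Variance-weighted grand mean.
    grand = sum(w * m for w, (m, _, _) in zip(weights, groups)) / total_w
    between = sum(w * (m - grand) ** 2 for w, (m, _, _) in zip(weights, groups)) / (k - 1)
    lam = sum((1 - w / total_w) ** 2 / (n - 1) for w, (_, _, n) in zip(weights, groups))
    f_stat = between / (1 + 2 * (k - 2) / (k ** 2 - 1) * lam)
    df2 = (k ** 2 - 1) / (3 * lam)
    return f_stat, k - 1, df2


# Reported summary values: (M, SD, n) per group.
reported_groups = [
    (18.24, 21.85, 55),  # resource-oriented prompting
    (28.40, 18.64, 47),  # deficit-oriented prompting
    (18.54, 24.14, 52),  # no prompting
]
f_stat, df1, df2 = welch_anova_from_summary(reported_groups)
print(round(f_stat, 2), df1, round(df2, 2))
```

With these inputs, the sketch reproduces the reported F(2, 100.17) = 4.07 to two decimal places.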

Nevertheless, 95% confidence intervals provide indication for an increased overestimation elicited through deficit-oriented prompting. Confidence intervals for mean metacognitive accuracy after resource-oriented prompting and no prompting largely overlap. We observed a confidence interval of 12.34 to 24.15 after resource-oriented prompting and a confidence interval of 11.82 to 25.26 after no prompting. The confidence interval of 22.93 to 33.87 for mean accuracy after deficit-oriented prompting is somewhat shifted towards positive values. Effect sizes are small to medium. Based on p-values, confidence intervals for mean values and effect sizes, we can neither confirm nor reject our hypothesis regarding the effects of prompting on metacognitive accuracy.
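The degree of overlap between the reported intervals can be made explicit with a small sketch. The helper name `overlap_width` is hypothetical, and interval overlap is only a rough descriptive aid, not a substitute for the inferential tests reported above.

```python
# Minimal sketch: width of the overlap between two confidence intervals (hypothetical helper).
def overlap_width(a, b):
    """Return the length of the intersection of intervals a and b (0 if disjoint)."""
    lower = max(a[0], b[0])
    upper = min(a[1], b[1])
    return max(0.0, upper - lower)


# 95% confidence intervals for mean metacognitive accuracy as reported in the text.
ci_resource = (12.34, 24.15)
ci_deficit = (22.93, 33.87)
ci_none = (11.82, 25.26)

print(round(overlap_width(ci_resource, ci_none), 2))     # resource vs no prompting
print(round(overlap_width(ci_deficit, ci_resource), 2))  # deficit vs resource
print(round(overlap_width(ci_deficit, ci_none), 2))      # deficit vs no prompting
```

The interval for resource-oriented prompting lies entirely within that for no prompting, whereas the interval for deficit-oriented prompting shares only its lower end with the other two.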

We observed no statistically detectable differences either in learning performance (Kruskal-Wallis test: χ2(2) = 0.21, p = .901; padj. > .999; η2 = 0.011) or in judgment of comprehension (Kruskal-Wallis test: χ2(2) = 3.39, p = .184; padj. > .920; η2 = 0.009). Overall, our results revealed mean learning performance of 44.1% (SD = 20.84%) and mean judgment of comprehension of 65.5% (SD = 21.62%).

Self-evaluated satisfaction with learning outcome and prediction about prolonged comprehension

We tested whether resource-oriented prompting, deficit-oriented prompting and no prompting influenced satisfaction with the learning outcome and prediction about prolonged comprehension (see Table 2 for descriptive data) via Kruskal-Wallis tests. We did not observe a statistically detectable difference in satisfaction with learning outcome between resource-oriented prompting, deficit-oriented prompting and no prompting; χ2(2) = 0.87, p = .648; padj. > .999; η2 = 0.007. We did not observe a statistically detectable difference in the prediction about prolonged comprehension (‘How much of the content will you still know in one week?’); χ2(2) = 2.87, p = .238; padj. > .952; η2 = 0.006.

Preliminary tests

Preliminary analyses were carried out to ensure absence of substantial differences between the three groups (resource-oriented prompting, deficit-oriented prompting, no prompting) at baseline (see Table 2 for descriptive data). Preliminary tests revealed no statistically detectable differences between groups in age, F(2, 159) = 0.56, p = .571, or prior knowledge, F(2, 156) = 1.87; p = .158; η2 = 0.023. We observed ratings of prior knowledge at a moderate level with high variance across all groups; resource-oriented prompting: M = 31.89, 95% CI (26.44; 37.33), SD = 19.75, n = 53, deficit-oriented prompting: M = 39.52, 95% CI (33.55; 45.49), SD = 20.99, n = 50, no prompting: M = 35.21, 95% CI (29.99; 40.44), SD = 19.52, n = 56. Interest in the topic ‘epigenetics’ did not differ between the groups; χ2(2) = 0.63, p = .728; η2 = 0.009. We observed rather high mean ratings of interest in the topic ‘epigenetics’ with high variance across all groups; resource-oriented prompting: M = 76.47, 95% CI (70.75; 82.19), SD = 20.76, n = 53, deficit-oriented prompting: M = 75.73, 95% CI (69.48; 81.99), SD = 21.79, n = 49, no prompting: M = 73.63, 95% CI (67.52; 79.75), SD = 21.29, n = 49.

Manipulation check

To check whether prompting did indeed increase the use of a metacognitive question as intended, we ran a Kruskal-Wallis test on the ordinal-scaled data for use frequency of a metacognitive question. Unexpectedly, no statistically detectable difference between the three groups was observed (χ2(2) = 1.95, p = .378; padj. > .999), with mean ranks for use frequency of 84.85 (resource-oriented prompt), 85.53 (deficit-oriented prompt) and 74.74 (no prompt). Using a metacognitive question three times was the most frequently reported category in the group with the resource-oriented question (23 times, 42%) and in the group with the deficit-oriented question (15 times, 30%). Using a metacognitive question two times was the most frequently reported category in the control group (18 times, 32%). This unexpected outcome will be discussed in the limitations section.

Discussion

How accurate are metacognitive judgments of comprehension?

The main aim of this study was to examine the impact of resource-oriented and deficit-oriented prompts during text-reading on metacognitive accuracy when evaluating text comprehension. Our hypothesis was based on the embedded-processes model of working memory (Cowan, 1988) and the notion that metacognitive prompts can be used to allocate attention to specific features during learning. We assumed that this information would subsequently be used when forming a judgment about comprehension. We hypothesised that applying resource-oriented prompts leads to an increase in metacognitive accuracy, and we proposed multiple mechanisms when applying a deficit-oriented prompt. To test this hypothesis, we examined the discrepancy between judgment of comprehension and learning performance across groups.

Based on our analyses, we can neither confirm nor reject our hypothesis. Confidence intervals and a small to moderate effect size point to shifted metacognitive accuracy (towards increased overestimation) after deficit-oriented prompting, but significance testing for mean differences does not confirm these initial observations. We are hesitant to draw a conclusion, but assume that if there was an effect of prompting on information processing and the formation of a metacognitive judgment, it might merely be a small effect. Other studies that addressed the effects of context-free and content-specific prompts have found that content-specific prompts are more effective than context-free prompts (e.g. Kramarski & Kohen, 2017). Having applied context-free prompts, our results are congruent with these studies.

In our study, we observed overall positive values in metacognitive accuracy in all groups implying an overestimation of judgment of comprehension. This finding is similar to a general and stable overconfidence effect as addressed by others (e.g. Gigerenzer et al., 1991; Koriat et al., 1980). This effect is said to occur when ‘confidence judgments are larger than the relative frequencies of the correct answers’ (Gigerenzer et al., 1991, p. 506). The overconfidence effect is generally observed when making a judgment after having answered a performance question. That is in contrast to our study design, in which a judgment about comprehension was made before answering performance questions.

The observed overestimation might also result from the difficult learning test, which was designed to yield test results in and around the centre of the performance spectrum and to avoid ceiling effects. A difference between judgment of comprehension and learning performance is therefore not unexpected. Naturally, the absolute values of discrepancy in our study need to be viewed in a different light compared to classroom assessments in which learners are supposedly more familiar with learning standards set by teachers. We would expect less overall discrepancy between judgment of comprehension and learning performance in settings in which learning goals and criteria for assessment are communicated transparently to learners (e.g., Bol et al., 2012).
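The sign convention used above (positive discrepancy values indicating overestimation) can be sketched as follows. This is a minimal illustration that assumes judgment of comprehension and learning performance are both expressed on the same 0 to 100 scale; the function names, the tolerance parameter and the three example learners are hypothetical.

```python
def metacognitive_bias(judgment_pct, performance_pct):
    """Discrepancy score: judged comprehension minus measured performance."""
    return judgment_pct - performance_pct


def classify(bias, tolerance=0.0):
    """Label a discrepancy score; `tolerance` is a hypothetical band around zero."""
    if bias > tolerance:
        return "overestimation"
    if bias < -tolerance:
        return "underestimation"
    return "accurate"


# Hypothetical learners: (id, judgment of comprehension, test performance).
learners = [("A", 80, 55), ("B", 60, 60), ("C", 40, 50)]

biases = [metacognitive_bias(j, p) for _, j, p in learners]
labels = [classify(b) for b in biases]
mean_bias = sum(biases) / len(biases)

print(biases)     # [25, 0, -10]
print(labels)     # ['overestimation', 'accurate', 'underestimation']
print(mean_bias)  # 5.0
```

A positive group mean, as in this toy example, corresponds to the overall overestimation described above, even though individual learners may judge their comprehension accurately or underestimate it.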

The impact of metacognitive prompting on learning performance and self-evaluation

In this study, we compared the impact of resource-oriented and deficit-oriented prompting on various measurements of learning. Contrary to previous findings (e.g., Berthold et al., 2007; Dori et al., 2018; Großschedl & Harms, 2013; Peters & Kitsantas, 2010), we did not observe improvements in learning performance. In accordance with the finding that prompts need to be used regularly to be an effective tool for improving learning performance (Moser et al., 2017), we observed no immediate impact of prompts on learning performance. Beyond that, we did not observe differences in satisfaction with learning, judgment of comprehension or the prediction of prolonged comprehension between the two types of metacognitive questions.

Effectiveness of prompting and metacognitive activity in an online environment

In this study, the effectiveness of prompting was measured using a self-report question on how frequently a metacognitive question was used. Participants in the control group did not receive an introduction to metacognition and were likely to be unfamiliar with the term ‘metacognitive question’ in the self-report question. A short explanation of metacognitive questions was therefore integrated. We believe this short explanation led participants to report the use of a metacognitive question retrospectively, so that the report does not reflect metacognitive activity itself. In our view, it is likely that pre-service teachers in the control group did not apply metacognitive questions deliberately and consciously, as is intended through prompting, and the measurement of use frequency is likely to be invalid. This is supported by the finding that the comparison of metacognitive accuracy with use frequency as covariate yielded no significant results, suggesting that use frequency has no impact on metacognitive accuracy.

However, the effectiveness of prompting (how often and how extensively a metacognitive question is used) is likely to play a major role in its impact on metacognitive accuracy. We would like to raise the question of whether the learning environment impacts the effectiveness of prompts. Although prompts are widely investigated in online learning settings, they might have restricted effectiveness compared to classroom or group settings in which learning might be more standardised. For example, the use of metacognitive questions in a classroom might be instructed verbally, a certain time span might be specifically assigned to answer these questions, and instructors could clarify task instructions in case of misunderstandings. Potential differences between learning settings could be an objective of future studies.

The role of extreme values in statistical analyses

We chose a conservative way of handling extreme values in this study (i.e. eliminating all extreme values that exceeded the threshold of two standard deviations from the mean value of each dependent variable). This was done to ensure that the assumptions of the respective statistical tests were met and to account for validity constraints that naturally accompany an online survey during the COVID-19 pandemic with lockdown restrictions. Indeed, there are good reasons to address extreme values. The extreme values that we observed might represent students who over- or underestimate their own performance far more strongly than the average student (or who perform particularly low or high) in the classroom. In naturalistic learning environments, these students might need individual support or feedback. In this study, we could not include these students due to our necessary statistical decisions, and we point out that those are the students who might benefit the most from prompts intended to improve metacognitive accuracy (see Kruger & Dunning, 1999). For future studies, we propose analyses of extreme values in naturalistic environments in an attempt to identify approaches to improve metacognitive accuracy in those groups who are particularly prone to over- and underestimation.
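The exclusion rule described above (values more than two standard deviations from the mean of a dependent variable) can be sketched as follows. This is a minimal illustration rather than the analysis script used in the study: the function name and the scores are hypothetical, and the use of the sample standard deviation is an assumption.

```python
from statistics import mean, stdev


def exclude_extreme(values, n_sd=2.0):
    """Split values into those within and those beyond n_sd SDs of the mean."""
    centre = mean(values)
    spread = stdev(values)  # sample standard deviation (assumed here)
    lower, upper = centre - n_sd * spread, centre + n_sd * spread
    kept = [v for v in values if lower <= v <= upper]
    removed = [v for v in values if v < lower or v > upper]
    return kept, removed


# Hypothetical discrepancy scores for one dependent variable.
scores = [10, 12, 11, 13, 12, 11, 10, 12, 11, 60]

kept, removed = exclude_extreme(scores)
print(removed)    # [60]
print(len(kept))  # 9
```

Returning the removed values alongside the retained ones keeps the excluded cases available for the kind of separate follow-up analysis of extreme values proposed above.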

Limitations

Like any study, this experimental study needs to be viewed in light of some limitations. Given that this study was carried out as an online survey, constraints on validity need to be addressed. Participants might have used additional aids to answer test questions despite being asked to refrain from doing so. Participants may have taken different amounts of time for reading the text and answering the questions. Participants’ motivation might have been diminished by the online survey in comparison to a classroom assessment. Whether they put appropriate effort into the task can be questioned, although we believe that the observed high interest in the topic speaks for sufficient effort. We addressed these anticipated limitations by choosing a conservative way of handling extreme values.

We did not identify in what depth students addressed and answered metacognitive questions. For instance, we were unable to observe whether pre-service teachers took notes, how much time they spent answering the metacognitive questions and which specific contents they focused on. However, the depth and specificity with which a question is addressed probably contribute to the activation of memory traces and hence to its impact on metacognitive accuracy and learning performance. A qualitative, laboratory research design with opportunities for participant observation or sufficient time for students’ introspection might clarify in what depth students addressed the metacognitive questions.

Conclusion and practical implications

Following the idea of the cue-utilisation approach (Koriat, 1997), metacognitive accuracy is influenced by information processing during learning. With reference to the embedded-processes model (Cowan, 1988), we argued that attentional processes are guided through prompting. Through guided prompting, learners place their attention on specific information during the learning process. This information is taken into account when forming a judgment of comprehension and hence impacts metacognitive accuracy. Based on our analyses, we can neither confirm nor reject our hypothesis but assume small effects of prompting on metacognitive accuracy, if there are any. Learning performance and judgment of comprehension were not impacted by the use of resource-oriented and deficit-oriented prompting. Other measurements of self-evaluation (i.e. satisfaction with learning outcome and prediction about prolonged comprehension) were not influenced by prompting either. Results are viewed against the background of online learning, which might have restricted the effectiveness of prompt implementation.

To increase the use of resource-oriented and deficit-oriented questions, we would like to address some practical considerations. These considerations are needed because addressing resources and deficits in an objective way might offer learners a range of opportunities for their academic development. Identifying gaps in comprehension is the key to filling those gaps, which in turn can lead to a manifestation of resources in the future. Finding a style of managing one’s own resources and deficits and cultivating appropriate regulation of the attendant emotions can be seen as a goal of metacognition itself as much as it can be viewed as an opportunity for academic development. Recommendations on how to address deficits and mistakes specifically might include the following: (a) making deficit-oriented prompts transparent and explaining how these questions are intended to improve metacognitive processes, (b) communicating learning goals transparently, (c) applying additional prompts to provide opportunities to close gaps in comprehension, (d) acknowledging potential negative emotions that might be involved and (e) creating an environment in which learners are encouraged to contribute openly and freely to classroom discussions and in which mistakes are not viewed as personal failures. Instead, a stance should be held that treats deficits and mistakes as inherent to learning and as opportunities for development. We view the promotion of pleasure in understanding one’s own thinking as the key to teaching metacognitive skills. Developing metacognitive skills and improving metacognitive accuracy are long-term processes to which resource-oriented and deficit-oriented prompts might contribute. For future research in this field, we identify the need for long-term studies investigating the efficiency of prompts with regard to cognitive and emotional criteria.

Data availability

Data are openly available in https://osf.io/ykzvg/.

References

Bannert, M., & Mengelkamp, C. (2013). Scaffolding hypermedia learning through metacognitive prompts. In International handbook of Metacognition and Learning Technologies (pp. 171–186). Springer. https://doi.org/10.1007/978-1-4419-5546-3_12

Bannert, M., & Reimann, P. (2012). Supporting self-regulated hypermedia learning through prompts. Instructional Science, 40(1), 193–211. https://doi.org/10.1007/s11251-011-9167-4

Bannert, M., Sonnenberg, C., Mengelkamp, C., & Pieger, E. (2015). Short- and long-term effects of students’ self-directed metacognitive prompts on navigation behavior and learning performance. Computers in Human Behavior, 52, 293–306. https://doi.org/10.1016/j.chb.2015.05.038

Berthold, K., Nückles, M., & Renkl, A. (2007). Do learning protocols support learning strategies and outcomes? The role of cognitive and metacognitive prompts. Learning and Instruction, 17(5), 564–577. https://doi.org/10.1016/J.LEARNINSTRUC.2007.09.007

Bol, L., Hacker, D. J., Walck, C. C., & Nunnery, J. A. (2012). The effects of individual or group guidelines on the calibration accuracy and achievement of high school biology students. Contemporary Educational Psychology, 37(4), 280–287.

Cowan, N. (1988). Evolving conceptions of memory storage, selective attention, and their mutual constraints within the human information-processing system. Psychological Bulletin, 104(2), 163. https://doi.org/10.1037/0033-2909.104.2.163

Daumiller, M., & Dresel, M. (2019). Supporting self-regulated learning with digital media using motivational regulation and metacognitive prompts. The Journal of Experimental Education, 87(1), 161–176. https://doi.org/10.1080/00220973.2018.1448744

de Boer, H., Donker, A. S., Kostons, D. D., & van der Werf, G. P. (2018). Long-term effects of metacognitive strategy instruction on student academic performance: A meta-analysis. Educational Research Review, 24, 98–115. https://doi.org/10.1016/j.edurev.2018.03.002

Devolder, A., van Braak, J., & Tondeur, J. (2012). Supporting self-regulated learning in computer-based learning environments: Systematic review of effects of scaffolding in the domain of science education. Journal of Computer Assisted Learning, 28(6), 557–573. https://doi.org/10.1111/j.1365-2729.2011.00476.x

Donker, A. S., De Boer, H., Kostons, D., Van Ewijk, C. D., & van der Werf, M. P. (2014). Effectiveness of learning strategy instruction on academic performance: A meta-analysis. Educational Research Review, 11, 1–26. https://doi.org/10.1016/j.edurev.2013.11.002

Dori, Y. J., Avargil, S., Kohen, Z., & Saar, L. (2018). Context-based learning and metacognitive prompts for enhancing scientific text comprehension. International Journal of Science Education, 40(10), 1198–1220. https://doi.org/10.1080/09500693.2018.1470351

Doering, N., & Bortz, J. (2016). Forschungsmethoden und Evaluation. [Research Methods and Evaluation]. Springerverlag.

Dunlosky, J., & Lipko, A. R. (2007). Metacomprehension: A brief history and how to improve its accuracy. Current Directions in Psychological Science, 16(4), 228–232.

Dunning, D., Heath, C., & Suls, J. M. (2004). Flawed self-assessment: Implications for health, education, and the workplace. Psychological Science in the Public Interest, 5(3), 69–106. https://doi.org/10.1111/j.1529-1006.2004.00018.x

Engelmann, K., Bannert, M., & Melzner, N. (2021). Do self-created metacognitive prompts promote short- and long-term effects in computer-based learning environments? Research and Practice in Technology Enhanced Learning, 16(1), 1–21. https://doi.org/10.1186/s41039-021-00148-w

Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive–developmental inquiry. American Psychologist, 34(10), 906. https://doi.org/10.1037/0003-066X.34.10.906

Gardner, M. J., & Altman, D. G. (1986). Confidence intervals rather than P values: Estimation rather than hypothesis testing. British Medical Journal, 292(6522), 746–750.

Gigerenzer, G., Hoffrage, U., & Kleinbölting, H. (1991). Probabilistic mental models: A Brunswikian theory of confidence. Psychological Review, 98(4), 506. https://doi.org/10.1037/0033-295x.98.4.506

Großschedl, J., & Harms, U. (2013). Effekte metakognitiver Prompts auf den Wissenserwerb beim Concept Mapping und Notizen Erstellen. [Effects of metacognitive prompts on knowledge acquisition in concept mapping and note taking]. Zeitschrift für Didaktik der Naturwissenschaften, 19, 375–395.

Haller, E. P., Child, D. A., & Walberg, H. J. (1988). Can comprehension be taught? A quantitative synthesis of “metacognitive” studies. Educational Researcher, 17(9), 5–8. https://doi.org/10.3102/0013189X017009005

Hemmerich, W. (2016). StatistikGuru: Rechner zur Adjustierung des α-Niveaus. [StatistikGuru: Calculator for the adjustment of α-levels]. Retrieved from https://statistikguru.de/rechner/adjustierung-des-alphaniveaus.html

Holm, S. (1979). A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics, 6(2), 65–70. https://www.jstor.org/stable/4615733

Hübner, S., Nückles, M., & Renkl, A. (2006). Prompting cognitive and metacognitive processing in writing-to-learn enhances learning outcomes. In Proceedings of the annual meeting of the cognitive science society (Vol. 28, No. 28).

Kim, N. J., Belland, B. R., & Walker, A. E. (2018). Effectiveness of computer-based scaffolding in the context of problem-based learning for STEM education: Bayesian meta-analysis. Educational Psychology Review, 30(2), 397–429. https://doi.org/10.1007/S10648-017-9419-1

King, J. F., Zechmeister, E. B., & Shaughnessy, J. J. (1980). Judgments of knowing: The influence of retrieval practice. The American Journal of Psychology, 329–343.

Knippers, R. (2017). Epigenetik. In Eine kurze Geschichte der Genetik (pp. 327–354). [A short History of Genetics]. Springer.

Koo, T. K., & Li, M. Y. (2016). A guideline of selecting and reporting intraclass correlation coefficients for reliability research. Journal of Chiropractic Medicine, 15(2), 155–163. https://doi.org/10.1016/j.jcm.2016.02.012

Koriat, A. (1997). Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General, 126(4), 349. https://doi.org/10.1037/0096-3445.126.4.349

Koriat, A., Lichtenstein, S., & Fischhoff, B. (1980). Reasons for confidence. Journal of Experimental Psychology: Human Learning and Memory, 6(2), 107. https://doi.org/10.1037/0278-7393.6.2.107

Kramarski, B., & Kohen, Z. (2017). Promoting preservice teachers’ dual self-regulation roles as learners and as teachers: Effects of generic vs. specific prompts. Metacognition and Learning, 12(2), 157–191. https://doi.org/10.1007/s11409-016-9164-8

Kruger, J., & Dunning, D. (1999). Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments. Journal of Personality and Social Psychology, 77(6), 1121. https://doi.org/10.1037/0022-3514.77.6.1121

Leiner, D. J. (2022). SoSci Survey (Version 3.3.20) [Computer software]. Available at https://www.soscisurvey.de

Mazzoni, G., & Cornoldi, C. (1993). Strategies in study time allocation: Why is study time sometimes not effective? Journal of Experimental Psychology: General, 122(1), 47.

Mazzoni, G., Cornoldi, C., & Marchitelli, G. (1990). Do memorability ratings affect study-time allocation? Memory & Cognition, 18, 196–204.

McCarthy, K. S., Likens, A. D., Johnson, A. M., Guerrero, T. A., & McNamara, D. S. (2018). Metacognitive overload!: Positive and negative effects of metacognitive prompts in an intelligent tutoring system. International Journal of Artificial Intelligence in Education, 28(3), 420–438. https://doi.org/10.1007/s40593-018-0164-5

Metcalfe, J., & Finn, B. (2008). Evidence that judgments of learning are causally related to study choice. Psychonomic Bulletin & Review, 15(1), 174–179. https://doi.org/10.3758/pbr.15.1.174

Metcalfe, J., & Kornell, N. (2005). A region of proximal learning model of study time allocation. Journal of Memory and Language, 52(4), 463–477.

Miller, T. M., & Geraci, L. (2014). Improving metacognitive accuracy: How failing to retrieve practice items reduces overconfidence. Consciousness and Cognition, 29, 131–140.

Mitchum, A. L., Kelley, C. M., & Fox, M. C. (2016). When asking the question changes the ultimate answer: Metamemory judgments change memory. Journal of Experimental Psychology: General, 145(2), 200.

Moser, S., Zumbach, J., & Deibl, I. (2017). The effect of metacognitive training and prompting on learning success in simulation-based physics learning. Science Education, 101(6), 944–967. https://doi.org/10.1002/SCE.21295

Nelson, T. O., & Dunlosky, J. (1991). When people’s judgments of learning (JOLs) are extremely accurate at predicting subsequent recall: The “delayed-JOL effect”. Psychological Science, 2(4), 267–271.

Petermann, F., & Schmidt, M. H. (2006). Ressourcen-ein Grundbegriff der Entwicklungspsychologie und Entwicklungspsychopathologie? [Resources - a basic concept in developmental Psychology and developmental Psychopathology?]. Kindheit und Entwicklung, 15(2), 118–127. https://doi.org/10.1026/0942-5403.15.2.118

Peters, E., & Kitsantas, A. (2010). The effect of nature of science metacognitive prompts on science students’ content and nature of science knowledge, metacognition, and self-regulatory efficacy. School Science and Mathematics, 110(8), 382–396. https://doi.org/10.1111/j.1949-8594.2010.00050.x

Prinz-Weiß, A., Lukosiute, L., Meyer, M., & Riedel, J. (2023). The role of achievement emotions for text comprehension and metacomprehension. Metacognition and Learning, 18(2), 347–373.

Quade, D. (1967). Rank analysis of covariance. Journal of the American Statistical Association, 62(320), 1187–1200.

Rawson, K. A., Dunlosky, J., & Thiede, K. W. (2000). The rereading effect: Metacomprehension accuracy improves across reading trials. Memory & Cognition, 28, 1004–1010.

Rhodes, M. G., & Castel, A. D. (2009). Metacognitive illusions for auditory information: Effects on monitoring and control. Psychonomic Bulletin & Review, 16(3), 550–554. https://doi.org/10.3758/PBR.16.3.550

Rhodes, M. G., & Tauber, S. K. (2011). The influence of delaying judgments of learning on metacognitive accuracy: A meta-analytic review. Psychological Bulletin, 137(1), 131. https://doi.org/10.1037/a0021705

Schraw, G. (1998). Promoting general metacognitive awareness. Instructional Science, 26(1), 113–125. https://doi.org/10.1023/A:1003044231033

Schraw, G., & Moshman, D. (1995). Metacognitive theories. Educational Psychology Review, 7(4), 351–371. https://doi.org/10.1007/BF02212307

Serra, M. J., & Dunlosky, J. (2010). Metacomprehension judgements reflect the belief that diagrams improve learning from text. Memory, 18(7), 698–711.

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22(11), 1359–1366. https://doi.org/10.1177/0956797611417632

Thiede, K. W., Anderson, M., & Therriault, D. (2003). Accuracy of metacognitive monitoring affects learning of texts. Journal of Educational Psychology, 95(1), 66.

Thiede, K. W., Dunlosky, J., Griffin, T. D., & Wiley, J. (2005). Understanding the delayed-keyword effect on metacomprehension accuracy. Journal of Experimental Psychology: Learning, Memory, and Cognition, 31(6), 1267.

Thiede, K. W., Griffin, T. D., Wiley, J., & Anderson, M. C. (2010). Poor metacomprehension accuracy as a result of inappropriate cue use. Discourse Processes, 47(4), 331–362.

Undorf, M., Söllner, A., & Bröder, A. (2018). Simultaneous utilization of multiple cues in judgments of learning. Memory & Cognition, 46, 507–519.

van Alten, D. C., Phielix, C., Janssen, J., & Kester, L. (2020). Effects of self-regulated learning prompts in a flipped history classroom. Computers in Human Behavior, 108, 106318. https://doi.org/10.1016/j.chb.2020.106318

Van den Boom, G., Paas, F., Van Merrienboer, J. J., & Van Gog, T. (2004). Reflection prompts and tutor feedback in a web-based learning environment: Effects on students’ self-regulated learning competence. Computers in Human Behavior, 20(4), 551–567. https://doi.org/10.1016/j.chb.2003.10.001

Vangsness, L., & Young, M. E. (2021). More isn’t always better: When metacognitive prompts are misleading. Metacognition and Learning, 16(1), 135–156.

Veenman, M. V. (2012). Metacognition in science education: Definitions, constituents, and their intricate relation with cognition. In Metacognition in science education (pp. 21–36). Springer. https://doi.org/10.1007/978-94-007-2132-6_2

Wasserstein, R. L., & Lazar, N. A. (2016). The ASA statement on p-values: Context, process, and purpose. The American Statistician, 70(2), 129–133.

Zheng, L. (2016). The effectiveness of self-regulated learning scaffolds on academic performance in computer-based learning environments: A meta-analysis. Asia Pacific Education Review, 17(2), 187–202. https://doi.org/10.1007/s12564-016-9426-9

Zohar, A., & Barzilai, S. (2013). A review of research on metacognition in science education: Current and future directions. Studies in Science Education, 49(2), 121–169. https://doi.org/10.1080/03057267.2013.847261

Acknowledgements

We would like to thank Maren Flottmann, Britta Moers and Lara Tolentino for their professional proof-reading.

Funding

Open Access funding enabled and organized by Projekt DEAL. This study was conducted using resources within the working group of Prof. Großschedl at the Institute for Biology Education, Faculty of Mathematics and Natural Sciences, University of Cologne, Cologne, Germany. This study received no specific funding.

Author information

Contributions

The authors agree with the content of this work and give their consent to its submission. The authors made the following contributions: SE: conceptualization and study design, acquisition, data analysis, data interpretation, original draft preparation and writing; JG: resources, revision and supervision

Ethics declarations

Ethics approval

This online study involving human participants was conducted in accordance with the ethical standards set in the Declaration of Helsinki from 2013, the ethical guidelines of the German Psychological Society (DGPs) and the institutional ethical standards.

Conflict of interest

The authors declare no competing interests.

Additional information

Current themes of research and most relevant publications in the field of Psychology of Education

Stefanie Elsner is a PhD student at the Institute for Biology Education at the University of Cologne. She is currently interested in research about teaching instructions to support learners’ skill development in metacognition and self-regulated learning. A second branch of her research interest covers exercise-induced effects on cognition and metacognition in children. She has published one paper (shared first authorship) in the field of Psychology of Education:

Lenski, S. J., Elsner, S., & Großschedl, J. Comparing construction and study of concept maps–An intervention study on cognitive, metacognitive and emotional effects of training & learning. In Frontiers in Education (p. 376). Frontiers.

Jörg Großschedl is Professor at the Institute for Biology Education at the University of Cologne. His research interests lie in learning and teaching strategies, metacognition, contextual thinking, teacher education and teaching evolution. He has published several papers in the field of Psychology of Education, for instance:

Großschedl, J., Welter, V., & Harms, U. (2019). A new instrument for measuring pre-service biology teachers' pedagogical content knowledge: The PCK-IBI. Journal of Research in Science Teaching, 56(4), 402–439. https://doi.org/10.1002/tea.21482

Mahler, D., Großschedl, J., & Harms, U. (2018). Does motivation matter? – The relationship between teachers’ self-efficacy and enthusiasm and students’ performance. PLoS ONE, 13(11), e0207252. https://doi.org/10.1371/journal.pone.0207252

Paulick, I., Großschedl, J., Harms, U., & Möller, J. (2017). How teachers perceive their expertise: The role of dimensional and social comparisons. Contemporary Educational Psychology, 51, 114–122.

Welter, V. D. E., Herzog, S., Harms, U., Steffensky, M., & Großschedl, J. (2022). School subjects’ synergy and teacher knowledge: Do biology and chemistry teachers benefit equally from their second subject? Journal of Research in Science Teaching, 59, 1–42. https://doi.org/10.1002/tea.21728

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

ESM 1

(DOCX 31 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Elsner, S., Großschedl, J. Can metacognitive accuracy be altered through prompting in biology text reading?. Eur J Psychol Educ 39, 1465–1483 (2024). https://doi.org/10.1007/s10212-023-00747-9

DOI: https://doi.org/10.1007/s10212-023-00747-9