Abstract

The present study aimed to merge expertise from evidence-based practice and user-centered design to develop a rating scale for considering user input and other sources of information about end-users in studies reporting on the design of technology-based support for autism. We conducted a systematic review of the relevant literature to test the reliability and validity of the scale. The scale demonstrated acceptable reliability and validity based on a randomized sample of 211 studies extracted from the output of the systematic review. The scale can help provide a more complete assessment of the quality of the design process of technology-based supports for autism and be beneficial to autistic people, their families, and related professionals in making informed decisions regarding such supports.

1 Introduction

Digital technologies can be used as therapeutic, educational, or accessibility tools [1]. This can include using technologies with a leisure function to facilitate participation and enhance quality of life. Members of the autistic community find technology-based supports particularly acceptable [2]. Subsequently, there has been an exponential increase in the number of technology-based supports for the autistic community [3,4,5]. In the past decade, technology-based supports for autism have extended beyond the use of simple desktop computers. They now involve robots [6,7,8], touch screen devices [9], speech-generating devices [10], virtual reality [11,12,13,14], tangibles [15], and wearables [16, 17]. Several systematic reviews highlight the intensity of research on supports for autistic people based on technologies such as virtual or augmented reality [18,19,20,21], mobile or wearable devices [22, 23], robots [24], computerized instruction [25, 26], and serious games [27, 28]. At the same time, parents/caregivers often worry about their autistic children (young people or adults) spending too much time on digital technology (e.g., games and social networks), expressing fears about obsessive behaviors or social isolation [29,30,31,32]. It is, therefore, pertinent for this group that technology-based supports are selected based upon solid evidence of appropriateness and that they are designed with autistic users in mind.

Although the results of studies evaluating technological supports vary in terms of the benefits for autistic people, the overall findings are positive. Technology-based supports often result in benefits such as increased motivation, decreased external signs of anxiety or distress, increased attention, and sometimes increased learning compared to traditional methods [33]. The literature also shows that technology-based supports are beneficial in the areas of social communication [34], emotion recognition [35,36,37,38] as well as academic skills acquisition and improvement [39, 40]. Technology, both in educational and home settings, is being used in a variety of supportive ways to increase independence, reduce anxiety, and create social opportunities for young autistic people [41,42,43,44,45]. With the advent of tablets and smartphones, digital technologies have become easier to use. They are accessible for a broader range of the autism spectrum, including very young children and individuals with lower reading and language abilities [31]. Studies have also reported participants' eagerness to engage with technology [46, 47] and high motivation to complete their work [48, 49].

Although a wide range of technology is available to support autistic people, evidence of its effectiveness is still a matter of debate [4, 50]. Despite almost five decades since the first studies on technology-based supports for autism [51, 52], and the intense research on this topic during the last fifteen years, technology-based supports are still perceived as "emerging" and lacking clinical validity (National Autism Center, www.nationalautismcenter.org). Evidence-based practice (EBP), when applied in healthcare, advocates for clinical decisions to be guided by the integration of best available research, clinical expertise, patient values, circumstances, and healthcare system policies [53,54,55]. Evidence of efficacy is based on relevant scientific methodologies, with meta-analyses and randomized controlled trials (RCT) considered "gold standard" methods [56]. Given the importance of non-clinical supports and educational approaches in autism support practices and a dearth of RCTs in this field, some argue in favor of a more inclusive range of methodologies to be considered, such as single-case designs [57, 58]. Ramdoss et al. [34] systematically reviewed the use of computer-based communication interventions for autistic children for their certainty of evidence. Conclusive evidence was found for only two studies. Two others had strong but not conclusive evidence (categorized by the authors as "preponderance level" of evidence), and six had suggestive evidence. A meta-analysis conducted by Grynszpan et al. [4] identified only 22 pre-post group design studies out of 379. Their findings indicated that digital supports could be effective for autism. Still, only a very small proportion of the evidence from group-design studies (6%) provided sufficient evidence to make an informed decision whether to use digital support.

Although the field of technology for autism largely lacks evidence from RCTs, there are signs that this gold-standard methodology is becoming more commonplace [8, 36, 37, 59,60,61,62,63,64,65,66,67,68]. For instance, three RCTs [59, 60, 62] report on computer-assisted learning to teach attention to and recognition of facial cues. An RCT [63] compared a conventional (low-tech) version of the Picture Exchange Communication System [69] with an open-source digital (high-tech) version (called FastTalker). Another RCT [67] found significant improvements in socialization for autistic participants receiving intervention with Superpower Glass, an artificial intelligence-driven wearable behavioral intervention. However, advances in the quality of evidence are somewhat undermined by the observation that technology-based supports having robust evidence of effectiveness are rarely commercially available [70], while commercially available therapeutic technologies are infrequently evaluated in research [34, 71]. For example, from an analysis of 695 commercially available apps for autism, only 5 (<1%) were found to be supported by direct evidence [50].

EBP focuses on evaluating the effectiveness of a finished product and is less concerned with its design process. Numerous EBP-inspired studies have assessed the effect of using technological supports on outcome measures. However, they have rarely analyzed how the design process was informed by evidence from autistic users [72]. Technology-based support requires not only a robust evidence base but also the involvement of the autistic community in the research and development process [30, 73,74,75] to ensure the needs of the community are met. Several studies have involved autistic end-users with diverse competency levels at different stages of the design process, which has benefitted the development of assistive technologies [76]. These methods are referred to as user-centered design (UCD) [54]. UCD is an iterative design approach based on an explicit understanding of users, tasks, and environments, driven by user evaluation and multidisciplinary perspectives. Involving potential users in the design process and testing prototypes is a hallmark of UCD [77]. Existing UCD models for health technologies advocate collecting evidence from end-users through various means, such as field observation, interviews, focus groups, literature reviews (including grey literature), surveys, demographic data, web analytics, prototype testing, and participation in the design process [78,79,80]. To the best of the authors' knowledge, there are currently no scaling instruments to evaluate the level of user input and other sources of evidence contributing to the design process as it relates to technology in autism. The goal of the present study was to create such a scale, primarily intended for autism specialists to apply without needing specific expertise in technological design.

To this end, we developed a rating instrument, named the User-based Information in Designing Support (UIDS) scale, and conducted a systematic literature review to test it. The instrument enables systematic evaluation of user input and other sources of information about end-users informing design processes reported in the technological support literature. It was meant to supplement a newly developed framework of evidence-based practice for technological supports for autistic people [32]. This framework was the output of a Delphi study, a methodology used to integrate opinions from diverse experts [81]. The study involved 27 panel members, including autistic people, families, autism-related professionals, and researchers who were asked to list and rate possible sources of evidence on technological supports. Outcomes revealed that the panel valued academic research, expertise of design teams, and inclusion of autistic people in design teams. Their conclusions thus suggested a need for a standardized instrument to evaluate sources of evidence used to guide the design process in studies on technological supports for autism.

2 Methods

2.1 Material: the User-based Information in Designing Support (UIDS) scale

The User-based Information in Designing Support (UIDS) scale was developed to assess the degree to which the design process of a piece of technology meant for support was informed about autistic users. It integrates ISO (International Organization for Standardization, www.iso.org) norms for human–computer interaction (ISO 9241-210:2010) and Druin's [82] taxonomy of user participation. As UCD requires understanding users' incentives, capacities, needs, and goals, we included criteria in the UIDS scale that evaluated the extent to which end-users were involved in iterative cycles of technological development. In addition, in compliance with Evidence-Based Software Engineering (EBSE) [54], the UIDS scale gauges whether the design process involved literature reviews. To translate these requirements into a rating instrument for examining research reports, we created a scoring system that considers whether the developer of the technology conducted a systematic literature review, referred to the literature or other relevant sources, made a replicable design with end-users or one with proxies (e.g., parents, autism professionals), made a non-replicable design with end-users or with proxies, and observed end-users in their environment (see Table 1). The items' weights were determined by consensus among the authors. Most of them followed an ordinal scale. Some were given half points if they were considered of lesser quality. To the best of the authors' knowledge, no other similar instrument could be used as a reference base to assess the quality of information about users in studies reporting on the design process of technology-based support.

2.1.1 Scoring of the UIDS scale

Three criteria were scored: design process, literature review, and user’s role (Tables 1, 2). They were presented in two separate tables for the sake of clarity.

The terms mentioned above were defined as follows:

Replicable design: Enough details are provided for another team to be able to run the same study.

End-users: Eason [83] defined system users as primary (people using the system), secondary (occasional users, or users through an intermediary), and tertiary (people affected by the usage or people making decisions about its purchase). Our study focused on primary users, whom we refer to as “end-users,” meaning the autistic people for whom the system was designed.

Proxies: People affiliated with the target population, such as parents or autism professionals. Typically developing people of the same developmental age as end-users can also be counted as proxies if they are involved in the design process to collect data relevant to the end-users due to similarities in abilities, incentives, goals, or needs. Some UCD methods require verbal reports from end-users testing the technology [84]. This can be an issue when designing technology for minimally verbal individuals, in which case resorting to proxies may be an acceptable workaround [74]. However, a non-replicable design with proxies is associated with only a half-point, as it is considered below the average quality expected from a standard design process from a UCD perspective.

Systematic literature review: Overview of primary research on a focused question following a systematic research strategy.

Literature review: Narrative review of a topic.

Reference to literature or official sources: Limited references, which are not sufficient to present a review on a topic, or references to grey literature, e.g., Government technical reports, citations of a few academic sources or material from relevant national organizations such as NAS (National Autistic Society, UK) and CRA (Centres Ressources Autisme, France). This label was associated with only a half-point as it was considered less than the average quality expected from a standard scientific literature review.

For the design procedure criterion, points could be added. For example, if a study involved users' observations and a non-replicable design with proxies, it would be given 1 + 0.5 = 1.5 points. However, no more than 2 points could be awarded in this part. For the literature review criterion, a study could only receive one of these ratings: 0.5, 1, or 2.

These ratings were combined with a rating for the user’s role in the design process. The definition of user’s roles was based on Druin's taxonomy [82, 85] (see Table 2). The UIDS scale assessed the extent to which user needs and goals were prioritized rather than business/institution goals. It also considered the time and number of occasions users were consulted. The user’s role criterion focused on end-users and not proxies, as we considered that the actual end-user needed to be consulted as she/he was to play a role in the technological design. Studies in which end-users were genuine design partners, in other words, "participatory designs,” received the highest scores. Attributing the highest score to participatory designs is consistent with the expert panel consensus yielded by the Delphi study reported in Zervogianni et al. [32]. Points 1–4 were awarded only if end-users (i.e., autistic people) were involved; otherwise, a 0 score was given (Table 2).

Any study could get a minimum of 0.5 and a maximum of 8 points (2 points for Design procedure, 2 points for Literature review, and 4 points for User’s role). UIDS quality was labelled "low" for scores between 0.5 and 2.5, "medium" for scores between 3 and 5.5, and "high" for scores between 6 and 8. A short scoring sheet is available in the joint online supplementary material (S1 UIDS scoring sheet).
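The scoring rules above can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' scoring sheet: the function names `uids_total` and `uids_label` are hypothetical, the item weights of Tables 1 and 2 are passed in as numbers rather than reproduced, and two points are assumptions (a literature rating of 0 when no source is cited, and whole-number points for the user's role).

```python
# Illustrative sketch of the UIDS aggregation rules described in the text.
# Item weights come from Tables 1 and 2 and are supplied by the caller.

ALLOWED_LITERATURE = (0, 0.5, 1, 2)   # 0 is an assumption (no source cited)
ALLOWED_USER_ROLE = (0, 1, 2, 3, 4)   # assumption: whole points only


def uids_total(design_items, literature, user_role):
    """Combine the three criteria into a single UIDS score."""
    # Design procedure: points are additive but capped at 2.
    design = min(sum(design_items), 2)
    # Literature review: a single rating, never a sum of several ratings.
    if literature not in ALLOWED_LITERATURE:
        raise ValueError("literature rating must be 0, 0.5, 1 or 2")
    # User's role: 0 when no autistic end-user was involved, else 1 to 4.
    if user_role not in ALLOWED_USER_ROLE:
        raise ValueError("user role rating must be between 0 and 4")
    return design + literature + user_role


def uids_label(total):
    """Map a total score to the low / medium / high quality label."""
    if total >= 6:
        return "high"
    if total >= 3:
        return "medium"
    return "low"


# Example from the text: users' observations (1) plus a non-replicable
# design with proxies (0.5) gives 1.5 points for the design procedure.
example_total = uids_total([1, 0.5], literature=1, user_role=2)
example_label = uids_label(example_total)
```

Because every item weight is a multiple of 0.5, totals never fall between two labelled ranges (for instance between 2.5 and 3), so simple thresholds are sufficient.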

2.2 Literature search and selection procedure

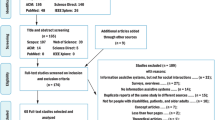

Validity testing of the instrument described above required material from the relevant literature. A systematic literature review was organized following the PRISMA guidelines for the identification, screening, eligibility, and inclusion of papers (Fig. 1). The PRISMA statement is a set of guidelines for the minimum collection of items reported in systematic reviews and meta-analyses on healthcare supports [86].

2.2.1 Identification of relevant literature

Our work aimed at screening digital interactive technology for the autistic population. The following keywords pertaining to technology-based supports were used: Computer, Mobile, Smartphone, iPad, Tablet, Virtual, Robot, Haptic, Wearable, and Wii. They were combined with keywords referring to autism conditions, namely autism and Asperger. Although the latter diagnosis is no longer made [87], it has been used extensively during the last decades.
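For illustration, the keyword combination described above can be written as a Boolean query string. This is a minimal sketch under assumptions: the exact syntax submitted to each database is not reported, so the OR/AND form and the helper name `build_query` are hypothetical.

```python
# Hypothetical helper: combines technology keywords with autism keywords.
# The real query syntax differs between databases and is not reported.

TECH_TERMS = [
    "Computer", "Mobile", "Smartphone", "iPad", "Tablet",
    "Virtual", "Robot", "Haptic", "Wearable", "Wii",
]
AUTISM_TERMS = ["autism", "Asperger"]


def build_query(tech_terms, condition_terms):
    """Return '(t1 OR t2 ...) AND (c1 OR c2 ...)'."""
    tech = " OR ".join(tech_terms)
    condition = " OR ".join(condition_terms)
    return f"({tech}) AND ({condition})"


query = build_query(TECH_TERMS, AUTISM_TERMS)
```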

Scientific databases of different foci were searched to accommodate the multidisciplinary nature of the field. More specifically, records were sought in the following scientific databases, covering the domains of education, medicine, psychology, and computer science: ACM (Association for Computing Machinery) Digital Library, IEEE (Institute of Electrical and Electronics Engineers) Xplore Digital Library, ERIC (Education Resources Information Center), PsycINFO, PubMed, and Web of Science.

Relevant papers were identified based on the following fields: title, abstract, and meta-data (language, publication year, location, number of pages). As technology rapidly becomes outdated, the search focused on recent literature. The date range for the records search was set between 2010 and 2018. The motivation for choosing 2010 as the start year was that it coincided with the launch of the iPad, a platform massively used in healthcare and educational contexts due to its accessibility and portability and the popularity of its manufacturer's products [31, 50]. The database search was conducted in March 2018. The total number of records returned through the process was 5,496. The citation files of each database were exported, and a software script (in Microsoft Visual Studio) was developed to process the files and remove duplicates. The number of records that remained after duplicate removal was 2,716.
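The duplicate-removal step can be sketched as follows. The script used in the study is not described beyond its purpose, so everything here is an assumption for illustration: the record fields (`title`, `year`), the matching key (normalized title plus year), and the function names.

```python
import re


def normalize_title(title):
    """Lowercase, replace punctuation with spaces, collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", title.lower())
    return " ".join(cleaned.split())


def remove_duplicates(records):
    """Keep the first record seen for each (normalized title, year) key."""
    seen = set()
    unique = []
    for record in records:
        key = (normalize_title(record["title"]), record["year"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical records exported from two databases.
records = [
    {"title": "Robots & Autism: A Pilot Study", "year": 2015, "source": "ACM"},
    {"title": "Robots & autism: a pilot study.", "year": 2015, "source": "PubMed"},
    {"title": "Tablets in the Classroom", "year": 2016, "source": "ERIC"},
]
unique_records = remove_duplicates(records)
```

Matching on a normalized title tolerates differences in case and punctuation between databases, but it would miss records whose titles were abbreviated or translated, so a manual check of borderline cases would still be needed.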

2.3 Screening

The inclusion and exclusion criteria listed below were applied to the title, abstract, and specific meta-data (language, year of publication, number of pages) in the screening process.

Inclusion criteria:

- The technology-based support was employed to result in a cognitive and/or behavioral change. Assistive technologies were considered to result in cognitive or behavioral changes;
- The technology used was interactive. It involved interactions with the user (e.g., playing computer games) compared to technology where users are passive (e.g., watching television). This criterion was the same as that used in the meta-analysis by Grynszpan et al. [4];
- Autistic individuals would be end-users of the technology. Technology meant to train or support practitioners or caregivers was discarded. In cases where a study targeted more than one population, including autism, at least 50% of the participants had to be autistic, or the support could not target more than one condition in addition to autism;
- The paper was written in English.

Exclusion criteria:

- Technology is only used for clinical evaluation, e.g., screening, assessment, diagnosis, monitoring tools, and fundamental research using technology;
- Literature reviews;
- Conference papers less than two pages long.

Two independent reviewers screened the titles, abstracts, and meta-data (language, year of publication, number of pages) of the 2,716 records yielded by the literature search. They applied the above-mentioned inclusion and exclusion criteria to filter out irrelevant papers. The lists of papers they each selected were then compared. In case of discrepancy between them, the decision to include or exclude a paper was made through consensus by consulting a third reviewer. The screening yielded 792 records that met our inclusion criteria. Our main goal was to evaluate the rating scale in a representative sample of individual research reports (i.e., we did not seek to apply it to the entire autism and technology literature). We opted for a sample of sufficient size to assume a normal distribution. From the 792 eligible articles, a random sample of 30% (n = 237) was considered appropriate to evaluate the scale. Selected articles were read in full, and the inclusion and exclusion criteria were reapplied to the full text. Twenty-six records were excluded at this stage. The final sample was 211 papers (references can be found in online supplementary material: S2 References of scored papers).
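The sample sizes reported above can be checked with a short sketch. The sampling tool actually used is not reported; the record identifiers, the seed, and the rounding-down of 30% of 792 (237.6) to 237 are assumptions made for illustration.

```python
import random


def draw_sample(record_ids, percent, seed=0):
    """Draw a simple random sample without replacement.

    The sample size is the given percentage of the list, rounded down
    (an assumption that reproduces n = 237 from 792 records).
    """
    size = len(record_ids) * percent // 100
    rng = random.Random(seed)  # fixed seed so the draw is repeatable
    return rng.sample(record_ids, size)


eligible = list(range(1, 793))        # 792 hypothetical record identifiers
sample = draw_sample(eligible, 30)    # 30% random sample
final_n = len(sample) - 26            # 26 records excluded after full reading
```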

2.4 Reliability and validity testing procedure

Two independent raters scored the sample of 211 papers (see the supplementary material: S3 All UIDS scored papers). The two raters were the persons who had screened the initial records yielded by the literature search to apply inclusion/exclusion criteria. To assess the scale's reliability, a random sub-sample (n = 78), amounting to a third of the included papers (after sampling, before full reading), was scored by both raters and served as a test dataset to determine the level of consensus. We used the intra-class correlation coefficient (ICC), an index used in inter-rater reliability analyses [88, 89].
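As an illustration of how an intra-class correlation can be computed from two raters' scores, the sketch below implements the two-way random-effects, absolute-agreement, single-rater form, often written ICC(2,1). This choice is an assumption: the specific ICC form used in the study is not stated here, and the function name `icc_2_1` and the example ratings are hypothetical.

```python
def icc_2_1(ratings):
    """ICC(2,1) for a table of ratings: one row per paper, one column per rater."""
    n = len(ratings)       # number of rated papers
    k = len(ratings[0])    # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares from a two-way ANOVA without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical UIDS scores given by two raters to five papers.
scores = [[3.0, 3.5], [5.0, 5.0], [1.5, 2.0], [6.5, 6.0], [4.0, 4.0]]
reliability = icc_2_1(scores)
```

With the actual ratings from the supplementary material (S4), the same function could be applied to the 78 doubly scored papers, although the result would only match the reported coefficient if the same ICC form was used.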

We also assessed the validity of our UIDS scale. As there are no scales for measuring the quality of information about users guiding the design process of technology-based supports, we could not compare this new scale with an equivalent rating instrument. Instead, we relied on the judgment of an independent researcher trained in user-centered design. This researcher had three years of experience in evaluating human–computer interaction (HCI) and completed a Ph.D., which was awarded soon after the end of this task. Ten papers given a low score on the UIDS scale (0–2.5) and 10 papers given a high score on the UIDS scale (6–8) were selected. The independent researcher was asked to categorize each of these papers as having either high or low user-centered quality. We then evaluated if the researcher's classification matched the grouping derived from UIDS scores.

Finally, we computed descriptive statistics to characterize the sample of 211 papers scored with the UIDS scale for illustrative purposes. Means with confidence intervals were estimated from the sample to evaluate the percentage of studies falling under each scoring label (low, medium, high) of the scale. We also categorized the studies of the sample according to the type of technology they employed.

3 Results

As explained above, a sub-sample of 78 studies was randomly selected to compute ICC between scores given by the two raters. Those scores can be found in the joint online supplementary material (S4 UIDS Ratings for Intra-Class Correlation (ICC)). Inter-rater reliability measured with the ICC for the UIDS scale was 0.93, 95% CI [0.87–0.96]. In addition, regarding the validity assessment of the UIDS scale, the independent researcher in user-centered design agreed 100% of the time with classifications derived from the UIDS scale. As there was no variability between the categorizations yielded by the scale and the independent researcher, it was deemed unnecessary to resort to any further statistical analysis. Indeed, the probability of obtaining such a perfect match by chance is lower than 10⁻⁵.
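The chance probability of a perfect match over the 20 validation papers can be illustrated under two simple null models. Which model underlies the reported bound is not stated, so both are assumptions: in the first, the researcher knows there are 10 high and 10 low papers and picks the 10 "high" ones at random; in the second, each paper is classified by an independent coin flip.

```python
from math import comb

# Null model 1 (assumption): 10 of the 20 papers are labelled "high" at random.
# Only one of the C(20, 10) possible selections matches the UIDS grouping.
p_fixed_split = 1 / comb(20, 10)

# Null model 2 (assumption): each of the 20 papers is labelled independently
# with probability 0.5, so all 20 labels must match by chance.
p_coin_flip = 0.5 ** 20
```

Both values fall below 10⁻⁵, so the conclusion does not depend on which of the two null models is chosen.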

Table 3 displays descriptive statistics for the random sample of 211 studies that were thoroughly reviewed. The mean score on the UIDS scale was 3.57 ± 1.50, 95%CI [3.37–3.77], with a range of possible values between 0.5 and 8.
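The reported confidence interval is consistent with a normal approximation, as the following sketch shows. It assumes that ± 1.50 denotes the sample standard deviation and that the interval uses the usual z value of 1.96; the function name `mean_ci95` is hypothetical.

```python
from math import sqrt


def mean_ci95(mean, sd, n, z=1.96):
    """Normal-approximation 95% confidence interval for a sample mean."""
    half_width = z * sd / sqrt(n)   # z times the standard error of the mean
    return mean - half_width, mean + half_width


# Mean 3.57, SD 1.50 (assumed), n = 211 scored papers.
low, high = mean_ci95(3.57, 1.50, 211)
```

Rounded to two decimals, the bounds are 3.37 and 3.77, matching the interval given in the text.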

Table 4 presents the types of technology featured in the sample of 211 scored studies. Mobile devices (tablets and smartphones), robots, desktop computers, and virtual or augmented reality were the most frequent technologies. Less frequent technologies included smart glasses (e.g., Google Glass [90]), wearable interfaces (e.g., tactile sleeve [91]), tangible user interfaces (e.g., soft haptic toys [92]), and Shared Active Surfaces (e.g., multi-user digital tabletop [93]).

4 Discussion

This was the first study to develop an instrument to evaluate information about the users guiding the design process of technology-based supports for autism. The inter-rater agreement for the UIDS scale was in the range of excellent values [88]. Additionally, there was complete agreement between scores obtained on this scale and how an independent researcher trained in user-centered design classified studies as high or low quality. These results are good indications of the relevance and applicability of this scale to evaluate the evidence on which technological design processes of supports for autism are based. Descriptive statistics revealed that the reviewed studies covered a large diversity of technology. As the UIDS scale was not technology-dependent, this diversity was not an issue when scoring papers. Design and development processes are often defined by business requirements, availability of technical means, and generic market research and thus not supported by insights as to what users actually do when using the product. By contrast, user involvement throughout the design and development process can reduce the risk of launching an unsuccessful or unusable product thanks to better-informed design decisions. User-centered design has been linked to more effective, efficient, and safer products being developed, contributing simultaneously to user-acceptance [84, 94]. Additionally, it has been argued that active involvement can improve users' understanding of a technology's potential and help them realize how the tasks they are required to perform may change [95]. A user-centered design approach including autistic users in its different phases gives autistic people opportunities to make their voices heard and control the support they receive.

Most studies received a medium score on the UIDS scale in the sample of papers we reviewed. None of the studies received the maximum possible score. Participatory designs which gain the most points in the implementation phase were rare, potentially due to the cost both in money and time to have participants involved in all stages of analysis and implementation. Lack of awareness of the importance of autistic users shaping the design of a product targeting them may be another factor. The UIDS scale requires evaluating the extent and quality of the literature review performed during a study. The assessed studies often included substantial and well-researched reviews on technology, but did not have sufficient information on autism and approaches used in supporting autistic people. They were usually focused on general technological advancements or on the mechanics of specific algorithms without attempting to scope the potential benefit for the autistic community. This tended to lower their score on the UIDS scale. Such studies merely assessed whether autistic people were willing or able to use a specific piece of technology that was pre-existing, like virtual reality helmets, or developed by the research team, for instance, robotic heads.

When deciding which support is the most appropriate for a person, various factors are taken into consideration, evidence being only one of them [53]. The weight of evidence in the decision process is modulated by other factors like societal values and personal preferences. The level of required evidence depends on the personal goal of using a specific piece of technology. For instance, digital products can be used for the element of fun, in which case stringent evidence criteria may not be critical. In such circumstances, though, ease of use and adequacy with the user's requirements are pivotal to the decision-making process. Assessment of the evidence base of technological support should thus be accompanied by evaluating how users were involved in the technological design process. Additionally, the rapid development of technology-based supports often prevents careful evaluation using long RCTs due to a mismatch between commercial and academic progress [96]. When this is the case, the UIDS scale could be used to evaluate the extent to which the design process was informed by a literature review of autism research and by autistic users' experience.

The rationale endorsed by the UIDS scale favors autistic users' involvement in the design process of technological supports. User participation in health and education digital support design remains limited to providing feedback on designers' ideas [97, 98]. Efforts to involve users as co-designers are often challenging due to the targeted users' abstraction ability [99]. Despite the challenges it poses, with appropriate adjustments, participatory design in the area of technology-based supports can enable digital empowerment and social inclusion of autistic individuals [73]. Additionally, the UIDS scale with minor modifications could be applied to conditions other than autism and extend to research areas such as mental health and education.

The present study has several limitations that should be noted. First, the UIDS scale was not applied to all eligible papers retrieved from the literature search. This was due to the vast number of relevant articles (n = 792) compared to our limited resources. A sample of randomly selected papers (n = 211) was used to test the scale to tackle this problem. It is, therefore, possible that the present study failed to identify specific issues in papers that were not included in the sample. Secondly, the application of user-centered design with autistic participants of younger age or with severe learning difficulties/intellectual disorders may not be so straightforward. For those cases, involving participants as designers can be challenging, and thus decisions often rely on caregivers. These additional difficulties may have lowered the scores on the UIDS scale for studies focusing on such participants.

5 Implications

The present study designed and tested the UIDS scale, which assesses information about users guiding the technological design process of digital supports for autistic users. This scale demonstrated high reliability and validity. As with other scales, the UIDS scale should be used according to its stated procedures, scoring systems, and concepts considered within the framework of the present article. The scaling instrument presented here should help address the critical challenge posed by the current proliferation of studies on technology-based supports for autistic users. The scale could prove useful to national and international organizations responsible for disseminating knowledge about autism, especially those that manage databases of technology-based supports and need a standardized way to display information about the quality of the design of those supports. Moreover, it should be of interest to the entire autism community (including researchers in the field, autistic people, their families, and related professionals) when deciding what digital supports to purchase.

Data availability

All data generated or analyzed during this study are included in this published article and its supplementary information files.

References

Durkin, K.: Videogames and young people with developmental disorders. Rev. Gen. Psychol. 14, 122 (2010)

Frauenberger, C.: Disability and technology: a critical realist perspective. In: Proceedings of the 17th International ACM SIGACCESS Conference on Computers & Accessibility, pp. 89–96. ACM (2015)

Grossard, C., Grynspan, O., Serret, S., Jouen, A.-L., Bailly, K., Cohen, D.: Serious games to teach social interactions and emotions to individuals with autism spectrum disorders (ASD). Comput. Educ. 113, 195–211 (2017)

Grynszpan, O., Weiss, P.L., Perez-Diaz, F., Gal, E.: Innovative technology-based interventions for autism spectrum disorders: a meta-analysis. Autism 18, 346–361 (2014). https://doi.org/10.1177/1362361313476767

Ploog, B.O., Scharf, A., Nelson, D., Brooks, P.J.: Use of computer-assisted technologies (CAT) to enhance social, communicative, and language development in children with autism spectrum disorders. J. Autism Dev. Disord. 43, 301–322 (2013)

Billard, A., Robins, B., Nadel, J., Dautenhahn, K.: Building Robota, a mini-humanoid robot for the rehabilitation of children with autism. Assist. Technol. 19, 37–49 (2007)

Dautenhahn, K.: Roles and functions of robots in human society: implications from research in autism therapy. Robotica 21, 443–452 (2003)

van den Berk-Smeekens, I., de Korte, M.W.P., van Dongen-Boomsma, M., Oosterling, I.J., den Boer, J.C., Barakova, E.I., Lourens, T., Glennon, J.C., Staal, W.G., Buitelaar, J.K.: Pivotal response treatment with and without robot-assistance for children with autism: a randomized controlled trial. Eur. Child Adolesc. Psychiatry (2021). https://doi.org/10.1007/s00787-021-01804-8

Cihak, D.F., Wright, R., Ayres, K.M.: Use of self-modeling static-picture prompts via a handheld computer to facilitate self-monitoring in the general education classroom. Educ. Train. Autism Dev. Disabil. 45, 136–149 (2010)

Blischak, D.M., Schlosser, R.W.: Use of technology to support independent spelling by students with autism. Top. Lang. Disord. 23, 293–304 (2003)

Bradley, R., Newbutt, N.: Autism and virtual reality head-mounted displays: a state of the art systematic review. J. Enabling Technol. 12, 101–113 (2018). https://doi.org/10.1108/JET-01-2018-0004

Mesa-Gresa, P., Gil-Gómez, H., Lozano-Quilis, J.-A., Gil-Gómez, J.-A.: Effectiveness of virtual reality for children and adolescents with autism spectrum disorder: an evidence-based systematic review. Sensors 18, 2486 (2018). https://doi.org/10.3390/s18082486

Parsons, S., Cobb, S.: State-of-the-art of virtual reality technologies for children on the autism spectrum. Eur. J. Spec. Needs Educ. 26, 355–366 (2011)

Parsons, S., Mitchell, P.: The potential of virtual reality in social skills training for people with autistic spectrum disorders. J. Intellect. Disabil. Res. 46, 430–443 (2002)

van Dijk, J., Hummels, C.: Designing for embodied being-in-the-world: two cases, seven principles and one framework. In: Proceedings of the Eleventh International Conference on Tangible, Embedded, and Embodied Interaction, pp. 47–56. ACM (2017)

Escobedo, L., Nguyen, D.H., Boyd, L., Hirano, S., Rangel, A., Garcia-Rosas, D., Tentori, M., Hayes, G.: MOSOCO: a mobile assistive tool to support children with autism practicing social skills in real-life situations. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 2589–2598. ACM (2012)

Taj-Eldin, M., Ryan, C., O’Flynn, B., Galvin, P.: A review of wearable solutions for physiological and emotional monitoring for use by people with autism spectrum disorder and their caregivers. Sensors 18, 4271 (2018). https://doi.org/10.3390/s18124271

Chen, Y., Zhou, Z., Cao, M., Liu, M., Lin, Z., Yang, W., Yang, X., Dhaidhai, D., Xiong, P.: Extended Reality (XR) and telehealth interventions for children or adolescents with autism spectrum disorder: systematic review of qualitative and quantitative studies. Neurosci. Biobehav. Rev. 138, 104683 (2022). https://doi.org/10.1016/j.neubiorev.2022.104683

Dechsling, A., Orm, S., Kalandadze, T., Sütterlin, S., Øien, R.A., Shic, F., Nordahl-Hansen, A.: Virtual and augmented reality in social skills interventions for individuals with autism spectrum disorder: a scoping review. J. Autism Dev. Disord. 52, 4692–4707 (2022). https://doi.org/10.1007/s10803-021-05338-5

Khowaja, K., Banire, B., Al-Thani, D., Sqalli, M.T., Aqle, A., Shah, A., Salim, S.S.: Augmented reality for learning of children and adolescents with autism spectrum disorder (ASD): a systematic review. IEEE Access 8, 78779–78807 (2020). https://doi.org/10.1109/ACCESS.2020.2986608

Parsons, S.: Authenticity in virtual reality for assessment and intervention in autism: a conceptual review. Educ. Res. Rev. 19, 138–157 (2016). https://doi.org/10.1016/j.edurev.2016.08.001

Koumpouros, Y., Kafazis, T.: Wearables and mobile technologies in autism spectrum disorder interventions: a systematic literature review. Res. Autism Spectr. Disord. 66, 101405 (2019). https://doi.org/10.1016/j.rasd.2019.05.005

Al-Rashaida, M., Amayra, I., López-Paz, J.F., Martínez, O., Lázaro, E., Berrocoso, S., García, M., Pérez, M., Rodríguez, A.A., Luna, P.M., Pérez-Núñez, P., Caballero, P.: Studying the effects of mobile devices on young children with autism spectrum disorder: a systematic literature review. Rev. J. Autism Dev. Disord. 9, 400–415 (2022). https://doi.org/10.1007/s40489-021-00264-9

Salimi, Z., Jenabi, E., Bashirian, S.: Are social robots ready yet to be used in care and therapy of autism spectrum disorder: a systematic review of randomized controlled trials. Neurosci. Biobehav. Rev. 129, 1–16 (2021). https://doi.org/10.1016/j.neubiorev.2021.04.009

Khowaja, K., Salim, S.S.: A systematic review of strategies and computer-based intervention (CBI) for reading comprehension of children with autism. Res. Autism Spectr. Disord. 7, 1111–1121 (2013)

Mazon, C., Fage, C., Sauzéon, H.: Effectiveness and usability of technology-based interventions for children and adolescents with ASD: a systematic review of reliability, consistency, generalization and durability related to the effects of intervention. Comput. Hum. Behav. 93, 235–251 (2019). https://doi.org/10.1016/j.chb.2018.12.001

Hassan, A., Pinkwart, N., Shafi, M.: Serious games to improve social and emotional intelligence in children with autism. Entertain. Comput. 38, 100417 (2021). https://doi.org/10.1016/j.entcom.2021.100417

Silva, G.M., Souto, J.J.S., Fernandes, T.P., Bolis, I., Santos, N.A.: Interventions with serious games and entertainment games in autism spectrum disorder: a systematic review. Dev. Neuropsychol. 46, 463–485 (2021). https://doi.org/10.1080/87565641.2021.1981905

Finkenauer, C., Pollmann, M.M., Begeer, S., Kerkhof, P.: Brief report: examining the link between autistic traits and compulsive Internet use in a non-clinical sample. J. Autism Dev. Disord. 42, 2252–2256 (2012)

Fletcher-Watson, S., Adams, J., Brook, K., Charman, T., Crane, L., Cusack, J., Leekam, S., Milton, D., Parr, J.R., Pellicano, E.: Making the future together: shaping autism research through meaningful participation. Autism 23, 943–953 (2019). https://doi.org/10.1177/1362361318786721

Laurie, M.H., Warreyn, P., Uriarte, B.V., Boonen, C., Fletcher-Watson, S.: An international survey of parental attitudes to technology use by their autistic children at home. J. Autism Dev. Disord. (2018). https://doi.org/10.1007/s10803-018-3798-0

Zervogianni, V., Fletcher-Watson, S., Herrera, G., Goodwin, M., Pérez-Fuster, P., Brosnan, M., Grynszpan, O.: A framework of evidence-based practice for digital support, co-developed with and for the autism community. Autism (2020). https://doi.org/10.1177/1362361319898331

Goldsmith, T.R., LeBlanc, L.A.: Use of technology in interventions for children with autism. J. Early Intensive Behav. Interv. 1, 166 (2004)

Ramdoss, S., Lang, R., Mulloy, A., Franco, J., O’Reilly, M., Didden, R., Lancioni, G.: Use of computer-based interventions to teach communication skills to children with autism spectrum disorders: a systematic review. J. Behav. Educ. 20, 55–76 (2011)

Berggren, S., Fletcher-Watson, S., Milenkovic, N., Marschik, P.B., Bölte, S., Jonsson, U.: Emotion recognition training in autism spectrum disorder: a systematic review of challenges related to generalizability. Dev. Neurorehabil. 21, 141–154 (2018)

Bölte, S., Hubl, D., Feineis-Matthews, S., Prvulovic, D., Dierks, T., Poustka, F.: Facial affect recognition training in autism: can we animate the fusiform gyrus? Behav. Neurosci. 120, 211–216 (2006). https://doi.org/10.1037/0735-7044.120.1.211

Faja, S., Webb, S.J., Jones, E., Merkle, K., Kamara, D., Bavaro, J., Aylward, E., Dawson, G.: The effects of face expertise training on the behavioral performance and brain activity of adults with high functioning autism spectrum disorders. J. Autism Dev. Disord. 42, 278–293 (2012)

Silver, M., Oakes, P.: Evaluation of a new computer intervention to teach people with autism or Asperger syndrome to recognize and predict emotions in others. Autism 5, 299–316 (2001)

Massaro, D.W., Bosseler, A.: Read my lips: the importance of the face in a computer-animated tutor for vocabulary learning by children with autism. Autism 10, 495–510 (2006)

Pennington, R.C.: Computer-assisted instruction for teaching academic skills to students with autism spectrum disorders: a review of literature. Focus Autism Other Dev. Disabil. 25, 239–248 (2010)

Aresti-Bartolome, N., Garcia-Zapirain, B.: Technologies as support tools for persons with autistic spectrum disorder: a systematic review. Int. J. Environ. Res. Public Health 11, 7767–7802 (2014)

Brosnan, M., Gavin, J.: Why those with an autism spectrum disorder (ASD) thrive in online cultures but suffer in offline cultures. In: Rosen, L.D., et al. (eds.) The Wiley Handbook of Psychology, Technology, and Society, p. 250. Wiley, London (2015)

Brosnan, M., Good, J., Parsons, S., Yuill, N.: Look up! Digital technologies for autistic people to support interaction and embodiment in the real world. Res. Autism Spectr. Disord. 58, 52–53 (2019)

Hedges, S.H., Odom, S.L., Hume, K., Sam, A.: Technology use as a support tool by secondary students with autism. Autism 22, 70–79 (2018)

Odom, S.L., Thompson, J.L., Hedges, S., Boyd, B.A., Dykstra, J.R., Duda, M.A., Szidon, K.L., Smith, L.E., Bord, A.: Technology-aided interventions and instruction for adolescents with autism spectrum disorder. J. Autism Dev. Disord. 45, 3805–3819 (2015). https://doi.org/10.1007/s10803-014-2320-6

Moore, M., Calvert, S.: Brief report: vocabulary acquisition for children with autism: teacher or computer instruction. J. Autism Dev. Disord. 30, 359–362 (2000)

Williams, C., Wright, B., Callaghan, G., Coughlan, B.: Do children with autism learn to read more readily by computer assisted instruction or traditional book methods? A pilot study. Autism 6, 71–91 (2002)

Bernard-Opitz, V., Sriram, N., Nakhoda-Sapuan, S.: Enhancing social problem solving in children with autism and normal children through computer-assisted instruction. J. Autism Dev. Disord. 31, 377–384 (2001)

Heimann, M., Nelson, K., Tjus, T., Gillberg, C.: Increasing reading and communication-skills in children with autism through an interactive multimedia computer-program. J. Autism Dev. Disord. 25, 459–480 (1995)

Kim, J.W., Nguyen, T.-Q., Gipson, S.Y.-M.T., Shin, A.L., Torous, J.: Smartphone apps for autism spectrum disorder—understanding the evidence. J. Technol. Behav. Sci. 3, 1–4 (2018). https://doi.org/10.1007/s41347-017-0040-4

Colby, K.M., Kraemer, H.C.: An objective measurement of nonspeaking children’s performance with a computer-controlled program for the stimulation of language behavior. J. Autism Child. Schizophr. 5, 139–146 (1975)

Colby, K.M., Smith, D.C.: Computers in the treatment of nonspeaking autistic children. Curr. Psychiatr. Ther. 11, 1–17 (1971)

Dijkers, M.P., Murphy, S.L., Krellman, J.: Evidence-based practice for rehabilitation professionals: concepts and controversies. Arch. Phys. Med. Rehabil. 93, S164–S176 (2012)

Kitchenham, B.A., Dyba, T., Jorgensen, M.: Evidence-based software engineering. In: Proceedings of the 26th International Conference on Software Engineering, pp. 273–281. IEEE Computer Society (2004)

Sackett, D.L.: Evidence-based medicine. In: Seminars in Perinatology, pp. 3–5. Elsevier (1997)

Sackett, D.L., Rosenberg, W.M., Gray, J.M., Haynes, R.B., Richardson, W.S.: Evidence based medicine. Br. Med. J. 313, 170 (1996)

Guldberg, K.: Evidence-based practice in autism educational research: can we bridge the research and practice gap? Oxf. Rev. Educ. 43, 149–161 (2017)

Mesibov, G.B., Shea, V.: Evidence-based practices and autism. Autism 15, 114–133 (2011)

Beaumont, R., Walker, H., Weiss, J., Sofronoff, K.: Randomized controlled trial of a video gaming-based social skills program for children on the autism spectrum. J. Autism Dev. Disord. (2021). https://doi.org/10.1007/s10803-020-04801-z

Beaumont, R., Sofronoff, K.: A multi-component social skills intervention for children with Asperger syndrome: the Junior Detective Training Program. J. Child Psychol. Psychiatry 49, 743–753 (2008)

Faja, S., Aylward, E., Bernier, R., Dawson, G.: Becoming a face expert: a computerized face-training program for high-functioning individuals with autism spectrum disorders. Dev. Neuropsychol. 33, 1–24 (2008). https://doi.org/10.1080/87565640701729573

Fletcher-Watson, S., Petrou, A., Scott-Barrett, J., Dicks, P., Graham, C., O’Hare, A., Pain, H., McConachie, H.: A trial of an iPad™ intervention targeting social communication skills in children with autism. Autism 20, 771–782 (2016). https://doi.org/10.1177/1362361315605624

Gilroy, S.P., Leader, G., McCleery, J.P.: A pilot community-based randomized comparison of speech generating devices and the picture exchange communication system for children diagnosed with autism spectrum disorder. Autism Res. 11, 1701–1711 (2018). https://doi.org/10.1002/aur.2025

Golan, O., Baron-Cohen, S.: Systemizing empathy: teaching adults with Asperger syndrome or high-functioning autism to recognize complex emotions using interactive multimedia. Dev. Psychopathol. 18, 591–617 (2006)

Hopkins, I.M., Gower, M.W., Perez, T.A., Smith, D.S., Amthor, F.R., Wimsatt, F.C., Biasini, F.J.: Avatar assistant: improving social skills in students with an ASD through a computer-based intervention. J. Autism Dev. Disord. 41, 1543–1555 (2011)

Tanaka, J.W., Wolf, J.M., Klaiman, C., Koenig, K., Cockburn, J., Herlihy, L., Brown, C., Stahl, S., Kaiser, M.D., Schultz, R.T.: Using computerized games to teach face recognition skills to children with autism spectrum disorder: the Let’s Face It! program. J. Child Psychol. Psychiatry 51, 944–952 (2010)

Voss, C., Schwartz, J., Daniels, J., Kline, A., Haber, N., Washington, P., Tariq, Q., Robinson, T.N., Desai, M., Phillips, J.M., Feinstein, C., Winograd, T., Wall, D.P.: Effect of wearable digital intervention for improving socialization in children with autism spectrum disorder: a randomized clinical trial. JAMA Pediatr. 173, 446–454 (2019). https://doi.org/10.1001/jamapediatrics.2019.0285

Zheng, Z., Nie, G., Swanson, A., Weitlauf, A., Warren, Z., Sarkar, N.: A randomized controlled trial of an intelligent robotic response to joint attention intervention system. J. Autism Dev. Disord. 50, 2819–2831 (2020). https://doi.org/10.1007/s10803-020-04388-5

Bondy, A.S., Frost, L.A.: The picture exchange communication system. Semin. Speech Lang. 19, 373–388 (1998). https://doi.org/10.1055/s-2008-1064055

Kientz, J.A., Hayes, G.R., Goodwin, M.S., Gelsomini, M., Abowd, G.D.: Interactive Technologies and Autism, 2nd edn. In: Synthesis Lectures on Assistive, Rehabilitative, and Health-Preserving Technologies, vol. 9, pp. i–229 (2020). https://doi.org/10.2200/S00988ED2V01Y202002ARH013

Constantin, A., Johnson, H., Smith, E., Lengyel, D., Brosnan, M.: Designing computer-based rewards with and for children with autism spectrum disorder and/or intellectual disability. Comput. Hum. Behav. 75, 404–414 (2017)

Fletcher-Watson, S.: A targeted review of computer-assisted learning for people with autism spectrum disorder: towards a consistent methodology. Rev. J. Autism Dev. Disord. 1, 87–100 (2014)

Brosnan, M., Parsons, S., Good, J., Yuill, N.: How can participatory design inform the design and development of innovative technologies for autistic communities? J. Assist. Technol. 10, 115–120 (2016)

Parsons, S., Yuill, N., Good, J., Brosnan, M.: ‘Whose agenda? Who knows best? Whose voice?’ Co-creating a technology research roadmap with autism stakeholders. Disabil. Soc. (2019). https://doi.org/10.1080/09687599.2019.1624152

Pellicano, E., Dinsmore, A., Charman, T.: Views on researcher-community engagement in autism research in the United Kingdom: a mixed-methods study. PLoS ONE 9, e109946 (2014)

Vines, J., Clarke, R., Wright, P., McCarthy, J., Olivier, P.: Configuring participation: on how we involve people in design. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 429–438. ACM (2013)

Jordan, P.W.: Designing Pleasurable Products: An Introduction to the New Human Factors. CRC Press, London (2003)

Schnall, R., Rojas, M., Bakken, S., Brown, W., Carballo-Dieguez, A., Carry, M., Gelaude, D., Mosley, J.P., Travers, J.: A user-centered model for designing consumer mobile health (mHealth) applications (apps). J. Biomed. Inform. 60, 243–251 (2016). https://doi.org/10.1016/j.jbi.2016.02.002

LeRouge, C., Ma, J., Sneha, S., Tolle, K.: User profiles and personas in the design and development of consumer health technologies. Int. J. Med. Inform. 82, e251–e268 (2013). https://doi.org/10.1016/j.ijmedinf.2011.03.006

Gaynor, M., Schneider, D., Seltzer, M., Crannage, E., Barron, M.L., Waterman, J., Oberle, A.: A user-centered, learning asthma smartphone application for patients and providers. Learn. Health Syst. 4, e10217 (2020). https://doi.org/10.1002/lrh2.10217

Hasson, F., Keeney, S., McKenna, H.: Research guidelines for the Delphi survey technique. J. Adv. Nurs. 32, 1008–1015 (2000)

Druin, A.: The role of children in the design of new technology. Behav. Inf. Technol. 21, 1–25 (2002)

Eason, K.D.: Information Technology and Organisational Change. CRC Press, London (1989)

Preece, J., Rogers, Y., Sharp, H.: Interaction Design: Beyond Human-Computer Interaction. Wiley, London (2015)

Fails, J.A., Guha, M.L., Druin, A.: Methods and techniques for involving children in the design of new technology for children. Found. Trends Hum. Comput. Interact. 6, 85–166 (2013)

Moher, D., Liberati, A., Tetzlaff, J., Altman, D.G., The PRISMA Group: Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 6, e1000097 (2009)

American Psychiatric Association: Diagnostic and Statistical Manual of Mental Disorders (DSM-5®). American Psychiatric Publishing, Washington (2013)

Hallgren, K.A.: Computing inter-rater reliability for observational data: an overview and tutorial. Tutor. Quant. Methods Psychol. 8, 23–34 (2012)

Koo, T.K., Li, M.Y.: A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 15, 155–163 (2016)

Sahin, N.T., Keshav, N.U., Salisbury, J.P., Vahabzadeh, A.: Second version of Google Glass as a wearable socio-affective aid: positive school desirability, high usability, and theoretical framework in a sample of children with autism. JMIR Hum. Factors 5, e1 (2018). https://doi.org/10.2196/humanfactors.8785

Tang, F., McMahan, R.P., Allen, T.T.: Development of a low-cost tactile sleeve for autism intervention. In: 2014 IEEE International Symposium on Haptic, Audio and Visual Environments and Games (HAVE) Proceedings, pp. 35–40 (2014)

Seo, J.H., Aravindan, P., Sungkajun, A.: Toward creative engagement of soft haptic toys with children with autism spectrum disorder. In: Proceedings of the 2017 ACM SIGCHI Conference on Creativity and Cognition, pp. 75–79. ACM, New York, NY, USA (2017)

Roldán-Álvarez, D., Márquez-Fernández, A., Rosado-Martín, S., Martín, E., Haya, P.A., García-Herranz, M.: Benefits of combining multitouch tabletops and turn-based collaborative learning activities for people with cognitive disabilities and people with ASD. In: 2014 IEEE 14th International Conference on Advanced Learning Technologies, pp. 566–570 (2014)

Abras, C., Maloney-Krichmar, D., Preece, J.: User-centered design. In: Bainbridge, W. (ed.) Encyclopedia of Human–Computer Interaction, vol. 37, pp. 445–456. Sage Publications, Thousand Oaks (2004)

Seffah, A., Gulliksen, J., Desmarais, M.C.: Human-Centered Software Engineering: Integrating Usability in the Software Development Lifecycle. Springer, Berlin (2005)

Fletcher-Watson, S.: Evidence-based technology design and commercialisation: recommendations derived from research in education and autism. TechTrends 59, 84–88 (2015)

Danielsson, K., Wiberg, C.: Participatory design of learning media: designing educational computer games with and for teenagers. Interact. Technol. Smart Educ. 3, 275–291 (2006)

Tan, J.L., Goh, D.H.-L., Ang, R.P., Huan, V.S.: Child-centered interaction in the design of a game for social skills intervention. Comput. Entertain. 9, 2 (2011)

Mazzone, E., Read, J.C., Beale, R.: Design with and for disaffected teenagers. In: Proceedings of the 5th Nordic Conference on Human–Computer Interaction: Building Bridges, pp. 290–297. ACM (2008)

Funding

This work was supported by a grant from a consortium composed of the International Foundation of Applied Disability Research, Orange Foundation, and UEFA (Union of European Football Associations) foundation for children (Grant Number: APa2016_026). This publication has also received financial support from the project Indigo! (Spanish Ministry of Science and Innovation and Universidad Autónoma de Madrid, with reference PID2019-105951RB-I00/AEI/10.13039/501100011033). The funding sources were not involved in conducting the research study.

Ethics declarations

Competing interests

In exchange for scientific advisory board membership, Dr. Matthew Goodwin holds stock in Empatica, Inc., and Behavior Imaging Solutions, Inc. He also receives income from Janssen (the pharmaceutical division of Johnson & Johnson) for consulting on their JAKE data collection platform. Dr. Sue Fletcher-Watson contributed to creating an app ("FindMe") for autistic children, including an arrangement to receive royalties if sales exceeded an agreed threshold. However, this threshold was not exceeded, and the app is no longer available commercially. The other authors have no competing interests to declare relevant to this article's content.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary S1. UIDS scoring sheet. (DOCX 16 kb)

Supplementary S2. References of scored papers. References of the 211 articles that were included. (DOCX 40 kb)

Supplementary S3. All UIDS scored papers. Scores of the 211 articles that were included. (XLSX 28 kb)

Supplementary S4. UIDS Ratings for Intra-Class Correlation (ICC). Scores on the UIDS scales, given by two independent raters, on which Inter-Rater Reliability was assessed using ICC. (XLSX 33 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zervogianni, V., Fletcher-Watson, S., Herrera, G. et al. A user-based information rating scale to evaluate the design of technology-based supports for autism. Univ Access Inf Soc (2023). https://doi.org/10.1007/s10209-023-00995-y
