Abstract

Automatic translation from signed to spoken languages is an interdisciplinary research domain at the intersection of computer vision, machine translation (MT), and linguistics. The domain is growing in popularity: the majority of scientific papers on sign language (SL) translation have been published in the past five years. Nevertheless, research in this domain is performed mostly by computer scientists in isolation. This article presents an extensive and cross-domain overview of the work on SL translation. We first give a high-level introduction to SL linguistics and MT to illustrate the requirements of automatic SL translation. Then, we present a systematic literature review of the state of the art in the domain. Finally, we outline important challenges for future research. We find that significant advances have been made on the shoulders of spoken language MT research. However, current approaches often lack linguistic motivation or are not adapted to the different characteristics of SLs. We explore challenges related to the representation of SL data, the collection of datasets, and the evaluation of SL translation models. We advocate for interdisciplinary research and for grounding future research in linguistic analysis of SLs. Furthermore, the inclusion of deaf and hearing end users of SL translation applications in use case identification, data collection, and evaluation is of utmost importance in the creation of useful SL translation models.

1 Introduction

Rapid progress in deep learning has enabled a range of new applications related to sign language recognition, translation, and synthesis, which can be grouped under the umbrella term “sign language processing.” Sign Language Recognition (SLR) can be likened to “information extraction from sign language data,” for example fingerspelling recognition [1, 2] and sign classification [3, 4]. Sign Language Translation (SLT) maps this extracted information to meaning and translates it to another (signed or spoken) language [5, 6]; the opposite direction, from text to sign language, is also possible [7, 8]. Sign Language Synthesis (SLS) aims to generate sign language from some representation of meaning, for example through virtual avatars [9, 10]. In this article, we concentrate on translation from signed languages to spoken languages.

In particular, we focus on translating videos containing sign language utterances to text, i.e., the written form of spoken language. We will only discuss SLT models that support video data as input, as opposed to models that require wearable bracelets or gloves, or 3D cameras. Systems that use smart gloves, wristbands or other wearables are considered intrusive and not accepted by sign language communities (SLCs) [11]. In addition, they are unable to capture all information present in signing, such as non-manual actions. Video-based approaches also have benefits compared to wearable-based approaches: they can be trained with existing data, and they could for example be integrated into conference calling software, or used for automatic captioning in videos of signing vloggers.

Several previously published scientific papers liken SLR to gesture recognition (among others, [12, 13]), or even present a fingerspelling recognition system as an SLT solution (among others, [14, 15]). Such classifications are oversimplified and incorrect, and they may lead to a misunderstanding of the technical challenges that must be solved. As Fig. 1 illustrates, SLT lies at the intersection of computer vision, machine translation, and linguistics. Experts from each domain must come together to truly address SLT.

This article aims to provide a comprehensive overview of the state of the art (SOTA) of sign to spoken language translation. To do this, we perform a systematic literature review and discuss the state of the domain. We aim to find answers to the following research questions:

- RQ1: Which datasets are used, for what languages, and what are the properties of these datasets?

- RQ2: How should we represent sign language data for Machine Translation (MT) purposes?

- RQ3: Which algorithms are currently the SOTA for SLT?

- RQ4: How are current SLT models evaluated?

Furthermore, we list several challenges in SLT. These challenges are of a technical and linguistic nature. We propose research directions to tackle them.

In parallel with this article, another survey on SLT was written and published [16]. It provides a narrative historical overview of the domains of SLR and SLT and positions them in the wider scope of sign language processing. It also discusses the “to-sign” direction of SLT, which we disregard. That survey gives a broader overview of the domain, but it is less in-depth and remains mostly limited to computer science. In contrast, we provide a systematic and extensive analysis of the most recent work on SLT, supported by a discussion of sign language linguistics.

This article is also related to the work of Bragg et al. [17], which gives a limited but informative overview of the domains of SLR, SLS and SLT based on the results of panel discussions. They list several challenges in the field that align with our own findings, e.g., data scarcity and involvement of SLCs.

We first provide a high level overview of some required background information on sign languages in Sect. 2. This background can help in the understanding of the remainder of this article, in particular the reasoning behind our inclusion criteria. Section 3 provides the necessary background in machine translation. We discuss the inclusion criteria and search strategy for our systematic literature search in Sect. 4 and objectively compare the considered papers on SLT in Sect. 5; this includes Sect. 5.7 focusing on a specific benchmark dataset. The research questions introduced above are answered in our discussion of the literature overview, in Sect. 6. We present several open challenges in SLT in Sect. 7. The conclusion and takeaway messages are given in Sect. 8.

2 Sign language background

2.1 Introduction

It is a common misconception that there exists a single, universal, sign language. Just like spoken languages, sign languages evolve naturally through time and space. Several countries have national sign languages, but often there are also regional differences and local dialects. Furthermore, signs in a sign language do not have a one-to-one mapping to words in any spoken language: translation is not as simple as recognizing individual signs and replacing them with the corresponding words in a spoken language. Sign languages have distinct vocabularies and grammars and they are not tied to any spoken language. Even in two regions with a shared spoken language, the regional sign languages used can differ greatly. In the Netherlands and in Flanders (Belgium), for example, the majority spoken language is Dutch. However, Flemish Sign Language (VGT) and the Sign Language of the Netherlands (NGT) are quite different. Meanwhile, VGT is linguistically and historically much closer to French Belgian Sign Language (LSFB) [18], the sign language used primarily in the French-speaking part of Belgium, because both originate from a common Belgian Sign Language, diverging in the 1990s [19]. In a similar vein, American Sign Language (ASL) and British Sign Language (BSL) are completely different even though the two countries share English as the official spoken language.

2.2 Sign language characteristics

2.2.1 Sign components

Sign languages are visual; they make use of a large space around the signer. Signs are not composed solely of manual gestures. In fact, there are many more components to a sign. Stokoe stated in 1960 that signs are composed of hand shape, movement and place of articulation parameters [20]. Battison later added orientation, both of the palm and of the fingers [21]. There are also non-manual components such as mouth patterns. These can be divided into mouthings, where the pattern refers to (part of) a spoken language word, and mouth gestures, e.g., pouting one’s lips. Non-manual components play an important role in sign language lexicons and grammars [22]. They can, for example, separate minimal pairs: signs that share all articulation parameters but one. When hand shape, orientation, movement and place of articulation are identical, mouth patterns can for example be used to differentiate two signs. Non-manual actions are not only important at the lexical level as just illustrated, but also at the grammatical level. A clear example of this can be found in eyebrow movements: furrowing or raising the eyebrows can signal that a question is being asked and indicate the type of question (open or closed).

2.2.2 Simultaneity

Sign languages exhibit simultaneity on several levels. There is simultaneity on the component level: as explained above, manual actions can be combined with non-manual actions simultaneously. We also observe simultaneity at the utterance level. It is, for example, possible to turn a positive utterance into a negative utterance by shaking one’s head while performing the manual actions. Another example is the use of eyebrow movements to transform a statement into a question.

2.2.3 Signing space

The space around the signer can also be used to indicate, for instance, the location or moment in time of the conversational topic. A signer can point behind their back to specify that an event occurred in the past and likewise, point in front of them to indicate a future event. An imaginary timeline can also be constructed in front of the signer, with time passing from left to right. Space is also used to position referents [18, 23]. For example, a person can be discussing a conversation with their mother and father. Both referents get assigned a location (locus) in the signing space and further references to these persons are made by pointing to, looking at, or signing toward these loci. For example, “mom gives something to dad” can be signed by moving the sign for “to give” from the locus associated with the mother to the one associated with the father. Modeling space, detecting positions in space, and remembering these positions is important for SLT models.

2.2.4 Classifiers

Another important aspect of sign languages is the use of classifiers. Zwitserlood [24] describes them as “morphemes with a non-specific meaning, which are expressed by particular configurations of the manual articulator (or: hands) and which represent entities by denoting salient characteristics.” There are many more intricacies of classifiers than can be listed here, so we give a limited set of examples instead. Several types of classifiers exist. They can, for example, represent nouns or adjectives according to their shape or size. Whole entity classifiers can be used to represent objects, e.g., a flat hand can represent a car; handling classifiers can be used to indicate that an object is being handled, e.g., a pencil is picked up from a table. In a whole entity classifier, the articulator represents the object, whereas in a handling classifier it operates on the object.

2.2.5 The established and the productive lexicon

The vocabularies of sign languages are not fixed. Oftentimes new signs are constructed by sign language users. On the one hand, sign languages can borrow signs from other sign languages, similar to loanwords in spoken languages. In this case, these signs become part of the established lexicon. On the other hand, there is the productive lexicon—one can create an ad hoc sign. Vermeerbergen [25] gives the example of “a man walking on long legs” in VGT: rather than expressing this clause by signing “man,” “walk,” “long” and “legs”, the hands are used (as classifiers) to imitate the man walking. Both the established and productive lexicons are integral parts of sign languages.

Signers can also enact other subjects with their whole body, or part of it. They can, for example, enact animals by imitating their movements or behaviors.

2.2.6 Fingerspelling

Fingerspelling can be used to convey concepts for which a sign does not (yet) exist, or to introduce a person who has not yet been assigned a name sign. It is based on the alphabet of a spoken language, where every letter in that alphabet has a corresponding (static or dynamic) sign. Fingerspelling is also not shared between sign languages. For example, in ASL, fingerspelling is one-handed, but in BSL two hands are used.

2.3 Notation systems for sign languages

Unlike many spoken languages, sign languages do not have a standardized written form. Several notation systems do exist, but none of them are generally accepted as a standard [26]. The earliest notation system was proposed in the 1960s, namely the Stokoe notation [20]. It was designed for ASL and comprises a set of symbols to notate the different components of signs. The position, movement and orientation of the hands are encoded in iconic symbols, and for hand shapes, letters from the Latin alphabet corresponding to the most similar fingerspelling hand shape are used [20]. Later, in the 1970s, Sutton introduced SignWriting: a notation system for sign languages based on a dance choreography notation system [27]. The SignWriting notation for a sign is composed of iconic symbols for the hands, face and body. The signing location and movements are also encoded in symbols, in order to capture the dynamic nature of signing. SignWriting is designed as a system for writing signed utterances for everyday communication. In 1989, the Hamburg Notation System (HamNoSys) was introduced [28]. Unlike SignWriting, it is designed mainly for linguistic analysis of sign languages. It encodes hand shapes, hand orientation, movements and non-manual components in the form of symbols.

Stokoe notation, SignWriting and HamNoSys represent the visual nature of signs in a compact format. They are notation systems that operate on the phonological level. These systems, however, do not capture the meaning of signs. In linguistic analysis of sign languages, glosses are typically used to represent meaning. A sign language gloss is a written representation of a sign in one or more words of a spoken language, commonly the majority language of the region. Glosses can be composed of single words in the spoken language, but also of combinations of words. Examples of glosses are: “CAR,” “BRIDGE,” but also “car-crosses-bridge.” Glosses do not accurately represent the meaning of signs in all cases and glossing has several limitations and problems [26]. Glosses are inherently sequential, whereas signs often exhibit simultaneity [29]. Furthermore, as glosses are based on spoken languages, there may be an implicit influence of the spoken language projected onto the sign language [25, 26]. Finally, there is no universal standard on how glosses should be constructed: this leads to differences between corpora of different sign languages, or even between several sign language annotators working on the same corpus [30].

Sign_A is a recently developed framework that aims to define an architecture that is sufficiently robust to model sign languages at the phonological level and, when combined with a role and reference grammar (RRG), to represent meaning as well [31]. Sign_A with RRG encodes not only the meaning of sign language utterances, but also parameters pertaining to manual and non-manual actions. De Sisto et al. [32] propose investigating the application of Sign_A for data-driven SLT systems.

The above notation systems for sign languages range from graphical to written and computational representations of signs and signed utterances. None of these notation systems were originally designed for the purpose of automatic translation from signed to spoken languages, but they can be used to train MT models. For example, glosses are often used for SLT because of their similarity to written language text, e.g., [5, 6]. These notation systems can also be used as labels to pre-train feature extractors for SLT models. For instance, Koller et al. presented SLR systems that exploit SignWriting [33, 34], and these systems are leveraged in some later works on SLT, e.g., [35, 36]. Many SLT models also use feature extractors that were pre-trained with gloss labels, e.g., [37, 38].

3 Machine translation

3.1 Spoken language MT

Machine translation is a sequence-to-sequence task. That is, given an input sequence of tokens that constitute a sentence in a source language, an MT system generates a new sequence of tokens that represents a sentence in a target language. A token refers to a sentence construction unit: a word, a number, a symbol, a character or a subword unit.
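The different token granularities mentioned above can be made concrete with a small sketch. The functions below are illustrative only: the subword vocabulary is a made-up assumption, and the greedy longest-match segmentation is a simplification of learned subword methods, not the tokenizer of any particular MT system.

```python
from typing import List, Set


def word_tokens(sentence: str) -> List[str]:
    """Word-level tokens: split the sentence on whitespace."""
    return sentence.split()


def char_tokens(sentence: str) -> List[str]:
    """Character-level tokens: every character is its own token."""
    return list(sentence)


def subword_tokens(word: str, vocab: Set[str]) -> List[str]:
    """Greedy longest-match segmentation of one word into subword units.

    `vocab` is a hypothetical subword vocabulary. Characters that are not
    covered by the vocabulary are emitted as single-character tokens.
    """
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it is found in the vocabulary.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            end = start + 1  # unknown character: fall back to one character
        pieces.append(word[start:end])
        start = end
    return pieces


toy_vocab = {"trans", "lat", "ion"}  # assumed vocabulary, for illustration
print(word_tokens("the weather is nice"))
print(subword_tokens("translation", toy_vocab))
```

The same sentence thus yields sequences of very different lengths depending on the chosen unit, which is one reason why the choice of token matters for sequence-to-sequence models.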

Current SOTA models for spoken language MT are based on a neural encoder-decoder architecture: an encoder network encodes an input sequence in the source language into a multi-dimensional representation; it is then fed into a decoder network which generates a hypothesis translation conditioned on this representation. The original encoder-decoder was based on Recurrent Neural Networks (RNNs) [39]. To deal with long sequences, Long Short-Term Memory Networks (LSTMs) [40] and Gated Recurrent Units (GRUs) [41] were used. To further improve the performance of RNN-based MT, an attention mechanism was introduced by Bahdanau et al. [42]. In recent years the transformer architecture [43], based primarily on the idea of attention (in combination with positional encoding) has pushed the SOTA even further.
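To illustrate the idea of attention, the following is a minimal sketch of scaled dot-product attention for a single query, the variant used in the transformer architecture [43] (the mechanism of Bahdanau et al. [42] scores query-key pairs differently). It uses plain Python lists rather than a deep learning library, and the function names are our own.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(
    query: List[float],
    keys: List[List[float]],
    values: List[List[float]],
) -> Tuple[List[float], List[float]]:
    """Return (attention weights, context vector) for one decoder query.

    Each encoder position contributes a key (used for scoring) and a value
    (used for the weighted sum that forms the context vector).
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context


# Two encoder positions with identical keys receive equal weight.
weights, context = attend([1.0, 0.0], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 2.0]])
print(weights, context)
```

The context vector is a weighted average of the encoder values, so the decoder can draw on different source positions for every target token instead of relying on a single fixed-size summary of the input.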

As noted above, a sentence is broken down into tokens and each token is fed into the Neural Machine Translation (NMT) model. NMT converts each token into a multidimensional representation before that token representation is used in the encoder or decoder to construct a sentence level representation. These token representations, typically referred to as word embeddings, encode the meaning of a token based on its context. Learning word embeddings is a monolingual task, since they are associated with tokens in a particular language. Given that for a large number of languages and use cases monolingual data is abundant, it is relatively easy to build word embedding models of high quality and coverage. Building such word embedding models is typically performed using unsupervised algorithms such as GloVe [44], BERT [45] and BART [46]. These algorithms encode words into vectors in such a way that the vectors of related words are similar.
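The notion that related words have similar vectors is commonly quantified with cosine similarity. The sketch below uses hand-written three-dimensional vectors that are purely hypothetical; real embeddings are learned from data and have hundreds of dimensions.

```python
import math
from typing import List


def cosine_similarity(u: List[float], v: List[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Made-up toy embeddings, chosen by hand for illustration only.
toy_embeddings = {
    "rain": [0.9, 0.1, 0.0],
    "snow": [0.8, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9],
}

related = cosine_similarity(toy_embeddings["rain"], toy_embeddings["snow"])
unrelated = cosine_similarity(toy_embeddings["rain"], toy_embeddings["car"])
print(related > unrelated)
```

In this toy setting, the two weather-related words end up closer to each other than either is to the unrelated word, which is the property the embedding algorithms aim for.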

The domain of spoken language MT is extensive and the current SOTA of NMT builds upon years of research. Providing a complete overview of spoken language MT is beyond the scope of this article. For a more in-depth overview of the domain, we refer readers to the work of Stahlberg [49].

3.2 Sign language MT

Conceptually, sign language MT and spoken language MT are similar. The main difference is the input modality. Spoken language MT operates on two streams of discrete tokens (text to text). As sign languages have no standardized notation system, a generic SLT model must translate from a continuous stream to a discrete stream (video to text). To reduce the complexity of this problem, sign language videos are discretized to a sequence of still frames that make up the video. SLT can now be framed as a sequence-to-sequence, frame-to-token task. As they are, these individual frames do not convey meaning in the way that the word embeddings in a spoken language translation model do. Even though it is possible to train SLT models using frame-based representations as inputs, the extraction of salient sign language representations is required to facilitate the modeling of meaning in sign language encoders.

Figure 2 shows a spoken language NMT and sign language NMT model side by side. The main difference between the two is the input modality. For a spoken language NMT model, both the inputs and outputs are text. For a sign language NMT model, the inputs are some representation of sign language (in this case, video embeddings). Other than this input modality, the models function similarly and are trained and evaluated in the same manner.

3.2.1 Sign language representations

For the encoder of the translation model to capture the meaning of the sign language utterance, a salient representation for sign language videos is required. We can differentiate between representations that are linked to the source modality, namely videos, and linguistically motivated representations.

As will be discussed in Sect. 5.4, representations of the former type are often frame-based, i.e., every frame in the video is assigned a vector, or clip-based, i.e., clips of arbitrary length are assigned a vector. These types of representations are rather simple to derive, e.g., by extracting information directly from a Convolutional Neural Network (CNN). However, they suffer from two main drawbacks. First, such representations are fairly long. For example, the RWTH-PHOENIX-Weather 2014T dataset [6] contains samples of on average 114 frames (in German Sign Language (DGS)), whereas the average sentence length (in German) is 13.7 words in that dataset. As a result, frame-based representations for sign languages increase the computational cost of SLT models. Second, such representations do not originate from domain knowledge. That is, they do not capture the semantics of sign language. If semantic information is not encoded in the sign language representation, the translation model is forced to model the semantics and perform translation at the same time.
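The length mismatch can be quantified with a short calculation based on the two averages quoted above. The quadratic cost model is an assumption on our part: it applies to architectures in which every input position interacts with every other position, such as self-attention, and ignores all constant factors.

```python
AVG_FRAMES = 114   # average frames per sample, as quoted above
AVG_WORDS = 13.7   # average words per German sentence, as quoted above

# How many input frames correspond to one output word on average.
frames_per_word = AVG_FRAMES / AVG_WORDS

# Assumed cost model: pairwise interactions grow with the square of the
# sequence length, so the relative cost is the squared length ratio.
pairwise_frames = AVG_FRAMES ** 2
pairwise_words = AVG_WORDS ** 2
relative_cost = pairwise_frames / pairwise_words

print(f"frames per word: {frames_per_word:.1f}")
print(f"relative pairwise cost: {relative_cost:.0f}x")
```

Under this assumption, an encoder operating on frames would process roughly eight times as many positions as one operating on words, and the number of pairwise interactions would grow by well over an order of magnitude.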

The second category includes a range of linguistically motivated representations, from semantic representations to individual sign representations. In Sect. 2.3, we presented an overview of some notation systems for sign languages: Stokoe notation, SignWriting, HamNoSys, glosses, and Sign_A. These notation systems can be used as representations in an SLT model, or to pre-train the feature extractor of SLT models. In current research, only glosses have been used as inputs or labels for the SLT models themselves, because large annotated datasets for the other systems do not exist.

3.2.2 Tasks

The reviewed papers cover five distinct translation tasks that can be classified based on whether, and how, glosses are used. To denote these tasks, we borrow the naming conventions from Camgöz et al. [6, 37]. These tasks are illustrated in Fig. 3.

Gloss2Text. Gloss2Text models are used to translate from sign language glosses to spoken language text. They provide a reference for the performance that can be achieved using a salient representation. Therefore they can serve as a compass for the design of sign language representations and the corresponding SLR systems. Note that the performance of a Gloss2Text model is not an upper bound for the performance of an SLT model: glosses do not capture all linguistic properties of signs (see Sect. 2.3).

Sign2Gloss2Text. A Sign2Gloss2Text translation system includes an SLR system as the first step, to predict glosses from video. Consequently, errors made by the recognition system are propagated to the translation system. Camgöz et al. [6] for example report a drop in translation accuracy when comparing a Sign2Gloss2Text system to a Gloss2Text system.

(Sign2Gloss, Gloss2Text). In this training setup, first a Gloss2Text model is trained using ground truth gloss and text data. Then, this model is fixed and used to evaluate the performance of the entire translation model, including the SLR model (Sign2Gloss). This is different from Sign2Gloss2Text models, where the Gloss2Text model is trained with the gloss annotations generated by the Sign2Gloss model. Camgöz et al. [6] show that (Sign2Gloss, Gloss2Text) models perform worse than Sign2Gloss2Text models, because the translation model of the latter can learn to correct the noisy outputs of the Sign2Gloss model.

Sign2(Gloss+Text). Glosses can provide a supervised signal to a translation system without being an information bottleneck, if the model is trained to jointly predict both glosses and text [37]. Such a model must be able to predict glosses and text from a single sign language representation. The gloss labels provide additional information to the encoder, facilitating the training process. In a Sign2Gloss2Text model (as previously discussed), the translation model receives glosses as inputs: any information that is not present in glosses cannot be used to translate into spoken language text. In Sign2(Gloss+Text) models, however, the translation model input is the sign language representation (embeddings), which may be richer.

Sign2Text. Sign2Text models forgo the explicit use of a separate SLR model, and instead perform translation directly with features that are extracted from videos. Sign2Text models do not need glosses to train the translation model. Note that in some cases, these features are still extracted using a model that was pre-trained for SLR, e.g., [37, 38]. This means that some Sign2Text models do indirectly require gloss level annotations for training.
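The difference in data flow between a two-stage and a direct setup can be sketched as function composition. Everything in this sketch is a hypothetical placeholder: the type aliases, the function names and the toy stand-in models are ours and do not correspond to any implementation in the reviewed papers.

```python
from typing import Callable, List, Sequence

Frames = Sequence[str]   # placeholder for the frames of a video
Features = List[float]   # placeholder for extracted sign representations
Glosses = List[str]
Text = str


def sign2gloss2text(
    sign2gloss: Callable[[Frames], Glosses],
    gloss2text: Callable[[Glosses], Text],
) -> Callable[[Frames], Text]:
    """Two-stage setup: the translator only ever sees the predicted glosses,
    so recognition errors and information missing from glosses carry over."""
    def translate(video: Frames) -> Text:
        return gloss2text(sign2gloss(video))
    return translate


def sign2text(
    extract: Callable[[Frames], Features],
    features2text: Callable[[Features], Text],
) -> Callable[[Frames], Text]:
    """Direct setup: the translator consumes the extracted features,
    without an intermediate gloss sequence."""
    def translate(video: Frames) -> Text:
        return features2text(extract(video))
    return translate


# Toy stand-ins for trained models, for illustration only.
def toy_recognizer(video: Frames) -> Glosses:
    return ["TOMORROW", "RAIN"]


def toy_gloss_translator(glosses: Glosses) -> Text:
    return " ".join(gloss.lower() for gloss in glosses)


pipeline = sign2gloss2text(toy_recognizer, toy_gloss_translator)
print(pipeline(["frame0", "frame1"]))
```

In the two-stage function, the only channel between video and text is the gloss list, which makes the information bottleneck discussed above explicit in the types.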

3.3 Requirements for sign language MT

With the given information on sign language linguistics and MT techniques, we are now able to sketch the requirements for sign language MT.

3.3.1 Data requirements

The training of data-driven MT models requires large datasets. The collection of such datasets is expensive and should therefore be tailored to specific use cases. To determine these use cases, members of SLCs must be involved. We answer RQ1 by providing an overview of existing datasets for SLT.

3.3.2 Video processing and sign language representation

We need to be able to process sign language videos and convert them into an internal representation (SLR). This representation must be rich enough to cover several aspects of sign languages (including manual and non-manual actions, simultaneity, signing space, classifiers, the productive lexicon, and fingerspelling). We look in our literature overview for an answer to RQ2 on how we should represent sign language data.

3.3.3 Translating between sign and spoken representations

We need to be able to translate from such a representation into a spoken language representation, which can be reused from existing spoken language MT systems. We need to adapt NMT systems to be able to work with the sign language representation, which will possibly contain simultaneous elements. By comparing different methods for SLT, we evaluate which MT algorithms perform best in the current SOTA (RQ3).

3.3.4 Evaluation

The evaluation of the resulting models can be automated by computing metrics on corpora. These metrics provide an estimate of the quality of translations. Human evaluation (by hearing and deaf people, signing and non-signing) and qualitative evaluations can provide insights into the models and data. We illustrate how current SLT models are evaluated (RQ4).
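Many automatic MT metrics are built on n-gram overlap between a hypothesis translation and a reference translation. As an illustration, the sketch below computes clipped n-gram precision, which is one ingredient of the well-known BLEU metric. It is not a full BLEU implementation: it omits the brevity penalty, the combination of several n-gram orders, and corpus-level aggregation.

```python
from collections import Counter
from typing import List, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """All contiguous n-grams in a token sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(hypothesis: List[str], reference: List[str], n: int) -> float:
    """Fraction of hypothesis n-grams that also occur in the reference.

    Each n-gram is credited at most as often as it appears in the reference,
    so repeating a correct word does not inflate the score.
    """
    hyp_counts = Counter(ngrams(hypothesis, n))
    ref_counts = Counter(ngrams(reference, n))
    total = sum(hyp_counts.values())
    if total == 0:
        return 0.0  # hypothesis is shorter than n tokens
    matched = sum(min(count, ref_counts[gram]) for gram, count in hyp_counts.items())
    return matched / total


reference = "tomorrow it will rain in the north".split()
hypothesis = "tomorrow it rains in the north".split()
print(clipped_precision(hypothesis, reference, 1))
print(clipped_precision(hypothesis, reference, 2))
```

Scores of this kind only measure surface overlap with a reference, which is one reason why the human and qualitative evaluations mentioned above remain valuable.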

4 Literature review methodology

4.1 Inclusion criteria and search strategy

To provide an overview of sound SLT research, we adhere to the following principles in our literature search. We consider only peer-reviewed publications. We include journal articles as well as conference papers: the latter are especially important in computer science research. Any paper that is included must be on the topic of sign language machine translation and must not misrepresent the natural language status of sign languages. Therefore, we omit any papers that present classification or transcription of signs or fingerspelling recognition as SLT models (we will show in this section that there are many papers that do this). As we focus on non-intrusive translation from sign languages to text, we exclude papers that use gloves or other wearable devices.

Three scientific databases were queried: Google Scholar, Web of Science and IEEE Xplore. Four queries were used to obtain initial results: “sign language translation,” “sign language machine translation,” “gloss translation” and “gloss machine translation.” These key phrases were chosen for the following reasons. We aimed to obtain scientific research papers on the topic of MT from sign to spoken languages; therefore, we searched for “sign language machine translation.” Several works perform translation between sign language glosses and spoken language text, hence “gloss machine translation.” As many papers omit the word “machine” in “machine translation,” we also included the key phrases “sign language translation” and “gloss translation.”

4.2 Search results and selection of papers

Our initial search yielded 855 results, corresponding to 565 unique documents. We applied our inclusion criteria step by step (see Table 1), and obtained a final set of 57 papers [5, 6, 35,36,37,38, 50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100]. The complete list of search results can be found in supplementary material (resource 1).

We further explain the reasons for excluding papers with examples. We found 30 papers not related to sign language. These papers discuss the classification and translation of traffic signs and other public signs. 60 papers consider a topic related to sign language, but not to sign language processing. These include papers from the domains of linguistics and psychology. Out of the remaining 345 papers, 130 papers claim to present a translation model in their title or main text, but in fact present a fingerspelling recognition (52 papers [14, 15, 101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150]), sign classification (58 papers [151,152,153,154,155,156,157,158,159,160,161,162,163,164,165,166,167,168,169,170,171,172,173,174,175,176,177,178,179,180,181,182,183,184,185,186,187,188,189,190,191,192,193,194,195,196,197,198,199,200,201,202,203,204,205,206,207,208]), or SLR (20 papers [209,210,211,212,213,214,215,216,217,218,219,220,221,222,223,224,225,226,227,228]) system. There are 36 papers ([16, 30, 229,230,231,232,233,234,235,236,237,238,239,240,241,242,243,244,245,246,247,248,249,250,251,252,253,254,255,256,257,258,259,260,261,262]) on various topics within the domain of sign language processing that do not implement a new MT model.

We find roughly twice as many papers on MT from spoken languages to sign languages as in the opposite direction: 117 compared to 59. These papers are closely related to the subject of this article, but often use different techniques, including virtual avatars (e.g., [9, 10]), due to the different source and target modality. Hence, translation from a spoken language to a sign language is outside the scope of this article. For an overview of this related field, we refer readers to a recent review article by Kahlon and Singh [263].

Note that our final inclusion criterion, “present a non-intrusive system based only on RGB camera inputs,” is almost entirely covered by the previous criteria. We find several papers that present glove-based systems, but they do not present translation systems. Instead, they are focused on fingerspelling recognition or sign classification (e.g., [119, 164,165,166]). The following five papers present an intrusive SLT system. Fang et al. [264] present an SLT system where signing deaf and hard of hearing people wear a device with integrated depth camera and augmented reality glasses to communicate with hearing people. Guo et al. [265] use a Kinect (RGB-D) camera to record the sign language data. The data used by Xu et al. [266] was also recorded using a Kinect. Gu et al. propose wearable sensors [267] and so do Zhang et al. [268]. After discarding these five papers, we obtain the final set of 57.

5 Literature overview

5.1 Sign language MT

Following our paper selection methodology, laid out in Sect. 4, we obtain 57 papers published from 2004 up to and including 2022. In the analysis, papers are classified based on tasks, datasets, methods and evaluation techniques.

The early work on MT from signed to spoken languages is based entirely on statistical methods [5, 50,51,52,53,54,55,56,57,58]. These works focus on gloss based translation. Several of them add visual inputs to augment the (limited) information provided by the glosses. Bungeroth et al. present the first statistical model that translates from signed to spoken languages [5]. They remark that glosses have limitations and need to be adapted for use in MT systems. Stein et al. incorporate visual information in the form of small images and hand tracking information to augment their model and enhance its performance [50], as do Dreuw et al. [51]. Dreuw et al. later ground this approach by listing requirements for SLT models, such as modeling simultaneity, signing space, and handling coarticulation [53]. Schmidt et al. further add non-manual visual information by incorporating lip reading [57]. The other papers in this set use similar techniques but on different datasets, or compare SMT algorithms [52, 54,55,56, 58].

In 2018, the domain moved away from SMT and toward NMT. This trend is clearly visible in Fig. 4. This drastic shift was not only motivated by the successful applications of NMT techniques in spoken language MT, but also by the publication of the RWTH-PHOENIX-Weather 2014T dataset and the promising results obtained on that dataset using NMT methods [6]. Two exceptions are found. Luqman et al. [63] use Rule-based Machine Translation (RBMT) in 2020 to translate from Arabic sign language into Arabic, and Moe et al. [60] compare NMT and SMT approaches for Myanmar sign language translation in 2018.

Between 2004 and 2018, research into translation from signed to spoken languages was sporadic (10 papers in our subset were published over 14 years). Since 2018, with the move toward NMT, the domain has become more popular, with 47 papers in our subset published over the span of 5 years.

5.2 Datasets

Several datasets are used in SLT research. Some are used often, whereas others are only used once. The distribution is shown in Fig. 5. It is clear that the most used dataset is RWTH-PHOENIX-Weather 2014T [6]. This is because it was the first dataset large enough for neural SLT and because it is readily available for research purposes. This dataset is an extension of earlier versions, RWTH-PHOENIX-Weather [269] and RWTH-PHOENIX-Weather 2014 [58]. It contains videos in DGS, gloss annotations, and text in German. Precisely because of the popularity of this dataset, we can compare several approaches to SLT: see Sect. 5.7.

Other datasets are also used several times. The KETI dataset [62] contains Korean Sign Language (KSL) videos, gloss annotations, and Korean text. RWTH-Boston-104 [50] is a dataset for ASL to English translation containing ASL videos, gloss annotations, and English text. The ASLG-PC12 dataset [270] contains ASL glosses and English text. The glosses are generated from English with a rule based approach. FocusNews and SRF were both introduced as part of the WMTSLT22 task [85], and they contain news broadcasts in Swiss German Sign Language (DSGS) with German translations. CSL-Daily is a dataset containing translations from Chinese Sign Language (CSL) to Chinese on everyday topics [77].

In 2022, the first SLT dataset containing parallel data in multiple sign languages was introduced [98]. The SP-10 dataset contains sign language data and parallel translations from ten sign languages. It was created from data collected in the SpreadTheSign research project [271]. Yin et al. [98] show that multilingual training of SLT models can improve performance and allow for zero-shot translation.

Several papers use the CSL dataset to evaluate SLT models [72, 79, 94]. However, this is problematic because this dataset was originally proposed for SLR [272]. Because the sign (and therefore gloss) order is the same as the word order in the target spoken language, this dataset is not suited for the evaluation of translation models (as explained in Sect. 2.1).

Table 2 presents an overview of dataset and vocabulary sizes. The number of sign instances refers to the number of individual signs that are produced. Each of these belongs to a vocabulary, of which the size is also given. Finally, there can be singleton signs: these are signs that occur only in the training set but not in the validation or test sets. ASLG-PC12 contains 827 thousand training sentences. It is the largest dataset in terms of number of parallel sentences. The most popular dataset with video data (RWTH-PHOENIX-Weather 2014T) contains only 7,096 training sentences. For MT between spoken languages, datasets typically contain several million sentences, for example the Paracrawl corpus [273]. Compared to spoken language datasets, sign language datasets clearly lack labeled data. In other words, SLT is a low-resource MT task.
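
As a rough illustration of how statistics of the kind listed in Table 2 can be derived, the following Python sketch counts sign instances, vocabulary size, and singletons from gloss-annotated splits. The toy gloss sequences and the function name `gloss_statistics` are hypothetical, and singletons follow the definition given above (glosses seen in the training set but in neither the validation nor the test set).

```python
from collections import Counter


def gloss_statistics(train, dev, test):
    """Compute simple corpus statistics from gloss-annotated splits.

    Each split is a list of sentences; each sentence is a list of gloss strings.
    """
    train_counts = Counter(g for sent in train for g in sent)
    heldout_vocab = {g for split in (dev, test) for sent in split for g in sent}
    return {
        "train_sentences": len(train),
        "sign_instances": sum(train_counts.values()),
        "vocabulary_size": len(train_counts),
        # Definition used in the text: seen in training, never in dev/test.
        "singletons": sum(1 for g in train_counts if g not in heldout_vocab),
    }


# Toy, made-up gloss sequences (not taken from any real dataset).
train = [["MORGEN", "REGEN", "NORD"], ["HEUTE", "SONNE"], ["MORGEN", "SONNE"]]
dev = [["MORGEN", "SONNE"]]
test = [["HEUTE", "REGEN"]]

stats = gloss_statistics(train, dev, test)
print(stats)
```

Comparing the resulting sentence counts with those of spoken language corpora makes the low-resource character of SLT directly visible.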

5.3 Tasks

A total of 20 papers report on a Gloss2Text model [5, 6, 37, 51, 52, 54,55,56,57,58, 60, 61, 63, 65, 68, 70, 71, 74, 91, 100]. Sign2Gloss2Text models are proposed in five papers [6, 37, 65, 77, 94] and (Sign2Gloss, Gloss2Text) models also in five [6, 37, 50, 51, 65]. Sign2(Gloss+Text) models are found eight times within the reviewed papers [37, 38, 78, 80, 88, 89, 92, 99] and Sign2Text models 30 times [6, 35,36,37, 62, 64, 66, 67, 69, 72, 73, 75, 76, 79, 81,82,83,84,85,86,87,88, 90, 92, 93, 95,96,97,98,99].

Before 2018, when SMT was dominant, Gloss2Text models were most popular, being proposed nine times out of eleven models, the other two being (Sign2Gloss, Gloss2Text) models. Since 2018, with the availability of larger datasets, deep neural feature extractors and neural SLR models, Sign2Gloss2Text, Sign2(Gloss+Text) and Sign2Text are becoming dominant. This gradual evolution from Gloss2Text models toward end-to-end models is visible in Fig. 6.

5.4 Sign language representations

The sign language representations used in the current scientific literature are glosses and representations extracted from videos. Early on, researchers using SMT models for SLT recognized the limitations of glosses and began to add visual information to their models [50, 51, 53, 57]. The advent of CNNs has made processing and incorporating visual inputs easier and more robust. All but one of the models since 2018 that include feature extraction use neural networks to do so.

We examine the representations on two dimensions. First, there is the method of extracting visual information (e.g., by using human pose estimation or CNNs). Second, there is the matter of which visual information is extracted (e.g., full frames, or specific parts such as the hands or face).

5.4.1 Extraction methods

The most popular feature extraction method in modern SLT is the 2D CNN. 19 papers use a 2D CNN as feature extractor [6, 35,36,37,38, 64, 65, 72, 75, 77,78,79,80,81, 87, 92, 93, 95, 98]. These are often pre-trained for image classification using the ImageNet dataset [274]; some are further pre-trained on the task of Continuous Sign Language Recognition (CSLR), e.g., [37, 38, 92, 93]. Three papers use a subsequent 1D CNN to temporally process the resulting spatial features [64, 77, 80].

Human pose estimation systems are used to extract features in fifteen papers [35, 36, 62, 69, 73, 79, 80, 84, 85, 88,89,90, 94, 95, 97]. The estimated poses can be the only inputs to the translation model [35, 62, 69, 73, 84, 85, 90, 94, 97], or they can augment other spatial or spatio-temporal features [36, 79, 80, 88, 89, 95]. Often, the keypoints are used as a sign language representation directly. In other cases they are processed using a graph neural network to map them onto an embedding space before translation [89, 94].

Ten papers use 3D CNNs for feature extraction [35, 66, 67, 76, 82, 83, 86, 88, 96, 99]. These networks are able to extract spatio-temporal features, leveraging the temporal relations between neighboring frames in video data. The output of a 3D CNN is typically a sequence that is shorter than the input, summarizing multiple frames in a single feature vector. Similarly to 2D CNNs, these networks can be pre-trained on general tasks such as action recognition (on Kinetics [275]) or on more specific tasks such as isolated SLR (e.g., on WLASL [276]). Chen et al. [99] and Shi et al. [82] have shown independently that pre-training on sign language specific tasks yields better downstream SLT scores.

CNNs were state of the art in image feature extraction for several years. More recently, the vision transformer architecture was introduced, which can outperform CNNs in certain scenarios [277]. Li et al. are the first to leverage vision transformers for feature extraction [89].

Kumar et al. opt to use traditional computer vision techniques instead of deep neural networks. They represent the hands and the face of the signer in the video as a set of contours [59]. First, they perform binarization to segment the hands and the face based on skin tone. Then they use the active contours method [278] to detect the edges of the hands and face. These are normalized with respect to the signer’s position in the video frame by representing every coordinate as an angle (binned to 360 different angles).
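
The angle-based normalization described above can be sketched in Python as follows. This is a minimal illustration, not the implementation of Kumar et al.: the choice of the contour centroid as reference point and the function name `contour_to_angle_bins` are assumptions, since the text only states that coordinates are represented as angles binned to 360 values.

```python
import math


def contour_to_angle_bins(points, num_bins=360):
    """Map 2D contour points to angle bins measured around the contour centroid."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    bins = []
    for x, y in points:
        # atan2 returns an angle in [-pi, pi]; shift it into [0, 360).
        angle = math.degrees(math.atan2(y - cy, x - cx)) % 360.0
        bins.append(int(angle * num_bins / 360.0) % num_bins)
    return bins


# Hypothetical square contour; translating it leaves the bins unchanged.
square = [(1, 0), (0, 1), (-1, 0), (0, -1)]
shifted = [(x + 50, y + 20) for x, y in square]
print(contour_to_angle_bins(square))
print(contour_to_angle_bins(shifted))
```

Because the angles are measured relative to a point on the signer, the representation does not change when the signer appears elsewhere in the frame, which is the purpose of the normalization.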

5.4.2 Multi-cue approaches

A simple approach to feature extraction is to consider full video frames as inputs. Performing further pre-processing of the visual information to target hands, face and pose information separately (referred to as a multi-cue approach) improves the performance of SLT models [36, 59, 65, 75, 80, 86, 96]. Zheng et al. [75] show through qualitative analysis that adding facial feature extraction improves translation accuracy in utterances where facial expressions are used. Dey et al. [96] observe improvements in BLEU scores when adding lip reading as an input channel. By adding face crops as an additional channel, Miranda et al. [86] improve the performance of the TSPNet architecture [66].

5.5 Sign language translation models

The current SOTA in SLT is entirely based on encoder-decoder NMT models. RNNs are evaluated in 16 papers [6, 35, 59,60,61,62, 64, 65, 67, 70, 75, 76, 79, 80, 91, 94] and transformers in 34 papers [35,36,37,38, 60, 62, 65, 66, 68, 69, 71,72,73,74, 77, 78, 81,82,83,84,85,86,87,88,89,90,91,92,93, 95, 96, 98,99,100]. Within the RNN-based models, several attention schemes are used: no attention, Luong attention [279] and Bahdanau attention [42].
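
For reference, the two named attention schemes differ mainly in how a decoder state is scored against each encoder state. In their commonly cited forms (the notation below is ours and is not taken from the reviewed papers), with decoder states \(s_t\), encoder states \(h_i\), and learned parameters \(W\), \(W_1\), \(W_2\) and \(v\):

\[
e_{t,i}^{\text{Luong}} = s_t^{\top} W h_i, \qquad
e_{t,i}^{\text{Bahdanau}} = v^{\top} \tanh\left(W_1 s_{t-1} + W_2 h_i\right)
\]

\[
\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{j} \exp(e_{t,j})}, \qquad
c_t = \sum_{i} \alpha_{t,i} h_i
\]

The Luong score shown here is the multiplicative ("general") variant; other variants exist, and individual papers may deviate from these forms.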

To the best of our knowledge, there has been no systematic comparison of RNNs and transformers across multiple tasks and datasets for SLT. Some authors perform a comparison between both architectures on specific datasets with specific sign language representations. A conclusive meta-study across papers is problematic due to inter-paper differences.

Ko et al. [62] report that RNNs with Luong attention obtain the highest ROUGE score, but transformers perform better in terms of METEOR, BLEU, and CIDEr (on the KETI dataset). In their experiments, Luong attention outperforms Bahdanau attention and RNNs without attention.

Moe et al. [60] compare RNNs and transformers for Gloss2Text with different tokenization schemes, and in every one of the experiments (on their own dataset), the transformer outperforms the RNN.

Four papers compare RNNs and transformers on RWTH-PHOENIX-Weather 2014T. Orbay et al. [35] report that an RNN with Bahdanau attention outperforms both an RNN with Luong attention and a transformer in terms of ROUGE and BLEU scores. Yin et al. [65] find that a transformer outperforms RNNs and that an RNN with Luong attention outperforms one with Bahdanau attention. Angelova et al. [91] achieve higher scores with RNNs than with transformers (on the DGS corpus [280] as well). Finally, Camgöz et al. [37] report a large increase in BLEU scores when using transformers, compared to their previous paper using RNNs [6]. However, the comparison is between models with different feature extractors and the impact of the architecture versus that of the feature extractors is not evaluated. It is likely that replacing a 2D CNN pre-trained on ImageNet [274] image classification with one pre-trained on CSLR will result in a significant increase in performance, especially when the CSLR model was trained on data from the same source (i.e., RWTH-PHOENIX-Weather 2014), as is the case here.

Pre-trained language models are readily available for transformers (for example via the HuggingFace Transformers library [281]). De Coster et al. have shown that integrating pre-trained spoken language models can improve SLT performance [38, 92]. Chen et al. pre-train their decoder network in two steps: first on a multilingual corpus, and then on Gloss2Text translation [88, 99]. This pre-training approach can drastically improve performance. Chen et al. outperform other models on the RWTH-PHOENIX-Weather 2014T dataset [88, 99]: 28.39 and 28.59 BLEU-4 (the next highest score is 25.59).

5.6 Evaluation

The majority of evaluation studies of the quality of SLT models is based on quantitative metrics. Nine different metrics are used across the 57 papers: BLEU, ROUGE, WER, TER, PER, CIDEr, METEOR, COMET and NIST.

A total of 22 papers [5, 6, 37, 51, 52, 61, 63,64,65,66, 70, 72, 75, 77, 79, 81,82,83, 86, 92, 93, 97] also provide a small set of example translations, along with ground truth reference translations, allowing for qualitative analysis. Dreuw et al.’s model outputs mostly correct translations, but with different word order than the ground truth [51]. Camgöz et al. mention that the most common errors are related to numbers, dates, and places: these can be difficult to derive from context in weather broadcasts [6, 37]. The same kind of errors is made by the models of Partaourides et al. [70] and Voskou et al. [81]. Zheng et al. illustrate how their model improves accuracy for longer sentences [64]. Including facial expressions in the input space improves the detection of emphasis laid on adjectives [75].

The datasets used in the WMTSLT22 task, FocusNews and SRF, have a broader domain (news broadcasts) than, e.g., the RWTH-PHOENIX-Weather 2014T dataset (weather broadcasts). This makes the task significantly more challenging, as can be observed in the range of BLEU scores that are achieved (typically less than 1, compared to scores in the twenties for RWTH-PHOENIX-Weather 2014T). Example translation outputs also provide insight here. The models of Tarres et al. [97] and Hamidullah et al. [83] simply predict the most common German words in many cases, indicating that the SLT model has failed to learn the structure of the data. The model of Shi et al. [82] only translates phrases correctly when they occur in the training set, suggesting overfitting. Angelova et al. use the DGS corpus [280] (which contains discourse on general topics) as a dataset; they also obtain much lower translation scores than on RWTH-PHOENIX-Weather 2014T [91].

To the best of our knowledge, none of the papers discussed in this overview contain evaluations by members of SLCs. Two papers perform human evaluation, but only by hearing people. Luqman et al. [63] ask native Arabic speakers to evaluate the model’s output translations on a three-point scale. For the WMTSLT22 challenge [85], translation outputs were scored by human evaluators (native German speakers trained as DSGS interpreters). The resulting scores indicate a considerable gap between the performance of human translators (87%) and MT (2%).

5.7 The RWTH-PHOENIX-Weather 2014T benchmark

The popularity of the RWTH-PHOENIX-Weather 2014T dataset facilitates the comparison of different SLT models on this dataset. We compare models based on their BLEU-4 score as this is the only metric consistently reported on in all of the papers using RWTH-PHOENIX-Weather 2014T (except [86]).
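
To make the compared quantity concrete, the following Python sketch computes corpus-level BLEU-4 with a single reference per hypothesis and no smoothing. It is an illustration under simplifying assumptions: the function names and example sentences are hypothetical, tokenization is plain whitespace splitting, and the tooling used in the reviewed papers may differ in tokenization and smoothing.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu4(hypotheses, references):
    """Corpus-level BLEU-4 (0-100), one reference per hypothesis, no smoothing."""
    matches = [0] * 4
    totals = [0] * 4
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, 5):
            hyp_ngrams = ngrams(hyp, n)
            ref_ngrams = ngrams(ref, n)
            # Clipped counts: an n-gram is credited at most as often as it
            # appears in the reference.
            matches[n - 1] += sum((hyp_ngrams & ref_ngrams).values())
            totals[n - 1] += sum(hyp_ngrams.values())
    if hyp_len == 0 or min(matches) == 0:
        return 0.0  # without smoothing, any zero precision gives BLEU = 0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / 4
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100.0 * brevity_penalty * math.exp(log_precision)


# Hypothetical, whitespace-tokenized sentences (not drawn from any dataset).
reference = "am tag regnet es im norden".split()
hypothesis = "am tag regnet es im westen".split()
print(round(corpus_bleu4([hypothesis], [reference]), 2))
```

Because the score is a geometric mean of 1- to 4-gram precisions, a single wrong word in a short sentence already lowers it considerably, which helps explain why errors in numbers, dates and places weigh heavily on this benchmark.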

An overview of Gloss2Text models is shown in Table 3. For Sign2Gloss2Text, we refer to Table 4, and for (Sign2Gloss, Gloss2Text) to Table 5. For Sign2(Gloss+Text) and Sign2Text, we list the results in Tables 6 and 7, respectively.

5.7.1 Sign language representations

Six papers use features extracted using a 2D CNN by first training a CSLR model on RWTH-PHOENIX-Weather 2014 [6, 36, 38, 81, 92, 93]. These papers use the full frame as input to the feature extractor.

Others combine multiple input channels. Yin et al. [65] use Spatio-Temporal Multi-Cue (STMC) features, extracting images of the face, hands and full frames as well as including estimated poses of the body. These features are processed by a network which performs temporal processing, both on the intra- and the inter-cue level. Their models are the SOTA of Sign2Gloss2Text translation (25.4 BLEU-4). The model by Zhou et al. is similar and obtains a BLEU-4 score of 23.65 on Sign2(Gloss+Text) translation [80]. Camgöz et al. [36] use mouth pattern cues, pose information and hand shape information; by using this multi-cue representation, they are able to remove glosses from their translation model (but their feature extractors are still trained using glosses). Zheng et al. [75] use an additional channel of facial information for Sign2Text and obtain an increase of 1.6 BLEU-4 compared to their baseline. Miranda et al. [86] augment TSPNet [66] with face crops, improving the performance of the network.

Frame-based feature representations result in long input sequences to the translation model. The length of these sequences can be reduced by considering short clips instead of frames. This is done by using a pre-trained 3D CNN or by reducing the sequence length using temporal convolutions or RNNs that are trained jointly with the translation model. Zhou et al. [77] use 2D CNN features extracted from full frames, which are then further processed using temporal convolutions, reducing the temporal feature size by a factor of 4. They call this approach Temporal Inception Networks (TIN). They achieve near-SOTA performance on Sign2Gloss2Text translation (23.51 BLEU-4) and Sign2Text translation (24.32 BLEU-4). Zheng et al. [64] use an unsupervised algorithm called Frame Stream Density Compression (FSDC) to remove temporally redundant frames by comparing frames on the level of pixels. The resulting features are processed using a combination of temporal convolutions and RNNs. They compare the different settings and their combination and find that these techniques can be used to reduce the input size of the sign language features and to increase the BLEU-4 score. Chen et al. [99] achieve SOTA results of 28.39 BLEU-4 using 3D CNNs pre-trained first on Kinetics-400 [275] and then on WLASL [276].
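
The effect of temporal reduction on sequence length can be illustrated with a small Python sketch. It uses two stages of non-overlapping average pooling as a stand-in for learned temporal convolutions, so it is not the TIN architecture itself; the function name `temporal_pool` and the toy features are assumptions made for illustration.

```python
def temporal_pool(features, window=2):
    """Average non-overlapping windows of frame features along the time axis."""
    pooled = []
    for start in range(0, len(features) - window + 1, window):
        chunk = features[start:start + window]
        pooled.append([sum(dim) / window for dim in zip(*chunk)])
    return pooled


# Hypothetical input: 16 frames, each with a 3-dimensional feature vector.
frames = [[float(t), float(2 * t), 1.0] for t in range(16)]
reduced = temporal_pool(temporal_pool(frames))  # two stride-2 stages -> factor 4
print(len(frames), "->", len(reduced))
```

Shorter sequences reduce the cost of attention in the translation model and bring the input length closer to the length of the target sentence.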

5.7.2 Neural architectures

We investigate whether RNNs or transformers perform best on this dataset. As this may depend on the sign language representation used, we analyze Gloss2Text, Sign2Gloss2Text, (Sign2Gloss, Gloss2Text), Sign2(Gloss+Text) and Sign2Text separately.

Because all Gloss2Text models use the same sign language representation (glosses), we can directly compare the performance of different encoder-decoder architectures. Transformers (\(23.02 \pm 2.05\)) outperform RNNs (\(18.29 \pm 1.931\)).
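
Summaries of the form mean ± standard deviation, as used in this section, can be computed from per-model scores as in the following Python sketch. The scores are placeholders rather than values from the tables, and the use of the sample standard deviation is an assumption, since the estimator is not stated in the text.

```python
from statistics import mean, stdev


def summarize(scores):
    """Return (mean, sample standard deviation) of a list of BLEU-4 scores."""
    return mean(scores), stdev(scores)


# Placeholder scores for illustration only (not taken from the reviewed papers).
bleu4_by_architecture = {
    "transformer": [20.0, 22.0, 24.0],
    "rnn": [17.0, 18.0, 19.0],
}

for name, scores in bleu4_by_architecture.items():
    avg, std = summarize(scores)
    print(f"{name}: {avg:.2f} +/- {std:.2f}")
```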

The Sign2Gloss2Text transformer models by Yin et al. [65] achieve better performance (\(23.84 \pm 1.225\)) than their recurrent models (\(20.47 \pm 2.032\)).

There is only a single (Sign2Gloss, Gloss2Text) model using an RNN, and it achieves 17.79 BLEU-4 [6]. The transformer models of Camgöz et al. [37] and Yin et al. [65] achieve 21.59 and 23.77, respectively. These models all use different feature extractors, so direct comparison is not possible.

No direct comparison is available for Sign2(Gloss+Text) translation. Zhou et al. [80] present an LSTM encoder-decoder using spatio-temporal multi-cue features and obtain 23.65 BLEU-4. The best Sign2(Gloss+Text) model leverages the pre-trained large language model mBART [282] (a transformer) and obtains 28.39 BLEU-4 [99].

The Sign2Text translation models exhibit higher variance in their scores than models for the other tasks. This is likely due to the lack of additional supervision signal in the form of glosses: the choice of sign language representation has a larger impact on the translation score. The difference in BLEU-4 score between transformers (\(19.86 \pm 5.62\)) and RNNs (\(10.72 \pm 3.63\)) is larger than in other tasks. However, we do not draw definitive conclusions from these results, as the sign language representations differ in architecture and pre-training task between models.

We provide a graphical overview of the performance of RNNs and transformers across tasks in Fig. 7 and observe that transformers often outperform RNNs on RWTH-PHOENIX-Weather 2014T. However, we cannot conclusively state whether this is due to the network architecture, or due to the sign language representations that these models are trained with.

5.7.3 Evolution of scores

Figure 8 shows an overview of the BLEU-4 scores on the RWTH-PHOENIX-Weather 2014T dataset from 2018 until 2023. It illustrates that the current best performing model (28.59 BLEU-4) is a Sign2Text transformer proposed by Chen et al. [88].

6 Discussion of the current state of the art

The analysis of the scientific literature on SLT in Sect. 5 allows us to formulate answers to the four research questions.

6.1 RQ1: Datasets

RQ1 asks, “Which datasets are used and what are their properties?” The most frequently used dataset is RWTH-PHOENIX-Weather 2014T [6] for translation from DGS to German. It contains 8257 parallel utterances from several different interpreters. The domain is weather broadcasts.

Current datasets have several limitations. They are typically restricted to controlled domains of discourse (e.g., weather broadcasts) and have little variability in terms of visual conditions (e.g., TV studios). Camgöz et al. recently introduced three new benchmark datasets from the TV news and weather broadcasts domain [73]. Two similar datasets were introduced in 2022 by Müller et al. [85]. Because news broadcasts are included, the domain of discourse (and thus the vocabulary) is broader. It is more challenging to achieve acceptable translation performance with broader domains [56, 73, 83, 91, 97]. Yet, these datasets are more representative of real-world signing.

Another limitation is not related to the content, but rather the style of signing. Many SLT datasets contain recordings of non-native signers. In several cases, the signing is interpreted (often under time pressure) from spoken language. This means that the used signing may not be representative of the sign language and may in fact be influenced by the grammar of a spoken language. Training a translation model on these kinds of data has implications for the quality and accuracy of the resulting translations.

6.2 RQ2: Sign language representations

RQ2 asks, “Which kinds of sign language representations are most informative?” The limitations and drawbacks of glosses lead to the use of visual-based sign language representations. This representation can have a large impact on the performance of the SLT model. Spatio-temporal and multi-cue sign language representations outperform simple spatial (frame-based) sign language representations. Pre-training on SLR tasks yields better features for SLT.

6.3 RQ3: Translation model architectures

RQ3 asks, “Which algorithms are currently the SOTA for SLT?” Despite the generally small size of the datasets used for SLT, we see that neural MT models achieve the highest translation scores. Transformers outperform RNNs in many cases, but our literature overview suggests that the choice of sign language representation has a larger impact than the choice of translation architecture.

6.4 RQ4: Evaluation

RQ4 asks, “How are current SLT models evaluated?” Many papers report several translation related metrics, such as BLEU, ROUGE, WER and METEOR. These are standard metrics in MT. Several papers also provide example translations to allow the reader to gauge the translation quality for themselves. Whereas the above metrics often correlate quite well with human evaluation, this is not always the case [284]. They also do not always correlate with each other: the best model can differ depending on the metric considered. Only two of the 57 reviewed papers incorporate human evaluators in the loop [63, 85]. None of the reviewed papers evaluate their models in collaboration with native signers.

7 Challenges and proposals

Our literature overview (Sect. 5) and discussion thereof (Sect. 6) illustrate that the current challenges in the domain are threefold: (i) the collection of datasets, (ii) the design of sign language representations, and (iii) evaluation of the proposed models. We discuss these below, and finally give suggestions for the development of SLT models with SOTA methods.

7.1 Dataset collection

7.1.1 Challenges

Currently, SLT is a low-resource MT task: the largest public video datasets for MT contain just thousands of training examples (see Table 2). Current research uses datasets in which the videos have fixed viewpoints, similar backgrounds, and sometimes the signers even wear similar clothing for maximum contrast with the background. Yet, in real-world applications, dynamic viewpoints and lighting conditions will be a common occurrence. Furthermore, far from all sign languages have corresponding translation datasets. Additional datasets need to be collected and existing ones need to be extended.

Current datasets are insufficiently large to support SLT on general topics. When moving from weather broadcasts to news broadcasts, we observe a significant drop in translation scores. There is a clear trade-off between dataset size and vocabulary size.

De Meulder [285] raises concerns with current dataset collection efforts. Existing datasets and those currently being collected suffer from several biases. If interpreted data are used, influence from spoken languages will be present in the dataset. If only native signer data are used, then the majority of signers will have the same ethnicity. Both statistical and neural MT exacerbate bias [286, 287]. Therefore, when our training datasets are biased and small, we cannot expect (data driven) MT systems to reach high quality and to generalize well.

7.1.2 Proposals

We propose to gather two kinds of datasets: focused datasets for training SLT models, but also large, multi-lingual datasets for the design of sign language representations. The former type of datasets already exists, but the latter kind, to the best of our knowledge, does not yet exist.

By collecting larger, multilingual datasets, we can learn sign language representations with (self-)supervised deep learning techniques. Such datasets do not need to consist entirely of native signing. They should include many topics and visual characteristics to be as general as possible.

In contrast, SLT requires high quality labeled data, the collection of which is challenging. Bragg et al.’s first and second calls to action, “Involve Deaf team members throughout” and “Focus on real-world applications” [17], guide the dataset collection process. By involving SLC members, the dataset collection effort can be guided toward use cases that would benefit SLCs. Additionally, by collecting datasets with a limited domain of discourse targeted at specific use cases, the SLT problem is effectively simplified. As a result, any applications would be limited in scope, but more useful in practice.

7.2 Sign language representations

7.2.1 Challenges

Current sign language representations do not take into account the productive lexicon. In fact, it is doubtful whether a pure end-to-end NMT approach is capable of tackling productive signs. To recognize and understand productive signs, we need models that have the ability to link abstract visual information to the properties of objects. Incorporating the productive lexicon in translation systems is a significant challenge, one for which, to the best of our knowledge, labeled data is currently not available.

Current end-to-end representations moreover do not explicitly account for fingerspelling, signing space, or classifiers. Learning these aspects in the translation model with an end-to-end approach is challenging, especially due to the scarcity of annotated data.

Our literature overview shows that the choice of representation has a significant impact on the translation performance. Hence, improving the feature extraction and incorporating the aforementioned sign language characteristics is paramount.

7.2.2 Proposals

Linguistic analysis of sign languages can inform the design of sign language representations. The definition of the so-called meaningful units has been discussed by De Sisto et al. [32]. It requires collaboration between computer scientists and (computational) linguists. Researchers should analyze the representations that are automatically learned by SOTA SLT models. For example, SLR models appear to implicitly learn to recognize hand shapes [288]. Based on such analyses, linguists can suggest which components to focus on next.

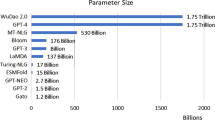

In parallel, we can exploit unlabeled sign language data to learn sign language representations in a self-supervised manner. Recently, increasingly large neural networks are being trained on unlabeled datasets to discover latent patterns and to learn neural representations of textual, auditory, and visual data. In the domain of natural language processing, we already observe tremendous advances thanks to self-supervised language models such as BERT [45]. In computer vision, self-supervised techniques are applied to pre-train powerful feature extractors which can then be applied to downstream tasks such as image classification or object detection. Algorithms such as SimCLR [283], BYOL [289] and DINO [290] are used to train 2D CNNs and vision transformers without labels, reaching performance that is almost on the same level as models trained with supervised techniques. In the audio domain, Wav2Vec 2.0 learns discrete speech units in a self-supervised manner [291]. In sign language processing, self-supervised learning can be applied to train spatio-temporal representations (like Wav2Vec 2.0 or SimCLR), and to contextualize those representations (like BERT).
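
To indicate what such a self-supervised objective looks like, the following Python sketch implements a SimCLR-style contrastive (NT-Xent) loss over paired embeddings. It is a minimal, framework-free illustration: the function names, the temperature value and the toy two-dimensional embeddings are assumptions, and in a sign language setting the two views would be embeddings of differently augmented versions of the same video clip.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent_loss(view_a, view_b, temperature=0.5):
    """NT-Xent loss: view_a[i] and view_b[i] are two views of the same sample."""
    embeddings = view_a + view_b
    n = len(view_a)
    total = 0.0
    for i, anchor in enumerate(embeddings):
        positive = (i + n) % (2 * n)
        logits = [cosine(anchor, other) / temperature
                  for k, other in enumerate(embeddings) if k != i]
        # Index of the positive once the anchor itself has been removed.
        pos_idx = positive if positive < i else positive - 1
        log_denominator = math.log(sum(math.exp(value) for value in logits))
        total += log_denominator - logits[pos_idx]
    return total / (2 * n)


# Toy embeddings: matching views should yield a lower loss than swapped ones.
view_a = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]
swapped = [[0.1, 0.9], [0.9, 0.1]]
print(nt_xent_loss(view_a, aligned), nt_xent_loss(view_a, swapped))
```

Minimizing this loss pulls the two views of the same clip together and pushes other clips apart, without requiring gloss or translation labels.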

Sign languages share some common elements, for example the fact that they all use the human body to convey information. Movements used in signing are composed of motion primitives and the configuration of the hand (shape and orientation) is important in all sign languages. The recognition of these low level components does not require language specific datasets and could be performed on multilingual datasets, containing videos recorded around the world with people of various ages, genders, and ethnicities. The representations extracted from multilingual SLR models can then be fine-tuned in monolingual or multilingual SLT models.

Self-supervised and multilingual learning should be evaluated for the purpose of learning such common elements of sign languages. This will not only facilitate automatic SLT, but could also lead to the development of new tools supporting linguistic analysis of sign languages and their commonalities and differences.

7.3 Evaluation

7.3.1 Challenges

Current research uses mostly quantitative metrics to evaluate SLT models, on datasets with limited scope. In-depth error analysis is missing from many SLT papers. SLT models should also be evaluated on real-world data from real-world settings. Furthermore, human evaluation from signers and non-signers is required to truly assess the translation quality. This is especially true because many of the SLT models are currently designed, implemented and evaluated by hearing researchers.

7.3.2 Proposals

Human-in-the-loop development can alleviate some of the concerns that live in SLCs about the application of MT techniques to sign languages and about the appropriation of sign languages. Human (signing and non-signing) evaluators should be included in every step of SLT research. Their feedback should guide the development of new models. For example, if the current models fail to properly translate classifiers, then SLT researchers could choose to focus on classifiers. This would hasten progress in this field, which currently focuses mostly on improving metrics that say little about the usability of SLT models.

Inspiration for human evaluation can be found in the yearly conference on machine translation (WMT), where researchers perform both direct assessment of translations and relative ranking [292]. Müller et al. performed human evaluation on a benchmark dataset after an SLT challenge [85]. They hired native German speakers trained as DSGS interpreters to evaluate four different models and to compare their outputs to human translations. Their work can be a guideline for human evaluation in future research.
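As a rough illustration of how direct assessment scores can be aggregated, the sketch below standardizes raw scores per rater (so that strict and lenient raters become comparable) and then averages the standardized scores per system. This follows the general idea used in WMT-style direct assessment, but it is a simplified sketch under stated assumptions: the rater names, system names, and scores are invented, and quality control steps such as rater filtering and significance testing are omitted.

```python
from collections import defaultdict
from statistics import mean, pstdev


def standardize_per_rater(ratings):
    """Convert raw scores to z-scores within each rater.

    ratings: list of (rater, system, score) tuples, e.g. scores on a 0-100 scale.
    Returns a list of (rater, system, z) tuples.
    """
    by_rater = defaultdict(list)
    for rater, _system, score in ratings:
        by_rater[rater].append(score)
    stats = {r: (mean(s), pstdev(s)) for r, s in by_rater.items()}

    standardized = []
    for rater, system, score in ratings:
        mu, sigma = stats[rater]
        # A rater who gives every item the same score carries no ranking signal.
        z = 0.0 if sigma == 0 else (score - mu) / sigma
        standardized.append((rater, system, z))
    return standardized


def rank_systems(ratings):
    """Rank systems by their mean standardized score, best first."""
    per_system = defaultdict(list)
    for _rater, system, z in standardize_per_rater(ratings):
        per_system[system].append(z)
    averages = {system: mean(zs) for system, zs in per_system.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Invented example: two raters with different scoring habits.
ratings = [
    ("rater_a", "human", 90), ("rater_a", "model_1", 40), ("rater_a", "model_2", 20),
    ("rater_b", "human", 60), ("rater_b", "model_1", 30), ("rater_b", "model_2", 15),
]
ranking = rank_systems(ratings)
for system, score in ranking:
    print(f"{system}: {score:+.2f}")
```

Including human translations as one of the "systems", as in the invented data above, gives a reference point against which model outputs can be interpreted.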

7.4 Applying SOTA techniques

There is still a large gap between MT and human-level performance for SLT [85]. However, with the current SOTA and sufficient constraints, it may be possible to develop limited SLT applications. The development of these applications can be guided by the following three principles.

First, a dataset should be collected that has a specific topic related to the application: it is not yet possible to train robust SLT models with large vocabularies [56, 73, 83, 91, 97]. Second, the feature extractor should be pre-trained on SLR tasks as this yields the most informative representations [82, 96, 99]. Third, qualitative evaluation and evaluation by humans can provide insights into the failure cases of SLT models.

8 Conclusion

In this article, we discuss the SOTA of SLT and explore challenges and opportunities for future research through a systematic overview of the papers in this domain. We review 57 papers on machine translation from sign to spoken languages. These papers are selected based on predefined criteria and they are indicative of sound SLT research. The selected papers are written in English and peer-reviewed. They propose, implement and evaluate a sign language machine translation system from a sign language to a spoken language, supporting RGB video inputs.

In recent years, neural machine translation has become dominant in the growing domain of SLT. The most powerful sign language representations are those that combine information from multiple channels (manual actions, body movements and mouth patterns) and those that are reduced in length by temporal processing modules. The translation models are typically RNNs or transformers. Transformers outperform RNNs in many cases, and large language models allow for transfer learning. SLT datasets are small: we are dealing with a low-resource machine translation problem. Many datasets consider limited domains of discourse and generally contain recordings of non-native signers. This has implications for the quality and accuracy of translations generated by models trained on these datasets, which must be taken into account when evaluating SLT models. Datasets that consider a broader domain of discourse are too small to train NMT models on. Evaluation is mostly performed using quantitative metrics that can be computed automatically, given a corpus. There are currently no works that perform evaluation of neural SLT models in collaboration with sign language users.

Progressing beyond the current SOTA of SLT requires efforts in data collection, the design of sign language representations, machine translation, and evaluation. Future research may improve sign language representations by incorporating domain knowledge into their design and by leveraging abundant, but as yet unexploited, unlabeled data. Research should be conducted in an interdisciplinary manner, with computer scientists, sign language linguists, and experts on sign language cultures working together. Finally, SLT models should be evaluated in collaboration with end users: native signers as well as hearing people who do not know any sign language.

Change history

25 January 2024

A Correction to this paper has been published: https://doi.org/10.1007/s10209-023-01085-9

Notes

For this reason, annotators of sign language corpora sometimes provide two parallel gloss tiers: one per hand [30].

Google Scholar: https://scholar.google.com, Web of Science: https://www.webofscience.com, IEEE Xplore: https://ieeexplore.ieee.org/.

As one paper may discuss several tasks, the total count is higher than the number of papers.

As discussed in Sect. 5.2, this dataset is an earlier version of RWTH-PHOENIX-Weather 2014T and they contain the same videos.

References

Pugeault, N., Bowden, R.: Spelling it out: Real-time asl fingerspelling recognition. In: 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), pp. 1114–1119 (2011). IEEE

Fowley, F., Ventresque, A.: Sign language fingerspelling recognition using synthetic data. In: AICS, pp. 84–95 (2021). CEUR-WS

Pigou, L., Van Herreweghe, M., Dambre, J.: Gesture and sign language recognition with temporal residual networks. In: Proceedings of the IEEE International Conference on Computer Vision Workshops, pp. 3086–3093 (2017)

Jiang, S., Sun, B., Wang, L., Bai, Y., Li, K., Fu, Y.: Skeleton aware multi-modal sign language recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3413–3423 (2021)

Bungeroth, J., Ney, H.: Statistical sign language translation. In: Workshop on Representation and Processing of Sign Languages, LREC, vol. 4, pp. 105–108 (2004). Citeseer

Camgoz, N.C., Hadfield, S., Koller, O., Ney, H., Bowden, R.: Neural sign language translation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7784–7793 (2018). https://doi.org/10.1109/CVPR.2018.00812

Stein, D., Bungeroth, J., Ney, H.: Morpho-syntax based statistical methods for automatic sign language translation. In: Proceedings of the 11th Annual Conference of the European Association for Machine Translation (2006)

Morrissey, S., Way, A.: Joining hands: Developing a sign language machine translation system with and for the deaf community. In: CVHI (2007)

San-Segundo, R., López, V., Martín, R., Sánchez, D., García, A.: Language resources for spanish–spanish sign language (lse) translation. In: Proceedings of the 4th Workshop on the Representation and Processing of Sign Languages: Corpora and Sign Language Technologies at LREC, pp. 208–211 (2010)

David, B., Bouillon, P.: Prototype of automatic translation to the sign language of french-speaking Belgium. Evaluation by the deaf community. Modelling, Measurement and Control C 79(4), 162–167 (2018)

Erard, M.: Why sign language gloves don’t help deaf people (2017). https://www.theatlantic.com/technology/archive/2017/11/why-sign-language-gloves-dont-help-deaf-people/545441/

Adnan, N.H., Wan, K., AB, S., BAKAR, J.A.A.: Learning and manipulating human’s fingertip bending data for sign language translation using pca-bmu classifier. CREAM: Curr. Res. Malaysia (Penyelidikan Terkini di Malaysia) 3, 361–372 (2013)

Caliwag, A., Angsanto, S.R., Lim, W.: Korean sign language translation using machine learning. In: 2018 Tenth International Conference on Ubiquitous and Future Networks (ICUFN), pp. 826–828 (2018). IEEE

Mistry, J., Inden, B.: An approach to sign language translation using the intel realsense camera. In: 2018 10th Computer Science and Electronic Engineering (CEEC), pp. 219–224 (2018). IEEE

Krishnan, P.T., Balasubramanian, P.: Detection of alphabets for machine translation of sign language using deep neural net. In: 2019 International Conference on Data Science and Communication (IconDSC), pp. 1–3 (2019). IEEE

Núñez-Marcos, A., Perez-de-Viñaspre, O., Labaka, G.: A survey on sign language machine translation. Expert Systems with Applications, 118993 (2022)

Bragg, D., Koller, O., Bellard, M., Berke, L., Boudreault, P., Braffort, A., Caselli, N., Huenerfauth, M., Kacorri, H., Verhoef, T., et al.: Sign language recognition, generation, and translation: An interdisciplinary perspective. In: The 21st International ACM SIGACCESS Conference on Computers and Accessibility, pp. 16–31 (2019)

Vermeerbergen, M., Twilhaar, J.N., Van Herreweghe, M.: Variation between and within sign language of the netherlands and flemish sign language. In: Language and Space Volume 30 (3): Dutch, pp. 680–699. De Gruyter Mouton, Berlin (2013)

Van Herreweghe, M., Vermeerbergen, M.: Flemish sign language standardisation. Current issues in language planning 10(3), 308–326 (2009)

Stokoe, W.: Sign language structure: An outline of the visual communication systems of the american deaf. Studies in Linguistics, Occasional Papers 8 (1960)

Battison, R.: Lexical Borrowing in American Sign Language. Linstok Press, Silver Spring (1978)

Bank, R., Crasborn, O.A., Van Hout, R.: Variation in mouth actions with manual signs in Sign Language of the Netherlands (NGT). Sign Language & Linguistics 14(2), 248–270 (2011)

Perniss, P.: 19. use of sign space. In: Sign Language, pp. 412–431. De Gruyter Mouton, Berlin (2012)

Zwitserlood, I.: In: Pfau, R., Steinbach, M., Woll, B. (eds.) Classifiers, pp. 158–186. De Gruyter Mouton, Berlin (2012). https://doi.org/10.1515/9783110261325.158

Vermeerbergen, M.: Past and current trends in sign language research. Lang. Commun. 26(2), 168–192 (2006). https://doi.org/10.1016/j.langcom.2005.10.004

Frishberg, N., Hoiting, N., Slobin, D.I.: In: Pfau, R., Steinbach, M., Woll, B. (eds.) Transcription, pp. 1045–1075. De Gruyter Mouton, Berlin (2012). https://doi.org/10.1515/9783110261325.1045

Sutton, V.: Sign Writing for Everyday Use. Sutton Movement Writing Press, New York (1981)

Prillwitz, S.: HamNoSys Version 2.0. Hamburg Notation System for Sign Languages: An Introductory Guide. Intern. Arb. z. Gebärdensprache u. Kommunik. Signum Press, Berlin (1989)

Vermeerbergen, M., Leeson, L., Crasborn, O.A.: Simultaneity in Signed Languages: Form and Function vol. 281. John Benjamins Publishing, Amsterdam (2007). https://doi.org/10.1075/cilt.281

De Sisto, M., Vandeghinste, V., Gómez, S.E., De Coster, M., Shterionov, D., Saggion, H.: Challenges with sign language datasets for sign language recognition and translation. In: LREC2022, the 13th International Conference on Language Resources and Evaluation, pp. 2478–2487 (2022)

Murtagh, I.E.: A linguistically motivated computational framework for irish sign language. PhD thesis, Trinity College Dublin. School of Linguistic Speech and Comm Sci (2019)

De Sisto, M., Shterionov, D., Murtagh, I., Vermeerbergen, M., Leeson, L.: Defining meaningful units. challenges in sign segmentation and segment-meaning mapping. In: Proceedings of the 1st International Workshop on Automatic Translation for Signed and Spoken Languages (AT4SSL), pp. 98–103. Association for Machine Translation in the Americas, Virtual (2021). https://aclanthology.org/2021.mtsummit-at4ssl.11

Koller, O., Camgoz, N.C., Ney, H., Bowden, R.: Weakly supervised learning with multi-stream cnn-lstm-hmms to discover sequential parallelism in sign language videos. IEEE Trans. Pattern Anal. Mach. Intell. 42(9), 2306–2320 (2019). https://doi.org/10.1109/TPAMI.2019.2911077

Koller, O., Ney, H., Bowden, R.: Deep hand: How to train a cnn on 1 million hand images when your data is continuous and weakly labelled. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3793–3802 (2016)

Orbay, A., Akarun, L.: Neural sign language translation by learning tokenization. In: 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), pp. 222–228 (2020). IEEE

Camgoz, N.C., Koller, O., Hadfield, S., Bowden, R.: Multi-channel transformers for multi-articulatory sign language translation. In: European Conference on Computer Vision, pp. 301–319 (2020). Springer

Camgoz, N.C., Koller, O., Hadfield, S., Bowden, R.: Sign language transformers: Joint end-to-end sign language recognition and translation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10023–10033 (2020)

De Coster, M., D’Oosterlinck, K., Pizurica, M., Rabaey, P., Verlinden, S., Van Herreweghe, M., Dambre, J.: Frozen pretrained transformers for neural sign language translation. In: Proceedings of the 1st International Workshop on Automatic Translation for Signed and Spoken Languages (AT4SSL), pp. 88–97. Association for Machine Translation in the Americas, Virtual (2021). https://aclanthology.org/2021.mtsummit-at4ssl.10

Sutskever, I., Vinyals, O., Le, Q.V.: Sequence to sequence learning with neural networks. In: Advances in Neural Information Processing Systems, pp. 3104–3112 (2014)

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997). https://doi.org/10.1162/neco.1997.9.8.1735

Cho, K., van Merriënboer, B., Gülçehre, Ç., Bahdanau, D., Bougares, F., Schwenk, H., Bengio, Y.: Learning phrase representations using RNN encoder–decoder for statistical machine translation. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, pp. 1724–1734 (2014)

Bahdanau, D., Cho, K.H., Bengio, Y.: Neural machine translation by jointly learning to align and translate. In: 3rd International Conference on Learning Representations, ICLR 2015 (2015)

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. In: Advances in Neural Information Processing Systems, pp. 5998–6008 (2017)

Pennington, J., Socher, R., Manning, C.D.: Glove: Global vectors for word representation. In: Empirical Methods in Natural Language Processing (EMNLP), pp. 1532–1543 (2014). https://doi.org/10.3115/v1/D14-1162

Devlin, J., Chang, M.-W., Lee, K., Toutanova, K.: BERT: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pp. 4171–4186. Association for Computational Linguistics, Minneapolis, Minnesota (2019). https://doi.org/10.18653/v1/N19-1423