Abstract

Given a real inner product space V and a group G of linear isometries, we construct a family of G-invariant real-valued functions on V that we call max filters. In the case where \(V={\mathbb {R}}^d\) and G is finite, a suitable max filter bank separates orbits, and is even bilipschitz in the quotient metric. In the case where \(V=L^2({\mathbb {R}}^d)\) and G is the group of translation operators, a max filter exhibits stability to diffeomorphic distortion like that of the scattering transform introduced by Mallat. We establish that max filters are well suited for various classification tasks, both in theory and in practice.

1 Introduction

Modern machine learning has been extraordinarily successful in domains where large volumes of labeled data are available [50, 68]. Indeed, highly expressive models can generalize once they fit an appropriately large training set. Unfortunately, many important domains are plagued by a scarcity of data or by expensive labels (or both). One way to bridge this gap is by augmenting the given dataset with the help of a large family of innocuous distortions. In many cases, the distortions correspond to the action of a group, meaning the ground truth exhibits known symmetries. Augmenting the training set by applying the group action encourages the model to learn these symmetries. While this approach has been successful [28, 30, 50, 69], it is extremely inefficient to train a large, symmetry-agnostic model to find a highly symmetric function. One wonders:

Why not use a model that already accounts for known symmetries?

This motivates invariant machine learning (e.g., [6, 43, 72, 79, 81]), where the model is invariant to underlying symmetries in the data. To illustrate, suppose an object is represented by a point x in a set V, but there is a group G acting on V such that the same object is also represented by \(gx\in V\) for every \(g\in G\). This ambiguity emerges, for example, when using a matrix to represent a point cloud or a graph, since the representation depends on the labeling of the points or vertices. If we apply a G-invariant feature map \(\Phi :V\rightarrow F\), then the learning task can be performed in the feature domain F without having to worry about symmetries in the problem. If F is a Euclidean space, then we can capitalize on the trove of machine learning methods that rely on that particular structure. Furthermore, if \(\Phi \) separates the G-orbits in V, then no information is lost by passing to the feature domain. In practice, V and F tend to be vector spaces out of convenience, and G is frequently a linear group.

While our interest in invariants stems from modern machine learning, maps like \(\Phi \) have been studied since Cayley established invariant theory in the nineteenth century [27]. Here, we take \(V={\mathbb {C}}^d\) and \(G\le {\text {GL}}(V)\), and the maps of interest consist of the G-invariant polynomials \({\mathbb {C}}[V]^G\). Hilbert [44] proved that \({\mathbb {C}}[V]^G\) is finitely generated as a \({\mathbb {C}}\)-algebra in the special case where G is the image of a representation of \({\text {SL}}({\mathbb {C}}^k)\), meaning one may take the feature domain F to be finite dimensional (one dimension for each generator). Since G is not a compact subset of \({\mathbb {C}}^{d\times d}\) in such cases, there may exist distinct G-orbits whose closures intersect, meaning no continuous G-invariant function can separate them; this subtlety plays an important role in Mumford’s more general geometric invariant theory [58]. In general, the generating set of \({\mathbb {C}}[V]^G\) is often extraordinarily large [38], making it impractical for machine learning applications. To alleviate this issue, there has been some work to construct separating sets of polynomials [34,35,36], i.e., sets that separate as well as \({\mathbb {C}}[V]^G\) does without necessarily generating all of \({\mathbb {C}}[V]^G\). For every reductive group G, there exists a separating set of \(2d+1\) invariant polynomials [21, 38], but the complexity of evaluating these polynomials is still quite large. Furthermore, these polynomials tend to have high degree, which makes them numerically unstable. In practice, one also desires a quantitative notion of separating so that distant orbits are not sent to nearby points in the feature space, and this behavior is not always afforded by a separating set of polynomials [21]. Despite these shortcomings, polynomial invariants are popular in the data science literature due in part to their rich algebraic theory, e.g., [6, 9, 12, 21, 61].

In this paper, we focus on the case where V is a real inner product space and G is a group of linear isometries of V. We introduce a family of non-polynomial invariants that we call max filters. In Sect. 2, we define max filters, we identify some basic properties, and we highlight a few familiar examples. In Sect. 3, we use ideas from [39] to establish that 2d generic max filters separate all G-orbits when G is finite (see Corollary 15), and then we describe various settings in which max filtering is computationally efficient. In Sect. 4, we show that when G is finite, a sufficiently large random max filter bank is bilipschitz with high probability; see Theorem 20. This is the first known construction of invariant maps for a general class of groups that enjoy a lower Lipschitz bound, meaning they separate orbits in a quantitative sense. In the same section, we later show that when \(V=L^2({\mathbb {R}}^d)\) and G is the group of translations, certain max filters exhibit stability to diffeomorphic distortion akin to what Mallat established for his scattering transform in [54]; see Theorem 24. In Sect. 5, we explain how to select max filters for classification in a couple of different settings, we determine the subgradient of max filters to enable training, and we characterize how random max filters behave for the symmetric group. In Sect. 6, we use max filtering to process real-world datasets. Specifically, we visualize the shape space of voting districts, we use electrocardiogram data to classify whether patients had a heart attack, and we classify a multitude of textures. Surprisingly, we find that even in cases where the data do not appear to exhibit symmetries in a group G, max filtering with respect to G can still reveal salient features. We conclude in Sect. 7 with a discussion of opportunities for follow-on work.

2 Preliminaries

Given a real inner product space V and a group G of linear isometries of V, consider the quotient space V/G consisting of the G-orbits \([x]:=G\cdot x\) for \(x\in V\). From this point forward, we assume that these orbits are closed, and so the quotient space is equipped with the metric

\[d([x],[y]):=\inf _{p\in [x],\,q\in [y]}\Vert p-q\Vert .\]

(Indeed, d satisfies the triangle inequality since G is a group of isometries of V.) This paper is concerned with the following function.

Definition 1

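The max filtering map \(\langle \hspace{-2.5pt}\langle \cdot ,\cdot \rangle \hspace{-2.5pt}\rangle :V/G\times V/G\rightarrow {\mathbb {R}}\) is defined by

\[\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle :=\sup _{p\in [x],\,q\in [y]}\langle p,q\rangle .\]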

Sometimes, “max filtering map” refers to the related function \(\langle \hspace{-2.5pt}\langle [\cdot ],[\cdot ]\rangle \hspace{-2.5pt}\rangle :V\times V\rightarrow {\mathbb {R}}\) instead. (Note the change of domain.) The intended domain should be clear from context.

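We note that since G consists of linear isometries, it is closed under adjoints (indeed, \(g^*=g^{-1}\in G\)), so that \(\langle g_1x,g_2y\rangle =\langle x,g_1^{-1}g_2y\rangle \) for all \(g_1,g_2\in G\), and the max filtering map can be alternatively expressed as

\[\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle =\sup _{g\in G}\langle x,gy\rangle .\]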

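Furthermore, if G is compact in the strong operator topology (e.g., finite), then the supremum can be replaced with a maximum:

\[\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle =\max _{g\in G}\langle x,gy\rangle .\]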

The max filtering map satisfies several important properties, summarized below.

Lemma 2

Suppose \(x,y,z\in V\). Then each of the following holds:

(a) \(\langle \hspace{-2.5pt}\langle [x],[x]\rangle \hspace{-2.5pt}\rangle =\Vert x\Vert ^2\).

(b) \(\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [y],[x]\rangle \hspace{-2.5pt}\rangle \).

(c) \(\langle \hspace{-2.5pt}\langle [x],[ry]\rangle \hspace{-2.5pt}\rangle =r\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle \) for every \(r\ge 0\).

(d) \(\langle \hspace{-2.5pt}\langle [x],[\cdot ]\rangle \hspace{-2.5pt}\rangle :V\rightarrow {\mathbb {R}}\) is convex.

(e) \(\langle \hspace{-2.5pt}\langle [x],[y+z]\rangle \hspace{-2.5pt}\rangle \le \langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle +\langle \hspace{-2.5pt}\langle [x],[z]\rangle \hspace{-2.5pt}\rangle \).

(f) \(d([x],[y])^2=\Vert x\Vert ^2-2\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle +\Vert y\Vert ^2\).

(g) \(\langle \hspace{-2.5pt}\langle [x],\cdot \rangle \hspace{-2.5pt}\rangle :V/G\rightarrow {\mathbb {R}}\) is \(\Vert x\Vert \)-Lipschitz.

Proof

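First, (a) and (b) are immediate, as is the \(r=0\) case of (c). For \(r>0\), observe that \(q\in [ry]\) precisely when \(q':=r^{-1}q\in [y]\), since each member of G is a linear isometry. Thus,

\[\langle \hspace{-2.5pt}\langle [x],[ry]\rangle \hspace{-2.5pt}\rangle =\sup _{p\in [x],\,q\in [ry]}\langle p,q\rangle =\sup _{p\in [x],\,q'\in [y]}\langle p,rq'\rangle =r\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle .\]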

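Next, (d) follows from the identity \(\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle =\sup _{p\in [x]}\langle p,y\rangle \), which expresses \(\langle \hspace{-2.5pt}\langle [x],[\cdot ]\rangle \hspace{-2.5pt}\rangle \) as a pointwise supremum of linear (hence convex) functions. For (e), we apply (d) and (c):

\[\langle \hspace{-2.5pt}\langle [x],[y+z]\rangle \hspace{-2.5pt}\rangle =2\langle \hspace{-2.5pt}\langle [x],[\tfrac{1}{2}y+\tfrac{1}{2}z]\rangle \hspace{-2.5pt}\rangle \le \langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle +\langle \hspace{-2.5pt}\langle [x],[z]\rangle \hspace{-2.5pt}\rangle .\]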

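Next, (f) follows by expanding \(\Vert p-q\Vert ^2=\Vert x\Vert ^2-2\langle p,q\rangle +\Vert y\Vert ^2\) for \(p\in [x]\) and \(q\in [y]\). For (g), select any \(y,z\in V\). We may assume \(\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle \ge \langle \hspace{-2.5pt}\langle [x],[z]\rangle \hspace{-2.5pt}\rangle \) without loss of generality. Select any \(p\in [y]\), \(q\in [z]\), and \(\epsilon >0\), and take \(g\in G\) such that \(\langle x,gp\rangle >\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle -\epsilon \). Since \(gq\in [z]\), we have \(\langle \hspace{-2.5pt}\langle [x],[z]\rangle \hspace{-2.5pt}\rangle \ge \langle x,gq\rangle \), and so

\[0\le \langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle -\langle \hspace{-2.5pt}\langle [x],[z]\rangle \hspace{-2.5pt}\rangle <\langle x,gp\rangle +\epsilon -\langle x,gq\rangle =\langle x,g(p-q)\rangle +\epsilon \le \Vert x\Vert \Vert p-q\Vert +\epsilon .\]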

Since p, q, and \(\epsilon \) were arbitrary, the result follows. \(\square \)
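For a finite group given explicitly as a list of orthogonal matrices, the formula \(\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle =\max _{g\in G}\langle x,gy\rangle \) can be evaluated by brute force. The following Python sketch assumes NumPy; the helper names `permutation_group`, `max_filter`, and `quotient_distance` are our own, chosen for illustration. It uses \(G\cong S_3\) and lets one check several parts of Lemma 2 numerically.

```python
import itertools
import numpy as np

def permutation_group(d):
    """All d x d permutation matrices, i.e., S_d acting on R^d (order d!)."""
    eye = np.eye(d)
    return [eye[list(p)] for p in itertools.permutations(range(d))]

def max_filter(x, y, group):
    """Brute-force max filtering over a finite group: max over g in G of <x, g y>."""
    return max(float(x @ (g @ y)) for g in group)

def quotient_distance(x, y, group):
    """Quotient metric for a finite group: min over g in G of ||x - g y||."""
    return min(float(np.linalg.norm(x - g @ y)) for g in group)

G = permutation_group(3)
x = np.array([3.0, 1.0, 2.0])
y = np.array([0.0, 5.0, 1.0])
print(max_filter(x, y, G))  # 17.0, i.e., 3*5 + 2*1 + 1*0
```

Brute force scales with the order of G, so it is only practical for small groups. Faster special cases appear in Sect. 3.2.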

Definition 3

Given a template \(z\in V\), we refer to \(\langle \hspace{-2.5pt}\langle [z],\cdot \rangle \hspace{-2.5pt}\rangle :V/G\rightarrow {\mathbb {R}}\) as the corresponding max filter. Given a (possibly infinite) sequence \(\{z_i\}_{i\in I}\) of templates in V, the corresponding max filter bank is \(\Phi :V/G\rightarrow {\mathbb {R}}^I\) defined by \(\Phi ([x]):=\{\langle \hspace{-2.5pt}\langle [z_i],[x]\rangle \hspace{-2.5pt}\rangle \}_{i\in I}\).

In what follows, we identify a few familiar examples of max filters. Reader beware: These examples (and our subsequent discussion) run the gamut of applied mathematics, and so there is no single choice of notation that captures all settings simultaneously, but we try to keep notation consistent whenever possible.

Example 4

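(Norms) There are several norms that can be thought of as a max filter with some template. For example, consider \(V={\mathbb {R}}^n\). Then taking G to be the orthogonal group \({\text {O}}(n)\) and any unit-norm template z gives

\[\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle =\sup _{g\in {\text {O}}(n)}\langle z,gx\rangle =\Vert x\Vert .\]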

Similarly, the infinity norm is obtained by taking G to be the group of signed permutation matrices and z to be a standard basis element, while the 1-norm comes from taking G to be the group of diagonal orthogonal matrices and z to be the all-ones vector. We can also recover various matrix norms when \(V={\mathbb {R}}^{m\times n}\). For example, taking \(G\cong {\text {O}}(m)\times {\text {O}}(n)\) to be the group of linear operators of the form \(X\mapsto Q_1XQ_2^{-1}\) for \(Q_1\in {\text {O}}(m)\) and \(Q_2\in {\text {O}}(n)\), then max filtering with any rank-1 matrix of unit Frobenius norm gives the spectral norm.
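The infinity-norm and 1-norm cases can be sanity-checked by brute force for small n. This is a minimal sketch assuming NumPy; `sign_flips`, `signed_permutations`, and `max_filter` are hypothetical helper names, not part of any library.

```python
import itertools
import numpy as np

def max_filter(z, x, group):
    """Brute-force max filter <<[z],[x]>> = max over g in G of <z, g x>."""
    return max(float(z @ (g @ x)) for g in group)

def sign_flips(n):
    """Diagonal orthogonal matrices diag(+-1, ..., +-1); order 2^n."""
    return [np.diag(s) for s in itertools.product((1.0, -1.0), repeat=n)]

def signed_permutations(n):
    """Signed permutation matrices D P; order 2^n * n!."""
    eye = np.eye(n)
    return [D @ eye[list(p)] for D in sign_flips(n) for p in itertools.permutations(range(n))]

x = np.array([0.5, -3.0, 2.0])
e1 = np.array([1.0, 0.0, 0.0])
print(max_filter(e1, x, signed_permutations(3)))  # 3.0, the infinity norm of x
print(max_filter(np.ones(3), x, sign_flips(3)))   # 5.5, the 1-norm of x
```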

Example 5

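(Power spectrum) Consider the case where \(V=L^2({\mathbb {R}}/{\mathbb {Z}})\) and G is the group of circular translation operators \(T_a\) defined by \(T_ag(t):=g(t-a)\) for \(a\in {\mathbb {R}}\). (Here and throughout, functions in \(L^2\) will be real valued by default.) Given a template \(z_k\) of the form \(z_k(t):=\cos (2\pi kt)\) for some \(k\in {\mathbb {N}}\), it holds that

\[\langle \hspace{-2.5pt}\langle [z_k],[x]\rangle \hspace{-2.5pt}\rangle =\sup _{a\in {\mathbb {R}}}\int _0^1\cos (2\pi k(t-a))\,x(t)\,dt=|\hat{x}(k)|\qquad \text{for every }x\in V,\]

where \(\hat{x}(k):=\int _0^1x(t)e^{-2\pi ikt}\,dt\).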

A similar choice of templates recovers the power spectrum over finite abelian groups.
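The claim that max filtering with \(z_k\) returns the magnitude \(|\hat{x}(k)|\) of the kth Fourier coefficient can be checked numerically. This sketch assumes NumPy and a hypothetical test signal that is a real trigonometric polynomial of low degree, so that the sample mean over N equispaced points equals the integral over one period; the supremum over \(a\in {\mathbb {R}}\) is approximated by a maximum over a fine grid of shifts, so the two quantities agree only up to a small discretization error.

```python
import numpy as np

N = 64                                    # samples per period
t = np.arange(N) / N
# Hypothetical test signal: a real trigonometric polynomial of degree 5.
x = 1.0 + 2.0 * np.cos(2 * np.pi * 3 * t + 0.7) - 0.5 * np.sin(2 * np.pi * 5 * t)

def max_filter_cos(x, k, num_shifts=4096):
    """Approximate sup_a <T_a z_k, x> for z_k(t) = cos(2 pi k t) by a max over a grid of shifts."""
    ts = np.arange(len(x)) / len(x)
    shifts = np.arange(num_shifts) / num_shifts
    # Row i samples (T_a z_k)(t) = cos(2 pi k (t - a)) at a = shifts[i]; the sample mean
    # over one period equals the integral for trigonometric polynomials of low degree.
    vals = np.cos(2 * np.pi * k * (ts[None, :] - shifts[:, None])) @ x / len(x)
    return float(vals.max())

def fourier_magnitude(x, k):
    """|x_hat(k)|, where x_hat(k) = int_0^1 x(t) exp(-2 pi i k t) dt, computed via the DFT."""
    return float(abs(np.fft.fft(x)[k]) / len(x))

for k in range(1, 7):
    print(k, max_filter_cos(x, k), fourier_magnitude(x, k))
```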

Example 6

(Unitary groups) While we generally assume V is a real inner product space, our theory also applies in the complex setting. For example, consider the case where \(V={\mathbb {C}}^n\) and G is a subgroup of the unitary group \({\text {U}}(n)\). Then V is a 2n-dimensional real inner product space with

\[\langle x,y\rangle :={\text {Re}}(x^*y),\]

where \(x^*\) denotes the conjugate transpose of x. As such, \({\text {U}}(n)\le {\text {O}}(V)\) since \(g\in {\text {U}}(n)\) implies

\[\langle gx,gy\rangle ={\text {Re}}((gx)^*gy)={\text {Re}}(x^*g^*gy)={\text {Re}}(x^*y)=\langle x,y\rangle .\]

Thus, \(G\le {\text {O}}(V)\).

Example 7

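(Phase retrieval) Suppose \(V={\mathbb {C}}^r\) and \(G=\{c\cdot {\text {id}}:|c|=1\}\le {\text {U}}(r)\). Then

\[\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle =\sup _{|c|=1}{\text {Re}}(z^*(cx))=\sup _{|c|=1}{\text {Re}}(c\,z^*x)=|z^*x|.\]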

The max filter bank corresponding to \(\{z_i\}_{i=1}^n\) in V is given by \(\Phi ([x])=\{|z_i^*x|\}_{i=1}^n\). The inverse problem of recovering [x] from \(\Phi ([x])\) is known as complex phase retrieval [4, 10, 31, 73], and over the last decade, several algorithms were developed to solve this inverse problem [22,23,24, 29, 33, 75]. In the related setting where \(V={\mathbb {R}}^d\) and \(G=\{\pm {\text {id}}\}\le {\text {O}}(d)\), the analogous inverse problem is known as real phase retrieval [4].
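The identity \(\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle =|z^*x|\) for the circle group acting on \({\mathbb {C}}^r\) can be checked with a short sketch, assuming NumPy and the real inner product \({\text {Re}}(u^*v)\) on \({\mathbb {C}}^r\). The supremum over unit-modulus scalars c is approximated on a grid of phases; the vectors and helper names are hypothetical.

```python
import numpy as np

def real_inner(u, v):
    """Real inner product on C^r viewed as R^{2r}: <u, v> = Re(u^* v)."""
    return float(np.real(np.vdot(u, v)))   # np.vdot conjugates its first argument

def max_filter_phase(z, x, num_phases=3600):
    """Approximate sup over |c| = 1 of <z, c x> using a grid of unit-modulus scalars c."""
    phases = np.exp(2j * np.pi * np.arange(num_phases) / num_phases)
    return max(real_inner(z, c * x) for c in phases)

z = np.array([1 + 2j, -1j, 0.5])
x = np.array([2 - 1j, 3.0, 1j])
print(max_filter_phase(z, x), abs(np.vdot(z, x)))   # both approximately 1.5
```

Here \(z^*x=-1.5i\), so the maximizing phase is \(c=i\), which happens to lie on the grid.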

Example 8

(Matched filtering) In classical radar, the primary task is to locate a target. Here, a transmitter emits a pulse \(p\in L^2({\mathbb {R}})\), which then bounces off the target and is received at the transmitter’s location with a known direction of arrival. The return signal q is a noisy version of \(T_ap\) for some \(a>0\), where \(T_a\) denotes the translation-by-a operator defined by \(T_af(t):=f(t-a)\). Since the transmitter-to-target distance is a/2 times the speed of light, the objective is to estimate a, which can be accomplished with matched filtering: simply find a for which \(\langle T_ap,q\rangle \) is largest. This is essentially a max filter with \(V=L^2({\mathbb {R}})\) and G being the group of translation operators, though for this estimation problem, the object of interest is the maximizer a, not the maximum value \(\langle \hspace{-2.5pt}\langle [p],[q]\rangle \hspace{-2.5pt}\rangle \). Meanwhile, the maximum value is used for the detection problem of distinguishing noise from noisy versions of translates of p. (This accounts for half of the etymology of max filtering.)
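A discrete toy version of matched filtering makes the distinction between the maximizer and the maximum concrete. This sketch assumes NumPy, replaces \(L^2({\mathbb {R}})\) with circular sequences of length n, and omits noise for simplicity; the pulse shape, delay, and attenuation are hypothetical.

```python
import numpy as np

def matched_filter(p, q):
    """Return (best shift a, max value) for a -> <T_a p, q> with (T_a p)[t] = p[t - a]."""
    scores = [float(np.dot(np.roll(p, a), q)) for a in range(len(p))]
    a_hat = int(np.argmax(scores))
    return a_hat, scores[a_hat]

n = 128
p = np.zeros(n)
p[:5] = [1.0, 2.0, 3.0, 2.0, 1.0]       # a short, non-periodic pulse
q = 0.8 * np.roll(p, 37)                # attenuated return delayed by 37 samples
a_hat, peak = matched_filter(p, q)
print(a_hat, peak)   # 37 and approximately 0.8 * ||p||^2 = 15.2
```

The estimate `a_hat` solves the estimation problem, while `peak` is the max filter value that would be thresholded for detection.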

Example 9

(Template matching) In computer vision, images are frequently processed with the help of a template image that is designed to locate a certain feature. Let V denote the vector space of grayscale images of a certain size, in which each pixel is treated as a coordinate of the image vector. Then translations with wraparound form a subgroup \(G\le {\text {O}}(V)\) of permutations of these pixels. Template matching is then performed by scanning the template (which is only supported on a small window of pixels) over the image by translations, and then recording the translation that yields the largest inner product. This can be viewed as a two-dimensional version of matched filtering (Example 8).

Example 10

(Max pooling) In a convolutional neural network, it is common for a convolutional layer to be followed by a max pooling layer. Here, the convolutional layer convolves the input image with several localized templates, and then the max pooling layer downsamples each of the resulting convolutions by partitioning the scene into patches and recording the maximum value in each patch. In the extreme case where the max pooling layer takes the entire scene to be a single patch to maximize over, these layers implement a max filter bank in which V is the image space and G is the group of translation operators. (This accounts for the other half of the etymology of max filtering.)

Example 11

(Geometric deep learning) The Geometric Deep Learning Blueprint given in [16] provides a framework for constructing group invariant and equivariant neural networks. Here, neural networks are decomposed into building blocks consisting of different types of layers, for example, the linear G-equivariant layer and the G-invariant (global pooling) layer. In fact, we may decompose max filtering into such layers:

For a fixed template \(z\in V\), the map \(f_z:x \mapsto \{\langle gz, x \rangle \}_{g \in G}\) is linear and G-equivariant, where G acts on the vector space \(G \rightarrow {\mathbb {R}}\) of real-valued functions over G by precomposition. Next, the sup map returns the supremum of a member of \(G\rightarrow {\mathbb {R}}\), which is G-invariant. Max filtering is precisely the composition of these two maps: \(\langle \hspace{-2.5pt}\langle [z],[\cdot ] \rangle \hspace{-2.5pt}\rangle = \sup \circ f_z\).

3 The complexity of separating orbits

For practical reasons, we are interested in orbit-separating invariants \(\Phi :V\rightarrow {\mathbb {R}}^n\) of low complexity, which we take to mean two different things simultaneously:

(i) n is small (i.e., the map has low sample complexity), and

(ii) one may evaluate \(\Phi \) efficiently (i.e., the map has low computational complexity).

While these notions of complexity are related, they impact the learning task in different ways. In what follows, we study both notions of complexity in the context of max filtering.

3.1 Generic templates separate orbits

In this subsection, we focus on the case in which \(V={\mathbb {R}}^d\) and \(G\le {\text {O}}(d)\) is semialgebraic, which we will define shortly. Every polynomial function \(p:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) determines a basic semialgebraic set

\[\{x\in {\mathbb {R}}^n:p(x)\ge 0\}.\]

By closing under finite unions, finite intersections, and complementation, the basic semialgebraic sets of \({\mathbb {R}}^n\) generate an algebra of sets known as the semialgebraic sets in \({\mathbb {R}}^n\). A semialgebraic subgroup is a subgroup of the general linear group \({\text {GL}}(d)\) that is also a semialgebraic set in \({\mathbb {R}}^{d\times d}\), e.g., the orthogonal group \({\text {O}}(d)\). A semialgebraic function is a function \(f:{\mathbb {R}}^s\rightarrow {\mathbb {R}}^t\) for which the graph \(\{(x,f(x)):x\in {\mathbb {R}}^s\}\) is a semialgebraic set in \({\mathbb {R}}^{s+t}\).

Lemma 12

For every semialgebraic subgroup \(G\le {\text {O}}(d)\), the corresponding max filtering map \(\langle \hspace{-2.5pt}\langle [\cdot ],[\cdot ]\rangle \hspace{-2.5pt}\rangle :{\mathbb {R}}^d\times {\mathbb {R}}^d\rightarrow {\mathbb {R}}\) is semialgebraic.

Proof

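The graph of the max filtering map can be expressed in first-order logic:

\[\big \{(x,y,t)\in {\mathbb {R}}^d\times {\mathbb {R}}^d\times {\mathbb {R}}:\ (\forall g\in G,\ \langle x,gy\rangle \le t)\ \wedge \ (\forall \epsilon >0,\ \exists g\in G,\ \langle x,gy\rangle >t-\epsilon )\big \}.\]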

(To be precise, one should replace our quantifiers over G with the polynomial conditions that define the semialgebraic set G to obtain a condition in first-order logic.) It follows from Proposition 2.2.4 in [13] that the graph is semialgebraic. \(\square \)

Every semialgebraic set A can be decomposed as a disjoint union \(A=\bigcup _i A_i\) of finitely many semialgebraic sets \(A_i\), each of which is homeomorphic to an open hypercube \((0,1)^{d_i}\) (where \((0,1)^0\) is a point). The dimension of A can be defined in terms of this decomposition as \({\text {dim}}(A):=\max _i d_i\). (It does not depend on the decomposition.)

Definition 13

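Given a semialgebraic subgroup \(G\le {\text {O}}(d)\), we say the corresponding max filtering map \(\langle \hspace{-2.5pt}\langle [\cdot ],[\cdot ]\rangle \hspace{-2.5pt}\rangle :{\mathbb {R}}^d\times {\mathbb {R}}^d\rightarrow {\mathbb {R}}\) is k-strongly separating if for every \(x,y\in {\mathbb {R}}^d\) with \([x]\ne [y]\), it holds that

\[{\text {dim}}\big \{z\in {\mathbb {R}}^d:\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [z],[y]\rangle \hspace{-2.5pt}\rangle \big \}\le d-k.\]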

As an example, consider the case where \(G={\text {O}}(d)\). Then \(\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [z],[y]\rangle \hspace{-2.5pt}\rangle \) holds precisely when \(\Vert z\Vert \Vert x\Vert =\Vert z\Vert \Vert y\Vert \), i.e., \(z=0\) or \([x]=[y]\). Thus, the max filtering map is d-strongly separating in this case. In the following, we say a property holds for a generic point if the failure set is a semialgebraic set of less-than-full dimension.

Theorem 14

Consider any semialgebraic subgroup \(G\le {\text {O}}(d)\) with k-strongly separating max filtering map \(\langle \hspace{-2.5pt}\langle [\cdot ],[\cdot ]\rangle \hspace{-2.5pt}\rangle :{\mathbb {R}}^d\times {\mathbb {R}}^d\rightarrow {\mathbb {R}}\) for some \(k\in {\mathbb {N}}\). For generic \(z_1,\ldots ,z_n\in {\mathbb {R}}^d\), the max filter bank \(x\mapsto \{\langle \hspace{-2.5pt}\langle [z_i],[x]\rangle \hspace{-2.5pt}\rangle \}_{i=1}^n\) separates G-orbits in \({\mathbb {R}}^d\) provided \(n\ge 2d/k\).

In the d-strongly separating case where \(G={\text {O}}(d)\), Theorem 14 implies that \(n=2\) generic templates suffice to separate orbits. (Of course, any single nonzero template suffices in this case.) Theorem 14 is an improvement to Theorem 2.7 in [39], which gives the condition \(n\ge 2d+1\). We obtain the improvement \(n\ge 2d/k\) by leveraging a more detailed notion of strongly separating, as well as the positive homogeneity of max filtering. The proof makes use of a lift-and-project technique that first appeared in [4] and was subsequently applied in [21, 31, 65, 76].

Proof of Theorem 14

Fix \(n\ge 2d/k\), and let \({\mathcal {Z}}\subseteq ({\mathbb {R}}^d)^n\) denote the set of \(\{z_i\}_{i=1}^n\) for which the max filter bank \(x\mapsto \{\langle \hspace{-2.5pt}\langle [z_i],[x]\rangle \hspace{-2.5pt}\rangle \}_{i=1}^n\) fails to separate G-orbits in \({\mathbb {R}}^d\). We will show that \({\mathcal {Z}}\) is semialgebraic with dimension \(\le dn-1\), from which the result follows. To do so, observe that \(\{z_i\}_{i=1}^n\in {\mathcal {Z}}\) precisely when there exists a witness, namely, \((x,y)\in {\mathbb {R}}^d\times {\mathbb {R}}^d\) with \([x]\ne [y]\) such that \(\langle \hspace{-2.5pt}\langle [z_i],[x]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [z_i],[y]\rangle \hspace{-2.5pt}\rangle \) for every \(i\in \{1,\ldots ,n\}\). In fact, we may assume that the witness (x, y) satisfies \(\Vert x\Vert ^2+\Vert y\Vert ^2=1\) without loss of generality since the set of witnesses for \(\{z_i\}_{i=1}^n\) avoids (0, 0) and is closed under positive scalar multiplication by Lemma 2c. This suggests the following lift of \({\mathcal {Z}}\):

\[{\mathcal {L}}:=\Big \{\big (\{z_i\}_{i=1}^n,(x,y)\big )\in ({\mathbb {R}}^d)^n\times ({\mathbb {R}}^d\times {\mathbb {R}}^d):\ \Vert x\Vert ^2+\Vert y\Vert ^2=1,\ [x]\ne [y],\ \langle \hspace{-2.5pt}\langle [z_i],[x]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [z_i],[y]\rangle \hspace{-2.5pt}\rangle \ \forall i\in \{1,\ldots ,n\}\Big \}.\]

Since G is semialgebraic, we have that \([x]\ne [y]\) is a semialgebraic condition. Furthermore, \(\langle \hspace{-2.5pt}\langle [z_i],[x]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [z_i],[y]\rangle \hspace{-2.5pt}\rangle \) is a semialgebraic condition for each i by Lemma 12. It follows that \({\mathcal {L}}\) is semialgebraic. Next, we define the projection maps \(\pi _1:(\{z_i\}_{i=1}^n,(x,y))\mapsto \{z_i\}_{i=1}^n\) and \(\pi _2:(\{z_i\}_{i=1}^n,(x,y))\mapsto (x,y)\). Then \({\mathcal {Z}}=\pi _1({\mathcal {L}})\) is semialgebraic by Tarski–Seidenberg (Proposition 2.2.1 in [13]). To bound the dimension of \({\mathcal {Z}}\), we first observe that

\[\pi _2^{-1}(x,y)=\big \{z\in {\mathbb {R}}^d:\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [z],[y]\rangle \hspace{-2.5pt}\rangle \big \}^n\times \{(x,y)\}\qquad \text{for every }(x,y)\in \pi _2({\mathcal {L}}),\]

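and so \({\text {dim}}(\pi _2^{-1}(x,y)) \le n(d-k)\), since the max filtering map is k-strongly separating by assumption. We use the fact that \(\pi _2({\mathcal {L}})\) is contained in the unit sphere in \(({\mathbb {R}}^d)^2\) together with Lemma 2.8 in [39] to obtain

\[{\text {dim}}({\mathcal {Z}})\le {\text {dim}}({\mathcal {L}})\le {\text {dim}}(\pi _2({\mathcal {L}}))+\max _{(x,y)\in \pi _2({\mathcal {L}})}{\text {dim}}(\pi _2^{-1}(x,y))\le (2d-1)+n(d-k)\le dn-1,\]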

where the last step is equivalent to the assumption \(n\ge 2d/k\). \(\square \)

Corollary 15

Consider any finite subgroup \(G\le {\text {O}}(d)\). For generic \(z_1,\ldots ,z_n\in {\mathbb {R}}^d\), the max filter bank \(x\mapsto \{\langle \hspace{-2.5pt}\langle [z_i],[x]\rangle \hspace{-2.5pt}\rangle \}_{i=1}^n\) separates G-orbits in \({\mathbb {R}}^d\) provided \(n\ge 2d\).

Corollary 15 follows immediately from Theorem 14 and the following lemma:

Lemma 16

For every finite subgroup \(G\le {\text {O}}(d)\), the corresponding max filtering map \(\langle \hspace{-2.5pt}\langle [\cdot ],[\cdot ]\rangle \hspace{-2.5pt}\rangle :{\mathbb {R}}^d\times {\mathbb {R}}^d\rightarrow {\mathbb {R}}\) is 1-strongly separating.

Proof

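Consider any \(x,y\in {\mathbb {R}}^d\) with \([x]\ne [y]\). Then \(\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [z],[y]\rangle \hspace{-2.5pt}\rangle \) only if there exist \(g,h\in G\) such that \(\langle z,gx\rangle =\langle z,hy\rangle \), i.e., \(z\in {\text {span}}\{gx-hy\}^\perp \). Thus,

\[\big \{z\in {\mathbb {R}}^d:\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [z],[y]\rangle \hspace{-2.5pt}\rangle \big \}\subseteq \bigcup _{g,h\in G}{\text {span}}\{gx-hy\}^\perp .\]

Since the max filtering map is semialgebraic by Lemma 12, it follows that the left-hand set is also semialgebraic. Since \([x]\ne [y]\), we have \(gx\ne hy\) for all \(g,h\in G\), and so each \({\text {span}}\{gx-hy\}^\perp \) is a hyperplane. Since G is finite, the right-hand set is a finite union of hyperplanes, hence semialgebraic with dimension \(d-1\), and the result follows. \(\square \)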

We would like to know if a version of Corollary 15 holds for all semialgebraic groups, but we do not have a proof of strongly separating for infinite groups in general. This motivates the following problem:

Problem 17

(a) For which semialgebraic groups is the max filtering map k-strongly separating? (Footnote 1)

(b) How many templates are needed to separate orbits for a given group?

We identify a couple of interesting instances of Problem 17. First, we consider the case of complex phase retrieval (as in Example 7), where \(V={\mathbb {C}}^r\) and \(G=\{\lambda \cdot {\text {id}}:\lambda \in {\mathbb {C}},|\lambda |=1\}\) is the center of \({\text {U}}(r)\). It is known that \(n=4r-4=2{\text {dim}}(V)-4\) generic templates separate orbits for every \(r\ge 2\), and this is the optimal threshold for infinitely many r [31], but there also exist 11 templates in \({\mathbb {C}}^4\) that separate orbits [73], one fewer than the generic threshold \(4\cdot 4-4=12\).

As another example, consider the case where \(V={\mathbb {R}}^d\) and \(G\cong S_d\) is the group of \(d\times d\) permutation matrices. Then Corollary 15 gives that 2d generic templates separate orbits. However, it is straightforward to see that the templates \(z_j:=\sum _{i=1}^j e_i\) for \(j\in \{1,\ldots ,d\}\) also separate orbits, where \(e_i\) denotes the ith standard basis element. Indeed, take \({\text {sort}}(x)\) to have weakly decreasing entries. Then the first entry equals \(\langle \hspace{-2.5pt}\langle [z_1],[x]\rangle \hspace{-2.5pt}\rangle \), while for each \(j>1\), the jth entry equals \(\langle \hspace{-2.5pt}\langle [z_j],[x]\rangle \hspace{-2.5pt}\rangle -\langle \hspace{-2.5pt}\langle [z_{j-1}],[x]\rangle \hspace{-2.5pt}\rangle \). As such, this max filter bank determines \({\text {sort}}(x)\), which is a separating invariant of V/G. Since d templates suffice here, which is exactly the count that Theorem 14 would give with \(k=2\), one might suspect that the max filtering map is 2-strongly separating in this case, but this is not so. Indeed, the cone C of sorted vectors in \({\mathbb {R}}^d\) has dimension d, and so there exists a subspace H of co-dimension 1 that intersects the interior of C. Select any x in the interior of C and any unit vector \(v\in H^\perp \), and then take \(y=x+\epsilon v\) for \(\epsilon >0\) sufficiently small so that \(y\in C\). Since \(\langle \hspace{-2.5pt}\langle [z],[w]\rangle \hspace{-2.5pt}\rangle =\langle z,w\rangle \) whenever \(z,w\in C\) (by the rearrangement inequality), it follows that

\[\big \{z\in {\mathbb {R}}^d:\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle =\langle \hspace{-2.5pt}\langle [z],[y]\rangle \hspace{-2.5pt}\rangle \big \}\supseteq \{z\in C:\langle z,v\rangle =0\}=C\cap H,\]

where the right-hand side is a semialgebraic set of dimension \(d-1\) since H intersects the interior of C. As \([x]\ne [y]\), the claim follows.
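The recovery of \({\text {sort}}(x)\) from the d max filters with templates \(z_j=e_1+\cdots +e_j\) can be sketched in a few lines of plain Python. We use the fact (rearrangement inequality) that for \(G\cong S_d\), \(\langle \hspace{-2.5pt}\langle [z],[x]\rangle \hspace{-2.5pt}\rangle \) is the inner product of the sorted vectors; the function names are our own.

```python
def max_filter_sym(z, x):
    """Max filter for G = S_d: <<[z],[x]>> = <sort(z), sort(x)> (rearrangement inequality)."""
    return sum(a * b for a, b in zip(sorted(z), sorted(x)))

def sort_via_max_filters(x):
    """Recover sort(x) (weakly decreasing) from the d max filters with z_j = e_1 + ... + e_j."""
    d = len(x)
    templates = [[1.0] * j + [0.0] * (d - j) for j in range(1, d + 1)]
    vals = [max_filter_sym(z, x) for z in templates]   # vals[j-1] = sum of the j largest entries
    return [vals[0]] + [vals[j] - vals[j - 1] for j in range(1, d)]

print(sort_via_max_filters([3.0, -1.0, 2.0, 5.0]))   # [5.0, 3.0, 2.0, -1.0]
```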

3.2 Low-complexity max filtering

In this subsection, we focus on the case in which \(V\cong {\mathbb {R}}^d\). Naively, one may compute the max filtering map \((x,y)\mapsto \langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle \) over a finite group \(G\le {\text {O}}(d)\) of order m by computing \(\langle x,gy\rangle \) for every \(g\in G\) and then returning the maximum. This approach costs O(md) operations. Of course, this is not possible when G is infinite, and it is prohibitive when G is finite but large. Interestingly, many of the groups that we encounter in practice admit a faster implementation. In particular, for many quotient spaces V/G, the quotient metric \(d:V/G\times V/G\rightarrow {\mathbb {R}}\) is easy to compute, and by Lemma 2f, the max filtering map is equally easy to compute in these cases:

\[\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle =\tfrac{1}{2}\big (\Vert x\Vert ^2+\Vert y\Vert ^2-d([x],[y])^2\big ).\]

In this subsection, we highlight a few examples of such quotient spaces before considering the harder setting of graphs.

3.2.1 Point clouds

Consider \(V={\mathbb {R}}^{k\times n}\) with Frobenius inner product. We can represent a point cloud of n points in \({\mathbb {R}}^k\) as a member of V by arbitrarily labeling the points with column indices. In this setting, we identify members of V that reside in a common G-orbit with \(G\cong S_n\) permuting the columns. The resulting quotient metric is, up to a factor of \(\sqrt{n}\), the 2-Wasserstein distance between the uniform distributions on the two point clouds:

\[d([X],[Y])=\min _{P\in \Pi (n)}\Vert X-YP\Vert _F,\]

where \(\Pi (n)\) denotes the set of \(n\times n\) permutation matrices and \(\Vert \cdot \Vert _F\) denotes the Frobenius norm. The corresponding max filtering map is then given by

\[\langle \hspace{-2.5pt}\langle [X],[Y]\rangle \hspace{-2.5pt}\rangle =\max _{P\in \Pi (n)}\langle X,YP\rangle =\max _{P\in {\text {conv}}\Pi (n)}\langle X,YP\rangle ,\]

where \({\text {conv}}\Pi (n)\) denotes the convex hull of \(\Pi (n)\), namely, the doubly stochastic matrices. By this formulation, the max filtering map can be computed in polynomial time by linear programming. In the special case where \(k=1\), the max filtering map has an even faster implementation:

\[\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle =\langle {\text {sort}}(x),{\text {sort}}(y)\rangle ,\]

which can be computed in linearithmic time.
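For \(k=1\), the sorting formula can be compared against the definition (a maximum over all n! relabelings) and against Lemma 2f. This is a plain-Python sketch for small n; the function names and sample point clouds are illustrative only.

```python
import itertools
import math

def max_filter_sorted(x, y):
    """k = 1 point clouds: inner product of x and y after sorting both in the same order; O(n log n)."""
    return sum(a * b for a, b in zip(sorted(x), sorted(y)))

def max_filter_bruteforce(x, y):
    """Definition-level check: max over all n! relabelings of y."""
    return max(sum(a * b for a, b in zip(x, perm)) for perm in itertools.permutations(y))

def quotient_distance(x, y):
    """min over permutations of ||x - P y||, attained by matching sorted orders."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(sorted(x), sorted(y))))

x = [0.3, -1.2, 2.5, 0.0, 1.1]
y = [1.0, 4.0, -2.0, 0.5, -0.7]
print(max_filter_sorted(x, y), max_filter_bruteforce(x, y))   # equal up to rounding
```

The brute-force routine is only there for validation; its cost grows like n!.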

3.2.2 Circular translations

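Consider the case where \(V\cong {\mathbb {R}}^n\) is the space of vectors with entries indexed by the cyclic group \(C_n:={\mathbb {Z}}/n{\mathbb {Z}}\), and \(G\cong C_n\) is the group of circular translations \(T_a\) defined by \(T_af(t):=f(t-a)\). Then the max filtering map is given by

\[\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle =\max _{a\in C_n}\langle x,T_ay\rangle =\max _{a\in C_n}(x\star Ry)(a),\]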

where \(\star \) denotes the circular convolution and R denotes the reversal operator. Thus, the max filtering map can be computed in linearithmic time with the help of the fast Fourier transform.
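The FFT route can be sketched as follows, assuming NumPy and taking the reversal to be \((Ry)(t)=y(-t)\), so that \((x\star Ry)(a)=\sum _t x(t)y(t-a)=\langle x,T_ay\rangle \). The brute-force version is included only to validate the FFT version; the function names are ours.

```python
import numpy as np

def max_filter_circular_bruteforce(x, y):
    """max over a of <x, T_a y> with (T_a y)[t] = y[t - a]; O(n^2) work."""
    return max(float(np.dot(x, np.roll(y, a))) for a in range(len(y)))

def max_filter_circular_fft(x, y):
    """Same value as the max of the circular convolution x * R y, computed with the FFT; O(n log n)."""
    Ry = np.roll(y[::-1], 1)                     # (R y)[t] = y[-t mod n]
    conv = np.fft.ifft(np.fft.fft(x) * np.fft.fft(Ry)).real
    return float(conv.max())

rng = np.random.default_rng(0)
x, y = rng.standard_normal(256), rng.standard_normal(256)
print(max_filter_circular_bruteforce(x, y), max_filter_circular_fft(x, y))   # equal up to rounding
```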

3.2.3 Shape analysis

In geometric morphometrics [55], it is common for data to take the form of a sequence of n landmarks in \({\mathbb {R}}^k\) (where k is typically 2 or 3) with a global rotation ambiguity. This corresponds to taking \(V={\mathbb {R}}^{k\times n}\) and \(G\cong {\text {O}}(k)\) acting on the left, and so the max filtering map is given by

\[\langle \hspace{-2.5pt}\langle [X],[Y]\rangle \hspace{-2.5pt}\rangle =\max _{Q\in {\text {O}}(k)}\langle X,QY\rangle =\Vert XY^{\top }\Vert _*,\]

where \(\Vert \cdot \Vert _*\) denotes the nuclear norm. As such, the max filtering map can be computed in polynomial time with the aid of the singular value decomposition.
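A short sketch of the SVD-based computation, assuming NumPy. If \(XY^{\top }=USV^{\top }\) is a singular value decomposition, then \(Q=UV^{\top }\) attains \(\langle X,QY\rangle ={\text {tr}}(S)=\Vert XY^{\top }\Vert _*\) (the orthogonal Procrustes solution). The landmark data are random placeholders and the function names are ours.

```python
import numpy as np

def max_filter_rotation(X, Y):
    """<<[X],[Y]>> = max over Q in O(k) of <X, QY>, which equals the nuclear norm of X Y^T."""
    return float(np.linalg.norm(X @ Y.T, ord="nuc"))

def best_orthogonal(X, Y):
    """A maximizer Q = U V^T, where X Y^T = U S V^T is a singular value decomposition."""
    U, _, Vt = np.linalg.svd(X @ Y.T)
    return U @ Vt

rng = np.random.default_rng(0)
X, Y = rng.standard_normal((3, 10)), rng.standard_normal((3, 10))   # 10 landmarks in R^3
Q = best_orthogonal(X, Y)
print(max_filter_rotation(X, Y), float(np.sum(X * (Q @ Y))))        # equal up to rounding
```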

3.2.4 Separation hierarchy for weighted graphs

Here, we focus on the case in which V is the vector space of real symmetric \(n\times n\) matrices with zero diagonal and \(G\cong S_n\) is the group of linear isometries of the form \(A\mapsto PAP^{-1}\), where P is a permutation matrix. We think of V/G as the space of weighted graphs on n vertices (up to isomorphism). One popular approach for separating graphs uses message-passing graph neural networks, but the separation power of such networks is limited by the so-called Weisfeiler–Lehman test [57, 78, 80]. For example, message-passing graph neural networks fail to distinguish \(C_3\cup C_3\) from \(C_6\). See [45, 67] for surveys of this rapidly growing literature.

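As an alternative, we consider a max filtering approach. Given two adjacency matrices \(A_1\) and \(A_2\), Lemma 2f implies that the corresponding graphs are isomorphic if and only if

\[\langle \hspace{-2.5pt}\langle [A_1],[A_2]\rangle \hspace{-2.5pt}\rangle =\tfrac{1}{2}\big (\Vert A_1\Vert _F^2+\Vert A_2\Vert _F^2\big ).\]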

As such, max filtering is graph isomorphism–hard in this setting. Interestingly, there exist \(A\in V\) for which the map \(X\mapsto \langle \hspace{-2.5pt}\langle [A],[X]\rangle \hspace{-2.5pt}\rangle \) can be computed in linearithmic time, and furthermore, these easy-to-compute max filters help with separating orbits. To see this, we follow [2], which uses color coding to facilitate computation by dynamic programming.

Definition 18

A tuple \(\{f_i\}_{i=1}^N\) with \(f_i:[n]\rightarrow [k]\) for each \(i\in [N]\) is an (n, k)-color coding if \(n\ge k\) and for every \(S\subseteq [n]\) of cardinality k, there exists \(i\in [N]\) such that \(f_i(S)=[k]\).

Lemma 19

Given \(n,k\in {\mathbb {N}}\) with \(n\ge k\), there exists an (n, k)-color coding of size \(\lceil ke^k\log n\rceil \).

Proof

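We show that N independent, uniformly random colorings form a color coding with positive probability. For each \(i\in [N]\) and \(S\in \left( {\begin{array}{c}[n]\\ k\end{array}}\right) \), we have \({\mathbb {P}}\{f_i(S)=[k]\}=k!/k^k\), and so independence and the union bound give

\[{\mathbb {P}}\Big \{\exists S\in \left( {\begin{array}{c}[n]\\ k\end{array}}\right) \ \forall i\in [N]:\ f_i(S)\ne [k]\Big \}\le \left( {\begin{array}{c}n\\ k\end{array}}\right) \Big (1-\frac{k!}{k^k}\Big )^N.\]

It suffices to select N so that the right-hand side is strictly smaller than 1. The result follows by applying the bounds \(\left( {\begin{array}{c}n\\ k\end{array}}\right) \le n^k\), \(k!\ge (k/e)^k\), and \((1-1/t)^t<1/e\) for \(t>1\):

\[\left( {\begin{array}{c}n\\ k\end{array}}\right) \Big (1-\frac{k!}{k^k}\Big )^N\le n^k\big (1-e^{-k}\big )^N=n^k\Big (\big (1-e^{-k}\big )^{e^k}\Big )^{N/e^k}<n^ke^{-N/e^k},\]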

which is at most 1 when \(N\ge ke^k\log n\). \(\square \)
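The probabilistic construction in Lemma 19 can be checked directly for small parameters. The sketch below (helper names are hypothetical) draws \(\lceil ke^k\log n\rceil \) independent uniform colorings, using colors \(0,\ldots ,k-1\) in place of \([k]\), and verifies the color coding property by brute force over all k-subsets. Since the lemma only guarantees success with positive probability, verifying the drawn family is the honest step.

```python
import itertools
import math
import random


def color_coding_size(n, k):
    """N = ceil(k e^k log n), the size from Lemma 19."""
    return math.ceil(k * math.exp(k) * math.log(n))


def is_color_coding(colorings, n, k):
    """Brute-force check: every k-subset of range(n) is colorful for some coloring.

    Each coloring is a tuple of length n with entries in range(k)."""
    for S in itertools.combinations(range(n), k):
        if not any(len({f[s] for s in S}) == k for f in colorings):
            return False
    return True


def random_color_coding(n, k, seed=0):
    """N independent uniformly random colorings [n] -> [k] (0-indexed colors)."""
    rng = random.Random(seed)
    N = color_coding_size(n, k)
    return [tuple(rng.randrange(k) for _ in range(n)) for _ in range(N)]


n, k = 6, 3
family = random_color_coding(n, k)
print(len(family), is_color_coding(family, n, k))
```

For \(n=6\) and \(k=3\), the family has 108 colorings; by the union bound above, the chance that it fails to be a color coding is far below \(10^{-9}\).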

Algorithm 1 computes the max filter with a small weighted tree using a color coding and dynamic programming. Lemma 19 implies that Algorithm 1 has runtime \(e^{O(k\log k)}n^2\log n\), which is linearithmic in the size of the data when k is fixed. Notice that max filtering with the path on \(k=4\) vertices already separates the graphs \(C_3\cup C_3\) and \(C_6\). Furthermore, using techniques from [2], one can modify Algorithm 1 to max filter with any template graph H on k vertices, though the runtime becomes \(e^{O(k\log k)}n^{t+1}\log n\), where t is the treewidth of H. Letting \({\mathcal {H}}(k,t)\) denote the set of weighted graphs on at most k vertices with treewidth at most t, we have the following hierarchy:

Corollary 15 gives that \(n(n-1)\) generic templates from \({\mathcal {H}}(n,n-1)\) separate all isomorphism classes of weighted graphs on n vertices. It would be interesting to study the separation power of templates of logarithmic order and bounded treewidth.
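Algorithm 1 itself is not reproduced in this section. The following is a hypothetical sketch in the spirit of the color-coding technique of [2], not a transcription of Algorithm 1. It shows how a color coding and dynamic programming can evaluate the max filter with a weighted path template. If T is the symmetric adjacency matrix of a path with edge weights \(w_1,\ldots ,w_{k-1}\), padded with isolated vertices, then \(\langle \hspace{-2.5pt}\langle [T],[A]\rangle \hspace{-2.5pt}\rangle \) is twice the maximum of \(\sum _jw_jA_{v_jv_{j+1}}\) over injective vertex sequences, which is what the code computes.

```python
import itertools
import math
import random


def path_max_brute(A, w):
    """max over injective (v_0, ..., v_{k-1}) of sum_j w[j] * A[v_j][v_{j+1}]."""
    n, k = len(A), len(w) + 1
    return max(
        sum(w[j] * A[v[j]][v[j + 1]] for j in range(k - 1))
        for v in itertools.permutations(range(n), k)
    )


def path_max_color_coding(A, w, colorings):
    """Same quantity via color coding plus dynamic programming.

    For each coloring, dp[(S, v)] is the best weight of a sequence that uses exactly the
    colors in the bitmask S (one vertex per color) and ends at v. Distinct colors force
    distinct vertices, so every sequence counted is injective; if `colorings` is an
    (n, k)-color coding, every injective sequence is colorful for some coloring.
    """
    n, k = len(A), len(w) + 1
    best = -math.inf
    for col in colorings:
        dp = {(1 << col[v], v): 0.0 for v in range(n)}
        for step in range(k - 1):
            new = {}
            for (S, u), val in dp.items():
                for v in range(n):
                    bit = 1 << col[v]
                    if S & bit:
                        continue  # the color of v is already used
                    cand = val + w[step] * A[u][v]
                    if cand > new.get((S | bit, v), -math.inf):
                        new[(S | bit, v)] = cand
            dp = new
        best = max([best, *dp.values()])
    return best


def cycle_union(lengths):
    """0/1 adjacency matrix (list of lists) of a disjoint union of cycles."""
    n = sum(lengths)
    A = [[0] * n for _ in range(n)]
    start = 0
    for m in lengths:
        for i in range(m):
            u, v = start + i, start + (i + 1) % m
            A[u][v] = A[v][u] = 1
        start += m
    return A


n, k = 6, 4
w = [1.0] * (k - 1)  # unit-weight path template on k = 4 vertices
rng = random.Random(0)
N = math.ceil(k * math.exp(k) * math.log(n))
colorings = [[rng.randrange(k) for _ in range(n)] for _ in range(N)]
results = [(path_max_color_coding(A, w, colorings), path_max_brute(A, w))
           for A in (cycle_union([3, 3]), cycle_union([6]))]
print(results)
```

The path on 4 vertices picks up at most 2 edges in \(C_3\cup C_3\) but 3 edges in \(C_6\), so the corresponding max filter values are 4 and 6, which separates the two graphs. Each coloring costs roughly \(2^k\cdot k\cdot n^2\) operations in this sketch.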

4 Stability of max filtering

4.1 Bilipschitz max filter banks

Upper and lower Lipschitz bounds are used to quantify the stability of a mapping between metric spaces, but it is generally difficult to estimate such bounds; see [5, 8, 10, 19, 47] for examples from phase retrieval and [6, 20, 21, 82] for other examples. In this subsection, we prove the following:

Theorem 20

Fix a finite group \(G\le {\text {O}}(d)\) of order m and select

Draw independent random vectors \(z_1,\ldots ,z_n\sim \textsf{Unif}(S^{d-1})\). With probability \(\ge 1-e^{-n/(12m^2)}\), it holds that the max filter bank \(\Phi :{\mathbb {R}}^d/G\rightarrow {\mathbb {R}}^n\) with templates \(\{z_i\}_{i=1}^n\) has lower Lipschitz bound \(\delta \) and upper Lipschitz bound \(n^{1/2}\).

This result distinguishes max filtering from separating polynomial invariants, which do not necessarily enjoy upper or lower Lipschitz bounds [21]. In Theorem 20, we may take the embedding dimension to be \(n=\Theta ^*(m^2d)\) with bilipschitz bounds \(\Theta ^*(\frac{1}{m^2d^{1/2}})\) and \(\Theta ^*(md^{1/2})\), where \(\Theta ^*(\cdot )\) suppresses logarithmic factors. For comparison, we consider a couple of cases that have already been studied in the literature. First, the case where \(G=\{\pm {\text {id}}\}\) reduces to the setting of real phase retrieval (as in Example 7), where it is known that there exist \(n=\Theta (d)\) templates that deliver lower- and upper-Lipschitz bounds \(\frac{1}{4}\) and 4, say; see equation (17) in [10]. Notably, these bounds do not get worse as d gets large. It would be interesting if a version of Theorem 20 held for infinite groups, but we do not expect it to hold for infinite-dimensional inner product spaces. Case in point, for \(V=\ell ^2\) with \(G=\{\pm {\text {id}}\}\), it was shown in [19] that for every choice of templates, the map is not bilipschitz.

Another interesting phenomenon from finite-dimensional phase retrieval is that separating implies bilipschitz; see Lemma 16 and Theorem 18 in [10] and Proposition 1.4 in [19]. This suggests the following:

Problem 21

Is every separating max filter bank \(\Phi :{\mathbb {R}}^d/G\rightarrow {\mathbb {R}}^n\) bilipschitz?

If the answer to Problem 21 is “yes,” then Corollary 15 implies that 2d generic templates produce a bilipschitz max filter bank \(\Phi :{\mathbb {R}}^d/G\rightarrow {\mathbb {R}}^{2d}\) whenever \(G\le {\text {O}}(d)\) is finite.

Theorem 20 follows immediately from Lemmas 22 and 23 below. Our proof uses the following notion that was introduced in [1]. We say \(\{z_i\}_{i=1}^n\in ({\mathbb {R}}^d)^n\) exhibits \((k,\delta )\)-projective uniformity if

for every \(x\in {\mathbb {R}}^d\), where \(s_k:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) returns the kth smallest entry of the input. In what follows, we denote \(\Vert \{z_i\}_{i=1}^n\Vert _F:=(\sum _{i=1}^n\Vert z_i\Vert ^2)^{1/2}\).

Lemma 22

Fix a finite subgroup \(G\le {\text {O}}(d)\) and suppose \(\{z_i\}_{i=1}^n\in ({\mathbb {R}}^d)^n\) exhibits \((\lceil \frac{n}{|G|^2}\rceil ,\delta )\)-projective uniformity. Then the max filter bank \(\Phi :{\mathbb {R}}^d/G\rightarrow {\mathbb {R}}^n\) with templates \(\{z_i\}_{i=1}^n\) has lower Lipschitz bound \(\delta \) and upper Lipschitz bound \(\Vert \{z_i\}_{i=1}^n\Vert _F\).

Proof

The upper Lipschitz bound follows from Lemma 2g:

For the lower Lipschitz bound, fix \(x,y\in {\mathbb {R}}^d\) with \([x]\ne [y]\), and then for each \(i\in \{1,\ldots ,n\}\), select \(g_i,h_i\in G\) such that \(\langle \hspace{-2.5pt}\langle [z_i],[x]\rangle \hspace{-2.5pt}\rangle =\langle z_i,g_ix\rangle \) and \(\langle \hspace{-2.5pt}\langle [z_i],[y]\rangle \hspace{-2.5pt}\rangle =\langle z_i,h_iy\rangle \). Then

where the inequality follows from the bound \(\Vert g_ix-h_iy\Vert \ge d([x],[y])\). Next, consider the map \(p:i\mapsto (g_i,h_i)\), and select \((g,h)\in G^2\) with the largest preimage. By pigeonhole, we have \(|p^{-1}(g,h)|\ge \lceil \frac{n}{|G|^2}\rceil =:k\), and so

Combining with (2) gives the result. \(\square \)
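The following is a minimal numerical sanity check of Lemma 22. It assumes \(G=C_d\) acting on \({\mathbb {R}}^d\) by cyclic shifts, which is a finite subgroup of \({\text {O}}(d)\) of order d, and the helper names are hypothetical. It samples random pairs and compares \(\Vert \Phi ([x])-\Phi ([y])\Vert /d([x],[y])\) against the upper Lipschitz bound \(\Vert \{z_i\}_{i=1}^n\Vert _F\). The smallest sampled ratio only upper-estimates the best lower Lipschitz constant; it does not certify \(\delta \).

```python
import math

import numpy as np


def orbit(x):
    """All cyclic shifts of x: the orbit of x under G = C_d acting on R^d (|G| = d)."""
    return np.stack([np.roll(x, s) for s in range(len(x))])


def max_filter_bank(Z, x):
    """Phi([x])_i = max_{g in G} <z_i, g x> for each template z_i (rows of Z)."""
    return (Z @ orbit(x).T).max(axis=1)


def quotient_dist(x, y):
    """d([x],[y]) = min_{g in G} ||x - g y||."""
    return np.linalg.norm(x - orbit(y), axis=1).min()


rng = np.random.default_rng(0)
d, n = 8, 64
Z = rng.standard_normal((n, d))
Z /= np.linalg.norm(Z, axis=1, keepdims=True)  # templates drawn from Unif(S^{d-1})
frob = np.linalg.norm(Z)                        # ||{z_i}||_F, equal to sqrt(n) here
k = math.ceil(n / d ** 2)                       # pigeonhole level ceil(n / |G|^2)

ratios = []
for _ in range(200):
    x, y = rng.standard_normal(d), rng.standard_normal(d)
    ratios.append(np.linalg.norm(max_filter_bank(Z, x) - max_filter_bank(Z, y))
                  / quotient_dist(x, y))
print(min(ratios), max(ratios), frob, k)
```

Every sampled ratio stays below \(\Vert \{z_i\}\Vert _F\), as Lemma 2g guarantees coordinatewise.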

The following lemma gives that random templates exhibit projective uniformity.

Lemma 23

(cf. Lemma 6.9 in [1]) Select \(p\in (0,1)\) and take

Draw independent random vectors \(z_1,\ldots ,z_n\sim \textsf{Unif}(S^{d-1})\). Then \(\{z_i\}_{i=1}^n\) exhibits \((\lceil pn\rceil ,\delta )\)-projective uniformity with probability \(\ge 1-e^{-pn/12}\).

Proof

Put \(k:=\lceil pn\rceil \), let \({\mathcal {E}}\) denote the failure event that \(\{z_i\}_{i=1}^n\) does not have \((k,\delta )\)-projective uniformity, and let \(N_\delta \) denote a \(\delta \)-net of \(S^{d-1}\) of minimum size. Note that if v is within \(\delta \) of x, then for every \(z_i\), it holds that

Thus, we may pass to the \(\delta \)-net to get

where the second inequality applies the union bound and the rotation invariance of the distribution \(\textsf{Unif}(S^{d-1})\). A standard volume comparison argument gives \(|N_\delta |\le (\frac{2}{\delta }+1)^d\). The final probability concerns a sum of independent Bernoulli variables with some success probability \(q=q(d,\delta )\), which can be estimated using the multiplicative Chernoff bound:

provided \(p>q\). Next, we verify that \(q(d,\delta )\le \frac{p}{2}\). Denoting \(g\sim {\textsf{N}}(0,I_d)\), we have

where the final inequality uses the facts that \(|\langle g,e_1\rangle |\) has half-normal distribution and \(\Vert g\Vert ^2\) has chi-squared distribution with d degrees of freedom. We select \(t:=(2d+3\log (\frac{4}{p}))^{1/2}\) so that the second term equals \(\frac{p}{4}\), and then our choice (3) for \(\delta \) ensures that the first term equals \(\frac{p}{4}\). Overall, we have

where the last step applied our assumption that \(n\ge \frac{12d}{p}\log (\frac{2}{\delta }+1)\). \(\square \)

4.2 Mallat-type stability to diffeomorphic distortion

In this subsection, we focus on the case in which \(V=L^2({\mathbb {R}}^d)\) and G is the group of translation operators \(T_a\) defined by \(T_af(x):=f(x-a)\) for \(a\in {\mathbb {R}}^d\). We first verify that G has closed orbits. Take any \(f,h\in L^2({\mathbb {R}}^d)\). Then for each \(a\in {\mathbb {R}}^d\), we have

where R denotes the reversal operator defined by \(Rh(x):=h(-x)\) and \(\star \) denotes convolution. Thus,

To see that the orbit [f] is closed, assume \(f \ne 0\) and take h to be an accumulation point of [f]. Then \(\inf _{a \in {\mathbb {R}}^d} \Vert h - T_a f \Vert ^2 = 0\), and \((Rh \star f)(a)\) is positive for some \(a \in {\mathbb {R}}^d\). Since \(Rh \star f\) also belongs to \(C_0({\mathbb {R}}^d)\), it attains a maximum at some \(a_0 \in {\mathbb {R}}^d\). Then \(\Vert h - T_a f \Vert ^2\) attains a minimum at \(a = a_0\), and \(h = T_{a_0} f \in [f]\).
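Computationally, the link between inner products of translates and the convolution \(Rh\star f\) means that one correlation evaluates the translation max filter at every shift at once. The sketch below assumes the discrete, periodic analogue \({\mathbb {Z}}_n\) in place of \({\mathbb {R}}^d\), which is an assumption and not the setting of this subsection. It compares an FFT evaluation against a direct loop over shifts; the function names are hypothetical.

```python
import numpy as np


def translation_max_filter_fft(h, f):
    """Return (max_a <T_a h, f>, argmax) on Z_n, where (T_a h)[x] = h[x - a].

    The correlation c[a] = sum_x h[x - a] f[x] = (Rh * f)(a), with circular convolution,
    is computed for every shift a at once via the FFT (h and f are real)."""
    c = np.fft.ifft(np.fft.fft(f) * np.conj(np.fft.fft(h))).real
    return c.max(), int(c.argmax())


def translation_max_filter_brute(h, f):
    """Direct O(n^2) evaluation for comparison; np.roll(h, a)[x] == h[x - a]."""
    c = np.array([np.dot(np.roll(h, a), f) for a in range(len(h))])
    return c.max(), int(c.argmax())


rng = np.random.default_rng(1)
h, f = rng.standard_normal(64), rng.standard_normal(64)
fast, brute = translation_max_filter_fft(h, f), translation_max_filter_brute(h, f)
print(fast, brute)
```

The FFT route costs \(O(n\log n)\) instead of \(O(n^2)\), and the maximizing shift plays the role of \(a_0\) above.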

Our motivation for this setting stems from image analysis, in which case \(d=2\). For a familiar example, consider the task of classifying handwritten digits. Intuitively, each class is translation invariant, and so it makes sense to treat images as members of V/G. In addition, images that are slight elastic distortions of each other should be sent to nearby points in the feature domain. The fact that image classification is invariant to such distortions has been used to augment the MNIST training set and boost classification performance [69]. Instead of using data augmentation to learn distortion-invariant features, it is desirable to restrict to feature maps that already exhibit distortion invariance. (Indeed, such feature maps would require fewer parameters to train.) This compelled Mallat to introduce his scattering transform [54], which has since played an important role in the theory of invariant machine learning [17, 18, 41, 60, 74]. Mallat used the following formalism to analyze the stability of the scattering transform to distortion.

Given a diffeomorphism \(g\in C^1({\mathbb {R}}^d)\), we consider the corresponding distortion operator \(L_g\) defined by \(L_gf(x):=f(g^{-1}(x))\). It will be convenient to interact with the vector field \(\tau :={\text {id}}-g^{-1}\in C^1({\mathbb {R}}^d)\), since \(L_gf(x)=f(x-\tau (x))\). For example, if \(\tau (x)=a\) for every \(x\in {\mathbb {R}}^d\), then \(L_g\) is translation by a. In what follows, \(J\tau (x)\in {\mathbb {R}}^{d\times d}\) denotes the Jacobian matrix of \(\tau \) at x.

Theorem 24

Take any continuously differentiable \(h\in L^2({\mathbb {R}}^d)\) for which

are bounded. There exists \(C(h)>0\) such that for every \(f\in L^2({\mathbb {R}}^d)\) and every diffeomorphism \(g\in C^1({\mathbb {R}}^d)\) for which \(\tau :={\text {id}}-g^{-1}\) satisfies \(\sup _{x\in {\mathbb {R}}^d}\Vert J\tau (x)\Vert _{2\rightarrow 2}\le \frac{1}{2}\), it holds that

This matches Mallat’s bound [54] on the stability of the scattering transform to diffeomorphic distortion. The proof of Theorem 24 follows almost immediately from the following modification of Lemma E.1 in [54], which bounds the commutator between the filter and the distortion by the magnitude of the distortion:

Lemma 25

Take \(h\in L^2({\mathbb {R}}^d)\) as in Theorem 24, and consider the linear operator \(Z_h\) defined by \(Z_hf:=h\star f\). There exists \(C(h)>0\) such that for every diffeomorphism \(g\in C^1({\mathbb {R}}^d)\) for which \(\tau :={\text {id}}-g^{-1}\) satisfies \(\sup _{x\in {\mathbb {R}}^d}\Vert J\tau (x)\Vert _{2\rightarrow 2}\le \frac{1}{2}\), it holds that

Assuming Lemma 25 for the moment, we can prove Theorem 24.

Proof of Theorem 24

The change of variables \(a=g^{-1}(a')\) gives

and so the result follows from Lemma 25. \(\square \)

The rest of this section proves Lemma 25. Our proof follows some of the main ideas in the proof of Lemma E.1 in [54].

Proof of Lemma 25

Denote \(K:=Z_h-L_gZ_hL_g^{-1}\). Then \(L_gZ_h-Z_hL_g=-KL_g\), and so

We first bound the second factor. For \(f\in L^2({\mathbb {R}}^d)\), a change of variables gives

For \(x\in {\mathbb {R}}^d\), the fact that \(\Vert J\tau (x)\Vert _{2\rightarrow 2}\le \frac{1}{2}\) implies

and so combining with the above estimate gives

It remains to bound \(\Vert K\Vert _{L^2({\mathbb {R}}^d)\rightarrow L^\infty ({\mathbb {R}}^d)}\). To this end, one may verify that K can be expressed as \(Kf(x)=\int _{{\mathbb {R}}^d}k(x,u)f(u)du\), where the kernel k is defined by

We will bound the \(L^2\) norms of every \(k(x,\cdot )\) and \(k(\cdot ,u)\), and then appeal to Young’s inequality for integral operators to bound \(\Vert K\Vert _{L^2({\mathbb {R}}^d)\rightarrow L^\infty ({\mathbb {R}}^d)}\). We decompose \(k=k_1+k_2+k_3\), where

First, we analyze \(k_1\). Letting \(p_1:[0,1]\rightarrow {\mathbb {R}}^d\) denote the parameterized line segment of constant velocity from \((I_d-J\tau (u))(x-u)\) to \(x-u\), we have

and so

To bound the first factor, let \(C_\infty (h)>0\) denote a simultaneous bound on the absolute value and 2-norm of (4). To use this, we bound \(\inf _{t\in [0,1]}\Vert p_1(t)\Vert _2\) from below:

Then

which allows us to further bound (6):

Next, we analyze \(k_2\). Since \(\Vert J\tau (x)\Vert _{2\rightarrow 2}\le \frac{1}{2}\) by assumption, Bernoulli’s inequality gives

Also, the convexity bound \((1+t)^d\le 1+(2^d-1)t\) for \(t\in [0,1]\) implies

Furthermore, we have

and so

Finally, we analyze \(k_3\). Put

Then letting \(p_2:[0,1]\rightarrow {\mathbb {R}}^d\) denote the parameterized line segment of constant velocity from r to \(r+s\), we have

and so

For the first factor of (9), we have \(|{\text {det}}(I_d-J\tau (u))|\le \Vert I_d-J\tau (u)\Vert _{2\rightarrow 2}^d\le (3/2)^d\). To bound the second factor of (9), we use our bound \(C_\infty (h)>0\) on (4). To do so, we bound \(\inf _{t\in [0,1]}\Vert p_2(t)\Vert _2\) from below. First, we note that

where \(p_3:[0,1]\rightarrow {\mathbb {R}}^d\) is the parameterized line segment of constant velocity from u to x. Thus,

and so for each \(t\in [0,1]\), we have

Overall, we have

Next, we apply (10) to bound the third factor of (9):

We combine these estimates to obtain the following bound on (9):

Finally, (7), (8), and (11) together imply

Importantly, this is a bounded function of \(x-u\) that decays like \(\Vert x-u\Vert _2^{-(d+1)/2}\). By integrating the square, this simultaneously bounds the \(L^2\) norm of every \(k(x,\cdot )\) and \(k(\cdot ,u)\) by a quantity of the form \(C_0(h)\cdot \sup _{z\in {\mathbb {R}}^d}\Vert J\tau (z)\Vert _{2\rightarrow 2}\). By Young’s inequality for integral operators (see Theorem 0.3.1 in [70], for example), it follows that

Combining with (5) then gives the result with \(C(h):=2^d\cdot C_0(h)\). \(\square \)

5 Template selection for classification

5.1 Classifying characteristic functions

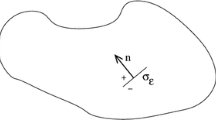

In this subsection, we focus on the case in which \(V=L^2({\mathbb {R}}^d)\) and G is the group of translation operators. Suppose we have k distinct G-orbits of indicator functions of compact subsets of \({\mathbb {R}}^d\). According to the following result, there is a simple classifier based on a size-k max filter bank that correctly classifies these orbits. (This cartoon setting enjoys precursors in [25, 26].)

Theorem 26

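Given compact sets \(S_1,\ldots ,S_k\subseteq {\mathbb {R}}^d\) of positive measure satisfying

\[[{\textbf{1}}_{S_i}]\ne [{\textbf{1}}_{S_j}]\quad \text {whenever } i\ne j,\]

there exist templates \(z_1,\ldots , z_k\in L^2({\mathbb {R}}^d)\) satisfying

\[\langle \hspace{-2.5pt}\langle [z_i],[{\textbf{1}}_{S_j}]\rangle \hspace{-2.5pt}\rangle \ \begin{cases}=|S_i| & \text {if } j=i,\\ <|S_i| & \text {if } j\ne i.\end{cases}\]
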
Proof

By compactness, there exists \(r>0\) such that every \(S_i\) is contained in the closed ball centered at the origin with radius r. For reasons that will become apparent later, we take B to be the closed ball centered at the origin with radius 3r, and we define \(z_i:={\textbf{1}}_{S_i}-{\textbf{1}}_{B\setminus S_i}\). Then for every i and j, it holds that

In the special case where \(j=i\), this implies

and so \(\langle \hspace{-2.5pt}\langle [z_i],[{\textbf{1}}_{S_i}]\rangle \hspace{-2.5pt}\rangle =|S_i|\). We consider all \(j\ne i\) in two cases.

Case I: \(j\ne i\) and \(|S_j|\le |S_i|\). Considering (12), it suffices to bound \(\langle \hspace{-2.5pt}\langle [{\textbf{1}}_{S_i}],[{\textbf{1}}_{S_j}]\rangle \hspace{-2.5pt}\rangle \). Letting R denote the reversal operator defined by \(Rf(x):=f(-x)\), then

Since \(R{\textbf{1}}_{S_i},{\textbf{1}}_{S_j}\in L^2({\mathbb {R}}^d)\), it holds that the convolution \(R{\textbf{1}}_{S_i}\star {\textbf{1}}_{S_j}\) is continuous, and since \(S_i\) and \(S_j\) are compact, the convolution has compact support. Thus, the extreme value theorem gives that the convolution achieves its supremum, meaning there exists \(a\in {\mathbb {R}}^d\) such that

Next, the assumptions \(|S_j|\le |S_i|\) and \([{\textbf{1}}_{S_i}]\ne [{\textbf{1}}_{S_j}]\) together imply

Indeed, equality in the bound \(|S_i\cap (S_j+a)|\le |S_i|\) is only possible if \(S_i\subseteq S_j+a\) (modulo null sets), but since \(|S_j|\le |S_i|\) by assumption, this requires \(S_i=S_j+a\) (modulo null sets), which violates the assumption \([{\textbf{1}}_{S_i}]\ne [{\textbf{1}}_{S_j}]\). Overall, we combine (12), (13) and (14) to get

Case II: \(j\ne i\) and \(|S_j|\ge |S_i|\). If \(\langle \hspace{-2.5pt}\langle [z_i],[{\textbf{1}}_{S_j}]\rangle \hspace{-2.5pt}\rangle \le 0\), then

and so we are done. Now suppose \(\langle \hspace{-2.5pt}\langle [z_i],[{\textbf{1}}_{S_j}]\rangle \hspace{-2.5pt}\rangle >0\). Considering

then by continuity and compactness, the extreme value theorem produces \(a\in {\mathbb {R}}^d\) such that

Since \(\langle \hspace{-2.5pt}\langle [z_i],[{\textbf{1}}_{S_j}]\rangle \hspace{-2.5pt}\rangle >0\), it follows that \(|S_i\cap (S_j+a)|>0\), i.e., \(S_i\cap (S_j+a)\) is nonempty, which in turn implies \(S_j+a\subseteq B\). (This is why we defined B to have radius 3r.) As before, \(|S_j|\ge |S_i|\) and \([{\textbf{1}}_{S_i}]\ne [{\textbf{1}}_{S_j}]\) together give \(|S_i\cap (S_j+a)|<|S_j|\). Thus,

We combine (15) and (16) to get

as claimed. \(\square \)
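The template construction from this proof can be tried on a toy discretization. The sketch assumes a cyclic grid \({\mathbb {Z}}_N\) in place of \({\mathbb {R}}^d\), with hand-picked shapes inside \([-r,r]\), and all names are hypothetical. It builds \(z_i={\textbf{1}}_{S_i}-{\textbf{1}}_{B\setminus S_i}\) with B the "ball" of radius 3r and tabulates the max filter values. The grid is large enough that translates meeting \(S_i\) never wrap around.

```python
import numpy as np

N, r = 32, 2  # assumed discretization: Z_N in place of R^d, shapes inside [-r, r]


def indicator(offsets):
    """Indicator vector on Z_N of a set of integer offsets (taken mod N)."""
    v = np.zeros(N)
    v[[o % N for o in offsets]] = 1.0
    return v


shapes = [[-1, 0, 1], [-1, 1], [-2, -1, 1, 2], [-2, 0, 1]]  # pairwise non-translates
ball = indicator(range(-3 * r, 3 * r + 1))  # B: "ball" of radius 3r about the origin


def template(S):
    """z = 1_S - 1_{B minus S}, as in the proof of Theorem 26 (S lies inside B)."""
    one_S = indicator(S)
    return one_S - (ball - one_S)


def max_filter(z, f):
    """<<[z],[f]>> = max over cyclic shifts a of <z, T_a f>."""
    return max(np.dot(z, np.roll(f, a)) for a in range(N))


Z = [template(S) for S in shapes]
F = [indicator(S) for S in shapes]
M = np.array([[max_filter(z, f) for f in F] for z in Z])  # M[i, j] = <<[z_i],[1_{S_j}]>>
sizes = np.array([len(S) for S in shapes])
pred = [int(np.argmax(M[:, j] - sizes)) for j in range(len(shapes))]
print(M)
print(pred)
```

In this toy example the diagonal of M equals the shape sizes and every off-diagonal entry in row i is below \(|S_i|\), so subtracting \(|S_i|\) and taking the argmax recovers each shape's label.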

For each i, assume \(S_i\) is translated so that it is contained in the smallest possible ball centered at the origin, and let \(r_i\) denote the radius of this ball. The proof of Theorem 26 gives that each template \(z_i\) is supported in a closed ball of radius \(R:=3\max _i r_i\). The fact that these templates are localized bears some consequence for certain image articulation manifolds [37]. In particular, for each \(\pi :\{1,\ldots ,k\}\rightarrow {\mathbb {N}}\cup \{0\}\), let \(M_\pi \subseteq L^2({\mathbb {R}}^d)\) denote the manifold of images of the form

Thanks to the 4R spacing, each translate of each template interacts with at most one component of the image, and so for every \(f\in M_\pi \), it holds that

In particular, the same max filter bank can be used to determine the support of \(\pi \). As an example, if some multiset of characters is typed on a page in a common font and with sufficient separation, then the max filter bank from Theorem 26 that distinguishes the characters can be used to determine which ones appear on the page.

5.2 Classifying mixtures of stationary processes

In this subsection, we focus on the case in which \(V={\mathbb {R}}^n\) and \(G\cong C_n\) is the group of circular translation operators. A natural G-invariant probability distribution is a multivariate Gaussian with mean zero and circulant covariance, and so we consider the task of classifying a mixture of such distributions. One-dimensional textures can be modeled in this way, especially if the covariance matrix has a small bandwidth so that distant pixels are statistically independent. A standard approach for this problem is to estimate the first- and second-order moments given a random draw. As we will soon see, one can alternatively classify with high accuracy by thresholding a single max filter. In what follows, we make use of Thompson’s part metric on the set of positive definite matrices:

\[d_\infty (A,B):=\log \max \big \{\lambda _{\textrm{max}}(A^{-1/2}BA^{-1/2}),\ \lambda _{\textrm{max}}(B^{-1/2}AB^{-1/2})\big \}.\]

We also let \(A_k\) denote the leading \(k\times k\) principal submatrix of A.

Theorem 27

Fix \(C>\log 2\), take \(n,w\in {\mathbb {N}}\) such that \(k:=\lfloor \sqrt{n/2}\rfloor \ge w\), and consider any positive definite \(A,B\in {\mathbb {R}}^{n\times n}\) that are circulant with bandwidth w and satisfy

\[d_\infty (A_k,B_k)\ge C. \tag{17}\]

There exists \(z\in {\mathbb {R}}^n\) supported on an interval of length k and a threshold \(\theta \in {\mathbb {R}}\) such that for every mixture \({\textsf{M}}\) of \({\textsf{N}}(0,A)\) and \({\textsf{N}}(0,B)\), then given \(x\sim {\textsf{M}}\), the comparison

correctly classifies the latent mixture component of x with probability \(1-o_{n\rightarrow \infty ;C}(1)\).

Proof

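First, we observe that \(d_\infty (A_k,B_k)\ge C\) is equivalent to

\[\max \big \{\lambda _{\textrm{max}}(A_k^{-1/2}B_kA_k^{-1/2}),\ \lambda _{\textrm{max}}(B_k^{-1/2}A_kB_k^{-1/2})\big \}\ge e^C.\]

Without loss of generality, we may assume \(\lambda _{\textrm{max}}(A_k^{-1/2}B_k A_k^{-1/2})\ge e^C\). Let \(v\in {\mathbb {R}}^k\) denote a corresponding unit eigenvector of \(A_k^{-1/2}B_k A_k^{-1/2}\), and define \(z\in {\mathbb {R}}^n\) to be supported in its first k entries as the subvector \(z_k:=A_k^{-1/2}v\). Then

\[z^\top Az=z_k^\top A_kz_k=v^\top v=1,\qquad z^\top Bz=z_k^\top B_kz_k=v^\top A_k^{-1/2}B_kA_k^{-1/2}v\ge e^C=e^C\,z^\top Az.\]
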
Consider \(x_1\sim {\textsf{N}}(0,A)\). Then \(\langle \hspace{-2.5pt}\langle [z],[x_1]\rangle \hspace{-2.5pt}\rangle =\max _{a\in C_n}\langle T_az,x_1\rangle \), where each \(\langle T_az,x_1\rangle \) has Gaussian distribution with mean zero and variance \((T_az)^\top A(T_az)\), which in turn equals \(z^\top Az\) since A is circulant. Denoting \(Z\sim {\textsf{N}}(0,1)\), a union bound then gives

for \(t\ge 0\). This failure probability is \(o_{n\rightarrow \infty ;c_1}(1)\) by taking \(t:=\sqrt{c_1\cdot z^\top Az\cdot \log n}\) for any \(c_1>2\). Next, consider \(x_2\sim {\textsf{N}}(0,B)\), and take any subset \(S\subseteq C_n\) consisting of k members of \(C_n\) of pairwise distance at least 2k. Then

In this case, each \(\langle T_az,x_2\rangle \) has Gaussian distribution with mean zero and variance \(z^\top Bz\). Furthermore, since \(k\ge w\), these random variables have pairwise covariance zero, and since they are Gaussian, they are therefore independent. For independent \(Z_1,\ldots ,Z_k\sim {\textsf{N}}(0,1)\) and \(t\ge 0\), we have

where the last step follows from a standard lower bound on the tail of a standard Gaussian distribution. Take \(t:=\sqrt{c_2\log k}\) to get

which is \(o_{k\rightarrow \infty ;c_2}(1)\) when \(c_2<2\). Overall, we simultaneously have

with probability \(1-o_{n\rightarrow \infty ;c_1,c_2}(1)\), provided \(c_1>2>c_2\). We select \(c_1:=2(\tfrac{e^C}{2})^{1/2}>2\) and \(c_2:=2(\tfrac{e^C}{2})^{-1/2}<2\), and then (18) implies

Thus, \(\theta _1\le \theta _2\), and so the result follows by taking \(\theta :=(\theta _1+\theta _2)/2\). \(\square \)
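The template from this proof is a small eigenvalue computation. The sketch below builds z for a toy pair of circulant covariances whose entries vanish beyond circular lag 1 (an assumed example). It checks the two identities used in the proof, \(z^\top Az=1\) and \(z^\top Bz=\lambda _{\textrm{max}}(A_k^{-1/2}B_kA_k^{-1/2})\), and that circulant structure makes the variance shift invariant. The helper names are hypothetical, and the "without loss of generality" step is handled by the caller swapping A and B if needed.

```python
import numpy as np


def circulant(first_col):
    """Circulant matrix whose (i, j) entry is first_col[(i - j) mod n]."""
    n = len(first_col)
    return np.array([[first_col[(i - j) % n] for j in range(n)] for i in range(n)])


def inv_sqrt_pd(M):
    """M^{-1/2} for a symmetric positive definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(M)
    return vecs @ np.diag(vals ** -0.5) @ vecs.T


def thompson(P, Q):
    """Thompson's part metric d_inf(P, Q) = max |log eigenvalue| of P^{-1/2} Q P^{-1/2}."""
    P_is = inv_sqrt_pd(P)
    vals = np.linalg.eigvalsh(P_is @ Q @ P_is)
    return max(np.log(vals[-1]), -np.log(vals[0]))


def theorem27_template(A, B, k):
    """z supported on the first k entries with z_k = A_k^{-1/2} v, where v is a top unit
    eigenvector of A_k^{-1/2} B_k A_k^{-1/2} (assumes this top eigenvalue is the large one;
    otherwise swap A and B)."""
    Ak_is = inv_sqrt_pd(A[:k, :k])
    vals, vecs = np.linalg.eigh(Ak_is @ B[:k, :k] @ Ak_is)
    z = np.zeros(A.shape[0])
    z[:k] = Ak_is @ vecs[:, -1]
    return z, vals[-1]


n = 32
k = int(np.floor(np.sqrt(n / 2)))  # = 4
colA = np.zeros(n); colA[0], colA[1], colA[-1] = 2.0, 0.5, 0.5
colB = np.zeros(n); colB[0], colB[1], colB[-1] = 3.0, -1.0, -1.0
A, B = circulant(colA), circulant(colB)  # symmetric, positive definite, circulant
z, lam = theorem27_template(A, B, k)
print(z @ A @ z, z @ B @ z, lam, thompson(A[:k, :k], B[:k, :k]))
```

The ratio \(z^\top Bz/z^\top Az=\lambda \) is exactly the variance gap that the thresholds \(\theta _1\) and \(\theta _2\) exploit.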

Given a mixture of k Gaussians with covariance matrices that pairwise satisfy (17), we can perform multiclass classification by a one-vs-one reduction. Indeed, the binary classifier in Theorem 27 can be applied to all \(\left( {\begin{array}{c}k\\ 2\end{array}}\right) \) pairs of Gaussians, in which case we correctly classify the latent mixture component with high probability (provided k is fixed and \(n\rightarrow \infty \)).

Interestingly, max filters can also distinguish between stationary processes with identical first- and second-order moments. For example, \(x\sim \textsf{Unif}(\{\pm 1\}^n)\) and \(y\sim {\textsf{N}}(0,I_n)\) are both stationary with mean zero and identity covariance. If \(z\in {\mathbb {R}}^n\) is a standard basis element, then with high probability, it holds that

This indicates that max filters incorporate higher-order moments.

5.3 Subgradients

In this subsection, we focus on the case in which \(V={\mathbb {R}}^d\) and G is a closed subgroup of \({\text {O}}(d)\). The previous subsections carefully designed templates to classify certain data models. For real-world classification problems, one is expected to train a classifier on a given training set of labeled data. To do so, one selects a parameterized family of classifiers and then locally minimizes some notion of training loss over this family. This is feasible provided the classifier is a differentiable function of the parameters. We envision a classifier in which the first layer is a max filter bank, and so this subsection establishes how to differentiate a max filter with respect to the template. This is made possible by convexity; see Lemma 2d.

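Every convex function \(f:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) has a subdifferential \(\partial f:{\mathbb {R}}^d\rightarrow 2^{{\mathbb {R}}^d}\) defined by

\[\partial f(x):=\big \{u\in {\mathbb {R}}^d:f(z)\ge f(x)+\langle z-x,u\rangle \text { for every } z\in {\mathbb {R}}^d\big \}.\]
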
For a fixed \(x\in {\mathbb {R}}^d\) and \(u\in \partial f(x)\), it is helpful to interpret the graph of \(z\mapsto f(x) + \langle z-x, u\rangle \) as a supporting hyperplane of the epigraph of f at x. For example, the absolute value function over \({\mathbb {R}}\) has the following subdifferential:

\[\partial |\cdot |(x)=\begin{cases}\{-1\} & \text {if } x<0,\\ [-1,1] & \text {if } x=0,\\ \{1\} & \text {if } x>0.\end{cases}\]

Indeed, this gives the slopes of the hyperplanes that support the epigraph of \(|\cdot |\) at \(x\in {\mathbb {R}}\).

Following [77], we will determine the subdifferential of the max filtering map by first finding its directional derivatives. In general, the directional derivative of f at x in the direction of \(v\ne 0\) is given by

\[f'(x;v):=\lim _{t\rightarrow 0^+}\frac{f(x+tv)-f(x)}{t}=\max _{u\in \partial f(x)}\langle u,v\rangle .\]

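The second equality is a standard result; see for example Theorem 23.4 in [64]. It will be convenient to denote the set

\[G(x,y):=\big \{g\in G:\langle x,gy\rangle =\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle \big \}.\]
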
Observe that G(x, y) is a closed subset of G since the map \(g\mapsto \langle x,gy\rangle \) is continuous.

Lemma 28

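Suppose G is a closed subgroup of \({\text {O}}(d)\) and select \(x,y,v\in {\mathbb {R}}^d\) with \(v\ne 0\). Then

\[\lim _{t\rightarrow 0^+}\frac{\langle \hspace{-2.5pt}\langle [x+tv],[y]\rangle \hspace{-2.5pt}\rangle -\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle }{t}=\max _{g\in G(x,y)}\langle v,gy\rangle .\]
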
Proof

For each \(t>0\) and \(g\in G(x,y)\), we have

For each \(t>0\), select \(g_t\in G(x+tv,y)\). Then

We rearrange and combine (19) and (20) to get

for all \(t>0\). Select a sequence \(t_n\rightarrow 0^+\) such that \(g_{t_n}\) converges to some \(g^\star \in G\) (by passing to a subsequence if necessary). Then continuity implies

Furthermore,

It follows that \(\langle x,g^\star y\rangle =\langle \hspace{-2.5pt}\langle [x],[y]\rangle \hspace{-2.5pt}\rangle \), i.e., \(g^\star \in G(x,y)\). Taking limits of (21) then gives

\(\square \)

Theorem 29

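Suppose G is a closed subgroup of \({\text {O}}(d)\) and select \(x,y\in {\mathbb {R}}^d\). Then

\[\partial \langle \hspace{-2.5pt}\langle [\cdot ],[y]\rangle \hspace{-2.5pt}\rangle (x)={\text {conv}}\{gy:g\in G(x,y)\}.\]
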
Proof

First, we claim that for every \(g\in G(x,y)\), the vector gy is in the subdifferential \(\partial \langle \hspace{-2.5pt}\langle [\cdot ],[y]\rangle \hspace{-2.5pt}\rangle (x)\). Indeed, for every \(h\in {\mathbb {R}}^d\), we have

where the last step uses the fact that \(g\in G(x,y)\). Since \(\partial \langle \hspace{-2.5pt}\langle [\cdot ],[y]\rangle \hspace{-2.5pt}\rangle (x)\) is convex, it follows that the \(\supseteq \) portion of the desired result holds. Next, suppose there exists \(w\in \partial \langle \hspace{-2.5pt}\langle [\cdot ],[y]\rangle \hspace{-2.5pt}\rangle (x)\) such that \(w\not \in {\text {conv}}\{gy:g\in G(x,y)\}\). Then there exists a hyperplane that separates w from \(\{gy:g\in G(x,y)\}\), i.e., there is a nonzero vector \(v\in {\mathbb {R}}^d\) such that

for every \(g\in G(x,y)\). By Lemma 28, it follows that

a contradiction. This establishes the \(\subseteq \) portion of the desired result. \(\square \)
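Theorem 29 translates directly into a recipe for the training setting described at the start of this subsection: pick any maximizing group element g and use gy as a subgradient with respect to the template. The sketch assumes a finite group, namely \(G=C_d\) acting by cyclic shifts. The helper names and the tie tolerance `tol` are hypothetical choices. At a generic x the maximizer is unique, so the result should match a finite-difference gradient.

```python
import numpy as np


def orbit(y):
    """Orbit of y under G = C_d (cyclic shifts), an assumed finite subgroup of O(d)."""
    return np.stack([np.roll(y, s) for s in range(len(y))])


def max_filter(x, y):
    """<<[x],[y]>> = max_{g in G} <x, g y>."""
    return float((orbit(y) @ x).max())


def subgradient(x, y, tol=1e-12):
    """Average of {g y : g in G(x, y)}, a point of conv{g y : g in G(x, y)}.

    By Theorem 29 this is a subgradient of <<[.],[y]>> at x; `tol` (an arbitrary choice)
    decides which numerically tied group elements count as maximizers."""
    O = orbit(y)
    vals = O @ x
    return O[vals >= vals.max() - tol].mean(axis=0)


rng = np.random.default_rng(2)
d = 10
x, y = rng.standard_normal(d), rng.standard_normal(d)
u = subgradient(x, y)
# Generic x: the maximizer is unique, so the max filter is differentiable and u is its gradient.
eps = 1e-6
fd = np.array([(max_filter(x + eps * e, y) - max_filter(x - eps * e, y)) / (2 * eps)
               for e in np.eye(d)])
print(np.max(np.abs(fd - u)))
```

At a tie, for example \(x=(1,\ldots ,1)\) and \(y=e_1\), every shift is a maximizer and the averaged subgradient is \(\frac{1}{d}(1,\ldots ,1)\), one point of the convex hull in Theorem 29.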

5.4 Random templates and limit laws

While the previous subsection was concerned with the differentiability needed to optimize a feature map, it is well known that random feature maps suffice for various tasks (e.g., Johnson–Lindenstrauss maps [48] and random kitchen sinks [63]). In fact, our bilipschitz result (Theorem 20) uses random templates. As such, one may be inclined to use random templates to produce features for classification.

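In this subsection, we focus on the case in which \(V={\mathbb {R}}^d\) and \(G\cong S_d\) is the group of \(d\times d\) permutation matrices. Consider a max filter bank consisting of independent standard gaussian templates \(z_1,\ldots ,z_n\in {\mathbb {R}}^d\). Then, by the rearrangement inequality,

\[\langle \hspace{-2.5pt}\langle [z_i],[x]\rangle \hspace{-2.5pt}\rangle =\langle {\text {sort}}(z_i),{\text {sort}}(x)\rangle \qquad \text {for every } i\in \{1,\ldots ,n\}.\]
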
When d is large, we expect the vectors \({\text {sort}}(z_i)\) to exhibit little variation, and so we are inclined to perform dimensionality reduction. Figure 1 illustrates that the principal components of \(\{{\text {sort}}(z_i)\}_{i=1}^n\) exhibit a high degree of regularity.
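Both claims in this paragraph are easy to probe numerically. The sketch below first checks the sort identity against brute force over all permutations for a small d. It then repeats the Figure 1 computation at reduced scale (n = 2,000 and d = 100, chosen here for speed; the figure uses n = 10,000 and d = 1,000). Function names are hypothetical.

```python
import itertools

import numpy as np


def max_filter_perm_brute(z, x):
    """<<[z],[x]>> = max over permutations P of <z, P x>, by brute force."""
    return max(float(np.dot(z, x[list(p)])) for p in itertools.permutations(range(len(x))))


def max_filter_perm_sort(z, x):
    """Same value via the rearrangement inequality: <sort(z), sort(x)>."""
    return float(np.dot(np.sort(z), np.sort(x)))


rng = np.random.default_rng(0)
d, n = 7, 5
Z = rng.standard_normal((n, d))
x = rng.standard_normal(d)
brute = [max_filter_perm_brute(z, x) for z in Z]
fast = [max_filter_perm_sort(z, x) for z in Z]

# Reduced-scale version of the Figure 1 experiment: principal directions of sorted gaussians.
S = np.sort(rng.standard_normal((2000, 100)), axis=1)
evals, evecs = np.linalg.eigh(np.cov(S, rowvar=False))
top6 = evecs[:, ::-1][:, :6]  # columns: top 6 principal directions
print(np.allclose(brute, fast), evals[::-1][:6])
```

Plotting the columns of `top6` should show the same smooth profiles as the left panel of Figure 1, up to sign and sampling noise.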

(left) Draw \(n=10,000\) independent standard gaussian random vectors in \({\mathbb {R}}^d\) with \(d=1,000\), sort the coordinates of each vector, and then plot the top 6 eigenvectors of the resulting sample covariance matrix. (right) Discretize and plot the top 6 eigenfunctions described in Theorem 30

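To explain this regularity, select \(Q:(0,1)\rightarrow {\mathbb {R}}\) so that \(Q^{-1}\) is the cumulative distribution function of the standard normal distribution. Draw \(z\sim {\textsf{N}}(0,I_d)\) and put \(s:={\text {sort}}(z)\). Denoting \(p_i:=\frac{i}{d+1}\) and \(q_i:=1-p_i\), Sect. 4.6 in [32] gives the first-order approximations

\[{\mathbb {E}}\,s_i\approx Q(p_i),\qquad {\text {Cov}}(s_i,s_j)\approx \frac{p_iq_j}{(d+2)\,\varphi (Q(p_i))\,\varphi (Q(p_j))}\quad (i\le j),\]

where \(\varphi \) is the standard normal density. The principal components of \(\{{\text {sort}}(z_i)\}_{i=1}^n\) approximate the top eigenvectors of the covariance matrix, which in turn approximate discretizations of eigenfunctions of the integral operator with kernel \(K:(0,1)^2\rightarrow {\mathbb {R}}\) defined by

\[K(x,y):=\frac{\min \{x,y\}\,\big (1-\max \{x,y\}\big )}{\varphi (Q(x))\,\varphi (Q(y))}.\]
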
The following result expresses these eigenfunctions in terms of the probabilist’s Hermite polynomials, which are defined by the following recurrence:

\[p_0(x):=1,\qquad p_{n+1}(x):=x\,p_n(x)-p_n'(x)\quad (n\ge 0). \tag{22}\]

Theorem 30

The integral operator \(L:L^2([0,1])\rightarrow L^2([0,1])\) defined by

\[(Lf)(x):=\int _0^1K(x,y)\,f(y)\,dy\]

has eigenvalue \(\frac{1}{n+1}\) with corresponding eigenfunction \(p_n\circ Q\) for each \(n\in {\mathbb {N}}\cup \{0\}\).

As such, instead of max filtering with independent gaussian templates, one can efficiently capture approximately the same information by taking the inner product between \({\text {sort}}(x)\) and discretized versions of the eigenfunctions \(p_n\circ Q\). To reduce the dimensionality, one can simply use fewer eigenfunctions. To prove Theorem 30, we will use the following lemma; here, \(\varphi :{\mathbb {R}}\rightarrow {\mathbb {R}}\) denotes the probability density function of the standard normal distribution.

Lemma 31

(a) \(p_n(x)=\frac{1}{n+1}p_{n+1}'(x)\).

(b) \(\int _0^x p_{n+1}(Q(y))dy=-\varphi (Q(x))p_n(Q(x))\).

The proof of Lemma 31 follows quickly from the recurrence (22).
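Both parts of Lemma 31 can be spot-checked numerically. The sketch below uses NumPy's `HermiteE` class for the probabilist's Hermite polynomials and the standard library's `NormalDist().inv_cdf` for Q. Part (a) is compared coefficientwise; part (b) substitutes \(y=Q^{-1}(u)\) and applies a trapezoid rule on \([-12,Q(x)]\). The lower cutoff \(-12\) and the grid size are assumptions that make the neglected tail far below the tolerance. The function names are hypothetical.

```python
import math
from statistics import NormalDist

import numpy as np
from numpy.polynomial import HermiteE


def phi(u):
    """Standard normal density."""
    return math.exp(-u * u / 2) / math.sqrt(2 * math.pi)


def lemma31a_holds(n):
    """(a): p_n = p_{n+1}' / (n + 1), compared as HermiteE coefficient vectors."""
    lhs = HermiteE.basis(n).coef
    rhs = HermiteE.basis(n + 1).deriv().coef / (n + 1)
    return np.allclose(lhs, rhs)


def lemma31b_gap(n, x, num=200001):
    """(b): |int_0^x p_{n+1}(Q(y)) dy + phi(Q(x)) p_n(Q(x))|, after substituting y = Phi(u)."""
    a = NormalDist().inv_cdf(x)
    u = np.linspace(-12.0, a, num)
    vals = HermiteE.basis(n + 1)(u) * np.exp(-u ** 2 / 2) / math.sqrt(2 * math.pi)
    integral = float(np.sum((vals[1:] + vals[:-1]) / 2 * np.diff(u)))  # trapezoid rule
    rhs = -phi(a) * float(HermiteE.basis(n)(a))
    return abs(integral - rhs)


print(all(lemma31a_holds(n) for n in range(8)),
      max(lemma31b_gap(n, x) for n in range(5) for x in (0.1, 0.5, 0.9)))
```

The substitution turns (b) into \(\int _{-\infty }^{Q(x)}p_{n+1}(u)\varphi (u)\,du\), and (22) gives \(\frac{d}{du}\big (\varphi (u)p_n(u)\big )=-\varphi (u)p_{n+1}(u)\), which is why (b) follows quickly.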

Proof of Theorem 30

We compute \(L(p_n\circ Q)\) by splitting the integral:

For both \(I_1\) and \(I_2\), we integrate by parts with

where the middle step follows from Lemma 31a. Then Lemma 31b gives

These combine (and mostly cancel) to give

\(\square \)

6 Numerical examples

In this section, we use max filters as feature maps for various learning tasks.

Example 32

(Thresholded bispectrum) Given a group-invariant classification task, one may use a max filter bank as a feature map. In this synthetic example, we compare how random templates and trained templates perform for such a task. First, we select a classification task by thresholding a group-invariant function. The bispectrum of a signal \(f :{\mathbb {Z}}_d\rightarrow {\mathbb {C}}\) consists of translation-invariant products of entries in the Fourier transform \({\hat{f}}:{\mathbb {Z}}_d\rightarrow {\mathbb {C}}\) and its complex conjugate. Explicitly, \({\text {bisp}}(f):{\mathbb {Z}}_d\times {\mathbb {Z}}_d\rightarrow {\mathbb {C}}\) is defined by

\[{\text {bisp}}(f)(k_1,k_2):={\hat{f}}(k_1)\,{\hat{f}}(k_2)\,\overline{{\hat{f}}(k_1+k_2)}.\]

Using the bispectrum, we can define an (admittedly arbitrary) translation-invariant classification task: Label any given function \(f:{\mathbb {Z}}_d\rightarrow {\mathbb {R}}\) by \({\text {sign}}\,{\text {Re}}\,{\text {bisp}}(f)(1,2)\).
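The labeling rule is short to implement, and its translation invariance can be checked directly. In this sketch, NumPy's unnormalized FFT stands in for \({\hat{f}}\). That normalization is an assumption, but any positive normalization only rescales the bispectrum by a positive constant and leaves the label unchanged. The function names are hypothetical.

```python
import numpy as np


def bispectrum(f):
    """bisp(f)(k1, k2) = fhat(k1) * fhat(k2) * conj(fhat(k1 + k2)), indices mod d."""
    fhat = np.fft.fft(f)
    d = len(f)
    k1, k2 = np.meshgrid(np.arange(d), np.arange(d), indexing="ij")
    return fhat[k1] * fhat[k2] * np.conj(fhat[(k1 + k2) % d])


def label(f):
    """Translation-invariant label sign(Re bisp(f)(1, 2)) from Example 32."""
    return int(np.sign(bispectrum(f)[1, 2].real))


rng = np.random.default_rng(3)
d = 20
X = rng.standard_normal((1000, d))  # gaussian random examples, as in Example 32
y = np.array([label(x) for x in X])
print(np.mean(y == 1))  # fraction of positive labels
```

A cyclic shift multiplies \({\hat{f}}(k)\) by a phase \(e^{-2\pi iks/d}\), and the three phases cancel in each bispectrum entry, which is why the label is translation invariant.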

Fix \(d=20\), and let the training set, validation set, and test set each consist of 1,000 gaussian random examples. We predict the classification label of a given vector by first passing it through a max filter bank and then classifying in this feature domain with a support vector machine (SVM). For the random template approach, we draw gaussian templates for the max filter bank and train the SVM in the feature domain. For the trained template approach, we simultaneously train the SVM and the max filtering templates. For each number n of templates, we trained both architectures using the Adam optimizer with a learning rate of 0.01, and we halted training once the validation error began to increase, at which point we recorded the accuracy of the model on the test set. (For larger n, we only trained the random architecture due to computational constraints.) The results of these trials are illustrated in Fig. 2. Notably, far fewer trained templates are necessary to achieve a given test accuracy.

Performance of the max filter–SVM architecture for various numbers of templates; see Example 32 for details. For each number of templates, we performed 30 trials to compute medians and 90% intervals. Note that the horizontal axis is in log scale

Example 33

(Voting districts) The one person, one vote principle insists that in each state, different voting districts must have nearly the same number of constituents. This principle is enforced with the help of a decennial redistricting process based on U.S. Census data. Interestingly, each state assembly applies its own process for redistricting, and partisan approaches can produce unwanted gerrymandering. Historically, gerrymandering has been detected by how contorted a district’s shape looks; for example, the Washington Post article [46] uses a particular geometric score to identify the top 10 most gerrymandered voting districts of the 113th Congress.

As an alternative, we visualize the distribution of district shapes with the help of max filtering. The shape files for all voting districts of the 116th Congress are available in [71]. We center each district at the origin, scale it to have unit perimeter, and then sample its boundary at \(n=50\) equally spaced points. This results in a \(2\times 50\) matrix representation of each district. However, the same district shape may be represented by many matrices, corresponding to an action of \({\text {O}}(2)\) on the left and an action of \(C_n\) that cyclically permutes columns. Hence, it is appropriate to apply max filtering with \(G\cong {\text {O}}(2)\times C_n\). It is convenient to identify \({\mathbb {R}}^{2\times 50}\) with \({\mathbb {C}}^{50}\) so that the corresponding max filter is given by

\[\langle \!\langle [x],[y]\rangle \!\rangle =\max _{k\in {\mathbb {Z}}_n}\max \bigg \{\bigg |\sum _{j\in {\mathbb {Z}}_n}x_j\overline{y_{j-k}}\bigg |,\ \bigg |\sum _{j\in {\mathbb {Z}}_n}x_j\,y_{j-k}\bigg |\bigg \},\]

where the first sum accounts for rotations and the second for reflections. Both are circular cross-correlations, which can be computed efficiently with the help of the fast Fourier transform. We max filter with 100 random templates to embed these districts in the feature space \({\mathbb {R}}^{100}\), and then we visualize the result using PCA; see Fig. 3.
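The preprocessing and the max filter in this example can be sketched directly. This sketch assumes each district boundary is given as a polygon (a list of vertices) and centers at the centroid of the sampled points, which is a hypothetical choice since the text does not say which center is used. It evaluates the max filter as the maximum over cyclic shifts k of \(|\sum _j x_j\overline{y_{j-k}}|\) (rotations) and \(|\sum _j x_jy_{j-k}|\) (reflections), using direct \(O(n^2)\) sums rather than the FFT for clarity. All names are illustrative.

```python
import random


def resample_closed_curve(vertices, n=50):
    """Center a closed polygon, scale it to unit perimeter, and return n boundary
    points equally spaced in arc length, encoded as complex numbers x + iy."""
    pts = [complex(x, y) for x, y in vertices]
    m = len(pts)
    edges = [(pts[i], pts[(i + 1) % m]) for i in range(m)]
    lengths = [abs(b - a) for a, b in edges]
    perimeter = sum(lengths)
    samples, i, walked = [], 0, 0.0  # walked = arc length before edge i
    for k in range(n):
        target = k * perimeter / n
        while walked + lengths[i] < target:  # advance to the edge containing target
            walked += lengths[i]
            i += 1
        a, b = edges[i]
        t = (target - walked) / lengths[i] if lengths[i] > 0 else 0.0
        samples.append(a + t * (b - a))
    center = sum(samples) / n  # hypothetical choice: centroid of the samples
    return [(p - center) / perimeter for p in samples]


def district_max_filter(x, y):
    """Max filter for G = O(2) x C_n acting on C^n:
    max over cyclic shifts k of |sum_j x_j conj(y_{j-k})| (rotations)
    and |sum_j x_j y_{j-k}| (reflections)."""
    n = len(x)
    best = 0.0
    for k in range(n):
        rot = sum(x[j] * y[(j - k) % n].conjugate() for j in range(n))
        ref = sum(x[j] * y[(j - k) % n] for j in range(n))
        best = max(best, abs(rot), abs(ref))
    return best


def district_features(x, n_templates=100, seed=0):
    """Embed one district in R^{n_templates} using complex Gaussian templates."""
    rng = random.Random(seed)
    n = len(x)
    templates = [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
                 for _ in range(n_templates)]
    return [district_max_filter(x, z) for z in templates]
```

Since both sums are circular cross-correlations, replacing the loop over k with FFT-based correlation would reduce the cost per template from \(O(n^2)\) to \(O(n\log n)\).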

Fig. 3 Visualization of voting districts of the 116th Congress obtained by max filtering and principal component analysis. See Example 33 for details.

Interestingly, the principal components of this feature domain appear to be interpretable. The first principal component (the horizontal axis) seems to capture the extent to which a district is convex, while the second principal component seems to capture its eccentricity. Six of the ten most gerrymandered districts from [46] are drawn in red, and they appear at relatively extreme points in this feature space. The four remaining districts (NC-1, NC-4, NC-12, and PA-7) were redrawn by court order between the 113th and 116th Congresses; the new versions of these districts are drawn in blue. Unsurprisingly, the redrawn districts are not contorted, and they appear at less extreme points in the feature space.

Example 34

(ECG time series) An electrocardiogram (ECG) uses electrodes placed on the skin to quantify the heart’s electrical activity over time. With ECG data, a physician can determine whether a patient has had a heart attack with about 70% accuracy [53]. Recently, Makimoto et al. [53] trained a 6-layer convolutional neural network to perform this task with about 80% accuracy. The dataset they used is available at [15]; it consists of \((12+3)\)-lead ECG data sampled at 1 kHz from 148 patients who had recently had a heart attack (i.e., myocardial infarction, or MI) and from 141 patients who had not.

As an alternative to convolutional neural networks, we use max filters to classify patients based on one second of ECG data (i.e., roughly one heartbeat). In particular, we read the first \(t=1000\) samples of all 15 leads to obtain a \(15\times t\) matrix X. We then lift this matrix to a \(15\times w\times (t-w+1)\) tensor with \(w=30\), where each \(15\times w\) slice is a contiguous \(15\times w\) submatrix of X. Next, we normalize each \(1\times w\times 1\) subvector to have mean zero, which gives the tensor Y; this discards the local baseline of each lead, and hence any slow trend in the data. We account for invariance to time shifts by taking G to be the order-\((t-w+1)\) group of circular permutations of the \(15\times w\) slices of Y. Using this group, we max filter with \(n=5\) different templates and then classify with a support vector machine. We constrain each template to be supported on a single slice, and we train these templates together with the support vector machine classifier by gradient descent to minimize hinge loss.
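A minimal sketch of this lifting and of the max filter it induces, under the stated constraint that each template is supported on a single slice: because G cyclically permutes the slices, the max filter reduces to the largest inner product between the template and any mean-centered window. The code assumes the ECG is given as a list of rows (one per lead) of length t, uses hypothetical function names, and omits the support vector machine and the hinge-loss training of the templates.

```python
def lift_and_center(X, w):
    """Lift a (leads x t) matrix, given as a list of rows, to its t - w + 1 contiguous
    (leads x w) windows, subtracting each lead's mean within each window."""
    t = len(X[0])
    slices = []
    for s in range(t - w + 1):
        window = []
        for row in X:
            seg = row[s:s + w]
            mu = sum(seg) / w
            window.append([v - mu for v in seg])
        slices.append(window)
    return slices


def slice_inner(a, b):
    """Frobenius inner product of two (leads x w) slices."""
    return sum(u * v for ra, rb in zip(a, b) for u, v in zip(ra, rb))


def ecg_max_filter(slices, template):
    """Max filter when G cyclically permutes slices and the template is supported on
    a single slice: the largest inner product between the template and any slice.
    Also returns the index of the best-aligned window."""
    scores = [slice_inner(sl, template) for sl in slices]
    best = max(range(len(scores)), key=scores.__getitem__)
    return scores[best], best
```

The returned index identifies the best-aligned window, which corresponds to the highlighted time segments in Fig. 4 when the templates are trained.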

Fig. 4 We train max filter templates and a support vector machine classifier on electrocardiogram data to distinguish patients who have had a heart attack from those who have not. Above, we plot the most extreme examples in the test set (those with heart attacks on the left, and those without on the right). The blue windows indicate the time segments of width \(w=30\) that align best with the templates. See Example 34 for details.

Following [53], we form the test set by randomly drawing 25 examples from the MI class and 25 examples from the non-MI class, and then we form the training set by randomly drawing 108 non-test-set examples from each class. We perform 10 trials of this experiment, and each trial achieves between 74% and 84% accuracy on the test set. In particular, this is competitive with the 6-layer convolutional neural network in [53], despite accessing only the first second of each recording. In Fig. 4, we illustrate what the classifier considers to be the most extreme examples in the test set. The time segments that align best with the trained templates typically cover the heartbeat portion of the ECG signal.

Example 35

(Textures) In this example, we use max filtering to classify textures from the Kylberg Texture Dataset v. 1.0, available in [51]. This dataset consists of 28 classes of textures, such as blanket, grass, and oatmeal, and each class consists of 160 distinct grayscale images. (For convenience, we crop these \(576\times 576\) images down to \(256\times 256\).) A few classifiers in the literature have been trained on this dataset [3, 52], but to achieve a high level of performance, they leverage domain-specific feature maps, augment the training set with other image sets, or initialize with a pre-trained network. As an alternative, we apply max filtering with random features, and we succeed with much smaller training sets. For a fair comparison, we train several classifiers on training sets of various sizes; the results are displayed in Table 1. (Here, the test set consists of 32 images from each class.) We describe each classifier in what follows.

First, we consider a convolutional neural network (CNN) with a standard architecture. We pass the \(256\times 256\) image through a convolutional layer with a \(3\times 3\) kernel and then max pool over \(2\times 2\) patches. We then pass the result through another convolutional layer with a \(3\times 3\) kernel and again max pool over \(2\times 2\) patches. We end with a dense layer that maps to 28 dimensions, one for each class. We train by minimizing the cross-entropy loss against the one-hot vector representation of each label. This model has 1.7 million parameters, which enables it to achieve perfect accuracy on the training set.
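The parameter count of such a network is dominated by its dense layer. The text does not state the number of filters or the padding, so the arithmetic below assumes, hypothetically, 16 filters per convolutional layer, unpadded ("valid") convolutions, and 2×2 pooling that floors odd sizes. Under these assumptions, the count comes out near the stated 1.7 million.

```python
def conv_params(k, c_in, c_out):
    """k x k convolution: k*k*c_in weights per output channel, plus one bias each."""
    return k * k * c_in * c_out + c_out


def cnn_param_count(size=256, channels=16, k=3, classes=28):
    """Parameters of conv -> 2x2 max pool -> conv -> 2x2 max pool -> dense,
    assuming (hypothetically) unpadded convolutions with `channels` filters each."""
    total = conv_params(k, 1, channels)        # grayscale input has one channel
    side = (size - k + 1) // 2                 # valid conv, then 2x2 pooling
    total += conv_params(k, channels, channels)
    side = (side - k + 1) // 2
    total += side * side * channels * classes + classes  # dense layer to 28 logits
    return total, side
```

Here `cnn_param_count()` returns `(1724620, 62)`: the final feature map is 62×62×16, and the dense layer alone contributes 1,722,140 of the parameters.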

As an alternative, we apply linear discriminant analysis (LDA). Training this classifier involves a massive matrix operation, and this requires too much memory in our setting when the training set has 128 points per class. This motivates the use of principal component analysis to reduce the dimensionality of each \(256\times 256\) image to \(k=25\) principal components. The resulting classifier (PCA–LDA) works for larger training sets, and even exhibits improved accuracy when the training set is not too small.

For our method, we apply the same PCA–LDA method to a max filtering feature domain. Surprisingly, we find that modding out by the entire group of pixel permutations (i.e., just sorting the pixel values) performs reasonably well as a feature map. Our method implements a multiscale version of this observation. For each \(\ell \in \{0,1,\ldots ,8\}\), one may partition the \(2^8\times 2^8\) image into \(4^{8-\ell }\) square patches of width \(2^\ell \). We perform max filtering with the group \((S_{4^\ell })^{4^{8-\ell }}\) of all patch-preserving pixel permutations for each \(\ell \in \{2,\ldots ,8\}\). It would be simplest to draw templates at random, but for computational efficiency, we simulate random templates with the help of Theorem 30. In particular, for each \(\ell \in \{2,\ldots ,8\}\) and \(n\in \{0,\ldots ,5\}\), we sort the pixels in each \(2^\ell \times 2^\ell \) patch, take their inner products with a discretized version of \(p_n\circ Q\), and sum over the patches. This maps each \(2^8\times 2^8\) image to a 42-dimensional feature vector (seven scales \(\ell \) times six indices n). As before, we apply PCA with \(k=25\) and then train an LDA classifier on the result. In the end, this max filtering approach significantly outperforms the other classifiers we considered, as seen in Table 1.
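The feature map can be sketched as follows. The sketch mirrors only the structure stated above (sort each patch, take inner products with fixed weight vectors, sum over patches); Theorem 30 and the functions \(p_n\) and Q are not reproduced here, so the weight vectors below, Legendre polynomials evaluated at cell midpoints of \([-1,1]\), are a hypothetical stand-in. By the rearrangement inequality, whenever a weight vector is sorted in increasing order, the corresponding feature equals the max filter for \((S_{4^\ell })^{4^{8-\ell }}\) with a template whose entries in every patch are those weights.

```python
def legendre(n, u):
    """Legendre polynomial P_n(u) via Bonnet's recurrence."""
    if n == 0:
        return 1.0
    p_prev, p = 1.0, u
    for k in range(1, n):
        p_prev, p = p, ((2 * k + 1) * u * p - k * p_prev) / (k + 1)
    return p


def weights(n, m):
    """Hypothetical stand-in for a discretized p_n o Q:
    P_n evaluated at the m cell midpoints of [-1, 1]."""
    return [legendre(n, (2 * i + 1) / m - 1) for i in range(m)]


def multiscale_sorted_features(img, scales=range(2, 9), degrees=range(6)):
    """For each scale l, sort the pixels of every 2^l x 2^l patch, take inner
    products with each weight vector, and sum over patches.
    img is a square list of rows whose side is a power of two."""
    size = len(img)
    feats = []
    for l in scales:
        w = 2 ** l
        ws = [weights(n, w * w) for n in degrees]
        sums = [0.0] * len(ws)
        for r in range(0, size, w):
            for c in range(0, size, w):
                patch = sorted(img[i][j] for i in range(r, r + w)
                               for j in range(c, c + w))
                for idx, wt in enumerate(ws):
                    sums[idx] += sum(a * b for a, b in zip(patch, wt))
        feats.extend(sums)
    return feats
```

With the default arguments, a 256×256 image yields 7×6=42 features, matching the count in the text, and the output is unchanged by any permutation of pixels within the finest patches.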

7 Discussion

Max filtering offers a rich source of invariants that can be used for a variety of machine learning tasks. In this section, we discuss several opportunities for follow-on work.

Separating. In terms of sample complexity, Corollary 15 gives that a generic max filter bank of size 2d separates all G-orbits in \({\mathbb {R}}^d\) provided \(G\le {\text {O}}(d)\) is finite. If \(G\le {\text {O}}(d)\) is not topologically closed, then no continuous invariant (such as a max filter bank) can separate all G-orbits. If \(G\le {\text {O}}(d)\) is topologically closed, then by Theorem 3.4.5 in [59], G is algebraic, and so Theorem 14 applies. We suspect that max filtering separates orbits in such cases, but progress on this front will likely factor through Problem 17a. For computational complexity, how well does the max filtering separation hierarchy (1) separate isomorphism classes of weighted graphs? Judging by [2], we suspect that max filtering with a template of order k and treewidth t can be computed with runtime \(e^{O(k)}n^{t+1}\log n\), which is polynomial in n when k is logarithmic and t is bounded. Which classes of graphs are separated by such max filters? Also, can max filtering be used to solve real-world problems involving graph data, or is the exponent too large to be practical?
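To spell out why the suspected runtime would be polynomial: if the template order satisfies \(k\le c\log n\) for some constant c, then

\[e^{O(k)}\,n^{t+1}\log n\;\le \;e^{O(c\log n)}\,n^{t+1}\log n\;=\;n^{O(c)+t+1}\log n,\]

which is polynomial in n whenever c and t are bounded. The exponent \(O(c)+t+1\) is what would determine whether such max filters are practical.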

Bilipschitz. The proof of Lemma 22 contains our approach to finding Lipschitz bounds on max filter banks. Our upper Lipschitz bound follows immediately from the fact that each individual max filter is Lipschitz, and it does not require the group to be finite. This bound is optimal for \(G={\text {O}}(d)\), but it is known to be loose for small groups like \(G=\{\pm {\text {id}}\}\). We suspect that our lower Lipschitz bound leaves a lot more room for improvement. In particular, we use the pigeonhole principle to pass from a sum to a maximum so that we can leverage projective uniformity. A different approach might lead to a tighter bound that does not require G to be finite. More abstractly, we suspect that separating implies bilipschitz, though the bounds might be arbitrarily bad; see Problem 21.
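For finite G, the upper Lipschitz bound can be spelled out in one line (a sketch of the standard argument, not a restatement of Lemma 22). Write \(\langle \!\langle [x],[z]\rangle \!\rangle =\max _{g\in G}\langle gx,z\rangle \), let \(d([x],[y])=\min _{h\in G}\Vert x-hy\Vert \) denote the quotient metric, and pick h attaining this minimum. Since each g is an isometry, Cauchy–Schwarz gives

\[\langle \!\langle [x],[z]\rangle \!\rangle =\max _{g\in G}\big (\langle ghy,z\rangle +\langle g(x-hy),z\rangle \big )\le \langle \!\langle [y],[z]\rangle \!\rangle +\Vert x-hy\Vert \,\Vert z\Vert .\]

Exchanging x and y yields \(|\langle \!\langle [x],[z]\rangle \!\rangle -\langle \!\langle [y],[z]\rangle \!\rangle |\le \Vert z\Vert \,d([x],[y])\), so a max filter bank with templates \(z_1,\ldots ,z_n\) is Lipschitz with constant at most \((\sum _{i}\Vert z_i\Vert ^2)^{1/2}\).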

Randomness. Our separating and bilipschitz results (Corollary 15 and Theorem 20) are not given in terms of explicit templates. Meanwhile, our Mallat-type stability result (Theorem 24) requires a localized template, and we can interpret the templates used in our weighted graph separation hierarchy (1) as being localized, too. We expect that there are other types of structured templates that would reduce the computational complexity of max filtering (much like the structured measurements studied in compressed sensing [49, 62, 66] and phase retrieval [11, 14, 40, 42]). It would be interesting to prove separating or bilipschitz results in such settings. More generally, can one construct explicit templates for which max filtering is separating or bilipschitz for a given group? Going the other direction, the reader may have noticed that the plots in Fig. 1 deviate from each other at the edges of the interval. We expect that such deviations decay as the dimension grows, but this requires further analysis. How can one analyze the behavior of random max filters for other groups?

Max filtering networks. Example 35 opens the door to a variety of innovations with max filtering. There, instead of fixing a single group to mod out by, we applied max filtering with a family of different groups. We selected permutations over patches due to the computational simplicity afforded by Theorem 30, and we arranged the patches hierarchically so as to capture behavior at different scales. Is there any theory to explain the performance of this architecture? Are there other useful architectures in this vicinity, perhaps obtained by combining max filtering with neural networks?

References

[1] B. Alexeev, A. S. Bandeira, M. Fickus, D. G. Mixon, Phase retrieval with polarization, SIAM J. Imaging Sci. 7 (2014) 35–66.

[2] N. Alon, R. Yuster, U. Zwick, Color-coding, J. ACM 42 (1995) 844–856.

[3] V. Andrearczyk, P. F. Whelan, Using filter banks in convolutional neural networks for texture classification, Pattern Recognit. Lett. 84 (2016) 63–69.

[4] R. Balan, P. Casazza, D. Edidin, On signal reconstruction without phase, Appl. Comput. Harmon. Anal. 20 (2006) 345–356.

[5] R. Balan, C. B. Dock, Lipschitz Analysis of Generalized Phase Retrievable Matrix Frames, SIAM J. Matrix Anal. Appl. 43 (2022) 1518–1571.

[6] R. Balan, N. Haghani, M. Singh, Permutation Invariant Representations with Applications to Graph Deep Learning, arXiv:2203.07546 (2022).

[7] R. Balan, E. Tsoukanis, \(G\)-Invariant Representations using Coorbits: Bi-Lipschitz Properties, arXiv:2308.11784 (2023).

[8] R. Balan, Y. Wang, Invertibility and robustness of phaseless reconstruction, Appl. Comput. Harmon. Anal. 38 (2015) 469–488.

[9] A. S. Bandeira, B. Blum-Smith, J. Kileel, J. Niles-Weed, A. Perry, A. S. Wein, Estimation under group actions: Recovering orbits from invariants, Appl. Comput. Harmon. Anal. 66 (2023) 236–319.

[10] A. S. Bandeira, J. Cahill, D. G. Mixon, A. A. Nelson, Saving phase: Injectivity and stability for phase retrieval, Appl. Comput. Harmon. Anal. 37 (2014) 106–125.

[11] A. S. Bandeira, Y. Chen, D. G. Mixon, Phase retrieval from power spectra of masked signals, Inform. Inference 3 (2014) 83–102.

[12] T. Bendory, D. Edidin, W. Leeb, N. Sharon, Dihedral multi-reference alignment, IEEE Trans. Inform. Theory 68 (2022) 3489–3499.

[13] J. Bochnak, M. Coste, M.-F. Roy, Real algebraic geometry, Springer, 2013.

[14] B. G. Bodmann, N. Hammen, Stable phase retrieval with low-redundancy frames, Adv. Comput. Math. 41 (2015) 317–331.

[15] R. Bousseljot, D. Kreiseler, A. Schnabel, Nutzung der EKG-Signaldatenbank CARDIODAT der PTB über das Internet, Biomed. Tech. 40 (1995) 317–318, https://www.physionet.org/content/ptbdb/1.0.0/.

[16] M. M. Bronstein, J. Bruna, T. Cohen, P. Veličković, Geometric Deep Learning: Grids, Groups, Graphs, Geodesics, and Gauges, arXiv:2104.13478 (2021).