Abstract

Regularization is necessary when solving inverse problems to ensure the well-posedness of the solution map. Additionally, it is desired that the chosen regularization strategy is convergent in the sense that the solution map converges to a solution of the noise-free operator equation. This provides an important guarantee that stable solutions can be computed for all noise levels and that solutions satisfy the operator equation in the limit of vanishing noise. In recent years, reconstructions in inverse problems are increasingly approached from a data-driven perspective. Despite empirical success, the majority of data-driven approaches do not provide a convergent regularization strategy. One such popular example is given by iterative plug-and-play (PnP) denoising using off-the-shelf image denoisers. These usually provide only convergence of the PnP iterates to a fixed point, under suitable regularity assumptions on the denoiser, rather than convergence of the method as a regularization technique, that is, under vanishing noise and regularization strength. This paper serves two purposes: first, we provide an overview of the classical regularization theory in inverse problems and survey a few notable recent data-driven methods that are provably convergent regularization schemes. We then continue to discuss PnP algorithms and their established convergence guarantees. Subsequently, we consider PnP algorithms with learned linear denoisers and propose a novel spectral filtering technique of the denoiser to control the strength of regularization. Further, by relating the implicit regularization of the denoiser to an explicit regularization functional, we are the first to rigorously show that PnP with a learned linear denoiser leads to a convergent regularization scheme. The theoretical analysis is corroborated by numerical experiments for the classical inverse problem of tomographic image reconstruction.

1 Introduction

Inverse problems deal with the estimation of an unknown model parameter \(x^{*}\in X\) from its noisy and indirect measurement \(y^{\delta }\in Y\) given by

\[ y^{\delta } = A x^{*} + e. \tag{1} \]

We consider the case where X and Y are (potentially infinite-dimensional) separable Hilbert spaces and \(A:X\rightarrow Y\) is a bounded linear operator. X and Y are endowed with inner products \(\langle \cdot , \cdot \rangle _X\) and \(\langle \cdot , \cdot \rangle _Y\), inducing the norms \(\Vert \cdot \Vert _X\) and \(\Vert \cdot \Vert _Y\), respectively. The measurement noise e is bounded in norm by the noise level \(\delta \), i.e., \(\Vert e\Vert _Y\le \delta \). The clean measurement is denoted by \(y^0 := Ax^*\).

The inverse problem in (1) is called ill-posed in the sense of Hadamard if the forward operator fails to be injective or surjective, or if the solution map is not stable. For instance, if A is a compact operator with an infinite-dimensional range, then surjectivity and stability are not satisfied. This is, for example, the case for the ray transform operator that underlies many applications in medical imaging, such as computed tomography (CT) and positron emission tomography (PET) [35, 36]. The study of inverse problems usually assumes ill-posedness, and we do so in the following.

To address ill-posedness, one needs a general concept of stable and unique solvability for an inverse problem of the form (1). Due to the aforementioned ill-posedness, we cannot guarantee the recovery of the true solution \(x^*\) for all measurements, and hence we first need the concept of a generalized solution. A common approach is to search for solutions that are closest to the measured data with respect to a suitable data discrepancy term \(f:Y\times Y\rightarrow \mathbb {R}_+\), such as the squared norm distance, i.e., \(f(Ax,y^\delta )=\Vert Ax-y^\delta \Vert _Y^2\). We then search for \(\widetilde{x}\in X\) such that

\[ \widetilde{x}\in \mathop{\mathrm{arg\,min}}_{x\in X}\, f(Ax,y^{\delta }). \tag{2} \]

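Equation (2) implies that \(\widetilde{x}\) is closest to the measured data with respect to \(f\), which handles the violation of surjectivity by disregarding components of \(y^\delta \) in the co-kernel of \(A\). Furthermore, if \(A\) has a non-trivial null space, then \(\widetilde{x}\) is not unique. To obtain a unique solution, one can define the minimum-norm solution as

\[ x^\dagger := \mathop{\mathrm{arg\,min}}_{\widetilde{x}\in X}\ \Vert \widetilde{x}\Vert _X \quad \text{subject to} \quad \widetilde{x}\in \mathop{\mathrm{arg\,min}}_{x\in X}\, f(Ax,y^{\delta }). \tag{3} \]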

The element \(x^\dagger \) can now be considered a desirable generalized solution to (1). When f is the squared \(L^2\)-distance and \(\Vert \cdot \Vert _X\) is the \(L^2\)-norm, we call \(x^\dagger \) the least-squares minimum-norm solution and can define a mapping \(A^\dagger \), defined on the dense subspace of Y consisting of all data for which (2) admits a minimizer, such that \(x^\dagger =A^\dagger y^\delta \). This mapping \(A^\dagger \) is the Moore-Penrose pseudo-inverse. Unfortunately, if A is compact with an infinite-dimensional range, then \(A^\dagger \) is unbounded and hence does not resolve the stability problem in the presence of noise in the data. This is where the concept of regularization becomes important, as we discuss next.
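As a finite-dimensional illustration of the minimum-norm solution and of the instability of the pseudo-inverse, the following NumPy sketch uses a toy matrix (an arbitrary construction, not an operator from this paper) whose singular values decay from 1 to \(10^{-6}\). It computes \(x^\dagger = A^\dagger y^0\) with `numpy.linalg.pinv`, checks that adding a null-space component leaves the data fit unchanged but increases the norm, and shows how a small data perturbation is strongly amplified.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy forward operator with a non-trivial null space (20 x 30) and singular values
# decaying from 1 to 1e-6, loosely mimicking a discretized compact operator.
m, n = 20, 30
U, _ = np.linalg.qr(rng.standard_normal((m, m)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
s = 10.0 ** -np.linspace(0, 6, m)
A = U @ np.diag(s) @ V[:, :m].T

x_true = rng.standard_normal(n)
y0 = A @ x_true

# Least-squares minimum-norm solution via the Moore-Penrose pseudo-inverse.
A_pinv = np.linalg.pinv(A)
x_dag = A_pinv @ y0

# Adding a null-space component gives another least-squares solution with larger norm.
z = V[:, m]                      # orthogonal to the row space of A, hence A @ z = 0
x_other = x_dag + z
print(np.linalg.norm(A @ x_other - y0), np.linalg.norm(x_dag), np.linalg.norm(x_other))

# Instability: a perturbation of norm 1e-4 is amplified by up to 1 / s.min() = 1e6.
e = rng.standard_normal(m)
e *= 1e-4 / np.linalg.norm(e)
x_noisy = A_pinv @ (y0 + e)
print(np.linalg.norm(x_noisy - x_dag))
```

The last printed error is many orders of magnitude larger than the perturbation, which is the finite-dimensional shadow of the unboundedness of \(A^\dagger \).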

Regularization theory considers specifically designed solution maps to deal with the stability issue. Such a solution map \(\mathcal {R}({\cdot ;\lambda }):Y\rightarrow X\), also called a reconstruction operator, is a parametric map that produces an estimate of \(x^*\) given \(y^{\delta }\). Here, the parameter \(\lambda \) depends on the noise level \(\delta \) and the measured data \(y^{\delta }\), which we denote explicitly by the mapping \(\lambda =\lambda (\delta ,y^\delta )\). In this paper, we are specifically interested in the notion of convergent regularization, which can be understood as convergence of the reconstruction operator as the noise level \(\delta \) tends to zero. More specifically, we require that, as \(\delta \rightarrow 0\), \(\lambda (\delta ,y^\delta )\rightarrow \lambda _0\ge 0\) and the reconstruction \(\mathcal {R}({y^{\delta };\lambda })\) converges to a generalized solution of the noiseless operator equation

\[ Ax = y^0. \tag{4} \]

A family of such reconstruction operators \(\mathcal {R}({y^{\delta };\lambda })\) can be formulated in the framework of variational regularization (see Sect. 2.1.3 for more details) by defining them as the mapping to a minimizer of a variational energy functional

\[ \mathcal {R}({y^{\delta };\lambda }) \in \mathop{\mathrm{arg\,min}}_{x\in X}\ f(Ax,y^{\delta }) + \lambda \, g(x). \tag{5} \]

Here, the first term ensures data consistency as in (2), and the second term acts as the regularizer to make the problem well-posed. It is often the case (although not always) that f is convex and smooth in x, while the regularizer g is convex but potentially non-smooth, e.g., sparsity-promoting regularizers involving the \(L^1\)-norm [6]. Therefore, to compute a minimizer of the variational problem, one uses non-smooth convex optimization algorithms that iteratively approximate \(\mathcal {R}({y^{\delta };\lambda })\). In particular, proximal splitting schemes are often used, such as forward-backward splitting (FBS), also referred to as proximal gradient descent, and the alternating direction method of multipliers (ADMM), which apply the proximal operator of g (see (21) for the definition) in each iteration to refine the solution. For instance, the FBS scheme iteratively updates the solution as

\[ x_{k+1} = \text {prox}_{\eta \lambda \,g}\bigl (x_k - \eta \,\nabla _x f(Ax_k,y^{\delta })\bigr ), \tag{6} \]

starting from an initialization \(x_0\), where \(\eta >0\) is the step size and \(\text {prox}_{\eta \lambda \,g}\) is the proximal operator of \(\eta \lambda \,g\). Note that (6) retains the modularity of the variational framework, in the sense that the proximal operator enforces prior knowledge about the image, while its argument depends only on the forward operator and the fidelity loss. This decoupling of the fidelity and the prior in proximal splitting algorithms forms the basis of the so-called plug-and-play (PnP) denoising algorithms.
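To make the FBS update concrete, here is a minimal NumPy sketch for the illustrative choice \(f(Ax,y^\delta )=\tfrac{1}{2}\Vert Ax-y^\delta \Vert _2^2\) (the factor 1/2 only rescales \(\lambda \)) and \(g=\Vert \cdot \Vert _1\), whose proximal operator is component-wise soft-thresholding. With step size \(\eta \), the proximal step soft-thresholds at level \(\eta \lambda \). The step size \(\eta =1/\Vert A\Vert ^2\), the helper names and the sparse test problem are assumptions for illustration.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1 (component-wise soft-thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def objective(A, y, lam, x):
    """Variational energy 0.5 * ||A x - y||^2 + lam * ||x||_1."""
    return 0.5 * np.sum((A @ x - y) ** 2) + lam * np.sum(np.abs(x))

def fbs_l1(A, y, lam, n_iter=500):
    """Forward-backward splitting for min_x 0.5 * ||A x - y||^2 + lam * ||x||_1."""
    eta = 1.0 / np.linalg.norm(A, 2) ** 2        # step size 1/L with L = ||A||^2
    x = np.zeros(A.shape[1])                      # initialization x_0 = 0
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)                  # forward (gradient) step on the data term
        x = soft_threshold(x - eta * grad, eta * lam)  # backward (proximal) step on lam * g
    return x

# Toy sparse recovery problem.
rng = np.random.default_rng(0)
A = rng.standard_normal((40, 80)) / np.sqrt(40)
x_true = np.zeros(80)
x_true[rng.choice(80, size=5, replace=False)] = rng.standard_normal(5)
y = A @ x_true + 0.01 * rng.standard_normal(40)
x_hat = fbs_l1(A, y, lam=0.02)
```

Only `soft_threshold` encodes the prior; replacing it by another operator leaves the rest of the loop untouched, which is exactly the modularity that PnP exploits.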

The PnP approach, pioneered by Venkatakrishnan et al. [49], is based on the observation that the proximal step can be interpreted as a denoising step and replaces the proximal operator with an off-the-shelf (Gaussian) image denoiser. While the initial PnP methods used classical model-based denoisers (such as BM3D, non-local means, or K-SVD with a learned basis), more recent PnP schemes have leveraged deep denoisers that outperform their classical counterparts in terms of denoising quality.
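The PnP idea can be sketched by taking the FBS loop and swapping the proximal step for a denoiser. In the following NumPy sketch the "denoiser" is a hypothetical stand-in (a simple moving-average filter, not BM3D or a deep network) and the forward operator is a toy 1D Gaussian blur; only the structure of the iteration is meant to carry over.

```python
import numpy as np

def gaussian_blur_matrix(n, sigma):
    """Row-normalized Gaussian blurring matrix acting on 1D signals of length n."""
    idx = np.arange(n)
    K = np.exp(-((idx[:, None] - idx[None, :]) ** 2) / (2.0 * sigma**2))
    return K / K.sum(axis=1, keepdims=True)

def moving_average_denoiser(x, width=5):
    """Hypothetical stand-in for an off-the-shelf denoiser: a linear smoothing filter."""
    return np.convolve(x, np.ones(width) / width, mode="same")

def pnp_fbs(A, y, denoiser, n_iter=100):
    """PnP forward-backward splitting: the proximal step of FBS is replaced by `denoiser`."""
    eta = 1.0 / np.linalg.norm(A, 2) ** 2
    x = A.T @ y                                      # simple initialization
    for _ in range(n_iter):
        x = denoiser(x - eta * A.T @ (A @ x - y))    # gradient step, then "denoising" step
    return x

rng = np.random.default_rng(0)
n = 128
grid = np.arange(n)
x_true = np.where((grid > 30) & (grid < 80), 1.0, 0.0)   # piecewise-constant toy signal
A = gaussian_blur_matrix(n, sigma=2.0)
y = A @ x_true + 0.02 * rng.standard_normal(n)
x_pnp = pnp_fbs(A, y, moving_average_denoiser)
```

Because this particular denoiser is linear, the sketch also corresponds to the linear-denoiser setting of panel (e) in Fig. 1; swapping in a non-linear denoiser requires no change to `pnp_fbs`.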

Fig. 1 Image reconstruction with different regularizers from data corrupted by Gaussian blur. The data in (d) are generated by convolving the ground-truth image (a) with a Gaussian kernel and adding white Gaussian noise. Panels (b) and (c) show variational reconstructions with the \(H^1\) seminorm, \(g(x) = \Vert \nabla x\Vert _2^2\), and the total variation (TV) seminorm, \(g(x) = \Vert \nabla x\Vert _1\), respectively. Panels (e) and (f) show plug-and-play (PnP) reconstructions (see Sect. 3) with a linear denoiser and a non-linear gradient-step denoiser, respectively. For (b) and (c), the regularization parameters were chosen to optimize the peak signal-to-noise ratio (PSNR). Both (b) and (e) are linear reconstructions, whereas (c) and (f) are non-linear reconstructions.

Figure 1 shows a comparison of the performance of variational regularization methods and PnP methods on an image deblurring task. We compare linear reconstructions (corresponding to a quadratic regularization functional and linear denoiser) in (b) and (e) and non-linear reconstructions (with a non-quadratic regularization functional and non-linear denoiser) in (c) and (f). Notwithstanding the impressive empirical performance of data-driven PnP algorithms for imaging problems, their analysis as a convergent regularization scheme has largely remained unaddressed [15, 33]. In this paper, we address the question of convergent data-driven regularization, provide a survey of existing approaches and establish a convergence result for learned PnP denoisers, based on a linearity assumption of the denoiser (corresponding to the setting of (e) in Fig. 1) and a novel spectral filtering to control regularization strength.

Organization and contributions: We first review classical approaches to regularization in Sect. 2 and explain why inverse problems necessitate a generalized notion of solvability. We then discuss how this classical approach can be combined with modern data-driven methods. In particular, we devote special attention to plug-and-play (PnP) approaches in Sect. 3. We discuss how, depending on the regularity of the denoiser, different convergence guarantees for the PnP iterations can be established, such as fixed-point convergence [41] and objective convergence [22, 34]. Nevertheless, there is still a gap in the literature on whether PnP can provide a convergent regularization for general denoisers.

In Sect. 4, we take an important step toward filling this gap by considering PnP algorithms with data-driven linear denoisers. We propose a novel spectral filtering technique to control the regularization strength of the denoiser, which allows us to establish that PnP with a learned linear denoiser leads to a convergent regularization scheme. More specifically, we prove that, as the noise vanishes, the PnP reconstruction converges to the minimizer of a regularizing potential subject to the solution satisfying the noiseless operator equation (4). In the numerical experiments, we examine the spectral filtering approach for the classical inverse problem of X-ray tomography and show that the proposed method indeed provides a numerically verifiable convergent regularization. Finally, in Sect. 5, we provide concluding remarks and discuss directions for future work.

2 Regularization for Inverse Problems and Data-Driven Methods

Regularization theory has been a rich and successful field in inverse problems for several decades. The primary motivation is to formulate a well-posed and stable inversion procedure that converges provably to a solution of the noiseless operator equation (4). The emergence of data-driven methods has given the field of inverse problems a new direction: by using large quantities of data we can significantly improve reconstruction results. However, the underlying question of a convergent regularization remains: does the obtained reconstruction solve the underlying operator equation?

A few data-driven methods are provably convergent regularization methods; we refer to [33] for a survey. In the following, we give a short overview of classical regularization theory and of existing data-driven approaches that are provably convergent regularization methods in this context.

2.1 Classical Regularization Theory

Stable solutions to inverse problems need a way to handle varying noise levels. For this purpose, the concept of regularization has proven highly useful. Roughly, regularization can be understood as a requirement of convergence to a unique solution, e.g., the minimum-norm solution \(x^\dagger \), as the noise level \(\delta \) vanishes. Formally, we consider the previously discussed reconstruction operator \(\mathcal {R}_\lambda := \mathcal {R}(\cdot ;\lambda )\), which provides a parameterized family of continuous operators \(\mathcal {R}_\lambda :Y\rightarrow X\). The parameter \(\lambda \) depends on the noise level \(\delta >0\), where \(\Vert y^{\delta } - y^0 \Vert \le \delta \) and \(y^0:= A x^*\) denotes noise-free data. We say that the family of reconstruction operators is a convergent regularization method if there exists a parameter choice rule \(\lambda = \lambda (\delta , y^{\delta })\) such that the reconstructions \(x^\delta :=\mathcal {R}_{\lambda (\delta ,y^\delta )}(y^\delta )\) converge to the solution \(x^\dagger :=A^{\dagger } y^0\) given by the pseudo-inverse as the noise vanishes, in the sense that

\[ \lim _{\delta \rightarrow 0}\, \sup \bigl \{ \Vert \mathcal {R}_{\lambda (\delta ,y^\delta )}(y^\delta ) - A^\dagger y^0\Vert _X \,:\, y^\delta \in Y,\ \Vert y^\delta - y^0\Vert _Y\le \delta \bigr \} = 0 \quad \text{and} \quad \lim _{\delta \rightarrow 0}\, \sup \bigl \{ \lambda (\delta ,y^\delta ) \,:\, y^\delta \in Y,\ \Vert y^\delta - y^0\Vert _Y\le \delta \bigr \} = 0. \tag{7} \]

In other words, we have point-wise convergence of the reconstruction operators to the pseudo-inverse, i.e., \(\mathcal {R}_{\lambda (\delta , y^{\delta })}(y^\delta ) \rightarrow A^\dagger y^0\) as \(\delta \rightarrow 0\). We refer interested readers to [16] for a detailed discussion. This is, of course, quite restrictive and only considers convergence to the least-squares minimum-norm solution. Nevertheless, this can already be used as an important tool to design learned regularization methods, i.e., learned reconstruction approaches that formally satisfy the above convergence criteria, as we will discuss in the following.
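The convergence notion above can be probed numerically. The sketch below is an illustration, not a proof: it uses Tikhonov regularization \(\mathcal {R}_\lambda (y)=(A^\top A+\lambda I)^{-1}A^\top y\) with the assumed parameter choice \(\lambda (\delta )=\delta \), for which \(\lambda \rightarrow 0\) and \(\delta ^2/\lambda \rightarrow 0\), and a toy ground truth of the form \(x^\dagger =A^\top w\) (a source condition chosen so that the error decays quickly). The reconstruction error with respect to \(A^\dagger y^0\) shrinks as \(\delta \) decreases.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n = 30, 40
U, _ = np.linalg.qr(rng.standard_normal((m, m)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
s = 10.0 ** -np.linspace(0, 3, m)              # singular values from 1 down to 1e-3
A = U @ np.diag(s) @ V[:, :m].T

w = rng.standard_normal(m)
x_dag = A.T @ w        # lies in the row space of A, so x_dag = A^dagger y0
y0 = A @ x_dag
noise = rng.standard_normal(m)
noise /= np.linalg.norm(noise)

def tikhonov(A, y, lam):
    """Tikhonov reconstruction R_lambda(y) = (A^T A + lam I)^{-1} A^T y."""
    return np.linalg.solve(A.T @ A + lam * np.eye(A.shape[1]), A.T @ y)

deltas = [1e-1, 1e-2, 1e-3, 1e-4]
errors = []
for delta in deltas:
    y_delta = y0 + delta * noise                  # ||y_delta - y0|| = delta
    x_delta = tikhonov(A, y_delta, lam=delta)     # parameter choice rule lambda(delta) = delta
    errors.append(np.linalg.norm(x_delta - x_dag))
print(errors)
```

A single noise direction is used for all \(\delta \); a more faithful check of the supremum in the definition would maximize over many noise realizations.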

2.1.1 Direct Regularization

Motivated by convergence to the pseudo-inverse solution, one can obtain a regularization method by mimicking the construction of the pseudo-inverse. In finite dimensions, this can be achieved using the singular value decomposition (SVD) \(A=USV^{\top }\) of the forward operator. The pseudo-inverse is then simply \(A^\dagger = VS^\dagger U^{\top }\), where \(S^\dagger \) is obtained by transposing the singular value matrix and inverting its nonzero singular values. A regularization method is then obtained by filtering the singular values with a noise-dependent filter function, or by a noise-level-dependent truncation.
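The filtered SVD construction can be sketched in a few lines of NumPy. The reconstruction is \(x = V\,\mathrm{diag}(\varphi (s_i)/s_i)\,U^\top y\) with filter factors \(\varphi \); the helper names and the two example filters (truncation at a threshold \(\alpha \), and the Tikhonov filter \(s^2/(s^2+\alpha )\)) are illustrative choices, and in a regularization method \(\alpha \) would be chosen depending on \(\delta \). Setting \(\varphi \equiv 1\) recovers the pseudo-inverse.

```python
import numpy as np

def spectral_reconstruction(A, y, filter_fn):
    """x = V diag(phi(s) / s) U^T y for filter factors phi(s) in [0, 1]."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    phi = filter_fn(s)
    # Divide only where s > 0; components with zero singular value are discarded.
    gains = np.divide(phi, s, out=np.zeros_like(s), where=s > 0)
    return Vt.T @ (gains * (U.T @ y))

def truncation_filter(alpha):
    """Truncated SVD: keep only singular values s >= alpha."""
    return lambda s: (s >= alpha).astype(float)

def tikhonov_filter(alpha):
    """Tikhonov filter s^2 / (s^2 + alpha)."""
    return lambda s: s**2 / (s**2 + alpha)

rng = np.random.default_rng(0)
A = rng.standard_normal((30, 20))
y = rng.standard_normal(30)
x_pinv = spectral_reconstruction(A, y, lambda s: np.ones_like(s))   # phi = 1: pseudo-inverse
x_tsvd = spectral_reconstruction(A, y, truncation_filter(1.0))
x_tik = spectral_reconstruction(A, y, tikhonov_filter(0.5))
```

The Tikhonov filter reproduces \((A^\top A+\alpha I)^{-1}A^\top y\), so spectral filtering and variational Tikhonov regularization coincide for quadratic penalties.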

Similarly, direct reconstruction methods that apply a regularized inverse of the forward operator can be shown to be convergent regularization methods. The most prominent example of such methods is filtered back-projection (FBP) for X-ray CT, which is still relevant in clinical practice. Here, the filtering step is combined with a low-pass window that damps high-frequency components in Fourier space to regularize the reconstructions. If the filtering is interpreted as a noise-dependent mollifier, one obtains the general class of approximate inverse methods [44], which converge as the noise vanishes.

A popular approach in data-driven methods is to formulate a learned reconstruction operator as the composition of a regularized reconstruction operator \(\mathcal {R}_\lambda :Y\rightarrow X\) with a data-driven component \(C_{\theta }:X\rightarrow X\). That is, the reconstruction operator is parameterized as \(\mathcal {R}_{(\theta ,\lambda )}:= C_{\theta }\circ \mathcal {R}_\lambda \), where the data-driven component \(C_{\theta }\), usually parameterized using a deep convolutional neural network (CNN), is designed to improve the reconstruction by removing noise or undersampling artifacts [23, 26]. These approaches are also popularly referred to as post-processing methods.

Such one-step post-processing approaches are especially popular due to their simplicity, as \(C_{\theta }\) can be trained efficiently when supervised pairs of high- and low-quality reconstructions are available. Unfortunately, there are very few results on reconstruction guarantees for such methods. Specifically, formulating the reconstruction as a regularized reconstruction followed by a data-driven component often causes the output to violate the so-called data-consistency criterion. That is, even if the data fidelity \(f(\bigl (A\circ \mathcal {R}_\lambda \bigr )(y^\delta ),y^\delta )\) is small, this does not necessarily imply a small value of \(f\bigl (\bigl (A\circ {C}_{\theta }\circ \mathcal {R}_\lambda \bigr )(y^\delta ),y^\delta \bigr )\) for the output of the post-processing network \({C}_{\theta }\). Thus, such schemes do not in general guarantee convergence of the data fidelity and hence fail to be a convergent regularization strategy.

Nevertheless, as proposed in [45], this approach can be reformulated by constructing the post-processing network as \({C}_{\theta } = {{\,\textrm{id}\,}}+\left( {{\,\textrm{id}\,}}-A^{\dagger }A\right) {Q}_{\theta }\), where \({Q}_{\theta }\) is a Lipschitz-continuous deep neural network (DNN) and \({{\,\textrm{id}\,}}\) denotes the identity operator on X. Here, \(\left( {{\,\textrm{id}\,}}-A^{\dagger }A\right) \) is the orthogonal projection onto the null space of A, and hence the operator \({C}_{\theta }\) (referred to as a null-space network) always satisfies \(\bigl (A\circ {C}_{\theta }\circ \mathcal {R}_\lambda \bigr )(y^\delta )=\bigl (A\circ \mathcal {R}_\lambda \bigr )(y^\delta )\); that is, post-processing with \({C}_{\theta }\) does not change how well the reconstruction explains the observed data. More importantly, the null-space network maintains the regularizing properties of the reconstruction method \(\mathcal {R}_\lambda \) and hence provides a convergent regularization scheme [45] in the sense of direct regularization. See [7] for a recent extension of null-space networks to non-linear inverse problems.
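The data-consistency property of the null-space network can be checked numerically. In this NumPy sketch, \(Q_\theta \) is a hypothetical stand-in for a trained network (a random one-hidden-layer tanh map), \(\mathcal {R}_\lambda \) is Tikhonov regularization with an arbitrary \(\lambda \), and the projection \(\mathrm{id}-A^\dagger A\) is formed explicitly, which is only feasible for small toy problems.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n = 15, 40
A = rng.standard_normal((m, n))
A_pinv = np.linalg.pinv(A)
P_null = np.eye(n) - A_pinv @ A          # orthogonal projection onto the null space of A

# Hypothetical stand-in for a Lipschitz-continuous network Q_theta (random weights, tanh).
W1 = rng.standard_normal((n, n)) / np.sqrt(n)
W2 = rng.standard_normal((n, n)) / np.sqrt(n)

def Q_theta(x):
    return W2 @ np.tanh(W1 @ x)          # Lipschitz constant at most ||W2|| * ||W1||

def plain_postprocessing(x):
    return x + Q_theta(x)                # residual correction without null-space projection

def null_space_network(x):
    return x + P_null @ Q_theta(x)       # C_theta = id + (id - A^dagger A) Q_theta

# Initial regularized reconstruction R_lambda(y_delta): Tikhonov with an arbitrary lam.
x_true = rng.standard_normal(n)
y_delta = A @ x_true + 0.01 * rng.standard_normal(m)
lam = 0.1
x_rec = np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ y_delta)

print(np.linalg.norm(A @ null_space_network(x_rec) - A @ x_rec))    # ~ machine precision
print(np.linalg.norm(A @ plain_postprocessing(x_rec) - A @ x_rec))  # clearly nonzero
```

The plain residual correction changes \(Ax\) and therefore the data fit, whereas the projected correction only modifies components that the data cannot see.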

2.1.2 Iterative Regularization

Iterative techniques constitute another important class of regularization approaches in the classical literature [24]. Here, a regularized solution is obtained by early stopping of an iterative algorithm according to a discrepancy principle. Landweber iteration is such a classical iterative regularization approach, in which iterations of the form \(x_{k+1}=x_k-A^\top (Ax_k-y^{\delta })\) (assuming, after rescaling, that \(\Vert A\Vert \le 1\)) are terminated after K steps, where K is the smallest integer such that \(\Vert Ax_K-y^{\delta }\Vert \le \delta \) is satisfied. Landweber iteration can be modified to include a damping term with parameters \(\lambda _k>0\) (see [43]), resulting in iterations of the form

\[ x_{k+1}=x_k-A^\top (Ax_k-y^{\delta })-\lambda _k\,\bigl (x_k-x^{(0)}\bigr ), \]

where \(x^{(0)}\) is an initial guess that encodes prior knowledge about the solution. The modified iteration converges to a solution that is closest to \(x^{(0)}\), thereby introducing further stability. Aspri et al. [5] considered the modified Landweber scheme for non-linear inverse problems (with a forward operator F) and proposed a data-driven variant of it by using a learned damping term, leading to an iterative regularization scheme of the form

where \(\tilde{A}\) is a learned linear operator introduced in the damping term. The authors are able to prove strong convergence and stability in infinite dimensional Hilbert spaces. We refer interested readers to [5] for a detailed exposition on the learning strategy for \(\tilde{A}\) and the analysis of the resulting data-driven iterative regularization approach.
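A minimal NumPy sketch of (damped) Landweber iteration with discrepancy-based early stopping follows. As assumptions for illustration, it uses the step size \(1/\Vert A\Vert ^2\) (which reduces to the unit step above when \(\Vert A\Vert =1\)), a constant damping weight (0 by default, i.e., plain Landweber), and a discrepancy factor \(\tau =1.1\) in \(\Vert Ax_K-y^\delta \Vert \le \tau \delta \) (the text above uses \(\tau =1\)). It does not implement the learned variant of [5].

```python
import numpy as np

def landweber(A, y_delta, delta, tau=1.1, damping=0.0, x_prior=None, max_iter=10_000):
    """(Damped) Landweber iteration stopped by the discrepancy principle.

    Update: x <- x - omega * A^T (A x - y_delta) - damping * (x - x_prior),
    stopped at the first K with ||A x_K - y_delta|| <= tau * delta.
    """
    omega = 1.0 / np.linalg.norm(A, 2) ** 2          # step size in (0, 2 / ||A||^2)
    x_prior = np.zeros(A.shape[1]) if x_prior is None else x_prior
    x = x_prior.copy()                               # start from the initial guess
    for k in range(max_iter):
        residual = A @ x - y_delta
        if np.linalg.norm(residual) <= tau * delta:  # discrepancy principle
            return x, k
        x = x - omega * (A.T @ residual) - damping * (x - x_prior)
    return x, max_iter

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 40)) / np.sqrt(40)
x_true = rng.standard_normal(40)
noise = rng.standard_normal(20)
delta = 0.05
y_delta = A @ x_true + delta * noise / np.linalg.norm(noise)   # ||y_delta - A x_true|| = delta
x_K, K = landweber(A, y_delta, delta)
print(K)
```

The stopping index K plays the role of the regularization parameter: smaller \(\delta \) allows more iterations before the residual reaches the noise level.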

2.1.3 Variational Regularization

The classical regularization theory, which defines convergent regularization by convergence to the pseudo-inverse solution as in (7), limits the set of possible solutions. Therefore, one can consider more general variational approaches to inverse problems, which have been particularly popular due to their flexibility in incorporating prior knowledge and handling different noise distributions. In the variational regularization framework, solutions are computed by minimizing a composite objective consisting of a data-consistency term and a regularization term. In particular, the solutions are given by

\[ \mathcal {R}_\lambda (y^{\delta })\in \mathop{\mathrm{arg\,min}}_{x\in X}\ f(Ax,y^{\delta })+\lambda \,g(x). \tag{8} \]

The loss functional \(f:Y\times Y\rightarrow \mathbb {R}^{+}\) measures data fidelity and is no longer restricted to be the squared \(L^2\)-norm. The regularization functional \(g:X\rightarrow \mathbb {R}\) encodes prior belief about the ground truth \(x^*\) and effectively selects among candidate solutions that differ by components in the null space of A. Here, \(\lambda >0\) is a simple weighting parameter that balances the two terms of the composite objective in (8), but more generally it could be a parameter of the functional itself, in which case we write \(g_\lambda \) instead. The choice of a suitable regularizer g is governed by the need to balance two important factors: desirable analytical features and the encoded prior belief. For instance, an analytically favorable choice is the squared \(L^2\)-norm, which, in combination with a squared \(L^2\)-norm for the data fidelity, yields a closed-form solution. Unfortunately, the solutions obtained with this regularizer will be smooth, which may not be suitable for many imaging applications. Consequently, more advanced sparsity-promoting priors have been favored, most commonly the \(L^1\)-norm for sparse signals and total variation (TV) for sparse gradients, i.e., piecewise constant functions. These regularizers are non-differentiable and hence require more advanced non-smooth optimization techniques to compute a minimizer [6], but they typically lead to better reconstructions than simple squared \(L^2\)-norm-based regularization. See the top row of Fig. 1 for a comparison of some handcrafted regularizers for the inverse problem of image deblurring.
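For quadratic regularizers \(g(x)=\Vert Lx\Vert _2^2\) combined with \(f(Ax,y^\delta )=\Vert Ax-y^\delta \Vert _2^2\), setting the gradient of the variational objective to zero gives the closed form \(x_\lambda =(A^\top A+\lambda L^\top L)^{-1}A^\top y^\delta \). The NumPy sketch below (toy random data, arbitrary \(\lambda \)) evaluates it for \(L=I\) (squared \(L^2\)-norm) and for a forward-difference matrix \(L\) (a discrete squared \(H^1\) seminorm, as in panel (b) of Fig. 1).

```python
import numpy as np

def quadratic_reconstruction(A, y, lam, L):
    """Minimizer of ||A x - y||^2 + lam * ||L x||^2, i.e. (A^T A + lam L^T L)^{-1} A^T y."""
    return np.linalg.solve(A.T @ A + lam * L.T @ L, A.T @ y)

rng = np.random.default_rng(0)
m, n = 40, 60
A = rng.standard_normal((m, n)) / np.sqrt(m)
y = rng.standard_normal(m)
lam = 0.1

I = np.eye(n)
D = np.diff(I, axis=0)          # (n-1) x n forward differences: a discrete gradient

x_l2 = quadratic_reconstruction(A, y, lam, I)   # squared L^2-norm regularizer
x_h1 = quadratic_reconstruction(A, y, lam, D)   # squared H^1 seminorm regularizer
```

Both reconstructions depend linearly on \(y\), which is why panels (b) and (e) of Fig. 1 are called linear reconstructions; non-quadratic choices such as TV have no such closed form.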

Notably, the roles of the two terms in (8) are conceptually similar to the general formulation of a minimum-norm solution in (3). Nevertheless, the variational formulation provides more flexibility and also necessitates a broader concept of regularization. This is because we cannot always guarantee convergence to the minimum-norm solution, but instead have to consider convergence with respect to the chosen regularization functional \(g\) [42]. The formal definition of a convergent regularization scheme is given in Definition 1. The primary differences from the classical formulation are that the minimizer of the regularizing functional g is not necessarily unique and that the regularization parameter is not required to converge to 0.

We remark that it is desirable to formulate a regularizer that takes small values for the desired images, i.e., one that penalizes undesired solutions but is also analytically or computationally tractable. It is important to note that different regularizers g that provide a convergent regularization will still produce different reconstructions, as illustrated in Fig. 1, since not all choices of g represent the desired ground-truth image well. Here, learned regularizers have proven very successful, as the data itself can be used to represent the regularizer, which hence naturally offers a good representation of the desired features. Depending on the choice of representation, the analysis of the learned regularizer may become more involved. In the following, we discuss several choices of learned data-driven regularizers and how they can be used within the realm of variational regularization.

2.2 Learning a Regularizer

The idea of learning a regularizer from data, rather than the classical approach of modeling it from first principles as outlined above, has appeared in the literature in various forms. We outline here a few such approaches, ranging from relatively older yet widely popular ideas like dictionary learning to the more recent approaches of learning regularizers using deep neural networks.

2.2.1 Learning Sparsity-Promoting Dictionaries

We start with the concept of dictionary learning, which nicely illustrates how data can be used to learn a representation of the desired images. Here, we use the concept of sparsity, which has long been important for modeling prior knowledge of solutions, to regularize inverse problems. Assuming that the reconstruction possesses a sparse representation in a given dictionary \(\mathbb {D}\), one can develop sparse recovery strategies, associated computational approaches, and error estimates for the reconstruction. Instead of working with a given dictionary, the key idea is to learn a dictionary either a priori or jointly with the reconstruction. Notably, almost all work on dictionary learning in sparse models has been carried out in the context of denoising, i.e., with \(A={{\,\textrm{id}\,}}\).

Learning the dictionary separately from solving the reconstruction problem is usually done using a sparsity assumption on the representation given by the dictionary. Let \(L_X: X\times X\rightarrow \mathbb {R}\) be a given loss function (e.g., the \(L^2\)- or \(L^1\)-norm). Further, let \(x_1, \ldots , x_N \in X\) be the given unsupervised training data, \(\mathbb {D}= \{ {\phi _i} \} \subset X\) a dictionary, and consider the synthesis operator associated with \(\mathbb {D}\), which acts on the encoder space \(\Xi \) and maps \(\xi \in \Xi \) to \(\sum _i \xi _i\,\phi _i\). One approach in dictionary learning is given by

Here, (10) is posed in terms of the \(L^0\)-norm and is an NP-hard problem. This suggests the use of convex relaxation, replacing \( \Vert \xi _i \Vert _0 \) with \(\Vert \xi _i \Vert _1\) in (10). This relaxation turns (10) into a bi-convex problem (convex in each variable when the others are kept fixed) for the usual choices of \(L_X\), and one can apply alternating minimization approaches to obtain an approximate solution. Seminal work on sparse dictionary learning includes the K-SVD approach [2], geometric multi-resolution analysis (GMRA) [3], and online dictionary learning [30]. See also [40] and references therein for a thorough discussion of sparse dictionary learning approaches.
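The alternating minimization for the convex relaxation can be sketched as follows, assuming the squared \(L^2\) loss for \(L_X\) and an \(\ell ^1\) penalty weighted by a hypothetical parameter `lam` in place of the \(L^0\) term. The coding step runs ISTA with the dictionary fixed; the dictionary step solves a least-squares problem with the codes fixed and rescales atoms to unit norm. This is a didactic sketch, not K-SVD [2] or online dictionary learning [30].

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1, applied entry-wise."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def sparse_code(D, X, lam, n_iter=100, Xi=None):
    """ISTA for min_Xi 0.5 * ||X - D Xi||_F^2 + lam * ||Xi||_1 with the dictionary D fixed."""
    L = max(np.linalg.norm(D, 2) ** 2, 1e-12)        # Lipschitz constant of the gradient
    Xi = np.zeros((D.shape[1], X.shape[1])) if Xi is None else Xi.copy()
    history = []
    for _ in range(n_iter):
        Xi = soft_threshold(Xi - D.T @ (D @ Xi - X) / L, lam / L)
        history.append(0.5 * np.sum((X - D @ Xi) ** 2) + lam * np.abs(Xi).sum())
    return Xi, history

def update_dictionary(X, Xi, eps=1e-8):
    """Least-squares update of D with the codes fixed, then rescaling atoms to unit norm."""
    M = Xi @ Xi.T + eps * np.eye(Xi.shape[0])
    D = np.linalg.solve(M, Xi @ X.T).T               # D = X Xi^T (Xi Xi^T + eps I)^{-1}
    norms = np.linalg.norm(D, axis=0, keepdims=True)
    return D / np.where(norms > 0, norms, 1.0)       # unused atoms stay exactly zero

def learn_dictionary(X, n_atoms, lam, n_outer=20, seed=0):
    """Alternating minimization: sparse coding step, then dictionary update step."""
    rng = np.random.default_rng(seed)
    D = rng.standard_normal((X.shape[0], n_atoms))
    D /= np.linalg.norm(D, axis=0, keepdims=True)
    Xi = None
    for _ in range(n_outer):
        Xi, _ = sparse_code(D, X, lam, Xi=Xi)
        D = update_dictionary(X, Xi)
    return D, Xi

# Synthetic training data: 200 signals of length 16, each a combination of 3 atoms.
rng = np.random.default_rng(1)
D_true = rng.standard_normal((16, 32))
D_true /= np.linalg.norm(D_true, axis=0, keepdims=True)
codes = np.zeros((32, 200))
for j in range(200):
    codes[rng.choice(32, size=3, replace=False), j] = rng.standard_normal(3)
X = D_true @ codes

D_hat, Xi_hat = learn_dictionary(X, n_atoms=32, lam=0.05)
```

Each coding step decreases the relaxed objective for the current dictionary; the rescaling of atoms is a common normalization that removes the scaling ambiguity between atoms and codes.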

While dictionary learning in the context of sparse coding has been very popular and successful, it still has several issues related to the locality of learned structures and the computational effort required, for instance when sparse coding is performed over a large number of images or image patches. To obtain a computationally more feasible approach, convolutional dictionaries have been introduced. Here, the dictionary atoms are convolution kernels that act on signal features via convolution and hence provide computationally feasible shift-invariant dictionaries, where the atoms depend on the entire signal/image, see for instance [17].

The dictionary can also be learned jointly with the reconstruction, by formulating a joint optimization problem. An example of such an approach is the adaptive dictionary-based statistical iterative reconstruction (ADSIR) [52], and its variants [11, 51]. A joint problem could be formulated as:

where

with the synthesis operator associated with \(\mathbb {D}\) defined as above.

A convergent regularization could then be obtained under suitable conditions on \(g_\lambda \), following the variational regularization framework in Sect. 2.1.3. Finally, a formulation in infinite-dimensional spaces is studied in [9], which proposes a convex variational model for joint reconstruction and dictionary learning that applies to inverse problems and allows one to establish existence and stability guarantees for the reconstruction.

2.2.2 Bilevel Learning

Starting from variational regularization methods, where the reconstruction operator is defined as the solution map of (8), one can formulate a generic setup for learning selected components of (8) using supervised training data and a suitable loss function \(L_X :X\times X\rightarrow \mathbb {R}\). This setup can be tailored towards learning the regularization functional \(g_\lambda \) [13, 14], the data fidelity term \(f\), or even an appropriate component of the forward operator \(A\), e.g., in blind image deconvolution [21]. Notably, the joint dictionary learning problem (11) can also be formulated as a bilevel learning problem.

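First, we generalize the regularizer \(g_\lambda \), which has a single regularization parameter \(\lambda \), to a regularizer \(g_\theta \) with a (vector-valued) set of parameters \(\theta \). Subsequently, we define the reconstruction operator as

\[ \mathcal {R}_\theta (y)\in \mathop{\mathrm{arg\,min}}_{x\in X}\ f(Ax,y)+g_\theta (x). \tag{13} \]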

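Given paired training data \((x_i, y_i) \in X\times Y\) that are i.i.d. samples of the \((X\times Y)\)-valued random variable \((\textbf{x},\textbf{y}) \sim \pi _\textrm{joint}\), we can formulate the following bilevel learning problem:

\[ \widehat{\theta }\in \mathop{\mathrm{arg\,min}}_{\theta }\ \mathbb {E}_{(\textbf{x},\textbf{y})\sim \pi _\textrm{joint}}\Bigl [L_X\bigl (\mathcal {R}_\theta (\textbf{y}),\textbf{x}\bigr )\Bigr ] \quad \text{subject to (13)}. \tag{14} \]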

Note that \(\widehat{\theta }\) is, by definition, a Bayes estimator [27, Chapter 4]: a set of parameters that minimizes the risk over the distribution \(\pi _\text {joint}\). However, the true joint distribution \(\pi _\textrm{joint}\) is typically unknown and is replaced by its empirical counterpart given by the training data, in which case \(\widehat{\theta }\) corresponds to empirical risk minimization.
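As a minimal illustration of bilevel learning with an empirical risk, the following sketch learns a single parameter \(\theta =\lambda \) of a Tikhonov regularizer, for which the lower-level problem has a closed-form solution. For simplicity, the upper-level problem is solved by grid search over \(\lambda \) rather than by a gradient-based method; the operator, data, noise level and grid are toy assumptions.

```python
import numpy as np

def lower_level(A, Y, lam):
    """Lower-level solution map: argmin_x ||A x - y||^2 + lam * ||x||^2 for each column y of Y."""
    return np.linalg.solve(A.T @ A + lam * np.eye(A.shape[1]), A.T @ Y)

def empirical_risk(A, X, Y, lam):
    """Upper-level objective: mean squared reconstruction error over the training pairs."""
    return np.mean(np.sum((lower_level(A, Y, lam) - X) ** 2, axis=0))

rng = np.random.default_rng(0)
m, n, N = 30, 30, 100
U, _ = np.linalg.qr(rng.standard_normal((m, m)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
A = U @ np.diag(10.0 ** -np.linspace(0, 3, n)) @ V.T     # ill-conditioned toy operator

X_train = rng.standard_normal((n, N))                     # toy ground-truth samples x_i
Y_train = A @ X_train + 0.01 * rng.standard_normal((m, N))  # paired noisy measurements y_i

grid = np.logspace(-8, 2, 51)                             # candidate parameters theta = lambda
risks = np.array([empirical_risk(A, X_train, Y_train, lam) for lam in grid])
lam_hat = grid[np.argmin(risks)]
print(lam_hat)
```

With many parameters (e.g., a parameterized \(g_\theta \)), grid search becomes infeasible, which is why gradient-based bilevel methods and their optimality conditions matter.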

In the bilevel optimization literature, as in the optimization literature as a whole, there are two main and mostly distinct approaches. In the discrete approach, one first discretizes the problem (13) and subsequently optimizes its parameters. In this way, optimality conditions and their well-posedness are derived in finite dimensions. Alternatively, the reconstruction operator and its parameter \(\theta \) in (14) are optimized in the continuum (i.e., in appropriate infinite-dimensional function spaces) and then discretized. It should be noted that the resulting problems present several difficulties due to the frequent non-smoothness of the lower-level problem (think of TV regularization), which, in general, makes it impossible to verify Karush–Kuhn–Tucker constraint qualification conditions. This issue has led to the development of alternative analytical approaches to obtain first-order necessary optimality conditions [12, 20].

2.2.3 Adversarial Regularization

Another notable alternative approach to include a data-driven regularization in the reconstruction process is to learn an explicit regularization term in (8) and solve the variational problem subsequently. One such option is to learn adversarial regularizers as first proposed in [29] and further developed in [32]. Here, the construction of data-driven regularization is inspired by how discriminative networks (also referred to as critics) are trained using modern Generative Adversarial Network (GAN) architectures.

To train such an adversarial regularizer, we assume access to samples \(\left\{ x_i\right\} _{i=1}^{n_1}\subset {X}\) and \(\left\{ y_i\right\} _{i=1}^{n_2}\subset {Y}\), drawn i.i.d. from the marginal distributions \(\pi _x\) and \(\pi _y\) of ground-truth images and measurement data, respectively. It is important to note that the training samples are unpaired, i.e., \(y_i\) does not necessarily correspond to the noisy measurement of \(x_i\), unlike in a supervised approach such as the learned primal-dual (LPD) method [1]. Additionally, we assume that there exists a (potentially regularizing) pseudo-inverse \(A^\dagger :{Y} \rightarrow {X}\) of the forward operator \(A\) and define the measure \({\pi }_\dagger \in \mathbb {P}_{{X}}\) as the push-forward \({\pi }_\dagger := A^\dagger _\# (\pi _{\textrm{data}})\) of the measurement distribution \(\pi _{\textrm{data}} \in \mathbb {P}_{{Y}}\) (i.e., \(\pi _y\)).

Then, the idea is to train a regularizer \(g_{\theta }\), parameterized by a neural network, to discriminate between the distributions \(\pi _x\) and \({\pi }_\dagger \), the latter representing the distribution of imperfect solutions \(A^\dagger y_i\). More concretely, we compute

$$\begin{aligned} \widehat{\theta } \in \mathop {\mathrm {arg\,min}}\limits _{\theta }\, L(\theta ), \end{aligned}$$

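where \(L(\theta )\) is chosen to be a Wasserstein-flavored loss functional [29]. In particular, one minimizes

$$\begin{aligned} L(\theta ):=\mathbb {E}_{\textbf{x}\sim \pi _x}\left[ g_{\theta }(\textbf{x})\right] -\mathbb {E}_{\textbf{z}\sim \pi _\dagger }\left[ g_{\theta }(\textbf{z})\right] +\mu \,\mathbb {E}_{\textbf{u}\sim \widetilde{\pi }}\left[ \left( \left\| \nabla _{\textbf{u}}g_{\theta }(\textbf{u})\right\| -1\right) _{+}^2\right] , \end{aligned}$$

where \(\mu >0\) weights the gradient penalty.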

Here, \(\widetilde{\pi }\) denotes the distribution of the random variable \(\textbf{u}=\epsilon \,\textbf{x}+(1-\epsilon )\textbf{z}\), where \(\textbf{x}\sim \pi _x\), \(\textbf{z}\sim \pi _{\dagger }\), and \(\epsilon \) is drawn uniformly at random from [0, 1]. The heuristic behind this choice of loss is that a regularizer trained this way will penalize noise and artifacts generated by the pseudo-inverse (and contained in \({\pi }_\dagger \)); when used as a regularizer, it will therefore discourage these undesirable features in the reconstruction. The term penalizing the gradient norm of \(g_\theta \) in (16) encourages \(g_{\theta }\) to be approximately 1-Lipschitz, which is required for the well-posedness of (16) and for the stability of the variational solution obtained with the regularizer resulting from (15). The resulting regularizer \(g_{\widehat{\theta }}\) is called an adversarial regularizer (AR). Note that in practical applications, the measures \(\pi _x, {\pi }_\dagger \in \mathbb {P}_{{X}}\) are replaced with their empirical counterparts given by the training data \(x_i\) and \(A^\dagger y_i\), respectively.
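To make the loss in (16) concrete, the sketch below evaluates its empirical version in NumPy for a deliberately simple, hypothetical linear critic \(g_\theta (u)=\langle \theta ,u\rangle \), whose gradient is \(\theta \) at every point, so that the gradient penalty can be written without automatic differentiation. The function name `ar_loss`, the penalty weight `mu`, and the toy data are assumptions made for illustration only; an actual AR uses a neural-network critic.

```python
import numpy as np

def ar_loss(theta, x_true, x_pinv, mu=10.0, seed=0):
    """Empirical adversarial-regularizer loss for a toy linear critic g(u) = <theta, u>.

    x_true : (n, d) array standing in for samples of pi_x (ground truth)
    x_pinv : (n, d) array standing in for samples of pi_dagger (pseudo-inverse outputs)
    mu     : weight of the gradient penalty (hypothetical value)
    """
    rng = np.random.default_rng(seed)
    # Random interpolates u = eps * x + (1 - eps) * z with eps ~ U[0, 1]
    eps = rng.uniform(size=(x_true.shape[0], 1))
    u = eps * x_true + (1.0 - eps) * x_pinv
    # For a linear critic the gradient w.r.t. u equals theta at every interpolate;
    # a neural-network critic would need automatic differentiation at each u instead.
    grad_norms = np.full(u.shape[0], np.linalg.norm(theta))
    penalty = np.mean(np.maximum(grad_norms - 1.0, 0.0) ** 2)
    return np.mean(x_true @ theta) - np.mean(x_pinv @ theta) + mu * penalty

# Toy data: ground truth at 0, pseudo-inverse outputs shifted along the first axis
x_true = np.zeros((4, 2))
x_pinv = np.tile([1.0, 0.0], (4, 1))
print(ar_loss(np.array([1.0, 0.0]), x_true, x_pinv))   # -1.0
print(ar_loss(np.array([2.0, 0.0]), x_true, x_pinv))   # 8.0
```

The first critic assigns larger values to the pseudo-inverse samples and respects the Lipschitz constraint; doubling \(\theta \) lowers the first two terms further but is outweighed by the gradient penalty.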

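Suppose one computes a gradient step on the learned regularizer, given by \(x_\eta =x-\eta \,\nabla _\textbf{x}g_{\widehat{\theta }}(\textbf{x})\), starting from \(x\sim \pi _{\dagger }\). Let \({\pi }^\eta _\dagger \) be the distribution of \(x_\eta \). Under appropriate regularity assumptions on the Wasserstein distance \(\mathcal {W}({\pi }^\eta _\dagger ,\pi _x)\) (see [29, Theorem 1]), one can show that

$$\begin{aligned} \frac{\textrm{d}}{\textrm{d}\eta }\,\mathcal {W}\left( {\pi }^\eta _\dagger ,\pi _x\right) \Big |_{\eta =0}=-\mathbb {E}_{\textbf{x}\sim \pi _\dagger }\left[ \left\| \nabla _{\textbf{x}}g_{\widehat{\theta }}(\textbf{x})\right\| ^2\right] . \end{aligned}$$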

This ensures that, by taking a small enough gradient step, one can reduce the Wasserstein distance to the ground-truth distribution \(\pi _x\). This is a good indicator that using \(g_{\widehat{\theta }}\) as a variational regularization term, and consequently penalizing it, indeed introduces the desired incentive to align the distribution of regularized solutions with the distribution \(\pi _x\) of ground-truth samples. Further, one can show that if the AR is Lipschitz-continuous, then a minimizer of the following variational problem exists

where the squared norm on x is needed to enforce coercivity. In this setting, convergence of the regularization procedure in the weak topology of X can be ensured.
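The gradient-step argument above can be illustrated in one dimension under assumptions chosen purely for illustration: the pseudo-inverse samples are the ground-truth samples shifted by \(+2\), and the critic is \(g(u)=u\), which is 1-Lipschitz with unit gradient norm. For equal-size one-dimensional samples, the Wasserstein-1 distance equals the mean absolute difference of the sorted samples; the helper name `w1_empirical` is hypothetical. Each step of size \(\eta \le 2\) then lowers the distance by exactly \(\eta \), consistent with a rate of \(-\mathbb {E}\Vert \nabla g\Vert ^2=-1\).

```python
import numpy as np

def w1_empirical(a, b):
    """Wasserstein-1 distance between two equal-size 1D empirical distributions."""
    return np.mean(np.abs(np.sort(a) - np.sort(b)))

x_true = np.linspace(-1.0, 1.0, 101)   # stand-in for samples of pi_x
z = x_true + 2.0                        # stand-in for samples of pi_dagger (shifted artifacts)

def grad_g(u):
    """Gradient of the toy critic g(u) = u (1-Lipschitz, unit gradient norm)."""
    return np.ones_like(u)

for eta in [0.0, 0.5, 1.0]:
    z_eta = z - eta * grad_g(z)         # gradient step on the learned regularizer
    print(eta, w1_empirical(z_eta, x_true))
```

Up to floating-point rounding, the printed distances are 2, 1.5 and 1. For steps larger than the shift, the samples overshoot and the distance grows again, which is why the result only holds for small enough steps.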

Additionally, we can enforce (strong) convexity on \(g_\theta \), leading to the adversarial convex regularizer (ACR), to achieve stronger forms of convergence while precluding discontinuities in the reconstruction operator. This necessitates a suitable parameterization of the learned regularizer. One such option is to use input convex neural networks (ICNNs) [4], which impose convexity on \(g_{\widehat{\theta }}\) by construction. Given a so-constructed ACR \(g_{\widehat{\theta }}\) that is convex in x, we then consider a similar regularization functional of the form

where \(g_{\widehat{\theta }}:{X}\rightarrow \mathbb {R}\) is the trained ACR, which we assume to be 1-Lipschitz and convex in x. The corresponding variational regularization problem then consists in minimizing

with respect to \(x\in {X}\). In this setting, standard arguments from the calculus of variations yield the following improved theoretical guarantees for the ACR.

Theorem 1

(Properties of Adversarial Convex Regularizer [32])

i. Existence and uniqueness: The functional in (19) is strongly convex in x and has a unique minimizer \(\widehat{x}_{\lambda }\left( y\right) \) for every \(y\in {Y}\) and \(\lambda >0\).

ii. Stability: The optimal solution \(\widehat{x}_{\lambda }\left( y\right) \) is continuous in y.

iii. Convergence: For \(\delta \rightarrow 0\) and \(\lambda (\delta ) \rightarrow 0\) such that \(\displaystyle \frac{\delta }{\lambda (\delta )}\rightarrow 0\), we have that \(\widehat{x}_{\lambda }\left( y^{\delta }\right) \) converges to the \(g\)-minimizing solution \(x^{\dagger }\) given in (9).

Theoretical guarantees notwithstanding, the numerical experiments in [32] (especially for sparse-view CT reconstruction) indicate a lack of expressive power of ACRs compared to their non-convex counterpart AR. This underscores the need to develop techniques that achieve a better compromise between empirical performance and theoretical certificates.
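The ICNN parameterization [4] mentioned above can be sketched as follows. Convexity in x holds because each hidden layer applies a convex, non-decreasing activation to a non-negative combination of convex functions plus an affine function of x. The layer sizes, the leaky ReLU activation, the weight `rho` of the added squared norm, and all names are assumptions of this sketch, not the architecture used in [32].

```python
import numpy as np

rng = np.random.default_rng(1)
d, h = 8, 16                                   # input and hidden sizes (arbitrary)

def leaky_relu(s, slope=0.2):
    """Convex and non-decreasing activation."""
    return np.where(s >= 0, s, slope * s)

# Hypothetical parameters; W_z1 and w_out must be entrywise non-negative
W_x0, b0 = rng.normal(size=(h, d)), rng.normal(size=h)
W_z1 = np.abs(rng.normal(size=(h, h)))
W_x1, b1 = rng.normal(size=(h, d)), rng.normal(size=h)
w_out = np.abs(rng.normal(size=h))

def icnn(x, rho=0.1):
    """Two-layer input convex network plus rho * ||x||^2 (strongly convex in x)."""
    z0 = leaky_relu(W_x0 @ x + b0)               # convex: activation of an affine map
    z1 = leaky_relu(W_z1 @ z0 + W_x1 @ x + b1)   # non-negative mix of convex terms + affine term
    return w_out @ z1 + rho * (x @ x)

# Midpoint convexity check on a random pair of inputs
a, b = rng.normal(size=d), rng.normal(size=d)
print(icnn(0.5 * (a + b)) <= 0.5 * (icnn(a) + icnn(b)))
```

Dropping the non-negativity of `W_z1` or `w_out` would generally break convexity, which is the price in expressive power alluded to above.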

2.2.4 The Network Tikhonov (NETT) Approach

Traditionally, regularizers are often chosen as sparsifying transforms with respect to certain features. For instance, total variation (TV) is sparsifying for piecewise constant functions. Similarly, neural networks are often trained in an encoder-decoder (autoencoder) structure, where the encoder is trained to represent the input signal in a low-dimensional space or to find a more efficient (e.g., sparse) representation. The Network Tikhonov (NETT) approach proposed in [28] follows this paradigm to learn a regularizer. Here, a pretrained network \(\mathcal {E}_{\theta } :{X} \rightarrow \Xi \) is composed with a regularization functional \(g:\Xi \rightarrow [0,+\infty ]\), such that \(g\circ \mathcal {E}_{\theta } :{X} \rightarrow [0,+\infty ]\) takes small values for desired model parameters and penalizes (by producing larger values for) model parameters with artifacts or other unwanted structures. The deep neural network \(\mathcal {E}_{\theta }\) in this approach is allowed to be a rather general architecture, such as the above-mentioned autoencoder. Once trained, the reconstruction is then given as the minimizer of the variational objective

Indeed, the NETT approach also provides a provably convergent regularization method under certain analytic conditions on (20), such as weak lower semi-continuity and coercivity of the regularizer \(g(\mathcal {E}_{\theta } (\cdot ))\), which can be achieved as follows. First, the usual ReLU activation function is replaced by the leaky ReLU, defined with a small \(\tau >0\) as

$$\begin{aligned} \sigma _{\tau }(s):={\left\{ \begin{array}{ll} s, & s\ge 0,\\ \tau \,s, & s<0, \end{array}\right. } \end{aligned}$$

which tends to \(- \infty \) for \(s \rightarrow - \infty \). In combination with the affine linear maps (weight matrices) in \(\mathcal {E}_{\theta }\), this yields a coercive and weakly lower semi-continuous regularization functional \(g\circ \mathcal {E}_{\theta }\) for standard choices of \(g\), such as weighted \(\ell _p\)-norms \(g(\xi )= \sum _i v_i |\xi _i|^p\) with uniformly positive weights \(v_i\) and \(p \ge 1\). Finally, strong convergence can be achieved by using the concept of absolute Bregman distances and imposing stronger conditions on the regularizer.
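The coercivity mechanism can be illustrated with a small, hypothetical encoder. The sketch assumes square, invertible weight matrices (so that the encoder is injective) and uses the leaky ReLU, which satisfies \(|\sigma _\tau (s)|\ge \tau |s|\) for \(\tau \le 1\); hence the encoder output, and with it the weighted \(\ell _1\) penalty, grows without bound along any ray. The architecture, slope, weights \(v_i\), and names are assumptions for illustration, not the networks used in [28].

```python
import numpy as np

rng = np.random.default_rng(2)
d, tau = 6, 0.1                                  # dimension and leaky-ReLU slope (assumed)

def leaky_relu(s):
    """Leaky ReLU: identity for s >= 0, slope tau for s < 0 (tends to -inf as s -> -inf)."""
    return np.where(s >= 0, s, tau * s)

# Hypothetical encoder weights: random square matrices are invertible with probability one
W1, b1 = rng.normal(size=(d, d)), rng.normal(size=d)
W2, b2 = rng.normal(size=(d, d)), rng.normal(size=d)
v = rng.uniform(0.5, 1.5, size=d)                # uniformly positive weights v_i

def encoder(x):
    return leaky_relu(W2 @ leaky_relu(W1 @ x + b1) + b2)

def nett_regularizer(x, p=1):
    """Weighted l_p penalty g(E(x)) = sum_i v_i |E(x)_i|^p."""
    return np.sum(v * np.abs(encoder(x)) ** p)

x = rng.normal(size=d)
print([nett_regularizer(t * x) for t in (1.0, 1e2, 1e4)])
```

With a plain ReLU, entire half-spaces could be mapped to zero, so the penalty would stay bounded along some rays; the leaky variant rules this out.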

3 Regularization by Plug-and-Play (PnP) Denoising

Denoising is the simplest and arguably the most well-studied inverse problem in imaging, with numerous algorithms developed over the past few decades, particularly for removing additive white Gaussian noise from images. It is, therefore, natural to ask whether one can leverage off-the-shelf denoisers for solving more complicated image recovery tasks with a non-trivial forward operator. Venkatakrishnan et al. [49] pioneered the idea of using denoisers within proximal splitting algorithms (e.g., the alternating direction method of multipliers (ADMM)) in a plug-and-play (PnP) fashion, and the resulting class of algorithms came to be known as the PnP denoising approach. To see the motivation behind using denoisers in place of proximal operators, let us recall the definition of the proximal operator with respect to a (potentially non-smooth) convex functional \(g:X\rightarrow \mathbb {R}\cup \{+\infty \}\) and a step-size \(\tau >0\):

$$\begin{aligned} {{\,\textrm{prox}\,}}_{\tau g}(x):=\mathop {\mathrm {arg\,min}}\limits _{u\in X}\; \frac{1}{2}\left\| u-x\right\| ^2+\tau \,g(u). \end{aligned}$$

As indicated by (21), evaluating the proximal operator amounts to denoising a noisy image x by Bayesian maximum a-posteriori (MAP) estimation, assuming additive white Gaussian noise of unit variance and a Gibbs prior proportional to \(\exp \left( -\tau \,g(u)\right) \). This denoising interpretation of proximal operators is the foundation of PnP approaches, which have been shown to produce excellent reconstruction results for a wide range of imaging inverse problems. A classic and widely popular example is PnP denoising combined with forward-backward splitting (FBS), which leads to the following iterative reconstruction algorithm:

$$\begin{aligned} x_{k+1}=D_{\sigma }\left( x_k-\eta _k\,\nabla f(x_k)\right) . \end{aligned}$$

Here, f denotes the data fidelity loss for the underlying inverse problem, \(\eta _k>0\) is the step-size at iteration k, and \(D_{\sigma }\) is a denoiser that eliminates Gaussian noise of standard deviation \(\sigma \) from its input.
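A minimal PnP-FBS loop in the spirit of (22) is sketched below for the least-squares fidelity \(f(x)=\frac{1}{2}\Vert Ax-y\Vert ^2\). As a stand-in denoiser it uses soft-thresholding, which is the proximal operator of \(t\Vert \cdot \Vert _1\) in the sense of (21), so in this special case the loop reduces to classical FBS (ISTA) for a LASSO problem. The problem sizes, threshold, constant step size, and the name `pnp_fbs` are assumptions for illustration; any other denoiser callable could be plugged in instead.

```python
import numpy as np

def soft_threshold(x, t):
    """prox of t * ||.||_1, used here as a simple sparsity-promoting 'denoiser'."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def pnp_fbs(A, y, denoiser, n_iter=2000):
    """Plug-and-play forward-backward splitting with f(x) = 0.5 * ||Ax - y||^2."""
    eta = 1.0 / np.linalg.norm(A, 2) ** 2        # constant step size eta_k = 1 / ||A||^2
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad_f = A.T @ (A @ x - y)                 # gradient of the data fidelity
        x = denoiser(x - eta * grad_f)             # gradient step followed by denoising
    return x

rng = np.random.default_rng(0)
A = rng.normal(size=(40, 60))
x_true = np.zeros(60)
x_true[[3, 17, 42]] = [1.0, -2.0, 1.5]
y = A @ x_true + 0.01 * rng.normal(size=40)
x_hat = pnp_fbs(A, y, lambda v: soft_threshold(v, 0.01))
print(np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true))
```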

Besides the PnP denoising framework within proximal methods, wherein a denoiser implicitly acts as a regularizer, Romano et al. [38] proposed an alternative approach that explicitly constructs a regularizer from a denoiser \(D_{\sigma }\) as

$$\begin{aligned} g(x):=\frac{1}{2}\left\langle x,\,x-D_{\sigma }(x)\right\rangle . \end{aligned}$$

One can then seek to minimize the energy functional \(f(x)+\lambda \,g(x)\), where g is as defined in (23), leading to fixed-point iterative schemes known as regularization-by-denoising (RED) algorithms. However, Reehorst and Schniter [37] subsequently showed that the energy minimization interpretation of RED is valid only when (i) the denoiser is locally homogeneous, i.e., \(D_{\sigma }\left( (1+\epsilon )x\right) =(1+\epsilon )D_{\sigma }(x)\) holds for all x and sufficiently small \(\epsilon \), and (ii) the Jacobian of \(D_{\sigma }\) is symmetric. These conditions are generally not satisfied by practical denoisers, which invalidates the energy minimization-based interpretation of RED. Instead, the authors of [37] proposed an interpretation of RED based on score matching to analyze the convergence of RED algorithms.
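The role of Jacobian symmetry can be checked numerically for a linear denoiser \(D(x)=Mx\), which is trivially homogeneous. For \(g(x)=\frac{1}{2}\langle x, x-Mx\rangle \), the gradient is \(x-\frac{1}{2}(M+M^\top )x\), which coincides with the RED update direction \(x-D(x)\) only when M is symmetric. The matrices and helper names below are hypothetical; the loop should print True for the symmetric matrix and False for the non-symmetric one.

```python
import numpy as np

def red_g(x, M):
    """RED regularizer g(x) = 0.5 * <x, x - D(x)> for a linear denoiser D(x) = M x."""
    return 0.5 * x @ (x - M @ x)

def numerical_grad(fun, x, h=1e-6):
    """Central finite-difference gradient of a scalar function."""
    e = np.eye(x.size)
    return np.array([(fun(x + h * e[i]) - fun(x - h * e[i])) / (2 * h) for i in range(x.size)])

rng = np.random.default_rng(3)
n = 5
B = rng.normal(size=(n, n))
M_sym = 0.5 * (B + B.T) / np.linalg.norm(B, 2)   # symmetric Jacobian
M_nonsym = B / np.linalg.norm(B, 2)               # generic, non-symmetric Jacobian
x = rng.normal(size=n)

for M in (M_sym, M_nonsym):
    grad = numerical_grad(lambda v: red_g(v, M), x)
    red_direction = x - M @ x                      # residual used by RED iterations
    print(np.allclose(grad, red_direction, atol=1e-6))
```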

In spite of their empirical success, PnP denoising algorithms such as (22) do not immediately inherit the convergence properties of the corresponding optimization scheme (in this specific instance, FBS). The convergence of PnP denoising has received a significant amount of attention in the mathematical imaging community in recent years. Arguably, the most natural form of convergence for PnP algorithms of the form (22) is the stability of the iterations, i.e., whether the sequence of iterates \(x_k\) generated by a PnP algorithm converges. Such guarantees are typically derived from fixed-point theorems, which require showing that the PnP iteration map is contractive [10, 41]. For instance, [41] established the fixed-point convergence of PnP-ADMM (i.e., PnP with the alternating direction method of multipliers) under a Lipschitz continuity assumption on the operator \(\left( D_{\sigma }-{{\,\textrm{id}\,}}\right) \). The precise result is stated in Theorem 2.

Theorem 2

(Fixed-point convergence of PnP-ADMM [41]) Consider the PnP-ADMM algorithm, given by

where the data-fidelity loss f is assumed to be \(\mu \)-strongly convex. One can equivalently express (24) as a fixed-point iteration, with the corresponding fixed-point operator defined in (25).

Suppose that the denoiser satisfies

$$\begin{aligned} \left\| \left( D_{\sigma }-{{\,\textrm{id}\,}}\right) (u)-\left( D_{\sigma }-{{\,\textrm{id}\,}}\right) (v)\right\| \le \epsilon \left\| u-v\right\| \end{aligned}$$

for all \(u,v\in X\) and some \(\epsilon >0\), and that the strong convexity parameter \(\mu \) is such that \(\displaystyle \frac{\epsilon }{(1+\epsilon -2\epsilon ^2)\,\mu }<\tau \). Then the fixed-point operator in (25) is contractive and the PnP-ADMM algorithm is fixed-point convergent. That is, \(\left( x_k,z_k\right) \rightarrow (x_{\infty },z_{\infty })\), where \((x_{\infty },z_{\infty })\) satisfy

As noted in [41], fixed-point convergence of PnP-ADMM follows from monotone operator theory if \(\left( 2D_{\sigma }-{{\,\textrm{id}\,}}\right) \) is non-expansive, but (26) imposes a less restrictive condition on the denoiser.
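For a linear denoiser \(D(x)=Mx\), the smallest \(\epsilon \) in (26) is the spectral norm \(\Vert M-{{\,\textrm{id}\,}}\Vert \), so the sufficient condition of Theorem 2 can be checked in a few lines. The function name and the sample values of \(\mu \) and \(\tau \) are hypothetical. The inequality is only meaningful when \(1+\epsilon -2\epsilon ^2=(1-\epsilon )(1+2\epsilon )>0\), i.e., \(\epsilon <1\), which this sketch enforces explicitly as an assumption; it evaluates the condition only and does not run PnP-ADMM.

```python
import numpy as np

def admm_contraction_condition(M, mu, tau):
    """Check the sufficient condition of Theorem 2 for a linear denoiser D(x) = M x.

    eps is the Lipschitz constant of (D - id), i.e. the spectral norm of M - I.
    Returns (condition_holds, eps).
    """
    eps = np.linalg.norm(M - np.eye(M.shape[0]), 2)
    denom = 1.0 + eps - 2.0 * eps**2           # positive exactly when eps < 1
    holds = bool(eps < 1.0 and eps / (denom * mu) < tau)
    return holds, eps

M = 0.9 * np.eye(4)                             # a mild, hypothetical denoiser: eps = 0.1
print(admm_contraction_condition(M, mu=1.0, tau=0.5))    # condition holds
print(admm_contraction_condition(M, mu=1.0, tau=0.05))   # tau too small: condition fails
```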

While fixed-point convergence ensures that the PnP iterations are stable, the specific fixed point to which they converge does not automatically minimize a variational energy function. To bridge the gap between classical variational approaches and PnP methods, it is important to derive conditions under which the limit point of PnP iterations can be characterized as the minimizer (or, at least a stationary point) of some regularized variational objective (which, of course, depends on the denoiser). This type of convergence is referred to as objective convergence and is stronger than fixed-point convergence.

Objective convergence of PnP with classical (pseudo-)linear denoisers (e.g., the non-local means denoiser) has been established in [34]. Hurault et al. [22] showed that PnP with a denoiser constructed as a gradient field (referred to as a gradient-step (GS) denoiser) converges to a stationary point of a (possibly non-convex) variational objective (cf. Theorem 3). The construction of GS denoisers is motivated by Tweedie's identity, according to which the optimal minimum mean-squared error (MMSE) Gaussian denoiser is given by

$$\begin{aligned} D_{\sigma }^*(x)=\mathbb {E}\left[ \textbf{x}_0\,|\,\textbf{x}=x\right] =x+\sigma ^2\,\nabla \log p_{\sigma }(x). \end{aligned}$$

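Here, \(\textbf{x}=\textbf{x}_0+\sigma \,\textbf{z}\) with \(\textbf{z}\sim \mathcal {N}(0,{{\,\textrm{id}\,}})\) is a version of the clean image \(\textbf{x}_0\in X\subseteq \mathbb {R}^d\) corrupted by Gaussian noise of variance \(\sigma ^2\), and

$$\begin{aligned} p_{\sigma }(x)=\int _{X}p(x_0)\,\mathcal {N}\left( x;\,x_0,\sigma ^2\,{{\,\textrm{id}\,}}\right) \,\textrm{d}x_0 \end{aligned}$$

is the density of \(\textbf{x}\), i.e., the density \(p\) of clean images smoothed by convolution with a Gaussian kernel of variance \(\sigma ^2\).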

Indeed, the optimal Gaussian denoiser is of the form \(D_{\sigma }^*(x)=x-\nabla \,g^{*}_{\sigma }(x)\) with \(g^{*}_{\sigma }:=-\sigma ^2\log p_{\sigma }\), a scaled negative log of the smoothed distribution \(p_{\sigma }\) defined in (29), which is exactly the structure of a GS denoiser.
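Tweedie's identity can be verified in closed form when the clean signal has a Gaussian prior. Assuming, for illustration only, \(x_0\sim \mathcal {N}(0,s^2)\) in one dimension, the smoothed density is \(p_\sigma =\mathcal {N}(0,s^2+\sigma ^2)\), and \(x+\sigma ^2\nabla \log p_\sigma (x)\) reproduces the classical MMSE (Wiener) shrinkage \(s^2x/(s^2+\sigma ^2)\). The snippet compares the two, computing the score by finite differences; the constants and names are arbitrary choices.

```python
import numpy as np

s, sigma = 1.5, 0.7                          # prior std of x0 and noise std (arbitrary)

def log_p_sigma(x):
    """log of the smoothed density p_sigma = N(0, s^2 + sigma^2), up to a constant."""
    return -0.5 * x**2 / (s**2 + sigma**2)

def tweedie_denoiser(x, h=1e-5):
    """Gradient-step form D(x) = x - grad g(x) with g = -sigma^2 * log p_sigma."""
    score = (log_p_sigma(x + h) - log_p_sigma(x - h)) / (2 * h)   # d/dx log p_sigma
    return x + sigma**2 * score

def wiener_denoiser(x):
    """Posterior mean E[x0 | x] for the Gaussian prior."""
    return s**2 / (s**2 + sigma**2) * x

xs = np.linspace(-3.0, 3.0, 13)
print(np.max(np.abs(tweedie_denoiser(xs) - wiener_denoiser(xs))))   # ~ 0 up to rounding
```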

Theorem 3

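(Objective convergence of PnP iterations with gradient-step (GS) denoisers [22]) Suppose the denoiser is constructed as a gradient-step (GS) denoiser, i.e., \(D_{\sigma }={{\,\textrm{id}\,}}-\nabla g_{\sigma }\), where \(g_{\sigma }\) is proper, lower semi-continuous, and differentiable with an L-Lipschitz gradient. The PnP algorithm proposed in [22] is given by

$$\begin{aligned} x_{k+1}={{\,\textrm{prox}\,}}_{\tau f}\left( \tau \lambda \,D_{\sigma }(x_k)+(1-\tau \lambda )\,x_k\right) ={{\,\textrm{prox}\,}}_{\tau f}\left( x_k-\tau \lambda \,\nabla g_{\sigma }(x_k)\right) , \end{aligned}$$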

where \(f :X\rightarrow \mathbb {R}\cup \{+\infty \}\) denotes data-fidelity and is assumed to be convex and lower semi-continuous. Then, the following guarantees hold for \(\tau <\frac{1}{\lambda \, L}\):

1. The sequence \(F(x_k)\), where \(F=f+\lambda \, g_{\sigma }\), is non-increasing and convergent.

2. \(\left\| x_{k+1}-x_k\right\| _2 \rightarrow 0\), which indicates that the iterations are stable, in the sense that the step lengths vanish if one iterates indefinitely.

3. All limit points of \(\{x_k\}\) are stationary points of F(x).

Notably, the PnP iteration defined by (30) is exactly equivalent to proximal gradient descent on \(f+\lambda \,g_{\sigma }\), with a potentially non-convex \(g_{\sigma }\).
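The monotonicity guarantee of Theorem 3 is easy to observe on a toy problem. The sketch assumes a hypothetical quadratic potential \(g_\sigma (x)=\frac{1}{2}\langle x,Qx\rangle \) with Q symmetric positive semi-definite (so the GS denoiser is \(D_\sigma ={{\,\textrm{id}\,}}-Q\) and \(L=\Vert Q\Vert \)), and a denoising fidelity \(f(x)=\frac{1}{2}\Vert x-y\Vert ^2\) whose proximal operator has a closed form. It runs \(x_{k+1}={{\,\textrm{prox}\,}}_{\tau f}(x_k-\tau \lambda \nabla g_\sigma (x_k))\) with \(\tau <1/(\lambda L)\) and records \(F=f+\lambda g_\sigma \).

```python
import numpy as np

rng = np.random.default_rng(4)
n, lam = 10, 2.0
B = rng.normal(size=(n, n))
Q = B.T @ B / n                                  # hypothetical p.s.d. Hessian of g_sigma
L = np.linalg.norm(Q, 2)                         # Lipschitz constant of grad g_sigma
y = rng.normal(size=n)

def f(x):
    return 0.5 * np.sum((x - y) ** 2)            # data fidelity (denoising, A = I)

def g(x):
    return 0.5 * x @ Q @ x                       # potential of the GS denoiser D = id - grad g

def grad_g(x):
    return Q @ x

def prox_f(v, tau):
    """prox of tau * f for f = 0.5 * ||x - y||^2 (closed form)."""
    return (v + tau * y) / (1.0 + tau)

tau = 0.9 / (lam * L)                            # step size satisfying tau < 1 / (lam * L)
x = np.zeros(n)
F_values = []
for _ in range(200):
    x = prox_f(x - tau * lam * grad_g(x), tau)   # proximal gradient step on f + lam * g
    F_values.append(f(x) + lam * g(x))
```

Here F is convex, so the stationary point is the global minimizer \(({{\,\textrm{id}\,}}+\lambda Q)^{-1}y\); with a non-convex \(g_\sigma \), only the stationarity of limit points is guaranteed.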

While objective convergence establishes a one-to-one connection between PnP iterates and the minimization of a variational objective, it does not provide any guarantees about the regularizing properties of the solution to which the iterates converge. In the spirit of classical regularization theory, it is therefore desirable to be able to control the implicit regularization effected by the denoiser in PnP algorithms and to analyze the asymptotic behavior of the PnP reconstruction as the noise level and the regularization strength vanish. More precisely, assuming that the PnP iterations converge to a solution \(\hat{x}\left( y^\delta ,\sigma ,\lambda \right) \), where \(\sigma \) is a parameter associated with the denoiser and \(\lambda \) is an explicit regularization parameter, one would like to obtain appropriate selection rules for \(\sigma \) and/or \(\lambda \) such that \(\hat{x}\left( y^\delta ,\sigma ,\lambda \right) \) exhibits convergence akin to (9) in the limit \(\delta \rightarrow 0\). To the best of our knowledge, the first progress in this direction was made in [15], and the precise convergence result is stated in Theorem 4.

Theorem 4

(Convergent plug-and-play (PnP) regularization [15]) Consider the PnP-FBS iterates of the form

where \(D_{\lambda }\) is a denoiser with a tunable regularization parameter \(\lambda \). Let \({{\,\textrm{PnP}\,}}\left( \lambda ,y^{\delta }\right) \) be the fixed point of the PnP iteration (31). For any \(y^0\in {{\,\textrm{range}\,}}(A)\) and any sequence \(\delta _k>0\) of noise levels converging to 0, there exists a sequence \(\lambda _k\) of regularization parameters converging to 0 such that, for all \(y_k\) with \(\Vert y_k-y^0\Vert _2\le \delta _k\), the following hold under appropriate assumptions on the denoiser (see Definition 3.1 in [15] for details):

1. \({{\,\textrm{PnP}\,}}\left( \lambda ,y^{\delta }\right) \) is continuous in \(y^{\delta }\) for any \(\lambda >0\);

2. The sequence \(\left( {{\,\textrm{PnP}\,}}\left( \lambda _k,y_k\right) \right) _{k\in \mathbb {N}}\) has a weakly convergent subsequence; and

3. The limit of every weakly convergent subsequence of \(\left( {{\,\textrm{PnP}\,}}\left( \lambda _k,y_k\right) \right) _{k\in \mathbb {N}}\) is a solution of the operator equation \(y^0=Ax\).

The result in [15], although the first of its kind, suffers from two main shortcomings: (i) the stability and convergence theory in [15] is based on fairly restrictive assumptions on the denoiser. In particular, the denoiser needs to be contractive, which is not satisfied by most practical denoisers, especially denoisers modeled using deep CNNs. (ii) It is not a priori clear how to select the denoiser parameter \(\lambda \) to control the strength of regularization for different noise levels. In this paper, we address the latter issue by considering linear denoisers, which are simple yet lead to competitive PnP algorithms [34]. Further, we show in Sect. 4 that the well-known idea of denoiser scaling [50] does not work for linear denoisers, which also underscores the need for an effective strategy to control the regularization of linear denoisers.

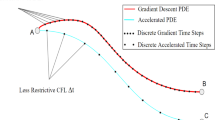

However, let us first discuss a more ambitious question here: Are PnP approaches with more general and expressive denoisers also convergent regularization methods, while offering a straightforward approach to control the regularization strength? This question is perhaps more tractable if one can associate the PnP solution (after convergence) with the minimizer of an underlying variational objective. We, therefore, first consider gradient-step denoisers, for which it is possible to establish such a connection (see Theorem 3). Treating \(\lambda \) in (30) as an explicit regularization parameter while using a fixed, pre-trained denoiser, one can interpret the converged PnP solution as a minimizer of \(f+\lambda \,g_{\sigma }\), where \(\lambda \) is varied depending on the noise level \(\delta \) in the measurement data and \(\sigma \) is kept fixed. The numerical results for image deblurring in Fig. 2 seem to indicate that gradient-step PnP is indeed a convergent regularization scheme, while the classical theory only guarantees stability akin to what is shown in [29] subject to \(g_{\sigma }\) being coercive and bounded below. In addition, the role of \(\sigma \) as an implicit regularization parameter is not exploited, and it is kept unchanged in our experiments regardless of the noise level in the measurement. This, in part, is due to the fact that the behavior of \(g_{\sigma }\) w.r.t. \(\sigma \) is non-trivial to characterize in a precise manner, leading to difficulties in tuning \(\sigma \) based on \(\delta \). Therefore, in order to rigorously establish convergence, together with developing a principled approach to control the regularization strength arising from the denoiser, we focus our attention on PnP with linear denoisers in the next section.

PnP gradient-step DRUNet denoiser as a convergent regularization method for image deblurring. The PnP scheme for reconstruction minimizes variational energy of the form \(f+\lambda \,g_{\sigma }\), where f is the fidelity and \(g_{\sigma }\) is the regularizer induced by a pre-trained denoiser. Plot a quantitatively demonstrates the convergence of the reconstructions as the noise level decreases. The input blurry image is given by \(y^{\sigma _0}=Ax+w\), where A is a Gaussian blur kernel and w is additive Gaussian noise with variance \(\sigma _0^2\). The images from b to i are the deblurred images \(\hat{x}\left( y^{\sigma _0}\right) \) corresponding to the noise level \(\sigma _0\) (expressed as the % of maximum pixel value 255.0 in the ground truth), while image j is the ground truth image. The regularization parameter is selected as \(\lambda =c\,\sigma _0+\epsilon \), where the constant \(c=0.04\) and \(\epsilon =10^{-4}\)

4 Controlling the Regularization Strength in PnP

A fundamental question that arises when applying learned denoisers to inverse problems via PnP is how to adjust the applied regularization strength. Indeed, learned denoisers are typically trained at a fixed noise level, whereas both their practical application to inverse problems in a PnP framework and the theoretical notion of convergent regularization require some control over the regularization strength.

An approach that has been shown to be beneficial in practice is denoiser scaling [50]: given a denoiser \(D_\sigma \) (designed for denoising at a given noise level \(\sigma \)), we introduce an extra scaling parameter \(\alpha > 0\) and define the scaled denoisers \(\{D_{\sigma , \alpha }\}_{\alpha > 0}\) as

$$\begin{aligned} D_{\sigma ,\alpha }(x):=\frac{1}{\alpha }\,D_{\sigma }(\alpha \,x). \end{aligned}$$

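This choice of scaling is motivated by the fact that if \(J:X\rightarrow \mathbb {R}\cup \{\infty \}\) is 1-homogeneous (i.e., \(J(\tau \,u)=\tau J(u)\) for \(\tau >0\)) and its proximal operator is well-defined, we have

$$\begin{aligned} {{\,\textrm{prox}\,}}_{J/\alpha }(x)=\frac{1}{\alpha }\,{{\,\textrm{prox}\,}}_{J}(\alpha \,x)\quad \text {for all } \alpha >0. \end{aligned}$$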

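In other words, if \(D_\sigma = {{\,\textrm{prox}\,}}_J\), then \(D_{\sigma , \alpha } = {{\,\textrm{prox}\,}}_{J/\alpha }\). Let us note that the choice of this particular scaling, while natural (norms and seminorms are 1-homogeneous, for example), is somewhat arbitrary. Indeed, suppose that J is instead c-homogeneous for some \(c > 0\), i.e., \(J(\delta \,u)=\delta ^c J(u)\) for any u and \(\delta >0\). Substituting \(u=v/\delta \) in the definition (21), we have, for \(\tau >0\) and arbitrary \(\delta >0\),

$$\begin{aligned} {{\,\textrm{prox}\,}}_{J/\tau }(x)=\mathop {\mathrm {arg\,min}}\limits _{u}\;\frac{1}{2}\Vert u-x\Vert ^2+\frac{J(u)}{\tau }=\frac{1}{\delta }\,{{\,\textrm{prox}\,}}_{\delta ^{2-c}J/\tau }(\delta \,x). \end{aligned}$$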

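Choosing \(\delta = \tau ^{\frac{1}{2-c}}\) (which requires \(c\ne 2\)), we find that

$$\begin{aligned} {{\,\textrm{prox}\,}}_{J/\tau }(x)=\tau ^{-\frac{1}{2-c}}\,{{\,\textrm{prox}\,}}_{J}\left( \tau ^{\frac{1}{2-c}}\,x\right) , \end{aligned}$$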

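which agrees with the result for 1-homogeneous functionals (take \(c=1\)) and generalizes it, except for 2-homogeneous functionals, for which the exponent \(\frac{1}{2-c}\) is undefined and the above derivation breaks down. In fact, this leads naturally to a setting in which no form of denoiser scaling as in Eq. (33) can be used to control the regularization strength so as to obtain a convergent regularization: for linear denoisers (which correspond to quadratic, hence 2-homogeneous, functionals, as shown in Sect. 4.1), the multiplicative factor inside the denoiser can be pulled out and cancels against the factor outside of it, i.e., \(\frac{1}{\alpha }D_\sigma (\alpha x)=D_\sigma (x)\) for all \(\alpha >0\).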
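Both observations can be checked numerically. The sketch below uses soft-thresholding with unit threshold, the proximal operator of the 1-homogeneous functional \(J=\Vert \cdot \Vert _1\), to confirm that the scaled denoiser \(\alpha ^{-1}D(\alpha x)\) equals \({{\,\textrm{prox}\,}}_{J/\alpha }(x)\), i.e., soft-thresholding with threshold \(1/\alpha \); it then shows that the same scaling leaves a linear denoiser \(D(x)=Mx\) unchanged. The matrix M and the helper names are hypothetical.

```python
import numpy as np

def soft_threshold(x, t):
    """prox of t * ||.||_1."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def scaled(denoiser, alpha):
    """Denoiser scaling: D_alpha(x) = D(alpha * x) / alpha."""
    return lambda x: denoiser(alpha * x) / alpha

rng = np.random.default_rng(5)
x = rng.normal(size=8)
D_l1 = lambda v: soft_threshold(v, 1.0)          # prox of J = ||.||_1

for alpha in (0.5, 2.0, 10.0):
    # 1-homogeneous J: scaling changes the effective regularizer to J / alpha
    assert np.allclose(scaled(D_l1, alpha)(x), soft_threshold(x, 1.0 / alpha))

M = 0.5 * np.eye(8)                               # a hypothetical linear denoiser
D_lin = lambda v: M @ v
for alpha in (0.5, 2.0, 10.0):
    # Linear D: the factor alpha cancels, so scaling has no effect at all
    assert np.allclose(scaled(D_lin, alpha)(x), D_lin(x))
print("denoiser-scaling checks passed")
```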

4.1 Controlling the Regularization Strength of a Linear Denoiser

Let us consider the setting in which we have a linear denoiser \(D_\sigma :X\rightarrow X\). If we are to interpret it as a proximal operator of some underlying functional, we must assume that it is a symmetric, positive semi-definite (p.s.d.) operator, and if we assume that the underlying functional is convex as well, then \(D_\sigma \) must be non-expansive in addition. These properties are direct consequences of the characterization of proximal operators given in [31] and generalized (to potentially non-convex functionals) in [19]. Let us restrict to the case where \(D_\sigma \) is non-expansive, bypassing the potential difficulties of non-convexity of the underlying variational problem. In fact, we will assume that \(D_\sigma \) is contractive, i.e. \(\Vert D_\sigma \Vert <1\), which as we will see later corresponds to assuming that the underlying regularization functional is coercive. Furthermore, we will assume that \(D_\sigma \) is bounded from below, i.e. \(\Vert D_\sigma (x)\Vert \ge c \Vert x\Vert \) for some \(c >0\), so that \(D_\sigma ^{-1}\) exists and is a bounded operator.

Remark 1

In practice, the assumption of symmetry can be relaxed somewhat by taking a different perspective: in [18] it is shown in finite dimensions that any denoiser which is similar to a symmetric p.s.d. matrix is admissible in PnP applications. Indeed, in this case we can find a modified inner product, with respect to which the denoiser is a proximal operator.

Note that the assumptions that we make are ideally suited to the application of the spectral theory of bounded linear operators on Hilbert spaces. Recall that the spectrum of a bounded linear operator \(A:X\rightarrow X\), \({{\,\textrm{spec}\,}}(A)\), is defined as the set of \(\lambda \in {\mathbb {C}}\) such that \(A-\lambda {{\,\textrm{id}\,}}\) is not boundedly invertible. For bounded and symmetric operators A (such as the ones we consider) \({{\,\textrm{spec}\,}}(A)\) is a compact subset of \({\mathbb {R}}\), and we have a spectral theorem that enables the use of the continuous functional calculus. This is crucial in what follows as it allows us to apply a spectral filtering operation to denoisers to control their regularization strength, by adjusting the weights of the spectral components of the denoiser. We recommend that readers who are interested in studying these topics in more detail consult the textbook [46, Chapter 5].

Let us study the characterization of proximal operators in more detail for the linear denoiser \(D_\sigma \). The goal is to understand the underlying functional \(J:X\rightarrow \mathbb {R}\) such that \(D_\sigma = {{\,\textrm{prox}\,}}_J\). Note first that it is immediate from the definition (Eq. 21) that we can only hope to recover J up to an additive constant. We have

$$\begin{aligned} D_\sigma ={{\,\textrm{prox}\,}}_J \iff D_\sigma =\left( {{\,\textrm{id}\,}}+\partial J\right) ^{-1}, \end{aligned}$$

with \(\partial \) being the subdifferential. On the other hand, since \(D_\sigma \) is linear, \(D_\sigma ^{-1}\) is linear, and by the equation above \(\partial J =: W\) is also linear (and self-adjoint, since \(D_\sigma \) is). As a result, up to an additive constant, \(J(x) = \frac{1}{2}\langle x, W x\rangle \). Furthermore, inverting the equation above and rearranging, we find that \(W = D_{\sigma }^{-1} - {{\,\textrm{id}\,}}\). Hence, up to an irrelevant additive constant, the underlying regularization functional J corresponding to \(D_\sigma \) is given by

$$\begin{aligned} J(x)=\frac{1}{2}\left\langle x,\left( D_{\sigma }^{-1}-{{\,\textrm{id}\,}}\right) x\right\rangle . \end{aligned}$$

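The most common way of controlling the regularization strength, when we have access to the underlying regularization functional J, is to simply scale it: introduce a parameter \(\tau >0\) and consider \({{\,\textrm{prox}\,}}_{\tau J}\). If we apply this to Eq. (34), we obtain

$$\begin{aligned} {{\,\textrm{prox}\,}}_{\tau J}=\left( {{\,\textrm{id}\,}}+\tau \left( D_{\sigma }^{-1}-{{\,\textrm{id}\,}}\right) \right) ^{-1}=\left( \tau \,D_{\sigma }^{-1}+(1-\tau )\,{{\,\textrm{id}\,}}\right) ^{-1}, \end{aligned}$$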

which suggests, by following the above reasoning in reverse, that

$$\begin{aligned} {{\,\textrm{prox}\,}}_{\tau J}=h_{\tau }(D_{\sigma }). \end{aligned}$$

Here \(h_\tau : \mathbb {R}\rightarrow \mathbb {R}\), given by \(h_\tau (\lambda ) = \lambda /(\tau - \lambda (\tau - 1))\) is applied to \(D_\sigma \) using the functional calculus. The takeaway message of the preceding derivation is that we can perform a spectral filtering operation on the linear denoiser \(D_\sigma \) to control its regularization strength. In fact, more general filter functions \(h_\tau \) than the one seen here can be used, as we will see in what follows.
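In finite dimensions, the functional calculus reduces to an eigendecomposition, \(h(D_\sigma )=V{{\,\textrm{diag}\,}}(h(\lambda _i))V^\top \). The sketch below builds a hypothetical symmetric denoiser with spectrum in (0, 1), checks that \(D_\sigma y\) satisfies the optimality condition of \({{\,\textrm{prox}\,}}_J\) with \(J(x)=\frac{1}{2}\langle x,(D_\sigma ^{-1}-{{\,\textrm{id}\,}})x\rangle \), and checks that \(h_\tau (D_\sigma )\) coincides with \(({{\,\textrm{id}\,}}+\tau (D_\sigma ^{-1}-{{\,\textrm{id}\,}}))^{-1}\). It also confirms that the ratio \((1-h_\tau )/(\tau h_\tau )\) equals \(1/\lambda -1\) for this filter, independently of \(\tau \). The spectrum, dimension, and names are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(6)
n = 6
V, _ = np.linalg.qr(rng.normal(size=(n, n)))      # orthonormal eigenvectors
lam = np.linspace(0.2, 0.9, n)                     # hypothetical spectrum inside (0, 1)
D = V @ np.diag(lam) @ V.T                         # symmetric, contractive, bounded below

def apply_filter(h, V, lam):
    """Functional calculus in finite dimensions: h(D) = V diag(h(lam)) V^T."""
    return V @ np.diag(h(lam)) @ V.T

W = np.linalg.inv(D) - np.eye(n)                   # W = D^{-1} - id, so J(x) = 0.5 <x, W x>

y = rng.normal(size=n)
u = D @ y
# Optimality condition of prox_J: 0 = u - y + grad J(u) = u - y + W u
print(np.allclose(u - y + W @ u, 0.0))

tau = 0.3
h_tau = lambda t: t / (tau - t * (tau - 1.0))       # spectral filter from the derivation
prox_tauJ = np.linalg.inv(np.eye(n) + tau * W)      # (id + tau W)^{-1}
print(np.allclose(apply_filter(h_tau, V, lam), prox_tauJ))

r_tau = (1.0 - h_tau(lam)) / (tau * h_tau(lam))     # ratio that reappears in Theorem 5
print(np.allclose(r_tau, 1.0 / lam - 1.0))          # independent of tau for this filter
```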

Remark 2

It is worth contrasting the spectral filtering approach proposed here with well-established spectral filtering approaches to the regularization of linear ill-posed inverse problems [16]: whereas the traditional approaches operate on the forward operator to enact a regularization effect, we operate on the denoiser (agnostic about the forward operator to which the denoiser will be applied) to control its regularization strength.

To get a better understanding of what the spectral filtering operation does to a denoiser, consider Fig. 3. This will help us get an idea of what we should ask of generalized filter functions, i.e. filter functions that do not just implement a scaling of the underlying regularization functional: as \(\tau \rightarrow 0 \), the effect of the denoiser should vanish at an appropriate rate.

The effect of filtering the denoiser as in (35). In accordance with intuition, the spectrum is flattened as \(\tau \rightarrow 0\): as the regularization strength vanishes, the effect of the denoiser should vanish too

4.2 Convergent Regularization Through Generalized Spectral Filtering of Linear Denoisers

In the previous section, we saw that there is a way in which we can spectrally filter a linear denoiser to effectively scale the underlying regularization functional. Now, we will generalize the conditions on the spectral filter and show that this spectral filtering of linear denoisers allows us to obtain a convergent regularization of linear ill-posed inverse problems.

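Equation (34) tells us that a linear denoiser is related to an underlying regularization functional J as follows: we have \(D_\sigma = {{\,\textrm{prox}\,}}_J\), where

$$\begin{aligned} J(x)=\frac{1}{2}\left\langle x,\left( D_{\sigma }^{-1}-{{\,\textrm{id}\,}}\right) x\right\rangle . \end{aligned}$$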

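Furthermore, we have seen the effect of scaling the regularization functional on the corresponding proximal operator. We can generalize this idea and look at

$$\begin{aligned} J_{\tau }(x):=\frac{1}{2}\left\langle x,\left( h_{\tau }(D_{\sigma })^{-1}-{{\,\textrm{id}\,}}\right) x\right\rangle ,\quad \text {so that}\quad {{\,\textrm{prox}\,}}_{J_{\tau }}=h_{\tau }(D_{\sigma }), \end{aligned}$$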

where \(\{h_\tau :{\mathbb {R}}\rightarrow {\mathbb {R}}\}_{\tau \in (0,\infty )}\) is a family of spectral filters that we can apply to \(D_\sigma \) using the continuous functional calculus. Let us now derive conditions on the spectral filters \(h_\tau \) under which this yields a convergent regularization. First, since we are assuming that \(D_\sigma \) is bounded from below and contractive, we have \({{\,\textrm{spec}\,}}(D_\sigma ) \subset (0, 1)\), and the same considerations that led to these assumptions lead us to require that \(h_\tau ({{\,\textrm{spec}\,}}(D_\sigma )) \subset (0, 1)\) for each \(\tau > 0\).

Since we would like to think of \(J_\tau \) as similar to \(\tau J\), we ask whether the limit

$$\begin{aligned} J^*(x):=\lim _{\tau \rightarrow 0}\frac{J_{\tau }(x)}{\tau } \end{aligned}$$

exists and is sufficiently well-behaved. Indeed, if this limit is well-defined, a natural result to aim for would be that we have convergence to a \(J^*\)-minimizing least-squares solution to the inverse problem with appropriate choices of \(\tau \rightarrow 0\) as \(\delta \rightarrow 0\).

Remark 3

In Theorem 5, as above, we will assume that the denoiser is contractive, which by (34) implies that the corresponding regularization functional is coercive, since then \(D_\sigma ^{-1}-{{\,\textrm{id}\,}}\ge \left( \Vert D_\sigma \Vert ^{-1}-1\right) {{\,\textrm{id}\,}}\) with \(\Vert D_\sigma \Vert ^{-1}-1>0\). We may be able to relax this assumption by requiring that the kernel of the forward operator be compatible with the denoiser, in the sense that the objective function in (36) is coercive.

Theorem 5

Suppose that \(D_\sigma :X\rightarrow X\) is a bounded, linear, self-adjoint operator, which is interpreted as a denoiser. Furthermore, assume that \(D_\sigma \) is positive definite, bounded from below, and contractive (so that \({{\,\textrm{spec}\,}}(D_\sigma )\subset (0, 1)\)). Suppose in addition that we have a bounded, linear forward operator \(A: X\rightarrow Y\) (assuming w.l.o.g. that \(\Vert A\Vert =1\)), and that \(\{h_\tau : {\mathbb {R}} \rightarrow {\mathbb {R}}\}_{\tau \in (0, \infty )}\) is a collection of continuous scalar functions satisfying

- A.1:

$$\begin{aligned} h_\tau ({{\,\textrm{spec}\,}}(D_\sigma )) \subset (0, 1)\,\quad \text {for any } \tau > 0, \end{aligned}$$

- A.2:

$$\begin{aligned} r_\tau (\lambda ):= \frac{1-h_\tau (\lambda )}{\tau \, h_\tau (\lambda )} \quad \text {converges uniformly for } \lambda \in {{\,\textrm{spec}\,}}(D_\sigma ) \text { as } \tau \rightarrow 0, \end{aligned}$$

with limit \(r^*\) and rate \(\Vert r_\tau - r^*\Vert _{L^\infty ({{\,\textrm{spec}\,}}(D_\sigma ))} = o(\tau )\),

- A.3:

$$\begin{aligned} {\underline{c}}:=\inf _{\tau> 0, \lambda \in {{\,\textrm{spec}\,}}(D_\sigma )} r_\tau (\lambda )> 0, \quad {\overline{c}}:=\sup _{\tau > 0, \lambda \in {{\,\textrm{spec}\,}}(D_\sigma )} r_\tau (\lambda ) < \infty . \end{aligned}$$

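In this setting, let us define (using the continuous functional calculus to apply scalar functions to \(D_\sigma \))

$$\begin{aligned} J_\tau (x):= \frac{1}{2}\langle x, (h_\tau (D_\sigma )^{-1} - I)x\rangle = \frac{\tau }{2}\langle x, r_\tau (D_\sigma )x\rangle . \end{aligned}$$

We can compute the solution to the variational problem

$$\begin{aligned} {\hat{x}}(y, \tau ):= \mathop {\mathrm {arg\,min}}\limits _{x\in X} \frac{1}{2}\Vert Ax - y\Vert ^2 + J_\tau (x) \end{aligned}\tag{36}$$

using PnP-FBS:

$$\begin{aligned} x_{k+1} = h_\tau (D_\sigma )\big (x_k - A^\top (Ax_k - y)\big ). \end{aligned}\tag{37}$$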
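By A.2 we can define \(J^*(x) = \lim _{\tau \rightarrow 0}J_\tau (x)/\tau \). Now, we obtain a convergent regularization when the regularization parameter \(\tau ^\delta \) is chosen appropriately: suppose that \(\tau ^\delta \sim \delta \). Assume that we have an underlying image \(x^*\in X\), clean measurements \(y=Ax^*\), that \(\{y^\delta \}_{\delta > 0}\) is a sequence in Y satisfying \(\Vert y^\delta - y\Vert \le \delta \), and

$$\begin{aligned} {\hat{x}}(y^\delta , \tau ^\delta ):= \mathop {\mathrm {arg\,min}}\limits _{x\in X} \frac{1}{2}\Vert Ax - y^\delta \Vert ^2 + J_{\tau ^\delta }(x). \end{aligned}$$

Then \({\hat{x}}(y^\delta , \tau ^\delta ) \rightarrow x^\dagger \) as \(\delta \rightarrow 0\), where

$$\begin{aligned} x^\dagger := \mathop {\mathrm {arg\,min}}\limits \Big \{ J^*(x) : x \in \mathop {\mathrm {arg\,min}}\limits _{z\in X} \Vert Az - y\Vert \Big \} \end{aligned}$$

is the \(J^*\)-minimizing least squares solution to the inverse problem \(Ax = y\).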
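As a finite-dimensional sanity check of the first step of the proof below, the following sketch runs PnP-FBS with a filtered linear denoiser and compares its limit with the solution of \((A^\top A + \tau r_\tau (D_\sigma ))x = A^\top y\). It assumes a unit step size together with \(\Vert A\Vert = 1\), as in Theorem 5; the random matrices, the Example 1 filter and the function name `pnp_fbs` are chosen purely for illustration.

```python
import numpy as np

def pnp_fbs(A, y, hD, num_iter=2000):
    """PnP-FBS x_{k+1} = hD (x_k - A^T (A x_k - y)) with unit step size.

    hD plays the role of h_tau(D_sigma); with ||A|| = 1 and ||hD|| < 1
    the map is a contraction, so the iterates converge to its unique fixed point.
    """
    x = np.zeros(A.shape[1])
    for _ in range(num_iter):
        x = hD @ (x - A.T @ (A @ x - y))
    return x

rng = np.random.default_rng(0)
n, m = 6, 4
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
lam = np.linspace(0.2, 0.9, n)               # toy spec(D_sigma) inside (0, 1)
A = rng.standard_normal((m, n))
A /= np.linalg.norm(A, 2)                    # normalise so that ||A|| = 1
y = rng.standard_normal(m)

tau = 0.5
h = lam / (tau * (1 - lam) + lam)            # Example 1 filter h_tau(lam)
r = (1 - h) / (tau * h)                      # r_tau(lam) as in assumption A.2
hD = (Q * h) @ Q.T                           # h_tau(D_sigma)
r_D = (Q * r) @ Q.T                          # r_tau(D_sigma)

x_pnp = pnp_fbs(A, y, hD)
x_var = np.linalg.solve(A.T @ A + tau * r_D, A.T @ y)  # minimiser of the variational problem
print(np.linalg.norm(x_pnp - x_var))
```

The agreement rests on the identity \(h_\tau (D_\sigma )^{-1} - I = \tau r_\tau (D_\sigma )\), which turns the fixed-point equation of PnP-FBS into the normal equations of the variational problem.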

Proof

First note that under the assumptions of the theorem, PnP-FBS as described in (37) is a contractive fixed-point iteration (so that it has a unique fixed point to which it converges) for any \(\tau \) and y, with fixed points satisfying the optimality condition of the variational problem in (36).

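By A.3, we can define \({\underline{J}}(x) = \inf _\tau J_\tau (x) / \tau \) and \({\overline{J}}(x) = \sup _\tau J_\tau (x) / \tau \), so that

$$\begin{aligned} \frac{{\underline{c}}}{2}\Vert x\Vert ^2 \le {\underline{J}}(x) \le \frac{J_\tau (x)}{\tau } \le {\overline{J}}(x) \le \frac{{\overline{c}}}{2}\Vert x\Vert ^2 \quad \text {for all } x\in X,\ \tau > 0. \end{aligned}$$

Taking limits, this also gives us that

$$\begin{aligned} \frac{{\underline{c}}}{2}\Vert x\Vert ^2 \le J^*(x) \le \frac{{\overline{c}}}{2}\Vert x\Vert ^2 \quad \text {for all } x\in X. \end{aligned}$$

The above bounds tell us that the \(J^*\)-minimizing least squares solution to the inverse problem is unique, since it is defined by the minimization of a strongly convex functional on a closed affine subspace of X.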

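We have clean measurements \(y = Ax^*\), a set of \(y^\delta \) such that \(\Vert y - y^\delta \Vert \le \delta \) and a parameter choice rule \(\delta \mapsto \tau ^\delta \) satisfying \(\tau ^\delta \sim \delta \) as \(\delta \rightarrow 0\). We are considering the corresponding set of reconstructions

$$\begin{aligned} {\hat{x}}(y^\delta , \tau ^\delta ) = \mathop {\mathrm {arg\,min}}\limits _{x\in X} \frac{1}{2}\Vert Ax - y^\delta \Vert ^2 + J_{\tau ^\delta }(x). \end{aligned}$$

By the remarks above, we can compute these reconstructions using (37). For the sake of the proof, let us also define the variational reconstruction operators with a static regularization functional \(J^*\), as follows:

$$\begin{aligned} {\hat{x}}_\text {static}(y^\delta , \tau ):= \mathop {\mathrm {arg\,min}}\limits _{x\in X} \frac{1}{2}\Vert Ax - y^\delta \Vert ^2 + \tau J^*(x). \end{aligned}$$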
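This static regularization approach, with the parameter choice that we are using, is a convergent regularization by the existing theory (this is guaranteed, for example, by the general result in [42, Proposition 3.32]): \({\hat{x}}_\text {static}(y^\delta , \tau ^\delta ) \rightarrow x^\dagger \) with \(x^\dagger \) the \(J^*\)-minimizing least squares solution to the inverse problem. Furthermore, the triangle inequality gives us that

$$\begin{aligned} \Vert {\hat{x}}(y^\delta , \tau ^\delta ) - x^\dagger \Vert \le \Vert {\hat{x}}(y^\delta , \tau ^\delta ) - {\hat{x}}_\text {static}(y^\delta , \tau ^\delta )\Vert + \Vert {\hat{x}}_\text {static}(y^\delta , \tau ^\delta ) - x^\dagger \Vert , \end{aligned}\tag{40}$$

so it suffices to show that

$$\begin{aligned} \Vert {\hat{x}}(y^\delta , \tau ^\delta ) - {\hat{x}}_\text {static}(y^\delta , \tau ^\delta )\Vert \rightarrow 0 \quad \text {as } \delta \rightarrow 0. \end{aligned}$$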
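We can write

$$\begin{aligned} {\hat{x}}(y^\delta , \tau ) = M_\tau ^{-1} A^\top y^\delta \end{aligned}$$

and

$$\begin{aligned} {\hat{x}}_\text {static}(y^\delta , \tau ) = M_{\tau ,\text {static}}^{-1} A^\top y^\delta , \end{aligned}$$

so we just need to show that \(\Vert M_\tau ^{-1} - M_{\tau ,\text {static}}^{-1}\Vert \rightarrow 0 \) as \(\tau \rightarrow 0\) (since \(\tau ^\delta \sim \delta \) and \(\Vert A^\top y^\delta \Vert \le \Vert y\Vert + \delta \) remains bounded), where \(M_\tau = A^\top A + \tau r_\tau (D_\sigma )\) and \(M_{\tau , \text {static}} = A^\top A + \tau r^*(D_\sigma )\). We have

$$\begin{aligned} M_\tau ^{-1} = \big (M_{\tau ,\text {static}} + \tau (r_\tau (D_\sigma ) - r^*(D_\sigma ))\big )^{-1} = \big (I + \tau M_{\tau ,\text {static}}^{-1}(r_\tau (D_\sigma ) - r^*(D_\sigma ))\big )^{-1} M_{\tau ,\text {static}}^{-1}. \end{aligned}\tag{41}$$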
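We will expand the inner matrix inversion using a Neumann series. Note first (by A.3) that \(M_{\tau , \text {static}}\) is bounded from below: \(\Vert M_{\tau ,\text {static}} x\Vert \ge {\underline{c}} \tau \Vert x\Vert \). As a result, \(\Vert M_{\tau ,\text {static}}^{-1}\Vert \le 1/({\underline{c}} \tau )\) and we can estimate

$$\begin{aligned} \Vert \tau M_{\tau ,\text {static}}^{-1}(r_\tau (D_\sigma ) - r^*(D_\sigma ))\Vert \le \tau \cdot \frac{1}{{\underline{c}}\tau } \Vert r_\tau - r^*\Vert _{L^\infty ({{\,\textrm{spec}\,}}(D_\sigma ))} = \frac{\Vert r_\tau - r^*\Vert _{L^\infty ({{\,\textrm{spec}\,}}(D_\sigma ))}}{{\underline{c}}}. \end{aligned}\tag{42}$$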
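Since A.2 tells us that \(\Vert r_\tau - r^*\Vert _{L^\infty ({{\,\textrm{spec}\,}}(D_\sigma ))} \rightarrow 0\) as \(\tau \rightarrow 0\), this must be smaller than 1 for sufficiently small \(\tau \), which is a sufficient condition for absolute convergence of the Neumann series. Using this and (41), we see that

$$\begin{aligned} M_\tau ^{-1} - M_{\tau ,\text {static}}^{-1} = \sum _{k=1}^\infty \big (-\tau M_{\tau ,\text {static}}^{-1}(r_\tau (D_\sigma ) - r^*(D_\sigma ))\big )^k M_{\tau ,\text {static}}^{-1}. \end{aligned}$$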
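Finally, using (42) and the fact that \(\Vert M_{\tau ,\text {static}}^{-1} \Vert \le 1 / ({\underline{c}} \tau )\), we can estimate the norm of this difference as follows, where all norms of \(r_\tau - r^*\) are taken in \(L^\infty ({{\,\textrm{spec}\,}}(D_\sigma ))\):

$$\begin{aligned} \Vert M_\tau ^{-1} - M_{\tau ,\text {static}}^{-1}\Vert \le \frac{1}{{\underline{c}}\tau }\sum _{k=1}^\infty \left( \frac{\Vert r_\tau - r^*\Vert }{{\underline{c}}}\right) ^k = \frac{\Vert r_\tau - r^*\Vert }{{\underline{c}}\tau \,({\underline{c}} - \Vert r_\tau - r^*\Vert )}. \end{aligned}$$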
Since we have assumed that \(\Vert r_\tau - r^* \Vert _{L^\infty ({{\,\textrm{spec}\,}}(D_\sigma ))} = o(\tau )\) as \(\tau \rightarrow 0\), we find by the above reasoning that \(\Vert {\hat{x}}(y^\delta , \tau ^\delta ) - {\hat{x}}_\text {static}(y^\delta , \tau ^\delta )\Vert \rightarrow 0\). Recalling the inequality in (40) lets us conclude that the spectral filtering approach is a convergent regularization. \(\square \)
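The key estimate of the proof can be checked numerically in finite dimensions. The sketch below uses hypothetical toy data: it perturbs \(r^*\) by a function of sup-norm at most \(\tau ^2\), which is \(o(\tau )\) as required by A.2 (only for the small values of \(\tau \) used here), and compares \(\Vert M_\tau ^{-1} - M_{\tau ,\text {static}}^{-1}\Vert \) with the Neumann-series bound derived above.

```python
import numpy as np

def neumann_bound(eps, c_low, tau):
    """Bound eps / (c_low * tau * (c_low - eps)) from the proof; needs eps < c_low."""
    if eps >= c_low:
        raise ValueError("Neumann series argument requires eps < c_low")
    return eps / (c_low * tau * (c_low - eps))

rng = np.random.default_rng(1)
n = 6
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
lam = np.linspace(0.2, 0.8, n)                  # toy spec(D_sigma)
A = rng.standard_normal((3, n))
A /= np.linalg.norm(A, 2)                       # ||A|| = 1
r_star = (1 - lam) / lam                        # limit r^* (here: Example 1 filter)
c_low = r_star.min()                            # lower bound making M_static invertible

def op(vals):
    """Q diag(vals) Q^T, i.e. a scalar function applied to D_sigma."""
    return (Q * vals) @ Q.T

diffs, bounds = [], []
for tau in [1e-1, 1e-2, 1e-3]:
    r_tau = r_star + tau**2 * np.cos(7 * lam)   # hypothetical perturbation, sup-norm <= tau^2
    eps = np.max(np.abs(r_tau - r_star))
    M_static = A.T @ A + tau * op(r_star)
    M_tau = A.T @ A + tau * op(r_tau)
    diffs.append(np.linalg.norm(np.linalg.inv(M_tau) - np.linalg.inv(M_static), 2))
    bounds.append(neumann_bound(eps, c_low, tau))
print(diffs, bounds)
```

With \(\Vert r_\tau - r^*\Vert = O(\tau ^2)\), the bound behaves like \(O(\tau )\), which illustrates why the rate \(o(\tau )\) in A.2 suffices.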

Example 1

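Consider the case treated previously in (34) and (35), corresponding to \(h_\tau (\lambda ) =\lambda / (\tau (1 - \lambda ) + \lambda )\). We have

$$\begin{aligned} r_\tau (\lambda ) = \frac{1-h_\tau (\lambda )}{\tau h_\tau (\lambda )} = \frac{1}{\tau }\cdot \frac{\tau (1-\lambda )}{\lambda } = \frac{1-\lambda }{\lambda }. \end{aligned}$$

In particular, the assumptions A.1, A.2 and A.3 are trivially satisfied: we have \(0< h_\tau (\lambda ) < 1\) for \(\lambda \in (0,1)\), \(r^* = r_\tau \) for all \(\tau > 0\) and

$$\begin{aligned} {\underline{c}} = \min _{\lambda \in {{\,\textrm{spec}\,}}(D_\sigma )} \frac{1-\lambda }{\lambda } = \frac{1-\lambda _{\max }}{\lambda _{\max }} > 0 \end{aligned}$$

and

$$\begin{aligned} {\overline{c}} = \max _{\lambda \in {{\,\textrm{spec}\,}}(D_\sigma )} \frac{1-\lambda }{\lambda } = \frac{1-\lambda _{\min }}{\lambda _{\min }} < \infty , \end{aligned}$$

where \(\lambda _{\min } > 0\) and \(\lambda _{\max } < 1\) denote the smallest and largest elements of the compact set \({{\,\textrm{spec}\,}}(D_\sigma )\).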
This should come as no surprise, since by the previous discussion, this choice of spectral filtering simply corresponds to the static regularization approach used in the proof of Theorem 5, for which classical theory establishes its convergence properties.
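For Example 1, both the \(\tau \)-independence of \(r_\tau \) and the identity \(h_\tau (D_\sigma )^{-1} - I = \tau (D_\sigma ^{-1} - I)\) can be checked numerically. The identity is the matrix form of "the filter scales the regularization functional by \(\tau \)", assuming (for this sketch) that the functional associated with a linear denoiser D is \(\frac{1}{2}\langle x, (D^{-1} - I)x\rangle \), whose proximal operator is D. The toy spectrum below is hypothetical, and evaluating \({\underline{c}}\), \({\overline{c}}\) on a finite grid of \(\tau \) values is a sanity check, not a proof.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 5
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
lam = np.array([0.15, 0.3, 0.5, 0.7, 0.85])     # toy spec(D_sigma), inside (0, 1)
D = (Q * lam) @ Q.T
I = np.eye(n)

def h(tau, lam):
    """Example 1 filter h_tau(lam) = lam / (tau (1 - lam) + lam)."""
    return lam / (tau * (1 - lam) + lam)

def r(tau, lam):
    """r_tau(lam) = (1 - h_tau(lam)) / (tau h_tau(lam)) from assumption A.2."""
    return (1 - h(tau, lam)) / (tau * h(tau, lam))

taus = [1e-3, 1e-2, 1e-1, 1.0, 10.0]
r_vals = np.array([r(t, lam) for t in taus])
print("A.1 holds on grid:", all(np.all((h(t, lam) > 0) & (h(t, lam) < 1)) for t in taus))
print("r_tau independent of tau:", np.allclose(r_vals, (1 - lam) / lam))
print("c_low, c_high:", r_vals.min(), r_vals.max())

# Matrix form of "J_tau = tau * J": h_tau(D)^{-1} - I == tau (D^{-1} - I)
for t in taus:
    hD = (Q * h(t, lam)) @ Q.T
    assert np.allclose(np.linalg.inv(hD) - I, t * (np.linalg.inv(D) - I))
```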

4.3 Experiments

In this section, we will demonstrate the use of the spectral filtering approach to control the regularization strength of a learned denoiser, when applied to an inverse problem using PnP-FBS, showing that it in fact gives rise to a practically convergent regularization method. Since the spectral filtering approach was developed for linear denoisers, we first need to decide on a reasonable design for a linear learnable denoiser.

In this work, we will modify the U-net architecture [39], which continues to be used with great success in image-to-image tasks, and combines a downscaling and upscaling path (as in an autoencoder) with skip connections that connect the corresponding scales before and after the bottleneck. The key insight for us is that the U-net architecture is symmetric, in the following sense: if the downscaling and upscaling operations are linear and each other’s transposes, and the activation functions and biases are omitted, the U-net is linear and its transpose is a U-net of the same shape (which can be thought of as running the original U-net in reverse). In particular, it is straightforward to see that we can obtain a symmetric linear U-net in this way by tying weights between the downscaling and upscaling paths. Alternatively, and perhaps more simply, we can take the average of a linear U-net and its transpose to get a symmetric linear denoiser. This is the approach that we will take in the experiments considered in this section, since we can leverage the power of JAX [8] to do so: given a linear U-net, we can efficiently compute its vector-Jacobian products to get its transpose. Figure 4 shows a comparison of the denoising performance (in the same setting as the one we will consider for the application to inverse problems below) of such a linear U-net with a comparable non-linear U-net. By this, we mean that the networks have the same sizes and the same number of trainable parameters. While the non-linear U-net allows for better reconstructions, most notably in terms of sharpness, both denoisers remove a significant part of the noise in the noisy images. In what follows, we will use the linear U-net \(D_{\sigma , \text {l}}\) and simply call it \(D_\sigma \).

Comparing the denoising performance of a non-linear U-net (\(D_{\sigma ,\text {nl}}\)) with a linear, symmetric U-net (\(D_{\sigma ,\text {l}}\)) based on the same architecture. Here \(x^*\) is a ground truth image and y is the same image, corrupted by Gaussian noise. These images are generated in the same way as the training data was generated. In contrast to \(D_{\sigma ,\text {nl}}\), \(D_{\sigma , \text {l}}\) struggles to reconstruct sharp edges as it does not contain any non-linearity. On the other hand, both denoisers significantly improve the signal-to-noise ratio: y has a PSNR of 24.3 dB, \(D_{\sigma , \text {nl}}(y)\) has a PSNR of 34.0 dB and \(D_{\sigma , \text {l}}(y)\) has a PSNR of 27.8 dB
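The symmetrization step described above can be illustrated without a deep-learning framework. In the text, the transpose of the linear U-net is obtained with vector-Jacobian products in JAX; in the NumPy sketch below we instead write the transpose by hand, running hypothetical toy layers (one pooling level, a bottleneck and a skip connection, no biases or activations) in reverse order with transposed weights, and then average the two maps. Symmetry is all this construction guarantees: positive definiteness and contractivity, as required in Theorem 5, are not enforced here.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 8                                                   # toy 1D "image" length (even)
P = np.kron(np.eye(n // 2), np.array([[0.5, 0.5]]))    # average pooling by 2, shape (n/2, n)
U = P.T                                                 # upsampling chosen as transpose of pooling
W_in = rng.standard_normal((n, n)) / np.sqrt(n)         # hypothetical encoder weights
W_skip = rng.standard_normal((n, n)) / np.sqrt(n)       # hypothetical skip-path weights
W_mid = rng.standard_normal((n // 2, n // 2)) / np.sqrt(n // 2)  # hypothetical bottleneck
W_out = rng.standard_normal((n, n)) / np.sqrt(n)        # hypothetical decoder weights

def linear_unet(x):
    """Toy linear U-net: no biases or activations, one down/up level plus a skip connection."""
    h = W_in @ x
    return W_out @ (W_skip @ h + U @ (W_mid @ (P @ h)))

def linear_unet_T(z):
    """Transpose of linear_unet: same layers in reverse order, each weight transposed."""
    g = W_out.T @ z
    return W_in.T @ (W_skip.T @ g + P.T @ (W_mid.T @ (U.T @ g)))

def symmetric_denoiser(x):
    """Average of the network and its transpose: a self-adjoint linear map."""
    return 0.5 * (linear_unet(x) + linear_unet_T(x))

S = np.column_stack([symmetric_denoiser(e) for e in np.eye(n)])  # dense matrix of the map
print(np.allclose(S, S.T))
```

Writing the transpose by hand is only practical for such a toy network; automatic differentiation removes the need to maintain a second, reversed implementation.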

The experiment that we will consider is concerned with the inverse problem of image reconstruction in computed tomography (CT). We will consider images of size \(64\times 64\), consisting of randomly generated ellipse phantoms as in Fig. 6, and simulate CT measurements (sinograms) using the ASTRA toolbox [47, 48] with a parallel beam geometry with 150 equispaced views. A linear U-net is trained as a denoiser on ellipse phantoms corrupted with Gaussian white noise, after which we apply the denoiser in a PnP-FBS manner: denoting by A the forward operator, which maps images to (clean) sinograms, by \(y^\delta \) the noisy measurements and by \(D_\sigma \) the trained denoiser, we iterate

$$\begin{aligned} x_{k+1} = h_\tau (D_\sigma )\big (x_k - \eta A^\top (Ax_k - y^\delta )\big ), \end{aligned}$$

where \(\eta \) is a step size satisfying \(\eta \le 2/\Vert A\Vert ^2\), so that the limit is well-defined. Here, we simply use the spectral filters \(h_\tau \) corresponding to scaling the underlying regularization functional as seen in Eq. (35). Given the size of the images used in this experiment, it is still feasible to implement Eq. (35) by computing a full eigendecomposition of the trained denoiser and applying the filter to the computed eigenvalues. An alternative approach, which is possible since \(h_\tau \) is analytic, is to use a power series expansion. This has the advantage that it requires only repeated applications of the denoiser, i.e. it is not necessary to compute and store an eigenbasis, and the power series can be truncated to give an approximate result.
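For the filter of Example 1, one matrix-free option follows from the identity \(\tau (1-\lambda ) + \lambda = 1 - (1-\tau )(1-\lambda )\), which gives the geometric (Neumann) series \(h_\tau (\lambda ) = \lambda \sum _{k\ge 0} ((1-\tau )(1-\lambda ))^k\), convergent for \(\lambda \in (0,1)\) and \(0<\tau \le 1\). This particular expansion is our choice for illustration and not necessarily the one best suited in practice. The NumPy sketch below evaluates a truncation with Horner-type updates that only require applications of the denoiser; `apply_D` stands for a hypothetical matrix-free denoiser call.

```python
import numpy as np

def filtered_denoise_series(apply_D, x, tau, num_terms):
    """Approximate h_tau(D) x, h_tau(lam) = lam / (tau (1 - lam) + lam), using only calls to apply_D.

    Truncates h_tau(D) = D sum_k ((1 - tau)(I - D))^k after num_terms terms;
    assumes spec(D) in (0, 1) and 0 < tau <= 1 so the series converges.
    """
    if not 0.0 < tau <= 1.0:
        raise ValueError("this sketch assumes 0 < tau <= 1")
    v = x.copy()
    for _ in range(num_terms - 1):
        v = x + (1.0 - tau) * (v - apply_D(v))  # v <- x + (1 - tau)(I - D) v
    return apply_D(v)

# Compare with the eigendecomposition-based filter on a toy symmetric denoiser.
rng = np.random.default_rng(3)
n = 10
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
lam = np.linspace(0.2, 0.9, n)
D = (Q * lam) @ Q.T
x = rng.standard_normal(n)
tau = 0.2

exact = (Q * (lam / (tau * (1 - lam) + lam))) @ (Q.T @ x)
approx = filtered_denoise_series(lambda v: D @ v, x, tau, num_terms=80)
print(np.linalg.norm(approx - exact))
```

The number of terms needed grows as \(\tau \) and the smallest eigenvalue of the denoiser shrink, since the convergence factor is \((1-\tau )(1-\lambda _{\min })\).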

In order to verify that the proposed spectral filtering provides a convergent regularization, we consider a sequence of noisy measurements \(\{y^\delta \}_{\delta > 0}\) such that \(\Vert y^\delta - y\Vert \le \delta \) and a corresponding regularization parameter \(\tau ^\delta \propto \delta \), satisfying the conditions of Theorem 5. The reference \(x^\dagger \) is the \(J^*\)-minimizing solution, computed with high accuracy from noiseless measurement data. In Figs. 5 and 6 we show that the spectral filtering approach indeed leads to a numerically verifiable convergent regularization, as predicted by Theorem 5. Compared to reconstructions obtained with filtered backprojection (FBP), we observe successful noise suppression at high noise levels, while the \(J^*\)-minimizing solution lacks some sharpness. This points to the limitations of the linear denoiser in comparison to non-linear networks.

Applying the spectral filtering approach, we observe convergent regularization in practice. Here \(x^\dagger \) is the \(J^*\)-minimizing solution to the noiseless least-squares problem as in Theorem 5

Applying the spectral filtering approach, we observe convergent regularization in practice. We show a selection of snapshots corresponding to the plot in Fig. 5. Note that \(x^*\) (the underlying ground truth) is distinct from \(x^\dagger \) since the forward operator has a non-trivial kernel
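The convergence study can be mimicked on a toy problem. The following NumPy sketch is a hypothetical small-scale analogue, not the CT experiment: a random forward operator with full row rank, a nontrivial kernel and \(\Vert A\Vert = 1\); a toy symmetric denoiser with the Example 1 filter, so that \(r^*(D_\sigma ) = D_\sigma ^{-1} - I\); the parameter choice \(\tau ^\delta = \delta \); and \(x^\dagger \) computed by minimizing \(J^*\) over the solutions of \(Ax = y\) through a null-space parametrization.

```python
import numpy as np

rng = np.random.default_rng(4)
n, m = 6, 3
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
W, _ = np.linalg.qr(rng.standard_normal((m, m)))
A = W @ np.diag([1.0, 0.8, 0.6]) @ V[:, :m].T    # ||A|| = 1, kernel spanned by V[:, m:]
N = V[:, m:]                                     # orthonormal basis of ker(A)

Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
lam = np.linspace(0.2, 0.9, n)                   # toy spec(D_sigma)
R = (Q * ((1 - lam) / lam)) @ Q.T                # r^*(D_sigma) = D_sigma^{-1} - I (Example 1)

x_true = rng.standard_normal(n)
y = A @ x_true

# x_dagger: minimise J^*(x) = 0.5 <x, R x> over {x : A x = y} = x_p + ker(A)
x_p = np.linalg.pinv(A) @ y
z = -np.linalg.solve(N.T @ R @ N, N.T @ R @ x_p)
x_dagger = x_p + N @ z

noise_dir = rng.standard_normal(m)
noise_dir /= np.linalg.norm(noise_dir)           # fixed unit direction, ||y_delta - y|| = delta
errors = []
for delta in [1e-1, 1e-2, 1e-3, 1e-4, 1e-5, 1e-6]:
    tau = delta                                  # parameter choice tau^delta = delta
    y_delta = y + delta * noise_dir
    x_hat = np.linalg.solve(A.T @ A + tau * R, A.T @ y_delta)  # minimiser of the variational problem
    errors.append(np.linalg.norm(x_hat - x_dagger))
print(errors)
```

As in the CT experiment, `x_true` generally differs from `x_dagger`, because the kernel component of `x_true` cannot be recovered from the data.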

5 Conclusions

The question of whether a reconstruction algorithm provides a convergent regularization has long been studied in inverse problems, as it provides more than just the knowledge that a solution can be computed at a certain noise level. It tells us that stable solutions exist for all noise realizations and, even more importantly, that in the limit of vanishing noise we obtain a solution of the underlying operator equation. In other words, we can guarantee mathematically that the obtained solutions are indeed solutions to the inverse problem.

This is in contrast to some novel data-driven approaches, where we may only guarantee that obtained solutions are minimizers of the empirical loss, given suitable training data. Consequently, the concept of convergent data-driven reconstructions has gained considerable interest very recently, see for instance [33]. Here, PnP approaches play a special role due to their straightforward connection to convex optimization [25] and the possibility to incorporate learned denoisers given by non-linear neural networks. But despite considerable advances in establishing convergence notions, namely fixed-point and objective convergence, the question of convergent regularization is still open for general non-linear denoisers.

In this work, we presented a step forward for learned linear denoisers using the novel concept of spectral filtering of the denoiser. The presented approach allows us to establish a provably convergent regularization in the PnP framework. Additionally, this convergence is demonstrated numerically on the inverse problem of CT image reconstruction. As established in Theorem 5, there is some freedom in the choice of filters to apply to the denoiser. In future work, this choice could be studied in more detail. In this direction, it is of particular interest to choose spectral filters that are not too computationally costly to evaluate but still give a way to tune the regularization strength of the denoiser. Indeed, in the present implementation of the method, after training, the denoiser is instantiated as a matrix, the eigen-decomposition of which is computed to apply the spectral filtering. By considering spectral filters given by polynomials, for example, we would circumvent the need to instantiate the denoiser as a matrix and compute a full eigen-decomposition. Besides this, it would be of great interest to study whether there is any reasonable generalization of the denoiser filtering approach to the setting in which the denoiser is non-linear.

In fact, we have observed similar convergence behavior numerically even when using a non-linear denoiser in the PnP gradient-step framework (see Fig. 2), suggesting a promising direction for proving that PnP with realistic assumptions on the denoiser can give rise to convergent regularization. The gradient-step framework is, however, just one way of controlling the regularization strength of the learned denoiser. In particular, it relies on flipping the usual splitting of the variational objective and, as a result, requires repeated evaluation of the proximal operator of the data term. This may be very computationally costly if the forward operator is expensive to evaluate. As a result, it is still of great interest to study other ways of controlling the regularization strength of a realistic learned denoiser in PnP that will result in provably convergent regularization.

Notes

1-Lipschitz continuity is approximately enforced by the gradient penalty term in (16), which does not guarantee, however, that the (AR) is Lipschitz continuous. This property can be enforced by choosing the right network architecture. Indeed, all convolutional neural networks with ReLU activations are Lipschitz continuous for some Lipschitz constant L, which might be arbitrarily large.

References