Abstract

We propose and study a class of novel algorithms that aim at solving bilinear and quadratic inverse problems. Using a convex relaxation based on tensorial lifting, and applying first-order proximal algorithms, these problems could be solved numerically by singular value thresholding methods. However, a direct realization of these algorithms for, e.g., image recovery problems is often impracticable, since computations have to be performed on the tensor-product space, whose dimension is usually tremendous. To overcome this limitation, we derive tensor-free versions of common singular value thresholding methods by exploiting low-rank representations and incorporating an augmented Lanczos process. Using a novel reweighting technique, we further improve the convergence behavior and rank evolution of the iterative algorithms. Applying the method to the two-dimensional masked Fourier phase retrieval problem, we obtain an efficient recovery method. Moreover, the tensor-free algorithms are flexible enough to incorporate a priori smoothness constraints that greatly improve the recovery results.

1 Introduction

The theory of inverse problems is nowadays one of the main tools to deal with recovery problems in medicine, engineering, and life sciences. The real-world applications of this theory include, for instance, computed tomography, magnetic resonance imaging, and deconvolution problems in microscopy, see [8, 48, 49, 59, 65, 66]. Besides these recent monographs, which are only a small selection, there exist many further publications about applications, regularization, and numerical solvers. In particular, the modern theory of inverse problems studies the regularization of ill-posed problems, i.e., strategies to overcome instability of the solution with respect to noisy data [24]. Among the various available regularization strategies, Tikhonov regularization [61, 62, 63] or, more generally, variational regularization, i.e., the stabilization of an inverse problem by solving suitable optimization problems, enjoys great attention within the literature. In particular, the latter allows one to incorporate a priori assumptions on the sought solutions and to exploit problem structure. Further, variational regularization commonly allows the utilization of optimization algorithms for the numerical solution and thus, in many cases, inherently provides approaches to solve given inverse problems in practice [34, 68].

In this paper, we consider the subclass of bilinear and quadratic inverse problems and propose dedicated solution algorithms based on specific variational regularization approaches. Problem formulations of these kinds originate from real-world applications in imaging and physics [56] like blind deconvolution [12, 36], deautoconvolution [1, 26, 29], phase retrieval [22, 47, 57], parallel imaging in MRI [9], and parameter identification in EIT [48]. Being nonlinear, bilinear and quadratic inverse problems can be studied with general techniques from nonlinear inverse problems [24, 64]. Recently, however, dedicated approaches have started to emerge, firstly for quadratic problems [25]. One of these approaches for both bilinear and quadratic inverse problems is the exploitation of so-called tensorial liftings. This allows one, in particular, to generalize the linear regularization theory to show well-posedness and to derive convergence rates for the solutions of the regularized problems in a common treatment [5]. The question of how to exploit the specific structure of bilinear and quadratic inverse problems to solve these problems numerically with a common approach, however, has remained open.

In recent years, PhaseLift [14, 17] has become increasingly popular to solve phase retrieval formulations of the form

for the measurement vectors \(\varvec{a}_m \in \mathbb {R}^M\). The main idea of PhaseLift is to rewrite (1) into

Relaxing the rank by the trace or nuclear norm, we here obtain a semi-definite program, which can be solved by interior point methods, projected subgradient methods, or non-convex low-rank parametrizations [51, 67]. Because the number of unknowns is squared in the lifted problem, solving the semi-definite program becomes tremendously challenging for high-dimensional instances since the matrix \(\varvec{U} \in \mathbb {R}^{N\times N}\) cannot be held in memory. From the theoretical side, it has been proved that the solution of the relaxed problem has rank one with high probability and thus yields a solution of the original phase retrieval problem [15, 17]. The close relation to linear matrix equations and matrix completion yields several further recovery guarantees for generic phase retrieval [23, 37, 51].

Noticing that the lifted and relaxed phase retrieval formulation is a convex minimization problem, one can replace the semi-definite programming solvers by convex optimization methods like forward-backward splitting [21, 44], the fast iterative shrinkage-thresholding algorithm (FISTA) [3], the alternating direction method of multipliers (ADMM) [10], or the proximal primal-dual methods [18, 19], to name a few examples. All of these methods have been intensively studied in the literature. Unfortunately, these methods usually suffer from the same problems as semi-definite solvers, again because of the dimension of the lifted formulation.

Methodology One central idea of this paper is to employ tensorial liftings to lift the bilinear/quadratic structure of the considered problems into a linear one using the universal property of the tensor product; so we transfer the idea behind PhaseLift for generic phase retrieval to arbitrary bilinear/quadratic inverse problems. In fact, the lifting allows us to rewrite the bilinear/quadratic inverse problem into a linear one with rank-one constraint. Similarly to PhaseLift or matrix completion, we then relax the lifted problem to obtain a convex variational formulation on the tensor product. If the dimension of the problem is large like in image recovery problems, then the dimension of the tensor product literally explodes such that the required operations on the tensor product usually become intractable for most convex solvers. The main focus of this work is to show that for some specific solvers like primal-dual [18] or FISTA [3], the required operations can be performed in a tensor-free manner, which has significantly lower memory requirements and makes the lifted problem computationally tractable.

Main contributions The main goal of this paper is to develop tensor-free numerical methods capable of solving general bilinear/quadratic inverse problems on the high-dimensional tensor product in a common manner. For this purpose, we combine the lifting ideas behind PhaseLift with convex optimization methods as well as algorithms from numerical linear algebra. Our main contributions consist in the following points:

- We show that the structure of the primal-dual method [19] and FISTA [3] can be exploited to derive tensor-free implementations for the solution of lifted bilinear/quadratic problems, which are efficient and memory-saving since they are based on a low-rank representation of the tensorial iteration variable. Moreover, our approaches allow an explicit evaluation of the proximal methods, the lifted operator, and its adjoint. The proposed methods can be used in real-world applications like imaging in masked phase retrieval as shown in the numerical examples.

- Throughout the paper, we generalize the classical nuclear norm heuristic that is based on the Euclidean setting to nuclear norms deduced from arbitrary Hilbert norms. In this manner, each argument of the bilinear forward operator may be regularized differently with respect to the bilinear/quadratic structure, and the nuclear norm remains computable. This allows us to incorporate a priori information like smoothness of one or both components directly into the nuclear norm regularizer to improve the convergence of the algorithm.

- We give detailed information on how the tensor-free methods, which turn out to be an iterative singular value thresholding, can be implemented with respect to the generalized nuclear norm heuristic based on arbitrary Hilbert spaces. For this, we generalize the restarted Lanczos process [2] and the orthogonal iteration [60] to compute the required partial singular value decompositions directly with respect to the actual inner products.

- To improve the convergence and solution behavior, we additionally propose a novel reweighting technique that reduces the rank of the iteration variables.

Road map The paper is organized as follows: In Sect. 2, we introduce the considered bilinear inverse problems in more detail. The focus here lies on the bilinear setting since quadratic formulations are based on underlying bilinear structures. Based on the universal property of bilinear mappings and the nuclear norm heuristic, we then derive a relaxed convex minimization problem with linear lifted forward operator. To stabilize the lifted problem regarding noise and measurement errors, we additionally consider a Tikhonov approach.

In Sect. 3, we first develop a proximal solver based on the first-order primal-dual method of Chambolle and Pock [18] to solve the lifted problem numerically. The primal-dual iteration is here only one explicit example and can be replaced by other proximal methods. In particular, we show an adaption to FISTA. In so doing, we obtain a singular value thresholding depending on the actual Hilbert spaces building the domain of the original problem. Although the tensorial lifting allows us to apply linear methods, the dimension of the relaxed minimization problem becomes tremendous.

To overcome this issue, we derive a tensor-free representation of the suggested algorithm. The efficient computation of the required singular value thresholding is here ensured by exploiting an orthogonal power iteration or, alternatively, an augmented Lanczos process, see Sect. 4. Moreover, in Sect. 5, we introduce a novel Hilbert space reweighting to promote low-rank iterations and solutions. The effect of the slightly different structure of quadratic problems instead of bilinear problems is discussed in Sect. 6. We complete the paper with a numerical study, where we consider generic Gaussian bilinear inverse problems and the masked Fourier phase retrieval; see Sects. 7 and 8.

2 Convex Liftings of Bilinear Inverse Problems

Bilinear problem formulations arise in a wide range of applications in imaging and physics [56] like blind deconvolution [12, 36], parallel imaging in MRI [9], and parameter identification in EIT [48]. Since we are mainly interested in computing a numerical solution, we restrict ourselves to finite-dimensional bilinear problems of the form

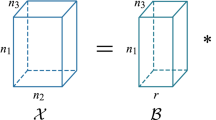

where \(\mathcal {B}\) is a bilinear operator from \(\mathbb {R}^{N_1} \times \mathbb {R}^{N_2}\) into \(\mathbb {R}^{M}\). In the following, we write \(\varvec{u} \in \mathbb {R}^{N_1}\) in the form \(\varvec{u} := (u_n)_{n=0}^{N_1-1}\). To incorporate a priori conditions, we equip each vector space with its own inner product and norm. In the finite-dimensional setting, every inner product corresponds to a unique symmetric, positive definite matrix \(\varvec{H}\) and can be written as \(\langle \cdot ,\cdot \rangle _{\varvec{H}} := \langle \varvec{H} \, \cdot ,\cdot \rangle = \langle \cdot ,\varvec{H} \, \cdot \rangle \), where the inner products on the right-hand side denote the usual Euclidean inner product \(\left\langle \varvec{u},\varvec{v}\right\rangle := \varvec{v}^* \varvec{u}\). Here \(\cdot ^*\) labels the transposition of a vector or matrix. The corresponding norm is denoted by \(||\cdot ||_{\varvec{H}}\). In the following, we denote the associate matrices of the spaces \(\mathbb {R}^{N_1}\), \(\mathbb {R}^{N_2}\), \(\mathbb {R}^M\) by \(\varvec{H}_1\), \(\varvec{H}_2\), \(\varvec{K}\) respectively. The associated matrix of the Euclidean inner product is the identity \(\varvec{I}_N \in \mathbb {R}^{N \times N}\).

Although we restrict ourselves to the real-valued setting, all following algorithms and statements remain valid for the complex-valued setting, where one considers sesquilinear mappings \(\mathcal {B} :{\mathbb {C}}^{N_1} \times {\mathbb {C}}^{N_2} \rightarrow {\mathbb {C}}^M\). Replacing the property ‘symmetric’ by ‘Hermitian,’ and using the real part of the inner products, i.e. \(\left\langle \varvec{u},\varvec{v}\right\rangle = \mathfrak {R}[ \varvec{v}^* \varvec{u}]\), where \(\cdot ^*\) is the transposition and conjugation, all considerations translate one to one. In the complex case, the associate matrices \(\varvec{H}\) may be complex-valued, Hermitian, and positive definite.

Inspired by PhaseLift [14, 17], which exploits the solution strategy developed for matrix completion problems [13, 46], we suggest to tackle the general bilinear problem (\({\mathfrak {B}}\)) by convex liftings and relaxations. Our approach is here based on the so-called universal property of the tensor product with respect to bilinear mappings; see, for instance, [35, 54, 55]. In the finite-dimensional setting, the lifting may be stated as follows.

Definition 1

(Universal property) For every bilinear mapping \(\mathcal {B} :\mathbb {R}^{N_1} \times \mathbb {R}^{N_2} \rightarrow \mathbb {R}^{M}\), there exists a unique linear mapping \(\breve{\mathcal {B}} :\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2} \rightarrow \mathbb {R}^{M}\) such that \(\breve{\mathcal {B}}(\varvec{u} \otimes \varvec{v}) = \mathcal {B}(\varvec{u}, \varvec{v})\).

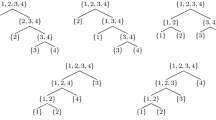

Notice that the universal property uniquely defines the tensor product \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\) in an abstract sense [35, 54]. The other way round, one can also first define the tensor product and then deduce the universal property or the bilinear lifting [55]. In the finite-dimensional setting considered by us, the tensor product \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\) can be identified with the matrix space \(\mathbb {R}^{N_2 \times N_1}\), where the rank-one tensor \(\varvec{u} \otimes \varvec{v}\) corresponds to the matrix \(\varvec{v} \varvec{u}^* = (v_{n_2} u_{n_1})_{n_2=0,n_1=0}^{N_2-1,N_1-1}\).

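Due to the uniqueness of the lifting, the bilinear inverse problem (\({\mathfrak {B}}\)) is equivalent to the linear inverse problem

\[ \text{find}\quad \varvec{w} \in \mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2} \quad \text{such that}\quad \breve{\mathcal {B}}(\varvec{w}) = \varvec{g}^\dagger \quad \text{and}\quad {\text {rank}}(\varvec{w}) \le 1. \tag{$\breve{\mathfrak {B}}$} \]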

The central benefit of these reformulations is the shift of the nonlinearity of the forward operator into the rank-one constraint. Although the problem is now linear, we have to deal with an additional non-convex side condition.
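As a minimal numerical sketch of the lifting, assume that the bilinear operator is given componentwise by \(\varvec{v}^* \varvec{A}_k \varvec{u}\) with matrices \(\varvec{A}_k \in \mathbb {R}^{N_2 \times N_1}\). The random matrices `A`, the dimensions, and the function names `bilinear` and `lifted` are illustrative assumptions and not part of the original method. The sketch checks that the lifted operator is linear on the matrix space and agrees with the bilinear operator on rank-one tensors.

```python
import numpy as np

rng = np.random.default_rng(0)
N1, N2, M = 4, 3, 5

# Hypothetical bilinear operator: B(u, v)_k = v^T A_k u with A_k in R^{N2 x N1}.
A = rng.standard_normal((M, N2, N1))

def bilinear(u, v):
    """Evaluate B(u, v) componentwise as v^T A_k u."""
    return np.einsum("j,kji,i->k", v, A, u)

def lifted(w):
    """Evaluate the lifted linear operator on a matrix w in R^{N2 x N1}."""
    return np.einsum("kji,ji->k", A, w)

u, v = rng.standard_normal(N1), rng.standard_normal(N2)
w = np.outer(v, u)                       # rank-one tensor u (x) v as the matrix v u^T
w_other = rng.standard_normal((N2, N1))  # arbitrary second tensor for the linearity check

lift_matches = np.allclose(lifted(w), bilinear(u, v))
is_linear = np.allclose(lifted(2.0 * w + 3.0 * w_other),
                        2.0 * lifted(w) + 3.0 * lifted(w_other))
rank_w = np.linalg.matrix_rank(w)
```

The nonlinearity of the original problem is visible only in the constraint that `w` has rank one; the map `lifted` itself is linear.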

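In order to eliminate the nonlinear constraint, we first rewrite (\({\breve{\mathfrak {B}}}\)) into the rank minimization problem

\[ \text{minimize}\quad {\text {rank}}(\varvec{w}) \quad \text{subject to}\quad \breve{\mathcal {B}}(\varvec{w}) = \varvec{g}^\dagger \]

and then relax the non-convex objective function by replacing it with the nuclear or projective norm \(||\cdot ||_{\pi ( \varvec{H}_1, \varvec{H}_2)}\) of the tensor \(\varvec{w}\). Depending on the norms \(||\cdot ||_{\varvec{H}_1}\) and \(||\cdot ||_{\varvec{H}_2}\), this norm is defined by

\[ ||\varvec{w}||_{\pi (\varvec{H}_1, \varvec{H}_2)} := \inf \Bigl\{ \sum _{n=0}^{R-1} ||\varvec{u}_n||_{\varvec{H}_1} \, ||\varvec{v}_n||_{\varvec{H}_2} : \varvec{w} = \sum _{n=0}^{R-1} \varvec{u}_n \otimes \varvec{v}_n \Bigr\}, \]

where the infimum is taken over all finite representations of the tensor \(\varvec{w}\). In so doing, we finally obtain the convex minimization problem

\[ \text{minimize}\quad ||\varvec{w}||_{\pi (\varvec{H}_1, \varvec{H}_2)} \quad \text{subject to}\quad \breve{\mathcal {B}}(\varvec{w}) = \varvec{g}^\dagger \tag{$\mathfrak {B}_{0}$} \]

with linear constraints.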

Since the considered norms are induced by inner products, the nuclear norm here coincides with the trace class norm or with the Schatten one-norm; so the nuclear norm is the sum of the corresponding singular values of the matrix \(\varvec{w} \in \mathbb {R}^{N_2 \times N_1}\) with respect to the chosen inner products, cf. for instance [69, Satz VI.5.5].

Lemma 1

(Projective norm, [69]) For \(\varvec{w} \in \mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\), the projective norm is given by \(||\varvec{w}||_{\pi (\varvec{H}_1, \varvec{H}_2)} = \sum _{n=0}^{R-1} \sigma _n\), where \(\sigma _n\) denotes the nth singular value and R the rank of \(\varvec{w}\).

The main idea behind the nuclear norm heuristic is that the projective norm is the convex envelope of the rank on the unit ball with respect to the spectral norm. Since we have restricted ourselves to the finite-dimensional setting, each bilinear operator \(\mathcal {B} :\mathbb {R}^{N_1} \times \mathbb {R}^{N_2} \rightarrow \mathbb {R}^{M}\) may be written as

\[ \mathcal {B}(\varvec{u}, \varvec{v}) = \bigl ( \varvec{v}^* \varvec{A}_k \, \varvec{u} \bigr )_{k=0}^{M-1} \]

with appropriate matrices \(\varvec{A}_k \in \mathbb {R}^{N_2 \times N_1}\). In general, we cannot expect that the solution of the lifted and relaxed problem (\({\mathfrak {B}_{0}}\)) is rank-one and thus yields a meaningful solution of the original inverse problem (\({\mathfrak {B}}\)). The lifting of the bilinear inverse problem is here a linear matrix equation. More precisely, the lifting can be written as

\[ \breve{\mathcal {B}}(\varvec{w}) = \bigl ( \operatorname {vec}(\varvec{A}_k)^* \operatorname {vec}(\varvec{w}) \bigr )_{k=0}^{M-1}, \]

where \(\operatorname {vec}(\cdot )\) is the columnwise vectorization; so the minimum-rank guarantees in [51] are applicable. Therefore, if the matrices \(\varvec{A}_k\) are, for instance, randomly generated with respect to the Gaussian or symmetric Bernoulli distribution, i.e.

the situation changes fundamentally. Combining [51, Thm. 3.3] and [51, Thm. 4.2], we obtain the following recovery guarantee.

Theorem 1

(Recht–Fazel–Parrilo) Let \(\mathcal {B}\) be a bilinear operator randomly generated as in (2). Then there exist positive constants \(c_0\) and \(c_1\) such that the solutions of (\({\mathfrak {B}}\)) and (\({\mathfrak {B}_{0}}\)) coincide with probability at least \(1 - \mathrm {e}^{-c_1 p}\) whenever \(p \ge c_0(N_1+N_2) \log (N_1 N_2)\).

Remark 1

Note that the random bilinear operators in (2) are only two examples of nearly isometrically distributed random variables considered in [51]. Therefore, Theorem 1 is only a special case of the theory presented in [51], which means that many other classes of randomly generated bilinear operators guarantee the recovery of the wanted solution with high probability using tensorial lifting and convex relaxation.

Further recovery guarantees that directly apply to the lifted bilinear problem can be found in [20, 23, 37]. For instance, [23, Thm. 2.2] ensures the recovery of the wanted rank-one solution tensor for Gaussian operators (left-hand side of (2)) almost surely.

Theorem 2

(Eldar–Needell–Plan) Let \(\mathcal {B} :\mathbb {R}^N \times \mathbb {R}^N \rightarrow \mathbb {R}^M\) with \(M \ge 2N\) be a bilinear Gaussian operator. Then the solutions of (\({\mathfrak {B}}\)) and (\({\mathfrak {B}_{0}}\)) coincide almost surely.

Up to this point, the given data \(\varvec{g}^\dagger \) have been known exactly. A first approach to incorporate noisy measurements into the inverse problems (\({\mathfrak {B}}\)) could be to extend the subspace of exact solutions with respect to a supposed error level. More precisely, one may consider the minimization problems

In other words, we minimize over all solutions that approximate the given noisy data \(\varvec{g}^\epsilon \) with \(||\varvec{g} - \varvec{g}^\epsilon ||_{\varvec{K}} \le \epsilon \) up to the error level \(\epsilon \). Another approach is to incorporate the data fidelity of the possible solutions directly into the objective function. Following this approach, we may minimize a Tikhonov functional with projective norm regularization to solve (\({\mathfrak {B}}\)). In more detail, we consider the problems

For the Euclidean setting, the stability of the lifted and relaxed problem (\({\mathfrak {B}_{\epsilon }}\)) has been well studied, see for instance [20, 37]. If the corresponding matrices \(\varvec{A}_k\) are again realizations of certain random variables or fulfil a restricted isometry property, then the solutions of (\({\mathfrak {B}_{\epsilon }}\)) yield a good approximation of the true rank-one solution \(\varvec{u}^\dagger \otimes \varvec{v}^\dagger \) of (\({\mathfrak {B}}\)). More precisely, one can show that

with high probability, where \(\varvec{w}^\dagger \) denotes a minimizer of (\({\mathfrak {B}_{\epsilon }}\)), and where C is an appropriate constant. For a bilinear Gaussian operator, the relaxed problem (\({\mathfrak {B}_{\epsilon }}\)) guarantees a stable solution as follows, which is a consequence of [37, Thm. 2].

Theorem 3

(Kabanava–Kueng–Rauhut–Terstiege) Let \(\mathcal {B} :\mathbb {R}^{N_1} \times \mathbb {R}^{N_2} \rightarrow \mathbb {R}^M\) with \(M \ge c_1 \rho ^{-2} (N_1 + N_2)\), where \(0< \rho < 1\), be a bilinear Gaussian operator. Then with probability at least \(1 - \mathrm {e}^{-c_2 M}\), the solution \(\varvec{w}^\dagger \) of (\({\mathfrak {B}_{\epsilon }}\)) approximates the solution \(\varvec{u}^\dagger \otimes \varvec{v}^\dagger \) of (\({\mathfrak {B}}\)) with

where \(c_1\), \(c_2\), \(c_3\) are positive constants.

For small noise, we thus expect that the solution \(\varvec{w}^\dagger \) is nearly rank-one, i.e., the leading singular value is large compared with the remaining ones, and that the projection to the rank-one tensors yields a good approximation of \(\varvec{u} \otimes \varvec{v}\). More precisely, on the basis of the Lidskii–Mirsky–Wielandt theorem, see for instance [41], the difference between the singular values is here bounded by

where \(\sigma _n\) are the singular values in decreasing order, and where R is the rank of \(\varvec{w}^\dagger \).
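The effect of the rank-one projection can be illustrated numerically. The following sketch works in the Euclidean setting only and uses a synthetic perturbation; the noise level, the dimensions, and all variable names are assumptions made for illustration. As a stand-in for the perturbation bound, it checks Weyl's inequality for singular values, \(|\sigma _n(\varvec{w}) - \sigma _n(\tilde{\varvec{w}})| \le ||\varvec{w} - \tilde{\varvec{w}}||\) in the spectral norm, which is a standard result of this type and not necessarily the exact estimate used here.

```python
import numpy as np

rng = np.random.default_rng(1)
N1, N2 = 6, 5

u, v = rng.standard_normal(N1), rng.standard_normal(N2)
w_true = np.outer(v, u)                        # u (x) v as the matrix v u^T
noise = 1e-3 * rng.standard_normal((N2, N1))   # assumed small perturbation
w_noisy = w_true + noise

# Projection onto the rank-one tensors: keep only the leading singular triple.
V, s, Ut = np.linalg.svd(w_noisy, full_matrices=False)
w_rank1 = s[0] * np.outer(V[:, 0], Ut[0])

# Weyl's inequality: singular values move by at most the spectral norm of the noise.
s_true = np.linalg.svd(w_true, compute_uv=False)
weyl_gap = np.max(np.abs(s - s_true))
spectral_noise = np.linalg.norm(noise, 2)

rel_err = np.linalg.norm(w_rank1 - w_true) / np.linalg.norm(w_true)
```

Since the exact tensor has only one non-zero singular value, all trailing singular values of `w_noisy` are bounded by `spectral_noise`, which is why the truncation to the leading triple loses little.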

3 Proximal Algorithms for the Lifted Problem

To exploit the nuclear norm heuristic, we have to solve the minimization problems (\({\mathfrak {B}_{0}}\)), (\({\mathfrak {B}_{\epsilon }}\)), and (\({\mathfrak {B}_\alpha }\)) in an efficient manner. Looking back at the comprehensive literature about matrix completion [13, 16, 46], low-rank solutions of matrix equations [51], and PhaseLift [14, 17], there exist several numerical methods like interior-point methods for semi-definite programming, fixed point iterations, singular value thresholding algorithms, projected subgradient methods, and low-rank parametrization approaches.

In order to solve the lifted bilinear inverse problems, we follow another approach. Let us first consider the actual structure of the three derived minimization problems in Sect. 2, which is given by

\[ \text{minimize}\quad F(\mathcal {A}(\varvec{w})) + G(\varvec{w}), \]

where \(\mathcal {A} :\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2} \rightarrow \mathbb {R}^{M}\) denotes the lifted bilinear forward operator, \(F :\mathbb {R}^{M} \rightarrow \overline{\mathbb {R}}\) with \(\overline{\mathbb {R}}:= \mathbb {R}\cup \{-\infty , +\infty \}\) describes the data fidelity, and \(G :\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2} \rightarrow \overline{\mathbb {R}}\) is the projective norm. Since the regularization mapping G and, in some circumstances, the data fidelity mapping F are non-smooth but convex functions, we may apply proximal first-order methods like the forward-backward splitting, the primal-dual method by Chambolle–Pock, the alternating direction method of multipliers (ADMM), the Douglas–Rachford splitting, and several variants of these and other algorithms, see for instance [19].

Theoretically, we can apply any of these algorithms to solve the lifted variational problems. In many applications, however, the dimension of the tensor product literally explodes such that the required tensorial operations cannot be computed efficiently. In view of this issue, we consider as examples the primal-dual method [18, Alg. 1]

with fixed parameters \(\tau , \sigma > 0\) and \(\theta \in [0,1]\) and FISTA (fast iterative shrinkage-thresholding algorithm) [3, Sect. 4]

with fixed parameter \(\tau > 0\). The details of these methods are given below. The main reason for this restriction is that both algorithms can be implemented in a tensor-free fashion. For further methods, which, for instance, require implicit steps on the tensor product, the efficient implementation on the tensor product is a non-trivial task.
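To make the structure of such an iteration concrete, the following is a minimal dense sketch of FISTA with singular value thresholding. It is explicitly not the tensor-free variant: it stores the full matrix, uses Euclidean inner products, and assumes a Tikhonov-type functional of the form \(\tfrac{1}{2} ||\mathcal {A}(\varvec{w}) - \varvec{g}||^2 + \alpha \, ||\varvec{w}||_\pi \), whose scaling is an assumption. The function names `svt`, `fista_nuclear`, and `objective` as well as the test problem are hypothetical.

```python
import numpy as np

def svt(w, tau):
    """Soft-threshold the Euclidean singular values of w at level tau."""
    V, s, Ut = np.linalg.svd(w, full_matrices=False)
    return (V * np.maximum(s - tau, 0.0)) @ Ut

def objective(A, g, alpha, w):
    """Assumed functional 0.5 * ||A(w) - g||^2 + alpha * (nuclear norm of w)."""
    res = A.reshape(A.shape[0], -1) @ w.ravel() - g
    return 0.5 * res @ res + alpha * np.linalg.svd(w, compute_uv=False).sum()

def fista_nuclear(A, g, alpha, n_iter=300):
    """Dense FISTA sketch; A has shape (M, N2, N1) and acts as <A_k, w>."""
    M, N2, N1 = A.shape
    A_mat = A.reshape(M, -1)                     # row-major flattening, used consistently
    tau = 1.0 / np.linalg.norm(A_mat, 2) ** 2    # step size 1/L with L = ||A||^2
    w = np.zeros((N2, N1))
    z, t = w.copy(), 1.0
    for _ in range(n_iter):
        # Gradient step on the smooth data term, evaluated at the extrapolated point z.
        grad = (A_mat.T @ (A_mat @ z.ravel() - g)).reshape(N2, N1)
        w_next = svt(z - tau * grad, tau * alpha)
        # Momentum update of FISTA.
        t_next = 0.5 * (1.0 + np.sqrt(1.0 + 4.0 * t * t))
        z = w_next + ((t - 1.0) / t_next) * (w_next - w)
        w, t = w_next, t_next
    return w

rng = np.random.default_rng(3)
N1, N2, M = 8, 8, 40
A = rng.standard_normal((M, N2, N1))             # hypothetical Gaussian operator
u, v = rng.standard_normal(N1), rng.standard_normal(N2)
g = A.reshape(M, -1) @ np.outer(v, u).ravel()    # exact data of a rank-one tensor
alpha = 1e-2

w_rec = fista_nuclear(A, g, alpha)
obj_start = objective(A, g, alpha, np.zeros((N2, N1)))
obj_end = objective(A, g, alpha, w_rec)
```

Every iteration of this sketch forms and decomposes an \(N_2 \times N_1\) matrix, which is exactly the cost that becomes prohibitive for image-sized problems and motivates the tensor-free formulation.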

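First, the primal-dual method and FISTA are originally defined for linear forward operators between finite-dimensional Hilbert spaces; so we have to equip the tensor product \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\) with a corresponding structure. In the following, we always assume that this structure arises from the inner product defined by

\[ \langle \varvec{u}_1 \otimes \varvec{v}_1, \varvec{u}_2 \otimes \varvec{v}_2 \rangle _{\varvec{H}_1 \otimes \varvec{H}_2} := \langle \varvec{u}_1, \varvec{u}_2 \rangle _{\varvec{H}_1} \, \langle \varvec{v}_1, \varvec{v}_2 \rangle _{\varvec{H}_2}, \]

see for instance [38, Sect. 2.6]. Using the matrices \(\varvec{H}_1\) and \(\varvec{H}_2\) defining the inner products, we can write the resulting Hilbertian inner product for \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\) in the form

\[ \langle \varvec{w}_1, \varvec{w}_2 \rangle _{\varvec{H}_1 \otimes \varvec{H}_2} = \langle \varvec{H}_2 \, \varvec{w}_1 \varvec{H}_1, \varvec{w}_2 \rangle = \langle \varvec{w}_1, \varvec{H}_2 \, \varvec{w}_2 \varvec{H}_1 \rangle , \tag{6} \]

where the inner products on the right-hand side denote the Hilbert–Schmidt inner product for matrices. Notice that the Hilbertian inner product is related to the Kronecker product \(\varvec{H}_1 \otimes \varvec{H}_2\) due to

\[ \langle \varvec{w}_1, \varvec{w}_2 \rangle _{\varvec{H}_1 \otimes \varvec{H}_2} = \langle (\varvec{H}_1 \otimes \varvec{H}_2) \operatorname {vec}(\varvec{w}_1), \operatorname {vec}(\varvec{w}_2) \rangle , \]

where we recall that \(\operatorname {vec}(\cdot )\) denotes the column-wise vectorization. Further, the defined inner product induces the norm \(||\cdot ||_{\varvec{H}_1 \otimes \varvec{H}_2}\), which differs from the projective norm \(||\cdot ||_{\pi (\varvec{H}_1,\varvec{H}_2)}\). Similarly, the Hilbertian norm may be computed using the singular values with respect to the equipped inner products. Here we have

\[ ||\varvec{w}||_{\varvec{H}_1 \otimes \varvec{H}_2} = \Bigl ( \sum _{n=0}^{R-1} \sigma _n^2 \Bigr )^{\nicefrac 12}; \]

thus \(||\cdot ||_{\varvec{H}_1 \otimes \varvec{H}_2}\) corresponds to the Schatten two-norm whereas \(||\cdot ||_{\pi (\varvec{H}_1,\varvec{H}_2)}\) corresponds to the Schatten one-norm.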
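The relation between the Hilbertian inner product, the Kronecker product, and the singular values can be checked numerically. The sketch below assumes randomly drawn symmetric positive definite matrices `H1` and `H2` and Cholesky factors as square roots; these choices and all names are illustrative. It verifies that \(\langle \varvec{H}_2 \varvec{w}_1 \varvec{H}_1, \varvec{w}_2\rangle \) in the Hilbert–Schmidt sense agrees with the Kronecker form under column-wise vectorization, and that the induced norm is the Schatten two-norm of the generalized singular values.

```python
import numpy as np

rng = np.random.default_rng(2)
N1, N2 = 4, 3

def random_spd(n):
    """Draw a hypothetical symmetric positive definite matrix."""
    X = rng.standard_normal((n, n))
    return X @ X.T + n * np.eye(n)

def vec(w):
    """Column-wise vectorization of a matrix."""
    return w.flatten(order="F")

H1, H2 = random_spd(N1), random_spd(N2)
w1 = rng.standard_normal((N2, N1))
w2 = rng.standard_normal((N2, N1))

# Hilbertian inner product in matrix form and in Kronecker form.
ip_matrix = np.sum((H2 @ w1 @ H1) * w2)
ip_kron = vec(w2) @ (np.kron(H1, H2) @ vec(w1))

# Singular values with respect to H1 and H2, using Cholesky factors H = L L^T,
# so that L^T plays the role of the (non-symmetric) square root.
L1, L2 = np.linalg.cholesky(H1), np.linalg.cholesky(H2)
sigma = np.linalg.svd(L2.T @ w1 @ L1, compute_uv=False)

hilbert_norm = np.sqrt(np.sum((H2 @ w1 @ H1) * w1))
schatten_two = np.sqrt(np.sum(sigma ** 2))
schatten_one = np.sum(sigma)          # projective norm with respect to H1 and H2
```

Forming `np.kron(H1, H2)` explicitly is only feasible for tiny dimensions; the matrix form `H2 @ w1 @ H1` gives the same value without building the Kronecker product.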

Next, the above-stated methods are mainly based on concepts from convex analysis, which is reflected in the presuppositions; so the data fidelity mapping F and, similarly, the regularization mapping G have to be convex and lower semicontinuous. Commonly, a function \(f :\mathbb {R}^{N} \rightarrow \overline{\mathbb {R}}\) is called convex when

\[ f(t \, \varvec{x}_1 + (1-t) \, \varvec{x}_2) \le t \, f(\varvec{x}_1) + (1-t) \, f(\varvec{x}_2) \]

for all \(\varvec{x}_1, \varvec{x}_2 \in \mathbb {R}^{N}\) and all \(t \in [0,1]\), and lower semicontinuous when

\[ f(\varvec{x}) \le \liminf _{n \rightarrow \infty } f(\varvec{x}_n) \]

for all sequences \((\varvec{x}_n)_{n\in \mathbb {N}}\) in \(\mathbb {R}^{N}\) with \(\varvec{x}_n \rightarrow \varvec{x}\). Since F and G in the relaxed minimization problems of Sect. 2 represent norms or indicator functions on closed convex sets, here the assumptions for the primal-dual method are always fulfilled. The forward-backward splitting additionally requires a differentiable data fidelity F with Lipschitz-continuous derivative; so this method can only be applied to the Tikhonov relaxations.

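For the primal-dual iteration (4), the first proximity operator \(\text {prox}_{\sigma F^*}\) is computed with respect to the Legendre–Fenchel conjugate \(F^*\). For any function \(f :\mathbb {R}^{N} \rightarrow \overline{\mathbb {R}}\), where \(\mathbb {R}^{N}\) is equipped with the inner product associated to \(\varvec{H}\), the Legendre–Fenchel conjugate \(f^* :\mathbb {R}^{N} \rightarrow \overline{\mathbb {R}}\) is defined by

\[ f^*(\varvec{x}') := \sup _{\varvec{x} \in \mathbb {R}^{N}} \, \langle \varvec{x}', \varvec{x} \rangle _{\varvec{H}} - f(\varvec{x}) \]

and is always convex and lower semicontinuous; see [52]. If the function \(f :\mathbb {R}^{N} \rightarrow \overline{\mathbb {R}}\) is lower semicontinuous and convex, the subdifferential \(\partial f\) at a certain point \(\varvec{x} \in \mathbb {R}^{N}\) is given by

\[ \partial f(\varvec{x}) := \bigl \{ \varvec{x}' \in \mathbb {R}^{N} : f(\varvec{y}) \ge f(\varvec{x}) + \langle \varvec{x}', \varvec{y} - \varvec{x} \rangle _{\varvec{H}} \text { for all } \varvec{y} \in \mathbb {R}^{N} \bigr \} \]

and figuratively consists of all linear minorants, see [52]. Finally, the proximation or proximity operator \(\text {prox}_{f}\) of a lower semicontinuous, convex function \(f :\mathbb {R}^{N} \rightarrow \overline{\mathbb {R}}\) is defined as the unique minimizer

\[ \text {prox}_{f}(\varvec{x}) := \mathop {\text {argmin}}_{\varvec{y} \in \mathbb {R}^{N}} \; f(\varvec{y}) + \tfrac{1}{2} \, ||\varvec{y} - \varvec{x}||_{\varvec{H}}^2. \]

Using the subdifferential calculus, one can show that the proximation coincides with the resolvent, i.e.

\[ \text {prox}_{f} = (I + \partial f)^{-1}. \]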

The most crucial step in the primal-dual iteration (4) and FISTA (5) is the application of the proximal projective norm \(\text {prox}_{\tau ||\cdot ||_{\pi (\varvec{H}_1, \varvec{H}_2)}}\), whereas the computation of proximal conjugated data fidelity \(\text {prox}_{\sigma F^*}\) is usually much simpler. To determine the proximal projective norm explicitly, we exploit the singular value decomposition of the argument with respect to the underlying inner products, which can be derived by an adaption of the classical singular value decomposition for matrices with respect to the Euclidean inner product.

Lemma 2

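(Singular value decomposition) Let \(\varvec{w}\) be a tensor in \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\). The singular value decomposition of \(\varvec{w}\) with respect to the associate matrices \(\varvec{H}_1\) and \(\varvec{H}_2\) is given by

\[ \varvec{w} = \sum _{n=0}^{R-1} \sigma _n \, (\tilde{\varvec{u}}_n \otimes \tilde{\varvec{v}}_n) \quad \text {with}\quad \tilde{\varvec{u}}_n := (\varvec{H}_1^{\nicefrac 12})^{-1} \varvec{u}_n \quad \text {and}\quad \tilde{\varvec{v}}_n := (\varvec{H}_2^{\nicefrac 12})^{-1} \varvec{v}_n, \]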

where \(\sum _{n=0}^{R-1} \sigma _n \, (\varvec{u}_n \otimes \varvec{v}_n)\) is the classical singular value decomposition of \(\varvec{H}_2^{\nicefrac 12} \varvec{w} \, (\varvec{H}_1^{\nicefrac 12})^*\) with respect to the Euclidean inner product.

Remark 2

Unless stated otherwise, the square roots \(\varvec{H}_1^{\nicefrac 12} \in \mathbb {R}^{N_1 \times N_1}\) and \(\varvec{H}_2^{\nicefrac 12} \in \mathbb {R}^{N_2 \times N_2}\) are taken with respect to the factorizations

\[ \varvec{H}_1 = (\varvec{H}_1^{\nicefrac 12})^* \, \varvec{H}_1^{\nicefrac 12} \quad \text {and}\quad \varvec{H}_2 = (\varvec{H}_2^{\nicefrac 12})^* \, \varvec{H}_2^{\nicefrac 12}. \]

Allowing also non-symmetric but invertible factorizations, the roots \(\varvec{H}_1^{\nicefrac 12}\) and \(\varvec{H}_2^{\nicefrac 12}\) are here non-unique. Possible candidates are the symmetric positive definite square root or the Cholesky decomposition of \(\varvec{H}_1\) and \(\varvec{H}_2\). In the following, the roots are solely required to derive the proximal projective norm mathematically. Their actual computation is not necessary in the final tensor-free algorithm.

Proof of Lemma 2

By assumption the possibly non-symmetric square roots \(\varvec{H}_1^{\nicefrac 12}\) and \(\varvec{H}_2^{\nicefrac 12}\) are invertible. Considering the classical Euclidean singular value decomposition of the matrix \(\varvec{H}_2^{\nicefrac 12} \varvec{w} \, (\varvec{H}_1^{\nicefrac 12})^*\), we immediately obtain

The last arrangement may be easily validated by using the matrix notation \(\varvec{v}_n \varvec{u}_n^*\) of the rank-one tensor \(\varvec{u}_n \otimes \varvec{v}_n\). Due to the identity

for all \(n,m \in \{0, \dots , R-1\}\), the singular vectors \(\{\tilde{\varvec{u}}_n : n=0,\dots ,R-1\}\) form an orthonormal system with respect to \(\varvec{H}_1\) as well as their counterparts \(\{\tilde{\varvec{v}}_n : n=0,\dots , R-1\}\) with respect to \(\varvec{H}_2\). \(\square \)

Remark 3

The singular value decomposition can also be interpreted as a matrix factorization of the tensor \(\varvec{w}\). In this case, we have the factorization

where \(\varvec{V} \varvec{\Sigma } \varvec{U}^*\) is the classical Euclidean singular value decomposition of \(\varvec{H}_2^{\nicefrac 12} \varvec{w} \, (\varvec{H}_1^{\nicefrac 12})^*\) with the left singular vectors \(\varvec{V} := [\varvec{v}_0, \dots , \varvec{v}_{R-1}]\), the right singular vectors \(\varvec{U} := [\varvec{u}_0, \dots , \varvec{u}_{R-1}]\), and the singular values \(\varvec{\Sigma } := \text {diag}(\sigma _0, \dots , \sigma _{R-1})\).
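A small dense sketch may help to see how the adapted singular value decomposition works in practice. It assumes that the square roots are obtained from Cholesky factorizations \(\varvec{H} = \varvec{L} \varvec{L}^*\), so that \(\varvec{L}^*\) serves as the root; the function name `generalized_svd` and the test matrices are hypothetical. The sketch computes the Euclidean decomposition of the transformed matrix, maps the singular vectors back, and exposes the quantities needed to check orthonormality with respect to \(\varvec{H}_1\) and \(\varvec{H}_2\).

```python
import numpy as np

def generalized_svd(w, H1, H2):
    """SVD of w in R^{N2 x N1} with respect to the inner products of H1 and H2."""
    L1 = np.linalg.cholesky(H1)          # H1 = L1 L1^T, root of H1 taken as L1^T
    L2 = np.linalg.cholesky(H2)          # H2 = L2 L2^T, root of H2 taken as L2^T
    # Euclidean SVD of the transformed matrix (root of H2) * w * (root of H1)^T.
    V, s, Ut = np.linalg.svd(L2.T @ w @ L1, full_matrices=False)
    U_tilde = np.linalg.solve(L1.T, Ut.T)   # columns: inverse root of H1 applied to u_n
    V_tilde = np.linalg.solve(L2.T, V)      # columns: inverse root of H2 applied to v_n
    return U_tilde, s, V_tilde

rng = np.random.default_rng(5)
N1, N2 = 4, 3
X1, X2 = rng.standard_normal((N1, N1)), rng.standard_normal((N2, N2))
H1 = X1 @ X1.T + N1 * np.eye(N1)         # hypothetical SPD matrices
H2 = X2 @ X2.T + N2 * np.eye(N2)
w = rng.standard_normal((N2, N1))

U_tilde, s, V_tilde = generalized_svd(w, H1, H2)
w_rebuilt = (V_tilde * s) @ U_tilde.T    # sum of s_n * (v~_n u~_n^T)
gram_u = U_tilde.T @ H1 @ U_tilde        # should be the identity
gram_v = V_tilde.T @ H2 @ V_tilde        # should be the identity
```

The explicit Cholesky factors are used here only for verification on small matrices; as noted in Remark 2, the final tensor-free algorithm does not need to compute the roots.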

With the adaption in Lemma 2, we can apply any numerical singular value method to compute the singular value decomposition of a given tensor. The next ingredient is the subdifferential of the nuclear norm based on \(\varvec{H}_1\) and \(\varvec{H}_2\) with respect to the Hilbertian inner product on \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\) associated to \(\varvec{H}_1 \otimes \varvec{H}_2\). In the following, the set-valued signum function \({\text {sgn}}\) is defined by

\[ {\text {sgn}}(t) := {\left\{ \begin{array}{ll} \{1\} &{} \text {if } t > 0, \\ {[-1, 1]} &{} \text {if } t = 0, \\ \{-1\} &{} \text {if } t < 0. \end{array}\right. } \]

Lemma 3

(Subdifferential) Let \(\varvec{w}\) be a tensor in \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\). Then the subdifferential of the projective norm \(||\cdot ||_{\pi (\varvec{H}_1, \varvec{H}_2)}\) at \(\varvec{w}\) with respect to \(\varvec{H}_1 \otimes \varvec{H}_2\) is given by

where \(\varvec{w} = \sum _{n=0}^{R-1} \sigma _n \, (\tilde{\varvec{u}}_n \otimes \tilde{\varvec{v}}_n)\) is a valid singular value decomposition of \(\varvec{w}\) with respect to \(\varvec{H}_1\) and \(\varvec{H}_2\).

Proof

The central idea to compute the subdifferential is to rely on the corresponding statement for the Euclidean setting in [39]. More precisely, if \(\mathbb {R}^{N_1}\) and \(\mathbb {R}^{N_2}\) are equipped with the Euclidean inner product, then the subdifferential \(\partial _{\mathcal {H\!S}}\) with respect to the Hilbert–Schmidt inner product on \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\) is given by

where \(\varvec{w} = \sum _{n=0}^{R-1} \sigma _n \, (\varvec{u}_n \otimes \varvec{v}_n)\) is a Euclidean singular value decomposition of \(\varvec{w}\), see [39, Cor. 2.5]. The upper bound R is here some number less than or equal to \(\min \{N_1, N_2\}\), and the singular value decomposition of \(\varvec{w}\) may contain zero as a singular value.

Next, we adapt this result to our specific case. To this end, we exploit that the projective norm is the sum of the singular values. Using Lemma 2, we notice that the generalized projective norm of a tensor \(\varvec{w}\) is given by

where the norm on the right-hand side is the usual projective norm with respect to the Euclidean inner product. In order to consider the Hilbertian inner product associated to \(\varvec{H}_1 \otimes \varvec{H}_2\) in the subdifferential, we exploit that

if and only if

where the inner product on the right-hand side is the usual Hilbert–Schmidt scalar product for matrices; see (6). Thus, the subdifferential with respect to the \(\varvec{H}_1 \otimes \varvec{H}_2\) scalar product can be expressed in terms of \(\partial _{\mathcal {H\!S}}\) by

Plugging (8) into (9), and using the chain rule, we obtain the assertion. \(\square \)

With the characterization of the subdifferential, we are ready to determine the proximity operator for the projective norm with respect to \(\varvec{H}_1\) and \(\varvec{H}_2\). In the following, the soft-thresholding operator with respect to the level \(\tau \) is defined by

$$\begin{aligned} S_\tau (t) := \begin{cases} t - \tau & \text {if } t > \tau , \\ t + \tau & \text {if } t < -\tau , \\ 0 & \text {otherwise.} \end{cases} \end{aligned}$$

Theorem 4

(Proximal projective norm) Let \(\varvec{w}\) be a tensor in \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\). The proximation of the projective norm is given by

$$\begin{aligned} {\text {prox}}_{\tau ||\cdot ||_{\pi (\varvec{H}_1, \varvec{H}_2)}}(\varvec{w}) = \sum _{n=0}^{R-1} S_\tau (\sigma _n) \, (\tilde{\varvec{u}}_n \otimes \tilde{\varvec{v}}_n), \end{aligned}$$

where \(\sum _{n=0}^{R-1} \sigma _n \, (\tilde{\varvec{u}}_n \otimes \tilde{\varvec{v}}_n)\) is a singular value decomposition of \(\varvec{w}\) with respect to \(\varvec{H}_1\) and \(\varvec{H}_2\).

Proof

In order to establish the statement, we only have to convince ourselves that \(\breve{\varvec{w}} := \sum _{n=0}^{R-1} S_\tau (\sigma _n) \, (\tilde{\varvec{u}}_n \otimes \tilde{\varvec{v}}_n)\) is the resolvent for the given \(\varvec{w}\), i.e. \(\varvec{w} \in (I + \tau \, \partial ||\cdot ||_{\pi (\varvec{H}_1, \varvec{H}_2)})(\breve{\varvec{w}})\). Since \(\breve{\varvec{w}}\) is already represented by its singular value decomposition, Lemma 3 implies

If \(\sigma _n > \tau \), the related summand becomes \(\sigma _n \, (\tilde{\varvec{u}}_n \otimes \tilde{\varvec{v}}_n)\). Otherwise, the summand is \(\mu _n \, (\tilde{\varvec{u}}_n \otimes \tilde{\varvec{v}}_n)\) with \(\mu _n \in [-\tau , \tau ]\). Since the singular value decomposition of \(\varvec{w}\) obviously has this form, the proof is completed. \(\square \)

Remark 4

(Singular value thresholding) The proximation of the projective norm with respect to \(\varvec{H}_1\) and \(\varvec{H}_2\) is a soft thresholding of the corresponding singular values. In the following, we denote the matrix-valued operation

as (soft) singular value thresholding \(\mathcal {S}_\tau \).
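
A dense reference computation may help to make the thresholding concrete. The following Python sketch assumes NumPy, stores the tensor as an \(N_2 \times N_1\) matrix, and realizes the transformation of Lemma 2 with symmetric square roots of \(\varvec{H}_1\) and \(\varvec{H}_2\); the names `sym_sqrt` and `svt` are hypothetical and only serve as illustration.

```python
import numpy as np

def sym_sqrt(H):
    """Symmetric square root of a symmetric positive definite matrix."""
    vals, vecs = np.linalg.eigh(H)
    return (vecs * np.sqrt(vals)) @ vecs.T

def svt(w, tau, H1=None, H2=None):
    """Soft singular value thresholding of w with respect to H1 and H2.

    Assumption: w is an (N2 x N1) array; H1, H2 default to the identity,
    which gives the classical Euclidean thresholding.
    """
    n2, n1 = w.shape
    H1 = np.eye(n1) if H1 is None else H1
    H2 = np.eye(n2) if H2 is None else H2
    R1, R2 = sym_sqrt(H1), sym_sqrt(H2)
    # Euclidean SVD of the transformed matrix H2^{1/2} w H1^{1/2}
    V, s, Ut = np.linalg.svd(R2 @ w @ R1, full_matrices=False)
    s_thr = np.maximum(s - tau, 0.0)      # soft thresholding of singular values
    V_t = np.linalg.solve(R2, V)          # back transformation of left vectors
    U_t = np.linalg.solve(R1, Ut.T)       # back transformation of right vectors
    return (V_t * s_thr) @ U_t.T
```

This dense variant forms the full matrix and is therefore only feasible for small \(N_1\) and \(N_2\); it is meant as a reference against which tensor-free variants can be compared.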

Knowing the proximation of the (modified) projective norm, we are now able to perform proximal algorithms to solve the minimization problem in Sect. 2. Although we can use any of the mentioned methods, here we restrict ourselves to the primal-dual iteration (4). First, we consider the bilinear minimization problems (\({\mathfrak {B}_{0}}\)), (\({\mathfrak {B}_{\epsilon }}\)), and (\({\mathfrak {B}_\alpha }\)).

For exactly given data \(\varvec{g}^\dagger \) corresponding to the minimization problem (\({\mathfrak {B}_{0}}\)), the data fidelity functional corresponds to \(F :\mathbb {R}^M \rightarrow \overline{\mathbb {R}}\) with \(F(\varvec{y}) := \chi _{\{0\}}(\varvec{y} - \varvec{g}^\dagger )\). Here and in the following, the indicator function \(\chi _C\) is equal to 0 for arguments in the set C and \(+\infty \) otherwise. A simple computation shows that the conjugate \(F^*\) is given by \(F^*(\varvec{y}') := \langle \varvec{y}',\varvec{g}^\dagger \rangle _{\varvec{K}}\) and the associated proximal mapping by

$$\begin{aligned} {\text {prox}}_{\sigma F^*}(\varvec{y}) = \varvec{y} - \sigma \, \varvec{g}^\dagger . \end{aligned}$$

Thus, we obtain the following algorithm.

Algorithm 1

(Primal-dual for exact data)

(i) Initiation: Fix the parameters \(\tau , \sigma > 0\) and \(\theta \in [0,1]\). Choose an arbitrary start value \((\varvec{w}^{(0)}, \varvec{y}^{(0)})\) in \((\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}) \times \mathbb {R}^M\), and set \(\breve{\varvec{w}}^{(0)}\) to \(\varvec{w}^{(0)}\).

(ii) Iteration: For \(n \ge 0\), update \(\varvec{w}^{(n)}\), \(\breve{\varvec{w}}^{(n)}\), and \(\varvec{y}^{(n)}\) by

$$\begin{aligned} \varvec{y}^{(n+1)}:= & {} \varvec{y}^{(n)} + \sigma \, (\breve{\mathcal {B}}(\breve{\varvec{w}}^{(n)}) - \varvec{g}^\dagger )\\ \varvec{w}^{(n+1)}:= & {} \mathcal {S}_{\tau }\bigl (\varvec{w}^{(n)} - \tau \,\breve{\mathcal {B}}^* (\varvec{y}^{(n+1)})\bigr )\\ \breve{\varvec{w}}^{(n+1)}:= & {} \varvec{w}^{(n+1)} + \theta \, ( \varvec{w}^{(n+1)} - \varvec{w}^{(n)}). \end{aligned}$$

Remark 5

If the projective norm in (\({\mathfrak {B}_{0}}\)) is weighted with a parameter \(\alpha > 0\) in order to control the influence of the data fidelity and the regularization, cf. (\({\mathfrak {B}_\alpha }\)), then the iteration in Algorithm 1 changes slightly. More precisely, one has to replace \(\mathcal {S}_\tau \) by \(\mathcal {S}_{\alpha \tau }\).

For inexact data \(\varvec{g}^\epsilon \), we first consider the Tikhonov minimization (\({\mathfrak {B}_\alpha }\)), whose data fidelity corresponds to \(F(\varvec{y}) := \nicefrac 12 \, ||\varvec{y} - \varvec{g}^\epsilon ||_{\varvec{K}}^2\). Here the conjugate \(F^*\) is given by \(F^*(\varvec{y}') = \nicefrac 12 \, ||\varvec{y}'||_{\varvec{K}}^2 + \left\langle \varvec{y}',\varvec{g}^\epsilon \right\rangle _{\varvec{K}}\) with subdifferential \(\partial F^*(\varvec{y}') = \{\varvec{y}' + \varvec{g}^\epsilon \}\). Again a simple computation leads to the proximation

$$\begin{aligned} {\text {prox}}_{\sigma F^*}(\varvec{y}) = \tfrac{1}{\sigma + 1} \, (\varvec{y} - \sigma \, \varvec{g}^\epsilon ), \end{aligned}$$

which yields the following algorithm.

Algorithm 2

(Tikhonov regularization)

(i) Initiation: Fix the parameters \(\tau , \sigma > 0\) and \(\theta \in [0,1]\). Choose an arbitrary start value \((\varvec{w}^{(0)}, \varvec{y}^{(0)})\) in \((\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}) \times \mathbb {R}^M\), and set \(\breve{\varvec{w}}^{(0)}\) to \(\varvec{w}^{(0)}\).

(ii) Iteration: For \(n \ge 0\), update \(\varvec{w}^{(n)}\), \(\breve{\varvec{w}}^{(n)}\), and \(\varvec{y}^{(n)}\) by

$$\begin{aligned} \varvec{y}^{(n+1)}:= & {} \tfrac{1}{\sigma + 1} \bigl (\varvec{y}^{(n)} + \sigma \, (\breve{\mathcal {B}}(\breve{\varvec{w}}^{(n)}) -\varvec{g}^\epsilon ) \bigr ) \\ \varvec{w}^{(n+1)}:= & {} \mathcal {S}_{\tau \alpha }\bigl (\varvec{w}^{(n)} - \tau \,\breve{\mathcal {B}}^* (\varvec{y}^{(n+1)})\bigr ) \\ \breve{\varvec{w}}^{(n+1)}:= & {} \varvec{w}^{(n+1)} + \theta \, ( \varvec{w}^{(n+1)} - \varvec{w}^{(n)}). \end{aligned}$$
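
The three update lines of Algorithm 2 translate almost literally into code. The following Python sketch assumes NumPy, Euclidean inner products (\(\varvec{H}_1\), \(\varvec{H}_2\), and \(\varvec{K}\) equal to the identity), and a dense matrix representation of the tensor; the function names and the callables `B_lift` and `B_adj` for the lifted operator and its adjoint are hypothetical.

```python
import numpy as np

def svt(w, level):
    """Euclidean soft singular value thresholding of a matrix."""
    V, s, Ut = np.linalg.svd(w, full_matrices=False)
    return (V * np.maximum(s - level, 0.0)) @ Ut

def primal_dual_tikhonov(B_lift, B_adj, g, shape, alpha, tau, sigma,
                         theta=1.0, iters=500):
    """Sketch of the primal-dual iteration for the Tikhonov functional.

    B_lift maps an (N2 x N1) array to the data space, B_adj is its adjoint.
    The number of iterations is fixed here; no stopping rule is implemented.
    """
    w = np.zeros(shape)          # start value w^(0)
    w_bar = w.copy()             # extrapolated iterate
    y = np.zeros_like(g)         # dual start value y^(0)
    for _ in range(iters):
        # dual update
        y = (y + sigma * (B_lift(w_bar) - g)) / (sigma + 1.0)
        # primal update by singular value thresholding with level tau * alpha
        w_new = svt(w - tau * B_adj(y), tau * alpha)
        # extrapolation
        w_bar = w_new + theta * (w_new - w)
        w = w_new
    return w
```

For the identity as forward operator, the minimizer of the Tikhonov functional is the thresholded data \(\mathcal {S}_\alpha (\varvec{g}^\epsilon )\), which gives a simple sanity check. The step sizes in such a check are chosen so that \(\tau \sigma \) times the squared operator norm stays below one, which is the usual requirement for this type of iteration.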

Remark 6

Since the data fidelity F for the Tikhonov functional is differentiable, one may here apply FISTA as an alternative for the primal-dual iteration. In so doing, the whole iteration in Algorithm 2.ii becomes

where \(\breve{\varvec{w}}^{(0)} := \varvec{w}^{(0)}\) and \(t_0 := 1\).

If we incorporate the measurement errors by extending the solution space as in (\({\mathfrak {B}_{\epsilon }}\)), then the data fidelity is chosen as \(F(\varvec{y}) := \chi _{\epsilon \mathbb {B}_{\varvec{K}}}(\varvec{y} - \varvec{g}^\epsilon )\), where \(\mathbb {B}_{\varvec{K}}\) denotes the closed unit ball with respect to the norm induced by \(\varvec{K}\), and \(\chi _{\epsilon \mathbb {B}_{\varvec{K}}}\) is the indicator functional of the closed \(\epsilon \)-ball, i.e., \(\chi _{\epsilon \mathbb {B}_{\varvec{K}}}(\varvec{y}) = 0\) if \(||\varvec{y}||_{\varvec{K}} \le \epsilon \) and \(\infty \) otherwise. Since the conjugation of the unit ball yields the corresponding norm, we obtain \(F^*(\varvec{y}') = \epsilon \, ||\varvec{y}'||_{\varvec{K}} + \left\langle \varvec{y}',\varvec{g}^\epsilon \right\rangle _{\varvec{K}}\) with subdifferential

cf. [53, Ex. 8.27]. Since the proximation is not as simple as in the previous cases, we give a more detailed computation.

Lemma 4

(Proximity operator) Let the functional \(F :\mathbb {R}^M \rightarrow \overline{\mathbb {R}}\) be defined by \(F(\varvec{y}) := \chi _{\epsilon \mathbb {B}_{\varvec{K}}}(\varvec{y} - \varvec{g}^\epsilon )\). The proximation of \(F^*\) is then given by

$$\begin{aligned} {\text {prox}}_{\sigma F^*}(\varvec{y}) = \begin{cases} \Bigl (1 - \frac{\sigma \epsilon }{||\varvec{y} - \sigma \varvec{g}^\epsilon ||_{\varvec{K}}}\Bigr ) \, (\varvec{y} - \sigma \varvec{g}^\epsilon ) & \text {if } ||\varvec{y} - \sigma \varvec{g}^\epsilon ||_{\varvec{K}} > \sigma \epsilon , \\ \varvec{0} & \text {otherwise.} \end{cases} \end{aligned}$$

Proof

The vector \(\breve{\varvec{y}}\) is the resolvent \((I + \sigma \, \partial F^*)^{-1}(\varvec{y})\) if and only if

which is an immediate consequence of (10). Bringing \(\sigma \varvec{g}^\epsilon \) to the left-hand side, we are looking for a \(\breve{\varvec{y}}\) such that

For \(||\varvec{y} - \sigma \varvec{g}^\epsilon ||_{\varvec{K}} \le \sigma \epsilon \), the last condition is fulfilled for \(\breve{\varvec{y}} = \varvec{0}\). Otherwise, it follows that \(\breve{\varvec{y}} = \gamma \, (\varvec{y} - \sigma \varvec{g}^\epsilon )\) for some \(\gamma > 0\). With the notation \(\varvec{z} := \varvec{y} - \sigma \varvec{g}^\epsilon \), the first condition becomes

Since \(||\varvec{z}||_{\varvec{K}} > \sigma \epsilon \), we obtain \(\gamma = 1 - \nicefrac {(\sigma \epsilon )}{||\varvec{z}||_{\varvec{K}}}\), and consequently, the assertion. \(\square \)

Remark 7

The central part of the resolvent in Lemma 4 is given by the operator \(\mathcal {P}_\gamma :\mathbb {R}^M \rightarrow \mathbb {R}^M\) with

$$\begin{aligned} \mathcal {P}_\gamma (\varvec{y}) := \begin{cases} \Bigl (1 - \frac{\gamma }{||\varvec{y}||_{\varvec{K}}}\Bigr ) \, \varvec{y} & \text {if } ||\varvec{y}||_{\varvec{K}} > \gamma , \\ \varvec{0} & \text {otherwise.} \end{cases} \end{aligned}$$

Pictorially, this operator may be interpreted as shrinkage or contraction around the origin.
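
The shrinkage can be written in a few lines. The following Python sketch assumes NumPy and reads \(\mathcal {P}_\gamma \) off the proof of Lemma 4 and its use in Algorithm 3: vectors whose \(\varvec{K}\)-norm is at most \(\gamma \) are mapped to zero, all others are shortened by \(\gamma \) in norm. The function name `shrink` is hypothetical.

```python
import numpy as np

def shrink(y, gamma, K=None):
    """Shrinkage around the origin with respect to the norm induced by K.

    Assumption: K is symmetric positive definite; K=None means the
    Euclidean norm.
    """
    y = np.asarray(y, dtype=float)
    K = np.eye(len(y)) if K is None else K
    norm = np.sqrt(y @ K @ y)
    if norm <= gamma:
        return np.zeros_like(y)          # inside the ball: mapped to zero
    return (1.0 - gamma / norm) * y      # outside: norm reduced by gamma
```

In Algorithm 3 this operator would be applied with \(\gamma = \sigma \epsilon \) to the vector \(\varvec{y}^{(n)} + \sigma \, (\breve{\mathcal {B}}(\breve{\varvec{w}}^{(n)}) - \varvec{g}^\epsilon )\).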

After this small digression to compute the proximation of the conjugated data fidelity, the minimization problem (\({\mathfrak {B}_{\epsilon }}\)) may be solved by the following primal-dual iteration.

Algorithm 3

(Primal-dual for inexact data)

(i) Initiation: Fix the parameters \(\tau , \sigma , \epsilon > 0\) and \(\theta \in [0,1]\). Choose an arbitrary start value \((\varvec{w}^{(0)}, \varvec{y}^{(0)})\) in \((\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}) \times \mathbb {R}^M\), and set \(\breve{\varvec{w}}^{(0)}\) to \(\varvec{w}^{(0)}\).

(ii) Iteration: For \(n \ge 0\), update \(\varvec{w}^{(n)}\), \(\breve{\varvec{w}}^{(n)}\), and \(\varvec{y}^{(n)}\) by

$$\begin{aligned} \varvec{y}^{(n+1)}:= & {} \mathcal {P}_{\sigma \epsilon }\bigl ( \varvec{y}^{(n)} + \sigma \, ( \breve{\mathcal {B}}(\breve{\varvec{w}}^{(n)}) - \varvec{g}^\epsilon )\bigr ) \\ \varvec{w}^{(n+1)}:= & {} \mathcal {S}_{\tau }\bigl (\varvec{w}^{(n)} - \tau \, \breve{\mathcal {B}}^* (\varvec{y}^{(n+1)})\bigr ) \\ \breve{\varvec{w}}^{(n+1)}:= & {} \varvec{w}^{(n+1)} + \theta \, ( \varvec{w}^{(n+1)} - \varvec{w}^{(n)}). \end{aligned}$$

The weighting between data fidelity and regularization in Remark 5 analogously holds for Algorithm 3.

The central differences between the primal-dual iterations in Algorithms 1, 2, and 3 for the minimization problems (\({\mathfrak {B}_{0}}\)), (\({\mathfrak {B}_\alpha }\)), and (\({\mathfrak {B}_{\epsilon }}\)) are contained in the dual update of \(\varvec{y}^{(n+1)}\). If the parameter \(\sigma \) is chosen close to zero, the three iterations nearly coincide. Thus, all three iterations should yield similar results; so Algorithm 1 should also be able to deal with noisy measurements.

4 Tensor-Free Singular Value Thresholding

Each of the proposed methods solving bilinear inverse problems is based on a singular value thresholding on the tensor product \(\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\). If the dimension of the original space \(\mathbb {R}^{N_1} \times \mathbb {R}^{N_2}\) is already enormous, then the dimension of the tensor product literally explodes, which makes the computation of the required singular value decomposition impracticable. This difficulty occurs for nearly all bilinear image recovery problems. However, since the tensor \(\varvec{w}^{(n)}\) is generated by a singular value thresholding, the iterates \(\varvec{w}^{(n)}\) usually possess a very low rank. Hence, the involved tensors can be stored in an efficient and storage-saving manner. In order to determine this low-rank representation, we only compute a partial singular value decomposition of the argument \(\varvec{w}\) of \(\mathcal {S}_\tau \) by deriving iterative algorithms only requiring the left- and right-hand actions of \(\varvec{w}\).
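
To illustrate what storing a tensor by its factors means in practice, the following Python sketch (assuming NumPy) applies a rank-\(\ell \) tensor \(\tilde{\varvec{V}} \varvec{\Sigma } \tilde{\varvec{U}}^*\) and its transpose to a vector without ever forming the \(N_2 \times N_1\) matrix. The function names are hypothetical, and the weightings by \(\varvec{H}_1\) and \(\varvec{H}_2\) are assumed to be applied to the input vector beforehand.

```python
import numpy as np

def apply_lowrank(V, s, U, x):
    """Right-hand action (V diag(s) U^T) x using only the factors."""
    return V @ (s * (U.T @ x))

def apply_lowrank_adjoint(V, s, U, y):
    """Left-hand action (V diag(s) U^T)^T y using only the factors."""
    return U @ (s * (V.T @ y))
```

Each action costs on the order of \(\ell \, (N_1 + N_2)\) operations and the storage is of the same order, whereas the explicit tensor requires \(N_1 N_2\) entries.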

Our first algorithm is based on the orthogonal iteration with Ritz acceleration, see [28, 60]. In order to compute the leading \(\ell \) singular values, the main idea is here a joint power iteration over two \(\ell \)-dimensional subspaces \(\tilde{\mathcal {U}}_n \subset \mathbb {R}^{N_1}\) and \(\tilde{\mathcal {V}}_n \subset \mathbb {R}^{N_2}\) alternately generated by \(\tilde{\mathcal {U}}_n := \varvec{w}^* \varvec{H}_2 \tilde{\mathcal {V}}_{n-1}\) and \(\tilde{\mathcal {V}}_n := \varvec{w} \varvec{H}_1 \tilde{\mathcal {U}}_{n}\). These subspaces are represented by orthonormal bases \(\tilde{\varvec{U}}_n := [\tilde{\varvec{u}}_0^{(n)}, \dots , \tilde{\varvec{u}}_{\ell -1}^{(n)}]\) and \(\tilde{\varvec{V}}_n := [\tilde{\varvec{v}}_0^{(n)}, \dots , \tilde{\varvec{v}}_{\ell -1}^{(n)}]\) with respect to the inner products associated with \(\varvec{H}_1\) and \(\varvec{H}_2\).

Algorithm 4

(Subspace iteration)

Input: \(\varvec{w} \in \mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\), \(\ell > 0\), \(\delta > 0\).

(i) Choose \(\tilde{\varvec{V}}_0 \in \mathbb {R}^{N_2 \times \ell }\), whose columns are orthonormal with respect to \(\varvec{H}_2\).

(ii) For \(n>0\), repeat:

(a) Compute \(\tilde{\varvec{E}}_n := \varvec{w}^* \varvec{H}_2 \tilde{\varvec{V}}_{n-1}\), and reorthonormalize the columns regarding \(\varvec{H}_1\).

(b) Compute \(\tilde{\varvec{F}}_n := \varvec{w} \varvec{H}_1 \tilde{\varvec{E}}_{n}\), and reorthonormalize the columns regarding \(\varvec{H}_2\).

(c) Determine the Euclidean singular value decomposition

$$\begin{aligned} \tilde{\varvec{F}}_n^* \varvec{H}_2 \varvec{w} \varvec{H}_1 \tilde{\varvec{E}}_n = \varvec{Y}_n \varvec{\Sigma }_n \varvec{Z}_n^*, \end{aligned}$$

and set \(\tilde{\varvec{U}}_n := \tilde{\varvec{E}}_n \varvec{Z}_n\) and \(\tilde{\varvec{V}}_n := \tilde{\varvec{F}}_n \varvec{Y}_n\).

until \(\ell \) singular vectors have converged, which means

$$\begin{aligned} \left| \left| \varvec{w}^* \varvec{H}_2 \tilde{\varvec{v}}_m^{(n)} - \sigma _m^{(n)} \tilde{\varvec{u}}_m^{(n)}\right| \right| _{\varvec{H}_1} \le \delta \, ||\varvec{w}||_{\mathcal {L}(\varvec{H}_1, \varvec{H}_2)} \qquad \text {for}\qquad 0 \le m < \ell , \end{aligned}$$

where \(||\cdot ||_{\mathcal {L}(\varvec{H}_1, \varvec{H}_2)}\) denotes the operator norm with respect to norms induced by \(\varvec{H}_1\) and \(\varvec{H}_2\), which may be estimated by \(\sigma _0^{(n)}\).

Output: \(\tilde{\varvec{U}}_n \in \mathbb {R}^{N_1 \times \ell }\), \(\tilde{\varvec{V}}_n \in \mathbb {R}^{N_2 \times \ell }\), \(\varvec{\Sigma }_n \in \mathbb {R}^{\ell \times \ell }\) with \(\tilde{\varvec{V}}_n^* \varvec{H}_2 \varvec{w} \varvec{H}_1 \tilde{\varvec{U}}_n = \varvec{\Sigma }_n\).

Here, reorthonormalization means that for each applicable m, the span of the first m columns of the matrix and its reorthonormalization coincide, and that the reorthonormalized matrix has orthonormal columns. This can, for instance, be achieved by the well-known Gram–Schmidt procedure.
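
The steps of Algorithm 4 can be sketched as follows. The Python code below assumes NumPy and the Euclidean case (\(\varvec{H}_1\) and \(\varvec{H}_2\) equal to the identity), uses a QR factorization for the reorthonormalization, and accesses \(\varvec{w}\) only through two callables; the names `subspace_iteration`, `apply_w`, and `apply_wt` are hypothetical.

```python
import numpy as np

def subspace_iteration(apply_w, apply_wt, n2, ell, delta=1e-10,
                       max_iter=1000, seed=0):
    """Leading ell singular triplets by subspace iteration (Euclidean sketch).

    apply_w maps (N1 x ell) arrays to (N2 x ell) arrays (action of w),
    apply_wt maps (N2 x ell) arrays to (N1 x ell) arrays (action of w^T).
    """
    rng = np.random.default_rng(seed)
    V, _ = np.linalg.qr(rng.standard_normal((n2, ell)))    # step (i)
    for _ in range(max_iter):
        E, _ = np.linalg.qr(apply_wt(V))                   # step (a)
        WE = apply_w(E)
        F, _ = np.linalg.qr(WE)                            # step (b)
        Y, s, Zt = np.linalg.svd(F.T @ WE)                 # step (c)
        U, V = E @ Zt.T, F @ Y
        # stopping rule: residuals relative to the estimate s[0] of ||w||
        residual = np.linalg.norm(apply_wt(V) - U * s, axis=0)
        if np.all(residual <= delta * s[0]):
            break
    return U, s, V
```

For a matrix `W` given explicitly, the callables would simply be `lambda X: W @ X` and `lambda Y: W.T @ Y`; in the tensor-free setting they would be assembled from the low-rank factors and the adjoint of the lifted operator.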

Under mild conditions on the subspace associated with \(\tilde{\varvec{V}}_0\), the matrices \(\tilde{\varvec{U}}_n\), \(\tilde{\varvec{V}}_n\), and \(\varvec{\Sigma }_n := \text {diag}(\sigma _0^{(n)}, \dots , \sigma _{\ell -1}^{(n)})\) converge to leading singular vectors as well as to the leading singular values of a singular value decomposition \(\varvec{w} = \sum _{n=0}^{R-1} \sigma _n \, (\tilde{\varvec{u}}_n \otimes \tilde{\varvec{v}}_n)\).

Theorem 5

(Subspace iteration) If none of the basis vectors in \(\tilde{\varvec{V}}_0\) is orthogonal to the \(\ell \) leading singular vectors \(\tilde{\varvec{v}}_0, \dots , \tilde{\varvec{v}}_{\ell -1}\), and if \(\sigma _{\ell -1} > \sigma _\ell \), then the singular values \(\sigma _0^{(n)} \ge \cdots \ge \sigma _{\ell -1}^{(n)}\) in Algorithm 4 converge to \(\sigma _0 \ge \cdots \ge \sigma _{\ell -1}\) with a rate of

Proof

By the construction in steps (a) and (b), the columns in \(\tilde{\varvec{E}}_n\) and \(\tilde{\varvec{F}}_n\) form orthonormal systems with respect to \(\varvec{H}_1\) and \(\varvec{H}_2\). In this proof, we denote the corresponding subspaces by \(\tilde{\mathcal {E}}_n\) and \(\tilde{\mathcal {F}}_n\), which are related by \(\tilde{\mathcal {E}}_n = \varvec{w}^* \varvec{H}_2 \tilde{\mathcal {F}}_{n-1}\) and \(\tilde{\mathcal {F}}_n = \varvec{w} \varvec{H}_1 \tilde{\mathcal {E}}_n\). Due to the basis transformation in (c), the columns of \(\tilde{\varvec{U}}_n\) and \(\tilde{\varvec{V}}_n\) also form orthonormal bases of \(\tilde{\mathcal {E}}_n\) and \(\tilde{\mathcal {F}}_n\). Next, we exploit that the projection \(\varvec{P}_n := \tilde{\varvec{V}}_n \tilde{\varvec{V}}_n^* \varvec{H}_2\) onto \(\tilde{\mathcal {F}}_n\) acts as identity on \(\varvec{w} \varvec{H}_1 \tilde{\mathcal {E}}_n\) by construction. Since \(\tilde{\varvec{U}}_n\) is a basis of \(\tilde{\mathcal {E}}_n\), and since \(\tilde{\varvec{V}}_n^* \varvec{H}_2 \varvec{w} \varvec{H}_1 \tilde{\varvec{U}}_n = \varvec{\Sigma }_n\) by the singular value decomposition in step (c), we have

and \(\tilde{\varvec{U}}_n\) diagonalizes \(\varvec{H}_1 \varvec{w}^* \varvec{H}_2 \varvec{w} \varvec{H}_1\) on the subspace \(\tilde{\mathcal {E}}_n\).

Using the substitutions

as well as

we notice that the iteration in Algorithm 4 is composed of two main steps. First, in (a) and (b), we compute an orthonormal basis \(\varvec{E}_n\) of

Secondly, (11) implies that we determine a Euclidean eigenvalue decomposition on the subspace \(\mathcal {E}_n\) by

and \(\varvec{U}_n := \varvec{E}_n \varvec{Z}_n\).

This two-step iteration exactly coincides with the orthogonal iteration with Ritz acceleration for the matrix \( (\varvec{H}_1^{\nicefrac 12} \varvec{w}^* (\varvec{H}_2^{\nicefrac 12})^* ) (\varvec{H}_2^{\nicefrac 12} \varvec{w} \, (\varvec{H}_1^{\nicefrac 12} )^*)\), see [28, 60]. Under the given assumptions, this iteration converges to the \(\ell \) leading eigenvalues and eigenvectors with the asserted rates. In view of Lemma 2, the columns in \(\tilde{\varvec{U}}_n\) and \(\tilde{\varvec{V}}_n\) together with \(\varvec{\Sigma }_n\) converge to the leading components of the singular value decomposition of \(\varvec{w}\) with respect to \(\varvec{H}_1\) and \(\varvec{H}_2\). \(\square \)

Considering the subspace iteration (Algorithm 4), notice that the algorithm does not need an explicit representation of its argument \(\varvec{w}\) but only the left- and right-hand actions of \(\varvec{w}\) as matrix-vector multiplications. We may thus use the subspace iteration to compute the singular value thresholding \(\mathcal {S}_\tau (\varvec{w})\) without a tensor representation of \(\varvec{w}\).

Algorithm 5

(Tensor-free singular value thresholding)

Input: \(\varvec{w} \in \mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\), \(\tau > 0\), \(\ell > 0\), \(\delta > 0\).

(i) Apply Algorithm 4 with the following modifications:

- If \(\sigma _m^{(n)} > \tau \) for all \(0 \le m < \ell \), increase \(\ell \) and extend \(\tilde{\varvec{V}}_n\) by further orthonormal columns, unless \(\ell = {\text {rank}}\varvec{w}\), i.e., when the columns of \(\tilde{\varvec{E}}_n\) would become linearly dependent.

- Additionally, stop the subspace iterations when the first \(\ell ' + 1\) singular values with \(\ell ' < \ell \) have converged and \(\sigma _{\ell '}^{(n)} < \tau \). Otherwise, continue the iteration until all nonzero singular values converge and set \(\ell ' = \ell \).

(ii) Set \(\tilde{\varvec{U}}' := [\tilde{\varvec{u}}_0, \dots , \tilde{\varvec{u}}_{\ell '-1}]\), \(\tilde{\varvec{V}}' := [\tilde{\varvec{v}}_0, \dots , \tilde{\varvec{v}}_{\ell '-1}]\), and

$$\begin{aligned} \varvec{\Sigma }' := \text {diag}\bigl (S_\tau \bigl (\sigma _0^{(n)}\bigr ), \dots , S_\tau \bigl (\sigma _{\ell '-1}^{(n)}\bigr )\bigr ). \end{aligned}$$

Output: \(\tilde{\varvec{U}}' \in \mathbb {R}^{N_1 \times \ell '}\), \(\tilde{\varvec{V}}' \in \mathbb {R}^{N_2 \times \ell '}\), \(\varvec{\Sigma }' \in \mathbb {R}^{\ell ' \times \ell '}\) with \(\tilde{\varvec{V}}' \varvec{\Sigma }' (\tilde{\varvec{U}}')^* = \mathcal {S}_\tau (\varvec{w})\).
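
The interplay between the partial decomposition and the growing number \(\ell \) of computed singular values may be sketched as follows. The Python code assumes NumPy and the Euclidean case, and it simplifies Algorithm 5 in two respects that are not part of the original method: the subspace iteration runs for a fixed number of sweeps instead of using the stopping rule, and \(\ell \) is doubled and the iteration restarted rather than extended. All function names are hypothetical.

```python
import numpy as np

def partial_svd(apply_w, apply_wt, n2, ell, iters=200, seed=0):
    """Leading ell singular triplets by a fixed number of subspace sweeps."""
    rng = np.random.default_rng(seed)
    V, _ = np.linalg.qr(rng.standard_normal((n2, ell)))
    for _ in range(iters):
        E, _ = np.linalg.qr(apply_wt(V))
        WE = apply_w(E)
        F, _ = np.linalg.qr(WE)
        Y, s, Zt = np.linalg.svd(F.T @ WE)
        V = F @ Y
    return E @ Zt.T, s, V

def tensor_free_svt(apply_w, apply_wt, n1, n2, tau, ell=2):
    """Low-rank factors of the thresholded tensor from the actions of w only."""
    while True:
        U, s, V = partial_svd(apply_w, apply_wt, n2, ell)
        # stop once a computed singular value falls below the threshold
        if s[-1] <= tau or ell == min(n1, n2):
            break
        ell = min(2 * ell, n1, n2)       # simplification: doubling with restart
    keep = s > tau
    return U[:, keep], s[keep] - tau, V[:, keep]
```

The returned triple plays the role of \(\tilde{\varvec{U}}'\), the diagonal of \(\varvec{\Sigma }'\), and \(\tilde{\varvec{V}}'\); the thresholded tensor itself is never formed.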

Corollary 1

(Exact singular value thresholding) If the nonzero singular values of \(\varvec{w}\) are distinct, and if none of the columns in \(\tilde{\varvec{V}}_n\) is orthogonal to the singular vectors with \(\sigma _n > \tau \), then Algorithm 5 computes the low-rank representation of \(\mathcal {S}_\tau (\varvec{w})\).

Although Algorithm 5 for generic start values always yields the singular value thresholding, the convergence of the subspace iteration is rather slow. Therefore, we now derive an algorithm that is based on the Lanczos-based bidiagonalization method proposed by Golub and Kahan in [27] and the Ritz approximation in [28]. This method again only requires the left-hand and right-hand action of \(\varvec{w}\) with respect to a given vector. To simplify the following considerations, we initially present the employed Lanczos process with respect to the Euclidean singular value decomposition.

The central idea is here to construct, for fixed k, orthonormal matrices \(\varvec{F}_k = [\varvec{f}_0, \dots , \varvec{f}_{k-1}] \in \mathbb {R}^{N_2 \times k}\) and \(\varvec{E}_k = [\varvec{e}_0, \dots , \varvec{e}_{k-1}] \in \mathbb {R}^{N_1 \times k}\) such that the transformed matrix

is bidiagonal, and then to compute the singular value decomposition of \(\varvec{B}_k\) by determining orthogonal matrices \(\varvec{Y}_k\) and \(\varvec{Z}_k\) as well as a diagonal matrix \(\varvec{\Sigma }_k\) in \(\mathbb {R}^{k \times k}\) such that

Defining \(\varvec{U}_k \in \mathbb {R}^{N_1 \times k}\) and \(\varvec{V}_k \in \mathbb {R}^{N_2 \times k}\) as

we finally obtain a set of approximate right-hand and left-hand singular vectors, see [2, 27, 28].

The values \(\beta _n\) and \(\gamma _n\) of the bidiagonal matrix \(\varvec{B}_k\) and the related vectors \(\varvec{e}_n\) and \(\varvec{f}_n\) can be determined by the following iterative procedure [27]: Choose an arbitrary unit vector \(\varvec{p}_{-1} \in \mathbb {R}^{N_1}\) with respect to the Euclidean norm, and compute

For the first iteration, we set \(\gamma _{-1} := 1\) and \(\varvec{f}_{-1} := \varvec{0}\). If \(\gamma _{m+1}\) vanishes, then we stop the Lanczos process since we have found an invariant Krylov subspace such that the computed singular values become exact.

In order to compute an approximate singular value decomposition with respect to \(\varvec{H}_1\) and \(\varvec{H}_2\), we exploit Lemma 2 and perform the Lanczos bidiagonalization regarding the transformed matrix \(\varvec{H}_2^{\nicefrac 12} \varvec{w} \, (\varvec{H}_1^{\nicefrac 12})^*\). Moreover, we incorporate the back transformation in Lemma 2 with the aid of the substitutions

In this manner, the square roots \(\varvec{H}_1^{\nicefrac 12}\) and \(\varvec{H}_2^{\nicefrac 12}\) and their inverses cancel out, and we obtain the following algorithm, which only relies on the original matrices \(\varvec{H}_1\) and \(\varvec{H}_2\).

Algorithm 6

(Lanczos bidiagonalization)

Input: \(\varvec{w} \in \mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\), \(k>0\).

(i) Initiation: Set \(\gamma _{-1} := 1\) and \(\tilde{\varvec{f}}_{-1} := \varvec{0}\). Choose a unit vector \(\tilde{\varvec{p}}_{-1}\) with respect to \(\varvec{H}_1\).

(ii) Lanczos bidiagonalization: For \(m = -1, \dots , k-2\) while \(\gamma _m \ne 0\), repeat:

(a) Compute \(\tilde{\varvec{e}}_{m+1} := \gamma _m^{-1} \, \tilde{\varvec{p}}_m\), and reorthogonalize with \(\tilde{\varvec{e}}_0, \dots , \tilde{\varvec{e}}_m\) as to \(\varvec{H}_1\).

(b) Determine \(\tilde{\varvec{q}}_{m+1} := \varvec{w} \varvec{H}_1 \tilde{\varvec{e}}_{m+1} - \gamma _m \tilde{\varvec{f}}_m\), and set \(\beta _{m+1} := ||\tilde{\varvec{q}}_{m+1}||_{\varvec{H}_2}\). Compute \(\tilde{\varvec{f}}_{m+1} := \beta _{m+1}^{-1} \tilde{\varvec{q}}_{m+1}\) and reorthogonalize with \(\tilde{\varvec{f}}_0, \dots , \tilde{\varvec{f}}_m\) as to \(\varvec{H}_2\).

(c) Determine \(\tilde{\varvec{p}}_{m+1} := \varvec{w}^* \varvec{H}_2 \tilde{\varvec{f}}_{m+1} - \beta _{m+1} \tilde{\varvec{e}}_{m+1}\), and set \(\gamma _{m+1} := ||\tilde{\varvec{p}}_{m+1}||_{\varvec{H}_1}\).

(iii) Compute the Euclidean singular value decomposition of \(\varvec{B}_k\) according to (12), i.e. \(\varvec{B}_k = \varvec{Y}_k \varvec{\Sigma }_k \varvec{Z}_{k}^*\), and set \(\tilde{\varvec{U}}_k := \tilde{\varvec{E}}_k \varvec{Z}_k\) and \(\tilde{\varvec{V}}_k := \tilde{\varvec{F}}_k \varvec{Y}_k\).

Output: \(\tilde{\varvec{U}}_k \in \mathbb {R}^{N_1 \times k}\), \(\tilde{\varvec{V}}_k \in \mathbb {R}^{N_2 \times k}\), \(\varvec{\Sigma }_k \in \mathbb {R}^{k \times k}\) with \(\tilde{\varvec{V}}_{k}^* \varvec{H}_2 \varvec{w} \varvec{H}_1 \tilde{\varvec{U}}_k = \varvec{\Sigma }_k\).
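
A compact realization of the bidiagonalization with full reorthogonalization might look as follows. The Python sketch assumes NumPy and the Euclidean case (\(\varvec{H}_1\) and \(\varvec{H}_2\) equal to the identity); the renormalization after each Gram–Schmidt sweep and the breakdown tolerance are additions for numerical safety, and the function name `lanczos_bidiag` is hypothetical.

```python
import numpy as np

def lanczos_bidiag(apply_w, apply_wt, n1, k, seed=0):
    """Golub-Kahan-Lanczos bidiagonalization with reorthogonalization.

    apply_w and apply_wt give the actions of w and w^T on a single vector.
    Returns approximate right vectors U, singular values s, left vectors V.
    """
    rng = np.random.default_rng(seed)
    p = rng.standard_normal(n1)
    p /= np.linalg.norm(p)                     # unit start vector p_{-1}
    gamma, f_prev = 1.0, 0.0                   # gamma_{-1} := 1, f_{-1} := 0
    E, F, betas, gammas = [], [], [], []
    for _ in range(k):
        e = p / gamma                          # step (a)
        for e_old in E:                        # Gram-Schmidt against e_0..e_m
            e -= (e_old @ e) * e_old
        e /= np.linalg.norm(e)
        q = apply_w(e) - gamma * f_prev        # step (b)
        beta = np.linalg.norm(q)
        f = q / beta
        for f_old in F:                        # Gram-Schmidt against f_0..f_m
            f -= (f_old @ f) * f_old
        f /= np.linalg.norm(f)
        p = apply_wt(f) - beta * e             # step (c)
        gamma = np.linalg.norm(p)
        E.append(e); F.append(f)
        betas.append(beta); gammas.append(gamma)
        f_prev = f
        if gamma < 1e-14 * beta:               # invariant subspace found
            break
    E, F = np.column_stack(E), np.column_stack(F)
    # upper bidiagonal matrix: beta_j on the diagonal, gamma_j above it
    B = np.diag(betas) + np.diag(gammas[:-1], 1)
    Y, s, Zt = np.linalg.svd(B)                # step (iii)
    return E @ Zt.T, s, F @ Y
```

If \(k\) equals \(N_1\), the matrix \(\varvec{B}_k\) carries the complete spectrum, so the computed singular values agree with those of \(\varvec{w}\) up to rounding; for small \(k\) they are only approximations.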

Remark 8

The bidiagonalization by Golub and Kahan is based on a Lanczos-type process, which is numerically unstable in the computation of \(\tilde{\varvec{e}}_n\) and \(\tilde{\varvec{f}}_n\). For this reason, we have to reorthogonalize all newly generated vectors \(\tilde{\varvec{e}}_n\) and \(\tilde{\varvec{f}}_n\) with the previously generated vectors, see [27]. This amounts to projecting \(\tilde{\varvec{e}}_{m+1}\) to the orthogonal complement of the span of \(\{\tilde{\varvec{e}}_{0}, \ldots , \tilde{\varvec{e}}_{m} \}\) and the analog for \(\tilde{\varvec{f}}_{m+1}\), for instance, via the Gram–Schmidt procedure.

Remark 9

The computation of the last \(\tilde{\varvec{p}}_{k-1}\) seems to be superfluous since it is not needed for the determination of the matrix \(\varvec{B}_k\). On the other hand, this vector represents the residuals of the approximate singular value decomposition. More precisely, we have

for \(m = 0, \dots , k-1\), see [2]. Here the vectors \(\tilde{\varvec{u}}_m\), \(\tilde{\varvec{v}}_m\), and \(\varvec{y}_m\) denote the columns of the matrices \(\tilde{\varvec{U}}_k = [\tilde{\varvec{u}}_0, \dots , \tilde{\varvec{u}}_{k-1}]\), \(\tilde{\varvec{V}}_k = [\tilde{\varvec{v}}_0, \dots , \tilde{\varvec{v}}_{k-1}]\), and \(\varvec{Y}_k = [\varvec{y}_0, \dots , \varvec{y}_{k-1}]\) respectively; the singular values \(\sigma _m\) of \(\varvec{B}_k\) are given by \(\varvec{\Sigma }_k = \text {diag}(\sigma _0, \dots , \sigma _{k-1})\); the vector \(\varvec{\eta }_{k-1} \in \mathbb {R}^k\) represents the last unit vector \((0, \dots , 0, 1)^*\).

Since the bidiagonalization method by Golub and Kahan is based on the Lanczos process for symmetric matrices, one can apply the related convergence theory to show that the approximate singular values and singular vectors – for increasing k – converge to the wanted singular value decomposition of \(\varvec{w}\), see [28]. Since we are only interested in the leading singular values and singular vectors, and since we want to choose the matrix \(\varvec{B}_k\) as small as possible, this convergence theory does not apply to our setting.

In order to improve the quality of the approximate singular value decomposition computed by Algorithm 6, we here use a restarting technique proposed by Baglama and Reichel [2]. The central idea is to adapt the Lanczos bidiagonalization such that the method can be restarted by a set of \(\ell \) previously computed Ritz vectors. For this purpose, Baglama and Reichel suggest a modified bidiagonalization of the form

where the first \(\ell \) columns of the orthonormal matrices

are predefined by the Ritz vectors of the previous iteration. For the computation of the first \(\ell < k\) leading singular values and singular vectors, we employ the following algorithm [2], which has been adapted to our setting by incorporating Lemma 2 and the substitution (14).

Algorithm 7

(Augmented Lanczos Bidiagonalization)

Input: \(\varvec{w} \in \mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\), \(\ell > 0\), \(k> \ell \), \(\delta >0\).

(i) Apply Algorithm 6 to compute an approximate singular value decomposition \(\tilde{\varvec{V}}_{k,0}^* \varvec{H}_2 \varvec{w} \varvec{H}_1 \tilde{\varvec{U}}_{k,0} = \varvec{\Sigma }_{k,0}\).

(ii) For \(n > 0\), until \(\ell \) singular vectors have converged, which means

$$\begin{aligned} \gamma _{k-1}^{(n-1)} |\varvec{\eta }_{k-1}^* \varvec{y}_m^{(n-1)}| \le \delta ||\varvec{w}||_{\mathcal {L}(\varvec{H}_1, \varvec{H}_2)} \qquad \text {for}\qquad 0 \le m < \ell , \end{aligned}$$

where \(||\cdot ||_{\mathcal {L}(\varvec{H}_1, \varvec{H}_2)}\) denotes the operator norm with respect to the norms induced by \(\varvec{H}_1\) and \(\varvec{H}_2\), which may be estimated by \(\sigma _0^{(n-1)}\), repeat:

(a) Initialize the new iteration by setting \(\tilde{\varvec{e}}_m^{(n)} := \tilde{\varvec{u}}_m^{(n-1)}\) and \(\tilde{\varvec{f}}_m^{(n)} := \tilde{\varvec{v}}_m^{(n-1)}\) for \(m = 0, \dots , \ell - 1\). Further, set \(\tilde{\varvec{p}}_{\ell - 1}^{(n)} := \tilde{\varvec{p}}_{k-1}^{(n-1)}\) and \(\gamma _{\ell - 1}^{(n)} := ||\tilde{\varvec{p}}_{\ell - 1}^{(n)}||_{\varvec{H}_1}\).

(b) Compute \(\tilde{\varvec{e}}_\ell ^{(n)} := (\gamma _{\ell - 1}^{(n)})^{-1} \, \tilde{\varvec{p}}_{\ell - 1}^{(n)}\), and reorthogonalize with \(\tilde{\varvec{e}}_0^{(n)}, \dots , \tilde{\varvec{e}}_{\ell - 1}^{(n)}\) as to \(\varvec{H}_1\).

(c) Determine \(\tilde{\varvec{q}}_{\ell }^{(n)} := \varvec{w} \varvec{H}_1 \tilde{\varvec{e}}_\ell ^{(n)}\), compute the inner products \(\rho _m^{(n)} := \langle \tilde{\varvec{f}}_m^{(n)},\tilde{\varvec{q}}_\ell ^{(n)}\rangle _{\varvec{H}_2}\) for \(m = 0, \dots , \ell - 1\), and reorthogonalize \(\tilde{\varvec{q}}_\ell ^{(n)}\) as to \(\varvec{H}_2\) by

$$\begin{aligned} \tilde{\varvec{q}}_\ell ^{(n)} := \tilde{\varvec{q}}_\ell ^{(n)} - \sum _{m=0}^{\ell - 1} \rho _m^{(n)} \tilde{\varvec{f}}_m^{(n)} . \end{aligned}$$

(d) Set \(\beta _\ell ^{(n)} := ||\tilde{\varvec{q}}_\ell ^{(n)}||_{\varvec{H}_2}\) and \(\tilde{\varvec{f}}_\ell ^{(n)} := (\beta _\ell ^{(n)})^{-1} \, \tilde{\varvec{q}}_\ell ^{(n)}\).

(e) Determine \(\tilde{\varvec{p}}_\ell ^{(n)} := \varvec{w}^* \varvec{H}_2 \tilde{\varvec{f}}_\ell ^{(n)} - \beta _\ell ^{(n)} \tilde{\varvec{e}}_\ell ^{(n)}\), and set \(\gamma _\ell ^{(n)} := ||\tilde{\varvec{p}}_\ell ^{(n)}||_{\varvec{H}_1}\).

(f) Calculate the remaining values of \(\varvec{B}_{k,n}\) by applying step (ii) of Algorithm 6 with \(m = \ell , \dots , k - 2\).

(g) Compute the Euclidean singular value decomposition of \(\varvec{B}_{k,n}\) in (15), i.e. \(\varvec{B}_{k,n} = \varvec{Y}_{k,n} \varvec{\Sigma }_{k,n} \varvec{Z}_{k,n}^*\), and set \(\tilde{\varvec{U}}_{k,n} := \tilde{\varvec{E}}_{k,n} \varvec{Z}_{k,n}\) and \(\tilde{\varvec{V}}_{k,n} := \tilde{\varvec{F}}_{k,n} \varvec{Y}_{k,n}\).

(iii) Set \(\tilde{\varvec{U}} := [\tilde{\varvec{u}}_0^{(n)}, \dots , \tilde{\varvec{u}}_{\ell -1}^{(n)}]\), \(\tilde{\varvec{V}} :=[\tilde{\varvec{v}}_0^{(n)}, \dots , \tilde{\varvec{v}}_{\ell -1}^{(n)}]\), and \(\varvec{\Sigma } := \text {diag}(\sigma _0^{(n)}, \dots , \sigma _{\ell -1}^{(n)})\).

Output: \(\tilde{\varvec{U}} \in \mathbb {R}^{N_1 \times \ell }\), \(\tilde{\varvec{V}} \in \mathbb {R}^{N_2 \times \ell }\), \(\varvec{\Sigma } \in \mathbb {R}^{\ell \times \ell }\) with \(\tilde{\varvec{V}}^* \varvec{H}_2 \varvec{w} \varvec{H}_1 \tilde{\varvec{U}} = \varvec{\Sigma }\).

Remark 10

The stopping criterion in step (ii) originates from the error representation in (14). For the operator norm \(||\tilde{\varvec{w}}||_{\mathcal {L}(\varvec{H}_1, \varvec{H}_2)}\), one may use the maximal leading singular value of the former iterations, which usually gives a sufficiently good approximation, see [2].

Although the numerical effort of the restarted augmented Lanczos process is enormously reduced compared with the subspace iteration, we are unfortunately not aware of a convergence and error analysis for this specific variant of the Lanczos-type method. Nevertheless, we can employ the obtained partial singular value decomposition to determine the singular value thresholding.

Algorithm 8

(Tensor-free singular value thresholding)

Input: \(\varvec{w} \in \mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\), \(\tau > 0\), \(\ell > 0\), \(k>\ell \), \(\delta > 0\).

-

(i)

Apply Algorithm 7 with the following modifications:

-

If \(\sigma _m^{(n)} > \tau \) for all \(0 \le m < \ell \), increase \(\ell \) and k with \(\ell < k\), unless \(k = {\text {rank}}\varvec{w}\), i.e., when \(\gamma _{k}^{(n)}\) in Algorithm 6 vanishes.

-

Additionally, stop the augmented Lanczos method when the first \(\ell ' + 1\) singular values with \(\ell ' < \ell \) have converged and \(\sigma _{\ell '+1}^{(n)} < \tau \). Otherwise, continue the iteration until all nonzero singular values converge and set \(\ell ' = \ell \).

-

-

(ii)

Set \(\tilde{\varvec{U}}' := [\tilde{\varvec{u}}_0^{(n)}, \dots , \tilde{\varvec{u}}_{\ell '-1}^{(n)}]\), \(\tilde{\varvec{V}}' := [\tilde{\varvec{v}}_0^{(n)}, \dots , \tilde{\varvec{v}}_{\ell '-1}^{(n)}]\), and

$$\begin{aligned} \varvec{\Sigma }' := \text {diag}\bigl (S_\tau \bigl (\sigma _0^{(n)}\bigr ), \dots , S_\tau \bigl (\sigma _{\ell '-1}^{(n)}\bigr )\bigr ). \end{aligned}$$

Output: \(\tilde{\varvec{U}}' \in \mathbb {R}^{N_1 \times \ell '}\), \(\tilde{\varvec{V}}' \in \mathbb {R}^{N_2 \times \ell '}\), \(\varvec{\Sigma }' \in \mathbb {R}^{\ell ' \times \ell '}\) with \(\tilde{\varvec{V}}' \varvec{\Sigma }' (\tilde{\varvec{U}}')^* = \mathcal {S}_\tau (\varvec{w})\).
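The thresholding in step (ii) of Algorithm 8 only touches the singular values, so it can be carried out directly on the factors. The following is a minimal sketch, assuming the Euclidean setting with orthonormal singular vectors; the names `soft_threshold` and `svt_factored` and the test data are hypothetical.

```python
import numpy as np

def soft_threshold(s, tau):
    """Soft thresholding S_tau applied entrywise to nonnegative singular values."""
    return np.maximum(s - tau, 0.0)

def svt_factored(U, s, V, tau):
    """Singular value thresholding of w = V diag(s) U^T in factored form."""
    shrunk = soft_threshold(np.asarray(s, dtype=float), tau)
    keep = shrunk > 0.0  # triplets with sigma <= tau vanish and are dropped
    return U[:, keep], shrunk[keep], V[:, keep]

rng = np.random.default_rng(1)
N1, N2, ell, tau = 8, 6, 4, 1.0
U, _ = np.linalg.qr(rng.standard_normal((N1, ell)))
V, _ = np.linalg.qr(rng.standard_normal((N2, ell)))
s = np.array([3.0, 2.0, 0.8, 0.3])
U_t, s_t, V_t = svt_factored(U, s, V, tau)
```

Only the singular triplets above the threshold survive, which is why a partial singular value decomposition suffices as input.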

Besides the singular value thresholding, the proximal methods in Sect. 3 to solve the lifted and relaxed bilinear problems in Sect. 2 require the application of the lifted operator \(\breve{\mathcal {B}}\) as well as its adjoint \(\breve{\mathcal {B}}^*\). Both operations can be computed in a tensor-free manner. Assuming that \(\varvec{w}\) has a low rank, one may compute the lifted bilinear forward operator with the aid of the universal property in Definition 1.

Corollary 2

(Tensor-free bilinear lifting) Let \(\mathcal {B} :\mathbb {R}^{N_1} \times \mathbb {R}^{N_2} \rightarrow \mathbb {R}^{M}\) be a bilinear mapping. If \(\varvec{w} \in \mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\) has the representation \(\varvec{w} = \tilde{\varvec{V}} \varvec{\Sigma } \tilde{\varvec{U}}^*\) with \(\tilde{\varvec{U}} := [\tilde{\varvec{u}}_0, \dots , \tilde{\varvec{u}}_{\ell -1}]\), \(\varvec{\Sigma } := \text {diag}(\sigma _0, \dots , \sigma _{\ell -1})\), and \(\tilde{\varvec{V}} := [\tilde{\varvec{v}}_0, \dots , \tilde{\varvec{v}}_{\ell -1}]\), then the lifted forward operator \(\breve{\mathcal {B}}\) acts by

$$\begin{aligned} \breve{\mathcal {B}}(\varvec{w}) = \sum _{k=0}^{\ell -1} \sigma _k \, \mathcal {B}(\tilde{\varvec{u}}_k, \tilde{\varvec{v}}_k). \end{aligned}$$
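By linearity and the universal property, the lifted operator applied to a low-rank tensor reduces to a weighted sum of evaluations of \(\mathcal {B}\) on the factors. A minimal sketch, assuming a hypothetical bilinear map \(\mathcal {B}(\varvec{u}, \varvec{v})_m = \langle \varvec{a}_m, \varvec{u} \rangle \langle \varvec{b}_m, \varvec{v} \rangle \) with measurement vectors stored as rows of `A1` and `A2` (both names, and this measurement model, are illustrative assumptions):

```python
import numpy as np

def bilinear_map(A1, A2, u, v):
    """Hypothetical bilinear map B(u, v)_m = <a_m, u> <b_m, v>."""
    return (A1 @ u) * (A2 @ v)

def lifted_forward(A1, A2, U, s, V):
    """Evaluate the lifted operator on w = V diag(s) U^T without forming w."""
    # Column k of (A1 @ U) * (A2 @ V) equals B(u_k, v_k); weight the columns by s_k.
    return ((A1 @ U) * (A2 @ V)) @ s

rng = np.random.default_rng(2)
N1, N2, M, ell = 6, 5, 9, 3
A1 = rng.standard_normal((M, N1))
A2 = rng.standard_normal((M, N2))
U = rng.standard_normal((N1, ell))
V = rng.standard_normal((N2, ell))
s = np.array([2.0, 1.0, 0.5])
y = lifted_forward(A1, A2, U, s, V)
```

The cost is \(\ell \) evaluations of the bilinear map instead of one evaluation on an \(N_2 \times N_1\) array.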

Considering the proximal methods, we see that the adjoint lifting only occurs in the argument of the singular value thresholding. If one applies the subspace iteration or the augmented Lanczos process, it is hence enough to study the left-hand and right-hand actions of the adjoint liftings. These actions can be expressed by the left-hand or right-hand adjoint of the original bilinear mapping \(\mathcal {B}\).

Lemma 5

(Tensor-free adjoint bilinear lifting) Let \(\mathcal {B} :\mathbb {R}^{N_1} \times \mathbb {R}^{N_2} \rightarrow \mathbb {R}^{M}\) be a bilinear mapping. The left-hand and right-hand actions of the adjoint lifting \(\breve{\mathcal {B}}^*(\varvec{y}) \in \mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}\) with \(\varvec{y} \in \mathbb {R}^M\) are given by

for \(\varvec{e} \in \mathbb {R}^{N_1}\) and \(\varvec{f} \in \mathbb {R}^{N_2}\).

Proof

Testing the right-hand action of the image \(\breve{\mathcal {B}}^*(\varvec{y})\) on \(\varvec{e} \in \mathbb {R}^{N_1}\) with an arbitrary vector \(\varvec{f} \in \mathbb {R}^{N_2}\), we obtain

The left-hand action follows analogously. \(\square \)

Remark 11

(Composed tensor-free adjoint lifting) Since the left-hand and right-hand actions of the tensor \(\varvec{w}^{(n)} = \sum _{k=0}^{R-1} \sigma _{k}^{(n)} \, (\tilde{\varvec{u}}_{k}^{(n)} \otimes \tilde{\varvec{v}}_{k}^{(n)})\) are given by

and

the right-hand action of the singular value thresholding argument \(\varvec{w} = \varvec{w}^{(n)} - \tau \, \breve{\mathcal {B}}^*(\varvec{y}^{(n+1)})\) within the proximal methods in Sect. 3 is given by

and the left-hand action by

where \(\varvec{w}^{(n)} = \sum _{k=0}^{R-1} \sigma _{k}^{(n)} \, (\tilde{\varvec{u}}_{k}^{(n)} \otimes \tilde{\varvec{v}}_{k}^{(n)})\).
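The composed actions in Remark 11 can be sketched as follows. This is not a reproduction of (18) and (19); it assumes the Euclidean setting \(\varvec{H}_1 = \varvec{I}\), \(\varvec{H}_2 = \varvec{I}\) and the hypothetical measurement model \(\mathcal {B}(\varvec{e}, \varvec{f})_m = \langle \varvec{a}_m, \varvec{e} \rangle \langle \varvec{b}_m, \varvec{f} \rangle \) with the vectors \(\varvec{a}_m\), \(\varvec{b}_m\) stored as rows of `A1`, `A2`. All function and variable names are illustrative.

```python
import numpy as np

def right_action(e, U, s, V, A1, A2, y, tau):
    """Apply w = w_n - tau * adjoint_lifting(y) to e in R^{N1}; result in R^{N2}."""
    low_rank_part = V @ (s * (U.T @ e))   # sum_k s_k <u_k, e> v_k
    adjoint_part = A2.T @ ((A1 @ e) * y)  # adjoint of f -> B(e, f), applied to y
    return low_rank_part - tau * adjoint_part

def left_action(f, U, s, V, A1, A2, y, tau):
    """Apply the transpose of w to f in R^{N2}; result in R^{N1}."""
    low_rank_part = U @ (s * (V.T @ f))   # sum_k s_k <v_k, f> u_k
    adjoint_part = A1.T @ ((A2 @ f) * y)  # adjoint of e -> B(e, f), applied to y
    return low_rank_part - tau * adjoint_part

rng = np.random.default_rng(3)
N1, N2, M, R, tau = 6, 5, 9, 2, 0.1
A1, A2 = rng.standard_normal((M, N1)), rng.standard_normal((M, N2))
U, V = rng.standard_normal((N1, R)), rng.standard_normal((N2, R))
s, y = np.array([1.5, 0.7]), rng.standard_normal(M)
e, f = rng.standard_normal(N1), rng.standard_normal(N2)
we = right_action(e, U, s, V, A1, A2, y, tau)
wf = left_action(f, U, s, V, A1, A2, y, tau)
```

These two routines are all that a subspace iteration or a Lanczos-type process needs from the thresholding argument; the tensor itself is never assembled.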

Now we are ready to rewrite the proximal methods in Sect. 3 into tensor-free variants. Exemplarily, we consider the primal-dual method for bilinear operators and exact data, see Algorithm 1.

Algorithm 9

(Tensor-free primal-dual for exact data)

-

(i)

Initiation: Fix the parameters \(\tau , \sigma > 0\) and \(\theta \in [0,1]\). Choose the start value \((\varvec{w}^{(0)}, \varvec{y}^{(0)}) = (\varvec{0} \otimes \varvec{0}, \varvec{0})\) in \((\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2}) \times \mathbb {R}^M\), and set \(\varvec{w}^{(-1)}\) to \(\varvec{w}^{(0)}\).

-

(ii)

Iteration: For \(n \ge 0\), update \(\varvec{w}^{(n)}\) and \(\varvec{y}^{(n)}\):

-

(a)

Using the tensor-free computations in Corollary 2, determine

$$\begin{aligned} \varvec{y}^{(n+1)} := \varvec{y}^{(n)} + \sigma \, \bigl ( (1 + \theta ) \; \breve{\mathcal {B}}(\varvec{w}^{(n)}) - \theta \; \breve{\mathcal {B}}(\varvec{w}^{(n-1)}) - \varvec{g}^\dagger \bigr ). \end{aligned}$$

-

(b)

Compute a low-rank representation \(\varvec{w}^{(n+1)} = \tilde{\varvec{V}}^{(n+1)} \varvec{\Sigma }^{(n+1)} (\tilde{\varvec{U}}^{(n+1)})^*\) of the singular value thresholding

$$\begin{aligned} \mathcal {S}_\tau ( \varvec{w}^{(n)} - \tau \, \breve{\mathcal {B}}^*(\varvec{y}^{(n+1)})) \end{aligned}$$

with Algorithm 8 (or 5). The required actions are given in (18) and (19).


Remark 12

As starting value for the augmented Lanczos bidiagonalization according to Algorithm 8 required for step (ii.b) of Algorithm 9, we suggest a linear combination of the right-hand singular vectors of the previous iteration \(\varvec{w}^{(n)}\) in the hope that they are good approximations of the new singular vectors.

Using the above tensor-free computation methods, we immediately obtain a tensor-free variant of FISTA in Remark 6, since this iteration scheme is likewise based on the singular value thresholding, the lifted operator, and the action of its adjoint. Exploiting the universal property and Lemma 5, we can compute the actions of \(\breve{\varvec{w}}^{(n)} - \tau \breve{\mathcal {B}}^*(\breve{\mathcal {B}} \breve{\varvec{w}}^{(n)} - \varvec{g}^\epsilon )\) by setting \(\beta _{n+1} := \nicefrac {(t_n - 1)}{t_{n+1}}\) and

The right-hand and left-hand actions are now given by

and

where \(\varvec{w}^{(n)} = \sum _{k=0}^{R^{(n)}-1} \sigma _{k}^{(n)} \, (\tilde{\varvec{u}}_{k}^{(n)} \otimes \tilde{\varvec{v}}_{k}^{(n)})\). These lead us to the following tensor-free algorithm.

Algorithm 10

(Tensor-free FISTA for Tikhonov)

-

(i)

Initiation: Fix the parameters \(\alpha , \tau > 0\). Choose the start value \((\varvec{w}^{(0)}) = (\varvec{0} \otimes \varvec{0})\) in \((\mathbb {R}^{N_1} \otimes \mathbb {R}^{N_2})\), and set \(\varvec{w}^{(-1)}\) to \(\varvec{w}^{(0)}\) as well as \(t_0 := 1\) and \(\beta _0 := 0\).

-

(ii)

Iteration: For \(n \ge 0\), update \(\varvec{w}^{(n)}\), \(t_n\) and \(\beta _n\):

-

(a)

Determine \(\varvec{y}^{(n)}\) in (20).

-

(b)

Compute a low-rank representation \(\varvec{w}^{(n+1)} = \tilde{\varvec{V}}^{(n+1)} \varvec{\Sigma }^{(n+1)} (\tilde{\varvec{U}}^{(n+1)})^*\) of the singular value thresholding

$$\begin{aligned} \mathcal {S}_{\tau \alpha } ( (1+\beta _n) \varvec{w}^{(n)} - \beta _n \varvec{w}^{(n-1)}- \tau \, \breve{\mathcal {B}}^*(\varvec{y}^{(n)})) \end{aligned}$$

with Algorithm 8 (or 5). The required actions are given in (21) and (22).

-

(c)

Set

$$\begin{aligned} t_{n+1} := \frac{1 + \sqrt{1 + 4 t_n^2}}{2} \qquad \text {and}\qquad \beta _{n+1} := \frac{t_n - 1}{t_{n+1}}. \end{aligned}$$
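The momentum update in step (ii.c) of Algorithm 10 is a scalar recursion and can be sketched in isolation. The function name `fista_momentum` is hypothetical; the recursion and the start values \(t_0 = 1\), \(\beta _0 = 0\) are taken from the algorithm above.

```python
import math

def fista_momentum(num_iter):
    """Return [beta_0, ..., beta_num_iter] for t_0 = 1 and beta_0 = 0."""
    t, betas = 1.0, [0.0]
    for _ in range(num_iter):
        t_next = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        betas.append((t - 1.0) / t_next)  # beta_{n+1} = (t_n - 1) / t_{n+1}
        t = t_next
    return betas

betas = fista_momentum(5)
```

Since \(t_0 = 1\), the first two extrapolation weights vanish, so the first two iterations are plain proximal gradient steps; afterwards the weights increase towards one.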


Since both FISTA and the primal-dual method require two evaluations of the lifted operator and one singular value thresholding per iteration, the numerical complexity of both algorithms is comparable.

Adapting the computation of \(\varvec{y}^{(n+1)}\), one may analogously apply Algorithms 2 and 3 in a completely tensor-free manner. Because the singular value thresholding can be computed with arbitrarily high accuracy, the convergence results for the primal-dual algorithm translate to our setting. The convergence analysis [18, Thm. 1] yields the following convergence guarantee, where the norm of the bilinear operator \(\mathcal {B}\) is defined by

Theorem 6

(Convergence—exact primal-dual) Under the parameter choice rule \(\theta = 1\) and \(\tau \sigma ||\mathcal {B}||^2 < 1\), the iteration \((\varvec{w}^{(n)}, \varvec{y}^{(n)})\) in Algorithm 9 converges to a minimizer \((\varvec{w}^\dagger , \varvec{y}^\dagger )\) of the lifted and relaxed problem (\({\mathfrak {B}_{0}}\)).

Proof

For the general minimization problem (3), the related saddle-point problem is given by

cf. [18]. Hence, the bilinear relaxation with exact data (\({\mathfrak {B}_{0}}\)) corresponds to the primal-dual formulation

Due to [52, Thm. 28.3], the first components \(\tilde{\varvec{w}}\) of the saddle-points \((\tilde{\varvec{w}}, \tilde{\varvec{y}})\) of (24) are solutions of (\({\mathfrak {B}_{0}}\)). Vice versa, [52, Cor. 28.2.2] implies that the solutions \(\tilde{\varvec{w}}\) of (\({\mathfrak {B}_{0}}\)) are saddle-points of (24). In particular, the saddle-point problem (24) has at least one solution since the given data are exact.

Now, [18, Thm. 1] yields the convergence \((\varvec{w}^{(n)}, \varvec{y}^{(n)}) \rightarrow (\varvec{w}^\dagger , \varvec{y}^\dagger )\) of the primal-dual iteration in Algorithm 9, where the limit \((\varvec{w}^\dagger , \varvec{y}^\dagger )\) denotes a saddle point of (24), and \(\varvec{w}^\dagger \) thus a solution of (\({\mathfrak {B}_{0}}\)). \(\square \)

The employed subspace iteration and augmented Lanczos bidiagonalization are iterative schemes, which only calculate an approximation of the required singular value decomposition. How do these errors affect the convergence of the tensor-free primal-dual method? Using the subspace iteration, we may theoretically calculate the required singular values and vectors arbitrarily precisely, which allows us to control the approximation error

between the exact thresholding \(\varvec{w}^{(n)}\) and the approximation \(\tilde{\varvec{w}}^{(n)}\). If the errors \(E_n\) are square-root summable, the primal-dual method nevertheless converges to the desired solution.

Theorem 7

(Convergence – inexact primal-dual) Let \(\theta = 1\) and \(\tau \sigma ||\mathcal {B}||^2 < 1\). If the series \(\sum _{n = 1}^\infty E_n^{\nicefrac 12}\) converges, then the iteration \((\varvec{w}^{(n)}, \varvec{y}^{(n)})\) in Algorithm 9 converges to a point \((\varvec{w}^\dagger , \varvec{y}^\dagger )\), where \(\varvec{w}^\dagger \) is a minimizer of the lifted and relaxed problem (\({\mathfrak {B}_{0}}\)).

Proof

Without loss of generality, we assume that the approximation errors are bounded by \(E_n \le 1\). Next, we compare the objective of the proximal function in (7) at the minimizer \(\varvec{w}^{(n)} := \mathcal {S}_\tau (\varvec{w})\) and at its approximation \(\tilde{\varvec{w}}^{(n)}\), where \(\varvec{w}\) is the argument of the singular value thresholding in the nth iteration. Exploiting that the projective (Schatten-one) norm is bounded by the Hilbertian (Schatten-two) norm, we obtain

with \(S := \min \{N_1,N_2\}\) and with an appropriate constant \(C > 0\). Since \(\tilde{\varvec{w}}^{(n)}\) approximates the minimum of the proximal function with precision \(C E_n\), the calculated \(\tilde{\varvec{w}}^{(n)}\) is a so-called type-one approximation of the proximal point \(\varvec{w}^{(n)} := \mathcal {S}_\tau (\varvec{w})\) with precision \(C E_n\), see [50, p. 385]. Since the precisions \((C E_n)^{\nicefrac 12}\) are summable, [50, Thm. 2] guarantees the convergence of the inexact primal-dual method to a saddle-point \((\varvec{w}^\dagger , \varvec{y}^\dagger )\). As in the proof of Theorem 6, the first component \(\varvec{w}^\dagger \) is a solution of the lifted problem (\({\mathfrak {B}_{0}}\)). \(\square \)
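To illustrate the summability condition in Theorem 7, consider three tolerance schedules for the singular value decomposition; these schedules are illustrative assumptions and not taken from the analysis above. A geometric decay and a sufficiently fast polynomial decay satisfy the condition, whereas \(E_n = n^{-2}\) does not, since it leads to the harmonic series:

$$\begin{aligned} E_n = q^{n}, \; 0< q< 1: \quad&\sum _{n=1}^\infty E_n^{\nicefrac 12} = \frac{q^{\nicefrac 12}}{1 - q^{\nicefrac 12}}< \infty , \\ E_n = n^{-2s}, \; s > 1: \quad&\sum _{n=1}^\infty E_n^{\nicefrac 12} = \sum _{n=1}^\infty n^{-s} < \infty , \\ E_n = n^{-2}: \quad&\sum _{n=1}^\infty E_n^{\nicefrac 12} = \sum _{n=1}^\infty n^{-1} = \infty . \end{aligned}$$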

Similar convergence guarantees can be obtained for the bilinear relaxations (\({\mathfrak {B}_{\epsilon }}\)) and (\({\mathfrak {B}_\alpha }\)). Depending on the considered problem, i.e., the bilinear forward operator, and on the applied proximal algorithm, one may even obtain explicit convergence rates. For instance, under the assumptions of the recovery guarantee in Theorem 1, Algorithm 9 moreover converges to a rank-one tensor and thus to a solution of the bilinear inverse problem (\({\mathfrak {B}}\)) with high probability. Analogous convergence results hold for other recovery guarantees for noise-free and noisy measurements.

Corollary 3

(Recovery guarantee) Let \(\mathcal {B}\) be a bilinear operator randomly generated as in (2). Then, there exist positive constants \(c_0\) and \(c_1\) such that Algorithm 9 converges to a solution of (\({\mathfrak {B}}\)) with probability at least \(1 - \mathrm {e}^{-c_1 p}\) whenever \(p \ge c_0(N_1+N_2) \log (N_1 N_2)\).

5 Reducing Rank by Hilbert Space Reweighting