Abstract

A family of effective equations for wave propagation in periodic media for arbitrary timescales \(\mathcal {O}(\varepsilon ^{-\alpha })\), where \(\varepsilon \ll 1\) is the period of the tensor describing the medium, is proposed. The well-posedness of the effective equations of the family is ensured without requiring a regularization process as in previous models (Benoit and Gloria in Long-time homogenization and asymptotic ballistic transport of classical waves, 2017, arXiv:1701.08600; Allaire et al. in Crime pays; homogenized wave equations for long times, 2018, arXiv:1803.09455). The effective solutions in the family are proved to be \(\varepsilon \)-close to the original wave in a norm equivalent to the \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{{\mathrm {L}^{2}}(\varOmega )})\) norm. In addition, a numerical procedure for the computation of the effective tensors of arbitrary order is provided. In particular, we present a new relation between the correctors of arbitrary order, which substantially reduces the computational cost of the effective tensors of arbitrary order. This relation is not limited to the effective equations presented in this paper and can be used to compute the effective tensors of alternative effective models.

1 Introduction

The wave equation in heterogeneous media is widely used in many applications such as seismic inversion, medical imaging, or the manufacture of composite materials. We consider the following model problem: let \(\varOmega \subset \mathbb {R}^d\) be a hypercube and let \(u^\varepsilon :[0,T]\times \varOmega \rightarrow \mathbb {R}\) be the solution of

\[ \partial _t^2 u^\varepsilon - \nabla \cdot \big (a^\varepsilon \nabla u^\varepsilon \big ) = f \quad \text{in } (0,T]\times \varOmega , \tag{1} \]

where we require \(x\mapsto u^\varepsilon (t,x)\) to be \(\varOmega \)-periodic and the initial conditions \(u^\varepsilon (0,x)\) and \(\partial _tu^\varepsilon (0,x)\) are given. As we allow the domain \(\varOmega \) to be arbitrarily large, (1) can be used to model wave propagation in infinite media. We assume here that the tensor \(a^\varepsilon \) varies at the scale \(\varepsilon \ll 1\) while the initial conditions and the source f have wavelengths of order \(\mathcal {O}(1)\). In such multiscale situations, standard numerical methods such as the finite element (FE) method or the finite difference (FD) method are accurate only if the grid resolves the microscopic scale \(\mathcal {O}(\varepsilon )\). Hence, as \(\varepsilon \rightarrow 0\) or as the domain \(\varOmega \) grows, the computational cost of these methods becomes prohibitive and multiscale numerical methods are needed.
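The cost argument can be made concrete with a back-of-the-envelope estimate. The sketch below assumes, purely for illustration, an explicit scheme on a uniform grid with a fixed number of points per period \(\varepsilon\) and a CFL-limited time step, integrated up to the long time \(\varepsilon^{-\alpha}T\) considered later; the function name `resolved_cost` and all parameter values are hypothetical.

```python
import math

def resolved_cost(eps, alpha, d=2, L=1.0, T=1.0, points_per_period=10,
                  cfl=0.5, wave_speed=1.0):
    """Rough work estimate (unknowns x time steps) for an explicit scheme that
    resolves the microscale eps on a d-dimensional box of side L, run up to
    time eps**(-alpha) * T. All parameters are illustrative assumptions."""
    h = eps / points_per_period                  # mesh size resolving one period
    n_space = math.ceil(L / h) ** d              # number of grid unknowns
    dt = cfl * h / wave_speed                    # CFL-limited time step
    n_time = math.ceil(eps ** (-alpha) * T / dt) # number of time steps
    return n_space, n_time, n_space * n_time

for eps in (1e-1, 1e-2, 1e-3):
    n_space, n_time, work = resolved_cost(eps, alpha=2)
    print(f"eps={eps:g}: {n_space:.1e} unknowns, {n_time:.1e} steps, work ~ {work:.1e}")
```

Under these assumptions the work grows like \(\varepsilon^{-(d+1+\alpha)}\): for \(d=2\) and \(\alpha=2\), dividing \(\varepsilon\) by 10 multiplies it by roughly \(10^5\).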

Several multiscale methods for the approximation of (1) are available in the literature. They can be divided into two groups (see [3] for a review). The first group consists of methods suited to media without scale separation: [26, 27, 33, 34], and [4]. These methods rely on sophisticated finite element spaces built from the solutions of localized problems at the fine scale. The second group consists of methods suited to media with scale separation (i.e., a special structure of the medium is required). These methods are built in the framework of the heterogeneous multiscale method (HMM): the FD-HMM [11, 23] and the FE-HMM [1]. In both methods, the effective behavior of the wave is approximated by solving micro-problems in small sampling domains.

The FD-HMM and the FE-HMM rely on homogenization theory [12, 14, 17, 28, 32, 36]: they are built to approximate the homogenized equation and thus provide approximations of \(u^\varepsilon \) in an \({\mathrm {L}^{\infty }}(0,T;{{\mathrm {L}^{2}}(\varOmega )})\) sense. The homogenization of the wave equation (1) is provided in [15]. For a given sequence of tensors \(\{a^\varepsilon \}_{\varepsilon >0}\), there exists a subsequence of \(\{u^\varepsilon \}_{\varepsilon >0}\) that converges weakly\(^*\) in \({\mathrm {L}^{\infty }}(0,T;{{\mathrm {W}_{\!{\mathrm {per}}}}(\varOmega )})\) to \(u^0\) as \(\varepsilon \rightarrow 0\) (definitions of the functional spaces are provided below). The limit \(u^0\), called the homogenized solution, solves the homogenized equation

\[ \partial _t^2 u^0 - \nabla \cdot \big (a^0 \nabla u^0 \big ) = f \quad \text{in } (0,T]\times \varOmega , \tag{2} \]

with the same initial conditions as for \(u^\varepsilon \). The homogenized tensor \(a^0\) in (2) is obtained as the G-limit of a subsequence of \(\{a^\varepsilon \}_{\varepsilon >0}\) (see [19, 38]). In general, \(a^0\) depends on the choice of the subsequence and thus no formula is available for its computation. In this paper, we consider periodic media, i.e., we assume that the medium is described by

\[ a^\varepsilon (x) = a\big (\tfrac{x}{\varepsilon }\big ), \qquad y\mapsto a(y) \text{ is } Y\text{-periodic}, \tag{3} \]

where Y is a reference cell (typically \(Y=(0,1)^d\)). Under assumption (3), \(a^0\) is proved to be constant and an explicit formula is obtained (see, e.g., [12, 14, 17, 28]): it can be computed by means of the first-order correctors, which are defined as the solutions of cell problems (i.e., elliptic equations in Y based on a(y) with periodic boundary conditions). Therefore, in the periodic case the homogenized solution \(u^0\) can be accurately approximated at a cost independent of \(\varepsilon \).

However, for wave propagation over long timescales, \(u^\varepsilon \) develops dispersive effects at the macroscopic scale that are not captured by \(u^0\). Furthermore, if the initial conditions or the source have high spatial frequencies (wavelengths between \(\mathcal {O}(\varepsilon )\) and \(\mathcal {O}(1)\)), the dispersion appears at shorter times. Hence, to develop numerical homogenization methods for long-time propagation or for high-frequency regimes, new effective models are required.

The study of this dispersion phenomenon has recently attracted considerable interest. Analyses for periodic media and timescales \(\mathcal {O}(\varepsilon ^{-2})\) are provided in [5, 6, 8, 20, 21, 29, 37] and numerical approaches are studied in [2, 10]. A result for locally periodic media for timescales \(\mathcal {O}(\varepsilon ^{-2})\) was also obtained in [7]. For arbitrary timescales \(\mathcal {O}(\varepsilon ^{-\alpha })\), \(\alpha \in \mathbb {N}\), effective equations were proposed in [9] and [13]. The well-posedness of these equations is obtained using regularization techniques: in [13], the regularization relies on the tuning of an unknown parameter, which poses problems in practice; in [9], a filtering process is introduced (not yet tested in practice).

In this paper, we present two main results, first reported in [35, Chap. 5]. The first main result is the definition of a family of effective equations that approximate \(u^\varepsilon \) for arbitrary timescales \(\mathcal {O}(\varepsilon ^{-\alpha })\). The effective equations, derived by generalizing the technique introduced in [5] for timescales \(\mathcal {O}(\varepsilon ^{-2})\), have the form

where \(a^0\) is the homogenized tensor and \(a^{2r},b^{2r}\) are pairs of nonnegative, symmetric tensors of order \(2r+2\) and 2r, respectively, which satisfy constraints based on high order correctors, the solutions of cell problems. Note that the correction of the right-hand side generalizes the one introduced in the case \(\alpha =2\) in [8] and discussed in [5]. For all effective solutions \(\tilde{u}\) in the family, we prove an error estimate ensuring that \(\tilde{u}\) is \(\varepsilon \)-close to \(u^\varepsilon \) in the \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;W)\) norm (see (5)). In contrast to the effective equations proposed in [9] and [13], the well-posedness of (4) does not rely on regularization but is naturally ensured by the non-negativity of the tensors. The unregularized versions of the effective equations from [9] and [13] do not belong to the family (4) but are closely related: they have the form (4) with \(a^{2r}=g^{2r}\), \(b^{2r}=0\), and these pairs of tensors satisfy the correct constraints. The issue, however, is that \(g^{2r}\) turns out to be negative for some r (this is proved for \(g^{2}\) in [18]), which results in the ill-posedness of the unregularized versions of these effective equations.

The second main result of the paper is an explicit procedure for the computation of the high order effective tensors \(\{a^{2r},b^{2r}\}\) in (4), for which we provide a new relation between the high order correctors. In particular, while the natural formula to compute \(a^{2r},b^{2r}\) requires solving the cell problems of order 1 to \(2r+1\), this relation ensures that only the cell problems of order 1 to \(r+1\) are in fact necessary. The consequence is a significant reduction of the computational cost of the effective tensors of arbitrary order. We emphasize that this result can also be used directly to reduce the computational cost of the tensors of the effective equations from [9] and [13].
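The size of this saving can be quantified by counting cell problems. Following Remark 1 (Sect. 2.1.2), each distinct entry of a symmetric corrector \(\chi^k\) is one scalar cell problem, i.e., there are \(\binom{k+d-1}{k}\) problems at order k. The sketch below (helper names are hypothetical) compares orders 1 to \(2r+1\) with orders 1 to \(r+1\).

```python
from math import comb

def cell_problems_up_to(order, d):
    """Total number of scalar cell problems for the symmetric correctors
    chi^1, ..., chi^order in dimension d (one per distinct tensor entry)."""
    return sum(comb(k + d - 1, k) for k in range(1, order + 1))

def cost_of_effective_tensors(r, d):
    """(natural, reduced) number of cell problems needed for a^{2r}, b^{2r}:
    orders 1..2r+1 versus orders 1..r+1."""
    return cell_problems_up_to(2 * r + 1, d), cell_problems_up_to(r + 1, d)

for d in (1, 2, 3):
    for r in (1, 2, 3):
        natural, reduced = cost_of_effective_tensors(r, d)
        print(f"d={d}, r={r}: {natural:4d} -> {reduced:3d} cell problems")
```

For example, for \(d=3\) and \(r=2\) the count drops from 55 to 19 cell problems.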

The paper is organized as follows. In Sect. 2, we present our first main result: We derive the family of effective equations and state the error estimate. We then compare the obtained effective equations with the ones from [9] and [13]. In Sect. 3, we construct a numerical procedure to compute the tensors of the effective equations. We then present our second main result: a relation between the correctors that reduces the computational cost of the effective tensors. In Sect. 4, we illustrate our theoretical findings in various numerical experiments. Finally, in Sect. 5 we provide the proofs of the main results.

1.1 Definitions and Notation

Let us start by introducing some definitions and notations used in the paper. Let \({\mathrm {H}^{1}_{\mathrm {per}}}(\varOmega )\) be the closure of the space \(\mathcal {C}^\infty _{\mathrm {per}}(\varOmega )\) for the \({\mathrm {H}^{1}}\) norm. We denote the quotient spaces \({\mathcal {L}^2}(\varOmega )= {\mathrm {L}^{2}}(\varOmega )/\mathbb {R}\) and \({\mathcal {W}_{{\mathrm {per}}}}(\varOmega )= {\mathrm {H}^{1}_{\mathrm {per}}}(\varOmega )/\mathbb {R}\). The space \({\mathrm {W}_{\!{\mathrm {per}}}}(\varOmega )\) (resp. \({{\mathrm {L}^{2}_0}(\varOmega )}\)) is composed of the zero mean representatives of the equivalence classes in \({\mathcal {W}_{{\mathrm {per}}}}(\varOmega )\) (resp. \({\mathcal {L}^2}(\varOmega )\)). The dual space of \({\mathrm {W}_{\!{\mathrm {per}}}}(\varOmega )\) (resp. \({\mathcal {W}_{{\mathrm {per}}}}(\varOmega )\)) is denoted \({\mathrm {W}_{\!{\mathrm {per}}}^*}(\varOmega )\) (resp. \({\mathcal {W}_{{\mathrm {per}}}^*}(\varOmega )\)). The integral mean of \(v\in {\mathrm {L}^{1}}(\varOmega )\) is denoted \(\langle {v}\rangle _\varOmega = \frac{1}{|\varOmega |} \int _\varOmega v\) and \((\cdot ,\cdot )_\varOmega \) denotes the standard inner product in \({\mathrm {L}^{2}}(\varOmega )\). We define the following norm on \({\mathrm {W}_{\!{\mathrm {per}}}}(\varOmega )\)

Using the Poincaré–Wirtinger inequality, we verify that \(\Vert \cdot \Vert _{W}\) is equivalent to the \({\mathrm {L}^{2}}\) norm: \(\Vert w\Vert _{W} \le \Vert w\Vert _{{\mathrm {L}^{2}}(\varOmega )} \le \max \{1,C_\varOmega \} \Vert w\Vert _{W}\) where \(C_\varOmega \) is the Poincaré constant.

We denote by \({\mathrm {Ten}}^n(\mathbb {R}^d)\) the vector space of tensors of order n. Throughout the text, we omit the summation symbol in products between tensors and use the convention that repeated indices are summed. The subspace of \({\mathrm {Ten}}^n(\mathbb {R}^d)\) of symmetric tensors is denoted \({\mathrm {Sym}}^n(\mathbb {R}^d)\), i.e., \(q\in {\mathrm {Sym}}^n(\mathbb {R}^d)\) iff \(q_{i_1\cdots i_n} = q_{i_{\sigma (1)}\cdots i_{\sigma (n)}}\) for any permutation \(\sigma \in \mathbb {S}_n\). We define the symmetrization operator \(S^n:{\mathrm {Ten}}^n(\mathbb {R}^d)\rightarrow {\mathrm {Sym}}^n(\mathbb {R}^d)\) as

\[ \big (S^n(q)\big )_{i_1\cdots i_n} = \frac{1}{n!}\sum _{\sigma \in \mathbb {S}_n} q_{i_{\sigma (1)}\cdots i_{\sigma (n)}}. \tag{6} \]

The coordinate \(\big (S^n(q)\big )_{i_1\cdots i_n}\) is denoted \(S^n_{i_1\cdots i_n}\{q_{i_1\cdots i_n}\}\). We write \(=_S\) for an equality that holds up to symmetries, i.e., for \(p,q\in {\mathrm {Ten}}^n(\mathbb {R}^d)\) we have

\[ p =_S q \quad \Longleftrightarrow \quad S^n(p) = S^n(q). \tag{7} \]

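A colon denotes the inner product of two tensors in \({\mathrm {Ten}}^{n}(\mathbb {R}^d)\), \(p:q= p_{i_1\cdots i_n}q_{i_1\cdots i_n}\). We say that a tensor \(q\in {\mathrm {Ten}}^{2n}(\mathbb {R}^d)\) is major symmetric if it satisfies

\[ q_{i_1\cdots i_n j_1\cdots j_n} = q_{j_1\cdots j_n i_1\cdots i_n} \quad \text{for all } i_1,\ldots ,i_n,j_1,\ldots ,j_n\in \{1,\ldots ,d\}. \tag{8} \]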

We say that a tensor \(q\in {\mathrm {Ten}}^{2n}(\mathbb {R}^d)\) is positive semidefinite if

and it is positive definite if the equality in (9) holds only for \(\xi =0\). The tensor product of \(p\in {\mathrm {Ten}}^m(\mathbb {R}^d)\) and \(q\in {\mathrm {Ten}}^n(\mathbb {R}^d)\) is the tensor of \({\mathrm {Ten}}^{m+n}(\mathbb {R}^d)\) defined as \((p\otimes q)_{i_1\cdots i_{m+n}} = p_{i_1\cdots i_m} q_{i_{m+1}\cdots i_{m+n}}\). Note that up to symmetries the tensor product is commutative, i.e., \(p\otimes q=_S q\otimes p\). We use the shorthand notation

The derivative with respect to the i-th space variable \(x_i\) is denoted \(\partial _{i}\); derivatives with respect to any other variable are indicated explicitly. For \(q\in {\mathrm {Ten}}^n(\mathbb {R}^d)\), we denote the differential operator

\[ q\nabla ^{n}_{x} = q_{i_1\cdots i_n}\,\partial _{i_1}\cdots \partial _{i_n}. \]

1.2 Settings of the Problem

Recall assumption (3): \(a^\varepsilon (x) = a\big (\tfrac{x}{\varepsilon }\big )\), where \(y\mapsto a(y)\) is a \(d\times d\) symmetric, Y-periodic tensor. In addition, we assume that a(y) is uniformly elliptic and bounded, i.e., there exist \(\lambda ,\varLambda >0\) such that

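Without loss of generality, let the reference cell be \(Y=(0,\ell _1)\times \cdots \times (0,\ell _d)\). We assume that the hypercube \(\varOmega =(\omega ^l_{1},\omega ^r_{1})\times \cdots \times (\omega ^l_{d},\omega ^r_{d})\) satisfies

\[ \omega ^r_{i} - \omega ^l_{i} = n_i\,\varepsilon \,\ell _i \quad \text{for some } n_i\in \mathbb {N},\quad i=1,\ldots ,d. \tag{12} \]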

In particular, (12) ensures that for a Y-periodic function \(\gamma \), the map \(x\mapsto \gamma \big (\tfrac{x}{\varepsilon }\big )\) is \(\varOmega \)-periodic (\(\gamma \) is extended to \(\mathbb {R}^d\) by periodicity). Note that the integers \(n_i\) in (12) can be arbitrarily large. In particular, \(n_i\) can be of order \(\mathcal {O}(\varepsilon ^{-\alpha })\).
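A quick numerical check of this periodicity statement in one dimension. It assumes that (12) requires each side length \(\omega^r_i-\omega^l_i\) to be an integer multiple \(n_i\varepsilon\ell_i\); the coefficient `gamma` and the helper `is_omega_periodic` are hypothetical examples, and the check only samples finitely many points.

```python
import math

def gamma(y, ell=1.0):
    """Hypothetical Y-periodic coefficient on Y = (0, ell)."""
    return 2.0 + math.sin(2.0 * math.pi * y / ell)

def is_omega_periodic(eps, side, ell=1.0, samples=50, tol=1e-9):
    """Check on sample points whether x -> gamma(x / eps) repeats after `side`."""
    xs = [side * j / samples for j in range(samples)]
    return all(abs(gamma((x + side) / eps, ell) - gamma(x / eps, ell)) < tol for x in xs)

eps, ell = 0.01, 1.0
print(is_omega_periodic(eps, 37 * eps * ell))    # side = n * eps * ell with n = 37
print(is_omega_periodic(eps, 37.5 * eps * ell))  # not an integer number of cells
```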

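Given an integer \(\alpha \ge 0\), we consider the wave equation: \(u^\varepsilon :[0,\varepsilon ^{-\alpha }T]\times \varOmega \rightarrow \mathbb {R}\) such that

\[ \begin{aligned} &\partial _t^2 u^\varepsilon - \nabla \cdot \big (a^\varepsilon \nabla u^\varepsilon \big ) = f \quad \text{in } (0,\varepsilon ^{-\alpha }T]\times \varOmega ,\\ &x\mapsto u^\varepsilon (t,x) \ \varOmega \text{-periodic},\qquad u^\varepsilon (0,x) = u_{0}(x),\quad \partial _t u^\varepsilon (0,x) = u_1(x), \end{aligned} \tag{13} \]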

where \(u_{0},u_1\) are given initial conditions, f is a source, and \({T^\varepsilon }=\varepsilon ^{-\alpha }T\). The well-posedness of (13) is proved in [24, 31]: if \(u_{0}\in {{\mathrm {W}_{\!{\mathrm {per}}}}(\varOmega )}\), \(u_1\in {{\mathrm {L}^{2}_0}(\varOmega )}\), and \(f\in {\mathrm {L}^{2}}(0,{T^\varepsilon };{{\mathrm {L}^{2}_0}(\varOmega )})\), then there exists a unique weak solution \(u^\varepsilon \in {\mathrm {L}^{\infty }}(0,{T^\varepsilon };{{\mathrm {W}_{\!{\mathrm {per}}}}(\varOmega )})\) with \(\partial _tu^\varepsilon \in {\mathrm {L}^{\infty }}(0,{T^\varepsilon };{{\mathrm {L}^{2}_0}(\varOmega )})\) and \({\partial ^2_t}u^\varepsilon \in {\mathrm {L}^{2}}(0,{T^\varepsilon };{{\mathrm {W}_{\!{\mathrm {per}}}^*}(\varOmega )})\).

2 First Main Result: Family of Effective Equations and a Priori Error Estimate

In this section, we present the family of effective equations and provide the corresponding a priori error estimate. In Sect. 2.1, we derive the family in three steps: (i) We discuss the ansatz on the form of the effective equations; (ii) using asymptotic expansion, we derive the high order cell problems; (iii) we obtain the constraints on the effective tensors by investigating the well-posedness of the cell problems. In Sect. 2.2, we rigorously define the family of effective equations and state the a priori error estimate. Finally, in Sect. 2.3 we compare the obtained equations with the other effective equations available in the literature. For the sake of readability, we postpone the technical proofs to Sect. 5.

2.1 Derivation of the Family of Effective Equations

In the whole derivation, we assume that the data are as regular as necessary. The specific requirements are stated in Theorem 2. Note that we consider here timescales \(\varepsilon ^{-\alpha }T\) with \(\alpha \ge 2\). For timescales \(\varepsilon ^{-\alpha }T\) with \(\alpha <2\) it can be shown following similar techniques that the standard homogenized equation is a valid effective model (see [35, Sect. 5.1.1]).
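A heuristic for why \(\alpha=2\) is the threshold. Suppose, as an assumption for this sketch only, that a plane wave with wavenumber k has a frequency satisfying \(\omega^2 = a^0k^2 + \varepsilon^2ck^4 + \mathcal{O}(\varepsilon^4)\) for some constant c, while the homogenized equation gives \(\omega_0=\sqrt{a^0}\,k\). The phase mismatch at time \(\varepsilon^{-\alpha}T\) is then about \(\varepsilon^{2-\alpha}cTk^3/(2\sqrt{a^0})\): negligible for \(\alpha<2\) and of order one for \(\alpha=2\). The values of `a0`, `c`, `k` below are arbitrary.

```python
import math

def phase_error(eps, alpha, a0=1.0, c=0.5, k=1.0, T=1.0):
    """|omega - omega0| * eps**(-alpha) * T for the assumed dispersion relation
    omega^2 = a0 k^2 + eps^2 c k^4 versus the homogenized omega0 = sqrt(a0) k."""
    omega = math.sqrt(a0 * k**2 + eps**2 * c * k**4)
    omega0 = math.sqrt(a0) * k
    return abs(omega - omega0) * eps ** (-alpha) * T

for alpha in (1, 2):
    errors = [phase_error(eps, alpha) for eps in (1e-1, 1e-2, 1e-3)]
    print(f"alpha={alpha}:", ", ".join(f"{e:.3e}" for e in errors))
```

With these values the phase error decays like \(\varepsilon\) for \(\alpha=1\) and stays close to 0.25 for \(\alpha=2\).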

2.1.1 Ansatz on the Form of the Effective Equations

We first discuss the ansatz on the form of the effective equations, which is of major importance in the derivation. We assume that the effective equations have the form

where \(a^0\) is the homogenized tensor (26), \(a^{2r}\in {\mathrm {Ten}}^{2r+2}(\mathbb {R}^d)\), \(b^{2r}\in {\mathrm {Ten}}^{2r}(\mathbb {R}^d)\) are tensors to be defined and Q is a differential operator to be defined (the construction of Qf is discussed in Remark 9). As discussed in [5], if the set of considered equations is too small, we end up with ill-posed equations. In particular, without the operators \(-b^{2r}\nabla ^{2r}_{x}{\partial ^2_t}\) in (14), our derivation would lead to the same ill-posed effective equations obtained in [9] and [13] (the unregularized versions).

Following the classical Faedo–Galerkin method (see [24, Chap. 7]), we prove the following well-posedness result for (14). We define the bilinear forms

and the associated Banach spaces

We call a function \(\tilde{u}\in {\mathrm {L}^{\infty }}(0,{T^\varepsilon };\mathcal {V})\), with \(\partial _t\tilde{u}\in {\mathrm {L}^{\infty }}(0,{T^\varepsilon };\mathcal {H})\), a weak solution of (14) if for all test functions \(v \in \mathcal {C}^2([0,{T^\varepsilon }];\mathcal {V})\), with \(v({T^\varepsilon })=\partial _tv({T^\varepsilon })=0\), \(\tilde{u}\) satisfies

Theorem 1

Assume that the tensors \(a^{2r}\in {\mathrm {Ten}}^{2r+2}(\mathbb {R}^d)\), \(b^{2r}\in {\mathrm {Ten}}^{2r}(\mathbb {R}^d)\) are positive semidefinite (9) and satisfy the major symmetries (8). Furthermore, assume that the data satisfy the regularity

Then, there exists a unique weak solution of (14).

Let us provide a short sketch of the proof. We look for successive approximations of a weak solution in the form \(u^m(t) = \sum _{k=0}^m u^m_k(t)\varphi _k\), where \(\{\varphi _k\}_{k\in \mathbb {N}}\) is a smooth basis of \({{\mathrm {W}_{\!{\mathrm {per}}}}(\varOmega )}\). For each m, \(u^m(t)\) is obtained as the solution of a well-posed ordinary differential equation. We then prove that the sequence \(\{u^m\}_{m\ge 0}\) is bounded in \({\mathrm {L}^{\infty }}(0,{T^\varepsilon };\mathcal {V})\). In particular, note that the sign assumptions on the tensors ensure that \((v,v)_\mathcal {H}\ge \Vert v\Vert _{\mathrm {L}^{2}}^2\) for any \(v\in \mathcal {H}\) and \(A(v,v)\ge \lambda \Vert \nabla v\Vert _{\mathrm {L}^{2}}^2\) for all \(v\in \mathcal {V}\). We thus obtain the existence of a subsequence that weakly\(^*\) converges in \({\mathrm {L}^{\infty }}(0,{T^\varepsilon };\mathcal {V})\). We can then prove that the weak\(^*\) limit is the unique weak solution.
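In one dimension, with \(\alpha=2\) and \(f=0\), the Galerkin argument becomes fully explicit with a Fourier basis. The sketch assumes the model \(\partial_t^2\tilde u - a^0\partial_x^2\tilde u + \varepsilon^2(a^2\partial_x^4\tilde u - b^2\partial_x^2\partial_t^2\tilde u) = 0\) as the special case of (14); the scalars `a0`, `a2`, `b2` are placeholders. Each mode \(e^{\mathrm{i}\kappa x}\), \(\kappa\neq0\), solves \(m\ddot u_\kappa + s u_\kappa = 0\) with mass \(m=1+\varepsilon^2b^2\kappa^2\ge1\) and stiffness \(s=a^0\kappa^2+\varepsilon^2a^2\kappa^4\ge a^0\kappa^2\) when \(a^2,b^2\ge0\), which are one-mode analogues of the two bounds above. A negative fourth-order coefficient instead makes s negative for large \(\kappa\), and the mode grows exponentially.

```python
import math

def mode_mass_stiffness(kappa, eps, a0, a2, b2):
    """Mass m and stiffness s of the Fourier mode exp(i*kappa*x) for the
    assumed 1D model u_tt - a0 u_xx + eps^2 (a2 u_xxxx - b2 u_xxtt) = 0."""
    mass = 1.0 + eps**2 * b2 * kappa**2
    stiffness = a0 * kappa**2 + eps**2 * a2 * kappa**4
    return mass, stiffness

def evolve_mode(u0, v0, t, kappa, eps, a0, a2, b2):
    """Exact solution of m u'' + s u = 0 with u(0) = u0, u'(0) = v0 (kappa != 0)."""
    mass, stiffness = mode_mass_stiffness(kappa, eps, a0, a2, b2)
    w2 = stiffness / mass
    if w2 > 0.0:                               # bounded oscillation
        w = math.sqrt(w2)
        return u0 * math.cos(w * t) + v0 * math.sin(w * t) / w
    if w2 == 0.0:                              # degenerate case: linear in time
        return u0 + v0 * t
    g = math.sqrt(-w2)                         # exponential growth: ill-posed regime
    return u0 * math.cosh(g * t) + v0 * math.sinh(g * t) / g

eps, a0 = 0.1, 1.0
for kappa in (5.0, 20.0, 40.0):
    stable = evolve_mode(1.0, 0.0, 1.0, kappa, eps, a0, a2=1.0, b2=0.5)
    unstable = evolve_mode(1.0, 0.0, 1.0, kappa, eps, a0, a2=-1.0, b2=0.0)
    print(f"kappa={kappa:5.1f}: a2,b2 >= 0 -> {stable:+.3f};  a2 < 0 -> {unstable:.3e}")
```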

2.1.2 Asymptotic Expansion, Inductive Boussinesq Tricks

We make the ansatz that \(u^\varepsilon \) can be approximated by an adaptation of \(\tilde{u}\) of the form

where \(u^k\) are to be defined and the map \(y\mapsto u^k(t,x,y)\) is Y-periodic. We split the error as

and follow the argument presented in [5] based on the error estimate of Lemma 9 (see also [35, Sect. 4.2.2]): for \(\Vert u^\varepsilon - \mathcal {B}^\varepsilon \tilde{u}\Vert _{{\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;W)}\) to be of order \(\mathcal {O}(\varepsilon )\), we need the terms involving \(\tilde{u}\) in the remainder

to be of order \(\mathcal {O}(\varepsilon ^{\alpha +1})\) in the \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{{\mathrm {W}_{\!{\mathrm {per}}}^*}(\varOmega )})\) norm (it is sufficient that this holds in the stronger \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{{\mathrm {L}^{2}}(\varOmega )})\) norm). We now expand \(r^\varepsilon \): using the equation for \(u^\varepsilon \) (13) and the form of the adaptation (16), we obtain

where the operators \(\mathcal {A}_{yy},\mathcal {A}_{xy},\mathcal {A}_{xx}\) are defined as

and the remainder is

Classical two-scale asymptotic expansion [14] suggests looking for \(u^k\) of the form

where the components of the tensor \(\chi ^k\) are Y-periodic functions to be defined.

The next step is the main difficulty of the derivation: We must use the effective equation (14) to substitute all the time derivatives in the terms of order \(\mathcal {O}(\varepsilon ^0)\) to \(\mathcal {O}(\varepsilon ^{\alpha })\) in (18). Various versions of this process have been used in related works [5, 6, 8, 21, 25, 30] to obtain well-posed effective equations from ill-posed ones. To refer to this kind of manipulation, the term Boussinesq trick was coined in [8] (see [16], where such tricks are used for several equations of Boussinesq type).

As these inductive Boussinesq tricks represent a technical challenge, let us explain here the case \(\alpha =2\) and \(f=0\) and postpone the general case to Sect. 5.1.1 (Theorem 5 and Lemma 8). For \(\alpha =2\) and \(f=0\), the effective equation (14) can be written as

We now use the equation to substitute \({\partial ^2_t}\tilde{u}\) in the last term and obtain

Using (20) and the last two equalities, we find that the time derivatives in (18) can be written as

where the remainder \(\mathcal {R}^\varepsilon \tilde{u}\) has order \(\mathcal {O}(\varepsilon ^3)\) in the \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{{\mathrm {L}^{2}}(\varOmega )})\) norm (for \(\tilde{u}\) sufficiently regular). Using this expression in (18), we obtain the desired development in the case \(\alpha =2\) and \(f=0\).
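The effect of one Boussinesq substitution can be checked on Fourier symbols. As a sketch, assume the 1D model \(\partial_t^2\tilde u - a^0\partial_x^2\tilde u + \varepsilon^2(a^2\partial_x^4\tilde u - b^2\partial_x^2\partial_t^2\tilde u)=0\). Substituting \(\partial_t^2\tilde u = a^0\partial_x^2\tilde u + \mathcal{O}(\varepsilon^2)\) into the mixed term gives \(\partial_t^2\tilde u = a^0\partial_x^2\tilde u - \varepsilon^2(a^2-a^0b^2)\partial_x^4\tilde u + \mathcal{O}(\varepsilon^4)\). In Fourier variables the code compares the exact \(\omega^2(\kappa)\) with this truncation; halving \(\varepsilon\) should divide the gap by about 16. The scalar values are placeholders.

```python
def exact_symbol(kappa, eps, a0, a2, b2):
    """omega^2 for the assumed model: (a0 k^2 + eps^2 a2 k^4) / (1 + eps^2 b2 k^2)."""
    return (a0 * kappa**2 + eps**2 * a2 * kappa**4) / (1.0 + eps**2 * b2 * kappa**2)

def boussinesq_symbol(kappa, eps, a0, a2, b2):
    """omega^2 after substituting u_tt -> a0 u_xx once in the mixed term."""
    return a0 * kappa**2 + eps**2 * (a2 - a0 * b2) * kappa**4

def gap(eps, kappa=2.0, a0=1.0, a2=0.3, b2=0.2):
    """Difference between the exact and substituted symbols (should be O(eps^4))."""
    return abs(exact_symbol(kappa, eps, a0, a2, b2) - boussinesq_symbol(kappa, eps, a0, a2, b2))

for eps in (0.1, 0.05, 0.025):
    print(f"eps={eps:<6} gap={gap(eps):.3e}  gap(eps)/gap(eps/2)={gap(eps) / gap(eps / 2):.2f}")
```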

This process is generalized in Theorem 5 and Lemma 8. With these results, we are able to rewrite \(r^\varepsilon \) in (18) without time derivative in the terms of order \(\mathcal {O}(\varepsilon ^0)\) to \(\mathcal {O}(\varepsilon ^{\alpha })\): defining the tensors \(c^r\in {\mathrm {Ten}}^{2r+2}(\mathbb {R}^d)\) inductively and \(p^{k}\in {\mathrm {Ten}}^{k+2}(\mathbb {R}^d)\) as

we obtain

Provided sufficient regularity of \(\tilde{u}\), the remainder \(\mathcal {R}^\varepsilon \tilde{u}\) has order \(\mathcal {O}(\varepsilon ^{\alpha +1})\) in the \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{{\mathrm {L}^{2}}(\varOmega )})\) norm. Furthermore, provided sufficient regularity of f, the remainder \(\mathcal {S}^\varepsilon f\) has order \(\mathcal {O}(\varepsilon )\) in the \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{{\mathrm {L}^{2}}(\varOmega )})\) norm (this is sufficient for \(\tilde{u}\) and \(u^\varepsilon \) to be \(\varepsilon \) close, see Theorem 2). Using the definition of \(u^k\) in (20) and of \(\mathcal {A}_{yy},\mathcal {A}_{xy},\mathcal {A}_{xx}\), we verify that (for \(k=0\) use \(u^0 = \tilde{u}\) and \(\chi ^0=1\))

where \(e_i\) denotes the i-th vector of the canonical basis of \(\mathbb {R}^d\). Hence, successively canceling the terms of order \(\mathcal {O}(\varepsilon ^{-1})\) to \(\mathcal {O}(\varepsilon ^{\alpha })\) in (22), we obtain the cell problems: the correctors \(\{\chi ^{k}_{i_1\cdots i_{k}}\}_{k=1}^{\alpha +2}\) are the functions in \({{\mathrm {W}_{\!{\mathrm {per}}}}(Y)}\) such that

where the tensors \(p^k\) are defined in (21) and the symmetrization operator is defined in (6). Note that the cell problems for \(\chi ^1_i\) and \(\chi ^2_{ij}\) are the same as the cell problems obtained with two-scale asymptotic expansion [14].
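A one-dimensional sketch of the first cell problem. It assumes the common convention \(u^1=\chi^1\partial_x\tilde u\), under which \(\chi^1\) solves \(-(a(1+\chi^{1\prime}))'=0\) in Y with periodic boundary conditions and zero mean (the convention in (23a) may differ by a sign). In 1D the homogenized coefficient is classically the harmonic mean \(\langle a^{-1}\rangle_Y^{-1}\), which the finite-volume solution reproduces. The discretization, the function name, and the test coefficient \(a(y)=2+\sin(2\pi y)\), whose harmonic mean is \(\sqrt3\), are illustrative choices.

```python
import numpy as np

def first_order_corrector_1d(a, n=200):
    """Finite-volume sketch of the 1D first-order cell problem on Y = (0, 1):
    find a periodic, zero-mean chi with -(a(y) (1 + chi'(y)))' = 0.
    Returns chi at the nodes y_j = j/n and a0 = <a (1 + chi')>."""
    h = 1.0 / n
    a_face = a((np.arange(n) + 0.5) * h)        # a at y_{j+1/2}
    a_left = np.roll(a_face, 1)                  # a at y_{j-1/2}, periodic wrap
    idx = np.arange(n)
    K = np.zeros((n, n))                         # h^2 * discrete -(a v')'
    K[idx, idx] = a_face + a_left
    K[idx, (idx + 1) % n] -= a_face
    K[idx, (idx - 1) % n] -= a_left
    rhs = h * (a_face - a_left)                  # h^2 * discrete a'
    # K annihilates constants: select the zero-mean representative with a
    # Lagrange multiplier (bordered system).
    M = np.zeros((n + 1, n + 1))
    M[:n, :n] = K
    M[:n, n] = 1.0
    M[n, :n] = 1.0
    chi = np.linalg.solve(M, np.append(rhs, 0.0))[:n]
    flux = a_face * ((np.roll(chi, -1) - chi) / h + 1.0)   # a (1 + chi') on faces
    return chi, flux.mean()

def a(y):
    return 2.0 + np.sin(2.0 * np.pi * y)

chi, a0 = first_order_corrector_1d(a)
print(f"a0 = {a0:.10f}, harmonic mean = {np.sqrt(3.0):.10f}")
```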

Remark 1

In (23), we have chosen symmetric right-hand sides. This is possible thanks to the symmetry of \(\nabla ^{n}_{x}\tilde{u}\) in (22), as a term of the form \(q\nabla ^{n}_{x}\tilde{u}\) can be rewritten as \(S^n(q)\nabla ^{n}_{x}\tilde{u}\). This choice ensures that the correctors are symmetric tensor-valued functions. In particular, \(\chi ^k\) has only \(\binom{k+d-1}{k}\) distinct entries instead of the \(d^k\) entries of a general tensor of order k. When it comes to numerical approximation, each distinct entry of the corrector corresponds to a cell problem to solve, so this symmetrization saves computational time. In addition, this choice ensures that the odd order cell problems are well-posed unconditionally (see below).
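A small NumPy sketch of the symmetrization operator (6), of the relation \(=_S\), and of the entry count above; the helper names are hypothetical. On random data it also checks that the tensor product is commutative up to symmetries, and that the index multisets \(\{i_1,\ldots,i_k\}\), one per cell problem, number \(\binom{k+d-1}{k}\).

```python
import itertools
import math
import numpy as np

def symmetrize(q):
    """S^n(q): average of q over all n! permutations of its indices."""
    perms = list(itertools.permutations(range(q.ndim)))
    return sum(np.transpose(q, axes) for axes in perms) / len(perms)

def equal_up_to_symmetries(p, q):
    """p =_S q, i.e. S^n(p) = S^n(q)."""
    return np.allclose(symmetrize(p), symmetrize(q))

def distinct_entries(k, d):
    """Number of distinct entries of a symmetric order-k tensor on R^d."""
    return math.comb(k + d - 1, k)

rng = np.random.default_rng(0)
d = 3
p = rng.standard_normal(d)
q = rng.standard_normal((d, d))
pq = np.multiply.outer(p, q)    # (p (x) q)_{ijk} = p_i q_{jk}
qp = np.multiply.outer(q, p)    # (q (x) p)_{ijk} = q_{ij} p_k
print(np.allclose(pq, qp), equal_up_to_symmetries(pq, qp))
# one cell problem per index multiset {i_1, ..., i_k}
multisets = list(itertools.combinations_with_replacement(range(d), 2))
print(len(multisets), distinct_entries(2, d), d**2)
```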

2.1.3 Constraints on the High Order Effective Tensors

The last step in the derivation of the family of effective equations is to obtain the constraints on the effective tensors by imposing the well-posedness of the cell problems. In order to investigate the solvability of (23), let us state the following classical result (obtained with the Fredholm alternative or the Lax–Milgram theorem combined with the characterization of \({{\mathrm {W}_{\!{\mathrm {per}}}^*}(Y)}\)).

Lemma 1

For an elliptic and bounded tensor a(y), consider the following variational problem: find \(v\in {{\mathrm {W}_{\!{\mathrm {per}}}}(Y)}\) such that

where \(f^0,f^1_1,\ldots ,f^1_d\) are given functions. Then, (24) has a unique solution \(v\in {{\mathrm {W}_{\!{\mathrm {per}}}}(Y)}\) if and only if

We now proceed to the following two tasks: first, we verify that the odd order cell problems unconditionally satisfy the solvability condition (25); second, we impose the solvability condition (25) on the right-hand sides of the even order cell problems to obtain the constraints on the effective tensors.
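A discrete illustration of the Fredholm alternative behind Lemma 1, in 1D and for a right-hand side without divergence part: the periodic finite-difference matrix of \(v\mapsto-(av')'\) is singular with the constants as its kernel, so \(Kv=f\) is solvable exactly when f is orthogonal to the constants, i.e., has zero mean. The matrix, grid, coefficient, and the use of the least-squares residual as a solvability test are assumptions of this sketch.

```python
import numpy as np

def periodic_stiffness(a_face):
    """h^2-scaled FD matrix of v -> -(a v')' on a uniform periodic grid;
    a_face[j] is the value of a midway between nodes j and j+1."""
    n = a_face.size
    a_left = np.roll(a_face, 1)
    idx = np.arange(n)
    K = np.zeros((n, n))
    K[idx, idx] = a_face + a_left
    K[idx, (idx + 1) % n] -= a_face
    K[idx, (idx - 1) % n] -= a_left
    return K

def is_solvable(K, f, tol=1e-10):
    """K v = f has a solution iff the least-squares residual vanishes."""
    v, *_ = np.linalg.lstsq(K, f, rcond=1e-10)
    return np.linalg.norm(K @ v - f) <= tol * max(1.0, np.linalg.norm(f))

n = 64
y_nodes = np.arange(n) / n
K = periodic_stiffness(2.0 + np.sin(2.0 * np.pi * (y_nodes + 0.5 / n)))
f_zero_mean = np.cos(2.0 * np.pi * y_nodes)      # <f> = 0
f_nonzero_mean = f_zero_mean + 0.1                # <f> = 0.1
print(np.allclose(K @ np.ones(n), 0.0))           # constants span ker K
print(is_solvable(K, f_zero_mean), is_solvable(K, f_nonzero_mean))
```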

First note that (23a) is well-posed as its right-hand side unconditionally satisfies (25). Next, we verify that the cell problem for \(\chi ^2\) is well-posed as the homogenized tensor satisfies

which ensures the solvability condition (25) to hold. We then continue this process to derive the constraints on the higher order effective tensors imposed by the well-posedness of the higher order cell problems.

We assume that the cell problems are well-posed up to order 2r, \(r\ge 1\), and consider the cell problem for \(\chi ^{2r+1}\). Recalling that \(p^{2r-1}=0\) and that the correctors \(\chi ^{1},\ldots ,\chi ^{2r}\) have zero mean, we verify that the solvability condition (25) is equivalent to the following relation between the correctors.

Lemma 2

For any \(1\le r \le \lfloor \alpha /2\rfloor \), the correctors \(\chi ^{2r}\) and \(\chi ^{2r-1}\) satisfy the equality

Remark 2

A proof of Lemma 2 can be found in [35, Lemma 5.2.5]. A similar result is also known in the context of Bloch wave theory (see, e.g., [13, 20] and the references therein). As discussed, Lemma 2 guarantees that the odd order cell problems are well-posed unconditionally. Pursuing this reasoning, this result ensures that no operator of odd order is needed in the effective equations. It is also the reason why no additional correction is required in the effective equation when increasing the timescale from an even integer \(\alpha \) to \(\alpha +1\).

Finally, consider the cell problem for \(\chi ^{2r+2}\). Imposing the solvability condition (25) on the right-hand side, we obtain the following constraint on the tensor \(p^{2r}\):

This constraint can be rewritten in terms of the effective tensors \(a^{2r},b^{2r}\) using the definition of \(c^r\) and \(p^{2r}\) in (21):

The constraints (28) characterize the family of effective equations. Indeed, if the effective tensors \(a^{2r},b^{2r}\) satisfy (28), the cell problems for \(\chi ^1\) to \(\chi ^{\alpha +2}\) are well-posed because their right-hand sides satisfy the solvability condition (25). Therefore, the adaptation \(\mathcal {B}^\varepsilon \tilde{u}\) given by (16) and (20) is well defined and can be used to prove an error estimate for \(u^\varepsilon -\tilde{u}\). This result is presented in the next section and rigorously proved in Sect. 5.2.

2.2 A Priori Error Estimate for the Family of Effective Equations

In the previous section, we derived the constraints on the effective tensors for the effective equations to approximate \(u^\varepsilon \). We present here an error estimate that ensures that the solutions of the derived effective equations are \(\varepsilon \) close to \(u^\varepsilon \) in the \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;W)\) norm.

Let us first rigorously define the family of effective equations derived in Sect. 2.1.

Definition 1

The family \(\mathcal {E}\) of effective equations is the set of equations (14), where Qf is defined as

and for \(1\le r \le \lfloor \alpha /2\rfloor \) the tensors \(a^{2r},b^{2r}\) satisfy the following requirements:

(i) \(a^{2r}\in {\mathrm {Ten}}^{2r+2}(\mathbb {R}^d)\), \(b^{2r}\in {\mathrm {Ten}}^{2r}(\mathbb {R}^d)\);

(ii) \(a^{2r}\) and \(b^{2r}\) are positive semidefinite (see (9));

(iii) \(a^{2r}\) and \(b^{2r}\) satisfy the major symmetries (8);

(vi) \(a^{2r}\) and \(b^{2r}\) satisfy the constraints (28).

Remark 3

In the case \(\alpha =2\), the correction Qf of the right-hand side in the effective equations was introduced in [8] and discussed in [5]. We verify that the definition of Qf in (29) leads to a lower constant multiplying \(\Vert f\Vert _{{\mathrm {L}^{1}}(0,\varepsilon ^{-\alpha }T;{\mathrm {H}^{r(\alpha )}(\varOmega )})}\) in estimate (30). More details on the origin of (29) are given in Remarks 9 and 11.

For the effective solutions in family \(\mathcal {E}\), we prove in Sect. 5.2 the following error estimate.

Theorem 2

Let \(d\le 3\), \(\alpha \ge 2\) and let \(\tilde{u}\) belong to the family of effective equations \(\mathcal {E}\) (Definition 1). Furthermore, assume that \(a(y)\in {\mathrm {L}^{\infty }}(Y)\) and that the data and \(\tilde{u}\) satisfy the following regularity

where \(r(\alpha )=\alpha +2\lfloor \alpha /2\rfloor +2\). Then, the following error estimate holds

where C depends only on T, \(\lambda \), \(\varLambda \), \(\{|a^{2r}|_\infty ,|b^{2r}|_\infty \}_{r=1}^{\lfloor \alpha /2\rfloor }\), and Y, and the norm \(\Vert {\cdot }\Vert _W\) is defined in (5).

Remark 4

For \(d\ge 4\), the result of Theorem 2 holds provided that higher regularity of the effective solution and of f is assumed. Specifically, if m is an integer large enough for the embedding \(\mathrm {H}^{m}_{\mathrm {per}}(\varOmega )\hookrightarrow \mathcal {C}^0(\varOmega )\) to hold, the statement of Theorem 2 is true for \(r(\alpha )=\alpha +2\lfloor \alpha /2\rfloor +m\).
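The regularity index of Theorem 2 and Remark 4 as a small function. Taking m as the smallest integer with \(m>d/2\) (the usual criterion for the Sobolev embedding into continuous functions) is a choice made for this illustration; any larger m is also admissible. The function name is hypothetical.

```python
def regularity_index(alpha, d):
    """r(alpha) from Theorem 2 (d <= 3) and Remark 4 (d >= 4), taking m as the
    smallest integer with m > d/2 so that H^m_per embeds into C^0."""
    if alpha < 2:
        raise ValueError("Theorem 2 assumes alpha >= 2")
    base = alpha + 2 * (alpha // 2)
    if d <= 3:
        return base + 2
    m = d // 2 + 1          # smallest integer strictly larger than d/2
    return base + m

for d in (1, 3, 4, 6):
    print(d, [regularity_index(alpha, d) for alpha in (2, 3, 4)])
```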

Remark 5

From the derivation in Sect. 2.1, we may hope that \(\mathcal {B}^\varepsilon \tilde{u}\) is a better approximation of \(u^\varepsilon \) than \(\tilde{u}\). For example, in the elliptic case the error between \(u^\varepsilon \) and an adaptation can be estimated in the energy norm (see, e.g., [28]; note that under (12) there is no boundary layer). However, in the case of the wave equation, the answer is not as simple. In fact, an error estimate in the energy norm can be proved only in some specific settings, for example if \(f=0\) and the initial position \(u_{0}\) is well prepared, i.e., of the form \(\mathcal {B}^\varepsilon \bar{u}_0\) for some \(\bar{u}_0\). Note that this issue is related to the lack of convergence of the energy of the fine scale wave toward the energy of the homogenized wave (see [15]).

2.3 Comparison with Other Effective Equations in the Literature

In [13], effective equations for arbitrary timescales are derived. The setting is slightly different: the wave equation (13) is considered in the whole space \(\mathbb {R}^d\), with a tensor that can be of a more general nature: periodic, almost periodic, quasiperiodic, or random (we refer to [13] for the precise definitions). In this setting, [13] proposes effective equations of the form

The effective tensors in this equation can be verified to match the tensors \(g^{2r}\) defined in (27) (see [35, Sect. 5.2.6]). Under a weak regularity assumption on the initial condition, an error estimate between \(u^\varepsilon \) and \(\bar{u}\) is proved in the \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{\mathrm {L}^{2}}(\mathbb {R}^d))\) norm. This estimate is a strong theoretical result, as it holds in the norm expected in the context of homogenization of the wave equation. However, the use of the effective equation (31) in practice is problematic, as no procedure for the computation of the regularization parameter \(\gamma \) is available. In fact, numerical tests indicate that the range of acceptable values for \(\gamma \) is narrow: if \(\gamma \) is too small, the equation is ill-posed, and if \(\gamma \) is too large, the solution \(\bar{u}\) of (31) does not describe \(u^\varepsilon \) accurately (see [35, Sect. 5.4.3]).

In [9], another effective equation for arbitrary timescales is proposed. The setting of this result is the following: the wave equation (13) is considered in the whole space \(\mathbb {R}^d\) with a periodic tensor and an oscillating density, a nonzero source, and trivial initial conditions. In the particular case of a constant density (as considered in the present paper), the effective equation proposed in [9] reads

where \(S^\varepsilon _1\), \(S^\varepsilon _2\) are filtering differential operators that ensure that the equation is well-posed. The main result is an error estimate in the energy norm between \(u^\varepsilon \) and an adaptation of \(\hat{u}\). In particular, by increasing \(\alpha \) accordingly, the adaptation can approximate \(u^\varepsilon \) to any desired accuracy. Nevertheless, the filtering process used in (32) to obtain a well-posed equation has yet to be tested in practice.

Despite the inherent differences between (31), (32), and the effective equations (14) in the family \(\mathcal {E}\), their respective effective tensors are tied through the relation (see (28)):

Hence, if we let \(b^{2r}=0\) for all r in the effective equations in \(\mathcal {E}\), relation (33) reads \(g^{2r} = (-1)^ra^{2r}\) and we recover the unregularized versions of (31) and (32) (i.e., \(\gamma =0\) and \(S_1^\varepsilon =S_2^\varepsilon =\mathrm {Id}\)). In particular, Eqs. (31) and (32) without their regularization devices satisfy requirement (vi) in Definition 1. However, while the well-posedness of (31) and (32) relies on their respective regularization processes, the well-posedness of the effective equations in \(\mathcal {E}\) is guaranteed by requirements (ii) and (iii). To fulfill these requirements, we provide an explicit and constructive algorithm, described in Sect. 3.1.
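The reduction to \(b^{2r}=0\) can also be traced directly through the constraints (34) and (35) of Sect. 3.1. Using \(c^0=a^0\) (see the proof of Lemma 7) and the fact that the full symmetrization is invariant under permuting the factors of a tensor product, the constraints (34) and (35) can be rearranged, for \(1\le r\le \lfloor \alpha /2\rfloor \), into

$$\begin{aligned} (-1)^r g^{2r} =_S a^{2r} - \sum _{\ell =0}^{r-1} c^\ell \otimes b^{2(r-\ell )}, \end{aligned}$$

where for \(r=1\) the sum reduces to the single term \(a^0\otimes b^{2}\). Setting \(b^{2s}=0\) for all s therefore leaves \(g^{2r} =_S (-1)^r a^{2r}\), i.e., the relation above in terms of symmetric parts.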

Finally, before any of the effective equations (14), (31), or (32) can be approximated, the effective tensors \(g^{2r}\) must be computed. For this calculation, substantial savings in computational time are obtained by using the formula provided by our second main result, Theorem 3 in Sect. 3.2.

3 Second Main Result: Computation of Effective Tensors and Reduction of the Computational Cost

In this section, we provide a numerical procedure for computing the tensors of some effective equations in the family \(\mathcal {E}\). In particular, in Sect. 3.2 we present a new relation between the correctors that significantly reduces the computational cost of the effective tensors. We emphasize that this result is not limited to the family \(\mathcal {E}\) and can be used to compute the effective tensors of the alternative effective models from [9] and [13] discussed in Sect. 2.3. The final algorithm is provided in Sect. 3.4.

3.1 Construction of High Order Effective Tensors

Recall that the tensors \(\{a^{2r},b^{2r}\}_{r=1}^{\lfloor \alpha /2\rfloor }\) of an effective equation in family \(\mathcal {E}\) are characterized by the following properties (Definition 1): for \(1\le r\le \lfloor \alpha /2\rfloor \)

(i) \(a^{2r}\in {\mathrm {Ten}}^{2r+2}(\mathbb {R}^d)\), \(b^{2r}\in {\mathrm {Ten}}^{2r}(\mathbb {R}^d)\);

(ii) \(a^{2r}\) and \(b^{2r}\) are positive semidefinite, i.e. (see (9)),

$$\begin{aligned} a^{2r}\xi :\xi \ge 0\quad \forall \xi \in {\mathrm {Sym}}^{r+1}(\mathbb {R}^d), \qquad b^{2r}\xi :\xi \ge 0\quad \forall \xi \in {\mathrm {Sym}}^{r}(\mathbb {R}^d); \end{aligned}$$

(iii) \(a^{2r}\) and \(b^{2r}\) satisfy the major symmetries, i.e.,

$$\begin{aligned} a^{2r}_{i_1\cdots i_{r+1}i_{r+2}\cdots i_{2r+2}} = a^{2r}_{i_{r+2}\cdots i_{2r+2}i_1\cdots i_{r+1}}, \qquad b^{2r}_{i_1\cdots i_{r}i_{r+1}\cdots i_{2r}} = b^{2r}_{i_{r+1}\cdots i_{2r}i_1\cdots i_{r}}; \end{aligned}$$

(vi) \(a^{2r}\) and \(b^{2r}\) satisfy the constraints

$$\begin{aligned} a^{2r}-b^{2r}\otimes a^0 =_S \check{q}^r, \end{aligned}\tag{34}$$

with the tensors \(\check{q}^r\in {\mathrm {Sym}}^{2r+2}(\mathbb {R}^d)\) defined as

$$\begin{aligned} \check{q}^1 = S^4\big ( -g^2 \big ) , \qquad \check{q}^r = S^{2r+2}\Big ( (-1)^r g^{2r} + \sum _{\ell =1}^{r-1} c^\ell \otimes b^{2(r-\ell )} \Big ) \quad 2\le r\le \lfloor \alpha /2\rfloor , \end{aligned}\tag{35}$$

where the tensors \(c^1\in {\mathrm {Ten}}^{4}(\mathbb {R}^d),\ldots , c^{r-1}\in {\mathrm {Ten}}^{2r}(\mathbb {R}^d)\), defined in (21), are computed from \(a^0\) and \(\{a^{2s},b^{2s}\}_{s=1}^{r-1}\), and the tensor \(g^{2r}\in {\mathrm {Ten}}^{2r+2}(\mathbb {R}^d)\), defined in (27), can be computed from the correctors \(\chi ^{2r}\) and \(\chi ^{2r+1}\) (a much cheaper alternative is to compute only \(S^{2r+2}(g^{2r})\) with formula (41); see Sect. 3.2).
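The symmetrization operators \(S^{n}\) in (35) are assumed here to be the usual average of a tensor over all permutations of its n indices. The following NumPy sketch (the name `sym` is ours, not from the paper) implements this definition by brute force; it scales like n! and is therefore practical only for small orders. It also checks that \(S^4(b\otimes a^0)=S^4(a^0\otimes b)\), which is why the order of the factors in (34) and (35) does not matter under \(=_S\).

```python
import itertools
import math

import numpy as np


def sym(t: np.ndarray) -> np.ndarray:
    """Full symmetrization S^n: average of t over all n! permutations of its axes."""
    acc = np.zeros(t.shape, dtype=float)
    for perm in itertools.permutations(range(t.ndim)):
        acc += np.transpose(t, perm)
    return acc / math.factorial(t.ndim)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a0 = np.array([[2.0, 0.5], [0.5, 1.0]])   # illustrative symmetric a^0
    b2 = rng.standard_normal((2, 2))           # arbitrary order-2 tensor
    lhs = sym(np.multiply.outer(b2, a0))       # S^4(b2 (x) a0)
    rhs = sym(np.multiply.outer(a0, b2))       # S^4(a0 (x) b2)
    print(np.allclose(lhs, rhs), np.allclose(sym(lhs), lhs))  # True True
```

The brute-force loop keeps the code close to the definition; for higher orders one would instead work directly with the distinct entries of symmetric tensors.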

Our goal is now to construct tensors \(\{a^{2r},b^{2r}\}_{r=1}^{\lfloor \alpha /2\rfloor }\) satisfying requirements (i) to (vi). Note that if \(\check{q}^r\) were positive semidefinite, the pair \(a^{2r}=\check{q}^r\) and \(b^{2r}=0\) would trivially satisfy (i) to (vi). However, \(\check{q}^1\) is known to be negative definite (see [5, 8, 18]), and the sign of \(\check{q}^r\) is unknown for \(r\ge 2\). Hence, the main challenge of the construction lies in the sign of the tensors, and to build valid tensors we need two basic tools from tensor algebra. First, we use the following result, proved in Sect. 5.3.

Lemma 3

For any \(n\ge 1\), the tensor \(S^{2n}(\otimes ^{n} a^0)\) is positive definite.

Second, we use a “matricization” operator, which linearly maps a symmetric tensor \(q\in {\mathrm {Sym}}^{2n}(\mathbb {R}^d)\) to a symmetric matrix M(q) with the same sign as q, i.e.,

where \(\nu \) is a simple tensor-to-vector transformation. One construction for such an operator is provided in Sect. 3.3.

With Lemma 3 and the operator M, we are able to build tensors that satisfy (i) to (vi).

Lemma 4

For \(r\ge 1\), assume that \(a^0\) and \(\{a^{2s},b^{2s}\}_{s=1}^{r-1}\) have already been computed and let \(\check{q}^r\) be the tensor defined in (35). Define the tensor \(R\in {\mathrm {Ten}}^{2r}(\mathbb {R}^d)\) as

where \(\lambda _{\min }(\cdot )\) denotes the minimal eigenvalue of a symmetric matrix and \(\{\cdot \}_+ = \max \{0,\cdot \}\). Then, the tensors

satisfy the requirements (i) to (vi) of Definition 1.

Proof

First, note that the orders of the tensors in (i) are correct. Second, as \(a^{2r},b^{2r}\) are fully symmetric tensors, they trivially satisfy the major symmetries and (iii) is verified. Next, we verify (vi), i.e., \(a^{2r},b^{2r}\) satisfy (34) (recall the meaning of \(=_S\) in (7)):

We are left with (ii): verifying that \(a^{2r},b^{2r}\) are positive semidefinite. The positive semidefiniteness of \(b^{2r}=R\) follows directly from the non-negativity of \(\delta ^*\) and Lemma 3. Finally, let us verify that \(a^{2r}\) is positive semidefinite. Using (36) and the definitions in (37), we have

where \(v_\xi =\nu (\xi )\) and \(|\cdot |\) denotes the Euclidean norm. The definition of \(\delta ^*\) in (37) ensures that the right-hand side is nonnegative, which proves that \(a^{2r}\) is positive semidefinite. This concludes the verification of (i) to (vi), and the proof of the lemma is complete. \(\square \)

Lemma 4 allows us to compute the tensors of one effective equation in the family \(\mathcal {E}\). We emphasize that this is one possible construction among others, and other effective equations can be obtained in several ways. For example, replacing \(\delta ^*\) in (37) with any \(\delta \ge \delta ^*\) provides other valid pairs of tensors. Alternatively, we can replace \(S^{2r}(\otimes ^{r} a^0)\) in the definition of R with another positive definite tensor. Finally, let us note the following alternative (used in [5] in the case \(\alpha =2\)).

Remark 6

Assume that the tensor \(\check{q}^r\) in (35) can be decomposed as \(\check{q}^r =_S \check{q}^{r,1} - \check{q}^{r,2}\!\otimes \!a^0\), where \(\check{q}^{r,2}\in {\mathrm {Ten}}^{2r}(\mathbb {R}^d)\) is positive semidefinite. Then we verify that the pairs (38), where R is defined as

define effective equations in the family.

3.2 A New Remarkable Relation Between the Correctors to Reduce the Cost of Computation of the Effective Tensors

Let us discuss the cost of the procedure described in the previous section. For an integer \(\alpha \), assume that we want to construct the tensors of an effective equation for a timescale \(\mathcal {O}(\varepsilon ^{-\alpha })\): \(a^0, \{a^{2r},b^{2r}\}_{r=1}^{s}\), where \(s = \lfloor \alpha /2\rfloor \). The main computational cost of this construction lies in the calculation of the tensors \(\{\check{q}^r\}_{r=1}^{s}\) in (35), which involves the tensors \(\{S^{2r+2}(g^{2r})\}_{r=1}^{s}\), where

Following this natural but naive formula, we would thus need approximations of the correctors \(\chi ^{1}\) to \(\chi ^{2s+1}\). In this section, we present a new relation between the correctors showing that the correctors \(\chi ^{1}\) to \(\chi ^{s+1}\) are in fact sufficient (Theorem 3).

Let us quantify the computational gain achieved thanks to this result. As each \(\chi ^r\) is a symmetric tensor-valued function, it has \(\left( {\begin{array}{c}r+d-1\\ r\end{array}}\right) \) distinct components (see Remark 1). The number of cell problems to solve to obtain all the distinct components of \(\chi ^{1}\) to \(\chi ^{k}\) is thus

$$\begin{aligned} \sum _{r=1}^{k} \left( {\begin{array}{c}r+d-1\\ r\end{array}}\right) . \end{aligned}$$

Hence, to compute \(\{\check{q}^r\}_{r=1}^{s}\), Theorem 3 allows us to avoid the approximation of \(\chi ^{s+2},\ldots ,\chi ^{2s+1}\), i.e., we spare the approximation of

$$\begin{aligned} \sum _{r=s+2}^{2s+1} \left( {\begin{array}{c}r+d-1\\ r\end{array}}\right) \end{aligned}$$

cell problems. To fully appreciate this gain, assume that we want to compute the effective tensors of an effective equation for a timescale \(\mathcal {O}(\varepsilon ^{-6})\), i.e., \(s=\lfloor \alpha /2\rfloor =3\): for \(d=2\), we spare 21 cell problems (14 cell problems to solve instead of 35), and for \(d=3\), we spare 85 cell problems (34 instead of 119).
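These counts follow from summing the number of distinct components of symmetric tensors; by the hockey-stick identity, \(\sum _{r=1}^{k}\binom{r+d-1}{r}=\binom{k+d}{k}-1\). The short Python sketch below (the function names are ours) reproduces the numbers quoted for \(s=3\).

```python
from math import comb


def n_components(d: int, r: int) -> int:
    """Number of distinct components of a symmetric order-r tensor in R^d."""
    return comb(r + d - 1, r)


def n_cell_problems(d: int, k: int) -> int:
    """Cell problems needed for all distinct components of chi^1, ..., chi^k."""
    return sum(n_components(d, r) for r in range(1, k + 1))


def savings(d: int, s: int) -> tuple[int, int, int]:
    """(count using Theorem 3, naive count, cell problems spared) for s = floor(alpha/2)."""
    with_thm3 = n_cell_problems(d, s + 1)  # correctors chi^1 ... chi^{s+1}
    naive = n_cell_problems(d, 2 * s + 1)  # correctors chi^1 ... chi^{2s+1}
    return with_thm3, naive, naive - with_thm3


if __name__ == "__main__":
    for d in (2, 3):
        print(d, savings(d, 3))
    # 2 (14, 35, 21)
    # 3 (34, 119, 85)
```

The gap between the two counts grows quickly with \(\alpha \) and d, since the naive count involves correctors of roughly twice the order.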

Remark 7

It is important to note that this gain is not specific to the effective equations presented here. Indeed, formula (41) can directly be used to compute the (sufficient) symmetric part of the high order tensors in the effective models from [9] and [13] discussed in Sect. 2.3.

The new relation between the correctors is presented in the following result, proved in Sect. 5.4. Note that this result was obtained independently in [22, Remark 2.5] in the context of stochastic homogenization.

Theorem 3

Let \(\{\chi ^{k}_{i_1\cdots i_{k}}\}_{k=1}^{2r+1}\) be the zero mean correctors defined in (23). Then, for \(1\le r\le \lfloor \alpha /2\rfloor \), the tensor \(g^{2r}\) defined by (40) satisfies the decomposition

where the tensors \(k^r, h^r \in {\mathrm {Ten}}^{2r+2}(\mathbb {R}^d)\) are defined as

where the second double sum in the definition of \(h^r\) vanishes for \(r=1\).

Observe that the tensor \(h^r\) only depends on the correctors \(\chi ^1\) to \(\chi ^{r}\) so that decomposition (41) indeed guarantees that \(S^{2r+2}(g^{2r})\) can be computed from \(\chi ^1\) to \(\chi ^{r+1}\).

Remark 8

It can be verified that the homogenized tensor (26) satisfies \(a^0 = g^0 = k^0\). Hence, decomposition (41) also holds in the case \(r=0\) with \(h^0=0\) (recall that \(\chi ^0=1\)).

3.3 Matrix Associated to a Symmetric Tensor of Even Order

In this section, we construct the “matricization” operator used in the construction of Sect. 3.1. This operator maps a given symmetric tensor \(q\) of even order to a matrix with the same sign as \(q\).

We consider the bilinear map

Denote by \(I(d,n)\) the set of multiindices of the distinct entries of a tensor in \({\mathrm {Sym}}^{n}(\mathbb {R}^d)\), i.e.,

We verify that the cardinality of \(I(d,n)\) is \( N(d,n) = |I(d,n)| = \left( {\begin{array}{c}d+n-1\\ n\end{array}}\right) . \) We denote \(J(d,n) = \{ 1,\ldots , N(d,n)\}\) and let \(\ell :J(d,n)\rightarrow I(d,n)\) be a bijection. We then define the bijective mapping

For \(i\in I(d,n)\), let z(i) be the number of multiindices in \(\{1,\ldots ,d\}^n\) that are equal to i up to symmetries, i.e.,

With these notations, we rewrite the map defined in (43) as

Finally, we define the matrix associated to a tensor as

We verify that for any \(\xi ,\eta \in {\mathrm {Sym}}^{n}(\mathbb {R}^d)\), \(q\xi :\eta = M(q) \nu (\xi ) \cdot \nu (\eta )\). Hence, \(q\) is positive definite (resp. semidefinite) if and only if \(M(q)\) is positive definite (resp. semidefinite). In particular, property (36) holds.
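The identity \(q\xi :\eta = M(q) \nu (\xi ) \cdot \nu (\eta )\) can be checked numerically. The NumPy sketch below uses one possible choice, which we assume here and which may differ from the exact weighting in (45): \(I(d,n)\) is enumerated as nondecreasing multiindices, \(z(i)\) is the multinomial coefficient counting the permutations of i, \(\nu (\xi )\) collects the distinct entries \(\xi _i\) scaled by \(\sqrt{z(i)}\), and \(M(q)_{ij}=\sqrt{z(i)z(j)}\,q_{ij}\). All function names are hypothetical.

```python
import itertools
import math

import numpy as np


def sym(t: np.ndarray) -> np.ndarray:
    """Full symmetrization: average of t over all permutations of its axes."""
    perms = list(itertools.permutations(range(t.ndim)))
    return sum(np.transpose(t, p) for p in perms) / len(perms)


def index_set(d: int, n: int) -> list:
    """I(d, n): nondecreasing multiindices, one per distinct entry of Sym^n(R^d)."""
    return list(itertools.combinations_with_replacement(range(d), n))


def multiplicity(i: tuple) -> int:
    """z(i): number of multiindices in {0, ..., d-1}^n equal to i up to permutation."""
    counts = [i.count(v) for v in set(i)]
    return math.factorial(len(i)) // math.prod(math.factorial(c) for c in counts)


def nu(xi: np.ndarray) -> np.ndarray:
    """Vector of the distinct entries of a symmetric tensor, weighted by sqrt(z(i))."""
    return np.array([math.sqrt(multiplicity(i)) * xi[i] for i in index_set(xi.shape[0], xi.ndim)])


def matricize(q: np.ndarray) -> np.ndarray:
    """M(q) for a symmetric tensor q of order 2n, so that q xi : eta = M(q) nu(xi) . nu(eta)."""
    d, n = q.shape[0], q.ndim // 2
    idx = index_set(d, n)
    w = np.sqrt([multiplicity(i) for i in idx])
    return w[:, None] * np.array([[q[i + j] for j in idx] for i in idx]) * w[None, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, n = 3, 2
    q = sym(rng.standard_normal((d,) * (2 * n)))
    xi, eta = (sym(rng.standard_normal((d,) * n)) for _ in range(2))
    lhs = np.tensordot(np.tensordot(q, xi, axes=n), eta, axes=n)  # full contraction q xi : eta
    rhs = matricize(q) @ nu(xi) @ nu(eta)
    print(len(index_set(d, n)), float(lhs), float(rhs))  # 6 distinct entries; lhs equals rhs
```

With the square-root weights, M(q) is symmetric and the quadratic forms of q on \({\mathrm {Sym}}^{n}(\mathbb {R}^d)\) and of M(q) on \(\mathbb {R}^{N(d,n)}\) coincide, so their definiteness can be read off from the eigenvalues of M(q).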

3.4 Algorithm for the Computation of the High Order Effective Tensors

We present here the algorithm for computing the effective tensors of one equation in the family \(\mathcal {E}\). The procedure relies on the construction explained in Lemma 4, the formula provided by Theorem 3, and the “matricization” operator M defined in (45). The set of indices I(d, n) is defined in (44).
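The NumPy sketch below illustrates only the final step of such an algorithm: the construction of Lemma 4 applied to a given tensor \(\check{q}^r\) (the computation of \(\check{q}^r\) from the correctors is not reproduced). Because the displayed definitions (37) and (38) are not shown in this section, the sketch assumes the form suggested by the proof of Lemma 4: \(\delta ^*=\{-\lambda _{\min }(M(\check{q}^r))/\lambda _{\min }(M(S^{2r+2}(\otimes ^{r+1}a^0)))\}_+\), \(b^{2r}=R=\delta ^*S^{2r}(\otimes ^{r}a^0)\), and \(a^{2r}=\check{q}^r+S^{2r+2}(R\otimes a^0)\). The weighting in `matricize` is likewise an assumption, and all function names are hypothetical.

```python
import itertools
import math

import numpy as np


def sym(t):
    """Full symmetrization S^n: average of t over all permutations of its axes."""
    perms = list(itertools.permutations(range(t.ndim)))
    return sum(np.transpose(t, p) for p in perms) / len(perms)


def matricize(q):
    """Matrix of a symmetric order-2n tensor on its distinct entries, weighted by sqrt(z(i))."""
    d, n = q.shape[0], q.ndim // 2
    idx = list(itertools.combinations_with_replacement(range(d), n))
    z = [math.factorial(n) // math.prod(math.factorial(i.count(v)) for v in set(i)) for i in idx]
    w = np.sqrt(z)
    return w[:, None] * np.array([[q[i + j] for j in idx] for i in idx]) * w[None, :]


def tensor_power(a0, r):
    """r-fold tensor product of a0 with itself (order 2r); r = 0 gives the scalar 1."""
    out = np.array(1.0)
    for _ in range(r):
        out = np.multiply.outer(out, a0)
    return out


def lemma4_pair(q_check, a0):
    """Hypothetical sketch: returns (a_2r, b_2r, delta) built from the symmetric tensor q_check."""
    r = q_check.ndim // 2 - 1                    # q_check has order 2r + 2
    lam_q = np.linalg.eigvalsh(matricize(q_check)).min()
    p = sym(tensor_power(a0, r + 1))             # positive definite by Lemma 3
    lam_p = np.linalg.eigvalsh(matricize(p)).min()
    delta = max(0.0, -lam_q / lam_p)             # assumed form of delta* in (37)
    b = delta * sym(tensor_power(a0, r))         # assumed R = b^{2r}
    a = q_check + sym(np.multiply.outer(b, a0))  # assumed a^{2r}
    return a, b, delta


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 2
    g = rng.standard_normal((d, d))
    a0 = g @ g.T + np.eye(d)                      # SPD stand-in for the homogenized tensor a^0
    q_check = sym(rng.standard_normal((d,) * 4))  # stand-in for check-q^1, indefinite in general
    a2, b2, delta = lemma4_pair(q_check, a0)
    print(delta, np.linalg.eigvalsh(matricize(a2)).min(), np.linalg.eigvalsh(matricize(b2)).min())
```

Since M is linear, \(M(a^{2r})=M(\check{q}^r)+\delta ^*M(S^{2r+2}(\otimes ^{r+1}a^0))\), so Weyl's inequality gives \(\lambda _{\min }(M(a^{2r}))\ge 0\); this mirrors the positivity argument in the proof of Lemma 4.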

4 Numerical Experiments

In this section, we present numerical experiments to illustrate the result of Theorem 2: the effective equations in the family capture the long-time behavior of \(u^\varepsilon \). Note that reporting the numerical error in the approximation of \(u^\varepsilon \) in a pseudo-infinite medium for very large timescales is not feasible, as computing a reference solution is out of reach even for one-dimensional problems. We can, however, consider a small periodic domain, as this setting is covered by our theory. In addition, we illustrate that high order effective models are also useful in high frequency regimes.

We consider the one-dimensional model problem (13) given by the data \(u_{0}(x) = e^{-4{x^2}}\), \(u_1=f=0\), \(a(y) = \sqrt{2} -\cos (2\pi y)\) (we verify that \(a^0=1\)) with \(\varepsilon =1/10\), and the periodic domain \(\varOmega = (-L,L)\), \(L=84\). Let us give some insight into the macroscopic evolution of \(u^\varepsilon \): the central pulse \(u_{0}\) separates into left- and right-going wave packets with speed \(\pm \sqrt{a^0}=\pm 1\); these packets meet at \(x=L\) (equivalently \(x=-L\)) at times \(t=L+2kL\), \(k\in \mathbb {N}\), and at \(x=0\) at times \(t=2kL\), \(k\in \mathbb {N}\). As time increases, dispersion appears in each packet. As the domain is periodic, the packets eventually start to superpose. In our setting, \(t=\varepsilon ^{-4}\) is the largest timescale for which the bulk of the dispersion spans at most approximately half of the domain \(\varOmega \). For \(\alpha =0,2,4\), we compare \(u^\varepsilon \) with the corresponding effective solutions of order \(s=\lfloor {\alpha /2}\rfloor \), denoted \(\tilde{u}^{\lfloor {\alpha /2}\rfloor }\) (\(\tilde{u}^{0}\) is the homogenized solution). We approximate \(u^\varepsilon \) using a spectral method on a grid of size \(h=\varepsilon /16\) and a leapfrog scheme for the time integration with \(\varDelta t = h/16\). The effective solutions \(\{\tilde{u}^s\}_{s=0}^2\) are approximated with a Fourier method (the effective coefficients are computed using Algorithm 4) on a grid of size \(h=\varepsilon /16\) (no time integration is needed). Details on the numerical methods can be found in [35, Sect. 5.3].
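In one dimension the homogenized coefficient is the harmonic mean \(a^0=\big (\int _0^1 a(y)^{-1}\,dy\big )^{-1}\). Since \(\int _0^1 (A-B\cos 2\pi y)^{-1}\,dy=(A^2-B^2)^{-1/2}\) for \(A>|B|\), the choice \(A=\sqrt{2}\), \(B=1\) gives \(a^0=1\), hence unit wave speed \(\sqrt{a^0}\). A quick numerical confirmation with the midpoint rule, which converges very fast for smooth periodic integrands, is sketched below (the helper name is ours).

```python
import numpy as np


def harmonic_mean_coefficient(a, n: int = 1024) -> float:
    """1D homogenized coefficient a^0 = (int_0^1 dy / a(y))^(-1), midpoint rule on [0, 1]."""
    y = (np.arange(n) + 0.5) / n
    return float(1.0 / np.mean(1.0 / a(y)))


def a(y):
    """Coefficient of the 1D experiment: a(y) = sqrt(2) - cos(2 pi y), 1-periodic."""
    return np.sqrt(2.0) - np.cos(2.0 * np.pi * y)


if __name__ == "__main__":
    a0 = harmonic_mean_coefficient(a)
    print(a0, np.sqrt(a0))  # both equal 1 up to rounding errors
```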

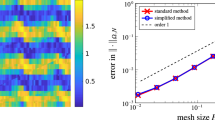

The results are displayed in Fig. 1. In the top-left plot, we compare \(u^\varepsilon \), \(\tilde{u}^0\), \(\tilde{u}^1\) at \(t=\varepsilon ^{-2}=10^2\). We observe that \(\tilde{u}^0\) does not capture the macroscopic dispersion developed by \(u^\varepsilon \), while \(\tilde{u}^1\) describes it accurately. In the top-right plot, we compare \(u^\varepsilon \) and \(\{\tilde{u}^s\}_{s=0}^2\) at \(t=\varepsilon ^{-4}=10^4\) (observe that the right- and left-going wave packets are in fact superposed). At this timescale, the first order effective solution \(\tilde{u}^1\) no longer captures the dispersion accurately, as expected, but the second order solution \(\tilde{u}^2\) does. In the bottom plot, we compare the normalized errors \( \mathrm {err} (\tilde{u}^{s})(t) = \Vert (u^\varepsilon -\tilde{u}^s)(t)\Vert _{{\mathrm {L}^{2}}(\varOmega )}/ \Vert u^\varepsilon (t)\Vert _{{\mathrm {L}^{2}}(\varOmega )}\) for the effective solutions \(\{\tilde{u}^s\}_{s=0}^2\) on the time interval \([0,\varepsilon ^{-4}]\) (the x-axis is in log scale). We observe that \(\tilde{u}^0\) is accurate up to \(\varepsilon ^{-1}\) and then deteriorates. The first order solution \(\tilde{u}^1\) is accurate up to \(\varepsilon ^{-3}\) and then deteriorates. Finally, the second order solution \(\tilde{u}^2\) has satisfactory accuracy over the whole time interval \((0,\varepsilon ^{-4})\). These observations corroborate the result of Theorem 2: the effective solution \(\tilde{u}^{\lfloor {\alpha /2}\rfloor }\) accurately describes \(u^\varepsilon \) up to \(\mathcal {O}(\varepsilon ^{-\alpha })\) timescales.

Top-left: Comparison of \(u^\varepsilon \) with the homogenized solution \(\tilde{u}^0\) and the order 1 effective solution \(\tilde{u}^1\) at \(t=\varepsilon ^{-2}\). Top-right: Comparison of \(u^\varepsilon \) with \(\tilde{u}^0\), \(\tilde{u}^1\), and an order 2 effective solution \(\tilde{u}^2\) at \(t=\varepsilon ^{-4}\). Bottom: Errors between \(u^\varepsilon \) and \(\tilde{u}^s\), \(0\le s\le 2\), over the time interval \([0,\varepsilon ^{-4}]\)

In two dimensions, computing a reference solution for the previous experiment would be prohibitively expensive. However, we can illustrate an interesting fact: high order effective models are useful in high frequency regimes. Let us first give some insight into this fact. We consider the following specific setting: let \(a\big (\tfrac{x}{\varepsilon }\big )\) be a given tensor with \(\varepsilon >0\) fixed, \(g(x)=e^{-\beta ^2|x|^2}\) a Gaussian with \(\beta =\mathcal {O}(1)\), and \(\nu >0\) a scaling parameter. In (13), we let \(f=0\) and take the initial conditions \(u_{0}(x) = g(\nu x)\) and \(u_1=0\) (we assume that \(\varOmega \) is arbitrarily large). To emphasize the dependence of \(u^\varepsilon \) on the parameter \(\nu \), we denote it by \(u^\varepsilon _\nu \). In the equation for \(u^\varepsilon _\nu \), making the changes of variables \(\hat{x} =\nu x\) and \(\hat{t} =\nu t\) and introducing \(\hat{u}^\varepsilon _\nu (\hat{t},\hat{x}) = u^\varepsilon _\nu (\hat{t}/\nu ,\hat{x}/\nu )\), we find

and we conclude that \(u^\varepsilon _\nu (\hat{t}/\nu ,\hat{x}/\nu ) = \hat{u}^\varepsilon _\nu (\hat{t},\hat{x}) = u^{\nu \varepsilon }_1(\hat{t},\hat{x})\). In other words, for \(\nu >1\) (i.e., an increase in the frequencies of the initial wave), the long-time effects of \(u^{\nu \varepsilon }_1\) can be observed at a shorter time in \(u^\varepsilon _\nu \) (modulo a contraction of space). However, for large values of \(\nu \) we meet situations where Theorem 2 does not provide a satisfactory error estimate: on the one hand, in the estimate for \(u^\varepsilon _\nu \), increasing \(\nu \) deteriorates the error constant in Theorem 2; on the other hand, in the estimate for \(u^{\nu \varepsilon }_1\), the constant is good, but the period of the tensor \(\varepsilon '=\nu \varepsilon \) is too far from the asymptotic regime for homogenization to be meaningful. In practice, we observe that increasing the frequencies of the initial wave (increasing \(\nu \)) leads to additional dispersive effects in \(u^\varepsilon _\nu \) (or \(u^{\nu \varepsilon }_1\)). Furthermore, higher order effective models allow us to capture these additional effects.
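The rescaling step can be checked directly, assuming that (13) has the form \(\partial _t^2u^\varepsilon -\nabla \cdot (a(x/\varepsilon )\nabla u^\varepsilon )=f\). Writing \(u^\varepsilon _\nu (t,x)=\hat{u}^\varepsilon _\nu (\nu t,\nu x)\), the chain rule gives \(\partial _t^2u^\varepsilon _\nu =\nu ^2\partial _{\hat{t}}^2\hat{u}^\varepsilon _\nu \) and \(\nabla _x\cdot (a(x/\varepsilon )\nabla _xu^\varepsilon _\nu )=\nu ^2\nabla _{\hat{x}}\cdot (a(\hat{x}/(\nu \varepsilon ))\nabla _{\hat{x}}\hat{u}^\varepsilon _\nu )\), so with \(f=0\) the factor \(\nu ^2\) cancels and

$$\begin{aligned} \partial ^2_{\hat{t}}\hat{u}^\varepsilon _\nu -\nabla _{\hat{x}}\cdot \Big (a\Big (\frac{\hat{x}}{\nu \varepsilon }\Big )\nabla _{\hat{x}}\hat{u}^\varepsilon _\nu \Big ) = 0, \qquad \hat{u}^\varepsilon _\nu (0,\hat{x}) = g(\hat{x}), \qquad \partial _{\hat{t}}\hat{u}^\varepsilon _\nu (0,\hat{x}) = 0. \end{aligned}$$

Up to the rescaling of the (arbitrarily large) domain, this is problem (13) with tensor period \(\nu \varepsilon \) and initial data independent of \(\nu \), i.e., the problem solved by \(u^{\nu \varepsilon }_1\).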

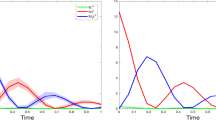

To illustrate this, we consider the two-dimensional model problem given by the data \(f=u_1=0\) and

We compute the effective tensors using Algorithm 4 and approximate \(u^\varepsilon _\nu \) and \(\{\tilde{u}^s_\nu \}_{s=1}^3\) at time \(t=20\). As above, \(u^\varepsilon _\nu \) is approximated with a spectral method on a grid of size \(h=\varepsilon /16\) and a leapfrog scheme with \(\varDelta t = h/100\), and \(\{\tilde{u}^s_\nu \}_{s=1}^3\) are computed with a Fourier method on a grid of size \(h=\varepsilon /16\) (see [35, Sect. 5.3]). In Fig. 2, we compare the solution in the periodic medium \(u^\varepsilon _\nu \) (top-left) with the effective solutions of order 1, \(\tilde{u}^1_\nu \) (top-right), and order 2, \(\tilde{u}^2_\nu \) (bottom-left). We observe that \(\tilde{u}^2_\nu \) captures the dispersion developed by \(u^\varepsilon _\nu \) more accurately than \(\tilde{u}^1_\nu \). This is even clearer in the 1d cut at \(\{x_1=0\}\) in the bottom-right plot of Fig. 2. Furthermore, even though distinguishing the higher order dispersion from the \(\varepsilon \)-scale oscillations is not easy in this regime, the zoom suggests that the order 3 model is more accurate than the order 2 model.
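Both experiments compute the reference solution with a Fourier spectral discretization in space and a leapfrog scheme in time. The NumPy sketch below shows this combination for the one-dimensional problem \(\partial _t^2u=\partial _x(a(x)\partial _xu)\) on a periodic interval with \(\partial _tu(0,\cdot )=0\). It is a hypothetical illustration (the function names are ours), not the implementation of [35]: derivatives are taken with the FFT, and the first step uses a second-order Taylor expansion. The demo checks it against the exact d'Alembert solution for \(a=1\).

```python
import numpy as np


def spectral_dx(v: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Derivative of a periodic grid function via the FFT (k: angular wavenumbers)."""
    return np.real(np.fft.ifft(1j * k * np.fft.fft(v)))


def leapfrog_wave(a_of_x, u0, L: float, n: int, dt: float, t_end: float):
    """Sketch: u_tt = (a(x) u_x)_x on (-L, L), periodic, u(0) = u0, u_t(0) = 0."""
    h = 2.0 * L / n
    x = -L + h * np.arange(n)
    k = 2.0 * np.pi * np.fft.fftfreq(n, d=h)
    a = a_of_x(x)

    def rhs(v):
        return spectral_dx(a * spectral_dx(v, k), k)

    steps = int(round(t_end / dt))
    u_prev = u0(x)
    u = u_prev + 0.5 * dt**2 * rhs(u_prev)  # Taylor start-up step, uses u_t(0) = 0
    for _ in range(steps - 1):
        # Leapfrog: u^{m+1} = 2 u^m - u^{m-1} + dt^2 (a u_x)_x evaluated at u^m
        u, u_prev = 2.0 * u - u_prev + dt**2 * rhs(u), u
    return x, u


if __name__ == "__main__":
    # Homogeneous check (a = 1): the exact solution is (u0(x - t) + u0(x + t)) / 2.
    L, n, dt, t_end = 10.0, 256, 0.002, 2.0
    x, u = leapfrog_wave(np.ones_like, lambda s: np.exp(-4.0 * s**2), L, n, dt, t_end)
    exact = 0.5 * (np.exp(-4.0 * (x - t_end) ** 2) + np.exp(-4.0 * (x + t_end) ** 2))
    print(np.max(np.abs(u - exact)))
```

The explicit scheme is only conditionally stable, roughly for \(\varDelta t\lesssim 2h/(\pi \sqrt{\max a})\), which is one reason the reference computations are expensive: with \(\varepsilon =1/10\), \(L=84\), and \(h=\varepsilon /16\) as in Fig. 1, the grid already has 26880 points, and the time step is a small fraction of h.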

5 Proofs of the Main Results

In this section, we provide the proofs of the main results of the paper. In Sect. 5.1, we prove the inductive Boussinesq tricks: Theorem 5 and Lemma 8. In Sect. 5.2, the a priori error estimate for the family of effective equations of Theorem 2 is proved. In Sect. 5.3, we prove the result on positive definite tensors from Lemma 3 and in Sect. 5.4, we prove the new relation between the correctors from Theorem 3.

5.1 Inductive Boussinesq Tricks for the Derivation of the Family of Effective Equations

In this section, we carry out the technical task that was postponed in the derivation of the family of effective equations in Sect. 2.1. Specifically, we provide the result that allows us to substitute the time derivatives in the terms of order \(\mathcal {O}(\varepsilon ^0)\) to \(\mathcal {O}(\varepsilon ^{\alpha })\) in \(r^\varepsilon \) (18). To that end, the main challenge is to carry out inductive Boussinesq tricks (Theorem 5). This result is then used in Lemma 8, which provides the specific relation used in Sect. 2.1.

5.1.1 Inductive Boussinesq Tricks

The following theorem is the key result of this section.

Theorem 5

Let \(\tilde{u}\) be the solution of (14) and let \(N\) be an even integer such that \(0\le N\le 2\lfloor \alpha /2\rfloor \). If the right-hand side of (14) is defined as

then \(\tilde{u}\) satisfies

where \(c^r\) is the tensor defined inductively as

and the remainders \(\mathcal {R}^\varepsilon _{N}\tilde{u}\) and \(\mathcal {S}^\varepsilon _{N} f\) are defined in (67) and satisfy the following estimates: for any integer n such that \(N+n\le \alpha \)

where \(r(\alpha ,N,n) = 2\lfloor \alpha /2\rfloor +N+n\) and the constants depend only on the tensors \(a^0,\{a^{2r},b^{2r}\}_{r=1}^{\lfloor \alpha /2\rfloor }\).

Remark 9

We verify that the definition (46) of Qf ensures that the remainder \(\mathcal {S}^\varepsilon _{N} f\) has maximal order in \(\varepsilon \) in the second estimate in (49). More specifically, among the right-hand sides of the form \(Qf = \sum _{s=0}^{\lfloor \alpha /2\rfloor } (-1)^s \varepsilon ^{2s} q^s \nabla ^{2s}_{x} f\), \(q^s\in {\mathrm {Ten}}^{2s}(\mathbb {R}^d)\), (46) is the only one that ensures \(\Vert \mathcal {S}^\varepsilon _{N}f\Vert = \mathcal {O}(\varepsilon ^{N+2})\) for all even \(N\) such that \(0\le N\le 2\lfloor \alpha /2\rfloor \). This claim is justified in Remark 10.

The result of Theorem 5 relies on inductive Boussinesq tricks. Let us summarize this process. We start from the expression of \({\partial ^2_t}\tilde{u}\) given by the effective equation (14), which we rewrite as

where Qf is defined in (46) (see Remark 9). The result for \(N=0\) follows trivially from (50) with \(\mathcal {S}^\varepsilon _{0} f= G^0(0)\) and \(\mathcal {R}^\varepsilon _{0} \tilde{u}=T^0(0)\) (defined in (55) below). Let us then assume that \(N\) is an even number such that \(2 \le N\le \alpha \). In the right-hand side of (50), we inductively substitute \({\partial ^2_t}\tilde{u}\) (using (50) itself) in the terms of order \(\mathcal {O}(\varepsilon ^{2})\) to \(\mathcal {O}(\varepsilon ^{N})\). To make this technical process easier to follow, let us define inductively some quantities and functions. These definitions arise in the result of Lemma 5, which exhibits the decomposition process of one Boussinesq trick.

We define the tensors

and

Associated with the tensors \(A^r(j)\) and \(B^r(j)\), we define for the given N the functions

With these notations, we verify that (50) reads (recall the definition of Qf in (46))

where the remainders are \(T^N(0)=G^N(0)=0\) if \(N=\alpha \) and

otherwise.

The proof is divided into three steps. In the first step (Lemma 5), we apply one Boussinesq trick: in \(S^N(j)\), we use (50) to substitute \({\partial ^2_t}\tilde{u}\) and decompose the result into terms of interest plus remainders. In the second step (Lemma 6), the decomposition is used inductively to obtain a first version of Theorem 5, which involves the tensor \(\tilde{c}^r\) instead of \(c^r\). The final step is to prove that \(\tilde{c}^r\) and \(c^r\) are equal (Lemma 7).

Lemma 5

The functions defined in (53) satisfy the relations ((56a) and (56b) hold only if \(N\ge 4\))

where the remainders \(\{T^N(j)\}_{j=1}^{N/2}\) and \(\{G^N(j)\}_{j=1}^{N/2}\) are defined in (61), (62), (64), and (65).

Lemma 6

The effective solution of (14) \(\tilde{u}\) satisfies the equality

where \(\tilde{c}^{r}\) is the tensor defined as

and the remainders \(\mathcal {R}^\varepsilon _{N}\tilde{u}\) and \(\mathcal {S}^\varepsilon _{N} f\) are defined in (67) and satisfy the estimates (49).

Lemma 7

For \(1\le r\le \lfloor \alpha /2\rfloor \), the tensor \(\tilde{c}^r\), defined in (58), equals the tensor \(c^r\), defined in (48).

Remark 10

Let us explain how the definition of Qf is obtained. If Qf is left unspecified, (54) reads \({\partial ^2_t}\tilde{u}= Qf + R^N(0)+ S^N(0)+ T^N(0)\). Applying inductively (56a) and (56c), which hold independently of Qf, we obtain (57) with \(\mathcal {S}^\varepsilon _{N} f\) defined as

We want to build Qf so that \(\mathcal {S}^\varepsilon _{N} f\) has order \(\mathcal {O}(\varepsilon ^{N+2})\) for all even \(0\le N\le 2\lfloor \alpha /2\rfloor \). Inserting the ansatz \(Qf = \sum _{s=0}^{\lfloor \alpha /2\rfloor } (-1)^s \varepsilon ^{2s} q^s \nabla ^{2s}_{x} f\), where \(q^s\in {\mathrm {Ten}}^{2s}(\mathbb {R}^d)\) are unknown, we obtain (after some work)

Canceling the terms of order \(\mathcal {O}(1)\) to \(\mathcal {O}(\varepsilon ^{N})\), we obtain \(q^0=1\) and an inductive definition for \(q^m\), \(m\ge 1\). We then verify by induction that \(q^m = b^{2m}\) for \(1\le m\le \lfloor \alpha /2\rfloor \), i.e., Qf is defined as (46).

Proof of Lemma 5

To slightly simplify the notation, let us denote \(\bar{\alpha }= 2\lfloor \alpha /2\rfloor \). We first prove (56a). Using (50), we substitute \({\partial ^2_t}\tilde{u}\) in \(S^N(j)\) and obtain

where the remainder is \(T^N_1(j+1) =0\) if \(N=\bar{\alpha }\) and

otherwise. In order to decompose the terms in (59), we study the two index sets (denoting \(\ell =N/2\))

where we recall that \(0\le j\le \ell -2\). We verify that these sets can be written as

Hence, we rewrite (59) as

where the remainder is

Using the definitions of \(A^r(j)\) in (52) and \(B^r(j)\) in (51), we verify that the two sums in (60) match \(R^N(j+1)\) and \(S^N(j+1)\), respectively. That proves (56a).

Next, let us prove (56b). Using the definition of Qf in (46) and recalling (53c) and (53d), we have

As done above, we decompose the sum and use the expression of \(J^{\ell }_m(j)\) to obtain

where the remainder is

Using the definitions of \(B^{m}(j+1)\) in (51) and \(F^N(j+1)\) in (53c), we obtain (56b).

Next, we prove (56c). From the definition of \(S^N(j)\) in (53b), we use (50) to get

where the remainder is

The first term of the right-hand side of (63) matches the definition of \(R^N(\bar{\alpha }/2)\) in (53a). That proves (56c).

Finally, let us prove (56d). From the definition of \(\tilde{F}^N(j)\), we verify that (56d) holds with \(G^N(N/2)\) defined as

That concludes the proof of Lemma 5. \(\square \)

Proof of Lemma 6

Starting from (54), we use inductively the decompositions in (56) and obtain

We define the remainders as

where \(\{T^N(j)\}_{j=0}^{N/2}\) and \(\{G^N(j)\}_{j=0}^{N/2}\) are defined in (55), (61), (62), (64), and (65). From the definitions of \(T^N(j)\) and \(G^N(j)\), we verify that \(\mathcal {R}^\varepsilon _{N}\tilde{u}\) and \(\mathcal {S}^\varepsilon _{N}f\) satisfy the estimates in (49). Next, we have to develop \(\sum _{j=0}^{N/2} R^N(j)\) in (66). Using the definition of \(R^N(j)\) in (53a) and exchanging the sums, we find that

Using the definition of \(A^r(j)\) in (52), we verify that for \(r=0\), \(\sum _{j=0}^r A^{r}(j)=a^0=\tilde{c}^0\), and for \(1\le r\le \alpha /2\),

Combining (66), (67), (68), and (69), we obtain (57) and the proof of Lemma 6 is complete. \(\square \)

Proof of Lemma 7

We prove by induction on r that the tensor \(\tilde{c}^r\), defined in (58), equals the tensor \(c^r\), defined in (48). The base case is trivially verified as \(\tilde{c}^0 = a^0 = c^0\). Then, for \(r\ge 2\), we assume that \(\tilde{c}^s=c^s\) for \(1\le s \le r-1\) and we have to verify that the tensor

equals \(\tilde{c}^r\), defined in (58). Using the induction assumption and (58), we have

Let us denote the triple sum by T and its summand by \(x^r_{\ell ,j,s} = a^{2s}\!\otimes \!B^{\ell -s}(j-1) \!\otimes \!b^{2(r-\ell )}\). Applying the change of indices \(m=r-\ell \) and exchanging the sums twice, we get

Using the definition of \(B^r(j)\) in (51), we then have

We change the index \(k=j+1\) in this equality to obtain from (70) (recall that \(B^r(0)=-b^{2r}\))

This expression matches the definition of \(\tilde{c}^r\) in (58). Hence, we have proved that \(\tilde{c}^r=c^r\) for all \(0\le r\le \lfloor \alpha /2\rfloor \) and the proof of Lemma 7 is complete. \(\square \)

5.1.2 Use of the Inductive Boussinesq Tricks

In the asymptotic expansion in Sect. 2.1, we need to substitute the terms involving \({\partial ^2_t}\tilde{u}\) in the development of \(r^\varepsilon \) in (18). This task is performed in the following result, obtained thanks to Theorem 5.

Lemma 8

Define tensors \(p^{k}\in {\mathrm {Ten}}^{k}(\mathbb {R}^d)\) as

where \(c^r\) is defined in (48) and let \(\chi ^0=1\) and \(\{\chi ^k\}_{k=1}^\alpha \) be the tensor functions in (20). Then, the following equality holds

where the remainders \(\mathcal {S}^\varepsilon f\) and \(\mathcal {R}^\varepsilon \tilde{u}\) are defined in (74), (75). In particular, provided sufficient regularity of \(\tilde{u}\), \(\mathcal {R}^\varepsilon \tilde{u}\) has order \(\mathcal {O}(\varepsilon ^{\alpha +1})\) in the \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T; {{\mathrm {L}^{2}}(\varOmega )})\) norm.

Proof of Lemma 8

Let us define \(T {:}{=}\sum _{k=0}^\alpha \varepsilon ^k\chi ^k\nabla ^{k}_{x}{\partial ^2_t}\tilde{u}\).

Using Theorem 5 and the definition of \(p^k\), we obtain for any choice of even integers \(0\le N(k)\le \alpha \):

where \(z^j_k\) and the remainders are defined as

with \(\mathcal {S}^\varepsilon _{N(k)} f\) and \(\mathcal {R}^\varepsilon _{N(k)} \tilde{u}\) the remainders defined in (67). The first estimate in (49) implies that

Hence, as our goal is for this quantity to have order \(\mathcal {O}(\varepsilon ^{\alpha +1})\) (see the discussion in Sect. 2.1), we need to set N(k) such that

Dealing with even and odd indices separately, we verify that the smallest even integer satisfying the above inequality is

We now rewrite the double sum in the right-hand side of (73) separately for even and odd index k. For the sum on even index \(\big \{k=2\ell \,:\, 0\le \ell \le \lfloor \alpha /2\rfloor \big \}\), we have

where in the first equality we changed the index \(m=r+\ell \) and in the second we exchanged the order of summation and used that \(z^{2s+1}_k=0\) for any s, k. Similarly, for the sum on odd index \(\big \{k=2\ell -1 \,:\, 1\le \ell \le \lceil \alpha /2\rceil \big \}\), we have

Using the last two equalities in (73), we gather the sums and find

Replacing \(z^{k-j}_j\) by its definition in (74), we obtain (72) and that concludes the proof of Lemma 8. \(\square \)

5.2 Proof of the a Priori Error Estimate for the Family of Effective Equations (Theorem 2)

In this section, we prove Theorem 2. Note that the main ingredient of the proof is an adaptation operator based on the adaptation constructed in Sect. 2.1.

Let us first introduce some notation. We use a bracket \([{v}]\) to denote the equivalence class of \(v\in {\mathrm {L}^{2}}(\varOmega )\) in \({\mathcal {L}^2}(\varOmega )\), and a bold face letter \(\varvec{v}\) to denote elements of \({\mathcal {W}_{{\mathrm {per}}}}(\varOmega )\). Note that the Hilbert space \({\mathcal {L}^2}(\varOmega )\) is equipped with the inner product \(\big ([{v}],[{w}]\big )_{{\mathcal {L}^2}(\varOmega )} = \big (v- \langle {v}\rangle _\varOmega ,w -\langle {w}\rangle _\varOmega \big )_{{\mathrm {L}^{2}}(\varOmega )} \). We define the following norm on \({\mathcal {W}_{{\mathrm {per}}}}(\varOmega )\)

In particular, we verify that a function \(w\in {{\mathrm {W}_{\!{\mathrm {per}}}}(\varOmega )}\) satisfies \(\Vert w\Vert _{W} = \Vert [{w}]\Vert _{\mathcal {W}}\), where \(\Vert {\cdot }\Vert _W\) is defined in (5).
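The inner product on \({\mathcal {L}^2}(\varOmega )\) does not depend on the chosen representatives, since adding a constant to v leaves \(v-\langle {v}\rangle _\varOmega \) unchanged. The following sketch checks this on a midpoint discretization of \(\varOmega =(0,2\pi )\), taking \(\langle {v}\rangle _\varOmega \) to be the mean of v over \(\varOmega \); the grid, the test functions and the function names are illustrative assumptions.

```python
import numpy as np

# Uniform periodic grid on Omega = (0, 2*pi); midpoint sums stand in for L^2 integrals.
n = 256
h = 2 * np.pi / n
x = h * (np.arange(n) + 0.5)


def mean(v):
    """Discrete <v>_Omega = |Omega|^{-1} * int_Omega v dx."""
    return np.sum(v) * h / (2 * np.pi)


def quotient_inner(v, w):
    """Discrete ([v], [w]) = (v - <v>, w - <w>)_{L^2(Omega)}."""
    return np.sum((v - mean(v)) * (w - mean(w))) * h


v = np.sin(x) + 0.5 * np.cos(3 * x)
w = np.sin(x) + 2.0
# Shifting either representative by a constant does not change the value.
print(quotient_inner(v, w), quotient_inner(v + 7.0, w - 3.0))
```

In particular, constants have zero norm in this space, which is why \({\mathcal {L}^2}(\varOmega )\) is the natural setting for periodic problems whose solutions are defined only up to an additive constant.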

The proof of Theorem 2 is structured as follows. First, based on the adaptation \(\mathcal {B}^\varepsilon \tilde{u}\) defined by (16) and (20), we define the adaptation operator \(\varvec{\mathcal {B}}^\varepsilon \tilde{u}\). Then, using the triangle inequality, we split the error as

and estimate the two terms of the right-hand side separately.

Let us first discuss the consequences of the assumptions made in the theorem. The fact that the effective equation belongs to the family \(\mathcal {E}\) (Definition 1) has two major implications: first, the equation is well-posed; second, the tensors \(\{a^{2r},b^{2r}\}_{r=1}^{\lfloor \alpha /2\rfloor }\) satisfy the constraints (28) and thus the cell problems (23) are well-posed. Hence, we have the existence and uniqueness of the effective solution \(\tilde{u}\) and of the correctors \(\chi ^1,\ldots ,\chi ^{\alpha +2}\). Inductively, we can show that the correctors satisfy the following bound

where the constant C depends only on the ellipticity constant \(\lambda \) and the reference cell Y. Let us then investigate the regularity of \(\tilde{u}\) and f. As we assume \(d\le 3\), the embedding \(\mathrm {H}^{2}_{\mathrm {per}}(\varOmega )\hookrightarrow \mathcal {C}^0_{\mathrm {per}}(\bar{\varOmega })\) is continuous and the regularity assumption ensures that

where we recall that \(r(\alpha ) = \alpha + 2\lfloor \alpha /2\rfloor + 2\) (as \(\alpha \ge 2\) we have \(r(\alpha )\ge \alpha +4\)).
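Splitting by parity gives a closed form for \(r(\alpha )\): \(r(\alpha )=2\alpha +2\) for even \(\alpha \) and \(r(\alpha )=2\alpha +1\) for odd \(\alpha \). Hence \(r(\alpha )-(\alpha +4) = 2\lfloor \alpha /2\rfloor -2\ge 0\) for \(\alpha \ge 2\), with equality exactly for \(\alpha \in \{2,3\}\). A short check of this arithmetic:

```python
def r(alpha: int) -> int:
    """r(alpha) = alpha + 2*floor(alpha/2) + 2."""
    return alpha + 2 * (alpha // 2) + 2


for alpha in range(2, 9):
    # Parity closed form: 2*alpha + 2 if alpha is even, 2*alpha + 1 if alpha is odd.
    assert r(alpha) == (2 * alpha + 2 if alpha % 2 == 0 else 2 * alpha + 1)
    assert r(alpha) >= alpha + 4  # equality only for alpha = 2, 3

print([r(a) for a in range(2, 9)])  # [6, 7, 10, 11, 14, 15, 18]
```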

We are now able to define the adaptation operator. Thanks to the regularity of f and the correctors, \(\mathcal {S}^\varepsilon f\) defined in (74) belongs to \({\mathrm {L}^{2}}(0,\varepsilon ^{-\alpha } T;{{\mathcal {L}^2}(\varOmega )})\). We can thus define \(\varvec{\varphi }\) as the unique solution in \({\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha } T;{{\mathcal {W}_{{\mathrm {per}}}}(\varOmega )})\) of

where \(\mathcal {A}^\varepsilon \) is defined in (17). The adaptation operator is then defined as

where \(\mathcal {B}^\varepsilon \tilde{u}\) is the adaptation constructed in Sect. 2.1 (see (16) and (20)):

Assumption (12) ensures that \(x\mapsto \chi ^k\big (\tfrac{x}{\varepsilon }\big )\nabla ^{k}_{x}\tilde{u}(t,x)\) is \(\varOmega \)-periodic. Using the regularity of the correctors and \(\tilde{u}\), we verify that the adaptation

(notice that the regularity of \(\varvec{\varphi }\) and of its derivatives is weaker than that of \(\mathcal {B}^\varepsilon \tilde{u}\)).

Next, define \(\varvec{\eta }^\varepsilon (t) = \varvec{\mathcal {B}}^\varepsilon \tilde{u}(t)-[{u^\varepsilon (t)}]\) and recall that we developed \(r^\varepsilon =({\partial ^2_t}+\mathcal {A}^\varepsilon ) (\mathcal {B}^\varepsilon \tilde{u}-u^\varepsilon )\) in Sect. 2.1. The development in (22), together with the cell problems (23), ensures that

where \(\mathcal {R}_{\mathrm {ini}}^\varepsilon \tilde{u}(t)\) is defined in (19) and \(\mathcal {R}^\varepsilon \tilde{u}\) in (74). The following error estimate can then be used to quantify \(\varvec{\eta }^\varepsilon \) (see [5, Corollary 2.2] or [35, Corollary 4.2.2]).

Lemma 9

Assume that \(\varvec{\eta }\in {\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{{\mathcal {W}_{{\mathrm {per}}}}(\varOmega )})\), with \(\partial _t\varvec{\eta }\in {\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{{\mathcal {L}^2}(\varOmega )})\) and \({\partial ^2_t}\varvec{\eta }\in {\mathrm {L}^{2}}(0,\varepsilon ^{-\alpha }T;{{\mathcal {W}_{{\mathrm {per}}}^*}(\varOmega )})\), satisfies

where \(\varvec{\eta }^0\in {{\mathcal {W}_{{\mathrm {per}}}}(\varOmega )}\), \(\varvec{\eta }^1\in {{\mathcal {L}^2}(\varOmega )}\), and \(\varvec{r} \in {\mathrm {L}^{1}}(0,\varepsilon ^{-\alpha }T;{{\mathcal {L}^2}(\varOmega )})\). Then, the following estimate holds

where \(C(\lambda )\) depends only on the ellipticity constant \(\lambda \). If, in addition, \(\varvec{r} \in {\mathrm {L}^{\infty }}(0,\varepsilon ^{-\alpha }T;{{\mathcal {L}^2}(\varOmega )})\), then

In order to estimate the remainder terms in (77), we also need the following result.

Lemma 10

Let \(\gamma \in \mathrm {L}^2_{\mathrm {per}}(Y)\) and \(v\in \mathrm {H}^{2}_{\mathrm {per}}(\varOmega )\). Then, the following estimate holds

where the constant C depends only on Y and d.

Proof

Recall that \(Y=(0,\ell _1)\times \cdots \times (0,\ell _d)\) and \(\varOmega = (\omega _1^l,\omega _1^r)\times \cdots \times (\omega ^l_d,\omega ^r_d)\). As \(\varOmega \) satisfies (12), the numbers \(N_i=\frac{\omega _i^r-\omega _i^l}{\ell _i\varepsilon }\) are integers and the cells constituting \(\varOmega \) belong to the set \(\{ \varepsilon (n\cdot \ell + Y) : 0\le n_i \le N_i-1\}\), where \(n\cdot \ell =(n_1\ell _1,\ldots ,n_d\ell _d)\) denotes the componentwise product. Denoting \(\varXi = \{\xi = n\cdot \ell : 0\le n_i \le N_i-1\}\), the domain \(\varOmega \) satisfies

Hence, almost every \(x\in \varOmega \) can be written as \(x=\varepsilon (\xi +y)\) for some \(\xi \in \varXi , y\in Y\). For such a triplet \((x,\xi ,y)\), the Y-periodic function \(\gamma \) satisfies \(\gamma \big (\tfrac{x}{\varepsilon }\big )= \gamma (\xi +y) = \gamma (y)\). Let \(Z\subset \mathbb {R}^d\) be an open set with a \(\mathcal {C}^{1}\) boundary that contains Y and is contained in its neighborhood, i.e.,

As Z has a \(\mathcal {C}^1\) boundary and \(d\le 3\), the Sobolev embedding theorem ensures that the embedding \(\mathrm {H}^{2}(Z)\hookrightarrow \mathcal {C}^0(\bar{Z})\) is continuous. Hence, there exists a constant \(C_{Y}\), depending only on Y, such that

We now prove (78). Using (79), we have

where we made the change of variables \(x=\varepsilon (\xi +y)\). As \(v\in \mathrm {H}^{2}_{\mathrm {per}}(\varOmega )\hookrightarrow \mathcal {C}^0_{\mathrm {per}}(\bar{\varOmega })\), we have

where \(v_{\xi ,\varepsilon }\) is the function of \(\mathcal {C}^0(\bar{Y})\) defined by \(v_{\xi ,\varepsilon }(y) = v\big (\varepsilon (\xi +y)\big )\). Using (80) gives \(\Vert v_{\xi ,\varepsilon }\Vert _{\mathcal {C}^0(\bar{Y})}\le C_Y \Vert v_{\xi ,\varepsilon }\Vert _{\mathrm {H}^{2}(N_Y)}\). Furthermore, we have

As \(\partial _{y_i}v_{\xi ,\varepsilon } = \varepsilon \partial _{x_i}v\) and \(\partial ^2_{y_{ij}}v_{\xi ,\varepsilon } = \varepsilon ^2 \partial ^2_{x_{ij}}v\), the change of variables \(x=\varepsilon (\xi +y)\) leads to

where we used that every cell \(\varepsilon (\xi +Y)\) belongs to the neighborhoods of \((6d-3)\) cells (including itself). This proves (78) and the proof of the lemma is complete. \(\square \)
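Estimate (78) itself is stated in a display not reproduced here, so the sketch below only illustrates the elementary cell-by-cell step of its proof: on each cell \(\varepsilon (\xi +Y)\), \(|v|\) is bounded by its maximum and the fast variable is integrated out, which gives \(\int _{\varepsilon (\xi +Y)}|\gamma (\tfrac{x}{\varepsilon })|^2|v|^2\,\mathrm {d}x\le \varepsilon ^d\Vert \gamma \Vert ^2_{\mathrm {L}^2(Y)}\max _{\varepsilon (\xi +\bar{Y})}|v|^2\). The setting is assumed for illustration: \(d=1\), \(\varOmega =Y=(0,1)\), \(\varepsilon =1/N\), midpoint quadrature, and hypothetical choices of a discontinuous \(\gamma \) and a smooth periodic v.

```python
import numpy as np


def gamma(s):
    """Hypothetical Y-periodic coefficient on Y = (0, 1): in L^2 but discontinuous."""
    return np.where(np.mod(s, 1.0) < 0.5, 1.0, 3.0)


def v(x):
    """Hypothetical smooth, Omega-periodic function on Omega = (0, 1)."""
    return np.cos(2 * np.pi * x) + 0.3 * np.sin(4 * np.pi * x)


def cellwise_bound(eps_inv, n_q=64):
    """Return (lhs, rhs) for d = 1, Omega = Y = (0, 1), eps = 1/eps_inv, midpoint rule:
    lhs = int_Omega |gamma(x/eps) v(x)|^2 dx,
    rhs = sum over cells eps*(xi + Y) of eps * ||gamma||^2_{L2(Y)} * (max of |v|^2 on the cell's nodes)."""
    eps = 1.0 / eps_inv
    n_cells = eps_inv                          # N_1 = (omega^r - omega^l) / (ell * eps) with Omega = Y = (0, 1)
    y = (np.arange(n_q) + 0.5) / n_q           # midpoint nodes in Y
    w = 1.0 / n_q                              # midpoint weights in Y
    gamma_sq_Y = np.sum(w * gamma(y) ** 2)     # ||gamma||^2_{L2(Y)}
    lhs, rhs = 0.0, 0.0
    for xi in range(n_cells):                  # xi runs over Xi = {0, 1, ..., N_1 - 1}
        x = eps * (xi + y)                     # change of variables x = eps*(xi + y), dx = eps dy
        lhs += np.sum(eps * w * gamma(x / eps) ** 2 * v(x) ** 2)
        rhs += eps * gamma_sq_Y * np.max(v(x) ** 2)  # |v| bounded by its max over the cell's nodes
    return lhs, rhs


for eps_inv in (4, 16, 64):
    lhs, rhs = cellwise_bound(eps_inv)
    print(eps_inv, lhs, rhs)  # lhs <= rhs for every eps
```

Only the values of \(\gamma \) on Y enter the right-hand side, which is why \(\gamma \in \mathrm {L}^2_{\mathrm {per}}(Y)\) suffices, while the pointwise control of v is where the embedding into \(\mathcal {C}^0\) (and hence \(d\le 3\)) is needed.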

With Lemma 10, we can estimate the remainders in (77). Using (74) and estimate (49), we obtain

From (75), we verify that \(\alpha -1\le N(k)+k \le \alpha \) and thus

Similarly, using (49) to estimate \(\mathcal {R}^\varepsilon _{\mathrm {ini}}\) (19) and \(\mathcal {S}^\varepsilon f\) (74), we verify that

Remark 11

From (74), we verify that

As explained in Remark 9, the definition of Qf ensures that the second term has order \(\mathcal {O}(\varepsilon ^{\alpha +1})\). Hence, the choice of Qf leads to a smaller constant in the second estimate in (83).

With these estimates, we are able to prove Theorem 2.

Proof of Theorem 2

We have to estimate both error terms on the right-hand side of (76). Using Lemma 9 and (83), we verify that \(\varvec{\varphi }\) satisfies the estimate

where \(r(\alpha ) = \alpha +2\lfloor \alpha /2\rfloor +2\). Hence, from the definition of \(\varvec{\mathcal {B}}^\varepsilon \tilde{u}\), we deduce that

Next, applying Lemma 9 with \(\varvec{\eta }^\varepsilon (t) = \varvec{\mathcal {B}}^\varepsilon \tilde{u}(t)-[{u^\varepsilon (t)}]\) (see (83)), we obtain

where we used that for \(\alpha \ge 2\), \(r(\alpha ) \ge \alpha +4\). Using (84) and (85) in (76) proves estimate (30) and the proof of Theorem 2 is complete. \(\square \)

5.3 A Symmetrized Tensor Product of Symmetric Positive Definite Matrices is Positive Definite (Proof of Lemma 3)

Lemma 3 states that the tensor \(S^{2n}(\otimes ^n a^0)\) is positive definite, where \(a^0\) is the homogenized tensor. We prove here that this property holds for any symmetric, positive definite matrix.

A first important result is the following.

Lemma 11

Let \(R\in {\mathrm {Ten}}^{2n}(\mathbb {R}^d)\) be a positive definite tensor and let \(A\in {\mathrm {Sym}}^2(\mathbb {R}^d)\) be a symmetric, positive definite matrix. Then the tensor of \({\mathrm {Ten}}^{2n+2}(\mathbb {R}^d)\) defined by \(A_{i_1i_{2n+2}} R_{i_2\cdot \cdot i_{2n+1}}\) is positive definite.

Proof

As A is symmetric positive definite, the Cholesky factorization provides an invertible matrix H such that \(A=H^TH\). For \(\xi \in {\mathrm {Sym}}^{n+1}(\mathbb {R}^d)\), we thus have

As R is positive definite, equality in (86) holds if and only if \(H_{rj}\xi _{j i_2\cdot \cdot i_{n+1}}=0\) for all \(r,i_2,\ldots , i_{n+1}\in \{1,\ldots ,d\}\). Fix \(i_2,\ldots ,i_{n+1}\) arbitrarily and denote \(v_j = \xi _{j i_2\cdot \cdot i_{n+1}}\). Then \(H_{rj}v_j=0\) for all r, which is equivalent to \(Hv=0\). As H is invertible, we obtain \(v=0\). Since \(i_2,\ldots ,i_{n+1}\) were arbitrary, equality in (86) implies \(\xi =0\). Hence, the tensor is positive definite and the proof of the lemma is complete. \(\square \)
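Lemma 11 can be tested numerically in small dimensions. The sketch below is an illustration, not part of the proof: it builds the tensor \(A_{i_1i_{2n+2}}R_{i_2\cdot \cdot i_{2n+1}}\) from random symmetric positive definite data and computes the smallest eigenvalue of the quadratic form \(\xi \mapsto T\xi :\xi \) restricted to \({\mathrm {Sym}}^{n+1}(\mathbb {R}^d)\), for \(d=3\) and \(n=1,2\). We assume the contraction convention \(T\xi :\xi = T_{i_1\cdot \cdot i_{2m}}\xi _{i_1\cdot \cdot i_m}\xi _{i_{m+1}\cdot \cdot i_{2m}}\). For \(n=2\), R is itself built with the construction of the lemma from two random SPD matrices, so it is positive definite by the case \(n=1\). The factor H of the proof is recovered from NumPy's Cholesky routine, which returns a lower-triangular L with \(A=LL^T\), so that \(H=L^T\). All helper names are hypothetical.

```python
import itertools

import numpy as np


def sym_basis(d, m):
    """Basis of Sym^m(R^d): one symmetrized unit tensor per multiset of indices."""
    basis = []
    for idx in itertools.combinations_with_replacement(range(d), m):
        e = np.zeros((d,) * m)
        for perm in set(itertools.permutations(idx)):
            e[perm] = 1.0
        basis.append(e)
    return basis


def min_eig_on_sym(t, m):
    """Smallest eigenvalue of xi -> t xi : xi on Sym^m for t of order 2m
    (first m indices of t contracted with one copy of xi, last m with the other)."""
    basis = sym_basis(t.shape[0], m)
    g = np.array([[np.sum(p * np.tensordot(t, q, axes=m)) for q in basis] for p in basis])
    return np.linalg.eigvalsh(0.5 * (g + g.T)).min()


def lemma11_tensor(a, r):
    """Tensor with entries A_{i_1 i_{2n+2}} R_{i_2 ... i_{2n+1}}."""
    # outer(a, r) has index order (i_1, i_{2n+2}, i_2, ..., i_{2n+1}); move i_{2n+2} last.
    return np.moveaxis(np.multiply.outer(a, r), 1, -1)


def random_spd(rng, d):
    """Random symmetric positive definite d x d matrix."""
    m = rng.standard_normal((d, d))
    return m @ m.T + d * np.eye(d)


rng = np.random.default_rng(0)
d = 3
a = random_spd(rng, d)
h = np.linalg.cholesky(a).T                  # a = L L^T with L lower triangular, so H = L^T gives a = H^T H
t2 = lemma11_tensor(a, random_spd(rng, d))   # n = 1: R is an SPD matrix, t2 has order 4
r2 = lemma11_tensor(random_spd(rng, d), random_spd(rng, d))  # order 4, positive definite by the n = 1 case
t3 = lemma11_tensor(a, r2)                   # n = 2: t3 has order 6
print(min_eig_on_sym(t2, 2), min_eig_on_sym(t3, 3))  # both strictly positive
```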

With Lemma 11 at hand, we are able to prove the following result, which implies Lemma 3.

Lemma 12

If \(A\in {\mathrm {Sym}}^2(\mathbb {R}^d)\) is a symmetric, positive definite matrix, then the tensor \(S^{2n}(\otimes ^n A)\in {\mathrm {Sym}}^{2n}(\mathbb {R}^d)\) is positive definite.

Proof

We proceed by induction on n. The case \(n=1\) follows from [5, Lemma 4.1] (it can also be deduced from Lemma 11). Assume that the result holds for \(1,\ldots ,n-1\); we prove it for n. Let \(\xi \in {\mathrm {Sym}}^{n}(\mathbb {R}^d)\backslash \{0\}\). First, assume that n is odd. Then, the product \(S^{2n}(\otimes ^n A)\xi : \xi \) is composed of terms of the form

i.e., one of the factors \(A_{i_ri_s}\) shares one index with each of the two copies of \(\xi \). The induction hypothesis combined with Lemma 11 ensures that all these terms are strictly positive, and thus \(S^{2n}(\otimes ^n A)\) is positive definite. Second, assume that n is even. Then, the product \(S^{2n}(\otimes ^n A)\xi : \xi \) is composed of terms of two forms. First, there are terms of the form (87), which are strictly positive by the same induction argument as before. Second, there are terms of the form

Altogether, we verify that \(S^{2n}(\otimes ^n A)\xi : \xi >0\) and the proof of the lemma is complete. \(\square \)
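A brute-force check of Lemma 12 for small n is sketched below. We assume that \(S^{2n}\) denotes the average over all \((2n)!\) permutations of the indices (a positive normalization factor does not affect definiteness), contract the first n indices with one copy of \(\xi \) and the last n with the other, and take a random symmetric positive definite A in dimension \(d=3\). Helper names are hypothetical. The cost grows like \((2n)!\,d^{2n}\), so this is only practical for \(n\le 3\).

```python
import itertools

import numpy as np


def symmetrize(t):
    """Average of t over all permutations of its indices (assumed normalization of S^{2n})."""
    perms = list(itertools.permutations(range(t.ndim)))
    return sum(np.transpose(t, p) for p in perms) / len(perms)


def sym_power(a, n):
    """S^{2n}(A (x) ... (x) A) with n factors of A."""
    t = a
    for _ in range(n - 1):
        t = np.multiply.outer(t, a)
    return symmetrize(t)


def sym_basis(d, m):
    """Basis of Sym^m(R^d): one symmetrized unit tensor per multiset of indices."""
    basis = []
    for idx in itertools.combinations_with_replacement(range(d), m):
        e = np.zeros((d,) * m)
        for perm in set(itertools.permutations(idx)):
            e[perm] = 1.0
        basis.append(e)
    return basis


def min_eig_on_sym(t, m):
    """Smallest eigenvalue of xi -> t xi : xi restricted to Sym^m, for t of order 2m."""
    basis = sym_basis(t.shape[0], m)
    g = np.array([[np.sum(p * np.tensordot(t, q, axes=m)) for q in basis] for p in basis])
    return np.linalg.eigvalsh(0.5 * (g + g.T)).min()


rng = np.random.default_rng(1)
d = 3
m0 = rng.standard_normal((d, d))
a = m0 @ m0.T + d * np.eye(d)  # random SPD matrix
for n in (1, 2, 3):
    print(n, min_eig_on_sym(sym_power(a, n), n))  # strictly positive for each n
```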

5.4 Proof of the New Relation Between the Correctors (Theorem 3)

We prove the result for \(r\ge 1\). Note that in the case \(r=1\), we adopt the convention that empty sums vanish, i.e., \(\sum _{k=1}^0 x_k=0\). For the sake of clarity, we assume that \(|Y|=1\) so that \((\cdot ,1)_Y = \langle {\cdot }\rangle _Y\).

In Sect. 2.1, we explained that the cell problems (23) are well-posed if and only if \(p^{j} =_S g^{j}\). We may therefore replace \(p^{j}\) by \(g^{j}\) in the cell problems (23). For \(r\ge 1\), let \(\chi ^1,\ldots ,\chi ^{2r+1}\) be the first \(2r+1\) zero-mean correctors. We define the tensors \(A^{k},B^k,C^k\in {\mathrm {Ten}}^{2r+2}(\mathbb {R}^d)\) as

Note that the symmetry of a ensures the following symmetry relations for \(A^k\) and \(C^k\):

Furthermore, using the test function \(w=\chi ^{2r+2-k}_{i_{k+1}\cdot \cdot i_{2r+2}}\) in the cell problem for \(\chi ^k_{i_1\cdot \cdot i_k}\) in (23) and the symmetry of a, we obtain the following relations

Define then the tensor \(T\in {\mathrm {Ten}}^{2r+2}(\mathbb {R}^d)\) as

Using the definition of \(\sigma ^k\) and (88), we verify that

where in the second equality we made the change of index \(k=2r-m\). Using (89), we decompose the tensor T as

where the tensors \(U^1\), \(U^2\) and \(U^3\) are

The rest of the proof relies on the following result.

Lemma 13

The tensors \(U^i\) defined above satisfy the following relations:

where \(h^r\) is the tensor defined in (42).

While (i) and (ii) follow directly, the proof of (iii) requires some preliminary work, carried out in the following two lemmas.

Lemma 14

The tensors \(D^k\) satisfy

where for \(r=1\) the sum on the right-hand side vanishes.

Lemma 15

The tensor \(H\) defined in (93) satisfies \(H=_S h^r\), where \(h^r\) is the tensor defined in (42).

Proof of Lemma 14

Let us denote \(z^{j,k}= \big \langle {g^{k-j} \otimes \chi ^j \otimes \chi ^{2r-k} }\big \rangle _Y\). As \(\chi ^0=1\) and the correctors have zero mean, we verify that \(z^{0,k} = z^{j,2r}= 0\). Hence, \(D^k\) can be written as \(D^k = \sum _{j=1}^k z^{j,k}\). Splitting the sum and making the change of index \(\ell =2r-j\), we write for \(1\le k\le r-1\)

As we verify that \(z^{2r-\ell ,2r-k} = _S z^{k,\ell }\), we can write

As \(g^{s}\) is nonzero only for even s (see (71)), we have \((-1)^{s}g^{s} = g^{s}\) for all s. In particular, taking \(s=\ell -k\), we have \((-1)^{k}g^{\ell -k} = (-1)^{\ell }g^{\ell -k}\), which ensures that \((-1)^{k} z^{k,\ell } = (-1)^{\ell } z^{k,\ell }\). Hence, changing the summation order, we find

Furthermore, we verify that