Abstract

Detecting coordinated attacks in cybersecurity is challenging because of their sophisticated and distributed nature, which often renders traditional Intrusion Detection Systems (IDS) ineffective, especially in heterogeneous networks with diverse devices and systems. This research introduces a novel Collaborative Intrusion Detection System (CIDS) that uses a Weighted Ensemble Averaging Deep Neural Network (WEA-DNN) to detect such attacks. The WEA-DNN combines deep learning and ensemble methods to enhance detection by integrating multiple Deep Neural Network (DNN) models, each trained on different data subsets with varying architectures. Differential Evolution (DE) computes the optimal weight of each model's contribution, allowing the system to analyze network traffic data from diverse sources collaboratively. Extensive experiments on real-world datasets, namely CICIDS2017, CSE-CICIDS2018, CICToNIoT, and CICBotIoT, show that the CIDS framework achieves an average accuracy of 93.8%, precision of 78.6%, recall of 60.4%, and F1-score of 62.4%, surpassing traditional ensemble models and matching the performance of local DNN models. These results demonstrate the practical benefits of WEA-DNN for detection in real-world heterogeneous network environments, offering superior adaptability and robustness in handling complex attack patterns.



1 Introduction

In today’s interconnected world, many different devices and systems are part of heterogeneous networks, which use various technologies, protocols, and infrastructures. This diversity enhances functionality and connectivity but also presents unique challenges, particularly in cybersecurity [1]. Coordinated attacks, in which attackers strategically plan and carry out cyberattacks across multiple devices and systems either simultaneously or sequentially, pose a significant threat in heterogeneous networks. The diverse technologies and protocols within these networks add complexity and variability, making such attacks difficult to detect [2]. Traditional IDS often struggle with this task because they rely on fixed signatures or rules that may fail to capture the complex and evolving nature of these attacks. The difficulty increases in heterogeneous networks, where attacks can exploit weaknesses in different segments, protocols, or devices, complicating detection and response. Coordinated attacks often use sophisticated strategies to evade detection and exploit the network’s diversity. CIDS has emerged as a promising solution that extends traditional IDS by drawing on the collective intelligence of multiple detection systems [3].

One effective way to use collective intelligence in CIDS for detecting complex attack patterns like coordinated attacks is by combining ensemble models with deep learning. Ensemble models leverage multiple classifiers, each trained on different data subsets or using various architectures, to handle diverse data by integrating insights from different detectors, each focusing on different aspects of the network. Meanwhile, deep learning models identify complex patterns and correlations within high-dimensional data [4]. Traditional ensemble methods usually give equal weight to each base classifier, regardless of their individual performance or characteristics. However, this approach might not effectively capture each detector’s varying expertise or reliability, especially with datasets containing different data patterns. Weighted strategies are crucial here, as they adjust the influence of each base detector based on its performance or relevance to the current context. By giving higher weights to more accurate detectors or those specializing in certain attack patterns, weighted strategies help the ensemble prioritize reliable insights while minimizing the impact of less accurate or less relevant detectors [5, 6].

In this research, we present a new CIDS approach designed to detect coordinated attacks in heterogeneous network environments. Our method combines deep learning with ensemble learning and weighting strategies to create a WEA-DNN specifically for this task. We use ensemble averaging to merge model predictions. Averaging gives a graded estimate of intrusion likelihood, reduces the impact of outliers, and creates smoother decision boundaries that help characterize intrusion patterns [7,8,9]. The base classifier in our ensemble is a DNN, chosen for its ability to handle large and complex data, cope with the growing volume of network traffic, and adapt to evolving network conditions [10, 11]. Additionally, we use Differential Evolution (DE), a population-based optimization algorithm, as the weighting strategy for our ensemble. DE efficiently finds optimal weight values for combining the individual detectors. The resulting weights reflect each detector’s performance and relevance in identifying different types of attacks, thereby improving overall detection effectiveness [12, 13].
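The following minimal Python/NumPy sketch makes ensemble averaging concrete. It combines the class-probability outputs of several models by a weighted average, and equal weights reduce it to plain ensemble averaging. The function name `weighted_ensemble_average` and the array layout (models × samples × classes) are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def weighted_ensemble_average(probas, weights=None):
    """Combine per-model class probabilities by (weighted) averaging.

    probas:  array-like of shape (n_models, n_samples, n_classes), e.g. softmax outputs.
    weights: array-like of shape (n_models,); None means equal weights (plain averaging).
    Returns the averaged probabilities and the predicted class index per sample.
    """
    probas = np.asarray(probas, dtype=float)
    if weights is None:
        weights = np.ones(probas.shape[0])
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()            # normalise so the output stays a distribution
    avg = np.tensordot(weights, probas, axes=1)  # sum_k w_k * P_k -> (n_samples, n_classes)
    return avg, avg.argmax(axis=1)

# Two hypothetical models that disagree on one sample (class 0 vs class 1).
P = [[[0.9, 0.1]],
     [[0.2, 0.8]]]
print(weighted_ensemble_average(P))            # equal weights
print(weighted_ensemble_average(P, [1, 3]))    # second model weighted three times higher
```

With equal weights the average is [0.55, 0.45], so the first model's class wins. Giving the second model three times the weight yields [0.375, 0.625] and flips the decision, which is the kind of effect a weighting strategy such as DE tunes.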

We evaluate the proposed model on real-world datasets, namely CICIDS2017, CSE-CICIDS2018, CICToNIoT, and CICBotIoT, which contain instances of coordinated attacks and together simulate heterogeneous networks. Beyond accuracy, the evaluation considers precision, recall, F1-score, training time, and the size of the trained model. By collaboratively analyzing network traffic data from heterogeneous sources, the CIDS based on WEA-DNN is expected to accurately identify coordinated attacks across diverse network architectures.

This research addresses the following research questions:

1. How does CIDS perform in detecting coordinated attacks in a heterogeneous network when it uses a DNN?
2. How does CIDS perform in detecting coordinated attacks in a heterogeneous network when it uses an Ensemble Averaging DNN?
3. How does CIDS perform in detecting coordinated attacks in a heterogeneous network when it uses a WEA-DNN?

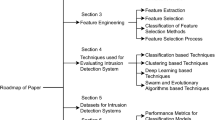

This research is organized as follows. Section 1 (Introduction) explains the background and state of the art of this research. Section 2 (Related Terms and Works) explains the terms coordinated attack, CIDS, and heterogeneous network, and describes the contribution of this research and how it differs from other research. Section 3 (Methodology) explains, step by step, the proposed model and the evaluation scenario. Section 4 (Result and Discussion) discusses the experimental results of the proposed model. Section 5 concludes this research.

2 Related terms and works

This section defines the terms coordinated attack, CIDS, and heterogeneous network. It also reviews existing literature related to this study to summarize important findings and the current state of research.

2.1 Coordinated attack

A coordinated attack is a type of malicious activity in which multiple attackers or groups collaborate and act in concert to achieve a common goal. These attackers may coordinate their timing, techniques, and targets to maximize the impact of their attack and overcome defenses. There are three common types of coordinated attacks: large-scale stealthy scans, distributed denial-of-service (DDoS) attacks, and worm outbreaks [14].

A port scan can be used as an initial attack to find vulnerabilities. To avoid detection, large-scale stealthy scans involve network scanning attacks that employ cautious timing profiles, such as T0 or T1 profiles in Nmap. Despite requiring a considerable amount of time to scan many machines or ports, these scans effectively cover a large part of the network while staying hidden [15].
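The time cost mentioned above can be estimated with a quick calculation. This back-of-the-envelope Python sketch gives a lower bound on scan duration from the inter-probe delay. It assumes the delays documented for Nmap's timing templates (about 5 minutes between probes for `-T0` and about 15 seconds for `-T1`), one serialized probe per host/port pair, and no retransmissions, so real scans will differ. The names `SCAN_DELAY_S` and `min_scan_hours` are illustrative.

```python
# Approximate inter-probe delays of Nmap's slowest timing templates, in seconds
# (-T0 "paranoid" waits about 5 minutes between probes, -T1 "sneaky" about 15 seconds).
SCAN_DELAY_S = {"T0": 300, "T1": 15}

def min_scan_hours(hosts: int, ports: int, template: str) -> float:
    """Lower bound on scan duration: one serialized probe per host/port pair."""
    probes = hosts * ports
    return probes * SCAN_DELAY_S[template] / 3600

print(min_scan_hours(1, 1000, "T1"))   # 1,000 probes x 15 s
print(min_scan_hours(1, 1000, "T0"))   # 1,000 probes x 300 s
```

For a single host and 1,000 ports, this gives roughly 4.2 hours with `-T1` and roughly 83 hours with `-T0`. Such slow scans are stealthy but lengthy.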

After finding vulnerabilities, the attacker may write a botnet script that spreads across the computer network. Computer worms are malicious programs that propagate quickly across computer networks without user interaction. They can encrypt or delete data on affected systems and may also have real-world consequences. A worm outbreak occurs when numerous computers or devices are infected by a worm, compromising security and potentially endangering public safety [16].

When a computer is infected with a bot, it becomes a “zombie” that the attacker can use to launch DDoS attacks on other networks. A DDoS attack is a cyberattack that aims to overwhelm a target network with malicious traffic, making it unavailable to legitimate users [17].

The impact of this scenario is severe because the attacker can take over one organization’s network and use it to attack another organization’s network. This makes coordinated attacks an attractive tool for attackers seeking monetary gain [18].

Most researchers detect these attacks separately. For example, [19] surveys research on port scan detection, [20] surveys research on botnet detection, and [21] surveys research on DDoS detection. Some researchers also mix coordinated attacks with other attack types, as in the survey [22]. Because coordinated attacks combine several attacks to damage a network, their patterns are complex and sophisticated. Given their potential damage and complexity, it is better to detect all coordinated attack patterns together rather than a single attack pattern in isolation or mixed with unrelated attacks.

2.2 CIDS

An IDS plays a crucial role in detecting the complex patterns of coordinated attacks. An IDS is a security tool designed to monitor network traffic or system activity for malicious behavior or policy violations. IDS employ various detection methods, including anomaly detection, which identifies deviations from normal behavior that could indicate intrusions or attacks. This method is effective at detecting new or unknown attacks that signature-based systems may overlook [23]. Given the sophisticated nature of coordinated attacks, a standard IDS can be insufficient. This calls for a CIDS capable of collecting evidence from large-scale distributed networks [24].

CIDS is a security concept that involves multiple entities working together to detect and mitigate security threats within a network or system. This approach often involves the sharing of information and resources among different security systems or organizations to enhance the overall effectiveness of intrusion detection. The basic idea behind CIDS is that by pooling resources and sharing information about potential threats, organizations can better identify and respond to security incidents. This can be particularly useful in detecting sophisticated and coordinated attacks that may span multiple systems or networks. CIDS typically involves various components such as sensors, analyzers, and decision-making modules. These components work together to monitor network traffic, analyze patterns and anomalies, and generate alerts or responses when potential intrusions are detected [25, 26].

CIDS consists of two primary operational units working together to provide a comprehensive view of network security. The first unit, the detection unit, monitors subnetworks and hosts using diverse sensors, which generate intrusion alerts at a granular level. These alerts are then forwarded to the second unit, the data correlation unit. Here, correlation processes are conducted to aggregate the alerts from individual sensors, presenting a comprehensive overview of the network’s security posture. CIDS operates by combining evidence from diverse networks, facilitating the detection of intrusions occurring across the entire network simultaneously. This is achieved through correlating attack signatures across distinct subnetworks, enabling CIDS to offer a holistic perspective on network security and identifying patterns and trends indicative of larger-scale attacks [27, 28].
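The split between detection units and a correlation unit can be illustrated with a toy correlation rule. The Python sketch below is hypothetical: the `Alert` record, the choice to group alerts by source IP, and the `min_subnets` threshold are assumptions for illustration only. It is not the correlation method used in this study.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Alert:
    subnet: str   # detection unit / subnetwork that raised the alert
    src_ip: str   # suspected attacker address
    kind: str     # e.g. "PortScan", "DDoS", "Bot"

def correlate(alerts, min_subnets=2):
    """Aggregate sensor alerts by source and keep sources seen in several subnetworks."""
    subnets = defaultdict(set)
    kinds = defaultdict(set)
    for a in alerts:
        subnets[a.src_ip].add(a.subnet)
        kinds[a.src_ip].add(a.kind)
    return {ip: (sorted(nets), sorted(kinds[ip]))
            for ip, nets in subnets.items() if len(nets) >= min_subnets}

alerts = [Alert("net1", "10.0.0.5", "PortScan"),
          Alert("net2", "10.0.0.5", "DDoS"),
          Alert("net3", "192.168.1.9", "Bot")]
print(correlate(alerts))   # only 10.0.0.5 appears in more than one subnetwork
```

Each detection unit only sees its own alerts. The correlation step is what reveals that one source scanned one subnetwork and flooded another.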

2.3 Heterogeneous network

Effectively implementing CIDS to detect coordinated attacks becomes even more critical in the context of heterogeneous networks. A heterogeneous network refers to a network that consists of diverse elements, components, or devices that may vary in terms of their technologies, protocols, capabilities, or characteristics. In a heterogeneous network environment, different types of devices, such as computers, servers, routers, switches, and mobile devices, may be interconnected using various communication technologies and protocols [29]. Heterogeneous networks often include a wide range of devices from different manufacturers, with varying hardware specifications, operating systems, and software applications. These devices may have different capabilities, functionalities, and requirements, which can pose challenges for network management and interoperability. Integrating and managing heterogeneous devices and technologies in a network environment often requires addressing interoperability challenges. This involves ensuring that different devices and systems can communicate effectively and seamlessly exchange data despite differences in protocols, formats, or standards [30].

Managing security in a heterogeneous network environment can be challenging due to the diverse nature of devices and technologies involved. To protect against cyber threats in these complex networks, organizations need a comprehensive strategy. This includes securing everything from traditional servers to IoT devices and cloud services, focusing on key areas like access control, encryption, authentication, and intrusion detection [31]. However, given the evolving nature of threats, relying solely on these measures may prove insufficient. CIDS emerges as a crucial approach, leveraging interconnected security systems to enhance threat detection and response capabilities. By sharing information and coordinating responses across multiple detection points, organizations can identify suspicious activities more swiftly and accurately. This collaborative approach enables adaptive responses to emerging threats through automated incident response mechanisms and facilitates proactive updates based on shared threat intelligence [32]. Securing heterogeneous networks requires a unified strategy that combines various security measures and CIDS to effectively reduce cyber risks and protect critical assets.

2.4 Related works

In this research, we propose a novel CIDS approach tailored to detect coordinated attacks in heterogeneous network environments. It integrates DNN techniques with ensemble averaging and uses DE as the weighting strategy, yielding a WEA-DNN designed for this purpose. To establish the novelty of our research, we review related works on intrusion detection that use ensemble models with weighting strategies.

In the research [33], a novel ensemble classifier called OW-ECIDM is introduced, utilizing a Genetic Algorithm (GA) to optimize the weights of individual base classifiers for intrusion detection. The focus is specifically on addressing low detection rates in U2L attacks and mitigating data imbalance issues. Feature extraction and dimension reduction are performed through Principal Component Analysis (PCA) to enhance feature quality, while parameters for the Support Vector Machine (SVM) are optimized. Evaluation of the model’s effectiveness is conducted on the NSL-KDD dataset, encompassing various attack types, including unknown ones. OW-ECIDM exhibits superior accuracy and generalization performance compared to alternative combination strategies such as stacking, majority vote, average of probabilities, and bagging. These results position OW-ECIDM as a promising solution for intrusion detection within the domain of network security.

Research [34] advocates the utilization of Particle Swarm Optimization (PSO) to derive weights for each classifier within the ensemble methodology. Fine-tuning of the PSO is achieved through the incorporation of Local Unimodal Sampling (LUS) as a meta-optimizer, and the creation of ensemble classifiers is executed using the weighted majority algorithm (WMA). This study focuses on tasks related to binary classification, employing SVM and k-nearest neighbor (KNN) as the foundational learning algorithms. The model is evaluated against the KDD99 dataset.

Research [12] introduces MultiTree and adaptive ensemble voting algorithms to augment the detection capabilities of IDS. In this scenario, the classification weights for each algorithm are established according to their training accuracy, culminating in the creation of the adaptive voting algorithm model. The study integrates DNNs, random forests, and decision trees as fundamental machine learning models and assesses the proposed model’s efficacy using the NSL-KDD dataset.

Research [35] proposes Weighted Majority Voting as an ensemble strategy to improve the reliability of diverse classifiers. The weights are generated using Ant Colony Optimization (ACO) for continuous search spaces. The study focuses on multi-class classification tasks with Artificial Neural Network (ANN), KNN, and Naive Bayes (NB) as base learning algorithms. The approach is validated on the NSL-KDD dataset.

Research [36] introduces a genetic programming (GP) method for ensemble combination, harnessing PSO to generate weights for the combiner function in the ensemble of classifiers. This study employs the Weighted Majority Algorithm (WMA) for the ensemble method and utilizes various machine learning algorithms for base classifiers. The proposed model is designed for binary classification tasks, and its performance is assessed using the ISCX IDS dataset.

Research [37] proposes a high-accuracy IDS model that uses weighted ensemble techniques and Chi-square feature reduction. The approach deploys SVM, LPBoost, and modified Naive Bayes (MNB) classifiers individually. It then applies a majority voting technique that predicts the class label based on the weight assigned to each classifier. The research addresses multi-class classification tasks and is tested on the NSL dataset.

Based on this brief review of related works, we conclude that intrusion detection using weighted ensemble methods is effective in identifying anomalies. However, as Table 1 indicates, deploying such methods in CIDS within a heterogeneous network environment remains an open problem. Most existing research focuses on homogeneous networks, using a single dataset to create the classifiers and ensemble them. In real-world scenarios, detecting anomalies such as coordinated attack patterns across multiple networks with varying configurations and devices can be quite challenging. Therefore, this research explores the detection of coordinated attacks in a heterogeneous network environment.

As Table 1 indicates, deep learning has also received little attention in the related works. Deep learning models can automatically learn features from raw data, eliminating the need for manual feature engineering. They can capture intricate relationships between features at multiple levels of abstraction and scale efficiently to large volumes of data. This makes them particularly suitable for complex, high-dimensional data. Traditional machine learning algorithms may suffice for simpler tasks or when interpretability is crucial. Deep learning, however, offers significant advantages in performance, scalability, and adaptability, making it a powerful tool in various domains [38, 39]. Therefore, this research uses a deep learning algorithm, namely a DNN, instead of traditional machine learning algorithms to improve predictions in CIDS.

Based on Table 1, most existing research uses the majority voting method, whereas ours employs the averaging method. The averaging process mitigates the impact of outliers or misclassified instances by considering the average prediction across multiple models. In contrast, outliers may heavily influence majority voting, especially when they occur in significant numbers. In scenarios where classes are imbalanced, the averaging process can provide more balanced predictions by considering the collective output of all models, regardless of class distribution. This can help prevent biases towards majority classes that may arise with majority voting. Additionally, the averaging process allows for a probabilistic interpretation of the ensemble’s predictions, providing a probabilistic score or confidence level for each class and offering more insight into the uncertainty associated with the predictions [40, 41]. Therefore, this research utilizes the averaging method due to its stability, robustness, and probabilistic interpretation advantages, making it a valuable alternative to majority voting in ensemble learning, especially in scenarios where data may be noisy or uncertain.
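The two combination rules can disagree even on a single sample. In this minimal NumPy sketch (the function names and probabilities are hypothetical), two models lean weakly towards class 1 and one model is confident in class 0. Hard majority voting returns class 1. Soft averaging returns class 0, together with a per-class score that can be read as a confidence.

```python
import numpy as np

def majority_vote(probas):
    """Hard voting: each model casts one vote for its argmax class."""
    probas = np.asarray(probas)
    votes = probas.argmax(axis=2)                                   # (n_models, n_samples)
    n_classes = probas.shape[2]
    counts = np.stack([(votes == c).sum(axis=0) for c in range(n_classes)])
    return counts.argmax(axis=0)                                    # ties go to the lower class index

def soft_average(probas):
    """Soft voting: average the class probabilities, then take the argmax."""
    avg = np.asarray(probas, dtype=float).mean(axis=0)              # (n_samples, n_classes)
    return avg.argmax(axis=1), avg

# Three hypothetical models, one sample, two classes.
P = [[[0.45, 0.55]],
     [[0.48, 0.52]],
     [[0.95, 0.05]]]
print(majority_vote(P))   # hard label only
print(soft_average(P))    # label plus averaged class scores
```

Voting discards how confident each model was. Averaging keeps that information, which is the probabilistic interpretation referred to above.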

Based on Table 1, PSO is a popular algorithm for computing ensemble weights, GA is also used, and another related work uses the ACO algorithm. Our research instead uses DE. DE is known for its efficiency in optimizing high-dimensional search spaces, is often more scalable, and requires fewer function evaluations to converge to a solution than PSO and GA. It is relatively easy to implement and has fewer parameters to tune, which makes it straightforward to use in practice. DE is also robust to noise and less susceptible to getting trapped in local optima than PSO and GA. This is because it perturbs existing candidate solutions and explores the search space through differential mutation, which helps it escape local optima. ACO, while robust in some cases, may struggle with noise and local optima because its pheromone-based approach can bias the search [42,43,44]. Therefore, our research uses DE for its efficiency in high-dimensional spaces, robustness to noise and local optima, and ease of implementation.
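The differential mutation, crossover, and selection steps described above fit in a few lines of code. The following is a generic textbook DE/rand/1/bin minimiser in NumPy, not the authors' configuration. The population size, scale factor `F`, crossover rate `CR`, and generation count are illustrative assumptions. In practice, SciPy's `scipy.optimize.differential_evolution` provides a maintained implementation.

```python
import numpy as np

def differential_evolution(f, bounds, pop_size=20, F=0.8, CR=0.9, generations=200, seed=0):
    """Minimise f over a box using the classic DE/rand/1/bin scheme."""
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(bounds, dtype=float).T          # bounds: list of (low, high) pairs
    dim = lo.size
    pop = lo + rng.random((pop_size, dim)) * (hi - lo)  # random initial population
    fit = np.array([f(x) for x in pop])
    for _ in range(generations):
        for i in range(pop_size):
            # Differential mutation: a random vector plus a scaled difference of two others.
            others = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(others, 3, replace=False)]
            mutant = np.clip(a + F * (b - c), lo, hi)
            # Binomial crossover; at least one coordinate always comes from the mutant.
            cross = rng.random(dim) < CR
            cross[rng.integers(dim)] = True
            trial = np.where(cross, mutant, pop[i])
            # Greedy selection: keep the trial only if it is no worse than the parent.
            f_trial = f(trial)
            if f_trial <= fit[i]:
                pop[i], fit[i] = trial, f_trial
    best = fit.argmin()
    return pop[best], fit[best]

x, fx = differential_evolution(lambda v: float(np.sum((v - 1.0) ** 2)), [(-5, 5)] * 3)
print(x, fx)   # should approach the minimiser (1, 1, 1)
```

The usage line minimises a shifted sphere function over [-5, 5]^3. The only information DE needs is the objective's value at candidate points, which is why it can optimise ensemble weights without gradients.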

Turning to the present research, it explores approaches to analyzing coordinated attacks using CIDS based on WEA-DNN in heterogeneous networks. This research uses the CICIDS2017, CSE-CICIDS2018, CICToNIoT, and CICBotIoT datasets to simulate heterogeneous networks. These datasets were selected because they contain coordinated attack traffic such as port scans, DDoS, and botnets. Using distinct datasets that feature coordinated attack patterns creates a simulated environment of multiple, heterogeneous networks. In this context, DE is used to compute the weight of each classifier from sample traffic drawn from each network. We use a single type of classifier, a DNN, with each instance trained on a different dataset. This gives the ensemble strong variance while keeping it simple, efficient in computational resource use, and consistent in behavior across its members.

3 Methodology

3.1 Dataset preprocessing

This research used the CICIDS2017 and CSE-CICIDS2018 datasets, generously provided by the Canadian Institute for Cybersecurity (CIC) [45, 46]. In addition, two datasets extracted from pcap files using CICFlowMeter were employed: the CICToNIoT dataset and the CICBotIoT dataset, both developed in research [47].

These datasets were selected because they have similar features, all having been extracted with the CICFlowMeter tool. The datasets therefore simulate traffic captured with the same sensor but from different networks with heterogeneous characteristics. CICIDS2017 and CSE-CICIDS2018 represent conventional networks, typically traditional computer networks with a variety of devices and protocols. CICToNIoT and CICBotIoT, on the other hand, represent IoT networks, characterized by interconnected devices that use specialized protocols and communication patterns. This distinction enables researchers to design and implement detection and security measures that address the specific challenges of each network type. Each dataset provides sample traffic patterns of coordinated attacks, showcasing diverse devices, protocols, network architectures, and traffic behaviors. This diversity enriches the simulations and analyses, offering insights into how different types of networks respond to and defend against coordinated attacks.

This study utilized these datasets to simulate a heterogeneous network. CICIDS2017 is designated as network 1, CSE-CICIDS2018 as network 2, CICToNIoT as network 3, and CICBotIoT as network 4. This setup effectively represents heterogeneous networks because each dataset varies in terms of devices, protocols, network architectures, and traffic patterns. The research focuses on analyzing anomalies in network traffic, specifically coordinated attacks. It adopts a centralized collaborative anomaly detection method to continuously monitor and analyze network traffic for unusual patterns. Rather than focusing on specific attack behaviors, the approach aims to detect deviations from established network norms. Therefore, the study does not explicitly aim to correlate attacks across different networks.

3.1.1 Data extraction and label verification

The first step is to extract normal traffic and coordinated attack patterns from each dataset to simulate coordinated attacks in a heterogeneous network. This research then verifies the labels and establishes consistent labels for coordinated attacks. This is necessary because the task is multi-class classification. The coordinated attack patterns encompass large-scale stealthy scans, DDoS attacks, and worm outbreaks. We extract data whose labels match these coordinated attack characteristics. The data and labels extracted and verified from all datasets are detailed in Table 2.

The CICIDS2017 dataset contains 14 labels for cyberattacks and 1 label for normal traffic (BENIGN). Among these 14 types of attacks in the CICIDS2017 dataset, 3 labels correspond to cyberattacks that include coordinated attacks, namely DDoS, PortScan, and Bot. Therefore, this research used only the traffic from these 3 cyberattack labels and the 1 label for normal traffic (BENIGN) in the CICIDS2017 dataset to create a coordinated attack pattern in the dataset.

The CSE-CICIDS2018 dataset also contains 14 labels for cyberattacks and 1 label for normal traffic (BENIGN). Its labels corresponding to coordinated attacks are Infiltration, DDOS attack-HOIC, DDoS attacks-LOIC-HTTP, DDOS attack-LOIC-UDP, and Bot. According to research [48], infiltration can involve scanning as part of gaining unauthorized access to a system or network. Scanning is typically one of the initial stages of an infiltration attempt, in which attackers gather information about the target environment. This information-gathering phase often includes scanning for open ports, identifying the network services running on those ports, probing for vulnerabilities, and mapping the network topology. Therefore, data labeled Infiltration was relabeled as PortScan. Data labeled DDOS attack-HOIC, DDoS attacks-LOIC-HTTP, and DDOS attack-LOIC-UDP was relabeled as DDoS. Consolidating these specific attack types under a more general label streamlines data management and analysis.

The CICToNIoT dataset comprises 9 labels for cyberattacks and 1 label for normal traffic (BENIGN). Its labels corresponding to coordinated attacks are scanning, DDoS, and backdoor. Data labeled scanning is relabeled as PortScan because it involves scanning the system or network for vulnerabilities. Although this dataset has no bot label, it does include data labeled backdoor. According to research [49], one behavior of bot attacks is to target remote-access computers by responding to specific, constructed client applications. Therefore, data with the backdoor label is relabeled as Bot.

The CICBotIoT dataset contains 4 labels for cyberattacks and 1 label for normal traffic (BENIGN). Its labels corresponding to coordinated attacks are Reconnaissance, DDoS, and Theft. “Reconnaissance,” also known as a probe, is a technique for gathering information about a network host. According to research [50], this data is related to scanning for vulnerabilities in the system. Therefore, data labeled “Reconnaissance” is relabeled as “PortScan.” The dataset also contains data labeled “Theft,” a group of attacks aimed at obtaining sensitive data, such as data theft and keylogging. According to research [51], this data is related to bot attack activity. Therefore, data labeled “Theft” is relabeled as “Bot.”

After relabeling, each dataset contains data labeled BENIGN, PortScan, DDoS, and Bot, as shown in Table 2. This research analyzes traffic labeled PortScan, DDoS, and Bot as cyberattacks (coordinated attacks), while data labeled BENIGN represents normal traffic in all datasets. These labels are used to identify and analyze coordinated attack patterns.
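The relabeling rules above can be summarised as a per-dataset lookup table. In this minimal Python sketch, the dictionary keys are the label names as written in this section. The exact label strings and capitalisation in the released CSV files may differ, so they are an assumption. Rows whose label is not in the table (other attack types) are dropped. `RELABEL` and `relabel` are hypothetical names.

```python
# Unified classes used after relabeling.
CLASSES = {"BENIGN", "PortScan", "DDoS", "Bot"}

# Per-dataset mapping from original labels to the unified coordinated-attack classes.
RELABEL = {
    "CICIDS2017": {"BENIGN": "BENIGN", "PortScan": "PortScan", "DDoS": "DDoS", "Bot": "Bot"},
    "CSE-CICIDS2018": {
        "BENIGN": "BENIGN",
        "Infiltration": "PortScan",           # scanning is an early stage of infiltration
        "DDOS attack-HOIC": "DDoS",
        "DDoS attacks-LOIC-HTTP": "DDoS",
        "DDOS attack-LOIC-UDP": "DDoS",
        "Bot": "Bot",
    },
    "CICToNIoT": {"BENIGN": "BENIGN", "scanning": "PortScan", "DDoS": "DDoS", "backdoor": "Bot"},
    "CICBotIoT": {"BENIGN": "BENIGN", "Reconnaissance": "PortScan", "DDoS": "DDoS", "Theft": "Bot"},
}

def relabel(dataset: str, labels: list[str]) -> tuple[list[int], list[str]]:
    """Return the indices of the rows to keep and their unified labels."""
    mapping = RELABEL[dataset]
    kept = [(i, mapping[lab]) for i, lab in enumerate(labels) if lab in mapping]
    return [i for i, _ in kept], [lab for _, lab in kept]

# "FTP-BruteForce" stands in for any label outside the coordinated-attack scope.
idx, y = relabel("CSE-CICIDS2018",
                 ["BENIGN", "Infiltration", "DDOS attack-HOIC", "FTP-BruteForce", "Bot"])
print(idx, y)
```

Returning the kept row indices lets the same selection be applied to the feature matrix, so features and labels stay aligned.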

3.1.2 Feature renaming

The second step renames the features so that all datasets have a consistent number of features and consistent feature names. This is necessary because this research employs horizontal learning, in which every model is trained on the same feature space. Because all datasets were generated with CICFlowMeter, they have the same number of features with similar names. However, some feature names are inconsistent, for example in capitalization, included symbols, and abbreviations. Therefore, this study standardizes all feature names across the datasets, as shown in Table 3. The feature names follow the 78 features defined by CICFlowMeter.
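The standardization in Table 3 is a fixed name mapping. As an illustration of how such inconsistencies can be normalised automatically, the minimal Python sketch below lower-cases names, strips symbols, and expands abbreviations. The alias table and the `canonical` function are hypothetical and cover only a few abbreviations. A real mapping should be checked against Table 3.

```python
import re

# Hypothetical alias table for abbreviations that differ between CICFlowMeter exports.
ALIASES = {"byts": "bytes", "pkts": "packets", "pkt": "packet", "len": "length",
           "tot": "total", "dst": "destination", "src": "source"}

def canonical(name: str) -> str:
    """Normalise case, symbols, and abbreviations so equivalent features share one name."""
    name = name.strip().lower().replace("/s", " per s")  # "Flow Bytes/s" -> "flow bytes per s"
    tokens = re.findall(r"[a-z0-9]+", name)              # split on spaces, '_', '/', '-', etc.
    return "_".join(ALIASES.get(tok, tok) for tok in tokens)

for raw in [" Destination Port", "Dst Port", "Flow Bytes/s", "Flow Byts/s",
            "Total Fwd Packets", "Tot Fwd Pkts"]:
    print(f"{raw!r:22} -> {canonical(raw)}")
```

Each pair of spellings maps to the same canonical name, so columns from different datasets can be aligned by name before training.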

3.1.3 Data cleaning

The final step is data cleaning, which removes missing values (NA) and zero values to prevent bias and inaccuracies in the analysis. Redundant features are then eliminated to reduce noise and model complexity without losing information, and duplicate features that introduce redundant data are removed. Table 4 shows the resulting traffic counts for each label in each dataset after all preprocessing steps.
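A minimal NumPy sketch of these cleaning steps follows. This section does not spell out how "zero values" and "redundant features" are identified. The sketch therefore assumes they mean constant (zero-variance) columns and exact duplicate columns. It also drops rows with missing or infinite values, since CICFlowMeter rate features such as Flow Bytes/s can contain infinities. The function name `clean` is hypothetical.

```python
import numpy as np

def clean(X, y, names):
    """Drop rows with NaN/inf, then constant columns and exact duplicate columns."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    # 1. Rows containing missing or infinite values.
    ok = np.isfinite(X).all(axis=1)
    X, y = X[ok], y[ok]
    # 2. Constant (e.g. all-zero) columns carry no information for the classifier.
    keep = X.std(axis=0) > 0
    # 3. Columns that exactly duplicate an earlier kept column.
    for j in range(X.shape[1]):
        if keep[j] and any(keep[k] and np.array_equal(X[:, k], X[:, j]) for k in range(j)):
            keep[j] = False
    names = [n for n, k in zip(names, keep) if k]
    return X[:, keep], y, names

X = [[1, 0, 1, 5],
     [2, 0, 2, float("nan")],
     [3, 0, 3, 7]]
Xc, yc, nc = clean(X, ["BENIGN", "Bot", "DDoS"], ["a", "b", "c", "d"])
print(Xc, yc, nc)   # row 2 (NaN), column b (constant) and column c (copy of a) removed
```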

3.2 CIDS model

The architecture of the CIDS for a heterogeneous network can be observed in Fig. 1. The CIDS consists of a detector unit and a correlation unit. The detector unit is distributed across each network and consists of a sensor unit and an analyzer unit. The sensor unit captures the data, while the analyzer unit is responsible for analyzing the data captured by the sensor unit. In this research, the analyzer unit also handles the construction of the training model using traffic data from each network. Subsequently, both the training model and sample traffic are transmitted to the central server’s correlation unit from each network.

All training models and sample traffic from each network are aggregated within the correlation unit. This unit computes the weight value for each training model using sample traffic. Once the correlation unit determines the weight value for each network, all training models and weight values for each network are transmitted back to their respective networks. These training models and weights are employed for traffic analysis in each network to detect anomalies.
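To make the correlation unit's task concrete, the sketch below shows one possible objective a DE optimiser could minimise when it weights the networks' models using pooled sample traffic. The choice of cross-entropy (log-loss) as the objective is an assumption. Accuracy or F1-score on the sample traffic would also be plausible. `ensemble_log_loss` is a hypothetical name. Each candidate weight vector corresponds to one DE individual.

```python
import numpy as np

def ensemble_log_loss(weights, probas, y_true, eps=1e-12):
    """Cross-entropy of the weighted ensemble average on pooled sample traffic.

    weights: (n_models,) non-negative candidate weights, e.g. one DE individual.
    probas:  (n_models, n_samples, n_classes) softmax outputs of each network's model
             evaluated on the pooled sample traffic.
    y_true:  (n_samples,) integer class labels.
    """
    w = np.clip(np.asarray(weights, dtype=float), 0, None)
    if w.sum() == 0:
        return np.inf                                   # degenerate individual
    w = w / w.sum()
    avg = np.tensordot(w, np.asarray(probas, dtype=float), axes=1)
    y_true = np.asarray(y_true)
    p_true = avg[np.arange(len(y_true)), y_true]        # probability given to the true class
    return float(-np.mean(np.log(p_true + eps)))

probas = [[[0.9, 0.1], [0.2, 0.8]],   # hypothetical model from network A: right on both samples
          [[0.5, 0.5], [0.5, 0.5]]]   # hypothetical model from network B: uninformative
y = [0, 1]
for w in ([1, 0], [1, 1], [0, 1]):
    print(w, round(ensemble_log_loss(w, probas, y), 3))
```

The loss is about 0.164, 0.394, and 0.693 for the three weightings. Minimising it with DE over bounds such as [0, 1] per model therefore pushes weight towards the models that are reliable on the sample traffic.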

3.3 Weighted ensemble averaging DNN

3.3.1 Details of DNN hyperparameter and configuration

This research develops WEA-DNN, a multi-class classification model, as the collaborative anomaly detection model within the CIDS for identifying coordinated attacks in heterogeneous networks. The CIDS detection process based on WEA-DNN is shown in Fig. 2. The first step of WEA-DNN is to build a classifier with the DNN algorithm from the training data of each of networks 1–4. The input layer of the DNN takes 77 features, matching the input dimensionality of the data. In the DNN, input data propagates through multiple layers of interconnected nodes to produce an output, as given in Eq. (1). Let \( X \) denote the input data matrix of shape \((n, m)\), where \( n \) is the number of samples and \( m \) is the number of features. The input data is passed through a series of hidden layers, each containing a different number of neurons. The weights and biases of layer \( l \), denoted \( W^{(l)} \) and \( b^{(l)} \), respectively, are learned during training with the Adam optimization algorithm; the index \( l \) runs over the layers of the network, including the input and output layers. Adam was chosen because it is well suited to multi-class classification: it uses adaptive learning rates, is efficient on large datasets and high-dimensional spaces, applies bias correction, and needs little hyperparameter tuning.

During the forward pass, the input to each layer is multiplied by a set of weights and added to a bias term, yielding a pre-activation value, which is then passed through an activation function. This research applies the Rectified Linear Unit (ReLU) activation function in every hidden layer. ReLU was chosen because it mitigates the vanishing gradient problem, introduces sparsity, is computationally efficient, combines linear and non-linear behavior, is easy to implement, and often leads to faster convergence during training. The ReLU activation in each hidden layer is given in Eq. (2), where \( \text {Activation}(\cdot ) \) denotes the ReLU function applied to the hidden layers.
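The hidden-layer forward pass described above (pre-activation, then ReLU) can be written in a few lines of NumPy. This is an illustrative sketch of the standard computation, not the Keras implementation used in the study; the hidden widths 64 and 32 in the test are hypothetical (the actual sizes are in Table 6).

```python
import numpy as np


def relu(z):
    """ReLU: element-wise max(z, 0)."""
    return np.maximum(z, 0.0)


def hidden_forward(X, weights, biases):
    """Propagate X (n_samples, n_features) through ReLU hidden layers."""
    a = X
    for W, b in zip(weights, biases):
        z = a @ W + b  # pre-activation z^(l) = a^(l-1) W^(l) + b^(l)
        a = relu(z)    # activation a^(l) = ReLU(z^(l))
    return a
```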

The training data is divided into batches of 1000 samples, and the optimizer updates the weights and biases iteratively over 10 epochs to minimize the loss function. The loss function is categorical cross-entropy, which is well suited to multi-class classification problems such as this one. Categorical cross-entropy measures the difference between the predicted class probabilities and the actual target labels, which encourages the network to make accurate predictions. In each layer, the input is transformed linearly by multiplying it with the weight matrix \( W^{(l)} \) and adding the bias vector \( b^{(l)} \). The resulting pre-activation values \( z^{(l)} \) are passed through the ReLU activation function, which introduces non-linearity and produces the output activations \( a^{(l)} \) of the layer.
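The following NumPy sketch shows what categorical cross-entropy computes and how a training set is split into mini-batches of 1000 samples. It is illustrative only: in the actual Keras training both are handled by the framework (Keras `Model.fit` shuffles the training data each epoch by default). The clipping constant `eps` is an assumption added to avoid `log(0)`.

```python
import numpy as np


def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean cross-entropy between one-hot labels and predicted probabilities, both (n, C)."""
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.clip(np.asarray(y_prob, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(np.sum(y_true * np.log(y_prob), axis=1)))


def minibatches(n_samples, batch_size=1000, rng=None):
    """Yield index arrays of at most batch_size samples, optionally shuffled."""
    idx = np.arange(n_samples)
    if rng is not None:
        rng.shuffle(idx)
    for start in range(0, n_samples, batch_size):
        yield idx[start:start + batch_size]
```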

Finally, the output layer applies a Softmax activation function to its pre-activation values \( z^{(l)} \) to convert them into probabilities, as given in Eq. (3), where \( \text {OutputActivation}(\cdot ) \) denotes the Softmax function applied to the output layer. Softmax ensures that the output values sum to 1 and form a probability distribution over the classes in the output dimension, which here is 4. To create the DNN training models, this research uses 70% of the traffic from each network as training data [52, 53]. The hyperparameters and the details of the hidden layers of the DNN are listed in Tables 5 and 6.
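A numerically stable softmax, as one would implement the output activation by hand, is sketched below. Subtracting the row maximum before exponentiating avoids overflow and does not change the result, because softmax is invariant to adding the same constant to all logits of a sample. The 4-class logits in the test are made up.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax for logits z of shape (n_samples, n_classes)."""
    z = np.asarray(z, dtype=float)
    shifted = z - z.max(axis=1, keepdims=True)  # largest logit in each row becomes 0
    e = np.exp(shifted)
    return e / e.sum(axis=1, keepdims=True)     # each row sums to 1
```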

This research used a combination of the geometric pyramid rule and domain-specific knowledge to determine the number of nodes in the hidden layers of the DNN. The geometric pyramid rule suggests that the number of neurons in each successive hidden layer forms a geometric sequence, typically halving at each layer. On the other hand, the domain-specific knowledge method operates by integrating accumulated expertise and insights from within a particular field to guide and optimize research processes [54].

In this study, we adopted the hyperparameter settings outlined in research [7] due to their relevance to our research domain. The referenced study explores IDS heterogeneity within the IoT domain, which parallels our investigation into heterogeneity within CIDS. Therefore, the configurations of the number of nodes in each hidden layer, detailed in Table 6, are informed by the insights from research [7]. While these configurations do not strictly follow the halving principle of the geometric pyramid rule, they do represent a progressively decreasing sequence of nodes, which aligns with the principle of gradual reduction advocated by the rule.
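Both the halving form of the geometric pyramid rule and the weaker "progressively decreasing" property that the adopted configuration satisfies can be expressed in a few lines. The function names and the starting width of 128 in the test are hypothetical; the actual layer sizes are those in Table 6.

```python
def pyramid_layer_sizes(first_width, n_layers, min_width=1):
    """Hidden-layer widths that halve at each layer (geometric pyramid rule)."""
    sizes, width = [], first_width
    for _ in range(n_layers):
        sizes.append(max(width, min_width))  # never go below min_width neurons
        width //= 2
    return sizes


def is_progressively_decreasing(sizes):
    """True if every hidden layer is narrower than the one before it."""
    return all(b < a for a, b in zip(sizes, sizes[1:]))
```

A configuration such as `[120, 80, 40, 10]` does not halve at each step but still passes the second check, which is the sense in which the adopted setting aligns with the rule.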

3.3.2 Weight strategy with DE algorithm

As shown in Fig. 2, once training is complete, the trained models are used as input for the weight computation. This research uses a DE algorithm to find the weight of each classifier using sample traffic. DE is a heuristic optimization algorithm in the family of evolutionary algorithms. It is widely applied to optimization and search problems with complex, nonlinear, and potentially noisy objective functions, for which traditional gradient-based techniques are less effective. DE maintains a population of candidate solutions (vectors) in the search space and systematically refines them so that they converge toward the optimal solution. The algorithm is inspired by natural selection and evolution, and it uses simple mathematical operations to evolve solutions over generations [55].

The implementation of DE in this research is based on [56] and [57]. Assume an objective function \(f(\textbf{x})\), where \(\textbf{x}\) is a vector of decision variables. In weighted ensemble learning, this objective function is the performance metric (accuracy) of the ensemble for a given set of weights. The steps of the DE algorithm are as follows:

1. Initialization
   - Select a population size N.
   - Generate an initial population of candidate solutions (weight vectors) \(\{\textbf{x}_1, \textbf{x}_2, \ldots , \textbf{x}_N\}\).

2. Mutation
   - For each candidate solution \(\textbf{x}_i\), select three distinct candidate solutions \(\textbf{x}_a\), \(\textbf{x}_b\), and \(\textbf{x}_c\) from the population, all different from \(\textbf{x}_i\).
   - Generate a mutant (donor) vector \(\textbf{u}_i\) using a mutation strategy, e.g., \(\textbf{u}_i = \textbf{x}_a + F \cdot (\textbf{x}_b - \textbf{x}_c)\), where F is a scaling factor.

3. Crossover
   - Perform a binomial crossover between \(\textbf{x}_i\) and \(\textbf{u}_i\) to create a trial vector \(\textbf{v}_i\). For each component, the crossover takes the value from \(\textbf{u}_i\) with a given probability (the crossover rate) and otherwise keeps the value from \(\textbf{x}_i\).

4. Selection
   - Compare the trial vector \(\textbf{v}_i\) with the current candidate solution \(\textbf{x}_i\):
     - If \(f(\textbf{v}_i) \ge f(\textbf{x}_i)\), replace \(\textbf{x}_i\) with \(\textbf{v}_i\) in the population (the objective \(f\) is maximized).
     - Otherwise, keep \(\textbf{x}_i\) in the population.

5. Termination
   - Repeat steps 2–4 for a fixed number of iterations (generations) or until a convergence criterion is met.

The algorithm terminates after a specified number of iterations (generations) or when a convergence criterion is met (e.g., the improvement in the objective function value becomes negligible). It returns the best solution found (weight vector), which in weighted ensemble learning corresponds to the optimal ensemble weights. The goal of the DE algorithm is thus to find the weight vector \(\textbf{x}^*\) that maximizes the objective function, as in Eq. (4).
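To make the Initialization, Mutation, Crossover, Selection, and Termination steps concrete, here is a minimal from-scratch sketch of the classic DE/rand/1/bin variant, written to maximize \(f\) as in Eq. (4). It is illustrative only: the study itself uses SciPy's implementation, and the population size, F, CR, generation count, the forced crossover index, and the clipping of mutants to the bounds are assumptions, not values from this research.

```python
import numpy as np


def de_rand_1_bin(f, bounds, pop_size=20, F=0.8, CR=0.9, generations=200, seed=0):
    """Maximize f over a box using DE/rand/1/bin; returns (best_x, best_f)."""
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(bounds, dtype=float).T
    dim = lo.size
    # 1. Initialization: uniform random population inside the bounds
    pop = lo + rng.random((pop_size, dim)) * (hi - lo)
    fitness = np.array([f(x) for x in pop])
    # 5. Termination: here simply a fixed number of generations
    for _ in range(generations):
        for i in range(pop_size):
            # 2. Mutation: three distinct members, all different from i
            candidates = [k for k in range(pop_size) if k != i]
            a, b, c = rng.choice(candidates, size=3, replace=False)
            mutant = np.clip(pop[a] + F * (pop[b] - pop[c]), lo, hi)
            # 3. Binomial crossover: take each mutant component with probability CR,
            #    and force at least one component to come from the mutant
            mask = rng.random(dim) < CR
            mask[rng.integers(dim)] = True
            trial = np.where(mask, mutant, pop[i])
            # 4. Selection (maximization): keep the trial if it is at least as good
            f_trial = f(trial)
            if f_trial >= fitness[i]:
                pop[i], fitness[i] = trial, f_trial
    best = int(np.argmax(fitness))
    return pop[best], fitness[best]
```

As a usage example, maximizing \(-\lVert \textbf{x} - 0.3 \rVert^2\) over \([0, 1]^3\) drives every component of the returned vector close to 0.3.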

The algorithm iteratively refines the weight vector toward the solution that maximizes the ensemble's performance metric. Let \(M\) be the set of ensemble models \(m_1, m_2, \ldots , m_n\); let \(w = [w_1, w_2, \ldots , w_n]\) be the weight vector, where \(w_i\) is the weight of model \(m_i\); let \(X\) be the input sample dataset and \(y\) the sample labels; and let \(L(w, X, y)\) be a performance metric of the ensemble with weights \(w\). This research uses accuracy as the performance metric. The goal is to find the optimal weight vector \(w^*\) that maximizes the ensemble's performance metric, as in Eq. (5).
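Because the implementation in Section 3.3.3 minimizes a loss rather than maximizing accuracy directly, it may help to write the two forms side by side. The sketch below assumes that each weight is bounded in \([0, 1]\) and normalized by its L1 norm before use (the bounds are an assumption; the text does not state them), with \(\hat{w}\) introduced here for the normalized weights. The two problems share the same solution because \(1 - L\) is a strictly decreasing transform of \(L\).

$$\begin{aligned} \hat{w} &= \frac{w}{\lVert w \rVert_1}, \qquad w \in [0, 1]^n, \\ w^* &= \arg\max_{w} \; L(\hat{w}, X, y) = \arg\min_{w} \; \bigl[\, 1 - L(\hat{w}, X, y) \,\bigr]. \end{aligned}$$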

3.3.3 Proposed WEA-DNN algorithm

The detailed WEA-DNN procedure is given in Algorithm 1. In this algorithm, 30% of the traffic from each network is designated as test data, while 10% of the traffic from the dataset is used as sample traffic for weight computation. The first step of WEA-DNN aggregates the training models from each network at the correlation unit; the sample traffic from each network is also sent to the correlation unit. The inputs are the list of models (members), the sample features testX, and the sample labels testy. The correlation unit calculates the weight of each DNN training model (classifier) by running the DE algorithm over the models' predictions on the sample traffic. In line 1, the algorithm initializes \(n\_members\_w\) with the length of the members list, i.e., the number of models in the ensemble. In line 2, \(bound\_w\) is initialized as a list of tuples giving the bounds of each weight during optimization. In line 3, \(search\_arg\) is set to a tuple containing the members, testX, and testy.

The algorithm defines a function EnsPredW that computes the ensemble prediction for given weights and input data (lines 4–9). Within the function, rslts stores each model's predictions on the input data and is converted into a NumPy array. The function then computes the weighted sum of the models' predictions using the given weights, takes the class label with the highest score for each data point, and returns the result. A second function, EvalEnsW, evaluates the ensemble for given weights and input data: rslt stores the ensemble predictions, and the function returns their accuracy score (lines 10–12).

The function NormalizeW normalizes the weights using the L1 norm. If the norm is zero (all weights are zero), the original weights are returned to avoid division by zero; otherwise, the normalized weights are returned (lines 13–17). LossFunc computes the loss for given weights and models as the complement of the accuracy score (1 − accuracy) obtained with the normalized weights (lines 18–20). The variable \(rslt\_ev\) stores the result of the DE optimization, which minimizes the loss function within the defined bounds and parameters; this research uses the DE implementation in Python's SciPy library to compute the weight of each classifier. Finally, the optimized weights obtained from DE are normalized, and the normalized weights are returned (lines 21–22).
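Algorithm 1 itself is not reproduced here; the following is a minimal Python sketch with the same structure, built on `scipy.optimize.differential_evolution`. The snake_case functions mirror EnsPredW, EvalEnsW, NormalizeW, and LossFunc, but the code is a reconstruction from the description above, not the authors' code. Assumptions: each member exposes a Keras-style `predict(X)` returning class probabilities of shape `(n_samples, n_classes)`; labels are integer class indices; each weight is bounded in `(0, 1)`; `maxiter`, `tol`, and `seed` are illustrative. `FixedProbModel` is a hypothetical stand-in used only for testing.

```python
import numpy as np
from scipy.optimize import differential_evolution


def ensemble_predict_weighted(members, weights, X):
    """EnsPredW: weighted sum of member probabilities, then argmax per sample."""
    probs = np.array([m.predict(X) for m in members])  # (n_models, n_samples, n_classes)
    summed = np.tensordot(weights, probs, axes=(0, 0))   # (n_samples, n_classes)
    return np.argmax(summed, axis=1)


def evaluate_ensemble_weighted(members, weights, X, y):
    """EvalEnsW: accuracy of the weighted ensemble on integer labels y."""
    return float(np.mean(ensemble_predict_weighted(members, weights, X) == y))


def normalize_weights(weights):
    """NormalizeW: L1 normalization; all-zero weights are returned unchanged."""
    total = np.sum(np.abs(weights))
    if total == 0.0:
        return weights
    return weights / total


def loss_function(weights, members, X, y):
    """LossFunc: 1 - accuracy with normalized weights (DE minimizes this)."""
    return 1.0 - evaluate_ensemble_weighted(members, normalize_weights(weights), X, y)


def optimize_weights(members, X, y, maxiter=100, seed=None):
    n_members = len(members)            # number of models in the ensemble
    bounds = [(0.0, 1.0)] * n_members   # assumed bounds for each weight
    search_args = (members, X, y)
    result = differential_evolution(loss_function, bounds, args=search_args,
                                    maxiter=maxiter, tol=1e-7, seed=seed, polish=False)
    return normalize_weights(result.x)


class FixedProbModel:
    """Hypothetical stand-in for a trained DNN that returns fixed probabilities."""

    def __init__(self, probs):
        self.probs = np.asarray(probs, dtype=float)

    def predict(self, X):
        return self.probs
```

In the CIDS setting, `members` would be the four local models M1–M4 and `X`, `y` one network's sample traffic. Local polishing is disabled because the accuracy-based loss is piecewise constant, so a gradient-based refinement step cannot improve it.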

Once the correlation unit determines the weight values for each classifier from each network, all training models and weight values are sent back to their respective networks. The training models and weights are then evaluated on 30% of the traffic from each network, and the final prediction for each traffic record in each network is obtained with the weighted ensemble averaging method.

The weighted ensemble averaging of the individual DNN models' outputs is given in Eq. (6). The ensemble computes the final prediction for an input \( x_i \) and class \( c \). Each model's prediction \( y_i^{(j)} \) is weighted by a corresponding weight \( w_j \), which reflects the significance or reliability of that model's contribution to the ensemble. Summing these weighted predictions over all \( N \) models and normalizing by the sum of the weights balances each model's influence on the final prediction. Here, \(P(y_i^{(j)} = c)\) is the probability that the \(j\)-th model assigns to class \(c\) for input \(x_i\).
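Read from the description above, the combination is a normalized weighted average of the member probabilities, \(P(y_i = c) = \sum_{j=1}^{N} w_j\,P(y_i^{(j)} = c) \big/ \sum_{j=1}^{N} w_j\), with the predicted label being the class with the highest averaged probability. The NumPy sketch below implements that reading; the array layout (models × samples × classes) and the function name are assumptions for illustration.

```python
import numpy as np


def weighted_ensemble_average(probs, weights):
    """Normalized weighted average of per-model class probabilities.

    probs:   (N models, n samples, C classes), each row a probability vector
    weights: (N,) non-negative model weights, not all zero
    returns: averaged probabilities (n, C) and predicted labels (n,)
    """
    probs = np.asarray(probs, dtype=float)
    weights = np.asarray(weights, dtype=float)
    # sum_j w_j * P_j(c), divided by sum_j w_j
    avg = np.tensordot(weights, probs, axes=(0, 0)) / weights.sum()
    return avg, avg.argmax(axis=1)
```

Dividing by \(\sum_j w_j\) is a positive scaling, so it keeps the averaged values in \([0, 1]\) but does not change the argmax; a label-only routine such as EnsPredW can therefore skip it without affecting its predictions.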

This weighted ensemble averaging process allows flexibility in assigning importance to different models based on their performance characteristics or domain expertise. Models with higher accuracy or specialized knowledge in certain areas can be assigned higher weights, giving them a greater impact on the final prediction. Conversely, models with weaker performance or less relevance to the task at hand can be assigned lower weights, reducing their influence on the ensemble's decision. The normalization step keeps the final ensemble prediction consistent and interpretable across different sets of models and weights: dividing the weighted sum of predictions by the total weight guarantees, for non-negative weights, that the ensemble's output lies within the valid range of probabilities and reflects a coherent combination of the individual models' insights [58, 59].

3.4 Performance parameters

This study employed various parameters to conduct a benchmarking analysis. The evaluation included accuracy, F1-score, precision, and recall metrics, which are pivotal for assessing classification model performance from diverse angles. These metrics offer unique insights into the predictive efficacy of the model, thereby aiding in problem-solving within classification tasks.

Furthermore, the research assessed the size of the training model generated by each algorithm, along with the duration of both training and testing phases. These metrics hold significance in real-world deployment and performance of classification models, influencing resource allocation, cost, latency, scalability, and user experience. Striking a balance among model size, training time, and prediction time is essential to ensure efficient and effective solutions for classification tasks in practical scenarios.

1. Accuracy (%). Accuracy is the proportion of all instances that the IDS classifies correctly, reflecting how reliably it identifies malicious activity and benign traffic. It is computed using Eq. (7).

$$\text {Accuracy (ACC)} = \frac{TP+TN}{TP+FP+TN+FN} \tag{7}$$

where TP denotes true positives, TN true negatives, FP false positives, and FN false negatives [60].

2. Precision (%). Precision measures the correctness of the IDS's positive predictions, calculated as the ratio of true positive predictions to all positive predictions. It is computed using Eq. (8) [60].

$$\text {Precision (PRE)} = \frac{TP}{TP+FP} \tag{8}$$

3. Recall (%). Recall, also known as sensitivity or true positive rate, assesses the model's ability to correctly identify positive instances. It is computed using Eq. (9) [60].

$$\text {Recall (RCL)} = \frac{TP}{TP+FN} \tag{9}$$

4. F1-Score (%). The F1-score combines precision and recall into a single value (their harmonic mean), providing a balanced assessment of model performance. It is computed using Eq. (10) [60].

$$\text {F1-Score (F1)} = \frac{2 \cdot Precision \cdot Recall}{Precision + Recall} \tag{10}$$

5. Processing Time (seconds, s). Processing time (PcT) is the sum of the training time (TT) and the prediction time (PdT). It is computed using Eq. (11) [61].

$$PcT = TT + PdT \tag{11}$$

6. Size of Training Model (kilobytes, KB). The size of the training model (SM) in kilobytes serves as a benchmarking parameter for performance analysis in anomaly detection [62].
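Eqs. (7)–(10) are stated for the binary case. For the multi-class setting of this study, one common approach, assumed here because the averaging scheme is not stated, is to derive per-class TP, FP, and FN from a confusion matrix and macro-average the per-class scores, with overall accuracy taken as the fraction of correctly classified samples. A NumPy sketch:

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Counts with rows = true class and columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def macro_metrics(y_true, y_pred, n_classes):
    cm = confusion_matrix(y_true, y_pred, n_classes)
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as class c, but the true class differs
    fn = cm.sum(axis=1) - tp  # true class c, but predicted as another class
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)  # Eq. (8) per class
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)     # Eq. (9) per class
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)  # Eq. (10)
    return {
        "accuracy": tp.sum() / cm.sum(),  # fraction of correctly classified samples
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
    }
```

scikit-learn's `precision_recall_fscore_support(..., average="macro")` computes the same macro averages (mean of per-class precision, recall, and F1) and can be used as a cross-check.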

3.5 Benchmarking scenarios

This research uses two categories of benchmarking scenarios: preliminary analysis and proposed model results. The preliminary analysis comprises Scenario 1 and Scenario 2, which address research questions 1 and 2, respectively. The proposed model results comprise Scenario 3, which addresses research question 3. To facilitate the discussion of the benchmarking results in the results and discussion section, Table 7 lists the terms used in the benchmarking scenarios. The details of each scenario are as follows:

1. Scenario 1: The first scenario benchmarks each DNN training model from every network (M1–M4) on the testing data from its own network traffic (TS1–TS4): M1 is tested using TS1, M2 using TS2, and so forth. The objective is to assess the performance of each network's training model on test data from that network's traffic. In addition, this scenario evaluates each DNN training model (M1–M4) through cross-testing on the testing data from the other networks: M1 is tested using TS2–TS4, M2 using TS1, TS3, and TS4, M3 using TS1, TS2, and TS4, and M4 using TS1–TS3. This scenario is designed to address research question 1.

2. Scenario 2: The second scenario evaluates the performance of the ensemble averaging DNN (ENS) using the testing data from each network (TS1–TS4). This scenario is designed to address research question 2.

3. Scenario 3: The third scenario benchmarks the performance of the proposed WEA-DNN using the testing data from each network (TS1–TS4). This scenario is designed to address research question 3.

4 Result and discussion

In this section, the results of the simulation modeling and benchmarking study are presented and discussed. The findings are discussed in the context of their impact on WEA-DNN for CIDS in heterogeneous networks, and potential areas for future research in this field are highlighted.

4.1 Experiment environment

The investigation utilized a server equipped with the following hardware: a 2.3 GHz 16-Core Intel(R) Xeon(R) CPU E5-2650 v3 and 128 GB of memory. The operating system chosen was Ubuntu 22.04.2 LTS. Python version 3.10.6, along with Keras version 2.12, served as the machine learning framework for executing the DNN experiments. To present the experiment and simulation outcomes, Jupyter notebook version 6.5.3 was employed.

4.2 Preliminary analysis

This section presents the results of experiments conducted in Scenario 1 and Scenario 2 of the benchmarking scenarios. Scenario 1 is further divided into Scenario 1a and Scenario 1b. Scenario 1a aims to evaluate the performance of each DNN training model from every network and test them using testing data from their respective network traffic. Scenario 1b assesses the performance of each DNN training model from every network through cross-testing using testing data from each other network.

The results of Scenario 1a are shown in Table 8. In Scenario 1a, DNN models M1 and M3 achieve the best accuracy and performance metrics. M1 achieves an accuracy of 98.6%, with precision, recall, and F1-score of 88.6%, 81.9%, and 85.1%, respectively. M3 achieves an accuracy of 98.8%, with precision, recall, and F1-score of 83.7%, 75.7%, and 79.4%, respectively. In contrast, M2 and M4 show significantly lower performance, particularly M2, whose F1-score is only 34.5%. M2 and M4 also have much longer training times (490 s for M2 and 310 s for M4) and prediction times, leading to higher overall processing times (727 s for M2 and 443 s for M4) than M1 and M3 (116 s and 123 s, respectively). All models have similar sizes, around 64.4–64.5 KB, which is expected because they share the same architecture; model size therefore does not explain the differences in the performance metrics.

The results of Scenario 1b are shown in Table 9, which compares the performance and resource utilization of the DNN models (M1–M4) when cross-tested on the testing data from each network (TS1–TS4). In Scenario 1b, where models are tested across different datasets, all models experience a decline in performance. Model M1 maintains high accuracy on TS1 (98.6%) but drops to 21.4% on TS4. Similarly, M2 and M3 achieve high accuracy on TS3 (97.3% and 98.8%, respectively) but perform poorly on TS4 (12.8% and 5.4%, respectively). M4 performs well on TS4 (87.6%) but shows significant decreases on TS1, TS2, and TS3. Despite these performance variations, each model's training time, prediction time, and processing time remain consistent across the testing datasets, indicating stable computational demands regardless of the dataset. Model sizes remain around 64.4–64.5 KB throughout the experiments.

The findings indicate that each DNN training model performs poorly when analyzing traffic from other datasets: M1, M2, and M4 exhibit lower accuracy, precision, recall, and F1-score when tested on data from other datasets than when tested on data from their own datasets. Comparing Scenario 1b with Scenario 1a also shows that some models exhibit different performance characteristics across datasets. Therefore, selecting the appropriate model and training data becomes pivotal depending on the specific task at hand.

Given that all models share the same DNN architecture and hyperparameters, the variations in performance observed among DNN models M1 through M4 in Scenarios 1a and 1b can be attributed to a combination of dataset characteristics and the effectiveness of model training rather than differences in model configuration. In Scenario 1a, where models were tested with data from their respective training sets, M1 and M3 consistently outperformed M2 and M4 across multiple metrics such as accuracy, precision, recall, and F1-score. This difference suggests that M1 (trained on N1, characterized by a substantial volume of benign traffic and moderate DDoS instances) and M3 (trained on N3, focusing on IoT traffic with diverse attacks) may have benefited from training on datasets that better matched the patterns inherent in their respective traffic types. In contrast, M2 (trained on N2, featuring a wide range of attack types including bots and DDoS) and M4 (trained on N4, focusing on IoT botnet traffic with significant DDoS events) encountered difficulties potentially because they were too tailored to particular dataset characteristics. The decline in performance observed in Scenario 1b, where models were tested with data from other datasets, highlights the difficulty of generalizing across diverse network traffic types. Despite these differences, all models displayed consistent resource utilization patterns, indicating robust computational efficiency across different scenarios. Future refinements could involve optimizing hyperparameters to better adapt to varied dataset compositions, thereby enhancing overall model adaptability and performance in cross-domain applications.

Scenario 2 assesses the performance of the traditional ensemble averaging DNN model (ENS), which combines the models trained on the different datasets, across the testing datasets (TS1–TS4). Table 10 details the model's performance metrics and resource utilization. The ensemble model achieves consistent accuracy, from 88.6 to 89.2%, on TS1, TS2, and TS3, with varying precision, recall, and F1-scores that reflect the differing data characteristics. TS3 in particular yields the best results, with an accuracy of 89.2% and higher precision, recall, and F1-scores. In contrast, performance drops sharply on TS4, with an accuracy of 23.4% and lower precision, recall, and F1-scores. Resource utilization metrics, including training time, prediction time, processing time, and model size, remain stable across all testing datasets, and the model size is unchanged at 257.9 KB, indicating minimal variation in computational resource demands.

The analysis of Scenario 2’s results on the ensemble averaging DNN model (ENS) provides valuable insights into the effectiveness of combining diverse DNN models trained on heterogeneous datasets. It is evident that the ensemble model exhibits lower performance when tested with data from each network compared to individual models evaluated on their respective datasets. This highlights the complexity involved in combining models trained on different datasets and suggests that a straightforward aggregation approach may not optimize results. Each DNN model has unique strengths and biases that affect its performance on specific datasets. When these models are combined through averaging, assigning equal weight to each prediction may lead to suboptimal outcomes, especially when the ensemble’s accuracy and performance metrics diverge significantly from those of the individual models.

The key challenge lies in harnessing the diverse capabilities of individual models within the ensemble framework effectively. Merely averaging predictions may not sufficiently address variations in model performance and dataset characteristics. Instead, employing a more sophisticated weighting scheme that considers each model’s reliability and expertise could enhance ensemble performance. Furthermore, the consistent resource utilization observed across different testing scenarios suggests that computational constraints are not the primary factor influencing ensemble performance. Rather, the primary factors are the heterogeneous nature of the datasets and the distinct capabilities of individual models, which contribute to the observed variations in performance.

Based on the preliminary results, it is evident that each DNN training model possesses distinct capabilities and specialized expertise in analyzing traffic derived from its respective dataset. The ensemble averaging method is anticipated to offer a more comprehensive perspective for analyzing heterogeneous networks. However, the indiscriminate application of equal averaging across models may result in suboptimal prediction performance, primarily due to the inherent uniqueness of each DNN training model. Thus, a crucial need arises for assigning appropriate weights to individual DNN training models within the ensemble learning framework to optimize predictive outcomes and ensure the effective integration of diverse model expertise.

4.3 Proposed model result

This section presents the results of Scenario 3 from the benchmarking scenarios. Scenario 3 is designed to assess the performance of the proposed WEA-DNN when analyzing traffic from heterogeneous datasets. The first step of WEA-DNN involves determining the weight value for each training model using sample traffic from each network. The results of the weight values for each DNN training model can be seen in Table 11. The table provides insights into the weight assigned to each DNN model (M1–M4) for different testing data sets (TS1–TS4) following the application of the DE algorithm.

For TS1, M1 holds the highest weight (0.53), followed by M2 (0.26), M3 (0.16), and M4 (0.02), indicating that M1 and M2 are more influential in predictions for this dataset. In TS2, M2 is most significant (0.37), followed by M1 (0.28), M4 (0.27), and M3 (0.05), showing a shift in importance towards M2 and M1. In TS3, M3 is the most influential with a weight of 0.50, followed by M1 (0.38), with M2 and M4 having minimal impact (0.05 and 0.02, respectively). For TS4, M4 has the highest weight (0.75), indicating its predominant role, followed by M3 (0.18), with M1 and M2 contributing minimally (0.01 and 0.04, respectively).

The variability in weights across different testing data sets reflects the algorithm’s ability to adapt and optimize the contribution of each model based on the specific characteristics and patterns present in the data. By assigning higher weights to models that demonstrate better performance or are more suited to the underlying data distribution, the algorithm seeks to enhance the overall predictive accuracy of the ensemble model.

These weight values are then used to predict traffic with the ensemble method. The results of Scenario 3, which addresses research question 3, are shown in Table 12, which presents the performance metrics of the WEA-DNN model across the testing datasets (TS1–TS4). The WEA-DNN model achieves high accuracy in all testing scenarios, ranging from 87.6 to 98.9%, which suggests that it makes correct predictions across diverse datasets. However, precision, recall, and F1-scores vary across the testing datasets. For instance, on TS2 the model has relatively lower precision, recall, and F1-score than on the other datasets, indicating difficulty in correctly identifying positive cases while avoiding false positives. Despite these variations, training time, prediction time, and processing time remain consistent across all testing datasets, suggesting stable resource utilization, and the model size is constant, indicating consistent model complexity. In summary, while WEA-DNN demonstrates high accuracy and consistent resource utilization, further optimization may be required to improve its precision, recall, and F1-scores across all testing scenarios.

When the average results of WEA-DNN are compared with those of the other models, WEA-DNN achieves the highest average accuracy. The comparison is shown in Fig. 3, which presents the evaluation results of six methods (WEA-DNN, ENS, M1, M2, M3, and M4) on four key metrics: average accuracy, precision, recall, and F1-score. WEA-DNN is the top performer in accuracy, at approximately 93.8%, showing strong predictive capability. However, it falls short in precision, recall, and F1-score, indicating a need for improvement in these areas to achieve a more balanced performance. The traditional ensemble averaging DNN, on the other hand, performs consistently across all metrics, with accuracy, precision, recall, and F1-score averaging around 70–72%, which highlights its reliability and stability. In comparison, models M1, M3, and M4 exhibit lower performance across the board, with accuracy, precision, recall, and F1-score hovering around 34–38% and 38–49%, respectively, indicating significant room for improvement in accuracy and precision. Model M2 stands out for its high accuracy and recall, close to 74% and 71%, respectively. However, its precision and F1-score are relatively lower, suggesting a need for a better balance between precision and recall.

Additionally, Fig. 4 compares WEA-DNN with the other DNN training models when each analyzes testing data from its own dataset. The comparison between the Local DNN Model and the WEA-DNN reveals substantial performance gaps across all metrics and tasks, indicating that the WEA-DNN performs better on the evaluated tasks. In Accuracy, the WEA-DNN consistently achieves higher values than the Local DNN Model, with an average gap of around 10%. This gap suggests that the WEA-DNN makes more accurate predictions, which may be attributed to its weighted combination of multiple DNNs trained on data from different networks.

In Precision, the WEA-DNN shows a gap of approximately 8% on average across all tasks compared with the Local DNN Model, reflecting a better ability to flag true attacks while minimizing false positives, which is crucial in anomaly-based intrusion detection. Similarly, Recall shows an average gap of around 12% in favor of the WEA-DNN, indicating that it detects a larger share of the positive (attack) instances in the data. For the F1-Score, the WEA-DNN shows a gap of approximately 9% on average compared with the Local DNN Model; its higher F1-Score reflects a better balance between Precision and Recall and therefore more reliable predictions.
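The average gaps above are given as percentages without stating whether they are absolute differences (percentage points) or relative differences, and the two can differ substantially. The small helper below makes the distinction explicit. The function name and the example scores are hypothetical and are not taken from Fig. 4.

```python
from statistics import mean
from typing import Dict, Sequence


def average_gaps(model_a: Sequence[float], model_b: Sequence[float]) -> Dict[str, float]:
    """Mean gap of model_a over model_b across tasks (e.g. TS1-TS4).

    Scores are fractions in [0, 1]. Returns the mean absolute gap in
    percentage points and the mean relative gap in percent of model_b.
    """
    if len(model_a) != len(model_b) or not model_a:
        raise ValueError("need one score per task for both models")
    if any(b <= 0 for b in model_b):
        raise ValueError("relative gaps need positive baseline scores")
    points = mean((a - b) * 100 for a, b in zip(model_a, model_b))
    relative = mean((a - b) / b * 100 for a, b in zip(model_a, model_b))
    return {"percentage_points": points, "relative_percent": relative}
```

For hypothetical per-task scores of 0.9 and 0.8 for one model against 0.8 and 0.5 for the other, the mean gap is 20 percentage points but about 36% in relative terms. Reporting which convention is used avoids this ambiguity.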

In conclusion, the gaps observed between the WEA-DNN and the Local DNN Model in Accuracy, Precision, Recall, and F1-Score indicate that the WEA-DNN performs better on the evaluated tasks. These gaps may be attributed to the WEA-DNN’s weighted combination of DNNs trained on different networks, with weights optimized by Differential Evolution, which makes it a more effective and reliable model for these tasks.

In this context, WEA-DNN keeps pace with the DNN training models when analyzing testing data from their respective datasets, while also offering a broader and more holistic perspective than the individual model in each network. Across the benchmarking scenarios, the WEA-DNN shows the best overall performance, achieving high accuracy, precision, recall, and F1-score in heterogeneous networks.

4.4 Discussion

In the analysis of Scenario 1a, an important observation was that each model (M1, M2, M3, and M4) demonstrated different performance levels depending on the training data they received. This pattern was also evident in Scenario 1b, where the models showed varying performance based on their specific training datasets. These findings emphasize that the effectiveness of a model largely depends on the nature of the data it is trained with. This variation in performance highlights the need to carefully match models with appropriate training datasets to achieve the best results.

In Scenario 2, the introduction of the Ensemble model was intended to reduce the performance discrepancies observed in individual models trained on different datasets. However, even with this combined approach, the Ensemble model still performed inconsistently across various training datasets. This highlights an important point: different models react differently to training data, making it essential to carefully choose the right model and training data combination for each specific case. It also suggests that the simple averaging method used in the Ensemble model may not be enough, potentially leading to less accurate predictions.

To address this limitation, Scenario 3 introduced a distinct weight for each network in the ensemble’s averaging process, with the weights optimized by Differential Evolution. This tailored weighting aims to improve predictive accuracy by assigning each network a level of importance based on its performance. In evaluation, the WEA-DNN model showed consistent performance across the various training datasets, demonstrating its ability to maintain strong performance across diverse network environments.
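In this framework, the ensemble weights are computed with Differential Evolution, but this section does not specify the fitness function, the bounds, or the DE settings. The sketch below is therefore a hedged illustration under several assumptions. Weights are searched in [0, 1]. Validation accuracy of the weighted-average ensemble is the fitness to maximize. The search uses the classic DE/rand/1/bin scheme with illustrative parameters (F = 0.8, CR = 0.9). All function names are hypothetical, and in practice `scipy.optimize.differential_evolution` provides a tested implementation.

```python
import random
from typing import Callable, List, Sequence

# Assumed layout: member_probs[m][i][c] = probability member m gives class c for sample i.
Probs = Sequence[Sequence[Sequence[float]]]


def ensemble_accuracy(weights: Sequence[float], member_probs: Probs,
                      labels: Sequence[int]) -> float:
    """Accuracy of the weighted-average ensemble on a labelled validation set."""
    total = sum(weights) or 1.0  # guard against an all-zero weight vector
    correct = 0
    for i, y in enumerate(labels):
        n_classes = len(member_probs[0][i])
        avg = [sum(w * probs[i][c] for w, probs in zip(weights, member_probs)) / total
               for c in range(n_classes)]
        correct += max(range(n_classes), key=avg.__getitem__) == y
    return correct / len(labels)


def differential_evolution(objective: Callable[[Sequence[float]], float], dim: int,
                           pop_size: int = 20, f: float = 0.8, cr: float = 0.9,
                           generations: int = 100, seed: int = 0) -> List[float]:
    """Minimize `objective` over [0, 1]^dim using classic DE/rand/1/bin."""
    if pop_size < 4:
        raise ValueError("DE/rand/1 needs at least 4 individuals")
    rng = random.Random(seed)
    pop = [[rng.random() for _ in range(dim)] for _ in range(pop_size)]
    scores = [objective(ind) for ind in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: base vector a plus scaled difference of b and c (all != i).
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # at least one gene comes from the mutant
            trial = []
            for j in range(dim):
                if j == j_rand or rng.random() < cr:  # binomial crossover
                    v = pop[a][j] + f * (pop[b][j] - pop[c][j])
                    trial.append(min(1.0, max(0.0, v)))  # clip to the [0, 1] bounds
                else:
                    trial.append(pop[i][j])
            score = objective(trial)
            if score <= scores[i]:  # greedy one-to-one selection
                pop[i], scores[i] = trial, score
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best]


def optimize_weights(member_probs: Probs, labels: Sequence[int], **de_kwargs) -> List[float]:
    """Search ensemble weights that maximize validation accuracy (DE minimizes, so negate)."""
    return differential_evolution(
        lambda w: -ensemble_accuracy(w, member_probs, labels),
        dim=len(member_probs), **de_kwargs)
```

Because this sketch optimizes accuracy, it could reinforce the accuracy-versus-F1 gap discussed later in this section. Swapping the fitness for a macro-averaged F1-score would be a small change to `optimize_weights`.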

The performance metrics of the WEA-DNN model in detecting coordinated attacks across heterogeneous networks highlight its effectiveness. With an average accuracy of 93.8%, a precision of 78.6%, a recall of 60.4%, and an F1-score of 62.4%, the WEA-DNN surpasses individual DNN models with similar objectives. These results demonstrate the model’s ability to accurately identify coordinated attacks in diverse network environments. Additionally, the WEA-DNN’s performance is comparable to that of individual DNN models when detecting attacks within single networks, further validating its utility and effectiveness in heterogeneous network scenarios.

The experimental results in this study reveal a notable discrepancy between the high accuracy and the relatively low precision, recall, and F1-scores observed across various scenarios. While the high accuracy suggests that the model effectively classifies a significant number of instances correctly, the lower precision, recall, and F1-scores indicate potential reliability issues, especially for real-time applications. This discrepancy can be attributed to several factors, including class imbalance in the datasets, algorithmic limitations, and potential overfitting. The datasets used for training and evaluation, such as CICIDS2017 and CICBotIoT, exhibit substantial differences in the number of instances per class. This imbalance can lead to a model biased towards the majority class, resulting in high overall accuracy but poor performance in identifying minority classes.
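A small worked example illustrates how class imbalance can produce high accuracy alongside low recall and F1-score. It uses a hypothetical binary test set with 950 benign and 50 attack flows, and a classifier that catches 10 of the attacks and raises 5 false alarms. The averaging scheme used in the paper's multi-class evaluation is not stated in this section, so this binary case is only an illustration.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                   positive: int = 1) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for one positive (attack) class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    tn = len(y_true) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(y_true), "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical imbalanced test set: 950 benign (0), 50 attacks (1).
    y_true = [0] * 950 + [1] * 50
    # 945 benign correct, 5 false alarms, 10 attacks caught, 40 attacks missed.
    y_pred = [0] * 945 + [1] * 5 + [1] * 10 + [0] * 40
    print(binary_metrics(y_true, y_pred))
```

Accuracy here is 95.5%, yet recall is only 20% and F1 is about 0.31. Most of the accuracy comes from correctly labeling the dominant benign class.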

The algorithmic approach, particularly the ensemble averaging models used in this study, may have inherent limitations. Variations in model weights across different testing datasets in Scenario 3 suggest that the ensemble model relies heavily on certain individual models for specific datasets, which may not generalize well across other datasets. The feature extraction and representation capabilities of the DNN models might not fully capture the nuances necessary to distinguish between different types of network traffic effectively. High accuracy may reflect the model’s ability to identify prevalent benign traffic rather than accurately detecting more complex anomalies, contributing to lower precision and recall.

To address these issues, several steps can be taken. Implementing data augmentation and resampling techniques can help balance the dataset, ensuring the model trains on a more representative sample. Enhancing the algorithm with advanced ensemble methods that dynamically adjust weights based on performance can improve its adaptability to diverse data scenarios. Improving feature extraction methods will also help capture more relevant data patterns, enhancing the model’s ability to distinguish between different classes. Applying regularization techniques can prevent overfitting, helping the model generalize better to unseen data. Addressing these areas will enhance the model’s reliability and robustness, making it more suitable for real-time applications.
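One simple way to make ensemble weights adjust dynamically with performance is to blend the current weights toward weights proportional to each member's recent score (for example, F1 on a sliding window of labelled traffic). This sketch is a hypothetical illustration of that idea and not a method evaluated in this study. The function name and the blending factor `alpha` are assumptions.

```python
from typing import List, Sequence


def update_weights(weights: Sequence[float], recent_scores: Sequence[float],
                   alpha: float = 0.3) -> List[float]:
    """Move ensemble weights toward values proportional to recent member scores.

    alpha in (0, 1] controls how quickly the weights react to new evidence.
    """
    if len(weights) != len(recent_scores):
        raise ValueError("one recent score per ensemble member is required")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    total = sum(recent_scores)
    if total <= 0:
        return list(weights)  # no usable evidence: keep the current weights
    target = [s / total for s in recent_scores]
    blended = [(1 - alpha) * w + alpha * t for w, t in zip(weights, target)]
    norm = sum(blended)
    return [w / norm for w in blended]  # renormalize so the weights sum to 1
```

A smaller `alpha` keeps the weights stable against noisy windows. A larger one reacts faster when a member's data distribution shifts.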

Moreover, the sample sizes and label distributions of the datasets, reported briefly in the tables, play a crucial role in the analysis. An uneven label distribution produces imbalanced datasets, which often skew performance metrics. Techniques such as oversampling minority classes or undersampling majority classes could be employed to address these imbalances; such balancing directly affects the reliability and generalizability of the model. To summarize, the observed gap between high accuracy and lower precision, recall, and F1-score points to potential reliability issues for real-time applications, but it also identifies critical areas for improvement. By addressing limitations such as label distribution through balancing techniques, along with ongoing research, we aim to develop a more dependable and effective real-time system.
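The random oversampling and undersampling mentioned above can be sketched in a few lines. The function `random_resample` is a hypothetical helper. Libraries such as imbalanced-learn provide `RandomOverSampler` and `RandomUnderSampler` for the same purpose. Resampling should be applied only to the training split so that test metrics still reflect the real class distribution.

```python
import random
from collections import defaultdict
from typing import Any, Hashable, List, Sequence, Tuple


def random_resample(X: Sequence[Any], y: Sequence[Hashable], mode: str = "over",
                    seed: int = 0) -> Tuple[List[Any], List[Hashable]]:
    """Balance classes by random oversampling ("over") or undersampling ("under")."""
    if mode not in ("over", "under"):
        raise ValueError("mode must be 'over' or 'under'")
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for xi, yi in zip(X, y):
        by_class[yi].append(xi)
    sizes = [len(items) for items in by_class.values()]
    target = max(sizes) if mode == "over" else min(sizes)
    X_out, y_out = [], []
    for label, items in by_class.items():
        if len(items) >= target:
            # Undersampling: keep a random subset without replacement
            # (for the largest class in "over" mode this just shuffles it).
            chosen = rng.sample(items, target)
        else:
            # Oversampling: keep every original sample and add random duplicates.
            chosen = items + rng.choices(items, k=target - len(items))
        X_out.extend(chosen)
        y_out.extend([label] * target)
    return X_out, y_out
```

Oversampling keeps all majority-class data but can encourage overfitting to duplicated minority samples. Undersampling avoids duplication but discards information.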

Looking beyond the experimental phase, the practical application of the WEA-DNN model shows great potential for real-world cybersecurity. With its strong performance in detecting coordinated attacks across diverse networks, the WEA-DNN is a valuable tool for enhancing network security. In real-world scenarios where cyber threats constantly evolve, the ability to accurately identify and mitigate coordinated attacks is crucial. The WEA-DNN provides a comprehensive view of network activity and can adapt to various data environments, making it a significant asset for cybersecurity professionals and organizations. Deploying the WEA-DNN in real-world settings could strengthen proactive defense strategies, allowing for timely responses to new threats and protecting critical network infrastructures from malicious activities. Additionally, ongoing refinements and optimizations based on real-world use and feedback could further improve its effectiveness against modern cybersecurity challenges. Therefore, the WEA-DNN represents a meaningful advancement in fortifying network security against today’s dynamic cyber threats.

4.5 Future work

This research primarily focuses on outsider attacks that the CIDS must detect. However, insider attacks that exploit vulnerabilities within the CIDS itself, such as data poisoning, pose a significant threat. Data poisoning can be highly detrimental to a CIDS, especially one that employs collaborative anomaly detection: because collaborative anomaly detection relies on collaborative learning to identify anomalies, poisoned data can corrupt the shared model, leading to incorrect predictions and potentially to Byzantine failures in the collaborative learning process. The presence of Sybil nodes in the network can further exacerbate these risks. Enhancing trust in CIDS is therefore crucial to mitigating data poisoning, and defending against this attack is a valuable avenue for future research on CIDS based on collaborative anomaly detection.
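One common mitigation, which is not part of this study and is named here as a swapped-in technique, is robust aggregation. Instead of plain averaging, the collaborator replaces each coordinate of the participants' reports (e.g., predicted class probabilities or model parameters) with a trimmed mean. This tolerates up to `trim` arbitrarily corrupted values per coordinate. The function name and example reports are hypothetical.

```python
from typing import List, Sequence


def trimmed_mean(reports: Sequence[Sequence[float]], trim: int) -> List[float]:
    """Coordinate-wise trimmed mean: drop the `trim` smallest and largest values
    per coordinate, then average the rest."""
    n = len(reports)
    if trim < 0 or 2 * trim >= n:
        raise ValueError("need 0 <= trim and 2 * trim < number of reports")
    result = []
    for j in range(len(reports[0])):
        column = sorted(r[j] for r in reports)
        kept = column[trim:n - trim]  # discard the extremes on both sides
        result.append(sum(kept) / len(kept))
    return result
```

With four honest nodes reporting about 0.85 for the benign class and one poisoned node reporting 0.0, the trimmed mean (trim = 1) stays at 0.85, whereas a plain mean would drop to 0.69. Trimming alone does not stop Sybil attacks that control many identities, which is why the trust mechanisms mentioned above remain necessary.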

Another promising direction is detecting unknown (previously unseen) attacks and analyzing data from new networks using existing trained models. The goal is to recognize anomalous behavior that has never been observed before, so that risky situations in networks and systems are caught before they escalate into serious incidents. Early detection of such novel threats is therefore an important direction for future cybersecurity research.
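A simple baseline for flagging possibly unknown traffic with an existing classifier is to reject predictions whose highest class probability falls below a threshold (a maximum-softmax-probability rule). This is an illustrative assumption, not a method from this study. The threshold value and the `unknown` label are hypothetical and would need tuning on held-out data. The rule is also known to miss novel attacks that the model classifies with high confidence.

```python
from typing import List, Sequence


def flag_unknown(probs: Sequence[Sequence[float]], threshold: float = 0.7,
                 unknown: int = -1) -> List[int]:
    """Predict the arg-max class, or `unknown` when the model is not confident enough."""
    predictions = []
    for p in probs:
        best = max(range(len(p)), key=p.__getitem__)
        predictions.append(best if p[best] >= threshold else unknown)
    return predictions
```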

In future studies, creating a dataset tailored to specific coordinated attack scenarios and heterogeneous network environments could help address the observed discrepancy between high accuracy and lower precision, recall, and F1-scores. Such a dataset would provide a more balanced representation of attack types and benign traffic, reducing the risk of model bias toward majority classes and improving precision and recall. By including diverse examples of coordinated attacks, the model can better learn the subtle patterns associated with these threats and distinguish them more reliably from benign traffic. A scenario-specific dataset also enables targeted feature engineering that focuses on the characteristics most indicative of attack behavior. Continuously extending the dataset with new attack types would keep the model relevant and accurate, enhancing its practical utility in real-time applications and its robustness against evolving threats.

5 Conclusion

The primary objective of this research was to devise a CIDS leveraging WEA-DNN for the detection of coordinated attacks within heterogeneous networks. The evaluation of WEA-DNN’s detection capabilities revealed a remarkable detection rate characterized by high accuracy, surpassing the performance of individual DNN models derived from each network and even outperforming the ensemble averaging DNN method in detecting coordinated attacks across diverse networks. Notably, the performance of WEA-DNN remained competitive with that of localized DNN models when analyzing traffic within individual networks. This compelling finding underscores the WEA-DNN’s proficiency in offering a comprehensive perspective for analyzing and detecting coordinated attacks in the intricate context of heterogeneous networks. Such robust detection capabilities position the proposed WEA-DNN model as a promising solution for bolstering network security against coordinated intrusion attempts across diverse network environments.

Data availability

The data that support the findings of this study are publicly available. The public datasets used in this research are: 1. CICIDS2017: www.unb.ca/cic/datasets/ids-2017.html. 2. CSE-CICIDS2018: www.kaggle.com/datasets/solarmainframe/ids-intrusion-csv. 3. CICToNIoT: staff.itee.uq.edu.au/marius/NIDS_datasets/#RA13. 4. CICBotIoT: staff.itee.uq.edu.au/marius/NIDS_datasets/#RA14.

Code availability

The code supporting the findings of this study is publicly available at github.com/aulwardana/WEA-DNN

References

Colom, J.F., et al.: Scheduling framework for distributed intrusion detection systems over heterogeneous network architectures. J. Netw. Comput. Appl. 108, 76–86 (2018)

Zhou, C.V., Leckie, C., Karunasekera, S.: A survey of coordinated attacks and collaborative intrusion detection. Comput. Sec. 29(1), 124–140 (2010)

Vasilomanolakis, E., Karuppayah, S., Mühlhäuser, M., Fischer, M.: Taxonomy and survey of collaborative intrusion detection. ACM Comput. Surv. (CSUR) 47(4), 1–33 (2015)

Folino, G., Sabatino, P.: Ensemble based collaborative and distributed intrusion detection systems: a survey. J. Netw. Comput. Appl. 66, 1–16 (2016)