Abstract

Software can be vulnerable to various types of interference. The production of cyber threat intelligence for closed source software requires significant effort, experience, and many manual steps. The objective of this study is to automate the production of cyber threat intelligence, focusing on closed source software vulnerabilities. To achieve this goal, we developed a system called cti-for-css, which uses deep learning algorithms for detection. To simplify data representation and reduce the pre-processing workload, the study proposes the function-as-sentence approach. The MLP, OneDNN, LSTM, and Bi-LSTM algorithms were trained using this approach on the SOSP and NDSS18 binary datasets, and their results were compared. These datasets contain buffer error vulnerabilities (CWE-119) and resource management error vulnerabilities (CWE-399). Our results are comparable to those reported in the literature. The system achieved its best performance with Bi-LSTM, with an F1 score of 82.4%. Additionally, an AUC score of 93.0% was obtained, which is the best reported in the literature. Finally, the study produced cyber threat intelligence for closed source software. Shareable intelligence was produced in an average of 0.1 s, excluding the detection process. Each record represented using our approach was classified in under 0.32 s on average.


1 Introduction

Software security is the safeguarding of software and its components within the scope of information security's three elements: confidentiality, integrity, and availability [1]. It also involves ensuring that software continues to perform its expected functions even when under attack. To detect potential software vulnerabilities, institutions perform various analyses on source code developed in-house or obtained from third parties.

Analysis of closed source software, including executables and libraries, is also essential. Accordingly, institutions also aim to identify security vulnerabilities in the closed source software they provide or use and to take appropriate measures to address them. The widely used Lighttpd web server provides an example of a vulnerable executable. Because the input string passed to the vulnerable function base64_decode is not validated, an adversary can supply malicious input that causes an out-of-bounds memory access and abnormal termination of the web server [2]. Once this flaw is known, however, a security mechanism that checks user input can be implemented. The Heartbleed vulnerability is another well-known example. Because the popular OpenSSL cryptographic library did not check the requested data size against the data actually received, it sent back as much data as was requested, which allowed the server to leak the contents of its memory [3]. Consequently, attackers could obtain server memory, which may contain passwords, certificates, and so forth, with relative ease.

Even end users want to know whether the applications they use contain any vulnerabilities. The Free MP3 CD Ripper software illustrates this need. This software converts audio CDs into the MP3 format. Because of a local buffer overflow vulnerability, version 1.1 of the software could execute an attacker's instructions embedded in a malicious .wav file prepared by the attacker, if the user opened that file [4]. It is essential to inform users when such a vulnerability is identified, so that they can refrain from using the vulnerable software. As a result, there is an increasing demand today for information about vulnerabilities in closed source software.

Cyber threat intelligence can be shared with institutions, developers, and end users who lack the ability or resources to conduct their own analysis. This enables them to become aware of security vulnerabilities and take necessary precautions.

To produce cyber threat intelligence, the first step is to identify any vulnerabilities. Software can exist in three forms: source code, bytecode, or binary code, and security vulnerabilities can be detected by examining any of these forms. As this study focuses on software whose source code cannot be accessed, the analysis was carried out on binary code. Binary code analysis also has a broader application area, as it does not require source code [5]. In certain fields, such as the Internet of Things (IoT) and performance-oriented industries, the source code is not shared and software is often distributed as compiled binaries [6,7,8]. Updates are also usually distributed in binary form [9]. Furthermore, malicious compilers such as XcodeGhost, which inject additional code, are a concern [8, 10]. In numerous cases, the source code may not precisely represent the code that is executed: because compilers make changes when converting source code into executable binary code, analysing the source code alone may not provide adequate results [5, 6, 11, 12]. Given the significant progress made in source code-based vulnerability analysis, binary code analysis is now considered more important [13]. Advanced analysis of bytecode, on the other hand, may not be necessary, as it can be converted back into source code.

With most existing analysis methods, many vulnerabilities can be missed because these methods depend on experts to create features manually [7]. It is therefore important to develop automated methods. Automated analysis of binary code is a promising research area because it enables new software analysis technologies that can perform more accurate and reliable examinations [5, 14]. However, detecting security vulnerabilities in binary code is challenging [6, 11]. The structure of binary code is complex [11], and there are many architectures, each with dozens of instructions [14]. Furthermore, binary data and control structures lack high-level, semantically rich information [6, 11]. Developing a tool for binary code analysis also requires significant programming effort [14].

This study aims to automate the creation of cyber threat intelligence for closed source software vulnerabilities by addressing the manual steps that require expert knowledge to detect vulnerabilities and create the related intelligence. The objective is to present an approach that offers a new perspective on binary code analysis, provides a standardized way of creating vulnerability intelligence, and simplifies the process steps without compromising performance. This paper contributes to the literature in the following areas:

- A literature review of vulnerability detection in binary code and of software vulnerability intelligence,
- A proposed flowchart and system design for creating threat intelligence for closed source software, based on an analysis of existing process steps and system architectures,
- Reviews and assessments of currently available datasets and cyber threat intelligence standards,
- Data types that should be defined in accordance with the recommended intelligence standard,
- A deep learning model that is not limited to a single processor architecture, file format, or software domain,
- The function-as-sentence approach, which treats each machine instruction as a word and each function as a sentence, for quickly detecting vulnerabilities in binary code,
- A new data representation for binary code analysis,
- Publicly available source code that is ready to use.

This study differs from previous studies in several ways. The majority of studies have focused solely on vulnerability analysis, and insufficient attention has been paid to the production of intelligence. Our study aims to fill this gap and to produce intelligence in a short time. Our approach assumes that each sentence conveys information about the presence of a security vulnerability, and it therefore tries to understand what the function expresses. For this reason, we propose simple transformations and avoid any steps that could introduce additional meanings. We also share the usage details of the proposed cyber threat intelligence standard to establish a standardised approach in the literature.

Section 2 examines studies in the literature on the analysis of closed source software, as well as studies on the production, use, and/or management of software vulnerability intelligence. It concludes with an evaluation of the literature that shows the need for this study. Section 3 presents the proposed flowchart and system design, surveys usable datasets and cyber threat intelligence standards, and details our approach in comparison with the approaches in the literature. Section 4 describes the algorithms used, presents their performance, and evaluates the implemented system. The conclusion is presented in Sect. 5.

2 Related studies

The literature review addressed vulnerability analysis and vulnerability intelligence separately in order to cover a wide range of approaches, to evaluate the solutions effectively, and to enable better comparison with our work. Relevant studies were identified using combinations of the following keywords:

- For vulnerability detection studies:
  - binary software, binary program, binary code, binary code executable, binary, executable, program
  - vulnerability, vulnerability detection, software vulnerability, software vulnerability analysis
  - machine learning, deep learning
- For vulnerability intelligence studies:
  - software vulnerability, software vulnerability analysis
  - cyber threat intelligence, threat intelligence, vulnerability intelligence

Keywords were searched in both English and Turkish, with no restriction on start or end date. The title, abstract, keyword, and full-text fields were searched.
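The keyword search above can be illustrated with a short Python sketch. Because the exact query syntax is not specified, the sketch assumes that one term is taken from each keyword group and that the terms are combined with AND; the group contents are copied from the lists above, while `build_queries` is a hypothetical helper.

```python
from itertools import product

# Keyword groups as listed above; one term from each group forms a query.
DETECTION_GROUPS = [
    ["binary software", "binary program", "binary code", "binary code executable",
     "binary", "executable", "program"],
    ["vulnerability", "vulnerability detection", "software vulnerability",
     "software vulnerability analysis"],
    ["machine learning", "deep learning"],
]
INTELLIGENCE_GROUPS = [
    ["software vulnerability", "software vulnerability analysis"],
    ["cyber threat intelligence", "threat intelligence", "vulnerability intelligence"],
]


def build_queries(groups):
    """Return one AND-joined, quoted query per combination (Cartesian product) of terms."""
    return [" AND ".join(f'"{term}"' for term in combo) for combo in product(*groups)]


if __name__ == "__main__":
    detection = build_queries(DETECTION_GROUPS)
    intelligence = build_queries(INTELLIGENCE_GROUPS)
    print(len(detection), len(intelligence))  # 56 6
    print(detection[0])  # "binary software" AND "vulnerability" AND "machine learning"
```

Under this assumption, the detection groups yield 7 × 4 × 2 = 56 queries and the intelligence groups 2 × 3 = 6; each query would also be issued with its Turkish equivalents.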

Some studies [15,16,17,18,19,20,21] used control and data dependencies, semantic information, or flow graphs to detect vulnerabilities. Cui et al. employed a combination of CNN and Bi-LSTM algorithms to detect multiple vulnerabilities [15]. The proposed model created binary code slices based on function calls, control dependencies, and data dependencies. Features were extracted from the created slices during the analysis, and 19 types of vulnerabilities were identified. Similarly, in [16], control and data dependencies were analysed from function calls to determine the exact location of vulnerabilities within binary code.

Diwan et al. [17] used VDGraph2Vec, which was developed to represent machine language, together with RoBERTa to obtain control flow and semantic information. The analysis was carried out with various neural networks, focusing on the CWE-121 and CWE-190 vulnerabilities in the Juliet dataset. From the NDSS18 dataset, only the samples containing CWE-119 vulnerabilities were used.

Cheng et al. [18] conducted a vulnerability analysis of Internet of Things devices. The study examined control flow using CNN and achieved 80% accuracy.

Gao et al. [19] analysed binary code vulnerabilities across multiple platforms. Labelled semantic flow graphs were created for the binary code, and a DNN was then used for vulnerability detection. The tests reported a detection time of 0.2 s per vulnerable function and an AUC value of 88.48%.

Padmanabhuni and Tan [20] utilised machine learning techniques to identify buffer overflow vulnerabilities in binary code. The variable information was obtained by parsing the binary data, and then the BinAnalysis tool was used to detect dependencies. They then created and analyzed various attributes using the Weka tool. The analysis compared the Naive Bayes, Multilayer Perceptron (MLP), Simple Logistics (SL), and Sequential Minimal Optimization (SMO) techniques. The results indicated that SMO had the highest accuracy rate of 94%.

Chen and He [21] conducted risk analysis and vulnerability detection through feature matching. The control flow graph was first obtained through binary code, then semantic information was extracted using LSTM, and finally, the vector representation was produced.

In some studies [22,23,24,25], prior to analysis, the binary code was translated into an intermediate language. Wang et al. [22] conducted a study on detecting known and unknown vulnerabilities in binary code. They obtained pseudocode from the binary code and analyzed it using the BiLSTM-attention model. The Juliet test set was used with a focus on the C/C++ programming language.

Taviss et al. conducted a vulnerability analysis on binary code using machine language summarisation methods [23]. The Bi-GRU algorithm yielded the best results, achieving a performance of 79.6% on the Juliet dataset and 84.1% on the NDSS18 dataset.

Redmond [24] utilised Natural Language Processing (NLP) to support multiple architectures, including ARM and X86. The machine instructions that are dependent on architecture were converted into a common language using NLP. Analyses were conducted using Bivec. Tests were performed on the openssl, coreutils, findutils, diffutils, and binutils programs.

Zheng et al. [25] proposed a method to detect CWE-134, CWE-191, CWE-401, and CWE-590 vulnerabilities in binary code using a recurrent neural network. Binary code was decompiled with the RetDec tool and converted to an intermediate language, after which flow analysis was performed. To determine the most suitable recurrent neural network, they compared the performance of six models: SRNN, BSRNN, LSTM, BLSTM, GRU, and BGRU. The analysis revealed that BSRNN achieved the highest accuracy. The NIST SARD dataset, compiled with GCC 5.3.1, was used. Upon examining the compiled programs, they identified vulnerabilities in 9,352 out of 14,657 binaries.

A number of studies [26,27,28,29,30] used vectors to represent binary data. Lee et al. [26] introduced a method that converts assembly code into fixed-size vectors instead of prioritising the ordering of API function calls. To achieve this, they used the Instruction2vec tool and applied Text-CNN to analyse and classify the vectors. The function classification model was trained and tested on the Juliet dataset provided by NIST, split into 80% for training and 20% for testing, and achieved an accuracy of 96.1%.

Yan et al. [27] analysed machine language using the Juliet and ICLR19 datasets and various algorithms. They first used Bi-GRU and a 'word-attention module' to obtain contextual information, followed by TextCNN and a 'spatial-attention module' for feature extraction. Their hybrid approach yielded better results than previous studies.

Nguyen et al. [28] proposed the deep cost-sensitive kernel machine to analyze binary code for CWE-119 and CWE-399. A total of 79,848 binary codes were tested. Their best F1 score for the NDSS18 binary dataset was 85.7%.

Le et al. [29] proposed the maximal divergence sequential autoencoder to automatically derive features for binary code vulnerability analysis. Furthermore, a labelled dataset suitable for binary code vulnerability analysis was developed. Their best F1 score for the dataset was 87.1%.

Feng et al. [30] developed a bug detection engine called Genius using unsupervised machine learning. The study analysed 8,126 firmware images containing 420,558,702 functions, using high-level numerical feature vectors instead of raw features.

A few studies [31,32,33] employed similarity analysis as a detection method. Eschweiler et al. [31] presented a new approach for similarity analysis of functions within binary code. They implemented a preprocessing step to reduce computational costs, allowing large code bases to be analysed. The developed prototype analysed binary code for various architectures, including x86, x64, ARM, and MIPS. The system identified vulnerabilities, including Heartbleed and POODLE, in an Android image containing over 130,000 ARM functions. Luo et al. [32] also performed binary code similarity analysis using a DeBERTa model; their best model achieved a recall of 79%.

Durmuş and Soğukpınar [33] presented a new method for analysing heap buffer overflow vulnerabilities in binary executables. The study aimed to identify similarities between the distributions and sequences of binary opcodes used for branching, looping, register value updating, memory value updating, stack operations, and system calls. Training and test sets were created from a total of 30 binaries, 15 of which contain vulnerabilities. These binaries were derived from the NIST SARD dataset, and all programs were written in C/C++. The tests employed various classification methods, including KNN, Naive Bayes, SVM, ZeroR, Bayes Multinomial, Hoeffding Tree, J48, K-Star, Random Forest, Random Tree, and Decision Table. The KNN, Naive Bayes, Hoeffding Tree, K-Star, and Random Tree algorithms demonstrated high performance, indicating their suitability for vulnerability analysis.

Various studies [7, 34,35,36,37,38,39] indicated that analysing binary instructions is a preferable approach. Liu et al. [7] used a deep learning-based approach to automate the vulnerability analysis of binary programs created for the Internet of Things. The binary instructions obtained with the IDA Pro tool were preprocessed and cleaned before the binary functions were extracted. A model was then trained on these functions using Att-BiLSTM (attention-based bidirectional LSTM) for vulnerability prediction.

Dong et al. [34] analysed defects in Android binary executables. They obtained over 90,000 smali files from approximately 50 APK files, and the extracted features were analysed using a DNN, resulting in an AUC value of 85.98%.

Morrison et al. [35] investigated several methods for analysing Windows 7/8 binaries, including logistic regression, naive Bayes, recursive partitioning, support vector machines, tree bagging, and random forest. However, the accuracy of their results fell behind that of studies that detect vulnerabilities through source code.

Saxe and Berlin [36] presented the Invincea system for binary code analysis. The system achieved a detection rate of 95% at a false positive rate (FPR) of 0.1%, based on tests conducted on over 400,000 pieces of software from various sources. The process involved creating features from binary code and then applying a four-layer feedforward DNN with 1024 inputs, hidden layers of 1024 units, and 1 output. The neural network model was built with Keras, a deep learning library.

Rosenblum et al. [37] performed binary code analysis on the Intel IA-32 architecture in both Windows and Linux environments. A probabilistic model was used with the IDA Pro and Dyninst tools to classify the start byte of each function (function entry point, FEP). The modelling was done with a Markov Random Field (MRF), resulting in a recall rate of 1%.

Tian et al. [38] compared the performance of several algorithms, including RNN, LSTM, GRU, Bi-RNN, Bi-LSTM, and Bi-GRU. The results show that the Bi-GRU and Bi-LSTM algorithms achieved the highest F1 score, reaching almost 90%. They developed a prototype called BVDetector and tested it using the SARD (Software Assurance Reference Dataset) provided by NIST.

Wu and Tang [39] used the Bi-GRU algorithm with the ELMo model. They achieved 98.9% and 87.7% accuracy on the Juliet and NDSS18 datasets, respectively.

A limited number of studies [40, 41] employed a dynamic approach to analysing binary code. Li et al. [40] proposed using fuzzing techniques to identify vulnerabilities and implemented the V-Fuzz prototype based on this approach. The prototype comprises two main components: a vulnerability prediction model and a vulnerability-oriented evolutionary fuzzer. When analysing a binary program with V-Fuzz, the system first predicts which parts of the program are more likely to contain vulnerabilities. The fuzzing component then uses an evolutionary algorithm to generate inputs that reach those parts of the program. Testing was conducted on uniq, MP3Gain, pdftotext, and libgxps. The experimental results indicate that 10 CVEs were found, 3 of which were newly discovered.
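The idea of vulnerability-oriented evolutionary fuzzing described above can be illustrated with a toy Python sketch. It is not V-Fuzz: the target program, its block names, and the per-block risk scores in `PREDICTED_RISK` are hypothetical stand-ins for a real binary and for the output of a prediction model. The fitness function rewards inputs that reach blocks with a higher predicted risk, so the search is steered toward the parts of the program flagged as likely vulnerable.

```python
import random

# Hypothetical output of a vulnerability prediction model: risk per basic block.
PREDICTED_RISK = {"parse_header": 0.1, "checksum": 0.2, "copy_payload": 0.9}


def run_target(data):
    """Toy target program: return the set of basic blocks the input reaches."""
    covered = {"parse_header"}
    if len(data) >= 4 and data[0] == 0x7F:
        covered.add("checksum")
        if data[1] == 0x45:
            covered.add("copy_payload")  # block the model flagged as most risky
    return covered


def fitness(data):
    """Reward inputs that reach blocks with a high predicted vulnerability risk."""
    return sum(PREDICTED_RISK[block] for block in run_target(data))


def mutate(data, rng):
    """Replace one random byte with a random value (data must be non-empty)."""
    buf = bytearray(data)
    buf[rng.randrange(len(buf))] = rng.randrange(256)
    return bytes(buf)


def evolve(seed, generations=2000, population=8, rng_seed=0):
    """Elitist loop: keep the fittest inputs among parents and their mutants."""
    rng = random.Random(rng_seed)
    pool = [seed] * population
    for _ in range(generations):
        children = [mutate(rng.choice(pool), rng) for _ in range(population)]
        pool = sorted(pool + children, key=fitness, reverse=True)[:population]
    return pool[0]


if __name__ == "__main__":
    best = evolve(b"\x00\x00\x00\x00")
    print(best, sorted(run_target(best)))
```

Because parents are kept alongside their children, the best fitness never decreases across generations; a real tool would instead execute the instrumented binary and derive the risk scores from a trained model.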

Grieco et al. [41] conducted a study on memory corruption prediction using static and dynamic features. The study analysed a dataset called VDiscover, which was built from 1039 Debian programs. The binary code was disassembled to extract static features, and dynamic features were obtained through execution analysis. The prediction module was trained on the extracted features using supervised machine learning techniques, such as logistic regression and random forest. Experiments were conducted with different algorithms, and the maximum accuracy achieved was 55%.

Commercial and freely available tools that analyse binary code were identified through web searches. Examples include GrammaTech CodeSurfer [42] and CodeSonar [43, 44], SourceForge BugScam [45], Hex-Rays IDA Pro [46], Veracode SAST [47], Microsoft CAT.NET [48], and Carnegie Mellon University BAP [5]. These tools do not describe their internal logic in detail, and we could not find any information on their use of machine learning or deep learning techniques.

Only a few studies have focused on cyber threat intelligence for software vulnerabilities. Patil and Malla [49] focused on software used in virtualization environments, with the aim of identifying vulnerabilities and prioritising their patch management. The vulnerabilities were identified by analysing both internal and external threat intelligence. Patch prioritisation was then carried out, and information was provided on possible controls to prevent the exploitation of vulnerabilities.

Wu et al. [50] presented a model for producing vulnerability intelligence by analysing the code repositories of open source tools. The tool supports only the C/C++ programming languages, and no cyber threat intelligence standard was used in the model.

According to Wu et al. [51], software vulnerabilities present a significant threat to power systems. To minimize this threat, they presented a solution and developed a model using the China National Vulnerability Database (CNNVD). The model enables corrective action to be taken based on vulnerability intelligence. The initial stage involves defining the entities within a topology. Based on the gathered information, patch management is performed on these assets to eliminate vulnerabilities.

Davidson et al. [52] discussed a solution for transferring information about software vulnerabilities between organisations or units within an organisation. The authors employed game theory as a methodology and developed a system that utilised standards such as STIX and TAXII. They also developed a proof-of-concept protocol for exchanging intelligence. The protocol was implemented using the Go programming language.

The studies [7, 38], examined above in the context of vulnerability analysis, are revisited here with a focus on vulnerability intelligence. These models did not focus on producing or distributing cyber threat intelligence in a specific format, and the data they acquire is primarily static and not shareable.

The majority of studies have focused solely on vulnerability analysis. Intelligence production is not given sufficient weight, especially in a shareable format. The lack of a commonly used benchmark dataset makes comparisons difficult [53]. Furthermore, many studies concentrate on software specific to a particular domain.

Studies in the literature vary in granularity. Some only identify vulnerabilities in binary code, while others classify them. Moreover, many studies concentrate on a single vulnerability and/or architecture. As a result, it is not feasible to make direct comparisons of the results presented in the literature.

As noted in [54], deep learning techniques are not yet mature for binary code analysis. Additionally, there is a lack of models and systems that can produce shareable cyber threat intelligence. Our study aims to fill this gap.

3 Proposed system

3.1 Dataset selection

We analysed the available datasets. The buffer overflow benchmark from MIT Lincoln Laboratory [55] contains outdated applications, and it includes only three applications and 14 exploitable vulnerabilities. Similarly, the LAVA-M dataset [56] has a limited number of applications.

The DARPA CGC dataset [57] focuses on a single architecture. Hence, it may not be sufficient for studies aiming to support multiple architectures.

The NIST SARD [58] is a collection of source code for various programming languages, platforms, and vulnerabilities. It contains numerous test cases and test suites, such as the Juliet C++ [59], C# Vulnerability Test Suite [60], and Java Test Suite [61]. However, researchers must compile the source code, which may pose a challenge.

The VulDeePecker dataset [62], also known as the NDSS18 dataset or NDSS18 source code dataset, was created using the NIST SARD. It contains buffer error vulnerabilities (CWE-119) and resource management error vulnerabilities (CWE-399). The dataset was created for deep learning studies, but it does not include compiled binaries either.

By compiling the source code files of the VulDeePecker dataset, the NDSS18 binary dataset [29] was created in 2018 with a specific data representation. The dataset includes records for various operating systems and architectures. In 2020, Nguyen et al. [28] presented a new dataset created from six open-source applications, using the same data representation.

Our study used the binary dataset from six open-source projects (hereinafter referred to as SOSP) and the NDSS18 binary dataset to detect CWE-119 and CWE-399 vulnerabilities. These datasets were chosen because their raw data acquisition procedures are simple. First, the binary code is decompiled to obtain machine language instruction sequences in hexadecimal (base 16). The function blocks are then converted to decimal. The opcode and instruction values are extracted from each line and merged using the pipe and comma symbols, respectively. This representation matches the word structure required by the function-as-sentence approach.
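A minimal Python sketch of the word construction described above follows. It assumes that each disassembled line has already been split into an opcode and its instruction bytes in hexadecimal, and that the pipe separates the opcode from the instruction values while commas separate the instruction values. The input layout and the helper names `line_to_word` and `function_to_words` are hypothetical; the exact layout of the SOSP and NDSS18 binary datasets may differ.

```python
def line_to_word(opcode_hex, instruction_hex):
    """Convert one hexadecimal line into a decimal word such as '139|72,139,69,248'."""
    opcode = int(opcode_hex, 16)
    values = [int(byte, 16) for byte in instruction_hex]
    return f"{opcode}|{','.join(str(v) for v in values)}"


def function_to_words(lines):
    """Map every (opcode, instruction bytes) line of a function block to a word."""
    return [line_to_word(opcode, instruction) for opcode, instruction in lines]


if __name__ == "__main__":
    # Hypothetical disassembly of a two-instruction x86-64 function.
    block = [("8b", ["48", "8b", "45", "f8"]), ("c3", ["c3"])]
    print(function_to_words(block))  # ['139|72,139,69,248', '195|195']
```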

3.2 Solution method

Natural languages are formed by combining root and derived word groups with suffixes, whereas machine language is formed by combining opcode and instruction information. The function-as-sentence approach has been proposed to treat machine language as a natural language. This approach considers each line in machine language as a word and each function as a sentence.

The first step is to convert the binary code according to the data representation of the selected dataset, which yields the words of each function. These words are then joined with spaces to form a sentence. In our vectorisation approach, punctuation marks within a word are not treated as part of the alphabet, so all punctuation marks are removed. The dataset is then analysed to determine the frequency of each distinct string, and this information is used to create a vocabulary. Using the vocabulary, the batch of strings is transformed into lists of integer token indices. The resulting vectorised sentences are used to train a deep learning model.
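The vectorisation steps above can be sketched in plain Python. This is an illustration under stated assumptions rather than the system's implementation: index 0 is reserved for padding and index 1 for out-of-vocabulary tokens (the convention used by Keras `TextVectorization`), ties in frequency are broken alphabetically, and the optional fixed-length padding in `vectorize` is an addition for batching.

```python
import string
from collections import Counter

PAD, OOV = 0, 1  # assumed reserved indices for padding and unknown words
_STRIP_PUNCTUATION = str.maketrans("", "", string.punctuation)


def to_sentence(words):
    """Join the words of one function with spaces, then remove all punctuation."""
    return " ".join(words).translate(_STRIP_PUNCTUATION)


def build_vocabulary(sentences):
    """Index tokens by descending frequency (ties alphabetical), starting after PAD/OOV."""
    counts = Counter(token for sentence in sentences for token in sentence.split())
    ordered = sorted(counts, key=lambda token: (-counts[token], token))
    return {token: index for index, token in enumerate(ordered, start=2)}


def vectorize(sentence, vocabulary, max_len=None):
    """Map a sentence to integer token indices, optionally truncated/padded to max_len."""
    ids = [vocabulary.get(token, OOV) for token in sentence.split()]
    if max_len is not None:
        ids = ids[:max_len] + [PAD] * (max_len - len(ids))
    return ids


if __name__ == "__main__":
    functions = [["139|72,139,69,248", "195|195"], ["139|72,139,69,248", "144|144"]]
    sentences = [to_sentence(words) for words in functions]
    vocabulary = build_vocabulary(sentences)
    print(sentences[0])                                    # 1397213969248 195195
    print(vectorize(sentences[0], vocabulary, max_len=4))  # [2, 4, 0, 0]
```

Because punctuation is removed rather than replaced by spaces, each instruction line remains a single word, so one token still corresponds to one machine instruction.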

When a binary is analysed, the same steps are applied. Each resulting sentence is then classified by the deep learning model, and cyber threat intelligence is produced based on the presence of vulnerabilities. Accuracy, rather than time and space complexity, is the main criterion of our approach. However, time remains an important consideration for detection systems, so we also report timing metrics, and Sect. 4 compares our results with those of other studies.
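The evaluation quantities reported in this study (F1 score, AUC, and average per-record classification time) can be computed as in the following stdlib-only Python sketch. The helper names are hypothetical, `classify` stands in for any trained model, and the pairwise AUC computation (the probability that a vulnerable sample is scored above a non-vulnerable one, counting ties as one half) is quadratic and meant for illustration, not for large test sets.

```python
import time
from statistics import mean


def f1_score(y_true, y_pred):
    """F1 for the positive (vulnerable = 1) class from binary labels and predictions."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def roc_auc(y_true, scores):
    """AUC as the fraction of (vulnerable, non-vulnerable) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mean_classification_time(records, classify):
    """Average wall-clock seconds spent in classify(record) per record."""
    durations = []
    for record in records:
        start = time.perf_counter()
        classify(record)
        durations.append(time.perf_counter() - start)
    return mean(durations)


if __name__ == "__main__":
    labels = [1, 1, 0, 0]
    print(f1_score(labels, [1, 0, 0, 1]))          # 0.5
    print(roc_auc(labels, [0.9, 0.4, 0.35, 0.8]))  # 0.75
```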

This study differs from previous approaches in several ways. For vulnerability analysis, some studies in the literature used control and data dependencies, semantic information, or flow graphs [15,16,17,18,19,20,21]. Our approach assumes that functions express themselves, similar to sentences in natural language, rather than focusing on their implementation details.

In certain cases, the binary code was transformed into an intermediate language before analysis [22,23,24,25]. Furthermore, several studies used vectors to represent binary data [26,27,28,29,30]. Our approach does not attempt to impose new meanings; it only acquires the existing machine language sequences and applies various transformations to enable more efficient and effective computation.

Similarity analysis has also been employed in the literature [31,32,33]. Our approach does not perform similarity analysis directly, as it assigns meaning to functions through patterns. The function-as-sentence approach assumes that each sentence conveys information about the presence of a security vulnerability, and it therefore tries to understand what the function expresses. For this reason, the objective is to perform simple transformations and avoid any steps that could introduce additional meanings.

In the field of vulnerability intelligence, some researchers have narrowed their focus to specific domains [51]. Additionally, the exchange of intelligence has been studied [52], but most of the data obtained is static and not easily shareable [7, 38]. This study proposes the automatic production of shareable cyber threat intelligence without focusing on a specific domain or platform. Table 1 presents the methods and features of the proposed solution.

3.3 General flow

The process steps and system architectures of the studies identified in the literature review were analysed, and a flow was derived from this analysis.

The flow was then extended with new steps, including plotting the training history, saving and importing a model, exporting an intelligence file, and preparing data in accordance with our approach. The flow is shown in Fig. 1.

First, the model preparation phase begins. Then, binary files are converted to the appropriate format and the classification process is performed. After that, the cyber threat intelligence creation phase uses the classification results and exports the intelligence file.

3.4 General design

The system architecture was designed based on the literature and then improved by updating it according to the flow diagram, standardizing the components, and refactoring it according to software engineering best practices.

Figure 2 illustrates the architecture, which provides the literature with a comprehensive design for building a system that analyses binary code and creates cyber threat intelligence.

In the architecture, the Orchestrator ensures that the learning, testing, and detection steps are performed in the correct order. ModelManager is responsible for preparing the deep learning model and analysing the binary code for classification. FormManager vectorises the input for training or testing, and it also transforms machine code into the appropriate format. Reconstructor decompiles the executable for analysis. CtiManager generates and exports the acquired intelligence. Commons comprises common modules and necessary functionalities, such as executing operating system commands, storing and retrieving configurations, reading and writing files, creating and deleting directories and files, logging, and preparing and exporting figures.
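The detection path through these components can be sketched in Python as below. Only the component names come from the text; the method names, the injected `disassembler` and `model` callables, the 0.5 threshold, and the plain JSON output are hypothetical placeholders. Commons and the training and testing paths are omitted, and a real Reconstructor would invoke an external disassembly tool.

```python
import json


class Reconstructor:
    """Turns an executable into {function name: instruction lines} (delegated here)."""

    def __init__(self, disassembler):
        self.disassembler = disassembler  # hypothetical callable: path -> dict

    def reconstruct(self, path):
        return self.disassembler(path)


class FormManager:
    """Formats a function as a sentence: one word per instruction line."""

    def to_sentence(self, lines):
        return " ".join(lines)


class ModelManager:
    """Wraps a trained classifier that scores a sentence between 0 and 1."""

    def __init__(self, model, threshold=0.5):
        self.model = model  # hypothetical callable: sentence -> probability
        self.threshold = threshold

    def is_vulnerable(self, sentence):
        return self.model(sentence) >= self.threshold


class CtiManager:
    """Serialises the findings; a STIX mapping would replace this placeholder."""

    def export(self, path, findings):
        return json.dumps({"file": path, "vulnerable_functions": findings})


class Orchestrator:
    """Runs the detection steps in order: reconstruct, format, classify, export."""

    def __init__(self, reconstructor, form_manager, model_manager, cti_manager):
        self.reconstructor = reconstructor
        self.form_manager = form_manager
        self.model_manager = model_manager
        self.cti_manager = cti_manager

    def detect(self, path):
        functions = self.reconstructor.reconstruct(path)
        findings = [
            name
            for name, lines in functions.items()
            if self.model_manager.is_vulnerable(self.form_manager.to_sentence(lines))
        ]
        return self.cti_manager.export(path, findings)


if __name__ == "__main__":
    fake_disassembly = {"parse_input": ["72139", "195195"], "helper": ["144144"]}
    orchestrator = Orchestrator(
        Reconstructor(lambda path: fake_disassembly),
        FormManager(),
        ModelManager(lambda sentence: 0.9 if "72139" in sentence else 0.1),
        CtiManager(),
    )
    print(orchestrator.detect("app.bin"))
    # {"file": "app.bin", "vulnerable_functions": ["parse_input"]}
```

Injecting the disassembler and model as callables keeps each component replaceable, which mirrors the separation of responsibilities described for the architecture.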

3.5 Cyber threat intelligence selection

Intelligence that does not comply with a specific format may create additional workloads and incompatibilities for stakeholders. Several formats are available for creating intelligence in a standardised form. The following formats have been found [63,64,65,66,67,68,69,70,71,72,73,74,75,76]: CybOX, CSAF, CVRF, GENE, IDMEF, IODEF, MAEC, MISP, MMDEF, OCIL, OpenIOC, OVAL, RID, Sigma, STIX, VERIS, XCCDF, YARA.

The GENE [77], OCIL [78], Sigma [79], XCCDF [80], and YARA [81] standards are not suitable for representing cyber threat intelligence related to software vulnerabilities. GENE, Sigma, and YARA are primarily used for creating detection rules. OCIL focuses on creating audit questions, while XCCDF is used for defining checklists.

The RID XML data schema is currently based on the IODEF [76]. Neither the IODEF [82] nor the RID [83] standard includes data fields directly relevant to a software vulnerability.

The MAEC [84] and MMDEF [85] standards are primarily intended to express information obtained from static and dynamic analysis of malware. Therefore, a vulnerability may not be expressed in full detail.

CVRF [86] is currently presented as CSAF [87], and CybOX [88] has been integrated into STIX [89]. As a result, the most suitable formats are CSAF, IDMEF [90], MISP [91], OpenIOC [92], OVAL [93], STIX and VERIS [94].

After evaluating which standard provides more compatible results with less effort, it was determined that the STIX standard is the most suitable due to its compatible fields, widespread usage, and mature documentation. All data fields in the standard can be utilised for vulnerability intelligence. Table 2 provides recommendations for the information to be included in each field when using the standard. This is expected to make a valuable contribution to the literature and prevent inconsistencies in studies using the same standard.

All information is filled in automatically by our system. For CWE-119 and CWE-399 vulnerabilities, any data that cannot be extracted automatically from the binary file is hard-coded in the system's source code.
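The sketch below shows the kind of STIX 2.1 object the system exports. The authors used the stix2 library [103]. To stay dependency-free, this sketch instead builds a minimal Vulnerability object and bundle by hand as plain JSON. The chosen fields are an illustrative assumption and do not reproduce the field recommendations of Table 2. The helper names `stix_timestamp`, `make_vulnerability` and `make_bundle` are hypothetical. The function name and probability are taken from the badLevelB sample discussed later.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp() -> str:
    # STIX 2.1 timestamps are UTC and end in "Z" (millisecond precision here).
    now = datetime.now(timezone.utc)
    return now.strftime("%Y-%m-%dT%H:%M:%S.") + f"{now.microsecond // 1000:03d}Z"


def make_vulnerability(cwe_id: str, function_name: str, probability: float) -> dict:
    """Minimal STIX 2.1 Vulnerability object for one flagged function."""
    ts = stix_timestamp()
    return {
        "type": "vulnerability",
        "spec_version": "2.1",
        "id": f"vulnerability--{uuid.uuid4()}",
        "created": ts,
        "modified": ts,
        "name": f"{cwe_id} in {function_name}",
        "description": (f"Function {function_name} was classified as {cwe_id} "
                        f"with probability {probability:.2f}."),
        "external_references": [{"source_name": "cwe", "external_id": cwe_id}],
    }


def make_bundle(objects: list) -> dict:
    # A bundle is the usual STIX container for sharing several objects at once.
    return {"type": "bundle", "id": f"bundle--{uuid.uuid4()}", "objects": objects}


bundle = make_bundle([make_vulnerability("CWE-119", "badLevelB", 0.99)])
print(json.dumps(bundle, indent=2))
```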

4 Algorithms, achievements and evaluation

The architecture was implemented in Python (v3.11.3) [95] because of its many mature libraries and its widespread use in deep learning applications. The Keras (v2.11.0) [96] and TensorFlow (v2.11.0) [97] libraries were used to manage the deep learning models. The dataset was processed with sklearn (v0.0.post1) [98] for operations such as splitting and random selection. The NumPy (v1.23.4) [99] and Pandas (v1.5.2) [100] libraries were used for data filtering and transformation. Figures of data distributions and learning/test performance curves were generated with the matplotlib (v3.6.2) [101] library. The RetDec tool [102] was used to decompile executable files, obtain their machine code in 16-bit format, and access additional data for cyber threat intelligence. The intelligence schema was created with the STIX 2 Python API (stix2) [103]. The hardware specifications were as follows: an Intel Core i7-7700HQ CPU (@2.80 GHz), 16 GB of RAM, and the 64-bit version of the Microsoft Windows 10 operating system.
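The sketch below illustrates one reading of the function-as-sentence approach mentioned in the abstract. It is a hedged illustration only and assumes the following:

- each function's machine code is available as a hexadecimal string;
- each byte is treated as a "word";
- words are mapped to integer ids and padded to a fixed length, the kind of input an embedding plus (Bi-)LSTM model consumes.

The byte-level tokenisation, the reserved PAD/UNK ids, `max_len` and all helper names are assumptions, not the cti-for-css implementation.

```python
from typing import Dict, List

PAD, UNK = 0, 1  # reserved ids: padding and out-of-vocabulary tokens


def function_to_sentence(hex_code: str) -> List[str]:
    """Treat one function's machine code as a sentence of byte 'words'."""
    cleaned = "".join(hex_code.split()).lower()
    if len(cleaned) % 2:
        raise ValueError("hex string must contain whole bytes")
    return [cleaned[i:i + 2] for i in range(0, len(cleaned), 2)]


def build_vocabulary(sentences: List[List[str]]) -> Dict[str, int]:
    vocab: Dict[str, int] = {}
    for sentence in sentences:
        for token in sentence:
            if token not in vocab:
                vocab[token] = len(vocab) + 2  # ids 0 and 1 are reserved
    return vocab


def encode(sentence: List[str], vocab: Dict[str, int], max_len: int) -> List[int]:
    # Truncate long functions and right-pad short ones to a fixed length.
    ids = [vocab.get(token, UNK) for token in sentence[:max_len]]
    return ids + [PAD] * (max_len - len(ids))


train = function_to_sentence("55 48 89 E5 c3")
vocab = build_vocabulary([train])
print(encode(function_to_sentence("55 90"), vocab, 4))  # [2, 1, 0, 0]
```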

The following metrics were used for evaluation: accuracy, precision, recall, F1 score, and AUC. Accuracy is the ratio of the number of correctly predicted functions to the total number of functions. Precision is the proportion of functions predicted as vulnerable that are actually vulnerable. Recall is the proportion of vulnerable functions in the dataset that the model correctly predicts as vulnerable. The F1 score is the harmonic mean of precision and recall; because it combines both into a single score, it is a convenient metric for comparing models. The AUC (area under the ROC curve) measures the model’s ability to distinguish between non-vulnerable and vulnerable functions.
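The sketch below makes the metric definitions above concrete using only the standard library. It treats "vulnerable" as the positive class. AUC is computed exactly as the share of (vulnerable, non-vulnerable) pairs that the scores rank correctly, with ties counting as half. The helper names and the six-function toy data are hypothetical. The paper's own numbers presumably come from library implementations such as Keras metrics, whose AUC may be approximated over thresholds.

```python
from typing import Dict, List


def confusion_counts(y_true: List[int], y_pred: List[int]) -> Dict[str, int]:
    """Count TP/TN/FP/FN with 1 = vulnerable (positive) and 0 = non-vulnerable."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(1 for t, p in pairs if t == 1 and p == 1),
        "tn": sum(1 for t, p in pairs if t == 0 and p == 0),
        "fp": sum(1 for t, p in pairs if t == 0 and p == 1),
        "fn": sum(1 for t, p in pairs if t == 1 and p == 0),
    }


def classification_metrics(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    c = confusion_counts(y_true, y_pred)
    total = sum(c.values())
    accuracy = (c["tp"] + c["tn"]) / total
    # Guard against empty denominators (no positive predictions / no positives).
    precision = c["tp"] / (c["tp"] + c["fp"]) if c["tp"] + c["fp"] else 0.0
    recall = c["tp"] / (c["tp"] + c["fn"]) if c["tp"] + c["fn"] else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true: List[int], scores: List[float]) -> float:
    """Exact ROC AUC: share of (vulnerable, non-vulnerable) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy example: six functions, three of them vulnerable.
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1]
y_pred = [int(s >= 0.5) for s in scores]
print(classification_metrics(y_true, y_pred))  # accuracy, precision, recall, F1 all 2/3
print(roc_auc(y_true, scores))                  # 8/9 ≈ 0.889
```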

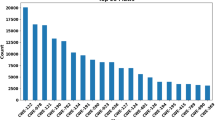

The algorithms were selected from those readily available in the deep learning library used (Keras): MLP, OneDNN, LSTM and Bi-LSTM. Bi-LSTM achieved the best performance on both the NDSS18 binary dataset and the SOSP dataset. Figure 3 shows its learning and validation results (validation metrics carry the val_ prefix), as well as the label distribution.

An accuracy of over 99% was achieved on the SOSP dataset when a test rate of 0.2, a validation rate of 0.5, and shuffling were used. However, the dataset comprises approximately 52,000 non-vulnerable records and only 600 vulnerable records. The fluctuation in the AUC, precision, and recall values during the learning and validation stages reflects this skewed distribution. Furthermore, the vulnerable records make up too small a share of the data for proportional selection to yield representative subsets.

For this reason, the NDSS18 binary dataset was used for the learning phase and for comparison with other studies. The dataset was split using a test rate of 0.2, a validation rate of 0.5, and shuffling. The class distribution was balanced.
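The arithmetic below shows why accuracy alone says little on a dataset this skewed. It uses the approximate SOSP class counts given above. A trivial model that labels every function as non-vulnerable already reaches almost 99% accuracy while finding no vulnerable function at all.

```python
# Approximate class counts reported for the SOSP dataset.
non_vulnerable = 52_000
vulnerable = 600
total = non_vulnerable + vulnerable

# A trivial model that labels every function as non-vulnerable.
baseline_accuracy = non_vulnerable / total
baseline_recall = 0 / vulnerable  # it never identifies a vulnerable function

print(f"baseline accuracy: {baseline_accuracy:.2%}")   # → 98.86%
print(f"vulnerable share:  {vulnerable / total:.2%}")  # → 1.14%
```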

The Bi-LSTM model achieved an accuracy of 84.2%, precision of 81.7%, recall of 88.6%, and AUC of 94.6% on the training subset. On the test subset, it achieved an accuracy of 81.8%, precision of 82.3%, recall of 82.6%, and AUC of 93.0%. Table 3 presents the confusion matrix of the Bi-LSTM algorithm on the test subset, along with the false positive and false negative rates.
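The test-set F1 score reported in the abstract (82.4%) follows directly from the test-set precision $P$ and recall $R$ above:

$$
F_1 = \frac{2PR}{P+R} = \frac{2(0.823)(0.826)}{0.823 + 0.826} = \frac{1.359596}{1.649} \approx 0.824
$$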

The accuracy, AUC, and precision values in the learning and validation stages are similar and converge to a common point. Although the recall value initially varied, it eventually converged as well. This is common in machine learning applications, where the model improves with each iteration and produces more true positives than at the beginning. Furthermore, there are no large differences between the results achieved in the learning and testing phases. Table 4 presents a comparison with other studies that used the same NDSS18 binary dataset.

Although results on the learning subset were higher, results on the test subset were used for the comparison. In the table, green is used if the highest achievement in our study (HA) is higher than that of the other study in the relevant category. Blue indicates that the HA is close to the result of the other study, with the closeness rate taken as 1%. Purple is used if our accuracy is lower but our F1 score is higher. If a study did not report accuracy, the comparison was made using the F1 score computed by us. On this basis, the comparison of our results can be summarised as follows:

-

There were 21 models for Windows data. Our model outperformed 14 of them, and two models were evaluated as similar. Therefore, our model was as successful as 16 of the 21 models.

-

There were 21 models for Linux data. Our model outperformed 10 of them, and two models were evaluated as similar. Therefore, our model was as successful as 12 of the 21 models.

-

There were 26 models for all data. Our model outperformed 22 of them, and one model was evaluated as similar. Therefore, our model was as successful as 23 of the 26 models. Additionally, we achieved the highest AUC score.
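The colour rules used for Table 4 can be written down as a small decision function, which makes their order of precedence explicit. This is a hypothetical encoding with three assumptions:

- the HA is compared on accuracy;
- the 1% closeness rate means one percentage point on a 0 to 1 scale;
- the green, blue and purple rules are checked in that order.

Our accuracy (81.8%) and F1 (82.4%) come from the text. The other studies' values below are made up for illustration and are not Table 4 entries.

```python
from typing import Optional


def table4_colour(our_acc: float, our_f1: float,
                  their_acc: Optional[float], their_f1: float,
                  tol: float = 0.01) -> str:
    """Hypothetical encoding of the colour rules; metrics are on a 0-1 scale."""
    if their_acc is None:
        ours, theirs = our_f1, their_f1   # no accuracy reported: compare F1
    else:
        ours, theirs = our_acc, their_acc
    if ours > theirs:
        return "green"    # our result is higher
    if theirs - ours <= tol:
        return "blue"     # within the 1% closeness rate (read as 1 point)
    if their_acc is not None and our_f1 > their_f1:
        return "purple"   # lower accuracy but higher F1 score
    return "none"


# Our test-set accuracy and F1 from the text; the other values are made up.
print(table4_colour(0.818, 0.824, 0.800, 0.790))   # green
print(table4_colour(0.818, 0.824, 0.825, 0.800))   # blue
print(table4_colour(0.818, 0.824, 0.850, 0.700))   # purple
print(table4_colour(0.818, 0.824, None, 0.830))    # blue (F1 fallback)
```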

AUC is a measure of the model’s capacity to discriminate between classes. Our model has demonstrated the most effective ability in the existing literature to distinguish between vulnerable and non-vulnerable functions.

With the same dataset and algorithm, our approach achieved better results. Table 4 shows the results of [23] using the Bi-LSTM algorithm; when used with our approach, the same algorithm achieved approximately twice the precision and recall values.

Overall, these results show that the function-as-sentence approach is effective for binary code vulnerability analysis. To evaluate the efficiency of the developed system on real applications, we created two binary samples in C++ and compiled them with the GCC [104] toolchain. We also analysed cmd.exe as a real-world application. During all tests, we used the Bi-LSTM model trained on all 32-bit (x32) and 64-bit (x64) Windows and Linux datasets.

One of the binary samples is a hello-world application; as expected, the system did not detect any vulnerabilities in it. The other is a binary containing two functions with buffer overflow vulnerabilities. Its source code is presented in Fig. 4.

cti-for-css calculated a 74% probability of vulnerability for the badLevelA function and a 99% probability for the badLevelB function. This indicates that the calculated probability depends on the code used within each function: as expected, it varies with how often code that causes the vulnerability is used and repeated. It is also widely acknowledged that the sscanf and cin functions can cause buffer overflows when used without bounds checking [105,106,107].

On average, cyber threat intelligence reports were produced in 0.1 s, excluding the detection process; we found no studies in the literature that report this duration. The transformation from binary code to our data representation took approximately 0.49 s per function, and each record represented using our approach was classified in 0.32 s on average. With the filtering feature, unwanted functions can be skipped during classification in under 0.001 s.
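The filtering feature is not specified in detail here, so the sketch below shows one plausible way to skip unwanted functions before classification: match their names against ignore patterns. A name check like this is far cheaper than model inference. The ignore list (typical compiler and runtime helpers) and the helper name `select_functions` are hypothetical. badLevelA and badLevelB are the sample functions from the text.

```python
import fnmatch
from typing import Iterable, List, Sequence

# Hypothetical ignore list, e.g. compiler/runtime helpers with no user logic.
IGNORE_PATTERNS = ("_start", "_init", "_fini", "__libc_*", "frame_dummy")


def select_functions(names: Iterable[str],
                     patterns: Sequence[str] = IGNORE_PATTERNS) -> List[str]:
    """Keep only function names that match none of the ignore patterns."""
    return [n for n in names
            if not any(fnmatch.fnmatchcase(n, p) for p in patterns)]


decompiled = ["_start", "main", "__libc_csu_init", "badLevelA", "badLevelB"]
print(select_functions(decompiled))   # ['main', 'badLevelA', 'badLevelB']
```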

Most studies did not provide duration information. Gao et al. [19], Feng et al. [30], and Eschweiler et al. [31] classified a function in 0.2, 0.1, and 0.08 s, respectively. Gao et al. [19] and Eschweiler et al. [31] require a preparation time of 50 min. Feng et al. [30] did not provide any information on their preparation time; moreover, instead of processing Portable Executable (PE) or Executable and Linkable Format (ELF) binaries, they processed firmware images. In addition, their time cost may increase linearly with the number of basic blocks in a function [19]. The datasets and system resources used also differ, so a direct comparison would not be accurate. Figure 5 shows an excerpt from the classification logs of the binary sample containing the two vulnerable functions.

Figure 6 shows a visualisation of the cyber threat intelligence produced automatically for this sample. The intelligence includes metadata such as the software producer, production location, and processor architecture. It also presents information on the vulnerable code section, the vulnerability definition, remediation techniques, groups aiming to exploit the vulnerability, the product that performed the analysis, and the relationships between these elements.

During the analysis of cmd.exe, 1332 functions were obtained. Most of them had meaningless names and code that is difficult to understand, which suggested that the software may be obfuscated. To reduce false positives, the classification threshold was raised to 0.99 using the system’s configuration feature.

As a result, 10 of the functions were classified as having CWE-119/CWE-399 vulnerabilities. This executable is known to contain vulnerabilities [108, 109]. Manual examination of the detected functions confirmed that they perform memory-related operations and could contain vulnerabilities.
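The effect of raising the threshold can be seen with the probabilities reported for the sample binary: badLevelA at 0.74 and badLevelB at 0.99. The sketch below is hypothetical. The default threshold of 0.5, the helper name `flag_vulnerable` and the score for `main` are assumptions, not values from cti-for-css.

```python
from typing import Dict, List


def flag_vulnerable(probabilities: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Names of functions whose predicted probability reaches the threshold."""
    return sorted(name for name, p in probabilities.items() if p >= threshold)


# badLevelA/badLevelB probabilities come from the text; "main" is made up.
scores = {"badLevelA": 0.74, "badLevelB": 0.99, "main": 0.12}
print(flag_vulnerable(scores))         # ['badLevelA', 'badLevelB']
print(flag_vulnerable(scores, 0.99))   # ['badLevelB']
```

A stricter threshold trades recall for precision. On a suspected obfuscated binary such as cmd.exe, only the most confident predictions survive.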

5 Conclusion

The aim of the study is to automatically identify vulnerabilities in closed source software and create cyber threat intelligence. The study focused on multiple architectures and developed a deep learning-based system named cti-for-css, which stands for “Cyber Threat Intelligence for Closed Source Software”. CWE-119 and CWE-399 vulnerabilities have been detected successfully.

When using the entire dataset, our model was as successful as 23 of the other 26 studies according to F1 scores. With the same dataset and algorithm, our approach outperformed the results of other studies. Additionally, our model achieved the highest AUC score. Since AUC measures the model’s capacity to discriminate between classes, our model demonstrated the best ability in the existing literature to distinguish between vulnerable and non-vulnerable functions. Data transformation, classification, and the production of shareable intelligence were all fast: the system can produce shareable intelligence from known vulnerabilities in 0.1 s. The simplified pre-processing steps, low time cost, and absence of hybrid/fusion strategies make our approach a suitable baseline for the comparative evaluation of future studies.

Furthermore, this study contributes the proposed flow diagram, software design, cyber threat intelligence standard selection, and dataset to the literature. Sharing the usage details of the selected cyber threat intelligence standard is expected to make a valuable contribution and to prevent inconsistencies among studies using the same standard.

The source code is publicly available on GitHub at https://github.com/arikansm/cti-for-css. We have also shared three tools: cti-for-css-library-usage, which shows how to use the system; cti-for-css-gui, which provides a graphical interface; and cti-for-css-stix-viewer, which visualises the produced cyber threat intelligence.

As future work, we plan to create a balanced dataset from real-world applications. Future research could also investigate the performance of our approach on other vulnerability types and with hybrid/fusion strategies. Additionally, the approach may be used beyond software vulnerability detection, for example in malware analysis and function task interpretation; for this reason, software vulnerability is not emphasised in the name of the tool.

Data availability

The source code is publicly accessible on GitHub at https://github.com/arikansm/cti-for-css.

References

TÜBITAK BILGEM, Secure Software Development Guide. Tech. Rep., TÜBITAK (2018). https://siberakademi.bilgem.tubitak.gov.tr/pluginfile.php/6115/mod_page/content/26/SGE-KLV-GuvenliYazilimGelistirmeKilavuzu_R1.1.pdf

Huang, Z., Tan, G., Yu, X.: Mitigating vulnerabilities in closed source software. ICST Trans. Secur. Saf. 8, e4 (2022). https://doi.org/10.4108/eetss.v8i30.253

heartbleed.com. Heartbleed Bug. https://heartbleed.com/

Ahrens, J.: Buffer overflow exploitation: a real world example. https://www.rcesecurity.com/2011/11/buffer-overflow-a-real-world-example/

Brumley, D., Jager, I., Avgerinos, T., Schwartz, E.J.: BAP: a binary analysis platform. In: Gopalakrishnan, G., Qadeer S. (eds.) Computer Aided Verification. Springer, Berlin, pp. 463–469 (2011). https://doi.org/10.1007/978-3-642-22110-1_37

Shoshitaishvili, Y., Wang, R., Salls, C., Stephens, N., Polino, M., Dutcher, A., Grosen, J., Feng, S., Hauser, C., Kruegel, C., Vigna, G.: SOK: (State of) the art of war: offensive techniques in binary analysis. In: 2016 IEEE Symposium on Security and Privacy (SP), pp. 138–157 (2016). https://doi.org/10.1109/SP.2016.17

Liu, S., Dibaei, M., Tai, Y., Chen, C., Zhang, J., Xiang, Y.: Cyber vulnerability intelligence for Internet of Things binary. IEEE Trans. Ind. Inform. 16(3), 2154 (2020). https://doi.org/10.1109/TII.2019.2942800

Kochetkova, K.: Allegedly 40 apps on App Store are infected (2015). https://www.kaspersky.com/blog/xcodeghost-compromises-apps-in-app-store/9965/

Sun, P., Garcia, L., Salles-Loustau, G., Zonouz, S.: Hybrid firmware analysis for known mobile and IoT security vulnerabilities. In: 2020 50th Annual IEEE/IFIP International Conference on Dependable Systems and Networks (DSN), pp. 373–384 (2020). https://doi.org/10.1109/DSN48063.2020.00053

B.B.C. News. Apple’s App Store infected with XcodeGhost malware in China (2015). https://www.bbc.com/news/technology-34311203

Song, D., Brumley, D., Yin, H., Caballero, J., Jager, I., Kang, M.G., Liang, Z., Newsome, J., Poosankam, P., Saxena, P.: BitBlaze: a new approach to computer security via binary analysis. In: International Conference on Information Systems Security. Springer, pp. 1–25 (2008)

Siman, M.: Enterprise application security source vs. binary code analysis. Tech. Rep., Checkmarx (2012). http://docs.media.bitpipe.com/io_10x/io_105943/item_564714/Enterprise%20Application%20Security%20-%20Source%20Vs.%20Binary%20Code%20Analysis.pdf

Tan, T., Wang, B., Xu, Z., Tang, Y.: The new progress in the research of binary vulnerability analysis. In: International Conference on Cloud Computing and Security. Springer, pp. 265–276 (2018)

Bardin, S., Herrmann, P., Leroux, J., Ly, O., Tabary, R., Vincent, A.: The BINCOA framework for binary code analysis. In: International Conference on Computer Aided Verification. Springer, pp. 165–170 (2011)

Cui, N., Chen, L., Du, G., Wu, T., Zhu, C., Shi, G.: BHMVD: binary code-based hybrid neural network for multiclass vulnerability detection. In: 2022 IEEE International Conference on Parallel & Distributed Processing with Applications, Big Data & Cloud Computing, Sustainable Computing & Communications, Social Computing & Networking (ISPA/BDCloud/SocialCom/SustainCom), pp. 238–245 (2022). https://doi.org/10.1109/ISPA-BDCloud-SocialCom-SustainCom57177.2022.00037

Cui, N., Chen, L., Shi, G.: Binary code vulnerability location identification with fine-grained slicing. In: 2023 3rd Asia-Pacific Conference on Communications Technology and Computer Science (ACCTCS), pp. 502–506 (2023). https://doi.org/10.1109/ACCTCS58815.2023.00103

Diwan, A., Li, M.Q., Fung, B.C.M.: VDGraph2Vec: vulnerability detection in assembly code using message passing neural networks. In: 2022 21st IEEE International Conference on Machine Learning and Applications (ICMLA), pp. 1039–1046 (2022). https://doi.org/10.1109/ICMLA55696.2022.00173

Cheng, Y., Cui, B., Chen, C., Baker, T., Qi, T.: Static vulnerability mining of IoT devices based on control flow graph construction and graph embedding network. Comput. Commun. 197, 267 (2023). https://doi.org/10.1016/j.comcom.2022.10.021

Gao, J., Yang, X., Fu, Y., Jiang, Y., Sun, J.: VulSeeker: a semantic learning based vulnerability seeker for cross-platform binary. In: 2018 33rd IEEE/ACM International Conference on Automated Software Engineering (ASE), pp. 896–899 (2018). https://doi.org/10.1145/3238147.3240480

Padmanabhuni, B.M., Tan, H.B.K.: Buffer Overflow Vulnerability Prediction from x86 Executables Using Static Analysis and Machine Learning. In: 2015 IEEE 39th Annual Computer Software and Applications Conference, vol. 2, pp. 450–459 (2015). https://doi.org/10.1109/COMPSAC.2015.78

Chen, Y., He, Y.: Computer software vulnerability detection and risk assessment system based on feature matching. In: International Conference on Multi-modal Information Analytics. Springer, pp. 162–169 (2022)

Wang, Y., Jia, P., Peng, X., Huang, C., Liu, J.: BinVulDet: detecting vulnerability in binary program via decompiled pseudo code and BiLSTM-attention. Comput. Secur. 125, 103023 (2023)

Taviss, S., Ding, S.H.H., Zulkernine, M., Charland, P., Acharya, S.: Asm2Seq: explainable assembly code functional summary generation for reverse engineering and vulnerability analysis. Digital Threats (2023). https://doi.org/10.1145/3592623

Redmond, K.M.: An instruction embedding model for binary code analysis. Ph.D. Thesis, University of South Carolina (2019)

Zheng, J., Pang, J., Zhang, X., Zhou, X., Li, M., Wang, J.: Recurrent neural network based binary code vulnerability detection. In: Proceedings of the 2019 2nd International Conference on Algorithms, Computing and Artificial Intelligence, pp. 160–165 (2019)

Lee, Y.J., Choi, S.H., Kim, C., Lim, S.H., Park, K.W.: Learning binary code with deep learning to detect software weakness. In: KSII the 9th International Conference on Internet (ICONI) 2017 Symposium (2017)

Yan, H., Luo, S., Pan, L., Zhang, Y.: HAN-BSVD: a hierarchical attention network for binary software vulnerability detection. Comput. Secur. 108, 102286 (2021). https://doi.org/10.1016/j.cose.2021.102286

Nguyen, T., Le, T., Nguyen, K., de Vel, O., Montague, P., Grundy, J., Phung, D.: Deep cost-sensitive kernel machine for binary software vulnerability detection. In: Pacific-Asia Conference on Knowledge Discovery and Data Mining. Springer, pp. 164–177 (2020)

Le, T., Nguyen, T.V., Le, T., Phung, D., Montague, P., De Vel, O., Qu, L.: Maximal divergence sequential auto-encoder for binary software vulnerability detection. In: Rush A. (ed.) International Conference on Learning Representations 2019. International Conference on Learning Representations (ICLR), United States of America (2019). https://iclr.cc/, https://iclr.cc/Conferences/2019

Feng, Q., Zhou, R., Xu, C., Cheng, Y., Testa, B., Yin, H.: Scalable graph-based bug search for firmware images. In: Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security (2016)

Eschweiler, S., Yakdan, K., Gerhards-Padilla, E.: discovRE: efficient cross-architecture identification of bugs in binary code. In: NDSS (2016)

Luo, Z., Wang, P., Xie, W., Zhou, X., Wang, B.: BlockMatch: a fine-grained binary code similarity detection approach using contrastive learning for basic block matching. Appl. Sci. (2023). https://doi.org/10.3390/app132312751

Durmuş, G., Soğukpinar, I.: A novel approach for analyzing buffer overflow vulnerabilities in binary executables by using machine learning techniques. J. Fac. Eng. Archit. Gazi Univ. 34(4), 1695 (2019)

Dong, F., Wang, J., Li, Q., Xu, G., Zhang, S.: Defect prediction in android binary executables using deep neural network. Wirel. Personal Commun. 102, 2261 (2018)

Morrison, P., Herzig, K., Murphy, B., Williams, L.: Challenges with applying vulnerability prediction models. In: Proceedings of the 2015 Symposium and Bootcamp on the Science of Security, pp. 1–9 (2015)

Saxe, J., Berlin, K.: Deep neural network based malware detection using two dimensional binary program features. In: 2015 10th International Conference on Malicious and Unwanted Software (MALWARE) (2015), pp. 11–20. https://doi.org/10.1109/MALWARE.2015.7413680

Rosenblum, N., Zhu, X., Miller, B., Hunt, K.: Machine learning-assisted binary code analysis. In: NIPS Workshop on Machine Learning in Adversarial Environments for Computer Security, Whistler, British Columbia, Canada (Citeseer, 2007)

Tian, J., Xing, W., Li, Z.: BVDetector: a program slice-based binary code vulnerability intelligent detection system. Inf. Softw. Technol. 123, 106289 (2020)

Wu, G., Tang, H.: Binary code vulnerability detection based on multi-level feature fusion. IEEE Access 11, 63904 (2023). https://doi.org/10.1109/ACCESS.2023.3289001

Li, Y., Ji, S., Lyu, C., Chen, Y., Chen, J., Gu, Q., Wu, C., Beyah, R.: V-fuzz: vulnerability prediction-assisted evolutionary fuzzing for binary programs. IEEE Trans. Cybern. 52(5), 3745 (2020)

Grieco, G., Grinblat, G.L., Uzal, L., Rawat, S., Feist, J., Mounier, L.: Toward large-scale vulnerability discovery using machine learning. In: Proceedings of the Sixth ACM Conference on Data and Application Security and Privacy, pp. 85–96 (2016)

Pradeep, L.: GrammaTech Releases CodeSurfer 1.6 for C (2020). https://news.grammatech.com/grammatech-releases-codesurfer-1-6-for-c

GrammaTech. CodeSonar SAST for Binary: Static Code Analysis Tool. https://www.grammatech.com/codesonar-sast-binary

Bill, G.: GrammaTech CodeSonar for Binary Code (2017). https://blogs.grammatech.com/grammatech-codesonar-for-binary-code

Sourceforge: BugScam IDC Package. https://sourceforge.net/projects/bugscam/files/bugscam/

Hex-rays: IDA Pro. https://www.hex-rays.com/ida-pro/

Veracode: Static Analysis (SAST). https://www.veracode.com/products/binary-static-analysis-sast

CAT.NET: Static Analysis (SAST) (2009). https://marketplace.visualstudio.com/items?itemName=MarkCurphey.CATNET

Patil, K., Malla, A.V.: Threat intelligence framework for vulnerability identification and patch management for virtual environment. In: Proceedings of 2nd International Conference on Innovative Practices in Technology and Management, ICIPTM 2022, vol. 2, p. 787 (2022). https://doi.org/10.1109/ICIPTM54933.2022.9754169

Wu, S., Chen, B., Sun, M.X., Duan, R., Zhang, Q., Huang, C.: DeepVuler: a vulnerability intelligence mining system for open-source communities. In: Proceedings of the 2021 IEEE 20th International Conference on Trust, Security and Privacy in Computing and Communications, TrustCom 2021 pp. 598–605 (2021). https://doi.org/10.1109/TrustCom53373.2021.00090

Wu, Q.Q., Wei, L.H., Liang, Z.Q., Yu, Z.W., Chen, M., Chen, Z.H., Tan, J.J.: Patching power system software vulnerability using CNNVD. DEStech Trans. Comput. Sci. Eng. (2019). https://doi.org/10.12783/dtcse/ccme2018/28630

Davidson, A., Fenn, G., Cid, C.: A model for secure and mutually beneficial software vulnerability sharing. In: WISCS 2016—Proceedings of the 2016 ACM Workshop on Information Sharing and Collaborative Security, co-located with CCS 2016, pp. 3–14 (2016). https://doi.org/10.1145/2994539.2994547

Ghaffarian, S.M., Shahriari, H.R.: Software vulnerability analysis and discovery using machine-learning and data-mining techniques: a survey. ACM Comput. Surv. 50(4), 1 (2017)

Zeng, P., Lin, G., Pan, L., Tai, Y., Zhang, J.: Software vulnerability analysis and discovery using deep learning techniques: a survey. IEEE Access 8, 197158 (2020)

Zitser, M., Lippmann, R., Leek, T.: Testing static analysis tools using exploitable buffer overflows from open source code. SIGSOFT Softw. Eng. Notes 29(6), 97 (2004). https://doi.org/10.1145/1041685.1029911

Dolan-Gavitt, B., Hulin, P., Kirda, E., Leek, T., Mambretti, A., Robertson, W., Ulrich, F., Whelan, R.: Lava: large-scale automated vulnerability addition. In: 2016 IEEE Symposium on Security and Privacy (SP). IEEE, pp. 110–121 (2016)

Fraze, D.: Cyber Grand Challenge (CGC) (Archived). https://www.darpa.mil/program/cyber-grand-challenge

NIST: Software Assurance Reference Dataset (SARD) Manual (2021). https://www.nist.gov/itl/ssd/software-quality-group/software-assurance-reference-dataset-sard-manual

NSA CAS: Juliet Test Suite for C/C++ 1.3 - NIST Software Assurance Reference Dataset (2017). https://samate.nist.gov/SARD/test-suites/112

Stivalet, B.C.: C# Vulnerability Test Suite—NIST Software Assurance Reference Dataset (2016). https://samate.nist.gov/SARD/test-suites/105

Koo, M.: Java Test Suite—NIST Software Assurance Reference Dataset (2010). https://samate.nist.gov/SARD/test-suites/64

Li, Z., Zou, D., Xu, S., Ou, X., Jin, H., Wang, S., Deng, Z., Zhong, Y.: VulDeePecker: a deep learning-based system for vulnerability detection. arXiv:abs/1801.0 (2018)

GOV.UK: Cyber-threat intelligence information sharing guide (2021). https://www.gov.uk/government/publications/cyber-threat-intelligence-information-sharing/cyber-threat-intelligence-information-sharing-guide

Tounsi, W., Rais, H.: A survey on technical threat intelligence in the age of sophisticated cyber attacks. Comput. Secur. 72, 212–233 (2018). https://doi.org/10.1016/j.cose.2017.09.001

Brown, S., Gommers, J., Serrano, O.: From cyber security information sharing to threat management. In: Proceedings of the 2nd ACM Workshop on Information Sharing and Collaborative Security. Association for Computing Machinery, New York, WISCS’15, pp. 43–49 (2015). https://doi.org/10.1145/2808128.2808133

de Melo e Silva, A., Costa Gondim, J.J., de Oliveira Albuquerque, R., García Villalba, L.J.: A methodology to evaluate standards and platforms within cyber threat intelligence. Future Internet 12(6), 108 (2020). https://doi.org/10.3390/fi12060108

Wagner, T.D., Mahbub, K., Palomar, E., Abdallah, A.E.: Cyber threat intelligence sharing: survey and research directions. Comput. Secur. 87, 101589 (2019). https://doi.org/10.1016/j.cose.2019.101589

Ramsdale, A., Shiaeles, S., Kolokotronis, N.: A comparative analysis of cyber-threat intelligence sources, formats and languages. Electronics 9(5), 824 (2020). https://doi.org/10.3390/electronics9050824

Burger, E.W., Goodman, M.D., Kampanakis, P., Zhu, K.A.: Taxonomy model for cyber threat intelligence information exchange technologies. In: Proceedings of the 2014 ACM Workshop on Information Sharing & Collaborative Security. Association for Computing Machinery, New York, WISCS’14, pp. 51–60 (2014). https://doi.org/10.1145/2663876.2663883

Mkuzangwe, N., Khan, Z.: Cyber-threat information-sharing standards: a review of evaluation literature. Afr. J. Inf. Commun. 25, 1 (1999). https://doi.org/10.23962/10539/29191

Farnham, G., Leune, K.: Tools and standards for cyber threat intelligence projects. SANS Inst. 3(2), 25 (2013)

Ahmed, N.: Recent review on image clustering. IET Image Process. 9(11), 1020 (2015). https://doi.org/10.1049/iet-ipr.2014.0885

Özdemir, A.: Cyber threat intelligence sharing technologies and threat sharing model using blockchain. Ph.D. Thesis, Middle East Technical University (2021)

El-Kosairy, A., Abdelbaki, N., Aslan, H.: A survey on cyber threat intelligence sharing based on Blockchain. Adv. Comput. Intell. 3(3), 10 (2023). https://doi.org/10.1007/s43674-023-00057-z

CERT: Standards and Tools for Exchange and Processing of Actionable Information. The European Union Agency for Network and Information Security (2014)

CBEST: CBEST Intelligence-Led Testing—Understanding Cyber Threat Intelligence Operations. Bank of England (2016)

Jerome, Q.: Go Evtc Signature Engine (Gene) (2018). https://rawsec.lu/blog/2018/02/04/gene-intro/

NIST: Security Content Automation Protocol. https://csrc.nist.gov/projects/security-content-automation-protocol

Darrington, J.: The Ultimate Guide To Sigma Rules. https://graylog.org/post/the-ultimate-guide-to-sigma-rules/

Waltermire, D., Schmidt, C., Scarfone, K., Ziring, N.: Specification for the Extensible Configuration Checklist Description Format (XCCDF) Version 1.2. National Institute of Standards and Technology (2012)

YARA: Welcome to YARA’s Documentation! https://yara.readthedocs.io/en/latest/

IODEF: The Incident Object Description Exchange Format. https://www.ietf.org/rfc/rfc5070.txt

IETF: Real-Time Inter-network Defense (RID). https://datatracker.ietf.org/doc/rfc6045/

MAEC: Malware Attribute Enumeration and Characterization. https://maecproject.github.io/

IEEE: ICSG ICAID Version 6. IEEE Standards Association (2018). https://standards.ieee.org/wp-content/uploads/import/governance/iccom/IC09-001-05_Computer_Security_Group_ICSG1.pdf

CVRF: Common Vulnerability Reporting Framework. https://github.com/CVRF/cvrf1.1

CSAF: Common Security Advisory Framework. https://oasis-open.github.io/csaf-documentation/

CybOX: Cyber Observable Expression. http://cyboxproject.github.io/

STIX: Introduction to STIX. https://oasis-open.github.io/cti-documentation/stix/intro

The IETF Trust: The Incident Detection Message Exchange Format (IDMEF). https://datatracker.ietf.org/doc/rfc4765/

MISPStandard: Malware Information Sharing Platform Standard. https://www.misp-standard.org/

What is open indicators of compromise (openioc) framework? https://cyware.com/security-guides/cyber-threat-intelligence/what-is-open-indicators-of-compromise-openioc-framework-ed9d

OVAL: Open Vulnerability and Assessment Language. https://oval.mitre.org/

VERIS: The VERIS Framework. https://verisframework.org/

Python.org: Python 3.11.3 (2023). https://www.python.org/downloads/release/python-3113/

Keras.io: Keras: deep learning for humans. https://keras.io/

Tensorflow.org: TensorFlow.org. https://www.tensorflow.org/?hl=en

Scikit-learn.org: scikit-learn: machine learning in Python. https://scikit-learn.org/stable/

Numpy.org: NumPy. https://numpy.org/

Pydata.org: pandas—Python Data Analysis Library. https://pandas.pydata.org/

Matplotlib.org: Matplotlib—Visualization with Python. https://matplotlib.org/

Avast: RetDec. https://github.com/avast/retdec

OASIS Open: STIX 2 Python API Documentation. https://stix2.readthedocs.io/en/latest/

GCC: The GNU Compiler Collection (2023). https://gcc.gnu.org/

Synopsys: String-overflow: Fn sscanf to fixed-sized destination (2022). https://community.synopsys.com/s/article/STRING-OVERFLOW-FN-sscanf-to-fixed-sized-destination

ExploitDB: Client/Server Remote sscanf() Buffer Overflow. https://www.exploit-db.com/exploits/23115

CodeQL: Dangerous use of cin. https://codeql.github.com/codeql-query-help/cpp/cpp-dangerous-cin/

PacketStorm: cmd.exe Stack Buffer Overflow (2021). https://packetstormsecurity.com/files/164175/Microsoft-Windows-cmd.exe-Stack-Buffer-Overflow.html

Gh0st0ne: Microsoft Windows cmd.exe Stack Buffer Overflow (2021). https://github.com/Gh0st0ne/Microsoft-Windows-cmd.exe-Stack-Buffer-Overflow

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Author information

Contributions

The authors confirm contribution to the paper as follows: study conception and design: S. M. Arikan, M. Alkan; implementation: S. M. Arikan; analysis and interpretation of results: S. M. Arikan, A. Koçak, M. Alkan; draft manuscript preparation: S. M. Arikan. All authors reviewed the results and approved the final version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare full compliance with ethical standards. This paper does not contain any studies involving humans or animals performed by any of the authors. The authors have no conflict of interest as defined by Springer, or other interests that might be perceived to influence the results and/or discussion reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Arıkan, S.M., Koçak, A. & Alkan, M. Automating shareable cyber threat intelligence production for closed source software vulnerabilities: a deep learning based detection system. Int. J. Inf. Secur. 23, 3135–3151 (2024). https://doi.org/10.1007/s10207-024-00882-4

DOI: https://doi.org/10.1007/s10207-024-00882-4