Abstract

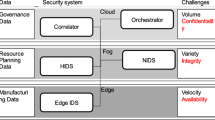

Compliance data consists of manufacturing quality measures collected in the production process. Quality checks are often computationally expensive to perform, mainly due to the amount of collected data. Trusted solutions for outsourcing analyses to the Cloud are an opportunity to reduce operating costs. However, the adoption of the Cloud computing paradigm is delayed by the many security risks associated with it. In the use case we consider in this paper, compliance data is very sensitive, because it may contain IP-critical information, or it may be related to safety-critical operations or products. While the technological solutions that protect data in transit or at rest have reached a satisfactory level of maturity, there is a huge demand for securing data in use. Homomorphic Encryption (HE) is one of the main technological enablers for secure computation outsourcing. In the last decade, HE has matured at a remarkable pace. However, using HE is still far from being an automated process, and each use case introduces different challenges. In this paper, we investigate the application of HE to the described scenario. In particular, we redesign the compliance check algorithm into an HE-friendly equivalent. We propose an efficient data input encoding that takes advantage of the SIMD-type computations supported by the CKKS HE scheme. Moreover, we introduce security/performance trade-offs by proposing a limited but acceptable information leakage. We have implemented our solution using the SEAL HE library and evaluated our results in terms of time complexity and accuracy. Finally, we analyze the benefits and limitations of integrating a Trusted Execution Environment to securely execute some computations that are overly expensive for the chosen HE scheme.


1 Introduction

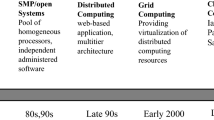

In recent years, more and more highly regulated manufacturing companies and organizations have outsourced their data and services to a cloud provider. As public or hybrid Cloud-based infrastructures become more widely adopted, threats related to multi-tenancy are increasing, further raising the risk of data leakage or compromise. In most cases, confidentiality of data is of supreme importance, either due to legislation and compliance obligations or due to the importance of data secrecy for the organization (IP protection needs, competitiveness, international regulations on technical data).

Compliance data consists of manufacturing quality measures (such as test results, geometrical measures and images) collected in the production process, either by sensors deployed on the industrial equipment itself or by dedicated machines used in intermediate production steps (e.g., tools for visual inspection of parts). Such data is an essential company asset: it is used as evidence of the parts’ manufacturing quality as well as to assess responsibilities in case of failures. Since quality checks may be computationally expensive to perform, due to the amount of collected data, trusted solutions for sharing and processing compliance data are an opportunity for (1) continuously monitoring and improving the quality of products with the help of external services, and (2) reducing the costs of owning and operating an adequate computing infrastructure by outsourcing analyses to the Cloud. However, the adoption of this collaboration paradigm is also delayed by the many security risks associated with it. Indeed, compliance data is very sensitive because it may contain IP-sensitive or competitive information, sometimes related to safety-critical systems.

Therefore, sensitive data should only be shared under the assumption that reliable security guarantees are in place, in particular regarding confidentiality. While the technological solutions that protect data in transit or at rest have reached a satisfactory level of maturity, there is a huge demand for securing data in use.

There are three main technological enablers for secure computation outsourcing: (1) secure multi-party computation (SMPC) cryptographic schemes, (2) hardware-based Trusted Execution Environments (TEEs), like Intel SGX or ARM TrustZone, and (3) homomorphic encryption (HE) cryptographic schemes. SMPC is a cryptographic tool that allows multiple parties to evaluate a function over their inputs without revealing their individual data. A TEE enables the creation of a protected environment for sensitive data storage and protected execution of critical code. Data access policies are enforced at the CPU level, thus providing data confidentiality and integrity as well as code confidentiality and integrity without relying on the operating system, which may be subject to vulnerabilities or misconfiguration. TEEs provide a broader set of security guarantees than HE (e.g., data and code integrity), but they are subject to vulnerabilities that may weaken their confidentiality guarantees (the CVE database lists several vulnerabilities affecting Intel SGX and ARM TrustZone), and they offer a very limited environment for executing complex algorithms (e.g., a limited amount of memory, or a lack of the numerical libraries needed by complex applications like the one presented in this paper).

On the other hand, HE offers confidentiality guarantees based on the mathematical properties of the underlying cryptographic primitives. The concept of HE was introduced in 1978 [1]; it is a form of cryptography that enables users to perform computations on encrypted data without first decrypting it, thus providing full confidentiality guarantees on the shared data, but no code confidentiality and no guarantees of data or code integrity.

HE schemes are implemented in libraries developed by professional cryptographers and widely used in the community, but these schemes may not natively support all the needed mathematical operations. Such operations must then either be reformulated in terms of the supported ones or be delegated to a trusted third party. In principle, therefore, these technologies offer complementary capabilities and can be fruitfully used in cooperation.

Our contribution In this paper, we consider the compliance checks performed when manufacturing engine turbine blades. Figure 1 shows a full aircraft engine, which contains several turbine blades. The proposed compliance use case requires computing “how much” the 3D CAD model of the turbine blade differs from the scan of the manufactured turbine blade. Figure 3 shows the scenario where all computations are executed in-house, with no release of sensitive data in untrusted environments.

Driven by the stronger confidentiality guarantees offered by HE, we explore the applicability of HE to a scenario that is very common in safety-critical parts manufacturing compliance. We choose HE rather than SMPC because no data is shared among multiple parties: there is only one client in our use case. In the HE implementation, the inputs are encrypted before being shared with a non-trusted party, like a cloud provider, and always remain encrypted. The computations are performed on the encrypted data.

Our contributions can be summarized as follows:

1. We provide a mathematical formulation of a manufacturing compliance check use case that can be implemented using HE technology. The scenario has been formulated in the context of COLLABS, a Horizon EC-funded project.

2. We redesign the compliance check algorithm into an HE-friendly one. Taking into account the limitations of the CKKS HE scheme, we address all the challenges that derive from the requirements of the COLLABS scenario.

3. We analyze a data packing strategy to encode multiple input data into a single plaintext. The proposed strategy is tailored to the needs of our use case and achieves computational gains through SIMD-like execution of operations.

4. We analyze the idea of leaking limited and practically insignificant information as a trade-off that improves the performance of the algorithm, and we quantify the resulting computational savings.

5. We implement our algorithm using the CKKS scheme as implemented in the SEAL HE library and present our results regarding accuracy and run time.

All the computations are outsourced to the server. We assume the honest-but-curious attack model for our use case. That is, the server owner is a passive attacker who executes the operations correctly but tries to learn as much information as she can. In the passive attacker model, we focus on data confidentiality.

The paper is organized as follows: after a brief overview of related work in the field, homomorphic encryption is introduced, together with its known challenges and limitations; Sect. 4 gives more details on our reference scenario, which requires the comparison of 3D images to identify any manufacturing differences; then, in Sects. 5 and 6 we propose solutions to several HE challenges, together with solution architectures and design. In Sect. 7, we evaluate and compare our proposed solutions; in Sect. 8, we discuss alternative design solutions assisted by a TEE. Finally, in Sect. 9 we conclude our work and propose next steps, such as opportunities for synergy with other technologies that offer confidential computing capabilities and may help fill the gaps identified in our application of HE.

2 Related work

2.1 HE-based applications

In the HE literature, the main application area is neural-network inference. Recently, HE compilers such as CHET [2] and EVA [3] have been designed to make FHE programming easier by hiding and automating tasks that otherwise require manual design by human experts. Although previous work demonstrates the capabilities of FHE for privacy-preserving machine learning, and most of the HE compilers described in [4] focus on machine learning applications, HE is not yet a generic solution. Building secure and efficient HE-based solutions in other application areas is challenging, and a lot of per-use-case tailoring needs to be performed by HE experts. In [5], which is a preliminary version of this research work, the authors describe the idea of using HE to provide secure outsourcing of manufacturing compliance checks. To the best of our knowledge, there is no other work related to manufacturing compliance checks.

2.2 Relation with our previous work

We would like to highlight that this research work builds on our preliminary work in [5]. In this section, we explain the new contributions of this paper: how we enhanced the preliminary results, improved the application scenario and its validation, and provided an in-depth explanation of how our HE algorithm was designed and how the implementation choices were made.

In this paper, we provide more details regarding the COLLABS use case and better describe a real manufacturing scenario where compliance checks take place. The compliance use case requires computing “how much” the 3D CAD model of the turbine blade differs from the scan of the manufactured turbine blade. The geometric plaintext algorithm of the compliance check is formalized in Appendix A, to support the explanation of the design decisions behind our HE-friendly algorithm and help the reader understand our approach in more detail.

The main contribution of this work is the complete design and implementation of our HE-friendly algorithm, including communication and computational improvements regarding time performance and accuracy of the algorithm, which has been extensively validated using real data, proving the feasibility of the initial concept presented in [5].

We provide a brief background description of the CKKS scheme and explain why we chose this HE scheme for our implementation. In addition, we analyze the parameter selection for the CKKS scheme, as the parameters influence the security level, the accuracy of the computed results and the computational complexity. Since recent HE compilers focus on machine learning applications, we have manually designed and implemented an HE-friendly algorithm based on our use case requirements, and we provide guidelines to make the process repeatable for the scientific community. We design an HE algorithm with a 128-bit security level and describe how to select parameters to achieve the required accuracy of the computed results (\(10^{-5}\)), taking into account that CKKS performs approximate computations that introduce a small extra error in the final results after decryption. We also describe how this error can be kept within the bounds of the desired accuracy.

The efficiency of all RLWE schemes is based on their packing capacity. In [5], we described and analyzed two packing techniques, coordinate-wise packing and point-wise packing, along with their storage capacity and the required number of plaintexts (and ciphertexts). In this paper, we also evaluate a hybrid packing solution that combines the two packing methods based on the available input data and the accuracy required by our use case.

In our efforts to minimize the computational complexity, in [5] we also proposed the high-level idea of leaking insignificant information to gain performance. In this paper, we extend that idea by describing how information leakage can be implemented in our HE-friendly algorithm, and we mathematically quantify the reduction of the computational cost, in terms of both time performance improvement and reduction of the number of homomorphically computed operations.

The fact that CKKS does not efficiently compute non-polynomial functions is another limitation of the HE scheme that we have tackled. Besides leveraging the client, as proposed in [5], we propose two additional and novel solutions. The first is to stay within HE, either by adopting polynomial approximations or by combining multiple HE schemes. The second is a hybrid solution that combines a TEE with HE. Recent research has shown that TEEs can be efficiently combined with HE. Building on that, we define and propose a custom TEE-based architecture, analyze the limitations of the TEE (e.g., limited memory resources inside the enclave, the secure channel needed to send the decryption keys) and the different attack models that should also be considered, and offer a feasibility analysis of the proposed solution.

Finally, we have performed an extensive evaluation of the HE-friendly algorithm, considering two metrics: the accuracy and the computational resources (time performance and memory), taking into account the maximum accepted error of the computed values, \(10^{-5}\). We have compared the geometric plaintext algorithm with the HE-friendly algorithm, with both coordinate-wise and point-wise packing and different polynomial degrees n. The experimental results show that the accuracy of the computed result is affected by larger values of the polynomial degree n in the coordinate-wise packing and by rotations in the point-wise packing. Regarding time performance, we compare the geometric plaintext algorithm and the HE-friendly algorithm using both packing methods with different input data workloads, showing that the SIMD packing of CKKS can be leveraged to significantly improve time performance and that lower values of n reduce the run time. As an additional contribution, we have evaluated the memory required by each packing technique, which should be considered if the TEE is used to compute the non-polynomial functions.

2.3 HE and TEE related work

In the literature, the need for preserving privacy and confidentiality when relying on remote platforms provided by third parties emerged even before the rise of the cloud paradigm. In particular, the use of homomorphic schemes and hardware-based isolation solutions was already considered for hosting databases on untrusted servers [6, 7]. Drucker et al. [8] first adopted an approach combining homomorphic encryption (Paillier scheme) with a modern Trusted Execution Environment technology (Intel SGX).

Wang et al. [9] generalized this approach by proposing TEEFHE, a solution combining FHE and Trusted Execution Environments. Specifically, in the proposed scheme, the Microsoft SEAL library has been ported to an SGX-based Trusted Execution Environment in order to support the execution of FHE bootstrapping while complying with SGX memory constraints. TEEFHE requires remote attestation as a preliminary step, in order to verify the configuration of the cloud server and to allow the provisioning of the encryption parameters and of the secret and public keys to the bootstrapping enclaves, as explained previously.

Coppolino et al. [10] instead proposed the Virtual Secure Enclave (VISE), a scheme combining homomorphic encryption and Trusted Execution Environments to support confidential computing in manufacturing scenarios. Their scheme also requires attestation as a preliminary step, in order to establish a secure communication channel between field devices and the cloud server. In their approach, data is sent directly to the protected enclaves, which handle the encryption step, while data analytics are executed on the encrypted data outside the enclave. In VISE, enclaves also support the evaluation of conditionals during the computation flow.

As a final note, moving part or all of the required computation into a Trusted Execution Environment may cause unexpected problems due to existing vulnerabilities of the underlying TEE technology. For example, confidentiality may be compromised by side-channel leakage from the enclave. Wang et al. [9] addressed this aspect by modifying the SEAL library to obtain memory access patterns that are independent of the secret information.

3 Preliminaries

3.1 Homomorphic encryption preliminaries

Modern HE schemes can be classified into two main categories depending on how they perform computations. The first category includes schemes that support Boolean operations. These schemes are able to compute logical gates efficiently, and their performance depends on the depth of the Boolean circuit that implements a program. These bit-wise HE schemes are considered the best choice for computing non-polynomial functions, such as number comparison. FHEW [11] and TFHE [12] are the main schemes in this category.

The second category includes schemes that support modular arithmetic operations over finite fields, such as BGV [13] and BFV [14, 15]. An input message is represented as an integer, and an encoder transforms it into a polynomial with modular coefficients. These word-wise HE schemes efficiently support the computation of polynomial functions. Of special interest is CKKS [16], which takes real or complex numbers as input and supports approximate arithmetic operations. CKKS is considered very efficient for use cases like machine learning model inference. In Sect. 3.1.1, we provide an overview of CKKS as we use it in our HE-based design.

3.1.1 CKKS scheme

Like the majority of HE schemes, CKKS’s security is based on the hardness of the ring variant of the Learning with Errors (RLWE) problem [17]. The plaintext is a polynomial with modular coefficients, and it is protected by adding a noise vector from a carefully chosen distribution. The ciphertext is a pair of polynomials \(c = (c_0, c_1)\) over the ring \(\mathbb {Z}_q[X]/(X^n + 1)\), where n is the polynomial degree and q is the ciphertext modulus.
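To make the ring \(\mathbb {Z}_q[X]/(X^n + 1)\) concrete, the following Python sketch multiplies two polynomials in it. It is only a toy: there is no encryption or noise, the parameters are tiny and insecure, and the quadratic loop is chosen for readability (production libraries such as SEAL use number-theoretic transforms instead). The function name `ring_mul` is ours, not a library API.

```python
from typing import List


def ring_mul(a: List[int], b: List[int], q: int) -> List[int]:
    """Multiply two polynomials in Z_q[X]/(X^n + 1).

    a and b are coefficient lists of equal length n, where index i holds the
    coefficient of X^i. Since X^n = -1 in this ring, a product term X^(i+j)
    with i + j >= n wraps around to X^(i+j-n) with its sign flipped
    (a negacyclic convolution).
    """
    n = len(a)
    if len(b) != n:
        raise ValueError("operands must have the same degree bound n")
    res = [0] * n
    for i in range(n):
        for j in range(n):
            k = i + j
            if k < n:
                res[k] += a[i] * b[j]
            else:
                res[k - n] -= a[i] * b[j]  # X^n = -1
    return [c % q for c in res]  # reduce coefficients into [0, q)


# Toy parameters n = 4, q = 17 (far too small to be secure).
print(ring_mul([1, 1, 0, 0], [1, 1, 0, 0], 17))  # (1 + X)^2 = 1 + 2X + X^2
print(ring_mul([0, 0, 0, 1], [0, 1, 0, 0], 17))  # X^3 * X = X^4 = -1 = 16 mod 17
```

The wrap-around with a sign flip is what distinguishes this ring from ordinary polynomial multiplication truncated to degree n.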

In CKKS, the noise added during encryption is treated as part of the error occurring during approximate computations. The ciphertext noise level must be greater than a minimum level \(B_0\) to be secure and lower than a maximum \(B_{{\textit{max}}}\) to be decryptable. Each homomorphic operation increases the noise level of its encrypted result. When the noise exceeds \(B_{{\textit{max}}}\), decryption no longer returns the correct result. Since ciphertext–ciphertext multiplication dominates the noise increase, the multiplicative depth (the number of consecutive ciphertext–ciphertext multiplications) must be kept as small as possible.
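Because depth, rather than the raw number of multiplications, is what consumes the noise budget, it helps to count it explicitly. The sketch below (function names are ours, for illustration) computes the minimum depth of evaluating \(x^k\) and of multiplying m ciphertexts with a balanced tree. The argument is that each multiplication level can at most double the largest exponent available so far, so \(\lceil \log_2 k \rceil\) levels are necessary, and repeated squaring combined with a balanced product of the needed powers achieves it.

```python
def depth_of_power(k: int) -> int:
    """Minimum multiplicative depth to compute x**k (k >= 1) from a fresh ciphertext x.

    After d levels the highest reachable exponent is 2**d, so ceil(log2(k))
    levels are required; repeated squaring plus a balanced product of the
    needed powers attains this bound.
    """
    if k < 1:
        raise ValueError("k must be >= 1")
    return (k - 1).bit_length()  # equals ceil(log2(k)) for k >= 1


def depth_of_product(m: int) -> int:
    """Depth of multiplying m fresh ciphertexts with a balanced binary tree.

    A sequential left-to-right product would instead have depth m - 1.
    """
    if m < 1:
        raise ValueError("m must be >= 1")
    return (m - 1).bit_length()


print(depth_of_power(2), depth_of_power(8), depth_of_product(8))
```

For instance, a squared Euclidean distance \((x_1-x_2)^2 + (y_1-y_2)^2 + (z_1-z_2)^2\) has depth 1, since additions do not add depth, whereas \(x^8\) needs depth 3 instead of the 7 of a naive chain of multiplications.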

If the noise level reaches \(B_{{\textit{max}}}\), the ciphertext must be decrypted and re-encrypted to bring the noise level back to the initial minimum level \(B_0\). This procedure is performed either by a trusted party that holds the secret decryption key (the data owner or a TEE module controlled by the data owner) or homomorphically by the server, using a procedure called bootstrapping. Bootstrapping is used in the bit-wise schemes, but is barely practical in word-wise schemes, as it adds a very large computational overhead. Alternatively, since \(B_{{\textit{max}}}\) depends on the parameter q, when the computations to be performed are known beforehand, q can be chosen large enough to accommodate them. A larger q allows more computations on encrypted data at the expense of efficiency.

The RLWE schemes, like CKKS, owe their efficiency to plaintext batching [18], i.e., the ability to encode multiple messages into a single plaintext. The plaintext (and the corresponding ciphertext) can be seen as a vector of values, one per slot. In CKKS, each operation between two ciphertexts is performed simultaneously on all packed values, as an element-wise operation (addition or multiplication) between the two vectors. That is, CKKS simulates Single Instruction Multiple Data (SIMD) computations.
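The slot-wise semantics can be modelled in plaintext with plain Python lists, as in the sketch below. It only reproduces the data layout and the element-wise behaviour: there is no encryption, and real CKKS results would additionally carry a small approximation error. The helper names are ours, and the coordinate values are made up for illustration.

```python
from typing import List

Vec = List[float]


def _check(u: Vec, v: Vec) -> None:
    if len(u) != len(v):
        raise ValueError("packed vectors must have the same number of slots")


def slot_add(u: Vec, v: Vec) -> Vec:
    """Slot-wise addition, modelling the addition of two packed ciphertexts."""
    _check(u, v)
    return [a + b for a, b in zip(u, v)]


def slot_sub(u: Vec, v: Vec) -> Vec:
    """Slot-wise subtraction."""
    _check(u, v)
    return [a - b for a, b in zip(u, v)]


def slot_mul(u: Vec, v: Vec) -> Vec:
    """Slot-wise multiplication, modelling one ciphertext-ciphertext multiplication."""
    _check(u, v)
    return [a * b for a, b in zip(u, v)]


# x-coordinates of four scanned points and of four reference points (made-up values)
x_scan = [1.0, 2.0, 3.0, 4.0]
x_model = [0.5, 2.0, 2.0, 4.5]
diff = slot_sub(x_scan, x_model)
print(slot_mul(diff, diff))  # one "multiplication" squares all four differences
```

The point of the layout is that the cost of `slot_mul` is paid once per ciphertext, regardless of how many of its slots are occupied.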

CKKS can efficiently compute polynomial functions, as it supports additions and multiplications between two ciphertexts or between a plaintext and a ciphertext. It also supports a cyclic rotation that shifts the slots of the encrypted plaintext. The runtime cost is dominated by two operations, namely ciphertext–ciphertext multiplication and rotation. Finally, there are also auxiliary ciphertext management operations, namely relinearization and rescale. Relinearization shrinks the ciphertext produced by a ciphertext–ciphertext multiplication back to two polynomials, while rescale divides the ciphertext by the scaling factor, reducing its scale and noise magnitude. Each rescale consumes part of the modulus, so the number of times rescale can be applied is determined by the initial value of q.
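Rotations are what allow values stored in different slots to interact. A standard pattern is "rotate-and-sum", which reduces a packed vector of n slots with \(\log_2 n\) rotations and additions instead of n - 1. The sketch below models it in plaintext; the helper names are ours, and the left-rotation convention is an assumption, since libraries differ in the sign of the rotation step.

```python
from typing import List, Tuple


def rotate(v: List[float], k: int) -> List[float]:
    """Cyclic left rotation by k slots: slot i receives the value of slot i + k.

    This models a CKKS slot rotation; the sign convention differs between libraries.
    """
    k %= len(v)
    return v[k:] + v[:k]


def rotate_and_sum(v: List[float]) -> Tuple[List[float], int]:
    """Sum all slots with log2(len(v)) rotations and additions.

    At the end every slot holds the total, which is how a packed vector can
    be reduced without decrypting it. len(v) must be a power of two.
    Returns the resulting vector and the number of rotations used.
    """
    n = len(v)
    if n == 0 or n & (n - 1):
        raise ValueError("number of slots must be a power of two")
    acc = list(v)
    step, rotations = 1, 0
    while step < n:
        acc = [a + b for a, b in zip(acc, rotate(acc, step))]
        step *= 2
        rotations += 1
    return acc, rotations


print(rotate_and_sum([1.0, 2.0, 3.0, 4.0]))
```

Since rotation is one of the two dominant costs, keeping the number of rotations logarithmic in the number of slots matters for the overall run time.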

3.1.2 HE-based algorithm design: challenges and limitations

Although HE technology has progressed rapidly toward maturity during the last decade, building efficient HE applications is still challenging for non-experts. In general, naively replacing operations with the corresponding HE ones leads to inefficient solutions. The developer must take into consideration the particularities and limitations of the HE scheme used and transform the initial (non-HE) algorithm into an equivalent HE-friendly one. In this section, we analyze the challenges that developers of HE applications face, ranging from the minimization of the multiplicative depth (the number of consecutive ciphertext–ciphertext multiplications) and the selection of the most efficient plaintext packing strategy to the implementation of non-polynomial functions and if/then conditions.

Recent compilers try to automate the transformation of an algorithm into an HE-friendly one, but they are still far from optimal [4].

Plaintext packing strategy An HE ciphertext can be seen as a vector several hundred times larger than a single input message, leading to storage blow-up. To deal with this problem, HE schemes based on RLWE can use all the vector slots to store (pack) several different messages in one ciphertext. The operations applied to this ciphertext are performed on all the packed messages simultaneously, simulating an SIMD architecture.

Thus, plaintext packing amortizes storage and computation costs. However, there are several packing strategies, and each of them can affect the performance of the HE algorithm differently. It is up to the HE designer to choose the optimal one for each use case.
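As an illustration of how the choice of layout changes the number of plaintexts, the sketch below packs a point cloud in two ways, under our own assumption about the two strategies named in Sect. 2.2: coordinate-wise packing is taken to mean one vector per axis (all x, all y, all z), and point-wise packing to mean the x, y, z of each point in consecutive slots, never splitting a point across two plaintexts. The function names and the example sizes are hypothetical, not the paper's implementation.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def coordinate_wise(points: Sequence[Point], slots: int) -> List[List[List[float]]]:
    """Assumed layout: one vector per axis (all x, all y, all z).

    Returns, for each of the three axes, a list of chunks; every chunk holds
    at most `slots` values and would be encoded as one plaintext.
    """
    packed = []
    for axis in range(3):
        column = [p[axis] for p in points]
        packed.append([column[i:i + slots] for i in range(0, len(column), slots)])
    return packed


def point_wise(points: Sequence[Point], slots: int) -> List[List[float]]:
    """Assumed layout: x, y, z of each point in consecutive slots.

    A point is never split across two plaintexts, so each chunk holds
    slots // 3 points.
    """
    if slots < 3:
        raise ValueError("need at least 3 slots per plaintext")
    per_chunk = slots // 3
    chunks = []
    for i in range(0, len(points), per_chunk):
        chunk: List[float] = []
        for p in points[i:i + per_chunk]:
            chunk.extend(p)
        chunks.append(chunk)
    return chunks


def plaintext_counts(n_points: int, slots: int) -> Tuple[int, int]:
    """Number of plaintexts (coordinate-wise, point-wise) needed for n_points points."""
    return 3 * math.ceil(n_points / slots), math.ceil(n_points / (slots // 3))


print(plaintext_counts(10_000, 4096))  # 4096 slots corresponds to n = 8192 in CKKS
```

In this sketch, the coordinate-wise layout keeps the x, y and z of a point in the same slot of three different ciphertexts, so they combine through plain slot-wise operations; the point-wise layout needs slightly fewer plaintexts here but requires rotations to bring the three coordinates of a point together.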

Non-polynomial function computation RLWE-based schemes that support SIMD are efficient for polynomial function computation, but they perform poorly with non-polynomial ones, like number comparison, square root or division. Three solutions have been proposed to deal with this problem:

1. the non-polynomial functions are approximated by polynomial ones, at the expense of accuracy loss [19];

2. the program switches between HE schemes, using Boolean HE schemes for the non-polynomial functions and arithmetic HE schemes for the polynomial ones [20, 21]; however, switching between schemes is very expensive;

3. the non-polynomial functions are computed in plaintext by a trusted party: either the data owner, at the cost of some communication overhead, or trusted hardware at the server controlled by the data owner, such as a TEE module, decrypts the HE ciphertext, evaluates the function and then re-encrypts the result under HE.

Branching Implementing “if then else” conditions or “switch/case” operations is very expensive in HE algorithms. To hide which branch is taken, the algorithm must compute all possible cases. For instance, let us assume that three conditions (only one of which can be true) determine which one of three functions \(f_i\), \(i=1,2,3\), is executed using “if then else” branching.

In plaintext, only one of them is executed. However, the HE implementation of the same algorithm must homomorphically compute all three functions \(f_i\), \(i=1,2,3\), in order to hide the values of the conditions.
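A common way to express this is to evaluate every branch and combine the results with 0/1 indicators, \(\sum_i c_i f_i(x)\). The sketch below shows the pattern on a hypothetical three-branch function of our own choosing. The indicators are computed with plaintext comparisons only to keep the example short; under HE they would themselves be encrypted (for example, obtained through an approximate comparison), and each \(c_i f_i(x)\) would cost one extra multiplication.

```python
from typing import Sequence


def oblivious_select(indicators: Sequence[float], branch_results: Sequence[float]) -> float:
    """Return sum_i c_i * f_i, where exactly one indicator c_i is 1 and the others 0.

    Every f_i is already computed, so the sequence of operations does not
    reveal which branch was "taken".
    """
    if len(indicators) != len(branch_results):
        raise ValueError("one indicator per branch is required")
    return sum(c * r for c, r in zip(indicators, branch_results))


def branchless_eval(x: float) -> float:
    """Hypothetical example: x + 1 if x < 0, 2x if 0 <= x < 1, x^2 otherwise."""
    results = [x + 1.0, 2.0 * x, x * x]  # all three branches are evaluated
    # Plaintext comparisons for illustration only; under HE these indicators
    # would be encrypted values produced homomorphically.
    c1 = 1.0 if x < 0 else 0.0
    c3 = 1.0 if x >= 1 else 0.0
    c2 = 1.0 - c1 - c3
    return oblivious_select([c1, c2, c3], results)


print(branchless_eval(-2.0), branchless_eval(0.5), branchless_eval(3.0))
```

The cost of the HE version therefore grows with the number of branches, not with the branch actually selected.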

HE parameters selection The HE parameters for CKKS are selected on the basis of the security level, the multiplicative depth and required accuracy of the result. These parameters define the key space, the plaintext and ciphertext space and the noise distribution, affecting the number of messages that can be packed in a single plaintext, the size of the ciphertext and the performance of the computations.

There are two main parameters that need to be selected:

1. The polynomial degree n, which determines the number of messages that can be packed in a single plaintext and ciphertext (in CKKS, a plaintext has n/2 slots).

2. The coefficient modulus q, which bounds the size of the ciphertext coefficients.

Figure 2 [22] illustrates how both parameters influence the security level, which increases as n grows and decreases as q grows. Since the HE parameters determine the plaintext and ciphertext space, increasing the dimension n or the coefficient modulus q increases the computational cost of homomorphic operations and makes the algorithm significantly slower. Larger values of q allow more computations to be performed on the encrypted data and help avoid precision loss and overflow issues. However, for a fixed n, the RLWE problem becomes easier as q grows, which lowers security. The recently proposed HE standard [23] provides parameter selection guidelines.

Because CKKS computations are approximate, CKKS has an additional parameter, the scaling factor, which controls the accuracy of the computations. Large values of the scaling factor, and therefore large values of q, mean performing operations with higher precision at a higher computational cost.
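The interplay between n, q and the scaling factor can be sketched with a small helper that, for a given multiplicative depth and scaling-factor size, builds a modulus chain and returns the smallest n whose 128-bit budget fits it. The chain layout (a first prime, one prime of about the scale's bit size per rescale, and a special prime used for key switching) follows the pattern of Microsoft SEAL's CKKS examples. The per-n maximum bit counts are, to our knowledge, the values SEAL uses for 128-bit classical security (`CoeffModulus::MaxBitCount`), and the 60-bit defaults for the first and special primes are an assumption borrowed from those examples, not the parameters of our experiments. The helper name is hypothetical.

```python
from typing import List, Tuple

# Maximum total coefficient-modulus bit count for 128-bit classical security,
# per polynomial degree n (values as used by SEAL's CoeffModulus::MaxBitCount).
MAX_BITS_128 = {1024: 27, 2048: 54, 4096: 109, 8192: 218, 16384: 438, 32768: 881}


def choose_ckks_params(depth: int, scale_bits: int,
                       first_bits: int = 60, special_bits: int = 60
                       ) -> Tuple[int, int, List[int], int]:
    """Pick the smallest n whose 128-bit budget fits a CKKS modulus chain.

    Chain layout: [first_bits] + [scale_bits] * depth + [special_bits], i.e.,
    one prime consumed per rescale (per multiplicative level).
    Returns (n, number of slots n // 2, chain of prime bit sizes, total bits).
    """
    chain = [first_bits] + [scale_bits] * depth + [special_bits]
    if max(chain) > 60:
        raise ValueError("SEAL limits each prime to at most 60 bits")
    total = sum(chain)
    for n in sorted(MAX_BITS_128):
        if total <= MAX_BITS_128[n]:
            return n, n // 2, chain, total
    raise ValueError("modulus chain too large for 128-bit security at n <= 32768")


print(choose_ckks_params(depth=2, scale_bits=40))
```

With a scale of \(2^{40}\), going from depth 2 to depth 3 pushes the total from 200 to 240 bits, beyond the budget for n = 8192, so n must double to 16384: twice the slots, but every homomorphic operation becomes slower.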

3.2 TEE overview

An Execution Environment is a set of hardware and software resources that supports running applications in a computing system. A Trusted Execution Environment (TEE) can be defined as an execution environment that is inherently trusted and isolated from the rest of the computing environment (usually referred to as Rich Execution Environment). A TEE ensures that sensitive assets (code, data and platform resources) are stored and processed in an isolated and trusted way, protecting them from unauthorized users and software components operating in the Rich Execution Environment.

Supporting a TEE usually requires a combination of capabilities at hardware level (usually providing the basic protection mechanisms supporting isolation) and firmware/software level (usually in terms of a small runtime providing the basic abstractions and API to interact with the underlying hardware primitives and supporting the interfacing with the TEE). This set of hardware and software mechanisms is usually referred to as part of the platform Trusted Computing Base, as other components of the platform necessarily must rely upon them for the assurance guarantees to hold. For example, software applications running in the TEE benefit from the assurance properties guaranteed by the separated environment and are thus referred to as Trusted Applications.

Different processing architectures adopt different approaches in supporting the establishment of Trusted Execution Environments, with different combinations of hardware and software elements providing the baseline capabilities.

A notable and widely adopted example of technology supporting TEEs is the Intel Software Guard Extensions (SGX) [24]. SGX relies almost exclusively on hardware support to provide TEE capabilities, leveraging microcode (SGX is often referred to as an Intel ISA extension) and other elements (Page Table Walker, TLBs) to partition the memory into a non-trusted section and a trusted section (referred to as Processor Reserved Memory) hosting Trusted Applications. In the SGX framework, a TEE is referred to as an Enclave, intended as an isolated container able to host critical applications along with their data, and to protect them from the external environment, including an untrusted hypervisor or operating system.

AMD proposed a different approach to trusted computing, introducing the Secure Encrypted Virtualization (SEV) technology [25]. At its core, SEV provides an isolation primitive for virtualized environments (containers or virtual machines), leveraging AES-based memory encryption for code and data protection. Successive extensions of the technology provide additional guarantees, adding register state encryption (SEV Encrypted State) and Secure Nested Paging (SEV-SNP), which, respectively, protect the virtual environments from an untrusted hypervisor and from a malicious cloud provider. Compared with Intel SGX, AMD SEV facilitates software development (as applications do not need to be split into a trusted and an untrusted section) but requires a larger Trusted Computing Base. SEV also poses limitations in accessing host devices.

ARM instead provides the TrustZone framework [26], a collection of several IPs supporting the partitioning of a system into an untrusted and a trusted mode of execution (referred to as, respectively, Normal World and Secure World). The Normal World represents the Rich Execution Environment and usually runs a complex and untrusted software stack spanning different privilege levels. The Secure World represents the Trusted Execution Environment and is intended to run a trusted runtime and multiple trusted applications. In the TrustZone framework, the most privileged execution mode is reserved for a Secure Monitor software component (referred to as ARM Trusted Firmware), managing both the configuration of the platform and the interaction between the Normal and Secure Worlds.

Several TEE-oriented frameworks targeting the open-source RISC-V ISA have also been presented. One of the most mature solutions, the Keystone framework [27], leverages the native support for Physical Memory Protection (PMP) to support isolation and introduce multiple enclaves. Differently from the SGX case, Keystone relies on a software Secure Monitor to configure the PMP and support interactions with the enclaves.

4 Manufacturing compliance scenario

4.1 Notation

To address the automatic compliance check scenario in a formal and mathematical way, we need to introduce some definitions.

A point P is a triple (x, y, z) of Cartesian coordinates, i.e., \(x,y,z\in \mathbb {R}\), and a triangle T is a triple of points,
\[ T = (P_1, P_2, P_3). \]
A point cloud C is defined as a finite set of \({N_C}\) points
\[ C = \{P_1, P_2, \ldots , P_{N_C}\}, \]
and a mesh M as a finite set of \(N_M\) triangles
\[ M = \{T_1, T_2, \ldots , T_{N_M}\}. \]

A point cloud C and a mesh M can therefore be represented as \(N_C \times 3\) and \(N_M \times 9\) matrices of real numbers, respectively, where each entry is a point coordinate in the three-dimensional space. We use \({{\textit{dist}}}(P,K)\) to denote the Euclidean distance between two points P and K.
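To make the matrix view concrete, the following plain-Python sketch (an illustration, not the authors' implementation) flattens a mesh into an \(N_M \times 9\) matrix and a point cloud into an \(N_C \times 3\) matrix, and defines dist. The helper names `mesh_to_matrix`, `cloud_to_matrix` and `dist` are hypothetical.

```python
import math

# A point P is an (x, y, z) tuple; a triangle T is a triple of points.


def mesh_to_matrix(mesh):
    """Flatten N_M triangles into an N_M x 9 matrix (x1, y1, z1, ..., x3, y3, z3)."""
    return [[coord for vertex in triangle for coord in vertex] for triangle in mesh]


def cloud_to_matrix(cloud):
    """Represent N_C points as an N_C x 3 matrix."""
    return [list(point) for point in cloud]


def dist(p, k):
    """Euclidean distance between points P and K."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, k)))
```

For example, the single triangle ((0, 0, 0), (1, 0, 0), (0, 1, 0)) becomes the row [0, 0, 0, 1, 0, 0, 0, 1, 0].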

4.2 Manufacturing scenario

The scenario under consideration concerns compliance checks of manufactured parts, which require comparing different 3D images to identify manufacturing errors; it has been driven by quality assurance needs in aerospace parts manufacturing. This scenario was formulated in the context of the COLLABS EU project,Footnote 6 which works on security in collaborative manufacturing.

Figure 3 shows the flow of artifacts, highlighting the sensitive ones. In more detail, engineers define a 3D production model, typically a CAD-like file, representing the part to be manufactured. The 3D model is built from a mesh, i.e., a collection of triangles, and is used to program manufacturing machines, such as CNC milling machines.

Each part produced in the factory is scanned for quality checks, producing a point cloud file, i.e., a file containing a discrete set of data points that represent the 3D shape of the product. Point clouds are produced by 3D scanners and by photogrammetry software.

To execute quality checks, every point cloud file and corresponding 3D model file are compared using an industrial metrology tool. The point cloud file and 3D model file represent the actual manufactured object and the desired manufactured object, respectively. Metrology tools implement algorithms for computing the normal distance between the surfaces of the scanned object and its reference model, and provide as output a visualization of the regions where the two models differ based on the distance between the actual and the expected point.

Currently, since the data is confidential and potentially subject to national regulations on its disclosure, using an external cloud infrastructure would pose a cyber-security problem; therefore, computations are performed in-house.

4.3 Point cloud to mesh distance computation

The computation of the distance between a 3D model and a point cloud has been widely investigated in the recent literature [28,29,30,31,32].

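In our case, the algorithm that computes the distance between a point cloud C (the scanned manufactured object) and a mesh M (the 3D model used as reference) uses the distance function \(\Delta \), defined as a vector function of \(N_C\) real values,

\[\Delta (C, M) = \big (\delta (P_1, M), \delta (P_2, M), \ldots , \delta (P_{N_C}, M)\big ),\]

where the scalar distance function \(\delta \) is defined as

\[\delta (P, M) = \min _{T \in M} \, \min _{K \in T} {{\textit{dist}}}(P, K), \tag{1}\]

i.e., for every triangle \(T \in M\) and every point \(K \in T\) the distance \({{\textit{dist}}}(P, K)\) is considered. We write \(d(P, T) = \min _{K \in T} {{\textit{dist}}}(P, K)\) for the inner minimum. Hence, the compliance check is based on numerous computations of the function \({{\textit{dist}}}(P, K)\).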

Let P be a point in the point cloud C, let T be a triangle in the mesh M, and let \(\pi (T)\) be the plane containing T. Let H be the orthogonal projection of P onto \(\pi (T)\). Then,

-

when H is inside the triangle T, we compute d(P, T) as the usual Euclidean norm \({{\textit{dist}}}(P, H)\),

-

when H is not inside the triangle T, we compute d(P, T) as \({{\textit{dist}}}(P, K)\), where K is the point of T closest to H.
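The two-case definition of d(P, T) can be sketched in plain Python as follows. This is a hypothetical illustration (all function names are ours), assuming non-degenerate triangles: it projects P onto \(\pi (T)\), tests whether H lies inside T with edge-orientation checks, and otherwise takes K as the point of T closest to H, which lies on one of the three edges. Because P minus H is orthogonal to \(\pi (T)\), minimizing dist(H, K) over \(K \in T\) also minimizes dist(P, K), so the two cases agree with relation (1).

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def mul(a, s):
    return tuple(x * s for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def closest_on_segment(h, a, b):
    """Closest point to h on the segment [a, b] (clamped projection)."""
    ab = sub(b, a)
    t = min(1.0, max(0.0, dot(sub(h, a), ab) / dot(ab, ab)))
    return add(a, mul(ab, t))


def point_triangle_distance(p, triangle):
    """d(P, T) via the orthogonal projection H of P onto the plane pi(T)."""
    v1, v2, v3 = triangle
    n = cross(sub(v2, v1), sub(v3, v1))                  # normal of pi(T)
    h = sub(p, mul(n, dot(sub(p, v1), n) / dot(n, n)))   # projection H
    edges = ((v1, v2), (v2, v3), (v3, v1))
    # H is inside T iff it lies on the inner side of all three oriented edges.
    if all(dot(cross(sub(b, a), sub(h, a)), n) >= 0.0 for a, b in edges):
        return math.dist(p, h)
    # Otherwise K, the point of T closest to H, lies on one of the edges.
    k = min((closest_on_segment(h, a, b) for a, b in edges),
            key=lambda q: math.dist(h, q))
    return math.dist(p, k)


def delta(p, mesh):
    """delta(P, M): minimum of d(P, T) over all triangles of the mesh."""
    return min(point_triangle_distance(p, t) for t in mesh)


def big_delta(cloud, mesh):
    """Delta(C, M): one delta value per point of the cloud."""
    return [delta(p, mesh) for p in cloud]
```

This brute-force loop over all point/triangle pairs mirrors the unoptimized plaintext benchmark; it is not meant to be fast.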

The algorithm outputs \(\delta (P, M)\) for all \(P\in C\). To avoid computing the Euclidean norm \({{\textit{dist}}}(P, K)\) for all \(K \in T\) in relation (1), the plane \(\pi (T)\) containing T is partitioned into seven regions, and d(P, T) is computed differently depending on which region contains the projection H of P. Figure 4 shows the partition; for example, if the projection H of a point P onto the plane containing \(T_i=\{T_{i1},T_{i2},T_{i3}\}\) lies in region \({\textit{Reg}}1\), then \(d(P, T_i)= \min _{K \in T_{i2}T_{i3}} {{\textit{dist}}}(P,K)\), where \(T_{i2}T_{i3}\) denotes the edge between \(T_{i2}\) and \(T_{i3}\).
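The seven-region case analysis can be illustrated with the well-known Voronoi-region formulation of the closest-point-on-triangle query (as presented, for example, in Ericson's Real-Time Collision Detection). This is a swapped-in textbook technique, not the algorithm of Appendix A: its seven regions (three vertex regions, three edge regions and the face region) are extrusions of a planar partition of \(\pi (T)\) along the normal, so classifying P is equivalent to classifying H, but the labels below are our own and need not match the numbering Reg0 to Reg6 of Fig. 4. Function names are hypothetical, and non-degenerate triangles are assumed.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def mul(a, s):
    return tuple(x * s for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def closest_point_with_region(p, a, b, c):
    """Return (region label, point of triangle abc closest to p)."""
    ab, ac, ap = sub(b, a), sub(c, a), sub(p, a)
    d1, d2 = dot(ab, ap), dot(ac, ap)
    if d1 <= 0 and d2 <= 0:
        return "vertex A", a
    bp = sub(p, b)
    d3, d4 = dot(ab, bp), dot(ac, bp)
    if d3 >= 0 and d4 <= d3:
        return "vertex B", b
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        return "edge AB", add(a, mul(ab, d1 / (d1 - d3)))
    cp = sub(p, c)
    d5, d6 = dot(ab, cp), dot(ac, cp)
    if d6 >= 0 and d5 <= d6:
        return "vertex C", c
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        return "edge AC", add(a, mul(ac, d2 / (d2 - d6)))
    va = d3 * d6 - d5 * d4
    if va <= 0 and (d4 - d3) >= 0 and (d5 - d6) >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return "edge BC", add(b, mul(sub(c, b), w))
    denom = 1.0 / (va + vb + vc)  # projection lies inside the triangle
    return "face", add(a, add(mul(ab, vb * denom), mul(ac, vc * denom)))


def region_distance(p, a, b, c):
    """d(P, T) computed from the region-specific closest point."""
    return math.dist(p, closest_point_with_region(p, a, b, c)[1])
```

Each branch evaluates only the formula for its own region, which is exactly the data-dependent branching that is expensive to hide under HE.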

The abstract steps of the implemented algorithm appear in the second column of Table 1; the details of each step are omitted to emphasize the HE challenges (see Appendix A for the full algorithm). The key point is that the algorithm relies on several Euclidean distance computations (and therefore square roots), some real-number divisions and comparisons. Moreover, the algorithm branches: different computations are performed depending on which of the seven regions of \(\pi (T)\) contains the projection of P.

Note

We note that, before computing the distance between C and M, a preliminary registration step must be executed, based on the iterative closest point (ICP) algorithm. In practice, a rotation and translation matrix is applied to the point cloud to achieve the best fit between the transformed point cloud and the mesh M. This step is not analyzed in this paper.
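ICP itself is out of scope, but applying the rotation R and translation t it estimates is a simple per-point map from p to R p + t. A minimal sketch, with a hypothetical function name and R given as a 3 × 3 row-major nested list:

```python
def apply_rigid_transform(cloud, rotation, translation):
    """Map every point p of the cloud to R p + t (R: 3x3 row-major nested list)."""
    return [
        tuple(sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
              for i in range(3))
        for p in cloud
    ]
```

For instance, a 90° rotation about the z axis followed by a unit shift along x maps (1, 0, 0) to (1, 1, 0).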

5 Compliance check secure outsourcing

5.1 HE-based architecture

In Fig. 5, the HE-based solution is presented. It consists of three steps.

As a first step, the compliance check algorithm must be redesigned and transformed into an HE-friendly one. In this step, among other things, the most suitable HE scheme and the best input encoding strategy are chosen. The HE scheme's parameters are selected for the required level of security and computation accuracy. Finally, all the necessary keys are generated, and the evaluation keys are deployed on the cloud server together with the HE code.

In the second step, the input data, i.e., the mesh and point cloud files, is encrypted and sent to the server. In the third step, the outsourced data is processed in encrypted form by the service provider, and the resulting ciphertext is sent back to the data owner, who decrypts it using the private key.

5.2 Design and setup phase

For the HE-based algorithm, we have made the following design decisions based on the requirements of the COLLABS scenario described in Sect. 4.3.

Scheme selection For the point cloud to mesh distance computation, we used the CKKS [16] scheme, as the computation involves many additions and multiplications on real numbers, which CKKS supports very efficiently.

Input data encoding In our use case, input data consists of triangles T from the CAD model of the desired manufactured object and points P, from the 3D scan of the manufactured object. Each triangle is defined by 3 points \(v_1 = (x_1, y_1, z_1), v_2 = (x_2, y_2, z_2)\) and \(v_3 = (x_3, y_3, z_3)\) and the point P is given by \(v_p = (x_p, y_p, z_p)\), i.e., in total 12 real numbers per point/triangle pair.

One of the main advantages of CKKS is that it allows plaintext packing, i.e., encoding multiple input values into a single plaintext and performing computations in a SIMD manner. We propose two data packing techniques, coordinate-wise and point-wise packing, to be chosen depending on the available workload. The two packing strategies are analyzed in Sect. 6.1.

Implementation of non-polynomial functions One of the most critical design challenges is the computation of non-polynomial functions. Operations such as real-number square root, division and comparison are not natively supported by CKKS. We provide a specific solution for each of these challenges, as follows.

Square root and division In the literature, square root and division are implemented with CKKS by evaluating a polynomial approximation of the corresponding function. However, such approximations are not sufficiently accurate for our use case. To avoid this loss of accuracy, we follow a different strategy: we avoid division and square root by using a scaled version of the computations. Thus, at the end of the algorithm, only a single division by a scalar \({\textit{scale}}\) and a single square root are needed.

These operations are very simple and are performed by the user after decryption, as shown in step 5 of Table 1. The scalar \({\textit{scale}}\) depends on the input data and is computed homomorphically by the cloud server in the first four steps of the HE algorithm. This solution is also described in [5].
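Appendix A is not reproduced here, so the following is only an illustrative instance of the scaling idea, for the case in which H lies inside T: the squared distance from P to \(\pi (T)\) is \(((P - v_1)\cdot n)^2 / (n \cdot n)\), with \(n = (v_2 - v_1) \times (v_3 - v_1)\). The server-side part uses only additions and multiplications, which CKKS evaluates natively, and returns the numerator together with the scale \(n \cdot n\); the client performs the single division and square root after decryption. The function names are hypothetical, and plain floats stand in for ciphertexts.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def server_side(p, v1, v2, v3):
    """Additions and multiplications only: returns (scaled squared distance, scale)."""
    n = cross(sub(v2, v1), sub(v3, v1))  # unnormalized normal of pi(T)
    s = dot(sub(p, v1), n)               # equals dist(P, pi(T)) * |n|
    return s * s, dot(n, n)              # numerator and scale = |n|^2


def client_side(scaled_sq, scale):
    """The single division and square root, done after decryption."""
    return math.sqrt(scaled_sq / scale)
```

Keeping n unnormalized is what removes the division and square root from the homomorphic part.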

Comparison with real numbers For the comparison computation, we propose three solutions: (1) leveraging the client, (2) using a polynomial approximation, or (3) using a hybrid solution.

In [5], the authors proposed sending a fraction of the original data in encrypted form back to the data owner, who decrypts it, performs the comparison in plaintext form and returns a new ciphertext to the server, which then continues the operations homomorphically. This option requires several communication steps between the server and the client.

The second approach uses the polynomial approximation of the comparison operation proposed in [33]. In the plaintext algorithm, a value is compared with zero, hence only the sign function is needed. The main idea of [33] is to approximate a non-polynomial function by composite polynomials to increase computational efficiency. For the third, hybrid, solution there are two options: (1) combining multiple HE schemes and (2) combining TEE with HE. We analyze the two approaches in Sect. 8.
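A minimal sketch of the composite-polynomial idea, assuming inputs normalized to [-1, 1] and the odd polynomial f(x) = (3x - x^3)/2, one member of the family used in composite-polynomial comparison methods; whether [33] uses exactly this polynomial and iteration count is an assumption here. Each application costs about two multiplicative levels in CKKS (x·x, then x·(3 - x²)), so the number of iterations trades accuracy near zero against depth. The names `f1`, `approx_sign` and `approx_is_positive` are hypothetical, and plain floats stand in for encrypted slots.

```python
def f1(x):
    """(3x - x^3) / 2: odd, maps [-1, 1] into itself and pushes values toward +/-1."""
    return x * (3.0 - x * x) / 2.0


def approx_sign(x, iterations):
    """Composite polynomial f1(f1(...f1(x))) approximating sign(x) on [-1, 1]."""
    for _ in range(iterations):
        x = f1(x)
    return x


def approx_is_positive(x, iterations):
    """Approximately 1 if x > 0 and 0 if x < 0, via (sign(x) + 1) / 2."""
    return (approx_sign(x, iterations) + 1.0) / 2.0
```

Values close to zero converge slowly (about a factor 1.5 per iteration while small), which is why inputs near the decision boundary dominate the required depth.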

HE parameters selection The data owner selects the HE scheme's parameters based on the desired level of security and the required computation accuracy. Then, the HE scheme's keys are generated. The private key is stored securely locally, while the evaluation keys are sent to the compliance check server deployed on the third-party infrastructure.

During the transformation of the plaintext algorithm into an HE-friendly algorithm, we design the HE circuit to be as efficient as possible. Since we have chosen the CKKS scheme, the HE circuit should have the minimum possible multiplicative depth (number of consecutive multiplications). For our COLLABS use case, we first select the level of security, which constrains the polynomial degree n. Regarding accuracy, the result of the HE algorithm may differ from the expected distance (the one computed with the plaintext algorithm) by at most \(10^{-5}\). To achieve this accuracy, we chose to increase n, as smaller values of n cause an overflow of the slots' values. This decision affects the efficiency of the algorithm, since it leads to slower computations.
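The interplay between security level, multiplicative depth and n can be made concrete with a small helper. It assumes the 128-bit maximum coefficient-modulus sizes tabulated in the Homomorphic Encryption Security Standard (the values Microsoft SEAL enforces by default) and a common CKKS layout of one scale-sized prime per level plus two 60-bit special primes; these are illustrative choices, not the parameters used in this paper, and `min_poly_degree` is a hypothetical name. More precision (more scale bits) or more depth enlarges the modulus and pushes toward a larger n.

```python
# Maximum total coefficient-modulus size (bits) for 128-bit classical security,
# as tabulated in the Homomorphic Encryption Security Standard.
MAX_BITS_128 = {1024: 27, 2048: 54, 4096: 109, 8192: 218, 16384: 438, 32768: 881}


def min_poly_degree(depth, scale_bits, special_bits=60):
    """Smallest n whose modulus budget fits `depth` scale-sized primes plus two
    special primes. Returns (n, total modulus bits, number of slots n/2)."""
    total_bits = 2 * special_bits + depth * scale_bits
    for n in sorted(MAX_BITS_128):
        if total_bits <= MAX_BITS_128[n]:
            return n, total_bits, n // 2
    raise ValueError("modulus too large for n <= 32768 at 128-bit security")
```

For example, a depth of 10 with 40-bit scales needs 520 bits, which only fits at n = 32,768.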

6 Communication and computation improvements

6.1 Plaintext packing strategy

The main benefit of word-wise HE schemes is the ability to encode multiple input values in a single plaintext and perform multiple operations at the cost of one. We also refer to this encoding technique as batching. For our scenario, described in Sect. 4, we designed two encoding methods: coordinate-wise and point-wise packing. Depending on the available data workload, i.e., the number of point/triangle pairs available for processing, either strategy can outperform the other. Thus, the data owner can choose the best packing technique each time, based on the available input data.

The coordinate-wise packing (CWP) strategy is straightforward. Each plaintext contains one Cartesian coordinate of one of the input points. Since four points define each point/triangle pair (the three vertices of the triangle and one point from the point cloud), 12 plaintexts are used in this encoding method. Since each plaintext has \(\frac{n}{2}\) available slots, up to \(\frac{n}{2}\) point/triangle pairs can be encoded and processed at the cost of one. If the number of pairs is larger than \(\frac{n}{2}\), an extra set of 12 plaintexts is used. If the number of pairs is not a multiple of \(\frac{n}{2}\), the remaining slots of the last set are idle. The CWP strategy is illustrated in Fig. 6.
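A minimal sketch of CWP in plain Python, with ordinary lists standing in for plaintext slot vectors (no HE library is involved; `cwp_pack` is a hypothetical name). Each group holds 12 vectors of n/2 slots, and unused slots of the last group stay at zero, i.e., idle.

```python
def cwp_pack(pairs, slots):
    """Coordinate-wise packing: vector j of a group holds coordinate j of every pair.

    pairs: list of (v1, v2, v3, p), each entry an (x, y, z) tuple.
    slots: number of slots per plaintext (n/2 for CKKS).
    Returns a list of groups, each made of 12 slot vectors of length `slots`.
    """
    groups = []
    for start in range(0, len(pairs), slots):
        chunk = pairs[start:start + slots]
        group = [[0.0] * slots for _ in range(12)]  # idle slots stay 0.0
        for s, (v1, v2, v3, p) in enumerate(chunk):
            coords = [c for point in (v1, v2, v3, p) for c in point]
            for j, c in enumerate(coords):
                group[j][s] = c
        groups.append(group)
    return groups
```

With this layout, every slot-wise operation acts on the same coordinate of all packed pairs at once, so no rotations are needed.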

In the point-wise packing (PWP) strategy, each one of the four points, \(v_1\), \(v_2\), \(v_3\) and p, is encoded in a separate plaintext and then encrypted, i.e., four ciphertexts are used for each triangle/point pair. However, for each point 9 slots are needed instead of 3, i.e., three copies of each Cartesian coordinate are stored.

This redundancy is due to the limitations of HE. In more detail, while packing multiple values in a single plaintext increases overall efficiency, random access to an individual slot is not possible; instead, the developer must rely on operations such as cyclic rotation of the slots. Since PWP leverages cyclic rotations, each point's coordinates are repeated 3 times, so that 9 slots are required for each point/triangle pair, as shown in Fig. 7. Hence, for each point/triangle pair, 6 of these slots are filled with repeated coordinate values; we refer to them as redundancy slots.

Thus, each point/triangle pair occupies 9 slots in each of the four plaintexts and, in total, \(\frac{1}{9}\cdot \frac{n}{2} = \frac{n}{18}\) point/triangle pairs can be encoded in the same set of plaintexts and processed with a single operation. Figure 8 illustrates PWP.
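The following sketch illustrates PWP and why rotations make the copies useful. It assumes one plausible layout for Fig. 7, namely (x, y, z, x, y, z, x, y, z) per point, which is our assumption rather than a detail confirmed by the text, and uses Python lists with a cyclic left rotation in place of CKKS slot rotations. With this layout, rotating by 1 or 2 slots brings (y, z, x) and (z, x, y) into the first three slots of every block without reading past the block, so a cross product (such as the one needed for a triangle normal) is obtained for all packed pairs with two slot-wise products and one subtraction. All names are hypothetical.

```python
def pwp_pack(points):
    """Point-wise packing: each point's (x, y, z) repeated three times in a 9-slot block."""
    slots = []
    for x, y, z in points:
        slots.extend([x, y, z] * 3)
    return slots


def pwp_capacity(n):
    """Pairs per plaintext: n/2 slots divided into 9-slot blocks (n/18, rounded down)."""
    return (n // 2) // 9


def rotate(vec, k):
    """Cyclic left rotation by k slots, the only way to move data between slots."""
    return vec[k:] + vec[:k]


def packed_cross(a, b):
    """Cross product of every packed pair, valid in the first 3 slots of each block."""
    t1 = [x * y for x, y in zip(rotate(a, 1), rotate(b, 2))]  # (ay*bz, az*bx, ax*by)
    t2 = [x * y for x, y in zip(rotate(a, 2), rotate(b, 1))]  # (az*by, ax*bz, ay*bx)
    return [x - y for x, y in zip(t1, t2)]
```

Slots outside the first three of each block hold values mixed across blocks and are simply ignored, which is part of the price paid for the redundancy.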

Hybrid packing strategy One of the HE parameters is the degree n of the polynomials that represent ciphertexts and plaintexts, which is determined by the solution requirements (level of security, multiplicative depth, accuracy). Thus, the number of available slots n/2 can be considered a constant defined by the use case.

Thus, in our analysis we aim to minimize storage needed for a given number P of triangle/point pairs.

In the case of CWP, plaintexts are processed in groups of 12 offering \(12\cdot n/2\) slots for input values, or in other words, in each group of 12, we can encode n/2 triangle/point pairs.

On the other hand, PWP plaintexts are processed in groups of 4, and each group can encode n/18 triangle/point pairs because of the redundancy slots. Thus, two groups need 8 plaintexts (ciphertexts) of storage and can encode up to n/9 pairs, while three groups need the same storage as CWP but can encode only n/6 pairs.

Let \(\nu _1=P \mod \frac{n}{2}\) and let \(\nu _2 = \frac{P-\nu _1}{\frac{n}{2}}\). Based on the analysis above, the hybrid strategy goes as follows

-

If \(1\le \nu _1\le \frac{n}{9}\), then \(\nu _2\) CWP groups and \(\lceil \frac{\nu _1}{\frac{n}{18}}\rceil \) PWP groups are used (one or two, since each PWP group encodes \(\frac{n}{18}\) pairs). The total cost is \(\nu _2\cdot 12+ \lceil \frac{\nu _1}{\frac{n}{18}}\rceil \cdot 4\) plaintexts (ciphertexts).

-

If \(\nu _1 =0\), then \(\nu _2\) CWP groups are used and storage cost is \(\nu _2\cdot 12\) plaintexts (ciphertexts).

-

If \(\nu _1 >\frac{n}{9}\), then \(\nu _2+1\) CWP groups are used and the storage cost is \((\nu _2+1)\cdot 12\) plaintexts (ciphertexts).
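The hybrid rule can be expressed as a small cost function. This sketch (hypothetical name `hybrid_cost`) counts plaintexts (ciphertexts); it takes the PWP group capacity as n/18 pairs rounded down, since slot counts must be integers, and uses one or two PWP groups only when they are cheaper than a 12-plaintext CWP group, following the group analysis above.

```python
import math


def hybrid_cost(num_pairs, n):
    """Plaintexts (ciphertexts) needed for num_pairs point/triangle pairs."""
    half = n // 2                  # slots per plaintext = pairs per CWP group
    pwp_cap = half // 9            # pairs per PWP group of 4 plaintexts
    nu2, nu1 = divmod(num_pairs, half)
    if nu1 == 0:
        return 12 * nu2
    if nu1 <= 2 * pwp_cap:         # 1 or 2 PWP groups (4 or 8 plaintexts) beat 12
        return 12 * nu2 + 4 * math.ceil(nu1 / pwp_cap)
    return 12 * (nu2 + 1)          # otherwise one more CWP group is cheaper
```

For n = 32,768, for example, 3000 pairs cost 8 plaintexts with two PWP groups instead of 12 with one CWP group.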

6.2 Security/performance trade-off

Cryptography has always been a trade-off between security and practicality, especially when the cost of protecting the secrecy of information is disproportionately high compared with the importance of that information.

However, the vast majority of HE-based solutions do not consider information leakage as a design parameter. In [34], the role of information leakage was highlighted in the context of AI oblivious inference and the notion of partial oblivious inference was defined.

In our case, we also investigated the possibility of improving performance at the expense of some leakage, using two criteria: the importance of the leaked information, based on the data owner's assessment, and the efficiency gain. Leaking the region Reg appeared to be the best choice.

Reg is used in branching, and the homomorphic evaluation of branching can be very expensive. In our case, depending on the point/triangle input pair, the point P is projected onto one of the 7 regions of the plane \(\pi (T)\), and a different Euclidean distance is computed for each region Reg. In a data-agnostic implementation, all 7 functions must be computed homomorphically to hide the projection result from the server, resulting in a costly implementation.

However, in this specific use case, the region containing the projection of each point is not confidential, while the cost of hiding it is too high. According to the data owner (the Customer), the projection of an unknown point onto an unknown plane does not provide any significant information to a malicious actor. Moreover, even if some of the triangle/point pairs are known to the attacker, the semantic security offered by the HE scheme prevents any correlation between different pairs.

Regarding the COLLABS scenario, using the projection information from Step 3 of Table 1, 7 ciphertexts are computed (one for each region), each encrypting a vector of ones and zeros. A one in a slot represents “true,” i.e., the region computed for the corresponding point/triangle pair, and a zero represents “false.” Assuming that CWP is used, Fig. 9 illustrates an example of the region ciphertexts in which point P lies in Reg 0 for the first point/triangle pair, in Reg 1 for the second pair, in Reg 3 for the third pair, etc.
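As a sketch of how the region vectors are filled before encryption, assuming the region index of each slot is already known in plaintext (for instance inside the TEE), the hypothetical helper below builds the seven one-hot vectors; in an HE implementation each vector would then be encoded and encrypted.

```python
def region_indicators(regions, num_regions=7):
    """regions[s] is the region index (0..6) of the pair in slot s.
    Returns one vector per region with 1.0 where the pair falls in that region."""
    return [[1.0 if r == i else 0.0 for r in regions] for i in range(num_regions)]
```

For the example of Fig. 9 (regions 0, 1, 3 for the first three pairs), exactly one of the seven vectors holds a 1.0 in each slot.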

Since the input data is packed and we hide which branch is computed, the server must compute 7 Euclidean-distance-like functions, one for each of the seven candidate regions. These computations are performed without taking the region ciphertexts into account. In Step 4 of Table 1, different distance formulas are used per region. Hence, 7 ciphertexts are computed containing the encrypted results of each distance formula, and 7 ciphertexts containing the encrypted scales of each formula. To describe the homomorphic distance and scale computation mathematically, we use the following notation:

1. Let \(f_{{{\textit{dist}}}, i}\) denote the distance formula for region i;

2. Let \(f_{{{\textit{scale}}}, i}\) denote the scale function for region i, used to avoid square root and division;

3. Let \(C_{{{\textit{reg}}}, i}\) denote the ciphertext of region i,

where i is the region index, i.e., \(i = 0, 1, \ldots , 6\).

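The server performs homomorphic multiplications and additions to compute the ciphertext \(C_{{{\textit{dist}}}}\), which contains the distance of each point/triangle pair,

\[C_{{{\textit{dist}}}} = \sum _{i=0}^{6} C_{{{\textit{reg}}}, i} \cdot f_{{{\textit{dist}}}, i},\]

and the ciphertext \(C_{{\textit{scale}}}\), which contains the corresponding scales,

\[C_{{\textit{scale}}} = \sum _{i=0}^{6} C_{{{\textit{reg}}}, i} \cdot f_{{{\textit{scale}}}, i},\]

where \(\cdot \) denotes slot-wise multiplication of the packed values.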

In our implementation, this requires 14 ciphertext-ciphertext HE multiplications (each with the associated relinearization and rescaling) and 12 ciphertext-ciphertext HE additions.
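The oblivious selection can be mimicked on plaintext slot vectors to check the operation count. In this sketch (hypothetical name `oblivious_select`), lists stand in for ciphertexts and ordinary `*` and `+` for the homomorphic slot-wise multiplication and addition; a CKKS implementation would also relinearize and rescale after each multiplication. Selecting both distances and scales costs 7 + 7 = 14 multiplications and 6 + 6 = 12 additions, matching the counts above.

```python
def oblivious_select(indicators, per_region):
    """Slot-wise sum over i of indicators[i] * per_region[i].

    Returns (selected vector, number of multiplications, number of additions).
    """
    mults = adds = 0
    acc = None
    for ind, vals in zip(indicators, per_region):
        term = [a * b for a, b in zip(ind, vals)]  # C_reg,i * f_i
        mults += 1
        if acc is None:
            acc = term
        else:
            acc = [a + b for a, b in zip(acc, term)]
            adds += 1
    return acc, mults, adds
```

Since each slot has exactly one indicator equal to 1, the sum keeps only the value of the region that actually applies to that pair.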

With region leakage, only one of the 7 Euclidean-distance-like functions is computed, so the computational overhead of the branching is reduced roughly in proportion to the number of regions (averaged over 1000 trials, from 0.9 s to 0.1 s).

7 Experiments and evaluation

7.1 Description of the tests

Experimental environment We implemented an HE-friendly plaintext version of the algorithm, referred to as the geometric plaintext algorithm, which is used as the benchmark. Starting from this version, we developed the HE version (also called the homomorphic algorithm here) using the SEAL library.

For the HE algorithm implementation, we used version 4.1 of the SEAL library with a C++17 compiler. The machine used for performance evaluation has 32 GB of RAM and an Intel i7-6820HQ processor with a base frequency of 2.70 GHz.

User requirements Due to the nature of the algorithm, and following the Customer's accuracy requirements, the HE version of the algorithm has to take floating-point numbers as input and has to match the geometric plaintext algorithm implementation within a tolerance of \(10^{-5}\).

Datasets The dataset used to evaluate accuracy consists of real data: a portion of the model of an engine turbine blade (mesh) and the corresponding portion of the scanned manufactured turbine blade (point cloud), with 27,251 triangles and 456 points (i.e., \(27{,}251 \times 456 = 12{,}426{,}456\) point/triangle distance computations in total). The complete dataset representing the entire engine turbine blade would contain about \(10^9\) point/triangle pairs.

Experiments description We evaluate the performance of the HE-based program for the two packing strategies, CWP (tests 1 and 3) and PWP (test 2), at a security level of 128 bits. The parameters of the CKKS scheme are selected based on the HE standard [23]. The polynomial degree is \(n = 32{,}768\) for tests 1 and 2 and \(n = 16{,}384\) for test 3. We recall that the CWP version of the homomorphic algorithm processes up to \(\frac{n}{2}\) point/triangle pairs in parallel and requires 12 ciphertexts, while the PWP version processes up to \(\frac{n}{18}\) point/triangle pairs in parallel and requires 4 ciphertexts.

To handle comparisons, we combine TEE with HE: the implementation of the homomorphic algorithm mimics the presence of a TEE inside the server that performs all comparisons in plaintext form.

We recall that, when designing the HE algorithm, it was decided (in accordance with the Customer's requirements) to set the maximum acceptable error to \(10^{-5}\). Therefore, when comparing the results of the (geometric) plaintext algorithm with those of the homomorphic algorithm, we consider them equal if they differ by less than this agreed threshold of \(10^{-5}\). Table 2 lists the three tests we consider.
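The accuracy figures reported in the next subsection (share of results exceeding the tolerance, mean error above it, mean discrepancy below it) can be computed as in this sketch; `accuracy_report` is a hypothetical helper, and treating a difference exactly equal to the threshold as a match is our assumption.

```python
def accuracy_report(plain, homomorphic, tol=1e-5):
    """Compare plaintext and decrypted HE results against an agreed tolerance."""
    diffs = [abs(a - b) for a, b in zip(plain, homomorphic)]
    above = [d for d in diffs if d > tol]
    below = [d for d in diffs if d <= tol]
    return {
        "fraction_above_pct": 100.0 * len(above) / len(diffs),
        "mean_error_above": sum(above) / len(above) if above else 0.0,
        "mean_discrepancy_below": sum(below) / len(below) if below else 0.0,
    }
```

For example, 9 mismatches out of 12,426,456 comparisons give a fraction of about 0.00007%.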

7.2 Test results

To evaluate the proposed solutions, we consider the following factors: accuracy and computational resources (execution time and memory). For accuracy and execution time, we use as benchmark the geometric plaintext algorithm, which computes the distance between a point cloud and a mesh simply by computing the distance between every point in the point cloud and every triangle in the mesh, as described in Sect. 4.3, i.e., with no optimizations based on the proximity of points and triangles. The three tests are also compared with one another to evaluate the necessary memory resources.

7.2.1 Accuracy

Test ID 1 The geometric plaintext algorithm and its homomorphic CWP version with polynomial degree \(n = 32{,}768\) differ by more than \(10^{-5}\) in only 0.00007% of cases (i.e., 9 out of almost 12.5 million), with a mean error of \(3.21 \times 10^{-5}\). When the two algorithms differ by less than the threshold, the mean discrepancy is \(2.17 \times 10^{-12}\).

Test ID 2 The geometric plaintext algorithm and its homomorphic PWP version with polynomial degree \(n = 32{,}768\) differ by more than \(10^{-5}\) in 0.53915% of cases, with a mean error of \(2.00 \times 10^{-4}\). When the two algorithms differ by less than the threshold, the mean discrepancy is \(2.99 \times 10^{-8}\). Accuracy is significantly worse for the PWP strategy than for CWP (same n); this can be explained by the rotations, which introduce additional noise that affects the accuracy of the results.

Test ID 3 Lowering the polynomial degree n to 16,384 degrades accuracy. The two algorithms differ by more than \(10^{-5}\) in 19,750 out of 12,426,456 cases (about 0.16%), with a mean error of 0.0034. When the two algorithms differ by less than the threshold, the mean discrepancy is \(3.08 \times 10^{-7}\).

Table 3 summarizes the accuracy results.

7.2.2 Time performances

For the plaintext algorithm, the mean time of a single point/triangle distance computation is constant, independent of the number of points and triangles in the dataset. The time performance of the homomorphic algorithm depends on the data packing strategy (CWP or PWP), the polynomial degree n and the time spent encrypting/decrypting data exchanged between Client and Server and between Server and TEE. Rather than focusing on the absolute time in seconds of each point/triangle distance computation, we report the performance of the homomorphic algorithm as a multiplicative factor with respect to the plaintext algorithm, while varying the amount of data packed together.

Test ID 1 We tested time performance by fixing the number of points and varying the number of triangles, using 1 point and 2000, 3000, 4500, 6750, 10,125, 15,188 and 16,384 triangles. Figure 10 shows that the mean time the homomorphic algorithm takes to compute the distance of a point/triangle pair decreases as the number of triangles packed in the same ciphertext increases, from a multiplicative factor of 67 to a factor of 9; the limit of 16,384 triangles is due to the chosen CKKS polynomial degree (n/2 slots). A factor of 1 would mean that the homomorphic and plaintext algorithms have the same time performance.

Test ID 2 We tested performance by varying the number of triangles, using 1 point and 16, 64, 512, 900 and 1819 triangles, so as not to exceed the maximum of \(\frac{n}{18}\) triangles per ciphertext. Figure 11 shows that time performance improves by 2 orders of magnitude, but remains 2 orders of magnitude worse than that of the plaintext algorithm.

Test ID 3 We ran this last test to evaluate the trade-off between two effects: with \(n = 16{,}384\), only up to 8192 triangles fit in a single ciphertext, but since n is lower than in Test ID 1, the internal computations should be faster (operations are performed in a lower-degree ring). We tested time performance by fixing the number of points and varying the number of triangles, using 1 point and 2000, 3000, 4500, 6750 and 8192 triangles. Figure 10 (orange line) shows that lowering the polynomial degree n does speed up computations, but not enough to compensate for packing fewer triangles per ciphertext.

Delegating computations to the TEE, instead of executing them in the REE or on the client, has an overhead. As analyzed by Suzaki et al. [35], operations on integers and double-precision floats, as well as memory accesses (especially sequential ones), have a low performance impact when using SGX as the TEE instead of an REE.

7.2.3 Memory resources

Server and TEE have to exchange (encrypted) data three times, in order for the enclave to execute all needed comparisons. During each of these three steps, the TEE has to access a piece of memory. Depending on the strategy adopted to pack data, CWP or PWP, and the value of the polynomial degree n, the size of these pieces of memory changes, as shown in Table 4. The overall size of memory needed at each step is in any case lower than 128 MB, therefore compliant with current technical limitations affecting most TEEs.
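A rough way to check such memory budgets is to estimate ciphertext sizes directly. The sketch assumes the in-memory layout of an uncompressed CKKS ciphertext in RNS form, as in SEAL: two polynomials, each with n coefficients per prime of the current level, stored as 64-bit words. Serialized (compressed) sizes differ, and the function names and example values are hypothetical, not the figures of Table 4.

```python
def ciphertext_bytes(n, num_primes, num_polys=2):
    """Uncompressed size of one CKKS ciphertext: polys x n x primes x 8 bytes."""
    return num_polys * n * num_primes * 8


def fits_in_enclave(num_ciphertexts, n, num_primes, limit_mb=128):
    """Total bytes for a batch of ciphertexts and whether it fits the limit."""
    total = num_ciphertexts * ciphertext_bytes(n, num_primes)
    return total, total <= limit_mb * 1024 * 1024
```

For instance, 12 ciphertexts with n = 32,768 and 10 remaining primes take about 60 MiB, within a 128 MB budget.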

We conclude the test results section by comparing the tests with respect to the minimum number of ciphertexts needed for varying numbers of input point/triangle pairs,Footnote 7 as shown in Table 5. These numbers depend directly on the polynomial degree n, because this HE parameter determines the number of available slots in each ciphertext. Based on the accuracy results in Table 3, and since high precision is needed for our use case, we compare the CWP and PWP strategies with \(n = 32{,}768\). Although the PWP technique is suitable for low data workloads, since it then requires significantly fewer ciphertexts than CWP, the available amount of point/triangle pairs must also be taken into account. Given the dataset described in Sect. 7.1, CWP is the more suitable strategy with respect to storage overhead.

8 TEE-based architecture analysis

In a cloud environment, TEEs may help overcome some of the limitations of HE. If the server-side environment offers TEE capabilities, the server can be configured to host cryptographic keys securely and to support decryption and re-encryption operations, thus allowing protected server-side operations on the plaintext (Fig. 12). This capability supports noise management, non-polynomial function computations and branching directly on the server, avoiding any additional communication and computation overhead for the client.

In more detail, regarding our scenario, all computations are performed homomorphically, except for the comparisons (step 3 of the algorithm) and the real-number division (last step, division by the number \({\textit{scale}}\)).

Instead of increasing the computational cost for the client, both operations are performed inside the TEE in plaintext form. This requires a secure channel to send the decryption keys to the TEE, and the memory limitations of the TEE must also be taken into account. Recent research has focused on hybrid solutions that combine HE with TEEs to perform ML computations efficiently. In particular, Slalom [36] splits ML computations into trusted and untrusted components: the nonlinear layers, which are hard to compute using HE, are evaluated in SGX, while the linear layers are outsourced to a GPU; linear computations of this kind can also be protected with HE schemes.

In the rest of the Section, we elaborate on the constraints the TEE imposes on the redesign of the solution architecture.

Trust base expansion Offloading plaintext computations to the Trusted Execution Environment requires sharing the decryption key with the Trusted Applications. Thus, as a preliminary step, a secure communication channel based on a shared key must be established with the TEE. The first step is authenticating the enclave (i.e., obtaining guarantees that the server-side application executes in an environment providing confidentiality guarantees). Then, to establish trust in the server-side application, we need to authenticate it and assess its integrity. Finally, we can share the key, with assurance that it will be kept confidential and used properly.
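The gate implied by these steps (authenticate the enclave, check the integrity of its code, then release the key) can be sketched as follows. This is a toy model: `report` is a hypothetical dictionary standing in for an attestation report whose signature has already been verified with the vendor's attestation service, and a plain SHA-256 of the enclave image stands in for a real measurement such as SGX's MRENCLAVE, which is computed differently. None of this is an SGX or SEV API.

```python
import hashlib
import hmac


def expected_measurement(enclave_image: bytes) -> bytes:
    """Toy measurement: SHA-256 of the expected enclave code."""
    return hashlib.sha256(enclave_image).digest()


def release_key_if_trusted(report: dict, enclave_image: bytes, secret_key: bytes):
    """Release the HE secret key only to an authenticated enclave running the expected code."""
    if not report.get("signature_verified", False):
        return None  # enclave not authenticated
    if not hmac.compare_digest(report["measurement"], expected_measurement(enclave_image)):
        return None  # code integrity check failed
    return secret_key  # in practice, sent encrypted under the channel key
```

The constant-time comparison from `hmac.compare_digest` avoids leaking how many bytes of the measurement matched.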

However, the use of TEE implies that the data owner has to trust the TEE manufacturer. This is not compliant with many security policies in the industry.

Limited portability The technology supporting the TEE must be available on the cloud server. This may reduce the portability of the applications to a different hosting environment. Among the cited technologies, SGX and SEV are widely adopted in cloud setups, mainly on the server side, whereas ARM and RISC-V architectures are more frequently deployed at the edge of network infrastructures.

Limited resource availability A Trusted Execution Environment may impose rigid limits on the available resources. For example, SGX provides very limited memory to enclaves (up to 128 MB on first-generation SGX platforms). This limits the data processing capabilities of applications deployed in enclaves. AMD SEV imposes fewer limitations in this respect but relies on the less flexible model of full-fledged virtual machines.

Attack model Remote attestation [37] allows a user to verify the status of software running in a remote platform, checking that it is actually running in a protected enclave. Thanks to remote attestation, the user and the remote enclave can establish a shared key with a key agreement protocol, enabling an authenticated communication channel between them. This effectively allows provisioning the software running in the enclave with confidential data or keys in a protected way. Remote attestation is directly supported by both Intel SGX and AMD-SEV. Leveraging remote attestation, the setup phase of a FHE scheme leveraging a server-side enclave can be revisited as illustrated in Fig. 13.

At the same time, the attack model can be expanded to include active attackers, since remote attestation provides code integrity guarantees.

9 Conclusion and future work

In conclusion, this paper investigates the use of HE-based computations in manufacturing. More precisely, we demonstrate how to securely outsource comparison of 3D images and, specifically, the comparison of the 3D engineering models required for production with the 3D scans of the actual part. This research work extends our preliminary work in [5]. The main contributions of the current paper are summarized as follows: First, we translate the plain-text 3D comparison algorithm to an HE-friendly one and its implementation, using CKKS scheme and the SEAL library. Most of the HE compilers focus on machine learning applications and therefore are not effective for our application, and we decided to design and implement our HE algorithm manually to be tailored to the COLLABS use case performance requirements, i.e. input data and the maximum acceptable error of the homomorphically computed results (\(10^{-5}\)). Second, based on our previous work [5], we propose and achieve communication and computational improvements regarding the accuracy of the computed results as well as of the time performance. Especially, we designed and implemented two packing methods, CWP and PWP, to realize a SIMD computation strategy in order to increase efficiency. We evaluated a hybrid packing solution, by combining the two packing methods, based on characteristics of the available input data. Third, we analyzed, mathematically supported and evaluated the idea of leaking insignificant information to the server, with the aim of gaining additional performance improvements. By using the HE algorithm with CWP strategy and information leakage, we managed to have a reduction from 0.9 seconds to 0.1 seconds. 
Fourth, we performed an extensive evaluation of the HE algorithm for both the CWP and PWP strategies at a 128-bit security level, and showed how different HE parameters (different values of the polynomial degree) and cyclic rotations (in the case of PWP) influence both the accuracy of the computed results and the execution time. Based on the requirements of our use case, namely the maximum acceptable error of the computed results (\(10^{-5}\)) and the number of point/triangle pairs available for distance computation (about \(10^9\) pairs), we established that CWP with polynomial degree \(n = 32{,}768\) is the most suitable approach for the COLLABS use case. In more detail, when comparing the results of the plaintext geometric algorithm with those of the HE algorithm using the CWP technique, the two differ by more than \(10^{-5}\) in only \(0.00007\%\) of cases (i.e., 9 out of almost 12.5 million comparisons), with a mean error of \(3.21 \times 10^{-5}\). Finally, we defined and proposed a custom TEE-based architecture to overcome some limitations of HE on specific mathematical operations, and analyzed the pros and cons and the security posture of such a TEE-based solution, including the need for a secure channel to share the secret key as well as challenges related to limited portability and connection availability. We also evaluated the memory resources required when combining TEE with HE, which yielded guidelines to guarantee the feasibility of the computations within the TEE enclave.
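Two back-of-the-envelope computations behind the figures above can be sketched in plain Python. The first uses the CKKS property that a ciphertext with polynomial degree \(n\) holds \(n/2\) slots, and estimates how many ciphertexts \(10^9\) values would need under the hypothetical assumption of one value per slot (this is not necessarily how CWP or PWP lay out the data). The second, `out_of_tolerance` (a hypothetical helper), counts how many HE results deviate from the plaintext results by more than the \(10^{-5}\) threshold, which is the accuracy criterion used in the evaluation.

```python
def ckks_slots(poly_degree: int) -> int:
    # CKKS encodes poly_degree / 2 values per plaintext/ciphertext.
    return poly_degree // 2


def ciphertexts_needed(num_values: int, poly_degree: int) -> int:
    # Ceiling division, assuming one value per slot (hypothetical layout).
    slots = ckks_slots(poly_degree)
    return (num_values + slots - 1) // slots


def out_of_tolerance(plain, he_results, threshold=1e-5):
    """Return (count, percentage) of HE results deviating by more than threshold."""
    count = sum(1 for p, h in zip(plain, he_results) if abs(p - h) > threshold)
    return count, 100.0 * count / len(plain)
```

With \(n = 32{,}768\), `ckks_slots` gives 16,384 slots, so \(10^9\) single-slot values would occupy 61,036 ciphertexts. Likewise, 9 out of 12.5 million comparisons corresponds to `100.0 * 9 / 12_500_000`, i.e. about \(7.2 \times 10^{-5}\%\), consistent with the reported \(0.00007\%\).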

Regarding possible next steps, it would be useful to extend the set of algorithms that can be executed directly over the encrypted data during the compliance check analyses. Candidate algorithms include (1) thickness estimation and (2) curvature estimation; see [38] and [39], respectively, for reference algorithms. The challenges these algorithms raise partially overlap with those already discussed (division, square roots, if-else statements), but the HE reformulation must be adapted to the new calculation chain. The curvature estimation algorithm introduces an additional challenge: the inversion of a matrix. Fig. 14 shows how these algorithms can be applied to meshes.
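To make these challenges concrete, below is a plaintext sketch of the Möller–Trumbore ray-triangle intersection test [38], one plausible building block for thickness estimation. This is our illustration, not the authors' planned HE algorithm: casting a unit-length ray from a surface point along the inward normal and keeping the smallest hit distance `t` is one hypothetical way to estimate local thickness. The comments mark the division and the comparisons that would need an HE-friendly reformulation (e.g. polynomial approximations) under CKKS.

```python
EPS = 1e-12


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle_intersect(orig, direction, v0, v1, v2):
    """Möller-Trumbore: distance t along the ray to triangle (v0, v1, v2), or None."""
    e1 = sub(v1, v0)
    e2 = sub(v2, v0)
    pvec = cross(direction, e2)
    det = dot(e1, pvec)
    if abs(det) < EPS:            # ray parallel to the triangle plane
        return None
    inv_det = 1.0 / det           # division: not natively available in CKKS
    tvec = sub(orig, v0)
    u = dot(tvec, pvec) * inv_det
    if u < 0.0 or u > 1.0:        # comparisons: need approximation under HE
        return None
    qvec = cross(tvec, e1)
    v = dot(direction, qvec) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, qvec) * inv_det
    return t if t > EPS else None  # only hits in front of the ray origin
```

For example, a ray from `(0.2, 0.2, -1.0)` along `(0.0, 0.0, 1.0)` hits the triangle `(0,0,0), (1,0,0), (0,1,0)` at `t = 1.0`, whereas the same ray shifted to `(2.0, 2.0, -1.0)` misses it.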

Data availability

The dataset used in the experiments and evaluation section of this paper consists of real data: part of the model of an engine turbine blade and the corresponding scan of the actual manufactured turbine blade piece. Hence, the dataset is classified as “confidential” by the manufacturing company and is not publicly available.

Notes

We say minimum because, for the sake of completeness, the number of points and the number of triangles forming the pairs must also be taken into account.

References

Rivest, R.L., Adleman, L., Dertouzos, M.L., et al.: On data banks and privacy homomorphisms. Found. Secur. Comput. 4(11), 169–180 (1978)

Dathathri, R., Saarikivi, O., Chen, H., Laine, K., Lauter, K., Maleki, S., Musuvathi, M., Mytkowicz, T.: CHET: An optimizing compiler for fully-homomorphic neural-network inferencing. In: Proceedings of the 40th ACM SIGPLAN Conference on Programming Language Design and Implementation, pp. 142–156 (2019)

Dathathri, R., Kostova, B., Saarikivi, O., Dai, W., Laine, K., Musuvathi, M.: EVA: An encrypted vector arithmetic language and compiler for efficient homomorphic computation. In: Proceedings of the 41st ACM SIGPLAN Conference on Programming Language Design and Implementation, pp. 546–561 (2020)

Viand, A., Jattke, P., Hithnawi, A.: SoK: fully homomorphic encryption compilers. In: 42nd IEEE Symposium on Security and Privacy, SP 2021, San Francisco, CA, USA, 24–27 May 2021, pp. 1092–1108. IEEE (2021)

Triakosia, A., Rizomiliotis, P., Tserpes, K., Tonelli, C., Senni, V., Federici, F.: Homomorphic encryption in manufacturing compliance checks. In: Katsikas, S.K., Furnell, S. (eds.) Trust, Privacy and Security in Digital Business—19th International Conference, TrustBus 2022, Vienna, Austria, August 24, 2022, Proceedings, volume 13582 of Lecture Notes in Computer Science, pp. 81–95. Springer (2022)

Tu, S., Kaashoek, M.F., Madden, S., Zeldovich, N.: Processing analytical queries over encrypted data. In: Proceedings of the 39th International Conference on Very Large Data Bases. PVLDB 13, Trento, Italy, VLDB Endowment, pp. 289–300 (2013)

Arasu, A., Blanas, S., Eguro, K., Kaushik, R., Kossmann, D., Ramamurthy, R., Venkatesan, R.: Orthogonal security with cipherbase. In: CIDR, CIDR 1–10 (2013)

Drucker, N., Gueron, S.: Combining homomorphic encryption with trusted execution environment: a demonstration with Paillier encryption and SGX. In: Proceedings of the 2017 International Workshop on Managing Insider Security Threats (2017)

Wang, W., et al.: Toward scalable fully homomorphic encryption through light trusted computing assistance. arXiv preprint arXiv:1905.07766 (2019)

Coppolino, L., et al.: VISE: Combining Intel SGX and homomorphic encryption for cloud industrial control systems. IEEE Trans. Comput. 70(5), 711–724 (2020)

Ducas, L., Micciancio, D.: FHEW: Bootstrapping homomorphic encryption in less than a second. In: Annual International Conference on the Theory and Applications of Cryptographic Techniques, pp. 617–640. Springer (2015)

Chillotti, I., Gama, N., Georgieva, M., Izabachene, M.: Faster fully homomorphic encryption: bootstrapping in less than 0.1 seconds. In: International Conference on the Theory and Application of Cryptology and Information Security, pp. 3–33. Springer (2016)

Brakerski, Z., Gentry, C., Vaikuntanathan, V.: (Leveled) fully homomorphic encryption without bootstrapping. ACM Trans. Comput. Theory (TOCT) 6(3), 1–36 (2014)

Brakerski, Z.: Fully homomorphic encryption without modulus switching from classical GapSVP. In: Annual Cryptology Conference, pp. 868–886. Springer, Berlin (2012)

Fan, J., Vercauteren, F.: Somewhat practical fully homomorphic encryption. IACR Cryptol. ePrint Arch. 2012, 144 (2012)

Cheon, J.H., Kim, A., Kim, M., Song, Y.: Homomorphic encryption for arithmetic of approximate numbers. In: International Conference on the Theory and Application of Cryptology and Information Security, pp. 409–437. Springer, Berlin (2017)

Lyubashevsky, V., Peikert, C., Regev, O.: On ideal lattices and learning with errors over rings. In: Annual International Conference on the Theory and Applications of Cryptographic Techniques, pp. 1–23. Springer, Berlin (2010)

Smart, N.P., Vercauteren, F.: Fully homomorphic SIMD operations. In: IACR Cryptol. ePrint Arch., p. 133 (2011)

Juvekar, C., Vaikuntanathan, V., Chandrakasan, A.P.: GAZELLE: A low latency framework for secure neural network inference. In: Enck, W., Felt, A.P. (eds.) 27th USENIX Security Symposium, USENIX Security 2018, Baltimore, MD, USA, August 15–17, 2018, pp. 1651–1669. USENIX Association (2018)

Lu, W., Huang, Z., Hong, C., Ma, Y., Qu, H.: PEGASUS: bridging polynomial and non-polynomial evaluations in homomorphic encryption. In: 42nd IEEE Symposium on Security and Privacy, SP 2021, San Francisco, CA, USA, 24–27 May 2021, pp. 1057–1073. IEEE (2021)

Boura, C., Gama, N., Georgieva, M., Jetchev, D.: Chimera: combining ring-lwe-based fully homomorphic encryption schemes. J. Math. Cryptol. 14(1), 316–338 (2020)

Lauter, K.E., Dai, W., Laine, K.: Protecting Privacy Through Homomorphic Encryption. Springer, Berlin (2022)

Homomorphic Encryption Standardization. https://homomorphicencryption.org/standard/. Standard

Costan, V., Devadas, S.: Intel SGX explained. Cryptology ePrint Archive (2016)

AMD: AMD SEV-SNP: Strengthening VM isolation with integrity protection and more. White Paper (2020)

Pinto, S., Santos, N.: Demystifying Arm TrustZone: a comprehensive survey. ACM Comput. Surv. (CSUR) 51(6), 1–36 (2019)

Lee, D., Kohlbrenner, D., Shinde, S., Asanović, K., Song, D.: Keystone: an open framework for architecting trusted execution environments. In: Proceedings of the Fifteenth European Conference on Computer Systems, pp. 1–16 (2020)

Anil, E., Tang, P., Akinci, B., Huber, D.: Deviation analysis method for the assessment of the quality of the as-is building information models generated from point cloud data. Automat. Constr. 35, 507–516 (2013)

Nguyen, C.H.P., Choi, Y.: Comparison of point cloud data and 3D CAD data for on-site dimensional inspection of industrial plant piping systems. Automat. Constr. 91, 44–52 (2018)

Zhao, X., Kang, R., Ruodan, L.: 3D reconstruction and measurement of surface defects in prefabricated elements using point clouds. J. Comput. Civ. Eng. 34(5), 04020033 (2020)

Abdallah, H.B., Orteu, J.-J., Jovancevic, I., Dolives, B.: Three-dimensional point cloud analysis for automatic inspection of complex aeronautical mechanical assemblies. J. Electron. Imaging 29(4), 041012 (2020)

Chen, X., Qin, F., Xia, C., Bao, J., Huang, Y., Zhang, X.: An innovative detection method of high-speed railway track slab supporting block plane based on point cloud data from 3D scanning technology. Appl. Sci. (2019)

Cheon, J.H., Kim, D., Kim, D.: Efficient homomorphic comparison methods with optimal complexity. In: International Conference on the Theory and Application of Cryptology and Information Security, pp. 221–256. Springer, Berlin (2020)

Rizomiliotis, P., Diou, C., Triakosia, A., Kyrannas, I., Tserpes, K.: Partially oblivious neural network inference. In: di Vimercati, S.D.C., Samarati, P. (eds.) Proceedings of the 19th International Conference on Security and Cryptography, SECRYPT 2022, Lisbon, Portugal, July 11–13, 2022, pp. 158–169. SCITEPRESS (2022)

Suzaki, K.K., Nakajima, K., Oi, T., Tsukamoto, A.: TS-Perf: General performance measurement of trusted execution environment and rich execution environment on Intel SGX, Arm TrustZone, and RISC-V Keystone. IEEE Access 9, 133520–133530 (2021). https://doi.org/10.1109/ACCESS.2021.3112202

Tramer, F., Boneh, D.: Slalom: Fast, verifiable and private execution of neural networks in trusted hardware. arXiv preprint arXiv:1806.03287 (2018)

Ménétrey, J., Göttel, C., Pasin, M., Felber, P., Schiavoni, V.: An exploratory study of attestation mechanisms for trusted execution environments. arXiv preprint arXiv:2204.06790 (2022)

Möller, T., Trumbore, B.: Fast, minimum storage ray-triangle intersection. J. Graph. GPU Games Tools 2(1), 21–28 (1997)

Rusinkiewicz, S.: Estimating curvatures and their derivatives on triangle meshes. In: Symposium on 3D Data Processing, Visualization, and Transmission (2004)

Collabs-871518 project website. https://www.collabs-project.eu/

Acknowledgements

This research has been funded by the European Union’s Horizon 2020 Research and Innovation program under grant agreement No. 871518, a project named COLLABS [40]. We would like to thank our colleagues Marc-Andre Bernier and Maikel Geres from Pratt & Whitney Canada MB-DBI for providing the use case of this paper and the real 3D data, which has been used for the performance evaluation.


Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this paper.

Ethical approval

This paper does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Plaintext algorithm

The inputs of the plaintext algorithm are a set of triangles and a set of points. For simplicity, we describe the algorithm that computes the distance between a triangle T, defined by the three points \(\{T_{i1}, T_{i2}, T_{i3}\}\), and a point P in three-dimensional space, and we omit the details of the data preprocessing for multiple triangles that is used to improve performance. To describe the plaintext algorithm mathematically, we define the following notation:

1. Let \(\lambda * v\) denote the scalar multiplication of a number \(\lambda \) and a vector v.

2. Let \(\langle v_1, v_2 \rangle \) denote the inner product of two vectors \(v_1\) and \(v_2\).

3. Let ||v|| denote the magnitude of a vector v.

4. Let dist(A, B) denote the distance between two points A and B.
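The four operations in the notation above map directly onto small helpers over 3D tuples. The sketch below is only an illustration; the function names `scale`, `inner`, `norm` and `dist` are our own and not taken from the authors' implementation.

```python
import math


def scale(lam, v):
    """lambda * v: scalar multiplication."""
    return tuple(lam * x for x in v)


def inner(v1, v2):
    """<v1, v2>: inner product."""
    return sum(a * b for a, b in zip(v1, v2))


def norm(v):
    """||v||: magnitude, i.e. sqrt(<v, v>)."""
    return math.sqrt(inner(v, v))


def dist(a, b):
    """dist(A, B) = ||A - B||: Euclidean distance between two points."""
    return norm(tuple(x - y for x, y in zip(a, b)))
```

For instance, `dist((1, 1, 1), (4, 5, 1))` is `5.0`. Note that `norm` and `dist` involve a square root, which CKKS does not support natively, whereas `scale` and `inner` use only additions and multiplications.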

The steps of the plaintext algorithm are analyzed in this section and illustrated in Fig. 4.

Step 1: Compute a vector n perpendicular to the plane \(\pi (T)\) that contains the triangle. The normal vector is computed as the cross product of two triangle edges, \(n = (T_{i1} - T_{i2}) \times (T_{i1} - T_{i3})\), and is then normalized as \(\hat{n}=\frac{n}{||n||}\).
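Step 1 can be sketched in plain Python as follows, assuming triangles are given as 3D tuples; the helper names are illustrative. The normal is the cross product of two triangle edges, and the final normalization requires a square root and a division, neither of which CKKS supports natively.

```python
import math


def cross(a, b):
    """Cross product a x b of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit_normal(t1, t2, t3):
    """Unit normal n_hat = n / ||n|| with n = (T1 - T2) x (T1 - T3)."""
    e1 = tuple(x - y for x, y in zip(t1, t2))
    e2 = tuple(x - y for x, y in zip(t1, t3))
    n = cross(e1, e2)
    length = math.sqrt(sum(c * c for c in n))  # zero for a degenerate triangle
    return tuple(c / length for c in n)
```

For the triangle `(0,0,0), (1,0,0), (0,1,0)` lying in the xy-plane, `unit_normal` returns `(0.0, 0.0, 1.0)`. A degenerate (zero-area) triangle would raise `ZeroDivisionError`, so it would need to be filtered out during preprocessing.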

Step 2: Compute the projection H of point P onto the plane \(\pi (T)\). Using the unit normal, the point H is computed as \(H = P - \langle P - T_{i3}, \hat{n} \rangle * \hat{n}\).
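Step 2 can be sketched as below, assuming the unit normal `n_hat` has already been computed (the function name is illustrative). The inner product \(\langle P - T_{i3}, \hat{n} \rangle\) is the signed distance of P from the plane, so the sketch returns it together with H; given `n_hat`, this step uses only subtractions and multiplications, which map directly onto CKKS operations.

```python
def project_onto_plane(p, t3, n_hat):
    """Return (H, d) with d = <P - T3, n_hat> and H = P - d * n_hat.

    n_hat must have unit length; |d| is then the distance from P to the plane.
    """
    d = sum((pi - ti) * ni for pi, ti, ni in zip(p, t3, n_hat))
    h = tuple(pi - d * ni for pi, ni in zip(p, n_hat))
    return h, d
```

For example, projecting `P = (0.3, 0.2, 5.0)` onto the plane through `(0, 1, 0)` with normal `(0, 0, 1)` gives `H = (0.3, 0.2, 0.0)` and signed distance `5.0`.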

Step 3: Decide whether the projection H is inside the triangle, i.e., in Region 0, or not. Step 3 contains two procedures:

-

1.