Abstract

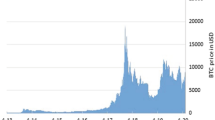

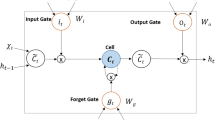

The goal of this paper is to provide a novel quantitative framework to describe the Bitcoin price behavior, estimate model parameters and study the pricing problem for Bitcoin derivatives. To this end, we propose a continuous time model for the Bitcoin price, motivated by findings in the recent literature on Bitcoin showing that price changes are affected by investor sentiment and attention, see e.g., (Kristoufek in Sci Rep 3:3415, 2013, PLoS ONE 10(4):e0123923, 2015; Bukovina and Martiček in Sentiment and bitcoin volatility. Technical report, Mendel University in Brno, Faculty of Business and Economics 2016). Economic studies, such as Yermack (Handbook of Digital Currency, chapter second. Elsevier, Amsterdam, pp 31–43, 2015), have also classified Bitcoin as a speculative asset rather than a currency because of its high volatility. Building on these outcomes, the price dynamics in our model are affected by an exogenous factor which represents market attention in the Bitcoin system. We prove that the model is arbitrage-free under a mild condition and fit it to historical Bitcoin price data: after obtaining an approximate formula for the likelihood, parameter values are estimated by means of the profile likelihood method. In addition, we derive a closed-form pricing formula for European-style derivatives on Bitcoin, whose performance is assessed on a panel of market prices for plain vanilla options quoted on www.deribit.com.


Notes

This website collects sentiment data through an algorithm based on Natural Language Processing techniques, which is capable of identifying strings of words conveying positive, neutral or negative sentiment on a topic (Bitcoin in this case).

It is well documented that Google is the most popular search engine.

Data available only from 01/07/2015.

The function \(\varphi \) is usually referred to as the contract function.

We thank an anonymous referee for this suggestion.

The Root Mean Squared Error is computed as the square root of the sum, across all options in the sample or subsample, of the squared differences between model and market prices.

We thank an anonymous referee for pointing this out.

With the exception of futures, traded on both the CBOE and the CME, which are not suited for calibration.

References

Barber, B.M., Odean, T.: All that glitters: the effect of attention and news on the buying behavior of individual and institutional investors. Rev. Financ. Stud. 21(2), 785–818 (2007)

Bistarelli, S., Cretarola, A., Figà-Talamanca, G., Mercanti, I., Patacca, M.: Is arbitrage possible in the bitcoin market? In: Coppola, M., Carlini, E., D’Agostino, D., Altmann, J., Bañares, J.Á. (eds.) Economics of Grids, Clouds, Systems, and Services: 15th International Conference, GECON 2018, Pisa, Italy, September 18–20, 2018. Springer International Publishing, https://doi.org/10.1007/978-3-030-13342-9_21 (2018)

Bistarelli, S., Cretarola, A., Figà-Talamanca, G., Patacca, M.: Model-based arbitrage in multi-exchange models for Bitcoin price dynamics. Digit. Finance (2019a). https://doi.org/10.1007/s42521-019-00001-2

Bistarelli, S., Figà-Talamanca, G., Lucarini, F., Mercanti, I.: Studying forward looking bubbles in Bitcoin/USD exchange rates. In: Proceedings of the 23rd International Database Applications & Engineering Symposium. ACM (2019b)

Black, F., Scholes, M.: The pricing of options and corporate liabilities. J. Polit. Econ. 81(3), 637–654 (1973)

Bukovina, J., Martiček, M.: Sentiment and bitcoin volatility. Technical report, Mendel University in Brno, Faculty of Business and Economics (2016)

Catania, L., Grassi, S.: Modelling crypto-currencies financial time-series. CEIS Working Paper (2017)

Chu, J., Nadarajah, S., Chan, S.: Statistical analysis of the exchange rate of bitcoin. PLoS ONE 10(7), e0133678 (2015)

Corbet, S., Lucey, B., Yarovaya, L.: Datestamping the Bitcoin and Ethereum bubbles. Finance Res. Lett. 26, 81–88 (2018)

Cretarola, A., Figà-Talamanca, G., Patacca, M.: A continuous time model for Bitcoin price dynamics. In: Corazza, M., Durbán, M., Grané, A., Perna, C., Sibillo, M. (eds.) Mathematical and Statistical Methods for Actuarial Sciences and Finance - MAF 2018, pp. 273–277. Springer International Publishing, https://doi.org/10.1007/978-3-319-89824-7_49 (2018)

Cretarola, A., Figà-Talamanca, G.: Detecting bubbles in Bitcoin price dynamics via market exuberance. Ann. Oper. Res. (2019). https://doi.org/10.1007/s10479-019-03321-z

Da, Z., Engelberg, J., Gao, P.: In search of attention. J. Finance 66(5), 1461–1499 (2011)

Davison, A.C.: Statistical Models, vol. 11. Cambridge University Press, Cambridge (2003)

Donier, J., Bouchaud, J.-P.: Why do markets crash? Bitcoin data offers unprecedented insights. PLoS ONE 10(10), e0139356 (2015)

Figà-Talamanca, G., Patacca, M.: Does market attention affect Bitcoin returns and volatility? Decisions Econ. Finan. (2019). https://doi.org/10.1007/s10203-019-00258-7

Föllmer, H., Schweizer, M.: Hedging of contingent claims under incomplete information. In: Davis, M.H.A., Elliott, R.J. (eds.) Applied Stochastic Analysis, vol. 5, pp. 389–414. Gordon and Breach, New York (1991)

Föllmer, H., Schweizer, M.: Minimal martingale measure. In: Encyclopedia of Quantitative Finance, Wiley Online Library (2010)

Fry, J., Cheah, E.-T.: Speculative bubbles in bitcoin markets? An empirical investigation into the fundamental value of bitcoin. Econ. Lett. 130, 32–36 (2015)

Gervais, S., Kaniel, R., Mingelgrin, D.H.: The high-volume return premium. J. Finance 56(3), 877–919 (2001)

Gourieroux, C., Monfort, A., Trognon, A.: Pseudo maximum likelihood methods: theory. Econometrica 52(3), 681–700 (1984)

Guo, L., Li, X.J.: Risk analysis of cryptocurrency as an alternative asset class. In: Applied Quantitative Finance, pp. 309–329. Springer (2017)

Hou, K., Xiong, W., Peng, L.: A tale of two anomalies: The implications of investor attention for price and earnings momentum. SSRN Electr. J. (2009)

Hull, J., White, A.: The pricing of options on assets with stochastic volatilities. J. Finance 42(2), 281–300 (1987)

Kim, Y.B., Lee, S.H., Kang, S.J., Choi, M.J., Lee, J., Kim, C.H.: Virtual world currency value fluctuation prediction system based on user sentiment analysis. PLoS ONE 10(8), e0132944 (2015)

Kou, S.G.: A jump-diffusion model for option pricing. Manage. Sci. 48(8), 1086–1101 (2002)

Kristoufek, L.: BitCoin meets Google trends and Wikipedia: quantifying the relationship between phenomena of the internet era. Sci. Rep. 3, 3415 (2013)

Kristoufek, L.: What are the main drivers of the bitcoin price? Evidence from wavelet coherence analysis. PLoS ONE 10(4), e0123923 (2015)

Levy, E.: Pricing European average rate currency options. J. Int. Money Finance 11(5), 474–491 (1992)

Malhotra, A., Maloo, M.: Bitcoin-is it a bubble? Evidence from unit root tests. SSRN Electr. J. (2014)

Mao, X., Sabanis, S.: Delay geometric Brownian motion in financial option valuation. Stoch. Int. J. Probab. Stoch. Process. 85(2), 295–320 (2013)

Massey Jr., F.J.: The Kolmogorov–Smirnov test for goodness of fit. J. Am. Stat. Assoc. 46(253), 68–78 (1951)

Milevsky, M.A., Posner, S.E.: Asian options, the sum of lognormals, and the reciprocal gamma distribution. J. Financ. Quant. Anal. 33(3), 409–422 (1998)

Nakamoto, S.: Bitcoin: A peer-to-peer electronic cash system. Working Paper (2008)

Pascucci, A.: PDE and Martingale Methods in Option Pricing. Springer Science & Business Media, New York (2011)

Pawitan, Y.: In All Likelihood: Statistical Modelling and Inference Using Likelihood. Oxford University Press, Oxford (2001)

Protter, P.E.: Stochastic Integration and Differential Equations, vol. 21 of Stochastic Modelling and Applied Probability. Springer, Berlin, 3rd corrected printing, 2nd edn (2005)

The Wall Street Journal. CBOE Teams Up with Winklevoss Twins for Bitcoin Data. https://www.wsj.com/articles/cboe-teams-up-with-winklevoss-twins-for-bitcoin-data-1501675200 (2017a)

The Wall Street Journal. Bitcoin Options Exchange Wins Approval from CFTC. https://www.wsj.com/articles/bitcoin-options-exchange-wins-approval-from-cftc-1500935886 (2017b)

Tsay, R.S.: Analysis of Financial Time Series, vol. 543. Wiley, New York (2005)

White, H.: Maximum likelihood estimation of misspecified models. Econometrica 50(1), 1–25 (1982)

Yermack, D.: Is bitcoin a real currency? An economic appraisal. In: Handbook of Digital Currency, chapter second, pp. 31–43. Elsevier, Amsterdam (2015)

Acknowledgements

The authors are thankful to Fabio Bellini for valuable discussions on the topic of the present paper and to an anonymous referee for valuable suggestions that helped to significantly improve the paper. Part of the paper was written during the stay of the second-named author at the Department of Finance and Risk Engineering, Tandon School of Engineering, New York University. The second-named author is especially grateful to Peter Carr and Charles Tapiero for valuable suggestions and stimulating discussions. The authors also gratefully acknowledge Stefano Bistarelli for having introduced them to the intriguing world of cryptocurrencies. The first-named author is a member of the Gruppo Nazionale per l’Analisi Matematica, la Probabilità e le loro Applicazioni (GNAMPA) of the Istituto Nazionale di Alta Matematica (INdAM). The authors note that this paper has circulated under the title “A sentiment-based model for the BitCoin: theory, estimation and option pricing” and, in its preliminary form, under the title “A confidence-based model for asset and derivative prices in the bitcoin market”.

Funding

The first and the second-named authors are grateful to the Bank of Italy and Fondazione Cassa di Risparmio Perugia for financial support, through project numbers 407660/16 and 2015.0459013, respectively.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Levy approximation

In Levy (1992) the author proves that the distribution of the time average \(\frac{1}{s} \int _0^s P_u \mathrm {d}u\) of the geometric Brownian motion P can be approximated by a log-normal distribution, at least for suitable values of the model parameters \(\mu _P, \sigma _P\); the parameters of the approximating log-normal distribution are obtained by a moment matching technique. Set

$$\begin{aligned} IP(s):=\int _0^s P_u \mathrm {d}u, \quad s>0. \end{aligned}$$ (A.1)

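Since IP(s) is s times this time average, its distribution can also be approximated by a log-normal for \(s >0\). By applying the moment matching technique, the parameters of the corresponding log-normal distribution for IP(s) are given by

$$\begin{aligned} m(s)=2\log \mathbb {E}\left[ IP(s)\right] -\frac{1}{2}\log \mathbb {E}\left[ IP(s)^2\right] ,\qquad v(s)=\log \mathbb {E}\left[ IP(s)^2\right] -2\log \mathbb {E}\left[ IP(s)\right] , \end{aligned}$$

where the first two moments of IP(s) are computed in the proof of Lemma B.1.

The approximate density function \(f_{IP(s)}\) of IP(s) is thus given by

$$\begin{aligned} f_{IP(s)}(x)\approx {\mathcal {LN}} pdf_{m(s),v(s)}(x), \quad x>0, \end{aligned}$$

where \( {\mathcal {LN}} pdf_{m,v} \) denotes the probability density function of a log-normal distribution with parameters m and v, i.e., of \(e^{Y}\) with \(Y\sim \mathcal {N}(m,v)\), defined as

$$\begin{aligned} {\mathcal {LN}} pdf_{m,v}(x)=\frac{1}{x\sqrt{2\pi v}}\exp \left( -\frac{(\log x-m)^2}{2v}\right) , \quad x>0. \end{aligned}$$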

In the paper the above approximation is applied for two different purposes. In Sect. 4, once \(\tau <\Delta \) is fixed, the Levy approximation is used to derive the distribution of \(A_1\) and of \(A_i\) given \(A_{i-1}\), where \(A_1=X_\tau ^\tau + IP(\Delta -\tau )\) and, for \(i\ge 2\), \(A_i=\int _{(i-1)\Delta -\tau }^{i\Delta -\tau } P_u \mathrm {d}u=\int _{-\tau }^{\Delta -\tau } P_{u+(i-1)\Delta } \mathrm {d}u =P_{(i-1)\Delta }\int _{-\tau }^{\Delta -\tau } P_{u} \mathrm {d}u =P_{(i-1)\Delta } \left( X_\tau ^\tau +IP(\Delta -\tau ) \right) \).
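The identity \(A_1=X_\tau ^\tau +IP(\Delta -\tau )\) is a change of variables. As a worked step, assume (as in (B.5)) that \(X_h^\tau =\int _0^h P_{u-\tau }\mathrm {d}u\), that \(P_u=\phi (u)\) for \(u\in [-\tau ,0]\), and that \(IP(s)=\int _0^s P_u\mathrm {d}u\); then, for a generic horizon \(h>\tau \),

$$\begin{aligned} X_h^\tau =\int _0^h P_{u-\tau }\mathrm {d}u=\int _{-\tau }^{h-\tau }P_v \mathrm {d}v =\underbrace{\int _{-\tau }^{0}\phi (v) \mathrm {d}v}_{=X_\tau ^\tau }+\underbrace{\int _{0}^{h-\tau }P_v \mathrm {d}v}_{=IP(h-\tau )}. \end{aligned}$$

Taking \(h=\Delta \) gives \(A_1\), and taking \(h=T\) gives the decomposition of the integrated attention over \([0,T]\) used in Sect. 5. Only the second term is random, so it is the only one that needs the log-normal approximation.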

In Sect. 5 it is applied to derive an approximate distribution for the integrated attention process starting at \(t=0\), i.e., for \(X_{0,T}^\tau =X_\tau ^\tau +IP(T-\tau )\). Since \(X_\tau ^\tau \) is deterministic and \(X_{0,T}^\tau -X_\tau ^\tau =IP(T-\tau )\), the distribution of \(X_{0,T}^\tau \) follows directly from that of \(IP(T-\tau )\).
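The moment matching step of the Levy approximation can be sketched numerically as follows. This is a minimal sketch: the helper names are hypothetical, m and v are taken to be the mean and variance of the logarithm (the convention used for log-normal laws in Theorem B.2), and the input moments would in practice come from Lemma B.1.

```python
import math
import numpy as np


def lognormal_moment_match(mean, var):
    """Return (m, v) so that exp(Y), Y ~ N(m, v), has the given mean and variance.

    Equivalent to v = log(E[X^2] / E[X]^2) and m = log E[X] - v / 2.
    """
    v = math.log(1.0 + var / mean**2)
    m = math.log(mean) - 0.5 * v
    return m, v


def lognormal_pdf(x, m, v):
    """Log-normal density with log-mean m and log-variance v (zero for x <= 0)."""
    x = np.asarray(x, dtype=float)
    safe = np.where(x > 0, x, 1.0)  # placeholder avoids log(0) on the x <= 0 branch
    dens = np.exp(-(np.log(safe) - m) ** 2 / (2.0 * v)) / (safe * np.sqrt(2.0 * np.pi * v))
    return np.where(x > 0, dens, 0.0)


# Hypothetical inputs standing in for E[IP(s)] and Var[IP(s)] from Lemma B.1.
m, v = lognormal_moment_match(mean=1.3, var=0.06)
print(m, v, lognormal_pdf([0.5, 1.3, 2.0], m, v))
```

By construction the matched log-normal reproduces the first two moments exactly; how well it reproduces the rest of the distribution depends on the parameter values, which is why the approximation is stated to hold only for suitable \(\mu _P, \sigma _P\).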

Appendix B: Technical proofs

The following result provides basic statistical properties of the integrated attention process \(X^\tau \) defined in (2.3), as well as of its increments \(X_{t,T}^\tau \), whenever these are not fully deterministic.

Lemma B.1

In the market model outlined in Eqs. (2.1)–(2.2), we have:

(i) For \(t > \tau \),

$$\begin{aligned} \mathbb {E}\left[ X_t^\tau \right]&= X_\tau ^\tau +\frac{\phi (0)}{\mu _{P}}\left( e^{\mu _{P}(t-\tau )} -1\right) ;\\ \mathbb V\mathrm{ar}[X_t^\tau ]&= \frac{2\phi ^{2}(0)}{\left( \mu _{P}+\sigma _{P}^{2}\right) \left( 2\mu _{P}+\sigma _{P}^{2}\right) }\left( e^{\left( 2\mu _{P}+\sigma _{P}^{2}\right) (t-\tau )} -1\right) \\&\quad -\frac{2\phi ^{2}(0)}{\mu _{P}\left( \mu _{P}+\sigma _{P}^{2}\right) }\left( e^{\mu _{P}(t-\tau )}-1\right) -\left( \frac{\phi (0)}{\mu _{P}}\left( e^{\mu _{P}(t-\tau )}-1\right) \right) ^2. \end{aligned}$$

(ii) For \( \tau \le t < T \),

$$\begin{aligned} \mathbb {E}\left[ X_{t,T}^\tau \right]&= \frac{\phi (0)e^{\mu _{P}(t-\tau )}}{\mu _{P}}\left( e^{\mu _{P}(T-t)}-1\right) ;\\ \mathbb V\mathrm{ar}[X_{t,T}^\tau ]&= \frac{2\phi ^{2}(0)e^{\left( 2\mu _{P}+\sigma _{P}^{2}\right) (t-\tau )}}{\left( \mu _{P}+\sigma _{P}^{2}\right) \left( 2\mu _{P}+\sigma _{P}^{2}\right) }\left( e^{\left( 2\mu _{P}+\sigma _{P}^{2}\right) (T-t)} -1\right) \\&\quad -\frac{2\phi ^{2}(0)e^{\left( 2\mu _{P}+\sigma _{P}^{2}\right) (t-\tau )}}{\mu _{P}\left( \mu _{P}+\sigma _{P}^{2}\right) }\left( e^{\mu _{P}(T-t)} -1\right) \\&\quad -\left( \frac{\phi (0)e^{\mu _{P}(t-\tau )}}{\mu _{P}}\left( e^{\mu _{P}(T-t)} -1\right) \right) ^2. \end{aligned}$$

(iii) For \( t \le \tau < T \),

$$\begin{aligned} \mathbb {E}\left[ X_{t,T}^\tau \right]&= \int _{t-\tau }^0 \phi \left( u\right) \mathrm {d}u + \frac{\phi (0)}{\mu _{P}}\left( e^{\mu _{P}(T-\tau )}-1\right) ;\\ \mathbb V\mathrm{ar}[X_{t,T}^\tau ]&= \frac{2\phi ^{2}(0)}{\left( \mu _{P}+\sigma _{P}^{2}\right) \left( 2\mu _{P}+\sigma _{P}^{2}\right) }\left( e^{\left( 2\mu _{P}+\sigma _{P}^{2}\right) (T-\tau )} -1\right) \\&\quad -\frac{2\phi ^{2}(0)}{\mu _{P}\left( \mu _{P}+\sigma _{P}^{2}\right) }\left( e^{\mu _{P}(T-\tau )} -1\right) -\left( \frac{\phi (0)}{\mu _{P}}\left( e^{\mu _{P}(T-\tau )} -1\right) \right) ^2. \end{aligned}$$

In Hull and White (1987) similar outcomes are claimed for \(\tau =0\) without providing a proof; for the sake of clarity, we give here a self-contained proof.

Proof

To prove the lemma, we first compute the mean and the variance of IP(s), defined in (A.1), for each \(s>0\).

Fix \(s >0\). Since \(P_u > 0\) for each \(u \in (0, s]\), by Fubini's theorem we get

where, for each \(u \ge 0\), we have

since P is a geometric Brownian motion with \(P_0=\phi (0)\). Hence

As for the variance of IP(s), we have

with

where the last equality again holds thanks to Fubini’s theorem. Moreover, by the independence property of the increments of Brownian motion, for \(0< u < v \le s\), we get

Further,

Hence

and by plugging (B.2) into (B.1), we have

Finally, gathering the results we get

Note that \(X_t^\tau \), with \(t\in [0,\tau ]\), and \(X_{t,T}^\tau \), with \(t <T \le \tau \), are fully deterministic and the computation is trivial.

To prove points (i)–(iii), it suffices to observe that

and the computation easily follows once the mean and variance of IP(s) are known for \(s>0\).

For point (ii), note that, given \(0 \le v<s\),

where \(r=u-v\). To obtain the desired result, it suffices to note that, for \(\tau \le t<T\),

and apply (B.3). The computation of the mean and variance of the above difference is straightforward given the independence of Brownian increments. \(\square \)
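Lemma B.1 lends itself to a quick numerical check. The sketch below, with hypothetical function names and illustrative parameter values, evaluates point (ii) in closed form and compares it with a Monte Carlo estimate obtained by exact sampling of the geometric Brownian motion P on a grid plus the trapezoidal rule for the time integral. The closed form writes the second moment through the factorisation \(\mathbb {E}[(X_{t,T}^\tau )^2]=\mathbb {E}[P_{t-\tau }^2]\,\mathbb {E}[IP(T-t)^2]/\phi (0)^2\), which follows from the independent multiplicative increments of P; as a consequence, both second-moment terms carry the factor \(e^{(2\mu _P+\sigma _P^2)(t-\tau )}\).

```python
import numpy as np


def lemma_b1_moments(t, T, tau, phi0, mu_p, sigma_p):
    """Mean and variance of X_{t,T}^tau = int_t^T P_{u-tau} du for tau <= t < T."""
    a, s = t - tau, T - t
    k = 2.0 * mu_p + sigma_p**2
    mean = phi0 * np.exp(mu_p * a) / mu_p * np.expm1(mu_p * s)
    # E[X^2] = E[P_a^2] * E[(int_0^s Pbar_r dr)^2], Pbar an independent GBM started at 1
    second = phi0**2 * np.exp(k * a) * (
        2.0 / ((mu_p + sigma_p**2) * k) * np.expm1(k * s)
        - 2.0 / (mu_p * (mu_p + sigma_p**2)) * np.expm1(mu_p * s)
    )
    return mean, second - mean**2


def lemma_b1_monte_carlo(t, T, tau, phi0, mu_p, sigma_p,
                         n_paths=10_000, n_steps=150, seed=0):
    """Monte Carlo estimate of the same mean and variance."""
    rng = np.random.default_rng(seed)
    dt = (T - tau) / n_steps                      # P is needed on [0, T - tau]
    dz = rng.standard_normal((n_paths, n_steps)) * np.sqrt(dt)
    log_p = np.cumsum((mu_p - 0.5 * sigma_p**2) * dt + sigma_p * dz, axis=1)
    p = phi0 * np.exp(np.hstack([np.zeros((n_paths, 1)), log_p]))
    seg = p[:, int(round((t - tau) / dt)):]       # P on [t - tau, T - tau]
    x = dt * (0.5 * seg[:, 0] + seg[:, 1:-1].sum(axis=1) + 0.5 * seg[:, -1])
    return x.mean(), x.var(ddof=1)


print(lemma_b1_moments(0.7, 1.7, 0.2, 1.0, 0.5, 0.3))
print(lemma_b1_monte_carlo(0.7, 1.7, 0.2, 1.0, 0.5, 0.3))
```

Setting \(t=\tau \) recovers the moments of \(IP(T-\tau )\), i.e., point (i) without the deterministic term \(X_\tau ^\tau \).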

The system given by Eqs. (2.1)–(2.2) is well-defined in \(\mathbb {R}_+\), as stated in the following theorem, which also provides its explicit solution.

Theorem B.2

In the market model outlined in (2.1)–(2.2), the following hold:

(i) the bivariate stochastic delayed differential equation

$$\begin{aligned} \left\{ \begin{array}{ll} \mathrm {d}S_t = \mu _S P_{t-\tau } S_t \mathrm {d}t+\sigma _S \sqrt{P_{t-\tau }}S_t\mathrm {d}W_t, \quad S_0=s_0 \in \mathbb {R}_+, \\ \mathrm {d}P_t =\mu _P P_t\mathrm {d}t+\sigma _P P_t\mathrm {d}Z_t, \quad P_t = \phi (t),\ t \in [-L,0], \\ \end{array} \right. \end{aligned}$$ (B.4)

has a continuous, \(\mathbb {F}\)-adapted, unique solution \((S,P)=\{(S_t,P_t),\ t \ge 0\}\) given by

$$\begin{aligned} S_t&=s_0e^{\left( \mu _{S}-\frac{\sigma _{S}^{2}}{2}\right) \int _0^t P_{u-\tau } \mathrm {d}u+\sigma _S \int _0^t \sqrt{P_{u-\tau }}\mathrm {d}W_u},\quad t \ge 0, \end{aligned}$$ (B.5)

$$\begin{aligned} P_t&= \phi (0)e^{\left( \mu _{P}-\frac{\sigma _{P}^{2}}{2}\right) t+\sigma _P Z_t}, \quad t \ge 0. \end{aligned}$$ (B.6)

More precisely, S can be computed step by step as follows: for \(k=0,1,2,\ldots \) and \(t \in [k\tau ,(k+1)\tau ]\),

$$\begin{aligned} S_t = S_{k\tau }e^{\left( \mu _{S}-\frac{\sigma _{S}^{2}}{2}\right) \int _{k\tau }^t P_{u-\tau } \mathrm {d}u+\sigma _S \int _ {k\tau }^t \sqrt{P_{u-\tau }}\mathrm {d}W_u}. \end{aligned}$$ (B.7)

In particular, \(P_t \ge 0\), \(\mathbf {P}\)-a.s. for all \(t \ge 0\). If, in addition, \(\phi (0) > 0\), then \(P_t > 0\), \(\mathbf {P}\)-a.s. for all \(t \ge 0\).

(ii) Further, for every \(t \ge 0\), the conditional distribution of \(S_{t}\), given the integrated attention \(X_t^\tau \), is log-normal: conditional on \(X_t^\tau \), \(\log \left( S_t\right) \) is normally distributed with mean \(\log \left( s_0\right) + \left( \mu _{S}-\frac{\sigma _{S}^{2}}{2}\right) X_t^\tau \) and variance \(\sigma _{S}^{2}X_t^\tau \).

(iii) Finally, for every \(t \in [0,\tau ]\), the random variable \(\log \left( S_t \right) \) has mean \(\log \left( s_0\right) + \left( \mu _{S}-\frac{\sigma _{S}^{2}}{2}\right) X_t^\tau \) and variance \(\sigma _{S}^{2}X_t^\tau \); for every \(t > \tau \), \(\log \left( S_t\right) \) has mean and variance, respectively, given by

$$\begin{aligned} \mathbb {E}\left[ \log \left( S_t \right) \right]&= \log \left( s_0\right) + \left( \mu _S-\frac{\sigma _S^2}{2}\right) \mathbb {E}\left[ X_t^\tau \right] ;\\ \mathbb V\mathrm{ar}\left[ {\log \left( S_t \right) }\right]&= \left( \mu _S-\frac{\sigma _S^2}{2}\right) ^2\mathbb V\mathrm{ar}[X_t^\tau ]+ \sigma _S^2 \mathbb {E}\left[ X_t^\tau \right] , \end{aligned}$$

where \(\mathbb {E}\left[ X_t^\tau \right] \) and \(\mathbb V\mathrm{ar}[X_t^\tau ]\) are both provided by Lemma B.1, point (i).
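The step-by-step construction (B.7) suggests a direct simulation scheme for the delayed system. The sketch below is an illustration under stated assumptions, not the authors' code: the helper name is hypothetical, the initial segment is taken constant (\(\phi \equiv \phi _0\) on \([-\tau ,0]\)), \(\tau \) is assumed to be a multiple of the time step, P is sampled exactly on the grid, and \(\log S\) is updated with a log-Euler step that freezes \(P_{u-\tau }\) at the left end of each step.

```python
import numpy as np


def simulate_delayed_model(s0, phi0, mu_s, sigma_s, mu_p, sigma_p, tau, T,
                           n_steps=200, n_paths=10_000, seed=1):
    """Simulate log S on [0, T] for the delayed system (B.4) (hypothetical helper).

    Assumes phi(u) = phi0 on [-tau, 0] and tau a multiple of T / n_steps.
    """
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    lag = int(round(tau / dt))                     # delay in grid steps
    # attention factor P on 0, dt, ..., T (exact GBM sampling, driven by Z)
    dz = rng.standard_normal((n_paths, n_steps)) * np.sqrt(dt)
    log_p = np.cumsum((mu_p - 0.5 * sigma_p**2) * dt + sigma_p * dz, axis=1)
    p = phi0 * np.exp(np.hstack([np.zeros((n_paths, 1)), log_p]))
    # delayed path P_{t_j - tau}: phi0 before time 0, then the shifted P
    p_del = np.hstack([np.full((n_paths, lag), phi0), p[:, : n_steps + 1 - lag]])
    # log-price increments driven by a Brownian motion W independent of Z
    dw = rng.standard_normal((n_paths, n_steps)) * np.sqrt(dt)
    left = p_del[:, :-1]
    incr = (mu_s - 0.5 * sigma_s**2) * left * dt + sigma_s * np.sqrt(left) * dw
    log_s = np.log(s0) + np.hstack([np.zeros((n_paths, 1)), np.cumsum(incr, axis=1)])
    return np.linspace(0.0, T, n_steps + 1), log_s


t_grid, log_s = simulate_delayed_model(100.0, 1.0, 0.3, 0.6, 0.5, 0.4, 0.25, 1.0)
```

For \(t \le \tau \) the delayed attention is deterministic, so the sample mean and variance of \(\log S_t\) should match \(\log s_0+(\mu _S-\sigma _S^2/2)\phi _0 t\) and \(\sigma _S^2\phi _0 t\) up to Monte Carlo error; for \(t>\tau \) the mean involves \(\mathbb {E}[X_t^\tau ]\) from Lemma B.1, point (i).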

Proof

Point (i). Clearly, S and P, given in (B.5) and (B.6), respectively, are \(\mathbb {F}\)-adapted processes with continuous trajectories. Following (Mao and Sabanis 2013, Theorem 2.1), we prove existence and uniqueness of a strong solution to the pair of stochastic differential equations in system (B.4) by forward induction steps of length \(\tau \), without needing to check any assumptions on the coefficients, such as the local Lipschitz condition and the linear growth condition.

First, note that the second equation in the system (B.4) does not depend on S, and its solution is well known for all \(t \ge 0\). Eq. (B.6) shows that \(P_t \ge 0\), \(\mathbf {P}\)-a.s. for all \(t \ge 0\), and that \(\phi (0) > 0\) implies that the solution P remains strictly positive over \([0,+\infty )\), i.e., \(P_t > 0\), \(\mathbf {P}\)-a.s. for all \(t \ge 0\).

Next, by applying Itô’s formula to \(\log \left( S_t\right) \) in the first equation of (B.4), we get

or equivalently, in integral form

For \(t \in [0,\tau ]\), (B.9) can be written as

that is, (B.7) holds for \(k = 0\).

Given that \(S_t\) is now known for \(t \in [0,\tau ]\), we may restrict the first equation in (B.4) to \(t \in [\tau , 2\tau ]\), which amounts to considering (B.8) for \(t \in [\tau , 2\tau ]\). Equivalently, in integral form,

This shows that (B.7) holds for \(k = 1\). Similar computations for \(k=2,3,\ldots \) give the final result.

Point (ii). Set \(Y_t:=\int _0^t\sqrt{\phi \left( u-\tau \right) } \mathrm {d}W_u \), for \(t \in [0,\tau ]\), and \(Y_t:= Y_{k\tau }+ \int _{k\tau }^t\sqrt{P_{u-\tau }} \mathrm {d}W_u \), for \(t \in [k\tau ,(k+1)\tau ]\), with \(k=1,2,\ldots \). Then, by applying the outcomes in Point (i) and the decomposition

for \(t\in [k\tau ,(k+1)\tau ]\), with \(k=1,2,\ldots \), we can write

To complete the proof, it suffices to show that, for each \(t\ge 0\), the random variable \(Y_t\), conditional on \(X_t^\tau \), is normally distributed with mean 0 and variance \(X_t^\tau \). This is straightforward from (B.10) if \(t \in [0,\tau ]\). Otherwise, we first observe that, since \(Z_{u-\tau }\) is independent of \(W_u\) for every \(\tau < u \le t\), the distribution of \(Y_t\), conditional on \(\mathcal {F}_{t-\tau }^P\), the \(\sigma \)-algebra generated by \(\{Z_{u-\tau }:\ \tau < u \le t\}\) (equivalently, by \(\{P_{u-\tau }:\ \tau < u \le t\}\)), is normal with mean 0 and variance \(X_t^\tau \).

Now, for each \(t > \tau \), the moment-generating function of \(Y_t\), conditioned on the history of the process P up to time \(t-\tau \), is given by

which depends on this history only through \(X_t^\tau \), that is,

Point (iii). The proof is trivial for \(t \in [0,\tau ]\). If \(t >\tau \), (B.11) and Lemma B.1, point (i), together with the zero-mean property of the Itô integral, give

Now, we compute the variance of \(\log \left( S_t\right) \). Since for each \(t > \tau \) the random variable \(Y_t \) has mean 0 conditional on \(\mathcal {F}_{t-\tau }^P\), we have

Thus, the proof is complete. \(\square \)

Let \(T > 0\) be a fixed and finite time horizon. The following result ensures that the model given in (2.1)–(2.2) is arbitrage-free.

Lemma B.3

Let \(\phi (t) > 0\), for each \(t \in [-L,0]\), in (2.2). Then, every equivalent martingale measure \(\mathbf {Q}\) for S defined on \((\Omega ,\mathcal {F}_T)\) has the following density

where \(L_T^\mathbf {Q}\) is the terminal value of the \((\mathbb {F},\mathbf {P})\)-martingale \(L^\mathbf {Q}=\{L_t^\mathbf {Q},\ t \in [0,T]\}\) given by

for a suitable \(\mathbb {F}\)-progressively measurable process \(\gamma =\{\gamma _t,\ t \in [0,T]\}\).

Proof

Firstly, we prove that formula (B.12) defines a probability measure \(\mathbf {Q}\) equivalent to \(\mathbf {P}\) on \((\Omega ,\mathcal {F}_T)\). This means we need to show that \(L^\mathbf {Q}\) is an \((\mathbb {F},\mathbf {P})\)-martingale, that is, \(\mathbb {E}\left[ L_T^\mathbf {Q}\right] =1\). Since the \(\mathbb {F}\)-progressively measurable process \(\gamma \) can be suitably chosen, to prove this relation we can assume \(\gamma \equiv 0\), without loss of generality. Set

We observe that since \(\phi (t) > 0\), for each \(t \in [-L,0]\), in (2.2), by Theorem B.2, point (i), we have that \(P_{t-\tau } > 0\), \(\mathbf {P}\)-a.s. for all \(t \in [0,T]\), so that the process \(\alpha =\{\alpha _t,\ t \in [0,T]\}\) given in (B.13) is well-defined, as is the random variable \(L_T^\mathbf {Q}\). Clearly, \(\alpha \) is an \(\mathbb {F}\)-progressively measurable process. Moreover, since the trajectories of the process P are continuous, P is almost surely bounded on [0, T], and this implies that \(\int _0^T|\alpha _u|^2 \mathrm {d}u < \infty \), \(\mathbf {P}\)-a.s.; on the other hand, the condition \(\phi (t)>0\), for every \(t \in [-L,0]\), implies that almost every path of \(\left\{ \frac{1}{\sigma _S \sqrt{P_{t-\tau }}},\ t \in [0,T]\right\} \) is bounded on the compact interval [0, T]. Set \(\mathcal {F}_t^P:=\mathcal {F}_0^P=\{\Omega ,\emptyset \}\), for \(t \le 0\). Then, \(\alpha _u\), for every \(u \in [0,T]\), is \(\mathcal {F}_{T-\tau }^P\)-measurable. Since \(Z_{u-\tau }\) is independent of \(W_u\), for every \(u \in [\tau , T]\), the stochastic integral \(\int _0^T \alpha _u \mathrm {d}W_u\) conditioned on \(\mathcal {F}_{T-\tau }^P\) has a normal distribution with mean zero and variance \(\int _0^T |\alpha _u|^2 \mathrm {d}u\). Consequently, the formula for the moment-generating function of a normal distribution implies

or equivalently

Taking the expectation of both sides of (B.14) immediately yields \(\mathbb {E}\left[ L_T^\mathbf {Q}\right] =1\). Now, set \(\widetilde{S}_t:= \displaystyle \frac{S_t}{B_t}\), for each \(t \in [0,T]\). It remains to verify that the discounted Bitcoin price process \(\widetilde{S}=\{\widetilde{S}_t,\ t \in [0,T]\}\) is an \((\mathbb {F},\mathbf {Q})\)-martingale. By Girsanov’s theorem, under the change of measure from \(\mathbf {P}\) to \(\mathbf {Q}\), we have two independent \((\mathbb {F},\mathbf {Q})\)-Brownian motions \(W^\mathbf {Q}=\{W_t^\mathbf {Q},\ t \in [0,T]\}\) and \(Z^\mathbf {Q}=\{Z_t^\mathbf {Q},\ t \in [0,T]\}\) defined, respectively, by

Under the martingale measure \(\mathbf {Q}\), the discounted Bitcoin price process \(\widetilde{S}\) satisfies the following dynamics

which implies that \(\widetilde{S}\) is an \((\mathbb {F},\mathbf {Q})\)-local martingale. Finally, proceeding as above it is easy to check that \(\widetilde{S}\) is a true \((\mathbb {F},\mathbf {Q})\)-martingale. \(\square \)
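The key step of the proof, from the conditional normality of \(\int _0^T\alpha _u\mathrm {d}W_u\) to \(\mathbb {E}[L_T^\mathbf {Q}]=1\), can be written out as follows, assuming that for \(\gamma \equiv 0\) the density is the Doléans-Dade exponential \(L_T^\mathbf {Q}=\exp \left( \int _0^T\alpha _u\mathrm {d}W_u-\frac{1}{2}\int _0^T|\alpha _u|^2\mathrm {d}u\right) \):

$$\begin{aligned} \mathbb {E}\left[ L_T^\mathbf {Q}\,\big |\,\mathcal {F}_{T-\tau }^P\right] &=e^{-\frac{1}{2}\int _0^T|\alpha _u|^2\mathrm {d}u}\,\mathbb {E}\left[ e^{\int _0^T\alpha _u\mathrm {d}W_u}\,\big |\,\mathcal {F}_{T-\tau }^P\right] \\&=e^{-\frac{1}{2}\int _0^T|\alpha _u|^2\mathrm {d}u}\,e^{\frac{1}{2}\int _0^T|\alpha _u|^2\mathrm {d}u}=1. \end{aligned}$$

The first equality uses the \(\mathcal {F}_{T-\tau }^P\)-measurability of \(\alpha \), the second the moment-generating function \(\mathbb {E}[e^{Y}]=e^{V/2}\) of \(Y\sim \mathcal {N}(0,V)\); the tower property then gives \(\mathbb {E}[L_T^\mathbf {Q}]=1\).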

Here \(\mathcal {E}(Y)\) denotes the Doléans-Dade exponential of an \((\mathbb {F}, \mathbf {P})\)-semimartingale Y. Then, Lemma B.3 ensures that the space of equivalent martingale measures for S is described by (B.12). Note that the attention factor dynamics under \(\mathbf {Q}\) in the Bitcoin market are given by

The process \(\gamma \) can be interpreted as the price for attention risk, i.e., the perception associated with the future direction of investor attention on the Bitcoin market. The probability measure which corresponds to the choice \(\gamma \equiv 0\) in (B.12) is the so-called minimal martingale measure. Let us focus on the special case of a constant process \(\gamma \); in this setting the equivalent martingale measure, denoted by \(\mathbf {Q}^\gamma \), is parameterized by the constant \(\gamma \) which governs the change of drift of the \((\mathbb {F},\mathbf {P})\)-Brownian motion Z. As a special case of the above dynamics, the two independent \((\mathbb {F},\mathbf {Q}^\gamma )\)-Brownian motions \({\widehat{W}}=\{{\widehat{W}}_t,\ t \in [0,T]\}\) and \({\widehat{Z}}= \{{\widehat{Z}}_t,\ t \in [0,T]\}\) are defined, respectively, by

Denote by \(\widetilde{S}=\{\widetilde{S}_t,\ t \in [0,T]\}\) the discounted Bitcoin price process defined as \(\displaystyle \widetilde{S}_t:=\frac{S_t}{B_t}\), for each \(t \in [0,T]\). Then, on the probability space \((\Omega ,\mathcal {F},\mathbf {Q}^\gamma )\), the pair \((\widetilde{S},P)\) satisfies the following system of stochastic delayed differential equations:

By Theorem B.2, point (i), the explicit expression of the solution to (B.15), which provides the discounted Bitcoin price \(\widetilde{S}_t\), at any time \(t \in [0,T]\), is given by

with the representation of the attention factor P still provided by (B.6), where the parameter \(\mu _P\) is replaced by \({\tilde{\mu }}_P=\mu _P -\gamma \,\sigma _P\). All the outcomes on the integrated attention variable \(X_{t,T}^\tau \) hold under the transformed probability measure \(\mathbf {Q}^\gamma \) once the parameter \(\mu _P\) is replaced by \({\tilde{\mu }}_P\).
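Since, under \(\mathbf {Q}^\gamma \), the attention factor only changes its drift to \({\tilde{\mu }}_P\), a European call can be priced by averaging a Black–Scholes price over the integrated attention. The sketch below is a conditional Monte Carlo in the spirit of Hull and White (1987), not the closed formula of Sect. 5. It assumes a constant interest rate r with \(B_t=e^{rt}\), a constant initial segment \(\phi \equiv \phi _0\) on \([-\tau ,0]\), \(T>\tau \), and that, conditional on \(X_{0,T}^\tau \), \(\log S_T\) is normal under \(\mathbf {Q}^\gamma \) with mean \(\log s_0+rT-\frac{1}{2}\sigma _S^2X_{0,T}^\tau \) and variance \(\sigma _S^2X_{0,T}^\tau \), by analogy with Theorem B.2, point (ii). All function names are hypothetical.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)


def norm_cdf(x):
    """Standard normal CDF via math.erf (avoids a SciPy dependency)."""
    return 0.5 * (1.0 + _erf(np.asarray(x, dtype=float) / math.sqrt(2.0)))


def bs_call_total_var(s0, k, r, T, w):
    """Black-Scholes call price when log S_T has total variance w."""
    sw = np.sqrt(w)
    d1 = (np.log(s0 / k) + r * T + 0.5 * w) / sw
    return s0 * norm_cdf(d1) - k * math.exp(-r * T) * norm_cdf(d1 - sw)


def attention_call_price(s0, k, r, T, sigma_s, phi0, mu_p, sigma_p, gamma, tau,
                         n_paths=20_000, n_steps=200, seed=2):
    """Conditional Monte Carlo price of a European call under Q^gamma (T > tau)."""
    rng = np.random.default_rng(seed)
    mu_q = mu_p - gamma * sigma_p                 # drift of P under Q^gamma
    dt = (T - tau) / n_steps                      # P enters X_{0,T} on [0, T - tau]
    dz = rng.standard_normal((n_paths, n_steps)) * np.sqrt(dt)
    log_p = np.cumsum((mu_q - 0.5 * sigma_p**2) * dt + sigma_p * dz, axis=1)
    p = phi0 * np.exp(np.hstack([np.zeros((n_paths, 1)), log_p]))
    ip = dt * (0.5 * p[:, 0] + p[:, 1:-1].sum(axis=1) + 0.5 * p[:, -1])
    x = phi0 * tau + ip                           # X_{0,T}^tau with phi = phi0 on [-tau, 0]
    return float(np.mean(bs_call_total_var(s0, k, r, T, sigma_s**2 * x)))


print(attention_call_price(100.0, 100.0, 0.01, 0.5, 0.3, 1.0, 2.0, 0.5, 0.5, 0.1))
```

Setting \(\sigma _P=0\) makes the integrated attention deterministic, and the sketch then reduces to a single Black–Scholes price with total variance \(\sigma _S^2X_{0,T}^\tau \), which is a convenient sanity check.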

In what follows we prove Lemma 3.1.

Proof of Lemma 3.1

By the approximation of Levy (1992) (see Appendix A), the distribution of \(A_1-X_\tau ^\tau \) can be approximated by a log-normal with parameters

By applying the outcomes of Lemma B.1, we have

Hence

Straightforward computations give part (i). Moreover,

Then

Conditioning on \(A_{i-1}\), we get

which gives part (ii). \(\square \)

Appendix C: Finite sample behavior of QML estimates

In order to check the goodness of the log-likelihood approximation introduced in Theorem 3.2, we apply the proposed estimation method to simulated data and assume, for the sake of simplicity, \(\tau =0\). We simulate m samples of length n for the processes in (2.1) and (2.2) with a constant, finer observation step \(\delta \); we then extract the corresponding samples for \({\mathbf {R,A}}\) at a lower frequency, with observation step \(\Delta =r\delta \). In the numerical exercise we choose \(n=730\), \(m=1000\), \(\delta =\frac{1}{365}\) (daily observations) and \(\Delta =7\delta \) (weekly observations); parameter values are set to \(\mu _P=2\), \(\sigma _P=0.5\), \(\mu _S=0.05\), \(\sigma _S=0.3\). We end up with 1000 samples of 104 observations for \(\left( {\mathbf {R,A}}\right) \); for each sample we estimate the parameters by quasi-maximum likelihood, as described in the main text. The results are summarized in Table 11.
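The data-generating step of this exercise can be sketched as follows for a single sample with \(\tau =0\); it shows how 730 daily steps aggregate into \(730 \,//\, 7 = 104\) weekly pairs \((R_i, A_i)\). The helper name, the initial level \(P_0=1\), the left-point daily discretisation and the definition of \(A_i\) as the sum of daily \(P\,\delta \) over the week are assumptions not spelled out in the text; the quasi-maximum likelihood step itself is not reproduced.

```python
import numpy as np


def simulate_weekly_sample(mu_p=2.0, sigma_p=0.5, mu_s=0.05, sigma_s=0.3,
                           n=730, r=7, delta=1 / 365, p0=1.0, rng=None):
    """One simulated sample with tau = 0 (hypothetical helper).

    Returns weekly log-returns R and weekly integrated attentions A.
    """
    rng = np.random.default_rng() if rng is None else rng
    # daily attention P (exact GBM sampling); p0 is an assumed initial level
    dz = rng.standard_normal(n) * np.sqrt(delta)
    p = p0 * np.exp(np.concatenate(
        [[0.0], np.cumsum((mu_p - 0.5 * sigma_p**2) * delta + sigma_p * dz)]))
    # daily log-price increments with P frozen at the start of each day
    dw = rng.standard_normal(n) * np.sqrt(delta)
    log_s = np.concatenate([[0.0], np.cumsum(
        (mu_s - 0.5 * sigma_s**2) * p[:-1] * delta + sigma_s * np.sqrt(p[:-1]) * dw)])
    n_weeks = n // r                              # 730 // 7 = 104 weekly observations
    R = np.diff(log_s[:: r][: n_weeks + 1])       # weekly log-returns
    A = (p[:-1] * delta)[: n_weeks * r].reshape(n_weeks, r).sum(axis=1)
    return R, A


rng = np.random.default_rng(0)
samples = [simulate_weekly_sample(rng=rng) for _ in range(1000)]  # m = 1000 samples
```

With this discretisation each weekly return is, conditional on \(A_i\), exactly normal with mean \((\mu _S-\sigma _S^2/2)A_i\) and variance \(\sigma _S^2A_i\), so only the distribution of \(A_i\) itself requires the log-normal approximation.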

We also performed a t test to check for estimation bias. The fitted values of \(\mu _P,\mu _S,\sigma _S\) are close on average to their theoretical values, with a reasonable standard deviation, and the p-values of the t test do not reject the hypothesis that these estimates are unbiased. Different conclusions hold for parameter \(\sigma _P\), whose estimates are clearly biased. In Fig. 2 we plot the histograms of the estimates, together with the fitted normal distribution and the expected mean of the asymptotic distribution. The plots confirm the bias of the estimator for \(\sigma _P\), while all other estimates perform well and may improve as the sample length increases. The simulation exercise was repeated for different parameter values, numbers of samples and sample lengths, with qualitatively analogous results.

In order to disentangle the contribution of the Levy approximation (Levy 1992) to the estimation bias, we apply the method of moments to estimate \(\mu _P\) and \(\sigma _P\), using the whole sample for P generated at the finer observation step \(\delta \) to compute the sample mean and sample variance of the attention realizations. If we then plug these estimated values into the likelihood (3.2) in order to estimate \(\lbrace \mu _S, \sigma _S \rbrace \), the estimates of \(\mu _S\) and \(\sigma _S\) remain unchanged; in fact, the likelihood may be maximized separately with respect to \(\mu _P,\sigma _P\) and \(\mu _S,\sigma _S\), since each of the two addends in the likelihood expression depends on only one of these pairs. The results of this alternative estimation method are reported in Table 12.
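One natural reading of this method-of-moments step uses the fact that the log-increments of a geometric Brownian motion observed every \(\delta \) are i.i.d. \(\mathcal {N}\big ((\mu _P-\sigma _P^2/2)\delta ,\ \sigma _P^2\delta \big )\). The sketch below matches their sample mean and variance; the helper name is hypothetical, and the paper may use a different set of moments of the attention realizations.

```python
import numpy as np


def gbm_moment_estimates(p, delta):
    """Moment estimates of (mu_P, sigma_P) from GBM observations every `delta`.

    Works along the last axis, so a 2-D array holds one path per row.
    """
    log_inc = np.diff(np.log(p), axis=-1)
    sigma2 = log_inc.var(axis=-1, ddof=1) / delta        # sigma^2 delta = Var
    mu = log_inc.mean(axis=-1) / delta + 0.5 * sigma2     # (mu - sigma^2/2) delta = mean
    return mu, np.sqrt(sigma2)


# Hypothetical check on 1000 simulated daily paths of length 730.
rng = np.random.default_rng(3)
delta = 1 / 365
dz = rng.standard_normal((1000, 730)) * np.sqrt(delta)
log_p = np.hstack([np.zeros((1000, 1)), np.cumsum((2.0 - 0.125) * delta + 0.5 * dz, axis=1)])
mu_hat, sigma_hat = gbm_moment_estimates(np.exp(log_p), delta)
print(mu_hat.mean(), sigma_hat.mean())
```

Because \(\sigma _P\) is identified from the daily increments rather than from weekly aggregates, this estimator does not rely on the log-normal approximation of the integrated attention; \(\mu _P\), by contrast, is estimated with low precision over a two-year path, whatever the sampling frequency.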

To visualize the bias of \(\sigma _P\), Fig. 3 shows the histograms of the estimates of \(\mu _P\) and \(\sigma _P\) obtained from the simulated data with the two methods. With the two-step procedure we obtain better estimates of \(\sigma _P\), both in terms of expected value and standard deviation. It is evident from Table 12 and Fig. 3 that the estimation of \(\sigma _P\) is not biased in this case; hence the estimation bias may essentially be attributed to the aggregation of the attention over time intervals and to the corresponding approximating distribution. Therefore, whenever the attention factor is observed at a finer step than the price, the above separate estimation is more reliable.


About this article

Cite this article

Cretarola, A., Figà-Talamanca, G. & Patacca, M. Market attention and Bitcoin price modeling: theory, estimation and option pricing. Decisions Econ Finan 43, 187–228 (2020). https://doi.org/10.1007/s10203-019-00262-x


DOI: https://doi.org/10.1007/s10203-019-00262-x