Abstract

A family of consistent tests, derived from a characterization of the probability generating function, is proposed for assessing Poissonity against a wide class of count distributions, which includes some of the most frequently adopted alternatives to the Poisson distribution. Specifically, the family of test statistics is based on the difference between the plug-in estimator of the Poisson cumulative distribution function and the empirical cumulative distribution function. The test statistics have an intuitive and simple form and are asymptotically normally distributed, allowing a straightforward implementation of the test. The finite sample properties of the test are investigated by means of an extensive simulation study. The test shows satisfactory behaviour compared with other tests having a known limiting distribution.

1 Introduction

Assessing the Poissonity assumption is a relevant issue in statistical inference, both because the Poisson distribution has an impressive list of applications in biology, epidemiology, physics, and queueing theory (see e.g. Johnson et al 2005; Puig and Weiß 2020) and because testing it is a preliminary step before applying many popular statistical models. The use of the probability generating function (p.g.f.) for testing discrete distributions has a long tradition (see e.g. Kocherlakota and Kocherlakota 1986; Meintanis and Bassiakos 2005; Rémillard and Theodorescu 2000), and several omnibus procedures based on the p.g.f. have been proposed for Poissonity (e.g. Nakamura and Pérez-Abreu 1993; Baringhaus and Henze 1992; Rueda and O’Reilly 1999; Gürtler and Henze 2000; Meintanis and Nikitin 2008; Inglot 2019; Puig and Weiß 2020). Omnibus tests are particularly appealing since they are consistent against all possible alternative distributions, but they commonly have a non-trivial asymptotic behaviour. Moreover, the distribution of the test statistic may depend on the unknown value of the Poisson parameter, so that computationally intensive bootstrap, jackknife, or other resampling methods are needed to approximate it. On the other hand, Poissonity tests against specific alternatives may achieve high power but rely on knowing which deviations from Poissonity can occur. An alternative approach, proposed by Meintanis and Nikitin (2008), is to consider tests with suitable asymptotic properties with respect to a fairly wide class of alternatives, which are also the most likely ones when dealing with the Poissonity assumption.

In this paper, referring to the same class of alternative distributions and using the characterization of the Poisson distribution based on its p.g.f., we propose a family of consistent and asymptotically normally distributed test statistics, based on the difference between the plug-in estimator of the Poisson cumulative distribution function (c.d.f.) and the empirical c.d.f., together with a data-driven procedure for choosing the parameter that indexes the statistics. The test statistics not only have an intuitive interpretation but, being simple to compute, allow a straightforward implementation of the test and lead to test procedures with satisfactory performance also in the presence of contiguous alternatives.

2 Characterization of the Poisson distribution

Let X be a random variable (r.v.) taking values in \(\{0,1,2,\ldots \}\), with probability mass function \(p_X\) and \(\textrm{E}[X]=\mu\). Moreover, let \(\Psi _{X}(t)=\textrm{E}[t^X]\), with \(t\in [0,1]\), be the p.g.f. of X. Following Meintanis and Nikitin (2008), we consider the class \(\Delta\) of count distributions such that

\[ D(t,\mu )=\Psi _{X}^\prime (t)-\mu \Psi _{X}(t) \]

is non-negative for all \(t\in [0,1]\) or non-positive for all \(t\in [0,1]\), for all \(\mu >0\), where \(\Psi _{X}^\prime (t)\) is the first-order derivative of the p.g.f.

As proven by Meintanis and Nikitin (2008), this class contains many popular alternatives to the Poisson distribution, such as the Binomial, the Negative Binomial, the generalized Hermite, the zero-inflated and the generalized Poisson distributions, among others.
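As a numerical illustration of the class \(\Delta\), the following Python sketch (an illustration only, not code from the paper) evaluates \(D(t,\mu )\) on a grid of t values from the closed-form p.g.f.s of the Poisson, Binomial and Negative Binomial distributions. The parameter values are arbitrary, and the Negative Binomial is assumed to count failures before the r-th success.

```python
import math

# D(t, mu) = Psi'(t) - mu * Psi(t), where mu = E[X] and Psi is the p.g.f.

def d_poisson(t, mu):
    # Poisson(mu): Psi(t) = exp(mu (t - 1)) and Psi'(t) = mu * Psi(t).
    psi = math.exp(mu * (t - 1.0))
    return mu * psi - mu * psi

def d_binomial(t, m, p):
    # Binomial(m, p): Psi(t) = (1 - p + p t)^m, mean m p.
    base = 1.0 - p + p * t
    return m * p * base ** (m - 1) - m * p * base ** m

def d_negbin(t, r, p):
    # Negative Binomial counting failures before the r-th success (assumed
    # parameterisation): Psi(t) = (p / (1 - q t))^r, q = 1 - p, mean r q / p.
    q = 1.0 - p
    psi = (p / (1.0 - q * t)) ** r
    dpsi = r * q / (1.0 - q * t) * psi
    return dpsi - (r * q / p) * psi

grid = [i / 100 for i in range(101)]
print(max(abs(d_poisson(t, 3.0)) for t in grid) == 0.0)    # D vanishes for the Poisson
print(min(d_binomial(t, 10, 0.3) for t in grid) > -1e-12)  # Binomial: D >= 0 up to rounding
print(max(d_negbin(t, 5, 0.6) for t in grid) < 1e-12)      # Negative Binomial: D <= 0
```

For the Binomial the difference simplifies to \(mp^2(1-t)(1-p+pt)^{m-1}\ge 0\), while for the Negative Binomial it equals \(rq^2p^{r-1}(t-1)/(1-qt)^{r+1}\le 0\), so these two families lie on opposite sides of the Poisson case.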

Note that \(D(t,\mu )=0\) for all \(t\in [0,1]\) and for some \(\mu >0\) if and only if X is a Poisson r.v. This characterization allows one to construct a goodness-of-fit test for Poissonity against alternatives belonging to the class \(\Delta\), that is, for the hypothesis system

\[ H_0: X\in \Pi _\mu \ \text{for some}\ \mu >0 \qquad \text{vs} \qquad H_1: X\in \Delta ,\ X\notin \Pi _\mu \ \text{for any}\ \mu >0, \]

where \(\Pi _\mu\) denotes the Poisson distribution with parameter \(\mu\). In particular, Meintanis and Nikitin (2008) adopt this characterization to construct a consistent test for the Poisson distribution by means of a suitably weighted empirical counterpart of \(D(t,\mu )\). An alternative approach can be based on the \(L^1\) distance of \(D(t,\mu )\) from 0, whose positive values indicate departures from Poissonity. The following Proposition, giving bounds for this distance, motivates the introduction of a family of test statistics.

Proposition 1

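For any natural number k, let \(f_k(\mu )=e^{-\mu }(1+\ldots +{\frac{\mu ^k}{ k!}}).\) For any \(X \in \Delta\) and for any \(\mu >0\), it holds

\[ e^{-\mu }\int _0^1\vert D(t,\mu )\vert \,dt\le \frac{\vert T^{(k)}\vert }{1+\ldots +\frac{\mu ^k}{k!}}\le \int _0^1\vert D(t,\mu )\vert \,dt, \qquad (1) \]

where \(T^{(k)}=f_k(\mu )-p_X(0)(1+\ldots +{\frac{\mu ^k}{ k!}}).\) In particular, for \(k=0\),

\[ e^{-\mu }\int _0^1\vert D(t,\mu )\vert \,dt\le \vert e^{-\mu }-p_X(0)\vert \le \int _0^1\vert D(t,\mu )\vert \,dt. \]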

Proof

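Since \(X \in \Delta\), \(D(t,\mu )\) does not change sign on [0,1]; moreover \(e^{-\mu }\le e^{-\mu t}\le 1\) for \(t\in [0,1]\). Hence

\[ e^{-\mu }\int _0^1\vert D(t,\mu )\vert \,dt\le \int _0^1\vert D(t,\mu )\vert e^{-\mu t}\,dt=\Big\vert \int _0^1 D(t,\mu )e^{-\mu t}\,dt\Big\vert \le \int _0^1\vert D(t,\mu )\vert \,dt. \]

As \(D(t,\mu )e^{-\mu t}=\{\Psi _{X}(t)e^{-\mu t}\}^\prime\), then

\[ \int _0^1 D(t,\mu )e^{-\mu t}\,dt=\Psi _X(1)e^{-\mu }-\Psi _X(0)=e^{-\mu }-p_X(0)=T^{(0)}. \]

By dividing and multiplying by \(1+\ldots +{\frac{\mu ^k}{ k!}}\), the thesis immediately follows. \(\square\)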
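The bounds of Proposition 1 can be checked numerically. The sketch below assumes a hypothetical Binomial(10, 0.3) alternative, approximates the integrals with the composite Simpson rule, and verifies both the identity \(\int _0^1 D(t,\mu )e^{-\mu t}dt=e^{-\mu }-p_X(0)\) used in the proof and the \(k=0\) inequality.

```python
import math

def simpson(f, a, b, n=2000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    s = f(a) + f(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * f(a + i * h)
    return s * h / 3

m, p = 10, 0.3          # hypothetical Binomial(m, p) alternative
mu = m * p              # its mean

def D(t):
    """D(t, mu) = Psi'(t) - mu Psi(t) for the Binomial(m, p) p.g.f."""
    base = 1.0 - p + p * t
    return m * p * base ** (m - 1) - mu * base ** m

l1 = simpson(lambda t: abs(D(t)), 0.0, 1.0)                     # L1 distance of D from 0
weighted = simpson(lambda t: D(t) * math.exp(-mu * t), 0.0, 1.0)
t0 = math.exp(-mu) - (1.0 - p) ** m                              # T^(0) = e^{-mu} - p_X(0)

print(abs(weighted - t0) < 1e-9)              # identity used in the proof
print(math.exp(-mu) * l1 <= abs(t0) <= l1)    # bounds of Proposition 1 with k = 0
```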

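Thanks to inequality (1), a family of test statistics, indexed by k and depending on an estimator of

\[ T^{(k)}=f_k(\mu )-p_X(0)\Big(1+\ldots +\frac{\mu ^k}{k!}\Big), \]

can be defined. Note that \(f_k(\mu )\) and \(p_X(0)(1+\ldots +{\frac{\mu ^k}{ k!}})\) are both equal to \(P(X\le k)\) when X belongs to \(\Pi _\mu\). Moreover, for \(X\in \Delta\), since \(T^{(k)}=T^{(0)}(1+\ldots +{\frac{\mu ^k}{ k!}})\), \(T^{(k)}\ne 0\) for some \(k\ge 0\) if and only if \(T^{(0)}\ne 0\), that is, if and only if X is not a Poisson r.v.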

3 The test statistic

Given a random sample \(X_1,\ldots ,X_n\) from X, let \({\overline{X}}_n\) be the sample mean and

\[ {\widehat{p}}_X(0)=\frac{1}{n}\sum _{i=1}^n I(X_i=0) \]

the relative frequency of zeros. The simplest test statistic arises from the estimator \({\widehat{T}}^{ (0)}=e^{-{\overline{X}}_n }-{\widehat{p}}_X(0)\) of \(T^{ (0)}\). This statistic is appealing also owing to its straightforward interpretation, being based on the comparison of the estimated probability that X takes the value zero with the estimated probability of zero for a Poisson r.v. Unfortunately, its performance may not be satisfactory, especially when the sample size is small and \(\mu\) is relatively large, as the estimation of \(p_X(0)\) then becomes even more critical.
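A minimal Python sketch of \({\widehat{T}}^{(0)}\), assuming the sample is given as a list of non-negative integers (the toy data are hypothetical):

```python
import math

def t_hat_zero(sample):
    """T-hat^(0) = exp(-sample mean) - relative frequency of zeros."""
    n = len(sample)
    xbar = sum(sample) / n
    p0_hat = sum(1 for x in sample if x == 0) / n
    return math.exp(-xbar) - p0_hat

# Two zeros out of six observations, sample mean 7/6.
print(round(t_hat_zero([0, 0, 1, 1, 2, 3]), 4))   # -0.0219
```

A negative value means that the sample contains more zeros than a Poisson distribution with the same mean would predict.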

Moreover, for \(k>0\), as \(T^{ (k)}=T^{ (0)}(1+\ldots +{{\mu ^k}\over { k!}})\), the natural estimator of \(T^{ (k)}\), given by \({\widehat{T}}^{ (0)}(1+\ldots +{{{{\overline{X}}_n} ^k}\over { k!}})\), suffers from the same drawbacks as \({\widehat{T}}^{ (0)}\). To avoid the estimation of \(p_X(0)\), since \(p_X(0) (1+\ldots +{\frac{\mu ^k}{ k!}})\) is equal to \(P(X\le k)\) under \(H_0\), the following estimator of \(T^{(k)}\) is proposed

\[ {\widehat{T}}_n^{(k)}=f_k({\overline{X}}_n)-F_n(k), \]

where

\[ F_n(k)=\frac{1}{n}\sum _{i=1}^n I(X_i\le k) \]

is the empirical c.d.f. evaluated at k.

It is worth noting that, since k is a fixed natural number (often \(k=0\)), \(\sqrt{n}{\widehat{T}}_n^{(k)}\) is the k-th r.v. of the estimated (discrete) empirical process introduced by Henze (1996) for dealing with goodness-of-fit tests for discrete distributions, and also considered by Gürtler and Henze (2000) in their critical synopsis of several procedures for assessing Poissonity. However, as \(\sqrt{n}{\widehat{T}}_n^{ (k)}\) is a single r.v. rather than a whole process, its asymptotic distribution can be easily derived from classical Central Limit Theorems under very mild assumptions, as shown in the following Proposition.

Proposition 2

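Let X be a r.v. with finite \(\textrm{Var}[X]\) and let k be a fixed natural number. Let

\[ V_n^{(k)}=\sqrt{n}\,{\widehat{T}}_n^{(k)}=\sqrt{n}\,\{f_k({\overline{X}}_n)-F_n(k)\}. \]

If \(X\in \Pi _\mu\), then \(V_n^{ (k)}\) converges in distribution to \({{\mathcal {N}}}(0,\sigma ^2_{\mu ,k})\) as \(n\rightarrow \infty\), where

\[ \sigma ^2_{\mu ,k}=f_k(\mu )\{1-f_k(\mu )\}-\mu e^{-2\mu }\Big(\frac{\mu ^k}{k!}\Big)^2. \qquad (2) \]

Moreover, if \(r_k=P(X\le k)-f_k(\mu )\ne 0\) for some k, in which case X is not a Poisson r.v., then \(\vert V_n^{ (k)}\vert\) converges in probability to \(\infty\).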

Proof

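Note that \(f^\prime _k(\mu )=-e^{-\mu }\frac{\mu ^k}{k!}.\) Owing to the Delta Method,

\[ V_n^{(k)}=\frac{g(X_1)+\ldots +g(X_n)}{\sqrt{n}}-\sqrt{n}\,r_k+o_P(1), \]

where g is the function defined by \(x\mapsto -e^{-\mu }{\frac{\mu ^{k}}{ k!}}(x-\mu )-\{I(x\le k)-P(X\le k)\}\). When \(X\in \Pi _\mu\), \(r_k=0\), \(\textrm{E}[g(X)]=0\) and \(\textrm{E}[o_P^2(1)]\) is o(1), since \(\vert f_k({\overline{X}}_n)-f_k(\mu )\vert \le \vert {\overline{X}}_n-\mu \vert .\) Then, under \(H_0\), \(V_n^{ (k)}\) converges in distribution to \({{\mathcal {N}}}(0,{ \textrm{Var}\, [g(X)]})\) owing to the Central Limit Theorem. Moreover, since, for \(X\in \Pi _\mu\),

\[ \textrm{Var}[I(X\le k)]=f_k(\mu )\{1-f_k(\mu )\} \]

and

\[ \textrm{Cov}[X,I(X\le k)]=\textrm{E}[X\,I(X\le k)]-\mu f_k(\mu )=-\mu e^{-\mu }\frac{\mu ^k}{k!}, \]

it follows that \(\textrm{Var}[g(X)]=\mu e^{-2\mu }(\mu ^k/k!)^2+f_k(\mu )\{1-f_k(\mu )\}-2\mu e^{-2\mu }(\mu ^k/k!)^2\); thus relation (2), and consequently the first part of the proposition, is proven.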

Now, let X be a r.v. such that \(r_k\ne 0\); in particular, X is not a Poisson r.v. Then \(\vert V_n^{ (k)}\vert\) converges in probability to \(\infty\), because \({\frac{g(X_1)+\ldots +g(X_n)}{\sqrt{n}}}+o_P(1)\) is bounded in probability while \(\sqrt{n} \vert r_k\vert\) diverges to \(\infty\). This proves the second part of the proposition. \(\square\)
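The variance formula (2) can be cross-checked by computing \(\textrm{Var}[g(X)]\) directly from the Poisson probability mass function. The following sketch does so by truncated summation (the truncation point of 400 is an assumption, harmless for the moderate means used here) and compares the result with the closed form.

```python
import math

def poisson_cdf(mu, k):
    """f_k(mu) = e^{-mu} (1 + mu + ... + mu^k / k!)."""
    term = total = math.exp(-mu)
    for j in range(1, k + 1):
        term *= mu / j
        total += term
    return total

def sigma2_closed(mu, k):
    """sigma^2_{mu,k} = f_k(mu){1 - f_k(mu)} - mu (e^{-mu} mu^k / k!)^2."""
    fk = poisson_cdf(mu, k)
    pk = math.exp(-mu) * mu ** k / math.factorial(k)
    return fk * (1.0 - fk) - mu * pk ** 2

def var_g_by_summation(mu, k, upper=400):
    """Var[g(X)] for X ~ Poisson(mu), summing the pmf up to `upper`."""
    a = math.exp(-mu) * mu ** k / math.factorial(k)
    fk = poisson_cdf(mu, k)
    pmf, total = math.exp(-mu), 0.0
    for x in range(upper + 1):
        g = -a * (x - mu) - ((1.0 if x <= k else 0.0) - fk)   # E[g(X)] = 0
        total += g * g * pmf
        pmf *= mu / (x + 1)                                    # P(X = x + 1)
    return total

for mu, k in [(0.5, 0), (3.0, 2), (10.0, 6)]:
    # each comparison prints True
    print(mu, k, abs(sigma2_closed(mu, k) - var_g_by_summation(mu, k)) < 1e-12)
```

Since \(f_k(1-f_k)\le 1/4\), the closed form also shows that \(\sigma _{\mu ,k}\le 1/2\).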

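Thanks to Proposition 2, for a fixed natural number \(k\ge 0\) and under the null hypothesis \(H_0\), \(\sqrt{n}\, {\widehat{T}}_n^{ (k)}/\sigma _{\mu ,k}\) converges in distribution to \({{\mathcal {N}}}(0,1)\). Therefore, an estimator of \(\sigma _{\mu ,k}\) is needed to define the test statistic. As the plug-in estimator

\[ {\widetilde{\sigma }}^2_{n,k}=f_k({\overline{X}}_n)\{1-f_k({\overline{X}}_n)\}-{\overline{X}}_n e^{-2{\overline{X}}_n}\Big(\frac{{\overline{X}}_n^k}{k!}\Big)^2 \]

converges a.s. and in quadratic mean to \(\sigma ^2_{\mu ,k}\) for any natural number k, the test statistic turns out to be

\[ Z_{n,k}=\frac{\sqrt{n}\,{\widehat{T}}_n^{(k)}}{{\widetilde{\sigma }}_{n,k}}. \]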

An \(\alpha\)-level large sample test rejects \(H_0\) for realizations of the test statistic whose absolute values are greater than \(z_{ 1-\alpha /2}\), where \(z_{ 1-\alpha /2}\) denotes the \({ 1-\alpha /2}\)-quantile of the standard normal distribution.
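The test just described can be sketched in a few lines of Python. This is an illustrative implementation under our own assumptions (the function names are hypothetical, the sample is a list of counts, and samples whose plug-in variance is not positive, such as all zeros, are rejected as degenerate); it uses only the standard library, with `statistics.NormalDist` providing \(z_{1-\alpha /2}\).

```python
import math
from statistics import NormalDist

def poisson_cdf_pmf(mu, k):
    """Return (f_k(mu), P(X = k)) for X ~ Poisson(mu)."""
    term = total = math.exp(-mu)
    for j in range(1, k + 1):
        term *= mu / j
        total += term
    return total, term

def z_statistic(sample, k):
    """Z_{n,k} = sqrt(n) * (f_k(xbar) - F_n(k)) / sigma-tilde_{n,k}."""
    n = len(sample)
    xbar = sum(sample) / n
    fk, pk = poisson_cdf_pmf(xbar, k)
    ecdf_k = sum(1 for x in sample if x <= k) / n   # F_n(k)
    s2 = fk * (1.0 - fk) - xbar * pk ** 2            # plug-in sigma^2
    if s2 <= 0.0:
        raise ValueError("degenerate sample: plug-in variance is not positive")
    return math.sqrt(n) * (fk - ecdf_k) / math.sqrt(s2)

def poissonity_test(sample, k=0, alpha=0.05):
    """Two-sided large-sample test: reject H0 when |Z_{n,k}| > z_{1 - alpha/2}."""
    z = z_statistic(sample, k)
    crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return z, abs(z) > crit

# Hypothetical sample with an excess of zeros: 30 zeros and 20 threes.
z, reject = poissonity_test([0] * 30 + [3] * 20, k=0)
print(round(z, 2), reject)   # -6.63 True
```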

Corollary 1

Under \(H_1\), for any natural number k such that \(P(X\le k)-f_k(\mu )\ne 0\), \(\vert Z_{n,k}\vert\) converges in probability to \(\infty\).

Proof

As \({\widetilde{\sigma }}^2_{n,k}\) converges a.s. to \(\sigma ^2_{\mu ,k}\), the proof immediately follows from the second part of Proposition 2. \(\square\)

It is at once apparent that \(Z_{n,k}\) constitutes a family of test statistics giving rise to consistent tests for \(k=0\) and for all the other values of k at which the c.d.f. of X differs from the Poisson c.d.f. Among this family of test statistics, only \(Z_{n,0}\) belongs to the family of Poisson zero indexes (Weiß et al 2019). It is particularly attractive owing to its simplicity but, as already pointed out, its finite-sample performance may deteriorate, especially if the sample size is small and \(\mu\) is relatively large. Therefore, the selection of a parameter k ensuring consistency and good discriminatory capability is crucial, and a data-driven selection criterion is proposed.

4 Data-driven choice of k

A heuristic, relatively simple criterion for choosing k is based on the relative discrepancy measure \(T^{ (k)}/f_k(\mu )\), which can be estimated by \(\frac{{\widetilde{\sigma }}_{n,k}}{f_k({\overline{X}}_n)\sqrt{n}}Z_{n,k}\). Recall that, under \(H_0\), \(Z_{n,k}\) is approximately a standard normal r.v. also for moderate sample sizes. As \(\frac{{\widetilde{\sigma }}_{n,k}}{f_k({\overline{X}}_n)\sqrt{n}}\) converges a.s. to 0 when \(n\rightarrow \infty\), for any fixed n, k may be selected in such a way that this factor is kept under control. This choice should ensure both high power and an actual significance level close to the nominal one. To this purpose, note that the function \(\mu \mapsto f_k(\mu )\) is decreasing for any k, so that, if \(\mu \ge 1\), it holds \(f^2_k(\mu )/f_k(1)\le f_k(\mu )\), and that \(\sup _k 1/f_k(1)=1/f_0(1)=e\). Then k can be selected as the smallest natural number such that \(\frac{{\widetilde{\sigma }}_{n,k}}{f^2_k({\overline{X}}_n)\sqrt{n}}\) is not greater than e when \({\overline{X}}_n\ge 1\), that is

\[ k^*_n=\min \Big\{k\in {\mathbb {N}}:\ \frac{{\widetilde{\sigma }}_{n,k}}{f^2_k({\overline{X}}_n)\sqrt{n}}\le e\Big\}. \]

Although \(k^*_n\) converges a.s. to 0 (since \({{\widetilde{\sigma }}_{n,k}}\le 1/2\) by (2)), the convergence rate may be very slow for large values of \(\mu\), so that \(k^*_n\) can be considerably larger than 0 even for large sample sizes. Finally, the test statistic corresponding to \(k^*_n\) is

\[ W_n=Z_{n,k^*_n}=\frac{\sqrt{n}\,{\widehat{T}}_n^{(k^*_n)}}{{\widetilde{\sigma }}_{n,k^*_n}}, \]

and its asymptotic behaviour can be obtained. Obviously, in this case \(\sqrt{n}\, {\widehat{T}}_n^{ (k^*_n)}\) is no longer a r.v. belonging to the estimated empirical process (Henze 1996).
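A sketch of the data-driven rule and of \(W_n\) follows. It applies the minimum rule for any sample mean; one can check that for \({\overline{X}}_n<1\) the condition already holds at \(k=0\), so the rule then returns 0. The function names and the iteration cap `max_k` are assumptions, and the inequality is written without division to avoid overflow when \(f_k({\overline{X}}_n)\) is tiny.

```python
import math

def poisson_cdf_pmf(mu, k):
    """Return (f_k(mu), P(X = k)) for X ~ Poisson(mu)."""
    term = total = math.exp(-mu)
    for j in range(1, k + 1):
        term *= mu / j
        total += term
    return total, term

def plugin_sigma(xbar, k):
    """Return (sigma-tilde_{n,k}, f_k(xbar)); tiny negative rounding is clipped to 0."""
    fk, pk = poisson_cdf_pmf(xbar, k)
    return math.sqrt(max(fk * (1.0 - fk) - xbar * pk ** 2, 0.0)), fk

def select_k(xbar, n, max_k=10_000):
    """k*_n: smallest k with sigma-tilde_{n,k} / (f_k(xbar)^2 sqrt(n)) <= e."""
    for k in range(max_k + 1):
        sigma, fk = plugin_sigma(xbar, k)
        if sigma <= math.e * math.sqrt(n) * fk ** 2:   # same inequality, no division
            return k
    raise RuntimeError("no admissible k found below max_k")

def w_statistic(sample):
    """Return (W_n, k*_n) for a list of counts."""
    n = len(sample)
    xbar = sum(sample) / n
    k = select_k(xbar, n)
    sigma, fk = plugin_sigma(xbar, k)
    if sigma == 0.0:
        raise ValueError("degenerate sample: plug-in variance is zero")
    ecdf_k = sum(1 for x in sample if x <= k) / n
    return math.sqrt(n) * (fk - ecdf_k) / sigma, k

print(select_k(0.5, 20), select_k(10.0, 50))   # 0 6
w, k = w_statistic([0] * 30 + [3] * 20)          # hypothetical sample
print(round(w, 2), k)                            # -6.63 0
```

The second call illustrates the point made above: with a sample mean of 10 and \(n=50\), the rule moves away from \(k=0\) and selects \(k=6\).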

Corollary 2

Under \(H_0\), \(W_n\) converges in distribution to \({{\mathcal {N}}}(0,1)\) as \(n\rightarrow \infty\) and, under \(H_1\), \(\vert W_n\vert\) converges in probability to \(\infty\).

Proof

Since \(k^*_n\) converges to 0, \(W_n\) and \(Z_{n,0}\) have the same asymptotic behaviour and the proof immediately follows from Proposition 2 and Corollary 1. \(\square\)

It is worth noting that the selection of \(k_n^*\) by means of the proposed data-driven criterion ensures consistency, maintaining the asymptotic normal distribution of the test statistic.

5 Asymptotic behaviour under contiguous alternatives

The asymptotic behaviour of the proposed test is investigated for detecting departures from Poissonity under contiguous alternatives. More precisely, given a positive number \(\lambda\), for any \(n\ge \lambda ^2\), let \(X^{(n)}\) be the mixture of r.v.s given by

\[ X^{(n)}=X\,I(A_n)+Y\,\{1-I(A_n)\}, \qquad (3) \]

where \(X\in \Pi _\mu\), Y is a non-negative integer-valued r.v. with \(\textrm{E}[Y]=\mu\) and \(\textrm{E}[Y^2]<\infty\), \(X,Y,I(A_n)\) are independent and \(P(A_n)=1-\frac{\lambda }{\sqrt{n}}\). Roughly speaking, \(\lambda\) is a parameter quantifying the discrepancy between the distribution of X and the distribution of \(X^{(n)}\). Obviously, for small values of \(\lambda\) detecting departures from the Poisson distribution is extremely difficult. Note that \(X^{(n)}\) belongs to \(\Delta\) if Y belongs to \(\Delta\), and that \(X^{(n)}\) converges in distribution to a Poisson r.v.
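To simulate from a contiguous alternative of this kind, one can first draw the indicator of \(A_n\) and then either a Poisson or a Y variate. The sketch below is only an illustration under stated assumptions: Poisson variates are generated with Knuth's multiplication method (adequate for moderate \(\mu\)), Y is passed in as a user-supplied sampler, and the example uses \(Y\sim \mathcal {B}(1,0.5)\) with \(\mu =0.5\), \(n=20\) and \(\lambda =2\).

```python
import math
import random

def poisson_variate(rng, mu):
    """Poisson(mu) draw by Knuth's multiplication method (fine for moderate mu)."""
    limit, k, prod = math.exp(-mu), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def contiguous_variate(rng, n, lam, mu, draw_y):
    """One draw of X^(n) = X I(A_n) + Y (1 - I(A_n)), with P(A_n) = 1 - lam / sqrt(n)."""
    if lam * lam > n:
        raise ValueError("the construction requires n >= lam^2")
    if rng.random() < 1.0 - lam / math.sqrt(n):   # event A_n: keep the Poisson draw
        return poisson_variate(rng, mu)
    return draw_y(rng)                             # otherwise draw from Y

def bernoulli_half(rng):
    """Y ~ B(1, 0.5), whose mean 0.5 matches mu = 0.5."""
    return 1 if rng.random() < 0.5 else 0

rng = random.Random(2024)
draws = [contiguous_variate(rng, 20, 2.0, 0.5, bernoulli_half) for _ in range(50_000)]
print(sum(draws) / len(draws))   # should be close to mu = 0.5, since E[Y] = mu
```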

Proposition 3

For any \(n\ge \lambda ^2\), given a random sample \(X^{(n)}_1,\ldots , X^{(n)}_n\) from \(X^{(n)}\) and a fixed natural number k, let

\[ U_n^{(k)}=\sqrt{n}\,\{f_k({\overline{X}}_n^{\prime })-F^{\prime }_n(k)\}, \]

where \({\overline{X}}_n^{\prime }\) and \(F^{\prime }_n\) are the sample mean and the empirical c.d.f. of \(X^{(n)}_1,\ldots , X^{(n)}_n\). Then \(U_n^{ (k)}\) converges in distribution to \({{\mathcal {N}}}(\tau _k,\sigma ^2_{\mu ,k})\) as \(n\rightarrow \infty\), where \(\tau _k=-\lambda \{P(Y\le k)-f_k(\mu )\}\). In particular, \(U^{(k^*_n)}_n\), where \(k^*_n\) is obtained by the data-driven criterion, is asymptotically equivalent to \(U^{ (0)}_n\), which converges in distribution to \({{\mathcal {N}}}(\lambda \{e^{-\mu }-P(Y=0)\},e^{-2\mu }(e^{\mu }-1-\mu ))\).

Proof

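Note that \(\vert f^{\prime \prime }_k\vert \le 2\) for any k. Owing to Taylor’s Theorem,

\[ \sqrt{n}\,\{f_k({\overline{X}}_n^{\prime })-f_k(\mu )\}=-\sqrt{n}\,e^{-\mu }\frac{\mu ^k}{k!}({\overline{X}}_n^{\prime }-\mu )+R_n,\qquad \vert R_n\vert \le \sqrt{n}\,({\overline{X}}_n^{\prime }-\mu )^2 . \]

Since \(\textrm{E}[X^{(n)}]=\mu\) and \(\textrm{Var}[X^{(n)}]\) is bounded in n,

\[ \textrm{E}\big[\sqrt{n}\,({\overline{X}}_n^{\prime }-\mu )^2\big]=\frac{\textrm{Var}[X^{(n)}]}{\sqrt{n}}\rightarrow 0, \]

so that \(U^{ (k)}_n\) and \({\frac{g_n(X^{(n)}_1)+\ldots +g_n(X^{(n)}_n)}{\sqrt{n}}}-\sqrt{n} r_{n,k}\) have the same asymptotic behaviour, where \(g_n\) is the function defined by \(x\mapsto -e^{-\mu }{\frac{\mu ^{k}}{ k!}}(x-\mu )-\{I(x\le k)-P(X^{(n)}\le k)\}\) and \(r_{n,k}=P(X^{(n)}\le k)-f_k(\mu )\). Since \(\lim _n -\sqrt{n} r_{n,k}=\tau _k\), the thesis follows from the convergence in distribution of \({\frac{g_n(X^{(n)}_1)+\ldots +g_n(X^{(n)}_n)}{\sqrt{n}}}\) to \({{\mathcal {N}}}(0,\sigma ^2_{\mu ,k}).\) \(\square\)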
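The limiting normal law of Proposition 3 is easy to evaluate for a given Y. The sketch below computes \(\tau _k\) and \(\sigma ^2_{\mu ,k}\); the helper names are hypothetical, and the example assumes \(X\in \Pi _{0.5}\), \(Y\sim \mathcal {B}(1,0.5)\) and \(\lambda =2\).

```python
import math

def poisson_cdf_pmf(mu, k):
    """Return (f_k(mu), P(X = k)) for X ~ Poisson(mu)."""
    term = total = math.exp(-mu)
    for j in range(1, k + 1):
        term *= mu / j
        total += term
    return total, term

def local_limit(mu, k, lam, cdf_y):
    """Mean tau_k and variance sigma^2_{mu,k} of the normal limit of U_n^(k)."""
    fk, pk = poisson_cdf_pmf(mu, k)
    tau = -lam * (cdf_y(k) - fk)
    var = fk * (1.0 - fk) - mu * pk ** 2
    return tau, var

def cdf_bernoulli_half(k):
    """P(Y <= k) for Y ~ B(1, 0.5) and k >= 0."""
    return 0.5 if k == 0 else 1.0

tau, var = local_limit(0.5, 0, 2.0, cdf_bernoulli_half)
print(round(tau, 4), round(var, 4))   # 0.2131 0.0547
```

When Y is itself Poisson with mean \(\mu\), \(\tau _k=0\) for every k, consistent with the absence of any departure from Poissonity.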

The previous proposition can be regarded as a non-parametric version of the classical asymptotic analysis under so-called shrinking alternatives. Moreover, the test statistic has a local asymptotic normal distribution, which is useful to highlight its discriminatory capability under non-trivial contiguous alternatives. In a parametric setting, by means of Le Cam's lemmas (Le Cam 2012), it would be possible to derive the limiting power function and to build an efficiency measure for test statistics. Clearly, in a non-parametric functional setting, a closed form of the power function is not available, and the power must be assessed by means of simulation studies.

6 Simulation study

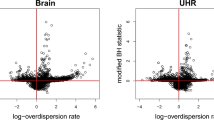

The performance of the proposed test has been assessed by means of an extensive Monte Carlo simulation. First, with the nominal level fixed at \(\alpha =0.05\), the significance level of the test is empirically evaluated, as the proportion of rejections of the null hypothesis, by independently generating 10000 samples of size \(n=50\) from Poisson distributions with \(\mu\) varying from 1 to 16 in steps of 0.5. As mentioned earlier, the family of test statistics \(Z_{n,k}\) depends on the parameter k; therefore, for each \(\mu\), the empirical significance level is computed for \(k=0,1,2,3\) and reported in Fig. 1. Simulation results confirm that for large values of \(\mu\) the empirical level is far from the nominal one even for a reasonably large sample size, and that a data-driven procedure is needed to select k. Thus, the test statistic \(W_n\) is considered, and its performance is compared with that of two tests having known asymptotic distributions: the test by Meintanis and Nikitin (2008), \(MN_n\), also recommended by Mijburgh and Visagie (2020) for its good power against a large variety of deviations from the Poisson distribution, and the Fisher index of dispersion, \(ID_n\), which, owing to its simplicity, is often considered as a benchmark. The explicit, ready-to-implement test statistic \(MN_n\) has a non-trivial expression and is based on \(\int _0^1D(t,\mu )t^a\,dt,\) where a is a suitable parameter; \(MN_n\) is proven to have an asymptotic normal distribution. In the simulation, a is set equal to 3, as suggested when there is no prior information on the alternative model. The Fisher index of dispersion test is performed as an asymptotic two-sided chi-square test and is based on the extremely simple test statistic \(ID_n={\sum _{i=1}^n(X_i-\overline{X}_n)^2}/{\overline{X}_n}.\)
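For reference, a sketch of the two-sided Fisher index of dispersion test follows, comparing \(ID_n\) with a chi-square distribution with \(n-1\) degrees of freedom. To stay within the Python standard library, the chi-square quantiles are approximated here by the Wilson-Hilferty transformation instead of being computed exactly; this is an assumption of the sketch, not part of the study, although the approximation is close to the exact quantiles for the sample sizes considered.

```python
from statistics import NormalDist

def chi2_quantile_wh(p, df):
    """Wilson-Hilferty approximation to the p-quantile of a chi-square(df)."""
    z = NormalDist().inv_cdf(p)
    c = 2.0 / (9.0 * df)
    return df * (1.0 - c + z * c ** 0.5) ** 3

def dispersion_test(sample, alpha=0.05):
    """Two-sided test comparing ID_n = sum (X_i - xbar)^2 / xbar with chi-square(n - 1)."""
    n = len(sample)
    xbar = sum(sample) / n
    if xbar == 0:
        raise ValueError("ID_n is undefined when the sample mean is 0")
    id_n = sum((x - xbar) ** 2 for x in sample) / xbar
    lower = chi2_quantile_wh(alpha / 2.0, n - 1)
    upper = chi2_quantile_wh(1.0 - alpha / 2.0, n - 1)
    return id_n, (id_n < lower or id_n > upper)

print(dispersion_test([0] * 25 + [4] * 25))             # (100.0, True): overdispersed
print(dispersion_test([0] * 12 + [2] * 26 + [4] * 12))  # (48.0, False)
```

Because the test is two-sided, strongly underdispersed samples (for instance, a constant sample with \(ID_n=0\)) are rejected as well.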

Initially, given \(\alpha =0.05\), the three tests are compared by means of their empirical significance levels, computed by generating 10000 samples of size \(n=20, 50\) from Poisson distributions with \(\mu\) varying from 1 to 16 in steps of 0.5. Fig. 2 shows that, even for the moderate sample size \(n = 20\), the test based on \(W_n\) attains the nominal significance level satisfactorily, highlighting a rather good speed of convergence to the normal distribution, as also confirmed by the empirical level for \(n=50\). The Fisher test shows an empirical significance level very close to the nominal one even for \(n = 20\), except when \(\mu\) is small. The test based on \(MN_n\), on the contrary, maintains the nominal significance level rather closely only for \(n=50\).

The null hypothesis of Poissonity is tested against the following alternative models (for details see Johnson et al 2005): the mixture of two Poisson distributions, denoted by \({\mathcal {M}}{\mathcal {P}}(\mu _{1},\mu _{2})\), the Binomial by \(\mathcal {B}(k, p)\), the Negative Binomial by \(\mathcal{N}\mathcal{B}(k, p)\), the Generalized Hermite by \(\mathcal{G}\mathcal{H}(a, b, k)\), the Discrete Uniform in \(\{0,1,\dots ,\nu \}\) by \(\mathcal{D}\mathcal{U}(\nu )\), the Discrete Weibull by \(\mathcal{D}\mathcal{W}(q, \beta )\), the Logarithmic Series translated by -1 by \(\mathcal{LS}^{-}(\theta )\), the Logarithmic Series by \(\mathcal{L}\mathcal{S}(\theta )\), the Generalized Poisson by \(\mathcal{G}\mathcal{P}(\mu _1,\mu _2)\), the Zero-inflated Binomial by \(\mathcal{Z}\mathcal{B}(k, p_{1}, p_{2})\), the Zero-inflated Negative Binomial by \(\mathcal {ZNB}(k, p_{1}, p_{2})\), and the Zero-inflated Poisson by \(\mathcal{Z}\mathcal{P}(\mu _{1},\mu _{2})\). Various parameter values are considered (see Table 1). Moreover, the significance level of the tests is reported for Poisson distributions with \(\mu =0.5, 1, 2, 5, 10, 15\). The alternatives considered in the simulation study include overdispersed, underdispersed, heavy-tailed, mixture and zero-inflated distributions, together with distributions having mean close to variance. Some alternatives that do not belong to the class \(\Delta\), such as the logarithmic and shifted logarithmic distributions with parameters 0.7, 0.8, and 0.9 and the discrete uniform in \(\{0,1,2,3\}\), have been included to check the robustness of the \(W_n\) and \(MN_n\) tests.

From each distribution, 10000 samples of size \(n=20, 30, 50\) are independently generated and, on each sample, the three tests are performed. The empirical power of each test is computed as the percentage of rejections of the null hypothesis. The simulation is implemented in R (R Core Team 2021), in particular using the packages extraDistr, hermite and RNGforGPD.
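The Monte Carlo recipe described above can be illustrated with a short Python sketch. It is not the R code used for the study: it estimates the rejection rate of the \(W_n\) test under a Poisson(5) null and under a Negative Binomial alternative generated, as an assumption of this sketch, as a gamma mixture of Poisson variates with shape 1 and mean 5; the number of replications and the seed are arbitrary.

```python
import math
import random
from statistics import NormalDist

def poisson_variate(rng, mu):
    """Poisson(mu) draw by Knuth's multiplication method (fine for moderate mu)."""
    limit, k, prod = math.exp(-mu), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def poisson_cdf_pmf(mu, k):
    """Return (f_k(mu), P(X = k)) for X ~ Poisson(mu)."""
    term = total = math.exp(-mu)
    for j in range(1, k + 1):
        term *= mu / j
        total += term
    return total, term

def w_statistic(sample):
    """W_n: Z_{n,k} evaluated at the data-driven k*_n."""
    n = len(sample)
    xbar = sum(sample) / n
    k = 0
    while True:
        fk, pk = poisson_cdf_pmf(xbar, k)
        sigma = math.sqrt(max(fk * (1.0 - fk) - xbar * pk ** 2, 0.0))
        if sigma <= math.e * math.sqrt(n) * fk ** 2:   # selection rule for k*_n
            break
        k += 1
    if sigma == 0.0:
        raise ValueError("degenerate sample: plug-in variance is zero")
    ecdf_k = sum(1 for x in sample if x <= k) / n
    return math.sqrt(n) * (fk - ecdf_k) / sigma

def rejection_rate(draw, n, reps, alpha=0.05, seed=1):
    """Share of `reps` simulated samples of size n for which |W_n| > z_{1 - alpha/2}."""
    rng = random.Random(seed)
    crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    rejections = 0
    for _ in range(reps):
        sample = [draw(rng) for _ in range(n)]
        if abs(w_statistic(sample)) > crit:
            rejections += 1
    return rejections / reps

level = rejection_rate(lambda r: poisson_variate(r, 5.0), n=50, reps=2000)
power = rejection_rate(lambda r: poisson_variate(r, r.gammavariate(1.0, 5.0)), n=50, reps=2000)
print(level, power)   # empirical level (near alpha) and empirical power
```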

Simulation results are reported in Table 1. The \(MN_n\) test is somewhat too conservative for smaller sample sizes and the \(ID_n\) test does not capture the significance level for small \(\mu\), while \(W_n\) shows an empirical significance level rather close to the nominal one even for small sample size and small \(\mu\).

As expected, also in view of the theoretical results of Janssen (2000), none of the three tests outperforms the others for every alternative and every sample size, and their power crucially depends on the parameter values, even for alternatives in the same class. Obviously, when the alternative model is very similar to a Poisson r.v., e.g. when the alternative is Binomial with k large and p small, or when dealing with the Poisson mixtures or the Negative Binomial with k large, the power of all the tests predictably decreases. Low power is also observed against slightly overdispersed or underdispersed discrete uniform distributions, while the power rapidly increases as overdispersion becomes more marked, with the performance of all three tests becoming comparable as n increases. For the discrete Weibull distributions, the \(W_n\) test has a certain edge over its competitors, which, on the other hand, perform better for the generalized Poisson distributions, even though their power is satisfactory only for \({{\mathcal {G}}{\mathcal {P}}}(5,0.4)\). The power of the test based on \(W_n\) is the highest for all the logarithmic distributions, with less remarkable differences for \(\theta =0.9\), while the three tests exhibit nearly the same power for the shifted logarithmic distributions, where a decrease in the power of \(W_n\) occurs especially for \(n=20\). As to the zero-inflated distributions, the three tests behave really unsatisfactorily for \(\mathcal {ZNB}(5, 0.9, 0.1)\) and \(\mathcal{Z}\mathcal{P}(1,0.2)\) even for \(n=50\), but \(W_n\) shows the best performance for most of the remaining alternatives and sample sizes. Overall, the number of alternatives for which the three tests reach a power greater than 90% is almost the same for \(n=20\) and \(n=30\). Interestingly, for \(n=50\) the proposed test reaches a power greater than 90% more frequently not only than the straightforward Fisher test but also than the Meintanis and Nikitin test, which is more complex to implement.

Finally, the discriminatory capability of the tests under contiguous alternatives is evaluated. In particular, the tests based on \(W_n\), \(MN_n\) and \(ID_n\) are considered and, for the sake of brevity, \(P_n\) denotes the power function corresponding to each test statistic. Obviously, \(P_n\) is a function of \(\lambda\), where \(\lambda \in \,\, ] 0,\sqrt{n}[\), which ensures that the contiguous mixture never completely degenerates, keeping its mixture nature for any \(\lambda\). Hence a basic efficiency measure is

\[ r_n=\frac{1}{\sqrt{n}}\int _0^{\sqrt{n}}P_n(\lambda )\,d\lambda , \]

which evidently takes values in \(]0,1[\) and, since \(P_n\) is not known, the Monte Carlo estimate

\[ {\hat{r}}_n=\frac{\varepsilon }{\sqrt{n}}\sum _{i=1}^m {\hat{P}}_n(\lambda _i) \]

is considered, where \(\lambda _{i}=i\varepsilon\), with \(i=1,\ldots ,m\) and \(m\le \lceil \frac{\sqrt{n}}{\varepsilon }\rceil -1\), for \(\varepsilon\) sufficiently small, and \(\hat{P_n}\) is the empirical power.

To assess the performance of the three tests fairly, the alternative distributions of type (3) are obtained by selecting Y such that the tests achieve similar power when Y is the alternative distribution. In particular, Y is \(\mathcal {B}(1, 0.5)\) and X is \(\Pi _{0.5}\). In this case, it should be noted that the behaviour of \(W_n\) coincides with that of the simpler version \(Z_{n,0}\) since \(k^*_n=0\) almost surely. In Fig. 3 the empirical power as a function of \(\lambda\), computed on 10000 independently generated samples, is reported for sample sizes \(n=20\) and \(n=50\) and for \(\varepsilon ={0.25}\), and in Table 2 the corresponding values of \({{\hat{r}}}_n\) are reported.

Graphical and numerical results show that, even if all the tests improve as n increases, the proposed test performs better for both sample sizes. In contrast, the \(ID_n\) and \(MN_n\) tests have very similar behaviour.

7 Some applications in biodosimetry

Biodosimetry, the measurement of biological response to radiation, plays an important role in accurately reconstructing the dose of radiation received by an individual by using biological markers, such as chromosomal abnormalities caused by radiation. When radiation exposure occurs, the DNA damage is randomly distributed among cells, producing chromosome aberrations, and interest lies in the number of aberrations (generally dicentrics and/or rings) observed. The Poisson distribution is the most widely recognised and commonly used distribution for the number of recorded dicentrics or rings per cell (Ainsbury et al 2013), even though, due to the complexity of radiation exposure cases, other distributions may be more suitable. Indeed, in the presence of partial body irradiation, heterogeneous exposures, and exposure to high Linear Energy Transfer radiation, the Poisson distribution does not fit properly, and the distribution of the chromosome aberrations provides useful insight into the patient’s exposure. Therefore, when dealing with biodosimetry data, a necessary first step consists of testing Poissonity.

Following Puig and Weiß (2020), we test Poissonity on the following datasets:

- Dataset 1: number of chromosome aberrations (dicentrics and rings) from a patient exposed to radiation after the nuclear accident of Stamboliyski (Bulgaria) in 2011;
- Dataset 2: total number of dicentrics from a male exposed to high doses of radiation in the nuclear accident that occurred in Tokai-mura (Japan) in 1999;
- Dataset 3: total number of rings from a male exposed to high doses of radiation in the nuclear accident that occurred in Tokai-mura (Japan) in 1999;
- Dataset 4: number of dicentrics observed from a healthy donor when exposed to 5 Gy of X rays;
- Dataset 5: number of dicentrics observed from a healthy donor when exposed to 7 Gy of X rays.

Data are reported in Table 3, and the values of the test statistic, together with the corresponding p-values, are given in Table 4. The test suggests that there are no noticeable departures from the Poisson distribution for Dataset 1 and Dataset 2, while for Dataset 3 the result of the test is statistically significant at the \(5\%\) level. Finally, the p-values of the test for Dataset 4 and Dataset 5 reveal strong evidence against the null hypothesis of Poisson distributed data.

8 Discussion

Although many tests for Poissonity are available in the literature, the proposed family of test statistics seems to be an appealing alternative in the absence of prior information regarding the type of deviation from Poissonity. In particular, the statistics are rather simple and easily interpretable, and the test implementation does not require intensive computational effort. Moreover, the test is consistent against any fixed alternative in \(\Delta\) when k is equal to 0 and when it is selected using the data-driven criterion, that is \(k=k^*_n\). For \(k=0\) the test statistic basically compares an estimator of \(P(X = 0)\) computed under the assumption that X is Poisson with the relative frequency of 0, but the finite sample performance of the test may not be satisfactory, especially for small sample sizes and relatively large Poisson parameters. The performance improves for \(k^*_n\), when the test compares the plug-in estimator of the Poisson c.d.f. with the empirical c.d.f. at \(k^*_n\). Indeed, even if \(k^*_n\) converges a.s. to 0, the convergence rate may be very slow for large values of the Poisson parameter, and thus, even for large sample sizes, \(k^*_n\) can be considerably larger than 0. Finally, the simulation study shows that, compared with the test by Meintanis and Nikitin (2008) and the test based on the Fisher index of dispersion, the test based on \(k^*_n\) offers rather satisfactory protection against a range of alternatives.

References

Ainsbury, E.A., Vinnikov, V.A., Maznyk, N.A., et al.: A comparison of six statistical distributions for analysis of chromosome aberration data for radiation biodosimetry. Radiat. Prot. Dosimetry 155, 253–267 (2013). https://doi.org/10.1093/rpd/ncs335

Baringhaus, L., Henze, N.: A goodness of fit test for the Poisson distribution based on the empirical generating function. Stat. Probab. Lett. 13, 269–274 (1992). https://doi.org/10.1016/0167-7152(92)90033-2

Gürtler, N., Henze, N.: Recent and classical goodness-of-fit tests for the Poisson distribution. J. Stat. Plan. Inference 90, 207–225 (2000). https://doi.org/10.1016/S0378-3758(00)00114-2

Henze, N.: Empirical-distribution-function goodness-of-fit tests for discrete models. Can. J. Stat. 24, 81–93 (1996). https://doi.org/10.2307/3315691

Inglot, T.: Data driven efficient score tests for Poissonity. Probab. Math. Stat. 39, 115–126 (2019). https://doi.org/10.19195/0208-4147.39.1.8

Janssen, A.: Global power functions of goodness of fit tests. Ann. Stat. 28, 239–253 (2000). https://doi.org/10.1214/aos/1016120371

Johnson, N.L., Kotz, S., Kemp, A.W.: Univariate discrete distributions. John Wiley & Sons, United States (2005)

Kocherlakota, S., Kocherlakota, K.: Goodness of fit tests for discrete distributions. Commun. Stat. Theor. Method. 15, 815–829 (1986). https://doi.org/10.1080/03610928608829153

Le Cam, L.: Asymptotic methods in statistical decision theory. Springer Science & Business Media (2012)

Meintanis, S., Bassiakos, Y.: Goodness-of-fit tests for additively closed count models with an application to the generalized Hermite distribution. Sankhyā: Indian J. Stat. 67, 538–552 (2005)

Meintanis, S., Nikitin, Y.Y.: A class of count models and a new consistent test for the Poisson distribution. J. Stat. Plan. Inference 138, 3722–3732 (2008). https://doi.org/10.1016/j.jspi.2007.12.011

Mijburgh, P., Visagie, I.: An overview of goodness-of-fit tests for the Poisson distribution. S. Afr. Stat. J. 54, 207–230 (2020). https://hdl.handle.net/10520/EJC-1fdb1ad43b

Nakamura, M., Pérez-Abreu, V.: Use of an empirical probability generating function for testing a Poisson model. Can. J. Stat. 21, 149–156 (1993). https://doi.org/10.2307/3315808

Puig, P., Weiß, C.H.: Some goodness-of-fit tests for the Poisson distribution with applications in biodosimetry. Comput. Stat. Data Anal. 144, 106878 (2020). https://doi.org/10.1016/j.csda.2019.106878

R Core Team: R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria (2021). https://www.R-project.org/

Rémillard, B., Theodorescu, R.: Inference based on the empirical probability generating function for mixtures of Poisson distributions. Stat. Decis. 18, 349–366 (2000). https://doi.org/10.1524/strm.2000.18.4.349

Rueda, R., O’Reilly, F.: Tests of fit for discrete distributions based on the probability generating function. Commun. Stat. Simul. Comput. 28, 259–274 (1999). https://doi.org/10.1080/03610919908813547

Weiß, C.H., Homburg, A., Puig, P.: Testing for zero inflation and overdispersion in INAR(1) models. Stat. Pap. 60, 823–848 (2019). https://doi.org/10.1007/s00362-016-0851-y

Funding

Open access funding provided by Università degli Studi di Siena within the CRUI-CARE Agreement.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Di Noia, A., Marcheselli, M., Pisani, C. et al. A family of consistent normally distributed tests for Poissonity. AStA Adv Stat Anal 108, 209–223 (2024). https://doi.org/10.1007/s10182-023-00478-8

DOI: https://doi.org/10.1007/s10182-023-00478-8