Abstract

Artificial Intelligence (AI) has emerged as a transformative force within medical imaging, making significant strides within emergency radiology. Presently, there is a strong reliance on radiologists to accurately diagnose and characterize foreign bodies in a timely fashion, a task that can be readily augmented with AI tools. This article first explores the most common clinical scenarios involving foreign bodies, such as retained surgical instruments, open and penetrating injuries, catheter and tube malposition, and foreign body ingestion and aspiration. Reviewing the existing imaging techniques used to diagnose these conditions helps clarify the potential role of AI in detecting non-biological materials. Yet the heterogeneous nature of foreign bodies and limited data availability complicate the development of computer-aided detection models. Despite these challenges, integrating AI can potentially decrease radiologist workload, enhance diagnostic accuracy, and improve patient outcomes.



Introduction

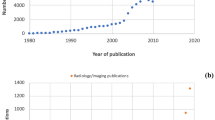

Over the past decade, artificial intelligence (AI) has ushered in a new age of radiology and is poised to revolutionize medical imaging. The concept behind AI involves creating systems to perform tasks that typically require human intelligence. As the number and type of radiological imaging studies increase, so does the workload on radiologists globally. By automating routine tasks and providing rapid insights, AI can be a valuable tool in alleviating radiologist workloads.

AI holds particular promise in emergency radiology, especially in the detection of foreign bodies. The ability of AI models to process large volumes of imaging data quickly and accurately may enhance diagnostic accuracy in the imaging of non-biological materials. However, the literature describing AI for this application remains sparse, and its development faces several other challenges. This review examines the applications of AI in detecting non-biological materials, including retained surgical bodies, open and penetrating injuries, catheter and tube malposition, and foreign body ingestion and aspiration.

Overview of artificial intelligence techniques

Within AI, machine learning (ML) techniques build statistical models and algorithms to perform specific user-defined tasks [1]. Traditional ML relies on expert knowledge to define and quantify radiographic features, which are then presented to the machine. Thus, traditional machine learning learns to identify radiologic findings from patterns in human-engineered features and algorithms [2]. Recent strides in AI have leaned heavily towards deep learning (DL), a subset of machine learning. Deep learning differs from traditional machine learning in that it typically uses larger datasets and does not rely on human-engineered features. Instead, it uses artificial neural networks (ANN) with multiple hidden layers, such as convolutional neural networks (CNN), that allow a machine to learn the relevant features itself [3]. DL systems can therefore extract radiologic features directly from images, reducing the need for a human interface and manual image processing and lowering the risk of operator bias [4]. As a result, deep learning can outperform traditional machine learning when datasets are larger and more complex. The following sections discuss applications of AI techniques in detecting retained surgical bodies, open and penetrating injuries, catheter and tube malposition, and foreign body ingestion and aspiration.
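To make the contrast with hand-engineered features concrete, the following is a minimal sketch, in plain Python, of the core computation in one CNN layer: a 2D convolution (strictly, cross-correlation), a ReLU nonlinearity, and 2x2 max pooling. The toy image, the vertical-line kernel, and all function names are illustrative assumptions. In a real CNN the kernel weights are learned from training data rather than set by hand, and many such layers are stacked.

```python
from typing import List

Matrix = List[List[float]]

def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding) 2D cross-correlation, the operation a CNN layer applies."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(fmap: Matrix) -> Matrix:
    """Zero out negative responses."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; a trailing odd row/column is dropped."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]

# Toy 6x6 "radiograph": a bright vertical line (e.g., a radiopaque marker) in column 3.
image = [[1.0 if c == 3 else 0.0 for c in range(6)] for _ in range(6)]

# Hypothetical hand-set vertical-line detector; a CNN would learn such weights.
kernel = [[-1.0, 2.0, -1.0],
          [-1.0, 2.0, -1.0],
          [-1.0, 2.0, -1.0]]

features = max_pool2x2(relu(conv2d(image, kernel)))
print(features)  # [[0.0, 6.0], [0.0, 6.0]]
```

The pooled map responds strongly only where the line is, which is the kind of feature a DL system discovers on its own instead of receiving from an expert.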

Retained surgical bodies

Retained surgical bodies (RSB), such as sponges, sutures, needles, and other instruments, can have dire consequences for patients and serious financial and legal ramifications for the medical institution involved. These reportable “never events” are rare: certain studies estimate an incidence of 1 in every 5,500–7,000 procedures, with higher rates of up to 1 RSB per 1,000 abdominal surgeries [5, 6]. The true number of RSB cases is likely underestimated because these incidents are underreported and because patients can be asymptomatic and thus unaware of their occurrence. Many authors note that the risk of this complication decreases when institutions follow recommended perioperative and postoperative checklists and guidelines [7]. Yet over 80% of operations later found to involve an RSB had reported correct counts at the end of the case [8]. Because most RSB have standardized shapes and sizes, computer-aided detection (CAD) systems are well suited to identifying them.

Plain radiography is the gold standard imaging modality for evaluating RSB. On X-ray, retained objects often appear as radiopacities with associated mass effect, mottled air, or increased density over the surrounding soft tissues [9]. One benefit of this modality is that most sponges contain radiopaque markers that make them detectable on X-ray [10]. However, these markers can become distorted within the patient’s body, so they are not a reliable means of detection [11]. Sponges without these markers are often identified with cross-sectional imaging or by a radiolucency on radiographs caused by trapped air [10]. Notably, false-negative radiographs do occur: certain authors report that intraoperative radiographs can miss up to one-third of RSB (Fig. 1) [12]. Furthermore, obtaining and reading a radiograph can be time-consuming, particularly at the end of a surgical case. AI techniques could therefore play a prominent role in detecting RSB quickly and accurately.

72-year-old male undergoing renal transplantation. Due to an incorrect count, an intraoperative X-ray was performed (A), which was negative for any retained metallic device. The optimal protocol in these clinical scenarios involves providing the interpreting radiologist an image of the missing foreign body (B).

Other imaging modalities, such as ultrasound, CT, and magnetic resonance imaging (MRI), have also been proposed to identify RSB. On ultrasound, retained sponges and gauze most commonly appear as hyperechoic masses with hypoechoic rims [13]. However, ultrasound is of limited use for this purpose: in a study by Modrzejewski et al., ultrasound detected only one of 25 RSB cases, a sensitivity of 4% [14]. CT, by contrast, is the most sensitive detection method and is usually obtained when an X-ray is negative but clinical suspicion remains high [15]. On CT, an RSB often appears either as a heterogeneous mass with a spongiform pattern, an associated radiodense linear structure, and entrapped gas bubbles or, if long-standing, as a reticular mass with a peripheral rind of calcification [9, 16]. MRI is rarely used to identify RSB because of the risk of metallic fragment migration in the magnetic field and the risk of internal tissue damage from heat produced by radiofrequency fields [17].

Although the literature remains limited, certain authors have explored the use of AI in RSB detection and recognized its potential to support human workflows (Table 1). Yamaguchi et al. developed and validated a deep learning CAD system for detecting retained surgical sponges, which one report of 191,168 operations at a tertiary care center found to be by far the most common RSB [12, 18]. The software performed well on phantom radiographs (100% sensitivity; 100% specificity), composite radiographs (97.9% sensitivity; 83.8% specificity), cadaver radiographs (97.7% sensitivity; 90.4% specificity), and normal postoperative radiographs (86.6% specificity) [18]. It even detected sponges overlapping with bone or with normal postoperative material such as drains, monitor leads, and staples. However, the authors note that the software identified only specific surgical sponges and could not recognize other retained surgical objects [18]. Kawakubo et al. also developed a DL model to detect retained surgical items, using post-processed fused images of surgical sponges and unremarkable postoperative X-rays, and compared it with two experienced radiologists identifying retained surgical sponges [19]. The deep learning model had higher sensitivity but lower specificity than both human observers, suggesting that it could support diagnosis by reducing the rate of missed RSB.
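The sensitivity and specificity figures above follow the standard definitions: sensitivity is TP / (TP + FN) and specificity is TN / (TN + FP). The short sketch below computes them from confusion-matrix counts; the function names are illustrative, and the worked example reuses the ultrasound result cited earlier (1 of 25 RSB detected).

```python
def sensitivity(tp: int, fn: int) -> float:
    """True-positive rate: share of cases with a retained item that are flagged."""
    if tp + fn == 0:
        raise ValueError("sensitivity is undefined without positive cases")
    return tp / (tp + fn)

def specificity(tn: int, fp: int) -> float:
    """True-negative rate: share of item-free cases that are correctly not flagged."""
    if tn + fp == 0:
        raise ValueError("specificity is undefined without negative cases")
    return tn / (tn + fp)

# Ultrasound example from the text: 1 of 25 RSB detected (24 missed).
print(f"{sensitivity(tp=1, fn=24):.0%}")  # 4%
```

A model with higher sensitivity but lower specificity, such as the one Kawakubo et al. describe, accepts more false alarms (false positives) in exchange for fewer missed items (false negatives).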

AI has also been used to detect less common RSB, such as retained surgical needles. Accurately diagnosing retained surgical needles remains a significant problem: certain studies report that conventional radiographs detect radiopaque needles smaller than 1 cm with a sensitivity of only 30% [20]. Furthermore, surgical needles are among the most frequently miscounted instruments [21]. In a proof-of-concept study, Asiyanbola et al. developed a map-seeking circuit algorithm and a modified version of it to detect needles on abdominal X-rays [22]. The algorithms were run at two detection threshold settings, which determine when an image is classified as containing a retained needle, on two image sets and their corresponding sub-images: one from a cassette-based X-ray machine and one from a digital C-arm [22]. The modified algorithm outperformed the unmodified version, with shorter computing times and higher detection rates. On the cassette-based images, it had a detection rate of 85.19% and a false positive rate of 9.98% at the lower threshold, and 53.70% and 0.00%, respectively, at the higher threshold. On the digital images, it had a detection rate of 72.73% and a false positive rate of 15.67% at the lower threshold, and 50.91% and 6.67%, respectively, at the higher threshold [22]. Sengupta et al. also developed a series of four CAD systems with rule-based, random forest, linear discriminant analysis (LDA), and neural network classifiers to detect retained surgical needles on postoperative radiographs [23]. The systems were run in two modes with different decision thresholds: mode I with higher specificity and mode II with higher sensitivity.
In mode I, the neural network classifier achieved a sensitivity of 75.4% with 0.23 false positives (FPs) per image, whereas in mode II it achieved a sensitivity of 86.0% with 0.57 FPs per image [23]. These results suggest not only that AI can detect surgical needles but also that the choice of decision threshold lets clinicians balance sensitivity against the false positive rate. Figure 2 shows needles detected by the CAD system, whereas Fig. 3 shows a needle missed by the system because overlapping structures, such as bone, distorted its apparent shape.
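Both needle studies report results at two operating thresholds. The sketch below shows, under simplifying assumptions, how a single score threshold determines sensitivity and false positives per image. Each candidate is a hypothetical (image ID, score, is-true-needle) tuple, each true needle is assumed to yield at most one candidate, and the scores and thresholds are invented for illustration rather than taken from either study.

```python
from typing import List, Tuple

# (image_id, detector score, whether the candidate is a true needle)
Candidate = Tuple[int, float, bool]

def operating_point(candidates: List[Candidate], n_images: int,
                    n_needles: int, threshold: float) -> Tuple[float, float]:
    """Sensitivity and false positives per image at a given score threshold.

    Assumes each true needle produces at most one candidate.
    """
    kept = [c for c in candidates if c[1] >= threshold]
    tp = sum(1 for _, _, is_needle in kept if is_needle)
    fp = len(kept) - tp
    return tp / n_needles, fp / n_images

# Hypothetical scores for 4 images containing 3 needles in total.
cands = [(0, 0.95, True), (0, 0.40, False), (1, 0.70, True), (1, 0.55, False),
         (2, 0.35, True), (2, 0.20, False), (3, 0.60, False)]

for label, thr in [("high-specificity mode", 0.65), ("high-sensitivity mode", 0.30)]:
    sens, fppi = operating_point(cands, n_images=4, n_needles=3, threshold=thr)
    print(f"{label}: sensitivity={sens:.2f}, FPs/image={fppi:.2f}")
```

With these toy scores, lowering the threshold from 0.65 to 0.30 raises sensitivity from 0.67 to 1.00 but increases false positives from 0 to 0.75 per image, the same qualitative trade-off seen between modes I and II.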

Needles of various shapes and orientations with different backgrounds that were detected by the CAD system. Figure reproduced with permission from Sengupta A, Hadjiiski L, Chan HP, Cha K, Chronis N, Marentis TC. Computer-aided detection of retained surgical needles from postoperative radiographs. Med Phys. 2017;44(1):180–191. https://doi.org/10.1002/mp.12011

Example of needle missed by both the rule-based and the neural network-based CAD systems. Figure reproduced with permission from Sengupta A, Hadjiiski L, Chan HP, Cha K, Chronis N, Marentis TC. Computer-aided detection of retained surgical needles from postoperative radiographs. Med Phys. 2017;44(1):180–191. https://doi.org/10.1002/mp.12011

Within RSB imaging, physical technological innovations can be used in conjunction with AI to further enhance detection [24]. Marentis et al. demonstrated the efficacy of CAD in detecting radiopaque micro-tags, which can be attached to sponges and other surgical instruments [25]. For these micro-tags, the high-specificity CAD system alone had a sensitivity of 79.5% and a specificity of 99.7%; when each of five radiologists used the CAD system, sensitivity ranged from 98.5% to 100% and specificity from 99.0% to 99.7% [25]. These data suggest that a CAD system and a radiologist can complement one another in detecting RSB.

Penetrating and open injuries

Another application of AI involves imaging of penetrating wounds. This class of injuries is caused by objects that pierce the skin to create an open wound [26]. Firearms and sharp objects are among the most common causes [26]. One report estimated that in the United States alone, from 2009 to 2017, an annual average of more than 85,000 emergency department visits were attributable to nonfatal firearm injuries, in addition to 34,538 deaths [27]. Additionally, the CDC estimates that cut or pierce wounds are responsible for over 1.8 million nonfatal injuries and over 3,000 deaths annually in the United States [28]. Prompt diagnosis of penetrating wounds is crucial for effective management and intervention.

While large, superficial foreign objects can often be detected by palpation, imaging is needed to detect smaller foreign bodies in patients with open wounds or penetrating injuries. Ultrasound (US), for instance, can be highly useful for identifying radiolucent foreign bodies and guiding their removal [29]. On US, foreign bodies disrupt the homogeneous echogenicity of soft tissue and therefore often appear hyperechoic relative to the surrounding tissue [30]. Over time, a hypoechoic ring can form around the foreign object, indicating an inflammatory response. Advantages of US include dynamic imaging and faster access than other modalities [31]. In superficial tissues, US may even offer higher resolution than X-ray or CT. However, its effectiveness decreases in deeper tissue because acoustic waves penetrate only to a limited depth, and it may be further limited when bone or air obscures the region of interest [32]. US is also operator dependent and less able to detect small foreign bodies [33]. Certain authors reported that foreign body identification declined by almost 20% when object size decreased from 2 to 1 mm [31].

Conventional radiography can also detect foreign bodies from penetrating injuries, but the advent of more advanced imaging modalities has made it less commonly used [34]. Its usefulness depends on the density contrast between the object and the surrounding tissue, making it difficult to detect wood or plastic, whose density resembles that of soft tissue, or graphite and gravel, whose density resembles that of bone [32, 35]. However, radiographs can still identify retained metal, such as metallic bullet fragments (Fig. 4).

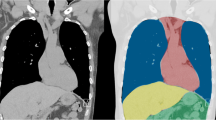

CT, by contrast, is the first-line modality for imaging penetrating injuries because of its high sensitivity and specificity and its ability to acquire multiplanar images relatively quickly [36, 37]. CT angiography (CTA) is also often used when vascular injury is suspected. The multiplanar nature and reconstructability of CT and CTA aid in detecting injuries within the tissue and help predict probable injuries [38]. While CT and CTA excel in imaging penetrating injuries, AI offers a promising avenue for further improving the accuracy and efficiency of detecting such injuries.

Presently, there is limited literature on the use of AI in imaging penetrating wounds. A series of models, TraumaSCAN and TraumaSCAN-Web, have used three-dimensional (3D) anatomical models together with patient signs, symptoms, and imaging findings to estimate, using Bayesian networks, the likelihood of injury to anatomic structures and the probability of subsequent conditions [39,40,41]. However, these models do not use AI to evaluate the images themselves; instead, a human reader assesses the images and encodes the findings as variables that serve as inputs to the model [42]. Further development of image-based AI models is therefore necessary before CAD systems can be implemented in clinical practice. Still, integrating AI with other clinical variables offers the potential for rapid, streamlined evaluation in urgent, high-acuity cases of penetrating wounds.
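As a rough illustration of how a Bayesian model can turn reader-encoded findings into an injury probability, the sketch below applies Bayes' rule to a single injury variable whose findings are assumed to be conditionally independent given injury status (a naive Bayes simplification). It is not the TraumaSCAN network, whose structure is far richer, and the prior and likelihood values are hypothetical.

```python
from typing import Iterable, Tuple

def posterior_injury(prior: float, findings: Iterable[Tuple[float, float]]) -> float:
    """P(injury | findings) via Bayes' rule.

    Each finding is a pair (P(finding | injury), P(finding | no injury)).
    Assumes findings are conditionally independent given injury status,
    i.e. one parent node with several child nodes.
    """
    if not 0.0 <= prior <= 1.0:
        raise ValueError("prior must be a probability")
    p_injury, p_no_injury = prior, 1.0 - prior
    for p_given_injury, p_given_no_injury in findings:
        p_injury *= p_given_injury
        p_no_injury *= p_given_no_injury
    return p_injury / (p_injury + p_no_injury)

# Hypothetical numbers: 20% prior risk; one finding seen in 90% of injured
# and 10% of uninjured patients.
print(round(posterior_injury(0.2, [(0.9, 0.1)]), 3))              # 0.692
# Adding a second, weaker finding raises the estimate further.
print(round(posterior_injury(0.2, [(0.9, 0.1), (0.8, 0.3)]), 3))  # 0.857
```

Because the inputs here are findings a human has already extracted, the sketch also shows why such models depend on reader assessment rather than on the images themselves.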

Before AI can be confidently used to image penetrating wounds, several challenges must be addressed. First, separate models must be developed for each imaging modality. Second, the diversity of objects causing penetrating injuries, coupled with the many locations on the body that an object can penetrate, requires large, standardized datasets to train a model [43]. Furthermore, some penetrating objects may splinter within the body or create bone fragments, which can follow varied trajectories as secondary projectiles [43]. Other challenges arise when the penetrating object has exited the body, making it difficult for AI models to determine the object’s tract and the tissues it injured [43]. However, even if not directly involved in identifying the object’s tract or the injured tissues, AI models could still augment clinical workflows through image enhancement or reconstruction.

Catheter/tube malposition

Endotracheal tubes (ETT), enteric tubes, and central venous catheters (CVCs) are commonly used in emergency and intensive care settings to deliver care. However, malpositioning of these devices can cause adverse outcomes, either through direct harm from improper insertion or through failure to deliver treatment. Malposition of endotracheal tubes, enteric tubes, and central venous catheters is estimated to occur in 5–28%, 3–20%, and 2–7% of cases, respectively [44]. An automated method for detecting catheter and tube malposition may therefore allow earlier identification and reduce the harm of an improperly placed device.

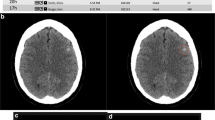

Chest radiography is the preferred imaging technique to confirm the proper positioning of these devices after placement, mainly due to its low cost and wide availability [45]. Portable X-rays are often employed in emergency departments or intensive care units (ICU), although these often result in images with low contrast and high noise [45]. Radiographs should also be obtained after any positioning changes in support devices, after bedside procedures, and if a patient experiences an acute change in clinical status [46]. In addition to X-ray, ultrasound has emerged as another rapid and viable alternative with high diagnostic accuracy [47]. However, ultrasound has limitations in cases with unusual airway anatomy, cervical collars, neck edema, subcutaneous emphysema, or neck masses [48].

Substantial research is currently underway on the use of AI to assess tube and catheter position, particularly endotracheal tube detection and position localization [49]. While results for endotracheal tube detection and critical tube malposition (ETT-carina distance < 1 cm) are strong across studies, models identifying subtle malpositions perform less well. In a model developed by An et al., the sensitivity and specificity for detecting critical tube position (ETT-carina distance < 1 cm) on ICU images were 100% and 99.2%–100%, respectively, whereas less critical malpositions were detected with sensitivities of only 72.5%–83.7% and specificities of 92.0%–100% [50]. Lakhani et al. reported similar findings, with a sensitivity of 93.9% and a specificity of 97.7% for identifying an ETT-carina distance of less than 1 cm, but a sensitivity of 66.5% and a specificity of 99.2% for identifying an ETT-carina distance > 7 cm [51]. These results suggest that such models may be best suited to a complementary rather than fully independent role [52]. If AI models can alert ICU physicians and radiologists when an endotracheal tube is improperly positioned, clinicians can quickly evaluate the need for repositioning and assess patient safety.
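Once a model has localized the ETT tip and the carina, the position categories above reduce to a simple distance rule. The sketch below assumes the model outputs row coordinates in pixels, that image rows increase toward the feet, and that pixel spacing is known. The function names and spacing value are hypothetical; only the 1 cm and 7 cm cut-offs come from the studies cited.

```python
def tip_above_carina_cm(tip_row: float, carina_row: float, pixel_spacing_mm: float) -> float:
    """Craniocaudal distance of the ETT tip above the carina, in cm.

    Assumes image rows increase toward the feet, so a tip above the carina
    has a smaller row index; a negative value means the tip is below the carina.
    """
    return (carina_row - tip_row) * pixel_spacing_mm / 10.0

def classify_ett_position(distance_cm: float) -> str:
    """Apply the two cut-offs discussed in the text (< 1 cm and > 7 cm)."""
    if distance_cm < 1.0:
        return "critical: tip < 1 cm above carina"
    if distance_cm > 7.0:
        return "flag: tip > 7 cm above carina"
    return "not flagged by these two rules"

# Hypothetical model outputs: tip at row 400, carina at row 420, 0.2 mm pixels.
d = tip_above_carina_cm(tip_row=400, carina_row=420, pixel_spacing_mm=0.2)
print(round(d, 2), classify_ett_position(d))  # 0.4 critical: tip < 1 cm above carina
```

In this framing, the weaker performance on subtle malpositions would come from errors in the localization step: a few pixels of error matter little far from a cut-off but can flip the category near one.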

AI has also been used to detect central venous catheter malposition. A model developed by Rueckel et al. reported that chest radiographs with improperly positioned CVCs were identified with an area under the receiver operating characteristic curve (AUC) of > 0.93–0.96 [53]. Tang et al.’s model achieved an AUC of 0.8715 for detecting unsatisfactory tube position [54]. However, the application of AI position detection with this class of devices presents additional challenges compared to endotracheal tubes. For example, it is more difficult to define optimal CVC position, and CVC insertions may occur through different veins. Additionally, there are a variety of mimicking objects, such as pacemaker wires, electrocardiogram (ECG) electrodes, and sheaths [53]. In their analysis of various central venous catheter subgroups, Tang et al. also noted that their model found it more challenging to detect peripherally inserted CVCs when compared to other subtypes, including dialysis catheters and jugular and subclavian lines [54]. This is likely a consequence of the thinner lines of peripherally inserted central catheters, as well as the more variable, peripherally located tips compared to other subtypes [54]. These results highlight the need for specific models to be developed for certain subtypes of catheters or tubes.
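The AUC values quoted above summarize performance across all possible thresholds. One equivalent way to compute AUC is the Mann–Whitney formulation: the probability that a randomly chosen positive (malpositioned) case receives a higher score than a randomly chosen negative case, with ties counted as one half. The sketch below implements this directly on hypothetical scores. It is quadratic in the number of cases and intended only for illustration.

```python
from typing import Sequence

def auc(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """ROC AUC as P(score of a random positive > score of a random negative).

    Ties count as 0.5. O(n * m) pairwise comparison, for illustration only.
    """
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

# Hypothetical model scores for malpositioned vs. well-positioned catheters.
print(auc([0.9, 0.8, 0.4], [0.7, 0.3, 0.2, 0.4]))  # 0.875
```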

Research has also explored the use of AI to detect enteric tube malposition, though the performance of these models leaves room for improvement (Table 1). Mallon et al.’s algorithm detected critically misplaced enteric tubes with a sensitivity of 80% and a specificity of 92% [55]. Other authors reported a sensitivity of 100% and a specificity of 76% for identifying enteric tube malposition [56]. When used in conjunction with human readers, one model tested by Drozdov et al. increased the confidence and interpretive performance of junior emergency medicine physicians [57]. When junior physicians were given a second opinion from this model regarding enteric tube placement, their sensitivity increased from 96% to 100% and their specificity from 69% to 78% [57]. However, the elevated rates of false positives and false negatives reported for these algorithms must be addressed. Analysis of one model found false positives caused by ECG leads and endobronchial barium and false negatives when multiple tubes were present [55]. Figure 5 shows the class activation maps used to analyze these false positives and negatives. Additionally, some models highlighted many irrelevant features, a frequent flaw of algorithms that analyze the whole image, and applying segmentation techniques to circumvent this issue adds complexity and introduces other sources of error [55, 56]. At present, the high numbers of false positives and negatives limit the efficacy of these models as standalone tools but highlight their potential as complements to human readers.

Failure analysis using class activation maps that highlight regions of interest within each radiograph. A. Correct classification of a safe enteric tube position shows maximum activation values along the course of the esophagus and stomach. B. Incorrect classification of a safe enteric tube position (false positive), with high activation in the right lower zone caused by linear opacification due to aspiration of barium. C. Correct classification of an enteric tube that is misplaced within the right lower lobe airways. D. Incorrect classification of a misplaced enteric tube within the left lower lobe (false negative). Misclassification may be due to the presence of a safely positioned enteric tube that enters the stomach. Figure reproduced with permission from Mallon DH, McNamara CD, Rahmani GS, O'Regan DP, Amiras DG. Automated detection of enteric tubes misplaced in the respiratory tract on chest radiographs using deep learning with two-centre validation. Clin Radiol. 2022;77(10):e758-e64
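Class activation maps such as those in Fig. 5 indicate where a network's evidence for a class lies. In the original CAM formulation, which assumes a network ending in global average pooling followed by a linear layer, the map for a class is a weighted sum of the final convolutional feature maps, using that class's output weights. Whether the cited study used this exact variant or a gradient-based one is not stated here. The sketch below, with toy 2x2 feature maps and hypothetical weights, shows only the weighted sum and a min-max normalization for display; upsampling the map to the radiograph's resolution is omitted.

```python
from typing import List

Matrix = List[List[float]]

def class_activation_map(feature_maps: List[Matrix], class_weights: List[float]) -> Matrix:
    """CAM for one class: sum_k w_k * f_k(x, y) over final-layer feature maps.

    feature_maps[k] is channel k of the last convolutional layer;
    class_weights[k] links that channel (after global average pooling)
    to the class output.
    """
    if len(feature_maps) != len(class_weights):
        raise ValueError("one weight per feature map is required")
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for fmap, wk in zip(feature_maps, class_weights):
        for i in range(h):
            for j in range(w):
                cam[i][j] += wk * fmap[i][j]
    return cam

def normalize(cam: Matrix) -> Matrix:
    """Min-max scale to [0, 1] so the map can be overlaid as a heat map."""
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    if hi == lo:
        return [[0.0] * len(row) for row in cam]
    return [[(v - lo) / (hi - lo) for v in row] for row in cam]

# Two toy channels: one fires top-left, one bottom-right (hypothetical values).
maps = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
cam = class_activation_map(maps, class_weights=[2.0, -1.0])
print(cam)  # [[2.0, 0.0], [0.0, -1.0]]
```

A region that lights up for the wrong reason, such as barium in panel B, would appear here as a high value produced by a channel with a large positive weight.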

Foreign Body Ingestion/Aspiration

Foreign body ingestion is a significant clinical problem that can take many forms. It is particularly common among individuals with psychiatric or neurological disorders and among young children; an estimated 795,074 children under six years of age presented to the ED for foreign body ingestion between 1995 and 2015 [58,59,60]. Among the most commonly ingested items are coins, toys, jewelry, batteries, and bones, including fish bones [58]. A closely related and serious problem is foreign body aspiration, which is often seen among young children [59]. Globally, foreign body aspiration had an estimated incidence of 109.6 per 100,000 children under five years old from 1990 to 2019 [61]. As with ingestion, commonly aspirated objects include batteries, coins, and other inorganic objects, though organic objects and food items are far more frequent causes [62].

Various imaging modalities can be used to detect ingested foreign bodies. Ultrasound is useful for detecting radiolucent foreign bodies and for imaging the pediatric population [63]. Radiographs are commonly used for initial diagnosis because they are widely available and can detect foreign bodies cheaply and rapidly; they can also help quickly rule out aspirated foreign objects [64]. Radiography is often the first-line modality for detecting radiopaque objects, but a negative X-ray can only rule out retained radiopaque materials, not radiolucent foreign bodies [65]. Figure 6 shows the X-ray findings of a patient who ingested multiple radiolucent plastic bags, which were initially overlooked because of the small difference in density between the plastic bags and soft tissue. Common radiolucent foreign bodies include chicken and fish bones, plastic, wood, and small metal objects [66]. Serial X-ray imaging also often has a role when the object is expected to pass without intervention.

51-year-old male with past medical history of schizoaffective and schizotypal personality disorder and multiple prior foreign body ingestions. Abdominal radiograph shows multiple regular radiolucencies projecting over the gastric fundus and body in the left upper abdominal quadrant (green arrows), concerning for a radiolucent foreign body. This was initially missed due to the small difference in density between soft tissue and plastic bags. Upper GI endoscopy found multiple plastic bags, which were successfully removed

Compared with X-ray, CT has higher sensitivity for imaging foreign objects. It can detect radiopaque objects such as metal, stone, and glass as well as objects such as plastics, wood, or other organic materials [32]. 3D rendering of cross-sectional CT images also allows enhanced localization and detection, which may aid in removing the foreign body [67]. Further, 3D models help prevent foreign objects from being obscured by bone [33]. However, CT is often not used for initial imaging because of its higher radiation dose, its cost, and its low sensitivity for some radiolucent materials [33].

Lastly, MRI is typically the most expensive, time-consuming, and least widely available of the major imaging modalities, which limits its use in foreign body detection [33]. Additionally, it can be difficult to determine whether an object is ferromagnetic, which raises significant safety concerns about interactions between the magnetic field and ferromagnetic foreign bodies. However, MRI is valuable for imaging radiolucent objects, as it can visualize tissues not apparent on ultrasound [32].

Despite the frequency of foreign body ingestion and aspiration, there is a dearth of literature on the use of AI for imaging in this context. The few published articles emphasize the advantage of CAD systems in not only detecting foreign objects but also classifying them. Rostad et al. developed two AI models for analyzing pediatric esophageal radiographs: one to detect discoid foreign bodies and a subsequent one to classify them as coins or button batteries [68]. Because button batteries in the esophagus require emergent endoscopic removal, coinlike objects on radiographs must be reliably distinguished as coins or button batteries [69]. The authors reported that the object detector identified all foreign bodies with 100% sensitivity and 100% specificity. The image classifier also performed well, classifying 6/6 (100%) button batteries correctly, 93/95 (97.9%) coins correctly, and 2/95 (2.1%) coins as button batteries [69]. Outside of these instances, there were only two cases incorrectly classified as coins: a stacked button battery and coin (Fig. 7), as well as two stacked coins.

An 11-month-old girl with a stacked button battery and coin in her proximal esophagus. A: An anteroposterior chest radiograph shows the stacked button battery and coin were detected but classified as a coin. B: The lateral radiograph view shows the stacked button battery and coin. Figure reproduced with permission from Rostad, B. S., E. J. Richer, E. L. Riedesel and A. L. Alazraki (2022). "Esophageal discoid foreign body detection and classification using artificial intelligence." Pediatr Radiol 52(3): 477–482
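The two-stage design described by Rostad et al. (detect, then classify) can be sketched as a small triage function. The detector and classifier below are hypothetical callables standing in for trained models, and the score cut-off and output messages are illustrative assumptions rather than the authors' implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Detection:
    box: Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels
    score: float                    # detector confidence in [0, 1]

# Hypothetical model interfaces (stand-ins for trained networks).
Detector = Callable[[object], List[Detection]]
Classifier = Callable[[object, Detection], str]  # returns "coin" or "button_battery"

def triage(image: object, detect: Detector, classify: Classifier,
           min_score: float = 0.5) -> str:
    """Stage 1: detect discoid objects; stage 2: classify each detection."""
    detections = [d for d in detect(image) if d.score >= min_score]
    if not detections:
        return "no discoid foreign body detected"
    labels = [classify(image, d) for d in detections]
    # Any battery label escalates, reflecting the urgency of esophageal batteries.
    if "button_battery" in labels:
        return "escalate: possible button battery"
    return "coin-like object detected"

# Usage with stub models that always find one coin-like object.
fake_detect = lambda img: [Detection(box=(10, 10, 40, 40), score=0.9)]
print(triage(None, fake_detect, lambda img, d: "coin"))            # coin-like object detected
print(triage(None, fake_detect, lambda img, d: "button_battery"))  # escalate: possible button battery
```

Escalating whenever any detection is labeled a battery reflects the asymmetric urgency described above, but it cannot catch a battery hidden in a stack that the classifier labels as a coin, as in Fig. 7.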

Limitations of AI imaging

These cases of incorrect classification illustrate important limitations of applying AI to foreign body imaging. First, a model’s ability to detect objects depends on the images in its training dataset, so it may fail to identify and classify foreign objects it has not previously encountered. This was particularly evident in the model developed by Kawakubo et al., which identified only the specific surgical sponges on which it was trained and could not recognize other retained surgical objects [19]. Accordingly, training datasets must be broad and varied, encompassing objects in diverse orientations and forms. Gaining access to such expansive datasets remains challenging given patient data and privacy concerns, though systems are being developed to circumvent this [70]. Complicating matters, models must be developed and trained for each imaging modality, a particularly significant issue when the object’s composition is unknown and the most effective imaging technique is not immediately apparent. Lastly, it is challenging to train software to recognize and classify heterogeneous objects. Objects of uniform size and shape, such as surgical equipment or medical tubes and lines, are far easier for models to learn than commonly aspirated or ingested objects of varying size and composition, such as fish bones, toys, or jewelry. It is therefore unsurprising that one of the first reports demonstrating AI’s utility in imaging ingested foreign bodies involved coins and button batteries, objects of uniform shape and size.
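One widely used way to expose a model to objects in more orientations without collecting new patient data is geometric data augmentation. The sketch below is an illustration rather than a method described in the studies above. It generates the eight 90-degree rotations and reflections of an image stored as a list of rows, and the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

def rot90(img: Matrix) -> Matrix:
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def hflip(img: Matrix) -> Matrix:
    """Mirror an image left to right."""
    return [row[::-1] for row in img]

def dihedral_variants(img: Matrix) -> List[Matrix]:
    """All 8 rotations and reflections of an image (the dihedral group D4)."""
    variants = []
    current = img
    for _ in range(4):
        variants.append(current)
        variants.append(hflip(current))
        current = rot90(current)
    return variants

# A toy 2x2 "image" with distinct pixels yields 8 distinct orientations.
for v in dihedral_variants([[1, 2], [3, 4]]):
    print(v)
```

Augmentation can broaden orientation coverage but cannot teach a model object types absent from its training data. Reflections also swap left and right, which may be undesirable for tasks that depend on anatomic laterality.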

Conclusion

Although AI holds enormous potential in foreign body detection, current applications have thus far been confined to research settings, where models are often trained and validated on constructed images, such as those of cadavers or fusion images. Before AI systems are widely deployed, these models must be trialed on real-world clinical datasets to confirm their clinical utility and performance. Significant legal hurdles surrounding liability and tort law also remain and may limit AI’s use [71]. Despite these challenges, advancements in AI technology, coupled with collective efforts to obtain diverse and comprehensive datasets, offer a promising trajectory for the future of medical imaging in foreign body analysis. Further, integrating AI into clinical practice has the potential to alleviate radiologists’ workload, enhance their efficiency, and reduce diagnostic errors. As medical imaging continues to progress, collaboration between AI and radiologists may ultimately improve diagnostic precision and patient care.

References

Lee JG, Jun S, Cho YW, Lee H, Kim GB, Seo JB, Kim N (2017) Deep learning in medical imaging: general overview. Korean J Radiol 18(4):570–584. https://doi.org/10.3348/kjr.2017.18.4.570

Liu J, Varghese B, Taravat F, Eibschutz LS, Gholamrezanezhad A (2022) An extra set of intelligent eyes: application of artificial intelligence in imaging of abdominopelvic pathologies in emergency radiology. Diagnostics 12(6):1351. https://doi.org/10.3390/diagnostics12061351

Behzadi-Khormouji H, Rostami H, Salehi S, Derakhshande-Rishehri T, Masoumi M, Salemi S, Keshavarz A, Gholamrezanezhad A, Assadi M, Batouli A (2020) Deep learning, reusable and problem-based architectures for detection of consolidation on chest X-ray images. Comput Methods Programs Biomed 185:105162. https://doi.org/10.1016/j.cmpb.2019.105162

Hazarika I (2020) Artificial intelligence: opportunities and implications for the health workforce. Int Health 12(4):241–245. https://doi.org/10.1093/inthealth/ihaa007

Zejnullahu VA, Bicaj BX, Zejnullahu VA, Hamza AR (2017) Retained surgical foreign bodies after surgery. Open Access Maced J Med Sci 5(1):97–100. https://doi.org/10.3889/oamjms.2017.005

Williams TL, Tung DK, Steelman VM, Chang PK, Szekendi MK (2014) Retained surgical sponges: findings from incident reports and a cost-benefit analysis of radiofrequency technology. J Am Coll Surg 219(3):354–364

Goldberg JL, Feldman DL (2012) Implementing AORN recommended practices for prevention of retained surgical items. AORN J 95:205–219

Gibbs VC, Coakley FD, Reines HD (2007) Preventable errors in the operating room: retained foreign bodies after surgery–Part I. Curr Probl Surg 44(5):281–337. https://doi.org/10.1067/j.cpsurg.2007.03.002

Kumar GVS, Ramani S, Mahajan A, Jain N, Sequeira R, Thakur M (2017) Imaging of retained surgical items: A pictorial review including new innovations. Indian J Radiol Imaging 27(3):354–361. https://doi.org/10.4103/ijri.IJRI_31_17

O’Connor AR, Coakley FV, Meng MV, Eberhardt SC (2003) Imaging of retained surgical sponges in the abdomen and pelvis. AJR Am J Roentgenol 180(2):481–489. https://doi.org/10.2214/ajr.180.2.1800481

Yun G, Kazerooni EA, Lee EM, Shah PN, Deeb M, Agarwal PP (2021) Retained surgical items at chest imaging. Radiographics 41(2):E10–E11. https://doi.org/10.1148/rg.2021200128

Cima RR, Kollengode A, Garnatz J, Storsveen A, Weisbrod C, Deschamps C (2008) Incidence and characteristics of potential and actual retained foreign object events in surgical patients. J Am Coll Surg 207(1):80–87. https://doi.org/10.1016/j.jamcollsurg.2007.12.047

Pole G, Thomas B (2017) A Pictorial Review of the Many Faces of Gossypiboma - Observations in 6 Cases. Pol J Radiol 82:418–421. https://doi.org/10.12659/PJR.900745

Modrzejewski A, Kaźmierczak KM, Kowalik K, Grochal I (2023) Surgical items retained in the abdominal cavity in diagnostic imaging tests: a series of 10 cases and literature review. Pol J Radiol 88:264–269. https://doi.org/10.5114/pjr.2023.127668

Wan W, Le T, Riskin L, Macario A (2009) Improving safety in the operating room: a systematic literature review of retained surgical sponges. Curr Opin Anaesthesiol 22(2):207–214. https://doi.org/10.1097/ACO.0b013e328324f82d

Lu YY, Cheung YC, Ko SF, Ng SH (2005) Calcified reticulate rind sign: A characteristic feature of gossypiboma on computed tomography. World J Gastroenterol 11:4927–4929

Pennsylvania Patient Safety Authority (no date) Retained surgical items: Events and guidelines revisited: Advisory, Pennsylvania Patient Safety Authority. Available at: https://patientsafety.pa.gov/ADVISORIES/Pages/201703_RSI.aspx. Accessed 19 Mar 2024

Yamaguchi S, Soyama A, Ono S, Hamauzu S, Yamada M, Fukuda T, Hidaka M, Tsurumoto T, Uetani M, Eguchi S (2021) Novel computer-aided diagnosis software for the prevention of retained surgical items. J Am Coll Surg 233(6):686–696

Kawakubo M, Waki H, Shirasaka T, Kojima T, Mikayama R, Hamasaki H, Akamine H, Kato T, Baba S, Ushiro S, Ishigami K (2023) A deep learning model based on fusion images of chest radiography and X-ray sponge images supports human visual characteristics of retained surgical items detection. Int J Comput Assist Radiol Surg 18(8):1459–1467

Ponrartana S, Coakley FV, Yeh BM et al (2008) Accuracy of plain abdominal radiographs in the detection of retained surgical needles in the peritoneal cavity. Ann Surg 247(1):8–12. https://doi.org/10.1097/SLA.0b013e31812eeca5

Egorova NN, Moskowitz A, Gelijns A et al (2008) Managing the prevention of retained surgical instruments: what is the value of counting? Ann Surg 247:13–18

Asiyanbola B, Cheng-Wu C, Lewin JS, Etienne-Cummings R (2012) Modified Map-Seeking Circuit: Use of Computer-Aided Detection in Locating Postoperative Retained Foreign Bodies. J Surg Res 175(2):47–52. https://doi.org/10.1016/j.jss.2011.11.1018

Sengupta A, Hadjiiski L, Chan HP, Cha K, Chronis N, Marentis TC (2017) Computer-aided detection of retained surgical needles from postoperative radiographs. Med Phys 44(1):180–191. https://doi.org/10.1002/mp.12011

Tripathi A, Marentis T, Chronis N (2012) Microfabricated instrument tag for the radiographic detection of retained foreign bodies during surgery. SPIE

Marentis TC, Davenport MS, Dillman JR, Sanchez R, Kelly AM, Cronin P, DeFreitas MR, Hadjiiski L, Chan HP (2018) Interrater Agreement and Diagnostic Accuracy of a Novel Computer-Aided Detection Process for the Detection and Prevention of Retained Surgical Instruments. AJR Am J Roentgenol 210(4):709–714. https://doi.org/10.2214/AJR.17.18576

Asensio JA, Verde JM (2012) Penetrating Wounds. In: Vincent JL, Hall JB (eds) Encyclopedia of Intensive Care Medicine. Springer, Berlin, Heidelberg, pp 1699–1703

Kaufman EJ, Wiebe DJ, Xiong RA, Morrison CN, Seamon MJ, Delgado MK (2021) Epidemiologic Trends in Fatal and Nonfatal Firearm Injuries in the US, 2009–2017. JAMA Intern Med 181(2):237–244

Centers for Disease Control and Prevention (2023) Web-based Injury Statistics Query and Reporting System (WISQARS).

Bryczkowski C (2020) Foreign body localization, Sonoguide. Available at: https://www.acep.org/sonoguide/procedures/foreign-bodies. Accessed 18 Dec 2023

Lewis D, Jivraj A, Atkinson P, Jarman R (2015) My patient is injured: identifying foreign bodies with ultrasound. Ultrasound 23(3):174–180. https://doi.org/10.1177/1742271X15579950. Accessed 19 Dec 2023

Shelhoss SC, Burgin CM (2022) Maximizing Foreign Body Detection by Ultrasound With the Water Bath Technique Coupled With the Focal Zone Advantage: A Technical Report. Cureus 14(11):e31577. https://doi.org/10.7759/cureus.31577

Voss JO, Maier C, Wüster J et al (2021) Imaging foreign bodies in head and neck trauma: a pictorial review. Insights Imaging 12:20. https://doi.org/10.1186/s13244-021-00969-9

Rupert J, Honeycutt JD, Odom MR (2020) Foreign bodies in the skin: evaluation and management. Am Fam Physician 101(12):740–747

Bukur M, Green DJ (2012) Plain x-rays for penetrating trauma. In: Velmahos G, Degiannis E, Doll D (eds) Penetrating trauma. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-20453-1_13

Campbell EA, Wilbert CD (2023) Foreign Body Imaging [updated 2023 Jul 30]. In: StatPearls [Internet]. StatPearls Publishing, Treasure Island (FL). Available from: https://www.ncbi.nlm.nih.gov/books/NBK470294/

Gopireddy DR, Kee-Sampson JW, Vulasala SSR, Stein R, Kumar S, Virarkar M (2023) Imaging of penetrating vascular trauma of the body and extremities secondary to ballistic and stab wounds. J Clin Imaging Sci 13:1

Ballard DH, Naeem M, Hoegger MJ, Rajput MZ, Mellnick VM (2020) Imaging of Penetrating Abdominal and Pelvic Trauma. In: Patlas MN, Katz DS, Scaglione M (eds) Atlas of Emergency Imaging from Head-to-Toe. Springer International Publishing, Cham, pp 1–17

Gunn ML, Clark RT, Sadro CT, Linnau KF, Sandstrom CK (2014) Current concepts in imaging evaluation of penetrating transmediastinal injury. Radiographics 34(7):1824–1841

Ogunyemi O, Clarke JR, Webber B, Badler N (2000) TraumaSCAN: assessing penetrating trauma with geometric and probabilistic reasoning. Proc AMIA Symp:620–624

Ogunyemi O (2006) Methods for reasoning from geometry about anatomic structures injured by penetrating trauma. J Biomed Inform 39(4):389–400

Matheny ME, Ogunyemi OI, Rice PL, Clarke JR (2005) Evaluating the discriminatory power of a computer-based system for assessing penetrating trauma on retrospective multi-center data. AMIA Annu Symp Proc 2005:500–504

Ahmed BA, Matheny ME, Rice PL, Clarke JR, Ogunyemi OI (2009) A comparison of methods for assessing penetrating trauma on retrospective multi-center data. J Biomed Inform 42(2):308–316

Steenburg SD, Sliker CW, Shanmuganathan K, Siegel EL (2010) Imaging evaluation of penetrating neck injuries. Radiographics 30(4):869–886

Yi X, Adams SJ, Henderson RDE, Babyn P (2020) Computer-aided assessment of catheters and tubes on radiographs: how good is artificial intelligence for assessment? Radiol Artif Intell 2(1):e190082

Wang CH, Hwang T, Huang YS, Tay J, Wu CY, Wu MC et al (2024) Deep learning-based localization and detection of malpositioned endotracheal tube on portable supine chest radiographs in intensive and emergency medicine: a multicenter retrospective study. Crit Care Med 52(2):237–247

Sakthivel MK, Bosemani T, Bacchus L, Pamuklar E (2020) Malpositioned lines and tubes on chest radiograph - a concise pictorial review. J Clin Imaging Sci 10:66

Zatelli M, Vezzali N (2017) 4-Point ultrasonography to confirm the correct position of the nasogastric tube in 114 critically ill patients. J Ultrasound 20(1):53–58

Farrokhi M, Yarmohammadi B, Mangouri A, Hekmatnia Y, Bahramvand Y, Kiani M et al (2021) Screening performance characteristics of ultrasonography in confirmation of endotracheal intubation; a systematic review and meta-analysis. Arch Acad Emerg Med 9(1):e68

Kara S, Akers JY, Chang PD (2021) Identification and localization of endotracheal tube on chest radiographs using a cascaded convolutional neural network approach. J Digit Imaging 34(4):898–904

An JY, Hwang EJ, Nam G, Lee SH, Park CM, Goo JM, Choi YR (2024) Artificial intelligence for assessment of endotracheal tube position on chest radiographs: validation in patients from two institutions. AJR Am J Roentgenol 222(1):e2329769

Lakhani P, Flanders A, Gorniak R (2021) Endotracheal tube position assessment on chest radiographs using deep learning. Radiol Artif Intell 3(1):e200026

Little BP (2024) Editorial comment: artificial intelligence for detection of endotracheal tube malposition-augmented rather than autonomous radiology interpretation. AJR Am J Roentgenol 222(1):e2330297

Rueckel J, Huemmer C, Shahidi C, Buizza G, Hoppe BF, Liebig T et al (2023) Artificial Intelligence to Assess Tracheal Tubes and Central Venous Catheters in Chest Radiographs Using an Algorithmic Approach With Adjustable Positioning Definitions. Invest Radiol 59(4):306–313

Tang CHM, Seah JCY, Ahmad HK, Milne MR, Wardman JB, Buchlak QD et al (2023) Analysis of line and tube detection performance of a chest x-ray deep learning model to evaluate hidden stratification. Diagnostics 13(14)

Mallon DH, McNamara CD, Rahmani GS, O’Regan DP, Amiras DG (2022) Automated detection of enteric tubes misplaced in the respiratory tract on chest radiographs using deep learning with two centre validation. Clin Radiol 77(10):e758–e764

Singh V, Danda V, Gorniak R, Flanders A, Lakhani P (2019) Assessment of critical feeding tube malpositions on radiographs using deep learning. J Digit Imaging 32(4):651–655. https://doi.org/10.1007/s10278-019-00229-9

Drozdov I, Dixon R, Szubert B, Dunn J, Green D, Hall N et al (2023) An artificial neural network for nasogastric tube position decision support. Radiol Artif Intell 5(2):e220165

Orsagh-Yentis D, McAdams RJ, Roberts KJ, McKenzie LB (2019) Foreign-body ingestions of young children treated in US emergency departments: 1995–2015. Pediatrics 143(5). https://doi.org/10.1542/peds.2018-1988

Sehgal IS, Dhooria S, Ram B, Singh N, Aggarwal AN, Gupta D, Behera D, Agarwal R (2015) Foreign body inhalation in the adult population: experience of 25,998 bronchoscopies and systematic review of the literature. Respir Care 60(10):1438–1448

Nastoulis E, Karakasi MV, Alexandri M, Thomaidis V, Fiska A, Pavlidis P (2019) Foreign bodies in the abdominal area: review of the literature. Acta Medica (Hradec Kralove) 62(3):85–93

Wu Y, Zhang X, Lin Z, Ding C, Wu Y, Chen Y, Wang D, Yi X, Chen F (2023) Changes in the global burden of foreign body aspiration among under-5 children from 1990 to 2019. Front Pediatr 11:1235308

Joseph M, Alajmi S, Alshammari V, Singh A, Parakh W, Indawati RT, Fasseeh N (2023) The characteristics of foreign bodies aspirated by children across different continents: A comparative review. Pediatr Pulmonol 58(2):408–424

Bella S, Heiney J, Patwa A (2023) Point-of-care ultrasound use for detection of multiple metallic foreign body ingestion in the pediatric emergency department: a case report. J Educ Teach Emerg Med 8(4):V1–V4. https://doi.org/10.21980/J83D2D

Ikenberry SO, Jue TL, Anderson MA et al (2011) Management of ingested foreign bodies and food impactions. Gastrointest Endosc 73:1085–1091

Hodge D 3rd, Tecklenburg F, Fleisher G (1985) Coin ingestion: does every child need a radiograph? Ann Emerg Med 14:443–446

Guelfguat M, Kaplinskiy V, Reddy SH, DiPoce J (2014) Clinical guidelines for imaging and reporting ingested foreign bodies. Am J Roentgenol 203(1):37–53. https://doi.org/10.2214/AJR.13.12185

Ariz C, Horton KM, Fishman EK (2004) 3D CT evaluation of retained foreign bodies. Emerg Radiol 11(2):95–99

Rostad BS, Richer EJ, Riedesel EL, Alazraki AL (2022) Esophageal discoid foreign body detection and classification using artificial intelligence. Pediatr Radiol 52(3):477–482

Tseng HJ, Hanna TN, Shuaib W, Aized M, Khosa F, Linnau KF (2015) Imaging foreign bodies: ingested, aspirated, and inserted. Ann Emerg Med 66(6):570–582.e5. https://doi.org/10.1016/j.annemergmed.2015.07.499

Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts H (2018) Artificial intelligence in radiology. Nat Rev Cancer 18(8):500–510

Mezrich JL (2022) Is artificial intelligence (AI) a pipe dream? Why legal issues present significant hurdles to AI autonomy. AJR Am J Roentgenol 219(1):152–156

Funding

Open access funding provided by SCELC, Statewide California Electronic Library Consortium

Ethics declarations

Conflicts of Interest

None.

Disclosures

None.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eibschutz, L., Lu, M.Y., Abbassi, M.T. et al. Artificial intelligence in the detection of non-biological materials. Emerg Radiol 31, 391–403 (2024). https://doi.org/10.1007/s10140-024-02222-4

DOI: https://doi.org/10.1007/s10140-024-02222-4