Abstract

Purpose

The increased utilization, and potential overutilization, of computed tomography pulmonary angiography (CTPA) is a well-recognized issue within emergency departments (EDs). The objective of this study is to determine the impact of performance feedback reports on CTPA ordering behavior among ED physicians.

Methods

We conducted a prospective study of the impact of individualized performance feedback reports on the ordering behavior of physicians working at two high-volume community EDs in Ontario, Canada. We generated individualized reports (or “Dashboards”) for each ED physician containing detailed feedback on, and peer comparison of, that physician’s CTPA ordering. Our baseline pre-intervention period was January 1 to December 31, 2018, and our intervention period was January 1, 2019, to December 31, 2021. We tracked individual and group ordering behavior throughout the study period. Our primary outcomes were the impact of feedback on (1) the overall group utilization rate and (2) the overall group diagnostic yield. A secondary analysis assessed the impact of the intervention on the physicians with the highest CTPA utilization rates.

Results

There was no statistically significant difference in the diagnostic yield of the included physicians in any of the intervention years. There was a statistically significant increase in the CTPA utilization rate over the study period (p < 0.001), from 5.9 CTPAs per 1000 ED visits in 2018 to 7.9 in 2020 and 11.4 in 2021; in pairwise comparisons with 2018, only the 2021 increase reached statistical significance.

Conclusion

Our study found no consistent significant impact of individualized feedback and peer comparison on physician ordering of CTPAs. This points to a potentially greater impact of environmental and institutional factors, as opposed to physician-targeted quality improvement measures, on physician ordering behavior.

Introduction

Overutilization of health care resources, defined as the provision of low-value or no-value health care, has been a central focus of health system research for the past several decades [1]. This issue has gained increasing urgency as health care resource utilization has increased and the proportion of health care expenditure felt to improve patient outcomes has decreased [2]. In tandem with this increase in health care utilization and expenditure, there has been a growing effort to mitigate the rise in low-value care through interventions targeting practitioners, institutions, and health systems [3,4,5,6]. Many of these efforts target individual physicians and aim to change physician ordering behavior through some combination of directive and persuasive measures.

The Choosing Wisely Campaign, while not the first to identify specific high-cost, low-yield health care interventions, is the most widely recognized campaign of its type, targeting multiple modalities across a variety of specialties [1, 6]. One such modality, and a very common target of quality improvement (QI) measures implemented within emergency departments (EDs), is the use of computed tomography pulmonary angiography (CTPA) to diagnose pulmonary embolism (PE). CTPA is a common target partly because of the availability of algorithms and decision support tools that allow practitioners to safely rule out PE in low-risk patients based solely on clinical criteria and/or serum assays (i.e., the D-dimer assay) [7, 8]. The QI measures aimed at reducing, or “optimizing,” the use of CTPAs have included physician education, audit-and-feedback, peer comparison, clinical decision support tools, and computerized physician order entry [9,10,11,12]. While many studies have sought to isolate the optimal combination of QI interventions, many of the interventions with demonstrated success in initial studies have failed to replicate those positive findings in real-world settings [13,14,15,16].

In this study, we evaluate the impact of one such physician-targeted QI intervention, carried out in two community EDs. The objective of this study is to measure the impact of individualized performance feedback reports on CTPA ordering behavior among ED physicians working in a non-academic community setting.

Methods

We conducted a prospective study of the impact of individualized performance feedback reports on the ordering behavior of physicians working at two high-volume community EDs in Ontario, Canada. See Table 1 for study site characteristics derived from our institution’s annual fiscal year reports.

Intervention

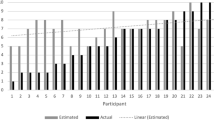

The intervention included components of both individualized audit-and-feedback and peer comparison feedback. We generated individualized reports (or “Dashboards”) for each ED physician containing detailed feedback on that physician’s CTPA ordering for the previous 6-month period. Each physician was provided with the number of CTPAs they had ordered, their CTPA utilization rate (defined as the total number of CTPAs ordered per 1000 ED visits), and their CTPA diagnostic yield (the proportion of CTPAs ordered that were positive for PE). The report also included measures of comparison with the provider group as a whole, including the group mean diagnostic yield and each physician’s rank and quartile for utilization rate within the group.
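The Dashboard metrics described above can be computed from simple per-physician counts. The following is a minimal Python sketch, not the authors’ reporting pipeline: the `PhysicianPeriod` record and the helper functions are hypothetical, the group mean diagnostic yield is assumed to average only physicians who ordered at least one CTPA, and quartiles are assumed to be assigned by rank position.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PhysicianPeriod:
    """Hypothetical per-physician counts for one 6-month reporting period."""
    physician_id: str
    ed_visits: int       # ED visits attributed to this physician
    ctpas_ordered: int   # CTPAs ordered by this physician
    ctpas_positive: int  # ordered CTPAs that were positive for PE


def utilization_rate(p: PhysicianPeriod) -> float:
    """CTPAs ordered per 1000 ED visits."""
    return 1000 * p.ctpas_ordered / p.ed_visits


def diagnostic_yield(p: PhysicianPeriod) -> Optional[float]:
    """Proportion of ordered CTPAs positive for PE; None if no CTPAs were ordered."""
    if p.ctpas_ordered == 0:
        return None
    return p.ctpas_positive / p.ctpas_ordered


def build_dashboards(group: list[PhysicianPeriod]) -> dict[str, dict]:
    """Return one dashboard (a dict of metrics) per physician in the group."""
    rates = {p.physician_id: utilization_rate(p) for p in group}
    # Rank 1 is the highest utilizer; ties keep input order (an assumption).
    ranking = sorted(rates, key=lambda pid: rates[pid], reverse=True)
    n = len(ranking)
    # Assumption: the group mean yield averages physicians who ordered >= 1 CTPA.
    yields = [y for y in map(diagnostic_yield, group) if y is not None]
    group_mean_yield = sum(yields) / len(yields) if yields else None

    dashboards = {}
    for p in group:
        rank = ranking.index(p.physician_id) + 1
        dashboards[p.physician_id] = {
            "ctpas_ordered": p.ctpas_ordered,
            "utilization_rate": rates[p.physician_id],
            "diagnostic_yield": diagnostic_yield(p),
            "group_mean_yield": group_mean_yield,
            "rank": rank,
            # Quartile 1 = top 25% of utilizers, split by rank position.
            "quartile": 1 + (rank - 1) * 4 // n,
        }
    return dashboards
```

A rank-based quartile split is only one option; a report could instead use the group’s 25th, 50th, and 75th percentile rates as cut-offs, which treats ties differently.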

The first individualized reports, covering January 1 to June 30, 2019, were distributed to the group by email, and subsequent reports followed every 6 months. Each report contained the Dashboard and a “user guide” explaining how to interpret its data. The final Dashboard covered July 1 to December 31, 2021.
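Assuming each Dashboard covers one calendar half-year, this schedule implies six reports in total. A small Python sketch of the reporting windows (the function name is illustrative, not from the study):

```python
from datetime import date


def reporting_windows(first_year: int, last_year: int) -> list[tuple[date, date]]:
    """Half-year windows (Jan 1 to Jun 30, Jul 1 to Dec 31) from first_year through last_year."""
    windows = []
    for year in range(first_year, last_year + 1):
        windows.append((date(year, 1, 1), date(year, 6, 30)))
        windows.append((date(year, 7, 1), date(year, 12, 31)))
    return windows


if __name__ == "__main__":
    for start, end in reporting_windows(2019, 2021):
        print(f"Dashboard covering {start.isoformat()} to {end.isoformat()}")
```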

Data collection

Data were collected from our hospital’s Radiology Information System (RIS), our institution’s electronic medical records system, and the National Ambulatory Care Reporting System (NACRS).

We collected data on all CTPAs ordered from January 1, 2018, to December 31, 2021. Our baseline pre-intervention period was January 1 to December 31, 2018, and our intervention period was January 1, 2019, to December 31, 2021. We collected individual and group ordering data for each 6-month period of the intervention (starting January 1, 2019) and created summary statistics for each intervention year (i.e., 2019, 2020, and 2021). The primary outcomes of this study were the impact of performance feedback on (1) the overall group utilization rate and (2) the overall group diagnostic yield. We defined utilization rate as the total number of CTPAs ordered per 1000 ED visits, and diagnostic yield as the proportion of CTPAs ordered that were positive for PE.
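The two primary outcome measures can be written compactly. In the notation below, which is ours rather than the authors’, $C$ is the number of CTPAs ordered in a period, $V$ the number of ED visits, and $P$ the number of CTPAs positive for PE; the worked numbers are hypothetical.

```latex
U = 1000 \times \frac{C}{V}
\qquad
Y = \frac{P}{C}

% Hypothetical example: C = 24 CTPAs, V = 2000 ED visits, P = 3 positive studies
U = 1000 \times \frac{24}{2000} = 12 \ \text{CTPAs per 1000 ED visits},
\qquad
Y = \frac{3}{24} = 0.125 = 12.5\%
```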

We also conducted a secondary analysis to determine the impact of the intervention on the physicians with the highest utilization rates, defined as those in the highest quartile for utilization in the pre-intervention period. This secondary analysis was limited to ED physicians who had worked ED shifts within our system in each of the 4 calendar years of the study.

Data analysis

Simple summary statistics were used to determine the utilization rates and diagnostic yields in each year of the study, and each intervention year was compared with the pre-intervention year. The statistical significance of differences in group utilization rate and group diagnostic yield was assessed using one-way analysis of variance (ANOVA).
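For readers unfamiliar with the test, a one-way ANOVA compares between-year variation with within-year variation. The Python sketch below computes the F statistic and its degrees of freedom using only the standard library; it assumes, as a hypothetical setup, that each observation is one physician’s utilization rate for a given year. The p-value would then come from an F distribution with those degrees of freedom, for example via `scipy.stats.f_oneway`, which returns both the statistic and the p-value.

```python
from statistics import fmean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """F statistic and degrees of freedom for a one-way ANOVA across groups (e.g., years)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least 2 groups and more observations than groups")
    group_means = [fmean(g) for g in groups]
    grand_mean = fmean(x for g in groups for x in g)
    # Between-group sum of squares: spread of each year's mean around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their own year's mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```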

To identify the top quartile of CTPA utilizers, we first limited the analysis to physicians who were present for all 4 years of the study period. We then identified the physicians with the highest utilization rates in the 2018 pre-intervention period and tracked their CTPA ordering over the subsequent 3 years. A paired t-test was used to determine whether their CTPA utilization rate or diagnostic yield changed significantly during the intervention period.
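The secondary-analysis steps can be sketched as follows. This is an illustrative Python sketch, not the authors’ SAS code: the function names and input shapes are hypothetical, and the quartile cut-off is assumed to be the floor of one quarter of the eligible physicians, a rule that happens to give the 17 of 69 physicians reported in the Results. The paired t statistic is computed on each physician’s change from 2018; `scipy.stats.ttest_rel(after, before)` would return the same statistic together with a p-value.

```python
from math import sqrt
from statistics import mean, stdev

STUDY_YEARS = {2018, 2019, 2020, 2021}


def eligible_physicians(years_worked: dict[str, set[int]]) -> set[str]:
    """Physicians who worked ED shifts in every study year (hypothetical input)."""
    return {pid for pid, years in years_worked.items() if STUDY_YEARS <= years}


def top_quartile(baseline_rates: dict[str, float]) -> list[str]:
    """Highest 2018 utilizers among eligible physicians, highest first."""
    ranked = sorted(baseline_rates, key=lambda pid: baseline_rates[pid], reverse=True)
    # Assumed cut-off: floor(n / 4) physicians (69 eligible -> 17).
    return ranked[: len(ranked) // 4]


def paired_t(before: list[float], after: list[float]) -> tuple[float, int]:
    """Paired t statistic and degrees of freedom for the after - before differences."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    # Undefined (division by zero) if every physician changed by exactly the same amount.
    t_stat = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t_stat, n - 1
```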

All analyses were carried out using SAS version 9.4 for Windows (SAS Institute Inc.) and Microsoft Excel 365.

Ethics approval was obtained through our institutional Research Ethics Office.

Results

A total of 103 physicians who worked during all or part of the study period were included in the initial analysis. Table 2 presents physician characteristics and practice patterns for each of the 4 study years (the pre-intervention year and the 3 intervention years).

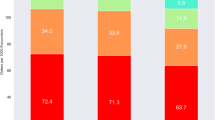

Table 3 presents the yearly total ED visits, CTPAs ordered, CTPA diagnostic yield, and CTPA utilization rate (i.e., CTPAs ordered per 1000 ED visits) for the group as a whole over the study period. Total ED visits remained relatively stable across the 4-year study period, with the exception of a decrease in 2020. The total number of CTPAs ordered by the group increased throughout the 4-year period, from 1,268 to 2,437. The greatest increase occurred between 2020 and 2021, when the total number of CTPAs ordered rose by 954. There was no statistically significant difference in the diagnostic yield of the included physicians in any of the intervention years (p = 0.83).
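As a rough consistency check, the reported figures can be combined to back-calculate the ED visit volume implied by each year’s utilization rate. The Python sketch below assumes that the rates quoted in the Abstract (5.9, 7.9, and 11.4 CTPAs per 1000 ED visits) are the group-level rates for 2018, 2020, and 2021, and derives the 2020 CTPA count as 2,437 minus 954. Because the rates are rounded to one decimal place, the implied visit counts are approximations, not reported data.

```python
def implied_ed_visits(ctpas: int, rate_per_1000: float) -> float:
    """Back-calculate ED visits from a CTPA count and a rate per 1000 ED visits."""
    return 1000 * ctpas / rate_per_1000


# (CTPAs ordered, CTPAs per 1000 ED visits) as reported in the text.
REPORTED = {
    2018: (1268, 5.9),
    2020: (2437 - 954, 7.9),  # 2020 count derived from the 2021 total and the 954 rise
    2021: (2437, 11.4),
}

implied = {year: implied_ed_visits(c, r) for year, (c, r) in REPORTED.items()}

if __name__ == "__main__":
    for year, visits in implied.items():
        print(f"{year}: ~{visits:,.0f} ED visits")
```

The implied 2020 volume (roughly 188,000 visits versus roughly 214,000 in 2018 and 2021) is consistent with the reported drop in ED visits that year, and suggests that the near doubling of the utilization rate between 2018 and 2021 reflects more CTPAs ordered on a similar visit volume.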

Comparison of means across years using one-way ANOVA with multiple pairwise comparisons revealed a statistically significant increase in the utilization rate (p < 0.001). In pairwise comparisons with 2018, the increase in utilization rate was statistically significant in 2021 (p < 0.001) but did not reach statistical significance in 2020 (p = 0.06) or 2019 (p = 0.9).

Sixty-nine of the 103 physicians who worked within our ED were present throughout all 4 years, and 17 of these physicians were included in the secondary analysis of the highest utilizers (i.e., those in the top quartile for utilization rate). Table 4 presents the data for the top quartile of CTPA utilizers. There were no statistically significant changes in the utilization rate of the highest CTPA utilizers in any intervention year compared with the pre-intervention period. There was a statistically significant increase of 3.9% in the diagnostic yield of the highest CTPA utilizers, but only in 2020.

Discussion

Our study found no consistent significant impact of individualized feedback and peer comparison on physician ordering behavior. In fact, there was an overall increase in CTPA ordering, both in the total number of CTPAs ordered and in the utilization rate per 1000 ED visits, over the course of the study period.

Two factors specific to our group may have dampened the overall impact of the intervention. First, our group’s initial pre-intervention diagnostic yield of 12% may be deemed acceptable, particularly in the context of other studies citing CTPA diagnostic yields of 5–10% in various US centers and 12–15% in Canadian centers [17,18,19]. Second, practice patterns varied widely among physicians in terms of both patient volume and CTPA utilization; a QI intervention and evaluation encompassing all physicians may therefore have failed to target and isolate the physicians who would benefit most from practice change and optimization. However, the lack of impact of our intervention persisted even among the highest CTPA utilizers within the group, i.e., the individuals with presumably the most potential for change and optimization in ordering behavior.

The absence of any measurable impact on ordering behavior in our study, while somewhat disappointing, is not surprising given the current literature on QI interventions that target individual practitioners’ ordering behavior. Population-based studies have consistently shown a trend toward increased utilization of imaging studies, particularly CTs and MRIs, over the past two decades [20,21,22]. This trend has held steady over the past several years, and across several jurisdictions, despite the volume and breadth of campaigns and institutional initiatives aimed at reducing “overutilization,” or optimizing ordering behavior. Interventions that have sought to optimize ordering behavior, for example by increasing guideline adherence through educational campaigns or, as in our study, through audit-and-feedback and peer comparison, have demonstrated either poor adherence to evidence-based guidelines or a lack of impact on measurable outcomes of utilization and yield despite increased guideline adherence [10, 13, 15, 16, 23,24,25]. One study that evaluated the impact of automated clinical decision support tools (based on predetermined professional society guidelines) on the volume of unnecessary diagnostic imaging found that physicians ignored close to 99% of all computer-generated alerts [23]. Among the studies that found a positive impact of these physician-targeted interventions, the effect sizes are often modest [14, 26]. Any modest decrease in resource utilization and expenditure must also be weighed against the expenditure and the material and human resources required to design, purchase, implement, and maintain these QI interventions and their associated technologies.

Total resource consumption is the result of a complex interplay between three main drivers: patient factors (e.g., age, comorbidity, clinical presentation, and patient expectations), physician factors (e.g., experience, level of training, risk tolerance, and tolerance of uncertainty), and contextual factors (e.g., crowding, wait-times, and accessibility of advanced diagnostics and therapeutics) [27,28,29]. Any intervention, and any subsequent evaluation, that focuses only on one part of this triad will inevitably leave unaccounted for the contributory impact of the other two drivers of utilization. The physicians in our group worked in a similar clinical environment, but were each exposed to a different population of patients, with a different mix of acuity, complexity, and expectations. This is true at any given point in time and also across the 4 years of the study period. As well, the clinical context changed over time, with increased hours of diagnostic imaging availability in response to increased demand. However, the most unpredictable, yet impactful phenomenon during the time period of this study was the COVID-19 pandemic. The pandemic significantly altered the patient population, clinical presentations, and contextual health system factors, thereby leading to alterations in established physician ordering behavior in ways that are extremely difficult to measure and quantify [30].

It has been posited that increased availability of a modality increases its utilization, which in turn increases its supply in a feedback loop of supply-induced demand. This phenomenon has been discussed in health policy since at least the 1960s and is most famously captured by Roemer’s law that “a built bed is a filled bed” [31]. Studies examining increased accessibility and supply of health care resources have shown positive relationships between empty beds and admissions [32], between fee-for-service (versus salary-based) provider reimbursement and procedure volume [33], between regional physician density and health care expenditures [34], and between hospital capacity and hospital utilization, even after correcting for disease burden and demographic factors [35]. In a separate study, we described the exponential rise in CT angiography of the head and neck at our center following the implementation of a Code Stroke protocol designed to help select patients for endovascular therapy (EVT) [36].

It is not possible, based on observational data, to determine whether this positive relationship between supply and utilization is causal (i.e., the result of supply-induced demand) or merely correlational and driven by external factors (such as increased demand due to changing patient demographics, broader system factors, or other unidentified or unmeasurable confounders). However, one consistent finding among these studies is the absence of any mortality benefit associated with the increased utilization [35, 37, 38].

It would therefore follow that decreasing the availability of, or restricting access to, a modality through a more directive, top-down approach will decrease, or at least “optimize,” its utilization. Indeed, the literature points toward success with interventions that operate a step above individual physician behavior, such as strict policy interventions that limit physicians’ access to certain diagnostics or therapeutics, or that add steps to the ordering pathway to reduce overutilization. Examples include removing certain low-yield tests from test profiles or formularies, removing automatic renewal orders, restricting insurer payment or requiring special authorization for certain tests and therapeutics, restricting ordering to certain physician groups (e.g., specialists), and decreasing availability [39,40,41,42,43,44].

The natural objection to this more proscriptive approach is that it encroaches upon physician autonomy and thus is highly likely to be met with resistance by physicians, and/or that it may lead to patient harm through delayed or missed diagnoses. For the latter objection, there is actually no compelling evidence in the literature that such policies cause harm through missed diagnoses [45]. Presumably, this is because the decreased ordering rate is not equally distributed among the patient population, but rather concentrated on those patients that the physician feels have the lowest pre-test probability for a disease, or the lowest likelihood to benefit from a specific therapy.

Limitations

There are several limitations to this study. First, our study did not include a concurrent control group, so it is not possible to isolate the effects of the intervention from the situational and environmental changes that occurred over time. Second, this study was conducted at two EDs within a single institution, and its results may not be generalizable to other centers. Third, the composition of our ED physician workforce shifted over the study period. Although staff turnover is a reality in many community EDs, it hinders direct year-to-year comparisons. We tried to correct for this by limiting the analysis of the top quartile of utilizers to physicians who were present for all 4 years of the pre-intervention and intervention periods. Fourth, the intervention did not include an educational component on appropriate CTPA ordering; the impact of the feedback might have been greater had it been coupled with an educational intervention. Fifth, we did not collect information on whether physicians opened the emails they received or accessed the included Dashboard, so we do not know whether, or to what degree, physicians engaged with the feedback. The intervention may have affected the ordering behavior of physicians who did engage with the reports, and such an effect would not have been captured in our analysis. Finally, throughout the conception, design, and implementation of this study, we assumed that “optimized” or appropriate utilization is equivalent to reduced utilization and increased diagnostic yield. While these are common outcome measures for similar QI initiatives, they leave unanswered the question of whether the intervention had the unintended consequence of underdiagnosis, or missed diagnoses, due to changes in physician ordering. As well, peer comparison is limited by the lack of an “objective” benchmark for quality, and implicitly presents the prevailing average group ordering behavior as optimal.

Conclusion

Our study failed to show any significant impact of individualized audit-and-feedback and peer comparison feedback on CTPA ordering volume, utilization rate, or diagnostic yield. This lack of impact was present both within the group as a whole and within those physicians with the highest utilization rates. Systems may choose to adopt such QI initiatives, including physician feedback reports, primarily as a form of continuing medical education and self-reflection, or as part of a broader, system-wide multimodal approach to optimizing utilization that shifts the overall clinical context so as to deter or disincentivize overutilization of diagnostic imaging.

Abbreviations

- ED: Emergency department
- CTPA: Computed tomography pulmonary angiography
- PE: Pulmonary embolism
- QI: Quality improvement
- CDS: Clinical decision support
- CPOE: Computerized physician order entry

References

Blumenthal-Barby JS (2013) “Choosing wisely” to reduce low-value care: a conceptual and ethical analysis. J Med Philos 38(5):559–580

Hendee WR, Becker GJ, Borgstede JP, Bosma J, Casarella WJ, Erickson BA, Maynard CD, Thrall JH, Wallner PE (2010) Addressing overutilization in medical imaging. Radiology 257(1):240–245

Schattner A (2008) The unbearable lightness of diagnostic testing: time to contain inappropriate test ordering. Postgrad Med J 84(998):618–621

Fahimi J, Kanzaria HK, Mongan J, Kahn KL, Wang RC (2019) Potential effect of the Protecting Access to Medicare Act on use of advanced diagnostic imaging in the emergency department: an analysis of the National Hospital Ambulatory Care Survey. Radiology 291(1):188–193

Hentel K, Menard A, Khorasani R (2017) New CMS clinical decision support regulations: a potential opportunity with major challenges. Radiology 283(1):10–13

Cassel CK, Guest JA (2012) Choosing wisely: helping physicians and patients make smart decisions about their care. JAMA 307(17):1801–1802

van Belle A, Buller HR, Huisman MV, Huisman PM, Kaasjager K, Kamphuisen PW, Kramer MH, Kruip MJ, Kwakkel-van Erp JM, Leebeek FW et al (2006) Effectiveness of managing suspected pulmonary embolism using an algorithm combining clinical probability, D-dimer testing, and computed tomography. JAMA 295(2):172–179

Wells PS, Anderson DR, Rodger M, Stiell I, Dreyer JF, Barnes D, Forgie M, Kovacs G, Ward J, Kovacs MJ (2001) Excluding pulmonary embolism at the bedside without diagnostic imaging: management of patients with suspected pulmonary embolism presenting to the emergency department by using a simple clinical model and d-dimer. Ann Intern Med 135(2):98–107

Levick DL, Stern G, Meyerhoefer CD, Levick A, Pucklavage D (2013) Reducing unnecessary testing in a CPOE system through implementation of a targeted CDS intervention. BMC Med Inform Decis Mak 13:43

Raja AS, Ip IK, Dunne RM, Schuur JD, Mills AM, Khorasani R (2015) Effects of performance feedback reports on adherence to evidence-based guidelines in use of CT for evaluation of pulmonary embolism in the emergency department: a randomized trial. AJR Am J Roentgenol 205(5):936–940

Raja AS, Ip IK, Prevedello LM, Sodickson AD, Farkas C, Zane RD, Hanson R, Goldhaber SZ, Gill RR, Khorasani R (2012) Effect of computerized clinical decision support on the use and yield of CT pulmonary angiography in the emergency department. Radiology 262(2):468–474

Winickoff RN, Coltin KL, Morgan MM, Buxbaum RC, Barnett GO (1984) Improving physician performance through peer comparison feedback. Med Care 22(6):527–534

Kadakia K, Pfeifer CM, Cao J, O’Connell EJ, Kwon J, Browning T (2020) Awareness of relative CT utilization among peers is not associated with changes in imaging requests among emergency department providers in a large county hospital. Emerg Radiol 27(1):17–22

Booker MT, Johnson JO (2017) Optimizing CT pulmonary angiogram utilization in a community emergency department: a pre- and postintervention study. J Am Coll Radiol 14(1):65–71

Drescher FS, Chandrika S, Weir ID, Weintraub JT, Berman L, Lee R, Van Buskirk PD, Wang Y, Adewunmi A, Fine JM (2011) Effectiveness and acceptability of a computerized decision support system using modified Wells criteria for evaluation of suspected pulmonary embolism. Ann Emerg Med 57(6):613–621

Kanaan Y, Knoepp UD, Kelly AM (2013) The influence of education on appropriateness rates for CT pulmonary angiography in emergency department patients. Acad Radiol 20(9):1107–1114

Venkatesh AK, Agha L, Abaluck J, Rothenberg C, Kabrhel C, Raja AS (2018) Trends and variation in the utilization and diagnostic yield of chest imaging for Medicare patients with suspected pulmonary embolism in the emergency department. AJR Am J Roentgenol 210(3):572–577

Costa AF, Basseri H, Sheikh A, Stiell I, Dennie C (2014) The yield of CT pulmonary angiograms to exclude acute pulmonary embolism. Emerg Radiol 21(2):133–141

Chen YA, Gray BG, Bandiera G, MacKinnon D, Deva DP (2015) Variation in the utilization and positivity rates of CT pulmonary angiography among emergency physicians at a tertiary academic emergency department. Emerg Radiol 22(3):221–229

Smith-Bindman R, Kwan ML, Marlow EC, Theis MK, Bolch W, Cheng SY, Bowles EJA, Duncan JR, Greenlee RT, Kushi LH et al (2019) Trends in use of medical imaging in US health care systems and in Ontario, Canada, 2000–2016. JAMA 322(9):843–856

Smith-Bindman R, Miglioretti DL, Johnson E, Lee C, Feigelson HS, Flynn M, Greenlee RT, Kruger RL, Hornbrook MC, Roblin D et al (2012) Use of diagnostic imaging studies and associated radiation exposure for patients enrolled in large integrated health care systems, 1996–2010. JAMA 307(22):2400–2409

Pola A, Corbella D, Righini A, Torresin A, Colombo PE, Vismara L, Trombetta L, Maddalo M, Introini MV, Tinelli D et al (2018) Computed tomography use in a large Italian region: trend analysis 2004–2014 of emergency and outpatient CT examinations in children and adults. Eur Radiol 28(6):2308–2318

Ip I, Lacson R, Hentel K, Malhotra S, Darer J, Langlotz C, Weiss J, Raja A, Khorasani R (2016) JOURNAL CLUB: predictors of provider response to clinical decision support: lessons learned from the Medicare imaging demonstration. Am J Roentgenol 208:1–7

Ryskina K, Dine CJ, Gitelman Y, Leri D, Patel M, Kurtzman G, Lin LY, Epstein AJ (2018) Effect of social comparison feedback on laboratory test ordering for hospitalized patients: a randomized controlled trial. J Gen Intern Med 33(10):1639–1645

Geeting GK, Beck M, Bruno MA, Mahraj RP, Caputo G, DeFlitch C, Hollenbeak CS (2016) Mandatory assignment of modified Wells score before CT angiography for pulmonary embolism fails to improve utilization or percentage of positive cases. AJR Am J Roentgenol 207(2):442–449

Mills AM, Ip IK, Langlotz CP, Raja AS, Zafar HM, Khorasani R (2018) Clinical decision support increases diagnostic yield of computed tomography for suspected pulmonary embolism. Am J Emerg Med 36(4):540–544

Hautz WE, Sauter TC, Hautz SC, Kämmer JE, Schauber SK, Birrenbach T, Exadaktylos AK, Stock S, Müller M (2020) What determines diagnostic resource consumption in emergency medicine: patients, physicians or context? Emerg Med J 37(9):546

Hoot NR, Aronsky D (2008) Systematic review of emergency department crowding: causes, effects, and solutions. Ann Emerg Med 52(2):126–136

Wong HJ, Sistrom CL, Benzer TI, Halpern EF, Morra DJ, Gazelle GS, Ferris TG, Weilburg JB (2013) Use of imaging in the emergency department: physicians have limited effect on variation. Radiology 268(3):779–789

Akudjedu TN, Mishio NA, Elshami W, Culp MP, Lawal O, Botwe BO, Wuni AR, Julka-Anderson N, Shanahan M, Totman JJ et al (2021) The global impact of the COVID-19 pandemic on clinical radiography practice: a systematic literature review and recommendations for future services planning. Radiography 27(4):1219–1226

Ginsburg PB, Koretz DM (1983) Bed availability and hospital utilization: estimates of the “Roemer effect.” Health Care Financ Rev 5(1):87–92

Freedman S (2016) Capacity and utilization in health care: the effect of empty beds on neonatal intensive care admission. Am Econ J Econ Policy 8(2):154–185

Nguyen LL, Smith AD, Scully RE, Jiang W, Learn PA, Lipsitz SR, Weissman JS, Helmchen LA, Koehlmoos T, Hoburg A et al (2017) Provider-induced demand in the treatment of carotid artery stenosis: variation in treatment decisions between private sector fee-for-service vs salary-based military physicians. JAMA Surg 152(6):565–572

Reich O, Weins C, Schusterschitz C, Thöni M (2012) Exploring the disparities of regional health care expenditures in Switzerland: some empirical evidence. Eur J Health Econ 13(2):193–202

Fisher ES, Wennberg JE, Stukel TA, Skinner JS, Sharp SM, Freeman JL, Gittelsohn AM (2000) Associations among hospital capacity, utilization, and mortality of US Medicare beneficiaries, controlling for sociodemographic factors. Health Serv Res 34(6):1351–1362

Salehi L, Jaskolka J, Ossip M, Phalpher P, Valani R, Mercuri M (2022) Utilization of CT angiography of the head and neck in the era of endovascular therapy for acute ischemic stroke: a retrospective study. Emerg Radiol 29(2):291–298

Wiener RS, Schwartz LM, Woloshin S (2011) Time trends in pulmonary embolism in the United States: evidence of overdiagnosis. Arch Intern Med 171(9):831–837

Li L, Chamoun GF, Chamoun NG, Sessler D, Gopinath V, Saini V (2021) Elucidating the association between regional variation in diagnostic frequency with risk-adjusted mortality through analysis of claims data of Medicare inpatients: a cross-sectional study. BMJ Open 11(9):e054632

Chami N, Li Y, Weir S, Wright JG, Kantarevic J (2021) Effect of strict and soft policy interventions on laboratory diagnostic testing in Ontario, Canada: a Bayesian structural time series analysis. Health Policy 125(2):254–260

van Walraven C, Goel V, Chan B (1998) Effect of population-based interventions on laboratory utilization: a time-series analysis. JAMA 280(23):2028–2033

Henderson J, Bouck Z, Holleman R, Chu C, Klamerus ML, Santiago R, Bhatia RS, Kerr EA (2020) Comparison of payment changes and choosing wisely recommendations for use of low-value laboratory tests in the United States and Canada. JAMA Intern Med 180(4):524–531

Nazerian P, Vanni S, Fanelli A, Fallai L, Duranti C, Ognibene A, Rapi S, Betti L, Tomaiuolo M, Calamai M et al (2019) Appropriate use of laboratory test requests in the emergency department: a multilevel intervention. Eur J Emerg Med 26(3):205–211

Pysher TJ, Bach PR, Lowichik A, Petersen MD, Shields LH (1999) Chemistry test ordering patterns after elimination of predefined multitest chemistry panels in a children’s hospital. Pediatr Dev Pathol 2(5):446–453

Naugler C, Hemmelgarn B, Quan H, Clement F, Sajobi T, Thomas R, Turin TC, Hnydyk W, Chin A, Wesenberg J (2017) Implementation of an intervention to reduce population-based screening for vitamin D deficiency: a cross-sectional study. CMAJ Open 5(1):E36–E39

Bindraban RS, Ten Berg MJ, Naaktgeboren CA, Kramer MH, Van Solinge WW, Nanayakkara PW (2018) Reducing test utilization in hospital settings: a narrative review. Ann Lab Med 38(5):402–412

Acknowledgements

The authors would like to thank Mr. Ishmail Sheri, Mr. Shaun Ghanny, Ms. Desa Marin, Ms. Priscilla Matthews, Dr. Oscar Karbi, Dr. Michael Garay, Dr. Andrew Healey, and the Brampton Civic Hospital Emergency Department Governance Committee for their contributions to this manuscript. Institutional support was provided by William Osler Health System, the Brampton Civic Hospital Emergency Department Governance Committee, McMaster University Department of Medicine, and University of Toronto Department of Family and Community Medicine.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Salehi, L., Jaskolka, J., Yu, H. et al. The impact of performance feedback reports on physician ordering behavior in the use of computed tomography pulmonary angiography (CTPA). Emerg Radiol 30, 63–69 (2023). https://doi.org/10.1007/s10140-022-02100-x

DOI: https://doi.org/10.1007/s10140-022-02100-x