Abstract

Nearest neighbour similarity measures are widely used in many time series data analysis applications. They compute a measure of similarity between two time series. Most applications require tuning of these measures’ meta-parameters in order to achieve good performance. However, most measures have at least \(O(L^2)\) complexity, where L is the length of the series, making them computationally expensive and the process of learning their meta-parameters burdensome, requiring days even for datasets containing only a few thousand series. In this paper, we propose UltraFastMPSearch, a family of algorithms to learn the meta-parameters for different types of time series distance measures. These algorithms are significantly faster than the prior state of the art. Our algorithms build upon the state of the art, exploiting the properties of a new efficient exact algorithm which supports early abandoning and pruning for most time series distance measures. We show on 128 datasets from the UCR archive that our new family of algorithms is up to an order of magnitude faster than the previous state of the art.



1 Introduction

Time series distance measures are used in a wide range of time series data mining tasks, including similarity search [5, 21, 24, 32], classification [3, 18, 34, 37, 43], regression [33], clustering [8, 23], indexing [39], and motif discovery [1]. All these tasks rely on nearest neighbour (\({{\,\mathrm{\textrm{NN}}\,}}\)) search, which is widely known to be most effective when the meta-parameters of the distance measures are learnt [3, 18, 37]. For instance, the Dynamic Time Warping (\({{\,\textrm{DTW}\,}}\)) distance proves to be the most effective when constrained by the right warping window (WW) [8, 26, 34]. Indeed, the unconstrained \({{\,\textrm{DTW}\,}}\) is subject to pathological warping, leading to unintuitive alignments [34] where a single point of a time series is aligned to a large section of another series [14].

Traditionally, learning the meta-parameters has been a time-consuming leave-one-out cross-validation (LOOCV) process that requires computing the distance between every pair of training instances, for each value of each meta-parameter [7, 8, 18, 35, 37]. This cost is compounded by the quadratic time complexity of most distance measures. Take \({{\,\textrm{DTW}\,}}\) as an example: with a training dataset of N time series of length L, learning the WW naively requires \(O(N^2 \cdot L^3)\) operations (Sect. 2.7). This is extremely slow, even for datasets with only a thousand time series. For instance, the naive approach took 58 h to learn the best WW on the StarLightCurves dataset from the UCR archive [7], which has only 1000 training instances of length 1024 (Fig. 1). In comparison, the prior state-of-the-art method, FastWWSearch, took 30 min, while our proposed approach took only 4 min. Similar improvements can be seen across all the largest (in terms of \(N\times L\)) datasets from the UCR archive [7], shown in Fig. 1 (note the log scale).

Fig. 1 UltraFastWWSearch vs the naive LOOCV approach and the state-of-the-art FastWWSearch. Total training time on the 6 largest datasets from [7] in terms of \(N\times L\)

The prior state of the art, FastWWSearch, which learns the best WW for \({{\,\textrm{DTW}\,}}\), is a sophisticated and intricate algorithm [34] that exploits the properties of \({{\,\textrm{DTW}\,}}\) and its various lower bounds to achieve a speedup of three orders of magnitude over the naive approach. Its significance is demonstrated by FastWWSearch having received the SDM 2018 best paper award. Even though FastWWSearch is 1000 times faster than the traditional LOOCV approach, it is still undesirably slow for large datasets with long time series. This is shown in Fig. 1, where FastWWSearch took almost 6 h to train on the HandOutlines dataset, one of the largest and longest datasets from the UCR archive [7], compared to just 11 min for our proposed method. FastWWSearch was later extended to other distance measures, forming the Fast Ensemble of Elastic Distances (FastEE) [37], which is 40 times faster than the original EE. In this paper, we refer to them as the FastEE approaches.

Before FastWWSearch and FastEE, there were two primary strategies for speeding up LOOCV. One was to speed up the \({{\,\mathrm{\textrm{NN}}\,}}\) search process, e.g. by using lower bounds to skip most of the distance computations [15,16,17, 24, 36, 41]. The other was to speed up the core computation of the distances, e.g. by approximating it [28] or pruning unnecessary operations [31]. However, scalability remains an issue for large datasets and long series [34, 37, 42].

Recently, Herrmann and Webb [11] developed an efficient implementation strategy for six elastic distances, including \({{\,\textrm{DTW}\,}}\). This strategy, known as “Early Abandoned and Pruned” (\({{\,\textrm{EAP}\,}}\)), relies on an upper bound beyond which the precise distance is not required, and uses it to prune and early abandon the core computation of elastic distances. Nearest neighbour search naturally provides such an upper bound (Sect. 3.1). \({{\,\textrm{EAP}\,}}\) demonstrated more than an order of magnitude speedup for several \({{\,\mathrm{\textrm{NN}}\,}}\) search tasks.

In this paper, we propose UltraFastMPSearch, a family of three algorithms to learn the meta-parameters for the six time series distance measures that are at the heart of the influential Ensemble of Elastic Distances (EE) algorithm [18]: Dynamic Time Warping (DTW) [27], Weighted Dynamic Time Warping (WDTW) [13], Longest Common Subsequence (LCSS) [4], Edit Distance with Real Penalty (\({{\,\textrm{ERP}\,}}\)) [5, 6], Move–Split–Merge (\({{\,\textrm{MSM}\,}}\)) [32], and Time Warp Edit Distance (\({{\,\textrm{TWE}\,}}\)) [21]. We fundamentally transformed the FastEE algorithm proposed in [37] to exploit the full capacity of \({{\,\textrm{EAP}\,}}\) [11]. UltraFastMPSearch consists of:

1. UltraFastWWSearch, recently proposed in our IEEE ICDM2021 paper [35], of which the current paper is an expanded version. UltraFastWWSearch was designed specifically for \({{\,\textrm{DTW}\,}}\), exploiting a \({{\,\textrm{DTW}\,}}\) property called window validity to gain further substantial speedup;

2. UltraFastLocalUB, a variant of UltraFastWWSearch that does not rely on window validity, extended to the other distance measures;

3. UltraFastGlobalUB, a variant of UltraFastLocalUB that uses a global rather than a local upper bound, ensuring that a distance computation is only early abandoned if it cannot provide a useful lower bound for distance computations with subsequent meta-parameter values.

Like FastWWSearch and all the FastEE approaches, UltraFastMPSearch is exact, i.e. it produces the same results as the traditional LOOCV approach. However, it is always faster than FastWWSearch and the FastEE approaches, by up to one order of magnitude, when tested on the 128 datasets from the UCR archive [7]. Figure 2 demonstrates this by comparing UltraFastWWSearch to FastWWSearch and to the traditional LOOCV approach.

Similar to the FastWWSearch and FastEE approaches, UltraFastMPSearch systematically fills a table recording the nearest neighbour at each meta-parameter value for each series in the dataset \(\mathcal {T}\). However, it does so without the intricate cascading of lower bounds that is critical to FastWWSearch and FastEE. UltraFastMPSearch processes each new time series in a systematic order, minimizing the number of distance computations while carefully exploiting the strengths of \({{\,\textrm{EAP}\,}}\) to speed up the remaining ones. We release our code as open source (Footnote 1) to ensure reproducibility and to enable researchers and practitioners to directly use UltraFastMPSearch as a subroutine to tune time series distance measures, whatever their application.

We believe that UltraFastMPSearch serves as an important foundation for further speeding up distance-based time series classification algorithms, such as the “Fast Ensemble of Elastic Distances” (FastEE) [37], which has fallen out of favour due to its relatively slow compute time.

This paper is organized as follows. In Sect. 2, we introduce the background and notation used in this work. We review related work in Sect. 3. Section 4 describes UltraFastMPSearch in detail. In Sect. 5, we evaluate our method against the standard methods. Lastly, Sect. 6 concludes our work and outlines future directions.

Note that this paper is an extended version of our IEEE ICDM2021 UltraFastWWSearch paper [35].

2 Background

We consider learning from a dataset \(\mathcal {T}=\{\mathcal {T}_1,\ldots ,\mathcal {T}_N\}\) of N time series where \(\mathcal {T}_i\) are of length L. The letters S and T denote two time series, and \(T_i\) denotes the ith element of T.

In this section, we briefly discuss the distance measures used in this work and their meta-parameters. We refer interested readers to their respective papers for a detailed overview of the measures.

2.1 Dynamic Time Warping

The \({{\,\textrm{DTW}\,}}\) distance was first introduced in 1971 by [27] as a speech recognition tool. Since then, it has been one of the most widely used distance measures in \({{\,\mathrm{\textrm{NN}}\,}}\) search, supporting sub-sequence search [24], regression [33], clustering [8, 23], motif discovery [1], and classification [3, 18, 37]; nearest neighbour with \({{\,\textrm{DTW}\,}}\) (\({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\)) has been the historical approach to time series classification. The “Ensemble of Elastic Distances” (EE) [18], introduced in 2015, was one of the first classifiers to be consistently more accurate than \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\) over a wide variety of tasks. It relies on eleven \({{\,\mathrm{\textrm{NN}}\,}}\) classifiers including \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\) (and its variant with a warping window, \({{\,\textrm{cDTW}\,}}\), see below). EE opened the door to “ensemble classifiers”, i.e. classifiers embedding other classifiers as components, for time series classification. Various recent and accurate ensemble classifiers such as HIVE-COTE [19], Proximity Forest [20], and TS-CHIEF [29] also embed both \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\) and \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{cDTW}\,}}\) classifiers.

\({{\,\textrm{DTW}\,}}\) computes, in \(O(L^2)\) time, the cost of an optimal alignment between two series (lower costs indicating more similar series) by minimizing the cumulative cost of aligning their individual points. Equations 1a to 1d define the “cost matrix” M for two series S and T such that M(i, j) is the minimal cumulative cost of aligning the first i points of S with the first j points of T. It follows that \({{\,\textrm{DTW}\,}}(S,T) {=} M(L,L)\).

Common functions for the cost of aligning two points are \({{\,\textrm{d}\,}}(S_i, T_j)=\left| S_i {-} T_j\right| \) and \({{\,\textrm{d}\,}}(S_i, T_j)=(S_i {-} T_j)^2\). In the current paper, we use the latter. However, our algorithms generalize to any cost function.
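For reference, the cost matrix described above can be written with the standard DTW recurrence below, which we assume matches Eqs. 1a to 1d up to notation. The last line adds the warping window w introduced in Sect. 2.1.1; with \(w \ge L{-}1\) it has no effect, and \({{\,\textrm{DTW}\,}}_w(S,T) = M(L,L)\).

```latex
\begin{aligned}
M(0,0) &= 0\\
M(i,0) &= M(0,j) = +\infty, && 1 \le i, j \le L\\
M(i,j) &= \mathrm{d}(S_i, T_j) + \min\bigl\{M(i{-}1,j{-}1),\; M(i{-}1,j),\; M(i,j{-}1)\bigr\}, && |i-j| \le w\\
M(i,j) &= +\infty, && |i-j| > w
\end{aligned}
```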

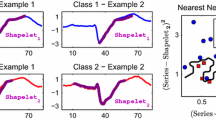

The individual alignments (dotted lines in Fig. 3) form a “warping path” in the cost matrix (Fig. 4a). Note how the vertical section of the path in column 1 of Fig. 4a corresponds to the first point of T being aligned three times in Fig. 3.

Fig. 3 Alignments associated with the warping path in Fig. 4a

Fig. 4 Cost matrix \(M_{{{\,\textrm{DTW}\,}}_{w}(S,T)}\) with decreasing warping window size w. We have \({{\,\textrm{DTW}\,}}_{w}(S,T){=}M_{{{\,\textrm{DTW}\,}}_{w}(S,T)}(L,L)\). Cells cut out by the warping window are in light grey; borders are in dark grey. The warping path computed by the full \({{\,\textrm{DTW}\,}}\) (a) is valid down to \(w{=}2\) (b). Hence, the next required computation is with \(w{=}1\), resulting in a higher \({{\,\textrm{DTW}\,}}\) cost of \(25>23\) (c)

2.1.1 Warping window

\({{\,\textrm{DTW}\,}}\) is usually associated with a “warping window” (WW) w (originally called the Sakoe–Chiba band), constraining how far the warping path can deviate from the diagonal of the matrix [27]. Given a row index \(1\le {}l\le {}L\) and a column index \(1\le {}c\le {}L\), only cells with \(\left| l{-}c\right| \le {}w\) can be part of the warping path. With the cost function \({{\,\textrm{d}\,}}(S_i, T_j){=}(S_i {-} T_j)^2\), a WW of 0 is equivalent to the squared Euclidean distance. On the other hand, a WW \(\ge {}L{-}1\) is equivalent to unconstrained (or “full”) \({{\,\textrm{DTW}\,}}\). \({{\,\textrm{DTW}\,}}\) with a WW is often called \({{\,\textrm{cDTW}\,}}\), or simply written as \({{\,\textrm{DTW}\,}}\) annotated with the window w. In this paper, we focus only on the commonly used warping window (Sakoe–Chiba band) [15, 18, 26, 34, 37]. However, other types of constraints exist for \({{\,\textrm{DTW}\,}}\), such as the Itakura Parallelogram [12] and the Ratanamahatana–Keogh band [25].
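The window constraint can be illustrated with the minimal Python sketch below, which computes \({{\,\textrm{DTW}\,}}_w\) with the squared cost function while keeping only two rows of the cost matrix in memory. The function name `cdtw` is hypothetical, and the sketch assumes equal-length series; it illustrates the recurrence, not the authors' implementation.

```python
import math


def cdtw(s, t, w):
    """DTW restricted to a Sakoe-Chiba window w, with cost d(a, b) = (a - b)^2."""
    n = len(s)
    assert n == len(t), "this sketch assumes equal-length series"
    w = min(w, n - 1)  # any window >= L - 1 is full DTW
    inf = math.inf
    # prev[j] holds M(i-1, j); index 0 is the infinite border, except M(0, 0) = 0
    prev = [inf] * (n + 1)
    prev[0] = 0.0
    for i in range(1, n + 1):
        curr = [inf] * (n + 1)
        # only columns j with |i - j| <= w lie inside the window
        for j in range(max(1, i - w), min(n, i + w) + 1):
            cost = (s[i - 1] - t[j - 1]) ** 2
            curr[j] = cost + min(prev[j - 1], prev[j], curr[j - 1])
        prev = curr
    return prev[n]


if __name__ == "__main__":
    s, t = [0.0, 1.0, 2.0], [1.0, 2.0, 3.0]
    print(cdtw(s, t, 0))  # 3.0: the squared Euclidean distance
    print(cdtw(s, t, 2))  # 2.0: full DTW, since w >= L - 1
```

Cells outside the window stay at infinity, so they can never lie on the optimal path; this is also why the window reduces the work per distance to \(O(w \cdot L)\).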

We can make the following observations.

1. Warping windows have a “validity”: if the warping path at a given window w deviates from the diagonal by no more than v, then the path, and hence the \({{\,\textrm{DTW}\,}}\) cost, remains the same for all windows \(v\le w'\le w\). This is called the window validity in [34] and is denoted [v, w], i.e. we say that \({{\,\textrm{DTW}\,}}_{w}(S,T)\) has a window validity of [v, w].

2. \({{\,\textrm{DTW}\,}}\) is monotonic in w: \({{\,\textrm{DTW}\,}}_{w}(S,T)\) never decreases as w decreases, i.e. \({{\,\textrm{DTW}\,}}_{w}(S,T) \ge {{\,\textrm{DTW}\,}}_{w{+}k}(S,T)\) for \({k\ge {1}}\). In other words, \({{\,\textrm{DTW}\,}}_w(S,T)\) is a lower bound for all \({{\,\textrm{DTW}\,}}_{w'}(S,T)\) with \(0\le {}w'<w\) (see Sect. 3.1).

Figure 4 illustrates these observations on the cost matrix. The full \({{\,\textrm{DTW}\,}}\) (Fig. 4a) has a window validity of \([2,L{-}1]\), i.e. the warping path and the \({{\,\textrm{DTW}\,}}\) cost of 23 are the same for all warping windows from \(L{-}1\) down to 2 (Fig. 4b). The next WW of 1 actually constrains the warping path, resulting in an increased \({{\,\textrm{DTW}\,}}\) cost of 25 (Fig. 4c). Figure 5 illustrates the consequence of these observations: the \({{\,\textrm{DTW}\,}}\) distance is constant over large ranges of windows and increases as w gets smaller.
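Both observations can be checked on a toy pair with the sketch below (hypothetical helper name, squared cost, equal-length series assumed). It fills the windowed cost matrix, backtracks one optimal warping path and reports the largest deviation v of that path from the diagonal. The path remains admissible for every window in [v, w], and by monotonicity no such window can give a lower cost, so the distance is identical over that whole range.

```python
import math


def dtw_with_validity(s, t, w):
    """Return (DTW_w(s, t), v), where v is the largest |i - j| on one optimal
    warping path, so the same distance holds for every window in [v, w]."""
    n = len(s)
    w = min(w, n - 1)
    inf = math.inf
    m = [[inf] * (n + 1) for _ in range(n + 1)]
    m[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(max(1, i - w), min(n, i + w) + 1):
            cost = (s[i - 1] - t[j - 1]) ** 2
            m[i][j] = cost + min(m[i - 1][j - 1], m[i - 1][j], m[i][j - 1])
    # backtrack from (n, n) to (1, 1), tracking the distance to the diagonal
    i, j, v = n, n, 0
    while (i, j) != (1, 1):
        v = max(v, abs(i - j))
        i, j = min(((i - 1, j - 1), (i - 1, j), (i, j - 1)),
                   key=lambda cell: m[cell[0]][cell[1]])
    return m[n][n], v


if __name__ == "__main__":
    s, t = [0.0, 1.0, 2.0], [1.0, 2.0, 3.0]
    print(dtw_with_validity(s, t, 2))  # (2.0, 1): full DTW is valid for windows [1, 2]
    print([dtw_with_validity(s, t, w)[0] for w in range(3)])  # [3.0, 2.0, 2.0]
```

On this pair the distance is constant over the validity [1, 2] and increases once the window drops below it, mirroring Fig. 4 on a smaller scale.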

2.2 Weighted Dynamic Time Warping

The Weighted Dynamic Time Warping (\({{\,\textrm{WDTW}\,}}\)) distance was proposed to reduce pathological alignments [13]. Instead of a hard constraint like the WW for \({{\,\textrm{DTW}\,}}\), \({{\,\textrm{WDTW}\,}}\) imposes a soft constraint on the warping path. The cost of aligning two points \(S_i\) and \(T_j\) is multiplied by a weight that depends on their distance in the time dimension, \(a{=}\left| i-j\right| \). The weight is larger when i is far from j, which reduces the chance of aligning \(S_i\) to \(T_j\) and thus discourages aligning points that are too far apart in time. The weights are computed using the modified logistic weight function described in Eq. 2, parameterized by the meta-parameter g, which controls the level of penalization for distant points [13]. The authors suggest that the optimal value of g lies between 0.01 and 0.6 [13]. \(\texttt{w}_{\max {}}\) is the upper bound for the weight and is typically set to 1 [13].

We observed that \({{\,\textrm{WDTW}\,}}\) monotonically decreases with increasing parameter g (Fig. 6), i.e. \({{\,\textrm{WDTW}\,}}_g(S,T) > {{\,\textrm{WDTW}\,}}_{g+k}(S,T)\) for \(k>0\). This means that \({{\,\textrm{WDTW}\,}}_g(S,T)\) is a lower bound for all \({{\,\textrm{WDTW}\,}}_{g'}(S,T)\) with \(0 \le g' < g\). Note that, unlike \({{\,\textrm{DTW}\,}}\), which stays constant within a window validity, \({{\,\textrm{WDTW}\,}}\) varies continuously with g because of its sigmoid weighting function, so there is no range of g over which its value stays constant, as illustrated in Fig. 6b.
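A minimal sketch of \({{\,\textrm{WDTW}\,}}\) follows. It assumes that Eq. 2 takes the modified logistic form \(\texttt{w}(a) = \texttt{w}_{\max}/(1+e^{-g(a - L/2)})\), with the midpoint at half the series length as described in [13], and it uses the squared cost function. The name `wdtw` is hypothetical. Only the precomputed weight table differs from plain \({{\,\textrm{DTW}\,}}\), so \({{\,\textrm{WDTW}\,}}\) keeps its \(O(L^2)\) cost.

```python
import math


def wdtw(s, t, g, w_max=1.0):
    """Weighted DTW: the cost (s_i - t_j)^2 is scaled by a logistic weight of a = |i - j|."""
    n = len(s)
    mc = n / 2  # assumed midpoint of the logistic curve: half the series length
    weights = [w_max / (1.0 + math.exp(-g * (a - mc))) for a in range(n)]
    inf = math.inf
    prev = [inf] * (n + 1)
    prev[0] = 0.0
    for i in range(1, n + 1):
        curr = [inf] * (n + 1)
        for j in range(1, n + 1):  # no window: WDTW uses a soft constraint only
            cost = weights[abs(i - j)] * (s[i - 1] - t[j - 1]) ** 2
            curr[j] = cost + min(prev[j - 1], prev[j], curr[j - 1])
        prev = curr
    return prev[n]


if __name__ == "__main__":
    s, t = [0.0, 1.0, 2.0], [1.0, 2.0, 3.0]
    for g in (0.0, 0.1, 0.6):
        # with g = 0 every weight is w_max / 2, i.e. half the full DTW cost
        print(g, round(wdtw(s, t, g), 4))
```

On this toy pair, the optimal path only uses alignments with \(a < L/2\), whose weights shrink as g grows, so the printed distance decreases with g, in line with the observation above.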

2.3 Longest Common Subsequence

The Longest Common Subsequence (\({{\,\textrm{LCSS}\,}}\)) is a common measure used to compare string sequences [4, 40]. It finds the longest subsequence common to the two sequences. Using a distance threshold \(\varepsilon \), \({{\,\textrm{LCSS}\,}}\) can be extended to numeric sequences (time series), where two points \(S_i\) and \(T_j\) are considered a match if the cost between them is less than \(\varepsilon \). Each cell \(M_{{{\,\textrm{LCSS}\,}}}(i,j)\) of the cost matrix holds the number of matches between the first i points of S and the first j points of T, i.e. the length of their longest common subsequence. The \({{\,\textrm{LCSS}\,}}\) distance is then L minus the value of the last cell of \(M_{{{\,\textrm{LCSS}\,}}}\). Equation 3 describes the computation of \({{\,\textrm{LCSS}\,}}\). A global constraint \(\delta \), similar to the WW, can also be applied to \({{\,\textrm{LCSS}\,}}\), restricting the alignment path.

Similar to WW, the \(\delta \) constraint parameter has a validity, making \({{\,\textrm{LCSS}\,}}\) constant for a range of \(\delta \) values. By definition, \({{\,\textrm{LCSS}\,}}\) is monotonic in both \(\delta \) and \(\varepsilon \) as shown in Fig. 7. \({{\,\textrm{LCSS}\,}}_{\delta ,\varepsilon }(S,T)\) decreases as \(\delta \) increases, i.e. \({{\,\textrm{LCSS}\,}}_{\delta ,\varepsilon }(S,T) \ge {{\,\textrm{LCSS}\,}}_{\delta {+}k,\varepsilon }(S,T)\) for \(k\ge 1\). In addition, \({{\,\textrm{LCSS}\,}}_{\delta ,\varepsilon }\) also decreases as \(\varepsilon \) increases, i.e. \({{\,\textrm{LCSS}\,}}_{\delta ,\varepsilon }(S,T) \ge {{\,\textrm{LCSS}\,}}_{\delta ,\varepsilon {+}k}(S,T)\) for \(k>0\). Note that \({{\,\textrm{LCSS}\,}}_{\delta ,\varepsilon }(S,T){=} {{\,\textrm{LCSS}\,}}_{\delta ,\varepsilon {+}k}(S,T)=0\) at a sufficiently large \(\varepsilon \). This allows \({{\,\textrm{LCSS}\,}}\) with a larger \(\delta \) or \(\varepsilon \) to lower bound \({{\,\textrm{LCSS}\,}}\) with a smaller \(\delta \) or \(\varepsilon \).
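The sketch below computes the \({{\,\textrm{LCSS}\,}}\) distance as described, under two assumptions of ours: the matching cost is the absolute difference \(\left| S_i - T_j\right| \), and \(\delta \) is applied as in the classic time series LCSS recurrence, where a pair of points more than \(\delta \) apart in time can never match. The name `lcss_distance` is hypothetical.

```python
def lcss_distance(s, t, delta, epsilon):
    """L minus the length of the longest common subsequence, where s_i and t_j
    match if |s_i - t_j| < epsilon and |i - j| <= delta."""
    n = len(s)
    # m[i][j]: number of matches between the first i points of s and first j of t
    m = [[0] * (n + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            if abs(s[i - 1] - t[j - 1]) < epsilon and abs(i - j) <= delta:
                m[i][j] = m[i - 1][j - 1] + 1  # extend the common subsequence
            else:
                m[i][j] = max(m[i - 1][j], m[i][j - 1])  # skip a point of s or t
    return n - m[n][n]


if __name__ == "__main__":
    s, t = [0.0, 1.0, 2.0], [1.0, 2.0, 3.0]
    print(lcss_distance(s, t, 0, 0.5))   # 3: no diagonal pair is within 0.5
    print(lcss_distance(s, t, 1, 0.5))   # 1: a larger delta lowers the distance
    print(lcss_distance(s, t, 0, 10.0))  # 0: every diagonal pair matches
```

The three calls illustrate both monotonic properties: enlarging either \(\delta \) or \(\varepsilon \) can only add matches, so the distance can only go down.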

2.4 Edit distance with real penalty

Most time series distance measures, such as \({{\,\textrm{DTW}\,}}\) and \({{\,\textrm{LCSS}\,}}\), are not metrics, making it challenging to index a time series dataset or prune k-NN queries under these measures. Edit Distance with Real Penalty (\({{\,\textrm{ERP}\,}}\)) is a metric that combines the L1-norm and edit distances such as \({{\,\textrm{DTW}\,}}\) [5, 6]. It is parameterized by two meta-parameters: the “gap value” g and the WW w. When a gap is added, the penalty is the cost between the point \(S_i\) or \(T_j\) and g, as described in Eq. 4. Note that our implementation uses the squared Euclidean distance as the cost function.

Increasing g increases \({{\,\textrm{ERP}\,}}_{g,w}(S,T)\), as illustrated in Fig. 8. We observed that \({{\,\textrm{ERP}\,}}_{g{+}k,w}(S,T) \ge {{\,\textrm{ERP}\,}}_{g,w}(S,T)\) for \(k\ge 0\) and, according to Eq. 4, \({{\,\textrm{ERP}\,}}_{g{+}k,w}(S,T){=}{{\,\textrm{ERP}\,}}_{g,w}(S,T)\) for a sufficiently large g. Hence \({{\,\textrm{ERP}\,}}_{g,w}(S,T)\) lower bounds \({{\,\textrm{ERP}\,}}_{g{+}k,w}(S,T)\). Similarly, \({{\,\textrm{ERP}\,}}_{g,w{+}k}(S,T)\) lower bounds \({{\,\textrm{ERP}\,}}_{g,w}(S,T)\) for all \(k\ge 1\). As with \({{\,\textrm{DTW}\,}}\) at \(w{=}0\), which is confined to the diagonal of the cost matrix, \({{\,\textrm{ERP}\,}}\) is constant when \(w{=}0\) regardless of the value of g, i.e. \({{\,\textrm{ERP}\,}}_{g{+}k,0}(S,T){=}{{\,\textrm{ERP}\,}}_{g,0}(S,T)\).
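A minimal sketch of \({{\,\textrm{ERP}\,}}_{g,w}\) with the squared cost function mentioned above follows. The name `erp` is hypothetical, and restricting the gap-only border cells to the window (border index at most w) is our assumption. With \(w{=}0\) only the diagonal cells remain, so the result no longer depends on g.

```python
import math


def erp(s, t, g, w):
    """Edit Distance with Real Penalty with gap value g, window w and squared cost."""
    n = len(s)
    w = min(w, n - 1)
    inf = math.inf

    def gap(x):
        return (x - g) ** 2  # cost of aligning point x against the gap value

    m = [[inf] * (n + 1) for _ in range(n + 1)]
    m[0][0] = 0.0
    # borders: a prefix aligned entirely against gaps, kept inside the window
    for i in range(1, w + 1):
        m[i][0] = m[i - 1][0] + gap(s[i - 1])
        m[0][i] = m[0][i - 1] + gap(t[i - 1])
    for i in range(1, n + 1):
        for j in range(max(1, i - w), min(n, i + w) + 1):
            m[i][j] = min(
                m[i - 1][j - 1] + (s[i - 1] - t[j - 1]) ** 2,  # match s_i with t_j
                m[i - 1][j] + gap(s[i - 1]),                  # s_i against a gap
                m[i][j - 1] + gap(t[j - 1]),                  # t_j against a gap
            )
    return m[n][n]


if __name__ == "__main__":
    s, t = [0.0, 1.0, 2.0], [1.0, 2.0, 3.0]
    print(erp(s, t, 0.0, 0), erp(s, t, 5.0, 0))  # 3.0 3.0: independent of g when w = 0
    print([erp(s, t, 0.0, w) for w in range(3)])  # non-increasing as w grows
```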

2.5 Move–Split–Merge

The Move–Split–Merge (\({{\,\textrm{MSM}\,}}\)) distance is a metric proposed to overcome the limitations of existing distance measures: the Euclidean distance is not robust to temporal misalignment; edit distances such as \({{\,\textrm{DTW}\,}}\) and \({{\,\textrm{LCSS}\,}}\) are not metrics; and the \({{\,\textrm{ERP}\,}}\) distance is a metric but is not translation invariant due to the way the gap cost is computed. \({{\,\textrm{MSM}\,}}\) is a metric, robust to temporal misalignment and translation invariant [32]. It is parameterized by an additive penalty \(\texttt{c}\), used to compute the cost of off-diagonal alignments (the split and merge operations), as described in Eq. 5. This cost function takes the new point (np) of the off-diagonal alignment and the two previously considered points (x and y). If the new point lies between x and y, the penalty is just \(\texttt{c}\).

The \({{\,\textrm{MSM}\,}}\) distance increases with the parameter c and stays constant beyond some sufficiently large c, because deviating from the diagonal becomes too costly, similar to a \(w{=}0\) scenario. Figure 9 shows that \({{\,\textrm{MSM}\,}}_{c{+}k}(S,T) \ge {{\,\textrm{MSM}\,}}_{c}(S,T)\) for \(k>0\), so that we can use \({{\,\textrm{MSM}\,}}_{c}(S,T)\) to lower bound \({{\,\textrm{MSM}\,}}_{c{+}k}(S,T)\).
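The sketch below follows the \({{\,\textrm{MSM}\,}}\) recurrence of [32], using absolute differences as the move cost (an assumption; the implementation evaluated in this paper may differ in such details). The hypothetical helper `split_merge_cost` plays the role of the cost function of Eq. 5: it returns just c when the new point lies between its two neighbours, and otherwise adds the distance to the closer one.

```python
def msm(s, t, c):
    """Move-Split-Merge distance with penalty c, assuming equal-length series."""

    def split_merge_cost(new, x, y):
        # only c if the new point lies between x and y
        if min(x, y) <= new <= max(x, y):
            return c
        return c + min(abs(new - x), abs(new - y))

    n = len(s)
    m = [[0.0] * n for _ in range(n)]
    m[0][0] = abs(s[0] - t[0])
    for i in range(1, n):
        m[i][0] = m[i - 1][0] + split_merge_cost(s[i], s[i - 1], t[0])
    for j in range(1, n):
        m[0][j] = m[0][j - 1] + split_merge_cost(t[j], s[0], t[j - 1])
    for i in range(1, n):
        for j in range(1, n):
            m[i][j] = min(
                m[i - 1][j - 1] + abs(s[i] - t[j]),                    # move
                m[i - 1][j] + split_merge_cost(s[i], s[i - 1], t[j]),  # split/merge on s
                m[i][j - 1] + split_merge_cost(t[j], s[i], t[j - 1]),  # split/merge on t
            )
    return m[n - 1][n - 1]


if __name__ == "__main__":
    s, t = [0.0, 0.0, 1.0], [0.0, 1.0, 1.0]
    print(round(msm(s, t, 0.1), 6), msm(s, t, 100.0))  # 0.2 1.0
```

With a small c, the shift between the two series is absorbed by two cheap split/merge operations; with a large c, only the diagonal (pure moves) remains, which is the constant regime described above.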

2.6 Time Warp Edit Distance

Existing distance measures assume that time series are uniformly sampled and do not consider timestamps when aligning them. The Time Warp Edit Distance (\({{\,\textrm{TWE}\,}}\)) was designed to take timestamps into account to better align time series that are not uniformly sampled [21]. It uses three main operations (delete\(_A\), delete\(_B\) and match) to align the time series. \({{\,\textrm{TWE}\,}}\) is parameterized by two meta-parameters, v and \(\lambda \). v controls the “stiffness” of the alignment by weighting the contributions from the timestamps: \(v{=}\infty \) means that off-diagonal cells of the matrix are never used, which is similar to the Euclidean distance, while \(v{=}0\) is similar to \({{\,\textrm{DTW}\,}}\) [21]. The match operation is the sum of the cost between the current and previous data points and the contribution of the respective timestamps weighted by v. \(\lambda \) is a constant penalty added to the cost of the alternative alignments, i.e. the delete operations. The costs of the three operations and the computation of each element of the cost matrix \(M_{{{\,\textrm{TWE}\,}}}\) are described in Eqs. 7 and 8, respectively. Note that \(t_{S_i}\) denotes the i-th timestamp of a series S. Our implementation assumes that the time series are uniformly sampled and does not use timestamps, thus \(t_{S_i}{=}i\).

Figure 10 shows that \({{\,\textrm{TWE}\,}}\) increases monotonically with v and \(\lambda \), allowing us to use distances computed at smaller parameter values to lower bound distances at larger ones. \({{\,\textrm{TWE}\,}}\) also becomes constant at sufficiently large v and \(\lambda \), where the delete operations become too costly. Hence, \({{\,\textrm{TWE}\,}}_{v{+}k,\lambda }(S,T) \ge {{\,\textrm{TWE}\,}}_{v,\lambda }(S,T)\) and \({{\,\textrm{TWE}\,}}_{v,\lambda {+}k}(S,T) \ge {{\,\textrm{TWE}\,}}_{v,\lambda }(S,T)\) for \(k>0\).
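A minimal sketch of \({{\,\textrm{TWE}\,}}\) with uniform timestamps \(t_{S_i}{=}i\) follows, under two further assumptions of ours: the point cost is the squared difference, and both series are padded with a leading 0 so that the first match has a "previous" point. The name `twe` is hypothetical. With \(t_{S_i}{=}i\), each delete pays \(v + \lambda \) on top of the point cost, and a match pays \(2v\left| i-j\right| \) in timestamp cost.

```python
import math


def twe(s, t, nu, lam):
    """Time Warp Edit distance with stiffness nu and penalty lam, assuming
    uniform timestamps t_i = i and a squared point cost."""
    n = len(s)
    a = [0.0] + list(s)  # assumed padding: a[0] is the "previous" point of a[1]
    b = [0.0] + list(t)

    def d(x, y):
        return (x - y) ** 2

    inf = math.inf
    m = [[inf] * (n + 1) for _ in range(n + 1)]
    m[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            # deletes: timestamp gap t_i - t_{i-1} is always 1 here
            delete_a = m[i - 1][j] + d(a[i], a[i - 1]) + nu + lam
            delete_b = m[i][j - 1] + d(b[j], b[j - 1]) + nu + lam
            # match: |t_i - t_j| + |t_{i-1} - t_{j-1}| = 2 |i - j|
            match = (m[i - 1][j - 1] + d(a[i], b[j]) + d(a[i - 1], b[j - 1])
                     + nu * 2 * abs(i - j))
            m[i][j] = min(delete_a, delete_b, match)
    return m[n][n]


if __name__ == "__main__":
    s, t = [0.0, 1.0, 2.0], [1.0, 2.0, 3.0]
    print(twe(s, t, 0.0, 0.0), twe(s, t, 1.0, 1.0))
    print(twe(s, t, 1000.0, 1000.0))  # 5.0: only diagonal matches remain affordable
```

Every operation cost is non-decreasing in both v and \(\lambda \), which is why the minimum over all alignments is monotonic in the two parameters.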

2.7 Learning the optimal meta-parameter

There are two main advantages of learning the optimal meta-parameter. First, a good meta-parameter provides better results (e.g. classification accuracy) with \({{\,\mathrm{\textrm{NN}}\,}}\) search. For instance, learning the best \({{\,\textrm{DTW}\,}}\) warping window prevents spurious alignments, which in turn improves the classification accuracy [8, 14, 34]. Recent research [3, 18, 34, 37] demonstrated that learning the optimal meta-parameter for each distance measure significantly improves classification accuracy, with improvements as large as from 65% to 93% [34]. Second, a window meta-parameter reduces the time complexity to \(O(w \cdot L)\). As a result, distance measures with a window meta-parameter, such as \({{\,\textrm{DTW}\,}}\), \({{\,\textrm{LCSS}\,}}\) and \({{\,\textrm{ERP}\,}}\), can be significantly faster to compute than without a window, especially for small windows. The usual approach for discovering the optimal meta-parameter is leave-one-out cross-validation (LOOCV) [3, 7, 8, 18], optimizing a performance metric such as training accuracy. This can be seen as creating a \((N\times L)\) table as shown in Table 1, giving the nearest neighbour of every time series for all meta-parameters, and finding the column that gives the best training accuracy.
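The brute-force LOOCV procedure can be sketched as follows: one row per series, one column per meta-parameter value, each entry holding the index of the nearest neighbour, then the column with the best training accuracy. The function names and the toy distance in the usage example are hypothetical; any `dist(a, b, param)` can be plugged in. The loop performs \(N(N{-}1)\) distance computations per parameter value, which is the cost that the methods discussed in this paper try to reduce.

```python
import math


def loocv_table(series, labels, params, dist):
    """Naive LOOCV: table[i][p] is the index of the nearest neighbour of series i
    (excluding itself) under dist(., ., params[p]). Also returns per-column accuracy."""
    n = len(series)
    table = [[None] * len(params) for _ in range(n)]
    for p, param in enumerate(params):
        for i in range(n):
            best, best_d = None, math.inf
            for j in range(n):
                if j == i:
                    continue  # leave the query out
                d = dist(series[i], series[j], param)
                if d < best_d:
                    best, best_d = j, d
            table[i][p] = best
    accuracy = [sum(labels[table[i][p]] == labels[i] for i in range(n)) / n
                for p in range(len(params))]
    return table, accuracy


def best_parameter(params, accuracy):
    """Column with the highest training accuracy; ties go to the first one."""
    return params[max(range(len(params)), key=lambda p: accuracy[p])]


if __name__ == "__main__":
    def prefix_sq_dist(a, b, k):
        # hypothetical toy "parameterized distance": compare only the first k points
        return sum((x - y) ** 2 for x, y in zip(a[:k], b[:k]))

    data = [[0, 0], [0, 1], [5, 0], [5, 1]]
    labels = ["a", "a", "b", "b"]
    table, acc = loocv_table(data, labels, [0, 1, 2], prefix_sq_dist)
    print(acc, best_parameter([0, 1, 2], acc))  # [0.5, 1.0, 1.0] 1
```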

In this paper, we use 1-\({{\,\mathrm{\textrm{NN}}\,}}\), which has been widely used for benchmarking distance-based TSC algorithms [18, 37]. Note that UltraFastMPSearch can easily be extended to cases where more than one neighbour (\(k>1\)) is desired. This is done by adding a third dimension, k, to Table 1 and keeping track of the distance to the k-th nearest neighbour. For simplicity, we describe our work in this paper using \(k{=}1\).

A straightforward LOOCV meta-parameter search implementation has \(O(N^2 \cdot L^2 \cdot P)\) time complexity (\(L^2\) for each distance computation, repeated over P meta-parameter values). To be consistent with the distance-based TSC literature [18, 37], we use \(P{=}100\) in our implementation. For measures with two meta-parameters, 10 values of each meta-parameter are sampled from a specified distribution (discussed later), forming 100 parameter combinations. We leave the exploration of different parameter search spaces to future work. For the rest of the paper, we use a parameter ID notation, p, to index the parameter space of each distance measure. For example, for single-parameter measures like \({{\,\textrm{DTW}\,}}\), \(p{=}0\) refers to \(w{=}0\), while for two-parameter measures like \({{\,\textrm{LCSS}\,}}\), \(p{=}1\) refers to a combination of \(\delta \) and \(\varepsilon \) such as \(\delta {=}0\) and \(\varepsilon {=}0.2\). Due to its \(L^2\) complexity, meta-parameter search does not scale to long series [34, 35]. Note that FastWWSearch, FastEE and UltraFastMPSearch primarily tackle the \(L^2\) part of the complexity, by minimizing the number of times the \(O(L^2)\) distance is computed. For instance, the FordA and FordB datasets in Fig. 1 have short series, but many of them, so the resulting speedup is limited by the \(N^2\) part of the complexity. Figure 15a, b clearly illustrate this. When the length of the series increases (Fig. 15a), UltraFastMPSearch, and especially UltraFastWWSearch, scales extremely well compared to the state of the art. On the other hand, when the number of series increases (Fig. 15b), both methods suffer from the associated quadratic complexity, although UltraFastMPSearch does better.
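The parameter ID notation and the cost estimate above can be made concrete with a small sketch. It assumes that IDs enumerate the two-parameter grid row by row (\(p = 10i + j\)), which is consistent with the example where \(p{=}1\) gives \(\delta {=}0\) and \(\varepsilon {=}0.2\) but is otherwise our assumption; the grid values are placeholders. The second hypothetical function counts the cost-matrix cells filled by a naive search that computes every ordered pair at full length, here for the StarLightCurves sizes quoted in Sect. 1.

```python
def param_from_id(p, first_values, second_values):
    """Map a parameter ID to a (first, second) pair, assuming row-major enumeration."""
    i, j = divmod(p, len(second_values))
    return first_values[i], second_values[j]


def naive_cells(n, length, n_params):
    """Cells filled by naive LOOCV: N(N-1) ordered pairs, L*L cells each, P values."""
    return n * (n - 1) * length * length * n_params


if __name__ == "__main__":
    deltas = list(range(10))                        # placeholder delta grid
    epsilons = [0.1 * (k + 1) for k in range(10)]   # placeholder epsilon grid
    print(param_from_id(1, deltas, epsilons))       # (0, 0.2)
    # StarLightCurves: N = 1000 training series of length L = 1024, P = 100
    print(f"{naive_cells(1000, 1024, 100):.3e}")    # 1.048e+14 cells
```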

Discovering the optimal meta-parameter for all distance measures is so expensive that a recent version of the state-of-the-art classifier HIVE-COTE dropped EE, even though doing so reduced its accuracy by \(0.6\%\) on average across the UCR archive [7], and by up to \(5\%\) on some datasets [2]. EE was dropped solely because its computational burden was too great for it to be considered feasible to employ. In the future, UltraFastMPSearch may allow a more efficient implementation of EE to be reinstated in HIVE-COTE, providing a substantial improvement to the state of the art.

3 Related work

In this section, we review the state-of-the-art methods to speed up \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\), focusing on LOOCV and learning the optimal meta-parameter efficiently.

3.1 Lower bounding

Filling out the \({{\,\mathrm{\textrm{NNs}}\,}}\) table in Table 1 amounts to finding the nearest neighbour for each time series T within \(\mathcal {T}\) at each meta-parameter. It follows that one way to speed up LOOCV is to speed up \({{\,\mathrm{\textrm{NN}}\,}}\) search. A common approach to speeding up \({{\,\mathrm{\textrm{NN}}\,}}\) search is through “lower bounding” [15,16,17, 24, 36, 37, 41]. A \({{\,\mathrm{\textrm{NN}}\,}}\) search returns the nearest neighbour \(\mathcal {T}_\text {nn}\) of a query S among a dataset \(\mathcal {T}\), i.e. we have \(d_\text {nn}{=}{{\,\textrm{DIST}\,}}(S, \mathcal {T}_\text {nn})\) such that \(\forall {T\in {\mathcal {T}}}, d_\text {nn}\le {{{\,\textrm{DIST}\,}}(S, T)}\), where \({{\,\textrm{DIST}\,}}\) denotes a time series distance measure (Algorithm 1).

It turns out that \({{\,\mathrm{\textrm{NN}}\,}}\) search naturally supports lower bounding (Algorithm 2). First, in Algorithm 1, notice how \(d_\text {nn}\) is an upper bound (\({{\,\textrm{UB}\,}}\)) on the end result: either it is the distance of the actual nearest neighbour, or it will later be replaced by a smaller value. A lower bound \({{\,\textrm{LB}\,}}(S,T) \le {{\,\textrm{DIST}\,}}(S,T)\) allows a costly \({{\,\textrm{DIST}\,}}\) computation to be avoided if \({{\,\textrm{LB}\,}}(S,T)\ge {{\,\textrm{UB}\,}}\), as \({{\,\textrm{LB}\,}}(S,T)\ge {{\,\textrm{UB}\,}}\Rightarrow {{\,\textrm{DIST}\,}}(S,T)\ge {{\,\textrm{UB}\,}}\) (Algorithm 2).
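A minimal Python sketch of plain and lower-bounded nearest neighbour search, in the spirit of Algorithms 1 and 2, is given below. Function names are hypothetical. In the usage example, the "lower bound" is the squared difference of the first points, which can never exceed the squared Euclidean distance, so it is a valid (if loose) lower bound.

```python
import math


def nn_search(query, candidates, dist):
    """Plain NN search: returns (index, distance) of the nearest candidate."""
    best, d_nn = None, math.inf
    for k, cand in enumerate(candidates):
        d = dist(query, cand)
        if d < d_nn:
            best, d_nn = k, d
    return best, d_nn


def nn_search_lb(query, candidates, dist, lb):
    """NN search with lower bounding: d_nn is an upper bound on the final result,
    so a candidate whose lower bound reaches it cannot be the nearest neighbour."""
    best, d_nn, skipped = None, math.inf, 0
    for k, cand in enumerate(candidates):
        if lb(query, cand) >= d_nn:
            skipped += 1
            continue  # LB >= UB implies DIST >= UB: the costly distance is avoided
        d = dist(query, cand)
        if d < d_nn:
            best, d_nn = k, d
    return best, d_nn, skipped


if __name__ == "__main__":
    def sq_ed(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    def first_point_lb(a, b):
        return (a[0] - b[0]) ** 2

    q = [0.0, 0.0]
    cands = [[1.0, 1.0], [3.0, 0.0], [0.0, 2.0], [5.0, 5.0]]
    print(nn_search(q, cands, sq_ed))                     # (0, 2.0)
    print(nn_search_lb(q, cands, sq_ed, first_point_lb))  # (0, 2.0, 2)
```

Both functions return the same nearest neighbour; the second one skips two of the four distance computations.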

An efficient lower bound must be fast to compute while closely approximating the distance (“tight”) [15, 36]. Because these aims compete, lower bounds of increasing tightness and cost are used in cascade, e.g. in the UCR-Suite [24]. Various lower bounds have been developed for \({{\,\textrm{DTW}\,}}\), the most common being \({{\,\mathrm{\textsc {LB\_Kim}}\,}}\) [16] and \({{\,\mathrm{\textsc {LB\_Keogh}}\,}}\) [15]. The UCR-Suite [24] is one of the fastest \({{\,\mathrm{\textrm{NN}}\,}}\) search algorithms. It uses four optimization techniques to speed up \({{\,\mathrm{\textrm{NN}}\,}}\) search: early abandoning, reordering early abandoning, reversing the query and candidate roles in \({{\,\mathrm{\textsc {LB\_Keogh}}\,}}\), and cascading lower bounds (\({{\,\mathrm{\textsc {LB\_Kim}}\,}}\) and \({{\,\mathrm{\textsc {LB\_Keogh}}\,}}\)). Tan et al. [34] show that although the UCR-Suite is faster than naive LOOCV, it is still significantly slower than FastWWSearch in optimizing \({{\,\textrm{DTW}\,}}\)’s warping window. Lower bounds for other time series distance measures have not been well explored. Tan et al. [37] developed lower bounds for other time series distance measures and demonstrated that they can significantly speed up the meta-parameter optimization process.
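As an illustration, the sketch below computes \(\textsc {LB\_Keogh}\) for \({{\,\textrm{DTW}\,}}_w\) with the squared cost: it builds the upper and lower envelopes of the candidate within the window and sums the squared amounts by which the query escapes them. For clarity the envelope is recomputed naively, in \(O(w)\) per point; the name `lb_keogh` is ours, and efficient implementations typically compute the envelopes in linear time.

```python
def lb_keogh(query, candidate, w):
    """LB_Keogh lower bound for DTW with window w and squared point cost."""
    n = len(candidate)
    total = 0.0
    for i, q in enumerate(query):
        # envelope of the candidate over the window centred on position i
        window = candidate[max(0, i - w): min(n, i + w + 1)]
        upper, lower = max(window), min(window)
        if q > upper:
            total += (q - upper) ** 2
        elif q < lower:
            total += (q - lower) ** 2
    return total


if __name__ == "__main__":
    s, t = [0.0, 1.0, 2.0], [1.0, 2.0, 3.0]
    print(lb_keogh(s, t, 1))  # 1.0, below DTW_1(s, t) = 2.0
    print(lb_keogh(s, t, 0))  # 3.0, equal to the squared Euclidean distance
```

A wider window widens the envelope, so the bound can only decrease as w grows, which is the monotonic property that FastWWSearch relies on.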

3.2 Improving distance measures implementation

Speeding up the distance measure itself also speeds up the whole LOOCV process. \(\textsc {PrunedDTW}\) is one of the first approaches to speed up \({{\,\textrm{DTW}\,}}\) computations [30]. It first computes an upper bound on the result (the Euclidean distance), then skips the cells of the cost matrix \(M_{{{\,\textrm{DTW}\,}}}\) whose values exceed it. It was later extended to use the upper bound \({{\,\textrm{UB}\,}}\) computed by the \({{\,\mathrm{\textrm{NN}}\,}}\) search process (\(d_\text {nn}\) in Algorithm 2), allowing early abandoning [31]. These techniques yielded only minimal improvements when applied to window search [34].

Recently, Herrmann and Webb [11] developed the new “\({{\,\textrm{EAP}\,}}\)” (Early Abandoned and Pruned) strategy, which tightly integrates pruning and early abandoning for the six time series distance measures considered in this paper. \({{\,\textrm{EAP}\,}}\) supports the fastest known time series distance measure implementations, even reducing the need for lower bounds. Like any early abandoned distance, \({{\,\textrm{EAP}\,}}\) takes an upper bound \({{\,\textrm{UB}\,}}\) as an extra parameter (again, \(d_\text {nn}\) in Algorithm 2) and abandons the computation as soon as it can be established that the end result will exceed it. The novelty of \({{\,\textrm{EAP}\,}}\) is that early abandoning is treated as an extreme consequence of pruning: if \({{\,\textrm{UB}\,}}\) prunes a full row of the cost matrix M, then no warping path can exist. Note that in Algorithm 2, the initial upper bound is set to \(\infty \), which does not allow anything to be pruned when using \({{\,\textrm{EAP}\,}}\). By initializing it to the cost along the diagonal of M (i.e. the squared Euclidean distance for \({{\,\textrm{DTW}\,}}\)), \({{\,\textrm{EAP}\,}}\) can prune some cells of M from the start, but cannot early abandon.
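The idea that early abandoning is the extreme case of pruning can be illustrated with the simplified sketch below. It is not the \({{\,\textrm{EAP}\,}}\) algorithm of [11], which avoids visiting pruned cells altogether; this sketch still visits every cell inside the window, discards those whose cumulative cost exceeds UB, and abandons as soon as a whole row is discarded, since every warping path crosses every row. The name `dtw_pruned_ea` is hypothetical; the squared cost and equal-length series are assumed.

```python
import math


def dtw_pruned_ea(s, t, w, ub):
    """Returns DTW_w(s, t) if it is <= ub, otherwise math.inf."""
    n = len(s)
    w = min(w, n - 1)
    inf = math.inf
    prev = [inf] * (n + 1)
    prev[0] = 0.0
    for i in range(1, n + 1):
        curr = [inf] * (n + 1)
        row_alive = False
        for j in range(max(1, i - w), min(n, i + w) + 1):
            best_prev = min(prev[j - 1], prev[j], curr[j - 1])
            if best_prev == inf:
                continue  # every predecessor is out of the window or pruned
            value = best_prev + (s[i - 1] - t[j - 1]) ** 2
            if value <= ub:  # costs are non-negative: a cell above ub stays above it
                curr[j] = value
                row_alive = True
        if not row_alive:
            return inf  # early abandon: the whole row is pruned
        prev = curr
    return prev[n]


if __name__ == "__main__":
    s, t = [0.0, 1.0, 2.0], [1.0, 2.0, 3.0]
    print(dtw_pruned_ea(s, t, 2, math.inf))  # 2.0: exact DTW, nothing pruned
    print(dtw_pruned_ea(s, t, 2, 3.0))       # 2.0: diagonal cost as UB prunes cells only
    print(dtw_pruned_ea(s, t, 2, 0.5))       # inf: abandoned on the first row
```

Pruning never changes an exact result: every cell on an optimal path has a cumulative cost no larger than the final distance, so none of them is discarded when the distance is at most UB.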

3.3 FastWWSearch and FastEE

FastWWSearch [34] is a window optimization algorithm for \({{\,\textrm{DTW}\,}}\), producing the same results as LOOCV while being significantly faster than both traditional LOOCV and the UCR-Suite. It exploits three important properties of \({{\,\textrm{DTW}\,}}\) and its \(\textsc {LB\_Keogh}\) lower bound: warping windows have a validity (observation 1 in Sect. 2.1.1); \({{\,\textrm{DTW}\,}}\) is monotonic with w (observation 2 in Sect. 2.1.1); and, like \({{\,\textrm{DTW}\,}}\), \(\textsc {LB\_Keogh}\) is monotonic with w.

FastWWSearch exploits these properties by starting from the largest warping window, skipping all the windows where the warping path remains the same. Starting from the largest warping window has another advantage: the monotonic property of \({{\,\textrm{DTW}\,}}\) and \(\textsc {LB\_Keogh}\) allows \(\textsc {LB\_Keogh}_{w{+}k}\) and \({{\,\textrm{DTW}\,}}_{w{+}k}\), for any \(k\ge {1}\), to be used as lower bounds for \({{\,\textrm{DTW}\,}}_{w}\). In other words, results obtained at larger windows provide “free” lower bounds for smaller windows.
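The following Python sketch illustrates the “free” lower bounds: a larger window only admits more warping paths, so \({{\,\textrm{DTW}\,}}_{w{+}1}\) can never exceed \({{\,\textrm{DTW}\,}}_{w}\). It visits windows from L-1 down to 0 and skips a window once the value at the next larger window already reaches the best-so-far distance. The squared point cost, the Sakoe-Chiba band and the function names are our assumptions; FastWWSearch itself combines this with a cascade of other lower bounds.

```python
import math


def dtw(s, t, w):
    """Windowed DTW with squared point cost (Sakoe-Chiba band of half-width w)."""
    n, m = len(s), len(t)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(max(1, i - w), min(m, i + w) + 1):
            D[i][j] = (s[i - 1] - t[j - 1]) ** 2 + min(D[i - 1][j - 1], D[i - 1][j], D[i][j - 1])
    return D[n][m]


def dtw_from_large_to_small(s, t, best_so_far):
    """Visit windows from L-1 down to 0. DTW at w+1 lower-bounds DTW at w, so once it
    reaches best_so_far, DTW at w (and at every smaller window) cannot beat it."""
    results = {}
    lower_bound = 0.0
    for w in range(len(s) - 1, -1, -1):
        if lower_bound >= best_so_far:
            results[w] = None  # skipped thanks to the "free" lower bound
            continue
        results[w] = dtw(s, t, w)
        lower_bound = results[w]
    return results


a = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0]
b = [1.0, 2.0, 3.0, 2.0, 1.0, 0.0]
print(dtw_from_large_to_small(a, b, best_so_far=3.0))
# {5: 2.0, 4: 2.0, 3: 2.0, 2: 2.0, 1: 2.0, 0: 6.0}
print(dtw_from_large_to_small(a, b, best_so_far=2.0))
# {5: 2.0, 4: None, 3: None, 2: None, 1: None, 0: None}
```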

FastWWSearch was subsequently extended to other distance measures, creating FastEE [37], a significantly faster implementation of EE. It uses lower bounds for the distance measures and exploits their properties described in Sect. 2. We refer to the FastEE method for each distance measure (except \({{\,\textrm{cDTW}\,}}\)) as FastWDTW, FastLCSS, FastERP, FastMSM and FastTWE, respectively. Each distance measure in EE has a search space of 100 parameter values. FastWWSearch was originally designed to search through L warping windows for \({{\,\textrm{DTW}\,}}\) and was modified in FastEE to use only 100 warping windows (expressed as percentages of the time series length).

FastWDTW exploits the monotonic property of \({{\,\textrm{WDTW}\,}}\) by starting from the largest g value and lower bounding \({{\,\textrm{WDTW}\,}}\) at smaller g using \({{\,\textrm{WDTW}\,}}\) at larger g. Since \({{\,\textrm{WDTW}\,}}\) varies continuously with g, it does not stay constant over ranges of g, which prevents FastWDTW from skipping any distance computations in the \({{\,\textrm{WDTW}\,}}\) parameter search space. The g parameter for \({{\,\textrm{WDTW}\,}}\) is chosen from a uniform distribution U(0, 1) with 100 values. FastMSM takes advantage of the property that \({{\,\textrm{MSM}\,}}\) increases monotonically with its parameter c and stays constant beyond some sufficiently large c. It uses \({{\,\textrm{MSM}\,}}\) distances computed at a smaller c to lower bound \({{\,\textrm{MSM}\,}}\) at a larger c, and skips the computations at values of c where the distances are constant. The parameter c is sampled from an exponential sequence in the range [0.01, 100] with 100 values.

For \({{\,\textrm{LCSS}\,}}\), 10 \(\varepsilon \) values are sampled uniformly from the range \([\sigma /5, \sigma ]\), where \(\sigma \) is the standard deviation of the training dataset, and 10 \(\delta \) values are sampled from the range [0, L/4], forming a total of 100 parameter combinations. These combinations are arranged such that the overall \({{\,\textrm{LCSS}\,}}\) distance decreases with increasing parameter ID (Fig. 11a), allowing FastLCSS to start from the largest parameter ID. As with the warping window, the \(\delta \) parameter also allows FastLCSS to skip the computations at values of \(\delta \) where the distances are constant.
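A minimal sketch of how such a 10 × 10 grid might be built. Several details are our assumptions rather than the published procedure: σ is taken as the population standard deviation over all training values, “sampled uniformly” is read as evenly spaced, δ is rounded to an integer window, and parameter IDs are laid out as 10·(δ index) + (ε index). The function name is hypothetical, and the exact arrangement used by FastLCSS is the one shown in Fig. 11a.

```python
import statistics


def lcss_parameter_grid(train, n_eps=10, n_delta=10):
    """Hypothetical 10 x 10 LCSS grid: a list of (epsilon, delta) indexed by parameter ID."""
    # Assumption: sigma is the population standard deviation over all training values.
    sigma = statistics.pstdev([x for series in train for x in series])
    length = len(train[0])
    # Assumption: "sampled uniformly" is read as evenly spaced over [sigma/5, sigma].
    eps = [sigma / 5 + i * (sigma - sigma / 5) / (n_eps - 1) for i in range(n_eps)]
    # Integer warping windows evenly spaced over [0, L/4] (rounding may create duplicates).
    deltas = [round(j * (length / 4) / (n_delta - 1)) for j in range(n_delta)]
    # Parameter ID = 10 * delta_index + eps_index: within each block of ten IDs delta is
    # fixed and epsilon grows, so the LCSS distance cannot increase inside a block.
    return [(e, d) for d in deltas for e in eps]


train = [[float(x) for x in range(8)], [float(x) for x in range(7, -1, -1)]]
grid = lcss_parameter_grid(train)
print(len(grid), grid[0][1], grid[-1][1])  # 100 0 2
```

For short series the rounded δ values repeat, which is one way constant distances across consecutive δ values can arise in practice.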

In contrast, the meta-parameter combinations for \({{\,\textrm{ERP}\,}}\) and \({{\,\textrm{TWE}\,}}\) are arranged such that the overall distances increase with increasing parameter ID (Fig. 11b, c), allowing FastERP and FastTWE to start from the smallest parameter combination. The search space for \({{\,\textrm{ERP}\,}}\)’s g and w meta-parameters is constructed in the same way as for \({{\,\textrm{LCSS}\,}}\). Note that regardless of the g value, the \({{\,\textrm{ERP}\,}}\) distance is the same when the window parameter is 0, since only the diagonal alignment is then allowed and the gap value g is never used. This redundancy in the search space will be explored in future work. For \({{\,\textrm{TWE}\,}}\), 10 v values are sampled from an exponential sequence in the range \([10^{-5}, 1]\), while 10 penalty parameters \(\lambda \) are chosen uniformly from the range [0, 0.1].
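The redundancy noted above can be checked directly: with a zero window only the diagonal cells of the cost matrix are reachable, so no element is ever matched against the gap value g. The sketch below is a plain dynamic-programming ERP with a Sakoe-Chiba window; the absolute-difference point cost, the band handling and the function name are our assumptions and may differ from the implementation used in EE and FastEE.

```python
import math


def erp(s, t, g, w):
    """ERP with absolute point cost, gap value g and a Sakoe-Chiba window w (assumptions)."""
    n, m = len(s), len(t)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    # Boundary cells: a prefix aligned only with gaps, kept inside the window.
    for i in range(1, min(n, w) + 1):
        D[i][0] = D[i - 1][0] + abs(s[i - 1] - g)
    for j in range(1, min(m, w) + 1):
        D[0][j] = D[0][j - 1] + abs(t[j - 1] - g)
    for i in range(1, n + 1):
        for j in range(max(1, i - w), min(m, i + w) + 1):
            D[i][j] = min(
                D[i - 1][j - 1] + abs(s[i - 1] - t[j - 1]),  # match s[i-1] with t[j-1]
                D[i - 1][j] + abs(s[i - 1] - g),              # s[i-1] against a gap
                D[i][j - 1] + abs(t[j - 1] - g),              # t[j-1] against a gap
            )
    return D[n][m]


s = [0.0, 1.0, 2.0, 3.0]
t = [1.0, 1.0, 3.0, 2.0]
# With w = 0 only the diagonal is reachable, so g never enters the result.
print([erp(s, t, g, 0) for g in (0.0, 0.5, 10.0)])  # [3.0, 3.0, 3.0]
```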

4 Ultra-fast meta-parameter search

Our UltraFastMPSearch is a family of three algorithms: UltraFastWWSearch, UltraFastLocalUB and UltraFastGlobalUB. The UltraFastWWSearch algorithm was introduced in our ICDM 2021 conference paper [35] and is designed specifically for the \({{\,\textrm{DTW}\,}}\) distance measure. Both UltraFastLocalUB and UltraFastGlobalUB generalize to all distance measures that can be computed with \({{\,\textrm{EAP}\,}}\). UltraFastLocalUB is a generalization of UltraFastWWSearch that does not use the window validity property. UltraFastGlobalUB differs from UltraFastLocalUB in that it uses a global rather than a local upper bound for \({{\,\textrm{EAP}\,}}\). A local upper bound is derived from the nearest neighbour distances at the current meta-parameter, while a global upper bound is derived from the nearest neighbour distances at an upper bound meta-parameter, which bounds the distances for a range of meta-parameters.

The significance of this difference lies in the consequences of EAP early abandoning a distance calculation. UltraFastMPSearch orders the meta-parameter values such that the distance at one value is a lower bound for the distance at the next. This is exploited to avoid calculating the distances at most values. However, if EAP early abandons a distance calculation, the exact distance is not known, only that it is greater than the upper bound that EAP employed. Hence, that upper bound is the tightest lower bound available for the distance at the next parameter value. When distances increase only slightly from one parameter value to the next, this is usually sufficient for effective lower bounding. However, when they increase more substantially, opportunities to exploit lower bounding are lost. In this case, it is better to use a weaker (larger) upper bound, so that any distance that would be a useful lower bound for some subsequent parameter value is computed in full. The strongest such weaker bound is the global upper bound: the minimum value that could allow the current candidate series to be a nearest neighbour at the final parameter value in the current sequence. Our experiments in Sect. 5 show that some distance measures are more effective with the local upper bound approach and others with the global one.

The core of UltraFastMPSearch is built upon FastEE [37] with the following key differences:

1. It replaces all calls to distance measures with their \({{\,\textrm{EAP}\,}}\) variants [11];
2. It takes full advantage of \({{\,\textrm{EAP}\,}}\)’s early abandoning and pruning;
3. It processes the time series in an order that best exploits \({{\,\textrm{EAP}\,}}\)’s capabilities; and
4. It does not use any custom lower bounds.

For the rest of the paper, all distance measures should be understood as being the \({{\,\textrm{EAP}\,}}\) variant unless specified otherwise.

Recall that learning the meta-parameter can be thought of as filling up an \((N\times P)\) nearest neighbour table, as shown in FastEE and illustrated in Table 1. Thus, FastEE and all algorithms in UltraFastMPSearch share the same space complexity. Once this table has been filled, we can easily determine the best meta-parameter for a particular problem by looking for the column that gives the best performance. In case of ties, we take the meta-parameter that is cheaper to compute at test time, for instance, the smaller w for \({{\,\textrm{DTW}\,}}\). Algorithm 3 describes this process. The result is identical to that of FastEE and LOOCV. In general, Algorithm 3 can be transformed into either FastEE or LOOCV by replacing the InitTable algorithm in line 1 with the corresponding algorithm for filling the \({{\,\mathrm{\textrm{NNs}}\,}}\) table. For LOOCV, this means naively filling the table as described in Sect. 2.7. UltraFastWWSearch, UltraFastLocalUB and UltraFastGlobalUB each have their own InitTable method, which will be discussed later in this section.
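As a sketch of the selection step in Algorithm 3, the Python function below scores each column of a filled LOOCV table by 1-NN accuracy and keeps the first best column. It assumes (our assumption, not the paper's data layout) that the table stores nearest neighbour indices and that parameter IDs are sorted from cheapest to most expensive at test time, so breaking ties towards the smaller ID matches the rule above; `best_parameter` is a hypothetical name.

```python
def best_parameter(nn_index, labels):
    """Pick the best column of a filled (N x P) LOOCV nearest neighbour table.

    nn_index[i][p] is the index of the nearest neighbour of series i at parameter ID p.
    Assumption: a smaller ID is cheaper at test time (e.g. a smaller window for DTW),
    so ties are broken towards the smaller ID.
    """
    n, num_params = len(nn_index), len(nn_index[0])
    best_p, best_acc = None, -1.0
    for p in range(num_params):
        correct = sum(labels[nn_index[i][p]] == labels[i] for i in range(n))
        accuracy = correct / n
        if accuracy > best_acc:  # strict '>' keeps the earliest (cheapest) ID on ties
            best_p, best_acc = p, accuracy
    return best_p, best_acc


labels = [0, 0, 1, 1]
table = [[1, 2], [0, 0], [3, 3], [2, 1]]
print(best_parameter(table, labels))  # (0, 1.0)
```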

In the following, we describe UltraFastMPSearch using UltraFastWWSearch and explain the key differences in both UltraFastLocalUB and UltraFastGlobalUB.

4.1 Ultra-fast warping window search

UltraFastWWSearch takes advantage of the properties of \({{\,\textrm{DTW}\,}}\) (Sect. 2.1.1) by ordering the computation from large to small windows. As in FastWWSearch, this allows UltraFastWWSearch to skip the computation of \({{\,\textrm{DTW}\,}}_{v\le {}w'<w}(S,T)\) when \({{\,\textrm{DTW}\,}}_{w}(S,T)\) has a window validity of [v, w], and to use \({{\,\textrm{DTW}\,}}_{w}\) results as lower bounds for smaller windows \(w''<v\) at no extra expense. In practice, for most time series pairs the window validity of \({{\,\textrm{DTW}\,}}_{L}\) extends down to around 10% of L, i.e. the validity is \([\frac{L}{10}, L]\), allowing us to skip many unnecessary computations.
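Window validity can be read off the warping path itself. The sketch below, which assumes squared point cost, equal-length series and a Sakoe-Chiba band (all our choices), traces back one optimal path and reports its largest deviation from the diagonal. If an optimal path never leaves the band of half-width v, then \({{\,\textrm{DTW}\,}}_{w'}\) equals \({{\,\textrm{DTW}\,}}_{w}\) for every \(v\le w'\le w\). When several optimal paths exist, the one traced here may not give the smallest possible v, so the reported validity is safe but not necessarily minimal.

```python
import math


def dtw_with_validity(s, t, w):
    """Windowed DTW (squared point cost) and a window validity for the result.

    The validity v is the largest |i - j| on one optimal warping path. That path fits in
    every window w' with v <= w' <= w, so DTW_{w'}(s, t) equals the returned distance there.
    """
    n, m = len(s), len(t)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(max(1, i - w), min(m, i + w) + 1):
            D[i][j] = (s[i - 1] - t[j - 1]) ** 2 + min(D[i - 1][j - 1], D[i - 1][j], D[i][j - 1])
    # Trace one optimal path back from (n, m) to (1, 1); min() on tuples prefers the
    # diagonal predecessor on ties because it has the smallest indices.
    i, j, validity = n, m, 0
    while (i, j) != (1, 1):
        validity = max(validity, abs(i - j))
        _, i, j = min((D[i - 1][j - 1], i - 1, j - 1), (D[i - 1][j], i - 1, j), (D[i][j - 1], i, j - 1))
    return D[n][m], validity


a = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0]
b = [1.0, 2.0, 3.0, 2.0, 1.0, 0.0]
print(dtw_with_validity(a, b, 5))  # (2.0, 1): the same value holds for all windows 1..5
print(dtw_with_validity(a, b, 0))  # (6.0, 0)
```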

We present UltraFastWWSearch as a set of algorithms. They rely on a global cache \(\mathcal {C}\) indexed by pairs of series, which stores a variety of information. \(\mathcal {C}_{(S,T)}.\texttt {value}\) stores the most recent \({{\,\textrm{DTW}\,}}\) value (i.e. at a larger window); \(\mathcal {C}_{(S,T)}.\texttt {validity}\) stores the minimum window size for which \(\mathcal {C}_{(S,T)}.\texttt {value}\) is valid. \(\mathcal {C}_{(S,T)}.\texttt {do\_euclidean}\) calculates the squared Euclidean distance between S and T on demand, caching the result for future use.

The AssessNN algorithm in Algorithm 4 is a function that assesses whether a given pair of time series (S, T) is less than some distance d apart for a meta-parameter p. For \({{\,\textrm{DTW}\,}}\), \(p{=}w\) is the warping window and \({{\,\textrm{DIST}\,}}{=}{{\,\textrm{DTW}\,}}\). AssessNN differs substantially from the FastWWSearch function on which it is based, LazyAssessNN, which incorporates complex management of partially completed lower bound calculations at varying windows. AssessNN uses \({{\,\textrm{DTW}\,}}_{w{+}k}\) computed at a larger window as a lower bound to avoid the computation of \({{\,\textrm{DTW}\,}}_{w}\) when possible. Unlike the complex cascade of lower bounds used in FastWWSearch, this is the only lower bound used in UltraFastWWSearch.

Algorithm 4 first checks whether the previously computed \({{\,\textrm{DTW}\,}}\) distance, stored in the cache \(\mathcal {C}_{(S,T)}\), is larger than the current best-so-far distance to beat, d. If so, the algorithm terminates without any extra computation. This is because \({{\,\textrm{DTW}\,}}\) distance increases with decreasing w (see Fig. 5), so if a distance at a larger \(w'\) is already larger than the best-so-far distance d at w, then so too is \({{\,\textrm{DTW}\,}}_{w}\). If not and the previously computed \({{\,\textrm{DTW}\,}}\) is still valid, it is returned (line 2). Otherwise, we have to compute \({{\,\textrm{DTW}\,}}_{w}(S,T)\). Notice that on line 4, we make use of the \({{\,\textrm{EAP}\,}}\) implementation of \({{\,\textrm{DTW}\,}}\), passing the upper bound \({{\,\textrm{UB}\,}}\) as an argument. We will describe how \({{\,\textrm{UB}\,}}\) is calculated in the following paragraphs. If we do not early abandon, then the new distance is stored in \(\mathcal {C}_{(S,T)}\). Else we store \({{\,\textrm{UB}\,}}\) in \(\mathcal {C}_{(S,T)}\) and terminate the algorithm. Storing \({{\,\textrm{UB}\,}}\) in \(\mathcal {C}_{(S,T)}\) instead of \(\infty \) provides a better ordering of \(T \in \mathcal {T}\) later in the algorithm.
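The three branches of AssessNN described above can be sketched in Python as follows. The cache entry plays the role of \(\mathcal {C}_{(S,T)}\); the distance routine is passed in as a hypothetical callable `dist_eap(w, ub)` that returns the pair (distance, validity), or `None` when it early abandons. Returning `None` for abort, starting the cache at a lower bound of 0, and testing the cached value with ≥ (a candidate must be strictly closer to replace the current nearest neighbour) are our assumptions.

```python
import math
from dataclasses import dataclass

ABORT = None  # sentinel: T cannot beat the best-so-far distance d at this window


@dataclass
class CacheEntry:
    value: float = 0.0          # DTW at a larger window, or the UB it was abandoned against
    validity: float = math.inf  # smallest window at which `value` is exact (inf: not exact)


def assess_nn(entry, w, d, ub, dist_eap):
    """Sketch of AssessNN for one pair (S, T) at window w.

    dist_eap(w, ub) is a hypothetical callable returning (distance, validity), or None
    if it early abandons because the distance exceeds ub. Callers pass ub >= d.
    """
    if entry.value >= d:
        return ABORT  # DTW only grows as w shrinks, so DTW_w >= entry.value >= d
    if entry.validity <= w:
        return entry.value  # the cached distance is still exact at this window
    result = dist_eap(w, ub)
    if result is None:
        entry.value, entry.validity = ub, math.inf  # keep UB: a lower bound for smaller windows
        return ABORT
    entry.value, entry.validity = result
    return entry.value


calls = []


def fake_dist(w, ub):
    # Hypothetical distance routine: exact value 1.0 with validity 6 at w = 10, abandons otherwise.
    calls.append(w)
    return (1.0, 6) if w == 10 else None


entry = CacheEntry()
print(assess_nn(entry, 10, 5.0, 5.0, fake_dist))  # 1.0 (computed)
print(assess_nn(entry, 8, 5.0, 5.0, fake_dist))   # 1.0 (still valid, no new call)
print(assess_nn(entry, 4, 0.5, 0.5, fake_dist))   # None (pruned by the cached lower bound)
```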

Algorithm 3 is used to fill up the \({{\,\mathrm{\textrm{NNs}}\,}}\) table to learn the warping window for \({{\,\textrm{DTW}\,}}\). It can be transformed into FastWWSearch by replacing line 1 with Algorithm 3 of [34]. We use Algorithm 6 to fill this table efficiently.

As in FastWWSearch, we build this table for a subset \(\mathcal {T}'\subseteq \mathcal {T}\) of increasing size until \(\mathcal {T}' = \mathcal {T}\). This allows us to process all the series in \(\mathcal {T}\) in a systematic and efficient order. We start by building the table for \(\mathcal {T}'\) comprising only the first two time series \(\mathcal {T}_1\) and \(\mathcal {T}_2\), and fill this (2 \(\times \) P)-table as if \(\mathcal {T}'\) were the entire dataset. At this stage, \(\mathcal {T}_2\) is trivially the nearest neighbour of \(\mathcal {T}_1\) and vice versa. We then add a third time series \(\mathcal {T}_3\) from \(\mathcal {T}\setminus \mathcal {T}'\) to our growing set \(\mathcal {T}'\). At this point, we have to do two things: (a) find the nearest neighbour of \(\mathcal {T}_3\) within \(\mathcal {T}' \setminus \{\mathcal {T}_3\} = \{\mathcal {T}_1,\mathcal {T}_2\}\) and (b) check whether \(\mathcal {T}_3\) has become the nearest neighbour of \(\mathcal {T}_1\) and/or \(\mathcal {T}_2\). This is described in Algorithm 5. We then add a fourth time series \(\mathcal {T}_4\), and so on until \(\mathcal {T}'=\mathcal {T}\).
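The growing-set scheme can be sketched for a single meta-parameter as follows. Each new series S is compared with every series already in \(\mathcal {T}'\), and each computed distance is used for both steps (a) and (b). This plain version uses a full distance and no bounds; the function name and the (index, distance) table layout are our assumptions.

```python
import math


def incremental_nn(series, dist):
    """Fill one column of the NN table by growing T' one series at a time.

    Returns a list of (index of nearest neighbour, distance) per series.
    """
    nn = [(None, math.inf)] * len(series)
    for s in range(1, len(series)):  # S is the series being added to T'
        for t in range(s):           # T ranges over T' minus {S}
            d = dist(series[s], series[t])
            if d < nn[s][1]:
                nn[s] = (t, d)       # (a) T is the best NN of S so far
            if d < nn[t][1]:
                nn[t] = (s, d)       # (b) S has become the NN of T
    return nn


data = [[0.0], [1.0], [5.0], [1.4]]
nn = incremental_nn(data, lambda a, b: abs(a[0] - b[0]))
print([i for i, _ in nn])  # [1, 3, 3, 1]
```

Each distance is computed once per unordered pair, which is what lets a single call serve both table entries.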

Algorithm 5 describes the process of checking whether either series of a pair (S, T) is a nearest neighbour of the other and, if so, updating the \({{\,\mathrm{\textrm{NNs}}\,}}\) table accordingly. This process differs from FastWWSearch by using a local \({{\,\textrm{UB}\,}}\) to early abandon and prune \({{\,\textrm{DTW}\,}}\) computations, exploiting \({{\,\textrm{EAP}\,}}\). It is important to have a “tight” \({{\,\textrm{UB}\,}}\), especially for \(w{=}L\), because \({{\,\textrm{DTW}\,}}_L\) is the most expensive operation in UltraFastWWSearch and its cost thus needs to be minimized. Using \({{\,\textrm{EAP}\,}}\) alone already provides a significant speedup to FastWWSearch, as our experiments in Sect. 5 show.

Lines 1 to 2 of Algorithm 5 calculate the upper bound that \({{\,\textrm{EAP}\,}}\) uses to early abandon and prune. The upper bound is calculated as \({{\,\textrm{UB}\,}}{=}\max ({{\,\mathrm{\textrm{NNs}}\,}}[S][w].\textrm{dist}, {{\,\mathrm{\textrm{NNs}}\,}}[T][w].\textrm{dist})\). This ensures that we calculate the full \({{\,\textrm{DTW}\,}}\) always and only when it can result in S being T’s \({{\,\mathrm{\textrm{NN}}\,}}\) or vice versa. If we do not yet have a best-so-far \({{\,\mathrm{\textrm{NN}}\,}}\) for S or T, e.g. when T is the first candidate \({{\,\mathrm{\textrm{NN}}\,}}\) considered for S and hence its distance is \(+\infty \), then we compute the Euclidean distance between S and T and use it as the \({{\,\textrm{UB}\,}}\) for \({{\,\textrm{EAP}\,}}\). The (squared) Euclidean distance is the cost of the diagonal warping path and hence an upper bound for \({{\,\textrm{DTW}\,}}\); unlike the commonly used \(+\infty \), it lets \({{\,\textrm{EAP}\,}}\) prune cells when computing \({{\,\textrm{DTW}\,}}\) at the largest window, \(w{=}L\). Then lines 3 to 5 check whether T can be the \({{\,\mathrm{\textrm{NN}}\,}}\) of S. The algorithm calls the \({{\,\textrm{AssessNN}\,}}\) function in Algorithm 4 to check whether T is able to beat (i.e. be closer than) the best-so-far \({{\,\mathrm{\textrm{NN}}\,}}\) distance of S, d. If \({{\,\textrm{AssessNN}\,}}\) returns \(\textrm{abort}\), it means that \({{\,\textrm{DTW}\,}}_{w}(S,T) \ge d\), so T cannot be the nearest neighbour of S. Otherwise, we update the nearest neighbour of S with T (line 7). Similarly, lines 6 to 8 check whether S is the nearest neighbour of T. Note that we only need to compute \({{\,\textrm{DTW}\,}}_w(S,T)\) once to update both \({{\,\mathrm{\textrm{NNs}}\,}}[S][w]\) and \({{\,\mathrm{\textrm{NNs}}\,}}[T][w]\).
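Below is a Python sketch of the upper bound rule of Algorithm 5 at a single window w, assuming squared point cost and a simplified row-wise early abandoning DTW in place of \({{\,\textrm{EAP}\,}}\) (names are hypothetical). We read the Euclidean fallback as applying whenever either best-so-far distance is still \(+\infty \), since the max is then unbounded. Because the squared Euclidean distance is the cost of the diagonal path, it never triggers an abandon, and an abandoned computation (true distance above both best-so-far distances) can change neither entry; the usage example compares the outcome with a brute-force search.

```python
import math
import random


def dtw_ea(s, t, w, ub):
    """Windowed DTW (squared point cost); returns math.inf once the result must exceed ub."""
    n = len(s)
    prev = [0.0] + [math.inf] * n
    for i in range(1, n + 1):
        curr = [math.inf] * (n + 1)
        for j in range(max(1, i - w), min(n, i + w) + 1):
            curr[j] = (s[i - 1] - t[j - 1]) ** 2 + min(prev[j - 1], prev[j], curr[j - 1])
        if min(curr) > ub:
            return math.inf
        prev = curr
    return prev[n] if prev[n] <= ub else math.inf


def squared_euclidean(s, t):
    return sum((a - b) ** 2 for a, b in zip(s, t))


def update_pair(nn, data, s, t, w):
    """Check whether S and T are each other's NN at window w; nn[i] = (index, distance)."""
    ub = max(nn[s][1], nn[t][1])
    if math.isinf(ub):
        # No best-so-far NN for S or T: the diagonal path cost bounds DTW from above.
        ub = squared_euclidean(data[s], data[t])
    d = dtw_ea(data[s], data[t], w, ub)  # math.inf means the distance exceeds ub
    if d < nn[s][1]:
        nn[s] = (t, d)
    if d < nn[t][1]:
        nn[t] = (s, d)


random.seed(0)
data = [[random.gauss(0.0, 1.0) for _ in range(12)] for _ in range(8)]
w = 3
nn = [(None, math.inf)] * len(data)
for s in range(1, len(data)):
    for t in range(s):
        update_pair(nn, data, s, t, w)
```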

The core of UltraFastWWSearch lies in Algorithm 6. In line 1, we start by initializing the \({{\,\mathrm{\textrm{NNs}}\,}}\) table to \((\_, +\infty )\), i.e. an empty table with \(+\infty \) nearest neighbour distances. Then, we initialize \(\mathcal {T}'\), the subset of \(\mathcal {T}\) processed so far. After initializing these key components, we start with the second time series in \(\mathcal {T}\), adding each preceding time series \(\mathcal {T}_{s{-}1}\) to \(\mathcal {T}'\). We start the computation from the largest window, \(w{=}L{-}1\), as described in lines 4 to 10.

Recall that FastWWSearch processes the series at \(w{=}L{-}1\) in the same way as at any smaller w. It goes through the set \(\mathcal {T}'\) in ascending order of lower bound distance to S. For the case of \(w{=}L{-}1\), \(\mathcal {T}'\) is ordered on \(\textsc {LB\_Kim}\), which is a loose lower bound. This exploits its complex cascade of lower bounds to minimize the number of full \({{\,\textrm{DTW}\,}}\) calculations, by using the lower bounds to prune as many as possible. In contrast, UltraFastWWSearch exploits the unique properties of \({{\,\textrm{EAP}\,}}\) by seeking to minimize the \({{\,\textrm{UB}\,}}\) used in each call to \({{\,\textrm{DTW}\,}}\). Recall that \({{\,\textrm{UB}\,}}{=}\max ({{\,\mathrm{\textrm{NNs}}\,}}[S][w].\textrm{dist}, {{\,\mathrm{\textrm{NNs}}\,}}[T][w].\textrm{dist})\). \({{\,\mathrm{\textrm{NNs}}\,}}[S][w].\textrm{dist}\) starts at \(\infty \) and can only decrease with each successive T. Hence, it is most productive to pair it initially with Ts with larger \({{\,\mathrm{\textrm{NNs}}\,}}[T][w].\textrm{dist}\), as the max will be large anyway, and to pair it with the smallest \({{\,\mathrm{\textrm{NNs}}\,}}[T][w].\textrm{dist}\) last, when it is also most likely to be small and hence the max will be small. To this end, we process \(\mathcal {T}'\) in descending order of the \({{\,\mathrm{\textrm{NN}}\,}}\) distance of each \(T\in \mathcal {T}'\) at \(w{=}L{-}1\), as outlined in lines 6 to 9.

However, while this sort order is important for minimizing the \({{\,\textrm{EAP}\,}}\) computations of the full \({{\,\textrm{DTW}\,}}\) when only loose lower bounds are available, \({{\,\textrm{DTW}\,}}_{w{+}1}(S,T)\) provides a very tight lower bound on \({{\,\textrm{DTW}\,}}_{w}(S,T)\), and once it is available it is advantageous to exploit it. Hence, on line 14, we order \(\mathcal {T}'\) in ascending order of their \({{\,\textrm{DTW}\,}}_{L{-}1}\) distances. Note that we only do this once for each series S. In practice, the order does not change substantially as the window size decreases. Rather than re-sorting at each window size, it is sufficient to keep track of the nearest neighbour at \(w{+}1\), process it first, as it is likely to be one of the nearest neighbours at w, and then process the remaining series in the \({{\,\textrm{DTW}\,}}_{L{-}1}\) sort order.
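The two visiting orders described in the last two paragraphs can be sketched as small helpers. The names, and the use of plain dictionaries keyed by series index for the best-so-far NN distances and for the \({{\,\textrm{DTW}\,}}_{L{-}1}\) distances to S, are our assumptions.

```python
def order_at_largest_window(candidates, nn_dist):
    """w = L-1: visit T in descending order of its best-so-far NN distance, so the large
    UB = max(NN[S].dist, NN[T].dist) values occur while NN[S].dist is still large."""
    return sorted(candidates, key=lambda t: nn_dist[t], reverse=True)


def sort_once_by_largest_window(candidates, dtw_largest):
    """Computed once per query S: ascending DTW_{L-1}(S, T), a tight lower bound at smaller w."""
    return sorted(candidates, key=lambda t: dtw_largest[t])


def order_at_window(sorted_once, nn_at_previous_window):
    """w < L-1: visit the NN of S found at w+1 first, then keep the DTW_{L-1} order."""
    return [nn_at_previous_window] + [t for t in sorted_once if t != nn_at_previous_window]


nn_dist = {0: 1.0, 1: 3.0, 2: 2.0}
print(order_at_largest_window([0, 1, 2], nn_dist))  # [1, 2, 0]
once = sort_once_by_largest_window([0, 1, 2], {0: 0.5, 1: 0.2, 2: 0.9})
print(order_at_window(once, 2))  # [2, 1, 0]
```

Keeping `sorted_once` fixed and only moving the previous nearest neighbour to the front avoids a full sort at every window.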

In addition, we keep track of the maximum window validity \(\omega \) over \({{\,\mathrm{\textrm{NNs}}\,}}[T][L]\) for all \(T\in \mathcal {T}'\). By keeping track of \(\omega \), we can quickly skip all the windows where the distances are constant for all \(T\in \mathcal {T}'\). On line 11, once we have the \({{\,\mathrm{\textrm{NN}}\,}}\) of S at \(w{=}L{-}1\), we propagate this information to all windows \(w' \le w\) for which the warping path is valid. Similarly, in lines 12 and 13, we propagate the \({{\,\mathrm{\textrm{NN}}\,}}\) of every \(T\in \mathcal {T}'\) for which \({{\,\mathrm{\textrm{NNs}}\,}}[T][L] {=} S\).

From line 16, we continue to process the windows from \(\omega {-}1\) down to 0. Line 17 checks whether we already have a \({{\,\mathrm{\textrm{NN}}\,}}\) for S from larger windows due to window validity. If so, lines 18 to 23 check whether S is the \({{\,\mathrm{\textrm{NN}}\,}}\) of any \(T\in \mathcal {T}'\). Since we already have the \({{\,\mathrm{\textrm{NN}}\,}}\) for S, the process is similar to lines 6–8 of Algorithm 5, the difference being the way \({{\,\textrm{UB}\,}}\) is calculated. In this case, we can use the distance of the current \({{\,\mathrm{\textrm{NN}}\,}}\) of T (if available) as the \({{\,\textrm{UB}\,}}\) instead of taking the max of the two \({{\,\mathrm{\textrm{NN}}\,}}\) distances, as we will not use the result to check whether T is S’s \({{\,\mathrm{\textrm{NN}}\,}}\). The \(\textrm{else}\) branch (line 24) handles the case where we have not obtained the \({{\,\mathrm{\textrm{NN}}\,}}\) of S at w from a larger window. In this case, we need to search \(\mathcal {T}'\) for the \({{\,\mathrm{\textrm{NN}}\,}}\) of S. We start from \(\mathcal {T}_{{{\,\mathrm{\textrm{NN}}\,}}^{w{+}1}}\), the \({{\,\mathrm{\textrm{NN}}\,}}\) of S at \(w{+}1\). The \({{\,\mathrm{\textrm{NN}}\,}}\)s of both S and \(\mathcal {T}_{{{\,\mathrm{\textrm{NN}}\,}}^{w{+}1}}\) are updated with Algorithm 5. The remaining \(T\in \mathcal {T}'\setminus \{\mathcal {T}_{{{\,\mathrm{\textrm{NN}}\,}}^{w{+}1}}\}\) are processed as in Algorithm 5, except that we need to keep track of \(\mathcal {T}_{{{\,\mathrm{\textrm{NN}}\,}}^{w{+}1}}\) (lines 27–35). Finally, once we have \({{\,\mathrm{\textrm{NNs}}\,}}[S][w]\), the \({{\,\mathrm{\textrm{NN}}\,}}\) of S at w, we propagate this information to all valid windows (line 36).

4.2 Ultra-fast meta-parameter search with local upper bound

UltraFastWWSearch is very specific to \({{\,\textrm{DTW}\,}}\) and is not applicable to other distance measures. Hence, we designed a more generic algorithm, UltraFastLocalUB, that is applicable to all time series distance measures that can be calculated using \({{\,\textrm{EAP}\,}}\), including \({{\,\textrm{DTW}\,}}\). The main difference from UltraFastWWSearch is that it does not exploit the window validity property of \({{\,\textrm{DTW}\,}}\), as not all measures have this property.

UltraFastLocalUB requires some slight modifications to generalize the algorithms in UltraFastWWSearch to other distance measures. Starting from Algorithm 5, Algorithm 7 replaces every w with p and takes an additional input, \(p^{{{\,\textrm{UB}\,}}}\), the meta-parameter that gives the upper bound distance in the parameter search space. This parameter allows us to first compute \(\mathcal {C}_{(S,T,p^{{{\,\textrm{UB}\,}}})}.\texttt {do\_upperBound} {=} {{\,\textrm{DIST}\,}}_{p^{{{\,\textrm{UB}\,}}}}(S,T)\), the distance at the upper bound meta-parameter, which is then cached for future use. This is similar to computing the Euclidean distance as the upper bound for \({{\,\textrm{DTW}\,}}\), where \(w{=}0\). For \({{\,\textrm{DTW}\,}}\), \({{\,\textrm{WDTW}\,}}\) and \({{\,\textrm{ERP}\,}}\), this upper bound distance is equivalent to computing the diagonal of the distance matrix M and can be computed in O(L) time instead of \(O(L^2)\). As in UltraFastWWSearch, if we do not yet have a best-so-far \({{\,\mathrm{\textrm{NN}}\,}}\) for S or T, we compute the upper bound distance between S and T at parameter \(p^{{{\,\textrm{UB}\,}}}\). The rest of the algorithm is similar to Algorithm 5 in UltraFastWWSearch.

UltraFastLocalUB then replaces Algorithm 6 in Algorithm 3 with Algorithm 8 to fill the \({{\,\mathrm{\textrm{NNs}}\,}}\) table. Algorithm 8 describes the general process of filling the \({{\,\mathrm{\textrm{NNs}}\,}}\) table for any time series distance measure that supports \({{\,\textrm{EAP}\,}}\). For ease of exposition, we assume that the meta-parameter p increases from 0 to P, although our implementation orders it according to the distance measure’s properties described in Sect. 2. There are two main differences from Algorithm 6. First, it does not use the maximum window validity as UltraFastWWSearch does, because not all distance measures have a window meta-parameter. Second, it needs to keep track of the upper bound parameter ID, \(p^{{{\,\textrm{UB}\,}}}\). Distance measures with two meta-parameters have multiple upper bound distances along the parameter search space, and when we do not yet have a nearest neighbour, we want to use the tightest possible upper bound for \({{\,\textrm{EAP}\,}}\), i.e. the upper bound that is closest to the current distance. Hence it is important to keep updating \(p^{{{\,\textrm{UB}\,}}}\) while going through the search space.

The upper bound parameter \(p^{{{\,\textrm{UB}\,}}}\) should be updated according to the properties of each distance measure, described in Sect. 2. \(p^{{{\,\textrm{UB}\,}}}\) is constant for measures with a single meta-parameter, i.e. \(p^{{{\,\textrm{UB}\,}}}_{{{\,\textrm{DTW}\,}}}{=}p^{{{\,\textrm{UB}\,}}}_{{{\,\textrm{WDTW}\,}}}{=}0\) and \(p^{{{\,\textrm{UB}\,}}}_{{{\,\textrm{MSM}\,}}}{=}P\). In this work, we ordered the parameters of measures with two meta-parameters such that every 10th meta-parameter is an upper bound for the previous 9. This means that we need to update \(p^{{{\,\textrm{UB}\,}}}\) at every 10th meta-parameter. For the special case of \({{\,\textrm{ERP}\,}}\), the upper bound is at \(w{=}0\), which gives the same \({{\,\textrm{ERP}\,}}\) distance for all g, i.e. the upper bound for \({{\,\textrm{ERP}\,}}\) is the same across its whole parameter space. When we process a new query, we reset \(p^{{{\,\textrm{UB}\,}}}\) to the first upper bound, as shown in line 4 of Algorithm 8. Line 15 of Algorithm 8 then checks and updates \(p^{{{\,\textrm{UB}\,}}}\) after moving to the next parameter.
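A hedged sketch of how the index of \(p^{{{\,\textrm{UB}\,}}}\) could be computed, under our own assumption that parameters are indexed in processing order (0 to P-1) and that distances never decrease along that order within a block, so the upper bound is the last index of the block. For single meta-parameter measures the block is the whole search space (matching \(w{=}0\) for \({{\,\textrm{DTW}\,}}\) and the largest c for \({{\,\textrm{MSM}\,}}\)); for two meta-parameters it is a block of 10. The \({{\,\textrm{ERP}\,}}\) special case, where one upper bound is shared by the whole space, is not modelled; `upper_bound_index` is a hypothetical name.

```python
def upper_bound_index(q, num_params, block=None):
    """Processing-order index whose distance upper-bounds the distance at index q.

    Assumes distances never decrease along the processing order inside a block.
    block=None: single meta-parameter measure, the whole search space is one block.
    block=10: two meta-parameters, every 10th value bounds the previous 9.
    """
    if block is None:
        return num_params - 1
    return min((q // block + 1) * block - 1, num_params - 1)


print([upper_bound_index(q, 100, block=10) for q in (0, 9, 10, 95)])  # [9, 9, 19, 99]
print(upper_bound_index(42, 100))  # 99
```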

4.3 Ultra-fast meta-parameter search with global upper bound

UltraFastLocalUB takes the maximum of the nearest neighbour distance of S and T at the current meta-parameter p as the upper bound. We call this a local upper bound as this is only applicable to the current meta-parameter. Instead of using the nearest neighbour of S and T at the current meta-parameter p, UltraFastGlobalUB uses a global upper bound, i.e. the nearest neighbour distance of S and T at the parameter \(p^{{{\,\textrm{UB}\,}}}\). The global upper bound is applicable to a range of previous meta-parameters. Note that by definition, the global upper bound is looser than the local upper bound.

We modify Algorithm 7 by replacing line 1 with \({{\,\textrm{UB}\,}}{=} \max ({{\,\mathrm{\textrm{NNs}}\,}}[S][p^{{{\,\textrm{UB}\,}}}].\text {dist},{{\,\mathrm{\textrm{NNs}}\,}}[T][p^{{{\,\textrm{UB}\,}}}].\text {dist})\), as presented in Algorithm 9. The rest of Algorithm 9 is the same as Algorithm 7. Similarly, Algorithm 8 is modified to use the global upper bound by changing lines 19 and 26. Note that since the global upper bound is used frequently, it is important to cache it for future use.
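The only change between the two variants is which column of the \({{\,\mathrm{\textrm{NNs}}\,}}\) table feeds the bound. In the sketch below, the table holds best-so-far NN distances, and `dist_at_p_ub` stands for a hypothetical cached routine returning \({{\,\textrm{DIST}\,}}_{p^{{{\,\textrm{UB}\,}}}}(S,T)\). The function returns the \({{\,\textrm{UB}\,}}\) passed to \({{\,\textrm{EAP}\,}}\) in either mode, falling back to the upper bound distance when a best-so-far distance is still \(+\infty \). `functools.lru_cache` is one simple way to provide the caching mentioned above; the function names are our assumptions.

```python
import math
from functools import lru_cache


def eap_upper_bound(nn_dist, s, t, p, p_ub, dist_at_p_ub, use_global):
    """UB for EAP when comparing series s and t at parameter p.

    nn_dist[i][q] is the best-so-far NN distance of series i at parameter q (math.inf if none).
    Local: the column of the current parameter p. Global: the column of p_ub.
    """
    column = p_ub if use_global else p
    ub = max(nn_dist[s][column], nn_dist[t][column])
    if math.isinf(ub):
        ub = dist_at_p_ub(s, t)  # no best-so-far NN yet: fall back to DIST at p_ub
    return ub


@lru_cache(maxsize=None)
def toy_dist_at_p_ub(s, t):
    # Hypothetical stand-in for DIST_{p_ub}(S, T); cached because it is reused often.
    return 7.0


nn_dist = [[1.0, 2.0, 4.0], [1.5, 3.0, 5.0], [math.inf, math.inf, math.inf]]
print(eap_upper_bound(nn_dist, 0, 1, 0, 2, toy_dist_at_p_ub, use_global=False))  # 1.5
print(eap_upper_bound(nn_dist, 0, 1, 0, 2, toy_dist_at_p_ub, use_global=True))   # 5.0
print(eap_upper_bound(nn_dist, 0, 2, 0, 2, toy_dist_at_p_ub, use_global=True))   # 7.0
```

The global bound (5.0) is looser than the local one (1.5), as stated above, so EAP abandons less often but keeps more distances exact for later parameters.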

5 Experiments

This section describes the experiments to evaluate our UltraFastMPSearch. To ensure reproducibility, we have made our code and results available open-source at https://github.com/ChangWeiTan/UltraFastWWS. Note that UltraFastMPSearch is exact, producing the same results as FastWWSearch, FastEE and LOOCV, hence we are only interested in comparing the training time.

Our experiments use all 128 benchmark UCR time series datasets [7]. For each method, we perform the search using the set of 100 meta-parameters (Sect. 3.3) used in EE [18] and FastEE [37]. This allows UltraFastMPSearch to be used directly in EE. Since the ordering of the series in a dataset might affect the training time, i.e. the speed depends on where the actual nearest neighbour is, we report the average results over 5 runs with different reshuffles of the training dataset. We conducted our experiments in Java, on a standard single-core cluster machine with an AMD EPYC processor @2.2GHz and 32GB RAM.

Our experiments are divided into four parts. (A) We first study the effect of using \({{\,\textrm{EAP}\,}}\) with \({{\,\textrm{DTW}\,}}\) on LOOCV. (B) Then, we explore the features of UltraFastWWSearch that help it achieve a significant speedup compared to the state of the art. (C) We investigate the scalability of UltraFastWWSearch on large and long datasets. (D) Lastly, we investigate the generalization of UltraFastWWSearch to other distance measures using UltraFastLocalUB and UltraFastGlobalUB.

5.1 Pruning and early abandoning with \({{\,\textrm{EAP}\,}}\)

Given the dramatic speedup of \({{\,\textrm{EAP}\,}}\) on \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\) [11], we first study the feasibility of replacing \({{\,\textrm{DTW}\,}}\) in LOOCV with \({{\,\textrm{EAP}\,}}\). The following methods are compared:

1. \({{\,\textrm{DTW}\,}}\)_LOOCV: Naive implementation of LOOCV described in Sect. 2.7 using \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\) with the early abandoning strategy described in [24] but without lower bounds and \({{\,\textrm{UB}\,}}\) from Sect. 3.1. The \({{\,\textrm{UB}\,}}\) is computed using the best-so-far \({{\,\mathrm{\textrm{NN}}\,}}\) distance.
2. \(\textsc {UCR-Suite}\)_LOOCV: Naive implementation of LOOCV using \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\) with the optimizations from UCR-Suite, i.e. cascading lower bounds and early abandoning as before [24].
3. \({{\,\textrm{EAP}\,}}\)_LOOCV: \({{\,\textrm{DTW}\,}}\)_LOOCV with \({{\,\textrm{DTW}\,}}\) replaced by \({{\,\textrm{EAP}\,}}\) [11].

Figure 12a compares the total training time of the three methods on the 128 datasets. The results show that \({{\,\textrm{EAP}\,}}\)_LOOCV reduces the training time by almost 1000 h (42 days) compared to \({{\,\textrm{DTW}\,}}\)_LOOCV and by about 300 h (12 days) compared to UCR-Suite_LOOCV. Note that \({{\,\textrm{EAP}\,}}\)_LOOCV achieves this significant speedup without using any lower bounds, while UCR-Suite_LOOCV uses a series of complex lower bounds. The main reason is that the \(\textsc {LB\_Kim}\) and \(\textsc {LB\_Keogh}\) lower bounds used in UCR-Suite are very loose at larger windows, as pointed out in [36], which showed that the more complex \(\textsc {LB\_Keogh}\) can be looser than the simpler \(\textsc {LB\_Kim}\) when \(w\ge 0.5\cdot L\). This shows that \({{\,\textrm{EAP}\,}}\) reduces the need for lower bounds for \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\), especially at larger warping windows.

Fig. 12 Total training time on the 128 datasets [7] of: a LOOCV with \({{\,\textrm{DTW}\,}}\) and \({{\,\textrm{EAP}\,}}\), UCR-Suite, and our method for reference; b FastWWSearch and UltraFastWWSearch and their variants. The numbers in round brackets represent the standard deviation over 5 runs

5.2 Speeding up the state of the art with UltraFastWWSearch

This section examines the features that make UltraFastWWSearch ultra-fast, comparing it to state-of-the-art FastWWSearch. The results are shown in Fig. 12b.

Much of \({{\,\textrm{EAP}\,}}\)’s speedup in many \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\) tasks actually comes from early abandoning (see Fig. 7 of [11]). Section 5.1 shows that \({{\,\textrm{EAP}\,}}\), even without lower bounds, speeds up the naive LOOCV implementation. Hence, to study how these two factors contribute to the speed of FastWWSearch, we created two variants of it, (1) with early abandoning and (2) without lower bounds, denoted by the suffixes “_EA” and “_NoLb”, respectively. We adopt the early abandoning strategy described in [24] for the original FastWWSearch and use the \({{\,\textrm{UB}\,}}\) described in Sect. 3.1 for the early abandoning process.

It is not surprising that removing lower bounds from FastWWSearch makes it slower, as it relies on various lower bounds to achieve its large speedup. However, it is interesting that adding early abandoning to FastWWSearch makes it the slowest variant. This is because, if \({{\,\textrm{DTW}\,}}\) is early abandoned at a larger window, its exact value is unavailable when FastWWSearch later needs the \({{\,\textrm{DTW}\,}}\) distance at a smaller window, so FastWWSearch has to recalculate \({{\,\textrm{DTW}\,}}\) from scratch. Similar behaviour was also observed in [34].

On the other hand, the opposite is observed for the \({{\,\textrm{EAP}\,}}\) variants. \({{\,\textrm{EAP}\,}}\)_FastWWSearch_NoLb in Fig. 12b is \({{\,\textrm{EAP}\,}}\)_FastWWSearch with lower bounds removed. Removing lower bounds actually improves \({{\,\textrm{EAP}\,}}\)_FastWWSearch, albeit only by about 20 min. This is consistent with the results of the \({{\,\textrm{EAP}\,}}\) paper [11], and again highlights the effectiveness of the early abandoning strategy of \({{\,\textrm{EAP}\,}}\) and the possibility of removing complex lower bounds.

UltraFastWWSearch incorporates six primary strategies that distinguish it from FastWWSearch. We study the effect of introducing each of them in turn with the following algorithms: \({{\,\textrm{EAP}\,}}\)_FastWWSearch: using \({{\,\textrm{EAP}\,}}\) for \({{\,\textrm{DTW}\,}}\) computations; \({{\,\textrm{EAP}\,}}\)_FastWWSearch_NoLb: removing lower bounds; \({{\,\textrm{EAP}\,}}\)_FastWWSearch_EA: using early abandoning; UltraFastWWSearch_V1: tighter upper bounds; UltraFastWWSearch_V2: sorting \(\mathcal {T}'\) in descending order of distance to nearest neighbour and then sorting on \({{\,\textrm{DTW}\,}}_L\); and UltraFastWWSearch: skipping windows from \(L{-}1\) to \(\omega \), the maximum window validity at \(L{-}1\).

Figure 12b shows that using \({{\,\textrm{EAP}\,}}\) to compute \({{\,\textrm{DTW}\,}}\) within FastWWSearch, even without early abandoning (\({{\,\textrm{EAP}\,}}\)_FastWWSearch), reduces the total training time over all 128 datasets by 5 h. Of this, 3 h 50 min comes from five long and large datasets: NonInvasiveFetalECGThorax1, UWaveGestureLibraryAll, HandOutlines, FordA and FordB. The pairwise plot in Fig. 13 illustrates that \({{\,\textrm{EAP}\,}}\)_FastWWSearch is consistently faster than FastWWSearch with the original \({{\,\textrm{DTW}\,}}\), although the difference is not large. This shows that, even without early abandoning, \({{\,\textrm{EAP}\,}}\) efficiently prunes unnecessary computations in \({{\,\textrm{DTW}\,}}\).

The effectiveness of early abandoning an \({{\,\textrm{EAP}\,}}\) computation depends on the \({{\,\textrm{UB}\,}}\) passed to it. \({{\,\textrm{EAP}\,}}\)_FastWWSearch_EA uses the \({{\,\textrm{UB}\,}}\) described in Sect. 3.1, whereas the V1 variant of UltraFastWWSearch uses the \({{\,\textrm{UB}\,}}\) described in Algorithm 5. The results in Fig. 12b show that this \({{\,\textrm{UB}\,}}\) improves the speed of UltraFastWWSearch, but only by a small margin. Since we calculate \({{\,\textrm{UB}\,}}\) as the maximum of the nearest neighbour distances of S and T, it is most productive to pair S with series T that have larger \({{\,\mathrm{\textrm{NNs}}\,}}[T][w].\textrm{dist}\) (Algorithm 6), which lets us better exploit the new \({{\,\textrm{UB}\,}}\). This ordering speeds up the search substantially, as demonstrated by the V2 variant of UltraFastWWSearch in Fig. 12b.
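To make the upper bound concrete, here is a minimal Python sketch of one LOOCV comparison. It assumes a simple row-minimum early-abandoning \({{\,\textrm{DTW}\,}}\) (squared-difference cost, window w) as a stand-in for \({{\,\textrm{EAP}\,}}\), which prunes far more aggressively; the names `dtw_ea` and `update_pair` are hypothetical. What it illustrates is the bound itself: computing the distance between S and T is only useful if T can become the nearest neighbour of S or S of T, so the computation can be abandoned once it exceeds the larger of the two current nearest neighbour distances.

```python
import math


def dtw_ea(a, b, w, ub):
    """Windowed DTW with squared-difference cost.

    Returns math.inf as soon as every cell of a row exceeds ub, which proves the
    final distance exceeds ub (costs are non-negative and every warping path
    crosses every row). A simple stand-in for EAP, not EAP itself.
    """
    n, m = len(a), len(b)
    prev = [0.0] + [math.inf] * m          # row 0 of the cost matrix
    for i in range(1, n + 1):
        curr = [math.inf] * (m + 1)
        row_min = math.inf
        for j in range(max(1, i - w), min(m, i + w) + 1):
            cost = (a[i - 1] - b[j - 1]) ** 2
            curr[j] = cost + min(prev[j - 1], prev[j], curr[j - 1])
            row_min = min(row_min, curr[j])
        if row_min > ub:
            return math.inf                # early abandoned
        prev = curr
    return prev[m]


def update_pair(series, nn_dist, nn_idx, s, t, w):
    """Compare S and T once in LOOCV, updating both nearest neighbours."""
    # T matters only if it can beat NN(S), or S can beat NN(T),
    # so the larger of the two distances is a valid abandoning bound.
    ub = max(nn_dist[s], nn_dist[t])
    d = dtw_ea(series[s], series[t], w, ub)
    if d < nn_dist[s]:
        nn_dist[s], nn_idx[s] = d, t
    if d < nn_dist[t]:
        nn_dist[t], nn_idx[t] = d, s
    return d


# Usage on three toy series with window w = 1.
series = [[0, 1, 2, 3], [0, 1, 2, 4], [5, 5, 5, 5]]
nn_dist, nn_idx = [math.inf] * 3, [-1] * 3
for s in range(3):
    for t in range(s + 1, 3):
        update_pair(series, nn_dist, nn_idx, s, t, w=1)
```

An abandoned computation returns `math.inf` and therefore never updates either nearest neighbour, which is safe because its true distance already exceeds both current nearest neighbour distances.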

Finally, we add the optimization of skipping windows from \(L{-}1\) to \(\omega \). Whereas the five previous optimizations all exploit the properties of \({{\,\textrm{EAP}\,}}\), this final optimization is a novel exploitation of the window validity property that goes beyond its use in FastWWSearch. It more than halves the total time.
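The window validity property behind this skip can be illustrated with a small Python sketch. It assumes window validity is the largest deviation \(|i-j|\) from the diagonal along an optimal full-window warping path: that path remains admissible for any window at least that wide, and widening the window can never increase the distance, so \({{\,\textrm{DTW}\,}}\) is unchanged for all such windows. The helpers `dtw_matrix` and `window_validity` are hypothetical names, and ties between optimal paths are broken arbitrarily.

```python
import math


def dtw_matrix(a, b, w):
    """Cumulative-cost matrix of windowed DTW (squared-difference cost)."""
    n, m = len(a), len(b)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(max(1, i - w), min(m, i + w) + 1):
            cost = (a[i - 1] - b[j - 1]) ** 2
            D[i][j] = cost + min(D[i - 1][j - 1], D[i - 1][j], D[i][j - 1])
    return D


def window_validity(a, b):
    """Return (full-window DTW, max |i - j| on one optimal warping path)."""
    n, m = len(a), len(b)
    D = dtw_matrix(a, b, max(n, m))
    i, j, validity = n, m, abs(n - m)
    while (i, j) != (1, 1):
        # Step back to the cheapest predecessor; this retraces an optimal path.
        _, i, j = min((D[i - 1][j - 1], i - 1, j - 1),
                      (D[i - 1][j], i - 1, j),
                      (D[i][j - 1], i, j - 1))
        validity = max(validity, abs(i - j))
    return D[n][m], validity


a = [0, 1, 2, 3, 2, 1, 0, 0]
b = [0, 0, 1, 2, 3, 2, 1, 0]
full, omega = window_validity(a, b)
# Every window from omega up to L - 1 gives the same distance as the full window.
same = all(dtw_matrix(a, b, w)[len(a)][len(b)] == full for w in range(omega, len(a)))
```

Here the two series are shifted copies, so the full-window distance is 0 with validity 1, while the zero-width window (Euclidean alignment) gives 6. If \(\omega \) is the largest such validity over the relevant pairs, the nearest neighbour results for every window from \(\omega \) to \(L{-}1\) coincide and need not be recomputed.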

Figure 12b shows that UltraFastWWSearch completes all 128 datasets in under 4 h, a sixfold speedup over the 24 h required by FastWWSearch.

We assessed the significance of our results with the Friedman test followed by the Wilcoxon signed-rank test with Holm correction as the post hoc test [10], and visualize them in the critical difference diagram in Fig. 14. Figure 14 shows the average rank of each method over all datasets, where rank 1 is the fastest and rank 9 the slowest. Methods in the same clique (black bars) are not significantly different from each other. Consistent with Fig. 12b, the optimizations for UltraFastWWSearch significantly speed up FastWWSearch. The critical difference diagram shows that all the \({{\,\textrm{EAP}\,}}\) variants are significantly faster than the original FastWWSearch, consistently across datasets. Interestingly, although early abandoning reduces the total time over the 128 datasets shown in Fig. 12b, it is ranked lower than all other methods. This is because early abandoning in \({{\,\textrm{EAP}\,}}\) reduces the time on the three largest datasets (HandOutlines, FordA, and FordB) by a large amount, whereas on smaller datasets the overhead of recalculating \({{\,\textrm{EAP}\,}}\) after an earlier early abandon costs more than the computation saved by abandoning. Finally, UltraFastWWSearch is the fastest of all, with an average rank close to 1 (i.e. it is faster than all other methods on almost all datasets), followed by its V2 and V1 variants. Figure 2 shows that UltraFastWWSearch is up to one order of magnitude faster than FastWWSearch.
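The bookkeeping behind Fig. 14 can be sketched in a few lines of Python. This is an illustrative sketch, not the evaluation code used here: it computes the average ranks plotted in a critical difference diagram and applies Holm’s step-down correction to a set of pairwise p-values. The p-values themselves would come from the Friedman and Wilcoxon signed-rank tests, for example via `scipy.stats.friedmanchisquare` and `scipy.stats.wilcoxon`; the helper names `average_ranks` and `holm_reject` are hypothetical.

```python
def average_ranks(times):
    """times[d][k] is the runtime of method k on dataset d.

    Ranks methods per dataset (1 = fastest, tied runtimes share their mean
    rank) and averages the ranks over datasets, as in a critical difference diagram.
    """
    n_methods = len(times[0])
    totals = [0.0] * n_methods
    for row in times:
        order = sorted(range(n_methods), key=lambda k: row[k])
        pos = 0
        while pos < n_methods:
            end = pos
            while end + 1 < n_methods and row[order[end + 1]] == row[order[pos]]:
                end += 1
            shared = (pos + end) / 2 + 1     # mean of ranks pos+1 .. end+1
            for k in order[pos:end + 1]:
                totals[k] += shared
            pos = end + 1
    return [t / len(times) for t in totals]


def holm_reject(p_values, alpha=0.05):
    """Holm step-down procedure: True where the null hypothesis is rejected."""
    m = len(p_values)
    reject = [False] * m
    for step, k in enumerate(sorted(range(m), key=lambda i: p_values[i])):
        if p_values[k] > alpha / (m - step):
            break                            # stop at the first non-rejection
        reject[k] = True
    return reject
```

Holm’s procedure compares the smallest p-value with \(\alpha /m\), the next with \(\alpha /(m-1)\), and so on, stopping at the first comparison that fails; this controls the family-wise error rate across all pairwise tests.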

5.3 Scalability to large and long datasets

We showed previously that UltraFastWWSearch is efficient on large and long datasets. We now investigate its scalability. We first experimented with the HandOutlines dataset, whose length of \(L{=}2709\) is the longest in the UCR archive [7]. We varied the length from \(0.1\times L\) to L and recorded the time to search for the best warping window. Figure 15a shows that the training time of UltraFastWWSearch grows more slowly than that of FastWWSearch as L increases. At only \(L{=}1000\), UltraFastWWSearch achieves a 9.4 times speedup, rising to 30 times at \(L{=}2709\). This reduces 6 h of compute time to 11 min (Fig. 1), effectively tackling the \(L^3\) part of the complexity.

We then evaluated scalability to larger datasets, using the same SITS dataset as [34], taken from [38]. We chose this dataset because its short length (\(L{=}46\)) tends to isolate the influence of N on scalability. Figure 15b shows that UltraFastWWSearch is on average 2 times faster than FastWWSearch for all N. Thus, although UltraFastWWSearch is faster than FastWWSearch, the \(N^2\) part of the complexity remains a limitation of UltraFastWWSearch. Nevertheless, the traditional LOOCV and UCR-Suite methods do not even scale to this dataset, requiring days to complete as shown in [34], while UltraFastWWSearch takes only 6 h for \(N{=}90,000\).

5.4 UltraFastMPSearch—Generalizing to other distance measures

The previous experiments showed that UltraFastWWSearch achieves a substantial speedup over FastWWSearch in optimizing \({{\,\textrm{DTW}\,}}\)’s warping window. In this section, we generalize UltraFastWWSearch to UltraFastLocalUB and UltraFastGlobalUB and apply them to all the other time series distance measures. For each measure, we compare UltraFastLocalUB and UltraFastGlobalUB only with the baseline FastEE approach and with FastEE using \({{\,\textrm{EAP}\,}}\) and early abandoning. We assume that LOOCV for each distance measure takes a similar time to \({{\,\textrm{DTW}\,}}\)_LOOCV, as they all have the same \(O(L^2)\) time complexity. Note that UltraFastWWSearch uses the local upper bound by default; we call the variant using the global upper bound UltraFastWWSearch-Global, which also uses window validity to skip some \({{\,\textrm{DTW}\,}}\) computations.

Figure 16 compares the total training time of the methods for each distance measure on the 128 datasets. Overall, the local upper bound is more efficient for most measures; the exceptions are \({{\,\textrm{MSM}\,}}\) and \({{\,\textrm{TWE}\,}}\), where the global upper bound is faster. This is expected because the local upper bound is, by definition, tighter than the global upper bound. The results show that UltraFastMPSearch significantly reduces the training time relative to the FastEE methods for all distance measures:

1. \({{\,\textrm{DTW}\,}}\) by 21 h (\(\approx 1\) day)
2. \({{\,\textrm{WDTW}\,}}\) by 69 h (\(\approx 3\) days)
3. \({{\,\textrm{LCSS}\,}}\) by 93 h (\(\approx 4\) days)
4. \({{\,\textrm{ERP}\,}}\) by 285 h (\(\approx 12\) days)
5. \({{\,\textrm{MSM}\,}}\) by 264 h (\(\approx 11\) days)
6. \({{\,\textrm{TWE}\,}}\) by 174 h (\(\approx 7\) days)

This speedup translates to a saving of more than 38 days when these measures are used in the EE setting (EE has 11 measures, including variants of these six measures).
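As a quick arithmetic check on the reductions listed above, the six values sum to 906 h, roughly 37.75 days, before counting the additional variants of these measures that EE includes; rounding each value to whole days reproduces the approximations in the list.

```python
# Training-time reductions (hours) over FastEE, as listed above.
savings_h = {"DTW": 21, "WDTW": 69, "LCSS": 93, "ERP": 285, "MSM": 264, "TWE": 174}

total_h = sum(savings_h.values())      # 906 h
total_days = total_h / 24              # 37.75 days
per_measure_days = {k: round(v / 24) for k, v in savings_h.items()}
```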

UltraFastLocalUB is slower for \({{\,\textrm{TWE}\,}}\) and \({{\,\textrm{MSM}\,}}\) because these measures use an additive penalty for off-diagonal alignments: \({{\,\textrm{TWE}\,}}\) adds a constant \(\lambda \) value, while \({{\,\textrm{MSM}\,}}\) adds a constant c value. These values are typically very small (less than 1), so the distances across the parameter search space are very similar. In the worst case, the difference between the distances at two successive meta-parameters is the difference between two consecutive values of \(\lambda \) or c. Because the distances are so similar, the computations typically early abandon near the end of the cost matrix, after almost the full distance has been computed. Recall that in UltraFastMPSearch an early abandoned distance cannot be used as a lower bound for subsequent meta-parameters. The algorithm therefore becomes inefficient when distance computations repeatedly early abandon late and must then be recomputed for the next meta-parameter. This is almost equivalent to computing each distance twice, which defeats the purpose of early abandoning.

Therefore, by using a looser global upper bound, UltraFastGlobalUB achieves a balance between the number of times the \({{\,\textrm{DIST}\,}}\) function is called and the number of times it early abandons. In other words, if a distance computation early abandons under the larger global upper bound, then we know that the candidate T cannot be the nearest neighbour for any meta-parameter between the current one and \(p^{{{\,\textrm{UB}\,}}}\), so all the corresponding distance function calls can be skipped. For the other distance measures, where the distances grow only by small amounts from one parameter value to the next, the global upper bound so rarely provides useful tighter lower bounds for subsequent parameter values that the time it saves is less than the time saved by the additional pruning that \({{\,\textrm{EAP}\,}}\) achieves with the tighter local upper bounds.
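The skipping argument can be sketched as follows. This is a minimal illustration under stated assumptions, not the UltraFastGlobalUB implementation: it assumes the meta-parameter values are visited in an order along which \({{\,\textrm{DIST}\,}}(S,T)\) never decreases, and that `ub_global` (the nearest neighbour distance at \(p^{{{\,\textrm{UB}\,}}}\)) bounds the nearest neighbour distance of S at every value in the range. `dist_ea`, `scan_with_global_ub` and the toy distance table are hypothetical stand-ins for a real measure.

```python
import math


def scan_with_global_ub(dist_ea, params, ub_global):
    """Evaluate candidate T against S over params, stopping at the first abandon.

    dist_ea(p, ub) returns DIST(S, T) at parameter p, or math.inf if it exceeds ub.
    Assumes DIST is non-decreasing along params and ub_global >= the nearest
    neighbour distance of S at every p in params. Returns (distances, calls);
    math.inf marks values of p at which T cannot be the nearest neighbour.
    """
    distances, calls = [], 0
    for k, p in enumerate(params):
        calls += 1
        d = dist_ea(p, ub_global)
        if math.isinf(d):
            # DIST(p) > ub_global, so DIST(p') > ub_global >= NN(p') for every
            # later p': T is ruled out for the rest of the range, no more calls.
            distances.extend([math.inf] * (len(params) - k))
            break
        distances.append(d)  # still has to be compared with the NN at p
    return distances, calls


# Toy stand-in: precomputed DIST(S, T) for five successive parameter values.
toy = {0: 1.0, 1: 2.0, 2: 5.0, 3: 9.0, 4: 12.0}


def toy_dist_ea(p, ub):
    return toy[p] if toy[p] <= ub else math.inf


distances, calls = scan_with_global_ub(toy_dist_ea, [0, 1, 2, 3, 4], ub_global=4.0)
```

Here the third call abandons, so the last two calls are skipped entirely. With a local upper bound, each call would instead use the nearest neighbour distance at its own parameter value; the trade-off described above is between those tighter bounds and the calls that the global bound lets the search skip.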

Figure 17 uses a critical difference diagram to show the average rank of each method, in terms of training time over all datasets, for all the distance measures. At a glance, UltraFastMPSearch is significantly faster than the current state of the art, the FastEE methods. As in Sect. 5.2, some methods, such as UltraFastWWSearch-Global, \({{\,\textrm{EAP}\,}}\)-FastMSM-EA, UltraFastTWE-Local and \({{\,\textrm{EAP}\,}}\)-FastTWE-EA, have a longer overall training time in Fig. 16 but a better rank in the critical difference diagram in Fig. 17. This is because these methods are slightly faster on most datasets, which are short and small and require little computation, but take longer on the larger and longer datasets, which dominate the overall computation.

6 Conclusion

This paper proposes UltraFastMPSearch—a family of ultra-fast algorithms that are able to learn the meta-parameters for time series distance measures efficiently. UltraFastWWSearch fundamentally transforms its predecessor FastWWSearch. It incorporates six major changes—using \({{\,\textrm{EAP}\,}}\) to compute \({{\,\textrm{DTW}\,}}\); removing the use of \({{\,\textrm{DTW}\,}}\) lower bounds; adding early abandoning of \({{\,\textrm{DTW}\,}}\); establishing tighter upper bounds for early abandoning; ordering the time series so as to best exploit the efficient pruning and early abandoning power of \({{\,\textrm{EAP}\,}}\); and using the window validity to skip the majority of window sizes altogether. UltraFastLocalUB generalizes UltraFastWWSearch by not using the window validity to skip the meta-parameters. Instead of using the nearest neighbour distance at the current meta-parameter for \({{\,\textrm{EAP}\,}}\), UltraFastGlobalUB uses as the upper bound the nearest neighbour distance computed at a parameter value that provides an upper bound for a series of subsequent parameter values. This achieves a better balance for measures with distances that grow substantially between successive meta-parameters, such as \({{\,\textrm{MSM}\,}}\) and \({{\,\textrm{TWE}\,}}\).

Our experiments show that the UltraFastMPSearch algorithms are up to an order of magnitude faster than the previous state of the art, with the greatest benefit achieved on long time series datasets, where it is most needed.

UltraFastWWSearch speeds up the training of \({{\,\mathrm{\textrm{NN}}\,}}\)-\({{\,\textrm{DTW}\,}}\), formerly one of the slowest time series classification (TSC) algorithms, to under 4 h on the UCR datasets, close to the training time of ROCKET, one of the fastest and most accurate TSC algorithms [9]. UltraFastMPSearch similarly speeds up the training of \({{\,\mathrm{\textrm{NN}}\,}}\) search with other distance measures, although the gains are smaller because these measures lack the window validity property. This speedup holds out the promise of reinstating EE in HIVE-COTE: EE is known to raise HIVE-COTE’s classification performance to a new state-of-the-art level for TSC and was omitted only because of its excessive compute time [22].

Change history

01 March 2023

Change history text: Missing funding note has been updated.

Notes

Source code available at https://github.com/ChangWeiTan/UltraFastWWS.

References

Alaee S, Mercer R, Kamgar K, Keogh E (2021) Time series motifs discovery under DTW allows more robust discovery of conserved structure. Data Min Knowl Disc 35(3):863–910

Bagnall A, Flynn M, Large J, Lines J, Middlehurst M (2020) A tale of two toolkits, report the third: on the usage and performance of HIVE-COTE v1.0. arXiv e-prints pp. arXiv–2004

Bagnall A, Lines J, Bostrom A, Large J, Keogh E (2017) The great time series classification bake off: a review and experimental evaluation of recent algorithmic advances. Data Min Knowl Disc 31(3):606–660

Boreczky JS, Rowe LA (1996) Comparison of video shot boundary detection techniques. J Electron Imaging 5(2):122–128

Chen L, Ng R (2004) On the marriage of Lp-norms and edit distance. In: Proceedings of the 30th international conference on very large databases (VLDB), pp 792–803

Chen L, Özsu MT, Oria V (2005) Robust and fast similarity search for moving object trajectories. In: Proceedings of the 2005 ACM SIGMOD international conference on management of data (SIGMOD), pp 491–502

Dau HA, Keogh E, Kamgar K, Yeh C-CM, Zhu Y, Gharghabi S, Ratanamahatana CA, Yanping, Hu B, Begum N, Bagnall A, Mueen A, Batista G, Hexagon-ML (2018) The UCR time series classification archive. https://www.cs.ucr.edu/~eamonn/time_series_data_2018/

Dau HA, Silva DF, Petitjean F, Forestier G, Bagnall A, Mueen A, Keogh E (2018) Optimizing dynamic time warping’s window width for time series data mining applications. Data Min Knowl Disc 32(4):1074–1120

Dempster A, Petitjean F, Webb GI (2020) ROCKET: exceptionally fast and accurate time series classification using random convolutional kernels. Data Min Knowl Disc 34(5):1454–1495

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30