Abstract

We introduce a framework for performing vector-valued regression in finite-dimensional Hilbert spaces. Using Lipschitz smoothness as our regularizer, we leverage Kirszbraun’s extension theorem for off-data prediction. We analyze the statistical and computational aspects of this method—to our knowledge, its first application to supervised learning. We decompose this task into two stages: training (which corresponds operationally to smoothing/regularization) and prediction (which is achieved via Kirszbraun extension). Both are solved algorithmically via a novel multiplicative weight updates (MWU) scheme, which, for our problem formulation, achieves significant runtime speedups over generic interior point methods. Our empirical results indicate a dramatic advantage over standard off-the-shelf solvers in our regression setting.

Similar content being viewed by others

Change history

20 January 2024

A Correction to this paper has been published: https://doi.org/10.1007/s10107-024-02056-5

Notes

Even when the problem is formally infinite-dimensional, such as with SVM, the Representer Theorem [25] shows that the solution is spanned by the finite sample.

As explained in Sect. 3, there is no need to assume that L is given, as this hyper-parameter can be tuned via Structural Risk Minimization (SRM).

A further improvement via the use of spanners allows reducing the number of constraints m from \(O(n^2)\) to O(n) and hence the ERM runtime to

, as detailed in Sect. 3.1.

, as detailed in Sect. 3.1.

References

Alman, J., Williams, V.V.: A refined laser method and faster matrix multiplication. In: Proceedings of the Symposium on Discrete Algorithms, pp. 522–539. SIAM (2021)

Arora, S., Hazan, E., Kale, S.: The multiplicative weights update method: a meta-algorithm and applications. Theory Comput. 8(1), 121–164 (2012)

Arya, S., Mount, D.M., Netanyahu, N., Silverman, R., Wu, A.Y.: An optimal algorithm for approximate nearest neighbor searching in fixed dimensions. In: Symposium on Discrete Algorithms, pp. 573–582 (1994)

Ashlagi, Y., Gottlieb, L., Kontorovich, A.: Functions with average smoothness: structure, algorithms, and learning. In: Belkin, M., Kpotufe, S. (eds.) Conference on Learning Theory, COLT 2021, 15–19 August 2021, Boulder, Colorado, USA, PMLR, Proceedings of Machine Learning Research, vol. 134, pp. 186–236. http://proceedings.mlr.press/v134/ashlagi21a.html (2021)

Borchani, H., Varando, G., Bielza, C., Larrañaga, P.: A survey on multi-output regression. Wiley Interdiscip Rev Data Min Knowl Discov 5(5), 216–233 (2015)

Boyd, S., Vandenberghe, L.: Convex Optimization. Information Science and Statistics. Cambridge University Press, Cambridge (2004)

Brualdi, R.A.: Introductory Combinatorics, 5th edn. Pearson Prentice Hall, Upper Saddle River (2010)

Brudnak, M.: Vector-valued support vector regression. In: The 2006 IEEE International Joint Conference on Neural Network Proceedings, pp. 1562–1569. IEEE (2006)

Bunch, J.R., Hopcroft, J.E.: Triangular factorization and inversion by fast matrix multiplication. Math. Comput. 28(125), 231–236 (1974)

Caruana, R.: Multitask learning. Mach. Learn. 28(1), 41–75 (1997)

Chen, S., Banerjee, A.: An improved analysis of alternating minimization for structured multi-response regression. In: Proceedings of the 32Nd International Conference on Neural Information Processing Systems, NIPS’18, pp. 6617–6628. Curran Associates Inc., USA (2018). http://dl.acm.org/citation.cfm?id=3327757.3327768

Christiano, P., Kelner, J.A., Madry, A., Spielman, D.A., Teng, S.H.: Electrical flows, Laplacian systems, and faster approximation of maximum flow in undirected graphs. In: Proceedings of Symposium on Theory of Computing, pp. 273–282 (2011)

Cohen, D.T., Kontorovich, A.: Learning with metric losses. In: Loh, P., Raginsky, M. (eds.) Conference on Learning Theory, 2–5 July 2022, London, UK, PMLR, Proceedings of Machine Learning Research, vol. 178, pp. 662–700 (2022). https://proceedings.mlr.press/v178/cohen22a.html

Cole, R., Gottlieb, L.A.: Searching dynamic point sets in spaces with bounded doubling dimension. In: Proceedings of the Symposium on Theory of Computing, pp. 574–583 (2006)

Davidson, R., MacKinnon, J.G., et al.: Estimation and Inference in Econometrics. OUP Catalogue (1993)

Gottlieb, L.A., Kontorovich, A., Krauthgamer, R.: Adaptive metric dimensionality reduction (extended abstract: ALT 2013). Theoretical Computer Science, pp. 105–118 (2016)

Gottlieb, L.A., Kontorovich, A., Krauthgamer, R.: Efficient regression in metric spaces via approximate Lipschitz extension. IEEE Trans. Inf. Theory 63(8), 4838–4849 (2017)

Greene, W.H.: Econometric Analysis. Pearson Education India (2003)

Greene, W.H.: Econometric Analysis. William H. Greene (2012)

Györfi, L., Kohler, M., Krzyzak, A., Walk, H.: A Distribution-Free Theory of Nonparametric Regression. Springer, Cham (2006)

Hanneke, S., Kontorovich, A., Kornowski, G.: Near-optimal learning with average Hölder smoothness. CoRR arXiv:2302.06005 (2023)

Har-Peled, S., Mendel, M.: Fast construction of nets in low-dimensional metrics and their applications. SIAM J. Comput. 35(5), 1148–1184 (2006)

Jain, P., Tewari, A.: Alternating minimization for regression problems with vector-valued outputs. In: Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 28, pp. 1126–1134. Curran Associates, Inc. (2015). http://papers.nips.cc/paper/5820-alternating-minimization-for-regression-problems-with-vector-valued-outputs.pdf

Kakade, S., Tewari, A.: Dudley’s theorem, fat shattering dimension, packing numbers. Lecture 15, Toyota Technological Institute at Chicago (2008). http://ttic.uchicago.edu/~tewari/lectures/lecture15.pdf

Kimeldorf, G.S., Wahba, G.: A correspondence between Bayesian estimation on stochastic processes and smoothing by splines. Ann. Math. Stat. 41(2), 495–502 (1970). https://doi.org/10.1214/aoms/1177697089

Kirszbraun, M.: Über die zusammenziehende und Lipschitzsche transformationen. Fundam. Math. 22(1), 77–108 (1934)

Koutis, I., Miller, G.L., Peng, R.: A fast solver for a class of linear systems. Commun. ACM 55(10), 99–107 (2012)

Mahabadi, S., Makarychev, K., Makarychev, Y., Razenshteyn, I.: Nonlinear dimension reduction via outer bi-lipschitz extensions. In: Proceedings of the 50th Annual ACM SIGACT Symposium on Theory of Computing, pp. 1088–1101. ACM (2018)

McShane, E.J.: Extension of range of functions. Bull. Am. Math. Soc. 40(12), 837–842 (1934). https://projecteuclid.org:443/euclid.bams/1183497871

Mohri, M., Rostamizadeh, A., Talwalkar, A.: Foundations Of Machine Learning. The MIT Press, Cambridge (2012)

Nadaraya, E.A.: Nonparametric Estimation of Probability Densities and Regression Curves. Springer, Cham (1989)

Naor, A.: Metric embeddings and Lipschitz extensions (2015)

Nesterov, Y., Nemirovskii, A.: Interior-Point Polynomial Algorithms in Convex Programming. SIAM, Philadelphia (1994)

Rudin, W.: Principles of Mathematical Analysis. International Series in Pure and Applied Mathematics, 3rd edn. McGraw-Hill Book Co., New York (1976)

Spielman, D.A., Teng, S.H.: Nearly-linear time algorithms for graph partitioning, graph sparsification, and solving linear systems. In: Proceedings of the Symposium on Theory of Computing, vol. 4 (2004)

Whitney, H.: Analytic extensions of differentiable functions defined in closed sets. Trans. Am. Math. Soc. 36(1), 63–89 (1934). http://www.jstor.org/stable/1989708

Acknowledgements

AK was partially supported by the Israel Science Foundation (Grant No. 1602/19), the Ben-Gurion University Data Science Research Center, and an Amazon Research Award. HZ was an MSc student at Ben-Gurion University of the Negev during part of this research. YM was partially supported by NSF awards CCF-1718820, CCF-1955173, and CCF-1934843.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: Appendix E contained response of the referees, but it was inadvertently published. Now, it has been removed.

Appendices

Appendix A: The Arora–Hazan–Kale result

Intuitive explanation of the result Consider a feasibility problem over a domain \(\mathscr {P}\in \mathbb {R}^n\) in which you need to determine if there exists \(x\in \mathscr {P}\) which satisfies finite set of constrains: \(f_i(\textbf{X}) \ge 0\) for all \(i\in [m]\). This problem in the general case is NP-Hard. The Arora-Hazan-Kale result indicates that, under certain conditions, if we know how to solve approximately a simpler problem: \(\exists ?\textbf{X}\in \mathscr {P}:\> \sum _i w_i f_i(\textbf{X}) \ge 0\) where \(\sum _i w_i = 1\), by an algorithm which we call “Oracle”, then we can run T iterations over the simpler problem, where in each iteration we update the weights \(\{w_i\}_{i\in [m]}\) using the MWU framework, and solve the updated problem using the Oracle. If a solution \(x_i\in \mathscr {P}\) exists for all iterations then \(\textbf{X}^* = \frac{1}{T}\sum _{t=1}^T \textbf{X}^{(t)}\) will be an approximate solution to the feasible problem. The conditions and the definitions of the meaning in the intuitive explanation are given in “Appendix A”. In this section, we present our algorithm, show our problem fits the paradigm of [2, Sec. 3.3.1, p. 137], show our oracle solves the simpler problem (given in the algorithm) and proof that our algorithms solve the feasible problems efficiently. For completeness, we quote here the relevant definitions and results from [2, Sec. 3.3.1, p. 137].

Consider the following feasibility problem. Let \(\mathscr {P}\in \mathbb {R}^n\) be a convex domain in \(\mathbb {R}^n\) and \(f_i:\mathscr {P}\rightarrow \mathbb {R}\) be concave functions for \(i\in [m]\). The goal is to determine if there exists \(x\in \mathscr {P}\) such that \(f_i(\textbf{X}) \ge 0\) for all \(i\in [m]\):

The multiplicative weights update method of Arora, Hazan, and Kale [2] provides an algorithm that satisfies (3) approximately, up to an additive error of \(\varepsilon \). We assume the existence of an oracle that given a probability distribution \(\textbf{w} = (w_1,w_2,\ldots ,w_m)\) solves the following feasibility problem:

Definition 1

We say that oracle Oracle is \((\ell ,\rho )\)-bounded if it always returns \(x\in \mathscr {P}\) such that \(f_i(\textbf{X}) \in [-\rho ,\ell ]\) for all \(\in [m]\). The width of the oracle is \(\rho +\ell \).

Remark 1

Note that if \(f_i(\textbf{X}) \in [-\rho ,\ell ]\) for all \(\textbf{X}\in \mathscr {P}\) then every oracle for the problem is \((\ell ,\rho )\)-bounded.

Definition 2

We say that oracle Oracle is \(\varepsilon \)-approximate if given \(\textbf{w} = (w_1,\dots , w_m)\) it either finds a solution \(\textbf{X}\in \mathscr {P}\) such that \(\sum _i w_i f_i \ge - \varepsilon \) for all \(i\in [m]\) or correctly concludes that (4) has no feasible solution.

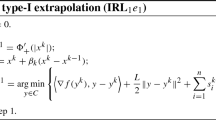

Consider the following algorithm.

We now state theorems providing performance guarantees for Algorithm 4. Theorems 5 and 6 are for the cases where we have an exact and \(\varepsilon \)-approximate oracles, respectively.

Theorem 5

(Theorem 3.4 in [2], restated) Let \(\varepsilon >0\) be a given error parameter. Suppose that there exists an \((\ell ,\rho )\)-bounded Oracle for the feasibility problem (3) with \(\ell \ge \varepsilon /2\). Then Algorithm 4 either

-

solves problem (3) up to an additive error of \(\varepsilon \); that is, finds a solution \(\textbf{X}^*\in \mathscr {P}\) such that \(f_i(\textbf{X}^*) \ge -\varepsilon \) for all \(i\in [m]\),

-

or correctly concludes that problem (3) is infeasible,

making only \(O(\ell \rho \log (m)/\varepsilon ^2)\) calls to the Oracle, with an additional processing time of O(m) per call.

Theorem 6

(Theorem 3.5 in [2], restated) Let \(\varepsilon >0\) be a given error parameter. Suppose that there exists an \((\ell ,\rho )\)-bounded \((\varepsilon /3)\)-approximate Oracle for the feasibility problem (3) with \(\ell \ge \varepsilon /2\). Consider Algorithm 4 with adjusted parameters \(\eta = \frac{\varepsilon }{6\ell }\) and \(T = \lceil \frac{18 \rho \ell \ln m}{\varepsilon ^2}\rceil \). Then the algorithm either

-

solves problem (3) up to an additive error of \(\varepsilon \); that is, finds a solution \(\textbf{X}^*\in \mathscr {P}\) such that \(f_i(\textbf{X}^*) \ge -\varepsilon \) for all \(i\in [m]\),

-

or correctly concludes that problem (3) is infeasible,

making only \(O(\ell \rho \log (m)/\varepsilon ^2)\) calls to the Oracle, with an additional processing time of O(m) per call.

Appendix B: Approximate oracle

This section is a continuation to Sect. 3.1, the analysis of the LipschitzSmooth algorithm in Theorem 1. To use the MWU method (see Theorem 6, Theorem 3.5 in [2]), we design an approximate oracle for the following problem.

Problem 3

Given non-negative edge weights \(w_{\Phi }\) and \(w_{ij}\), which add up to 1, find \(\widetilde{\textbf{Y}}\) such that

If Problem 3 has a feasible solution, the oracle finds a solution \(\widetilde{\textbf{Y}}\) such that

Let \(\mu _{ij} = \frac{w_{ij} + \varepsilon /(m+1)}{M^2\Vert x_i - x_j\Vert ^2}\) and \(\lambda _i = \lambda = (w_{\Phi } + \varepsilon /(m+1))/\Phi _0\). We solve Laplace’s problem with parameters \(\mu _{ij}\) and \(\lambda _i\) (see Sect. 1 and Line 9 of the algorithm). We get a matrix \(\widetilde{\textbf{Y}} = (\tilde{y}_1,\dots , \tilde{y}_n)\) minimizing

Consider the optimal solution \(\tilde{y}_1^*,\dots , \tilde{y}_n^*\) for Lipschitz Smoothing. We have

We verify that \(\widetilde{\textbf{Y}}\) is a feasible solution for Problem 3. We have

as required.

Finally, we bound the width of the problem. We have \(h_{\Phi }(\widetilde{\textbf{Y}}) \le 1\) and \(h_{ij}(\widetilde{\textbf{Y}}) \le 1\). Then, using (7), we get

Therefore, \(-h_{\Phi }(\widetilde{\textbf{Y}}) \le O(\sqrt{m/\varepsilon })\).

Similarly,

Therefore, \(-h_{ij}(\widetilde{\textbf{Y}}) \le O(\sqrt{m/\varepsilon })\).

Appendix C: Generalization bounds

Recall the following statistical setting of Sect. 3. We are given a labeled sample \((x_i,y_i)_{i\in [n]}\), where \(x_i\in X:=\mathbb {R}^a\) and \(y_i\in Y:=\mathbb {R}^b\). For a user-specified Lipschitz constant \(L>0\), we compute the (approximate) Empirical Risk Minimizer (ERM) \(\hat{f}:=\hbox {argmin}_{f\in F_L}\hat{R}_n(f)\) over \(F_L:=\{ f\in Y^X: \left\| f \right\| _{\text {{\tiny Lip }}}\le L \}\). A standard method for tuning L is via Structural Risk Minimization (SRM): One computes a generalization bound \(R(\hat{f})\le \hat{R}_n(\hat{f})+Q_n(a,b,L)\), where \(Q_n(a,b,L):=\sup _{f\in F_L}|R(f)-\hat{R}_n(f)|=O(L/n^{a+b+1})\), and chooses \(\hat{L}\) to minimize this. In this section, we will derive the aforementioned bound.

Let \( B_X \subset \mathbb {R}^k \) and \(B_Y \subset \mathbb {R}^\ell \) be the unit balls of their respective Hilbert spaces (each endowed with the \(\ell _2\) norm \(||\cdot ||\) and corresponding inner product) and \(\mathscr {H}_L \subset {B_Y}^{B_X}\) be the set of all L-Lipschitz mappings from \(B_X\) to \(B_Y\). In particular, every \(h\in \mathscr {H}_L\) satisfies

Let \(\mathscr {F}_L\subset \mathbb {R}^{B_X \times B_Y}\) be the loss class associated with \(\mathscr {H}_L\):

In particular, as every \(f\in \mathscr {F}_L\) satisfies \(0\le f\le 2\).

Our goal is to bound the Rademacher complexity of \(\mathscr {F}_L\). We do this via a covering numbers approach:

The empirical Rademacher complexity of a collection of functions \(\mathscr {F}\), mapping some set \({\textbf {A}} = \{a_1,\dots ,a_n\}\subset A^n\) to \(\mathbb {R}\) is defined by:

where \(\sigma _1, \sigma _2, \dots , \sigma _m\) are independent random variables drawn from the Rademacher distribution: \(\Pr (\sigma _{i}=+1)=\Pr (\sigma _{i}=-1)=1/2\). Recall the relevance of Rademacher complexities to uniform deviation estimates for the risk functional \(R(\cdot )\) [30, Theorem 3.1]: for every \(\delta >0\), with probability at least \(1-\delta \), for each \(h\in \mathscr {H}_L\):

Define \(Z=B_X\times B_Y\) and endow it with the norm \(\left\| (x,y) \right\| _Z=\left\| x \right\| +\left\| y \right\| \); note that \((Z,\left\| \cdot \right\| _Z)\) is a Banach but not a Hilbert space. First, we observe that the functions in \(\mathscr {F}_L\) are Lipschitz under \(\left\| \cdot \right\| _Z\). Indeed, choose any \(f=f_h\in \mathscr {F}_L\) and \(x,x'\in B_X\), \(y,y'\in B_Y\). Then

where \(a\vee b:=\max \left\{ a,b\right\} \). We conclude that any \(f\in \mathscr {F}_L\) is \((L\vee 1) < (L+1) \)-Lipschitz under \(\left\| \cdot \right\| _Z\).

Since we restricted the domain and range of \(\mathscr {H}_L\), respectively, to the unit balls \(B_X\) and \(B_Y\), the domain of \(\mathscr {F}_L\) becomes \(B_Z:=B_X\times B_Y\) and its range is [0, 2]. Let us recall some basic facts about the \(\ell _2\) covering of the k-dimensional unit ball

an analogous bound holds for \(\mathscr {N}(t,B_Y,\left\| \cdot \right\| )\). Now if \(\mathscr {C}_X\) is a collection of balls, each of diameter at most t, that covers \(B_X\) and \(\mathscr {C}_Y\) is a similar collection covering \(B_Y\), then clearly the collection of sets

covers \(B_Z\). Moreover, each \(E\in \mathscr {C}_Z\) is a ball of diameter at most 2t in \((Z,\left\| \cdot \right\| _Z)\). It follows that

Finally, we endow \(F_L\) with the \(\ell _\infty \) norm, and use a Kolmogorov–Tihomirov type covering estimate (see, e.g., [16, Lemma 4.2]):

Finally, we invoke a standard result bounding the Rademacher complexity in terms of the covering numbers via the so-called Dudley entropy integral [24],

The estimate in (12) is computed, e.g., in [16, Theorem 4.3]:

Putting \(d = k+\ell \) and combining (12) with (11) yields our generalization bound: with probability at least \(1-\delta \),

Appendix D: Additional experiments

For completeness we add here the comparison of the results from the experiment for \(f(x) = \sin (x)\) for \( x\in [-2\pi ,2\pi ]\) (Tables 7, 8, 9, 10, 11 and Fig. 1).

a–d Demonstrate the comparison, where training size is 200 random points, and \(f(x)=\sin (x)\). a, b Are the smoothing and extension phases implemented with our MWU based algorithm while c–d are the results of Matlab’s IntPt implementation. In all graphs the line represents the ground truth function while the X represent the estimation by the learned function

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zaichyk, H., Biess, A., Kontorovich, A. et al. Efficient Kirszbraun extension with applications to regression. Math. Program. (2023). https://doi.org/10.1007/s10107-023-02023-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10107-023-02023-6

, as detailed in Sect.

, as detailed in Sect.