Abstract

Adaptive cubic regularization (ARC) methods for unconstrained optimization compute steps from linear systems involving a shifted Hessian in the spirit of the Levenberg-Marquardt and trust-region methods. The standard approach consists in performing an iterative search for the shift akin to solving the secular equation in trust-region methods. Such search requires computing the Cholesky factorization of a tentative shifted Hessian at each iteration, which limits the size of problems that can be reasonably considered. We propose a scalable implementation of ARC named ARC\(_q\)K in which we solve a set of shifted systems concurrently by way of an appropriate modification of the Lanczos formulation of the conjugate gradient (CG) method. At each iteration of ARC\(_q\)K to solve a problem with \(n\) variables, a range of \(m \ll n\) shift parameters is selected. The computational overhead in CG beyond the Lanczos process is thirteen scalar operations to update five vectors of length \(m\), and two \(n\)-vector updates for each value of the shift. The CG variant only requires one Hessian-vector product and one dot product per iteration, independently of \(m\). Solves corresponding to inadequate shift parameters are interrupted early. All shifted systems are solved inexactly. Such modest cost makes our implementation scalable and appropriate for large-scale problems. We provide a new analysis of the inexact ARC method including its worst case evaluation complexity, global and asymptotic convergence. We describe our implementation and report numerical experience that confirms that our implementation of ARC\(_q\)K outperforms a classic Steihaug-Toint trust-region method, and the ARC method of the GALAHAD library. The latter solves the subproblem in nested Krylov subspaces by a Lanczos-based method, which requires the storage of a dense matrix that can be comparable to or larger than the two dense arrays required by our approach if the problem is large or requires many Lanczos iterations. Finally, we generalize our convergence results to inexact Hessians and nonlinear least-squares problems.

Similar content being viewed by others

Notes

An iterate was generated where the objective evaluated to \(-\infty \).

References

Bianconcini, T., Liuzzi, G., Morini, B., Sciandrone, M.: On the use of iterative methods in cubic regularization for unconstrained optimization. Comput. Optim. Appl. 60(1), 35–57 (2014). https://doi.org/10.1007/s10589-014-9672-x

Birgin, E.G., Gardenghi, J.L., Martínez, J.M., Santos, S.A., Toint, Ph.L.: Worst-case evaluation complexity for unconstrained nonlinear optimization using high-order regularized models. Math. Progr. 163(1–2), 359–368 (2016). https://doi.org/10.1007/s10107-016-1065-8

Carmon, Y., Duchi, J.: Gradient descent finds the cubic-regularized nonconvex Newton step. SIAM J. Optim. 29(3), 2146–2178 (2019). https://doi.org/10.1137/17m1113898

Cartis, C., Gould, N.I.M., Toint, Ph.L.: Adaptive cubic regularisation methods for unconstrained optimization. Part I: motivation, convergence and numerical results. Math. Program. 127(2), 245–295 (2011). https://doi.org/10.1007/s10107-009-0286-5

Cartis, C., Gould, N.I.M., Toint, Ph.L.: Adaptive cubic regularisation methods for unconstrained optimization. Part II: worst-case function- and derivative-evaluation complexity. Math. Program. 130(2), 295–319 (2011). https://doi.org/10.1007/s10107-009-0337-y

Cartis, C., Gould, N.I.M., Toint, Ph.L.: Complexity bounds for second-order optimality in unconstrained optimization. J. Complex. 28(1), 93–108 (2012). https://doi.org/10.1016/j.jco.2011.06.001

Cartis, C., Gould, N.I.M., Toint, Ph.L.: On the evaluation complexity of cubic regularization methods for potentially rank-deficient nonlinear least-squares problems and its relevance to constrained nonlinear optimization. SIAM J. Optim. 23(3), 1553–1574 (2013). https://doi.org/10.1137/120869687

Conn, A.R., Gould, N.I.M., Toint. Ph.L.: Trust-Region Methods, volume 1 of MPS/SIAM Series on Optimization. SIAM, Philadelphia, USA (2000). https://doi.org/10.1137/1.9780898719857

Cristofari, A., Niri, T.D., Lucidi, S.: On global minimizers of quadratic functions with cubic regularization. Optim. Lett. 13(6), 1269–1283 (2018). https://doi.org/10.1007/s11590-018-1316-0

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Progr. Ser. B 29(2), 201–213 (2002). https://doi.org/10.1007/s101070100

Dussault, J.-P.: ARCq: a new adaptive regularization by cubics. Optim. Method Softw. 33(2), 322–335 (2018). https://doi.org/10.1080/10556788.2017.1322080

Dussault, J.-P.: A unified efficient implementation of trust-region type algorithms for unconstrained optimization. Infor 58(2), 290–309 (2020). https://doi.org/10.1080/03155986.2019.1624490

Fong, D.C.L., Saunders, M.A.: LSMR: an iterative algorithm for sparse least-squares problems. SIAM J. Sci. Comput. 33(5), 2950–2971 (2011). https://doi.org/10.1137/10079687X

Frommer, A., Maass, P.: Fast CG-based methods for Tikhonov-Phillips regularization. SIAM J. Sci. Comput. 20(5), 1831–1850 (1999). https://doi.org/10.1137/S1064827596313310

Glässner, U., Güsken, S., Lippert, T., Ritzenhöfer, G., Schilling, K., Frommer, A.: How to compute Green’s functions for entire mass trajectories within Krylov solvers. Int. J. Mod. Phys. C 07(05), 635–644 (1996)

Gould, N.I.M., Simoncini, V.: Error estimates for iterative algorithms for minimizing regularized quadratic subproblems. Optim. Method Softw. 35(2), 304–328 (2019). https://doi.org/10.1080/10556788.2019.1670177

Gould, N.I.M., Lucidi, S., Roma, M., Toint, Ph.L.: Solving the trust-region subproblem using the Lanczos method. SIAM J. Optim. 9(2), 504–525 (1999). https://doi.org/10.1137/S1052623497322735

Gould, N.I.M., Orban, D., Toint, Ph.L.: GALAHAD, a library of thread-safe Fortran 90 packages for large-scale nonlinear optimization. ACM Trans. Math. Softw. 29(4), 353–372 (2003). https://doi.org/10.1145/962437.962438

Gould, N.I.M., Porcelli, M., Toint, Ph.L.: Updating the regularization parameter in the adaptive cubic regularization algorithm. Comput. Optim. Appl. 53(1), 1–22 (2012). https://doi.org/10.1007/s10589-011-9446-7

Gould, N.I.M., Orban, D., Toint, Ph.L.: CUTEst: a constrained and unconstrained testing environment with safe threads for mathematical optimization. Comput. Optim. Appl. 60, 545–557 (2015). https://doi.org/10.1007/s10589-014-9687-3

Griewank, A.: The modification of Newton’s method for unconstrained optimization by bounding cubic terms. Technical Report NA/12, Department of Applied Mathematics and Theoretical Physics, University of Cambridge (1981)

Hestenes, M.R., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49(6), 409–436 (1952)

Jegerlehner, B.: Krylov space solvers for shifted linear systems. Technical Report IUHET-353, Indiana University, Department of Physics, Bloomington, IN, 1996. arXiv:hep-lat/9612014

Jiang, R., Yue, M.-C., Zhou, Z.: An accelerated first-order method with complexity analysis for solving cubic regularization subproblems. Comput. Optim. Appl. 79(2), 471–506 (2021). https://doi.org/10.1007/s10589-021-00274-7

Lanczos, C.: An iteration method for the solution of the eigenvalue problem of linear differential and integral operators. J. Res. Natl. Bur. Stand. 45(4), 255–282 (1950)

Montoison, A., Orban, D., and contributors. Krylov.jl: A Julia basket of hand-picked Krylov methods, June 2020. https://doi.org/10.5281/zenodo.822073. https://github.com/JuliaSmoothOptimizers/Krylov.jl

Moré, J.J., Sorensen, D.C.: Computing a trust region step. SIAM J. Sci. Stat. Comput. 4(3), 553–572 (1983). https://doi.org/10.1137/0904038

Orban, D., Siqueira, A.S.: JuliaSmoothOptimizers: Infrastructure and solvers for continuous optimization in Julia, 2019. https://doi.org/10.5281/zenodo.2655082. https://www.juliasmoothoptimizers.github.io

Orban, D., Siqueira, A.S., and contributors. CUTEst.jl: Julia’s CUTEst interface (2020a). https://doi.org/10.5281/zenodo.1188851. https://github.com/JuliaSmoothOptimizers/CUTEst.jl

Orban, D., Siqueira, A. S., and contributors. NLPModels.jl: Data structures for optimization models (2020b). https://doi.org/10.5281/zenodo.2558627. https://github.com/JuliaSmoothOptimizers/NLPModels.jl

Orban, D., Siqueira, A. S., and contributors. SolverBenchmark.jl: Benchmark tools for solvers (2020c). https://doi.org/10.5281/zenodo.3948381. https://github.com/JuliaSmoothOptimizers/SolverBenchmark.jl

Orban, D., Siqueira, A. S., and contributors. SolverTools.jl: Tools for developing nonlinear optimization solvers (2020d). https://doi.org/10.5281/zenodo.3937408. https://github.com/JuliaSmoothOptimizers/SolverTools.jl

Ortega, J. M.: Numerical analysis: a second course. Number 3 in Class. Appl. Math. Soc. Indust. Appl. Math., Philadelphia, PA, 1990

Paige, C.C., Saunders, M.A.: Solution of sparse indefinite systems of linear equations. SIAM J. Numer. Anal. 12(4), 617–629 (1975). https://doi.org/10.1137/0712047

Paige, C.C., Saunders, M.A.: LSQR: an algorithm for sparse linear equations and sparse least squares. ACM Trans. Math. Softw. 8(1), 43–71 (1982). https://doi.org/10.1145/355984.355989

Steihaug, T.: The conjugate gradient method and trust regions in large scale optimization. SIAM J. Numer. Anal. 20(3), 626–637 (1983). https://doi.org/10.1137/0720042

Toint, Ph.: Towards an efficient sparsity exploiting Newton method for minimization. In I. S. Duff, editor, Sparse Matrices and Their Uses, pages 57–88. Academic press, 1981

van den Eshof, J., Sleijpen, G.L.: Accurate conjugate gradient methods for families of shifted systems. Appl. Numer. Math. 49(1), 17–37 (2004). https://doi.org/10.1016/j.apnum.2003.11.010

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Research partially supported by an NSERC Discovery Grant, Research supported by IVADO and the Canada First Research Excellence Fund / Apogée, Research partially supported by an NSERC Discovery Grant.

Supplementary Information

Below is the link to the electronic supplementary material.

Appendices

Appendix A. Example of an ill-conditioned problem

We examine the iterations of the ARC\(_q\)K method on problem sbrybnd from the CUTEst test set [20], which has unfavorable eigenvalues. The problem is a Broyden banded system of nonlinear equations with 5000 variables, solved in the least squares sense. The smallest eigenvalue is of order \(-10^{12}\) at the initial point. With the parameters of Sect. 4, ARC\(_q\)K and ARC both stop because they attain the time limit. Both solvers stop close to stationary point in the sense that \(\Vert \nabla f(x_k)\Vert \approx {\tilde{\epsilon }} \Vert \nabla f(x_0)\Vert \) with \({\tilde{\epsilon }} = 2.26 \cdot 10^{-5}\) after \(148\) iterations for ARC\(_q\)K, and \({\tilde{\epsilon }} = 2.45 \cdot 10^{-4}\) after \(299\) iterations for ARC.

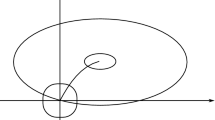

Figure 5 reports the shift \(\lambda _k\) used by ARC\(_q\)K and the absolute value of the smallest eigenvalue \(\sigma _k\) of \(\nabla ^2 f(x_k)\) as a function of the iterations (left), and the norm of the search direction \(d_k\) (right). As expected, at iterations where \(\sigma _k\) is very negative, \(\lambda _k\) is very large, and \(\Vert d_k\Vert \) is small, which induces slow progresses. Figure 6 shows the corresponding quantities for ARC, and the conclusions are similar.

Problem sbrybnd illustrates the necessity to make provision for potentially large shifts on problems with unfavorable eigenvalue distribution. Both methods make slow progress in regions dominated by very negative eigenvalues. They both manage to solve the problem to an acceptable level, though not to the prescribed stopping tolerance. Of course, large shifts may be unnecessary on many problems. One can easily imagine a refined implementation in which large shifts are only employed when smaller shifts fail to provide a satisfactory search direction. Evidently, the issue would be mitigated by an appropriate problem scaling, but that is out of the scope of the present analysis.

Appendix B Technical results

Denote by \(\sigma _{1,k}\le ...\le \sigma _{n,k}\) the eigenvalues of \(\nabla ^2 f(x_k)\), and consider an eigendecomposition of \(\nabla ^2 f(x_k) = U_k^T\Sigma _k U_k\) where \(\Sigma _k = diag (\sigma _{1,k}, \ldots , \sigma _{n,k})\), and \(U_k\) is an orthonormal matrix of eigenvectors.

As described in the preamble of Sect. 3, at each iteration k, the sequence of shifts is defined such that

where \(\lambda _0:= \psi ^{-e_L}\) and \(\psi > 1\).

Assume that there exists \({\bar{\imath }}_k\) the smallest nonnegative integer such that

Note that such an \({\bar{\imath }}_k\) always exists if the eigenvalues are bounded and m is sufficiently large. The motivation of this section is to prove that there exists a constant \(\beta \) such that for some \(i \ge {\bar{\imath }}_k\), (2.2c) is satisfied,

assuming that for all \(\lambda ^k_{i}\), there exists an oracle returning \(d(\lambda ^k_{i})\) satisfying (2.2a) with \(r^k_i\). First, the following lemma shows that \(\phi \) is monotonic.

Lemma B.1

Assume that \(\nabla f(x_k) \ne 0\). Let \(\Gamma _k > 0\) be a constant such that

Then, the sequence \(i \mapsto \phi (\lambda ^k_{i})\) is strictly increasing for \(i \ge {\bar{\imath }}_k\).

Conditions (B.1) and (B.2) impose a constraint on the precision used to solve the linear system, which is asymptotically less stringent than Assumption 2.6 used in the proofs.

Proof

The beginning of the proof follows the same line as [8, Section 7.3.1]. Using the eigendecomposition of the \(\nabla ^2 f(x_k)\), it follows that

where \(\gamma _{j,k} = [U_k \nabla f(x_k)]_j\) is the j-th component of \(U_k \nabla f(x_k)\). Additionally, using the triangle inequality and that \(\Vert a - b\Vert \ge \Vert a\Vert - \Vert b\Vert \), it holds for all \(i \ge {\bar{\imath }}_k\) that

We now prove that \(\Vert d_k(\lambda _{i+1}^k)\Vert _2^2 < \Vert d_k(\lambda ^k_i)\Vert _2^2\), which would conclude the proof. For \(i \ge {\bar{\imath }}_k\), using previous inequality, we get

Considering the first part of the right-hand side in previous inequality yields

The proof is complete after combining this relation with (B.2). \(\square \)

The following lemma gives an upper bound independent of i of

Lemma B.2

For all \(i \ge {\bar{\imath }}_k\),

Proof

The case \(i = {\bar{\imath }}_k\) is straightforward. Let \(i > {\bar{\imath }}_k\). Using \(\sigma _{1,k} + \psi ^{{\bar{\imath }}_k}\lambda _0 > 0\), then

On the other hand,

Combining the two previous inequalities into the definition of \({\overline{\kappa }}_{k}(i)\) yields

and the result follows as \(\psi ^{i - {\bar{\imath }}_k} \ge \psi \). \(\square \)

Proposition B.1

Let the assumptions of Lemma B.1 hold. Moreover, let \(\beta _k \ge 1\) be chosen such that

where \(\Gamma _k\) and \({\overline{\kappa }}\) are respectively the constants defined in Lemma B.1 and Lemma B.2. Then, there exists \(i \ge {\bar{\imath }}_k\) such that (2.2c) holds.

Proof

We can further assume that \(\phi (\lambda ^k_{{\bar{\imath }}_k}) < \frac{1}{\beta _k}\), as otherwise \(\phi (\lambda ^k_{{\bar{\imath }}_k}) \ge \frac{1}{\beta _k}\) and the result would follow by (B.3). The condition (2.2c) can be reformulated as

so the targeted interval is of length \(2\log (\beta _k)\). By Lemma B.1, the sequence \(i \mapsto \phi (\lambda ^k_{i})\) is strictly increasing, \(\log (\phi (\lambda ^k_{{\bar{\imath }}_k})) < \log (\beta _k)\) from (B.3), and we now prove that

which would conclude the proof.

Let \(i \ge {\bar{\imath }}_k\), it follows from (2.2a) that

Taking the norm on both sides, and using the triangle inequality yields

Additionally, \(\lambda ^k_i = \psi ^i \lambda _0\) gives

Therefore, using that \(\log \) is increasing and that \(x \mapsto \log (1+x)\) is subadditive,

which proves (B.5), and the result. \(\square \)

Proposition B.2

Let \(\{x^k\}\) be a sequence generated by Algorithm 3. Assume that the eigenvalues of \(\nabla ^2 f(x_k)\) are bounded for all k. Assume further that there exists a constant \(\phi ^{\max }\) such that

Then, there exists a constant \(\beta \ge 1\) such that (B.5) holds for all k. Furthermore, for all k, there exists a \(i \ge {\bar{\imath }}_k\) such that (2.2c) holds.

Proof

Using Lemma B.2 and that the eigenvalues of \(\nabla ^2 f(x_k)\) are bounded for all k, it is clear that \(\beta \) can be chosen greater than \(\phi ^{\max }\) and \(\sqrt{\psi {\overline{\kappa }}_k (1 + \Gamma _k)}\) for all k. \(\square \)

The condition (B.6) further imposes that the shifts \(\lambda ^k_i\) covers sufficiently small values.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Dussault, JP., Migot, T. & Orban, D. Scalable adaptive cubic regularization methods. Math. Program. (2023). https://doi.org/10.1007/s10107-023-02007-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10107-023-02007-6