Abstract

We study nonlinear optimization problems with a stochastic objective and deterministic equality and inequality constraints, which emerge in numerous applications including finance, manufacturing, power systems and, recently, deep neural networks. We propose an active-set stochastic sequential quadratic programming (StoSQP) algorithm that utilizes a differentiable exact augmented Lagrangian as the merit function. The algorithm adaptively selects the penalty parameters of the augmented Lagrangian and performs a stochastic line search to decide the stepsize. Global convergence is established: for any initialization, the KKT residuals converge to zero almost surely. Our algorithm and analysis further develop the prior work of Na et al. (Math Program, 2022. https://doi.org/10.1007/s10107-022-01846-z). Specifically, we allow nonlinear inequality constraints without requiring the strict complementarity condition; refine some of the designs in Na et al. (2022), such as the feasibility error condition and the monotonically increasing sample size; strengthen the global convergence guarantee; and improve the sample complexity on the objective Hessian. We demonstrate the performance of the designed algorithm on a subset of nonlinear problems collected in the CUTEst test set and on constrained logistic regression problems.

1 Introduction

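We study stochastic nonlinear optimization problems with deterministic equality and inequality constraints:

\[ \min _{{{\varvec{x}}}\in \mathbb {R}^d}\; f({{\varvec{x}}}) = \mathbb {E}_{\xi \sim {{\mathcal {P}}}}[F({{\varvec{x}}}; \xi )] \quad \text {s.t.}\quad c({{\varvec{x}}}) = {{\varvec{0}}},\quad g({{\varvec{x}}}) \le {{\varvec{0}}}, \tag{1} \]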

where \(f: \mathbb {R}^d\rightarrow \mathbb {R}\) is an expected objective, \(c: \mathbb {R}^d\rightarrow \mathbb {R}^{m}\) are deterministic equality constraints, \(g: \mathbb {R}^d\rightarrow \mathbb {R}^{r}\) are deterministic inequality constraints, \(\xi \sim {{\mathcal {P}}}\) is a random variable following the distribution \({{\mathcal {P}}}\), and \(F(\cdot ; \xi ):\mathbb {R}^d\rightarrow \mathbb {R}\) is a realization of the objective. In the stochastic optimization regime, f and its derivatives cannot be evaluated directly. Instead, we assume that one can generate independent and identically distributed samples \(\{\xi _i\}_{i}\) from \({{\mathcal {P}}}\) and estimate f and its derivatives from the realizations \(\{F(\cdot \;; \xi _i )\}_{i}\).

Problem (1) arises in a variety of industrial applications, including finance, transportation, manufacturing, and power systems [8, 56]. It includes constrained empirical risk minimization (ERM) as a special case, where \({{\mathcal {P}}}\) is the uniform distribution over n data points \(\{\xi _i = ({{\varvec{y}}}_i, {{\varvec{z}}}_i)\}_{i=1}^n\), with \(({{\varvec{y}}}_i, {{\varvec{z}}}_i)\) being the feature-outcome pairs. Thus, the objective has the finite-sum form

\[ f({{\varvec{x}}}) = \frac{1}{n}\sum _{i=1}^n F({{\varvec{x}}}; \xi _i) = \frac{1}{n}\sum _{i=1}^n F({{\varvec{x}}}; {{\varvec{y}}}_i, {{\varvec{z}}}_i). \]

The goal of (1) is to find the optimal parameter \({{\varvec{x}}}^\star \) that best fits the data. One of the most common choices of F is the negative log-likelihood of the underlying distribution of \(({{\varvec{y}}}_i, {{\varvec{z}}}_i)\); in this case, the optimizer \({{\varvec{x}}}^\star \) is the maximum likelihood estimator (MLE). Constraints on parameters are also common in practice; they encode prior model knowledge or restrict model complexity. For example, [30, 31] studied inequality constrained least-squares problems, where inequality constraints maintain structural consistency such as non-negativity of the elasticities. [42, 45] studied statistical properties of constrained MLE, where constraints characterize the parameter space of interest. More recently, a growing body of literature has studied training constrained neural networks [15, 25, 32, 33], where constraints are imposed to prevent weights from either vanishing or exploding, and objectives take the above finite-sum form.
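As a concrete illustration of the finite-sum form and of sample-based estimation, the following Python sketch uses the logistic negative log-likelihood \(F({{\varvec{x}}}; ({{\varvec{y}}}, z)) = \log (1 + \exp (-z\,{{\varvec{y}}}^T{{\varvec{x}}}))\) with outcomes \(z\in \{-1, +1\}\). This choice of F, the label encoding, and the function names are assumptions made for illustration only; the sketch merely shows how f and \(\nabla f\) are replaced by sample averages over i.i.d. draws from the uniform distribution over the data.

```python
import math
import random


def logistic_loss_and_grad(x, y, z):
    """Realized objective F(x; (y, z)) = log(1 + exp(-z * y^T x)) and its gradient in x.

    Assumes the outcome z is encoded as -1 or +1; y is the feature vector.
    """
    m = z * sum(xi * yi for xi, yi in zip(x, y))
    # Numerically stable log(1 + exp(-m)) and sigma(-m) = 1 / (1 + exp(m)).
    if m >= 0:
        e = math.exp(-m)
        loss, s = math.log1p(e), e / (1.0 + e)
    else:
        e = math.exp(m)
        loss, s = -m + math.log1p(e), 1.0 / (1.0 + e)
    grad = [-z * s * yi for yi in y]
    return loss, grad


def sample_average(x, data, indices):
    """Average of F(x; xi_i) and of its gradient over the data points in `indices`."""
    total, grad = 0.0, [0.0] * len(x)
    for i in indices:
        y, z = data[i]
        loss, g = logistic_loss_and_grad(x, y, z)
        total += loss
        grad = [a + b for a, b in zip(grad, g)]
    n = len(indices)
    return total / n, [a / n for a in grad]


def minibatch_estimate(x, data, batch_size, rng):
    """Estimate f(x) and grad f(x) from i.i.d. draws of the uniform distribution over data."""
    indices = [rng.randrange(len(data)) for _ in range(batch_size)]
    return sample_average(x, data, indices)
```

Averaging over all indices recovers f and \(\nabla f\) exactly; a stochastic method would instead call the minibatch version with a batch size of its choosing.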

This paper aims to develop a numerical procedure to solve (1) with a global convergence guarantee. When the objective f is deterministic, numerous nonlinear optimization methods with well-understood convergence results are applicable, such as exact penalty methods, augmented Lagrangian methods, sequential quadratic programming (SQP) methods, and interior-point methods [41]. However, methods that solve constrained stochastic nonlinear problems with satisfactory convergence guarantees have been developed only recently. In particular, for problems with only equality constraints, [4] designed the first stochastic SQP (StoSQP) scheme using an \(\ell _1\)-penalized merit function, and showed that for any initialization, the KKT residuals \(\{R_t\}_t\) converge in two different regimes, determined by a prespecified deterministic stepsize-related sequence \(\{\alpha _t\}_t\):

(a) (constant sequence) if \(\alpha _t = \alpha \) for some small \(\alpha >0\), then \( \sum _{i=0}^{t-1}\mathbb {E}[R_i^2]/t \le \varUpsilon /(\alpha t) + \varUpsilon \alpha \) for some \(\varUpsilon >0\);

(b) (decaying sequence) if \(\alpha _t\) satisfies \(\sum _{t=0}^{\infty }\alpha _t = \infty \) and \(\sum _{t=0}^{\infty }\alpha _t^2 <\infty \), then \(\liminf _{t\rightarrow \infty }\mathbb {E}[R_t^2] = 0\).
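The two regimes can be illustrated on the simplest unconstrained case, where \(R_t = |f'(x_t)|\). The following Python sketch runs stochastic gradient descent on the toy problem \(f(x) = x^2/2\) with Gaussian gradient noise, an assumption made only for illustration and not the setting of [4]. With a constant \(\alpha _t = \alpha \), the squared residual stalls at a noise floor of order \(\alpha \); with \(\alpha _t = 1/(t+1)\), which satisfies both summability conditions in (b), it is driven towards zero.

```python
import random


def run_sgd(stepsize, num_iters, sigma=1.0, x0=5.0, seed=0):
    """SGD on f(x) = x^2 / 2 using the noisy gradient x + sigma * N(0, 1).

    Returns the squared residuals R_t^2 = f'(x_t)^2 = x_t^2 for t = 0, ..., num_iters - 1.
    """
    rng = random.Random(seed)
    x, squared_residuals = x0, []
    for t in range(num_iters):
        squared_residuals.append(x * x)
        noisy_grad = x + sigma * rng.gauss(0.0, 1.0)  # unbiased estimate of f'(x) = x
        x -= stepsize(t) * noisy_grad
    return squared_residuals


def tail_mean(values, k=1000):
    """Average of the last k entries, a crude proxy for the limiting E[R_t^2]."""
    return sum(values[-k:]) / k


T = 20000
constant = run_sgd(lambda t: 0.5, T)            # regime (a): alpha_t = 0.5
decaying = run_sgd(lambda t: 1.0 / (t + 1), T)  # regime (b): sum alpha_t = inf, sum alpha_t^2 < inf
```

For \(\alpha = 0.5\) and unit noise, the stationary value of \(\mathbb {E}[x_t^2]\) is \(\alpha /(2-\alpha ) = 1/3\); with \(\alpha _t = 1/(t+1)\), the iterate \(x_t\) equals minus the running mean of the first t noise draws, so \(\mathbb {E}[x_t^2] = 1/t\) for \(t\ge 1\).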

Both convergence regimes are well known for unconstrained stochastic problems, where \(R_t = \Vert \nabla f({{\varvec{x}}}_t)\Vert \) (see [12] for a recent review), and [4] generalized these results to equality constrained problems. Within the algorithm of [4], the authors designed a stepsize selection scheme (based on the prespecified deterministic sequence) to introduce some adaptivity into the algorithm. However, the prespecified sequence, which can be aggressive or conservative, still strongly affects the performance. To address this adaptivity issue, [40] proposed an alternative StoSQP, which exploits a differentiable exact augmented Lagrangian merit function and enables a stochastic line search procedure to select the stepsize adaptively. Under a different setup (where the model is precisely estimated with high probability), [40] proved a different guarantee: for any initialization, \(\liminf _{t\rightarrow \infty } R_t = 0\) almost surely. Subsequently, a series of extensions has been reported. [3] designed a StoSQP scheme to deal with rank-deficient constraints. [18] designed a StoSQP that exploits inexact Newton directions. [6] designed an accelerated StoSQP via variance reduction for finite-sum problems. [5] further developed [4] to achieve adaptive sampling. [17] established the worst-case iteration complexity of StoSQP, and [39] established the asymptotic local rate of StoSQP and performed statistical inference. In addition, [43] investigated a deterministic SQP where the objective and constraints are evaluated with noise. However, none of the aforementioned works handles inequality constraints.

Our paper develops this line of research by designing a StoSQP method that handles nonlinear inequality constraints. To do so, we have to overcome a number of intrinsic difficulties that arise with inequality constraints, which were already noted in the classical nonlinear optimization literature [7, 41]. Our work builds upon [40], where we exploited an augmented Lagrangian merit function under the SQP framework. We enhance some of the designs in [40] (e.g., the feasibility error condition, the increasing batch size, and the complexity of Hessian sampling; more on these later), and the analysis of this paper is more involved. To generalize [40], we address the following two subtleties.

(a) With inequalities, SQP subproblems are inequality constrained (nonconvex) quadratic programs (IQPs), which are themselves difficult to solve in most cases. Some SQP literature (e.g., [10]) assumes that IQPs are solved exactly by a QP solver; however, a practical scheme embeds a finite number of inner-loop iterations of an active-set or interior-point method into the main SQP loop to solve IQPs approximately. The inner loop then introduces an approximation error in the search direction at each iteration, which complicates the analysis.

(b) When applied to deterministic objectives with inequalities, the SQP search direction is a descent direction of the augmented Lagrangian only in a neighborhood of a KKT point [50, Propositions 8.3, 8.4]. This is in contrast to equality constrained problems, where the descent property of the SQP direction holds globally, provided the penalty parameters of the augmented Lagrangian are suitably chosen. This difference is caused by the inequality constraints: to make the (active-set) SQP direction informative, the estimated active set has to be close to the optimal active set (see Lemma 3 for details). Thus, simply changing the merit function in [40] does not work for Problem (1).

The existing literature on inequality constrained SQP has addressed (a) and (b) with various tools for deterministic objectives, while we provide new insights for stochastic objectives. To resolve (a), we design an active-set StoSQP scheme: given the current iterate, we first identify an active set that includes all inequality constraints that are likely to hold with equality. We then obtain the search direction by solving an SQP subproblem that includes all inequality constraints in the identified active set but treats them as equalities. The subproblem is then an equality constrained QP (EQP), which can be solved exactly provided the matrix factorization is within the computational budget. To resolve (b), we add a safeguarding direction to the scheme. In each step, we check whether the SQP subproblem is solvable and generates a descent direction of the augmented Lagrangian merit function. If so, we keep the SQP direction, as it typically enjoys a fast local rate; if not, we switch to the safeguarding direction (e.g., one gradient/Newton step of the augmented Lagrangian), along which the iterates still decrease the augmented Lagrangian, although convergence may be slower than that of SQP.
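The decision rule just described (solve the EQP over the identified active set, keep the SQP direction if the subproblem is solvable and yields descent, otherwise fall back to a safeguarding direction) can be sketched in Python as follows. This is a simplified, hypothetical illustration rather than the paper's scheme: it solves only a primal EQP (the coupled system (12) additionally produces a dual direction), the merit function is assumed to depend on the primal variable only, the descent test `merit_grad^T dx <= -kappa * ||dx||^2` and the parameter `kappa` are placeholders, the safeguard is a plain negative merit-gradient step, and the KKT system is solved by dense Gaussian elimination instead of a production factorization.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve_linear(A, b, tol=1e-12):
    """Gaussian elimination with partial pivoting; returns None if A is (numerically) singular."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(M[i][k]))
        if abs(M[p][k]) < tol:
            return None
        M[k], M[p] = M[p], M[k]
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= factor * M[k][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def eqp_direction(grad_f, B, C, h):
    """Solve the EQP  min_dx grad_f^T dx + dx^T B dx / 2  s.t.  h + C dx = 0.

    Rows of C stack the equality Jacobian J and the rows of G in the identified
    active set; h stacks c and g_a. Returns (dx, multipliers), or None if the
    KKT matrix [[B, C^T], [C, 0]] is numerically singular.
    """
    d, k = len(grad_f), len(C)
    K = [list(B[i]) + [C[j][i] for j in range(k)] for i in range(d)]
    K += [list(C[j]) + [0.0] * k for j in range(k)]
    sol = solve_linear(K, [-gi for gi in grad_f] + [-hj for hj in h])
    return None if sol is None else (sol[:d], sol[d:])


def choose_direction(grad_f, B, C, h, merit_grad, kappa=1e-4):
    """Keep the EQP direction if it exists and passes a placeholder descent test
    on the merit function; otherwise fall back to the negative merit gradient."""
    step = eqp_direction(grad_f, B, C, h)
    if step is not None:
        dx, _ = step
        if dot(merit_grad, dx) <= -kappa * dot(dx, dx):
            return dx, "sqp"
    return [-gi for gi in merit_grad], "safeguard"
```

Duplicated active rows in C make the KKT matrix singular, which mimics an imprecisely identified active set; in that case the sketch returns the safeguarding direction.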

Furthermore, to design a scheme that adaptively selects the penalty parameters and stepsizes for Problem (1), additional challenges have to be resolved. In particular, there exist unknown deterministic thresholds for the penalty parameters that ensure a one-to-one correspondence between stationary points of the merit function and KKT points of Problem (1). However, due to the stochasticity of the scheme, the stabilized penalty parameters are random, and we cannot tell whether the stabilized values are above (or below, depending on the context) the thresholds. Thus, we cannot directly conclude that the iterates converge to a KKT point, even if we ensure a sufficient decrease of the merit function in each step and force the iterates to converge to one of its stationary points.

The above difficulty has been resolved for the \(\ell _1\)-penalized merit function in [4], where the authors imposed a probability condition on the noise (satisfied by symmetric noise; see [4, Proposition 3.16]). [40] resolved this difficulty for the augmented Lagrangian merit function by modifying the SQP scheme when selecting the penalty parameters. In particular, [40] required the feasibility error to be bounded by the gradient magnitude of the augmented Lagrangian in each step, and generated monotonically increasing samples to estimate the gradient. Although that analysis does not require noise conditions, adjusting the penalty parameters to enforce the feasibility error condition may be unnecessary for iterates that are far from stationarity. Also, generating increasing samples is unsatisfactory, since the sample size should be chosen adaptively based on the iterates. In this paper, we refine the techniques of [40] and generalize them to inequality constraints. We weaken the feasibility error condition by using a (large) multiplier to rescale the augmented Lagrangian gradient and, more significantly, by enforcing the condition only when the magnitude of the rescaled augmented Lagrangian gradient is smaller than the estimated KKT residual. In other words, the feasibility error condition is imposed only when we have stronger evidence that the iterate is approaching a stationary point of the merit function than a KKT point. Such a relaxation matches the motivation of the feasibility error condition, i.e., bridging the gap between stationary points and KKT points. We also remove the increasing sample size requirement by adaptively controlling the absolute deviation of the augmented Lagrangian gradient for new iterates only (i.e., when the previous step is a successful step; see Sect. 3). Following [40], we perform a stochastic line search procedure.
However, instead of using the same sample set to estimate the gradient \(\nabla f\) and Hessian \(\nabla ^2 f\) as in [40], we sharpen the analysis and show that estimating \(\nabla ^2 f\) requires significantly fewer samples than estimating \(\nabla f\).
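To make the point about separate sample sets concrete, the following Python sketch estimates \(\nabla f\) and \(\nabla ^2 f\) by sample averages over two independent i.i.d. sample sets of sizes `n_grad` and `n_hess`. The function names, the toy realized objective \(F({{\varvec{x}}}; \xi ) = \Vert {{\varvec{x}}}- \xi \Vert ^2/2\) with \(\xi \sim N({{\varvec{0}}}, I)\), and the batch sizes in the usage lines are hypothetical; the adaptive sample-size rules of Sect. 3 are not reproduced here.

```python
import random


def estimate_derivatives(grad_F, hess_F, sample, x, n_grad, n_hess, rng):
    """Sample-average estimates of grad f(x) and Hess f(x), using independent
    i.i.d. sample sets of sizes n_grad and n_hess (here n_hess < n_grad)."""
    d = len(x)
    xi_grad = [sample(rng) for _ in range(n_grad)]
    xi_hess = [sample(rng) for _ in range(n_hess)]
    grads = [grad_F(x, xi) for xi in xi_grad]
    g = [sum(gk[i] for gk in grads) / n_grad for i in range(d)]
    hessians = [hess_F(x, xi) for xi in xi_hess]
    H = [[sum(Hk[i][j] for Hk in hessians) / n_hess for j in range(d)] for i in range(d)]
    return g, H


# Toy realized objective F(x; xi) = ||x - xi||^2 / 2 with xi ~ N(0, I):
# grad_F = x - xi and hess_F = I, so grad f(x) = x and Hess f(x) = I.
def grad_F(x, xi):
    return [a - b for a, b in zip(x, xi)]


def hess_F(x, xi):
    d = len(x)
    return [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]


def sample(rng):
    return [rng.gauss(0.0, 1.0) for _ in range(2)]


g_hat, H_hat = estimate_derivatives(grad_F, hess_F, sample, [1.0, -2.0],
                                    n_grad=4000, n_hess=10, rng=random.Random(1))
```

In this toy case the Hessian does not depend on \(\xi \), so even a small Hessian sample is exact; the general claim that fewer Hessian samples suffice rests on the paper's analysis, not on this example.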

With all the above extensions of [40], we finally prove that the KKT residual \(R_t\) satisfies \(\lim _{t\rightarrow \infty } R_t = 0\) almost surely for any initialization. This result is stronger than [44, Theorem 4.10] for unconstrained problems and [40, Theorem 4] for equality constrained problems, which only established “liminf”-type convergence. Our result also differs from the (liminf) convergence of the expected KKT residual \(\mathbb {E}[R_t^2]\) established in [3,4,5,6, 18] (under a different setup).

Related work

A number of methods have been proposed to optimize stochastic objectives without constraints, ranging from first-order to second-order methods [12]. For all methods, adaptively choosing the stepsize is particularly important for practical deployment. A line of literature selects the stepsize by adaptively controlling the batch size and embedding a natural (stochastic) line search into the schemes [11, 13, 20, 22, 29]. Although empirical experiments suggest the validity of stochastic line search, a rigorous analysis of these schemes is missing. Only recently have researchers revisited unconstrained stochastic optimization through the lens of classical nonlinear optimization methods and established promising convergence guarantees. In particular, [1, 9, 16, 28, 57] studied stochastic trust-region methods, and [2, 14, 19, 44] studied stochastic line search methods. Moreover, [3,4,5,6, 18, 40] designed a variety of StoSQP schemes to solve equality constrained stochastic problems. Our paper contributes to this line of work by proposing an active-set StoSQP scheme to handle inequality constraints.

There are numerous methods for solving deterministic problems with nonlinear constraints, including exact penalty methods, augmented Lagrangian methods, interior-point methods, and sequential quadratic programming (SQP) methods [41]. Our paper is based on SQP, which is a very effective (or at least competitive) approach for both small and large problems. When inequality constraints are present, SQP methods can be classified into IQP and EQP approaches. The former solve inequality constrained subproblems; the latter, to which our method belongs, solve equality constrained subproblems. A clear advantage of EQP over IQP is that the subproblems are less expensive to solve, especially when the quadratic matrix is indefinite. See [41, Chapter 18.2] for a comparison. Within SQP schemes, an exact penalty function is used as the merit function to monitor the progress of the iterates towards a KKT point. The \(\ell _1\)-penalized merit function, \(f({{\varvec{x}}}) + \mu \left( \Vert c({{\varvec{x}}})\Vert _1 + \Vert \max \{g({{\varvec{x}}}),{{\varvec{0}}}\}\Vert _1\right) \), is a common choice because of its simplicity. However, a disadvantage of such non-differentiable merit functions is that they can impede fast local rates, and a nontrivial local modification of SQP has to be employed to relieve this issue [10]. As a remedy, multiple differentiable merit functions have been proposed [7]. We exploit an augmented Lagrangian merit function, which was first proposed for equality constrained problems by [46, 51] and then extended to inequality constrained problems by [47, 48]. [50] further improved this series of works by designing a new augmented Lagrangian and established its exactness property under weaker conditions. [50] did not include equality constraints, although they are not crucial for that exactness analysis.
In this paper, we enhance the augmented Lagrangian in [50] by incorporating both equality and inequality constraints, and we study the case where the objective is stochastic. When inequality constraints are absent, our algorithm and analysis naturally reduce to [40] (with refinements). We should mention that differentiable merit functions are often more expensive to evaluate, and their benefits mostly appear in local rates (see [38, Figure 1] for a comparison between the augmented Lagrangian and \(\ell _1\) merit functions on an optimal control problem). Thus, having established only a global analysis, we do not claim benefits of the augmented Lagrangian over the popular \(\ell _1\) merit function. On the other hand, the augmented Lagrangian is a very common alternative to non-differentiable penalty functions; it has been widely used for inequality constrained problems and has achieved promising performance [52,53,54,55, 60]. Also, our global analysis is a first step towards understanding the local rate of StoSQP when differentiable merit functions are employed.
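For comparison with the augmented Lagrangian used later, the \(\ell _1\)-penalized merit function quoted above can be evaluated directly. In this Python sketch, the toy problem and the callables `f`, `c`, `g` are illustrative assumptions; the one-sided difference quotients at the end show the kink that makes this merit function non-differentiable where a constraint becomes active.

```python
def l1_merit(f, c, g, x, mu):
    """ell_1-penalized merit f(x) + mu * (||c(x)||_1 + ||max{g(x), 0}||_1)."""
    eq_violation = sum(abs(ci) for ci in c(x))
    ineq_violation = sum(max(gi, 0.0) for gi in g(x))
    return f(x) + mu * (eq_violation + ineq_violation)


# Toy problem: minimize x0^2 s.t. x0 + x1 - 1 = 0, x0 - 2 <= 0, -x1 <= 0.
def f(x):
    return x[0] ** 2


def c(x):
    return [x[0] + x[1] - 1.0]


def g(x):
    return [x[0] - 2.0, -x[1]]


# At the feasible point (0.5, 0.5), move x1 in either direction with x0 fixed.
h = 1e-3
base = l1_merit(f, c, g, [0.5, 0.5], 10.0)
right_slope = (l1_merit(f, c, g, [0.5, 0.5 + h], 10.0) - base) / h  # about +mu
left_slope = (base - l1_merit(f, c, g, [0.5, 0.5 - h], 10.0)) / h   # about -mu
```

The right and left slopes differ by \(2\mu \), which is the non-smoothness that differentiable merit functions such as the augmented Lagrangian avoid.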

Structure of the paper

We introduce the augmented Lagrangian merit function and the active-set SQP subproblems in Sect. 2. We propose our StoSQP scheme and analyze it in Sect. 3. The experiments and conclusions are in Sects. 4 and 5, respectively. Due to space limits, we defer all proofs to the Appendix.

Notation We use \(\Vert \cdot \Vert \) to denote the \(\ell _2\) norm for vectors and the spectral norm for matrices. For two scalars a and b, \(a\wedge b = \min \{a, b\}\) and \(a\vee b = \max \{a, b\}\). For two vectors \({{\varvec{a}}}\) and \({{\varvec{b}}}\) with the same dimension, \(\min \{{{\varvec{a}}}, {{\varvec{b}}}\}\) and \(\max \{{{\varvec{a}}}, {{\varvec{b}}}\}\) denote their entrywise minimum and maximum, respectively. For \({{\varvec{a}}}\in \mathbb {R}^r\), \(\textrm{diag}({{\varvec{a}}}) \in \mathbb {R}^{r\times r}\) is the diagonal matrix whose diagonal entries are given by \({{\varvec{a}}}\) in order. I denotes the identity matrix, whose dimension is clear from the context. For a set \(\mathcal {A}\subseteq \{1,2,\ldots , r\}\) and a vector \({{\varvec{a}}}\in \mathbb {R}^r\) (or a matrix \(A\in \mathbb {R}^{r\times d}\)), \({{\varvec{a}}}_{\mathcal {A}} \in \mathbb {R}^{|\mathcal {A}|}\) (or \(A_{\mathcal {A}}\in \mathbb {R}^{|\mathcal {A}|\times d}\)) is the sub-vector (or sub-matrix) consisting of the entries (or rows) indexed by \(\mathcal {A}\); \(\varPi _{\mathcal {A}}(\cdot ): \mathbb {R}^r\rightarrow \mathbb {R}^r\) (or \(\mathbb {R}^{r\times d} \rightarrow \mathbb {R}^{r\times d}\)) is a projection operator with \([\varPi _{\mathcal {A}}({{\varvec{a}}})]_i = {{\varvec{a}}}_i\) if \(i\in \mathcal {A}\) and \([\varPi _{\mathcal {A}}({{\varvec{a}}})]_i = 0\) if \(i\notin \mathcal {A}\) (for \(A\in \mathbb {R}^{r\times d}\), \(\varPi _{\mathcal {A}}(A)\) is applied column-wise); \(\mathcal {A}^c = \{1,2,\ldots , r\}\backslash \mathcal {A}\). Finally, we reserve the following notation for the Jacobian matrices of the constraints: \(J({{\varvec{x}}}) = \nabla ^T c({{\varvec{x}}}) = (\nabla c_1({{\varvec{x}}}), \ldots , \nabla c_m({{\varvec{x}}}))^T \in \mathbb {R}^{m\times d}\) and \(G({{\varvec{x}}}) = \nabla ^T g({{\varvec{x}}}) = (\nabla g_1({{\varvec{x}}}), \ldots , \nabla g_r({{\varvec{x}}}))^T \in \mathbb {R}^{r\times d}\).
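The sub-vector and projection notation can be mirrored by small helpers, shown here as an illustrative Python sketch. The helper names are hypothetical, and Python indices start at 0, whereas the text indexes constraints from 1.

```python
def sub(a, A):
    """a_A: the entries of a indexed by A, in increasing index order."""
    return [a[i] for i in sorted(A)]


def proj(a, A):
    """Pi_A(a): keep the entries of a indexed by A and zero out the rest."""
    return [ai if i in A else 0.0 for i, ai in enumerate(a)]


def proj_rows(M, A):
    """Pi_A(M) for an r x d matrix M: Pi_A applied to every column, i.e., zero the rows outside A."""
    return [list(row) if i in A else [0.0] * len(row) for i, row in enumerate(M)]


def complement(A, r):
    """A^c = {0, ..., r-1} minus A (0-based indices)."""
    return set(range(r)) - set(A)
```

By construction, \({{\varvec{a}}} = \varPi _{\mathcal {A}}({{\varvec{a}}}) + \varPi _{\mathcal {A}^c}({{\varvec{a}}})\), which is how the active and inactive parts of a vector are split.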

2 Preliminaries

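Throughout this section, we suppose f, c, g are twice continuously differentiable (i.e., \(f,g,c\in C^2\)). The Lagrangian function of Problem (1) is

\[ \mathcal {L}({{\varvec{x}}}, \varvec{\mu }, {\varvec{\lambda }}) = f({{\varvec{x}}}) + \varvec{\mu }^T c({{\varvec{x}}}) + {\varvec{\lambda }}^T g({{\varvec{x}}}). \]

We denote by

\[ \varOmega = \{{{\varvec{x}}}\in \mathbb {R}^d: c({{\varvec{x}}}) = {{\varvec{0}}},\; g({{\varvec{x}}}) \le {{\varvec{0}}}\} \]

the feasible set and

\[ \{i\in \{1,\ldots , r\}: g_i({{\varvec{x}}}) = 0\} \]

the active set. We aim to find a KKT point \(({{\varvec{x}}}^\star , {\varvec{\mu }^\star }, {\varvec{\lambda }}^\star )\) of (1) satisfying

\[ \nabla f({{\varvec{x}}}^\star ) + J^T({{\varvec{x}}}^\star ){\varvec{\mu }^\star } + G^T({{\varvec{x}}}^\star ){\varvec{\lambda }}^\star = {{\varvec{0}}},\quad c({{\varvec{x}}}^\star ) = {{\varvec{0}}},\quad g({{\varvec{x}}}^\star ) \le {{\varvec{0}}},\quad {\varvec{\lambda }}^\star \ge {{\varvec{0}}},\quad ({\varvec{\lambda }}^\star )^T g({{\varvec{x}}}^\star ) = 0. \tag{4} \]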

When a constraint qualification holds, the existence of a dual pair \(({\varvec{\mu }^\star }, {\varvec{\lambda }}^\star )\) satisfying (4) is a first-order necessary condition for \({{\varvec{x}}}^\star \) to be a local solution of (1). In most cases, it is difficult to find an initial iterate that satisfies all inequality constraints and to keep the inequality constraints satisfied as the iteration proceeds. This motivates us to consider a perturbed set. For \(\nu >0\), we let

Here, the perturbation radius \(\nu /2\) is not essential and can be replaced by \(\nu /\kappa \) for any \(\kappa >1\). Also, the cubic power in \(a({{\varvec{x}}})\) can be replaced by any power s with \(s>2\), which ensures that \(a({{\varvec{x}}})\in C^2\) provided \(g_i({{\varvec{x}}})\in C^2\), \(\forall i\). We also define a scaling function

where \(a_{\nu }({{\varvec{x}}})\) measures the distance of \(a({{\varvec{x}}})\) to the boundary \(\nu \), and \(q_{\nu }({{\varvec{x}}}, {\varvec{\lambda }})\) rescales \(a_{\nu }({{\varvec{x}}})\) by penalizing \({\varvec{\lambda }}\) that has a large magnitude. In the definitions of (5) and (6), \(\nu >0\) is a parameter to be chosen: given the current primal iterate \({{\varvec{x}}}_t\), we choose \(\nu = \nu _t\) large enough so that \({{\varvec{x}}}_t \in \mathcal {T}_{\nu }\). Note that while it is difficult to have \({{\varvec{x}}}_t\in \varOmega \), it is easy to choose \(\nu \) to have \({{\varvec{x}}}_t\in \mathcal {T}_{\nu }\). We also note that

With (6) and a parameter \(\epsilon >0\), we define a function to measure the dual feasibility of inequality constraints:

The following lemma justifies definition (7). The proof is immediate and omitted.

Lemma 1

Let \(\epsilon , \nu >0\). For any \(({{\varvec{x}}}, {\varvec{\lambda }}) \in \mathcal {T}_{\nu }\times \mathbb {R}^r\), \(\varvec{w}_{\epsilon , \nu }({{\varvec{x}}}, {\varvec{\lambda }}) = {{\varvec{0}}} \Leftrightarrow g({{\varvec{x}}}) \le ~{{\varvec{0}}}, {\varvec{\lambda }}\ge {{\varvec{0}}}, {\varvec{\lambda }}^Tg({{\varvec{x}}}) = 0\).

An implication of Lemma 1 is that, when the iteration sequence converges to a KKT point, \(\varvec{w}_{\epsilon , \nu }({{\varvec{x}}}, {\varvec{\lambda }})\) converges to 0, i.e., \(g({{\varvec{x}}}) = {{\varvec{b}}}_{\epsilon , \nu }({{\varvec{x}}},{\varvec{\lambda }})\). This motivates us to define the following augmented Lagrangian function:

where \(\eta >0\) is a prespecified parameter, which can be any positive number throughout the paper. The augmented Lagrangian (8) generalizes the one in [50] by including equality constraints and introducing \(\eta \) for added flexibility (\(\eta =2\) in [50]). Without inequalities, (8) reduces to the augmented Lagrangian studied in [40]. The penalty in (8) consists of two parts. The first part characterizes the feasibility error and consists of \(\Vert c({{\varvec{x}}})\Vert ^2\) and \(\Vert g({{\varvec{x}}})\Vert ^2 - \Vert {{\varvec{b}}}_{\epsilon , \nu }({{\varvec{x}}}, {\varvec{\lambda }})\Vert ^2\). The latter term is rescaled by \(1/q_{\nu }({{\varvec{x}}}, {\varvec{\lambda }})\) to penalize \({\varvec{\lambda }}\) with a large magnitude. In fact, if \(\Vert {\varvec{\lambda }}\Vert \rightarrow \infty \), then \(q_{\nu }({{\varvec{x}}}, {\varvec{\lambda }}){\varvec{\lambda }}\rightarrow {{\varvec{0}}}\), so that \({{\varvec{b}}}_{\epsilon , \nu }({{\varvec{x}}}, {\varvec{\lambda }}) \rightarrow \min \{{{\varvec{0}}}, g({{\varvec{x}}})\}\) (cf. (7)). Thus, the penalty term \((\Vert g({{\varvec{x}}})\Vert ^2 - \Vert {{\varvec{b}}}_{\epsilon , \nu }({{\varvec{x}}}, {\varvec{\lambda }})\Vert ^2)/q_{\nu }({{\varvec{x}}},{\varvec{\lambda }})\rightarrow \infty \), which is impossible when the iterates decrease \(\mathcal {L}_{\epsilon , \nu , \eta }\). The second part characterizes the optimality error and does not depend on the parameters \(\epsilon \) and \(\nu \). We mention that there are alternative forms of the augmented Lagrangian, some of which transform nonlinear inequalities using (squared) slack variables [7, 60]. In that case, additional variables are involved, and the strict complementarity condition is often needed to ensure the equivalence between the original and transformed problems [23].
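The role of \(\nu \) can be made concrete with a short Python sketch. It assumes \(a({{\varvec{x}}}) = \sum _i \max \{g_i({{\varvec{x}}}), 0\}^3\) (consistent with the cubic power mentioned after (5)) and \(\mathcal {T}_{\nu } = \{{{\varvec{x}}}: a({{\varvec{x}}}) < \nu /2\}\) (consistent with the perturbation radius \(\nu /2\)); both forms are assumptions, as is the doubling rule used to choose \(\nu \). Under the assumed form, the chain rule gives \(\nabla a({{\varvec{x}}}) = 3G^T({{\varvec{x}}}){{\varvec{l}}}({{\varvec{x}}})\), with \({{\varvec{l}}}\) the vector defined after (10).

```python
def a_fun(g_val):
    """Assumed a(x) = sum_i max{g_i(x), 0}^3, evaluated from the vector g(x)."""
    return sum(max(gi, 0.0) ** 3 for gi in g_val)


def grad_a(g_val, G):
    """Chain rule: grad a(x) = 3 G(x)^T l(x), where l(x) = max{g(x), 0} squared entrywise."""
    l = [max(gi, 0.0) ** 2 for gi in g_val]
    d = len(G[0])
    return [3.0 * sum(G[i][k] * l[i] for i in range(len(l))) for k in range(d)]


def in_T(g_val, nu):
    """Membership test for the assumed perturbed set T_nu = {x : a(x) < nu / 2}."""
    return a_fun(g_val) < nu / 2.0


def choose_nu(g_val, nu, factor=2.0):
    """Hypothetical rule: enlarge nu geometrically until the current iterate lies in T_nu."""
    while not in_T(g_val, nu):
        nu *= factor
    return nu
```

Because \(a({{\varvec{x}}})\) is finite at every point, such a loop always terminates, which reflects the remark that it is easy to choose \(\nu \) with \({{\varvec{x}}}_t\in \mathcal {T}_{\nu }\) even when \({{\varvec{x}}}_t\notin \varOmega \).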

The exactness property of (8) can be studied as in [50]; however, this is incremental and not crucial for our analysis. We only use (a stochastic version of) (8) to monitor the progress of the iterates. By direct calculation, we obtain the gradient \(\nabla \mathcal {L}_{\epsilon , \nu , \eta }\). We first suppress the evaluation point for conciseness and define the following matrices

where \(\varvec{e}_{i, m}\in \mathbb {R}^{m}\) is the i-th canonical basis vector of \(\mathbb {R}^m\) (similarly for \(\varvec{e}_{i, r}\in \mathbb {R}^{r}\)). Then,

where \({{\varvec{l}}}= {{\varvec{l}}}({{\varvec{x}}}) = \textrm{diag}(\max \{g({{\varvec{x}}}), {{\varvec{0}}}\})\max \{g({{\varvec{x}}}),{{\varvec{0}}}\}\). Clearly, the evaluation of \(\nabla \mathcal {L}_{\epsilon , \nu , \eta }\) requires \(\nabla f\) and \(\nabla ^2 f\), which have to be replaced by their stochastic counterparts \({\bar{\nabla }}f\) and \({\bar{\nabla }}^2 f\) for Problem (1). Based on (10), we note that, if the feasibility error vanishes, then \(\nabla \mathcal {L}_{\epsilon , \nu , \eta } = {{\varvec{0}}}\) implies the KKT conditions (4) hold for any \(\epsilon ,\nu ,\eta >0\). We summarize this observation in the next lemma. The result holds without any constraint qualifications.

Lemma 2

Let \(\epsilon , \nu , \eta >0\) and let \(({{\varvec{x}}}^\star , {\varvec{\mu }^\star }, {\varvec{\lambda }}^\star ) \in \mathcal {T}_{\nu } \times \mathbb {R}^{m}\times \mathbb {R}^{r}\) be a primal-dual triple. If \(\Vert c({{\varvec{x}}}^\star )\Vert = \Vert \varvec{w}_{\epsilon , \nu }({{\varvec{x}}}^\star , {\varvec{\lambda }}^\star )\Vert = \Vert \nabla \mathcal {L}_{\epsilon , \nu , \eta }({{\varvec{x}}}^\star , {\varvec{\mu }^\star }, {\varvec{\lambda }}^\star )\Vert = 0\), then \(({{\varvec{x}}}^\star , {\varvec{\mu }^\star }, {\varvec{\lambda }}^\star )\) satisfies (4) and, hence, is a KKT point of Problem (1).

Proof

See Appendix A.1. \(\square \)
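Lemma 2 and the KKT conditions (4) suggest a simple numerical check. The following Python sketch evaluates one common residual, the Euclidean norm of \((\nabla _{{{\varvec{x}}}}\mathcal {L}, c({{\varvec{x}}}), \min \{-g({{\varvec{x}}}), {\varvec{\lambda }}\})\). The complementarity block vanishes exactly when \(g({{\varvec{x}}})\le {{\varvec{0}}}\), \({\varvec{\lambda }}\ge {{\varvec{0}}}\) and \({\varvec{\lambda }}^Tg({{\varvec{x}}}) = 0\), since \(\min \{-g_i, \lambda _i\} = 0\) forces both quantities to be nonnegative and one of them to be zero. This residual is an assumed choice for illustration; the KKT residual \(R_t\) used in the analysis may be defined differently.

```python
import math


def kkt_residual(grad_f, J, G, c_val, g_val, mu, lam):
    """Norm of (grad_x L, c(x), min{-g(x), lambda}) at a primal-dual triple.

    grad_f: gradient of f (length d); J: m x d Jacobian of c; G: r x d Jacobian of g;
    c_val, g_val: constraint values; mu, lam: multipliers of c and g.
    """
    d = len(grad_f)
    # grad_x L = grad f + J^T mu + G^T lam
    grad_L = [
        grad_f[k]
        + sum(J[i][k] * mu[i] for i in range(len(mu)))
        + sum(G[i][k] * lam[i] for i in range(len(lam)))
        for k in range(d)
    ]
    complementarity = [min(-gi, li) for gi, li in zip(g_val, lam)]
    return math.sqrt(sum(v * v for v in grad_L + list(c_val) + complementarity))
```

For example, for minimizing \(x^2\) subject to \(1 - x\le 0\), the residual is zero at the KKT point \(x = 1\), \(\lambda = 2\), and positive elsewhere.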

In the next subsection, we introduce an active-set SQP direction that is motivated by the augmented Lagrangian (8).

2.1 An active-set SQP direction via EQP

Let \(\epsilon , \nu , \eta >0\) be fixed parameters. Given the t-th iterate \(({{\varvec{x}}}_t,\varvec{\mu }_t,{\varvec{\lambda }}_t)\in \mathcal {T}_{\nu }\times \mathbb {R}^m\times \mathbb {R}^r\), we let \(J_t = J({{\varvec{x}}}_t)\), \(G_t = G({{\varvec{x}}}_t)\) (similarly for \(\nabla f_t, c_t, g_t\), \(q_{\nu }^t\), etc.) denote the quantities evaluated at the t-th iterate. We generally use the index t as a subscript, except for quantities (e.g., \(q_{\nu }^t\)) that depend on \(\epsilon \), \(\nu \), or \(\eta \), which already occupy the subscript; for these, t appears as a superscript. For an active set \(\mathcal {A}\subseteq \{1,\ldots , r\}\), we denote by \({\varvec{\lambda }}_{t_a} = ({\varvec{\lambda }}_t)_{\mathcal {A}}\) and \({\varvec{\lambda }}_{t_c} = ({\varvec{\lambda }}_t)_{\mathcal {A}^c}\) (similarly for \(g_{t_a}\), \(g_{t_c}\), \(G_{t_a}\), \(G_{t_c}\), etc.) the sub-vectors (or sub-matrices), and write \(\varPi _a(\cdot ) = \varPi _{\mathcal {A}}(\cdot )\), \(\varPi _c(\cdot ) = \varPi _{\mathcal {A}^c}(\cdot )\) for shorthand.

With the t-th iterate \(({{\varvec{x}}}_t,\varvec{\mu }_t,{\varvec{\lambda }}_t)\) and the above notation, we first define the identified active set as

We then solve the following coupled linear system

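for some \(B_t\) that approximates the Hessian \(\nabla _{{{\varvec{x}}}}^2\mathcal {L}_t\). Our active-set SQP direction is then \(\varDelta _t:=(\varDelta {{\varvec{x}}}_t, \varDelta \varvec{\mu }_t, \varDelta {\varvec{\lambda }}_t)\). Finally, we update the iterate as

\[ ({{\varvec{x}}}_{t+1}, \varvec{\mu }_{t+1}, {\varvec{\lambda }}_{t+1}) = ({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t) + \alpha _t\varDelta _t \]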

with \(\alpha _t\) chosen to ensure a certain sufficient decrease on the merit function (8).

This definition of the active set was introduced in [50, (8.5)] and has been used, e.g., in [53]. Intuitively, for the i-th inequality constraint, if \(g_i^\star = (g({{\varvec{x}}}^\star ))_i = 0\) and \({\varvec{\lambda }}_i^\star >0\), then i will be identified when \(({{\varvec{x}}}_t, {\varvec{\lambda }}_t)\) is close to \(({{\varvec{x}}}^\star ,{\varvec{\lambda }}^\star )\); if \(g_i^\star <0\) and \({\varvec{\lambda }}^\star _i = 0\), then i will not be identified. The stepsize \(\alpha _t\) is usually chosen by line search. In Sect. 3, we will design a stochastic line search scheme to select \(\alpha _t\) adaptively. Compared to fully stochastic SQP schemes [3, 4, 18], this requires more precise model estimates. We explain the SQP direction (12) in the next remark.
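The line search mentioned above can be illustrated by the classical Armijo backtracking rule, sketched below in Python. This is a generic deterministic rule with assumed parameter names (`alpha0`, `beta`, `c1`); in the stochastic setting, both the merit values and the directional derivative would be replaced by sample-based estimates, and the stochastic line search of Sect. 3 involves further conditions that are not reproduced here.

```python
def backtracking(phi, slope, x, direction, alpha0=1.0, beta=0.5, c1=1e-4, max_iter=50):
    """Armijo backtracking: return the first alpha in {alpha0, beta*alpha0, beta^2*alpha0, ...}
    with phi(x + alpha * direction) <= phi(x) + c1 * alpha * slope, where
    slope = grad phi(x)^T direction should be negative. Returns None if no alpha passes."""
    phi0 = phi(x)
    alpha = alpha0
    for _ in range(max_iter):
        trial = [xi + alpha * di for xi, di in zip(x, direction)]
        if phi(trial) <= phi0 + c1 * alpha * slope:
            return alpha
        alpha *= beta
    return None


def half_sq_norm(x):
    """Toy merit function phi(x) = ||x||^2 / 2."""
    return 0.5 * sum(xi * xi for xi in x)
```

From \(x = 2\), the direction \(-4\) overshoots at unit step and is accepted after one halving, whereas the direction \(-2\) is accepted with \(\alpha = 1\); an ascent direction is never accepted.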

Remark 1

Our dual direction \((\varDelta \varvec{\mu }_t, \varDelta {\varvec{\lambda }}_t)\) differs from the usual SQP direction introduced, for example, in [50, (8.9)]. In particular, the system (12a) is exactly the KKT system of the following EQP:

Thus, \((\varDelta {{\varvec{x}}}_t, \varvec{\mu }_t + {\tilde{\varDelta }}\varvec{\mu }_t, {\varvec{\lambda }}_{t_a} + {\tilde{\varDelta }}{\varvec{\lambda }}_{t_a})\) solved from (12a) is also the primal-dual solution of the above EQP. However, instead of using \(({\tilde{\varDelta }}\varvec{\mu }_t, {\tilde{\varDelta }}{\varvec{\lambda }}_{t_a}, -{\varvec{\lambda }}_{t_c})\), we solve for the dual direction \((\varDelta \varvec{\mu }_t, \varDelta {\varvec{\lambda }}_t)\) of both active and inactive constraints from (12b). As \(B_t\) converges to \(\nabla _{{{\varvec{x}}}}^2\mathcal {L}_t\) and \(({{\varvec{x}}}_t,\varvec{\mu }_t,{\varvec{\lambda }}_t)\) converges to a KKT point \(({{\varvec{x}}}^\star , {\varvec{\mu }^\star }, {\varvec{\lambda }}^\star )\), it is fairly easy to see that the difference between \((\varDelta \varvec{\mu }_t, \varDelta {\varvec{\lambda }}_t)\) and \(({\tilde{\varDelta }}\varvec{\mu }_t, {\tilde{\varDelta }}{\varvec{\lambda }}_t)\) (where we denote \({\tilde{\varDelta }}{\varvec{\lambda }}_{t_c} = -{\varvec{\lambda }}_{t_c}\)) vanishes at a higher order, by noting that

Thus, the fast local rate of the SQP direction \((\varDelta {{\varvec{x}}}_t, {\tilde{\varDelta }}\varvec{\mu }_t, {\tilde{\varDelta }}{\varvec{\lambda }}_t)\) is preserved by \(\varDelta _t\). However, the adjustment in \(\varDelta _t\) turns out to be crucial for the merit function (8) when \(B_t\) is far from \(\nabla _{{{\varvec{x}}}}^2\mathcal {L}_t\). A similar coupled SQP system has been employed for equality constrained problems [35, 40]; here we extend it to inequality constraints. In fact, [50, Proposition 8.2] showed that \((\varDelta {{\varvec{x}}}_t, {\tilde{\varDelta }}\varvec{\mu }_t, {\tilde{\varDelta }}{\varvec{\lambda }}_t)\) is a descent direction of \(\mathcal {L}_{\epsilon , \nu , \eta }^t\) if \(({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t)\) is near a KKT point and \(B_t = \nabla ^2_{{{\varvec{x}}}}\mathcal {L}_t\). However, requiring \(B_t = \nabla ^2_{{{\varvec{x}}}}\mathcal {L}_t\) (i.e., no Hessian modification) is restrictive even for a deterministic line search, and that descent result does not hold if \(B_t \ne \nabla ^2_{{{\varvec{x}}}}\mathcal {L}_t\). In contrast, as shown in Lemma 3, \(\varDelta _t\) is a descent direction even if \(B_t\) is not close to \(\nabla _{{{\varvec{x}}}}^2\mathcal {L}_t\).

2.2 The descent property of \(\varDelta _t\)

In this subsection, we present a descent property of \(\varDelta _t\), focusing on the term \((\nabla \mathcal {L}_{\epsilon , \nu , \eta }^t)^T\varDelta _t\). Unlike SQP for equality constrained problems, \(\varDelta _t\) may fail to be a descent direction of \(\mathcal {L}_{\epsilon , \nu , \eta }^t\) at some points, even if \(\epsilon \) is chosen small enough. To see this, we suppress the iteration index, denote \(g_a = g_{t_a}\) (similarly for \({\varvec{\lambda }}_a\), \({\varvec{\lambda }}_c\), etc.), and divide \(\nabla \mathcal {L}_{\epsilon , \nu , \eta } \) (cf. (10)) into two terms: a dominating term that depends on \((g_a, {\varvec{\lambda }}_c)\) linearly, and a higher-order term that depends on \((g_a, {\varvec{\lambda }}_c)\) at least quadratically. In particular, we write \(\nabla \mathcal {L}_{\epsilon , \nu , \eta } = \nabla \mathcal {L}_{\epsilon , \nu , \eta }^{(1)} + \nabla \mathcal {L}_{\epsilon , \nu , \eta }^{(2)}\), where

Loosely speaking (see Lemma 3 for a rigorous result), \((\nabla \mathcal {L}_{\epsilon , \nu , \eta }^{(1)})^T\varDelta \) provides a sufficient decrease provided the penalty parameters are suitably chosen, while \((\nabla \mathcal {L}_{\epsilon , \nu , \eta }^{(2)})^T\varDelta \) has no such guarantee in general. Since \(\nabla \mathcal {L}_{\epsilon , \nu , \eta }^{(2)}\) depends on \((g_a, {\varvec{\lambda }}_c)\) quadratically, to ensure \(\nabla \mathcal {L}_{\epsilon , \nu , \eta }^T\varDelta <0\) we require \(\Vert g_a\Vert \vee \Vert {\varvec{\lambda }}_c\Vert \) to be small enough for the linear term \((\nabla \mathcal {L}_{\epsilon , \nu , \eta }^{(1)})^T\varDelta \) to dominate. This essentially requires the iterate to be close to a KKT point, since \(\Vert g_a\Vert = \Vert {\varvec{\lambda }}_c\Vert = 0\) at a KKT point. Consequently, if the iterate is far from a KKT point, \(\varDelta \) may not be a descent direction of \(\mathcal {L}_{\epsilon , \nu , \eta }\). In fact, for an iterate far from a KKT point, the KKT matrix \(K_a\) (and its component \(G_a\)) is likely to be singular due to the imprecisely identified active set. The Newton system (12) is then not solvable at this iterate at all, let alone able to generate a descent direction. Without inequalities, the quadratic term \(\nabla \mathcal {L}_{\epsilon , \nu , \eta }^{(2)}\) vanishes and our analysis reduces to the one in [40]. The presence of \(\nabla \mathcal {L}_{\epsilon , \nu , \eta }^{(2)}\) makes our augmented Lagrangian very different from the one in [40] and makes it harder to design a global algorithm that handles inequality constraints.

We point out that requiring a local iterate is not an artifact of the proof technique. Such a requirement is imposed for different search directions in related literature. For example, [50] showed that the SQP direction obtained by either EQP or IQP is a descent direction of \(\mathcal {L}_{\epsilon , \nu , \eta }\) in a neighborhood of a KKT point (cf. Propositions 8.2 and 8.4). That work also required \(B_t = \nabla _{{{\varvec{x}}}}^2\mathcal {L}_t\), which we relax by considering a coupled Newton system. Subsequently, [53, 55] studied truncated Newton directions, whose descent properties hold only locally as well (cf. [53, Proposition 3.7], [55, Proposition 10]).

Now, we introduce two assumptions and formalize the descent property.

Assumption 1

(LICQ) We assume at \({{\varvec{x}}}^\star \) that \((J^T({{\varvec{x}}}^\star )\;\; G^T_{\mathcal {I}({{\varvec{x}}}^\star )}({{\varvec{x}}}^\star ))\) has full column rank, where \(\mathcal {I}({{\varvec{x}}}^\star )\) is the active inequality set defined in (3).

Assumption 2

For \({{\varvec{z}}}\in \{{{\varvec{z}}}\in \mathbb {R}^d: J_t{{\varvec{z}}}= {{\varvec{0}}}, G_{t_a}{{\varvec{z}}}= {{\varvec{0}}}\}\), we have \({{\varvec{z}}}^TB_t{{\varvec{z}}}\ge \gamma _{B}\Vert {{\varvec{z}}}\Vert ^2\) and \(\Vert B_t\Vert \le \varUpsilon _{B}\) for constants \(\varUpsilon _{B}\ge 1\ge \gamma _{B}>0\).

The above condition on \(B_t\) is standard in the nonlinear optimization literature [7]. In fact, \(B_t = I\) with \(\gamma _{B} = \varUpsilon _{B} = 1\) suffices for the analysis in this paper. The requirement \(\varUpsilon _{B}\ge 1\ge \gamma _{B}>0\) (and similarly for other constants defined later) is inessential and only simplifies the presentation. Without it, our analysis holds with \(\gamma _{B}\) replaced by \(\gamma _{B}\wedge 1\) and \(\varUpsilon _{B}\) replaced by \(\varUpsilon _{B}\vee 1\).
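Assumption 2 constrains \(B_t\) only on the null space of the stacked Jacobian \((J_t;\, G_{t_a})\), plus a global norm bound. As a minimal sketch, assuming dense NumPy arrays and an SVD-based null-space basis (the helper name `curvature_constants` is ours, not from the paper), the two constants can be evaluated at a given iterate as follows.

```python
import numpy as np


def curvature_constants(B, J, G_a, tol=1e-12):
    """Return (gamma_B, Upsilon_B) as in Assumption 2 at a single iterate.

    gamma_B: smallest eigenvalue of B restricted to {z : J z = 0, G_a z = 0}.
    Upsilon_B: spectral norm of B.
    """
    B = np.asarray(B, dtype=float)
    d = B.shape[0]
    # Stack the equality Jacobian and the Jacobian of the active inequalities.
    A = np.vstack([np.asarray(J, dtype=float).reshape(-1, d),
                   np.asarray(G_a, dtype=float).reshape(-1, d)])
    if A.shape[0] == 0:
        Z = np.eye(d)  # no constraints: the null space is all of R^d
    else:
        # Rows of Vt beyond the numerical rank span the null space of A.
        _, s, Vt = np.linalg.svd(A)
        rank = int(np.sum(s > tol * max(1.0, s.max())))
        Z = Vt[rank:].T
    upsilon = np.linalg.norm(B, 2)
    if Z.shape[1] == 0:
        # Trivial null space: the curvature condition holds vacuously.
        return np.inf, upsilon
    reduced = Z.T @ B @ Z
    gamma = np.linalg.eigvalsh((reduced + reduced.T) / 2).min()
    return gamma, upsilon
```

For example, `B = diag(1, -1)` is indefinite, yet with `J = [[0, 1]]` its restriction to the null space is positive, giving \(\gamma_B = \varUpsilon_B = 1\); the choice \(B_t = I\) likewise gives \(\gamma_B = \varUpsilon_B = 1\), as stated above.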

Lemma 3

Let \(\nu , \eta >0\) and suppose Assumptions 1 and 2 hold. There exist a constant \(\varUpsilon >0\) depending on \(\varUpsilon _{B}\) but not on \((\nu ,\eta ,\gamma _{B})\), and a compact set \(\mathcal {X}_{\epsilon ,\nu }\times \mathcal {M}\times \varLambda _{\epsilon ,\nu }\) around \(({{\varvec{x}}}^\star , {\varvec{\mu }^\star }, {\varvec{\lambda }}^\star )\) depending on \((\epsilon , \nu )\) but not on \(\eta \),Footnote 1 such that if \(({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t) \in \mathcal {X}_{\epsilon ,\nu }\times \mathcal {M}\times \varLambda _{\epsilon ,\nu }\) with \(\epsilon \) satisfying \(\epsilon \le \gamma _{B}^2(\gamma _{B}\wedge \eta )/\left\{ (1\vee \nu )\varUpsilon \right\} \), then

Furthermore, there exists a compact subset \(\mathcal {X}_{\epsilon ,\nu ,\eta }\times \mathcal {M}\times \varLambda _{\epsilon ,\nu ,\eta }\subseteq \mathcal {X}_{\epsilon ,\nu }\times \mathcal {M}\times \varLambda _{\epsilon ,\nu }\) depending additionally on \(\eta \), such that if \(({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t) \in \mathcal {X}_{\epsilon ,\nu ,\eta }\times \mathcal {M}\times \varLambda _{\epsilon ,\nu ,\eta }\), then

Proof

See Appendix A.2. \(\square \)

Similar arguments for other directions can be found in [53, Proposition 3.5] and [55, Proposition 9]. From the proof of Lemma 3, as long as \(M_t\) and \((J_t^T\;\; G_{t_a}^T)\) in the SQP system (12) have full (column) rank, \((\nabla \mathcal {L}_{\epsilon , \nu , \eta }^{t\; (1)})^T\varDelta _t\) ensures a sufficient decrease provided \(\epsilon \) is small enough. However, (A.11) in the proof also shows that \((\nabla \mathcal {L}_{\epsilon , \nu , \eta }^{t\; (2)})^T\varDelta _t\) is only bounded by

where \(\varUpsilon '>0\) is a constant independent of \((\epsilon , \nu , \eta )\). Thus, to ensure that \((\nabla \mathcal {L}_{\epsilon , \nu , \eta }^t)^T\varDelta _t\) is negative, we have to restrict to a neighborhood in which \(\Vert g_{t_a}\Vert \vee \Vert {\varvec{\lambda }}_{t_c}\Vert \) is small enough that \(\varUpsilon '(\frac{1\vee \nu }{\epsilon (1\wedge \nu ^2)}\vee \eta )(\Vert g_{t_a}\Vert + \Vert {\varvec{\lambda }}_{t_c}\Vert ) \le (\gamma _{B}\wedge \eta )/4\). This requirement is achievable near a KKT pair \(({{\varvec{x}}}^\star , {\varvec{\lambda }}^\star )\), where the active set is correctly identified (implying that \(\Vert g_{t_a}\Vert \le \Vert (g_t)_{\mathcal {I}({{\varvec{x}}}^\star )}\Vert \) and \(\Vert {\varvec{\lambda }}_{t_c}\Vert \le \Vert ({\varvec{\lambda }}_t)_{\{i: 1\le i\le r, {\varvec{\lambda }}^\star _i=0\}}\Vert \)); the radius of the neighborhood clearly depends on \((\epsilon , \nu , \eta )\).
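The inequality above can be solved for the largest admissible value of \(\Vert g_{t_a}\Vert + \Vert {\varvec{\lambda }}_{t_c}\Vert \). The sketch below does only that arithmetic; \(\varUpsilon '\) is not computable in practice, so `upsilon_prime` is a hypothetical input used to illustrate how the admissible neighborhood shrinks as \(\epsilon \) decreases.

```python
def local_radius(upsilon_prime, eps, nu, eta, gamma_B):
    """Largest ||g_a|| + ||lambda_c|| satisfying
    Upsilon' * (max(1, nu) / (eps * min(1, nu^2)) v eta) * r <= min(gamma_B, eta) / 4.
    """
    # The factor multiplying ||g_a|| + ||lambda_c|| in the displayed condition.
    scale = max(max(1.0, nu) / (eps * min(1.0, nu ** 2)), eta)
    return min(gamma_B, eta) / (4.0 * upsilon_prime * scale)
```

With \(\varUpsilon ' = \nu = \eta = \gamma_B = 1\) and \(\epsilon = 0.1\), the admissible sum is \(1/(4\cdot 10) = 0.025\); reducing \(\epsilon \) tenfold reduces it tenfold.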

In the next section, we exploit the augmented Lagrangian merit function (8) and the active-set SQP direction (12) to design a StoSQP scheme for Problem (1). We adaptively choose proper \(\epsilon \) and \(\nu \) (recall that \(\eta >0\) can be any positive number in this paper), incorporate a stochastic line search to select the stepsize, and globalize the scheme by utilizing a safeguarding direction (e.g., a Newton or steepest descent step) of the merit function \(\mathcal {L}_{\epsilon ,\nu ,\eta }\). If the system (12) is not solvable, or is solvable but does not generate a descent direction, we search along the safeguarding direction to decrease the merit function. Nevertheless, since \(\varDelta _t\) usually enjoys a fast local rate (see [50, Proposition 8.3] for a local analysis of \((\varDelta {{\varvec{x}}}_t, {\tilde{\varDelta }}\varvec{\mu }_t, {\tilde{\varDelta }}{\varvec{\lambda }}_t)\) and Remark 1), we prefer to preserve \(\varDelta _t\) as much as possible.

3 An adaptive active-set StoSQP scheme

We design an adaptive scheme for Problem (1) that embeds stochastic line search, originally designed and analyzed for unconstrained problems in [14, 44], into an active-set StoSQP. There are two challenges in designing adaptive schemes for constrained problems. First, the merit function has penalty parameters that are random and adaptively specified, whereas unconstrained problems simply use the objective function in line search. To show global convergence, it is crucial that the stochastic penalty parameters stabilize almost surely, so that for each run, after finitely many iterations, we always target a stabilized merit function. Otherwise, if each iteration decreases a different merit function, the decreases across iterations may not accumulate. Second, since the stabilized parameters are random, they may not lie below unknown deterministic thresholds. Such a condition is critical to ensure the equivalence between the stationary points of the merit function and the KKT points of Problem (1). Thus, even if the iterates converge to a stationary point of the (stabilized) merit function, that stationary point is not necessarily a KKT point of Problem (1).

With only equality constraints, [4, 40] addressed the first challenge under a boundedness condition, and our paper follows the same type of analysis. A similar boundedness condition is also required in deterministic analyses for the penalty parameters to stabilize [7, Chapter 4.3.3]. [4] resolved the second challenge by introducing a noise condition (satisfied by symmetric noise), while [40] resolved it by adjusting the SQP scheme when selecting the penalty parameters. As discussed in Sect. 1, the technique of [40] has two flaws: (i) it requires generating increasing numbers of samples to estimate the gradient of the augmented Lagrangian (cf. [40, Step 1]); (ii) it imposes a feasibility error condition at every step (cf. [40, (19)]). In this paper, we refine the technique of [40] and accommodate inequality constraints. As revealed by Sect. 2, the analysis with inequality constraints is much more involved; more importantly, our “lim” convergence guarantee strengthens the existing “liminf” convergence of the stochastic line search in [40, 44]. In what follows, we use \(\bar{(\cdot )}\) to denote random quantities, except for the iterate \(({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t)\). For example, \({\bar{\alpha }}_t\) denotes a random stepsize.

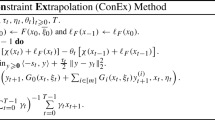

3.1 The proposed scheme

Let \(\eta , \alpha _{max}, \kappa _{grad}, \chi _{grad}, \chi _{f},\chi _{err}>0; \rho >1\); \(\gamma _{B}\in (0,1]\); \(\beta , p_{grad}, p_f\in (0, 1)\); \(\kappa _f\in (0, \beta /(4\alpha _{max})]\) be fixed tuning parameters. Given quantities \(({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t, {\bar{\nu }}_t, {\bar{\epsilon }}_t, {\bar{\alpha }}_t,\bar{\delta }_t)\) at the t-th iteration with \({{\varvec{x}}}_t\in \mathcal {T}_{{\bar{\nu }}_t}\), we perform the following five steps to derive quantities at the \((t+1)\)-th iteration.

Step 1: Estimate objective derivatives

We generate a batch of independent samples \(\xi _1^t\) to estimate the gradient \(\nabla f_t\) and Hessian \(\nabla ^2f_t\). The estimators \({\bar{\nabla }}f_t\) and \({\bar{\nabla }}^2f_t\) need not be computed with the same number of samples, since they have different sample complexities. For example, we can compute \({\bar{\nabla }}f_t\) using \(\xi _1^t\) while computing \({\bar{\nabla }}^2 f_t\) using a fraction of \(\xi _1^t\) (more on this in Sect. 3.4). With \({\bar{\nabla }}f_t\) and \({\bar{\nabla }}^2f_t\), we then compute \({\bar{\nabla }}_{{{\varvec{x}}}}\mathcal {L}_t\), \({\bar{Q}}_{1,t}\), and \({\bar{Q}}_{2,t}\) used in the system (12).
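Step 1 does not fix a particular estimator. A minimal mini-batch sketch, assuming user-supplied per-sample callables `grad_F` and `hess_F` (hypothetical names for \(\nabla F(\cdot ;\xi )\) and \(\nabla ^2F(\cdot ;\xi )\)) and a fixed Hessian fraction `hess_fraction` (our illustrative choice; the paper discusses sample sizes in Sect. 3.4), is:

```python
import numpy as np


def estimate_derivatives(grad_F, hess_F, x, batch, hess_fraction=0.5):
    """Mini-batch estimates of grad f(x) and Hessian f(x).

    The gradient averages over the whole batch xi_1^t; the Hessian averages
    over only the first ceil(hess_fraction * |batch|) samples.
    """
    n = len(batch)
    n_hess = max(1, int(np.ceil(hess_fraction * n)))
    g_bar = np.mean([grad_F(x, xi) for xi in batch], axis=0)
    H_bar = np.mean([hess_F(x, xi) for xi in batch[:n_hess]], axis=0)
    return g_bar, H_bar
```

For the toy realization \(F(x;\xi ) = \tfrac12\Vert x-\xi \Vert ^2\), the gradient estimate at \(x = 0\) is minus the batch mean, and the Hessian estimate is the identity regardless of the fraction used.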

We require the batch size \(|\xi _1^t|\) to be large enough to make the gradient error of the merit function small. In particular, we define

A simple observation from (10) is that \({\bar{\varDelta }}(\nabla \mathcal {L}_\eta ^t)\) is independent of \({\bar{\epsilon }}_t\) (and \({\bar{\nu }}_t\)), which will be selected later (Step 2). We require \(|\xi _1^t|\) to satisfy two conditions:

(a) the event \(\mathcal {E}_1^t\),

satisfies

(b) if \(t-1\) is a successful step (see Step 5 for the meaning), then

The sample complexities required to ensure (15) and (16) are discussed in Sect. 3.4. Compared to [40], we do not let \(|\xi _1^t|\) increase monotonically; instead, we impose the expectation condition (16) whenever we arrive at a new iterate. From our analysis, (16) can also be replaced by requiring the subsequence \(\{|\xi _1^t|: t-1 \text { is a successful step}\}\) to increase to infinity (e.g., by at least one each time), which is still weaker than the requirement in [40]. The right-hand side of (16) will become clear when we use \(\bar{\delta }_t\) in Step 5 (cf. (27)). We use \(P_{\xi _1^t}(\cdot )\) and \(\mathbb {E}_{\xi _1^t}[\cdot ]\) to denote the probability and expectation taken over the randomness of sampling \(\xi _1^t\) only, conditioning on the other random quantities such as \(({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t)\) and \({\bar{\alpha }}_t\). More precisely, \(P_{\xi _1^t}(\mathcal {E}_1^t) = P(\mathcal {E}_1^t\mid \mathcal {F}_{t-1})\) (similarly for \(\mathbb {E}_{\xi _1^t}[\cdot ]\)), where the \(\sigma \)-algebra \(\mathcal {F}_{t-1}\) is defined in (28) below.

Step 2: Set parameter \({\bar{\epsilon }}_t\). With current \({\bar{\nu }}_t\), we decrease \({\bar{\epsilon }}_t \leftarrow {\bar{\epsilon }}_t/\rho \) until \({\bar{\epsilon }}_t\) is small enough to satisfy the following two conditions simultaneously:

(a) the feasibility error is proportionally bounded by the gradient of the merit function, whenever the iterate is closer to a stationary point than a KKT point:

(We use the same multiplier \(\chi _{err}\) only to simplify the notation.)

(b) if the SQP system (12) with \({\bar{\nabla }}_{{{\varvec{x}}}}\mathcal {L}_t\), \({\bar{Q}}_{1,t}\), and \({\bar{Q}}_{2,t}\) is solvable, then we obtain \({\bar{\varDelta }}_t = ({\bar{\varDelta }}{{\varvec{x}}}_t, {\bar{\varDelta }}\varvec{\mu }_t, {\bar{\varDelta }}{\varvec{\lambda }}_t)\) and require

We prove in Lemmas 4 and 5 that both (17) and (18) are satisfied for sufficiently small \({\bar{\epsilon }}_t\). In fact, Lemma 3 has already established (18) in the deterministic case. Even though \({\bar{\varDelta }}_t\) is not always used as the search direction, we still enforce (18) for \(({\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^{t\;(1)})^T{\bar{\varDelta }}_t\). This avoids ruling out \({\bar{\varDelta }}_t\) merely because \({\bar{\epsilon }}_t\) is not small enough, which would result in a positive dominating term \(({\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^{t\;(1)})^T{\bar{\varDelta }}_t\). If (12) is not solvable (e.g., the active set is imprecisely identified so that \(K_{t_a}\) is singular), then (18) is not needed.
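The \({\bar{\epsilon }}_t\)-update in Step 2 is a plain backtracking loop. In the sketch below, `conditions_hold` is a hypothetical callable standing in for the checks (17) and (18), whose displays are not reproduced here; as a toy predicate we use the sufficient bound on \(\epsilon \) from Lemma 3 with an assumed value of the unknown constant \(\varUpsilon \). The iteration cap is our addition; in the paper, termination follows from Lemmas 4 and 5.

```python
def lemma3_eps_threshold(gamma_B, eta, nu, upsilon):
    """Bound eps <= gamma_B^2 (gamma_B ^ eta) / ((1 v nu) Upsilon) from Lemma 3."""
    return gamma_B ** 2 * min(gamma_B, eta) / (max(1.0, nu) * upsilon)


def reduce_epsilon(eps, rho, conditions_hold, max_reductions=200):
    """Step 2: divide eps by rho > 1 until conditions_hold(eps) is True."""
    for _ in range(max_reductions):
        if conditions_hold(eps):
            return eps
        eps /= rho
    raise RuntimeError("eps did not satisfy the conditions within the cap")
```

For instance, with \(\gamma_B = 0.5\), \(\eta = 1\), \(\nu = 2\) and an assumed \(\varUpsilon = 1\), the threshold is \(0.0625\), and starting from \(\epsilon = 1\) with \(\rho = 2\) the loop stops after four reductions.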

The condition (17) is the key to ensuring that the stationary point of the merit function to which we converge is a KKT point of (1). By Lemma 2, “the stationarity of the merit function plus vanishing feasibility error” implies a vanishing KKT residual, and (17) states that the feasibility error is roughly controlled by the gradient of the merit function. (17) relaxes [40, (19)] in two respects. First, [40] used no multiplier, while we allow any (large) multiplier \(\chi _{err}\). Second, [40] enforced (17) at every step, while we enforce it only when there is stronger evidence that the scheme is approaching a stationary point than a KKT point. Both relaxations follow from the purpose for which the condition is imposed. When adjusting \({\bar{\epsilon }}_t\), if \(\Vert {\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t,{\bar{\nu }}_t,\eta }^t\Vert \) exceeds \({\bar{R}}_t\) before \(\Vert (c_t, \varvec{w}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t}^t)\Vert \) does (which easily happens for a large \({\bar{\nu }}_t\)), then one can immediately stop adjusting \({\bar{\epsilon }}_t\). Compared to [40], where the SQP system is assumed to be always solvable, (17) has an extra use: when \({\bar{\varDelta }}_t\) is not available, (17) ensures that the safeguarding direction can be computed using the samples from Step 1. This property is not easily achieved, and further relaxations of (17) can be designed if we generate new samples for the safeguarding direction (in Step 3). The subtlety lies in the fact that no penalty parameters are involved when we generate \(\xi _1^t\) in Step 1, while (17) builds a connection between \(\xi _1^t\) and the penalty parameters. Consequently, a sample set \(\xi _1^t\) satisfying (15) and (16) also satisfies the corresponding conditions for the safeguarding direction.

Step 3: Decide the search direction.

We may obtain a stochastic SQP direction \({\bar{\varDelta }}_t\) from Step 2. However, if (12) is not solvable, or it is solvable but \({\bar{\varDelta }}_t\) is not a sufficient descent direction because

then an alternative safeguarding direction \({\hat{\varDelta }}_t\) must be employed to ensure the decrease of the merit function. In that case, we follow [53, 55] and regard \(\mathcal {L}_{{\bar{\epsilon }}_t,{\bar{\nu }}_t,\eta }\) as a penalized objective. We require \({\hat{\varDelta }}_t\) to satisfy

for a constant \(\chi _{u}\ge 1\). As in (17), we use the same constant \(\chi _{u}\) for the two multipliers to simplify the notation: with two different constants \(\chi _{1,u}\) and \(\chi _{2,u}\), we can always set \(\chi _{u} = (1/\chi _{1,u})\vee \chi _{2,u}\) so that (20) holds. The condition (20) is standard in the literature [53, (60a,b)] [55, (52a,b)]. One computationally cheap example satisfying (20) is the steepest descent direction \({\hat{\varDelta }}_t = - {\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^t\) with \(\chi _{u} = 1\). This direction comes at (almost) no extra cost, since the two components of \({\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^t\), namely \({\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^{t\; (1)}\) and \({\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^{t\; (2)}\), have already been computed when checking (18) and (19). A more computationally expensive example is the regularized Newton step \({\hat{H}}_t{\hat{\varDelta }}_t = -{\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^t\), where \({\hat{H}}_t\) captures second-order information of \(\mathcal {L}_{{\bar{\epsilon }}_t,{\bar{\nu }}_t,\eta }^t\) and satisfies \(1/\chi _{u}I \preceq {\hat{H}}_t\preceq \chi _{u}I\). In particular, \({\hat{H}}_t\) can be obtained by regularizing the (generalized) Hessian matrix \(H_t\), which is provided and discussed in [50, 53] and has the formFootnote 2

Here, \({{\varvec{1}}} = (1,\ldots , 1)\in \mathbb {R}^r\) is the all-ones vector. Other examples that improve upon the regularized Newton step include the choices in [21, 54], where a truncated conjugate gradient method is applied to an indefinite Newton system [54, Proposition 3.3, (14)]. We numerically implement the regularized Newton and steepest descent steps in Sect. 4.
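Both safeguarding examples can be sketched in a few lines of NumPy. Since (20) is not displayed here, we assume it reads \(({\bar{\nabla }}\mathcal {L})^T{\hat{\varDelta }}_t \le -\Vert {\bar{\nabla }}\mathcal {L}\Vert ^2/\chi _u\) and \(\Vert {\hat{\varDelta }}_t\Vert \le \chi _u\Vert {\bar{\nabla }}\mathcal {L}\Vert \), which is consistent with both examples in the text. Clipping the eigenvalues of \(H_t\) to \([1/\chi _u, \chi _u]\) is one possible regularization and not necessarily the one used in the experiments; all function names are hypothetical.

```python
import numpy as np


def steepest_descent_direction(grad):
    """Safeguarding direction -grad (satisfies the assumed (20) with chi_u = 1)."""
    return -np.asarray(grad, dtype=float)


def regularized_newton_direction(H, grad, chi_u):
    """Solve H_hat d = -grad, with H_hat = H after clipping eigenvalues to [1/chi_u, chi_u]."""
    H = np.asarray(H, dtype=float)
    grad = np.asarray(grad, dtype=float)
    w, V = np.linalg.eigh((H + H.T) / 2)   # symmetrize, then eigendecompose
    w_clipped = np.clip(w, 1.0 / chi_u, chi_u)
    # H_hat^{-1} = V diag(1 / w_clipped) V^T
    return -(V @ ((V.T @ grad) / w_clipped))


def satisfies_safeguard(grad, d, chi_u, tol=1e-12):
    """Check the assumed form of (20): sufficient descent and bounded length."""
    grad = np.asarray(grad, dtype=float)
    gnorm = np.linalg.norm(grad)
    descent = grad @ d <= -gnorm ** 2 / chi_u + tol
    bounded = np.linalg.norm(d) <= chi_u * gnorm + tol
    return bool(descent and bounded)
```

Because every eigenvalue of \({\hat{H}}_t^{-1}\) lies in \([1/\chi _u, \chi _u]\), the regularized Newton step satisfies both inequalities even when \(H_t\) is indefinite; the steepest descent step meets them with equality for \(\chi _u = 1\).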

Step 4: Estimate the merit function. The adopted search direction is either the SQP direction \({\bar{\varDelta }}_t\) from Step 2 or the safeguarding direction \({\hat{\varDelta }}_t\) from Step 3. We aim to perform stochastic line search by checking the Armijo condition (26) at the trial point \(({{\varvec{x}}}_{s_t}, \varvec{\mu }_{s_t}, {\varvec{\lambda }}_{s_t})\), obtained by moving from \(({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t)\) along the adopted direction with stepsize \({\bar{\alpha }}_t\).

We estimate the merit function in this step and perform line search in Step 5.

First, we check whether the trial primal point \({{\varvec{x}}}_{s_t}\) is in \(\mathcal {T}_{{\bar{\nu }}_t}\). In particular, if \({{\varvec{x}}}_{s_t} \notin \mathcal {T}_{{\bar{\nu }}_t}\), that is, \(a_{s_t} = a({{\varvec{x}}}_{s_t}) > {\bar{\nu }}_t/2\) (cf. (5)), then we stop the current iteration and reject the trial point by letting \(({{\varvec{x}}}_{t+1}, \varvec{\mu }_{t+1}, {\varvec{\lambda }}_{t+1}) = ({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t)\), \({\bar{\epsilon }}_{t+1} = {\bar{\epsilon }}_t\), \({\bar{\alpha }}_{t+1} = {\bar{\alpha }}_t\), and \(\bar{\delta }_{t+1} = \bar{\delta }_t\). We also increase \({\bar{\nu }}_t\) by letting

where \(\lceil y\rceil \) denotes the smallest integer greater than or equal to y. The definition of \(j\ge 1\) in (22) ensures \({{\varvec{x}}}_{s_t} \in \mathcal {T}_{{\bar{\nu }}_{t+1}}\). However, \(j=1\) works as well, since \({{\varvec{x}}}_{t+1}={{\varvec{x}}}_t \in \mathcal {T}_{{\bar{\nu }}_t} \subseteq \mathcal {T}_{{\bar{\nu }}_{t+1}}\), as required for performing the next iteration. When \({{\varvec{x}}}_{s_t} \notin \mathcal {T}_{{\bar{\nu }}_t}\), and particularly if \(a_{s_t} \ge {\bar{\nu }}_t\), evaluating the merit function \(\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^{s_t}\) is not informative, since the penalty term in \(\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^{s_t}\) may be rescaled by a negative multiplier. Thus, we increase \({\bar{\nu }}_t\) and rerun the iteration at the current point.
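Display (22) is not reproduced here. Assuming it sets \({\bar{\nu }}_{t+1} = \rho ^j{\bar{\nu }}_t\) with the smallest integer \(j\ge 1\) such that \(a_{s_t} \le {\bar{\nu }}_{t+1}/2\) (consistent with the properties stated above: \(j\ge 1\), an increase by at least a factor \(\rho \), and \({{\varvec{x}}}_{s_t} \in \mathcal {T}_{{\bar{\nu }}_{t+1}}\)), the update can be sketched as follows. The function name is hypothetical, and an integer search replaces the ceiling of a logarithm to avoid floating-point rounding at exact powers of \(\rho \).

```python
def update_nu(nu, a_trial, rho):
    """Enlarge nu when the trial point leaves T_nu = {x : a(x) <= nu / 2}.

    a_trial is a(x_{s_t}); rho > 1 is the growth factor. Returns nu unchanged
    if the trial point already lies in T_nu.
    """
    if a_trial <= nu / 2:
        return nu
    j = 1
    # Smallest j >= 1 with rho**j * nu >= 2 * a_trial, i.e. x_{s_t} in T_{rho^j nu}.
    while rho ** j * nu < 2 * a_trial:
        j += 1
    return rho ** j * nu
```

For example, with \({\bar{\nu }}_t = 1\), \(\rho = 2\) and \(a_{s_t} = 2\), the sketch returns \(4\), the smallest power-of-two multiple for which \(a_{s_t} \le {\bar{\nu }}_{t+1}/2\).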

Otherwise, if \({{\varvec{x}}}_{s_t} \in \mathcal {T}_{{\bar{\nu }}_t}\), we generate a batch of independent samples \(\xi _2^t\), which are also independent of \(\xi _1^t\), and estimate \(f_t, f_{s_t}, \nabla f_t, \nabla f_{s_t}\). As in Step 1, the estimators \({\bar{f}}_t, {\bar{f}}_{s_t}\) and \({\bar{{\bar{\nabla }}}}f_t, {\bar{{\bar{\nabla }}}}f_{s_t}\) need not be computed with the same number of samples. For example, \({\bar{f}}_t\) and \({\bar{f}}_{s_t}\) can be computed using \(\xi _2^t\), while \({\bar{{\bar{\nabla }}}}f_t\) and \({\bar{{\bar{\nabla }}}}f_{s_t}\) can be computed using a fraction of \(\xi _2^t\). The sample complexities are discussed in Sect. 3.4. Here, we distinguish \({\bar{{\bar{\nabla }}}}f_t\) from \({\bar{\nabla }}f_t\) in Step 1: both estimate \(\nabla f_t\), but the former is computed from \(\xi _2^t\) and the latter from \(\xi _1^t\). Using \({\bar{f}}_t,{\bar{f}}_{s_t},{\bar{{\bar{\nabla }}}}f_t,{\bar{{\bar{\nabla }}}}f_{s_t}\), we compute \({\bar{\mathcal {L}}}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^t\) and \({\bar{\mathcal {L}}}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^{s_t}\) according to (8).

We require \(|\xi _2^t|\) to be large enough that the event \(\mathcal {E}_2^t\),

satisfies

and

As in (15) and (16), \(P_{\xi _2^t}(\cdot )\) and \(\mathbb {E}_{\xi _2^t}[\cdot ]\) denote probability and expectation taken over the randomness of sampling \(\xi _2^t\) only, conditioning on the other random quantities. That is, \(P_{\xi _2^t}(\mathcal {E}_2^t) = P(\mathcal {E}_2^t\mid \mathcal {F}_{t-0.5})\) (similarly for \(\mathbb {E}_{\xi _2^t}[\cdot ]\)), where the \(\sigma \)-algebra \(\mathcal {F}_{t-0.5} = \sigma (\mathcal {F}_{t-1}\cup \sigma (\xi _1^t))\) is defined in (28) below.

Step 5: Perform line search. With the merit function estimates, we check the Armijo condition next.

(a) If the Armijo condition holds,

then the trial point is accepted by letting \(({{\varvec{x}}}_{t+1}, \varvec{\mu }_{t+1}, {\varvec{\lambda }}_{t+1}) = ({{\varvec{x}}}_{s_t}, \varvec{\mu }_{s_t}, {\varvec{\lambda }}_{s_t})\) and the stepsize is increased by \({\bar{\alpha }}_{t+1} = \rho {\bar{\alpha }}_t\wedge \alpha _{max}\). Furthermore, we check if the decrease of the merit function is reliable. In particular, if

then we increase \(\bar{\delta }_t\) by \(\bar{\delta }_{t+1} = \rho \bar{\delta }_t\); otherwise, we decrease \(\bar{\delta }_t\) by \(\bar{\delta }_{t+1} = \bar{\delta }_t/\rho \).

(b) If the Armijo condition (26) does not hold, then the trial point is rejected by letting \(({{\varvec{x}}}_{t+1}, \varvec{\mu }_{t+1}, {\varvec{\lambda }}_{t+1}) = ({{\varvec{x}}}_{t}, \varvec{\mu }_{t},{\varvec{\lambda }}_{t})\), \({\bar{\alpha }}_{t+1} = {\bar{\alpha }}_t/\rho \) and \(\bar{\delta }_{t+1} = \bar{\delta }_t/\rho \).

Finally, in both cases (a) and (b), we let \({\bar{\epsilon }}_{t+1} = {\bar{\epsilon }}_t\), \({\bar{\nu }}_{t+1} = {\bar{\nu }}_t\) and repeat the procedure from Step 1. From (27), we see that \(\bar{\delta }_t\) (roughly) has the order \({\bar{\alpha }}_t\Vert {\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t,{\bar{\nu }}_t,\eta }^t\Vert ^2\), which justifies the right-hand side of (16).

The proposed scheme is summarized in Algorithm 1. We define three types of iterations for line search. If the Armijo condition (26) holds, we call the iteration a successful step; otherwise, we call it an unsuccessful step. For a successful step, if the sufficient decrease in (27) is satisfied, we call it a reliable step; otherwise, we call it an unreliable step. The same terminology is used in [14, 40, 44].
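The bookkeeping of Step 5 and the three iteration types can be summarized as a small state update. This is a sketch only: the Armijo check (26) and the reliability check (27) are assumed to be evaluated elsewhere and passed in as booleans, and the names `LineSearchState` and `step5_update` are hypothetical. The parameters \({\bar{\epsilon }}_t\) and \({\bar{\nu }}_t\) are untouched in Step 5, so they do not appear.

```python
from dataclasses import dataclass


@dataclass
class LineSearchState:
    alpha: float  # stepsize bar-alpha_t
    delta: float  # reliability threshold bar-delta_t


def step5_update(state, armijo_holds, reliable, rho, alpha_max):
    """Return (new_state, accept_trial_point, step_type) following Step 5."""
    if not armijo_holds:
        # (b) Unsuccessful step: keep the iterate, shrink alpha and delta.
        return (LineSearchState(state.alpha / rho, state.delta / rho),
                False, "unsuccessful")
    # (a) Successful step: accept the trial point and enlarge alpha (capped).
    new_alpha = min(rho * state.alpha, alpha_max)
    if reliable:
        return LineSearchState(new_alpha, rho * state.delta), True, "reliable"
    return LineSearchState(new_alpha, state.delta / rho), True, "unreliable"
```

The `reliable` flag matters only for successful steps, mirroring the text: \(\bar{\delta }_t\) grows only after a reliable step and shrinks otherwise.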

To end this section, we introduce the filtration induced by the randomness of the algorithm. Given a random sample sequence \(\{\xi _1^t,\xi _2^t\}_{t=0}^\infty \),Footnote 3 we let \(\mathcal {F}_t = \sigma (\{\xi _1^j, \xi _2^j\}_{j=0}^t)\), \(t\ge 0\), be the \(\sigma \)-algebra generated by all samples up to iteration t; \(\mathcal {F}_{t-0.5} = \sigma (\{\xi _1^j, \xi _2^j\}_{j=0}^{t-1}\cup \xi _1^t)\), \(t\ge 0\), be the \(\sigma \)-algebra generated by all samples up to iteration \(t-1\) together with \(\xi _1^t\); and \(\mathcal {F}_{-1}\) be the trivial \(\sigma \)-algebra generated by the (deterministic) initial iterate. Throughout the presentation, \({\bar{\epsilon }}_t\) denotes the quantity obtained after Step 2; that is, \({\bar{\epsilon }}_t\) satisfies (17) and (18). With this setup, it is easy to see that

We analyze Algorithm 1 in the next subsection.

3.2 Assumptions and stability of parameters

We study the stability of the parameter sequence \(\{{\bar{\epsilon }}_t, {\bar{\nu }}_t\}_t\). We will show that, for each run of the algorithm, the sequence is stabilized after a finite number of iterations. Thus, Lines 5 and 14 of Algorithm 1 will not be performed when the iteration index t is large enough. We begin by introducing the assumptions.

Assumption 3

(Regularity condition) We assume the iterates \(\{({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t)\}\) and trial points \(\{({{\varvec{x}}}_{s_t}, \varvec{\mu }_{s_t}, {\varvec{\lambda }}_{s_t})\}\) are contained in a convex compact region \(\mathcal {X}\times \mathcal {M}\times \varLambda \). Further, if \({{\varvec{x}}}_{s_t}\in \mathcal {T}_{{\bar{\nu }}_t}\), then the segment \(\{\zeta {{\varvec{x}}}_t + (1-\zeta ){{\varvec{x}}}_{s_t}: \zeta \in (0, 1)\}\subseteq \mathcal {T}_{\theta {\bar{\nu }}_t}\) for some \(\theta \in [1, 2)\). We also assume the functions f, g, c are thrice continuously differentiable over \(\mathcal {X}\), and the realizations \(|F({{\varvec{x}}}, \xi )|\), \(\Vert \nabla F({{\varvec{x}}}, \xi )\Vert \), \(\Vert \nabla ^2 F({{\varvec{x}}}, \xi )\Vert \) are uniformly bounded over \({{\varvec{x}}}\in \mathcal {X}\) and \(\xi \sim {{\mathcal {P}}}\).

Assumption 4

(Constraint qualification) For any \({{\varvec{x}}}\in \varOmega \), we assume that \((J^T({{\varvec{x}}})\,\, G^T_{\mathcal {I}({{\varvec{x}}})}({{\varvec{x}}}))\) has full column rank, where \(\varOmega \) is the feasible set in (2) and \(\mathcal {I}({{\varvec{x}}})\) is the active set in (3). For any \({{\varvec{x}}}\in \mathcal {X}\backslash \varOmega \), we assume the linear system

has a solution for \({{\varvec{z}}}\in \mathbb {R}^d\).

The boundedness condition on realizations in Assumption 3 is widely used in StoSQP analysis to obtain a well-behaved stochastic penalty parameter sequence [3, 4, 18, 40]. The third derivatives of f, g, c are required only in the analysis, not in the implementation. They are needed because the existence of the (generalized) Hessian of the augmented Lagrangian relies on third derivatives; see, for example, [50, Section 6] for the same requirement. For deterministic schemes, the compactness condition on the iterates is typical in augmented Lagrangian and SQP analyses [7, Chapter 4] [41, Chapter 18]. Some literature relaxes it by assuming that all quantities (e.g., the objective gradient and the constraint Jacobian) are uniformly bounded and that the objective (hence the merit function) is bounded below. However, either condition is rather restrictive for StoSQP due to the underlying randomness of the scheme. That said, given that the StoSQP iterates presumably contract toward a deterministic feasible set, we believe that an unbounded iteration sequence is rare in general. Furthermore, compared to the fully stochastic schemes in [3, 4, 18], we generate a batch of samples to obtain a more precise estimate of the true model in each iteration; thus, our stochastic iterates are more likely to closely track the underlying deterministic iterates.

The convexity of \(\mathcal {M}\times \varLambda \) can be removed by replacing it with the closed convex hull \(\overline{\text {conv}(\mathcal {M})} \times \overline{\text {conv}(\varLambda )}\). However, the convexity of the set containing the primal iterates is essential for a valid Taylor expansion. See [54, Proposition 2.2 and Section 4] [52, Proposition 2.4 and (14)] and references therein for the same requirement when performing line search with (8) and applying its Taylor expansion.

In particular, by the design of Algorithm 1, we have \({{\varvec{x}}}_t \in \mathcal {T}_{{\bar{\nu }}_t}\) for any t, while the trial point \({{\varvec{x}}}_{s_t}\) may lie outside \(\mathcal {T}_{{\bar{\nu }}_t}\). If \({{\varvec{x}}}_{s_t}\notin \mathcal {T}_{{\bar{\nu }}_t}\), we enlarge \({\bar{\nu }}_t\) (Line 14) and rerun the iteration from the beginning. Assumption 3 states that if \({{\varvec{x}}}_{s_t}\in \mathcal {T}_{{\bar{\nu }}_t}\), then the whole segment \(\zeta {{\varvec{x}}}_t+(1-\zeta ){{\varvec{x}}}_{s_t}\), which need not lie entirely in \(\mathcal {T}_{{\bar{\nu }}_t}\) since \(\mathcal {T}_{{\bar{\nu }}_t}\) may be nonconvex, lies in the larger set \(\mathcal {T}_{\theta {\bar{\nu }}_t}\) with \(\theta \in [1,2)\). Since \(\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }\) is SC\(^1\) in \(\mathcal {T}_{2{\bar{\nu }}_t}^\circ \times \mathbb {R}^m\times \mathbb {R}^r\) and \(\mathcal {T}_{\theta {\bar{\nu }}_t} \subseteq \mathcal {T}_{2{\bar{\nu }}_t}^\circ \), where \(\mathcal {T}_{2{\bar{\nu }}_t}^\circ \) denotes the interior of \(\mathcal {T}_{2{\bar{\nu }}_t}\), the second-order Taylor expansion at \(({{\varvec{x}}}_t, \varvec{\mu }_t, {\varvec{\lambda }}_t)\) is valid [50]. Note that the range of \(\theta \) is inessential: if we replace \(\nu /2\) in (5) by \(\nu /\kappa \) for any \(\kappa >1\), then any \(\theta \in [1, \kappa )\) is allowed. In fact, the condition on the segment always holds when the input \(\alpha _{max}\), the upper bound of \({\bar{\alpha }}_t\) (cf. Line 18), is suitably small. Specifically, supposing  (ensured by compactness of iterates), for any \(\theta > 1\) and \(\zeta \in (0, 1)\), as long as \(\alpha _{max} \le (\theta -1){\bar{\nu }}_0/(2\varUpsilon ^2)\), we have \(\zeta {{\varvec{x}}}_t+(1-\zeta ){{\varvec{x}}}_{s_t} \in \mathcal {T}_{\theta {\bar{\nu }}_t}\) by noting that

Clearly, the condition on the segment is not required if \(\mathcal {T}_{\nu }\) in (5) is a convex set, which is the case, for example, for the linear inequality constraints \({{\varvec{x}}}\le {{\varvec{0}}}\) or, more generally, when each \(g_i(\cdot )\) is convex. In the experiments, we further investigate the effect of the range of \(\theta \) by varying \(\kappa \) (\(\kappa = 2\) by default; cf. (5)).

By the compactness condition and noting that \({\bar{\nu }}_t\) is increased by at least a factor of \(\rho \) each time in (22), we immediately know that \({\bar{\nu }}_t\) stabilizes when t is large. Moreover, if we let

then \({\bar{\nu }}_t \le {\tilde{\nu }}\), \(t\ge 0\), almost surely. We will show a similar result for \({\bar{\epsilon }}_t\).

Assumption 4 imposes the constraint qualifications. In particular, for feasible points \(\varOmega \), we assume the linear independence constraint qualification (LICQ), which is a standard condition to ensure the existence and uniqueness of the Lagrange multipliers [41]. For infeasible points \(\mathcal {X}\backslash \varOmega \), we assume that the solution set of the linear system (29) is nonempty. The condition (29) restricts the behavior of the constraint functions outside the feasible set and, together with the compactness condition, implies \(\varOmega \ne \emptyset \) (cf. [36, Proposition 2.5]). In fact, the condition (29) weakens the generalized Mangasarian-Fromovitz constraint qualification (MFCQ) [59, Definition 2.5] and relates to the weak MFCQ, which was proposed for problems with only inequality constraints in [36, Definition 1] and adopted in [50, Assumption A3] and [53, Assumption 3.2]. However, [36] requires the weak MFCQ to hold at feasible points in addition to LICQ, whereas [50, 53] and this paper remove this requirement. The condition (29) simplifies and generalizes the weak MFCQ in [36, 50, 53] by including equality constraints. We note that the weak MFCQ is slightly weaker than (29). By Gordan’s theorem [26], (29) implies that \(\{c_i\cdot \nabla c_i\}_{i: c_i\ne 0}\cup \{\nabla g_i\}_{i: g_i>0}\) are positively linearly independent:

\[\sum _{i: c_i\ne 0} a_i c_i\nabla c_i + \sum _{i: g_i>0} b_i\nabla g_i \ne {{\varvec{0}}}\]

for any coefficients \(a_i, b_i\ge 0\) and \(\sum _i a_i^2+b_i^2 >0\). In contrast, the weak MFCQ only requires that the above linear combination is nonzero for a particular set of coefficients. However, we adopt the simplified but a bit stronger condition only because (29) has a cleaner form and a clearer connection to SQP subproblems. The coefficients of the weak MFCQ in [36, 50, 53] are relatively hard to interpret. Instead of regarding the constraint qualification as the essence of constraints, those coefficients depend on particular choice of the merit function, although that assumption statement is sharper. That said, (29) is still weaker than other literature on the augmented Lagrangian [34, 47, 49]; and weaker than what is widely assumed in SQP analysis [10], where the IQP system, \(c_i + \nabla ^Tc_i{{\varvec{z}}}= {{\varvec{0}}}\), \(1\le i\le m\), \(g_i + \nabla ^Tg_i{{\varvec{z}}}\le {{\varvec{0}}}\), \(1\le i\le r\), is supposed to have a solution. Moreover, we do not require the strict complementary condition, which is often imposed for the merit functions that apply (squared) slack variables to transform nonlinear inequality constraints [60, A2], [23, Proposition 3.8].
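By Gordan's theorem, positive linear independence of the vectors above can be checked numerically at a given infeasible point through a small linear feasibility problem: the vectors are positively linearly independent exactly when there is no \({{\varvec{y}}}\ge {{\varvec{0}}}\) with \({{\varvec{1}}}^T{{\varvec{y}}}=1\) whose combination of the vectors vanishes. The sketch below is a minimal illustration, assuming the constraint values \(c, g\) and Jacobians \(J\) (rows \(\nabla ^Tc_i\)) and \(G\) (rows \(\nabla ^Tg_i\)) are available as NumPy arrays; the function names are hypothetical, and the check relies on SciPy's `linprog` with the HiGHS solver.

```python
import numpy as np
from scipy.optimize import linprog


def violated_constraint_vectors(c, J, g, G, tol=0.0):
    """Stack c_i * grad c_i (for c_i != 0) and grad g_i (for g_i > 0) as rows."""
    d = J.shape[1]
    rows = [c[i] * J[i] for i in range(len(c)) if abs(c[i]) > tol]
    rows += [G[i] for i in range(len(g)) if g[i] > tol]
    return np.array(rows, dtype=float).reshape(-1, d)


def positively_linearly_independent(V):
    """Gordan: no nonzero y >= 0 with V^T y = 0  <=>  some z satisfies V z < 0."""
    k, d = V.shape
    if k == 0:
        return True  # vacuous: no violated constraints at this point
    # Feasibility LP in y: V^T y = 0, sum(y) = 1, y >= 0, with a zero objective.
    A_eq = np.vstack([V.T, np.ones((1, k))])
    b_eq = np.append(np.zeros(d), 1.0)
    res = linprog(np.zeros(k), A_eq=A_eq, b_eq=b_eq,
                  bounds=[(0, None)] * k, method="highs")
    return res.status == 2  # status 2: the LP is infeasible


# Toy infeasible points in R^2 with one equality and one inequality constraint.
J = np.array([[1.0, 0.0]])
V_bad = violated_constraint_vectors(np.array([1.0]), J, np.array([0.5]), np.array([[-1.0, 0.0]]))
V_good = violated_constraint_vectors(np.array([1.0]), J, np.array([0.5]), np.array([[0.0, 1.0]]))
V_sign = violated_constraint_vectors(np.array([-2.0]), J, np.array([0.5]), np.array([[1.0, 0.0]]))
```

In the first toy case the vectors \((1,0)\) and \((-1,0)\) cancel, so positive linear independence fails; the third case shows why the factor \(c_i\) matters: with \(c_1=-2\), the vector \(c_1\nabla c_1=(-2,0)\) cancels against \(\nabla g_1=(1,0)\).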

The first lemma shows that (17) is satisfied for sufficiently small \({\bar{\epsilon }}_t\). Although (17) is inspired by [40, (19)] for equality constraints, the proof differs substantially from that in [40] (cf. Lemma 4 there).

Lemma 4

Under Assumptions 3 and 4, there exists a deterministic threshold \(\tilde{\epsilon }_1>0\) such that (17) holds for any \({\bar{\epsilon }}_t \le {\tilde{\epsilon }}_1\).

Proof

See Appendix B.1. \(\square \)

The second lemma shows that (18) is satisfied for sufficiently small \({\bar{\epsilon }}_t\); the analysis is similar to that of Lemma 3. We need the following condition on the SQP system (12).

Assumption 5

We assume that, whenever (12) is solvable, \((J_t^T\; G_{t_a}^T)\) has full column rank, and there exist positive constants \(\varUpsilon _{B}\ge 1\ge \gamma _{B}\vee \gamma _{H}\) such that

and \({{\varvec{z}}}^TB_t{{\varvec{z}}}\ge \gamma _{B}\Vert {{\varvec{z}}}\Vert ^2\), \(\forall {{\varvec{z}}}\in \{{{\varvec{z}}}\in \mathbb {R}^d: J_t{{\varvec{z}}}= {{\varvec{0}}}, G_{t_a}{{\varvec{z}}}= {{\varvec{0}}}\}\).

Assumption 5 summarizes Assumptions 1 and 2. As shown in Lemma 3, the conditions on \(M_t\) and \((J_t^T\; G_{t_a}^T)\) hold locally. For the global analysis presented here, it is easy to construct a Hessian approximation \(B_t\) that satisfies the condition, e.g., \(B_t = I\); however, such a choice does not lead to fast local rates. In practice, given a lower bound \(\gamma _{B}>0\), \(B_t\) can be constructed by regularizing a subsampled Hessian (e.g., for finite-sum objectives) or a sketched Hessian (e.g., for regression objectives), which preserves some second-order information at a lower cost. With Assumption 5, we have the following result.
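As an illustration of the practical construction just described, the following minimal NumPy sketch averages a mini-batch of per-sample Hessians of a finite-sum objective and clips the eigenvalues of the average into \([\gamma _{B}, \varUpsilon _B]\). Eigenvalue clipping is one common regularization, assumed here for concreteness rather than taken from the paper; it enforces \({{\varvec{z}}}^TB_t{{\varvec{z}}}\ge \gamma _{B}\Vert {{\varvec{z}}}\Vert ^2\) on all of \(\mathbb {R}^d\), which is stronger than the null-space condition in Assumption 5, and the upper clip at \(\varUpsilon _B\) is an extra safeguard. The function name and the random test Hessians are hypothetical.

```python
import numpy as np


def regularized_subsampled_hessian(sample_hessians, batch_idx, gamma_B, upsilon_B):
    """Average a mini-batch of per-sample Hessians and clip the spectrum.

    Clipping eigenvalues to [gamma_B, upsilon_B] gives z^T B z >= gamma_B ||z||^2
    for every z (hence also on the null space of (J_t; G_{t_a})) and ||B|| <= upsilon_B.
    """
    H = np.mean([sample_hessians[i] for i in batch_idx], axis=0)
    H = 0.5 * (H + H.T)  # symmetrize to remove round-off asymmetry
    eigvals, Q = np.linalg.eigh(H)
    clipped = np.clip(eigvals, gamma_B, upsilon_B)
    return (Q * clipped) @ Q.T  # Q diag(clipped) Q^T


# Hypothetical finite-sum setting: n symmetric, generally indefinite sample Hessians.
rng = np.random.default_rng(0)
d, n = 4, 20
sample_hessians = []
for _ in range(n):
    A = rng.standard_normal((d, d))
    sample_hessians.append(A + A.T)
batch_idx = rng.choice(n, size=5, replace=False)
B = regularized_subsampled_hessian(sample_hessians, batch_idx, gamma_B=0.1, upsilon_B=10.0)
```

Clipping keeps the eigenvectors of the subsampled Hessian, so directions of positive curvature inside \([\gamma _{B}, \varUpsilon _B]\) are preserved exactly, unlike the choice \(B_t = I\).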

Lemma 5

Under Assumptions 3 and 5, there exists a deterministic threshold \(\tilde{\epsilon }_2>0\) such that (18) holds for any \({\bar{\epsilon }}_t \le {\tilde{\epsilon }}_2\).

Proof

See Appendix B.2. \(\square \)

We summarize (30) and Lemmas 4 and 5 in the next theorem.

Theorem 1

Under Assumptions 3, 4, and 5, there exist deterministic thresholds \({\tilde{\nu }}\), \({\tilde{\epsilon }}>0\) such that the sequence \(\{{\bar{\nu }}_t, {\bar{\epsilon }}_t\}_t\) generated by Algorithm 1 satisfies \({\bar{\nu }}_t \le {\tilde{\nu }}\) and \({\bar{\epsilon }}_t \ge {\tilde{\epsilon }}\) for all \(t\ge 0\). Moreover, almost surely, there exists an iteration threshold \(\bar{t}<\infty \) such that \({\bar{\epsilon }}_t = {\bar{\epsilon }}_{\bar{t}}\) and \({\bar{\nu }}_t = {\bar{\nu }}_{\bar{t}}\) for all \( t\ge \bar{t}\).

Proof

The existence of \({\tilde{\nu }}\) is shown in (30). By Lemmas 4 and 5, defining \({\tilde{\epsilon }}= ({\tilde{\epsilon }}_1\wedge {\tilde{\epsilon }}_2)/\rho \) yields the existence of \({\tilde{\epsilon }}\). The existence of the iteration threshold \({\bar{t}}\) follows since \(\{{\bar{\nu }}_t, 1/{\bar{\epsilon }}_t\}_t\) are bounded from above and each update increases \({\bar{\nu }}_t\) or \(1/{\bar{\epsilon }}_t\) by at least a factor of \(\rho >1\). \(\square \)

We mention that the iteration threshold \({\bar{t}}\) is random for stochastic schemes and varies across runs; however, it exists almost surely. The following analysis assumes that t is large enough that \(t\ge {\bar{t}}\) and \({\bar{\epsilon }}_t, {\bar{\nu }}_t\) have stabilized. We condition our analysis on the \(\sigma \)-algebra \(\mathcal {F}_{{\bar{t}}}\), which means that we only consider the randomness of the samples generated after \({\bar{t}}+1\) iterations and, by (28), the parameters \({\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}\) are fixed. We should point out that, although it is standard to focus only on the tail of the iteration sequence to show global convergence (even in the deterministic case [41, Theorem 18.3]), such an analysis misses non-asymptotic guarantees. In particular, we know that the scheme changes the merit parameters at most \(\log ({\tilde{\nu }}{\bar{\epsilon }}_0/({\bar{\nu }}_0{\tilde{\epsilon }}))/\log (\rho )\) times; however, our analysis does not answer how many iterations these changes span. Establishing a bound on \({\bar{t}}\) in expectation or with high probability would help us further understand the efficiency of the scheme. However, since characterizing \({\bar{t}}\) is difficult even for deterministic schemes, we leave such a study to future work. Another missing aspect is the iteration complexity, namely the number of iterations needed to attain an \(\epsilon \)-first- or second-order stationary point (we abuse the notation \(\epsilon \) here to denote the accuracy level). The iteration complexity has recently been studied for two StoSQP schemes under very particular setups [5, 17]; none of the existing works allows stochastic line search or inequality constraints. We leave the iteration complexity of our scheme to future work as well.
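The bound on the number of merit-parameter changes is elementary: each update multiplies \({\bar{\nu }}_t\) or \(1/{\bar{\epsilon }}_t\) by at least \(\rho \), so the ratio \({\bar{\nu }}_t/{\bar{\epsilon }}_t\) grows by at least a factor of \(\rho \) per update while staying below \({\tilde{\nu }}/{\tilde{\epsilon }}\). The hypothetical helper below counts the maximal number of updates directly, which avoids rounding issues in the floating-point ratio of logarithms; it assumes positive inputs with \({\bar{\nu }}_0\le {\tilde{\nu }}\) and \({\bar{\epsilon }}_0\ge {\tilde{\epsilon }}\). As noted above, it says nothing about when these updates occur.

```python
def max_merit_updates(nu0, eps0, nu_tilde, eps_tilde, rho):
    """Largest k with rho**k * (nu0 / eps0) <= nu_tilde / eps_tilde.

    Each update multiplies nu_bar or 1/eps_bar by at least rho > 1, so the
    ratio nu_bar / eps_bar grows by at least rho per update and is capped by
    nu_tilde / eps_tilde; k is therefore the maximal number of updates.
    """
    if rho <= 1:
        raise ValueError("rho must exceed 1")
    ratio, cap, k = nu0 / eps0, nu_tilde / eps_tilde, 0
    while ratio * rho <= cap:
        ratio *= rho
        k += 1
    return k
```

For example, with \(\rho =2\), \({\bar{\nu }}_0={\bar{\epsilon }}_0=1\), \({\tilde{\nu }}=8\), and \({\tilde{\epsilon }}=1/4\), at most five updates can occur, matching \(\log (32)/\log (2)=5\).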

3.3 Convergence analysis

We now conduct the global convergence analysis of Algorithm 1. We prove that \(\lim _{t\rightarrow \infty } R_t = 0\) almost surely, where \(R_t = \Vert (\nabla _{{{\varvec{x}}}}\mathcal {L}_t, c_t, \max \{g_t,-{\varvec{\lambda }}_t\})\Vert \) is the KKT residual. We suppose that the line search conditions (15), (16), (24), and (25) hold; the sample complexities that ensure these generic conditions are discussed in Sect. 3.4. It is fairly easy to see that all conditions hold for sufficiently large batch sizes.
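For concreteness, the KKT residual \(R_t\) can be evaluated as below. This is a minimal sketch that assumes the standard Lagrangian \(\mathcal {L} = f + {\varvec{\mu }}^Tc + {\varvec{\lambda }}^Tg\), with \({\varvec{\mu }}\) denoting the equality multipliers and \(J_t\), \(G_t\) the constraint Jacobians (rows \(\nabla ^Tc_i\), \(\nabla ^Tg_i\)); these symbols and the function name are our assumptions. The component \(\max \{g_t, -{\varvec{\lambda }}_t\}\) vanishes exactly when the inequalities are satisfied, the multipliers are nonnegative, and complementary slackness holds.

```python
import numpy as np


def kkt_residual(grad_f, J, G, c, g, mu, lam):
    """R = ||(grad_x L, c, max{g, -lam})|| with L = f + mu^T c + lam^T g (assumed form)."""
    grad_L = grad_f + J.T @ mu + G.T @ lam
    # max(g_i, -lam_i) = 0 iff g_i <= 0, lam_i >= 0, and lam_i * g_i = 0.
    comp = np.maximum(g, -lam)
    return np.linalg.norm(np.concatenate([grad_L, c, comp]))


# Toy problem: min x^2 s.t. 1 - x <= 0 (no equality constraints), so G = [[-1]].
no_eq_J, no_eq = np.zeros((0, 1)), np.zeros(0)
G = np.array([[-1.0]])
r_opt = kkt_residual(np.array([2.0]), no_eq_J, G, no_eq, np.array([0.0]), no_eq, np.array([2.0]))
r_other = kkt_residual(np.array([4.0]), no_eq_J, G, no_eq, np.array([-1.0]), no_eq, np.array([0.0]))
```

On this toy problem the residual vanishes at the KKT pair \((x,\lambda )=(1,2)\) and equals 4 at \((x,\lambda )=(2,0)\), where only the stationarity component is nonzero.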

Our proof structure closely follows [40]. The analyses of Lemmas 7, 9, 10, 11 and Theorem 3 are more involved, since they account for the differences between equality and inequality constraints, as well as for our relaxations of the feasibility error condition and the increasing sample size requirement of [40]. The analysis of Theorem 5 is new and strengthens the “liminf” convergence in [40]. The analysis of Theorem 4 is slightly adjusted, while those of Lemma 8 and Theorem 2 are the same as in [40]. The adopted potential function (or Lyapunov function) is

where \(\omega \in (0, 1)\) is a coefficient to be specified later. We note that using \(\mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^t\) by itself (i.e., \(\omega =1\)) to monitor the iteration progress is not suitable in the stochastic setting, since \(\mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^t\) may increase while \({\bar{\mathcal {L}}}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^t\) decreases. In contrast, \(\varTheta _{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta , \omega }^t\) linearly combines different components and thus provides a composite measure of progress. For example, a decrease of \(\varTheta _{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta , \omega }^t\) may come from \(\bar{\delta }_t\) (Lines 22 and 25 of Algorithm 1).

Since the parameters \({\bar{\epsilon }}_{\bar{t}}, {\bar{\nu }}_{{\bar{t}}}, \eta \) in \(\mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }\) are fixed (conditional on \(\mathcal {F}_{{\bar{t}}}\)), we write \(\varTheta _{\omega }^t = \varTheta _{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}},\eta , \omega }^t\) for notational simplicity. In presenting the theoretical results, we only track the parameters \((\beta , \alpha _{max}, \kappa _{grad}, \kappa _{f}, p_{grad}, p_{f}, \chi _{grad}, \chi _{f})\) that relate to the line search conditions. In particular, we use \(C_1, C_2,\ldots \) and \(\varUpsilon _1, \varUpsilon _2,\ldots \) to denote deterministic constants that are independent of these parameters but may depend on \((\gamma _{B}, \gamma _{H}, \varUpsilon _B, \chi _{u}, \chi _{err},\rho , \eta , {\bar{\epsilon }}_0, {\bar{\nu }}_0)\), and thus on the deterministic thresholds \({\tilde{\epsilon }}\) and \({\tilde{\nu }}\). Recall that \((\gamma _{B}, \gamma _{H}, \varUpsilon _B, \chi _{u})\) come from Assumption 5 and (20), while \((\chi _{err}, \rho , \eta , {\bar{\epsilon }}_0, {\bar{\nu }}_0)\) are algorithm inputs.

The first lemma presents a preliminary result.

Lemma 6

Under Assumptions 3, 4, and 5, the following results hold deterministically conditional on \(\mathcal {F}_{t-1}\).

(a) There exists \(C_1>0\) such that the following two inequalities hold for any iteration \(t \ge 0\) ((a2) also holds for \(s_t\)), any parameters \(\epsilon ,\nu \), and any generated sample set \(\xi \):

(a1) \(\left\| {\bar{\nabla }}\mathcal {L}_{\epsilon , \nu , \eta }^t - \nabla \mathcal {L}_{\epsilon , \nu , \eta }^t \right\| \le C_1\left\{ \left\| {\bar{\nabla }}f_t - \nabla f_t\right\| \vee ({\bar{R}}_t\wedge 1) \cdot \left\| {\bar{\nabla }}^2 f_t - \nabla ^2 f_t\right\| \right\} \);

(a2) \(\left| {\bar{\mathcal {L}}}_{\epsilon , \nu , \eta }^t - \mathcal {L}_{\epsilon , \nu , \eta }^t \right| \le C_1\{|{\bar{f}}_t - f_t| \vee [({\bar{R}}_t\vee \Vert {\bar{\nabla }}f_t-\nabla f_t\Vert )\wedge 1]\cdot \left\| {\bar{\nabla }}f_t - \nabla f_t\right\| \}\).

(b) There exists \(C_2>0\) such that for any \(t\ge 0\) and set \(\xi \),

$$\begin{aligned} \left\| {\bar{\nabla }}_{{{\varvec{x}}}}\mathcal {L}_t\right\| \le C_2\left\{ \Vert {\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^t\Vert + \left\| ( c_t,\;\varvec{w}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t}^t) \right\| \right\} . \end{aligned}$$

(c) There exists \(C_3>0\) such that for any \(t\ge 0\) and set \(\xi \), if (12) is solvable, then

$$\begin{aligned} \left\| {\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^t\right\| \le C_3\left\| \left( \begin{array}{c} {\bar{\varDelta }}{{\varvec{x}}}_t\\ J_t{\bar{\nabla }}_{{{\varvec{x}}}}\mathcal {L}_t\\ G_t{\bar{\nabla }}_{{{\varvec{x}}}}\mathcal {L}_t + \varPi _c(\textrm{diag}^2(g_t){\varvec{\lambda }}_t) \end{array} \right) \right\| . \end{aligned}$$

Proof

See Appendix B.3. \(\square \)

The results in Lemma 6 hold deterministically conditional on \(\mathcal {F}_{t-1}\), because the samples \(\xi \) used to compute \({\bar{\nabla }}\mathcal {L}_{{\bar{\epsilon }}_t, {\bar{\nu }}_t, \eta }^t\) and \({\bar{\nabla }}_{{{\varvec{x}}}}\mathcal {L}_t\) are also taken as given in the statement. The following result shows that if both the gradient \(\nabla \mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^t\) and the function evaluations \(\mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^t\), \(\mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^{s_t}\) are accurately estimated, in the sense that the event \(\mathcal {E}_1^t\cap \mathcal {E}_2^t\) happens (cf. (14), (23)), then there is a threshold, uniform in t, such that the Armijo condition holds whenever \({\bar{\alpha }}_t\) does not exceed it.

Lemma 7

For \(t\ge {\bar{t}}+ 1\), suppose \(\mathcal {E}_1^t\cap \mathcal {E}_2^t\) happens. There exists \(\varUpsilon _1>0\) such that the t-th step satisfies the Armijo condition (26) (i.e., is a successful step) if

Proof

See Appendix B.4. \(\square \)
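To make the role of the accurate-estimation events concrete, here is a generic sketch of one stochastic line-search trial in the spirit of the Armijo test: it compares estimated merit values, uses the estimated gradient in the sufficient-decrease term, enlarges the stepsize (capped at \(\alpha _{max}\)) after a success, and shrinks it after a failure. The exact form of (26) and the additional safeguards of Algorithm 1 (e.g., the update of \(\bar{\delta }_t\)) are not reproduced; the function and parameter names, including `step_factor`, are hypothetical.

```python
import numpy as np


def armijo_trial(merit_est, grad_est, x, direction, alpha, beta, step_factor, alpha_max):
    """One generic stochastic Armijo trial (hypothetical; not the exact test (26)).

    merit_est and grad_est return *estimates* of the merit function and its gradient.
    Returns (next iterate, next stepsize, success flag).
    """
    trial = x + alpha * direction
    # Sufficient-decrease test built only from estimated quantities.
    success = merit_est(trial) <= merit_est(x) + alpha * beta * (grad_est(x) @ direction)
    if success:
        # Accept the trial point and enlarge the stepsize, capped at alpha_max.
        return trial, min(step_factor * alpha, alpha_max), True
    # Reject: stay at x and shrink the stepsize.
    return x, alpha / step_factor, False


def merit(z):
    """Toy merit function 0.5 * ||z||^2, evaluated exactly here."""
    return 0.5 * float(z @ z)


def merit_grad(z):
    """Exact gradient of the toy merit function."""
    return z


x0 = np.array([1.0, 1.0])
d0 = -merit_grad(x0)
x1, a1, ok1 = armijo_trial(merit, merit_grad, x0, d0, alpha=1.0, beta=0.3,
                           step_factor=2.0, alpha_max=1.5)
x2, a2, ok2 = armijo_trial(merit, merit_grad, x0, d0, alpha=3.0, beta=0.3,
                           step_factor=2.0, alpha_max=1.5)
```

With exact estimates, the unit step along the negative gradient is accepted, while the overly long step \(\alpha =3\) is rejected and the stepsize is halved; this mirrors the message of Lemma 7 that sufficiently small stepsizes succeed when the estimates are accurate.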

The next result shows that, if only the function evaluations \(\mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^t\), \(\mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^{s_t}\) are accurately estimated, in the sense that the event \(\mathcal {E}_2^t\) happens, then a sufficient decrease of \({\bar{\mathcal {L}}}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^t\) implies a sufficient decrease of \(\mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }^t\). The proof follows directly from [40, Lemma 6] and is thus omitted.
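The mechanism behind this kind of result can be seen from a generic triangle-inequality step. As an illustration only (the exact error bounds defining \(\mathcal {E}_2^t\) in (23) and the exact decrease term in (26) are not reproduced here), suppose that the estimation errors of the merit function at the current iterate and at the trial point \(s_t\) are both at most \(e_t\ge 0\), and that the estimated merit function decreases by \(D_t\ge 0\). Writing \(\mathcal {L}\) and \({\bar{\mathcal {L}}}\) for \(\mathcal {L}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }\) and \({\bar{\mathcal {L}}}_{{\bar{\epsilon }}_{{\bar{t}}}, {\bar{\nu }}_{{\bar{t}}}, \eta }\), we have

$$\begin{aligned} \mathcal {L}^{s_t} \le {\bar{\mathcal {L}}}^{s_t} + e_t \le {\bar{\mathcal {L}}}^{t} - D_t + e_t \le \mathcal {L}^{t} - D_t + 2e_t. \end{aligned}$$

Hence the true merit function decreases by at least \(D_t - 2e_t\); for instance, \(e_t\le D_t/4\) guarantees a decrease of at least \(D_t/2\).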

Lemma 8

For \(t\ge {\bar{t}}+ 1\), suppose \(\mathcal {E}_2^t\) happens. If the t-th step satisfies the Armijo condition (26), then