Abstract

We introduce a novel gradient descent algorithm refining the well-known Gradient Sampling algorithm on the class of stratifiably smooth objective functions, which are defined as locally Lipschitz functions that are smooth on some regular pieces—called the strata—of the ambient Euclidean space. On this class of functions, our algorithm achieves a sub-linear convergence rate. We then apply our method to objective functions based on the (extended) persistent homology map computed over lower-star filters, which is a central tool of Topological Data Analysis. For this, we propose an efficient exploration of the corresponding stratification by using the Cayley graph of the permutation group. Finally, we provide benchmarks and novel topological optimization problems that demonstrate the utility and applicability of our framework.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Motivation and related work

In its most general instance, nonsmooth, non-convex, optimization seeks to minimize a locally Lipschitz objective or loss function \(f:\mathbb {R}^n \rightarrow \mathbb {R}\). Without further regularity assumptions on \(f\), most algorithms—such as the usual Gradient Descent with learning rate decay, or the Gradient Sampling method—are only guaranteed to produce iterates whose subsequences are asymptotically stationary, without explicit convergence rates. Meanwhile, when restricted to the class of min-max functions (like the maximum of finitely many smooth maps), stronger guarantees such as convergence rates can be obtained [1]. This illustrates the common paradigm in nonsmooth optimization: the richer the structure in the irregularities of \(f\), the better the guarantees we can expect from an optimization algorithm. Note that there are algorithms specifically tailored to deal with min–max functions, see e.g. [2].

Another illustration of this paradigm are bundle methods [3,4,5]. They consist, roughly, in constructing successive linear approximations of \(f\) as a proxy for minimization. Their convergence guarantees are strong, especially when an additional semi-smoothness property of \(f\) [6, 7] can be made. Other types of methods, like the variable metric ones, can also benefit from the semi-smoothness hypothesis [8]. In many cases, convergence properties of the algorithm are not only dependent on the structure on \(f\), but also on the amount of information about \(f\) that can be computed in practice. For instance, the bundle method in [9] assumes that the Hessian matrix, when defined locally, can be computed. For accounts on the theory and practice in nonsmooth optimization, we refer the interested reader to [10,11,12].

The partitionning of \(\mathbb {R}^n\) into well-behaved pieces where \(f\) is regular, is another important type of structure. Examples, in increasing order of generality, include semi-algebraic, (sub)analytic, definable, tame (w.r.t. an o-minimal structure), and Whitney stratifiable functions [13]. For such objective functions, the usual gradient descent with learning-rate decay (GDwD) algorithm, or a stochastic version of it, converges to stationary points [14]. In order to obtain further theoretical guarantees such as convergence rates, it is necessary to design optimization algorithms specifically tailored for regular maps, since they enjoy stronger properties, e.g., tame maps are semi-smooth [15], and the generalized gradients of Whitney stratifiable maps are closely related to the (restricted) gradients of the map along the strata [13]. Besides, strong convergence guarantees can be obtained under the Kurdyka–Łojasiewicz assumption [16, 17], which includes the class of semi-algebraic maps. Our method is related to this line of work, in that we exploit the strata of \(\mathbb {R}^n\) in which \(f\) is smooth.

The motivation behind this work stems from Topological Data Analysis (TDA), where the underlying geometric structures of complex data are characterized by means of computable topological descriptors. Persistent Homology (PH) is one such descriptor, which has been successfully applied to various areas including neuroscience [18], material sciences [19], signal analysis [20], shape recognition [21], or machine learning [22]. From a high-level view, given a graph (or simplicial complex) on n vertices equipped with a vertex function, represented as a vector \(x\in \mathbb {R}^n\), Persistent Homology captures topological and geometric information about the sublevel sets of \(x\) and encodes it into a barcode \(\textrm{PH}(x)\).

Barcodes form a metric space \(\textbf{Bar}\) when equipped with the standard metrics of TDA, the so-called bottleneck and Wasserstein distances, and the persistence map \(\textrm{PH}: \mathbb {R}^n \rightarrow \textbf{Bar}\) is locally Lipschitz [23, 24]. However \(\textbf{Bar}\) is not Euclidean nor a smooth manifold, thus hindering the use of these topological descriptors in standard statistical or machine learning pipelines. Still, there exist natural notions of differentiability for maps in and out of \(\textbf{Bar}\) [25]. In particular, the persistence map \(\textrm{PH}: \mathbb {R}^n \rightarrow \textbf{Bar}\) restricts to a locally Lipschitz, smooth map on a stratification of \(\mathbb {R}^n\) by polyhedra. If we compose the persistence map with a smooth and Lipschitz map \(V: \textbf{Bar}\rightarrow \mathbb {R}\), the resulting objective (or loss) function

is itself Lipschitz and smooth on the various strata. From [14], and as recalled in [26], the standard Gradient Descent with weight decay (GDwD) on \(f\) asymptotically converges to stationary points. Similarly, the Gradient Sampling (GS) method asymptotically converges. See [27] for an application of GS to topological optimization.

Nonetheless, it is important to design algorithms that take advantage of the structure in the irregularities of the persistence map \(\textrm{PH}\), in order to get better theoretical guarantees. For instance, one can locally integrate the gradients of \(\textrm{PH}\)—whenever defined—to stabilize the iterates [27], or add a regularization term to \(f\) that acts as a smoothing operator [28]. In the present work, we rather exploit the stratification of \(\mathbb {R}^n\) induced by \(\textrm{PH}\), as it turns out to be easy to manipulate. We show in particular that we can efficiently access points \(x'\) located in neighboring strata of the current iterate \(x\), as well as estimate the distance to these strata.

Given this special structure in the irregularities of \(\textrm{PH}\), and the growing number of applications where \(\textrm{PH}\) is optimized (e.g. in point cloud inference [29], surface reconstruction [30], shape matching [31], graph classification [32], topological regularization for generative models [33], image segmentation [34], or dimensionality reduction [35]), we believe that persistent homology-based objective functions form a rich playground for nonsmooth optimization.

1.2 Contributions and outline of contents

Our method, called Stratified Gradient Sampling (SGS), is a variation of the established GS algorithm, whose main steps for updating the current iterate \(x_k\in \mathbb {R}^n\) we recall in Algorithm 1 below. Our method is motivated by the closing remarks of a recent overview of the GS algorithm [36], in which the authors suggest that assuming extra structure on the non-differentiability of \(f\) could improve the GS theory and practice compared to the general case of locally Lipschitz maps which are differentiable on an open and dense subspace.

In this work, we deal with stratifiably smooth maps, for which the non-differentiability is organized around smooth submanifolds that together form a stratification of \(\mathbb {R}^n\). In Sect. 2, we review some background material in nonsmooth analysis and define stratifiably smooth maps \(f:\mathbb {R}^n\rightarrow \mathbb {R}\), a variant of the Whitney stratifiable functions from [13] for which we do not impose any Whitney regularity on the gluing between adjacent strata of \(\mathbb {R}^n\), but rather enforce that there exist local \(C^2\)-extensions of the restrictions of \(f\) to top-dimensional strata.

Examples of stratifiably smooth maps are numerous (see Remark 2), and in particular comprise the topological descriptors based on \(\textrm{PH}\) studied in this paper, as well as all functions used in Deep Learning applications, and more generally, definable maps in an o-minimal structure for which restrictions to strata admit local smooth extensions.

Section 3 reviews the requirements, implementation and analysis of the SGS algorithm. Assumptions are detailed in Sect. 3.1. Beside two standard assumptions, namely that \(f\) has compact sublevel sets and that we have knowledge of whether \(f\) is differentiable at any given point, we assume that \(f\) is stratifiably smooth and that we can sample points in strata nearby the current iterate. This last assumption is essential to the design of the SGS algorithm in Sect. 3.2, which strongly resembles GS except that, instead of sampling points randomly around the current iterate \(x_k\) (see line 1 of Algorithm 1), it samples one point in each \(\epsilon \)-close stratum.

This difference in the sampling procedure has strong implications on the convergence analysis conducted in Sect. 3.3. While the convergence of GS relies on the sample size \(m\ge n+1\) in order to apply the Carathéodory Theorem to subgradients, our convergence analysis relies on the fact that the gradients of \(f\), when restricted to neighboring strata, are locally Lipschitz. Hence, our proof of asymptotic convergence to stationary points (Theorem 5) is substantially different.

Most importantly, in Theorem 7, we determine a convergence rate of our algorithm that holds for any stratifiably smooth map with compact sublevel sets, which is an improvement over the guarantees of GS (and of GDwD) for general locally Lipschitz maps. In Sect. 3.4, we adapt the algorithm and its convergence analysis to the case where only estimated distances to nearby strata are available.

From the perspective of time complexity, the computation of the descent direction depends on the number of samples m around the current iterate. The GS algorithm samples \(m\ge n+1\) at each iteration, while the SGS approach samples as many points as there are \(\epsilon \)-close strata. In scenarios where the stratification is small (see Example 1 below), the SGS sampling procedure is therefore more efficient, and in fact it boils down to classic gradient descent (\(m = 1\)) in regions where \(f\) has no \(\epsilon \)-close points of non-differentiability.

Although in practical scenarios, iterates often fall in the latter situation, the worst-case complexity of the sampling procedure significantly increases when numerous strata can theoretically meet inside an \(\epsilon \)-ball, which can be the case for the persistence based functions studied in this paper. To handle such situations in practice, we also introduce natural heuristics to reduce the complexity (see Remark 10), which come at the cost of theoretical guarantees.

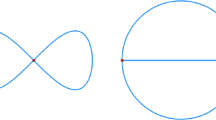

Example 1

For \(x\in \mathbb {R}^2\), we write \(x=(z_1,z_2)\) using Euclidean coordinates. Consider the objective function \(f: (z_1,z_2) \in \mathbb {R}^2 \rightarrow 10 \log (1 + \Vert z_1 \Vert ) + z_2^2 \in \mathbb {R}\). It is a stratifiably smooth map for which the stratification has two top-dimensional strata \(\{z_1<0\}\) and \(\{z_1>0\}\) where \(f\) is smooth, which meet along a codimension 1 stratum \(\{z_1=0\}\) where \(f\) is non-differentiable. In this case, around an iterate \(x\), the SGS algorithm needs only to compute the distance to the hyperplane \(\{z_1=0\}\) to determine which \(\epsilon \)-close strata to sample. In particular, in early iterations, SGS behaves like standard GDwD as it does not sample additional points to compute the approximate gradient of a point, while in late iterations it samples at most 1 additional point as iterates get closer to \(\{z_1=0\}\). See Fig. 1 for a simulation of GDwD, GS and SGS on this example.

In Sect. 4, we introduce the map \(\textrm{PH}\) over a fixed simplicial complex \(K\), which gives rise to a wide class of stratifiably smooth objective functions with rich applications in TDA. We characterize strata around the current iterate (i.e., filter function) \(x_k\) by means of the permutation group over n elements, where n is the number of vertices in \(K\). Then, the Cayley graph associated to the permutation group allows us to efficiently explore the set of neighboring strata by increasing order of distances to \(x_k\).

In Sect. 5 we provide empirical evidence that SGS behaves better than GDwD and GS for optimizing some persistent homology-based objective functions on both toy data (Sects. 5.1 and 5.2) and real 3D shape data 5.3. A fourth experiment involving biological data has been deferred to the appendix (Section A.1) for the sake of concision. Note that Sects. 5.2 and A.1 introduce novel topological optimization problems which we believe are of independent interest for applications.

A proof-of-concept comparison between different gradient descent techniques for the objective function \(f\) of Example 1 (blue surface) which attains its minimum at \(x_* = (0,0)\) and is not smooth along the line \(\{z_1=0\}\). In particular, \(\Vert \nabla f\Vert > 1\) around \(x_*\), thus the gradient norm cannot be used as a stopping criterion. The standard GD with learning-rate decay (GDwD), for which updates are given by \(x_{k+1} = x_k - \frac{\lambda _0}{k+1} \nabla f(x_k)\), oscillates around \(\{z_1=0\}\) due to the non-smoothness of \(f\) and asymptotically converges toward \(x_*\) because of the decaying learning rate \(\frac{\lambda _0}{k+1}\). Meanwhile, non-smooth optimization methods that sample points around \(x_k\) in order to produce reliable descent directions converge in finite time. Specifically, the Gradient Sampling (GS) method randomly samples 3 points and manages to reach an \((\epsilon ,\eta )\)-stationary point (see Definition 1) of \(f\) in about \(20.6 \pm 3.9\) iterations (averaged over 100 experiments), while our stratified approach (SGS) improves on this by leveraging the fact that we explicitly have access to the two strata \(\{z_1<0\}\) and \(\{z_1>0\}\) where \(f\) is differentiable. In particular, we only sample additional points when \(x_k\) is \(\epsilon \)-near the line \(\{z_1=0\}\), and reach an \((\epsilon , \eta )\)-stationary point in 18 iterations. Plots showcase the evolution of the objective value \(f(x_k)\) and the distance to the minimum \(\Vert x_k - x_*\Vert \) across iterations. Parameters: \(x_0 = (0.8, 0.8)\), \(\lambda _0 = 10^{-1}\), \(\epsilon = 10^{-1}\), \(\eta = 10^{-2}\) (color figure online)

2 A direction of descent for stratifiably smooth maps

In this section, we define the class of stratifiably smooth maps whose optimization is at stake in this work. For such maps, we can define an approximate subgradient and a corresponding descent direction, which is the key ingredient of our algorithm. Throughout, \(f:\mathbb {R}^n\rightarrow \mathbb {R}\) is a locally Lipschitz (non necessary smooth, nor convex) function with compact sublevel sets, which we aim to minimize.

2.1 Nonsmooth analysis

We first recall some useful background in nonsmooth analysis, essentially from [37]. First-order information of \(f\) at \(x\in \mathbb {R}^n\) in a direction \(v \in \mathbb {R}^n\) is captured by its generalized directional derivative:

Besides, the generalized gradient is the following set of linear subapproximations:

When \(f\) is convex, \(\partial f(x)\) is the usual subdifferential of a convex function.

Given an arbitrary set \(S\subset \mathbb {R}^n\) of Lebesgue measure 0, we have an alternative description of the generalized gradient in terms of limits of surrounding gradients, whenever defined:

where \(\nabla f (x_i)\) is the gradient of \(f\) (provided \(f\) is differentiable at \(x_i\)), and \(\overline{\textrm{co}}\) is the operation of taking the closure of the convex hull.Footnote 1 The duality between generalized directional derivatives and gradients is captured by the equality:

The Goldstein subgradient [38] is an \(\epsilon \)-relaxation of the generalized gradient:

Given \(x\in \mathbb {R}^n\), we say that:

Any local minimum is stationary, and conversely if \(f\) is convex. We also have weaker notions:

Definition 1

Given \(\epsilon , \eta >0\), an element \(x\in \mathbb {R}^n\) is \(\epsilon \)-stationary if \(0\in \partial _\epsilon f(x)\); and \((\epsilon ,\eta )\)-stationary if \(d(0,\partial _\epsilon f(x))\leqslant \eta \).

2.2 Stratifiably smooth maps

Desirable properties for an optimization algorithm is that it produces iterates \((x_k)_k\) that either converge to an \((\epsilon ,\eta )\)-stationary point in finitely many steps, or whose subsequences (or some of them) converge to an \(\epsilon \)-stationary point. For this, we work in the setting of objective functions that are smooth when restricted to submanifolds, that together partition \(\mathbb {R}^n\).

Definition 2

A stratification \(\mathcal {X}=\{X_i\}_{i\in I}\) of \(\mathbb {R}^n\) is a locally finite partition by smooth submanifolds \(X_i\) without boundary called strata.

Note that we do not impose any (usually needed) gluing conditions between adjacent strata, as we do not require them in the analysis. In particular, semi-algebraic, subanalytic, or definable subsets of \(\mathbb {R}^n\), together with Whitney stratified sets are stratified in the above weak sense. We next define the class of maps \(f\) with smooth restrictions \(f_{\mid X_i}\) to strata \(X_i\) of some stratification \(\mathcal {X}\), inspired by the Whitney stratifiable maps of [13] (there \(\mathcal {X}\) is required to be Whitney) and the stratifiable functions of [39], however we further require that the restrictions \(f_{\mid X_i}\) admit local extensions of class \(C^2\).

Definition 3

The map \(f:\mathbb {R}^n\rightarrow \mathbb {R}\) is stratifiably smooth if there exists a stratification \(\mathcal {X}\) of \(\mathbb {R}^n\), such that for each top-dimensional stratum \(X_i\in \mathcal {X}\), the restriction \(f_{\mid X_{i}}\) admits an extension \(f_{i}\) of class \(C^2\) in a neighborhood of \(X_i\).

Remark 1

The slightly weaker assumption that the extension \(f_{i}\) is continuously differentiable with locally Lipschitz gradient would have also been sufficient for our purpose.

Remark 2

Examples of stratifiably smooth maps include continuous selection of \(C^2\) maps \(f_1,\cdots , f_m\) using a selection function \(s:\mathbb {R}^n \rightarrow \{1,\cdots , m\}\), that is:

If the level-sets \(X_i:= \{x\mid s(x)=i\}\) are finite unions of submanifolds (possibly with corners), then up to refining the partition \(\mathcal {X}=\{X_i\}_{1\le i \le n}\), the map \(f\) is automatically stratifiably smooth. For instance the elementary selections of [40] and the PAP functions of [41], which together form a wide subset of semi-algebraic maps, and more generally, definable maps in an o-minimal structure, are stratifiably smooth.

These examples contain nearly all functions used in deep learning applications, which include in particular piecewise affine functions (which from [42, Proposition 2.2.2] are the same as max-min of affine functions).

We denote by \(X_x\) the stratum containing \(x\), and by \(\mathcal {X}_x\subseteq \mathcal {X}\) the set of strata containing \(x\) in their closures. More generally, for \(\epsilon > 0\), we let \(\mathcal {X}_{x,\epsilon }\subseteq \mathcal {X}\) be the set of strata \(X_i\) such that the closure of the ball \(B(x,\epsilon )\) has non-empty intersection with the closure of \(X_i\). Local finiteness in the definition of a stratification implies that \(\mathcal {X}_{x,\epsilon }\) (and \(\mathcal {X}_{x}\)) is finite.

If \(f\) is stratifiably smooth and \(X_i\in \mathcal {X}_x\) is a top-dimensional stratum, there is a well-defined limit gradient \(\nabla _{X_{i}}f(x)\), which is the unique limit of the gradients \(\nabla f_{\mid {X_i}}(x_n)\) where \(x_n\in X_i\) is any sequence converging to \(x\). Indeed, this limit exists and does not depend on the choice of sequence since \(f_{\mid X_{i}}\) admits a local \(C^2\) extension \(f_i\). The following result states that the generalized gradient at \(x\) can be retrieved from these finitely many limit gradients along the various adjacent top-dimensional strata.

Proposition 1

If \(f\) is stratifiably smooth, then for any \(x\in \mathbb {R}^n\) we have:

More generally, for \(\epsilon >0\):

Proof

We show the first equality only, as the second can be proven along the same lines. We use the description of \(\partial f(x)\) in terms of limit gradients from (3), which implies the inclusion of the right-hand side in \(\partial f(x)\). Conversely, let S be the union of strata in \(\mathcal {X}_x\) with positive codimension, which is of measure 0. Let \(x_i\) be a sequence avoiding S, converging to \(x\), such that \(\nabla f(x_i)\) converges as well. Since \(\mathcal {X}_x\) is finite, up to extracting a subsequence, we can assume that all \(x_i\) are in the same top-dimensional stratum \(X_i\in \mathcal {X}_x\). Consequently, \(\nabla f(x_i)\) converges to \(\nabla _{X_{i}}f(x)\). \(\square \)

2.3 Direction of descent

Thinking of \(x\) as a current position, we look for a direction of (steepest) descent, in the sense that a perturbation of \(x\) in this direction produces a (maximal) decrease of \(f\). Given \(\epsilon \geqslant 0\), we let \(g(x,\epsilon )\) be the projection of the origin on the convex set \(\partial _\epsilon f(x)\). Equivalently, \(g(x,\epsilon )\) solves the minimization problem:

Introduced in [38], the direction \(-g(x,\epsilon )\) is a good candidate of direction for descent, as we explain now. Since \(g(x,\epsilon )\) is the projection of the origin on the convex closed set \(\partial _\epsilon f(x)\), we have the inequality  that holds for any g in the Goldstein subgradient at \(x\). Equivalently,

that holds for any g in the Goldstein subgradient at \(x\). Equivalently,

Informally, if we think of a small perturbation \(x-tg(x,\epsilon )\) of \(x\) along this direction, for \(t>0\) small enough, then  . Using (7), since \(\nabla f(x)\in \partial _\epsilon f(x)\), we deduce that \(f(x-tg(x,\epsilon ))\leqslant f(x)- t \Vert g(x,\epsilon )\Vert ^2\). So \(f\) locally decreases at linear rate in the direction \(-g(x,\epsilon )\). This intuition relies on the fact that \(\nabla f(x)\) is well-defined so as to provide a first order approximation of \(f\) around \(x\), and that t is chosen small enough. In order to make this reasoning completely rigorous, we need the following well-known result (see for instance [37]):

. Using (7), since \(\nabla f(x)\in \partial _\epsilon f(x)\), we deduce that \(f(x-tg(x,\epsilon ))\leqslant f(x)- t \Vert g(x,\epsilon )\Vert ^2\). So \(f\) locally decreases at linear rate in the direction \(-g(x,\epsilon )\). This intuition relies on the fact that \(\nabla f(x)\) is well-defined so as to provide a first order approximation of \(f\) around \(x\), and that t is chosen small enough. In order to make this reasoning completely rigorous, we need the following well-known result (see for instance [37]):

Theorem 2

(Lebourg Mean value Theorem) Let \(x, x'\in \mathbb {R}^n\). Then there exists some \(y\in [x,x']\) and some \(w\in \partial f(y)\) such that:

Let \(t>0\) be lesser than \(\frac{\epsilon }{\Vert g(x,\epsilon )\Vert }\), in order to ensure that \(x':=x- t g(x,\epsilon )\) is \(\epsilon \)-close to \(x\). Then by the mean value theorem (and Proposition 1), we have that

for some \(w\in \partial _\epsilon f(x)\). Eq. (7) yields

as desired.

In practical scenarios however, it is unlikely that the exact descent direction \(-g(x,\epsilon )\) could be determined. Indeed, from (6), it would require the knowledge of the set \(\partial _\epsilon f(x)\), which consists of infinitely many (limits of) gradients in an \(\epsilon \)-neighborhood of \(x\). We now build, provided \(f\) is stratifiably smooth, a faithful approximation \(\tilde{\partial }_\epsilon f(x)\) of \(\partial _\epsilon f(x)\), by collecting gradient information in the strata that are \(\epsilon \)-close to \(x\).

For each top-dimensional stratum \(X_i\in \mathcal {X}_{x,\epsilon }\), let \(x_i\) be an arbitrary point in \(\overline{X}_i\cap \overline{B}(x,\epsilon )\). Define

Of course, \(\tilde{\partial }_\epsilon f(x)\) depends on the choice of each \(x_i\in X_i\). But this will not matter for the rest of the analysis, as we will only rely on the following approximation result which holds for arbitrary choices of points \(x_i\):

Proposition 3

Let \(x\in \mathbb {R}^n\) and \(\epsilon >0\). Assume that \(f\) is stratifiably smooth. Let \(L\) be a Lipschitz constant of the gradients \(\nabla f_{i}\) restricted to \(\overline{B}(x,\epsilon )\cap X_i\), where \(f_i\) is some local \(C^2\) extension of \(f_{\mid X_i}\), and \( X_i\in \mathcal {X}_{x,\epsilon } \) is top-dimensional. Then we have:

Note that, since the \(f_i\) are of class \(C^2\), their gradients are locally Lipschitz, hence by compactness of \(\overline{B}(x,\epsilon )\), the existence of the Lipschitz constant \(L\) above is always guaranteed.

Proof

From Proposition 1, we have

This yields the inclusion \(\tilde{\partial }_\epsilon f(x) \subseteq \partial _\epsilon f(x)\). Now, let \(x' \in \mathbb {R}^n\), \(\Vert x' - x\Vert \leqslant \epsilon \), and let \(X_i \in \mathcal {X}_{x'}\subseteq \mathcal {X}_{x,\epsilon }\) be a top-dimensional stratum touching \(x'\). Based on how \(x_i\) is defined in (9), we have that \(x'\) and \(x_i\) both belong to \(\overline{B}(x,\epsilon )\), and they both belong to the stratum \(X_i\). Therefore, \(\Vert \nabla _{X_i}f(x')-\nabla _{X_i}f(x_i)\Vert \leqslant 2L\epsilon \), and so \(\nabla _{X_i}f(x')\in \tilde{\partial }_\epsilon f(x)+ \overline{B}(0, 2L\epsilon )\). The result follows from the fact that \(\tilde{\partial }_\epsilon f(x)+ \overline{B}(0, 2L\epsilon )\) is convex and closed. \(\square \)

Recall from Eq. (8) that the (opposite to the) descent direction \(-g(x,\epsilon )\) is built as the projection of the origin onto \(\partial _\epsilon f(x)\). Similarly, we define our approximate descent direction as \(-\tilde{g}(x,\epsilon )\), where \(\tilde{g}(x,\epsilon )\) is the projection of the origin onto the convex closed set \(\tilde{\partial }_\epsilon f(x)\):

We show that this choice yields a direction of decrease of \(f\), in a sense similar to (8).

Proposition 4

Let \(f\) be stratifiably smooth, and let \(x\) be a non-stationary point. Let \(0<\beta <1\), and \(\epsilon _0>0\). Denote by \(L\) a Lipschitz constant for all gradients of the restrictions \(f_i\) to the ball \(\overline{B}(x,\epsilon _0)\) (as in Proposition 3). Then:

- (i):

-

For \(0<\epsilon \leqslant \epsilon _0\) small enough we have \(\epsilon \leqslant \frac{1-\beta }{2L}\Vert \tilde{g}(x,\epsilon )\Vert \); and

- (ii):

-

For such \(\epsilon \), we have \(\forall t \leqslant \frac{\epsilon }{\Vert \tilde{g}(x,\epsilon )\Vert }, \, f(x-t\tilde{g}(x,\epsilon ))\leqslant f(x)-\beta t\Vert \tilde{g}(x,\epsilon )\Vert ^2\).

Proof

Saying that \(x\) is non-stationary is equivalent to the inequality \(\Vert g(x,0)\Vert >0\). We show that the map \(\epsilon \in \mathbb {R}^+ \mapsto \Vert g(x,\epsilon )\Vert \in \mathbb {R}^+\), which is non-increasing, is continuous at \(0^+\). Let \(\epsilon \) be small enough such that the sets of strata incident to \(x\) are the same that meet with the \(\epsilon \)-ball around \(x\), i.e., \(\mathcal {X}_{x,\epsilon }=\mathcal {X}_{x}\). Such an \(\epsilon \) exists since there are finitely many strata that meet with a sufficiently small neighborhood of \(x\). Of course, all smaller values of \(\epsilon \) enjoy the same property. By Proposition 1, we then have the nesting

where \(L\) is a Lipschitz constant for the gradients in neighboring strata. In turn, \(0\leqslant \Vert g(x,0)\Vert -\Vert g(x,\epsilon )\Vert \leqslant 2L\epsilon \). In particular, \(\Vert g(x,\epsilon )\Vert \rightarrow \Vert g(x,0)\Vert >0\) as \(\epsilon \) goes to 0, hence \(\epsilon =o(\Vert g(x,\epsilon )\Vert )\). Besides, the inclusion \(\tilde{\partial }_\epsilon f(x)\subseteq \partial _\epsilon f(x)\) (Proposition 3) implies that \(\Vert \tilde{g}(x,\epsilon )\Vert \geqslant \Vert g(x,\epsilon )\Vert >0\). This yields \(\epsilon =o(\Vert \tilde{g}(x,\epsilon )\Vert )\) and so item (i) is proved.

We now assume that \(\epsilon \) satisfies the inequality of item (i), and let \(0\leqslant t \leqslant \frac{\epsilon }{\Vert \tilde{g}(x,\epsilon )\Vert }\). By the Lebourg mean value theorem, there exists a \(y\in [x,x-t\tilde{g}(x,\epsilon )]\) and some \(w\in \partial f(y)\) such that:

Since \(t\leqslant \frac{\epsilon }{\Vert \tilde{g}(x,\epsilon )\Vert }\), y is at distance no greater than \(\epsilon \) from \(x\). In particular, w belongs to \(\partial _\epsilon f(x)\). From Proposition 3, there exists some \(\tilde{w}\in \tilde{\partial }_\epsilon f(x)\) at distance no greater than \(2L\epsilon \) from w. We then rewrite:

On the one hand, by the Cauchy-Schwarz inequality:

where the last inequality relies on the assumption that \(\epsilon \leqslant \frac{1-\beta }{2L}\Vert \tilde{g}(x,\epsilon )\Vert \). On the other hand, since \(\tilde{g}(x,\epsilon )\) is the projection of the origin onto \(\tilde{\partial }_\epsilon f(x)\), we obtain  , or equivalently:

, or equivalently:

Plugging the inequalities of Eqs. (12) and (13) into Eq. (11) proves item (ii). \(\square \)

3 Stratified gradient sampling (SGS)

In this section we describe our gradient descent algorithm for the optimization of stratifiably smooth functions \(f:\mathbb {R}^n\rightarrow \mathbb {R}\), and we analyse its convergence properties.

3.1 Assumptions on the objective function

The algorithm and analysis are based on the following assumptions on \(f\)

- (A1):

-

\(f:\mathbb {R}^n\rightarrow \mathbb {R}\) has bounded (hence compact) sublevel sets.

- (A2):

-

For each point \(x\in \mathbb {R}^n\), we have an oracle \(\textbf{DiffOracle}(x)\) checking whether \(f\) is differentiable at \(x\).

- (A3):

-

\(f\) is stratifiably smooth, and for each point \(x\in \mathbb {R}^n\) and \(\epsilon \)-close top-dimensional stratum \(X_{i}\in \mathcal {X}_{x,\epsilon }\), we have an oracle \(\textbf{Sample}(x,\epsilon )\) which returns an element \(x^i\in X_{i}\) with \(\Vert x-x^i\Vert \le \epsilon \).

Assumption A1 is also needed in the original GS algorithm [43], although it has been omitted in subsequent work such as [44] to allow the values \(f(x_k)\) to decrease to \(-\infty \). In our case we stick to this assumption because we need the gradient of \(f\) (whenever defined) to be Lipschitz on sublevel sets.

Assumption A2 is standard in non-smooth optimization, for instance it is assumed in the GS algorithm and in quasi-Newton methods [45]. In bundle methods [46] it is generally replaced with the ability to sample the subgradient of \(f\) even at points of non-differentiability. We use A2 to ensure the differentiability of \(f\) at each iterate or, if needed, at arbitrarily small perturbations of the iterate. In practice, iterates are generically points of differentiability, hence A2 is mostly a theoretical requirement.

Assumption A3 is the strongest one, replacing the weaker assumption used in the GS algorithm that \(f\) is locally Lipschitz and that the set \(\mathcal {D}\) where \(f\) is differentiable is open and dense. A3 requires that \(f:\mathbb {R}^n\rightarrow \mathbb {R}\) is stratifiably smooth and that we have an oracle \(\textbf{Sample}(x,\epsilon )\) at our disposal to sample points in \(\epsilon \)-close top-dimensional strata of an iterate \(x\).

The SGS algorithm relies on A3 to gather points \(x_k^i\) in top-dimensional strata near the current iterate \(x_k\) in order to compute a finer approximation \(\tilde{\partial }_\epsilon f(x_k)\) of the Goldstein subgradient \(\partial _\epsilon f(x_k)\) and, in turn, to derive a descent direction \(g_k\) for producing the subsequent iterate \(x_{k+1}:=x_k-t_kg_k\). This allows us not only to guarantee asymptotic convergence to stationary points as in GS, but also to prove convergence rates for SGS (Theorem 7).

Both in GS and SGS, computing the descent direction \(g_k\) requires finding the element of minimal norm in the convex hull of the points \(x_k^i\) sampled around \(x_k\). This is a semidefinite programming problem which can for instance be solved by the ellipsoid method [47] with polynomial complexity in the number of samples \(x_k^i\). This number is different in GS and in SGS though, hence different time complexities for computing \(g_k\). Specifically, GS requires to sample at least \(m=n+1\) points at each iteration, while SGS requires to sample as many points as there are \(\epsilon \)-close strata. Therefore:

-

The sampling procedure of SGS is advantageous when the stratification \(\mathcal {X}\) has few strata, or at least few strata meeting in any \(\epsilon \)-ball—which per se does not preclude having a large (even infinite) number of strata in total. Example 1, or the continuous selection of few functions (see Remark 2), are typical examples.

-

The worst-case complexity of computing \(\tilde{\partial }_\epsilon f(x_k)\) in SGS increases significantly when numerous strata meet inside an \(\epsilon \)-ball, which can happen for the persistence based functions studied in Sect. 4. The complexity can be reduced at the cost of theoretical convergence guarantees, for instance by imposing an upper-bound on the number of samples \(x_k^i\), as further detailed in Remark 10 and implemented in the experiment of Sect. 5.3.

-

Even when numerous strata come close together in certain parts of the domain, most of the iterates may still fall within regions where \(f\) has no (or only a few) other nearby strata – see the experiment of section 5.2 for an example. In such cases, SGS runs faster than GS at most iterates, even as fast as classic gradient descent whenever there are no other strata nearby.

Remark 3

There are many possible ways to design the oracle \(\textbf{Sample}(x,\epsilon )\) of assumption A3: for instance the sampling could depend upon arbitrary probability measures on each stratum, or it could be set by deterministic rules depending on the input \((x,\epsilon )\) as will be the case for the persistence map studied in Sect. 4. Our algorithm and its convergence properties are oblivious to these degrees of freedom, as long as some \(\epsilon \)-close point is sampled in each \(\epsilon \)-close stratum.

Remark 4

For stratifiably smooth functions, points \(x\) of non-differentiability must belong to strata of codimension at least 1, making them easier to detect. In numerous cases (e.g. deep neural networks with ReLu activations, persistence based functions and minima or maxima of affine functions), this amounts to checking whether \(x\) belongs to a finite union of hyperplanes.

3.2 The algorithm

The details of the main algorithm \(\textbf{SGS}\) are given in Algorithm 2.

The algorithm \(\textbf{SGS}\) calls the method \(\textbf{UpdateStep}\) of Algorithm 3 as a subroutine to compute the right descent direction \(g_k\) and the right step size \(t_k\). Essentially, this method progressively reduces the exploration radius \(\epsilon _k\) of the ball where we compute the descent direction \(g_k:=\tilde{g}(x_k,\epsilon _k)\) until the criteria of Proposition 4 ensuring that the loss sufficiently decreases along \(g_k\) are met.

Given the iterate \(x_k\) and the radius \(\epsilon _k\), the calculation of \(g_k:=\tilde{g}(x_k,\epsilon _k)\) is done by the subroutine \(\textbf{ApproxGradient}\) in Algorithm 4: points \(x'\) in neighboring strata that intersect the ball \(B(x_k,\epsilon _k)\) are sampled using \(\textbf{Sample}(x_k,\epsilon _k)\) to compute the approximate Goldstein gradient and in turn the descent direction \(g_k\).

Much like the original GS algorithm, our method behaves like the well-known smooth gradient descent where the gradient is replaced with a descent direction computed from gradients in neighboring strata. A key difference however is that, in order to find the right exploration radius \(\epsilon _k\) and step size \(t_k\), the \(\textbf{UpdateStep}\) needs to maintain a constant \(C_k\) to approximate the ratio \(\frac{1-\beta }{2L}\) of Proposition 4, as no Lipschitz constant \(L\) may be explicitly available.

To this effect, \(\textbf{UpdateStep}\) maintains a relative balance between the exploration radius \(\epsilon _k\) and the norm of the descent direction \(g_k\), controlled by \(C_k\), i.e., \(\epsilon _k \simeq C_k \Vert g_k\Vert \). As we further maintain \(C_k \simeq \frac{1-\beta }{2L}\), we know that the convergence properties of \(\epsilon _k\) and \(g_k\) are closely related. Thus, the utility of this controlling constant is mainly theoretical, to ensure convergence of the iterates \(x_k\) towards stationary points in Theorem 5. In practice, we start with a large initial constant \(C_0\), and decrease it on line 11 of Algorithm 3 whenever it violates a property of the target constant \(\frac{1-\beta }{2L}\) given by Proposition 4.

Remark 5

Assume that we know a common Lipschitz constant \(L\) for all gradients \(\nabla f_i\) in the \(\epsilon \)-neighborhood of the current iterate \(x_k\), recall that \(f_i\) is any \(C^2\) extension of the restriction \(f_{\mid X_i}\) to the neighboring top-dimensional stratum \(X_i\in \mathcal {X}_{x,\epsilon }\). Then we can simplify Algorithm 3 by decreasing the exploration radius \(\epsilon _k\) progressively until \(\epsilon _k\le \frac{(1-\beta )}{2L} \Vert \tilde{g}(x_k,\epsilon _k)\Vert \) as done in Algorithm 6. This ensures by Proposition 4 that the resulting update step satisfies the descent criterion \(f(x_k-t_k\tilde{g}(x_k,\epsilon _k)) < f(x_k)-\beta t_k\Vert \tilde{g}(x_k,\epsilon _k)\Vert ^2\). In particular the parameter \(C_k\) is no longer needed, and the theoretical guarantees of the simplified algorithm are unchanged. Note that for objective functions from TDA (see section 4), the stability theorems (e.g. from [23]) often provide global Lipschitz constants, enabling the use of the simplified update step described in Algorithm 6.

Remark 6

In the situation of Remark 5, let us further assume that \(\epsilon \in \mathbb {R}_+ \mapsto \Vert \tilde{g}(x,\epsilon )\Vert \in \mathbb {R}_+\) is non-increasing. This monotonicity property mimics the fact that \(\epsilon \in \mathbb {R}_+ \mapsto \Vert g(x,\epsilon )\Vert \in \mathbb {R}_+\) is non-increasing, since increasing \(\epsilon \) grows the Goldstein generalized gradient \(\partial _\epsilon f(x)\), of which \(g(x,\epsilon )\) is the element with minimal norm. If the initial exploration radius \(\epsilon \) does not satisfy the termination criterion (Line 8), \(\epsilon \le \frac{1-\beta }{2L} \Vert \tilde{g}(x_k, \epsilon )\Vert \), then one can set \(\epsilon _k :=\frac{1-\beta }{2L} \Vert \tilde{g}(x_k,\epsilon )\Vert \le \epsilon \), yielding \(\epsilon _k \le \frac{1-\beta }{2L} \Vert \tilde{g}(x_k,\epsilon _k)\Vert \). This way Algorithm 6 is further simplified: in constant time we find a \(\epsilon _k\) that yields the descent criterion \(f(x_k-t_k \tilde{g}(x_k,\epsilon _k)) < f(x_k)-\beta t_k\Vert \tilde{g}(x_k,\epsilon _k)\Vert ^2\), and the parameter \(\gamma \) is no longer needed. A careful reading of the proofs provided in the following section yields that the convergence rate (Theorem 7) of the resulting algorithm is unchanged, however the asymptotic convergence (Theorem 5), case \(\mathbf {(b)}\), needs to be weakened: converging subsequences converge to \(\epsilon \)-stationary points instead of stationary points. We omit details for the sake of concision.

3.3 Convergence

We show convergence of Algorithm 2 towards stationary points in Theorem 5. Finally, Theorem 7 provides a non-asymptotic sub linear convergence rate, which is by no mean tight yet gives a first estimate of the number of iterations required in order to reach an approximate stationary point.

Theorem 5

If \(\eta =0\), then almost surely the algorithm either \(\mathbf {(a)}\) converges in finitely many iterations to an \(\epsilon \)-stationary point, or \(\mathbf {(b)}\) produces a bounded sequence of iterates \((x_k)_k\) whose converging subsequences all converge to stationary points.

As an intermediate result, we first show that the update step computed in Algorithm 3 is obtained after finitely many iterations and estimate its magnitude relatively to the norm of the descent direction.

Lemma 6

\(\textbf{UpdateStep}(f,x_k,\epsilon ,\eta ,C_k, \beta , \gamma )\) terminates in finitely many iterations. In addition, let \(L\) be a Lipschitz constant for the restricted gradients \(\nabla f_i\) (as in Proposition 3) in the \(\epsilon \)-neighborhood of \(x_k\). Assume that \(\frac{1-\beta }{2L}\leqslant C_{k}\). If \(x_k\) is not an \((\epsilon ,\eta )\)-stationary point, then the returned exploration radius \(\epsilon _k\) satisfies:

Moreover the returned controlling constant \(C_{k+1}\) satisfies:

Proof

If \(x_k\) is a stationary point, then \(\textbf{ApproxGradient}\) returns a trivial descent direction \(g_k=0\) because the approximate gradient \(G_k\) contains \(\nabla f(x_k)\) (Line 1). In turn, \(\textbf{UpdateStep}\) terminates at Line 4.

Henceforth we assume that \(x_k\) is not a stationary point and that the breaking condition of Line 4 in Algorithm 3 is never reached (otherwise the algorithm terminates). Therefore, at each iteration of the main loop, either \(C_{k+1}\) is replaced by \(\gamma C_{k+1} \) (line 9), or \(\epsilon _k\) is replaced by \(\gamma \epsilon _k\) (line 12), until both the following inequalities hold (line 14):

Once \(C_{k+1}\) becomes lower than \(\frac{1-\beta }{2L}\), inequality (A) implies inequality (B) by Proposition 4\(\mathbf{(ii)}\). It then takes finitely many replacements \(\epsilon _k \leftarrow \gamma \epsilon _k\) to reach inequality (A), by Proposition 4(i). At that point (or sooner), Algorithm 3 terminates. This concludes the first part of the statement, namely \(\textbf{UpdateStep}\) terminates in finitely many iterations.

Next we assume that \(x_k\) is not an \((\epsilon ,\eta )\)-stationary point, which ensures that the main loop of \(\textbf{UpdateStep}\) cannot break at Line 5. We have the invariant \(C_{k+1}\geqslant \gamma \frac{1-\beta }{2L}\): this is true at initialization (\(C_{k+1}=C_{k}\)) by assumption, and in later iterations \(C_{k+1}\) is only replaced by \(\gamma C_{k+1}\) whenever (A) holds but not (B), which forces \(C_{k+1}\geqslant \frac{1-\beta }{2L}\) by Proposition 4 (ii).

At the end of the algorithm, \(\epsilon _k \leqslant C_{k+1} \Vert \tilde{g}(x_k,\epsilon _k)\Vert \) by inequality (A), and so we deduce the right inequality \(\epsilon _k \leqslant \min (C_{k} \Vert \tilde{g}(x_k,\epsilon _k)\Vert ,\epsilon )\).

Besides, if both (A) and (B) hold when entering the main loop (line 11) for the first time, then \(\epsilon _k=\epsilon \). Otherwise, let us consider the penultimate iteration of the main loop for which the update step is \(\frac{1}{\gamma } \epsilon _k\). Then, either condition (A) does not hold, namely \(\frac{1}{\gamma }\epsilon _k> C_{k+1} \Vert \tilde{g}(x_k,\frac{1}{\gamma }\epsilon _k)\Vert \geqslant \gamma \frac{1-\beta }{2L} \Vert \tilde{g}(x_k,\frac{1}{\gamma }\epsilon _k)\Vert \), or condition (B) does not hold, which by the assertion (ii) of Proposition 4 implies \(\frac{1}{\gamma }\epsilon _k\geqslant \frac{1-\beta }{2L} \Vert \tilde{g}(x_k,\frac{1}{\gamma }\epsilon _k)\Vert \). In any case, we deduce that

\(\square \)

Proof of Theorem 5

We assume that alternative \(\mathbf {(a)}\) does not happen. By Lemma 6, Algorithm 3 terminates in finitely many iteration and by Line 14 we have the guarantee:

The subsequent iterate \(x_{k+1}\) is initialized at \(x_k-t_k\tilde{g}(x_k,\epsilon _k)\) by \(\textbf{MakeDifferentiable}\) (see Algorithm 5) and replaced by a sample in a progressively shrinking ball \(B(x_k-t_k\tilde{g}(x_k,\epsilon _k), r)\) until two conditions are reached. The first condition is that \(f\) is differentiable at \(x_{k+1}\), and since \(\mathcal {D}\) has full measure by Rademacher’s Theorem, this requirement is almost surely satisfied in finitely many iterations. The second condition is that

which by Eq. (14) and continuity of \(f\) is satisfied in finitely many iterations as well. Therefore \(\textbf{MakeDifferentiable}\) terminates in finitely many iterations almost surely. In particular, the sequence of iterates \((x_k)_k\) is infinite.

By Eq. (15) the sequence of iterates’ values \((f(x_k))_k\) is decreasing and it must converge by compactness of the sublevel sets below \(f\). Using (15), we obtain:

Let also \(L\) be Lipschitz constant for the restricted gradients \(\nabla f_i\) (as in Proposition 3) in the \(\epsilon \)-offset of the sublevel set \(\{x, f(x)\leqslant f(x_0) \}\). Up to taking \(L\) large enough, there is another Lipschitz constant \(L'<L\) ensuring that

By Lemma 6, upon termination of Algorithm 3, \(C_1\ge \gamma \frac{1-\beta }{2L'}\ge \frac{1-\beta }{2L}\). If \(C_1\le \frac{1-\beta }{2L'}\), the (ii) of Proposition 4 prevents Line 9 in Algorithm 3 from ever occurring again, i.e., \(C_k=C_1\) is constant in later iterations. Otherwise, \(C_1\) satisfies \(C_1\ge \frac{1-\beta }{2L'}\) just like \(C_0\). A quick induction then yields:

Therefore, by Lemma 6:

In particular, using the rightmost inequality and Eq. (16), we get \(\epsilon _k\rightarrow 0^+\). In turn, using the leftmost inequality, we get that

The sequence of iterates \((x_k)_k\) is bounded; up to extracting a converging subsequence, we assume that it converges to some \(x_*\). Let \(\epsilon '>0\). We claim that \(0\in \partial _{\epsilon '} f(x_*)\), that is \(x_*\) is \(\epsilon '\)-stationary. As \(x_k \rightarrow x_*\) and \(\epsilon _k \rightarrow 0\), we have that for k large enough \(B(x_k,\frac{1}{\gamma }\epsilon _k)\subseteq B(x_*,\epsilon ')\), which implies that:

Besides, from Proposition 3, we have \(\tilde{\partial }_{\frac{1}{\gamma }\epsilon _k}f(x_k)\subseteq \partial _{\frac{1}{\gamma }\epsilon _k} f(x_k)\), so that \(\tilde{g}(x_k,\frac{1}{\gamma }\epsilon _k)\in \partial _{\frac{1}{\gamma }\epsilon _k} f(x_k)\). Hence \(\tilde{g}(x_k,\frac{1}{\gamma }\epsilon _k)\in \partial _{\epsilon '}f(x_*)\). In the limit, (18) implies \(0\in \partial _{\epsilon '}f(x_*)\), as desired.

Following [43], the intersection of the Goldstein subgradients \(\partial _{\epsilon '} f(x_*)\), over \(\epsilon '>0\), yields \(\partial f(x_*)\). Hence, \(0\in \partial f(x_*)\) and \(x_*\) is a stationary point. \(\square \)

Theorem 7

If \(\eta >0\), then Algorithm 2 produces an \((\epsilon ,\eta )\)-stationary point using at most \(O\left( \frac{1}{\eta \min (\eta ,\epsilon )}\right) \) iterations.

As seen in the proof below, the bound \(O(\frac{1}{\eta \min (\eta ,\epsilon )})\) depends on hyper-parameters \(\beta \) and \(\gamma \), on the Lipschitz constant \(L\) of the restrictions \(f_i\) and on the gap between the initial value \(f(x_0)\) and the asymptotic local minimum \(f(x_*)\).

Proof

Assume that Algorithm 2 has run over k iterations without producing an \((\epsilon ,\eta )\)-stationary point. From Algorithm 3 (line 14), Algorithm 5 (Line 2) and the choice \(t_j=\frac{\epsilon _j}{\Vert \tilde{g}(x_j,\epsilon _j)\Vert }\) of update step for \(j\leqslant k\), it holds that \(\beta \epsilon _j \Vert \tilde{g}(x_j,\epsilon _j)\Vert \le f(x_j) - f(x_{j+1}) \), and in turn

where \(f_0:=f(x_0)\) and \(f^*\) is a minimal value of \(f\). Besides, using Lemma 6, \(\epsilon _j\) is either bigger than \(\frac{\gamma ^2(1-\beta )}{2L} \left\| \tilde{g}\left( x_j,\frac{1}{\gamma }\epsilon _j\right) \right\| \) or than \(\epsilon \), hence

The two equations cannot simultaneously hold whenever

which allows us to conclude. \(\square \)

3.4 Approximate distance to strata

The algorithm and its convergence assume that strata \(X\) which are \(\epsilon \)-close to an iterate \(x\) can be detected and sampled by the oracle \(\textbf{Sample}(x,\epsilon )\). In some situations, this may be expansive or even impossible. In this section, we relax these requirements and assume that we only have approximate distances \(\hat{d}(x,X)\) and an approximate assignment to nearby points:

at our disposal. We then replace the accurate oracle \(\textbf{Sample}(x,\epsilon )\) with the following approximate oracle:

For the purpose of this section, we make the following assumption on the estimates: There exists a constant \(a\geqslant 1\) such that, for any point \(x\in \mathbb {R}^n\) and stratum \( X\in \mathcal {X}\), the following inequalities hold:

Remark 7

Note that an assignment \((x,X)\mapsto \tilde{x}_{X}\) alone canonically induces an approximate distance \(\hat{d}(x,X):=d(x,\tilde{x}_{X})\). This will be useful in several experiments of Sect. 5 involving Persistent Homology. Furthermore, in these situations Eq. (19) will specialize to \(d(x,X) \leqslant \hat{d}(x,X)\leqslant 2d(x,X)\), that is \(a=2\), see Proposition 11.

We then replace the approximate Goldstein subgradient \(\tilde{\partial }_\epsilon f(x)\) with \(\hat{\partial }_\epsilon f(x)\), defined in the exact same way except that it is computed from strata satisfying \(\hat{d}(x,X)\leqslant \epsilon \), that is, \(\hat{\partial }_\epsilon f(x)\) contains \(\nabla f(\tilde{x}_{X})\) for each such stratum. The proof of Proposition 3 adapts straightforwardly to the following statement:

Proposition 8

Let \(x\in \mathbb {R}^n\) and \(\epsilon >0\). Assume that \(f\) is stratifiably smooth. Let \(L\) be a Lipschitz constant of the gradients \(\nabla f_{i}\) restricted to \(\overline{B}(x,a\epsilon )\cap X_i\), where \(f_i\) is some local \(C^2\) extension of \(f_{\mid X_i}\), and \( X_i\in \mathcal {X}_{x,\epsilon } \) is top-dimensional. Then we have:

Proof

The inclusion \(\hat{\partial }_\epsilon f(x) \subseteq \partial _\epsilon f(x)\) is clear. Conversely, let \(\nabla _{X}f(x')\in \partial _\epsilon f(x)\), where \(X\) is a top-dimensional stratum incident to \(x'\) and \(|x'-x|\leqslant \epsilon \). We then have \(\hat{d}(x,X)\leqslant a\epsilon \) and hence \(\tilde{x}_{X}\) is a point in \(\hat{\partial }_{a\epsilon }f(x)\) which is \((a+1)\epsilon \)-close to \(x'\). Therefore \(\nabla _{X}f(x')\in \hat{\partial }_{a\epsilon }f(x) + \overline{B}(0,(a+1)L\epsilon )\). The rest of the proof is then conducted as in Proposition 3. \(\square \)

The vector \(\hat{g}(x,\epsilon )\) with minimal norm in \(\hat{\partial }_\epsilon f(x)\) can then serve as the new descent direction in place of \(\tilde{g}(x,\epsilon )\):

Proposition 9

Let \(f\) be stratifiably smooth, and \(x\) be a non-stationary point. Let \(0<\beta <1\), and \(\epsilon _0>0\). Denote by \(L\) a Lipschitz constant for all gradients of the restriction \(f_i\) in the ball \(\overline{B}(x,a\epsilon _0)\) (as in Proposition 8).

- (i):

-

For \(0<\epsilon \leqslant \epsilon _0\) small enough we have \(\epsilon \leqslant \frac{1-\beta }{2L} \Vert \hat{g}(x,\epsilon )\Vert \); and

- (ii):

-

For such \(\epsilon \), we have \(\forall t \leqslant \frac{\epsilon }{a\Vert \hat{g}(x,\epsilon )\Vert }, \, f(x-t\hat{g}(x,\epsilon ))\leqslant f(x)-\beta t\Vert \hat{g}(x,\epsilon )\Vert ^2\).

Proof

The proof of Proposition 4 can be replicated by replacing \(\epsilon \) with \(\frac{\epsilon }{a}\) and using Proposition 8 instead of Proposition 3. \(\square \)

In light of this result, we can use \(g_k=\hat{g}(x_k,\epsilon _k)\) as a descent direction, which in practice simply amounts to replace the accurate oracle \(\textbf{Sample}(x_k,\epsilon _k)\) in Algorithm 4 with the approximate oracle \(\textbf{ApproxSample}(x_k,\epsilon _k)\). The only difference is that the assignment of update step in Algorithm 3 (Line 7) should take the constant \(a\) into account, namely:

The convergence analysis of Sect. 3.3 holds as well for this algorithm, and the proofs of Theorem 5 and Theorem 7 are unchanged.

4 Application to topological data analysis

In this section, we define the persistence map \(\textrm{PH}:\mathbb {R}^n \rightarrow \textbf{Bar}\) which is a central descriptor in TDA that gives rise to prototypical stratifiably smooth objective functions \(f\) in this work. We refer the reader to [48,49,50] for full treatments of the theory of Persistence. We then introduce the stratification that makes \(\textrm{PH}\) a stratifiably smooth map, by means of the permutation group. Using the associated Cayley graph we give a way to implement the oracle \(\textbf{Sample}(x,\epsilon )\) that samples points in nearby top-dimensional strata, which is the key ingredient of Algorithm 4 for computing descent directions.

4.1 The persistence map

Persistent Homology and Barcodes. Let \(n \in \mathbb {N}\), and let \(\{v_1,\dots ,v_n\}\) be a (finite) set of vertices. A simplicial complex \(K\) is a subset of the power set \(\mathcal {P}(\{v_1,\dots ,v_n\})\) whose elements are called simplices, and which is closed under inclusion: if \(\sigma \in K\) is a simplex and \(\sigma '\subseteq \sigma \), then \(\sigma '\in K\). The dimension of the complex is the maximal cardinality of its simplices minus one. In particular a 1-dimensional simplicial complex is simply an undirected graph.

A filter function is a function on the vertices of \(K\), which we equivalently view as a vector \(x\in \mathbb {R}^n\). Given \(t\in \mathbb {R}\), we have the sub complexes \(K_{\le t} = \{ \sigma \in K,\ \forall v \in \sigma , \ x(v) \le t\}\). This yields a nested sequence of sub complexes called the sublevel sets filtration of \(K\) by \(x\):

See Fig. 2 for an illustration on graphs. The (Ordinary) Persistent Homology of \(x\) in degree \(p\in \{0,\cdots ,\dim K\}\) records topological changes in (20) by means of points \((b,d)\in \mathbb {R}^2\), here \(b<d\), called intervals. For instance, in degree \(p=0\), an interval (b, d) corresponds to a connected component that appears in \(K_{\le b}\) and that is merged with an older component in \(K_{\le d}\). In degree \(p=1\) and \(p=2\), intervals track loops and cavities respectively, and more generally an interval (b, d) in degree p tracks a p-dimensional sphere that appears in \(K_{\le b}\) and persists up to \(K_{\le d}\).

Note that there are possibly infinite intervals \((b,\infty )\) for p-dimensional cycles that persist forever in the filtration (20). Such intervals are not easy to handle in applications, and it is common to consider the (Extended) Persistent Homology of \(x\), for which they do not occur, i.e. we append the following sequence of pairs involving superlevel sets \(K_{\ge t} :=\{\sigma \in K \mid \ \forall v \in \sigma , \ x(v) \ge t \}\) to (20):

Together intervals (b, d) form the (extended) barcode \(\textrm{PH}_p(x)\) of \(x\) in degree p, which we simply denote by \(\textrm{PH}(x)\) when the degree is clear from the context.

Definition 4

A barcode is a finite multi-set of pairs \((b,d)\in \mathbb {R}^2\) called intervals, with \(b\leqslant d\). Two barcodes differing by intervals of the form (b, b) are identified. We denote by \(\textbf{Bar}\) the set of barcodes.

The set \(\textbf{Bar}\) of barcodes can be made into a metric space as follows. Given two barcodes \(D :=\{(b,d)\}\) and \(D':=\{(b',d')\}\), a partial matching \(\gamma : D\rightarrow D'\) is a bijective map from some subset \(A \subseteq D\) to some \(B \subseteq D'\). For \(q\ge 1\) the q-th diagram distance \(W_q(D,D')\) is the following cost of transferring intervals (b, d) to intervals \((b',d')\), minimized over partial matchings \(\gamma \) between D and \(D'\):

In particular the intervals that are not in the domain A and image B of \(\gamma \) contribute to the total cost relative to their distances to the diagonal \(\{b=d\} \subset \mathbb {R}^2\).

The Stability Theorem [23, 24] implies that the map \(\textrm{PH}:\mathbb {R}^n\rightarrow \textbf{Bar}\), which we refer to as the persistence map in what follows, is Lipschitz continuous.

Differentiability of Persistent Homology. Next we recall from [25] the notions of differentiability for maps in and out of \(\textbf{Bar}\) and the differentiability properties of \(\textrm{PH}\). Note that the results of [25] focus on ordinary persistence, yet they easily adapt to extended persistence, see e.g. [32].

Given \(r\in \mathbb {N}\), we define a local \(\mathcal {C}^r\)-coordinate system as a collection of \(C^r\) real-valued maps coming in pairs \(b_i,d_i: U\rightarrow \mathbb {R}\) defined on some open Euclidean set \(U\subseteq \mathbb {R}^n\), indexed by a finite set I, and satisfying \(b_i(x)\le d_i(x)\) for all \(x\in U\) and \(i\in I\). A local \(\mathcal {C}^r\)-coordinate system is thus equally represented as a map valued in barcodes

where each interval \((b_i(x),d_i(x))\) is identified and tracked in a \(\mathcal {C}^r\) manner.

Definition 5

A map \(B: \mathbb {R}^n\rightarrow \textbf{Bar}\) is r-differentiable at \(x\in \mathbb {R}^n\) if \(B_{\mid U}=\tilde{B}_{\mid U}\) for some local \(\mathcal {C}^r\)-coordinate system \(\tilde{B}\) defined in a neighborhood U of \(x\).

Similarly we have a notion of differentiability for maps out of barcodes.

Definition 6

A map \(V: \textbf{Bar}\rightarrow \mathbb {R}^m\) is r-differentiable at \(D\in \textbf{Bar}\) if \(V\circ \tilde{B}:\mathbb {R}^n\rightarrow \mathbb {R}^m\) is of class \(\mathcal {C}^r\) in a neighborhood of the origin for all \(n\in \mathbb {N}\) and local \(\mathcal {C}^r\)-coordinate system \(\tilde{B}\) defined around the origin such that \(\tilde{B}(0)=D\).

These notions compose together via the chain rule, so for instance an objective function \(f=V\circ B: \mathbb {R}^n \rightarrow \mathbb {R}^m\) is differentiable in the usual sense as soon as B and \(V\) are so.

We now define the stratification \(\mathcal {X}\) of \(\mathbb {R}^n\) such that the persistence map \(B=\textrm{PH}\) is r-differentiable (for any r) over each stratum. Denote by \(\Sigma _n\) the group of permutations on \(\{1,\cdots ,n\}\). Each permutation \(\pi \in \Sigma _n\) gives rise to a closed polyhedron

which is a cell in the sense that its (relative) interior is a top-dimensional stratum of our stratification \(\mathcal {X}\). The (relative) interiors of the various faces of the cells \(\mathcal {S}_{\pi }\) form the lower dimensional strata. In terms of filter functions, a stratum is simply a maximal subset whose functions induce the same pre-order on vertices of \(K\). We then have that any persistence based loss is stratifiably smooth w.r.t. this stratification.

Proposition 10

Let \(V: \textbf{Bar}\rightarrow \mathbb {R}\) be a 2-differentiable map. Then the objective function \(f:=V\circ \textrm{PH}\) is stratifiably smooth for the stratification \(\mathcal {X}\) induced by the permutation group \(\Sigma _n\).

Proof

From Proposition 4.23 and Corollary 4.24 in [25], on each cell \(\mathcal {S}_{\pi }\) we can define a local \(C^2\) coordinate system adapted to \(\textrm{PH}\) that consists of linear maps \(b_i,d_i: \mathcal {S}_{\pi }\rightarrow \mathbb {R}\), in particular it admits a \(C^2\) extension on a neighborhood of \(\mathcal {S}_{\pi }\). Since \(V\) is globally 2-differentiable, by the chain rule, we incidentally obtain a local \(C^2\) extension \(f_i\) of \(f_{\mid \mathcal {S}_{\pi }}=(V\circ \textrm{PH})_{\mid \mathcal {S}_{\pi }}\). \(\square \)

Remark 8

Note that the condition that \(f=V\circ \textrm{PH}\) has compact sublevel sets, as required for the analysis of Algorithm 2, sometimes fails because preimage of compact sets by \(\textrm{PH}\) may not be bounded in general. The intuitive reason for this is that a filter function \(x\) can have an arbitrarily large value on two distinct entries—one simplex creates a homological cycle that the other destroys immediately—that may not be reflected in the barcode \(\textrm{PH}(x)\). Hence the fiber of \(\textrm{PH}\) is not bounded. However, when the simplicial complex K is (homeomorphic to) a compact oriented manifold, any filter function must reach its maximum at the simplex that generates the fundamental class of the manifold (or one of its components), hence \(\textrm{PH}\) has bounded preimages on compact sets in that case. Finally, we note that it is always possible to turn a loss function \(f\) based on \(\textrm{PH}\) into one with compact sublevel sets by adding a regularization term that controls the norm of the filter function \(x\).

4.2 Exploring the space of strata

In the setting of Proposition 10, the objective function \(f=V\circ \textrm{PH}:\mathbb {R}^n \rightarrow \mathbb {R}\) is a stratifiably smooth map, where the stratification involved is induced by the group \(\Sigma _n\) of permutations on \(\{1,\cdots ,n\}\), with cells \(\mathcal {S}_{\pi }\) as in (23). In order to calculate the approximate subgradient \(\partial _\epsilon f(x)\), we need to compute the set \(\mathcal {X}_{x,\epsilon }\) of cells \(\mathcal {S}_{\pi }\) that are at Euclidean distance no greater than \(\epsilon \) from \(x\):

Estimating distances to strata. In practice however, solving the quadratic problem of (24) to compute \(d_2(x,\mathcal {S}_{\pi })\) can be done in \(O(n \log n)\) time using solvers for isotonic regression [51]. Since we want to approximate many such distances to neighboring cells, we rather propose the following estimate which boils down to O(1) computations to estimate \(d_2(x,\mathcal {S}_{\pi })\). For any \(\pi \in \Sigma _n\), we consider the mirror of \(x\) in \(\mathcal {S}_{\pi }\), denoted by \(x^{\pi }\in \mathbb {R}^n\) and obtained by permuting the coordinates of \(x\) according to \(\pi \):

In the rest of this section, we assume for simplicity that the point \(x\) is fixed and has increasing coordinates, \(x_i\leqslant x_{i+1}\). The proxy \(d_2(x,x^\pi )\) then yields a good estimate of \(d_2(x,\mathcal {S}_{\pi })\), as expressed by the following result.

Proposition 11

For any permutation \(\pi \in \Sigma _n\), we have:

Proof

The left inequality is clear from the fact that \(x^{\pi }\) belongs to the cell \(\mathcal {S}_{\pi }\). To derive the right inequality, let \(\hat{x}^\pi \) be the projection of \(x\) onto \(\mathcal {S}_{\pi }\). It is a well-known fact in the discrete optimal transport literature, or alternatively a consequence of Lemma 13 below, that

so that in particular \(d_2(x^{\pi },\hat{x}^\pi )\leqslant d_2(x,\hat{x}^\pi )\). Consequently,

\(\square \)

Our approximate oracle for estimating the Goldstein subgradient, see Sect. 3.4, computes the set of mirrors \(x^{\pi }\) that are at most \(\epsilon \)-away from the current iterate \(x:=x_k\), that is:

Remark 9

Recall that the oracle is called several times in Algorithm 3 when updating the current iterate \(x_k\) with a decreasing exploration radius \(\epsilon _k\). However, for the oracle above we have

so that once we have computed \(\textbf{ApproxSample}(x_k,\epsilon _k)\) for an initial value \(\epsilon _k\) and the current \(x_k\), we can retrieve \(\textbf{ApproxSample}(x_k,\epsilon ')\) for any \(\epsilon ' < \epsilon _k\) in a straightforward way, avoiding re-sampling neighboring points around \(x_k\) and computing the corresponding gradient each time \(\epsilon _k\) decreases, saving an important amount of computational resources.

Sampling in nearby strata. In order to implement the oracle \(\textbf{ApproxSample}(x,\epsilon )\), we consider the Cayley graph with permutations \(\Sigma _n\) as vertices and edges between permutations that differ by elementary transpositions (those that swap consecutive elements). In other words, the Cayley graph is dual to the stratification of filter functions: a node corresponds uniquely to a cell \(\mathcal {S}_{\pi }\) and an edge corresponds to a pair of cells sharing a common \((n-1)\)-dimensional face.

We explore this graph by maintaining a list \(\mathcal {V}\) of visited nodes and a list \(\mathcal {N}\) of nodes to visit next. Initially, \(\mathcal {V}\) reduces to the source node, the identity permutation, and \(\mathcal {N}\) consists of its immediate neighbors, the elementary transpositions. At each step of the exploration procedure, we add a node that has not yet been visited, i.e. a permutation \(\pi \in \mathcal {N}\), to the list \(\mathcal {V}\) of visited nodes. If \(d_2(x,x^{\pi })\le \epsilon \), then we record the mirror point \(x^{\pi }\) in \(\textbf{ApproxSample}(x,\epsilon )\), and we add in \(\mathcal {N}\) all the neighbors \(\pi '\) of \(\pi \) that are not yet in \(\mathcal {V}\cup \mathcal {N}\). Note that by storing \(x^{\pi }\) and \(d_2(x,x^{\pi })\), we will later be able to retrieve \(x^{\pi '}\) and \(d_2(x,x^{\pi '})\) in O(1) time for any neighbor \(\pi '\). The following result entails that this exploration procedure indeed computes the set \(\textbf{ApproxSample}(x,\epsilon )\) of all mirrors \(x^{\pi }\) that satisfy \(d_2(x,x^{\pi })\le \epsilon \).

Proposition 12

Let \(\pi '\in \Sigma _n\) be a permutation such that \(d_2(x,x^{\pi '})\le \epsilon \). Then there exists a path in the Cayley graph from the identity to \(\pi '\) whose nodes \(\pi \) also satisfy \(d_2(x,x^\pi )\le \epsilon \).

Proof

In fact, from the well-known Lemma 13 below, any shortest path from the identity to \(\pi '\) does the job, because along such a path the set \(\textrm{inv}(\pi )\) of inversions, i.e. pairs (i, j) satisfying \((j-i)(\pi (j)-\pi (i))<0\), grows incrementally. \(\square \)

Lemma 13

Let \(x,y\in \mathbb {R}^n\) be two vectors whose coordinates are sorted in the same order, namely \(x_i\leqslant x_j \Leftrightarrow y_i\leqslant y_j\). Given \(\pi \in \Sigma _n\) a permutation, let \(\textrm{inv}(\pi )\) be the set of inversions, i.e. pairs (i, j) satisfying \((j-i)(\pi (j)-\pi (i))<0\). Then

Remark 10

For an arbitrary filter function \(x\), the computation of the barcode \(\textrm{PH}(x)\) has complexity \(O(\#K^3)\), here \(\#K\) is the number of vertices and edges in the graph \(K\) (or the number of simplices if \(K\) is a simplicial complex). In the SGS algorithm we need to compute \(\textrm{PH}(x_\pi )\) for each cell \(\mathcal {S}_{\pi }\) near the current iterate \(x_k\), which can quickly become too expansive. Below we describe two heuristics that we implemented in some of our experiments to reduce time complexity.

The first method bounds the number of strata that can be explored with a hyper-parameter \(N\in \mathbb {N}\), enabling a precise control of the memory footprint of the algorithm. In this case exploring the Cayley graph of \(\Sigma _n\) using Dijkstra’s algorithm is desirable, since it allows to retrieve the N strata that are the closest to the current iterate \(x_k\). Note that in the original Dijkstra’s algorithm for computing shortest-path distances to a source node, each node is reinserted in the priority queue each time one of its neighbors is visited. However in our case we know the exact distances \(d(x,x_\pi )\) to the source each time we encounter a new node, permutation \(\pi \), so we can simplify Dijkstra’s algorithm by treating each node of the graph at most once. Even though this heuristic lacks the theoretical convergence guarantees of Theorem 5 and Theorem 7, it is shown to be empirically efficient in Sect. 5.3.

The second approach is memoization: inside a cell \(\mathcal {S}_{\pi }\), all the filter functions induce the same pre-order on the n vertices of \(K\), hence the knowledge of the barcode \(\textrm{PH}(x_{\pi })\) of one of its filter functions allows to compute \(\textrm{PH}(x_{\pi }')\) for any other \(x_{\pi }'\in \mathcal {S}_{\pi }\) in \(O(\#K)\) time. We can take advantage of this fact by recording the cells \(\mathcal {S}_{\pi }\) (and the barcode \(\textrm{PH}(x_{\pi })\) of one filter function \(x_{\pi }\) therein) that are met by the SGS (or GS) algorithm during the optimization, thereby avoiding redundant computations whenever the cell \(\mathcal {S}_{\pi }\) is met for a second time.

5 Experiments

In this section we apply our approach to the optimization of objective functions based on the persistence map \(\textrm{PH}\), and compare it with other methods.

We consider three experimental settings in increasing level of complexity. Section 5.1 is dedicated to the optimization of an elementary objective function in TDA that allows for explicit comparisons of SGS with other optimization techniques. Section 5.2 introduces a novel topological optimization task: that of topological registration for translating filter functions between two distinct simplicial complexes. Finally, Sect. 5.3 considers a topological optimization problem introduced in [52] and used to refine functional maps between 3D shapes, showcasing the behavior of our algorithm (and other variants) in practical situations.

A fourth experiment related to the estimation of topological Fréchet mean of Mapper graphs built on top of a biological data set has been deferred to the appendix for the sake of concision.

Implementation. Our implementation is done in Python 3 and relies on TensorFlow [53] for automatic-differentiation, Gudhi [54] for TDA-related computations (barcodes, distances \(W_q\), Mapper graphs), cvxpy [55] for solving the quadratic minimization problem involved in Algorithm 4. Our implementation handles both ordinary and extended persistence, complexes of arbitrary dimension, and can easily be tuned to enable general objective functions (assuming those are provided in an automatic differentiation framework). Our code is publicly available at https://github.com/tlacombe/topt.

5.1 Proof-of-concept: minimizing total extended persistence

The goal of this experiment is to provide a simple yet instructive framework where one can clearly compare different optimization methods. Here we consider the standard Gradient Descent (GD) with fixed learning rate (a.k.a. step-size), its variant with learning-rate decay (GDwD), the Gradient Sampling (GS) algorithm and our Stratified Gradient Sampling (SGS) approach. Recall that GD is very well-suited to smooth optimization problems, while GS deals with objective functions that are merely locally Lipschitz with a dense subset of differentiability. To some extent, SGS is specifically tailored to functions with an intermediate degree of regularity since their restrictions to strata are assumed to be smooth, and this type of functions arise naturally in TDA.

We consider the elementary example of filter functions \(x\) on the graph obtained from subdividing the unit interval with n vertices and the associated (extended) barcodes \(\textrm{PH}(x)=\textrm{PH}_0(x)\) in degree 0.Footnote 2 When the target diagram is empty, \(D=\emptyset \), the objective \(x\mapsto W_1(\textrm{PH}(x),\emptyset )\) to minimize is also known in the TDA literature as the total extended persistence of \(\textrm{PH}(x)\):

Throughout the minimization, the sublevel sets of \(x\) are simplified until they become topologically trivial: \(\textrm{Pers}(x)\) is minimal if and only if \(x\) is constant. This elementary optimization problem enables a clear comparison of the GD, GDwD, GS and SGS methods.

For each \(\textrm{mode} \in \{\textrm{GD},\textrm{GDwD},\textrm{GS},\textrm{SGS}\}\), we get a gradient \(g_k^{\textrm{mode}}\) and thus build a sequence of iterates

For GD, the update step-size \(\epsilon _k = \epsilon \) is constant, for GDwD it is set to be \(\epsilon _k = \epsilon /(1+k)\), and for \(\textrm{mode}\in \{\textrm{GS},\textrm{SGS}\}\) it is reduced until \(\textrm{Pers}(x_k - \epsilon _k g_k^\textrm{mode}) < \textrm{Pers}(x_k) - \beta \epsilon _k \Vert g_k^\textrm{mode}\Vert ^2\) (and in addition \(\epsilon _k < C_k \Vert g_k^\textrm{SGS}\Vert \) for SGS). In each case the condition \(\Vert g_k^\textrm{mode}\Vert \le \eta \) is used as a stopping criterion.

For the experiments, the graph has \(n=5\) vertices, \(x_0 = (0.4, 0.72,0,0.3,0.14)\), \(\epsilon =\eta =0.01\), and we chose hyper-parameters \(\gamma = 0.5\) and \(\beta = 0.5\) for the GS and SGS algorithm. The results are illustrated in Fig. 3.

Whenever differentiable, the objective \(\textrm{Pers}\) has gradient norm greater than 1, so in particular it is not differentiable at its minima, which consists of constant filter functions \(x\). Therefore GD oscillates around its optimal value: the stopping criterion \(\Vert g_k^\textrm{GD}\Vert \le \eta \) is never met which prevents from detecting convergence. Setting \(\epsilon _k\) to decay at each iteration in GDwD theoretically ensures the convergence of the sequence \((x_k)_k\), but comes at the expense of a dramatic decrease of the convergence rate.

Comparison of Gradient Descent (GD), Gradient Descent with decay (GDwD), Gradient Sampling (GS) and our Stratified Gradient Sampling (SGS) on a toy example. a The evolution of filter functions \((x_k)_k\) as the total extended persistence \(\textrm{Pers}\) is minimized with the SGS method. Purple arrows indicate descent direction at \(k=0\). As expected, the minimization tends to make \((x_k)_k\) topologically as trivial as possible, that is flat in this context. b The barcodes \(\textrm{PH}(x_k)\) represented as persistence diagrams extended with the interval \((\min (x_k),\max (x_k))\). c The value \(\textrm{Pers}(x_k)\) of the objective function across iterations k. d The corresponding gradient norms \((\Vert g_k\Vert )_k\). Only GS and SGS reach the stopping criterion \(\Vert g_k\Vert < \eta \) (color figure online)

In contrast, the GS and SGS methods use a fixed step-size \(\epsilon _k\) yet they converge since their computed descent direction is the element of minimal norm in the convex hull of the surrounding gradients \(\{\nabla \textrm{Pers}(x_k), \nabla \textrm{Pers}(x^{(1)}),\dots , \nabla \textrm{Pers}(x^{(m)})\}\), as described in Algorithms 1 and 4. Here \(x^{(1)}, \dots , x^{(m)}\) are either sampled randomly around the current iterate \(x_k\) (with \(m=n+1\)) for GS or in the strata around \(x_k\) (if any) for SGS. We observe that it takes less iterations for SGS to converge: 137 iterations versus \(\sim 165\) iterations for GS (averaged over 10 runs). This is because in GS the convex hull of the random sampling \(\{x^{(1)},\dots ,x^{(m)} \}\) may be far from the actual generalized gradient \(\partial _\epsilon f\), incidentally producing sub-optimal descent directions and missing local minima, while in SGS the sampling takes all nearby strata into account which guarantees reliable approximate subgradient \(\tilde{\partial }_\epsilon f(x_k)\) and decent direction (by Proposition 4), in fact since the objective \(\textrm{Pers}\) restricts to a linear map on each stratum the approximate gradient \(\tilde{\partial }_\epsilon f(x_k)\) equals the Goldstein subgradient \(\partial _\epsilon f(x_k)\).

Another difference is that GS samples \(n+1=6\) nearby points at each iteration k, while SGS samples as many points as there are nearby strata, and for early iterations there is just one such stratum. In practice, this results in a total running time of \(\sim 2.7\)s for GS vs. 2.4s for SGS to reach convergence.Footnote 3

5.2 Topological registration

We now present the more sophisticated optimization task of topological registration. This problem takes inspiration from registration experiments in shapes and medical images analysis [56], where we want to translate noisy real-world data (e.g. MRI images of a brain) into a simpler and unified format, e.g. a given template of the brain. As detailed at the end of the section, this experiment is an example of scenarios where SGS requires sampling much less points at each iteration than GS would, while keeping stronger convergence guarantees.

Illustration of topological registration. a The target function defined on a (circular) simplicial complex of which we want to reproduce the topology. b The registration obtained when using a template with \(n=4\) vertices. Purple arrows indicate descent direction at \(k=0\). c The registration obtained when using a template with \(n=15\) vertices. d The target persistence diagram (blue triangles) along with the diagram trajectories through iterations for both cases (green and brown, respectively). e The values of the objective function across iterations. Using a larger template allows to attain lower objective values. f The corresponding gradient norms, both reaching the stopping criterion \(\Vert g_k\Vert \le \eta \). g The number of strata detected at each iteration of SGS (color figure online)

Problem formulation. In a topological analog of this problem from medical analysis, the observation consists of a filter function F defined on a simplicial complex \(K\) which may have, for instance, a large number of vertices, and the template consists of a simplicial complex \(K'\) simpler than \(K\) (e.g. with the same homotopy type but fewer vertices). The goal is then to find a filter function \(x\) on \(K'\) such that \((K', x)\) faithfully recovers the topology of the observation \((K, F)\). Formally we minimize the q-th distance (\(q \in [1,+\infty ]\)) between their barcodes

where we include the complexes in the notations \(\textrm{PH}(F, K)\) of the barcodes to make a clear distinction between filter functions defined on \(K\) and \(K'\).