Abstract

We consider minimizing a smooth and strongly convex objective function using a stochastic Newton method. At each iteration, the algorithm is given an oracle access to a stochastic estimate of the Hessian matrix. The oracle model includes popular algorithms such as Subsampled Newton and Newton Sketch, which can efficiently construct stochastic Hessian estimates for many tasks, e.g., training machine learning models. Despite using second-order information, these existing methods do not exhibit superlinear convergence, unless the stochastic noise is gradually reduced to zero during the iteration, which would lead to a computational blow-up in the per-iteration cost. We propose to address this limitation with Hessian averaging: instead of using the most recent Hessian estimate, our algorithm maintains an average of all the past estimates. This reduces the stochastic noise while avoiding the computational blow-up. We show that this scheme exhibits local Q-superlinear convergence with a non-asymptotic rate of \((\varUpsilon \sqrt{\log (t)/t}\,)^{t}\), where \(\varUpsilon \) is proportional to the level of stochastic noise in the Hessian oracle. A potential drawback of this (uniform averaging) approach is that the averaged estimates contain Hessian information from the global phase of the method, i.e., before the iterates converge to a local neighborhood. This leads to a distortion that may substantially delay the superlinear convergence until long after the local neighborhood is reached. To address this drawback, we study a number of weighted averaging schemes that assign larger weights to recent Hessians, so that the superlinear convergence arises sooner, albeit with a slightly slower rate. Remarkably, we show that there exists a universal weighted averaging scheme that transitions to local convergence at an optimal stage, and still exhibits a superlinear convergence rate nearly (up to a logarithmic factor) matching that of uniform Hessian averaging.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider minimizing a smooth and strongly convex objective function:

where \(f:\mathbb {R}^d\rightarrow \mathbb {R}\) is twice continuously differentiable with \(\textbf{H}(\textbf{x}) = \nabla ^2 f(\textbf{x}) \in \mathbb {R}^{d\times d}\) being its Hessian matrix. We suppose the Hessian \(\textbf{H}(\textbf{x})\) satisfies

for some constants \(0<\lambda _{\min }\le \lambda _{\max }\), and we let \(\kappa = \lambda _{\max }/\lambda _{\min }\) denote its condition number. We also let \(\textbf{x}^\star = \arg \min _{\textbf{x}\in \mathbb {R}^d} f(\textbf{x})\) be the unique global solution.

Problem (1) is arguably the most basic optimization problem, which nevertheless arises in many applications in machine learning and statistics [7, 9, 35, 56]. There is a plethora of methods for solving (1), and each (type of) method has its own benefits under favorable settings. This paper particularly focuses on solving (1) via second-order methods, where a (approximated) Hessian matrix is involved in the scheme. Consider the classical Newton’s method of the form

where \(\nabla f_t = \nabla f(\textbf{x}_t)\), \(\textbf{H}_t = \textbf{H}(\textbf{x}_t)\), and \(\mu _t\) is selected by passing a line search condition. Classical results indicate that Newton’s method in (2) exploits a global Q-linear convergence in the error of function value \(f(\textbf{x}_t)-f({\textbf{x}^\star })\); and a local Q-quadratic convergence in the iterate error \(\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert \). More precisely, Newton’s method has two phases, separated by a neighborhood

When \(\textbf{x}_t\not \in {\mathcal N}_\nu \), (2) is in the damped Newton phase, where the objective \(f(\textbf{x}_t)\) is decreased by at least a fixed amount in each iteration, and converges linearly. In this phase, \(\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert \) may converge slowly (e.g., it provably converges R-linearly using the fact that \(\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert ^2\le 2/\lambda _{\min }(f(\textbf{x}_t)-f({\textbf{x}^\star }))\)). When \(\textbf{x}_t\in {\mathcal N}_\nu \), (2) transits to the quadratically convergent phase, where the unit stepsize is accepted and \(\Vert \textbf{x}_t-\textbf{x}^\star \Vert \) converges quadratically. See [8, Section 9.5] for the analysis. Compared to first-order methods, although second-order methods often exploit a faster convergence rate and behave more robustly to tuning parameters, they hinge on a high computational cost of forming the exact Hessian matrix \(\textbf{H}_t\) at each step. To resolve such a computational bottleneck, which is particularly pressing in large-scale data applications, a variety of deterministic and stochastic methods have been proposed for constructing different alternatives of the Hessian matrix.

When a deterministic alternative of \(\textbf{H}_t\), say \({\check{\textbf{H}}}_t\), is employed in (2), which may come from a finite difference approximation of the second-order derivative, or from a quasi-Newton update such as BFGS or DFP, the convergence behavior is well understood. Specifically, if \(\{{\check{\textbf{H}}}_t\}_t\) are positive definite with uniform upper and lower bounds, the damped phase is preserved by the same analysis as Newton’s method. Furthermore, the quadratically convergent phase is weakened to a superlinearly convergent phase, and the superlinear convergence occurs if and only if the celebrated Dennis-Moré condition [14] holds, i.e.,

See [47, Theorem 3.7] for the analysis. Recently, a deeper understanding of quasi-Newton methods for minimizing smooth and strongly convex objectives has been reported in [31, 50,51,52]. These works enhanced the analyses based on Dennis-Moré condition in (3) by performing a local, non-asymptotic convergence analysis, and provided explicit superlinear rates for different potential functions of quasi-Newton methods. The non-asymptotic superlinear results are more informative than asymptotic superlinear results established via (3), i.e., \(\Vert \textbf{x}_{t+1} - {\textbf{x}^\star }\Vert /\Vert \textbf{x}_t - {\textbf{x}^\star }\Vert \rightarrow 0\) as \(t\rightarrow \infty \). However, the analyses in [31, 50,51,52] highly rely on specific properties of quasi-Newton updates in the Broyden class, and do not apply to general Hessian approximations (e.g., finite difference approximation).

1.1 Stochastic Newton methods

A parallel line of research explores stochastic Newton methods, where a stochastic approximation \({\hat{\textbf{H}}}_t\) is used in place of \(\textbf{H}_t\) in (2). The stochasticity of \({\hat{\textbf{H}}}_t\) may come from evaluating the Hessian on a random subset of data points (i.e., subsampling), or from projecting the Hessian onto a random subspace to achieve the dimension reduction (i.e., sketching). To unify different approaches, we consider in this paper a general Hessian approximation given by a stochastic oracle. In particular, we express the approximation \({\hat{\textbf{H}}}(\textbf{x})\) at any point \(\textbf{x}\) by

where \(\textbf{E}(\textbf{x})\in \mathbb {S}^{d\times d}\) is a symmetric random noise matrix following a certain distribution (conditional on \(\textbf{x}\)) with mean zero. At iterate \(\textbf{x}_t\), we query the oracle to obtain an approximation \({\hat{\textbf{H}}}_t = {\hat{\textbf{H}}}(\textbf{x}_t)\), which (implicitly) comes from generating a realization of the random matrix \(\textbf{E}_t = \textbf{E}(\textbf{x}_t)\), and then adding \(\textbf{E}_t\) to the true Hessian \(\textbf{H}_t\). We do not assume \(\textbf{E}_t\) and \(\textbf{H}_t\) are accessible to us.

As mentioned, the popular specializations of stochastic oracle include subsampling and sketching. For Hessian subsampling, a finite-sum objective is considered

In the t-th iteration, a subset \(\xi _t \subseteq \{1,2,\ldots , n\}\) is sampled uniformly at random, and the subsampled Hessian is defined as

We note that the components \(f_i\) in (5) may not be convex even if the function f is strongly convex.Footnote 1 This is the so-called sum-of-non-convex setting [2, 25, 26, 55, e.g., see ]. Our oracle model (4) allows for this, since \({\hat{\textbf{H}}}_t\) is not required to be positive semidefinite, while the existing Subsampled Newton methods generally do not allow it. See Sect. 1.3 and Example 1 for further discussion.

For Hessian sketching, one first forms the square-root Hessian matrix \(\textbf{M}_t\in \mathbb {R}^{n\times d}\) satisfying \(\textbf{H}_t = \textbf{M}_t^\top \textbf{M}_t\), where n is the number of data points. Then, one generates a random sketch matrix \(\textbf{S}_t\in \mathbb {R}^{s\times n}\) with the sketch size s and the property \(\mathbb {E}[\textbf{S}_t^\top \textbf{S}_t] = \textbf{I}\), and the sketched Hessian is defined as

In some cases, \(\textbf{M}_t\) can be easily obtained. One particular example is a generalized linear model, where the objective has the form

with \(\{\textbf{a}_i\}_{i=1}^n\in \mathbb {R}^d\) being n data points. In this case, \(\textbf{M}_t = \frac{1}{\sqrt{n}}\cdot \textrm{diag}(f_1''(\textbf{a}_1^\top \textbf{x}_t)^{1/2}, \ldots , f_n''(\textbf{a}_n^\top \textbf{x}_t)^{1/2})\textbf{A}\) where \(\textbf{A}= (\textbf{a}_1, \ldots , \textbf{a}_n)^\top \in \mathbb {R}^{n\times d}\) is the data matrix.

Another family of stochastic Newton methods is based on the so-called Sketch-and-Project framework (e.g., [28]), where a low-rank Hessian estimate is used to construct a Newton-like step (with the Moore-Penrose pseudoinverse instead of the matrix inverse). For example, in one approach [27], the Newton-like step in the t-th iteration is obtained by generating a sketching matrix \(\textbf{S}_t\in \mathbb {R}^{s\times d}\) to construct a rank-s approximate of the inverse Hessian, resulting in the update:

While linear convergence rates have been derived for these methods (e.g., [16]), they do not fit into our stochastic oracle framework due to an intrinsic bias arising in the Hessian estimates (see Sect. 1.3 for further discussion).

Numerous stochastic Newton methods using (6) or (7) with various types of convergence guarantees have been proposed [1, 6, 10, 11, 15, 17, 18, 22, 24, 32, 34, 48, 53]. We review these related references later and point to [4] for a recent brief survey. However, the existing approaches to stochastic Newton methods share common limitations, which we now discuss.

The majority of literature studies the convergence of stochastic Newton by establishing a high probability error recursion. For example, [1, 22, 48, 53] all showed, roughly speaking, that stochastic Newton methods exploit a local linear-quadratic recursion:

for some constants \(c_1, c_2>0\) and \(\delta \in (0, 1)\). These constants depend on the sample/sketch size at each step. Based on (8), one often applies the result for \(t = 0,1,\ldots ,T\) recursively, uses the union bound, and establishes local convergence with probability \(1 - T\delta \). This approach has the following key drawbacks.

-

(a)

The presence of the linear term with coefficient \(c_1>0\) in the recursion means that, once \(\textbf{x}_t\) is sufficiently close to \({\textbf{x}^\star }\), the algorithm can only achieve the linear convergence, as opposed to the quadratic or superlinear convergence achieved by deterministic methods. Prior works (e.g., [6, 53]) discussed how to achieve local superlinear convergence by diminishing the coefficient \(c_1 = c_{1,t}\) gradually as t increases. However, since \(c_1\) is proportional to the magnitude of stochastic noise in the Hessian estimates, diminishing it requires increasing the sample size for estimating the Hessian, which results in a blow-up of the per-iteration computational cost. To be specific, \(c_1\) is proportional to the reciprocal of the square root of the sample size; thus this blow-up in terms of the sample size can be as fast as exponential if we wish to attain the quadratic convergence rate, and linear if we wish to attain the superlinear convergence rate established in this paper later.

-

(b)

The presence of the failure probability \(\delta \) in (8) means that after T iterations, a convergence guarantee may only hold with probability \(1-T\delta \). Thus, the failure probability explodes when \(T\rightarrow \infty \). To resolve this issue one can gradually diminish \(\delta \), e.g., \(\delta = \delta _t = O(1/t^2)\) such that \(\sum _t \delta _t<\infty \). However, once again, such adjustment on \(\delta \) leads to an increasing sample/sketch size as the algorithm proceeds, although not as drastically as in (a) (it suffices to increase the sample size logarithmically). Thus, in our stochastic oracle model, where the noise level (and hence the sample size) remains constant, it is problematic to show any convergence with high probability from (8) as \(T\rightarrow \infty \).

We note that some prior works [6, 42] showed the convergence in expectation guarantees. Although the explosion of the failure probability in (b) is suppressed by the direct characterization of the expectation, the drawback (a) still remains. In addition, the convergence in expectation results require stronger assumptions. For example, [6, (2.17)] and [42, Theorem 2] showed similar recursions to (8) for \(\mathbb {E}[\Vert \textbf{x}_t - {\textbf{x}^\star }\Vert ]\). However, these works assumed a bounded moments of iterates condition, i.e., \(\mathbb {E}[\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert ^2]\le \gamma (\mathbb {E}[\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert ])^2\) for some constant \(\gamma >0\), and assumed each component \(f_i\) in (5) to be strongly convex. Both conditions are not needed in high probability analyses. Note that the majority of high probability analyses assume that each component \(f_i\) is convex though not necessarily strongly convex [1, 53], while we do not impose any conditions on \(f_i\), which significantly weakens the conditions in the existing literature. Furthermore, the stepsize in [6, 42] was prespecified without any adaptivity. [3] introduced a non-monotone line search to address the adaptivity issue, while extra conditions such as the compactness of \(\{\textbf{x}_t\}_t\) were imposed for their expectation analysis.

1.2 Main results: stochastic Newton with Hessian averaging

Concerned by the above limitations of stochastic Newton methods, we ask the following question:

Can we design a stochastic Newton method that exploits global linear and local superlinear convergence in high probability, even for an infinite iteration sequence, and without increasing the computational cost in each iteration?

We provide an affirmative answer to this question by studying a class of stochastic Newton methods with Hessian averaging. A simple intuition is that the approximation error \(\textbf{E}_t\) can be de-noised by aggregating all the errors \(\{\textbf{E}_i\}_{i=0}^{t}\), inspired by the central limit theorem for martingale differences. Thus, if we could reuse the past samples and replace \(\textbf{E}_t\) by \(\frac{1}{t+1}\sum _{i=0}^t\textbf{E}_i\), then the matrix \(\textbf{H}_t + \frac{1}{t+1}\sum _{i=0}^t\textbf{E}_i\) would be more precise than \({\hat{\textbf{H}}}_t = \textbf{H}_t+\textbf{E}_t\). However, since \(\{\textbf{E}_i\}_{i=0}^t\) are unknown and only \(\{{\hat{\textbf{H}}}_i\}_{i=0}^t\) are known to us, in order to de-noise \(\textbf{E}_t\), we can only aggregate all the Hessian estimates \(\{{\hat{\textbf{H}}}_i\}_{i=0}^t\) and derive \(\frac{1}{t+1}\sum _{i=0}^t{\hat{\textbf{H}}}_i = \frac{1}{t+1}\sum _{i=0}^t\textbf{H}_i + \frac{1}{t+1}\sum _{i=0}^t\textbf{E}_i\). Compared to \({\hat{\textbf{H}}}_t\), such a matrix does not preserve the information of the true Hessian \(\textbf{H}_t\). Thus, we observe a trade-off between de-noising the error \(\textbf{E}_t\) and preserving the Hessian information \(\textbf{H}_t\): on one hand, we want to assign equal weights to all the past errors to achieve the fastest concentration for the error average (see Remark 2); on the other hand, we want to assign all weights to \(\textbf{H}_t\) to fully preserve the most recent Hessian information. In this paper, we investigate this trade-off, and show how the Hessian averaging resolves the drawbacks of existing stochastic Newton methods.

1.2.1 The proposed method

We consider an online averaging scheme (we let \({\tilde{\textbf{H}}}_{-1} = {\varvec{0}}\)):

where \(\{w_t\}_{t=-1}^{\infty }\) is a prespecified increasing non-negative weight sequence starting with \(w_{-1} = 0\). By online we mean that we only keep an average Hessian \({\tilde{\textbf{H}}}_t\) in each iteration, and update it as (9) when we obtain a new Hessian estimate. This scheme is in contrast to keeping all the past Hessian estimates. By the scheme (9), we note that, at iteration t, we re-scale the weights of \(\{{\hat{\textbf{H}}}_i\}_{t=0}^{t-1}\) equally by a factor of \(w_{t-1}/w_t\), instead of completely redefining all the weights. We note that such an online averaging scheme is memory and computation efficient: compared to stochastic Newton methods without averaging, we only require an extra \(O(d^2)\) space to save \({\tilde{\textbf{H}}}_t\) and \(O(d^2)\) flops to update it, which is negligible in the setting of stochastic Newton methods where the Hessian vector product requires \(O(d^2)\) flops and solving the exact Newton system requires \(O(d^3)\) flops. In (9), we use the ratio factors \(w_{t-1}/w_t\) instead of direct weights merely to simplify our later presentation. Furthermore, (9) can be reformulated as a general weighted average as follows:

In particular, by setting \(w_t=t+1\), we obtain \(z_{i,t} = 1/(t+1)\) and further have \({\tilde{\textbf{H}}}_t = \frac{1}{t+1}\sum _{i=0}^{t}{\hat{\textbf{H}}}_i\). Thus, we recover simple uniform averaging. If we let the sequence \(w_t\) grow faster-than-linearly, this results in a weighted average that is skewed towards the more recent Hessian estimates.

Our proposed method replaces \(\textbf{H}_t\) by \({\tilde{\textbf{H}}}_t\) when computing the Newton direction (2). The detailed procedure is displayed in Algorithm 1. We make two comments about the algorithm.

First, Algorithm 1 supposes that, unlike the Hessian, the function values and gradients are known deterministically. As a result, the method generates a monotonically decreasing sequence of \(f(\textbf{x}_t)\). If, on the other hand, \(f(\textbf{x}_t)\) and \(\nabla f(\textbf{x}_t)\) were known with random noise, we would have to relax the Armijo condition by adding extra error terms (hence, \(f(\textbf{x}_t)\) would be potentially non-monotonic), and analyze the resulting method under a random model framework such as in [5, 12]. We leave these non-trivial extensions to future work.

Second, for early iterates, the average Hessian \({\tilde{\textbf{H}}}_t\) may not be a good approximate of \(\textbf{H}_t\). For example, \({\tilde{\textbf{H}}}_t\) may be indefinite (or even singular) so that \(\textbf{p}_t\) is not a descent direction. In that case, we skip the line search step and let \(\textbf{x}_{t+1}=\textbf{x}_t\) (see Line 6 of Algorithm 1). Further, even if \({\tilde{\textbf{H}}}_t\) is positive definite, it may not be properly bounded, and thus leads to an extremely small stepsize \(\mu _t\). However, as analyzed later in Lemmas 2 and 7, the errors \(\{\textbf{E}_i\}_{i=0}^t\) are sufficiently concentrated for large t with high probability, so that \({\tilde{\textbf{H}}}_t\) is properly bounded from above and below. Thus, Algorithm 1 is well defined and can be performed for any iteration t, while it behaves like the classical Newton method only after a few iterations (the threshold is provided in Lemmas 2 and 7).

1.2.2 Main results

We conduct convergence analysis for Algorithm 1 with different weight sequences \(\{w_t\}_{t=-1}^\infty \). Throughout the analysis, we only assume the oracle noise \(\textbf{E}(\textbf{x})\) has a sub-exponential tail, which in particular includes Hessian subsampling and Hessian sketching as special cases. Our convergence guarantees rely on the quality of the average Hessian approximation \({\tilde{\textbf{H}}}_t\); thus, we do not require \({\hat{\textbf{H}}}_t\) to be a good approximation of \(\textbf{H}_t\). In other words, our scheme is applicable even if we generate a single sample for forming the subsampled Hessian estimator, and applicable even if some components \(f_i\) are non-convex. This is because the noise \(\textbf{E}_t\) can always be reduced by averaging (ensured by the central limit theorem) even if it has a large variance.

We show that, with high probability, Algorithm 1 has four convergence phases with three transition points:

-

(a)

Global phase: \(\textbf{x}_t\) converges from any initial iterate \(\textbf{x}_0\) to a local neighborhood of \({\textbf{x}^\star }\), in which the unit stepsize starts being accepted.

-

(b)

Steady phase: \(\textbf{x}_t\) stays in the neighborhood.

-

(c)

Slow superlinear phase: \(\textbf{x}_t\) starts converging superlinearly with a rate gradually increasing.

-

(d)

Fast superlinear phase: the superlinear acceleration reaches its full potential and is maintained for all the following iterations.

We mention that the superlinear rate is measured with respect to the error \(\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert _{{\textbf{H}^\star }} :=\sqrt{(\textbf{x}_t-{\textbf{x}^\star })^\top {\textbf{H}^\star }(\textbf{x}_t-\textbf{x}_0)}\) where \({\textbf{H}^\star }= \textbf{H}({\textbf{x}^\star })\). Before introducing the main results in Theorems 1 and 2, we summarize them in Table 1, showing the transition points for two weight sequences. The transitions for general weights are provided in Sect. 4. We use \(\varUpsilon \) to denote the noise level of stochastic Hessian oracle (see Assumption 1 for a formal definition; typically \(\varUpsilon = O(\kappa )\)). We also use \(O(\cdot )\) to suppress the logarithmic factors and the dependence on other constants except \(\varUpsilon \) and \(\kappa \). We emphasize that all results of the paper require that the (weighted) average of the errors \(\{\textbf{E}_i\}_{i=0}^t\) is sufficiently concentrated; thus, they hold with high probability.

The first main result studies the uniform averaging scheme, which is informally stated below. We refer to Theorem 4 for a formal statement.

Theorem 1

(Uniform averaging; informal) Consider Algorithm 1 with \(w_t = t+1\). With high probability, the algorithm satisfies:

-

1.

After \({\mathcal T}= O(\kappa ^2+\varUpsilon ^2)\) iterations, \(\textbf{x}_t\) converges to a local neighborhood of \({\textbf{x}^\star }\).

-

2.

After \(O({\mathcal T}\kappa )\) iterations, \(\textbf{x}_t\) converges superlinearly to \({\textbf{x}^\star }\).

-

3.

After \(O({\mathcal T}^2\kappa ^2/\varUpsilon ^2)\) iterations, the superlinear rate reaches \((\varUpsilon \sqrt{\log (t)/t}\,)^{t}\), and this rate is maintained as \(t\rightarrow \infty \).

First, we observe that our convergence guarantee holds with high probability for the entire infinite iteration sequence, which addresses the issue of the blow-up of failure probability associated with the existing stochastic Newton methods (see part (b) in Sect. 1.1).

Second, the parameter \(\varUpsilon \) characterizes the noise level of the stochastic Hessian oracle. When the Hessian is generated by subsampling or sketching, \(\varUpsilon \) depends on the adopted sample/sketch sizes. As illustrated in Examples 1 and 2, \(\varUpsilon =O(\kappa )\) for popular Hessian estimators when sample/sketch sizes are independent of \(\kappa \). In this case, \({\mathcal T}= O(\kappa ^2)\). We require \({\mathcal T}\ge O(\varUpsilon ^2)\) only to ensure that \(\{{\tilde{\textbf{H}}}_t\}_{t\ge {\mathcal T}}\) are positive definite with condition numbers scaling as \(\kappa \), so that the Newton step based on \({\tilde{\textbf{H}}}_t\) leads to a usual decrease of the objective. On the other hand, popular stochastic Newton methods often generate a larger sample size (which depends on \(\kappa \)) to enforce \(\Vert \textbf{E}_t\Vert \le \epsilon \lambda _{\min }\) for any \(t\ge 0\) with an \(\epsilon \in (0,1)\) (e.g., see Lemma 2 and Equation 3.10 in [48, 53] respectively). In that case, \(\{{\hat{\textbf{H}}}_t\}_{t\ge 0}\) are positive definite and so are \(\{{\tilde{\textbf{H}}}_t\}_{t\ge 0}\). Importantly, our method does not require such well-conditioned Hessian oracles.

Third, we notice that the uniform averaging approach has three transitions outlined in Theorem 1. For the iterations before \({\mathcal T}\), the error in function value decreases linearly, implying that \(\textbf{x}_t\) converges R-linearly. The first transition occurs after \({\mathcal T}\) iterations when \(\textbf{x}_t\) reaches the local neighborhood, where second-order information starts being useful. \({\mathcal T}\) is also where the exact Newton methods would reach quadratic convergence. However, the averaged Hessian estimates still carry inaccurate Hessian information from the global phase, which is only gradually forgotten. From \({\mathcal T}\) to \(O({\mathcal T}\kappa )\) iterations, the algorithm gradually forgets the Hessian estimates in the global phase, while \(\textbf{x}_t\) still converges R-linearly. The second transition occurs after \(O({\mathcal T}\kappa )\) iterations, when \(\textbf{x}_t\) starts converging superlinearly (with a slow rate). The third transition occurs after \(O({\mathcal T}^2\kappa ^2/\varUpsilon ^2)\), when the superlinear rate is accelerated to \((\varUpsilon \sqrt{\log (t)/t}\,)^{t}\) and stabilized. This rate comes from the central limit theorem of averaging out the oracle noise; thus, this rate cannot be further improved.

Note that if we were to reset the averaged Hessian estimate \({\tilde{\textbf{H}}}_t\) after the first transition (so that the Hessian average does not include information from the global phase), then the algorithm would immediately reach the superlinear rate \((\varUpsilon \sqrt{\log (t)/t}\,)^{t}\) after \({\mathcal T}\) iterations (i.e., all transitions would occur at once). However, the algorithm does not know a priori when this transition will occur. As a result, the uniform Hessian averaging incurs a potentially significant delay of up to \(O({\mathcal T}^2\kappa ^2/\varUpsilon ^2)\) iterations before reaching the desired superlinear rate.

In our second main result, we address the delayed transition to superlinear convergence that occurs in the uniform averaging. Specifically, we ask:

Does there exist a universal weighted averaging scheme that achieves superlinear convergence without a delayed transition, and without any prior knowledge about the objective?

Remarkably, the answer to this question is affirmative. The weighted/non-uniform averaging scheme we present below puts more weight on recent Hessian estimates, so that the second-order information from the global phase is forgotten more quickly, and the transition to a fast superlinear rate occurs after only \(O({\mathcal T})\) iterations. Thus, the superlinear convergence occurs without any delay (up to constant factors). Such a scheme will necessarily have a slightly weaker superlinear rate than the uniform averaging as \(t\rightarrow \infty \), but we show that this difference is merely an additional \(O(\sqrt{\log t})\) factor (see Theorem 5 and Example 5 for a formal statement).

Theorem 2

(Weighted averaging; informal) Consider Algorithm 1 with \(w_t=(t+1)^{\log (t+1)}\). With high probability, after \(O({\mathcal T}) = O(\kappa ^2+\varUpsilon ^2)\) iterations, \(\textbf{x}_t\) achieves a superlinear convergence rate \((\varUpsilon \log (t)/\sqrt{t}\,)^t\), which is maintained as \(t\rightarrow \infty \).

We note that, given some knowledge about the global/local transition points (e.g., if the algorithm knows \(\kappa \), or if some convergence criterion is used for estimating the transition point), it is possible to switch from the more conservative weighted averaging to the asymptotically more effective uniform averaging within one run of the algorithm. However, since knowing transition points is difficult and rare in practice, we leave such considerations to future work, and only focus on problem-independent averaging schemes.

It is also worth mentioning that this paper only considers a basic stochastic Newton scheme based on (2), where we suppose exact function and gradient information and solutions to the Newton systems are known exactly. Some literature allows one to access inexact function values and/or gradients, and/or apply Newton-CG or MINRES to solve the linear systems inexactly [23, 37, 53, 63]. Applying our sample aggregation technique under these setups is promising, but we defer it to future work. The basic scheme purely reflects the benefits of Hessian averaging, which is the main interest of this work. Additionally, some literature deals with non-convex objectives via stochastic trust region methods [5, 12] or stochastic Levenberg-Marquardt methods [39]. The averaging scheme may not directly apply for these methods due to potential bias brought by Hessian modifications for addressing non-convexity, while our sample aggregation idea is still inspiring. We leave the generalization to non-convex objectives to future work as well. Some literature addressed the superlinearity of stochastic Newton methods under distributed or federated learning settings [30, 49, 54]. These works are not fully compatible with our Hessian oracle framework, since they exploit some distributed nature of problem to produce Hessian estimates with noise diminishing to zero (as opposed to the bounded noise in this paper).

1.3 Literature review

Stochastic Newton methods have recently received much attention. The popular Hessian approximation methods include subsampling and sketching.

For subsampled Newton methods, aside from extensive empirical studies on different problems [33, 40, 41, 62], the pioneering work in [10] established the very first asymptotic global convergence by showing that \(\Vert \nabla f_t\Vert \rightarrow 0\) as \(t\rightarrow \infty \), while the quantitative rate is unknown. Furthermore, [11, 24] studied Newton-type algorithms with subsampled gradients and/or subsampled Hessians, and established global Q-linear convergence in the error of function value \(f(\textbf{x}_t)-f({\textbf{x}^\star })\). However, the above analyses neglected the underlying probabilistic nature of the subsampled Hessian \({\hat{\textbf{H}}}_t\), and required \({\hat{\textbf{H}}}_t\) to be lower bounded away from zero deterministically. Such a condition holds only if each \(f_i\) in (5) is strongly convex, which is restrictive in general. Erdogdu and Montanari [22] relaxed such a condition by developing a novel algorithm, where the subsampled Hessian is adjusted by a truncated eigenvalue decomposition. With the exact gradient information and properly prespecified stepsizes, the authors showed a linear-quadratic error recursion for \(\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert \) in high probability. Arguably, the convergence of standard subsampled Newton methods is originally analyzed in [53] and [6] from different perspectives. In particular, for both sampling and not sampling the gradient, [53] showed a global Q-linear convergence for \(f(\textbf{x}_t) - f({\textbf{x}^\star })\) and a local linear-quadratic convergence for \(\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert \) in high probability. Under some additional conditions, [6] derived a global R-linear convergence for the expected function value \(\mathbb {E}[f(\textbf{x}_t) - f({\textbf{x}^\star })]\) and a (similar) local linear-quadratic convergence for the expected iterate error \(\mathbb {E}[\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert ]\). For both works, the authors also discussed how to gradually increase the sample size for Hessian approximation to achieve a local Q-superlinear convergence with high probability and in expectation, respectively. Building on the two studies, various modifications of subsampled Newton methods have been reported with similar convergence guarantees. We refer to [3, 36, 61, 64] and references therein. We note that [32] designed a scheme that allows for a single sample in each iteration of subsampled Newton. That work established a local linear convergence in expectation, while we obtain a superlinear convergence in high probability.

As a parallel approach to subsampling, Newton sketch has also been broadly investigated. Pilanci and Wainwright [48] proposed a generic Newton sketch method that approximates the Hessian via a Johnson–Lindenstrauss (JL) transform (e.g., the Hadamard transform), and the gradient is exact. Furthermore, [1, 15, 17, 18] proposed different Newton sketch methods with debiased or unbiased Hessian inverse approximations. Dereziński et al. [19] relied on a novel sketching technique called Leverage Score Sparsified (LESS) embeddings [20] to construct a sparse sketch matrix, and studied the trade-off between the computational cost of \({\hat{\textbf{H}}}_t\) and the convergence rate of the algorithm. Similar to subsampled Newton methods, the aforementioned literature established a local linear-quadratic (or linear) recursion for \(\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert \) in high probability. A recent work [34] adaptively increased the sketch size to let the linear coefficient be proportional to the iterate error, which leads to a quadratic convergence. However, the per-iteration computational cost is larger than typical methods. See [4] for a review of subsampled and sketched Newton, and their connections to, and empirical comparisons with, first-order methods.

Sketching has also been used to construct low-rank Hessian approximations through the Sketch-and-Project framework, originally developed by [28] for solving linear systems, and extended to general convex/nonconvex optimization by [21, 27, 38, 44]. The convergence properties of this family of methods have been thoroughly studied: they achieve linear convergence in expectation, with the rate controlled by a so-called stochastic condition number, which is defined as the smallest eigenvalue of the expectation of the low-rank projection matrix defined by the sketch [28]. While the per-iteration cost of stochastic Newton methods based on Sketch-and-Project is generally lower than that of the aforementioned Newton sketch methods, their convergence rates are more sensitive to the spectral properties of the Hessian. The precise characterizations of the convergence rates are given in [16, 18, 43]. Moreover, the Sketch-and-Project estimates are generally biased, so they are not appropriate for averaging.

In summary, none of the aforementioned existing works achieve superlinear convergence with a fixed per-iteration computational cost. Additionally, high probability convergence guarantees generally fail as \(t\rightarrow \infty \), with potent exceptions of certain stochastic trust-region methods [12] that enjoy almost sure convergence. However, the per-iteration computation of the exceptions is not fixed and the local rate is unknown. Further, for finite-sum objectives, the existing literature on stochastic Newton methods assumes each \(f_i\) to be strongly convex [6] or convex [53]. However, \(f_i\) needs not be convex even if f is strongly convex. See [2, 25, 26, 55] and references therein for first-order algorithms designed under such a setting. We address the above limitations of stochastic Newton methods by reusing all the past samples to average Hessian estimates. Our scheme is especially preferable when we have a limited budget for per-iteration computation (e.g., when we use very few samples in subsampled Newton, resulting in an ill-conditioned Hessian estimate). Our established non-asymptotic superlinear rates are stronger than the existing results, and our numerical experiments demonstrate the superiority of Hessian averaging.

Notation: Throughout the paper, we use \(\textbf{I}\) to denote the identity matrix, and \({\varvec{0}}\) to denote the zero vector or matrix. Their dimensions are clear from the context. We use \(\Vert \cdot \Vert \) to denote the \(\ell _2\) norm for vectors and spectral norm for matrices. For a positive semidefinite matrix \(\textbf{A}\), we let \(\Vert \textbf{x}\Vert _{\textbf{A}} = \sqrt{\textbf{x}^\top \textbf{A}\textbf{x}}\). For two scalars a, b, \(a\vee b = \max (a,b)\) and \(a\wedge b = \min (a, b)\). For two matrices \(\textbf{A}, \textbf{B}\), \(\textbf{A}\prec (\preceq )\textbf{B}\) if \(\textbf{B}- \textbf{A}\) is a positive (semi)definite matrix. Recall that we reserve the notation \(\lambda _{\min }, \lambda _{\max }\) to denote the lower and upper bounds of the true Hessian, and \(\kappa = \lambda _{\max }/\lambda _{\min }\) is the condition number.

Structure of the paper: In Sect. 2 we present the preliminaries on matrix concentration that are needed for our results. Then, we establish convergence results for the uniform averaging scheme in Sect. 3. Section 4 establishes convergence for general weight sequences. Numerical experiments and conclusions are provided in Sects. 5 and 6, respectively.

2 Preliminaries on matrix concentration

In this section, we present the key results on the concentration of sums of random matrices, which we then use to bound the noise of the averaged Hessian estimates. The Hessian estimates constructed by our algorithm are not independent, and hence standard matrix concentration results do not apply. However, they do satisfy a martingale difference condition, which we will exploit to derive useful concentration results.

Given a sequence of stochastic iterates \(\{\textbf{x}_t\}_{t=0}^{\infty }\), we let \(\mathcal {F}_0\subseteq \mathcal {F}_1\subseteq \mathcal {F}_2\subseteq \ldots \) be a filtration where \(\mathcal {F}_t = \sigma (\textbf{x}_{0:t})\), \(\forall t\ge 0\), is the \(\sigma \)-algebra generated by the randomness from \(\textbf{x}_0\) to \(\textbf{x}_t\). With such a filtration, we denote the conditional expectation by \(\mathbb {E}_t[\cdot ] = \mathbb {E}[\cdot \mid \mathcal {F}_t]\) and the conditional probability by \(\mathbb {P}_t(\cdot ) = \mathbb {P}(\cdot \mid \mathcal {F}_t)\). We suppose \(\textbf{x}_0\) is deterministic, so that \(\mathcal {F}_0\) is a trivial \(\sigma \)-algebra.

For a given weight sequence \(\{w_t\}_{t=-1}^\infty \) with \(w_{-1}=0\), the scheme (9) leads to

where \(z_{i,t} = (w_i - w_{i-1})/w_t\). Note that \(\sum _{i=0}^t z_{i,t}=1\) and \(z_{i,t}\propto z_i:=w_i-w_{i-1}\), i.e., \(z_{i,t}\) is proportional to an un-normalized weight \(z_i\). We see from (11) that \({\tilde{\textbf{H}}}_t\) consists of the Hessian averaging and noise averaging with the same weights. In principle, the Hessian averaging \(\sum _{i=0}^{t}z_{i,t}\textbf{H}_i\rightarrow {\textbf{H}^\star }\) as \(\textbf{x}_t\rightarrow {\textbf{x}^\star }\), while the noise averaging \(\sum _{i=0}^{t}z_{i,t}\textbf{E}_i\rightarrow {\varvec{0}}\) due to the central limit theorem. We will show that the Hessian averaging (eventually) converges faster than the noise averaging.

To study the concentration of noise averaging \(\bar{\textbf{E}}_t\), we use the fact that \(\{\textbf{E}_t\}_{t=0}^\infty \) is a martingale difference sequence, and rely on concentration inequalities for matrix martingales. These concentration inequalities require a sub-exponential tail condition on the noise. We say that a random variable X is K-sub-exponential if \(\mathbb {E}[|X|^p]\le p!\cdot K^p/2\) for all \(p=2,3,\ldots \,\), which is consistent (up to constants) with all standard notions of sub-exponentiality (see Sect. 2.7 in [59]).

Assumption 1

(Sub-exponential noise) We assume that \(\textbf{E}(\textbf{x})\) is mean zero and \(\Vert \textbf{E}(\textbf{x})\Vert \) is \(\varUpsilon _E\)-sub-exponential for all \(\textbf{x}\). Also, we define \(\varUpsilon :=\varUpsilon _E/\lambda _{\min }\) to be the scale-invariant noise level.

Remark 1

The sub-exponentiality of \(\Vert \textbf{E}(\textbf{x})\Vert \) implies that \(\textbf{E}(\textbf{x})\) has sub-exponential matrix moments: \(\mathbb {E}[\textbf{E}(\textbf{x})^p]\preceq p!\cdot \varUpsilon _E^p/2\cdot \textbf{I}\) for \(p=2,3,\ldots \,\). In fact, our analysis immediately applies under this slightly weaker condition. We impose the moment condition on \(\Vert \textbf{E}(\textbf{x})\Vert \) purely because it is easier to check in practice. Also, we note that sometimes the noise \(\textbf{H}(\textbf{x})^{-1/2}\textbf{E}(\textbf{x})\textbf{H}(\textbf{x})^{-1/2}\) is more natural to study, e.g., for sketching-based oracles where we additionally have \({\hat{\textbf{H}}}(\textbf{x})\succeq {\varvec{0}}\). Thus, we can alternatively impose \(\tilde{\varUpsilon }\)-sub-exponentiality on \(\Vert \textbf{H}(\textbf{x})^{-1/2}\textbf{E}(\textbf{x})\textbf{H}(\textbf{x})^{-1/2}\Vert \). Our analysis can also be adapted to this alternate condition, and leads to tighter convergence rates (in terms of the dependence on \(\kappa \)) for particular sketching-based oracles. However, this adaptation loses certain generality, and thus we prefer to impose conditions directly on the oracle noise \(\textbf{E}(\textbf{x})\).

Assumption 1 is weaker than assuming \(\Vert \textbf{E}(\textbf{x})\Vert \) to be uniformly bounded by \(\varUpsilon _E\), and it is satisfied by all of the popular Hessian subsampling and sketching methods. For example, when using Gaussian sketching, the noise is not bounded but sub-exponential. Further, the sub-exponential constant \(\varUpsilon _E\) (and hence \(\varUpsilon \)) reflects how the stochastic noise depends on the sample/sketch size, as illustrated in the examples below (see Appendix A for rigorous proofs).

Example 1

Consider subsampled Newton as in (6) with sample size \(s=|\xi _t|\), and suppose \(\Vert \nabla ^2f_i(\textbf{x})\Vert \le \lambda _{\max }R\) for some \(R>0\) and for all i. Then, we have \(\varUpsilon = O(\kappa R \sqrt{\log (d)/s})\). If we additionally assume that all \(f_i(\textbf{x})\) are convex, then \(\varUpsilon \) is improved to \(\varUpsilon = O(\sqrt{\kappa R\log (d)/s}+\kappa R\log (d)/s\,)\).

Example 2

Consider Newton sketch as in (7) with \(\textbf{S}\in \mathbb {R}^{s\times n}\) consisting of i.i.d. \(\mathcal N(0,1/s)\) entries. Then, we have \(\varUpsilon = O(\kappa (\sqrt{d/s}+ d/s))\).

From the above two examples, we observe that \(\varUpsilon \) scales as \(O(\kappa )\) when holding everything else fixed. Also, Example 1 illustrates that our Hessian oracle model applies to subsampled Newton even when some components \(f_i(\textbf{x})\) are non-convex (while \(f(\textbf{x})\) is still convex), although this adversely affects the sub-exponential constant. For Gaussian sketch in Example 2, we can show that \(\widetilde{\varUpsilon }=O(\sqrt{d/s}+ d/s)\) (where \(\widetilde{\varUpsilon }\) was defined in Remark 1). Thus, the dependence on \(\kappa \) can be avoided for the sub-exponential constant of noise \(\textbf{H}(\textbf{x})^{-1/2}\textbf{E}(\textbf{x})\textbf{H}(\textbf{x})^{-1/2}\). Analogous noise bounds can be proved for other sketching matrices \(\textbf{S}\), including sparse sketches and randomized orthogonal transforms.

We now show concentration inequalities for \(\bar{\textbf{E}}_t\) in (11) under Assumption 1. We state the following preliminary lemma, which is a variant of Freedman’s inequality for matrix martingales.

Lemma 1

(Adapted from Theorem 2.3 in [57]) Let \(t\ge 0\) be a fixed integer. Consider a d-dimensional martingale difference \(\{\textbf{E}_i\}_{i=0}^{t}\) (i.e., \(\mathbb {E}_i[\textbf{E}_i] = {\varvec{0}}\)). Suppose there exists a function \(g_t:\varTheta _t\rightarrow [0, \infty ]\) with \(\varTheta _t\subseteq (0, \infty )\), and a sequence of matrices \(\{\textbf{U}_i\}_{i=0}^{t}\), such that for any \(i = 0,1,\ldots ,t\),Footnote 2

Then, we have for any scalars \(\eta \ge 0\) and \(\sigma ^2>0\),

The function \(g_t\) in [57, Theorem 2.3] is defined on the full positive set \((0, \infty )\), but the proof applies to any subset \(\varTheta _t\). We use Lemma 1 to show the next result.

Theorem 3

(Concentration of sub-exponential martingale difference) Under Assumption 1, for any integer \(t\ge 0\) and scalar \(\eta \ge 0\), \(\bar{\textbf{E}}_t\) in (11) satisfies

where \(z_t^{(\max )} = \max _{i\in \{0,\ldots ,t\}}z_{i,t}\).

Proof

See Appendix C.1. \(\square \)

The martingale concentration in Theorem 3 matches the matrix Bernstein results for independent noises \(\{\textbf{E}_i\}_{i=0}^t\) (cf. Theorems 6.1, 6.2 in [58]). For any \(\delta \in (0, 1)\), if we let the right hand side of (13) be \(\delta /(t+1)^2\), then we obtain that, with probability at least \(1 - \delta /(t+1)^2\),

We provide the following remark to discuss the fastest concentration rate.

Remark 2

To achieve the fastest concentration rate, we minimize the right hand side of (14) under the restriction \(\sum _{i=0}^t z_{i,t} = 1\). Note that the minimum of both \(\sum _{i=0}^t z_{i,t}^2\) and \(z_t^{(\max )}\) is attained with equal weights, that is \(z_{i,t} = 1/(t+1)\), for \( i = 0,1,\ldots , t\). Thus, the fastest concentration rate is attained with equal weights. Furthermore, a union bound over t leads to

We note that the square root term \(\sqrt{\log (d(t+1)/\delta )/t+1}\) dominates the error bound for large t. Recalling from (11) that \(z_{i,t} = (w_i - w_{i-1})/w_t\), we know \(w_t = t+1\) for the equal weights. If fact, the concentration rate of \(\Vert \bar{\textbf{E}}_t\Vert \) relates to the superlinear convergence rate of \(\textbf{x}_t\) (see Theorem 1), because, as shown in the following sections, the convergence rate of \(\textbf{x}_t\) is proportional to \(\Vert \bar{\textbf{E}}_t\Vert \) when \(\textbf{x}_t\) is sufficiently close to \({\textbf{x}^\star }\).

3 Convergence of uniform Hessian averaging

We now study the convergence of stochastic Newton with Hessian averaging. We consider the uniform averaging scheme, i.e., \(w_t = t+1\), \(\forall t\ge 0\). Our first result suggests that, with high probability, \({\tilde{\textbf{H}}}_t \succ {\varvec{0}}\) for all large t. This implies that the Newton direction \(\textbf{p}_t = -({\tilde{\textbf{H}}}_t)^{-1}\nabla f_t\) will be employed from some t onwards (cf. Line 6 of Algorithm 1). We recall that \(\varUpsilon = \varUpsilon _E/\lambda _{\min }\), and e denotes the natural base.

Lemma 2

Consider Algorithm 1 with \(w_t = t+1\), \(\forall t\ge 0\). Under Assumption 1, we let \(\delta , \epsilon \in (0, 1)\) with \(d/\delta \ge e\). We also let

Then, with probability \(1-\delta \pi ^2/6\), the event

occurs, which implies \((1-\epsilon )\lambda _{\min }\cdot \textbf{I}\preceq {\tilde{\textbf{H}}}_t \preceq (1+\epsilon )\lambda _{\max }\cdot \textbf{I}\), \(\forall t\ge {\mathcal T}_1\).

Proof

See Appendix C.2. \(\square \)

By Lemma 2, we initialize the convergence analysis from the iteration \(t = {\mathcal T}_1\), and condition on the event \({\mathcal E}\). For \(0\le t< {\mathcal T}_1\), the Newton system may or may not be solvable and the lower and upper bounds of \({\tilde{\textbf{H}}}_t\) may or may not scale as \(\lambda _{\min }\) and \(\lambda _{\max }\) (cf. Line 6 of Algorithm 1). Thus, for the iterates \(\textbf{x}_{0:{\mathcal T}_1}\), we do not generally have guarantees on the convergence rate, but only know that the objective value is non-increasing, that is, \(f(\textbf{x}_0)\ge \cdots \ge f(\textbf{x}_{{\mathcal T}_1})\).

We next provide a Q-linear convergence for the objective value \(f(\textbf{x}_t)-f({\textbf{x}^\star })\) for \(t\ge {\mathcal T}_1\).

Lemma 3

Conditioning on the event (17), we let

and have \(f(\textbf{x}_{t+1})-f({\textbf{x}^\star }) \le (1-\phi )(f(\textbf{x}_t) - f({\textbf{x}^\star }))\), \(\forall t\ge {\mathcal T}_1\), which implies R-linear convergence of the iterate error,

Proof

See Appendix C.3. \(\square \)

We next show that \(\textbf{x}_t\) stays in a neighborhood around \({\textbf{x}^\star }\) for all large t. For this, we need a Lipschitz continuity condition.

Assumption 2

(Lipschitz Hessian) We assume \(\textbf{H}(\textbf{x})\) is L-Lipschitz continuous. That is \(\Vert \textbf{H}(\textbf{x}_1) - \textbf{H}(\textbf{x}_2)\Vert \le L\Vert \textbf{x}_1 - \textbf{x}_2\Vert \) for any \(\textbf{x}_1, \textbf{x}_2\in \mathbb {R}^d\).

Combining Lemma 3 with Assumption 2 leads to the following corollary.

Corollary 1

Consider Algorithm 1 with \(w_t=t+1\), \(\forall t\ge 0\). Under Assumptions 1 and 2, we let \(\delta , \epsilon \in (0, 1)\) with \(d/\delta \ge e\), and define the neighborhood \({\mathcal N}_{\nu }\) as

Then, with probability \(1 - \delta \pi ^2/6\), we have \(\textbf{x}_t\in {\mathcal N}_\nu \), for all \(t\ge {\mathcal T}\) where \({\mathcal T}= {\mathcal T}_1 + {\mathcal T}_2\) with \({\mathcal T}_1\) defined in (16) and

Proof

See Appendix C.4. \(\square \)

Combining (16) and (19), and using \(O(\cdot )\) to neglect logarithmic factors and all constants except \(\kappa \) and \(\varUpsilon \), we have \({\mathcal T}= O(\varUpsilon ^2 + \kappa ^2)\) with high probability. Building on Corollary 1, we then show that the unit stepsize is accepted locally.

Lemma 4

Under Assumption 2, suppose \(\textbf{p}_t = -({\tilde{\textbf{H}}}_t)^{-1}\nabla f_t\). Then \(\mu _t = 1\) if \(\textbf{x}_t \in {\mathcal N}_{\nu }\) and \((1-\psi )\textbf{H}_t \preceq {\tilde{\textbf{H}}}_t \preceq (1+\psi )\textbf{H}_t\) with \(\nu , \psi \) satisfying

Proof

See Appendix C.5. \(\square \)

The unit stepsize enables us to show a linear-quadratic error recursion.

Lemma 5

Under Assumption 2 and suppose \(\textbf{p}_t = -({\tilde{\textbf{H}}}_t)^{-1}\nabla f_t\), \(\textbf{x}_t \in {\mathcal N}_{\nu }\), and \((1-\psi )\textbf{H}_t \preceq {\tilde{\textbf{H}}}_t \preceq (1+\psi )\textbf{H}_t\) with \(\nu , \psi \) satisfying (20). Then, we have

Proof

See Appendix C.6. \(\square \)

Lemma 5 suggests that \(\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert \) exhibits local Q-linear convergence.

Corollary 2

Under Assumption 2 and suppose \(\textbf{p}_t = -({\tilde{\textbf{H}}}_t)^{-1}\nabla f_t\), \(\textbf{x}_t \in {\mathcal N}_{\nu }\), and \((1-\psi )\textbf{H}_t \preceq {\tilde{\textbf{H}}}_t \preceq (1+\psi )\textbf{H}_t\) with \(\nu , \psi \) satisfying (20). Then, we have

which implies linear convergence provided \(3(\nu +\psi )<1\).

Given all the presented lemmas, we state the final convergence guarantee.

Theorem 4

Consider Algorithm 1 with \(w_t = t+1\), \(\forall t\ge 0\). Under Assumptions 1, 2, we let \(\delta \in (0, 1)\) satisfy \(d/\delta \ge e\), and let \(\epsilon , \nu \in (0, 1)\) satisfy

Define the neighborhood \({\mathcal N}_{\nu }\) as in (18), and define \({\mathcal T}= {\mathcal T}_1 +{\mathcal T}_2\) with \({\mathcal T}_1\) given by (16) and \({\mathcal T}_2\) given by (19). We also let \(\mathcal {J}= 4{\mathcal T}\kappa /\nu \). Then, with probability \(1-\delta \pi ^2/6\), we have that \(\textbf{x}_{{\mathcal T}_1:{\mathcal T}+\mathcal {J}}\) converges R-linearly, \(\textbf{x}_{{\mathcal T}:{\mathcal T}+\mathcal {J}}\in {\mathcal N}_{\nu }\), and

with

satisfying \(24\rho _t\le 1\), \(\forall t\ge 0\). \(\square \)

Proof

See Appendix C.7. \(\square \)

We note from Theorem 4 that the condition on \(\epsilon \) and \(\nu \) does not depend on unknown quantities of objective function and noise level. The convergence rate \(\rho _t\) consists of two terms. The first term is due to the fact that \({\mathcal T}= O(\varUpsilon ^2+ \kappa ^2)\) Hessians are accumulated in the global phase, and each of them contributes an error (in norm \(\Vert \cdot \Vert _{{\textbf{H}^\star }}\)) as large as \(\kappa \). Given these imprecise Hessians, the method cannot immediately converge superlinearly after \({\mathcal T}\) iterations. That is, \(\rho _t \nleq 1\) if \(\mathcal {J}=t=0\). We need \(\mathcal {J}= O({\mathcal T}\kappa )\) iterates to suppress the effect of these imprecise Hessians. The second term is due to the noise averaging, i.e., \(\Vert \bar{\textbf{E}}_t\Vert \), which decays slower than the first term. Thus, for sufficiently large t, the noise averaging will finally dominate the convergence rate.

We present the above observation in the following corollary. It suggests that the averaging scheme has three transition points; thus four convergence phases.

Corollary 3

Under the setup of Theorem 4, Algorithm 1 has three transitions:

(a): From \(\textbf{x}_0\) to \(\textbf{x}_{{\mathcal T}}\): the algorithm converges to a local neighborhood \({\mathcal N}_{\nu }\) from any initial point \(\textbf{x}_0\).

(b): From \(\textbf{x}_{{\mathcal T}}\) to \(\textbf{x}_{{\mathcal T}+\mathcal {J}}\): the sequence \(\textbf{x}_t\) stays in the neighborhood \({\mathcal N}_{\nu }\).

(Starting from \(\textbf{x}_{{\mathcal T}_1}\), the sequence \(\textbf{x}_t\) exhibits R-linear convergence)

(c): From \(\textbf{x}_{{\mathcal T}+\mathcal {J}}\) to \(\textbf{x}_{{\mathcal T}+\mathcal {J}+{\mathcal K}}\): the algorithm converges Q-superlinearly with

where

(d): From \(\textbf{x}_{{\mathcal T}+\mathcal {J}+{\mathcal K}}\): the algorithm converges Q-superlinearly with

where \(t\ge {\mathcal T}+\mathcal {J}+{\mathcal K}\).

Proof

See Appendix C.8. \(\square \)

For an infinite iteration sequence \(\{\textbf{x}_t\}_{t=0}^\infty \) and with high probability, Corollary 3(a) suggests that the first transition is \({\mathcal T}=O(\kappa ^2+\varUpsilon ^2)\); Corollary 3(b) suggests that the second transition is \(\mathcal {J}= O({\mathcal T}\kappa )\); Corollary 3(c) suggests that the third transition is \({\mathcal K}= O({\mathcal T}^2\kappa ^2/\varUpsilon ^2)\); and Corollary 3(d) suggests that the final rate is \(\rho _t^{(2)} =O(\varUpsilon \sqrt{\log t/t})\). This recovers Theorem 1. Recalling that \(\varUpsilon \) typically does not exceed \(\kappa \), in this case, we have \({\mathcal T}= O(\kappa ^2)\), \(\mathcal {J}= O(\kappa ^3)\), and \({\mathcal K}= O(\kappa ^6/\varUpsilon ^2)\). This suggests a trade-off between the final superlinear rate and the final transition. When the oracle noise level \(\varUpsilon \) is small, a faster superlinear rate is finally attained, but \({\mathcal K}\) also increases, meaning that the time to attain the final rate is further delayed. By Examples 1 and 2, \(\varUpsilon \) decays as sample/sketch size increases. Thus, the final superlinear rate is improved by increasing the sample/sketch size s, however the effect of this change may be delayed due to the rate/transition trade-off. Fortunately, as we will see in the following section, this trade-off can be optimized via weighted Hessian averaging.

4 Convergence of averaging with general weights

Although the superlinear convergence of stochastic Newton with uniform Hessian averaging (Corollary 3) is promising, since the scheme eventually attains the optimal superlinear rate implied by the central limit theorem, a clear drawback is the delayed transition—the scheme spends quite a long time before attaining the final rate. In this section, we study the relationship between transitions and general weight sequences. We consider performing Algorithm 1 with a weight sequence \(w_t\) that satisfies the following general condition.

Assumption 3

We assume \(w_t = w(t)\) for all integer \(t\ge 0\), where \(w(\cdot ): \mathbb {R}\rightarrow \mathbb {R}\) is a real function satisfying (i) \(w(\cdot )\) is twice differentiable; (ii) \(w(-1)= 0\), \(w(t)>0\), \(\forall t\ge 0\); (iii) \(w'(-1)\ge 0\); (iv) \(w''(t)\ge 0\), \(\forall t\ge -1\); (v) \(w(t+1)/w(t) \vee w'(t+1)/w'(t) \le \varPsi \), \(\forall t\ge 0\) for some \(\varPsi \ge 1\).

By the above assumption, we specialize the result of noise averaging in (14) as follows.

Lemma 6

Under Assumptions 1 and 3, for any \(t\ge 0\), with probability \(1-\delta /(t+1)^2\),

Proof

See Appendix C.9. \(\square \)

Naturally, to have \(\Vert \bar{\textbf{E}}_t\Vert \) concentrate, we require \(\lim _{t\rightarrow \infty }\log (\frac{d(t+1)}{\delta })\frac{w'(t)}{w(t)}=0\). It is easy to see that for some weight sequences, such as \(w(t) = \exp (t)-\exp (-1)\), such a requirement cannot be satisfied, which makes convergence fail. On the other hand, this is reasonable since \(z_{i,t}\propto z_i = w_i-w_{i-1} = \exp (i)-\exp (i-1) = (1-1/e)\exp (i)\), which means that we assign an exponentially large weight to the current Hessian estimate. Such an assignment preserves recent Hessian information better, but it diminishes the previous estimates too quickly to let the noise averaging concentrate.

Given the above concentration results, we have a similar result to Lemma 2.

Lemma 7

Consider Algorithm 1 with \(w_t\) satisfying Assumption 3. Under Assumption 1, for any \(\delta , \epsilon \in (0, 1)\), we let

Then, with probability \(1-\delta \pi ^2/6\), the event

occurs, which implies \((1-\epsilon )\lambda _{\min }\cdot \textbf{I}\preceq {\tilde{\textbf{H}}}_t \preceq (1+\epsilon )\lambda _{\max }\cdot \textbf{I}\), \(\forall t\ge \mathcal {I}_1\).

Proof

See Appendix C.10. \(\square \)

We note that Lemma 3 and Corollary 1 still hold for general weight sequences. Thus, we let

and know that \(\textbf{x}_t\in {\mathcal N}_{\nu }\) for all \(t\ge \mathcal {I}\). Lemmas 4, 5 and Corollary 2 also carry over to the setting of general weight sequences. Building on these results, we state the final convergence guarantee.

Theorem 5

Consider Algorithm 1 with \(w_t\) satisfying Assumption 3. Under Assumptions 1, 2, we let \(\delta , \epsilon , \nu \in (0,1)\) and \(\epsilon , \nu \) satisfy

Define the neighborhood \({\mathcal N}_{\nu }\) as in (18), and define \(\mathcal {I}\) as in (25). We also let \({\mathcal U}\) be \(w(\mathcal {I}+{\mathcal U}) = 2w(\mathcal {I}-1)\kappa /\nu \). Then, with probability \(1-\delta \pi ^2/6\), we have that \(\textbf{x}_{\mathcal {I}_1:\mathcal {I}+{\mathcal U}}\) converges R-linearly, \(\textbf{x}_{\mathcal {I}:\mathcal {I}+{\mathcal U}}\in {\mathcal N}_{\nu }\), and

with

satisfying \(12\varPsi \theta _t\le 1\), \(\forall t\ge 0\).

Proof

See Appendix C.11. \(\square \)

The proof of Theorem 5 is more involved than the one of Theorem 4. In particular, when dealing with a critical term \(\sum _{j=\mathcal {I}}^{\mathcal {I}+{\mathcal U}+t}(w(j)-w(j-1))\Vert \textbf{x}_j-{\textbf{x}^\star }\Vert _{{\textbf{H}^\star }}\), the analysis of Theorem 4 uses the facts that \(w(j)-w(j-1)=1\) and \(\textbf{x}_j\) converges linearly (cf. Corollary 2). However, that analysis does not apply for general weights, since plugging the setup \(w(j)=j+1\) masks some critical properties of \(w(\cdot )\), such as \(w'(j)\ge 0\) and \(w(j+1)/w(j) \le \varPsi \), \(\forall j\ge 0\) (\(\varPsi =2\) for \(w(j)=j+1\)). In contrast, for Theorem 5, we separate the above term into two terms, \(\sum _{j=\mathcal {I}}^{\mathcal {I}+{\mathcal U}}(w(j)-w(j-1))\Vert \textbf{x}_j-{\textbf{x}^\star }\Vert _{{\textbf{H}^\star }}\) and \(\sum _{j=\mathcal {I}+{\mathcal U}+1}^{\mathcal {I}+{\mathcal U}+t}(w(j)-w(j-1))\Vert \textbf{x}_j-{\textbf{x}^\star }\Vert _{{\textbf{H}^\star }}\). The first term is bounded by \(w(\mathcal {I}+{\mathcal U})\nu \) since \(\textbf{x}_j\in {\mathcal N}_{\nu }\) for \(\mathcal {I}\le j\le \mathcal {I}+{\mathcal U}\). The second term, which is proven to have the same bound as the first term, not only utilizes the linear convergence of \(\textbf{x}_j\) with a rate depending on \(\varPsi \) (proved by induction), but also utilizes general properties of \(w(\cdot )\) in Assumption 3.

We arrive at the following corollary for the iteration transitions. The proof is the same as for Corollary 3, and is omitted.

Corollary 4

Under the setup of Theorem 5, Algorithm 1 has three transitions:

(a): From \(\textbf{x}_0\) to \(\textbf{x}_\mathcal {I}\): the algorithm converges to a local neighborhood \({\mathcal N}_{\nu }\) from any initial point \(\textbf{x}_0\).

(b): From \(\textbf{x}_{\mathcal {I}}\) to \(\textbf{x}_{\mathcal {I}+ {\mathcal U}}\): the sequence \(\textbf{x}_t\) stays in the neighborhood \({\mathcal N}_{\nu }\).

(Starting from \(\textbf{x}_{\mathcal {I}_1}\), the sequence \(\textbf{x}_t\) exhibits R-linear convergence)

(c): From \(\textbf{x}_{\mathcal {I}+{\mathcal U}}\) to \(\textbf{x}_{\mathcal {I}+{\mathcal U}+{\mathcal V}}\): the algorithm converges Q-superlinearly with

where

(d): From \(\textbf{x}_{\mathcal {I}+{\mathcal U}+{\mathcal V}}\): the algorithm converges Q-superlinearly with

where \(t\ge \mathcal {I}+{\mathcal U}+{\mathcal V}\).

Given Corollary 4, we provide the following examples. Again, we use \(O(\cdot )\) to neglect the logarithmic factors and the dependence on all constants except \(\kappa \) and \(\varUpsilon \). We emphasize that for an iteration sequence, the three transition points occur only with high probability. For sake of presentation, we will not repeat “with high probability” for each \(O(\cdot )\). As mentioned, typically, \(\varUpsilon = O(\kappa )\) in practice. We note that for all considered \(w_t\) sequences, Assumption 3 is satisfied and \(\mathcal {I}_1\) has the same order as \({\mathcal T}_1\) (cf. (16)), so that \(\mathcal {I}= O({\mathcal T}) = O(\varUpsilon ^2 + \kappa ^2)\). In other words, the weighted averaging and uniform averaging take the same order of iterations to get into the same local neighborhood. In the following examples, we show how the second and third transition points \({\mathcal U}\) and \({\mathcal V}\), as well as the superlinear rates \(\theta _t^{(1)}\) and \(\theta _t^{(2)}\), change for different weight sequences (see Table 2 for a summary of the case \(\varUpsilon \le O(\kappa )\), i.e. \(\mathcal {I}= O(\kappa ^2)\)).

Example 3

(Uniform averaging) Let \(w_t = t+1\). Then, the superlinear rates are:

Moreover, the second and third transition points are given by \({\mathcal U}= O(\mathcal {I}\cdot \kappa )\) and \({\mathcal V}=O(\mathcal {I}^2\kappa ^2/\varUpsilon ^2)\). The above example recovers Corollary 3 (up to constant scaling factors). It achieves the best final rate \(\theta _t^{(2)}\) ensured by the central limit theorem, but the initial rate \(\theta _t^{(1)}\) is sub-optimal. Thus, this scheme takes a long time for the iterates to reach the final rate.

Example 4

(Accelerated initial rate) Let \(w_t = (t+1)^p\) for \(p \ge 1\). The superlinear rates are:

Moreover, the second and third transition points are given by

In the above example, we substantially accelerate the initial superlinear rate \(\theta _t^{(1)}\), while preserving the final rate \(\theta _t^{(2)}\) up to a constant factor p. We observe that the transitions \({\mathcal U}\) and \({\mathcal V}\) are both improved upon Example 3. In particular, the dependence of \(\kappa \) in \({\mathcal U}\) is improved from \(\kappa \) to \(\kappa ^{1/p}\), and the dependence of \(\mathcal {I}\) in \({\mathcal V}\) is improved from \(\mathcal {I}^2\) to \(\mathcal {I}^{2p/(2p-1)}\). An important observation is that both \({\mathcal U}\) and \({\mathcal V}\) contract to \(\mathcal {I}\) as p goes to \(\infty \), which inspires us to consider the following sequence.

Example 5

(Optimal transition points) Let \(w_t = (t+1)^{\log (t+1)}\). Then, the superlinear rates are:

We now derive the second and third transition points. In particular, \({\mathcal U}\) can be obtained from:

where the first implication uses the fact \(y = x^{\log x} \Longleftrightarrow x = \exp (\sqrt{\log y})\), as well as the fact \(\log \kappa \le (\log \mathcal {I})^2\). The third transition \({\mathcal V}\) can be obtained from:

where the implication uses \(\kappa ^2\le \mathcal {I}\). To let the right hand side hold, we let \({\mathcal V}= \xi \cdot \mathcal {I}\) and require

Thus, the final transition point \({\mathcal V}\) can be chosen as

Note that, as long as the oracle noise \(\varUpsilon \) is bounded below as \(\varUpsilon \ge 1/\text {poly}(\kappa )\), we have \({\mathcal V}=O(\mathcal {I})\). Since, as we mentioned, in practice the oracle noise actually grows proportionally with \(\kappa \), this is an extremely mild condition. However, it is technically necessary, since, if the Hessian oracle always returned the true Hessian, i.e., \(\varUpsilon =0\), then \(\theta _t^{(2)}=0\), so we would necessarily have \({\mathcal V}=\infty \) (i.e., Hessian averaging is not helpful when we have the exact Hessian). In the above example, all of the transition points \(\mathcal {I}, {\mathcal U}, {\mathcal V}\) are within constant factors of one another (not even logarithmic factors), while we sacrifice only \(\sqrt{\log (t)}\) in the final superlinear rate. The above results recover Theorem 2. This suggests that, using the weight sequence \(w_t=(t+1)^{\log (t+1)}\), the averaging scheme transitions from the global phase to the local superlinearly convergent phase smoothly, and the local neighborhood that separates the two phases is consistent with the neighborhood of classical Newton methods that separate the global phase (i.e., the damped Newton phase) and the local quadratically convergent phase (cf. Lemma 5 with \({\tilde{\textbf{H}}}_t=\textbf{H}_t\)).

5 Numerical experiments

We implement the proposed Hessian averaging schemes and compare them with popular baselines including BFGS, subsampled Newton methods and sketched Newton methodsFootnote 3. We focus on the regularized logistic regression problem

where \(\{(b_i, \textbf{a}_i)\}_{i=1}^n\) with \(b_i\in \{-1,1\}\) are input-label pairs. We let \(\textbf{A}= (\textbf{a}_1, \textbf{a}_2, \ldots , \textbf{a}_n)^\top \in \mathbb {R}^{n\times d}\) be the data matrix.

Data generating process: We generate several data matrices with \((n,d,\nu ) = (1000,100,10^{-3})\), varying their properties. First, we vary the coherence of \(\textbf{A}\), since higher coherence leads to higher variance for subsampled Hessian estimates, compared to sketched Hessian estimates. We use \(\tau _{\textbf{A}}\) to denote the coherence of \(\textbf{A}\), which is defined as

where \(\textbf{A}= \textbf{U}\mathbf {\varSigma }\textbf{V}^\top \) is the reduced singular value decomposition of \(\textbf{A}\) with \(\textbf{U}\in \mathbb {R}^{n\times d}\) and \(\textbf{V}\in \mathbb {R}^{d\times d}\). Second, we vary the condition number \(\kappa _{\textbf{A}}\) of \(\textbf{A}\), since (as suggested by our theory), higher condition number leads to slower convergence and delayed transitions to superlinear rate. The detailed generalization of \(\textbf{A}\) is as follows. We fix \(\textbf{V}= \textbf{I}\in \mathbb {R}^{d\times d}\). For low coherence, we generate a \(n\times d\) random matrix with each entry being independently generated from \({\mathcal N}(0,1)\), and let \(\textbf{U}\) be the left singular vectors of that matrix. For high coherence, we divide each row of \(\textbf{U}\) by \(\sqrt{\textbf{z}_i}\), where \(\textbf{z}_i\) is independently sampled from Gamma distribution with shape 0.5 and scale 2. We observe that the coherence of the low coherence matrix is \(\approx 1\) (minimal), while the coherence of the high coherence matrix is \(\approx 10=n/d\) (maximal). For either low or high coherence, we vary \(\kappa _{\textbf{A}}=\{d^{0.5}, d, d^{1.5}\}\). For each \(\kappa _{\textbf{A}}\), we let singular values in \(\mathbf {\varSigma }\) vary from 1 to \(\kappa _{\textbf{A}}\) with equal spacing, and finally form the matrix \(\textbf{A}= \textbf{U}\mathbf {\varSigma }\). We also generate a random vector \(\textbf{x}\sim {\mathcal N}({\varvec{0}}, 1/d\cdot \textbf{I})\), and the response \(b_i\in \{-1,1\}\) with \(P(b_i = 1) = 1/(1+\exp (-\textbf{a}_i^\top \textbf{x}))\), \(\forall i=1,\ldots ,n\).

Methods: We implement the deterministic BFGS method, and stochastic subsampled and sketched Newton methods. Given a batch size s, the subsampled Newton method computes the Hessian as

where the index set \(\xi \) has size s and is generated uniformly from [1, n] without replacement. The sketched Newton method instead computes the Hessian as

where \(\textbf{S}\in \mathbb {R}^{s\times n}\) is the sketch matrix. We consider three sketching methods, one dense (slow and accurate) and two sparse variants (fast but less accurate).

(i) Gaussian sketch: we let \(\textbf{S}_{i,j}{\mathop {\sim }\limits ^{iid}} {\mathcal N}(0, 1)\).

(ii) CountSketch [13]: for each column of \(\textbf{S}\), we randomly pick a row and realize a Rademacher variable.

(iii) LESS-Uniform [19]: for each row of \(\textbf{S}\), we randomly pick a fixed number of nonzero entries and realize a Rademacher variable in each nonzero entry. We let the number of nonzero entries in each row to be 0.1d.

For (i)–(iii), the sketches are scaled appropriately so that \(\mathbb {E}[{\hat{\textbf{H}}}(\textbf{x})]=\textbf{H}(\textbf{x})\).

For each of the above Hessian oracles (both sketched and subsampled), we consider three variants of the stochastic Newton methods, depending on how the final Hessian estimate is constructed.

(1) NoAvg: the standard method that uses the oracle estimate directly;

(2) UnifAvg: the uniform Hessian averaging (i.e., \(w_t=t+1\) from Theorem 1);

(3) WeightAvg: the universal weighted Hessian averaging (i.e., \(w_t=(t+1)^{\log (t+1)}\) from Theorem 2).

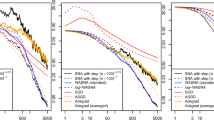

Convergence plots for Subsampled Newton with several Hessian averaging schemes, compared to the standard method without averaging (NoAvg) and to BFGS. For all of the plots, we have \((n,d,\nu ) = (1000, 100, 10^{-3})\). We vary the data coherence \(\tau _{\textbf{A}}\), condition number \(\kappa _{\textbf{A}}\) and the subsample size s

The overall results are summarized in Table 3, where we show the number of iterations until convergence for each experiment (we use \(\Vert \textbf{x}_{t} - {\textbf{x}^\star }\Vert _{{\textbf{H}^\star }}\le 10^{-6}\) as the criterion). We display the median over 50 independent runs. The first general takeaway is that our proposed WeightAvg performs best overall, and it is the most robust to different problem settings, when varying the coherence \(\tau _{\textbf{A}}\), condition number \(\kappa _{\textbf{A}}\), sample size s, and sketch/sample type. In particular, among the three variants of NoAvg/UnifAvg/WeightAvg, the latter is the only one to beat BFGS in all settings with sample size as small as \(s=0.5 d\), as well as the only one to successfully converge within the iteration budget (999 iterations) for all Hessian oracles. Nevertheless, there are a few problem instances where either NoAvg or UnifAvg perform somewhat better than WeightAvg, which can also be justified by our theory. In the cases where NoAvg performs best, the oracle noise is particularly small (due to the use of a dense Gaussian sketch and/or a large sketch size), which means that averaging out the noise is not helpful until long after the method has converged very close to the optimum. On the other hand, the cases where UnifAvg performs best are characterized by a well-conditioned objective function, where the superlinear phase of the optimization is reached almost instantly, so the slightly weaker superlinear rate of WeightAvg compared to UnifAvg manifests itself before reaching convergence (i.e., the additional factor of \(\sqrt{\log (t)}\) in Theorem 2).

To investigate the performance of Hessian averaging more closely, we present selected convergence plots in Fig. 1. The figure shows decay of the error in the log-scale, so that a linear slope indicates linear convergence whereas a concave slope implies superlinear rate. Here, we used subsampling as the Hessian oracle, varying the coherence \(\tau _{\textbf{A}}\), the condition number \(\kappa _{\textbf{A}}\), and sample size s, and compared the Hessian averaging schemes UnifAvg and WeightAvg against the baselines of standard Subsampled Newton (i.e., NoAvg) and BFGS. We make the following observations:

-

(a)

Subsampled Newton with Hessian averaging (UnifAvg and WeightAvg) exhibits a clear superlinear rate, observable in how its error plot curves away from the linear convergence of NoAvg. We note that BFGS also exhibits a superlinear rate, but only much later in the convergence process.

-

(b)

The gain from Hessian averaging (relative to NoAvg) is more significant both for highly ill-conditioned problems (large condition number \(\kappa \)) and for noisy Hessian oracles (small sample size s). For example, in the setting of \((\kappa ,s)=(d^{1.5},d)\) for both low and high coherence, standard Subsampled Newton (NoAvg) converges orders of magnitude slower than BFGS, and yet after introducing weighted averaging (WeightAvg), it beats BFGS by a factor of at least 2.5.

-

(c)

For small condition number, the two Hessian averaging schemes (UnifAvg and WeightAvg) perform similarly, although in the setting of \((\kappa ,s)=(\sqrt{d},d)\), the superlinear rate of UnifAvg is slightly better than that of WeightAvg (cf. Fig. 1a), which aligns with a slightly better rate in Theorem 1 compared to Theorem 2.

-

(d)

For highly ill-conditioned problems, WeightAvg converges much faster than UnifAvg. This is because, as suggested by Theorem 1, it takes much longer for UnifAvg to transition to its fast rate. In fact, in the settings of \((\tau _{\textbf{A}},\kappa _{\textbf{A}},s)=(1, d^{1.5},5d)\) and \((\tau _{\textbf{A}},\kappa _{\textbf{A}},s)=(10,d,5d)\), UnifAvg initially trails behind NoAvg, which is a consequence of the distortion of the averaged Hessian by the noisy estimates from the early global convergence.

We next investigate the convergence rate of different methods, and aim to show that Hessian averaging leads to Q-superlinear convergence, while subsampled/sketched Newton (with fixed sample/sketch size) only exhibit Q-linear convergence. Figure 2 plots \(\Vert \textbf{x}_{t+1}-{\textbf{x}^\star }\Vert _{{\textbf{H}^\star }}/\Vert \textbf{x}_t-{\textbf{x}^\star }\Vert _{{\textbf{H}^\star }}\) versus t. From the figure, we indeed observe that our method with different weight sequences (UnifAvg and WeightAvg) always exhibits a Q-superlinear convergence, and the superlinear rate exhibits the trend of \((1/t)^{t/2}\) matching the theory up to logarithmic factors. We also observe that subsampled/sketched Newton methods with different sketch matrices (NoAvg) always exhibit a Q-linear convergence. These observations are all consistent with our theory.

6 Conclusions

This paper investigated the stochastic Newton method with Hessian averaging. In each iteration, we obtain a stochastic Hessian estimate and then average it with all the past Hessian estimates. We proved that the proposed method exhibits a local Q-superlinear convergence rate, although the non-asymptotic rate and the transition points are different for different weight sequences. In particular, we proved that uniform Hessian averaging finally achieves \((\varUpsilon \sqrt{\log t/t})^t\) superlinear rate, which is faster than the other weight sequences, but it may take as many as \(O(\varUpsilon ^2 + \kappa ^6/\varUpsilon ^2)\) iterations with high probability to get to this rate. We also observe that using weighted averaging \(w_t = (t+1)^{\log (t+1)}\), the averaging scheme transitions from the global phase to the superlinear phase smoothly after \(O(\varUpsilon ^2+\kappa ^2)\) iterations with high probability, with only a slightly slower rate of \((\varUpsilon \log t/\sqrt{t})^t\).

One of the future works is to apply our Hessian averaging technique on constrained nonlinear optimization problems. [45, 46] designed various stochastic second-order methods based on sequential quadratic programming (SQP) for solving constrained problems, where the Hessian of the Lagrangian function was estimated by subsampling. These works established the global convergence for stochastic SQP methods, while the local convergence rate of these methods remains unknown. On the other hand, based on our analysis, the local rate of Hessian averaging schemes is induced by the central limit theorem, which we can also apply on the noise of the Lagrangian Hessian oracles. Thus, it is possible to design a stochastic SQP method with Hessian averaging and show a similar local superlinear rate for solving constrained nonlinear optimization problems. We note that a recent work [44] utilized the Hessian averaging to prove a local sublinear rate for stochastic SQP methods, while that result is not as strong as the one in this paper. Further, for unconstrained convex optimization problems, generalizing our analysis to enable stochastic gradients and function evaluations as well as inexact Newton directions is also an important future work, which can further improve the applicability of the algorithm. Finally, given any iteration threshold \({\mathcal T}\), establishing the probability that the first/second/third transition occurs before \({\mathcal T}\) is an interesting open question.

Notes

A concrete example is finding the leading eigenvector of a covariance matrix \(\textbf{A}= \frac{1}{n} \sum _{i=1}^{n}\textbf{a}_i\textbf{a}_i^\top \). Here, the objective can be structured as \(f_{{\varvec{b}}, \nu }(\textbf{x}) = \frac{1}{n}\sum _{i=1}^{n}(\textbf{x}^\top (\nu \textbf{I}- \textbf{a}_i\textbf{a}_i^\top )\textbf{x}- {\varvec{b}}^\top \textbf{x})\), where \(\nu >\Vert \textbf{A}\Vert \) and \({\varvec{b}}\) are given. See [25] for details.

The matrix exponential is defined by power series expansion: \(\exp (\textbf{A}) = \textbf{I}+ \sum _{i=1}^{\infty } \textbf{A}^i/ i!\).

All results can be reproduced via https://github.com/senna1128/Hessian-Averaging.

References

Agarwal, N., Bullins, B., Hazan, E.: Second-order stochastic optimization for machine learning in linear time. J. Mach. Learn. Res. 18(1), 4148–4187 (2017)

Allen-Zhu, Z., Yuan, Y.: Improved svrg for non-strongly-convex or sum-of-non-convex objectives. In: International Conference on Machine Learning, PMLR, pp. 1080–1089 (2016)

Bellavia, S., Krejić, N., Jerinkić, N.K.: Subsampled inexact Newton methods for minimizing large sums of convex functions. IMA J. Numer. Anal. 40(4), 2309–2341 (2019)

Berahas, A.S., Bollapragada, R., Nocedal, J.: An investigation of Newton-sketch and subsampled Newton methods. Optim. Methods Softw. 35(4), 661–680 (2020)

Blanchet, J., Cartis, C., Menickelly, M., Scheinberg, K.: Convergence rate analysis of a stochastic trust-region method via supermartingales. INFORMS J. Optim. 1(2), 92–119 (2019)