Abstract

Sparse principal component analysis with global support (SPCAgs) is the problem of finding the top-\(r\) leading principal components such that all these principal components are linear combinations of a common subset of at most \(k\) variables. SPCAgs is a popular dimension reduction tool in statistics that enhances interpretability compared to regular principal component analysis (PCA). Methods for solving SPCAgs in the literature are either greedy heuristics (in the special case of \(r = 1\)) with guarantees under restrictive statistical models, or algorithms with stationary-point convergence for some regularized reformulation of SPCAgs. Crucially, none of the existing computational methods can efficiently guarantee the quality of the solutions obtained by comparing them against dual bounds. In this work, we first propose a convex relaxation based on operator norms that provably approximates the feasible region of SPCAgs within a \(c_1 + c_2 \sqrt{\log r} = O(\sqrt{\log r})\) factor for some constants \(c_1, c_2\). To prove this result, we use a novel random sparsification procedure that uses the Pietsch-Grothendieck factorization theorem and may be of independent interest. We also propose a simpler relaxation that is second-order cone representable and gives a \((2\sqrt{r})\)-approximation for the feasible region. Using these relaxations, we then propose a convex integer program that provides a dual bound for the optimal value of SPCAgs. Moreover, it also has worst-case guarantees: it is within a multiplicative/additive factor of the original optimal value, and the multiplicative factor is \(O(\log r)\) or \(O(r)\) depending on the relaxation used. Finally, we conduct computational experiments showing that, within a reasonable time, our convex integer program provides good upper bounds that are typically significantly better than the natural baselines.
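To make the problem statement concrete, here is a brute-force reference solver for tiny instances. It is a sketch under stated assumptions and not the method proposed in this paper.

- It assumes the standard formulation: maximize \(\text{Tr}(\varvec{V}^{\top}\varvec{A}\varvec{V})\) over \(\varvec{V}\in\mathbb{R}^{d\times r}\) with orthonormal columns and at most \(k\) nonzero rows.
- For a fixed support \(S\), Ky Fan's maximum principle makes the best value equal to the sum of the \(r\) largest eigenvalues of \(\varvec{A}_{S,S}\).
- By Cauchy interlacing, supports of size exactly \(k\) suffice.

The function name `spcags_bruteforce` is hypothetical. Its running time grows like \(\binom{d}{k}\).

```python
import itertools

import numpy as np


def spcags_bruteforce(A, k, r):
    """Exact SPCAgs value for a tiny symmetric matrix A (d x d).

    Enumerates every support S of size k and scores it by the sum of the r
    largest eigenvalues of A[S, S] (Ky Fan's maximum principle). Supports of
    size exactly k suffice because, by Cauchy interlacing, enlarging S never
    decreases that sum. Runtime grows like C(d, k), so use only for small d.
    """
    d = A.shape[0]
    if not (1 <= r <= k <= d):
        raise ValueError("need 1 <= r <= k <= d")
    best_val, best_support = -np.inf, None
    for support in itertools.combinations(range(d), k):
        idx = list(support)
        eigs = np.linalg.eigvalsh(A[np.ix_(idx, idx)])  # ascending order
        val = eigs[-r:].sum()
        if val > best_val:
            best_val, best_support = val, support
    return best_val, best_support


# Usage on a random sample covariance matrix A = X X^T / M.
rng = np.random.default_rng(0)
X = rng.standard_normal((6, 50))
A = X @ X.T / X.shape[1]
value, support = spcags_bruteforce(A, k=3, r=2)
print(value, support)
```

Exhaustive enumeration like this is what makes dual bounds valuable: it only scales to toy sizes, while the convex integer program targets realistic \(d\).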

Notes

For some intuition: the first term in the parentheses controls the variance of \(\widetilde{\varvec{W}}_{ii}\), which satisfies \(\text {Var}(\widetilde{\varvec{W}}_{ii}) \le \frac{\varvec{W}^2_{ii}}{p_i} \le \frac{6}{k}\). The second term controls the largest norm of a row of \(\widetilde{\varvec{V}}\), which satisfies \(\Vert \widetilde{\varvec{V}}_{i,:}\Vert _2 \le \left\| \frac{\varvec{V}^*_{i,:}}{p_i} \right\| _2 \le \frac{6}{k} \sum _{i'} \Vert \varvec{V}^*_{i',:}\Vert _2\). This quantity is at most \(6\sqrt{\frac{r}{k}} \le 6\) because \(\varvec{V}^* \in \mathcal {C}\mathcal {R}1\).
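The first inequality in this footnote rests on the variance of an inverse-probability-weighted sample. Assume, as the notation suggests, that \(\widetilde{\varvec{W}}_{ii}\) equals \(\varvec{W}_{ii}/p_i\) with probability \(p_i\) and \(0\) otherwise; the exact sampling scheme is defined in the main text, not here. Then the estimator is unbiased and its variance is \(\varvec{W}_{ii}^2(1-p_i)/p_i \le \varvec{W}_{ii}^2/p_i\). The hypothetical helpers below compute these moments exactly and check unbiasedness by simulation.

```python
import random


def sparsify(w, p, rng):
    """Keep w with probability p, rescaled to w / p; otherwise return 0."""
    return w / p if rng.random() < p else 0.0


def exact_moments(w, p):
    """Exact mean and variance of sparsify(w, p, .)."""
    mean = p * (w / p)                # equals w: the estimator is unbiased
    second_moment = p * (w / p) ** 2  # equals w**2 / p
    return mean, second_moment - mean ** 2  # w**2 * (1 - p) / p <= w**2 / p


w, p = 0.5, 0.25
mean, var = exact_moments(w, p)
print(mean, var, w ** 2 / p)  # prints 0.5 0.75 1.0
```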

Formally, we can append zero rows to \(\frac{\varvec{V}^*_i}{\Vert \varvec{V}^*_i\Vert _2}\) so that the resulting matrix is in \(\mathcal {C}\mathcal {R}1\).

References

[1] Alizadeh, A.A., Eisen, M.B., Davis, R.E., Ma, C., Lossos, I.S., Rosenwald, A., Boldrick, J.C., Sabet, H., Tran, T., Yu, X., et al.: Distinct types of diffuse large B-cell lymphoma identified by gene expression profiling. Nature 403(6769), 503 (2000)

[2] Alon, U., Barkai, N., Notterman, D.A., Gish, K., Ybarra, S., Mack, D., Levine, A.J.: Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc. Natl. Acad. Sci. 96(12), 6745–6750 (1999)

[3] Asteris, M., Papailiopoulos, D., Kyrillidis, A., Dimakis, A.G.: Sparse PCA via bipartite matchings. In: Advances in Neural Information Processing Systems, pp. 766–774 (2015)

[4] Asteris, M., Papailiopoulos, D.S., Karystinos, G.N.: Sparse principal component of a rank-deficient matrix. In: 2011 IEEE International Symposium on Information Theory Proceedings, pp. 673–677. IEEE (2011)

[5] Attouch, H., Bolte, J., Redont, P., Soubeyran, A.: Proximal alternating minimization and projection methods for nonconvex problems: an approach based on the Kurdyka–Łojasiewicz inequality. Math. Oper. Res. 35(2), 438–457 (2010)

[6] Berthet, Q., Rigollet, P.: Computational lower bounds for sparse PCA. arXiv:1304.0828 (2013)

[7] Bolte, J., Sabach, S., Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146(1–2), 459–494 (2014)

[8] Boutsidis, C., Drineas, P., Magdon-Ismail, M.: Sparse features for PCA-like linear regression. In: Advances in Neural Information Processing Systems, pp. 2285–2293 (2011)

[9] Burgel, P.R., Paillasseur, J., Caillaud, D., Tillie-Leblond, I., Chanez, P., Escamilla, R., Perez, T., Carré, P., Roche, N., et al.: Clinical COPD phenotypes: a novel approach using principal component and cluster analyses. Eur. Respir. J. 36(3), 531–539 (2010)

[10] Cai, T., Ma, Z., Wu, Y.: Optimal estimation and rank detection for sparse spiked covariance matrices. Probab. Theory Relat. Fields 161(3–4), 781–815 (2015)

[11] Cai, T.T., Ma, Z., Wu, Y., et al.: Sparse PCA: optimal rates and adaptive estimation. Ann. Stat. 41(6), 3074–3110 (2013)

[12] Chan, S.O., Papailiopoulos, D., Rubinstein, A.: On the approximability of sparse PCA. In: Conference on Learning Theory, pp. 623–646 (2016)

[13] Chen, S., Ma, S., Xue, L., Zou, H.: An alternating manifold proximal gradient method for sparse PCA and sparse CCA. arXiv:1903.11576 (2019)

[14] d’Aspremont, A., Bach, F., El Ghaoui, L.: Approximation bounds for sparse principal component analysis. Math. Program. 148(1–2), 89–110 (2014)

[15] d’Aspremont, A., Bach, F., El Ghaoui, L.: Optimal solutions for sparse principal component analysis. J. Mach. Learn. Res. 9(Jul), 1269–1294 (2008)

[16] d’Aspremont, A., El Ghaoui, L., Jordan, M.I., Lanckriet, G.R.: A direct formulation for sparse PCA using semidefinite programming. In: Advances in Neural Information Processing Systems, pp. 41–48 (2005)

[17] Del Pia, A.: Sparse PCA on fixed-rank matrices. http://www.optimization-online.org/DB_HTML/2019/07/7307.html (2019)

[18] Deshpande, Y., Montanari, A.: Sparse PCA via covariance thresholding. J. Mach. Learn. Res. 17(1), 4913–4953 (2016)

[19] Dey, S.S., Mazumder, R., Wang, G.: A convex integer programming approach for optimal sparse PCA. arXiv:1810.09062 (2018)

[20] Erichson, N.B., Zheng, P., Manohar, K., Brunton, S.L., Kutz, J.N., Aravkin, A.Y.: Sparse principal component analysis via variable projection. arXiv:1804.00341 (2018)

[21] Gallivan, K.A., Absil, P.-A.: Note on the convex hull of the Stiefel manifold. Technical note (2010)

[22] Gu, Q., Wang, Z., Liu, H.: Sparse PCA with oracle property. In: Advances in Neural Information Processing Systems, pp. 1529–1537 (2014)

[23] Hiriart-Urruty, J.B., Lemaréchal, C.: Fundamentals of Convex Analysis. Springer Science & Business Media (2012)

[24] Johnstone, I.M., Lu, A.Y.: Sparse principal components analysis. arXiv:0901.4392 (2009)

[25] Jolliffe, I.T., Cadima, J.: Principal component analysis: a review and recent developments. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 374(2065), 20150202 (2016)

[26] Jolliffe, I.T., Trendafilov, N.T., Uddin, M.: A modified principal component technique based on the LASSO. J. Comput. Graph. Stat. 12(3), 531–547 (2003)

[27] Journée, M., Nesterov, Y., Richtárik, P., Sepulchre, R.: Generalized power method for sparse principal component analysis. J. Mach. Learn. Res. 11(Feb), 517–553 (2010)

[28] Kannan, R., Vempala, S.: Randomized algorithms in numerical linear algebra. Acta Numerica 26, 95 (2017)

[29] Kim, J., Tawarmalani, M., Richard, J.P.P.: Convexification of permutation-invariant sets and applications. arXiv:1910.02573 (2019)

[30] Krauthgamer, R., Nadler, B., Vilenchik, D., et al.: Do semidefinite relaxations solve sparse PCA up to the information limit? Ann. Stat. 43(3), 1300–1322 (2015)

[31] Lei, J., Vu, V.Q., et al.: Sparsistency and agnostic inference in sparse PCA. Ann. Stat. 43(1), 299–322 (2015)

[32] Ma, S.: Alternating direction method of multipliers for sparse principal component analysis. J. Oper. Res. Soc. China 1(2), 253–274 (2013)

[33] Ma, T., Wigderson, A.: Sum-of-squares lower bounds for sparse PCA. In: Advances in Neural Information Processing Systems, pp. 1612–1620 (2015)

[34] Mackey, L.W.: Deflation methods for sparse PCA. In: Advances in Neural Information Processing Systems, pp. 1017–1024 (2009)

[35] Magdon-Ismail, M.: NP-hardness and inapproximability of sparse PCA. Inf. Process. Lett. 126, 35–38 (2017)

[36] Mitzenmacher, M., Upfal, E.: Probability and Computing: Randomization and Probabilistic Techniques in Algorithms and Data Analysis. Cambridge University Press (2017)

[37] Papailiopoulos, D., Dimakis, A., Korokythakis, S.: Sparse PCA through low-rank approximations. In: International Conference on Machine Learning, pp. 747–755 (2013)

[38] Pietsch, A.: Operator Ideals, vol. 16. Deutscher Verlag der Wissenschaften (1978)

[39] Probel, C.J., Tropp, J.A.: Technical report no. 2011-02, August 2011 (2011)

[40] Sigg, C.D., Buhmann, J.M.: Expectation-maximization for sparse and non-negative PCA. In: Proceedings of the 25th International Conference on Machine Learning, pp. 960–967. ACM (2008)

[41] Steinberg, D.: Computation of matrix norms with applications to robust optimization. Research thesis, Technion-Israel Institute of Technology (2005)

[42] Tropp, J.A.: Column subset selection, matrix factorization, and eigenvalue optimization. In: Proceedings of the Twentieth Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 978–986. SIAM (2009)

[43] Tropp, J.A.: User-friendly tail bounds for sums of random matrices. Found. Comput. Math. 12(4), 389–434 (2012)

[44] Vu, V., Lei, J.: Minimax rates of estimation for sparse PCA in high dimensions. In: Artificial Intelligence and Statistics, pp. 1278–1286 (2012)

[45] Vu, V.Q., Cho, J., Lei, J., Rohe, K.: Fantope projection and selection: a near-optimal convex relaxation of sparse PCA. In: Advances in Neural Information Processing Systems, pp. 2670–2678 (2013)

[46] Wang, G., Dey, S.S.: Upper bounds for model-free row-sparse principal component analysis. In: Proceedings of the International Conference on Machine Learning (2020)

[47] Wang, Z., Lu, H., Liu, H.: Tighten after relax: minimax-optimal sparse PCA in polynomial time. In: Advances in Neural Information Processing Systems, pp. 3383–3391 (2014)

[48] Wolsey, L.A., Nemhauser, G.L.: Integer and Combinatorial Optimization, vol. 55. Wiley, New York (1999)

[49] Yeung, K.Y., Ruzzo, W.L.: Principal component analysis for clustering gene expression data. Bioinformatics 17(9), 763–774 (2001)

[50] Li, Y., Xie, W.: Exact and approximation algorithms for sparse PCA. http://www.optimization-online.org/DB_HTML/2020/05/7802.html (2020)

[51] Yuan, X.T., Zhang, T.: Truncated power method for sparse eigenvalue problems. J. Mach. Learn. Res. 14(Apr), 899–925 (2013)

[52] Zhang, Y., d’Aspremont, A., El Ghaoui, L.: Sparse PCA: convex relaxations, algorithms and applications. In: Handbook on Semidefinite, Conic and Polynomial Optimization, pp. 915–940. Springer (2012)

[53] Zou, H., Hastie, T., Tibshirani, R.: Sparse principal component analysis. J. Comput. Graph. Stat. 15(2), 265–286 (2006)

Acknowledgements

We would like to thank the anonymous reviewers for excellent comments that significantly improved the paper. In particular, the reviewers suggested the SDP presented in Sect. 2.2 and the heuristic method in Appendix B.3. Marco Molinaro was supported in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES, Brasil) - Finance Code 001, by Bolsa de Produtividade em Pesquisa #312751/2021-4 from CNPq, by the FAPERJ grant “Jovem Cientista do Nosso Estado”, and by the CAPES-PrInt program. Santanu S. Dey gratefully acknowledges the support of grant N000141912323 from ONR.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A preliminary version of this paper was published in [46].

Appendices

Appendix

Additional concentration inequalities

We need the standard multiplicative Chernoff bound (see Theorem 4.4 of [36]).

Lemma 3

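(Chernoff bound) Let \(X_1,\ldots ,X_n\) be independent random variables taking values in [0, 1]. Then for any \(\delta > 0\) we have

\[ \Pr \left( \sum _i X_i \ge (1+\delta )\mu \right) \le \left( \frac{e^{\delta }}{(1+\delta )^{1+\delta }}\right) ^{\mu }, \]

where \(\mu = {\mathbb {E}}\sum _i X_i\).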
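As a sanity check of this bound, the sketch below compares the Chernoff expression with a Monte Carlo estimate of the upper tail. It uses independent Bernoulli variables, a special case of \([0,1]\)-valued variables. The parameters (100 variables with mean 0.1, \(\delta = 0.5\)) and the helper names are illustrative choices, not taken from the paper.

```python
import math
import random


def chernoff_bound(mu, delta):
    """Upper-tail multiplicative Chernoff bound (e^delta / (1+delta)^(1+delta))^mu."""
    return (math.exp(delta) / (1.0 + delta) ** (1.0 + delta)) ** mu


def empirical_upper_tail(probs, delta, trials, seed=0):
    """Monte Carlo estimate of Pr[sum_i X_i >= (1 + delta) * mu] for
    independent Bernoulli(p_i) variables X_i."""
    rng = random.Random(seed)
    mu = sum(probs)
    threshold = (1.0 + delta) * mu
    hits = 0
    for _ in range(trials):
        total = sum(1 for p in probs if rng.random() < p)
        if total >= threshold:
            hits += 1
    return hits / trials


probs = [0.1] * 100  # mu = 10
delta = 0.5
print(empirical_upper_tail(probs, delta, trials=5000), chernoff_bound(sum(probs), delta))
```

The empirical tail (roughly 0.07 here) sits well below the bound (roughly 0.34). This gap is typical: the Chernoff bound trades tightness for a closed form that is easy to manipulate in proofs.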

We also need the one-sided Chebyshev inequality; see, for example, Exercise 3.18 of [36].

Lemma 4

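(One-sided Chebyshev) For any random variable X with finite first and second moments and any \(t > 0\),

\[ \Pr \left( X \ge {\mathbb {E}}X + t \right) \le \frac{\text {Var}(X)}{\text {Var}(X) + t^2} \quad \text {and} \quad \Pr \left( X \le {\mathbb {E}}X - t \right) \le \frac{\text {Var}(X)}{\text {Var}(X) + t^2}. \]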
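The sketch below checks the one-sided (Cantelli) form \(\text{Var}(X)/(\text{Var}(X)+t^2)\) exactly with rational arithmetic on a small discrete distribution. It uses a fair six-sided die, an illustrative choice, and checks both the upper and the lower tail. The helper names are hypothetical.

```python
from fractions import Fraction


def cantelli_bound(var, t):
    """One-sided Chebyshev bound Var / (Var + t^2) for a deviation t > 0."""
    return var / (var + t * t)


def exact_tails(values, probs, t):
    """Exact variance, Pr[X >= E X + t] and Pr[X <= E X - t] for a finite distribution."""
    mean = sum(v * p for v, p in zip(values, probs))
    var = sum(p * (v - mean) ** 2 for v, p in zip(values, probs))
    upper = sum(p for v, p in zip(values, probs) if v >= mean + t)
    lower = sum(p for v, p in zip(values, probs) if v <= mean - t)
    return var, upper, lower


# A fair six-sided die: E X = 7/2, Var X = 35/12.
values = [Fraction(v) for v in range(1, 7)]
probs = [Fraction(1, 6)] * 6
var, upper, lower = exact_tails(values, probs, Fraction(2))
print(var, upper, lower, cantelli_bound(var, Fraction(2)))  # 35/12 1/6 1/6 35/83
```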

Scaling invariance of Theorem 4

Given any data matrix \(\varvec{X} \in {\mathbb {R}}^{d \times M}\) with M samples, the sample covariance matrix is \(\varvec{A} = \frac{1}{M} \varvec{X}\varvec{X}^{\top }\). Theorem 4 shows that

Note that rescaling the data matrix \(\varvec{X}\) to \(\tilde{\varvec{X}} = c \cdot \varvec{X}\) for any constant \(c > 0\) does not change the approximation ratios \(\rho _{\mathcal {CR}i}^2\) for \(i \in \{1, 1', 2\}\), and it changes the terms \(\text {opt} ^{{\mathcal {F}}}(\varvec{A})\) and \(\text {add} (\varvec{A})\) quadratically. Letting \(\tilde{\varvec{A}} := \frac{1}{M} \tilde{\varvec{X}}\tilde{\varvec{X}}^{\top } = c^2 \varvec{A}\), we have

\[ \text {opt} ^{{\mathcal {F}}}(\tilde{\varvec{A}}) = c^2\, \text {opt} ^{{\mathcal {F}}}(\varvec{A}), \qquad \text {add} (\tilde{\varvec{A}}) = c^2\, \text {add} (\varvec{A}). \]

In particular, the effective multiplicative ratio (emr) between \(\text {opt}^{{\mathcal {F}}}(\varvec{A})\) and the affine upper bound \(\text {aff} (\varvec{A})\) is invariant under rescaling:

\[ \text {emr}(\tilde{\varvec{A}}) = \text {emr}(\varvec{A}). \]
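The scaling argument can be checked numerically. The sketch below confirms three things: \(\tilde{\varvec{A}} = c^2\varvec{A}\), the optimal SPCAgs value (computed by brute force) scales by \(c^2\), and the ratio of two \(c^2\)-homogeneous quantities is unchanged. Since \(\text{aff}(\varvec{A})\) is not defined in this section, a stand-in upper bound is used in its place: the sum of the \(k\) largest diagonal entries. This substitute and the helper names are assumptions made for illustration.

```python
import itertools

import numpy as np


def spcags_opt(A, k, r):
    """Brute-force SPCAgs value: max over |S| = k of the sum of the r largest
    eigenvalues of A[S, S]. Exponential in d; for tiny examples only."""
    best = -np.inf
    for support in itertools.combinations(range(A.shape[0]), k):
        idx = list(support)
        best = max(best, np.linalg.eigvalsh(A[np.ix_(idx, idx)])[-r:].sum())
    return best


def topk_diagonal_bound(A, k):
    """Stand-in upper bound (hypothetical, not the paper's aff): sum of the
    k largest diagonal entries of A."""
    return np.sort(np.diag(A))[-k:].sum()


rng = np.random.default_rng(0)
X = rng.standard_normal((6, 40))
M = X.shape[1]
c = 3.0
A = X @ X.T / M
A_tilde = (c * X) @ (c * X).T / M  # equals c**2 * A

ratio = topk_diagonal_bound(A, 3) / spcags_opt(A, 3, 2)
ratio_tilde = topk_diagonal_bound(A_tilde, 3) / spcags_opt(A_tilde, 3, 2)
print(ratio, ratio_tilde)  # equal up to floating-point error
```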

Greedy heuristic for SPCAgs

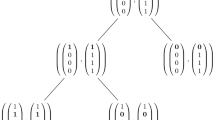

1.1 Complete pseudocode

1.2 Proof of Lemma 2

By optimality of \(\varvec{V}^t_{S_t}\) we can see that \(f(S_t) = f(S_t, \varvec{V}^t_{S_t})\) for all t. Thus, letting \(\varvec{G}_t := \varvec{I}^k - \varvec{V}^t_{S_{t}} (\varvec{V}_{S_{t}}^t)^{\top }\) to simplify the notation, we have

1.3 Primal heuristic algorithm for near-maximal determinant

Here we present another primal heuristic algorithm that finds a principal submatrix whose determinant is near-maximal.

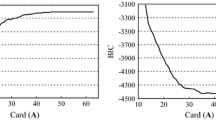
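For readers without access to the algorithm figure, here is a generic greedy determinant-maximization routine of the kind this subsection describes. It is not a transcription of the algorithm in the figure, and both helper names are hypothetical. It grows a support one index at a time, each time keeping the principal submatrix with the largest log-determinant. Any support \(S\) with \(|S| \le k\) then yields a feasible solution, so the sum of the \(r\) largest eigenvalues of \(\varvec{A}_{S,S}\) is a primal lower bound.

```python
import numpy as np


def greedy_max_det_support(A, k):
    """Greedily grow a support S (hypothetical helper): at each step add the
    index j that maximizes log det A[S + [j], S + [j]]."""
    d = A.shape[0]
    support = []
    for _ in range(k):
        best_j, best_logdet = None, -np.inf
        for j in range(d):
            if j in support:
                continue
            idx = support + [j]
            sign, logdet = np.linalg.slogdet(A[np.ix_(idx, idx)])
            if sign > 0 and logdet > best_logdet:
                best_j, best_logdet = j, logdet
        if best_j is None:  # no extension keeps the submatrix positive definite
            break
        support.append(best_j)
    return support


def support_lower_bound(A, support, r):
    """Primal lower bound: sum of the r largest eigenvalues of A[S, S]."""
    idx = sorted(support)
    return np.linalg.eigvalsh(A[np.ix_(idx, idx)])[-r:].sum()


rng = np.random.default_rng(0)
X = rng.standard_normal((8, 60))
A = X @ X.T / X.shape[1]
S = greedy_max_det_support(A, k=4)
print(S, support_lower_bound(A, S, r=2))
```

The log-determinant (via `slogdet`) is used instead of the raw determinant to avoid overflow and underflow as the support grows.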

We compare the performance of the greedy neighborhood search (GNS), Algorithm 1, with that of the greedy heuristic (GH), Algorithm 2, in Tables 7 and 8, where we report the relative gap \(\text {Gap} := \frac{\text {lb}_{\text {GH}}}{\text {lb}_{\text {GNS}}}\). Here \(\text {lb}_{\text {GH}}\) and \(\text {lb}_{\text {GNS}}\) denote the primal lower bounds for sparse PCA obtained from the greedy heuristic and the greedy neighborhood search, respectively.

Based on the numerical results in Tables 7 and 8, the greedy neighborhood search (GNS) algorithm outperforms the greedy heuristic (GH) in every instance.

Techniques for reducing the running time of CIP

In practice, we want to reduce the running time of CIP. We used the following techniques to improve its efficiency.

1.1 Threshold

The first technique is to reduce the number of SOS-II constraints in the set PLA. Let \(\lambda _{\mathrm {TH}}\) be a threshold parameter that splits the eigenvalues \(\{\lambda _j\}_{j = 1}^d\) of the sample covariance matrix \(\varvec{A}\) into two parts, \(J^+ = \{j: \lambda _j > \lambda _{\mathrm {TH}} \}\) and \(J^- = \{j: \lambda _j \le \lambda _{\mathrm {TH}} \}\). The objective function \(\text {Tr} \left( \varvec{V}^{\top } \varvec{A} \varvec{V} \right) \) satisfies

in which the first term is convex, the second term is concave, and the third term satisfies

due to \(\sum _{j = 1}^d \sum _{i = 1}^r g_{ji}^2 \le r\). Since maximizing a concave function is a convex optimization problem, we replace the second term by a new auxiliary variable s and the third term by its upper bound \(r \lambda _{\mathrm {TH}}\), so that

where

is a convex constraint. We select a value of \(\lambda _{\mathrm {TH}}\) so that \(|J^+| = 3\). Therefore, it suffices to construct a piecewise-linear upper approximation for the quadratic terms \(g_{ji}^2\) in the first term with \(j \in J^+\), i.e., the constraint set \(\text {PLA}([J^+] \times [r])\). We thus greatly reduce the number of SOS-II constraints from \({\mathcal {O}}( d \times r )\) to \({\mathcal {O}}( |J^+| \times r )\), i.e., to 3r SOS-II constraints in our experiments.
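The displays of this split are missing here, so the sketch below illustrates one decomposition consistent with the description. It assumes that \(g_{ji} = \varvec{u}_j^{\top}\varvec{v}_i\), where \(\varvec{A} = \sum_j \lambda_j \varvec{u}_j\varvec{u}_j^{\top}\); this definition is an assumption. Under it, \(\text{Tr}(\varvec{V}^{\top}\varvec{A}\varvec{V}) = \sum_j \lambda_j \sum_i g_{ji}^2\), which splits into three terms:

- a convex part over \(J^+\) with positive coefficients \(\lambda_j - \lambda_{\mathrm{TH}}\);
- a concave part over \(J^-\) with nonpositive coefficients;
- the term \(\lambda_{\mathrm{TH}}\sum_{j,i} g_{ji}^2\), which is at most \(r\lambda_{\mathrm{TH}}\) because \(\lambda_{\mathrm{TH}} \ge 0\) for a covariance matrix.

The threshold is chosen so that \(|J^+| = 3\), as in the experiments.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 8, 2
X = rng.standard_normal((d, 30))
A = X @ X.T / X.shape[1]
lam, U = np.linalg.eigh(A)                        # A = U diag(lam) U^T, lam ascending
V, _ = np.linalg.qr(rng.standard_normal((d, r)))  # r orthonormal columns
G = U.T @ V                                       # assumed: G[j, i] = g_ji = u_j^T v_i

lam_th = lam[-4]                  # threshold chosen so that |J+| = 3
plus = lam > lam_th               # J+
minus = ~plus                     # J-
row_sq = (G ** 2).sum(axis=1)     # sum_i g_ji^2 for each j

convex_part = ((lam[plus] - lam_th) * row_sq[plus]).sum()     # coefficients > 0
concave_part = ((lam[minus] - lam_th) * row_sq[minus]).sum()  # coefficients <= 0
constant_part = lam_th * row_sq.sum()                         # <= r * lam_th

objective = np.trace(V.T @ A @ V)
print(objective, convex_part + concave_part + constant_part)
```

Only the convex part needs piecewise-linear approximation of \(g_{ji}^2\). That is why the number of SOS-II constraints drops to \(|J^+| \cdot r\).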

1.2 Cutting planes

As in classical integer programming, we can add cutting planes to improve efficiency.

Cutting plane for sparsity: The first family of cutting planes is obtained as follows. Since \(\Vert \varvec{V}\Vert _0 \le k\) and \(\varvec{v}_1, \ldots , \varvec{v}_r\) are orthonormal, Bessel's inequality gives

We call the above cuts sparse cuts, since \(\theta _j\) is obtained from the row-sparsity parameter k.
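The sketch below checks the Bessel-type inequality behind the sparse cuts. The definition of \(\theta_j\) is not reproduced in this section, so it assumes one natural choice: \(\theta_j^2\) is the sum of the \(k\) largest squared entries of the eigenvector \(\varvec{u}_j\). With this choice the cut holds for every \(\varvec{V}\) that has orthonormal columns supported on at most \(k\) rows. If \(S\) is the support, then \(\sum_i (\varvec{u}_j^{\top}\varvec{v}_i)^2 = \sum_i (\varvec{u}_{j,S}^{\top}\varvec{v}_{i,S})^2 \le \Vert \varvec{u}_{j,S}\Vert_2^2 \le \theta_j^2\). The helper `theta` is hypothetical.

```python
import numpy as np


def theta(u, k):
    """Assumed choice: sqrt of the sum of the k largest squared entries of u."""
    return np.sqrt(np.sort(u ** 2)[-k:].sum())


rng = np.random.default_rng(0)
d, k, r = 10, 4, 2
X = rng.standard_normal((d, 50))
A = X @ X.T / X.shape[1]
_, U = np.linalg.eigh(A)                        # columns u_j: eigenvectors of A

support = rng.choice(d, size=k, replace=False)  # a row support with |S| = k
Q, _ = np.linalg.qr(rng.standard_normal((k, r)))
V = np.zeros((d, r))
V[support, :] = Q                               # orthonormal columns, k nonzero rows

G = U.T @ V                                     # G[j, i] = u_j^T v_i
lhs = (G ** 2).sum(axis=1)                      # sum_i g_ji^2 for each j
rhs = np.array([theta(U[:, j], k) ** 2 for j in range(d)])
print(np.all(lhs <= rhs + 1e-12))
```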

Cutting plane from objective value: The second type of cutting plane is based on the following property: for any symmetric matrix, the sum of its diagonal entries equals the sum of its eigenvalues. Letting \(\varvec{A}_{j_1, j_1}, \ldots , \varvec{A}_{j_k, j_k}\) be the k largest diagonal entries of the sample covariance matrix \(\varvec{A}\), we have
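A short numerical check of the reasoning behind this cut follows. The missing display is assumed to bound the objective by the sum of the \(k\) largest diagonal entries. For a support \(S\) with \(|S| = k\), the sum of the \(r\) largest eigenvalues of the positive semidefinite matrix \(\varvec{A}_{S,S}\) is at most \(\text{Tr}(\varvec{A}_{S,S})\), and that trace is at most the sum of the \(k\) largest diagonal entries of \(\varvec{A}\). The sketch compares a brute-force optimum with this bound. The helper names are hypothetical.

```python
import itertools

import numpy as np


def spcags_opt(A, k, r):
    """Brute-force SPCAgs value (tiny d only)."""
    return max(
        np.linalg.eigvalsh(A[np.ix_(list(S), list(S))])[-r:].sum()
        for S in itertools.combinations(range(A.shape[0]), k)
    )


def diagonal_cut(A, k):
    """Sum of the k largest diagonal entries of A."""
    return np.sort(np.diag(A))[-k:].sum()


rng = np.random.default_rng(1)
X = rng.standard_normal((7, 25))
A = X @ X.T / X.shape[1]
for r in (1, 2, 3):
    print(r, spcags_opt(A, 4, r), diagonal_cut(A, 4))
```

When \(r = k\), the bound is attained by the support of the \(k\) largest diagonal entries. The cut is therefore most informative when \(r\) is close to \(k\).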

Proposition 1

The following are valid cuts for SPCAgs:

When the splitting points \(\{\gamma _{ji}^{\ell }\}_{\ell = - N}^N\) in SOS-II are set to be \(\gamma _{ji}^{\ell } = \frac{\ell }{N} \cdot \theta _j\), we have:
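With splitting points \(\gamma_{ji}^{\ell} = \frac{\ell}{N}\theta_j\), an SOS-II formulation represents the piecewise-linear interpolant of \(g_{ji}^2\) through consecutive breakpoints on \([-\theta_j, \theta_j]\). Because \(x \mapsto x^2\) is convex, each chord lies above the curve. On a segment \([a, b]\) the gap is \((x-a)(b-x) \le h^2/4\), where \(h = \theta_j/N\). The sketch below assumes the variable is confined to \([-\theta_j, \theta_j]\) and evaluates this interpolant directly rather than through SOS-II variables. The helper name is hypothetical.

```python
def pl_upper_square(x, theta, N):
    """Piecewise-linear interpolant of x**2 through the breakpoints
    gamma_l = (l / N) * theta, l = -N, ..., N (hypothetical helper)."""
    if not -theta <= x <= theta:
        raise ValueError("x must lie in [-theta, theta]")
    h = theta / N                                # spacing between breakpoints
    seg = min(int((x + theta) // h), 2 * N - 1)  # segment index, 0 .. 2N-1
    a = -theta + seg * h                         # left breakpoint
    b = a + h                                    # right breakpoint
    return a * a + (a + b) * (x - a)             # chord of x**2 over [a, b]


theta, N = 1.5, 4
h = theta / N
worst = max(pl_upper_square(x, theta, N) - x * x
            for x in (theta * t / 1000 for t in range(-1000, 1001)))
print(worst, h * h / 4)  # worst gap is at most h^2 / 4
```

The quadratic decay \(\theta_j^2/(4N^2)\) of the worst-case gap shows how the number of splitting points trades accuracy against the size of the SOS-II model.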

1.3 Implemented version of CIP

Thus the implemented version of CIP is

1.4 Submatrix technique

Proposition 2

Let \(X \in {\mathbb {R}}^{m \times n}\) and let \(\theta \) be defined as

Then \(\theta \le \sqrt{r} \Vert X\Vert _F\).

Proof

Note that the final maximization problem is equal to

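Next we verify that the eigenvalues of

\[ \begin{pmatrix} \varvec{0} & X \\ X^{\top } & \varvec{0} \end{pmatrix} \]

are ± the singular values of X (together with \(|m-n|\) zeros). Let \(X = U\Sigma W^{\top }\). In particular, note that: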

Therefore, we have

\(\square \)
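The proof relies on two standard facts that are easy to check numerically. First, the symmetric dilation \(\begin{pmatrix} 0 & X \\ X^{\top} & 0 \end{pmatrix}\) has eigenvalues \(\pm\sigma_i(X)\) plus \(|m-n|\) zeros. Second, by Cauchy-Schwarz, \(\sum_{i=1}^r \sigma_i(X) \le \sqrt{r}\,(\sum_i \sigma_i^2)^{1/2} = \sqrt{r}\Vert X\Vert_F\). The definition of \(\theta\) is not reproduced in this section, so the sketch does not compute \(\theta\) itself. It only verifies these two ingredients on a random matrix, with illustrative sizes.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, r = 5, 3, 2
X = rng.standard_normal((m, n))

# Symmetric dilation [[0, X], [X^T, 0]] of size (m + n) x (m + n).
D = np.block([[np.zeros((m, m)), X], [X.T, np.zeros((n, n))]])
eigs = np.sort(np.linalg.eigvalsh(D))

sv = np.linalg.svd(X, compute_uv=False)  # singular values, descending
expected = np.sort(np.concatenate([sv, -sv, np.zeros(abs(m - n))]))

top_r_sv = sv[:r].sum()
bound = np.sqrt(r) * np.linalg.norm(X, "fro")
print(np.allclose(eigs, expected), top_r_sv <= bound)
```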

Cite this article

Dey, S.S., Molinaro, M. & Wang, G. Solving sparse principal component analysis with global support. Math. Program. 199, 421–459 (2023). https://doi.org/10.1007/s10107-022-01857-w
