Abstract

A class of risk-neutral generalized Nash equilibrium problems is introduced in which the feasible strategy set of each player is subject to a common linear elliptic partial differential equation with random inputs. In addition, each player's actions are restricted to a bounded, closed, and convex set of individual strategies and are subject to a bound constraint on the common state variable. Existence of Nash equilibria and first-order optimality conditions are derived by exploiting higher integrability and regularity of the random field state variables and a specially tailored constraint qualification for GNEPs with the assumed structure. A relaxation scheme based on the Moreau-Yosida approximation of the bound constraint is proposed, which ultimately leads to numerical algorithms for the individual player problems as well as the GNEP as a whole. The relaxation scheme is related to probability constraints, and the viability of the proposed numerical algorithms is demonstrated via several examples.

1 Introduction

Whether it be a consequence of noisy measurements, estimated parameter values, or model ambiguity, uncertainty is present in just about every mathematical model of real-world phenomena. Whenever the uncertainty cannot be adequately described by deterministic quantities, it is natural to incorporate it into our mathematical models via random variables, vectors, or elements. This allows us to find more robust solutions in the face of future uncertainty and guard against outlier events. Since many models in engineering and the natural sciences are defined by partial differential equations (PDEs), the inclusion of random inputs leads us to consider parametric or random PDEs as part of our optimization problems, cf. [9, 18, 49, 60, 62].

PDE-constrained optimization under uncertainty is a challenging area of mathematical optimization with many relevant applications in the engineering sciences. It is a growing field with many recent contributions in theory and algorithms, see e.g. [3, 11,12,13,14, 20, 22, 39,40,41, 43,44,45, 57, 58, 63]. However, many practical problems require the simultaneous minimization of multiple objectives. By pitting these objectives against each other, i.e., treating the problem as a noncooperative game with each objective and separate control representing a player and its individual strategy, we naturally come to study PDE-constrained Nash equilibrium problems under uncertainty. In the deterministic setting, we mention here the pioneering works [16, 26, 51,52,53,54, 61]. It is important to note, however, that the models in these papers do not consider bound constraints; in particular, there are no state constraints. This is an important distinction, as it makes the difference between modeling the game via a system of coupled PDEs (no bound constraints), a variational inequality (bound constraints on the controls only), or a quasivariational inequality (with state constraints).

As with their deterministic counterparts, it is often necessary to look for a control that forces the state (solution of the PDE) to satisfy certain bound constraints, e.g., below a maximum temperature threshold or above a physical obstacle. When uncertain inputs are involved, this problem of state constraints becomes much more challenging. This is due in part to a lack of smoothness with respect to the random parameters and missing compactness properties, which we would expect in a deterministic setting. Moreover, though an adjoint equation solely for the state equation can be introduced, an adjoint equation that combines the state equation as well as a multiplier for the state constraint cannot be derived without assuming additional properties. The inclusion of state constraints leads in fact to generalized Nash equilibrium problems in Banach spaces. For recent work in the deterministic setting, we refer the reader to [33, 34, 37, 38] and the references therein.

Summarizing the discussions above, we thus consider a class of risk-neutral PDE-constrained GNEPs under uncertainty subject to state constraints. In an abstract sense, this amounts to considering an N-player GNEP in which the \(i\mathrm{th}\) player’s problem takes the form

Here, S(z) is the z-dependent random field solution of a linear elliptic PDE with uncertain inputs, \(Z^{i}_\mathrm{ad}\) and K are closed convex sets and \(J_i\) is an appropriate convex disutility function for player i. We will make the appropriate data assumptions below. The term “risk-neutral” arises due to the fact that only the expected disutility is considered. Letting \(\overline{z}\) be a Nash equilibrium for this problem, player i would expect \(\overline{z}_i\) to be the best response to \(\overline{z}_{-i}\) on average, i.e., if the game were played repeatedly. Since the literature is rather scarce on the treatment of state constraints in PDE-constrained optimization under uncertainty, see e.g., [19, 23] and the recent preprint [21], we pay special attention to the case where \(N = 1\), as well. We comment further on the studies [19, 23] below, which make use of probability constraints. In contrast, the abstract results in [22] can be used for state constraints as considered in this paper. However, these results require a different kind of constraint qualification that may be difficult to verify in general.

The contributions of our paper are as follows:

-

1.

We exploit existing results on elliptic regularity theory to prove higher integrability and regularity of the random field solutions S(z).

-

2.

Under appropriate constraint qualifications, we prove existence of solutions/equilibria and derive optimality conditions for the optimization problem and GNEP.

-

3.

We extend the well-known Moreau-Yosida approach for state constraints to the stochastic case and rigorously prove that the approximations converge to the original GNEP.

-

4.

The link between the Moreau-Yosida regularization technique and probability constraints is established using concentration inequalities.

-

5.

We propose and demonstrate the viability of numerical algorithms for the optimization problem and GNEP.

The first contribution is crucial, as we need at least essential boundedness of the random field solutions in order to use techniques of convex optimization in Banach spaces to develop the optimality theory. In (2), we require a Slater-type condition for the optimization problem and the strict uniform feasible response (SUFR) condition introduced in [33] for the GNEP. The SUFR condition imposes a kind of hidden symmetry on the GNEP model. Although Moreau-Yosida regularization has been used successfully in deterministic settings, the stochastic setting poses additional pitfalls. Nevertheless, passing to the limit in the relaxation parameter is crucial for the justification of the numerical methods in the fully continuous setting. The link to probability constraints in (4) is interesting in its own right, since the approximating problems are much easier to solve than a similar problem with probability constraints. In addition, we obtain a kind of probabilistic rate of convergence for the Moreau-Yosida relaxations, which is reflected in the properties of the out-of-sample controlled states in (5), even after solving with relatively small increasing batches and modest values of the relaxation parameter. The encouraging results in our numerical study (5) motivate a number of future research directions.

The rest of the paper is structured as follows. In Sect. 2, we pose a number of basic assumptions along with an analysis of the forward problem. In addition, the optimization problems and GNEP are introduced. Following this, we derive existence results and optimality conditions in Sect. 3 using the underlying structure and basic constraint qualifications. Due to the low regularity of the multiplier in the optimality conditions and the lack of an adjoint equation whose right-hand side is the sum of the derivative of the objective with respect to the state and the Lagrange multiplier for the state constraint, we propose a Moreau-Yosida technique in Sect. 4. This allows us to formulate function-space-based numerical algorithms for both the optimization problems and the GNEP in Sect. 5. The potential of the algorithms is demonstrated via several numerical examples. In particular, we provide a brief post-optimal analysis that uses the performance of the computed controls to derive a statistic on the violation of the state constraint.

2 Problem formulation

2.1 Notation, standing assumptions, and preliminary results

We start by defining the necessary function spaces. We assume that the physical domain \(D \subset \mathbb {R}^d\) with \( d = 1,2, \) or 3 is an open bounded set such that D is either a convex polyhedron or the boundary of D, denoted by \(\partial D\), is of class \(C^{1,1}\).

The triple \(\left( \varOmega , \mathcal {F}, \mathbb {P} \right) \) denotes a complete probability space, where \(\varOmega \) is the sample space of possible outcomes, \(\mathcal {F}\) the Borel \(\sigma \)-algebra of \(\varOmega \) for a fixed topology on \(\varOmega \) and \(\mathbb {P}\) is a probability measure.

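Given a real-valued Banach space \((V, \left\| \cdot \right\| _V)\), Borel measure \(\mu \), and \(p \in [1,\infty ]\) we denote the usual Lebesgue-Bochner space \(L_{\mu }^p(\varOmega ;V)\) of all strongly \({\mathcal {F}}\)-measurable V-valued functions by

$$\begin{aligned} L_{\mu }^p(\varOmega ;V) := \left\{ u : \varOmega \rightarrow V \,:\, u \text { strongly } {\mathcal {F}}\text {-measurable},\ \Vert u \Vert _{L_{\mu }^p(\varOmega ;V)} < \infty \right\} , \end{aligned}$$

where

$$\begin{aligned} \Vert u \Vert _{L_{\mu }^p(\varOmega ;V)} := {\left\{ \begin{array}{ll} \left( \displaystyle \int _{\varOmega } \Vert u(\omega ) \Vert _V^p \, \mathrm {d}\mu (\omega ) \right) ^{1/p}, & p < \infty , \\ \mathop {\mathrm {ess\,sup}}_{\omega \in \varOmega } \Vert u(\omega ) \Vert _V, & p = \infty . \end{array}\right. } \end{aligned}$$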
When \(V = \mathbb {R},\) we set \(L_{\mu }^p(\varOmega ; \mathbb {R}) = L_{\mu }^p(\varOmega )\) the usual Lebesgue space with underlying measure \(\mu \). When the Lebesgue measure \( \mu = {\mathcal {L}} \) is considered, we omit the subscript \({\mathcal {L}}\) and simply write \(L^p(\varOmega ).\) We denote by \({\mathcal {F}}_{{\mathcal {L}}}\) the \(\sigma \)-algebra of Lebesgue measurable sets. We recall that for \(1 \le p < \infty \) and \(1 < q \le \infty \) with \( \nicefrac {1}{p} + \nicefrac {1}{q} = 1\), the topological dual satisfies \(L_{\mu }^p(\varOmega ;V)^* \simeq L_{\mu }^q(\varOmega ;V^*)\) whenever \(\mu \) is \(\sigma \)-finite and \(V^*\) has the Radon-Nikodým property, which is the case, e.g., if V is reflexive. For \(p = \infty \), this identification fails in general. If V is reflexive, then so is \(L_{\mu }^p(\varOmega ;V)\) for \(1< p < \infty \). For further information see [28, Chapter III].
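For a measure with finitely many atoms, as in a sample-based discretization of \({\mathbb {P}}\), the Lebesgue-Bochner norm reduces to a weighted sum over the atoms. The following Python sketch is only an illustration: it assumes that V is identified with \(\mathbb {R}^n\) with the Euclidean norm, and the function name `bochner_norm` is hypothetical. It also shows that for \(p = \infty \) the essential supremum ignores atoms of measure zero.

```python
import numpy as np


def bochner_norm(samples, weights, p):
    """Norm of a V-valued random element under a discrete measure.

    samples: array of shape (K, n); row k holds u(omega_k), with V = R^n
             (Euclidean norm) as a stand-in for a general Banach space.
    weights: nonnegative masses mu({omega_k}) of the K atoms.
    p:       exponent in [1, inf]; np.inf gives the essential supremum.
    """
    weights = np.asarray(weights, dtype=float)
    norms = np.linalg.norm(np.asarray(samples, dtype=float), axis=1)  # ||u(omega_k)||_V
    if np.isinf(p):
        # Essential supremum: atoms of measure zero are ignored.
        return norms[weights > 0].max()
    return np.sum(weights * norms**p) ** (1.0 / p)


samples = np.array([[3.0, 4.0], [0.0, 0.0], [6.0, 8.0]])
weights = [0.5, 0.5, 0.0]
print(bochner_norm(samples, weights, 2))       # sqrt(0.5 * 25), about 3.5355
print(bochner_norm(samples, weights, np.inf))  # 5.0, the atom of mass zero is ignored
```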

We show in the sections below that the multipliers for the stochastic state constraints are of very low regularity, namely bounded, finitely additive measures. We will need the space \(\mathbf {ba}\), which we recall here for ease of reference, cf. [27, 20.27 Definition] or [17].

Definition 1

Let \(\left( \varXi , {\mathcal {B}}, \mu \right) \) be a \(\sigma \)-finite measure space. The space \(\mathbf {ba}(\varXi , {\mathcal {B}}, \mu )\) denotes the set of all real-valued set-functions \(\tau : {\mathcal {B}} \rightarrow \mathbb {R}\) such that

-

(i)

\(\sup \lbrace | \tau (A)| : A \in {\mathcal {B}} \rbrace < \infty ,\)

-

(ii)

\(\tau (A \cup B ) = \tau (A) + \tau (B)\) for \(A, B \in {\mathcal {B}} \) with \(A \cap B = \emptyset \) and

-

(iii)

\(\tau (A) = 0\) if \(A \in {\mathcal {B}} \) is \(\mu \)-null, i.e., \(\tau \ll \mu \).

The norm of \(\tau \in \mathbf {ba}(\varXi , {\mathcal {B}}, \mu )\) is given by \( | \tau | (\varXi )\), the total variation of \(\tau \) on \({\mathcal {B}}\).

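The key result for our analysis related to this space is the existence of an isometric isomorphism between \((L_{\pi }^{\infty }(\varXi ))^*\) and \(\mathbf {ba}(\varXi , {\mathcal {B}}, \pi )\), cf. [17, Thm. IV.8.16], where we use

$$\begin{aligned} \varXi := D \times \varOmega , \quad {\mathcal {B}} := {\mathcal {F}}_{{\mathcal {L}}} \times {\mathcal {F}}, \quad \pi := {\mathcal {L}} \times {\mathbb {P}}. \end{aligned}$$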

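Finally, we fix several notational conventions. For a (real) Banach space V we denote the expectation of a random element \(X: \varOmega \rightarrow V\) by

$$\begin{aligned} {\mathbb {E}}[X] = {\mathbb {E}}_{{\mathbb {P}}}[X] := \int _{\varOmega } X(\omega ) \, \mathrm {d}{\mathbb {P}}(\omega ), \end{aligned}$$

where the integral is understood in the Bochner sense.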

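For some nonempty subset \(C \subset V\), \({\mathcal {I}}_C: V \rightarrow \mathbb {R} \cup \lbrace \infty \rbrace \) represents the standard indicator function, which satisfies \({\mathcal {I}}_{C}(x) = 0\) if \(x \in C\) and \(+\infty \) otherwise. For an arbitrary convex set \(K \subset V\), we define the standard convex normal cone by

$$\begin{aligned} {\mathcal {N}}_{K}(x) := {\left\{ \begin{array}{ll} \lbrace v \in V^* : \langle v, y - x \rangle \le 0 \;\; \forall \, y \in K \rbrace , & x \in K, \\ \emptyset , & x \notin K. \end{array}\right. } \end{aligned}$$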

The (set-theoretic) characteristic function associated with some subset A is denoted by \(\chi \) or \(\chi _{A}\), where \(\chi _{A}(x) = 1\) if \(x \in A\) and 0 otherwise. Strong convergence of a sequence is denoted by \( \rightarrow \), weak-convergence by \(\rightharpoonup \), and weak-*-convergence by \( \overset{*}{\rightharpoonup }\). The closed \(\varepsilon \)-ball with center x in some normed space is denoted \({\mathbb {B}}_\varepsilon (x)\). The superscript \(*\) is used to denote the adjoint operator or dual space. As usual \(C \lesssim D\) means that C is bounded by D up to an independent constant. For two Banach spaces V and W, the set of all bounded linear operators from V to W will be denoted by \({\mathcal {L}}(V,W)\). We use the typical convention from game theory for a vector u with N components for emphasizing the \(i\mathrm{th}\) component by writing \( u = (u_i, u_{-i}) = (u_{-i}, u_i). \)
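As a concrete instance of the normal cone, consider a box \(K = \lbrace y \in \mathbb {R}^n : a \le y \le b \rbrace \), a common finite-dimensional model of control constraints such as \(Z_{\mathrm{ad}}\). Since \(\sup _{y \in K} \langle v, y - x\rangle \) separates over the components, membership \(v \in {\mathcal {N}}_K(x)\) can be checked directly from the definition. The Python sketch below is illustrative only; the box and the name `in_normal_cone_box` are hypothetical.

```python
import numpy as np


def in_normal_cone_box(v, x, lo, hi, tol=1e-12):
    """Check v in N_K(x) for the box K = {y : lo <= y <= hi} in R^n.

    Uses the definition N_K(x) = {v : <v, y - x> <= 0 for all y in K} for
    x in K, and N_K(x) = {} for x outside K.
    """
    v, x, lo, hi = (np.asarray(t, dtype=float) for t in (v, x, lo, hi))
    if np.any(x < lo - tol) or np.any(x > hi + tol):
        return False  # x is not in K, so the normal cone is empty
    # sup_{y in K} <v, y - x> splits into one term per component, and each
    # linear term v_j * (y_j - x_j) is maximized at y_j = lo_j or y_j = hi_j.
    support = np.sum(np.maximum(v * (hi - x), v * (lo - x)))
    return bool(support <= tol)


lo, hi = np.zeros(2), np.ones(2)
print(in_normal_cone_box([-1.0, 0.0], [0.0, 0.5], lo, hi))  # True: x_1 sits at its lower bound
print(in_normal_cone_box([1.0, 0.0], [0.0, 0.5], lo, hi))   # False
```

The tolerance `tol` only guards against rounding; in exact arithmetic the test is precisely the defining inequality.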

2.2 Risk-neutral PDE-constrained equilibrium problems

2.2.1 PDE-constrained equilibrium problems as strategic games

As mentioned above, our results apply to both PDE-constrained optimization problems under uncertainty as well as stochastic equilibrium problems with PDE-constraints. Whereas the solution concept for PDE-constrained optimization is obvious, there are several possibilities for equilibrium problems from the perspective of game theory. The notation in this brief section is chosen to reflect the references to the game theory literature.

We recall that a strategic game comprises a set of N players or agents, their sets of actions \(A^i\), and a preference relation for each player over all possible profiles of actions \(\varvec{A} := A^1 \times \dots \times A^N\). In many cases, the preference relation can be described by the values of utility functions \(u_i : \varvec{A} \rightarrow {\mathbb {R}}\) and the preferred solution concept for noncooperative behavior is often taken to be a Nash equilibrium; cf. [47]. The latter states that \(\bar{\varvec{a}} \in \varvec{A}\) is a (pure strategy) Nash equilibrium provided for all \(i = 1,\dots , N\) we have

$$\begin{aligned} u_i({\bar{a}}_i, \bar{\varvec{a}}_{-i}) \ge u_i(a_i, \bar{\varvec{a}}_{-i}) \quad \forall \, a_i \in A^i, \end{aligned}$$

see, e.g., [48] for more details. We will refer to games in which the solution concept is a Nash equilibrium as Nash Equilibrium Problems or NEPs.
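For finite action sets, the Nash equilibrium condition can be checked exhaustively. The following Python sketch is not part of the paper's methodology and its function name is hypothetical: it enumerates the pure-strategy equilibria of a two-player game given by utility matrices by testing that each player's action is a best response to the other player's action.

```python
import numpy as np


def pure_nash_equilibria(U1, U2):
    """All pure-strategy Nash equilibria of a finite two-player game.

    U1[a1, a2] and U2[a1, a2] are the utilities u_1 and u_2 of the action
    profile (a1, a2). A profile is an equilibrium if neither player can
    increase their own utility by a unilateral deviation.
    """
    U1, U2 = np.asarray(U1, dtype=float), np.asarray(U2, dtype=float)
    best1 = U1 >= U1.max(axis=0, keepdims=True)  # a1 is a best response to a2
    best2 = U2 >= U2.max(axis=1, keepdims=True)  # a2 is a best response to a1
    return [tuple(int(k) for k in idx) for idx in np.argwhere(best1 & best2)]


# Prisoner's dilemma (action 0 = cooperate, 1 = defect): mutual defection is the only equilibrium.
U1 = np.array([[-1.0, -3.0], [0.0, -2.0]])
print(pure_nash_equilibria(U1, U1.T))  # [(1, 1)]
```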

We will take an analogous perspective for our PDE-constrained equilibrium problems. However, due to the presence of state constraints, the action sets are given by set-valued mappings \(A^i(\varvec{a}_{-i})\) that also depend on \(\varvec{a}_{-i}\) for each i. This leads to a natural extension, first introduced by Debreu [15], see also [6]: \(\bar{\varvec{a}} \in \varvec{A}\) is a (generalized) Nash equilibrium provided for all \(i=1,\dots ,N\) we have \({\bar{a}}_i \in A^{i}(\bar{\varvec{a}}_{-i})\) and

$$\begin{aligned} u_i({\bar{a}}_i, \bar{\varvec{a}}_{-i}) \ge u_i(a_i, \bar{\varvec{a}}_{-i}) \quad \forall \, a_i \in A^i(\bar{\varvec{a}}_{-i}). \end{aligned}$$

These games are significantly more difficult from both a theoretical as well as numerical perspective due to the embedded fixed point relation. We refer to games of this type as Generalized Nash Equilibrium Problems or GNEPs.
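To see how each player's feasible set depends on the others, consider a hypothetical two-player game with actions \(a_i \in [0,1]\), utilities \(u_i(\varvec{a}) = -(a_i - 1)^2\), and the shared constraint \(a_1 + a_2 \le 1\), so that \(A^i(\varvec{a}_{-i}) = [0, 1 - a_{-i}]\). Every feasible profile with \(a_1 + a_2 = 1\) is a generalized Nash equilibrium, so equilibria are far from unique; only the symmetric point \((1/2, 1/2)\) solves the associated variational inequality over the shared feasible set, i.e., is a variational equilibrium. The Python sketch below checks the defining conditions only approximately, by comparing against deviations on a grid; the game and the function names are illustrative assumptions.

```python
import numpy as np


def utility(i, a):
    """Hypothetical utility u_i(a) = -(a_i - 1)^2 of player i."""
    return -(a[i] - 1.0) ** 2


def feasible(a, tol=1e-12):
    """Box constraints 0 <= a_i <= 1 and the shared constraint a_1 + a_2 <= 1."""
    return bool(np.all(a >= -tol) and np.all(a <= 1.0 + tol) and a.sum() <= 1.0 + tol)


def is_gne(a, grid, tol=1e-9):
    """Approximate check of the GNE conditions using deviations from a grid."""
    a = np.asarray(a, dtype=float)
    if not feasible(a):
        return False
    for i in range(a.size):
        for candidate in grid:
            b = a.copy()
            b[i] = candidate  # unilateral deviation of player i
            # Only deviations in A^i(a_{-i}) count, i.e. those keeping b feasible.
            if feasible(b) and utility(i, b) > utility(i, a) + tol:
                return False
    return True


grid = np.linspace(0.0, 1.0, 101)
print(is_gne([0.3, 0.7], grid), is_gne([0.5, 0.5], grid), is_gne([0.2, 0.2], grid))
# True True False
```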

2.2.2 Linear elliptic random PDEs

Returning now to the context of PDE-constrained optimization, we introduce a class of linear elliptic random PDEs as our state system. Let

Given \(z \in L^2(D)\), we consider the following problem: Find \(u \in {\mathcal {U}}\) such that

for all test functions \(v \in {\mathcal {U}}\). Note that (2.3) can be equivalently written in a semi-weak form. Let u solve (2.3). Then using \(v(x,\omega ) = \chi _{A}(\omega ) \varphi (x)\) such that \(A \in {\mathcal {F}}\) and \(\varphi \in H^1_0(D)\) (or \(\varphi \in C^{\infty }_{0}(D)\)) we have

for every \(\varphi \in H^1_0(D)\) and consequently

for every \(\varphi \in H^1_0(D)\). The reverse direction (from \({\mathbb {P}}\)-pointwise weak solutions to a solution of (2.3)) can be easily adapted from the nonlinear setting in [42]. The key steps of the argument are to prove the existence of a solution for \({\mathbb {P}}\)-a.e. \(\omega \), to demonstrate measurability in \(\omega \) using Filippov's theorem on measurable selections, and to obtain integrability using standard a priori estimates for elliptic PDEs. It is sometimes more convenient to work with one form rather than the other, as we will see below. For \(z = 0\), we denote the solution of (2.3) by \(u_f\), and for \(f \equiv 0\) we set \(u = S(z)\). Hence, any solution u of (2.3) can be written as

$$\begin{aligned} u = S(z) + u_f. \end{aligned}\tag{2.6}$$

We will demonstrate below that S(z) is a bounded linear operator in z between appropriate function spaces.
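The splitting of a solution into \(S(z) + u_f\) and the linearity of \(z \mapsto S(z)\) can be observed in a simple discretization in which the state equation is solved \({\mathbb {P}}\)-pointwise, i.e., sample by sample. The following Python sketch uses a hypothetical setting that is not taken from the paper: D = (0,1), a finite-difference approximation of \(-(A(\cdot ,\omega ) u')' = f(\omega ) + z\) with homogeneous Dirichlet conditions, \(B = I\) (N = 1), \(A(x,\omega ) = 1 + 0.3\,\xi _1 \sin (\pi x) + 0.2\,\xi _2 \cos (\pi x)\), and \(f(\omega ) = \xi _3\), with \(\xi _j\) independent and uniform on [-1,1], so that \(0.5 \le A \le 1.5\) as required by Assumption 1.(i). The sign convention and all data are assumptions.

```python
import numpy as np

N_NODES = 99                                            # interior nodes of D = (0, 1)
H = 1.0 / (N_NODES + 1)                                 # mesh width
X = np.linspace(H, 1.0 - H, N_NODES)                    # interior nodes x_j
X_MID = np.linspace(H / 2, 1.0 - H / 2, N_NODES + 1)    # cell midpoints x_{j+1/2}


def stiffness(a_mid):
    """Finite-difference matrix of -(a u')' on (0, 1) with u(0) = u(1) = 0."""
    main = (a_mid[:-1] + a_mid[1:]) / H**2
    off = -a_mid[1:-1] / H**2
    return np.diag(main) + np.diag(off, 1) + np.diag(off, -1)


def sample_data(rng):
    """Draw one omega: the matrix for A(., omega) in [0.5, 1.5] and f(omega)."""
    xi = rng.uniform(-1.0, 1.0, size=3)
    a_mid = 1.0 + 0.3 * xi[0] * np.sin(np.pi * X_MID) + 0.2 * xi[1] * np.cos(np.pi * X_MID)
    return stiffness(a_mid), xi[2] * np.ones(N_NODES)


def solve_state(K, z, f):
    """Discrete solution of the state equation for one omega (B = identity)."""
    return np.linalg.solve(K, z + f)


rng = np.random.default_rng(0)
z = np.sin(np.pi * X)
zero = np.zeros(N_NODES)
for _ in range(3):
    K, f = sample_data(rng)
    u = solve_state(K, z, f)
    # u - (S(z) + u_f): S(z) solves with f = 0 and u_f solves with z = 0;
    # the printed value is at the level of rounding errors.
    print(np.max(np.abs(u - (solve_state(K, z, zero) + solve_state(K, zero, f)))))
```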

In order to ensure well-defined solutions and derive higher regularity results, we make the following additional assumptions on the problem data.

Assumption 1

In addition to the standing assumptions on D, \(\partial D\), and \((\varOmega ,{\mathcal {F}},{\mathbb {P}})\), the following sets of assumptions will be necessary below.

-

(i)

(Minimum Regularity) The coefficient mapping \(A : D \times \varOmega \rightarrow {\mathbb {R}}\) is \(({\mathcal {L}} \times {\mathbb {P}})\)-measurable and there exist constants \(0< {\underline{A}} < {\overline{A}}\) such that

$$\begin{aligned} {\underline{A}} \le A(x,\omega ) \le {\overline{A}} \quad ({\mathcal {L}} \times {\mathbb {P}})\text {-a.e. } (x,\omega ) \in D \times \varOmega . \end{aligned}$$

The fixed bulk term f satisfies

$$\begin{aligned} f \in L^{\infty }_{{\mathbb {P}}}(\varOmega ; L^2(D)). \end{aligned}$$

-

(ii)

(Higher Regularity) In addition to (i), \(A \in L^{\infty }_{{\mathbb {P}}}(\varOmega ; C^{0,1}({\bar{D}}))\).

-

(iii)

(Control Mapping) The control mapping \(B : \varOmega \rightarrow {\mathcal {L}}(L^2(D)^N, L^2(D))\) is measurable and essentially bounded, i.e., \(B \in L^\infty _{{\mathbb {P}}}(\varOmega ; {\mathcal {L}}(L^2(D)^N, L^2(D)))\). Moreover, as a mapping from \(\varOmega \) to \({\mathcal {L}}(L^2(D)^N, H^{-1}(D))\), B is completely continuous in the sense that for \({\mathbb {P}}\)-a.e. \(\omega \in \varOmega \) we have

$$\begin{aligned} z_k \rightharpoonup z \text { in } L^2(D)^N \Longrightarrow B(\omega ) z_k \rightarrow B(\omega ) z \text { in } H^{-1}(D). \end{aligned}$$

Some remarks are in order. Assumption 1.(i) can be slightly weakened to allow for unbounded coefficients and still obtain the existence of solutions, cf. e.g., [24]. It is also possible to choose f and/or \(B(\omega )z\) that is unbounded in \(\omega \). However, weakening these assumptions would mean that the solutions u to (2.3) are, in general, no longer bounded. The latter property is essential for our treatment of state constraints. The Lipschitz continuity of \(A(\cdot ,\omega ) : {\bar{D}} \rightarrow {\mathbb {R}}\) in Assumption 1.(ii) will be used to ensure boundedness of u in x. This assumption, along with the regularity assumption on the boundary \(\partial D\), can be slightly weakened to the extent that we can guarantee \(u \in L^{\infty }_{{\mathbb {P}} \times {\mathcal {L}}}(\varOmega \times D)\), e.g., we could relax Lipschitz to Hölder and work with \(u(\cdot ,\omega )\) in \(W^{1,p}(D)\) with \(p > d\). The properties in Assumption 1.(iii) are the weakest possible for our analysis. Using Assumption 1, we gather several essential properties of the mapping \(z \mapsto u\) in the following result.

Proposition 1

Let Assumption 1 hold. For any \(z \in L^2(D)\), there exists a unique solution \(u \in {\mathcal {U}}\) of (2.3). Moreover, \(u \in L^{\infty }_{{\mathbb {P}}}(\varOmega ; H^2(D)\cap H^1_0(D))\) and the following a priori bound holds

Here, C is independent of \(\omega \).

Proof

Defining the bilinear form \(b : {\mathcal {U}} \times {\mathcal {U}} \rightarrow {\mathbb {R}}\) by

and z-dependent linear form \(L(\cdot ; z) : {\mathcal {U}} \rightarrow \mathbb R\) by

we can view (2.3), as the variational problem: Find \(u \in {\mathcal {U}}\) such that

It readily follows from Assumption 1 that b is a bounded, \({\mathcal {U}}\)-coercive bilinear form. Then by the Lax-Milgram lemma there exists a unique solution \(u \in {\mathcal {U}}\). In light of the equivalence to (2.5), we immediately deduce from the standard a priori bound:

that \(u : \varOmega \rightarrow H^1_0(D)\) is \({\mathbb {P}}\)-essentially bounded. Due to the assumptions on A, \(C_1\) does not depend on \(\omega \).

For the a priori bound (2.7), we need to consider two cases. We once again appeal to the equivalence between (2.3) and (2.5). If \(\partial D\) is of type \(C^{1,1}\), then it follows from Assumption 1 along with Friedrichs’ theorem, see e.g., [5, A12.2 Theorem], that for \({\mathbb {P}}\)-a.e. \(\omega \in \varOmega \) we have

Here, \(C(\omega ) = C\left( \partial D,d,{\underline{A}}, \Vert A(\cdot ,\omega )\Vert _{C^{0,1}({\bar{D}})}\right) \). The same estimate also holds when \(\partial D\) is nonsmooth, but D is a convex polyhedron, see Remark 1 below. The “constant” \(C(\omega )\) is indeed a bounded and measurable function in \(\omega \). This follows from the fact that the term \(\Vert A(\cdot ,\omega ) \Vert _{C^{0,1}({\bar{D}})}\) is measurable, essentially bounded by Assumption 1.(ii), and uniformly bounded away from zero (it dominates \({\underline{A}}\)), and that \(C(\omega )\) is a sum of rational functions of \(\Vert A(\cdot ,\omega ) \Vert _{C^{0,1}({\bar{D}})}\) in which this term appears in both numerators and denominators. Continuing, for \({\mathbb {P}}\)-a.e. \(\omega \in \varOmega \), we have

Furthermore, we obtain

Finally, due to the Gelfand triple \(H_0^1(D) \hookrightarrow L^2(D) \hookrightarrow H^{-1}(D)\), we have

where

and \(C_{\mathrm{emb}}\) is the embedding constant for \(L^2(D)\) into \(H^{-1}(D)\). Passing to the \({\mathbb {P}}\)-essential supremum yields

Thus, \(u \in L_{{\mathbb {P}}}^\infty (\varOmega ;H_0^1(D) \cap H^2(D))\) and (2.7) follows. \(\square \)
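In a discrete setting, the \(\omega \)-independence of the first constant \(C_1\) can be checked numerically. For a hypothetical one-dimensional finite-difference model (D = (0,1), right-hand side g = z + f, coefficient values in [0.5, 1.5]), testing the discrete equation with u, using \(A \ge {\underline{A}}\), and applying the discrete Poincaré inequality gives \(|u|_{1,h} \le \Vert g\Vert _{h} / ({\underline{A}} \sqrt{\lambda _1})\), where \(\lambda _1 = 4h^{-2}\sin ^2(\pi h/2) \le \pi ^2\) is the smallest eigenvalue of the discrete Dirichlet Laplacian. The Python sketch below evaluates the ratio \(|u|_{1,h}/\Vert g\Vert _h\) over random samples; names and data are illustrative assumptions, and only the \(H^1_0\) part of the argument is covered, not the \(H^2\) estimate.

```python
import numpy as np

N_NODES = 99
H = 1.0 / (N_NODES + 1)
X = np.linspace(H, 1.0 - H, N_NODES)
X_MID = np.linspace(H / 2, 1.0 - H / 2, N_NODES + 1)
A_LOW = 0.5  # lower bound of the hypothetical coefficient used below

# Smallest eigenvalue of the discrete Dirichlet Laplacian: discrete Poincare constant.
LAMBDA_1 = 4.0 / H**2 * np.sin(np.pi * H / 2) ** 2
C_BOUND = 1.0 / (A_LOW * np.sqrt(LAMBDA_1))  # the same constant for every omega


def stiffness(a_mid):
    """Finite-difference matrix of -(a u')' on (0, 1) with u(0) = u(1) = 0."""
    main = (a_mid[:-1] + a_mid[1:]) / H**2
    off = -a_mid[1:-1] / H**2
    return np.diag(main) + np.diag(off, 1) + np.diag(off, -1)


def h1_seminorm(u):
    """Discrete H^1_0 seminorm, appending the zero boundary values."""
    du = np.diff(np.concatenate(([0.0], u, [0.0]))) / H
    return np.sqrt(H * np.sum(du**2))


def l2_norm(v):
    """Discrete L^2(D) norm."""
    return np.sqrt(H * np.sum(v**2))


def bound_ratio(rng, g):
    """|u|_{1,h} / ||g||_h for one random omega, where -(A(., omega) u')' = g."""
    xi = rng.uniform(-1.0, 1.0, size=2)
    a_mid = 1.0 + 0.3 * xi[0] * np.sin(np.pi * X_MID) + 0.2 * xi[1] * np.cos(np.pi * X_MID)
    u = np.linalg.solve(stiffness(a_mid), g)
    return h1_seminorm(u) / l2_norm(g)


rng = np.random.default_rng(0)
g = 1.0 + np.sin(3 * np.pi * X)  # a fixed right-hand side g = z + f
ratios = [bound_ratio(rng, g) for _ in range(200)]
print(max(ratios), "<=", C_BOUND)
```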

Remark 1

For details on the well-known regularity results for deterministic elliptic PDEs on nonsmooth domains, we refer to [25, Thm. 3.2.1.2] and especially to [25, Thm. 3.1.3.3, Lem. 3.1.3.2, Thm. 3.1.3.1] for the estimation bounds.

Proposition 1 justifies the decomposition in (2.6). In particular, we see that S is a bounded linear operator and \(u_{f} \in L_{{\mathbb {P}}}^\infty (\varOmega ;H_0^1(D) \cap H^2(D))\). We deduce several additional properties in the following corollary.

Corollary 1

Under the hypotheses of Proposition 1 we have:

-

(i)

As a mapping from \(L^2(D)\) to \(L^q_{{\mathbb {P}}}(\varOmega ; H^1_0(D))\) with \(q \in [1,\infty )\), S is completely continuous, bounded, and linear.

-

(ii)

As a mapping from \(L^{2}(D)\) to \(L_{{\mathbb {P}}}^\infty (\varOmega ;H_0^1(D) \cap H^2(D))\), S is bounded and linear.

Proof

Case (i) is a special case of [42, Prop 2.3]. In case (ii) linearity follows trivially from the definition of S(z) whereas boundedness is a consequence of (2.7) and Assumption 1.(iii). \(\square \)

We end this section by introducing a convenient \(\mathbb P\)-pointwise notation that will aid in the derivation of optimality conditions below. We define

to be the operators given by

for \(u, v \in H^1_0(D) \cap H^2(D)\) and

respectively. Note that \(\varvec{A}(\omega )\) is a linear isomorphism due to the regularity results above. Given \(\varvec{A}, \varvec{B}\) we can understand \(S(z) + u_f\) \({\mathbb {P}}\)-pointwise as

whenever we need to work with higher regularity.

2.2.3 A class of risk-neutral PDE-constrained optimization problems

In this section, we introduce a class of optimization problems that will serve as a template for the individual player problems in the PDE-constrained GNEP.

Assumption 2

We assume that

-

(i)

(Control Constraints) \(Z_{\mathrm{ad}} \subset L^2(D)\) is a nonempty, closed, bounded, and convex set.

-

(ii)

(Objective) The cost parameter \(\nu \ge 0\), \(u_d \in L^2(D)\), \(T \in {\mathcal {L}}(L^2(D))\), and \(J : L^2(D) \times L^2(D) \rightarrow {\mathbb {R}}\) is defined by

$$\begin{aligned} J(u,z) := \frac{1}{2} \Vert Tu - u_{d} \Vert ^2_{L^2(D)} + \frac{\nu }{2}\Vert z \Vert ^2_{L^2(D)}. \end{aligned}\tag{2.11}$$

-

(iii)

(State Constraint) Given \(\psi \in C(\overline{\varOmega \times D})\) for which there exists \(\varepsilon > 0\) such that

$$\begin{aligned} \psi |_{\partial D}(\omega ) \le -\varepsilon \quad {\mathbb {P}} \text {-a.s.}, \end{aligned}$$

we define the state constraint by

$$\begin{aligned} S(z) + u_f \ge \psi \text { for } ({\mathcal {L}} \times {\mathbb {P}}) \text {-a.e. } (x,\omega ) \in D \times \varOmega . \end{aligned}\tag{2.12}$$

-

(iv)

(Feasibility) There exists \(z \in Z_{\mathrm{ad}}\) such that (2.12) holds.

The boundedness in Assumption 2.(i) is only needed in the optimization setting if \(\nu = 0\). However, it is unclear how to prove existence for the GNEP without it, as the proof relies on the Kakutani-Fan-Glicksberg theorem, which includes a compactness condition. It is not necessary for our analysis to restrict ourselves to the tracking-type objective in Assumption 2.(ii). We could proceed in a more general manner as suggested in [44] under appropriate convexity, continuity, and growth conditions. This would require further technical assumptions that we believe would detract from the main purpose of the text. The nonemptiness of the feasible set in our setting is assumed in Assumption 2.(iv). Provided \(Z_{\mathrm{ad}}\) admits a \(z > 0\) with sufficiently large \(L^{\infty }(D)\)-norm, the existence of a feasible point can be guaranteed by the maximum principle in light of the regularity result in Proposition 1.
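The feasibility argument via the maximum principle has a transparent discrete counterpart: the finite-difference matrix of \(-(A(\cdot ,\omega )u')'\) is an M-matrix, so its inverse is entrywise nonnegative and \(z \ge 0\) implies \(S(z) \ge 0\) sample by sample. In the hypothetical one-dimensional setting sketched below (B = I, \(f(\omega ) = \xi _3\) constant in x, and \(\psi (x) = 0.2 \sin (\pi x) - 0.1\), so that \(\psi = -0.1\) on \(\partial D\)), the worst-case slack of the discretized constraint (2.12) over the drawn samples is nondecreasing in the level c of a constant control \(z \equiv c\) and becomes nonnegative once c is large enough. The data, the names, and the tested levels of c are assumptions for illustration.

```python
import numpy as np

N_NODES = 99
H = 1.0 / (N_NODES + 1)
X = np.linspace(H, 1.0 - H, N_NODES)
X_MID = np.linspace(H / 2, 1.0 - H / 2, N_NODES + 1)
PSI = 0.2 * np.sin(np.pi * X) - 0.1  # hypothetical obstacle psi, equal to -0.1 on the boundary


def stiffness(a_mid):
    """Finite-difference matrix of -(a u')' on (0, 1) with u(0) = u(1) = 0 (an M-matrix)."""
    main = (a_mid[:-1] + a_mid[1:]) / H**2
    off = -a_mid[1:-1] / H**2
    return np.diag(main) + np.diag(off, 1) + np.diag(off, -1)


def sample_data(rng):
    """One omega: matrix for A(., omega) in [0.5, 1.5] and f(omega) = xi_3 (constant in x)."""
    xi = rng.uniform(-1.0, 1.0, size=3)
    a_mid = 1.0 + 0.3 * xi[0] * np.sin(np.pi * X_MID) + 0.2 * xi[1] * np.cos(np.pi * X_MID)
    return stiffness(a_mid), xi[2] * np.ones(N_NODES)


def min_slack(K, f, z):
    """min over nodes of S(z) + u_f - psi for one sample (B = identity)."""
    return np.min(np.linalg.solve(K, z + f) - PSI)


rng = np.random.default_rng(0)
SAMPLES = [sample_data(rng) for _ in range(50)]
for c in (0.0, 1.0, 10.0, 100.0):
    worst = min(min_slack(K, f, c * np.ones(N_NODES)) for K, f in SAMPLES)
    print(f"z = {c:5.1f}: worst slack over the samples = {worst:+.4f}")
```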

The inclusion of state constraints of the form (2.12) in PDE-constrained optimization under uncertainty is new. An alternative way of interpreting (2.12) would be to consider either

or

From the perspective of stochastic programming, this is rather restrictive and, in general settings (beyond PDE-constrained optimization), may lead to empty feasible sets. Typically one remedies this by selecting a minimum probability level \(p \in (0,1)\) and considering instead:

Several recent studies have considered this perspective, see [19, 23]. However, these approaches do not circumvent the fundamental difficulties encountered with state constraints with regard to multiplier regularity and mesh-independent numerical approaches. In addition, the functional

is nontrivial to analyze and use in numerical algorithms. This usually requires that \({\mathbb {P}}\) admit a log-concave density and that \(S(z)(x,\omega )\) have a very specific structure with respect to \(\omega \). For more on probability constraints, we refer the reader to [50, 59] and the related references therein.
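For a fixed control, the probability in such a constraint can at least be estimated by sampling. The Python sketch below is a hypothetical illustration rather than a method from the paper: it counts the fraction of sampled \(\omega \) for which the discretized state satisfies \(S(z) + u_f \ge \psi \) at all grid points, and attaches the two-sided Hoeffding radius \(t = \sqrt{\log (2/\delta )/(2M)}\), which is valid because each indicator takes values in [0,1] and the M samples are independent. This only evaluates the probability for a given z; it does not address the difficulties of optimizing subject to a probability constraint. The one-dimensional model, \(\psi \), and the control are assumptions.

```python
import numpy as np

N_NODES = 99
H = 1.0 / (N_NODES + 1)
X = np.linspace(H, 1.0 - H, N_NODES)
X_MID = np.linspace(H / 2, 1.0 - H / 2, N_NODES + 1)
PSI = 0.2 * np.sin(np.pi * X) - 0.1  # hypothetical obstacle psi


def stiffness(a_mid):
    """Finite-difference matrix of -(a u')' on (0, 1) with u(0) = u(1) = 0."""
    main = (a_mid[:-1] + a_mid[1:]) / H**2
    off = -a_mid[1:-1] / H**2
    return np.diag(main) + np.diag(off, 1) + np.diag(off, -1)


def feasible_sample(rng, z):
    """Draw one omega and report whether S(z) + u_f >= psi holds at every node."""
    xi = rng.uniform(-1.0, 1.0, size=3)
    a_mid = 1.0 + 0.3 * xi[0] * np.sin(np.pi * X_MID) + 0.2 * xi[1] * np.cos(np.pi * X_MID)
    u = np.linalg.solve(stiffness(a_mid), z + xi[2])  # f(omega) = xi_3, B = identity
    return bool(np.all(u >= PSI))


def estimate_probability(indicators, delta=0.05):
    """Empirical frequency and Hoeffding radius t with P(|p_hat - p| >= t) <= delta."""
    indicators = np.asarray(indicators, dtype=float)
    m = indicators.size
    return indicators.mean(), np.sqrt(np.log(2.0 / delta) / (2.0 * m))


rng = np.random.default_rng(0)
z = 2.0 * np.ones(N_NODES)  # a hypothetical constant control
p_hat, t = estimate_probability([feasible_sample(rng, z) for _ in range(400)])
print(f"estimated probability {p_hat:.3f} +/- {t:.3f} (confidence 95%)")
```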

We may now formulate the optimization problem

2.2.4 A class of risk-neutral PDE-constrained GNEPs

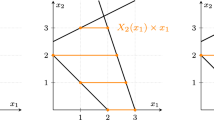

We now introduce a noncooperative game with N players by using the results of the previous section. The \(i\mathrm{th}\) player is assumed to solve the following optimization problem

Here, the quantities \(Z^{i}_{\mathrm{ad}}\), \(T_i\), \(\nu _i\), and \(u_d^i\) are defined analogously to those in the standard optimization setting, where we again require Assumptions 1 and 2 for each \(i =1,\dots ,N\). In what follows, we denote the collective admissible set of controls by \({\mathcal {Z}}_{\mathrm{ad}} = Z^{1}_{\mathrm{ad}} \times \dots \times Z^{N}_{\mathrm{ad}}\). The main difference for the individual player problems lies in the definition of the control mapping \(\varvec{B}\). For the sake of reference, we make the following assumption.

Assumption 3

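The operator \(\varvec{B}\) has the additive representation

$$\begin{aligned} \varvec{B} z = \sum _{i=1}^{N} \varvec{B}_i z_i, \quad z = (z_1,\dots ,z_N), \end{aligned}$$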

where \(\varvec{B}_i\) satisfies Assumption 1 for \(i =1,\dots , N\).

In light of the assumptions, we may also formulate the PDE-constrained GNEP in terms of the following reduced space problems.

3 Existence and optimality conditions

We first prove existence of optimal solutions of (2.14) and provide optimality conditions. Then, by extending the arguments used in [34], we prove the existence of generalized Nash equilibria for (2.15). Optimality conditions for a certain type of equilibria are also derived. We will use the concept of variational equilibria, which is strongly related to the notion of normalized equilibrium due to Rosen [56], although Rosen’s concept of normalized equilibrium was formulated using Lagrange multipliers. This is a specific class of Nash equilibria that can in many cases be computed numerically.

3.1 Risk-neutral PDE-constrained optimization problems

For the risk-neutral PDE-constrained optimization problems the existence and optimality conditions are formulated as follows.

Theorem 4

Let Assumptions 1 and 2 hold. Then (2.14) admits a solution \({\bar{z}}\). If \(\nu > 0\), then \({\bar{z}}\) is unique. Moreover, if there exists a \(z_0 \in Z_{\mathrm{ad}}\) and a constant \(\kappa > 0\) such that

then there exists a measure \({\bar{\mu }} \in \mathbf {ba}(\varXi , {\mathcal {B}}, \pi )\) such that

-

(i)

(Nonpositivity) \({\bar{\mu }} \) satisfies

$$\begin{aligned} \int _\varXi g(x,\omega ) \, \mathrm {d}{{\bar{\mu }}}(x, \omega ) \le 0, \quad \forall \, g \in L^{\infty }_{\pi }(\varXi )_+. \end{aligned}$$

-

(ii)

(Complementarity) \({\bar{\mu }}\) fulfills

$$\begin{aligned} \int _{\varXi } G({\bar{z}})(x, \omega ) \, \mathrm {d} {\bar{\mu }}(x, \omega ) = 0, \end{aligned}$$

where

$$\begin{aligned} G(z) = \iota \varvec{A}^{-1} \varvec{B} z +\iota u_f - \psi \end{aligned}$$

and

$$\begin{aligned} \iota : L_{{\mathbb {P}}}^\infty (\varOmega ;H_0^1(D) \cap H^2(D)) \rightarrow L^{\infty }_{\pi }(\varXi ) \end{aligned}$$

is the continuous embedding.

-

(iii)

(Subgradient Condition) The general inclusion holds

$$\begin{aligned} 0 \in {\mathbb {E}}_{{\mathbb {P}}}[\varvec{B}^* \varvec{A}^{-*} T^*( T S{\bar{z}} + Tu_f - u_d)] + \nu {\bar{z}} + {\mathcal {N}}_{Z_{\mathrm{ad}}}({\bar{z}}) + \varvec{B}^*\varvec{A}^{-*}\iota ^*{\bar{\mu }}. \end{aligned}$$

Here, the latter term must be understood as

$$\begin{aligned} \langle \varvec{B}^*\varvec{A}^{-*}\iota ^*{\bar{\mu }}, \delta z \rangle = \int _{\varXi } (\varvec{A}^{-1}(\omega ) \varvec{B}(\omega ) \delta z)(x) \, \mathrm {d} {\bar{\mu }}(x, \omega ) \end{aligned}$$

for an arbitrary test function \(\delta z \in L^2(D)\).

Conversely, if there exists a pair \(({\bar{z}},{\bar{\mu }})\) such that (i)-(iii) hold, then \({\bar{z}}\) is an optimal solution of (2.14).

Remark 2

In this general setting, we cannot guarantee that \({\bar{\mu }}\) splits into a generalized product of measures that would allow us to write \(\varvec{B}^*\varvec{A}^{-*}\iota ^*{\bar{\mu }}\) using an expectation. We explain this in more detail following the proof. However, the subgradient condition in (iii) can be brought into a form slightly more familiar to PDE-constrained optimization by introducing an adjoint variable \({\widehat{\lambda }}\) that satisfies the pointwise adjoint equations

Using this term we can “unfold” the general subgradient condition into the inclusion

coupled to the adjoint Eq. (3.2), a state equation for \(S{\bar{z}}\) and complementarity conditions for the state constraint. Similarly, in some settings we may choose sufficiently regular test functions \(\varphi \) and introduce an additional adjoint variable \({\widetilde{\lambda }}\) to simplify the term \( \varvec{B}^*\varvec{A}^{-*}\iota ^*{\bar{\mu }}\) using

Indeed, \({\bar{\mu }}\) defines a bounded linear functional on \( L^{\infty }_{\pi }(\varXi )\). So if \(\varvec{A}\) can define a linear isomorphism between a subspace of \(L^{\infty }_{\pi }(\varXi )\) and, e.g., \(L^2_{\pi }(\varXi )\), then \({\widetilde{\lambda }}\) would be a (very weak) solution. These two observations could potentially be used in a numerical setting, especially if \({\mathbb {P}}\) is discrete.
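For a discrete probability measure and in the absence of the state constraint (\({\bar{\mu }} = 0\)), the first two terms in (iii), \({\mathbb {E}}_{{\mathbb {P}}}[\varvec{B}^* \varvec{A}^{-*} T^*(T S z + Tu_f - u_d)] + \nu z\), form the gradient of the reduced objective and can be evaluated with one adjoint solve per sample, in the spirit of \({\widehat{\lambda }}\). The following Python sketch is a hypothetical sample average approximation: M equally weighted samples, D = (0,1), finite differences, \(T = B = I\), and an arbitrary target \(u_d\); none of these choices is taken from the paper. Because the objective is quadratic, a central difference quotient reproduces the directional derivative up to rounding, which gives a simple consistency check of the adjoint-based gradient.

```python
import numpy as np

N_NODES, M_SAMPLES, NU = 49, 20, 1e-3
H = 1.0 / (N_NODES + 1)
X = np.linspace(H, 1.0 - H, N_NODES)
X_MID = np.linspace(H / 2, 1.0 - H / 2, N_NODES + 1)
U_D = np.sin(np.pi * X)  # hypothetical target u_d


def stiffness(a_mid):
    """Finite-difference matrix of -(a u')' on (0, 1) with u(0) = u(1) = 0."""
    main = (a_mid[:-1] + a_mid[1:]) / H**2
    off = -a_mid[1:-1] / H**2
    return np.diag(main) + np.diag(off, 1) + np.diag(off, -1)


def draw_samples(rng, m):
    """m equally weighted samples (K_k, f_k) of the random data."""
    samples = []
    for _ in range(m):
        xi = rng.uniform(-1.0, 1.0, size=3)
        a_mid = 1.0 + 0.3 * xi[0] * np.sin(np.pi * X_MID) + 0.2 * xi[1] * np.cos(np.pi * X_MID)
        samples.append((stiffness(a_mid), xi[2] * np.ones(N_NODES)))
    return samples


SAMPLES = draw_samples(np.random.default_rng(0), M_SAMPLES)


def objective(z):
    """Sample average of 1/2 ||u - u_d||^2 plus nu/2 ||z||^2 (discrete L^2 norms)."""
    value = 0.5 * NU * H * np.sum(z**2)
    for K, f in SAMPLES:
        u = np.linalg.solve(K, z + f)
        value += 0.5 * H * np.sum((u - U_D) ** 2) / M_SAMPLES
    return value


def gradient(z):
    """L^2(D)-gradient: nu z plus the sample mean of the adjoints lambda_k."""
    grad = NU * z
    for K, f in SAMPLES:
        u = np.linalg.solve(K, z + f)
        lam = np.linalg.solve(K.T, u - U_D)  # adjoint equation A^* lambda = T^*(T u - u_d)
        grad = grad + lam / M_SAMPLES
    return grad


z = np.cos(np.pi * X)
d = np.random.default_rng(1).standard_normal(N_NODES)
step = 1e-3
fd = (objective(z + step * d) - objective(z - step * d)) / (2 * step)
print(fd, H * gradient(z) @ d)  # both directional derivatives agree up to rounding
```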

Proof

To prove existence, we need to argue that the feasible set is weakly sequentially closed and \(F(z) := {\mathbb {E}}[J(S(z) + u_f,z)]\) is weakly sequentially lower semicontinuous on \(L^2(D)\). Since the assumptions on J imply F is convex and the latter component of J is deterministic and continuous, we concentrate on the properties of S and their relation to the first argument of J.

By Assumption 2, (2.14) admits a feasible point and consequently a minimizing sequence \(\{z_k\} \subset Z_{\mathrm{ad}}\) such that (2.12) holds. Since \(Z_\mathrm{ad}\) is bounded, closed, and convex, and therefore weakly sequentially compact in the Hilbert space \(L^2(D)\), \(\{z_k\}\) admits a subsequence \(\{z_{k_l}\}\) that converges weakly to some \({\bar{z}} \in Z_{\mathrm{ad}}\). For each l, we have

Since S is completely continuous as a mapping into \(L^2_{\mathbb P}(\varOmega ; H^1_0(D))\), we have \(S(z_{k_l}) \rightarrow S({\bar{z}})\) strongly. Moreover, the Sobolev embedding theorem (see e.g. [2, 4.12 Theorem]) and the fact that \(L^p(\varOmega ; X) \hookrightarrow L^q(\varOmega ;Y)\) if \(X \hookrightarrow Y\) for \(1 \le q \le p < \infty \), together with the equivalence of \(L^1_{\mathbb P}(\varOmega ;L^1(D))\) and \(L^1_{\pi }(\varXi )\) (see e.g. [36, Proposition 1.2.24]), imply that \(S(z_{k_l}) \rightarrow S({\bar{z}})\) in \(L^1_{\pi }(\varXi )\). Therefore, there exists a subsequence \(\{z_{k_{l_{m}}}\}\) such that \(S(z_{k_{l_{m}}}) \rightarrow S({\bar{z}})\) \(\pi \)-almost everywhere. It follows that

Continuing, the integrand J induces a superposition operator that is continuous from the product space \(L^2_{{\mathbb {P}}}(\varOmega ; H^1_0(D)) \times L^2(D)\) to \(L^1_{{\mathbb {P}}}(\varOmega )\), see e.g. [44, Ex. 3.2]. Combining the properties of S with this continuity result, we deduce the weak lower semicontinuity of F. It follows from the direct method that \({\bar{z}}\) is an optimal solution, which is unique if \(\nu > 0\), since the objective is then strictly convex.

In order to derive first order optimality conditions for (2.14), we write

and appeal to the general Lagrangian formalism in [10, Chap. 3]. Here, we set

where \( \iota : L_{{\mathbb {P}}}^\infty (\varOmega ;H_0^1(D) \cap H^2(D)) \rightarrow L^{\infty }_{\pi }(\varXi ) \) is the continuous embedding and K is the convex cone of all nonnegative essentially bounded \({\mathcal {B}}\)-measurable functions. Note that to define \(\iota \), we first use the continuous embedding of \( L_{{\mathbb {P}}}^\infty (\varOmega ;H_0^1(D)\cap H^2(D))\) into \(L_{{\mathbb {P}}}^\infty (\varOmega ;L^\infty (D))\) and then the continuous embedding of \(L_{{\mathbb {P}}}^\infty (\varOmega ; L^\infty (D))\) into \(L^{\infty }_{\pi }(\varXi )\). The latter two spaces are not equivalent.

Since K has a nonempty interior and G is clearly convex with respect to the partial order induced by \((-K)\), (3.1) is equivalent to the constraint qualification \(0 \in \mathrm {int\,} \lbrace G(Z_{\mathrm{ad}}) - K \rbrace \) (and therefore Robinson’s CQ), cf. [10, Prop. 2.106]. It follows from [10, Thm. 3.6] that

where \( L(z,\mu ) = F(z) + \langle G(z), \mu \rangle . \) Due to convexity, these are both necessary and sufficient for optimality. It remains to make the conditions more explicit.

Since K is a closed, convex cone, \({{\bar{\mu }}} \in {\mathcal {N}}_{K}(G({\bar{z}}))\) yields assertions (i) and (ii). To obtain the form in (iii), we first note that

and

For the objective function F, we can exploit the equivalence with the pointwise adjoints and write

Furthermore, the uniform integrability of the operators \(\varvec{A}\) and \(\varvec{B}\), and hence of \(\varvec{B}^*\) and \(\varvec{A}^{-*}\), allows us to write via [28, Thm. 3.7.12]

This concludes the proof. \(\square \)

We caution the reader that the duality pairing used for the \(\mu \)-multiplier does not, a priori, involve the expectation with respect to \({\mathbb {P}}\). However, if \(\mu \) is \(\sigma \)-finite, \(\sigma \)-additive, and absolutely continuous with respect to \({\mathcal {L}} \times {\mathbb {P}}\), then by the Radon-Nikodym theorem there exists a density \(\rho _{\mu }\) such that

In other words, we would have \( \mathrm {d} \mu = \rho _{\mu } \mathrm {d} ( {\mathcal {L}} \times {\mathbb {P}}). \) Furthermore, the sign condition on \(\mu \) carries over to \(\rho _{\mu }\), in which case \(|\rho _{\mu }| = -\rho _{\mu }\). This would indicate that \(\rho _{\mu } \in L^1_{\pi }(\varXi )\). We could then write

by Fubini’s theorem.
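In a fully discrete setting, the representation via Fubini's theorem reduces to reordering a finite double sum. The sketch below goes beyond the text in its assumptions: D = (0, 1) is replaced by a uniform midpoint grid with quadrature weight h, \({\mathbb {P}}\) is supported on three scenarios, and \(\mu \) has the illustrative nonpositive density `rho`. The function `g` stands in for a test function. All names and data are hypothetical.

```python
# Minimal sketch: discrete Fubini check for d(mu) = rho * d(L x P).
# Assumptions (not from the paper): D = (0, 1) with a uniform midpoint grid,
# finitely many scenarios with probabilities p[k], and an illustrative
# nonpositive density rho(x, omega_k).
import math

n = 50                      # number of spatial cells
h = 1.0 / n                 # quadrature weight of each cell
xs = [(j + 0.5) * h for j in range(n)]
p = [0.2, 0.5, 0.3]         # scenario probabilities (sum to 1)
shifts = [-0.1, 0.0, 0.2]   # scenario parameter entering rho and g


def rho(x, s):
    """Illustrative nonpositive density of the multiplier."""
    return -(1.0 + s) * math.sin(math.pi * x) ** 2


def g(x, s):
    """Illustrative test function (stands in for the state)."""
    return x * (1.0 - x) + s


# Integral over Xi = D x Omega as one joint weighted sum.
joint = sum(g(x, s) * rho(x, s) * h * pk
            for x in xs for s, pk in zip(shifts, p))

# Expectation (outer) of the spatial integral (inner): E[ int_D g rho dx ].
iterated = sum(pk * sum(g(x, s) * rho(x, s) * h for x in xs)
               for s, pk in zip(shifts, p))

# Spatial integral (outer) of the expectation (inner).
swapped = sum(h * sum(pk * g(x, s) * rho(x, s) for s, pk in zip(shifts, p))
              for x in xs)

print(joint, iterated, swapped)  # equal up to rounding
```

In the continuous setting, the same interchange requires the integrability of \(\rho _{\mu }\) discussed above.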

Furthermore, note that [28, Theorem 3.8.1] together with [42, Lemma 2.1] ensures that \(\varvec{B}^*\) is a bounded linear operator from \({\mathcal {U}}\) into \(L^p_{{\mathbb {P}}}(\varOmega ;L^2(D))\). This would then allow us to incorporate the density into the adjoint equation, which is formulated in a very weak sense. This is essential, as otherwise the dual pairing with \(\rho _{\mu }\) and the test functions would not be defined.

3.2 Risk-neutral PDE-constrained GNEPs

In order to prove existence of at least one generalized Nash equilibrium and link the proof to a function-space-based numerical algorithm, we restrict ourselves to a variational reformulation as mentioned earlier. The variational reformulation is based on the so-called Nikaido-Isoda function \(\varPsi : L^2(D)^N \times L^2(D)^N \rightarrow \mathbb {R}\). For our GNEP the Nikaido-Isoda function is given by

We then introduce the potentially set-valued function \(\widehat{{\mathcal {R}}}: {\mathcal {Z}}_{\mathrm{ad}} \rightrightarrows {\mathcal {Z}}_{\mathrm{ad}}\) given by

This mapping acts as a collective best-response function to a strategy vector \(z \in Z_{\mathrm{ad}}\) for all players simultaneously. Next, we define variational equilibria by their characterization as fixed points of the best-response mapping \(\widehat{{\mathcal {R}}}\). The nomenclature diverges somewhat from the literature, but the intended meaning should be clear from the context below.
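The fixed-point characterization can be tried out on a finite-dimensional toy game. The sketch below is not the setting of this paper. It assumes the standard Nikaido-Isoda form \(\varPsi (z,y) = \sum _{i} [\theta _i(z_i,z_{-i}) - \theta _i(y_i,z_{-i})]\) with hypothetical objectives \(\theta _i(z) = \frac{1}{2}(z_i - 1)^2\) for two scalar players, boxes [0, 2], and the shared constraint \(z_1 + z_2 \ge 3\), which stands in for the state constraint. The best response maximizes \(\varPsi (z,\cdot )\) over the jointly feasible set, here by brute-force grid search. A strategy vector is a fixed point exactly when it maximizes its own best-response problem, which in this toy means that the maximal value is zero.

```python
# Toy check of the fixed-point characterization of variational equilibria.
# Hypothetical data: two scalar players, theta_i(z) = 0.5 * (z_i - 1)^2,
# boxes [0, 2] and the shared ("state") constraint z_1 + z_2 >= 3.
# The feasible set is sampled on the grid {0, 1/20, ..., 2}^2.

STEPS = 20                      # grid points per unit length
GRID = range(0, 2 * STEPS + 1)  # integer indices k, representing k / STEPS


def theta(i, z):
    """Objective of player i (0-based) at the strategy vector z."""
    return 0.5 * (z[i] - 1.0) ** 2


def nikaido_isoda(z, y):
    """Psi(z, y) = sum_i [theta_i(z_i, z_-i) - theta_i(y_i, z_-i)]."""
    total = 0.0
    for i in range(2):
        deviated = list(z)
        deviated[i] = y[i]      # player i deviates to y_i, the others keep z_-i
        total += theta(i, z) - theta(i, deviated)
    return total


def feasible_grid():
    """Jointly feasible grid points: boxes and shared constraint."""
    for a in GRID:
        for b in GRID:
            if a + b >= 3 * STEPS:  # exact integer test of y_1 + y_2 >= 3
                yield (a / STEPS, b / STEPS)


def best_response(z):
    """Maximize Psi(z, .) over the feasible grid; return (value, maximizer)."""
    return max((nikaido_isoda(z, y), y) for y in feasible_grid())


value, y_star = best_response((1.5, 1.5))
print(value, y_star)                 # 0.0 (1.5, 1.5): a fixed point
print(best_response((2.0, 1.0))[0])  # 0.25 > 0: not an equilibrium
```

In this toy, (1.5, 1.5) is the unique variational equilibrium (the shared multiplier equals 1/2), whereas the feasible point (2, 1) admits a profitable joint deviation.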

Definition 2

A strategy vector \({\bar{z}}\) with \({\bar{z}} \in {\mathcal {Z}}_{\mathrm{ad}} \) and \( S({\bar{z}}_i,{\bar{z}}_{-i}) + u_f \ge \psi \text { for } ({\mathcal {L}} \times {\mathbb {P}}) \text {-a.e. } (x,\omega ) \in D \times \varOmega \) is a variational equilibrium if and only if \({\bar{z}} \in \widehat{{\mathcal {R}}}({\bar{z}})\).

Note that for jointly convex GNEPs, every variational equilibrium is also a Nash equilibrium [34, Theorem 3.2]. This characterization reduces the existence proof for Nash equilibria to a fixed point problem. The essential ingredient is the fixed point theorem of Kakutani-Fan-Glicksberg, see e.g. [4, Corollary 17.55].

Theorem 5

Let Assumptions 1 and 3 hold. The set of variational equilibria of the jointly convex GNEP (2.15) is weakly compact and nonempty.

Proof

We proceed as in [34, Theorem 3.2], in order to apply the fixed point theorem of Kakutani-Fan-Glicksberg on \(\widehat{{\mathcal {R}}}\). By adapting the proof to the current setting, it follows from Theorem 4 that \(\widehat{{\mathcal {R}}}\) has nonempty and convex images.

To ensure compactness, we recast the problem in the space \(X_i\), where \(X_i\) is \(L^2(D)\) endowed with the weak topology. Note that \(X_i\) is a real locally convex topological space. The equivalence of weak and strong closure for convex sets in reflexive Banach spaces implies that \(Z_{\mathrm{ad}}^i\) is closed in \(X_i\). Moreover, the weak compactness of closed, bounded, and convex subsets of reflexive Banach spaces implies that each set \(Z_{\mathrm{ad}}^i\) is convex and compact in \(X_i\), or equivalently sequentially compact (see [65, Satz VIII.6.1 (Satz von Eberlein-Shmulyan)]). Consequently, if we take \({\mathcal {Z}}_{\mathrm{ad}} = Z_{\mathrm{ad}}^1 \times \dots \times Z_{\mathrm{ad}}^N\) and \(X = X_1 \times \dots \times X_N\), then \({\mathcal {Z}}_{\mathrm{ad}} \subset X\), where \({\mathcal {Z}}_{\mathrm{ad}} \) is also nonempty, convex, and compact in X. Due to the latter property, the weak topology is metrizable on \({\mathcal {Z}}_{\mathrm{ad}} \) (see [65, Lemma VIII.6.2]).

In order to see the closedness of the graph of \(\widehat{{\mathcal {R}}}\), we introduce the set

Now, we consider a closed subset \(C \subset \mathcal {X}_{\mathrm{ad}}\) and a sequence \(\lbrace z^n \rbrace _{n \in \mathbb {N}} \subset \widehat{{\mathcal {R}}}^{-1}(C)\) with \(z^n \rightarrow {\bar{z}}\) in X (i.e., \(z^n \rightharpoonup {\bar{z}}\) in \(L^2(D)^N\)). For every \(z^n\), we choose \(v^n \in C \cap \widehat{{\mathcal {R}}}(z^n).\) By a slight adaptation of the arguments in the proof of Theorem 4, we can show that \(\mathcal {X}_{\mathrm{ad}}\) is sequentially compact. Hence, there exists a convergent subsequence \(v^{n_k} {\mathop {\rightarrow }\limits ^{X}} {\bar{v}}\) with \({\bar{v}} \in C\).

For some arbitrary \(w \in \mathcal {X}_{\mathrm{ad}}\) it holds that

By adapting the proof of Theorem 4, we can argue that

This is a consequence of the properties of the expectation, the objectives \(J_i\) and the solution operator S. In particular, it is essential that S is completely continuous into \(L^1_{\mathbb P}(\varOmega ; H^1_0(D))\). Using again the complete continuity, we have

It follows that \({\bar{v}} \in \widehat{{\mathcal {R}}}({\bar{z}})\), which proves the sequential closedness of the graph of \(\widehat{{\mathcal {R}}}\) or, equivalently, its closedness in X [65, Theorem B.1.2]. We can now apply the fixed point theorem of Kakutani-Fan-Glicksberg: the set of variational equilibria of the GNEP is nonempty and compact in X and thus weakly compact in \(L^2(D)^N\). \(\square \)

The optimality conditions for a generalized Nash equilibrium read as follows. We adopt the same notation as in Theorem 4.

Theorem 6

Let Assumptions 1 and 3 hold. If there exist \((z_i^0,z_{-i}^0) \in {\mathcal {Z}}_{\mathrm{ad}}\) and a constant \(\kappa > 0\) such that

then there exists a measure \({\bar{\mu }} \in \mathbf {ba}(\varXi , {\mathcal {B}}, \pi )\) such that

(i) (Nonpositivity) \({\bar{\mu }} \) is an element of the polar cone of \( L^{\infty }_{\pi }(\varXi )_{+}\).

(ii) (Complementarity) \({\bar{\mu }}\) fulfills

$$\begin{aligned} \int _{\varXi } G({\bar{z}}_i,{\bar{z}}_{-i})(x, \omega ) \, \mathrm {d} {\bar{\mu }}(x, \omega ) = 0. \end{aligned}$$

(iii) (Subgradient conditions) For \(i = 1, \dots , N\), the following inclusion holds:

$$\begin{aligned} 0 \in {\mathbb {E}}_{{\mathbb {P}}}[\varvec{B}_i^* \varvec{A}^{-*} T_i^*( T_i S({\bar{z}}_i,{\bar{z}}_{-i}) + T_iu_f - u_d^i)] + \nu _i {\bar{z}}_i + {\mathcal {N}}_{Z_{\mathrm{ad}}^i}({\bar{z}}_i) + \varvec{B}_i^*(\varvec{A}^{-*}\iota ^*{\bar{\mu }}). \end{aligned}$$

Conversely, if there exists a pair \(({\bar{z}},{\bar{\mu }})\) such that (i)-(iii) hold, then \({\bar{z}}\) is a generalized Nash equilibrium of (2.15).

Proof

Similar to the proof of Theorem 4, we work with the general Lagrangian formalism. We first note that \({\bar{z}} \in \widehat{{\mathcal {R}}}({\bar{z}})\). This is equivalent to

In order to derive first order optimality conditions for variational equilibria of (2.15), we recall some of the notation from the proof of Theorem 4. We again set

and define the continuous embedding \(\iota : L_{{\mathbb {P}}}^\infty (\varOmega ;H_0^1(D) \cap H^2(D)) \rightarrow L^{\infty }_{\pi }(\varXi ).\) In the notation of [10], we set

which yields the parametric Lagrangian

Since (3.4) is equivalent to the constraint qualification \(0 \in \mathrm {int\,} \lbrace G({\mathcal {Z}}_{\mathrm{ad}} ) - K \rbrace \), it follows from [10, Thm. 3.6] that

Assertions (i) and (ii) are implied by \({{\bar{\mu }}} \in {\mathcal {N}}_{K}(G({\bar{z}}_i,{\bar{z}}_{-i}))\) since K is a closed, convex cone. To obtain the subgradient conditions in (iii), we first note that

For the objective function, it holds that

In order to see that the sum of the subdifferentials equals the product in (3.5), we refer to the proof of [34, Theorem 3.7]. Analogously to (3.3), we can write

Moreover, [8, section 4.6] enables us to write the normal cones as

For \(i=1, \dots , N\), we have the componentwise subgradient condition

\(\square \)

4 A Moreau-Yosida regularization technique

The optimality conditions derived in Theorems 4 and 6 are not suitable for the development of algorithms. This is mainly due to the low regularity of the multiplier \({\bar{\mu }}\) for the state constraint. To remedy this issue, we propose a Moreau-Yosida (MY) regularization technique, similar to the studies [1, 30, 31, 34].

From the perspective of risk aversion, the MY-regularization can be seen as a measure of regret for the state constraint, e.g., as in [55]. We will also use several concentration inequalities below, which link MY-regularization to probability constraints. This further supports the viability of the approach and provides a modeling solution for cases in which the constraint qualification is hard to verify or it is not known whether the original problem actually has a feasible point. To the best of our knowledge, this is the first time that such concentration inequalities have been used in the context of MY-regularization for infinite dimensional optimization under uncertainty.
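Markov's inequality gives one elementary example of such a link. It is included here only as an illustration and is not necessarily among the inequalities used below. Write \(u = S(z) + u_f\) and suppose that the MY-term is bounded, say \(\frac{\gamma }{2}{\mathbb {E}}_{{\mathbb {P}}}[\Vert (\psi - u)_+ \Vert _{L^2(D)}^2] \le C\) with C independent of \(\gamma \), as happens along the sequence of equilibria considered in the proof of Lemma 1. Then, for every \(t > 0\),

$$\begin{aligned} {\mathbb {P}}\left( \Vert (\psi - u)_+ \Vert _{L^2(D)}^2 > t \right) \le \frac{1}{t}\, {\mathbb {E}}_{{\mathbb {P}}}\left[ \Vert (\psi - u)_+ \Vert _{L^2(D)}^2 \right] \le \frac{2C}{\gamma t}, \end{aligned}$$

so the probability that the constraint violation exceeds a fixed level decays at least like \(1/\gamma \).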

4.1 Approximation of the risk-neutral PDE-constrained GNEPs

More specifically, the \(\gamma \)-dependent regularized problem of player i in the risk-neutral PDE-constrained GNEP (2.15) reads as

where \(\gamma > 0\). The usage of MY-regularization amounts to approximating the original GNEP by a more numerically tractable NEP. We will refer to this \(\gamma \)-dependent strategic game as \(\hbox {NEP}_{\gamma }\).
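The mechanism is easiest to see on the smallest possible example. Consider one player with a scalar control z, a state \(u(\omega ) = z + \xi (\omega )\) taking finitely many values, and the MY-penalized objective \(\frac{1}{2}(z-a)^2 + \frac{\gamma }{2}{\mathbb {E}}[(\psi - u)_+^2]\). All data are hypothetical. The penalized problem is smooth and convex, so bisection can solve its stationarity condition. The script reports the largest constraint violation and \({\mathbb {E}}[\mu ^\gamma ]\) with \(\mu ^\gamma = -\gamma (\psi - u)_+\), the sign convention used for the multiplier in Section 4.3.

```python
# Moreau-Yosida (quadratic penalty) relaxation of a pointwise state
# constraint u(omega) >= psi on a hypothetical one-player toy problem:
#   minimize 0.5 * (z - a)^2 + (gamma / 2) * E[ max(psi - z - xi, 0)^2 ].
# Scenarios xi_k with probabilities p_k replace the random field.

a, psi = 0.0, 1.0
xi = [-0.5, 0.0, 0.5]
p = [1 / 3, 1 / 3, 1 / 3]


def violation(z, x):
    """Pointwise constraint violation (psi - u)_+ with u = z + x."""
    return max(psi - z - x, 0.0)


def derivative(z, gamma):
    """Derivative of the penalized objective; continuous and increasing in z."""
    return (z - a) - gamma * sum(pk * violation(z, x) for x, pk in zip(xi, p))


def solve(gamma, iters=200):
    """Find the unique root of the derivative by bisection."""
    lo = a - 1.0                          # derivative(lo) <= lo - a < 0
    hi = max(a, psi - min(xi)) + 1.0      # penalty inactive, derivative > 0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if derivative(mid, gamma) > 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


for gamma in [1.0, 1e2, 1e4, 1e6]:
    z = solve(gamma)
    max_viol = max(violation(z, x) for x in xi)
    mean_mu = -gamma * sum(pk * violation(z, x) for x, pk in zip(xi, p))
    print(f"gamma={gamma:.0e}  z={z:.6f}  max violation={max_viol:.2e}  E[mu]={mean_mu:.4f}")
```

Here the constrained solution is z = 1.5. As \(\gamma \) grows, the violation decays like \(1/\gamma \), while \({\mathbb {E}}[\mu ^\gamma ]\) stays bounded and approaches -1.5. This mirrors, in one dimension, the behavior analyzed in Section 4.3.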

4.2 Existence and optimality conditions

The existence of a Nash equilibrium for every \(\gamma > 0\) follows by using almost identical arguments to those in Theorem 5. Moreover, the first-order conditions have a similar, but numerically more workable form. We state the following theorem for ease of reference.

Theorem 7

Let Assumptions 1 and 3 hold. The set of variational equilibria of the jointly convex \(\hbox {NEP}_{\gamma }\) (4.1) is weakly compact and nonempty. If \({\overline{z}}^{\gamma } \in {\mathcal {Z}}_{\mathrm{ad}}\) is a Nash equilibrium, then we have the following necessary and sufficient optimality conditions: For each \(i = 1,\dots , N\)

where

Corollary 7 allows us to introduce the adjoint variables \({\bar{\lambda }}^\gamma _i \in L_{{\mathbb {P}}}^2(\varOmega ; H^1_0(D))\) for \(i =1,\dots ,N\) and the associated adjoint equations:

for all \(\varphi \in H^1_0(D)\), where \({\bar{u}} = S({\bar{z}}^{\gamma }_i,{\bar{z}}^{\gamma }_{-i}) \). This simplifies (4.2) to

4.3 Asymptotic considerations

We now investigate the behavior of \(\hbox {NEP}_{\gamma }\) as \( \gamma \rightarrow \infty \). This is important for both theoretical as well as numerical considerations. We closely follow the approach in [33]. In order to ensure consistency of the relaxed problems, we will require the fulfillment of a constraint qualification as introduced in [33].

Definition 3

We say that (2.15) satisfies the strict uniform feasible response constraint qualification (SUFR), if there exists an \(\varepsilon > 0\) such that for all \(i= 1, \dots ,N\) and \(z_{-i} \in {\mathcal {Z}}_{\mathrm{ad}}^{-i}\) there is a \(v_i \in Z_{\mathrm{ad}}^i\) that satisfies

A few comments are in order. Traditional constraint qualifications, such as the existence of a Slater point or, in nonlinear programming, the Mangasarian-Fromovitz constraint qualification (MFCQ; Robinson’s CQ in infinite dimensions), were developed for optimization problems. They guarantee not only the existence of Lagrange multipliers but also a certain stability of the constraint set around the optimal solution. For example, under MFCQ the set of Lagrange multipliers associated with the point in question is a nonempty, convex, compact polytope. In Theorem 4, it was enough to assume such a CQ without adapting it to the GNEP setting. For approximation purposes, however, we will see in the following that GNEPs require a considerably more robust CQ such as SUFR in order to exhibit the local stability needed to bound the dual variables, in this case the adjoint states and the constraint multipliers. From a game-theoretic perspective, we require that each player has a feasible response to any strategy of its competitors such that the common state constraint is strictly and uniformly fulfilled. Finally, as the current regularity assumptions on the random inputs only provide essential boundedness with respect to \(\omega \), we will need more regularity of the solutions.
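The game-theoretic reading of SUFR can be checked numerically on a hypothetical two-player game with scalar strategies \(z_i \in [0, b_i]\) and a shared constraint \(z_1 + z_2 \ge \psi \) standing in for the state constraint. SUFR asks for a single \(\varepsilon > 0\) that works against every opponent strategy, so the relevant quantity is a min-max over strategy grids. In this linear toy it reduces to \(\min _i b_i - \psi \), and the sketch below confirms this by brute force.

```python
# Grid check of the SUFR margin for a hypothetical two-player toy game:
# strategies z_i in [0, b_i], shared constraint z_1 + z_2 >= psi.
# SUFR holds iff the returned margin (the largest admissible epsilon) is > 0.

def grid(upper, n=200):
    """Uniform grid on [0, upper] with n + 1 points."""
    return [upper * k / n for k in range(n + 1)]


def sufr_margin(b, psi):
    """Minimum over players i and opponent strategies z_-i of the best
    constraint slack that player i can achieve with a response v_i."""
    margins = []
    for i in range(2):
        worst = min(
            max(v + z_other - psi for v in grid(b[i]))  # best response of i
            for z_other in grid(b[1 - i])               # worst opponent move
        )
        margins.append(worst)
    return min(margins)


print(sufr_margin((2.0, 2.5), 1.5))  # 0.5: SUFR holds with any epsilon <= 0.5
print(sufr_margin((2.0, 2.0), 3.0))  # -1.0: no player can secure the constraint alone
```

The second case shows that SUFR can fail even when jointly feasible strategies exist. The constraint is satisfiable only through cooperation, not by every player unilaterally.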

Assumption 8

(Higher Parametric Regularity) The set \(\varOmega \) is a compact Polish space. The solution mapping \(S(\cdot ) + u_f\) is a continuous affine mapping from \(L^2(D)^N\) into \(C(\varOmega ; H^1_0(D) \cap H^2(D))\).

The need for \(\varOmega \) to be a compact Polish space will be evident in the following proof. Under weaker assumptions, we have already shown that the mapping \(S(\cdot ) + u_f\) is a continuous affine mapping from \(L^2(D)^N\) into \(L^{\infty }_{{\mathbb {P}}}(\varOmega ; H^1_0(D) \cap H^2(D))\). The continuity assumption is actually weaker than it appears and can be guaranteed under mild assumptions (continuity in \(\omega \)) on \(A(x,\omega )\), \(B(\omega )\) and \(f(x,\omega )\), cf. the results in [35, Section 6]. The main idea is to reformulate the random PDE as a parametric fixed point equation and apply classic results on parametric dependence of solutions to fixed point equations. We now state the main result of this section.

Theorem 9

Suppose the GNEP (2.15) satisfies the Slater condition (3.1) and SUFR. If in addition Assumption 8 holds, then there exist sequences \(\gamma _n \rightarrow \infty \) and

- \(\{ z^{\gamma _{n}} \}_{n \in \mathbb {N}} \subset L^2(D)^N \),
- \(\{ u^{\gamma _{n}} \}_{n \in \mathbb {N}} \subset L^2_{{\mathbb {P}}}(\varOmega ; H^1_0(D) \cap H^2(D))\),
- \(\{ \lambda ^{\gamma _{n}} \}_{n \in \mathbb {N}} \subset L^2_{{\mathbb {P}}}(\varOmega ; H^1_0(D) \cap H^2(D))^N\),
- \(\{ \eta ^{\gamma _{n}} \}_{n \in \mathbb {N}} \subset L^2(D)^N\),
- \(\{ \mu ^{\gamma _n} \}_{n \in {\mathbb {N}}} \subset L_{\pi }^{2}(\varXi )\),

such that for each \(i =1,\dots , N\), \((z_i^{\gamma _n},u^{\gamma _n},\lambda _i^{\gamma _n},\eta _i^{\gamma _n},\mu ^{\gamma _n})\) satisfies (4.2) as stated in Corollary 7. This sequence admits a limit point

where, for all \(i= 1, \dots , N\), we have

Moreover, the limit point satisfies

for an arbitrary test function \(\varphi \in L^2(D)\). Finally, \(\rho ^*\) satisfies

Note that (4.5c) and (4.5d) correspond to the subdifferential inclusion in Theorem 6. For readability, we split the proof over several partial results.

Lemma 1

Under the assumptions of Theorem 9, there exists a sequence of MY parameters \(\gamma _k \rightarrow \infty \) such that the associated sequence of Nash equilibria \(\{z^{\gamma _k}\}_{k \rightarrow \infty }\) converges weakly to a feasible strategy of the GNEP, i.e. (4.5a) and (4.5b) hold.

Proof

Fix a sequence \(\gamma _n \rightarrow \infty \) for \(n \rightarrow \infty \). Since \({\mathcal {Z}}_{\mathrm{ad}}\) is weakly compact in \(L^2(D)^N \) and \(z^{\gamma _n} \in {\mathcal {Z}}_{\mathrm{ad}}\) for all \(\gamma _n\), there exist a subsequence, denoted by \(\gamma _k := \gamma _{n_k}\), and some element \(z^* \in {\mathcal {Z}}_{\mathrm{ad}}\) such that \(z^{\gamma _k} \rightharpoonup z^{*} \) in \(L^2(D)^N \). According to SUFR, there exist an \(\varepsilon > 0\) and a sequence \(\{ v^{\gamma _k}\}_{k \rightarrow \infty } \subset {\mathcal {Z}}_{\mathrm{ad}}\) such that \(S(v_i^{\gamma _k},z_{-i}^{\gamma _k})+ u_f \ge \psi + \varepsilon \) \(\pi \)-a.e. for all \(i = 1, \dots ,N\). By definition of \({\mathcal {Z}}_{\mathrm{ad}}\), \(\{ v^{\gamma _k}\}_{k \rightarrow \infty }\) is uniformly bounded in \(L^2(D)^N \). Then for all \(\gamma _k\), the nonnegativity of the MY-term gives us the lower bound:

Since \(S(v_i^{\gamma _k},z_{-i}^{\gamma _k})+ u_f \ge \psi + \varepsilon \), it holds that

Furthermore, by definition of a Nash equilibrium we have the simple upper bound

Using the fact that S is completely continuous into \(L^2_{\pi }(\varXi )\) and each individual feasible set is bounded, we deduce the existence of a constant M independent of \(i, \gamma _k\) such that

Combining these observations yields

Using the weak lower semicontinuity of the objective functions, it follows that the bound also holds for the limit

As a result, \({\mathbb {E}}_{{\mathbb {P}}}\left[ \frac{\gamma _k}{2} \Vert (\psi - (S(z_i^{\gamma _k},z_{-i}^{\gamma _k}) + u_f))_+ \Vert _{L^2(D)}^2 \right] \) is bounded. This can only hold if

since \( \gamma _k \rightarrow \infty \). Since \(S(z_i^{\gamma _k},z_{-i}^{\gamma _k})\) converges strongly to \(S(z_i^*,z_{-i}^{*})\) in \(L^2_{\pi }(\varXi )\), we also have

We can conclude, that

Thus, \(z^* \in {\mathcal {Z}}_{\mathrm{ad}}\) satisfies \(S(z_i^{*},z_{-i}^{*})+ u_f \ge \psi \) \(\pi \)-a.e., i.e., \(z^*\) is a feasible strategy vector for the GNEP. \(\square \)

We note that for feasibility of \(z^*\), it is not necessary for \(\varepsilon \) to be positive in the SUFR condition. In what follows, we discuss the convergence of the stationary points individually. We start by showing that \(z^*\) is also a generalized Nash equilibrium.

Lemma 2

Suppose the assumptions of Theorem 9 hold. Let \(\left\{ \gamma _k\right\} \) be the sequence of MY parameters from the proof of Lemma 1. Then there exists a subsequence \(\left\{ \gamma _{l}\right\} \) with \(\gamma _{l} := \gamma _{k_l} \rightarrow +\infty \) such that the weak limit point \(z^{*}\) is a generalized Nash equilibrium.

Proof

Define \(X_i = \{ v_i \in {\mathcal {Z}}_{\mathrm{ad}}^i \, : \, S(v_i,z^*_{-i}) + u_f \ge \psi \, \pi \text {-a.s.} \}\). Due to the SUFR condition, \(X_i\) is nonempty. Since for all \(\gamma _k\) the associated \(z^{\gamma _k}\) is a Nash equilibrium, it holds that

for all \(v_i \in X_i\). For any \(v_i \in X_i\), we want to construct a strongly convergent sequence \(\{ v_i^{\gamma _k} \}_{k \rightarrow \infty }\) such that \(v_i^{\gamma _k} \rightarrow v_i\) in \(L^2(D)\) and \(S(v_i^{\gamma _k},z_{-i}^{\gamma _k}) + u_f \ge \psi \).

Due to the SUFR condition, there exists an \(\varepsilon > 0\) and for all k, a \(v_i^k \in Z_{\mathrm{ad}}^i\) such that \(S(v_i^k,z^{\gamma _k}_{-i}) + u_f \ge \psi + \varepsilon \), \(\pi \)-a.s. Clearly, \(\{ v_i^k\}_{k \rightarrow \infty }\) is uniformly bounded in \(L^2(D)\). Since every admissible set of each player is convex, we have that

lies in \( {\mathcal {Z}}_{\mathrm{ad}}^i\) for all \(t \in (0,1)\). Due to the linearity of the operators \({\mathbf {A}}\) and \({\mathbf {B}}\), it holds that

We know that for \({\mathbb {P}}\)-a.e. \(\omega \in \varOmega \) the solution operator \(S(v_i, \cdot )(\omega ) + u_f(\omega )\) maps continuously from \( L^2(D)^{N-1} \) into \( H^1_0(D) \cap H^2(D)\). Due to the Sobolev and Rellich-Kondrachov theorems, the solution of the state equation can be continuously and compactly embedded into the space of continuous functions over \({\bar{D}}\), \({\mathbb {P}}\)-a.s. Thus, \(S(v_i, \cdot )(\omega ) + u_f(\omega )\) maps from \( L^2(D)^{N-1} \) into \(C({\bar{D}})\) for \({\mathbb {P}}\)-a.e. \(\omega \in \varOmega \). Combining this with the regularity assumption on the solution of the state equation, we have \(S(v_i,z_{-i}^{\gamma _k}) + u_f \rightarrow S(v_i,z_{-i}^{*}) + u_f\) in \(C(\varOmega ;C({\bar{D}}))\). Then, by virtue of convergence in the \(C(\varOmega ;C({\bar{D}}))\)-norm, we deduce the existence of a subsequence \(\gamma _{k_l}\), denoted by \(\gamma _l\), such that

on D for all k. Now, setting

then \(t_l \rightarrow 0\) and \(t_l \in (0,1)\) for all l. Moreover, substituting (4.9) in (4.7) and due to (4.8), we have

Thus, \( S(v_i(t_l),z_{-i}^{\gamma _l}) + u_f \ge \psi \) for all l. And finally, since

Passing to the limit as \( l \rightarrow \infty \) yields \(|t_l|\left( \Vert v_i^l \Vert _{L^2(D)} + \Vert v_i \Vert _{L^2(D)} \right) \rightarrow 0\), due to the boundedness of \(\{ v_i^l \}_{l \rightarrow \infty }\) and the fact that \(\{ t_l\}_{l \rightarrow \infty }\) is a null sequence. Thus, we have constructed a sequence \(\{ v_i(t_l) \}_{l \rightarrow \infty }\) such that \(v_i(t_l) \rightarrow v_i\) in \(L^2(D)\) and \(S(v_i(t_l),z_{-i}^{\gamma _l}) + u_f \ge \psi \). Note that \(v_i \in X_i\) was arbitrary.
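The role of the convex combination can be made explicit with one concrete choice of \(t_l\). The parametrization below is an assumption for illustration and may differ from the one in (4.7) and (4.9). Suppose \(v_i(t) = t v_i^l + (1-t) v_i\) and set \(\delta _l := \Vert S(v_i,z_{-i}^{\gamma _l}) - S(v_i,z_{-i}^{*}) \Vert _{C(\varOmega ;C({\bar{D}}))}\), which tends to zero by the convergence in \(C(\varOmega ;C({\bar{D}}))\) established above. Since \(v_i \in X_i\), we have \(S(v_i,z_{-i}^{\gamma _l}) + u_f \ge \psi - \delta _l\). Since S is linear, \(S(t a + (1-t) b, z_{-i}) = t S(a,z_{-i}) + (1-t) S(b,z_{-i})\). Together with \(S(v_i^l,z_{-i}^{\gamma _l}) + u_f \ge \psi + \varepsilon \), this gives

$$\begin{aligned} S(v_i(t),z_{-i}^{\gamma _l}) + u_f&= t\left( S(v_i^l,z_{-i}^{\gamma _l}) + u_f\right) + (1-t)\left( S(v_i,z_{-i}^{\gamma _l}) + u_f\right) \\&\ge t(\psi + \varepsilon ) + (1-t)(\psi - \delta _l) = \psi + t\varepsilon - (1-t)\delta _l . \end{aligned}$$

Hence every \(t \ge \delta _l/(\varepsilon + \delta _l)\) restores feasibility, and \(t_l := \delta _l/(\varepsilon + \delta _l)\) is a null sequence in [0, 1) with \(\Vert v_i(t_l) - v_i \Vert _{L^2(D)} = t_l \Vert v_i^l - v_i \Vert _{L^2(D)}\). With \(\varepsilon = 0\), the same estimate would only guarantee feasibility for t = 1. This is why the uniform margin in SUFR matters here.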

Finally, by substitution, we have

For all \(i = 1, \dots , N\), passing to the limit inferior yields the following inequality

for all \(v_i \in X_i\). Thus, \((z_i^{*}, z_{-i}^{*})\) is a generalized Nash equilibrium. \(\square \)

Here, we see that the uniformity in the SUFR condition is crucial to prove that \(z^{*}\) is in fact a Nash equilibrium. In the following result, we obtain a stronger form of convergence to \(z^{*}\). This is necessary to derive the adjoint equation in the limit.

Lemma 3

Under the assumptions of Theorem 9, (4.4a) holds.

Proof

First, we choose \(z_i^* \in X_i\) in the construction of (4.7) with \(t=t_l\) as in (4.9), then we have

Recall that \(v_i^*(t_l) \rightarrow z_i^*\) in \(L^2(D)\) and \(S(v_i^*(t_l),z_{-i}^{\gamma _l}) + u_f \ge \psi \) for all \(l \in \mathbb {N}\). Then it holds that

Passing to the limit superior yields

Due to the complete continuity of S, we have

Then (4.10) reads as

This implies that

Due to the weak convergence of \(\{z_i^{\gamma _l}\}_{l \in \mathbb {N}}\), it holds that \( \underset{l \rightarrow \infty }{\lim \inf } \, \Vert z_i^{\gamma _l} \Vert ^2_{L^2(D)} \ge \Vert z_i^* \Vert ^2_{L^2(D)}\). This implies

Together with the weak convergence, the assertion follows. \(\square \)
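The last step uses the standard Hilbert-space fact that weak convergence combined with convergence of the norms implies strong convergence. If \(z_i^{\gamma _l} \rightharpoonup z_i^*\) and \(\limsup _{l} \Vert z_i^{\gamma _l} \Vert _{L^2(D)} \le \Vert z_i^* \Vert _{L^2(D)}\), then

$$\begin{aligned} \Vert z_i^{\gamma _l} - z_i^* \Vert ^2_{L^2(D)} = \Vert z_i^{\gamma _l} \Vert ^2_{L^2(D)} - 2\,(z_i^{\gamma _l}, z_i^*)_{L^2(D)} + \Vert z_i^* \Vert ^2_{L^2(D)}, \end{aligned}$$

and the limit superior of the right-hand side is at most \(\Vert z_i^* \Vert ^2_{L^2(D)} - 2\Vert z_i^* \Vert ^2_{L^2(D)} + \Vert z_i^* \Vert ^2_{L^2(D)} = 0\).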

We proceed with the sequence of the state variables.

Lemma 4

Under the assumptions of Theorem 9, (4.4b) and (4.5b) hold.

Proof

This follows directly from the assumption that \(S(\cdot ,\cdot ) + u_f : L^2(D)^N \rightarrow C(\varOmega ; H^1_0(D) \cap H^2(D))\) is continuous and the fact that the sequences \(\{z_i^{\gamma _l}\}_{l \in \mathbb {N}}\) converge strongly in \(L^2(D)\) for all \(i = 1, \dots , N\). \(\square \)

We note that the continuity in \(\varOmega \) is not really needed to prove a norm convergence result. Indeed, since \(\{z_i^{\gamma _l}\}_{l \in \mathbb {N}}\) is bounded, \(\{u^{\gamma _l}\}_{l \in \mathbb {N}}\) is still bounded in \(L^2_{{\mathbb {P}}}(\varOmega ; H^1_0(D)\cap H^2(D))\). Hence, along a subsequence, \(u^{\gamma _{l_n}} \rightharpoonup u^* \) in \(L^2_{{\mathbb {P}}}(\varOmega ; H^1_0(D)\cap H^2(D)).\) By Corollary 1, we even know that \(u^{\gamma _{l_n}} \rightarrow u^* \text { in } L^\infty _{{\mathbb {P}}}(\varOmega ; H^1_0(D) \cap H^2(D))\) holds.

Next, we turn our attention to the sequence of the multipliers \(\mu ^{\gamma }\) for the state constraint. We will observe that the Slater condition is enough to obtain a bound on \(\mu ^{\gamma }\). Recall that \(\mu ^{\gamma } = -\gamma (\psi - (S({\bar{z}}^{\gamma }_i,{\bar{z}}^{\gamma }_{-i}) + u_f))_+\).

Lemma 5

Suppose the assumptions of Theorem 9 hold. In particular, (3.1) is fulfilled. Then we have (4.4c).

Proof

We now prove the existence of a constant \(c_0 > 0\) such that

for any \(z \in {\mathbb {B}}_{\varepsilon }(0) \subset L^{\infty }_{\pi }(\varXi )\) and some fixed \(\varepsilon > 0\). For the sake of readability, we set \(\beta : L^2_{\pi }(\varXi ) \rightarrow \mathbb {R}_+\) such that

Unless otherwise noted, \((\cdot ,\cdot )\) denotes the inner product on \(L^2_{\pi }(\varXi )\) throughout the proof.

One readily shows that \(\beta \) is convex and continuously differentiable and therefore, \(\mu ^\gamma = \gamma \beta '(u^\gamma )\). Since \(\beta \) is convex, differentiable, and nonnegative, we obtain for any
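The identity \(\mu ^\gamma = \gamma \beta '(u^\gamma )\) can be checked numerically once \(\beta \) is discretized. The sketch assumes \(\beta (u) = \frac{1}{2}\Vert (\psi - u)_+\Vert ^2_{L^2_\pi (\varXi )}\). This is consistent with the formula \(\mu ^{\gamma } = -\gamma (\psi - u)_+\) recalled above, but it is an assumption about the elided definition. The sketch also replaces the inner product on \(L^2_{\pi }(\varXi )\) by a weighted sum with hypothetical weights \(w_j\), which play the role of quadrature weights times scenario probabilities. Central differences then recover the Riesz gradient \(-(\psi - u)_+\). Sample points near the kink of \(\max (\cdot ,0)\) are avoided on purpose.

```python
# Finite-difference check that beta(u) = 0.5 * sum_j w_j * max(psi_j - u_j, 0)^2
# has the Riesz gradient beta'(u) = -max(psi - u, 0) with respect to the
# weighted inner product (u, v) = sum_j w_j u_j v_j (a discretized L^2_pi).
import random

rng = random.Random(0)
m = 40
w = [rng.uniform(0.5, 1.5) / m for _ in range(m)]
psi = [rng.uniform(-1.0, 1.0) for _ in range(m)]
# Keep every u_j at least 0.1 away from psi_j so that the finite difference
# does not straddle the kink of max(., 0).
u = [pj + rng.choice([-1.0, 1.0]) * rng.uniform(0.1, 1.0) for pj in psi]


def beta(v):
    return 0.5 * sum(wj * max(pj - vj, 0.0) ** 2 for wj, pj, vj in zip(w, psi, v))


def beta_grad(v):
    """Riesz representative of beta'(v) in the weighted inner product."""
    return [-max(pj - vj, 0.0) for pj, vj in zip(psi, v)]


h = 1e-6
fd = []
for j in range(m):
    up, down = list(u), list(u)
    up[j] += h
    down[j] -= h
    partial = (beta(up) - beta(down)) / (2 * h)  # = w_j * (Riesz gradient)_j
    fd.append(partial / w[j])

gamma = 1e3
mu = [gamma * gj for gj in beta_grad(u)]          # mu^gamma = gamma * beta'(u)
err = max(abs(x - y) for x, y in zip(fd, beta_grad(u)))
print(err, all(x <= 0 for x in mu))
```

Division by \(w_j\) converts partial derivatives into the gradient with respect to the weighted inner product. Without it, the check would compare against the Euclidean gradient instead.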

the equality

By assumption (3.4), there exist \(\varepsilon > 0\) and \(z^0 \in {\mathcal {Z}}_{\mathrm{ad}}\) such that for all \(v \in {\mathbb {B}}_{\varepsilon }(0) \subset L^\infty _{\pi }(\varXi )\) we have

Since \(\left( \varOmega , {\mathcal {F}}, {\mathbb {P}} \right) \) is a complete probability space and the spatial domain D is bounded, \(\pi \) is a finite measure. Hence the Lebesgue spaces on \(\varXi \) are nested, and \(v \in L^2_{\pi }(\varXi )\). Furthermore, \(Sz^0 +u_f \in L^2_{\pi }(\varXi )\). Fixing an arbitrary \(v \in {\mathbb {B}}_{\varepsilon }(0)\), we have

Due to (4.13), we have

The definition of the multiplier \(\mu ^\gamma \) and the operator \(\varvec{B}\) yield

Substituting the adjoint equation and applying the adjoint operator \( \varvec{B}^*\varvec{A}^{-*}\) yields

Applying [28, Thm. 3.7.12] yields

Using \(0 = \nu _i z^\gamma _i + {\mathbb {E}}_{{\mathbb {P}}}\left[ \varvec{B}_i^* \lambda ^\gamma _i \right] + \eta ^\gamma _i\) and the fact that \(z_i^0 \in Z_{\mathrm{ad}}^i\) yields

Here, the existence of \(c_0\) is guaranteed, since the mappings

are continuously differentiable with uniformly bounded gradients on \({\mathcal {Z}}_{\mathrm{ad}}\) for all \(i= 1, \dots , N\). This proves (4.12), since z was arbitrary. Since the \(L^1\)-norm is positively homogeneous, subadditive, and continuous, it is convex and lower semicontinuous. The Fenchel-Moreau theorem therefore implies that it coincides with its biconjugate, which yields the dual representation

It follows that the sequence \(\{ \mu ^\gamma \}_{\gamma \rightarrow \infty }\) is bounded in \(L^1_\pi (\varXi )\). Therefore, by [17, Theorem IV.6.2] or [7, Corollary 2.4.3], we can extract a subsequence \(\left\{ \mu ^{\gamma _l} \right\} _{l \in \mathbb {N}}\) which is \(\text {weak}^*\) convergent to some regular countably additive Borel measure \(\rho ^* \in {\mathcal {M}}({\overline{\varXi }})\). \(\square \)
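The duality step above can be illustrated in a discretized setting. For this sketch only, \(L^1_{\pi }(\varXi )\) is replaced by a weighted sequence space with hypothetical weights \(w_j\). In that space, the supremum of the pairing over the \(L^\infty \) unit ball is attained at the sign of the multiplier. By scaling, a one-sided bound \((\mu ^\gamma , z) \le c_0\) on \({\mathbb {B}}_{\varepsilon }(0)\) (our reading of (4.12) for this illustration) yields \(\Vert \mu ^\gamma \Vert _{L^1_\pi (\varXi )} \le c_0/\varepsilon \). The data are synthetic.

```python
# Discrete check of the dual representation of the weighted L^1 norm:
#   sum_j w_j |mu_j| = max { sum_j w_j mu_j v_j : |v_j| <= 1 },
# with the maximum attained at v = sign(mu).  All data are synthetic.
import random

rng = random.Random(1)
m = 30
w = [1.0 / m] * m                                     # uniform weights
mu = [-abs(rng.gauss(0.0, 1.0)) for _ in range(m)]    # nonpositive, like mu^gamma


def pairing(a, b):
    """Weighted inner product sum_j w_j a_j b_j."""
    return sum(wj * aj * bj for wj, aj, bj in zip(w, a, b))


l1 = sum(wj * abs(mj) for wj, mj in zip(w, mu))
sign = [1.0 if mj > 0 else -1.0 if mj < 0 else 0.0 for mj in mu]
attained = pairing(mu, sign)

# Random elements of the unit ball never exceed the L^1 norm.
sampled = max(pairing(mu, [rng.uniform(-1.0, 1.0) for _ in range(m)])
              for _ in range(1000))

# Scaling: if (mu, z) <= c0 for all ||z||_inf <= eps, then ||mu||_1 <= c0 / eps.
eps = 0.25
c0 = pairing(mu, [eps * s for s in sign])             # smallest admissible c0
print(l1, attained, sampled <= l1 + 1e-12, c0 / eps)
```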

Next, we discuss the limit of the adjoint equation. We start by investigating the behavior of the expectation of the adjoint states. This leads to the derivation of a limiting adjoint state \(\varLambda ^*\).

Lemma 6

Under the assumptions of Theorem 9, for all \(i = 1, \dots , N\), (4.4d) holds.

Proof

We start by constructing a specific test function. Let \(\phi \) be the solution of the operator equation

for \(\varphi \in L^2(D)\). Then by the assumptions, \(\phi \in L^\infty _{{\mathbb {P}}}(\varOmega ; H^1_0(D) \cap H^2(D))\) and, by Assumption 8, \(\phi \in C(\varOmega ; H^1_0(D) \cap H^2(D))\) holds. Using the adjoint state as a test function, we have

Then, due to the Cauchy-Schwarz inequality and Hölder's inequality, respectively, we obtain

Due to the continuous embedding of \( H^2(D) \cap H^1_0(D)\) into \(L^2(D)\) and \(L^\infty (D)\), respectively, we have

By the assumptions on the operators \({\mathbf {A}}\) and \({\mathbf {B}}\), there exists \(C_2 \in L^{\infty }_{{\mathbb {P}}}(\varOmega )\) such that

Now, combining the latter with (4.15) and (4.14), we obtain

for all \(\varphi \in L^2(D)\). Here, \(C_3 \in L^\infty _{{\mathbb {P}}}(\varOmega )\) and \(C_4 \in L^1_{{\mathbb {P}}}(\varOmega )\). The existence of \(C_4\) follows from the uniform bound on \(\mu ^{\gamma }\) in the \(L^1_{\pi }(\varXi )\)-norm. Taking the expectation and applying Fubini’s theorem yield

for all \(\varphi \in L^2(D)\). In other words, the sequence \(\{ {\mathbb {E}}_{{\mathbb {P}}} \left[ \varvec{B}_i^* \lambda _i^\gamma \right] \}_{\gamma \rightarrow \infty } \) is bounded in \( L^2(D)\). Thus, there exists a weakly convergent subsequence \(\{ {\mathbb {E}}_{{\mathbb {P}}} \left[ \varvec{B}_i^* \lambda _i^{\gamma _l} \right] \}_{l \in \mathbb {N}}\) and a \(\varLambda _i^* \in L^2(D) \) such that \( {\mathbb {E}}_{{\mathbb {P}}} \left[ \varvec{B}_i^* \lambda _i^{\gamma _l} \right] \rightharpoonup \varLambda _i^*\) in \(L^2(D)\). \(\square \)

Remark 3

The adjoint state plays an important role in numerical methods. In particular, \({\mathbb {P}}\) is often replaced by an empirical measure \({\mathbb {P}}_{N}\), which is associated with an i.i.d. random sample of size N. Therefore the quantity

is of practical interest. By the (Kolmogorov) strong law of large numbers, we have

with probability 1 as \(N \rightarrow +\infty \) for any \(\varphi \in L^2(D)\). For readability, set

and recall that almost sure convergence implies convergence in probability. Then for fixed \(l \in {\mathbb {N}}\) and any \(\varepsilon > 0\), there exists \(N_{l,\varepsilon } \in {\mathbb {N}}\) such that

On the other hand, the previous lemma gives us \( {\mathbb {E}}_{{\mathbb {P}}} \left[ \varvec{B}_i^* \lambda _i^{\gamma _l} \right] \rightharpoonup \varLambda _i^*\) in \(L^2(D)\) as \(l \rightarrow +\infty \). It follows that for any \(\varphi \in L^2(D)\) we have

This means that the set of all events for which

is contained in the set of all events for which

Therefore, fix \(\varphi \in L^2(D)\) and \(\varepsilon > 0\), and choose l such that

Then for all \(\varepsilon \), there exists an l such that

Thus, the diagonal sequence of sample averages of the adjoint variables converges weakly in probability to the limiting adjoint variable \(\varLambda _i^*\). A similar statement could be derived for a fully discrete scheme based on a finite element discretization of the underlying deterministic state spaces, provided that error estimates for the deterministic adjoint variables are available. This observation partly justifies the update heuristic in our algorithm and, more generally, any related numerical algorithm in which the sample sizes gradually increase with the MY-parameters.
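The diagonal argument can be illustrated with a toy model in which everything is explicit. The sketch below is purely hypothetical: it replaces \({\mathbb {E}}_{{\mathbb {P}}}[\varvec{B}_i^* \lambda _i^{\gamma _l}]\) by \(\Lambda_l(\xi)(x) = (1 + 1/l)\,\xi \sin(\pi x)\) with \(\xi \sim U(0,1)\), uses the test function \(\varphi(x) = \sin(\pi x)\), and ties the sample size to the level through the assumed rule \(N_l = 100\, l^2\). The expectation converges to \(\Lambda^*(x) = \sin(\pi x)/2\), so \(\langle \Lambda^*, \varphi \rangle = 1/4\), and the sample-average pairing along the diagonal approaches this value.

```python
import math
import random


def diagonal_estimate(l, n_grid=64, seed=0):
    """Estimate <E[Lambda_l], phi> from N_l = 100 * l**2 samples.

    Hypothetical toy model: Lambda_l(xi)(x) = (1 + 1/l) * xi * sin(pi x) with
    xi ~ U(0, 1) and phi(x) = sin(pi x). E[Lambda_l] tends to
    Lambda*(x) = sin(pi x) / 2, whose pairing with phi equals 1/4.
    """
    rng = random.Random(seed)
    h = 1.0 / n_grid
    # midpoint-rule L^2(D) pairing <sin(pi .), phi> (equal to 1/2)
    base = h * sum(math.sin(math.pi * (j + 0.5) * h) ** 2 for j in range(n_grid))
    N = 100 * l**2
    # by linearity, the pairing of one sample is (1 + 1/l) * xi * base,
    # so the sample average only needs the empirical mean of xi
    mean_xi = sum(rng.random() for _ in range(N)) / N
    return (1 + 1 / l) * mean_xi * base


for l in (1, 5, 25, 50):
    print(l, diagonal_estimate(l))
```

Both error sources shrink along the diagonal: the bias \(1/(4l)\) from the level \(l\) and the sampling error of order \(N_l^{-1/2}\).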

Next, we turn our attention to the adjoint equation in the limit.

Lemma 7

Under the assumptions of Theorem 9, (4.5c) holds.

Proof

As in the previous proof, we start by constructing a specific test function. In this case, let w be the solution of the operator equation

for all \(\varphi \in L^2(D)\). Then \( w \in C(\varOmega ; H^1_0(D) \cap H^2(D))\), and it follows that

Taking the expectation on both sides yields

We know that \(\mu ^{\gamma _l} \rightharpoonup ^* \rho ^* \) in \({\mathcal {M}}({\overline{\varXi }})\). The right-hand side reads

Passing to the limit \(l \rightarrow \infty \) yields

for all \(\varphi \in L^2(D)\). \(\square \)

Next, we turn to the sequence \(\{\eta ^\gamma \}_{\gamma \rightarrow \infty } \subset {\mathcal {N}}_{Z_{\mathrm{ad}}^i}(z_i^\gamma )\).

Lemma 8

Under the assumptions of Theorem 9, (4.4e) and (4.5d) hold.

Proof

Due to (4.2), we can write

Then the boundedness of the sequence \(\{ \eta _i^{\gamma _l} \}_{l \in \mathbb {N}}\) in \(L^2(D)\) directly follows from

Thus, there exist an \(\eta _i^* \in L^2(D)\) and a subsequence \(\{ \eta _i^{\gamma _{l_n}} \}_{n \in \mathbb {N}}\) such that the assertion holds. \(\square \)

Finally, we derive the complementarity system for the multiplier \(\rho ^*\).

Lemma 9

Under the assumptions of Theorem 9, (4.6a) and (4.6b) hold.

Proof

As used several times above, there exists a subsequence of MY parameters \(\gamma _{l} \rightarrow +\infty \) along which the multipliers \(\left\{ \mu ^{\gamma _l}\right\} \) converge \(\hbox {weak}^*\) in \({\mathcal {M}}({\overline{\varXi }})\) to some \(\rho ^* \in {\mathcal {M}}({\overline{\varXi }})\). For each fixed l we have \(\pi \)-a.s.:

Therefore, for any non-negative test function \(\phi \in C({\overline{\varXi }})\), we have

By definition, \(\langle \phi ,\mu ^{\gamma _l}\rangle \rightarrow \langle \phi ,\rho ^* \rangle \) as \(l \rightarrow +\infty \). Hence, \(\rho ^*\) is a nonpositive measure. Moreover, setting

which is continuous and converges strongly in \(C({\overline{\varXi }})\) (by assumption) to

we have

Furthermore, for each l, we have \(\langle \phi _{l},\mu ^{\gamma _l}\rangle \le 0\) and \(\langle \phi _{l},\mu ^{\gamma _l}\rangle \rightarrow \langle \phi ^*,\rho ^* \rangle \). Whence we have the complementarity condition. \(\square \)

This completes the derivation of Theorem 9.

4.4 Probability constraints and Moreau-Yosida regularization

In this final theoretical section, we wish to draw the link between Moreau-Yosida regularization and probability constraints. We do so only for the risk-neutral PDE-constrained optimization problem (2.14), as the treatment of the GNEP would require further technical assumptions and somewhat obscure our main point. The main tools are basic concentration inequalities from probability theory. We recall again the \(\gamma \)-dependent optimization problem:

where \(\gamma > 0\). We note that yet another way of formulating the original state constraint is

Ideally, we would use the \(L^{\infty }(D)\)-norm as opposed to the \(L^2(D)\)-norm, since the latter still permits large violations of the constraint on sets of small but positive measure in the weaker constraint

for arbitrarily small \(\varepsilon > 0\). However, deriving a result like the following proposition for the \(L^{\infty }(D)\)-norm would require a careful analysis similar to [32], which goes beyond the scope of the current paper.
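A one-line computation shows why the \(L^2(D)\)-norm is weaker here. In the following sketch, the function \(v\) is a hypothetical stand-in for \((\psi - u)_+\): it violates the bound by a height of 10 on a set of measure \(10^{-4}\) in \(D = (0,1)\). Its squared \(L^2(D)\)-norm is only \(10^{2} \cdot 10^{-4} = 10^{-2}\), while its \(L^{\infty}(D)\)-norm is 10, so a squared-\(L^2\) tolerance of \(\varepsilon = 10^{-2}\) would accept this state.

```python
import math


def violation_norms(height, width, n=10_000):
    """L^2(D) and L^inf(D) norms of v = height * 1_[0, width) on D = (0, 1).

    v is a hypothetical stand-in for (psi - u)_+, evaluated on a uniform
    midpoint grid with n cells (midpoint rule for the L^2 integral).
    """
    h = 1.0 / n
    v = [height if (j + 0.5) * h < width else 0.0 for j in range(n)]
    l2 = math.sqrt(h * sum(vj * vj for vj in v))
    linf = max(v)
    return l2, linf


l2, linf = violation_norms(10.0, 1e-4)
print(l2, linf)  # small L^2 norm, large pointwise violation
```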

Proposition 2

Let \(z^{\gamma }\) be the unique minimizer of (4.16). Then for any \(\varepsilon > 0\), we have

where \(\alpha = {\mathbb {E}}_{{\mathbb {P}}}\left[ J(S(z),z)\right] \) and z is the unique minimizer of (2.14).

Proof

Using Markov’s inequality, we have

We use \(z^{\gamma }\) to obtain a simpler upper bound. By definition of \(z^\gamma \), it holds that

for all \(v \in Z_{\mathrm{ad}}\). In particular, we obtain the bound

for all \(v \in Z_{\mathrm{ad}}\) such that \(S(v) + u_f \ge \psi \text { for } ({\mathcal {L}} \times {\mathbb {P}}) \text {-a.e. } (x,\omega ) \in D \times \varOmega \). Using the minimizer z of (2.14) leads to

From this we obtain \( {\mathbb {E}}_{{\mathbb {P}}}\left[ \Vert (\psi - (S(z^{\gamma }) + u_f))_+ \Vert ^2_{L^2(D)}\right] \le \frac{2\alpha }{\gamma }. \) Then, returning to Markov’s inequality, we now have

Finally, the complementary event is given by

\(\square \)
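For the reader's convenience, the chain of estimates in the proof can be collected in one display. This is a sketch under two stated assumptions: that (4.16) minimizes \({\mathbb {E}}_{{\mathbb {P}}}[J(S(v),v)] + \frac{\gamma}{2}{\mathbb {E}}_{{\mathbb {P}}}[\Vert (\psi - (S(v)+u_f))_+\Vert^2_{L^2(D)}]\) over \(Z_{\mathrm{ad}}\), and that \(J \ge 0\). The first inequality uses \(J \ge 0\), the second uses the optimality of \(z^{\gamma}\) and the feasibility of \(z\) (so that its penalty term vanishes), and the last line is Markov's inequality.

\[
\begin{aligned}
\frac{\gamma}{2}\,{\mathbb {E}}_{{\mathbb {P}}}\big[\Vert (\psi - (S(z^{\gamma}) + u_f))_+\Vert^2_{L^2(D)}\big]
&\le {\mathbb {E}}_{{\mathbb {P}}}\big[J(S(z^{\gamma}),z^{\gamma})\big] + \frac{\gamma}{2}\,{\mathbb {E}}_{{\mathbb {P}}}\big[\Vert (\psi - (S(z^{\gamma}) + u_f))_+\Vert^2_{L^2(D)}\big]\\
&\le {\mathbb {E}}_{{\mathbb {P}}}\big[J(S(z),z)\big] + \frac{\gamma}{2}\,{\mathbb {E}}_{{\mathbb {P}}}\big[\Vert (\psi - (S(z) + u_f))_+\Vert^2_{L^2(D)}\big] = \alpha,\\
{\mathbb {P}}\Big(\Vert (\psi - (S(z^{\gamma}) + u_f))_+\Vert^2_{L^2(D)} \ge \varepsilon\Big)
&\le \frac{1}{\varepsilon}\,{\mathbb {E}}_{{\mathbb {P}}}\big[\Vert (\psi - (S(z^{\gamma}) + u_f))_+\Vert^2_{L^2(D)}\big] \le \frac{2\alpha}{\gamma\varepsilon}.
\end{aligned}
\]

Passing to the complementary event, the squared violation is smaller than \(\varepsilon\) with probability at least \(1 - 2\alpha/(\gamma\varepsilon)\).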

Remark 4

Using the analysis from the previous sections, we know that there exists a sequence \(\gamma _n \rightarrow +\infty \) such that the random variable

converges strongly in \(L^1(\varOmega ,{\mathcal {F}},{\mathbb {P}})\) to

Since \(z^*\) is feasible, the state constraint holds and \(X^* \equiv 0\). Therefore, there exists a subsequence \(\gamma _k := \gamma _{n_k}\) along which \(X_{k} := X_{n_k}\) converges almost surely, and hence in distribution, to 0. For each k, we can set \(\varepsilon _k = 1/\sqrt{\gamma _k}\) and treat \(Y_k := \varepsilon _k\) as a degenerate random variable, which clearly converges in distribution to 0. It follows from Slutsky’s theorem that \(X_k + Y_k\) converges in distribution to \( \Vert (\psi - (S(z^{*}) + u_f))_+ \Vert ^2_{L^2(D)}\), i.e., to 0, and since

the Portmanteau lemma yields

In this sense, Proposition 2 provides a probabilistic rate at which the Moreau-Yosida solutions approach feasibility for the original problem. We observe almost exactly this behavior in the out-of-sample experiments in Sect. 5: for \(\gamma _k = 1000\), between one and three percent of the out-of-sample states violate the state constraint.
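To make the rate explicit, assume the bound of Proposition 2 has the Markov form obtained in its proof. Inserting the choice \(\varepsilon _k = 1/\sqrt{\gamma _k}\) from this remark gives

\[
{\mathbb {P}}\Big(\Vert (\psi - (S(z^{\gamma _k}) + u_f))_+\Vert^2_{L^2(D)} \ge \gamma _k^{-1/2}\Big) \le \frac{2\alpha}{\gamma _k\,\varepsilon _k} = \frac{2\alpha}{\sqrt{\gamma _k}},
\]

so both the tolerance and the probability of exceeding it decay like \(\gamma _k^{-1/2}\). For example, \(\gamma _k = 1000\) yields \(\varepsilon _k \approx 0.0316\) and a bound of approximately \(0.063\,\alpha\).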

5 Numerical experiments

In this final section, we provide a numerical study to indicate how stochastic PDE-constrained optimization problems subject to pointwise state constraints and PDE-constrained GNEPs under uncertainty might best be solved. To the best of our knowledge, this is the first attempt to solve such problems numerically. As a result, the focus will be on the numerical solution of the individual optimization problems. For the GNEP, a Krasnoselskii-Mann-type alternating method is employed in which the dueling agents use the solver from Sect. 5.1.

5.1 Solving the individual problems

The basic idea behind this algorithm derives from the success of semismooth Newton methods for solving deterministic PDE-constrained optimization problems subject to state constraints using Moreau-Yosida regularization and path-following for the parameter updates; see, e.g., [30, 31]. Indeed, given \(\gamma > 0\) and an i.i.d. sample of size M, we can replace the underlying probability distribution with the associated empirical probability measure \({\mathbb {P}}_M\) and consider

This is now a deterministic problem. In order to solve (5.1) with a semismooth Newton solver, we rewrite the first-order optimality system as a single nonsmooth equation in z:

where for each \(m =1,\dots , M\), \(\lambda ^{\gamma }_m \in H^1_0(D)\) solves

for all \(\varphi \in H^1_0(D)\), \({u}^{\gamma }_m = S({z}^{\gamma ,M})(\omega ^{m}) \in H^1_0(D)\) solves

with the same test functions \(\varphi \), and

The fixed random terms \(u_{f}(\omega ^{m})\) are defined analogously to \(u^{\gamma }_m\). For readability, we denote the mapping \(z \mapsto B^* \lambda ^{\gamma }\) as \(\varLambda (z)\) or \(\varLambda (z,\omega )\) to indicate the dependence on \(\omega \). Moreover, we set

In the current setting, \(F^{\gamma }_{M} : L^2(D) \rightarrow L^2(D)\) admits a Newton derivative \(G^{\gamma }_{M}(z)\) of the form

where \({\mathcal {G}}\) is the Newton derivative of the projection operator. This allows us to apply a semismooth Newton method in \(L^2(D)\) [29, 64], which is known to be locally superlinearly convergent for each M and \(\gamma > 0\).

However, since \(\gamma \) must be taken to \(+\infty \), such an algorithm would not be computationally efficient if M were chosen large for comparatively small \(\gamma \). If M were to remain fixed, then we could use a strategy as in [1, 30, 31]. On the other hand, M should ideally be as large as possible or also be treated as a parameter going to \(+\infty \). To remedy this issue, we set a maximum allowable sample size \(M_{\mathrm{max}} > 0\) and a maximum penalty parameter \(\gamma _{\mathrm{max}} > 0\) and, starting with \(M_0 \in {\mathbb {N}}\) and \(\gamma _0 > 0\), we add samples to \(M_k\) every time \(\gamma _k\) passes a certain threshold. For our numerical experiments, we consider a heuristic motivated by the previous section, in particular by the convergence statements in the fully continuous setting along with Remarks 3 and 4. A full convergence analysis linking sampling, approximation, and smoothing errors goes beyond the scope of this paper. The full algorithm is given in Algorithm 1. A few comments are in order.

The operator \(G^{\gamma _k}_{M_k}(z^k_{l})\) is not given explicitly. Thus, an iterative method is needed to solve for the Newton steps \(dz^{k}_{l}\), for which we use the tolerance \( {\texttt {tol}}^{{\texttt {newt}}} \ge 0\). Since we are using a semismooth Newton iteration for pointwise bound constraints, the components of \(dz^{k}_{l}\) are fixed on the estimated active sets for each l, and we only need to solve the linear systems on the potentially smaller inactive set. Here, it is important to note that each evaluation of \(G^{\gamma _k}_{M_k}(z^k_l) dz^k_l\) requires the solution of the forward equation and two adjoint equations for every sample \(m_k = 1,\dots ,M_k\). In our implementation, we employ a preconditioned conjugate gradient method. Therefore, the cost of each Hessian-vector product scales with \(M_k\) and must also include the cost of applying the preconditioner. Similarly, the evaluation of the residual \(F^{\gamma }_{M_k}(z^{k}_{l})\) requires a forward and an adjoint solve for each sample. For our numerical examples, we use a direct solver for the linear elliptic PDEs.
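The structure of \(F^{\gamma}_{M}\), its Newton derivative, and the reduced solve on the inactive set can be illustrated on a small finite-difference analogue of (5.1). The following Python sketch is not the authors' implementation. The 1D finite-difference discretization, the control operator \(B = I\), the hypothetical random coefficients \(\kappa_m(x) = 1 + \xi_m x\), the random constant sources defining \(u_f(\omega^m)\), and all parameter values are assumptions chosen for illustration (in particular, \(\nu = 0.1\) is much larger than in Sect. 5.2). The adjoint convention \(\lambda_m = A_m^{-1}((u_m - \widetilde{u}) - \gamma(\psi - u_m)_+)\) is our own, and dense matrices replace the preconditioned CG solves.

```python
import numpy as np


def fd_operator(kappa):
    """Finite-difference matrix of -(kappa u')' on (0, 1), zero Dirichlet data.

    kappa holds the coefficient at the n + 1 cell midpoints (n interior nodes).
    """
    n = kappa.size - 1
    h = 1.0 / (n + 1)
    main = (kappa[:-1] + kappa[1:]) / h**2
    off = -kappa[1:-1] / h**2
    return np.diag(main) + np.diag(off, 1) + np.diag(off, -1)


def solve_saa_my(n=31, M=8, nu=0.1, gamma=1.0, lo=-0.75, up=0.75,
                 psi=-0.05, tol=1e-10, maxit=50, seed=0):
    """Semismooth Newton for F(z) = z - P(-g(z)/nu) = 0 (hypothetical toy setup)."""
    rng = np.random.default_rng(seed)
    x = np.arange(1, n + 1) / (n + 1)             # interior nodes
    xm = (np.arange(n + 1) + 0.5) / (n + 1)       # cell midpoints
    u_tilde = np.sin(50.0 * x / np.pi)            # target as in Sect. 5.2
    # M samples of the (symmetric) solution operators A_m^{-1} and of u_f
    Ainv = [np.linalg.inv(fd_operator(1.0 + rng.uniform() * xm)) for _ in range(M)]
    uf = [Ai @ np.full(n, rng.uniform()) for Ai in Ainv]

    def residual(z):
        """Return F(z), the sample-average adjoint g(z), and violation indicators."""
        g = np.zeros(n)
        violated = []
        for Ai, ufm in zip(Ainv, uf):
            u = Ai @ z + ufm                      # forward solve for sample m
            viol = np.maximum(psi - u, 0.0)       # (psi - u_m)_+
            g += Ai @ ((u - u_tilde) - gamma * viol) / M   # adjoint solve
            violated.append(viol > 0.0)
        return z - np.clip(-g / nu, lo, up), g, violated

    z = np.zeros(n)
    for _ in range(maxit):
        F, g, violated = residual(z)
        if np.linalg.norm(F) < tol:
            break
        # Newton derivative of g: mean of A_m^{-1} (I + gamma * chi_m) A_m^{-1}
        H = sum(Ai @ (np.eye(n) + gamma * np.diag(v)) @ Ai
                for Ai, v in zip(Ainv, violated)) / M
        w = -g / nu
        inactive = np.flatnonzero((w > lo) & (w < up))
        active = np.flatnonzero((w <= lo) | (w >= up))
        dz = -F                                   # step is fixed on the active set
        if inactive.size:
            # (I + H_II / nu) dz_I = -F_I - H_IA dz_A / nu on the inactive set
            K = np.eye(inactive.size) + H[np.ix_(inactive, inactive)] / nu
            rhs = -F[inactive] - H[np.ix_(inactive, active)] @ dz[active] / nu
            dz[inactive] = np.linalg.solve(K, rhs)
        z = z + dz
    return z, np.linalg.norm(residual(z)[0])


z, res = solve_saa_my()
print(res)
```

Since F is piecewise affine, the iteration typically terminates after a few steps once the active sets stop changing. With a large \(\gamma\) or a small \(\nu\), a plain full-step iteration like this one can cycle, which is one reason to increase \(\gamma\) gradually as in Algorithm 1.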

Due to these facts, we suggest starting with a relatively small \(M_0\) and increasing it slowly with \(\gamma _k\). Moreover, we suggest a relatively large \({{\texttt {tol}}}^{\mathrm{res}}_{0} > 0\) and \(\rho ^{\mathrm{res}}\) close to 1. In step 13 of Algorithm 1, we simply set \(\gamma _{k+1} = \phi (\gamma _k) = \gamma _k + 1\). More aggressive strategies may be possible, but empirical evidence suggests that they are not necessary and may even cause the Newton iteration to cycle. Finally, in step 15 of Algorithm 1, we link the increases of the sample sizes \(M_k\) to \(\gamma _k\). For our implementation, we start with \(\gamma _0\) and \(M_0\) and increase \(M_k\) by 10 every time \(\gamma _k\) is divisible by 100. This is merely a heuristic and other strategies are possible.
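The parameter schedule described above can be sketched in a few lines. This is only the \((\gamma_k, M_k)\) bookkeeping, not Algorithm 1 itself: the inner semismooth Newton solves and the residual-tolerance updates are omitted, and the default values of \(\gamma_0\), \(M_0\), \(\gamma_{\mathrm{max}}\), and \(M_{\mathrm{max}}\) are hypothetical.

```python
def continuation_schedule(gamma0=1, M0=10, gamma_max=1000, M_max=200,
                          dM=10, every=100):
    """List the (gamma_k, M_k) pairs of the path-following heuristic.

    gamma_{k+1} = gamma_k + 1, and M_k grows by dM (capped at M_max) each time
    the updated gamma_k is divisible by `every`. Defaults are hypothetical.
    """
    gamma, M = gamma0, M0
    schedule = []
    while gamma <= gamma_max:
        # at this point, Algorithm 1 would solve (5.1) with parameters (gamma, M)
        schedule.append((gamma, M))
        gamma += 1                      # phi(gamma) = gamma + 1
        if gamma % every == 0:
            M = min(M + dM, M_max)      # add samples once the threshold is passed
    return schedule


s = continuation_schedule()
print(s[98], s[99], s[-1])
```

With these defaults, the sample size grows from 10 to 110 over the 1000 continuation steps, so most of the inexpensive early solves use few samples.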

5.2 Example: risk-neutral PDE-constrained optimization

In order to demonstrate the viability of the algorithm, we consider a model problem based on [43, Ex. 6.1, Ex. 6.2] and [35, Sec. 7.2]. Here, we set \(\nu = 10^{-3}\), \(D = (0,1)\), \({\widetilde{u}}(x) = \sin (50x/\pi )\), and consider the optimal control problem

where \(z \in Z_{\mathrm{ad}} := \left\{ w \in L^2(D) \left| -0.75 \le w(x) \le 0.75 \text { a.e. } x \in D\right. \right\} \) and the state \(u=u(z)\in L^{\infty }(\varOmega , {\mathcal {F}}, \mathbb P;H^1(D))\) solves the weak form of

In addition, we impose the state constraint

Furthermore, we suppose that

with random variables \(\xi _i :\varOmega \rightarrow {\mathbb {R}}\), \(i=1,2,3,4\), each of which has support [0, 1]. We assume here that each of these random variables is uniformly distributed. Following the usual change of variables, the forward problem (5.7) can be understood as

with \(\varXi = [0,1]^4\), endowed with the associated uniform density. We define \(\varvec{\xi }:= (\xi _1,\dots ,\xi _4) \in \varXi \). Since (5.8) is linear, we can use the superposition principle to lift the boundary conditions into the right-hand side of (5.8). This allows us to transform the problem into the function space setting used throughout the paper.
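The lifting step can be checked on a discretized 1D model. The sketch below assumes a finite-difference discretization of \(-(\kappa u')' = f\) on \((0,1)\) with Dirichlet data \(u(0) = a\), \(u(1) = b\); the coefficient, source, and boundary values are hypothetical placeholders, since the data of (5.8) are not reproduced here. Writing \(u = w + \ell\) with the linear lift \(\ell(x) = a + (b - a)x\), the correction \(w\) has zero boundary values and solves the same equation with right-hand side \(f + (\kappa \ell')'\). By linearity, this reproduces the solution obtained by moving the boundary values into the right-hand side directly.

```python
def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal linear system with the Thomas algorithm."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0] = upper[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - lower[i - 1] * c[i - 1]
        c[i] = upper[i] / m if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i - 1] * d[i - 1]) / m
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def solve_dirichlet(kappa, f, a, b):
    """FD solve of -(kappa u')' = f, u(0) = a, u(1) = b; kappa at n + 1 midpoints."""
    n = len(f)
    h = 1.0 / (n + 1)
    diag = [(kappa[i] + kappa[i + 1]) / h**2 for i in range(n)]
    off = [-kappa[i + 1] / h**2 for i in range(n - 1)]   # symmetric off-diagonal
    rhs = list(f)
    rhs[0] += kappa[0] * a / h**2                          # boundary data moved to RHS
    rhs[-1] += kappa[n] * b / h**2
    return thomas(off, diag, off, rhs)


def solve_lifted(kappa, f, a, b):
    """Same problem via superposition: u = w + ell with a homogeneous w."""
    n = len(f)
    h = 1.0 / (n + 1)
    ell = [a + (b - a) * j * h for j in range(n + 2)]    # linear lift, all nodes
    # discrete -(kappa ell')' at the interior nodes
    g = [-(kappa[i + 1] * (ell[i + 2] - ell[i + 1])
           - kappa[i] * (ell[i + 1] - ell[i])) / h**2 for i in range(n)]
    w = solve_dirichlet(kappa, [fi - gi for fi, gi in zip(f, g)], 0.0, 0.0)
    return [wi + ell[i + 1] for i, wi in enumerate(w)]
```

Both functions return the same nodal values up to rounding, which is the discrete counterpart of transforming (5.8) into a problem with homogeneous boundary conditions.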

Remark 5