Abstract

We present a generalization of the notion of neighborliness to non-polyhedral convex cones. Although a definition of neighborliness is available in the non-polyhedral case in the literature, it is fairly restrictive as it requires all the low-dimensional faces to be polyhedral. Our approach is more flexible and includes, for example, the cone of positive-semidefinite matrices as a special case (this cone is not neighborly in general). We term our generalization Terracini convexity due to its conceptual similarity with the conclusion of Terracini’s lemma from algebraic geometry. Polyhedral cones are Terracini convex if and only if they are neighborly. More broadly, we derive many families of non-polyhedral Terracini convex cones based on neighborly cones, linear images of cones of positive-semidefinite matrices, and derivative relaxations of Terracini convex hyperbolicity cones. As a demonstration of the utility of our framework in the non-polyhedral case, we give a characterization based on Terracini convexity of the tightness of semidefinite relaxations for certain inverse problems.

1 Introduction

The combinatorial view of polytopes is a pillar of polyhedral theory which has played a prominent role both in deepening our understanding of the structure of polytopes as well as in illuminating those attributes of polytopes that are significant in the context of particular applications such as linear programming. A parallel perspective for non-polyhedral convex sets—even in the presence of additional structure—has generally been lacking. This limitation may be attributed to the fact that the central object of study in polyhedral combinatorics is the face lattice, and consequently, many of the key ideas and definitions in the field are face-centric. However, face-centric notions do not always carry over naturally to the non-polyhedral setting for a number of reasons; in particular, non-polyhedral closed convex sets consist of infinitely many faces, may contain non-exposed faces, may lack faces of all dimensions, may not be closed under linear images, and so forth. Motivated by this broad challenge of bridging the gap in our understanding between the polyhedral and non-polyhedral cases, we focus in this article on the question of obtaining a suitable generalization of neighborliness for non-polyhedral convex sets, with a less face-centric reformulation of neighborliness of polytopes playing a central role in our development.

A polyhedral cone that is pointed is called k-neighborly if the cone over any subset of up to k extreme rays forms a face [13]. Neighborliness arises in many contexts in geometry and polyhedral combinatorics, most notably in the characterization of various extremal classes of polytopes [13] and in conditions under which linear programming relaxations are tight for certain nonconvex inverse problems [9].

1.1 Motivation

We are aware that there is a definition available for non-polyhedral k-neighborly convex cones that are closed and pointed which parallels the polyhedral setting [14]—that is, the cone over any subset of up to k extreme rays forms an exposed face. However, this notion is too restrictive in the non-polyhedral case as it essentially requires that all the low-dimensional faces are polyhedral, and, in particular, are linearly isomorphic to orthants. This limitation restricts the utility of neighborliness in the non-polyhedral context in a number of ways.

As one example, the cone of positive semidefinite matrices is not k-neighborly for any \(k > 1\) as all the faces other than the extreme rays are non-polyhedral, and as a consequence, neighborliness is not useful for characterizing tightness of semidefinite relaxations for nonconvex problems that are ubiquitous in many applications [8, 15], in contrast to the situation with linear programming. Concretely, Donoho and Tanner [9] used neighborliness of polytopes to characterize the exactness of linear programming relaxations for identifying nonnegative vectors with the smallest number of nonzeros in affine spaces. A similar characterization of the success of semidefinite relaxations for identifying low-rank positive semidefinite matrices in affine spaces—a problem that arises in a range of applications such as factor analysis, collaborative filtering, and phase retrieval, and contains NP-hard problems as special cases—has been lacking. Thus, we seek a more flexible notion for non-polyhedral cones that specializes to the usual definition of neighborliness for polyhedral cones.

In a different vein, the utility of neighborliness lies in the fact that it provides a succinct characterization of the geometry of the ‘most singular’ pieces of the boundary of a polyhedral cone. It is of intrinsic interest to understand such geometry more generally for other families of structured cones. Hyperbolicity cones serve as an instructive case study in this regard. These are convex cones derived from hyperbolic polynomials, with the nonnegative orthant and the positive semidefinite matrices being prominent examples. Relaxations based on derivatives of hyperbolicity cones offer the prospect of computationally less expensive approaches for obtaining bounds on conic optimization problems with respect to hyperbolicity cones, and an intriguing feature of these relaxations is that they tend to preserve the low-dimensional faces of the original hyperbolicity cone. Formalizing and quantifying this assertion by leveraging the perspective of neighborliness would provide new insights into the facial geometry of a large class of structured convex cones.

In this paper, we describe a generalization of neighborliness for non-polyhedral cones that addresses the preceding objectives.

1.2 Towards a definition for non-polyhedral cones

In aiming at an appropriate generalization of neighborliness for non-polyhedral cones that overcomes the limitation of polyhedrality of the low-dimensional faces, a natural approach is to reformulate neighborliness via other geometric attributes that are less face-centric. As a first attempt, for a convex cone \(\mathscr {C}\) that is closed and pointed but not necessarily polyhedral, let \(\mathscr {S}_\mathscr {C}(x)\) denote the linear span of the smallest exposed face of \(\mathscr {C}\) that contains x. Then one can check that if the extreme rays of \(\mathscr {C}\) are exposed, k-neighborliness of \(\mathscr {C}\) is equivalent to the following condition for any collection \(x^{(1)},\dots ,x^{(k)}\) of generators of the extreme rays of \(\mathscr {C}\):

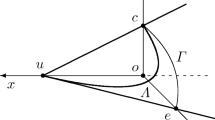

One can check that the left-hand-side of this equation always contains the right-hand-side, with the containment being strict in general and equality holding only for k-neighborly cones. It is instructive to consider the three cones in \(\mathbb {R}^3\) that are shown in Fig. 1 from the perspective of the relation (1). The cone \(\mathscr {C}_1\) is isomorphic to the orthant in \(\mathbb {R}^3\), which is 3-neighborly, and therefore the relation (1) holds for any subset of the generators of the three extreme rays. The cone \(\mathscr {C}_2\) is not 2-neighborly as the cone over the generators \(x^{(1)}, x^{(2)}\) is not a face of \(\mathscr {C}_2\); accordingly, we note that \(\mathscr {S}_{\mathscr {C}_2}(x^{(1)} + x^{(2)}) \supsetneq \mathscr {S}_{\mathscr {C}_2}(x^{(1)}) + \mathscr {S}_{\mathscr {C}_2}(x^{(2)})\). Finally, the ice-cream cone \(\mathscr {C}_3\) is evidently not 2-neighborly by considering the cone over the generators \(x^{(1)}, x^{(2)}\); as expected, we again have the strict containment \(\mathscr {S}_{\mathscr {C}_3}(x^{(1)} + x^{(2)}) \supsetneq \mathscr {S}_{\mathscr {C}_3}(x^{(1)}) + \mathscr {S}_{\mathscr {C}_3}(x^{(2)})\). The cone \(\mathscr {C}_3\) presents an interesting case study as it is also linearly isomorphic to the cone of \(2 \times 2\) symmetric positive semidefinite matrices. As mentioned previously, developing a suitable generalization of neighborliness that encompasses the cone of positive semidefinite matrices is one of the motivations for this article, and we investigate next what precisely fails with the relation (1) for \(\mathscr {C}_3\).

For a polyhedral cone \(\mathscr {C}\) that is pointed, the map \(\mathscr {S}_\mathscr {C}(x)\) represents a kind of “local linearization” of \(\mathscr {C}\) around the point x; concretely, the set \(\mathscr {S}_\mathscr {C}(x)\) is the largest subspace—also called the lineality space—in the cone of feasible directions from x into \(\mathscr {C}\). However, the interpretation of \(\mathscr {S}_\mathscr {C}(x)\) as a local linearization of \(\mathscr {C}\) at x no longer holds in general if \(\mathscr {C}\) is not polyhedral. For the cone \(\mathscr {C}_3\) in Fig. 1, the set \(\mathscr {S}_{\mathscr {C}_3}(x^{(1)})\) does not fully represent a local linearization of \(\mathscr {C}_3\) around \(x^{(1)}\) as it fails to account for the curvature of the boundary of \(\mathscr {C}_3\) at \(x^{(1)}\). Rather, the subspace \(\mathscr {L}_{\mathscr {C}_3}(x^{(1)})\) in Fig. 1, akin to a tangent space at \(x^{(1)}\) with respect to the boundary of \(\mathscr {C}_3\), provides a more accurate local linearization of \(\mathscr {C}_3\) at \(x^{(1)}\). Letting \(\mathscr {L}_{\mathscr {C}_3}(x^{(2)})\) similarly denote an accurate local linearization of \(\mathscr {C}_3\) at \(x^{(2)}\), we observe that \(\mathscr {L}_{\mathscr {C}_3}(x^{(1)})+\mathscr {L}_{\mathscr {C}_3}(x^{(2)}) = \mathbb {R}^3\). As \(x^{(1)}+x^{(2)}\) lies in the interior of \(\mathscr {C}_3\), a natural local linearization of \(\mathscr {C}_3\) at \(x^{(1)} + x^{(2)}\) is the full space \(\mathbb {R}^3\), i.e., \(\mathscr {L}_{\mathscr {C}_3}(x^{(1)}+x^{(2)}) = \mathbb {R}^3\). Consequently, we have that the relation (1) holds for \(\mathscr {C}_3\) with \(k=2\) if we substitute \(\mathscr {S}_{\mathscr {C}_3}\) with \(\mathscr {L}_{\mathscr {C}_3}\). 
Motivated by this discussion, our generalization of neighborliness to closed, convex, pointed cones is based on a criterion analogous to (1) with a more accurate notion of local linearization; as we discuss in the sequel, this criterion is satisfied by neighborly polyhedral cones, cones of positive semidefinite matrices, as well as many other families.

1.3 Terracini convex cones

We begin by giving a formal definition of the map \(\mathscr {L}_{\mathscr {C}}(x)\). In the example with the cone \(\mathscr {C}_3\) from Fig. 1, the set \(\mathscr {L}_{\mathscr {C}_3}(x)\) corresponds to a tangent space. However, convex cones in general have both smooth and singular features in their boundary, and therefore we do not explicitly appeal to any differential notions. Our definition is stated in terms of the feasible directions \(\mathscr {K}_\mathscr {C}(x)\) into a convex cone \(\mathscr {C}\subset \mathbb {R}^d\) that is closed and pointed from any \(x \in \mathscr {C}\):

\[\mathscr{K}_\mathscr{C}(x) = \{v \in \mathbb{R}^d \;:\; x + \epsilon v \in \mathscr{C} \text{ for some } \epsilon > 0\}.\]

The closure of the cone of feasible directions \(\overline{\mathscr {K}_\mathscr {C}(x)}\) is called the tangent cone of \(\mathscr {C}\) at x.

Definition 1

Let \(\mathscr {C}\subset \mathbb {R}^d\) be a convex cone that is closed and pointed. For any \(x \in \mathscr {C}\), the convex tangent space of \(\mathscr {C}\) at x is denoted by \(\mathscr {L}_\mathscr {C}(x)\) and is defined as the lineality space of the tangent cone of \(\mathscr {C}\) at x:

\[\mathscr{L}_\mathscr{C}(x) = \overline{\mathscr{K}_\mathscr{C}(x)} \cap -\overline{\mathscr{K}_\mathscr{C}(x)}.\]

In some sense, the subspace \(\mathscr {L}_\mathscr {C}(x)\) represents all those directions from x in which the cone \(\mathscr {C}\) is locally “flat”. For smooth convex cones \(\mathscr {C}\) that are closed and pointed, the convex tangent space \(\mathscr {L}_\mathscr {C}(x)\) at a point x (\(\ne 0\)) on the boundary is indeed the tangent space with respect to the boundary of \(\mathscr {C}\) at x. For polyhedral cones \(\mathscr {C}\) that are pointed, one can check that \(\mathscr {L}_\mathscr {C}(x) = \mathscr {S}_\mathscr {C}(x)\). With this definition, we are in a position to present the main object of investigation of this article.

Definition 2

A convex cone \(\mathscr {C}\subset \mathbb {R}^d\) that is closed and pointed is k-Terracini convex if the following condition holds for any collection \(x^{(1)}, \dots , x^{(k)}\) of generators of extreme rays of \(\mathscr {C}\):

\[\mathscr{L}_\mathscr{C}\left(\sum_{i=1}^k x^{(i)}\right) = \sum_{i=1}^k \mathscr{L}_\mathscr{C}\left(x^{(i)}\right).\]

If \(\mathscr {C}\) is k-Terracini convex for all k, then we say that \(\mathscr {C}\) is Terracini convex.

One inclusion always holds as \(\mathscr{L}_\mathscr{C}\left(\sum_{i=1}^k x^{(i)}\right) \supseteq \sum_{i=1}^k \mathscr{L}_\mathscr{C}\left(x^{(i)}\right)\), and the relevant portion of this definition is the other inclusion. The reason for the terminology ‘Terracini convexity’ is that the stipulation in this definition mirrors the consequence of Terracini’s lemma in algebraic geometry [19], with the convex tangent space playing the role in our context that a tangent space does in Terracini’s lemma. We give next some preliminary examples of k-Terracini convex cones:

Example 1

To begin with, it is instructive to compare k-Terracini convexity to k-neighborliness for polyhedral cones. For a polyhedral cone \(\mathscr {C}\) that is pointed, we observed previously that \(\mathscr {L}_\mathscr {C}(x) = \mathscr {S}_\mathscr {C}(x)\) for \(x \in \mathscr {C}\). As \(\mathscr {C}\) has exposed extreme rays and as the relation (1) is equivalent to k-neighborliness, we have that k-Terracini convexity and k-neighborliness are equivalent for pointed polyhedral cones. We also prove this fact as a special case of a more general result (see Theorem 1 and Corollary 2).
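
The equivalence in Example 1 can be checked by hand on a small polyhedral cone. The sketch below is an illustration only: it assumes a cone over a square in \(\mathbb{R}^3\), described by four hypothetical generators and four facet inequalities that are not taken from the text, and the function names are made up for this example. For each choice of generators it compares the dimension of \(\mathscr{S}_\mathscr{C}\left(\sum_i x^{(i)}\right)\) with that of \(\sum_i \mathscr{S}_\mathscr{C}(x^{(i)})\). Because one containment always holds, equal dimensions mean that relation (1) holds.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a list of equal-length rows, computed exactly over the rationals."""
    m = [[Fraction(v) for v in row] for row in rows]
    r = 0
    ncols = len(m[0]) if m else 0
    for c in range(ncols):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c] / m[r][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r

# Assumed example: the cone {(x, y, z) : |x| <= z, |y| <= z}, a cone over a square.
GENERATORS = [(1, 1, 1), (-1, 1, 1), (-1, -1, 1), (1, -1, 1)]  # cyclic order
FACETS = [(-1, 0, 1), (1, 0, 1), (0, -1, 1), (0, 1, 1)]        # a . p >= 0 on the cone

def dot(a, b):
    return sum(s * t for s, t in zip(a, b))

def face_generators(p):
    """Generators of the smallest face containing p (facets tight at p stay tight)."""
    tight = [a for a in FACETS if dot(a, p) == 0]
    return [g for g in GENERATORS if all(dot(a, g) == 0 for a in tight)]

def relation_holds(indices):
    """Compare dim S(sum of chosen generators) with dim of the sum of the S(x_i)."""
    pts = [GENERATORS[i] for i in indices]
    total = tuple(sum(c) for c in zip(*pts))
    lhs = rank(face_generators(total))
    rhs = rank([g for p in pts for g in face_generators(p)])
    return lhs == rhs

print(relation_holds((0, 1)))  # adjacent extreme rays: True
print(relation_holds((0, 2)))  # opposite extreme rays: False
```

Adjacent generators span a two-dimensional face, so the relation holds for them. Opposite generators sum to an interior point, so the left-hand side is all of \(\mathbb{R}^3\) while the right-hand side is only a plane. This assumed cone is therefore not 2-neighborly and, since \(\mathscr{L}_\mathscr{C} = \mathscr{S}_\mathscr{C}\) for pointed polyhedral cones, not 2-Terracini convex either.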

Example 2

All convex cones that are closed and pointed are trivially 1-Terracini convex. As a contrast, based on the generalization of [14] of neighborliness to non-polyhedral cones, a convex cone that is closed and pointed is 1-neighborly if and only if all its extreme rays are exposed.

Example 3

Let \(\mathscr{C}\subset \mathbb{R}^d\) be a smooth convex cone that is closed and pointed. Then \(\mathscr{C}\) is Terracini convex. To see this, consider any collection \(x^{(1)},\dots,x^{(k)}\) of generators of extreme rays of \(\mathscr{C}\). Due to the smoothness of \(\mathscr{C}\), we have that \(\sum_{i=1}^k \mathscr{L}_\mathscr{C}\left(x^{(i)}\right) = \mathrm{span}(\mathscr{C})\) for \(k\ge 2\), unless all the \(x^{(i)}\)’s generate the same extreme ray (in which case the Terracini convexity condition is trivially satisfied). As \(\sum_{i=1}^k \mathscr{L}_\mathscr{C}\left(x^{(i)}\right) \subseteq \mathscr{L}_\mathscr{C}\left(\sum_{i=1}^k x^{(i)}\right) \subseteq \mathrm{span}(\mathscr{C})\), equality holds throughout.

Example 4

As our next example, we consider the cone of positive semidefinite matrices \(\mathbb{S}^d_+\) in the space of \(d \times d\) real symmetric matrices \(\mathbb{S}^d\). This cone consists of both smooth and singular features in its boundary. For \(X \in \mathbb{S}^d_+\), one can check that \(\mathscr{L}_{\mathbb{S}^d_+}(X) = \{MX + XM \;:\; M \in \mathbb{S}^d\}\), from which it follows that \(\mathbb{S}^d_+\) is Terracini convex. We give an alternative proof of this fact via a dual perspective on Terracini convexity; see Example 5 after Proposition 1.
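
As a sanity check on Example 4, the following sketch computes dimensions of convex tangent spaces of \(\mathbb{S}^d_+\) in exact rational arithmetic, taking the formula \(\mathscr{L}_{\mathbb{S}^d_+}(X) = \{MX + XM\}\) with \(M\) symmetric as given. The function names and the test vectors are assumptions chosen for illustration. It compares the dimension of the tangent space at \(\sum_i v^{(i)}{v^{(i)}}'\) with the dimension of the sum of the tangent spaces at the individual rank-one matrices. Since one space always contains the other, equal dimensions imply equality.

```python
from fractions import Fraction
from itertools import combinations_with_replacement

def rank(rows):
    """Rank of a list of equal-length rows, computed exactly over the rationals."""
    m = [[Fraction(v) for v in row] for row in rows]
    r = 0
    ncols = len(m[0]) if m else 0
    for c in range(ncols):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c] / m[r][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r

def sym_basis(d):
    """Basis of the d x d symmetric matrices (E_ii and E_ij + E_ji for i < j)."""
    basis = []
    for i, j in combinations_with_replacement(range(d), 2):
        M = [[0] * d for _ in range(d)]
        M[i][j] = M[j][i] = 1
        basis.append(M)
    return basis

def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def tangent_generators(X):
    """Flattened matrices M X + X M as M ranges over a basis of symmetric matrices."""
    d = len(X)
    gens = []
    for M in sym_basis(d):
        MX, XM = matmul(M, X), matmul(X, M)
        gens.append([MX[i][j] + XM[i][j] for i in range(d) for j in range(d)])
    return gens

def outer(v):
    return [[a * b for b in v] for a in v]

def terracini_check(vectors):
    """Return (dim L(sum of v v'), dim of the sum of the spaces L(v v'))."""
    d = len(vectors[0])
    rank_ones = [outer(v) for v in vectors]
    total = [[sum(R[i][j] for R in rank_ones) for j in range(d)] for i in range(d)]
    dim_at_sum = rank(tangent_generators(total))
    dim_of_sum = rank([g for R in rank_ones for g in tangent_generators(R)])
    return dim_at_sum, dim_of_sum

print(terracini_check([[1, 0, 0, 0], [1, 1, 0, 0], [0, 1, 2, 0]]))  # (9, 9)
print(terracini_check([[1, 0], [0, 1]]))                            # (3, 3)
```

In the \(2 \times 2\) case each rank-one matrix has a two-dimensional convex tangent space, and the two spaces together span all of \(\mathbb{S}^2\), matching the discussion of the cone \(\mathscr{C}_3\) in Sect. 1.2.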

It is instructive to consider the definition of Terracini convexity from a dual perspective, as this leads to a characterization that is more easily verified in some cases. In preparation to state this dual criterion, we recall that the polar of a cone \(\mathscr {S} \subset \mathbb {R}^d\) is the collection of linear functionals that are nonpositive on \(\mathscr {S}\) and is denoted \(\mathscr {S}^\circ \). With this notation, the normal cone to a convex cone \(\mathscr {C}\subset \mathbb {R}^d\) at \(x \in \mathscr {C}\) is denoted \(\mathscr {N}_\mathscr {C}(x)\) and is the polar \(\mathscr {K}_\mathscr {C}(x)^\circ \) of the cone of feasible directions from x into \(\mathscr {C}\). As \(\mathscr {C}\) is a cone, one can check that the normal cone to \(\mathscr {C}\) at \(x \in \mathscr {C}\) is given by:

\[\mathscr{N}_\mathscr{C}(x) = \{\ell \in \mathscr{C}^\circ \;:\; \ell(x) = 0\}, \tag{3}\]

which is the set of linear functionals that are nonpositive on \(\mathscr {C}\) and vanish at x. We now establish an equivalent dual formulation of Terracini convexity.

Proposition 1

A closed, pointed, convex cone \(\mathscr {C}\subset \mathbb {R}^d\) is k-Terracini convex if and only if for any collection \(x^{(1)},\ldots ,x^{(k)}\) of generators of extreme rays of \(\mathscr {C}\),

\[\mathrm{span}\left(\bigcap_{i=1}^k \mathscr{N}_\mathscr{C}\left(x^{(i)}\right)\right) = \bigcap_{i=1}^k \mathrm{span}\left(\mathscr{N}_\mathscr{C}\left(x^{(i)}\right)\right).\]

Remark 1

In the result above, one inclusion is trivial—we always have that the span of the intersection of the normal cones is contained inside the intersection of the spans of the normal cones. Terracini convexity corresponds to the reverse inclusion being true, and this is all we need to verify. This remark is dual to the assertion after Definition 2 about one inclusion always being true.

Proof

The normal cone and the closure of the cone of feasible directions at a point \(x \in \mathscr{C}\) are related via \(\mathscr{N}_{\mathscr{C}}(x) = \mathscr{K}_{\mathscr{C}}(x)^\circ = \overline{\mathscr{K}_{\mathscr{C}}(x)}^\circ\), which implies that \(\mathscr{L}_{\mathscr{C}}(x)^\perp = \mathrm{span}(\mathscr{N}_{\mathscr{C}}(x))\). Taking orthogonal complements in the definition of k-Terracini convexity, we see that \(\mathscr{C}\) is k-Terracini convex if and only if for any collection \(x^{(1)},\ldots,x^{(k)}\) of generators of extreme rays of \(\mathscr{C}\),

\[\mathrm{span}\left(\mathscr{N}_\mathscr{C}\left(\sum_{i=1}^k x^{(i)}\right)\right) = \bigcap_{i=1}^k \mathrm{span}\left(\mathscr{N}_\mathscr{C}\left(x^{(i)}\right)\right).\]

Here we have used that the orthogonal complement of a sum of subspaces is the intersection of the orthogonal complements. To complete the proof, we note that \(\mathscr{N}_{\mathscr{C}}\left(\sum_{i=1}^k x^{(i)}\right) = \bigcap_{i=1}^k \mathscr{N}_{\mathscr{C}}(x^{(i)})\) whenever \(x^{(1)},\ldots,x^{(k)}\in \mathscr{C}\). For one inclusion, if \(\ell \in \mathscr{C}^\circ\) and \(\ell(x^{(i)}) = 0\) for each i, then \(\ell\left(\sum_{i=1}^k x^{(i)}\right) = 0\). For the other inclusion, if \(\ell \in \mathscr{C}^\circ\) and \(\ell\left(\sum_{i=1}^k x^{(i)}\right) = \sum_{i=1}^k \ell(x^{(i)}) = 0\), then we have that \(\ell(x^{(i)}) \le 0\) for each i (as \(\ell \in \mathscr{C}^\circ\)) and therefore \(\ell(x^{(i)}) = 0\) for each i (as \(\sum_{i=1}^k \ell(x^{(i)}) = 0\)). \(\square\)

To illustrate the utility of this dual formulation, we show that the positive semidefinite cone is Terracini convex.

Example 5

(Positive semidefinite cone) Let \(\mathscr {C}= \mathbb {S}^d_+\) be the cone of \(d\times d\) positive semidefinite matrices. Given an extreme ray \(vv'\) for \(v \in \mathbb {R}^d\), the corresponding normal cone from (3) is \(\mathscr {N}_{\mathscr {C}}(vv') = \{Q \in -\mathbb {S}^d_+ ~:~ v'Qv = 0\} = \{Q \in -\mathbb {S}^d_+ ~:~ Qv = 0\}\). For any collection of generators of extreme rays \(v^{(1)}{v^{(1)}}', \dots , v^{(k)}{v^{(k)}}'\) of \(\mathscr {C}\) for \(v^{(1)},\ldots ,v^{(k)}\in \mathbb {R}^d\), we have that:

\[\mathrm{span}\left(\bigcap_{i=1}^k \mathscr{N}_\mathscr{C}\left(v^{(i)}{v^{(i)}}'\right)\right) = \mathrm{span}\left(\{Q \in -\mathbb{S}^d_+ \;:\; Qv^{(i)} = 0,\ i=1,\dots,k\}\right) = \{Q \in \mathbb{S}^d \;:\; Qv^{(i)} = 0,\ i=1,\dots,k\}.\]

As \(\mathrm {span}\left( \mathscr {N}_{\mathscr {C}}\left( v^{(i)}{v^{(i)}}'\right) \right) = \{Q \in \mathbb {S}^d ~:~ Q v^{(i)} = 0\}\) and as k was arbitrary, it follows that \(\mathbb {S}_+^d\) is Terracini convex.

1.4 Outline of contributions

We initiate our study of Terracini convex cones by investigating the face structure of such cones. Specifically, in Sect. 2 we provide two conditions for a closed, pointed, convex cone to be Terracini convex based on order-theoretic properties of the faces of the cone. The first condition states that if a cone is k-Terracini convex for a sufficiently large k, which is a function of the height of the partially ordered set of faces, then the cone is Terracini convex. The second condition gives a necessary and sufficient characterization for a cone to be Terracini convex based on the collection of all convex tangent spaces of the cone inheriting some of the lattice structure of the subspace lattice.

From the examples in the previous subsection we see that Terracini convexity is equivalent to neighborliness for polyhedral cones, but there are many families of non-polyhedral cones that are also Terracini convex. Thus, a natural question is to clarify the distinction between Terracini convexity and neighborliness for non-polyhedral cones. In one direction, the cone of positive semidefinite matrices serves as an example that there are Terracini convex cones that are not neighborly. In the other direction, we prove in Sect. 3 that subject to a non-degeneracy condition that is of the form of a quadratic growth property, k-neighborly cones are k-Terracini convex. As a consequence of this result, we obtain that the cone over the (homogeneous) moment curve, which was studied by Kalai and Wigderson in [14], is Terracini convex; see Sect. 3.3 for more examples.

Next we demonstrate the utility of the notion of Terracini convexity in characterizing tightness of semidefinite relaxations for the problem of finding a positive semidefinite matrix of smallest rank in an affine space. A commonly employed heuristic to solve this problem is to compute the positive semidefinite matrix of smallest trace in the given affine space, which can be obtained via a tractable semidefinite program. In Sect. 4, we show that the success of this heuristic is closely tied to a certain cone being Terracini convex. Our result may be viewed as a generalization of Donoho and Tanner’s result on using neighborliness to characterize the exactness of linear programming relaxations for identifying nonnegative vectors with the smallest number of nonzeros in affine spaces [9]. As a by-product of our result, we obtain that ‘most’ linear images of a cone of positive semidefinite matrices are k-Terracini convex, where the value of k depends on the dimension of the image of the linear map; see Theorem 4.

In Sect. 5, we investigate the Terracini convexity properties of derivative relaxations of hyperbolicity cones. We study conditions under which derivatives of Terracini convex hyperbolicity cones continue to be k-Terracini convex (for suitable k), and in particular the relationship between the number of derivatives and k. As a consequence, we obtain new examples of Terracini convex cones, and in particular ones that are basic semialgebraic; it is instructive to contrast these examples with the ones described in Sect. 4.3 of linear images of cones of positive semidefinite matrices, which are semialgebraic but not necessarily basic semialgebraic.

Sections 3, 4, and 5 illustrate the role that Terracini convexity plays in illuminating various aspects of the facial structure of convex cones. In each case, we obtain new examples of Terracini convex cones in the course of our discussion. We conclude in Sect. 6 with some open questions.

2 Order-theoretic conditions for Terracini convexity

In this section we discuss conditions under which a closed, pointed, convex cone is Terracini convex based on the order structure underlying the faces of a convex cone. Section 2.1 shows that a cone that is k-Terracini convex for sufficiently large k is Terracini convex, with the threshold value of k depending on the length of the longest chain of faces of the cone. In Sect. 2.2 we give a lattice-theoretic condition on the collection of lineality spaces that is necessary and sufficient for a cone to be Terracini convex.

In preparation for our discussion, we recall briefly a few relevant facts about the face structure of a convex cone. Let \(\mathscr{C}\) be a closed, pointed, convex cone. A subset \(\mathscr{F}\subseteq \mathscr{C}\) is a face if \(x,y \in \mathscr{C}\) and \(x+y \in \mathscr{F}\) implies that \(x,y \in \mathscr{F}\). A face \(\mathscr{F}\subseteq \mathscr{C}\) is exposed if \(\mathscr{F}\) can be expressed as the intersection of \(\mathscr{C}\) and a hyperplane specified by a linear functional \(\ell \in \mathscr{C}^\circ\), i.e., \(\mathscr{F}= \{x \in \mathscr{C}\;:\; \ell(x) = 0\}\). By convention \(\mathscr{C}\) is itself an exposed face as one can take \(\ell = 0\). The collection of (exposed) faces of \(\mathscr{C}\) forms a partially ordered set (poset) by inclusion. For any subset \(\mathscr{X}\subseteq \mathscr{C}\), let \(\mathscr{F}_\mathscr{C}(\mathscr{X})\) (respectively, \(\mathscr{F}^{\mathrm{exp}}_\mathscr{C}(\mathscr{X})\)) denote the inclusion-wise minimal (exposed) face of \(\mathscr{C}\) containing \(\mathscr{X}\). For any element \(x \in \mathscr{C}\), one can check that the normal cone \(\mathscr{N}_\mathscr{C}(x)\) depends only on \(\mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x)\), which in turn depends only on \(\mathscr{F}_\mathscr{C}(x)\); consequently, the convex tangent space \(\mathscr{L}_\mathscr{C}(x)\) depends only on \(\mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x)\) and in turn \(\mathscr{F}_\mathscr{C}(x)\) [18]. Formally, for any \(x^{(1)}, x^{(2)} \in \mathscr{C}\):

\[\mathscr{F}_\mathscr{C}(x^{(1)}) = \mathscr{F}_\mathscr{C}(x^{(2)}) \;\Rightarrow\; \mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x^{(1)}) = \mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x^{(2)}) \;\Rightarrow\; \mathscr{N}_\mathscr{C}(x^{(1)}) = \mathscr{N}_\mathscr{C}(x^{(2)}) \;\Rightarrow\; \mathscr{L}_\mathscr{C}(x^{(1)}) = \mathscr{L}_\mathscr{C}(x^{(2)}). \tag{6}\]

2.1 Terracini convexity and the height of the poset of faces

Given a closed, pointed, convex cone \(\mathscr{C}\), consider a collection of points \(x^{(1)}, \dots, x^{(k)} \in \mathscr{C}\). For large k, it is possible to replace the convex tangent space \(\mathscr{L}_\mathscr{C}(\sum_{i=1}^k x^{(i)})\) by \(\mathscr{L}_\mathscr{C}(\sum_{i \in I} x^{(i)})\) for a subset \(I \subseteq \{1,\dots,k\}\) whose cardinality is potentially much smaller than k, by appealing to the observation that the convex tangent space at a point depends only on the smallest face containing the point. This allows us to conclude that if \(\mathscr{C}\) is k-Terracini convex for sufficiently large k, then \(\mathscr{C}\) is Terracini convex.

We describe next the relevant terminology that we use in our result. A collection of faces \(\mathscr{F}^{(i)}, ~ i=1,\dots,m\) of \(\mathscr{C}\) that satisfies \(\mathscr{F}^{(1)} \subsetneq \cdots \subsetneq \mathscr{F}^{(m)}\) is called a chain of faces. For a closed, pointed, convex cone \(\mathscr{C}\), let \(\mathscr{H}(\mathscr{C})\) denote the height of the poset of faces of \(\mathscr{C}\), which is the length (that is, the number of faces) of the longest chain of faces of \(\mathscr{C}\). As the dimension always increases strictly along chains of faces and as any maximal-length chain of faces begins with the zero-dimensional face \(\{0\}\) and ends with \(\mathscr{C}\), we have that \(\mathscr{H}(\mathscr{C}) \le \dim(\mathscr{C})+1\). We have next a result that allows us to replace the convex tangent space of a large sum of elements of \(\mathscr{C}\) by that of a smaller subset based on \(\mathscr{H}(\mathscr{C})\):

Lemma 1

Let \(\mathscr {C}\) be a closed, pointed, convex cone, and consider a collection of points \(x^{(1)},\ldots ,x^{(k)}\in \mathscr {C}\). There exists \(I \subseteq \{1,\dots ,k\}\) with \(|I| \le \mathscr {H}(\mathscr {C})-1\) such that \(\mathscr {F}_{\mathscr {C}}\left( \sum _{i=1}^k x^{(i)}\right) = \mathscr {F}_{\mathscr {C}}\left( \sum _{i\in I}x^{(i)}\right) \).

Proof

We explicitly construct a set I with \(|I| \le \mathscr{H}(\mathscr{C})-1\). Set \(j = 0, I_0 = \emptyset, \mathscr{F}_\mathscr{C}^{(0)} = \{0\}\). Running sequentially through \(i = 1,\dots,k\), if \(x^{(i)} \notin \mathscr{F}_\mathscr{C}^{(j)}\), then (a) increase j by one, (b) set \(I_j = I_{j-1} \cup \{i\}\), and (c) set \(\mathscr{F}_\mathscr{C}^{(j)} = \mathscr{F}_\mathscr{C}\left(\sum_{m \in I_j} x^{(m)}\right)\).

The sequence of faces \(\mathscr {F}_\mathscr {C}^{(0)}, \dots , \mathscr {F}_\mathscr {C}^{(j)}\) has the property that \(\mathscr {F}_{\mathscr {C}}^{(0)} \subsetneq \cdots \subsetneq \mathscr {F}_{\mathscr {C}}^{(j)} = \mathscr {F}_{\mathscr {C}}\left( \{x^{(1)},\dots ,x^{(k)}\}\right) \), and therefore forms a chain of faces of \(\mathscr {C}\) of length at most \(\mathscr {H}(\mathscr {C})\). As \(\mathscr {F}_{\mathscr {C}}\left( \{x^{(1)},\dots ,x^{(k)}\}\right) = \mathscr {F}_{\mathscr {C}}\left( \sum _{i=1}^k x^{(i)}\right) \) and as the index set \(I_j\) satisfies \(|I_j| \le \mathscr {H}(\mathscr {C})-1\), setting \(I = I_j\) leads to the desired conclusion. \(\square \)
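
The construction in this proof can be traced on the nonnegative orthant, where faces are easy to describe. The sketch below is an illustration under that assumption: in \(\mathbb{R}^d_+\) the smallest face containing a point is determined by the point's support, so a face is represented here by a set of coordinate indices. The function names are hypothetical and indices are 0-based.

```python
def support(x):
    """Indices of the nonzero coordinates of a nonnegative vector x."""
    return frozenset(i for i, v in enumerate(x) if v != 0)

def greedy_index_set(points):
    """Greedy selection from the proof of Lemma 1, specialized to the orthant.

    Returns (I, face): I lists the selected indices, and face is the support
    describing the smallest face containing the sum of the selected points.
    """
    selected = []
    face = frozenset()              # the empty support encodes the face {0}
    for i, x in enumerate(points):
        if not support(x) <= face:  # x lies outside the current face
            selected.append(i)
            face = face | support(x)
    return selected, face

points = [
    (1, 0, 0, 0),
    (2, 0, 0, 0),
    (1, 1, 0, 0),
    (0, 1, 0, 0),
    (0, 0, 3, 0),
    (1, 1, 1, 0),
]
I, face = greedy_index_set(points)
print(I, sorted(face))  # [0, 2, 4] [0, 1, 2]
```

Here six points reduce to three, and the face of the reduced sum agrees with the face of the full sum (the union of all supports). For the orthant in \(\mathbb{R}^4\) the height of the poset of faces is 5, so the bound \(|I| \le \mathscr{H}(\mathscr{C})-1 = 4\) from Lemma 1 is respected.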

We are now in a position to state and prove the main result of this section.

Proposition 2

Let \(\mathscr {C}\) be a closed, pointed, convex cone that is \((\mathscr {H}(\mathscr {C})-1)\)-Terracini convex. Then \(\mathscr {C}\) is Terracini convex.

Proof

Let \(x^{(1)},\ldots ,x^{(k)}\) be a collection of generators of extreme rays of \(\mathscr {C}\). By Lemma 1, we know that there exists \(I\subseteq \{1,\dots ,k\}\) with \(|I| \le \mathscr {H}(\mathscr {C})-1\) such that \(\mathscr {F}_{\mathscr {C}}\left( \sum _{i = 1}^k x^{(i)}\right) = \mathscr {F}_{\mathscr {C}}\left( \sum _{i\in I}x^{(i)}\right) \). From (6) we have that:

\[\mathscr{L}_\mathscr{C}\left(\sum_{i=1}^k x^{(i)}\right) = \mathscr{L}_\mathscr{C}\left(\sum_{i\in I} x^{(i)}\right). \tag{7}\]

Since \(\mathscr {C}\) is \((\mathscr {H}(\mathscr {C})-1)\)-Terracini convex, it is |I|-Terracini convex and therefore

\[\mathscr{L}_\mathscr{C}\left(\sum_{i\in I} x^{(i)}\right) = \sum_{i\in I} \mathscr{L}_\mathscr{C}\left(x^{(i)}\right). \tag{8}\]

Combining (7) and (8), and noting that \(\sum_{i=1}^k \mathscr{L}_{\mathscr{C}}\left(x^{(i)}\right) \subseteq \mathscr{L}_{\mathscr{C}}\left(\sum_{i=1}^k x^{(i)}\right)\) as well as \(\sum_{i\in I} \mathscr{L}_{\mathscr{C}}\left(x^{(i)}\right) \subseteq \sum_{i=1}^k \mathscr{L}_{\mathscr{C}}\left(x^{(i)}\right)\), we conclude that \(\mathscr{C}\) is k-Terracini convex. Since k was arbitrary, we have shown that \(\mathscr{C}\) is Terracini convex. \(\square\)

As a consequence of this result, we have the following corollary:

Corollary 1

Let \(\mathscr{C}\) be a closed, pointed, convex cone that is \(\dim(\mathscr{C})\)-Terracini convex. Then \(\mathscr{C}\) is Terracini convex.

Proof

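This follows from Proposition 2 and the observation that \(\mathscr{H}(\mathscr{C}) \le \dim(\mathscr{C}) + 1\): a cone that is \(\dim(\mathscr{C})\)-Terracini convex is in particular \((\mathscr{H}(\mathscr{C})-1)\)-Terracini convex. \(\square\)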

2.2 Terracini convexity and the lattice of subspaces

Motivated by the order-theoretic structure underlying the faces of a closed, pointed, convex cone \(\mathscr {C}\subset \mathbb {R}^d\), we consider the order-theoretic aspects of the collection of convex tangent spaces associated to \(\mathscr {C}\):

\[\mathfrak{L}(\mathscr{C}) = \{\mathscr{L}_\mathscr{C}(x) \;:\; x \in \mathscr{C}\}.\]

As \(\mathfrak {L}(\mathscr {C})\) is a subset of the collection of subspaces in \(\mathbb {R}^d\), one may view \(\mathfrak {L}(\mathscr {C})\) as a poset by inclusion. However, the collection of all subspaces in \(\mathbb {R}^d\) additionally forms a lattice (called the subspace lattice in \(\mathbb {R}^d\)) with the join of two subspaces given by their sum and the meet given by their intersection. In this section we relate Terracini convexity of \(\mathscr {C}\) to \(\mathfrak {L}(\mathscr {C})\) inheriting some of the lattice structure of the collection of all subspaces in \(\mathbb {R}^d\).

In preparation to present this result, we discuss next a link between the elements of \(\mathfrak{L}(\mathscr{C})\) and the exposed faces of \(\mathscr{C}\). As noted previously in (6), the convex tangent space at a point \(x \in \mathscr{C}\) depends only on the smallest exposed face of \(\mathscr{C}\) containing x so that the elements of \(\mathfrak{L}(\mathscr{C})\) are in one-to-one correspondence with the exposed faces of \(\mathscr{C}\). The next result describes how one obtains an exposed face of \(\mathscr{C}\) given an element of \(\mathfrak{L}(\mathscr{C})\):

Lemma 2

Let \(\mathscr {C}\) be a closed, pointed, convex cone. For any \(x \in \mathscr {C}\) we have that:

\[\mathscr{C}\cap \mathscr{L}_\mathscr{C}(x) = \mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x).\]

Proof

One can check that \(\mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x) \subseteq \mathscr{L}_\mathscr{C}(x)\), and therefore \(\mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x) \subseteq \mathscr{C}\cap \mathscr{L}_\mathscr{C}(x)\). In the other direction, we begin by observing that any hyperplane supporting \(\mathscr{C}\) that contains \(\mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x)\) must contain \(\mathscr{L}_\mathscr{C}(x)\). Consider a hyperplane H supporting \(\mathscr{C}\) that exposes \(\mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x)\), i.e., \(\mathscr{C}\cap H = \mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x)\) (such a hyperplane must exist as \(\mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x)\) is an exposed face). As \(\mathscr{L}_\mathscr{C}(x) \subseteq H\), we have that \(\mathscr{C}\cap \mathscr{L}_\mathscr{C}(x) \subseteq \mathscr{F}^{\mathrm{exp}}_\mathscr{C}(x)\). This concludes the proof. \(\square\)

With this result in hand, we are now in a position to state and prove the following proposition:

Proposition 3

Let \(\mathscr {C}\subset \mathbb {R}^d\) be a closed, pointed, convex cone. The cone \(\mathscr {C}\) is Terracini convex if and only if \(\mathfrak {L}(\mathscr {C})\) is a join sub-semilattice of the lattice of all subspaces in \(\mathbb {R}^d\) (i.e., the poset \(\mathfrak {L}(\mathscr {C})\) has a join given by the sum of two subspaces).

Proof

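Suppose first that \(\mathscr {C}\) is Terracini convex. Consider any pair \(\mathscr {L}_\mathscr {C}(x), \mathscr {L}_\mathscr {C}(y) \in \mathfrak {L}(\mathscr {C})\) corresponding to \(x,y \in \mathscr {C}\), and let \(x = \sum _i x^{(i)}\) and \(y = \sum _j y^{(j)}\) be decompositions in terms of generators of extreme rays of \(\mathscr {C}\). As \(\mathscr {C}\) is Terracini convex, we have that

\[ \mathscr {L}_\mathscr {C}(x) + \mathscr {L}_\mathscr {C}(y) = \sum _i \mathscr {L}_\mathscr {C}\left( x^{(i)}\right) + \sum _j \mathscr {L}_\mathscr {C}\left( y^{(j)}\right) = \mathscr {L}_\mathscr {C}(x + y). \]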

Since \(\mathscr {L}_\mathscr {C}(x + y) \in \mathfrak {L}(\mathscr {C})\), the poset \(\mathfrak {L}(\mathscr {C})\) is a join sub-semilattice of the lattice of all subspaces in \(\mathbb {R}^d\).

In the other direction, suppose that the poset \(\mathfrak {L}(\mathscr {C})\) is a join sub-semilattice of the lattice of all subspaces in \(\mathbb {R}^d\). Consider any collection \(x^{(1)},\dots ,x^{(k)} \in \mathscr {C}\) of generators of extreme rays of \(\mathscr {C}\). As the join is given by subspace sum, we have that \(\sum _{i=1}^k \mathscr {L}_\mathscr {C}\left( x^{(i)}\right) \in \mathfrak {L}(\mathscr {C})\), which implies that \(\sum _{i=1}^k \mathscr {L}_\mathscr {C}\left( x^{(i)}\right) \) is the convex tangent space at some point \(y \in \mathscr {C}\). Then, from Lemma 2 we see that \(\mathscr {C}\cap \sum _{i=1}^k \mathscr {L}_\mathscr {C}\left( x^{(i)}\right) = \mathscr {F}^{exp }_\mathscr {C}(y)\), and in particular, \(\sum _{i=1}^k x^{(i)} \in \mathscr {F}^{exp }_\mathscr {C}(y)\) as each \(x^{(i)} \in \mathscr {L}_\mathscr {C}\left( x^{(i)}\right) \). We also have that \(\mathscr {C}\cap \mathscr {L}_\mathscr {C}\left( \sum _{i=1}^k x^{(i)}\right) = \mathscr {F}^{exp }_\mathscr {C}\left( \sum _{i=1}^k x^{(i)}\right) \). As \(\sum _{i=1}^k \mathscr {L}_\mathscr {C}\left( x^{(i)}\right) \subseteq \mathscr {L}_\mathscr {C}\left( \sum _{i=1}^k x^{(i)}\right) \), we conclude that \(\mathscr {F}^{exp }_\mathscr {C}(y) \subseteq \mathscr {F}^{exp }_\mathscr {C}\left( \sum _{i=1}^k x^{(i)}\right) \), which in turn implies that \(\mathscr {F}^{exp }_\mathscr {C}(y) = \mathscr {F}^{exp }_\mathscr {C}\left( \sum _{i=1}^k x^{(i)}\right) \) because \(\sum _{i=1}^k x^{(i)} \in \mathscr {F}^{exp }_\mathscr {C}(y)\). Appealing to (6), we can then conclude that \(\sum _{i=1}^k \mathscr {L}_\mathscr {C}\left( x^{(i)}\right) = \mathscr {L}_\mathscr {C}\left( \sum _{i=1}^k x^{(i)}\right) \). \(\square \)

Therefore, Terracini convexity of a cone \(\mathscr {C}\) is linked to the poset \(\mathfrak {L}(\mathscr {C})\) inheriting the join structure of the lattice of subspaces. In general, \(\mathfrak {L}(\mathscr {C})\) does not inherit the meet structure of the lattice of subspaces as the intersection of the convex tangent spaces corresponding to two exposed faces does not usually yield a convex tangent space corresponding to an exposed face of \(\mathscr {C}\) (the positive semidefinite cone provides a counterexample); indeed, the preceding proposition makes no assumptions on the existence of a meet operation.
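As a small illustration of the join structure in Proposition 3, the following Python sketch looks at the nonnegative orthant in \(\mathbb {R}^3\). It assumes (this is not restated in the present section) that the convex tangent space at \(x \ge 0\) is the coordinate subspace indexed by the support of x, so that a subspace can be encoded by an index set and the sum of two subspaces by a union. The helper names `tangent_support` and `join` are hypothetical.

```python
from itertools import product

def tangent_support(x):
    """Index set encoding the coordinate subspace assumed to be the
    convex tangent space of the nonnegative orthant at x >= 0."""
    return frozenset(i for i, xi in enumerate(x) if xi > 0)

def join(s, t):
    """Sum of two coordinate subspaces, encoded as a union of index sets."""
    return s | t

# Representative points of the orthant in R^3 (one per support pattern).
points = list(product([0, 1], repeat=3))
subspaces = {tangent_support(x) for x in points}

# The collection of tangent spaces is closed under subspace sum ...
closed_under_join = all(join(s, t) in subspaces for s in subspaces for t in subspaces)

# ... and the sum of the tangent spaces at x and y is the tangent space at x + y.
sum_rule = all(
    join(tangent_support(x), tangent_support(y))
    == tangent_support(tuple(a + b for a, b in zip(x, y)))
    for x in points
    for y in points
)
print(len(subspaces), closed_under_join, sum_rule)
```

For the orthant the index sets are also closed under intersection, so this toy case does not exhibit the failure of the meet structure mentioned above for the positive semidefinite cone.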

3 Neighborliness and Terracini convexity

Terracini convexity is one approach to extend neighborliness from polyhedral cones to non-polyhedral convex cones. As discussed in the introduction, there is already a previous notion of neighborliness available in the non-polyhedral case due to Kalai and Wigderson [14]. In this section we investigate the relationship between these two concepts, and in particular we show that k-neighborly convex cones (formally defined in Sect. 3.1) are k-Terracini convex subject to mild non-degeneracy conditions. Throughout this section we view \(\mathbb {R}^m\) as being equipped with an inner product (which varies based on context and is specified clearly in each case), and we define an associated set \(\mathscr {S}^{m-1} \subset \mathbb {R}^m\) of unit-norm elements induced by the inner product. Doing so allows us to work with a distinguished set \(ext (\mathscr {K})\cap \mathscr {S}^{m-1}\) of normalized extreme rays of a closed, pointed, convex cone \(\mathscr {K}\subseteq \mathbb {R}^{m}\).

3.1 k-Neighborly convex cones

In [14] Kalai and Wigderson extend the notion of a neighborly polytope to define a k-neighborly embedded smooth manifold. This concept serves as the point of departure for a definition of a k-neighborly convex cone that is expressed in convex-geometric terms with no reference to an underlying embedded manifold.

Definition 3

Let \(\mathscr {M}\) be a smooth manifold and let \(\phi : \mathscr {M}\rightarrow \mathbb {R}^m\) be an embedding of \(\mathscr {M}\) in \(\mathbb {R}^m\). The image \(\phi (\mathscr {M})\) is a k-neighborly embedded manifold if for any collection \(x^{(1)},x^{(2)},\ldots ,x^{(k)}\) of elements of \(\phi (\mathscr {M})\), there exists an affine function \(\ell :\mathbb {R}^m\rightarrow \mathbb {R}\) such that \(\ell (x^{(i)}) =0\) for \(i=1,2,\ldots ,k\) and \(\ell (x) > 0\) for all \(x\in \phi (\mathscr {M})\setminus \{x^{(1)},x^{(2)},\ldots ,x^{(k)}\}\).

This definition is a slight reformulation of that of Kalai and Wigderson and it is stated in a manner that is more convenient for our presentation. The neighborliness of \(\phi (\mathscr {M})\) clearly only depends on the convex hull of \(\phi (\mathscr {M})\), which suggests the following notion of a k-neighborly convex cone.

Definition 4

A closed, pointed, convex cone \(\mathscr {K}\subseteq \mathbb {R}^{m}\) is k-neighborly if for every collection \(x^{(1)},x^{(2)},\ldots ,x^{(k)}\) of normalized extreme rays of \(\mathscr {K}\), there exists a linear functional \(\ell :\mathbb {R}^{m}\rightarrow \mathbb {R}\) such that \(\ell (x^{(i)})=0\) for \(i=1,2,\ldots ,k\) and \(\ell (x) > 0\) for all \(x\in ext (\mathscr {K})\cap \mathscr {S}^{m-1}\setminus \{x^{(1)},x^{(2)},\ldots ,x^{(k)}\}\).

It is straightforward to check that if an embedded smooth manifold \(\phi (\mathscr {M})\subseteq \mathbb {R}^m\) is k-neighborly, then the cone over \(\phi (\mathscr {M})\), i.e., \(cone (\{1\}\times \phi (\mathscr {M}))\subseteq \mathbb {R}^{m+1}\), is a k-neighborly convex cone. A basic observation about k-neighborly convex cones is that all of their sufficiently low-dimensional faces are linearly isomorphic to a nonnegative orthant.

Proposition 4

Consider a closed, pointed, convex cone \(\mathscr {K} \subseteq \mathbb {R}^m\) that is k-neighborly, and suppose \(\mathscr {F}\) is a face of \(\mathscr {K}\) of dimension \(d \le k\). Then \(\mathscr {F}\) is linearly isomorphic to \(\mathbb {R}_+^d\).

Proof

As \(\mathscr {K}\) is a closed, pointed, convex cone, so is \(\mathscr {F}\). Hence, \(\mathscr {F}\) is the conic hull of its extreme rays. Let \(x^{(1)},\ldots ,x^{(d)}\) be a choice of d linearly independent normalized extreme rays of \(\mathscr {F}\) (and hence of \(\mathscr {K}\)). Let \(\ell \) be a linear functional satisfying \(\ell (x^{(i)}) = 0\) for \(i=1,2,\ldots ,d\) and \(\ell (x) > 0\) for all other normalized extreme rays of \(\mathscr {K}\), whose existence is guaranteed due to the k-neighborliness of \(\mathscr {K}\). Let \(\tilde{\mathscr {F}} = \{x\in \mathscr {K}\;:\; \ell (x) = 0\}\) be the face of \(\mathscr {K}\) exposed by \(\ell \). Since every extreme ray of \(\mathscr {K}\) that belongs to \(\tilde{\mathscr {F}}\) is also an extreme ray of \(\tilde{\mathscr {F}}\), it follows from the definition of \(\ell \) that \(x^{(1)},x^{(2)},\ldots ,x^{(d)}\) are exactly the normalized extreme rays of \(\tilde{\mathscr {F}}\). As such, \(\tilde{\mathscr {F}}\) is a closed, pointed, convex cone with exactly d linearly independent extreme rays, and therefore it must be linearly isomorphic to \(\mathbb {R}_+^d\). Finally, \(\tilde{\mathscr {F}}\) and \(\mathscr {F}\) are both faces of \(\mathscr {K}\) such that their relative interiors have a point in common, so \(\tilde{\mathscr {F}} = \mathscr {F}\) [18, Corollary 18.1.2]. \(\square \)

Proposition 4 makes it clear that k-Terracini convex cones are not necessarily k-neighborly. Indeed, we have seen that the positive semidefinite cone is Terracini convex, and yet its faces are not linearly isomorphic to nonnegative orthants in general. We describe next an example that serves as a running illustration throughout this section. This cone was considered by Kalai and Wigderson [14] in the language of neighborly manifolds.

Cone over the Veronese embedding The Veronese embedding \(\phi _{n,2d}:\mathbb {R}^{n}\rightarrow \mathbb {R}^{\left( {\begin{array}{c}n+2d-1\\ 2d\end{array}}\right) }\) is defined by the homogeneous moment map \(\phi _{n,2d}(z) = (z^{\alpha })_{\alpha \in \mathscr {A}_{n,2d}}\) where \(\mathscr {A}_{n,2d} = \{\alpha \in \mathbb {N}^{n}\;:\; \sum _{i=1}^{n}\alpha _i = 2d\}\) and \(z^\alpha := \prod _{i=1}^{n}z_i^{\alpha _i}\). We denote the cone over this embedding by

\[ \mathscr {C}_{n,2d} = cone \left( \{\phi _{n,2d}(z) \;:\; z \in \mathbb {R}^n\}\right) . \]

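When discussing this example, we let \(m=\left( {\begin{array}{c}n+2d-1\\ 2d\end{array}}\right) \) and equip \(\mathbb {R}^m\) with the inner product (see Footnote 4) that satisfies

\[ \langle \phi _{n,2d}(y), \phi _{n,2d}(z)\rangle _{B} = \langle y, z\rangle ^{2d} \quad \text {for all } y,z \in \mathbb {R}^n, \]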

where the inner product on the right is the Euclidean inner product on \(\mathbb {R}^n\). The norms associated with these inner products are denoted \(\Vert \cdot \Vert _{B}\) and \(\Vert \cdot \Vert \), respectively. Any linear functional \(\ell : \mathbb {R}^m \rightarrow \mathbb {R}\) restricted to the extreme rays of the cone \(\mathscr {C}_{n,2d}\) can be interpreted as a homogeneous polynomial of degree 2d in n variables, i.e.,

\[ \ell (\phi _{n,2d}(z)) = \sum _{\alpha \in \mathscr {A}_{n,2d}} \ell _\alpha z^\alpha , \]

where \(\ell _\alpha \) denotes the coefficient of \(\ell \) on the coordinate indexed by \(\alpha \).

Under this interpretation, the dual cone \(-\mathscr {C}_{n,2d}^\circ \) is the cone of (coefficients of) nonnegative homogeneous polynomials of degree 2d in n variables.
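The defining property of the inner product on \(\mathbb {R}^m\) can be checked numerically on a small case. The sketch below assumes that the inner product is realized in monomial coordinates by weighting the coordinate indexed by \(\alpha \) with the multinomial coefficient \((2d)!/\prod _i \alpha _i!\); by the multinomial theorem this weighting reproduces \(\langle y,z\rangle ^{2d}\) on pairs \(\phi _{n,2d}(y), \phi _{n,2d}(z)\). The function names are hypothetical and only the Python standard library is used.

```python
from itertools import product
from math import factorial, prod

def exponents(n, deg):
    """All exponent vectors alpha in N^n with sum(alpha) == deg."""
    return [a for a in product(range(deg + 1), repeat=n) if sum(a) == deg]

def multinomial(alpha):
    """Multinomial coefficient (sum alpha)! / prod(alpha_i!)."""
    c = factorial(sum(alpha))
    for a in alpha:
        c //= factorial(a)
    return c

def veronese(z, deg):
    """Homogeneous moment map z -> (z^alpha) over all |alpha| = deg."""
    return [prod(zi ** ai for zi, ai in zip(z, alpha))
            for alpha in exponents(len(z), deg)]

def weighted_inner(u, v, n, deg):
    """Assumed realization of the inner product: multinomial-weighted sum."""
    return sum(multinomial(a) * ua * va
               for a, ua, va in zip(exponents(n, deg), u, v))

n, d = 3, 2
y, z = (1, 2, -1), (3, 1, 2)
lhs = weighted_inner(veronese(y, 2 * d), veronese(z, 2 * d), n, 2 * d)
rhs = sum(yi * zi for yi, zi in zip(y, z)) ** (2 * d)
print(len(exponents(n, 2 * d)), lhs, rhs)  # ambient dimension m, then both sides
```

Here \(m = \binom{6}{4} = 15\) and both sides equal \(3^4 = 81\), since \(\langle y, z\rangle = 3\) for the chosen vectors.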

Example 6

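(Neighborliness of cones over Veronese embeddings [14]) The cone \(\mathscr {C}_{n,2d}\) is a d-neighborly convex cone. To see this, consider a collection of up to d normalized extreme rays

\[ \phi _{n,2d}(z^{(1)}), \phi _{n,2d}(z^{(2)}), \ldots , \phi _{n,2d}(z^{(d)}), \quad \text {with } \Vert z^{(i)}\Vert = 1 \text { for } i=1,2,\ldots ,d, \]

and define the linear functional

\[ \ell (\phi _{n,2d}(z)) = \prod _{i=1}^{d}\left( \Vert z\Vert ^2 \Vert z^{(i)}\Vert ^2 - \langle z, z^{(i)}\rangle ^2\right) . \]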

From the Cauchy-Schwarz inequality, we can see that this is a nonnegative polynomial in z. (In fact, it is a sum of squares.) As such, \(\ell \) defines a linear functional that is nonnegative on the extreme rays of \(\mathscr {C}_{n,2d}\), and hence on \(\mathscr {C}_{n,2d}\) itself. Furthermore, the only normalized extreme rays at which \(\ell \) vanishes are \(\phi _{n,2d}(z^{(i)})\) for \(i=1,2,\ldots ,d\).
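The argument of Example 6 can be tried out on a small instance. The Python sketch below assumes that the exposing functional has the product form \(\ell (\phi _{n,2d}(z)) = \prod _{i}(\Vert z\Vert ^2\Vert z^{(i)}\Vert ^2 - \langle z, z^{(i)}\rangle ^2)\), which is consistent with the Cauchy-Schwarz argument above; the function name `ell`, the chosen points, and the integer grid are illustrative assumptions.

```python
from itertools import product

def dot(u, v):
    """Euclidean inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def ell(z, points):
    """Assumed exposing functional, evaluated as a polynomial in z:
    the product over i of ||z||^2 ||z_i||^2 - <z, z_i>^2."""
    val = 1
    for p in points:
        val *= dot(z, z) * dot(p, p) - dot(z, p) ** 2
    return val

# d = 2 unit vectors in R^3, so ell is a form of degree 2d = 4.
points = [(1, 0, 0), (0, 1, 0)]

# Nonnegativity on a small integer grid (each factor is a Cauchy-Schwarz gap).
grid = list(product(range(-2, 3), repeat=3))
nonnegative = all(ell(z, points) >= 0 for z in grid)

# Unit vectors in the grid at which ell vanishes: only +/- the chosen points,
# and z and -z give the same extreme ray because the degree is even.
zeros_on_sphere = [z for z in grid if dot(z, z) == 1 and ell(z, points) == 0]
print(nonnegative, zeros_on_sphere)
```

The remaining unit vectors \((0,0,\pm 1)\) of the grid give the value 1, so the functional is strictly positive there.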

3.2 Non-degeneracy and regularity of convex cones

Our approach to showing that a k-neighborly cone is k-Terracini convex is based on the dual characterization of k-Terracini convexity from Proposition 1. Specifically, for any collection of normalized extreme rays \(x^{(1)},\dots ,x^{(k)}\) of a k-neighborly cone \(\mathscr {K} \subseteq \mathbb {R}^m\), we wish to prove that \(\bigcap _{i=1}^{k}span \left( \mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \subseteq span \left( \bigcap _{i=1}^{k}\mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \). Our strategy is to identify an \(\ell \in -\bigcap _{i=1}^{k}\mathscr {N}_{\mathscr {K}}(x^{(i)})\) such that

\[ \ell + \left( U \cap \bigcap _{i=1}^{k}span \left( \mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \right) \subseteq -\bigcap _{i=1}^{k}\mathscr {N}_{\mathscr {K}}(x^{(i)}) \]

for an open set \(U \subseteq \mathbb {R}^m\) containing the origin. The linear functional that supports \(\mathscr {K}\) at the points \(x^{(1)}, \dots , x^{(k)}\), which is available to us from the definition of k-neighborliness, serves as a natural candidate for \(\ell \). The key issue with executing this strategy is that we need to control the extent to which any \(\Delta \in \bigcap _{i=1}^{k} span \left( \mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \) perturbs \(\ell \). In particular, as \(\Delta \in \bigcap _{i=1}^{k} span \left( \mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \) may be decomposed as \(\Delta = \Delta ^{(i)}_{+} - \Delta ^{(i)}_{-}\) for each \(i=1,\dots ,k\), (with \(\Delta ^{(i)}_{+},\Delta ^{(i)}_{-}\in -\mathscr {N}_{\mathscr {K}}(x^{(i)})\)), we need to bound the amount that the ‘negative’ parts \(\Delta ^{(i)}_{-}\) perturb \(\ell \). We consider two conditions to address this point. The first one ensures that \(\ell (x)\) grows sufficiently fast around \(\{x^{(1)},x^{(2)},\ldots ,x^{(k)}\}\). The second one controls the growth of any linear functional in \(-\mathscr {N}_{\mathscr {K}}(x)\) for any normalized extreme ray \(x \in \mathscr {K}\). Under these conditions—with the second one applied to each \(\Delta ^{(i)}_{-}\)—we show that \(\ell \) dominates \(\Delta ^{(i)}_{-}\); consequently, we prove that for each \(\Delta \in \bigcap _{i=1}^{k} span \left( \mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \) there exists \(\gamma \ne 0\) such that \(\ell + \gamma \Delta \in -\bigcap _{i=1}^{k}\mathscr {N}_{\mathscr {K}}(x^{(i)})\). The first condition is a requirement on k-neighborly cones and takes the form of a quadratic growth criterion, while the second one is a regularity property applicable to arbitrary closed, pointed, convex cones. Both of these conditions are mild; for example, we show that the cone over the Veronese embedding satisfies them. 
(That being said, we are unaware of a method to prove that a k-neighborly cone is k-Terracini convex without these two conditions.) We precisely describe the conditions next, and we prove in Sect. 3.3 that k-neighborly cones satisfying these conditions are k-Terracini convex.

3.2.1 Non-degenerate neighborliness

We present a non-degenerate extension of the notion of k-neighborliness in which the linear functional exposing a subset of k extreme rays satisfies an additional growth condition when restricted to nearby extreme rays.

Definition 5

A closed, pointed, convex cone \(\mathscr {K}\subseteq \mathbb {R}^m\) is non-degenerate k-neighborly if for every collection \(x^{(1)},x^{(2)},\ldots ,x^{(k)}\) of normalized extreme rays of \(\mathscr {K}\), there exist \(\epsilon >0\), \(\mu > 0\), and a linear functional \(\ell : \mathbb {R}^m \rightarrow \mathbb {R}\), such that \(\ell (x^{(i)})=0\) for \(i=1,2,\ldots ,k\), \(\ell (x) > 0\) for all \(x\in (ext (\mathscr {K})\cap \mathscr {S}^{m-1})\setminus \{x^{(1)},\ldots ,x^{(k)}\}\), and

\[ \ell (x) \ge \mu \min _{1\le i\le k}\Vert x - x^{(i)}\Vert ^2 \quad \text {for all } x\in (ext (\mathscr {K})\cap \mathscr {S}^{m-1}) \cap \bigcup _{i=1}^{k}\mathscr {B}(x^{(i)},\epsilon ). \tag{10} \]

The quadratic growth condition (10) is a mild restriction, and it is satisfied by the examples of k-neighborly convex cones we consider in this section.

Example 7

(k-neighborly polyhedral cones are non-degenerate k-neighborly) If \(\mathscr {K} \subseteq \mathbb {R}^m\) is a k-neighborly polyhedral cone, then for any collection \(x^{(1)},x^{(2)},\ldots ,x^{(k)}\) of normalized extreme rays there is a linear functional \(\ell \) such that \(\ell (x^{(i)}) = 0\) for \(i=1,2,\ldots ,k\) and \(\ell (x) > 0\) for all other normalized extreme rays of \(\mathscr {K}\). As the set of normalized extreme rays is finite, one can choose \(\epsilon \) smaller than half the minimum distance between normalized extreme rays and obtain that

\[ (ext (\mathscr {K})\cap \mathscr {S}^{m-1}) \cap \bigcup _{i=1}^{k}\mathscr {B}(x^{(i)},\epsilon ) = \{x^{(1)},x^{(2)},\ldots ,x^{(k)}\}, \]

which implies that (10) is vacuously satisfied for any positive \(\mu \).

Example 8

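(Cone \(\mathscr {C}_{n,2d}\) over the Veronese embedding is non-degenerate d-neighborly) For \(y,z \in \mathbb {R}^n\) with unit Euclidean norm so that \(\Vert \phi (y)\Vert _B = \Vert \phi (z)\Vert _B = 1\) (this is the norm associated with the Bombieri inner product on \(\mathbb {R}^m\)), we have that

\[ \Vert \phi (y) - \phi (z)\Vert _B^2 = 2 - 2\langle y, z\rangle ^{2d} = 2\left( 1 - \langle y, z\rangle ^2\right) \sum _{j=0}^{d-1}\langle y, z\rangle ^{2j} \le 2d\left( 1 - \langle y, z\rangle ^2\right) . \]

Here, the inequality follows from the Cauchy-Schwarz inequality and the fact that y and z have unit Euclidean norm. For unit Euclidean norm \(z^{(i)}, ~ i=1,2,\ldots ,d\) and unit Euclidean norm \(z \in \mathbb {R}^n\), the linear functional \(\ell \) from Example 6 satisfies

\[ \ell (\phi (z)) = \prod _{i=1}^{d}\left( 1 - \langle z, z^{(i)}\rangle ^2\right) \ge \frac{1}{(2d)^d}\prod _{i=1}^{d}\Vert \phi (z) - \phi (z^{(i)})\Vert _B^2. \]

Choosing \(\epsilon = \frac{1}{2}\min _{i\ne j}\Vert \phi (z^{(i)})-\phi (z^{(j)})\Vert _B > 0\), whenever \(\phi _{n,2d}(z)\in \bigcup _{i=1}^{d}\mathscr {B}(\phi _{n,2d}(z^{(i)}),\epsilon )\) and \(\Vert z\Vert ^2=1\), every factor other than the one associated with the nearest \(\phi _{n,2d}(z^{(i)})\) is at least \(\epsilon ^2\), and so we have that

\[ \ell (\phi _{n,2d}(z)) \ge \frac{\epsilon ^{2(d-1)}}{(2d)^d}\min _{1\le i\le d}\Vert \phi _{n,2d}(z) - \phi _{n,2d}(z^{(i)})\Vert _B^2. \]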

It follows that \(\mathscr {C}_{n,2d}\) is non-degenerate d-neighborly.

Although the definition of being non-degenerate k-neighborly only requires quadratic growth locally around the set of minimizers, compactness of the sphere means that local quadratic growth implies global quadratic growth.

Lemma 3

If a closed, pointed, convex cone \(\mathscr {K} \subseteq \mathbb {R}^m\) is non-degenerate k-neighborly then for every collection \(x^{(1)},x^{(2)},\ldots ,x^{(k)}\) of normalized extreme rays of \(\mathscr {K}\), there exists \(\mu _0 > 0\), and a linear functional \(\ell \), such that \(\ell (x^{(i)})=0\) for \(i=1,2,\ldots ,k\) and

\[ \ell (x) \ge \mu _0 \min _{1\le i\le k}\Vert x - x^{(i)}\Vert ^2 \quad \text {for all } x\in ext (\mathscr {K})\cap \mathscr {S}^{m-1}. \]

Proof

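Let \(x^{(1)},x^{(2)},\ldots ,x^{(k)}\) be a collection of normalized extreme rays of \(\mathscr {K}\). Let \(\epsilon \) and \(\mu \) be the positive constants, and let \(\ell \) be the linear functional, that exist because \(\mathscr {K}\) is non-degenerate k-neighborly. Let

\[ \mathscr {W} = (ext (\mathscr {K})\cap \mathscr {S}^{m-1}) \cap \bigcup _{i=1}^{k}\mathscr {B}(x^{(i)},\epsilon ) \]

and let \(\mathscr {W}^c = ext (\mathscr {K})\cap \mathscr {S}^{m-1} \setminus \mathscr {W}\) be its complement in normalized extreme rays. By compactness of \(\mathscr {W}^c\) and the fact that \(\ell (x) > 0\) on \(\mathscr {W}^c\), there exists some \(M>0\) such that

\[ \ell (x) \ge M \ge \frac{M}{4}\min _{1\le i\le k}\Vert x - x^{(i)}\Vert ^2 \quad \text {for all } x\in \mathscr {W}^c, \]

where the second inequality holds because \(\Vert x-y\Vert ^2\le 4\) whenever \(x,y\in \mathscr {S}^{m-1}\). Since

\[ \ell (x) \ge \mu \min _{1\le i\le k}\Vert x - x^{(i)}\Vert ^2 \quad \text {for all } x\in \mathscr {W}, \]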

taking \(\mu _0 = \min \{\mu , M/4\}\) completes the proof. \(\square \)

3.2.2 Regular cones

Our notion of regularity for a closed, pointed, convex cone requires that no linear functional in the dual cone grows too fast around its minimizer when restricted to extreme rays. This holds whenever the restriction of a linear functional to the extreme rays is smooth.

Definition 6

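A closed, pointed, convex cone \(\mathscr {K}\subseteq \mathbb {R}^m\) is regular if for each \(x_0\in ext (\mathscr {K})\) and each \(\ell \in -\mathscr {N}_{\mathscr {K}}(x_0)\), there exist \(\delta >0\) and \(\nu >0\) such that

\[ \ell (x) \le \nu \Vert x - x_0\Vert ^2 \quad \text {for all } x\in (ext (\mathscr {K})\cap \mathscr {S}^{m-1}) \cap \mathscr {B}(x_0,\delta ). \tag{11} \]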

Example 9

(Polyhedral cones are regular) If \(\mathscr {K} \subseteq \mathbb {R}^m\) is a proper polyhedral cone, then the set of normalized extreme rays is finite. Therefore, for sufficiently small \(\delta \), \((ext (\mathscr {K}) \cap \mathscr {S}^{m-1}) \cap \mathscr {B}(x_0,\delta ) = \{x_0\}\). If \(\ell \in -\mathscr {N}_{\mathscr {K}}(x_0)\), then \(\ell (x_0) = 0\) and so (11) is vacuously satisfied for any \(\nu >0\).

Example 10

(Cone over the Veronese embedding is regular) Suppose that \(z_0\in \mathscr {S}^{n-1}\) and \(\ell (\phi _{n,2d}(z))\) is nonnegative and vanishes at \(z_0\). Consider the nonnegative homogeneous quadratic \(\Vert z\Vert ^2 \Vert z_0\Vert ^2 - \langle z, z_0\rangle ^2\), which vanishes only on the line spanned by \(z_0\). Since both \(\ell (\phi _{n,2d}(z))\) and its gradient vanish at \(z=z_0\), there exists \(M > 0\) such that \(\ell (\phi _{n,2d}(z)) \le M (\Vert z\Vert ^2 \Vert z_0\Vert ^2 - \langle z, z_0\rangle ^2)\) for all \(z\in \mathscr {S}^{n-1}\). Then if \(z\in \mathscr {S}^{n-1}\),

\[ \ell (\phi _{n,2d}(z)) \le M\left( 1 - \langle z, z_0\rangle ^2\right) \le M\left( 1 - \langle z, z_0\rangle ^{2d}\right) = \frac{M}{2}\Vert \phi _{n,2d}(z) - \phi _{n,2d}(z_0)\Vert _B^2. \]

Since \(z_0\) was arbitrary, it follows that \(\mathscr {C}_{n,2d}\) is regular.

Although the definition of a cone being regular only bounds the growth of a linear functional on normalized extreme rays locally around its minimizer, such a local bound can be extended to a global bound.

Lemma 4

If a closed, pointed, convex cone \(\mathscr {K} \subseteq \mathbb {R}^m\) is regular then for each \(x_0\in ext (\mathscr {K})\) and each \(\ell \in -\mathscr {N}_{\mathscr {K}}(x_0)\) there exists \(\nu _0>0\) such that \(\ell (x) \le \nu _0\Vert x-x_0\Vert ^2\) for all \(x\in ext (\mathscr {K})\cap \mathscr {S}^{m-1}\).

Proof

If \(x_0\in ext (\mathscr {K})\), the cone \(\mathscr {K}\) is regular, and \(\ell \in -\mathscr {N}_{\mathscr {K}}(x_0)\), then there exist \(\delta >0\) and \(\nu > 0\) such that \(x\in ext (\mathscr {K}) \cap \mathscr {S}^{m-1}\) and \(\Vert x-x_0\Vert < \delta \) implies \(\ell (x) \le \nu \Vert x-x_0\Vert ^2\). If, on the other hand, \(\frac{\Vert x_0-x\Vert }{\delta }\ge 1\) and \(L = \max _{x\in \mathscr {S}^{m-1}}\ell (x)\) then

\[ \ell (x) \le L \le L\,\frac{\Vert x - x_0\Vert ^2}{\delta ^2}. \]

Choosing \(\nu _0 = \max \{\nu ,L/\delta ^2\}\) completes the proof. \(\square \)

3.3 Terracini convexity of neighborly cones

We are now in a position to state and prove the main result of this section.

Theorem 1

If a closed, pointed, convex cone is non-degenerate k-neighborly and regular, then it is k-Terracini convex.

Proof

Let \(x^{(1)},x^{(2)},\ldots ,x^{(k)}\) be a collection of normalized extreme rays of a closed, pointed, non-degenerate k-neighborly convex cone \(\mathscr {K}\). To establish that \(\mathscr {K}\) is k-Terracini convex, by Remark 1 it suffices to show that \(\bigcap _{i=1}^{k}span \left( \mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \subseteq span \left( \bigcap _{i=1}^{k}\mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \). As such, let \(\Delta \in \bigcap _{i=1}^{k}span \left( \mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \) be arbitrary.

Let \(\ell \) be a linear functional from the definition of non-degenerate k-neighborliness of \(\mathscr {K}\). Since this functional is nonnegative on \(\mathscr {K}\) and vanishes on \(x^{(i)}\) for \(i=1,2,\ldots ,k\), it follows that \(\ell \in -\bigcap _{i=1}^{k}\mathscr {N}_{\mathscr {K}}(x^{(i)})\). Further, from Lemma 3 there exists \(\mu _0 > 0\) such that

\[ \ell (x) \ge \mu _0 \min _{1\le i\le k}\Vert x - x^{(i)}\Vert ^2 \quad \text {for all } x\in ext (\mathscr {K})\cap \mathscr {S}^{m-1}. \tag{12} \]

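Since \(\Delta \in \bigcap _{i=1}^{k}span \left( \mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \), for each i we have a decomposition of \(\Delta \) as \(\Delta = \Delta ^{(i)}_+ - \Delta ^{(i)}_-\) where \(\Delta ^{(i)}_+,\Delta ^{(i)}_-\in -\mathscr {N}_{\mathscr {K}}(x^{(i)})\). As \(\mathscr {K}\) is regular, for each \(i=1,2,\ldots ,k\), there exists \(\nu ^{(i)}_0> 0\) such that \(\Delta ^{(i)}_-(x) \le \nu ^{(i)}_0\Vert x-x^{(i)}\Vert ^2\) for all \(x\in ext (\mathscr {K}) \cap \mathscr {S}^{m-1}\) from Lemma 4. Setting \(\nu _0 = \max _i\{\nu ^{(i)}_0\}\) we have that

\[ \Delta ^{(i)}_-(x) \le \nu _0\Vert x - x^{(i)}\Vert ^2 \quad \text {for all } x\in ext (\mathscr {K})\cap \mathscr {S}^{m-1} \text { and } i=1,2,\ldots ,k. \tag{13} \]

If we choose \(0<\gamma < \mu _0/\nu _0\) it follows from (12) and (13) that

\[ \ell (x) + \gamma \Delta (x) \ge \ell (x) - \gamma \min _{1\le i\le k}\Delta ^{(i)}_-(x) \ge (\mu _0 - \gamma \nu _0)\min _{1\le i\le k}\Vert x - x^{(i)}\Vert ^2 \ge 0 \quad \text {for all } x\in ext (\mathscr {K})\cap \mathscr {S}^{m-1}, \]

where the first inequality holds because each \(\Delta ^{(i)}_+\) is nonnegative on \(\mathscr {K}\).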

Using the fact that \(\Delta (x^{(i)}) = \ell (x^{(i)}) = 0\) for \(i=1,2,\ldots ,k\), we can conclude that \(\ell +\gamma \Delta \in -\bigcap _{i=1}^{k}\mathscr {N}_{\mathscr {K}}(x^{(i)})\). Since \(\gamma \ne 0\), it follows that \(\Delta \in span \left( \bigcap _{i=1}^{k}\mathscr {N}_{\mathscr {K}}(x^{(i)})\right) \), and so \(\mathscr {K}\) is k-Terracini convex. \(\square \)

This theorem yields two immediate corollaries based on the examples in Sects. 3.2.1 and 3.2.2.

Corollary 2

A pointed k-neighborly polyhedral cone is k-Terracini convex.

Proof

This follows immediately from Theorem 1 and Examples 7 and 9. \(\square \)

Corollary 3

The cone \(\mathscr {C}_{n,2d}\) over the Veronese embedding is d-Terracini convex.

Proof

This follows immediately from Theorem 1 and Examples 8 and 10. \(\square \)

While Corollary 3 holds for general cones over Veronese embeddings, for the special case of the cone over the moment curve, i.e., the case \(n=2\), a stronger conclusion is possible.

Corollary 4

The cone \(\mathscr {C}_{2,2d}\) over the homogeneous moment curve is Terracini convex, i.e., is k-Terracini convex for all k.

Proof

Let \(x^{(1)},\ldots ,x^{(k)}\) generate distinct extreme rays of \(\mathscr {C}_{2,2d}\). Then there exist points \(z^{(1)},\ldots ,z^{(k)}\in \mathbb {R}^2\) such that \(\phi _{2,2d}(z^{(i)}) = x^{(i)}\) for \(i=1,2,\ldots ,k\) and \(z^{(i)}_1z^{(j)}_2 - z^{(j)}_1z^{(i)}_2 \ne 0\) whenever \(i\ne j\). In other words, the \(z^{(i)}\) represent distinct elements of the real projective line. Since \(\mathscr {C}_{2,2d}\) is d-Terracini convex, to conclude that \(\mathscr {C}_{2,2d}\) is Terracini convex it suffices to show that if \(k\ge d+1\) then

\[ \bigcap _{i=1}^{k}span \left( \mathscr {N}_{\mathscr {C}_{2,2d}}(x^{(i)})\right) = \{0\}. \]

Elements \(\ell \in \mathscr {N}_{\mathscr {C}_{2,2d}}(x^{(i)})\) are exactly the linear functionals with the property that \(\ell (\phi _{2,2d}(z))\) is a bivariate homogeneous polynomial of degree 2d that is non-positive and vanishes at \(z^{(i)}\). As such \(\ell \in \bigcap _{i=1}^{d}\mathscr {N}_{\mathscr {C}_{2,2d}}(x^{(i)})\) if and only if \(\ell (\phi _{2,2d}(z))\) is a non-negative multiple of \(p(z) = -\prod _{i=1}^{d}(z_1z^{(i)}_2 - z_2z^{(i)}_1)^2\). From d-Terracini convexity of \(\mathscr {C}_{2,2d}\), it follows that \(\ell \in \bigcap _{i=1}^{d}span \left( \mathscr {N}_{\mathscr {C}_{2,2d}}(x^{(i)})\right) \) if and only if \(\ell (\phi _{2,2d}(z))\) is a scalar multiple of p(z).

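Consider any \(\tilde{\ell }\in \bigcap _{i=1}^{k}span \left( \mathscr {N}_{\mathscr {C}_{2,2d}}(x^{(i)})\right) \) for \(k\ge d+1\) and let \(q(z) = \tilde{\ell }(\phi _{2,2d}(z))\). Then \(q(z) = \alpha p(z)\) for some scalar \(\alpha \) since

\[ \tilde{\ell }\in \bigcap _{i=1}^{k}span \left( \mathscr {N}_{\mathscr {C}_{2,2d}}(x^{(i)})\right) \subseteq \bigcap _{i=1}^{d}span \left( \mathscr {N}_{\mathscr {C}_{2,2d}}(x^{(i)})\right) . \]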

Furthermore, \(q(z^{(d+1)}) = 0\) since \(\tilde{\ell }\in span (\mathscr {N}_{\mathscr {C}_{2,2d}}(x^{(d+1)}))\). Since \(z^{(i)}_1z^{(j)}_2 - z^{(j)}_1z^{(i)}_2 \ne 0\) whenever \(i\ne j\), this is only possible if \(\alpha =0\) and hence \(\tilde{\ell } = 0\). \(\square \)
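The last step of the proof of Corollary 4 can be illustrated on a concrete instance. The following Python sketch evaluates \(p(z) = -\prod _{i=1}^{d}(z_1z^{(i)}_2 - z_2z^{(i)}_1)^2\) for \(d=2\); the points chosen for \(z^{(1)}, z^{(2)}, z^{(3)}\) and the function name `p` are illustrative assumptions.

```python
from itertools import product

def p(z, roots):
    """Evaluate p(z) = -prod_i (z_1 * r_2 - z_2 * r_1)^2 over the given roots r."""
    val = -1
    for r in roots:
        val *= (z[0] * r[1] - z[1] * r[0]) ** 2
    return val

roots = [(1, 0), (0, 1)]  # z^(1), z^(2): distinct points of the projective line, d = 2
extra = (1, 1)            # z^(3): a further point, projectively distinct from both

values_at_roots = [p(r, roots) for r in roots]
value_at_extra = p(extra, roots)

# p is non-positive everywhere (checked here on a small integer grid).
nonpositive = all(p(z, roots) <= 0 for z in product(range(-3, 4), repeat=2))
print(values_at_roots, value_at_extra, nonpositive)
```

Since p does not vanish at the additional point, a multiple \(\alpha p\) that vanishes there must have \(\alpha = 0\), which is the conclusion reached in the proof.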

A natural question at this stage is whether cones \(\mathscr {C}_{n,2d}\) over Veronese embeddings for \(n > 2\) are also Terracini convex, rather than merely being d-Terracini convex. For the case of \(n=3\), this question is open, and (to the best of our knowledge) cannot be resolved given the current understanding of the structure of \(\mathscr {C}_{3,2d}\). For the case of \(n=4\), the following example shows that \(\mathscr {C}_{4,4}\) is not Terracini convex based on Blekherman’s study of dimensional differences between faces of nonnegative polynomials and sums of squares [5].

Example 11

([5, Section 2.2]) Consider the cone \(\mathscr {C}_{4,4}\), which can be viewed as dual to nonnegative quartic forms in four variables. Let \(S = \{(1,1,0,0), (1,0,1,0), (1,0,0,1), (0,1,1,0), (0,1,0,1), (0,0,1,1), (1,1,1,1)\}\). Blekherman shows that the face of nonnegative quartic forms in four variables that vanish on S has dimension 6, i.e.,

\[ \dim \left( span \left( \bigcap _{z\in S}\mathscr {N}_{\mathscr {C}_{4,4}}(\phi _{4,4}(z))\right) \right) = 6. \tag{15} \]

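Furthermore, each of the subspaces \(span (\mathscr {N}_{\mathscr {C}_{4,4}}(\phi _{4,4}(z)))\) for \(z\in S\) has codimension 4 in the 35-dimensional space of quartic forms in four variables. The intersection (over \(z \in S\)) of these subspaces consists exactly of the forms that vanish doubly on S. Consequently, as S contains 7 points,

\[ \dim \left( \bigcap _{z\in S}span \left( \mathscr {N}_{\mathscr {C}_{4,4}}(\phi _{4,4}(z))\right) \right) \ge 35 - 7\cdot 4 = 7. \tag{16} \]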

In Blekherman’s language, the set S is not 2-independent. It follows from (15) and (16) that \(\mathscr {C}_{4,4}\) is not 7-Terracini convex, and hence not Terracini convex.
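The dimension count behind Example 11 can be reproduced with exact arithmetic. The Python sketch below assumes that a quartic form vanishes doubly at a point exactly when its gradient vanishes there (for a homogeneous form the value then vanishes as well, by Euler's identity), and it builds the resulting linear conditions in the monomial basis. The helper names are hypothetical, and the code asserts only the lower bound on the dimension, not its exact value.

```python
from fractions import Fraction
from itertools import product
from math import prod

def exponents(n, deg):
    """All exponent vectors alpha in N^n with sum(alpha) == deg."""
    return [a for a in product(range(deg + 1), repeat=n) if sum(a) == deg]

def gradient_rows(s, monos):
    """Rows of the map p -> grad p(s), in the monomial basis `monos`."""
    rows = []
    for j in range(len(s)):
        row = []
        for a in monos:
            if a[j] == 0:
                row.append(0)
            else:
                b = list(a)
                b[j] -= 1  # differentiate z^a with respect to z_j
                row.append(a[j] * prod(si ** bi for si, bi in zip(s, b)))
        rows.append(row)
    return rows

def rank(rows):
    """Rank via exact Gaussian elimination over the rationals."""
    m = [[Fraction(v) for v in r] for r in rows]
    rk = 0
    for c in range(len(m[0])):
        piv = next((i for i in range(rk, len(m)) if m[i][c] != 0), None)
        if piv is None:
            continue
        m[rk], m[piv] = m[piv], m[rk]
        for i in range(rk + 1, len(m)):
            if m[i][c] != 0:
                f = m[i][c] / m[rk][c]
                m[i] = [x - f * y for x, y in zip(m[i], m[rk])]
        rk += 1
        if rk == len(m):
            break
    return rk

S = [(1, 1, 0, 0), (1, 0, 1, 0), (1, 0, 0, 1), (0, 1, 1, 0),
     (0, 1, 0, 1), (0, 0, 1, 1), (1, 1, 1, 1)]
monos = exponents(4, 4)                       # 35 quartic monomials in 4 variables
per_point = [rank(gradient_rows(s, monos)) for s in S]
conditions = [row for s in S for row in gradient_rows(s, monos)]
dim_double_vanishing = len(monos) - rank(conditions)
print(len(monos), per_point, dim_double_vanishing)
```

Each point contributes 4 independent conditions, matching the codimension stated above, and the 28 conditions together cannot cut the 35-dimensional space below dimension 7, which exceeds the dimension 6 of the face.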

4 Preservation of Terracini convexity under linear images

In this section, we consider the Terracini convexity properties of linear images of Terracini convex cones such as the nonnegative orthant and the positive semidefinite matrices. We carry out our investigation by analyzing the performance of convex relaxations for nonconvex inverse problems. Specifically, we consider the problem of finding the componentwise nonnegative vector with the smallest number of nonzero entries (i.e., nonnegative sparse vectors) in an affine space, and that of finding the smallest rank positive semidefinite matrix in an affine space. Both of these problems arise commonly in many applications and they have been widely studied in the literature. In Sect. 4.1 we consider sparse vector recovery and we reprove a result of Donoho and Tanner that a natural linear programming relaxation succeeds in recovering nonnegative sparse vectors in an affine space if and only if a particular linear image of the nonnegative orthant is k-Terracini convex for an appropriate k [9]. Donoho and Tanner’s original proof was given in the language of neighborly polytopes. We provide an alternate proof in Sect. 4.1 by appealing to the dual relation Proposition 1 as it is instructive in our subsequent analysis on recovering low-rank matrices in affine spaces. In Sect. 4.2 we prove that the success of a semidefinite programming relaxation in recovering positive semidefinite low-rank matrices implies k-Terracini convexity of a particular linear image of the cone of positive semidefinite matrices for a suitable k; in the reverse direction, we show that a ‘robust’ analog of k-Terracini convexity implies success of the semidefinite relaxation. The results in Sect. 4.2 lead to a new family of non-polyhedral Terracini convex cones, which we describe in Sect. 4.3. 
Thus, this section supplies new examples of Terracini convex cones, and our results also highlight the utility of our definition of Terracini convexity in generalizing neighborly polyhedral cones, as the usual notion of neighborliness for non-polyhedral cones is not the right one for characterizing the performance of semidefinite relaxations for low-rank matrix recovery.

4.1 Linear images of the nonnegative orthant

In applications ranging from feature selection in machine learning to recovering signals and images from a limited number of measurements, a frequently encountered question is that of finding vectors with the smallest number of nonzero entries in a given affine space. Consider the following model problem:

\[ \min _{x \in \mathbb {R}^d} \; |\mathrm {support}(x)| \quad \text {s.t.} \quad Ax = b, ~ x \ge 0. \tag{P0} \]

Here \(A : \mathbb {R}^d \rightarrow \mathbb {R}^n\) is a linear map, \(b \in \mathbb {R}^n\), \(x \ge 0\) denotes componentwise nonnegativity of x, and \(|\mathrm {support}(x)|\) denotes the number of nonzero entries of x. As solving (P0) is NP-hard in general, the following tractable linear programming relaxation is the method of choice that is employed in most contexts:

\[ LP(A,b) = \underset{x \in \mathbb {R}^d}{\mathrm {arg\,min}} \; \langle 1, x\rangle \quad \text {s.t.} \quad Ax = b, ~ x \ge 0. \tag{P1} \]

In assessing the performance of the relaxation (P1), the usual mode of analysis is to suppose that there exists a nonnegative vector \(x^\star \in \mathbb {R}^d\) with a small number of nonzeros such that \(b = A x^\star \), and to then ask whether \(x^\star \) is the unique optimal solution of (P1), i.e., whether \(LP(A,A x^\star ) = \{x^\star \}\). The main result of Donoho and Tanner [9] relates the success of (P1) to neighborliness properties of images of the d-simplex \(\Delta ^d = \{x \in \mathbb {R}^d \;:\; x \ge 0, ~ \langle 1, x \rangle = 1\}\) under the map A.
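To make the success and failure of the relaxation concrete, here is a minimal Python sketch that computes the optimal vertices of (P1) for very small instances by brute-force enumeration of basic feasible solutions with exact rational arithmetic. This enumeration stands in for a real linear programming solver and is not the method of [9]; the function names and the two example maps are illustrative assumptions, and the code assumes that A has full row rank. Because the objective \(\langle 1, x\rangle \) is strictly positive on nonzero nonnegative directions, the optimal set is the convex hull of the optimal vertices, so \(x^\star \) is the unique solution exactly when it is the only optimal vertex.

```python
from fractions import Fraction
from itertools import combinations

def solve_square(M, b):
    """Solve M x = b exactly by Gauss-Jordan elimination; None if M is singular."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(M, b)]
    for c in range(n):
        piv = next((r for r in range(c, n) if aug[r][c] != 0), None)
        if piv is None:
            return None
        aug[c], aug[piv] = aug[piv], aug[c]
        pivot = aug[c][c]
        aug[c] = [v / pivot for v in aug[c]]
        for r in range(n):
            if r != c and aug[r][c] != 0:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n] for row in aug]

def lp_optimal_vertices(A, b):
    """Optimal vertices of min <1, x> s.t. A x = b, x >= 0 (A of full row rank)."""
    n, d = len(A), len(A[0])
    vertices = set()
    for basis in combinations(range(d), n):
        sol = solve_square([[A[r][c] for c in basis] for r in range(n)], b)
        if sol is None or any(v < 0 for v in sol):
            continue  # singular basis or infeasible basic solution
        x = [Fraction(0)] * d
        for c, v in zip(basis, sol):
            x[c] = v
        vertices.add(tuple(x))
    if not vertices:
        return []
    best = min(sum(x) for x in vertices)
    return sorted(x for x in vertices if sum(x) == best)

# Recovery succeeds: b = A x* with x* = (1, 0, 0, 0).
A_good, b_good = [[1, 0, -1, 0], [0, 1, 0, -1]], [1, 0]
# Recovery fails: b = A x* with x* = (1, 0, 0), but (0, 1, 0) is also optimal.
A_bad, b_bad = [[1, 1, -2]], [1]

print(lp_optimal_vertices(A_good, b_good))
print(lp_optimal_vertices(A_bad, b_bad))
```

In both examples the all-ones vector lies in the null space of A, so the positivity condition on the null space used later in this section holds; only the first map recovers the 1-sparse vector uniquely.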

In Theorem 2, to follow, we state a conic analog of the result in [9], and we reprove it in two stages. The proof we give offers a template for our generalization in Sect. 4.2 on relating the performance of semidefinite relaxations for low-rank matrix recovery to Terracini convexity of linear images of the cone of positive semidefinite matrices. Our analysis relies on relating the following three properties; each of these is stated with respect to a positive integer k, which will be clear from context.

- A linear map \(A : \mathbb {R}^d \rightarrow \mathbb {R}^n\) satisfies the exact recovery property if, for each \(x^\star \in \mathbb {R}^d_+\) with \(|\mathrm {support}(x^\star )| \le k\), the linear programming relaxation (P1) has \(x^\star \) as its unique optimal solution, i.e., \(LP(A,Ax^\star ) = \{x^\star \}\).

- Consider a linear map \(B : \mathbb {R}^d \rightarrow \mathbb {R}^N\). The cone \(B(\mathbb {R}^d_+)\) satisfies the unique preimage property if, for each \(x^\star \in \mathbb {R}^d_+\) with \(|\mathrm {support}(x^\star )| \le k\), the point \(B x^\star \) has a unique preimage in \(\mathbb {R}^d_+\).

- Consider a linear map \(B : \mathbb {R}^d \rightarrow \mathbb {R}^N\). The cone \(B(\mathbb {R}^d_+)\) satisfies the Terracini convexity property if it is pointed, it has d extreme rays, and it is k-Terracini convex.

Given these notions we state next the result of Donoho and Tanner in conic form:

Theorem 2

Consider a linear map \(A : \mathbb {R}^d \rightarrow \mathbb {R}^n\) that is surjective and define the linear map \(B : \mathbb {R}^d \rightarrow \mathbb {R}^{n+1}\) as \(Bx = \begin{pmatrix}Ax \\ \langle 1, x \rangle \end{pmatrix}\). Suppose that \(\mathrm {null}(A) \cap \mathbb {R}^d_{++} \ne \emptyset \). Fix a positive integer \(k < d\). The map A satisfies the exact recovery property if and only if the cone \(B(\mathbb {R}^d_+)\) satisfies the Terracini convexity property.

Remark 2

This result is a conic analog of those in [9]. The assumption that A is surjective is to ensure a cleaner argument; if this condition is not satisfied, the proof can be adapted by restricting to the image of A. Finally, the results in [9] do not require the condition \(\mathrm {null}(A) \cap \mathbb {R}^d_{++} \ne \emptyset \), and they are described in terms of a property termed ‘outward neighborliness’. However, the particular restriction on which we focus suffices for our purposes and leads to a simpler exposition.

This result leads to two types of consequences in [9]. In one direction, Donoho and Tanner leveraged results on constructions of neighborly polytopes to obtain new families of linear maps A for which the linear program (P1) succeeds in sparse recovery. Conversely, by building on results in the sparse recovery literature, they constructed new families of neighborly polytopes.
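To make the exact recovery property concrete, the following Python sketch checks it by brute force on a small instance. It assumes that (P1) is the linear program that minimizes \(\langle 1, x \rangle\) subject to \(Ax = b, ~ x \ge 0\), and it enumerates basic feasible solutions in exact rational arithmetic instead of calling an LP solver. The helper names (`solve_square`, `lp_solutions`, `exact_recovery`) and the matrix `A_square` are illustrative choices that do not come from [9]; the approach is only sensible for very small d.

```python
from fractions import Fraction as F
from itertools import combinations

def solve_square(M, b):
    """Solve M z = b exactly by Gauss-Jordan elimination; None if M is singular."""
    n = len(M)
    aug = [[F(v) for v in row] + [F(b[i])] for i, row in enumerate(M)]
    for col in range(n):
        piv = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if piv is None:
            return None
        aug[col], aug[piv] = aug[piv], aug[col]
        aug[col] = [v / aug[col][col] for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [aug[r][n] for r in range(n)]

def lp_solutions(A, b):
    """Optimal vertices of min <1, x> s.t. A x = b, x >= 0 (A of full row rank).

    The optimal set is the convex hull of these vertices, so the optimum is
    unique exactly when a single vertex is returned.
    """
    n, d = len(A), len(A[0])
    vertices = set()
    for cols in combinations(range(d), n):
        z = solve_square([[A[r][c] for c in cols] for r in range(n)], b)
        if z is not None and all(v >= 0 for v in z):
            x = [F(0)] * d
            for c, v in zip(cols, z):
                x[c] = v
            vertices.add(tuple(x))
    best = min(sum(x) for x in vertices)
    return {x for x in vertices if sum(x) == best}

def exact_recovery(A, k):
    """Check recovery for one representative per support of size 1..k.

    Whether x* is the unique optimum depends only on its support, so the
    indicator vector of each support suffices.
    """
    d = len(A[0])
    for size in range(1, k + 1):
        for S in combinations(range(d), size):
            x_star = tuple(F(1) if i in S else F(0) for i in range(d))
            b = [sum(row[i] * x_star[i] for i in range(d)) for row in A]
            if lp_solutions(A, b) != {x_star}:
                return False
    return True

# Hypothetical instance: the columns of A are the vertices of a square,
# so (1, 1, 1, 1) is a strictly positive element of null(A).
A_square = [[1, 0, -1, 0],
            [0, 1, 0, -1]]
recovers_k1 = exact_recovery(A_square, 1)
recovers_k2 = exact_recovery(A_square, 2)
```

For `A_square`, recovery holds with \(k = 1\) and fails with \(k = 2\): the support \(\{1, 3\}\) gives \(b = 0\), for which the program returns the origin. This agrees with the geometric picture behind Theorem 2, as two diagonally opposite generators of the cone over a square do not span a face.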

Our proof proceeds in two steps and is based on the following intermediate results.

Lemma 5

Consider a linear map \(A : \mathbb {R}^d \rightarrow \mathbb {R}^n\) and define the linear map \(B : \mathbb {R}^d \rightarrow \mathbb {R}^{n+1}\) as \(Bx = \begin{pmatrix}Ax \\ \langle 1, x \rangle \end{pmatrix}\). Suppose that \(\mathrm {null}(A) \cap \mathbb {R}^d_{++} \ne \emptyset \). Fix a positive integer \(k < d\). The map A satisfies the exact recovery property if and only if the cone \(B(\mathbb {R}^d_+)\) satisfies the unique preimage property.

Proof

For the case \(x^\star = 0\), one can check that \(LP(A,0) = \{0\}\) and that the only preimage of \(0 \in \mathbb {R}^{n+1}\) under the map B in \(\mathbb {R}^d_+\) is \(0\). For nonzero \(x^\star \), in considering the exact recovery property and the unique preimage property, we may assume without loss of generality that \(\langle 1, x^\star \rangle = 1\). The reason for this is that \(LP(A, \alpha b) = \alpha LP(A, b)\) for any \(\alpha > 0\); the unique preimage property is similarly unaffected by such scaling. With this normalization, the exact recovery property is equivalent to the statement that for any \(x^\star \in \mathbb {R}^d_+\) with \(|\mathrm {support}(x^\star )| \le k\), the point \(A x^\star \) has a unique preimage in the solid simplex \(\Delta ^d_0 = \{x \in \mathbb {R}^d \;:\; \langle 1, x \rangle \le 1, ~ x \ge 0\}\).

Consider the implication that the exact recovery property implies the unique preimage property. Assume that the unique preimage property does not hold. Then there exists \(x^\star \in \mathbb {R}^d_+\) with \(|\mathrm {support}(x^\star )| \le k\) and \(\tilde{x} \in \mathbb {R}^d_+\) such that \(B \tilde{x} = B x^\star , ~ \tilde{x} \ne x^\star \). Based on the description of B, we can conclude that \(\langle 1, \tilde{x} \rangle = 1\) and therefore \(\tilde{x} \in \Delta ^d\). This violates the property that \(A x^\star \) has a unique preimage in \(\Delta ^d_0\); hence the exact recovery property does not hold.

Conversely, consider the implication that the unique preimage property implies the exact recovery property. Assume for the sake of a contradiction that there exists \(x^\star \in \mathbb {R}^d_+\) with \(|\mathrm {support}(x^\star )| \le k\) and \(\tilde{x} \in \Delta ^d_0\) such that \(A \tilde{x} = A x^\star , ~ \tilde{x} \ne x^\star \). As \(\mathrm {null}(A) \cap \mathbb {R}^d_{++} \ne \emptyset \), there exists \(x^0 \in \Delta ^d\) with \(|\mathrm {support}(x^0)| = d\) such that \(A x^0 = 0\). The point \(x' = (1-\langle 1, \tilde{x}\rangle ) x^0 + \tilde{x}\) has the property that \(B x' = B x^\star \). Consequently, we have that \(x^\star = x' = (1-\langle 1, \tilde{x}\rangle ) x^0 + \tilde{x}\), which in turn implies that \(x^0\) and \(\tilde{x}\) belong to the smallest face of \(\mathbb {R}^d_+\) containing \(x^\star \), i.e., \(\mathrm {support}(x^0) \subseteq \mathrm {support}(x^\star )\) and \(\mathrm {support}(\tilde{x}) \subseteq \mathrm {support}(x^\star )\). However, as \(|\mathrm {support}(x^0)| = d\) but \(|\mathrm {support}(x^\star )| \le k < d\), we have the desired contradiction. \(\square \)
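The construction of \(x'\) in the converse direction can be checked on a tiny instance. The following Python sketch uses a hypothetical map \(A = (1, -1, 0)\) on \(\mathbb {R}^3\) (an assumption for illustration, not an example from this paper), for which \(x^0 = (1/3, 1/3, 1/3)\) lies in \(\mathrm {null}(A) \cap \mathbb {R}^3_{++}\). With \(x^\star = e_3\) and \(\tilde{x} = 0\), the point \(A x^\star \) has two preimages in \(\Delta ^3_0\), and the vector \(x'\) built as in the proof is a second preimage of \(B x^\star \) in \(\mathbb {R}^3_+\).

```python
from fractions import Fraction as F

def apply(M, x):
    """Matrix-vector product in exact rational arithmetic."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

# Hypothetical toy instance (illustration only).
A = [[F(1), F(-1), F(0)]]
B = A + [[F(1)] * 3]            # B x = (A x, <1, x>)

x_star = [F(0), F(0), F(1)]     # 1-sparse with <1, x_star> = 1
x_tilde = [F(0), F(0), F(0)]    # in the solid simplex, A x_tilde = A x_star
x0 = [F(1, 3)] * 3              # strictly positive, in null(A), <1, x0> = 1

# x' = (1 - <1, x_tilde>) x0 + x_tilde, as in the proof of Lemma 5.
slack = 1 - sum(x_tilde)
x_prime = [slack * a + b for a, b in zip(x0, x_tilde)]

same_image = apply(B, x_prime) == apply(B, x_star)   # B x' = B x_star
distinct = x_prime != x_star                         # x' differs from x_star
nonneg = all(v >= 0 for v in x_prime)                # x' lies in the orthant
```

In this instance both properties of Lemma 5 fail together for \(k = 1\), as the lemma predicts.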

Our next result relates the unique preimage property to the Terracini convexity property:

Proposition 5

Consider a linear map \(A : \mathbb {R}^d \rightarrow \mathbb {R}^n\) and define the linear map \(B : \mathbb {R}^d \rightarrow \mathbb {R}^{n+1}\) as \(Bx = \begin{pmatrix}Ax \\ \langle 1, x\rangle \end{pmatrix}\). Suppose the map B is surjective. Fix a positive integer k. The cone \(B(\mathbb {R}^d_+)\) satisfies the unique preimage property if and only if it satisfies the Terracini convexity property.

Proof

First, we give a dual reformulation of the unique preimage property. For each \(x^\star \in \mathbb {R}^d_+\) with \(|\mathrm {support}(x^\star )| \le k\), the property that \(B x^\star \) has a unique preimage in \(\mathbb {R}^d_+\) is equivalent to the transverse intersection condition \(\mathrm {null}(B) \cap \mathscr {K}_{\mathbb {R}^d_+}(x^\star ) = \{0\}\). The cone \(\mathscr {K}_{\mathbb {R}^d_+}(x^\star )\) is closed and therefore one can check that this transverse intersection condition is equivalent to \(\mathrm {null}(B)^\perp \cap \mathrm {ri}(\mathscr {N}_{\mathbb {R}^d_+}(x^\star )) \ne \emptyset \). As the nonnegative orthant is a self-dual cone, the normal cone \(\mathscr {N}_{\mathbb {R}^d_+}(x^\star )\) is given by a face of \(\mathbb {R}^d_+\) of co-dimension at most k. In summary, the unique preimage property states that for any face \(\Omega \) of \(\mathbb {R}^d_+\) of co-dimension at most k, we have that \(\mathrm {null}(B)^\perp \cap \mathrm {ri}(\Omega ) \ne \emptyset \).

Second, we note that the cone \(B(\mathbb {R}^d_+)\) is pointed by construction. As the linear map B is surjective, elements of the normal cone \(\mathscr {N}_{B(\mathbb {R}^d_+)}(Bx)\) for any \(x \in \mathbb {R}^d_+\) are in one-to-one correspondence with \(\mathrm {null}(B)^\perp \cap \mathscr {N}_{\mathbb {R}^d_+}(x)\). Consequently, by appealing to Proposition 1, the cone \(B(\mathbb {R}^d_+)\) being k-Terracini convex is equivalent to the condition that for any face \(\Omega \) of \(\mathbb {R}^d_+\) of co-dimension at most k, we have that \(\mathrm {span}(\mathrm {null}(B)^\perp \cap \Omega ) = \mathrm {null}(B)^\perp \cap \mathrm {span}(\Omega )\).

With these two reformulations of the unique preimage property and the Terracini convexity property in hand, we proceed to establish the desired result.

Consider the implication that the unique preimage property implies the Terracini convexity property. Applying the unique preimage property to elements of \(\mathbb {R}^d_+\) with one nonzero entry, we conclude that \(B(\mathbb {R}^d_+)\) has d extreme rays. Next, fix a face \(\Omega \) of \(\mathbb {R}^d_+\) of co-dimension at most k. By the dual reformulation of the unique preimage property, there exists \(v \in \mathrm {null}(B)^\perp \cap \mathrm {ri}(\Omega )\). Letting U be a bounded open set in \(\mathbb {R}^d\) containing the origin, we have that \(v + \epsilon [U \cap \mathrm {null}(B)^\perp \cap \mathrm {span}(\Omega )] \subset \mathrm {null}(B)^\perp \cap \mathrm {ri}(\Omega )\) for a sufficiently small \(\epsilon > 0\). Thus \(\mathrm {null}(B)^\perp \cap \Omega \) contains a relatively open subset of the subspace \(\mathrm {null}(B)^\perp \cap \mathrm {span}(\Omega )\), and we can conclude that \(\mathrm {span}(\mathrm {null}(B)^\perp \cap \Omega ) = \mathrm {null}(B)^\perp \cap \mathrm {span}(\Omega )\), which is equivalent to \(B(\mathbb {R}^d_+)\) being k-Terracini convex.

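Next, consider the implication that the Terracini convexity property implies the unique preimage property. We prove this by induction on k. For the base case \(k=1\), as the cone \(B(\mathbb {R}^d_+)\) has d extreme rays, we have that the unique preimage property holds for \(k=1\). For \(k > 1\), suppose for the sake of a contradiction that \(\mathrm {null}(B)^\perp \cap \mathrm {ri}(\Omega ) = \emptyset \) for some face \(\Omega \) of \(\mathbb {R}^d_+\) of co-dimension k. Thus, there exists a face \(\hat{\Omega }\) of \(\mathbb {R}^d_+\) contained strictly in \(\Omega \), i.e., \(\hat{\Omega } \subsetneq \Omega \), such that \(\mathrm {null}(B)^\perp \cap \hat{\Omega } = \mathrm {null}(B)^\perp \cap \Omega \). We have the following sequence of containment relations:

\[
\mathrm {null}(B)^\perp \cap \mathrm {span}(\hat{\Omega }) \subseteq \mathrm {null}(B)^\perp \cap \mathrm {span}(\Omega ) \subseteq \mathrm {span}(\mathrm {null}(B)^\perp \cap \Omega ) \subseteq \mathrm {span}(\mathrm {null}(B)^\perp \cap \hat{\Omega }) \subseteq \mathrm {null}(B)^\perp \cap \mathrm {span}(\hat{\Omega }).
\]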

The first relation follows from \(\hat{\Omega } \subseteq \Omega \), the second one follows from the Terracini convexity property, the third one follows from \(\mathrm {null}(B)^\perp \cap \hat{\Omega } = \mathrm {null}(B)^\perp \cap \Omega \), and the final one follows from the fact that the span of the intersection of two sets is contained inside the intersection of the spans of the sets. In conclusion, all the containments are satisfied with equality and we have that \(\mathrm {null}(B)^\perp \cap \mathrm {span}(\hat{\Omega }) = \mathrm {null}(B)^\perp \cap \mathrm {span}(\Omega )\), or equivalently, after taking orthogonal complements, that:

\[
\mathrm {null}(B) + \mathrm {span}(\hat{\Omega })^\perp = \mathrm {null}(B) + \mathrm {span}(\Omega )^\perp . \tag{17}
\]

As \(\mathbb {R}^d_+\) is a polyhedral cone, we note that \(\mathrm {span}(\hat{\Omega })^\perp \) and \(\mathrm {span}(\Omega )^\perp \) are themselves spans of faces of \(\mathbb {R}^d_+\). In particular, let \(\mathscr {F}, \hat{\mathscr {F}}\) be faces of \(\mathbb {R}^d_+\) such that \(\mathscr {F} \subsetneq \hat{\mathscr {F}}\), and \(\mathrm {span}(\mathscr {F}) = \mathrm {span}(\Omega )^\perp , \mathrm {span}(\hat{\mathscr {F}}) = \mathrm {span}(\hat{\Omega })^\perp \). The relationship (17) implies that there exists a generator \(\hat{x}\) of an extreme ray of \(\mathbb {R}^d_+\) in \(\hat{\mathscr {F}} \backslash \mathscr {F}\) such that \(\hat{x} = (x^{(+)} - x^{(-)}) + v\) for \(x^{(+)}, x^{(-)} \in \mathscr {F}\) with disjoint supports and \(v \in \mathrm {null}(B)\). Hence, we have that \(B (\hat{x} + x^{(-)}) = B x^{(+)}\). As \(\mathrm {dim}(\mathscr {F}) = k\), the sum of the sizes of the supports of \(\hat{x} + x^{(-)}\) and of \(x^{(+)}\) is at most \(k+1\). If \(x^{(+)} \ne 0\) we have a contradiction due to the inductive hypothesis. If \(x^{(+)} = 0\), comparing the last coordinates of \(B (\hat{x} + x^{(-)}) = B x^{(+)}\) gives \(\langle 1, \hat{x} + x^{(-)}\rangle = 0\). As both \(\hat{x}\) and \(x^{(-)}\) are nonnegative, this implies that \(\hat{x} + x^{(-)} = 0\) and in turn that \(\hat{x} = 0\), also a contradiction. \(\square \)
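The dual reformulation of the unique preimage property used in the proof above can be verified explicitly when the generators lie on the moment curve. In the following Python sketch (a hypothetical instance, not taken from this paper), the columns of A are \((t_i, t_i^2, \dots , t_i^{2k})\) for distinct nodes \(t_i\), so that every element of \(\mathrm {null}(B)^\perp = \mathrm {range}(B^T)\) has entries \(p(t_i)\) for a polynomial p of degree at most 2k. For a support S with \(|S| \le k\), the polynomial \(p(t) = \prod _{i \in S}(t - t_i)^2\) vanishes on S and is positive elsewhere, which gives a point of \(\mathrm {null}(B)^\perp \) in the relative interior of the corresponding face of \(\mathbb {R}^d_+\). The function names are illustrative.

```python
from itertools import combinations

def poly_mul(p, q):
    """Multiply polynomials given as coefficient lists (lowest degree first)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def certificate(ts, S, k):
    """Coefficients of p(t) = prod_{i in S} (t - t_i)^2, padded to degree 2k."""
    p = [1]
    for i in S:
        p = poly_mul(p, poly_mul([-ts[i], 1], [-ts[i], 1]))
    return p + [0] * (2 * k + 1 - len(p))

# Hypothetical instance: d = 6 integer nodes on the moment curve, k = 2.
k, ts = 2, [-3, -2, -1, 1, 2, 3]
d = len(ts)
# Rows of B: t, t^2, ..., t^{2k} (these form A), followed by the all-ones row.
B = [[t ** j for t in ts] for j in range(1, 2 * k + 1)] + [[1] * d]

def all_supports_certified():
    """For every support S with 1 <= |S| <= k, check that B^T y is zero on S
    and strictly positive off S, where y holds the coefficients of p."""
    for size in range(1, k + 1):
        for S in combinations(range(d), size):
            c = certificate(ts, S, k)
            y = c[1:] + c[:1]        # reorder: constant term pairs with the ones row
            z = [sum(y[r] * B[r][i] for r in range(2 * k + 1)) for i in range(d)]
            on_S = all(z[i] == 0 for i in S)
            off_S = all(z[i] > 0 for i in range(d) if i not in S)
            if not (on_S and off_S):
                return False
    return True

certified = all_supports_certified()
```

The sign of the certificate is immaterial because \(\mathrm {null}(B)^\perp \) is a subspace. Since B here has \(2k+1 = 5\) rows and six distinct nodes, it is surjective, so this instance falls within the setting of Proposition 5.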

Based on these two results, we are now in a position to prove Theorem 2.

Proof of Theorem 2

As \(\mathrm {null}(A) \cap \mathbb {R}^d_{++} \ne \emptyset \) and \(k < d\) by assumption, we can apply Lemma 5. Specifically, the exact recovery property for A is equivalent to the unique preimage property for \(B(\mathbb {R}^d_+)\).

Next, in preparation to apply Proposition 5, we need to verify that the linear map B is surjective. The surjectivity of B is equivalent to A being surjective and \(1 \notin \mathrm {null}(A)^\perp \). The former condition holds by assumption and the latter condition is in turn equivalent to \(\mathrm {null}(A) \nsubseteq \mathrm {span}(1)^\perp \). The assumption \(\mathrm {null}(A) \cap \mathbb {R}^d_{++} \ne \emptyset \) implies that \(\mathrm {null}(A) \nsubseteq \mathrm {span}(1)^\perp \). Thus, we are in a position to apply Proposition 5 and obtain that the unique preimage property of the cone \(B(\mathbb {R}^d_+)\) is equivalent to \(B(\mathbb {R}^d_+)\) satisfying the Terracini convexity property. This concludes the proof. \(\square \)

4.2 Linear images of the positive semidefinite matrices

The development of convex relaxations for obtaining low-rank matrices in affine spaces largely paralleled and built upon the literature on sparse recovery. Notable examples of such problems include factor analysis and collaborative filtering. Concretely, given an affine space in \(\mathbb {S}^d\) of the form \(\{X \in \mathbb {S}^d \;:\; \mathscr {A}(X) = b\}\) where \(\mathscr {A} : \mathbb {S}^d \rightarrow \mathbb {R}^n\) is a linear map and \(b \in \mathbb {R}^n\), consider the following optimization problem for identifying a positive-semidefinite low-rank matrix in this space:

\[
\min _{X \in \mathbb {S}^d} ~ \mathrm {rank}(X) \quad \mathrm {s.t.} \quad \mathscr {A}(X) = b, ~ X \succeq 0. \tag{R0}
\]

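As with the problem (P0), the program (R0) is also NP-hard to solve in general. Consequently, the following semidefinite relaxation is widely employed in practice:

\[
SDP(\mathscr {A}, b) = \mathop {\mathrm {arg\,min}}_{X \in \mathbb {S}^d} ~ \mathrm {tr}(X) \quad \mathrm {s.t.} \quad \mathscr {A}(X) = b, ~ X \succeq 0. \tag{R1}
\]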

By analogy with the analysis of the performance of (P1), we are interested in obtaining conditions under which the unique optimal solution of (R1) with \(b = \mathscr {A}(X^\star )\) for a low-rank matrix \(X^\star \in \mathbb {S}^d_+\) is equal to \(X^\star \), i.e., whether \(SDP(\mathscr {A},\mathscr {A}(X^\star )) = \{X^\star \}\). Our objective in the remainder of this section is to relate such exact recovery to Terracini convexity of an appropriate linear image of \(\mathbb {S}^d_+\).

As with the previous subsection, our analysis is organized in terms of three properties:

-

A linear map \(\mathscr {A} : \mathbb {S}^d \rightarrow \mathbb {R}^n\) satisfies the exact recovery property if for any \(X^\star \in \mathbb {S}^d_+\) with \(\mathrm {rank}(X^\star ) \le k\), the unique optimal solution of the semidefinite programming relaxation (R1) is \(SDP(\mathscr {A},\mathscr {A}(X^\star )) = \{X^\star \}\).

-

Consider a linear map \(\mathscr {B} : \mathbb {S}^d \rightarrow \mathbb {R}^N\). The cone \(\mathscr {B}(\mathbb {S}^d_+)\) satisfies the unique preimage property if for any \(X^\star \in \mathbb {S}^d_+\) with \(\mathrm {rank}(X^\star ) \le k\), the point \(\mathscr {B}(X^\star )\) has a unique preimage in \(\mathbb {S}^d_+\).

-

Consider a linear map \(\mathscr {B} : \mathbb {S}^d \rightarrow \mathbb {R}^N\). The cone \(\mathscr {B}(\mathbb {S}^d_+)\) satisfies the Terracini convexity property if it is closed and pointed, its extreme rays are in one-to-one correspondence with those of \(\mathbb {S}^d_+\), and it is k-Terracini convex.

In what follows, let \(\mathscr {O}^d = \{X\in \mathbb {S}^d\;:\; \mathrm {tr}(X)=1,\; X \succeq 0\}\) be the spectraplex. This plays the same role as the simplex \(\Delta ^d\) did in Sect. 4.1. We are now in a position to state the main new result of this section.

Theorem 3

Consider a linear map \(\mathscr {A} : \mathbb {S}^d \rightarrow \mathbb {R}^n\) and fix a positive integer \(k < d\). Then the following two statements hold:

1. Suppose that \(\mathscr {A}\) is surjective and \(\mathrm {null}(\mathscr {A}) \cap \mathbb {S}^d_{++} \ne \emptyset \). Consider the linear map \(\mathscr {B}: \mathbb {S}^d \rightarrow \mathbb {R}^{n+1}\) defined as \(\mathscr {B}(X) = \begin{pmatrix}\mathscr {A}(X) \\ \mathrm {tr}(X)\end{pmatrix}\). If the map \(\mathscr {A}\) satisfies the exact recovery property, then the cone \(\mathscr {B}(\mathbb {S}^d_+)\) satisfies the Terracini convexity property.

2. Assume that \(n > \binom{d+1}{2} - \binom{d-k+1}{2}\). Suppose there exists an open set \(\mathfrak {S}\) in the space of linear maps from \(\mathbb {S}^d\) to \(\mathbb {R}^n\) with the following properties:

-

\(\mathscr {A}\in \mathfrak {S}\)

-

For each \(\tilde{\mathscr {A}} \in \mathfrak {S}\), the map \(\tilde{\mathscr {A}}\) is surjective and satisfies \(\mathrm {null}(\tilde{\mathscr {A}}) \cap \mathbb {S}^d_{++} \ne \emptyset \).

-

For each \(\tilde{\mathscr {A}} \in \mathfrak {S}\) with associated \(\tilde{\mathscr {B}} : \mathbb {S}^d \rightarrow \mathbb {R}^{n+1}\) defined as \(\tilde{\mathscr {B}}(X) = \begin{pmatrix}\tilde{\mathscr {A}}(X) \\ \mathrm {tr}(X)\end{pmatrix}\), the cone \(\tilde{\mathscr {B}}(\mathbb {S}^d_+)\) satisfies the Terracini convexity property.

Then the map \(\mathscr {A}\) satisfies the exact recovery property.
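The lower bound on n in the second statement is easy to tabulate. The following Python sketch evaluates \(\binom{d+1}{2} - \binom{d-k+1}{2}\) and compares it with the algebraically equivalent expression \(kd - \binom{k}{2}\), which is the dimension of the set of matrices in \(\mathbb {S}^d\) of rank at most k. The function names are illustrative and are not notation used in this paper.

```python
from math import comb

def measurement_threshold(d, k):
    """Value of C(d+1, 2) - C(d-k+1, 2); Theorem 3 (part 2) asks for n above it."""
    return comb(d + 1, 2) - comb(d - k + 1, 2)

def closed_form(d, k):
    """Equivalent expression k*d - C(k, 2)."""
    return k * d - comb(k, 2)

# Tabulate the threshold for a few small sizes with k < d.
table = {(d, k): measurement_threshold(d, k) for d in range(2, 7) for k in range(1, d)}
```

For instance, with \(d = 3\) and \(k = 1\) the threshold equals 3, so the second statement concerns maps with \(n \ge 4\).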

-