Abstract

Projection-free optimization via different variants of the Frank–Wolfe method has become one of the cornerstones of large-scale optimization for machine learning and computational statistics. Numerous applications within these fields involve the minimization of functions with self-concordance-like properties. Such generalized self-concordant functions do not necessarily feature a Lipschitz continuous gradient, nor are they strongly convex, making them a challenging class of functions for first-order methods. Indeed, in a number of applications, such as inverse covariance estimation or distance-weighted discrimination problems in binary classification, the loss is given by a generalized self-concordant function having potentially unbounded curvature. For such problems, projection-free minimization methods have no theoretical convergence guarantee. This paper closes this apparent gap in the literature by developing provably convergent Frank–Wolfe algorithms with standard \(\mathcal {O}(1/k)\) convergence rate guarantees. Based on these new insights, we show how these sublinearly convergent methods can be accelerated to yield linearly convergent projection-free methods, by relying either on the availability of a local linear minimization oracle or on a suitable modification of the away-step Frank–Wolfe method.

1 Introduction

Statistical analysis using generalized self-concordant (GSC) functions as a loss function is gaining increasing attention in the machine learning community [2, 45, 46, 50]. Beyond machine learning, GSC loss functions are also used in image analysis [44] and quantum state tomography [33]. This class of loss functions makes it possible to obtain faster statistical rates similar to least-squares [37]. At the same time, the minimization of empirical risk in this setting is a challenging optimization problem in high dimensions. Thus, without knowledge of specific structure, interior-point or other polynomial-time methods are unappealing. Moreover, large-scale optimization models in machine learning often depend on noisy data, and thus high-accuracy solutions are not really needed, or obtainable. All these features make simple optimization algorithms, with low implementation costs, the preferred methods of choice. In this paper we focus on projection-free methods which rely on the availability of a Linear Minimization Oracle (LMO). Such algorithms are known as Conditional Gradient (CG) or Frank–Wolfe (FW) methods. These classes of gradient-based algorithms are among the oldest convex optimization tools, and their origins can be traced back to [22, 32]. For a given convex compact set \(\mathcal {X}\subset \mathbb {R}^n\), and a convex objective function f, FW methods solve the smooth convex optimization problem

\[ \min _{x\in \mathcal {X}} f(x) \tag{P} \]

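by sequential calls to an LMO, which returns at the point x the target state

\[ s(x)\in \mathop {\mathrm {argmin}}_{s\in \mathcal {X}}\langle \nabla f(x),s\rangle . \tag{1} \]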

The selection s(x) is determined via some pre-defined tie breaking rule, whose specific form is of no importance for the moment. Computing this target state is the only computational bottleneck of the method. Progress of the algorithm is monitored via a merit function. The standard merit function in this setting is the Frank–Wolfe (dual) gap

\[ \mathsf {Gap}(x)\triangleq \langle \nabla f(x),x-s(x)\rangle . \]

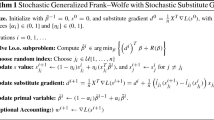

It is easy to see that \({{\,\mathrm{\mathsf {Gap}}\,}}(x)\ge 0\) for all \(x\in \mathcal {X}\), with equality if and only if x is a solution to (P). The vanilla implementation of FW (Algorithm 1) aims to reduce the gap function by sequentially solving linear minimization subproblems to obtain the target point s(x).

As always, the general performance of an algorithm depends heavily on the availability of practical step-size policies \(\{\alpha _{k}\}_{k\in \mathbb {N}}\). Two popular choices are either \(\alpha _{k}=\frac{2}{k+2}\) (FW-Standard), or an exact line-search (FW-Line Search). Under either choice, the algorithm exhibits an \(\mathcal {O}(1/k)\) rate of convergence for solving (P) in the case where f is convex and either possesses a Lipschitz continuous gradient or has a bounded curvature constant. The latter concept is a slight weakening of the classical Lipschitz gradient assumption, and is the key quantity in the modern analysis of FW due to Jaggi [28]. The curvature constant is defined as

Assuming that \(\kappa _{f}<\infty \), [28] estimated the iteration complexity of Algorithm 1 to be \(\mathcal {O}(1)\frac{\kappa _{f}{{\,\mathrm{diam}\,}}(\mathcal {X})}{\varepsilon }\). This iteration complexity is in fact optimal [30], even when f is strongly convex. This is quite surprising, since gradient methods are known to display linear convergence on well-conditioned optimization problems, i.e. when the objective function is strongly convex with a Lipschitz continuous gradient [41].
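
To make the vanilla scheme concrete, the following Python sketch runs FW-Standard with the step size \(2/(k+2)\) on the unit simplex. The quadratic objective, the target vector `b`, and the function names are assumptions chosen for this illustration; they are not part of Algorithm 1. For this well-conditioned objective the curvature is bounded by the squared diameter of the simplex, so the classical \(\mathcal {O}(1/k)\) guarantee applies.

```python
import numpy as np

def lmo_simplex(grad):
    """Linear minimization over the unit simplex: the vertex e_i with i = argmin_i grad_i."""
    s = np.zeros_like(grad)
    s[np.argmin(grad)] = 1.0
    return s

def frank_wolfe(grad_f, x0, num_iters):
    """Vanilla Frank-Wolfe with step size 2 / (k + 2); returns the last iterate and all gaps."""
    x = np.asarray(x0, dtype=float)
    gaps = []
    for k in range(num_iters):
        g = grad_f(x)
        s = lmo_simplex(g)
        gaps.append(float(g @ (x - s)))      # Frank-Wolfe gap at the current iterate
        x = x + 2.0 / (k + 2) * (s - x)      # convex combination, so x stays feasible
    return x, gaps

b = np.array([0.2, 0.3, 0.5])                # hypothetical target inside the simplex
f = lambda x: 0.5 * float(np.sum((x - b) ** 2))
num_iters = 200
x_final, gaps = frank_wolfe(lambda x: x - b, np.array([1.0, 0.0, 0.0]), num_iters)
```

Since the minimal value of this objective is zero, `f(x_final)` directly measures the suboptimality after `num_iters` steps.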

Frank–Wolfe for ill-conditioned functions. In this paper we are interested in functions which are possibly ill-conditioned: f is neither assumed to be globally strongly convex, nor to possess a Lipschitz continuous gradient over the feasible set. Recently, many empirical risk minimization problems have been identified to be ill-conditioned, or at least nearly so [36, 37, 45]. This explains why the study of algorithms for this challenging class of problems has received a lot of attention recently. The role of self-concordance-like properties of loss functions has been clarified in the influential work by Bach [2]. Since then, numerous papers at the intersection of statistics, machine learning and optimization have exploited the self-concordance-like behavior of typical statistical loss functions to improve existing statistical rate estimates [37, 45, 46], or to improve the practical performance and theoretical guarantees of optimization algorithms [8, 16, 19, 20, 51, 52, 53]. Besides applications in statistics, generalized self-concordant functions are of some importance in scientific computing. [54] construct self-concordant barriers for a class of polytopes arising naturally in combinatorial optimization. [50] show that the well-known matrix balancing problem minimizes a GSC function. We believe that our results are going to be useful in such problems as well.

The main difficulties one faces in minimizing functions with self-concordance like properties can be easily illustrated with a basic, in some sense minimal, example:

Example 1

Consider the function \(f(x,y)=-\ln (x)-\ln (y)\) where \(x,y> 0\) satisfy \(x+y=1\). This function is the standard self-concordant barrier for the positive orthant (the log-barrier) and thus (2, 3)-generalized self-concordant (see Definition 1). Its Bregman divergence is easily calculated as

\[ D_{f}(u,v)\triangleq f(u)-f(v)-\langle \nabla f(v),u-v\rangle =\sum _{i=1}^{2}\left[ -\ln \left( \frac{u_{i}}{v_{i}}\right) +\frac{u_{i}}{v_{i}}-1\right] . \]

Neither the function f, nor its gradient, is Lipschitz continuous over the set of interest. In particular the curvature constant is unbounded, i.e. \(\kappa _{f}=\infty \). Moreover, if we start from \(u^0=(1/4,3/4)\) and apply the standard \(2/(k+2)\)-step size policy, then \(\alpha _0=1\), which leads to \(u^1=s(u^0)=(1,0) \notin {{\,\mathrm{dom}\,}}f\). Clearly, the standard method fails. \(\blacklozenge \)
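
The failure described in Example 1 can be reproduced in a few lines. The sketch below is only an illustration; the convention of returning `inf` outside the domain and the variable names are assumptions made for this snippet.

```python
import numpy as np

def f(u):
    """Log-barrier from Example 1; returns +inf outside the domain u > 0."""
    u = np.asarray(u, dtype=float)
    return float(-np.sum(np.log(u))) if np.all(u > 0) else float("inf")

u0 = np.array([0.25, 0.75])
grad = -1.0 / u0                      # gradient of -ln(x) - ln(y) at u0
s = np.zeros(2)
s[np.argmin(grad)] = 1.0              # LMO on the simplex selects a vertex
alpha0 = 2.0 / (0 + 2)                # standard step size at k = 0
u1 = u0 + alpha0 * (s - u0)           # full step to the vertex
print(u1, f(u1))
```

The first iterate lands on a vertex of the simplex, where the objective is infinite, so the standard policy cannot even be continued.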

The logarithm is one of the canonical members of (generalized) self-concordant functions, and thus the above example is quite representative for the class of optimization problems of interest in this paper. It is therefore clear that the standard analysis of [28], and all subsequent investigations relying on estimates of the Lipschitz constant of the gradient or the curvature, cannot be applied straightforwardly to the problem of minimizing a GSC function via projection-free methods.

1.1 Related literature

The development of FW methods for ill-conditioned problems has received quite some attention recently. [40] requires the gradient of the objective function to be Hölder continuous and similar results for this setting are obtained in [7, 49]. Implicitly it is assumed that \(\mathcal {X}\subseteq {{\,\mathrm{dom}\,}}f\). This would also not be satisfied in important GSC minimization problems, and hence we do not impose it (e.g. \(0\in \mathcal {X}\), but \(0\notin {{\,\mathrm{dom}\,}}f\) in the Covariance Estimation problem in Sect. 6.4). Specialized to solving a quadratic Poisson inverse problem in phase retrieval, [44] provided a globally convergent FW method using the convex and self-concordant (SC) reformulation, based on the PhaseLift approach [9]. They constructed a provably convergent FW variant using a new step size policy derived from estimate sequence techniques [3, 39], in order to match the proof technique of [40].

Very recently, two other FW-methods for ill-conditioned problems appeared. [34] employed a FW-subroutine for computing the Newton step in a proximal Newton framework for minimizing self-concordant (SC)-functions over a convex compact set. After the first submission of this work, Professor Robert M. Freund sent us the preprint [57], in which the SC-FW method from our previous conference paper [17] is refined to minimize a logarithmically homogeneous barrier [42] over a convex compact set. They also propose new stepsizes for FW for minimizing functions with Hölder continuous gradient. None of these recent contributions develop FW methods for the much larger class of GSC-functions, nor do they consider linearly convergent variants.

Linearly convergent Frank–Wolfe methods. Given their slow convergence, it is clear that the application of projection-free methods can only be interesting if projections onto the feasible set are computationally expensive. Various previous papers worked out conditions under which the iteration complexity of projection-free methods can be potentially improved. [25] obtained linear convergence rates in well-conditioned problems under the a-priori assumption that the solution lies in the relative interior of the feasible set, and the rate of convergence explicitly depends on the distance of the solution from the boundary (see also [5, 21]). If no a-priori information on the location of the solution is available, there are essentially two known twists of the vanilla FW to boost the convergence rates. One twist is to modify the search directions via corrective, or away search directions [23, 25, 26, 48, 55]. The Away-Step Frank–Wolfe (ASFW) method can remove weight from "bad" atoms in the active set. These drop steps have the potential to circumvent the well-known zig-zagging phenomenon of FW when the solution lies on the boundary of the feasible set. When the feasible set \(\mathcal {X}\) is a polytope, [29] derived linear convergence rates for ASFW using the "pyramidal width constant" in the well-conditioned optimization case. Unfortunately, the pyramidal width is the optimal value of a complicated combinatorial optimization problem, whose value is unknown even on simple sets such as the unit simplex. [4] improved their construction by replacing the pyramidal width with a much more tractable gradient bound condition, involving the "vertex-facet distance". In many instances, including the unit simplex, the \(\ell _{1}\)-ball and the \(\ell _{\infty }\)-ball, the vertex-facet distance can be computed (see Section 3.4 in [4]). In this paper we develop a corresponding away-step FW variant for the minimization of a GSC function (Algorithm 8 (ASFWGSC)), extending [4] to ill-conditioned problems.

While we were working on the revision of this paper, Professor Sebastian Pokutta shared with us the recent preprint [10], where a monotone modification of FW-Standard applied to GSC-minimization problems is proposed. They derive a \(\mathcal {O}(1/k)\) convergence rate guarantee for minimizing GSC functions. Moreover, they exhibit a linearly convergent variant using away-steps. These results have been achieved independently from our work, and they give a nice complementary view on our away-step variant ASFWGSC. The basic difference between our analysis and [10] is that we exploit the vertex-facet distance instead of the pyramidal width. As already said, this gives explicit and efficiently computable error bounds for some important geometries, and thus allows for a more in-depth complexity assessment.

The alternative twist to obtain linear convergence is to change the design of the LMO [24, 27, 30] via a well-calibrated localization procedure. Extending the work by Garber and Hazan [24], we construct another linearly convergent FW-variant based on local linear minimization oracles (Algorithm 7, FWLLOO).

1.2 Main contributions and outline of the paper

In this paper, we demonstrate that projection-free methods extend to a large class of potentially ill-conditioned convex programming problems, featuring self-concordant like properties. Our main contributions can be succinctly summarized as follows:

(i) Ill-conditioned problems. We construct a set of globally convergent projection-free methods for minimizing generalized self-concordant functions over convex compact domains.

(ii) Detailed complexity analysis. Algorithms with sublinear and linear convergence rate guarantees are derived.

(iii) Adaptivity. We develop new backtracking variants in order to come up with new step size policies which are adaptive with respect to local estimates of the gradient's Lipschitz constant, or basic parameters related to the self-concordance properties of the objective function. The construction of these backtracking schemes fully exploits the basic properties of GSC-functions. Specifically, Algorithm 3 (LBTFWGSC) builds on a standard quadratic upper model over which a local search for the Lipschitz modulus of the gradient, restricted to level sets, can be performed. This local search method is inspired by [47], but our convergence proof is much simpler and more direct. Our second backtracking variant (Algorithm 5, MBTFWGSC) performs a local search for the generalized self-concordance constant. To the best of our knowledge, this is the first algorithm which adaptively adjusts the self-concordance parameters on-the-fly. We thus present three new sublinearly converging FW-variants which are all adaptive, and share the standard sublinear \(\mathcal {O}(1/\varepsilon )\) complexity bound which is proved in Sect. 4. On top of that, we derive two new linearly converging schemes, either building on the availability of a Local Linear Optimization Oracle (LLOO) (Algorithm 7 (FWLLOO)), or suitably defined Away-Steps (Algorithm 8 (ASFWGSC)).

(iv) Detailed numerical experiments. We test the performance of our method on a set of challenging test problems, spanning all possible GSC parameters over which our algorithms are provably convergent.

This paper builds on, and significantly extends, our conference paper [17]. This previous work exclusively focused on the minimization of standard self-concordant functions. The extension to generalized self-concordant functions requires some careful additional steps and a detailed case-by-case analysis that are not simple corollaries of [17]. On top of that, in this paper we derive two completely new projection-free algorithms, and new proofs of existing algorithms we already introduced in our first publication. In light of these contributions, this paper significantly extends the results reported in [17].

Outline Section 2 contains necessary definitions and properties for the class of GSC functions in a self-contained way. Our algorithmic analysis starts in Sect. 3 where a new FW variant with an analytic step-size rule is presented (Algorithm 2, FWGSC). This algorithm can be seen as the basic template from which the other methods are subsequently derived. Section 4 presents the convergence analysis for the three sublinearly convergent variants presented in Sect. 3. Section 5 presents the two linearly convergent variants and their convergence analysis. Section 6 reports results from extensive numerical experiments using the proposed algorithms and their comparison with the baselines. Section 7 concludes the paper.

Notation Given a proper, closed, and convex function \(f:\mathbb {R}^n\rightarrow (-\infty ,\infty ]\), we denote by \({{\,\mathrm{dom}\,}}f\triangleq \{x\in \mathbb {R}^n\vert f(x)<\infty \}\) the (effective) domain of f. For a set X, we define the indicator function \(\delta _{X}(x)=\infty \) if \(x\notin X\), and \(\delta _{X}(x)=0\) otherwise. We use \({\mathbf {C}}^{k}({{\,\mathrm{dom}\,}}f)\) to denote the class of functions \(f:\mathbb {R}^n\rightarrow (-\infty ,\infty ]\) which are k-times continuously differentiable on their effective domain. We denote by \(\nabla f\) the gradient map, and \(\nabla ^{2}f\) the Hessian map.

Let \(\mathbb {R}_{+}\) and \(\mathbb {R}_{++}\) denote the sets of nonnegative and positive real numbers, respectively. We use \(\mathbb {S}^{n}\triangleq \{x\in \mathbb {R}^{n\times n}\vert x^{\top }=x\}\) to denote the set of symmetric matrices, and \(\mathbb {S}^{n}_{+},\mathbb {S}^{n}_{++}\) to denote the sets of symmetric positive semi-definite and positive definite matrices, respectively. Given \(Q\in \mathbb {S}^{n}_{++}\) we define the weighted inner product \(\langle u,v\rangle _{Q}\triangleq \langle Qu,v\rangle \) for \(u,v\in \mathbb {R}^n\), and the corresponding norm \(\Vert u\Vert _{Q}\triangleq \sqrt{\langle u,u\rangle _{Q}}\). The associated dual norm is \(\Vert v\Vert ^{*}_{Q}\triangleq \sqrt{\langle v,v\rangle _{Q^{-1}}}\). For \(Q\in \mathbb {S}^{n}\), we let \(\lambda _{\min }(Q)\) and \(\lambda _{\max }(Q)\) denote the smallest and largest eigenvalues of the matrix Q, respectively.

2 Generalized self-concordant functions

Following [50], we briefly introduce the basic properties of the class of GSC functions. Let \(\varphi :\mathbb {R}\rightarrow \mathbb {R}\) be a three-times continuously differentiable function on \({{\,\mathrm{dom}\,}}\varphi \). Recall that \(\varphi \) is convex if and only if \(\varphi ''(t)\ge 0\) for all \(t\in {{\,\mathrm{dom}\,}}\varphi \).

Definition 1

[50] Let \(\varphi \in {\mathbf {C}}^{3}({{\,\mathrm{dom}\,}}\varphi )\) be a convex function with \({{\,\mathrm{dom}\,}}\varphi \) open. Given constants \(\nu >0\) and \(M_{\varphi }\ge 0\), we call \(\varphi \) \((M_{\varphi },\nu )\) generalized self-concordant (GSC) if

\[ |\varphi '''(t)|\le M_{\varphi }\,\varphi ''(t)^{\nu /2}\qquad \forall t\in \mathrm {dom}\,\varphi . \]

If \(\varphi (t)=\frac{a}{2}t^2+bt+c\) for any constant \(a\ge 0\) we get a \((0,\nu )\)-generalized self-concordant function. Hence, any convex quadratic function is GSC for any \(\nu >0\). Standard one-dimensional examples are summarized in Table 1 (based on [50]).
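
As a quick numerical sanity check of the one-dimensional GSC inequality \(|\varphi '''(t)|\le M_{\varphi }\varphi ''(t)^{\nu /2}\), the sketch below tests two standard examples on a grid: \(\varphi (t)=-\ln t\) with \((M_{\varphi },\nu )=(2,3)\) and the logistic loss \(\varphi (t)=\ln (1+e^{-t})\) with \((M_{\varphi },\nu )=(1,2)\). The grids, the tolerance, and the helper name `gsc_holds` are assumptions for this illustration; a grid check is of course no proof.

```python
import numpy as np

def gsc_holds(d2, d3, M, nu, ts, rtol=1e-9):
    """Check |phi'''(t)| <= M * phi''(t)**(nu/2) on the grid ts (up to rounding)."""
    lhs = np.abs(d3(ts))
    rhs = M * d2(ts) ** (nu / 2.0)
    return bool(np.all(lhs <= rhs * (1.0 + rtol)))

# phi(t) = -ln(t) on t > 0: second and third derivatives
log_d2 = lambda t: 1.0 / t**2
log_d3 = lambda t: -2.0 / t**3

# phi(t) = ln(1 + exp(-t)): derivatives expressed via the sigmoid
sig = lambda t: 1.0 / (1.0 + np.exp(-t))
logistic_d2 = lambda t: sig(t) * (1.0 - sig(t))
logistic_d3 = lambda t: sig(t) * (1.0 - sig(t)) * (1.0 - 2.0 * sig(t))

ts_pos = np.linspace(0.05, 20.0, 2000)
ts_all = np.linspace(-15.0, 15.0, 2000)

log_ok = gsc_holds(log_d2, log_d3, M=2.0, nu=3.0, ts=ts_pos)
logistic_ok = gsc_holds(logistic_d2, logistic_d3, M=1.0, nu=2.0, ts=ts_all)
log_with_smaller_M = gsc_holds(log_d2, log_d3, M=1.9, nu=3.0, ts=ts_pos)
```

For the logarithm the inequality holds with equality, which is why lowering the constant below 2 makes the check fail.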

This definition generalizes to multivariate functions by requiring GSC along every straight line. Specifically, let \(f:\mathbb {R}^n\rightarrow (-\infty ,+\infty ]\) be a closed convex, lower semi-continuous function with effective domain \({{\,\mathrm{dom}\,}}f\) which is an open nonempty subset of \(\mathbb {R}^n\). For \(x\in {{\,\mathrm{dom}\,}}f\) and \(u,v\in \mathbb {R}^n\), define the real-valued function \(\varphi (t):=\langle \nabla ^{2}f(x+tv)u,u\rangle \). For \(t\in {{\,\mathrm{dom}\,}}\varphi \), one sees that \(\varphi '(t)=\langle D^{3}f(x+tv)[v]u,u\rangle ,\) where \(D^{3}f(x)[v]\) denotes the third-derivative tensor at (x, v), viewed as a bilinear mapping \(\mathbb {R}^n\times \mathbb {R}^n\rightarrow \mathbb {R}\). The Hessian of the function f defines a semi-norm \(\Vert u\Vert _{x}\triangleq \sqrt{\langle u,u\rangle _{\nabla ^{2}f(x)}}\) for all \(x\in {{\,\mathrm{dom}\,}}f,\) with dual norm \(\Vert a\Vert ^{*}_{x}\) given by \(\left( \Vert a\Vert ^{*}_{x}\right) ^{2}\triangleq \sup _{d\in \mathbb {R}^n}\{2\langle d,a\rangle -\Vert d\Vert _{x}^{2}\}.\) If \(\nabla ^{2}f(x)\in \mathbb {S}^{n}_{++}\) then \(\Vert \cdot \Vert _{x}\) is a true norm, and \(\Vert d\Vert ^{*}_{x}=\sqrt{\langle d,d\rangle _{{[\nabla ^{2}f(x)]^{-1}}}}\).

Definition 2

[50] A closed convex function \(f\in {\mathbf {C}}^{3}({{\,\mathrm{dom}\,}}f)\), with \({{\,\mathrm{dom}\,}}f\) open, is called \((M_{f},\nu )\) generalized self-concordant of the order \(\nu \in [2,3]\) and with constant \(M_{f}\ge 0\), if for all \(x\in {{\,\mathrm{dom}\,}}f\)

\[ |\langle D^{3}f(x)[v]u,u\rangle |\le M_{f}\Vert u\Vert _{x}^{2}\,\Vert v\Vert _{x}^{\nu -2}\,\Vert v\Vert _{2}^{3-\nu }\qquad \forall u,v\in \mathbb {R}^n. \]

We denote this class of functions as \(\mathcal {F}_{M_{f},\nu }\).

In the extreme case \(\nu =2\) we recover the definition \(|\langle D^{3}f(x)[v]u,u\rangle |\le M_{f}\Vert u\Vert ^{2}_{x}\Vert v\Vert _{2}\), which is the generalized self-concordance definition proposed by Bach [2]. If \(\nu =3\) and \(u=v\) the definition becomes \(|\langle D^{3}f(x)[u]u,u\rangle |\le M_{f}\Vert u\Vert ^{3}_{x}\), which is the standard self-concordance definition due to [42].

Given \(\nu \in [2,3]\) and \(f\in \mathcal {F}_{M_{f},\nu }\), we define the distance-like function

\[ \mathsf {d}_{\nu }(x,y)\triangleq {\left\{ \begin{array}{ll} M_{f}\Vert y-x\Vert _{2} &amp; \text {if } \nu =2,\\ \frac{\nu -2}{2}M_{f}\Vert y-x\Vert _{2}^{3-\nu }\Vert y-x\Vert _{x}^{\nu -2} &amp; \text {if } \nu >2, \end{array}\right. } \]

and the Dikin Ellipsoid

\[ \mathcal {W}(x;r)\triangleq \{y\in \mathbb {R}^n\vert \mathsf {d}_{\nu }(x,y)<r\}. \]

Since \(f\in \mathcal {F}_{M_{f},\nu }\) are closed convex functions with open domain, it follows that they are barrier functions for \({{\,\mathrm{dom}\,}}f\): Along any sequence \(\{x_{n}\}_{n\in \mathbb {N}}\subset {{\,\mathrm{dom}\,}}f\) with \({{\,\mathrm{dist}\,}}\left( x_{n},{{\,\mathrm{bd}\,}}({{\,\mathrm{dom}\,}}f)\right) \rightarrow 0\) we have \(f(x_{n})\rightarrow \infty \). This fact allows us to use the Dikin Ellipsoid as a safeguard region within which we can perturb the current position x without falling off \({{\,\mathrm{dom}\,}}f\).

Lemma 1

([50], Prop. 7) Let \(f\in \mathcal {F}_{M_{f},\nu }\) with \(\nu \in (2,3]\). We have \(\mathcal {W}(x;1)\subset {{\,\mathrm{dom}\,}}f \) for all \(x\in {{\,\mathrm{dom}\,}}f\).

The inclusion \(\mathcal {W}(x;1)\subset {{\,\mathrm{dom}\,}}f\) for \(\nu \in (2,3]\) is a generalization of a well-known classical property of self-concordant functions [42]. It gains relevance for the case \(\nu >2\), since when \(\nu =2\), we have \({{\,\mathrm{dom}\,}}f=\mathbb {R}^n\), making the statement trivial.
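
The safeguard role of the Dikin ellipsoid can be checked numerically for the log-barrier \(f(x)=-\sum _{i}\ln x_{i}\), which has \(M_{f}=2\) and \(\nu =3\). The sketch assumes the distance-like function for \(\nu =3\) takes the form \(\mathsf {d}_{3}(x,y)=\frac{M_{f}}{2}\Vert y-x\Vert _{x}\); the sampling ranges and helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def local_norm(x, h):
    """||h||_x for f(x) = -sum(log x_i), whose Hessian is diag(1 / x_i**2)."""
    return float(np.sqrt(np.sum((h / x) ** 2)))

def d3(x, y, M=2.0):
    """Assumed distance-like function for nu = 3: (M / 2) * ||y - x||_x."""
    return 0.5 * M * local_norm(x, y - x)

inside = []
for _ in range(1000):
    x = rng.uniform(0.1, 5.0, size=4)                  # a point in the domain
    h = rng.normal(size=4)
    h *= rng.uniform(0.0, 0.999) / local_norm(x, h)    # rescale so that d3(x, x + h) < 1
    y = x + h
    inside.append(d3(x, y) < 1.0 and bool(np.all(y > 0)))

all_inside = all(inside)
```

Every sampled point of the ellipsoid has strictly positive coordinates, in line with Lemma 1 for this particular barrier.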

The next Lemma gives a-priori local bounds on the function values.

Lemma 2

([50], Prop. 10) Let \(x,y\in {{\,\mathrm{dom}\,}}f\) for \(f\in \mathcal {F}_{M_{f},\nu }\) and \(\nu \in [2,3]\). Then

\[ f(x)+\langle \nabla f(x),y-x\rangle +\omega _{\nu }(-\mathsf {d}_{\nu }(x,y))\Vert y-x\Vert _{x}^{2}\le f(y)\le f(x)+\langle \nabla f(x),y-x\rangle +\omega _{\nu }(\mathsf {d}_{\nu }(x,y))\Vert y-x\Vert _{x}^{2}, \tag{9} \]

where, if \(\nu >2\), the right-hand side of (9) holds if and only if \(\mathsf {d}_{\nu }(x,y)<1\). Here \(\omega _{\nu }(\cdot )\) is defined as

\[ \omega _{\nu }(t)\triangleq {\left\{ \begin{array}{ll} \frac{e^{t}-t-1}{t^{2}} &amp; \text {if } \nu =2,\\ \frac{\nu -2}{4-\nu }\,\frac{1}{t}\left[ \frac{\nu -2}{2(3-\nu )t}\left( (1-t)^{\frac{2(3-\nu )}{2-\nu }}-1\right) -1\right] &amp; \text {if } \nu \in (2,3),\\ \frac{-t-\ln (1-t)}{t^{2}} &amp; \text {if } \nu =3, \end{array}\right. } \]

The function \(\omega _{\nu }(\cdot )\) is strictly convex and one can check that \(\omega _{\nu }(t)\ge 0\) for all \(t\in {{\,\mathrm{dom}\,}}(\omega _{\nu })\). These bounds on the function values can be seen as local versions of the standard approximations valid for strongly convex functions, respectively for functions with a Lipschitz continuous gradient (see e.g. [41], Def. 2.1.3 and Lemma 1.2.3). In particular, the upper bound (9) corresponds to a local version of the celebrated descent lemma, a fundamental tool in the analysis of first-order methods [18]. To emphasize this analogy, we will also refer to (9) as the GSC-descent lemma.
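
The two-sided bound of Lemma 2 can be probed numerically in one dimension. The sketch below assumes the closed forms \(\omega _{2}(t)=(e^{t}-t-1)/t^{2}\) and \(\omega _{3}(t)=(-t-\ln (1-t))/t^{2}\), together with \(\mathsf {d}_{2}(x,y)=M_{f}|y-x|\) and \(\mathsf {d}_{3}(x,y)=\frac{M_{f}}{2}\Vert y-x\Vert _{x}\), and tests them on the logarithm (\(\nu =3\), \(M_{f}=2\)) and the logistic loss (\(\nu =2\), \(M_{f}=1\)). Grids, tolerance and function names are assumptions for illustration.

```python
import numpy as np

def omega(t, nu):
    """omega_nu for nu = 2 and nu = 3 (t != 0; t < 1 when nu = 3)."""
    if nu == 2:
        return (np.exp(t) - t - 1.0) / t**2
    if nu == 3:
        return (-t - np.log(1.0 - t)) / t**2
    raise ValueError("only nu = 2 and nu = 3 are covered in this sketch")

def bounds_hold(f, df, d2f, M, nu, x, y, tol=1e-10):
    """One-dimensional check of the lower and upper bound for a pair x != y."""
    h = y - x
    d = M * abs(h) if nu == 2 else 0.5 * M * abs(h) * np.sqrt(d2f(x))
    remainder = f(y) - f(x) - df(x) * h          # f(y) minus its linearization at x
    scale = d2f(x) * h**2                        # squared local norm of y - x
    lower_ok = omega(-d, nu) * scale <= remainder + tol
    upper_ok = True
    if nu == 2 or d < 1.0:                       # upper bound only claimed when d < 1 for nu > 2
        upper_ok = remainder <= omega(d, nu) * scale + tol
    return bool(lower_ok and upper_ok)

grid_log = np.linspace(0.2, 3.0, 15)
log_ok = all(
    bounds_hold(lambda t: -np.log(t), lambda t: -1.0 / t, lambda t: 1.0 / t**2, 2.0, 3, x, y)
    for x in grid_log for y in grid_log if abs(x - y) > 0.05
)

grid_logit = np.linspace(-4.0, 4.0, 17)
logistic_ok = all(
    bounds_hold(lambda t: np.logaddexp(0.0, -t), lambda t: -1.0 / (1.0 + np.exp(t)),
                lambda t: np.exp(t) / (1.0 + np.exp(t)) ** 2, 1.0, 2, x, y)
    for x in grid_logit for y in grid_logit if abs(x - y) > 0.05
)
```

For the logarithm the upper bound is attained with equality whenever \(y<x\), which is why a small tolerance is used in the comparison.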

3 Frank–Wolfe works for generalized self-concordant functions

In this section we describe three provably convergent modifications of Algorithm 1, displaying sublinear convergence rates.

3.1 Preliminaries

Assumption 1

The following assumptions shall be in place throughout this paper:

- The function f in (P) belongs to the class \(\mathcal {F}_{M_{f},\nu }\) with \(\nu \in [2,3]\).

- The solution set \(\mathcal {X}^{*}\) of (P) is nonempty, with \(x^{*}\in \mathcal {X}^{*}\) representing a solution and \(f^{*}= f(x^{*})\) the corresponding objective function value.

- \(\mathcal {X}\) is convex compact and the search direction (1) can be computed efficiently and accurately.

- \(\nabla ^{2}f\) is continuous and positive definite on \(\mathcal {X}\cap {{\,\mathrm{dom}\,}}f\).

Define the Frank–Wolfe search direction as

\[ v_{\mathrm {FW}}(x)\triangleq s(x)-x. \]

We also declare the functions \(\mathtt {e}(x)\triangleq \Vert v_{{{\,\mathrm{FW}\,}}}(x)\Vert _{x}\text { and }\beta (x)\triangleq \Vert v_{{{\,\mathrm{FW}\,}}}(x)\Vert _{2}\) for all \(x\in {{\,\mathrm{dom}\,}}f.\)

3.2 A Frank–Wolfe method with analytical step-size

Our first Frank–Wolfe method (Algorithm 2, FWGSC) for minimizing generalized self-concordant functions builds on a new adaptive step-size rule, which we derive from a judicious application of the GSC-descent Lemma (9). An attractive feature of this new step size policy is that it is available in analytical form, which allows us to do away with any globalization strategy (e.g. line search). This has significant practical impact when function evaluations are expensive.

Given \(x\in \mathcal {X}\), set \(x^{+}_{t}\triangleq x+t v_{{{\,\mathrm{FW}\,}}}(x)\), and assume that \(\mathtt {e}(x)\ne 0\). Moving from the current position x to the point \(x^{+}_{t}\), we know that \(\mathsf {d}_{\nu }(x,x^{+}_{t})=tM_{f}\delta _{\nu }(x)\), where

\[ \delta _{\nu }(x)\triangleq {\left\{ \begin{array}{ll} \beta (x) &amp; \text {if } \nu =2,\\ \frac{\nu -2}{2}\beta (x)^{3-\nu }\mathtt {e}(x)^{\nu -2} &amp; \text {if } \nu >2. \end{array}\right. } \tag{12} \]

Choosing \(t\in (0,\frac{1}{M_{f}\delta _{\nu }(x)})\), the GSC-descent lemma (9) gives us the upper bound

\[ f(x^{+}_{t})\le f(x)-t\,\mathsf {Gap}(x)+t^{2}\mathtt {e}(x)^{2}\omega _{\nu }(tM_{f}\delta _{\nu }(x)). \]

For \(x\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\), define \(\eta _{x,M,\nu }:\mathbb {R}_{+}\rightarrow (-\infty ,+\infty ]\) by

\[ \eta _{x,M,\nu }(t)\triangleq t\,\mathsf {Gap}(x)-t^{2}\mathtt {e}(x)^{2}\omega _{\nu }(tM\delta _{\nu }(x)). \]

Note that \(\eta _{x,M,\nu }(t)\) is strictly concave on \({{\,\mathrm{dom}\,}}(\eta _{x,M,\nu })\subseteq [0,\frac{1}{M\delta _{\nu }(x)}]\). This leads to the per-iteration change in the objective function value as

\[ f(x^{+}_{t})\le f(x)-\eta _{x,M_{f},\nu }(t)\qquad \forall t\in \left( 0,\tfrac{1}{M_{f}\delta _{\nu }(x)}\right) . \]

Since \(\eta _{x,M_{f},\nu }(t)>0\) for \(t\in (0,\frac{1}{M_{f}\delta _{\nu }(x)})\), we are ensured that we make progress in reducing the objective function value when choosing a step size within the indicated range. Given the triple \((x,M,\nu )\), we search for a value t such that the per-iteration decrease is as big as possible. Hence, we aim to find \(t\ge 0\) which solves the concave maximization problem

\[ \sup _{t\ge 0}\,\eta _{x,M,\nu }(t). \tag{14} \]

Call \(\mathtt {t}_{M,\nu }(x)\) a solution of this program. Since we have to stay within the feasible set, we cannot simply use the number \(\mathtt {t}_{M,\nu }(x)\) as our step size as it might lead to an infeasible point. Consequently, we propose the truncated step-size

\[ \alpha _{M,\nu }(x)\triangleq \min \{1,\mathtt {t}_{M,\nu }(x)\}. \tag{15} \]

In Sect. 4 we show that this step-size policy guarantees feasibility and a sufficient decrease.
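
To see the truncated step size at work, the following sketch applies it to Example 1, i.e. the log-barrier on the two-dimensional simplex with \(M_{f}=2\) and \(\nu =3\). It assumes that for \(\nu =3\) the maximizer of the concave model has the closed form \(\mathtt {t}=\mathsf {Gap}(x)/(M_{f}\delta _{3}(x)\mathsf {Gap}(x)+\mathtt {e}(x)^{2})\) with \(\delta _{3}(x)=\mathtt {e}(x)/2\), which follows from setting the derivative of the model to zero when \(\omega _{3}(t)=(-t-\ln (1-t))/t^{2}\). The function name `fwgsc_step` and the stopping tolerance are hypothetical, and the loop is a simplified illustration rather than a reproduction of Algorithm 2.

```python
import numpy as np

M, NU = 2.0, 3.0                            # GSC parameters of the log-barrier

f = lambda x: float(-np.sum(np.log(x)))
grad = lambda x: -1.0 / x
hess_diag = lambda x: 1.0 / x**2            # the Hessian is diagonal for this f

def fwgsc_step(x):
    """One step with the analytic step size for nu = 3; returns (x_next, gap, alpha)."""
    g = grad(x)
    s = np.zeros_like(x)
    s[np.argmin(g)] = 1.0                   # LMO over the unit simplex
    v = s - x                               # Frank-Wolfe direction
    gap = float(-(g @ v))
    e = float(np.sqrt(np.sum(hess_diag(x) * v**2)))   # local norm e(x)
    delta = 0.5 * (NU - 2.0) * e            # delta_3(x) = e(x) / 2
    t = gap / (M * delta * gap + e**2)      # assumed closed-form maximizer for nu = 3
    alpha = min(1.0, t)                     # truncation keeps the iterate in the simplex
    return x + alpha * v, gap, alpha

x = np.array([0.25, 0.75])                  # starting point of Example 1
history = [x.copy()]
for _ in range(50):
    x_next, gap, alpha = fwgsc_step(x)
    if gap < 1e-12:                         # hypothetical stopping tolerance
        break
    x = x_next
    history.append(x.copy())
```

In contrast to the \(2/(k+2)\) policy, every iterate stays strictly inside the domain and the objective decreases monotonically towards its minimum at \((1/2,1/2)\).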

Remark 1

We emphasize that the basic step-size rule is derived by identifying a suitable local majorizing model \(f(x)-\eta _{x,M_{f},\nu }(t)\). Minimization with respect to t aligns the model as close as possible to the effective progress we are making in reducing the objective function value. This upper model holds for all GSC functions with the same characteristic parameter \((M_{f},\nu )\). Thus, our derived step size strategy is universally applicable to all functions within the class \(\mathcal {F}_{M_{f},\nu }\). Therefore, akin to [50, 52], the derived adaptive step size policy can be regarded as an optimal choice in the analytic worst-case sense.

3.3 Backtracking Frank–Wolfe variants

Algorithm FWGSC comes with several drawbacks. First, it relies on the minimization of a universal upper model derived from the GSC-descent Lemma. This over-estimation strategy leads to a worst-case performance estimate, relying on various state-dependent quantities, such as the local norm \(\mathtt {e}(x^{k})\), and the GSC parameters \((M_{f},\nu )\). Evaluating the local norm requires the computation of the matrix-vector product between the Hessian \(\nabla ^{2}f(x^{k})\), and the FW search direction \(v_{{{\,\mathrm{FW}\,}}}(x^{k})\). The GSC parameter \(M_{f}\) is a global quantity, relating the second and third derivative over the entire domain of the function f. Additionally, it restricts the interval of admissible step sizes \((0,\frac{1}{M_{f}\delta _{\nu }(x)})\). Consequently, a local search for this parameter could lead to larger step-sizes, which may improve the performance. Motivated by these facts, this section presents two backtracking variants of the basic Frank–Wolfe method. Both methods are based on the assumption that we can easily answer the question whether a given candidate search point x belongs to the domain of the function f, or not.

Assumption 2

(Domain Oracle) Given a point x, it is easy to decide if \(x\in {{\,\mathrm{dom}\,}}f\), or not.

Remark 2

For many problems such domain oracles are easy to construct. As a concrete example, consider the problem of minimizing the log-barrier function over a compact domain in \(\mathbb {R}^{n}_{+}\), which is a standard routine in interior-point methods (e.g. the computation of the analytic center). For this problem, a simple domain oracle makes a single pass through the coordinates of the vector x and checks whether each entry is positive. The complexity of such an oracle is linear in the number of variables.
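
A domain oracle of this kind is a one-liner in practice. The sketch below is a minimal illustration for the log-barrier; the function name is hypothetical.

```python
import numpy as np

def in_domain_log_barrier(x):
    """Domain oracle for f(x) = -sum(log x_i): a single pass over the coordinates."""
    return bool(np.all(np.asarray(x, dtype=float) > 0.0))

interior_point = in_domain_log_barrier([0.2, 0.3, 0.5])
boundary_point = in_domain_log_barrier([1.0, 0.0, 0.0])
```

The cost is linear in the number of coordinates, and no function or gradient evaluation is needed.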

3.3.1 Backtracking over the Lipschitz constant

Our first backtracking variant of FWGSC performs a local search over the Lipschitz modulus of the gradient over level sets. From the previous analysis we know that, under an appropriate choice of the step size, the algorithm is guaranteed to stay in the level set on which the function has a Lipschitz continuous gradient. Thus, we can appropriately modify and apply the Backtracking FW algorithm proposed in [47] for functions with Lipschitz-continuous gradient. The main difference is that we additionally check that the step is feasible w.r.t. \({{\,\mathrm{dom}\,}}f\). Our proof is also simpler and much more direct. We also remark that our algorithm is not only applicable to generalized self-concordant minimization, but also to other settings with locally Lipschitz gradient.

Consider the quadratic model

where \(x\in \mathcal {X}\) is the current position of the algorithm, and \(t,\mathcal {L}>0\) are parameters. From the complexity analysis of FWGSC, we know that there exists a range of step-size parameters \(t>0\) that guarantee decrease in the objective function value. Denote by \(\mathcal {S}(x)\triangleq \{x'\in \mathcal {X}\vert f(x')\le f(x)\}\), and set \(\gamma _{k}\triangleq \sup \{t>0\vert x^{k}+t(s^{k}-x^{k})\in \mathcal {S}(x^{k})\}\) as well as \(L_{k}\triangleq \max _{x\in \mathcal {S}(x^{k})}\lambda ^{2}_{\max }(\nabla ^{2}f(x))\). Then, for all \(t\in [0,\gamma _{k}]\), it holds true that \(f(x^{k}+t(s^{k}-x^{k}))\le f(x^{k})\). Therefore, by the mean-value-theorem

Hence, for all \(t\in (0,\gamma _{k})\),

The idea is to dispense with the computation of the local Lipschitz estimate \(L_{k}\) over the level set \(\mathcal {S}(x^{k})\), and replace it by the backtracking procedure \(\mathtt {step}_{L}(f,v^{k},x^{k},\mathcal {L}_{k-1})\) (Algorithm 4) as an inner-loop within Algorithm 3 (LBTFWGSC). In particular, using Assumption 2, the implementation of LBTFWGSC does not require the evaluation of the Hessian matrix \(\nabla ^{2}f(x^{k})\), and simultaneously determines a step size which minimizes the quadratic model under the prevailing local Lipschitz estimate.
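
The backtracking idea can be sketched as follows. This is not Algorithm 4 itself, whose precise form is not reproduced here: the quadratic model \(f(x)-t\,\mathsf {Gap}(x)+\frac{\mathcal {L}t^{2}}{2}\beta (x)^{2}\), the shrink and growth factors, the initial estimate and all names are assumptions made for illustration. A candidate estimate is accepted once the trial point passes the domain oracle and the model majorizes the objective there.

```python
import numpy as np

def backtrack_lipschitz(f, in_domain, x, v, gap, L_prev, shrink=0.9, grow=2.0, max_tries=60):
    """Return (t, L) such that x + t*v is in dom f and f(x + t*v) <= quadratic model."""
    fx, beta2 = f(x), float(v @ v)
    L = shrink * L_prev                      # optimistic: first try a smaller estimate
    for _ in range(max_tries):
        t = min(1.0, gap / (L * beta2))      # minimizer of the quadratic model on [0, 1]
        y = x + t * v
        if in_domain(y) and f(y) <= fx - t * gap + 0.5 * L * t**2 * beta2:
            return t, L
        L *= grow                            # model too optimistic: increase the estimate
    raise RuntimeError("backtracking did not terminate")

# Illustration: log-barrier over the unit simplex in R^3
f = lambda x: float(-np.sum(np.log(x)))
in_domain = lambda x: bool(np.all(x > 0.0))

x = np.array([0.6, 0.3, 0.1])
L, values = 1.0, [f(x)]
for _ in range(100):
    g = -1.0 / x
    s = np.zeros_like(x)
    s[np.argmin(g)] = 1.0                    # LMO over the simplex
    v = s - x
    gap = float(-(g @ v))
    if gap < 1e-4:                           # hypothetical stopping tolerance
        break
    t, L = backtrack_lipschitz(f, in_domain, x, v, gap, L)
    x = x + t * v
    values.append(f(x))
```

No Hessian is evaluated, and the acceptance test guarantees a decrease of at least half of `t * gap` per iteration, so the recorded objective values are strictly decreasing.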

3.3.2 Backtracking over the GSC parameter \(M_{f}\)

Our second backtracking variant performs a local search for the GSC parameter \(M_{f}\). Our goal is to construct a backtracking procedure for the constant \(M_{f}\) such that for a given candidate GSC parameter \(\mu >0\) and search point \(x^{+}_{t}=x+tv_{{{\,\mathrm{FW}\,}}}(x)\), we have feasibility: \(x^{+}_{t}\in {{\,\mathrm{dom}\,}}f\), and sufficient decrease:

Optimizing the new upper model \(Q_{M}(x,t,\mu )\) with respect to \(t\ge 0\) yields a step-size \(\mathtt {t}_{\mu ,\nu }(x)\), whose definition is just like the maximizer in (14), but using the parameters \((x,\mu ,\nu )\) as input. This approach allows us to define a localized step-size, exploiting the analytic structure of the step-size policy associated with the base algorithm FWGSC.

The main merit of this backtracking method can be seen by revisiting the analytical step-size criterion attached to FWGSC, defined in eq. (15). Inspection of the definition of the function \(\alpha _{M,\nu }(x)\) shows that a larger M cannot lead to a larger step size. Hence, a precise local estimate of the GSC parameter M opens up possibilities to make larger steps and thus improve the practical performance of the method. We will see in our numerical experiments in Sect. 6 that this claim has some substance in important machine learning problems.

4 Complexity analysis

4.1 Complexity analysis of FWGSC

Based on the preliminary discussion of Sect. 3.2, our strategy to determine the step-size policy is to first compute \(\mathtt {t}_{M_{f},\nu }(x)\), defined as the solution to program (14), and then clip the value accordingly. A technical analysis of the optimization problem (14), relegated to “Appendix B”, yields the following explicit expression for \(\mathtt {t}_{M_{f},\nu }(x)\).

Proposition 1

The unique solution to program (14) is given by

where \(\delta _{\nu }(x),\nu \in [2,3],\) is defined in eq. (12).

Next we show that FWGSC is well-defined using the step size policy (15).

Proposition 2

Let \(\{x^{k}\}_{k\ge 0}\) be generated by FWGSC with step size policy \(\{\alpha _{M_{f},\nu }(x^{k})\}_{k\ge 0}\) defined in (15). Then \(x^{k}\in \mathcal {X}\cap {{\,\mathrm{dom}\,}}f\) for all \(k\ge 0\).

Proof

The proof proceeds by induction. By assumption, \(x^{0}\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\). To perform the induction step, assume that \(x^{k}\in \mathcal {X}\cap {{\,\mathrm{dom}\,}}f\) for some \(k\ge 0\). We consider two cases:

-

If \(\nu =2\), then since \(\alpha _{M_{f},2}(x^{k})\le 1\), feasibility follows immediately from convexity of \(\mathcal {X}\) (recall that \({{\,\mathrm{dom}\,}}f=\mathbb {R}^n\) in this case).

-

If \(\nu \in (2,3]\), then, whenever \(x^{k}\in \mathcal {X}\), we deduce from (19) that \(\mathtt {t}_{M_{f},\nu }(x^{k})M_{f}\delta _{\nu }(x^{k})<1\). If \(\mathtt {t}_{M_{f},\nu }(x^k)>1\), then \(\alpha _{M_{f},\nu }(x^{k})M_{f} \delta _{\nu }(x^{k}) = M_{f}\delta _{\nu }(x^{k})< \mathtt {t}_{M_{f},\nu }(x^{k}) M_{f}\delta _{\nu }(x^{k}) <1\). The claim then follows thanks to Lemma 1.

\(\square \)

In order to simplify the notation, let us introduce the sequences \(\alpha _{k}\equiv \alpha _{M_{f},\nu }(x^{k})\) and \(\Delta _{k}\equiv \eta _{x^{k},M_{f},\nu }(\alpha _{M_{f},\nu }(x^{k}))\). Along the sequence \(\{x^{k}\}_{k\ge 0}\), we have \(\mathsf {d}_{\nu }(x^{k},x^{k+1})=M_{f}\alpha _{k}\delta _{\nu }(x^{k})<1\), and we know that we reduce the objective function value by at least the quantity \(\Delta _{k}>0\). Whence,

so that \(f(x^{k})\le f(x^{0})\), or equivalently, \(\{x^{k}\}_{k\ge 0}\subset \mathcal {S}(x^{0})\triangleq \{x\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\vert f(x)\le f(x^{0})\}.\)

Lemma 3

The set \(\mathcal {S}(x^{0})\) is compact.

Proof

Since \(\mathcal {S}(x^0)\subseteq \mathcal {X}\), the set \(\mathcal {S}(x^0)\) is bounded. Moreover, f is closed and convex, so its sublevel set \(\{x\in {{\,\mathrm{dom}\,}}f\vert f(x)\le f(x^{0})\}\) is closed, and \(\mathcal {X}\) is closed as well. Hence, \(\mathcal {S}(x^0)\) is closed as the intersection of two closed sets, and therefore compact. \(\square \)

Accordingly, \(\mathcal {S}(x^0)\subset {{\,\mathrm{dom}\,}}(f)\) and the numbers \( L_{\nabla f}\triangleq \max _{x\in \mathcal {S}(x^{0})}\lambda _{\max }(\nabla ^{2}f(x))\) and \(\sigma _{f}\triangleq \min _{x\in \mathcal {S}(x^{0})}\lambda _{\min }(\nabla ^{2}f(x))\) are well defined and finite. Furthermore, since the level set \(\mathcal {S}(x^{0})\) is compact, Assumption 1 guarantees \(\nabla ^{2}f(x)\succ 0\) for all \(x\in \mathcal {S}(x^{0})\), and hence \(\sigma _{f}>0\). By [41, Thm.2.1.11], for any \(x \in \mathcal {S}(x^{0})\) it holds that

Proposition 3 below shows asymptotic convergence to a solution along subsequences. We omit the proof, as it follows from [17].

Proposition 3

Suppose Assumption 1 holds. Then, the following assertions hold for FWGSC:

-

(a)

\(\{f(x^{k})\}_{k\ge 0}\) is non-increasing;

-

(b)

\(\sum _{k\ge 0}\Delta _{k}<\infty \), and hence the sequence \(\{\Delta _{k}\}_{k\ge 0}\) converges to 0;

-

(c)

For all \(K\ge 1\) we have \(\min _{0\le k<K}\Delta _{k}\le \frac{1}{K}(f(x^{0})-f^{*})\).

In order to assess the iteration complexity of FWGSC, we need a lower bound on the sequence \(\{\Delta _{k}\}_{k\ge 0}\). We start with a bound at iterations satisfying \(\mathtt {t}_{M_{f},\nu }(x^{k})>1\).

Lemma 4

If \(\mathtt {t}_{M_{f},\nu }(x^{k})>1\), we have \(\Delta _{k}\ge \frac{1}{2}{{\,\mathrm{\mathsf {Gap}}\,}}(x^{k}).\)

Proof

See “Appendix C.1”. \(\square \)

Next, we turn to iterates for which \(\mathtt {t}_{M_{f},\nu }(x^{k})\le 1\). In this case, the per-iteration progress reads as \(\Delta _{k}=\eta _{x^{k},M_{f},\nu }(\mathtt {t}_{M_{f},\nu }(x^{k}))\), and enjoys the following lower bound:

Lemma 5

If \(\mathtt {t}_{M_{f},\nu }(x^{k})\le 1\), we have

where \(\tilde{\gamma }_{\nu }\triangleq 1+\frac{4-\nu }{2(3-\nu )}\left( 1-2^{2(3-\nu )/(4-\nu )}\right) \) and \(\mathtt {b}\triangleq \frac{2-\nu }{4-\nu }\).

Proof

See “Appendix C.2”. \(\square \)

Remark 3

It can be checked that \(\lim _{\nu \rightarrow 3}\tilde{\gamma }_{\nu }=1-\ln (2)\), so that the lower bound \(\tilde{\Delta }_{k}\) is continuous in the parameter range \(\nu \in (2,3]\).
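The limit in Remark 3 can be checked numerically. The sketch below evaluates \(\tilde{\gamma }_{\nu }\) from Lemma 5 for values of \(\nu \) approaching 3 and compares it with \(1-\ln (2)\); the function name `gamma_tilde` and the chosen offsets are illustrative assumptions, not part of the original analysis.

```python
import math

def gamma_tilde(nu: float) -> float:
    """Constant gamma-tilde from Lemma 5, evaluated for 2 <= nu < 3."""
    a = 4.0 - nu
    b = 3.0 - nu
    exponent = 2.0 * b / a
    # 1 - 2**exponent, computed via expm1 to limit cancellation near nu = 3
    one_minus_pow = -math.expm1(exponent * math.log(2.0))
    return 1.0 + a / (2.0 * b) * one_minus_pow

limit = 1.0 - math.log(2.0)
# Distance to the claimed limit for nu = 3 - eps with shrinking eps
gaps = [abs(gamma_tilde(3.0 - eps) - limit) for eps in (1e-2, 1e-4, 1e-6)]
```

The distances in `gaps` shrink roughly in proportion to \(3-\nu \), which is what a continuous extension at \(\nu =3\) would predict.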

Combining Lemma 4 together with Lemma 5 and estimates summarized in “Appendix C.2”, we get the next fundamental relation.

Proposition 4

Suppose Assumption 1 holds. Let \(\{x^{k}\}_{k\ge 0}\) be generated by FWGSC. Then, for all \(k\ge 0\), we have

where, for \((M,\nu )\in (0,\infty )\times [2,3]\), we define

and

Proof

We only illustrate the lower bound for the case \(\nu =2\). All other claims can be verified in exactly the same way. From Lemma 4, we know that \(\Delta _{k}\ge \frac{1}{2}{{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})\) whenever \(\mathtt {t}_{M_{f},2}(x^{k})>1\). Moreover, from Lemma 5 we have that, if \(\mathtt {t}_{M_{f},2}(x^{k})\le 1\), then

Consequently,

\(\square \)

With the help of the lower bound in Proposition 4, we are now able to establish the \(\mathcal {O}(1/\varepsilon )\) convergence rate in terms of the approximation error \(h_{k}\triangleq f(x^{k})-f^{*}\).

Theorem 1

Suppose that Assumption 1 holds. Let \(\{x^{k}\}_{k\ge 0}\) be generated by FWGSC. For \(x^{0}\in \mathcal {X}\cap {{\,\mathrm{dom}\,}}f\) and \(\varepsilon >0\), define \(N_{\varepsilon }(x^{0})\triangleq \inf \{k\ge 0\vert h_{k}\le \varepsilon \}.\) Then, for all \(\varepsilon >0\),

Proof

To simplify the notation, let us set \(\mathtt {c}_{1}\equiv \mathtt {c}_{1}(M_{f},\nu )\) and \(\mathtt {c}_{2}\equiv \mathtt {c}_{2}(M_{f},\nu )\). By convexity, we have \({{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})\ge h_{k}\). Therefore, Proposition 4 shows that \(\Delta _{k}\ge \min \{\mathtt {c}_{1} h_{k},\mathtt {c}_{2} h_{k}^{2}\}\). This implies

From this inequality we see that \(h_k\) is decreasing and there are two potential phases of convergence:

Phase I. \(\mathtt {c}_1 h_{k} < \mathtt {c}_2 h_{k}^2\), which is equivalent to \(h_{k}>\frac{\mathtt {c}_{1}}{\mathtt {c}_{2}}\).

Phase II. \(\mathtt {c}_1 h_{k} \ge \mathtt {c}_2 h_{k}^2\), which is equivalent to \(h_{k}\le \frac{\mathtt {c}_{1}}{\mathtt {c}_{2}}\).

For fixed initial condition \(x^{0}\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\), we can thus subdivide the time domain into the set \(\mathcal {K}_{1}(x^{0})\triangleq \{k\ge 0\vert h_{k}>\frac{\mathtt {c}_{1}}{\mathtt {c}_{2}}\}\) (Phase I) and \(\mathcal {K}_{2}(x^{0})\triangleq \{k\ge 0\vert h_{k}\le \frac{\mathtt {c}_{1}}{\mathtt {c}_{2}}\}\) (Phase II). Since in Phase I \(\{h_{k}\}_{k\in \mathcal {K}_{1}(x^{0})}\) is decreasing and bounded from below by the positive constant \(\mathtt {c}_{1}/\mathtt {c}_{2}\), the set \(\mathcal {K}_{1}(x^{0})\) is bounded. Let us set

the first time at which the process \(\{h_{k}\}_{k}\) enters Phase II. To get a worst-case estimate on this quantity, we assume without loss of generality that \(0\in \mathcal {K}_{1}(x^{0})\), so that \(\mathcal {K}_{1}(x^{0})=\{0,1,\ldots ,T_{1}(x^{0})-1\}\). Then, using the definition of Phase I, for all \(k=1,\ldots ,T_{1}(x^{0})-1\) we have

Note that \(\mathtt {c}_{1}\le 1/2\), so the error contracts like a geometric series, i.e. we have linear convergence in this phase. Hence, \(h_{k}\le (1-\mathtt {c}_{1})^{k}h_{0}\) for all \(k=0,\ldots ,T_{1}(x^{0})-1\). By the definition of Phase I, \(h_{T_{1}(x^{0})-1}>\frac{\mathtt {c}_{1}}{\mathtt {c}_{2}}\), so we get \(\frac{\mathtt {c}_{1}}{\mathtt {c}_{2}}\le h_{0} (1-\mathtt {c}_{1})^{T_{1}(x^{0})-1}\) iff \((T_{1}(x^{0})-1)\ln (1-\mathtt {c}_{1})\ge \ln \left( \frac{\mathtt {c}_{1}}{h_{0}\mathtt {c}_{2}}\right) \). Hence,

After this number of iterations, the process enters Phase II, in which \(h_{k}\le \frac{\mathtt {c}_{1}}{\mathtt {c}_{2}}\) holds. Therefore, \(h_{k}\ge h_{k+1}+\mathtt {c}_{2} h_{k}^{2}\), or equivalently,

Pick \(N>T_{1}(x^{0})\) an arbitrary integer. Summing (28) from \(k=T_{1}(x^{0})\) up to \(k=N-1\), we arrive at

By definition \(h_{T_{1}(x^{0})}\le \frac{\mathtt {c}_{1}}{\mathtt {c}_{2}}\), so that for all \(N>T_{1}(x^{0})\), we see

Consequently,

By definition of the stopping time \(N_{\varepsilon }(x^{0})\), it is true that \(h_{N_{\varepsilon }(x^{0})-1}>\varepsilon \). Consequently, evaluating (29) at \(N=N_{\varepsilon }(x^{0})-1\), we obtain

Combining this upper bound with (27) shows the claim. \(\square \)
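The two-phase behaviour used in the proof can be illustrated by iterating the worst-case recursion \(h_{k+1}=h_{k}-\min \{\mathtt {c}_{1}h_{k},\mathtt {c}_{2}h_{k}^{2}\}\) directly. The following sketch does this for hypothetical constants; the function name and the numerical values of \(h_{0},\mathtt {c}_{1},\mathtt {c}_{2},\varepsilon \) are assumptions chosen for illustration only.

```python
def iterations_to_accuracy(h0, c1, c2, eps, max_iter=10**6):
    """Iterate the worst-case recursion h <- h - min(c1*h, c2*h**2).

    Returns (number of Phase I steps, total steps until h <= eps).
    """
    h, k, phase1 = h0, 0, 0
    while h > eps and k < max_iter:
        if c1 * h < c2 * h * h:    # Phase I: h > c1/c2, linear decrease
            phase1 += 1
            h -= c1 * h
        else:                      # Phase II: h <= c1/c2, sublinear decrease
            h -= c2 * h * h
        k += 1
    return phase1, k

# Hypothetical constants with c1 <= 1/2, as required in the proof
phase1, total = iterations_to_accuracy(h0=100.0, c1=0.5, c2=0.05, eps=1e-2)
```

With these values the Phase I count depends only logarithmically on \(h_{0}\mathtt {c}_{2}/\mathtt {c}_{1}\), while the Phase II count stays below \(1/(\mathtt {c}_{2}\varepsilon )\), in line with the telescoping argument based on \(1/h_{k+1}\ge 1/h_{k}+\mathtt {c}_{2}\).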

Remark 4

Combining the result of Theorem 1 and the definitions of the constants \(\mathtt {c}_{1}(M,\nu )\) in (23) and \(\mathtt {c}_{2}(M,\nu )\) in (24), we can see that, neglecting the logarithmic terms and using that \(-\frac{1}{\ln (1-x)}\le \frac{1}{x}\) for \(x\in (0,1)\), the iteration complexity of FWGSC can be bounded as

where \(c_1,c_2,c_3\) are numerical constants. The first term corresponds to Phase I, in which one observes linear convergence, and the second term corresponds to Phase II, with sublinear convergence. Interestingly, the second term has the same form as the standard complexity bound for FW methods. The only difference is that the global Lipschitz constant of the gradient is replaced by the Lipschitz constant over the level set defined by the starting point.
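For completeness, the elementary inequality invoked in Remark 4 follows from \(\ln (1-x)\le -x\) for \(x\in (0,1)\). Applied to the Phase I estimate from the proof of Theorem 1 (with \(x=\mathtt {c}_{1}\) and assuming \(h_{0}>\mathtt {c}_{1}/\mathtt {c}_{2}\)), it gives the following sketch of the bound on the length of Phase I:

$$\begin{aligned} -\ln (1-x)\ge x>0\quad&\Longrightarrow \quad -\frac{1}{\ln (1-x)}\le \frac{1}{x},\\ T_{1}(x^{0})-1\le \frac{\ln \left( \frac{h_{0}\mathtt {c}_{2}}{\mathtt {c}_{1}}\right) }{-\ln (1-\mathtt {c}_{1})}\quad&\Longrightarrow \quad T_{1}(x^{0})\le 1+\frac{1}{\mathtt {c}_{1}}\ln \left( \frac{h_{0}\mathtt {c}_{2}}{\mathtt {c}_{1}}\right) . \end{aligned}$$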

4.2 Complexity analysis of backtracking versions

The complexity analysis of both backtracking-based algorithms (LBTFWGSC and MBTFWGSC) uses similar ideas, which all essentially rest on the specific form of the employed upper models \(Q_{L}\) and \(Q_{M}\), respectively. We will first derive a uniform bound on the per-iteration decrease of the objective function value, and then deduce the complexity analysis from Theorem 1. In both algorithms we use a generic bound on the backtracking parameter.

Lemma 6

Let \(\{\mathcal {L}_{k}\}_{k\in \mathbb {N}}\) be the sequence of Lipschitz estimates produced by the procedure \(\mathtt {step}_{L}(f,v^{k},x^{k},\mathcal {L}_{k-1})\) and \(\{\mu _{k}\}_{k\in \mathbb {N}}\) the sequence of GSC-parameter estimates produced by \(\mathtt {step}_{M}(f,v^{k},x^{k},\mu _{k-1})\), respectively. We have \(\mathcal {L}_{k}\le \max \{\mathcal {L}_{-1},\gamma _{u}L_{\nabla f}\}\) and \(\mu _{k}\le \max \{\mu _{-1},\gamma _{u}M_{f}\}\).

Proof

We prove the statement only for the sequence \(\{\mathcal {L}_{k}\}_{k}\). The claim for \(\{\mu _{k}\}_{k\in \mathbb {N}}\) can be shown in the same way. By construction of the backtracking procedure, we know that if the sufficient decrease condition is satisfied at the first trial, then \(\mathcal {L}_{k-1}\ge \mathcal {L}_{k}\ge \gamma _{d}\mathcal {L}_{k-1}\). If not, then it is clear that \(\mathcal {L}_{k}\le \gamma _{u}L_{\nabla f}.\) Hence, for all \(k\ge 0\), \(\mathcal {L}_{k}\le \max \{\gamma _{u}L_{\nabla f},\mathcal {L}_{k-1}\}\). By backward induction, it then follows that \(\mathcal {L}_{k}\le \max \{\mathcal {L}_{-1},\gamma _{u}L_{\nabla f}\}\). \(\square \)
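The bound of Lemma 6 can be observed on a toy example. The sketch below is an assumed, simplified version of a backtracking search for the local Lipschitz estimate and is not taken verbatim from Algorithm 4: it starts from \(\gamma _{d}\mathcal {L}_{k-1}\) and multiplies the estimate by \(\gamma _{u}\) until a quadratic model along the search direction upper-bounds the objective. The names `step_L`, `phi`, `gap`, `beta2`, the default values of `gamma_d` and `gamma_u`, and the toy data are all illustrative assumptions; `phi` is expected to return `float('inf')` outside \({{\,\mathrm{dom}\,}}f\).

```python
def step_L(phi, gap, beta2, L_prev, gamma_d=0.9, gamma_u=2.0):
    """Backtracking sketch for a local Lipschitz estimate.

    phi(a)  : objective along the ray, i.e. f(x + a*v)
    gap     : Frank-Wolfe gap -<grad f(x), v>, assumed positive
    beta2   : squared Euclidean norm of the direction v
    L_prev  : estimate from the previous iteration
    """
    L = gamma_d * L_prev                      # optimistic first trial
    while True:
        alpha = min(1.0, gap / (L * beta2))   # minimizer of the model on [0, 1]
        model = phi(0.0) - alpha * gap + 0.5 * L * alpha**2 * beta2
        if phi(alpha) <= model + 1e-12:       # sufficient decrease holds
            return alpha, L
        L *= gamma_u                          # model too optimistic: increase L

# Toy quadratic along a fixed direction: curvature v'Av = 2.75, ||v||^2 = 0.5,
# so the model is valid as soon as L >= 2.75 / 0.5 = 5.5.
gap, beta2, curv = 2.25, 0.5, 2.75
phi = lambda a: 1.375 - a * gap + 0.5 * curv * a**2
alpha, L_new = step_L(phi, gap, beta2, L_prev=0.1)
```

In this example the returned estimate never exceeds \(\gamma _{u}\) times the curvature ratio along the direction, which mirrors the argument in the proof: the last rejected trial must have been below the true constant.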

4.2.1 Analysis of LBTFWGSC

Calling Algorithm LBTFWGSC at position \(x^{k}\) generates a step size \(\alpha _{k}\) and a local Lipschitz estimate \(\mathcal {L}_{k}\) via \((\alpha _{k},\mathcal {L}_{k})=\mathtt {step}_{L}(f,v_{{{\,\mathrm{FW}\,}}}(x^{k}),x^{k},\mathcal {L}_{k-1})\). The new search point thus produced satisfies \(x^{k+1}=x^{k}+\alpha _{k}v^{k}\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\), and

The reported step size is \(\alpha _{k}=\min \left\{ 1,\frac{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})}{\mathcal {L}_{k}\beta _{k}^{2}}\right\} \). For each of these possible realizations of this step size, we will provide a lower bound of the achieved reduction in the objective function value.

Case 1 If \(\alpha _{k}=1\), then \(\mathcal {L}_{k}\beta _{k}^{2}\le {{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})\) and \(x^{k+1}=x^{k}+v^{k}\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\). Hence,

Case 2 If \(\alpha _{k}=\frac{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})}{\mathcal {L}_{k}\beta _{k}^{2}}\), then

Since \(\mathcal {L}_{k}\le \max \{\gamma _{u}L_{\nabla f},\mathcal {L}_{-1}\}\equiv \bar{L}\) (Lemma 6), we obtain the performance guarantee

Setting \(\mathtt {c}_{1}\equiv \frac{1}{2}\) and \(\mathtt {c}_{2}\equiv \frac{1}{2\bar{L}{{\,\mathrm{diam}\,}}(\mathcal {X})^{2}}\), it follows that

In terms of the approximation error, this implies

Thus, we can use an analysis similar to the one in the proof of Theorem 1, and obtain the following \(\mathcal {O}(1/\varepsilon )\) iteration complexity guarantee for method LBTFWGSC.

Theorem 2

Suppose that Assumptions 1 and 2 hold. Let \(\{x^{k}\}_{k\ge 0}\) be generated by LBTFWGSC. For \(x^{0}\in \mathcal {X}\cap {{\,\mathrm{dom}\,}}f\) and \(\varepsilon >0\), define \(N_{\varepsilon }(x^{0})\triangleq \inf \{k\ge 0\vert h_{k}\le \varepsilon \}.\) Then, for all \(\varepsilon >0\),

where \(\bar{L}=\max \{\gamma _{u}L_{\nabla f},\mathcal {L}_{-1}\}\).
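As a worked check of the constants \(\mathtt {c}_{1}=\frac{1}{2}\) and \(\mathtt {c}_{2}=\frac{1}{2\bar{L}{{\,\mathrm{diam}\,}}(\mathcal {X})^{2}}\), assume that the sufficient-decrease test in \(\mathtt {step}_{L}\) has the quadratic form \(f(x^{k+1})\le f(x^{k})-\alpha _{k}{{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})+\frac{\mathcal {L}_{k}\alpha _{k}^{2}}{2}\beta _{k}^{2}\) with \(\beta _{k}=\Vert v^{k}\Vert _{2}\le {{\,\mathrm{diam}\,}}(\mathcal {X})\) (this form is an assumption made here for illustration). Under this assumption, the two cases for the step size give

$$\begin{aligned} \alpha _{k}=1:\quad&f(x^{k})-f(x^{k+1})\ge {{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})-\frac{\mathcal {L}_{k}\beta _{k}^{2}}{2}\ge \frac{1}{2}{{\,\mathrm{\mathsf {Gap}}\,}}(x^{k}),\\ \alpha _{k}=\frac{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})}{\mathcal {L}_{k}\beta _{k}^{2}}:\quad&f(x^{k})-f(x^{k+1})\ge \frac{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})^{2}}{2\mathcal {L}_{k}\beta _{k}^{2}}\ge \frac{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})^{2}}{2\bar{L}{{\,\mathrm{diam}\,}}(\mathcal {X})^{2}}, \end{aligned}$$

where the first line uses \(\mathcal {L}_{k}\beta _{k}^{2}\le {{\,\mathrm{\mathsf {Gap}}\,}}(x^{k})\) and the second uses \(\mathcal {L}_{k}\le \bar{L}\).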

4.2.2 Analysis of MBTFWGSC

The complexity analysis of this algorithm is completely analogous to the one corresponding to Algorithm LBTFWGSC. The main difference between the two variants is the upper model employed in the local search. Calling MBTFWGSC at position \(x^{k}\) generates the pair \((\alpha _{k},\mu _{k})=\mathtt {step}_{M}(f,v_{{{\,\mathrm{FW}\,}}}(x^{k}),x^{k},\mu _{k-1})\) such that

where \(\mathtt {e}_{k}\equiv \mathtt {e}(x^{k})\). The step size parameter \(\alpha _{k}\) satisfies \(\alpha _{k}=\min \{1,\mathtt {t}_{\mu _{k},\nu }(x^{k})\}.\) We can thus apply Proposition 4 in order to obtain the recursion

involving the constants defined in (23) and (24). By construction of the backtracking step, we know that \(\mu _{k}\le \max \{\gamma _{u}M_{f},\mu _{-1}\}\equiv \bar{M}\) (Lemma 6). Hence, after setting \(\mathtt {c}_{1}\equiv \mathtt {c}_{1}(\bar{M},\nu ),\mathtt {c}_{2}\equiv \mathtt {c}_{2}(\bar{M},\nu )\), we arrive at

From here the complexity analysis proceeds as in Theorem 1. The only change that has to be made is to replace the expressions \(\mathtt {c}_{1}(M_{f},\nu )\) and \(\mathtt {c}_{2}(M_{f},\nu )\) by the numbers \(\mathtt {c}_{1}(\bar{M},\nu )\) and \(\mathtt {c}_{2}(\bar{M},\nu )\), respectively.

Theorem 3

Suppose that Assumptions 1 and 2 hold. Let \(\{x^{k}\}_{k\ge 0}\) be generated by MBTFWGSC. For \(x^{0}\in \mathcal {X}\cap {{\,\mathrm{dom}\,}}f\) and \(\varepsilon >0\), define \(N_{\varepsilon }(x^{0})\triangleq \inf \{k\ge 0\vert h_{k}\le \varepsilon \}.\) Then, for all \(\varepsilon >0\),

where \(\bar{M}=\max \{\gamma _{u}M_{f},\mu _{-1}\}.\)

Note that a similar remark to Remark 4 can be made in this case.

Remark 5

While the proofs of Theorem 2 and Theorem 3 follow the same steps, the underlying models are different. LBTFWGSC is based on the observation that since the algorithm is monotone, it stays in the level set on which the objective function has Lipschitz-continuous gradient. This allows us to use the quadratic upper bound (16) to find the corresponding stepsize. On the contrary, MBTFWGSC is based on the upper bound (18) that is specific to generalized self-concordant functions. Moreover, these two different models lead to different stepsize definitions, different estimates for the per-iteration progress, and slightly different complexity results, yet with similar dependence on \(\varepsilon \).

5 Linearly convergent variants of Frank–Wolfe for GSC functions

In the development of all our linearly convergent variants, we assume that the feasible set is a polytope described by a system of linear inequalities.

Assumption 3

The feasible set \(\mathcal {X}\) admits the explicit representation

where \({\mathbf{B}}\in \mathbb {R}^{m\times n}\) and \(b\in \mathbb {R}^{m}\).

5.1 Local linear minimization oracles

In this section we show how the local linear minimization oracle of [24] can be adapted to accelerate the convergence of FW-methods for minimizing GSC functions. In particular, we work out an analytic step-size criterion which guarantees linear convergence towards a solution of (P). The construction is a non-trivial modification of [24], as it exploits the local descent properties of GSC functions. In particular, we neither assume global Lipschitz continuity, nor strong convexity of the objective function. Instead, our working assumption in this section is the availability of a local linear minimization oracle, defined as follows:

Definition 3

([24], Def. 2.5) A procedure \(\mathcal {A}(x,r,c)\), where \(x\in \mathcal {X},r>0,c\in \mathbb {R}^n,\) is a Local Linear Optimization Oracle (LLOO) with parameter \(\rho \ge 1\) for the polytope \(\mathcal {X}\) if \(\mathcal {A}(x,r,c)\) returns a point \(u(x,r,c)=u\in \mathcal {X}\) such that

We refer to [24] for illustrative examples for oracles \(\mathcal {A}(x,r,c)\). In particular, [24] provide an explicit construction of the LLOO for a simplex and for general polytopes. We further redefine the local norm as

With an obvious abuse of notation, we also redefine

As in the previous sections, our goal is to come up with a step-size policy guaranteeing feasibility and a sufficient decrease. As will become clear in a moment, our construction relies on a careful analysis of the function

where \(\xi ,\delta \ge 0\) are free parameters. This function is also used in the complexity analysis of FWGSC, and thoroughly discussed in “Appendix B”. In particular, the analysis in “Appendix B” shows that \(t\mapsto \psi _{\nu }(t)\) is concave, unimodal with \(\psi _{\nu }(0)=0\), increasing on the interval \([0,t_{\nu }^{*})\) and decreasing on \([t_{\nu }^{*},\infty )\), where the cut-off value \(t_{\nu }^{*}\) is defined in eq. (62). Moreover, \(\psi _{\nu }(t)\ge 0\) for \(t \in [0,t_{\nu }^{*}]\). To facilitate the discussion, let us redefine this cut-off value in a way which emphasizes its dependence on structural parameters. We call

We construct our step size policy iteratively. Suppose we are given the current iterate \(x^{k}\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\), produced by k sequential calls of FWLLOO, using a finite sequence \(\{\alpha _{i}\}_{i=0}^{k-1}\) of step-sizes and search radii \(\{r_{i}\}_{i=0}^{k-1}\). Set \(c_{k}=\exp \left( -\frac{1}{2}\sum _{i=0}^{k-1}\alpha _{i}\right) \). Call the LLOO to obtain the target state \(u^{k}=u(x^{k},r_{k},\nabla f(x^{k}))\), using the updated search radius \(r_{k}=r_{0}c_{k}\). We define the next step size \(\alpha _{k}=\alpha _{\nu }(x^{k})\) by setting

Update the sequence of search points to \(x^{k+1}=x^{k}+\alpha _{k}(u^{k}-x^{k})\). By construction of \(t^{k}_{\nu }\equiv t^{*}_{\nu }\left( M_f\delta _{\nu }(x^{k}),\frac{2\mathtt {e}(x^{k})^2}{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})c_{k}}\right) \), this point lies in \({{\,\mathrm{dom}\,}}f\cap \mathcal {X}\). To see this, consider first the case in which \(\alpha _{k}=1<t^{k}_{\nu }\). Then, \(\mathsf {d}_{\nu }(x^{k+1},x^{k})=\alpha _{k}M_f\delta _{\nu }(x^{k})=M_f\delta _{\nu }(x^{k})<t^{k}_{\nu }M_f\delta _{\nu }(x^{k})<1\). On the other hand, if \(\alpha _{k}=t^{k}_{\nu }\), then it follows from the definition of the involved quantities that \(\mathsf {d}_{\nu }(x^{k+1},x^{k})=\alpha _{k}M_f\delta _{\nu }(x^{k})<1\).

Repeating this procedure iteratively yields a sequence \(\{x^{k}\}_{k\in \mathbb {N}}\), whose performance guarantees in terms of the approximation error \(h_{k}=f(x^{k})-f^{*}\) are described in the Theorem below.

Theorem 4

Suppose Assumption 1 holds. Let \(\{x^{k}\}_{k\ge 0}\) be generated by FWLLOO. Then, for all \(k\ge 0\), we have \(x^{*}\in \mathbb {B}(x^{k},r_{k})\) and

where the sequence \(\{\alpha _{k}\}_{k}\) is constructed as in (37).

Proof

Let us define \(\mathcal {P}(x^{0})\triangleq \left\{ x\in \mathcal {X}\vert f(x)\le f^{*}+{{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})\right\} \). We proceed by induction. For \(k=0\), we have \(x^{0}\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\) by assumption and \(x^{0}\in \mathcal {P}(x^{0})\) by definition. (21) gives

Let \(u^{0}\equiv u(x^{0},r_{0},\nabla f(x^{0})),\delta _{0}\equiv \delta _{\nu }(x^{0}),\xi _{0}=\frac{2\mathtt {e}(x^{0})^{2}}{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})}\) and \(\alpha _{0}=\alpha _{\nu }(x^{0})\) obtained by evaluating (37) with the cut-off value \(t^{*}_{\nu }(M_{f}\delta _{0},\xi _{0})\). Since \(r_{0} = \sqrt{\frac{2{{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})}{\sigma _{f}}}\ge \sqrt{\frac{2h_{0}}{\sigma _{f}}}\), (39) implies that \(x^{*}\in \mathbb {B}(x^{0},r_{0})\). The definition of the LLOO gives us

Set \(x^{1}=x^{0}+\alpha _{0}(u^{0}-x^{0})\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\). The GSC-descent lemma (9) gives then

Hence, writing the above in terms of the approximation error \(h_{k}=f(x^{k})-f^{*}\), we obtain

We see that the second summand in the right-hand side above is just the value of the function \(\psi _{\nu }(\alpha _{0})\), with the parameters \(\delta =M_{f}\delta _{0}\) and \(\xi =\xi _{0}=\frac{2\mathtt {e}(x^{0})^{2}}{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})}\). Hence, by construction, the second summand is nonnegative, which gives us the bound

To perform the induction step, assume that for some \(k\ge 1\) it holds

Since \(c_{k}\in (0,1)\), we readily see that \(x^{k}\in \mathcal {P}(x^{0})\). Call \(\delta _{k}=\delta _{\nu }(x^{k})\) and \(\xi _{k}=\frac{2\mathtt {e}(x^{k})^{2}}{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})c_{k}}\). (21) leads to

Call the LLOO to obtain the target point \(u^{k}=\mathcal {A}(x^{k},r_{k},\nabla f(x^{k}))\). Using the definition of the LLOO, (42) implies

Define the step size \(\alpha _{k}=\alpha _{\nu }(x^{k})\), and declare the next search point \(x^{k+1}=x^{k}+\alpha _{k}(u^{k}-x^{k})\). By the discussion preceding the theorem, it is clear that \(x^{k+1}\in \mathcal {X}\cap {{\,\mathrm{dom}\,}}f\). Via the GSC-descent lemma and the induction hypothesis, we arrive, in exactly the same way as for the case \(k=0\), at the inequality

The construction of the step size \(\alpha _{k}\) ensures that the expression in the brackets on the right-hand-side is non-negative. Consequently, we obtain \(h_{k+1} \le (1-\alpha _k/2){{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})c_k \le {{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})c_k \exp (-\alpha _k/2) = {{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})c_{k+1}\), which finishes the induction proof. \(\square \)

To obtain the final linear convergence rate, it remains to lower bound the step size sequence \(\alpha _{k}=\alpha _{\nu }(x^{k})\). Note that for all values \(\nu \in [2,3]\), \(t^{*}_{\nu }(\delta ,\xi )\) is an increasing function of \(\frac{1}{\delta }\) and \(\frac{\delta }{\xi }\). Thus, our next steps are to lower bound the values of the non-negative sequences \(\{\frac{1}{M_f\delta _{k}}\}_{k}\) and \(\{\frac{M_f\delta _{k}}{\xi _{k}}\}_{k}\), where \(\delta _{k}=\delta _{\nu }(x^{k})\) and \(\xi _{k}=\frac{2\mathtt {e}(x^{k})^{2}}{{{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})c_{k}}\) for all \(k\ge 0\). We have

By definition of the LLOO, we have \(\Vert u^{k}-x^{k}\Vert _{2} \le \min \{\rho r_k, {{\,\mathrm{diam}\,}}(\mathcal {X})\}\). Thus, if \(\nu =2\), we have

while if \(\nu >2\), we observe

Furthermore, from the identity \(\frac{2{{\,\mathrm{\mathsf {Gap}}\,}}(x^0)c_k}{\sigma _f}=r^{2}_{k}\), we conclude \({{\,\mathrm{\mathsf {Gap}}\,}}(x^0)c_k = \frac{\sigma _fr^{2}_{k}}{2}\). Hence,

If \(\nu =2\), we see that

while if \(\nu >2\), we have in turn

Denoting \(\gamma _{\nu } = \frac{\nu -2}{2}M_{f} L_{\nabla f}^{\frac{\nu -2}{2}}\) for \(\nu >2\) and \(\gamma _{\nu } = M_{f}\) for \(\nu =2\), and substituting these lower bounds into the expression for \(t_{\nu }^*\), we obtain

For all \(\nu \in [2,3]\), the minorizing sequence \(\{\underline{t}_{k}\}_{k}\) has a limit \(\frac{\sigma _f}{4\rho ^2 L_{\nabla f}}\) as \(r_k \rightarrow 0\). Moreover, as the search radii sequence \(\{r_{k}\}_{k\in \mathbb {N}}\) is decreasing, basic calculus shows that the sequence \(\{\underline{t}_{k}\}_{k}\) is monotonically increasing. Whence, we get a uniform lower bound of the cut-off values \(\{t_{\nu }^{k}\}_{k}\) as

Corollary 1

Suppose Assumption 1 holds. Algorithm FWLLOO guarantees linear convergence in terms of the approximation error:

where \(\bar{\alpha }=\min \{\underline{t},1\}\) with \(\underline{t}\) defined in (44).

Proof

It is clear that \(\alpha _{k}\ge \bar{\alpha }=\min \{\underline{t},1\}\) for all \(k\ge 0\). Hence \(\exp \left( -\frac{1}{2}\sum _{i=0}^{k-1}\alpha _{i}\right) \le \exp (-k\bar{\alpha }/2)\), and the claim follows. \(\square \)
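The step from the per-iteration contraction to the uniform rate in Corollary 1 rests on two elementary facts: \(1-\alpha /2\le \exp (-\alpha /2)\), and \(\sum _{i<k}\alpha _{i}\ge k\bar{\alpha }\) whenever every \(\alpha _{i}\ge \bar{\alpha }\). The short sketch below checks both on a hypothetical step-size sequence, using the normalization \(c_{k}=\exp (-\frac{1}{2}\sum _{i<k}\alpha _{i})\) from the proof of Corollary 1; the function name and the step sizes are assumptions for illustration.

```python
import math

def contraction_factors(alphas):
    """Return c_k = exp(-0.5 * sum_{i<k} alpha_i) for k = 0..len(alphas)."""
    c, total = [1.0], 0.0
    for a in alphas:
        total += a
        c.append(math.exp(-0.5 * total))
    return c

alphas = [1.0, 0.6, 0.35, 0.3, 0.3]   # hypothetical step sizes in (0, 1]
alpha_bar = min(alphas)               # uniform lower bound on the steps
c = contraction_factors(alphas)
# Uniform rate exp(-k * alpha_bar / 2) that dominates c_k
uniform = [math.exp(-0.5 * k * alpha_bar) for k in range(len(c))]
```

The gap between `c` and `uniform` on such a sequence reflects the conservatism of the uniform bound when early steps are longer than \(\bar{\alpha }\).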

The obtained bound can be quite conservative since we used a uniform bound for the sequence \(\underline{t}_{k}\). At the same time, since \(r_k\) converges geometrically to 0 and, for all \(\nu \in [2,3]\), the minorizing sequence \(\{\underline{t}_{k}\}_{k}\) has the limit \(\frac{\sigma _f}{4\rho ^2 L_{\nabla f}}\) as \(r_k \rightarrow 0\), we may expect that after some burn-in phase, the sequence \(\alpha _{k}\) can be bounded from below by \(\frac{\sigma _f}{8\rho ^2 L_{\nabla f}}\). This lower bound yields the linear convergence estimate \(h_{k}\le {{\,\mathrm{\mathsf {Gap}}\,}}(x^{0})\exp (-k_0\bar{\alpha }/2)\exp \left( -(k-k_0)\frac{\sigma _f}{16\rho ^2 L_{\nabla f}}\right) \) for \(k \ge k_0\), where the length of the burn-in phase \(k_0\) is, up to logarithmic factors, equal to \(\frac{1}{\bar{\alpha }}\). This corresponds to the iteration complexity

Interestingly, the second term has the same form as the complexity bound for the FW method under the LLOO proved in [24], with \(\frac{\rho ^2 L_{\nabla f}}{\sigma _f}\) playing the role of the condition number. The only difference is that the global Lipschitz constant of the gradient is replaced by the Lipschitz constant over the level set defined by the starting point.

5.2 Away-step Frank–Wolfe (ASFW)

We start with some preparatory remarks. Recall that in this section Assumption 3 is in place. Hence, \(\mathcal {X}\) is a polytope of the form (33). By compactness and the Krein–Milman theorem, we know that \(\mathcal {X}\) is the convex hull of finitely many vertices (extreme points) \(\mathcal {U}\triangleq \{u_{1},\ldots ,u_{q}\}\). Let \(\Delta (\mathcal {U})\) denote the set of discrete measures \(\mu \triangleq (\mu _{u}:u\in \mathcal {U})\) with \(\mu _{u}\ge 0\) for all \(u\in \mathcal {U}\) and \(\sum _{u\in \mathcal {U}}\mu _{u}=1\). A measure \(\mu ^{x}\in \Delta (\mathcal {U})\) is a vertex representation of x if \(x=\sum _{u\in \mathcal {U}}\mu ^{x}_{u}u\). Given \(\mu \in \Delta (\mathcal {U})\), we define \({{\,\mathrm{supp}\,}}(\mu )\triangleq \{u\in \mathcal {U}\vert \mu _{u}>0\}\) and the set of active vertices \(\mathcal {U}(x)\triangleq \{u\in \mathcal {U}\vert u\in {{\,\mathrm{supp}\,}}(\mu ^{x})\}\) of point \(x\in \mathcal {X}\) under the vertex representation \(\mu ^{x}\in \Delta (\mathcal {U})\). We use \(I(x)\triangleq \{i\in \{1,\ldots ,m\}\vert {\mathbf{B}}_{i}x=b_{i}\}\) to denote the set of binding constraints at x. For a given set \(V\subset \mathcal {U}\), we let \(I(V)=\bigcap _{u\in V}I(u)\).

For the linear minimization oracle generating the target point s(x), we invoke an explicit tie-breaking rule in its definition.

Assumption 4

The linear minimization procedure

returns a vertex solution, i.e. \(s(x)\in \mathcal {U}\) for all \(x\in \mathcal {X}\).

Remark 6

[4] refer to this as a vertex linear oracle.

ASFW also needs a target vertex that is as aligned as possible with the direction of the gradient vector at the current position x. Such a target vertex is defined as

At each iteration, we assume that the iterate \(x^{k}\) is represented as a convex combination of active vertices \(x^{k}=\sum _{u\in \mathcal {U}}\mu ^{k}_{u}u\), where \(\mu ^{k}\in \Delta (\mathcal {U})\). In this case, the sets \(U^{k}= \mathcal {U}(x^{k})\) and the carrying measure \(\mu ^{k}=\mu ^{x^{k}}\) provide a compact representation of \(x^{k}\). The ASFW scheme updates the thus described representation \((U^{k},\mu ^{k})\) via the vertex representation updating (VRU) scheme, as defined in [4]. A single iteration of ASFW can perform two different updating steps:

-

1.

Forward Step This update is constructed in the same way as FWGSC.

-

2.

Away Step This is a correction step in which the weight of a single vertex is reduced, or even nullified. Specifically, the away step regime builds on the following ideas: Let \(x\in \mathcal {X}\) be the current position of the algorithm with vertex representation \(x=\sum _{u\in \mathcal {U}}\mu ^{x}_{u}u\). Pick u(x) as in (45). Define the away direction

$$\begin{aligned} v_{{{\,\mathrm{A}\,}}}(x)\triangleq x-u(x), \end{aligned}\tag{46}$$

and apply the step size \(t>0\) to produce the new point

$$\begin{aligned} x^{+}_{t}&=x+tv_{{{\,\mathrm{A}\,}}}(x)\\&=\sum _{u\in \mathcal {U}(x)\setminus \{u(x)\}}(1+t)\mu ^{x}_{u}u+\left( \mu ^{x}_{u(x)}(1+t)-t\right) u(x). \end{aligned}$$

Choosing \(t\equiv \bar{t}(x)\triangleq \frac{\mu ^{x}_{u(x)}}{1-\mu ^{x}_{u(x)}}\) eliminates the vertex \(u=u(x)\) from the support of the current point x and leaves us with the new position \(x^{+}=x^{+}_{\bar{t}(x)}=\sum _{u\in \mathcal {U}(x)\setminus \{u(x)\}}\frac{\mu ^{x}_{u}}{1-\mu ^{x}_{u(x)}}u\). This vertex removal is called a drop step.
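The weight update behind the away step can be sketched in a few lines. The function below applies the formula for \(x^{+}_{t}\) to a vertex representation stored as a dictionary and removes vertices whose weight vanishes; the function name, the dictionary-based representation, the vertex labels and the numerical tolerance are illustrative assumptions and do not reproduce the VRU scheme of [4].

```python
def away_step_weights(mu, u_away, t):
    """Vertex weights of x + t*(x - u_away), given the weights mu of x.

    mu maps vertex labels to convex weights; vertices whose new weight
    is (numerically) zero are dropped from the support.
    """
    new = {}
    for u, w in mu.items():
        # Every weight is scaled by (1 + t); the away vertex also loses t.
        w_new = (1.0 + t) * w - (t if u == u_away else 0.0)
        if w_new > 1e-15:
            new[u] = w_new
    return new

mu = {"u1": 0.5, "u2": 0.25, "u3": 0.25}   # hypothetical representation of x
t_bar = mu["u3"] / (1.0 - mu["u3"])        # maximal away step size
dropped = away_step_weights(mu, "u3", t_bar)
```

With \(t=\bar{t}(x)\) the away vertex leaves the support and the remaining weights are rescaled by \(1/(1-\mu ^{x}_{u(x)})\), so they still sum to one. Any \(t<\bar{t}(x)\) keeps all vertices active.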

For the complexity analysis of ASFWGSC, we introduce some convenient notation. Define the vector field \(v:\mathcal {X}\rightarrow \mathbb {R}^n\) by

The modified gap function is

One observes that \(G(x)\ge 0\) for all \(x\in {{\,\mathrm{dom}\,}}f\cap \mathcal {X}\). To construct a feasible method, we need to impose bounds on the step-size. To that end, define

where \(\{\mu _{u}\}_{u\in \mathcal {U}}\in \Delta (\mathcal {U})\) is a given vertex representation of the current point x, and u(x) is the target state identified under the away-step regime (45).

The construction of our step size policy is based on an optimization argument, similar to the one used in the construction of FWGSC. In order to avoid unnecessary repetitions, we thus only spell out the main steps.

Recall that if \(\mathsf {d}_{\nu }(x,x+tv(x))<1\), then we can apply the generalized self-concordant descent lemma (9):

where \(\delta _{\nu }(x)\) is defined as in (12), modulo the change \(\beta (x)=\Vert v(x)\Vert _{2}\) and \(\mathtt {e}(x)=\Vert v(x)\Vert _{x}\). Using the modified gap function (48), this gives the upper model for the objective function

provided that \(G(x)>0\). This upper model is structurally equivalent to the one employed in the step-size analysis of FWGSC. Hence, to obtain an adaptive step-size rule in Algorithm 8, we solve the concave program

As in Sect. 3.2, and with some deliberate abuse of notation, let us denote the unique solution to this maximization problem by \(\mathtt {t}_{\nu }(x)\) (dependence on \(M_{f}\) is suppressed here, since we consider this parameter as given and fixed in this regime). Building on the insights we gained from proving Proposition 1, we thus obtain the familiar-looking characterization of the unique maximizer of the concave program (50):

Theorem 5

The unique solution to program (50) is given by

where \(\delta _{\nu }(x)\) is defined in eq. (12), with \(\beta (x)=\Vert v(x)\Vert _{2}\) and \(\mathtt {e}(x)=\Vert v(x)\Vert _{x}\) considering the vector field (47).

Analogously to Proposition 2, we see that when applying the step-size policy

we can guarantee that \(x^{k}\in \mathcal {X}\) for all \(k\ge 0\). Indeed, inspecting the expression (51) for each value \(\nu \in [2,3]\), it is easy to see that \(M_{f}\delta _{\nu }(x)\mathtt {t}_{\nu }(x)<1\). Hence, if \(\bar{t}(x)\le \mathtt {t}_{\nu }(x)\), it is immediate that \(\bar{t}(x)M_{f}\delta _{\nu }(x)<1\). Consequently, \(x+\alpha _{\nu }(x)v(x)\in \mathcal {X}\cap {{\,\mathrm{dom}\,}}f\) for all \(x\in \mathcal {X}\cap {{\,\mathrm{dom}\,}}f\). Therefore, the sequence generated by Algorithm 8 is always well defined. In terms of the thus constructed process \(\{x^{k}\}_{k\ge 0}\), we can quantify the per-iteration progress \(\Delta _{k}\equiv \tilde{\eta }_{x^{k},\nu }(\alpha _{k}),\) setting \(\alpha _{k}\equiv \alpha _{\nu }(x^{k})\), via the following modified version of Lemma 5:

Lemma 7

If \(\mathtt {t}_{\nu }(x)\le \bar{t}(x)\), we have

where \(\tilde{\gamma }_{\nu }\triangleq 1+\frac{4-\nu }{2(3-\nu )}\left( 1-2^{2(3-\nu )/(4-\nu )}\right) \) and \(\mathtt {b}\triangleq \frac{2-\nu }{4-\nu }\).
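To get a feeling for the size of the constants appearing in Lemma 7, the following minimal Python sketch evaluates \(\tilde{\gamma }_{\nu }\) and \(\mathtt {b}\) for \(\nu \in [2,3]\). The function names are hypothetical. The formula for \(\tilde{\gamma }_{\nu }\) is singular at \(\nu =3\); the sketch substitutes the limiting value \(1-\ln 2\) there, which is our own computation and not a statement taken from the lemma.

```python
import math


def gamma_tilde(nu: float) -> float:
    """Constant tilde{gamma}_nu of Lemma 7 for nu in [2, 3]."""
    if not 2.0 <= nu <= 3.0:
        raise ValueError("nu must lie in [2, 3]")
    if nu == 3.0:
        # Assumption: use the limit of the formula as nu -> 3, i.e. 1 - ln 2.
        return 1.0 - math.log(2.0)
    exponent = 2.0 * (3.0 - nu) / (4.0 - nu)
    ratio = (4.0 - nu) / (2.0 * (3.0 - nu))
    # 1 - 2**exponent, written with expm1 to limit cancellation near nu = 3.
    return 1.0 - ratio * math.expm1(exponent * math.log(2.0))


def b_exponent(nu: float) -> float:
    """Constant b = (2 - nu) / (4 - nu) of Lemma 7."""
    return (2.0 - nu) / (4.0 - nu)


if __name__ == "__main__":
    for nu in (2.0, 2.5, 3.0):
        print(nu, gamma_tilde(nu), b_exponent(nu))
```

Note that the formula gives \(\tilde{\gamma }_{2}=0\) and \(\mathtt {b}=0\) at \(\nu =2\).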

This means that at each iteration of Algorithm 8 in which \(\alpha _{k}=\mathtt {t}_{\nu }(x^{k})\), we succeed in reducing the objective function value by at least

To proceed further with the complexity analysis of ASFWGSC, we need the following technical angle condition, valid for polytope domains:

Lemma 8

(Corollary 3.1, [4]) For any \(x\in \mathcal {X}\setminus \mathcal {X}^{*}\) with support \(\mathcal {U}(x)\), we have

where

To assess the overall iteration complexity of Algorithm 8 we consider separately the following cases:

(a) If the step size regime \(\alpha _{k}=\mathtt {t}_{\nu }(x^{k})\) applies, then from Proposition 4 we deduce that \(f(x^{k+1})-f(x^{k})\le -\Delta _{k}\), where

$$\begin{aligned} \Delta _{k}\ge \min \{\mathtt {c}_{1}(M_{f},\nu )G(x^{k}), \mathtt {c}_{2}(M_{f},\nu ) G(x^{k})^{2}\}. \end{aligned}$$

The multiplicative constants \(\mathtt {c}_{1}(M_{f},\nu ),\mathtt {c}_{2}(M_{f},\nu )\) are the ones defined in (23) and (24). Hence,

$$\begin{aligned} f(x^{k+1})-f(x^{k})\le -\min \{\mathtt {c}_{1}(M_{f},\nu )G(x^{k}),\mathtt {c}_{2}(M_{f},\nu ) G(x^{k})^{2}\}. \end{aligned}$$

(b) Else, we apply the step size \(\alpha _{k}=\bar{t}_{k}\). Then, there are two cases to consider:

(b.i) If a Forward Step is applied, then we know that \(\bar{t}_{k}=1\). Since \(1<\mathtt {t}_{\nu }(x^{k})\), we can apply Lemma 4, but now evaluating the function \(\tilde{\eta }_{x,\nu }(t)\) at \(t=1\), to obtain the bound

$$\begin{aligned} \frac{\tilde{\eta }_{x^{k},\nu }(\bar{t}_{k})}{G(x^{k})}\ge \frac{1}{2}. \end{aligned}$$

This gives the per-iteration progress

$$\begin{aligned} f(x^{k+1})-f(x^{k})\le -\frac{1}{2}G(x^{k}). \end{aligned}$$

(b.ii) If an Away Step is applied, then we do not have a lower bound on \(\bar{t}_{k}\). However, we know that \(f(x^{k+1})-f(x^{*})\le f(x^{k})-f(x^{*})\). As in [4], we know that such drop steps can happen in at most half of the iterations.

Collecting these cases, we are ready to state and prove the main result of this section.

Theorem 6

Let \(\{x^{k}\}_{k\ge 0}\) be the trajectory generated by Algorithm 8 (ASFWGSC). Suppose that Assumptions 1, 3 and 4 are in place. Then, for all \(k\ge 0\) we have

where \(\theta \triangleq \min \left\{ \frac{1}{2},\frac{\mathtt {c}_{1}(M_{f},\nu )\Omega }{2{{\,\mathrm{diam}\,}}(\mathcal {X})},\frac{\mathtt {c}_{2}(M_{f},\nu )\Omega ^{2}\sigma _{f}}{8}\right\} \), \(\Omega \equiv \frac{\Omega _{\mathcal {X}}}{|\mathcal {U}|}\).

Proof

We say that iteration k is productive if it is either a Forward step or an Away step that is not a drop step. Based on the estimates developed by inspecting the cases (a) and (b.i) above, we see that at all productive steps we reduce the objective function value according to

We now develop a uniform bound for this decrease.

First, we recall that on the level set \(\mathcal {S}(x^{0})\), we have the strong convexity estimate

Using Lemma 8 and the definition of an Away-Step, we obtain the bound

where \(\Omega \equiv \frac{\Omega _{\mathcal {X}}}{|\mathcal {U}|}\le \frac{\Omega _{\mathcal {X}}}{|\mathcal {U}(x^{k})|}\). At the same time,

Consequently,

and

Furthermore,

Hence, in the cases (a) and (b.i), we can lower bound the per-iteration progress in terms of the approximation error \(h_{k}=f(x^{k})-f^{*}\) as

Since we are making a full drop step in at most k/2 iterations (recall that we initialize the algorithm from a vertex), we conclude from this that

\(\square \)
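As an illustration of how the rate constant \(\theta \) of Theorem 6 translates into an iteration count, consider the following minimal Python sketch. It rests on an explicit assumption: that the bound of the theorem has the geometric form \(h_{k}\le (1-\theta )^{k/2}h_{0}\), which is what the proof suggests (a contraction by \(1-\theta \) at each productive step, with at least k/2 productive steps). The function names and all numerical inputs are hypothetical; in practice \(\mathtt {c}_{1},\mathtt {c}_{2},\Omega ,\sigma _{f}\) are rarely known.

```python
import math


def rate_constant(c1: float, c2: float, omega: float,
                  diam: float, sigma_f: float) -> float:
    """theta = min{1/2, c1*Omega/(2 diam(X)), c2*Omega^2*sigma_f/8}."""
    return min(0.5, c1 * omega / (2.0 * diam), c2 * omega ** 2 * sigma_f / 8.0)


def iterations_for_accuracy(theta: float, h0: float, eps: float) -> int:
    """Smallest k with (1 - theta)^(k/2) * h0 <= eps.

    Assumption: the linear rate has the form h_k <= (1 - theta)^(k/2) * h0.
    """
    if not 0.0 < theta < 1.0:
        raise ValueError("theta must lie in (0, 1)")
    if eps >= h0:
        return 0
    return math.ceil(2.0 * math.log(eps / h0) / math.log1p(-theta))


if __name__ == "__main__":
    # Hypothetical constants, chosen only to exercise the formulas.
    th = rate_constant(c1=1.0, c2=1.0, omega=0.5, diam=1.0, sigma_f=1.0)
    print(th, iterations_for_accuracy(th, h0=1.0, eps=1e-6))
```

The sketch makes visible that the third term, which scales with \(\Omega ^{2}\sigma _{f}\), typically determines \(\theta \) when \(\Omega \) is small.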

Remark 7

We would like to point out that Algorithm ASFWGSC does not need to know the constants \(\sigma _f\), \(L_{\nabla f}\), which may be hard to estimate. Moreover, the constants in Lemma 8 are used only in the analysis and are not required to run the algorithm. Compared to [10], our ASFW does not rely on a backtracking line search, but it requires evaluating the Hessian, though not inverting it. Furthermore, our method does not involve the pyramidal width of the feasible set, which is in general extremely difficult to evaluate.

6 Numerical results

We provide four examples to compare our methods with existing methods in the literature. As competitors we take Algorithm 1, with its specific versions FW-Standard and FW-Line Search (see Footnote 2). As further benchmarks, we implement the self-concordant Proximal-Newton (PN) and the Proximal-Gradient (PG) of [50, 52], as available in the SCOPT package (see Footnote 3). All codes are written in Python 3, with the scientific computing packages NumPy 1.18.1 and SciPy 1.4.1. The experiments were conducted on an Intel(R) Xeon(R) Gold 6254 CPU @ 3.10 GHz server with a total of 300 GB RAM and 72 threads, where each method was allowed to run on a maximum of two threads.

We ran all first-order methods for a maximum of 50,000 iterations and PN, which is more computationally expensive, for a maximum of 1000 iterations. FW-Line Search is run with a tolerance of \(10^{-10}\). In order to ensure that FW-standard generates feasible iterates for \(\nu >2\), we check whether the next iterate is inside the domain; if not, we replace the step-size by 0, as suggested in [10]. PG was only used in instances where \(\nu =3\), as this method has been developed for standard self-concordant functions only [52]. Within PN we use monotone FISTA [6], with at most 100 iterations and a tolerance of \(10^{-5}\), to find the Newton direction. The step size used in PG is determined by the Barzilai-Borwein method [43] with a limit of 100 iterations, similar to [52]. FWLLOO was only implemented for experiments where the feasible set is the simplex, for which [24] provide an explicit LLOO, since the LLOO implementation for general polytopes suggested in [24] is non-trivial and involves calculating barycentric coordinates of the iterates.

Our comparison is based on performance profiles [15]. In order to present the results, we first estimate \(f^*\) by the best function value achieved by any of the algorithms, and compute the relative error attained by each of the methods at iteration k. More precisely, given the set of methods \(\mathcal {S}\), test problems \(\mathcal {P}\) and initial points \(\mathcal {I}\), denote by \(F_{ijl}\) the function value attained by method \(j\in \mathcal {S}\) on problem \(i\in \mathcal {P}\) starting from point \(l\in \mathcal {I}\). We define the estimate of the optimal value of problem i by \(f^*_i=\min \{F_{ijl}\vert j\in \mathcal {S}, l\in \mathcal {I}\}\). Denoting by \(\{x^{k}_{ijl}\}_{k}\) the sequence produced by method j on problem i starting from point l, we define the relative error as \(r^{k}_{ijl}=\frac{f(x^{k}_{ijl})-f^*_i}{f^*_i}\).

Now, for all methods \(j\in \mathcal {S}\) and any relative error \(\varepsilon \), we compute the proportion of data sets that achieve a relative error of at most \(\varepsilon \) (successful instances). We construct this statistic as follows: Let \(\bar{N}_{j}\) denote the maximum allowed number of iterations for method \(j\in \mathcal {S}\) (i.e., 50,000 for first-order methods and 1000 for PN). Define \(\mathcal {I}_{ij}(\varepsilon )\triangleq \{l\in \mathcal {I}: \exists k\le \bar{N}_j, r^{k}_{ijl}\le \varepsilon \}\). Then, the proportion of successful instances is

We are also interested in comparing the iteration complexity and CPU time. For that purpose, we define \(N_{ijl}(\varepsilon )\triangleq \min \{0\le k\le \bar{N}_{j}\vert r^{k}_{ijl}\le \varepsilon \}\) as the first iteration in which method \(j\in \mathcal {S}\) achieves a relative error \(\varepsilon \) on problem \(i\in \mathcal {P}\) starting from point \(l\in \mathcal {I}\). Analogously, \(T_{ijl}(\varepsilon )\) measures the minimal CPU time in which method \(j\in \mathcal {S}\) achieves a relative error \(\varepsilon \) on problem \(i\in \mathcal {P}\) starting from point \(l\in \mathcal {I}\). For comparing the iteration complexity and the CPU time across methods we construct the following statistics:

Besides average performance, we also report the mean and standard deviation of \(N_{ijl}(\varepsilon )\) and \(T_{ijl}(\varepsilon )\) across starting points, for specific values of relative error \(\varepsilon \) for all tested methods and data sets.
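The bookkeeping behind these statistics can be sketched in a few lines of Python with NumPy. This is a minimal illustration and not the code used for the experiments: the container `traces`, the function names, and the toy numbers are hypothetical, and the success proportion is computed here as the fraction of (problem, starting point) pairs on which a method reaches the target, which is our assumption about how the instances are aggregated. As in the definition of \(r^{k}_{ijl}\), the estimate of the optimal value is assumed to be positive.

```python
import numpy as np


def estimate_f_star(traces, problem):
    """Best function value reached on `problem` by any method and start."""
    return min(float(np.min(v)) for (i, j, l), v in traces.items()
               if i == problem)


def relative_error(trace, f_star):
    """Relative error (f(x^k) - f*) / f* along one run (assumes f* > 0)."""
    return (np.asarray(trace, dtype=float) - f_star) / f_star


def first_hit(rel_err, eps):
    """N(eps): first iteration with relative error <= eps, or None."""
    hits = np.flatnonzero(rel_err <= eps)
    return int(hits[0]) if hits.size else None


def success_proportion(traces, method, eps):
    """Fraction of (problem, start) pairs on which `method` reaches eps."""
    outcomes = []
    for (i, j, l), v in traces.items():
        if j != method:
            continue
        r = relative_error(v, estimate_f_star(traces, i))
        outcomes.append(first_hit(r, eps) is not None)
    return float(np.mean(outcomes))


# Toy data: keys are (problem, method, start), values are f(x^k), k = 0, 1, 2.
traces = {
    ("p1", "A", 0): [4.0, 2.0, 1.0],
    ("p1", "B", 0): [4.0, 3.0, 2.0],
}

if __name__ == "__main__":
    for m in ("A", "B"):
        print(m, success_proportion(traces, m, eps=0.5))
```

The CPU-time statistic \(T_{ijl}(\varepsilon )\) can be obtained in the same way by recording cumulative wall-clock times next to the function values and reading off the time at the index returned by `first_hit`.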

6.1 Logistic regression

Starting with [2], the logistic regression problem has been the main motivation from the perspective of statistical theory to analyze self-concordant functions in detail. The objective function involved in this standard classification problem is given by

Here \(\mu \) is a given intercept, \(y_{i}\in \{-1,1\}\) is the label attached to the i-th observation, and \(a_{i}\in \mathbb {R}^n\) are predictors given as input data for \(i=1,2,\ldots ,p\). The regularization parameter \(\gamma >0\) is usually calibrated via cross-validation. The task is to learn a linear hypothesis \(x\in \mathbb {R}^n\). According to [50], we can treat (57) as a \((M_{f}^{(3)},3)\)-GSC function minimization problem with \(M_{f}^{(3)}\triangleq \frac{1}{\sqrt{\gamma }}\max \{\Vert a_{i}\Vert _{2}\vert 1\le i\le p\}\). On the other hand, we can also consider it as a \((M_{f}^{(2)},2)\)-GSC minimization problem with \(M_{f}^{(2)}\triangleq \max \{\Vert a_{i}\Vert _{2}\vert 1\le i\le p\}\). It is important to observe that the regularization parameter \(\gamma >0\) affects the self-concordant parameter \(M_{f}^{(3)}\) but not \(M_{f}^{(2)}\). This gains relevance, since usually the regularization parameter is negatively correlated with the sample size p. Hence, for \(p\gg 1\), the GSC constant \(M_{f}\) could differ by orders of magnitude, which suggests considerable differences in the performance of numerical algorithms.
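The dependence of the two GSC constants on \(\gamma \) is easy to check numerically. The following minimal sketch computes \(M_{f}^{(2)}\) and \(M_{f}^{(3)}\) from a data matrix whose rows are the predictors \(a_{i}\); the function name and the random data are hypothetical and serve only as an illustration.

```python
import numpy as np


def gsc_constants(A, gamma):
    """Return (M2, M3) for logistic regression with predictor rows a_i.

    M2 = max_i ||a_i||_2 and M3 = M2 / sqrt(gamma), as stated in the text.
    """
    max_norm = float(np.max(np.linalg.norm(A, axis=1)))
    return max_norm, max_norm / float(np.sqrt(gamma))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    p, n = 10_000, 20
    A = rng.standard_normal((p, n))
    A /= np.linalg.norm(A, axis=1, keepdims=True)  # unit-norm predictors
    M2, M3 = gsc_constants(A, gamma=1.0 / p)
    print(M2, M3, M2 / M3)  # the ratio equals p**(-1/2) for gamma = 1/p
```

With unit-norm predictors and \(\gamma =1/p\), the ratio \(M_{f}^{(2)}/M_{f}^{(3)}\) is \(p^{-1/2}\), so the two constants already differ by two orders of magnitude for \(p=10^{4}\).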

We consider the elastic net formulation of the logistic regression problems [58], by enforcing sparsity of the estimators via an added \(\ell _{1}\) penalty. The resulting optimization problem reads as

This introduces another free parameter \(R>0\), which can be treated as another hyperparameter just like \(\gamma \).

We test our algorithms using \(R=10\), \(\mu =0\) and \(\gamma =1/{p}\), where \(a_i\) and \(y_i\) are based on data sets a1a–a9a from the LIBSVM library [12], where the predictors are normalized so that \(\Vert a_i\Vert =1\). Hence, \(M_{f}^{(2)}/M_{f}^{(3)}=p^{-1/2}\). For each data set, the methods were run for 10 randomly generated starting points, where each starting point was chosen as a random vertex of the \(\ell _1\) ball with radius 10.
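For concreteness, the two primitives needed on the \(\ell _{1}\) ball can be sketched as follows: drawing a random vertex as starting point, and solving the linear minimization problem over the ball. The vertices of the \(\ell _{1}\) ball of radius R are the points \(\pm R e_{i}\), and a linear function is minimized at the vertex aligned with the largest gradient entry in absolute value. The function names are hypothetical and this is a sketch, not the experimental code.

```python
import numpy as np


def random_l1_vertex(n, radius, rng):
    """Draw a uniformly random vertex +/- radius * e_i of the l1 ball."""
    x = np.zeros(n)
    i = int(rng.integers(n))
    x[i] = radius * rng.choice([-1.0, 1.0])
    return x


def l1_ball_lmo(grad, radius):
    """Return argmin of <grad, s> over the l1 ball of the given radius."""
    grad = np.asarray(grad, dtype=float)
    i = int(np.argmax(np.abs(grad)))
    s = np.zeros_like(grad)
    s[i] = -radius * np.sign(grad[i])  # s = 0 if grad is identically zero
    return s


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x0 = random_l1_vertex(5, 10.0, rng)
    print(x0, l1_ball_lmo([1.0, -3.0, 2.0], 10.0))
```

Each oracle call costs one pass over the gradient, which is the main reason projection-free methods are attractive on this feasible set.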

We first compare the methods that are affected by the value of \(\nu \in \{2,3\}\) and \(M_f\in \{M_{f}^{(2)},M_{f}^{(3)}\}\), i.e. FWGSC, MBTFWGSC, ASFWGSC, and PN. We display the comparison of the average relative error over the starting points versus iteration and time for data set a9a in Fig. 1. Note that for this data set we have \(p=32,561\). It is apparent that the linearly convergent methods ASFWGSC and PN gain the most benefit from the lower \(M_f\) associated with the shift from \(\nu =3\) to \(\nu =2\), reducing both iteration complexity and time. Moreover, for FWGSC and MBTFWGSC the change of \(\nu \) only seems to benefit the method in earlier iterations, but does not create any asymptotic speedup. Specifically, the benefit for MBTFWGSC is very small, probably since the backtracking procedure already takes advantage of the possible increase in the step-size that is partially responsible for the improved performance in the other methods. We observed the same behavior for all other data sets considered. Thus, we next compare these methods with \(\nu =2\) to MBTFWGSC, FW-standard, FW-Line Search, and PG, and display the performance of all tested methods using the aggregate statistics \(\rho (\varepsilon ),\tilde{\rho }(\varepsilon ),\hat{\rho }(\varepsilon )\) in Fig. 2. Table 2 reports statistics for \(N(\varepsilon )\) and \(T(\varepsilon )\) for each individual data set. PG has the best performance in terms of time to reach a certain value of relative error, followed by FW-standard and ASFWGSC. FW-standard is slightly better for relative errors higher than \(10^{-5}\) but becomes inferior to ASFWGSC for lower error values. From this example we conclude that, given a choice, using the FW algorithms with the lower parameter \(\nu \) is preferable, since the upper bound obtained by this choice is tighter. The fact that we want to use the GSC setting with \(\nu =2\), even when the problem can be formulated in the standard self-concordant setting \(\nu =3\), provides motivation for developing methods and analyses for the GSC case. Moreover, the advantage of ASFWGSC over other FW methods lies in its ability to achieve higher accuracy with lower iteration complexity. However, this advantage may be offset by its higher computational cost per iteration.

Performance profile for the logistic regression problem (57) obtained after averaging over 9 binary classification problems

6.2 Portfolio optimization with logarithmic utility

We study high-dimensional portfolio optimization problems with logarithmic utility [13]. In this problem there are n assets with returns \(r_{t}\in \mathbb {R}^{n}_{+}\) in period t of the investment horizon. More precisely, \(r_{t}\) measures the return as the ratio between the closing price of the current day \(R_{t,i}\) and the previous day \(R_{t-1,i}\), i.e. \(r_{t,i}=R_{t,i}/R_{t-1,i},1\le i\le n\). The utility function of the investor is given as

Based on historical observations \(r_t\), \(t\in \{1,\ldots ,p\}\), our task is to design a portfolio x solving the problem