Abstract

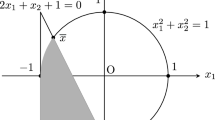

This paper studies high-order evaluation complexity for partially separable convexly-constrained optimization involving non-Lipschitzian group sparsity terms in a nonconvex objective function. We propose a partially separable adaptive regularization algorithm using a pth order Taylor model and show that the algorithm needs at most \(O(\epsilon ^{-(p+1)/(p-q+1)})\) evaluations of the objective function and its first p derivatives (whenever they exist) to produce an \((\epsilon ,\delta )\)-approximate qth-order stationary point. Our algorithm uses the underlying rotational symmetry of the Euclidean norm function to build a Lipschitzian approximation for the non-Lipschitzian group sparsity terms, which are defined by the group \(\ell _2\)–\(\ell _a\) norm with \(a\in (0,1)\). The new result shows that the partially-separable structure and non-Lipschitzian group sparsity terms in the objective function do not affect the worst-case evaluation complexity order.

Notes

If \(u_i=u_i^+\), then \(R_i= I\). If \(n_i=1\) and \(r_ir_i^+<0\), this rotation is just the mapping from \(\mathbb {R}_+\) to \(\mathbb {R}_-\), defined by a simple sign change, as in the two-sided model of [17].

References

Ahsen, M.E., Vidyasagar, M.: Error bounds for compressed sensing algorithms with group sparsity: a unified approach. Appl. Comput. Harmon. Anal. 43, 212–232 (2017)

Beck, A., Hallak, N.: Optimization problems involving group sparsity terms. Math. Program. 178, 39–67 (2019)

Baldassarre, L., Bhan, N., Cevher, V., Kyrillidis, A., Satpathi, S.: Group-sparse model selection: hardness and relaxations. IEEE Trans. Inf. Theory 62, 6508–6534 (2016)

Bellavia, S., Gurioli, G., Morini, B., Toint, Ph.L.: Deterministic and stochastic inexact regularization algorithms for nonconvex optimization with optimal complexity. SIAM J. Optim. 29, 2881–2915 (2019)

Bian, W., Chen, X.: Worst-case complexity of smoothing quadratic regularization methods for non-Lipschitzian optimization. SIAM J. Optim. 23, 1718–1741 (2013)

Bian, W., Chen, X., Ye, Y.: Complexity analysis of interior point algorithms for non-Lipschitz and nonconvex minimization. Math. Program. 149, 301–327 (2015)

Birgin, E.G., Gardenghi, J.L., Martínez, J.M., Santos, S.A., Toint, Ph.L.: Worst-case evaluation complexity for unconstrained nonlinear optimization using high-order regularized models. Math. Program. 163, 359–368 (2017)

Breheny, P., Huang, J.: Group descent algorithms for nonconvex penalized linear and logistic regression models with grouped predictors. Stat. Comput. 25, 173–187 (2015)

Cartis, C., Gould, N.I.M., Toint, Ph.L.: An adaptive cubic regularization algorithm for nonconvex optimization with convex constraints and its function-evaluation complexity. IMA J. Numer. Anal. 32, 1662–1695 (2012)

Cartis, C., Gould, N.I.M., Toint, Ph.L.: Second-order optimality and beyond: characterization and evaluation complexity in convexly-constrained nonlinear optimization. Found. Comput. Math. 18, 1073–1107 (2018)

Cartis, C., Gould, N.I.M., Toint, Ph.L.: Sharp worst-case evaluation complexity bounds for arbitrary-order nonconvex optimization with inexpensive constraints. SIAM J. Optim. (To appear)

Cartis, C., Gould, N.I.M., Toint, Ph.L.: Worst-case evaluation complexity and optimality of second-order methods for nonconvex smooth optimization. In: The Proceedings of the 2018 International Congress of Mathematicians (ICM 2018) (To appear)

Chen, L., Deng, N., Zhang, J.: Modified partial-update Newton-type algorithms for unary optimization. J. Optim. Theory Appl. 97, 385–406 (1998)

Chen, X., Xu, F., Ye, Y.: Lower bound theory of nonzero entries in solutions of \(\ell _2\)-\(\ell _p\) minimization. SIAM J. Sci. Comput. 32, 2832–2852 (2010)

Chen, X., Niu, L., Yuan, Y.: Optimality conditions and smoothing trust region Newton method for non-Lipschitz optimization. SIAM J. Optim. 23, 1528–1552 (2013)

Chen, X., Ge, D., Wang, Z., Ye, Y.: Complexity of unconstrained \(L_2\)-\(L_p\) minimization. Math. Program. 143, 371–383 (2014)

Chen, X., Toint, Ph.L., Wang, H.: Complexity of partially-separable convexly-constrained optimization with non-Lipschitzian singularities. SIAM J. Optim. 29, 874–903 (2019)

Chen, X., Womersley, R.: Spherical designs and nonconvex minimization for recovery of sparse signals on the sphere. SIAM J. Imaging Sci. 11, 1390–1415 (2018)

Conn, A.R., Gould, N.I.M., Sartenaer, A., Toint, Ph.L.: Convergence properties of minimization algorithms for convex constraints using a structured trust region. SIAM J. Optim. 6, 1059–1086 (1996)

Conn, A.R., Gould, N.I.M., Toint, Ph.L.: LANCELOT: A Fortran Package for Large-scale Nonlinear Optimization (Release A), Number 17 in Springer Series in Computational Mathematics. Springer, Berlin (1992)

Conn, A.R., Gould, N.I.M., Toint, Ph.L.: Trust-Region Methods. MPS-SIAM Series on Optimization. SIAM, Philadelphia (2000)

Eldar, Y.C., Kuppinger, P., Bölcskei, H.: Block-sparse signals: uncertainty relations and efficient recovery. IEEE Trans. Signal Process. 58, 3042–3054 (2010)

Fourer, R., Gay, D.M., Kernighan, B.W.: AMPL: a mathematical programming language. Computer science technical report. AT&T Bell Laboratories, Murray Hill, USA (1987)

Gay, D.M.: Automatically finding and exploiting partially separable structure in nonlinear programming problems. Technical report. Bell Laboratories, Murray Hill, NJ, USA (1996)

Goldfarb, D., Wang, S.: Partial-update Newton methods for unary, factorable and partially separable optimization. SIAM J. Optim. 3, 383–397 (1993)

Gould, N.I.M., Orban, D., Toint, Ph.L.: CUTEst: a constrained and unconstrained testing environment with safe threads for mathematical optimization. Comput. Optim. Appl. 60, 545–557 (2015)

Gould, N.I.M., Toint, Ph.L.: FILTRANE, a Fortran 95 filter-trust-region package for solving systems of nonlinear equalities, nonlinear inequalities and nonlinear least-squares problems. ACM Trans. Math. Softw. 33, 3–25 (2007)

Griewank, A., Toint, Ph.L.: On the unconstrained optimization of partially separable functions. In: Powell, M.J.D. (ed.) Nonlinear Optimization 1981, pp. 301–312. Academic Press, London (1982)

Huang, J., Ma, S., Xie, H., Zhang, C.: A group bridge approach for variable selection. Biometrika 96, 339–355 (2009)

Huang, J., Zhang, T.: The benefit of group sparsity. Ann. Stat. 38, 1978–2004 (2010)

Juditsky, A., Karzan, F., Nemirovski, A., Polyak, B.: Accuracy guaranties for \(\ell _1 \) recovery of block-sparse signals. Ann. Stat. 40, 3077–3107 (2012)

Le, G., Sloan, I., Womersley, R., Wang, Y.: Isotropic sparse regularization for spherical harmonic representations of random fields on the sphere. Appl. Comput. Harmon. Anal. (To appear)

Lee, K., Bresler, Y., Junge, M.: Subspace methods for joint sparse recovery. IEEE Trans. Inf. Theory 58, 3613–3641 (2012)

Lee, S., Oh, M., Kim, Y.: Sparse optimization for nonconvex group penalized estimation. J. Stat. Comput. Simul. 86, 597–610 (2016)

Lv, X., Bi, G., Wan, C.: The group Lasso for stable recovery of block-sparse signal representations. IEEE Trans. Signal Process. 59, 1371–1382 (2011)

Ma, S., Huang, J.: A concave pairwise fusion approach to subgroup analysis. J. Am. Stat. Assoc. 112, 410–423 (2017)

Mareček, J., Richtárik, P., Takáč, M.: Distributed block coordinate descent for minimizing partially separable functions. Technical report, Department of Mathematics and Statistics, University of Edinburgh, Edinburgh, Scotland (2014)

Obozinski, G., Wainwright, M.J., Jordan, M.: Support union recovery in high-dimensional multivariate regression. Ann. Stat. 39, 1–47 (2011)

Yuan, M., Lin, Y.: Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. B 68, 49–67 (2006)

Acknowledgements

Xiaojun Chen would like to thank the Hong Kong Research Grant Council for Grant PolyU153001/18P. Philippe Toint would like to thank the Hong Kong Polytechnic University for its support while this research was being conducted. We would like to thank the editor and two referees for their helpful comments.

Appendix

Proof of Lemma 3.1

The proof of (3.3) is essentially borrowed from [11, Lemma 2.4], although details differ because the present version covers \(a \in (0,1)\). We first observe that \(\nabla _\cdot ^j \Vert r\Vert ^a\) is a jth order tensor, whose norm is defined using (1.7). Moreover, using the relationships

defining

and proceeding by induction, we obtain that, for some \(\mu _{j,i}\ge 0\) with \(\mu _{1,1}=1\),

where the last equation uses the convention that \(\mu _{j,0} = 0\) and \(\mu _{j-1,j} = 0\) for all j. Thus we may write

with

where we used the identity

to deduce the second equality. Now (A.3) gives that

It is then easy to see that the maximum in (1.7) is achieved for \(v = r/\Vert r\Vert \), so that

with

Successively using this definition, (A.4), (A.5) (twice), the identity \(\mu _{j-1,j} = 0\) and (A.7) again, we then deduce that

Since \(\pi _1 = a\) from the first part of (A.1), we obtain from (A.8) that

which, combined with (A.6) and (A.7), gives (3.3). Moreover, (A.9), (A.7) and (A.3) give (3.2) with \(\phi _{i,j}= \mu _{j,i}\,\nu _i\). In order to prove (3.4) (where now \(\Vert r\Vert =1\)), we use (A.3), (A.7), (A.9) and obtain that

Using (1.7) again, it is easy to verify that the maximum defining the norm is achieved for \(v=r\) and (3.4) then follows from \(\Vert r\Vert =1\). \(\square \)
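As an informal sanity check on the radial direction used in this proof, the sketch below compares a finite-difference estimate with a closed form. It relies only on the elementary fact that, for \(v = r/\Vert r\Vert \) and \(t > -\Vert r\Vert \), one has \(\Vert r + t v\Vert = \Vert r\Vert + t\), so the \(j\)th derivative of \(t \mapsto \Vert r+tv\Vert ^a\) at \(t=0\) is \(a(a-1)\cdots (a-j+1)\,\Vert r\Vert ^{a-j}\). For \(j=1\) the coefficient is \(a\), which agrees with \(\pi _1 = a\). The function names, the test vector and the step size are illustrative assumptions; the code does not reproduce the displayed equations of the appendix and does not check that the radial direction attains the maximum in (1.7).

```python
import math


def falling_factorial_coeff(a, j):
    """Return a*(a-1)*...*(a-j+1), the coefficient of the j-th derivative of t**a."""
    coeff = 1.0
    for i in range(j):
        coeff *= (a - i)
    return coeff


def norm(r):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(x * x for x in r))


def radial_derivative_exact(r, a, j):
    """Closed form of d^j/dt^j ||r + t v||^a at t = 0, with v = r/||r||."""
    return falling_factorial_coeff(a, j) * norm(r) ** (a - j)


def radial_derivative_fd(r, a, j, h=1e-2):
    """Central finite-difference estimate of the same radial derivative."""
    rho = norm(r)
    v = [x / rho for x in r]

    def g(t):
        return norm([x + t * vi for x, vi in zip(r, v)]) ** a

    # j-th central difference: sum_k (-1)^k C(j,k) g((j/2 - k) h) / h^j
    total = 0.0
    for k in range(j + 1):
        total += (-1) ** k * math.comb(j, k) * g((j / 2 - k) * h)
    return total / h ** j


if __name__ == "__main__":
    r, a = [3.0, 4.0], 0.5  # hypothetical test data with a in (0, 1)
    for j in (1, 2, 3):
        print(j, radial_derivative_fd(r, a, j), radial_derivative_exact(r, a, j))
```

The step size `h` trades truncation error against rounding error; the default is only meant for low orders such as \(j \le 3\).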

About this article

Cite this article

Chen, X., Toint, P.L. High-order evaluation complexity for convexly-constrained optimization with non-Lipschitzian group sparsity terms. Math. Program. 187, 47–78 (2021). https://doi.org/10.1007/s10107-020-01470-9

DOI: https://doi.org/10.1007/s10107-020-01470-9

Keywords

- Complexity theory

- Nonlinear optimization

- Non-Lipschitz functions

- Partially-separable problems

- Group sparsity

- Isotropic model