Abstract

We study a fundamental online job admission problem where jobs with deadlines arrive online over time at their release dates, and the task is to determine a preemptive single-server schedule which maximizes the number of jobs that complete on time. To circumvent known impossibility results, we make a standard slackness assumption by which the feasible time window for scheduling a job is at least \(1+\varepsilon \) times its processing time, for some \(\varepsilon >0\). We quantify the impact that different provider commitment requirements have on the performance of online algorithms. Our main contribution is one universal algorithmic framework for online job admission both with and without commitments. Without commitment, our algorithm with a competitive ratio of \(\mathcal {O}(1/\varepsilon )\) is the best possible (deterministic) for this problem. For commitment models, we give the first non-trivial performance bounds. If the commitment decisions must be made before a job’s slack becomes less than a \(\delta \)-fraction of its size, we prove a competitive ratio of \(\mathcal {O}(\varepsilon /((\varepsilon -\delta )\delta ^2))\), for \(0<\delta <\varepsilon \). When a provider must commit upon starting a job, our bound is \(\mathcal {O}(1/\varepsilon ^2)\). Finally, we observe that for scheduling with commitment the restriction to the “unweighted” throughput model is essential; if jobs have individual weights, we rule out competitive deterministic algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Many modern computing environments involve a centralized system for managing the resource allocation for processing many different jobs. Such environments are varied, including, for example, internal clusters and public clouds. These systems typically handle a diverse workload [22] with a mixture of jobs including short time-sensitive jobs, longer batch jobs, and everything in between. By centralizing computing and scheduling decisions, one can potentially better utilize resources.

The challenge for a system designer is to implement scheduling policies that trade off between these different types of jobs and obtain good performance. There are many ways to define good performance and in this paper, we will focus on the commonly used notion of throughput which is the number of jobs completed, or if jobs have weights, the total weight of jobs completed.

In general, throughput is a “social welfare” objective that tries to maximize total utility. To this end, a solution may abort jobs close to their deadlines in favor of many shorter and more urgent tasks [12]. As companies start to outsource mission critical processes to external clouds, they may require a certain provider-side guarantee, i.e., service providers have to commit to complete admitted jobs before they cannot be moved to other computing clusters anymore. Moreover, companies tend to rely on business analytics to support decision making. Analytical tools, that usually work with copies of databases, depend on faultless data. This means, once such a copy process started, its completion must be guaranteed.

Formally, we consider a model in which jobs arrive online over time at their release date \(r_j\). Each job has a processing time \(p_j\ge 0\), a deadline \(d_j\), and possibly a weight \(w_j>0\). In order to complete, a job must receive a total of \(p_j\) units of processing time in the interval \([r_j,d_j)\). We allow preemption, that is, the processing time does not need to be contiguous. If a schedule completes a set S of jobs, then the throughput is |S|, while the weighted throughput is \(\sum _{j \in S} w_j\). We analyze the performance of algorithms using standard competitive analysis in which the performance of an algorithm is compared to that of an optimal offline algorithm with full knowledge of the future. More precisely, an online algorithm Alg is called c-competitive if it achieves for any input instance I a total value of \(\textsc {Alg} (I) \ge \frac{1}{c} \textsc {Opt} (I)\), where Opt is the value of an optimal offline algorithm.

Deadline-based objectives are typically much harder to optimize than other Quality-of-Service metrics such as makespan or total completion time. Indeed, the problem becomes hopeless when preemption is not allowed: whenever an algorithm starts a job j without being able to preempt it, it may miss the deadlines of an arbitrary number of jobs that would have been schedulable if j had not been started. For scheduling with commitment, we provide a similarly strong lower bound for the preemptive version of the problem in the presence of weights. Therefore, we focus on unweighted preemptive online throughput maximization.

Hard examples for online algorithms tend to involve jobs that arrive and then must immediately be processed since \(d_j - r_j \approx p_j\). It is entirely reasonable to bar such jobs from a system, requiring that any submitted job contains some slack, that is, we must have some separation between \(p_j\) and \(d_j - r_j\). To that end we say that an instance has \(\varepsilon \)-slack if every job satisfies \(d_j - r_j \ge (1 + \varepsilon ) p_j\). We develop algorithms whose competitive ratio depends on \(\varepsilon \); the greater the slack, the better we expect the performance of our algorithm to be. This slackness parameter captures certain aspects of Quality-of-Service provisioning and admission control, see e.g. [14, 20], and it has been considered in previous work, e.g., in [2, 4, 13, 15, 22, 24]. Other results for scheduling with deadlines use speed scaling, which can be viewed as another way to add slack to the schedule, e.g. [1, 3, 16, 23]. In this paper we quantify the impact that different job commitment requirements have on the performance of online algorithms. We parameterize our performance guarantees by the slackness of jobs.

1.1 Our results and techniques

Our main contribution is a general algorithmic framework, called region algorithm, for online scheduling with and without commitments. We prove performance guarantees which are either tight or constitute the first non-trivial results. We also answer open questions in previous work. We show strong lower bounds for the weighted case and therefore our algorithms are all for the unweighted case, \(w_j \equiv 1\).

Optimal algorithm for scheduling without commitment We give an implementation of the region algorithm that achieves a competitive ratio of \(\mathcal {O}(\frac{1}{\varepsilon })\). We prove that this is optimal by giving a matching lower bound (ignoring constants) for any deterministic online algorithm.

Impossibility results for commitment upon job arrival In this most restrictive model, an algorithm must decide immediately at a job’s release date if the job will be completed or not. We show that no (randomized) online algorithm admits a bounded competitive ratio. Such a lower bound has only been shown by exploiting arbitrary job weights [22, 26]. Given our strong negative result, we do not consider this commitment model any further.

Scheduling with commitment We distinguish two different models: (i) commitment upon job admission and (ii) \(\delta \)-commitment. In the first model, an algorithm may discard a job any time before its start, its admission. This reflects the situation when the start of a process is the critical time point after which the successful execution is essential (e.g., faultless copy of a database). In the second model, \(\delta \)-commitment, an online algorithm must commit to complete a job when its slack has reduced from the original slack requirement of at least an \(\varepsilon \)-fraction of the job size to a \(\delta \)-fraction for \(0< \delta < \varepsilon \). Then, the latest time for committing to job j is \(d_j - (1 + \delta ) p_j\). This models an early enough commitment (parameterized by \(\delta \)) for mission critical jobs.

For both models, we show that implementations of the region algorithm allow for the first non-trivial performance guarantees. We prove an upper bound on the competitive ratio of \(\mathcal {O}(1/\varepsilon ^2)\) for commitment upon admission and a competitive ratio of \(\mathcal {O}(\varepsilon /((\varepsilon -\delta )\delta ^2))\), for \(0<\delta <\varepsilon \), in the \(\delta \)-commitment model. These are the first rigorous non-trivial upper bounds in any commitment model (excluding the special weighted setting with \(w_j=p_j\) that has been resolved; see related work).

Instances with arbitrary weights are hopeless without further restrictions. We show that there is no deterministic online algorithm with bounded competitive ratio, neither for commitment upon admission (also shown in [2]) nor for \(\delta \)-commitment. Informally, our construction implies that there is no deterministic online algorithm with bounded competitive ratio in any commitment model in which a scheduler may have to commit to a job before it has completed. (This is hard to formalize but may give guidance for the design of alternative commitment models.) Our lower bound for \(\delta \)-commitment is actually more fine-grained: for any \(\delta >0\) and any \(\varepsilon \) with \(\delta \le \varepsilon < 1+\delta \), no deterministic online algorithm has a bounded competitive ratio for weighted throughput. In particular, this rules out bounded performance guarantees for \(\varepsilon \in (0,1)\). We remark that for sufficiently large slackness (\(\varepsilon >3\)), Azar et al. [2] provide an online algorithm that has bounded competitive ratio. Our new lower bound answers affirmatively the open question if high slackness is indeed required.

Finally, our impossibility result for weighted jobs and the positive result for instances without weights clearly separate the weighted from the unweighted setting. Hence, we do not consider weights in this paper. We summarize in Table 1 the state of the art regarding competitive analysis for online throughput maximization with and without commitment.

Our techniques Once a job j is admitted to the system, its slack becomes a scarce resource: to complete the job before its deadline (which may be mandatory depending on the commitment model, but is at least desirable), one needs to carefully “spend” the slack on admitting jobs to be processed before the deadline of j. Our general framework for admission control, the region algorithm, addresses this issue by the concept of “responsibility”: whenever a job \(j'\) is admitted while j could be processed, \(j'\) becomes responsible for not admitting similar-length jobs for a certain period, its region. The intention is that \(j'\) reserves time for j to complete. To balance between reservation (commitment to complete j) and performance (loss of other jobs), the algorithm uses the parameters \(\alpha \) and \(\beta \), which specify the length of a region and similarity of job lengths.

A major difficulty in the analysis of the region algorithm is understanding the complex interval structure formed by feasible time windows, regions, and time intervals during which jobs are processed. Here, we rely on a key design principle of our algorithm: regions are defined independently of the actual execution of jobs. Thus, the analysis can be naturally split into two parts.

In the first part, we argue that the scheduling routine can handle the admitted jobs sufficiently well for suitably chosen parameters \(\alpha \) and \(\beta \). That means that the respective commitment model is obeyed and, if not implied by that, an adequate number of the admitted jobs is completed.

In the second part, we can disregard how jobs are actually scheduled by the scheduling routine and argue that the region algorithm admits sufficiently many jobs to be competitive with an optimum solution. The above notion of “responsibility” suggests a proof strategy mapping jobs that are completed in the optimum to the corresponding job that was “responsible” due to its region. Transforming this idea into a charging scheme is, however, a non-trivial task: There might be many ( \(\gg \mathcal {O}(\frac{1}{\varepsilon ^2}\))) jobs released within the region of a single job j and completed by the optimum, but not admitted by the region algorithm due to many consecutive regions of varying size. It is unclear where to charge these jobs—clearly not all of them to j.

We develop a careful charging scheme that avoids such overcharging. We handle the complex interval structure by working on a natural tree structure (interruption tree) related to the region construction and independent of the actual schedule. Our charging scheme comprises two central routines for distributing charge: moving charge along a sequence of consecutive jobs (Push Forward) or to children (Push Down).

We show that our analysis of the region algorithm is tight up to a constant factor.

1.2 Previous results

Preemptive online scheduling and admission control have been studied rigorously. There are several results regarding the impact of deadlines on online scheduling; see, e.g., [5, 13, 15] and references therein. Impossibility results for jobs with hard deadlines and without slack have been known for decades [6, 7, 18, 19, 21].

Scheduling without commitment Most research on online scheduling does not address commitment. The only results independent of slack (or other job-dependent parameters) concern the machine utilization, i.e., weighted throughput for the special case \(w_j=p_j\), where a constant competitive ratio is possible [6, 18, 19, 25]. In the unweighted setting, a randomized \(\mathcal {O}(1)\)-competitive algorithm is known [17]. For instances with \(\varepsilon \)-slack, Lucier et al. [22] give an \(\mathcal {O}(\frac{1}{\varepsilon ^2})\)-competitive algorithm in the most general weighted setting. To the best of our knowledge, no lower bound was known to date.

Scheduling with commitment Much less is known for scheduling with commitment. In the most restrictive model, commitment upon job arrival, Lucier et al. [22] rule out competitive online algorithms for any slack parameter \(\varepsilon \) when jobs have arbitrary weights. For commitment upon job admission, they give a heuristic that empirically performs very well but for which they cannot show a rigorous worst-case bound. In fact, later Azar et al. [2] show that no bounded competitive ratio is possible for weighted throughput maximization for small \(\varepsilon \). For the \(\delta \)-commitment model, Azar et al. [2] design (in the context of truthful mechanisms) an online algorithm that is \(\mathcal {O}(\frac{1}{\varepsilon ^2})\)-competitive if the slack \(\varepsilon \) is sufficiently large. They call an algorithm in this model \(\beta \)-responsive algorithm. They left open if this latter condition is an inherent property of any committed scheduler in this model and we answer this affirmatively.

Again, the machine utilization variant (\(w_j=p_j\)) is much more tractable than weighted or unweighted throughput maximization. Simple greedy algorithms achieve the best possible competitive ratio \(\varTheta (\frac{1}{\varepsilon })\) [11, 13] in all aforementioned commitment models, even commitment upon arrival.

2 Our general framework

2.1 The region algorithm

In this section we present our general algorithmic framework which we apply to scheduling with and without commitment. We assume that an online algorithm is given the slackness constant \(\varepsilon >0\) and, in the \(\delta \)-commitment model, \(0< \delta < \varepsilon \).

To gain some intuition for our algorithm, we first describe informally the three underlying design principles. The third principle is crucial to improve on existing results that only use the first two [22].

-

1.

A running job can be preempted only by significantly smaller jobs (parameter \(\beta \)).

-

2.

A job cannot start for the first time when its remaining slack is too small (constant \(\delta \) which is part of the input in the \(\delta \)-commitment model and otherwise set to \(\delta =\frac{\varepsilon }{2}\)).

-

3.

If a job preempts other jobs, then it has to take “responsibility” for a certain time interval (parameter \(\alpha \)) with which it assures that the jobs it preempted can complete on time.

We implement it in the following way. The region algorithm has two parameters, \(\alpha \ge 1\) and \(0< \beta < 1\). A region is a union of time intervals associated with a job, and the size of the region is the sum of sizes of the intervals. We denote the region of job j by R(j). Region R(j) will always have size \(\alpha p_j\), although the particular time intervals composing the region may change over time. Regions are always disjoint, i.e., for any \(i \ne j\), \(R(i) \cap R(j) = \emptyset \). Informally, whenever our algorithm starts a job i (we say i is admitted) that arrives during the region of an already admitted job j, then the current interval of j is split into two intervals and the region R(j) as well as all later regions are delayed.

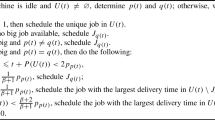

Formally speaking, at any time t, the region algorithm maintains two sets of jobs: admitted jobs, which have been started before or at time t, and available jobs. A job j is available if it is released before or at time t, is not yet admitted, and it is not too close to its deadline, i.e., \(r_j \le t\) and \(d_j - t \ge (1 + \delta ) p_j\). The intelligence of the region algorithm lies in admitting jobs and (re)allocating regions. The actual scheduling decision then is simple and independent of the regions: at any point in time, schedule the shortest admitted job that has not completed its processing time, i.e., we schedule admitted jobs in Shortest Processing Time (SPT) order. The region algorithm never explicitly considers deadlines except when deciding whether to admit jobs.

The region algorithm starts by admitting job 1 at its release date and creates the region \(R(1) := [r_1,r_1+\alpha p_1)\). There are two events that trigger a decision of the region algorithm: the release of a job and the end of a region. If one of these events occurs at time t, the region algorithm invokes the region preemption subroutine. This routine compares the processing time of the smallest available job i with the processing time of the admitted job k whose region contains t. If \(p_i < \beta p_k\), job i is admitted and the region algorithm reserves the interval \([t,t + \alpha p_i)\) for processing i. Since regions must be disjoint, the algorithm then modifies all other remaining regions, i.e., the parts of regions that belong to \([t,\infty )\) of other jobs j. We refer to the set of such jobs j whose regions have not yet completed by time t as J(t). Intuitively, we preempt the interval of the region containing t and delay its remaining part as well as the remaining regions of all other jobs. Formally, this update of all remaining regions is defined as follows. Let k be the one job whose region is interrupted at time t, and let \([a_k',b_k')\) be the interval of R(k) containing t. Interval \([a_k',b_k')\) is replaced by \([a_k',t)\cup [t+\alpha p_i, b_k'+ \alpha p_i)\). For all other jobs \(j \in J(t)\backslash \{k\}\), the remaining region \([a_j', b_j')\) of j is replaced by \([a_j'+\alpha p_i, b_j'+\alpha p_i )\). Observe that, although the region of a job may change throughout the algorithm, the starting point of a region for a job will never be changed. We summarize the region algorithm in Algorithm 1.

We apply the algorithm in different commitment models with different choices of parameters \(\alpha \) and \(\beta \), which we derive in the following sections. In the \(\delta \)-commitment model, \(\delta \) is given as part of the input. In the other models, i.e., without commitment or with commitment upon admission, we simply set \(\delta =\frac{\varepsilon }{2}\).

Commitment The region algorithm always commits upon admission of a job, i.e., at its first start. This is possibly earlier than required in the \(\delta \)-commitment model. The parameter \(\delta \) determines the latest possible start time of a job, which is then for our algorithm also the latest time the job can be admitted. Thus, for the analysis, the algorithm execution for commitment upon admission (with \(\delta =\frac{\varepsilon }{2}\)) is a special case of \(\delta \)-commitment. This is true only for our algorithm, not in general.

2.2 Main results on the region algorithm

In the analysis we focus on instances with small slack as they constitute the hard case. Notice that instances with large slack clearly satisfy a small slack assumption. In such a case, we simply run our algorithm by setting \(\varepsilon =1\) and obtain constant competitive ratios. Therefore, we assume for the remainder that \(0<\varepsilon \le 1\).

Our main results are as follows. Without commitment, we present an optimal online algorithm.

Theorem 1

(Scheduling without commitment) Let \(0<\varepsilon \le 1\). With the choice of \(\alpha =1\), \(\beta = \frac{\varepsilon }{4}\), and \(\delta = \frac{\varepsilon }{2}\), the region algorithm is \(\varTheta (\frac{1}{\varepsilon })\)-competitive for scheduling without commitment.

This is an exponential improvement upon the previously best known upper bound [22] (given for weighted throughput). For scheduling with commitment, we give the first rigorous upper bound.

Theorem 2

(Scheduling with commitment) Let \(0< \delta < \varepsilon \le 1\). Choosing \(\alpha =\frac{8}{\delta }\), \(\beta = \frac{\delta }{4}\), the region algorithm is \(\mathcal {O}(\frac{\varepsilon }{(\varepsilon -\delta )\delta ^2})\)-competitive in the \(\delta \)-commitment model. When the scheduler has to commit upon admission, the region algorithm has a competitive ratio \(\mathcal {O}(\frac{1}{\varepsilon ^2})\) for \(\alpha =\frac{4}{\varepsilon }\) and \(\beta = \frac{\varepsilon }{8}\).

In Sect. 5, we show that the analysis of our framework is tight up to constants.

2.3 Interruption trees

To analyze the performance of the region algorithm on a given instance, we consider the final schedule and the final regions and investigate them retrospectively. Let \(a_j\) be the admission date of job j which remained fixed throughout the execution of the algorithm. Let \(b_j\) denote the final end point of j’s region. Then, the convex hull of R(j) is given by \(conv (R(j)) = [a_j, b_j)\).

Our analysis crucially relies on understanding the interleaving structure of the regions that the algorithm constructs. This structure is due to the interruption by smaller jobs and can be captured well by a tree or forest in which each job is represented by one vertex. A job vertex is the child of another vertex if and only if the region of the latter is interrupted by the first one. The leaves correspond to jobs with non-interrupted regions. By adding a machine job M with \(p_M := \infty \) and \(a_M=-\infty \), we can assume that the instance is represented by a tree which we call interruption tree. This idea is visualized in Fig. 1, where the vertical arrows indicate the interruption of a region by another job and intervals of the same color belong to one job.

Let \(\pi (j)\) denote the parent of j. Further, let \(T_j\) be the subtree of the interruption tree rooted in job j and let the forest \(T_{-j}\) be \(T_j\) without its root j. By slightly abusing notation, we denote the tree/forest as well as its job vertices by \(T_{*}\).

A key property of this tree is that the processing times on a path are geometrically decreasing.

Lemma 1

Let \(j_1,\ldots ,j_{\ell }\) be \(\ell \) jobs on a path in the interruption (sub)tree \(T_j\) rooted in j such that \(\pi (j_{i+1}) = j_{i}\). Then, \(p_{j_\ell } \le \beta p_{j_{\ell -1}} \cdots \le \beta ^{\ell -1} p_{j_1} \le \beta ^{\ell }p_j\) and the total processing volume is

Proof

Let the jobs \(j_1,\ldots ,j_{\ell }\) be indexed in decreasing order of processing times, i.e., \(p_{j_{\ell } }\le p_{j_{\ell -1}} \le \cdots \le p_{j_1}\).

Observe that \(p_{j_1} < \beta p_j\) as otherwise the region of j shall not be preempted by \(j_1\). Furthermore, for any \(2\le i\le \ell \), we claim that job \({j_i}\) is released after \(j_{i-1}\). Suppose the claim is not true, then for some i job \(j_i\) is released before \(j_{i-1}\). Consider the point in time t when job \(j_{i-1}\) is admitted. The time t either belongs to the region of \(j_i\), or belongs to the region of some job \(j'\) which interrupts the region of \(j_i\), and consequently \(p_{j'} < \beta p_{j_i}\). In both cases the algorithm will not admit \(j_{i-1}\), and therefore the claim is true. At any point in time when the algorithm admits a job \(j_i\), then it interrupts the region of \(j_{i-1}\) and \(p_{j_i} < \beta p_{j_{i-1}}\). Thus, we have

We conclude by observing that the total processing volume of the jobs \(j_1,\ldots ,j_\ell \) is

\(\square \)

3 Successfully completing sufficiently many admitted jobs

We show that the region algorithm completes sufficiently many jobs among the admitted jobs before their deadline. For scheduling without commitment, we show how to choose \(\alpha , \beta \), and \(\delta \) to ensure that at least half of all admitted jobs are completed on time. For scheduling with commitment, we provide a choice of \(\alpha , \beta \), and \(\delta \) such that every admitted job is guaranteed to complete on time.

Recall that the region algorithm schedules admitted and yet not completed jobs independently of the regions in SPT order. This guarantees the following.

Observation 1

For \(\alpha \ge 1\), the region algorithm always prioritizes a job within its own region.

3.1 Scheduling without commitment

In this section we fix \(\delta =\frac{\varepsilon }{2}\) for \(0< \varepsilon \le 1\). We show the following result.

Theorem 3

Let \(\alpha = 1\) and \(\beta = \frac{\varepsilon }{4}\). Then the region algorithm completes at least half of all admitted jobs before their deadline.

The intuition for setting \(\alpha =1\) and thus reserving regions of minimum size \(|R(j)| = p_j\), for any j, is that in the model without commitment, we do not need to block extra time in the future to ensure the completion of earlier admitted jobs. Because of Observation 1, for \(\alpha =1\), every job j completes at the end of the region at \(b_j\). Thus, j completes on time if and only if the region R(j) ends before \(d_j\), i.e., \(b_j \le d_j\). We prove Theorem 3 by showing that at least half of all regions end before the deadline of their respective jobs. Formally, we prove the following lemma.

Lemma 2

For any instance \(\mathcal {I}\) and some job j, for which the region algorithm generates an interruption tree \(T_j\) with regions in \([a_j, b_j)\), there is an instance \(\mathcal {I}'\) with at most \(|T_j|+1\) jobs such that the regions in \([a_j,b_j)\) and the tree \(T_j\) are identical.

Proof

Consider an instance \(\mathcal {I}\) of the non-committed scheduling problem, and let \(T_j\) be the interruption tree constructed by the region algorithm with its root in j. Let the interval \([a_j, b_j)\) be the convex hull of the subintervals belonging to the region of j. Our goal is to modify the instance \(\mathcal {I}\) such that we can remove jobs outside of \(T_j\) without changing the interruption tree of the algorithm. We do so by setting \(\mathcal {I}'\) to contain the set of jobs in \(T_j\) and changing only parameters of job j (and possibly adding one auxiliary job). Note that j is, by definition, the largest job in \(\mathcal {I}'\).

If \(d_j - a_j \ge (1+\varepsilon ) p_j\) then we set \(r_j':= a_j\). Otherwise, we add an auxiliary job 0 to \(\mathcal {I}'\) that is tight and blocks the machine until \(a_j\). This means \(r_0 = d_j - (1+\varepsilon ) p_j\), \(p_0 = (1+\varepsilon ) p_j - (d_j - a_j)\), and \(d_0 = r_0 + (1+\varepsilon ) p_0\). Moreover, we modify the release date of j to \(r_j':= r_0\). Since the auxiliary job is the smallest job in instance \(\mathcal {I}'\) at time \(r_0\), the region algorithm admits this job and delays job j. Let \({\mathcal {R}}\) and \({\mathcal {R}}'\) be the schedule of regions in \([a_j,b_j)\) generated by the region algorithm when applied to \(\mathcal {I}\) and \(\mathcal {I}'\), respectively. We show that \({\mathcal {R}}\) and \({\mathcal {R}}'\) are identical in \([a_j, b_j)\).

Consider the time \(t = a_j\). Clearly, j’s region starts in \({\mathcal {R}}\) by assumption. If no auxiliary job was used, job j is the only available job in \(\mathcal {I}'\). Thus, the region algorithm admits j. In contrast, if \(0 \in \mathcal {I}'\), it finishes at \(a_j\) by definition. Since j is admitted in \({\mathcal {R}}\), it must hold that \(d_j - a_j \ge (1+\delta )p_j\). Thus, its regions also begins in \({\mathcal {R}}'\) at \(a_j\).

Let \(a_j< t < b_j\) be the first time when the two region schedules \({\mathcal {R}}\) and \({\mathcal {R}}'\) are different. Since both schedules are generated by the region algorithm, any change in the structure of the regions is due to one of the two decision events of the region algorithm. Recall that these events where the end of a job’s region and the release of a new job. We distinguish two cases based on the job k that caused the difference in \({\mathcal {R}}\) and \({\mathcal {R}}'\): \(k\in T_j\) or \(k\notin T_j\).

By definition, the region of any job outside of \(T_j\) has empty intersection with \([a_j, b_j)\). Thus, the release of such a job can neither change \({\mathcal {R}}\) nor \({\mathcal {R}}'\). Of course, the region of such job cannot end within \([a_j, b_j)\). Thus, a job \(k\in T_j\) is the reason for the difference in \({\mathcal {R}}\) and \({\mathcal {R}}'\). Let k be the job that owns time t in \({\mathcal {R}}\). If the processor is idle in \({\mathcal {R}}\) after t let k be the job that owns t in \({\mathcal {R}}'\). As the two schedules are identical in \([a_j,t)\), let \(i \in T_j\) be the unique job that owns the time right before t.

Consider the event that the region of i ended at t. If k is an ancestor of i, then there is no sufficiently small job available in \(\mathcal {I}\) that prevents k from being restarted at t. Additionally, the amount of time that belongs to R(k) in \([a_j,t)\) is identical in \({\mathcal {R}}\) and \({\mathcal {R}}'\). Thus, k also resumes processing in \({\mathcal {R}}'\). If k is admitted at t in \({\mathcal {R}}\), it is sufficiently small to (further) preempt the ancestor of i and it is available for admission. Hence, these two properties are also satisfied in \(\mathcal {I}'\) and k is admitted at t in \({\mathcal {R}}'\) as well. Therefore \({\mathcal {R}}\) contains idle time at t while the region of k is scheduled in \({\mathcal {R}}'\). Since the jobs in \(\mathcal {I}'\) are a subset of the jobs in \(\mathcal {I}\) (except for 0), job k is also admitted and unfinished or available at t in \(\mathcal {I}\). This is a contradiction. \(\square \)

We show that the existence of a late job j implies that the subtree \(T_j\) rooted in j contains more finished than unfinished jobs. We fix a job \(j \in J\) that was admitted by the region algorithm at time \(a_j\) and whose region completes at time \(b_j\). We want to analyze the structure of all regions \({\mathcal {R}}\) in \([a_j,b_j)\), i.e., the regions of all jobs in \(T_j\). Let \(F_j\) denote the set of jobs in \(T_j\) that finish on time. Similarly, we denote the set of jobs in \(T_j\) that complete after their deadlines, i.e., that are unfinished at their deadline, by \(U_j\).

Lemma 3

Let \(\alpha =1\) and \(\beta = \frac{\varepsilon }{4}\), with \(\varepsilon >0\). If \(b_j - a_j \ge (\ell + 1) p_j\) for \(\ell > 0\), then \(|F_j| - |U_j| \ge \lfloor \frac{4\ell }{\varepsilon }\rfloor \).

As this proof is rather technical without new insights, we give only the proof sketch here and refer to the Appendix for the full details.

Proof

(Proof sketch) Assume for the sake of contradiction that there is an instance such that the interruption tree generated by the region algorithm contains a subtree \(T_j\) with \(b_j - a_j \ge (\ell + 1) p_j\) and \(|F_j| - |U_j| < \lfloor \frac{4\ell }{\varepsilon }\rfloor \). Let \(\mathcal {I}\) be such an instance that uses a minimal number of jobs in total. The goal is to construct an instance \(\mathcal {I}'\) that satisfies \(b_j - a_j \ge (\ell + 1) p_j\) and \(|F_j| - |U_j| < \lfloor \frac{4\ell }{\varepsilon }\rfloor \) although it uses less jobs than \(\mathcal {I}\).

To this end, we modify \(\mathcal {I}\) in several steps such that we can merge three jobs to one larger job without violating \(b_j - a_j \ge (\ell +1) p_j\), changing \(|F_j|\) or \(|U_j|\), or making the instance infeasible. The three jobs will be leaves with the same parent \(i\) in \(T_j\). If i is an unfinished job that has children which are all leaves, then there have to be at least three jobs that interrupt \(i\). After merging the three jobs, we adapt the release date and deadline of \(i\) to guarantee that the modified instance remains feasible. For all these modification steps, it is crucial that we can restrict to instances in which all jobs appear in the interruption tree (Lemma 2).

However, this modification might lead to \(b_{i} \le d_{i}'\) which implies that \(i\) finishes on time. This changes the values of \(|F_j|\) and \(|U_j|\). Clearly, in this case, \(|U_j'| = |U_j| -1\). By a careful analysis, we see that the number of finished jobs decreases by one as well because the three children of \(i\) are replaced by only one finished job. Hence, \(|F_j'| - |U_j'| = |F_j| - |U_j|\). If \(i\) does not finish by \(d_{i}'\), then \(|F_j'| - |U_j'| = (|F_j| - 2) - |U_j|\). Thus, the modified instance \(\mathcal {I}'\) also violates \(|F_j'| - |U_j'| \ge \lfloor \frac{4\ell }{\varepsilon }\rfloor \) but uses less jobs than \(\mathcal {I}\) does; a contradiction. \(\square \)

Proof

(Theorem 3) Let U be the set of jobs that are unfinished by their deadline but whose ancestors (except machine job M) have all completed on time. Every job \(j\in U\) was admitted by the algorithm at some time \(a_j\) with \(d_j - a_j \ge (1+\delta ) p_j\). With \(\delta = \frac{\varepsilon }{2}\) this implies \(b_j - a_j > d_j - a_j \ge (1+\frac{\varepsilon }{2}) p_j\). By Lemma 3, it follows that \(|F_j| - |U_j| \ge \lfloor \frac{4\cdot \varepsilon /2}{\varepsilon } \rfloor = 2 \). Then, \( |T_j| = |F_j| + |U_j| \le 2|F_j| - 2 < 2|F_j|. \) This completes the proof. \(\square \)

3.2 Scheduling with commitment

We analyze the region algorithm for scheduling with commitment. For both models, commitment at admission and \(\delta \)-commitment, we show that there is a choice of \(\alpha \) and \(\beta \) such that every job that has started processing will be completed before its deadline. Recall that we can restrict to analyzing the algorithm in the \(\delta \)-commitment model since it runs with \(\delta =\frac{\varepsilon }{2}\) for commitment at admission.

Lemma 4

Let \(\varepsilon ,\delta >0\) be fixed with \(\delta <\varepsilon \). If \(\alpha \ge 1\) and \(0<\beta < 1\) satisfy the condition that

then any job j that is admitted by the algorithm at time \(a_j\le d_j -(1+\delta )p_j\) will be finished by \(d_j\).

Proof

Consider a job j that is admitted (and simultaneously accepted for completion) by time \(a_j\). It holds that \(d_j-a_j\ge (1+\delta ) p_j\). We show that j receives at least \(p_j\) units of time within \([a_j,d_j)\). Let |R(k)| denote the total length of intervals in R(k), the region of job k.

Let \(D_{j}\subseteq T_{-j}\) be the set of jobs whose region delays the region of job j, and has nonempty intersection with \([a_j,d_j)\). Notice that a job \(k\in D_{j}\) can only be released after time \(a_j\). Let \(D_{j}'\subseteq D_{j}\) be the subset of jobs whose region is completely contained in \([a_j,d_j)\) and \(D_{j}''=D_{j}\backslash D_{j}'\).

Consider \(D_{j}'\). Notice that \(\big | \bigcup _{k\in D_{j}'} R(k) \big | = \alpha \sum _{k\in D_{j}'} p_k.\) Thus, within regions R(k) of jobs \(k\in D_{j}'\), an \(\frac{\alpha -1}{\alpha }\)-fraction of the total time is available for processing job j.

Consider \(D_{j}''=\{j_1,j_2,\ldots ,j_\ell \}\) and assume that \(p_{j_1}\ge p_{j_2}\ge \cdots \ge p_{j_\ell }\). Any interval \([a_{j_i},b_{j_i})\) of such a job \(j_i\) in \(D_{j}''\) contains \(d_j\). This implies that \(\pi (j_{i+1}) = j_i\) for \(0 \le i < \ell \) where \(j_0 := j\) for simplicity. Applying Lemma 1 gives an upper bound on the total processing volume of jobs in \( D_{j}''\), i.e., \( \sum _{i=1}^{\ell } p_{j_i} \le \frac{\beta }{1-\beta }\cdot p_j. \)

To determine the amount of time for processing j within \([a_j,d_j)\), we first subtract the total processing time for jobs in \(D_{j}''\). The remaining interval may be covered with regions of \(D_{j}'\) within which we can use an \(\frac{\alpha -1}{\alpha }\)-fraction as shown above. Recall that \(d_j-a_j \ge (1+\delta ) p_j\). Thus, the amount of time that we can process job j within \([a_j,d_j)\) is at least

This bound is now independent of the actual schedule. We can conclude, if \(\alpha \) and \(\beta \) satisfy Condition (1), then job j can process for \(p_j\) units of time within \([a_j,d_j)\) and completes before its deadline. \(\square \)

4 Competitiveness: admission of sufficiently many jobs

We show that the region algorithm admits sufficiently many jobs, independently of the commitment model.

Theorem 4

The number of jobs that an optimal (offline) algorithm can complete on time is at most a factor \(\lambda + 1\) larger than the number of jobs admitted by the region algorithm, where \(\lambda := \frac{\varepsilon }{\varepsilon -\delta }\frac{\alpha }{\beta }\), for \(0<\delta <\varepsilon \le 1\).

To prove the theorem, we fix an instance and an optimal offline algorithm Opt. Let X be the set of jobs that Opt scheduled and the region algorithm did not admit. We can assume that Opt completes all jobs in X on time. Let J denote the jobs that the region algorithm admitted. Then, \(X\cup J\) is a superset of the jobs scheduled by Opt. Thus, showing \(|X|\le \lambda |J|\) implies Theorem 4.

To this end, we develop a charging procedure that assigns each job in X to a unique job in J such that each job \(j\in J\) is assigned at most \(\lambda = \frac{\varepsilon }{\varepsilon -\delta }\frac{\alpha }{\beta }\) jobs. For a job \(j\in J\) admitted by the region algorithm we define the subset \(X_j \subset X\) based on release dates. Then, we inductively transform the laminar family \((X_j)_{j\in J}\) into a partition \((Y_j)_{j \in J}\) of X with \(|Y_j| \le \lambda \) for all \(j\in J\) in the proof of Lemma 5, starting with the leaves in the interruption tree as base case (Lemma 7). For the construction of \((Y_j)_{j \in J}\), we heavily rely on the key property (Volume Lemma 6) and Corollary 1.

More precisely, for a job \(j\in J\) let \(X_j\) be the set of jobs \(x \in X\) that were released in the interval \( [a_j,b_j)\) and satisfy \(p_x < \beta p_{\pi (j)}\). Let \(X_j^S := \{x\in X_j: p_x < \beta p_j\}\) and \(X_j^B := X_j \backslash X_j^S\) denote the small and the big jobs, respectively, in \(X_j\). Recall that \([a_j,b_j)\) is the convex hull of the region R(j) of job j and that it includes the convex hulls of the regions of all descendants of j in the interruption tree, i.e., jobs in \(T_j\). In particular, \(X_k \subset X_j\) if \(k\in T_j\).

Observation 2

-

1.

Any job that is scheduled by Opt and not admitted by the region algorithm is released within the region of some job \(j\in J\), i.e., \(\bigcup _{j \in J} X_j = X\).

-

2.

As the region algorithm admits any job that is small w.r.t. j and released in R(j), it holds that \(X_j^S = \bigcup _{k: \pi (k) = j} X_k\).

Recall that M denotes the machine job. By Observation 2, \(X = X_M^S\) and, thus, it suffices to show that \(|X_M^S| \le \lambda |J|\). In fact, we show a stronger statement for each job \(j\in J\): the number of small jobs in \(X_j\) is bounded by \(\lambda \tau _j\) where \(\tau _j\) is the number of descendants of j in the interruption tree, i.e., \(\tau _j := |T_{-j}|\).

Lemma 5

For all \(j\in J\cup \{M\}\), \(|X_j^S| \le \lambda \tau _j\).

Before proving the lemma, we will highlight the main steps in the following. The fine-grained definition of the sets \(X_j\) in terms of the release dates and the processing times allows us to show that any job j with \(|X_j| > (\tau _j +1) \lambda \) has siblings \(j_1,\ldots ,j_k\) such that \(|X_j| + \sum _{i=1}^k|X_{j_i}| \le \lambda ( \tau _j +1 + \sum _{i=1}^k (\tau _{j_i}+1))\). We call i and j siblings if they have the same parent in the interruption tree. Simultaneously applying this charging idea to all descendants of a job h already proves \(|X_h^S| \le \lambda \tau _h\) as \(X_h^S = \bigcup _{j: \pi (j) = h} X_j\) by Observation 2.

We prove that this “balancing” of \(X_j\) between jobs only happens between siblings \(j_1,\ldots ,j_k\) with the property that \(b_{j_i} = a_{j_{i+1}}\) for \(1\le i < k \). We call such a set of jobs a string of jobs. The ellipses in Fig. 1 visualize the maximal strings of jobs. A job j is called isolated if \(b_i \ne a_j\) and \(b_j \ne a_i\) for all children \(i\ne j\) of \(\pi (j)\).

The next (technical) lemma is a key ingredient for the “balancing” of \(X_j\) between a string of jobs. For any subset of J, we index the jobs in order of increasing admission points \(a_j\). Conversely, for a subset of X, we order the jobs in increasing order of completion times, \(C_x^*\), in the optimal schedule.

Lemma 6

(Volume Lemma) Let \(f,\ldots ,g \in J\) be jobs with a common parent in the interruption tree. Let \(x\in \bigcup _{j=f}^g X_{j}\) such that

Then, \(p_x \ge \beta p_{j^*}\), where \(j^*\in J\cup \{M\}\) is the job whose region contains \(b_{g}\), i.e., \(b_{g}\in R(j^*)\).

Proof

Let \(f,\ldots ,g\), x, and \(j^*\) as in the lemma. Since \(x \in X\), the region algorithm did not accept x at time \(b_{g}\). There are two possible reasons for this behavior: either \(p_x \ge \beta p_{j^*}\) or x was not available for admission at time \(b_{g}\) anymore.

Assume for the sake of contradiction that \(p_x < \beta p_{j^*}\) and, thus, \(d_x - b_{g} < (1 + \delta ) p_x\). By assumption, \(r_x \ge a_{f}\) and \(d_x - r_x \ge (1 + \varepsilon ) p_x\). Hence,

By (V), the volume Opt processes between \(b_{g}\) and \(C_x^*\) is at least \( \frac{\delta }{\varepsilon -\delta } (b_{g} - a_{f}) + p_x \). By applying the above calculated lower bound, we get that

and, hence, that \(C_x^* \ge b_{g} + (1 + \delta ) p_x > d_x\), which contradicts that Opt is a feasible schedule. \(\square \)

The next corollary follows directly from the Volume Lemma applied to a string of jobs or to a single job \(j \in J\) (let \(f=j=g\)). To see this, recall that \(X_j\) contains only jobs that are small w.r.t. \(\pi (j)\), i.e., all \(x\in X_j\) satisfy \(p_x < \beta p_{\pi (j)}\).

Corollary 1

Let \(\{ f,\ldots , g \} \subset J\) be a string of jobs and let \(x\in \bigcup _{j=f}^g X_j\) satisfy (V). Then, the interruption tree contains a sibling \(j^*\) of g with \(b_g = a_{j^*}\).

The main part of the proof of Lemma 5 is to show (V) for a string of jobs only relying on \(\sum _{j=f}^g |X_j| > \lambda \sum _{j=f}^g (\tau _j +1)\). Then, Corollary 1 allows us to charge the “excess” jobs to a subsequent sibling \(g+1\). The relation between processing volume and size of job sets is possible due to the definition of \(X_j\) based on \(T_j\).

We inductively prove Lemma 5 where the induction is on the distance \(\varphi (j)\) of a job j from the machine job M, i.e., \(\varphi (M) := 0\) and \(\varphi (j) := \varphi (\pi (j)) + 1\) for \(j\in J\). Moreover, let \(\varphi _{\max } := \max \{ \varphi (j): j\in J \}\) be the maximal distance or, equivalently, the height of the interruption tree. Any job j at maximal distance from the machine job is a leaf in the interruption tree. The following lemma serves as base case in the proof of Lemma 5.

Lemma 7

Let \(\{f, \ldots , g\}\subset J\) be jobs at maximal distance from M such that \(\sum _{j=f}^i |X_{j}| > \lambda (i+1 - f) \) holds for all \(f\le i\le g\). If g is the last such job, there is a sibling \(j^*\) of g with \(b_{g} = a_{j^*}\) and \(\sum _{j = f }^{j^*} |X_{j}| \le \lambda (j^*+ 1 - f)\).

Proof

Observe that \([a_{f}, b_{g}) = \bigcup _{j=1}^k R(j)\) because the leaves \(f, \ldots , g\) form a string of jobs. Thus, by showing that there is a job \(x \in X_f^g := \bigcup _{j=f}^g X_{j}\) that satisfies (V), we prove the statement with the Volume Lemma. To this end, we show that for every job \(f \le j \le g\) there exists a set \(Y_j\) such that the processing volume within \(Y_j\) is sufficient to cover the interval \([a_{j},b_{j})\) at least \(\frac{\varepsilon }{\varepsilon -\delta }\) times. More precisely, \(Y_f,\ldots ,Y_g\) will satisfy

-

(i)

\(\bigcup _{j=f}^g Y_j \subset X_f^g\),

-

(ii)

\(|Y_j| = \lambda \), and

-

(iii)

\(Y_j \subset \{x \in X_f^g: p_x \ge \beta p_{j}\}\) for every \(f\le j \le g\).

Then, (ii) and (iii) imply \(\sum _{y \in Y_j} p_y \ge \lambda \beta p_{j} = \frac{\varepsilon }{\varepsilon -\delta }(b_{j} - a_{j})\). Thus, if we choose x among those jobs in \(X_f^g\) that Opt completes last and guarantee that \(x \notin \bigcup _{j=f}^g Y_j\), the volume condition (V) is satisfied. We first describe how to find \(Y_f,\ldots ,Y_g\) before we show that these sets satisfy (i) to (iii).

By assumption, \(|X_{f}| > \lambda \). Let \(X_{f} = \{x_1,\ldots ,x_\lambda ,x_{\lambda +1},\ldots \}\) be indexed in increasing completion times \(C_x^*\). Define \(Y_f := \{x_1,\ldots ,x_\lambda \}\) and \(L_f := \{x_{\lambda +1},\ldots \}= X_f \backslash Y_f \), i.e., \(Y_f\) contains the \(\lambda \) jobs in \(X_{f}\) that Opt completes first and \(L_f\) contains the last jobs. For \(f < j+1\le g\), let \(Y_f,\ldots ,Y_j\) and \(L_j\) be defined. By assumption, \(|X_{j+1 } \cup L_j| > \lambda \) since \(|Y_i| = \lambda \) for \(1\le i \le j\). The jobs in \(X_{j +1} \cup L_j = \{x_1, \ldots , x_\lambda , x_{\lambda +1}, \ldots \}\) are again indexed in increasing order of optimal completion times. Then, \(Y_{j+1} := \{x_1,\ldots ,x_\lambda \}\) and \(L_{j+1} := \{x_{\lambda +1},\ldots \}\). Since we move jobs only horizontally to later siblings, we call this procedure Push Forward.

By definition, (i) and (ii) are satisfied. Since \(f,\ldots ,g\) are leaves, the jobs in \(Y_j \cap X_j\) are big w.r.t. j. Thus, it remains to show that the jobs in \(L_j\) are big w.r.t. the next job \(j+1\).

To this end, we observe the following. Assume that the jobs in \(Y_f,\ldots ,Y_j\) are big w.r.t. \(f,\ldots ,j\), respectively. If we find an index \(f\le i(x) \le j\) such that x as well as the jobs in \(\bigcup _{i=i(x)}^j Y_i\) are released after \(a_{i(x)}\), i.e.,

and x completes after every \(y \in \bigcup _{i = i(x)}^j Y_i\), i.e.,

then we can apply the Volume Lemma to show that \(x \in L_j\) is big w.r.t. \(j+1\). Indeed, then

We show by induction that such an index \(i(x)\) exists for every \(x \in L_j\).

Since \(Y_f \subset X_f\), we set \(i(x) := f\) for \(x\in L_f\). By definition of \(L_f\), \(C_y^* \le C_x^*\) for \(y \in Y_f\) and \(x \in L_f\). Hence, applying the Volume Lemma as explained above shows \(p_x \ge \beta p_{f+1}\).

Let \(f< j < g\). Assume that \(Y_f,\ldots ,Y_j\) and \(L_j\) are defined as described above. For jobs \(x \in L_j \backslash X_j \subset L_{j-1}\), we have \(i(x)\) with the Properties (ii) and (iii) by induction. For \(x \in L_j \cap X_j\), we temporarily set \(i(x) := j\) for simplification. We have to distinguish two cases: \(i(x)\) also satisfies (ii) and (iii) for j or we have to adjust \(i(x)\). Fix \(x\in L_j\).

-

\(L_i \cap Y_j = \emptyset \) for every \(f\le i < i(x)\). Since only jobs in \(L_i\) are shifted to some later job j, this implies \(\bigcup _{i=f}^{i(x)-1} X_i \cap Y_j = \emptyset \). Thus, the jobs in \(Y_j\) are released after \(a_{i(x)}\) and by definition, \(C_y^* \le C_x^*\) for \(y \in Y_j\). By induction, x and the jobs in \(Y_{i(x)} \cup \cdots \cup Y_{j-1}\) satisfy (ii) and (iii). Hence, \(i(x)\) is a suitable choice for x and j.

-

\(L_i \cap Y_j \ne \emptyset \) for some \(f\le i < i(x)\). Choose the job \(y \in L_{j-1} \cap Y_j\) with the smallest \(i(y)\). By a similar argumentation as before, \(\bigcup _{i=f}^{i(y)-1} X_i \cap Y_j = \emptyset \), which implies (ii) for \(z \in Y_j\). Again by induction, y and the jobs in \(Y_{i(y)} \cup \cdots \cup Y_{j-1}\) satisfy (ii) and (iii). Since \(x\in L_j\), \(C_x^* \ge C_z^*\) for all \(z \in Y_j\). This implies \(C_x^* \ge C_z^*\) for \(z \in \bigcup _{i = i(y)}^{j-1} Y_i\) because \(y \in L_{j-1}\cap Y_j\). Set \(i(x) := i(y)\).

As explained above, the Volume Lemma implies \(p_x \ge \beta p_{j+1}\).

The same argumentation holds for \(j = g\) although in this special case, Corollary 1 implies the statement. \(\square \)

We can now generalize the above described procedure to arbitrary strings of jobs in the interruption tree and, thus, prove Lemma 5.

Proof

(Lemma 5) We show that for every \(j\in J\cup \{M\}\), there exists a partition \((Y_k)_{k\in T_{-j}}\) with

-

(i)

\(\bigcup _{k\in T_{-j}} Y_k = X_j^S\),

-

(ii)

\(Y_k \subset \{ x \in X_j: p_x \ge \beta p_k \}\), and

-

(iii)

\(|Y_k| \le \lambda \) for every \(k\in T_{-j}\).

Then, it holds that \(|X_j^S | = |\bigcup _{k\in T_{-j}} Y_k | = \sum _{k \in T_{-j}} |Y_k| \le \lambda \tau _j\) and, thus, the lemma follows.

The proof consists of an outer and an inner induction. The outer induction is on the distance \(\varphi (j)\) of a job j from machine job M, i.e., \(\varphi (M) := 0\) and \(\varphi (j) := \varphi (\pi (j)) + 1\) for \(j\in J\). The inner induction uses the idea about pushing jobs \(x\in X_j\) to some later sibling of j in the same string of jobs (see proof of Lemma 7).

Let \(j \in J\) with \(\varphi (j) = \varphi _{\max } -1 := \max \{\varphi (i): i \in J\} -1\). By Observation 2, \(X_j^S = \bigcup _{k: \pi (k) = j} X_k\), where all \(k \in T_{-j}\) are leaves at maximal distance from M. We distinguish three cases for \(k\in T_{-j}\):

Case I If \(k\in T_{-j}\) is isolated, \(|X_k| \le \lambda \) follows directly from the Volume Lemma as otherwise \(\sum _{x \in X_k} p_x \ge \lambda \beta p_k + p_x = \tfrac{\varepsilon }{\varepsilon - \delta } (b_k - a_k) + p_x\) contradicts Corollary 1, where \(x\in X_k\) is the last job that Opt completes from the set \(X_k\). Since all jobs in \(X_k\) are big w.r.t. k, we set \(Y_k := X_k\).

Case II If \(k\in T_{-j}\) with \(|X_k| > \lambda \) is part of a string, let \(f ,\ldots , g\) be the maximal string satisfying Lemma 7 with \(k\in \{f,\ldots ,g\}.\) With this lemma, we find \(Y_f,\ldots ,Y_g\) and set \(Y_{g+1} := X_{g+1} \cup L_g\).

Case III We have not yet considered jobs k in a string with \(|X_k| \le \lambda \) that do not have siblings \(f,\ldots ,g\) in the same string with \(b_g = a_k\) and \(\sum _{i=f}^{g} |X_j| > (g - f) \lambda \). This means that such jobs do not receive jobs \(x \in X_i\) for \(i\ne k\) by the Push Forward procedure in Case II. For such \(k\in T_{-j}\) we define \(Y_k := X_k\).

Then, \(X_j^S = \bigcup _{k: \pi (k) = j} X_k = \bigcup _{k \in T_{-j}} X_k = \bigcup _{k \in T_{-j}} Y_k\) and, thus, (i) to (iii) are satisfied.

Let \(\varphi < \varphi _{\max }\) such that \((Y_k)_{k\in T_{-j}}\) satisfying (i) to (iii) exists for all \(j\in J\) with \(\varphi (j) \ge \varphi \). Fix \(j \in J\) with \(\varphi (j) = \varphi -1\). By induction and Observation 2, it holds that \(X_j^S = \bigcup _{k: \pi (k) = j} \left( X_k^B \cup \bigcup _{i \in T_{-k}} Y_i \right) \). Now, we use the partitions \((Y_i)_{i \in T_{-k}}\) for k with \(\pi (k) = j\) as starting point to find the partition \((Y_k)_{k \in T_{-j}}\). Fix k with \(\pi (k)= j\) and distinguish again the same three cases as before.

Case I If k is isolated, we show that \(|X_k| \le \lambda (\tau _k+1) \) and develop a procedure to find \((Y_i)_{i \in T_k}\). Assume for sake of contradiction that \(|X_k| > \lambda (\tau _k+1) \) and index the jobs in \(X_k\) in increasing order of completion times, i.e., \(X_k = \{x_1, \ldots , x_{\lambda (\tau _k + 1) }, x_{\lambda (\tau _k + 1) +1 },\ldots \}\), and set \(L := \{ x_{\lambda (\tau _k + 1) +1 },\ldots \}\). Then,

By induction hypothesis, \(\lambda - |Y_i\backslash L | \ge 0\) for \(i \in T_{-k}\). Let \(Y_k\) contain \(\lambda \) arbitrary big jobs in \(X_k^B\backslash L\) and assign each \(Y_i\) for \(i\in T_{-k}\) exactly \(\lambda - |Y_i\backslash L |\) of the remaining (big) jobs in \(X_k^B\backslash L\). This is possible because the jobs in \(X_k^B\) are big for any descendant of k, i.e., they satisfy (ii). By choice of \(\lambda \), each of the just obtained sets covers the region of the corresponding job at least \(\frac{\varepsilon }{\varepsilon -\delta }\) times. Thus, the jobs in \(X_k \backslash L\) have a total processing volume of at least \(\frac{\varepsilon }{\varepsilon -\delta }(b_k - a_k)\). Therefore, any job \(x \in L\) satisfies (V) which contradicts the fact that k is isolated by Corollary 1. Thus, \(|X_k| \le \lambda (\tau _k +1)\).

To construct \((Y_i)_{i \in T_{k} }\), we assign \(\min \{\lambda , |X_k^B|\}\) jobs from \(X_k^B\) to \(Y_k\). If \(|X_k^B| > \lambda \), distribute the remaining jobs according to \(\lambda - |Y_i|\) among the descendants of k. Then, \(X_k = \bigcup _{i \in T_{k}} Y_i\). Because a job that is big w.r.t job k is also big w.r.t. all descendants of k, every (new) set \(Y_i\) satisfies (ii) and (iii). We refer to this procedure as Push Down since jobs are shifted vertically to descendants.

Case II If \(|X_k| > \lambda (\tau _k+1) \), k must belong to a string with similar properties as described in Lemma 7, i.e., there are jobs \(f,\ldots ,g\) containing k such that

-

1.

\(\sum _{j=f}^i |X_{j}| > \lambda \sum _{j=f}^i \tau _j \) for all \(f\le i\le g\) and

-

2.

\(b_{j} = a_{j+1}\) for all \(f\le j < g\).

Choose \(\{f,\ldots ,g\}\) maximal with those two properties. We show that the Volume Lemma implies the existence of another sibling \(g+1\) that balances the sets \(X_f,\ldots ,X_g,X_{g+1}\). This is done by using the Push Down procedure within a generalization of the Push Forward procedure.

As the jobs \(f,\ldots ,g\) may have descendants, we use Push Forward to construct the sets \(Z_f,\ldots ,Z_g\) and \(L_f,\ldots , L_g\) with \(|Z_k| = \lambda (\tau _k+1)\). Then, we show that we can apply Push Down to \(Z_k\) and \((Y_i)_{i\in T_{-k}}\) in order to obtain \((Y_i)_{i \in T_{k} }\). This means the newly obtained partition satisfies

-

(iv)

\(Y_k\cup \bigcup _{i\in T_{-k}} Y_i = Z_k\),

-

(v)

\(Y_i \subset \{ x \in X_j: p_x \ge \beta p_i \}\) and

-

(vi)

\(|Y_i| = \lambda \) for every \(i\in T_{k}\).

This implies that the set \(Z_k\) covers \([a_k,b_k)\) at least \(\frac{\varepsilon }{\varepsilon -\delta }\) times. Thus, the sets \(X_k\) with \(f\le k\le g\) satisfy (V) and we can apply Corollary 1.

To define \(Z_f,\ldots ,Z_g\), we index the jobs in \(X_f = \{x_1, \ldots , x_{\lambda _f}, x_{\lambda (\tau _f+1) + 1},\ldots \}\) in increasing order of optimal completion times and set \(Z_f := \{x_1, \ldots , x_{\lambda (\tau _f+1)}\}\) and \(L_f = X_f\backslash Z_f\). Assume that \(Z_f,\ldots ,Z_k\) and \(L_f,\ldots ,L_k\) are defined. Index the jobs in \(X_{k+1} \cup L_k = \{x_1, \ldots , x_{\lambda (\tau _{k+1}+1)}, x_{\lambda (\tau _{k+1}+1) + 1},\ldots \}\) in increasing order of completion times and set \(Z_{k+1} := \{x_1, \ldots , x_{\lambda (\tau _{k+1}+1)}\}\) and \(L_{k+1} = (X_{k+1} \cup L_k)\backslash Z_{k+1}\). Use the Push Down procedure to obtain the partition \((Y_i)_{i \in T_{k}}\).

If we can show that any job \(x \in L_k\) is big w.r.t. \(k+1\), we have that \(Z_{k+1} \backslash X_{k+1}^S\) only contains big jobs w.r.t. \({k+1}\), which are also big w.r.t. every \( i \in T_{-(k+1)}\). As in Case I,

Hence, the just defined partition \((Y_i)_{i \in T_{k}}\) satisfies (iv) to (vi).

As in the proof for Lemma 7, we show by induction that every \(x \in L_k\) exhibits an index \(i(x)\) with

for \(y = x\) or \(y \in \bigcup _{i = i(x)}^j Z_i\). Then, the Volume Lemma implies that \(p_x \ge \beta p_{k+1}\).

For \(x\in L_f\), set \(i(x) = f\). Thus, Eqs. (4) and (5) are trivially satisfied. Since \(Z_f \subset X_f\), we have that \(Z_f \backslash X_f^S\) only contains big jobs w.r.t. f.

Let \(f< k < g\). Assume that \(Z_f,\ldots ,Z_k\) and \(L_k\) are defined as described above. For jobs \(x \in L_k \backslash X_k\), we have \(i(x)\) with the Properties (iv) and (v) by induction. For \(x \in L_k \cap X_k\), we temporarily set \(i(x) := k\) for simplification. We have to distinguish two cases: \(i(x)\) also satisfies (iv) and (v) for k or we have to adjust \(i(x)\). Fix \(x\in L_k\).

-

\(L_i \cap Z_k = \emptyset \) for every \(f\le i < i(x)\). Since only jobs in \(L_i\) are shifted to some later job k, this implies \(\bigcup _{i=f}^{i(x)-1} X_i \cap Z_k = \emptyset \). Thus, the jobs in \(Z_k\) are released after \(a_{i(x)}\) and by definition, \(C_y^* \le C_x^*\) for \(y \in Z_k\). By induction, x and the jobs in \(Z_{i(x)} \cup \cdots \cup Z_{k-1}\) satisfy (iv) and (v). Hence, \(i(x)\) is a suitable choice for x and k.

-

\(L_i \cap Z_k \ne \emptyset \) for \(f\le i < i(x)\). Choose the job \(y \in L_{k-1} \cap Z_k\) with the smallest \(i(y)\). By a similar argumentation as before, \(\bigcup _{i=f}^{i(y)-1} X_i \cap Z_k = \emptyset \), which implies (iv) for \(z \in Z_k\). Again by induction, y and the jobs in \(Z_{i(y)} \cup \cdots \cup Z_{k-1}\) satisfy (iv) and (v). Since \(x\in L_k\), \(C_x^* \ge C_z^*\) for all \(z \in Z_k\). This implies \(C_x^* \ge C_z^*\) for \(z \in \bigcup _{i = i(y)}^{k-1} Z_i\) because \(y \in L_{k-1}\cap Y_k\). Set \(i(x) := i(y)\).

As explained above, the Volume Lemma implies \(p_x \ge \beta p_{k+1}\).

For \(k+1 = g\), the above argumentation can be combined with Corollary 1 to prove that the sibling \(g+1\) indeed exists. Set \(Z_{g+1} := X_{g+1} \cup L_g\) and use Push Down to construct \((Y_{i})_{i \in T_{(g+1)}}\).

Case III Any job k with \(\pi (k) = j\) that is part of a string and was not yet considered must satisfy \(|X_{k} | \le (\tau _k+1) \lambda \). We use the Push Down procedure for isolated jobs to get the partition \((Y_{i})_{i \in T_k }\).

Hence, we have found \((Y_k)_{k\in T_{-j}}\) with the properties (iv) to (vi). \(\square \)

We can now prove the main result of this section.

Proof

(Theorem 4) As explained before, the job set scheduled by Opt clearly is a subset of \(X\cup J\), the union of jobs only scheduled by Opt and the jobs admitted by the region algorithm. Thus, it suffices to prove that \(|X| \le \lambda |J|\). By Observation 2, \(X = X_M^S\) and, hence, \(|X_M^S| \le \lambda |J|\) implies \(|X| \le \lambda |J|\). This is true as Lemma 5 also holds for the machine job M. \(\square \)

Finalizing the proofs of Theorems 1and 2

Proof

(Theorem 1) Set \(\alpha =1\) and \(\beta = \frac{\varepsilon }{4}\). Theorem 3 shows that our algorithm completes at least half of all admitted jobs on time. Theorem 4 implies that the region algorithm is \(\frac{16}{\varepsilon }\)-competitive.\(\square \)

Proof

(Theorem 2) By Lemma 4, the choice \(\alpha = \frac{8}{\delta }\) and \(\beta = \frac{\delta }{4}\) implies that the region algorithm completes all admitted jobs. Theorem 4 implies that our algorithm is (\(\frac{32\varepsilon }{(\varepsilon -\delta )\delta ^2}+1\))-competitive. \(\square \)

5 Tightness of the region algorithm

In this section, we consider scheduling with commitment. We show that the analysis of the region algorithm is tight in the sense that the competitive ratio of the region algorithm is \(\varOmega (\alpha /\beta )\). Moreover, we give examples that show that for the commitment upon admission model the choice \(\alpha \in \varOmega (1/\varepsilon )\) and \(\beta \in O(1/\varepsilon )\) is best possible.

In Sect. 6.1 we show that the region algorithm is best possible (up to constants) for scheduling without commitment.

Lemma 8

Let \(0 < \varepsilon \le 1 \), \(\alpha \ge 1\), and \(0< \beta < 1\). Then, the competitive ratio of the region algorithm is bounded from below by \(\alpha /\beta \).

Proof

We consider an instance where a job 0 with processing time \(p_0 =1\) and a huge scheduling interval \([r_0, r_0+\alpha +2)\) is released first. Then, the region algorithm blocks the region \([r_0, r_0 +\alpha ]\) for this job. During this interval, \(\lfloor \alpha / \beta \rfloor \) jobs of size \(p_j = \beta \) arrive. They all fit into R(0) but the jobs are to big relative to 0 to be admitted. Then, an offline optimum would process all small jobs until \(r_0 + \alpha \) before starting job 0. Hence, the region algorithm completes one job while it is optimal to complete \(\lfloor \alpha / \beta \rfloor + 1\) jobs.

More formally, let \(r_0 = 0\), \(p_0 = 1\) and \(d_0 = \alpha + 1\). Fix \(0< \varphi< \beta < 1\). For \(1 \le j \le \lfloor \alpha /\beta \rfloor \) let \(r_j = (j-1)\beta + \varphi \), \(p_j = \beta \) and \(d_j = r_j + (1+\varepsilon ) p_j\). The region algorithm admits job 0 at time 0 and blocks the interval \([0,\alpha )\) for 0. Thus, the region algorithm cannot admit any of the small jobs and completes only job 0. This behavior does not depend on the commitment model.

An optimal offline algorithm processes the jobs \(1,\ldots ,\lfloor \alpha /\beta \rfloor \) one after the other in the interval \([\varphi , \lfloor \alpha / \beta \rfloor \beta + \varphi ) \subset [0, \alpha + 1)\). At the latest at time \(\alpha +1\) job 0 starts processing and finishes on time.

Thus, the competitive ratio of the algorithm is bounded from below by \(\lfloor \alpha / \beta \rfloor + 1 \ge \alpha / \beta \). \(\square \)

Lemma 9

The competitive ratio of the region algorithm in the scheduling with commitment model is bounded from below by \(\varOmega (1/\varepsilon ^2)\).

Proof

The proof consists of two parts. First we show an upper bound on the choice of \(\beta \) in terms of \(\delta \). Then, we use this observation to show an upper bound on \(\beta \) depending on \(\alpha \).

It is obvious that \(\beta \le \delta \) must hold as otherwise a job that is admitted at \(d_j - (1+\delta )p_j\) and interrupted by another job i with \(p_i=\beta p_j\) cannot finish on time. Hence, \(\beta \le \delta \le 1\) must hold.

We define a family of instances \(\mathcal {I}_m(c)\) that depends on two natural numbers \(m, c \in \mathbb {N}\) where c is chosen such that

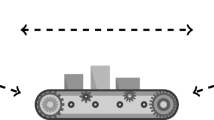

Each instance consists of four types of jobs, a job 0 that cannot be finished unless \(\alpha \) and \(\beta \) satisfy certain bounds, an auxiliary job \(-1\) that guarantees that 0 is not admitted before \(d_0 - (1+\delta ) p_0\) and two sets of jobs, B(c) and G(m), that block as much time in \([a_0,d_0)\) as possible. A visualization of the instance can be seen in Fig. 2.

The structure of the regions and the schedule generated by the region algorithm when faced with the instance \(\mathcal {I}_m(c)\). The darkest shades of a color mean that jobs are scheduled there. The light yellow and blue parts show that the region is currently interrupted. The only time slots where 0 can be processed are the lighter parts of the green regions, i.e., the regions belonging to B(c) (color figure online)

More precisely, at time \(t=0\), an auxiliary job \(-1\) is released with \(p_{-1} =1\) and \(d_{-1} = (1+\varepsilon ) p_{-1}\). The region algorithm admits this job and assigns it the region \(R(-1) = [0,\alpha )\). At time \(\alpha -(\varepsilon -\delta )\) job 0 is released with \(p_0 =1\) and \(d_0 = \alpha + 1 + \delta \). Obviously, this job is admitted at time \(\alpha \) as it is still available. Fix \(\varphi >0\) sufficiently small.

At time \(\alpha + \varphi \) the sequence B(c) of c identical jobs is released one after the other such that the release date of one job coincides with the end of the region of the previous job. For \(0 \le i \le c-1\), a tight job is released at \(r_i := \alpha + i/c + \varphi \) with processing time \(p_i = \beta -\varphi \) and deadline \(d_i = r_i + (1+\varepsilon )p_i\). Since

each of these jobs is admitted by the region algorithm at their release date. The last of these regions ends at \(\alpha + (c-1)/c + \varphi + \alpha (\beta - \varphi ) = \alpha + \frac{c-1}{c} + \varphi + \frac{1}{c} - \alpha \varphi \le \alpha +1\). Thus, in the limit \(\varphi \rightarrow 0\), they block \(c\beta \) units of time in \([a_j, a_j + d_j)\).

At time \(d_0 - \frac{\beta }{1-\beta }\), a sequence of m geometrically decreasing jobs G(m) is released. For \(1\le j \le m\), job j is released at \(r_j =d_0 - \frac{\beta }{1-\beta } + \sum _{i =1}^j \beta ^i\) with processing time \(p_j = (\beta - \varphi )^j\) and deadline \(d_j = r_j + (1+\varepsilon )p_j\). Then, \(p_{j+1} = (\beta - \varphi ) p_j < \beta p_j\). Thus, the region algorithm admits each of the m jobs. Again, in the limit \(m\rightarrow \infty \) and \(\varphi \rightarrow 0\), the processing volume of G(m) sums up to \(\frac{\beta }{1-\beta }\).

Putting the two observations together, we obtain

as otherwise job 0 cannot finish on time. Hence, \(0 \le c \beta ^2 - (1 + c + \delta )\beta + \delta .\) Solving for the two roots, \(\beta _+\) and \(\beta _-\), we obtain

As we have seen by the first example, \(\beta \le 1\) must hold. Thus, we conclude that the only valid choice for \(\beta \) is in the interval \((0,\beta _-)\). By a similar calculation, it follows that \(\beta _{-} \le \frac{\delta }{c}.\) As we know by Lemma 8, the competitive ratio is bounded from below by \(\alpha / \beta \). Combined with the two bounds on \(\alpha \), \(\frac{1}{\beta (c+1)} < \alpha \le \frac{1}{\beta c}\), we obtain

Since the right hand side is increasing in c for positive c, the expression is minimized for \(c=1\). This implies that \(\beta \in \mathcal {O}(\varepsilon )\) and therefore \(\alpha \in \varOmega (1/\varepsilon )\). \(\square \)

6 Lower bounds on the competitive ratio

In this section we give a collection of lower bounds on the competitive ratio in the different commitment models and for different problem settings. To simplify notation, we formally introduce the notion of laxity. Let j be a job with processing time \(p_j\), deadline \(d_j\), and \(r_j\). The laxity \(\ell _j\) is defined as \(d_j - r_j - p_j\).

6.1 Scheduling without commitment

We give a lower bound matching our upper bound in Theorem 2. This shows that the region algorithm is best possible for scheduling without commitment.

Theorem 5

Every deterministic online algorithm has a competitive ratio \(\varOmega (\frac{1}{\varepsilon })\).

Proof

The proof idea is as follows: we release \(\varOmega (\frac{1}{\varepsilon })\)levels of jobs. In each level, the release date of any but the first job is the deadline of the previous job. Whenever an online algorithm decides to complete a job from level i (provided no further jobs are released), then the release of jobs in level i stops and a sequence of \(\mathcal {O}(\frac{1}{\varepsilon })\) jobs in level \(i+1\) is released. Jobs in level \(i+1\) have processing time that is too large to fit in the slack of a job of level i. Thus, an algorithm has to discard the job started at level i to run a job of level \(i+1\). This implies that it can only finish one job, while the optimum can finish a job from every other level.

Formally, let \(\varepsilon <\frac{1}{10}\) such that \(\frac{1}{8\varepsilon } \in \mathbb {N}\) and suppose there is an online algorithm with competitive ratio \(c<\frac{1}{8\varepsilon }\), from which it is sufficient to deduce a contradiction. We construct an adversarial instance in which each job j belongs to one of \(2\cdot \lceil c+1\rceil \) levels and fulfills \(d_j=r_j+(1+\varepsilon )\cdot p_j\). The processing time for any job j in level i are \(p_j = p^{(i)} = (2\varepsilon )^i\).

This (along with the interval structure) makes sure that no two jobs from consecutive levels can both be completed by a single schedule, which we will use to show that the online algorithm can only complete a single job throughout the entire instance. The decrease in processing times between levels, however, also makes sure that the optimum can finish a job from every other level, resulting in an objective value of \(\lceil c+1\rceil \), which is a contradiction to the algorithm being c-competitive.

The sequence starts in level 0 at time 0 with the release of one job j with processing time \(p^{(0)}=1\) and, thus, deadline \(d_{j}=1+\varepsilon \). We will show inductively that, for each level i, there is a time \(t_i\) when there is only a single job \(j_i\) left that the algorithm can still finish, and this job is from the current level i (and, thus, \(p_{j_i} = p^{(i)} = (2\varepsilon )^{i}\)). We will also make sure that at \(t_i\) at most a \((\frac{2}{3})\)-fraction of the time window of \(j_i\) has passed. From \(t_i\) on, no further jobs from level i are released, and jobs from level \(i+1\) start being released (or, if \(i=2\cdot \lceil c+1\rceil -1\), we stop releasing jobs altogether). It is clear that \(t_0\) exists.

Consider some time \(t_i\), and we will release jobs from level \(i+1\) so as to create time \(t_{i+1}\). The first job j from level \(i+1\) has release date \(t_i\) and, by the above constraints, \(d_j=t_i+(1+\varepsilon )\cdot p_j\) where \(p_j= p^{(i+1)}=(2\varepsilon )^{i+1}\). As long as no situation occurs that fits the above description of \(t_{i+1}\), we release an additional job of level \(i+1\) at the deadline of the previous job from this level (with identical time-window length and processing time). We show that we can find time \(t_{i+1}\) before \(\frac{1}{8\varepsilon }\) jobs from level \(i+1\) have been released. Note that the deadline of the \(\frac{1}{8\varepsilon }\)th job from level \(i+1\) is \(t_i+\frac{1}{8\varepsilon } \cdot (1+\varepsilon ) \cdot 2\varepsilon \cdot p^{(i)}\), which is smaller than the deadline of \(d_{j_i}\) since by induction \(d_{j_i}-t_i\ge \frac{2}{3} \cdot p^{(i)}\) and \(\varepsilon <\frac{1}{10}\). This shows that, unless more than \(\frac{1}{8\varepsilon }\) jobs from level \(i+1\) are released (which will not happen as we will show), all time windows of jobs from layer \(i+1\) are contained in that of \(j_i\).

Note that there must be a job \(j^\star \) among the \(\frac{1}{8\varepsilon }\) first ones in level \(i+1\) that the algorithm completes if no further jobs are released within the time window of \(j^\star \): by induction, the algorithm can only hope to finish a single job released before time \(t_i\) and the optimum could complete \(\frac{1}{8\varepsilon }\) jobs from level \(i+1\), so \(j^\star \) must exist for the algorithm to be c-competitive. Now we can define \(j_{i+1}\) to be the first such job \(j^\star \) and find \(t_{i+1}\) within its time window: at the release date of \(j^\star \), the algorithm could only complete \(j_i\). However, since the algorithm finishes \(j_{i+1}\) if there are no further jobs released, and \(\varepsilon <\frac{1}{10}\), it must have worked on \(j_{i+1}\) for more than \(\frac{p^{(i+1)}}{2}\) units of time until \(r_{i+1}+\frac{2}{3}\cdot p^{(i+1)}=:t_{i+1}\). This quantity, however, exceeds the laxity of \(j_i\), meaning that the algorithm cannot finish \(j_i\) any more. (Recall that the laxity of \(j_i\) is \(\varepsilon p^{(i)} = 2^i \varepsilon ^{i+1}\).) So \(t_{i+1}\) has the desired properties.

This defines \(t_{2\cdot \lceil c+1\rceil }\), and indeed the algorithm will only finish a single job. We verify that an optimal algorithm can schedule a job from every other level. Note that, among levels of either parity, processing times are decreasing by a factor of \(4\varepsilon ^2\) between consecutive levels. So, for any job j, the total processing time of jobs other than j that need to be processed within the time window of j adds up to less than

This completes the proof. \(\square \)

6.2 Scheduling with commitment

6.2.1 Commitment upon arrival

We strengthen earlier results for weighted jobs [22, 26] and show that the model is hopeless even in the unweighted setting and even for randomized algorithms.

Theorem 6

No randomized online algorithm has a bounded competitive ratio for commitment upon arrival.

In the proof of the theorem, we use the following algebraic fact.

Lemma 10

Consider positive numbers \(n_1,\dots ,n_k, c\in {\mathbb {R}}_+\) with the following properties:

-

(i)

\(\sum _{i=1}^k n_i\le 1\),

-

(ii)

\(\sum _{i=1}^j n_i\cdot 2^{i-1}\ge \frac{2^{j-1}}{c}\) for all \(j=1,\dots ,k\).

Then it holds that \(c\ge \frac{k+1}{2}.\)

Proof

We take a weighted sum over all inequalities in (ii), where the weight of the inequality corresponding to \(j<k\) is \(2^{k-j-1}\) and the weight of the inequality corresponding to \(j=k\) is 1. The result is

If \(c<\frac{k+1}{2}\), this contradicts (i). \(\square \)

We proceed to the proof of the theorem.

Proof

(Theorem 6) Consider any \(\varepsilon >0\) and arbitrary \(\gamma \in (0,1)\). Suppose there is a (possibly randomized) c-competitive algorithm, where c may depend on \(\varepsilon \).

We will choose some \(k\in {\mathbb {N}}\) later. The adversary releases at most k waves of jobs, but the instance may end after any wave. Wave i has \(2^i\) jobs. Each job from the ith wave has release date \(\frac{i}{k}\,\cdot \,\gamma \), deadline 1, and processing time \(\frac{1}{2^i}\,\cdot \,\frac{1-\gamma }{1+\varepsilon }\). Note that choosing \(p_j\le \frac{1-\gamma }{1+\varepsilon }\) for all jobs j makes sure that indeed \(\ell _j\ge \varepsilon \cdot p_j\), and observe that the total volume of jobs in wave i adds up to no more than \(1-\gamma \).

Define \(n_i\) to be the expected total processing time of jobs that the algorithm accepts from wave i. We observe:

-

(i)

Since all accepted jobs have to be scheduled within the interval [0, 1], we must have \(\sum _{i=1}^k n_i\le 1\).

-

(ii)

For each i, possibly no further jobs are released after wave i. Since, in this case, the optimum schedules all jobs from wave i and the jobs’ processing times decrease by a factor of 2 from wave to wave, it must hold that \(\sum _{i=1}^j n_i\cdot 2^{i-1}\ge \frac{2^{j-1}}{c}\).

This establishes the conditions necessary to apply Lemma 10 to \(n_1,\dots ,n_k\), which shows that choosing \(k\ge 2c\) yields a contradiction. \(\square \)

6.2.2 Commitment on job admission and \(\delta \)-commitment

Since these models are more restrictive than scheduling without commitment, the lower bound \(\varOmega (\frac{1}{\varepsilon })\) from Theorem 5 holds. In the present setting we can provide a much simpler (but asymptotically equally strong) lower bound.

Commitment upon admission For scheduling with arbitrary weights, Azar et al. [2] rule out any bounded competitive ratio for deterministic algorithms. Thus, our bounded competitive ratio for the unweighted setting (Theorem 2) gives a clear separation between the weighted and the unweighted setting.

Scheduling with \(\delta \)-commitment We give a lower bound depending on parameters \(\varepsilon \) and \(\delta \).

Theorem 7

Consider scheduling weighted jobs in the \(\delta \)-commitment model. For any \(\delta >0\) and any \(\varepsilon \) with \(\delta \le \varepsilon < 1+\delta \), no deterministic online algorithm has a bounded competitive ratio.

Proof

We reuse the idea of [2] to release the next job upon admission of the previous one while heavily increasing the weights of subsequent jobs. However, the scheduling models differ in the fact that the \(\delta \)-commitment model allows for processing before commitment which is not allowed in the commitment-upon-admission model.

Assume for the sake of contradiction, that there is a c-competitive algorithm. We consider the following instance that consists of n tight jobs with the same deadline \(d := 1+\varepsilon \). Job j has a weight of \(w_j := (c+1)^j\) which implies that any c-competitive algorithm has to admit job j at some point even if all jobs \(1,\ldots ,j-1\) are admitted. In the \(\delta \)-commitment model, the admission cannot happen later than \(d-(1+\delta ) p_j\) which is the point in time when job \(j+1\) is released.

More precisely, the first job is released at \(r_1=0\) with processing time \(p_1=1\). If jobs \(1,\ldots ,j\) have been released, job \(j+1\) is released at \(r_{j+1} = d-(1+\delta ) p_j\) and has processing time

An instance with n such jobs has a total processing volume of

Any c-competitive algorithm has to complete the n jobs before \(d = 1+\varepsilon \). This also holds for \(n \rightarrow \infty \) and, thus, \(\frac{1 + \varepsilon }{ \varepsilon - \delta } \le 1+ \varepsilon \) is implied. This is equivalent to \(\varepsilon \ge 1+ \delta \). In other words, if \(\varepsilon < 1 + \delta \), there is no deterministic c-competitive online algorithm. \(\square \)

In particular, there is no bounded competitive ratio possible for \(\varepsilon \in (0,1)\). A restriction for \(\varepsilon \) appears to be necessary as Azar et al. [2] provide such a bound when the slackness is sufficiently large, i.e, \(\varepsilon >3\). In fact, our bound answers affirmatively the open question in [2] if high slackness is indeed required. Again, this strong impossibility result gives a clear separation between the weighted and the unweighted problem as we show in the unweighted setting a bounded competitive ratio for any \(\varepsilon >0\) (Theorem 2).

Proportional weights \((w_j = p_j)\) For scheduling with commitment, it is known that simple greedy algorithms achieve the best possible competitive ratio \(\varTheta (1/\varepsilon )\) [11, 13]. In this section, we show a weaker lower bound for randomized algorithms.

Theorem 8

Consider proportional weights \((w_j=p_j)\). For commitment on job admission and the \(\delta \)-commitment model, the competitive ratio of any randomized algorithm is \(\varOmega (\log \frac{1}{\varepsilon })\).

Proof