Abstract

In this paper, we identify partial correlation information structures that allow for simpler reformulations in evaluating the maximum expected value of mixed integer linear programs with random objective coefficients. To this end, assuming knowledge of only the mean and the covariance matrix entries restricted to block-diagonal patterns, we develop a reduced semidefinite programming formulation. The complexity of solving this formulation is tied to characterizing a suitable projection of the convex hull of the set \(\{(\mathbf x , \mathbf x {} \mathbf x '): \mathbf x \in \mathcal {X}\}\), where \(\mathcal {X}\) is the feasible region. In some cases, this projection admits an efficient representation and yields polynomial-time solvable instances, most notably for the distributionally robust appointment scheduling problem with random job durations, as well as for computing tight bounds in the newsvendor problem, project evaluation and review technique (PERT) networks and linear assignment problems. To the best of our knowledge, this is the first example of a distributionally robust optimization formulation for appointment scheduling that permits a tight polynomial-time solvable semidefinite programming reformulation which explicitly captures partially known correlation information between uncertain processing times of the jobs to be scheduled. We also discuss extensions where the random coefficients are assumed to be non-negative and additional overlapping correlation information is available.

References

Aldous, D.J.: The \(\zeta (2)\) limit in the random assignment problem. Random Struct. Algorithms 18(4), 381–418 (2001)

Anstreicher, K.M., Burer, S.: Computable representations for convex hulls of low-dimensional quadratic forms. Math. Program. 124, 33–43 (2010)

Ball, M.O., Colbourn, C.J., Provan, J.S.: Network reliability. In: Handbooks in Operations Research and Management Science, vol. 7, pp. 673–762. Elsevier Science B.V., Amsterdam (1995)

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization. Princeton University Press, Princeton (2009)

Berge, C.: Some classes of perfect graphs. In: Harary, F. (ed.) Graph Theory and Theoretical Physics, pp. 155–166. Academic Press, London (1967)

Bertsimas, D., Doan, X.V., Natarajan, K., Teo, C.-P.: Models for minimax stochastic linear optimization problems with risk aversion. Math. Oper. Res. 35(3), 580–602 (2010)

Bertsimas, D., Natarajan, K., Teo, C.-P.: Probabilistic combinatorial optimization: moments, semidefinite programming, and asymptotic bounds. SIAM J. Optim. 15(1), 185–209 (2004)

Bertsimas, D., Natarajan, K., Teo, C.-P.: Persistence in discrete optimization under data uncertainty. Math. Program. 108(2), 251–274 (2006)

Bertsimas, D., Popescu, I.: Optimal inequalities in probability theory: a convex optimization approach. SIAM J. Optim. 15(3), 780–804 (2005)

Bertsimas, D., Sim, M., Zhang, M.: Adaptive distributionally robust optimization. Manag. Sci. 65(2), 604–618 (2018)

Bomze, I.M., Cheng, J., Dickinson, P.J.C., Lisser, A.: A fresh CP look at mixed-binary QPs: new formulations and relaxations. Math. Program. 166(1–2), 159–184 (2017)

Bomze, I.M., De Klerk, E.: Solving standard quadratic optimization problems via linear, semidefinite and copositive programming. J. Glob. Optim. 24(2), 163–185 (2002)

Buck, M.W., Chan, C.S., Robbins, D.P.: On the expected value of the minimum assignment. Random Struct. Algorithms 21(1), 33–58 (2002)

Burer, S.: On the copositive representation of binary and continuous nonconvex quadratic programs. Math. Program. 120(2), 479–495 (2010)

Burer, S.: A gentle, geometric introduction to copositive optimization. Math. Program. 151(1), 89–116 (2015)

Cambanis, S., Simons, G., Stout, W.: Inequalities for E k(X, Y) when the marginals are fixed. Zeitschrift für Wahrscheinlichkeitstheorie und verwandte Gebiete 36(4), 285–294 (1976)

Delage, E., Ye, Y.: Distributionally robust optimization under moment uncertainty with application to data-driven problems. Oper. Res. 58(3), 595–612 (2010)

Dickinson, P.J.C.: Geometry of the copositive and completely positive cones. J. Math. Anal. Appl. 380(1), 377–395 (2011)

Dickinson, P.J.C., Gijben, L.: On the computational complexity of membership problems for the completely positive cone and its dual. Comput. Optim. Appl. 57(2), 403–415 (2014)

Doan, X.V., Natarajan, K.: On the complexity of nonoverlapping multivariate marginal bounds for probabilistic combinatorial optimization problems. Oper. Res. 60(1), 138–149 (2012)

Donath, W.E.: Algorithm and average-value bounds for assignment problems. IBM J. Res. Dev. 13(4), 380–386 (1969)

Dyer, M.E., Frieze, A.M., McDiarmid, C.J.H.: On linear programs with random costs. Math. Program. 35(1), 3–16 (1986)

Fulkerson, D.R.: Expected critical path lengths in PERT networks. Oper. Res. 10(6), 808–817 (1962)

Galichon, A.: Optimal Transport Methods in Economics. Princeton University Press, Princeton (2016)

Goemans, M.X., Kodialam, M.S.: A lower bound on the expected cost of an optimal assignment. Math. Oper. Res. 18(2), 267–274 (1993)

Grone, R., Johnson, C.R., Sá, E.M., Wolkowicz, H.: Positive definite completions of partial Hermitian matrices. Linear Algebra Appl. 58, 109–124 (1984)

Hagstrom, J.N.: Computational complexity of PERT problems. Networks 18(2), 139–147 (1988)

Hanasusanto, G.A., Kuhn, D.: Conic programming reformulations of two-stage distributionally robust linear programs over Wasserstein balls. Oper. Res. 66(3), 849–869 (2018)

Hanasusanto, G.A., Kuhn, D., Wallace, S.W., Zymler, S.: Distributionally robust multi-item newsvendor problems with multimodal demand distributions. Math. Program. 152(1), 1–32 (2015)

Jiang, R., Shen, S., Zhang, Y.: Integer programming approaches for appointment scheduling with random no-shows and service durations. Oper. Res. 65(6), 1638–1656 (2017)

Karp, R.M.: An upper bound on the expected cost of an optimal assignment. In: Johnson, D.S., Nishizeki, T., Nozaki, A., Wilf, H.S. (eds.) Discrete Algorithms and Complexity, pp. 1–4. Academic Press, New York (1987)

Kong, Q., Lee, C.-Y., Teo, C.-P., Zheng, Z.: Scheduling arrivals to a stochastic service delivery system using copositive cones. Oper. Res. 61(3), 711–726 (2013)

Kuhn, H.W.: The Hungarian method for the assignment problem. Naval Res. Logist. Q. 2(1–2), 83–97 (1955)

Kurtzberg, J.M.: On approximation methods for the assignment problem. J. ACM 9(4), 419–439 (1962)

Laurent, M.: Matrix Completion Problems, pp. 1967–1975. Springer, Boston (2009)

Lazarus, A.J.: Certain expected values in the random assignment problem. Oper. Res. Lett. 14(4), 207–214 (1993)

Lorentz, G.G.: An inequality for rearrangements. Am. Math. Month. 60(3), 176–179 (1953)

Mak, H.-Y., Rong, Y., Zhang, J.: Appointment scheduling with limited distributional information. Manag. Sci. 61(2), 316–334 (2015)

Mézard, M., Parisi, G.: On the solution of the random link matching problems. J. Phys. 48(9), 1451–1459 (1987)

Möhring, R.H.: Scheduling under uncertainty: bounding the makespan distribution. In: Alt, H. (ed.) Computational Discrete Mathematics, pp. 79–97. Springer, Berlin (2001)

Munkres, J.: Algorithms for the assignment and transportation problems. J. Soc. Ind. Appl. Math. 5(1), 32–38 (1957)

Murty, K.G., Kabadi, S.N.: Some NP-complete problems in quadratic and nonlinear programming. Math. Program. 39(2), 117–129 (1987)

Natarajan, K., Teo, C.-P.: On reduced semidefinite programs for second order moment bounds with applications. Math. Program. 161(1–2), 487–518 (2017)

Natarajan, K., Teo, C.-P., Zheng, Z.: Mixed 0–1 linear programs under objective uncertainty: a completely positive representation. Oper. Res. 59(3), 713–728 (2011)

Olin, B.: Asymptotic properties of random assignment problems. PhD thesis, Division of Optimization and Systems Theory, Department of Mathematics, Royal Institute of Technology, Stockholm, Sweden (1992)

Padberg, M.: The Boolean quadric polytope: some characteristics, facets and relatives. Math. Program. 45(1), 139–172 (1989)

Parrillo, P.A.: Structured semidefinite programs and semi-algebraic geometry methods in robustness. Ph.D. Thesis, California Institute of Technology (2000)

Penrose, R.: A generalized inverse for matrices. Math. Proc. Camb. Philos. Soc. 51(3), 406–413 (1955)

Pitowsky, I.: Correlation polytopes: their geometry and complexity. Math. Program. 50(1), 395–414 (1991)

Radhakrishna Rao, C., Mitra, S.K.: Generalized inverse of a matrix and its applications. In: Proceedings of the Sixth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Theory of Statistics, pp. 601–620. University of California Press, Berkeley (1972)

Rose, D.J.: Triangulated graphs and the elimination process. J. Math. Anal. Appl. 32(3), 597–609 (1970)

Shogan, A.W.: Bounding distributions for a stochastic PERT network. Networks 7(4), 359–381 (1977)

Toh, K.C., Todd, M.J., Tutuncu, R.H.: SDPT3 – a MATLAB software package for semidefinite programming. Optim. Methods Softw. 11, 545–581 (1999)

Tutuncu, R.H., Toh, K.C., Todd, M.J.: Solving semidefinite-quadratic-linear programs using SDPT3. Math. Program. Ser. B 95, 189–217 (2003)

Van Slyke, R.M.: Monte Carlo methods and the PERT problem. Oper. Res. 11(5), 839–860 (1963)

Walkup, D.: On the expected value of a random assignment problem. SIAM J. Comput. 8(3), 440–442 (1979)

Wiesemann, W., Kuhn, D., Sim, M.: Distributionally robust convex optimization. Oper. Res. 62(6), 1358–1376 (2014)

Wästlund, J.: A proof of a conjecture of Buck, Chan, and Robbins on the expected value of the minimum assignment. Random Struct. Algorithms 26(1–2), 237–251 (2005)

Xu, G., Burer, S.: A data-driven distributionally robust bound on the expected optimal value of uncertain mixed 0–1 linear programming. Comput. Manag. Sci. 15(1), 111–134 (2018)

Yang, B., Anstreicher, K., Burer, S.: Quadratic programs with hollows. Math. Program. 170(2), 541–552 (2018)

Zangwill, W.I.: A deterministic multi-period production scheduling model with backlogging. Manag. Sci. 13(1), 105–119 (1966)

Zangwill, W.I.: A backlogging model and a multi-echelon model of a dynamic economic lot size production system—a network approach. Manag. Sci. 15(9), 506–527 (1969)

Zuluaga, L.F., Peña, J.F.: A conic programming approach to generalized Tchebycheff inequalities. Math. Oper. Res. 30(2), 369–388 (2005)

Acknowledgements

The research of the first and the second author was partially supported by the MOE Academic Research Fund Tier 2 Grant T2MOE1706, “On the Interplay of Choice, Robustness and Optimization in Transportation” and the SUTD-MIT International Design Center Grant IDG21700101 on “Design of the Last Mile Transportation System: What Does the Customer Really Want?”. The authors would like to thank Teo Chung-Piaw (NUS) for providing some useful references on this research. We would also like to thank the editor Alper Atamturk, the associate editor and the two anonymous reviewers for their detailed reading of the paper and their useful suggestions for improving it.

Appendices

Appendix A: On the structure of a worst-case distribution

In this section, we exhibit a probability distribution for \(\tilde{\mathbf{c }}\) that attains the optimal value \(Z^*\) of (7). The construction follows the worst-case distribution proposed in Step 2 of the proof of Theorem 1 in [43]. The worst-case distribution we identify is a mixture of normal distributions. Each of these normal distributions is in turn obtained by first constructing suitable marginal distributions and then coupling them through conditional independence.

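We begin with a result on psd matrix factorization from [43]. The following definition of the Moore–Penrose pseudoinverse (see [48, 50]) is useful in stating the psd matrix factorization in Theorem 6. Let \(\mathbf X \) be a matrix of dimension \(k_1 \times k_2\). Then the Moore–Penrose pseudoinverse of \(\mathbf X \) is the matrix \(\mathbf X ^\dagger \) of dimension \(k_2 \times k_1\) defined as the unique solution to the following set of four equations:

\[\mathbf X {} \mathbf X ^\dagger {} \mathbf X = \mathbf X , \quad \mathbf X ^\dagger {} \mathbf X {} \mathbf X ^\dagger = \mathbf X ^\dagger , \quad (\mathbf X {} \mathbf X ^\dagger )' = \mathbf X {} \mathbf X ^\dagger , \quad (\mathbf X ^\dagger {} \mathbf X )' = \mathbf X ^\dagger {} \mathbf X .\]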

Theorem 6

[43, Theorem 1] Suppose that \(\mathbf L \) is a \((k_1 + k_2) \times (k_1+k_2)\) positive semidefinite block matrix of the form

\[\mathbf L = \begin{bmatrix} \mathbf A & \mathbf B ' \\ \mathbf B & \mathbf C \end{bmatrix},\]

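where the matrices \(\mathbf A \in \mathbb {R}^{k_1 \times k_1}, \mathbf C \in \mathbb {R}^{k_2 \times k_2}\) are symmetric and the matrix \(\mathbf C \) admits an explicit factorization given by \(\mathbf C = \mathbf V {} \mathbf V '\). Then \(\mathbf L \) admits the following factorization:

\[\mathbf L = \begin{bmatrix} \mathbf B '(\mathbf V ^{\dagger })' & \mathbf U \\ \mathbf V & \mathbf 0 \end{bmatrix} \begin{bmatrix} \mathbf B '(\mathbf V ^{\dagger })' & \mathbf U \\ \mathbf V & \mathbf 0 \end{bmatrix}',\]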

where the matrix \(\mathbf U \) is defined such that \(\mathbf A - \mathbf B '{} \mathbf C ^{\dagger }{} \mathbf B = \mathbf U {} \mathbf U ' \succeq 0\).
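As a numerical sanity check of the statement above, the following minimal Python sketch builds a small rank-deficient example in exact rational arithmetic and verifies both the four pseudoinverse equations and a factorization of \(\mathbf L \). It assumes the block form \(\mathbf L = [\mathbf A , \mathbf B '; \mathbf B , \mathbf C ]\) and the factor \(\mathbf F = [\mathbf B '(\mathbf V ^{\dagger })', \mathbf U ; \mathbf V , \mathbf 0 ]\) with \(\mathbf L = \mathbf F {} \mathbf F '\), which is one factorization consistent with the Schur-complement condition in the theorem. The matrices `V`, `W` and `U` and all helper names are hypothetical illustration data, and the pseudoinverse formula used here is valid only because `V` is chosen with full column rank.

```python
from fractions import Fraction

def T(M):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]

def mul(P, Q):
    """Matrix product P Q."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Q)] for row in P]

def add(P, Q):
    """Entrywise sum P + Q."""
    return [[a + b for a, b in zip(r, s)] for r, s in zip(P, Q)]

def inv2(M):
    """Inverse of a nonsingular 2 x 2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def pinv_full_column_rank(V):
    """Pseudoinverse (V'V)^{-1} V' of a matrix with two independent columns."""
    return mul(inv2(mul(T(V), V)), T(V))

def frac(M):
    """Convert integer entries to exact fractions."""
    return [[Fraction(v) for v in row] for row in M]

# Hypothetical data: k1 = 2, k2 = 3, and C = V V' has rank 2 (singular).
V = frac([[1, 0], [0, 1], [1, 1]])
W = frac([[1, 2], [0, 1]])
U = frac([[1, 0], [1, 1]])

Vp = pinv_full_column_rank(V)
C = mul(V, T(V))
Cp = mul(T(Vp), Vp)      # pseudoinverse of C = V V' (valid for full-column-rank V)
B = mul(V, W)            # columns of B lie in the range of C, as L >= 0 requires
A = add(mul(mul(T(B), Cp), B), mul(U, T(U)))   # so that A - B' C^+ B = U U'

# Assemble L = [[A, B'], [B, C]] and the factor F = [[B'(V^+)', U], [V, 0]].
L = [ra + rb for ra, rb in zip(A, T(B))] + [rb + rc for rb, rc in zip(B, C)]
top = [r1 + r2 for r1, r2 in zip(mul(T(B), T(Vp)), U)]
bottom = [row + [Fraction(0)] * len(U[0]) for row in V]
F = top + bottom

penrose_ok = (
    mul(mul(V, Vp), V) == V
    and mul(mul(Vp, V), Vp) == Vp
    and T(mul(V, Vp)) == mul(V, Vp)
    and T(mul(Vp, V)) == mul(Vp, V)
)
factorization_ok = mul(F, T(F)) == L
print(penrose_ok, factorization_ok)
```

Exact fractions are used so that the equalities can be tested without a numerical tolerance; for larger instances a floating-point pseudoinverse routine would be the natural replacement.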

For a given partition \(\{\mathcal {N}_r: r \in [R\,]\}\) and projected covariance matrices \(\{{\varvec{\Pi }}^r: r \in [R\,]\}\), suppose that \(\{\mathbf{p}_*, \mathbf{X}_*^r, \mathbf{Y}^r_*: r \in [R]\}\) maximizes (7). As in the proof of Theorem 1, it follows from Carathéodory’s theorem and the convex hull constraint in (7) that there exists \(\hat{\mathcal {X}}\), a subset of \(\mathcal {X}\), containing at most \(1+\sum _r (n_r^2 + 3n_r)/2\) elements such that,

for some \(\{\alpha _\mathbf{x }: \mathbf x \in \hat{\mathcal {X}}\}\) satisfying \(\alpha _\mathbf x \ge 0\), \(\sum _{\mathbf x \in \hat{\mathcal {X}}} \alpha _\mathbf{x } = 1\). Consequently, for any \(r \in [R\,]\), we have from Theorem 6 that,

where \(\mathbf d _r(\mathbf x ^r)\in \mathbb {R}^{n_r} \) and \({\varvec{\Phi }}_r \in \mathcal {S}_{n_r}^+\) for every \(r \in [R\,]\). From the above factorization, observe that,

For completeness, we now list explicitly the expressions for the means \(\mathbf d _r(\mathbf x ^r)\) and the covariances \({\varvec{\Phi }}_r\). These expressions are obtained by making appropriate substitutions as per Theorem 6. For every \(r \in [R\,]\), define the matrix \(\mathbf V _r\) of size \((n_r + 1) \times m_r\), where \(m_r \) is the number of points in the projected space \(\mathcal {X}^r\), as follows.

Each column of \(\mathbf V _r\) corresponds to an element \(\mathbf x ^r\) of \(\mathcal {X}^r\) and is of the form \( \begin{bmatrix}\sqrt{\alpha _r(\mathbf x ^r)} \\ \sqrt{\alpha _r(\mathbf x ^r) }{} \mathbf x ^r \end{bmatrix}\). Define \({\varvec{\Phi }}_r\) of size \(n_r \times n_r\) as:

The mean vector \(\mathbf d _{r}(\mathbf x ^r)\) is set to be the column of the matrix \(\begin{bmatrix} \varvec{\mu }^r&\quad \hat{\mathbf{Y }}_*^r \end{bmatrix}(\mathbf V _r^{\dagger })'\), scaled by \(1/\sqrt{\alpha _{r}(\mathbf x ^r)}\), at the position where \(\mathbf x ^r\) occurs in \(\mathbf V _r\).
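To make the construction of \(\mathbf V _r\) concrete, the following Python sketch builds its columns from a hypothetical projected set with three points and hypothetical weights \(\alpha _r(\mathbf x ^r)\), and checks that \(\mathbf V _r{} \mathbf V _r'\) coincides with the weighted moment matrix \(\sum \alpha _r(\mathbf x ^r)\,(1, \mathbf x ^r)(1, \mathbf x ^r)'\). The points, weights and function names are assumptions made purely for illustration.

```python
import math

def build_V(points, weights):
    """Return V_r as a list of rows; column j is sqrt(alpha_j) * (1, x_j)."""
    cols = [[math.sqrt(a)] + [math.sqrt(a) * xi for xi in x]
            for x, a in zip(points, weights)]
    return [list(row) for row in zip(*cols)]

def gram(V):
    """Compute V V'."""
    return [[sum(a * b for a, b in zip(r, s)) for s in V] for r in V]

def moment_matrix(points, weights):
    """Compute sum_j alpha_j * (1, x_j)(1, x_j)' directly."""
    n = len(points[0]) + 1
    M = [[0.0] * n for _ in range(n)]
    for x, a in zip(points, weights):
        y = [1.0] + list(x)
        for i in range(n):
            for j in range(n):
                M[i][j] += a * y[i] * y[j]
    return M

# Hypothetical block with n_r = 2 and m_r = 3 points.
points = [(0, 0), (1, 0), (1, 1)]
weights = [0.25, 0.25, 0.5]

V_r = build_V(points, weights)          # size (n_r + 1) x m_r
G = gram(V_r)
M = moment_matrix(points, weights)
max_gap = max(abs(G[i][j] - M[i][j]) for i in range(3) for j in range(3))
print(len(V_r), len(V_r[0]), max_gap < 1e-12)
```

The first row and column of the product recover the total weight and the weighted mean of the points, while the remaining block recovers the weighted second moments.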

Proposition 2

Suppose that \(\{\mathbf{p}_*, \mathbf{X}_*^r, \mathbf{Y}^r_*: r \in [R]\}\) maximizes (7). Let \(\hat{\mathcal {X}} \subseteq \mathcal {X}\) be a finite subset and \(\{\alpha _\mathbf{x }: \mathbf x \in \hat{\mathcal {X}}\}\) satisfy (54). Let \(\theta ^*\) be the distribution of \(\tilde{\mathbf{c }}\) generated as follows:

- Step 1: Generate a random vector \(\tilde{\mathbf{x }} \in \hat{\mathcal {X}} \subseteq \mathcal {X}\) such that \(P(\tilde{\mathbf{x }} = \mathbf x ) = \alpha _\mathbf{x }\).

- Step 2: For every \(r \in [R\,]\), independently generate a normally distributed random vector \(\tilde{\mathbf{z }}_r \in \mathbb {R}^{n_r}\), conditionally on \(\tilde{\mathbf{x }} = \mathbf x \), with mean \( \mathbf d _r(\mathbf x ^r)\) and covariance \({\varvec{\Phi }}_r\). Set \(\tilde{\mathbf{c }}^r = \tilde{\mathbf{z }}_r\).

Then \(\theta ^*\) attains the maximum in (2).
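The two-step generation scheme can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the support, the weights `alpha`, the block partition, the conditional means and the covariances are all hypothetical numbers, and the helper names `cholesky_psd` and `sample_c` are introduced here only for the example.

```python
import math
import random

def cholesky_psd(S, tol=1e-12):
    """Lower-triangular L with L L' = S for a positive semidefinite S."""
    n = len(S)
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        d = S[j][j] - sum(L[j][k] ** 2 for k in range(j))
        if d < -tol:
            raise ValueError("matrix is not positive semidefinite")
        L[j][j] = math.sqrt(d) if d > tol else 0.0
        if L[j][j] > 0.0:
            for i in range(j + 1, n):
                s = sum(L[i][k] * L[j][k] for k in range(j))
                L[i][j] = (S[i][j] - s) / L[j][j]
    return L

def sample_c(support, alpha, blocks, means, covs, rng):
    """Draw one realization (x, c) following Steps 1 and 2."""
    # Step 1: pick x from the finite support with P(x) = alpha_x.
    x = rng.choices(support, weights=alpha, k=1)[0]
    c = [0.0] * len(x)
    # Step 2: given x, the blocks c^r are drawn independently of each other.
    for r, idx in enumerate(blocks):
        x_r = tuple(x[i] for i in idx)      # projection of x onto block r
        d = means[r][x_r]                   # conditional mean d_r(x^r)
        L = cholesky_psd(covs[r])           # factor of Phi_r
        g = [rng.gauss(0.0, 1.0) for _ in idx]
        for a, i in enumerate(idx):
            c[i] = d[a] + sum(L[a][b] * g[b] for b in range(len(idx)))
    return x, c

# Hypothetical data: n = 3 coordinates split into blocks {0, 1} and {2}.
support = [(1, 0, 1), (0, 1, 1)]
alpha = [0.4, 0.6]
blocks = [[0, 1], [2]]
means = [
    {(1, 0): [2.0, -1.0], (0, 1): [0.5, 3.0]},
    {(1,): [1.5]},
]
covs = [[[1.0, 0.3], [0.3, 2.0]], [[0.5]]]

rng = random.Random(0)
x, c = sample_c(support, alpha, blocks, means, covs, rng)
print(x, c)
```

Note that the covariance of each block does not depend on the drawn point, while the mean does; the dependence across blocks enters only through the shared draw of `x`.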

Proof

Consider \((\tilde{\mathbf{x }} ,\tilde{\mathbf{c }})\) generated jointly according to the described steps. Then it follows from the law of iterated expectations that \(\mathbb {E}[f(\tilde{\mathbf{c }})] = \mathbb {E}[ \mathbb {E}[f(\tilde{\mathbf{c }}) \vert \tilde{\mathbf{x }} ]] = \sum _{\mathbf x \in \hat{\mathcal {X}}}\alpha _\mathbf{x }\mathbb {E}[f(\tilde{\mathbf{c }}) \vert \tilde{\mathbf{x }} = \mathbf x ]\), for any function f. As a result, we have from (54) that for any \(r \in [R\,]\),

Moreover, as \(\tilde{\mathbf{x }}\in \mathcal {X}\), the objective \(\mathbb {E}[\max _\mathbf{x \in \mathcal {X}}\tilde{\mathbf{c }}'{} \mathbf x ]\) satisfies,

where the last three equalities follow, respectively, from (55), the optimality of \(\{\mathbf{p}_*, \mathbf{X}_*^r, \mathbf{Y}_*^r: r \in [R\,]\}\) for (7), and Theorem 1. Combining this observation with (56), we have that the distribution of \(\tilde{\mathbf{c }}\), denoted by \(\theta ^*\), is feasible and it attains the maximum in (2). \(\square \)
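The moment computations in the proof rest on a standard fact about mixtures: if a block is normal with mean \(\mathbf d (\mathbf x )\) and common covariance \({\varvec{\Phi }}\) conditional on \(\tilde{\mathbf{x }} = \mathbf x \), then its unconditional mean is \(\sum _\mathbf x \alpha _\mathbf x \mathbf d (\mathbf x )\) and its second-moment matrix is \({\varvec{\Phi }} + \sum _\mathbf x \alpha _\mathbf x \mathbf d (\mathbf x )\mathbf d (\mathbf x )'\). The short Python sketch below evaluates these two expressions for a single block; the weights, means and covariance are hypothetical numbers chosen only so the result is easy to check by hand.

```python
def mixture_moments(alpha, means, Phi):
    """Mean and second-moment matrix of a normal mixture with common covariance Phi."""
    n = len(Phi)
    # Law of iterated expectations applied to c and to c c'.
    mean = [sum(a * d[i] for a, d in zip(alpha, means)) for i in range(n)]
    second = [[sum(a * (Phi[i][j] + d[i] * d[j]) for a, d in zip(alpha, means))
               for j in range(n)] for i in range(n)]
    return mean, second

# Hypothetical two-component mixture in dimension 2.
alpha = [0.25, 0.75]
means = [[0.0, 0.0], [2.0, 4.0]]
Phi = [[1.0, 0.0], [0.0, 1.0]]

mean, second = mixture_moments(alpha, means, Phi)
print(mean, second)
```

For these numbers the mean is (1.5, 3) and the second-moment matrix has rows (4, 6) and (6, 13), which can be confirmed directly from the two formulas.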

The generation of the normal distributions for each \(\tilde{\mathbf{c }}^r\) and the mixture proportions \(\alpha _\mathbf{x }\) are both identical to [43]. The difference is that in Step 2 above, the joint distribution of the whole vector \(\tilde{\mathbf{c }}\) is obtained by taking the blocks \(\tilde{\mathbf{c }}^r, \, r \in [R\,]\), to be independent conditional on \(\mathbf x \). Note that in [43], this additional step of constructing a joint distribution was not required, as the whole vector \(\tilde{\mathbf{c }}\) was generated at once.

Appendix B: Reasoning for gap in bounds produced by formulation (48)

In this section we investigate why the formulation (48) does not necessarily provide tight bounds for \(Z^*_{\mathrm{series}}\).

Using reasoning similar to Step 1 of the proof of Theorem 1, we can show that \( Z^*_{\mathrm{series}} \le \hat{Z}^{*}_{\mathrm{series}}\). However, a similar adaptation of Step 2 of the proof of Theorem 1 to establish \( Z^*_{\mathrm{series}} \ge \hat{Z}^{*}_{\mathrm{series}}\) does not go through. To see this, let \(\mathbf p ^*, \mathbf X ^{*}, \mathbf Y ^{*}\) be an optimal solution to formulation (48) and let us attempt to construct a solution \(\bar{\mathbf{p }}, \bar{\mathbf{X }}, \bar{\mathbf{Y }}, \bar{{\varvec{\Delta }}}\) feasible to (47) such that \(\mathrm{trace}(\bar{\mathbf{Y }}) = \hat{Z}^*_{\mathrm{series}} = \sum _{i=1}^n \mathrm{trace}(\mathbf Y ^{*}_{ii})\).

Construction of \(\bar{\mathbf{p }}\) and \(\bar{\mathbf{X }}\): Analogous to proof of Theorem 1, step 2.

Construction of \(\bar{\mathbf{Y }}\) and \(\bar{{\varvec{\Delta }}}\):

Set \(\bar{Y}_{ii} = Y^{*}_{ii}, \bar{Y}_{i,i+1} = Y^{*}_{i,i+1}, \bar{Y}_{i+1,i} =Y^{*}_{i+1,i}\) and \(\bar{\varDelta }_{ii} = \varPi _{ii}\), \(\bar{\varDelta }_{i,i+1} =\varPi _{i,i+1}\).

As before, consider a \((2n+1) \times (2n+1)\) partial symmetric matrix \(\bar{\mathbf{L }}_p\) constructed using the analogous partial matrices \(\bar{\mathbf{Y }}_p\) (with the entries \(\bar{Y}_{ii}\) and \(\bar{Y}_{i,i+1} \) described above), \(\bar{{\varvec{\Delta }}}_p\) (with the entries \(\bar{\varDelta }_{ii}\) and \(\bar{\varDelta }_{i,i+1} \) described above) and the fully specified matrix \(\bar{\mathbf{X }}\) as follows:

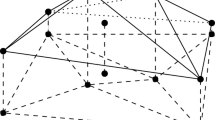

We note that the fully specified principal submatrices of \(\bar{\mathbf{L }}_p\) are exactly the matrices that appear in the positive semidefinite constraints in formulation (48) and are therefore guaranteed to be positive semidefinite. As in the proof of Theorem 1, if the partial matrix \(\bar{\mathbf{L }}_p\) can be shown to admit a completion \(\bar{\mathbf{L }}_{\mathrm{comp}}\) such that \(\bar{\mathbf{L }}_{\mathrm{comp}} \succeq 0\), then the remaining entries of \(\bar{\mathbf{Y }}\) and \(\bar{{\varvec{\Delta }}}\) can be computed. However, at this stage, the analogous graph constructed as in Lemma 3 is not chordal (refer to Fig. 10), and so the existence of a positive semidefinite completion cannot be guaranteed. Therefore the bound \(\hat{Z}^*_{\mathrm{series}} \) is not necessarily tight. It may nevertheless be used as a polynomial-time computable upper bound for \(Z^*_{\mathrm{series}}\). Whether \(Z^*_{\mathrm{series}}\) can be computed in polynomial time is an interesting open question.

Fig. 10 Illustration of a graph G for the case where \(n=4\) and the moments corresponding to \(\{\{1,2\},\{2,3\},\{3,4\}\}\) are known. The whole graph additionally includes a vertex s connected to all the nodes in the graph and is omitted here for clarity. Consider a subgraph formed by the vertices \(\{c_2, x_1, x_4, c_3\}\). The edges induced by this subset of vertices are shown in red. These edges form a chordless cycle and therefore the graph is not chordal (color figure online)
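The chordality obstruction can be checked mechanically. The Python sketch below tests chordality by repeatedly deleting simplicial vertices, a standard characterization via perfect elimination orderings. The graph it builds is an assumption, since Lemma 3 is not reproduced in this appendix: the \(x\)-vertices are taken to form a clique, each \(c_i\) is joined to \(x_i\), and \(c_i\) is joined to \(c_j\), \(x_j\) (and \(c_j\) to \(x_i\)) whenever the moments for the pair \(\{i,j\}\) are known. The vertex s is left out, as in the figure; a vertex adjacent to all others does not affect chordality.

```python
from itertools import combinations

def is_chordal(adj):
    """Chordality test by repeatedly deleting simplicial vertices."""
    adj = {v: set(nb) for v, nb in adj.items()}
    while adj:
        simplicial = next(
            (v for v, nb in adj.items()
             if all(b in adj[a] for a, b in combinations(nb, 2))),
            None,
        )
        if simplicial is None:
            return False          # no perfect elimination ordering exists
        for u in adj.pop(simplicial):
            adj[u].discard(simplicial)
    return True

def build_graph(n, known_pairs):
    """Assumed graph structure; see the lead-in for the edge rules."""
    adj = {("x", i): set() for i in range(1, n + 1)}
    adj.update({("c", i): set() for i in range(1, n + 1)})

    def link(u, v):
        adj[u].add(v)
        adj[v].add(u)

    for i, j in combinations(range(1, n + 1), 2):
        link(("x", i), ("x", j))          # X is fully specified
    for i in range(1, n + 1):
        link(("c", i), ("x", i))          # diagonal entries of Y
    for i, j in known_pairs:
        link(("c", i), ("c", j))          # known second moments of c
        link(("c", i), ("x", j))          # known off-diagonal entries of Y
        link(("c", j), ("x", i))
    return adj

series = build_graph(4, [(1, 2), (2, 3), (3, 4)])   # overlapping pairs
disjoint = build_graph(4, [(1, 2), (3, 4)])         # non-overlapping blocks
cycle = [("c", 2), ("x", 1), ("x", 4), ("c", 3)]
induced = sorted((u, v) for u, v in combinations(cycle, 2) if v in series[u])
print(is_chordal(series), is_chordal(disjoint), len(induced))
```

Under these assumed edge rules, the overlapping series pattern fails the test because the four vertices in `cycle` induce exactly four edges forming a chordless cycle, whereas the non-overlapping block pattern passes.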

Cite this article

Padmanabhan, D., Natarajan, K. & Murthy, K. Exploiting partial correlations in distributionally robust optimization. Math. Program. 186, 209–255 (2021). https://doi.org/10.1007/s10107-019-01453-5

DOI: https://doi.org/10.1007/s10107-019-01453-5