Abstract

Estimating losses on portfolios both theoretically and technically is an interesting and hard issue because the comprehensive formulation of the problem results in a complicated task to solve. Simulation algorithms are among the most popular, currently available procedures used by financial institutions and striving to obtain closed form formulas to see the structure of the maximum loss requires the exploration of additional properties of the maximum loss problem. We prove that within a given Mahalanobis distance, under assumptions, the maximum loss on a loan portfolio is located at the frontier of this distance, and this property allow us to set up closed forms to define efficient initial solutions. The performance of these initial solutions is compared to the optimum solution when we have two variables indicating the state of the macro economy. The supposed advantage of this approach would appear in case of large number of macro variables, but this requires further research and investigation. We found that the initial solutions generated by the compact forms underestimates losses only with insignificant manner compared to the optimum solution for a particular set of maximum loss problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The accurate knowledge of expected losses on both individual financial assets and loan portfolios is an important advantage for the management of financial institutions because good estimation of the probability of default of individual financial assets helps avoid critical cases, understand the factors of profitability of the product, and may lead to setting up a healthy portfolio. Accountants need this help as well because the expected initial loss conditional upon circumstances in the future should be reported in the profit and loss statement of the financial institutions. Requesting financial institutions to have sufficient capital to cover unexpected, rare situations, especially since the 2008 crisis, the loss, and probability of default analysis are interesting for financial regulators, too. Regulators usually prefer unconditional risk models to estimate capital requirements, and, then, financial institutions tend to measure the risk-adjusted return of a loan based on unconditional risk models rather than on conditional ones (Garcia-Céspedes and Moreno 2017).

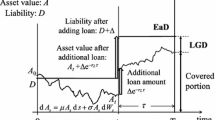

To provide a loan, a financial institution considers the outcomes of both current and future external circumstances, like the time path of GDP, real incomes or wages, interest rates to mention some. At least with the same importance, the future value of the investment should be followed as it is the basis of paying back loans. The hints come from Merton’s (1974) idea, according to which the value of a risky debt depends on firm value, and default risk is correlated because firm values are correlated via common dependence on market factors. A loan defaults when the value of the firm drops below the volume of the liability. Developed by Vasicek (1987), and improved by Finger (1999) and Gordy (2003), in the single macro factor model, the firm value is influenced by two random factors when one of them is a latent macroeconomic factor, and the other one is an independent idiosyncratic risk term.

Since then, the Vasicek (1987) model has become the base for Basel regulatory capital requirements and has been widely used by financial institutions. This model determines the probability of defaults for homogenous financial assets with the assumption that the correlation between the values of any two assets is the same, and the default probability (PD) is conditional upon a macro state. An important characteristic of this model is that it lays the foundation of a linear relationship between N−1(PD) and a macro state, where N−1 is the inverse function of the cumulative standard normal distribution function. The idea can be extended for the case when the macro state is described more than one variable, and we suppose in our analysis that we own linear regression functions describing the relationship between the observed N−1(PD) data and macro variables with a high percentage of determination coefficients. In the paper of Bhat et al. (2018) for example, the authors linearly regress the loan loss provision for the current quarter (period t) divided by prior quarter total loans on the change in non-performing loans from quarter end t-2 to quarter end t divided by quarter t-1 total loans (including other variables as well) to analyze how credit risk modeling implicates loan loss provisions and loan-origination procyclicality.

Among other models, Breuer et al. (2012) use the Vasicek model to illustrate the difference between plausibility given at a level and the losses in the worst case under a given risk level. Mahalanobis distance of changes in a set of macro factors is used to measure the plausibility level, and over this set, they maximize possible losses. Intuitively, the Mahalanobis distance of a multidimensional move can be interpreted as the number of standard deviations of the multivariate move from a center (Breuer et al. 2009). There exist other approaches as well to measure large losses. Chan and Kroese (2010) use t-copula models to estimate large portfolio loss probabilities. By utilizing the asymmetric description of how the rare event occurs, they derive conditional Monte Carlo simulation algorithms to estimate the probability of large loss occurrence. Monte Carlo simulation plays important role in the research of Bassamboo et al. (2008), using an importance sampling algorithm to efficiently compute credit risk. The authors are interested in determining probabilities indicating large portfolio losses over a fixed time horizon and they illustrate the implications of extremal dependence among obligors.

There are other streams of research tracks applying Vasicek model-types to estimate portfolio loss and at the same time, to increase the credibility of these estimations aiming to develop more explicit forms exhibiting larger clarity. Garcia-Céspedes and Moreno (2017) propose second and third-order adjustment formulas of the Taylor expansion approximation to obtain multi-period loss distributions. As we offer approximation methods later, we need to have accurate solutions to evaluate the performance of these methods. To do so, we apply a 20th order Maclaurin polynomial approximation for the cumulative standard normal distribution, which seems to be rather accurate in all five digits determining the areas of the standard normal density function at the interval of [0, 2.5], and we have observed only slight differences in the fifth digit moving toward 3 (of course the accuracy can be extended further).

It is worth noting that the Vasicek model-related idea is used in other fields of financial analysis as well, mainly when the interest rate is stochastic (Yao et al. 2016; Shevchenko and Luo 2017; Wu et al. 2019; Chang and Chang 2017; Orlando 2020), and is also popular in continuous time models (Christensen et al. 2016; Abid et al. 2020; Xiao and Yu 2019).

This paper deals with the estimation of maximum losses on individual financial assets and portfolios to provide further insights into the nature of the complicated problem. To estimate the maximum losses of portfolios under a given Mahalanobis distance, first, we formulate a conditional optimization model whose objective function is neither convex nor concave. Using an economically not significant restriction, we prove that the objective function takes its maximum value at the frontier of the Mahalanobis distance. This finding can significantly speed up computations, and then, we elaborate a Maclaurin polynomial approximation method to determine a rather accurate level of maximum loss on portfolios under given Mahalanobis distance when we have two macro variables, but an arbitrary number of financial products. The insights provided by the theoretical analysis suggest an approximation method as well, which uses compact closed forms, and hopefully its simplicity increases the belief and trust of top managers in financial tools and methods.

The paper is organized as follows: the next section gives a compact form solution of the maximum loss problem when we have only one homogenous financial product, and apply these forms to consumer loans. The Sect. 3 characterizes the nature of the maximum loss of portfolios with multiple financial products under given Mahalanobis distance. Based upon these findings an approximation method is suggested in Sect. 4. This method uses simple, compact forms again, and a variety of portfolios is defined to test the new procedure. The performance of this new procedure is compared to the optimal solution, where the optimal solution is provided by an elaborated Maclaurin approximation with the order of 20. Section 5 gives the conclusions.

2 Model formulation

Laying the foundations of our concept, we quote that in the Vasicek model (1987), a financial asset defaults when

where B is the initial investment (equivalent with the value of the firm at the very beginning), out of which the volume of debt is D, and DTD is called the distance to default. Here y denotes the expected annual percentage increase of the asset value and s denotes its standard deviation over one time unit and \(\varepsilon \) is a random variable with (\(\varepsilon \sim N(0, 1)\)). It is thought that \(\varepsilon \) is the source of two random effects, namely

where v is the idiosyncratic random variable with the standard normal distribution (\(v\sim N(0, 1)\)), x is the macro state, which is again a random variable with standard normal distribution (\(x\sim N(0, 1))\), and \(\rho \) is the correlation between the value of the firm and the macro state (it is supposed that \(\rho >0\)). Applying (1b) in (1a), we have

The first term of the numerator in (1c), or the right-hand side of (1a), is frequently called as the unconditional Distance to Default (DTD), x as Distance from Economy (DFE), while N−1(PDx) as the distance to default conditional upon the x state of the economy (DTDE). PDx is called the probability of default supposing that the economy is in state x. As \(v\sim N(0, 1)\), from (1c) it follows that.

from which

follows. We note that theoretically, it is a key idea that if our portfolio consists of homogeneous loans and if their number is significantly large enough, for a given value of DFE, the proportion of loans in the portfolio that default, will converge to PDDFE. Thus the classification procedure of financial assets is crucial, for which Doumpos et al. (2002) offer a multi-criteria hierarchical discrimination approach to classify firms seeking credit into homogenous groups. We also note that the idea of extremal common shock plays important role in many models, see for example Bassamboo et al. (2008). In their model, instead of (1b),

and w is a nonnegative random variable independent of both v and x, and its probability density function satisfies \(\propto {w}^{k-1}+o({w}^{k-1})\) as \(w\downarrow 0\) for some constants \(\propto >0, k>0\).

Frequently, in the financial industry, not only one macro variable are considered, and the underlying correlations are often specified through a linear factor model, like.

where \({x}_{1}\), …, \({x}_{n}\) are i.i.d. standard normal random variables that measure for example global, country, and industry effects impacting all companies, v is the already mentioned idiosyncratic normal random variable, and \({c}_{1}\), …, \({c}_{n}\) are the loading factors (Bassamboo et al. 2008).

Based upon this linear relationship, and the form of (3), we suppose we have the affine relationship between the conditional distance to default values and the macro states, like

where a0 is a constant, a and x are vectors with n elements, prime’ means transposition: a’ = [a1,…, an], and x’ = [x1, …, xn]. In this regression ai is the coefficient of the i-th macro variable (= xi), and we may suppose that a \(\ne \) 0 (Table 1).

Let us denote \(\Lambda \) the estimated loss on a particular product, which is composed of three factors: the probability of default (= PD), Loss Given Default (= LGD), and the third one: Exposure at Default (= EAD). Then, we are searching for the maximum of

Although this paper focuses on the probability of default, there are interesting results connected with the other two factors. Somers and Whittaker (2007) apply quantile regression to predict the volume of loss given default for mortgages which is considered to be an asymptotic process. These quantities are also well exposed to credit scoring, and Verbraken et al. (2014) developed consumer credit scoring models based on profit-based classification measures. Reducing the attention for the probability of default, in the literature it is usually assumed that LGD and EAD are given volumes, consequently, we can focus on the question, how large is the maximum default level? For portfolios, Gotoh and Takeda (2012) derive a portfolio optimization model by minimizing the upper and lower bounds of loss probability. Based on these bounds two fractional programs are derived which result in a non-convex, constrained optimization problem, and an algorithm is proposed.

The probability of default in case of a given macro state (PDx) is determined by taking the value of the cumulative distribution function at DTDE, and DTDE is given by (4). So:

Our aim is that over a set of macro variables, where this set is constrained by the Mahalanobis (Maha) distance, to identify macro states that maximize loss, which is equivalent to the maximum probability of default in this case. Thus, we need to solve the following mathematical programming problem:

st.

In (7b) V is the nxn covariance-variance matrix of macro variables. We suppose that this matrix is positive definite (as it is known, this matrix is at least positive semi-definite), \({{\varvec{V}}}^{-1}\) is the inverse of this matrix, and \(\updelta \) is a given Mahalanobis distance (obviously positive). Considering the possible values of \(\updelta \), we start form (7b), which is equivalent with \({\varvec{x}}\boldsymbol{^{\prime}}{{\varvec{V}}}^{-1}{\varvec{x}} \le {\delta }^{2}\), and it is known that the square of the Mahalanobis distance of a multivariate normal vector follows the chi-squared distribution. Thus, for example with the degree of freedom two (having two components of the normal vector), from the chi-squared distribution table we have quantiles 13.3, 9.2, 4.6 (consequently 3.65, 3.03, 2.15 \(\updelta \) values) for probabilities 0.1%, 1% and 10%, respectively.

Although it is easy to solve the mathematical programming problem formulated by (7), we make a formal statement, namely Proposition 1.

Proposition 1

The maximum probability of default defined by (7) under given Mahalanobis distance \(\updelta \) is determined by the macro states.

and this maximum probability (denoted by PDmax) is given by the expressions

(N denotes the standard normal cumulative distribution function).

Proof

Considering that the standard normal cumulative distribution is strictly increasing, (7) is equivalent to the following problem:

st.

Although formally (7b) and (8b) are different, we have no made mistake of taking equality instead of inequality. The reason is that the objective function is a constant plus a linear function, and the set of feasible solutions is convex. Linear functions take their maximum or minimum values at the boundary of convex sets. Thus, it is enough to consider macro states that position themselves at the frontier of the feasible set.

Based on (8), the Lagrangean of this problem can be written as:

where \(\lambda \) is the Lagrange multiplier attached to the assumption (8b).

The first order conditions to have maximum point are given by the equations:

Let us multiply from left both sides of (10a) with the matrix V, and then we have:

Multiplying this from left by vector a’, we gain the following result:

Now, let us multiply from left both sides of Eq. (10a) by vector x, revealing the relationship:

from which.

and using this in (12) we obtain the values of \(\lambda \):

Knowing the value of the Lagrange multiplier the macro variables are determined. In Eq. (12) \({{\varvec{a}}}^{\boldsymbol{^{\prime}}}{\varvec{V}}{\varvec{a}}>0\), as V is positive definite. Then neither \(\lambda \) nor \({\varvec{a}}\boldsymbol{^{\prime}}{\varvec{x}}\) are zero, and from (11):

Using (13), the solution providing maximum or minimum value is given by:

and from (15) we have the following:

We can ignore the negative \(\lambda \) value because we are seeking for maximum, which is

This important relationship leads us to the maximum level of loss, or default. Based on (6):

which result completes the proof.

Example

We observed the data of a bank connected with consumer loans (is a real case). The data are summarized in Table 2, moreover we determined the linear regression for DTDE as function of macro states identified by interest rate and GDP growth, respectively. (The determination coefficient is of 92%.)

We found that

and the covariance/correlation matrix is

Here x1 indicates the interest rate, while x2 the GDP increase (as DFI and DFE, respectively, as defined before. Let the Maha distance 3, i.e. \(\updelta =3\). The covariance/correlation of the two variables is − 0.95. Thus, our inputs are: a0 = − 1.815, a′ = [0.177, − 0.0084], \(\updelta =3\), and the V given above.

(DFE (distance from economy) values are calculated from the GDP % increase data: the average of these values and their variance are calculated and the average is deducted from the actual observed data and this difference is divided by the variance. DTDE and DFI standard normalized data are calculated similarly for default and interest rate, respectively. GDP data and interest rates are released by the Hungarian Statistical Office and the Hungarian National Bank, respectively.)

Using these inputs, we calculate Va = [0.185, − 0.176]’, and a\(\boldsymbol{^{\prime}}\)Va = 0.0342. Then, \({\lambda }^{2}=0.0342/36\), and \(\lambda = \pm 0.031\). Based on (15) the macro states that determine maximum loss/default probability: x1 = 3, and x2 = − 2.85. Then, we calculate DTDE from (16): DTDE = a0 + \(\delta \boldsymbol{ }\sqrt{{\varvec{a}}\boldsymbol{^{\prime}}{\varvec{V}}{\varvec{a}}}\) = − 1.815 + 3 \(\sqrt{0.0342}\) = − 1.26. Thus based on (17) we will have the maximum loss/default probability: PDmax = N(− 1.26) = 10.5%. For a more strict case when the quantile is 13.3, thus \(\delta \) = 3.65, we have DTDE = − 1.815 + 3.65 \(\sqrt{0.0342}\) = − 1.14, for which PDmax = N(− 1.14) = 12.5%.

3 The nature of the maximum loss on loan portfolios

The solution to this problem requires more significant efforts as for a given macro state the loss on an individual product may differ from product to product. This section aims to reveal some properties of the problem that may speed up known procedures and may lead to defining new ones.

Let us suppose now that there are k financial products in the portfolio and this way we need to modify (5) to be valid for multiple products. For the j-th product, we have PDj \(\times \) LGDj \(\times \) EADj, (j = 1, 2,…, k), thus for the full portfolio the total loss is:

As \({LGD}_{j}\times {EAD}_{j}\) is considered to be given in case of each product in the portfolio, the maximum of loss formulated by (18) depends on the default probabilities of the individual products that can be different for the same macro state. Let \({\varphi }_{j}= {LGD}_{j}\times {EAD}_{j},\) and without loss of generality we may assume that \({\sum }_{j=1}^{m}{\varphi }_{j}=1\), and 0 < \({\varphi }_{j}\) < 1 for each j. For \({\varphi }_{j}=1\), the previous section gives the solution.

Similarly to (7), we can formulate the maximum loss problem for a portfolio as:

s.t.

Here the expression \(\left({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}}\right)\) determines DTDE for the jth product in the portfolio, for which we also use the denotation DTDEj. Instead of (19b) we use the equivalent assumption \({{\varvec{x}}}^{\boldsymbol{^{\prime}}}{{\varvec{V}}}^{-1}{\varvec{x}}\boldsymbol{ }\le {\updelta }^{2}\). Thus, we can attach the following Lagrangian function to (19):

To have a stationary point of the function L, the necessary Kuhn-Tucker conditions can be formulated (Sydsaeter and Hammond 1995) as:

where \({{{\varvec{v}}}_{i}^{-1}}^{\boldsymbol{^{\prime}}}\) is the ith row of matrix V−1, and aji is the ith component of the vector aj, playing role in the determination of the value of DTDEj. (21a) assumption set can also be formulated as:

where \(\phi \) denotes the probability density of the standard normal distribution.

First, we note that the set of feasible solutions defined by (19b) is a closed convex set, and the objective function is continuous, (19) has optimal solution, and consequently, (21) has solution. Let x0 denote a stationary point–a solution of the system (21).

Proposition 2

An interior stationary point x0 may not be an optimal solution when for at least one i the expression.

is negative.

Proof

For an interior stationary point the Lagrange multiplier is zero, i.e. \(\lambda =0\). However, in the case of \(\lambda =0,\) taking the derivative of (21a) again with respect to xi, we will have:

If x0 were an interior local maximum point, F would attain an unconstrained local maximum point at x0. However, the Hesse matrix of F must be negative semidefinite at this local maximum point, but by the assumption in Proposition 2, this rule is violated (Sydsaeter and Hammond, 1995).

Proposition 3

When the default probability of each product of the portfolio is below 50% under given Mahalanobis distance, the macro variables providing maximum loss are located on the boundaries of the Mahalanobis distance.

Proof

Let us suppose now we have one financial product only, let us say the j-th one. The expression \(({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}})\) measures the distance to default, i.e. DTDEj = \(({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}})\). However, when the probability of default is below 50%, then \(({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}})\) = DTDEj < 0. Consequently, the value of the second-order expression in (22a) is positive for j. The other elements of the Hesse matrix of F in this case are:

Then, we can set up the Hesse matrix (denoted by H) for product j now as:

The matrix \(({{\varvec{a}}}_{{\varvec{j}}}{{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}\)) is positive semidefinite and considering the rest of the expressions of (22c), we may conclude that H is positive semidefinite as well when \(\left({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}}\right)\) is negative. Consequently, the cumulative distribution function \(N({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}})\) is convex in x. The objective function in (19) is the sum of these convex functions, thus F is convex as well. Convex functions have maximum values on the boundaries of convex sets, which fact completes the proof.

Analyzing the data of Example above, which depicts a real case, one may observe that even during the largest crises the probability of default was around 6%, far below the 50. The state of the economy that time (in 2009) was at DFE = − 2.7, which appears ones during 300 years on average. The 2021 first half year report of the same bank declares 5.4% default rate on loans, basically during the third wave of pandemic. We believe, these numbers provide grounds for closed form approaches.

Proposition 4

In the assumption system (21) when.

the value of \(\lambda \) is positive, and

Proof

Assumptions formulated by (21a) can be written in the form of:

As the Mahalanobis distance \(\updelta >0\), and \({{\varvec{V}}}^{-1}\) is nonsingular, \({{\varvec{V}}}^{-1}{\varvec{x}}\) may not be zero. By (21) \(\lambda \ge 0\). If \(\lambda \) were zero, then (21a) or (24) is satisfied if \(\nabla F\left({\varvec{x}}\right)=0\). Thus \(\lambda >0\), \(\lambda {{\varvec{V}}}^{-1}{\varvec{x}}\boldsymbol{ }\ne 0\) when \(\nabla F\left({\varvec{x}}\right)\ne 0\).

Let us note that a solution of the equation system \(\nabla F\left({\varvec{x}}\right)=0\), \({\updelta }^{2}={{\varvec{x}}}^{\boldsymbol{^{\prime}}}{{\varvec{V}}}^{-1}{\varvec{x}}\) may present a maximum point as well (the case, when the maximum point lies on boundary anyway, and \(\lambda =0\) in this case, too).

Now, we move closer to determining the value of \(\lambda \) in case of \(\nabla F\left({\varvec{x}}\right)\ne 0\). Multiplying from the left both sides of (24) with the matrix V, we arrive at:

Then multiplying this equality from left by the row vector \(\nabla F\left({\varvec{x}}\right)\)’ we will have:

Let us turn to (23) now, and multiplying both sides from left with the row vector x’:

Utilizing this relationship at the left-hand side of (26), we have.

\(2\lambda \cdot 2\lambda {\delta }^{2}\) = \(4{\lambda }^{2}{\delta }^{2}\)= \(\nabla F\left( {\varvec{x}} \right)^{\prime } {\varvec{V}}\nabla F\left( {\varvec{x}} \right)\)

from which the statement follows.

Example

To illustrate the flexibility of our approach connected with the assumption that the probability of default is below 50% in case of each financial product, we define two products with the distance to default functions DTDE1 = − 1.81 + 0.5x, and DTDE2 = − 1.81–0.5x, respectively (i.e. we have only one macro variable–not reducing generality, considering the purpose of the example). Let the ratio of weighting these two products in the portfolio be 1:8. (Not influencing the aim, we omit the expression \(\frac{1}{\sqrt{2\uppi }}\), thus none of the functions are multiplied by this expression).

More generally, to see the key players of the optimum, let us focus our attention on the assumptions formulated by (21a). In this expression, there are two components depending on xi (that will be x in our special, one macro variable case). One of them is the term \(\frac{1}{\sqrt{2\pi }}{\sum }_{j=1}^{k}{\varphi }_{j}{a}_{ji}{e}^{-\frac{{({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}})}^{2}}{2}}\), and the other one is the term\(2\lambda {{{\varvec{v}}}_{i}^{-1}}^{\boldsymbol{^{\prime}}}{\varvec{x}}\). Except for xi, let us fix all other variables. Then, we see that

while \(2\lambda {{{\varvec{v}}}_{i}^{-1}}^{\boldsymbol{^{\prime}}}{\varvec{x}}\) = \(2\lambda { {v}_{ii}^{-1}x}_{i}+constant ({{v}_{ii}^{-1}\boldsymbol{ }\mathrm{is the i}-\mathrm{th component of the vector}\boldsymbol{ }\boldsymbol{ }{\varvec{v}}}_{i}^{-1}\) (which is the i-th row of the matrix V−1). Figure 1 exhibits the components of assumption (21a) with positive \(\lambda \), from which one may conclude that the maximum point always exists. (We note that \({v}_{ii}^{-1}\) is positive.)

The components of the assumption (21a), and the second order assumption \(\frac{{\partial }^{2}F}{\partial {x}_{i}\partial {x}_{i}}\)

In Fig. 1 the function \(\frac{\partial F}{\partial {x}_{i}}\) is the derivative of the objective function without condition. The derivative of this function is the second-order derivative \(\frac{{\partial }^{2}F}{\partial {x}_{i}\partial {x}_{i}}\). We picked up an arbitrary line to represent the function \(2\lambda {v}_{ii}^{-1}{x}_{i}+\mathrm{costant}\). The functions well indicate that the invoked non-negativity assumptions on the DTDE values (that default probabilities are below 50%) practically do not reduce real-life problems as the function \(\frac{{\partial }^{2}F}{\partial {x}_{i}\partial {x}_{i}}\) representing the second-order assumption, in the interval [− 3.6, 3.6] is positive (and the function \(\frac{\partial F}{\partial {x}_{i}}\) monotonously increases in this interval), i.e., we have a guarantee that the maximum point is on the Maha frontier. On Fig. 1, the shape of the function \(\frac{\partial L}{\partial {x}_{i}}\) (assumption (21a)) is the composition of \(\frac{\partial F}{\partial {x}_{i}}\) and (\(2\lambda { {v}_{ii}^{-1}x}_{i}+constant\)), taking their difference. The point x = 2.7 is a maximum point as on the one hand \(\frac{\partial L}{\partial {x}_{i}}\) = 0, and on the other hand, \(\frac{{\partial }^{2}L}{\partial {x}_{i}\partial {x}_{i}}<0\), because just before the maximum point the value of \(\frac{\partial F}{\partial {x}_{i}}\) is larger than (\(2\lambda { {v}_{ii}^{-1}x}_{i}+constant\)), and after that, it is smaller. So, we can say that at the maximum point \(\frac{\partial L}{\partial {x}_{i}}\) is decreasing, so \(\frac{{\partial }^{2}L}{\partial {x}_{i}\partial {x}_{i}}<0\). It is worth noting that we assigned a relatively large coefficient to the macro variable x. In real life, we have observed significantly smaller numbers than 0.5. If this coefficient were 0.2 for example, then 1.8/0.2= \(\pm \) 9 would be the limits of the interval in which the individual defaults are below 50%, giving a guarantee for the macro variable to stay at frontiers of the Mahalanobis limit. It is known that the emphasized 0.1% case is at the interval [− 3, 3] approximately.

Proposition 5:

If for the \({{\varvec{x}}}_{0}\) optimal solution \(\nabla F\left({{\varvec{x}}}_{0}\right) \ne 0\), and the DTDEj = \(({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{{\varvec{x}}}_{0})\) values were the same for each j, j = 1,…, k, then the optimum solution is.

Proof

From (23),

And substituting this value in (25), and after rearranging,

Let \({e}^{-\frac{{(DTDEj)}^{2}}{2}}\) = \({e}^{-\frac{{({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}})}^{2}}{2}}\) \(={e}^{-\frac{{(DTDE)}^{2}}{2}}=z\), then (29) is

from which our statement follows.

Equation (29) indicates than in the case of our previous example, when we have only one macro variable with any finite number of financial products, the optimal solution can be determined without any restriction: this point is the intersection of the function y = x, and the right-hand-side of (29), which exclusively depends on x. Formula (29) allows us to conduct nice sensitivity analysis.

4 Generating initial solutions for the maximum loss problems

Especially, in case of large number of macro variables, the solution to the problem in (19) is not simple, even owning the results above, but hopefully, they lead us to pragmatic initial solutions. Using the properties of the problem identified above, we are going to formulate a procedure to generate good initial solutions, intending to speed up searching methods, like Monte Carlo simulation, which is generally used in this field. To compare the efficiency of the generated initial solutions, we need a benchmark. This benchmark will be the optimal solution, which will be exhibited by the use of the Maclaurin polynomial approximation of the standard normal distribution. This polynomial approximation is the following:

We found that setting K at 10, which results in the highest exponent of 19, provides rather accurate numbers of the cumulative distribution function. Till around z = 2.5, all digits are accurate, moving towards 3, with slight differences in the fifth digit.

Equation (31) gives the territory of the region below the curve of the density function represented in Fig. 2 for the interval of (0,z), but we need the territory over the interval (− \(\infty \), DTDE) because this territory gives the default probability, which is the loss in this case as well. So, we need to mirror the DTDE value to the vertical axes, and we must deduct the probability values from 0.5. The result is the probability of default (PD) belonging to DTDE.

Using (31) to solve the maximum loss problem in (19), our task is to solve

s.t.

Equation (32) can be solved for any finite number of products and macro variables, but with two macro variables (GDP increase and interest rate, for example) a very simple, pragmatic solution can be offered. Here it is:

-

For each financial product let us determine the coefficients of the linear regression function. For the j-th product they are: \(\left({a}_{0j}, {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}\right)=({a}_{0j},\boldsymbol{ }{a}_{1j},{a}_{2j})\), j = 1, …, k.

-

For each product let us determine its weight in the portfolio. It is \({\varphi }_{j}\), j = 1, …, k.

-

Let us determine the covariance-variance matrix and its inverse. They are V, and V−1, respectively.

-

Let us give the desired Maha distance. It is \(\updelta \). As we have two macro variables, based on (32b), we can express one of them as a function of the other one, and let us substitute this in the function (32a).

-

(32a) is a function with one variable in this case, and have the function plotted on the screen of the computer.

-

Let us determine the accurate value of the maximum point.

Example

Let us define three financial products in a portfolio. Let the first one be the known consumption loan case, where we know that DTDE1 = − 1.815 + 0.177x1–0.0084x2. We will consider Mahalanobis distances, and,

\(\updelta =3,\text{and}\,\updelta =2.15\) where these chi-square quantiles correspond to 99% and 90% probabilities, respectively. Then the inputs are: a01 = − 1.815, \({{\varvec{a}}}_{1}^{^{\prime}}\) = [0.177, − 0.0084], \(\updelta =3,\,\text{or}\,2.15\), and the covariance-variance matrix is:

To gain further insights we define an artificial covariance matrix as well. Namely:

The inputs of the next two products are artificial, trying to define hopefully interesting cases to provide better insights into the nature of the problem. The inputs of the second products are: \(({a}_{02},\boldsymbol{ }{a}_{12},{a}_{22})\) = (− 1.815, 0.177, − 0.084), and for the third product: \(({a}_{03},\boldsymbol{ }{a}_{13},{a}_{23})\) = (− 1.815, 0.05, − 0,1).

To start with, first, we analyze the individual products, and the solution can be given by formulas given in Proposition 1. Table 3 summarizes the inputs, and Table 4 indicates the projected probability of defaults for different Mahalanobis distances.

Using the algorithm given above, Table 5 indicates the maximum losses (default probabilities) by Maclaurin approximation for different portfolio structures, and Table 6 gives the same, but the correlation coefficient is − 0.95.

From these computational results, we may conclude that a strong correlation does not allow large latitude for variables to move freely. For Mahalanobis distance 3, the value of the first variable is always around 3, and when the distance is close to two, the value of this variable is always close to 2.

In the following lines, we define an algorithm, which uses easily calculable inputs, and the formulas are compact and closed. We will see that the solutions provided by this approximation method are rather close to the ‘optimal’ ones. The steps of this procedure are the following:

-

Using the weights of the products inside the portfolio, we construct an individual maximum loss problem by defining the inputs of the single product problem as:

$$ \alpha _{0} = \mathop \sum \limits_{{j = 1}}^{k} \varphi _{j} a_{{0j}} ,\;\user2{\alpha } = ~\mathop \sum \limits_{{j = 1}}^{k} \varphi _{j} \user2{a}_{j} $$(33)(let us note that \({\sum }_{j=1}^{k}{\varphi }_{j}=1,\) and \({\varphi }_{j}> 0\) for all j)

-

Then, based on Proposition 1, the macro states causing maximum loss are determined by the form:

$${\varvec{x}}=\boldsymbol{ }\frac{\delta }{\sqrt{\boldsymbol{\alpha }\boldsymbol{^{\prime}}{\varvec{V}}\boldsymbol{\alpha }}}{\varvec{V}}\boldsymbol{\alpha }.$$(34)

and the distance to default that results in the maximum probability of default or loss is given as:

-

DTDE = \(\alpha \)0 + \(\delta \boldsymbol{ }\sqrt{\boldsymbol{\alpha }\boldsymbol{^{\prime}}{\varvec{V}}\boldsymbol{\alpha }}\). Consequently,

-

The maximum loss is \(\Lambda \) = N(\(\alpha \)0 + \(\delta \boldsymbol{ }\sqrt{\boldsymbol{\alpha }\boldsymbol{^{\prime}}{\varvec{V}}\boldsymbol{\alpha }}\))–where N stands for the cumulative standard normal distribution function.

Example

Let us consider the coefficients of the regression functions as inputs given in Table 3. Using the approximation procedure defined above, let us determine the maximum losses and the corresponding macro states for different portfolio structures and Mahalanobis distance 2.15. Table 7 summarizes the results. We note that as the a0j values are the same for each product (− 1.815) the weighted average is the same, i.e. \({\alpha }_{0}\)= − 1.815.

Comparing the last two lines, as has been expected, the approximation procedure gives lower numbers than what optimum solutions provide. But the difference is insignificant, and the procedure is quick, compact, and easy to explain, maybe top management will believe in.

It is noticeable that the hint for the approximation method originates from Proposition 5. In this proposition, the \({e}^{-\frac{{({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}})}^{2}}{2}}\) values are the same for each product, and the suggested approximation in (32) applies the results of Proposition 5 in (30). This gives the soul of the approximation method. We can increase the potential number of initial solutions for possible search algorithms by using the macro state values given by this approximation method in (33) to recalculate \({e}^{- \frac{{({a}_{0j} + {{\varvec{a}}}_{{\varvec{j}}}^{\boldsymbol{^{\prime}}}{\varvec{x}})}^{2}}{2}}\) for each j, and then we modify the aj vectors with this term. From Table 7 we have followed this idea for the portfolio structure (0.2, 0.2, 0.6) as here we have the largest difference between the optimal and the approximated solution (7.14 and 6.6, respectively). Practically we have arrived at the optimum.

5 Conclusions

The Vasicek model formulates a linear relationship between the distance to default conditional on a macro state (DTDE) and one macro variable (DFE). Obtaining linear regression functions describing the relationship between conditional distance to default and macro states is important as in the case of individual financial products there is a straightforward connection between the conditional distance to default and probability of default as the cumulative distribution is increasing in DTDE. The importance of the DTDE concept is increased by elaborating explicit compact forms set up from the inputs of the linear regression functions. These closed forms describe the macro states that determine the maximum loss or default probabilities of a single financial product and the conditional distance to default providing maximum loss can be described by a closed form as well for a given level of Mahalanobis distance. If we observe this formula for the multivariate macro state, it is a nice extension of the one macro variable problem: the Mahalanobis distance \(\delta \) describes the macro state having the coefficient \(\sqrt{\boldsymbol{\alpha }\boldsymbol{^{\prime}}{\varvec{V}}\boldsymbol{\alpha }}\). These single product formulas are important as using an approximation procedure, with appropriately defining the inputs of the procedure, the determination of the maximum portfolio loss is traced back to the single product problem for which we have simple, compact forms.

With two macro state variables, we elaborated a procedure to determine the accurate level of the maximum loss of portfolios. This procedure is based on the Maclaurin’s polynomial approximation method which, despite this, is accurate. The results of this procedure provide a base for comparing the outcomes of a new approximation procedure. The soul of this approximation procedure is the composition of an artificial single product where the components of the linear regression function are the weighted average of the regression components of the individual products in the portfolio. The underestimation of the portfolio loss level is expected, however, comparing it to the optimum numbers, the difference is not significant. The usage of these compact formulas would be especially beneficial in case of large number of macro variables, as simulation algorithms takes large running times. We lack the proof of this idea, but hopefully this research inspires new algorithms that utilize the compact forms advocated above.

One further interesting question could be how the new findings connected with the nature of the optimization problem could speed up procedures, let it be simulation or other method, to find the maximum loss on loan portfolios. Although the hunch is that in the case of a larger number of products in a portfolio the performance of the defined initial solution is better, this needs verification. As always, to gain results, we have applied trade-offs. Eliminating some of them while insisting on closed forms is certainly a useful research area, similarly to comparing the results with other methods.

References

Abid A, Abid F, Kaffel B (2020) CDS-based implied probability of default estimation. J Risk Finance. https://doi.org/10.1108/JRF-05-2019-0079

Bassanboo A, Juneja S, Zeevi A (2008) Portfolio credit risk with extremal dependence: asymptotic analysis and efficient simulation. Oper Res 56(3):593–606

Bhat G, Ryan SG, Vyas D (2018) The implications of credit risk modeling for banks’ loan loss provisions and loan-origination procyclicality. Manage Sci 65(5):2116–2141

Breuer T, Jandacka M, Rheinberger K, Summer M (2009) How to find plausible, severe and useful stress scenarios. Int J Cent Bank 3:205–224

Breuer T, Jandacka M, Mencia J, Summer M (2012) A systematic approach to multi-period stress testing of portfolio credit risk. J Bank Finance 36:332–340

Chan JCC, Kroese DP (2010) Efficient estimation of large portfolio loss probabilities in t-copula models. Eur J Oper Res 2010:361–367

Chang H, Chang K (2017) Optimal consumption-investment strategy under the Vasicek model: HARA utility and Legendre transform. Insur Math Econ 72:215–227

Christensen BJ, Posch O, van der Wel M (2016) Estimating dynamic equilibrium models using mixed frequency macro and financial data. J Econom 194:116–137

Doumpos M, Kosmidou K, Baourakis G, Zopounidis C (2002) Credit risk assessment using a multi criteria hierarchical discrimination approach: a comparative analysis. Eur J Oper Res 138:392–412

Finger C (1999) Conditional approach for CreditMetrics portfolio distributions, CreditMetrics Monitor. Riscmetrics Group

Garcia-Céspedes R, Moreno M (2017) An approximate multi-period Vasicek credit risk model. J Bank Finance 81:105–113

Gordy MB (2003) A risk factor model foundation for rating based bank capital rules. J Financ Intermed 12:199–232

Gotoh J, Takeda A (2012) Minimizing loss probability bounds for portfolio selection. Eur J Oper Res 217:371–380

Merton RC (1974) On the pricing of corporate debt: the risk structure of interest rates. J Finance 29:449–470

Orlando G, Mininni RM, Bufalo M (2020) Forecasting interest rates through Vasicek and CIR models: a partitioning approach. J Forecast 39(4):569–579

Shevchenko PV, Luo X (2017) Valuation of variable annuities with Guaranteed Minimum Withdrawal Benefit under stochastic interest rate. Insur Math Econ 76:104–117

Somers M, Whittaker J (2007) Quantile regression for modelling distributions of profit and loss. Eur J Oper Res 183:1477–1487

Sydsaeter K, Hammond PJ (1995) Mathematics for Economic Analysis. Prentice Hall, N.J.

Vasicek OA (1987) Probability of loss on loan portfolio, KVM Corporation, working paper

Verbraken T, Bravo C, Baesens B (2014) Development and application of consumer credit scoring models using profit-based classification measures. Eur J Oper Res 238:505–513

Wu X, Xie D, Edwards DA (2019) An optimal mortgage refinancing strategy with stochastic interest rate. Comput Econ 53:1353–1375

Xiao W, Yu J (2019) Asymptotic theory for rough fractional Vasicek models. Econ Lett 177:26–29

Yao H, Li Z, Li D (2016) Multi-period mean-variance portfolio selection with stochastic interest rate and uncontrollable liability. Eur J Oper Res 252:837–851

Acknowledgements

The author thanks Mr. Sándor Csányi, CEO and Chairman of the OTP Bank Plc for the inspiration and support to research in this field and for releasing the data. The research project TKP2021-NKTA-19 has been implemented with the support provided from the National Research, Development and Innovation Fund of Hungary, financed under the TKP2021-NKTA funding scheme. The author also thanks professor Sándor Komlósi for his valuable help.

Funding

Open access funding provided by University of Pécs.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

Hereby the author of the paper ‘Some properties of the maximum loss on loan portfolios’, József Vörös, I state that the paper below is authentic, and it does not express any interest that would influence the reader to conclude potentially biased judgements.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vörös, J. Some properties of the maximum loss on loan portfolios. Cent Eur J Oper Res 32, 155–176 (2024). https://doi.org/10.1007/s10100-022-00837-x

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10100-022-00837-x