Abstract

The interactive programming (IP) using aspiration levels is a well-known method applied to multi-criteria decision making under certainty (M-DMC). However, some essential analogies between M-DMC and scenario-based one-criterion decision making under uncertainty (1-DMU) have recently been revealed in the literature. These observations give the opportunity to adjust the IP to a totally new issue. The goal of the paper is to create two novel procedures for uncertain problems on the basis of the IP ideas: the first one for pure strategy searching and the second for mixed strategy searching. In many ways, they allow a better consideration of the decision maker's preferences than classical decision rules. One of their significant advantages consists in analyzing particular scenarios sequentially. Another strong point is that the new procedures can be used by any type of decision maker (optimists, moderates, pessimists). The new approaches may be helpful when solving problems under uncertainty with partially known probabilities. Both methods are illustrated in the paper on the basis of two fictitious decision problems concerning the choice of an optimal location and the optimization of the stock portfolio structure.

1 Introduction

The goal of the paper is to present two novel methods for uncertain problems on the basis of the interactive programming (IP) ideas. The IP is one of the approaches applied to multi-criteria decision making under certainty (M-DMC). This type of optimization is related to the situation where the decision maker (DM) assesses particular decision variants (alternatives, options, courses of action) in terms of more than one criterion and all the parameters of the problem are deterministic.

The IP is successfully used in both the discrete and the continuous version of M-DMC. The discrete version is called Multiple Attribute Decision Problems (MADP). In MADP the number of possible courses of action is precisely defined at the beginning of the decision making process and the levels of analyzed attributes are assigned to each decision variant (Singh et al. 2020). The continuous version of M-DMC is related to Multiple Objective Decision Problems (MODP). Within MODP the cardinality of the set of potential options is not exactly known. The DM only knows the mathematical optimization model, i.e., the set of objective functions and constraints that create the set of possible solutions (Ding et al. 2016; Tzeng and Huang 2011). In this paper we investigate both cases (MADP and MODP).

So far, the IP has been used in multi-criteria problems: initially under certainty (Nakayama 1995; Stewart 1999) and later under uncertainty as well (Tehhem et al. 1986; Yano 2017). Nevertheless, some evident analogies between multi-criteria decision making with deterministic parameters (M-DMC) and scenario-based one-criterion decision making under uncertainty (1-DMU) have recently been revealed in the literature (Gaspars-Wieloch 2020a, 2021a, 2022). These observations make it possible to extend the current application range of the IP and to adjust it to an entirely new domain: uncertain optimization with one criterion.

The IP in M-DMC consists in analysing objectives iteratively according to a pre-established order while the IP in 1-DMU means an iterative scenario analysis where the sequence of considered scenarios is supposed to be defined as well. Such a concept differs significantly from existing decision rules designed for 1-DMU, but the novel idea opens new opportunities especially in the case of uncertain problems with partially known probabilities. The main advantage of the suggested approach is its flexibility.

The paper contains a description of two procedures. The first one is designed for pure strategy searching and is based on the discrete interactive programming. Pure strategies are connected with situations where the DM selects and executes only one option. The second method is designed for mixed strategy searching and refers to the continuous interactive programming. Mixed strategies are combinations of several options. They are especially common in portfolio construction and cultivation of different plants.

The paper is organized as follows. Section 2 describes the original version of the IP. The emphasis is put on IP based on aspiration levels since this variety is directly used to develop analogous decision rules for 1-DMU. Section 3 presents the analogies between multi-criteria optimization with deterministic parameters and scenario-based one-criterion optimization with non-deterministic parameters. Section 4 describes two new procedures: IP for uncertain pure strategy searching and IP for uncertain mixed strategy searching. The first method has already been briefly presented in (Gaspars-Wieloch 2021b), but it requires a more thorough analysis, while the description of the second method has never been published. Section 5 uses two illustrative examples to show how the novel approaches may be applied to indicate the optimal pure and mixed strategy. Conclusions concerning the suggested procedures are gathered in the last section.

2 Interactive programming with aspiration levels

The interactive programming is regarded as a multi-criteria reduction technique (Peitz and Dellnitz 2018), which means that it does not compute the entire set of optimal compromises, but instead interactively explores the Pareto set. One of the main advantages of interactive methods is the reduced computational effort, especially in the presence of many criteria, since it is not affected significantly by the dimension of the Pareto set. "In contrast to a priori and a posteriori methods, in an interactive multiobjective optimization method, the DM specifies preferences progressively during the solution process to guide the search towards his/her preferred regions. (…) Only one or a small set of solutions which the DM is interested in is found. Thus the computational complexity is reduced and the DM does not need to compare many non-dominated solutions simultaneously." (Xin et al. 2018). However, it is worth underlining that, for the same reason, IP approaches applied to the discrete version of M-DMC can hardly generate a ranking consisting of all the options.

There are numerous varieties of IP techniques designed for multiple objective decision making (Stewart 1999), but in the paper we examine only one IP algorithm. Shin and Ravindran (1991) have divided IP methods into the following groups: branch-and-bound methods, feasible region reduction methods, feasible direction methods, Lagrange multiplier methods, visual interactive methods using aspiration levels, criterion weight space methods and trade-off cutting plane methods. Within the last 30 years other approaches have been developed. They are discussed for instance in (Jaszkiewicz and Branke 2008; Peitz and Dellnitz 2018; Xin et al. 2018). In the article we investigate the concept related to the feasible region reduction. Additionally, Ravindran (2008) distinguishes diverse interaction styles used in IP, such as the precise local trade-off ratio, interval trade-off ratio, comparative trade-off ratio, index specification and value trade-off, binary pairwise comparison and vector comparison, but here we concentrate on IP based on so-called aspiration levels. This tool has three essential advantages (Nakayama 1995): "(1) It does not require any consistency of the DM’s judgement, (2) It reflects the wish of the DM very well, (3) It plays the role of probe better than the weight for objective functions."

The discrete IP algorithm explored here consists of the following steps:

1. Define the set of alternatives A = {A1,…,Aj,…,An} where n is the number of decision variants.

2. Define the set of criteria C = {C1,…,Ck,…,Cp} where p is the number of criteria.

3. Generate the payoff matrix (see Table 1, Sect. 3) where bk,j represents the performance of criterion Ck if option Aj is selected.

4. Choose the first criterion to be analyzed (l = 1).

5. Normalize the initial values connected with the first criterion (l = 1) according to Eq. (1).

$$ b\left( n \right)_{k,j} = \begin{cases} \dfrac{b_{k,j} - \min\limits_{j} b_{k,j}}{\max\limits_{j} b_{k,j} - \min\limits_{j} b_{k,j}} & \text{for maximized criteria} \\[2ex] \dfrac{\max\limits_{j} b_{k,j} - b_{k,j}}{\max\limits_{j} b_{k,j} - \min\limits_{j} b_{k,j}} & \text{for minimized criteria} \end{cases} $$ (1)

6. Select the decision variants satisfying the first objective according to the normalized aspiration level declared by the DM (AL1).

7. Move to criterion l = 2 (subsequent elements of the objective sequence are established iteratively by the DM), normalize its values only for the reduced set of options (A1) and select decision variants fulfilling AL2. Follow the same procedure for criteria l = 3,…,p-1.

8. Select from the current reduced set of alternatives (i.e. Ap−1) the option (or set of options) that performs best with respect to criterion l = p. That is the compromise solution.

Note that there is no need to normalize all the data in step 5 since after each iteration the set of possible options changes and when it changes, maximum and minimum values within a given criterion may vary as well. Of course, the normalization is only required if the objectives are expressed in different units and/or scales. If each initial value has to be transformed into a performance degree, it means that the aspiration levels also must be normalized. When the normalization is not necessary, we say that a given variant satisfies the aspiration level if its value is not lower than AL (case of maximized criterion) or not higher than this parameter (case of minimized criterion). When the normalization is applied, normalized values should be always not lower than the normalized aspiration level (irrespective of the criterion optimization type).
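The discrete procedure can be sketched in a few lines of Python. This is only a minimal illustration under our own assumptions: the function names (`normalize`, `discrete_ip`), the three criteria and all payoff values are hypothetical and do not come from the paper. The handling of the degenerate case where all remaining options perform equally is also an assumption.

```python
def normalize(values, maximize):
    """Eq. (1), applied only to the options that are still under consideration."""
    lo, hi = min(values.values()), max(values.values())
    if hi == lo:  # assumption: identical performances all get the top score
        return {a: 1.0 for a in values}
    if maximize:
        return {a: (v - lo) / (hi - lo) for a, v in values.items()}
    return {a: (hi - v) / (hi - lo) for a, v in values.items()}


def discrete_ip(payoffs, order, maximize, aspiration):
    """payoffs[criterion][option]; aspiration holds normalized levels for all but the last criterion."""
    remaining = list(payoffs[order[0]])
    for crit in order[:-1]:
        # Steps 5-7: re-normalize on the reduced set, then filter by the aspiration level.
        scores = normalize({a: payoffs[crit][a] for a in remaining}, maximize[crit])
        remaining = [a for a in remaining if scores[a] >= aspiration[crit]]
        if not remaining:
            raise ValueError(f"aspiration level for {crit} excludes every option")
    # Step 8: the last criterion is simply optimized over the reduced set.
    last = order[-1]
    pick = max if maximize[last] else min
    best = pick(payoffs[last][a] for a in remaining)
    return [a for a in remaining if payoffs[last][a] == best]


# Hypothetical data: three criteria, four options.
payoffs = {
    "profit": {"A1": 10, "A2": 40, "A3": 30, "A4": 50},
    "cost": {"A1": 5, "A2": 9, "A3": 4, "A4": 8},
    "quality": {"A1": 7, "A2": 6, "A3": 9, "A4": 8},
}
maximize = {"profit": True, "cost": False, "quality": True}
result = discrete_ip(payoffs, ["profit", "cost", "quality"], maximize,
                     {"profit": 0.5, "cost": 0.1})
print(result)
```

With these numbers, A1 is dropped on profit, A2 is dropped on cost (after re-normalizing over the three survivors), and A3 beats A4 on quality.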

As it has been mentioned in Sect. 1, the IP may support MODP as well. In this case the optimal solution is generated by means of an optimization model.

The continuous IP algorithm with aspiration levels involves the following steps:

1. Define the decision variables x1, …, xj, …, xn. They represent the share of a given alternative in the whole mixed strategy.

2. Define the set of criteria C = {C1,…,Ck,…,Cp} and their objective functions f1(x), …, fk(x), …, fp(x).

3. Formulate constraints connected with the decision problem:

$$ x_{j} \in SFS^{\prime}\quad j = 1, \ldots ,n $$ (2)

$$ x_{j} \ge 0\quad j = 1, \ldots ,n $$ (3)

$$ \sum\limits_{j = 1}^{n} x_{j} = 1 $$ (4)

where SFS’ denotes the set of feasible solutions. Thus, Eq. (2) concerns all the conditions additionally formulated by the DM.

4. Establish iteratively the sequence of the analyzed criteria Seq = (C(1),…,C(l),…,C(t)) where t = p.

5. Declare iteratively the aspiration levels as normalized values: AL(1), …, AL(l),…, AL(t-1).

6. Solve the optimization model (2)-(6):

$$ f_{C\left( 2 \right)} \left( x \right) \to \min /\max $$ (5)

$$ g_{C\left( 1 \right)} \left( x \right) \ge AL\left( 1 \right) $$ (6)

where gk(x) denotes the normalized value of criterion Ck and is computed according to formula (7).

$$ g_{k} \left( x \right) = \begin{cases} \dfrac{f_{k} \left( x \right) - f_{k}^{\min } \left( x \right)}{f_{k}^{\max } \left( x \right) - f_{k}^{\min } \left( x \right)} & \text{for maximized criteria} \\[2ex] \dfrac{f_{k}^{\max } \left( x \right) - f_{k} \left( x \right)}{f_{k}^{\max } \left( x \right) - f_{k}^{\min } \left( x \right)} & \text{for minimized criteria} \end{cases} $$ (7)

7. Solve the optimization model consisting of Eqs. (2)-(4), (6), (8) and (9), with the normalized values computed according to formula (7):

$$ f_{C\left( 3 \right)} \left( x \right) \to \min /\max $$ (8)

$$ g_{C\left( 2 \right)} \left( x \right) \ge AL\left( 2 \right) $$ (9)

where gC(2)(x) is calculated on the basis of the maximum and minimum value of function fC(2)(x) obtained after solving model (2)-(6).

8. Follow the same procedure for criteria C(3),…,C(t-1) using Eqs. (2)-(4), (10) and (11).

$$ f_{C\left( l \right)} \left( x \right) \to \min /\max $$ (10)

$$ g_{C\left( 1 \right)} \left( x \right) \ge AL\left( 1 \right); \ldots ; g_{{C\left( {l - 1} \right)}} \left( x \right) \ge AL\left( {l - 1} \right) $$ (11)

When solving the last optimization model (i.e. in the case where criterion C(t) is optimized) there is no need to search both the maximal and minimal function value. It suffices to apply the direction of optimization desired by the DM. The result is the compromise solution.

As we can observe, similar to IP for MADP, within IP for MODP the maximum and minimum criteria values are regularly updated, which means that for each subsequent optimization model the final set of feasible solutions (SFS) is smaller and smaller.
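As a short worked step, the normalized aspiration constraint can be rewritten in the original units of the criterion, which shows how the updated extreme values enter each subsequent model. The equivalences below follow directly from formula (7), assuming that the maximum is strictly greater than the minimum; here the extreme values are written simply as $f_k^{\min}$ and $f_k^{\max}$, and the numbers in the example are hypothetical.

$$ g_{k}(x) \ge AL \iff f_{k}(x) \ge f_{k}^{\min} + AL \cdot \left( f_{k}^{\max} - f_{k}^{\min} \right) \quad \text{for maximized criteria} $$

$$ g_{k}(x) \ge AL \iff f_{k}(x) \le f_{k}^{\max} - AL \cdot \left( f_{k}^{\max} - f_{k}^{\min} \right) \quad \text{for minimized criteria} $$

For instance, with $f_k^{\min} = 2$, $f_k^{\max} = 10$ and $AL = 0.75$, the constraint becomes $f_k(x) \ge 2 + 0.75 \cdot 8 = 8$ for a maximized criterion and $f_k(x) \le 10 - 0.75 \cdot 8 = 4$ for a minimized one. If the objective functions are linear, each added constraint is therefore linear as well.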

Steps 4 and 5 require a broader comment. The expression “iteratively” means that there is no need to declare the whole sequence of criteria and all the aspiration levels at the beginning of the decision making process. These data may be defined successively after solving a given optimization model. The iterative approach has two essential advantages. First, it gives the DM the possibility to control the search of the solution and to express his/her expectations at every stage of the process. Second, it protects the DM from formulating a contradictory optimization problem without any feasible solution.

It is worth emphasizing that usually the decision variables need to be non-negative [Eq. (3)], but in some specific situations this constraint is redundant (e.g. portfolio construction with short sale).

3 Comparative analysis of M-DMC and 1-DMU

We will see that the structure of multi-criteria optimization under certainty is very similar to the structure of the scenario-based one-criterion optimization under uncertainty. This observation was made for the first time by the author of this paper in Gaspars-Wieloch (2020a, 2021a). Here we recall the main conclusions. M-DMC is connected with cases where the decision maker evaluates particular options in terms of many criteria (i.e. at least two). "Under certainty" means that the parameters of the problem are supposed to be known. The second area refers to situations in which the DM assesses a given course of action on the basis of only one objective function but, for instance due to numerous unknown future factors, the problem data are not deterministic. Instead, a set of potential scenarios is available. These scenarios may be suggested by experts, decision makers or by someone who is both an expert and a DM. "Scenario" means a possible way in which the future might unfold.

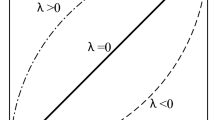

There are diverse uncertainty levels (Courtney et al. 1997; Waters 2011):

- uncertainty with known probabilities (the DM knows the alternatives, scenarios and their likelihood),

- uncertainty with partially known probabilities (the DM knows the alternatives and scenarios, but the probability of their occurrence is not exactly known: it may be given as intervals or scenarios can be ordered from the most to the least probable),

- uncertainty with unknown probabilities (the DM knows the alternatives and scenarios, but has no knowledge on their probabilities),

- uncertainty with unknown scenarios (the DM knows possible alternatives only).

In this research we focus on uncertainty with partially known probabilities. Uncertainty may be modelled in diverse ways, but here we refer to scenario planning (SP) since it is a very convenient tool: it does not require sophisticated mathematical skills, so it can be used both by researchers and managers (i.e. theoreticians and practitioners). Furthermore, within SP the set of scenarios does not need to be exhaustive.

The payoff matrix representing M-DMC is given in Table 1, where n is the number of alternatives, p is the number of criteria and bk,j is the performance of criterion Ck if option Aj is selected.

On the other hand, Table 2 shows the payoff matrix for scenario-based 1-DMU, where m is the number of scenarios and ai,j is the payoff obtained if option Aj is selected and scenario Si occurs.

Payoffs in Tables 1 and 2 may represent efficiencies, profits, revenues, profitability, sale volumes etc.

The similarities between both tables are clearly visible: the payoff matrices are almost analogous. In both cases there is a set of potential alternatives, and the set of objectives in M-DMC can correspond to the set of scenarios in 1-DMU. In Gaspars-Wieloch (2022) another significant analogy is discussed: in both issues the discrete and continuous version can be investigated, which means that the pure and mixed strategy searching is possible. It is worth underlining that without the use of scenario planning as an uncertainty modelling tool the identification of some analogies between M-DMC and 1-DMU would not be possible.

A comparative analysis involves not only similarities, but also differences. The first discrepancy is as follows. Within 1-DMU, if Aj is chosen, the final outcome (ai,j) is single and depends on the real scenario which will occur. Within M-DMC, if Aj is selected, there are p final payoffs, i.e. b1,j, …, bk,j, …, bp,j, because the decision variants are assessed in terms of p essential objectives. Second, in the case of M-DMC initial values usually have to be normalized as they represent the performance of diverse criteria. In 1-DMU the problem is related to one objective. That is why the normalization is redundant. The observed discrepancies do not cancel the opportunity to develop new procedures for 1-DMU on the basis of methods already invented for M-DMC. Nevertheless, the existence of the aforementioned differences should be certainly taken into account in Sect. 4.

4 Two interactive decision rules for 1-DMU

The observed analogies give us the possibility to adjust the IP to a totally new area, i.e. to scenario-based one-criterion optimization under uncertainty with partially known probabilities. In the previous section we have mentioned that this uncertainty degree is usually related to a decision situation where the probability is given as interval values (instead of point values) or where the DM is able to order scenarios from the most to the least probable. In this research we assume that the decision maker can declare the order.

Let us start with the construction of the algorithm for pure strategy searching. From the technical point of view, the methodology is similar, but the interpretation is going to be different. The Interactive Decision Rule for one-criterion uncertain problems and pure strategies may consist of the following steps (IDR(P)):

1. Define the set of options A = {A1,…,Aj,…,An} where n is the number of alternatives.

2. Define the set of scenarios S = {S1,…,Si,…,Sm} where m is the number of scenarios.

3. Generate the payoff matrix (see Table 2, Sect. 3) where ai,j represents the payoff obtained if option Aj is selected and scenario Si occurs.

4. Choose the scenario with the highest subjective chance of occurrence (l = 1).

5. Find set A1, i.e. select the alternatives satisfying AL1, the aspiration level declared for the aforementioned scenario by the DM.

6. Move to scenario l = 2 (subsequent elements of the scenario sequence are established iteratively by the DM), and select alternatives fulfilling AL2 from set A1. Follow the same procedure for scenarios l = 3,…,m-1.

7. Select from the current reduced set of alternatives (i.e. Am−1) the option (or set of options) with the best payoff value within scenario l = m. That is the optimal pure strategy.

Let us briefly discuss the features of IDR(P).

First, when analysing the structure of the suggested algorithm, indeed, we can notice that the normalization step is not required, since the data representing payoffs are connected with only one criterion.

Second, within M-DMC, the order of criteria is determined by the subjective objective importance, while within 1-DMU with partially known probabilities the order of scenarios depends on their subjective chance of occurrence which is closely linked to the DM’s attitude towards risk, i.e. his or her state of mind and soul.

Third, in IDR(P) the aspiration level has a different interpretation than in the original IP. In M-DMC parameters AL are used to declare the decision maker’s requirements. He or she expects at least this level within a considered criterion. On the other hand, in 1-DMU diverse parameters AL are applied for particular scenarios since the payoff ranges related to each scenario may be diverse. Additionally, some scenarios can offer really unfavourable results and that is why in such cases the DM’s expectations should be lower. Of course, sometimes, if the payoff ranges are similar, parameters ALi may be the same for each scenario since all the results are related to the same objective.
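The steps of IDR(P) can be illustrated with the following minimal Python sketch. The function name `idr_pure`, the three scenarios and all payoffs are hypothetical values chosen for illustration only; they are not taken from the paper. In line with the remarks above, no normalization is performed and each aspiration level is expressed directly in payoff units.

```python
def idr_pure(payoffs, scenario_order, aspiration, maximize=True):
    """payoffs[scenario][option]; aspiration[scenario] is given in payoff units.

    Returns the recommended option(s) and the reduced set after each scenario.
    """
    remaining = list(payoffs[scenario_order[0]])
    history = []
    for s in scenario_order[:-1]:
        if maximize:
            remaining = [a for a in remaining if payoffs[s][a] >= aspiration[s]]
        else:
            remaining = [a for a in remaining if payoffs[s][a] <= aspiration[s]]
        history.append((s, list(remaining)))
        if not remaining:
            raise ValueError(f"aspiration level for {s} excludes every option")
    # Last scenario in the sequence: pick the best payoff among the survivors.
    last = scenario_order[-1]
    best = (max if maximize else min)(payoffs[last][a] for a in remaining)
    return [a for a in remaining if payoffs[last][a] == best], history


# Hypothetical payoff matrix: 3 scenarios, 4 options, maximized criterion.
payoffs = {
    "S1": {"A1": 120, "A2": 80, "A3": 150, "A4": 60},
    "S2": {"A1": 40, "A2": 90, "A3": 20, "A4": 70},
    "S3": {"A1": 10, "A2": 30, "A3": 5, "A4": 55},
}
# The DM considers S2 the most probable scenario, then S1, then S3.
choice, history = idr_pure(payoffs, ["S2", "S1", "S3"], {"S2": 40, "S1": 70})
print(choice, history)
```

Here A3 fails the level declared for S2, A4 fails the level declared for S1, and A2 offers the better payoff under S3. Returning the history of reduced sets mirrors the interactive character of the rule: in practice the DM would inspect each reduced set before declaring the next aspiration level.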

In the second part of this section the algorithm for mixed strategy searching will be developed. The Interactive Decision Rule for one-criterion uncertain problems and mixed strategies may consist of the following steps (IDR(M)):

1. Define the decision variables x1, …, xj, …, xn.

2. Define the set of scenarios S = {S1,…,Si,…,Sm} and the coefficients of their objective functions f1(x), …, fi(x), …, fm(x).

3. Formulate constraints connected with the decision problem:

$$ x_{j} \in SFS^{\prime} \quad j = 1, \ldots ,n $$ (12)

$$ x_{j} \ge 0\quad j = 1, \ldots ,n $$ (13)

$$ \sum\limits_{j = 1}^{n} x_{j} = 1 $$ (14)

where SFS’ denotes the set of feasible solutions. Thus, Eq. (12) concerns all the conditions additionally formulated by the DM.

4. Establish iteratively the sequence of the analyzed scenarios Seq = (S(1),…,S(l),…,S(t)) where t = m.

5. Declare iteratively the aspiration levels: AL(1), …, AL(l),…, AL(t-1).

6. Solve the optimization model (12)-(16):

$$ f_{S\left( 2 \right)} \left( x \right) \to \min /\max $$ (15)

$$ f_{S\left( 1 \right)} \left( x \right) \ge AL\left( 1 \right) $$ (16)

7. Solve the optimization model (12)-(14), (16) and (17)-(18):

$$ f_{S\left( 3 \right)} \left( x \right) \to \min /\max $$ (17)

$$ f_{S\left( 2 \right)} \left( x \right) \ge AL\left( 2 \right) $$ (18)

where AL(2) is determined after solving the model (12)-(16) and finding the maximum and minimum possible value of function fS(2)(x).

8. Follow the same procedure for scenarios S(3),…,S(t-1) using Eqs. (12)-(14), (19) and (20).

$$ f_{S\left( l \right)} \left( x \right) \to \min /\max $$ (19)

$$ f_{S\left( 1 \right)} \left( x \right) \ge AL\left( 1 \right); \ldots ; f_{{S\left( {l - 1} \right)}} \left( x \right) \ge AL\left( {l - 1} \right) $$ (20)

When solving the last optimization model (i.e. in the case where function fS(t) is optimized) there is no need to search both the maximum and minimum function value. It suffices to apply the direction of optimization desired by the DM. The result is the optimal mixed strategy.

In the description of the algorithm we have assumed in Eqs. (16), (18) and (20) that the analysed objective is maximized. However, if a minimized objective is investigated, then the inequality symbol in constraints (16), (18) and (20) ought to be reversed.

Similarly to the IP for M-DMC, the novel interactive approach for mixed strategies does not require non-negative decision variables in each case. In portfolio optimization with short sale this constraint is omitted.

Again, we see that within IDR(M) the normalization is not necessary, which can be regarded as a significant advantage: the procedure is less time-consuming than the original IP for continuous multi-criteria optimization.
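To make the sequence of models in IDR(M) more tangible, the sketch below replaces the optimization solver with a brute-force search over a coarse grid of shares. This substitution is our own simplification, chosen to keep the example dependency-free; a real application would solve each model with a linear programming solver. The function names, the three assets, the integer rates of return (in %), the 50% cap and the aspiration levels are all hypothetical.

```python
from itertools import product


def grid_points(n, steps, cap):
    """Integer vectors k with 0 <= k_j <= cap and sum(k) == steps; share x_j = k_j / steps."""
    for head in product(range(cap + 1), repeat=n - 1):
        last = steps - sum(head)
        if 0 <= last <= cap:
            yield head + (last,)


def scaled_payoff(k, rates):
    """steps * f_S(x), kept as an integer so that threshold checks are exact."""
    return sum(kj * rj for kj, rj in zip(k, rates))


def idr_mixed(rates, order, aspiration, steps=10, cap=5):
    n = len(rates[order[0]])
    feasible = list(grid_points(n, steps, cap))  # counterpart of Eqs. (12)-(14)
    for s in order[:-1]:
        # Add the constraint f_S(x) >= AL for each scenario already examined.
        feasible = [k for k in feasible if scaled_payoff(k, rates[s]) >= aspiration[s] * steps]
        if not feasible:
            raise ValueError(f"aspiration level for {s} leaves no feasible strategy")
    last = order[-1]
    best = max(feasible, key=lambda k: scaled_payoff(k, rates[last]))
    shares = tuple(kj / steps for kj in best)
    payoffs = {s: scaled_payoff(best, rates[s]) / steps for s in order}
    return shares, payoffs


# Hypothetical monthly rates of return (%) of three assets under three scenarios.
rates = {"S1": (4, 1, 2), "S2": (-1, 3, 1), "S3": (2, 2, 5)}
shares, payoffs = idr_mixed(rates, ("S2", "S3", "S1"), {"S2": 1, "S3": 3})
print(shares, payoffs)
```

With a grid step of 10% the search returns shares (0.3, 0.3, 0.4), which meet both aspiration levels and give 2.3% under the last scenario of the sequence. A finer grid or an exact solver could find a slightly better strategy, so the result should be read as an approximation.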

5 Examples

Let us discuss two simple illustrative examples in order to analyse the essence of the proposed procedures.

We start with the use of IDR(P) for the following problem. We assume that the managing director of a company is searching for an attractive location for a social event which is going to be held on 15 May 202X (five potential places, step 1). The company tends to select the place according to the number of participants (the criterion is maximized), but this number depends on diverse factors. Therefore the company considers six possible scenarios (step 2). They are quite different as numerous diverse aspects have been taken into account. Necessary data concerning the expected number of participants are gathered in Table 3 (step 3). The figures have been estimated by three experts. The degree of uncertainty is rather high as the social event will be held in seven months and such events have not been organized by the firm before, so the managing director is only able to establish a sequence of scenarios, i.e. to order the scenarios from the most to the least probable. His opinion mainly relies on subjective predictions and assumptions.

The decision maker sets the following scenario order: (S6, S5, S2, S1, S4, S3), which means that the scenario with the highest subjective chance of occurrence is S6 (step 4). The aspiration level declared subjectively by the managing director for the first considered scenario is equal to AL1(S6) = 1000, so in step 5 we obtain set A1 = {A1, A2, A3, A4} (option A5 is removed because 100 < 1000). Within step 6 we perform the same procedure for scenarios S5, S2, S1 and S4. The DM has noticed that the payoff range connected with scenario S5 is narrower and lower than it was for scenario S6. Therefore, he will be satisfied with a number of participants equal to at least AL2(S5) = 400. This condition is not met by alternative A3, thus A2 = {A1, A2, A4}. Scenario S2 is quite favourable, so in this case the managing director would be satisfied with 3000 participants: AL3(S2) = 3000, which entails another reduction of the set of alternatives: A3 = {A1, A4}. For scenarios S1 and S4 the options from A3 have the same values (6000 = 6000 and 800 = 800), so the declaration of aspiration levels AL4(S1) and AL5(S4) is quite unusual. If the DM defines a level not exceeding the expected numbers, both locations will still belong to the set; if the DM determines a level exceeding the expected numbers, both locations will be excluded and the managing director will not be able to indicate the best solution. Thus, let us assume that the DM intends to examine all the remaining scenarios and that is why he declares the following conditions: AL4(S1) = 6000 and AL5(S4) = 800. With such preferences the reduced set remains the same: A3 = A4 = A5 = {A1, A4}. Now we can move to step 7 since scenario S3 is the last to consider. Within set A5 location A4 is related to a larger number of participants than location A1 (1500 > 0). Therefore the managing director should select the fourth location (A4). This is the optimal pure strategy recommended by IDR(P).

We can compare the IDR(P) solutions with existing classical decision rules recommendations. The basics of these procedures are explained for instance in Gaspars-Wieloch (2020b, 2021a):

- For the Bayes rule (designed for multi-shot decisions) A3 is the best.

- For the Wald rule (designed for one-shot decisions and extreme pessimists) A4 is the best.

- According to the max-max rule (designed for one-shot decisions and extreme optimists) A4 should be chosen.

- The Hurwicz rule (designed for one-shot decisions and only based on extreme payoffs) indicates: A3 for high, mid and low optimism coefficient values; A2 for very low optimism values and A4 for extreme pessimists.

- The Savage rule (designed for one-shot decisions and analysing the position of a given payoff within the remaining scenario outcomes; recommended to extreme pessimists, based on relative losses) suggests A2.

- The Hayashi rule (designed for one-shot decisions and analysing the position of a given payoff within the remaining scenario outcomes; recommended to extreme pessimists, based on relative profits) treats all the alternatives as equivalent: A1, A2, A3, A4 and A5.

As we can observe, solutions are diverse, but this should not be surprising since each decision rule is designed for different purposes and different types of decision makers. Therefore, a comparison is not justified.
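For readers less familiar with the classical rules listed above, the following sketch shows textbook formulations of five of them for a maximized criterion. It rests on several assumptions of ours: the Bayes rule is taken here as the plain average payoff, the Hayashi rule is omitted, ties are resolved in favour of the first option, and the payoff table is hypothetical (it is not Table 3).

```python
from statistics import mean


def wald(table):
    """Max-min: best worst-case payoff."""
    return max(table, key=lambda a: min(table[a]))


def max_max(table):
    """Best best-case payoff."""
    return max(table, key=lambda a: max(table[a]))


def hurwicz(table, optimism):
    """Weighted mix of the extreme payoffs; optimism lies in [0, 1]."""
    return max(table, key=lambda a: optimism * max(table[a]) + (1 - optimism) * min(table[a]))


def bayes(table):
    """Highest average payoff (equal scenario weights assumed)."""
    return max(table, key=lambda a: mean(table[a]))


def savage(table):
    """Min-max regret: regret = best payoff in the scenario minus the option's payoff."""
    n_scen = len(next(iter(table.values())))
    col_best = [max(row[i] for row in table.values()) for i in range(n_scen)]
    return min(table, key=lambda a: max(col_best[i] - table[a][i] for i in range(n_scen)))


# Hypothetical payoffs: table[option] = payoffs under scenarios S1, S2, S3.
table = {"A1": [10, 2, 6], "A2": [5, 5, 5], "A3": [12, 1, 3]}
print(wald(table), max_max(table), hurwicz(table, 0.5), bayes(table), savage(table))
```

Even on this small table the rules point to different options, which is the same pattern as in the comparison above.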

Now let us explore the decision problem concerning mixed strategy searching and test IDR(M). We assume that the investor is interested in creating a stock portfolio without short sale. He considers 7 potential companies (A1–A7) and wants to maximize the total monthly rate of return. Thus, the optimization model will contain seven decision variables (step 1). According to experts four essential scenarios (S1–S4) should be taken into account (step 2), but neither the experts nor the decision maker are able to estimate the probability of occurrence. The investor can only order the scenarios from the most to the least probable. Table 4 represents the expected monthly rates of return.

Let us assume that in the investor’s opinion the share of each company in the portfolio should not exceed 20% and that share A6 ought to be not higher than share A3 (the losses connected with A6 are the most severe, A3 is the only company with positive rates of return, regardless of the scenario). Hence, constraints concerning shares are as follows (step 3):

The investor is not able to estimate the probability of occurrence of each scenario, but he thinks that scenario S3 is the most probable (this scenario has the lowest average of rates of return and a quite high standard deviation). S4 is a little less probable than S3 (S4 has a higher average of payoffs, but its standard deviation is also higher), step 4. Within step 5 the investor is supposed to declare the aspiration level for the first scenario from the sequence, e.g. AL1(S3) = 7%. Now the first optimization model may be solved (step 6). It consists of Eqs. (21), (22), (23), (24) and (25).

The maximum and minimum value of the current objective function is equal to 9% and 5.48%, respectively. This information will allow the investor to declare a rational aspiration level for the second scenario from the sequence: AL2(S4) = 6%. According to the investor, scenario S2 is a little less likely than scenario S4, which means that S2 is the third element in the sequence (step 7). The second optimization model to solve contains Eqs. (21), (22) and (23) and (25), (26) and (27).

The maximum and minimum value of the new objective function is equal to 1.042% and −0.2%, respectively. This information will allow the investor to declare a rational aspiration level for the third scenario from the sequence: AL3(S2) = 1%. According to the investor, scenario S1 is the least probable, thus S1 is the last element in the sequence. The third optimization model to solve contains Eqs. (21), (22), (23), (25), (27), (28) and (29), step 8.

The optimal solution is x1 = 0.185, x2 = 0.132, x3 = 0.200, x4 = 0.083, x5 = 0.200, x6 = 0.000, x7 = 0.200. The shares represent the best mixed strategy obtained by means of IDR(M) and on the basis of the DM’s preferences. The maximal expected rate of return for scenario S1 is equal to 3.809%. In such a case the expected rate of return for the remaining scenarios equals 1.042% (for scenario S2), 7.000% (for scenario S3) and 6.000% (for scenario S4).

As the reader may have noticed, our investor is rather a pessimistic decision maker, but IDR(M) can be applied by any type of decision maker, so the investor could instead start with a scenario with a relatively high average of payoffs and a relatively low standard deviation.

Let us assume that in connection with the fact that some expected rates of return are negative, the investor decides to find the optimal mixed strategy where the short sale is possible. Within the short sale the investor sells assets or stocks that he does not own. They are borrowed in anticipation of a price decline. The seller is then supposed to return an equal number of shares at some point in the future. The use of the short sale entails the necessity to replace constraint (22) with condition (30). In such circumstances the decision variables do not need to be non-negative.

If we assume that the investor still intends to apply the sequence Seq = (S3, S4, S2, S1), the first model to solve consists of Eqs. (21), (23), (24), (25), (30). The first optimal solution is: x1 = 0.200, x2 = 0.200, x3 = 0.200, x4 = 0.200, x5 = 0.200, x6 = -0.200, x7 = 0.200. Hence, the short sale would be applied to share A6.

The maximum and minimum values of the current objective function are equal to 17.6% and 2.97%, respectively. This information allows the investor to declare a rational aspiration level for the second scenario in the sequence, but here we maintain the value set before, i.e. AL2(S4) = 6%. The second optimization model to solve contains Eqs. (21), (23), (25), (26), (27) and (30).

The maximum and minimum values of the new objective function are equal to 1.45% and −4.6%, respectively. We assume that AL3(S2) = 1.042%. Why is the aspiration level modified compared to the case without short sale? The answer is as follows: since the final expected rate of return for scenario S2 in the optimal solution with non-negative shares is equal to 1.042%, we can try to reach the same result with the short sale as well. The third optimization model to solve contains Eqs. (21), (23), (25), (27), (28), (29) and (30), but the coefficient 1 needs to be replaced with 1.042 in Eq. (29).

The optimal solution is x1 = 0.199, x2 = 0.078, x3 = 0.200, x4 = 0.200, x5 = 0.200, x6 = -0.055, x7 = 0.178. The shares represent the best mixed strategy obtained by means of IDR(M) on the assumption that the short sale is possible. The maximal expected rate of return for scenario S1 is equal to 4.814%. In such a case the expected rate of return for the remaining scenarios equals 1.042% (for scenario S2), 7.000% (for scenario S3) and 6.000% (for scenario S4).

Hence, thanks to the short sale, an improvement was possible. If the investor borrows the shares of company A6 (5.5% of the investor's initial capital) and sells them for one month, his total capital will increase by 5.5%. According to data from Table 4, after adding the short sale, the expected monthly rates of return will be the same for scenarios S2, S3 and S4, but this measure will improve for scenario S1 (from 3.809% to 4.814%). Of course, we must be aware of the fact that the short sale usually entails additional costs (loan costs and supplementary transaction costs), which means that in real problems the difference between the rate of return without short sale and the rate of return with short sale is affected by the aforementioned expenses. Nevertheless, it is worth stressing that formulas (22) and (30) also had a strong impact on the final results. If there were no share limitations, the possibilities to increase the rate of return would be greater in both cases.
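The figures quoted for the two final portfolios can be cross-checked with a few lines of code. The sketch below uses only the shares and rates of return reported in the text; the function `net_gain_s1` and its cost parameter are hypothetical additions that illustrate, under a simple assumed cost model (a monthly fee proportional to the borrowed value), how loan and transaction costs would reduce the advantage of the short sale.

```python
# Shares of A1..A7 in the two final portfolios, as reported in the text.
no_short = [0.185, 0.132, 0.200, 0.083, 0.200, 0.000, 0.200]
with_short = [0.199, 0.078, 0.200, 0.200, 0.200, -0.055, 0.178]

# Expected monthly rates of return (%) per scenario, as reported in the text.
ret_no_short = {"S1": 3.809, "S2": 1.042, "S3": 7.000, "S4": 6.000}
ret_with_short = {"S1": 4.814, "S2": 1.042, "S3": 7.000, "S4": 6.000}


def budget_ok(shares, tol=1e-9):
    """Long and short shares together must add up to the whole capital."""
    return abs(sum(shares) - 1.0) <= tol


# Size of the short position as a fraction of the initial capital.
short_position = -sum(x for x in with_short if x < 0)

# Gain in percentage points attributable to the short sale, per scenario.
gain = {s: round(ret_with_short[s] - ret_no_short[s], 3) for s in ret_no_short}


def net_gain_s1(cost_rate_pct):
    """Gain in S1 after a HYPOTHETICAL monthly cost (in % of borrowed value)."""
    return gain["S1"] - short_position * cost_rate_pct


print(budget_ok(no_short), budget_ok(with_short))
print(short_position, gain)
print(round(net_gain_s1(2.0), 3))
```

Both share vectors sum to one, the short position corresponds to 5.5% of the initial capital, and the only scenario whose expected rate of return changes is S1.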

Equation (14) in IDR(M) indicates that the decision maker is allowed to define numerous additional constraints. In the analysed portfolio example (with and without short sale) they can be connected with the relationships between particular shares, but they can also concern for instance the accepted risk level.

6 Discussion and conclusions

The goal of this paper was to:

- Explain in detail the IDR(P), i.e. the interactive decision rule for pure strategies;

- Develop IDR(M), i.e. the interactive decision rule for mixed strategies.

The first method has already been presented in Gaspars-Wieloch (2021b), but here the description was broader and more precise.

The second procedure has never been formulated before, but is vital, since it allows the DM to generate solutions being a weighted combination of numerous alternatives. Such recommendations are especially desired in the optimal investment portfolio construction.

Both decision rules have been developed thanks to the author’s observation (Gaspars-Wieloch 2021a) connected with the analogy between two different issues: the multi-criteria optimization under certainty and the one-criterion optimization under uncertainty. The creation of two new procedures for 1-DMU was possible thanks to the existence of two almost analogous methods applied to M-DMC. “The novel interactive decision rules (IDR) are technically very similar to the initial ones, but the interpretation of particular steps is often different. Within the original IP criteria are ordered according to their importance while within the suggested approaches the scenario sequence is established on the basis of the subjective chance of occurrence” (Gaspars-Wieloch 2021b).

Now, let us discuss several aspects related to the analysed topic.

(1) IDR(P) is designed for pure strategies while IDR(M) is designed for mixed strategies, but note that if we apply binary decision variables in the optimization model used in IDR(M), we will obtain the same optimal solution as with IDR(P). Such a relationship is due to the fact that IDR(M) is actually a generalization of IDR(P). Nevertheless, even if IDR(M) could solve any problem, it is recommended to apply IDR(P) when a pure strategy is sought, because the IDR(M) methodology is more time-consuming.

(2) It is worth stressing that both presented decision rules are designed for one-shot decisions, i.e. for decisions performed only once. “If the DM considers executing one of the analysed alternatives in the future, it is advised to follow the procedure one more time, because the decision maker’s attitude towards the problem may change as time goes on” (Gaspars-Wieloch 2021b).

(3) The proposed procedures should not be compared with classical decision rules because each technique is based on different assumptions which result from the DM’s different needs, goals, predictions and states of mind.

(4) “Sometimes the IP for multiple criteria problems is used under the assumption that all the aspiration levels are declared at the beginning of the decision making process. Such a possibility also exists in the case of 1-criterion uncertain problems, but note that under such circumstances the solved problem may have an empty feasible region. That is why it is recommended to estimate the aspiration levels sequentially in both analogical techniques” (Gaspars-Wieloch 2021b).

(5) The paper focuses on maximized (and minimized) criteria, but real problems often contain neutral criteria, i.e. objectives within which the decision maker tends to reach a specified value or interval. This case has not been discussed in the article, but the formulated algorithms can be easily modified for that purpose.
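Remark (4) can be illustrated with a small pure-strategy sketch. The payoff matrix, the aspiration levels and the function names below are hypothetical, and the code is a simplified filter in the spirit of IDR(P), not a reproduction of its exact steps. It shows that aspiration levels declared all at once may leave no alternative, whereas levels declared sequentially, after the DM has seen the range still attainable, cannot exceed the best available payoff.

```python
# Hypothetical payoff matrix: rows are pure strategies, columns are scenarios.
PAYOFFS = {
    "A1": {"S1": 10, "S2": 4, "S3": 7},
    "A2": {"S1": 6, "S2": 8, "S3": 5},
    "A3": {"S1": 8, "S2": 6, "S3": 2},
}


def filter_upfront(payoffs, levels):
    """Keep alternatives meeting ALL aspiration levels declared in advance."""
    return [a for a, row in payoffs.items()
            if all(row[s] >= al for s, al in levels.items())]


def filter_sequential(payoffs, sequence, declare):
    """Declare one aspiration level at a time, then maximize the last scenario.

    declare(scenario, low, high) sees the range among remaining alternatives.
    """
    remaining = list(payoffs)
    for scenario in sequence[:-1]:
        values = [payoffs[a][scenario] for a in remaining]
        level = declare(scenario, min(values), max(values))
        remaining = [a for a in remaining if payoffs[a][scenario] >= level]
    return max(remaining, key=lambda a: payoffs[a][sequence[-1]])


def midpoint(scenario, low, high):
    """Hypothetical DM who always asks for the middle of the attainable range."""
    return (low + high) / 2


# Levels fixed in advance: no alternative satisfies both of them.
print(filter_upfront(PAYOFFS, {"S1": 9, "S2": 6}))

# Levels declared step by step: at least one alternative always remains.
print(filter_sequential(PAYOFFS, ("S1", "S2", "S3"), midpoint))
```

Because the sequential variant reports the range before each declaration, a DM who stays within that range never ends up with an empty set of alternatives.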

IDR(P) and IDR(M) have some significant advantages. First, they do not require the use of precise probabilities. This is quite important, since in the case of decisions made in turbulent times and in the case of innovative or innovation projects the probability estimation may be rather complicated. Second, neither procedure requires payoff normalization. Third, they can be applied by any kind of decision maker (optimist, pessimist, moderate), since each decision maker is allowed to define the scenario sequence and aspiration levels individually. Fourth, they give the possibility to analyse scenarios sequentially, while classical decision rules assume that the whole information on the DM’s preferences is declared at the beginning of the decision making process. Thus, IDRs are definitely more flexible.

In the future it would be desirable to investigate the possibilities to adjust the initial multiple-criteria IP to multi-criteria decision making under uncertainty. Approaches for that area are already described in the literature (Klein et al. 1990; Nowak et al. 2021; Ozceylan and Paksoy 2014; Tehhem et al. 1986; Yano 2017), but the author’s intention is to maintain the interactive character for scenarios, not for criteria. So, would it be possible to examine numerous objectives, each one described by a separate scenario planning matrix, and find a compromise solution assuming that the decision maker can analyse the scenarios according to a pre-established order connected with the subjective chance of occurrence?

Availability of data and material

All the data are fictitious and prepared by the author.

Code availability

Not applicable.

References

Courtney H, Kirkland J, Viguerie P (1997) Strategy under uncertainty. Harv Bus Rev 75(6):66–79

Ding T, Liang L, Min Y, Huaqing W (2016) Multiple attribute decision making based on cross-evaluation with uncertain decision parameters. Math Problems Eng 2016:10

Gaspars-Wieloch H (2020a) A new application for the goal programming–the target decision rule for uncertain problems. J Risk Financial Manag 13:280–293

Gaspars-Wieloch H (2020b) Critical analysis of classical scenario-based decision rules for pure strategy searching. Organ Manag Ser 149:155–165

Gaspars-Wieloch H (2021a) On some analogies between one-criterion decision making under uncertainty and multi-criteria decision making under certainty. Econ Business Rev 7(2):17–36

Gaspars-Wieloch H (2021b) From the interactive programming to a new decision rule for uncertain one-criterion problems. In: Drobne S, Zadnik Stirn L, Kljajic Borstnar M, Povh J, Zerovnik J (eds) Proceedings of the 16th International Symposium on Operational Research in Slovenia (Sept. 22–24, 2021), Ljubljana, pp 669–674

Gaspars-Wieloch H (2022) From goal programming for continuous multi-criteria optimization to target decision rule for mixed uncertain problems. Entropy 24(1):51–62

Jaszkiewicz A, Branke J (2008) Interactive multiobjective evolutionary algorithms. In: Branke J, Deb K, Miettinen K, Słowiński R (eds) Multiobjective optimization. Lecture Notes Computer Science. Springer, Berlin, Heidelberg

Klein G, Moskowitz H, Ravindran A (1990) Interactive multiobjective optimization under uncertainty. Manage Sci 36:58–75

Nakayama H (1995) Aspiration level approach to interactive multi-objective programming and its applications. In: Pardalos PM, Siskos Y, Zopounidis C (eds) Advances in Multicriteria Analysis. Kluwer Academic Publishers, pp 147–174

Nowak M, Trzaskalik T, Sitarz S (2021) Interactive multi-objective procedure for mixed problems and its application to capacity planning. Entropy 23(10):1243–1262

Ozceylan E, Paksoy T (2014) Interactive fuzzy programming approaches to the strategic and tactical planning of a closed-loop supply chain under uncertainty. Int J Prod Res 52(8):2363–2387

Peitz S, Dellnitz M (2018) A survey of recent trends in multiobjective optimal control-surrogate models, feedback control and objective reduction. Math Comput Appl. https://doi.org/10.3390/mca23020030

Ravindran AR (ed) (2008) Operations research and management science. Handbook. Taylor and Francis Group LLC., Boca Raton, London, New York

Shin WS, Ravindran A (1991) Interactive multi objective optimization: survey I–continuous case. Comput Oper Res 18:97–114

Singh A, Anjana G, Aparna M (2020) Matrix games with 2-tuple linguistic information. Ann Oper Res 287:895–910

Stewart TJ (1999) Concepts of interactive programming. In: Multicriteria decision making, pp 277–304

Tehhem J, Dugrane D, Thauvoye M, Kunsch P (1986) Strange: an interactive method for multi-objective linear programming under uncertainty. Eur J Oper Res 26(1):65–82

Tzeng GH, Huang JJ (2011) Multiple attribute decision making, methods and applications. A Chapman and Hall Book, London, New York

Waters D (2011) Supply chain risk management. Vulnerability and resilience in Logistics, 2nd edn. Kogan Page, London

Xin B, Chen L, Chen J, Ishibuchi H, Hirota K, Liu B (2018) Interactive multiobjective optimization: a review of state-of-the-art. IEEE Access 6:41256–41279

Yano H (2017) Interactive multiobjective decision making under uncertainty. CRC Press, Boca Raton, FL

Funding

Not applicable.

Contributions

Not applicable.

Ethics declarations

Conflicts of interest

Not applicable; the author declares that there is no conflict of interest.

Ethics approval

Not applicable—the study does not involve any human participants, their data or biological material.

Consent to participate

Not applicable.

Research human or animal rights

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gaspars-Wieloch, H. Possible new applications of the interactive programming based on aspiration levels—case of pure and mixed strategies. Cent Eur J Oper Res 31, 733–749 (2023). https://doi.org/10.1007/s10100-022-00836-y

DOI: https://doi.org/10.1007/s10100-022-00836-y