Abstract

By rewriting the Riemann–Liouville fractional derivative as a Hadamard finite-part integral and using piecewise quadratic interpolation polynomial approximations, a numerical scheme is developed for approximating the Riemann–Liouville fractional derivative of order \(\alpha \in (1,2)\). The error has the asymptotic expansion \( \big ( d_{3} \tau ^{3- \alpha } + d_{4} \tau ^{4-\alpha } + d_{5} \tau ^{5-\alpha } + \cdots \big ) + \big ( d_{2}^{*} \tau ^{4} + d_{3}^{*} \tau ^{6} + d_{4}^{*} \tau ^{8} + \cdots \big ) \) at any fixed time \(t_{N}= T, N \in {\mathbb {Z}}^{+}\), where \(d_{i}, i=3, 4,\ldots \) and \(d_{i}^{*}, i=2, 3,\ldots \) denote some suitable constants and \(\tau = T/N\) denotes the step size. Based on this discretization, a new scheme for approximating the linear fractional differential equation of order \(\alpha \in (1,2)\) is derived and its error is shown to have a similar asymptotic expansion. As a consequence, a high-order scheme for approximating the linear fractional differential equation is obtained by extrapolation. Further, a high-order scheme for approximating a semilinear fractional differential equation is introduced and analyzed. Several numerical experiments are conducted to show that the numerical results are consistent with our theoretical findings.

1 Introduction

This paper deals with high-order numerical methods for approximating the following semilinear fractional differential equation for \(\alpha \in (1,2)\)

where \(\beta < 0\), the time \(T>0\), initial values \(y_{0}, y_{0}^{1} \in {\mathbb {R}}\) and f is a nonlinear function satisfying the following uniform Lipschitz continuity: for \(s_1,s_2 \in {\mathbb {R}}\) there exists a positive constant L such that

$$\begin{aligned} |f(s_{1}) - f(s_{2})| \le L |s_{1} - s_{2}|. \end{aligned}$$ (2)

Moreover, \(y^{\prime } (0)\) denotes the derivative of y at \(t=0\) and the Caputo derivative \( _0^C D_{t}^{\alpha } y(t)\) is defined by [15, 23], with \(\alpha \in (1, 2)\),

$$\begin{aligned} \, _0^C D_{t}^{\alpha } y(t) = \frac{1}{\varGamma (2-\alpha )} \int _{0}^{t} (t-s)^{1-\alpha } y''(s) \, \textrm{d}s, \end{aligned}$$ (3)

where \( y''(s)\) denotes the second order derivative of y and \(\varGamma \) denotes the standard \(\varGamma \) function.

Using the relation between the Caputo and Riemann–Liouville fractional derivatives, Eq. (1) can be rewritten, see [3], as

The aim of this paper is to construct a high-order numerical method for approximating (4). In order to achieve this, we first construct a high-order scheme to approximate the Riemann–Liouville fractional derivative \( _0^R D_{t}^{\alpha } y(t), \, \alpha \in (1,2).\) Recall that the Riemann–Liouville fractional derivative can be expressed as a Hadamard finite-part integral. Approximating the Hadamard finite-part integral with the quadratic interpolation polynomials, we obtain a scheme to approximate the Riemann–Liouville fractional derivative. We shall show in Sect. 2 that the error has the asymptotic expansion \( \big ( d_{3} \tau ^{3- \alpha } + d_{4} \tau ^{4-\alpha } + d_{5} \tau ^{5-\alpha } + \cdots \big ) + \big ( d_{2}^{*} \tau ^{4} + d_{3}^{*} \tau ^{6} + d_{4}^{*} \tau ^{8} + \cdots \big ) \) at any fixed time \( T= N \tau = t_{N}\) for some suitable constants \(d_{i}, i=3, 4,\ldots \) and \(d_{i}^{*}, i=2, 3,\ldots \), where \(\tau \) denotes the step size. Applying this scheme, a numerical method for the fractional differential equation (4) is derived in Sect. 3 and the corresponding error is also shown to have a similar asymptotic expansion.

The Hadamard finite-part integral approach was proposed and analyzed in 1997 by Diethelm [2] for linear fractional differential equations of order \(\alpha \in (0, 1)\), where the author proposed a numerical scheme to approximate the Riemann–Liouville fractional derivative by approximating the Hadamard finite-part integral with piecewise linear interpolation polynomials. The convergence order of the scheme is \(O(\tau ^{2- \alpha }), \alpha \in (0, 1).\) One obtains the L1 scheme when the scheme in [2] is applied to approximate the Caputo fractional derivative of order \(\alpha \in (0, 1)\). Diethelm and Walz [5] showed that the error of the L1 scheme obtained in [2] for approximating the Caputo fractional derivative of order \(\alpha \in (0, 1)\) has an asymptotic expansion, which can be applied to the linear fractional differential equation to derive higher convergence orders by extrapolation, see also [19, 30]. Dimitrov [6, 7] also considered the asymptotic expansion of the L1 scheme for approximating the Caputo fractional derivative of order \(\alpha \in (0, 1)\), using a different approach from that of [5], see also [10, 24]. For other numerical methods for fractional differential equations, we refer to [18, 20, 1, 14, 17, 26,27,28,29, 11, 12, 21, 13, 16, 31], and references therein.

To the best of our knowledge, there are hardly any numerical schemes in the literature for approximating the Caputo fractional derivative and fractional differential equations of order \( \alpha \in (1,2) \) by using the Hadamard finite-part integral approach. Therefore, an attempt has been made in this paper to develop and analyse approximation schemes for the Caputo fractional derivative and the fractional differential equation of order \(\alpha \in (1, 2)\) by using the Hadamard finite-part integral approach.

The main contributions of this paper are as follows:

1. A new high-order scheme for approximating the Riemann–Liouville fractional derivative of order \( \alpha \in (1,2)\) is introduced based on the Hadamard finite-part integral. It is observed that the error has the asymptotic expansion

$$\begin{aligned} \big ( d_{3} \tau ^{3- \alpha } + d_{4} \tau ^{4-\alpha } + d_{5} \tau ^{5-\alpha } + \cdots \big ) + \big ( d_{2}^{*} \tau ^{4} + d_{3}^{*} \tau ^{6} + d_{4}^{*} \tau ^{8} + \cdots \big ) \end{aligned}$$ (5)

for some suitable constants \(d_{i}, i=3, 4,\ldots \) and \(d_{i}^{*}, i=2, 3,\ldots \), where \(\tau \) denotes the step size.

2. We examine a finite difference method based on the previously mentioned scheme for solving semilinear fractional differential equations of order \(\alpha \in (1,2)\) and prove that the approximation error of this method admits an asymptotic expansion.

3. The properties of the weights used in the approximation scheme for the Riemann–Liouville fractional derivative are investigated. Based on these properties, we establish rigorous error estimates of order \(O(\tau ^{3-\alpha })\), where \(\alpha \in (1, 2)\), for semilinear fractional differential equations.

4. By employing Richardson extrapolation in conjunction with the asymptotic expansion of the error, we devise higher-order approximation schemes for semilinear fractional differential equations. The successive extrapolated approximations have convergence rates \(O(\tau ^{3-\alpha })\), \(O(\tau ^{4-\alpha })\), \(O(\tau ^{5-\alpha })\), \(O(\tau ^{4})\), etc.

The paper is organized as follows. Section 2 deals with high-order approximation of the Riemann–Liouville fractional derivative of order \(\alpha \in (1,2)\) based on the Hadamard finite-part integral. Section 3 focuses on high-order approximations of linear fractional differential equations of order \(\alpha \in (1,2)\). Section 4 is on the numerical approximation of a semilinear fractional differential equation of order \(\alpha \in (1,2)\). Numerical tests are carried out to confirm our theoretical findings in Sect. 5.

By \(C, c_{l}, c^{*}_{l}, d_{l}, d^{*}_{l}, l \in {\mathbb {Z}}^{+}\), we denote some positive constants independent of the step size \(\tau \), but not necessarily the same at different occurrences.

2 New high-order scheme for the Riemann–Liouville fractional derivative of order \(\alpha \in (1, 2)\)

In this section, based on the Hadamard finite-part integral approximation, we introduce a new high-order scheme for approximating the Riemann–Liouville fractional derivative of order \(\alpha \in (1, 2)\) and prove that the error has an asymptotic expansion.

2.1 The scheme

It is well known that the Riemann–Liouville fractional derivative and the Hadamard finite-part integral have the following relation, see, e.g., Elliott [9, Theorem 2.1], with \(\alpha \in (1,2)\),

where the integral \( =\!\!\!\!\!\! \int _{0}^{1} \) must be interpreted as a Hadamard finite-part integral, see Diethelm [3, p.233].
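The finite-part interpretation can be checked on monomials. The following is a minimal sketch, assuming the relation above takes the standard form \( _0^R D_{t}^{\alpha } f(t) = \frac{t^{-\alpha }}{\varGamma (-\alpha )} =\!\!\!\!\!\! \int _{0}^{1} w^{-1-\alpha } f(t - t w) \, \textrm{d}w\) (the displayed equation is not reproduced in this text, so this form is an assumption) and that the finite part of \(\int _{0}^{1} w^{p-1-\alpha } \, \textrm{d}w\) equals \(1/(p-\alpha )\) for integer \(p \ge 0\). The function names are illustrative only. For \(f(t) = t^{m}\) the result is compared with the power rule \(\varGamma (m+1)/\varGamma (m+1-\alpha ) \, t^{m-\alpha }\).

```python
import math

def fp_monomial(p, alpha):
    """Hadamard finite part of the integral of w**(p - 1 - alpha) over [0, 1].

    For p - alpha < 0 the ordinary integral diverges at w = 0; the finite
    part discards the divergent term and keeps 1 / (p - alpha).
    """
    return 1.0 / (p - alpha)

def rl_power_via_finite_part(m, alpha, t):
    """RL derivative of t**m via the assumed finite-part representation."""
    # Expand f(t - t*w) = t**m * (1 - w)**m with the binomial theorem.
    fp = sum(math.comb(m, p) * (-1) ** p * fp_monomial(p, alpha)
             for p in range(m + 1))
    return t ** (m - alpha) / math.gamma(-alpha) * fp

def rl_power_rule(m, alpha, t):
    """Closed-form RL derivative of t**m (power rule)."""
    return math.gamma(m + 1) / math.gamma(m + 1 - alpha) * t ** (m - alpha)

if __name__ == "__main__":
    for m in (0, 1, 2, 5):
        print(m, rl_power_via_finite_part(m, 1.5, 1.0), rl_power_rule(m, 1.5, 1.0))
```

Both routes agree up to rounding, which illustrates why divergent terms at \(w=0\) can be dropped consistently.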

Let \(0 = t_{0}< t_{1}< \cdots < t_{N} =T\) be a partition of [0, T] and \(\tau = \frac{T}{N}\) the step size. At \(t=t_{n}, \, n=1, 2,\ldots , N\), we may write

For any fixed \( n=1, 2,\ldots , N\), we denote \(g(w)=f(t_{n}-t_{n}w)\). When \( n \ge 2\), we approximate g(w) by the following piecewise quadratic interpolation polynomial \(g_{2}(w)\) defined on the nodes \(w_{l}=\frac{l}{n}, l=0,1,2,\ldots , n, \, n \ge 2\):

and, for \( k=2,3,\ldots ,n, \)

We remark that on the first interval \([w_{0}, w_{1}]\), the piecewise quadratic interpolation polynomial \(g_{2}(w)\) is defined on the nodes \(w_{0}, w_{1}, w_{2}\), and on the other interval \([w_{k-1}, w_{k}], k=2, 3,\ldots , n\), the piecewise quadratic interpolation polynomial \(g_{2}(w)\) is defined on the nodes \(w_{k-2}, w_{k-1}, w_{k}\). We also remark that when \(n=1\), it is not possible to approximate \(g(w) = f(t_{n}- t_{n} w)\) with a quadratic interpolation polynomial since we only have two nodes \(w_{l}= \frac{l}{n}, l=0, 1\) in this case.

The following lemma gives a new approximation scheme of the Riemann–Liouville fractional derivative.

Lemma 1

Let \(0=t_{0}< t_{1}< \cdots < t_{N}=T\) with \( N \ge 2\) be a partition of [0, T] and \(\tau = \frac{T}{N}\) the step size. Let \(\alpha \in (1, 2)\). Then, with \(n \ge 2\),

where the weights \(w_{kn}, k=0,1,2,\ldots , n\) and \(n=2,3,\ldots ,N\) are determined as follows.

For \(n=2,\)

For \(n=3,\)

For \(n=4,\)

For \(n\ge 5,\)

Here \(E(\cdot ), F(\cdot )\) and \(G(\cdot )\) are defined by (17), (18) and (19), respectively.

Proof

We first write

where \(R_{n}(g)\) denotes the remainder term. By Diethelm [4, Theorem 2.4], one obtains

Note that

By the definition of Hadamard finite-part integral and with \(K(\alpha )=(-\alpha )(1-\alpha )(2-\alpha ) >0,\) for \(\alpha \in (1,2)\), we obtain, see, Elliott [9] and Diethelm [3, p. 233],

For the second term of the right hand side in (14), we arrive with \(k=2, 3,\ldots ,n\) at

Here, with \(k=2, 3,\ldots ,n\),

where

Similarly, it follows for \(k=2, 3,\ldots ,n\) that

and

where

and

Combining (15) with (16), we obtain from (14),

for some suitable weights \(\alpha _{kn}\). Thus, we obtain

where \(R_{n}(g)\) is introduced in (12). By (13) and with \(g(w) = f(t_{n} - t_{n} w)\), we write (21) in the following form,

where \(w_{kn}\) are defined by (8)–(11) and

This completes the proof. \(\square \)
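The construction in Lemma 1 can be sketched numerically without the closed-form weights (8)–(11), which are not reproduced in this text. The sketch below is an illustration under stated assumptions, not the authors' implementation: it assumes the finite-part representation \( _0^R D_{t}^{\alpha } f(t_{n}) = \frac{t_{n}^{-\alpha }}{\varGamma (-\alpha )} =\!\!\!\!\!\! \int _{0}^{1} w^{-1-\alpha } g(w) \, \textrm{d}w\), expands each quadratic Lagrange basis function in monomials, and integrates term by term, dropping the divergent terms at \(w=0\) on the first interval. The names `fp_weights` and `rl_derivative` are hypothetical.

```python
import math

def fp_weights(n, alpha):
    """Weights a[0..n] such that the finite-part integral of
    w**(-1-alpha) * g2(w) over [0, 1] equals sum(a[l] * g(l/n)).

    g2 is the piecewise quadratic interpolant: nodes (w0, w1, w2) on the
    first interval, nodes (w_{k-2}, w_{k-1}, w_k) on [w_{k-1}, w_k], k >= 2.
    """
    h = 1.0 / n
    a = [0.0] * (n + 1)
    for k in range(1, n + 1):
        i0 = 0 if k == 1 else k - 2
        xs = [(i0 + m) * h for m in range(3)]
        lo, hi = (k - 1) * h, k * h
        for m in range(3):
            # Lagrange basis through the other two nodes, in monomial form.
            xp, xq = [xs[r] for r in range(3) if r != m]
            denom = (xs[m] - xp) * (xs[m] - xq)
            coeffs = (xp * xq / denom, -(xp + xq) / denom, 1.0 / denom)
            for p, c in enumerate(coeffs):
                e = p - alpha
                # Finite part: on the first interval the term at w = 0 is dropped.
                lower = 0.0 if k == 1 else lo ** e
                a[i0 + m] += c * (hi ** e - lower) / e
    return a

def rl_derivative(f, t, n, alpha):
    """Approximate the RL derivative of order alpha in (1, 2) at time t, n >= 2."""
    a = fp_weights(n, alpha)
    s = sum(a[l] * f(t - t * l / n) for l in range(n + 1))
    return t ** (-alpha) / math.gamma(-alpha) * s

if __name__ == "__main__":
    alpha = 1.5
    exact = math.gamma(6) / math.gamma(6 - alpha)  # RL derivative of t**5 at t = 1
    for n in (20, 40, 80):
        print(n, abs(rl_derivative(lambda t: t ** 5, 1.0, n, alpha) - exact))
```

The monomial expansion is chosen for brevity; it loses some digits to cancellation for large n, so a production code would more likely use closed-form weights such as those of the lemma.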

Remark 1

Using the following relationship between Caputo and Riemann–Liouville fractional derivatives for \(\alpha \in (1, 2)\),

we obtain the following approximation scheme of the Caputo fractional derivative at \(t = t_{n}, \, n=2, 3,\ldots , N\),

2.2 The error formula for the approximation scheme (21)

This subsection is on the asymptotic expansion of the error for the approximation of the Riemann–Liouville fractional derivative defined in (21).

Theorem 1

Let \(0=t_{0}< t_{1}< \cdots < t_{N}=T\) with \( N \ge 2\) be a partition of [0, T] and \(\tau = \frac{T}{N}\) the step size. Let \(\alpha \in (1, 2)\) and let g be sufficiently smooth on [0, T]. With \(R_{n} (g)\) as the remainder term in (21), the following expansion holds for \(n=2, 3,\ldots , N\)

for some suitable coefficients \(d_{l}, \, l=3, 4 \ldots \) and \(d_{l}^{*}, \, l=2, 3 \ldots ,\) which are independent of n.

Our proof of the above theorem is influenced by [5, Theorem 1.3], where the piecewise linear interpolation polynomials are used to consider the approximation of the Hadamard finite-part integral with \(\alpha \in (0,1)\) and also by [30, Lemma 2.3], where the quadratic interpolation polynomials are applied for the approximation of the Hadamard finite-part integral with \(\alpha \in (0,1)\).

Proof

Let \(n \ge 2\) be fixed and let \(0=w_{0}< w_{1}< w_{2}< \cdots < w_{n}=1\), \(w_{k}= k/n, k=0, 1, 2,\ldots , n\) be a partition of [0, 1] with step size \(h=1/n, n \ge 2.\) Let \(g_{2}(w)\) denote the piecewise quadratic interpolation polynomials defined by (6) on \([w_{l}, w_{l+1}], l=0, 1, 2,\ldots , n-1\) with \(n \ge 2\). Then, it follows that

For \(I_{1}\), there holds

Since g is sufficiently smooth, by using the Taylor series expansion, we find for \(k=0, 1, 2\) that

Thus, we obtain

for some suitable functions \(\pi _{0} (s), \pi _{1}(s), \pi _{2}(s),\ldots \).

Applying Lemma 3 in Appendix with \(G(t) = g^{(l)}(t), l=3, 4,\ldots \), we arrive at

for some suitable functions \(a_{kl} (s), b_{kl}(s), k=0, 1,\ldots \) and \( l=3, 4,\ldots ,\) which are not necessarily the same at different occurrences. Hence, we obtain

for some suitable coefficients \(d_{l}, \, l=3, 4 \ldots \) and \(d_{l}^{*}, \, l=2, 3 \ldots \) which are independent of h. Here, we note that the expansion does not contain any odd integer powers of h, following the proof of [5, Theorem 1.3].

Now, we turn to the estimate of \(I_{2}\). For \(n \ge 2\), there holds

A use of the Taylor series expansion as in the estimate of \(I_{1}\) shows

Hence, from (24), it follows that

for some suitable coefficients \(d_{l}, \, l=3, 4 \ldots \) and \(d_{l}^{*}, \, l=2, 3 \ldots \) which are independent of h. We again note that the expansion does not contain any odd integer powers of h, following the argument in the proof of [5, Theorem 1.3]. This concludes the proof. \(\square \)

Remark 2

For any fixed \( n \ge 2\), we rewrite (23) for \(n=2, 3,\ldots , N\) as

In particular, for \(n=N\), there holds for \(t_{n}= t_{N}=T=1\)

Remark 3

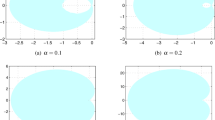

We indeed observe in Example 1 that the experimentally determined convergence order of the numerical scheme defined by (21) (or (7)) is \(O(\tau ^{3- \alpha })\) with \(\alpha \in (1, 2)\) at any fixed time \(t_{N}=T\) and the extrapolated values of the scheme have the convergence orders \(O(\tau ^{4- \alpha }), O(\tau ^{5- \alpha }), O(\tau ^{4}), \ldots \), with \(\alpha \in (1, 2)\) as expected in the asymptotic expansion formula (25).

3 A high-order numerical scheme for linear fractional differential equation of order \(\alpha \in (1,2)\)

This section focuses on a high-order numerical method for approximating the solution of (4) in linear case with \(\alpha \in (1,2)\), \(\beta <0\), that is,

For simplicity of the notations, we shall assume that \(T=1\).

Let \(0 = t_{0}< t_{1}< t_{2}<\cdots < t_{N} =T=1\) with \(N \ge 2\) be a partition of \([0, T]=[0, 1]\) and \(\tau = \frac{T}{N} =\frac{1}{N} \) the step size. At node \(t_{j} = j \tau , j =2, 3,\ldots , N\), the Eq. (4) satisfies

By (21), the solution of (26) satisfies, for \( j=2, 3,\ldots , N, \, N \ge 2\),

Let \(y_{j} \approx y(t_{j})\) denote the approximation of the exact solution y(t) at \(t=t_{j}.\) Assume that the starting values \(y_{0}\) and \(y_{1}\) are given. Then, define the following numerical scheme to approximate (4), for \( j=2, 3,\ldots , N, \, N \ge 2\) as

for given \(y_0= y(0)\) and \(y_1=y(t_1).\)
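A time-stepping loop of this kind can be sketched as follows. This is a hedged illustration rather than scheme (29) itself, whose displayed form is not reproduced in this text: it assumes zero initial data \(y_{0} = y_{0}^{1} = 0\) (as in Example 2, so that the Caputo and Riemann–Liouville derivatives coincide), assumes the discrete equation at \(t_{j}\) reads \(\sum _{k=0}^{j} W_{kj} \, y_{j-k} = \beta y_{j} + f(t_{j})\) with \(W_{kj} = t_{j}^{-\alpha } a_{kj} / \varGamma (-\alpha )\), and computes the quadrature weights \(a_{kj}\) by term-by-term finite-part integration of the quadratic Lagrange basis. The names `fp_weights` and `solve_linear_fde` are hypothetical.

```python
import math

def fp_weights(n, alpha):
    """Finite-part quadrature weights for w**(-1-alpha) on [0, 1] with the
    piecewise quadratic interpolant on nodes l/n (n >= 2)."""
    h = 1.0 / n
    a = [0.0] * (n + 1)
    for k in range(1, n + 1):
        i0 = 0 if k == 1 else k - 2
        xs = [(i0 + m) * h for m in range(3)]
        lo, hi = (k - 1) * h, k * h
        for m in range(3):
            xp, xq = [xs[r] for r in range(3) if r != m]
            denom = (xs[m] - xp) * (xs[m] - xq)
            for p, c in enumerate((xp * xq / denom, -(xp + xq) / denom, 1.0 / denom)):
                e = p - alpha
                lower = 0.0 if k == 1 else lo ** e  # drop divergent term at w = 0
                a[i0 + m] += c * (hi ** e - lower) / e
    return a

def solve_linear_fde(f, beta, alpha, N, y1, T=1.0):
    """March y_2, ..., y_N given y_0 = 0 and the starting value y_1."""
    tau = T / N
    y = [0.0, y1]
    for j in range(2, N + 1):
        tj = j * tau
        scale = tj ** (-alpha) / math.gamma(-alpha)
        a = fp_weights(j, alpha)
        # History term: contributions of the already computed values y_{j-k}, k >= 1.
        history = scale * sum(a[k] * y[j - k] for k in range(1, j + 1))
        # Solve (W_0 - beta) * y_j = f(t_j) - history for y_j.
        y.append((f(tj) - history) / (scale * a[0] - beta))
    return y

if __name__ == "__main__":
    alpha, beta, N = 1.5, -1.0, 40
    # Manufactured right-hand side for the exact solution y(t) = t**5.
    f = lambda t: math.gamma(6) / math.gamma(6 - alpha) * t ** (5 - alpha) + t ** 5
    y = solve_linear_fde(f, beta, alpha, N, y1=(1.0 / N) ** 5)
    print(abs(y[-1] - 1.0))
```

Recomputing the weights at every step keeps the sketch short; since \(\beta < 0\) and the diagonal weight is positive for \(\alpha \in (1,2)\), the divisor does not vanish.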

Theorem 2

Let \(\alpha \in (1, 2)\). Let \(0 = t_{0}< t_{1}< t_{2}< \cdots < t_{N} =1\) with \(N \ge 2\) be a partition of [0, 1] and \(\tau \) the step size. Let \(y(t_{j})\) and \( y_{j}\) be the exact and the approximate solutions of (28) and (29), respectively. Assume that the function \( y \in C^{m+2}[0, 1], \; m \ge 3\). Further assume that we obtain the exact starting values \(y_{0} = y(0)\) and \(y_{1} = y(t_{1})\). Then, there exist coefficients \(c_{\mu } = c_{\mu } (\alpha )\) and \(c_{\mu }^{*} = c_{\mu }^{*} (\alpha )\) such that the error possesses an asymptotic expansion of the form

or, since \(\tau = \frac{1}{N}\),

Proof

Our proof here is influenced by the proof of Theorem 2.1 in [5], where the asymptotic expansion of the error for approximating the linear fractional differential equation with order \(\alpha \in (0, 1)\) is considered.

Let us fix \(t_{l} =c\) to be a constant for \( l=2,3,\ldots N\) with \(N \ge 2\) and investigate the difference

where \(\tau =1/N\) is the step size. In other words, there is a constant c, independent of N, such that

and consequently, we observe that if \(e_{l}\) possesses an asymptotic expansion with respect to l, then \(e_{N}\) possesses at the same time one with respect to N, and vice versa.

We now claim that

for some suitable constants \(c_{\mu }, c_{\mu }^{*}\) which will be determined later.

Subtracting (29) from (28) and noting \(t_{l} = l \tau = \frac{l}{N} =c\), there holds, with \( g(w) = f(t_{l}- t_{l} w)\),

Since \( g \in C^{m+2}[0, 1], \; m \ge 3,\) an application of Theorem 1 shows

where \(\mu ^{*}\) is the integer satisfying \( 2 \mu ^{*}< m+1 - \alpha < 2 ( \mu ^{*} +1), \) and \(d_{\mu }\) and \(d_{\mu }^{*}\) are certain coefficients which are independent of \(\tau \).

Since \(l/N =c\), rewrite (33) as

for some new coefficients \({\tilde{d}}_{\mu }\) and \( {\tilde{d}}_{\mu }^{*}\). Choose

We now claim that (31) holds for the coefficients \(c_{\mu }, c_{\mu }^{*}\) defined in (35) and (36).

For the proof, we use mathematical induction. By our assumption, \(e_{0} =0, e_{1} =0\), and hence, (31) holds for \(l=0, 1\) with the coefficients given by (35) and (36). Let us now consider the case \(l=2\). An application of Theorem 1 implies

Thus, noting that \( \sum _{k=0}^{2} \alpha _{k 2} = - 1/\alpha \) and \( \alpha _{0, 2} = \frac{2^{-\alpha } (\alpha +2) ( N c)^{\alpha }}{(-\alpha ) ( -\alpha +1) ( -\alpha +2)}\), we arrive at

This shows that \(e_{2}\) has an asymptotic expansion with respect to the powers of N. By comparing with the coefficients of powers of N, see [5],

where \(c_{\mu }\) and \(c_{\mu }^{*}\) are defined by (35) and (36), respectively.

Assume that (31) holds for \(l=0, 1,\ldots , j-1\). Following the same argument as for showing (38), we obtain, noting \( \sum _{k=0}^{j} \alpha _{k j} = - 1/\alpha \) and applying Theorem 1,

This shows that \(e_{j}\) possesses an asymptotic expansion with respect to the powers of N. By comparing the coefficients of powers of N, see [5], we find that

Hence, (31) holds for \(l=j\). Together these estimates complete the proof of (31). Taking \(l=N\) in (31), we obtain (30). This concludes the proof. \(\square \)

Remark 4

In Theorem 2, we indeed assume that \(y_{1}\) is known exactly. However, in practical applications, it is often necessary to approximate \(y_{1}\) numerically using alternative numerical methods. These methods aim to achieve a desired level of accuracy, ensuring that the error \(|y_{1}- y(t_{1})|\) meets the required precision. Since the primary focus of this work is on investigating the asymptotic expansion for \(y_{N}\) with \(N \ge 2\), we assume, for the sake of simplicity, that we are able to accurately obtain the value of \(y_{1}\). This simplifying assumption allows us to concentrate on the analysis of higher-order terms and the behavior of the approximation beyond the initial point.

Remark 5

In Theorem 2, we have made an assumption that the solution of the fractional differential equation (28) is smooth enough. However, such an assumption may not be realistic for fractional differential equations as the behavior of the solution to (28) typically follows a power-law growth of the form \(O(t^{\alpha })\), where \(\alpha \in (1, 2)\). But for zero initial data, it is possible to obtain smooth solution as required by our analysis. Our numerical experiments with nonzero initial data suggest that our extrapolation scheme provides higher order convergence as predicted by our theory. To address cases where the solution lacks sufficient smoothness, one approach is to introduce improved algorithms that apply extrapolation techniques. These algorithms aim to achieve higher-order accuracy, as demonstrated in [17, Section 4.1]. By employing extrapolation, it becomes possible to enhance the approximation accuracy even when dealing with solutions that do not exhibit strong regularity.

4 A high-order numerical scheme for a semilinear fractional differential equation of order \(\alpha \in (1,2)\)

This section focuses on a high-order numerical scheme for approximating the solution of the semilinear problem (4).

For simplicity, we shall assume that \(T=1\) as before.

Let \(y_{j} \approx y(t_{j})\) denote the approximation of the exact solutions \(y(t_{j})\). Based on the Caputo derivative approximation scheme (22), we denote

Given the starting values \(y_{0}\) and \(y_{1},\) define the following numerical scheme for approximating (40)

with \(y_0= y(0)\) and \(y_1=y(t_1)\).

Let \({\tilde{y}}_j,\;j=2,3,\ldots ,N\) be the solutions of the linearized problem:

with \({\tilde{y}}_0= y(0)\) and \({\tilde{y}}_1=y(t_1).\) Set for \(j=2, 3,\ldots , N\)

Applying Theorem 2 with f(t) replaced by f(y(t)), we obtain the asymptotic expansion of the error \(\eta _N\) as

Therefore, it remains to prove a similar error expansion of \(\theta _N.\) Now the error equation in \(\theta _j\) becomes

with \(\theta _0= 0\) and \(\theta _1= 0.\) With the help of

and

rewrite \(D^{\alpha }_{\tau } \theta _j\) in a suitable manner in terms of \(\delta _t \theta _{j-\frac{1}{2}}\) and hence, obtain an equivalent equation for \(\theta _j \), \( j=1,2,\ldots , N\), as

with \(\theta _0= 0\) and \(\theta _1= 0.\)

In order to derive an estimate of \(\theta _j,\) we need the following lemma, whose proof is given in Sect. 7.2 of the “Appendix”.

Lemma 2

Let \(1<\alpha <2\). Then, the coefficients \(p_{l}\) defined by (46) satisfy the following properties

Theorem 3

For \(\alpha \in (1, 2),\) let \(y(t_{j})\) and \( y_{j}\) be the exact and the approximate solutions of (40) and (41), respectively. Assume that the function \( y \in C^{m+2}[0, 1], \; m \ge 3\). Further, assume that exact starting values \(y_{0} = y(0)\) and \(y_{1} = y(t_{1})\) are known. Then, there exist coefficients \(c_{\mu } = c_{\mu } (\alpha )\) and \(c_{\mu }^{*} = c_{\mu }^{*} (\alpha )\) such that the error satisfies

Proof

Since \( e_{j} = (y(t_{j}) - {\tilde{y}}_{j}) + ({\tilde{y}}_{j} - y_{j}) =: \eta _{j} + \theta _{j} \) and the estimate of \(\eta _j\) is known from (43), it is enough to estimate \(\theta _j, j=2, 3,\ldots , N\). Multiplying (47) by \(\tau \delta _t \theta _{j-\frac{1}{2}}\), it follows that

Note that

and

and

we arrive at

Denoting

we obtain

It follows using \(1/p_j = \varGamma (2-\alpha ) \tau ^{1-\alpha }\;t_j^{\alpha -1}, j \ge 2\), \(\theta _0=0\), \(\theta _1=0\), and \( \tau \sum _{l=2}^{j} t_{l}^{\alpha -1} \le C t_{j}^{\alpha }\) that

A use of Gronwall’s inequality yields

which implies that

where \(\eta _{l}\), by (43), has the asymptotic expansion. This completes the proof. \(\square \)

Remark 6

Indeed, proving the asymptotic expansion of the error for the approximation of the semilinear problem (40) directly can pose a significant challenge. The nonlinearity introduces additional complexities that can significantly complicate the analysis and proof techniques required. Therefore, an intermediate solution \({\tilde{y}}_j\) through linearization is introduced and then comparison with semilinear discrete solution helps to provide the necessary result.

5 Numerical examples

This section deals with several numerical experiments using high-order numerical methods developed in this paper for approximating the Riemann–Liouville fractional derivative, the linear and semilinear fractional differential equations. It is observed that the numerical results are consistent with our theoretical findings.

Let us first recall the Richardson extrapolation algorithm. Let \(A_{0}(\tau )\) denote the approximation of A calculated by an algorithm with the step size \(\tau \). Assume that the error has the following asymptotic expansion

where \(a_{j}, j=0, 1, 2,\ldots \) are unknown constants and \(0< \lambda _{0}< \lambda _{1}< \lambda _{2} < \cdots \) are some positive numbers.

Let \(b \in {\mathbb {Z}}^{+}, \, b >1\) (usually \(b=2\)). Let \(A_{0} \big (\frac{\tau }{b} \big ) \) denote the approximation of A calculated with the step size \(\frac{\tau }{b}\) by the same algorithm as for calculating \(A_{0}(\tau )\). Then by (57), there holds

Similarly, we may calculate the approximations \(A_{0} \big (\frac{\tau }{b^2} \big ), A_{0} \big (\frac{\tau }{b^3} \big ),\ldots \) of A by using the step sizes \( \frac{\tau }{b^2}, \frac{\tau }{b^3},\ldots \).

By (57), we note that

that is, the approximation \(A_{0}(\tau )\) has the convergence order \(O(\tau ^{\lambda _{0}})\). The convergence order \(\lambda _{0}\) can be calculated numerically. By (59), there exists a constant C such that, for sufficiently small \(\tau \),

and

Hence, we obtain

which implies that the convergence order \(\lambda _{0}\) can be calculated by
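The order estimate can be sketched in a few lines. This is a minimal illustration, assuming the estimate takes the usual form \(\lambda _{0} \approx \log _{b} \big ( |A - A_{0}(\tau )| / |A - A_{0}(\tau /b)| \big )\) (the displayed formula is not reproduced in this text); the function name `eoc` and the synthetic error values are illustrative and are not data from the paper.

```python
import math

def eoc(err_coarse, err_fine, b=2.0):
    """Experimentally determined order of convergence from two errors
    obtained with step sizes tau and tau / b."""
    return math.log(err_coarse / err_fine) / math.log(b)

if __name__ == "__main__":
    # Synthetic errors behaving like C * tau**(3 - alpha) with alpha = 1.5.
    alpha, C = 1.5, 0.3
    taus = [1.0 / 20, 1.0 / 40, 1.0 / 80]
    errs = [C * tau ** (3 - alpha) for tau in taus]
    for e0, e1 in zip(errs, errs[1:]):
        print(eoc(e0, e1))
```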

Since the error in (57) has the asymptotic expansion, we may use the Richardson extrapolation to construct a new approximation of A which has higher convergence order \(O(\tau ^{\lambda _{1}}), \, \lambda _{1} > \lambda _{0}\). To see this, multiplying (58) by \(b^{\lambda _{0}}\), we get

Subtracting (57) from (61), we obtain

Denote

we then arrive at

for some suitable constants \(b_{1}, b_{2},\ldots \). We now remark that the new approximation \(A_{1}(\tau )\) only depends on b and \(\lambda _{0}\) and is independent of the coefficients \(a_{j}, j=0, 1, 2,\ldots \) in (57). Hence we obtain a new approximation \(A_{1}(\tau )\) of A which has the higher convergence order \(O(\tau ^{\lambda _{1}})\). To see the convergence order \(\lambda _{1}\), using (62), we may calculate

Then, by (63), it follows that

and

Continuing this process, we may construct the high-order approximations \(A_{2}(\tau ), A_{3}(\tau ),\ldots \) which have the convergence orders \(O(\tau ^{\lambda _{2}})\), \(O(\tau ^{\lambda _{3}}),\ldots . \)

Remark 7

The approximations \(A_{1}(\tau ), A_{2}(\tau ),\ldots \) only depend on b and \(\lambda _{j}, j=0, 1, 2,\ldots \) and are independent of the coefficients \(a_{j}, j=0, 1, 2,\ldots \) in (57).
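The repeated extrapolation can be organised as a triangular table. The sketch below assumes the first extrapolate has the form \(A_{1}(\tau ) = \big ( b^{\lambda _{0}} A_{0}(\tau /b) - A_{0}(\tau ) \big ) / \big ( b^{\lambda _{0}} - 1 \big )\), which is what multiplying (58) by \(b^{\lambda _{0}}\) and subtracting (57) yields, and applies the same step with \(\lambda _{1}, \lambda _{2}, \ldots \) in later columns. The function name `richardson_table` and the synthetic data are illustrative, not results from the paper.

```python
def richardson_table(values, exponents, b=2.0):
    """Build the extrapolation table.

    values[i]    : approximation A_0(tau / b**i)
    exponents[j] : lambda_j, the j-th exponent in the error expansion
    table[i][j]  : approximation after j extrapolation steps
    """
    table = [[v] for v in values]
    for i in range(1, len(values)):
        for j in range(1, min(i, len(exponents)) + 1):
            r = b ** exponents[j - 1]
            # Eliminate the tau**lambda_{j-1} term from two neighbouring entries.
            table[i].append((r * table[i][j - 1] - table[i - 1][j - 1]) / (r - 1.0))
    return table

if __name__ == "__main__":
    alpha, A = 1.5, 2.0
    exps = [3 - alpha, 4 - alpha, 5 - alpha]
    # Synthetic approximations with a three-term error expansion.
    def A0(tau):
        return A + 0.7 * tau ** exps[0] - 0.4 * tau ** exps[1] + 0.9 * tau ** exps[2]
    vals = [A0((1.0 / 20) / 2 ** i) for i in range(4)]
    for row in richardson_table(vals, exps):
        print([abs(x - A) for x in row])
```

As Remark 7 states, only b and the exponents enter; the coefficients of the expansion are never needed.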

Example 1

Consider the approximation scheme defined in (21) or (7) for approximating Riemann–Liouville fractional derivative \(\, _{0}^{R} D_{t}^{\alpha } f(t_{N}), \alpha \in (1, 2)\) at a fixed time \(t_{N} =T\) for some smooth functions \(f(t), t \in [0, T]\).

By Theorem 1, we obtain

where the error \(R_{N}(g)\) satisfies

for some suitable coefficients \(d_{l}, \, l=3, 4 \ldots \) and \(d_{l}^{*}, \, l=2, 3 \ldots \). This implies for fixed \(t_{N}=T\),

Denote \(A= \, _{0}^{R} D_{t}^{\alpha } f(t_{N})\) and approximate A by \( A_{0}(\tau ) = \tau ^{-\alpha } \sum _{k=0}^{N} w_{k N} f(t_{N-k}). \) Then, by (65),

In Table 1, we choose \(f(t) = t^{5}, \tau = 1/20\), \(b=2\) and \(T=1\). We obtain the approximate solutions with the step sizes \( \big ( \tau , \frac{\tau }{2}, \frac{\tau }{2^2}, \frac{\tau }{2^3}, \frac{\tau }{2^4}, \frac{\tau }{2^5} \big )= \big ( \frac{1}{20}, \frac{1}{40}, \frac{1}{80}, \frac{1}{160}, \frac{1}{320}, \frac{1}{640} \big )\).

We observe that the experimentally determined order of convergence (EOC) of the approximate solutions in the first column is \(O(\tau ^{3- \alpha })\) with \(\alpha \in (1, 2)\). After the first extrapolation, the new approximate solutions have the experimentally determined convergence order \(O(\tau ^{4- \alpha })\). After the second extrapolation, the experimentally determined convergence order of the approximate solutions is slightly less than the expected order \(O(\tau ^{5- \alpha }),\) because of the computational errors. Here, the number in the bracket \(( \cdot )\) denotes the expected convergence order.

In Tables 2 and 3, we choose \(f(t) = \cos (\pi t)\) and \(f(t) = e^{t}\), respectively, and use the same parameters as in Table 1. We observe experimentally determined orders of convergence similar to those in Table 1.

Example 2

Consider the following linear fractional differential equation

where \(y(t) = t^{5}\) and \( \beta =-1\) and \(f(t) = \, _{0}^{R} D_{t}^{\alpha } t^5 + t^5\). The initial values are \(y_{0}= y_{0}^{1}=0\).

Let \(A= y(t_{N})\) with \(t_{N}=1\) be the exact solution of (67). Let \(A_{0}(\tau )= y_{N}\) be the approximate solution obtained from (29). By Theorem 2, we arrive at

for some suitable constants \(c_{\mu }, \mu = 3, 4,\ldots \) and \(c^{*}_{\mu }, \mu = 2, 3,\ldots \).

In Tables 4 and 5, we choose \(\tau = 1/20\), \(b=2\), \( y_{0}=0\) and \(y_{1} = \tau ^{5}\). We obtain the extrapolated values of the approximate solutions with the step sizes \( \big ( \tau , \frac{\tau }{2}, \frac{\tau }{2^2}, \frac{\tau }{2^3}, \frac{\tau }{2^4}, \frac{\tau }{2^5} \big )= \big ( \frac{1}{20}, \frac{1}{40}, \frac{1}{80}, \frac{1}{160}, \frac{1}{320}, \frac{1}{640} \big )\).

We observe that the experimentally determined order of convergence (EOC) of the approximate solution in the first column is \(O(\tau ^{3- \alpha })\) with \(\alpha \in (1, 2)\). After the first extrapolation, we get the new approximate solutions, whose experimentally determined order of convergence is slightly less than the expected order \(O(\tau ^{4- \alpha })\) because of the computational errors. After the second extrapolation, the experimentally determined order of convergence of the approximate solutions is also slightly less than the expected order \(O(\tau ^{5- \alpha })\) due to the computational errors.

In Tables 4 and 5, we compare the convergence orders and CPU times of our numerical scheme with those of a scheme developed in [8]. In [8], an approximate scheme (47)–(49) with a convergence order of \(O(\tau ^{4-\alpha }), \alpha \in (1, 2)\), was proposed for solving time fractional wave equations of order \(\alpha \in (1, 2)\). We modified the scheme (47)–(49) in [8] to solve fractional differential equations of order \(\alpha \in (1, 2)\). We observe that our scheme achieves a convergence order close to \(O(\tau ^{5-\alpha })\) after two extrapolations, while requiring nearly the same CPU time as the method presented in [8]. This indicates that our scheme exhibits better convergence behavior than the scheme in [8], which only achieves a convergence order of \(O(\tau ^{4-\alpha })\).

Example 3

Consider the following semilinear fractional differential equation

where \(y(t) = t^{5}\), \( \beta =-1\), \(f(y) = \sin (y)\) and \(g(t)= \, _{0}^{R} D_{t}^{\alpha } t^5 + t^5 - \sin (t^5)\).

For given \(y_{0} = y (0) =0, \, y_{1} = y(\tau )= \tau ^{5}\), we define the following numerical method, with \(n \ge 2\),

Let \(A= y(t_{N})\) with \(t_{N}=1\) be the exact solution of (69). Let \(A_{0}(\tau )= y_{N}\) be the approximate solution obtained from (70) by using the MATLAB function "fsolve.m".
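For readers without MATLAB, the implicit step can also be solved with a scalar Newton iteration. The sketch below is a substitute for "fsolve.m", not the authors' code, and it assumes that at each time level the scheme reduces to a scalar equation of the form \(c \, y_{n} - f(y_{n}) = r\), where c collects the diagonal weight minus \(\beta \) and r collects the history terms and \(g(t_{n})\); this form is an assumption, since (70) is not reproduced in this text. The name `solve_implicit_step` and the sample values of c and r are hypothetical.

```python
import math

def solve_implicit_step(c, rhs, f, df, y_guess, tol=1e-14, max_iter=50):
    """Solve c * y - f(y) = rhs for y with Newton's method.

    c, rhs  : scalar coefficient and right-hand side of the step equation
    f, df   : the nonlinearity and its derivative
    y_guess : starting value, e.g. the solution at the previous time level
    """
    y = y_guess
    for _ in range(max_iter):
        residual = c * y - f(y) - rhs
        step = residual / (c - df(y))
        y -= step
        if abs(step) <= tol * max(1.0, abs(y)):
            break
    return y

if __name__ == "__main__":
    # Nonlinearity of Example 3, f(y) = sin(y), with illustrative c and rhs.
    y = solve_implicit_step(10.0, 3.0, math.sin, math.cos, y_guess=0.0)
    print(y, 10.0 * y - math.sin(y) - 3.0)
```

Newton's method is well behaved here whenever c exceeds the Lipschitz constant of f, since the derivative \(c - f'(y)\) then stays positive.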

In Table 6, we choose \(\tau = 1/20\), \(b=2\). We obtain the extrapolated values of the approximate solutions with the step sizes \( \big ( \tau , \frac{\tau }{2}, \frac{\tau }{2^2}, \frac{\tau }{2^3}, \frac{\tau }{2^4}, \frac{\tau }{2^5} \big )= \big ( \frac{1}{20}, \frac{1}{40}, \frac{1}{80}, \frac{1}{160}, \frac{1}{320}, \frac{1}{640} \big )\).

We observe in Table 6 that the experimentally determined order of convergence (EOC) of the approximate solution in the first column is almost \(O(\tau ^{3- \alpha })\) with \(\alpha =1.5\). After the first extrapolation, we get the new approximate solutions. The experimentally determined order of convergence is slightly less than the expected \(O(\tau ^{4- \alpha })\). After the second extrapolation, the experimentally determined order of convergence of the approximate solutions is also less than the expected \(O(\tau ^{5- \alpha })\) due to the nonlinearity of the problem.

Example 4

Consider the following semilinear fractional differential equation

where \(y(t) = t^{5}\), \( \beta =-1\), \(f(y) = y-y^3\) and \(g(t)= \, _{0}^{R} D_{t}^{\alpha } t^5 + t^5 - \big ( t^5 - (t^5)^3 \big ) \).

For given \(y_{0} = y (0) =0, \, y_{1} = y(\tau )= \tau ^{5}\), we define the following numerical method, with \(n \ge 2\),

Let \(A= y(t_{N})\) with \(t_{N}=1\) be the exact solution of (71). Let \(A_{0}(\tau )= y_{N}\) be the approximate solution obtained from (72) by using the MATLAB function "fsolve.m".

We use the same parameters as in Example 3. In Table 7, we obtain experimentally determined convergence orders similar to those in Example 3.

Note that this type of nonlinearity does not satisfy the uniform Lipschitz condition (2); it only satisfies a local Lipschitz condition. Nevertheless, the computational results above indicate that it may be possible to derive an error analysis for this case, which will be part of our future work.

Example 5

Consider the following linear fractional differential equation

where \(y(t) = t^{\gamma }, \gamma >1 \) and \( \beta =-1\) and \(f(t) = \, _{0}^{R} D_{t}^{\alpha } t^{\gamma } + t^{\gamma }\). The initial values are \(y_{0}= y_{0}^{1}=0\).

In this example, we consider the case where the solution is not sufficiently smooth. We choose \(\gamma = 1.4\), so that the exact solution is \(y (t) = t^{1.4}\). We use the same step sizes as in Example 2. In Table 8 we choose \( \alpha = 1.4\), \(\tau = 1/20\), \(b=2\), \(y_{0} =0\) and \( y_{1}= \tau ^{1.4}\). We obtain the extrapolated values of the approximate solutions with the step sizes \( \big ( \tau , \frac{\tau }{2}, \frac{\tau }{2^2}, \frac{\tau }{2^3}, \frac{\tau }{2^4}, \frac{\tau }{2^5} \big )= \big ( \frac{1}{20}, \frac{1}{40}, \frac{1}{80}, \frac{1}{160}, \frac{1}{320}, \frac{1}{640} \big )\). We observe that the convergence orders obtained after extrapolation are much lower than the expected values because the solution lacks sufficient smoothness. Handling such solutions requires alternative extrapolation approaches; see Remark 5 in Sect. 3 for further discussion.

6 Conclusion

In this paper, we construct a new high-order scheme for approximating the Riemann–Liouville fractional derivative of order \(\alpha \in (1, 2)\) based on the Hadamard finite-part integral expression of the Riemann–Liouville fractional derivative. The asymptotic expansion of the approximation error is proved. Using the proposed scheme, we obtain a high-order numerical method for a linear fractional differential equation whose error also has an asymptotic expansion. We also construct and analyze a high-order numerical scheme for approximating a semilinear fractional differential equation and show that its error again has an asymptotic expansion. The numerical experiments are consistent with our theoretical results.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Chen, M., Deng, W.: High order algorithms for the fractional substantial diffusion equation with truncated Lévy flights. SIAM J. Sci. Comput. 37, A890–A917 (2015)

Diethelm, K.: An algorithm for the numerical solution of differential equations of fractional order. Electron. Trans. Numer. Anal. 5, 1–6 (1997)

Diethelm, K.: The Analysis of Fractional Differential Equations. Lecture Notes in Mathematics, Springer, Berlin (2010)

Diethelm, K.: Generalized compound quadrature formulae for finite-part integrals. IMA J. Numer. Anal. 17, 479–493 (1997)

Diethelm, K., Walz, G.: Numerical solution of fractional order differential equations by extrapolation. Numer. Algorithms 16, 231–253 (1997)

Dimitrov, Y.: A second order approximation for the Caputo fractional derivative. J. Fract. Calc. Appl. 7, 175–195 (2016)

Dimitrov, Y.: Three-point approximation for Caputo fractional derivative. Commun. Appl. Math. Comput. 31, 413–442 (2017)

Du, R., Yan, Y., Liang, Z.: A high-order scheme to approximate the Caputo fractional derivative and its application to solve the fractional diffusion wave equation. J. Comput. Phys. 376, 1312–1330 (2019)

Elliott, D.: An asymptotic analysis of two algorithms for certain Hadamard finite-part integrals. IMA J. Numer. Anal. 13, 445–462 (1993)

Gao, G., Sun, Z.: Two unconditionally stable and convergent difference schemes with the extrapolation method for the one-dimensional distributed-order differential equations. Numer. Methods Partial Differ. Equ. 32, 591–615 (2016)

Gohar, M., Li, C., Li, Z.: Finite difference methods for Caputo–Hadamard fractional differential equations. Mediterr. J. Math. 17, 194–220 (2020)

Gohar, M., Li, C., Yin, C.: On Caputo Hadamard fractional differential equations. Int. J. Comput. Math. 97, 1459–1483 (2020)

Hao, Z., Cao, W., Li, S.: Numerical correction of finite difference solution for two-dimensional space-fractional diffusion equations with boundary singularity. Numer. Algorithms 86, 1071–1087 (2021)

Jin, B., Zhou, Z.: A finite element method with singularity reconstruction for fractional boundary value problems. ESAIM: M2AN 49, 1261–1283 (2015)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier Science, Amsterdam (2006)

Li, C., Cai, M.: Theory and Numerical Approximations of Fractional Integrals and Derivatives. SIAM, Philadelphia (2019)

Li, S., Cao, W., Hao, Z.: An extrapolated finite difference method for two-dimensional fractional boundary value problems with non-smooth solution. Int. J. Comput. Math. 99, 274–291 (2022)

Li, C., Zeng, F.: Numerical Methods for Fractional Calculus. Chapman and Hall/CRC, Boca Raton (2015)

Li, Z., Liang, Z., Yan, Y.: High-order numerical methods for solving time fractional partial differential equations. J. Sci. Comput. 71, 785–803 (2017)

Lin, Y., Xu, C.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225, 1533–1552 (2007)

Liu, Y., Roberts, J., Yan, Y.: Detailed error analysis for a fractional Adams method with graded meshes. Numer. Algorithms 78, 1195–1216 (2018)

Lyness, J.N.: Finite-part integrals and the Euler–MacLaurin expansion. In: Zahar, R.V.M. (ed.) Approximation and Computation. International Series of Numerical Mathematics, vol. 119, pp. 397–407. Basel, Birkhäuser (1994)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1999)

Qi, R., Sun, Z.: Some numerical extrapolation methods for the fractional sub-diffusion equation and fractional wave equation based on the L1 formula. Commun. Appl. Math. Comput. (2022). https://doi.org/10.1007/s42967-021-00177-8

Vong, S., Lyu, P., Chen, X., Lei, S.: High order finite difference method for time-space fractional differential equations with Caputo and Riemann–Liouville derivatives. Numer. Algorithms 72, 195–210 (2016)

Wang, Y.: A high-order compact finite difference method and its extrapolation for fractional mobile/immobile convection-diffusion equations. Calcolo 54, 733–768 (2017)

Wang, Y.: A Crank-Nicolson-type compact difference method and its extrapolation for time fractional Cattaneo convection-diffusion equations with smooth solutions. Numer. Algorithms 81, 489–527 (2019)

Yang, X., Zhang, H., Xu, D.: Orthogonal spline collocation method for the two-dimensional fractional sub-diffusion equation. J. Comput. Phys. 256, 824–837 (2014)

Yin, B., Liu, Y., Li, H., He, S.: Fast algorithm based on TT-MFE system for space fractional Allen–Cahn equations with smooth and non-smooth solutions. J. Comput. Phys. 379, 351–372 (2019)

Yan, Y., Pal, K., Ford, N.J.: High-order numerical methods for solving fractional differential equations. BIT Numer. Math. 54, 555–584 (2014)

Zeng, F., Li, C., Liu, F., Turner, I.: Numerical algorithms for time-fractional subdiffusion equation with second-order accuracy. SIAM J. Sci. Comput. 37, A55–A78 (2015)

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interests.


7 Appendix

7.1 Euler-Maclaurin expansion of the integral

In the proof of Theorem 1, we frequently apply the following Lemma 3.

Lemma 3

Let \( 0 = w_{0}< w_{1}< \cdots < w_{n}=1, n \ge 1 \) be a partition of [0, 1] with step size \(h=\frac{1}{n}\) and \(w_{k} = \frac{k}{n}, k=0, 1,\ldots , n\). Let G(w) be a sufficiently smooth function defined on [0, 1] and \( q \in {\mathbb {R}}\) with \( q <-1\). Then, for \( s \in (0, 1)\), there holds

for some suitable functions \(a_{k} (s), b_{k} (s), k=0, 1, 2,\ldots \).

For completeness, we will give the idea of the proof of Lemma 3 in this subsection. Let us first recall some results related to the Euler-Maclaurin expansion of the integral which help us to prove Lemma 3. For more details on the Hadamard finite-part integral and the Euler-Maclaurin expansion of the integral, we refer to Lyness [22].

There are many ways to approximate the integral

where f(x) is sufficiently smooth or \(f(x) = x^{q} g(x)\) with \(q \in {\mathbb {R}}\) and g is sufficiently smooth. When the singularity of the integrand is not integrable, the integral must be understood in the Hadamard finite-part sense; see Lyness [22].
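To illustrate the finite-part interpretation on the simplest case \(f(x) = x^{q}\) with \(q < -1\): the ordinary integral over \([\epsilon , 1]\) equals \(\frac{1}{q+1} - \frac{\epsilon ^{q+1}}{q+1}\), and discarding the term that diverges as \(\epsilon \rightarrow 0\) leaves the finite part \(\frac{1}{q+1}\). The Python sketch below checks this numerically; the function names and the choice of the midpoint rule are illustrative assumptions, not part of the paper.

```python
def truncated_integral(q, eps, n=20000):
    """Midpoint-rule value of the ordinary integral of x**q over [eps, 1]."""
    h = (1.0 - eps) / n
    return h * sum((eps + (j + 0.5) * h) ** q for j in range(n))

def finite_part_power(q, eps, n=20000):
    """Finite part of the integral of x**q over (0, 1), for q < -1.

    Subtracts the term -eps**(q+1)/(q+1), which diverges as eps -> 0,
    from the truncated integral. The exact finite part is 1/(q+1).
    """
    divergent = -eps ** (q + 1.0) / (q + 1.0)
    return truncated_integral(q, eps, n) - divergent

if __name__ == "__main__":
    q = -1.5
    print("numerical finite part:", finite_part_power(q, 0.01))
    print("exact 1/(q+1):        ", 1.0 / (q + 1.0))
```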

Let \(0= x_{0}< x_{1}< \cdots < x_{N}=1\) be a partition with the step size h. The simplest method to approximate (74) is the rectangle rule, that is,

The trapezoidal rule is

where \( P_{1} (x) = \frac{x-x_{j}}{x_{j-1} - x_{j}} f(x_{j-1}) + \frac{x-x_{j-1}}{x_{j} - x_{j-1}} f(x_{j}) \) is the linear interpolation polynomial of f(x) on \([x_{j-1}, x_{j}]\). It is easy to show that, for sufficiently smooth f(x), the errors of the rectangle rule and the trapezoidal rule have the convergence orders O(h) and \(O(h^2)\), respectively.
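The two convergence orders can be observed numerically with the short Python sketch below. It assumes a uniform partition of [0, 1] and a left-endpoint rectangle rule (the choice of endpoint is an assumption here); the function names are illustrative only.

```python
import math

def rectangle_rule(f, n):
    """Left-endpoint rectangle rule on [0, 1] with n equal subintervals."""
    h = 1.0 / n
    return h * sum(f(j * h) for j in range(n))

def trapezoidal_rule(f, n):
    """Composite trapezoidal rule on [0, 1] with n equal subintervals."""
    h = 1.0 / n
    interior = sum(f(j * h) for j in range(1, n))
    return h * (0.5 * f(0.0) + interior + 0.5 * f(1.0))

def observed_order(rule, f, exact, n):
    """Estimate the convergence order by halving the step size once."""
    e1 = abs(exact - rule(f, n))
    e2 = abs(exact - rule(f, 2 * n))
    return math.log2(e1 / e2)

if __name__ == "__main__":
    exact = math.e - 1.0  # integral of exp(x) over [0, 1]
    print("rectangle order:  ", observed_order(rectangle_rule, math.exp, exact, 64))
    print("trapezoidal order:", observed_order(trapezoidal_rule, math.exp, exact, 64))
```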

It is natural to consider the following approximation

With \(h= \frac{1}{N}\), denote

Then the following result holds, whose proof can be found in [22, Theorem 2.5].

Lemma 4

If f(x) is sufficiently smooth, that is, \(f, f^{\prime },\ldots , f^{(p+1)} \in L^{1} [0, 1]\) for some \( p \in {\mathbb {Z}}^{+}\), then the error has the following asymptotic expansion.

where \(f^{(s)} (w):= \frac{d^{s} f(x)}{d x^s}\), and

where \(B_{s}(x)\) is the Bernoulli polynomial and \({\bar{B}}_{s}(x)\) the corresponding Bernoulli function, which coincides with \(B_{s}(x)\) in the interval (0, 1) and has unit period.
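For readers who wish to evaluate these quantities, the following Python sketch computes the Bernoulli polynomial \(B_{s}(x)\) from the Bernoulli numbers and obtains the periodic Bernoulli function by reducing the argument modulo 1. It uses the convention \(B_{1} = -\frac{1}{2}\); the function names are hypothetical.

```python
from fractions import Fraction
from math import comb, floor

def bernoulli_numbers(m):
    """Bernoulli numbers B_0, ..., B_m (convention B_1 = -1/2)."""
    B = [Fraction(1)]
    for n in range(1, m + 1):
        # Recurrence: sum_{k=0}^{n} C(n+1, k) B_k = 0 for n >= 1.
        B.append(-sum(comb(n + 1, k) * B[k] for k in range(n)) / (n + 1))
    return B

def bernoulli_poly(s, x):
    """Bernoulli polynomial B_s(x) = sum_k C(s, k) B_k x**(s-k)."""
    B = bernoulli_numbers(s)
    return sum(comb(s, k) * B[k] * x ** (s - k) for k in range(s + 1))

def bernoulli_periodic(s, x):
    """Periodic Bernoulli function: B_s evaluated at the fractional part of x."""
    return bernoulli_poly(s, x - floor(x))

if __name__ == "__main__":
    print(bernoulli_poly(2, Fraction(1, 2)))   # B_2(1/2)
    print(bernoulli_periodic(3, 2.25))         # equals B_3(0.25)
```

Passing a `Fraction` argument keeps the evaluation exact, while a float argument returns a float.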

The next lemma is based on [22, Theorem 3.2]; its proof is given below for completeness.

Lemma 5

Let \( f(x) = x^{q} g(x)\) with \(q \in {\mathbb {R}}\). Assume \( g \in C^{p_{1}+1} [0, 1]\) for some sufficiently large \(p_{1} \in {\mathbb {Z}}^{+}\). Then, for any \( 0 \le p < q + 1 + p_{1}, \, p \in {\mathbb {Z}}^{+}\) and \( p_{2} > q + p_{1}, \, p_{2} \in {\mathbb {Z}}^{+}\), we have the following asymptotic expansion, with \(0< \beta <1\),

for some suitable functions \(a_{j}(\beta ), a_{0 j} (\beta ), b_{s}(\beta )\).

Proof

Step 1. A Taylor series expansion shows

Thus, it follows that

Step 2. We first estimate II.

Setting

we shall show that \(F, F^{\prime },\ldots , F^{(p+1)} \in L^{1} [0, 1]\) for some suitable \(p \in {\mathbb {Z}}^{+}\). In fact, we observe that

which implies

Hence, \( F^{\prime } \in L^{1} (0, 1),\) provided \( p_{1} + q + 1>0. \) Similarly, it is easy to show \( F^{(p+1)} \in L^{1} (0, 1), \) provided \( p_{1} + q - (p+1) +2 >0, \) that is, \(p < q + p_{1} +1.\)

Applying Lemma 4, we obtain for \(p < q + p_{1} +1\)

for some suitable constants \(a_{l} (\beta ), l=0, 1,\ldots , p+1\).

Step 3. In order to estimate I, we first consider \(R_{N}^{\beta } (x^{q})\).

For fixed \(j=1, 2,\ldots , N-1\), set \( w(x) = (j + x)^q. \) With \(f_{q} (x) = x^q\), we now find that

Hence,

Note that

and for \(s \ge 2\)

Further note that

Since for \( p_{2} > q\)

and

then, we arrive at

Similarly, noting that \(p_{2} > q + p_{1}\), we have, with \(l=0, 1,\ldots , p_{1}\),

Combining these estimates, we arrive at

Hence, we obtain for \( g \in C^{p_{1}+1}(0, 1)\) and \(p< q +1 + p_{1}, \; p_{2} > q + p_{1}\), the following result

This completes the proof of Lemma 5. \(\square \)

Proof of Lemma 3

Applying Lemma 5, we immediately arrive at the conclusion in Lemma 3. \(\square \)

7.2 Proof of Lemma 2

In this subsection, we provide a proof of Lemma 2. To prove Lemma 2, we need the following two lemmas.

Lemma 6

Let \(E(k), F(k), G(k), k=2,3,\ldots , n, n=2,3,\ldots , N\) be defined by (17), (18) and (19), respectively. Then, there hold

and

Proof

We first prove \(E(k)<0\) for \(k=2,3,\ldots , n,\, n=2,3,\ldots , N\). By the expression of E(k) and the series expansion of \((1-1/k)^{-\alpha +j}\) for \(j=1, 2\), we obtain

A use of \(K(\alpha )>0\) with some simplifications shows

Similarly, it is easy to prove \(F(k)<0\) and \(G(k)>0\). It remains to show \(E(k+1)-2F(k)>0\) for \(k=2,3,\ldots , n,\, n=2,3,\ldots , N-1\). With \(K(\alpha )>0,\) we note that

Together these estimates complete the rest of the proof. \(\square \)

Lemma 7

Let \(1<\alpha <2\). Then the coefficients \(w_{kn}\) defined by (2.2) satisfy the following properties

Proof

We only prove the case \(n\ge 5\). The cases \(n=2,3,4\) can be proved in a similar manner. We first show (82). Note with \(n=5,6,\cdots , N\) that

Since

and

we obtain for \(n=5,6,\cdots , N\)

It follows from Lemma 6 that for \(n=5,6,\cdots , N\), the following result holds

Similarly, for \(k=3,4,\cdots , n-2,\,n=5,6,\cdots , N\), there holds

Using Lemma 6 again, it follows that for \(n=5,6,\cdots , N\),

and \(w_{nn}=\frac{1}{\varGamma (3-\alpha )}\frac{1}{2}G(n)>0.\)

We next prove (83). For \(l=1,2,\cdots ,n, n=5,6,\cdots ,N\), since

we obtain, taking \( f(t) \equiv 1\),

Finally, we give the proof of (84). Letting \(f(t)\equiv t\), we note at \(t=t_{l}\) that

that is,

This implies

Together these estimates complete the proof of Lemma 7. \(\square \)

We are now ready to give the proof of Lemma 2.

Proof of Lemma 2

Similar to Lemma 7, we only prove the case for \(n=5,6,\cdots , N\). The case for \(n=2,3,4\) can be proved similarly. We first show (48). According to (84), it is easy to see that

We next prove (49). Recalling (83), we arrive at

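Finally, we give the proof of (50). We now claim that, for \(1<\alpha <2\) and \(n\ge 1\), the following inequality (85) holds: \( \sum \limits _{l=1}^{n}l^{1-\alpha }\le \frac{n^{2-\alpha }}{2-\alpha }. \)

Assuming for the moment that (85) holds, we find for \(n=5,6,\cdots , N\) that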

which is (50).

It remains to show (85). We use mathematical induction on n. When \(n=1\), the result is obviously true. We assume that the conclusion is true for \(n=1,2,\cdots ,k-1\) and prove that it holds for \(n=k\), that is, \( \sum \limits _{l=1}^{k}l^{1-\alpha }\le \frac{k^{2-\alpha }}{2-\alpha }. \) By the inductive hypothesis, it suffices to show \( \frac{(k-1)^{2-\alpha }}{2-\alpha }+k^{1-\alpha }\le \frac{k^{2-\alpha }}{2-\alpha }, \) that is, \( \frac{(2-\alpha )k^{1-\alpha }}{k^{2-\alpha }-(k-1)^{2-\alpha }}\le 1. \) Using again the series expansion of \((1-1/k)^{2-\alpha }\), we note that

This shows the result holds true for \(n=k\) and by the principle of induction, we obtain (85). This concludes the rest of the proof.\(\square \)
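As an informal sanity check, independent of the induction argument, the inequality \( \sum _{l=1}^{n}l^{1-\alpha }\le \frac{n^{2-\alpha }}{2-\alpha }\) can be tested numerically for sample values of \(\alpha \in (1,2)\). The Python sketch below does this; the function names and the sampled values of \(\alpha \) are illustrative choices, and a numerical check of this kind does not replace the proof.

```python
def bound(n, alpha):
    """Right-hand side n**(2-alpha) / (2-alpha)."""
    return n ** (2.0 - alpha) / (2.0 - alpha)

def check_inequality(alphas, n_max):
    """Return True if the partial sums stay below the bound for all tested n."""
    for alpha in alphas:
        partial = 0.0
        for n in range(1, n_max + 1):
            partial += n ** (1.0 - alpha)  # running sum of l**(1-alpha)
            if partial > bound(n, alpha):
                return False
    return True

if __name__ == "__main__":
    sample_alphas = [1.1, 1.3, 1.5, 1.7, 1.9]
    print(check_inequality(sample_alphas, 2000))
```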

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, Y., Green, C.W.H., Pani, A.K. et al. High-order schemes based on extrapolation for semilinear fractional differential equation. Calcolo 61, 2 (2024). https://doi.org/10.1007/s10092-023-00553-1

DOI: https://doi.org/10.1007/s10092-023-00553-1