Abstract

A frequently occurring problem in frequency analysis is the reconstruction of a signal from given samples. Since the samples are usually noisy, purely interpolating approaches are not recommended; appropriate approximation methods are more suitable, as they can be interpreted as a kind of denoising. Two approaches are widely used: one uses the reflection coefficients of a finite sequence of Szegő polynomials, the other the zeros of the so-called Prony polynomial. We show that both approaches are closely related. Since the reconstruction is a kind of inverse problem, it is not surprising that both methods depend very sensitively on sampling errors. We use known properties of the signal to estimate the positions of the zeros of the corresponding Szegő- or Prony-like polynomials and construct adaptive algorithms to calculate them. In this way, we also obtain the corresponding parameters in the exponential parts of the signal. Then, the coefficients of the signal (as a linear combination of such exponential functions) can be obtained from a system of linear equations by minimizing the residuals with respect to a suitable norm, which again acts as a kind of denoising.

1 Introduction

We consider a signal h with finite bandlength m of the form

$$h(x)=\sum _{j=1}^{m}\lambda _j e^{\omega _j x} \tag{1}$$

with \(\,\mathrm {Re}\,\omega _j\in [-\alpha ,0],\ \alpha \ge 0, \ \,\mathrm {Im}\, \omega _j\in (-\pi ,\pi ],\ \omega _i\ne \omega _j\) for \(i\ne j\), \(\lambda _j\in \mathbb {C}\backslash \{ 0\}\). In particular, all \(z_j:=e^{\omega _j}\) lie in an annulus \([[e^{-\alpha },1]]=\{z\in \mathbb {C}\,:\, e^{-\alpha }\le |z|\le 1\}\), where \([[a,b]]:=\{z\in \mathbb {C}\,:\, a\le |z|\le b\}\); in particular, \([[1,1]]=\{z\,:\, |z|=1\}\) denotes the unit circle. In the same manner we define \(((a,b]]:=\{ z\,:\, a<|z|\le b\}\) and analogously \([[a,b)),\ ((a,b))\) for \(a,b\in \mathbb {R},\ a\le b\). By \(h_k:=h(k),\ k\in \mathbb {N}_0\), we denote the equidistantly sampled values.

A problem in signal theory is to get the signal h or an approximation of it from given samples. From the sampling theorem it is known that 2m samples are necessary for the reconstruction, i.e. to get the \(\lambda _j,\ \omega _j,\ j=1,\ldots ,m\).

The classical approach of Prony [35] uses 2m samples \(h_k\) to calculate a monic polynomial \(\rho _m\) in its monomial representation whose zeros are the desired \(z_j\), i.e. \(\rho _m(z)=\prod _{j=1}^m (z-z_j)\). By construction, \(\rho _m\) has only simple zeros. If we know the \(z_j\) we get the corresponding \(\omega _j\) via \(\omega _j=\mathrm {Log}\, z_j\) (complex logarithm).

If all \(\omega _j\) are known then the coefficients \(\lambda _j,\ j=1,\ldots ,m\), can be obtained from (1) as the solution of a system of linear equations, using the samples as interpolation conditions, see Sect. 9.
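
The classical procedure just described can be sketched in a few lines. This is only an illustration for exact samples, not the method developed in this article: the function name `prony` is hypothetical, and the Hankel system is written in the standard textbook form \(\sum _{\nu =0}^{m-1} p_\nu h_{k+\nu }=-h_{k+m}\), which is assumed here.

```python
import numpy as np

def prony(h, m):
    """Classical Prony sketch: recover (omega_j, lambda_j) from 2m exact samples."""
    h = np.asarray(h, dtype=complex)
    # Hankel system: sum_{nu<m} p_nu h_{k+nu} = -h_{k+m}, k = 0..m-1 (p_m = 1)
    H = np.array([h[k:k + m] for k in range(m)])
    p = np.linalg.solve(H, -h[m:2 * m])
    # zeros of the monic Prony polynomial (np.roots expects descending order)
    z = np.roots(np.concatenate(([1.0], p[::-1])))
    omega = np.log(z)  # principal branch of the complex logarithm
    # coefficients lambda_j from the Vandermonde system h_k = sum_j lambda_j z_j^k
    V = np.vander(z, N=2 * m, increasing=True).T
    lam, *_ = np.linalg.lstsq(V, h[:2 * m], rcond=None)
    return omega, lam

# illustrative test signal with m = 3 (values chosen arbitrarily)
omega_true = np.array([-0.1 + 1.0j, 0.5j, -0.3 - 2.0j])
lam_true = np.array([1.0, 2.0 - 1.0j, 0.5])
h = np.array([np.sum(lam_true * np.exp(omega_true * k)) for k in range(6)])
omega, lam = prony(h, 3)
```

For noise-free data the recovered parameters agree with the true ones up to rounding; with perturbed samples the same code can fail badly, which is the motivation for the rest of the article.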

Usually, the measured values \(h_k\) contain some errors which may also distort the Prony polynomial. From numerical analysis it is well known that even small perturbations of the monomial coefficients may cause substantial changes in the zeros of \(\rho _m\). This can already be the case for small values of m. Thus, Prony’s original method is not recommended for general signal reconstruction.

Nevertheless, methods which are based on this approach are widely used by engineers, in particular for special applications, e.g. in the medical, radiophysical and electromechanical context, see [9,10,11, 15, 22, 23, 28] for a few examples.

Another classical way constructs the Prony polynomial not in its monomial representation, see e.g. [1, 2, 13, 17,18,19,20,21]. Using given samples, some authors construct moments of a weight function. By a Levinson-like algorithm, an appropriate finite sequence of Szegő polynomials \((s_\nu )_{\nu =0}^m\) resp. its reflection coefficients can be obtained. Here, \(s_m\) coincides up to normalization with the Prony polynomial \(\rho _m\) if all zeros of \(\rho _m\) are in the interior or all of them are on the boundary of the unit disc.

The reflection coefficients are the entries of a Hessenberg matrix whose eigenvalues are the zeros of \(s_m\). But this method has some drawbacks, especially if some zeros are in the interior of the unit disc and some of them are unimodular ones. Then, the convergence of the zeros of \(s_m\) to the unimodular ones of \(\rho _m\) is not guaranteed [29, 30].

Other methods, which take erroneous samples into account, use \(l_1\)- or \(l_2\)-techniques (or a mixture of them). Popular examples in the \(l_2\)-context are the ESPRIT method [37, 38], the matrix-pencil method [16, 39], a method which uses annihilating filters [8, 40, 43] and the approximate Prony method [32,33,34].

Besides the least squares approaches, one can use the \(l_1\)-approximation. For example, in [36, p. 255] it was noted that this approximation appears to be markedly superior to other \(l_p\)-approximations, \(p>1\), in the presence of outliers or wild points. In the next section we sketch an algorithm, based on the method given in [42], to calculate an approximate Prony polynomial by using the \(l_1\)-norm for the overdetermined case (i.e., if we have at least 2m samples).

In this article we focus on the overdetermined case with \(N\ge 2m\) samples: Theoretically, the signal can be reconstructed in the absence of noisy samples and numerical uncertainties. In practice, however, due to noise and numerical errors, it can only be approximated.

Nevertheless, if we have too few samples (i.e. an underdetermined system), we note that an \(l_1\)-solution is in most cases the sparsest solution [7], i.e. the obtained approximation of the Prony polynomial has in most cases the minimal number of nonzero coefficients in its monomial representation, see e.g. [4, 5, 24]. Thus, an \(l_1\)-solution of the underdetermined analogues of (4) resp. of (21) usually yields sparse vectors and hence a minimal value of m and therefore a sparse approximation for h.

Usually, m is not known a priori and will be estimated. If m is initially overestimated by \(\ell \ge m\), by the \(l_1\)-norm approach we get a linear programming problem. Depending on the kind of the underlying noise, the number of basis variables in the final tableau gives for the real case an ‘optimal’ value of m which is the numerical rank of the matrix of the overdetermined system of linear equations [42]. (For the complex case see there, too.) For ‘moderate’ noise this coincides with the true value of m. If we have too few samples, the underdetermined system yields an approximation of the calculated signal in the above mentioned sparse sense.

We see that there exist various methods to calculate a Prony polynomial \(\rho _m\) or an approximation of it. A possible approach is sketched in the next section.

However, the main objective of this article is not the calculation of an (approximate) Prony polynomial but the determination of its zeros and thus the parameters \((\omega _j)_{j=1}^m\) of the signal. In principle, any zero finder for polynomials can be used. But using properties of this polynomial, which can be derived from known characteristics of the signal, may be a more appropriate approach.

Here, we use knowledge of the possible positions of the zeros of \(\rho _m\) to gain more insight into the structure of this polynomial (Sect. 3). For example, we use connections between the Szegő- and the Prony-polynomials in Sects. 4 and 5 to find a factorization of \(\rho _m\) as the product of two polynomials \(\rho _m= s_\delta \rho _{m-\delta }\), whose zeros are in the interior resp. on the boundary of the unit disc.

More precisely, all the zeros of \(s_{\delta }\) are simple and lie in \([[e^{-\alpha },1))\). In Sect. 5 we construct a sequence of Szegő polynomials \((s_\nu )_{\nu =0}^\delta\) which we use in Sect. 6 to determine their reflection coefficients. With them we build a Hessenberg matrix whose eigenvalues are the zeros of \(s_\delta\).

In Sect. 7 we derive an algorithm to calculate the zeros of \(\rho _{m-\delta }\). We use the property that all of its zeros are on the unit circle for the design of this algorithm.

From the calculated zeros \(z_j\) of \(\rho _m\) we obtain \(\omega _j\) as the complex logarithms \(\omega _j=\mathrm {Log}\, z_j\). After calculating the corresponding coefficients \(\lambda _j\) in Sect. 9, the signal h, given as in (1), is reconstructed or approximated from the given samples.

Numerically, it is difficult to distinguish between zeros on the unit circle and zeros ‘near’ it, especially for a noisy signal. Thus, this case deserves closer attention in Sect. 8. In total, we get an algorithm to reconstruct or approximate a given signal from possibly noisy samples. Some tests and numerical remarks are given in Sect. 10.

2 Determining an approximate Prony polynomial

Prony [35] has shown that the coefficients of the monomial representation of \(\rho _m\),

$$\rho _m(z)=\sum _{\nu =0}^{m} p_\nu z^\nu ,\qquad p_m=1, \tag{2}$$

can be obtained from 2m samples \(h_\nu :=h(\nu ) ,\ \nu =0,\ldots ,2m-1\), where h is the exponential sum in (1), by solving the system of linear equations

Unfortunately, the zeros of \(\rho _m\) depend very sensitively on these coefficients: For a polynomial in monomial representation it is known that even slight perturbations of some coefficients may change the positions of its zeros substantially (a famous example is Wilkinson’s polynomial), even for small m. Here, the coefficients depend on the samples, which are often distorted in the real world, e.g. by sampling errors, and the value of m is usually not known a priori. Thus, it is recommended to use known properties of the signal for its reconstruction/approximation. Algorithms with satisfactory stability behaviour for calculating the zeros of a polynomial or the eigenvalues of its companion matrix are given, for example, in [3].
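
The sensitivity mentioned above can be observed directly with Wilkinson’s polynomial \(\prod _{k=1}^{20}(z-k)\). The following sketch perturbs the coefficient of \(z^{19}\) by \(2^{-23}\), as in Wilkinson’s classical experiment; the variable names are illustrative, and the zeros are computed with NumPy’s general-purpose `np.roots`, not with the algorithms of this article.

```python
import numpy as np

# Wilkinson's polynomial with zeros 1, 2, ..., 20 (float dtype avoids integer overflow)
exact_zeros = np.arange(1, 21, dtype=float)
coeffs = np.poly(exact_zeros)      # descending monomial coefficients, coeffs[1] = -210

perturbed = coeffs.copy()
perturbed[1] -= 2.0 ** -23         # tiny change of the z^19 coefficient

zeros = np.roots(perturbed)
max_imag = np.max(np.abs(zeros.imag))
print("largest imaginary part after perturbation:", max_imag)
```

Although all zeros of the unperturbed polynomial are real, several zeros of the perturbed one move far into the complex plane.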

In [42] we modified Prony’s method and used that \(p_0\ne 0\) (instead of \(p_m=1\)). This is possible because we have assumed that all zeros lie in \([[e^{-\alpha },1]]\), and thus 0 is not a zero of \(\rho _m\). We may renormalise \(\rho _m\) so that \(p_0\) becomes an arbitrary nonzero value, e.g. \(p_0:=1\), which can be done by defining \(\tilde{p}_{\nu }:=\frac{p_\nu }{p_0}\) and using \(\tilde{\rho }_m:=\frac{1}{p_0}\rho _m\). With N samples of h and an upper bound \(\ell\) for the unknown value of m (\(m\le \ell \le \lfloor N/2\rfloor\)) we modified Prony’s method to get an overdetermined system of linear equations

$$H_{N-\ell ,\ell }\,\tilde{p}=-\mathrm {h} \tag{4}$$

with \(H_{N-\ell ,\ell }=(h_{j+k})_{\begin{array}{c} {j=0,\ldots ,N-\ell -1}\\ {k=1,\ldots ,\ell \ \ \ \ \ \ } \end{array}},\ \mathrm {h}:=(h_0,\ldots ,h_{N-\ell -1})^T\) and \(\tilde{p}=(\tilde{p}_1,\ldots ,\tilde{p}_{\ell })^T\).

We have shown that \(\mathrm {rank}\, H_{N-\ell ,\ell }= m\) or in other words: The number m of individual superimposed signals \(e^{\omega _j\cdot }\) of h is given by the rank of \(H_{N-\ell ,\ell }\).
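
The rank statement can be checked numerically for exact samples. The sketch below builds \(H_{N-\ell ,\ell }=(h_{j+k})\) with the index ranges given above; the helper name `hankel_matrix`, the test signal and the tolerance `1e-10` are assumptions made only for this illustration.

```python
import numpy as np

def hankel_matrix(h, ell):
    """H_{N-ell,ell} = (h_{j+k}) with j = 0..N-ell-1 and k = 1..ell."""
    N = len(h)
    return np.array([[h[j + k] for k in range(1, ell + 1)]
                     for j in range(N - ell)])

# exact samples of a signal with m = 3 exponentials (values chosen arbitrarily)
z = np.array([0.9 * np.exp(0.5j), np.exp(1.2j), 0.7 * np.exp(-2.0j)])
lam = np.array([1.0, 2.0, 0.5])
N, ell = 20, 6                      # m <= ell <= N // 2
h = np.array([np.sum(lam * z ** k) for k in range(N)])

H = hankel_matrix(h, ell)
rank = np.linalg.matrix_rank(H, tol=1e-10)   # numerical rank, expected to equal m
```

For noisy samples the numerical rank depends on the chosen tolerance, so this check gives only a rough estimate of m in that case.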

With the residual vector

$$r(\tilde{p}):=H_{N-\ell ,\ell }\,\tilde{p}+\mathrm {h}$$

we developed in [42] an algorithm which minimizes \(\Vert r(\tilde{p})\Vert _\dagger\). The norm \(\Vert \cdot \Vert _\dagger\) is defined by \(\Vert z\Vert _\dagger := \Vert \,\mathrm {Re}\, z\Vert _1+\Vert \,\mathrm {Im}\, z\Vert _1,\ z\in \mathbb {C}^{N-\ell }\), which coincides with the \(l_1\)-norm \(\Vert \cdot \Vert _1\) for real or purely imaginary vectors. Without additional work, we get a reasonable value of m, which takes possibly noisy samples into account, even if \(H_{N-\ell ,\ell }\) has singular values with small moduli. We can interpret m (real case) resp. 2m (complex case) as the number of basis variables in the final tableau of the algorithm described in [42]. Of course, the numerical rank of \(H_{N-\ell ,\ell }\) depends on some tolerance parameters in this algorithm.

It can be shown that such a solution \(\tilde{p}^\dagger\) is a near-best approximation, for which the \(l_1\)-error is within a factor \(\sqrt{2}\) of its minimal value \(\Vert r(\tilde{p}^\star )\Vert _1\).
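
One elementary way to see where the factor \(\sqrt{2}\) comes from is the norm equivalence \(\Vert z\Vert _1\le \Vert z\Vert _\dagger \le \sqrt{2}\,\Vert z\Vert _1\), which yields \(\Vert r(\tilde{p}^\dagger )\Vert _1\le \Vert r(\tilde{p}^\dagger )\Vert _\dagger \le \Vert r(\tilde{p}^\star )\Vert _\dagger \le \sqrt{2}\,\Vert r(\tilde{p}^\star )\Vert _1\). The following sketch only illustrates the two norms; the function names are hypothetical and the minimization algorithm of [42] is not reproduced.

```python
import numpy as np

def dagger_norm(z):
    """||z||_dagger = ||Re z||_1 + ||Im z||_1."""
    z = np.asarray(z, dtype=complex)
    return np.sum(np.abs(z.real)) + np.sum(np.abs(z.imag))

def l1_norm(z):
    """Ordinary l_1-norm of a complex vector."""
    return np.sum(np.abs(np.asarray(z, dtype=complex)))

rng = np.random.default_rng(0)
z = rng.standard_normal(50) + 1j * rng.standard_normal(50)
ratio = dagger_norm(z) / l1_norm(z)   # always lies in [1, sqrt(2)]
```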

The solution \((p_0,\ldots ,p_m)^T\) of (3) can be used as a solution of (4) by \(\tilde{p}_{\nu }=\frac{p_\nu }{p_0},\ \nu =0,\ldots ,\ell ,\) where \(p_m=1\) and \(p_\nu =0\) for \(\nu >m\). Although (4) may have solutions with \(\tilde{p}_\nu \ne 0\) for \(\ell \ge \nu >m\), it can be achieved that only the first m components of \(\tilde{p}\) may be nonzero. Hence, the obtained polynomial \(\frac{1}{\tilde{p}_m}\tilde{\rho }_m\), \(\tilde{\rho }_m(z)=\sum _{\nu =0}^m \tilde{p}_\nu z^\nu\), coincides with the Prony polynomial \(\rho _m\) in the absence of any sources of errors.

From the practical point of view it is reasonable to interpret \(\frac{1}{\tilde{p}_m}\tilde{\rho }_m\) as an approximation to the ‘true’ Prony polynomial that particularly takes erroneous samples into account. Especially its zeros can be used as approximations for zeros of the Prony polynomial which can be obtained by suitable root finding algorithms.

In [42] the main focus is the construction of the Prony-like polynomial \(\rho _m\) and the calculation of the coefficients \(\lambda _j,\ j=1,\ldots ,m\). Here, we focus on determining the zeros of \(\rho _m\), using special properties of the signal. In the subsequent sections we don’t distinguish between the Prony(-like) polynomial and its numerically calculated approximation, and we concentrate on calculating the zeros of \(\rho _m\), which may not be monic.

Although Szegő polynomials are widely used in classical algorithms of frequency analysis, see e.g. [17,18,19,20,21], it is also known from these papers that their construction is sensitive not only to erroneous samples but also to the number of samples. Furthermore, the convergence of the algorithms is not always guaranteed [21, 29, 30]. These articles suggest that the moments used there depend sensitively on the samples, too.

We remark that most of the above-mentioned articles assume that in their model all \(\omega _j\) have real part zero, i.e. that \(|z_j|=1\) for all j.

Apart from a degenerate case, Szegő polynomials have all their zeros in the interior of the unit disc, so it seems that their use would be inappropriate in that context.

We consider the more general case \(0<e^{-\alpha }\le |z_j|\le 1\) for all j. Starting from \(\rho _m\), in Sect. 4 we construct Szegő polynomials \((s_{\mu })_{\mu =0}^\delta\). In Sect. 6 we use their reflection coefficients to find the zeros in the interior of the unit disc and a polynomial \(\rho _{m-\delta }\), whose zeros are all unimodular, \(\rho _m=s_\delta \rho _{m-\delta }\). Here, the number \(\delta\) of zeros of \(\rho _m\) resp. \(s_\delta\) in \([[e^{-\alpha },1))\), will be calculated by our algorithm, too.

For numerical reasons we cannot distinguish exactly between zeros with \(|z_j|<1\) and zeros with \(|z_j|=1\). In fact, we distinguish between zeros \(z_j\) of a Szegő polynomial \(s_\delta\) with \(|z_j|< 1-\sqrt{\epsilon },\ 0<\epsilon \ll 1\), and zeros of a polynomial \(\rho _{m-\delta }\) whose zeros are in \([[{1-\sqrt{\epsilon }},1]]\). The latter can be obtained by another approach which calculates zeros with a fixed modulus, where we vary the moduli by a bisection strategy. Both polynomials, \(s_\delta\) and \(\rho _{m-\delta }\), will be calculated in Sect. 5.

3 On the positions of the zeros

From the explanations of the preceding section we may assume that we have calculated \(\rho _m\) in its monomial representation (and especially that m is known),

A goal of this article is to determine the zeros of \(\rho _m\). Although many root finding algorithms can do this [3, 26, 27], we develop another one, which takes special properties of this polynomial into account.

Since all zeros of \(\rho _m\) lie in \([[e^{-\alpha },1]]\) we get

$$\left| \frac{p_0}{p_m}\right| =\prod _{j=1}^{m}|z_j|\le 1$$

and thus \(|p_0|\le |p_m|\). Furthermore, we have equality iff all zeros are unimodular. Since \(\prod _{j=1}^{m} |z_j|\ge \exp (-m\alpha )\) we have \(\exp (-m\alpha ) |p_m|-|p_0|\le 0\) or

If \(\rho _m\) is calculated by the algorithm outlined in Sect. 2, a violation of the previous inequality contradicts the model and indicates that \(\rho _m\) is erroneous. Of course, by (6) we can add the additional (linear) restriction \(|\tilde{p}_m|=\left| \frac{p_m}{p_0}\right| \ge 1\) to (4) to enforce that all zeros are in \([[e^{-\alpha },1]]\). (Note that we have normalized \(p_0=1\) there.) But even for erroneous polynomials the calculated zeros in the interior of the unit disc are usually close to the exact ones in our tests. Thus, we don’t add this restriction. Only zeros on [[1, 1]] or close to it are sometimes more strongly affected in our tests, see Example 1. These can be improved e.g. by iterative methods like the Newton method. Of course, this requires that erroneous samples don’t affect the calculation of \(\rho _m\), and hence the positions of its zeros, too much.
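
The consistency check described above uses only the first and the last monomial coefficient. A minimal sketch, assuming coefficients stored in ascending order \(p_0,\ldots ,p_m\); the function name and the tolerance `tol` are hypothetical. Note that the test is a necessary condition only: it can detect an erroneous polynomial but cannot certify a correct one.

```python
import numpy as np

def consistent_with_model(p, alpha, tol=1e-12):
    """Check exp(-m*alpha) <= |p_0 / p_m| <= 1 for ascending coefficients p."""
    m = len(p) - 1
    ratio = abs(p[0]) / abs(p[-1])      # equals the product of the moduli of the zeros
    return bool(np.exp(-m * alpha) - tol <= ratio <= 1.0 + tol)

# zeros inside the annulus [[e^{-alpha}, 1]] with alpha = 1
good = np.poly([0.5, 0.8 * np.exp(1.0j), np.exp(-2.0j)])[::-1]
# one zero outside the closed unit disc violates the model
bad = np.poly([1.2, np.exp(1.0j), np.exp(-2.0j)])[::-1]

ok_good = consistent_with_model(good, alpha=1.0)
ok_bad = consistent_with_model(bad, alpha=1.0)
```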

Besides the case \(|p_m|=|p_0|\), i.e. that all zeros are unimodular, which we examine in Sect. 7, we have to consider the case \(|p_m|-|p_0|>0\), i.e. that at least one zero has modulus \(<1\). Then we also have \(|p_m|^2-|p_0|^2=(|p_m|+|p_0|)(|p_m|-|p_0|)>0\).

For numerical reasons we choose an \(\epsilon \in \mathbb {R}\), \(0<\epsilon \ll 1\), and assume that \(|p_m|>\sqrt{\epsilon }\). Although the leading coefficient can be chosen arbitrarily (\(\ne 0\)), its modulus shouldn’t be chosen too small.

Furthermore, we distinguish in the subsequent sections between the cases \(1-\sqrt{\epsilon }>\left| \frac{p_0}{p_m}\right| =\prod _{j=1}^m |z_j|\) (there exist some zeros in the interior of the unit disc, possibly in addition to unimodular zeros), \(\left| \frac{p_0}{p_m}\right| =1\) (all \(z_j\) are unimodular) and \(1>\left| \frac{p_0}{p_m}\right| \ge 1-\sqrt{\epsilon }\), i.e. besides possible unimodular zeros, all remaining zeros (at least one) are in \([[{1-\sqrt{\epsilon }}, 1))\).

In the next sections we generate a sequence of polynomials \((\rho _{m-\mu })_{\mu =0}^\delta\), where \(\rho _{m-\delta }\) has only unimodular zeros and is a common divisor of all \(\rho _{m-\mu },\ \mu =0,\ldots ,\delta\). Especially, its zeros are the unimodular ones of \(\rho _m\). The zeros of \(\rho _{m-\delta }\) will be calculated by the algorithm, derived in Sect. 7.

By construction, \(s_j:=\rho _{j+m-\delta }/\rho _{m-\delta },\ j=0,\ldots ,\delta\), have all their zeros in the interior of the unit disc. We will see that it is a sequence of Szegő polynomials, whose coefficients are the entries of a Hessenberg matrix. Its eigenvalues are the zeros of \(s_\delta\) resp. of \(\rho _m\) in \([[e^{-\alpha },1))\). Usually, the zeros of \(s_\delta\) have moduli less than \(1-\sqrt{\epsilon }\) for sufficiently small \(\epsilon\). Nevertheless, there may be zeros of \(\rho _m\) in \([[1-\sqrt{\epsilon }, 1))\).

By a modification of the algorithm for calculating the unimodular zeros, the number of such zeros can be determined and the zeros themselves can be calculated by a bisection strategy. This will be done in Sect. 8.

It should be noted that \(1-\sqrt{\epsilon }\approx 1\). From examinations in a similar context, cf. Jones et al. [20, p. 145], [31, Remark after Theorem 2.4], it can be expected that for sufficiently small \(\epsilon\), zeros appearing in \([[1-\sqrt{\epsilon },1))\) often indicate the presence of noisy samples or other numerical inaccuracies. Nevertheless, it is easy to construct a signal whose Prony polynomial has zeros there.

4 Construction of a recurrence for the Prony polynomial

In this section we consider the case \(1-\sqrt{\epsilon }>\left| \frac{p_0}{p_m}\right|\), i. e. \(|p_m|-|p_0|>|p_m|\sqrt{\epsilon }\) (\(>\epsilon\) since \(|p_m|>\sqrt{\epsilon }\)). Especially we have \(1-\left| \frac{p_0}{p_m}\right| >\sqrt{\epsilon }\) and, of course, \(1+\left| \frac{p_0}{p_m}\right| >\sqrt{\epsilon }\). From this we conclude

Since \(\epsilon ^2<\epsilon <\sqrt{\epsilon }\), \(\epsilon\) should be chosen so that \(\epsilon ^2\) is larger than the machine epsilon and \(1/\epsilon ^2\) is smaller than the largest finite floating point number.
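
In IEEE double precision the two conditions on \(\epsilon\) can be tested as follows. This is only a sketch of the stated requirement; the function name is hypothetical, and `np.finfo` supplies the machine epsilon and the largest finite floating point number.

```python
import numpy as np

def epsilon_is_admissible(eps):
    """True if eps^2 exceeds the machine epsilon and 1/eps^2 stays finite."""
    info = np.finfo(float)
    return bool(0.0 < eps < 1.0
                and eps ** 2 > info.eps
                and 1.0 / eps ** 2 < info.max)

print(epsilon_is_admissible(1e-6))   # eps^2 = 1e-12 is above the machine epsilon
print(epsilon_is_admissible(1e-9))   # eps^2 = 1e-18 is below the machine epsilon
```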

For a polynomial \(\rho _m\in \Pi _m\) (the space of complex algebraic polynomials of degree at most m) we define its reciprocal polynomial \(\rho _m^\star\) by

If \(\rho _m\) is given in its monomial representation (2), this can be written as

Since \(\rho _m(0)=p_0\ne 0\ne p_m\) we see that \(\rho _m^\star\) has degree m, too. We take the approach

and want to find the coefficients \(p_\nu ^{(1)}\) of a polynomial \(\rho _{m-1}\),

resp. of \(\rho _{m-1}^\star\), such that (7) is satisfied.

This approach is based on the construction of the recurrence of Szegő polynomials. We start with \(\rho _m\), generate a sequence \(\rho _{m-1},\rho _{m-2},\ldots ,\rho _{m-\delta }\) of polynomials for a suitable \(\delta \le m\) (this will be specified later) to get a factorization \(\rho _m=\rho _{m-\delta } s_\delta\), where all zeros of \(s_\delta\) are in \([[e^{-\alpha },1))\) and all zeros of \(\rho _{m-\delta }\) are unimodular.
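
The reciprocal polynomial on which this construction rests is easy to form from monomial coefficients. The sketch below assumes the definition that is standard in the theory of Szegő polynomials, \(\rho _m^\star (z)=z^m\,\overline{\rho _m(1/\overline{z})}=\sum _{\nu =0}^m \overline{p}_{m-\nu } z^\nu\); the function name is hypothetical and coefficients are stored in ascending order.

```python
import numpy as np

def reciprocal(p):
    """Ascending coefficients of rho*(z) = z^m * conj(rho(1 / conj(z)))."""
    return np.conj(np.asarray(p, dtype=complex)[::-1])

# zeros of rho (chosen arbitrarily); the zeros of rho* are 1 / conj(z_j)
zeros = np.array([0.5, 0.3 + 0.2j, np.exp(0.7j)])
p = np.poly(zeros)[::-1]                 # ascending coefficients of rho
p_star = reciprocal(p)
zeros_star = np.roots(p_star[::-1])      # np.roots expects descending order
expected = 1.0 / np.conj(zeros)
```

Under this definition a zero on the unit circle is a common zero of \(\rho _m\) and \(\rho _m^\star\), whereas a zero in the interior of the unit disc is reflected to the exterior.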

From (7) we immediately see

Combining this identity with (7) we get

On the other hand, using the monomial representations of \(\rho _m\) and \(\rho _m^\star\), we obtain

The right-hand side can be simplified if we choose the normalization \(p_{m-1}^{(1)}\in \mathbb {R}\backslash \{0\}\) for the leading coefficient of \(\rho _{m-1}^{(1)}\). This has no effect on the zeros of the polynomials. Usually, we may assume \(p_{m-1}^{(1)}=1\), i.e. that \(\rho _{m-1}\) is monic. For a real valued leading coefficient of \(\rho _{m-1}\) we get

and thus for \(p_m^2-|p_0|^2> 0\)

This proves the following theorem.

Theorem 1

Let \(\rho _m\in \Pi _m\backslash \Pi _{m-1},\ m\ge 1,\) be given with real leading coefficient \(p_m\) and with all its zeros in \([[e^{-\alpha },1]]\). If it has at least one zero with modulus less than 1, i.e. \(\left| \frac{\rho _m(0)}{p_m}\right| <1\), then there exists a polynomial \(\rho _{m-1}\in \Pi _{m-1}\backslash \Pi _{m-2}\) with leading coefficient \(p_{m-1}^{(1)}\in \mathbb {R}\backslash \{0\}\), so that the representation (7) holds. Up to its normalization, \(\rho _{m-1}\) is uniquely determined.

As before, all zeros of \(\rho _{m-1}\) are unimodular iff \(|p_{m-1}^{(1)}|^2-|p_0^{(1)}|^2=0\). Otherwise, we can repeat the procedure with \(\rho _{m-1}\) instead of \(\rho _m\). This suggests the preliminary Algorithm 1 to calculate \(\rho _{m-\mu },\ \mu =1,2,\ldots ,\) from given \(\rho _m\).

We get a sequence of polynomials \(\rho _{m-\mu },\)

where \(\delta\) denotes the index, where the outer loop stops, i. e. \(\rho _{m-\delta }\) has only unimodular zeros for \(\delta \le m-1\). If \(\delta =m-1\) then we choose \(\rho _0(z):=p_0^{(m)}=\kappa _0=a_0\in \mathbb {R}\backslash \{0\}\) (arbitrarily). The leading coefficients \(\kappa _{m-j}:=p_{m-j}^{(j)}\) of \(\rho _{m-j}\) can be chosen arbitrarily (\(\in \mathbb {R}\backslash \{0\}\)). Furthermore, we denote \(a_{m-j}:=\rho _{m-j}(0)=p_0^{(j)} ,\ j=0,\ldots ,\delta\).

Writing \(\rho _{m-j}\) in its product form \(\rho _{m-j}(z)=\kappa _{m-j} \prod _{\nu =1}^{m-j}(z- z_\nu ^{(m-j)})\), where \(z_\nu ^{(m-j)}\) denote the (unknown) zeros of this polynomial, we have

and especially \(\overline{a}_{m-\delta }=\frac{\kappa _{m-\delta }^2}{a_{m-\delta }}\) resp.

since all zeros of \(\rho _{m-\delta }\) have modulus 1 for \(\delta \le m-1\).

In the inner loop of Algorithm 1 the product

is obviously independent of \(\nu\) and can be calculated recursively by

We obtain Algorithm 2 as a more elaborate reformulation of Algorithm 1.

5 Recurrence relations

From (7) and (8) we see that the calculated polynomials satisfy the recurrences

For \(\delta =m-1\) we choose \(\rho _0(z):=\rho _0^\star (z):=\kappa _0=a_0\in \mathbb {R}\backslash \{0\}\).

For \(\delta <m-1\) the recurrences stop with \(\rho _{m-\delta }\) and \(\kappa _{m-\delta }^2=|a_{m-\delta }|^2\) (this property defines the value of \(\delta\)) and we get the sequence \((\rho _{m-\mu })_{\mu =0,\ldots ,\delta }\), where all zeros of \(\rho _{m-\delta }\) are unimodular.

We summarize

Theorem 2

Let \(\rho _m\in \Pi _m\backslash \Pi _{m-1}\) be a polynomial which has only simple zeros, located in \([[e^{-\alpha },1]],\ \alpha \ge 0\), normalized with leading coefficient \(\kappa _m\in \mathbb {R}\backslash \{0\}\) and \(a_m:= \rho _m(0)\). Then polynomials \(\rho _{m-1},\ldots ,\rho _{m-\delta },\ \deg \rho _{m-\mu }=m-\mu\), can be generated which satisfy the recurrences (13), (14) with \(a_{m-\mu }=\rho _{m-\mu }(0)\) and arbitrary leading coefficients \(\kappa _{m-\mu }\in \mathbb {R}\backslash \{0\},\ \mu =1,\ldots ,\delta\). Up to these non zero normalizing factors they are uniquely determined.

For \(\mu <\delta\) all zeros of \(\rho _{m-\mu }\) are in \([[e^{-\alpha },1]]\). Furthermore, the recurrences stop with a polynomial \(\rho _{m-\delta }\) which has for \(\delta <m\) all its zeros in [[1, 1]], i.e. (11) is satisfied.

It is not necessary that \(\rho _m\) is given in its monomial representation. (14) can be rewritten as

from which we get in the same manner by (13)

In summary, we obtain

Applying these recurrences, starting with \(\rho _m\) and \(a_m=\rho _m(0)\), the polynomials \(\rho _\mu\) can be generated ‘backwards’. Again, if \(\delta\) is unknown, these recurrences stop if the denominator \(\kappa _\mu ^2-|a_\mu |^2\) is close to zero with \(\delta =m-\mu\), i.e. with \(\rho _{m-\delta }\) which has only unimodular zeros for \(\delta <m\).

If \(\rho _m\) is given in monomial representation, the construction of the monomial coefficients of \(\rho _\mu\) in Algorithm 2 corresponds to the Schur–Cohn algorithm/Schur–Cohn test [14, Chapter 6].
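
To illustrate the connection with the Schur–Cohn reduction, the following sketch performs the textbook step \(\rho _{m-1}(z)\propto \bigl (\overline{p}_m\rho _m(z)-p_0\rho _m^\star (z)\bigr )/z\) on ascending monomial coefficients and repeats it until \(\kappa ^2-|a|^2\) is numerically zero. It is not Algorithm 2 of this article: the function names, the monic normalisation and the tolerance `tol` are assumptions made for this example, and zeros close to the unit circle (Sect. 8) are not treated.

```python
import numpy as np

def step_down(p):
    """One Schur-Cohn reduction on ascending coefficients p_0, ..., p_m."""
    p = np.asarray(p, dtype=complex)
    p_star = np.conj(p[::-1])                   # reciprocal polynomial
    num = np.conj(p[-1]) * p - p[0] * p_star    # constant term cancels
    q = num[1:]                                 # division by z
    return q / q[-1]                            # monic normalisation (a choice)

def split_off_unimodular(p, tol=1e-8):
    """Reduce until |a| = |kappa| numerically; returns (rho_{m-delta}, delta)."""
    p = np.asarray(p, dtype=complex)
    p = p / p[-1]
    delta = 0
    while len(p) > 1 and 1.0 - abs(p[0]) ** 2 > tol:
        p = step_down(p)
        delta += 1
    return p, delta

# two zeros in the interior of the unit disc, two on the unit circle
inner = np.array([0.5, 0.3 + 0.2j])
unimodular = np.exp(1j * np.array([0.7, -1.3]))
rho = np.poly(np.concatenate((inner, unimodular)))[::-1]

rest, delta = split_off_unimodular(rho)
rest_zeros = np.roots(rest[::-1])               # zeros of the remaining factor
```

In this example the loop stops after two steps, and the remaining quadratic factor carries the two unimodular zeros.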

As in (10) we get

and especially \(\kappa _{m-\mu }=\rho _{m-\mu }^\star (0)\). Since for \(\mu =\delta\) all zeros of \(\rho _{m-\delta }\) are unimodular, we obtain

Writing the recurrences (13) and (14) for \(\mu =m,\ldots ,m-\delta\), using (15), it is easy to see that \(\rho _{m-\delta }\) is a common divisor of each \(\rho _{m-\mu }\). Thus, there exist polynomials \(s_\nu \in \Pi _\nu\) so that \(\rho _{m-\nu }=\rho _{m-\delta } s_{\delta -\nu }\) (and hence \(\rho _{m-\nu }^\star =\rho _{m-\delta }^\star s_{\delta -\nu }^\star\)) for \(\nu =0,\ldots ,\delta\), and the recurrences (13), (14) can be rewritten as recurrences for the \(s_{\mu -m+\delta }=\rho _{\mu }/\rho _{m-\delta }\) and \(s_{\mu -m+\delta }^\star\). We obtain

Dividing by \(\rho _{m-\delta }\) and using (15) we get

for \(\nu =0,\ldots ,\delta -1\). Analogously we proceed for the reciprocal polynomial from (14) where we get

By definition, we have \(s_{\delta -\nu }(0)=\frac{a_{m-\nu }}{a_{m-\delta }}\ne 0\), \(s_{\delta -\nu }^\star (0)=\frac{\kappa _{m-\nu }}{\kappa _{m-\delta }}\in \mathbb {R}\backslash \{0\}\), and with \(j:= \delta -\nu\) we get

where \(s_0:=s_0^\star :=1\) for consistency with \(\rho _{m-\nu }= \rho _{m-\delta } s_{\delta -\nu }\) for \(\nu =\delta\).

Lemma 1

Each \(s_j\), defined in (18), has all its zeros in the interior of the unit disc, and 0 is not a zero of any of them. Furthermore, \(s_j\) and \(s_{j-1},\ j=1,\ldots ,\delta\), have no common zeros.

Proof

By (18) it can be seen that the leading coefficient of \(s_j\) is \(\prod _{\nu =1}^{j} \frac{\kappa _{\nu +m-\delta }}{\kappa _{\nu +m-\delta -1}}=\frac{\kappa _{j+m-\delta }}{\kappa _{m-\delta }}\). Since renormalising a polynomial doesn’t change its zeros, we consider the monic polynomials

Especially, we have \(\tilde{s}_j^\star = \frac{\kappa _{m-\delta }}{\kappa _{j+m-\delta }} s_j^\star\). By (18) we get the recurrence \(\tilde{s}_0(z)=\tilde{s}_0^\star (z)=1\),

Relation (11) implies that the reflection coefficients \(\frac{a_{j+m-\delta }}{\kappa _{j+m-\delta }} \frac{\kappa _{m-\delta }}{a_{m-\delta }}\) have moduli

Thus, the result of the lemma follows from [12, Theorem 9.1] and the text immediately after (17). \(\square\)

Obviously, the zeros of \(\rho _m\) in \([[e^{-\alpha },1))\) are those of \(s_\delta\) (which have moduli \(\ge e^{-\alpha }\) by the given assumptions), and its unimodular zeros are those of \(\rho _{m-\delta }\).

The elements \((\tilde{s}_j)_{j=0}^\delta\) satisfy the recurrence (19), whose reflection coefficients have moduli less than one. By [12, Theorem 4.1 and the sentence before] it follows that these polynomials are orthogonal with respect to a sequence of complex numbers, respectively with respect to a linear functional. More precisely, the examinations before this cited Theorem 4.1 show that these polynomials are orthogonal on the (complex) unit circle [[1, 1]] with respect to a bounded nondecreasing measure with at least \(\delta +1\) points of increase on \([0,2\pi ]\), where \(z=e^{\mathrm{i}\, t},\ t\in [0,2\pi ]\).

Thus, the designation ‘Szegő polynomials’ for the elements of both sequences \((\tilde{s}_j)_{j=0}^\delta\) and \((s_j)_{j=0}^\delta\) is justified, because \(\tilde{s}_j\) and \(s_j\) differ only by a normalisation factor. In particular, \(\tilde{s}_j\) and \(s_j\) have the same zeros for \(j=1,\ldots ,\delta\), and the normalisation does not affect the orthogonality properties of the two sequences of polynomials.

But this article does not focus on the orthogonality of these polynomials, rather we need the recurrence and the reflection coefficients.
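
The role of the reflection coefficients can be made concrete with the standard forward Szegő recurrence for monic polynomials, \(\tilde{s}_j(z)=z\,\tilde{s}_{j-1}(z)+\alpha _j\,\tilde{s}_{j-1}^\star (z)\) with \(\tilde{s}_j(0)=\alpha _j\). This form and its sign convention are assumed here and may differ from (19) in normalisation; the function name and the sample coefficients are hypothetical.

```python
import numpy as np

def szego_from_reflection(alphas):
    """Monic s_j(z) = z*s_{j-1}(z) + alpha_j*s_{j-1}^*(z), ascending coefficients."""
    s = np.array([1.0 + 0.0j])                       # s_0 = 1
    for a in alphas:
        s_star = np.conj(s[::-1])                    # reciprocal of s_{j-1}
        s = (np.concatenate(([0.0], s))              # z * s_{j-1}(z)
             + a * np.concatenate((s_star, [0.0])))  # alpha_j * s_{j-1}^*(z)
    return s

alphas = np.array([0.3 + 0.4j, -0.5, 0.2j, 0.9])     # all moduli less than one
s = szego_from_reflection(alphas)
moduli = np.abs(np.roots(s[::-1]))                   # moduli of the zeros of s_4
```

As long as all reflection coefficients have modulus less than one, all zeros of the generated polynomials lie in the interior of the unit disc, even when a coefficient is as close to the unit circle as 0.9.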

6 Zeros in the interior of the unit disc

In this section we determine the zeros of \(s_\delta \). We abbreviate \(\alpha _j:=\frac{a_{j+m-\delta }}{\kappa _{j+m-\delta -1}} \frac{\kappa _{m-\delta }}{a_{m-\delta }}\), \(\gamma _j:=\frac{\kappa _{j+m-\delta }}{\kappa _{j+m-\delta -1}}\) and \(m-\delta =:\omega , \ 0\le \omega \le m\). Then we have

By (18) we get

where empty products (\(\nu =j\) in the sum) have value 1, all \(\gamma _\mu -\frac{|\alpha _\mu |^2}{\gamma _\mu }\ne 0 \) since \( 0< 1- \left| \frac{\alpha _\mu }{\gamma _\mu }\right| ^2<1\). With \(\beta _0:=1\),

and (18) we get

This can be rewritten in the form

Hence, the zeros of \(s_\delta \) are the eigenvalues of \(\mathbf {A}\in \mathbb {C}^{\delta ,\delta }\),

where \(\mathbf {D}:=\mathrm {diag}\left( \beta _0,\ldots ,\beta _{\delta -1}\right) , \ \mathbf {D}^{-1}=\mathrm {diag}\left( \frac{1}{\beta _0},\ldots ,\frac{1}{\beta _{\delta -1}}\right) \) and

Here, we have used

Since \(\mathbf {B}\) is similar to \( \mathbf {A}\), the eigenvalues of both matrices are the same and \(s_\delta \) is the characteristic polynomial of \(\mathbf {A}\) and hence of \(\mathbf {B}\), too, cf. [2, Theorem 3.2], [14].

From (10) we see, as expected, that the entries of the nondegenerate Hessenberg matrix \(\mathbf {B}\) are independent of the representation of the \(s_j\), because only terms \(\frac{a_{j+\omega }}{\kappa _{j+\omega }}\) resp. \(\frac{\overline{a}_{j+\omega }}{\kappa _{j+\omega }}\) appear, which are the values of the monic polynomials \(\frac{1}{\kappa _{j+\omega }} \rho _{j+\omega }\) resp. \(\frac{1}{\kappa _{j+\omega }} \overline{\rho }_{j+\omega }\) at 0, \(j=0,\ldots ,\delta\) (note that \(|a_{j+\omega }|<|\kappa _{j+\omega }|,\ j=0,\ldots ,\delta -1\)).

7 Zeros on the unit circle

In this section we consider \(\rho _\omega (z)=\sum _{\nu =0}^\omega p_\nu ^{(\omega )} z^\nu\) on [[1, 1]]. Although its zeros can be determined with standard zero finders, see e.g. [26, 27], we sketch an algorithm which uses the knowledge that \(\rho _{\omega }\) has only unimodular zeros.

We follow ideas given in [25] and write \(z=e^{\mathrm{i}\, t},\ -\pi < t\le \pi\), i.e. \(\rho _\omega (e^{\mathrm{i}\, t})=u_\omega (t)+\mathrm{i}\, v_\omega (t)\),

Thus, the common zeros \(t_j\) of the trigonometric polynomials \(u_\omega\) and \(v_\omega\) in \((-\pi ,\pi ]\) yield the zeros \(z_j\) of \(\rho _m\) in [[1, 1]] and \(\omega _j=\mathrm{i}\, t_j,\ j=1,\ldots ,\omega\). By the given assumptions there are \(\omega\) simple ones.

In the following we consider the case that \(\rho _\omega\) has only real coefficients \(p_\nu ^{(\omega )},\ \nu =0,\ldots ,\omega\) (the case that it has only imaginary coefficients can be treated in the same manner). For the general case one may consider the real polynomial \(|\rho _\omega |^2\). Of its \(2\omega\) zeros \(z_j,\ \overline{z}_j\), only those with \(\mathrm {Re}\,z_j\ge 0\) need to be calculated. The other ones can be obtained by conjugation. Then, one verifies which of them are zeros of \(\rho _\omega\).

For \(p_\nu ^{(\omega )}\in \mathbb {R}\) for all \(\nu =0,\ldots ,\omega\), the functions \(u_\omega ,\ v_\omega\) can be written as

Furthermore, we define the even trigonometric polynomial \(\tilde{v}_{\omega -1}\) by

Since \(\rho _\omega ([[1,1]])\) is symmetric with respect to the real axis, it is sufficient to consider \(\rho _\omega (e^{\mathrm{i}\, t})\) for \(t\in [0,\pi ]\) instead of \(-\pi <t\le \pi\). As the transformation \(x:=\cos t,\ t\in [0,\pi ],\ x\in [-1,1]\), is bijective, we have

where \(T_\nu\) (resp. \(U_\nu\)) denotes the \(\nu\)th Chebyshev polynomial of the first (resp. second) kind. Since a possible zero of \(\rho _\omega\) at \(\pm 1\) can be detected and divided out by the Horner algorithm, we assume in the following that \(\rho _\omega (\pm 1)\ne 0\) and in particular that \(\omega\) is even, because \(\rho _\omega\) is then a real polynomial with only non-real and simple zeros. In this case, the zeros of \(\rho _\omega\) on [[1, 1]] can be obtained from the common zeros of \(r_\omega\) and \(r_{\omega -1}\) in \((-1,1)\), i.e. as the zeros of the greatest common divisor (\(\gcd\)) of \(r_\omega\) and \(r_{\omega -1}\).
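The substitution \(x=\cos t\) rests on the identities \(\cos (\nu t)=T_\nu (x)\) and \(\sin (\nu t)=\sin t\; U_{\nu -1}(x)\). The following minimal Python sketch checks numerically that, for real coefficients, the real and imaginary parts of \(\rho (e^{\mathrm{i}\, t})\) can be evaluated from a \(T_\nu\)-sum and a \(U_\nu\)-sum in x. The function names are hypothetical, and the assumption that these two sums correspond to \(r_\omega\) and \(r_{\omega -1}\) is ours, since the displayed formulas are not reproduced here.

```python
import cmath
import math


def chebyshev_T_U(n, x):
    """Return [T_0(x), ..., T_n(x)] and [U_0(x), ..., U_n(x)] using the
    shared three-term recurrence P_{k+1}(x) = 2 x P_k(x) - P_{k-1}(x)."""
    T = [1.0, x]
    U = [1.0, 2.0 * x]
    for _ in range(2, n + 1):
        T.append(2.0 * x * T[-1] - T[-2])
        U.append(2.0 * x * U[-1] - U[-2])
    return T[: n + 1], U[: n + 1]


def real_imag_via_chebyshev(p, t):
    """For real coefficients p[0..n] of rho(z) = sum_k p[k] z^k, return
    (u, v) with rho(e^{it}) = u + i v, evaluated in x = cos t."""
    n = len(p) - 1
    x = math.cos(t)
    T, U = chebyshev_T_U(n, x)
    # u(t) = sum_k p_k cos(k t) = sum_k p_k T_k(x)
    u = sum(p[k] * T[k] for k in range(n + 1))
    # v(t) / sin t = sum_{k>=1} p_k U_{k-1}(x)
    v_tilde = sum(p[k] * U[k - 1] for k in range(1, n + 1))
    return u, math.sin(t) * v_tilde


def direct(p, t):
    """Reference value rho(e^{it}) computed in the monomial basis."""
    z = cmath.exp(1j * t)
    return sum(c * z**k for k, c in enumerate(p))


# Illustrative data (not taken from the paper).
p_example = [1.0, -0.5, 2.0, 0.25, 1.0]
u_example, v_example = real_imag_via_chebyshev(p_example, 0.7)
```

Working in x avoids trigonometric evaluations inside the zero finder; the factor \(\sin t\) is nonzero for \(t\in (0,\pi )\), which is why the zeros at \(\pm 1\) have to be treated separately.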

As \(\rho _\omega\) has \(\omega\) zeros on \(|z|=1\) and its zeros are symmetric with respect to the real axis, there are \(\frac{\omega }{2}\) zeros for \(t\in (0,\pi )\). Thus, \(r_{\omega /2}:=\gcd (r_\omega , r_{\omega -1})\in \Pi _{\omega /2}\) can be calculated by the Euclidean algorithm for Chebyshev expansions which we have introduced in [25]. This algorithm yields \(r_{\omega /2}\) as a \(U_\nu\)-expansion. It has all its \(\omega /2\) (simple) zeros in \((-1,1)\), which can be obtained successively, e.g. by Newton’s method with deflation. The deflation can be done by the Euclidean algorithm for Chebyshev expansions [25], too, or by the Clenshaw algorithm in the version which we have described in [41, p. 605 f.], where \(q_{n-1}^{(1)}\) from there is the deflated polynomial and y from there denotes the zero. Alternatively, the algorithm in [6] calculates the roots as eigenvalues of a matrix whose entries depend on the recurrence coefficients of the \(U_\nu\) and on the coefficients of \(r_{\omega /2}\) in terms of the \(U_\nu\).

8 Zeros near the unit circle

Until now we have distinguished between finding zeros \(z_j\) with modulus less than one and with modulus equal to one. For numerical reasons, we have chosen a small value \(\epsilon ,\ 0<\epsilon \ll 1\), and replaced the condition \(1-\left| \frac{a_{m-\mu }}{\kappa _{m-\mu }}\right| >0\) in the while-loop in Algorithm 2 by \(1-\left| \frac{a_{m-\mu }}{\kappa _{m-\mu }}\right| >\sqrt{\epsilon },\ 0\le \mu \le \delta \le m-1\). By the approach of Sect. 3 this means that we have assumed that all zeros of \(\rho _{m-\mu }\) lie in \([[e^{-\alpha },1-\sqrt{\epsilon }))\) and in particular that the \(\delta\) zeros of \(s_\delta\) resp. the eigenvalues of \(\mathbf {B}\) are there, too. Consequently, Algorithm 2 calculates a sequence of polynomials all of whose zeros lie there.

Furthermore, for \(\rho _{m-\delta }\) we have calculated its unimodular zeros. If we had exact samples and no numerical inaccuracies, we could choose \(\epsilon =0\) and would obtain all zeros of \(\rho _m\) on the unit circle (i.e. all \(m-\delta\) zeros of \(\rho _{m-\delta }\) would be there).

But for \(\epsilon >0\), \(\rho _{m-\delta }\) may have only \(\deg (\gcd (u_\omega , v_\omega ))<m-\delta\) zeros on the unit circle, which can be calculated by the algorithm described in Sect. 7. Thus, the remaining \(m-\delta - \deg (\gcd (u_\omega , v_\omega ))\) zeros of \(\rho _m\) resp. \(\rho _{m-\delta }\) are in \([[{1-\sqrt{\epsilon }},1))\), and \(s_\delta\) has all its zeros in \([[e^{-\alpha },1-\sqrt{\epsilon }))\).

Now, we consider \(\rho _{m-\delta }\) on \([[1-\sqrt{\epsilon },1-\sqrt{\epsilon }]]\), i.e. the scaled polynomial \(\rho _{m-\delta }((1-\sqrt{\epsilon })\, z)\) on the unit circle \(|z|=1\), and determine its zeros there by the algorithm outlined in Sect. 7. Then we repeat this procedure, using a bisection strategy on \([[1-{\epsilon _\nu },1-{\epsilon _\nu }]],\ \epsilon _\nu :=\frac{\sqrt{\epsilon }}{2^\nu }\), \(\nu =1,2,\ldots\), until all zeros have been found. The scaling of \(\rho _{m-\delta }\), i.e. the usage of \(\rho _{m-\delta }((1-{\epsilon _\nu }) z)\) for \(|z|=1\) and various \(\epsilon _\nu\), may increase the risk of numerical instabilities. Thus, it is recommended to use the scaled polynomials in Sect. 7 only to find rough estimates for the zeros, followed by an iterative refinement with the unscaled polynomial, e.g. with Newton’s or Bairstow’s method and deflation.
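The scaling step can be illustrated by a short Python sketch: for a radius r, the polynomial \(q(z):=\rho (r z)\) has the coefficients \(p_\nu r^\nu\), and a zero of \(\rho\) with modulus r becomes a unimodular zero of q. The helper names and the test polynomial are hypothetical and only serve as an illustration under these assumptions.

```python
import cmath


def scale_coefficients(p, r):
    """Coefficients of q(z) = rho(r z) for rho(z) = sum_k p[k] z^k."""
    return [c * r**k for k, c in enumerate(p)]


def horner(p, z):
    """Evaluate sum_k p[k] z^k by the Horner scheme."""
    value = 0j
    for c in reversed(p):
        value = value * z + c
    return value


# Illustrative polynomial with the conjugate zero pair r * exp(+-i * 0.4),
# i.e. rho(z) = z^2 - 2 r cos(0.4) z + r^2 with r slightly below one.
r = 0.99
z0 = r * cmath.exp(0.4j)
p = [abs(z0) ** 2, -2.0 * z0.real, 1.0]

q = scale_coefficients(p, r)
# The zero z0 of rho on |z| = r corresponds to the unimodular zero z0 / r of q.
residual_on_circle = abs(horner(q, z0 / r))
```

Since the coefficients are multiplied by \(r^\nu\), their magnitudes can spread considerably for large degrees, which is one way to see why the scaled polynomial is better suited for rough estimates than for the final refinement.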

9 Determining the coefficients \((\lambda _j)_{j=1}^m\) of the signal

In the previous Sects. 6 and 7, we have calculated \(\omega _j,\ j=1,\ldots ,m\), and in particular the bandlength m of the signal h. Using N given samples \(h_k,\ k=0,\ldots ,N-1\), with \(N\ge 2m\) we get from (1) the interpolation conditions

or

where \(E_{N,m}=\left( e^{\nu \omega _j}=z_j^\nu \right) _{\begin{array}{c} {\nu =0,\ldots ,N-1} \\ {j=1,\ldots ,m}\ \ \end{array}},\ \lambda =(\lambda _1,\ldots ,\lambda _m)^T,\ \mathrm {h}=(h_0,\ldots ,h_{N-1})^T\) and \(N\ge k\ge m\). The matrix \(E_{N,m}\) has full rank m, so m of the equations suffice to determine \(\lambda\). If the samples are noise-free and no errors occur during the calculations, (21) has a unique solution. The overdetermination of this system can be used to regularize the effects of numerical errors during the calculations. This can be done by minimizing \(\Vert r(\lambda )\Vert\), where \(r(\lambda ):= \mathrm {h}-E_{N,m} \lambda\) and \(\Vert \cdot \Vert\) denotes a suitable norm, usually the \(l_1\) or \(l_2\) norm, or the norm \(\Vert \cdot \Vert _\dagger\) from Sect. 2. In a last step, all terms \(\lambda _j e^{\omega _j\cdot }\) of h with \(|\lambda _j|<\varepsilon\) for a suitably small positive \(\varepsilon\) are removed, and we set \(m:=\# \{j\, :\, |\lambda _j|\ge \varepsilon \}\), so that a possibly overestimated value of m can be corrected.
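As an illustration of this last step, the following Python sketch fits the coefficients \(\lambda _j\) in the \(l_2\) sense and then removes terms with small \(|\lambda _j|\). It is a minimal sketch under stated assumptions, not the procedure of the paper: it uses the normal equations \(E^H E\lambda =E^H \mathrm {h}\), which square the condition number (a QR- or SVD-based solver would usually be preferable), it does not cover the \(l_1\) norm or \(\Vert \cdot \Vert _\dagger\), and all function names and data are hypothetical.

```python
import cmath


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def fit_coefficients(z, h):
    """l2 least-squares solution of E lam = h with E[k][j] = z[j]**k,
    computed from the normal equations E^H E lam = E^H h."""
    N, m = len(h), len(z)
    E = [[complex(zj) ** k for zj in z] for k in range(N)]
    G = [[sum(E[k][i].conjugate() * E[k][j] for k in range(N))
          for j in range(m)] for i in range(m)]
    rhs = [sum(E[k][i].conjugate() * h[k] for k in range(N)) for i in range(m)]
    return solve_linear(G, rhs)


def prune(z, lam, eps):
    """Keep only the terms with |lambda_j| >= eps."""
    return [(zj, lj) for zj, lj in zip(z, lam) if abs(lj) >= eps]


# Illustrative data: the third node is spurious (its true coefficient is 0),
# mimicking an overestimated m.
z_nodes = [0.9 * cmath.exp(0.5j), cmath.exp(-1.2j), complex(0.5)]
lam_true = [2.0 + 0j, -1.0 + 1.0j, 0j]
samples = [sum(l * zj**k for l, zj in zip(lam_true, z_nodes)) for k in range(8)]

lam_fit = fit_coefficients(z_nodes, samples)
terms = prune(z_nodes, lam_fit, eps=1e-8)
```

In this example the spurious node receives a coefficient of negligible modulus and is dropped, so the corrected value of m is the length of `terms`.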

10 Numerical remarks

Example 1

To test the algorithm for various values of m and \(\epsilon\) we have randomly generated \(\omega _j,\ j=1,\ldots ,m,\) and thus the zeros of a polynomial \(\rho _m\) which we expand in its monomial representation, using Mathematica. Its zeros in the interior of the unit disc are computed by the algorithm in Sect. 6 and compared with the exact zeros by the \(l_2\)-norm of the residual vectors. The results are given in Table 1, where the residual-norms are mean values of the considered examples.

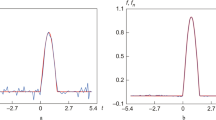

Even in cases with larger norms of the residuals, most zeros are accurately determined and only a few ‘outliers’ appear, see Fig. 1 for a (randomly generated) example. Thus, it may be appropriate, especially for large m, to use an iterative method (e.g. Newton’s method) to improve the accuracy of the numerically calculated zeros.

Fig. 1 a Positions of the zeros: red bullet: numerically determined, \(\otimes\): positions of the exact zeros, polynomial degree \(m=50\), all zeros in the interior of the unit disc (\(\epsilon =10^{-8}\)). b Numbering of the zeros by their absolute values and their errors, measured by the minimal distances between the exact and numerical values

From the previous examinations we get the number \(\omega\) of zeros in \([[{1-\sqrt{\epsilon }},1]]\) and the number \(\delta =m-\omega\) of zeros in \([[e^{-\alpha },1-\sqrt{\epsilon }))\). If m is estimated by \(\ell >m\) or if \(\epsilon\) is chosen too large, there may be zeros in \([[{1-\sqrt{\epsilon }},1]]\) which appear only due to noisy samples, numerical difficulties, an inappropriately chosen \(\ell\), etc. If we find a zero there, it is advisable to test whether there are other zeros in a small disc around it, in order to check whether there is in fact a cluster of (simple) zeros or whether this is a zero caused by noise. In such cases it is possible to use filtering and approximation methods to detect and correct such zeros. But this is a topic of its own.

The value \(\epsilon\) defines the distance between the zero with maximal modulus in the interior of the unit disc and [[1, 1]]. If it is too small, very small denominators may appear in Algorithm 2. Then \(\mathbf {B}\) has a superdiagonal element with small modulus, which means that this matrix is nearly degenerate.

If there are zeros in the interior of \([[e^{-\alpha },1]]\), but close to [[1, 1]], the results of Algorithm 2 may depend sensitively on the chosen value of \(\epsilon\). A larger value of \(\epsilon\) increases the width of the annulus in which ‘nearly’ unimodular zeros lie (these are calculated as described in Sect. 8).

If \(\epsilon\) is too small, it may lead to a too large value of \(\delta\), i.e. of the dimension of \(\mathbf {B}\). As a consequence, some of its numerically calculated eigenvalues may have moduli \(\ge 1\). In the following we develop a simple method to handle this.

Until now we have assumed that \(\delta\) is known from the calculations of the previous sections, especially from Sect. 6. Now, we show how \(\mathbf {B}\) can be calculated successively if \(\delta\) is only known as an upper bound for the number of zeros in the interior of the unit disc or if \(\epsilon\) is chosen inappropriately. To emphasize the dependence of \(\mathbf {B}\) on m and \(\delta\) we write \(\mathbf {B}_{m-\delta }^m:=\mathbf {B}\). Obviously, this matrix can be subdivided as

where \(\mathbf {B}_{m-\delta }^{m-\delta +j}\in \mathbb {C}^{j,j},\ \mathbf {B}_{m-(\delta -j)}^m\in \mathbb {C}^{\delta -j,\delta -j}\) are Hessenberg matrices, too. In Algorithm 2, and according to the text after Theorem 2, the coefficients \(a_{m-\mu }\) are calculated for \(\mu =0,1,\ldots\) This means that \(\mathbf {B}\) is generated successively from \(\mathbf {B}_{m-\mu }^m,\ \mu =1,2,\ldots ,\delta\).

If there are zeros on the unit circle, Algorithm 2 stops with \(\mu =\delta\) for which \(1-\left| \frac{a_{m-\delta }}{\kappa _{m-\delta }}\right| <\sqrt{\epsilon }\) and we get \(\mathbf {B}=\mathbf {B}_{m-\delta }^m\). This corresponds to stopping the iteration for computing the \(\rho _{m-\mu }\) in Algorithm 2 at \(\mu =\delta\). If \(\epsilon\) is too large, it may be that \(\delta\), and hence the dimension of \(\mathbf {B}\) resp. the degree of \(s_\delta\), becomes too small. Then we have not found all zeros in the interior of the unit disc and \(\rho _{m-\delta }\) may have some zeros close to but not in [[1, 1]]. This will be taken into account in Sect. 7, too.

For an \(\epsilon\) chosen too small, we may obtain a stopping parameter \(\delta _\epsilon >\delta\) instead of \(\delta\), so that the dimension \(\delta _\epsilon\) of \(\mathbf {B}\) is too large. In this case, for decreasing j there is an index \(j=j_\epsilon\), with \(\delta _\epsilon -j_\epsilon >\delta ,\) for which we have \(1\gg 1-\left| \frac{a_{m-\delta _\epsilon +j_\epsilon }}{\kappa _{m-\delta _\epsilon +j_\epsilon }}\right| >\sqrt{\epsilon }\approx 0\).

Let us consider for a moment what would happen if \(1-\left| \frac{a_{m-\delta _\epsilon +j_\epsilon }}{\kappa _{m-\delta _\epsilon +j_\epsilon }}\right| =0\). Then the set of eigenvalues of \(\mathbf {B}_{m-\delta _\epsilon }^m\) is the union of the sets of eigenvalues of \(\mathbf {B}_{m-\delta _\epsilon }^{m-\delta _\epsilon +j_\epsilon }\) and \(\mathbf {B}_{m-(\delta _\epsilon -j_\epsilon )}^m\). Thus, for sufficiently small (positive) \(1-\left| \frac{a_{m-\delta _\epsilon +j_\epsilon }}{\kappa _{m-\delta _\epsilon +j_\epsilon }}\right|\), we may presume that the eigenvalues of the latter two matrices are good approximations for the eigenvalues of \(\mathbf {B}_{m-\delta _\epsilon }^m\), i.e. for the zeros of a polynomial \(s_{\delta _\epsilon }\), whose \(\delta\) zeros with smallest moduli are approximations for the zeros of \(s_\delta\), and the remaining \(\delta _\epsilon -\delta\) eigenvalues are approximations for some of the unimodular zeros of \(\rho _{m-\delta }\). The latter ones may be close to the unit circle, but may have moduli \(>1\) if they are obtained numerically. So, they must be corrected, because this would contradict the assumptions of the model of the given signal (1). We illustrate this in the following examination of a situation which may occur if we build up the matrix \(\mathbf {B}\) using a too small \(\epsilon\).
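The decoupling argument can be checked on a small example. The Python sketch below assumes, as the remarks on superdiagonal elements suggest, that \(\mathbf {B}\) is a lower Hessenberg matrix (zero entries above the superdiagonal). If one superdiagonal entry vanishes, the matrix is block lower triangular and its characteristic polynomial factors into those of the two diagonal blocks; for a tiny nonzero entry the factorization holds approximately. The matrix entries and helper names are hypothetical.

```python
def det(A):
    """Determinant by Gaussian elimination with partial pivoting."""
    M = [list(map(complex, row)) for row in A]
    n = len(M)
    d = 1 + 0j
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0:
            return 0j
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]
            d = -d
        d *= M[col][col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n):
                M[r][c] -= f * M[col][c]
    return d


def char_poly_value(A, lam):
    """Value of det(A - lam * I)."""
    n = len(A)
    return det([[A[i][j] - (lam if i == j else 0) for j in range(n)]
                for i in range(n)])


def split(B, j):
    """Leading j x j block and trailing block of B."""
    return [row[:j] for row in B[:j]], [row[j:] for row in B[j:]]


# Lower Hessenberg example whose superdiagonal entry in row 2, column 3
# (1-based) is exactly zero, so B decouples into two 2 x 2 blocks.
B = [[0.3, 0.8, 0.0, 0.0],
     [0.1, -0.2, 0.0, 0.0],
     [0.5, 0.4, 0.6, 0.7],
     [0.2, -0.3, 0.1, -0.4]]
top, bottom = split(B, 2)

lam = 0.37 + 0.2j
exact_gap = abs(char_poly_value(B, lam)
                - char_poly_value(top, lam) * char_poly_value(bottom, lam))

# The same matrix with a tiny superdiagonal entry: nearly decoupled.
B_near = [row[:] for row in B]
B_near[1][2] = 1e-10
near_gap = abs(char_poly_value(B_near, lam)
               - char_poly_value(top, lam) * char_poly_value(bottom, lam))
```

Here `exact_gap` vanishes up to rounding, while `near_gap` is of the order of the perturbed entry, in line with the presumption that the block eigenvalues approximate those of the full matrix.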

Taking \(\mathbf {B}_{m-\delta }^m\), we add the first column/row to get \(\mathbf {B}_{m-\delta _\epsilon }^{m}\), assuming \(\delta _\epsilon = \delta +1\). We have \(\mathbf {B}_{m-(\delta +1)}^{m-\delta }=\left( -\frac{\overline{a}_{m-(\delta +1)}}{\kappa _{m-(\delta +1)}}\right)\) with an eigenvalue having a modulus \(\ge 1\), i.e. the eigenvalues of \(\mathbf {B}_{m-\delta }^m\) are the zeros of \(\rho _m\) in the interior of the unit disc. The value of \(\mathbf {B}_{m-(\delta +1)}^{m-\delta }\) indicates that there may be a zero in (or very close to) [[1, 1]]. Thus, we correct \(\delta _\epsilon\) to \(\delta\) and can use the additionally located zero as an approximation for a possible unimodular zero of \(\rho _m\).

In a similar manner we conclude that for each \(\delta _\epsilon >\delta\) the eigenvalues of \(\mathbf {B}_{m-\delta }^m\) are the zeros of \(\rho _m\) in the interior of the unit disc and that the eigenvalues of \(\mathbf {B}_{m-\delta _\epsilon }^{m-\delta }\) are approximations for \(m-\delta _\epsilon \le \omega\) unimodular zeros of \(\rho _m\).

These approximations can be used as initial values, e.g. for a few iterations of Newton’s method applied to \(\rho _m\) (not to \(\rho _{m-\delta }\) and \(s_\delta\), because these may have been calculated inaccurately due to numerical errors in this case), to improve the positions of the zeros. An alternative way is given in [2], applied to \(\rho _m^\star\). Deflation can be used to reduce the effort.
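A refinement of this kind can be sketched in Python as follows: a few Newton steps on the polynomial in its monomial representation, with value and derivative computed by the Horner scheme, followed by deflation through synthetic division. This is a minimal sketch under stated assumptions; the helper names, the fixed number of steps and the test polynomial are hypothetical and not taken from the paper.

```python
import cmath
import math


def horner_with_derivative(p, z):
    """Return (rho(z), rho'(z)) for rho(z) = sum_k p[k] z^k."""
    value, deriv = 0j, 0j
    for c in reversed(p):
        deriv = deriv * z + value
        value = value * z + c
    return value, deriv


def newton_refine(p, z0, steps=6):
    """Improve an approximate zero z0 of rho by a few Newton steps."""
    z = complex(z0)
    for _ in range(steps):
        value, deriv = horner_with_derivative(p, z)
        if deriv == 0:
            break
        z -= value / deriv
    return z


def deflate(p, z):
    """Quotient of rho(x) / (x - z) by synthetic division (the remainder,
    which is rho(z), is discarded). Coefficients in ascending order."""
    q = [0j] * (len(p) - 1)
    carry = 0j
    for k in range(len(p) - 1, 0, -1):
        carry = p[k] + z * carry
        q[k - 1] = carry
    return q


# Illustrative polynomial rho(z) = (z^2 - 2 cos(0.3) z + 1)(z - 0.5) with
# the unimodular zeros exp(+-0.3i) and one zero inside the unit disc.
c = 2.0 * math.cos(0.3)
p = [-0.5, 1.0 + 0.5 * c, -(0.5 + c), 1.0]

# Start from a slightly wrong approximation with modulus > 1.
z_start = 1.01 * cmath.exp(0.31j)
z_refined = newton_refine(p, z_start)
q = deflate(p, z_refined)
```

After the refinement, `z_refined` lies on the unit circle up to rounding, and the deflated polynomial `q` retains the remaining two zeros, so that subsequent Newton iterations work on a polynomial of lower degree.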

Example 2

For \(\epsilon =10^{-30}\), a randomly generated polynomial of degree \(m=20\),

we have obtained \(\delta =15\) and

with a Hessenberg matrix \(\mathbf {B}_6^{20}\in \mathbb {C}^{14,14}\), whose superdiagonal elements have moduli \(>\sqrt{\epsilon }\). The first element of \(\mathbf {B}\) has an absolute value greater than one. The element on superdiagonal position (1, 2) has a modulus close to zero, indicating that \(\epsilon\) is too small.

Obviously, the element on position (1, 1) of \(\mathbf {B}_5^{20}\), i.e. \(\mathbf {B}_5^{6}\in \mathbb {C}\), is an approximate eigenvalue, but it lies outside the unit disc and close to a zero \(0.998768-0.0496163\mathrm {i}\) of \(\rho _m\) on the unit circle, see Fig. 2. If \(\delta\) were correct, the eigenvalues of \(\mathbf {B}_5^{20}\) would be the zeros of a Szegő polynomial \(s_{15}\) which are, due to Lemma 1, all in the interior of the unit disc. This indicates that \(\delta\) is chosen too large resp. \(\epsilon\) too small.

If we choose \(\epsilon =10^{-7}\) we obtain \(\mathbf {B}=\mathbf {B}_6^{20}\), whose eigenvalues are the zeros of \(s_{14}\). They are all in the interior of the unit disc. Since \(\mathbf {B}_5^{20}\) is nearly degenerate, the eigenvalues of \(\mathbf {B}_6^{20}\) are close to the corresponding ones of \(\mathbf {B}_5^{20}\) in the interior of the unit disc, but without its incorrect one outside the unit disc. The \(l_2\)-norm of the vector of the differences between the calculated zeros of \(s_{14}\) and the corresponding zeros of \(\rho _m\) in the interior of the unit disc is \(2.96315\times 10^{-12}\). The zeros on [[1, 1]] are \(-0.0352764-0.999378 \mathrm{i}\, ,\ -0.494871+0.868967\mathrm{i} ,\ 0.0917022 -0.995786 \mathrm{i} ,\ -0.491722+0.870752 \mathrm{i} ,\ -0.57585-0.817556 \mathrm{i} , \ 0.998768 -0.0496163 \mathrm{i}\) (this one was approximated by \(\mathbf {B}_5^{6}\)).

References

Ammar, G.S., Calvetti, D., Gragg, W.B., Reichel, L.: Polynomial zerofinders based on Szegő polynomials. J. Comput. Appl. Math. 127, 1–16 (2001)

Ammar, G.S., Calvetti, D., Reichel, L.: Continuation methods for the computation of zeros of Szegő polynomials. Lin. Algebra Appl. 249, 125–155 (1996)

Aurentz, J.L., Mach, T., Robol, L., Vandebril, R., Watkins, D.S.: Core–Chasing Algorithms for the Eigenvalue Problem. SIAM, Philadelphia (2018)

Boyko, N., Karamemis, G., Kuzmenko, V., Uryasev, S.: Sparse signal reconstruction: LASSO and cardinality approaches. In: Vogiatzis, C., Walteros, J., Pardalos, P. (eds), Dynamics of Information Systems. Springer Proceedings in Mathematics & Statistics, vol. 105, pp. 77–90, Springer, Cham (2014)

Cai, T.T., Xu, G., Zhang, J.: On recovery of sparse signals via \(\ell _{1}\) minimization. IEEE Trans. Inform. Theory 55, 3388–3397 (2009)

Day, D., Romero, L.: Roots of polynomials expressed in terms of orthogonal polynomials. SIAM J. Numer. Anal. 43, 1969–1987 (2005)

Donoho, D.L.: For most large underdetermined systems of linear equations the minimal \(l_1\)-norm solution is also the sparsest solution. Commun. Pure Appl. Math. 59, 797–829 (2006)

Dragotti, P.L., Vetterli, M., Blu, T.: Sampling moments and reconstructing signals of finite rate of innovation: Shannon meets Strang-Fix. IEEE Trans. Signal Process. 55, 1741–1757 (2007)

Drobakhin, O.O., Olevskyi, O.V., Olevskyi, V.I.: Study of eigenfrequencies with the help of Prony’s method. AIP Conf. Proc. 1895, 060001-1-060001–8 (2017)

Elsayed, O.A., Eldeib, A., Elhefnawi, F.: Parametric modeling of ICTAL epilepsy EEG signal using Prony method. Int. J. Comput. Sci. Softw. Eng. (IJCSSE) 3, 86–89 (2014)

Føyen, S., Kvammen, M.-E., Fosso, O.B.: Prony’s method as a tool for power system identification in smart grids. In: 24th IEEE International Symposium Power Electronics and Electrical Drives, Automation and Motion (SPEEDAM), pp. 562–569 (2018)

Geronimus, Ya. L.: Polynomials orthogonal on a circle and their applications, Transl. AMS, Ser. 1, 3 (Series and Approximation), 1–77 (1962, Reprint 1968), translated from the original article, publ. in Zapiski Naučno-Issled. Inst. Mat. Meh. Har’kov. Mat. Obšč. (4) 19, 35–120 (1948)

Gragg, W.B.: Positive definite Toeplitz matrices, the Arnoldi process for isometric operators, and Gaussian quadrature on the unit circle, J. Comput. Appl. Math. 46, 183–198 (1993), rev. transl. from: Gregg, V. B. [Gragg, William B.] Positive definite Toeplitz matrices, the Hessenberg process for isometric operators, and the Gauss quadrature on the unit circle (Russian). In: Numerical methods of linear algebra (Russian), Moskov. Gos. Univ., Moscow, pp. 16–32 (1982)

Henrici, P.: Applied and Computational Complex Analysis, vol. 1. Wiley, New York (1974)

Ho, A.T.S., Tham, W.H., Low, K.S.: Improving classification accuracy in through-wall radar imaging using hybrid Prony’s and singular value decomposition method. In: Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, IGARSS ’05, pp. 4267–4270 (2005)

Hua, Y., Sarkar, T.K.: Matrix pencil method for estimating parameters of exponentially damped/undamped sinusoids in noise. IEEE Trans. Speech Signal Process. 38, 814–824 (1990)

Jones, W.B., Njåstad, O., Saff, E.B.: Szegő polynomials associated with Wiener–Levinson filters. J. Comput. Appl. Math. 32, 387–406 (1990)

Jones, W.B., Njåstad, O.: Applications of Szegő polynomials to digital signal processing. Rocky Mountain J. Math. 21, 387–436 (1991)

Jones, W.B., Thron, W.J., Njåstad, O., Waadeland, H.: Szegő polynomials applied to frequency analysis. J. Comput. Appl. Math. 46, 217–228 (1993)

Jones, W.B., Njåstad, O., Waadeland, H.: An alternative way of using Szegő polynomials in frequency analysis. In: Cooper, S.C., Thron, W.J. (eds.), Continued Fractions and Orthogonal Polynomials-Theory and Applications, Lecture Notes in Pure and Applied Mathematics, vol. 154, Marcel Dekker, Inc., New York, pp. 141–152 (1994)

Jones, W.B., Njåstad, O., Waadeland, H.: Asymptotics of zeros of orthogonal and para-orthogonal Szegő polynomials in frequency analysis. In: Cooper, S.C., Thron, W.J. (eds.), Continued Fractions and Orthogonal Polynomials-Theory and Applications, Lecture Notes in Pure and Applied Mathematics, vol. 154, Marcel Dekker, Inc., New York, pp. 153–190 (1994)

Khazaei, J., Fan, L., Jiang, W., Manjure, D.: Distributed Prony analysis for real-world MPU data. Electr. Pow. Res. 133, 113–120 (2016)

Khalaf, M.W., El-Hefnawi, F.M., Elsherbeni, A.Z., Harb, H.M., Bannis, M.H.: Feature extraction and classification of buried landmine signals. In: IEEE International Symposium Antennas and Propagation and USNC-URSI Radio Science Meeting, pp. 1175–1176 (2018)

Liu, Q., Wang, J.: \(L_1\)-minimization algorithms for sparse signal reconstruction based on a projection neural network. IEEE Trans. Neural Netw. Learn. Syst. 27, 698–707 (2016)

Locher, F., Skrzipek, M.: An algorithm for locating all zeros of a real polynomial. Computing 54, 359–375 (1995)

McNamee, J.M.: Numerical Methods for Roots of Polynomials—Part I (Series: Studies in Computational Mathematics, vol. 14). Elsevier Science, Amsterdam (2007)

McNamee, J.M., Pan, V., Numerical Methods for Roots of Polynomials—Part II (Series: Studies in Computational Mathematics, vol. 16). Elsevier Science, Amsterdam (2013)

de Morena-Váquez, J., Sartorius-Castellanos, A.R., Antonio-Ortiz, R., Hernández-Nieto, M.L., Zamudio-Radilla, A.: Prony’s method implementation for slow wave identification of electroenterogram signals, Rev. Facul. de Ingeniera, Univ. de Antioquia 76, 114–122 (2015)

Petersen, V.: A combination of two methods in frequency analysis: the \(R(N)\)-process. In: Jones, W.B., Ranga, A.R. (eds.) Orthogonal Functions, Moment Theory, and Continued Fractions: Theory and Applications, pp. 387–398. Marcel Dekker Inc, New York, Basel, Hong Kong (1998)

Petersen, V.: Zeros of polynomials used in frequency analysis: The \(R(N)\)-process. In: Jones, W.B., Ranga, A.R. (eds.) Orthogonal Functions, Moment Theory, and Continued Fractions: Theory and Applications, pp. 399–408. Marcel Dekker Inc, New York, Basel, Hong Kong (1998)

Pan, K., Saff, E.B.: Asymptotics for zeros of Szegő polynomials associated with trigonometric polynomial signals. J. Approx. Theory 71, 239–251 (1992)

Potts, D., Tasche, M.: Parameter estimation for exponential sums by approximate Prony method. Signal Process. 90, 1631–1642 (2010)

Potts, D., Tasche, M.: Nonlinear approximation by sums of nonincreasing exponentials. Appl. Anal. 90, 609–626 (2011)

Potts, D., Tasche, M.: Parameter estimation for nonincreasing exponential sums by Prony-like methods. Linear Algebra Appl. 439, 1024–1039 (2013)

de Prony, G.: Essai expérimental et analytique sur les lois de la dilatabilité des fluides élastiques et sur celles de la force expansive de la vapeur de l’eau et de la vapeur de l’alkool, à différentes températures, J. École Polytech. 1, 24–76 (1795)

Rice, J.R., White, J.S.: Norms for smoothing and estimation. SIAM Rev. 6, 243–256 (1964)

Roy, R., Kailath, T.: ESPRIT-estimation of signal parameters via rotational invariance techniques. IEEE Trans. Acoustic Speech Signal Process. 37, 984–994 (1989)

Roy, R., Kailath, T.: ESPRIT-estimation of signal parameters via rotational invariance techniques. In: Auslander, L., Grünbaum, F.A., Helton, J.W., Kailath, T., Khargoneka, P., Mitter, S. (eds.) Signal Processing, Part II: Control Theory and Applications, pp. 369–411. Springer, New York (1990)

Sarkar, T.K., Pereira, O.: Using the matrix pencil method to estimate the parameters of a sum of complex exponentials. IEEE Antennas Propag. Mag. 37, 48–55 (1995)

Shukla, P., Dragotti, P.L.: Sampling schemes for multidimensional signals with finite rate of innovation. IEEE Trans. Signal Process. 55, 3670–3686 (2007)

Skrzipek, M.: Polynomial evaluation and associated polynomials. Numer. Math. 79, 601–613 (1998)

Skrzipek, M.: Signal recovery by discrete approximation and a Prony-like polynomial. J. Comput. Appl. Math. 326, 193–203 (2017)

Vetterli, M., Marziliano, P., Blu, T.: Sampling signals with finite rate of innovation. IEEE Trans. Signal Process. 50, 1417–1428 (2002)

Acknowledgements

I thank the unknown reviewers for their valuable hints and comments.

Funding

Open Access funding enabled and organized by Projekt DEAL.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Skrzipek, MR. Parameter estimation and signal reconstruction. Calcolo 58, 40 (2021). https://doi.org/10.1007/s10092-021-00431-8


Keywords

- Prony’s method

- Prony polynomial

- Szegő polynomial

- Exponential sum

- Hessenberg matrix

- Recurrence relation

- Chebyshev polynomial

- overdetermined system

- Recovery of structured functions