Abstract

Statistical solutions have recently been introduced as an alternative solution framework for hyperbolic systems of conservation laws. In this work, we derive a novel a posteriori error estimate in the Wasserstein distance between dissipative statistical solutions and numerical approximations obtained from the Runge-Kutta Discontinuous Galerkin method in one spatial dimension. These approximations rely on so-called regularized empirical measures. The error estimator can be split into deterministic parts, which correspond to spatio-temporal approximation errors, and a stochastic part, which reflects the stochastic error. We provide numerical experiments which examine the scaling properties of the residuals and verify their splitting.

1 Introduction

Analysis and numerics of hyperbolic conservation laws have seen a significant shift of paradigms in the last decade. The investigation and approximation of entropy weak solutions was state of the art for a long time. This has changed for two reasons. Firstly, analytical insights [5, 9] revealed that entropy weak solutions to the Euler equations in several space dimensions are not unique. Secondly, numerical experiments [14, 23] have shown that in simulations of, e.g., the Kelvin-Helmholtz instability, numerical solutions do not converge under mesh refinement. In contrast, when families of simulations with slightly varying initial data are considered, averaged quantities like mean, variance and also higher moments are observed to converge under mesh refinement. This has led to several weaker (more statistics inspired) notions of solutions being proposed. We would like to mention dissipative measure valued solutions [13] and statistical solutions [17]. Considering measure valued solutions has a long history in hyperbolic conservation laws and can be traced back to the works of DiPerna [12] considering Young measures. Statistical solutions are time-parametrized probability measures on spaces of integrable functions and have been introduced recently for scalar problems in [15] and for systems in [17]. We would like to mention that in the context of the incompressible Navier-Stokes equations and turbulence, statistical solutions have a long history going back to the seminal work of Foias et al., see [19] and references therein.

The precise definition of statistical solutions is based on an equivalence theorem [17, Theorem 2.8] that relates probability measures on spaces of integrable functions and correlation measures on the state space. Correlation measures are measures that determine joint probability distributions of some unknown quantity at any finite collection of spatial points. In this sense, statistical solutions contain more information than e.g. dissipative measure valued solutions and, indeed, for scalar problems uniqueness of entropy dissipative statistical solutions can be proven. This proof is similar to the classical proof of uniqueness of entropy weak solutions for scalar problems. In contrast, for systems in multiple space dimensions the non-uniqueness of entropy weak solutions immediately implies non-uniqueness of dissipative measure valued solutions and statistical solutions. Still, all these concepts satisfy weak-strong uniqueness principles, i.e., as long as a Lipschitz continuous solution exists in any of these classes it is the unique solution in any of these classes. The (technical) basis for obtaining weak-strong uniqueness results is the relative entropy framework of Dafermos and DiPerna [8], which can be extended to dissipative statistical solutions as in [17].

Convergence of numerical schemes for nonlinear systems of hyperbolic conservation laws is largely open (except when the solution is smooth). An exception is the one-dimensional situation, where convergence of the Glimm scheme is well known [22] and is, indeed, used for constructing the standard Riemann semigroup [4]. For multi-dimensional problems, there is recent progress showing convergence of numerical schemes towards dissipative measure valued solutions [13], with more information on the convergence in case the limit is an entropy weak solution. It seems impossible to say anything about convergence rates in this setting due to the multiplicity of entropy weak solutions.

The work at hand tries to complement the a priori analysis from [17] with a reliable a posteriori error estimator, i.e., we propose a computable upper bound for the numerical approximation error of statistical solutions. This extends results for entropy weak solutions of deterministic and random systems of hyperbolic conservation laws [10, 20, 21, 30] towards statistical solutions. One appealing feature of our error estimator is that it can be decomposed into different parts corresponding to space-time and stochastic errors. Our analysis relies on the relative entropy framework of Dafermos and DiPerna [8] extended to statistical solutions and, as such, it requires one of the (approximate) solutions that are to be compared to be Lipschitz continuous. Thus, we need to introduce a suitable reconstruction of the numerical solution, see [29] for the general idea, and our estimator is not convergent under mesh refinement once shocks have formed in the exact solution. We would like to mention that there are also other frameworks for providing a posteriori error analysis for hyperbolic systems, namely superconvergence, e.g., [1], dual weighted residuals, e.g., [24] and Bressan’s \(L^1\) stability framework, e.g., [27, 28] for one-dimensional systems.

The structure of this work is as follows: Sect. 2 reviews the notion of (dissipative) statistical solutions of hyperbolic conservation laws from [17]. Section 3 is concerned with the numerical approximation of dissipative statistical solutions using empirical measures. Moreover, we recall a reconstruction procedure which allows us to define the so-called regularized empirical measure. In Sect. 4, we present our main a posteriori error estimate between a dissipative statistical solution and its numerical approximation using the regularized empirical measure. We explain in Sect. 5 that our a posteriori error analysis directly extends to statistical solutions to general systems that take values in some compact subset of the state space. Finally, Sect. 6 provides some numerical experiments examining and verifying the convergence and splitting of the error estimators.

2 Preliminaries and notations

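We consider the following one-dimensional system of \(m\in {\mathbb {N}}\) nonlinear conservation laws:

$$\begin{aligned} \partial _t u + \partial _x f(u) = 0, \quad (t,x) \in (0,T)\times D, \qquad u(0,x) = {\bar{u}}(x), \quad x \in D. \end{aligned}$$ (1)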

Here, \(u(t,x)\in {\mathcal {U}}\subset {\mathbb {R}}^m\) is the vector of conserved quantities, \({\mathcal {U}}\) is an open and convex set that is called state space, \(f\in C^{2}( {\mathcal {U}}; {\mathbb {R}}^m)\) is the flux function, \(D\subset {\mathbb {R}}\) is the spatial domain and \(T \in {\mathbb {R}}_+\). We restrict ourselves to the case where \(D=(0,1)\) with periodic boundary conditions. The system (1) is called hyperbolic if for any \(u \in {\mathcal {U}}\) the flux Jacobian \({{\,\mathrm{D}\,}}f(u)\) has \(m\) real eigenvalues and admits a basis of eigenvectors. We assume that (1) is equipped with an entropy/entropy flux pair \((\eta ,q)\), where the strictly convex function \(\eta \in C^{2}({\mathcal {U}};{\mathbb {R}})\) and \(q\in C^{2}({\mathcal {U}};{\mathbb {R}})\) satisfy \({{\,\mathrm{D}\,}}q= {{\,\mathrm{D}\,}}\eta {{\,\mathrm{D}\,}}f.\)

Most literature on numerical schemes for hyperbolic conservation laws focuses on computing numerical approximations of entropy weak solutions of (1). In contrast, we are interested in computing statistical solutions. We recall the definition of statistical solutions in Sect. 2.1. It is worthwhile to note that statistical solutions were only defined for systems for which \({\mathcal {U}}={\mathbb {R}}^m\) in [17]. We restrict our analysis to this setting in Sects. 2.1 and 4. In Sect. 5, we discuss some issues concerning how to define statistical solutions to general systems.

2.1 Statistical solutions

In this section, we provide a brief overview of the notions needed to define statistical solutions for (1), following the exposition in [17], referring to [17, Sect. 2] for more background, details and proofs.

Let us introduce some notation: For any topological space X let \({\mathcal {B}}(X)\) denote the Borel \(\sigma\)-algebra on X and let \({\mathcal {M}}(X)\) denote the set of signed Radon measures on \((X,{\mathcal {B}}(X))\). In addition, \({\mathcal {P}}(X)\) denotes the set of probability measures on \((X,{\mathcal {B}}(X))\), i.e., all non-negative \(\mu \in {\mathcal {M}}(X)\) satisfying \(\mu (X)=1.\) We consider \({\mathcal {U}}= {\mathbb {R}}^m\) and choose \(p\in [1,\infty )\) minimal, such that

holds for some constant \(C>0\). The following classical theorem states the duality between \(L^{1}(D^k; C_0({\mathcal {U}}^k))\) and \(L^\infty (D^k ; {\mathcal {M}}({\mathcal {U}}^k))\).

Theorem 2.1

([2], p. 211) For any \(k \in {\mathbb {N}}\) the dual space of \({\mathcal {H}}_0^k(D,{\mathcal {U}}):= L^{1}(D^k; C_0({\mathcal {U}}^k))\) is \({\mathcal {H}}_0^{k*}(D,{\mathcal {U}}) := L^\infty (D^k ; {\mathcal {M}}({\mathcal {U}}^k))\), i.e., the space of bounded, weak*-measurable maps from \(D^k\) to \({\mathcal {M}}({\mathcal {U}}^k)\) under the duality pairing

Definition 2.2

(Correlation measures [15, Def 2.5]) A p-summable correlation measure is a family \(\varvec{\nu }=(\nu ^1, \nu ^2,\dots )\) with \(\nu ^k \in {\mathcal {H}}_0^{k*}(D;{\mathcal {U}})\) satisfying for all \(k \in {\mathbb {N}}\) the following properties:

a. \(\nu ^k\) is a Young measure from \(D^k\) to \({\mathcal {U}}^k\).

b. Symmetry: If \(\sigma\) is a permutation of \(\{1,\dots ,k\}\), acting on \(x=(x_1,\ldots ,x_k)\) via \(\sigma (x):=(x_{\sigma (1)}, \ldots , x_{\sigma (k)})\), and if \(f\in C_0({\mathcal {U}}^k)\), then \(\langle \nu _{\sigma (x)}^k,f(\sigma (\xi )) \rangle =\langle \nu _x^k,f(\xi ) \rangle\) for a.e. \(x \in D^k\).

c. Consistency: If \(f \in C_b({\mathcal {U}}^k)\) is of the form \(f(\xi _1,\dots ,\xi _k)= g(\xi _1,\dots ,\xi _{k-1})\) for some \(g \in C_0({\mathcal {U}}^{k-1})\), then \(\langle \nu ^k_{x_1,\dots ,x_k}, f\rangle = \langle \nu ^{k-1}_{x_1,\dots ,x_{k-1}}, g\rangle\) for a.e. \(x=(x_1,\dots ,x_k)\in D^k\).

d. \(L^p\)-integrability: \(\int _{D} \langle \nu _x^1,|\xi |^p \rangle \, {\text {d}}\!x <\infty\).

e. Diagonal continuity: \(\lim _{r \searrow 0} \int _D \frac{1}{|B_r(x_1)|} \int _{B_r(x_1)} \langle \nu ^2_{x_1,x_2}, |\xi _1 - \xi _2|^p\rangle \, {\text {d}}\!x_2 {\text {d}}\!x_1=0.\)

Let \({\mathcal {L}}^p(D;{\mathcal {U}})\) denote the set of all p-summable correlation measures.

Theorem 2.3

(Main theorem on correlation measures [15, Thm. 2.7]) For every correlation measure \(\varvec{\nu } \in {\mathcal {L}}^p(D;{\mathcal {U}})\) there exists a unique probability measure \(\mu \in {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) whose p-th moment is finite, i.e., \(\int _{L^p} \Vert u \Vert _{L^{p}(D;{\mathcal {U}})}^p d \mu (u) < \infty\) and such that \(\mu\) is dual to \(\varvec{\nu }\), i.e.,

Conversely, for every \(\mu \in {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) with finite p-th moment there is a \(\varvec{\nu } \in {\mathcal {L}}^p(D;{\mathcal {U}})\) that is dual to \(\mu\).

To take into account the time-dependence in (1) the authors of [17] suggest to consider time parametrized probability measures. For \(T \in (0,\infty ]\) consider time parametrized measures \(\mu : [0,T) \rightarrow {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\). Note that such a \(\mu\) does not contain any information about correlation between function values at different times. We denote \(\mu\) evaluated at time t by \(\mu _t\). Let us define \({\mathcal {H}}_0^{k*}([0,T),D;{\mathcal {U}}) := L^\infty ([0,T)\times D^k; {\mathcal {M}}({\mathcal {U}}^k))\) and notice that it was shown in [17] that it is meaningful to evaluate an element \(\nu ^k \in {\mathcal {H}}_0^{k*}([0,T),D;{\mathcal {U}})\) at almost every \(t \in [0,T).\)

Definition 2.4

A time-dependent correlation measure \(\varvec{\nu }\) is a collection \(\varvec{\nu }=(\nu ^1, \nu ^2,\dots )\) of maps \(\nu ^k \in {\mathcal {H}}_0^{k*}([0,T),D;{\mathcal {U}})\) such that

a. \((\nu _t^1, \nu _t^2 , \dots ) \in {\mathcal {L}}^p(D;{\mathcal {U}})\) for a.e. \(t \in [0,T).\)

b. \(L^p\)-integrability:

$$\begin{aligned} \mathop {\text {ess sup}}\limits _{t \in [0,T)} \int _D \langle \nu _{t,x}^1, |\xi |^p \rangle \, {\text {d}}\!x < \infty. \end{aligned}$$

c. Diagonal continuity:

$$\begin{aligned} \lim _{r \searrow 0} \int _0^{T'} \int _D \frac{1}{|B_r(x_1)|} \int _{B_r(x_1)} \langle \nu ^2_{t,x_1,x_2}, |\xi _1 - \xi _2|^p\rangle \, {\text {d}}\!x_2 {\text {d}}\!x_1\, dt =0 \quad \forall T' \in (0,T). \end{aligned}$$

We denote the space of all time-dependent p-summable correlation measures by \({\mathcal {L}}^p([0,T),D;{\mathcal {U}})\).

A time-dependent analogue of Theorem 2.3 holds true:

Theorem 2.5

For every time-dependent correlation measure \(\varvec{\nu } \in {\mathcal {L}}^p([0,T),D;{\mathcal {U}})\) there is a unique (up to subsets of [0, T) of Lebesgue measure zero) map \(\mu :[0,T) \rightarrow {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) such that

a. The mapping

$$\begin{aligned} t \mapsto \int _{L^p} \int _{D^k} g(x,u(x)) \, {\text {d}}\!x d\mu _t(u) \end{aligned}$$

is measurable for all \(g \in {\mathcal {H}}_0^k(D;{\mathcal {U}})\).

b. \(\mu\) is \(L^p\)-bounded, i.e.,

$$\begin{aligned} {\text {ess sup}}_{t \in [0,T)} \int _{L^p} \Vert u \Vert _{L^{p}(D;{\mathcal {U}})}^p d \mu _t(u) <\infty . \end{aligned}$$

c. Duality, i.e., \(\mu\) is dual to \(\varvec{\nu }\):

$$\begin{aligned} \int _{D^k} \langle \nu ^k_{t,x} , g(x) \rangle \, {\text {d}}\!x =\int _{L^p} \int _{D^k} g(x,u(x)) {\text {d}}\!x d\mu _t(u) \text { for a.e. } t \in [0,T), \\ \forall g \in {\mathcal {H}}_0^k(D,{\mathcal {U}}), \ \forall k \in {\mathbb {N}}. \end{aligned}$$

Conversely, for every \(\mu :[0,T) \rightarrow {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) satisfying (a) and (b) there is a unique correlation measure \(\varvec{\nu } \in {\mathcal {L}}^p([0,T),D;{\mathcal {U}})\) such that (c) holds.

Definition 2.6

(Bounded support) We say some \({{\bar{\mu }}} \in {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) has bounded support, provided there exists \(C>0\) so that

Definition 2.7

(Statistical solution) Let \({{\bar{\mu }}} \in {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) have bounded support. A statistical solution of (1) with initial data \(\bar{\mu }\) is a time-dependent map \(\mu :[0,T) \rightarrow {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) such that each \(\mu _t\) has bounded support and such that the corresponding correlation measures \(\nu ^k_t\) satisfy

in the sense of distributions, for every \(k \in {\mathbb {N}}\).

Lemma 2.8

Let \({{\bar{\mu }}} \in {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) have bounded support. Then, every statistical solution \(\mu : [0,T) \rightarrow {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) to (1) with initial data \({{\bar{\mu }}}\) satisfies

for any \(\phi \in C^{\infty }([0,T) \times D;{\mathbb {R}}^m)\), where \(\mu _t\) denotes \(\mu\) at time t.

Proof

This is a special case of [17, Equation 3.7] for \(M=1\). \(\square\)

In order to show uniqueness (for scalar problems) and weak-strong uniqueness the authors of [15] and [17] require a comparison principle that compares statistical solutions to convex combinations of Dirac measures. Therefore, the entropy condition for statistical solutions has two parts. The first imposes stability under convex decompositions and the second is reminiscent of the standard entropy condition for deterministic problems. To state the first condition, we need the following notation: For \(\mu \in {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\), \(K\in {\mathbb {N}}\), \(\alpha \in {\mathbb {R}}^K\) with \(\alpha _i \geqslant 0\) and \(\sum _{i=1}^K\alpha _i =1\) we set

$$\begin{aligned} \Lambda (\alpha ,\mu ) := \Big \{ (\mu _1,\ldots ,\mu _K) \in \big ({\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\big )^K ~:~ \sum _{i=1}^K\alpha _i \mu _i = \mu \Big \}. \end{aligned}$$ (4)

The elements of \(\Lambda (\alpha ,\mu )\) are strongly connected to transport plans that play a major role in defining the Wasserstein distance, see Remark 3.5 for details. Now, we are in position to state the selection criterion for statistical solutions.

Definition 2.9

(Dissipative statistical solution) A statistical solution of (1) is called a dissipative statistical solution if

1. for every choice of coefficients \(\alpha _i \geqslant 0\), satisfying \(\sum _{i=1}^K\alpha _i =1\) and for every \(({\bar{\mu }}_1,\ldots ,{\bar{\mu }}_K) \in \Lambda (\alpha ,{\bar{\mu }})\), there exists a function \(t \mapsto (\mu _{1,t},\ldots ,\mu _{K,t}) \in \Lambda (\alpha ,\mu _t)\), such that each \(\mu _i: [0,T) \rightarrow {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) is a statistical solution of (1) with initial measure \({\bar{\mu }}_i\).

2. it satisfies

$$\begin{aligned} \int \limits _0^T\int \limits _{L^{p}(D;{\mathcal {U}})} \int \limits _{D} \eta (u(x))\partial _{t} \phi (t) ~ \mathrm {d}x\mathrm {d}\mu _{t}(u) \mathrm {d}t + \int \limits _{L^{p}(D;{\mathcal {U}})} \int \limits _{D} \eta ({\bar{u}}(x)) \phi (0) ~ \mathrm {d}x\mathrm {d}{\bar{\mu }}({\bar{u}}) \geqslant 0, \end{aligned}$$

for any \(\phi \in C_c^\infty ([0,T);{\mathbb {R}}_+)\).

This selection criterion implies weak-(dissipative)-strong uniqueness, i.e., as long as some initial value problem admits a statistical solution supported on finitely many classical solutions, then this is the only dissipative statistical solution (on that time interval), [17, Lemma 3.3]. That result is a major ingredient in the proof of our main result, Theorem 4.2, and it is indeed the special case of Theorem 4.2 for \(R^{st}_k \equiv 0.\) Both results are extensions of the classical relative entropy stability framework going back to seminal works of Dafermos and DiPerna. The attentive reader will note that on the technical level there are some differences between [17, Lemma 3.3] and Theorem 4.2. This is due to the following consideration: If \(L^2\) stability results are to be inferred from the relative entropy framework, upper and lower bounds on the Hessian of the entropy and an upper bound on the Hessian of the flux \(f\) are needed. To this end, Fjordholm et al. restrict their attention to systems for which such bounds exist globally, while we impose conditions (8), (9) and discuss situations in which they are satisfied (including the setting of [17, Lemma 3.3]), see Remark 4.3.

3 Numerical approximation of statistical solutions

This section is concerned with the description of the numerical approximation of statistical solutions. Following [16, 17] the stochastic discretization relies on a Monte-Carlo, resp. collocation approach. Once samples are picked the problem at each sample is deterministic and we approximate it using the Runge–Kutta Discontinuous Galerkin method which we outline briefly. Moreover, we introduce a Lipschitz continuous reconstruction of the numerical solutions which is needed for our a posteriori error estimate in Theorem 4.2. Let us start with the deterministic space and time discretization.

3.1 Space and time discretization: Runge–Kutta discontinuous Galerkin method

We briefly describe the space and time discretization of (1), using the Runge–Kutta Discontinuous Galerkin (RKDG) method from [6]. Let \({\mathcal {T}}:= \{ I_l\}_{l=0}^{N_s-1}\), \(I_l:=(x_l,x_{l+1})\) be a quasi-uniform triangulation of \(D\). We set \(h_l:= (x_{l+1}-x_l)\), \(h_{\max }:= \max \limits _lh_l\), \(h_{\min }:= \min \limits _lh_l\) for the spatial mesh and the (spatial) piecewise polynomial DG spaces for \(p\in {\mathbb {N}}_0\) are defined as

Here \({\mathbb {P}}_p\) denotes the space of polynomials of degree at most \(p\) and \({\mathcal {L}}_{V_h^{p}}\) denotes the \(L^2\)-projection into the DG space \(V_h^{p}\). After spatial discretization of (1) we obtain the following semi-discrete scheme for the discrete solution \(u_h\in C^{1}([0,T);V_h^{p})\):

where \(L_h :V_h^{p} \rightarrow V_h^{p}\) is defined by

Here, \(F: {\mathbb {R}}^m \times {\mathbb {R}}^m \rightarrow {\mathbb {R}}^m\) denotes a consistent, conservative and locally Lipschitz-continuous numerical flux, \(\psi _h(x^{\pm }):= \lim \limits _{s \searrow 0} \psi _h(x \pm s)\) are spatial traces and \({{\,\mathrm{[\![}\,}}\psi _h {{\,\mathrm{]\!]}\,}}_l:= (\psi _h(x_l^-)-\psi _h(x_l^+))\) are jumps.
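To illustrate the three properties required of \(F\), the following Python sketch implements a Lax-Friedrichs type flux for a scalar conservation law together with the jump operator. The choice of this particular flux, the Burgers flux and all function names are assumptions made for the example; the scheme above only requires \(F\) to be consistent, conservative and locally Lipschitz-continuous.

```python
def burgers_flux(u):
    """Flux f(u) = u^2 / 2 of the Burgers equation (example choice)."""
    return 0.5 * u * u


def lax_friedrichs_flux(f, u_minus, u_plus, lam):
    """Lax-Friedrichs type numerical flux F(u_minus, u_plus).

    lam is an upper bound for |f'(u)| between the two states.
    """
    return 0.5 * (f(u_minus) + f(u_plus)) - 0.5 * lam * (u_plus - u_minus)


def jump(psi_minus, psi_plus):
    """Jump [[psi]] = psi(x^-) - psi(x^+) at a cell interface."""
    return psi_minus - psi_plus


# Consistency: F(u, u) = f(u).
flux_same_state = lax_friedrichs_flux(burgers_flux, 2.0, 2.0, lam=2.0)
# Flux across a discontinuity with left state 1 and right state 0.
flux_shock = lax_friedrichs_flux(burgers_flux, 1.0, 0.0, lam=1.0)
```

Conservativity is built in because a single flux value is used for both cells adjacent to an interface.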

The initial-value problem (DG) is advanced in time by an \(R\)-th order strong stability preserving Runge–Kutta (SSP-RK) method [26, 32]. To this end, we let \(0={t}_{0}<{t}_{1}<\ldots < {t}_{N_{t}}=T\) be a (non-equidistant) temporal decomposition of [0, T]. We define \(\Delta {t}_{n} :=( {t}_{n+1}-{t}_{n})\), \(\Delta t :=\max \limits _n~\Delta {t}_{n}\). To ensure stability, the explicit time-stepping scheme has to obey the CFL-type condition

where \(\lambda _{\text {max}}\) is an upper bound for absolute values of eigenvalues of the flux Jacobian \({{\,\mathrm{D}\,}}f\) and \(C\in (0,1]\). Furthermore, we let \(\Pi _h: {\mathbb {R}}^m \rightarrow {\mathbb {R}}^m\) be the TVBM minmod slope limiter from [7]. The complete \(S\)-stage time-marching algorithm for given \(n\)-th time-iterate \(u_h^n:=u_h({t}_{n},\cdot )\in V_h^{p}\) can then be written as follows.

Note that the initial condition \(u_h(t=0)\) is also limited by \(\Pi _h\). The parameters \(\delta _{sl}\) satisfy the conditions \(\delta _{sl}\geqslant 0\), \(\sum _{l=0}^{s-1} \delta _{sl}=1\), and if \(\beta _{sl} \ne 0\), then \(\delta _{sl} \ne 0\) for all \(s=1,\ldots , S\), \(l=0,\ldots ,s\).
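The conditions on \(\delta _{sl}\) mean that every stage is a convex combination of forward Euler steps. As a minimal sketch of this structure, the following Python code performs one step of the well-known third-order SSP Runge-Kutta method in Shu-Osher form. The specific coefficients (3/4, 1/4, 1/3, 2/3), the omission of the limiter \(\Pi _h\) and the scalar test problem are assumptions of this example and are not taken from the scheme above.

```python
def ssp_rk3_step(u, dt, rhs):
    """One step of the third-order SSP Runge-Kutta method (Shu-Osher form).

    u   : list of floats (degrees of freedom of the discrete solution)
    rhs : callable returning the spatial operator applied to u, as a list
    Each stage is a convex combination of forward Euler steps.
    """

    def euler(v):
        return [vi + dt * li for vi, li in zip(v, rhs(v))]

    u1 = euler(u)
    u2 = [0.75 * a + 0.25 * b for a, b in zip(u, euler(u1))]
    return [a / 3.0 + 2.0 * b / 3.0 for a, b in zip(u, euler(u2))]


# Linear test problem u' = -u with exact solution exp(-t).
u_new = ssp_rk3_step([1.0], 0.1, lambda v: [-vi for vi in v])
```

For this linear problem the step reproduces the Taylor polynomial of \(e^{-\Delta t}\) up to third order, which is a quick sanity check of the coefficients.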

3.2 Reconstruction of numerical solution

Our main a posteriori error estimate Theorem 4.2 is based on the relative entropy method of Dafermos and DiPerna [8] and its extension to statistical solutions as described in the recent work [17]. The a posteriori error estimate requires that the approximating solutions are at least Lipschitz-continuous in space and time. To ensure this property, we reconstruct the numerical solution \(\{ u_h^{n} \}_{n=0}^{N_{t}}\) to a Lipschitz-continuous function in space and time. We do not elaborate upon this reconstruction procedure, to keep notation short and simple, but refer to [10, 21, 30]. The reconstruction process provides a computable space-time reconstruction \(u^{st}\in W_\infty ^1((0,T);V_h^{p+1}\cap C^0(D))\), which allows us to define the following residual.

Definition 3.1

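(Space-time residual) We call the function \({\mathcal {R}}^{st}\in L^{2}((0,T)\times D;{\mathbb {R}}^m)\), defined by

$$\begin{aligned} {\mathcal {R}}^{st}:= \partial _t u^{st}+ \partial _x f(u^{st}), \end{aligned}$$ (5)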

the space-time residual for \(u^{st}\).

We would like to stress that the mentioned reconstruction procedure does not only render \(u^{st}\) Lipschitz-continuous, but it is also specifically designed to ensure that the residual, defined in (5), has the same decay properties as the error of the RKDG numerical scheme as h tends to zero, cf. [10].
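The residual measures how far \(u^{st}\) is from solving (1) pointwise, i.e., it is the quantity \(\partial _t u^{st} + \partial _x f(u^{st})\). The Python sketch below evaluates this expression for a scalar problem by central finite differences. The finite-difference evaluation, the Burgers flux and the two test functions are assumptions of this example; for a piecewise polynomial reconstruction the derivatives would rather be evaluated exactly.

```python
def burgers_flux(u):
    """Flux f(u) = u^2 / 2 of the Burgers equation (example choice)."""
    return 0.5 * u * u


def spacetime_residual(u, f, t, x, h=1e-4):
    """Approximate d_t u + d_x f(u) at (t, x) by central differences."""
    dt_u = (u(t + h, x) - u(t - h, x)) / (2.0 * h)
    dx_f = (f(u(t, x + h)) - f(u(t, x - h))) / (2.0 * h)
    return dt_u + dx_f


# u(t, x) = x / (1 + t) solves the Burgers equation, so its residual vanishes.
res_exact = spacetime_residual(lambda t, x: x / (1.0 + t), burgers_flux, 0.5, 0.3)
# u(t, x) = x is not a solution; its residual equals u * d_x u = x.
res_perturbed = spacetime_residual(lambda t, x: x, burgers_flux, 0.5, 0.3)
```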

3.3 Computing the empirical measure

Following [16, 17], we approximate the statistical solution of (1) using empirical measures. In this work, we allow for arbitrary sample points and weights. The sample points can either be obtained by randomly sampling the initial measure (Monte-Carlo), or by using roots of corresponding orthogonal polynomials. In this article we focus on the Monte-Carlo sampling. Let us assume that the sampling points are indexed by the set \({\mathcal {K}}=\{1,\ldots ,K\}\), \(K\in {\mathbb {N}}\) and let us denote the corresponding weights by \(\{w_{\mathrm {k}}\}_{\mathrm {k}\in {\mathcal {K}}}\), i.e., for Monte-Carlo sampling we have \(w_{\mathrm {k}}= \frac{1}{K}\), for all \(\mathrm {k}\in {\mathcal {K}}\). We use the following Monte-Carlo type algorithm from [17] to compute the empirical measure.

Definition 3.2

(Empirical measure) Let \(\{u_{h,\mathrm {k}}(t)\}_{\mathrm {k}\in {\mathcal {K}}}\) be a sequence of approximate solutions of (1) with initial data \({\bar{u}}_\mathrm {k}\), at time \(t \in (0,T)\). We define the empirical measure at time \(t \in (0,T)\) via

For the a posteriori error estimate in Theorem 4.2, we need to consider a regularized measure, which we define using the reconstructed numerical solution \(u^{st}\) obtained from the reconstruction procedure described in Sect. 3.2.
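Since the empirical measure of Definition 3.2 is a weighted sum of Dirac measures at the sample solutions, integrating a functional against it reduces to a weighted sum over the samples. The following Python sketch illustrates this for Monte-Carlo sampling with \(w_{\mathrm {k}}=1/K\). The random initial datum (a sine wave with uniformly distributed amplitude), the midpoint-rule quadrature and all function names are hypothetical choices made for the example; at later times the sampled initial data would be replaced by the corresponding numerical solutions.

```python
import math
import random


def sample_initial_data(K, n_cells, seed=0):
    """Draw K Monte-Carlo samples of a (hypothetical) random initial datum.

    Each sample is a sine wave with uniformly distributed amplitude,
    stored by its values at the cell midpoints of a uniform mesh of (0, 1).
    """
    rng = random.Random(seed)
    midpoints = [(i + 0.5) / n_cells for i in range(n_cells)]
    samples = []
    for _ in range(K):
        amplitude = rng.uniform(0.9, 1.1)
        samples.append([amplitude * math.sin(2.0 * math.pi * x) for x in midpoints])
    weights = [1.0 / K] * K  # Monte-Carlo weights w_k = 1/K
    return weights, samples


def integrate(weights, samples, functional):
    """Integral of a functional against sum_k w_k * delta_{u_k}."""
    return sum(w * functional(u) for w, u in zip(weights, samples))


def l2_norm_sq(u):
    """Squared L^2(0,1) norm via the midpoint rule."""
    return sum(ui * ui for ui in u) / len(u)


weights, samples = sample_initial_data(K=8, n_cells=16)
total_mass = integrate(weights, samples, lambda u: 1.0)
second_moment = integrate(weights, samples, l2_norm_sq)
```

Here `total_mass` checks that the weights define a probability measure, and `second_moment` approximates the second moment \(\int \Vert u\Vert _{L^2(D)}^2 \,\mathrm {d}\mu (u)\).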

Definition 3.3

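(Regularized empirical measure) Let \(\{u^{st}_{\mathrm {k}}(t)\}_{\mathrm {k}\in {\mathcal {K}}}\) be a sequence of reconstructed numerical approximations at time \(t \in (0,T)\). We define the regularized empirical measure as follows

$$\begin{aligned} {\hat{\mu }}_{t}^K:= \sum _{\mathrm {k}\in {\mathcal {K}}} w_{\mathrm {k}}\delta _{u^{st}_{\mathrm {k}}(t)}. \end{aligned}$$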

Common metrics for computing distances between probability measures on Banach spaces are the Wasserstein-distances. In Theorem 4.2, we bound the error between the dissipative statistical solution of (1) and the discrete regularized measure in the 2-Wasserstein distance.

Definition 3.4

(\(r\)-Wasserstein distance) Let \(r\in [1, \infty )\), X be a separable Banach space and let \(\mu , \rho \in {\mathcal {P}}(X)\) with finite \(r\)th moment, i.e., \(\int \limits _X \Vert u\Vert _X^r~ \mathrm {d}\mu (u)< \infty\), \(\int \limits _X \Vert u\Vert _X^r~ \mathrm {d}\rho (u)< \infty\). The \(r\)-Wasserstein distance between \(\mu\) and \(\rho\) is defined as

$$\begin{aligned} W_{r}(\mu ,\rho ) := \Big ( \inf _{\pi \in \Pi (\mu ,\rho )} \int \limits _{X^2} \Vert u-v\Vert _X^r ~ \mathrm {d}\pi (u,v) \Big )^{\frac{1}{r}}, \end{aligned}$$

where \(\Pi (\mu ,\rho ) \subset {\mathcal {P}}(X^2)\) is the set of all transport plans from \(\mu\) to \(\rho\), i.e., the set of measures \(\pi\) on \(X^2\) with marginals \(\mu\) and \(\rho\), i.e.,

$$\begin{aligned} \pi (A \times X) = \mu (A), \qquad \pi (X \times A) = \rho (A) \end{aligned}$$

for all measurable subsets A of X.
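For two measures that each consist of \(K\) equally weighted atoms, an optimal transport plan can be chosen as a permutation that matches atoms one-to-one, so the 2-Wasserstein distance becomes an assignment problem. The Python sketch below solves it by brute force over all permutations, which is only feasible for small \(K\). Representing functions by their cell values on a uniform mesh of \((0,1)\), the midpoint-rule \(L^2\) distance and the function names are assumptions of this example.

```python
import itertools
import math


def l2_dist_sq(u, v):
    """Squared L^2(0,1) distance of two grid functions (midpoint rule)."""
    return sum((a - b) ** 2 for a, b in zip(u, v)) / len(u)


def wasserstein2_uniform(atoms_mu, atoms_rho):
    """2-Wasserstein distance between two measures with K equally weighted atoms.

    Brute force over all K! permutations, so only usable for small K.
    """
    K = len(atoms_mu)
    if K != len(atoms_rho):
        raise ValueError("both measures need the same number of atoms")
    cost = [[l2_dist_sq(u, v) for v in atoms_rho] for u in atoms_mu]
    best = min(
        sum(cost[i][perm[i]] for i in range(K))
        for perm in itertools.permutations(range(K))
    )
    return math.sqrt(best / K)


# Two atoms each; every function is constant on a mesh with four cells.
mu_atoms = [[0.0] * 4, [1.0] * 4]
rho_atoms = [[1.5] * 4, [0.5] * 4]
distance = wasserstein2_uniform(mu_atoms, rho_atoms)
```

In this example the optimal matching pairs the constant 0 with 0.5 and the constant 1 with 1.5, giving a distance of 0.5. For larger \(K\) or non-uniform weights, a dedicated assignment or linear programming solver would be the natural replacement for the brute-force loop.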

Remark 3.5

It is important to recall from [15, Lemma 4.2] that if \(\rho \in {\mathcal {P}}(X)\) is K-atomic, i.e., \(\rho = \sum _{i=1}^K\alpha _i \delta _{u_i}\) for some \(u_1, \dots , u_K\in X\) and some \(\alpha _1,\dots , \alpha _K\geqslant 0\) with \(\sum _{i=1}^K\alpha _i =1,\) then there is a one-to-one correspondence between transport plans in \(\Pi (\mu ,\rho )\) and elements of \(\Lambda (\alpha ,\mu )\), which was defined in (4).

4 The a posteriori error estimate

In this section, we present the main a posteriori error estimate between dissipative statistical solutions and regularized numerical approximations. A main ingredient for the proof of Theorem 4.2 is the notion of relative entropy. Since the relative entropy framework is essentially an \(L^2\)-framework and due to the quadratic bounds in (8), (9) it is natural to consider the case \(p=2\). Therefore, for the remaining part of this paper we denote \({\mathcal {F}}:= L^{2}(D;{\mathcal {U}}).\)

Definition 4.1

(Relative entropy and relative flux) Let \((\eta ,q)\) be a strictly convex entropy/entropy flux pair of (1). We define the relative entropy \(\eta (\cdot |\cdot ) : {\mathcal {U}}\times {\mathcal {U}}\rightarrow {\mathbb {R}}\), and relative flux \(f(\cdot |\cdot ) : {\mathcal {U}}\times {\mathcal {U}}\rightarrow {\mathbb {R}}^m\) via

$$\begin{aligned} \eta (u|v)&:= \eta (u)-\eta (v)-{{\,\mathrm{D}\,}}\eta (v)(u-v), \\ f(u|v)&:= f(u)-f(v)-{{\,\mathrm{D}\,}}f(v)(u-v), \end{aligned}$$

for all \(u,v\in {\mathcal {U}}\).
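As a small worked example, assume the standard definitions \(\eta (u|v) = \eta (u)-\eta (v)-{{\,\mathrm{D}\,}}\eta (v)(u-v)\) and \(f(u|v) = f(u)-f(v)-{{\,\mathrm{D}\,}}f(v)(u-v)\) and consider the scalar Burgers equation with \(f(u)=\eta (u)=u^2/2\). Both quantities then reduce to \((u-v)^2/2\), which makes the quadratic nature of the relative entropy explicit. The Python sketch below checks this; the scalar setting and the function names are choices made for the illustration.

```python
def relative_entropy(eta, d_eta, u, v):
    """eta(u|v) = eta(u) - eta(v) - eta'(v) * (u - v) for scalar states."""
    return eta(u) - eta(v) - d_eta(v) * (u - v)


def relative_flux(f, d_f, u, v):
    """f(u|v) = f(u) - f(v) - f'(v) * (u - v) for scalar states."""
    return f(u) - f(v) - d_f(v) * (u - v)


def half_square(u):
    """u^2 / 2, used both as Burgers flux and as entropy."""
    return 0.5 * u * u


def identity(u):
    """Derivative of u^2 / 2."""
    return u


rel_eta = relative_entropy(half_square, identity, 3.0, 1.0)
rel_f = relative_flux(half_square, identity, 3.0, 1.0)
```

Both values equal \((3-1)^2/2 = 2\).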

We can now state the main result of this work which is an a posteriori error estimate between dissipative statistical solutions and their numerical approximations using the regularized empirical measure.

Theorem 4.2

Let \(\mu _{t}\) be a dissipative statistical solution of (1) as in Definition 2.9 and let \({\hat{\mu }}_{t}^K\) be the regularized empirical measure from Definition 3.3. Assume that there exist constants \(A,B>0\) (independent of \(h\), \(K\)) such that for all \(t \in [0,T)\)

for a.e. \(x\in D\), for \(\mu _{t}\)-a.e. \(u\in {\mathcal {F}}\) and for \({\hat{\mu }}_{t}^K\)-a.e. \(v \in {\mathcal {F}}\). Here \(H_v\) denotes the Hessian matrix w.r.t. \(v\).

Then the distance between \(\mu _{t}\) and \({\hat{\mu }}_{t}^K\) satisfies

for a.e. \(s \in (0,T)\) and \(L:= \max \limits _{\mathrm {k}\in {\mathcal {K}}} \Vert \partial _{x}u^{st}_{\mathrm {k}} \Vert _{L^{\infty }((0,s)\times D)}\). Here we denoted \(\hat{{\bar{\mu }}}^K:= \sum _{\mathrm {k}\in {\mathcal {K}}} w_{\mathrm {k}}\delta _{{\bar{u}}^{st}_{\mathrm {k}}}\).

Remark 4.3

There are several settings that guarantee validity of (8). Two of them are

1. The global bounds of the Hessians of \(f\) and \(\eta\) assumed in [17, Lemma 3.3].

2. Let \({\mathcal {C}}\Subset {\mathcal {U}}\) be a compact and convex set. Let us assume that \(\mu _t\) and \({\hat{\mu }}_{t}^K\) are (for all t) supported on solutions having values in \({\mathcal {C}}\) only. Due to the regularity of \(f, \eta\) and the compactness of \({\mathcal {C}}\), there exist constants \(0< C_{{\overline{f}},{\mathcal {C}}}< \infty\) and \(0< C_{{\underline{\eta }},{\mathcal {C}}}<C_{{\overline{\eta }},{\mathcal {C}}}< \infty\), such that

$$\begin{aligned} |u^\top H_{v}f(v) u| \leqslant C_{{\overline{f}},{\mathcal {C}}}|u|^2, \quad C_{{\underline{\eta }},{\mathcal {C}}}|u|^2 \leqslant | u^\top H_{v}\eta (v) u| \leqslant C_{{\overline{\eta }},{\mathcal {C}}}|u|^2, \quad \forall u\in {\mathbb {R}}^m, v\in {\mathcal {C}}. \end{aligned}$$ (10)

In this case, \(A\), \(B\) from (8), (9) can be chosen as

$$\begin{aligned} A= \max \{ (1 + C_{{\overline{f}},{\mathcal {C}}}) C_{{\underline{\eta }},{\mathcal {C}}}^{-1}, C_{{\overline{\eta }},{\mathcal {C}}}\}, \quad B= C_{{\overline{\eta }},{\mathcal {C}}}. \end{aligned}$$ (11)
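Formula (11) is easy to evaluate once the Hessian bounds from (10) are known. The short Python sketch below does so for hypothetical values of the three constants; the function name and the numbers are purely illustrative and do not correspond to a particular system.

```python
def estimator_constants(c_f_upper, c_eta_lower, c_eta_upper):
    """Constants A and B according to formula (11)."""
    A = max((1.0 + c_f_upper) / c_eta_lower, c_eta_upper)
    B = c_eta_upper
    return A, B


# Hypothetical Hessian bounds on a compact set C.
A, B = estimator_constants(c_f_upper=1.0, c_eta_lower=0.5, c_eta_upper=2.0)
```

With these values, \(A = \max \{(1+1)/0.5,\, 2\} = 4\) and \(B = 2\). A small lower bound on the entropy Hessian therefore inflates \(A\).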

Remark 4.4

Let us briefly outline a fundamental difference between Theorem 4.2 and [17, Thm 4.9]. The latter result is an a priori estimate that treats the empirical measure (created via Monte Carlo sampling) as a random variable and infers convergence in the mean via the law of large numbers. Theorem 4.2 provides an a posteriori error estimate that can be evaluated once the numerical scheme has produced an approximate solution. This process involves sampling the initial data measure \({{\bar{\mu }}}\) and the “quality” of these samples enters the error estimate via the term \(W_{2}({\bar{\mu }},\hat{{\bar{\mu }}}^K)\).

Remark 4.5

Note that the error bound in Theorem 4.2 depends exponentially on the spatial Lipschitz constant of the regularized empirical measure. Thus, if the exact solution \(\mu _t\) assigns positive measure to some set of discontinuous functions then the error estimator from Theorem 4.2 is expected to blow up under mesh refinement.

Proof

We recall that the weights \(\{ w_{\mathrm {k}} \}_{\mathrm {k}\in {\mathcal {K}}}\) satisfy \(\sum _{\mathrm {k}\in {\mathcal {K}}} w_{\mathrm {k}}=1\). We further denote the vector of weights by \(\vec {w}:=(w_{1},\ldots ,w_{K})\), and let \({\overline{\mu }}^{*}=({\overline{\mu }}^{*}_1,\ldots ,{\overline{\mu }}^{*}_K) \in \Lambda (\vec {w},{\bar{\mu }})\) correspond to an optimal transport plan between \({\bar{\mu }}\) and \(\hat{{\bar{\mu }}}^K\). Because \(\mu _{t}\) is a dissipative statistical solution, there exists \((\mu _{1,t}^*,\ldots ,\mu _{K,t}^*) \in \Lambda (\vec {w},\mu _{t})\), such that

for every \(\phi _\mathrm {k}\in C_c^\infty ([0,T)\times D;{\mathbb {R}}^m)\), \(\mathrm {k}\in {\mathcal {K}}\). Recalling that each function \(u^{st}_{\mathrm {k}}\) is a Lipschitz-continuous solution of the perturbed conservation law (5), considering its weak formulation yields

for every \(\phi _\mathrm {k}\in C_c^\infty ([0,T)\times D;{\mathbb {R}}^m)\). As \((\mu _{\mathrm {k},t}^*)_{\mathrm {k}\in {\mathcal {K}}}\) are probability measures on \({\mathcal {F}}\), we obtain (after changing order of integration)

Subtracting (14) from (12) and using the Lipschitz-continuous test function \(\phi _\mathrm {k}(t,x):= {{\,\mathrm{D}\,}}\eta (u^{st}_{\mathrm {k}}(t,x)) \phi (t)\), where \(\phi \in C_c^\infty ([0,T);{\mathbb {R}}_+)\) yields

We compute the partial derivatives of \({{\,\mathrm{D}\,}}\eta (u^{st}_{\mathrm {k}}(t,x))\phi (t)\) using product and chain rule

Next, we multiply (5) by \({{\,\mathrm{D}\,}}\eta (u^{st}_{\mathrm {k}})\). Upon using the chain rule for Lipschitz-continuous functions and the relationship \({{\,\mathrm{D}\,}}q( u^{st}_{\mathrm {k}})={{\,\mathrm{D}\,}}\eta (u^{st}_{\mathrm {k}}) {{\,\mathrm{D}\,}}f(u^{st}_{\mathrm {k}})\) we derive the relation

Let us consider the weak form of (18) and integrate w.r.t. x, t and \(\mathrm {d}\mu _{\mathrm {k},t}^*\) for \(\mathrm {k}\in {\mathcal {K}}\). Upon changing the order of integration we have

for any \(\phi \in C_c^\infty ([0,T);{\mathbb {R}}_+)\). Since \(\mu _{\mathrm {k},t}^*\) is a dissipative statistical solution it satisfies

Hence, subtracting (19) from (20) and using the definition of the relative entropy from Definition 4.1 yields

After subtracting (15) from (21) and using (16), (17) we are led to

Rearranging (5) yields

Plugging (23) into (22) and after rearranging we have

where we have used \({{\,\mathrm{D}\,}}f \cdot H\eta = H\eta {{\,\mathrm{D}\,}}f.\) Up to now, the choice of \(\phi (t)\) was arbitrary. We fix \(s>0\) and \(\epsilon >0\) and define \(\phi\) as follows
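A common choice in relative entropy arguments, stated here as an assumption because the original display is not reproduced, is a piecewise-linear cut-off that equals one up to time \(s\) and decays to zero on \((s,s+\epsilon)\). Such a function is only Lipschitz-continuous, which would be admissible by a density argument.

```latex
\phi(\sigma) :=
\begin{cases}
1, & \sigma < s,\\[2pt]
1-\dfrac{\sigma-s}{\epsilon}, & s \le \sigma \le s+\epsilon,\\[2pt]
0, & \sigma > s+\epsilon.
\end{cases}
```

With this choice \(\partial _\sigma \phi = -1/\epsilon\) on \((s,s+\epsilon )\), so terms containing the time derivative of \(\phi\) become averages over \((s,s+\epsilon )\), which is where the Lebesgue point argument enters.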

According to Theorem 2.5 (a) we have that the mapping

is measurable for all \(\mathrm {k}\in {\mathcal {K}}\). Moreover, due to the quadratic bound on the relative entropy, cf. (8), Lebesgue’s differentiation theorem states that a.e. \(t \in (0,T)\) is a Lebesgue point of (25). Thus, letting \(\epsilon \rightarrow 0\) we obtain

The left hand side of (26) is bounded from below using (8). The first term on the right hand is estimated using the \(L^\infty (D)\)-norm of the spatial derivative. We estimate the second term on the right hand side by Young’s inequality. Finally, we apply (8) and then (9) to both terms. The last term on the right hand side is estimated using (8). We, thus, end up with

Upon using Grönwall’s inequality we obtain

Using \(\max \limits _{\mathrm {k}\in {\mathcal {K}}} \Vert \partial _{x}u^{st}_{\mathrm {k}}\Vert _{ L^{\infty }((0,s)\times D)}=:L\) and recalling that \(({\overline{\mu }}^{*}_\mathrm {k})_{\mathrm {k}\in {\mathcal {K}}}\) corresponds to an optimal transport plan and that \((\mu _{\mathrm {k},s}^*)_{\mathrm {k}\in {\mathcal {K}}}\) corresponds to an admissible transport plan, we finally obtain

\(\square\)

To obtain an error estimate with separated bounds, i.e., bounds that quantify spatio-temporal and stochastic errors, respectively, we split the 2-Wasserstein error in initial data into a spatial and a stochastic part. Using the triangle inequality we obtain
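Judging from the discussion of the two terms that follows, and writing \({\bar{u}}_\mathrm {k}\) for the exact initial samples, the splitting presumably has the following form (a reconstruction under that assumption):

```latex
W_2\big(\bar{\mu}, \hat{\bar{\mu}}^K\big)
\;\le\;
W_2\Big(\bar{\mu}, \sum_{\mathrm{k}\in\mathcal{K}} w_{\mathrm{k}}\,\delta_{\bar{u}_{\mathrm{k}}}\Big)
+
W_2\Big(\sum_{\mathrm{k}\in\mathcal{K}} w_{\mathrm{k}}\,\delta_{\bar{u}_{\mathrm{k}}}, \hat{\bar{\mu}}^K\Big).
```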

The first term in (27) is a stochastic error, which is inferred from approximating the initial data by an empirical measure. On the other hand, the second term is essentially a spatial approximation error. This can be seen from the following lemma.

Lemma 4.6

With the same notation as in Theorem 4.2, the following inequality holds.

Equality in (28) holds provided spatial discretization errors are smaller than distances between samples. A sufficient condition is:

Proof

Recalling the definition of \(\hat{{\bar{\mu }}}^K= \sum _{\mathrm {k}\in {\mathcal {K}}}w_{\mathrm {k}} \delta _{{\bar{u}}^{st}_{\mathrm {k}}}\) and defining the transport plan \(\pi ^* := \sum _{\mathrm {k}\in {\mathcal {K}}} w_{\mathrm {k}}(\delta _{{\bar{u}}_\mathrm {k}} \otimes \delta _{{\bar{u}}^{st}_{\mathrm {k}}})\) yields the assertion, because

If (29) holds, \(\pi ^*\) is an optimal transport plan. \(\square\)
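The idea behind the lemma can be illustrated numerically with a minimal sketch. It assumes uniform weights and equally many atoms on both sides, in which case an optimal plan can be chosen as a permutation and found by brute force for small \(K\). The sample functions, the perturbation standing in for the discretization error, and all function names are hypothetical and not taken from the paper.

```python
import itertools
import numpy as np

def l2_sq(u, v, dx):
    """Squared L2(D) distance of two grid functions (rectangle rule, uniform periodic grid)."""
    return float(np.sum((u - v) ** 2) * dx)

def w2_sq_uniform(samples_a, samples_b, dx):
    """Squared 2-Wasserstein distance between two uniform empirical measures
    with the same number of atoms, by brute force over all permutations."""
    K = len(samples_a)
    cost = np.array([[l2_sq(a, b, dx) for b in samples_b] for a in samples_a])
    best = min(
        sum(cost[k, perm[k]] for k in range(K))
        for perm in itertools.permutations(range(K))
    )
    return best / K

# Hypothetical data: K well-separated "exact" initial samples on [0, 1)
x = np.linspace(0.0, 1.0, 64, endpoint=False)
dx = x[1] - x[0]
K = 5
exact = [np.sin(2.0 * np.pi * (x + 0.2 * k)) for k in range(K)]
# Small perturbation standing in for the spatial discretization error
approx = [u + 0.01 * np.cos(6.0 * np.pi * x) for u in exact]

# Cost of the diagonal plan pi^* that couples each sample with its own approximation
diag_bound = sum(l2_sq(u, v, dx) for u, v in zip(exact, approx)) / K
w2_sq = w2_sq_uniform(exact, approx, dx)
print(w2_sq, diag_bound)
```

Because the perturbation is much smaller than the distance between distinct samples, the diagonal plan is optimal here and both printed numbers coincide; for larger perturbations the diagonal cost is only an upper bound.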

Remark 4.7

In contrast to random conservation laws as considered in [30], the stochastic part of the error estimator of Theorem 4.2 is solely given by the stochastic discretization error of the initial data, i.e., by the first term in (27), which may be amplified due to nonlinear effects. There is no stochastic error source during the evolution of the numerical approximation.

5 Extension to general systems (with \({\mathcal {U}}\ne {\mathbb {R}}^m\))

Up to this point, we have stuck with the setting from [17] to stress that a posteriori error estimates can be obtained as long as the relative entropy framework from [17] is applicable. Having said this, it is fairly clear that this setting does not cover certain systems of practical interest, e.g. the Euler equations of fluid dynamics.

This raises the question how statistical solutions can be defined for more general systems. It seems to be sufficient to require \(\mu _t\) to be a measure on some set of functions \({\mathcal {F}}\) so that \(u \in {\mathcal {F}}\) implies that \(u, f(u)\) and \(\eta (u)\) are integrable. In particular, such a definition ensures (fairly generically) that \(\int _{{\mathcal {F}}} \int _D f(u) {\text {d}}\!x d\mu (u)\) is well-defined, i.e. that \(E: {\mathcal {F}} \rightarrow {\mathbb {R}}^m, \ u \mapsto E(u):= \int _D f(u) {\text {d}}\!x\) is measurable. For \({\mathcal {F}}=L_f^r := \{ u \in L^r : \int _D | f(u(x)) | \, {\text {d}}\!x < \infty \}\) for some \(r \in [1,\infty ]\) and \({\mathcal {F}}\) being equipped with the \(L^r\) topology this can be seen as follows: For any \(M>0\) the functional \(u \mapsto E_M (u):= \int _D \max \{ -M, \min \{ M, f(u)\}\} {\text {d}}\!x\) is continuous and E is measurable as pointwise limit of continuous functions. The same argument works for \(\int _{{\mathcal {F}}} \int _D \eta (u) {\text {d}}\!x d\mu (u)\).

However, it is not clear to us whether the a posteriori error estimate in Sect. 4 can be extended to all such settings in which statistical solutions can be defined, since it requires (8), (9). One interesting, albeit somewhat restrictive, setting in which (8), (9) hold is the case that all measures under consideration are supported on some set of functions taking values in some compact subset of the state space. We will provide a definition of statistical solutions and an a posteriori error estimate in this setting.

Definition 5.1

(\({\mathcal {C}}\)-valued statistical solution) Let \({\mathcal {C}} \Subset {\mathcal {U}}\) be compact and convex. Let \({{\bar{\mu }}} \in {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) be supported on \(L^{p}(D;{\mathcal {C}})\). A \({\mathcal {C}}\)-valued statistical solution of (1) with initial data \({{\bar{\mu }}}\) is a time-dependent map \(\mu :[0,T) \rightarrow {\mathcal {P}}(L^{p}(D;{\mathcal {U}}))\) such that each \(\mu _t\) is supported on \(L^{p}(D;{\mathcal {C}})\) for all \(t \in [0,T)\) and such that the corresponding correlation measures \(\nu ^k_t\) satisfy

in the sense of distributions, for every \(k \in {\mathbb {N}}\).

Remark 5.2

Note that duality implies that if \(\mu\) is supported on \(L^{p}(D;{\mathcal {C}})\) then the corresponding \(\nu ^k\) is supported on \({\mathcal {C}}^k\) (for all \(k \in {\mathbb {N}}\)).

Definition 5.3

(Dissipative \({\mathcal {C}}\)-valued statistical solution) A \({\mathcal {C}}\)-valued statistical solution of (1) is called a dissipative statistical solution provided the conditions from Definition 2.9 hold with “statistical solution” being replaced by “ \({\mathcal {C}}\)-valued statistical solution”.

We immediately have the following theorem, whose proof follows mutatis mutandis from the proof of Theorem 4.2.

Theorem 5.4

Let \(\mu _{t}\) be a \({\mathcal {C}}\)-valued dissipative statistical solution of (1) with initial data \({{\bar{\mu }}}\) as in Definition 2.9 and let \({\hat{\mu }}_{t}^K\) be the regularized empirical measure as in Definition 3.3 and supported on functions with values in \({\mathcal {C}}\). Then the difference between \(\mu _{t}\) and \({\hat{\mu }}_{t}^K\) satisfies

for a.e. \(s \in (0,T)\) and \(L:= \max \limits _{\mathrm {k}\in {\mathcal {K}}} \Vert \partial _{x}u^{st}_{\mathrm {k}} \Vert _{L^{\infty }((0,s)\times D)}\).

6 Numerical experiments

In this section we illustrate how to approximate the 2-Wasserstein distances that occur in Theorem 4.2. Moreover, we examine the scaling properties of the estimators in Theorem 4.2 by means of a smooth and a non-smooth solution of the one-dimensional compressible Euler equations.

6.1 Numerical approximation of Wasserstein distances

We illustrate how to approximate the 2-Wasserstein distance on the example of \(W_{2}({\bar{\mu }},\sum _{\mathrm {k}\in {\mathcal {K}}} w_{\mathrm {k}}\delta _{{\bar{u}}_\mathrm {k}})\) from (27). For the given initial measure \({\bar{\mu }}\in {\mathcal {P}}(L^{2}(D))\) we choose a probability space \((\Omega ,\sigma ,{\mathbb {P}})\) and a random field \({\bar{u}} \in L^{2}(\Omega ;L^{2}(D))\), such that the law of \({\bar{u}}\) with respect to \({\mathbb {P}}\) coincides with \({\bar{\mu }}\). We approximate the initial measure \({\bar{\mu }}\) using some empirical measure \(\sum _{\mathrm {m}\in {\mathcal {M}}} w_{\mathrm {m}} \delta _{{\bar{u}}_\mathrm {m}}\), for a second sample set \({\mathcal {M}}:=\{1, \ldots ,M \}\), where the number of samples \(M \gg K\) is significantly larger than the number of samples of the numerical approximation. To distinguish between the two different sample sets \({\mathcal {K}}\) and \({\mathcal {M}}\), we write \(\{{\bar{u}}^{{\mathcal {K}}}_\mathrm {k}\}_{\mathrm {k}\in {\mathcal {K}}}\), \(\{{\bar{u}}^{{\mathcal {M}}}_\mathrm {m}\}_{\mathrm {m}\in {\mathcal {M}}}\) and \(\{ w_{\mathrm {k}}^{\mathcal {K}}\}_{\mathrm {k}\in {\mathcal {K}}}\), \(\{ w_{\mathrm {m}}^{\mathcal {M}}\}_{\mathrm {m}\in {\mathcal {M}}}\) respectively. Finally, we collect the weights \(\{ w_{\mathrm {k}}^{\mathcal {K}}\}_{\mathrm {k}\in {\mathcal {K}}}\) in the vector \(\vec {w}^{\mathcal {K}}\) and \(\{ w_{\mathrm {m}}^{\mathcal {M}}\}_{\mathrm {m}\in {\mathcal {M}}}\) in the vector \(\vec {w}^{\mathcal {M}}\).

Computing the optimal transport \(\pi ^*\) (and thus the 2-Wasserstein distance) between the two atomic measures can be formulated as the following linear program, cf. [31, (2.11)]

where \(\Pi \Big ( \sum _{\mathrm {k}\in {\mathcal {K}}} w_{\mathrm {k}}^{\mathcal {K}}\delta _{{\bar{u}}^{\mathcal {K}}_\mathrm {k}}, \sum _{\mathrm {m}\in {\mathcal {M}}} w_{\mathrm {m}}^{\mathcal {M}}\delta _{{\bar{u}}^{\mathcal {M}}_\mathrm {m}} \Big ):= \{ \pi \in {\mathbb {R}}^{K\times M}_+~|~ \pi \mathbb {1}_{M} = \vec {w}^{\mathcal {K}}\text { and } \pi ^\top \mathbb {1}_{K} = \vec {w}^{\mathcal {M}}\}\) denotes the set of transport plans and \(\mathbb {1}_{n} := (1,\ldots ,1)^\top \in {\mathbb {R}}^n\). Moreover, the entries of the cost matrix \({\mathbb {C}}\in {\mathbb {R}}^{K\times M}_+\) are computed as

The linear program is solved using the network simplex algorithm [3, 31], which is available as ot.emd in the Python Optimal Transport (POT) library [18]. The 2-Wasserstein distance is finally approximated by

where (32) can be computed with ot.emd2(\(\vec {w}^{\mathcal {K}},\vec {w}^{\mathcal {M}},{\mathbb {C}}\)).
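The procedure of this subsection can be sketched as follows. The sketch assumes that the cost entries are squared \(L^2(D)\)-distances between samples, so that the square root of the optimal value of the linear program approximates the 2-Wasserstein distance. It substitutes the general-purpose solver scipy.optimize.linprog for the network simplex routine of POT so that it does not depend on that library. The random field, the grid, the sample sizes and all function names are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog

def cost_matrix(samples_k, samples_m, dx):
    """C[k, m] = squared L2(D) distance between sample k and sample m
    (rectangle rule on a uniform periodic grid)."""
    diff = samples_k[:, None, :] - samples_m[None, :, :]
    return np.sum(diff ** 2, axis=2) * dx

def w2_empirical(w_k, w_m, C):
    """2-Wasserstein distance between two atomic measures via the transport LP."""
    K, M = C.shape
    # The plan pi (K x M) is flattened row by row.
    # Row sums must equal w_k, column sums must equal w_m.
    A_rows = np.kron(np.eye(K), np.ones((1, M)))
    A_cols = np.kron(np.ones((1, K)), np.eye(M))
    A_eq = np.vstack([A_rows, A_cols])
    b_eq = np.concatenate([w_k, w_m])
    res = linprog(C.ravel(), A_eq=A_eq, b_eq=b_eq, bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return float(np.sqrt(max(res.fun, 0.0)))

# Hypothetical random field: shifted sine waves with a uniformly distributed shift
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 128, endpoint=False)
dx = x[1] - x[0]

def draw(n):
    xi = rng.uniform(0.0, 1.0, n)
    return 1.0 + 0.2 * np.sin(2.0 * np.pi * (x[None, :] + xi[:, None]))

K, M = 8, 200                      # M >> K plays the role of the reference sample set
samples_k, samples_m = draw(K), draw(M)
w_k, w_m = np.full(K, 1.0 / K), np.full(M, 1.0 / M)
dist = w2_empirical(w_k, w_m, cost_matrix(samples_k, samples_m, dx))
print(dist)
```

With POT installed, the optimal value of the same linear program would, to our understanding, be returned by ot.emd2 when it is called with the two weight vectors and the cost matrix.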

6.2 Numerical experiment for a smooth solution

This section is devoted to the numerical study of the scaling properties of the individual terms in the a posteriori error estimate of Theorem 4.2. In the following experiment we consider, as an instance of (1), the one-dimensional compressible Euler equations for the flow of an ideal gas, which are given by

\[ \partial _t \rho + \partial _x m = 0, \qquad \partial _t m + \partial _x \Big ( \frac{m^2}{\rho } + p \Big ) = 0, \qquad \partial _t E + \partial _x \Big ( (E+p)\frac{m}{\rho } \Big ) = 0, \tag{33} \]

where \(\rho\) describes the mass density, m the momentum and E the energy of the gas. The constitutive law for pressure p reads

\[ p = (\gamma -1)\Big ( E - \frac{m^2}{2\rho } \Big ) \]

with the adiabatic constant \(\gamma =1.4\). We construct a smooth exact solution of (33) by introducing an additional source term. The exact solution reads as follows

where \(\xi \sim {\mathcal {U}}(0,1)\) is a uniformly distributed random variable. Moreover, the spatial domain is \([0,1]_{\text {per}}\) and we compute the solution up to \(T=0.2\). We sample the initial measure \({\bar{\mu }}\) and the exact measure \(\mu _T\) with 10000 samples.
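For orientation, the flux of the one-dimensional Euler equations in the conservative variables \((\rho , m, E)\) can be sketched as below. The sketch assumes the standard ideal-gas closure \(p=(\gamma -1)(E-m^2/(2\rho ))\); the function names are hypothetical and the code is not taken from the solver used in the experiments.

```python
import numpy as np

GAMMA = 1.4  # adiabatic constant used in the experiments

def pressure(rho, m, E, gamma=GAMMA):
    """Ideal-gas pressure from density, momentum and total energy (assumed closure)."""
    return (gamma - 1.0) * (E - 0.5 * m ** 2 / rho)

def euler_flux(rho, m, E, gamma=GAMMA):
    """Flux f(u) of the 1D compressible Euler equations for u = (rho, m, E)."""
    p = pressure(rho, m, E, gamma)
    v = m / rho  # velocity
    return np.array([m, m * v + p, (E + p) * v])

# Gas at rest with unit density and unit pressure: only the momentum flux is non-zero
print(euler_flux(1.0, 0.0, 2.5))
```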

For the remaining part of this section we introduce the notations

Moreover, when we refer to error, we mean the Wasserstein distance between exact and numerically computed density \(\rho\) at time \(t=T\).

As numerical solver we use the RKDG code Flexi [25]. The DG polynomial degree is always two, and for the time-stepping we use a low-storage SSP Runge-Kutta method of order three as in [26]. The time-reconstruction is also of order three. As numerical flux we choose the Lax-Wendroff numerical flux

Computing \({\mathcal {E}}\,^{\text {det}}, {\mathcal {E\,}}_0^{\text {det}}, {\mathcal {E\,}}_0^{\text {stoch}}\) requires evaluating integrals, which we approximate via Gauß-Legendre quadrature with seven points in each time-cell and ten points in every spatial cell.
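A cell-wise Gauß-Legendre rule of this kind can be sketched as follows. The integrand, the mesh and the function name are hypothetical and serve only to illustrate the quadrature; ten points per cell are used as in the spatial integrals above.

```python
import numpy as np

def composite_gauss_legendre(f, a, b, n_cells, n_points):
    """Integrate f over [a, b] with an n_points Gauss-Legendre rule on each of n_cells uniform cells."""
    nodes, weights = np.polynomial.legendre.leggauss(n_points)  # rule on [-1, 1]
    edges = np.linspace(a, b, n_cells + 1)
    total = 0.0
    for left, right in zip(edges[:-1], edges[1:]):
        half = 0.5 * (right - left)
        mid = 0.5 * (right + left)
        # Affine map of the reference nodes to the cell [left, right]
        total += half * np.sum(weights * f(mid + half * nodes))
    return total

# Squared L2(0,1)-norm of a hypothetical smooth function, 16 cells, ten points per cell
val = composite_gauss_legendre(lambda x: np.sin(2.0 * np.pi * x) ** 2, 0.0, 1.0, 16, 10)
print(val)
```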

6.2.1 Spatial refinement

In this section we examine the scaling properties of \({\mathcal {E}}\,^{\text {det}}(T)\) and \({\mathcal {E\,}}_0^{\text {det}}\) when we gradually increase the number of spatial cells. We start initially on a coarse mesh with 16 elements, i.e., \(h=1/16\) and upon each refinement we subdivide each spatial cell uniformly into two cells. To examine a possible influence of the stochastic resolution we consider a coarse and fine sampling with 100 and 1000 samples, respectively. Table 1 and Fig. 1a display the error estimator parts, \({\mathcal {E}}\,^{\text {det}}\), \({\mathcal {E\,}}_0^{\text {stoch}}\) and \({\mathcal {E\,}}_0^{\text {det}}\) from (35)-(37) when we compute the numerical approximation with 100 samples and Table 2 and Fig. 1b display the same quantities for 1000 samples. We observe that the convergence of \({\mathcal {E}}\,^{\text {det}}\) and \({\mathcal {E\,}}_0^{\text {det}}\) does not depend on the stochastic resolution. Indeed, both quantities decay when we increase the number of spatial elements and they are uniform with respect to changes in the sample size. Moreover, \({\mathcal {E\,}}_0^{\text {det}}\) exhibits the expected order of convergence which is six for a DG polynomial degree of two (note that we compute squared quantities). It can also be observed that \({\mathcal {E}}\,^{\text {det}}\) converges with order five, which is due to a suboptimal rate of convergence on the first time-step. This issue has also been discussed in detail in [30, Remark 4.1]. Furthermore, we see that the numerical error remains almost constant upon mesh refinement, since it is dominated by the stochastic resolution error, which is described by \({\mathcal {E\,}}_0^{\text {stoch}}\). Since \({\mathcal {E\,}}_0^{\text {stoch}}\) reflects the stochastic error it also remains constant upon spatial mesh refinement.
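Convergence orders such as those quoted above are usually read off as experimental orders of convergence between consecutive refinement levels. The following minimal sketch shows the computation; the error values are synthetic (generated to decay like \(h^6\)) and are not the values from the tables, and the function name is hypothetical.

```python
import math

def experimental_order(errors, mesh_widths):
    """Experimental order of convergence between consecutive refinement levels."""
    return [
        math.log(errors[i] / errors[i + 1]) / math.log(mesh_widths[i] / mesh_widths[i + 1])
        for i in range(len(errors) - 1)
    ]

# Synthetic data: mesh halved on each level, squared quantity decaying like h^6
mesh_widths = [1.0 / 16, 1.0 / 32, 1.0 / 64, 1.0 / 128]
errors = [3.0 * h ** 6 for h in mesh_widths]
rates = experimental_order(errors, mesh_widths)
print(rates)
```

The same function applies to stochastic refinement if the mesh widths are replaced by the reciprocals of the sample numbers.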

Spatial refinement for 100 and 1000 samples. Numerical example from Sect. 6.2

6.2.2 Stochastic refinement

In this section, we consider stochastic refinement, i.e., we increase the number of samples and keep the spatial resolution fixed. Similarly to the numerical example in the previous section we consider a very coarse spatial discretization with 8 elements (Table 3, Fig. 2a) and a fine spatial discretization with 256 elements (Table 4, Fig. 2b). We observe that \({\mathcal {E}}\,^{\text {det}}\) and \({\mathcal {E\,}}_0^{\text {det}}\) remain constant when we increase the number of samples. This is the correct behavior, since both residuals reflect spatio-temporal errors. For the coarse spatial discretization we observe that the numerical error does not decrease when increasing the number of samples since the spatial discretization error, reflected by \({\mathcal {E}}\,^{\text {det}}\), dominates the numerical error. For the fine spatial discretization the numerical error is dominated by the stochastic resolution error and thus the error converges with the same order. We observe an experimental rate of convergence of one (resp. one half after taking the square root). Finally, we see that \({\mathcal {E\,}}_0^{\text {stoch}}\) is independent of the spatial discretization because its convergence is not altered by the spatio-temporal resolution (Tables 5, 6, 7, 8).

Stochastic refinement for 8 and 256 spatial cells. Numerical example from Sect. 6.2

6.3 Numerical experiment for a non-smooth solution

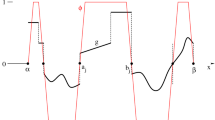

In this numerical experiment we consider a spatially non-smooth exact solution given by

where \(\xi \sim {\mathcal {U}}(0,1)\) is a uniformly distributed random variable. The spatial domain is again \([0,1]_{\text {per}}\). We compute the solution up to \(T=0.2\) and sample the initial measure \({\bar{\mu }}\) and the exact measure \(\mu _T\) with 10000 samples.

6.3.1 Spatial refinement

Again we first examine the scaling properties of \({\mathcal {E}}\,^{\text {det}}(T)\) and \({\mathcal {E\,}}_0^{\text {det}}\) when we gradually increase the number of spatial cells. We start initially on a coarse mesh with 16 elements, i.e., \(h=1/16\). Strictly speaking, the samples \({{\bar{\rho }}}_\mathrm {k}\) are smooth, but the meshes under consideration are sufficiently coarse so that the numerics sees the \(k^{-2}\) decay of Fourier coefficients in \({{\bar{\rho }}}_\mathrm {k}\) while the cut-off at \(k=600\) is not resolved. Thus, for the meshes under consideration, the decay of the error is as if each sample \(\{{\bar{\rho }}_\mathrm {k}\}_{\mathrm {k}\in {\mathcal {K}}}\) satisfies \({\bar{\rho }}_\mathrm {k}\in H^{s}(D)\) for any \(s< \frac{3}{2}\) but is not in \(H^{3/2}(D)\). We expect the convergence of \({\mathcal {E\,}}_0^{\text {det}}\) in terms of the mesh width h to be of order 3, resp. \(\frac{3}{2}\) after taking the square root. This behavior can indeed be observed in Fig. 3. Similarly, the numerical error converges with the order \(\tfrac{3}{2}\), up to the point where it is dominated by the stochastic error. The deterministic residual \({\mathcal {E}}\,^{\text {det}}\) exhibits a rate of convergence of 1, resp. \(\tfrac{1}{2}\) after taking the square root, which has also been observed in [11].

Spatial refinement for 100 and 1000 samples. Numerical example from Sect. 6.3

6.3.2 Stochastic refinement

Finally, we consider stochastic refinement for two fixed spatial meshes. The setup of the meshes and number of stochastic samples is exactly the same as in the numerical experiment from Sect. 6.2.2. In Fig. 4 we observe a similar behavior of the residuals and the numerical error as in Sect. 6.2.2, that is, the stochastic residual \({\mathcal {E\,}}_0^{\text {stoch}}\) converges independently of the spatial resolution and on the coarse grid the numerical error does not converge due to the low spatial resolution. On the fine grid, however, it converges with the same order as the stochastic resolution error, which is one, resp. one-half after taking the square root.

Stochastic refinement for 8 and 256 spatial cells. Numerical example from Sect. 6.3

7 Conclusions

This work provides a first rigorous a posteriori error estimate for numerical approximations of dissipative statistical solutions in one spatial dimension. Our numerical approximations rely on so-called regularized empirical measures, which enable us to use the relative entropy method of Dafermos and DiPerna [8] within the framework of dissipative statistical solutions introduced by the authors of [17]. We derived a splitting of the error estimator into a stochastic and a spatio-temporal part. In addition, we provided numerical examples verifying this splitting. Moreover, our numerical results confirm that the quantities that occur in our a posteriori error estimate decay with the expected order of convergence.

Further work should focus on a posteriori error control for multi-dimensional systems of hyperbolic conservation laws, especially the correct extension of the space-time reconstruction to two and three spatial dimensions. Moreover, based on the a posteriori error estimate it is possible to design reliable space-time-stochastic numerical schemes for the approximation of dissipative statistical solutions. For random conservation laws and classical \(L^2(\Omega ;\, L^2(D))\)-estimates, the stochastic part of the error estimator is given by the discretization error in the initial data and an additional stochastic residual which occurs during the evolution, see for example [21, 30]. For dissipative statistical solutions the stochastic part of the error estimator in the 2-Wasserstein distance is directly related to the stochastic discretization error of the initial data which may be amplified in time due to nonlinear effects. This result shows that stochastic adaptivity for dissipative statistical solutions becomes significantly easier compared to random conservation laws since only stochastic discretization errors of the initial data (and their proliferation) need to be controlled. The design of space-stochastic adaptive numerical schemes based on this observation will be the subject of further research.

References

Adjerid, S., Devine, K.D., Flaherty, J.E., Krivodonova, L.: A posteriori error estimation for discontinuous Galerkin solutions of hyperbolic problems. Comput. Methods Appl. Mech. Eng. 191, 1097–1112 (2002)

Ball, J.M.: A version of the fundamental theorem for Young measures. In: PDEs and Continuum Models of Phase Transitions (Nice, 1988). Lecture Notes in Physics, vol. 344, pp. 207–215. Springer, Berlin (1989)

Bonneel, N., Van De Panne, M., Paris, S., Heidrich, W.: Displacement interpolation using lagrangian mass transport. In ACM Transactions on Graphics (TOG), vol. 30, ACM, p. 158 (2011)

Bressan, A.: The unique limit of the Glimm scheme. Arch. Rational Mech. Anal. 130, 205–230 (1995)

Chiodaroli, E.: A counterexample to well-posedness of entropy solutions to the compressible Euler system. J. Hyperbolic Differ. Equ. 11, 493–519 (2014)

Cockburn, B., Shu, C.-W.: The Runge-Kutta discontinuous Galerkin method for conservation laws. V. Multidimensional systems. J. Comput. Phys. 141, 199–224 (1998)

Cockburn, B., Shu, C.-W.: Runge–Kutta discontinuous Galerkin methods for convection-dominated problems. J. Sci. Comput. 16, 173–261 (2001)

Dafermos, C.M.: Hyperbolic conservation laws in continuum physics. Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], vol. 325, 4th edn. Springer-Verlag, Berlin (2016)

De Lellis, C., Székelyhidi, L., Jr.: On admissibility criteria for weak solutions of the Euler equations. Arch. Ration. Mech. Anal. 195, 225–260 (2010)

Dedner, A., Giesselmann, J.: A posteriori analysis of fully discrete method of lines discontinuous Galerkin schemes for systems of conservation laws. SIAM J. Numer. Anal. 54, 3523–3549 (2016)

Dedner, A., Giesselmann, J.: Residual error indicators for discontinuous Galerkin schemes for discontinuous solutions to systems of conservation laws. In: Theory numerics and applications of hyperbolic problems I, Aachen, Germany, August 2016, pp. 459–471. Springer, Cham (2018)

DiPerna, R.J.: Measure-valued solutions to conservation laws. Arch. Rational Mech. Anal. 88, 223–270 (1985)

Feireisl, E., Lukáčová-Medvidová, M., Mizerová, H.: \({\cal K}\)-convergence as a new tool in numerical analysis. IMA J. Numer. Anal. (2019)

Fjordholm, U.S., Käppeli, R., Mishra, S., Tadmor, E.: Construction of approximate entropy measure-valued solutions for hyperbolic systems of conservation laws. Found. Comput. Math. 17, 763–827 (2017)

Fjordholm, U.S., Lanthaler, S., Mishra, S.: Statistical solutions of hyperbolic conservation laws: foundations. Arch. Ration. Mech. Anal. 226, 809–849 (2017)

Fjordholm, U.S., Lye, K., Mishra, S.: Numerical approximation of statistical solutions of scalar conservation laws. SIAM J. Numer. Anal. 56, 2989–3009 (2018)

Fjordholm, U.S., Lye, K., Mishra, S., Weber, F.: Statistical solutions of hyperbolic systems of conservation laws: numerical approximation. Math. Models Methods Appl. Sci. 30, 539–609 (2020)

Flamary, R., Courty, N.: POT Python Optimal Transport library, GitHub: https://github.com/rflamary/POT, (2017)

Foias, C., Manley, O., Rosa, R., Temam, R.: Navier–Stokes Equations and Turbulence. Encyclopedia of Mathematics and its Applications, vol. 83. Cambridge University Press, Cambridge (2001)

Giesselmann, J., Makridakis, C., Pryer, T.: A posteriori analysis of discontinuous Galerkin schemes for systems of hyperbolic conservation laws. SIAM J. Numer. Anal. 53, 1280–1303 (2015)

Giesselmann, J., Meyer, F., Rohde, C.: A posteriori error analysis and adaptive non-intrusive numerical schemes for systems of random conservation laws. BIT 60, 619–649 (2020)

Glimm, J.: Solutions in the large for nonlinear hyperbolic systems of equations. Comm. Pure Appl. Math. 18, 697–715 (1965)

Glimm, J., Sharp, D.H., Lim, H., Kaufman, R., Hu, W.: Euler equation existence, non-uniqueness and mesh converged statistics. Philos. Trans. Roy. Soc. A 373, 20140282 (2015)

Hartmann, R., Houston, P.: Adaptive discontinuous Galerkin finite element methods for nonlinear hyperbolic conservation laws. SIAM J. Sci. Comput. 24, 979–1004 (2002)

Hindenlang, F., Gassner, G.J., Altmann, C., Beck, A., Staudenmaier, M., Munz, C.-D.: Explicit discontinuous Galerkin methods for unsteady problems. Comput. Fluids 61, 86–93 (2012)

Ketcheson, D.I.: Highly efficient strong stability-preserving Runge-Kutta methods with low-storage implementations. SIAM J. Sci. Comput. 30, 2113–2136 (2008)

Laforest, M.: A posteriori error estimate for front-tracking: systems of conservation laws. SIAM J. Math. Anal. 35, 1347–1370 (2004)

Laforest, M.: An a posteriori error estimate for Glimm’s scheme, in Hyperbolic problems. Theory, numerics and applications. Proceedings of the 11th international conference on hyperbolic problems, Ecole Normale Supérieure, Lyon, France, July 17–21, (2006), pp. 643–651 Berlin: Springer (2008)

Makridakis, C.: Space and time reconstructions in a posteriori analysis of evolution problems. ESAIM Proc. 21, 31–44 (2007)

Meyer, F., Rohde, C., Giesselmann, J.: A posteriori error analysis for random scalar conservation laws using the stochastic Galerkin method. IMA J. Numer. Anal. 40, 1094–1121 (2020)

Peyré, G., Cuturi, M.: Computational optimal transport. Found. Trends Mach. Learn. 11, 355–607 (2019)

Shu, C.-W., Osher, S.: Efficient implementation of essentially nonoscillatory shock-capturing schemes. J. Comput. Phys. 77, 439–471 (1988)

Funding

Open Access funding enabled and organized by Projekt DEAL.


Additional information


F.M., C.R. thank the Baden-Württemberg Stiftung for support via the project “SEAL”. J.G. thanks the German Research Foundation (DFG) for support of the project via DFG grant GI1131/1-1.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Giesselmann, J., Meyer, F. & Rohde, C. Error control for statistical solutions of hyperbolic systems of conservation laws. Calcolo 58, 23 (2021). https://doi.org/10.1007/s10092-021-00417-6


DOI: https://doi.org/10.1007/s10092-021-00417-6

Keywords

- Hyperbolic conservation laws

- Statistical solutions

- A posteriori error estimates

- Discontinuous Galerkin method