Abstract

This paper is concerned with the efficient numerical treatment of 1D stationary Schrödinger equations in the semi-classical limit when including a turning point of first order. As such it is an extension of the paper [3], where turning points still had to be excluded. For the considered scattering problems we show that the wave function asymptotically blows up at the turning point as the scaled Planck constant \(\varepsilon \rightarrow 0\), which is a key challenge for the analysis. Assuming that the given potential is linear or quadratic in a small neighborhood of the turning point, the problem is analytically solvable on that subinterval in terms of Airy or parabolic cylinder functions, respectively. Away from the turning point, the analytical solution is coupled to a numerical solution that is based on a WKB-marching method—using a coarse grid even for highly oscillatory solutions. We provide an error analysis for the hybrid analytic-numerical problem up to the turning point (where the solution is asymptotically unbounded) and illustrate it in numerical experiments: if the phase of the problem is explicitly computable, the hybrid scheme is asymptotically correct w.r.t. \(\varepsilon \). If the phase is obtained with a quadrature rule of, e.g., order 4, then the spatial grid size has the limitation \(h=\mathcal{O}(\varepsilon ^{7/12})\) which is slightly worse than the \(h=\mathcal{O}(\varepsilon ^{1/2})\) restriction in the case without a turning point.


1 Introduction

This paper is concerned with the numerical treatment of the stationary, one-dimensional Schrödinger equation

$$\begin{aligned} \varepsilon ^2\psi ''(x)+a(x)\,\psi (x)=0,\qquad x\in \mathbb {R}, \end{aligned}\tag{1.1}$$

in the semi-classical limit \(\varepsilon \rightarrow 0\). Here, \(0<\varepsilon \ll 1\) is the rescaled Planck constant (\(\varepsilon := \frac{\hbar }{\sqrt{2m}} \)), \(\psi (x)\) the (possibly complex valued) Schrödinger wavefunction, and the (given) real valued coefficient function a(x) is related to the potential. For \(a(x)>0\) and \(\varepsilon \) “small”, the solution is highly oscillatory, and efficient numerical schemes have been developed, e.g., in [1, 19]. The novel feature of this work is to include one turning point of first order at \(x=0\) (i.e. \(a(0)=0\), \(a'(0)>0\)). Then, \(x<0\) represents the evanescent region where the solution \(\psi \) decreases exponentially, possibly including a pronounced boundary layer. \(x>0\) is the oscillatory region, where the solution exhibits rapid oscillations with (local) wave length \(\lambda (x)=\tfrac{2\pi \varepsilon }{\sqrt{a(x)}}\). Hence, the situation at hand is a classical multi-scale problem.

Standard numerical methods (e.g., [12, 13]) for (1.1) are costly and inefficient, particularly in the highly oscillatory regime, since they must resolve the oscillations by choosing a spatial grid with step size \(h=\mathcal{O}(\varepsilon )\). By contrast, we are aiming here at a numerical method on a coarse grid with \(h>\lambda \), while still recovering the fine structures of the solution. Our strategy is built upon the following works: for the purely oscillatory case \(a(x)\ge \tau _1>0\) in the semi-classical regime, WKB-based marching methods (named after the physicists Wentzel, Kramers, Brillouin) were developed in [1, 15, 19]. They relax the grid limitation to at least \(h=\mathcal{O}(\sqrt{\varepsilon })\). For the evanescent case \(a(x)\le \tau _3<0\) (as \(\varepsilon \rightarrow 0\)), a WKB-based multi-scale FEM was introduced in §3 of [22]. A hybrid method to couple both of these regimes was recently introduced and analyzed in [3]; it consists of a (non-overlapping) domain decomposition method. Turning points were excluded there, since (to the authors’ knowledge) no \(\varepsilon \)-uniformly accurate method for (1.1) including turning points has been developed so far. Hence, the function a in [3] was assumed to have a jump discontinuity at the interface between the evanescent and the oscillatory regimes. In this work we shall extend the setting by including turning points of special form. Rather than providing a numerical scheme that can handle general turning points (no such scheme is known so far), this paper is more of a feasibility study to identify the problems involved. One of the key challenges towards a uniformly accurate scheme for turning points is the fact that the continuous solutions \(\psi _\varepsilon \) asymptotically blow up (as \(\varepsilon \rightarrow 0\)) at the turning point (for a scattering problem to be specified in Sect. 2 below).
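To make the grid restrictions quoted above concrete, the following minimal sketch compares the step sizes \(h=\varepsilon \), \(h=\varepsilon ^{1/2}\) and \(h=\varepsilon ^{7/12}\) with the local wave length at \(a=1\). The helper names are hypothetical, and setting all constants hidden in the \(\mathcal{O}\)-notation to 1 is an assumption made purely for illustration.

```python
import math


def local_wavelength(eps: float, a: float) -> float:
    """Local wave length 2*pi*eps/sqrt(a) in the oscillatory region (a > 0)."""
    return 2.0 * math.pi * eps / math.sqrt(a)


def step_size(eps: float, exponent: float, constant: float = 1.0) -> float:
    """Step size h = constant * eps**exponent (constant = 1 is an assumption)."""
    return constant * eps ** exponent


def cells_on_unit_interval(h: float) -> int:
    """Number of grid cells of size h needed to cover [0, 1]."""
    return math.ceil(1.0 / h)


eps = 1e-4
h_resolving = step_size(eps, 1.0)       # standard methods: h = O(eps)
h_wkb = step_size(eps, 0.5)             # WKB marching without a turning point
h_hybrid = step_size(eps, 7.0 / 12.0)   # restriction with turning point, order-4 quadrature

for name, h in [("resolving", h_resolving), ("wkb", h_wkb), ("hybrid", h_hybrid)]:
    print(f"{name:10s} h = {h:.3e}  cells = {cells_on_unit_interval(h)}")
```

Under these assumptions both coarse step sizes exceed the local wave length, while the resolving grid needs far more cells.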

Hence, we shall not attempt here to extend the WKB-marching method from [1] up to the turning point. As a first step towards a full semi-classical method including turning points, we shall rather assume that a is either a linear or quadratic function of x close to the turning point, say on \([0,x_1]\). On that interval, the solution of (1.1) is then an Airy function or, respectively, a parabolic cylinder function. This will lead to a hybrid method that is analytic on \([0,x_1]\) and numerical for \(x>x_1\). Our strategy thus combines the WKB-method from [1] (away from the turning point) with the philosophy of [10], §15.5 (i.e. a linear approximation of the potential in the first cell adjacent to the turning point). Nevertheless, this coupled problem still includes the effects of the turning point and the problems with handling it: the solution to (1.1), posed as a boundary value problem (BVP), becomes unbounded at the turning point in the semi-classical limit. Therefore, as \(\varepsilon \rightarrow 0\), the errors of standard numerical methods would become unbounded there as well (since numerical errors in a BVP are non-local and pollute the whole interval). But for our hybrid method we shall still derive an error estimate up to the turning point, and this error even decreases with \(\varepsilon \). We first note that, although the analytic form of the solution will be known on \([0,x_1]\), that (asymptotically unbounded) solution part is polluted by the numerical error at the boundaries. For our estimates it will be crucial that the WKB-method from [1] is not only uniformly accurate w.r.t. \(\varepsilon \) but even asymptotically correct, i.e. the numerical error goes to zero as \(\varepsilon \rightarrow 0\). This will allow us to over-compensate the unbounded growth of \(|\psi _\varepsilon (0)|\) as \(\varepsilon \rightarrow 0\).

This paper is organized as follows: in Sect. 2 we specify the scattering problem to be discussed, and in Sect. 3 we rewrite the scattering-BVP as an initial value problem (IVP), coupled to an Airy function solution close to the turning point (for the case of a linear potential on \([0,x_1]\)). Section 4 illustrates the blow-up of \(\psi _\varepsilon \) in the semi-classical limit. Section 5 is the core part of this work: we extend the WKB-error analysis from [1] to the hybrid problem at hand. This follows the strategy for the domain decomposition method in [3], but is more subtle here—due to the unboundedness of \(\psi _\varepsilon \). A numerical illustration of the proved error estimates closes that section. Section 6 extends the previous analysis to the case of quadratic potentials—close to the turning point. In the final Sect. 7 we briefly discuss possible extensions to more general potentials close to the turning point.

2 Scattering model

Highly oscillatory problems similar to (1.1) appear in a wide range of applications (e.g., electromagnetic and acoustic scattering, quantum physics). Our interest in this problem is motivated by the electron transport in nano-scale semiconductor devices, which will determine the details of our set-up. 1D models are of course idealizations, but quite appropriate, e.g., for resonant tunneling diodes [5]. In this application \(\psi \) represents the quantum mechanical wave function. The derived macroscopic quantities of interest to practitioners are the particle density \(n(x):=|\psi (x)|^2\) and the current density \(j(x):=\varepsilon \mathfrak {I}({\bar{\psi }}(x)\psi '(x))\), see [1] for more details. While \(\psi \) is highly oscillatory, n and j are not; but they can only be obtained from the wave function.
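As a small illustration of the two macroscopic quantities defined above, the sketch below evaluates \(n=|\psi |^2\) and \(j=\varepsilon \mathfrak {I}({\bar{\psi }}\psi ')\) for a single plane wave \(\psi (x)=e^{\mathrm {i}\sqrt{a}x/\varepsilon }\) with constant a. The plane wave and the parameter values are assumptions chosen for illustration only; they show that n and j are non-oscillatory although \(\psi \) is highly oscillatory.

```python
import cmath
import math


def density(psi: complex) -> float:
    """Particle density n = |psi|^2."""
    return abs(psi) ** 2


def current(psi: complex, dpsi: complex, eps: float) -> float:
    """Current density j = eps * Im(conj(psi) * psi')."""
    return eps * (psi.conjugate() * dpsi).imag


eps, a = 1e-3, 2.0            # illustrative values, not taken from the paper
k = math.sqrt(a) / eps        # wave number of the plane wave

xs = [i / 10 for i in range(11)]
n_vals = [density(cmath.exp(1j * k * x)) for x in xs]
j_vals = [current(cmath.exp(1j * k * x), 1j * k * cmath.exp(1j * k * x), eps) for x in xs]

print(n_vals[:3], j_vals[:3])  # n stays at 1 and j at sqrt(a), up to rounding
```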

We consider the internal domain \(x\in [0,1]\), which corresponds to the semiconductor device. Moreover, we assume that electrons are injected from the right boundary (or lead) with the prescribed energy E. The coefficient function a in (1.1) is then given by \(a(x) := E-V(x)\) where V(x) is the (prescribed) electrostatic potential of the problem. In reality, electrons are injected into a device as a statistical mixture with continuous energies \(E\ge E_0\) [8]. Therefore, given a potential V, a whole energy interval will give rise to turning points, i.e. zeros of a within the interval [0, 1]. To simplify the presentation we shall assume that the only turning point is located at \(x=0\), and we shall consider only one such injection energy E. Specifically, we shall make the following assumptions for the given potential V (see Fig. 1).

Assumption 2.1

- (a) \(V\in {\mathcal {C}}(\mathbb {R};\mathbb {R})\). Potential jumps are excluded here only for simplicity. Without difficulty, they could be included inside the interior domain (0, 1) by restarting the IVP (from Sect. 3) at jump points.

- (b) Let \(V(x)<V(0)\) for \(x\in (0,1]\) (to exclude further turning points besides \(x=0\)).

- (c) The potential in the left exterior domain (i.e. \(x<0\)), and also in a (small) neighborhood of \(x=0\), is linear (for simplicity of the hybrid problem). We also assume (w.l.o.g.) that it has slope \(-1\). More precisely we assume that \(\exists \,x_1\in (0,1)\) such that \(a(x)=x\) for \(x\le x_1\). Hence, (1.1) is the (scaled) Airy equation for \(x\le x_1\).

- (d) The potential in the right exterior domain (i.e. \(x>1\)) is constant with value V(1).

Hence, this scattering problem is oscillatory for \(x>0\), evanescent for \(x<0\), and it has a turning point of order 1 at \(x=0\). Since \(V(x)>E\) for \(x<0\), the injected wave function is fully reflected. Due to a) and b) \(\exists \,\tau _1>0\) such that \(\tau _1\le a(x)\) on \([x_1,1]\), which is an important assumption for the WKB-marching method from [1].
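As a quick consistency check of Assumption 2.1 c), one can verify directly that a scaled Airy function solves the equation on \(x\le x_1\). The computation below is a sketch that reads (1.1) as \(\varepsilon ^2\psi ''+a(x)\psi =0\) with \(a(x)=x\), uses only the Airy equation \(\mathrm{Ai}''(y)=y\,\mathrm{Ai}(y)\), and introduces the abbreviation \(z:=x\,\varepsilon ^{-2/3}\) and an arbitrary constant \(c_0\):

$$\begin{aligned} \psi (x)&=c_0\,\mathrm{Ai}(-z),\qquad \psi ''(x)=c_0\,\varepsilon ^{-4/3}\,\mathrm{Ai}''(-z)=-c_0\,\varepsilon ^{-4/3}z\,\mathrm{Ai}(-z),\\ \varepsilon ^2\psi ''(x)&=-\varepsilon ^{2/3}z\,c_0\,\mathrm{Ai}(-z)=-x\,\psi (x). \end{aligned}$$

Hence \(\varepsilon ^2\psi ''+x\,\psi =0\), and the \(\varepsilon ^{2/3}\)-scaling of the argument is exactly what absorbs the factor \(\varepsilon ^2\).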

In a realistic device model, it would of course be appropriate to assume that the potential V is constant also in the left lead, i.e. on \((-\infty ,x_2]\) with some \(x_2<0\). If \(V(x_2)>E\), the injected wave would still be fully reflected, leading to a situation that is qualitatively very similar to the present case. In order to simplify the subsequent proofs, we shall stick here to Assumption 2.1 c). The extension to a constant potential on \((-\infty ,x_2]\) will be discussed in a follow-up work [6].

Fig. 1 Sketch of the model described in Assumption 2.1 with linear potential left of \(x_1\). Electrons are injected from the right boundary \(x=1\) and there is a turning point of first order at the left boundary \(x=0\). The coefficient function is \(a(x) := E-V(x)\). The explicit solution form is available for \(x\le x_1\) and for \(x\ge 1\); on \((x_1,1)\) the solution is obtained numerically.

For each fixed \(\varepsilon \), the wave function \(\psi \) is \({\mathcal {C}}^2\) and we require the scattering solution to be bounded w.r.t. \(x\in \mathbb {R}\). Since the potential grows linearly for \(x<0\), \(\psi (x)\) has to decay to 0 as \(x\rightarrow -\infty \). Hence, \(\psi \) is a scaled Airy function \(\mathrm{Ai}\) for \(x\le x_1\):

$$\begin{aligned} \psi _-(x)=c_0\,\mathrm{Ai}\left( -\tfrac{x}{\varepsilon ^{2/3}}\right) ,\qquad x\le x_1, \end{aligned}\tag{2.1}$$

with some \(c_0\in \mathbb {C}\) to be determined. Moreover \(\psi \) is a superposition of two plane waves (incoming and outgoing) for \(x\ge 1\):

with \(a_1 := a(1)\).

This whole-space problem (with \(x\in \mathbb {R}\)) can be written as an equivalent BVP for \(\psi \) on the interval \([x_1,1]\) by using transparent boundary conditions (BCs) that correspond to the \({\mathcal {C}}^1\)-continuity of the matched whole-space solution. The inhomogeneous transparent BC at \(x=1\) is well known from [1, 18] (see (2.4)) and ensures that there is no reflection induced by the BC for an incident wave coming from the right. \({\mathcal {C}}^1\)-matching of \(\psi \) with \(\psi _-\) at \(x_1\) reads:

with some \(c_0(\varepsilon )\in \mathbb {C}{{\setminus }}{\{0\}}\). Eliminating the (so far) unknown constant \(c_0\), the last two conditions are combined into a Robin BC. In summary this yields the following BVP:

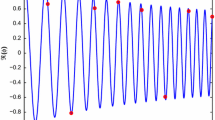

Here we already used the assumption that the incident wave has amplitude 1, and more precisely that \(c_2 = e^\frac{\mathrm {i}\sqrt{a_1}}{\varepsilon }\). Since the wave is fully reflected, we have \(|c_1|=1\) for the reflection coefficient \(c_1\). Hence, the wave \(\psi _{+}\) from (2.2) has the maximum amplitude 2 on \(x\ge 1\). The plots in Figs. 2 and 7 illustrate this.
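The amplitude statement above can be checked numerically. The sketch below assumes the convention \(\psi _+(x)=c_1e^{\mathrm {i}\sqrt{a_1}x/\varepsilon }+c_2e^{-\mathrm {i}\sqrt{a_1}x/\varepsilon }\) and a transparent BC of the form \(\varepsilon \psi '(1)-\mathrm {i}\sqrt{a_1}\,\psi (1)=-2\mathrm {i}\sqrt{a_1}\); both are assumptions about the form of (2.2) and (2.4), and the value of \(c_1\) is hypothetical (only \(|c_1|=1\) matters).

```python
import cmath
import math

eps, a1 = 1e-2, 1.5                 # illustrative values
kappa = math.sqrt(a1)
c2 = cmath.exp(1j * kappa / eps)    # incident wave, normalised to value 1 at x = 1
c1 = cmath.exp(0.7j)                # hypothetical reflection coefficient with |c1| = 1


def psi_plus(x: float) -> complex:
    """Superposition of reflected and incident plane wave (assumed convention)."""
    phase = kappa * x / eps
    return c1 * cmath.exp(1j * phase) + c2 * cmath.exp(-1j * phase)


def eps_dpsi_plus(x: float) -> complex:
    """eps times the derivative of psi_plus."""
    phase = kappa * x / eps
    return 1j * kappa * (c1 * cmath.exp(1j * phase) - c2 * cmath.exp(-1j * phase))


# Sample one wave length to the right of x = 1.
wavelength = 2.0 * math.pi * eps / kappa
samples = [1.0 + wavelength * i / 4000 for i in range(4001)]
max_amplitude = max(abs(psi_plus(x)) for x in samples)

# Residual of the assumed inhomogeneous transparent BC at x = 1.
bc_residual = eps_dpsi_plus(1.0) - 1j * kappa * psi_plus(1.0) + 2j * kappa

print(max_amplitude, abs(bc_residual))
```

With this convention the residual vanishes for every \(c_1\), which reflects that the BC only fixes the incident part of the wave.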

For the solvability of this BVP the following simple result holds:

Proposition 2.2

Let \(x_1\in [0,1)\) and \(a\in {\mathcal {C}}[x_1,1]\) with \(a(1)>0\). Then the BVP (2.4) has a unique solution \(\psi \in {\mathcal {C}}^1[x_1,1]\).

Proof

The proof is analogous to those of Proposition 2.3 of [4] and Proposition 1.1 of [3]: one multiplies the Schrödinger equation by \(\bar{\psi }\), integrates by parts, and takes the imaginary part. \(\square \)

Notation and assumptions

Now we recall some notation and assumptions needed to apply the WKB-marching method from [1] to the BVP (2.4). The well-known WKB-approximation (cf. [16], §15 of [10]), for the oscillatory regime where \(a(x)\ge \tau _1>0\), is based on inserting the asymptotic power series ansatz

into the Eq. (1.1), and comparing \(\varepsilon \)-powers to successively obtain the functions \(\phi _p(x)\). Truncating the sum in the exponential after \(p=2\) leads to the 2nd order asymptotic WKB-approximation for the oscillatory regime

with the phase

In the WKB-marching method from [1] this 2nd order WKB-approximation is used to transform the Eq. (1.1) to a smoother problem that is then numerically solved on a coarse grid, accurately and efficiently. This is done by reformulating the BVP (2.4) into an IVP using the boundary condition at \(x_1\) and then scaling the numerical approximation to this IVP (obtained by the scheme we will recall in Sect. 5.1) to also satisfy the boundary condition at \(x=1\).

We need to make an assumption to assure the feasibility of the WKB-marching method:

Assumption 2.3

Let \(a\in {\mathcal {C}}^5[x_1,1]\) be real valued and satisfy the following bounds

Moreover let \(0<\varepsilon \le \varepsilon _0\), with some \(\varepsilon _0\) such that

where \(\beta _+\) denotes the non-negative part of \(\beta \).

Mind that this assumption on \(\varepsilon \) guarantees that the phase \(\phi (x)\) for the 2nd order WKB-approximation is strictly increasing since the integrand \(\sqrt{a}-\varepsilon ^2\beta \) is then positive.
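For the linear case \(a(x)=x\) the quantities in this remark can be made explicit. The sketch below assumes the definition \(\beta :=-\frac{1}{2a^{1/4}}\,(a^{-1/4})''\), as is customary for the WKB-marching method of [1] (treat it as an assumption here). For \(a(x)=x\) it gives \(\beta (x)=-\frac{5}{32}x^{-5/2}\), which is consistent with the closed form \(\int _{x_0}^x|\beta (y)|dy=\frac{5}{48}(x_0^{-3/2}-x^{-3/2})\) used in the proof of Proposition 4.2. All function names are hypothetical.

```python
import math


def a_linear(x: float) -> float:
    """Coefficient function a(x) = x (linear potential)."""
    return x


def beta(a, x: float, dx: float = 1e-4) -> float:
    """beta = -(a^(-1/4))'' / (2 a^(1/4)) via a central second difference (assumed definition)."""
    g = lambda y: a(y) ** (-0.25)
    g2 = (g(x + dx) - 2.0 * g(x) + g(x - dx)) / dx ** 2
    return -g2 / (2.0 * a(x) ** 0.25)


def beta_exact(x: float) -> float:
    """Closed form of beta for a(x) = x."""
    return -5.0 / (32.0 * x ** 2.5)


def simpson(f, lo: float, hi: float, n: int = 2000) -> float:
    """Composite Simpson rule with an even number n of subintervals."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(lo + i * h)
    return total * h / 3.0


x0, x_end, eps = 0.1, 1.0, 0.1
integral = simpson(lambda y: abs(beta_exact(y)), x0, x_end)
closed = 5.0 / 48.0 * (x0 ** -1.5 - x_end ** -1.5)

# Phase integrand sqrt(a) - eps^2 * beta on a sample grid; it must stay positive.
grid = [x0 + (x_end - x0) * i / 100 for i in range(101)]
min_integrand = min(math.sqrt(a_linear(x)) - eps ** 2 * beta_exact(x) for x in grid)

print(integral, closed, min_integrand)
```

Since \(\beta <0\) for \(a(x)=x\), its non-negative part vanishes and the phase integrand is positive for every \(\varepsilon \) in this special case.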

3 Analytical problem: reformulation as IVP

For the numerical solution of (2.4) we want to apply the WKB-marching method from [1] on the interval \([x_1,1]\). To this end we need to reformulate the BVP (2.4) as an IVP, whose solution \({\hat{\psi }}\) will be scaled afterwards to satisfy the BCs in (2.4). Note that the left BC at \(x_1\) in (2.4) is invariant under scalings.

\({Step\, 1:}\) Since (2.4) only includes one condition at \(x_1\), it is necessary to prescribe an additional, auxiliary initial value for \({{\hat{\psi }}}(x_1)\). The condition on \(\varepsilon {\hat{\psi }}^\prime (x_1)\) then follows from the Robin BC at \(x_1\) in (2.4). On the one hand the two ICs should have the structure of (2.3) (with an appropriate choice of \(c_0\)), and on the other hand the scaling constant \(c_0\) should be of order \(\Theta (\varepsilon ^{-\frac{1}{6}})\), such that the initial condition vector \(({\hat{\psi }}(x_1),\varepsilon {\hat{\psi }}^\prime (x_1))^\top \) is \(\varepsilon \)-uniformly bounded above and below. In fact, \(c_0= \varepsilon ^{-\frac{1}{6}}\) in (2.3) yields

where the constants \(c,C>0\) are independent of \(\varepsilon \). This can be verified using the asymptotic expansions for \(\mathrm{Ai}(-z)\) and \({\mathrm{Ai}}^\prime (-z)\) from (A.4): we get the asymptotic representations with the argument \(z=\frac{x}{\varepsilon ^{2/3}}\) for some (fixed) \(x>0\) as \(\varepsilon \rightarrow 0\):

where \(\xi (x):= \frac{2x^\frac{3}{2}}{3\varepsilon }- \frac{\pi }{4}\). We verify

The \(\varepsilon \)-uniform lower bound on the IC can be found, as \(\cos \) and \(\sin \) never vanish simultaneously. Hence, this scaling gives a natural balance of \(\psi _{-}\) and \(\varepsilon \psi _{-}^\prime \). We shall thus consider the IVP

Here and in the sequel we use the notation \({\hat{\psi }}\) to refer to the solution of this IVP.
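The \(\varepsilon \)-uniform bounds on the initial condition vector can be observed numerically. The following sketch evaluates \({\hat{\psi }}(x_1)=\varepsilon ^{-1/6}\mathrm{Ai}(-x_1\varepsilon ^{-2/3})\) and \(\varepsilon {\hat{\psi }}^\prime (x_1)=-\varepsilon ^{1/6}{\mathrm{Ai}}^\prime (-x_1\varepsilon ^{-2/3})\) for several \(\varepsilon \). The helper `airy_neg` is a hypothetical stand-in that integrates the Airy equation with a classical Runge-Kutta scheme; it is not part of the method of this paper, and the value \(x_1=0.1\) is an illustrative assumption.

```python
import math

AI0 = 1.0 / (3.0 ** (2.0 / 3.0) * math.gamma(2.0 / 3.0))    # Ai(0)
AIP0 = -1.0 / (3.0 ** (1.0 / 3.0) * math.gamma(1.0 / 3.0))  # Ai'(0)


def airy_neg(z: float, h: float = 1e-3):
    """Return (Ai(-z), Ai'(-z)) for z >= 0 by RK4 on w'' = -t*w with w(t) = Ai(-t)."""
    n = max(1, math.ceil(z / h))
    dt = z / n
    w, v, t = AI0, -AIP0, 0.0   # w = Ai(-t), v = dw/dt = -Ai'(-t)
    for _ in range(n):
        k1w, k1v = v, -t * w
        k2w, k2v = v + 0.5 * dt * k1v, -(t + 0.5 * dt) * (w + 0.5 * dt * k1w)
        k3w, k3v = v + 0.5 * dt * k2v, -(t + 0.5 * dt) * (w + 0.5 * dt * k2w)
        k4w, k4v = v + dt * k3v, -(t + dt) * (w + dt * k3w)
        w += dt * (k1w + 2.0 * k2w + 2.0 * k3w + k4w) / 6.0
        v += dt * (k1v + 2.0 * k2v + 2.0 * k3v + k4v) / 6.0
        t += dt
    return w, -v


def initial_condition(eps: float, x1: float):
    """(psi_hat(x1), eps * psi_hat'(x1)) for the scaling c0 = eps**(-1/6)."""
    ai, aip = airy_neg(x1 / eps ** (2.0 / 3.0))
    return eps ** (-1.0 / 6.0) * ai, -eps ** (1.0 / 6.0) * aip


x1 = 0.1
norms = {}
for eps in (1e-2, 1e-3, 1e-4):
    psi_hat, eps_dpsi_hat = initial_condition(eps, x1)
    norms[eps] = math.hypot(psi_hat, eps_dpsi_hat)
    print(f"eps = {eps:.0e}  |IC| = {norms[eps]:.4f}")
```

The printed norms stay within fixed positive bounds as \(\varepsilon \) decreases, in line with the leading-order asymptotics of \(\mathrm{Ai}(-z)\) and \({\mathrm{Ai}}^\prime (-z)\).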

\({Step\, 2:}\) Next the solution \({\hat{\psi }}\) of this IVP is scaled as

with

in order to satisfy the BC at \(x=1\). Note that this scaling preserves the BC at \(x_1\), and thus, \(\psi \) is a solution to the BVP (2.4). From here on we use the notation \(\psi \) to refer to the solution of BVP (2.4). This scaling is also applied to the extension of the solution to \([0,x_1]\) as \(\psi (x) = \alpha \,\psi _{-}(x)\), with the choice \(c_0=\varepsilon ^{-\frac{1}{6}}\) in (2.1). This equivalence of the BVP to an IVP with a-posteriori scaling was already used in [1, §2] and [3, Prop. 2.2] for closely related problems.
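The a-posteriori scaling of Step 2 can be sketched in a few lines. The code assumes that the right BC in (2.4) has the form \(\varepsilon \psi '(1)-\mathrm {i}\sqrt{a(1)}\,\psi (1)=-2\mathrm {i}\sqrt{a(1)}\), so that the scaling factor reads \(\alpha =-2\mathrm {i}\sqrt{a(1)}\,/\,(\varepsilon {\hat{\psi }}^\prime (1)-\mathrm {i}\sqrt{a(1)}\,{\hat{\psi }}(1))\); this form is an assumption, and the function name and sample values are hypothetical.

```python
import math


def scaling_factor(psi_hat_1: float, eps_dpsi_hat_1: float, a1: float) -> complex:
    """Scaling alpha such that alpha * psi_hat satisfies the assumed BC at x = 1."""
    s = math.sqrt(a1)
    denom = complex(eps_dpsi_hat_1, -s * psi_hat_1)
    if denom == 0:
        # For real data this only happens for the trivial IVP solution.
        raise ValueError("psi_hat(1) and eps*psi_hat'(1) must not both vanish")
    return -2j * s / denom


# Hypothetical real-valued IVP data at x = 1.
psi_hat_1, eps_dpsi_hat_1, a1 = 0.3, -0.5, 2.0
alpha = scaling_factor(psi_hat_1, eps_dpsi_hat_1, a1)

# The scaled values satisfy the assumed inhomogeneous BC at x = 1.
s = math.sqrt(a1)
residual = alpha * eps_dpsi_hat_1 - 1j * s * alpha * psi_hat_1 + 2j * s
print(alpha, abs(residual))
```

Because the Robin BC at \(x_1\) is homogeneous, multiplying by any such constant leaves it satisfied; only the condition at \(x=1\) fixes \(\alpha \).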

The vector valued system

Following [1] it is convenient to reformulate the second order differential equation (1.1) as a system of first order. This is done in the following non-standard way: instead of the vector \(({\hat{\psi }}(x),\varepsilon {\hat{\psi }}^\prime (x))^\top \) we shall use

with the transformation matrix

Under Assumption 2.3 (i.e. a(x) is bounded away from zero), the transformation matrix A(x) and its inverse are uniformly bounded w.r.t. \(x\in [x_1,1]\) and \(\varepsilon \). Hence, the norms of the two vectors \({\hat{W}}(x)\) and \(({\hat{\psi }}(x),\varepsilon {\hat{\psi }}^\prime (x))^\top \) are equivalent, uniformly in \(\varepsilon \). After the transformation (3.6), the IVP (3.3) reads

with the two matrices

In order to show (in Sect. 5) that the WKB-marching method from [1] applied to (3.3) yields a uniformly accurate scheme for the BVP (2.4), we shall need \(\varepsilon \)-uniform boundedness of the scaling factor \(\alpha \) from (3.5). This can be inferred from a uniform lower bound on \(({\hat{\psi }},\varepsilon \hat{\psi ^\prime })^\top \), which we establish similarly to [3, Lemma 3.4]:

Lemma 3.1

Let \(a(x)\in {\mathcal {C}}^2[x_1,1]\) and \(a(x)\ge \tau _1>0\). Let \({{\hat{\psi }}}(x)\) be the solution to the IVP (3.3), then \(({{\hat{\psi }}}(x),\varepsilon {\hat{\psi }}^\prime (x))\) is uniformly bounded above and below, i.e.

or equivalently

where the constants \(C_1,\ldots ,C_4>0\) are independent of \(0<\varepsilon <\varepsilon _0\).

Proof

Let \({\hat{W}}(x)\) be a solution to (3.8). A short calculation for the norm \(\Vert {\hat{W}}(x)\Vert ^2=|{\hat{w}}_1(x)|^2+|{\hat{w}}_2(x)|^2\) shows

which implies

As the norms of \({\hat{W}}(x)\) and \(({\hat{\psi }},\varepsilon {\hat{\psi }}^\prime )^\top \) are (\(\varepsilon \)-uniformly) equivalent, the proof is concluded if the norm of the initial condition \({\hat{W}}(x_1)\) is \(\varepsilon \)-uniformly bounded from above and below. This is again equivalent to (3.1), proving the assertion. \(\square \)

A solution \({\hat{W}}\) to the analytical IVP (3.8) needs to be scaled such that, after transforming back via \(A(x)^{-1}\) to \((\psi ,\varepsilon \psi ^\prime )^\top \), it fits both boundary conditions in (2.4). In analogy to (3.5) this is done via the scaling parameter \({\tilde{\alpha }}\in \mathbb {C}\) defined as

Now we can write the exact solution \(\psi (x)\) to the BVP (2.4) extended to the region [0, 1] as

\({\hat{W}}\), as well as \({\hat{\psi }}\), are real-valued, and \(\psi \) only becomes complex-valued due to the scaling with \({\tilde{\alpha }} (=\alpha )\). Since \({\hat{W}}\in \mathbb {R}^2\), the denominator in \({\tilde{\alpha }} \) cannot vanish, except for the trivial solution \({\hat{W}}\equiv 0\). Therefore the scaling by \({\tilde{\alpha }}\) is well-defined and one can show the following properties.

Lemma 3.2

(see Lemma 3.7 in [3]) Let \(\delta >0\) be fixed. Then the map \({\tilde{\alpha }}: \mathbb {R}^2{\setminus } B_\delta (0)\rightarrow \mathbb {C}\) in (3.12) is Lipschitz continuous with Lipschitz constant \(L_{{\tilde{\alpha }}}>0\) and bounded with a constant \(C_{{\tilde{\alpha }}} \). Both, \(L_{{\tilde{\alpha }}}\) and \(C_{{\tilde{\alpha }}}\), can be chosen uniformly with respect to \(0<\varepsilon \le \varepsilon _0\).

4 Asymptotic blow-up at the turning point

The goal of this work is to construct an \(\varepsilon \)-uniformly accurate numerical scheme for (2.4). Since it incorporates (implicitly) the turning point at \(x=0\), it shall be a generalization of [1, 3]. A key ingredient of the numerical analysis in these papers was the uniform boundedness of the solution \(\psi \) w.r.t. \(\varepsilon \). But when including a turning point, this does not hold any more, which is a main challenge of the situation at hand. At the turning point, solutions to the BVP (2.4) exhibit blow-up behavior as \(\varepsilon \rightarrow 0\), i.e. \(|\psi (0)| = \Theta (\varepsilon ^{-\frac{1}{6}})\). This is shown in the following explicitly solvable example and the proposition that follows it.

Example 4.1

Consider (2.4) with \(x_1=0\) and \(a(x) = x\) for \(x\in [0,1]\) and \(0<\varepsilon <1\). Then the explicit solution reads

Figures 2 and 3 illustrate that \(\varepsilon \Vert \psi ^\prime _\varepsilon \Vert _{L^\infty (0,1)}\) is uniformly bounded w.r.t. \(0<\varepsilon \le 1\), but \(\Vert \psi _\varepsilon \Vert _{L^\infty (0,1)}\) is not since \(\{|\psi _\varepsilon (0)|\}\) becomes unbounded as \(\varepsilon \rightarrow 0\).
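The blow-up rate in this example can be reproduced with a short computation. The sketch below assumes that the right BC of (2.4) reads \(\varepsilon \psi '(1)-\mathrm {i}\psi (1)=-2\mathrm {i}\) for \(a(1)=1\), so that \(\psi =\alpha \,\varepsilon ^{-1/6}\mathrm{Ai}(-x\varepsilon ^{-2/3})\) with the corresponding scaling factor \(\alpha \). The Runge-Kutta helper `airy_neg` is a hypothetical stand-in for a library Airy function.

```python
import math

AI0 = 1.0 / (3.0 ** (2.0 / 3.0) * math.gamma(2.0 / 3.0))    # Ai(0)
AIP0 = -1.0 / (3.0 ** (1.0 / 3.0) * math.gamma(1.0 / 3.0))  # Ai'(0)


def airy_neg(z: float, h: float = 2e-3):
    """Return (Ai(-z), Ai'(-z)) for z >= 0 by RK4 on w'' = -t*w with w(t) = Ai(-t)."""
    n = max(1, math.ceil(z / h))
    dt = z / n
    w, v, t = AI0, -AIP0, 0.0   # w = Ai(-t), v = dw/dt = -Ai'(-t)
    for _ in range(n):
        k1w, k1v = v, -t * w
        k2w, k2v = v + 0.5 * dt * k1v, -(t + 0.5 * dt) * (w + 0.5 * dt * k1w)
        k3w, k3v = v + 0.5 * dt * k2v, -(t + 0.5 * dt) * (w + 0.5 * dt * k2w)
        k4w, k4v = v + dt * k3v, -(t + dt) * (w + dt * k3w)
        w += dt * (k1w + 2.0 * k2w + 2.0 * k3w + k4w) / 6.0
        v += dt * (k1v + 2.0 * k2v + 2.0 * k3v + k4v) / 6.0
        t += dt
    return w, -v


def psi_at_turning_point(eps: float) -> complex:
    """psi(0) for a(x) = x on [0, 1], using the assumed BC at x = 1."""
    ai1, aip1 = airy_neg(eps ** (-2.0 / 3.0))
    psi_hat_1 = eps ** (-1.0 / 6.0) * ai1
    eps_dpsi_hat_1 = -eps ** (1.0 / 6.0) * aip1
    alpha = -2j / complex(eps_dpsi_hat_1, -psi_hat_1)   # sqrt(a(1)) = 1
    return alpha * eps ** (-1.0 / 6.0) * AI0


eps_values = (1e-1, 1e-2, 1e-3)
values = [abs(psi_at_turning_point(eps)) for eps in eps_values]
scaled = [v * eps ** (1.0 / 6.0) for v, eps in zip(values, eps_values)]

for eps, v, s in zip(eps_values, values, scaled):
    print(f"eps = {eps:.0e}  |psi(0)| = {v:.4f}  eps^(1/6)*|psi(0)| = {s:.4f}")
```

In this sketch \(|\psi _\varepsilon (0)|\) grows as \(\varepsilon \) decreases, whereas \(\varepsilon ^{1/6}|\psi _\varepsilon (0)|\) stays close to a constant, which matches the rate \(\Theta (\varepsilon ^{-\frac{1}{6}})\).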

This blow-up and, resp., boundedness behavior of \(\psi _\varepsilon \) actually extends to all potentials satisfying Assumption 2.1:

Proposition 4.2

Let \(x_1\in (0,1)\) and a(x) be as in Assumption 2.1 and \({\mathcal {C}}^2\) on \([x_1,1]\). Then the family of solutions \(\{\psi _\varepsilon (x)\}\) to the BVP (2.4), extended with the Airy solution on \([0,x_1]\), satisfies:

- (a) \(\Vert \psi _\varepsilon \Vert _{L^\infty (0,1)}\) is of the (sharp) order \(\Theta (\varepsilon ^{-\frac{1}{6}})\) for \(\varepsilon \rightarrow 0\).

- (b) \(\varepsilon \Vert \psi _\varepsilon ^\prime \Vert _{L^\infty (0,1)}\) is uniformly bounded with respect to \(\varepsilon \rightarrow 0\).

Proof

For readability of this proof, we omit the index \(\varepsilon \) in \(\psi _\varepsilon \) and \({\hat{\psi }}_\varepsilon \). As discussed in Sect. 3, the solution \(\psi \) to the BVP (2.4) can be obtained by scaling the solution \({\hat{\psi }}\) to the IVP (3.3) with the constant \(\alpha \) from (3.5), i.e. \(\psi = \alpha \,{\hat{\psi }}\). With some (fixed) \(x_0\in (0,x_1)\) we make a case distinction:

\({{Region\, x_0\le x\le 1}}\)

For \(x\ge x_0\) we consider the IVP (3.8) first with a generic initial condition at \(x_0\). For such x we have \(a(x)\ge \tau _3>0\), and therefore the transformation matrix A(x) and its inverse are uniformly bounded w.r.t. x and \(\varepsilon \). Denoting by W the vector valued solution to this IVP, this matrix bound implies equivalence (uniform in \(\varepsilon \)) of the norms of the two vectors W(x) and \(({\psi }(x),\varepsilon {\psi }^\prime (x))^\top \). Note that this equivalence would not hold for the choice \(x_0=0\). Analogously to the proof of Lemma 3.1 we obtain

\({{Step\, 1:}}\) First we consider an auxiliary problem that corresponds to the “pure” Airy function solution: let \({{\tilde{W}}}\) be the solution of the IVP (3.8) on \(x\ge x_0\) with \(\varepsilon =1\), \(a(x) = x\) and the initial condition

obtained from (3.3). The solution reads

Then the estimates (4.2) with \(\int _{x_0}^x|\beta (y)|dy = \frac{5}{48}(x_0^{-\frac{3}{2}} - x^{-\frac{3}{2}})\le \frac{5}{48} x_0^{-\frac{3}{2}}\) yield

with the constants defined as

\({{Step\, 2:}}\) Let \({{\hat{W}}}\) be the solution of the IVP (3.8) on \(x_0\le x\le x_1\) with \(0<\varepsilon \le 1\), \(a(x) = x\) and the initial condition

Then the vector valued solution corresponds to the scaled Airy function:

where \(x/\varepsilon ^{\frac{2}{3}}\ge x_0\). Hence, (4.3) implies

Since \(\Vert {\tilde{W}}(x_0)\Vert \) is independent of \(\varepsilon \), this proves \(\Vert {\hat{W}}(x)\Vert = \Theta _\varepsilon (1)\) on \([x_0,x_1]\).

\({{ Step\, 3:}}\) On \(x_1\le x\le 1\) the (generic) function a(x) satisfies \(a(x)\ge \tau _1>0\). Hence, we obtain the following upper and lower bounds analogously to (4.2):

where \(d_{x_1}:=e^{-\int _{x_1}^1|\beta (y)| dy}\) and \(c_{x_1}:= e^{\int _{x_1}^1|\beta (y)| dy}\). Since (4.5) particularly holds for \(\Vert {\hat{W}}(x_1)\Vert \), the bounds in (4.6) yield \(\Vert {\hat{W}}(x)\Vert = \Theta _\varepsilon (1)\) on \([x_1,1]\). Since the norms of \({\hat{W}}(x)\) and \(({\hat{\psi }}(x),\varepsilon {\hat{\psi }}^\prime (x))^\top \) are (\(\varepsilon \) uniformly) equivalent, we get

This yields the asymptotic behavior of the scaling constant \(\alpha \) from (3.5):

In the region \(x_0\le x\le 1\), this yields for the vector solution of the BVP (2.4)

\({{Region\, 0\le x\le x_0:}}\)

\({{ Step\,4:}}\) Note that the solution to the BVP (2.4), extended to [0, 1], exhibits an asymptotic blow-up at the turning point \(x=0\): as \({\hat{\psi }}(x)= \varepsilon ^{-\frac{1}{6}}\mathrm{Ai}(-\frac{x}{\varepsilon ^{2/3}})\) on \([0,x_1]\), it holds that

Moreover, \(\max _{x\in [0,x_0]}|\psi _\varepsilon (x)| = \Theta (\varepsilon ^{-\frac{1}{6}})\), which is seen as follows: the Airy function \(\mathrm{Ai}\) is continuous and bounded on \(\mathbb {R}\). It attains its unique maximum at some \(y_{max}^{\mathrm{Ai}}\approx -1.01879\), i.e.

The maximum of \(\mathrm{Ai}(-\frac{x}{{\tilde{\varepsilon }}^{2/3}})\), located at \(x_{max}^{\mathrm{Ai}} = -{\tilde{\varepsilon }}^{2/3}y_{max}^{\mathrm{Ai}}\), lies inside \([0,x_0]\) for \({\tilde{\varepsilon }}\) sufficiently small. Hence, \(\max _{x\in [0,x_0]} \mathrm{Ai}(-\frac{x}{\varepsilon ^{2/3}}) = M:=\mathrm{Ai}\left( y_{max}^{\mathrm{Ai}}\right) \) is constant for \(0<\varepsilon \le {\tilde{\varepsilon }}\). Since \(\alpha =\Theta _\varepsilon (1)\) we get

Hence, (4.9) together with (4.11) yields

thus proving a).

\({{ Step\,5:}}\) To prove the uniform bound on the \(\varepsilon \)-scaled derivative, we use the asymptotic representation (3.2) for \({\mathrm{Ai}}^\prime \) and the fact that

Fix some \(z_0>0\) for another case distinction. With \(z=\frac{x}{\varepsilon ^{2/3}}\) the asymptotic representation (3.2) holds for \(z\ge z_0>0\). I.e. there exists a \(c_1>0\) such that for \(z_0\varepsilon ^\frac{2}{3}\le x\le x_0\) it holds that

since \(\alpha = \Theta _\varepsilon (1)\) and \(x^{-5/4}\varepsilon \le z_0^{-5/4}\varepsilon ^{1/6}\le c_2\) for some \(c_2>0\). For small arguments \(0\le z\le z_0\) (i.e. close to the turning point) it holds that \(|{\mathrm{Ai}}^\prime (-z)|\le c_3\) for some \(c_3>0\), as \({\mathrm{Ai}}^\prime \) is continuous on a compact set. Hence, for \(0\le x\le z_0\varepsilon ^\frac{2}{3}\) it holds that

for some \(c_4>0\). All four constants \(c_1,\ldots ,c_4\) are independent of \(\varepsilon \) yielding \(\varepsilon \Vert \psi _\varepsilon ^\prime \Vert _{L^\infty (0,x_0)}=\mathcal{O}(1)\) for \(\varepsilon \rightarrow 0\). Together with (4.9) this yields an overall uniform bound, i.e.

\(\square \)

Remark 4.3

The proof of the above proposition illustrates the reason for the asymptotic blow-up of \(|\psi _\varepsilon (0)|\): essentially, it stems from the \(x^{-1/4}\)-decay of the flipped Airy function \(\mathrm{Ai}(-x)\) as \(x\rightarrow \infty \) (note that \({{\tilde{w}}}_1=x^{1/4}\mathrm{Ai}(-x)\) satisfies (4.3)). Close to the turning point of first order, \(\psi _\varepsilon \) behaves like the scaled Airy function \(\varepsilon ^{-1/6}\mathrm{Ai}(-x\,\varepsilon ^{-2/3})\). In the scattering model of Sects. 2–5 this even holds exactly, and in Sect. 6 this will hold approximately. This \(\varepsilon \)-scaling of the x variable compresses this Airy function decay to the (small) interval \([0,x_1]\). At the fixed point \(x_1\), \(\mathrm{Ai}(-x_1\varepsilon ^{-2/3})\) is proportional to \(\varepsilon ^{1/6}\), which we compensated by the scaling \(\varepsilon ^{-{1/6}}\) yielding an \(\varepsilon \)-uniformly bounded initial condition \({\hat{W}}(x_1)\). This \(\varepsilon \)-uniformity is then not affected any more on the subsequent interval \([x_1,1]\), since a(x) is there uniformly bounded away from zero. Hence, the solution propagator is \(\varepsilon \)-uniformly bounded (above and below) on \([x_1,1]\), see Step 3 in the above proof. On the other hand, at the turning point \(x=0\) the Airy function has the value \(\mathrm{Ai}(0)\), i.e. constant w.r.t. \(\varepsilon \). Hence, the \(\varepsilon ^{-{1/6}}\) scaling yields the asymptotic blow-up at \(x=0\).

This asymptotic blow-up is, for the time being, one of the key problems for extending asymptotic preserving schemes (like the WKB-method from [1] or the adiabatic integrators from [19]) up to the turning point. To mitigate this problem, yet still include the turning point into the scattering system, we made the simplifying assumption that \(a(x) \equiv x\) on some (small) interval \([0,x_1]\). This way we shall match the analytic solution on \([0,x_1]\) to a numerical solution on \([x_1,1]\). The former is explicit up to a scaling factor that, however, inherits a numerical error from the approximation to \(\psi (1)\).

5 Numerical method and error analysis

In this section we will review the WKB-marching method for the IVP (3.3) and derive error estimates for the BVP (2.4), extended to [0, 1].

We recall that the BVP (2.4) is solved in two steps: first the corresponding IVP (3.3) is solved numerically; then the numerical solution is scaled according to (3.4), to fit the right boundary condition. For the turning point problem we actually want to solve (2.4) on [0, 1]. But the solution to this BVP on \([0,x_1]\) is given by a scaled Airy function as in (2.1). We are left with numerically approximating a solution on \([x_1,1]\) and matching the two parts at \(x_1\) to obtain a solution on the whole interval. In the 2-step solution process we incur an error from the WKB-marching method on \([x_1,1]\) and this propagates into a second error from the (inaccurate) \(\alpha \)-scaling of the “Airy-solution” on \([0,x_1]\).

The essential novelty compared to [3] is the inclusion of a first order turning point at \(x=0\). We proved in Sect. 4 that the exact solution \(\psi _\varepsilon \) blows up at the turning point like \(\Theta (\varepsilon ^{-\frac{1}{6}})\). Hence, one might expect that the corresponding numerical error would also be unbounded there. We recall that the numerical error stems from the \(\alpha \)-scaling, where \(\alpha ({{\hat{\psi }}},{\hat{\psi ^\prime }})\) depends on the numerically obtained approximations of \({{\hat{\psi }}}(1)\) and \({\hat{\psi ^\prime }}(1)\). In fact, the inclusion of the turning point “costs” a factor \(\varepsilon ^{-\frac{1}{6}}\) in the error estimates due to the blow-up of the sequence \(\psi _\varepsilon \) at \(x=0\) (compare the estimates (5.5) and (5.12) below). In these estimates the negative power of \(\varepsilon \) can be compensated by restricting the step size h as a function of \(\varepsilon \). With a turning point, this \(h(\varepsilon )\)-relation becomes slightly less favorable.

In the special case of an explicitly integrable phase \(\phi (x)\) from (2.7), the WKB-marching method is an asymptotically correct scheme w.r.t. \(\varepsilon \) (with error order \(\mathcal{O}(\varepsilon ^3)\)). This asymptotic correctness will compensate for the fact that the solution sequence \(\psi _\varepsilon \) is unbounded at the turning point \(x=0\), and it will still yield an overall asymptotically correct scheme for (2.4).

To clarify the notation, we summarize it in the following Table 1. Here the superscript \(^{(\prime )}\) means that we refer to the function as well as its derivative. Let \(x_1<x_2<\cdots <x_N=1\) be a grid for the numerical method on \([x_1,1]\), where \(x_1>0\), and \(h:=\max _{2\le n\le N}|x_n-x_{n-1}|\) is the step size.
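The grid notation can be made concrete with a few lines of Python. This is only a minimal sketch: the helper names `uniform_grid` and `max_step_size` are hypothetical, and the uniform grid is just one admissible choice, since the definition of h above also covers non-uniform grids.

```python
def uniform_grid(x1, n_points):
    """Uniform grid x_1 < x_2 < ... < x_N = 1 with N = n_points (illustrative helper)."""
    if not (0.0 < x1 < 1.0):
        raise ValueError("x1 must lie in (0, 1)")
    if n_points < 2:
        raise ValueError("need at least two grid points")
    grid = [x1 + (1.0 - x1) * k / (n_points - 1) for k in range(n_points - 1)]
    grid.append(1.0)  # set the right end point exactly
    return grid


def max_step_size(grid):
    """Return h = max_n |x_n - x_{n-1}| for a strictly increasing grid."""
    steps = [b - a for a, b in zip(grid, grid[1:])]
    if not steps or min(steps) <= 0.0:
        raise ValueError("grid must be strictly increasing with at least two points")
    return max(steps)
```

For a non-uniform grid such as `[0.1, 0.2, 0.5, 1.0]` the step size entering the error estimates is the largest gap, here 0.5.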

Occasionally we shall use a sub- or superscript \(\varepsilon \) to emphasize the \(\varepsilon \)-dependence of that quantity.

The Airy-WKB scheme for an approximation \(\psi _h^{(\prime )}\) to the solution \(\psi ^{(\prime )}\) of the BVP (2.4) extended to [0, 1] consists of the following steps:

\({Step\,1:}\)

- (a)

On \([0,x_1]\): The solution (2.1) satisfying the ICs in (3.3) reads

$$\begin{aligned} \psi _-(x) = \varepsilon ^{-\frac{1}{6}}\mathrm{Ai}\left( -\tfrac{x}{\varepsilon ^{2/3}}\right) \;;\;\;\varepsilon \psi _-^\prime (x) = -\varepsilon ^{\frac{1}{6}}{\mathrm{Ai}}^\prime \left( -\tfrac{x}{\varepsilon ^{2/3}}\right) . \end{aligned}$$

- (b)

On \([x_1,1]\): Compute a numerical approximation \(({\hat{\psi }}_{h,n},\varepsilon {\hat{\psi }}_{h,n}^\prime )^\top \) to the IVP (3.3) on the grid \(\{x_1,\ldots ,x_N\}\) via the WKB-marching method.

\({Step\,2:}\)

Scale \(\psi _-^{(\prime )}\) and \({\hat{\psi }}_{h,n}^{(\prime )}\) using \(\alpha :=\alpha ({\hat{\psi }}_{h,N},{\hat{\psi }}_{h,N}^\prime )\) and set

5.1 The WKB-marching method on \([x_1,1]\)

We shall now review the basics of the second order WKB-marching method from [1]: here the focus is on the algorithm and error estimates. The background, including the motivation for the tools used in this method, can be found in [1]. The method consists of two parts: first, a transformation of the highly oscillatory problem (1.1) to a smoother problem, and second, the numerical discretization of this smooth problem so as to obtain an \(\varepsilon \)-asymptotically correct scheme.

Analytic transformation The first order system (3.8) for \({\hat{W}}(x)\) is transformed to the system in the variable Z(x) as follows:

with matrices

where \(\phi (x)\) is the phase function defined in (2.7). For this analytic transformation we assume that \(\phi \) is explicitly available (for a generalization to numerically computed phases see Remark 5.3 below). This yields the system

where \(N^\varepsilon (x)\) is non-zero only in the off-diagonal entries

The above system exhibits much smoother solutions compared to the system for \({\hat{W}}(x)\) from (3.8). Moreover, the strong limit of its solutions \(Z_\varepsilon \) as \(\varepsilon \rightarrow 0\) satisfies the trivial equation \(Z^\prime (x) = 0\), since \(N^\varepsilon (x)\) is \(\varepsilon \)-uniformly bounded. Next we recall from [1] a numerical scheme that is at the same time \(\varepsilon \)-asymptotically correct and second order in the step size h.

Numerical scheme The second order (in h) scheme is rather non-standard and developed via the second order Picard approximation of (5.1):

These (iterated) oscillatory integrals (with \(\phi \) assumed to be known exactly) are then approximated using similar techniques as the asymptotic method in [14]. This yields the following scheme that is \(\varepsilon \)-asymptotically correct:

For a given initial condition \(Z_1:= Z(x_1)\) the algorithm reads

with the matrices \(A_n^1\) and \(A_n^2\) given as

Here we used the following notations:

and the discrete phase increments are

In the end we obtain a sequence of vectors \(Z_n\) which we have to transform back via

This yields an approximation of the solution to the vector valued system (3.8) for \({\hat{W}}\).

Now let us formulate a discrete analogue of Lemma 3.1.

Lemma 5.1

Let Assumption 2.3 hold and let the initial condition \({\hat{W}}_1^\varepsilon \in ~\mathbb {R}^2\) be \(\varepsilon \)-uniformly bounded above and below. Then \(\exists \,\varepsilon _1\in (0,\varepsilon _0]\) such that the WKB-marching method for (3.8) yields a sequence of vectors \({\hat{W}}_n^\varepsilon \in ~\mathbb {R}^2\), \(n=1,\ldots ,N\) that is uniformly bounded from above and below, i.e.

where the constants \(C_5,C_6>0\) are independent of \(0<\varepsilon \le \varepsilon _1\) and of the numerical grid on \([x_1,1]\).

Proof

A proof can be carried out exactly as in [3, Lemma 3.5], with the only difference that the initial condition \({\hat{W}}_1\) is now \(\varepsilon \)-dependent (but uniformly bounded above and below). \(\square \)

In particular Lemma 5.1 applies to the \(\varepsilon \)-dependent initial condition \({\hat{W}}_1:={\hat{W}}(x_1)\) obtained from (3.3) via the transformation (3.6). \({\hat{W}}_1=\Theta _\varepsilon (1)\) because of (3.1) and the \(\varepsilon \)-uniform equivalence of the norms \(\Vert {\hat{W}}(x_1)\Vert \) and \(\Vert ({\hat{\psi }}(x_1),\varepsilon {\hat{\psi }}^\prime (x_1))^\top \Vert \).

5.2 Error estimates including the turning point

The following result is a simple consequence of Theorem 3.1 in [1]. It shows that the WKB-marching method applied to the IVP (3.3)—however, with a different IC compared to [1]—yields the same h- and \(\varepsilon \)-order as when applied to the IVP proposed in [1]. So we obtain:

Proposition 5.2

(see Thm. 3.1 in [1]) Let Assumptions 2.1 and 2.3 on the coefficient function a(x) be satisfied. Then the global error of the second order WKB-marching method for the IVP (3.3) satisfies

with a constant C independent of n, h and \(\varepsilon \). Here, \(\gamma >0\) is the order of the chosen numerical integration method for evaluating the phase integral \(\phi \) from (2.7).

Remark 5.3

In the error analysis of [1], as well as in Proposition 5.2 above, the possible error of the matrices \(A_n^1, A_n^2\) in (5.2) arising from an incorrect phase \(\phi \) is not taken into account. Errors of \(\phi \) are only considered for the back transformation (5.3). However, in the recent, more complete error analysis in [2], both occurrences of the error of \(\phi \) are included. It would lead to a third error term in (5.5) that is \(\mathcal{O}(\varepsilon ^2)\) and includes the \(W^{2,\infty }\)-error of the phase (see [2, Theorem 3.2]). We omit this additional error term here for brevity of the presentation.

Proof of Proposition 5.2

The proof is using the main result [1, Theorem 3.1] for the IVP

and it only remains to generalize it to the initial condition in (3.3). Let \(Y(x) = (y_1(x), y_2(x))^\top \) be the vector valued solution to (5.6) after the transformation via (3.6), and let \(Y_n\) be its numerical approximation at \(x_n\) obtained via the WKB-marching method. By Theorem 3.1 of [1] we have

Next we give a transformation formula to connect \(\varphi \) with \({\hat{\psi }}\), the solution of (3.3). One easily verifies that

with the \(\varepsilon \)-dependent constant

Using the asymptotic representations (3.2) we verify

where \(\xi (x):= \tfrac{2x^\frac{3}{2}}{3\varepsilon }- \tfrac{\pi }{4}\). Thus it holds

with \(C>0\) independent of n, h and \(\varepsilon \). \(\square \)

In some applications the phase \(\phi \) is exactly computable: in quantum tunneling models, e.g., the crystalline heterostructure leads to a piecewise linear potential V(x) (with jumps due to the contact potential difference), and hence piecewise linear a(x). In this case the \(\frac{h^\gamma }{\varepsilon }\)-error term in (5.5) drops out and the scheme satisfies an \(\varepsilon \)-uniform, second order in h error estimate. Moreover it is even asymptotically correct with respect to \(\varepsilon \). The opposite situation, when \(\phi (x)\) has to be computed numerically, will be discussed in Remark 5.5 below.

Scaling to fit the right boundary condition

The numerical approximation \(W_n\) for \(n=1,\ldots ,N\) to the solution vector \(W(x_n)\) of (2.4) is obtained by first calculating the numerical approximation \({\hat{W}}_n\) for the IVP (3.8) via the WKB-marching method. Then it is scaled with \({\tilde{\alpha }}:={\tilde{\alpha }}({\hat{W}}_N)\), i.e.

where \({\hat{W}}_N\) is the approximation to \({\hat{W}}(1)\) obtained in the last step of the WKB-scheme. Now we can give the error estimates for numerically solving the BVP (2.4) using the \({\tilde{\alpha }}\)-scaled Airy function \({\tilde{\alpha }}\,\psi _-\) (with the choice \(c_0:=\varepsilon ^{-\frac{1}{6}}\)) on \([0,x_1]\) and the \({\tilde{\alpha }}\)-scaled numerical solution \({\tilde{\alpha }}\,{\hat{\psi }}_{h,n}\) on \(\{x_1,\ldots ,x_N\}\), i.e.

We recall that the \(\varepsilon \)-scaling of the Airy function on \([0,x_1]\) is important here to satisfy the IC at \(x_1\) for the IVP (3.3). Next we give error estimates for the hybrid solution (5.8), (5.9), i.e. Airy function on \([0,x_1]\) coupled to the WKB-solution on \([x_1,1]\). While our main strategy follows §3.5 of [3], the turning point at \(x=0\), and thus, the unboundedness of \(\psi _\varepsilon (0)\), causes technical challenges.

Theorem 5.4

(Convergence of the Airy-WKB method) Let Assumptions 2.1 and 2.3 be satisfied and \(0<\varepsilon \le \varepsilon _1\). Then the pair \((\psi _h,\varepsilon \psi _h^\prime )\) satisfies the following error estimates:

- (a)

In the region \([0,x_1]\), we have

$$\begin{aligned} \Vert e_h\Vert _{C[0,x_1]} \le C\tfrac{h^\gamma }{\varepsilon ^{7/6}} + C\varepsilon ^{\frac{17}{6}}h^2\;,\quad \varepsilon \Vert e_h^\prime \Vert _{C[0,x_1]} \le C\tfrac{h^\gamma }{\varepsilon } + C\varepsilon ^3 h^2\;, \end{aligned}\tag{5.10}$$

where \(e_h(x) := \psi (x) - \psi _h(x)\).

- (b)

In the region \([x_1,1]\), we have

$$\begin{aligned} |e_{h,n}| + \varepsilon |e_{h,n}^\prime | \le C\tfrac{h^\gamma }{\varepsilon }+C\varepsilon ^3 h^2\;,\quad n=1,\ldots ,N\;, \end{aligned}\tag{5.11}$$

where \(e_{h,n}:= \psi (x_n)-{\tilde{\alpha }}\,{\hat{\psi }}_{h,n}\) and \(e_{h,n}^\prime := \psi ^\prime (x_n)-{\tilde{\alpha }}\,{\hat{\psi }}_{h,n}^\prime \).

- (c)

For the hybrid method on the interval [0, 1] we have the error estimate

$$\begin{aligned} \Vert e_h\Vert _\infty \le C\tfrac{h^\gamma }{\varepsilon ^{7/6}} + C\varepsilon ^{\frac{17}{6}}h^2 \;,\quad \varepsilon \Vert e_h^\prime \Vert _\infty \le C\tfrac{h^\gamma }{\varepsilon }+C\varepsilon ^3 h^2 \;, \end{aligned}\tag{5.12}$$

where \(\Vert e_h\Vert _\infty := \max \{\Vert e_h\Vert _{C[0,x_1]}; \max \limits _{n=1,\ldots ,N}|e_{h,n}|\}\).

Remark 5.5

The \(\frac{h^\gamma }{\varepsilon }\) (or \(\frac{h^\gamma }{\varepsilon ^{7/6}}\)) term drops out if the phase \(\phi \) in (2.7) is explicitly integrable, leading to a second order scheme (in h) that is asymptotically correct with respect to \(\varepsilon \). But when \(\phi \) has to be computed numerically, e.g., via Simpson’s rule where \(\gamma =4\), the scheme is still second order in h as long as h is bounded by \(\mathcal{O}(\varepsilon ^{\frac{7}{12}})\), since \(h^4\varepsilon ^{-\frac{7}{6}}\le h^2\) is equivalent to \(h\le \varepsilon ^{\frac{7}{12}}\). For the (slightly) less restrictive step size bound \(h=\mathcal{O}(\sqrt{\varepsilon })\), the order of the scheme reduces to \(h^{\frac{5}{3}}\). As a comparison, we note that the bound \(h=\mathcal{O}(\sqrt{\varepsilon })\) is well known for the WKB approximation of highly oscillatory problems without a turning point (see [1, 19]), yielding a scheme of order \(h^2\). Using a spectral method for the phase integral makes it possible to drastically reduce the quadrature error for \(\phi \), as illustrated in [2].
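The exponents quoted in Remark 5.5 follow from simple power counting, which the following Python sketch reproduces with exact rational arithmetic. It is an illustration only: the function names are hypothetical, all constants C are ignored, and the factors \(\varepsilon ^{\frac{17}{6}}\le 1\) and \(\varepsilon ^3\le 1\) in front of \(h^2\) are dropped, so the marching error is treated as plain \(h^2\).

```python
from fractions import Fraction


def max_step_exponent(gamma, q):
    """Exponent p such that h = O(eps^p) guarantees h^gamma / eps^q <= h^2.

    gamma: order of the quadrature rule for the phase (must exceed 2).
    q:     power of eps in the denominator (7/6 with, 1 without turning point).
    """
    if gamma <= 2:
        raise ValueError("quadrature order must exceed 2")
    return Fraction(q) / (gamma - 2)


def effective_order(gamma, q, p):
    """Order in h of h^gamma / eps^q + h^2 when eps = h^(1/p), i.e. h = eps^p."""
    phase_order = Fraction(gamma) - Fraction(q) / Fraction(p)
    return min(Fraction(2), phase_order)


# Simpson's rule (gamma = 4) with a turning point: h = O(eps^(7/12)).
print(max_step_exponent(4, Fraction(7, 6)))
# Simpson's rule without a turning point: h = O(eps^(1/2)).
print(max_step_exponent(4, 1))
# With a turning point but h = O(sqrt(eps)): order h^(5/3).
print(effective_order(4, Fraction(7, 6), Fraction(1, 2)))
```

The same helper shows how a higher-order quadrature rule would relax the restriction, e.g. `max_step_exponent(6, Fraction(7, 6))` gives the exponent 7/24.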

Proof of Theorem 5.4

Within this proof, we will use Lemma 3.2 multiple times for arguments \({\hat{W}}(x_n)\) as well as \({\hat{W}}_n\). Thus we choose \(\delta :=\min (C_3,C_5)\) with the lower bounds \(C_3\) and \(C_5\) on the arguments obtained in Lemma 3.1 and Lemma 5.1. It is crucial here that the solution vector \({\hat{W}}(x_n)\) of the IVP (3.8) as well as the numerical approximation \({\hat{W}}_n\) are in \(\mathbb {R}^2\) (see Lemma 5.1). Then the map \({\tilde{\alpha }}\) is Lipschitz continuous with a constant \(L_{{\tilde{\alpha }}}\) and uniformly bounded by a constant \(C_{{\tilde{\alpha }}}\), both independent of \(0<\varepsilon \le \varepsilon _0\), as stated in Lemma 3.2.

- (a)

For \(x\in [0,x_1]\) we first recall that

$$\begin{aligned} \psi (x) = \alpha ({\hat{\psi }}(1),{\hat{\psi }}^\prime (1))\,\psi _{-}(x) = {\tilde{\alpha }}({\hat{W}}(1))\,\psi _{-}(x), \end{aligned}$$

cf. (3.4), (3.12). Hence, with (5.8) we have

$$\begin{aligned} |\psi (x)-\psi _h(x)|&= |{\tilde{\alpha }}({\hat{W}}(1))-{\tilde{\alpha }}({\hat{W}}_N)|\,|\psi _-(x)|\\&\le L_{{\tilde{\alpha }}}\,\Vert {\hat{W}}(1)-{\hat{W}}_N\Vert \,\varepsilon ^{-\frac{1}{6}}\,\left| \mathrm{Ai}\left( -\tfrac{x}{\varepsilon ^{2/3}}\right) \right| \\&\le C\,\left( \tfrac{h^\gamma }{\varepsilon }+\varepsilon ^3 h^2\right) \,\varepsilon ^{-\frac{1}{6}}\,\left| \mathrm{Ai}\left( -\tfrac{x}{\varepsilon ^{2/3}}\right) \right| \\&\le C\,\left( \tfrac{h^\gamma }{\varepsilon ^{7/6}}+\varepsilon ^{\frac{17}{6}} h^2\right) , \end{aligned}$$

where we used the \(\varepsilon \)-uniform boundedness of the (scaled) Airy function \(\mathrm{Ai}\left( -\tfrac{x}{\varepsilon ^{2/3}}\right) \) on \(\mathbb {R}^+\). We also note that the term \(\varepsilon ^{-\frac{1}{6}}\) cannot be compensated by \(\mathrm{Ai}\left( -\tfrac{x}{\varepsilon ^{2/3}}\right) \), since the latter term takes a constant value (independent of \(\varepsilon \)) at \(x=0\). We also used the Lipschitz continuity of \({\tilde{\alpha }}\), in addition to Proposition 5.2. For the derivative we estimate as follows:

$$\begin{aligned} \varepsilon |\psi ^\prime (x)-\psi _h^\prime (x)|&= \varepsilon |{\tilde{\alpha }}({\hat{W}}(1))-{\tilde{\alpha }}({\hat{W}}_N)|\,|\psi _-^\prime (x)|\\&\le L_{{\tilde{\alpha }}}\,\Vert {\hat{W}}(1)-{\hat{W}}_N\Vert \,\left| \varepsilon ^{\frac{1}{6}}{\mathrm{Ai}}^\prime \left( -\tfrac{x}{\varepsilon ^{2/3}}\right) \right| \\&\le C\,\left( \tfrac{h^\gamma }{\varepsilon }+\varepsilon ^3 h^2\right) , \end{aligned}$$

where we used that \(|\varepsilon ^{\frac{1}{6}}{\mathrm{Ai}}^\prime \left( -\tfrac{x}{\varepsilon ^{2/3}}\right) |\le c\). This can be argued in the same manner as in Step 5 of the proof of Proposition 4.2.

- (b)

It is convenient to use the vector notation W on \([x_1,1]\). Note that scaling \({\hat{W}}\) with the constant \({\tilde{\alpha }}\) is equivalent to scaling \(({\hat{\psi }},\varepsilon {\hat{\psi }}^\prime )\). Therefore the estimates after the \({\tilde{\alpha }}\)-scaling are

$$\begin{aligned} \Vert W(x_n) - W_n\Vert&= \Vert {\tilde{\alpha }}({\hat{W}}(1))\,{\hat{W}}(x_n) - {\tilde{\alpha }}({\hat{W}}_N)\,{\hat{W}}_n\Vert \\&\le |{\tilde{\alpha }}({\hat{W}}(1)) - {\tilde{\alpha }}({\hat{W}}_N)|\,\Vert {\hat{W}}(x_n)\Vert + |{\tilde{\alpha }}({\hat{W}}_N)|\,\Vert {\hat{W}}(x_n) - {\hat{W}}_n\Vert \\&\le L_{{\tilde{\alpha }}} \Vert {\hat{W}}(1) - {\hat{W}}_N\Vert \, C_4 + C_{{\tilde{\alpha }}}\,\Vert {\hat{W}}(x_n) - {\hat{W}}_n\Vert \\&\le C\,\left( \tfrac{h^\gamma }{\varepsilon }+\varepsilon ^3 h^2\right) , \quad n=1,\ldots ,N. \end{aligned}$$

In the second to last line we used the Lipschitz continuity and boundedness of \({\tilde{\alpha }}\) as well as (3.11). In the last line we used the estimate (5.5) twice. Due to the (\(\varepsilon \)-uniform) equivalence of \(\Vert W\Vert \) and \(\Vert (\psi ,\varepsilon \psi ')\Vert \), the estimate above yields the desired bound on the interval \([x_1,1]\).

- (c)

The overall estimate on [0, 1] is a combination of the previous two.

\(\square \)
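The role of the factor \(\varepsilon ^{-\frac{1}{6}}\) in part (a) of the proof can be checked directly at the turning point, where Step 1(a) gives \(\psi _-(0)=\varepsilon ^{-\frac{1}{6}}\mathrm{Ai}(0)\) and \(\varepsilon \psi _-^\prime (0)=-\varepsilon ^{\frac{1}{6}}{\mathrm{Ai}}^\prime (0)\). The Python sketch below uses only the classical closed forms \(\mathrm{Ai}(0)=3^{-2/3}/\Gamma (\tfrac{2}{3})\) and \({\mathrm{Ai}}^\prime (0)=-3^{-1/3}/\Gamma (\tfrac{1}{3})\). The function names are hypothetical, and the \({\tilde{\alpha }}\)-scaling is deliberately left out, so the sketch illustrates the unscaled function \(\psi _-\) only.

```python
import math


def airy_ai_at_zero():
    """Ai(0) = 1 / (3^(2/3) * Gamma(2/3))."""
    return 1.0 / (3.0 ** (2.0 / 3.0) * math.gamma(2.0 / 3.0))


def airy_ai_prime_at_zero():
    """Ai'(0) = -1 / (3^(1/3) * Gamma(1/3))."""
    return -1.0 / (3.0 ** (1.0 / 3.0) * math.gamma(1.0 / 3.0))


def psi_minus_at_turning_point(eps):
    """psi_-(0) = eps^(-1/6) * Ai(0), which grows as eps -> 0."""
    return eps ** (-1.0 / 6.0) * airy_ai_at_zero()


def eps_dpsi_minus_at_turning_point(eps):
    """eps * psi_-'(0) = -eps^(1/6) * Ai'(0), which stays bounded and tends to 0."""
    return -(eps ** (1.0 / 6.0)) * airy_ai_prime_at_zero()


for k in (4, 8, 12):
    eps = 2.0 ** (-k)
    print(k, psi_minus_at_turning_point(eps), eps_dpsi_minus_at_turning_point(eps))
```

Decreasing \(\varepsilon \) by a factor \(2^{6}\) doubles \(\psi _-(0)\) and halves \(\varepsilon \psi _-^\prime (0)\), in line with the different \(\varepsilon \)-powers in the two estimates of (5.10).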

5.3 Numerical results

In this subsection we will present numerical results to illustrate the error estimates of Theorem 5.4 for the Airy-WKB method.

Fig. 4 Absolute error on a log-log scale for the Airy-WKB method on [0, 1] with \(x_1=0.1\) and the linear potential \(a(x)=x\) for several values of \(\varepsilon \). h is the step size for the WKB-marching method. On the left are the results with the phase \(\phi \) computed numerically via the composite Simpson rule, and on the right the results using the explicitly known phase

Figure 4 shows the error of the Airy-WKB method on [0, 1] with coefficient function \(a(x)=x\) and “switching-point” \(x_1=0.1\). This is a convenient test case since its solution is explicitly known (an Airy function), yet the numerical WKB method on \([x_1,1]\) is not trivial. Moreover, the integral of the phase \(\phi (x)\), needed for the WKB-marching method, is explicitly available without numerical integration. For comparison we shall present two simulations, one with the exact phase \(\phi (x)\) and one with a numerically computed phase (used both in (5.2) and (5.3)). In the right plot, the \(\frac{h^\gamma }{\varepsilon ^{7/6}}\)-term drops out of the error estimate (5.12), yielding an asymptotically correct scheme as \(\varepsilon \rightarrow 0\) (for fixed step size h).
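To make the difference between the two simulations tangible, the following Python sketch compares the composite Simpson rule with the closed-form integral for \(a(x)=x\) on \([x_1,1]\) with \(x_1=0.1\). It is a simplified illustration under stated assumptions: only the leading-order part \(\int _{x_1}^{x}\sqrt{a(\tau )}\,d\tau \) of the phase is integrated (any \(\varepsilon \)-dependent correction contained in (2.7) is ignored), the helper names are hypothetical, and the quadrature is applied once over the whole interval rather than cumulatively along the WKB grid.

```python
import math


def composite_simpson(f, a, b, n):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n <= 0 or n % 2 != 0:
        raise ValueError("n must be a positive even integer")
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        weight = 4.0 if k % 2 == 1 else 2.0
        total += weight * f(a + k * h)
    return total * h / 3.0


def exact_leading_phase(x, x1):
    """Closed form of int_{x1}^{x} sqrt(tau) d tau for a(x) = x."""
    return (2.0 / 3.0) * (x ** 1.5 - x1 ** 1.5)


def simpson_phase_error(n, x1=0.1, x=1.0):
    """Absolute quadrature error of the leading-order phase integral."""
    approx = composite_simpson(math.sqrt, x1, x, n)
    return abs(approx - exact_leading_phase(x, x1))


for n in (32, 64, 128, 256):
    print(n, simpson_phase_error(n))
```

Halving the step size reduces the quadrature error by a factor close to 16, which is the fourth order behaviour (\(\gamma =4\)) entering the \(h^\gamma \)-term of the error estimate.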

In the left plot, the first term of (5.12) (i.e. \(\frac{h^\gamma }{\varepsilon ^{7/6}}\), originating from the numerical integration of the phase \(\phi \)) is dominant for large values of h and/or small values of \(\varepsilon \). Hence, the error behaves like \(\frac{h^4}{\varepsilon ^{7/6}}\), due to the Simpson rule with \(\gamma =4\), as visualized by the right slope triangle. At \(h=0.1\) we can clearly see an inversion of the error curves (\(\varepsilon =2^{-12}\) at the top and \(\varepsilon =2^{-8}\) at the bottom) with an \(\varepsilon \)-dependence of almost exactly \({\mathcal {O}}(\varepsilon ^{-7/6})\). This error term originating from the numerical integration of the phase could be reduced to machine precision by using the spectral method proposed in [2].
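Which of the two terms in (5.12) dominates can be estimated by equating them. The Python sketch below does this with both constants C set to one, which is an assumption made purely for illustration (the actual constants are not known explicitly), so the resulting crossover step size is indicative only. The function names are hypothetical.

```python
def error_terms(h, eps, gamma=4):
    """Return the two terms of the bound (5.12) for e_h with both constants set to 1."""
    phase_term = h ** gamma / eps ** (7.0 / 6.0)       # from the phase quadrature
    marching_term = eps ** (17.0 / 6.0) * h ** 2       # from the WKB-marching scheme
    return phase_term, marching_term


def crossover_step(eps, gamma=4):
    """Step size h* at which both terms coincide: h*^(gamma - 2) = eps^4."""
    if gamma <= 2:
        raise ValueError("quadrature order must exceed 2")
    return eps ** (4.0 / (gamma - 2))


for k in (8, 10, 12):
    eps = 2.0 ** (-k)
    print(k, crossover_step(eps), error_terms(0.1, eps))
```

For Simpson's rule the crossover is at \(h^*=\varepsilon ^2\) under this assumption, so at \(h=0.1\) the quadrature term dominates for all \(\varepsilon \) considered, consistent with the inversion of the error curves described above.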

In the right plot (and likewise in the left plot for small h and large \(\varepsilon \)) we clearly observe the quadratic convergence rate in h for each value of \(\varepsilon \). The error in \(\varepsilon \) is decreasing with order of about \(\varepsilon ^{3.4}\) to \(\varepsilon ^{3.6}\) and therefore better than the predicted estimates of order \(\varepsilon ^{17/6}\) from the second term in (5.12). This improved \(\varepsilon \)-order of the error does not originate in the choice of a linear potential, as a similar observation was already made in the error plot for the WKB-marching method in [1, Fig. 3.1 (right)]. There, a simulation with a quadratic coefficient function was chosen and the error order in \(\varepsilon \) was showing better results than the predicted order of \(\varepsilon ^3\).

For small values of both h and \(\varepsilon \), the error is so small that it is eventually polluted by round-off errors (due to double precision computations in Matlab). Hence, in this case the error does not show a simple dependence on h and/or \(\varepsilon \), and is of the order of \(10^{-11}\).

In order to illustrate the efficiency of the Airy-WKB method, we make a comparison to a standard Runge–Kutta method, the ‘ode45’ single step Matlab solver. This solver is based on an explicit Runge–Kutta (4, 5) formula (the Dormand-Prince pair). It adapts the step size h using error estimates obtained by comparing a 4th and a 5th order approximation. In this example we used (again) the linear coefficient function \(a(x)=x\), such that we have the explicit formula for the exact solution at hand, in terms of the Airy function \(\mathrm{Ai}\). Additionally, the phase is explicitly available without numerical integration, and thus it does not contribute an additional error or an increase of the run time. Also, in this case the WKB scheme is asymptotically correct.

The goal of this test is to compare (for several fixed values of \(\varepsilon \)) the run times of the Airy-WKB and the Runge–Kutta methods, when both methods achieve (almost) the same numerical accuracy. To match the accuracies, we first determined the error of the Airy-WKB scheme, in the norm \({\Vert e_h\Vert _\infty +\varepsilon \Vert e_h^\prime \Vert _\infty }\) (as defined in Theorem 5.4) for a grid with uniform step size \(h=10^{-3}\). Then we specified an error tolerance for ‘ode45’ (somewhat by trial and error) to obtain a matching approximation error (see Table 2). Figure 5 then gives a comparison of the run times: as expected, the Airy-WKB method’s run times stay constant for decreasing \(\varepsilon \), since we leave the step size h fixed. But the Runge–Kutta method’s run times grow strongly for smaller \(\varepsilon \). This has two causes. The first is the prescribed error tolerances: they need to decrease, since the Airy-WKB method has decreasing error as \(\varepsilon \rightarrow 0\) (even for a fixed step size), and we need to obtain comparable errors for both methods. The second is that, for smaller \(\varepsilon \), the oscillatory solution to the problem exhibits higher and higher frequencies, i.e. of order \(\mathcal{O}(\frac{\sqrt{a}}{\varepsilon })\). Therefore the Runge–Kutta method needs to refine the step size h even more to resolve the oscillations and to meet the prescribed error tolerances.
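The second effect can be quantified with the local wave length \(\lambda (x)=\tfrac{2\pi \varepsilon }{\sqrt{a(x)}}\) from the introduction. The Python sketch below counts the number of wave lengths on \([x_1,1]\) for \(a(x)=x\); this count is a rough proxy (an assumption of this illustration, not a statement about the internals of ‘ode45’) for the minimum number of steps any solver that resolves the oscillations must take. The function names are hypothetical.

```python
import math


def local_wavelength(x, eps, a=lambda x: x):
    """Local wave length lambda(x) = 2*pi*eps / sqrt(a(x)) for a(x) > 0."""
    return 2.0 * math.pi * eps / math.sqrt(a(x))


def oscillation_count(eps, x1=0.1, x_end=1.0):
    """Number of wave lengths on [x1, x_end] for a(x) = x.

    Computed as (1 / (2*pi*eps)) * int_{x1}^{x_end} sqrt(x) dx.
    """
    phase = (2.0 / 3.0) * (x_end ** 1.5 - x1 ** 1.5)
    return phase / (2.0 * math.pi * eps)


for k in range(4, 11):
    eps = 2.0 ** (-k)
    print(k, oscillation_count(eps), local_wavelength(1.0, eps))
```

The count grows like \(1/\varepsilon \), reaching about 105 wave lengths for \(\varepsilon =2^{-10}\), whereas the WKB grid with \(h=10^{-3}\) is the same for every \(\varepsilon \).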

Fig. 5 Run times of the Airy-WKB method in comparison to the standard Runge–Kutta Matlab solver ‘ode45’ plotted against \(\varepsilon \) in a semi-log plot. We used the coefficient function \(a(x)=x\), and \(\varepsilon = 2^{-4},\ldots ,2^{-10}\). For each \(\varepsilon \), the prescribed error tolerance of ‘ode45’ is ‘fitted’ for the two methods to be comparable

6 Generalization to quadratic potentials close to the turning point

The goal of this section is a generalization of the hybrid method of Sects. 2–5 to the situation when the potential V(x) is quadratic instead of linear in the vicinity of the turning point (which is still of first order at \(x=0\)). As we shall take a similar path as in the previous sections, not all of the (analogous) motivating deductions will be repeated. We start with specifying the assumptions on the coefficient function a(x) analogously to Assumption 2.1.

Assumption 6.1

Let parts (a), (b) and (d) of Assumption 2.1 remain unchanged.

- (c’)

More general than in Sects. 2–5, we now assume the potential to be quadratic in the left exterior and also in a (small) neighborhood of \(x=0\). More precisely we assume that \(\exists \,x_1\in (0,1)\) such that \(a(x) = k_1 x^2+k_2 x\) for \(x\le x_1\) with \(k_1<0\) and \(k_2>-k_1x_1>0\) such that the second zero of a(x) is strictly larger than \(x_1\), and thus, not included in \([0,x_1]\).

Fig. 6 Sketch of the model described in Assumption 6.1 with quadratic potential left of \(x_1\). Electrons are injected from the right boundary \(x=1\) and there is a turning point of first order at the left boundary \(x=0\). The coefficient function is \(a(x) := E-V(x)\). The explicit solution form is available for \(x\le x_1\) and for \(x\ge 1\); on \((x_1,1)\) the solution is obtained numerically

Note that the quadratic form of a(x) stated above is the most general one, since a turning point at \(x=0\) requires \(a(0)=0\). We consider a potential \(V(x)\rightarrow +\infty \) as \(x\rightarrow -\infty \) (see Fig. 6), which requires a scattering solution of the equation \(\varepsilon ^2\psi ''+a(x)\psi =0\) to decay for \(x\rightarrow -\infty \). For a quadratic potential, this solution is given on \((-\infty ,x_1]\) by the parabolic cylinder function (PCF) denoted by \(U(\nu ,z)\), cf. [23, §12].

For \(a(x) = k_1x^2+k_2x\), we have

and a fundamental set of solutions is \(\{U(\nu ,z(x)),U(-\nu ,\mathrm {i}z(x))\}\). Here, \(U(\nu ,z(x))\) is the solution that stays bounded (and even decays) for \(x\rightarrow -\infty \). This yields the following BVP with transparent BCs:

Here and in the sequel the derivative of the parabolic cylinder function is denoted by \(U^\prime (\nu ,z)\), where the \(^\prime \) always refers to the derivative w.r.t. the (second) argument z, not the order \(\nu \). A result analogous to Proposition 2.2 on existence and uniqueness of solutions holds for the BVP (6.2). As in Sect. 3 this BVP will first be reformulated as the IVP

with a constant \(c_1\in \mathbb {R}{\setminus }\{0\}\) that we shall fix later. From here on we denote by \({\hat{\psi }}\) the solution to this IVP. The solution \(\psi \) of (6.2) is then obtained by scaling \({\hat{\psi }}\) to fit the right BC of (6.2), at \(x=1\). Note that this scaling preserves the validity of the left BC of (6.2), at \(x_1\).

6.1 Asymptotic blow-up at the turning point

In this section, we shall analyze the asymptotic behavior of solutions to the BVP (6.2) with quadratic potential close to the turning point. The following proposition will be used later to prove that the solution to (6.2) is not uniformly bounded w.r.t. \(\varepsilon \,\); this unboundedness arises at the turning point.

Proposition 6.2

(Asymptotics of the parabolic cylinder function) The function \(U(\nu ,z(x))\) with (6.1) has the following asymptotic representations for (a fixed) \(x\in (0,-\frac{k_2}{k_1})\) as \(\varepsilon \rightarrow 0\):

with the notation

and

The (\(\varepsilon \)-dependent) constant \(g(\mu )\) is defined in (A.7) and (A.8).

The lengthy proof is deferred to the Appendix A.3.

An essential requirement for the proofs in Sects. 3–5 was the uniform boundedness of the initial condition \(({\hat{\psi }}(x_1),\varepsilon {\hat{\psi }}^\prime (x_1))^\top \) w.r.t. \(\varepsilon \). Proposition 6.2 shows that the initial condition in (6.3) has the asymptotic order \(\mathcal{O}(g(\mu ))\) w.r.t. \(\varepsilon \), if \(c_1\) is chosen independent of \(\varepsilon \). In order to obtain again \(\varepsilon \)-uniform boundedness of the IC, we shall now choose \(c_1\) depending on \(\varepsilon \). As \(g(\mu )\) is defined as an asymptotic series and \(g(\mu )=\Theta _\varepsilon (h(\mu ))\), we choose in the IC of (6.3):

with \(h(\mu )\) defined in (A.8). Using \(\tfrac{1}{h(\mu )}\) is more practical than \(\tfrac{1}{g(\mu )}\) since it can be implemented explicitly and it yields the same asymptotic scaling as \(\tfrac{1}{g(\mu )}\). Then the IVP reads

After transforming \(({\hat{\psi }},\varepsilon {\hat{\psi }}^\prime )\) to \({\hat{W}}\in \mathbb {R}^2 \) via the matrix A(x) from (3.7) we get the vector-valued system

with the two matrices \(A_0(x)\) and \(A_1(x)\) as in (3.9).

A result similar to Lemma 3.1 yields the \(\varepsilon \)-uniform boundedness of the analytic solution \(({\hat{\psi }},\varepsilon {\hat{\psi }}^\prime )(x)\) to the IVP (6.5) and of the vector valued solution \({\hat{W}}(x)\) to (6.6):

Lemma 6.3

Let \(a(x)\in {\mathcal {C}}^2[x_1,1]\) and \(a(x)\ge \tau _1>0\). Let \({{\hat{\psi }}}(x)\) be the solution to the IVP (6.5). Then \(({{\hat{\psi }}}(x),\varepsilon {\hat{\psi }}^\prime (x))\) is uniformly bounded above and below, i.e.

or equivalently

where the constants \(C_7,\ldots ,C_{10}>0\) are independent of \(0<\varepsilon \le \varepsilon _0\).

Proof

The proof is identical to the proof of Lemma 3.1. It only remains to show the \(\varepsilon \)-uniform boundedness above and below of the initial condition. As \(x_1\in (0,1)\) we can use Proposition 6.2 for an asymptotic representation of \(({{\hat{\psi }}}(x_1),\varepsilon {\hat{\psi }}^\prime (x_1))\): with \(t_1:= 1+\frac{2k_1}{k_2}x_1\in (-1,1)\) we get

where \(\xi (t):=\eta (t)-\tfrac{\pi }{4}\) and \(f(\mu ) := \frac{g(\mu )}{h(\mu )} = \Theta _\varepsilon (1)\). As \(\sin (\xi (t_1))\) and \(\cos (\xi (t_1))\) are never simultaneously zero we get \(\Vert ({\hat{\psi }},\varepsilon {\hat{\psi }}^\prime )(x_1)\Vert = \Theta _\varepsilon (1)\), proving uniform boundedness above and below. \(\square \)
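The parameter constraints of Assumption 6.1 and the range \(t_1\in (-1,1)\) used in the proof above are easy to verify for concrete data. The Python sketch below is a hypothetical helper (the name `check_assumption_6_1` and the sample values are assumptions for illustration): it checks \(k_1<0\) and \(k_2>-k_1x_1>0\), and returns the second zero \(-\tfrac{k_2}{k_1}\) of \(a(x)=k_1x^2+k_2x\) together with \(t_1=1+\tfrac{2k_1}{k_2}x_1\).

```python
def check_assumption_6_1(k1, k2, x1):
    """Validate (k1, k2, x1) against Assumption 6.1 (c') and return (second_zero, t1).

    second_zero is the second root -k2/k1 of a(x) = k1*x^2 + k2*x,
    t1 = 1 + 2*k1*x1/k2 is the transformed switching point.
    """
    if not (0.0 < x1 < 1.0):
        raise ValueError("x1 must lie in (0, 1)")
    if not (k1 < 0.0):
        raise ValueError("k1 must be negative")
    if not (k2 > -k1 * x1):
        raise ValueError("k2 must exceed -k1*x1 so that the second zero lies beyond x1")
    second_zero = -k2 / k1
    t1 = 1.0 + 2.0 * k1 * x1 / k2
    return second_zero, t1


# Coefficient of Example 6.4, a(x) = x - x^2/2, with an illustrative x1 = 0.1.
print(check_assumption_6_1(-0.5, 1.0, 0.1))
```

Since \(k_2>-k_1x_1\) implies \(-2<\tfrac{2k_1}{k_2}x_1<0\), every admissible parameter set yields \(t_1\in (-1,1)\), and the turning point \(x=0\) corresponds to \(t=1\).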

Next we illustrate that the solutions of the BVP (6.2) become unbounded at the turning point \(x=0\), analogously to Example 4.1. First we consider

Example 6.4

Consider (6.2) with \(x_1=0\) and \(a(x) = x-\frac{x^2}{2}\) for \(x\in [0,1]\) and \(0<\varepsilon <1\). Then the explicit solution reads

where

The quadratic coefficient \(a(x) = x-\tfrac{x^2}{2}\) has a turning point at \(x=0\) and in Fig. 7 the absolute value of solutions \(|\psi _\varepsilon (x)|\) is plotted for various \(\varepsilon >0\): the solutions \(|\psi _\varepsilon (x)|\) are unbounded as \(\varepsilon \rightarrow 0\) at the turning point \(x=0\). Fig. 8 shows the plot of the family \(\{\varepsilon |\psi _\varepsilon ^\prime (x)|\}\), which is bounded on [0, 1] uniformly in \(\varepsilon \). It is even decreasing (in \(\varepsilon \rightarrow 0\)) at \(x=0\).

The generalization of Example 6.4 to potentials as in Assumption 6.1 is the main result of the following proposition.

Proposition 6.5

Let \(x_1\in (0,1)\) and a(x) be as in Assumption 6.1 and \({\mathcal {C}}^2\) on \([x_1,1]\). Then the family of solutions \(\{\psi _\varepsilon (x)\}\) to the BVP (6.2), extended with the PCF solution on \([0,x_1]\), satisfies:

- (a)

\(\Vert \psi _\varepsilon \Vert _{L^\infty (0,1)}\) is of the (sharp) order \(\Theta (\varepsilon ^{-\frac{1}{6}})\) for \(\varepsilon \rightarrow 0\).

- (b)

\(\varepsilon \Vert \psi _\varepsilon ^\prime \Vert _{L^\infty (0,1)}\) is uniformly bounded with respect to \(\varepsilon \rightarrow 0\).

The lengthy and involved proof is provided in the Appendix A.3.

6.2 Numerical method and error analysis

In this section we extend the Airy-WKB method from Sect. 5 to the more general case of a quadratic potential in the vicinity of the turning point. As the fundamental solution to the equation in (6.2) for quadratic a(x) is a parabolic cylinder function (PCF), we refer to this method as the PCF-WKB method. The error estimates and convergence results will be essentially the same as for the Airy-WKB method in Sect. 5.

For the notation we will again use Table 1, but instead of the BVP (2.4) and IVPs (3.3), resp. (3.8) we consider the corresponding BVP (6.2) and IVPs (6.5), resp. (6.6).

With the uniformly bounded initial condition \({\hat{W}}(x_1)\) from (6.6), Lemma 5.1 can be directly applied to the numerical approximation \({\hat{W}}_n^\varepsilon \) obtained via the WKB-marching method. Hence, there exists \(\varepsilon _1\in (0,\varepsilon _0]\) and \(\varepsilon \)-independent constants \(C_{11},C_{12}>0\) such that

Next we want to carry over the error estimates of the WKB-marching method [1], just like in Proposition 5.2. Observe that, similar to (5.7), one can write the solution \({\hat{\psi }}(x)\) to the IVP (6.5) as

where \(\varphi \) is the solution to (5.6) and

Using Proposition 6.2 one can see that

and we obtain an error estimate analogous to Proposition 5.2: under Assumptions 2.3 and 6.1, and for \(0<\varepsilon \le \varepsilon _1\), we have

Here, \({\hat{W}}(x_n)\) is the exact solution to the IVP (6.6) at \(x_n\), and \({\hat{W}}_n\) is its numerical approximation obtained by the WKB-marching method.

Using the numerical approximation \({\hat{W}}_n\) we shall denote the (numerical) approximation \(\psi _h^{(\prime )}\) to the solution \(\psi ^{(\prime )}\) of the BVP (6.2)—extended to \([0,x_1]\)—as

with the abbreviation \({\tilde{\alpha }}:={\tilde{\alpha }}({\hat{W}}_N)\).

With this notation we can now formulate the analogue of Theorem 5.4 for quadratic potentials satisfying Assumption 6.1. The error orders are the same as in the case of a linear potential, since we considered a first order turning point at \(x=0\) in both cases.

Theorem 6.6

(Convergence of the PCF-WKB method) Let Assumptions 2.3 and 6.1 be satisfied and \(0<\varepsilon \le \varepsilon _1\). Then the pair \((\psi _h,\varepsilon \psi _h^\prime )\) satisfies the same error estimates as in Theorem 5.4.

Proof

In this proof we are using the Lipschitz constant \(L_{{\tilde{\alpha }}}\) and upper bound \(C_{{\tilde{\alpha }}}\) for \({\tilde{\alpha }}\) from Lemma 3.2 with \(\delta := \min (C_9,C_{11})\) as lower bound for the arguments \({\hat{W}}(x_n)\) and \({\hat{W}}_n\), cf. (6.8) and (6.10).

- (a)

For the error estimate on \(\psi (x)\) with \(x\in [0,x_1]\) we need the following estimate:

$$\begin{aligned} |\psi _{-}&(x)| = \left| \tfrac{1}{h(\mu )}U(\nu ,z(x))\right| = \left| \tfrac{g(\mu )}{h(\mu )}\left( 2\sqrt{\pi }\varphi (t)\mu ^\frac{1}{3}\mathrm{Ai}(\mu ^\frac{4}{3}\zeta (t)) + \mathcal{O}(\varepsilon )\right) \right| \\&\le \tfrac{|f(\mu )|\,2^\frac{2}{3} \sqrt{\pi }\,k_2^\frac{1}{3}}{(-k_1)^\frac{1}{4}}\max _{t\in [1+\frac{2k_1}{k_2}x_1,1]}\left| \varphi (t)\mathrm{Ai}(\mu ^\frac{4}{3}\zeta (t))\right| \varepsilon ^{-\frac{1}{6}} + \mathcal{O}(\varepsilon )\le C_{\psi _-}\varepsilon ^{-\frac{1}{6}}, \end{aligned}\tag{6.14}$$

with an \(\varepsilon \)-independent constant \(C_{\psi _-}>0\). In the first line we used the asymptotic representation (A.21) for \(U(\nu ,z(x))\), which is uniform in \(x\in [0,x_1]\); the terms \(\varphi (t)\) and \(\zeta (t)\) are defined right after (A.21). In the last line we used that the \(\varepsilon \)-dependent constant \(f(\mu ) = \Theta _\varepsilon (1)\), and that the \(\max \)-term is \(\varepsilon \)-uniformly bounded, see (A.22). Using the estimate (6.14) yields

$$\begin{aligned} |\psi (x)-\psi _h(x)|&= |{\tilde{\alpha }}({\hat{W}}(1))-{\tilde{\alpha }}({\hat{W}}_N)|\,|\psi _-(x)|\\&\le L_{{\tilde{\alpha }}}\,\Vert {\hat{W}}(1)-{\hat{W}}_N\Vert \,C_{\psi _-}\varepsilon ^{-\frac{1}{6}}\\&\le C\,\left( \tfrac{h^\gamma }{\varepsilon }+\varepsilon ^3 h^2\right) \,\varepsilon ^{-\frac{1}{6}}= C\,\left( \tfrac{h^\gamma }{\varepsilon ^{7/6}}+\varepsilon ^{\frac{17}{6}} h^2\right) , \end{aligned}$$

where we used (6.11) in addition to the Lipschitz continuity of \({\tilde{\alpha }}\). For the \(\varepsilon \)-scaled derivative \(\varepsilon \psi _{-}^\prime (x)\) in (6.13) we have the asymptotic expansion (A.6). The term \(\mu ^{-\frac{4}{3}}\mathrm{Ai}(\mu ^\frac{4}{3}\zeta (t))=\mathcal{O}(\varepsilon ^\frac{4}{6})\), when including the turning point at \(t=1\) (since \(\zeta (1)=0\)), and for all other \(t\in [1+\frac{2k_1}{k_2}x_1,1)\) we have \(\mathrm{Ai}(\mu ^\frac{4}{3}\zeta (t)) = \mathcal{O}(\varepsilon ^\frac{1}{6})\). Truncating the asymptotic expansion (A.6) after the lowest order term in \(\varepsilon \) (which pertains to \(D_0(\zeta )=1\)) shows that there exists an \(\varepsilon \)-independent \(C_{\psi _-^\prime }>0\), such that

$$\begin{aligned} |\varepsilon \psi _{-}^\prime (x)| = \bigg |-\frac{f(\mu )\,2^\frac{1}{3}\sqrt{\pi }k_2^\frac{2}{3}}{(-k_1)^\frac{1}{4}}\frac{{\mathrm{Ai}}^\prime (\mu ^\frac{4}{3}\zeta (t))\varepsilon ^\frac{1}{6}}{\varphi (t)}+\mathcal{O}(\varepsilon ^\frac{5}{6})\bigg |\le C_{\psi _-^\prime }. \end{aligned}$$

Here we used the fact that \(|\frac{{\mathrm{Ai}}^\prime (\mu ^{4/3}\zeta (t))\varepsilon ^{1/6}}{\varphi (t)}|\) for \(t\in [1+\frac{2k_1}{k_2}x_1,1]\) is \(\varepsilon \)-uniformly bounded above, see (A.23). Hence,

$$\begin{aligned} \varepsilon |\psi ^\prime (x)-\psi _h^\prime (x)|&= \varepsilon |{\tilde{\alpha }}({\hat{W}}(1))-{\tilde{\alpha }}({\hat{W}}_N)|\,|\psi _-^\prime (x)|\\&\le L_{{\tilde{\alpha }}}\,\Vert {\hat{W}}(1)-{\hat{W}}_N\Vert \,C_{\psi _-^\prime }\\&\le C\,\left( \tfrac{h^\gamma }{\varepsilon }+\varepsilon ^3 h^2\right) . \end{aligned}$$

Parts (b) and (c) are proved exactly as the corresponding parts of Theorem 5.4. \(\square \)

The \(\tfrac{h^\gamma }{\varepsilon ^{7/6}} (\hbox {or} \, \frac{h^\gamma }{\varepsilon })\) term from the numerical integration of the phase \(\phi (x)\) from (2.7) appears again in the error estimates of Theorem 6.6. As mentioned in Sect. 5.2, this term drops out for some applications, when the phase is explicitly integrable.
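To get a feeling for how the two contributions in these estimates compete, the following minimal sketch evaluates both terms with the constant \(C\) set to 1. This is an assumption made purely for illustration, since the actual constants are not specified; the function names and the flag `scaled_derivative` are hypothetical. Because the exponents of \(\varepsilon \) add up to 4 in both estimates, the step size at which the terms balance scales like \(\varepsilon ^{4/(\gamma -2)}\), up to a constant factor that depends on the unknown constants.

```python
import math


def error_terms(h, eps, gamma=4.0, scaled_derivative=False):
    """Return (phase_term, wkb_term) of the error bound, with C set to 1.

    psi on [0, x_1]:          h^gamma / eps^(7/6)  and  eps^(17/6) * h^2
    eps * psi' on [0, x_1]:   h^gamma / eps        and  eps^3 * h^2
    """
    p, q = (1.0, 3.0) if scaled_derivative else (7.0 / 6.0, 17.0 / 6.0)
    return h**gamma / eps**p, eps**q * h**2


def crossover_step(eps, gamma=4.0):
    """Step size where both terms coincide (unit constants, gamma > 2).

    h^gamma / eps^p = eps^q * h^2 with p + q = 4 in both estimates.
    """
    return eps ** (4.0 / (gamma - 2.0))


if __name__ == "__main__":
    for k in range(5, 10):
        eps = 2.0 ** (-k)
        phase, wkb = error_terms(1e-2, eps)
        print(f"eps=2^-{k}: phase term {phase:.3e}, WKB term {wkb:.3e}, "
              f"balance at h={crossover_step(eps):.3e}")
```

With unit constants the phase term dominates for step sizes above the balance point; the position of that point in an actual computation shifts with the true constants.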

6.3 Numerical results

The error estimates of Theorem 6.6 for the PCF-WKB method are illustrated analogously to Sect. 5.3. Here the coefficient function of (1.1) is chosen as \(a(x)= x-\frac{x^2}{2}\) on [0, 1], so that the solution \(\psi \) is explicitly known in terms of a parabolic cylinder function (PCF). The calculations are done in MATLAB®, where the PCF is not implemented but can be obtained via relations to other functions, e.g. the confluent hypergeometric function [23, §12.7(iv)]. As the range of \(\varepsilon \) we chose \(\varepsilon = 2^{-5},\ldots ,2^{-9}\), since for \(\varepsilon =2^{-10}\) the evaluation of the PCF returns Inf. This is because its order \(\nu \) becomes large (negative) for small \(\varepsilon \), and thus the PCF takes very large values. For \(\varepsilon = 2^{-9}\) the evaluation in MATLAB is already very inaccurate, so for this case we used Mathematica® to evaluate the PCF for the reference solution.
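The overflow can be made plausible with a short sketch in Python (not the MATLAB code used for the experiments). It rests on two assumptions that are not taken from the text above: that (1.1) reads \(\varepsilon ^2\psi ''+a(x)\psi =0\), and that the PCF solves the standard form \(w''=(\tfrac{z^2}{4}+\nu )w\). Substituting \(z=c\,(x-1)\) with \(c^2=\sqrt{2}/\varepsilon \) then gives \(\nu =-\tfrac{1}{2\sqrt{2}\,\varepsilon }\) for \(a(x)=x-\tfrac{x^2}{2}\); the normalization used in Sect. 6 may differ. The sketch evaluates only the known value \(U(\nu ,0)=\sqrt{\pi }\,/\,\big (2^{\nu /2+1/4}\,\Gamma (\tfrac{3}{4}+\tfrac{\nu }{2})\big )\), which corresponds to the single point \(x=1\), and works with logarithms so that nothing overflows. The function names are hypothetical.

```python
import math
import sys


def pcf_order(eps):
    """Order nu for a(x) = x - x^2/2 (assumed normalization, see text)."""
    return -1.0 / (2.0 * math.sqrt(2.0) * eps)


def log10_abs_U_at_zero(nu):
    """log10 |U(nu, 0)| from U(nu, 0) = sqrt(pi) / (2^(nu/2+1/4) Gamma(3/4+nu/2)).

    math.lgamma returns log|Gamma|, so large magnitudes stay representable.
    """
    ln_abs = (0.5 * math.log(math.pi)
              - (0.5 * nu + 0.25) * math.log(2.0)
              - math.lgamma(0.75 + 0.5 * nu))
    return ln_abs / math.log(10.0)


# log10 of the largest finite double-precision number (about 308.25)
LOG10_DOUBLE_MAX = math.log10(sys.float_info.max)

if __name__ == "__main__":
    for k in range(5, 11):
        eps = 2.0 ** (-k)
        nu = pcf_order(eps)
        mag = log10_abs_U_at_zero(nu)
        status = "overflows" if mag > LOG10_DOUBLE_MAX else "representable"
        print(f"eps=2^-{k}: nu={nu:9.3f}, log10|U(nu,0)|={mag:8.2f} ({status})")
```

Under these assumptions the magnitude at \(\varepsilon =2^{-10}\) exceeds the double-precision limit of about \(1.8\cdot 10^{308}\), while it stays below that limit for \(\varepsilon =2^{-9}\).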

For the plots in Fig. 9, we computed the integral for the phase \(\phi (x)\) numerically, once for the plot on the right with an error tolerance of \(10^{-12}\) (such that the corresponding error term is negligible in comparison to the second error term in (5.12)), and once using the composite Simpson rule for the phase in the plot on the left. We clearly see the \(h^2\) convergence rate of the method for each \(\varepsilon \). An interesting difference can be seen in the left plot for small \(\varepsilon \) and large h: here the \(\frac{h^4}{\varepsilon ^{7/6}}\)-term, originating from the numerical integration of the phase, is dominant.
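The fourth-order behaviour of the composite Simpson rule on this example can be checked with a small sketch. As a simplifying assumption it integrates only \(\sqrt{a(x)}\), taken here as the leading-order part of the phase; the full phase \(\phi \) from (2.7) may contain further \(\varepsilon \)-dependent terms. The helper names are hypothetical, and the closed-form reference value follows from the substitution \(u=1-x\).

```python
import math


def a(x):
    """Coefficient function of the experiment, a(x) = x - x^2/2."""
    return x - 0.5 * x * x


def composite_simpson(f, left, right, n):
    """Composite Simpson rule with n (even) subintervals on [left, right]."""
    if n % 2 != 0:
        raise ValueError("n must be even")
    h = (right - left) / n
    total = f(left) + f(right)
    for i in range(1, n):
        # interior nodes: weight 4 at odd indices, weight 2 at even indices
        total += (4.0 if i % 2 else 2.0) * f(left + i * h)
    return total * h / 3.0


def exact_leading_phase(x1):
    """Closed form of the integral of sqrt(a) over [x1, 1]."""
    u = 1.0 - x1
    return (u * math.sqrt(1.0 - u * u) + math.asin(u)) / (2.0 * math.sqrt(2.0))


def simpson_error(n, x1=0.1):
    """Absolute quadrature error for n subintervals on [x1, 1]."""
    approx = composite_simpson(lambda x: math.sqrt(a(x)), x1, 1.0, n)
    return abs(approx - exact_leading_phase(x1))


if __name__ == "__main__":
    for n in (32, 64, 128, 256):
        print(f"n={n:4d}: error={simpson_error(n):.3e}")
```

Halving the step size should reduce the error by a factor close to 16. The integration starts at \(x_1>0\), away from the square-root singularity of the integrand at the turning point.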

In the region with quadratic convergence (i.e. when the second term in (5.12) is dominant), the error in \(\varepsilon \) is decreasing with order of about \(\varepsilon ^{3}\) to \(\varepsilon ^{3.7}\), and thus it is again (cf. Sect. 5.3) slightly superior to the predicted estimates of order \(\varepsilon ^\frac{17}{6}\).

Fig. 9 Absolute error on a log-log scale for the PCF-WKB method on [0, 1] with \(x_1=0.1\) and the quadratic potential \(a(x)=x-\frac{x^2}{2}\) for several values of \(\varepsilon \). h is the step size for the WKB-marching method. On the left are the results with the phase \(\phi \) computed numerically via the composite Simpson rule, and on the right the results using a numerically computed phase with high precision (error tolerance of \(10^{-12}\))

7 Generalization and outlook

The next natural question is how to relax the restriction that the potential V(x) should be (exactly) linear or quadratic in a neighborhood of the turning point. An obvious and frequently used strategy is to approximate the potential: in [11] a piecewise constant approximation was used (actually in a regime without turning points), and in [10, §15.5], [21, §7.3.1], [9, §4.3] a linear approximation at the turning point was employed. But, as we shall illustrate next, such linear (or even higher order) approximations lead to errors that are unbounded as \(\varepsilon \rightarrow 0\). Hence, they cannot serve as a starting point to construct uniformly accurate schemes. The error incurred by taking Airy functions (as solutions to the reduced problem, i.e. the Airy equation) can only be uniformly bounded when restricting to a region around the turning point that shrinks fast enough as \(\varepsilon \rightarrow 0\) (specifically, like \(o(\varepsilon ^{2/5})\), see [20]). But, for the time being, the WKB-marching method requires a constant-in-x transition point \(x_1\).
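A heuristic way to see where the exponent \(\tfrac{2}{5}\) comes from is to compare the leading-order WKB phases \(\tfrac{1}{\varepsilon }\int \sqrt{a}\) of the exact and the linearized coefficient. The following is only a sketch: it assumes \(a(x)=k_2x+k_1x^2\) with \(k_2>0\) near the turning point (the constants are meant as in Sect. 6, which is an assumption) and \({\tilde{a}}(x)=k_2x\), and it tracks nothing but the accumulated phase mismatch up to \(x_1\):

$$\begin{aligned} \sqrt{a(x)}-\sqrt{{\tilde{a}}(x)}&= \sqrt{k_2x}\left( \sqrt{1+\tfrac{k_1}{k_2}x}-1\right) = \tfrac{k_1}{2\sqrt{k_2}}\,x^{3/2}+\mathcal{O}(x^{5/2}),\\ \tfrac{1}{\varepsilon }\int _0^{x_1}\left( \sqrt{a(x)}-\sqrt{{\tilde{a}}(x)}\right) dx&= \tfrac{k_1}{5\sqrt{k_2}}\,\tfrac{x_1^{5/2}}{\varepsilon }+\mathcal{O}\left( \tfrac{x_1^{7/2}}{\varepsilon }\right) . \end{aligned}$$

The mismatch tends to zero as \(\varepsilon \rightarrow 0\) precisely when \(x_1=o(\varepsilon ^{2/5})\), whereas for a fixed \(x_1\) it grows without bound. This argument is not meant to reproduce the error rates observed in the experiment below, which involve the full solution and not only its leading-order phase.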

Fig. 11 Error of the scattering solution due to the linear approximation of a(x) by \({{\tilde{a}}}(x)\), as shown in Fig. 10. The plot shows the error in dependence of \(\varepsilon \) and the cut-off point \(x_1\); apparently it is of the order \(x_1^3/\varepsilon ^{1.5}\). The function E(x) is the difference of the two solutions

Next we illustrate the consequence of a linear approximation of the potential close to the turning point: we chose a piecewise quadratic coefficient function a(x), see Fig. 10, along with its linear approximation \({{\tilde{a}}}\) on \([0,x_1]\) with some (fixed) \(x_1\in (0,1)\).

Since the solutions of the scattering problem for both potentials are analytically known in terms of Airy functions and parabolic cylinder functions, the error plotted in Fig. 11 is not due to any numerical method. The non-uniform error (in \(\varepsilon \)) stems only from the modified coefficient function, and hence modified phase of the solution: for \(\varepsilon \) small, the approximate solution is entirely out of phase close to \(x=x_1\). On the one hand the error in Fig. 11 is of order \(\mathcal{O}(x_1^3)\) for fixed \(\varepsilon \), which stems from the linear approximation of the potential. On the other hand, for fixed \(x_1\) the error grows approximately like \(\varepsilon ^{-3/2}\).

As a conclusion of this feasibility study and as an outlook for a follow-up work there are two options to proceed. Since a linear approximation of a(x) leads to \(\varepsilon \)-uniform errors for transition points \(x_1=o(\varepsilon ^{2/5})\) (see [20]), a first option would be to extend the WKB-marching method to \(\varepsilon \)-dependent intervals of the form \([\varepsilon ^\alpha ,1]\), with some \(0<\alpha <1\). Since the WKB-method yields an \(\mathcal{O}(\varepsilon ^3)\)-error (for analytically integrable phase functions, see Proposition 5.2) on \(\varepsilon \)-independent intervals, an extension to some \([\varepsilon ^\alpha ,1]\) should yield \(\varepsilon \)-uniform errors—hopefully with \(\alpha >\frac{2}{5}\), to have an overlap between both regimes.

A second option is to use Langer functions [17] instead of Airy functions on a fixed interval \([0,x_1]\), coupled to the WKB-marching method. Langer functions are essentially Airy functions with a (generally) non-linear transformation of the argument and a (linear) modification of the amplitude, cf. modified Airy functions in [25]. Both this procedure and the linear approximation of the potential correspond to a transformation to a perturbed Airy equation, but they differ strongly in the resulting error: the error incurred by using the solution to the reduced problem is non-uniform in \(\varepsilon \) for the linear approximation of the potential, but uniform on a fixed interval \([0,x_1]\) for the transformation proposed by Langer, cf. [20, §7.2.5]. The concept of Langer functions also extends to higher order turning points.
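For orientation, one common form of such a transformation can be sketched as follows. It assumes that (1.1) reads \(\varepsilon ^2\psi ''+a(x)\psi =0\) with \(a>0\) on \((0,x_1]\); the symbols \(\xi \) and \(\psi _L\) are introduced here only for illustration, and the normalization in [17] may differ:

$$\begin{aligned} \xi (x)&:= \left( \tfrac{3}{2}\int _0^x\sqrt{a(s)}\,ds\right) ^{2/3},\qquad \psi _L(x) := \left( \xi '(x)\right) ^{-1/2}\,\mathrm{Ai}\left( -\varepsilon ^{-2/3}\xi (x)\right) ,\\ \varepsilon ^2\psi _L''(x)+a(x)\psi _L(x)&= \varepsilon ^2\,\left( \xi '(x)\right) ^{1/2}\left( \left( \xi '(x)\right) ^{-1/2}\right) ''\,\psi _L(x). \end{aligned}$$

The second line follows from \(\xi \,(\xi ')^2=a\) and \(\mathrm{Ai}''(s)=s\,\mathrm{Ai}(s)\). For a linear coefficient \(a(x)=k_2x\) one obtains \(\xi (x)=k_2^{1/3}x\), so the right-hand side vanishes and \(\psi _L\) reduces to a multiple of the Airy function \(\mathrm{Ai}(-\varepsilon ^{-2/3}k_2^{1/3}x)\). For a general smooth \(a\) with a first order turning point, the residual carries an explicit factor \(\varepsilon ^2\) on the whole fixed interval.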

Notes

There, a classical particle with energy E in the potential V(x) would change directions, hence the name.

“Big Theta” is defined as: \(f(x)=\Theta (g(x))\) as \(x\rightarrow a :\Leftrightarrow \exists \,k_1, k_2, \delta >0:\forall x:|x-a|<\delta : \,k_1|g(x)|\le |f(x)|\le k_2|g(x)|\), i.e. g(x) is an asymptotically tight bound for f(x). Occasionally we will give \(\Theta \) a subscript to specify the variable of the asymptotic limit.

I.e. the numerical error decreases to zero with \(\varepsilon \rightarrow 0\), even for a fixed spatial grid.

Note: this constitutes a numerical scheme only for a coefficient function a(x) that is linear (or even quadratic, as in Sect. 6 below) on \([0,x_1]\) for some \(x_1\in (0,1)\).

The ‘little-O’ notation \(f = o(g)\) as \(x\rightarrow x_0\) is equivalent to \(\frac{f(x)}{g(x)}\rightarrow 0\) as \(x\rightarrow x_0\).

References

Arnold, A., Abdallah, N.B., Negulescu, C.: WKB-based schemes for the oscillatory 1D Schrödinger equation in the semi-classical limit. SIAM J. Numer. Anal. 49(4), 1436–1460 (2011)

Arnold, A., Klein, C., Ujvari, B.: WKB-method for the 1D Schrödinger equation in the semi-classical limit: enhanced phase treatment, submitted (2019). arXiv:1808.01887

Arnold, A., Negulescu, C.: Stationary Schrödinger equation in the semi-classical limit: numerical coupling of oscillatory and evanescent regions. Numer. Math. 138(2), 501–536 (2018)

Abdallah, N.B., Degond, P., Markowich, P.A.: On a one-dimensional Schrödinger–Poisson scattering model. ZAMP 48, 35–55 (1997)

Abdallah, N.B., Pinaud, O.: Multiscale simulation of transport in an open quantum system: resonances and WKB interpolation. J. Comput. Phys. 213(1), 288–310 (2006)

Döpfner, K., Arnold, A.: On the stationary Schrödinger equation in the semi-classical limit: Asymptotic blow-up at a turning point. PAMM (2019). https://doi.org/10.1002/pamm.201900004

Erdélyi, A.: Asymptotic Expansions. Dover Publ., New York (1956)

Ferry, D.K., Goodnick, S.M.: Transport in Nanostructures. Cambridge Univ. Press (1997)

Holmes, M.H.: Introduction to Perturbation Methods. Springer-Verlag, New York (1995)

Hall, B.C.: Quantum Theory for Mathematicians, Graduate Texts in Mathematics 267. Springer-Verlag, New York (2013)

Han, H., Huang, Z.: A tailored finite point method for the Helmholtz equation with high wave numbers in heterogeneous medium. J. Comput. Math. 26(5), 728–739 (2008)

Ihlenburg, F., Babuška, I.: Finite element solution of the Helmholtz equation with high wave number. I. The \(h\)-version of the FEM. Comput. Math. Appl. 30(9), 9–37 (1995)

Ihlenburg, F., Babuška, I.: Finite element solution of the Helmholtz equation with high wave number. II. The \(h\)-\(p\) version of the FEM. SIAM J. Numer. Anal. 34(1), 315–358 (1997)

Iserles, A., Nørsett, S.P., Olver, S.: Highly oscillatory quadrature: The story so far. In: Bermudez de Castro, A. (ed.) Proceedings of ENuMath, pp. 97–118. Santiago de Compostela. Springer, Berlin (2006)

Jahnke, T., Lubich, C.: Numerical integrators for quantum dynamics close to the adiabatic limit. Numerische Mathematik 94, 289–314 (2003)

Landau, L.D., Lifschitz, E.M.: Quantenmechanik. Akademie-Verlag, Berlin (1985)

Langer, R.E.: On the asymptotic solutions of ordinary differential equations, with an application to the Bessel functions of large order. Transact. AMS 33(1), 23–64 (1931)

Lent, C.S., Kirkner, D.J.: The quantum transmitting boundary method. J. Appl. Phys. 67, 6353–6359 (1990)

Lorenz, K., Jahnke, T., Lubich, C.: Adiabatic integrators for highly oscillatory second-order linear differential equations with time-varying eigendecomposition. BIT 45(1), 91–115 (2005)

Miller, P.D.: Applied Asymptotic Analysis. Graduate Studies in Mathematics, vol. 75. American Mathematical Society (2006)

Nayfeh, A.H.: Perturbation Methods. Wiley, New York (1973)

Negulescu, C.: Asymptotic models and numerical schemes for quantum systems. PhD-thesis at Université Paul Sabatier, Toulouse (2005)

Olver, F.W., Lozier, D.W., Boisvert, R.F., Clark, C.W.: NIST Handbook of Mathematical Functions. Cambridge University Press, Cambridge (2010)

Olver, F.W.: Introduction to Asymptotics and Special Functions. Acad. Press, New York (1974)

Smith, A.J., Baghai-Wadji, A.R.: A numerical technique for solving Schrödinger's equation in molecular electronic applications. Proc. SPIE 7268, 72681Q (2008)

Acknowledgements