Abstract

The aim of our work is to present a method (p-factor method) for solving nonlinear equations of the form

in singular (irregular) case, i.e. when the matrix \(F'(x)\) is singular at an initial point \(x_0\) of an iterative sequence \(\{x_k\}\), \(k=1,2,\ldots \) We investigate conditions that have to be fulfilled at the initial point \(x_0\) to obtain the existence of solution of nonlinear equation \(F(x)=0\), prove convergence of the presented p-factor method and give estimation of convergence rate for this method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider the problem of a construction of a numerical method (or an iterative sequence \(\{x_k\}\), \(k=1,2,\ldots \)) for solving a system of nonlinear equations

where \(F: \mathbb {R}^n\rightarrow \mathbb {R}^m\), \(m\le n\) and F is singular at the initial point \(x_0\). The problem (1) is called regular at the point \(x_{0}\) if \(\mathrm{rank }F'(x_{0})=m\). Otherwise, it is called nonregular (singular).

Usually, for proving convergence of the numerical method and obtaining an estimation of a convergence rate, a local convergence is supposed, i.e. the initial point \(x_0\) of the iterative sequence is assumed to belong to some neighborhood \(U_{\varepsilon }(x^{*})=\{x: \Vert x-x^{*}\Vert <\varepsilon \}\), \(\varepsilon >0\) of a solution \(x^{*}\) to the system (1) and moreover this solution has to exist. For this reason, an important problem in construction numerical method one can faced is to prove the existence of solution in some neighborhood of the initial point and moreover to fix assumptions on a neighborhood of the starting point so that the constructed numerical method is convergent.

For the classical Newton (or Gauss–Newton) method in regular case,

where \(\left( F'(x_0)\right) ^{-1}_R=F'(x_0)\left( F'(x_0)F'(x_0)^T\right) ^{-1}\), the existence of a solution \(x^{*}\) in neighborhood of the initial point \(x_0\) is guaranteed by Theorem 1 from the Sect. 2. And if the assumptions of Theorem 1 are valid, for the Newton method we have geometrical estimation of convergence rate:

However, one of the main requirements for the method convergence, existence of solutions and estimation of the convergence rate is nonsingularity of the Jacobian matrix \(F'(x_0)\). In a singular case, when \(\left( F'(x_0)\right) ^{-1}_R\) does not exist, the situation is much more complicated.

In the paper we present a numerical method (p-factor method) for solving equations in the case when \(F'(x_0)\) is singular. We prove a theorem on existence of solutions and justify convergence and estimation of the rate of convergence to the described method.

One could wonder why we propose a new method instead of choosing another initial point and then using the classical method. It is because of the fact that even if one choose a starting point which is not singular it could happened that the next iteration might give rejection effect, that is \(\Vert x_1-x^{*}\Vert \gg \Vert x_0-x^{*}\Vert \). The method described in this paper allows to avoid such effects and gives quadratic rate of convergence.

The construction of p-regularity introduced in [7] gives new possibilities for description and investigation of the set of solutions in the singular case. We use some of the methods to prove the conditions of the existence of local solutions to nonlinear singular equations in [4] and then some other results in the case with non-zero p-kernel (see [5]) and also give a description of a tangent cone in singular case [6].

Throughout this paper we assume that the mapping \(F:\mathbb {R}^n\rightarrow \mathbb {R}^m\) is continuously p-times differentiable on \(\mathbb {R}^n\) and its pth order derivative at \(\;x\in \mathbb {R}^n\;\) will be denoted as \(F^{(p)}(x)\) (a symmetric multilinear map of p copies of \(\mathbb {R}^n\) to \(\mathbb {R}^m\)) and the associated p-form is

Let X and Y are sets. We denote by \(2^{Y}\) the set of all subsets of the set Y. Any mapping \(\varPhi : X{\rightarrow } 2^{Y}\) is said to be a multimapping (or a multivalued mapping) from X into Y. For a linear operator \(\; \varLambda :X\rightarrow Y,\;\) we define by \(\; \varLambda ^{-1}_{R}\;\) its right inverse, that is \(\varLambda ^{-1}_{R}:Y \rightarrow 2^{X}\) and \( \varLambda ^{-1}_{R}y=\left\{ x\in X: \varLambda x = y\right\} . \) Of course, \( \varLambda \varLambda ^{-1}_{R}=I_{Y}. \) Furthermore, we shall use the “norm” of such right inverse operator

and so

In our further considerations, under notion \(\varLambda ^{-1}\) we shall mean just right inverse operator (multivalued) with the norm defined by (3).

The set \(M(x^{*})=\left\{ x\in U: F(x)=F(x^{*})=0\right\} \) is called the solution set for the mapping F in neighborhood U of \(x^{*}\).

We call \(h\in \mathbb {R}^n\) a tangent vector to a set \(M\subseteq \mathbb {R}^n\) at \(x^{*} \in M\) if there exist \(\varepsilon > 0\) and a function \(r:[0,\varepsilon ]\rightarrow \mathbb {R}^n\) such that \(x^{*}+th+r(t)\in M\), where \(t\in [0,\varepsilon ]\) and \(\Vert r(t)\Vert =o(t).\)

The collection of all tangent vectors at \(x^{*}\) is called the tangent cone to M at \(x^{*}\) and it is denoted by \(T_{1}M(x^{*})\).

We recall two auxiliary lemmas. The first of these lemmas is a “multivalued” generalization of the contraction mapping principle. By \(\mathrm{dist}(A_{1},A_{2})\) we mean the Hausdorff distance between sets \(A_{1}\) and \(A_{2}.\)

Lemma 1

(Contraction multimapping principle [2]) Assume that we are given a multimapping

on a ball \(U_{\varepsilon }(z_{0})=\left\{ z: \rho (z,z_{0})\le \varepsilon \right\} \;\;(\varepsilon >0)\) where the sets \(\varPhi (z)\) are non-empty and closed for any \(z\in U_{\varepsilon }(z_{0}).\) Further, assume that there exists a number \(\theta ,\; 0<\theta <1\) such that

-

1.

\(\mathrm{dist}(\varPhi (z_{1}),\varPhi (z_{2}))\le \theta \rho (z_{1},z_{2})\) for any \(z_{1},z_{2}\in U_{\varepsilon }(z_{0})\)

-

2.

\(\rho (z_{0},\varPhi (z_{0}))<(1-\theta ) \varepsilon .\)

Then, for every number \(\varepsilon _{1}\) which satisfies the inequality

there exists \(z\in B_{\varepsilon _{1}/(1-\theta )}(z_{0})=\left\{ \omega : \rho (\omega ,z_{0})\le \varepsilon _{1}/(1-\theta )\right\} \) such that

Lemma 2

[2] Let \(\varLambda \in \mathcal {L}(\mathbb {R}^n,\mathbb {R}^m).\) We set

If \(\;\mathrm{Im}\varLambda = \mathbb {R}^m,\) then \(C(\varLambda )<\infty .\)

2 Elements of p-regularity theory

Let us consider the case when the regularity condition fails but the mapping F is p-regular. We construct the p-factor operator under the assumption that \(\mathbb {R}^m\) is decomposed into an orthogonal sum

where \(Y_{1}=\hbox {Im} F'(x_{0})\), and the remaining spaces are defined as follows. Let \(Z_{1}=\mathbb {R}^m,\) \(Z_{2}=Y_{1}^{\perp }\), and let \(P_{Z_{2}}:Y\rightarrow Z_{2}\) be the orthogonal projection onto \(Z_{2}\). Let \(Y_{2}\) be a linear span of the image of the quadratic map \(P_{Z_{2}}F''(x_{0})[\cdot ]^{2}.\) More generally, define inductively,

where \(Z_{i}=\left( Y_{1}\oplus \cdots \oplus Y_{i-1}\right) ^{\perp }\) with respect to \(\mathbb {R}^m,\) \(i=2,\ldots ,p\) and \(P_{Z_{i}}:\mathbb {R}^m\rightarrow Z_{i}\) is the orthogonal projection onto \(Z_{i}\), \(i=2, \ldots ,p.\) Finally, \(Y_{p}=Z_{p}.\) The order p is the minimum number for which (5) holds.

Define the following mappings \(f_{i}:U\rightarrow Y_{i},\) \(f_{i}(x)=P_{Y_{i}}F(x),\) \(i=1,\ldots ,p,\) where \(\;P_{Y_{i}}:\mathbb {R}^m\rightarrow Y_{i}\;\) is the orthogonal projection onto \(\;Y_{i}\), \(i=1,\ldots ,p\) (see e.g. [8]). It is obvious that \(F(x)=f_1(x)+\cdots +f_p(x)\).

If \(F^{(i)}(x_0)=0\), where \(i=1,\ldots ,p-1\) then we say that F is completely degenerate at \(x_0 \in \mathbb {R}^n\) up to the order p. Note that \(f^{(i)}_k(x_0)=0\), \(i=1,\ldots ,k-1\), \(k=1,\ldots ,p\).

Definition 1

The linear operator \(\varLambda _h\in \mathcal {L}(\mathbb {R}^n,Y_{1}\oplus \cdots \oplus Y_{p})\) is defined for \(h\in \mathbb {R}^n\) by

and is called the p-factor operator along h at the point \(x_{0}.\)

Sometimes it is convenient to use the following equivalent definition of p-factor operator \(\widetilde{\varLambda }_{h}\in \mathcal {L}\left( \mathbb {R}^n,Y_{1}\times \cdots \times Y_{p}\right) \) for \(h \in \mathbb {R}^n,\)

We also introduce the corresponding multivalued right inverse operator,

and nonlinear operator

and \(\varPsi ^{-1}(y)=\left\{ h\in \mathbb {R}^n: y=\varPsi [h]^{p}\right\} \). It is easy to see that \(\varPsi [h]^{p}=\widetilde{\varLambda }_h(h).\)

Let us introduce the following set

We can write it also as follows

where

For our further considerations we need a generalization of the notion of regular mapping.

Definition 2

We say that the mapping F is p-regular at \(x_{0}\) along h if \(\hbox {rank} \varLambda _{h}=m\).

Definition 3

We say that the mapping F is p-regular at \(x_{0}\) if either it is p-regular along every h from the set \(\hbox {H}_{p}(x_{0})\setminus \{0\} \hbox { or } \hbox { H}_{p}(x_{0}) ={\{0\}}.\)

3 Regular case

In regular case the tangent cone to a solution set of the Eq. (1) equals to the kernel of the first derivative of the mapping F, i.e. \(T_{1}M(x_{0})=\mathrm{Ker}F'(x_{0})\). To investigate regular nonlinear problems one can apply classical results, such as Lyusternik theorem, implicit function theorem, Lagrange–Euler optimality conditions.

Besides description of the solution set and formulation of optimality conditions, very important problem is to give a guarantee of existence of a solution in some neighborhood of an initial point.

We quote one of the modifications of the theorem about existence of solutions of Eq. (1) in regular case (see e.g. [1]).

Theorem 1

Let \(F:\mathbb {R}^n\rightarrow \mathbb {R}^m,\) \(F\in \mathcal {C}^{2}(U_{\varepsilon }(x_{0})),\) \(\varepsilon >0\), \(F'(x_{0})\ne 0\), \(\eta =\Vert F(x_{0})\Vert ,\) F is regular at \(x_0\) and \(\delta =\left\| [F'(x_{0})]^{-1}\right\| \), \(c=\max \nolimits _{x \in U_{\varepsilon }(x_{0})} \Vert F''(x)\Vert . \) If the following conditions

-

1.

\(\delta \cdot c \cdot \varepsilon \le \frac{1}{6}\),

-

2.

\(\delta \cdot \eta \le \frac{\varepsilon }{2}\)

are valid then the equation \(F(x)=0\) has a solution \(x^{*} \in U_{\varepsilon }(x_{0})\), the method (2) converges to \(x^{*}\) and the following estimation holds:

For the proof see [4].

4 Singular case

We can generalize the above results and give the necessary conditions that have to be valid for existence of solutions in the singular case, i.e. when \(F'(x_0)\) is singular. First, let us recall two lemmas that are essential in the proof of Theorems 2 and 3.

Lemma 3

[3] Let U be an open subset of \(\mathbb {R}^n\) such that \([a,b]\subset U.\) If \(f:U\rightarrow \mathbb {R}^m\) and \(f\in \mathcal {C}^{1}(U)\) then

Lemma 4

[4] Let \(S : \mathbb {R}^n\rightarrow \mathbb {R}^m=Y_1\oplus \cdots \oplus Y_p\) be a nonlinear operator of the form \(S(x)=\left( S_{1}(x),S_{2}(x),\ldots ,S_{p}(x)\right) ,\) where \(S_{r}(x)\) is r-form, \(r=1,\ldots ,p\). If

then for \(y=\left( y_{1},\ldots ,y_{p}\right) ,\) \(y_i\in Y_i,\) \(i=1,\ldots ,p\), where \(\Vert y\Vert =\Vert y_1\Vert +\ldots +\Vert y_p\Vert ,\) we have

For the singular case we have the following generalization of the Theorem 1.

Theorem 2

Let \(F:\mathbb {R}^n\rightarrow \mathbb {R}^m,\) \(F\in \mathcal {C}^{p+1}(U_{\varepsilon }(x_0))\), \(\varepsilon >0\) and there exists h, such that \(h\in \varPsi ^{-1} (-F(x_0))\setminus \{0\}\), \(\hat{h}=\frac{h}{\Vert h\Vert }\) and F is p-regular at \(\;x_{0}\;\) along h. Moreover, assume that for a given \(\varepsilon \) the following conditions are satisfied

-

1.

\( c_{3} p^{2}\delta ^{\frac{1}{p}}\le \frac{1}{3}\varepsilon ,\)

-

2.

\(0<\delta < 1,\)

-

3.

\(\frac{4}{3}\left( 4^{p}-1\right) c_{1} c_{2} \varepsilon \le \frac{1}{2},\)

where \(\delta = \left\| F(x_{0})\right\| \ne 0\), \(c_{1}=\left\| \varLambda _{\widehat{h}}^{-1}\right\| \), \(c_{2}=\max \nolimits _i \max \nolimits _{x\in U_{\varepsilon }(x_0)} \left\| f^{(i+1)}_{i}(x)\right\| <\infty ,\) \(i=1,\ldots ,p,\) \(c_{3}=\left\| \varPsi ^{-1}\right\| <\infty \). Then the equation \(F(x)=0\) has a solution \(x^{*} \in U_{\varepsilon }(x_0).\)

For the proof see [4]. Notice that if \(p=1\) then Theorem 2 reduces to the Theorem 1. If \(p\ge 2\) then \(F'(x_0)\) is singular and we have singular case.

Remark 1

(Sequential p-regularity) The assumptions of Theorem 2 can be relax if we consider the sequence:

Namely, instead of p-regularity of F at \(x_0\) we can assume p-regularity F on \(\{\xi _k\}\), \(k=1,2,\ldots \). It means that instead of surjectivity of \(\varLambda _h\) we can require that \(\mathrm{Im}\varLambda _h=Y'_1\oplus \cdots \oplus Y'_p\) and \(f_i(x_0+ h+\xi _k)\in Y'_i,\) where \(Y'_i\subseteq Y_i\), \(i=1,\ldots ,p\), \(k=0,1,\ldots .\)

Remark 2

We choose \(x\in \mathbb {R}^{n}\) as a representative of the set \(\varLambda _{h}^{-1}(y)\) if \(\Vert x\Vert =\min \left\{ \Vert z\Vert : \varLambda _{h}(z)=y\right\} \). We will write then \(\varLambda _{h}^{-1}(y)=x\).

Consider the following method (p-factor method)

Theorem 3

Suppose that for F, \(x_0\), h, \(\varepsilon \), \(\delta \) the assumptions of Theorem 2 are valid. Then the sequence defined in (8) converges to the solution \(x^{*}\in U_{\varepsilon }(x_0)\) and the following estimation of convergent rate holds:

Proof

Consider the following equivalent form of the sequence (8).

We prove that \(\forall _k \Vert \xi _{k+1}-\xi _k\Vert \le \frac{1}{2}\Vert \xi _k-\xi _{k-1}\Vert \). For this purpose we define a multivalued mapping \(\varPhi _{h}: U_{\varepsilon }(x_{0})\rightarrow 2^{\mathbb {R}^n},\) such that

The sets \(\varPhi _{h}(x)\) are non-empty because \(\varLambda _h\) is a surjection for any \(x\in U_{\varepsilon }(x_{0}).\) Moreover, for any \(y\in Y_{1}\times \cdots \times Y_{p}\) the sets \(\varLambda ^{-1}_h(y)\) are linear manifolds parallel to \(\mathrm{Ker}\varLambda _h,\) and hence the sets \(\varPhi _{h}(x)\) are closed for any \(x\in U_{\varepsilon }(x_{0}).\) Taking into account Remark 2 we can write \(\Vert \xi _{k+1}-\xi _k\Vert =\mathrm{dist}\left( \varPhi _{h}(\xi _{k+1}),\varPhi _{h}(\xi _{k})\right) \). We prove that

for \(\xi _{k+1},\xi _{k}\in U_{\frac{\varepsilon }{2}}(x_{0})\) such that \(\Vert \xi _{j}\Vert \le \frac{\Vert h\Vert }{R},\) \(j=k,k+1,\) where

Let \(\varLambda _{h,i}=\frac{1}{(i-1)!}f_{i}^{(i)}(x_{0})[h]^{i-1}, \; i=1,\ldots ,p\), \(s_{1}=x_{0}+h+\xi _{k},\) \(s_{2}=x_{0}+h+\xi _{k-1}.\) Then

Taking into account Lemma 3, we have

As \(f_{i}\) is completely degenerate up to the order i we obtain the following Taylor expansion

where

Moreover,

Thus, inserting (14) into (13) and then putting the obtained formula into (11), we get

Let \(F(x_{0})=(y_{1},\ldots ,y_{p}),\) where \(y_{i}\in Y_{i}, \; i=1, \ldots ,p.\) Then from the assumption and the definition of norm in \(\mathbb {R}^n\) we have \(\Vert y_{1}\Vert +\cdots +\Vert y_{p}\Vert \le \delta .\) As \(R\Vert \xi _{j}\Vert \le \Vert h\Vert ,\) \(j=k-1,k\) we have \(\left\| h+\xi _{k-1}+\theta (s_{1}-s_{2})\right\| \le 4 \Vert h\Vert .\) Taking into account Lemma 4 and assumptions (3) and (2) of Theorem 2 we have

where \(0<\varDelta <\frac{1}{2}.\) This and the previous formulae imply

Moreover,

Taking into account the definition of \(R_{i}\), we get

Now, inserting (15)–(17) into (14) we obtain

Hence

which proves (10).

Let \(\xi _{1}\) be an element of \(\varPhi _{h}(\xi _{0})\) such that \(\xi _{1}\in \xi _{0}-\varPsi ^{-1}(F(x_{0}))\) and \(\Vert \xi _{1}-\xi _{0}\Vert \le (1+\varDelta )\Vert \varPsi ^{-1} (-F(x_{0})\Vert ,\) where \(0<\varDelta <\frac{1}{2}.\) Thus we have

From the above and from (10) we obtain that the sequence \(\xi _k\) is a Cauchy sequence hence there exists an element \(\xi ^{*}\) such that \(\xi ^{*}\in \varPhi _{h}(\xi ^{*}).\) It means that

and hence \(\left( f_{1}(x_{0}+h+\xi ^{*})+\cdots + f_{p}(x_{0}+h+\xi ^{*})\right) =\mathbf {0}.\) Then for \(i=1, \ldots , p,\) \(f_{i}(x_{0}+h+\xi ^{*})=0\) which is equivalent to \(F(x_{0}+h+\xi ^{*})=\mathbf {0}.\) It follows that the sequence \(z_k=x_0+h+\xi _k\) is convergent to \(x^{*}=x_0+h+\xi ^{*}\) which is a solution of \(F(x)=0\).

The estimation (9) follows from (10). \(\square \)

We conclude with an example which is very easy but serves to illustrate the main idea of the method.

Example

Consider Eq. (1) and let \(F:\mathbb {R}^2\rightarrow \mathbb {R}^2\) be the mapping given by

Let \(x_0=(-1,-1)^T\) be an initial point. One can easily verify that F is nonregular at \(x_0\). Indeed, \(F'(x^1,x^2)=\left( {\begin{matrix} 1+3(x^1+1)^2 &{} 1 \\ x^2+1 &{} x^1+3(x^2+1)^2+1 \\ \end{matrix}} \right) \) and \(\mathrm{rank }F'(-1,-1)\ne 2\), so the Newton method could not be applied. We will prove that F is 2-regular and construct 2-factor operator.

We can decompose the space \(\mathbb {R}^2\) as follows \(\mathbb {R}^2=Y_1\oplus Y_2\), where \(Y_1=\left\{ \left( {\begin{matrix} t \\ 0 \end{matrix}} \right) : t\in \mathbb {R}\right\} \) and \(Y_2=\left\{ \left( {\begin{matrix} 0 \\ r \end{matrix}} \right) : r\in \mathbb {R}\right\} \). Then we calculate norms of some elements to examine if the assumptions of Theorem 2 are satisfied. We obtain \(\delta =\frac{\sqrt{10^{6}+1}}{10^{6}}\), \(\hat{h}=\left( \begin{array}{cc} \frac{1-\sqrt{3}}{2\sqrt{2}} &{} \frac{1+\sqrt{3}}{2\sqrt{2}} \\ \end{array} \right) ^T\) and 2-factor operator is \(\varLambda _{\hat{h}}=\left( \begin{array}{cc} 1 &{} 1 \\ \frac{1+\sqrt{3}}{2\sqrt{2}} &{} \frac{1-\sqrt{3}}{2\sqrt{2}} \\ \end{array} \right) \). Hence, \(\mathrm{rank }\varLambda _{\hat{h}}=2\), so it is surjective. To verify all assumptions of Theorem 2 we calculate \(c_1\approx 1.414214\), \(c_2\approx 1\), \(c_3\approx 0.001\), \(\varepsilon =0.01\) and hence we can conclude that in \(\varepsilon \)-neighbourhood of \(x_0\) there exists a solution of (1).

We use the sequence (8) to find an approximation of the solution. Here \(\xi _1^1=0,\) \(\xi _1^2=0\) and one of the three solutions which is in \(\varepsilon \)-neighbourhood of \(x_0\) is \(x^{*}\approx (-1.000619933,-99838)\).

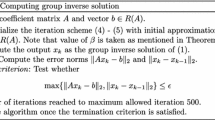

In the table one can see the six iterations of the method described in this paper.

k | \(\quad z^{1}_{k}\) | \(z^{2}_{k}\) | \(z^{1}_{k}-x^{1*} \) | \(z^{2}_{k}-x^{2*}\) |

|---|---|---|---|---|

1 | 0 | 0 | 0.000619933 | \( -0.001619933\) |

2 | \(-1.000366025\) | \( -0.998634\) | 0.000253908 | \( -0.00025393\) |

3 | \(-1.000366528\) | \( -0.998344\) | 0.000253405 | \( 3.6067 \times 10^{-5}\) |

4 | \(-1.00065657\) | \( -0.998343\) | \( -3.6637 \times 10^{-5}\) | \( 3.73067\times 10^{-5}\) |

5 | \(-1.000657155\) | \( -0.998392\) | \( -3.7222\times 10^{-5}\) | \( -1.1933\times 10^{-5}\) |

6 | \(-1.000608426\) | \( -0.998384\) | \( 1.1507\times 10^{-5}\) | \( -3.933\times 10^{-6}\) |

References

Demidovitch, B.P., Maron, I.A.: Basis of Computational Mathematics. Nauka, Moscow (1973)

Ioffe, A.D., Tihomirov, V.M.: Theory of Extremal Problems. Nauka, Moscow (1974). (in Russian)

Krasnosel’skii, M.A., Wainikko, G.M., Zabreiko, P.P., Rutitcki, YuB, Stetsenko, VYu.: Approximated Solutions of Operator Equations, p. 484. Walters-Noordhoff Publishing, Groningen (1972)

Prusińska, A., Tret’yakov, A.: A remak on the existence of solutions to nonlinear equations with degenerate mappings. Set-Valued Anal. 16, 93–104 (2008)

Prusińska, A., Tret’yakov, A.: On the existence of solutions to nonlinear equations involving singular mappings with non-zero p-kernel. Set-Valued Anal. 19, 399–416 (2011)

Prusińska, A., Tret’yakov, A.: \(p\)-Regularity theory. Tangent cone description in singular case. Ukr. Math. J. 67,(8), 1097–1106 (2015)

Tret’yakov, A.: Necessary and sufficient conditions for optimality of p-th order. USSR Comput. Math. Math. Phys. 24(1), 123–127 (1984)

Tret’yakov, A., Marsden, J.E.: Factor-analysis of nonlinear mappings: p-regularity theory. Commun. Pure Appl. Math. 2, 425–445 (2003)

Author information

Authors and Affiliations

Corresponding author

Additional information

Research of the second author is supported by the Russian Foundation for Basic Research Grant No 14-07-00805 and the Council for the State Support of Leading Scientific Schools Grant 4640.2014.1 and by the Russian Academy of Sciences, Presidium Programme P-8.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Prusińska, A., Tret’yakov, A. Iterative method for solving nonlinear singular problems. Calcolo 53, 635–645 (2016). https://doi.org/10.1007/s10092-015-0166-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10092-015-0166-8