Abstract

Information necessary for decision-making is often distributed among agents with misaligned interests. In such settings receiving information is desirable for the agents, while revealing it may be privately harmful. This paper constructs a class of almost-truthful interim-biased mediation protocols that incentivize information exchange in a succinct model capturing such conflicts. The protocols in this class receive signal reports from the agents and send private messages back, almost always transmitting the received signal reports without any distortions. Each rare distorted message is deliberately designed to prevent deviations from truth-telling. Specifically, each mediator’s distortion aims at implicitly encouraging a truthful agent to take the action that is interim-optimal given her private signal report only. A deviating agent, however, receives an encouragement based on an untruthful report and thus shifts her action away from the truly interim-optimal one when facing such a distortion. As a result, the deviating agent is put to a disadvantage when the mediator distorts the signals, which is enough to ensure truthful communication when the misalignment of interests between the agents is sufficiently small.

Similar content being viewed by others

Notes

In fact, the preferences may be completely “opposite”: the payoff structure, such that an increase in one agent’s utility may always lead to the decrease of the other agent’s utility, is allowed.

Further examples of the literature on communication in oligopoly include Novshek and Sonnenschein (1982), Vives (1984, 1990), Gal-Or (1985), Li (1985), Shapiro (1986) and Raith (1996). See Kühn and Vives (1995) and Vives (2001) for extensive reviews. This strand of research typically assumes commitment power or verifiable private information. A notable exception is Ziv (1993), who shows that conveying credible information in the oligopoly setting is also possible if “money-burning” or transfers are allowed. In the present paper agents lack commitment power, information is non-verifiable, and both “money-burning” and transfers are assumed out.

Which is not unique: both the private signal \(s_k\) and 1/2 are optimal actions for every agent.

This paper is primarily motivated by the situations where agents have misaligned interests, but the model formally allows for aligned agents’ preferences as well (i.e. a change in one player’s action being beneficial for both players). Exploiting the specifics of such an alignment of interests can, in principle, be used to facilitate communication, but constructing such setting-specific schemes is beyond the scope of the present paper. The mediation protocol class introduced further in the paper can enable information exchange in the situations of aligned interests (under a set of assumptions, also discussed below), but does not depend on such an alignment.

To see this formally, one can utilize Lemma 4.1.

The setup under part (i) of Assumption 4.3 can be interpreted as follows. Agents agree on the possible states of the world: \(\mathcal {S}_1=\mathcal {S}_2=S\), and receive noisy signals about the true state with the signal space coinciding with the states of the world set.

If the expected value of \(v_k\) is weakly lower than under no communication for both \(k=1\) and \(k=2,\) there are two possible cases. In the first case, the expected value of the \(v_k\)-component is the same as without communication for both agents, which means no welfare improvement coming from direct communication. In the second case, the expected value of the \(v_k\)-component is strictly lower relative to the no-communication situation for some k. The latter, however, is impossible in equilibrium, since returning to the interim-correct actions would be profitable for such agent k. Thus, there has to exist k such the expected value of the the \(v_{k}\)-component is strictly greater in the welfare-improving equilibrium of the direct communication extension, provided that such an equilibrium exists.

Notice that Assumption 4.4 does not add restrictions on the cost functions \(c_k,\) so the result on the impossibility of direct communication with “opposite” preferences still holds with this additional assumption.

Note also that the results in “Appendix C” ensure that this discussion also applies when the interaction between project decisions can slightly affect employees’ payoffs. Specifically, “Appendix C” demonstrates that almost-truthful interim-biased mediation protocols can also facilitate communication in cases when agents’ payoffs are not fully separable in actions, provided that the the payoff changes due to action interaction are relatively small compared to payoff changes induced by the own-action component \(v_k.\)

In the continuous action space both agents’ optimal actions conditional on the available information will reflect the possibility that the mediator’s message was distorted.

The solution concept corresponds to information design with elicitation in terminology of Bergemann and Morris (2018).

The correct action recommendation to agent k in this case is \((i+j)/2\) in case of truthful reporting, while the incorrect action recommendation to agent k is \((i+(1-j))/2.\)

References

Alonso R, Dessein W, Matouschek N (2008) When does coordination require centralization? Am Econ Rev 98(1):145–79

Ambrus A, Takahashi S (2008) Multi-sender cheap talk with restricted state spaces. Theor Econ 3(1):1–27

Ambrus A, Azevedo EM, Kamada Y (2013) Hierarchical cheap talk. Theor Econ 8(1):233–261

Austen-Smith D (1993) Interested experts and policy advice: multiple referrals under open rule. Games Econ Behav 5(1):3–43

Battaglini M (2002) Multiple referrals and multidimensional cheap talk. Econometrica 70(4):1379–1401

Bergemann D, Morris S (2018) Information design: a unified perspective. J Econ Lit 57:44–95

Blume A, Board OJ, Kawamura K (2007) Noisy talk. Theor Econ 2(4):395–440

Council on Foreign Relations (2006) FBI and law enforcement. Archived at https://web.archive.org/web/20171119172108/; https://www.cfr.org/backgrounder/fbi-and-law-enforcement. Accessed 19 Nov 2017

Crawford VP, Sobel J (1982) Strategic information transmission. Econometrica 50(6):1431–1451

Deal J (2018) How leaders can stop employees from deliberately hiding information. Wall Street J. Archived at https://web.archive.org/web/20181015152315/; https://blogs.wsj.com/experts/2018/08/14/how-leaders-can-stop-employees-from-deliberately-hiding-information/. Accessed 14 Oct 2018

Farrell J, Gibbons R (1989) Cheap talk with two audiences. Am Econ Rev 79(5):1214–1223

Forges F (1986) An approach to communication equilibria. Econometrica 54(6):1375–1385

Gal-Or E (1985) Information sharing in oligopoly. Econometrica 53(2):329–343

Galeotti A, Ghiglino C, Squintani F (2013) Strategic information transmission networks. J Econ Theory 148(5):1751–1769

Garicano L, Posner RA (2005) Intelligence failures: an organizational economics perspective. J Econ Perspect 19(4):151–170

Goltsman M, Pavlov G (2011) How to talk to multiple audiences. Games Econ Behav 72(1):100–122

Goltsman M, Pavlov G (2014) Communication in Cournot oligopoly. J Econ Theory 153:152–176

Goltsman M, Hörner J, Pavlov G, Squintani F (2009) Mediation, arbitration and negotiation. J Econ Theory 144(4):1397–1420

Ivanov M (2010) Communication via a strategic mediator. J Econ Theory 145(2):869–884

Kolotilin A, Mylovanov T, Zapechelnyuk A, Li M (2017) Persuasion of a privately informed receiver. Econometrica 85(6):1949–1964

Krishna V, Morgan J (2001) A model of expertise. Q J Econ 116(2):747–775

Krishna V, Morgan J (2004) The art of conversation: eliciting information from experts through multi-stage communication. J Econ Theory 117(2):147–179

Kühn K-U, Vives X (1995) Information exchange among firms and their impact on competition. Office for Official Publications of the European Communities, Luxembourg

Li L (1985) Cournot oligopoly with information sharing. Rand J Econ 16(4):521–536

Menon T, Thompson L (2010). Envy at work. Harvard Bus Rev. Archived at https://web.archive.org/web/20211128132300/; https://hbr.org/2010/04/envy-at-work. Accessed 30 Nov 2020

Myers CG (2015) Is your company encouraging employees to share what they know? Harvard Bus Rev. Archived at https://web.archive.org/web/20181015154019/; https://hbr.org/2015/11/is-your-company-encouraging-employees-to-share-what-they-know. Accessed 14 Oct 2018

Myerson RB (1982) Optimal coordination mechanisms in generalized principal-agent problems. J Math Econ 10(1):67–81

Myerson RB (1986) Multistage games with communication. Econometrica 54(2):323–358

Myerson RB (1997) Game theory: analysis of conflict. Harvard University Press, Cambridge

Novshek W, Sonnenschein H (1982) Fulfilled expectations Cournot duopoly with information acquisition and release. Bell J Econ 13(1):214–218

Raith M (1996) A general model of information sharing in oligopoly. J Econ Theory 71(1):260–288

Sales NA (2010) Share and share alike: intelligence agencies and information sharing. Geo Wash L Rev 78(2):279–352

Shapiro C (1986) Exchange of cost information in oligopoly. Rev Econ Stud 53(3):433–446

U.S. Congress (2004) Intelligence reform and terrorism prevention act of 2004. 108th Cong., 2d sess., Public Law 108-458. U.S. G.P.O, Washington

U.S. Congress, Senate, Select Committee on Intelligence. U.S. Congress, House, Permanent Select Committee on Intelligence (2002) Joint inquiry into intelligence community activities before and after the terrorist attacks of September 11, 2001: report of the U.S. Senate Select Committee on Intelligence and U.S. House Permanent Select Committee on Intelligence together with Additional Views. 107th Cong., 2d sess., S. Rep. 107-351, H. Rep. 107-792. U.S. G.P.O, Washington

Vida P, Forges F (2013) Implementation of communication equilibria by correlated cheap talk: the two-player case. Theor Econ 8(1):95–123

Vives X (1984) Duopoly information equilibrium: Cournot and Bertrand. J Econ Theory 34(1):71–94

Vives X (1990) Trade association disclosure rules, incentives to share information, and welfare. Rand J Econ 21(3):409–430

Vives X (2001) Oligopoly pricing: old ideas and new tools. MIT Press, Cambridge, p 2001

Wang S, Noe RA (2010) Knowledge sharing: a review and directions for future research. Hum Resour Manag Rev 20(2):115–131

Ziv A (1993) Information sharing in oligopoly: the truth-telling problem. Rand J Econ 24(3):455–465

Acknowledgements

The author thanks Timur Abbiasov, Yingni Guo, Gaston Illanes, Sergei Izmalkov, Riccardo Marchingiglio, Alessandro Pavan, Robert Porter, Dmitry Sorokin, Xavier Vives, Asher Wolinsky, Andrey Zhukov and seminar participants at Northwestern University for helpful comments and discussions. Special thanks to Anna Algina, Francisco Poggi and Quitzé Valenzuela-Stookey. The author is also grateful to the Editor, Moritz Meyer-ter-Vehn, and to the anonymous reviewers for useful suggestions. This paper previously circulated under the title “Information Exchange between Competitors”. The author gratefully acknowledges financial support received from the Russian Science Foundation [Project No. 15-18-30081, 2015–2017] when that version of the paper was in progress.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Appendices

A Proofs for Sect. 3

Proposition A.1

The only Bayesian Nash Equilibrium of the game \(\Gamma _E\) consists of a strategy profile \(\left\{ a_k^*()\right\} _{i=1}^2\) with

Each player’s ex-ante expected equilibrium payoff of the game \(\Gamma _E\) is

Proof

Note that agent k’s best response to each strategy of agent \((3-k)\) requires selecting the most likely correct action of nature given k’s signal. Since

while

and then since \(r>1/2,\) it must be that in equilibrium each agent selects an action that coincides with her signal and thus indeed \(a_k^*(s_k)=s_k.\)

Given the equilibrium strategies, each agent guesses the the correct action with probability r and thus the ex-ante equilibrium payoff of each player is \(\pi ^E=r(1-\alpha ).\) \(\square \)

Proposition A.2

Consider a benevolent third party that observes both signals, cares equally about the agents and solves for the first-best

This problem is solved by \(a_k(s)=s^*\) and accordingly \(\pi ^{E,FB}=1-\alpha .\)

Proof

Note that for each choice of \(\left\{ a_k(s)\right\} _{i=1}^2\)

where \(p_k\) is the unconditional probability of player k guessing the correct action \(s^*\) given \(a_k(s)\). When \(r\in (1/2,1)\) and \(\alpha \in (0,1),\) the expression in (4) is maximized by \(p_k=1\) for each k. The only way to achieve \(p_k=1\) is by setting \(a_k(s)=s^*.\) The corresponding expected payoff of each agent is \(\pi ^{E,FB}=1-\alpha .\) \(\square \)

Proposition A.3

Consider the game \(\Gamma _E\) extended with a finite set \(\mathcal {W}_k\) of messages that agent k can send to agent \((3-k)\) upon observing her private signal. Let \(\Gamma _D\) denote the extended game. In every weak Perfect Bayesian Equilibrium of \(\Gamma _D\) for each message \(w_{3-k}\in \mathcal {W}_{3-k}\) player k chooses the action according to her private signal only: \(a_k(s_k,w_{3-k})=s_k.\)

Proof

Note first that according to Proposition A.1, each agent chooses the same action as her signal in the absence of any additional information. Expected equilibrium payoffs of the players in the game with communication take the form

where \(p_k\) is the probability of player k guessing the correct action. Since each player can guarantee herself a correct guess with probability r based on the private information only, it must be that \(p_k,p_{3-k}\geqslant r.\)

Now suppose that there exists a message \(w_{3-k}\) sent by type \(s_{3-k}\) of agent \((3-k)\) in equilibrium, such that some type \(s_k\) of agent k chooses action 1/2.

There are two cases: either (i) the type \(1-s_k\) continues to choose action \(1-s_k\) upon observing message \(w_{3-k}\) or (ii) the type \(1-s_k\) chooses action 1/2 upon observing message \(w_{3-k}.\) In both of these cases player \((3-k)\) can deceive agent k to choose the correct action with probability less than r.

-

(i)

If type \(1-s_k\) continues to choose action \(1-s_k\) upon observing message \(w_{3-k},\) then by sending message \(w_{3-k}\) irrespective of her own signal, agent \((3-k)\) induces type \(s_k\) of agent k to do action 1/2 both in case of coinciding or non-coinciding signals, while type \(1-s_k\) continues to act on private information only. Thus in case of \((3-k)\) always sending message \(w_{3-k}\), the probability of k guessing the correct action is \({\hat{p}}_k=1/2<r\leqslant p_k.\) Since for a fixed strategy of k the probability of \((3-k)\) guessing the correct action \(p_{3-k}\) remains constant, agent \((3-k)\) has a profitable deviation.

-

(ii)

If type \(1-s_k\) chooses action 1/2 upon observing message \(w_{3-k},\) then by sending message \(w_{3-k}\) irrespective of her signal, agent \((3-k)\) induces all types of agent k to do action 1/2 and the probability of k guessing the correct action in case of such a deviation is \({\hat{p}}_k=1-r<r\leqslant p_k.\) Again, since for a fixed strategy of k the probability of \((3-k)\) guessing the correct action \(p_{3-k}\) remains constant, agent \((3-k)\) has a profitable deviation.

Thus if there exists a message \(w_{3-k}\) that induces some type of agent k to choose action 1/2, agent \((3-k)\) necessarily has a profitable deviation. Therefore, it must be that in every every weak Perfect Bayesian Equilibrium of the game \(\Gamma _D\) with direct communication, each agent acts on her private information only. \(\square \)

B Optimal mediation for the illustrative example

This appendix section finds the optimal mediation protocol for the illustrative example of Sect. 3. Recall that the baseline case of the example consists of the game \(\Gamma _E.\) In the game, each agent \(k\in \{1,2\}\) obtains a binary signals \(s_k\in \mathcal {S}=\{0,1\}\) with the following joint distribution \(\mathbb {P}\) over \(\mathcal {S}^2=\mathcal {S}\times \mathcal {S}\) parametrized by \(r\in (1/2, 1):\)

Together, these signals determine the correct action

Both agents would like to guess \(s^*\) by choosing an action in the set \(\mathcal {A}_k=\{0,1/2,1\}.\) The agents have a conflict of interest and prefer the opponent not to be able to guess the correct action. The payoffs representing such preferences are given by

where \(\alpha \in (0,1).\)

Consider now a mediated game. That is, a mediation protocol is introduced that receives reports from the agents and sends messages back to the agents. For each possible report profile received from the agents the protocol specifies a distribution on the messages sent back to the agents.

Due to the revelation principle (see Myerson 1982, 1986 and Forges 1986), attention can be restricted to direct revelation mediation protocols that take the type-reports from the agents and send back action recommendations to each player. A direct revelation mediation protocol M is defined as a function from the product type space into the joint distributions over the action recommendations \(M:\mathcal {S}\rightarrow \Delta (A\times A).\) That is, each agent is asked to submit her signal received and conditional on the pair of reports is advised on an action, possibly in a random way. The mediation protocol should be such that the agents find it optimal to report their true types and follow the recommended action conditional on the other player reporting truthfully and following recommendations.Footnote 12

Let \(\mathcal {M}\) be the set of all incentive-compatible mediation protocols. Let \(\pi ^{M}_k\) be the ex-ante expected equilibrium payoff of agent k in game \(\Gamma _M\) with two agents, signals jointly distributed according to \(\mathbb {P}\) in (B), the correct action \(s^*\) determined as in (B), payoff-relevant actions be \(A_k=\{0,1/2, 1\}\) and the mediation protocol \(M\in \mathcal {M}.\) Consider now the problem of optimal mediation protocol design faced by competitors

The following sequence of lemmas first simplifies the problem in (5) and leads to Theorem B.1 which presents the optimal mediation protocol.

Lemma B1 below shows that, when solving (5), without loss of generality one can consider only mediation protocols that generate independent recommendations conditional on the pair of reports.

Lemma B1

For every \(M\in \mathcal {M}\) there exists \(M'\in \mathcal {M}\) such that

-

(i)

\(M':\mathcal {S}\rightarrow \Delta (A)\times \Delta (A)\)

-

(ii)

\(M({\hat{s}})\) and \(M'({\hat{s}})\) have the same marginal distributions for every \({\hat{s}}\)

-

(iii)

\(\pi ^{M'}_k=\pi ^{M}_k\) for every k

Proof

Consider an incentive-compatible mediation protocol \(M\in \mathcal {M}.\) Define \(M'\) to be a mapping from \(\mathcal {S}\) to \(\Delta (A)\times \Delta (A)\) such that for each \({\hat{s}}\in \mathcal {S},\) for each vector of recommendations a

That is, \(M'\) is defined to be the product of the marginal distributions of M for each pair of reports s. By construction, (i) \(M'\in \Delta (A)\times \Delta (A)\) and (ii) M and \(M'\) have the same marginal distributions.

Now, since \(M\in \mathcal {M},\) it must also be that \(M'\in \mathcal {M}.\) To see this observe first that for type \(s_k\) of agent k that submitted report \({\hat{s}}_k\) and received a recommendation \({\hat{a}}_k,\) agent k’s posterior over the signal of agent \((3-k)\) [and thus also k’s preferred action] is pinned down by the marginal of recommendation distributions. Thus for each report \({\hat{s}}_k\) of type \(s_k\) of agent k under \(M',\) the resulting distribution of k’s actions is the same as under M and consequently the probability of type \(s_k\) agent k making a correct guess is the same under M and \(M'.\) Similarly, for each report \({\hat{s}}_k\) of agent k under \(M',\) the resulting distribution of \((3-k)\)’s actions is the same as under M. Since the distribution of both agents’ actions pin down the expected payoff of agent k, type \(s_k\) of agent k has the same expected payoff for each report \({\hat{s}}_k\) under M and \(M'.\) By assumption there were no profitable deviations from truth-telling under M, thus so is the case under \(M'.\) It is therefore proved that \(M'\in \mathcal {M}\) and (iii) \(\pi ^{M'}_k=\pi ^{M}_k.\) \(\square \)

Exploiting Lemma B1, one can restrict attention to mediation protocols with independent action recommendations when solving for the optimal communication protocol. Thus from now on \(\mathcal {M}\) is redefined to be the set of IC mediation protocols with independent action recommendations.

Next, Lemma B2 shows that none of the IC mediation protocols recommend action \(1-{\hat{s}}_k\) to agent k who reported type \({\hat{s}}_k.\) That is, an action that is known to be incorrect by a particular agent is never recommended to this agent.

Lemma B2

For every \(M\in \mathcal {M}\) it must be that \(M({\hat{s}})\in \times _k\Delta \left( \left\{ {\hat{s}}_k,1/2\right\} \right) .\)

Proof

Suppose that for some \(M\in \mathcal {M}\) action \(1-{\hat{s}}_k\) is recommended to agent with a truthful report \({\hat{s}}_k\). Note that following such a recommendation yields a probability 0 of agent k guessing \(s^*.\) Obviously, agent k can deviate from following the recommendation, act on her private information only and guarantee herself at least a probability r of guessing \(s^*.\) \(\square \)

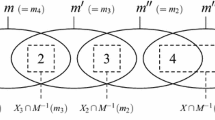

Every \(M\in \mathcal {M}\) is now completely summarized by a vector in \([0,1]^8\) with typical values \(m_{i,j}^k\in [0,1]\) presented in the following table:

In the table \(m_{i,j}^k\) is the probability of recommending the correct action to agent k, when agent k reported i and agent \((3-k)\) reported j.Footnote 13 The vector m consisting of \(m_{i,j}^k\in [0,1]\) is feasible if the corresponding M belongs to \(\mathcal {M}.\)

Lemma B3 below simplifies the problem in (5) even further, stating that there is no loss in optimizing the weighted sum of payoffs with respect to just two variables: the probability of recommending a correct action in case of (i) coinciding and (ii) non-coinciding reports.

To prove Lemma B3 the following claim is first established.

Claim

A mediation protocol M defined by a vector \(\left\{ \left\{ m_{i,j}^k\right\} _{i,j=0,0}^{1,1}\right\} _{k=1}^2\) belongs to the set of IC mediation protocols \(\mathcal {M}\) if and only if the following two sets of condition hold. First, for each k and i

Second, for each k and i

Conditions (6)–(7) ensure that each agent finds it profitable to follow the mediation protocol’s recommendation. Conditions (8) ensure that there are no profitable deviations from reporting truthfully to the mediation protocol.

Proof

The two sets of IC conditions (6)–(7) and (8) are established separately.

“Following recommendation” conditions Under an IC direct revelation mediation protocol, agent k finds it profitable to follow the mediation protocol’s recommendation. In the problem at hand, one needs to establish conditions under which this is the case for every signal and recommendation obtained by agent k.

-

Suppose agent k has signal i and obtained a recommendation R to do i. Such a recommendation can occur when the reported pair of types is either (i, i) or \((i,1-i).\) The posterior probability that the state is (i, i) (and thus that the correct action is indeed i) is equal to

$$\begin{aligned} \begin{aligned} \mathbb {P}{\left[ s=(i,i)|R=i,s_k=i\right] }&=\mathbb {P}{\left[ s_{3-k}=i|R=i,s_k=i\right] }\\&=\dfrac{\mathbb {P}{\left[ s_k=i,s_{3-k}=i,R=i\right] }}{\mathbb {P}{\left[ s_k=i,R=i\right] }}\\&=\dfrac{m_{ii}^k\cdot \frac{r}{2}}{m_{ii}^k\cdot \frac{r}{2}+(1-m_{i,1-i}^k)\cdot \frac{1-r}{2}} \end{aligned} \end{aligned}$$Agent k following recommendation means that \(s=(i,i)\) is more likely than \(s=(i,1-i)\), which yields the first IC constraint

$$\begin{aligned} m_{ii}^k r\geqslant (1-m_{i,1-i}^k)(1-r) \end{aligned}$$and condition (6) is established.

-

Suppose now agent k has signal i and obtained a recommendation R to do 1/2. Such a recommendation can occur when the reported pair of types is either (i, i) or \((i,1-i).\) The posterior probability that the state is \((i,1-i)\) (and thus the correct action is indeed 1/2) is equal to

$$\begin{aligned} \begin{aligned} \mathbb {P}{\left[ s=(i,1-i)|R=1/2,s_k=i\right] }&=\mathbb {P}{\left[ s_{3-k}=1-i|R=1/2,s_k=i\right] }\\&=\dfrac{\mathbb {P}{\left[ s_k=i,s_{3-k}=1-i,R=1/2\right] }}{\mathbb {P}{\left[ s_k=i,R=1/2\right] }}\\&=\dfrac{m_{i,1-i}^k\cdot \frac{1-r}{2}}{m_{i,1-i}^k\cdot \frac{1-r}{2}+(1-m_{ii}^1)\cdot \frac{r}{2}} \end{aligned} \end{aligned}$$Agent 1 following recommendation means that \((i,1-i)\) is more likely than (i, i), which yields the second IC constraint

$$\begin{aligned} m_{i,1-i}^k (1-r)\geqslant (1-m_{ii}^k)r \end{aligned}$$and condition (7) is established.

The “following recommendation” conditions are thus established.

“Truthful reporting” conditions Under an IC direct revelation mediation protocol, agent k finds it profitable to report truthfully to the mediation protocol and follow the recommendation rather than misreporting and doing some other action upon receiving a recommendation. In the problem at hand, one needs to establish conditions under which this is the case for every signal obtained by agent k.

-

Suppose agent k received a signal i. If she reports [T]ruthfully and follows the recommendation by the mediation protocol (and so does agent \((3-k)\)), the expected payoff is

$$\begin{aligned} \pi ^T_k&=\mathbb {E}_{}{\left[ \pi ^T_k|s_k=i\right] }=\mathbb {E}_{}{\left[ \pi ^T_k|s_k=i, s_{3-k}=i\right] }\mathbb {P}{\left[ s_{3-k}=i|s_k=i\right] }\\&\quad +\mathbb {E}_{}{\left[ \pi ^T_k|s_k=i,s_{3-k}=1-i\right] }\mathbb {P}{\left[ s_{3-k}=1-i|s_k=i\right] }\\&=(m_{ii}^k-\alpha m_{ii}^{3-k})r+(m_{i,1-i}^k-\alpha m_{1-i,i}^{3-k})(1-r) \end{aligned}$$ -

If agent k misreports and sends \(1-i\) to the mediation protocol instead of i, it is possible to hear two recommendations in response: \(1-i\) or 1/2. The optimal actions in each of these cases are established below:

-

What is the optimal action if \(1-i\) is recommended back by the mediation protocol? The conditional probability of agent \((3-k)\) having a signal i is equal to

$$\begin{aligned} \mathbb {P}_{U}{\left[ s_{3-k}=i|R=1-i,s_k=i\right] }&=\dfrac{\mathbb {P}_{U}{\left[ s_k=i,s_{3-k}=i,R=1-i\right] }}{\mathbb {P}_{U}{\left[ R=1-i,s_k=i\right] }}\\&=\dfrac{(1-m_{1-i,i}^k)\cdot \frac{r}{2}}{(1-m_{1-i,i}^k)\cdot \frac{r}{2}+m_{1-i,1-i}^k\cdot \frac{1-r}{2}}, \end{aligned}$$where \(\mathbb {P}_{U}{\left[ \right] }\) stands for the updated probabilities given an [U]ntruthful report.

Similarly, the conditional probability of agent \((3-k)\) having a signal \(1-i\) is equal to

$$\begin{aligned} \mathbb {P}_{U}{\left[ s_{3-k}=1-i|R=1-i,s_k=i\right] }&=\dfrac{m_{1-i,1-i}^k\cdot \frac{1-r}{2}}{(1-m_{1-i,i}^k)\cdot \frac{r}{2}+m_{1-i,1-i}^k\cdot \frac{1-r}{2}} \end{aligned}$$Consequently, if \((1-m_{1-i,i}^k)r> m_{1-i,1-i}^k(1-r),\) agent k will do action i upon receiving signal \(1-i\) and if \((1-m_{1-i,i}^k)r\leqslant m_{1-i,1-i}^k(1-r),\) agent k will do action 1/2 upon receiving signal \(1-i.\)

-

What is the optimal action if 1/2 is recommended back by the mediation protocol? The conditional probability of agent \((3-k)\) having a signal i is equal to

$$\begin{aligned} \mathbb {P}_{U}{\left[ s_{3-k}=i|R=1/2,s_{k}=i\right] }&=\dfrac{\mathbb {P}_{U}{\left[ s_{3-k}=i,s_{k}=i,R=1/2\right] }}{\mathbb {P}_{U}{\left[ R=1/2,s_{k}=i\right] }}\\&=\dfrac{m_{1-i,i}^k\cdot \frac{r}{2}}{m_{1-i,i}^k\cdot \frac{r}{2}+(1-m_{1-i,1-i}^1)\cdot \frac{1-r}{2}}, \end{aligned}$$while the conditional probability of agent \((3-k)\) having a signal \(1-i\) is equal to

$$\begin{aligned} \mathbb {P}_{U}{\left[ s_{3-k}=1-i|R=1/2,s_{k}=i\right] }&=\dfrac{(1-m_{1-i,1-i}^k)\cdot \frac{1-r}{2}}{m_{1-i,i}^k\cdot \frac{r}{2}+(1-m_{1-i,1-i}^k)\cdot \frac{1-r}{2}}, \end{aligned}$$Note that the IC constraint (7) and Assumption 1 imply that \(m_{1-i,i}^kr>(1-m_{1-i,1-i}^k)(1-r)\) and thus agent k prefers to do action i upon hearing a recommendation of 1/2 from the mediation protocol.

-

-

Now [after trivially calculating the probabilities of agent \((3-k)\) making the correct guess], the expected payoff of agent k in case of misreporting and sending \(1-i\) instead of i can be computed. If \((1-m_{1-i,i}^k)r> m_{1-i,1-i}^k(1-r),\) agent k does i in any case and gets an expected utility of

$$\begin{aligned} \pi ^{U,>}_k=r(1-\alpha (1-m_{i,1-i}^{3-k}))+(1-r)(0-\alpha (1-m_{1-i,1-i}^{3-k})) \end{aligned}$$If \((1-m_{1-i,i}^k)r\leqslant m_{1-i,1-i}^k(1-r),\) agent k does 1/2 in case of hearing a recommendation of \(1-i\) and i in case of recommendation of 1/2 and gets an expected utility of

$$\begin{aligned} \pi ^{U,\leqslant }_k&=r(m_{1-i,i}^l\cdot 1+(1-m_{1-i,i}^k)\cdot 0-\alpha (1-m_{i,1-i}^k))\\&\quad +(1-r)(m_{1-i,1-i}^k\cdot 1+(1-m_{1-i,1-i}^l)\cdot 0-\alpha (1-m_{1-i,1-i}^{3-k}))\\&=r(m_{1-i,i}^k-\alpha (1-m_{i,1-i}^{3-k}))+(1-r)(m_{1-i,1-i}^k-\alpha (1-m_{1-i,1-i}^{3-k})) \end{aligned}$$

Thus the IC constraint for agent k reporting truthfully upon observing signal i is

and “truthful reporting” conditions (8) are established.

The proof of the claim is now completed. \(\square \)

Lemma B3

There exists a solution to the optimal mediation protocol design problem in Eq. (5) such that \(\forall k, i\) \(m_{i,i}^k=p\in [0,1]\) and \(m_{i,1-i}^k=q\in [0,1].\)

Proof

Note first that the optimal mediation protocol design problem

can be written more explicitly as

To prove the lemma, one needs to notice that (i) the constraint set defined by IC conditions is convex; (ii) the objective and the constraint set are symmetric with respect to players and states.

Convexity of the constraint set (6)–(8) To establish (i) one can show first that each IC condition defines a convex set. This is obvious for conditions (6)–(7) as these a linear in parameters. Now consider the typical truthful-reporting IC constraint

and consider two vectors t and s that satisfy those constraints.

If both t and s are such that \(\left[ (1-t_{1-i,i}^k)r> l_{1-i,1-i}^k(1-r)\right. \) and \(\left. (1-s_{1-i,i}^k)r>\right. \left. s_{1-i,1-i}^k(1-r)\right] \) or \(\left[ (1-t_{1-i,i}^k)\right. r\) \(\leqslant l_{1-i,1-i}^k(1-r)\) and \(\left. (1-s_{1-i,i}^k)r\leqslant s_{1-i,1-i}^k\right. \left. (1-r)\right] ,\) then the convex combination \(u=\beta t+(1-\beta ) s,\beta \in [0,1]\) also satisfies the constraint (11) with \((1-u_{1-i,i}^k)r> u_{1-i,1-i}^k(1-r)\) or \((1-u_{1-i,i}^k)r\leqslant u_{1-i,1-i}^k(1-r)\) respectively by linearity of both RHS and LHS of the inequality in (11).

Now suppose that

and let \(u=\beta t+(1-\beta )s\) with \(\beta \in [0,1].\)

First, either \((1-u_{1-i,i}^k)r> u_{1-i,1-i}^k(1-r)\) or \((1-u_{1-i,i}^k)r\leqslant u_{1-i,1-i}^k(1-r).\) Suppose \((1-u_{1-i,i}^k)r> u_{1-i,1-i}^k(1-r),\) then to show convexity of the set defined by constraint (11), one needs to establish that

Indeed, if this is the case, then also

since u is a convex combination of t and s.

To show (12) note that

where the last inequality follows from \((1-s_{1-i,i}^k)r\leqslant s_{1-i,1-i}^k(1-r).\) The case of \((1-u_{1-i,i}^k)r\leqslant u_{1-i,1-i}^k(1-r)\) is similar.

Since the intersection of convex sets is convex, the constraint set is convex itself.

Symmetry of the objective (10) and the constraint set (6)–(8) Note that if the maximization problem is solved by some vector \(m=(m^1,m^2)\) (where \(m^i\) denotes the subvector of probabilities related to agent i), then vector \(m'=(m^2,m^1)\) leads to the same value of the objective function and constraints are satisfied at \(m'\) by symmetry. Thus the same value of the objective is achieved at the average of \(m,m'\) and one can restrict attention to maximizing with 4-element vector \(m_{00},m_{01},m_{10},m_{11}.\) Again, swapping \(m_{00}\) with \(m_{11}\) and \(m_{01}\) with \(m_{10}\) leads to the same value of the modified objective function and constraints being satisfied by symmetry. Thus one can restrict attention to maximizing with respect to 2-element vector (p, q) with \(p=m_{ii}\) and \(q=m_{i,1-i}\) and the proof of the lemma is now completed. \(\square \)

Utilizing the results from the preceding lemmas, Theorem B.1 provides an explicit solution to the problem of designing the optimal mediation protocol.

Theorem B.1

The optimal mediation protocol design problem in Eq. (5) is solved by \(M^*\in \mathcal {M}\) such that \(\forall k, i\) \((m^*)_{i,i}^k=p^*\) and \((m^*)_{i,1-i}^k=q^*\) with

Proof

Due to Lemma B3 the optimal mediation protocol design problem is reduced to

For a moment, ignore the first two constraints (13)–(14). It will be verified later that the solution of the relaxed maximization problem still satisfies these two constraints.

-

Note that the value of the objective grows with p along the line \((1-q)r=p(1-r)\) under Assumption 1. Indeed, substituting

$$\begin{aligned} q=1-p\dfrac{1-r}{r} \end{aligned}$$into the objective yields coefficient equal to \(2-1/r\) on the variable p and thus the objective grows in p. Having observed this, it is easy to see that the value of the objective in the region with \( (1-q)r> p(1-r)\) is not higher than at the point point on its boundary with the highest value of p, which is \(\left( 1,\frac{2r-1}{r}\right) .\)

-

Now also note that the objective grows with p along the line

$$\begin{aligned} (p-\alpha p)r+(q-\alpha q)(1-r)=r(q-\alpha (1-q))+(1-r)(p-\alpha (1-p)) \end{aligned}$$Indeed, substituting

$$\begin{aligned} q=p\dfrac{1-2r+\alpha }{1-2r-\alpha }-\dfrac{\alpha }{1-2r-\alpha } \end{aligned}$$into the objective yields coefficient \((\alpha +1) (2 r-1)/(\alpha +2 r-1)>0\) on p. Thus if the point \(\left( 1,\frac{2r-1}{2r+\alpha -1}\right) \) satisfies \((1-q)r\leqslant p(1-r)\), it has the highest value of the objective in the region with \((1-q)r\leqslant p(1-r).\)

-

Note that \(\alpha \leqslant 1-r\) simultaneously guarantees that \(\left( 1,\frac{2r-1}{2r+\alpha -1}\right) \) satisfies \((1-q)r\leqslant p(1-r)\) and has a higher value of the objective than \(\left( 1,\frac{2r-1}{r}\right) .\) Moreover, the point \(\left( 1,\frac{2r-1}{2r+\alpha -1}\right) \) satisfies the two omitted constraints (13)–(14) of the maximization problem. Thus the point that maximizes the objective for \(\alpha \leqslant 1-r\) is \(\left( 1,\frac{2r-1}{2r+\alpha -1}\right) .\)

-

If in turn \(\alpha >1-r,\) then there are no points that satisfy the constraint with \((1-q)r<p(1-r):\) the set defined by \((p-\alpha p)r+(q-\alpha q)(1-r)\geqslant r(q-\alpha (1-q))+(1-r)(p-\alpha (1-p))\) has no intersection with the set defined by \((1-q)r<p(1-r),\) which is easy to verify by comparing the values of the linear constraints at the boundary points of the constraint set \(p=0\) and \(p=1\). Moreover, the only point satisfying the constraint with \((1-q)r\geqslant p(1-r)\) is (1, 0), which remains the only candidate for the optimal mediation protocol when \(\alpha >1-r.\) This point obviously satisfies the omitted constraints (13)–(14).

-

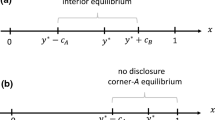

For a graphical treatment, two typical constraint sets are shown in Fig. 1.

The search for the optimal mediation protocol is thus completed

and Theorem B.1 is proved. \(\square \)

Remark B.1

\(M^*\) is the unique solution of the optimal mediation protocol design problem in the set \(\mathcal {M}\) of protocols with independent action recommendations.

Proof

Table 1 below explicitly presents \(M^*\) together with its gradient and the gradients of the 8 constraints that bind at the maximum found in Theorem B.1. The binding constraints are (i) \(\left\{ \left\{ m_{ii}^k\leqslant 1\right\} _{i=0}^1\right\} _{k=1}^2\); (ii) the “truthful reporting” constraints in (8).

Now note that the gradient of the objective is a linear combination of the gradients of the constraints with weights

that are strictly positive when \(r\in (1/2,1)\) and \(\alpha \in (0,1).\) Thus \(M^*\) is locally the unique solution to the maximization problem that defines the optimal mediation protocol. Due to the convexity of the constraint set and the linearity of the objective, \(M^*\) is also the unique global solution in the set \(\mathcal {M}\) of mediation protocols with independent action recommendations. \(\square \)

Remark B.2

It is easy to see that the mediation protocol of Theorem B.1 can be implemented by a generalized almost-truthful interim-biased mediation protocol with

C The case of payoffs non-separable in agents’ actions

This appendix demonstrates that almost-truthful interim-biased mediation protocols can also facilitate communication in cases when agents’ payoffs are not fully separable in actions, provided that the the payoff changes due to action interaction are relatively small compared to payoff changes induced by the own-action component \(v_k.\)

Consider the following modification to the specification of agents’ payoffs in eq. (2):

where the function \(z_k\) captures the effect of action interaction on agents’ payoffs. Assume also that \(\alpha \in (0,1]\) and suppose that Assumption 4.1 (which guarantees the uniqueness of the state-specific correct action) holds throughout this part of the appendix. Also assume that the interaction of actions leads to a smaller variation in payoffs relative to the \(v_k\)-component of the utility:

Assumption C.1

For every agent \(k\in \{1,2\},\) state \(s\in \mathcal {S},\) action pair \(a_k, a'_k\in \mathcal {A}_k\) and \((3-k)\)’s action \(a_{3-k}\in \mathcal {A}_{3-k}\) if \(v_k(a_k, s) - v_k(a'_k, s)>0\) then

This assumption essentially guarantees that agent k’s optimal action in state s is not affected by agent \((3-k)'\)s action.

Under this assumption, the main results of the paper continue to hold even when agents’ actions interact in determining the payoffs as in (17). The intuition is similar to the main result. First, the mediator is able to control agents’ beliefs by only rarely introducing distortions. With rare distortions, the mediator is able to induce the agents to take actions \(a^*(s_k, m)\) that are correct given their private signals and the mediator’s message (these actions do not depend on the counterpart’s action due to Assumption C.1 as demonstrated below). Given that the mediator is able to shift actions in this way, agents’ \(v_k\)-component of the utility is maximized by truth-telling. This motive for truth-telling dominates any secondary payoff effects through the counterpart’s action provided that the misalignment of interests \(\alpha \) is small enough. The formal proof is sketched below with some details omitted given the similarity to the main part of the paper.

Lemma C1

Consider agent k and state \(s\in \mathcal {S}.\) For all \(a_{3-k}\in \mathcal {A}_{3-k}\) and \(\alpha \in (0,1]\) the correct action \(a_k^{*}(s)\) maximizes \(v_k(a_k, s) - \alpha \times z_k(a_k, a_{3-k}, s))\) with respect to \(a_k.\)

Proof

To see this notice first that given the definition of \(a_k^{*}(s)\) and the uniqueness assumption (part (i) of Assumption 4.1), for every \(a'_k\in \mathcal {A}_k:a'_k\ne a_k^{*}(s)\)

Now, since \(v_k(a_k^{*}(s), s) - v_k(a'_k, s)>0\), then by Assumption C.1 for every \(a_{3-k}\) and \(\alpha \in (0,1]\)

Thus for every \(a'_k\in \mathcal {A}_k:a'_k\ne a_k^{*}(s)\) and every \(a_{3-k}\)

which implies that indeed for every \(a_{3-k}\) and \(\alpha \in (0,1]\) the correct action \(a_k^{*}(s)\) maximizes \(v_k(a_k, s) - \alpha \times z_k(a_k, a_{3-k}, s))\) with respect to \(a_k.\) \(\square \)

Next, similarly to Lemma 4.1, notice that an action that is correct for a given signal of the counterpart will be chosen for high enough belief on this signal for any action of the counterpart.

Lemma C2

Let \({\tilde{\pi }}_k\) be agent k’s belief over \(\mathcal {S}_{3-k}\). There exists a \({\bar{\delta }}^z_k<1\) such that for all \(s_{3-k},\) \(\alpha \in (0,1]\) and any \(a_{3-k}\in \mathcal {A}_{3-k},\) if \({\tilde{\pi }}_k(s_{3-k})\geqslant {\bar{\delta }}^z_k,\) then \(\arg \max _{a_k}\mathbb {E}_{{\tilde{\pi }}_k}{\left[ v_k(a_k,s)-\alpha z_k(a_k, a_{3-k}, s)\right] }=a^*_k(s_k,s_{3-k}).\)

Proof

Notice that for \({\tilde{\pi }}_k(s_{3-k})=1\) and \(a^*=a^*_k(s_k,s_{3-k})\) (which is unique by part (i) of Assumption 4.1)

for every \(a'\ne a^*_k(s_k,s_{3-k}),\) where the inequality for every \(a_{3-k}\) is implied by Lemma C1. Thus by continuity of \(\sum _{t_{3-k}}{\tilde{\pi }}_k(t_{3-k})v_k(a_k,s)-\alpha z_k(a_k, a_{3-k}, s)\) with respect to \({\tilde{\pi }}_k(t_{3-k}),\) there exists a \({\bar{\delta }}^z_k(s_{3-k}, a_{3-k})<1\) such that the same strict inequality holds for all \({\tilde{\pi }}_k\) such that \({\tilde{\pi }}_k(s_{3-k})\geqslant {\bar{\delta }}^z_k(s_{3-k}, a_{3-k}).\) The proof of the lemma is completed by defining \({\bar{\delta }}^z_k=\max _{s_{3-k}, a_{3-k}}\left\{ {\bar{\delta }}^z_k(s_{3-k}, a_{3-k})\right\} .\) \(\square \)

Now, to see that the equilibrium with truth-telling exists even with the interplay of agents’ actions, notice that the remaining steps of the proof in the main part of the paper can be reproduced almost without changes. First, Lemma 4.2 showing that the mediator can create a belief weight arbitrarily close to 1 by using rare distortions does not depend on the interplay of the actions and goes through in exactly the same form. Next, the definition of almost-truthful mediation requires only a small change reflecting that the belief weights that are enough for each agent to behave as if the counterpart’s signal is perfectly known now also depend on possible variation in the counterpart’s action:

Definition C.1

Let an almost-truthful interim-biased mediation protocol [for the case of payoff functions allowing for an interaction between agents’ actions] \(m^{az}\) be a collection of random variables \(\left\{ m^{az}_k\right\} _{k=1,2}\) with \(m_k^{az}({\hat{s}}_k,{\hat{s}}_{3-k})=m_k^b({\hat{s}}_k,{\hat{s}}_{3-k})\) for some \(\varepsilon _k\in \left( 0,{\bar{\varepsilon }}_k({\bar{\delta }}^z_k)\right] .\)

The only difference between this definition and Definition 4.5 is that \({\bar{\delta }}_k\) is replaced with \({\bar{\delta }}^z_k.\) Then, Lemma 4.3 can be reformulated similarly:

Lemma C3

For each agent k, \(\alpha \in (0,1],\) signal \(s_k,\) signal report \({\hat{s}}_k,\) \((3-k)\)’s action and realization m of mediator’s message \(m_k^{az}({\hat{s}}_k,s_{3-k}),\) agent k’s optimal action coincides with the correct action in state \((s_k, m):\) \(\arg \max _{a_k}\mathbb {E}_{k}{\left[ v_k(a_k, s)-\alpha z_k(a_k,\right] } {a_{3-k}, s)|s_k, m_k^a({\hat{s}}_k, s_{3-k})=m}= a_k^*(s_k, m).\)

Proof

By Definition C.1 agent k’s posterior belief over agent \((3-k)\)’s signal places a higher than \({\bar{\delta }}^z_k\) weight on m. By Lemma C2, \(\arg \max _{a_k} \mathbb {E}_{k}{\left[ v_k(a_k, s)-\alpha z_k(a_k, a_{3-k}, s)|s_k, m_k^a\right] }\)\({({\hat{s}}_k, s_{3-k})=m}=a_k^*(s_k, m).\) \(\square \)

Next, since the agent’s optimal behavior upon observing the mediator’s message is known for any counterpart action (and coincides with the correct action in the corresponding state), Lemma 4.4 holds without any modifications, reproduced here for reference:

Lemma C4

Suppose that Assumption 4.4 holds. For each agent k, signals \(s_k\ne {\hat{s}}_k\) and mediation protocol \(m^{az},\) \(V_{m_k^{az}}(s_k, s_k)>V_{m_k^{az}}(s_k, {\hat{s}}_k).\)

That is, the \(v_k\)-component of the utility (excluding the action interaction part and the \(c_k\)-component) is still strictly maximized by truth-telling.

Finally, the proof of the Theorem 4.1 requires only a small modification to show the existence of low enough misalignment of interest that ensures truth-telling:

Theorem C.1

Suppose that Assumption 4.4 holds. There exists an \({\bar{\alpha }}\) such that for all \(\alpha \in (0, {\bar{\alpha }}),\) for each agent k, signals \(s_k\ne {\hat{s}}_k\) and almost-truthful mediation protocol \(m_k^{az},\) \(U_{m_k^{az}}(s_k, s_k)>U_{m_k^{az}}(s_k, {\hat{s}}_k).\)

Proof

Define \(\Delta Z_{m_k^{az}}(s_k, {\hat{s}}_k),\) \(\Delta U_{m_k^{az}}(s_k, {\hat{s}}_k)\) and \(\Delta C_{m_k^{az}}(s_k, {\hat{s}}_k)\) analogously to \(\Delta V_{m_k^{az}}(s_k, {\hat{s}}_k)\). Notice that \(\Delta Z_{m_k^{az}}(s_k, {\hat{s}}_k)\) and \(\Delta U_{m_k^{az}}(s_k, {\hat{s}}_k)\) are well-defined expectations, since the counterpart’s action conditional on their private signal is uniquely pinned down by the mediator’s message to the counterpart (i.e. there’s a unique equilibrium in the post-communication game which only depends on the signals and mediator’s messages). Also,

Let

and

Notice that \({\bar{\Delta }} V_{m_k^{az}}>0\) by Lemma C4 and \({\bar{\Delta }} H_{m_k^{az}}\geqslant 0\) by construction. Define

where the last line ensures that \({\bar{\alpha }}\in (0,1].\) It remains to notice that for \(\alpha \in (0, {\bar{\alpha }}),\) \(\Delta U_{m_k^{az}}(s_k, {\hat{s}}_k)>0\) for every k and \(s_k\ne {\hat{s}}_k,\) which completes the proof. \(\square \)

Rights and permissions

About this article

Cite this article

Sedov, D. Almost-truthful interim-biased mediation enables information exchange between agents with misaligned interests. Rev Econ Design 27, 505–546 (2023). https://doi.org/10.1007/s10058-022-00301-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10058-022-00301-x