Abstract

Virtual Reality (VR) technology has the potential to support the aging population and to improve the testing of daily abilities for detecting functional decline. In many research studies, participants' VR performance has been assessed by measuring the time to complete a test. However, two questions have been understudied, especially for fine motor tasks: the effect of learning how to use the VR system, and the differences between real and virtual environments. In this study, 20 older adults aged 65–84 performed a task requiring fine motor skills in real life and then in a VR replica of the same task. All participants completed the task in both settings without difficulty. A clear learning effect was observed in VR, which was attributed to learning how to use the device itself. Still, participants could not reach the same level of performance (time) in VR as in real life. Participants rated the VR task as more mentally and physically demanding, and as more stressful, than the real-life task, although overall demand remained low. In an exploratory cluster analysis, participants in a group with an average age of 69 years owned more technological devices and found the VR system more usable and realistic than participants in a group with an average age of 76 years. This study demonstrated that VR influences the time to complete a fine motor task. It also showed that, unless properly considered in VR studies with older adults, learning effects related to the system could be confounded with actual task performance.



1 Introduction

Life expectancy has increased from 69.9 years in 1959 to 77.8 years in 2020 (Arias et al. 2021). As a result, the number of older adults has progressively increased. The United States Census Bureau projects that there will be more older adults than children by 2035, with older adults increasing from 15.2% of the US population in 2016 to 23.4% by 2060 (US Census 2018).

This aging of the population will increase the number of individuals unable to live independently. The risk of cognitive decline, including changes that affect sensory, mental, and physical functioning, increases with age (Murman 2015; World Health Organization 2015). Yet even with expected declines, research has shown that older adults can still learn new performance skills and can preserve motor memories acquired later in life (Smith et al. 2005).

Newly developed technologies can help increase the quality of life of older adults in several ways. They can provide medical rehabilitation (Bui et al. 2021; Canning et al. 2020; Pedram et al. 2020; Perez-Marcos et al. 2018; Stamm et al. 2022), increase engagement in physical activity (Campo-Prieto et al. 2021; Gao et al. 2020), and even decrease loneliness and social isolation (Appel et al. 2020; Lee et al. 2019). Virtual Reality (VR) technology can simulate highly realistic environments. This can also contribute to better diagnostic tools for remotely detecting changes in cognition (Zygouris et al. 2017), such as mild cognitive impairment (Cavedoni et al. 2020).

When deciding to incorporate VR into training or testing applications, researchers must understand how representative a VR task is of its real-life counterpart. This property is commonly referred to as validity, and it can be measured by comparing behavioral metrics in VR and in real life (Paljic 2017). Immersive VR systems have recently been used to compare real-life and VR performance in prosthetics (Joyner et al. 2021) and manual training (Carlson et al. 2015; Elbert et al. 2018; Murcia-López and Steed 2018), but not with older adults.

Using VR for training aims to facilitate training and to ensure that its results transfer to real-life applications (Bezerra et al. 2018; Elbert et al. 2018). Elbert et al. (2018) examined the transferability of order-picking performance between real life and VR in a sample of working-age adults, using a task replica and an immersive system. Another study examined differences in kinematics when picking up objects from a supermarket shelf in real life and in a virtual environment (Arlati et al. 2022). Bezerra et al. (2018) did not use a fully immersive system (Microsoft Kinect), but they worked specifically with older adults to evaluate the transferability of VR skills.

Few studies have had participants perform a task both in real life and in a VR replica (Arlati et al. 2022; Bezerra et al. 2018; Elbert et al. 2018). Such a design should allow a better comparison, especially for subjective measures, i.e., how people feel about the different settings. It is much easier to judge how much harder a task is in VR right after performing the real-life version than to recall previous experiences, an ability known to decline in late life (Hedden and Gabrieli 2004).

One commonly overlooked factor is the effect of VR on performance involving fine motor skills, and how users learn the system in a VR setting. Past research has used time as a measure of performance (Bezerra et al. 2018; Chen and Or 2017; Mason et al. 2019; Porffy et al. 2022), and learning the system itself might directly influence this measure. Studies normally include a demonstration or training portion. Even so, research should incorporate multiple trials, as some past studies did (Bezerra et al. 2018; Elbert et al. 2018). The challenge is dealing with practice effects, which might be due to factors such as memorization and learned strategies, as is commonly seen in cognitive tests (Calamia et al. 2012).

When making a direct comparison between real and virtual environments, one must also understand the acceptability and feasibility of the virtual setting. Past research has compared VR with real life (Bezerra et al. 2018; Parra and Kaplan 2019). However, it has not necessarily used immersive environments or motor-cognitive tasks related to daily abilities, commonly referred to as the Instrumental Activities of Daily Living (IADLs). These can be defined as “intentional and complex activities, requiring high-level controlled processes in response to individuals’ needs, mainly related to novel and/or challenging daily living situations” (de Rotrou et al. 2012). Examples of IADLs include driving, shopping for products, cooking, managing money, and doing laundry. These complex tasks require fine motor-cognitive skills, such as the precision needed to select and move objects during activities like sorting laundry. Since IADLs are considered higher-order and complex activities, it is reasonable to assume a strong connection between them and cognitive function. Various studies have already demonstrated this relationship (Marshall et al. 2011; Reppermund et al. 2011).

When considering the use of different technologies in any field, it is crucial to understand how user interaction with them can affect performance. In VR development, researchers must take into account the human experience, which includes understanding the usability of the system and its potential limitations. For example, consumer behavior in VR was found to be mostly consistent with everyday behavior in product development testing (Branca et al. 2023), making VR an effective and more sustainable alternative to traditional tests. A potential limitation is cybersickness, a common issue with VR technology (Dilanchian et al. 2021; Mittelstaedt et al. 2019; Weidner et al. 2017). However, technological advances that increased display resolution and refresh rate have somewhat alleviated this issue (Saredakis et al. 2020). To minimize cybersickness, designers must focus on the user’s sense of presence within the VR environment. Studies have found that a stronger sense of ‘being there’ is negatively associated with feelings of cybersickness (Weech et al. 2019). Overall, cybersickness reported by older adults in VR experiences is generally low (Huygelier et al. 2019), and research even indicates that older adults report less cybersickness than younger adults (Dilanchian et al. 2021).

Understanding the effect of the VR system on performance, including learning to use it, is essential for developing clinical applications that replicate tasks from common cognitive ability testing, such as the IADLs. In this study, older adults performed a task requiring fine motor skills in both settings (real life and VR) so that differences could be evaluated. Participants repeated the same task in each setting to determine whether and how learning would take place in VR. Testing multiple times in each setting was a deliberate choice. It ensured that participants had adequately learned the task and could perform it in a real-life context before replicating it in VR. This approach allows a direct comparison of learning outcomes and adaptations in the VR environment against a real-life baseline, isolating VR-specific learning effects. Both objective (time and effectiveness) and subjective (perceived task load) measures were collected, as well as usability and acceptability measures related to the VR equipment and environment.

2 Methodology

2.1 Participants

A total of 20 participants were recruited from the Memory and Aging Laboratory at Kansas State University. This study complied with the American Psychological Association Code of Ethics and was approved by Kansas State University’s Institutional Review Board (#IRB-10,786). Consent was obtained by having participants read and sign the consent form after the nature of the study was explained to them.

The sample had an average age of 72.4 years (SD = 5.0, MIN = 65, MAX = 84), with 12 males, 7 females, and 1 participant who preferred not to report gender. The sample was highly educated, with a mean of 17.2 (SD = 2.6) years of education. The majority of participants were retired (16 retired, 4 still working).

2.2 Task design

For this study, a task was designed that requires fine motor coordination (selecting objects jumbled in a bowl) and that participants could easily complete in a real-life setting (hereafter RL). Performing the same task in VR would require participants to learn how to interact with the system. The system does not provide the same sensory feedback (touch) and requires more precision when selecting objects.

The task designed for this experiment was a sorting task requiring hand-eye coordination along with decision-making processes. Seated participants were presented with a transparent bowl containing two types of objects in various colors. A total of 54 1-inch cubes in six colors (9 of each color) and 45 balls of 1.5-inch diameter in nine colors (5 of each color) were randomly mixed in the bowl (see Fig. 1).

Participants were instructed to sort the objects from the transparent bowl in front of them into three black rectangular containers according to specific pairs of colors. For example, one container would hold blue and green objects only, another red and orange objects only, and the last one red and purple objects only. Knowing in advance which colors to combine could shorten participants' decision-making time. To prevent this learning effect, the color combinations changed for each trial. Only one object could be selected at a time.

To account for learning of the task itself, the task was performed three times in each setting, i.e., three times in RL and three times in VR. Data collected from each trial included completion time (measured with a stopwatch) and the number of misplaced objects, i.e., objects put in an incorrect container.
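Scoring the misplaced objects for a trial amounts to checking each placement against that trial's color pairing. The sketch below assumes a hypothetical data representation that is not taken from the study materials. Container labels map to their two allowed colors, and each placement is a (color, container) tuple. The container labels and color names are illustrative only.

```python
from statistics import mean

# Hypothetical representations (illustrative, not from the study materials).
Placement = tuple[str, str]          # (object_color, container_label)
Pairing = dict[str, frozenset[str]]  # container_label -> allowed colors


def count_misplaced(placements: list[Placement], pairing: Pairing) -> int:
    """Count objects put in a container whose color pair excludes them."""
    return sum(1 for color, container in placements
               if color not in pairing[container])


def mean_misplaced_per_trial(trials: list[tuple[list[Placement], Pairing]]) -> float:
    """Average misplaced-object count across trials; each trial has its own pairing."""
    return mean(count_misplaced(placements, pairing) for placements, pairing in trials)


# Hypothetical trial with illustrative color pairs.
pairing = {
    "A": frozenset({"blue", "green"}),
    "B": frozenset({"red", "orange"}),
    "C": frozenset({"yellow", "purple"}),
}
placements = [("blue", "A"), ("orange", "A"), ("red", "B"), ("purple", "C")]
print(count_misplaced(placements, pairing))  # 1: the orange object is in container A
```

The pairing is passed separately for each trial, so the count follows the color combinations as they change between trials.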

The task was designed to isolate the effect of VR on task performance and to reduce practice effects. A conscious decision was made not to counterbalance the order of RL and VR. The primary objective was to assess older adults' abilities in tasks that reflect everyday activities with which they are already familiar. For example, the sorting task is akin to routine activities such as organizing household items or sorting laundry, which most participants have likely encountered in daily life. Practice effects were reduced by changing the color pairs at every trial. Participants therefore could not memorize the correct assignments and would still need the same amount of decision-making time in each trial.

It was hypothesized that there would not be a significant difference in time performance between second and third trials in RL. If time differences between second and third trials are not significant, any time difference seen later in the VR could be attributed to the VR itself.

2.3 Virtual reality apparatus and system training

The virtual environment was designed in Unity (version 2020.3.10f1) and built to accurately replicate the real environment, that is, with the same quantities, colors, and sizes of objects, as seen in Fig. 1. Object colliders were designed to exactly match the visual size and shape of the balls and cubes. Additionally, a sphere collider with a radius of 0.05 (Unity units), located in the middle of each of the participant’s virtual hands, was used for grabbing.
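The collider-based grabbing described above can be sketched as a geometric overlap test. This is not the study's Unity implementation, which would rely on the engine's physics system. It is a minimal Python sketch under several assumptions. Cubes are treated as axis-aligned boxes. One Unity unit is taken as 1 m, so a 1-inch cube has a half-size of 0.0127 and a 1.5-inch ball has a radius of 0.01905. When several objects touch the hand sphere, a hypothetical tie-break picks the nearest object center. The selection returns at most one object, matching the rule that only one object could be selected at a time.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]

# Radius of the grab sphere on each virtual hand, from the text (Unity units).
GRAB_RADIUS = 0.05


@dataclass
class Ball:
    name: str
    center: Vec3
    radius: float


@dataclass
class Cube:
    name: str
    center: Vec3
    half_size: float  # assumption: cube treated as axis-aligned


def overlaps(hand: Vec3, obj: "Ball | Cube", r: float = GRAB_RADIUS) -> bool:
    """True if the hand's grab sphere intersects the object's collider."""
    if isinstance(obj, Ball):
        # Sphere-sphere: centers closer than the sum of the radii.
        return math.dist(hand, obj.center) <= r + obj.radius
    # Sphere-box: clamp the hand center onto the box, then measure the gap.
    closest = tuple(
        min(max(h, c - obj.half_size), c + obj.half_size)
        for h, c in zip(hand, obj.center)
    )
    return math.dist(hand, closest) <= r


def select_object(hand: Vec3, objects: list, trigger_pressed: bool):
    """Return at most one object to grab, or None."""
    if not trigger_pressed:
        return None
    touching = [o for o in objects if overlaps(hand, o)]
    if not touching:
        return None
    # Hypothetical tie-break: the object whose center is nearest the hand.
    return min(touching, key=lambda o: math.dist(hand, o.center))


ball = Ball("blue ball", (0.06, 0.0, 0.0), 0.01905)
cube = Cube("red cube", (0.03, 0.0, 0.0), 0.0127)
print(select_object((0.0, 0.0, 0.0), [ball, cube], trigger_pressed=True).name)  # red cube
```

In this example both objects touch the hand sphere, so the tie-break decides which is grabbed. A real engine may resolve overlapping colliders differently.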

The Oculus Quest 2, a fully immersive VR system consisting of a Head Mounted Display (HMD) and two hand-held controllers, was selected for this study. Objects in the virtual environment were grabbed by pressing the side trigger button on the controller. Although participants held physical controllers, in the VR setting they saw only virtual hands, not the controllers themselves. The device was connected to a computer that rendered the virtual environment.

A demonstration scene was prepared to train participants on how to use the controllers to grab objects. Before the experiment began, all object colors and shapes were displayed in front of the participant. This gave participants a better understanding of the color assignments and of how to grab each object. After grasping and releasing at least half of the objects, participants could move on to the actual sorting task. The color pairs for each trial were displayed on a gray wall in front of the participant. In the real-life task, the color pairs were displayed on a piece of paper affixed to the wall and replaced at each round, mirroring the setup in the VR environment.

2.4 Procedure

The study was conducted in an Ergonomics Laboratory at Kansas State University. Participants were informed of the study location and came to the laboratory independently. Participants gave consent to join the study and started by answering a pre-experiment survey that included basic demographics. Participants also reported their familiarity with technology by selecting the devices they owned from a list (e.g., tablet, smartphone, computer, and VR devices). They also answered the Computer Proficiency Questionnaire (Boot et al. 2015). All participants reported owning a computer and a cellphone, and 14 participants owned a tablet. Participants owned on average 3.15 (SD = 0.87) technological devices, ranging from 2 to 5 devices in total.

Figure 2 describes the basic procedure followed by each participant in the study. Each participant took the Mini Montreal Cognitive Assessment (MOCA) Version 2.1, administered by a MOCA-certified rater (ID USKAUCR7093499-01). The purpose of the study was not to evaluate possible effects of cognitive decline. Results were therefore not interpreted by the researcher, and participants were not informed of their scores. The MOCA score was used only to control for cognitive abilities in the modeling process. No participant who completed the task effectively in both RL and VR was removed from the analysis, regardless of their MOCA score. MOCA scores averaged 12.80 (SD = 1.73); a score of 11 or above out of 15 points is considered normal cognition. Two participants scored below 11 points, mostly due to the recall portion of the test. They were not excluded from the analysis because they successfully completed the study.

All participants were seated during both tasks. Participants were allowed to continue to the first trial of the VR condition after successfully completing the training session described in the previous section.

Right after the three RL trials, task load was assessed using the NASA-TLX questionnaire. It comprises six subscales: mental, physical, and temporal demand, performance, effort, and frustration (Hart and Staveland 1988). Participants then performed the remaining three trials in the VR condition. These were followed by another task load questionnaire referring to the VR task and by a post-experiment survey. VR-specific assessments in the survey included the following:
(1) presence in the VR environment, measured with the IGroup Presence Questionnaire (IPQ) (Schubert 2003). The included items related to Experienced Realism (REAL1, REAL2) and General Presence (G1, SP1, SP5) and were combined into a VR Realness score;
(2) the Simulator Sickness Questionnaire (SSQ) (Kennedy et al. 1993), measuring cybersickness possibly caused by the VR experience;
(3) the System Usability Scale (Brooke 1996), evaluating the usability of this type of technology; and
(4) open-ended questions about strategy changes between the real and virtual settings and about likes and dislikes regarding the VR experience.

3 Results

All 20 participants completed the entire experiment without difficulties or technical issues. The study took approximately one hour per participant.

3.1 Performance results

Completion rates were extremely high, with very few mistakes in either setting. The average number of misplaced objects per trial was 0.73 in RL and 0.86 in VR. Most of these mistakes were attributed to difficulty distinguishing similar colors, such as pink and purple. Participants were capable of sorting colors during the trials, which suggests that color blindness was not a factor. Still, colors with lower contrast could potentially have affected performance.

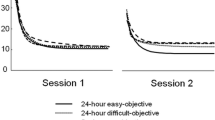

To evaluate whether there were significant differences between trials, a repeated-measures analysis was conducted. Normality was tested with the Shapiro-Wilk test and was rejected for most trials because the completion times were right-skewed. Therefore, the non-parametric Friedman test was used. The three RL trials differed significantly (\(\chi^{2}(2) = 4524\), \(p < 0.001\)), and so did the three VR trials (\(\chi^{2}(2) = 4000\), \(p < 0.001\)).

The Wilcoxon signed-rank test, a non-parametric alternative to the paired t-test, was used for pairwise comparisons. It compared times from the first and second RL trials (\(T = 29\), \(p = 0.003\)) and from the second and third RL trials (\(T = 101.50\), \(p = 0.89\)). A Bonferroni correction was applied, giving a significance level of 0.025 (0.05/2). The first RL trial therefore involved more learning of the task than subsequent trials. This is consistent with the straightforward nature of the task and the participants’ increasing proficiency with practice. In VR, the fourth and fifth trials were compared (\(T = 3\), \(p < 0.001\)), as were the fifth and sixth trials (\(T = 44\), \(p = 0.04\)). Improvement between the second and third RL trials was 0%, so the initial hypothesis could not be rejected. Participants had therefore already learned the task, and the changes observed during the VR trials were attributed to the VR system. The p-value for the fifth versus sixth trials was much lower than for the second versus third RL trials. This suggests that some improvement was still taking place in VR, although at a lower rate than between trials four and five. However, this improvement did not meet the corrected significance threshold and was therefore not considered statistically significant.

A final Wilcoxon signed-rank test compared the third RL trial with the sixth (last VR) trial to compare the two conditions directly (\(T = 0\), \(p < 0.001\)). The two conditions were significantly different, with participants taking longer in VR than in RL.
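The analysis described above can be sketched with SciPy. It consists of a Shapiro-Wilk normality check, a Friedman omnibus test across three trials, and Wilcoxon signed-rank post hoc comparisons with a Bonferroni threshold of 0.025. The function name, the data layout (one row per participant, one column per trial), and the simulated times are assumptions for illustration. The numbers the sketch produces are not the study's results.

```python
import numpy as np
from scipy import stats


def analyze_setting(times: np.ndarray, alpha: float = 0.05) -> dict:
    """Repeated-measures comparison of three trials within one setting.

    times: shape (n_participants, 3); column j holds the completion times
    (seconds) of trial j + 1, and each row belongs to one participant.
    """
    t1, t2, t3 = times.T
    # Normality check per trial; small p-values argue for non-parametric tests.
    shapiro_p = [float(stats.shapiro(col).pvalue) for col in times.T]
    # Omnibus non-parametric test across the three related samples.
    fr = stats.friedmanchisquare(t1, t2, t3)
    # Two planned pairwise comparisons, Bonferroni-corrected: 0.05 / 2 = 0.025.
    threshold = alpha / 2
    post_hoc = {}
    for label, (a, b) in {"1 vs 2": (t1, t2), "2 vs 3": (t2, t3)}.items():
        res = stats.wilcoxon(a, b)  # two-sided; statistic is T = min(W+, W-)
        post_hoc[label] = (float(res.statistic), float(res.pvalue),
                           bool(res.pvalue < threshold))
    return {
        "shapiro_p": shapiro_p,
        "friedman": (float(fr.statistic), float(fr.pvalue)),
        "threshold": threshold,
        "post_hoc": post_hoc,
    }


# Simulated (not study) data: 20 right-skewed baselines, a slower first
# trial, then two similar trials, each with small multiplicative noise.
rng = np.random.default_rng(0)
base = rng.lognormal(mean=5.0, sigma=0.3, size=20)
times = np.column_stack([base * 1.12, base, base]) * rng.normal(1.0, 0.03, size=(20, 3))
result = analyze_setting(times)
```

Calling `stats.wilcoxon` on the third RL column and the last VR column, with rows matched by participant, gives the direct between-setting comparison.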

Figure 3 shows boxplots of the time (in seconds) to complete each trial. Many participants (17 of 20) showed a small improvement in time across the three RL trials. Most of this improvement occurred between the first and second trials, with more consistent results between the second and third trials. This suggests that participants had already mastered the task by then. All 20 participants improved on their initial VR time over the VR trials, but the VR trials still took longer than the RL trials.

Table 1 summarizes the mean time and standard deviation for each trial in the study. Mean time improved by 10.38% between the first and second RL trials but remained stable between the second and third RL trials (0% change). A sharp learning process took place in VR, as seen in Fig. 3. Mean time improved by 23.84% between the first and second VR trials, with some further improvement (3.84%) between the second and third.
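The text does not state exactly how the improvement percentages were computed. One plausible reading is the relative reduction between consecutive trial means, sketched below. Averaging each participant's own percent change would be an alternative, and it can give different numbers. The helper names and the example means are hypothetical, not values from Table 1.

```python
def percent_improvement(prev_mean: float, next_mean: float) -> float:
    """Relative reduction in mean completion time, in percent (positive = faster)."""
    return 100.0 * (prev_mean - next_mean) / prev_mean


def successive_improvements(trial_means: list[float]) -> list[float]:
    """Percent improvement between each pair of consecutive trials."""
    return [percent_improvement(a, b) for a, b in zip(trial_means, trial_means[1:])]


# Hypothetical trial means in seconds (not Table 1 values).
print(successive_improvements([100.0, 90.0, 90.0]))  # [10.0, 0.0]
```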

3.2 Task load comparison

Each participant rated the RL and VR tasks using the NASA Task Load Index immediately after completing the trials in each setting. Mean scores were 12.54 (SD = 12.80) for RL and 22.21 (SD = 17.04) for VR, out of 100 points. Because the NASA-TLX scores failed the Shapiro-Wilk test, the non-parametric Wilcoxon signed-rank test was used. Results showed a significant difference between conditions (T = 24.00, p = 0.001). On average, participants reported a higher task load when performing the same sorting task in the virtual environment.

3.2.1 Individual scores

Average scores for every task load dimension were worse in the VR setting except for temporal demand (the pace of the task). This suggests that participants perceived themselves, on average, as working at a slower pace in the VR task. All individual subscales failed the Shapiro-Wilk test for normality. Therefore, the Wilcoxon signed-rank test was run for each subscale, with a Bonferroni-corrected significance threshold of 0.008 (0.05/6). Table 2 summarizes the statistical results. Mental demand, physical demand, and perceived stress (frustration) differed significantly, all with higher means in VR. Overall, participants found the same task harder in the virtual environment. Even so, on average they rated their performance very highly in both settings (lower scores represent higher success).
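The text does not say whether raw (unweighted) or weighted NASA-TLX scores were used. The sketch below assumes raw TLX, the mean of the six 0–100 subscale ratings. It then runs one Wilcoxon signed-rank test per subscale against the Bonferroni threshold of 0.05/6. The subscale order, the array layout, and the function names are assumptions for illustration. SciPy's default `zero_method="wilcox"` discards participants whose two ratings are identical, which matters for coarse rating scales.

```python
import numpy as np
from scipy import stats

# Assumed column order of the six NASA-TLX subscales.
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: np.ndarray) -> np.ndarray:
    """Unweighted ("raw") TLX: mean of the six 0-100 subscale ratings per row."""
    return ratings.mean(axis=1)


def compare_subscales(rl: np.ndarray, vr: np.ndarray, alpha: float = 0.05):
    """Wilcoxon signed-rank test per subscale with a Bonferroni threshold.

    rl, vr: shape (n_participants, 6), rows matched by participant.
    """
    threshold = alpha / len(SUBSCALES)  # 0.05 / 6 ≈ 0.0083
    results = {}
    for j, name in enumerate(SUBSCALES):
        res = stats.wilcoxon(rl[:, j], vr[:, j])
        results[name] = (float(res.statistic), float(res.pvalue),
                         bool(res.pvalue < threshold))
    return threshold, results


print(raw_tlx(np.array([[10, 20, 30, 40, 50, 60]], dtype=float)))  # [35.]
```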

3.3 System usability, realness, and cybersickness effects

Usability of the system was assessed with the System Usability Scale (SUS), on which scores above 68 are considered above average. Participants gave the system an average usability score of 78 (SD = 13.40). Participants were told that their ratings should refer to using the VR to complete the task. The majority of participants found the system easy to use and felt confident using it. Notably, most participants were using the system for the very first time. Even so, most did not think they needed to learn a lot of things before they could get going with the system.
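SUS scores such as the average of 78 follow the standard scoring procedure for the scale's ten 1–5 items (Brooke 1996). Odd-numbered items contribute (response − 1), and even-numbered items contribute (5 − response). The 0–40 sum is then multiplied by 2.5. The helper names below are illustrative, and the benchmark of 68 is the one quoted in the text.

```python
def sus_score(responses: list[int]) -> float:
    """Standard SUS score (0-100) from ten responses rated 1 to 5.

    Odd-numbered (positively worded) items contribute (response - 1);
    even-numbered (negatively worded) items contribute (5 - response).
    """
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses in the range 1-5")
    total = sum(
        (r - 1) if item % 2 == 1 else (5 - r)
        for item, r in enumerate(responses, start=1)
    )
    return total * 2.5


def above_average(score: float, benchmark: float = 68.0) -> bool:
    """Compare a SUS score with the commonly cited average of 68."""
    return score > benchmark


print(sus_score([5, 1] * 5))  # 100.0: strongest agreement on odd items, disagreement on even
```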

VR Realness, measured using items of the IGroup Presence Questionnaire, had an average score of 4.95 (SD = 0.89) on a scale from 0 to 6, with higher scores representing a more realistic VR experience. Three of the selected items referred to presence and two to experienced realism. Given these items, participants overall felt present in the virtual environment while performing the tasks and thought the environment looked relatively real. Answers were mixed for item REAL2, which relates to the consistency of the virtual environment with its real-world counterpart. This item had an average score of 4.20 (SD = 1.47), with a higher standard deviation than the five items combined.

Scores on the Simulator Sickness Questionnaire were low, with a mean of 3.9 (SD = 5.62). Scores over 20 indicate “perceptible discomfort” (Kennedy et al. 1993), a level reported by only one participant in the group.
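SSQ scoring is commonly reproduced from Kennedy et al. (1993) as follows. Each of the 16 symptoms is rated 0–3 and counts toward one or two of three subscales: nausea (N), oculomotor (O), and disorientation (D). Raw subscale sums are weighted by 9.54, 7.58, and 13.92, and the total score is the sum of the three raw sums times 3.74. The sketch follows that scheme and should be checked against the original before use. The item keys, the treatment of unrated items as 0, and the function name are assumptions. The threshold of 20 is the one given in the text.

```python
# Item-to-subscale assignment as commonly reproduced from Kennedy et al. (1993):
# N = nausea, O = oculomotor, D = disorientation. Some items load on two.
SSQ_ITEMS = {
    "general_discomfort": "NO",
    "fatigue": "O",
    "headache": "O",
    "eyestrain": "O",
    "difficulty_focusing": "OD",
    "increased_salivation": "N",
    "sweating": "N",
    "nausea": "ND",
    "difficulty_concentrating": "NO",
    "fullness_of_head": "D",
    "blurred_vision": "OD",
    "dizzy_eyes_open": "D",
    "dizzy_eyes_closed": "D",
    "vertigo": "D",
    "stomach_awareness": "N",
    "burping": "N",
}
WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
TOTAL_WEIGHT = 3.74


def ssq_scores(ratings: dict[str, int]) -> dict[str, float]:
    """Weighted SSQ subscale and total scores from 0-3 symptom ratings."""
    unknown = set(ratings) - set(SSQ_ITEMS)
    if unknown:
        raise ValueError(f"unknown SSQ items: {sorted(unknown)}")
    raw = {"N": 0, "O": 0, "D": 0}
    for item, subscales in SSQ_ITEMS.items():
        r = ratings.get(item, 0)  # assumption: an unrated item counts as 0 (none)
        if not 0 <= r <= 3:
            raise ValueError(f"{item} must be rated 0-3")
        for s in subscales:
            raw[s] += r
    return {
        "nausea": raw["N"] * WEIGHTS["N"],
        "oculomotor": raw["O"] * WEIGHTS["O"],
        "disorientation": raw["D"] * WEIGHTS["D"],
        "total": (raw["N"] + raw["O"] + raw["D"]) * TOTAL_WEIGHT,
    }


scores = ssq_scores({"nausea": 1, "eyestrain": 1})
perceptible_discomfort = scores["total"] > 20  # threshold quoted in the text
```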

3.4 Exploratory data analysis

In an exploratory data analysis, K-means clustering was used to find natural groupings among participants. Data were standardized before the cluster analysis to reduce bias from variables measured on larger scales. The elbow method showed a clear bend at two clusters, and given the small sample size, two clusters were selected for further analysis.
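The clustering steps above can be sketched with scikit-learn: z-score standardization, an elbow curve of within-cluster sum of squares (inertia), and K-means with the chosen k. The clustering variables here are hypothetical stand-ins for the kinds of measures summarized in Table 3 (age, devices owned, SUS, SSQ, MOCA), and the data are simulated rather than taken from the study. Picking k where the inertia curve bends remains a visual judgment.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler


def standardize(X: np.ndarray) -> np.ndarray:
    """z-score each column so variables on larger scales do not dominate."""
    return StandardScaler().fit_transform(X)


def elbow_inertias(X: np.ndarray, k_max: int = 6, seed: int = 0) -> list[float]:
    """Within-cluster sum of squares for k = 1..k_max on standardized data."""
    Z = standardize(X)
    return [
        float(KMeans(n_clusters=k, n_init=10, random_state=seed).fit(Z).inertia_)
        for k in range(1, k_max + 1)
    ]


def cluster_participants(X: np.ndarray, k: int = 2, seed: int = 0) -> np.ndarray:
    """Cluster label for each participant (row of X)."""
    Z = standardize(X)
    return KMeans(n_clusters=k, n_init=10, random_state=seed).fit_predict(Z)


# Simulated participants (not study data). Hypothetical columns:
# age, devices owned, SUS score, SSQ total, MOCA score.
rng = np.random.default_rng(2)
X = np.column_stack([
    rng.normal(72, 5, 20),
    rng.integers(2, 6, 20),
    rng.normal(78, 13, 20),
    rng.gamma(1.0, 4.0, 20),
    rng.normal(12.8, 1.7, 20),
])
inertias = elbow_inertias(X)
labels = cluster_participants(X, k=2)
# Per-cluster means of the original (unstandardized) variables.
cluster_means = {int(c): X[labels == c].mean(axis=0) for c in np.unique(labels)}
```

The clusters are formed on standardized data, but the per-cluster means are reported on the original scales so that they stay interpretable (years, device counts, score points).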

Table 3 shows the means and standard deviations for participants in each of the two clusters. Participants in the first cluster had a mean age of 69.54 years, owned an average of 4.09 devices, and gave high overall usability scores (87.04). The second cluster had slightly older participants (mean age 75.88 years). They owned fewer devices on average, found the system less usable, and had higher SSQ scores. MOCA scores did not differentiate well between the groups.

3.5 Strategy changes

One key finding reported by some participants concerned changes in strategy when switching to the virtual environment. Sixty percent of participants (12 of 20) reported changing their strategies. This was mostly due to initial difficulty selecting the exact object they had planned to grab, which increased their decision time and made them reassess which container the selected color belonged in.

3.6 Post-experiment feedback and other comments

Participants reported positive experiences with the study and showed enthusiasm for the VR equipment, with 18 participants describing it as “fun” or “interesting”. When asked which components of the study they disliked, participants commonly mentioned the controller itself and how to use it. Four participants reported having a hard time holding the controllers and pressing the correct buttons, which might have distracted them during the task. Two participants reported neck discomfort from the weight of the headset, even though the VR portion of the study lasted on average 15 min.

4 Discussion

In this study, the high completion rate and minimal errors indicate that older adults were able to interact effectively with the VR system. These findings are in line with previous research (Smith et al. 2005) suggesting that this demographic can engage with new technologies. VR indeed seems to be a feasible tool for older adults (Appel et al. 2020; Chau et al. 2021; Gerber et al. 2018; Zygouris et al. 2017). The findings therefore run counter to the ageist assumption that older adults have difficulties with new technologies (Rosales and Fernández-Ardèvol 2019).

In this study, there was no statistically significant difference in the number of errors between VR and RL, but participants did have a harder time selecting objects in VR. This was reflected in longer completion times in the VR trials, even after several repetitions. Past studies comparing VR and real-life performance also found that participants took longer to complete a task in VR than in real life (Elbert et al. 2018; Oren et al. 2012). The same was found when comparing VR with a regular desktop display in a spatial ability test (Guzsvinecz et al. 2022). However, those studies did not focus on fine motor movements.

Cybersickness levels were low and consistent with past research (Dilanchian et al. 2021). In the cluster analysis, however, the older group reported higher levels of cybersickness than the younger group. This could be related to other clinical measures or health conditions not captured in the study, such as smoking, which has been linked to lower levels of cybersickness (Kim et al. 2021).

The ergonomics of the device could be improved with lighter headsets and more intuitive controllers or hand-tracking systems. Haptic gloves have been developed to enhance the user experience, but few commercially available options exist (Perret and Vander Poorten 2018). For tasks such as Object Location Spatial Memory (OLSM), their use did not differ significantly from that of regular VR controllers (Forgiarini et al. 2023). Equipment used in similar studies will need to be light, compact, and equipped with precise sensors to replicate tactile stimulation like that experienced in real life (Van Wegen et al., 2023). Also, depending on the type of task, reducing the number of available controller buttons could help participants. The controllers of the chosen device included multiple buttons that were not needed for this study and could therefore have made the task more difficult to execute.

Past research has also evaluated the use of VR technology to promote well-being in older adults with mild cognitive impairment or related dementias. Virtual experiences were well accepted and improved participants’ mood and apathy (D’Cunha et al. 2019). Learning rates and task feasibility in these populations should be investigated further, especially if the goal is to measure cognitive decline with VR tests. In such tests, learning to use the VR technology could be a confounding factor. The VR market has been growing, and future studies should evaluate whether learning rates differ between VR users and non-users. This will also help determine how much training is needed to use the VR system effectively.

Other skills related to daily activities should also be tested in VR, comparing real and virtual environments to evaluate feasibility and validity. Training of IADLs using non-immersive systems has already improved neuropsychological measures in older adults (Gamito et al. 2019). Further research should therefore test daily abilities using currently available immersive systems.

Furthermore, the ability to standardize IADL assessments in VR presents a significant advantage over traditional office-based evaluations. Certain IADLs, such as the sorting task investigated in this study, can readily be tested in an office environment. Even so, VR can offer a more controlled and uniform testing framework (Bohil et al. 2011). This standardized approach minimizes the potential for rater bias. It also reduces the training and resources typically required to conduct these assessments. By providing a consistent environment with reduced rater bias, VR has the potential to yield more reliable and objective measures of daily living skills. Such measures are critical for accurately evaluating the capabilities of older adults.

4.1 Study limitations

Although the sample had a similar size to other VR studies with older adults (Chen and Or 2017; Dilanchian et al. 2021; Mason et al. 2019; Park et al. 2022; Parra and Kaplan 2019), larger sample sizes would provide stronger statistical power and insights. This study also had a sample with high educational levels, which might yield different results when compared to other subgroups of older adults (Brazil and Rys 2022). Even with a relatively similar group in education and technology usage, a high variability in time to perform the task in both settings was observed.

Properly modeling the learning rate would require more trials, because learning-curve models are typically expressed on a logarithmic scale. In this study, individual object picks were not analyzed separately; only the time to complete the whole task (sorting all the objects in the bowl) was analyzed. Each participant spent about 15 min on the VR tasks, a common session length for VR studies with older adults (D’Cunha et al. 2019; Jones et al. 2016). A shorter task would allow more trials within the same session and would therefore be better suited to mathematically modeling a learning curve.
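To illustrate why more trials would help, the sketch below fits the power law of practice, T_n = T_1 · n^(−b), which is one common learning-curve model, by ordinary least squares on log completion time versus log trial number. This is not the analysis performed in this study. The function name `fit_power_law_learning_curve` and the synthetic times in the example are hypothetical and are not data from the study.

```python
import math


def fit_power_law_learning_curve(times):
    """Fit the power law of practice, T_n = T_1 * n**(-b), to trial times.

    times: completion times (e.g. seconds) for trials 1..N, in order.
    Returns (t1, b, learning_rate), where learning_rate = 2**(-b) is the
    fraction of completion time remaining each time the trial count doubles.
    """
    if len(times) < 2:
        raise ValueError("at least two trials are needed to estimate a slope")
    if any(t <= 0 for t in times):
        raise ValueError("completion times must be positive to take logs")

    # Linearize: log T_n = log T_1 - b * log n, then use ordinary least squares.
    xs = [math.log(n) for n in range(1, len(times) + 1)]
    ys = [math.log(t) for t in times]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))

    slope = sxy / sxx                    # estimate of -b
    intercept = y_mean - slope * x_mean  # estimate of log T_1
    b = -slope
    return math.exp(intercept), b, 2 ** (-b)


if __name__ == "__main__":
    # Hypothetical, noise-free times generated from T_1 = 120 s and b = 0.3;
    # these are NOT data from the study.
    synthetic = [120.0 * n ** (-0.3) for n in range(1, 11)]
    t1, b, rate = fit_power_law_learning_curve(synthetic)
    print(f"T_1 = {t1:.1f} s, b = {b:.3f}, learning rate = {rate:.1%}")
```

Fitting in log–log space keeps the model linear, so only the standard library is needed. With just three trials, the slope rests on three points, and a single unusually slow or fast trial can shift b substantially. This is why a protocol with more, shorter trials would give a steadier estimate.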

The time difference between RL and VR in this study could have two explanations. First, participants may have aimed for an object of a specific color, picked up a different one, and then had to reassess which container it belonged in. Second, participants may have needed multiple attempts to grab the object they were aiming for. Because participants were asked to maintain the same pace across all trials, the learning effect observed in the VR trials was assumed to reflect improvement in picking up the intended objects rather than learning of the task itself. Future work should isolate this component by allowing only one sorting strategy.

Although this study used a VR system with controller-based interaction, newer systems with hand-tracking technology are emerging. Even so, this research provides insights into how older adults interact with VR technology that replicates real-life tasks, and these insights remain pertinent as the technology evolves. Future research should extend this work by comparing the efficacy and accessibility of controller-based and hand-tracking interfaces with older adults, to determine which readily available VR technology best suits this population. Any latency issues with newer systems should also be rigorously evaluated to ensure that they are suitable for older users.

5 Conclusion

In this study, the validity of virtual reality for a fine motor task was assessed by directly comparing older adults’ performance on a sorting task in real life and then in VR. The effect of learning how to use the VR system was assessed objectively. This effect was observed even after participants had received instructions and gone through a demonstration scene. The task’s feasibility in VR was confirmed, as all participants completed it with few mistakes, which demonstrates that older adults can learn to use the system even for fine motor tasks. VR therefore emerges as a potential tool for assessing older adults in instrumental activities of daily living (IADLs) and for helping identify functional and cognitive decline.

It is essential to account for the effect of VR in performance analyses of older adults, especially when performance is measured as time to complete a task, since all participants improved their VR times by the third trial. Learning rates varied, but overall the task took longer to complete in VR than in real life. This should also be factored in when designing VR tests for this population.

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Appel L, Appel E, Bogler O, Wiseman M, Cohen L, Ein N, Abrams HB, Campos JL (2020) Older adults with cognitive and/or physical impairments can benefit from immersive virtual reality experiences: a feasibility study. Front Med 6:329. https://doi.org/10.3389/fmed.2019.00329

Arias E, Tejada-Vera B, Ahmad F, Kochanek K (2021) Provisional life expectancy estimates for 2020. National Center for Health Statistics (U.S.). https://doi.org/10.15620/cdc:107201

Arlati S, Keijsers N, Paolini G, Ferrigno G, Sacco M (2022) Kinematics of aimed movements in ecological immersive virtual reality: a comparative study with real world. Virtual Reality 26(3):885–901. https://doi.org/10.1007/s10055-021-00603-5

Bezerra ÍMP, Crocetta TB, Massetti T, da Silva TD, Guarnieri R, Meira CdeM, Arab C, Abreu LCde, Araujo LVde, Monteiro CBdeM (2018) Functional performance comparison between real and virtual tasks in older adults: a cross-sectional study. Medicine 97(4):e9612. https://doi.org/10.1097/MD.0000000000009612

Bohil CJ, Alicea B, Biocca FA (2011) Virtual reality in neuroscience research and therapy. Nat Rev Neurosci 12(12):752–762. https://doi.org/10.1038/nrn3122

Boot WR, Charness N, Czaja SJ, Sharit J, Rogers WA, Fisk AD, Mitzner T, Lee CC, Nair S (2015) Computer proficiency questionnaire: assessing low and high computer proficient seniors. Gerontologist 55(3):404–411. https://doi.org/10.1093/geront/gnt117

Branca G, Resciniti R, Loureiro SMC (2023) Virtual is so real! Consumers’ evaluation of product packaging in virtual reality. Psychol Mark 40(3):596–609. https://doi.org/10.1002/mar.21743

Brazil CK, Rys MJ (2022) Technology adherence and incorporation to daily life activities of highly educated older adults (SSRN Scholarly Paper 4167279). https://doi.org/10.2139/ssrn.4167279

Brooke J (1996) SUS: a quick and dirty usability scale. In: Usability evaluation in industry. CRC Press

Bui J, Luauté J, Farnè A (2021) Enhancing upper limb rehabilitation of stroke patients with virtual reality: a mini review. Front Virtual Real 2:595771. https://doi.org/10.3389/frvir.2021.595771

Calamia M, Markon K, Tranel D (2012) Scoring higher the second time around: Meta-analyses of Practice effects in Neuropsychological Assessment. Clin Neuropsychol 26(4):543–570. https://doi.org/10.1080/13854046.2012.680913

Campo-Prieto P, Cancela JM, Rodríguez-Fuentes G (2021) Immersive virtual reality as physical therapy in older adults: Present or future (systematic review). Virtual Reality 25(3):801–817. https://doi.org/10.1007/s10055-020-00495-x

Canning CG, Allen NE, Nackaerts E, Paul SS, Nieuwboer A, Gilat M (2020) Virtual reality in research and rehabilitation of gait and balance in Parkinson disease. Nat Rev Neurol 16(8). https://doi.org/10.1038/s41582-020-0370-2

Carlson P, Peters A, Gilbert SB, Vance JM, Luse A (2015) Virtual training: learning transfer of Assembly tasks. IEEE Trans Vis Comput Graph 21(6):770–782. https://doi.org/10.1109/TVCG.2015.2393871

Cavedoni S, Chirico A, Pedroli E, Cipresso P, Riva G (2020) Digital biomarkers for the early detection of mild cognitive impairment: artificial intelligence meets virtual reality. Front Hum Neurosci 14:245. https://doi.org/10.3389/fnhum.2020.00245

Chau PH, Kwok YYJ, Chan MKM, Kwan KYD, Wong KL, Tang YH, Chau KLP, Lau SWM, Yiu YYY, Kwong MYF, Lai WTT, Leung MK (2021) Feasibility, acceptability, and efficacy of virtual reality training for older adults and people with disabilities: single-arm Pre-post Study. J Med Internet Res 23(5):e27640. https://doi.org/10.2196/27640

Chen J, Or C (2017) Assessing the use of immersive virtual reality, mouse and touchscreen in pointing and dragging-and-dropping tasks among young, middle-aged and older adults. Appl Ergon 65:437–448. https://doi.org/10.1016/j.apergo.2017.03.013

D’Cunha NM, Nguyen D, Naumovski N, McKune AJ, Kellett J, Georgousopoulou EN, Frost J, Isbel S (2019) A mini-review of virtual reality-based interventions to Promote Well-being for people living with dementia and mild cognitive impairment. Gerontology 65(4):430–440. https://doi.org/10.1159/000500040

de Rotrou J, Wu Y-H, Hugonot-Diener L, Thomas-Antérion C, Vidal J-S, Plichart M, Rigaud A-S, Hanon O (2012) DAD-6: a 6-item version of the Disability Assessment for Dementia scale which may differentiate Alzheimer’s disease and mild cognitive impairment from controls. Dement Geriatr Cogn Disord 33(2–3):210–218. https://doi.org/10.1159/000338232

Dilanchian AT, Andringa R, Boot WR (2021) A pilot study exploring age differences in Presence, workload, and Cybersickness in the experience of immersive virtual reality environments. Front Virtual Real 2:129. https://doi.org/10.3389/frvir.2021.736793

Elbert R, Knigge J-K, Sarnow T (2018) Transferability of order picking performance and training effects achieved in a virtual reality using head mounted devices. IFAC-PapersOnLine 51(11):686–691. https://doi.org/10.1016/j.ifacol.2018.08.398

Forgiarini A, Buttussi F, Chittaro L (2023) Virtual reality for object location spatial memory: a comparison of handheld controllers and force feedback gloves. In: Proceedings of the 15th Biannual Conference of the Italian SIGCHI Chapter, pp 1–9

Gamito P, Oliveira J, Morais D, Coelho C, Santos N, Alves C, Galamba A, Soeiro M, Yerra M, French H, Talmers L, Gomes T, Brito R (2019) Cognitive stimulation of Elderly individuals with instrumental virtual reality-based activities of Daily Life: Pre-post Treatment Study. Cyberpsychology Behav Social Netw 22(1):69–75. https://doi.org/10.1089/cyber.2017.0679

Gao Z, Lee JE, McDonough DJ, Albers C (2020) Virtual reality exercise as a coping strategy for health and wellness promotion in older adults during the COVID-19 pandemic. J Clin Med 9(6):1986. https://doi.org/10.3390/jcm9061986

Gerber SM, Muri RM, Mosimann UP, Nef T, Urwyler P (2018) Virtual reality for activities of daily living training in neurorehabilitation: a usability and feasibility study in healthy participants. In: 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp 1–4. https://doi.org/10.1109/EMBC.2018.8513003

Guzsvinecz T, Orbán-Mihálykó É, Sik-Lányi C, Perge E (2022) Investigation of spatial ability test completion times in virtual reality using a desktop display and the Gear VR. Virtual Reality 26(2):601–614. https://doi.org/10.1007/s10055-021-00509-2

Hart SG, Staveland LE (1988) Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. In: Hancock PA, Meshkati N (eds) Advances in psychology, vol 52. North-Holland, pp 139–183. https://doi.org/10.1016/S0166-4115(08)62386-9

Hedden T, Gabrieli JDE (2004) Insights into the ageing mind: a view from cognitive neuroscience. Nat Rev Neurosci 5(2). https://doi.org/10.1038/nrn1323

Huygelier H, Schraepen B, van Ee R, Vanden Abeele V, Gillebert CR (2019) Acceptance of immersive head-mounted virtual reality in older adults. Sci Rep 9(1):4519. https://doi.org/10.1038/s41598-019-41200-6

Jones T, Moore T, Choo J (2016) The impact of virtual reality on Chronic Pain. PLoS ONE 11(12):e0167523. https://doi.org/10.1371/journal.pone.0167523

Joyner JS, Vaughn-Cooke M, Benz HL (2021) Comparison of dexterous task performance in virtual reality and real-world environments. Front Virtual Real 2:599274. https://doi.org/10.3389/frvir.2021.599274

Kennedy RS, Lane NE, Berbaum KS, Lilienthal MG (1993) Simulator Sickness Questionnaire: an enhanced method for quantifying Simulator sickness. Int J Aviat Psychol 3(3):203–220. https://doi.org/10.1207/s15327108ijap0303_3

Kim H, Kim DJ, Chung WH, Park K-A, Kim JDK, Kim D, Kim K, Jeon HJ (2021) Clinical predictors of cybersickness in virtual reality (VR) among highly stressed people. Sci Rep 11(1). https://doi.org/10.1038/s41598-021-91573-w

Lee LN, Kim MJ, Hwang WJ (2019) Potential of augmented reality and virtual reality technologies to promote wellbeing in older adults. Appl Sci 9(17):3556. https://doi.org/10.3390/app9173556

Marshall GA, Rentz DM, Frey MT, Locascio JJ, Johnson KA, Sperling RA (2011) Executive function and instrumental activities of daily living in MCI and AD. Alzheimers Dement 7(3):300–308. https://doi.org/10.1016/j.jalz.2010.04.005

Mason AH, Grabowski PJ, Rutherford DN (2019) The role of visual feedback and age when grasping, transferring and passing objects in virtual environments. Int J Human–Computer Interact 35(19):1870–1881. https://doi.org/10.1080/10447318.2019.1574101

Mittelstaedt JM, Wacker J, Stelling D (2019) VR aftereffect and the relation of cybersickness and cognitive performance. Virtual Reality 23(2):143–154. https://doi.org/10.1007/s10055-018-0370-3

Murcia-López M, Steed A (2018) A comparison of virtual and physical training transfer of Bimanual Assembly tasks. IEEE Trans Vis Comput Graph 24(4):1574–1583. https://doi.org/10.1109/TVCG.2018.2793638

Murman DL (2015) The impact of age on Cognition. Semin Hear 36(3):111–121. https://doi.org/10.1055/s-0035-1555115

Oren M, Carlson P, Gilbert S, Vance JM (2012) Puzzle assembly training: real world vs. virtual environment. In: 2012 IEEE Virtual Reality Workshops (VRW), pp 27–30. https://doi.org/10.1109/VR.2012.6180873

Paljic A (2017) Ecological validity of virtual reality: three use cases. In: Battiato S, Farinella GM, Leo M, Gallo G (eds) New trends in image analysis and processing – ICIAP 2017. Springer International Publishing, pp 301–310. https://doi.org/10.1007/978-3-319-70742-6_28

Park S, Lee H, Kwon M, Jung H, Jung H (2022) Understanding experiences of older adults in virtual reality environments with a subway fire disaster scenario. Univ Access Inf Soc. https://doi.org/10.1007/s10209-022-00878-8

Parra MA, Kaplan RI (2019) Predictors of performance in Real and virtual scenarios across Age. Exp Aging Res 45(2):180–198. https://doi.org/10.1080/0361073X.2019.1586106

Pedram S, Palmisano S, Perez P, Mursic R, Farrelly M (2020) Examining the potential of virtual reality to deliver remote rehabilitation. Comput Hum Behav 105:106223. https://doi.org/10.1016/j.chb.2019.106223

Perez-Marcos D, Bieler-Aeschlimann M, Serino A (2018) Virtual reality as a vehicle to empower motor-cognitive neurorehabilitation. Front Psychol 9:2120. https://doi.org/10.3389/fpsyg.2018.02120

Perret J, Vander Poorten E (2018) Touching virtual reality: a review of haptic gloves. In: ACTUATOR 2018; 16th International Conference on New Actuators, pp 1–5

Porffy LA, Mehta MA, Patchitt J, Boussebaa C, Brett J, D’Oliveira T, Mouchlianitis E, Shergill SS (2022) A novel virtual reality Assessment of Functional Cognition: Validation Study. J Med Internet Res 24(1):e27641. https://doi.org/10.2196/27641

Reppermund S, Sachdev PS, Crawford J, Kochan NA, Slavin MJ, Kang K, Trollor JN, Draper B, Brodaty H (2011) The relationship of neuropsychological function to instrumental activities of daily living in mild cognitive impairment. Int J Geriatr Psychiatry 26(8):843–852. https://doi.org/10.1002/gps.2612

Rosales A, Fernández-Ardèvol M (2019) Smartphone usage diversity among older people. In: Sayago S (ed) Perspectives on human-computer interaction research with older people. Springer International Publishing, pp 51–66. https://doi.org/10.1007/978-3-030-06076-3_4

Saredakis D, Szpak A, Birckhead B, Keage HAD, Rizzo A, Loetscher T (2020) Factors associated with virtual reality sickness in head-mounted displays: a systematic review and meta-analysis. Front Hum Neurosci 14:96. https://doi.org/10.3389/fnhum.2020.00096

Schubert TW (2003) The sense of presence in virtual environments. Z Für Medienpsychologie 15(2):69–71. https://doi.org/10.1026/1617-6383.15.2.69

Smith CD, Walton A, Loveland AD, Umberger GH, Kryscio RJ, Gash DM (2005) Memories that last in old age: motor skill learning and memory preservation. Neurobiol Aging 26(6):883–890. https://doi.org/10.1016/j.neurobiolaging.2004.08.014

Stamm O, Dahms R, Reithinger N, Ruß A, Müller-Werdan U (2022) Virtual reality exergame for supplementing multimodal pain therapy in older adults with chronic back pain: a randomized controlled pilot study. Virtual Reality. https://doi.org/10.1007/s10055-022-00629-3

US Census (2018) The U.S. joins other countries with large aging populations. Census.gov, March 2018. https://www.census.gov/library/stories/2018/03/graying-america.html

Van Wegen M, Herder JL, Adelsberger R, Pastore-Wapp M, van Wegen EEH, Bohlhalter S, Nef T, Krack P, Vanbellingen T (2023) An overview of wearable haptic technologies and their performance in virtual object exploration. Sensors 23:1563. https://doi.org/10.3390/s23031563

Weech S, Kenny S, Barnett-Cowan M (2019) Presence and cybersickness in virtual reality are negatively related: a review. Front Psychol 10:158. https://doi.org/10.3389/fpsyg.2019.00158

Weidner F, Hoesch A, Poeschl S, Broll W (2017) Comparing VR and non-VR driving simulations: an experimental user study. In: 2017 IEEE Virtual Reality (VR), pp 281–282. https://doi.org/10.1109/VR.2017.7892286

World Health Organization (2015) World report on ageing and health. World Health Organization

Zygouris S, Ntovas K, Giakoumis D, Votis K, Doumpoulakis S, Segkouli S, Karagiannidis C, Tzovaras D, Tsolaki M (2017) A preliminary study on the feasibility of using a virtual reality cognitive training application for remote detection of mild cognitive impairment. J Alzheimer’s Disease 56(2):619–627. https://doi.org/10.3233/JAD-160518

Acknowledgements

We are thankful to the Memory and Aging Lab at Kansas State University, which assisted with the recruitment of participants for this study.

Funding

The authors did not receive support from any organization for the submitted work.


Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the contents of this article.

Ethical approval

This study was approved by the Institutional Review Board of Kansas State University.

Consent to participate

Informed consent was obtained from all the participants in the study.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Brazil, C.K., Rys, M.J. The effect of VR on fine motor performance by older adults: a comparison between real and virtual tasks. Virtual Reality 28, 113 (2024). https://doi.org/10.1007/s10055-024-01009-9

DOI: https://doi.org/10.1007/s10055-024-01009-9