Abstract

Falls are a major health concern. Existing augmented reality (AR) and virtual reality (VR) solutions for fall prevention aim to improve balance in dedicated training sessions. We propose a novel AR prototype as an assistive wearable device to improve balance and prevent falls in daily life. We use a custom head-mounted display toolkit to present augmented visual orientation cues in the peripheral field of view. Based on the user’s tracked head position, the cues provide a continuous, space-stationary visual reference frame for balance control. In a proof-of-concept study, users performed a series of balance trials to test the effect of the displayed visual cues on body sway. Our results showed that body sway can be reduced with our device, indicating improved balance. We also showed that superimposed movements of the visual reference in forward-backward or sideways directions induce corresponding sway responses. This indicates a direction-specific integration of the displayed cues into balance control. Based on our findings, we conclude that artificially generated visual orientation cues using AR can improve balance and could possibly reduce fall risk.


1 Introduction

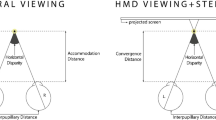

Balance control is a complex mechanism that integrates visual, vestibular, and proprioceptive cues to maintain an upright posture and prevent falls. Recently, we showed that balance while viewing a computer-generated stimulus in head-mounted display (HMD) based virtual reality (VR) has reached behavioral realism: body sway with eyes open was comparable between stance in a photo-realistic virtual scene and in a real-world visual scene (Assländer and Streuber 2020; Assländer et al. 2023). Therefore, artificially generated images presented in HMDs can be integrated for balance control. Consequently, we proposed that VR is a valid tool to study the visual contribution to balance control. In the study presented here, we tested whether artificially generated visual input presented in the periphery of the human visual field can be used to improve balance in real life. This would be very desirable, as it would lay the foundation for assistive devices that improve visual orientation and balance and reduce the risk of falling. Presenting subtle visual cues in the periphery is a promising concept, as it leaves central vision free of obstructions that could be disturbing during everyday tasks.

While viewing a stable visual reference (without additional vision-based tasks) improves balance in everyday life, situations occur in which the visual input is degraded. For example, reduced brightness (Dev et al. 2021) and reduced visual acuity (Paulus et al. 1984) lead to an increase in body sway, indicating decreased postural stability. In addition, visual stabilization depends on the richness of the visual scene (Paulus et al. 1984). In the extreme case, standing or turning around in front of a white wall does not change the retinal image as the head moves. The visual system then no longer provides reliable feedback for balance control: undesired sway is not encoded by the visual system, and no corrective muscle contraction is generated. Given the partly severe functional degradation of all parts of the balance control system (see below), fall-prone individuals likely have difficulty compensating for a loss of visual feedback, resulting in a high fall risk. We think that such threatening situations could be avoided by providing reliable visual feedback using augmented reality (AR); in other words, AR might be used to improve balance and prevent falls. Furthermore, additional visual orientation cues might help avoid so-called white-out situations (Meeks et al. 2023), in which deteriorated visual cues lead to a complete loss of orientation; they might also help to avoid motion sickness and aid balance-demanding tasks such as slacklining.

The study presented here was conducted with young adults as a proof of concept for technical feasibility. Nonetheless, the main motivation for our approach is the global health problem of falls among older adults and in neurological patient populations. The World Health Organization fact sheet on falls cites an estimate of 37.3 million falls per year that are severe enough to require medical attention, and of 684,000 fall-related deaths per year. Fall-related injury costs underline the magnitude of the problem: the fact sheet reports average injury costs of US$ 3611 for Finland and US$ 1049 for Australia, and in Canada, a 20% reduction in falls is estimated to reduce costs by US$ 120 million annually (United Nations 2021).

Falls and fall risk are multifactorial problems; the present study targets the sensory system. Humans rely on three sensory systems for self-orientation and balance: 1) the vestibular organ, a gravito-inertial sensor in the inner ear; 2) the proprioceptive system, which senses joint angles and thereby provides information on body orientation with respect to the support surface; and 3) the visual system, which extracts self-motion cues with respect to the visual scene (Peterka 2002). Sensory function deteriorates with age, affecting the vestibular (Agrawal et al. 2020), proprioceptive (Henry and Baudry 2019), and visual systems (Andersen 2012). Evidence suggests that people with a high fall risk rely more heavily on visual information (Osoba et al. 2019). A high dependence on vision, however, could expose them to an increased risk of orientation loss when vision deteriorates (e.g. poor lighting) or in visually poor scenes (e.g. a white wall). It should be noted that, despite the subjective impression that standing balance is easy, human stance is inherently unstable and requires constant corrective muscle contractions. Losing orientation for a fraction of a second is therefore sufficient to accelerate the body enough to cause a fall. These considerations highlight the potential of using AR to improve visual orientation and balance.

The main application of our device is likely as AR goggles that improve visual orientation and balance and serve as an assistive device for fall prevention. Baragash et al. (2022) reviewed AR and VR applications to improve the lives of older adults, and balance is one of the most prevalent topics. Most approaches use AR- or VR-based balance training interventions (Nishchyk et al. 2021), in which older adults wear an HMD to perform balance exercises (Mostajeran et al. 2020; Kouris et al. 2018). One notable approach used a VR device to generate a virtual tiled floor to enhance walking rhythm and speed (Baram 1999). This approach uses a virtual environment that occludes the real-world environment and serves as a training device (Baram and Miller 2006). The tiled floor could also have a balance-stabilizing effect; however, the device is not designed for use outside of training sessions. In addition to such technology-supported training approaches, multiple fall detection devices have been proposed (Wang et al. 2020). In general, technology is widely deployed in the field of fall prevention. Oh-Park et al. (2021) highlight four fields: 1) fall risk assessment, 2) fall detection, 3) fall injury prevention, and 4) fall prevention. For fall prevention, mostly the technology-supported training interventions described above are used. Exceptions are three studies we found in which robotic devices detect and actively prevent falls as they occur, based on fall event detection algorithms (Trkov et al. 2017; Ji et al. 2018; Oh-Park et al. 2021). While these approaches are certainly valuable, assuming a version that works reliably, the exoskeletons and robotic devices involved have severe downsides in terms of usability and cost. In summary, AR and VR systems, as well as technology in general, are widely used in fall-related prevention, targeting physical exercise, rehabilitation, and emergencies.
However, to the best of our knowledge and apart from mechanical walking aids, no assistive devices exist that actually improve balance stability and reduce the risk of falling.

We propose a novel approach: an AR system that provides visual orientation in everyday situations. The aim of the system is to continuously augment a user’s view with reliable visual orientation cues. The approach might not provide improvements when looking at a stationary, visually rich, well-illuminated, high-contrast visual scene. However, in situations with poor visual conditions or a moving natural visual scene, we posit that the system could make the difference between a fall and maintained balance, and could help avoid white-out situations. Please note that the idea is not based on fall detection or other event-based interventions, but solely on guaranteeing and enhancing the reliability and validity of the visual reference that is naturally integrated into human balance control. Furthermore, we focused on feedback in the periphery to avoid clutter and disturbances in central vision.

To test our idea, we used a custom-built HMD (Albrecht et al. 2023) and conducted a study testing the effects of the device on balance as a proxy for its functionality. Humans show small oscillations when standing upright (spontaneous sway), which are considerably larger with eyes closed than with eyes open. Blocking the view thus mimics an extreme case of poor vision, which should increase sway to eyes-closed levels. Presenting augmented visual orientation cues (AVOC) should then reduce spontaneous sway again, ideally restoring eyes-open levels. Our device displays space-stationary patterns in the peripheral field of view. The goals of the present work were to develop a proof-of-concept prototype implementing AVOC in augmented reality and to test the following two hypotheses:

- Hypothesis 1: AVOC cues can reduce spontaneous body sway as compared to a sham condition displaying a static image.
- Hypothesis 2: AVOC cues are used for both anterior-posterior and medio-lateral balance, such that small superimposed movements of the visual scene evoke direction-specific sway responses.

2 Methods

2.1 Display device

Commercially available AR devices show content in central vision and are therefore not suitable for our approach of providing visual cues in the periphery. Thus, we used our own head-mounted display toolkit for peripheral vision, MoPeDT (Albrecht et al. 2023). MoPeDT is a headset toolkit that augments peripheral vision using a custom 3D-printed headset and off-the-shelf electronic components. Our configuration of MoPeDT (Fig. 1) consists of two 2.9-inch displays with 1440 × 1440 pixels at 120 Hz (LS029B3SX02, Sharp, Osaka, Japan), placed on each side of the wearer’s head at a horizontal angle of 40 degrees (the angle between the sagittal plane and the line perpendicular to the screen at its center) and approximately 10 cm from the wearer’s eyes. The screen centers are positioned at eye height. Each peripheral display covers approximately 30 × 30 degrees of a participant’s field of view. When wearing the HMD, central vision stays clear of any obstructions. Participants can still see their environment except for the parts where the peripheral display modules block vision (Fig. 1).
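As a rough plausibility check of this geometry, the sketch below estimates the visual angle of one display and the resulting average pixel density. It rests on our own assumptions, not on stated specifications: the 2.9-inch figure is taken as the diagonal of a square active area, the screen is viewed head-on from 10 cm, and pixels are treated as spread uniformly over the visual angle. The function names are illustrative.

```python
import math

def display_angle_deg(diagonal_in: float, distance_m: float) -> float:
    """Full visual angle subtended by a square display viewed head-on from its center."""
    side_m = diagonal_in * 0.0254 / math.sqrt(2)  # diagonal in inches -> side length in metres
    return math.degrees(2 * math.atan((side_m / 2) / distance_m))

def pixels_per_degree(pixels: int, angle_deg: float) -> float:
    """Average pixel density; a flat screen actually has more px/deg toward its edges."""
    return pixels / angle_deg

angle = display_angle_deg(2.9, 0.10)
print(f"{angle:.1f} deg, {pixels_per_degree(1440, angle):.1f} px/deg")  # roughly 29 deg, 49 px/deg
print(pixels_per_degree(1440, 30.0))  # 48.0
```

An estimate of roughly 29 degrees is consistent with the stated coverage of about 30 × 30 degrees, and 1440 pixels over 30 degrees gives the 48 pixels per degree quoted later in this section.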

To track the user’s head in space, a virtual reality tracker (Vive Tracker 3.0, HTC, Taoyuan, Taiwan) is mounted on the headset. The MoPeDT HMD is connected to a host PC via DisplayPort for the video signal and USB for power. A custom Unity application (Version 2020.3.26f1, Unity Technologies, San Francisco, USA) controls the HMD rendering, tracking, experimental protocol, and data recording.

The content displayed on the screens is designed to provide high-contrast patterns that are space-stationary from a participant’s perspective. We render an infinite 3D grid of white lines on a black background (Fig. 2), employing a simple visual language and maximizing contrast for good perception in the periphery (Strasburger et al. 2011; Luyten et al. 2016). To avoid visual clutter, the grid is only displayed at a fixed distance from the user: close (\(\le\) 0.4 m) and far (\(\ge\) 0.6 m) grid segments are gradually faded out. In the center of the displays, a line of the grid covers 2.7–6.4 degrees of visual angle (depending on its distance), at 48 pixels per degree. The brightness of the peripheral displays is dimmed according to the brightness of the study environment to avoid dazzling wearers, which would decrease perceived contrast.

As the HMD is motion-tracked, head movement leads to the motion of the grid on the peripheral displays, such that the grid appears space stationary for the participant. This way, the displayed visual cues behave like cues in a real environment, for example, the outlines of a door in a room or a wall with a high contrast pattern. Thus, the grid is assumed to be naturally integrated as a visual head-orientation reference and used for balance.
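Keeping the grid space-stationary amounts to expressing fixed world points in the tracked head frame before drawing them. The following is a minimal sketch under our own assumptions: a right-handed frame with z pointing up (Unity itself uses a left-handed, y-up convention, so this is illustrative only) and a head pose given as a position vector plus a rotation matrix. `world_to_head` and `yaw_matrix` are hypothetical helpers, not part of the described Unity application.

```python
import numpy as np

def world_to_head(points_world, head_pos, head_rot):
    """Express world-fixed points in head coordinates.

    head_rot maps head-frame vectors to world-frame vectors, so its
    transpose maps world vectors back into the head frame.
    """
    p = np.atleast_2d(np.asarray(points_world, dtype=float))
    return (p - head_pos) @ head_rot  # row-vector form of R.T @ (p - h)

def yaw_matrix(deg):
    """Rotation about the vertical (z) axis."""
    a = np.radians(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0],
                     [np.sin(a),  np.cos(a), 0.0],
                     [0.0,        0.0,       1.0]])

grid_point = [0.5, 0.0, 0.0]  # hypothetical grid vertex 0.5 m ahead
# Head moves 5 cm forward: the vertex is now 0.45 m ahead in head coordinates.
print(world_to_head(grid_point, np.array([0.05, 0.0, 0.0]), np.eye(3)))
# Head yaws 90 degrees to the left: the vertex now lies to the side (negative y).
print(world_to_head(grid_point, np.zeros(3), yaw_matrix(90)))
```

Because the world-fixed grid is re-expressed every frame, a head translation shifts the lines in the opposite direction on the displays, which is what makes them behave like a real wall pattern.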

We quantified the delay between HMD (i.e. tracker) motion and the self-motion correcting pattern motion on the display. We used a high-speed camera (Mikrotron Eosens Mini2, SVS-Vistek, Gilching, Germany) to capture the HMD displays and their physical rim at 1000 frames per second. A weight on a pendulum was swung against the side of the HMD to induce a slight position change. We then counted the number of frames to get the time in milliseconds from the moment the HMD starts moving until there is a visual change on the displays. We measured an average delay of 33 milliseconds (SD = 2 ms) over 7 measurements using a wireless connection to the tracker and the displays running at 120 Hz. This is comparable to consumer VR HMDs in terms of latency without pose prediction (Warburton et al. 2022) (see also Sect. 4).
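Because the high-speed camera records at 1000 frames per second, each counted frame corresponds to 1 ms. The sketch below converts frame counts to delays and relates the measured 33 ms to the refresh period of the 120 Hz displays; the frame counts in the example are hypothetical, not the study's measurements.

```python
from statistics import mean, stdev

def frames_to_ms(frame_counts, camera_fps=1000):
    """Convert counted high-speed-camera frames into delays in milliseconds."""
    return [n * 1000.0 / camera_fps for n in frame_counts]

delays = frames_to_ms([32, 33, 34])  # hypothetical frame counts, not study data
print(mean(delays), stdev(delays))   # 33.0 1.0

display_frame_ms = 1000.0 / 120      # one refresh of a 120 Hz display, about 8.3 ms
print(33 / display_frame_ms)         # about 4 display refresh periods
```

A delay of about 33 ms therefore spans roughly four refresh periods of the displays.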

2.2 Study procedure

A total of 34 healthy young participants took part in the study. Exclusion criteria were balance or neurological disorders, recent concussions, epilepsy, and orthopedic problems. Due to technical recording issues, only the data of 31 participants (18 female, 13 male) aged between 21 and 35 years (\(M = 24.58\), \(SD = 3.45\)) were analyzed. Participant height was between 1.57 and 1.89 m (\(M = 1.70\), \(SD = 0.08\)) and weight was between 51 and 94 kg (\(M = 69.01\), \(SD = 11.24\)).

Prior to the study, participants received a short written and verbal introduction, filled out a short health questionnaire, and gave written consent for their participation. Then, participants filled out a first Simulator Sickness Questionnaire (SSQ) (Kennedy et al. 1993) to assess baseline sickness.

Shoulder and hip kinematics were recorded using HTC Vive Trackers 3.0 attached using velcro straps. In all but the eyes-open and eyes-closed reference conditions, participants also wore the HMD which allowed us to additionally record head movement (Fig. 2). During the experiment, participants were standing in socks with feet together (narrow stance) in the middle of a foam pad (Balance-Pad, Airex, Sins, Switzerland) placed on a force plate (Leonardo Mechanograph GRFP STD, Novotec Medical, Pforzheim, Germany). Analog center of pressure data from the force plate was captured by a data acquisition device (T7, LabJack, Lakewood, USA) connected to the host PC (i7-9700K, RTX 2070, 16 GB RAM) running the Unity application (see Sect. 2.1).

Table 1 provides an overview of all conditions. In conditions 1 and 2 (eyes open and eyes closed), subjects did not wear the HMD; these conditions provided baseline sway for comparison. In conditions 3–6 (Sham/AVOC \(\times\) Room/Dark), subjects wore the HMD to test for balance improvements through augmented visual orientation cues (Hypothesis 1). In Dark conditions, a towel was used to fully block the view of the room and simulate a case of no visual space reference (see Sect. 1). The towel was hung over the frontal part of the participant’s head and HMD such that the displays remained visible and participants had a small volume of space in front of their face to breathe comfortably. Additionally, we turned off the room light to prevent light from leaking in. In Room conditions, subjects looked at the regularly lit lab room of 3.2 × 3.7 × 3.3 m (width, length, height). The walls were approximately 2 m away to the front and 1.5 m on each side. The participants’ view contained two white doors, a white cupboard, a dotted black floor, and several smaller items and structures. During AVOC, the pattern displayed on the screens was rendered such that it appeared space-stationary to the user: when the head tilted, the vertical lines remained aligned with gravity, and when the head translated, the lines moved across the screen. During Sham, the pattern froze after 10 s, and only a static picture was displayed for the rest of the trial. The Sham condition was introduced to account for potential confounds from subjects wearing the HMD and the towel. During conditions 1–6, subjects were instructed to stand upright and comfortably while we measured sway and force-plate data for 60 s. Another four conditions (SineAP/SineML \(\times\) Room/Dark) were designed to test for the direction-specific integration of augmented visual orientation cues (Hypothesis 2).
For conditions 7–10, subjects were again instructed to stand upright and comfortably while we measured for 240 s. Throughout these trials, the AVOC cues, in addition to correcting for self-motion, moved sinusoidally with an amplitude of 10 cm at a frequency of 0.25 Hz in either the anterior-posterior (AP) or the medio-lateral (ML) direction. In total, the measurements took around 35 min per participant, and participants could take short breaks between trials if required.
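The superimposed stimulus is a 10 cm, 0.25 Hz sine, i.e. one cycle every 4 s. A minimal sketch of the offset that would be added to the grid position along the stimulated axis, on top of the head-tracking correction; the zero starting phase and the constant names are our assumptions.

```python
import math

AMPLITUDE_M = 0.10  # 10 cm stimulus amplitude
FREQ_HZ = 0.25      # one cycle every 4 s

def stimulus_offset(t_s: float) -> float:
    """Superimposed grid displacement along the AP or ML axis in metres (zero phase assumed)."""
    return AMPLITUDE_M * math.sin(2 * math.pi * FREQ_HZ * t_s)

peak_velocity = 2 * math.pi * FREQ_HZ * AMPLITUDE_M  # about 0.157 m/s
cycles_per_trial = 240 * FREQ_HZ                     # 60 cycles in a 240 s trial
print(stimulus_offset(1.0), round(peak_velocity, 3), cycles_per_trial)
```

The peak stimulus velocity of about 0.16 m/s and the 60 cycles per trial follow directly from the amplitude, frequency, and trial duration.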

Conditions were tested in random order and double-blinded: neither participants nor instructors were aware of the purpose of the study or of the currently applied condition, apart from what the instructions revealed (with/without HMD, eyes open/closed, Dark/Room). Before each condition, participants were instructed to stand upright and comfortably, cross their hands in front of the hips, and look straight ahead without fixating a point or looking directly at the peripheral displays.

After all conditions, participants filled out a second SSQ.

2.3 Data analysis

Preprocessing of data was performed in MATLAB (The Mathworks, Natick, USA), and statistics and plots in R (The R Foundation, https://www.R-project.org). We used the center of pressure (COP) as measured by the force plate as our main outcome measure. The COP essentially reflects changes in ankle torque, and higher variability is typically associated with less stable balance (note that this is not universally true, e.g. in patient populations). One standard measure to quantify COP oscillations is the path length ’traveled’ in a given time (Maurer and Peterka 2005). For the six spontaneous sway conditions, we filtered the data with a 5 Hz low-pass filter to reduce measurement noise and discarded the first 10 s to avoid including preparatory movements of the participants at the start of the measurement. For the remaining 50 s of each trial, we calculated the 2D COP path length and divided it by 50 s to obtain a measure independent of the recording time (i.e. the mean COP velocity). We then normalized the data for each participant to the individual eyes open (0%) and eyes closed (100%) path length values. We statistically compared results using a Friedman test, as the assumption of normality was violated, and calculated Kendall’s W as the effect size. Afterwards, we performed pairwise Holm-corrected Wilcoxon post-hoc tests. Additionally, we compared each spontaneous sway condition with the HMD to the normalized eyes open (0%) and eyes closed (100%) reference values using one-sample two-sided Wilcoxon tests. We also calculated the Wilcoxon effect size r for each test.
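The path-length measure and the per-participant normalization can be sketched as follows. This is an illustration, not the study's MATLAB code: the 5 Hz low-pass filter is omitted because its design is not specified, the input is assumed to be an array of AP/ML COP positions sampled at a known rate `fs`, and the function names are hypothetical.

```python
import numpy as np

def cop_mean_velocity(cop_xy, fs, discard_s=10.0):
    """2D COP path length divided by the analysed duration (filtering omitted).

    cop_xy: array of shape (n_samples, 2) holding AP and ML COP positions.
    """
    xy = np.asarray(cop_xy, dtype=float)[int(round(discard_s * fs)):]
    step_lengths = np.linalg.norm(np.diff(xy, axis=0), axis=1)  # distance between samples
    return step_lengths.sum() / (xy.shape[0] / fs)              # e.g. 5000 samples at 100 Hz -> 50 s

def normalize_sway(value, eyes_open, eyes_closed):
    """Map a participant's value onto eyes open = 0 % and eyes closed = 100 %."""
    return 100.0 * (value - eyes_open) / (eyes_closed - eyes_open)
```

With this normalization, a value of 60% in a Dark condition would mean that 40% of the difference between eyes closed and eyes open sway was recovered.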

COP data of the four sine-stimulus conditions were low-pass filtered at 5 Hz, and the first 20 s were discarded to avoid transient effects at the start of the trials. Further, we calculated COP velocity to reduce the effect of position drifts (Assländer and Peterka 2016) and cut the 220 s long AP and ML COP recordings into 55 sine-stimulus cycles of 4 s duration.
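The velocity computation and the segmentation into stimulus cycles can be sketched as below. The sampling rate is not reported here, so `fs` is a free parameter, and using central differences (`np.gradient`) for differentiation is our choice; the filter is again omitted.

```python
import numpy as np

def cycle_matrix(cop, fs, discard_s=20.0, cycle_s=4.0):
    """Differentiate a 1D COP trace and cut it into stimulus cycles.

    Returns an array of shape (n_cycles, samples_per_cycle) of COP velocity.
    """
    x = np.asarray(cop, dtype=float)[int(round(discard_s * fs)):]
    vel = np.gradient(x, 1.0 / fs)        # central differences, position units per second
    n = int(round(cycle_s * fs))          # samples in one 4 s cycle
    n_cycles = vel.size // n
    return vel[: n_cycles * n].reshape(n_cycles, n)
```

For a 240 s trial at a hypothetical 100 Hz, this yields 55 cycles of 400 samples each, matching the 220 s / 4 s = 55 cycles described above.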

Averaging across cycles (i.e. stimulus repetitions) reduces the random sway component and brings forth the sway component evoked by the stimulus. We further reduced the random sway contribution by calculating a scaled Fourier transform of all cycles and solely using the 0.25 Hz frequency point. Taking the arithmetic mean across all cycles of all participants and calculating the absolute value provided our mean sway response for each condition and direction.
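When each cycle spans exactly one 4 s stimulus period, the 0.25 Hz component is the first DFT bin. The sketch below extracts that complex value per cycle, averages across all cycles (and participants, via any leading axes), and takes the magnitude. The 2/N scaling, which makes the magnitude equal to a sinusoid's amplitude, is our assumption of what "scaled" means.

```python
import numpy as np

def response_at_stimulus_frequency(cycles):
    """Complex 0.25 Hz component of each 4 s cycle (DFT bin 1, scaled by 2/N).

    cycles: array (..., samples_per_cycle); leading axes such as
    participants and repetitions are kept.
    """
    x = np.asarray(cycles, dtype=float)
    return 2.0 * np.fft.rfft(x, axis=-1)[..., 1] / x.shape[-1]

def mean_response_magnitude(cycles):
    """Average the complex values over all cycles first, then take the magnitude."""
    return np.abs(response_at_stimulus_frequency(cycles).mean())
```

Averaging the complex values before taking the magnitude is what suppresses random sway: components with inconsistent phase across cycles cancel, whereas the stimulus-locked component survives.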

As the Fourier values for the sine-stimulus conditions are complex numbers, conventional multivariate analyses such as repeated-measures MANOVA were not suitable for our data. Instead, we chose a bootstrapping-based approach for both hypothesis testing and confidence interval calculation (Zoubir and Boashash 1998). To test the difference of means between two conditions, we bootstrapped the original samples (0.25 Hz frequency points of 31 participants and 55 cycles each) 10,000 times. For each resulting bootstrap sample, we calculated the statistic \({\hat{T}}^*\) as follows:

\[ {\hat{T}}^* = \frac{|\bar{g_1}^* - \bar{g_2}^*| - |\bar{g_1} - \bar{g_2}|}{{\hat{\sigma }}^*} \]

where |...| denotes the complex magnitude, \(\bar{g_1}\) and \(\bar{g_2}\) the means of the original samples of the two compared conditions, and \(\bar{g_1}^*\) and \(\bar{g_2}^*\) the means of the current bootstrap sample. \({\hat{\sigma }}^*\) is the standard deviation of the bootstrap sample, which was calculated in a nested design: each bootstrap sample was again bootstrapped (200 replicates), and the standard deviation across the resulting 200 complex magnitudes of the mean differences between \(g_1^*\) and \(g_2^*\) was calculated.

The p-value for the hypothesis test is the number of \({\hat{T}}^*\) values that are greater than the cutoff point
\[ \hat{T} = \frac{|\bar{g_1} - \bar{g_2}|}{\hat{\sigma}} \]
divided by the number of bootstraps (10,000), where \(\hat{\sigma}\) is calculated as described above but for the original sample.
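The nested bootstrap test can be sketched in NumPy. This is an illustration under stated assumptions, not the analysis code used in the study: we assume the standard studentized form, in which each replicate yields \((|\bar{g_1}^* - \bar{g_2}^*| - |\bar{g_1} - \bar{g_2}|)/{\hat{\sigma }}^*\) and the cutoff is \(|\bar{g_1} - \bar{g_2}|/\hat{\sigma}\); we also assume that each condition's pooled complex values (participants × cycles) are resampled independently, since the resampling unit is not specified. All helper names are hypothetical.

```python
import numpy as np

def _resample(g, rng):
    """Draw a bootstrap sample of the same size, with replacement."""
    return g[rng.integers(0, g.size, g.size)]

def _mag_diff(a, b):
    """Complex magnitude of the difference of means."""
    return abs(a.mean() - b.mean())

def _boot_sd(g1, g2, n_inner, rng):
    """SD of |mean(g1*) - mean(g2*)| over inner bootstrap replicates."""
    vals = [_mag_diff(_resample(g1, rng), _resample(g2, rng)) for _ in range(n_inner)]
    return np.std(vals, ddof=1)

def bootstrap_p_value(g1, g2, n_boot=10_000, n_inner=200, seed=0):
    """Studentized bootstrap test for a difference in mean complex responses."""
    rng = np.random.default_rng(seed)
    g1, g2 = np.asarray(g1), np.asarray(g2)
    theta = _mag_diff(g1, g2)
    cutoff = theta / _boot_sd(g1, g2, n_inner, rng)
    t_star = np.empty(n_boot)
    for b in range(n_boot):
        s1, s2 = _resample(g1, rng), _resample(g2, rng)
        t_star[b] = (_mag_diff(s1, s2) - theta) / _boot_sd(s1, s2, n_inner, rng)
    return np.mean(t_star > cutoff)
```

With the full 10,000 × 200 replicates this pure-Python loop is slow; smaller values of `n_boot` and `n_inner` are convenient for trying it out.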

We compared AP and ML sway with and without sine stimulus in the respective direction (4 tests) and the influence of the environment condition (Room vs. Dark) for sway with a sine stimulus in the same direction (2 tests). The p-values for each group of tests were Holm-corrected. Additionally, bias-corrected and accelerated (BCa) 95% confidence intervals were calculated for both AP and ML sway and for each condition. Finally, we calculated Cohen’s d as effect size measure, where we estimated the standard deviation from the confidence intervals using
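\[ SD = \sqrt{N}\,\frac{\mathrm{CI}_{\mathrm{upper}} - \mathrm{CI}_{\mathrm{lower}}}{2 \times 1.96} \]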
where \(N = 31\) is the number of recorded subjects.
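The effect size computation can be sketched as follows, assuming (our assumption) the common normal-approximation conversion \(SD = \sqrt{N}\,(\mathrm{CI}_{\mathrm{upper}} - \mathrm{CI}_{\mathrm{lower}})/(2 \times 1.96)\) and a pooled SD taken as the root mean square of the two condition SDs. Note that BCa intervals can be asymmetric, which this conversion ignores. Function names are illustrative.

```python
import math
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)  # two-sided 95 % normal quantile, about 1.96

def sd_from_ci(lower, upper, n):
    """Standard deviation implied by a 95 % CI of a mean (normal approximation)."""
    return math.sqrt(n) * (upper - lower) / (2 * Z_95)

def cohens_d(mean1, ci1, mean2, ci2, n=31):
    """Cohen's d using the root mean square of the two CI-derived SDs as pooled SD."""
    sd1, sd2 = sd_from_ci(*ci1, n), sd_from_ci(*ci2, n)
    pooled = math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return (mean1 - mean2) / pooled
```

For example, a CI of width 0.4 with \(N = 31\) corresponds to an SD of about 0.57 under this assumption.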

We compared SSQ scores before and after the experiment to assess if the presentation of balance cues induces motion sickness or a general feeling of discomfort. As normality was violated for all SSQ scores, we performed paired Wilcoxon tests for each sub-score and the total score. We also calculated the Wilcoxon effect size r for each test.
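For reference, the SSQ sub-scores are weighted sums of raw item scores (Kennedy et al. 1993): nausea × 9.54, oculomotor × 7.58, disorientation × 13.92, and the total score is the sum of the three raw sums × 3.74. The sketch takes the raw subscale sums as input and leaves out the item-to-subscale assignment; the function name is illustrative.

```python
SSQ_WEIGHTS = {"nausea": 9.54, "oculomotor": 7.58, "disorientation": 13.92}
TOTAL_WEIGHT = 3.74

def ssq_scores(raw_nausea, raw_oculomotor, raw_disorientation):
    """Weighted SSQ sub-scores and total score from raw (unweighted) subscale sums."""
    raw = {"nausea": raw_nausea, "oculomotor": raw_oculomotor,
           "disorientation": raw_disorientation}
    scores = {name: value * SSQ_WEIGHTS[name] for name, value in raw.items()}
    scores["total"] = (raw_nausea + raw_oculomotor + raw_disorientation) * TOTAL_WEIGHT
    return scores
```

This weighting explains why the medians reported in the Results are multiples of these weights, e.g. a nausea median of 19.08 = 2 × 9.54.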

We selected an alpha level of 0.05 for all tests.

3 Results

3.1 Center of pressure

3.1.1 Spontaneous sway

Figure 3 shows the COP velocity during the unperturbed stance conditions. COP velocity differed significantly between conditions (\(\chi ^2(3) = 76.74\), \(p < 0.0001\), \(W = 0.825\)). The main comparison of interest, avoc_dark vs. sham_dark, showed a 23% reduction (\(p = 0.01\), \(r = 0.496\)), and avoc_room vs. sham_room showed a 2% reduction (\(p = 0.039\), \(r = 0.37\)). We further compared individual conditions to the eyes open and eyes closed baseline COP path length measures. COP velocity was 40% lower in avoc_dark than in eyes closed (\(p < 0.0001\), \(r = 0.718\)). However, we also found a 17% reduction in sham_dark as compared to eyes closed (\(p = 0.0289\), \(r = 0.391\)). COP path length was comparable between avoc_room and eyes open (\(p = 0.0695\)), but showed a 9% increase in sham_room as compared to eyes open (\(p = 0.0246\), \(r = 0.401\)). Please refer to Table 2 for original and normalized summary statistics of the COP velocity for each condition.
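The reported effect size can be cross-checked from the Friedman statistic, since Kendall's \(W = \chi^2 / (N(k-1))\). The sketch assumes \(N = 31\) participants and \(k = 4\) conditions (three degrees of freedom), which we read as the four HMD conditions because eyes open and eyes closed are fixed at 0% and 100% after normalization; this is our interpretation. The rank-based Friedman function is a simplified illustration that does not handle ties.

```python
import numpy as np

def friedman_chi2(data):
    """Friedman statistic for an (n_subjects, k_conditions) array (no tie handling)."""
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    ranks = x.argsort(axis=1).argsort(axis=1) + 1  # ranks 1..k within each subject
    rank_sums = ranks.sum(axis=0)
    return 12.0 / (n * k * (k + 1)) * np.sum(rank_sums ** 2) - 3.0 * n * (k + 1)

def kendalls_w(chi2, n, k):
    """Kendall's W effect size derived from the Friedman statistic."""
    return chi2 / (n * (k - 1))

print(round(kendalls_w(76.74, n=31, k=4), 3))  # 0.825, matching the reported W
```

The match between 76.74 / (31 × 3) and the reported W supports the reading of four compared conditions.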

3.1.2 Direction-specific integration of visual feedback

Figure 4: Stimulus and COP sway response for each direction-specific sway condition, averaged over all participants for a single stimulus cycle. Colored COP response lines depict the signal after 0.3 Hz low-pass filtering, and colored ribbons denote the standard deviation. Gray COP response lines depict the signal after 5 Hz low-pass filtering. The stimulus is plotted without filtering.

To display the effect of the sinusoidal translation of the displayed visual reference, we averaged the time-domain COP trajectories across all participants and recorded cycle repetitions (Fig. 4). The gray trajectories show the 5 Hz low-pass filtered average, revealing higher-order oscillations and a rough resemblance to the stimulus. Colored lines show the 0.3 Hz low-pass filtered, averaged trajectories. Here, sinusoidal sway is visible in all conditions in which the sine stimulus was present, while no sinusoidal sway is visible in the directions without stimulus. To quantify these effects, we conducted frequency-based analyses with bootstrap hypothesis tests, as described in Sect. 2.3. Figure 5 shows the magnitude of the mean in AP and ML direction for each condition (averaged across all participants and stimulus repetitions), bootstrapped BCa 95% confidence intervals, and bootstrapped hypothesis test results. The x-axis shows AP and ML sway for the four conditions with stimulus (Dark/Room \(\times\) stimAP/stimML). In the Dark AP stimulus condition, the magnitude of mean AP sway was much larger than that of ML sway (\(p < 0.001\), \(d = 1.37\)). A similar, though non-significant (\(p = 0.055\), \(d = 0.79\)), effect was present in the Room AP stimulus condition. Likewise, the magnitude of mean ML sway was larger than that of AP sway in the Dark ML stimulus condition (\(p = 0.041\), \(d = 0.68\)) and in the Room ML condition (\(p = 0.041\), \(d = 0.82\)). We did not find a significant difference in AP sway between the Dark AP and Room AP conditions (\(p = 0.073\), \(d = 0.73\)), or in ML sway between the Dark ML and Room ML conditions (\(p = 0.110\), \(d = 0.26\)). The magnitude of mean sway for all non-stimulus components (i.e. ML sway for AP stimulus and AP sway for ML stimulus) showed similarly small values in all conditions.

3.2 SSQ

All sub-scores and the total score of the SSQ were significantly higher (\(p < 0.01\)) after the experiment compared to before the experiment (Fig. 6). Post disorientation (\(MD = 13.92\), \(IQR = 41.75\)) was higher than pre disorientation (\(MD = 0\), \(IQR = 6.96\), \(p = 0.001\), \(r = 0.69\)). Post nausea (\(MD = 19.08\), \(IQR = 19.08\)) was twice as high as pre nausea (\(MD = 9.54\), \(IQR = 19.08\), \(p = 0.002\), \(r = 0.58\)). Post oculomotor (\(MD = 22.74\), \(IQR = 22.74\)) was three times as high as pre oculomotor (\(MD = 7.58\), \(IQR = 7.58\), \(p = 0.001\), \(r = 0.70\)). Post total SSQ score (\(MD = 18.7\), \(IQR = 24.31\)) was 2.5 times as high as pre total SSQ score (\(MD = 7.48\), \(IQR = 11.22\), \(p < 0.001\), \(r = 0.77\)).

4 Discussion

In this study, we tested whether visual patterns displayed in the periphery of a participant’s field of view can be used to improve human standing balance (Hypothesis 1) and induce either anterior-posterior or medio-lateral sway through a superimposed motion of the displayed pattern (Hypothesis 2). Our results confirmed both hypotheses, showing a 23% reduction in center of pressure oscillations (sham_dark vs. avoc_dark), as well as direction-specific responses to the pattern movement in AP and ML directions. Our results, therefore, provide a proof of concept for a technical device to improve standing balance by augmenting visual orientation cues in the peripheral field of view. The observed effects were statistically robust, but also showed potential for improvements. In the following, we will discuss some experimental shortcomings and the challenges in design and technical solutions for future developments.

The observed effects on spontaneous sway were statistically robust with a large effect size. However, during the Dark conditions, we found an improvement also in the Sham condition as compared to eyes closed, which was unexpected. One factor could be that the headset and towel had a mechanical effect on sway behavior through the additional weight, inertia, and cables attached to the participant. We had no suspension for the cables, which therefore could have caused a strain on the participant’s head and/or body. Also, psychological effects could have caused the difference between eyes closed and sham, such as changes in concentration on or distraction from the balance task.

Participants stood on foam pads in all conditions. Our rationale was that a compliant support surface reduces the information that proprioception provides about body orientation with respect to the surface (Schut et al. 2017). Reducing the proprioceptive contribution typically increases the visual contribution, thereby increasing the potential effects of AVOC. However, we underestimated the amount of variability induced by the instability of the participants on the foam. Foam is widely known to increase sway variability, and here the large variability resulted in rather wide confidence bounds, somewhat masking the effects. Owing to the sufficient number of recruited participants, we were nonetheless able to obtain statistically robust results. Another shortcoming of our design was the chosen randomization, which was not counterbalanced and therefore does not fully exclude potential order effects.

We did not find meaningful differences between the eyes open and avoc_room conditions. As the room provides reliable visual feedback, the visual cues in avoc_room could have merely substituted ’natural’ visual feedback with artificial visual feedback. This result is important, as it confirms that AVOC did not perturb balance or confuse participants. We did, however, find slightly increased COP oscillations in the sham_room condition as compared to eyes open. One explanation could be that in this condition, the displays act like blinders, showing only a static pattern and reducing the wearer’s field of view.

The device covered only a very small proportion of the human field of view (30 × 30 degrees per eye). There are some indications that the size of the field of view modulates the extent to which vision reduces spontaneous sway, with a larger field of view leading to less spontaneous sway (Paulus et al. 1984). However, the size needs to be corrected by the cortical magnification factor, which corresponds to the retinal receptor density and also determines visual acuity across the human field of view (Cowey and Rolls 1974). In other words, more central areas contribute more, as they have a larger cortical representation. Thus, the small field of view of our device technically limits the possible improvement, which is likely a major factor explaining the rather small effect on spontaneous sway (23% in Dark AVOC vs. Sham). Paulus et al. (1984) looked at changes in spontaneous sway when occluding parts of the field of view. Incidentally, one condition was roughly comparable to our setup, where participants only saw 30 degrees in the periphery of one eye. We normalized their reported data to eyes open (0%) and eyes closed (100%) and found an improvement of 41% in the \(30^{\circ }\) condition compared to eyes closed. This indicates a general limitation of small-field-of-view feedback as used in our setup. However, it strengthens our findings, in that our technical presentation of AVOC cues showed results similar to the high-contrast real-world presentation used in the study of Paulus et al. (1984). Notably, Paulus et al. (1984) explicitly discuss that the visual contribution to balance has enough "redundancy for compensation of small ’scotomas’". Thus, AVOC cues presented in a significant portion of the human field of view are likely sufficient to stabilize balance in poor vision conditions enough to prevent orientation loss and falls; covering the full field of view does not seem to be necessary.

As with spontaneous sway, the sway amplitudes evoked by the sinusoidal stimuli were small compared to earlier studies (Stoffregen 1985, 1986; Dijkstra et al. 1992). The size and location of the stimulated field of view differed considerably between those studies and our displays. While one condition of Stoffregen (1985) used an even smaller field of view (\(20^{\circ } \times 23^{\circ }\)), it was located in the foveal region, where, owing to the cortical magnification factor, it has a much larger effect on balance than our peripheral AVOC cues (Straube et al. 1994). In addition, the amplitudes and velocities of the motion stimuli differed substantially, which could have added to the differences through sensory reweighting (Peterka 2002). Another major factor that potentially reduced the use of the displayed cues is the reduced visual acuity caused by the near-eye location of the displays. When subjects look into the distance, the eyes accommodate to far distances, which effectively blurs the displays. Thus, future systems should use display optics adjusted to the accommodation distances typical in everyday life.

The significantly higher SSQ scores after the experiment suggest that presenting balance cues using MoPeDT can induce low levels of cybersickness. We suspect that the current technical limitations of the system could be one reason for this. The motion-to-photon latency of our HMD (approx. 33 ms, see Sect. 2.1) is comparable to that of common consumer VR headsets (Warburton et al. 2022). However, we did not use motion prediction, which uses the current velocity and direction of a movement to predict future poses and can thereby drastically reduce the experienced latency during ongoing movements (Warburton et al. 2022). We suspect that a lower average latency of the displayed balance cues could further improve their effectiveness and possibly reduce cybersickness. Furthermore, the motion stimuli used in our SineAP/SineML conditions could themselves have induced motion sickness, as reported, e.g., by Stoffregen (1985). In addition, because of differences in head shape and in how users wear the HMD, each user might view the peripheral displays from a slightly different angle, so the displayed visual cues do not perfectly match that user’s viewing angle. A possible solution would be to use eye tracking to precisely account for individual head geometry, or to use even simpler visual cues that do not depend on the viewing angle. Apart from such technical reasons, standing itself and especially wearing the towel over the head likely also contributed to the higher SSQ scores.
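To make the idea of motion prediction concrete, the following Python sketch extrapolates a tracked head position over the motion-to-photon latency under a constant-velocity assumption. It is a hypothetical, deliberately simplified illustration (position only, velocity estimated from the last two tracker samples) and not the method used by Warburton et al. (2022) or by any particular headset; practical predictors also have to handle orientation and noisy velocity estimates. The names `PoseSample` and `predict_position` are introduced here for illustration only.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PoseSample:
    """One (hypothetical) head-tracker sample."""
    t: float        # timestamp in seconds
    position: Vec3  # head position in metres


def predict_position(prev: PoseSample, curr: PoseSample, latency_s: float) -> Vec3:
    """Extrapolate the head position latency_s seconds beyond the newest sample.

    Assumes the velocity between the last two samples stays constant
    (simple linear extrapolation); orientation is ignored in this sketch.
    """
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("samples must be in strictly increasing time order")
    # Finite-difference velocity estimate from the last two samples
    velocity = tuple((c - p) / dt for c, p in zip(curr.position, prev.position))
    # Place the cues where the head is expected to be once the frame is displayed
    return tuple(c + v * latency_s for c, v in zip(curr.position, velocity))


if __name__ == "__main__":
    # Illustrative samples 10 ms apart: head moving forward at about 0.1 m/s
    prev = PoseSample(t=0.000, position=(0.000, 0.0, 0.0))
    curr = PoseSample(t=0.010, position=(0.001, 0.0, 0.0))
    # 33 ms corresponds to the approximate motion-to-photon latency reported above
    print(predict_position(prev, curr, latency_s=0.033))
```

Rendering the cues at the predicted rather than the last measured pose is what shortens the experienced latency; the price is overshoot when the head decelerates or reverses, which is one reason practical systems smooth the velocity estimate.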

In the present study, we used opaque peripheral displays for maximum contrast and because of their simple implementation. The next steps are to increase the area of the human field of view in which AVOC cues are presented and to develop optical see-through augmented reality optics. Transparency is mandatory for usability in everyday life, especially when the AVOC display area is increased. Transparent displays might introduce additional problems, such as a trade-off between too low contrast and too disturbing AVOC cues, which need to be evaluated with purpose-built hardware and in usability studies. In addition, see-through devices need optics tailored to provide sufficient visual acuity at everyday focal distances, which poses considerable technical challenges. Beyond the display technology, future work will need to optimize the presented patterns. Likely moderating factors include contrast and brightness, resolution and spatial frequency, crowding, and the optimal area in which to display the cues. Once a sufficiently mature device is available, future studies need to investigate the potential of AVOC in populations at risk of falling and in patient populations. The effort is potentially rewarding: our results show that, implemented as an optical see-through augmented reality device, the approach has the potential to improve balance in situations with poor visual conditions.

5 Conclusion

We proposed a novel approach to improve balance in situations with a poor visual reference, developed a technical implementation, and demonstrated its functionality. Our results confirmed that technically generated, space-stationary visual patterns are integrated as a reference for the visual contribution to balance. Currently, the main technical limitation is presenting AVOC across a large field of view without restricting the real-world view. As balance deficits are a major health problem leading to numerous falls in affected populations, improving visual orientation through AVOC might have considerable potential.

Availability of data and materials

The datasets generated and analysed during the current study are available from the corresponding author upon reasonable request.

References

Agrawal Y, Merfeld DM, Horak FB et al (2020) Aging, vestibular function, and balance: proceedings of a national institute on aging/national institute on deafness and other communication disorders workshop. J Gerontol Ser A 75(12):2471–2480. https://doi.org/10.1093/gerona/glaa097

Albrecht M, Assländer L, Reiterer H et al (2023) MoPeDT: a modular head-mounted display toolkit to conduct peripheral vision research. In: 2023 IEEE conference virtual reality and 3D user interfaces (VR), pp 691–701. https://doi.org/10.1109/VR55154.2023.00084

Andersen GJ (2012) Aging and vision: changes in function and performance from optics to perception. Wiley Interdiscip Rev Cognit Sci 3(3):403–410. https://doi.org/10.1002/wcs.1167

Assländer L, Peterka RJ (2016) Sensory reweighting dynamics following removal and addition of visual and proprioceptive cues. J Neurophysiol 116(2):272–285. https://doi.org/10.1152/jn.01145.2015

Assländer L, Streuber S (2020) Virtual reality as a tool for balance research: eyes open body sway is reproduced in photo-realistic, but not in abstract virtual scenes. PLoS One 15(10):e0241479. https://doi.org/10.1371/journal.pone.0241479

Assländer L, Albrecht M, Diehl M et al (2023) Estimation of the visual contribution to standing balance using virtual reality. Sci Rep 13(1):2594. https://doi.org/10.1038/s41598-023-29713-7

Association WM (2013) World medical association declaration of helsinki: ethical principles for medical research involving human subjects. JAMA 310(20):2191–2194. https://doi.org/10.1001/jama.2013.281053

Baragash RS, Aldowah H, Ghazal S (2022) Virtual and augmented reality applications to improve older adults’ quality of life: a systematic mapping review and future directions. Digital Health 8:20552076221132100. https://doi.org/10.1177/20552076221132099

Baram Y (1999) Walking on tiles. Neural Process Lett 10:81–87. https://doi.org/10.1023/A:1018713516431

Baram Y, Miller A (2006) Virtual reality cues for improvement of gait in patients with multiple sclerosis. Neurology 66(2):178–181. https://doi.org/10.1212/01.wnl.0000194255.82542.6b

Cowey A, Rolls E (1974) Human cortical magnification factor and its relation to visual acuity. Exp Brain Res 21:447–454. https://doi.org/10.1007/BF00237163

Dev MK, Wood JM, Black AA (2021) The effect of low light levels on postural stability in older adults with age-related macular degeneration. Ophthal Physiol Opt 41(4):853–863. https://doi.org/10.1111/opo.12827

Dijkstra T, Gielen C, Melis B (1992) Postural responses to stationary and moving scenes as a function of distance to the scene. Human Movement Sci 11(1–2):195–203. https://doi.org/10.1016/0167-9457(92)90060-O

Henry M, Baudry S (2019) Age-related changes in leg proprioception: implications for postural control. J Neurophysiol 122(2):525–538. https://doi.org/10.1152/jn.00067.2019

Ji J, Guo S, Song T et al (2018) Design and analysis of a novel fall prevention device for lower limbs rehabilitation robot. J Back Musculoskeletal Rehabil 31(1):169–176. https://doi.org/10.3233/BMR-169765

Kennedy RS, Lane NE, Berbaum KS et al (1993) Simulator sickness questionnaire: an enhanced method for quantifying simulator sickness. Int J Aviat Psychol 3(3):203–220. https://doi.org/10.1207/s15327108ijap0303_3

Kouris I, Sarafidis M, Androutsou T et al (2018) Holobalance: an augmented reality virtual trainer solution for balance training and fall prevention. In: 2018 40th Annual international conference of the IEEE engineering in medicine and biology society (EMBC), pp 4233–4236. https://doi.org/10.1109/EMBC.2018.8513357

Luyten K, Degraen D, Rovelo Ruiz G et al (2016) Hidden in plain sight: an exploration of a visual language for near-eye out-of-focus displays in the peripheral view. In: Proceedings of the 2016 CHI conference on human factors in computing systems. Association for Computing Machinery, New York, NY, USA, CHI ’16, pp 487–497. https://doi.org/10.1145/2858036.2858339

Maurer C, Peterka RJ (2005) A new interpretation of spontaneous sway measures based on a simple model of human postural control. J Neurophysiol 93(1):189–200. https://doi.org/10.1152/jn.00221.2004

Meeks RK, Anderson J, Bell PM (2023) Physiology of spatial orientation. StatPearls Publishing, Treasure Island (FL). http://europepmc.org/books/NBK518976

Mostajeran F, Steinicke F, Ariza Nunez OJ et al (2020) Augmented reality for older adults: exploring acceptability of virtual coaches for home-based balance training in an aging population. In: Proceedings of the 2020 CHI conference on human factors in computing systems. Association for Computing Machinery, New York, NY, USA, CHI ’20, pp 1–12. https://doi.org/10.1145/3313831.3376565

Nishchyk A, Chen W, Pripp AH et al (2021) The effect of mixed reality technologies for falls prevention among older adults: systematic review and meta-analysis. JMIR Aging 4(2):e27972. https://doi.org/10.2196/27972

Oh-Park M, Doan T, Dohle C et al (2021) Technology utilization in fall prevention. Am J Phys Med Rehabil 100(1):92–99. https://doi.org/10.1097/PHM.0000000000001554

Osoba MY, Rao AK, Agrawal SK et al (2019) Balance and gait in the elderly: a contemporary review. Laryngoscope Investigative Otolaryngol 4(1):143–153. https://doi.org/10.1002/lio2.252

Paulus W, Straube A, Brandt T (1984) Visual stabilization of posture: physiological stimulus characteristics and clinical aspects. Brain 107(4):1143–1163. https://doi.org/10.1093/brain/107.4.1143

Peterka RJ (2002) Sensorimotor integration in human postural control. J Neurophysiol 88(3):1097–1118. https://doi.org/10.1152/jn.2002.88.3.1097

Schut I, Engelhart D, Pasma J et al (2017) Compliant support surfaces affect sensory reweighting during balance control. Gait Posture 53:241–247. https://doi.org/10.1016/j.gaitpost.2017.02.004

Stoffregen TA (1985) Flow structure versus retinal location in the optical control of stance. J Exp Psychol Human Percept Perform 11(5):554. https://doi.org/10.1037/0096-1523.11.5.554

Stoffregen TA (1986) The role of optical velocity in the control of stance. Percept Psychophys 39:355–360. https://doi.org/10.3758/BF03203004

Strasburger H, Rentschler I, Jüttner M (2011) Peripheral vision and pattern recognition: a review. J Vis 11(5):13–13. https://doi.org/10.1167/11.5.13

Straube A, Krafczyk S, Paulus W et al (1994) Dependence of visual stabilization of postural sway on the cortical magnification factor of restricted visual fields. Exp Brain Res 99:501–506

Trkov M, Wu S, Chen K et al (2017) Design of a robotic knee assistive device (rokad) for slip-induced fall prevention during walking. IFAC PapersOnLine 50(1):9802–9807. https://doi.org/10.1016/j.ifacol.2017.08.887

United Nations (2021) Factsheet: falls. https://www.who.int/news-room/fact-sheets/detail/falls

Wang Z, Ramamoorthy V, Gal U et al (2020) Possible life saver: a review on human fall detection technology. Robotics 9(3). https://doi.org/10.3390/robotics9030055

Warburton M, Mon-Williams M, Mushtaq F et al (2022) Measuring motion-to-photon latency for sensorimotor experiments with virtual reality systems. Behav Res Methods. https://doi.org/10.3758/s13428-022-01983-5

Zoubir A, Boashash B (1998) The bootstrap and its application in signal processing. IEEE Signal Process Mag 15(1):56–76. https://doi.org/10.1109/79.647043

Acknowledgements

We thank our student lab members Johannes Dörr, Saiguhan Elancheran, Anna Grootenhuis, and Lennart Präger for their help during data collection for this study.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research was funded by the "Transferplattform" of the University of Konstanz, and the "EFRE-Programm Baden-Württemberg 2021-2027" (ID 2519389).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Matthias Albrecht and Lorenz Assländer. The first draft of the manuscript was written by Matthias Albrecht and Lorenz Assländer. All authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have filed a patent application for the idea tested in this publication (U.S. Patent Application No. 17/864,946) and have no other competing interests to declare that are relevant to the content of this article.

Ethics approval

The study was conducted in accordance with the IRB regulations of the ethics committee of the University of Konstanz, Germany, and the latest version of the Declaration of Helsinki (Association 2013).

Consent to participate

All participants were informed about the study and consented to participate.

Consent for publication

All participants gave consent that their anonymized data recorded during the study may be used for publications.

Code availability

The code that was used to conduct and analyze the current study is available from the corresponding author upon reasonable request.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Albrecht, M., Streuber, S. & Assländer, L. Improving balance using augmented visual orientation cues: a proof of concept. Virtual Reality 28, 109 (2024). https://doi.org/10.1007/s10055-024-01006-y

DOI: https://doi.org/10.1007/s10055-024-01006-y