Abstract

Robotic surgery is preferred over open or laparoscopic surgeries due to its intuitiveness and convenience. However, prolonged use of surgical robots can cause neck pain and joint fatigue in the wrist and fingers. In addition, the input systems are bulky and difficult to maintain. To resolve these issues, we propose a novel input module based on real-time 3D hand tracking driven by RGB images and the MediaPipe framework to control surgical robots such as the patient side manipulator (PSM) and endoscopic camera manipulator (ECM) of the da Vinci research kit. In this paper, we explore the mathematical basis of the proposed 3D hand tracking module and provide a proof-of-concept through user experience (UX) studies conducted in a virtual environment. End-to-end latencies for controlling the PSM and ECM were 170 ± 10 ms and 270 ± 10 ms, respectively. Of fifteen novice participants recruited for the UX study, thirteen reached a qualifying level of proficiency after 50 min of practice, and fatigue of the hand and wrist was imperceptible. We therefore conclude that we have developed a robust 3D hand tracking module for surgical robot control, which may in the future reduce hardware cost and volume and help resolve ergonomic problems. Furthermore, the RGB image driven 3D hand tracking module developed in our study is applicable to diverse fields such as extended reality (XR) development and remote robot control. In addition, we provide a new standard for evaluating novel input modalities of XR environments from a UX perspective.


1 Introduction

Minimal invasiveness has become a key paradigm in modern surgery (Berggren et al. 1994; Mack 2001; Fuchs 2002; Lanfranco et al. 2004; Gemmill and McCulloch 2007; Nezhat 2008; Townsend et al. 2016; Martínez-Pérez et al. 2017; Nouralizadeh et al. 2018). Over the past few decades, minimally invasive surgery has proven to be superior to open surgery in terms of various clinical outcome indicators, such as intraoperative blood loss, perioperative pain, postoperative complications, hospitalization period, and patients’ aesthetic satisfaction (Berggren et al. 1994; Fuchs 2002; Gemmill and McCulloch 2007; Townsend et al. 2016; Martínez-Pérez et al. 2017; Nouralizadeh et al. 2018). However, the environment for conducting minimally invasive surgery was not surgeon-friendly since it was uncomfortable and was constrained by difficult instrument control (Mack 2001; Fuchs 2002; Lanfranco et al. 2004; Lawson et al. 2007; Nezhat 2008; Townsend et al. 2016). To overcome these difficulties and maximize the benefits of minimally invasive surgery, robot-assisted minimally invasive surgery (RAMIS) was devised (Mack 2001; Lanfranco et al. 2004; Townsend et al. 2016).

RAMIS platforms, like the remarkable da Vinci surgical system, enable intuitive manipulator control by implementing a system composed of a surgeon console and a patient side robot (DiMaio et al. 2011; Douissard et al. 2019). The movements of the user are input via master tool manipulators (MTMs) and processed to control multiple patient side manipulators (PSMs) and an endoscopic camera manipulator (ECM). Current RAMIS platforms increase the surgeon’s dexterity and precision by adding extra wrist joints to the laparoscopic instruments and implementing tremor filtration algorithms as well as motion scaling (Mack 2001; DiMaio et al. 2011; Douissard et al. 2019). In addition, a stereoscopic endoscope and stereo viewer provide the surgeon with an in-depth binocular view of the operative field. Therefore, RAMIS allows surgeons to easily learn and comfortably perform submillimeter procedures, thereby decreasing complication rates for some surgeries that require delicate instrument manipulation (Ficarra et al. 2007; Nezhat 2008; Gala et al. 2014; Crippa et al. 2021). Nevertheless, various crucial weaknesses of RAMIS persist, including low cost-effectiveness, fatigue owing to non-ergonomic postures, extended operation time, and risk of intraoperative nerve compression from bulky hardware (Mack 2001; Lanfranco et al. 2004; Meijden and Schijven 2009; Mills et al. 2013; Koehn and Kuchenbecker 2015; Townsend et al. 2016; Wee et al. 2020). In this study, we mainly addressed problems related to ergonomics in RAMIS.

Although RAMIS is more comfortable than conventional laparoscopic surgery, surgeons still suffer from fatigue and pain in the back and joints of the upper extremities (Lawson et al. 2007; Lux et al. 2010; Hullenaar et al. 2017; Lee et al. 2017; Wee et al. 2020). Studies have shown that prolonged pain-inducing postures are adopted during extensive surgeries and approximately 20% of surgeons have advocated for improvements in the console design, thus leading to various solutions (Lawson et al. 2007; Lux et al. 2010; Hullenaar et al. 2017; Lee et al. 2017; Wee et al. 2020). Direct adjustments to the surgeon console, such as changing the viewer angle and arm rest height, have improved the user experience (UX), but some surgeons reportedly remained unsatisfied even after such adjustments (Lux et al. 2010; Hullenaar et al. 2017). Alternatively, new systems have been developed by implementing new hardware. The head-mounted display (HMD) is one of the most actively studied vision systems as an alternative to the stereo viewer (Qian et al. 2018; Dardona et al. 2019; Jo et al. 2020; Danioni et al. 2022; Kim et al. 2022). Compared with a conventional stereo viewer, surgeons using an HMD can conduct procedures in a more upright position and have reported reduced neck and back fatigue in UX studies (Dardona et al. 2019; Kim et al. 2022).

Finger and wrist pain is also a significant ergonomic issue of contemporary RAMIS systems (Lee et al. 2017). To control the ECM or PSMs, surgeons must grab and move the end-effectors of the MTMs. Although a gravity compensating mechanism is implemented to reduce the force applied to the fingers, it has been reported that extensive surgeries lasting for hours can cause a significant amount of hand and wrist pain, potentially affecting surgeons’ performance (Lee et al. 2017). Consequently, a need for a novel input mechanism for RAMIS systems has emerged. Wearable devices such as motion gloves have been proposed as a substitute for MTMs (Danioni et al. 2022). However, they are too bulky for users to express delicate movements, their battery capacity is not sufficient for extensive robotic surgeries lasting for hours, and their cost can pose a significant barrier to widespread use. Currently, deep learning based real-time hand gesture recognition is actively studied in the field of computer vision (Amprimo et al. 2023; Kavana and Suma 2022; Buckingham 2021). In conjunction with HMDs, hand tracking based on sets of RGB images is also emerging as an input modality that lets users interact intuitively with virtual reality environments (Amrani et al. 2022; Nikitin et al. 2020; Han et al. 2020). However, to our knowledge, the application of computer vision based hand tracking technology to the control of RAMIS systems has not been reported.

We propose an unconstrained lightweight control interface (ULCI) that reduces the finger and wrist pain caused by MTMs by implementing a contactless hand tracking algorithm for manipulator control. As depicted in Fig. 1, the three-dimensional (3D) locations of hand landmarks are tracked in real time through images obtained from multiple cameras. The images are processed to control virtual manipulators that are displayed on the HMD. We first report a proof of concept by introducing the underlying mechanisms of the ULCI. Then, we assess the interface both qualitatively and quantitatively through the trajectories and latencies of the virtual PSMs and ECM. Finally, we describe UX studies in which naïve participants completed several tasks and a set of questionnaires to investigate the feasibility and ergonomic properties of the ULCI.

Diagram of the proposed unconstrained lightweight control interface (ULCI) for robot-assisted minimally invasive surgery (RAMIS) is shown. Several cameras are set at different angles to capture the movement of hands. The data from the cameras are processed with a hand tracking module to extract a set of hand landmark data. Finally, this data is used to visualize the virtual manipulators such as the patient side manipulators (PSMs) or the endoscopic camera manipulator (ECM). A stereoscopic view of the virtual environment is displayed on a head-mounted display (HMD)

2 Materials and methods

2.1 Components of the unconstrained lightweight control interface

2.1.1 Overview

ULCI is a surgical robot control platform based on a real-time computer vision driven hand tracking system and an HMD. As shown in Fig. 2, the ULCI can be divided into three units according to information flow: input, robot control, and visualization units.

Diagram of main components of the ULCI and their connections are shown. The ULCI is composed of three units: input, robot control, and visualization units. The input unit consists of multiple USB cameras and foot pedals. The robot control unit analyzes the videos captured by the cameras with MediaPipe and scripts developed on ROS to determine the joint states of the surgical robot. The visualization unit displays the state of the robot through an HMD via Unity software

2.1.2 Input unit

The input unit comprises several USB cameras (C930E Business Webcam, Logitech, Newark, CA, USA) that stream images at 30 frames per second (FPS) and a pair of customized foot pedals connected to an Arduino Uno board (Arduino Uno Rev3, Arduino, Somerville, MD, USA). We focused on adopting widely available devices in the ULCI to allow maximum flexibility for further development. For example, the use of USB cameras allows various up-to-date computer vision algorithms to be implemented, and additional sensors and input devices can be interfaced with the Arduino board. In this study, we placed four cameras at points surrounding and pointing down at the user (surgeon), as shown in Fig. 3a, to acquire images of the user’s hands from a wide range of viewpoints and to minimize the risk of malfunction owing to a blind spot. Although the camera settings can be configured according to the user’s preferences, they were kept fixed in this study. Input signals from the cameras and pedals were sent to a computer via a USB connection for integration, data processing, and robot control.

(a) Diagram of the experimental setting is illustrated. In our study, four cameras were positioned above the user and were tilted as described in the figure. (b) An example scene of a user controlling a surgical robot with the ULCI in a virtual environment is shown. The cameras set at different poses capture the user’s hands in different angles to create the input data and the virtual surgical robot manipulators are displayed through an HMD. (c) Arduino based foot pedals are used in the ULCI. In the study, two pedals, ECM pedal and PSM pedal, were used. (d) An example scene inside a virtual world from a third person perspective (TPP) is shown. (e) An example scene inside a virtual world from a first person perspective (FPP) is shown. It should be noted that a stereoscopic display is given to the user to allow depth perception

2.1.3 Robot control unit

Robot control is implemented on a Linux computer with the following specifications: Ubuntu 20.04 LTS operating system, Intel® Core i7-12700K (5.0 GHz, 12 cores) processor with 64 GB of DDR4 RAM, and MSI GeForce RTX™ 3080 Gaming Z 12G graphics processing unit (GPU). Input images from the cameras are processed in parallel through the MediaPipe framework (version 0.8.10) with OpenGL GPU support to return two-dimensional (2D) coordinates of hand landmarks for both hands viewed from each camera (Lugaresi et al. 2019; Zhang et al. 2020). Then, these data and the input signals from the foot pedals are integrated into a Robot Operating System (ROS) environment developed using open-source software for the da Vinci research kit (dVRK) (Kazanzides et al. 2014; Chen et al. 2017). The ULCI was built with ROS Noetic Ninjemys, which is compatible with the Ubuntu 20.04 operating system. The sets of 2D coordinates of hand landmarks obtained from the USB cameras via MediaPipe are used to compute the 3D position of each hand landmark and to determine the joint states of the manipulators constituting the dVRK, especially the PSM and ECM, at a loop rate of 100 Hz. Finally, the joint states are sent to the visualization unit via TCP communication using the ROS TCP endpoint and connector modules, which are open-source Python scripts provided by Unity Robotics (version 0.7.0) (Codd-Downey et al. 2014; Hussein et al. 2018). The mechanisms for computing the 3D coordinates of each hand landmark and manipulating the PSMs and ECM are introduced in detail in the following sections.

2.1.4 Visualization unit

We developed a virtual world and performed visualization on a Windows computer with the following specifications: Windows 10 operating system, Intel® Core i9-9900K (3.6 GHz, 8 cores) processor with 64 GB of DDR4 RAM, and NVIDIA GeForce RTX 2070 GPU. A virtual world was built using Unity (version 2020.3.36f1), and we modified the open-source dVRK-XR package to visualize and render the movements of a virtual surgical robot in the Unity scene (Qian et al. 2019). Two PSMs and one ECM based on open-source 3D models, as shown in Fig. 4, were imported to the scene (Kazanzides et al. 2014; Chen et al. 2017; Qian et al. 2019). Finally, stereoscopic videos seen from the tip of the virtual ECM were streamed to a VIVE Pro Eye HMD (HTC, Taoyuan, Taiwan), which served as the main display of the proposed ULCI.

2.2 Hand tracking in 3D space

2.2.1 Naming conventions for vectors and coordinate systems

We detail the mathematical and mechanical bases of the ULCI. First, consider the following naming conventions for vectors and frames. Symbols \(\overrightarrow{v}\) and \(\widehat{v}\) denote a vector and its directional unit vector in 3D space, respectively. An uppercase letter enclosed in braces { } refers to a coordinate system (e.g., \(\left\{O\right\}\)). Symbol \({\overrightarrow{v}}^{\left\{O\right\}}\) represents the 3 × 1 matrix corresponding to vector \(\overrightarrow{v}\) expressed in frame \(\left\{O\right\}\). In addition, \({R}_{AB}\) and \({T}_{AB}\) are the 3 × 3 rotation and 4 × 4 transformation matrices from frame \(\left\{A\right\}\) to frame \(\left\{B\right\}\), respectively.

2.2.2 Localization of hand landmarks

MediaPipe is a pipeline-based framework developed by Google for building artificial neural networks in a modular architecture (Lugaresi et al. 2019). It allows developers to integrate various calculators, which are modules executing specific functions, in a graph structure to develop various applications. MediaPipe provides calculators for human motion tracking including face mesh, hand tracking, and pose detection. We mainly used MediaPipe Hands with OpenGL GPU support to quickly track hand position and orientation. This module detects multiple hands and their handedness in an image and tracks 21 anatomical landmarks per hand as illustrated in Fig. 5 (Zhang et al. 2020).

Twenty-one anatomical landmarks detected by MediaPipe Hands are illustrated. One carpometacarpal (CMC) joint, five metacarpophalangeal (MCP) joints, one interphalangeal (IP) joint, four proximal interphalangeal (PIP) joints, four distal interphalangeal (DIP) joints, and five fingertips (TIP) are labeled with red dots. The number in each label indicates the finger on which the landmark is located; based on a figure from (Zhang et al. 2020).

Although MediaPipe provides 3D coordinates of the landmarks with respect to the camera frame, they are not reliable enough for surgical robot control owing to the intrinsic difficulty of depth perception from a single two-dimensional image. Therefore, we used \(N=4\) cameras in different poses to precisely estimate the 3D coordinates of a point of interest (\(A\)). The coordinate frame of each camera and its position vector in the fixed laboratory (global) frame, \(\left\{O\right\}\), are denoted as \(\left\{{C}_{i}\right\}\) and \({\overrightarrow{c}}_{i}\), respectively, as shown in Fig. 6. In addition, \({\widehat{v}}_{i}^{\left\{{C}_{i}\right\}}\) is a directional unit vector pointing from the \(i\)-th camera to point \(A\) expressed in the coordinate frame \(\left\{{C}_{i}\right\}\). Vector \({\widehat{v}}_{i}\) is determined from the relative location of the tracked point in the view of the \(i\)-th camera. For example, if the tracked point is at the center of the view of the \(i\)-th camera, \({\widehat{v}}_{i}\) is parallel to the \(z\)-axis of \(\left\{{C}_{i}\right\}\), so that \({\widehat{v}}_{i}^{\left\{{C}_{i}\right\}}\) equals \({\left[0, 0, 1\right]}^{T}\). Then, the position vector of \(A\), denoted as \(\overrightarrow{r}\), is estimated as the vector that minimizes the sum of squared distances between \(A\) and points on lines \({l}_{i}:{\overrightarrow{c}}_{i}+{k}_{i}{\widehat{v}}_{i} ({k}_{i}\in \mathbb{R}, 1\le i\le N)\), as given in Eq. (1). Because the target quadratic function is convex, this technique yields stable solutions that tolerate some level of measurement error.
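As an illustration of how a direction vector \({\widehat{v}}_{i}\) might be obtained from a 2D landmark, the following is a minimal sketch and not the implementation used in this study. It assumes an ideal pinhole camera with the principal point at the image center and no lens distortion; the focal lengths `fx` and `fy`, the function names, and the convention that `R_OC` maps camera-frame vectors into \(\left\{O\right\}\) are assumptions made for the example. The input is taken to be normalized image coordinates in [0, 1], as returned by MediaPipe Hands.

```python
import numpy as np

def ray_from_landmark(u_norm, v_norm, width, height, fx, fy):
    """Unit vector from the camera center toward a landmark, in frame {C_i}.

    u_norm, v_norm: normalized image coordinates in [0, 1].
    width, height:  image size in pixels.
    fx, fy:         focal lengths in pixels (hypothetical calibration values).
    Assumes an ideal pinhole camera with its principal point at the image center.
    """
    x = (u_norm * width - width / 2.0) / fx
    y = (v_norm * height - height / 2.0) / fy
    v = np.array([x, y, 1.0])
    return v / np.linalg.norm(v)

def to_lab_frame(R_OC, v_cam):
    """Express a camera-frame direction in the laboratory frame {O}.

    R_OC is assumed to hold the camera axes, written in {O}, as its columns.
    """
    return np.asarray(R_OC, dtype=float) @ np.asarray(v_cam, dtype=float)

# A landmark at the image center gives a ray along the camera z-axis.
center_ray = ray_from_landmark(0.5, 0.5, 1280, 720, fx=900.0, fy=900.0)
```

In practice the focal lengths and distortion parameters would come from a camera calibration step, which is outside the scope of this sketch.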

Simplified diagram for estimating 3D coordinates of point A from multiple cameras positioned around the interaction volume is shown. The laboratory frame is denoted as {O} and the coordinate frames of the cameras are denoted as {Ci}, where i is an integer ranging from 1 to N and N is the number of cameras

Setting the partial derivatives of \(f\left(\overrightarrow{x}, {k}_{1},{k}_{2}, \dots, {k}_{N}\right)\) with respect to variables \({k}_{j} (1\le j\le N)\) to zero, we obtain Eqs. (2) and (3), where \({k}_{j}^{\circ }\) is the value of \({k}_{j}\) that minimizes \(f\left(\overrightarrow{x}, {k}_{1},{k}_{2}, \dots, {k}_{N}\right)\) for a given \(\overrightarrow{x}\).

Then, under the set of \({k}_{j}^{\circ }\) obtained from Eq. (3) and looking from the laboratory frame \(\left\{O\right\}\), Eq. (4) can be derived from Eq. (1), where matrices \({M}_{i}\) are defined by Eq. (5) and \({\widehat{v}}_{i}^{\left\{O\right\}}\) can be obtained from Eq. (6) (Lynch and Park 2017). \({T}_{O{C}_{i}}\) is the 4 × 4 transformation matrix from coordinate frame \(\left\{O\right\}\) to coordinate frame \(\left\{{C}_{i}\right\}\).
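The display equations referenced here are not reproduced in this text. The following is a reconstruction that follows from the definitions given above; the exact form and layout of the published Eqs. (1) to (6) may differ. It assumes that \({R}_{O{C}_{i}}\) denotes the rotation block of \({T}_{O{C}_{i}}\) and that \({I}_{3}\) is the 3 × 3 identity matrix.

```latex
\begin{align}
\vec{r} &= \operatorname*{arg\,min}_{\vec{x}} \; \min_{k_1,\dots,k_N} f(\vec{x},k_1,\dots,k_N),
\qquad
f = \sum_{i=1}^{N} \left\lVert \vec{x} - \left(\vec{c}_i + k_i \hat{v}_i\right) \right\rVert^{2} \\
\frac{\partial f}{\partial k_j} &= -2\,\hat{v}_j \cdot \left(\vec{x} - \vec{c}_j - k_j \hat{v}_j\right) = 0 \\
k_j^{\circ} &= \hat{v}_j \cdot \left(\vec{x} - \vec{c}_j\right) \\
f\!\left(\vec{x}^{\{O\}}\right) &= \sum_{i=1}^{N} \left\lVert M_i \left(\vec{x}^{\{O\}} - \vec{c}_i^{\{O\}}\right) \right\rVert^{2} \\
M_i &= I_3 - \hat{v}_i^{\{O\}} \left(\hat{v}_i^{\{O\}}\right)^{T} \\
\hat{v}_i^{\{O\}} &= R_{OC_i}\, \hat{v}_i^{\{C_i\}}
\end{align}
```

Each \({M}_{i}\) projects onto the plane perpendicular to \({\widehat{v}}_{i}\), so each term of the reduced objective is the squared perpendicular distance from \(\overrightarrow{x}\) to line \({l}_{i}\).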

Furthermore, setting the partial derivative of \(f\left({\overrightarrow{x}}^{\left\{O\right\}}\right)\) with respect to \({\overrightarrow{x}}^{\left\{O\right\}}\) to zero and using a relation \({M}_{i}^{2}={M}_{i}={M}_{i}^{T}\), an explicit solution for \(\overrightarrow{r}\) is given through the process shown in Eqs. (7) and (8). The number of cameras \(N\) should be at least 2 because a unique solution for \({\overrightarrow{r}}^{\left\{O\right\}}\) cannot be obtained otherwise, given the singular nature of \({M}_{i}\). This is consistent with the fact that in general, precise depth perception cannot be achieved through monocular vision.
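Under the definitions above, the stationarity condition \(\sum_{i}{M}_{i}\left(\overrightarrow{x}-{\overrightarrow{c}}_{i}\right)=0\) gives \(\overrightarrow{r}={\left(\sum_{i}{M}_{i}\right)}^{-1}\sum_{i}{M}_{i}{\overrightarrow{c}}_{i}\), with all quantities expressed in \(\left\{O\right\}\). The sketch below illustrates this closed form with NumPy; it is not the code used in the study, and the function name and camera positions are hypothetical values chosen for the example.

```python
import numpy as np

def triangulate(centers, directions):
    """Least-squares intersection of N rays c_i + k_i * v_i, all in frame {O}.

    centers:    (N, 3) array of camera positions c_i.
    directions: (N, 3) array of ray directions v_i (normalized internally).
    Returns the point minimizing the sum of squared distances to the rays.
    """
    centers = np.asarray(centers, dtype=float)
    directions = np.asarray(directions, dtype=float)
    if len(centers) < 2:
        raise ValueError("at least two cameras are required")
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for c, v in zip(centers, directions):
        v = v / np.linalg.norm(v)
        M = np.eye(3) - np.outer(v, v)  # projector onto the plane normal to v
        A += M
        b += M @ c
    # Raises numpy.linalg.LinAlgError if all rays are parallel (A is singular).
    return np.linalg.solve(A, b)

# Hypothetical setup: four cameras above the workspace observing one point.
target = np.array([0.1, -0.2, 0.5])
cams = np.array([[1.0, 1.0, 2.0], [-1.0, 1.0, 2.0],
                 [1.0, -1.0, 2.0], [-1.0, -1.0, 2.0]])
rays = target - cams
r = triangulate(cams, rays)
```

With noise-free rays the estimate coincides with the observed point; with noisy rays it is the point closest, in the least-squares sense, to all lines.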

2.3 Robot control mechanism

2.3.1 Operation mode selection

Clutch and move are the two major operation modes for the manipulators, inspired by the control mechanism of conventional RAMIS platforms. Clutch mode allows the user to move the hands freely while the end-effector of the robot arm remains stationary. Move mode displaces the manipulators according to the user’s hand gestures, which are tracked and scaled. In the proposed ULCI, the selection of manipulators and modes is controlled by the foot pedals shown in Fig. 3c. The default mode is PSM clutch, which is active when neither or both of the foot pedals are pressed. When only the PSM pedal is pressed, PSM move mode is activated. Pressing only the ECM pedal enables ECM control mode, in which the choice between ECM clutch mode and ECM move mode is determined from hand gestures as described below.
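The pedal logic described above can be summarized as a small decision function. This is an illustrative sketch rather than the study's code: the enum, function, and argument names are hypothetical, and treating a jaw angle exactly equal to the 15° threshold (Sect. 2.3.3) as a grip is an assumption.

```python
from enum import Enum

class Mode(Enum):
    PSM_CLUTCH = "psm_clutch"
    PSM_MOVE = "psm_move"
    ECM_CLUTCH = "ecm_clutch"
    ECM_MOVE = "ecm_move"

GRIP_THRESHOLD_DEG = 15.0  # jaw-angle threshold for the gripping gesture

def select_mode(psm_pedal, ecm_pedal, left_jaw_deg=None, right_jaw_deg=None):
    """Map pedal states and hand jaw angles (degrees) to an operation mode."""
    if psm_pedal == ecm_pedal:
        # Neither pedal or both pedals pressed: default mode.
        return Mode.PSM_CLUTCH
    if psm_pedal:
        return Mode.PSM_MOVE
    # Only the ECM pedal is pressed: the gesture decides clutch versus move.
    both_gripping = (
        left_jaw_deg is not None
        and right_jaw_deg is not None
        and left_jaw_deg <= GRIP_THRESHOLD_DEG
        and right_jaw_deg <= GRIP_THRESHOLD_DEG
    )
    return Mode.ECM_MOVE if both_gripping else Mode.ECM_CLUTCH
```

A missing jaw angle (for example, when a hand is not detected) is treated here as "not gripping", which keeps the ECM stationary.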

2.3.2 PSM control

Fig. 7 illustrates the coordinate frames involved in the ULCI. \(\left\{O\right\}\), \(\left\{P\right\}\), and \(\left\{E\right\}\) in Fig. 7a are the reference frame, hand frame, and eye frame in the real world, respectively, while the coordinate frames shown in Fig. 7b belong to the virtual world. \(\left\{{O}^{{\prime }}\right\}\), \(\left\{{O}_{P}^{{\prime }}\right\}\), and \(\left\{{O}_{E}^{{\prime }}\right\}\) are the reference frames of the virtual world, PSM, and ECM, respectively, while \(\left\{{P}^{{\prime }}\right\}\) and \(\left\{{E}^{{\prime }}\right\}\) are the end-effector coordinate frames of the PSM and ECM, respectively. In addition, \(H\) and \({H}^{{\prime }}\) are the reference points for PSM control, which can be repositioned only in PSM clutch mode. During PSM clutch mode, \(H\) and \({H}^{{\prime }}\) are floating points that track the 3D locations of the user’s hand and the PSM end-effector, respectively. Then, at the instant PSM move mode is activated, \(H\) and \({H}^{{\prime }}\) are held fixed until the mode returns to PSM clutch mode. \(\left\{{O}_{P}^{{\prime }}\right\}\) and \(\left\{{O}_{E}^{{\prime }}\right\}\) should be predefined alongside \(\left\{{O}^{{\prime }}\right\}\) because the dVRK ROS package provides functions for setting the goal transformation matrices from \(\left\{{O}_{P}^{{\prime }}\right\}\) to \(\left\{{P}^{{\prime }}\right\}\) and from \(\left\{{O}_{E}^{{\prime }}\right\}\) to \(\left\{{E}^{{\prime }}\right\}\), denoted as \({T}_{{O}_{P}^{{\prime }}{P}^{{\prime }}}\) and \({T}_{{O}_{E}^{{\prime }}{E}^{{\prime }}}\), respectively.

(a) Real world coordinate frames used in the study are depicted. {O}, {E}, {P} are the lab frame, eye frame, and hand frame in the real world. (b) Virtual world coordinate frames used in the study are shown. {O'}, {E'}, {P'} of subfigure (b) are the world frame, endoscope frame, and PSM end-effector coordinate frames. {O'P} and {O'E} are the coordinate frames of the base of PSM and ECM, respectively. Points H and H' are the reference points for PSM control

Before controlling the PSM, a relationship between the 3D hand landmarks and the end-effector of the PSM should be established. Most surgical procedures are conducted using the thumb and index finger. Hence, we mapped the second metacarpophalangeal (MCP) joint, M2 for short, to the wrist-yaw joint axis of the PSM. Also, the tips of the thumb and the index finger, denoted as T1 and T2, were mapped to the respective tips of the end-effector as shown in Fig. 8. The jaw angle was determined as the angle between \(\overrightarrow{M_2T_1}\) and \(\overrightarrow{M_2T_2}\), and the orientation was assessed from M1, M2, T1, T2, and P2, where M1 and P2 refer to the first metacarpophalangeal and second proximal interphalangeal joints, respectively. Unit vector \(\widehat{z}\) of \(\left\{P\right\}\) points from M2 to the midpoint of T1 and T2, and \(\widehat{x}\) of \(\left\{P\right\}\) is calculated as the unit vector of the cross-product of \(\overrightarrow{M_2M_1}\) and \(\overrightarrow{M_2P_2}\). M1 and P2 were selected for the determination of \(\widehat{x}\) because using \(T_1\) and \(T_2\) instead would lead to unstable solutions as the jaw angle approaches zero. Finally, \(\widehat{y}\) of \(\left\{P\right\}\) was determined by the cross-product of \(\widehat{z}\) and \(\widehat{x}\).
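The construction of the hand frame \(\left\{P\right\}\) and the jaw angle can be sketched as follows. This is an illustration under stated assumptions, not the study's implementation: the landmark indices follow the MediaPipe Hands numbering (thumb MCP 2, thumb tip 4, index MCP 5, index PIP 6, index tip 8), the function names are hypothetical, and the final re-orthogonalization of \(\widehat{x}\) is an added step to guarantee a valid rotation matrix, since \(\widehat{z}\) and \(\widehat{x}\) as defined are not necessarily perpendicular.

```python
import numpy as np

# MediaPipe Hands landmark indices used in the text.
M1, T1 = 2, 4          # thumb MCP joint, thumb tip
M2, P2, T2 = 5, 6, 8   # index MCP joint, index PIP joint, index fingertip

def _unit(v):
    return v / np.linalg.norm(v)

def hand_frame(landmarks):
    """Return (R_OP, jaw_angle_rad) from a (21, 3) array of 3D landmarks in {O}.

    The columns of R_OP are the x, y, and z axes of {P} expressed in {O}.
    """
    lm = np.asarray(landmarks, dtype=float)
    m1, t1 = lm[M1], lm[T1]
    m2, p2, t2 = lm[M2], lm[P2], lm[T2]

    z = _unit((t1 + t2) / 2.0 - m2)          # M2 toward the midpoint of T1 and T2
    x = _unit(np.cross(m1 - m2, p2 - m2))    # normal to the plane of M2, M1, P2
    y = _unit(np.cross(z, x))
    x = np.cross(y, z)                       # assumption: re-orthogonalize x

    cos_jaw = np.dot(_unit(t1 - m2), _unit(t2 - m2))
    jaw = np.arccos(np.clip(cos_jaw, -1.0, 1.0))
    return np.column_stack([x, y, z]), jaw
```

Because \(\widehat{x}\) depends only on M1, M2, and P2, the frame stays well defined even when the thumb and index fingertips touch and the jaw angle approaches zero.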

(a) Points and vectors used for determination of the hand coordinate frame {P} are shown. M1 and M2 refer to the first and second metacarpophalangeal joints, T1 and T2 refer to the tips of the thumb and index finger, and P2 is the proximal interphalangeal joint of the index finger. (b) PSM end-effector coordinate frame {P'} is shown. An additional algorithm is implemented so that the orientation of the hand is mimicked by the PSM end-effector

For the PSM to appear to replicate the user’s hand movements, two relations should be satisfied. First, the orientation of the PSM end-effector seen from the endoscope should be equal to the orientation of the hand seen from the eye. In other words, the rotation matrix from \(\left\{{E}^{{\prime }}\right\}\) to \(\left\{{P}^{{\prime }}\right\}\), denoted as \({R}_{{E}^{{\prime }}{P}^{{\prime }}}\), should be equal to the rotation matrix from \(\left\{E\right\}\) to \(\left\{P\right\}\), denoted as \({R}_{EP}\), which yields the first relation given as Eq. (9). Next, given the reference points of the hand and the PSM end-effector, \(H\) and \({H}^{{\prime }}\), respectively, we can derive a relation that implements scaled motion of the PSM end-effector according to the movement of the user’s hand. The displacement vector from \({H}^{{\prime }}\) to \({P}^{{\prime }}\) seen from the endoscope should be parallel to the displacement vector from \(H\) to \(P\) seen from the eye. After introducing a scaling factor \(\alpha\), empirically set to 0.2 by default, the second relation is derived as Eq. (10).

Using the relations above, \({T}_{{O}_{P}^{{\prime }}{P}^{{\prime }}}\) is determined uniquely as given in Eqs. (11) and (12). The transformation matrix \({T}_{OE}\) can be calculated by measuring the position and orientation of the HMD. \({T}_{{O}^{{\prime }}{O}_{P}^{{\prime }}}\) and \({T}_{{O}^{{\prime }}{O}_{E}^{{\prime }}}\) are calculated from the relative position and orientation of the virtual PSM and ECM with respect to the reference coordinate frame of the virtual world. \({\overrightarrow{{O}^{{\prime }}{H}^{{\prime }}}}^{\left\{{O}^{{\prime }}\right\}}\) and \({T}_{{O}_{E}^{{\prime }}{E}^{{\prime }}}\) can be determined from the joint states of the PSM and ECM through forward kinematics. Similarly, \({T}_{OP}\) and \({\overrightarrow{HP}}^{\left\{O\right\}}\) are derived from the tracked 3D coordinates of the hand landmarks.
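One way to combine the two relations (equal relative orientation, and displacement scaled by \(\alpha\)) into a goal pose is sketched below. This is a reconstruction under assumptions, not the published Eqs. (11) and (12): it takes \({T}_{AB}\) to mean the pose of frame \(\left\{B\right\}\) expressed in \(\left\{A\right\}\), so that \({\overrightarrow{v}}^{\left\{A\right\}}={R}_{AB}{\overrightarrow{v}}^{\left\{B\right\}}\), and all function and argument names are hypothetical.

```python
import numpy as np

def psm_goal_pose(T_OE, T_OP, HP_O, T_W_Pbase, T_W_Ebase, T_Ebase_E, WH_W,
                  alpha=0.2):
    """Goal pose of the PSM end-effector {P'} in the PSM base frame {O'_P}.

    T_OE, T_OP:  4x4 poses of the eye and hand frames in the lab frame {O}.
    HP_O:        displacement from H to P expressed in {O}.
    T_W_Pbase:   4x4 pose of the PSM base {O'_P} in the virtual world {O'}.
    T_W_Ebase:   4x4 pose of the ECM base {O'_E} in the virtual world {O'}.
    T_Ebase_E:   4x4 pose of the endoscope {E'} in the ECM base frame.
    WH_W:        position of reference point H' expressed in {O'}.
    alpha:       motion scaling factor (0.2 by default in the text).
    """
    R_OE = T_OE[:3, :3]
    R_EP = R_OE.T @ T_OP[:3, :3]         # hand orientation seen from the eye
    HP_E = R_OE.T @ np.asarray(HP_O)     # hand displacement seen from the eye

    T_W_E = T_W_Ebase @ T_Ebase_E        # endoscope pose in the virtual world
    R_W_E = T_W_E[:3, :3]

    T_W_P = np.eye(4)
    T_W_P[:3, :3] = R_W_E @ R_EP                           # R_E'P' = R_EP
    T_W_P[:3, 3] = np.asarray(WH_W) + R_W_E @ (alpha * HP_E)  # scaled motion
    return np.linalg.inv(T_W_Pbase) @ T_W_P
```

For example, with all frames coincident and the hand displaced by 1 unit along \(x\) from \(H\), the goal position is displaced by 0.2 units along \(x\) from \({H}^{{\prime }}\).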

2.3.3 ECM control

During ECM control mode, a user can activate ECM move mode by simultaneously making a gripping gesture with both hands within a jaw angle threshold of 15°. At the instant the control mode is switched from ECM clutch mode to ECM move mode, reference point \(H_E\) is set as the midpoint of the second MCP joints of the two hands. Then, as the user’s hands move, the ULCI tracks the 3D location of the midpoint and the slope of the line connecting the two MCP joints to calculate the displacement vector \({\overrightarrow{m}}^{\left\{E\right\}}\) and rotation angle \(\varphi\), as illustrated in Fig. 9. The \(x\)-, \(y\)-, and \(z\)-axis values of \({\overrightarrow{m}}^{\left\{E\right\}}\) are scaled by factor \(\beta\) (default value of 0.1) to control the yaw, insertion, and pitch of the ECM, respectively, and \(\varphi\) controls the roll. Finally, when the user releases the gripping gesture, the control mode returns to ECM clutch mode and \(H_E\) is reset.
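The mapping from the tracked midpoint and line slope to ECM inputs can be sketched as follows. This is illustrative only: the function and argument names are hypothetical, and measuring the slope of the line between the two MCP joints in the \(x\)-\(z\) plane of \(\left\{E\right\}\) is an assumption, since the text does not state the projection plane. Units and any further conversion to joint commands are likewise left unspecified.

```python
import numpy as np

def ecm_command(mid_ref_E, mid_now_E, line_ref_E, line_now_E, beta=0.1):
    """Map two-hand motion to ECM yaw, insertion, pitch, and roll inputs.

    mid_ref_E, mid_now_E:   midpoint of the two second MCP joints in {E},
                            at the reference instant (H_E) and now.
    line_ref_E, line_now_E: vector from one MCP joint to the other in {E},
                            at the reference instant and now.
    beta:                   scaling factor (0.1 by default in the text).
    """
    m = np.asarray(mid_now_E, dtype=float) - np.asarray(mid_ref_E, dtype=float)

    def slope_angle(line):
        # Assumption: slope measured in the x-z plane of the eye frame.
        return np.arctan2(line[2], line[0])

    phi = slope_angle(line_now_E) - slope_angle(line_ref_E)
    phi = (phi + np.pi) % (2.0 * np.pi) - np.pi  # wrap to [-pi, pi)

    return {
        "yaw": beta * m[0],
        "insertion": beta * m[1],
        "pitch": beta * m[2],
        "roll": phi,
    }
```

Wrapping \(\varphi\) avoids a jump of 2π when the slope angle crosses the branch cut of `arctan2`.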

Illustration of the ECM control mechanism is given. When both hands make a gripping gesture, the reference point \(H_E\) is set as the midpoint of the two second metacarpophalangeal (MCP) joints. Then, as the hands move while maintaining the gripping gesture, the displacement vector of the midpoint and the slope of the line connecting the two MCP joints, denoted as \({\overrightarrow{m}}\) and \(\varphi\), respectively, are tracked to provide input signals for ECM control

2.4 Usability study

2.4.1 Participants

Fifteen naïve participants with minimal experience in both HMD and RAMIS were enrolled in a usability study that was approved by the institutional review board of Seoul National University Hospital (approval number: H-2107-167-1236). The study consisted of three stages: modified peg transfer task (mPTT), line tracking task (LTT), and UX survey. All procedures involving PSM and ECM manipulation were conducted in a virtual environment constructed in Unity.

2.4.2 mPTT

The peg transfer task is a widely practiced task intended to evaluate and improve the ability to perform laparoscopic procedures (Peters et al. 2004; Ritter and Scott 2007; Sroka et al. 2010; Zendejas et al. 2016). During a peg transfer task, each participant is asked to grasp an object with one hand, pass it to the other hand in mid-air, place it on an empty peg on the opposite side, and repeat this process for six objects. In this study, we designed a virtual environment for the peg transfer task in Unity, as shown in Fig. 10a. Each participant was given 10 minutes to become familiar with the ULCI and 40 minutes to perform the task to the best of their ability. The original task requires participants to move 12 objects in 300 s, but considering their low familiarity with laparoscopic tools and procedures, we required them to move only 6 objects in 150 s (Badalato et al. 2014; Hong et al. 2019; Miura et al. 2019). The minimum task time during the 40-minute testing period was recorded for each participant.

2.4.3 LTT

The line tracking task is used to assess a user’s proficiency in ECM control, and we designed a virtual LTT environment as shown in Fig. 10b (Cagiltay et al. 2019; Jo et al. 2020). Participants were instructed to move a red sphere of radius 1.5 cm, fixed at a point 5 cm distal to the end-effector of the ECM, along a blue track consisting of six segments of 15 cm. As with the mPTT, participants were given 10 minutes to practice ECM control and were then told to move the sphere along the track 10 times while trying their best to stay on the path. Task time and mean deviation of the center of the red sphere from the track were measured.

(a) Virtual environment for a modified peg transfer task (mPTT) is shown. Users should move six objects on the left to the pegs on the right as fast as they can without dropping the objects. (b) Virtual environment for line tracking task (LTT) is demonstrated. A red sphere is fixed relatively to the ECM end-effector coordinate frame and users should move the sphere along the blue track by controlling the ECM

2.4.4 UX survey

After the mPTT and LTT, participants were asked to fill out a questionnaire based on their experience with the ULCI. We studied scores obtained from the system usability scale (SUS), Van der Laan’s technology acceptance scale, and overall fatigue. The SUS score is measured on a 100-point scale from the answers to 10 questions and reflects how easily users can use the ULCI (Brooke 1996; Bangor et al. 2008; Lewis 2018). Van der Laan’s technology acceptance score comprises nine items that reflect usefulness and satisfaction scales with values ranging from − 2 to 2, where a higher score indicates a higher affinity for the proposed system (Laan et al. 1997). Then, the participants were asked to score their fatigue levels for the neck, back, shoulder, elbow, wrist, fingers, and eyes, as well as dizziness, on a 10-point scale. Participants were instructed to answer the survey according to the following standards: scores less than or equal to 3 indicate negligible discomfort, scores between 4 and 6 indicate perceivable yet tolerable discomfort, and scores greater than or equal to 7 indicate intolerable fatigue. Finally, they were asked to write their subjective opinions about the ULCI in response to open-ended questions. The questionnaires and scoring guidelines are provided in the Appendix.
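For reference, the standard SUS scoring rule (Brooke 1996) and the fatigue bands described above can be written as short functions. This is a sketch for illustration; the function names and band labels are hypothetical, and the scoring guidelines in the Appendix take precedence where they differ.

```python
def sus_score(responses):
    """Standard SUS score (0 to 100) from ten responses on a 1-to-5 scale.

    Odd-numbered items contribute (response - 1); even-numbered items
    contribute (5 - response); the sum is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for index, r in enumerate(responses):
        # index 0 corresponds to item 1, which is an odd-numbered item
        total += (r - 1) if index % 2 == 0 else (5 - r)
    return total * 2.5

def fatigue_band(score):
    """Classify a fatigue score on the 10-point scale into the three bands."""
    if score <= 3:
        return "negligible"
    if score <= 6:
        return "tolerable"
    return "intolerable"
```

For example, `sus_score([5, 1] * 5)` gives the maximum of 100, and answering 3 to every item gives 50.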

3 Results

3.1 Intrinsic ULCI characteristics

Accuracy of PSM control was qualitatively investigated from trajectories of the hands and PSMs recorded during an mPTT trial as shown in Fig. 11. Figure 11a, d show that the hand movements were replicated and scaled to the corresponding PSMs. Hand positions estimated by MediaPipe contained noise, but it was eliminated through the internal control mechanism available in the open-source dVRK ROS package, resulting in virtually no discomfort when manipulating the virtual robots.

A qualitative evaluation of PSM trajectories from one mPTT trial is shown. (a) Scaled 3D trajectories of left hand and PSM1 are plotted. (b) x, y, and z coordinates of left hand and PSM1 are plotted with respect to time. (c) Jaw angles of the left hand and PSM1 are plotted with respect to time. (d) Scaled 3D trajectories of right hand and PSM2 are plotted. (e) x, y, and z coordinates of right hand and PSM2 are plotted with respect to time. (f) Jaw angles of the right hand and PSM2 are plotted with respect to time

End-to-end latency of the ULCI was determined from the sum of delays caused by the following processes: image processing through MediaPipe, robot control using dVRK-ROS, TCP communication, and rendering for robot visualization. The time consumed in image processing ranged from 3.5 ms to 12 ms, with its peak at 4.0 ms. The latency caused by robot control was measured by comparing the time difference between the hand and PSM coordinate values, which were scaled into the interval between 0 and 1, as shown in Fig. 11 and Fig. 12. This latency was measured to be 130 ± 10 ms and 230 ± 10 ms for the PSM and ECM, respectively. As we used wired communication, networking time was negligible and rendering time did not exceed 38 ms. Hence, the end-to-end latency of the PSM and ECM were measured to be 170 ± 10 ms and 270 ± 10 ms, respectively, as listed in Table 1.
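As a rough check of the budget, adding the most frequent image processing time (about 4 ms), the PSM control latency (130 ms), and the rendering bound (38 ms) gives about 172 ms, which agrees with the reported 170 ± 10 ms. The control latency itself is a time shift between two normalized signals. The sketch below shows one simple way such a shift could be estimated, by min-max scaling both signals to [0, 1] and choosing the lag with the smallest mean squared difference; this specific estimator is an assumption and is not claimed to be the procedure used in the study. The synthetic signals and all names are hypothetical.

```python
import numpy as np

def minmax(x):
    """Scale a signal into the interval [0, 1]."""
    x = np.asarray(x, dtype=float)
    return (x - x.min()) / (x.max() - x.min())

def estimate_lag_ms(hand, robot, rate_hz, max_lag):
    """Estimate how far `robot` trails `hand`, in milliseconds.

    Both signals are sampled at rate_hz; lags from 0 to max_lag samples
    are tested and the one with the smallest mean squared error is returned.
    """
    a, b = minmax(hand), minmax(robot)
    n = len(a)
    errors = [np.mean((a[: n - lag] - b[lag:]) ** 2)
              for lag in range(max_lag + 1)]
    return 1000.0 * int(np.argmin(errors)) / rate_hz

# Synthetic check: a 100 Hz signal and a copy delayed by 13 samples (130 ms).
rate = 100
t = np.arange(1000) / rate
hand = np.sin(2 * np.pi * 0.5 * t) + 0.3 * np.sin(2 * np.pi * 1.3 * t)
delay = 13
robot = np.concatenate([np.full(delay, hand[0]), hand[:-delay]])
lag_ms = estimate_lag_ms(hand, robot, rate, max_lag=50)
```

The resolution of such an estimate is limited by the sampling period, which is 10 ms at the 100 Hz loop rate.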

Fig. 12 A qualitative evaluation of the ECM trajectory from one LTT trial is shown. (a) Scaled 3D trajectories of the midpoint between two hands and joint states of the ECM are plotted. (b) x, y, and z coordinates of the midpoint between two hands and their corresponding joint states of the ECM are plotted with respect to time

3.2 Usability study

3.2.1 mPTT

Best task times for the mPTT during a testing period of 40 minutes per participant are listed in Table 2. Of the 15 participants, 13 (87%) achieved a task time below 150 s (P = .0015), with a mean task time of 95 ± 47 s.

3.2.2 LTT

Task times of LTT decreased after repetition, from 204 ± 91 s to 113 ± 30 s after 10 trials, while mean deviation of the red sphere from the track remained relatively constant, estimated to be 1.1 ± 0.5 cm as shown in Fig. 13a and Fig. 13b, respectively.

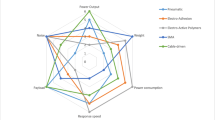

3.2.3 UX survey

As shown in Fig. 14a, the median SUS score was 75.0, indicating that most participants felt good about the proposed system (Bangor et al. 2009). In addition, both scales for Van der Laan’s technology acceptance score were distributed above zero (P < .001 for both scales), as shown in Fig. 14b.

Fatigue levels of different body parts were measured on a 10-point scale. Joint pain in the neck and shoulders was perceived yet tolerable while that in other joints was mostly imperceptible. The eye fatigue and level of dizziness were comparatively high but tolerable throughout the study.

4 Discussion

4.1 Feasibility study

We examined accuracy and latency to assess the feasibility of the proposed ULCI. These factors substantially affect the surgeon’s performance and patient’s safety (Anvari et al. 2005; Lum et al. 2009; Xu et al. 2014). Toward the end of each task, the participants were able to easily manipulate the virtual robots as intended. Latency requires thorough investigation because it is associated with various performance indicators in telesurgeries, such as task time and error rate (Anvari et al. 2005; Lum et al. 2009; Xu et al. 2014). The end-to-end latency measured for the PSM and ECM was around 200 ms, which is suitable to perform telesurgery (Xu et al. 2014). Additional latency caused by image processing with MediaPipe was minimal compared with the total delay, although it may vary according to the GPU specifications. None of the participants reported discomfort from inaccuracy or time delay, suggesting a successful development of a real-time robot manipulation interface based on contactless hand tracking.

Results from the mPTT trials show that a large proportion of the naïve participants could reach a qualifiable level of PSM manipulation after 50 minutes of practice (Peters et al. 2004; Ritter and Scott 2007). LTT results in Fig. 13 show that mean deviation from the track remained similar throughout a series of trials, while the mean task time decreased. Hence, the participants were able to learn from their experience to achieve faster execution with a similar amount of effort. In addition, SUS scores distributed from 60 to 80 (Fig. 14a) indicate that the participants easily familiarized themselves with the ULCI. Moreover, Van der Laan’s technology acceptance scores above zero indicate that most of the participants felt positive about the proposed ULCI as an emerging technology.

4.2 Ergonomic evaluation

Ergonomic evaluation is crucial to the development of surgical robot control systems. According to Table 3, fatigue levels of the elbow, wrist, and finger joints were minimal, implying that the ULCI may resolve existing issues of contemporary RAMIS systems related to pain in the distal upper extremities, though a comparative study should be conducted in future work. On the other hand, most users reported shoulder discomfort after completing the tasks. This originated from the prolonged shoulder abduction illustrated in Fig. 15a, where the deltoid muscle was strained from holding the arms up for most of the task time. This problem can be addressed by adding arm rests, which have been proven to be effective for the ergonomics of current RAMIS platforms (Lee et al. 2017). When adding arm rests to the ULCI, we suggest that they be long and wide so that users can freely slide their elbows on them, thus minimizing the fatigue of the shoulder joints while maintaining the degrees of freedom of the arms. In addition, arm rest heights should be adjustable to allow optimal use for each surgeon.

Although preliminary studies have shown that an HMD is more ergonomic than a stereo viewer in terms of posture and joint fatigue, the participants in this study reported a moderate level of fatigue in the neck. Most participants pointed out the weight of the HMD (550 g) as a major cause of neck pain. Fig. 15b shows that the anterior position of the center of mass of the HMD exerts a considerable torque on the cervical spine, resulting in continuous recruitment of the posterior neck muscles to compensate for the weight of the HMD (Knight and Baber 2004, 2007; Chihara and Seo 2018; Ito et al. 2021). Toward the end of the usability study, participants tended to extend their necks to reduce the torque exerted by the HMD. This behavior was consistent with the findings reported by Knight and Baber (2007). Neck fatigue can be alleviated by either reducing the weight of the HMD through further development of lightweight hardware or adding a counterweight to the back so that the center of mass moves posteriorly (Chihara and Seo 2018; Ito et al. 2021). The HMD used in our study weighs approximately 550 g, and most of its mass is concentrated in the display at the front. Newly released HMDs are much lighter; according to our market analysis, the HTC VIVE Flow weighs 189 g (HTC VIVE 2022). In addition, many contemporary products such as the Microsoft HoloLens 2 and HTC VIVE XR Elite use the battery as a counterweight to the display, and they tend to cause less neck pain (Palumbo 2022). Therefore, we believe that fatigue of the cervical spine can be reduced even with devices available today.

Dizziness is another aspect to consider when using an HMD. Dizziness levels reported from the usability study were relatively high compared with other fatigue levels such as joint discomfort. Based on the subjective opinions of participants, motion sickness may have been induced by the discrepancy between the head rotation and the movement of the field of vision (Munafo et al. 2017). In this study, foot pedals were used to switch modes between the PSM and ECM and suitably reproduce the control environment of commercial RAMIS platforms. This method may have aggravated motion sickness because the field of view cannot be changed intuitively through head motion but is instead altered via predefined hand gestures. Dizziness caused by the HMD is expected to decrease with improved hardware, and it can be further reduced by implementing a more user-centered robot control mechanism, such as using a gyroscope sensor to detect head orientation for mapping to the ECM (Jo et al. 2020). In addition, aiding hand-eye coordination by displaying the user’s hands on the HMD would contribute to reducing dizziness. Implementing smooth movement of the ECM and modulating its speed and acceleration is also suggested (Lareyre et al. 2021; Sun et al. 2019).

4.3 Hardware cost and volume

In this study, a robot control interface based on the MediaPipe framework with tolerable accuracy and latency was developed using commercial devices such as USB cameras and an HMD. The total budget for developing the full system was around USD 10,000. Input systems for contemporary RAMIS platforms contain delicate input devices such as MTMs, each of which is a wire-driven robot manipulator with eight degrees of freedom. They are bulky and prone to collisions, making their maintenance challenging. In contrast, because the proposed interface implements a computer vision driven input mechanism, the ULCI is more compact and volume-efficient. In addition, the hardware components of the ULCI are portable, meaning that a surgeon console need not be confined to an operating room, which enables more efficient use of the operating room. Although further studies should be conducted, the ULCI may become a promising alternative to the surgeon console used today, hopefully contributing to the improvement of space utilization and reduction of medical expenses associated with robotic surgery, as well as providing comfort to the surgeon (Barbash and Glied 2010; Turchetti et al. 2012).

4.4 Contribution to the extended reality (XR) community

As mentioned previously, hand gesture recognition is an emerging input modality for users to actively interact with extended reality (XR) environments. Prior to the development of computer vision based hand tracking modules, various mechanisms for 3D hand gesture recognition were developed, such as infrared based depth perception modules and motion capture gloves (Yasen and Jusoh 2019; Suarez and Murphy 2012; Moreira et al. 2014). Recently, computer vision based hand tracking technology has been actively studied due to the wide availability of RGB cameras and source code. In this paper, we explicitly detailed the underlying mechanism of 3D hand tracking based on a set of RGB images and established a proof of concept through UX studies. Given the wide accessibility of RGB cameras and computer vision algorithms, we expect our work to be actively studied and further developed by scholars interested in developing interactive XR environments, not only those confined to RAMIS systems (Tavakoli et al. 2006; Martín-Barrio et al. 2020; Hong et al. 2021).

In addition, our work is significant in that we conducted extensive UX studies based on widely accepted surveys and questionnaires such as the SUS and Van der Laan’s technology acceptance scale. Ergonomic evaluation was also thoroughly carried out by quantitatively assessing discomfort in various body parts. Therefore, we hope that our work will provide a new standard for measuring the properties of input modalities for XR environments from a UX perspective.

5 Conclusion

ULCI is a surgical robot control interface based on real-time contactless hand tracking. We devised the interface to overcome issues of existing RAMIS platforms regarding ergonomics. We investigated the mathematical background of the interface and performed feasibility and UX studies as proof of concept. Accuracy and latency of the ULCI did not significantly affect participants’ performance in conducting various tasks. SUS and Van der Laan’s technology acceptance scores showed that naïve users were able to easily learn and improve their task performance in a short period of 100 minutes, suggesting that the ULCI is intuitive and user-friendly. In addition, fatigue levels in the distal upper extremities experienced by the participants were low, indicating that ULCI may contribute to surgical environments with improved ergonomics. Nevertheless, the interface should be further improved to alleviate joint pain in the neck and shoulders. Consisting of portable devices such as an HMD and USB cameras, the proposed system can reduce the overall hardware volume of conventional RAMIS platforms and increase space utilization efficiency.

In conclusion, we have successfully developed a surgical robot control system using the MediaPipe framework and an HMD and confirmed its feasibility by investigating its intrinsic features and UX, including ergonomic evaluation, with a group of widely accepted surveys and questionnaires. The proposed system allows dexterous robot manipulation without constraining the hands to delicate devices such as MTMs, resulting in minimal strain in the upper extremities. As real-time 3D hand tracking technology is not confined to surgical robot control, it can be applied to any field requiring delicate hand gestures as input, including XR environments and remotely controlled robotic systems, hopefully contributing to improved human–machine interaction.

Data availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

Amprimo G, Masi G, Pettiti G, Olmo G, Priano L, Ferraris C (2023) Hand tracking for clinical applications: validation of the Google MediaPipe Hand (GMH) and the depth-enhanced GMH-D frameworks. arXiv preprint arXiv:2308.01088. https://doi.org/10.48550/arXiv.2308.01088

Amrani MZ, Borst CW, Achour N (2022) Multi-sensory assessment for hand pattern recognition. Biomed Signal Process Control 72:103368. https://doi.org/10.1016/j.bspc.2021.103368

Anvari M, Broderick T, Stein H, Chapman T, Ghodoussi M, Birch DW, Mckinley C, Trudeau P, Dutta S, Goldsmith CH (2005) The impact of latency on surgical precision and task completion during robotic-assisted remote telepresence surgery. Comput Aided Surg 10(2):93–99. https://doi.org/10.3109/10929080500228654

Badalato GM, Shapiro E, Rothberg MB, Bergman A, RoyChoudhury A, Korets R, Patel T, Badani KK (2014) The Da Vinci robot system eliminates multispecialty surgical trainees’ hand dominance in open and robotic surgical settings. JSLS 18(3):e2014. https://doi.org/10.4293/JSLS.2014.00399

Bangor A, Kortum P, Miller J (2009) Determining what individual SUS scores mean: adding an adjective rating scale. J Usability Stud 4(3):114–123. https://doi.org/10.5555/2835587.2835589

Bangor A, Kortum PT, Miller JT (2008) An empirical evaluation of the system usability scale. Int J Hum–Comput Interact 24(6):574–594. https://doi.org/10.1080/10447310802205776

Barbash GI, Glied SA (2010) New technology and health care costs–the case of robot-assisted surgery. N Engl J Med 363(8):701–704. https://doi.org/10.1056/NEJMp1006602

Berggren U, Gordh T, Grama D, Haglund U, Rastad J, Arvidsson D (1994) Laparoscopic versus open cholecystectomy: hospitalization, sick leave, analgesia and trauma responses. J Br Surg 81(9):1362–1365. https://doi.org/10.1002/bjs.1800810936

Brooke J (1996) SUS-A quick and dirty usability scale. Usability Eval Ind 189(194):4–7

Buckingham G (2021) Hand tracking for immersive virtual reality: opportunities and challenges. Front Virtual Real 2:728461. https://doi.org/10.3389/frvir.2021.728461

Cagiltay NE, Ozcelik E, Berker M, Dalveren GGM (2019) The underlying reasons of the navigation control effect on performance in a virtual reality endoscopic surgery training simulator. Int J Hum–Comput Interact 35(15):1396–1403. https://doi.org/10.1080/10447318.2018.1533151

Chen Z, Deguet A, Taylor RH, Kazanzides P (2017) Software architecture of the Da Vinci Research Kit. 2017 First IEEE Int Conf Robot Comput 180–187. https://doi.org/10.1109/IRC.2017.69

Chihara T, Seo A (2018) Evaluation of physical workload affected by mass and center of mass of head-mounted display. Appl Ergon 68:204–212. https://doi.org/10.1016/j.apergo.2017.11.016

Codd-Downey R, Forooshani PM, Speers A, Wang H, Jenkin M (2014) From ROS to unity: leveraging robot and virtual environment middleware for immersive teleoperation. 2014 IEEE Int Conf Inf Autom 932–936. https://doi.org/10.1109/ICInfA.2014.6932785

Crippa J, Grass F, Dozois EJ, Mathis KL, Merchea A, Colibaseanu DT, Kelley SR, Larson DW (2021) Robotic surgery for rectal cancer provides advantageous outcomes over laparoscopic approach: results from a large retrospective cohort. Ann Surg 274(6):e1218–e1222. https://doi.org/10.1097/SLA.0000000000003805

Danioni A, Yavuz GC, Ozan DE, Momi ED, Koupparis A, Dogramadzi S (2022) A study on the dexterity of surgical robotic tools in a highly immersive virtual environment: assessing usability and efficacy. IEEE Robot Autom Mag 29(1):68–75. https://doi.org/10.1109/MRA.2022.3141972

Dardona T, Eslamian S, Reisner LA, Pandya A (2019) Remote presence: development and usability evaluation of a head-mounted display for camera control on the Da Vinci surgical system. Robotics 8(2):31. https://doi.org/10.3390/robotics8020031

DiMaio S, Hanuschik M, Kreaden U (2011) The Da Vinci surgical system. Surgical robotics: systems applications and visions. Springer US, USA

Douissard J, Hagen ME, Morel P (2019) The Da Vinci surgical system. Bariatric robotic surgery: a comprehensive guide. Springer, Germany

Ficarra V, Cavalleri S, Novara G, Aragona M, Artibani W (2007) Evidence from robot-assisted laparoscopic radical prostatectomy: a systematic review. Eur Urol 51(1):45–55. https://doi.org/10.1016/j.eururo.2006.06.017

Fuchs KH (2002) Minimally invasive surgery. Endoscopy 34(2):154–159. https://doi.org/10.1055/s-2002-19857

Gala RB, Margulies R, Steinberg A, Murphy M, Lukban J, Jeppson P, Aschkenazi S, Olivera C, South M, Lowenstein L, Schaffer J, Balk EM, Sung V (2014) Systematic review of robotic surgery in gynecology: robotic techniques compared with laparoscopy and laparotomy. J Minim Invasive Gynecol 21(3):353–361. https://doi.org/10.1016/j.jmig.2013.11.010

Gemmill EH, McCulloch P (2007) Systematic review of minimally invasive resection for gastro-oesophageal cancer. J Br Surg 94(12):1461–1467. https://doi.org/10.1002/bjs.6015

Han S, Liu B, Cabezas R, Twigg CD, Zhang P, Petkau J, Yu TH, Tai CJ, Akbay M, Wang Z, Nitzan A, Dong G, Ye Y, Tao L, Wan C, Wang R (2020) MEgATrack: monochrome egocentric articulated hand-tracking for virtual reality. ACM Trans Graph 39(4):87. https://doi.org/10.1145/3386569.3392452

Hong M, Rozenblit JW, Hamilton AJ (2021) Simulation-based surgical training systems in laparoscopic surgery: a current review. Virtual Real 25:491–510. https://doi.org/10.1007/s10055-020-00469-z

Hong N, Kim M, Lee C, Kim S (2019) Head-mounted interface for intuitive vision control and continuous surgical operation in a surgical robot system. Med Biol Eng Comput 57(3):601–614. https://doi.org/10.1007/s11517-018-1902-4

HTC VIVE (2022) https://www.vive.com/us/product/vive-flow/overview/

Hullenaar CDPV, Hermans B, Broeders IAMJ (2017) Ergonomic assessment of the Da Vinci console in robot-assisted surgery. Innov Surg Sci 2(2):97–104. https://doi.org/10.1515/iss-2017-0007

Hussein A, García F, Olaverri-Monreal C (2018) ROS and Unity based framework for intelligent vehicles control and simulation. 2018 IEEE Int Conf Veh Electron Saf 1–6. https://doi.org/10.1109/ICVES.2018.8519522

Ito K, Tada M, Ujike H, Hyodo K (2021) Effects of the weight and balance of head-mounted displays on physical load. Appl Sci 11(15):6802. https://doi.org/10.3390/app11156802

Jo Y, Kim YJ, Cho M, Lee C, Kim M, Moon HM, Kim S (2020) Virtual reality-based control of robotic endoscope in laparoscopic surgery. Int J Control Autom Syst 18(1):150–162. https://doi.org/10.1007/s12555-019-0244-9

Kavana KM, Suma NR (2022) Recognization of Hand Gestures Using Mediapipe Hands. Int Res J Moderniz Eng Tech Sci 4(06)

Kazanzides P, Chen Z, Deguet A, Fischer GS, Taylor RH, DiMaio SP (2014) An open-source research kit for the da Vinci® Surgical System. 2014 IEEE Int Conf Robot Autom 6434–6439. https://doi.org/10.1109/ICRA.2014.6907809

Kim YG, Mun G, Kim M, Jeon B, Lee JH, Yoon D, Kim BS, Kong SH, Jeong CW, Lee KE, Cho M, Kim S (2022) A study on the VR Goggle-based vision system for robotic surgery. Int J Control Autom Syst 20(9):2959–2971. https://doi.org/10.1007/s12555-021-1044-6

Knight JF, Baber C (2004) Neck muscle activity and perceived pain and discomfort due to variations of head load and posture. Aviat Space Environ Med 75(2):123–131

Knight JF, Baber C (2007) Effect of head-mounted displays on posture. Hum Factors 49(5):797–807. https://doi.org/10.1518/001872007X230172

Koehn JK, Kuchenbecker KJ (2015) Surgeons and non-surgeons prefer haptic feedback of instrument vibrations during robotic surgery. Surg Endosc 29(10):2970–2983. https://doi.org/10.1007/s00464-014-4030-8

Laan JDVD, Heino A, Waard DD (1997) A simple procedure for the assessment of acceptance of advanced transport telematics. Transp Res Part C Emerg Technol 5(1):1–10. https://doi.org/10.1016/S0968-090X(96)00025-3

Lanfranco AR, Castellanos AE, Desai JP, Meyers WC (2004) Robotic surgery: a current perspective. Ann Surg 239(1):14–21. https://doi.org/10.1097/01.sla.0000103020.19595.7d

Lareyre F, Chaudhuri A, Adam C, Carrier M, Mialhe C, Raffort J (2021) Applications of head-mounted displays and smart glasses in vascular surgery. Ann Vasc Surg 75:497–512. https://doi.org/10.1016/j.avsg.2021.02.033

Lawson EH, Curet MJ, Sanchez BR, Schuster R, Berguer R (2007) Postural ergonomics during robotic and laparoscopic gastric bypass surgery: a pilot project. J Robot Surg 1(1):61–67. https://doi.org/10.1007/s11701-007-0016-z

Lee GI, Lee MR, Green I, Allaf M, Marohn MR (2017) Surgeons’ physical discomfort and symptoms during robotic surgery: a comprehensive ergonomic survey study. Surg Endosc 31(4):1697–1706. https://doi.org/10.1007/s00464-016-5160-y

Lewis JR (2018) The system usability scale: past, present, and future. Int J Hum–Comput Interact 34(7):577–590. https://doi.org/10.1080/10447318.2018.1455307

Lugaresi C, Tang J, Nash H, McClanahan C, Uboweja E, Hays M, Zhang F, Chang CL, Yong MG, Lee J, Chang WT, Hua W, Georg M, Grundmann M (2019) MediaPipe: a framework for building perception pipelines. arXiv preprint arXiv:1906.08172. https://doi.org/10.48550/arXiv.1906.08172

Lum MJH, Rosen J, King H, Friedman DCW, Lendvay TS, Wright AS, Sinanan MN, Hannaford B (2009) Teleoperation in surgical robotics–network latency effects on surgical performance. 2009 Annu Int Conf IEEE Eng Med Biol Soc 6860–6863. https://doi.org/10.1109/IEMBS.2009.5333120

Lux MM, Marshall M, Erturk E, Joseph JV (2010) Ergonomic evaluation and guidelines for use of the daVinci Robot system. J Endourol 24(3):371–375. https://doi.org/10.1089/end.2009.0197

Lynch KM, Park FC (2017) Modern Robotics. Cambridge University Press, Cambridge

Mack MJ (2001) Minimally invasive and robotic surgery. JAMA 285(5):568–572. https://doi.org/10.1001/jama.285.5.568

Martín-Barrio A, Roldán JJ, Terrile S, Del Cerro J, Barrientos A (2020) Application of immersive technologies and natural language to hyper-redundant robot teleoperation. Virtual Real 24:541–555. https://doi.org/10.1007/s10055-019-00414-9

Martínez-Pérez A, Carra MC, Brunetti F, De’Angelis N (2017) Pathologic outcomes of laparoscopic vs open mesorectal excision for rectal cancer: a systematic review and meta-analysis. JAMA Surg 152(4):e165665. https://doi.org/10.1001/jamasurg.2016.5665

Meijden OAJVD, Schijven MP (2009) The value of haptic feedback in conventional and robot-assisted minimal invasive surgery and virtual reality training: a current review. Surg Endosc 23(6):1180–1190. https://doi.org/10.1007/s00464-008-0298-x

Mills JT, Burris MB, Warburton DJ, Conaway MR, Schenkman NS, Krupski TL (2013) Positioning injuries associated with robotic assisted urological surgery. J Urol 190(2):580–584. https://doi.org/10.1016/j.juro.2013.02.3185

Miura S, Oshikiri T, Miura Y, Takiguchi G, Takase N, Hasegawa H, Yamamoto M, Kanaji S, Matsuda Y, Yamashita K, Matsuda T, Nakamura T, Suzuki S, Kakeji Y (2019) Optimal monitor positioning and camera rotation angle for mirror image: overcoming reverse alignment during laparoscopic colorectal surgery. Sci Rep 9(1):8371. https://doi.org/10.1038/s41598-019-44939-0

Moreira AH, Queirós S, Fonseca J, Rodrigues PL, Rodrigues NF, Vilaça JL (2014) Real-time hand tracking for rehabilitation and character animation. 2014 IEEE SeGAH 1–8. https://doi.org/10.1109/SeGAH.2014.7067086

Munafo J, Diedrick M, Stoffregen TA (2017) The virtual reality head-mounted display Oculus Rift induces motion sickness and is sexist in its effects. Exp Brain Res 235(3):889–901. https://doi.org/10.1007/s00221-016-4846-7

Nezhat F (2008) Minimally invasive surgery in gynecologic oncology: laparoscopy versus robotics. Gynecol Oncol 111(2):S29–S32. https://doi.org/10.1016/j.ygyno.2008.07.025

Nikitin A, Reshetnikova N, Sitnikov I, Karelova O (2020) VR training for railway wagons maintenance: architecture and implementation. Procedia Comput Sci 176:622–631. https://doi.org/10.1016/j.procs.2020.08.064

Nouralizadeh A, Tabatabaei S, Basiri A, Simforoosh N, Soleimani M, Javanmard B, Ansari A, Shemshaki H (2018) Comparison of open versus laparoscopic versus hand-assisted laparoscopic nephroureterectomy: a systematic review and meta-analysis. J Laparoendosc Adv Surg Tech 28(6):656–681. https://doi.org/10.1089/lap.2017.0662

Palumbo A (2022) Microsoft HoloLens 2 in medical and healthcare context: state of the art and future prospects. Sensors 22(20):7709. https://doi.org/10.3390/s22207709

Peters JH, Fried GM, Swanstrom LL, Soper NJ, Sillin LF, Schirmer B, Hoffman K, SAGES FLS Committee (2004) Development and validation of a comprehensive program of education and assessment of the basic fundamentals of laparoscopic surgery. Surgery 135(1):21–27. https://doi.org/10.1016/S0039-6060(03)00156-9

Qian L, Deguet A, Kazanzides P (2018) ARssist: augmented reality on a head-mounted display for the first assistant in robotic surgery. Healthc Technol Lett 5(5):194–200. https://doi.org/10.1049/htl.2018.5065

Qian L, Deguet A, Kazanzides P (2019) dVRK-XR: mixed reality extension for Da Vinci Research Kit. Hamlyn Symp Med Robot. https://doi.org/10.31256/HSMR2019.47

Ritter EM, Scott DJ (2007) Design of a proficiency-based skills training curriculum for the fundamentals of laparoscopic surgery. Surg Innov 14(2):107–112. https://doi.org/10.1177/1553350607302329

Sroka G, Feldman LS, Vassiliou MC, Kaneva PA, Fayez R, Fried GM (2010) Fundamentals of laparoscopic surgery simulator training to proficiency improves laparoscopic performance in the operating room—a randomized controlled trial. Am J Surg 199(1):115–120. https://doi.org/10.1016/j.amjsurg.2009.07.035

Suarez J, Murphy RR (2012) Hand gesture recognition with depth images: a review. 2012 IEEE RO-MAN 411–417. https://doi.org/10.1109/ROMAN.2012.6343787

Sun Y, Pan B, Fu Y, Niu G (2019) Development of a novel hand-eye coordination algorithm for robot assisted minimally invasive surgery. 2019 IEEE ROBIO 1234–1239. https://doi.org/10.1109/ROBIO49542.2019.8961587

Tavakoli M, Patel RV, Moallem M (2006) A haptic interface for computer-integrated endoscopic surgery and training. Virtual Real 9:160–176. https://doi.org/10.1007/s10055-005-0017-z

Townsend CM, Beauchamp RD, Evers BM, Mattox KL (2016) Sabiston Textbook of Surgery. Elsevier Health Sciences, Amsterdam

Turchetti G, Palla I, Pierotti F, Cuschieri A (2012) Economic evaluation of Da Vinci-assisted robotic surgery: a systematic review. Surg Endosc 26(3):598–606. https://doi.org/10.1007/s00464-011-1936-2

Wee IJY, Kuo LJ, Ngu JCY (2020) A systematic review of the true benefit of robotic surgery: ergonomics. Int J Med Robot Comput Assist Surg 16(4):e2113. https://doi.org/10.1002/rcs.2113

Xu S, Perez M, Yang K, Perrenot C, Felblinger J, Hubert J (2014) Determination of the latency effects on surgical performance and the acceptable latency levels in telesurgery using the dV-Trainer® simulator. Surg Endosc 28(9):2569–2576. https://doi.org/10.1007/s00464-014-3504-z

Yasen M, Jusoh S (2019) A systematic review on hand gesture recognition techniques, challenges and applications. PeerJ Comput Sci 5:e218. https://doi.org/10.7717/peerj-cs.218

Zendejas B, Ruparel RK, Cook DA (2016) Validity evidence for the fundamentals of laparoscopic surgery (FLS) program as an assessment tool: a systematic review. Surg Endosc 30(2):512–520. https://doi.org/10.1007/s00464-015-4233-7

Zhang F, Bazarevsky V, Vakunov A, Tkachenka A, Sung G, Chang CL, Grundmann M (2020) MediaPipe hands: on-device real-time hand tracking. arXiv preprint arXiv:2006.10214. https://doi.org/10.48550/arXiv.2006.10214

Acknowledgements

This work was supported by the Korea Medical Device Development Fund grant funded by the Korean government (the Ministry of Science and ICT, the Ministry of Trade, Industry and Energy, the Ministry of Health & Welfare, the Ministry of Food and Drug Safety) (Project Number: 1711174462, RS-2020-KD000123), the Interdisciplinary Research Initiatives Program from College of Engineering and College of Medicine, Seoul National University (grant no. 800-20230385), and grant No. 1920230020 from the SNUH Research Fund.

Author information

Contributions

Conceptualization: Wounsuk Rhee, Young Gyun Kim, Jong Hyeon Lee, Jae Woo Shim, Byeong Soo Kim, Dan Yoon, Minwoo Cho, and Sungwan Kim. Methodology: Wounsuk Rhee, Young Gyun Kim, Jong Hyeon Lee, and Jae Woo Shim. Investigation: Wounsuk Rhee, Young Gyun Kim, Jong Hyeon Lee, Jae Woo Shim, Byeong Soo Kim, and Dan Yoon. Software: Wounsuk Rhee. Evaluation: Wounsuk Rhee and Young Gyun Kim. Formal analysis: Wounsuk Rhee, Young Gyun Kim, Jong Hyeon Lee, and Jae Woo Shim. Visualization: Wounsuk Rhee. Writing – original draft: Wounsuk Rhee and Young Gyun Kim. Writing – review & editing: Wounsuk Rhee, Young Gyun Kim, Jong Hyeon Lee, Jae Woo Shim, Byeong Soo Kim, Dan Yoon, Minwoo Cho, and Sungwan Kim. Funding acquisition: Sungwan Kim. Supervision: Minwoo Cho and Sungwan Kim.

Ethics declarations

Ethical approval

Approval of all ethical and experimental procedures and protocols was granted by the institutional review board (IRB) in Seoul National University Hospital (IRB No: H-2107-167-1236). Written consent was obtained from all participants.

Conflict of interest

The authors declare that they have no conflict of interest.

Appendix

Usability questionnaire

The usability questionnaire contains the 10 questions listed in Table 4. A single value is calculated from the responses according to the system usability scale. For questions 1, 3, 5, 7, and 9, 1 is subtracted from the preference score; for questions 2, 4, 6, 8, and 10, the preference score is subtracted from 5. The resulting values are then summed and multiplied by 2.5 to obtain an overall score on a 100-point scale. Higher scores indicate that the system is more user-friendly.
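The scoring rule above can be written as a short function. This is a minimal sketch with a hypothetical function name; it assumes that each question is answered on a 1 to 5 preference scale, as in the standard SUS.

```python
def sus_score(responses):
    """Compute the SUS score (0 to 100) from ten responses on an assumed 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    total = 0
    for question, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("each response must lie between 1 and 5")
        if question % 2 == 1:
            # Questions 1, 3, 5, 7, 9: subtract 1 from the response.
            total += response - 1
        else:
            # Questions 2, 4, 6, 8, 10: subtract the response from 5.
            total += 5 - response
    return total * 2.5
```

For example, a participant who answers 3 to every question obtains 20 × 2.5 = 50, and the most favorable answer pattern (5 on odd-numbered and 1 on even-numbered questions) yields 100.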

Van der Laan’s technology acceptance scoring

Van der Laan’s technology acceptance score indicates the users’ willingness to use a given technology. It comprises the nine items listed in Table 5. The score per item ranges from −2 to 2, where a higher score reflects a more positive response. The questionnaire consists of scales related to usefulness (items 1, 3, 5, 7, and 9) and satisfaction (items 2, 4, 6, and 8).
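The split into two scales can be sketched as follows. The function name is hypothetical. Taking the mean of the items in each scale is an assumption, since the aggregation is not stated here. Item scores are also assumed to be already oriented so that higher values are more positive.

```python
FIRST_SCALE_ITEMS = (1, 3, 5, 7, 9)   # items 1, 3, 5, 7, and 9
SATISFACTION_ITEMS = (2, 4, 6, 8)     # items 2, 4, 6, and 8


def acceptance_subscales(item_scores):
    """Return (first_scale_mean, satisfaction_mean) from nine item scores in [-2, 2]."""
    if len(item_scores) != 9:
        raise ValueError("the questionnaire has exactly nine items")
    if any(not -2 <= score <= 2 for score in item_scores):
        raise ValueError("each item score must lie between -2 and 2")
    # Items are numbered from 1 in the questionnaire, so shift to 0-based indices.
    first = sum(item_scores[i - 1] for i in FIRST_SCALE_ITEMS) / len(FIRST_SCALE_ITEMS)
    satisfaction = sum(item_scores[i - 1] for i in SATISFACTION_ITEMS) / len(SATISFACTION_ITEMS)
    return first, satisfaction
```

Under these assumptions, a participant scoring 2 on every odd-numbered item and 1 on every even-numbered item would obtain 2.0 on the first scale and 1.0 on the satisfaction scale, both above zero.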

Ergonomics survey

Ergonomics is evaluated separately for the modified peg transfer and line tracking tasks according to the items listed in Table 6. We mainly focus on joint fatigue in the spine and upper extremities as well as eye fatigue and dizziness. All the scores are recorded on a 0–10 scale. Higher scores indicate more fatigue.
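The interpretation of individual fatigue scores follows the standards given to the participants: scores of 3 or below indicate negligible discomfort, scores from 4 to 6 indicate perceivable yet tolerable discomfort, and scores of 7 or above indicate intolerable fatigue. A minimal sketch of this mapping is given below; the function name and category labels are hypothetical, and integer scores are assumed because the standards do not define how values between 3 and 4 or between 6 and 7 should be treated.

```python
def fatigue_category(score):
    """Map an integer fatigue score (0 to 10) to the category given to participants."""
    if not 0 <= score <= 10:
        raise ValueError("fatigue scores are recorded on a 0-10 scale")
    if score <= 3:
        return "negligible"
    if score <= 6:
        return "tolerable"
    return "intolerable"
```

For instance, `fatigue_category(5)` falls in the perceivable yet tolerable range, which corresponds to the level reported for the neck and shoulders.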

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rhee, W., Kim, Y.G., Lee, J.H. et al. Unconstrained lightweight control interface for robot-assisted minimally invasive surgery using MediaPipe framework and head-mounted display. Virtual Reality 28, 114 (2024). https://doi.org/10.1007/s10055-024-00986-1

DOI: https://doi.org/10.1007/s10055-024-00986-1