Abstract

This work presents the development of a mixed reality (MR) application that uses color Passthrough to help users learn to play the piano. A study (N = 33) compared the participants’ performance outcomes and subjective experience when learning to play the piano with this MR application and with a system based on Synthesia. The results show that both the MR application and Synthesia were effective for learning the piano. However, the students played the pieces significantly better when using the MR application. Both applications provided a satisfying user experience, but the students’ subjective experience was better with the MR application. Other conclusions derived from the study include the following: (1) the students’ outcomes and their subjective opinion about the experience when using the MR application were independent of age and gender; (2) the sense of presence offered by the MR application was high (above 6 on a scale of 1 to 7); (3) the adverse effects induced by wearing the Meta Quest Pro and using our MR application were negligible; and (4) the students preferred the MR application. In conclusion, the advantage of our MR application over other types of applications (e.g., non-projected piano roll notation) is that the user has a direct view of the piano and the help elements appear integrated into the user’s view. The user does not have to take their eyes off the keyboard and can stay focused on playing the piano.



1 Introduction

Extended reality (XR) is a term that encompasses virtual reality (VR), augmented reality (AR), and mixed reality (MR). XR has been gaining traction in various sectors, including entertainment, education, design, and simulation. This evolution has been fueled by increasingly advanced software (e.g., Vuforia, ARCore, ARKit, SparkAR) and hardware (e.g., Oculus/Meta Quest 2, HoloLens 2). In July 2021, Meta released an experimental API called Passthrough for its Oculus Quest 2 headset, which allowed users to view their real environment in 3D using the device’s front-facing cameras and sensors. The ability to integrate elements of the real world with virtual objects through this function has promoted the development of various MR applications. However, this first version of Passthrough has some limitations because it relies on infrared cameras that were designed to capture movement (controller tracking) rather than specifically for this purpose. As a result, the reconstructed image is of low quality and is displayed in grayscale. In addition, users may experience instabilities, especially at short distances. Furthermore, the running application cannot directly access the real-world image information: from the application’s point of view, the real-world image behaves like a fixed background that is replaced by the image captured by the cameras in a way that is transparent to the application. More recently, in October 2022, Meta released a new headset called Meta Quest Pro. This headset is equipped with enhanced infrared sensors to capture the surrounding environment and an additional front-facing high-resolution color camera, resulting in a color Passthrough with greater definition and stability. This is one of the two major differences from its predecessor; the other is facial and eye tracking.

This work presents an MR application for learning to play the piano that takes advantage of the new color Passthrough of the Meta Quest Pro. Our MR application uses Passthrough to show users the real image of the piano in color, on which they play a previously loaded piece. The help information for each note (its start time and duration) is presented to users as virtual cubes that fall onto the real keys; the application renders these cubes over the real-world image generated by Passthrough.

Systems based on different technologies have been proposed for music learning, and specifically for the piano. Some applications run on computers or mobile devices (e.g., tablets) and, from a MIDI file or similar input, show rectangles falling onto a virtual piano keyboard. Both the rectangles and the virtual piano are displayed on a computer or device screen to guide the performance of a piece of music. Among them, one of the best known is Synthesia (https://synthesiagame.com), which has been widely used as a method for learning to play the piano on one’s own. Synthesia is considered one of the baseline approaches for learning the piano, and it is one of the systems most frequently compared with other proposals [e.g., (Rogers et al. 2014; Molloy et al. 2019)]. Therefore, in this work, we carried out a study to compare our MR application with a Synthesia-based reference approach (using a MIDI keyboard and an iPad).

2 Related work

The evolution of technology has been reflected in all fields, and music learning has been no exception. Various systems have been presented for learning to play the piano, violin, guitar, drums, or flute. However, the piano is the instrument that has received the most attention (Deja et al. 2022). Different applications have been proposed for learning to play the piano, several of which use the idea of falling notes to project visual information onto a real or virtual piano keyboard. VR applications have been developed using the VR headsets of the time, and the emergence of Leap Motion allowed hand tracking to be incorporated. From the beginning, one way of achieving AR was to add cameras to these VR headsets to capture the image of the real world, superimpose virtual elements on the captured image, and display the augmented image on the headset screens. The emergence of headsets like HoloLens marked a turning point with respect to their predecessors because they include hand tracking, they are standalone devices, the virtual elements are blended with the real environment, and the interaction with virtual elements is natural. The main drawback of HoloLens is its high price. MR is increasingly becoming the agreed-upon term for experiences on headsets like HoloLens and on devices with similar or greater capabilities. The appearance of the Oculus/Meta Quest 2 and its grayscale Passthrough marked another turning point because it is a standalone device that allows virtual elements to be integrated into the real environment at an affordable price. Its main drawback is that the Passthrough image is grayscale and unstable. Some previous works that illustrate this technological evolution and its use for learning the piano are described below.

With regard to VR, Wijaya et al. (2020) presented a VR system that recorded and analyzed the user’s fingering data. Their system used a transparent physical board that simulated a keyboard. For fingering registration, the system used multiple pressure sensors attached to the users’ fingertips and two Leap Motion devices for wide-range hand tracking. An HTC Vive headset was used for visualization. The authors compared visualizing the system on the headset with visualizing it on a 2D screen and concluded that the participants found the headset visualization more helpful for learning, more intuitive, and more enjoyable than the 2D screen visualization. As a drawback, the participants noted that the headset was less comfortable due to its weight. Desnoyers-Stewart et al. (2017) developed a system that combined an Arduino-based MIDI keyboard, a VR headset (HTC Vive), and a hand tracking device (Leap Motion). The Leap Motion detected the users’ hands while they played the real piano, and the users saw the virtual piano and a representation of their hands on the headset screen.

With regard to AR, Huang et al. (2011) developed Piano AR, which did not require markers to identify the physical position of the piano. Instead, it identified the keyboard area by the structure of the black and white keys and the outline of the keyboard by natural feature tracking, using a webcam to capture the image. The authors’ goal was to determine the accuracy of the system. With regard to AR displayed on headsets, Chow et al. (2013) proposed an AR system to encourage practice and promote notation literacy. Their system used marker-based tracking, a headset with cameras (Trivisio ARvision-3D), and a MIDI interface for tracking performances. It displayed feedback on note-playing accuracy and a final report after a piece was played. Molloy et al. (2019) used a VR headset (HTC Vive) with a mounted stereo camera (Zed Mini) and a MIDI keyboard connected to a computer to develop a gamified piano practice environment. They focused on providing precise feedback on rhythm and note accuracy. Some limitations of their system were inconsistent tracking stability and inaccuracies in the overlaid display. They reported that users played notes more accurately than with Synthesia. Rigby et al. (2020) presented piARno, an AR application for teaching sheet music reading, which superimposed the notes to be played on a virtual musical sheet. To do this, they used a VR headset (HTC Vive) with a mounted stereo camera (Zed Mini). They demonstrated that piARno significantly improved users’ ability to recall notes by name and to execute them correctly. With regard to AR projected onto the real environment, Rogers et al. (2014) presented P.I.A.N.O., a system that physically projected notes onto the real piano using a projector placed above the piano on a tripod. In their study, they compared P.I.A.N.O., Finale, and Synthesia. They concluded that P.I.A.N.O. required less cognitive load, provided faster progress over a week of practice, facilitated better initial learning performance, provided a better user experience, and induced better perceived quality compared to non-projected piano roll notation (Synthesia) and sheet music notation (Finale).

With regard to MR using HoloLens, Birhanu and Rank (2017) used HoloLens to explore piano pedagogy. They demonstrated that MR can be an effective tool to help students learn to play the piano. Hackl and Anthes (2017) also used HoloLens, placing an image target underneath the piano keyboard that was tracked by Vuforia. Hackl and Anthes encountered difficulties due to the technical limitations of HoloLens, particularly the limited field of view of the device. With a development similar to that of Hackl and Anthes, Molero et al. (2021) also used HoloLens and Vuforia to track the real piano; in this case, the real piano itself served as the image target tracked by Vuforia. They carried out a study involving 5 teachers and 13 students and concluded that the participants were satisfied with the experience and that most of them wished to use this type of technology in their piano lessons.

With regard to MR using Quest 2, an MR application for learning to play the piano that used the grayscale Passthrough of the Meta Quest 2 was recently presented (Banquiero et al. 2023). Two visualization modes were used to display the piano keys (Wireframe and Solid). In the Wireframe mode, the border lines of all the keys were shown; in the Solid mode, a solid color hid the real keys. The two modes were compared. That work concluded that the students perceived the limitations of the grayscale Passthrough, although both display modes provided a satisfactory user experience.

Our work aims to go one step further than previous works in the use of technology applied to music learning. This is the first time that color Passthrough has been used for music learning, and, to our knowledge, no applications for other purposes have used color Passthrough. Moreover, this work explores the practical limits of this new technology and demonstrates how hardware improvements can be effectively leveraged to enrich the MR experience. In our case, integrating the virtual help into the real piano, so that users can play the real piano without having to look away from it, makes the experience as natural and intuitive as possible.

As mentioned in the introduction, Synthesia has been widely used as a method for learning to play the piano on one’s own. As detailed in previous works (Rogers et al. 2014; Molloy et al. 2019), Synthesia has been used as a baseline approach for comparison with new proposals. Apart from being used in research for comparison, Synthesia has also been used for other purposes. For example, supervised piano training sessions using Synthesia, combined with home practice exercises without Synthesia, were used to improve manual dexterity, finger movement coordination, and upper extremity function in chronic stroke survivors (Villeneuve et al. 2014). Villeneuve et al. carried out a study involving thirteen participants with chronic stroke. The piano training lasted three weeks and consisted of supervised sessions (9 × 60 min) and home practice. In the supervised training sessions, the musical pieces were shown using Synthesia on a laptop screen placed on the piano, and the participants played on a touch-sensitive Yamaha P-155 piano keyboard. Home piano exercises were performed on a roll-up flexible piano (Hand Roll Piano, 61 K). They concluded that the improvements in manual dexterity, finger movement coordination, and upper extremity function persisted for three weeks after the intervention. In another work, Synthesia was used to facilitate angklung learning (Julia et al. 2019). Julia et al. carried out a study involving fifteen participants. Their study consisted of the following steps: (1) creating angklung musical compositions as MIDI files; (2) loading the MIDI files into Synthesia; (3) setting up Synthesia; (4) learning the angklung using Synthesia; and (5) evaluating the learning process. They concluded that Synthesia helped students who could not read notation to play angklung compositions. These studies, along with others in the field, show that Synthesia is a well-established reference tool, which supports our decision to compare our MR application with Synthesia.

3 Development of the MR application and Synthesia

3.1 The Passthrough API

The Passthrough API provides the user with a 3D perception of the real world by capturing the environment through the front sensors of the headset. This information is used to reconstruct a three-dimensional image of the external environment. The reconstructed image is rendered by a dedicated service in a layer called the “Passthrough layer,” which is separate from the other rendering options. This layer is then composited internally with the rest of the image generated by the headset software, in a way that is transparent to the application. In the Oculus/Meta Quest 2 headset, the front-facing cameras used to generate the Passthrough layer are infrared cameras that were originally designed for controller tracking. Due to this configuration, the environment is reconstructed at low resolution and in grayscale. To address these limitations, Meta launched the Meta Quest Pro headset, which added high-resolution RGB cameras capable of generating a color Passthrough with higher visual quality (2328 × 1748 (16 MP RGB Passthrough overlay)). Nevertheless, stability issues and distortions persist, especially at short distances. With either headset (Oculus/Meta Quest 2 or Meta Quest Pro), the application has no access to the reconstructed image information, including the RGB color channels and depth (Z-buffer), or to any other information captured by the sensors. In other words, the application has very limited control over the interaction with Passthrough, which means, for example, that computer vision techniques (e.g., object detection) cannot be used. In addition, the current version does not support depth-based operations such as Z-buffering, which means that any virtual element drawn on top of Passthrough overwrites the real-world image. However, the API does support certain predefined types of compositing using the Passthrough layer (e.g., color keying or automatic edge detection). These functionalities are always transparent to the application, i.e., the application does not have direct control over them. It is important to mention that with both headsets, the reconstruction of the environment through Passthrough is highly sensitive to lighting conditions; under unsuitable lighting, stability issues may be more pronounced.

3.2 Meta Quest Pro

The Meta Quest Pro is the latest headset released by Meta, with significant improvements in MR and the Passthrough layer. Its display quality is considerably higher than that of its predecessors: its screen uses local dimming, which provides 75% higher contrast than the Oculus/Meta Quest 2, and it has 37% more pixels per inch and a larger sweet spot of maximum clarity thanks to its new pancake lenses. In terms of performance, the Meta Quest Pro has a newer processor with superior performance and improved graphics capabilities.

The Meta Quest Pro has its battery at the back, counterbalancing the weight of the front visor. It features higher-resolution front infrared sensors and an additional 16 MP color camera. Together, these allow MR applications to provide the user with a more immersive experience. In addition, a new Passthrough reconstruction method based on artificial neural networks is included. This technique, called NeuralPassthrough (Xiao et al. 2022), combines stereo depth estimation and image reconstruction networks to generate images from the point of view of the eye using an end-to-end approach. The Meta Quest Pro controllers benefit from improved tracking, so they remain tracked even when they are outside the headset’s view, for example, behind the headset. However, the controllers have internal rechargeable batteries that must be sufficiently charged for proper tracking. Their charge does not last long, and they discharge even when not in use. When the controllers are undercharged, the normal operation of the application is affected by constantly appearing messages that interrupt its use.

3.3 Design of the MR application

Our objective was to design an MR application for learning to play the piano that was both practical and attractive, taking advantage of the new color Passthrough of the Meta Quest Pro. The agile Scrum methodology was used for the design and implementation of the application. This methodology is based on iterative and incremental project management, which allows greater flexibility and responsiveness to change; the functionalities of the application were modified and expanded through successive iterations. First, a prototype was developed for the Meta Quest 2, with grayscale Passthrough and fewer functionalities than the application presented in this publication. The characteristics of this first prototype are described in Banquiero et al. (2023). Therefore, this publication mentions the common features and emphasizes the differences between the two applications. For clarity, where there may be confusion, Version 1 refers to the application with grayscale Passthrough and Version 2 refers to the application with color Passthrough. For the design, we first defined the main functional characteristics:

-

The application must include interaction that is as intuitive and natural as possible.

-

The interaction must be gestural, using the hands.

-

The application must facilitate learning while playing the real piano, so the application must have visual aids.

Four piano teachers (1 man and 3 women) collaborated in the design, development, and validation of the application. The meetings with the teachers were essential for creating the first prototype and, in subsequent meetings, for modifying some functionalities or adding new ones. For example, the first prototype had no 8th guide, staff, or next-note hint. The following guidelines were derived from the design and included in Version 1. Figure 1 shows some of the augmented elements displayed on the headset screen.

-

Background guide: a dark background that helps the user estimate the position of each falling note. This background is displayed just above the real piano keyboard. It has lines at the octaves and fifths, and adjacent keys are displayed with different intensities.

-

Colors: different colors are used for different notes. Notes to be played on a black piano key (notes with accidentals, i.e., sharps or flats) are shown in a darker tone. Notes that must be played with the left hand are displayed in a different color than notes that must be played with the right hand.

-

C keys: the keys on the real piano that represent the notes C (DO) are highlighted with a color to facilitate the location of octaves.

-

Names of the notes: they appear on the white keys of the real keyboard of the piano.

-

Staff: the application includes an option to display a sliding staff above the background guide.

-

Division of the measures: the application includes an option to display the measures. These measures are shown on the background guide with horizontal lines.
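Several of the guides above (darker notes on black keys, highlighted C keys, note names on white keys, and hand-specific colors) depend on classifying each key by its pitch class. The following Python sketch shows one way to do this from MIDI note numbers. The solfège labels, the RGB colors, and the darkening factor are illustrative assumptions, not values taken from the application.

```python
from typing import Optional, Tuple

# Pitch classes (MIDI note number mod 12) that lie on black keys: C#, D#, F#, G#, A#.
BLACK_PITCH_CLASSES = {1, 3, 6, 8, 10}
# Fixed-do solfège names for the white keys, indexed by pitch class (assumed labels).
WHITE_KEY_NAMES = {0: "DO", 2: "RE", 4: "MI", 5: "FA", 7: "SOL", 9: "LA", 11: "SI"}
# Hypothetical base colors (RGB, 0-255) for the notes assigned to each hand.
HAND_COLORS = {"left": (60, 120, 255), "right": (60, 200, 90)}
BLACK_KEY_SHADE = 0.6  # hypothetical factor that darkens notes played on black keys


def is_black_key(midi_note: int) -> bool:
    """True if the note is played on a black key (a sharp or flat)."""
    return midi_note % 12 in BLACK_PITCH_CLASSES


def is_c_key(midi_note: int) -> bool:
    """True for every C, the key highlighted to mark the start of an octave."""
    return midi_note % 12 == 0


def key_label(midi_note: int) -> Optional[str]:
    """Name drawn on a white key; black keys get no label."""
    return WHITE_KEY_NAMES.get(midi_note % 12)


def note_color(midi_note: int, hand: str) -> Tuple[int, int, int]:
    """Hand-specific color, shown in a darker tone for notes on black keys."""
    r, g, b = HAND_COLORS[hand]
    if is_black_key(midi_note):
        return (round(r * BLACK_KEY_SHADE), round(g * BLACK_KEY_SHADE), round(b * BLACK_KEY_SHADE))
    return (r, g, b)
```

For example, middle C (MIDI note 60) is labeled "DO" and highlighted as a C key, while C#4 (MIDI note 61) gets no label and a darker version of its hand's color.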

The following guidelines were derived from the design and were included in Version 2.

-

Next note indicator: the application has the option of showing an indicator on the piano key or keys corresponding to the next notes to be played in the piece. This helps the user to quickly identify the next finger movements or to place the hand in a suitable position.

-

Fingering indicator: the application offers a help function that shows the recommended fingering for playing the notes. It draws a dotted line from the piano key to be played to the corresponding finger, using the hand tracking of the device and the fingering information of the piece when available.

-

Performance feedback: the application can estimate (with some degree of accuracy) whether the user is playing the keys correctly and provide feedback. This is achieved by tracking the fingertips. When the correct key is pressed, the application draws icons that represent success and, likewise, shows icons that represent the mistakes made. This provides real-time feedback, allowing users to correct their mistakes and improve their playing technique. In addition, the application offers further information, such as the percentage of correct keys played with each hand, which allows users to monitor their progress.
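Once each expected note has been judged as played correctly or not, the per-hand percentage mentioned above is a simple ratio. The Python sketch below assumes a hypothetical `NoteResult` record produced by the feedback module; how a note is judged correct (the statistical approach outlined in Section 3.5.4) is outside its scope.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class NoteResult:
    hand: str      # "left" or "right" (hypothetical labels)
    correct: bool  # whether the expected key was judged as correctly pressed


def accuracy_by_hand(results: Iterable[NoteResult]) -> Dict[str, float]:
    """Percentage of correctly played notes for each hand that had notes."""
    results = list(results)
    totals = Counter(r.hand for r in results)
    hits = Counter(r.hand for r in results if r.correct)
    # Counter returns 0 for a hand with no correct notes, so no special case is needed.
    return {hand: 100.0 * hits[hand] / totals[hand] for hand in totals}
```

Hands without any notes in the piece are simply absent from the result, which avoids reporting a misleading 0% or dividing by zero.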

Different levels of augmenting the piano keyboard were defined (solid, wireframe, and clean). The solid and wireframe levels were included in Version 1 to alleviate the limitations of the Passthrough in grayscale. The clean level was included in Version 2 to exploit the potential of the Passthrough in color. These levels, ordered from fully virtual to the least degree of augmentation, are:

-

Solid: the real piano keys are hidden with virtual keys. The application draws the keys in a solid color (Fig. 2a). The user sees the rest of the piano and the environment (Fig. 3a).

-

Wireframe: the application highlights the outline of the real piano keys by drawing lines on their edges (Fig. 3b). By default, white lines are employed for the white keys and black lines for the black keys (Fig. 2c), allowing users to perceive the actual piano with virtual borders on the real keys. Additionally, in Version 2, users have the option to apply alpha blending to fill the interior (Fig. 2b), and they have the flexibility to choose alternative colors for the borders, such as light green for white keys and dark green for black keys (Fig. 2d).

-

Clean: the application shows only the note name above the center of the real piano key (Fig. 2e). The users can see the entire piano through the Passthrough image (Fig. 3c). Optionally, the application can also highlight the C keys in color, allowing the user to quickly identify the beginning of each octave.

These levels allow the augmentation of the piano keys to be adjusted progressively, from a completely virtual representation to a minimal one.
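The three levels can be understood in terms of the standard "over" compositing operator applied to each pixel of a key: the opacity of the virtual fill decides how much of the real key remains visible. The Python sketch below models that operator conceptually; it is an assumption for illustration and not the implementation used by the application's shaders or by the Meta runtime compositor, which the application cannot inspect.

```python
from typing import Tuple

Color = Tuple[float, float, float]  # RGB components in [0, 1]


def blend_over(virtual: Color, alpha: float, passthrough: Color) -> Color:
    """Composite a virtual color over the real-world color ("over" operator).

    alpha = 1 hides the real key completely (as in the Solid level),
    0 < alpha < 1 tints it (as in the optional Wireframe fill), and
    alpha = 0 leaves the Passthrough color unchanged (as in the Clean level).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    r, g, b = (alpha * v + (1.0 - alpha) * p for v, p in zip(virtual, passthrough))
    return (r, g, b)
```

Because Passthrough offers no depth information, the blend is applied wherever the virtual key is drawn, regardless of whether a hand lies in front of it.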

3.4 Description of the MR application for learning to play the piano

The user does not lose contact with reality at any time and continues to see the real world in color. The user presses the real piano keys to play the piece. The application helps users learn to play the piano by showing virtual notes that fall onto the real keys. The user knows which real piano key to press because the key is highlighted with color. Figure 4 shows a user playing the real piano with our MR application and her view.

Fig. 4 A user playing the piano with our application and her view: (a) photograph of a user using the application, showing both the user interface and the augmented objects superimposed on the actual photograph; (b) raw image captured by the device, showing the user’s perspective

Before musical pieces can be played on the piano, the person in charge of calibration must perform an initial calibration process. The purpose of this phase is for the application to learn the position and orientation of the physical piano within the virtual reference frame of the headset, so that the augmented information is seamlessly integrated with the real piano.

The calibration phase begins by defining the number of keys on the piano. A menu offers options for 49, 61, 76, or 88 keys for standard pianos and keyboards. Alternatively, a custom keyboard option allows the number of keys and the starting note to be specified. Optionally, the dimensions of the actual piano can be entered, specifically its length, measured from the start of the first white key to the end of the last one. Other physical measurements that are unrelated to calibration but offer flexibility in adjusting visual and esthetic parameters can also be entered, such as the length and height of the white and black keys. These values can be saved for future use, streamlining the calibration phase.
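The number of keys, the starting note, and the keyboard length are enough to place every key along the keyboard axis. The Python sketch below shows one way to do this, under simplifying assumptions that are ours rather than the application's: all white keys are equally wide, and each black key is centered on the boundary between its neighboring white keys (real black keys are slightly offset).

```python
from dataclasses import dataclass
from typing import List

BLACK_PITCH_CLASSES = {1, 3, 6, 8, 10}  # C#, D#, F#, G#, A#


@dataclass
class KeyLayout:
    midi_note: int
    is_black: bool
    center_x: float  # metres from the left edge of the first white key


def layout_keys(num_keys: int, start_note: int, length_m: float) -> List[KeyLayout]:
    """Place every key along the keyboard axis from the calibration parameters."""
    notes = range(start_note, start_note + num_keys)
    white_count = sum(1 for n in notes if n % 12 not in BLACK_PITCH_CLASSES)
    if white_count == 0:
        raise ValueError("the keyboard must contain at least one white key")
    white_width = length_m / white_count  # all white keys assumed equally wide
    keys: List[KeyLayout] = []
    whites_placed = 0
    for n in notes:
        if n % 12 in BLACK_PITCH_CLASSES:
            # Simplification: center the black key on the boundary between its neighbors.
            keys.append(KeyLayout(n, True, whites_placed * white_width))
        else:
            keys.append(KeyLayout(n, False, (whites_placed + 0.5) * white_width))
            whites_placed += 1
    return keys
```

For example, an 88-key piano starting at A0 (MIDI note 21) has 52 white keys, so with a measured length of 1.22 m (a hypothetical value) each white key is about 2.35 cm wide.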

Then, the person in charge of calibration must interactively define the position and orientation of the piano using either the controllers or their hands, employing one of the three methods explained in the calibration module section. After calibration, the application displays the main user interface in front of the piano, where it is easy for the user to reach without obstructing the view of the piano keys. However, some users, especially children and those playing grand pianos, may have difficulty reaching distant menu options when seated. To address this, the application offers an on-demand secondary mini user interface positioned near the left wrist. This interface provides quick access to essential functions without interfering with playing the piano keys.
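To illustrate what calibration has to recover, the Python sketch below shows a hypothetical two-point method: the calibrator marks the left end of the first white key and the right end of the last white key, and every key's offset along the keyboard is mapped into headset coordinates. This is not necessarily one of the three methods the application implements; it recovers only the keyboard's long axis, and a complete method would also need the direction pointing into the keys (e.g., from a third point or by assuming the keyboard is level).

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def keyboard_axis(left_end: Vec3, right_end: Vec3) -> Tuple[Vec3, float]:
    """Unit vector along the keyboard and the measured keyboard length (metres)."""
    dx, dy, dz = (r - l for r, l in zip(right_end, left_end))
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    if length == 0.0:
        raise ValueError("calibration points must be distinct")
    return (dx / length, dy / length, dz / length), length


def key_world_position(left_end: Vec3, axis: Vec3, local_x: float) -> Vec3:
    """Map a key's offset along the keyboard (metres) to headset coordinates."""
    x, y, z = (l + a * local_x for l, a in zip(left_end, axis))
    return (x, y, z)
```

The measured length could also be compared with the length entered in the calibration menu as a sanity check on the two marked points.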

The user interface enables the selection of several pieces and tutorials included in the application. The tutorials comprise different scales for practicing with the left hand, right hand, or both hands; their purpose is to familiarize the user with the interface and the augmented information. When a piece is selected, the application shows the initial hand placement by displaying left and right avatar hands, and the user is given a few seconds to prepare. Subsequently, a series of cubes, referred to as “falling notes,” appear above the keys of the physical piano and descend onto them. Each falling note represents a note of the piece and lands on the corresponding key of the piano. The user must press that key on the physical piano precisely when the falling note makes contact with it. The length and speed of each falling note are set so that it remains in contact with the physical key for exactly the duration specified in the melody. The falling notes are the most important augmented element generated by the application: they enable users, even those without any music-reading skills, to perform any piece by following the guides described earlier. The video accompanying this publication shows how our MR application works: first the calibration process, then a tutorial and the different display modes.
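The timing rule above follows directly if the notes fall at a constant speed: a box whose length is the note duration multiplied by the fall speed touches the key from the note's start time until its end. The Python sketch below computes the visible part of a falling note at a given song time. The constant speed, the spawn height, and the clipping at the key surface are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class FallingNote:
    midi_note: int
    start_s: float     # time at which the key must be pressed
    duration_s: float  # how long the key must be held


def note_extent(note: FallingNote, t: float, fall_speed: float,
                spawn_height: float) -> Optional[Tuple[float, float]]:
    """Visible (bottom, top) heights above the key surface at song time t, in metres.

    With a constant fall speed, the box length is duration * speed, so its
    bottom reaches the key at start_s and its top reaches it at start_s + duration_s.
    """
    bottom = (note.start_s - t) * fall_speed
    top = bottom + note.duration_s * fall_speed
    if top <= 0.0 or bottom >= spawn_height:
        return None  # already absorbed by the key, or not yet spawned
    return (max(bottom, 0.0), min(top, spawn_height))


def is_touching_key(note: FallingNote, t: float) -> bool:
    """True while the key should be held down."""
    return note.start_s <= t < note.start_s + note.duration_s
```

For example, a note starting at 2.0 s and lasting 0.5 s, falling at 1 m/s, spans 2.0 to 2.5 m above its key at t = 0 and has shrunk to its last 0.25 m at t = 2.25 s.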

3.5 Architecture and technical specifications

The application was developed using Unity 2020.3.14 and the Oculus Integration SDK version 54.1, which includes OVRPlugin 1.86.0. The same application build is compatible with both devices: the Meta Quest 2 with the grayscale Passthrough and the Meta Quest Pro with the enhanced color Passthrough. The architecture of the application is shown in Fig. 5. The application comprises five modules: calibration, user interface, feedback, scene generator, and simulation and renderer.

3.5.1 The simulation and renderer module

A dedicated simulation module, independent of the Unity physics engine, animates the falling note boxes. Its primary objective is to ensure that each box reaches its piano key precisely at the note’s start time. This synchronized animation, combined with other visual cues, allows the user to anticipate which key to play and when, effectively following the rhythm of the melody. Because the module is independent of the physics engine, it enables seamless advancement or regression within the melody, as well as control over playback speed, without any loss of precision. In Version 2, several visual effects were added. The wireframe mode was improved with a specialized shader that uses screen-space derivatives to consistently outline the borders of the piano keys. Additionally, Version 2 models the piano keys in more detail, ensuring a closer match to their real-world counterparts. Furthermore, the update incorporates new visual effects such as particle systems and disintegration effects, which create the illusion that the virtual elements merge seamlessly with the Passthrough image. All of these visual effects, including blending with the Passthrough layer and edge detection for the wireframe modes, are rendered through fragment shaders, which makes them easy to port to other graphics APIs and shading languages such as OpenGL (GLSL) or Direct3D (HLSL).
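One way to obtain seeking and speed control without loss of precision is to drive everything from a single song clock and compute each note's position directly from it, instead of integrating velocities frame by frame as a physics engine would. The Python sketch below illustrates that idea; the class and function names are hypothetical and do not describe the module's actual code.

```python
class PlaybackClock:
    """Song clock driven by frame time, decoupled from any physics engine."""

    def __init__(self, speed: float = 1.0) -> None:
        if speed <= 0.0:
            raise ValueError("speed must be positive")
        self.speed = speed      # 1.0 = original tempo, 0.5 = half speed
        self.song_time = 0.0    # seconds into the piece

    def tick(self, frame_dt: float) -> float:
        """Advance by one rendered frame, scaled by the playback speed."""
        self.song_time += frame_dt * self.speed
        return self.song_time

    def seek(self, song_time: float) -> None:
        """Jump forwards or backwards; no simulation state has to be replayed."""
        self.song_time = max(0.0, song_time)


def note_bottom_height(start_s: float, song_time: float, fall_speed: float) -> float:
    """Height of a note's lower face, computed directly from the clock (no integration)."""
    return (start_s - song_time) * fall_speed
```

Because positions are a pure function of the clock, jumping back to a difficult passage or halving the tempo places every note exactly where it would have been, with no accumulated numerical drift.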

3.5.2 The scene generator module

The objective of this module is to generate the geometry of the objects for the falling notes based on the musical information contained in music files. For this purpose, the application needs to know, at a minimum, the start time, duration, and frequency of each note that composes a musical piece. While Version 1 utilized boxes to represent each note, Version 2 introduces a variety of shapes, such as cylinders, capsules, and other geometric representations. The length of these objects depends on the duration of the notes, and their horizontal and vertical positions are determined by the frequency and start time of the notes, respectively. In addition to the shape variations, Version 2 enhances the color coding of the falling notes. Version 1 differentiated between the left and right hands using specific colors. In Version 2, a wider color palette is adopted to represent other voices. These could be played by someone else, left unplayed or disabled, or even be played by the application itself, serving as a form of accompaniment. Currently, the music parser supports two widely used musical formats: MIDI and MusicXML. MIDI files are primarily oriented toward the playback and control of electronic musical instruments. They only contain the basic information necessary for transmitting messages of notes, durations, dynamics, and other musical parameters to MIDI devices. As a result, they do not contain detailed information about musical interpretation. On the other hand, MusicXML files, unlike MIDI files, do contain more detailed information about musical interpretation. MusicXML is an XML-based format that allows for the representation of a wide range of musical elements, including notation, lyrics, articulations, dynamics, digitation, and more. 
When this information is present, the application can utilize it to incorporate optional augmented elements that aid in musical interpretation, such as fingering (indicating which finger to use for each note) or visualizing the staff.
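The mapping described above (pitch to horizontal position, start time to vertical position, duration to length) can be sketched in Python as follows. This is a hypothetical illustration, not the application's parser: it assumes note events have already been decoded into `(time_s, kind, pitch)` tuples (a real MIDI parser must also treat a note-on with velocity 0 as a note-off), an 88-key keyboard starting at A0 (MIDI 21), a white-key width of 23.5 mm, and, as a simplification, black keys centered on the boundary between their white neighbors. All names and parameters are illustrative.

```python
from dataclasses import dataclass

# Pitch classes of the black keys: C#, D#, F#, G#, A#
BLACK_PITCH_CLASSES = {1, 3, 6, 8, 10}


def is_black(pitch: int) -> bool:
    return pitch % 12 in BLACK_PITCH_CLASSES


def key_center_x(pitch: int, lowest: int = 21, white_width: float = 0.0235) -> float:
    """Horizontal position (m) of a key center, measured from the left edge.

    Assumes the keyboard starts on a white key (`lowest`) and centers each
    black key on the boundary between its two white neighbors.
    """
    whites_below = sum(1 for q in range(lowest, pitch) if not is_black(q))
    if is_black(pitch):
        return whites_below * white_width
    return (whites_below + 0.5) * white_width


@dataclass
class NoteBox:
    x: float       # horizontal center, from pitch
    y: float       # height of the bottom edge, from start time
    length: float  # vertical length, from duration
    black: bool    # could select a different depth or color


def pair_events(events):
    """Turn (time_s, kind, pitch) events, kind 'on'/'off', into
    (pitch, start, duration) notes.

    Sorting puts 'off' before 'on' at equal times, so a re-struck key
    closes the old note before a new one is opened.
    """
    open_notes = {}
    notes = []
    for time, kind, pitch in sorted(events):
        if kind == "on":
            open_notes[pitch] = time
        elif kind == "off" and pitch in open_notes:
            start = open_notes.pop(pitch)
            notes.append((pitch, start, time - start))
    return notes


def build_boxes(notes, meters_per_second: float = 0.2):
    """Create one box per note; time is converted to height in meters."""
    return [NoteBox(key_center_x(p), start * meters_per_second,
                    dur * meters_per_second, is_black(p))
            for p, start, dur in notes]
```

Voice or hand information (for the color coding) and MusicXML fingering could be carried as extra fields of `NoteBox`; they are omitted here to keep the sketch short.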

3.5.3 The user interface module

Our application follows the design and interaction guidelines that Meta has established for its devices, adapted to the specific context of learning to play the piano. Therefore, although the user can optionally use the controllers, the entire application can be operated with the hands alone. In Version 1, the core of the interaction was a module called Smart Tracker, which used algorithms to analyze the raw data from the device's hand tracking API. In Version 2, the Smart Tracker has been enhanced with finite state machines (FSMs) to refine user interaction: each interface component, such as a virtual button or slider, now has its own FSM. This approach not only makes the interaction more natural and better aligned with user expectations but also mitigates errors that may arise from native hand tracking.
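As an illustration of how a per-component FSM can filter tracking noise, the following Python sketch models a virtual button driven by the signed distance between the fingertip and the button surface. It is a hypothetical example, not the Smart Tracker itself and not a Meta SDK API: the states, the thresholds (`hover_dist`, `press_depth`, `release_depth`) and the `min_frames` debounce are assumptions. Hysteresis (a release threshold smaller than the press threshold) and the consecutive-frame requirement prevent a single noisy frame from triggering or releasing a click.

```python
from enum import Enum, auto


class ButtonState(Enum):
    IDLE = auto()
    HOVER = auto()
    PRESSED = auto()


class VirtualButtonFSM:
    """Per-button state machine driven by the fingertip-to-surface distance.

    distance > 0: fingertip in front of the button surface (meters)
    distance < 0: fingertip pushed "into" the button
    """

    def __init__(self, hover_dist=0.03, press_depth=0.01,
                 release_depth=0.004, min_frames=2):
        self.hover_dist = hover_dist        # enter HOVER when closer than this
        self.press_depth = press_depth      # depth needed to count as a press
        self.release_depth = release_depth  # smaller than press_depth: hysteresis
        self.min_frames = min_frames        # consecutive deep frames to click
        self.state = ButtonState.IDLE
        self._press_frames = 0

    def update(self, distance: float) -> bool:
        """Advance one tracking frame; return True on the frame a click fires."""
        depth = -distance
        if self.state is ButtonState.IDLE:
            if distance < self.hover_dist:
                self.state = ButtonState.HOVER
        elif self.state is ButtonState.HOVER:
            if distance >= self.hover_dist:
                self.state = ButtonState.IDLE
                self._press_frames = 0
            elif depth >= self.press_depth:
                self._press_frames += 1
                if self._press_frames >= self.min_frames:
                    self.state = ButtonState.PRESSED
                    self._press_frames = 0
                    return True
            else:
                # A single noisy deep frame does not accumulate toward a click.
                self._press_frames = 0
        elif self.state is ButtonState.PRESSED:
            if depth < self.release_depth:
                self.state = ButtonState.HOVER
        return False
```

A slider could follow the same pattern with an additional DRAGGING state whose value is updated only while the pinch or press is held.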

3.5.4 The feedback module

Neither the Meta Quest 2 nor the Meta Quest Pro provides sufficient precision to play a complex melody accurately based solely on native hand tracking, without a physical piano. If the application generated sound when virtual keys were pressed (like an “air piano”), an additional detection or interaction mechanism would be required, because hand tracking alone is not precise enough for this task. However, the tracking precision of the devices is adequate to provide reasonably good feedback during the performance of a specific musical piece, because the exact position required for each finger at any given moment is known and because of the algorithm we designed for this purpose, which is described later in this subsection.

This module was added in Version 2. During the performance of a piece, it continuously checks for collisions between the fingers and the piano keyboard and compares this information with the falling notes to determine whether the user pressed the correct key at the right moment. However, device tracking alone is not precise enough for this task because of several factors, such as finger overlap in certain parts of the piece, the proximity of adjacent white and black keys (e.g., B and B-flat), and the speed of the melody in some passages. To address this issue, a statistical approach is employed. To simplify the problem, we made the following assumptions:

- Measurements derived from the device tracking are essentially treated as independent.
- The three spatial coordinates that represent each finger's position are considered separately.

When a finger position is projected onto the virtual piano, the horizontal coordinate indicates the pitch of the note, the vertical coordinate indicates whether or not the key is pressed, and the depth coordinate differentiates between black and white keys. The algorithm calculates an error metric based on the difference between the detected finger position and the desired position derived from the score. This positional discrepancy, termed the “error”, is modeled using a normal distribution centered at zero (no error), with a standard deviation derived from empirical observations and our prior experiments. Using this distribution, the algorithm estimates the probability that the user is pressing the correct key and compares it with a chosen probability level. This level is tied directly to the desired difficulty setting: a higher probability level demands a stricter error margin, so the user's finger position must closely match the desired position, whereas a lower level offers greater leniency. This approach compensates for potential inaccuracies in the device measurements while remaining forgiving of minor user errors. In addition to spatial accuracy, the algorithm takes timing into account: recognizing the crucial role of rhythm in music, it tolerates minor deviations in the exact moment at which a note is played. The algorithm also stores previous finger positions to estimate future ones and thus predicts note accuracy both spatially and temporally.
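The text does not specify how the per-axis probabilities are combined or how the probability level is applied, so the following Python sketch is only one plausible reading, under explicit assumptions. For each axis, it computes the two-sided probability P(|E| ≥ |e|) of an error at least as large as the observed one under E ~ N(0, σ²); following the independence assumption above, it multiplies the three axis probabilities and accepts the press if the result reaches `level` (so a higher level shrinks the accepted error margin). Timing is checked against a fixed tolerance, and future positions are predicted by linear extrapolation from the last two stored samples. The σ values, `time_tolerance`, and all function names are hypothetical.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def axis_tail_probability(error: float, sigma: float) -> float:
    """Two-sided probability P(|E| >= |error|) for E ~ N(0, sigma^2)."""
    z = abs(error) / sigma
    return 2.0 * (1.0 - _STD_NORMAL.cdf(z))


def key_press_probability(detected, target, sigmas) -> float:
    """Combine per-axis probabilities (x = pitch, y = press, z = black/white).

    The axes are treated as independent, so the joint probability is the
    product of the per-axis probabilities.
    """
    p = 1.0
    for d, t, s in zip(detected, target, sigmas):
        p *= axis_tail_probability(d - t, s)
    return p


def is_correct_press(detected, target, sigmas, level,
                     t_detected, t_expected, time_tolerance) -> bool:
    """Accept if timing is within tolerance and the spatial probability
    reaches `level` (a higher level means a stricter error margin)."""
    if abs(t_detected - t_expected) > time_tolerance:
        return False
    return key_press_probability(detected, target, sigmas) >= level


def predict_position(previous, current, dt_sample, dt_ahead):
    """Linearly extrapolate a fingertip position dt_ahead seconds forward."""
    k = dt_ahead / dt_sample
    return tuple(c + (c - p) * k for p, c in zip(previous, current))
```

With σ = 1 cm on each axis, a 1 cm horizontal error gives a probability of about 0.32: it passes at `level=0.05` but fails at `level=0.5`, which is the difficulty behavior described above.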

3.5.5 The calibration module

It is crucial that the geometry of the falling notes intersects the keys of the physical piano accurately. This alignment prevents user confusion, keeps the augmented information meaningful, ensures that the user can clearly identify which note to play, and maintains the integrity of the MR experience. It is achieved by the calibration module. To position the falling notes accurately on the physical piano, the application needs to know the position and orientation of the piano relative to the headset. The calibration module provides three methods for aligning the physical piano with the virtual reference frame of the device:

Method 1: Anchors + Right Controller. The user employs the right controller to grab and move two spherical objects called anchors, each of which defines a position in 3D space. To calibrate the piano, the user places the left anchor at the initial position; the right anchor defines the final position and, thus, the orientation. Figures 6a and 6b show calibration using this method.

Method 2: Right and Left Controllers. The user calibrates with both controllers. First, the user places the left controller in physical contact with the left end of the piano. Then, the user repeats the process, placing the right controller on the right end of the piano, and presses the primary button to fix the position.

Method 3: Hand Tracking. The user presses the first key of the piano with the index finger of the left hand while simultaneously performing a pinch gesture with the right hand for about two seconds. The same process is repeated on the right side.

Once the piano has been calibrated using any of these three methods, the user can fine-tune the calibration through the user interface. A series of buttons moves the left or right anchor in any direction in steps as small as a tenth of a millimeter, enabling precise adjustments (Fig. 6c).
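All three methods end with two reference points, one at each end of the keyboard. The following Python sketch shows one way such a pair of points could define the keyboard frame. It is an illustration under assumptions, not the module's code: the world y axis points up, the keyboard is assumed to be level (so only the horizontal x–z components define its direction), and the sign of the perpendicular (depth) axis is an arbitrary convention. `piano_pose`, `keyboard_to_world`, and `nudge` are hypothetical names; `nudge` mimics the 0.1 mm fine-tuning buttons.

```python
import math


def piano_pose(left, right):
    """Estimate the keyboard frame from two calibration points.

    left, right: (x, y, z) world positions of the two reference points,
    with y pointing up. Returns (origin, yaw, length), where yaw is the
    angle of the keyboard axis in the x-z plane, measured from +x toward +z.
    """
    dx = right[0] - left[0]
    dz = right[2] - left[2]
    return tuple(left), math.atan2(dz, dx), math.hypot(dx, dz)


def keyboard_to_world(u, v, w, pose):
    """Map keyboard-local coordinates to world coordinates.

    u: distance along the keyboard from the left point
    v: height above the left point
    w: horizontal offset perpendicular to the keyboard
    """
    (ox, oy, oz), yaw, _ = pose
    c, s = math.cos(yaw), math.sin(yaw)
    # Along-keyboard axis is (c, 0, s); perpendicular axis is (-s, 0, c).
    return (ox + u * c - w * s, oy + v, oz + u * s + w * c)


def nudge(point, axis, steps, step=0.0001):
    """Fine-tuning: move an anchor by whole 0.1 mm steps along one axis."""
    moved = list(point)
    moved[axis] += steps * step
    return tuple(moved)
```

After any nudge, recomputing `piano_pose` from the adjusted anchors updates the positions of all falling notes at once.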

3.6 Synthesia

Synthesia is a software application for learning to play the piano. It displays the musical notes in a graphical interface while the piece is playing, which facilitates learning and practicing music. Synthesia generates the pieces from MIDI files and displays the notes to be played as colored cubes that fall from the top of the screen onto the corresponding keys of an on-screen piano. Each colored cube lines up with the specific note that needs to be played. Synthesia can be used by touching the notes directly on a touch screen, providing an intuitive and accessible experience. If a MIDI keyboard is connected, Synthesia takes its input and uses it to provide feedback in real time. Users can follow the notes and practice at their own pace, adjusting the playback speed to their skill level. Synthesia also offers options for one-handed practice, repeating specific sections of a piece, and receiving real-time feedback on performance. In addition to its primary piano-teaching functionality, Synthesia offers features such as custom sheet music creation, performance recording, and sound mixing. There are several similar applications, such as Playground Sessions, Flowkey, and Piano Marvel. However, Synthesia is considered one of the most popular applications among beginners and hobbyists who want to learn and improve their piano playing skills.

In this work, we carried out a study comparing our MR application with a system based on Synthesia. In our study, Synthesia (Version 10.9.5895) was run on an 11″ iPad Pro. A paid Synthesia license was used to access the 150 pieces it includes. We used a CME M-Key MIDI keyboard with 49 keys, connected to the iPad Pro via a USB MIDI connector. The iPad Pro was placed horizontally on a stand, which made it easier to view the falling notes. Figure 7 shows the layout of the complete system.

4 Study

4.1 Participants

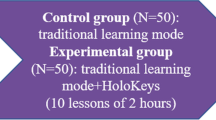

The participants were 33 piano students (11 men and 22 women) from the U.M. Santa Cecilia Music School of Benicàssim, Spain. They came from two programs of study: an official program (22 students) and an unofficial program for adults (11 students). The students of the official program were distributed across its levels as follows: initiation (6 students), first level of elementary education (5 students), second level (3 students), third level (4 students), and fourth level (4 students). The participants were divided into two groups (MR and Syn), counterbalancing age and gender. The MR group had 17 students and the Syn group had 16 students; in both groups, ages ranged from 7 to 65 years. The students, or their parents if the students were minors, received information about the study and signed the informed consent form before participating. The study was carried out in accordance with the Declaration of Helsinki and was approved by the Ethics Committee of the Universitat Politècnica de València (Spain).

4.2 Measures

4.2.1 Assessment of the teachers

The teachers assessed how each student played two pieces with each of the two modalities (MR and Syn) on a scale from 0 to 10. For each modality, the mean of the scores assigned to the two pieces was calculated (variables TeacherMR and TeacherSyn).

4.2.2 Questionnaires

The following questionnaires were used:

(1) SSQ (simulator sickness questionnaire) (Kennedy et al. 1993). The SSQ contains sixteen symptoms rated on a 4-point Likert scale. The symptoms are grouped into three subscales: disorientation, oculomotor, and nausea. Each subscale contains seven symptoms, and some symptoms contribute to more than one subscale. The procedure proposed by Kennedy et al. (1993) was used to calculate the total score (TSSQ variable) and the subscale scores (Disorientation, Oculomotor, and Nausea variables).

(2) Presence. Six questions were used (Regenbrecht and Schubert 2002). The presence score (PRE variable) was calculated as the mean of the scores of the presence questions, after inverting the scores of the two negatively worded questions.

(3) TAM (technology acceptance model) (Davis 1993). Four questions were used. Three questions (variables TAM1–TAM3) measure perceived usefulness, and the fourth question (TAM4 variable) measures perceived ease of use.

(4) UEQ (user experience questionnaire) (Laugwitz et al. 2008). The UEQ consists of twenty-six items on a 7-point Likert scale, grouped into six variables: Attractiveness, Efficiency, Dependability, Stimulation, Novelty, and Perspicuity.

(5) Preferences. This questionnaire asks about the participants' preferences between the two applications and invites open comments. The questions were: PF1- Which one did you like the most (MR or Synthesia)?; PF2- Which one do you think is better for musical learning (MR or Synthesia)?; PF3- Which one was easier for you to handle (MR or Synthesia)?; PF4- Would you recommend them to your colleagues (MR, Synthesia, the two equally, neither)?; PF5- Would you like your teacher to use either of them in class (MR, Synthesia, the two equally, neither)?; PF6- Open comments.

(6) Data. This questionnaire collected the following information about the participants: age, gender, type of vision, and level of experience with computers.
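The SSQ and presence scoring described above can be sketched in Python as follows. The symptom-to-subscale assignment and the weights (9.54 for nausea, 7.58 for oculomotor, 13.92 for disorientation, and 3.74 for the total score applied to the sum of the three raw subscale sums) are those published by Kennedy et al. (1993). The presence function assumes a 1–7 scale and inverts negatively worded items as 8 − x; which two of the six items are negative is not stated here, so the indices in the usage example are hypothetical.

```python
# Symptom -> subscales it contributes to (N = nausea, O = oculomotor,
# D = disorientation), following Kennedy et al. (1993).
SSQ_ITEMS = {
    "general discomfort": "NO",
    "fatigue": "O",
    "headache": "O",
    "eyestrain": "O",
    "difficulty focusing": "OD",
    "increased salivation": "N",
    "sweating": "N",
    "nausea": "ND",
    "difficulty concentrating": "NO",
    "fullness of head": "D",
    "blurred vision": "OD",
    "dizzy (eyes open)": "D",
    "dizzy (eyes closed)": "D",
    "vertigo": "D",
    "stomach awareness": "N",
    "burping": "N",
}
WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
TOTAL_WEIGHT = 3.74


def ssq_scores(ratings):
    """ratings: symptom -> 0 (none), 1 (slight), 2 (moderate), 3 (severe).

    Missing symptoms count as 0. Returns weighted subscale scores and the
    total score "TS" (the TSSQ variable).
    """
    raw = {"N": 0, "O": 0, "D": 0}
    for symptom, subscales in SSQ_ITEMS.items():
        for s in subscales:
            raw[s] += ratings.get(symptom, 0)
    scores = {s: raw[s] * WEIGHTS[s] for s in raw}
    scores["TS"] = (raw["N"] + raw["O"] + raw["D"]) * TOTAL_WEIGHT
    return scores


def presence_score(answers, negative_items, scale_max=7):
    """Mean of 1..scale_max answers, inverting negatively worded items."""
    adjusted = [(scale_max + 1 - a) if i in negative_items else a
                for i, a in enumerate(answers)]
    return sum(adjusted) / len(adjusted)
```

For example, a participant who reports only slight nausea obtains a total score of (1 + 0 + 1) × 3.74 = 7.48, because "nausea" contributes to both the nausea and disorientation subscales.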

4.3 Protocol

The participants were counterbalanced and randomly assigned to one of two groups:

(1) The MR Group. The students followed this protocol:
  (a) They played two pieces on the piano using the MR application. The pieces were chosen by the teacher based on the student's level.
  (b) They filled out the following questionnaires: SSQ, Presence, TAM, and UEQ.
  (c) They played two pieces on a MIDI keyboard using Synthesia, with the visual aids displayed on the iPad screen. The pieces were chosen by the teacher based on the student's level.
  (d) They filled out the following questionnaires: TAM, UEQ, Preferences, and Data.

(2) The Syn Group. The students followed this protocol:
  (a) They played two pieces on a MIDI keyboard using Synthesia, with the visual aids displayed on the iPad screen. The pieces were chosen by the teacher based on the student's level.
  (b) They filled out the following questionnaires: TAM and UEQ.
  (c) They played two pieces on the piano using the MR application. The pieces were chosen by the teacher based on the student's level.
  (d) They filled out the following questionnaires: SSQ, Presence, TAM, UEQ, Preferences, and Data.

The study was carried out at the Music School U.M. Santa Cecilia of Benicàssim (Spain) for one week from Monday to Friday, during each student’s class time (from 3:30 p.m. to 9:00 p.m.). The students needed about half an hour to use the two applications and fill out the questionnaires.

5 Results

The Shapiro–Wilk test was applied to check whether the variables were normally distributed. Because most of the variables were not normally distributed, nonparametric tests, which are more suitable for distributions of this type, were used for the entire data set. Results with p < 0.05 are considered statistically significant and are shown in bold. To facilitate reading, the detailed statistics of some analyses without statistically significant differences are not reported. The statistical analysis was performed with R (http://www.r-project.org).

5.1 Outcomes

The piano teachers selected four pieces for each student based on the student's level, and each student played two pieces with each of the two applications. For each application, the teacher tried to select a first piece of equal or lower difficulty than the second. The teachers assessed how the students played each of the four pieces on a scale from 0 to 10, and the mean of the two scores obtained with each application was calculated (TeacherMR, TeacherSyn). The Mann–Whitney U test was applied to compare the mean scores of the students who used the MR application first (Mdn = 8.75; IQR = 1.75) with those of the students who used the system with Synthesia first (Mdn = 7.38; IQR = 2.63) (U = 197.5, Z = 2.23, p = 0.027, r = 0.389). Figure 8 shows box plots of the mean scores for the first use of the MR application and of the system with Synthesia. Figure 9 shows the corresponding interaction plots, considering the age and gender of the students.
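For readers who want to reproduce this kind of between-subjects comparison, the following Python sketch computes the Mann–Whitney U statistic with the tie-corrected normal approximation and the effect size r = |Z|/√N, where N is the total number of participants. The reported values are consistent with this convention (2.23/√33 ≈ 0.39). This is an illustrative sketch, not the R code used in the study: R's `wilcox.test` applies a continuity correction by default and computes exact p-values for small samples without ties, so its results may differ slightly.

```python
import math
from statistics import NormalDist


def rank_with_ties(values):
    """1-based ranks; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney(a, b):
    """Return (U, Z, p, r) using the normal approximation with tie correction."""
    n1, n2 = len(a), len(b)
    pooled = list(a) + list(b)
    n = n1 + n2
    ranks = rank_with_ties(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2   # U statistic for sample a
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1))))
    z = (u - n1 * n2 / 2) / sigma
    p = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    r = abs(z) / math.sqrt(n)               # effect size
    return u, z, p, r
```

Here `a` and `b` would be the TeacherMR scores of the MR-first group and the TeacherSyn scores of the Syn-first group, respectively.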

Fig. 9 Interaction plots for the mean scores assessed by the teachers for the first two pieces played by the students, considering the age and gender of the students: a mean scores for the first use of the MR application; b mean scores for the first use of the system with Synthesia. The red rhombuses indicate men

The mean scores obtained by the students of the MR group were also analyzed, i.e., the mean scores of the participants who used the MR application first and the system with Synthesia second were compared. The Wilcoxon signed-rank test was applied to check if there were differences in the mean scores of the same students when playing the pieces using the two applications. The results are shown in Table 1 (first row).

The mean scores obtained by the students of the Syn group were also analyzed, i.e., the mean scores of the participants who used the system with Synthesia first and the MR application second were compared. The Wilcoxon signed-rank test was applied to check whether there were differences in the mean scores of the same students when playing the pieces with the two applications. The results are shown in Table 1 (second row).
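The within-subjects comparisons use the Wilcoxon signed-rank test. The following Python sketch shows the test with the normal approximation: zero differences are discarded and the variance is corrected for tied absolute differences. It is illustrative, not the R code used in the study. The within-subjects effect sizes reported in this section appear consistent with r = Z/√(2n), where 2n is the total number of paired observations (e.g., 2.231/√34 ≈ 0.383 for the 17 students of the MR group), but this convention is an inference, not something the section states.

```python
import math
from statistics import NormalDist


def rank_with_ties(values):
    """1-based ranks; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Paired test on x[i] - y[i]; returns (W_plus, Z, p, n_used)."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    ranks = rank_with_ties([abs(d) for d in diffs])
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    counts = {}
    for d in diffs:
        counts[abs(d)] = counts.get(abs(d), 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    var = n * (n + 1) * (2 * n + 1) / 24 - ties / 48
    z = (w_plus - n * (n + 1) / 4) / math.sqrt(var)
    p = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return w_plus, z, p, n
```

For the Syn group, `x` would hold each student's TeacherMR score and `y` the same student's TeacherSyn score.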

All of these analyses show statistically significant differences in the students' mean scores in favor of the MR application.

5.2 User experience

The UEQ Data Analysis Tool (https://www.ueq-online.org) was used to analyze the responses to the UEQ questionnaire. First, we present the results for the first use of either application, i.e., the data of the group that used the MR application first are compared with those of the group that used the system with Synthesia first. For the group that used the MR application first, one participant was removed from the UEQ analysis because of a problematic response pattern; the tool identifies such a pattern as an inconsistency and suggests removing it. Using one of the downloadable UEQ Excel sheets, two graphs were obtained (Fig. 10) to determine how good each application is compared to the products in the benchmark data set (Martin et al. 2017) included in the UEQ Data Analysis Tool. These graphs indicate that the MR application is excellent compared to the UEQ benchmark: according to the interpretation of the graphs provided in the UEQ tool and by Martin et al. (2017), our MR application falls within the range of the 10% best results for all six variables (scales). The graphs also indicate that the system with Synthesia is excellent compared to the UEQ benchmark for five variables and above average for one variable.

Mann–Whitney U tests were applied to check whether using one application or the other first affected any of the six variables. The results are shown in Table 2. Figure 11, generated by the UEQ Data Analysis Tool, shows bar charts of the six variables for the first use of the two applications; the error bars represent the 95% confidence intervals (5% error probability) of the variable means. Statistically significant differences in favor of the MR group were found for two variables (Perspicuity and Novelty).
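The scale values and confidence intervals plotted by the UEQ tool can be approximated as in the following Python sketch. Each 1–7 answer is shifted to the range −3 to +3 so that +3 always corresponds to the positive pole, each participant's scale value is the mean of that scale's items, and a normal-approximation 95% interval is computed across participants. The actual assignment of the 26 items to the six scales and the orientation of each item are defined in the UEQ handbook; the mapping in the usage example is hypothetical, and the exact interval method used by the tool may differ.

```python
import math
from statistics import NormalDist, mean, stdev


def transform(raw: int, positive_on_right: bool) -> int:
    """Map a 1..7 answer to -3..+3 so that +3 is always the positive pole."""
    return raw - 4 if positive_on_right else 4 - raw


def scale_means(answers, scales, orientation):
    """Per-participant scale values.

    answers: item number -> raw answer (1..7)
    scales: scale name -> list of item numbers
    orientation: item number -> True if the positive term is on the right
    """
    return {name: mean(transform(answers[i], orientation[i]) for i in items)
            for name, items in scales.items()}


def mean_with_ci(values, confidence=0.95):
    """Mean across participants with a normal-approximation interval."""
    m = mean(values)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half = z * stdev(values) / math.sqrt(len(values))
    return m, m - half, m + half
```

Applying `mean_with_ci` to the per-participant values of one scale gives one bar and its error bar in charts such as Fig. 11.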

Second, the data for the second use of the applications were analyzed, i.e., the data of the group that used the MR application second were compared with those of the group that used Synthesia second. In this case, the UEQ Data Analysis Tool identified three participants with problematic response patterns for Synthesia, who were removed from the analysis. The graphs comparing the data of both groups with the benchmark provided in the UEQ Data Analysis Tool showed the same trend as Fig. 10. Mann–Whitney U tests were applied to check whether the second use of the applications affected any of the six variables. No statistically significant differences were found for any of them.

Third, the data considering the MR group were analyzed, i.e., the data of the participants who used the MR application first and the system with Synthesia second were compared. As indicated by the UEQ Data Analysis Tool and as in the previous analyses, one participant had a problematic response pattern using the MR application first and three participants had a problematic response pattern using Synthesia second. These participants were removed from their groups to obtain the graphs. The graphs comparing the data of both groups with the benchmark provided in the UEQ Data Analysis Tool showed the same trend as the one shown in Fig. 10. The Wilcoxon signed-rank tests were applied to check if there were differences in the experience of the same users for the two applications considering the six variables. The four participants mentioned above were removed from this analysis. No statistically significant differences were found for any of the six variables.

Fourth, the data considering the Syn group were analyzed, i.e., the data of the participants who used the system with Synthesia first and the MR application second were compared. The graphs comparing the data of both groups with the benchmark provided in the UEQ Data Analysis Tool showed the same trend as the one shown in Fig. 10. The Wilcoxon signed-rank tests were applied to check if there were differences in the experience of the same users for the two applications considering the six variables. The results are shown in Table 3. Statistically significant differences were found for the six variables. Figure 12 shows the bar charts with confidence intervals for the six variables for the second use of the MR application. The graph was generated by the UEQ Data Analysis Tool. The graph of the first use of the system with Synthesia is shown in Fig. 11b.

Mann–Whitney U tests were applied to check whether there were differences in the TAM variables (perceived usefulness, TAM1–TAM3, and perceived ease of use, TAM4) between the participants according to the application they used first. The results are shown in Table 4.

The data considering only the second use with one of the two applications were analyzed, i.e., the data of the group that used the MR application second were compared with the participants who used the system with Synthesia second. The Mann–Whitney U tests were applied to check if the second use of the applications affected the TAM variables. No statistically significant differences were found for any of the four variables.

The data for the MR group were analyzed, i.e., the data of the participants who used the MR application first and the system with Synthesia second were compared. The Wilcoxon signed-rank tests were applied to check if there were differences in the experience of the same students for the two applications considering the TAM variables. A statistically significant difference was found only for the TAM2 variable (W = 15, Z = 2.231, p = 0.048, r = 0.383) in favor of the MR application.

The data for the Syn group were analyzed, i.e., the data of the participants who used the system with Synthesia first and the MR application second were compared. Wilcoxon signed-rank tests were applied to check whether there were differences in the experience of the same users for the two applications considering the four TAM variables. The results are shown in Table 5. Statistically significant differences were found for three of the TAM variables.

5.3 Presence

The Mann–Whitney U test was applied to check whether the Presence variable differed between the participants who used the MR application first (Mdn = 6.33; IQR = 1.67) and those who used it second (Mdn = 6.67; IQR = 0.38) (U = 92.5, Z = −1.585, p = 0.117, r = 0.276). No statistically significant difference was found. In both cases, the median was high (above 6 on a scale of 1 to 7).

5.4 Adverse effects of wearing headsets

The mean TSSQ score was 2.86 when the MR application was used first and 2.34 when it was used second. The thresholds for classifying the induced symptoms are: negligible (< 5), minimal (5–10), significant (10–15), concerning (15–20), and bad (> 20) (Bimberg et al. 2020). Therefore, the symptoms induced by the MR application were negligible whether it was used first or second.
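The classification above amounts to a simple lookup over the cited bands. The following Python sketch makes it explicit; because the bands share their endpoints (e.g., 10 belongs to both "minimal" and "significant" as written), the handling of the exact boundary values is an assumption of this sketch.

```python
def classify_ssq_total(total: float) -> str:
    """Classify a total SSQ score using the bands cited from Bimberg et al. (2020).

    Boundary handling (which band the values 10, 15, and 20 fall into) is
    an assumption: lower bounds are inclusive, and 20 counts as concerning.
    """
    if total < 5:
        return "negligible"
    if total < 10:
        return "minimal"
    if total < 15:
        return "significant"
    if total <= 20:
        return "concerning"
    return "bad"
```

Both reported means (2.86 and 2.34) fall in the "negligible" band.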

5.5 Gender and age

To determine if gender influences the mean scores obtained by the students, we applied the Mann–Whitney U tests. The mean scores obtained by women and men were compared in each of the four possible uses of the applications. The results are shown in Table 6. No significant differences were found for any of the analyses.

We applied Mann–Whitney U tests to determine whether gender influences the UEQ variables, comparing the UEQ variables of women and men for the first use of each of the two applications. No significant differences were found in any of the analyses. We applied the same test to the presence variable, comparing women and men for the use of the MR application first and second; no significant differences were found. The same comparison for the SSQ variables also revealed no significant differences. Finally, we compared the TAM variables of women and men for the first use of each of the two applications. A statistically significant difference was found only for the TAM2 variable among the participants who used Synthesia first (U = 12, Z = −2.107, p = 0.041, r = 0.527), with men giving significantly higher ratings. From all these analyses, it can be concluded that all of the variables analyzed were independent of the gender of the participants when using the MR application.

With regard to Synthesia, a statistically significant difference was found in only one of the seventeen analyses. Therefore, although it cannot be concluded that all of the variables analyzed were independent of gender, all except one were.

The Kruskal–Wallis tests were applied to determine if age influences the mean scores obtained by the students. The results of these analyses are shown in Table 7.
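The Kruskal–Wallis test compares more than two independent groups using ranks. The section does not state how the participants' ages were grouped, so any grouping used with the following Python sketch (e.g., children, adolescents, adults) is hypothetical. The sketch computes the tie-corrected H statistic; for an even number of degrees of freedom, the chi-square survival function has a closed form, which gives the approximate p-value without external libraries. This is an illustration, not the R code used in the study.

```python
import math


def rank_with_ties(values):
    """1-based ranks; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis(groups):
    """Return (H, df) with the standard correction for ties."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = rank_with_ties(pooled)
    h, start = 0.0, 0
    for g in groups:
        r_sum = sum(ranks[start:start + len(g)])
        h += r_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * h - 3 * (n + 1)
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    return h / correction, len(groups) - 1


def chi2_sf_even_df(x, df):
    """P(X >= x) for X ~ chi-square with an even number of degrees of freedom."""
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= (x / 2) / i
        total += term
    return math.exp(-x / 2) * total
```

With three age groups (df = 2), the p-value reduces to exp(−H/2).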

We applied the Kruskal–Wallis tests to determine if age influences the UEQ variables. The UEQ variables were compared for the first use of each of the two applications considering age. No significant differences were found for any of the analyses. We applied the Kruskal–Wallis tests to determine if age influences the presence variable. The presence variable was analyzed for the use of the MR application first and second. No significant differences were found for any of the analyses. We applied the Kruskal–Wallis tests to determine if age influences the SSQ variables. The SSQ variables were analyzed for the use of the MR application first and second. No significant differences were found for any of the variables analyzed. We applied Kruskal–Wallis tests to determine if age influences the TAM variables. The TAM variables were compared for the first use of each of the two applications considering age. No significant differences were found for any of the analyses. From all these analyses, it can be concluded that all of the variables analyzed were independent of the age of the participants when using the MR application and Synthesia.

5.6 Correlations

Tables 8 and 9 show the correlations obtained between some of the variables analyzed when the MR application and the system with Synthesia, respectively, were used first. Positive correlations were obtained between some of the UEQ variables, both for the students who used the MR application first and for those who used the system with Synthesia first. The students who reported more experience with computers rated the MR application as more dependable.
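The section does not state which correlation coefficient was used. Given the non-normal data and the nonparametric analysis elsewhere in this section, a rank-based coefficient such as Spearman's ρ is a natural choice; the following Python sketch computes it as the Pearson correlation of tie-averaged ranks. The choice of Spearman's ρ is an assumption of this sketch, not a statement of the authors' method.

```python
import math


def rank_with_ties(values):
    """1-based ranks; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on tie-averaged ranks."""
    rx, ry = rank_with_ties(x), rank_with_ties(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)
```

For instance, `x` could hold each student's self-reported computer experience and `y` the same student's UEQ Dependability score for the MR application.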

5.7 Preferences

This section presents the preferences expressed by the participants. For PF1 (Which one did you like the most?), 90.9% of the students preferred the MR application. For PF2 (Which one do you think is better for musical learning?), 72.7% preferred the MR application. For PF3 (Which one was easier for you to handle?), 66.7% preferred the MR application. For PF4 (Would you recommend them to your colleagues?), 48.5% of the students chose the MR application and 51.5% chose both applications equally. For PF5 (Would you like your teacher to use either of them in class?), 51.5% chose the MR application, 39.4% chose both applications equally, and 9.1% chose neither (3 students, aged 18, 63, and 65).

6 Discussion

This work has demonstrated that the color Passthrough of the Meta Quest Pro offers a satisfactory MR experience. In our case, this new functionality of the Meta Quest Pro was used to develop an MR application for learning to play the piano. We also carried out a study to compare the interpretation outcomes of the students and their subjective experience when using our MR application and a system composed of a MIDI keyboard and an iPad Pro running Synthesia. The interpretation outcomes show that both the MR application and the system with Synthesia were effective for learning the piano: all of the students obtained scores from the teachers greater than or equal to 5 on a scale from 0 to 10 with both applications. However, the students played the pieces significantly better using the MR application.

Both applications provided a satisfying user experience. However, on first use (between-subjects comparison), there were statistically significant differences in perspicuity and novelty in favor of the MR application. For the students who used Synthesia first and then the MR application (within-subjects comparison), there were statistically significant differences in favor of the MR application for all six UEQ variables. A similar trend was observed for the TAM variables. Therefore, it can be concluded that the students' subjective experience was better with the MR application. Our interpretation of these results is that, although the students rated the MR application higher, they also recognized the value of Synthesia as a good application for learning to play the piano. Furthermore, although practically none of the students had used Synthesia before, they liked it and rated it well.

From the comments of the students that were added to the final questionnaire as open comments (PF6), the teachers’ comments and our own observations during the study, the main advantage of our application with Meta Quest Pro compared to the system with Synthesia is that everything is integrated in the screen of the headset. The students do not have to look elsewhere. They only pay attention to one thing (playing the piano), not two (the MIDI keyboard and the screen of the iPad). Our MR application helps to locate the key on the piano keyboard efficiently, quickly, and more intuitively. The integration of the help elements directly on the real piano greatly helps in the placement of the fingers on the key to be played. The system with Synthesia is confusing because the students have to look at the iPad screen and the keyboard, i.e., they have to look at the screen to see the note and then search for the note to play on the keyboard. One student added that it is better to look at a staff, which has more information. The non-clarity is especially obvious when the key to be played next is separated by more than four keys from the current key. In this case, the student has to count the keys to the right or to the left. The students could not correctly play any pieces with two hands or pieces that had intervals that require switching to another keyboard position, except for students who had experience with Simply Piano or similar. When the piece has a certain width, more than two or three octaves (e.g., those that are played with two hands), the piano image on the tablet screen is very small, and this image clearly does not match the MIDI keyboard, which is very confusing. This inconvenience could be solved with a screen that is the same size as the keyboard. With pieces that are musically simple but go over four intervals with the same hand, the vast majority of the students could not follow them once they lost the sequence. 
With our MR application, it is much easier to resume a piece from any point. All of these observations allow us to conclude that integrating help elements directly on the real piano has advantages over other types of systems, such as non-projected piano roll notation (e.g., Synthesia). This conclusion is in line with previous works (Rogers et al. 2014; Banquiero et al. 2023). Another advantage of our application is that the students do not lose contact with the real world. They can see the real piano and the teacher, who can guide them visually during the learning process.

Nevertheless, one aspect in favor of the system with Synthesia is that its setup is simpler and faster than with headsets. With headsets, setup is slower: the experimenter has to fit the headset properly and check that the image is not blurry, among other steps. In addition, pressing buttons on a touch screen is easier than pressing virtual buttons in the headset.

Based on our experience with Meta Quest 2 and Meta Quest Pro, we draw the following conclusions. The main advantage of Meta Quest Pro is color Passthrough. The Passthrough visualization is much improved in Meta Quest Pro, not only because of the color but also because of the stability of the image. With Meta Quest 2, distortion appears at greater distances, i.e., at distances of less than 0.6 m (Banquiero et al. 2023). The image is also distorted with Meta Quest Pro, but only at much shorter distances, e.g., when bringing the hand close to the face or bringing the headset close to the piano. In our tests, we verified that Meta Quest Pro is very stable at distances greater than 40 cm and begins to show noticeable artifacts at distances of less than 35 cm. Figure 13 shows an extreme example of distortion. Our MR application is not affected by this distortion because, when playing the piano, the distance between the headset and the piano lies outside the range in which distortion occurs. Therefore, the MR experience with Meta Quest Pro is much better than with Meta Quest 2.

We also observed some aspects in which Meta Quest 2 is better than Meta Quest Pro. We had no problems with the Meta Quest 2 controllers: if they run out of battery, the battery is simply replaced. In contrast, we had many problems with the Meta Quest Pro controllers. They discharge even when not in use, and the headset does not detect them reliably and loses tracking. In some cases, warning messages about this appear and distract the user from the application. In addition, when the Meta Quest Pro was removed and placed on another student, the headset sometimes lost its position and the virtual piano went out of calibration, which did not happen with Meta Quest 2. The offset is only a few millimeters and almost imperceptible to the naked eye, but it becomes noticeable when the student plays the keys.

The Meta Quest Pro also has problems casting the image through https://www.oculus.com/casting. We had no problems with this functionality on Oculus Quest and Oculus/Meta Quest 2, but we had quite a few with Meta Quest Pro. In most cases, casting did not work on the first attempt and we had to try several times. Moreover, when it was working, it sometimes stopped suddenly. For this reason, this functionality was not used in the study. However, when casting works, the visualization is excellent because both the real world and the embedded virtual elements are transmitted in color. In contrast, with Meta Quest 2, only the virtual elements were visible in the cast, not the real world (not even in grayscale). We also note that, with Oculus/Meta Quest 2, the full Passthrough view is shown when using SideQuest and Oculus Hub. Finally, the Meta Quest Pro is considerably more sensitive to lighting than Meta Quest 2.
The current price of Meta Quest Pro is more than three times that of Meta Quest 2. Headset size has both advantages and disadvantages. The Meta Quest 2 is smaller than the Meta Quest Pro. This is an advantage for smaller heads (e.g., small children) but a drawback for people who wear glasses: when the headset is taken off, the glasses usually remain inside it. When using Meta Quest Pro with small children (7 years old in our study), we had problems because the headset was more difficult to fit and adjust. Once fitted, the headset tended to slip or move.

The sense of presence offered by the MR application was high (above 6 on a scale of 1–7). Specifically, consider the groups of participants that used the MR application first (Mdn = 6.33; IQR = 1.67) and second (Mdn = 6.67; IQR = 0.38). Both groups reported a greater sense of presence than that induced by Meta Quest 2 for the same purpose (Banquiero et al. 2023). That study measured two display modes: Wireframe (Mdn = 5.6; IQR = 1.8) and Solid (Mdn = 5.7; IQR = 1.2). Therefore, the sense of presence induced in an MR application by Meta Quest Pro was greater than that induced by Meta Quest 2.
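The presence results above are reported as the median (Mdn) and interquartile range (IQR) of per-participant scores on the 1–7 scale. As a minimal sketch of how such a summary can be computed, the Python below uses the standard-library `statistics` module. The quartile convention (`method="inclusive"`) and the example scores are assumptions for illustration only. The section does not state which convention was used, and different conventions can give slightly different IQR values.

```python
from statistics import median, quantiles


def median_iqr(scores: list[float]) -> tuple[float, float]:
    """Return (median, interquartile range) of per-participant scores.

    Assumption: quartiles use the "inclusive" method, which treats the
    sample as the full population of interest; the default "exclusive"
    method would yield slightly different Q1 and Q3 values.
    """
    # quantiles(..., n=4) returns the three cut points Q1, Q2, Q3;
    # it sorts the data internally, so input order does not matter.
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    return median(scores), q3 - q1


if __name__ == "__main__":
    # Hypothetical presence scores for five participants (not study data).
    print(median_iqr([5, 6, 6, 7, 7]))
```

For these hypothetical scores, the median is 6 and the IQR is 1.0. A small IQR, such as the 0.38 reported for the group that used the MR application second, indicates that most participants gave similar scores.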

The adverse effects induced by wearing the Meta Quest Pro and using our MR application were negligible (a mean of less than 3). In the study in which Meta Quest 2 was used for learning to play the piano (Banquiero et al. 2023), the mean adverse-effect scores were 14.49 with the Wireframe visualization of the piano keyboard and 7.26 with the Solid visualization. Therefore, the Meta Quest Pro induced fewer adverse effects than Meta Quest 2.

The outcomes of the students and their subjective opinion about the experience when using the MR application were independent of age and gender. These results are in line with those obtained when Meta Quest 2 was used for learning to play the piano (Banquiero et al. 2023). We also report some opinions expressed by the participants or observed by the experimenters. Some students expressed satisfaction with the natural-looking virtual hand, which covered the real hand like a glove and followed it naturally. Several students commented that they played pieces that they did not know and had not previously felt capable of playing. These comments corroborate the satisfaction experienced by the students. Only one student, aged 7, reported that the Meta Quest Pro was uncomfortable and heavy for her. A student who cannot perceive 3D in the cinema did perceive 3D with the headset. This is common with headsets whose features are similar to or better than those of the Oculus Rift (Cárdenas-Delgado et al. 2017).

Among the headsets used to date for developing applications to learn to play the piano, our proposal uses the Meta Quest Pro, the headset with the most suitable features for an immersive MR experience. HoloLens has technical limitations, such as its limited field of view (52 degrees), which was noted by the authors who used HoloLens to learn to play the piano (Hackl and Anthes 2017). Because the user's natural field of view is wider than the device's, virtual elements are displayed only within the device's field of view, not across the user's full field of view. Any virtual element that falls outside the device's field of view is not displayed. In the case of the piano, the virtual elements in the central area of the user's view are displayed, but not those furthest to the left or right. The HTC Vive headset used in previous works (Molloy et al. 2019; Rigby et al. 2020) required a mounted stereo camera to capture the image of the real world. Two new headsets with many possibilities for use are coming soon. Meta Quest 3, with a possible release in the fall of 2023, will have color Passthrough and cost half the price of Meta Quest Pro. Another interesting alternative will be Apple's Vision Pro headset, which will be available in the United States in early 2024.

7 Conclusion

This work presents the design, development, and validation of the first MR application that uses color Passthrough for learning to play the piano. A study was also carried out to compare the interpretation outcomes of the participants and their subjective experience when using our MR application versus a system with Synthesia. The results show that both the MR application and the system with Synthesia are effective for learning piano. However, the students played the pieces significantly better when using the MR application. Both systems provided a satisfying user experience, but the subjective experience of the students was better when they used the MR application. The outcomes of the students and their subjective opinion about the experience when using the MR application were independent of age and gender. The students showed a preference for the MR application. Meta Quest Pro improves on Meta Quest 2 in two ways: color Passthrough, and distortion that appears only at shorter distances. These improvements make Meta Quest Pro, and devices with better features, suitable for creating satisfying MR experiences. Regarding the comparison between our MR application and the system with Synthesia, the students argued that what makes the difference is seeing the real world with the help elements integrated into it (indicating the key to be played on the key itself).

The information included in this publication could help in the development of new applications and in the selection of the headset to use. As future work, our MR application can be extended in several ways. For example, an option could be added to manage the hands, i.e., to show or hide the virtual hands, select the type of skin (skin color, gender, etc.), or show only the hand skeleton. Our application can also be used to perform other studies. One study could compare performance results and subjective opinions between people who do not know how to play the piano and piano students. Our MR application could also be used in a longitudinal study and compared with a control group in which the students learn on a traditional piano.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Banquiero M, Valdeolivas G, Trincado S et al (2023) Passthrough mixed reality with Oculus Quest 2: a case study on learning piano. IEEE Multimed 30:60–69. https://doi.org/10.1109/MMUL.2022.3232892

Bimberg P, Weissker T, Kulik A (2020) On the usage of the simulator sickness questionnaire for virtual reality research. In: 2020 IEEE conference on virtual reality and 3D user interfaces abstracts and workshops (VRW), pp 464–467

Birhanu A, Rank S (2017) KeynVision: exploring piano pedagogy in mixed reality. In: Extended abstracts publication of the annual symposium on computer-human interaction in play. Association for Computing Machinery, New York, NY, USA, pp 299–304

Cárdenas-Delgado S, Juan MC, Méndez-López M, Pérez-Hernández E (2017) Could people with stereo-deficiencies have a rich 3D experience using HMDs? In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), pp 97–116

Chow J, Feng H, Amor R, Wünsche BC (2013) Music education using augmented reality with a head mounted display. In: Proceedings of the fourteenth Australasian user interface conference, vol 139. Australian Computer Society, Inc., AUS, pp 73–79

Davis FD (1993) User acceptance of information technology: system characteristics, user perceptions and behavioral impacts. Int J Man Mach Stud 38:475–487. https://doi.org/10.1006/imms.1993.1022

Deja JA, Mayer S, Čopič Pucihar K, Kljun M (2022) A survey of augmented piano prototypes: has augmentation improved learning experiences? Proc ACM Hum Comput Interact. https://doi.org/10.1145/3567719

Desnoyers-Stewart J, Gerhard D, Smith M (2017) Mixed reality MIDI keyboard. In: 13th International symposium on CMMR, pp 376–386

Hackl D, Anthes C (2017) HoloKeys—an augmented reality application for learning the piano. In: CEUR workshop, pp 140–144

Huang F, Zhou Y, Yu Y et al (2011) Piano AR: a markerless augmented reality based piano teaching system. In: 2011 Third international conference on intelligent human-machine systems and cybernetics, pp 47–52

Julia J, Kurnia D, Isrokatun I et al (2019) The use of the Synthesia application to simplify Angklung learning. J Phys Conf Ser 1318:12040. https://doi.org/10.1088/1742-6596/1318/1/012040

Kennedy RS, Lane NE, Berbaum KS, Lilienthal MG (1993) Simulator sickness questionnaire: an enhanced method for quantifying simulator sickness. Int J Aviat Psychol 3:203–220. https://doi.org/10.1207/s15327108ijap0303_3

Laugwitz B, Held T, Schrepp M (2008) Construction and evaluation of a user experience questionnaire. In: Holzinger A (ed) HCI and usability for education and work. Springer Berlin Heidelberg, Berlin, Heidelberg, pp 63–76

Martin S, Jörg T, Andreas H (2017) Construction of a benchmark for the user experience questionnaire (UEQ). Int J Interact Multimed Artif Intell 4:40–44. https://doi.org/10.9781/ijimai.2017.445

Molero D, Schez-Sobrino S, Vallejo D et al (2021) A novel approach to learning music and piano based on mixed reality and gamification. Multimed Tools Appl 80:165–186. https://doi.org/10.1007/s11042-020-09678-9

Molloy W, Huang E, Wünsche BC (2019) Mixed reality piano tutor: a gamified piano practice environment. In: 2019 International conference on electronics, information, and communication (ICEIC), pp 1–7

Regenbrecht H, Schubert T (2002) Measuring presence in augmented reality environments: design and a first test of a questionnaire. In: Proceedings of the 5th annual international workshop presence, pp 1–7