Abstract

Several studies have shown that video games may indicate or even develop intellectual and cognitive abilities. As intelligence is one of the most widely used predictors of job performance, video games could thus have potential for personnel assessment. However, few studies have investigated whether and how virtual reality (VR) games can be used to make inferences about intelligence, even though companies increasingly use VR technology to recruit candidates. This proof-of-concept study contributes to bridging this gap between research and practice. Under controlled laboratory conditions, 103 participants played the commercial VR game Job Simulator and took the short version of the intelligence test BIS-4. Correlation and regression analyses reveal that, on average, participants who completed the game more quickly than others had higher levels of general intelligence and processing capacity, suggesting that VR games may provide useful supplementary tools for predicting job performance. Still, our results also indicate that game-based assessments have limitations that deserve researchers’ attention, which leads us to discuss directions for future research.



1 Introduction

It has become apparent that video games could be used to assess or develop skills such as communication, collaboration, and digital literacy (see, e.g., Sourmelis et al. 2017). Therefore, Petter et al. (2018) argued that employers should consider gaming-related experience and achievements in the hiring process and encourage applicants to share their gaming backgrounds on their résumés or during job interviews. Similar to voluntary work that signals social responsibility and awareness, or sports activities that demonstrate goal orientation and team spirit, an applicant’s history in gaming may indicate a variety of skills and attributes that are professionally valuable (Barber et al. 2017).

In fact, employers are increasingly interested in video games. Robert Half (2017), a US-based human resource (HR) consulting firm, surveyed more than 2500 CIOs about technology-related hobbies and activities that increase graduates’ appeal to technology employers, and 24 percent of the respondents cited video gaming or game development. Interest in video games is also growing on the other side of the recruiting table, as applicants increasingly inform employers about their gaming experience and expertise to help them land a job. For example, Heather Newman, Director of Marketing and Communications for the School of Information at the University of Michigan, listed her World of Warcraft experience on her résumé, as several tasks involved in the game, such as managing guilds of hundreds of people and organizing large-scale raids, required skills that she believed also applied to the job (Rubenfire 2014; also see Barber et al. 2017). Such anecdotal evidence suggests that video games have something to offer HR management, which is why companies from various industries have started to use them to identify and attract talent (see Fetzer et al. 2017).

However, while most HR professionals would probably agree that video games may be used for branding and recruiting purposes (America’s Army, a first-person shooter game, for example, is widely considered the most effective of all armed-service recruiting tools; Buday et al. 2012), many are unsure about their usefulness in personnel selection, because whether and to what extent game-related data can predict future job performance remains unclear (Melchers and Basch 2022). As this paper contends, a strong argument for the usefulness of video games in candidate assessment is that they may indicate intellectual and cognitive abilities (see Weidner and Short 2019), which has been confirmed not only for puzzle and brain-training games (e.g., Buford and O’Leary 2015; Quiroga et al. 2009) but also for several other game genres such as action and casual games (see Quiroga and Colom 2020). HR research has repeatedly demonstrated that general intelligence is one of the best, if not the best, predictors of future job performance (see, e.g., Schmidt et al. 2016), so video games that indicate intelligence may meaningfully support companies’ assessment procedures: In particular, as video games enable “stealth assessments” during which candidates are less aware that they are being monitored and evaluated, game-based assessments could reduce test anxiety, prevent faking, and foster candidate engagement (see, e.g., Boot 2015; Fetzer 2015; Shute et al. 2016; Wu et al. 2022). As Fetzer et al. (2017, p. 297) put it, “candidates may become so immersed in the game that their true behaviors emerge, increasing the accuracy of the assessment, rather than being constrained or changed by social desirability and the propensity of candidates to second-guess their actions during employment assessment.”

Still, the assessment of job candidates based on video games has some limitations, one of which is that a video game can lose its ability to indicate intelligence when players become familiar with the game (see Quiroga et al. 2011). Therefore, companies typically gamify traditional assessment techniques or use self-developed, “serious” games for which applicants are unlikely to be able to prepare. But even then, experience with other video games or with gaming hardware may bias the results, since gamers can be expected to have better mouse and keyboard control and better knowledge of the underlying game mechanics than inexperienced applicants do. Ensuring fairness in the selection process is therefore a key concern in game-based assessment (see Bina et al. 2021). Accordingly, game-based assessments require the use of video games that are similarly attractive to different groups of applicants (Melchers and Basch 2022), and it is up to research to determine what games and game genres qualify for personnel selection and which ones can be used to demonstrate and develop which skills and abilities (see Petter et al. 2018). The relationship between gaming and intelligence has been studied for only some of the available games and game genres (see Quiroga and Colom 2020), and empirical studies on the criterion-related validity of game-based assessment are rare (see Bina et al. 2021; Melchers and Basch 2022).

Virtual reality (VR) games in particular deserve researchers’ attention (e.g., Sanchez et al. 2022; Weiner and Sanchez 2020). As Aguinis et al. (2001) argued, VR-based assessments may be more valid than some of the traditional assessment techniques, allow for higher levels of standardization and structure, and even enable simulations that were once not feasible, such as handling hazardous tasks or fictitious products. Despite these benefits, high equipment costs and negative side effects, such as cyber sickness, have long hindered the broader adoption of VR hardware (Valmaggia 2017), but now that VR technology has become affordable and has matured, many HR professionals have realized that it has much to offer personnel selection. For example, Lloyds Banking Group (2021) uses VR to present candidates with situations that would not be feasible in conventional assessments, Accenture assesses graduates’ problem-solving skills in a virtual “Egyptian tomb,” and BDO Global is testing an Alice in Wonderland-themed VR game to assess candidates’ ability to judge cause and effect (Consultancy.uk 2019; also see Wohlgenannt et al. 2020).

Against this background, research is challenged to keep pace with HR practice, as few empirical studies, especially studies that use game-based approaches, have addressed VR technology’s usefulness in personnel selection (e.g., Sanchez et al. 2022; Weiner and Sanchez 2020). VR games differ considerably from conventional video games, as they are much more immersive and realistic and give players the feeling of physical presence in a virtual environment (Weiner and Sanchez 2020), so it remains to be determined whether extant findings from video-game studies are applicable to VR contexts. Accordingly, this proof-of-concept study uses the commercial VR game Job Simulator and the intelligence test BIS-4 and explores the relationship between game results and test results to discuss VR’s applicability to personnel assessment.

The paper proceeds as follows. Section 2 provides a rationale for game-based assessment and reviews studies that have investigated the relationship between gaming and intelligence. Section 3 explains the controlled laboratory study that was used for data collection and analysis, and Sect. 4 presents the results. Section 5 discusses implications and limitations, and Sect. 6 draws conclusions.

2 Background

2.1 Game-based recruitment and assessment

Researchers from various disciplines have repeatedly found that performance in video games correlates with skills that are professionally valuable. For example, tower-defense games like Plants vs. Zombies 2 could be used to assess problem-solving skills (Shute et al. 2016); massively multiplayer online role-playing games like World of Warcraft and EVE Online to assess leadership skills (Lisk et al. 2012); strategy video games like Sid Meier’s Civilization to assess managerial skills such as organizing and planning (Simons et al. 2021); educational video games like Physics Playground to assess creativity (Shute and Rahimi 2021); and Xbox Kinect video games like Just Dance or Table Tennis to assess elderly people’s driving skills (Vichitvanichphong et al. 2016). In addition, while researchers have long studied the negative effects of video games, such as addiction or aggression, they have recently turned to possible positive outcomes and provided arguments for video games’ ability not only to indicate but also to develop professional skills. For example, multiplayer games like Rock Band and Halo 4 have been used in team-building activities to improve team cohesion and performance (Keith et al. 2018), and games such as Borderlands 2, Lara Croft and the Guardian of Light, Minecraft, Portal 2, and Warcraft III may foster players’ communication skills, adaptability, and resourcefulness (Barr 2017).

Accordingly, given the ever-growing skill gap that has emerged in practice, researchers have realized that video games may meaningfully inform personnel recruitment. For example, Barber et al. (2017) and Petter et al. (2018) proposed that employers encourage applicants to include their gaming accomplishments and experience in their résumés or to share them during job interviews, as finding digitally skilled candidates has become increasingly difficult. In addition, researchers have also argued that game-based recruitment practices can improve employers’ talent pools because the use of video games or game elements may increase companies’ attractiveness to applicants and foster diversity and engagement (see Bina et al. 2021). Since modern video games are typically played online and since players’ profiles are often public, video game platforms, rankings, and forums may further provide promising new data sources for scouting and recruiting, and employers may even create add-ons or “mods” for video games with which to recruit suitable candidates. For example, WibiData, a former software company, developed a Portal 2 mod with new puzzles, and players who solved all of the puzzles unlocked a special job application (Kuo 2013).

Perhaps the most promising application of video games in HR management is candidate assessment (see, e.g., Bhatia and Ryan 2018; Fetzer et al. 2017; Landers and Sanchez 2022; Melchers and Basch 2022), not least because video games may allow companies to make inferences about candidates’ intellectual abilities (see Weidner and Short 2019). The assessment of intellectual ability plays an important role in personnel selection, as intelligence has long been established as one of the best performance predictors for diverse professions, especially for employees with no experience in the job (Schmidt et al. 2016). However, companies’ reluctance to use intelligence tests has grown (see Krause et al. 2014), since intelligence tests are not popular among applicants (see Hausknecht et al. 2004), so game-based assessment could provide a useful supplement to personnel selection. In particular, as they are entertaining and fun, video games could help organizations to “augment their brand awareness, engage candidates and enhance positive perceptions of the company due to being at the cutting edge of technology, providing competitive advantage in the battle for talent” (Fetzer et al. 2017, p. 297).

2.2 Game-based intelligence assessment

Researchers’ interest in using video games to assess intellectual or cognitive abilities goes back to the 1980s, and the Space Fortress video game (e.g., Rabbitt et al. 1989) was probably the most notable and systematic attempt to study the relationship between gaming and human cognition (Boot 2015). Since then, several studies have provided empirical evidence that video games can indicate intelligence and cognition levels. For example, some of Nintendo’s puzzle and brain-training games, such as Train and Professor Layton, have been used to assess general intelligence (Quiroga et al. 2009, 2016); casual online games such as DigiSwitch and Sushi Go Round to assess working memory, perceptual speed, and fluid intelligence (Baniqued et al. 2013); puzzle platformers such as Portal 2 to assess problem-solving ability, spatial skills, and persistence (Shute et al. 2015); multiplayer online battle arenas like League of Legends to assess fluid intelligence (Kokkinakis et al. 2017); digital board games such as Taboo to assess abstract reasoning, spatial reasoning, and verbal reasoning (Lim and Furnham 2018); and sandbox games such as Minecraft to assess fluid intelligence and spatial ability (Peters et al. 2021). Perhaps most noteworthy, Quiroga et al. (2015) used a variety of video games, most of which were from Nintendo’s Big Brain Academy, to measure individual differences in general intelligence, and Quiroga et al. (2019) provided similar results using several genres of games other than brain-training games. Accordingly, grounded in a comprehensive overview of game-related research studies, Quiroga and Colom (2020, p. 651) recently provided strong arguments for the use of video games to measure intelligence, arguments that could also justify their use for assessment purposes (“Is it time to use video games for measuring intelligence and related cognitive abilities? Yes, it is.”).

However, despite these promising results, the use of video games to assess intellectual ability comes with some challenges, one of which is that their ability to indicate intelligence can decrease with practice. As Quiroga et al. (2011) argued based on a three-stage model of cognitive, associative, and autonomous learning (see, e.g., Ackerman and Cianciolo 2000), individual cognitive differences can explain the variability in game results only in the first of these stages, when one learns how to play the game; after that, perceptual speed (second stage) and psychomotor ability (third stage) come into play. The authors concluded that only games that are sufficiently novel, complex, and inconsistent can keep players in the cognitive stage and prevent them from relying on abilities other than cognitive ones (Quiroga et al. 2011). Therefore, commercial video games cannot usually be used for assessment purposes, and companies typically use self-developed, “serious” games instead. Put simply, selection games should be played only once, since “there is a strong need to avoid contaminating the scores obtained with practice” (Fetzer et al. 2017, p. 305).

Having said that, even if companies use such serious games, previous gaming experience and expertise may still bias the results, so researchers have raised concerns that game-based assessments may favor certain groups of applicants (see, e.g., Bina et al. 2021; Leutner et al. 2021; Weidner and Short 2019). As Fetzer et al. (2017) argued, potential issues in game-based assessment have most commonly been associated with age and gender, and although the number of female and older gamers continues to increase, it is still important to minimize demographic differences in game-based assessment. For example, using a large dataset from the financial industry, Melchers and Basch (2022) recently found that male and younger applicants achieved overall higher scores in a game-based assessment than female and older applicants did, even though the female candidates performed significantly better in an adjunct assessment center. Accordingly, game-based assessments require the use of games and game genres that are similarly attractive to different groups of applicants as well as careful consideration of previous gaming experiences. For example, McPherson and Burns (2008) found that men completed the video game Space Code, a self-developed, computer-game-like test of processing speed, more quickly than women did, but when the authors controlled for current and previous computer gaming, the correlation dropped to almost zero. Similarly, Quiroga et al. (2016) found significant, game-related differences between two studies that used the Professor Layton game to assess intelligence, likely because participants in one study had higher levels of gaming experience than participants in the other, since the samples were otherwise quite homogeneous. Still, the available evidence is partly contradictory when it comes to gaming experiences in assessment contexts, which highlights the need to carefully design game-based assessments (see Fetzer et al. 2017). For example, Foroughi et al. (2016) found no significant differences between experienced and inexperienced gamers in their Portal 2 study of fluid intelligence, probably because they used self-designed puzzles (“chambers”) that did not (or only partly did) require hand-eye coordination and measured the number of puzzles solved rather than the time required to complete them. As the authors acknowledged, a different research design may have resulted in an advantage for experienced gamers unrelated to fluid intelligence, as gamers can be expected to have better mouse and keyboard control and maneuver in the game environment more quickly than non-gamers can (Foroughi et al. 2016).

In summary, for the selection process to be fair, game-based assessment must ensure that previous gaming experience and expertise, with both the video game used for assessment and video games in general, do not bias the results. As the present study serves as a proof-of-concept, its research design is intended to address these and related challenges. In particular, the study uses a casual VR simulation game for assessment purposes. On the one hand, this game genre is intuitive and similarly attractive to different groups of applicants; on the other hand, few researchers have investigated whether and how VR games can be used to make inferences about intelligence.

3 Research design

3.1 Participants

We recruited university students for our study, so the participants had similar educational and social backgrounds, which reduced the need for extensive control variables. This pool of recruits was appropriate because the intelligence test we used was developed for relatively young subjects (i.e., teenagers and young adults) with at least an intermediate-school education and because students can be expected to have differing levels of experience with video games. We promoted participation in the study in lectures, via e-mail, and through posters and flyers. To prevent participants from preparing for the study or being nervous about taking an intelligence test, we told them only that the study would assess VR games’ applicability in HR management and that they would be taking a paper-and-pencil assessment test. To ensure sufficient comprehension of the instructions and the tests themselves, we invited only native German speakers to apply, and we compensated each participant with CHF 30.00. In addition, participants had the chance to win, in a drawing, one of three CHF 500.00 vouchers for purchases at a local electronics store.

One hundred twenty students volunteered to participate. As we had to ensure that none of them knew the VR game we used for the study, we excluded eight students who had prior experience with VR technology. (We did not disclose this exclusion criterion when we promoted the study to avoid recruiting students who were highly interested in VR and gaming and whose eagerness to participate could lead them to provide false information about their VR experience.) In addition, we excluded two students: the video-clip application we used (Sect. 3.3) did not work for one of them, and the other spent considerably more time playing the VR game than any other participant and was therefore identified as an outlier (Grubbs test: G = 3.68, U = 0.87, p = 0.01).Footnote 1 Finally, seven students did not show up for their appointments, so our final sample consisted of 103 participants.
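The outlier rule mentioned above can be illustrated with a minimal Python sketch of Grubbs’ test for a single outlier, using only the standard library. It assumes the one-sided variant that tests the most extreme value: G is that value’s absolute deviation from the sample mean divided by the sample standard deviation, U is the sum of squared deviations without that value divided by the sum of squared deviations of all values (so U = 1 - nG^2/(n - 1)^2), and the p-value uses the common Bonferroni-type approximation n·P(T > t) with n - 2 degrees of freedom. The function names and the playing times are hypothetical, not the study’s data, and the software the authors actually used may compute these quantities differently.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def t_upper_tail(t: float, df: int, steps: int = 2000) -> float:
    """P(T > t) for Student's t with df degrees of freedom, assuming t >= 0.

    Integrates the t density from 0 to t with Simpson's rule (steps must be
    even) and subtracts the area from 0.5, using the symmetry of T.
    """
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))

    def density(x: float) -> float:
        return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))

    h = t / steps
    area = density(0.0) + density(t)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * density(i * h)
    return 0.5 - area * h / 3


def grubbs_test(values: Sequence[float]) -> Tuple[float, float, float, float]:
    """One-sided Grubbs test for the single most extreme value.

    Returns (suspect, G, U, p).
    """
    n = len(values)
    m, s = mean(values), stdev(values)  # stdev uses the n - 1 denominator
    suspect = max(values, key=lambda x: abs(x - m))
    g = abs(suspect - m) / s
    # U = SS without the suspect / SS of all values, expressed through G.
    u = 1 - n * g ** 2 / (n - 1) ** 2
    if u <= 0:  # degenerate case: all other values are identical
        return suspect, g, u, 0.0
    # Equivalent t statistic with n - 2 degrees of freedom.
    t = math.sqrt((n - 2) * (1 - u) / u)
    # Bonferroni-type approximation n * P(T > t), capped at 1.
    p = min(1.0, n * t_upper_tail(t, n - 2))
    return suspect, g, u, p


# Hypothetical playing times in minutes (not the study's data).
times = [31.2, 33.8, 29.5, 35.1, 32.4, 30.9, 34.6, 33.0, 36.2, 52.7]
suspect, g, u, p = grubbs_test(times)
print(f"outlier={suspect}, G={g:.2f}, U={u:.2f}, p={p:.4f}")
```

A value far above the rest yields a large G, a small U, and a small p, which is the pattern that justifies treating a single, unusually long playing time as an outlier.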

Table 1 provides descriptive information about the participants. Their average Age was 23.12 years, with a standard deviation of 2.88 years, and seventy of the participants (67.96%) were men. To account for their gaming experience and expertise, which could have influenced how well they coped with the VR game, we asked them to self-assess their gaming skills using a Likert scale from 1 (“very poor”) to 5 (“excellent”). The participants’ self-assessed Gaming Skills averaged 3.27 (“fair”), with a standard deviation of 0.84.

3.2 Materials and equipment

The VR game was run on a workstation that was set up specifically for the study. We used the PC version of the commercially successful game Job Simulator from Owlchemy Labs, which runs on Steam, a distribution platform for video games, and the first-generation HTC Vive VR system, which includes a headset, two wireless controllers, and two base stations that create a 360-degree tracking area of up to 15 × 15 feet within which players can move. Job Simulator puts players into a futuristic world in which robots do all of the humans’ jobs but, for nostalgia and entertainment, the humans in the game can visit “job museums” to experience how it must have felt to work for a living. While this game, which can perhaps best be described as a casual simulation game, contains comic and satiric elements and confronts players with some unusual tasks, it also requires them to think outside the box and solve challenging problems, so we deemed it appropriate for our proof-of-concept study. In particular, we selected the Job Simulator game because few genres other than simulations are similarly attractive to female and male players (see Lucas and Sherry 2004), and because the game has been found to highlight “the multitude of ways that the increasing proliferation of virtual reality technology could help to shape future job assessments” (Weidner and Short 2019, p. 155). Job Simulator features three types of simulations, one of which, Gourmet Chef, is a kitchen simulation that we used for data collection. The Gourmet Chef simulation confronts players with seventeen tasks, from preparing a sandwich to participating in a television cooking show, and requires them to complete each task before they can move on to the next. While successful approaches to completing some of the tasks differ, one solution was considered as good as another in the study. (Videos illustrating the gameplay are available online; see, e.g., YouTube 2016.)Footnote 2

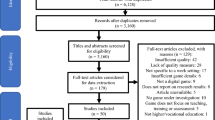

Our study was grounded in the Berlin Intelligence Structure (BIS) model, which makes three assumptions: Intellectual achievement is a function of all intellectual abilities, albeit to different degrees; intellectual abilities can be classified into content-related and operative abilities; and intellectual abilities are hierarchically structured (Jäger et al. 1997). Therefore, the model distinguishes four operative components of intellectual ability—processing capacity, processing speed, memory, and creativity—and three content components of intellectual ability—figural ability, verbal ability, and numerical ability—and puts general intelligence at the top of the hierarchy (Fig. 1).

Fig. 1 BIS model (Jäger et al. 1997, p. 5)

The BIS test is a validated instrument for measuring general intelligence and several more specific intellectual abilities, so researchers have used it in various studies (e.g., Beauducel and Kersting 2002; Bucik and Neubauer 1996; Süß and Beauducel 2005; Süß et al. 2002; Weis and Süß 2007). The current version of the test, BIS-4, is a paper-and-pencil test that was developed for subjects aged sixteen to nineteen with intermediate or high levels of education, but it can also be used for older subjects if they have sufficient educational backgrounds (Jäger et al. 1997). The test covers a broad range of intellectual abilities, as its development is grounded in a pool of more than 2000 exercises (Beauducel and Kersting 2002), and it can be customized for research purposes. In particular, its developers have made available a shortened test version, which we deemed appropriate for our proof-of-concept study: While the short version uses fifteen instead of forty-five exercises, it is still a valid instrument for measuring general intelligence and processing capacity, although it does not contain enough exercises to measure the other abilities (Jäger et al. 1997).Footnote 3

3.3 Procedure

We conducted a controlled observational laboratory study. There was no treatment, so the study design was correlational and the same for all participants, and we made individual appointments with the participants, each of which took approximately two and a half hours. At the beginning of these appointments, we informed the participants about the risks of using VR devices (e.g., epilepsy and dizziness) and about their rights during the study (e.g., the right to quit at any time for any reason without forfeiting the CHF 30.00 payment). We also told them that we would be taking video of their VR games (not video of themselves) and asked them to keep the study’s contents confidential until it was completed, so other participants could not prepare. Finally, to provide a performance incentive, we informed them that one of the shopping vouchers would be raffled among the ten participants who completed the VR game the most quickly, and all participants completed a short survey about their experience with video games to supplement the information that had already been collected via e-mail.

After receiving this introductory information, the participants were given a written instruction sheet that explained the Job Simulator game and how to use the VR headset and controllers. In particular, the instruction sheet told them to complete the game as quickly as possible, so thoroughness was not required or helpful (since the Job Simulator game does not count points or anything similar). Participants confirmed that they understood these instructions and that they would try to complete the VR game as quickly as possible. We then conducted a series of vision and hearing tests and performed some simple motor tests with the participants, such as asking them to focus on an object or to grab and throw items in the virtual environment. After the participants signaled they were ready, they started the Gourmet Chef simulation.

To avoid the risk of injury, the room provided sufficient space for participants to move about when they played the VR game, and at least one researcher supervised the participants to ensure they did not fall over the cable that connected the VR headset with the desktop computer or collide with a wall. The supervising researcher used a detailed protocol that explained what information could be given to participants who asked for support and contained the exact wording of all audio instructions the participants could hear during the game. Apart from small technical and language problems that could be addressed quickly, all simulations ran smoothly and were consistent.

After completing the VR game, the participants had a short break during which they confirmed that they did not feel unwell or dizzy, and then took the intelligence test. As with the VR simulations, the tests were conducted individually to ensure that participants did not feel pressure from the presence of other participants and that they could ask questions. Two researchers, both of whom had been instructed by the psychologist on the research team, supervised the participants during the test. The verbal instructions given to the participants followed the test’s guidelines exactly, and time-keeping was strict. The researchers kept detailed protocols in which they noted the times required to explain and perform the exercises and any special incidents, although none occurred.

3.4 Measures

The main purpose of our study was to determine whether the time required to complete the VR game correlated with the scores achieved in the intelligence test. Playing Time was measured in minutes and automatically calculated based on two unambiguous audio signals that sounded when the participants started and finished the game. The evaluation of the intelligence test’s results followed the test’s guidelines and its detailed instructions and standardized templates. As the structure of the BIS model is bimodal (Bucik and Neubauer 1996), the BIS-4 test assumes that performance on each exercise is influenced not only by general intelligence but also by an operative factor and a content factor (Brunner and Süß 2005), so the test measures General Intelligence, three content-related abilities (i.e., Figural Ability, Numerical Ability, and Verbal Ability), and four operative abilities (i.e., Processing Capacity, Processing Speed, Memory, and Creativity). However, as explained, we used the short test version for our study, which should be used only to measure general intelligence and processing capacity. Still, we also calculated the number of points earned in the exercises related to the other abilities, as we were not interested in diagnosing or certifying abilities at the individual level but in exploring and understanding patterns at the group level. While the validity of these measures is unclear, the points achieved in those exercises may still indicate which abilities could be valuable in playing the Job Simulator game and highlight areas for future research. Accordingly, Table 2 uses all seven intellectual abilities to show the order and type of exercises included in the short test version.
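The bimodal logic behind these measures can be sketched as a simple aggregation: if each exercise is assigned to exactly one operative ability and one content ability, summing points within each facet yields two breakdowns of the same total, which here serves as the General Intelligence score. The exercise labels, facet assignments, and point values below are hypothetical placeholders rather than the actual short-form exercises listed in Table 2, and the sketch ignores any conversion or norming steps the BIS-4 guidelines may prescribe.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

OPERATIVE = ("Processing Capacity", "Processing Speed", "Memory", "Creativity")
CONTENT = ("Figural Ability", "Verbal Ability", "Numerical Ability")


@dataclass(frozen=True)
class Exercise:
    name: str        # hypothetical exercise label
    operative: str   # one of OPERATIVE
    content: str     # one of CONTENT
    points: float    # points the participant earned on this exercise


def bis_scores(exercises: List[Exercise]) -> Dict[str, float]:
    """Aggregate exercise points by operative facet and by content facet.

    General Intelligence is the sum over all exercises, so the operative
    facets and the content facets each partition the same total.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ex in exercises:
        if ex.operative not in OPERATIVE or ex.content not in CONTENT:
            raise ValueError(f"unknown facet in exercise {ex.name}")
        scores[ex.operative] += ex.points
        scores[ex.content] += ex.points
        scores["General Intelligence"] += ex.points
    return dict(scores)


# Hypothetical results for one participant (not BIS-4 items or norms).
answers = [
    Exercise("E1", "Processing Capacity", "Figural Ability", 98.0),
    Exercise("E2", "Processing Speed", "Verbal Ability", 103.0),
    Exercise("E3", "Memory", "Numerical Ability", 95.0),
    Exercise("E4", "Creativity", "Figural Ability", 101.0),
    Exercise("E5", "Processing Capacity", "Numerical Ability", 97.0),
    Exercise("E6", "Processing Capacity", "Verbal Ability", 104.0),
]
scores = bis_scores(answers)
print(scores["General Intelligence"], scores["Processing Capacity"])
```

Because every exercise enters exactly one operative and one content sum, the operative scores and the content scores each add up to the General Intelligence total.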

After the participants completed the test, all pages of the test booklets were disassembled and copied, so the two assessors could evaluate each exercise independently using the evaluation protocols provided by BIS-4. In line with those instructions, one of the researchers evaluated all participants’ test results, and another researcher evaluated 52 randomly selected tests (i.e., more than 50%). The BIS-4 evaluation templates provided unambiguous sample solutions for nearly all exercises, so the assessors’ ratings were almost always consistent, and the failure/disagreement rate was well below the threshold of 1 to 2 percent per exercise. The more complex exercises that measured creativity based on either idea fluency (number of solutions) or idea flexibility (diversity of solutions) were all independently evaluated by both assessors. Because these exercises required creative solutions, the participants’ responses were less consistent than those for the other exercises, so the assessors created protocols to justify their ratings. Still, as they used detailed criteria catalogues and checklists, the level of agreement between the two assessors was also high for the three creativity exercises.Footnote 4 All participants completed the tests, so there were no missing values, and because there were no special incidents, measurement was straightforward.
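The double-scoring procedure can be illustrated with a minimal sketch: randomly draw a subset of test booklets for the second assessor, then compute, for each exercise, the share of double-scored booklets on which the two assessors’ ratings differ, and flag exercises above a threshold. The data layout, the fixed random seed, the exercise labels, and the use of simple percent disagreement are assumptions made for illustration; the agreement statistics the authors report may have been computed differently.

```python
import random
from typing import Dict, List, Tuple

# (booklet_id, exercise_label) -> points awarded by one assessor
Ratings = Dict[Tuple[int, str], int]


def draw_second_rater_sample(booklet_ids: List[int], k: int, seed: int = 0) -> List[int]:
    """Randomly choose k booklets for independent re-scoring."""
    return sorted(random.Random(seed).sample(booklet_ids, k))


def disagreement_rates(first: Ratings, second: Ratings) -> Dict[str, float]:
    """Per exercise, the share of double-scored booklets on which ratings differ."""
    tallies: Dict[str, List[int]] = {}  # exercise -> [disagreements, booklets]
    for (booklet, exercise), points in second.items():
        tally = tallies.setdefault(exercise, [0, 0])
        tally[0] += int(points != first[(booklet, exercise)])
        tally[1] += 1
    return {ex: d / n for ex, (d, n) in tallies.items()}


booklets = list(range(1, 104))                   # 103 participants
sample = draw_second_rater_sample(booklets, 52)  # 52 re-scored booklets
exercises = ("E1", "E2")                         # hypothetical labels
first = {(b, ex): 1 for b in booklets for ex in exercises}
second = {(b, ex): first[(b, ex)] for b in sample for ex in exercises}
second[(sample[0], "E2")] = 0                    # one simulated disagreement
rates = disagreement_rates(first, second)
flagged = [ex for ex, r in rates.items() if r > 0.02]  # 2 percent threshold
print(rates, flagged)
```

With one disagreement among 52 double-scored booklets, exercise E2 has a rate of about 1.9 percent, just under the 2 percent threshold used in the sketch, so no exercise is flagged.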

4 Results

4.1 Descriptive results

Table 3 shows the descriptive results for the VR game and the intelligence test. Playing Time ranged from 24.46 to 46.03 min, with a mean of 33.42 min and a standard deviation of 4.41 min. The total points achieved in the intelligence test, which provided our measure of General Intelligence, ranged from 1313 to 1648 points, with a mean of 1484.77 points and a standard deviation of 71.52 points. Processing Capacity ranged from 507 to 687 points and had a mean (standard deviation) of 586.49 (34.87) points.Footnote 5

As explained, we further calculated the total number of points achieved in the exercises that related to intellectual abilities other than Processing Capacity, although these scores should not be interpreted as ability measures. The means (standard deviations) were as follows: Processing Speed: 310.54 (22.30); Memory: 290.09 (21.59); Creativity: 297.65 (20.89); Verbal Ability: 502.06 (31.05); Numerical Ability: 488.22 (34.41); and Figural Ability: 494.49 (30.51). (The point values for Memory, Creativity, and Processing Speed were generally lower than those for the content-related abilities because they were measured with fewer exercises (Table 2).)
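Because the BIS model is bimodal, each exercise counts toward exactly one operative ability and exactly one content ability. If, as the reported values suggest, the General Intelligence score is the total across all exercises, then the four operative means and the three content means should each add up to the General Intelligence mean. The short check below uses only the means reported in this section; the variable names are illustrative.

```python
# Means reported in Sect. 4.1 (points)
general_intelligence = 1484.77
operative = {
    "Processing Capacity": 586.49,
    "Processing Speed": 310.54,
    "Memory": 290.09,
    "Creativity": 297.65,
}
content = {
    "Verbal Ability": 502.06,
    "Numerical Ability": 488.22,
    "Figural Ability": 494.49,
}

# Each exercise is assigned to one operative and one content ability, so
# both partitions should reproduce the total (up to rounding of the means).
operative_total = round(sum(operative.values()), 2)
content_total = round(sum(content.values()), 2)
print(operative_total, content_total)  # 1484.77 1484.77
```

Both partitions reproduce the General Intelligence mean, which is consistent with the bimodal scoring structure described in the Methods.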

4.2 Correlational results

To explore the associations between our measures, we started with an exploratory data analysis that examined how our Playing Time variable correlated with General Intelligence and Processing Capacity and with the number of points that participants achieved in the exercises related to the other intellectual abilities. We calculated both Pearson and Spearman correlation coefficients (Table 7); as these coefficients were similar, we report only the Pearson coefficients r here. Following Kokkinakis et al.’s (2017) visualization, Table 4 shows the skill variables that were significantly related to the participants’ playing times, together with histograms and scatterplots that illustrate these variables’ distributions. The scatterplots suggested weak, negative linear relationships between Playing Time and the other variables, and the histograms and a series of Shapiro-Wilk tests indicated that all of these variables could be assumed to be normally distributed (even though Playing Time and Figural Ability were slightly skewed).Footnote 6
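For readers who want to see the difference between the two coefficients, the sketch below computes both in plain Python: Pearson’s r on the raw values and Spearman’s ρ as Pearson’s r on ranks, with tied values given their average rank. The function names and the toy data are hypothetical and not taken from the study; in practice, a statistics package would be used.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson's r computed on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy data: a monotonic but non-linear relationship
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]
print(round(pearson(x, y), 4), round(spearman(x, y), 4))  # 0.9811 1.0
```

Spearman’s ρ depends only on the ordering of the values, so it is less sensitive to skew and outliers; when the two coefficients are close, reporting Pearson’s r alone is a common simplification.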

In summary, the correlation analysis showed several moderate to weak correlations. The association between Playing Time and General Intelligence was significantly negative (r = −0.257; p = 0.009), as was the relationship between Playing Time and Processing Capacity (r = −0.281; p = 0.004), so participants who required less time to complete the VR game tended to have higher levels of general intelligence and processing capacity than those who required more time. In addition, Playing Time correlated with the number of points achieved in exercises related to Memory (r = −0.202; p = 0.040), Verbal Ability (r = −0.206; p = 0.037), and Figural Ability (r = −0.344; p < 0.001), but not with exercises related to Processing Speed, Creativity, or Numerical Ability. Playing Time was not significantly correlated with any of our control variables except Gaming Skills (r = −0.318; p = 0.001). Of the remaining control variables, only Gender correlated significantly with other variables: Age (r = 0.399; p < 0.001), Gaming Skills (r = 0.297; p = 0.002), Verbal Ability (r = −0.199; p = 0.044), and Numerical Ability (r = 0.279; p = 0.004) (Table 7).
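The p values above can be checked from r and the sample size with the usual t test for a Pearson correlation, t = r√(n − 2)/√(1 − r²) with n − 2 degrees of freedom. The sketch below does this with the Python standard library only, evaluating the two-sided p value through the regularized incomplete beta function (continued-fraction evaluation). It assumes n = 103, the sample size stated in the abstract; with a slightly different analysis sample, the values shift only marginally. The helper names are hypothetical.

```python
import math


def _betacf(a, b, x, max_iter=300, eps=1e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def regularized_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b) for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def correlation_p_value(r, n):
    """Two-sided p value for H0: rho = 0, via t = r*sqrt(n-2)/sqrt(1-r^2)."""
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1.0 - r * r)
    # For Student's t: P(|T| > |t|) = I_{df/(df+t^2)}(df/2, 1/2)
    return regularized_beta(df / 2.0, 0.5, df / (df + t * t))


# Correlations and p values reported in Sect. 4.2 (n = 103 assumed)
reported = {
    "Playing Time ~ General Intelligence": (-0.257, 0.009),
    "Playing Time ~ Processing Capacity": (-0.281, 0.004),
    "Playing Time ~ Memory": (-0.202, 0.040),
    "Playing Time ~ Figural Ability": (-0.344, 0.000),
}
for label, (r, p_reported) in reported.items():
    p = correlation_p_value(r, 103)
    print(f"{label}: r = {r}, p = {p:.4f} (reported {p_reported:.3f})")
```

The recomputed values agree with the reported ones to within rounding, and the Figural Ability correlation gives p well below 0.001.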

4.3 Regression results

To explore further the associations between our main variables, namely (1) General Intelligence and Playing Time and (2) Processing Capacity and Playing Time, we built on studies that have analyzed response times (e.g., van der Linden 2006). As response times are typically right-skewed and cannot be below zero, as is the case for our Playing Time variable (Table 4), we used the following log-normal regression models:

\[
\begin{aligned}
\text{Playing Time}_i &\sim \text{LogNormal}\left(\mu_i, \sigma\right)\\
\mu_i &= \alpha + \beta_1\,\text{General Intelligence}_i + \beta_2\,\text{Controls}_i \quad \text{(Model 1)}\\
\mu_i &= \alpha + \beta_1\,\text{Processing Capacity}_i + \beta_2\,\text{Controls}_i \quad \text{(Model 2)}
\end{aligned}
\]

where i indexes the subjects 1, …, N, and \(\text{Playing Time}_i\) is the dependent variable, which is assumed to be log-normally distributed: its logarithm has mean \(\mu_i\) (i.e., the linear model) and standard deviation \(\sigma\). The associations between \(\text{General Intelligence}_i\) and \(\text{Playing Time}_i\) and between \(\text{Processing Capacity}_i\) and \(\text{Playing Time}_i\) are captured by \(\beta_1\), for which we expect a negative sign in both models, as higher ability levels should be associated with faster gameplay. Controls is a vector of variables that includes Age, Gender, and Gaming Skills, with coefficients \(\beta_2\).
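Because the log-normal model is a normal linear model for the logarithm of Playing Time, the maximum-likelihood estimates of α and β1 coincide with ordinary least squares on the logged playing times. The sketch below fits the single-predictor version (as in Models 1a and 2a) this way. It is a non-Bayesian illustration under that assumption, not the Bayesian estimation reported here; the function name and the simulated data are hypothetical.

```python
import math
import random


def fit_log_ols(x, y):
    """Fit log(y) = alpha + beta1 * x + e by least squares on the log scale.

    Returns (alpha, beta1, sigma); these are the maximum-likelihood
    estimates of a log-normal regression (sigma uses the ML divisor n).
    All y must be strictly positive.
    """
    n = len(x)
    log_y = [math.log(v) for v in y]
    mean_x = sum(x) / n
    mean_ly = sum(log_y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (li - mean_ly) for xi, li in zip(x, log_y))
    beta1 = sxy / sxx
    alpha = mean_ly - beta1 * mean_x
    resid = [li - (alpha + beta1 * xi) for xi, li in zip(x, log_y)]
    sigma = math.sqrt(sum(e * e for e in resid) / n)
    return alpha, beta1, sigma


# Hypothetical simulation, NOT the study's data: parameters chosen so that
# the median simulated time is about 33 min at a score of 1485 points.
random.seed(1)
true_alpha, true_beta1, true_sigma = 4.25, -0.0005, 0.12
scores = [random.gauss(1485, 71.5) for _ in range(103)]
times = [math.exp(random.gauss(true_alpha + true_beta1 * s, true_sigma))
         for s in scores]

alpha_hat, beta1_hat, sigma_hat = fit_log_ols(scores, times)
print(f"beta1 = {beta1_hat:.5f}; "
      f"change per point = {100 * (math.exp(beta1_hat) - 1):.3f}%")
```

With weakly informative priors and a sample of this size, a Bayesian posterior mean would typically lie close to, but not exactly at, this least-squares estimate.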

Following van der Linden (2006), we used Bayesian inference to estimate the statistical models; this approach offers some advantages over more traditional methods (see Kruschke et al. 2012). For example, a Bayesian approach can incorporate existing knowledge from the literature as a prior belief and update it using new data, which makes Bayesian models particularly suitable for small samples. (Although our sample was comparatively large, small sample sizes have been identified as a shortcoming of laboratory video-game research (Unsworth et al. 2015).) For the models’ unknown parameters \(\alpha\), \({\beta }_{1}\), \({\beta }_{2}\), and \(\sigma\), we used the following weakly informative priors from the literature (e.g., McElreath 2020): \(\alpha \sim Normal\left(\mu =3, \sigma =1.5\right)\), \({\beta }_{\mathrm{1,2}}\sim Normal\left(\mu =0, \sigma =1\right)\), and \(\sigma \sim Exponential\left(\lambda =1\right)\).
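To make the model and priors concrete, the sketch below writes out the unnormalized log posterior for the single-predictor model without controls (as in Models 1a and 2a) with the priors stated above. It is an illustrative plain-Python re-implementation; the estimation itself was done with brms and Stan (Footnote 7), whose sampler explores a function of this kind. The function names are hypothetical.

```python
import math

LOG_SQRT_2PI = 0.5 * math.log(2 * math.pi)


def normal_logpdf(v, mu, sd):
    """Log density of Normal(mu, sd) at v."""
    return -math.log(sd) - LOG_SQRT_2PI - (v - mu) ** 2 / (2 * sd ** 2)


def lognormal_logpdf(y, mu, sigma):
    """Log density of y when log(y) ~ Normal(mu, sigma); includes the Jacobian."""
    return normal_logpdf(math.log(y), mu, sigma) - math.log(y)


def log_posterior(alpha, beta1, sigma, x, y):
    """Unnormalized log posterior of y_i ~ LogNormal(alpha + beta1 * x_i, sigma)
    with priors alpha ~ N(3, 1.5), beta1 ~ N(0, 1), sigma ~ Exponential(1)."""
    if sigma <= 0:
        return -math.inf  # outside the support of the Exponential prior
    lp = normal_logpdf(alpha, 3.0, 1.5)
    lp += normal_logpdf(beta1, 0.0, 1.0)
    lp += -sigma  # log density of Exponential(rate = 1): log(1) - sigma
    lp += sum(lognormal_logpdf(yi, alpha + beta1 * xi, sigma)
              for xi, yi in zip(x, y))
    return lp


# Tiny illustrative evaluation: one observation of exp(3) minutes at x = 0
print(round(log_posterior(3.0, 0.0, 1.0, [0.0], [math.exp(3.0)]), 4))
```

A sampler such as NUTS only needs this function up to an additive constant; Stan additionally maps σ to an unconstrained scale so that the sampler never proposes σ ≤ 0.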

Table 5 shows the regression results for our first model, both without control variables (Model 1a) and with control variables (Model 1b).Footnote 7 The results confirm that General Intelligence and Playing Time are negatively associated (β1 = −0.0005***; 95%-CI [−0.0008, −0.0002]) and that this association remains robust when control variables are added (β1 = −0.0004***; 95%-CI [−0.0007, −0.0001]). (Of the control variables, only the participants’ self-assessed Gaming Skills are significant (\({\beta }_{2}\) = −0.0441***; 95%-CI [−0.0682, −0.0190]).) As we used a log-normal model and the coefficients are small, they can be interpreted approximately as proportional changes: a one-point increase in General Intelligence is associated with a reduction in Playing Time of approximately 0.05 percent. Although this per-point effect appears small, it is substantial given the scale of the test (mean General Intelligence = 1484.77 points; standard deviation = 71.52 points). The effect size becomes evident in a sensitivity analysis, where an increase of 100 test points (roughly 1.4 standard deviations) is associated with a decrease of approximately 1.7 playing minutes, or about 5 percent of the mean Playing Time of 33.42 min.

Table 6 shows the regression results for our second model, both without control variables (Model 2a) and with control variables (Model 2b). The results confirm that Processing Capacity and Playing Time are also negatively associated (β1 = −0.0011***; 95%-CI [−0.0017, −0.0005]) and that this association remains robust when control variables are added (β1 = −0.0009***; 95%-CI [−0.0015, −0.0003]). (Of the control variables, only the participants’ self-assessed Gaming Skills are significant (\({\beta }_{2}\) = −0.0407***; 95%-CI [−0.0671, −0.0145]).) Again, these log-normal coefficients can be interpreted approximately as proportional changes: a one-point increase in Processing Capacity (mean = 586.49 points) is associated with a reduction in Playing Time of approximately 0.11 percent, so an increase of 100 points in exercises related to Processing Capacity is associated with a decrease of approximately 3.7 playing minutes (about 11 percent of the mean Playing Time).Footnote 8

Finally, as part of our robustness checks, we ran linear regression models both with and without controls, which confirmed our results (Model 1a: β1 = −0.016***; 95%-CI [−0.025, −0.006]; Model 1b: β1 = −0.015**; 95%-CI [−0.024, −0.005]; Model 2a: β1 = −0.036***; 95%-CI [−0.055, −0.016]; Model 2b: β1 = −0.033**; 95%-CI [−0.052, −0.014]). That is, each additional point that a participant achieved in the intelligence test (in exercises related to Processing Capacity) was associated with a decrease in Playing Time of 0.016 (0.036) minutes, so an increase of 100 test points was associated with a decrease of 1.6 (3.6) playing minutes in the linear models.
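The minute figures in this section follow from simple arithmetic. In the log-normal models, a 100-point increase multiplies the expected Playing Time by exp(100·β1), which is close to 1 + 100·β1 when β1 is small; in the linear models, the slope is already in minutes per point. The sketch below evaluates the log-normal changes at the mean Playing Time of 33.42 min; this evaluation point is an assumption, since the text does not state how the figures were derived. The function names are hypothetical.

```python
import math

MEAN_PLAYING_TIME = 33.42  # minutes, Sect. 4.1
POINTS = 100               # size of the score increase considered


def minutes_lognormal(beta1, points=POINTS, base=MEAN_PLAYING_TIME):
    """Change in minutes at `base` for a `points` increase (log-normal model).

    Returns (first-order approximation, exact multiplicative change).
    """
    approx = base * beta1 * points
    exact = base * (math.exp(beta1 * points) - 1.0)
    return approx, exact


def minutes_linear(slope, points=POINTS):
    """Change in minutes for a `points` increase (linear model)."""
    return slope * points


for label, beta1 in [("General Intelligence (Model 1a)", -0.0005),
                     ("Processing Capacity (Model 2a)", -0.0011)]:
    approx, exact = minutes_lognormal(beta1)
    print(f"{label}: approx {approx:.2f} min, exact {exact:.2f} min")

for label, slope in [("General Intelligence (linear 1a)", -0.016),
                     ("Processing Capacity (linear 2a)", -0.036)]:
    print(f"{label}: {minutes_linear(slope):.1f} min")
```

The first-order values (about −1.67 and −3.68 min) round to the 1.7 and 3.7 min given above, whereas the exact transform gives slightly smaller reductions (about −1.63 and −3.48 min); the gap grows with the size of the score change. The linear slopes give −1.6 and −3.6 min directly.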

5 Discussion

Researchers have proposed the use of VR-based tools to assess cognitive abilities like working memory and attention (Climent et al. 2021), and it has been argued that VR technology may eventually replace traditional intelligence tests, as it offers new ways to analyze data and to simulate highly naturalistic scenarios (Koch et al. 2021). With the advent of consumer-grade VR headsets that are readily available for gaming and entertainment, researchers have also taken an interest in VR games, using them to train high-fidelity memory in older adults (Wais et al. 2021), to compare their impact on cognitive ability with that of 3D games (Wan et al. 2021), and to assess cognitive abilities like visual speed and accuracy, visual pursuit, and space visualization (Weiner and Sanchez 2020). However, compared to the rapidly growing stream of intelligence-related research on regular video games, VR games have received little research attention, and the available evidence is mixed. For example, Sanchez et al. (2022) used two commercial VR games (Project M and Richie’s Plank Experience) for assessment purposes and found only limited evidence for their reliability and validity.

Against this background, the objective of this proof-of-concept study was to (re-)assess whether VR games may be used to draw inferences about intellectual ability and to discuss their applicability for assessment purposes. A correlational analysis suggested that, on average, participants who completed the VR game Job Simulator more quickly than others had higher levels of general intelligence and processing capacity, and a regression analysis largely confirmed these results. In addition, we found that faster participants achieved higher overall scores on exercises related to memory, verbal ability, and figural ability, which could indicate that these abilities were helpful in playing the game (although the validity of these measures is questionable, as we used the short version of the BIS-4 intelligence test). While these results are promising, our study also has some limitations that suggest topics for further research.

First, although our results are comparable to those of related work in the VR realm (e.g., Weiner and Sanchez 2020), the correlations we found were only moderate to weak, so our results do not confirm that VR games may replace traditional intelligence tests but suggest that they could be used for pre-screening candidates or as supplementary tools in predicting job performance. As other gaming studies have reported higher correlations (see Quiroga and Colom 2020), future research could explore whether the Job Simulator game (or similar games) can be used to assess intellectual ability more reliably by, for example, focusing only on tasks that are cognitively demanding and excluding those that require motor skills or luck (which probably plays a role in Job Simulator, as some of the items that participants had to search for were located in unusual places). However, researchers who plan to conduct similar studies should consider that we used the Job Simulator game for research purposes and do not recommend the use of commercial games for personnel selection. As explained, video games may lose their ability to indicate intelligence with practice, so HR professionals typically use self-developed serious games instead, and the Job Simulator game is particularly (or maybe even only) challenging when it is played for the first time, as it is highly repetitive. Still, we believe that our results provide an important step toward clarifying the potential of VR games in assessing intelligence: If a simple, fun game like Job Simulator, which was developed for entertainment rather than assessment purposes, can indicate intelligence and related abilities (albeit only to some degree), VR games are likely to have much to offer for personnel selection. Compared to regular video games, VR games may amplify the various benefits that game-based stealth assessments offer, such as increased candidate engagement or reduced test anxiety, as they are much more immersive and realistic and can even make players forget about the real world (see Wohlgenannt et al. 2020).

Second, since fairness is a prerequisite in the selection process, researchers have raised concerns that game-based assessments may favor certain groups of applicants (see Weidner and Short 2019), which leads to another limitation of our study. In particular, candidates’ previous gaming experience and expertise may bias the results, so it has been argued that women and older applicants could be at a disadvantage (see Fetzer et al. 2017), which has been confirmed by empirical research using archival data from actual game-based assessments (Melchers and Basch 2022). While the number of female gamers continues to increase (Lopez-Fernandez et al. 2019), a broad survey from the Entertainment Software Association (2020) still suggests that fifty-nine percent of video-game players in the US are male and no older than thirty-four years, and that gamers’ preferences vary across groups (e.g., females most often play casual mobile games, which are typically not part of on-site assessments). We did not have much age-related variance in our data, and our female participants tended to rate their own gaming skills lower than our male participants did; nevertheless, our participants’ age and gender were not significantly associated with how fast they completed the game. This result could indicate that the demographics of video gamers have changed during the past years (Fetzer et al. 2017) and/or that the genre we studied is similarly attractive to different groups of applicants (see Lucas and Sherry 2004). On the other hand, our results may also indicate that VR games are more intuitive to use than regular video games are (Zhang 2017), which would provide another argument for their applicability in personnel assessment. Accordingly, future research should explore whether experience with VR technology may be another issue for game-based assessment, as none of our participants had such experience. Aguinis et al. (2001) identified the need for applicants to be familiar with or trained in using VR technology as a potential drawback to its broad adoption in assessment practice, and Sophie Thompson, co-founder and Chief Operating Officer of VirtualSpeech, a UK-based VR education platform, observed that “it’s quite a jump from looking at a computer or phone and observing the digital world, to then becoming an active participant right in the middle of it” (Debusmann 2021).

Third, researchers should use more sophisticated questionnaires and tests to measure previous gaming experience and expertise, as we used only the participants’ self-assessment of their gaming skills in our regression models. In doing so, however, researchers should be aware that such measurement is not straightforward, as other game-related variables that we intended to use for our study turned out to be misleading. For example, we asked our participants how often and for how long they had been playing video games but omitted these measures from the analysis because they were not only unrelated to our main variables but also of questionable validity. Questions like “Approximately how often do you play video games?” and “For how many years have you been playing video games?” seem intuitive but are difficult to answer: skilled and experienced players who are currently abstaining from video-game play may answer the first question with “very rarely,” and the second question does not distinguish between occasional and intensive video-game play. Against that background, Latham et al. (2013) suggested the use of screening video games or more objective measures like rankings, achievements, and awards, which are readily available on gaming platforms like Steam, to measure participants’ gaming experience and expertise. When using more comprehensive study set-ups and models, researchers should also consider variables other than those we measured, including temperament, persistence, drive, motor skills, motivation (although the lottery offered in our study was intended to provide a performance incentive), and, in particular, cyber sickness, which has been identified as a major barrier to the broader use of VR technology (Tian et al. 2022). (We simply asked participants whether they had felt unwell or dizzy during the game, which none of them confirmed.) Even height may have influenced our results: One participant was only 1.62 m (5′4″) tall and had to jump several times to reach some items in the VR game. (At the time we conducted our study, we were not aware that Owlchemy Labs had made available a “smaller human mode” for the Job Simulator game.)

Fourth, while our controlled laboratory study was designed to be reproducible for other researchers, future research could explore VR games’ applicability in more realistic, high-stakes selection contexts (e.g., Melchers and Basch 2022). Companies commonly use techniques other than intelligence tests (personality tests, work-sample tests, interviews, reference checks, and many more) to assess several skills and attributes that were outside the scope of this paper. For example, assessment centers are often used to assess management potential in terms of communication, drive, organizing and planning, problem-solving, influence, and awareness (Arthur et al. 2003), and employers are also interested in applicants’ personality traits (see, e.g., Weidner and Short 2019; Wu et al. 2022) and in forms of intelligence other than general intelligence, such as practical, emotional, and social intelligence (Lievens and Chan 2017). Therefore, even though general intelligence has been identified as one of the best predictors of work performance, future research could evaluate VR games’ usefulness in assessing these and related skills and abilities to provide a comprehensive picture of candidates’ aptitude. Such broader assessments may also require researchers to consider alternative approaches to collecting game data, as we measured only the time required to complete the Job Simulator game. However, efficiency is only one of many ways to measure work performance, and even the Job Simulator game could be used to analyze qualitatively how thoroughly or resourcefully participants complete their tasks and/or to collect other types of data that were outside the scope of this article. For example, in a secondary study that may be presented elsewhere, we further analyzed the video clips that we recorded of the participants’ games to track how often and how fast they moved their heads during the game. A preliminary analysis of these motion data suggests that video clips, as they are typically shared on platforms like YouTube and Twitch, may also be used to draw inferences about intellectual abilities: On average, participants who moved their heads less frequently and faster also achieved better test results than did participants who looked around more frequently and at lower speed.

Fifth, the intelligence test we used may present another limitation. We used the short version of BIS-4 for our study, so we could validly measure only general intelligence and processing capacity. However, as part of our proof-of-concept study, we also compared the participants’ game results with the number of points they achieved in exercises related to abilities other than processing capacity; although these numbers should not be interpreted as ability measures, they may guide similar studies that seek to clarify which intellectual abilities can be assessed with VR games. In any case, while we used a valid instrument to measure general intelligence and processing capacity, the validity of our other measures is questionable, so future research could build on and extend our results using the full test version. In addition, BIS-4 is an established instrument in the German-speaking research community, but most Anglo-American research on assessment has followed the Cattell-Horn-Carroll theory of cognitive abilities (see, e.g., Schneider and McGrew 2018), so future research could replicate our results using alternative measurement instruments to identify the intellectual abilities that may be assessed with VR games. Researchers have explored the relationships between BIS and other models, and since intellectual abilities like processing capacity and memory are related to fluid intelligence (see Beauducel and Kersting 2002), our results suggest that fluid intelligence, which reflects the capacity to solve reasoning problems, may be assessed using VR games. Finally, when studying the relationship between VR gaming and different facets of intelligence, researchers should also recruit more diverse samples, as our participants were of similar age, had similar backgrounds and no experience with VR technology, and were not randomly selected (they had applied to take part in our study).

6 Conclusion

Researchers have proposed the use of video games for assessment purposes to create a more pleasant test atmosphere, reduce test anxiety, and increase candidate engagement, among other reasons. VR games in particular deserve researchers’ attention in this regard, as they can simulate highly realistic, work-like environments and confront candidates with situations that were once not feasible. Since intelligence is one of the most widely used predictors of future job performance, our controlled laboratory study used a commercial VR game, an established intelligence test, and a sample of 103 university students to explore the relationship between the participants’ playing times and their intellectual abilities. We found that, on average, participants who completed the VR game more quickly than others also had higher levels of general intelligence and processing capacity and achieved more points on exercises related to memory, verbal ability, and figural ability. While researchers have raised concerns that game-based assessments could favor men and younger applicants, who are supposedly more experienced with video games, our participants’ age and gender were not significantly associated with how quickly they completed the VR game. However, since participants who rated their gaming skills higher tended to complete the game faster than others did, our study reinforces the need to study individual differences in game-based assessment. Furthermore, the correlations we found were moderate to weak rather than strong, so our results do not suggest that VR games should replace traditional intelligence tests, but rather that they may be used to pre-screen candidates or as supplementary tools in predicting job performance.

Data availability

The datasets generated and/or analyzed during the study are not publicly available due to privacy and ethical reasons.

Notes

1. Two of the researchers watched the participant’s video clip to determine why they had not performed well in the VR game and concluded that the participant had major problems both in handling the controllers (motor problems) and in understanding the audio instructions, so they had to restart several tasks. As these problems appeared frequently at the beginning of the game, we concluded that the participant had lost the motivation to complete the game quickly and excluded them from the sample. However, the outlier’s exclusion did not affect our estimation results (Sect. 4.3).

2. Several pretests with colleagues suggested that the Job Simulator game was sufficiently intuitive to be used for data collection and that we could expect enough variance in the resulting data. While the game was available only in English when we conducted the study, all participants had English-language skills on at least the B2 level (based on the Council of Europe’s Common European Framework of Reference for Languages), which was sufficient for playing the game.

3. The shortened test results correlate strongly with those obtained from the original test (r = 0.92 for processing capacity and r = 0.93 for general intelligence); BIS-4 focuses on processing capacity because several tests of criterion validity have found that processing capacity has the highest predictive power of the operative abilities (Jäger et al. 1997).

4. We measured inter-rater reliability using a two-way mixed-effects, consistency, average-measures intra-class correlation, which was in the “excellent” range for all creativity-related exercises (exercise 3: 0.947; exercise 7: 0.950; exercise 13: 0.989) (Hallgren 2012).

5. Note that we used the participants’ point values for measurement because the BIS-4 test provides standard scores only for subjects aged sixteen to nineteen.

6. The results of the Shapiro-Wilk tests were as follows: Playing Time (W = 0.979; p > 0.109), General Intelligence (W = 0.992; p > 0.777), Processing Capacity (W = 0.990; p > 0.611), Memory (W = 0.987; p > 0.418), Verbal Ability (W = 0.994; p > 0.917), and Figural Ability (W = 0.981; p > 0.153).

7. For model estimation, we used R (Ihaka and Gentleman 1996) and the probabilistic programming language Stan (Carpenter et al. 2017) via the brms package (Bürkner 2018). Samples were drawn using the No-U-Turn Sampler (Hoffman and Gelman 2014). We ran four chains of 4000 iterations each, of which 2000 were used for warm-up and 2000 for sampling. All diagnostics met expectations (\(\widehat{R}\) < 1.1; effective sample size ESS > 1000), indicating that the chains were well mixed and efficient.

8. These results remained robust when the outlier was included, both with and without controls (Model 1a: (β1 = −0.0005***; 95%-CI: [−0.0008, −0.0002]); Model 1b: (β1 = −0.0004**; 95%-CI: [−0.0007, −0.0001]); Model 2a: (β1 = −0.0011***; 95%-CI: [−0.0017, −0.0004]); Model 2b: (β1 = −0.0009**; 95%-CI: [−0.0015, −0.0003])).

References

Ackerman PL, Cianciolo AT (2000) Cognitive, perceptual-speed, and psychomotor determinants of individual differences during skill acquisition. J Exp Psychol Appl 6:259–290. https://doi.org/10.1037/1076-898X.6.4.259

Aguinis H, Henle CA, Beaty JC Jr (2001) Virtual reality technology: a new tool for personnel selection. Int J Sel Assess 9:70–83. https://doi.org/10.1111/1468-2389.00164

Arthur W Jr, Day EA, McNelly TL, Edens PS (2003) A meta-analysis of the criterion-related validity of assessment center dimensions. Pers Psychol 56:125–153. https://doi.org/10.1111/j.1744-6570.2003.tb00146.x

Baniqued PL, Lee H, Voss MW, Basak C, Cosman JD, DeSouza S, Severson J, Salthouse TA, Kramer AF (2013) Selling points: what cognitive abilities are tapped by casual video games? Acta Psychol 142:74–86. https://doi.org/10.1016/j.actpsy.2012.11.009

Barber CS, Petter SC, Barber D (2017) It’s all fun and games until someone gets a real job!: From online gaming to valuable employees. In: Proceedings of the 38th International Conference on Information Systems. Seoul. Accessed 15 May 2022 https://aisel.aisnet.org/icis2017/PracticeOriented/Presentations/1

Barr M (2017) Video games can develop graduate skills in higher education students: a randomised trial. Comput Educ 113:86–97. https://doi.org/10.1016/j.compedu.2017.05.016

Beauducel A, Kersting M (2002) Fluid and crystallized intelligence and the Berlin model of intelligence structure (BIS). Eur J Psychol Assess 18:97–112. https://doi.org/10.1027/1015-5759.18.2.97

Bhatia S, Ryan AM (2018) Hiring for the win: game-based assessment in employee selection. In: Dulebohn JH, Stone DL (eds) The brave new world of eHRM 2.0. Information Age Publishing, Charlotte, pp 81–110

Bina S, Mullins JK, Petter S (2021) Examining game-based approaches in human resources recruitment and selection: a literature review and research agenda. In: Proceedings of the 54th Hawaii International Conference on System Sciences. Kauai. Accessed 15 May 2022 http://hdl.handle.net/10125/70773

Boot WR (2015) Video games as tools to achieve insight into cognitive processes. Front Psychol 6:1–3. https://doi.org/10.3389/fpsyg.2015.00003

Brunner M, Süß H-M (2005) Analyzing the reliability of multidimensional measures: an example from intelligence research. Edu Psychol Measure 65:227–240. https://doi.org/10.1177/0013164404268669

Bucik V, Neubauer AC (1996) Bimodality in the Berlin model of intelligence structure (BIS): a replication study. Person Individ Differ 21:987–1005. https://doi.org/10.1016/S0191-8869(96)00129-8

Buday R, Baranowski T, Thompson D (2012) Fun and games and boredom. Games Health J 1:257–261. https://doi.org/10.1089/g4h.2012.0026

Buford CC, O’Leary BJ (2015) Assessment of fluid intelligence utilizing a computer simulated game. Int J Gaming Comput-Med Simul 7:1–17. https://doi.org/10.4018/IJGCMS.2015100101

Bürkner P-C (2018) Advanced Bayesian multilevel modeling with the R package brms. R J 10:395–411. https://doi.org/10.32614/RJ-2018-017

Carpenter B, Gelman A, Hoffman MD, Lee D, Goodrich B, Betancourt M, Brubaker MA, Guo J, Li P, Riddell A (2017) Stan: a probabilistic programming language. J Stat Softw 76:1–32. https://doi.org/10.18637/jss.v076.i01

Climent G, Rodríguez C, García T, Areces D, Mejías M, Aierbe A, Moreno M, Cueto E, Castellá J, González MF (2021) New virtual reality tool (Nesplora Aquarium) for assessing attention and working memory in adults: a normative study. Appl Neuropsychol Adult 28:403–415. https://doi.org/10.1080/23279095.2019.1646745

Consultancy.uk (2019) BDO trialling virtual reality game for recruitment. Accessed 15 May 2022 https://www.consultancy.uk/news/21819/bdo-trialling-virtual-reality-game-for-recruitment

Debusmann B Jr (2021) How virtual reality can help to recruit and train staff. British Broadcasting Corporation (BBC). Accessed 15 May 2022 https://www.bbc.com/news/business-57805093

Entertainment Software Association (2020) Essential facts about the video game industry. Accessed 15 May 2022 https://www.theesa.com/resource/2020-essential-facts

Fetzer M (2015) Serious games for talent selection and development. Ind-Organ Psychol 52:117–125

Fetzer M, McNamara J, Geimer JL (2017) Gamification, serious games and personnel selection. In: Goldstein HW, Pulakos ED, Passmore J, Semedo C (eds) The Wiley Blackwell handbook of the psychology of recruitment, selection and employee retention. John Wiley & Sons Ltd., New York, pp 293–309. https://doi.org/10.1002/9781118972472.ch14

Foroughi CK, Serraino C, Parasuraman R, Boehm-Davis DA (2016) Can we create a measure of fluid intelligence using Puzzle Creator within Portal 2? Intelligence 56:58–64. https://doi.org/10.1016/j.intell.2016.02.011

Hallgren KA (2012) Computing inter-rater reliability for observational data: an overview and tutorial. Tutor Quant Methods Psychol 8:23–34. https://doi.org/10.20982/tqmp.08.1.p023

Hausknecht JP, Day DV, Thomas SC (2004) Applicant reactions to selection procedures: an updated model and meta-analysis. Pers Psychol 57:639–683. https://doi.org/10.1111/j.1744-6570.2004.00003.x

Hoffman MD, Gelman A (2014) The No-U-Turn Sampler: adaptively setting path lengths in Hamiltonian Monte Carlo. J Mach Learn Res 15:1593–1623

Ihaka R, Gentleman R (1996) R: a language for data analysis and graphics. J Comput Graph Stat 5:299–314. https://doi.org/10.2307/1390807

Jäger AO, Süß H-M, Beauducel A (1997) Berliner Intelligenzstruktur-Test: BIS-Test, Form 4. Hogrefe-Verlag, Göttingen

Keith MJ, Anderson G, Gaskin JE, Dean DL (2018) Team video gaming for team building: effects on team performance. AIS Transact Human-Comput Interact 10:205–231. https://doi.org/10.17705/1thci.00110

Koch M, Becker N, Spinath FM, Greiff S (2021) Assessing intelligence without intelligence tests: future perspectives. Intelligence 89:101596. https://doi.org/10.1016/j.intell.2021.101596

Kokkinakis AV, Cowling PI, Drachen A, Wade AR (2017) Exploring the relationship between video game expertise and fluid intelligence. PLoS ONE 12:e0186621. https://doi.org/10.1371/journal.pone.0186621

Krause DE, Anderson N, Rossberger RJ, Parastuty Z (2014) Assessment center practices in Indonesia: an exploratory study. Int J Sel Assess 22:384–398. https://doi.org/10.1111/ijsa.12085

Kruschke JK, Aguinis H, Joo H (2012) The time has come: Bayesian methods for data analysis in the organizational sciences. Organ Res Methods 15:722–752. https://doi.org/10.1177/1094428112457829

Kuo I (2013) Why Wibidata’s Portal 2 recruiting game was so successful. Gamification Co. Accessed 15 May 2022 http://www.gamification.co/2013/02/07/wibidata-portal-2-recruiting-game

Landers RN, Sanchez DR (2022) Game-based, gamified, and gamefully designed assessments for employee selection: definitions, distinctions, design, and validation. Int J Sel Assess 30:1–13. https://doi.org/10.1111/ijsa.12376

Latham AJ, Patston LLM, Tippett LJ (2013) Just how expert are “expert” video-game players? Assessing the experience and expertise of video-game players across “action” video-game genres. Front Psychol 4:941. https://doi.org/10.3389/fpsyg.2013.00941

Leutner F, Codreanu S-C, Liff J, Mondragon N (2021) The potential of game- and video-based assessments for social attributes: examples from practice. J Manag Psychol 36:533–547. https://doi.org/10.1108/JMP-01-2020-0023

Lievens F, Chan D (2017) Practical intelligence, emotional intelligence, and social intelligence. In: Farr JL, Tippins NT (eds) Handbook of employee selection, 2nd edn. Routledge, New York, pp 342–364

Lim J, Furnham A (2018) Can commercial games function as intelligence tests? A pilot study. Comput Games J 7:27–37. https://doi.org/10.1007/s40869-018-0053-z

Lisk TC, Kaplancali UT, Riggio RE (2012) Leadership in multiplayer online gaming environments. Simul Gaming 43:133–149. https://doi.org/10.1177/1046878110391975

Lloyds Banking Group (2021) Virtual reality assessment: bringing assessment into the future. Accessed 15 May 2022 https://www.lloydsbankinggrouptalent.com/early-careers-hub/virtual-reality

Lopez-Fernandez O, Williams AJ, Griffiths MD, Kuss DJ (2019) Female gaming, gaming addiction, and the role of women within gaming culture: a narrative literature review. Front Psychiatry 10:454. https://doi.org/10.3389/fpsyt.2019.00454

Lucas K, Sherry JL (2004) Sex differences in video game play: a communication-based explanation. Commun Res 31:499–523. https://doi.org/10.1177/0093650204267930

McElreath R (2020) Statistical rethinking: a Bayesian course with examples in R and Stan, 2nd edn. CRC Press, Boca Raton. https://doi.org/10.1201/9780429029608

McPherson J, Burns NR (2008) Assessing the validity of computer-game-like tests of processing speed and working memory. Behav Res Methods 40:969–981. https://doi.org/10.3758/BRM.40.4.969

Melchers KG, Basch JM (2022) Fair play? Sex-, age-, and job-related correlates of performance in a computer-based simulation game. Int J Sel Assess 30:48–61. https://doi.org/10.1111/ijsa.12337

Peters H, Kyngdon A, Stillwell D (2021) Construction and validation of a game-based intelligence assessment in Minecraft. Comput Hum Behav 119:106701. https://doi.org/10.1016/j.chb.2021.106701

Petter S, Barber D, Barber CS, Berkley RA (2018) Using online gaming experience to expand the digital workforce talent pool. MIS Q Exec 17:315–332. https://doi.org/10.17705/2msqe.00004

Quiroga MA, Herranz M, Gómez-Abad M, Kebir M, Ruiz J, Colom R (2009) Video-games: do they require general intelligence? Comput Educ 53:414–418. https://doi.org/10.1016/j.compedu.2009.02.017

Quiroga MÁ, Román FJ, Catalán A, Rodríguez H, Ruiz J, Herranz M, Gómez-Abad M, Colom R (2011) Videogame performance (not always) requires intelligence. Int J Online Pedagog Course Des 1:18–32. https://doi.org/10.4018/ijopcd.2011070102

Quiroga MÁ, Escorial S, Román FJ, Morillo D, Jarabo A, Privado J, Hernández M, Gallego B, Colom R (2015) Can we reliably measure the general factor of intelligence (g) through commercial video games? Yes, we can! Intelligence 53:1–7. https://doi.org/10.1016/j.intell.2015.08.004

Quiroga MA, Diaz A, Román FJ, Privado J, Colom R (2019) Intelligence and video games: beyond “brain-games.” Intelligence 75:85–94. https://doi.org/10.1016/j.intell.2019.05.001

Quiroga MÁ, Colom R (2020) Intelligence and video games. In: Sternberg RJ (ed) The Cambridge handbook of intelligence, 2nd edn. Cambridge University Press, Cambridge, pp 626–656. https://doi.org/10.1017/9781108770422.027

Quiroga MA, Román FJ, De La Fuente J, Privado J, Colom R (2016) The measurement of intelligence in the XXI century using video games. Spanish J Psychol 19:e89. https://doi.org/10.1017/sjp.2016.84

Rabbitt P, Banerji N, Szymanski A (1989) Space fortress as an IQ test? Predictions of learning and of practised performance in a complex interactive video-game. Acta Psychol 71:243–257. https://doi.org/10.1016/0001-6918(89)90011-5

Robert Half (2017) Stand-out skills for today’s IT grads. Accessed 15 May 2022 https://www.roberthalf.com/blog/salaries-and-skills/stand-out-skills-for-todays-it-grads

Rubenfire A (2014) Can ‘World of Warcraft’ game skills help land a job? The Wall Street Journal. Accessed 15 May 2022 https://www.wsj.com/articles/can-warcraft-game-skills-help-land-a-job-1407885660

Sanchez DR, Weiner E, Van Zelderen A (2022) Virtual reality assessments (VRAs): exploring the reliability and validity of evaluations in VR. Int J Sel Assess 30:103–125. https://doi.org/10.1111/ijsa.12369

Schmidt FL, Oh I-S, Shaffer JA (2016) The validity and utility of selection methods in personnel psychology: practical and theoretical implications of 100 years of research findings. Working paper. Accessed 15 May 2022 https://www.researchgate.net/publication/309203898_The_Validity_and_Utility_of_Selection_Methods_in_Personnel_Psychology_Practical_and_Theoretical_Implications_of_100_Years_of_Research_Findings

Schneider WJ, McGrew KS (2018) The Cattell-Horn-Carroll theory of cognitive abilities. In: Flanagan DP, McDonough EM (eds) Contemporary intellectual assessment: theories, tests, and issues, 4th edn. The Guilford Press, New York, pp 73–162

Shute VJ, Ventura M, Ke F (2015) The power of play: the effects of Portal 2 and Lumosity on cognitive and noncognitive skills. Comput Educ 80:58–67. https://doi.org/10.1016/j.compedu.2014.08.013

Shute VJ, Wang L, Greiff S, Zhao W, Moore G (2016) Measuring problem solving skills via stealth assessment in an engaging video game. Comput Hum Behav 63:106–117. https://doi.org/10.1016/j.chb.2016.05.047

Shute VJ, Rahimi S (2021) Stealth assessment of creativity in a physics video game. Comput Hum Behav 116:106647. https://doi.org/10.1016/j.chb.2020.106647

Simons A, Wohlgenannt I, Weinmann M, Fleischer S (2021) Good gamers, good managers? A proof-of-concept study with Sid Meier’s Civilization. Rev Manag Sci 15:957–990. https://doi.org/10.1007/s11846-020-00378-0

Sourmelis T, Ioannou A, Zaphiris P (2017) Massively multiplayer online role playing games (MMORPGs) and the 21st century skills: a comprehensive research review from 2010 to 2016. Comput Hum Behav 67:41–48. https://doi.org/10.1016/j.chb.2016.10.020

Süß H-M, Oberauer K, Wittmann WW, Wilhelm O, Schulze R (2002) Working-memory capacity explains reasoning ability–and a little bit more. Intelligence 30:261–288. https://doi.org/10.1016/S0160-2896(01)00100-3

Süß H-M, Beauducel A (2005) Faceted models of intelligence. In: Wilhelm O, Engle RW (eds) Handbook of understanding and measuring intelligence. Sage Publications, Thousand Oaks, pp 313–332. https://doi.org/10.4135/9781452233529.n18

Tian N, Lopes P, Boulic R (2022) A review of cybersickness in head-mounted displays: raising attention to individual susceptibility. Virtual Real 26:1409–1441. https://doi.org/10.1007/s10055-022-00638-2

Unsworth N, Redick TS, McMillan BD, Hambrick DZ, Kane MJ, Engle RW (2015) Is playing video games related to cognitive abilities? Psychol Sci 26:759–774. https://doi.org/10.1177/0956797615570367

Valmaggia L (2017) The use of virtual reality in psychosis research and treatment. World Psychiatry 16:246–247. https://doi.org/10.1002/wps.20443

van der Linden WJ (2006) A lognormal model for response times on test items. J Educ Behav Stat 31:181–204. https://doi.org/10.3102/10769986031002181

Vichitvanichphong S, Talaei-Khoei A, Kerr D, Ghapanchi AH, Scott-Parker B (2016) Good old gamers, good drivers: results from a correlational experiment among older drivers. Australas J Inf Syst 20:1–21. https://doi.org/10.3127/ajis.v20i0.1110

Wais PE, Arioli M, Anguera-Singla R, Gazzaley A (2021) Virtual reality video game improves high-fidelity memory in older adults. Sci Rep 11:2552. https://doi.org/10.1038/s41598-021-82109-3

Wan B, Wang Q, Su K, Dong C, Song W, Pang M (2021) Measuring the impacts of virtual reality games on cognitive ability using EEG signals and game performance data. IEEE Access 9:18326–18344. https://doi.org/10.1109/ACCESS.2021.3053621

Weidner N, Short E (2019) Playing with a purpose: the role of games and gamification in modern assessment practices. In: Landers RN (ed) The Cambridge handbook of technology and employee behavior. Cambridge University Press, Cambridge, pp 151–178. https://doi.org/10.1017/9781108649636.008

Weiner EJ, Sanchez DR (2020) Cognitive ability in virtual reality: validity evidence for VR game-based assessments. Int J Sel Assess 28:215–235. https://doi.org/10.1111/ijsa.12295

Weis S, Süß H-M (2007) Reviving the search for social intelligence—a multitrait-multimethod study of its structure and construct validity. Personal Individ Differ 42:3–14. https://doi.org/10.1016/j.paid.2006.04.027

Wohlgenannt I, Simons A, Stieglitz S (2020) Virtual reality. Bus Inf Syst Eng 62:455–461. https://doi.org/10.1007/s12599-020-00658-9

Wu FY, Mulfinger E, Alexander L III, Sinclair AL, McCloy RA, Oswald FL (2022) Individual differences at play: an investigation into measuring Big Five personality facets with game-based assessments. Int J Sel Assess 30:62–81. https://doi.org/10.1111/ijsa.12360

Youtube (2016) Job Simulator—Gourmet Chef [no commentary]. Uploaded by Delco. Accessed 15 May 2022 https://www.youtube.com/watch?v=YtWCne2ilzE

Zhang H (2017) Head-mounted display-based intuitive virtual reality training system for the mining industry. Int J Min Sci Technol 27:717–722. https://doi.org/10.1016/j.ijmst.2017.05.005

Funding

Open access funding provided by University of Liechtenstein. Financial support was received from the Liechtenstein National Research Fund under grant number wi-1–16.

Author information

Contributions

AS and IW developed the study design, prepared the material, collected the data, and supported data analysis; SZ supported the study as a psychologist, contributed to study design, and provided instructions on how to supervise and evaluate the intelligence tests; MW contributed to the study design, analyzed the data, and summarized the results; JS computed the participants’ playing times and supported data analysis; JvB contributed ideas, comments, and revisions, especially regarding practical implications, and served as corresponding author. All authors commented on previous versions of the manuscript and read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or nonfinancial interests to disclose.

Ethics approval

Because the authors’ academic community is Information Systems, the study adhered to the Association for Information Systems (AIS) Code of Research Conduct. The research commission of the authors’ university, which has the responsibilities of a research ethics committee, reviewed and approved the study before data collection began.

Consent to participate

All participants took part in the study voluntarily, provided written informed consent, and could quit the study at any time without giving a reason.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Simons, A., Wohlgenannt, I., Zelt, S. et al. Intelligence at play: game-based assessment using a virtual-reality application. Virtual Reality 27, 1827–1843 (2023). https://doi.org/10.1007/s10055-023-00752-9

