Abstract

Virtual reality (VR) has emerged as a valid addition to conventional therapy in rehabilitation and sports medicine. This has enabled the development of novel and affordable rehabilitation strategies. However, before VR devices can be used in these situations, they must accurately capture the range of motion of the body segment on which they are mounted. This study aims to establish the accuracy of the Oculus Touch v2 controller when used to measure the elbow’s motion in the sagittal plane. The controller is benchmarked against an inertial sensor (ENLAZA\(^{\mathrm{TM}}\)), which has already been validated as a reliable measurement device. We have developed a virtual environment that matches both the Oculus Touch v2 and the inertial sensor orientations using a digital goniometer. We have also collected the orientation measurements given by each system for a set of 17 static angles that cover the full range of normal elbow flexion and hyperextension motion, in 10° intervals from −10° (hyperextension) to 150° (flexion). We have applied the inter-rater reliability test to assess the level of agreement between the measurements of these devices, obtaining a value of 0.999, with a 95% confidence interval ranging from 0.996 to 1.000. By analyzing the angle measurement outcomes, we have found that the accuracy degrades at flexion values between 70° and 110°, peaking at 90°. The accuracy of the Oculus Touch v2 when used to capture the elbow’s flexion motion is good enough for the development of VR rehabilitation applications based on it. However, the flaws in the accuracy that have been revealed in this experimental study must be considered when designing such applications.


1 Introduction

Recent technological developments can be applied to sports medicine and rehabilitation, helping with both patient assessment and the execution of rehabilitation programs. Wearable sensors are especially popular because they can be used by a wide range of people, from patients with mobility problems to athletes who are recovering from an injury. One of their more important features is the ability to take range of motion (ROM) measurements of body joints as accurately as goniometers, which are currently the most popular instrument in rehabilitation clinics (Costa et al. 2020). These quantitative measurements of human movement can be used to assess the presence of a motor disorder. In addition, wearable devices can be employed in a free-living environment, expanding their use cases in rehabilitation (Porciuncula et al. 2018). Finally, they also provide an opportunity for the collection of large amounts of data from many patients, which allows the growth of personalized and precision medicine (Dhawan 2016). Many wearable sensors use inertial measurement units (IMUs), which contain a gyroscope, an accelerometer and a magnetometer. IMUs are small enough for the devices that employ them to provide systematic, objective and reliable monitoring of human movement without hindering it or imposing space limitations (Camomilla et al. 2018).

Another emerging technology that can be used for rehabilitation purposes is immersive virtual reality (VR), usually through headsets that can be combined with other devices, such as omni-directional treadmills, special gloves (Jerald 2019) or controllers. VR’s ability to generate realistic images, sounds and other sensations, and, through them, replicate a real environment or create an imaginary world, has proven to be very useful in this field. VR is currently gaining traction in many areas, such as teaching or health, and it is expected to grow even more in the near future (Checa and Bustillo 2020). Concerning rehabilitation, VR has emerged as a valid addition to conventional therapy, enriching new and low-cost rehabilitation strategies (Laver et al. 2017).

VR can provide a positive learning experience, while being motivating and engaging (Maillot et al. 2012). This is especially important in rehabilitation, which can be a tough, prolonged and exhausting experience that patients might feel reluctant to proceed with. Any tool that is able to alleviate this problem is, therefore, particularly useful. Another advantage of VR-based therapy is the possibility of adapting the tasks to each particular patient’s needs. Virtual environments can be easily customized, so tasks tailored to the patient’s cognitive and physical impairments can be designed, maximizing brain reorganization and reactivating the areas of the brain involved in motor planning, learning and execution (Kim et al. 2005; Boyd and Winstein 2001), while maintaining engagement (Maillot et al. 2012). These modifications would be considerably more difficult and expensive to achieve with real environments.

There are few published studies that use immersive VR for rehabilitation therapies; most of the existing ones are non-immersive experiences in which the patient can see their own body on a standard computer screen (Costa et al. 2020; Cui et al. 2019; Shum et al. 2019; Jost et al. 2021; Postolache et al. 2019; Oña et al. 2018; Borresen et al. 2019; Lee 2017). However, there are some that could be considered immersive VR, such as CAREN (Computer Assisted Rehabilitation Environment), which is a CAVE-like (Cruz-Neira et al. 1992) solution designed for balance assessment and therapy, gait analysis, adaptability, and motor control, among others (CAREN 2021). This system integrates an instrumented dual-belt treadmill with a six degrees of freedom (DOF) motion base and a 3D motion capture system inside an immersive environment. Some studies (De Luca et al. 2020; Rachitskaya et al. 2020; Calabrò et al. 2020; Kalron et al. 2016) have pointed out that this device may be a useful tool in rehabilitation therapies, which encourages the idea that VR could be a useful addition to the therapist’s toolset.

1.1 Use of IMUs in immersive applications

Several studies have successfully employed IMUs with immersive applications to measure shoulder joint (Cui et al. 2019) or upper arm and forearm mobility (Kim et al. 2013). These approaches have shown their potential to reduce the human resources and time required to assess the patient’s joint mobility in comparison with traditional methods. In addition, these solutions could lead to the development of personalized training methods for upper extremity rehabilitation. There are similar systems that integrate more inertial sensors for motion capture of all body segments (Brandão et al. 2020; Fitzgerald et al. 2007). These solutions allow the patient to receive real-time visual stimuli from a virtual environment, and they provide the therapist with information about the movements performed during therapy. More complex developments (Patil et al. 2020) have proposed pose-tracking systems for virtual interaction by fusing multiple 3D light sensors and IMUs. In that work, the inertial sensors are used to capture the orientation of each body segment, from which the position and orientation of every joint of the body are estimated. This setup enables a real-time 3D avatar reconstruction. The accuracy results of that study show that the solution is comparable to state-of-the-art pose-tracking systems.

1.2 Position and orientation trackers in virtual reality

Motion tracking technologies can be combined with VR, which extends the innate tracking capabilities of this technology to body parts other than the head. This enables rehabilitation therapies to take advantage of some VR features (e.g., immersion, accurate head tracking), while collecting any additional physiological parameters that might be relevant. The more advanced VR Head-Mounted Displays (HMDs) that are currently available on the consumer market are supported by advanced six DOF tracking and are usually based on embedded infrared systems, as is the case with Oculus Rift\(^{\mathrm{TM}}\) (Farahani et al. 2016) or the Lighthouse Stations (Dempsey 2016), which are typically used with HTC Vive\(^{\mathrm{TM}}\) and other SteamVR-based headsets. These systems provide very precise tracking of both position and orientation (Niehorster et al. 2017). The HTC Vive\(^{\mathrm{TM}}\) tracking system and the WorldViz\(^{\mathrm{TM}}\) Precision Position Tracking System have been compared (Niehorster et al. 2017) in terms of accuracy and latency, concluding that the RMS in orientation was less than 0.0113° for the former and less than 0.0053° for the latter for all the rotations. An accuracy analysis of the position and orientation of the HTC Vive\(^{\mathrm{TM}}\) controllers and trackers (Spitzley and Karduna 2019) has concluded that the mean angular errors for both devices were less than 0.4°. In the same domain of tracking technologies for VR is the Antilatency\(^{\mathrm{TM}}\) system, which is a positional tracker designed to be used with a VR headset. It uses several trackers consisting of IMU sensors with real-time position correction based on optical data, which allows for full-body tracking (LLC 2021). However, despite its potentially high performance, no scientific studies have been found that use this system in the field of motion tracking for rehabilitation.

Despite the positive outcomes in position and orientation tracking of these systems, they still have to deal with two main problems: first, the reduced tracking areas limit the person’s movement space and activities; and second, the loss of tracking or changes in height measurements along the tracking space can cause incorrect measurements of the orientation of the device (Niehorster et al. 2017). There are several alternatives to this setup. For example, inside-out tracking uses infrared cameras that are mounted on the headset itself to scan the environment, but they are less accurate and are highly dependent on the environmental conditions (Passos and Jung 2020). Although most motion capture accuracy studies using trackers are not performed with inside-out tracking HMDs (Farahani et al. 2016; Dempsey 2016; Niehorster et al. 2017; Spitzley and Karduna 2019), two studies have focused on the positional and rotational accuracy of the Oculus Touch v1 controller. One of these studies (Shum et al. 2019) acquired static data samples from the device at different step sizes and at different points in a \(2.4\times 2.4\) m play space. The authors determined that the maximum positional accuracy error of the Oculus Touch was \(3.5\pm 2.5\) mm at the largest step size of 500 mm along the z-axis. The other study (Jost et al. 2021) evaluated the rotations in three orthogonal axes for rotation intervals of 90°. The authors found that the rotational accuracy of the system was \(0.34^{\circ }\pm 0.38^{\circ }\) for the HMD and \(1.13^{\circ }\pm 1.23^{\circ }\) for the controller. However, no study has examined the orientation accuracy for movements in the sagittal plane. In addition, thorough studies of the accuracy of every component of a VR system that tracks a part of the user’s body or an object that the user interacts with are (as has been stated in this section) still lacking. This is an important gap in our understanding of the development of VR-based rehabilitation solutions.

1.3 Aim

The main objective of this work is to validate the accuracy of the Oculus Touch v2 device (Oculus Quest 2 controller) when measuring the elbow’s motion in the sagittal plane. To achieve this, we used a wireless motion capture wearable device as a benchmark. This device, the ENLAZA\(^{\mathrm{TM}}\), is based on an inertial measurement unit (IMU) sensor and is already validated as a reliable measuring device for the elbow’s range of motion (ROM) (Costa et al. 2020). The previous validity and feasibility study of the use of an inertial sensor to measure elbow and wrist active ROM was a comparative test-retest study between this device and a standard goniometry system with 29 participants. The results revealed that the ROM measurements that were obtained for the elbow had similar values for both systems. The inertial sensor had better reliability when compared to the goniometer for elbow measurements. The intra-rater and inter-rater reliability ICC values ranged from 0.83 to 0.96 and from 0.94 to 0.97, respectively.

However, to be able to do this research, we must first fulfill the secondary objective of this work: to test the capability of an IMU sensor as a VR tracker in conjunction with a VR headset. This will enable us to confidently use the aforementioned IMU sensor as a proper benchmark for the Oculus Touch v2. In immersive applications, VR trackers should fulfill strict accuracy and latency requirements to induce the user’s perception of ownership over a virtual body (Banakou et al. 2013). Concerning latency, the existing literature has pointed out that a high delay between the time that a physical movement is performed and an output image is rendered on the HMD can decrease the user’s sense of immersion (Farahani et al. 2016). In fact, in VR, an end-to-end latency higher than 30 ms will break the sense of agency and body ownership (Raaen and Kjellmo 2015). Consequently, we must ensure that the IMU sensor respects this limit.

To achieve both aims, we have developed a VR scenario for the Oculus Quest\(^{\mathrm{TM}}\) 2 that uses both an Oculus Touch v2 and the IMU sensor to track the elbow flexion–extension movement.

2 Methods

2.1 Approach

We have devised the present setup with the aim of establishing a benchmark to test the orientation accuracy of Oculus Touch v2 controllers for VR applications, focusing on its application to the elbow’s ROM. This setup has also been designed to integrate the IMU sensor’s measurements, which will enable accuracy measurements of both devices (Oculus Touch v2 and IMU sensor) to be taken and compared.

The wireless motion capture system is composed of three fundamental elements: the inertial sensor, a Raspberry Pi-based computer and an Oculus Quest 2 device. We have implemented the communication between the three blocks, which has resulted in a low-latency and accurate position tracking method.

2.2 Materials

2.2.1 Inertial sensors

This approach uses an inertial sensor to capture the flexion–extension movement of the forearm. This device contains an IMU module that integrates a three-axis accelerometer, a three-axis gyroscope and a three-axis compass. The IMU sensor also includes a microcontroller unit (MCU) (8-bit AVR, 8 MHz, 32 KBytes of flash memory) that is in charge of acquiring the IMU data; computing the Direction Cosine Matrix (DCM) using the 3D accelerometer, gyroscope and magnetometer data following a published (Premerlani and Bizard 2009) algorithm; and interfacing with a computer unit via Bluetooth.

2.2.2 Computer unit

The computer unit is a Raspberry Pi\(^{\mathrm{TM}}\), which is a low-cost and small ARM-based computer that supports WiFi and Bluetooth wireless communications. This computing core handles the reception of data from the IMU sensor via Bluetooth, the calculation of the Euler angles from such data, and the sending of these angles via WiFi to a VR device using UDP (User Datagram Protocol), which is chosen to reduce latency.

2.2.3 Virtual reality device

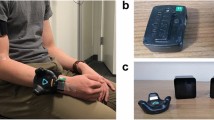

The Oculus Quest\(^{\mathrm{TM}}\) 2 VR device is used. This is a VR HMD with six DOF for both the headset itself and two Oculus Touch v2 controllers. The Oculus Touch v2 is a handheld unit that contains a set of infrared LEDs, which allow it to be tracked by the cameras that are present in the Oculus Quest\(^{\mathrm{TM}}\) 2 headset (Constine 2015), and an IMU sensor, which determines the controller’s own orientation.

2.3 System design

The DCM information from the IMU sensor is transmitted via Bluetooth to the Raspberry Pi, which transforms the DCM into the angle data that will be used in the VR application that runs on the Oculus Quest 2. Once transformed, the angle data are transmitted via WiFi using UDP to the VR headset. The application uses these data to move a virtual arm that replicates the user’s real arm in VR (see Fig. 1). This movement will also be registered by the Oculus Touch v2. A digital goniometer will be used for the static orientation of the devices.

Bluetooth communication between the sensor and the Raspberry Pi is established using the Serial Port Profile (SPP). This enables the angular orientation data to be sent in a binary format.
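As a minimal sketch of how a binary frame could be decoded on the Raspberry Pi, the following example unpacks nine 32-bit floats into a 3×3 matrix. The frame layout (little-endian float32, row-major order), the `DCM_FRAME` name and the `parse_dcm_frame` helper are assumptions made for illustration; the actual format sent by the sensor is not described in the text.

```python
import struct

# Hypothetical frame layout (not specified in the text): nine little-endian
# float32 values holding the 3x3 DCM in row-major order.
DCM_FRAME = struct.Struct("<9f")


def parse_dcm_frame(payload):
    """Unpack one binary frame into a 3x3 matrix (list of rows)."""
    if len(payload) != DCM_FRAME.size:
        raise ValueError(f"expected {DCM_FRAME.size} bytes, got {len(payload)}")
    values = DCM_FRAME.unpack(payload)
    return [list(values[i:i + 3]) for i in (0, 3, 6)]


# Round trip with an identity matrix standing in for real sensor data.
identity = [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]
frame = DCM_FRAME.pack(*identity)
print(parse_dcm_frame(frame))
```

A fixed-size binary frame keeps each sample small, which matters on a serial link that carries 50 samples per second.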

The WiFi communication between the Raspberry Pi and the Oculus Quest\(^{\mathrm{TM}}\) 2 is implemented using the UDP protocol with a static IP-port endpoint to which data will be sent. We used UDP communication to prioritize low-latency over reliability because the system is tolerant to the loss of some datagrams (Rind et al. 2006).
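The fire-and-forget nature of this link can be illustrated with a short sketch. It sends one datagram containing three angles and reads it back over the loopback interface. The packet layout (three little-endian float32 values) and the function names are hypothetical; in the real setup the endpoint would be the static IP and port of the headset.

```python
import socket
import struct

# Hypothetical packet layout: three little-endian float32 Euler angles (degrees).
ANGLES = struct.Struct("<3f")


def send_angles(sock, endpoint, flexion, abduction, rotation):
    """Send one datagram; no acknowledgement or retransmission is attempted."""
    sock.sendto(ANGLES.pack(flexion, abduction, rotation), endpoint)


def receive_angles(sock):
    """Block until one datagram arrives and unpack it."""
    payload, _sender = sock.recvfrom(ANGLES.size)
    return ANGLES.unpack(payload)


# Loopback demonstration only.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
receiver.settimeout(2.0)
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
send_angles(sender, receiver.getsockname(), 90.0, 0.0, 0.0)
received = receive_angles(receiver)
sender.close()
receiver.close()
print(received)
```

Because each datagram carries a complete orientation sample, a lost packet only means that the virtual arm is updated one sample later.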

2.4 Data processing

From the first DCM received, which corresponds to the user’s initial (neutral) position, we calculate a calibration matrix that defines a new reference frame. Once the calibration matrix is known, we are able to calculate the transformation matrix for each subsequent DCM received. Next, we can obtain the rotation angle of the reference system, which is equivalent to the rotation measured by the sensor.

This process uses the following calculations: \(R_{\mathrm{cal}}\) denotes the calibration matrix, \(R_{\mathrm{s}}\) denotes the data sent by the IMU (in relation to the reference frame), and \(R_{\mathrm{t}}\) denotes the transformation matrix. We calculate the movement in relation to the calibration using the following equation:

\[R_{b} = T \times R_{a}\]

where \(R_{a}\) denotes the initial matrix, representing the initial position and orientation; T denotes the transformation matrix, representing the transformation applied to the initial matrix (a rotation in this case); and \(R_{b}\) denotes the final matrix after the transformation, representing the position and orientation after the transformation. This corresponds in our case to: \(R_{\mathrm{s}} = R_{\mathrm{t}} \times R_{\mathrm{cal}}\), so we have that:

\[R_{\mathrm{t}} = R_{\mathrm{s}} \times R_{\mathrm{cal}}^{-1}\]

After calculating \(R_{\mathrm{t}}\), we normalize it to ensure that the system is orthonormal.
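The calibration and normalization steps can be sketched as follows. The example solves \(R_{\mathrm{s}} = R_{\mathrm{t}} \times R_{\mathrm{cal}}\) for \(R_{\mathrm{t}}\), using the fact that the inverse of a rotation matrix is its transpose. The text does not say which normalization method is used, so the Gram-Schmidt procedure on the rows shown here is an assumption, as are all function names.

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]


def relative_rotation(r_s, r_cal):
    """R_t = R_s * R_cal^-1; for a rotation matrix the inverse is the transpose."""
    return matmul(r_s, transpose(r_cal))


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def orthonormalize(m):
    """Gram-Schmidt on the first two rows; third row rebuilt as their cross product."""
    x = normalize(m[0])
    dot = sum(a * b for a, b in zip(x, m[1]))
    y = normalize([b - dot * a for a, b in zip(x, m[1])])
    z = [x[1] * y[2] - x[2] * y[1],
         x[2] * y[0] - x[0] * y[2],
         x[0] * y[1] - x[1] * y[0]]
    return [x, y, z]


def rot_x(deg):
    """Rotation about the X-axis, used here only to build test data."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


# Calibration pose at 20 degrees, current pose at 65 degrees: R_t is a 45 degree rotation.
r_t = orthonormalize(relative_rotation(rot_x(65), rot_x(20)))
print(round(math.degrees(math.atan2(r_t[2][1], r_t[1][1])), 6))
```

Re-orthonormalizing after the product removes the small scale and skew errors that accumulate from finite-precision arithmetic, so that the later angle extraction operates on a valid rotation.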

Finally, we convert \(R_{\mathrm{t}}\) to Euler angles, using the formulas of the ZXY convention, as proposed by the International Society of Biomechanics (ISB) (Wu et al. 2005). The sequence ZXY can be intuitively interpreted as the individual Euler rotations. This means that the first Euler angle represents a flexion of the arm, the second represents an abduction of the arm, and the third represents an internal/external rotation of the arm (Campeau-Lecours et al. 2020). These data are sent via UDP to replicate the movement virtually.
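As an illustration of the conversion, the sketch below extracts ZXY Euler angles from a rotation matrix. It assumes that the matrix is composed as \(R = R_z(\alpha) R_x(\beta) R_y(\gamma)\) with intrinsic rotations; the exact formulas used in the study are not given in the text, so this composition and the function names are assumptions. Which anatomical movement each axis represents depends on how the sensor frame is aligned with the arm.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rot_z(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rot_x(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def euler_zxy(r):
    """Return (z, x, y) angles in degrees for R = Rz(z) * Rx(x) * Ry(y).

    The middle angle is limited to [-90, 90]; at exactly +/-90 degrees the
    other two angles are not uniquely defined (gimbal lock) and this sketch
    does not handle that case.
    """
    x = math.asin(max(-1.0, min(1.0, r[2][1])))
    z = math.atan2(-r[0][1], r[1][1])
    y = math.atan2(-r[2][0], r[2][2])
    return tuple(math.degrees(v) for v in (z, x, y))


# Build a matrix from known angles and recover them.
r = matmul(matmul(rot_z(30.0), rot_x(10.0)), rot_y(-20.0))
angles = euler_zxy(r)
print([round(a, 6) for a in angles])
```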

2.5 Latency analysis

To measure the total delay of the developed wireless motion capture system, we implemented a time measurement tool in all processes of the data transmission thread. The latency of the system represents the total delay between the instant that the movement occurs and the time that it is displayed on the Oculus Quest\(^{\mathrm{TM}}\) 2 HMD. Applying the following approach, we expected to find that the end-to-end latency of this setup stays below 30 ms (Raaen and Kjellmo 2015) to avoid breaking the sense of body ownership in VR experiences.

The simplest way to record these time delays is to stamp each data packet with a standardized timestamp between events. In this way, when the packet is received by the HMD, the timing data can be stored for later analysis. To support the latency analysis, we store two latency measurements: the Raspberry Pi data-processing timestamp for each sample and the elapsed time of the communication process (UDP), which is the time taken to read each incoming message, recorded over 1 min. From these results, the time averages and standard deviations, together with the maximum and minimum values, are calculated.

Because the inertial sensor has a fixed sampling rate of 50 Hz, a new sample is acquired every 20 ms. The times at which each piece of data is received are then captured to determine the delay with respect to this sampling period, because an interval of 20 ms between consecutive samples arriving at the VR HMD would be the expected value in a system with no latency. This delay reflects the time cost of the three processes: the Bluetooth data transmission between the IMU sensor and the Raspberry Pi, the computation of the Euler angles done by the Raspberry Pi and the UDP socket communication process.
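One way to read this comparison is sketched below: the intervals between consecutive arrival times are computed, the 20 ms sampling period is subtracted, and the mean and standard deviation of the remainder are reported. The arrival times are made-up values for illustration only, not measured data, and the `latency_stats` helper is hypothetical.

```python
import statistics

SAMPLING_PERIOD_MS = 20.0  # 50 Hz sensor, as stated in the text


def latency_stats(arrival_times_ms):
    """Return (mean, standard deviation) of the delay beyond the sampling period."""
    intervals = [b - a for a, b in zip(arrival_times_ms, arrival_times_ms[1:])]
    excess = [dt - SAMPLING_PERIOD_MS for dt in intervals]
    return statistics.mean(excess), statistics.stdev(excess)


# Made-up arrival times (ms); a real run would use timestamps logged on the HMD.
arrivals = [0.0, 24.0, 49.0, 72.0, 98.0]
mean_excess, sd_excess = latency_stats(arrivals)
print(mean_excess, round(sd_excess, 3))
```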

Table 1 shows the time costs of end-to-end communication, the IMU sampling rate and the difference between them. This results in the end-to-end latency. As can be seen, the latency stays inside the values recommended by the literature.

2.6 Data analysis

To analyze the accuracy of the Oculus Touch v2 controller as a tracker and an elbow ROM measurement device, we compare it with the IMU sensor using a digital goniometer to set the devices in different orientations. The ENLAZA\(^{\mathrm{TM}}\), the particular IMU sensor that we used, has already been validated for elbow ROM (Costa et al. 2020) and will be used as the baseline against which the Oculus Touch v2 controller is compared. Accuracy has been assessed by comparing the means and standard deviations of the pairs of measurements.

To measure the reliability of this validation procedure, we calculated the ICC (95% confidence interval [CI]) using the reliability analysis of the IBM SPSS Statistics 27 software suite. In this study, the ICC reflects the variation in the measurements made by the devices in the same setup under the same conditions. We took the design of Koo and Li (2016) as a reference and applied the two-way mixed-effects model with the absolute agreement definition. We did this because this is an inter-rater reliability study for two different devices, and agreement is expected between their averaged measurements.
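For readers without access to SPSS, the absolute-agreement ICC can be computed from the two-way ANOVA mean squares, as in the following minimal sketch. It returns both the single-measure and the average-measure forms, because the text does not state which one was reported. The function name and the three-row data set are illustrative assumptions and do not come from the study.

```python
def icc_absolute_agreement(rows):
    """Two-way, absolute-agreement ICC for n targets (rows) and k raters (columns).

    Returns (single_measure, average_measure).
    """
    n, k = len(rows), len(rows[0])
    grand = sum(sum(r) for r in rows) / (n * k)
    row_means = [sum(r) / k for r in rows]
    col_means = [sum(r[j] for r in rows) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    ss_error = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)               # between-target mean square
    msc = ss_cols / (k - 1)               # between-rater mean square
    mse = ss_error / ((n - 1) * (k - 1))  # residual mean square

    single = (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)
    average = (msr - mse) / (msr + (msc - mse) / n)
    return single, average


# Illustrative data: the second "device" reads exactly 1 unit higher than the first.
single, average = icc_absolute_agreement([[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]])
print(round(single, 4), round(average, 4))
```

In this toy data set the two columns are perfectly consistent but offset by a constant, so the absolute-agreement ICC is below 1; this is the property that makes the absolute agreement definition suitable for comparing two measurement devices.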

2.7 Protocol

We assessed the accuracy of the orientation measures reported by the Oculus Touch v2 at each orientation. Specifically, for accuracy, we looked at whether the roll orientation of the Oculus was stable across space. The orientation of the controllers is referenced with respect to their local coordinate system and not with respect to the external HMD coordinate system. The Oculus Touch v2 bases its measurements on a reference plane that is aligned with its button pad. Therefore, for initial orientation (0, 0, 0), the aim axis is tilted 40° with respect to the horizontal plane of the grip (see Figs. 2 and 3).

To compare static orientation measurements between the Oculus Touch v2 controller and the IMU sensor, both devices were attached to the movable segment of the digital goniometer Silverline\(^{\mathrm{TM}}\). The Oculus Quest 2 headset was located on a stable table near the goniometer to record the measurement data. The system’s setup protocol was run before each data collection session.

The 3D environment built in Unity has two 3D representations of the goniometer (the first for the IMU sensor and the second for the Oculus Touch v2), consisting of two segments and two joints. The first joint enables the rotation of the whole object, and the other joint enables the rotation of the last segment around the X-axis, replicating the real goniometer’s rotation (see Fig. 4). When the IMU sensor and the Oculus Touch v2 rotate, their angles of rotation are replicated in the corresponding Unity object’s joints, to translate the real movement to the VR environment. This allows the data from both devices to be simultaneously stored and represented in the virtual environment.

The angles measured by the IMU sensor and the controller are taken for each set angle that is assessed with the goniometer. This allows us to later evaluate the accuracy of both devices in characterizing this range of angular values. According to the existing literature on the elbow joint normal ROM values (Soucie et al. 2011), healthy men and women aged 9–69 years can achieve a flexion value ranging between 143.5° and 150.0° and a hyperextension value ranging between \(-\,0.8^{\circ }\) and \(-\,6.4^{\circ }\) (see Fig. 5). Based on these data, we captured the expected normal ROM angles: from 350° (equivalent to \(-\,10^{\circ }\) in hyperextension) to 150°, in 10° intervals, which are shown in the results section (Table 2).
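The set of test angles and the equivalence between 350° and \(-\,10^{\circ }\) can be made explicit with a short sketch. The helper name `to_signed` is hypothetical.

```python
def to_signed(angle_deg):
    """Map an angle in degrees to the range (-180, 180], so that 350 becomes -10."""
    wrapped = angle_deg % 360.0
    return wrapped - 360.0 if wrapped > 180.0 else wrapped


# Goniometer readings from 350 (hyperextension) through 0 up to 150, in 10 degree steps.
test_angles = [a % 360 for a in range(-10, 151, 10)]
signed = [to_signed(a) for a in test_angles]
print(len(test_angles), test_angles[0], signed[0], signed[-1])
```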

3 Results

Table 3 shows the results for the angle measurements performed with the IMU sensor and the Oculus Touch v2, respectively, with both devices fixed to the goniometer. To better characterize the measured angle, the average value of three repetitions was used for each angle.

The difference between the mean value of each device and the reference value (goniometer) is shown. This shows the precision achieved by each system. By observing the descriptive results of the measurements, it can be assumed that the average value replicates the reference value because of the narrow dispersion of the samples (standard deviation) for each angle. The standard deviation outcomes of the IMU sensor ranged from 0.127° to 3.307°, and the Oculus Touch v2 standard deviation outcomes ranged from 0.342° to 1.472°.

The standard error of the mean (SEM) was calculated to measure the dispersion of sample means around the population mean. The average standard error of the IMU sensor outcomes is 0.307°, with a minimum value of 0.076° and a maximum value of 1.909°. Meanwhile, the average standard error of the Oculus Touch v2 outcomes is 0.449°, with a minimum value of 0.197° and a maximum value of 0.849°. The degree of agreement between the measurements of the devices was statistically estimated by applying the inter-rater reliability test. We obtained an ICC of 0.999, with values ranging from 0.996 to 1.000 for a 95% confidence interval.
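The relation between standard deviation and standard error can be sketched as follows, assuming the usual definition \({\text{SEM}} = {\text{SD}}/\sqrt{n}\) with \(n = 3\) repetitions per angle. The text does not state the formula explicitly, so this is an assumption; it is consistent with the largest IMU values reported above (a standard deviation of 3.307° and a standard error of 1.909°).

```python
import math


def standard_error(sd, n):
    """Standard error of the mean for a sample standard deviation and sample size."""
    return sd / math.sqrt(n)


# Largest IMU standard deviation reported in the text, three repetitions assumed.
print(round(standard_error(3.307, 3), 3))
```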

To better illustrate the low dispersion of the results, the mean values and their corresponding standard deviations for each device are graphed in Figs. 6 and 7. The data for 350° have been excluded to preserve the scale of the graph (Fig. 6), but their values are similar to those depicted.

4 Discussion

The present study aimed to compare the static rotation measurements between an Oculus Touch v2 controller and the IMU sensor. These measurements were meant to establish the former’s accuracy in measuring orientation for the sagittal plane roll rotation, with the aim of tracking kinematic data for an immersive VR application.

An ICC value of 0.999 was obtained for the reliability measurement (Table 4), with a 95% CI that ranged from 0.996 to 1.000, indicating excellent reliability according to the existing literature (Koo and Li 2016). In addition, the F-test value (with true value 0) that we obtained is 872.322, with a statistical significance of \(p\le 0.001\). Based on the ICC results, we proceed to discuss the results of each device in more detail for each angle range. For the IMU sensor outcomes, the standard deviation results for each measured angle show low dispersion. However, for the Oculus Touch v2 outcomes, higher standard deviation values are observed in most cases. The angles reported by the Oculus Touch v2 between the static angles of 70° and 110° deviate considerably from the goniometer reference values, as shown in the mean difference between goniometer and Oculus Touch v2 values in Table 2. The average SEM value of the IMU sensor was 0.307°. In contrast, the Oculus Touch v2 showed an average SEM value of 0.449° and a mean difference of 2.22°. The latter result is similar to the mean difference angle value of \(1.13^{\circ }\pm 1.23^{\circ }\) that was reported by Jost et al. (2021) in their quantitative analysis of the position and orientation of the Oculus Rift\(^{\mathrm{TM}}\) controllers, which correspond to the first version of the Oculus Touch. These authors already reported the consistent error in accuracy of the Oculus Touch (v1) for roll rotation in the z-axis. According to Ertzgaard et al. (2016) and Youdas et al. (1991), who compared different (inertial and optical) ROM assessment systems, small systematic errors were detected in all planes of elbow motion, ranging from 1.2° to 1.3°. Meanwhile, Brodie et al. (2008) investigated the accuracy of IMUs from XSens Technologies (set as a gold standard) and obtained an RMS error of between 0.8 and 1.3. Then, assuming that all sensing systems can have an average systematic measurement error of around 1.3° (Costa et al. 2020; Youdas et al. 1991; Brodie et al. 2008), values that are below or close to this threshold over the entire range of measurement could be considered acceptably accurate. In this study, it is evident that the SEM values of the Oculus Touch v2 are not constant over the whole physiological range of motion of the elbow flexion–extension because its accuracy fluctuates considerably in the identified zone from 80° to 110°.

Besides the technical demands on accuracy, it is also essential to consider objective accuracy values from a clinical point of view. To do so, it is first worth introducing the concept of “minimal detectable change” (MDC) (Fernández Serrano et al. 2014), which is the change in measurement that represents a real improvement in the patient’s clinical condition and which, ideally, is not caused by a measurement error. The MDC value is calculated based on the average SEM of the device (\(1.96*\sqrt{2*{\text{SEM}}}\)). Therefore, in the case of the Oculus Touch v2 (\({\text{SEM}} = 0.449^{\circ }\)), it should guarantee a measurement accuracy of at least 1.857° to be considered sufficiently accurate for clinical applications. As shown in Table 3, the controller meets the MDC value at every static angle in the whole range. In the case of the inertial system (\({\text{SEM}} = 0.307^{\circ }\)), its MDC value is 1.536°, which is respected throughout the whole range except for the value of 110°.
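The two MDC values quoted above can be reproduced by evaluating the expression exactly as it is written in the text. For comparison only, the sketch also evaluates the form that is more commonly found in the reliability literature, \(1.96*\sqrt{2}*{\text{SEM}}\), in which the SEM is outside the square root. That second function is an addition for illustration and is not part of the study; both function names are hypothetical.

```python
import math


def mdc_as_written(sem):
    """MDC with the SEM inside the square root, as the expression appears in the text."""
    return 1.96 * math.sqrt(2 * sem)


def mdc_conventional(sem):
    """Commonly used form with the SEM outside the square root (for comparison only)."""
    return 1.96 * math.sqrt(2) * sem


for name, sem in (("Oculus Touch v2", 0.449), ("IMU sensor", 0.307)):
    print(name, round(mdc_as_written(sem), 3), round(mdc_conventional(sem), 3))
```

The first value in each row matches the figures given in the text (1.857° and 1.536°); the second shows how much the threshold would change under the conventional definition.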

From these results, it is clear that in the range of angles close to 90°, the Oculus Touch v2 controller loses precision as we approach this particular angle. It is reasonable to speak of the existence of a blind area around the 90° angle, caused either by the hardware sensors or the software that processes their input. This blind area affects the sensitivity of the continuous roll rotation measurement. With that said, it can be considered that the Oculus Touch v2 enables the assessment of the elbow ROM in virtual rehabilitation applications because it accurately captures the extreme (0°, 150°) angle values of the ROM. However, its lack of precision around the 90° angle must be taken into account, so the specific VR application must be designed to properly position the user’s arm to avoid having to take accurate measurements around this blind area. Our characterization of the accuracy of the reported 3D orientation of the Oculus Touch v2 in the sagittal plane complements previous studies (Shum et al. 2019), which were focused on the measurement of the positional error of the Oculus Touch v1 controllers. As in the aforementioned study, we conclude that the Oculus Touch v2 controllers could provide a cost-effective alternative for tracking gross upper limb movements in rehabilitation applications.

From all these insights, we can conclude that using higher precision inertial sensors rather than the Oculus Touch would be strongly recommended for VR applications that are designed for elbow ROM rehabilitation. Thus, we recommend the use of devices based on IMUs, such as SlimeVR\(^{\mathrm{TM}}\) or DecaMove\(^{\mathrm{TM}}\) because they use a technology similar to the one featured in the ENLAZA\(^{\mathrm{TM}}\) sensor we used as a benchmark for this study. Regarding more robust motion capture systems, which combine optical and inertial systems, such as Antilatency\(^{\mathrm{TM}}\), Tundra Tracker\(^{\mathrm{TM}}\) or Indo Track\(^{\mathrm{TM}}\), it could be assumed that they would be even more precise. They also enable the capture of more segments, although their potential as full-body trackers for virtual rehabilitation applications should be studied in depth in the future.

We can also conclude that the Oculus Touch v2 tracking data can be used in applications that involve elbow movements covering the full range of motion, especially if most of the time is spent outside the known zone of lowered accuracy. Several movements that are validated for rehabilitation exercises (Shahmoradi et al. 2021) fulfill these criteria: touching the chin, mouth or opposite shoulder; extending the hand forward to reach objects; or holding an object and lifting it or taking it down. These movements require elbow flexion–extension, and their working range mostly avoids the lower accuracy zone of the Oculus Touch v2.
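The criterion of spending most of the time outside the zone of lowered accuracy can be checked for a candidate exercise with a short calculation. The following Python sketch is illustrative only: it assumes that the elbow flexion angle is sampled at a fixed rate, and it takes the 70° to 110° band reported in this study as the zone of lowered accuracy. The function name, the constants and the sample trajectory are hypothetical and are not part of the software used in the experiments.

```python
from typing import Sequence

# Band of reduced accuracy reported in this study (flexion angle, in degrees).
LOW_ACCURACY_MIN_DEG = 70.0
LOW_ACCURACY_MAX_DEG = 110.0


def fraction_in_low_accuracy_zone(
    flexion_deg: Sequence[float],
    low: float = LOW_ACCURACY_MIN_DEG,
    high: float = LOW_ACCURACY_MAX_DEG,
) -> float:
    """Return the share of samples whose flexion angle lies in [low, high].

    Assumes uniformly sampled angles, so the share of samples
    approximates the share of time spent inside the band.
    """
    if len(flexion_deg) == 0:
        raise ValueError("flexion_deg must contain at least one sample")
    inside = sum(1 for angle in flexion_deg if low <= angle <= high)
    return inside / len(flexion_deg)


# Hypothetical trajectory (illustrative values, not measured data).
reach = [0, 10, 20, 30, 40, 50, 60, 70, 80, 90]
print(f"Share of samples in the low-accuracy band: "
      f"{fraction_in_low_accuracy_zone(reach):.2f}")
```

A designer could compute this share for each planned exercise and favor those with a low value, or reposition the arm so that the working range moves away from 90°. The acceptable threshold is application-specific and is not established by this study.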

5 Conclusion

The Oculus Touch v2 controllers show a lack of accuracy in some specific orientations of the sagittal rotation plane. Nevertheless, the full ROM of the elbow joint can be properly described in a virtual environment using these devices. The user would only perceive small misalignments in orientation when the controller is close to the 90° angle. In VR applications where a few degrees of offset do not matter, the use of the Oculus Touch v2 may not be a major problem. However, in applications where highly accurate orientation measurement is a priority, an IMU sensor is much preferred, because the accuracy of the ENLAZA\(^{\mathrm{TM}}\), a device that uses this technology, has been confirmed to be very good, if not excellent.

Availability of data and materials

Not applicable.

References

Banakou D, Groten R, Slater M (2013) Illusory ownership of a virtual child body causes overestimation of object sizes and implicit attitude changes. Proc Natl Acad Sci 110(31):12846–12851

Borresen A, Wolfe C, Lin C-K, Tian Y, Raghuraman S, Nahrstedt K, Prabhakaran B, Annaswamy T (2019) Usability of an immersive augmented reality based telerehabilitation system with haptics (ARTESH) for synchronous remote musculoskeletal examination. Int J Telerehabilit 11(1):23

Boyd LA, Winstein CJ (2001) Implicit motor-sequence learning in humans following unilateral stroke: the impact of practice and explicit knowledge. Neurosci Lett 298(1):65–69

Brandão AF, Dias DRC, Reis STM, Cabreira CM, Frade MCM, Beltrame T, de Paiva Guimarães M, Castellano G (2020) Biomechanics sensor node for virtual reality: a wearable device applied to gait recovery for neurofunctional rehabilitation. In: International conference on computational science and its applications. Springer, pp 757–770

Brodie MA, Walmsley A, Page W (2008) Dynamic accuracy of inertial measurement units during simple pendulum motion. Comput Methods Biomech Biomed Eng 11(3):235–242. https://doi.org/10.1080/10255840802125526

Calabrò RS, Naro A, Cimino V, Buda A, Paladina G, Di Lorenzo G, Manuli A, Milardi D, Bramanti P, Bramanti A (2020) Improving motor performance in Parkinson disease: a preliminary study on the promising use of the computer assisted virtual reality environment (CAREN). Neurol Sci 41(4):933–941

Camomilla V, Bergamini E, Fantozzi S, Vannozzi G (2018) Trends supporting the in-field use of wearable inertial sensors for sport performance evaluation: a systematic review. Sensors 18(3):873

Campeau-Lecours A, Vu D-S, Schweitzer F, Roy J-S (2020) Alternative representation of the shoulder orientation based on the tilt-and-torsion angles. J Biomech Eng 142(7):074504

CAREN (2021) Why the CAREN. Motek Medical B.V., Shared https://www.motekmedical.com/solution/caren/

Checa D, Bustillo A (2020) A review of immersive virtual reality serious games to enhance learning and training. Multimedia Tools Appl 79(9):5501–5527

Constine J (2015) Oculus previews ‘Oculus Touch’ handheld motion-tracking haptic controllers. TechCrunch. https://techcrunch.com/2015/06/11/oculus-touch/

Costa V, Ramírez Ó, Otero A, Muñoz-García D, Uribarri S, Raya R (2020) Validity and reliability of inertial sensors for elbow and wrist range of motion assessment. PeerJ 8:9687

Cruz-Neira C, Sandin DJ, DeFanti TA, Kenyon RV, Hart JC (1992) The cave: audio visual experience automatic virtual environment. Commun ACM 35(6):64–73

Cui J, Yeh S-C, Lee S-H (2019) Wearable sensors integrated with virtual reality: a self-guided healthcare system measuring shoulder joint mobility for frozen shoulder. J Healthc Eng 2019:1–6

De Luca R, Portaro S, Le Cause M, De Domenico C, Maggio MG, Cristina Ferrera M, Giuffrè G, Bramanti A, Calabrò RS (2020) Cognitive rehabilitation using immersive virtual reality at young age: a case report on traumatic brain injury. Appl Neuropsychol Child 9(3):282–287

Dempsey P (2016) The teardown: HTC Vive VR headset. Eng Technol 11(7–8):80–81

Dhawan AP (2016) Collaborative paradigm of preventive, personalized, and precision medicine with point-of-care technologies. IEEE J Transl Eng Health Med 4:1–8

Ertzgaard P, Öhberg F, Gerdle B, Grip H (2016) A new way of assessing arm function in activity using kinematic Exposure Variation Analysis and portable inertial sensors–A validity study. Man Ther 21:241–249

Farahani N, Post R, Duboy J, Ahmed I, Kolowitz BJ, Krinchai T, Monaco SE, Fine JL, Hartman DJ, Pantanowitz L (2016) Exploring virtual reality technology and the oculus rift for the examination of digital pathology slides. J Pathol Inform 7:22

Fernández Serrano M, et al (2014) Cambio mínimo clínicamente relevante en la calidad de vida de pacientes con lumbalgia inespecífica

Fitzgerald D, Foody J, Kelly D, Ward T, Markham C, McDonald J, Caulfield B (2007) Development of a wearable motion capture suit and virtual reality biofeedback system for the instruction and analysis of sports rehabilitation exercises. In: 2007 29th Annual international conference of the IEEE engineering in medicine and biology society. IEEE, pp 4870–4874

Jerald J (2019) What is virtual reality. In: Jerald J (ed) The VR book: human-centered design for virtual reality, 1st edn. Acorn Publishing, Seoul, pp 39–43

Jost TA, Nelson B, Rylander J (2021) Quantitative analysis of the oculus rift s in controlled movement. Disabil Rehabil Assist Technol 16(6):632–636

Kalron A, Fonkatz I, Frid L, Baransi H, Achiron A (2016) The effect of balance training on postural control in people with multiple sclerosis using the CAREN virtual reality system: a pilot randomized controlled trial. J Neuroeng Rehabil 13(1):1–10

Kim GJ et al (2005) A SWOT analysis of the field of virtual reality rehabilitation and therapy. Presence 14(2):119–146

Kim J-N, Ryu M-H, Choi H-R, Yang Y-S, Kim T-K (2013) Development and functional evaluation of an upper extremity rehabilitation system based on inertial sensors and virtual reality. Int J Distrib Sens Netw 9(8):168078

Koo TK, Li MY (2016) A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropr Med 15(2):155–163

Laver KE, Lange B, George S, Deutsch JE, Saposnik G, Crotty M (2017) Virtual reality for stroke rehabilitation. Cochrane Database Syst Rev 11:1–180

Lee SA (2017) Towards the development of tele-rehabilitation system based on virtual reality environment and cloud service. Adv Sci Lett 23:12807–12811

LLC A (2021) Antilatency. Shared https://antilatency.com/

Maillot P, Perrot A, Hartley A (2012) Effects of interactive physical-activity video-game training on physical and cognitive function in older adults. Psychol Aging 27(3):589

Niehorster DC, Li L, Lappe M (2017) The accuracy and precision of position and orientation tracking in the HTC vive virtual reality system for scientific research. i-Perception. https://doi.org/10.1177/2041669517708205

Oña ED, Balaguer C, Jardón A (2018) Towards a framework for rehabilitation and assessment of upper limb motor function based on serious games. In: 2018 IEEE 6th International conference on serious games and applications for health (SeGAH). IEEE, pp 1–7

Passos DE, Jung B (2020) Measuring the accuracy of inside-out tracking in XR devices using a high-precision robotic arm. In: International conference on human–computer interaction. Springer, pp 19–26

Patil AK, Balasubramanyam A, Ryu JY, BN PK, Chakravarthi B, Chai YH (2020) Fusion of multiple lidars and inertial sensors for the real-time pose tracking of human motion. Sensors 20(18):5342

Porciuncula F, Roto AV, Kumar D, Davis I, Roy S, Walsh CJ, Awad LN (2018) Wearable movement sensors for rehabilitation: a focused review of technological and clinical advances. PM&R 10(9):220–232

Postolache O, Teixeira L, Cordeiro J, Lima L, Arriaga P, Rodrigues M, Girão P (2019) Tailored virtual reality for smart physiotherapy. In: 2019 11th International symposium on advanced topics in electrical engineering (ATEE). IEEE, pp 1–6

Premerlani W, Bizard P (2009) Direction cosine matrix IMU: Theory. Diy Drone, pp 13–15

Raaen K, Kjellmo I (2015) Measuring latency in virtual reality systems. In: International conference on entertainment computing. Springer, pp 457–462

Rachitskaya A, Yuan A, Davidson S, Streicher M, DeBenedictis M, Rosenfeldt AB, Alberts J (2020) Computer-assisted immersive visual rehabilitation in Argus II retinal prosthesis recipients. Ophthalmol Retina 4(6):613–619

Rind AR, Shahzad K, Qadir MA (2006) Evaluation and comparison of TCP and UDP over wired-cum-wireless LAN. In: 2006 IEEE international multitopic conference. IEEE, pp 337–342

Shahmoradi L, Almasi S, Ahmadi H, Bashiri A, Azadi T, Mirbagherie A, Ansari NN, Honarpishe R (2021) Virtual reality games for rehabilitation of upper extremities in stroke patients. J Bodyw Mov Ther 26:113–122

Shum LC, Valdés BA, Van der Loos HM (2019) Determining the accuracy of oculus touch controllers for motor rehabilitation applications using quantifiable upper limb kinematics: validation study. JMIR Biomed Eng 4(1):12291

Soucie J, Wang C, Forsyth A, Funk S, Denny M, Roach K, Boone D, Network HTC (2011) Range of motion measurements: reference values and a database for comparison studies. Haemophilia 17(3):500–507

Spitzley KA, Karduna AR (2019) Feasibility of using a fully immersive virtual reality system for kinematic data collection. J Biomech 87:172–176

Wu G, Van der Helm FC, Veeger HD, Makhsous M, Van Roy P, Anglin C, Nagels J, Karduna AR, McQuade K, Wang X et al (2005) ISB recommendation on definitions of joint coordinate systems of various joints for the reporting of human joint motion-part II: shoulder, elbow, wrist and hand. J Biomech 38(5):981–992

Youdas JW, Carey JR, Garrett TR (1991) Reliability of measurements of cervical spine range of motion-comparison of three methods. Phys Ther 71(2):98–104

Funding

This research was funded by the SPANISH GOVERNMENT (FEDER/Ministry of Science and Innovation/AEI.), Grant Number RTI2018-097122-A-I00.

Ethics declarations

Conflict of interest

Raya R. is the CEO of Werium Solutions; Urendes E. is a shareholder of Werium Solutions; Rojo A. is a software developer at Werium Solutions. The other authors declare no conflict of interest. The funding sponsors have no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript and in the decision to publish the result.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Code availability

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rojo, A., Cortina, J., Sánchez, C. et al. Accuracy study of the Oculus Touch v2 versus inertial sensor for a single-axis rotation simulating the elbow’s range of motion. Virtual Reality 26, 1651–1662 (2022). https://doi.org/10.1007/s10055-022-00660-4

DOI: https://doi.org/10.1007/s10055-022-00660-4