Abstract

Unilateral spatial neglect (USN) is a frequent repercussion of a cerebrovascular accident, typically a stroke. USN patients fail to orient their attention to the contralesional side to detect auditory, visual, and somatosensory stimuli, as well as to collect and purposely use this information. Traditional methods for USN assessment and rehabilitation include paper-and-pencil procedures, which address cognitive functions as isolated from other aspects of patients’ functioning within a real-life context. This might compromise the ecological validity of these procedures and limit their generalizability; moreover, USN evaluation and treatment currently lacks a gold standard. The field of technology has provided several promising tools that have been integrated within the clinical practice; over the years, a “first wave” has promoted computerized methods, which cannot provide an ecological and realistic environment and tasks. Thus, a “second wave” has fostered the implementation of virtual reality (VR) devices that, with different degrees of immersiveness, induce a sense of presence and allow patients to actively interact within the life-like setting. The present paper provides an updated, comprehensive picture of VR devices in the assessment and rehabilitation of USN, building on the review of Pedroli et al. (2015). The present paper analyzes the methodological and technological aspects of the studies selected, considering the issue of usability and ecological validity of virtual environments and tasks. Despite the technological advancement, the studies in this field lack methodological rigor as well as a proper evaluation of VR usability and should improve the ecological validity of VR-based assessment and rehabilitation of USN.

1 Introduction

A cerebrovascular accident, such as stroke, represents an urgent public health issue, and patients surviving this catastrophic acute event must deal with life-long motor and cognitive disabilities. This could restrict patients’ participation in social activities, placing a considerable psychological burden on them and their caregivers (Chen et al., 2013; Béjot et al., 2016; Mansfield et al., 2018). Depending on location, type, and severity of the cerebrovascular occlusion, patients typically exhibit two main categories of impairments following the acute phase of a stroke: (i) motor disability, manifested as the inability to walk, problematic coordination and balance, hemiplegia or hemiparesis; (ii) cognitive and neuropsychological impairments, including aphasia, amnesia, executive dysfunctions, apraxia, impaired visuospatial abilities, and mood disorders (Sundar and Adwan 2010; Chen et al., 2013; Sun et al., 2014; Pedroli et al., 2015; Jokinen et al., 2015; Cipresso et al., 2018a). Approximately 50% of patients who suffered a right-brain stroke manifest unilateral spatial neglect (USN), a complex and heterogeneous attentional-perceptual syndrome characterized by a difficulty or inability to pay attention to, detect, and orient toward stimuli presented in the contralesional side (Heilman et al., 2000; Tsirlin et al., 2009; Pedroli et al., 2015; Rode et al., 2017; Zigiotto et al., 2020). USN can be divided into several subcategories, depending on whether the behavior is elicited by a sensory, motor, or representational modality, or whether it involves one’s peripersonal, extra-personal, or spatial representation (Plummer et al., 2003; Buxbaum et al., 2004; Grattan and Woodbury, 2017). Thus, USN can also be referred to as visuospatial neglect (VSN), visual neglect (VN), and hemispatial neglect (HSN). For the purpose of this review, we will use the terms USN and VSN interchangeably.
Approximately 50% of patients manifest USN following a stroke involving the inferior parietal lobe, the superior temporal lobe, the frontal cortex, and subcortical nuclei. Moreover, right-brain damage accounts for 90% of USN patients (Buxbaum et al., 2004; Yasuda et al. 2017, 2018). Studies that employed functional magnetic resonance imaging (fMRI) on VSN patients’ brains have also shown lesions in the right superior and medio-temporal gyri and the basal ganglia, as well as white matter tract damage in both the uncinate fasciculus and the inferior occipitofrontal fasciculus (Karnath et al., 2011; Vuilleumier, 2013; Lunven et al., 2015; Wahlin et al., 2019). Furthermore, the deficits involve the ventral and dorsal areas of the attention networks, located in the fronto-parietal portion of the brain; the ventral attention network (VAN) includes the temporo-parietal and inferior-frontal right cortex and accounts for the detection of unexpected, relevant stimuli, whereas the dorsal attention network (DAN) accounts for the top-down selection of stimuli and comprises portions of the intraparietal and superior frontal cortex (Corbetta et al., 2005; Ogourtsova et al., 2018a, 2018b; Wahlin et al. 2019; Zigiotto et al., 2020).

At a behavioral level, USN can manifest when patients rotate their head and eyes to the impaired side and focus their attention on the central, unaffected visual field to register information (thus manifesting visuospatial recognition impairment and visual field defects, VFD; Sugihara et al., 2016). USN patients fail to orient their attention and detect contralesional auditory, visual, and somatosensory stimuli, as well as to collect and purposely use information located in their contralesional side (Booth, 1982; Heilman et al., 2000; Tsirlin et al., 2009; Aravind and Lamontagne 2018; Wahlin et al. 2019; Zigiotto et al., 2020). Moreover, patients affected by USN can collide with static or moving people or objects placed on the contralesional near or far space while performing tasks (Tsirlin et al., 2009; Aravind and Lamontagne, 2014, 2018; Aravind et al., 2015). The higher collision rates seem related to a delay in detecting obstacles and adopting an adequate strategy to avoid them (Aravind and Lamontagne, 2014, 2018; Aravind et al., 2015). Patients’ impaired judgment of distances from objects could also reflect an ipsilesional shift of their subjective midline, which acts as a framework for goal-directed walking and spatial orientation (Karnath et al., 1991; Richard et al., 2004; Aravind and Lamontagne, 2018). USN patients also tend to miss words while reading (Kim et al., 2015; Sugihara et al., 2016; Yasuda et al., 2017, 2018), and present a reduced ability to manage both basic and instrumental activities of daily living (BADL and IADL, respectively), such as autonomously bathing or grocery shopping (Buxbaum et al., 2004; Grattan and Woodbury, 2017). Furthermore, USN patients manifest different degrees of unawareness of their impairments (i.e., anosognosia) and this condition is associated with motor, cognitive, and sensory deficits. 
This could result in longer rehabilitation periods, the need for constant supervision and, overall, a poorer prognosis along with a reduced possibility to benefit from rehabilitative interventions (Cherney et al., 2001; Azouvi et al., 2003; Di Monaco et al., 2011; Nijboer et al., 2008, 2014; Tobler-Ammann 2017a, 2017b; Glize et al., 2017; Cipresso et al., 2018a; Zigiotto et al., 2020). This entails the need for a prompt assessment and rehabilitation of USN, in order to reduce its detrimental effects on patients’ functioning and quality of life.

2 Current USN assessment and rehabilitation

2.1 USN assessment

Among the numerous paper-and-pencil standard neuropsychological assessment tools for USN, the most frequent are: (i) cancellation tests, which require patients to detect specific targets among numerous distractors, employing stimuli such as lines (Albert, 1973), letters (Diller and Weinberg, 1977), symbols (Weintraub and Mesulam, 1988), and circles (Vallar and Perani, 1986; Pallavicini et al., 2015a; Pedroli et al., 2015); (ii) copy tests, where patients are asked to copy a simple or complex picture or reproduce it from memory (Rey, 1941; Suhr et al., 1998; Shulman 2000); (iii) line bisection tests, which require patients to mark the midpoint of horizontal lines (Schenkenberg et al., 1980; Pallavicini et al., 2015a; Sugihara et al., 2016). Other measures employ a more ecological approach to USN assessment: (iv) the Behavioral Inattention Test (BIT, Wilson et al., 1987, 2010), a short screening battery of tests to assess the presence and the severity of neglect symptoms in everyday skills. Moreover, some of the tests included could be compared or used separately for a qualitative description of the patient’s functional performance (Hartman-Maeir and Katz, 1995), although this proposal is currently hypothetical and lacks proper validation; (v) the Catherine Bergego Scale (CBS; Bergego et al., 1995; Azouvi 1996), a standardized checklist used to detect the presence and degree of USN symptoms during the observation of patients performing in daily situations. It also compares patients’ and caregivers’ evaluations to verify the presence of anosognosia (Azouvi et al., 2003; Sugihara et al., 2016). These measures could be considered more ecological because they address patients’ functional performance and their ability to autonomously perform daily life activities, which could be useful for a more sensitive detection of milder forms of USN (Ogourtsova et al., 2018b).
In fact, traditional paper-and-pencil tests do not appear sufficiently sensitive in detecting subtle impairments which, over time, could have a detrimental effect and worsen patients’ functioning and life quality (Bowen et al., 1999; Buxbaum et al., 2008; Aravind and Lamontagne, 2014; Ogourtsova et al., 2019, 2018b). These tests, and particularly the line bisection, generally require a manual correction by an experienced clinician; this could increase the risk of biases, inter- and intra-rater variability (Jee et al., 2015). Moreover, clinical assessment of USN is usually limited to near peripersonal space (within arm’s reach) and fails to consider other behavioral manifestations in personal and motor symptoms along with far extra-personal space (beyond the arm’s reach) (Azouvi et al., 2003; Ogourtsova et al., 2018b; Knobel et al., 2020) and egocentric versus allocentric spatial representations as well (Bickerton et al., 2011; Pedroli et al., 2015; Ogourtsova et al., 2018b).
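To make the idea of lateralized omissions in a cancellation test more concrete, the following minimal Python sketch computes the share of missed targets on each half of the sheet. The `Target` record, the `omission_rates` function, and the simple split at the sheet midline are assumptions introduced here for illustration; they do not reproduce the scoring rules of any specific published test.

```python
from dataclasses import dataclass


@dataclass
class Target:
    x: float         # horizontal position on the sheet (0 = left edge)
    cancelled: bool  # True if the patient marked this target


def omission_rates(targets, sheet_width):
    """Return (left_rate, right_rate): share of targets missed on each half."""
    mid = sheet_width / 2
    left = [t for t in targets if t.x < mid]
    right = [t for t in targets if t.x >= mid]

    def rate(group):
        # An empty half contributes no omissions by convention (assumption).
        if not group:
            return 0.0
        return sum(not t.cancelled for t in group) / len(group)

    return rate(left), rate(right)


# Hypothetical sheet: three of four left-side targets missed, none on the right.
example = [
    Target(10, False), Target(20, False), Target(30, False), Target(40, True),
    Target(60, True), Target(70, True), Target(80, True), Target(90, True),
]
left_rate, right_rate = omission_rates(example, sheet_width=100)
```

A markedly higher omission rate on the contralesional half than on the ipsilesional half is the pattern such a summary is meant to expose; any clinical threshold would have to come from the validated test manual, not from this sketch.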

2.2 USN rehabilitation

Rehabilitative interventions for USN patients usually fall under two broad categories of behavioral approaches (Pedroli et al., 2015; Azouvi et al., 2017; Rode et al., 2017; De Luca et al., 2019; Liu et al., 2019; Zigiotto et al., 2020): (i) top-down, activity-based approaches, which aim at orienting patients’ spatial attention toward the left side of space and at promoting their functional abilities. Over the past 40 years, several systematic visual scanning training (VST) programs have been developed (e.g., Pizzamiglio et al., 1992; Antonucci et al., 1995) to stimulate patients to actively explore their contralesional (neglected) side, with therapists asking them to voluntarily direct their gaze leftward (Tsirlin et al., 2009; Liu et al., 2019; Zigiotto et al., 2020). The visual search includes tasks such as scanning, copying, or reading stimuli placed in the contralesional side of space and can be guided either by contralesional cues or therapists’ feedback. Other top-down approaches include the limb activation treatment (LAT), in which patients are asked to perform intentional movements using their contralesional hemibody (Rizzolatti and Berti, 1990; Robertson and North, 1992, 1993); (ii) bottom-up, non-activity-based interventions, which aim at reducing patients’ bodily deficits using external instruments to manipulate the sensory environment. These interventions also exploit physical stimulation to improve neglect symptoms, with methods such as hemiblinding, eye-blinding, caloric, galvanic or optokinetic stimulation (OKS; Pizzamiglio et al., 1990; Robertson et al., 1998; Moon et al., 2006; Kim et al., 2015; Azouvi et al., 2017), or prism adaptation (PA; Tsirlin et al., 2009; Jacquin-Courtois et al., 2010, 2013; Azouvi et al., 2017; Glize et al., 2019; Liu et al., 2019).
OKS has proved particularly effective for treating left hemispatial neglect; by activating the brain stem, basal ganglia, cerebellum, and the parieto-occipital cortex, it improved distorted body orientation, tactile extinction, motor neglect, and even attention to auditory stimuli. This is particularly true when OKS includes leftward moving stimuli such as dots (Vallar et al., 1993, 1997), vertical strip or random dot backgrounds (Kim et al., 2007), or drums (Moon et al., 2006), whereas rightward OKS appears to worsen left hemispatial neglect (Kim et al., 2015). PA, instead, consists of actively exposing patients to a rightward optical deviation of their visual field, with the aim of reorienting their behavior toward the neglected side. To achieve this, the procedure exploits prisms that systematically shift both visuomotor and proprioceptive responses to the left (Jacquin-Courtois et al., 2010, 2013; Azouvi et al., 2017; Glize et al., 2019). The process of PA requires patients to repeatedly perform movements toward visual targets placed in the contralesional side, with prisms deviating the environment about 10° rightward. The prismatic exposure is preceded by a pre-test phase, in which patients aim at the direction of visual targets without wearing the prisms, in order to obtain reference values. Following prismatic exposure, patients are asked to aim toward the visual targets without the prisms, in order to evaluate the after-effects (Azouvi et al., 2017).
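As a rough geometric illustration of what a deviation of about 10° means in practice, the sketch below converts an angular deviation into the apparent lateral displacement of a target at a given distance, using plain trigonometry (displacement = distance × tan(angle)). The function name `lateral_shift` and the 0.5 m reaching distance are assumptions chosen for the example; they are not taken from the PA protocols cited above.

```python
import math


def lateral_shift(distance_m, deviation_deg=10.0):
    """Apparent sideways displacement (in metres) of a target seen through
    a prism that deviates the visual field by `deviation_deg` degrees."""
    return distance_m * math.tan(math.radians(deviation_deg))


# Hypothetical reaching distance of 0.5 m.
shift_at_arm_length = lateral_shift(0.5)
```

Under these assumptions the target appears shifted by roughly 9 cm, which is the pointing error the patient has to correct during the exposure phase.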

OKS and PA have received increasing interest over the years; on the one hand, OKS requires USN patients to observe moving visual targets, which encourages their visual scanning of the neglected hemispace. The initial research conducted on OKS showed that the exposure to a moving stimulus, which induced optokinetic nystagmus, could modify patients’ analysis of the perceived space (Pizzamiglio et al., 1990). On the other hand, PA has proved to be one of the most effective and widely employed rehabilitation methods, reducing the behavioral biases and the awareness deficits seen in the contralateral hemispace of spatial neglect. The active prism exposure re-calibrates attention and re-orients patients’ behavior toward the neglected side, reducing visual, sensory, and auditory neglect (Dijkerman et al., 2003; Jacquin-Courtois et al., 2010), space and object-based neglect (Dijkerman et al., 2003; Maravita et al., 2003), and spatial dyslexia and dysgraphia (Farnè et al., 2002; Rode et al., 2006). Mental imagery and higher-level spatial representations seem to benefit from PA as well; however, it is unclear whether this impact can be broadened to navigation and topographic memory, which contribute to spatial cognition (Glize et al., 2017). Therefore, despite the positive outcomes following the traditional PA rehabilitation, to date, its mechanisms are largely unclear.

3 What is virtual reality?

A major drawback of the traditional approach to USN is that cognitive functions are considered as a variable isolated from the actual context patients live in. This could hinder the ecological validity of both assessment and rehabilitation processes; in other words, the observations and data obtained from the traditional methods might not generalize to how patients function in their real-life surroundings. This entails methodological as well as practical issues that could be addressed by using tools that simulate patients’ responses to stimuli or situations closely resembling the ones they would encounter outside the clinical setting (Tsirlin et al., 2009; Levick, 2010; Pallavicini et al., 2015a; Ogourtsova et al., 2019, 2018a, 2018b). A plausible solution comes from integrating standard procedures with new technologies, such as virtual reality (VR). VR is a 3D computer-generated environment that virtually recreates real-life contexts in which the individual can feel immersed and “present” and can interact with the surroundings (Riva and Mantovani, 2014, 2019; Riva et al., 2018; Moreno et al., 2019). The interaction with the virtual environment provides the individual with immediate multisensorial feedback (e.g., visual, haptic, auditory), and is highly responsive to the users’ movements and inputs (e.g., gesture, vocal command) (Burdea and Coiffet, 2003; Riva, 2008). Moreover, the concurrent stimulation of multiple senses and the realism of the stimuli employed can induce the feeling of immersion within a safe and controlled VR environment and offer the possibility to interact with objects (Rizzo et al., 2004; Riva, 2008; Slater, 2009; Riva and Mantovani, 2012; Chirico et al., 2016; Riva et al., 2018; Cipresso et al., 2018b).
Several VR devices have been developed over time and, depending on their degree of immersiveness, can be categorized into: (i) non-immersive systems, such as computer screens; (ii) semi-immersive systems, such as the cave automated virtual environment (CAVE; Cruz-Neira et al., 1993), which makes use of large, fixed screens distant from the viewer and is available in many configurations. The three-wall setup provides a 180° scenario that can be considered semi-immersive because, as soon as patients turn around, they are no longer immersed in the virtual environment; (iii) fully immersive systems, such as head-mounted displays (HMDs) and the four-wall setup of the CAVE.

Specifically, the fully immersive devices are capable of isolating individuals from the external “real” environment and, most importantly, generate the feeling of presence, i.e., the feeling of being really “there” in the simulated environment and being able to act purposively within it (Rizzo et al., 2004; Slater, 2009; Riva and Mantovani, 2012, 2014; Negut et al., 2016; Chirico et al., 2016; Riva et al., 2018). The integration between the input data, collected via trackers sensing the user’s position and orientation, and a real-time update of the virtual environment generates a high sense of presence (Riva et al., 2018). This allows achieving a brain activity like that spontaneously elicited when the individual interacts with the real-life surroundings and predicts the inputs incoming from the context in which he is immersed. A more detailed examination of these aspects will be presented later in this paper and will consider a plausible explanation for VR effectiveness based on the concept of the “body matrix” (Moseley et al., 2012; Riva, 2018).

3.1 Technological advancements in USN assessment and rehabilitation

Over time, VR has been extensively applied within the field of neuropsychological assessment and rehabilitation of clinical and non-clinical populations of young and older adults (García-Betances et al., 2015; De Tommaso et al., 2016; Plancher and Piolino, 2017; Riva et al., 2020). Clinical subsamples include, among others, patients suffering from spatial memory (Allain et al., 2014) and balance impairments (Serino et al., 2017a, 2017b; Gerber et al., 2018; Soares et al., 2018), and from the consequences of stroke (Henderson et al., 2007; Saposnik and Levin, 2011; Laver et al., 2017), traumatic brain injury (Aida et al., 2018; Alashram et al., 2019; Maggio et al., 2019), and neurocognitive disorders (Moreno et al., 2019).

Specifically, USN assessment and rehabilitation seemingly share common issues: despite the vast number of measures and interventions, a gold standard procedure is currently lacking (Bowen et al., 1999; Menon and Korner-Bitensky, 2004; Pedroli et al., 2015; Grattan and Woodbury, 2017; Ogourtsova et al., 2019, 2018b). USN presents with a complex and heterogeneous set of manifestations, and neither an assessment tool nor a rehabilitative training alone can comprehensively address this condition (Menon and Korner-Bitensky 2004; Pedroli et al., 2015; Ogourtsova et al., 2019, 2018b). Furthermore, both employ measures and tasks which often lack sensitivity, standardization, and ecological validity, and thus fail to evaluate how patients perform relevant tasks in their daily environment (Perez-Garcia et al., 1998; Azouvi et al., 2003; Tsirlin et al., 2009; Levick, 2010; Pallavicini et al., 2015a; Ogourtsova et al., 2019, 2018a, 2018b). Regardless of the method used, early assessment and prompt rehabilitation are crucial for USN patients and could improve their behavioral and socio-cognitive outcomes: this, in turn, would translate into a decreased hospitalization rate and reduced healthcare assistance costs. Promoting at-home interventions is also crucial for outpatients to maintain and consolidate the positive outcomes following the rehabilitation training, even after discharge (Mugueta-Aguinaga and Garcia-Zapirain 2017; Serino et al. 2017a, 2017b; Kidd et al., 2019). Over time, these limitations have led many researchers to transfer conventional paper-and-pencil assessment tools and rehabilitative training into a computerized form. This transition has represented a first step forward in enhancing both precision and consistency in detecting more subtle forms of deficits and in recording patients’ performance (Tsirlin et al., 2009; Pallavicini et al., 2015a; Pedroli et al., 2015).
A computerized task differs from a paper-and-pencil task not only in the format in which the stimuli are presented (i.e., a monitor instead of a sheet), but also in the cognitive and motor demands required to perform the task itself (Jee et al., 2015). Figure 1 illustrates a computerized version of the card dealing task and the barrage task. Paper-and-pencil tasks recruit patients’ motor abilities and visuospatial attention, whereas computerized tasks increase perceptual sensitivity while reducing the motor action required (Jee et al., 2015). The attentional capacity of USN patients tends to decrease as perceptual tasks become increasingly demanding; this, in turn, reduces patients’ ability to sustain and orient their attention to the ipsilesional side. Computerized tasks seemingly reduce the motor and attentional load required (Jee et al., 2015). Furthermore, computer-based assessment and rehabilitation provide information unobtainable from the traditional assessment, such as reaction times.

Fig. 1 Paper-and-pencil versus computerized USN assessment (courtesy of Pallavicini et al., 2015b)

However, despite their utility, computerized systems are still not capable of simulating a complex set of actions and do not provide an ecological environment with a high sense of realism and presence. This also limits patients’ possibility to interact with the device providing the stimuli. Therefore, the most recent technological advancements within the field of VR have allowed a second major transition, from non-immersive toward fully immersive devices (e.g., HMDs, CAVE) capable of providing a safe, standardized, and more realistic virtual environment that simulates complex daily situations, directly involving patients and engaging them to purposely interact within it (Slater, 2009; Cipresso et al., 2018b). These crucial properties have fostered the widespread diffusion of VR for the assessment and rehabilitation of many neurocognitive disorders (Rizzo et al., 2004; Parsons et al., 2013; García-Betances et al. 2015; Negut et al., 2016; Aida et al., 2018; Cipresso et al., 2018b; Alashram et al., 2019; Moreno et al., 2019; Kim et al., 2019; Maggio et al., 2019; Riva et al., 2020) (Fig. 2). VR-generated environments allow a strict experimental control over stimulus administration, and either the assessment or the rehabilitation procedure can be adjusted and tailored to patients’ needs and difficulties and carried out safely. VR-based setups also allow examining not only the peripersonal space, but also the extra-personal space (Knobel et al., 2020). Furthermore, clinicians could benefit from the employment of VR in many ways; this technology could help them detect subtle manifestations of deficits, which could otherwise remain unrecognized and have a detrimental effect on patients over time. In terms of assessment, this would be fostered by using virtual environments that examine patients’ functioning and impairments. This technology also allows the complexity of the tasks required to be gradually increased, which could provide a refined assessment of patients’ impairments.
VR-based rehabilitation, instead, could gradually train patients to carry out tasks similar to those they perform in their daily life, so that they can learn again to execute them in a safe and controlled environment. This also rules out possible interfering variables and allows the procedure to be suspended whenever needed; although VR has very few side effects, patients can sometimes manifest cybersickness (CS), a form of visually induced motion sickness producing a constellation of symptoms and discomfort during or following VR exposure (Martirosov and Kopecek, 2017; Weech et al., 2019; Knobel et al., 2020). CS symptoms include disorientation, nausea, headache, fatigue, and postural instability, due to the mismatch between sensory inputs, since the user sees the movement on the screen without feeling it (Martirosov and Kopecek 2017).

Given the importance of VR devices for assessment and rehabilitation, particularly within the field of USN, a review of the most recent technological advancements is needed to obtain a comprehensive picture of the state of the art and to provide future directions for researchers. Therefore, the present review aims at updating the previous work of Pedroli et al. (2015) and at presenting the most recent evidence of VR advancements for both assessment and rehabilitation of USN. Specifically, this article reviews VR applications from 2015 to 2021 inclusive, analyzing the methodology and the technologies employed in the selected studies in order to understand the advancements in this field and future research directions. The following sections will consider how VR technologies have been implemented within standard neuropsychological assessment and rehabilitation; it is crucial to note that VR does not intend to replace traditional methods, but rather to enhance them and help increase the amount of data collected, to provide a fuller, more comprehensive and ecological picture of patients’ impairments and to help tailor safe interventions closer to their needs.

4 Methods

The present review builds on and updates the previous work by Pedroli et al. (2015), following the Preferred Reporting Items for Systematic Reviews and Meta-Analysis (PRISMA) guidelines (Moher et al., 2009). This section will present the systematic strategy and the criteria employed to select the studies, which will be discussed later.

4.1 Search Strategy

A computer-based search for relevant publications was performed across several databases, specifically PsycINFO, Web of Science (Web of Knowledge), and PubMed/Medline. In line with the previous work of Pedroli et al. (2015), the search string employed was (“Virtual Reality” OR “Technolog*”) AND [“Neglect” OR (“Unilateral Spatial Neglect” OR “Hemispatial Neglect” OR “Visual Neglect” OR “Visuospatial Neglect”)]. The choice to include both “Virtual Reality” and “Technolog*” as keywords was made to avoid missing papers, since these words are sometimes used in an interchangeable or misleading way. We also wanted this review to be as replicable and inclusive as possible. The articles were individually considered to verify whether they fulfilled the following inclusion criteria: (a) research article; (b) provide information regarding the sample used; (c) provide information regarding the measures used; (d) published in English. We excluded conference papers and articles that did not consider neglect as a neuropsychological syndrome (e.g., articles on childhood traumatic experiences, maltreatment, and abuse).
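The Boolean logic of the search string can be sketched as a simple text filter. The Python example below is only an approximation under stated assumptions: the function `matches_search_string` is hypothetical, it works on a free-text title or abstract, and it treats “Technolog*” as a word-prefix wildcard; the actual databases apply their own field-specific indexing and matching rules.

```python
import re

# Phrases taken from the search string; the matching rules are assumptions.
NEGLECT_PHRASES = [
    "unilateral spatial neglect",
    "hemispatial neglect",
    "visual neglect",
    "visuospatial neglect",
]


def matches_search_string(text):
    """Approximate ("Virtual Reality" OR "Technolog*") AND ("Neglect" OR ...)."""
    t = text.lower()
    has_technology = (
        "virtual reality" in t
        or re.search(r"\btechnolog\w*", t) is not None  # prefix wildcard
    )
    has_neglect = (
        re.search(r"\bneglect\b", t) is not None
        or any(phrase in t for phrase in NEGLECT_PHRASES)
    )
    return has_technology and has_neglect


hit = matches_search_string(
    "Virtual reality training for hemispatial neglect after stroke"
)
off_topic_hit = matches_search_string(
    "Technological aids for childhood neglect screening"
)
miss = matches_search_string("Virtual reality for balance rehabilitation")
```

The second example shows why the broad term “Neglect” also retrieves records about childhood neglect, which then have to be removed by the exclusion criteria during manual screening.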

4.2 Systematic Review Flow

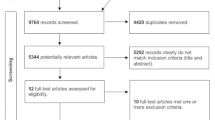

The flowchart of the review is shown in Fig. 3. By searching in PubMed/Medline, Web of Science (Web of Knowledge), and PsycINFO, our initial search yielded a total number of 5066 non-duplicate citations. Considering our inclusion and exclusion criteria, we retrieved 68 articles that were further screened. After the full-text screening, we selected 31 articles, and two additional full papers were excluded in the data extraction phase. In the end, 28 studies met the full criteria and were included in the present review. The risk of bias was assessed using PRISMA recommendations for systematic literature analysis. SC, PC, and EP independently selected paper titles and abstracts and analyzed the full papers meeting the inclusion criteria. Any disagreement was resolved through consensus. SC wrote the manuscript, and all the authors (PC, EP, VM, and FB) read, revised, and approved its final version.

5 Results

5.1 VR systems for USN assessment

The field of USN assessment could benefit from more ecological devices capable of detecting this condition more precisely, to overcome the lack of sensitivity, specificity, and ecological validity of paper-and-pencil tests. Over the past five years, several studies have integrated VR-based devices in USN assessment, either digitalizing traditional neuropsychological tests or developing new paradigms. A total of 15 studies were selected; six studies employed non-immersive technologies (by means of a tablet/iPad, computer screen, and auditory stimulation: Pallavicini et al., 2015a; Jee et al., 2015; Guilbert et al., 2016; Grattan and Woodbury, 2017; Spreij et al., 2020; Siddique et al., 2021); nine studies employed fully immersive technologies (by means of HMDs: Aravind et al., 2015; Sugihara et al., 2016; Aravind and Lamontagne, 2017, 2018; Ogourtsova et al., 2018a, 2018b; Knobel et al., 2020; Yasuda et al., 2020; Kim et al., 2021). Table 1 provides a detailed description of the VR technology employed in these studies, as well as the characteristics of the sample, the sessions, and the main outcomes.

5.1.1 Non-immersive technologies

Among the studies included, two papers (Pallavicini et al., 2015a; Siddique et al., 2021) exploited the potential of a mobile device, such as a tablet or an iPad, which has the technological requirements for supporting VR environments while being affordable and user-friendly. Moreover, the iPad can display traditional cancellation tests, so that patients can be presented with a digitalized assessment. Despite the paucity of studies considering this tool, Pallavicini et al. (2015a) developed Neglect App, an iPad-based assessment tool for screening USN symptoms (see also: Cipresso et al., 2018b). The study aimed at exploring the potential of the application, which consists of two categories of tasks: (i) Neglect App cancellation tests, which provide a digitalized version of paper-and-pencil cancellation tests and include simple cancellation tests, corresponding to the line cancellation test, and cancellation with distractors tests, based on the star cancellation test; (ii) the Neglect App card dealing task, which recreates a digitalized version of the card dealing task (Pallavicini et al., 2015a). Both correct answers and omissions were recorded. Following a clinical interview and a neuropsychological assessment, the 16 patients selected were assigned to either a neglect group (patients with USN) or a non-neglect group (patients without USN). The assessment procedure consisted of a single session lasting one hour, where patients completed both the paper-and-pencil tests and the Neglect App tasks, in a randomized order. Each session began by administering a self-report scale to evaluate the individual’s technological skills, and each subject completed a 10-min session in a virtual environment to get acquainted with the technology before starting the Neglect App. At the end of the session, each patient filled in a System Usability Scale (SUS; Brooke, 1996) to evaluate the usability of Neglect App.
Preliminary evidence showed the feasibility of Neglect App for assessing USN symptoms, with the Neglect App version of the cancellation tests proving as effective as the paper-and-pencil version. Moreover, the Neglect App Card Dealing task proved more sensitive in the detection of neglect symptoms, compared to the traditional neuropsychological task. With respect to the cancellation tests, USN patients showed a significantly more aberrant search performance compared to the non-USN group in the virtual cancellation task. With respect to the Card Dealing Task, instead, the USN group showed a significant difference in the omission rates, which decreased only in the Neglect App version of the Card Dealing Task, but not in the paper-and-pencil version, compared to the non-USN group reporting higher omission rates. This latter result could be due to a specific App feature; moreover, the Neglect App version of the Card Dealing Task could assess the near extra-personal space to a higher extent than the paper-and-pencil version.
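
For readers unfamiliar with the SUS, the standard scoring rule described by Brooke (1996) can be sketched as follows. This is a minimal illustration and not the analysis code of any study reviewed here; the function name `sus_score` and the example ratings are hypothetical.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for ten item ratings on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for index, rating in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: contribution = rating - 1
            total += rating - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: contribution = 5 - rating
            total += 5 - rating
    # The summed contributions (0-40) are rescaled to 0-100
    return total * 2.5


# Hypothetical ratings from a single respondent
example_ratings = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example_ratings))
```

Higher scores indicate better perceived usability; an adapted SUS, such as the one used in some HMD studies, may require a different item mapping.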

Jee and colleagues evaluated the feasibility of a semi-computerized version of the classical line bisection test (LBT), aimed at improving the quality of unilateral visual neglect assessment and excluding possible confounding errors deriving from manual scoring of the paper-and-pencil test (Jee et al., 2015). The e-system included an electronic pen (e-pen) capable of recognizing position patterns, a micro-pattern printed paper, and line bisection test software installed on a computer. The e-pen detects written or drawn information on the micro-pattern printed paper and sends it to the LBT software; it consists of a pressure sensor, an infrared LED, an image sensor, a digital signal processor, and a Bluetooth interface (Jee et al., 2015). The e-system was first tested on a group of healthy participants and then applied to a sample of eleven patients with unilateral visual neglect. Specifically, patients were asked to use their right hand to cut each line in half, drawing a single pen notch without skipping any of the stimuli. The printed paper was taped to the table in front of the patient, to avoid excessive movement. The e-system also automatically recorded the time needed to complete the task. All patients also completed a cognitive screening measure (the Korean version of the MMSE, K-MMSE). The inter- and intra-rater reliability of the e-system showed very high correlations, particularly for the group of patients; results were simultaneously and reliably computed, and the e-system could provide additional information such as pen pressure and tilt. In this group, the authors also noted different results in the neglected lines between the e-system and the raters, and the e-system was recalibrated in order to increase its sensitivity and rule out system errors. The authors also outlined the need for further studies including a wider range of cognitively impaired patients and larger sample sizes.
The semi-computerized LBT assessment seems a promising tool to be integrated in a standard neuropsychological assessment of USN.
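
The kind of score a computerized LBT can derive from each pen mark may be illustrated with a short sketch. The formula below is the commonly used percentage deviation of the mark from the true midpoint; it is an assumption made here for illustration, not the scoring implemented in the e-system of Jee et al. (2015), and all names and values are hypothetical.

```python
def bisection_deviation_percent(line_start_mm, line_end_mm, mark_mm):
    """Signed deviation of a bisection mark from the true midpoint.

    Returns the deviation as a percentage of half the line length.
    Positive values indicate a rightward error, negative values a leftward one.
    """
    if line_end_mm <= line_start_mm:
        raise ValueError("line_end_mm must be greater than line_start_mm")
    midpoint = (line_start_mm + line_end_mm) / 2
    half_length = (line_end_mm - line_start_mm) / 2
    return (mark_mm - midpoint) / half_length * 100


# Hypothetical 200 mm line with a mark placed 10 mm right of the midpoint
print(bisection_deviation_percent(0, 200, 110))
```

A rightward deviation is the pattern typically expected in left-sided neglect; a threshold for classifying a deviation as pathological would need to come from normative data.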

A digitalized version of the traditional Posner visual cuing paradigm was replicated within a VR context by Guilbert et al. (2016). For the purpose of this review, this paradigm has been considered because it helps to clarify the mechanisms underlying the visual orienting of attention, seemingly impaired in USN patients. A thorough description of the paradigm is beyond the scope of the present paper and can be found elsewhere (Posner and Cohen, 1984; Posner, 1980). The cuing effect observed in this paradigm reflects exogenous orientation of attention; patients with USN manifest deficits in disengaging, moving and engaging their attention, with endogenous orientation being less compromised, and an early orientation toward the ipsilateral side of the lesion (Bartolomeo and Chokron, 2001; Guilbert et al., 2016). This paradigm has been previously applied to healthy subjects (Spence and Drive, 1994), and Guilbert and colleagues’ adaptation aimed at studying the exogenous orientation of auditory attention, which is less studied in USN patients (Guilbert et al., 2016). Specifically, the authors explored whether detection and lateralization tasks could identify the mechanisms underlying exogenous orientation of auditory attention, as well as their impairments in USN patients. A plausible hypothesis was that auditory and visual attentional orienting shared an underlying deficit unobservable in patients without USN. The authors considered a total sample of 14 patients, four of whom were without USN. The total group was then divided into two sub-groups depending on the task they completed, either the detection or the lateralization task. The experimental setup included a 3D virtual environment presenting the auditory stimuli through headphones. Patients were blinded and provided their responses by using a mouse placed in front of them. A white noise signaled the beginning and the end of each trial (4020 ms) and was followed by a cue (a pure tone of 1000 Hz, lasting 20 ms).
The target, instead, was a complex harmonic sound of 500 Hz, lasting for 100 ms. The detection task required patients to press a mouse button as soon as they heard the target, whereas in the lateralization task patients had to press either the left or right mouse button according to the spatial position of the target. Catch trials (cues in the absence of targets) were also present. Results of the detection task provided no evidence that patients, with or without USN, or healthy controls recruited a spatial process to detect a sound; furthermore, no evidence was found for significant cueing effects. Previous studies hypothesized that USN does not imply impaired auditory detection, because the bilateral projection of auditory stimuli in the brain does not rely on spatial location. In the lateralization task, instead, spatial cues appeared to be taken into account when the task required a spatial judgment to locate the target. USN patients appear to manifest difficulties in auditory orienting. Only one patient performed poorly in this task, but this patient also had more severe impairments in sustained attention, which the authors did not examine in detail; they therefore suggest jointly examining multiple aspects of attention alongside auditory attention (Guilbert et al., 2016). However, a lateralization task could involve difficulties related to spatial impairment in either processing the response or orienting auditory attention. Further studies should address the orientation of auditory attention, possibly employing the VR-based paradigm suggested by the authors.

The paper by Grattan and Woodbury (2017), instead, compared traditional paper-and-pencil, functional, and VR-based neglect assessments to test whether different assessment tools detect neglect differently. Specifically, the VR-based neglect assessment considered was the Enhanced Array Virtual Reality Lateralized Attention Test (VRLAT; Dawson et al., 2008; Buxbaum et al., 2012), a brief assessment tool that predicts patients’ collisions while navigating in the real world. The test takes place within a VR environment displayed on a PC laptop, and patients are requested to travel down a virtual path by means of a joystick, while correctly identifying targets (e.g., trees, statues) placed on both the left and right sides of the path. This version of the VRLAT is more challenging due to the multiple distractors (e.g., a ball bouncing in front of the patient, a streetlamp) that patients are instructed to ignore. All patients, suffering from neglect due to ischemic or hemorrhagic stroke, completed the measures in a standard order. The measures were administered in one or two sessions, either in a laboratory (for outpatients) or in the hospital stroke rehabilitation unit (for inpatients). A complete neuropsychological assessment battery was administered as well (Grattan and Woodbury, 2017). Results showed a greater capacity of both functional and VR-based assessment procedures to detect patients with neglect, therefore highlighting that performance differs across neglect assessment tools. However, results should be cautiously interpreted due to the small sample size (Grattan and Woodbury, 2017).

Spreij et al. (2020) employed a simulated driving task to compare the performance of patients with right- versus left-sided VSN, patients without VSN (“recovered”), and healthy controls. Moreover, they investigated the relationship between VSN severity and patients’ average position on the road, considered as a measure of lateralized attention. The authors also assessed the diagnostic accuracy of the simulated driving test, compared to traditional tasks. Patients were initially screened by means of neuropsychological measures (CBS, shape cancellation task) and categorized as follows: (i) left-sided VSN +; (ii) right-sided VSN +; (iii) left-sided R-VSN (i.e., patients who showed right-sided VSN during the screening but not on the second measurement, considered as “recovered”; the right-sided R-VSN group was excluded due to its small sample size); (iv) a healthy control group. The setup for the simulated driving task consisted of a driving scene on a straight road, projected on a large screen. A steering wheel was fixed on a table, where a plain white board was placed in order to remove any visuospatial references. After a brief practice trial, patients were asked to use the steering wheel to adjust and maintain their position at the center of the right lane, despite a simulated side wind coming from both directions. The projection of the driving scene vibrated when patients drove off into the left or the right verge. The analysis of group performances showed that, in terms of average position, left-sided VSN + patients deviated more compared to right-sided VSN + and VSN- patients. Neither right-sided VSN + and VSN- patients nor VSN- patients and healthy controls differed significantly in terms of their average position. Compared to right-sided VSN + patients and VSN- patients, left-sided VSN + patients showed a larger magnitude of sway.
Again, neither right-sided VSN + and VSN- patients nor VSN- patients and healthy controls differed significantly in terms of sway magnitude. Left-sided R-VSN deviated more leftward, compared to VSN- patients, but their average position on the road did not differ significantly from the position of left-sided VSN + patients. The authors also found a moderate positive relationship between the average position and VSN severity as measured by the shape cancellation task, whereas a stronger relationship was found with CBS. The authors hypothesized that dynamic tasks requiring a natural behavior and relating more to daily activities, such as the CBS and the driving task, could increase the ecological validity of the assessment (Spreij et al., 2020). However, the sensitivity and specificity values of the simulated driving task indicate that this procedure cannot be used as a stand-alone tool for the assessment of VSN yet (Spreij et al., 2020).
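
The sensitivity and specificity referred to above follow the usual definitions for a diagnostic test compared against a reference classification. The sketch below illustrates the computation under that assumption; the function name and the counts are invented for illustration and are not data from Spreij et al. (2020).

```python
def diagnostic_accuracy(true_pos, false_neg, true_neg, false_pos):
    """Return (sensitivity, specificity) from a 2x2 confusion table.

    sensitivity = TP / (TP + FN): share of patients with neglect that the test flags
    specificity = TN / (TN + FP): share of patients without neglect that the test clears
    """
    if true_pos + false_neg == 0 or true_neg + false_pos == 0:
        raise ValueError("both reference groups must contain at least one case")
    sensitivity = true_pos / (true_pos + false_neg)
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


# Invented counts: 10 patients with neglect and 10 without, per the reference test
sens, spec = diagnostic_accuracy(true_pos=6, false_neg=4, true_neg=9, false_pos=1)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

A test with high specificity but modest sensitivity, as in this invented example, would miss a substantial share of affected patients, which is one reason a tool may not be recommended as a stand-alone assessment.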

Siddique et al. (2021) employed Visual Attention Lite, a mobile app installed on a tablet, to assess USN in a group of 14 acute stroke patients. The app has two modes: (i) test mode, designed to measure both the time and accuracy of patients’ scanning abilities, with increasingly difficult tasks. The tasks (e.g., playing fields, clock) require patients to touch as many targets as possible over a short period of time; (ii) practice mode, designed to help patients move their eyes, from top to bottom and from left to right, when they touch the targets. Each level of difficulty is completed only when the patient finds all the targets. Patients had to complete ten consecutive days of practice using the Visual Attention Lite practice mode. Before and after the ten days of sessions, patients were assessed by means of the Visual Attention Lite test mode. Results suggested that the app is a promising tool for the assessment and management of USN in acute stroke patients.

5.1.2 Fully immersive technologies

As previously mentioned, VR-based USN assessment has employed HMDs as well and has shown promising results so far. Over the years, the field of USN assessment has benefited from the contribution of Aravind and Lamontagne (Aravind and Lamontagne, 2014; Aravind et al., 2015; Aravind and Lamontagne 2017, 2018), whose studies have created a consistent line of research on the behavior of patients with neglect, particularly obstacle detection and avoidance. Some of these studies were also included in the previous systematic review by Pedroli et al. (2015) (Aravind and Lamontagne, 2014; Aravind et al., 2015). Specifically, Aravind et al. (2015) created an obstacle avoidance task within a virtual environment to assess patients’ ability to both detect and avoid moving obstacles approaching from different directions. The tendency to collide with static and moving objects and to manifest navigational impairments is a common behavior in VSN; whether the collisions are mostly due to postural and locomotor impairments following a stroke, or to attentional-perceptual deficits due to VSN, remains unclear (Aravind et al., 2015). The authors included three tasks consisting of either detecting an obstacle, avoiding a joystick-driven obstacle, or avoiding a locomotor obstacle. These latter tasks were performed using a VR setup comprising an HMD and a joystick that patients used to navigate within the virtual environment. A detailed description of the procedure and the main outcomes is reported in Pedroli et al. (2015). The authors showed the specific involvement of VSN in the altered behavior of obstacle avoidance: not only do patients display a precise pattern of collision, but they also show a correlation between the distance at the time of detection and at the onset of the avoiding strategy.
Thus, patients showed a reduced distance from the obstacle at its detection and, conversely, an increased amount of time for the detection of contralesional obstacles, even though patients performed the tasks while seated. VSN patients also showed a gradient of performance, which gradually worsened with contralesional obstacles approaching. Either the attentional/perceptual bias, the predisposition to initiate environmental visual scanning, or the rightward shift in egocentric representation, observed in VSN, could account for this delayed detection of obstacles and initiation of response strategies. The authors hypothesize that VSN patients underestimate the distance between them and the obstacle approaching on the contralesional side, which becomes much closer than patients think, resulting in collisions. VSN patients also displayed specific (delayed) avoidance strategies consisting of both changing their mediolateral deviation and increasing their speed. However, this could be influenced by the seated versus walking execution of the task in the two studies. The VR setup appeared to be an easy and useful tool for the assessment of attentional-perceptual deficits and collision avoidance behaviors in VSN patients.

The obstacle avoidance behavior was later examined in two studies (Aravind and Lamontagne 2017, 2018) comparing VSN + versus VSN- patients performing tasks within a virtual environment, using a setup that comprised an HMD, reflective markers, and a 12-camera Vicon motion capture system. This VR-based setup was employed in a previous study (Aravind et al., 2015). The first study (Aravind and Lamontagne, 2017) tested whether the simultaneous negotiation of moving obstacles and performance of a cognitive task would produce dual-task, cognitive-motor interference. The authors also hypothesized that both VSN + and VSN- patients would display this interference, which would increase for VSN + patients performing more complex tasks. VSN + patients would also manifest a more compromised avoidance performance with obstacles approaching from their neglected contralesional side. Patients underwent two conditions: (i) Cognitive Single Task (CogST), an auditory pitch discrimination task (Auditory Stroop) that patients performed while observing the virtual environment from a seated position. This condition included a simple task (i.e., the word “cat” presented in a high versus low pitch) and a complex task (i.e., the words “high”/ “low” presented in a high versus low pitch), the latter requiring greater attention and inhibition skills. Patients simultaneously observed a virtual simulation of a locomotor task and had to verbally name the pitch of the sound. (ii) Locomotor Dual task (LocoDT), involving obstacle avoidance while performing the simple and complex cognitive tasks. Results showed that VSN + patients displayed a greater deterioration in locomotor and cognitive performances while dual tasking. In line with their previous study (Aravind et al., 2015), VSN + patients displayed a greater collision rate with delays in obstacle perception.
Once again, the attentional/perceptual bias emerged more prominently in VSN + patients, explaining their compromised obstacle avoidance strategies and their enhanced risk of obstacle collisions. Moreover, dual-task walking has a significant detrimental effect on both cognitive and locomotor performances of VSN patients and is associated with greater locomotor costs, possibly due to deficits in executive functions. The dual-task condition also further delayed patients’ initiation of an avoiding strategy and increased collision rates, thus showing the importance of task complexity for VSN patients.

In the second study (Aravind and Lamontagne, 2018), the authors compared VSN + versus VSN- patients on both their changes in heading and head orientation while avoiding obstacles arriving toward them from different directions and reorienting toward the target. A small control group (HC) was included as well. The authors also hypothesized that VSN patients would prefer to orient both their heading and head toward the ipsilesional side rather than to the contralesional side, where they would display increased error rates while heading toward the target. A VR-based setup like that of previous studies (Aravind et al., 2015; Aravind and Lamontagne, 2017) was employed; however, this procedure required patients to walk toward the target while avoiding obstacles. Patients underwent (i) the same locomotor obstacle task, conducted in the same virtual environment as in previous studies (Aravind et al., 2015; Aravind and Lamontagne, 2017). Patients had to walk toward the target and simultaneously avoid the collision with an approaching obstacle; if unavoidable, the collision was signaled with a flashing sign. Furthermore, patients completed (ii) a perceptual task in a seated position, which required them to press a joystick button as soon as they detected a moving obstacle. Patients also underwent a complete clinical and neuropsychological assessment (Aravind and Lamontagne, 2018). Results showed that VSN- patients and HC avoided obstacles with a specific strategy, i.e., either deviating to the same side as the obstacle or to its opposite side, thus minimizing the risk of colliding. VSN + patients showed higher collision rates with contralesional static and dynamic objects, which could be explained by the ipsilesional bias occurring in VSN and by patients’ preference to direct their attention toward the ipsilesional space (Posner et al., 1984; Dvorkin et al., 2012).
VSN + patients also displayed an attentional/perceptual bias resulting in more time spent with their head oriented toward the ipsilesional side and a tendency to deviate their path rightward while walking toward either static or moving obstacles. Moreover, VSN + patients showed poorer variability in locomotor responses, executive functions (assessed with TMT-B) and ability to reorient toward the goal, compared to VSN- patients (and HC). Therefore, both studies (Aravind and Lamontagne, 2017, 2018) support the VR-based paradigm as a useful assessment tool for collision avoidance behavior in VSN patients and highlight the influence of task complexity on cognitive and locomotor performances.

The work of Sugihara et al. (2016) tested a digitalized version of line cancellation tests as well. As opposed to the work of Pallavicini et al. (2015a), previously reported, the authors employed a fully immersive technology exploiting HMDs and compared USN patients to subjects affected by visual field defects (VFD), i.e., a disorder of visual space recognition in which patients necessarily capture information by eye movement in an unimpaired visual field and head rotation toward the impaired visual field. The aim was to assess the eye movements of USN versus VFD patients and shed light on the features of spatial recognition impairment. The line cancellation task sheet was blanked firstly on the left side and then on the right side. At first, four USN patients were compared to four VFD patients, and they all completed a line cancellation test without wearing the HMD. Then, patients wore the HMD and performed four conditions of the virtual line cancellation task: (i) no reduction in the size of the test sheet image, displayed on an LCD screen within the HMD; (ii) 80% reduction in the test sheet image toward the central part of the LCD; (iii) and (iv) 80% reduction toward the right and left of the LCD screen, respectively. All patients performed the line cancellation task following this order. Patients’ single-eye movement was also recorded while they performed the task, by means of two miniature CMOS cameras. Results showed that VFD patients performed correctly under any condition of the left and right test sheets; specifically, their eyeball position was higher on the left-hand side under the conditions of no image reduction, center image reduction, and left image reduction. On the right-hand side, instead, the eyeball position was higher under the right image reduction condition.
USN patients’ answer rates differed depending on the task presentation and the reduction side; their 100% correct performance in the left paper-and-pencil condition significantly dropped to 40% under the no-reduction condition, to 42% in the center/right image reduction, and to 38% in the left image reduction, while wearing the HMD (Sugihara et al., 2016). Their eyeball position deviated rightward under all conditions and was particularly high under the center and right reduction conditions. Therefore, the HMD did not influence the visual field conditions for VFD patients, whereas USN patients performed poorly while wearing the HMD. The authors explained these results by showing that VFD patients’ eyeballs tended to stay either over the left sheet (in the no-reduction, center, and left reduction conditions) or over the right side (only under the right reduction condition); this could be because patients generally saw the assessment sheets on the side of their affected visual field and visual search enhanced eye movement compensation. This could have increased the ratio of the left-hand side, whereas the right-hand side ratio could have been increased because the assessment sheets tended to be presented in an unaffected visual field, under the right image reduction condition. USN patients displayed a rightward deviation in the eye movement, compared to VFD patients. USN patients’ decreased performance under the HMD condition was possibly due to the rightward deviated attention of all right-handed patients. Their own hand, displayed on the HMD monitor, hid lines placed on the right side of the test sheet, while they performed the cancellation test from the right-hand side. The HMD condition provided a specific range of attention while diverting it rightward. The major drop was observed for the answers given under the left reduction condition; placing the left sheet to the left side of the screen (under the left image reduction condition) could have contributed to this decrease.

Ogourtsova and colleagues conducted two studies exploiting the potential of VR-based assessment (Ogourtsova et al., 2018a, 2018b). The first study considered the role of spatial cognition on locomotion and navigational abilities in post-stroke USN patients (USN +), presenting with walking deficits in goal-directed conditions with cognitive and perceptual demands (Ogourtsova et al., 2018a). Goal-directed walking deficits could depend on USN-induced perceptual and attentional deficits or could be mediated by post-stroke sensorimotor alterations as well, affecting gait, balance, and posture. The authors compared USN + to non-USN patients (USN-) and healthy controls (HC). They explored the effects of post-stroke USN on controlling goal-directed walking behavior in a goal-directed navigation task carried out with a joystick, within a VR environment displayed by means of an HMD. The navigation task included three conditions: (i) online, where the participant had to navigate toward a target, always visible; (ii) offline, where the participant had to remember the position of a target that disappeared during the navigation task; (iii) online, where the participant had to navigate toward a shifting target, which changed its location following participant’s displacement. The study had several aims, the primary being to estimate the degree to which post-stroke USN affects goal-directed navigation abilities in both conditions. The navigation task was performed while sitting, in order to minimize potential confounding effects of gait and walking abilities, where USN patients differ from a healthy control. Results are in line with a previous study (Aravind et al., 2015): USN presents with specific deficits in both spatial navigation and object detection and a joystick-driven task could reflect real perceptual-motor abilities in neglect. USN subjects showed a greater endpoint mediolateral error in the offline, memory-guided condition. 
USN patients seemingly manifest an impaired ability to update their spatial representation during navigation, as shown by their impaired ability to detect and adapt to a shifting target. Therefore, post-stroke USN appears to have a detrimental effect on spatial navigation, even in a seated position. Contrary to previous studies, the authors found a left-sided navigation deviation, which could be explained by the absence of a walking demand in this study. The joystick-driven task appeared more useful for detecting perceptual-motor post-stroke USN abilities while failing to estimate USN impact on actual locomotion. The detection task was also able to highlight USN-related deficits in contralesional targets’ detection time, in line with previous research focusing on the attentional theory of USN (Kinsbourne, 1993; Smania et al., 1998).

Later, Ogourtsova et al. (2018b) developed a three-dimensional Ecological VR-based Evaluation of Neglect Symptoms (EVENS) and examined its feasibility in a cross-sectional observational study. Specifically, the aim was to investigate the effects of post-stroke USN on both object detection and navigation toward a target, within a virtual grocery shopping aisle. The VR environment was displayed by means of an HMD, and USN patients performed a detection task and a navigation task. Patients could navigate and interact within simple and complex VR environments by using a joystick. On the one hand, the detection task required patients to press the button when they detected a target (e.g., a blue cereal box), either in a simple scene (e.g., the blue box alone on a shelf) or in a complex scene (e.g., the same blue box among other distractors placed on multiple shelves), receiving auditory feedback afterward. In the absence of the target, patients were asked to wait for the next trial. On the other hand, the navigation task required patients to navigate toward the target using the joystick, following the most straightforward pathway possible. The VR-based assessment was preceded by a practice trial for patients to get acquainted with the procedure, and neuropsychological measures were collected (Ogourtsova et al., 2018b). A repeated-measures mixed model was employed to examine the effects of scene complexity and targets’ location on both detection time and goal-directed navigation. For the detection task, USN + patients showed significantly longer detection times compared to both USN- patients and healthy controls. For the navigation task, USN + patients moved toward the target after “searching” for it, making corrections at the end of the trial, as opposed to USN- patients, who followed a selected direction from the beginning to the end of the task.
Moreover, the authors tested whether the outcomes from both the detection and navigation tasks varied as a function of USN severity: specifically, the navigation task outcome (time to target) and, to a lesser degree, the detection task outcome (detection time) were progressively more affected as USN severity increased. Therefore, USN negatively affects both perceptual and navigational abilities for targets placed on the neglected side, and the deficits worsen to a greater extent when patients are exposed to a complex scene. These deficits in detection time and time to target could be explained within the conceptual framework of the attentional mechanisms underlying USN.

The work of Ogourtsova et al. (2018b) also paved the way for the study of Yasuda et al. (2020). The authors note that the earlier study mainly focused on neglect in the extra-personal space and was not able to quantitatively identify patients’ neglected areas in three-dimensional space. Therefore, the aim of the proof-of-concept study of Yasuda and colleagues was to develop and introduce an HMD-based assessment for both near and far space neglect. The setup included an HMD, a personal computer running Unity software for the VR environment, and a tracking sensor. The virtual environment was tested on a single stroke patient and consisted of a virtual room seen from a first-person perspective, in which a target sphere appeared at several angles and at distances from the patient ranging from 50 cm to 6 m. The system recorded the patient’s area of neglect for each distance, while the sphere appeared in concentric circles around the patient’s head. Three height levels were defined relative to the patient’s line of sight. The patient was instructed to verbally report the presence of the red sphere when he recognized the target in the virtual room, within a 30-s time limit. Results showed that the patient had a significantly larger angle of recognition for near space than for far space, and that the angle of recognition tended to increase as height decreased. The immersive VR environment seemingly makes it possible to record and visualize neglect in both near and far space; however, further studies are needed to confirm the reliability and validity of the platform, as well as its clinical utility.

Knobel et al. (2020) aimed at testing the feasibility and acceptance of an HMD-based visual search task and its ability to detect neglect. A group of 15 patients was divided into two sub-groups (Neglect and No-Neglect) depending on their performance on the Sensitive Neglect Test (SNT; Reinhart et al., 2016). The setup consisted of an HMD displaying a blue background with 120 objects (white spheres as targets and cubes as distractors), arranged within a hemisphere. The spheres were placed symmetrically, whereas the cubes were randomly distributed across the virtual environment. Patients held a controller to provide their answers and were asked to touch all the targets as fast as possible, without touching the distractors. After the procedure, patients completed an adapted version of the System Usability Scale (SUS; Brooke, 1996) and a measure of cybersickness (Simulator Sickness Questionnaire, SSQ; Kennedy et al., 1993). Results showed that the two sub-groups of patients did not differ in terms of acceptance, usability, or adverse effects of VR-based assessment. The authors also included the Center of Cancellation (CoC; Rorden and Karnath, 2010) as a measure of neglect severity, assessed in cancellation tasks. This measure reflects the normalized mean deviation from the center due to neglect and ranges from −1 to 1, where positive CoC values indicate a rightward shift and negative CoC values indicate a leftward shift in space. The CoC measure was analyzed comparing the Neglect, No-Neglect, and control groups; compared to the paper-and-pencil version, the VR cancellation task seems less sensitive in detecting neglect symptoms and severity. This result could be explained by several factors, such as the different configurations of the three-dimensional VR stimuli versus the two-dimensional, paper-and-pencil version.
The authors also hypothesize that the low number of targets and distractors could reduce the sensitivity of the test; therefore, future applications of this method should increase the number of stimuli, as well as the sample size (Knobel et al., 2020).
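To make the CoC measure more concrete, the following minimal Python sketch computes a simplified, one-dimensional version of it: the mean horizontal position of the detected targets, expressed as a deviation from the mean position of all targets and scaled to the range −1 to 1. The function name, the input format, and the scaling rule are assumptions made for illustration only; this is not the implementation of Rorden and Karnath (2010) or of Knobel et al. (2020).

```python
def center_of_cancellation(targets_x, detected):
    """Simplified, illustrative CoC on the horizontal axis.

    targets_x: horizontal coordinate of every target (negative = left).
    detected:  one boolean per target, True if the patient cancelled it.
    Returns a value in [-1, 1]; positive = rightward shift (assumed convention).
    """
    if len(targets_x) != len(detected):
        raise ValueError("targets_x and detected must have the same length")
    if max(targets_x) == min(targets_x):
        raise ValueError("targets must span more than one horizontal position")
    found = [x for x, hit in zip(targets_x, detected) if hit]
    if not found:
        raise ValueError("no target was detected; CoC is undefined")

    mean_all = sum(targets_x) / len(targets_x)
    deviation = sum(found) / len(found) - mean_all

    # Assumed scaling: divide by the largest possible deviation on that side,
    # so that detecting only the outermost target yields exactly -1 or 1.
    if deviation >= 0:
        scale = max(targets_x) - mean_all
    else:
        scale = mean_all - min(targets_x)
    return deviation / scale


# Hypothetical example: five targets, the two leftmost ones are missed.
positions = [-2.0, -1.0, 0.0, 1.0, 2.0]
hits = [False, False, True, True, True]
print(center_of_cancellation(positions, hits))  # 0.5
```

In this hypothetical example, omitting the two leftmost targets shifts the score toward the right, whereas detecting all targets would return 0.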

Kim and colleagues had previously proposed the FOPR test, i.e., a VR-based assessment for the detection of binocular visual stimuli (Jang et al., 2016; Kim et al., 2021). The FOPR test assumes that individuals need to move their head and body to fully explore a visual scenario and that the visual search patterns can be differentiated into the field of perception (FOP) and field of regard (FOR), depending on the head and body movement. FOP refers to the size or angle of the visual field a person can see without moving the head or the body, whereas FOR refers to the total range of the visual field a person has when moving the head or the body (Jang et al., 2016; Kim et al., 2021). The paper-and-pencil assessment of HSN cannot take head and body movement into account and does not distinguish between FOP and FOR, a distinction that could be useful to increase the sensitivity of USN assessment. Moreover, considering FOP and FOR could shed more light on the perceptual and exploratory components of HSN. Choosing an HMD made it possible to evaluate both FOP (which is unaffected by body movements or head rotations, since the screen is fixed on the patient’s head) and FOR (which requires the exploration of the space as if in the real world). Built-in sensors also made it possible to track head movements. The authors considered a group of stroke patients with a right-brain lesion and HSN (HSN+SS), a group of stroke patients with a right-brain lesion but no HSN (HSN−SS), and a healthy control group. HSN symptoms were first assessed using the line bisection test and the star cancellation test of the BIT and CBS. Secondly, the FOPR test was administered by means of the HMD and included two conditions: (i) the FOP condition, where patients were instructed to constantly look at a white fixation cross between each trial. As soon as the white cross disappeared, the target was presented (either blue or red spheres).
To collect the FOP measurement, the head tracker was turned off in order to preserve the patient’s view of the screen despite the head movements; (ii) the FOR condition, where a red cross displayed on the screen identified the center of the HMD. Patients were asked to move their heads in order to align the red cross and the white fixation cross, before each trial. The FOR measurement was collected when the head tracker was active, and the view of the screen changed according to the head rotation. In both conditions, patients had to click the left or right button of a computer mouse as soon as they saw a blue or red sphere, respectively. Auditory feedback for right and wrong answers was provided. The authors considered success rate (FOPR-SR, i.e., the percentage of correct answers) and response time (FOPR-RT, i.e., the interval between target appearance and mouse click). Results showed that both SR and RT provide a more sensitive quantification of visuospatial function, and that discriminating between FOP and FOR could allow the detection of milder forms of HSN. Therefore, the FOPR test seems a valid tool for the assessment of visuospatial function. However, the test should also be integrated with an eye-tracking system and provide an equal number of FOP and FOR tests, possibly using a more ecological virtual environment in order to address patients’ behavior in functional tasks (Kim et al., 2021).
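The two FOPR outcome measures can be illustrated with a minimal Python sketch based on the definitions given above: the success rate as the percentage of correct answers, and the response time as the interval between target appearance and mouse click. The `Trial` structure, the field names, and the choice to average response times over correct trials only are assumptions made for this example; they are not taken from Kim et al. (2021).

```python
from dataclasses import dataclass
from typing import List, Optional

# Mapping described in the text: left button for blue, right button for red.
EXPECTED_BUTTON = {"blue": "left", "red": "right"}


@dataclass
class Trial:
    target_color: str          # "blue" or "red"
    button: Optional[str]      # "left", "right", or None if no response
    onset_s: float             # time at which the target appeared (seconds)
    click_s: Optional[float]   # time of the mouse click, None if no response


def is_correct(trial: Trial) -> bool:
    """A trial is correct when the button matches the target color."""
    return trial.button is not None and trial.button == EXPECTED_BUTTON[trial.target_color]


def success_rate(trials: List[Trial]) -> float:
    """FOPR-SR: percentage of correct answers."""
    if not trials:
        raise ValueError("at least one trial is required")
    return 100.0 * sum(is_correct(t) for t in trials) / len(trials)


def mean_response_time(trials: List[Trial]) -> Optional[float]:
    """FOPR-RT: mean interval between target onset and click.

    Assumption for this sketch: only correct trials contribute.
    Returns None when there is no correct trial.
    """
    times = [t.click_s - t.onset_s for t in trials if is_correct(t)]
    return sum(times) / len(times) if times else None


# Hypothetical trials: two correct, one wrong button, one omission.
trials = [
    Trial("blue", "left", 0.0, 0.5),
    Trial("red", "right", 1.0, 1.75),
    Trial("red", "left", 2.0, 2.5),
    Trial("blue", None, 3.0, None),
]
print(success_rate(trials))        # 50.0
print(mean_response_time(trials))  # 0.625
```

Computing the two measures separately for trials collected in the FOP and FOR conditions would yield the condition-specific scores discussed above.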

5.2 VR systems for USN rehabilitation

The field of USN rehabilitation has progressively implemented VR-based devices as well: we found 14 articles, nine considering non-immersive technologies (such as computer screens, shutter glasses, joysticks or computer keyboards: Faria et al., 2016; Fordell et al., 2016; Ekman et al., 2018; Wahlin et al., 2019; Tobler-Ammann, 2017a, 2017b; Glize et al., 2017; De Luca et al., 2019; Cogné et al., 2020) and five including fully immersive technologies (such as HMDs: Kim et al., 2015; Yasuda et al., 2017, 2018; Choi et al., 2021; Huygelier et al., 2020). Table 2 provides a detailed description of the VR technology employed in the following studies, as well as the characteristics of the sample, the sessions, and the main outcomes.

5.2.1 Non-immersive technologies

The study of Faria et al. (2016) tackles a relevant issue: cognitive rehabilitation for USN is currently directed toward specific cognitive functions, such as memory, attention, executive functions and language. However, performing daily activities inevitably requires combining them: therefore, a rehabilitation program should account for this complexity and focus on the patient’s global functioning, with ecological tasks and environments reproducing life-like situations. The authors conducted an RCT with 18 stroke patients, divided into two groups; the experimental group underwent a 12-session VR-based intervention with Reh@City (Faria et al., 2016). Reh@City allows an integrative rehabilitation of multiple cognitive domains, personalized with respect to the patient’s needs. The 20-min sessions were distributed over a period of 4 to 6 weeks, and patients were asked to complete increasingly difficult tasks within familiar places, such as a post office, a bank, a pharmacy and a supermarket. The virtual city was displayed by means of a computer screen and a joystick enabled the navigation in the environment; if necessary, patients were able to ask for help and several cues were available (e.g., a mini map of the city, a guidance arrow). Whenever patients performed correctly, some of the cues were gradually removed session after session and reintroduced as soon as they failed to respond correctly (method of Decreasing Assistance, DA; Faria et al., 2016). The control group, instead, received traditional cognitive rehabilitation. Both groups underwent a cognitive and functional assessment before and after the intervention. Results suggest that VR-based, ecologically valid cognitive rehabilitation programs could be more effective than traditional training.
In line with previous studies, the authors report that patients in the experimental condition improved significantly in terms of global functioning, attention, memory, and visuospatial abilities, as well as executive functions (Kim et al., 2011a; Faria et al., 2016). In terms of cognitive functions, the control group showed a significant worsening in verbal fluency and an improvement in attention and processing speed, but this result might be influenced by the fewer years of education of the controls. The experimental group also reported a significant improvement in terms of social participation, emotion, and in the physical domain, suggesting the benefits of a comprehensive rehabilitation that goes beyond mere cognition. Despite the promising potential of this rehabilitation program, future studies on Reh@City should include a larger sample size and develop parallel versions for multiple assessments, to avoid learning effects. It would also be relevant to assess the extent to which improvements transfer from VR to daily life (Faria et al., 2016).
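The Decreasing Assistance logic described above for Reh@City (cues removed after correct performance and reintroduced after a failure) can be sketched as a simple state update. The class name, the order in which cues are withdrawn, and the one-cue-per-update step are hypothetical choices made for illustration; the actual Reh@City implementation is not described at this level of detail.

```python
class DecreasingAssistance:
    """Illustrative cue-fading scheme, not the Reh@City implementation.

    Cues are listed in the order in which they are withdrawn (assumption):
    the first cue is removed first and restored last.
    """

    def __init__(self, cues):
        self._cues = list(cues)
        self._removed = 0  # number of cues currently withdrawn

    def active_cues(self):
        """Cues that are still shown to the patient."""
        return self._cues[self._removed:]

    def update(self, performed_correctly):
        """Withdraw one cue after a success, restore one after a failure."""
        if performed_correctly:
            self._removed = min(self._removed + 1, len(self._cues))
        else:
            self._removed = max(self._removed - 1, 0)
        return self.active_cues()


# Hypothetical run with the two cues mentioned in the text.
da = DecreasingAssistance(["guidance arrow", "mini map"])
print(da.update(True))   # ['mini map']
print(da.update(True))   # []
print(da.update(False))  # ['mini map']
```

Under these assumptions, assistance fades only while the patient keeps succeeding, and a single error is enough to bring back the most recently removed cue.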

Three studies (Fordell et al., 2016; Ekman et al., 2018; Wahlin et al., 2019) examined the effectiveness of an integrated platform that includes VR-DiSTRO™, a test battery for assessing neglect, and RehAtt® as a rehabilitation program (Fordell et al., 2016). Specifically, patients completed five computerized neglect tests that assessed spatial attention (Star cancellation test, Baking tray task, Line bisection, Extinction and Posner task). This first testing procedure was carried out using a computer monitor and shutter glasses providing stereoscopic vision. The VR-DiSTRO™ test battery has been previously validated (Fordell et al., 2011; Fordell, 2017). For the purpose of the present review, however, we will consider the VR-based rehabilitation procedure: patients underwent a 5-week VR-based intervention combining multi-modal sensory stimulation, an intense visual scanning training, and a sensorimotor activation while playing 3D videogames (Fordell et al., 2016). The RehAtt® training was first developed for and employed with patients with chronic neglect due to right-sided cerebral infarction and impaired attentional networks. The primary aim was to provide a recovery method for attention, by stimulating neglect-related mechanisms within and between the ventral (VAN, includes bottom-up processes) and dorsal (DAN, includes top-down processes) attentional networks, involved in reorientation, spatial attention, and stimulus selection (Arrington et al., 2000; Corbetta et al., 2005; Corbetta and Shulman, 2011; He et al., 2007; Knudsen, 2007; Ekman et al., 2018).

In their study, Fordell and colleagues (2016) employed the RehAtt® training on 15 patients after they completed a 5-week baseline. The training combined a top-down scanning training with a bottom-up tactile stimulation and visuomotor training. Patients had to wear 3D vision glasses while facing a 27″ monitor and using a robotic pen with a haptic force feedback interface. When playing the VR intervention game, patients used the paretic left hand to grasp the robotic pen, which provided vibrotactile feedback. Patients employed the robotic pen to move, rotate and manipulate 3D objects to perform three different tasks: (i) a mental rotation task, moving 3D figures from the left to the right side of the screen and placing them on the correct template figure. By means of the robotic pen, these figures could be touched and rotated; (ii) a visuomotor exploring task, picking 3D cubes from a tray located at the right side of the screen. Patients could place the cubes in three-dimensional straight lines; (iii) a visuospatial and scanning task, where shaped targets appeared at increasing speed from either above or the left side of the screen, and patients could use the robotic pen to trap, rotate and place them within a puzzle of corresponding shapes. Each 30-min session was separated by five minutes of audio-spatial training. Results showed that the RehAtt® rehabilitation method could improve elderly patients’ spatial attention performance, and that the improvements transferred to activities of daily living and persisted at a 6-month follow-up.

The following studies provided further evidence of neuronal changes following RehAtt® training, by means of fMRI. Specifically, Ekman et al. (2018) tested whether clinical improvements following RehAtt® training resulted in neural changes in attentional networks and related areas. The authors used the Posner cuing task and evaluated fMRI blood oxygenation level dependent (BOLD) changes before and after RehAtt® training, expecting training-related neuronal changes within and/or between the ventral and dorsal networks. The authors also hypothesized that RehAtt® training could lead to changes in prefrontal regions, associated with goal-directed behaviors and guiding attention. Twelve patients with chronic neglect following right-sided infarction underwent the same 5-week RehAtt® training as the one described by Fordell et al. (2016). One week before and one week after the training, patients also underwent fMRI scanning while performing a Posner cuing task. Results showed an increased BOLD signal following the RehAtt® training during top-down focus of attention, where the strongest effects were observed in prefrontal regions associated with goal-directed behaviors and guiding attention (specifically, dorsolateral prefrontal cortex, anterior cingulate cortex and bilateral temporal cortex). Thus, RehAtt® training induces cerebral changes that go beyond the DAN/VAN nodes and extend to the frontal eye field and ventral frontal cortex as well. Combining an intensive learning of a top-down scanning strategy with a multisensory bottom-up stimulation, implemented in a virtual environment, appears to be a promising rehabilitation intervention for patients with chronic spatial neglect.
However, it should be noted that this study had a small sample size and lacked a control group, thus generalization to other neglect patients should be made with caution; moreover, estimation accuracy could have been influenced by movement corrections and by the inclusion of brain lesion voxels.

Following this line of research, Wahlin et al. (2019) considered pre-post-rehabilitation improvements in resting-state inter-hemispheric functional connectivity within the DAN following the RehAtt® training. Moreover, the authors adapted a recent tracking method to detect stroke-related longitudinal changes in functional connectivity within resting-state networks, and examined whether this adaptation made it possible to compare changes in the DAN to those in other cerebral networks. Thirteen patients affected by visuospatial neglect underwent two fMRI scanning sessions, one week before the RehAtt® training and one week after the end of the intervention. Furthermore, symptoms’ stability was assessed three times at baseline and at follow-up. DAN localizations within fMRI were obtained by means of a scanner-adapted Posner task. Results showed that the intense scanning VR training enhanced resting-state functional connectivity in patients’ DAN, inducing changes in intrinsic neural communication. The tasks included in the intensive training strongly activated neurons within the lateral prefrontal and superior parietal cortices, increasing the functional connectivity between these regions. The intense scanning VR training increased DAN inter-hemispheric functional connectivity in patients affected by chronic visuospatial neglect; this suggests that chronic conditions, too, could benefit from training approaches that have shown positive effects on recovery from acute states of neglect. Moreover, the training increased the integration of the frontal eye field (FEF), controlling the saccadic eye movements to the left side of the space, with the left posterior parietal cortex. The authors also explored whether an increased prefrontal activation was observable following the fMRI Posner task.
Whereas this effect was not detected within the DAN in a previous study (Ekman et al., 2018), the study of Wahlin and colleagues showed an intrinsic DAN change observable in the resting-state condition, and it is plausible that a simultaneous activation within the DAN would provide a major improvement in its intrinsic communication. However, the authors also point out the lack of a control group and the small sample size, which reduce the possibility to carry out more detailed comparisons between sub-groups of patients (Wahlin et al., 2019).