Abstract

A large number of people in the world need to use a wheelchair because of different disabilities. Driving a wheelchair requires complex physical and cognitive abilities which need to be trained. Virtual training helps users acquire driving skills in a safe environment. The aim of this paper is to describe and technically validate simulation models for both manual (MW) and powered wheelchairs (PW) based on an immersive virtual reality (VR) CAVE. The VR system used was the Gait Real-time Analysis Interactive Lab (GRAIL), a CAVE equipped with a motion platform with two degrees of freedom and an optoelectronic motion capture system. A real wheelchair was positioned on the motion platform with the rear wheels free to turn in the MW modality, and a commercial joystick was installed on an armrest to simulate the PW modality. Passive markers were used to track the wheel rotation, the joystick and the user’s hand motion. Custom D-flow applications were developed to manage the virtual scene response to user actions. Overground tests, based on single wheel rotation, were performed to verify the reliability of the simulation model. Quantitative results demonstrated that the MW simulator kinematics was consistent with that of a real wheelchair overground in the absence of wheel slip and inertia (median error for MW 0.40 °, no systematic bias p = 0.943, high correlation rho > 0.999, p < 0.01). The proposed solution is flexible and adaptable to different wheelchairs, joysticks and optoelectronic systems. The main limitation is the absence of force feedback. Nevertheless, it is a reliable prototype that can be used to validate new virtual scenarios as well as for wheelchair training. The next steps include validation of the system with real end users and assessment of the simulator’s effectiveness as a training tool.

1 Introduction

According to estimates of the World Health Organization, 75 million people in the world were using a wheelchair in 2018 (WHO 2018). Wheelchairs are needed for mobility in many different kinds of disabilities, among which are amputation and spinal cord injury (Archambault et al. 2012; Karmarkar et al. 2009; Singh et al. 2020; van den Akker et al. 2020), paralysis (Aarai et al. 2011; AlAbboudi et al. 2020; Fadhil et al. 2019; Kuntal et al. 2020; Ruzaij et al. 2017), cerebral palsy (Alkhateeb et al. 2019; Rodby-Bousquet and Hägglund 2010; Rozas Llontop et al. 2020), multiple sclerosis (Devitt et al. 2004; Revathi et al. 2008; Silveira et al. 2020), muscular dystrophy (Bayley et al. 2020; Richardson and Frank 2009; Xi et al. 2019) and traumatic brain injury (Quilico et al. 2020; Spaeth et al. 2008).

Wheelchairs increase mobility of people with physical impairments (Borg et al. 2011; WHO 2018), enabling them to become more involved and active in their communities, with a significant improvement in their social participation (Brandt et al. 2004; Cooper et al. 2011; Davies et al. 2003; Domingues et al. 2019; Evans et al. 2007; Hosseini et al. 2012; Labbé et al. 2020; Miles-Tapping 1996; Rousseau-Harrison et al. 2009). Manual or powered mobility can have a great effect on the lives of people with mobility impairments. People using wheelchairs reported feeling empowered and more productive, enjoying more free time and self-care (Domingues et al. 2020; Miles-Tapping 1996).

On the other hand, wheelchair driving requires complex physical and cognitive abilities, such as vision, balance, spatial awareness and a general knowledge of the wheelchair characteristics and reaction times (Bigras et al. 2020; Morgan et al. 2017; Pithon et al. 2009). Thus, it is important for people with physical disabilities to manage all these skills. Wheelchair use has indeed many implications for the safety of the user as well as other people, and in terms of safeguarding objects in the environment (Field 1999; Frost et al. 2020). Some studies have highlighted that the risk of tipping over, falling and colliding with static or moving objects in the environment is not negligible (Corfman et al. 2003; Kirby et al. 1994; Rice et al. 2019; Xiang et al. 2006).

New wheelchair users report some difficulties in learning to drive the device, be it a powered wheelchair (PW) (Torkia et al. 2015) or a manual one (MW), especially when secondary pain conditions affect the upper limbs or hands (Briley et al. 2020; Dieruf et al. 2008; Walford et al. 2019). Therefore, training is usually required in order to improve independent mobility in users (Bigras et al. 2020; Keeler et al. 2019; R. Lee Kirby et al. 2015; Mountain et al. 2010a, b). Typically, such training aims to teach, with different levels of difficulty, abilities like obstacle avoidance, turning in open or tight spaces, passing through doors, moving backward/forward while maneuvering, driving over different terrains, and going up/down stairs and ramps. Such training on a real wheelchair can be potentially unsafe (Frost et al. 2020; Mountain et al. 2010a, b). By contrast, virtual reality (VR)-based training can improve maneuvering abilities while ensuring user safety and strongly reducing the risk of injuries (Archambault et al. 2012; Archambault and Bigras 2019; Desbonnet 1998; A. Harrison et al. 2002; Hasdai et al. 1998; Nunnerley et al. 2017; Torkia et al. 2015; Webster et al. 2001).

With the increasing diffusion of VR technology (Arlati et al. 2019), different types of wheelchair simulators have been developed in recent years. Most papers in the literature describe PW simulators (Archambault et al. 2012, 2017; Carlozzi et al. 2013; Desbonnet 1998; Devigne et al. 2017; Harrison et al. 2002; Hasdai et al. 1998; Harshal P. Mahajan et al. 2013; Harshal Prabhakar Mahajan 2013; Nunnerley et al. 2017; Panadero et al. 2014; Spaeth et al. 2008; Webster et al. 2001), while only a few authors developed MW-specific solutions (Blouin et al. 2015; Chenier et al. 2014; Crichlow et al. 2011; Crichlow and Crichlow 2011; C. S. Harrison et al. 2000; Maxhall et al. 2004). Furthermore, to the best of our knowledge, no published studies describe flexible systems that integrate both driving modalities.

Moreover, most simulators are based on non-immersive VR (Archambault et al. 2012, 2017; Blouin et al. 2015; Chenier et al. 2014; Desbonnet 1998; A. Harrison et al. 2002; Hasdai et al. 1998; Harshal Prabhakar Mahajan 2013; Spaeth et al. 2008; Webster et al. 2001), and thus, they do not take advantage of the increased engagement and motivation often related to the increased level of immersion (Kim and Biocca 2018).

Some papers demonstrated that VR training is effective in improving driving abilities (Archambault and Bigras 2019; Keeler et al. 2019); in particular, virtual maneuverability tasks seemed to be a useful pre-training activity (Archambault et al. 2012; Desbonnet 1998; A. Harrison et al. 2002; Hasdai et al. 1998; Nunnerley et al. 2017; Webster et al. 2001). Training with non-immersive PW simulators enabled participants to control their PW in a real environment: driving tasks were performed in the same way in the virtual environment as in the real one, suggesting that similar driving skills were acquired (Archambault et al. 2012). Additionally, such simulators are useful for training people to perform specific daily living tasks such as cooking, doing self-care activities or moving in small spaces such as a supermarket (Archambault et al. 2012). Since training proved effective regardless of the immersion level (Carlozzi et al. 2013; Harshal P. Mahajan et al. 2013), it is plausible that increased immersion could keep such simulators effective while also benefiting from higher engagement and motivation (Kim and Biocca 2018).

On the other hand, in the MW simulators developed in immersive VR, interaction with the virtual environment and control of the virtual wheelchair motion were based on specific hardware interfaces, such as rollers or encoders, to translate real wheel movement into virtual wheelchair motion (Crichlow and Crichlow 2011; C. S. Harrison et al. 2000; Maxhall et al. 2004). Although a hardware interface guarantees appropriate force feedback useful for training, the setup is rather complex and does not necessarily enable use of a personal wheelchair.

As a side effect of VR wheelchair simulators, the literature mentions cybersickness, which could be related to a combination of the immersion level and the feedback provided to users (Carlozzi et al. 2013; Harshal P. Mahajan et al. 2013; Mittelstaedt et al. 2018; Nunnerley et al. 2017; Weech et al. 2019). Specifically, the higher the level of immersion, the greater the sickness as well as the engagement and challenge (Weech et al. 2019).

The main purpose of this work is to describe the development of a new wheelchair simulation system based on an immersive VR CAVE and a real wheelchair. This system allows simulating both manual and powered wheelchair modalities. Furthermore, the simulation system in PW modality integrates interaction between movements of the non-driving hand and objects in the virtual environment. A secondary aim of this work is to verify the reliability of the mathematical model used to simulate the MW motion by comparing it with the movement of a real MW overground.

The current work is part of the Rientr@ project whose aim is promoting return to work of wheelchair users after a job-related injury.

The newly developed simulation system could potentially be used as a tool to teach new wheelchair users wheelchair driving skills and interaction with objects in the environment, and to assess them, in safe and realistic conditions. Furthermore, this system is independent of scenarios and could also be used to validate newly developed virtual environments.

2 Materials and methods

2.1 The GRAIL system

The wheelchair simulation system was developed using the Gait Real-time Analysis Interactive Lab (GRAIL by Motek, NL). The GRAIL system comprises many different hardware components, which are controlled by the D-flow software (Geijtenbeek et al. 2011). The GRAIL is an instrumented 2 degrees of freedom (i.e. forward/backward tilt and lateral sway) multisensor platform based on immersive VR for gait training and rehabilitation in engaging VR environments. A Vicon motion capture system (Oxford Metrics, Oxford, UK) captures the kinematics of passive markers in real time. The GRAIL system projects VR environments on a 180 ° semicylindrical screen and gives multisensory (audio, video and proprioceptive) feedback, thus allowing users to feel immersed in the computer-generated world. Finally, the GRAIL system is equipped with 3 video cameras and a Dolby surround system to support this immersive experience (Fig. 1a).

The GRAIL system, controlled by the D-flow software, allows an operator to define feedback strategies through a flexible application development framework. The D-flow software is based on visual and Lua script programming, and it is designed for the development of interactive and immersive VR applications, for the purpose of clinical research and rehabilitation. D-flow is based on the concept of modules with specific functionalities, which can be combined to create complex interactive VR applications. Some software modules directly control specific hardware devices, such as the treadmill or the motion platform. Other modules provide access to real-time data streams from input devices or control of the virtual environment. D-flow integrates and synchronizes all hardware components and makes the information available in real time. Furthermore, D-flow manages the relationship between patient, scenery and interactive feedback and simulations.

The D-flow modules available for the GRAIL system used in this research have different features and can be grouped in five categories according to their functionalities (Fig. 1b). Scene includes all modules connected to the scene and those enabling its modification and interaction. Modules included in Resources receive signals from a hardware device (e.g. the motion capture system) or send signals to another hardware device (e.g. platform and treadmill). Preset comprises embedded modules to manipulate marker coordinates which can provide hardware feedback. Modules belonging to Editor are software modules to perform simple operations, such as sound activation, as well as to develop complex programs and algorithms, e.g. the Script module. Finally, Data incorporates all modules related to input and output, aimed at defining input features for a specific module or at recording an output.

The main D-flow modules used for the simulator development are detailed below:

- the Mocap module receives all data coming from the motion capture and force plate systems and makes them available as output
- the VGait module allows modifying the pitch (from − 10 ° to + 10 °) and sway (max displacement 5 cm) of the moving platform
- the Marker Matcher module allows labeling markers according to a custom setup
- the Dynamic Balance module uses input marker coordinates to calculate a combined virtual marker position, as a sort of barycenter; when calibrated, the module sets the zero level position of the virtual marker. This module also makes it possible to scale the virtual marker movements in order to simulate larger or smaller displacements
- the Navigator module dynamically controls objects in the 3D scene, e.g. it converts an input displacement into speed or acceleration
- the Camera module is used to set and control the point of view in the 3D scene
- the Parameter module is used to develop and configure a graphic user interface where the user can define input parameters and control the whole system
- the Script module is the most flexible module and works as an algorithm editor; it can be programmed in Lua and allows defining complex logic and system behaviors not included in standard module functionalities.

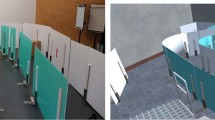

2.2 Physical setup

To develop the simulation system in both modalities (i.e. MW and PW), a real wheelchair (KSP Italia, model KSP N23R24) was used and positioned in the middle of the motion platform of the GRAIL system. The wheelchair was placed over 4 blocks, to leave the rear wheels free to turn in the manual modality (Fig. 2a), and a commercial joystick of a PW was placed on an armrest (Fig. 2b), to allow for motion control in power modality. The joystick can be mounted on the right or the left armrest, to accommodate right- and left-handed people.

Fig. 2 Wheelchair simulator setup. a configuration with 3 markers for each driving wheel, as required for the MW simulator; rear wheels are free to move thanks to 4 blocks that raise the wheelchair; b configuration with the commercial joystick on which the marker support was mounted, as needed for the PW simulator

Passive markers were placed on the wheels and the joystick to track wheels or joystick movements, respectively, according to the modality used, as described in the next sections. The wheelchair was firmly secured with ropes to the GRAIL platform in both modalities.

2.3 Manual wheelchair modeling

For the MW simulation, 6 spherical markers with a 12.5 mm diameter were placed on the wheels, 3 markers for each wheel: One marker was positioned in the center of the wheel, and two markers were located on two spokes at 90 °, about halfway between the wheel tire and the center (Fig. 2a).

A custom 3D Vicon model was created to recognize and track the motion of the three identified rigid bodies: the left wheel, defined by markers labeled Axis1, Lwheel1 and Lwheel2; the right wheel, represented by markers labeled Axis2, Rwheel1 and Rwheel2; and the wheelchair axis connecting the centers of the two wheels, defined by markers Axis1 and Axis2 (Fig. 3).

Fig. 3 Three rigid bodies are identified by the Vicon model of the manual wheelchair simulator: the left wheel, defined by markers labeled Axis1, Lwheel1 and Lwheel2; the right wheel, represented by markers named Axis2, Rwheel1 and Rwheel2; and the wheelchair axis connecting the centers of the two wheels, defined by markers Axis1 and Axis2

The main D-flow modules used for the development of the MW simulator were Mocap, Camera, and Parameter. However, the MW simulator was mainly developed by using the Script module, as described below.

D-flow received, through the Mocap module, the 3D coordinates (x, y, z) of each labeled marker from the Vicon system in real time. An interactive interface was developed using a Parameter module so that the application can be adapted to different wheelchair models or marker positions. The operator needs to enter the wheel radius (Fig. 4a parameter r, in green), the wheel-to-ground distance (Fig. 4a parameter g, in blue), the distance of the markers on the wheel spokes from the center of the wheel itself (Fig. 4b parameter m, in white) and the offset between the real wheel center and the marker on the rotation axis (Fig. 4b parameter c, in red). The application combines these inputs with the marker coordinates to calculate the geometry of the wheelchair that is necessary to reproduce the wheelchair motion.

Since the D-flow sampling rate is 250 Hz and the maximum linear speed of a manual wheelchair can be estimated at about 3 m/s, the distance between two consecutive detected positions of any marker placed on the wheel spokes (in our setup positioned approximately 305 mm from the center) is less than 12 mm, corresponding to a wheel rotation angle of less than 2.25°. Thus, the arc of circumference covered by each wheel marker between two consecutive frames was approximated by the subtended chord L(t) (Eq. 1), that is, the distance between two consecutive detected positions of the same marker:

\[ L(t) = \sqrt{\left(x(t) - x(t - \Delta t)\right)^{2} + \left(y(t) - y(t - \Delta t)\right)^{2} + \left(z(t) - z(t - \Delta t)\right)^{2}} \qquad (1) \]

where x, y, z are the spoke marker coordinates and Δt is the sampling interval.
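The figures quoted for the chord approximation can be checked with a short calculation. The following is a minimal sketch, not the authors' Lua Script: the function name `chord_length` and the constant names are illustrative, and the 305 mm distance is taken from the text as the radius at which the marker travels.

```python
import math

SAMPLING_RATE_HZ = 250.0   # D-flow sampling rate stated in the text
MAX_SPEED_M_S = 3.0        # estimated top speed of a manual wheelchair
MARKER_RADIUS_M = 0.305    # marker distance from the wheel center quoted in the text


def chord_length(prev, curr):
    """Distance between two consecutive (x, y, z) positions of the same marker."""
    return math.dist(prev, curr)


# Largest displacement between two frames and the matching wheel rotation
max_step_m = MAX_SPEED_M_S / SAMPLING_RATE_HZ
max_angle_rad = max_step_m / MARKER_RADIUS_M
max_angle_deg = math.degrees(max_angle_rad)

# Relative error made by replacing the arc with the chord at that angle
arc = MARKER_RADIUS_M * max_angle_rad
chord = 2.0 * MARKER_RADIUS_M * math.sin(max_angle_rad / 2.0)
relative_error = (arc - chord) / arc
```

Under these assumptions the per-frame step is 12 mm, the rotation is about 2.25°, and the chord differs from the arc by less than 0.01 %, which supports treating the two as interchangeable at this sampling rate.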

A kinematic constraint was assumed to describe movement in the virtual space: the linear speed of each wheel at the ground contact point was set equal to zero (i.e., no-slip condition). In this way, the linear displacements of both wheels are combined to calculate the wheelchair movement frame by frame.

The mathematical model used to calculate the MW kinematics was based on the differential drive model (Fig. 5). Angular speeds are ωr(t) for the right wheel and ωl(t) for the left wheel; Vm(t) and ωm(t) are the linear and the angular speed applied to the midpoint axis of the wheelchair, respectively, and θ(t) is the instant angle of the wheelchair at the Center of Instant Rotation (CIR).

Fig. 5 Unicycle differential drive model. ωl(t) = instant left wheel angular speed; ωr(t) = instant right wheel angular speed; Vm(t) = instant midpoint linear speed; ωm(t) = instant midpoint wheelchair axis angular speed; CIR = center of instant rotation of wheelchair; and θ(t) = instant attitude angle of wheelchair at CIR

According to this model, the angular speed ωm(t) and the linear speed Vm(t) of the wheelchair midpoint are linked in a unique way to the angular speed ωr(t) of the right wheel and ωl(t) of the left wheel, by means of Eqs. 2 and 3:

where R is the wheel radius and D is the wheelchair axis length.
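For reference, the differential drive relations are commonly written as \(V_m(t) = R\,(\omega_r(t) + \omega_l(t))/2\) and \(\omega_m(t) = R\,(\omega_r(t) - \omega_l(t))/D\). The sketch below uses this textbook form; the sign of the angular speed (positive when the right wheel turns faster) is an assumed convention, and the function name `midpoint_speeds` is illustrative rather than taken from the simulator.

```python
def midpoint_speeds(omega_r, omega_l, wheel_radius, axis_length):
    """Linear and angular speed of the axis midpoint for a differential drive.

    omega_r, omega_l: wheel angular speeds in rad/s
    wheel_radius (R) and axis_length (D) in meters
    Sign convention (assumed): angular speed is positive when the right
    wheel turns faster than the left one.
    """
    v_m = wheel_radius * (omega_r + omega_l) / 2.0
    omega_m = wheel_radius * (omega_r - omega_l) / axis_length
    return v_m, omega_m
```

With equal wheel speeds the midpoint moves straight (zero angular speed); with opposite wheel speeds it rotates in place (zero linear speed).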

As previously explained, for each wheel the product of ω(t)·dt and R was assumed to be equal to the subtended chord (Eq. 1).

Operatively, a first Lua Script module was designed to receive the current coordinates from Mocap while saving those of the previous sample. A second Script, which dynamically receives the coordinates from the first one, implements Eq. 1 to calculate the instant linear displacements Lr(t) and Ll(t) (of the right and the left wheel, respectively) frame by frame. At each frame, a third Script module receives the instant linear shifts Lr(t) and Ll(t) from the second one and calculates the rototranslation of the wheelchair axis midpoint according to Eqs. 4 and 5 (Fig. 6).

The instant angle θ(t) of the wheelchair at CIR is computed according to Eq. 4:

The midpoint axis instant shift Lm(t) is calculated according to Eq. 5:

By applying the same considerations as above and approximating the circumference arc length by the subtended chord, the bisector of θ(t) was considered perpendicular to Lm(t) and the relative instantaneous rotation of the wheelchair axis midpoint was approximated to θ(t)/2 (Fig. 7).

Fig. 7 Wheelchair movement between two frames; red lines represent the wheelchair axis, and blue lines show the arc covered by the left and right wheels and the midpoint axis. Measures of the midpoint in the relative axis are shown in green. Ll(t) = instant left wheel shift; Lr(t) = instant right wheel shift; Lm(t) = wheelchair instant midpoint shift; θ(t) = instant attitude angle at CIR(t); (Xr;Zr) = relative system; Zm(t) = relative z coordinate; Xm(t) = relative x coordinate; and θ(t)/2 = relative instant rotation of the midpoint of wheelchair axis

According to this, the third Script module calculates the instant wheelchair axis midpoint coordinates Xm(t) and Zm(t) along the relative axis according to Eqs. 6 and 7.

Lastly, by applying rototranslation equations between the relative and absolute reference systems, this Script calculates the absolute positions X(t) and Z(t) and the attitude Rot(t) of the wheelchair midpoint axis, as shown by Eqs. 8, 9 and 10.

The position of the virtual wheelchair is updated by the algorithm frame by frame, so the simulator is able to track the position of the real wheelchair in the virtual space in real time.
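The frame-by-frame update described in this section can be sketched as follows. This is a reconstruction from the prose under stated assumptions, not the authors' Script: the instant angle is taken as \(\theta = (L_r - L_l)/D\), the midpoint shift as \(L_m = (L_r + L_l)/2\), the relative displacement is directed at \(\theta/2\) from the previous heading, and the heading Rot is measured from the absolute Z axis, positive toward the absolute X axis. The function names are hypothetical.

```python
import math


def update_pose(x, z, rot, l_r, l_l, axis_length):
    """Advance the axis midpoint by one frame.

    l_r, l_l: right and left wheel shifts for this frame (m)
    axis_length: distance D between the wheel centers (m)
    Sign and axis conventions are assumptions (see lead-in).
    """
    theta = (l_r - l_l) / axis_length      # instant angle at the CIR
    l_m = (l_r + l_l) / 2.0                # midpoint shift
    x_m = l_m * math.sin(theta / 2.0)      # relative lateral coordinate
    z_m = l_m * math.cos(theta / 2.0)      # relative forward coordinate
    # Rototranslation from the relative to the absolute reference system
    x_new = x + x_m * math.cos(rot) + z_m * math.sin(rot)
    z_new = z - x_m * math.sin(rot) + z_m * math.cos(rot)
    return x_new, z_new, rot + theta


def simulate_locked_wheel_turn(axis_length, steps):
    """Full turn about a locked left wheel, split into equal small steps."""
    x, z, rot = 0.0, 0.0, 0.0
    step = 2.0 * math.pi * axis_length / steps   # right wheel shift per frame
    for _ in range(steps):
        x, z, rot = update_pose(x, z, rot, step, 0.0, axis_length)
    return x, z, rot
```

With one wheel locked, as in the assessment trials, the accumulated rotation reaches 2π when the free wheel has travelled 2πD, and the midpoint returns to its starting point, which gives a simple consistency check for the update rule.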

The instantaneous absolute wheelchair position calculated by the algorithm is sent to the Camera module available in the D-flow software: the virtual camera was positioned at the axis midpoint to simulate the user’s point of view. The position of the camera along the vertical axis can be adjusted according to the user’s height so that users have the impression of navigating in the virtual scenario in first-person view.

2.4 Powered wheelchair simulation

For the PW simulation, two 12.5 mm spherical markers were positioned on the joystick through a custom-made handpiece, designed and produced with 3D printing techniques. The handle of the original joystick was removed, and the cylindrical handpiece (25 mm diameter, 80 mm high) was placed onto the lever and a 100 mm metal bar (3 mm in diameter) was fit on the top of the handpiece parallel to the ground. Two markers were placed at the extremities of the bar (Fig. 2b). A third 12.5 mm marker on the back of the non-driving hand tracked its movements and enabled user interaction with objects within the virtual environment.

The main D-flow modules used for the development of the PW simulator were: Mocap, Marker Matcher, Dynamic Balance, Navigator and Camera.

The D-flow Mocap module receives the detected marker coordinates from the Vicon system in real time. The Marker Matcher module is used to receive the coordinates from Mocap and to label markers directly in D-flow according to a custom template. It was decided to use this module instead of the Vicon model because there were only 3 markers in this setup, and markers on the joystick never changed their relative position.

Labeled joystick marker coordinates are sent to the Dynamic Balance module, which computes the combined virtual marker position of the two markers on the joystick. At the beginning of each trial, this virtual marker position is zero-leveled by calibration, which must be performed by the operator before starting the simulator application. Furthermore, the Dynamic Balance module allows changing the relation between the virtual marker displacement and the navigation in the virtual environment, i.e. the joystick sensitivity, according to Eqs. 11 and 12:

where X(t) and Z(t) are the original combined marker coordinates, Xout(t) and Zout(t) are the final coordinates of the combined marker, and α and β are configurable values used by the Dynamic Balance module to change the joystick sensitivity that can be customized, similarly to real wheelchair joysticks.

The D-flow Navigator module receives coordinates Zout(t) and Xout(t) from the Dynamic Balance module in real time. The Navigator module uses these coordinates to update its position and rotation in the virtual environment (Fig. 8). Navigation within the scene takes place thanks to the two markers positioned on the joystick: The increasing/decreasing speed is proportional to the forward/backward movement of the joystick (Zout(t)) compared to the zero position (the one measured during calibration), while rotational movements are proportional to the joystick right/left shift (Xout(t)). The maximum speed that can be reached in forward movements was limited to 3 m/s and in backward movements to 2 m/s.
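The joystick chain described above (combined marker, zero-level calibration, sensitivity scaling, speed limits) can be illustrated with a minimal sketch. The linear scaling \(X_{out} = \alpha X\), \(Z_{out} = \beta Z\) is an assumed form of the sensitivity relation, and the gains `speed_gain` and `turn_gain` are hypothetical parameters; the internal behavior of the D-flow Dynamic Balance and Navigator modules is not reproduced here.

```python
MAX_FORWARD_M_S = 3.0    # forward speed limit stated in the text
MAX_BACKWARD_M_S = 2.0   # backward speed limit stated in the text


def combined_marker(marker_a, marker_b):
    """Midpoint of the two joystick markers, each given as (x, z)."""
    return ((marker_a[0] + marker_b[0]) / 2.0,
            (marker_a[1] + marker_b[1]) / 2.0)


def scaled_displacement(marker_a, marker_b, zero, alpha, beta):
    """Displacement from the calibrated zero position, scaled by alpha/beta."""
    x, z = combined_marker(marker_a, marker_b)
    return alpha * (x - zero[0]), beta * (z - zero[1])


def navigation_command(x_out, z_out, speed_gain, turn_gain):
    """Map scaled displacement to a clamped speed and a turn rate.

    speed_gain and turn_gain are illustrative proportionality constants.
    """
    speed = speed_gain * z_out
    speed = max(-MAX_BACKWARD_M_S, min(MAX_FORWARD_M_S, speed))
    turn_rate = turn_gain * x_out
    return speed, turn_rate
```

Keeping the sensitivity scaling separate from the speed clamp mirrors the split between the two modules in the text: the first stage can be tuned per user, while the limits stay fixed.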

Since the position and rotation of the Navigator module in the virtual environment reflect the wheelchair movement, they are applied to the Camera module to render the user’s point of view.

This navigation method was considered reliable as several applications developed by the GRAIL manufacturer are based on the same principle. In those exergames, two markers are placed on the subject’s lower back or shoulders, and the subject moves in the virtual environment by moving the corresponding part of his/her body.

2.4.1 Virtual hand

In the PW simulation modality, the third marker represents the user’s hand, as explained in the previous paragraph. This marker is labeled by the Marker Matcher module (Fig. 8). The hand marker coordinates are considered as the relative position of the hand in relation to the wheelchair position in the virtual environment. The absolute position of the wheelchair is constantly updated by the Navigator module during navigation in the virtual environment. A Script module was designed to compute the absolute position of the hand in real time: The absolute hand coordinates XH(t), YH(t) and ZH(t) are computed by combining the absolute position of the wheelchair and the relative marker coordinates for the hand according to Eqs. 13, 14 and 15.

where Xh(t), Yh(t) and Zh(t) are the relative marker coordinates for the hand received from the Mocap module, XW(t), YW(t) and ZW(t) are the absolute coordinates for the wheelchair, and αW(t) is the absolute rotation for the wheelchair, all received from the Navigator module.
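Combining the wheelchair pose with the relative hand coordinates amounts to a rotation about the vertical axis followed by a translation. The sketch below shows one such rototranslation; the rotation direction (heading measured from the absolute Z axis, positive toward X) is an assumption, and the function name `hand_absolute` is illustrative.

```python
import math


def hand_absolute(hand_rel, chair_abs, alpha_w):
    """Absolute hand position from relative hand coordinates.

    hand_rel: (Xh, Yh, Zh) relative to the wheelchair
    chair_abs: (XW, YW, ZW) absolute wheelchair position
    alpha_w: absolute wheelchair rotation about the vertical axis (rad)
    The sign convention of the rotation is an assumption.
    """
    x_h, y_h, z_h = hand_rel
    x_w, y_w, z_w = chair_abs
    x_abs = x_w + x_h * math.cos(alpha_w) + z_h * math.sin(alpha_w)
    y_abs = y_w + y_h                      # height is unaffected by the rotation
    z_abs = z_w - x_h * math.sin(alpha_w) + z_h * math.cos(alpha_w)
    return x_abs, y_abs, z_abs
```

For a zero rotation the result is a pure translation of the hand by the wheelchair position; for a quarter turn, a hand held straight ahead ends up displaced along the absolute X axis instead of Z.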

A volume representing the user’s virtual hand was created and associated with the marker coordinates for the hand XH(t), YH(t) and ZH(t) computed by the previously described Script: the simulator could thus recognize when the hand collided with scene objects. The virtual hand was visible in the environment (Fig. 9) and could translate in the 3D environment without rotating about its relative axes.

Some custom rules related to collision detection were implemented to make the simulator able to manage the interactions, such as grabbing an object, opening/closing a door and moving an object from one place to another, producing an appropriate feedback for the user.

2.5 User feedback, interaction with scenes and data recording

In both PW and MW simulation modalities, a bounding box—width 50 cm, depth 80 cm and height 100 cm—was created and applied to the midpoint of the wheelchair axis. This bounding box was configured to detect the collision of the virtual wheelchair with other scene objects. When the wheelchair volume touches an object volume, even partially, the simulator system is programmed to detect the triggering collision, and to produce vibrations through treadmill movement and/or audio feedback. Moreover, the bounding box was used to configure some background sounds to be played for a greater immersion experience, e.g. it was possible to hear the traffic noise along a city street or birds chirping inside a park.
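A partial-overlap test between the wheelchair volume and an object volume can be sketched with axis-aligned boxes. This is a simplification for illustration: the boxes are not rotated with the wheelchair heading, the box is assumed to rest on the ground, and the class and function names are hypothetical rather than D-flow collision primitives.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box given by its center and full sizes, in meters."""
    x: float
    y: float
    z: float
    width: float    # extent along x
    height: float   # extent along y (vertical)
    depth: float    # extent along z

    def overlaps(self, other: "Box") -> bool:
        """True when the two volumes touch or intersect, even partially."""
        return (
            abs(self.x - other.x) <= (self.width + other.width) / 2.0
            and abs(self.y - other.y) <= (self.height + other.height) / 2.0
            and abs(self.z - other.z) <= (self.depth + other.depth) / 2.0
        )


def wheelchair_box(x, z):
    """Bounding box of the sizes given in the text, centered on the axis midpoint.

    The vertical center at 0.5 m assumes the box rests on the ground.
    """
    return Box(x, 0.5, z, width=0.5, height=1.0, depth=0.8)
```

A collision is reported as soon as the extents overlap on all three axes, which matches the "even partially" criterion in the text.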

The wheelchair bounding box was also used to simulate ascents and descents in the virtual environment, for example, on sidewalks or ramps, as D-flow does not provide the functionalities to implement appropriate physics-based behaviors. Hidden volumes were created and placed at the beginning/end of the ascent/descent and used as trigger colliders. A specific Script module was developed to detect collisions of the wheelchair volume with trigger colliders and to evaluate whether the collision position corresponded to an ascent (or descent). In this case, the module was programmed to send an output command to the VGait module so as to tilt the platform of the GRAIL system up to + (or − ) 10 ° according to the real slope of the scene.
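The mapping from a scene slope to a platform tilt command reduces to a clamp at the ± 10 ° limit. The sketch below is illustrative only: the function name `platform_pitch_deg` is hypothetical, and the actual command interface of the VGait module is not shown.

```python
MAX_PITCH_DEG = 10.0   # platform pitch limit stated in the text


def platform_pitch_deg(slope_deg, ascending):
    """Pitch command for a ramp of the given slope magnitude.

    Positive for ascents and negative for descents, limited to the
    platform range. How the command reaches the platform is not modeled.
    """
    magnitude = min(abs(slope_deg), MAX_PITCH_DEG)
    return magnitude if ascending else -magnitude
```

For example, a 6 ° ramp taken downhill gives a command of − 6 °, while a 15 ° virtual slope is limited to the 10 ° the platform can reproduce.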

By means of a dedicated Script developed to manage hand interactions, the simulation system in PW modality is able to detect collisions between the volume representing the user’s virtual hand and scene objects. The algorithm is able to activate visible and audible feedback to reinforce the interaction with objects. For example, if the user touches a closed door, the door opens and an appropriate sound is played by the system. When an object needs to be moved to another place, the object disappears when it is correctly grasped and it reappears when it is released in the correct position, followed by a sound. An object is considered correctly held if the hand volume collides with the object volume, while it is considered correctly placed if the volume of the hand collides with the volume of the area in which it must be placed.
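The grasp-and-place rule described above behaves like a small state machine. The following sketch is one way to express it; the state names, event labels and class name `CarryTask` are hypothetical, and the collision flags are assumed to be provided by the collision detection already described.

```python
class CarryTask:
    """Tracks one object that must be picked up and placed in a target area."""

    def __init__(self):
        self.state = "idle"   # idle -> held -> placed

    def update(self, hand_touches_object, hand_touches_target):
        """Return the feedback events triggered in this frame."""
        events = []
        if self.state == "idle" and hand_touches_object:
            # Object correctly grasped: it disappears from the scene
            self.state = "held"
            events.append("hide_object")
        elif self.state == "held" and hand_touches_target:
            # Object released in the correct area: it reappears, with a sound
            self.state = "placed"
            events.extend(["show_object_at_target", "play_sound"])
        return events
```

Calling `update` once per frame with the two collision flags yields the events to trigger; touching the target area before the object has been grasped produces no feedback.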

The application in MW modality was configured to record a .txt file containing the midpoint axis coordinates of the virtual wheelchair, the absolute rotation, the instant linear speed, the number of collisions against objects in the VR environment and the absolute coordinates of each collision point, in order to make these quantities available for future analysis.

The simulation system in PW modality was configured to record a .txt file containing the same quantities registered in MW modality, plus the hand interactions with objects.

2.6 Manual wheelchair simulation assessments

To evaluate the accuracy of the model developed for the MW simulation, the overground movement of the wheelchair was compared with the movement calculated by the simulator.

As the wheelchair on the GRAIL platform did not actually move because of the limited available space (only the wheels turn on the simulator), overground tests of the manual wheelchair were performed in a different laboratory equipped with the Elite motion capture system (BTS Bioengineering) furnished with 8 optoelectronic cameras (sampling frequency 100 Hz).

Four markers were used for these tests: 1 marker was applied on each hand-rail wheel and 1 marker on each armrest of the wheelchair. The marker setup was different from that used for the GRAIL experiments due to Vicon requirements (i.e. the need for a marker model made of joints connected by segments); however, in both setups it was possible to track wheel and wheelchair movements, thus making the two measurements comparable.

One experimenter performed ten trials for each wheel; in each trial, one wheel was free to turn, while the other was blocked using the brake; each trial consisted of a 360-degree rotation of the wheelchair with the blocked wheel as rotation center. The coordinates of each marker were collected from the Elite motion capture system and registered in a .txt file.

The same experimenter performed tests on the GRAIL system, mimicking the ones performed overground and using the same wheelchair. Markers were positioned according to the Vicon model developed for MW simulator (Sect. 2.3). Ten trials for each wheel, as described above, were performed, and the wheelchair rotation angles were calculated by the simulation system and registered in a text output file together with all the marker coordinates.

The rotational movement with a locked wheel was a type of movement that could be easily reproduced in the two environments. Therefore, this was a replicable test to assess motion equations; tire pressure and wear were also controlled to reduce possible errors.

2.7 Data analysis

Experimental data were analyzed using custom MATLAB® scripts, which computed the absolute rotational position of the wheelchair every 5 ° of wheel rotation.

The .txt files recorded by the simulator and by the Elite system were collected and loaded in MATLAB workspace.

To track the wheel rotation, the displacement in the vertical direction of the marker on the rotating wheel was converted into the rotation angle of the wheel by using the arccosine function. The expected output was a triangular wave with values between 0 and π.
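The conversion described above can be illustrated with a minimal Python sketch (the original analysis was carried out in MATLAB, whose scripts are not reproduced here). The function names are hypothetical, and the estimation of the wheel center height and marker radius from the extremes of the recorded trace is an assumption made only for illustration.

```python
import math

def wheel_angle_from_height(z, z_center, radius):
    """Return the wheel angle in [0, pi] from the marker height z."""
    # Clamp to [-1, 1] so that measurement noise cannot push acos out of its domain.
    c = max(-1.0, min(1.0, (z - z_center) / radius))
    return math.acos(c)

def wheel_angles(heights):
    """Convert a series of marker heights into wheel angles (radians)."""
    # Assumption: center height and radius are taken from the extremes of the trace.
    z_max, z_min = max(heights), min(heights)
    z_center = 0.5 * (z_max + z_min)
    radius = 0.5 * (z_max - z_min)
    return [wheel_angle_from_height(z, z_center, radius) for z in heights]
```

Because the arccosine only returns values between 0 and π, a wheel turning at constant speed produces the triangular wave mentioned above: the angle rises from 0 to π during the first half turn and falls back to 0 during the second.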

To evaluate the wheelchair rotation, two different scripts were developed for the simulator and for the overground tests. The GRAIL simulator directly provided this value in the form of a sawtooth wave with values between −π and π. By contrast, for the overground test, the wheelchair rotation was calculated by applying the arctangent function to the two horizontal components of the segment connecting the two markers on the armrests. The output was a sawtooth wave with values between −π and π, comparable to the values provided by the GRAIL system.
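For the overground case, the heading computation can be sketched as follows. This is only an illustrative Python example: the function name, the argument layout and the choice of the two-argument arctangent are assumptions, not the authors' MATLAB code.

```python
import math

def wheelchair_heading(left, right):
    """Heading, in (-pi, pi], of the segment joining the two armrest markers.

    left and right are hypothetical (x, y) pairs holding the horizontal
    coordinates of the left and right armrest markers.
    """
    dx = right[0] - left[0]
    dy = right[1] - left[1]
    # atan2 resolves the quadrant, which gives the sawtooth range (-pi, pi].
    return math.atan2(dy, dx)
```

Using the two-argument form avoids the quadrant ambiguity of a plain arctangent of the ratio dy/dx and remains defined when dx is zero.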

The total rotation angles of the wheelchair were then obtained by correcting the instantaneous jump from −π to π (or vice versa, depending on the rotation direction), for both simulator and overground data, whenever the angle variation between two subsequent samples exceeded π in absolute value. Data were also filtered by means of a moving average with a window length of 1 s for both tests. Finally, the absolute angle values for the wheelchair were extracted every 5° of wheel rotation using linear interpolation.
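The three processing steps just described (jump correction, moving average and resampling) can be sketched in Python as shown below. All function names are hypothetical. The handling of the window at the signal edges and the conversion of the 1 s window into a number of samples (sampling rate multiplied by 1 s) are assumptions, since the text does not specify them.

```python
import math

def unwrap(angles):
    """Remove jumps larger than pi between consecutive samples (radians)."""
    out = [angles[0]]
    offset = 0.0
    for prev, cur in zip(angles, angles[1:]):
        step = cur - prev
        if step > math.pi:
            offset -= 2.0 * math.pi
        elif step < -math.pi:
            offset += 2.0 * math.pi
        out.append(cur + offset)
    return out

def moving_average(values, window):
    """Centered moving average; the window shrinks near the edges (assumption)."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out

def sample_every(x, y, step):
    """Linearly interpolate y at multiples of step.

    x must be strictly increasing and contain at least two samples,
    e.g. the cumulative wheel rotation in degrees.
    """
    samples = []
    target = math.ceil(x[0] / step) * step
    i = 0
    while target <= x[-1]:
        # Advance to the interval [x[i], x[i + 1]] that contains the target.
        while x[i + 1] < target:
            i += 1
        t = (target - x[i]) / (x[i + 1] - x[i])
        samples.append((target, y[i] + t * (y[i + 1] - y[i])))
        target += step
    return samples
```

In this sketch, `sample_every(wheel_deg, chair_deg, 5)` would return the wheelchair angle at every 5° of wheel rotation, where `wheel_deg` and `chair_deg` are hypothetical names for the unwrapped and filtered series.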

Data used for statistical analysis were collected during the second 360° rotation of the wheel, to exclude the initial transient.

2.8 Statistics

Following the tests described in Sect. 2.6, 20 trials were collected (10 for each wheel) for both the simulator (virtual wheelchair) and the real wheelchair overground. For each test, data coming from each wheel were pooled to calculate the mean values of wheel and wheelchair angles.

The mean rotational positions of the wheelchair obtained in the two tests were statistically evaluated as described below in order to compare the motion calculated by the simulator with the real motion on the ground.

To evaluate the accuracy of the simulator, the absolute (E) and relative (Er) errors between mean angles of the wheelchair in the two groups were computed (Eqs. 16 and 17).

Median and interquartile range (75th percentile–25th percentile; IQR) were computed for E and Er.
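As an illustration of these summary measures, the following Python sketch computes paired errors together with their median and IQR. The definitions used here (absolute difference, and absolute difference divided by the overground angle, expressed as a percentage) are assumptions standing in for Eqs. 16 and 17, and the function names are hypothetical. The quartile convention of Python's `statistics.quantiles` may also differ slightly from the one used in the original MATLAB analysis.

```python
import statistics

def errors(sim, real):
    """Absolute and relative (percent) errors between paired mean angles.

    Assumed definitions: E = |sim - real| and Er = 100 * E / |real|.
    """
    abs_err = [abs(s - r) for s, r in zip(sim, real)]
    # Pairs whose reference angle is zero are skipped in the relative error.
    rel_err = [100.0 * abs(s - r) / abs(r) for s, r in zip(sim, real) if r != 0]
    return abs_err, rel_err

def median_iqr(values):
    """Median and interquartile range (75th minus 25th percentile)."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q2, q3 - q1
```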

The Shapiro–Wilk test was performed to verify whether the data were normally distributed. Since the data were not normally distributed, a Mann–Whitney U test was used to detect any systematic bias. Spearman’s rho was computed to evaluate the correlation between the two measurements.
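To make the two non-parametric measures concrete, a self-contained Python sketch is given below. It is not the authors' analysis code: the function names are hypothetical, the Mann–Whitney z score uses the plain normal approximation without tie or continuity correction, and the Shapiro–Wilk test is omitted. In practice a statistics package would normally be used for all three tests.

```python
import math

def ranks(values):
    """Average ranks (1-based); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r

def spearman_rho(x, y):
    """Spearman's rho, computed as the Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)

def mann_whitney_z(x, y):
    """U statistic of sample x and its normal-approximation z score."""
    r = ranks(list(x) + list(y))
    n1, n2 = len(x), len(y)
    u = sum(r[:n1]) - n1 * (n1 + 1) / 2.0
    mu = n1 * n2 / 2.0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    return u, (u - mu) / sigma
```

A z score close to zero indicates that neither sample tends to take larger values than the other, which is how the absence of systematic bias is read in this context.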

3 Results

The quantitative test of the MW simulator showed that the developed model, based on the hypothesis of no wheel slip and no inertia, was consistent with the overground movements of a real wheelchair. The mean angles of the wheelchair corresponding to a 360° wheel rotation were calculated for the simulator and overground data. The two curves were qualitatively compared (Fig. 10), showing that the simulated curve had a slightly steeper slope than the overground one at the end of the trial.

Table 1 shows the median (IQR) of the absolute and relative errors. The absolute error was less than half a degree, and the relative error was equal to 0.6%.

The Mann–Whitney U test showed no systematic bias between the two measurements (Z = −0.072, p = 0.943).

The correlation between the two groups is shown in Fig. 11. Spearman's rank-order correlation test showed a very high and statistically significant correlation (rho > 0.999, p < 0.01).

4 Discussion

The aim of this study was to describe the development of a new wheelchair simulation system consisting of an immersive VR CAVE and a real wheelchair. A simulation system that can imitate both manual and powered wheelchairs was developed. The reliability of the mathematical model used to simulate the kinematics of MW motion, in the absence of wheel slipping and inertia, was assessed by comparing it to the movement of a real MW overground using a single wheel rotation test. Specifically, this was achieved by evaluating wheelchair angles as a function of wheel rotation: simulated and real manual wheelchairs had very similar slopes, except at the end of the wheelchair rotation, where the simulation slope was higher than the overground one.

Furthermore, no systematic bias was detected, and simulated and real MW movements were highly correlated. In terms of accuracy, the errors between the overground test and the MW simulation were very small: the absolute error was less than half a degree and the relative error was 0.6%. These errors may be due to the approximations used in the development of the simulation mathematics, as discussed below.

First of all, the approximation involving the use of the chord instead of the arc of circumference introduces an error that becomes negligible with small angles. In our case, the maximum measured chord was equivalent to 1/146 of a circle. This means that we were approximating the circumference with a polygon with at least 146 sides, the other chords being smaller, with a resulting theoretical error smaller than 10⁻² %. Hence, this approximation did not contribute to the error found.
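The order of magnitude of this bound can be checked with a short calculation. The Python sketch below rests on one possible reading of the error, namely the relative difference in length between a chord and the arc it subtends, which is an assumption. For an arc equal to 1/N of a circle of radius R, the arc length is 2πR/N and the chord length is 2R sin(π/N). The function name is hypothetical.

```python
import math

def chord_arc_relative_error(n_sides):
    """Relative shortfall (percent) of a chord with respect to its arc,
    for an arc equal to 1/n_sides of a full circle."""
    half_angle = math.pi / n_sides
    # chord / arc = 2R sin(pi/N) / (2 pi R / N) = sin(pi/N) / (pi/N)
    chord_over_arc = math.sin(half_angle) / half_angle
    return 100.0 * (1.0 - chord_over_arc)
```

Under this reading, `chord_arc_relative_error(146)` is roughly 0.008%, which is below 10⁻² % and consistent with the bound stated above.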

Secondly, the no-slip hypothesis prevented the simulator from accounting for the slipping that occurs in overground tests. The slightly lower mean angle obtained after a wheel rotation of 360° in overground tests (177.58°) compared with the MW simulator mean angle (180.73°) was therefore probably due to the fact that slipping is not simulated.

The literature describes several wheelchair simulators, mainly for PW (Archambault et al. 2012, 2017; Carlozzi et al. 2013; Desbonnet 1998; Devigne et al. 2017; Harrison et al. 2002; Hasdai et al. 1998; Mahajan et al. 2013; Mahajan 2013; Nunnerley et al. 2017; Panadero et al. 2014; Spaeth et al. 2008; Webster et al. 2001) and few for MW (Blouin et al. 2015; Chenier et al. 2014; Crichlow et al. 2011; Crichlow and Crichlow 2011; Harrison et al. 2000; Maxhall et al. 2004). Most of these simulators are based on non-immersive VR (Archambault et al. 2012, 2017; Blouin et al. 2015; Chenier et al. 2014; Desbonnet 1998; Harrison et al. 2002; Hasdai et al. 1998; Mahajan 2013; Spaeth et al. 2008; Webster et al. 2001), which is known to be less engaging and motivating than immersive VR owing to the low level of immersion (Kim and Biocca 2018).

To the best of our knowledge, the simulation system developed in this work is the first one integrating both manual and powered wheelchairs in immersive VR.

Furthermore, considering manual wheelchair simulators developed in immersive VR, the interaction with the virtual environment and the control of the virtual wheelchair motion were based on specific hardware interfaces. Specifically, Crichlow and coll. (Crichlow and Crichlow 2011) developed a hardware interface with torque sensors used to detect user input torques and a servomotor for force feedback. Similarly, Harrison et al. (Harrison et al. 2000) used rollers to detect wheel turning and a variable torque hysteresis brake to provide resistance feedback at each roller according to different virtual slopes. Maxhall and coll. (Maxhall et al. 2004) developed a hardware interface with step counters to measure wheel rotation, and some motion sensors were integrated to detect motion of the user’s hands, head and relative position between body and wheelchair.

By contrast, the MW simulation system developed in this work exploited an optoelectronic motion analysis system to track wheel motion by means of passive markers. This makes it possible to change the wheelchair and the setup easily and quickly. Furthermore, it could be adapted to different motion tracking systems, including less costly ones (e.g., using inertial sensors). The MW interface developed can be considered more manageable and less complex than an ad hoc hardware interface, such as rollers or encoders, for translating real wheel movement into virtual wheelchair motion.

PW simulators described in the literature instead used different immersive VR technologies and different interfaces. Some authors used head mounted displays (HMDs) or glasses (Carlozzi et al. 2013; Nunnerley et al. 2017; Panadero et al. 2014); others used VR screens (Devigne et al. 2017; Mahajan et al. 2013) to show the virtual wheelchair movement, using joysticks as the interface. Panadero and coll. developed a simulator that integrated a platform to generate motion feedback to the user (Panadero et al. 2014). By contrast, Devigne and colleagues did not integrate any kind of feedback in their simulator and reported cybersickness in users at the end of tests (Devigne et al. 2017). Furthermore, Mahajan and Carlozzi (Carlozzi et al. 2013; Mahajan et al. 2013) demonstrated that PW simulators based on HMD or on 3 flat screens were similar in terms of training effectiveness and user sickness. However, driving was found to be more challenging with HMD technology than with 3 VR screens (Carlozzi et al. 2013) and more difficult with VR screens than with a PC monitor (Mahajan et al. 2013). Also, in their study Nunnerley et al. reported the effectiveness of a VR simulator for training, together with user nausea at the end of the tests (Nunnerley et al. 2017).

The PW simulation system developed in this work uses actual joystick shifts to drive wheelchair movements in the VR environment through a motion analysis system. Given the available hardware and the possibilities of integration provided by D-flow, this interface is more straightforward than ad hoc systems such as USB joysticks requiring the joystick to be physically connected to the simulator through dedicated electronics. Our solution is also easily adaptable to any type of joystick thus allowing each subject to use the simulator with their wheelchair.

Previous works demonstrated that simulators based on immersive VR may cause cybersickness (Carlozzi et al. 2013; Devigne et al. 2017; Mahajan et al. 2013; Nunnerley et al. 2017) and that appropriate vestibular feedback could reduce it. Cybersickness may indeed be related to the combination of the immersion level and the feedback provided to users; it was also shown that training is effective regardless of the immersion level and that driving difficulty increases with the level of immersion.

Moreover, consistently with what has been shown in the literature, the use of a VR immersive screen and appropriate feedback may provide for realistic navigation and reduce user cybersickness (Mittelstaedt et al. 2018; Weech et al. 2019).

Consistent with the indications provided by previous studies, the simulation system described in this manuscript integrates both platform movements to generate vestibular stimuli and optic/acoustic feedback.

5 Conclusion

This work aimed at describing a new simulation system, based on an immersive VR CAVE that integrates both manual and powered wheelchairs. The innovation of this work was the development of an integrated MW/PW setup that enables users to interact with virtual objects from their own wheelchair, thanks to the virtual hand integration in powered modality.

Concerning the MW mode, this work contributed the development of the mathematical model, its implementation in D-flow and its validation. This step showed that the simulator is able to reproduce wheelchair overground movements, under the assumption that no wheel slip occurs.

Regarding the PW simulator, the main contribution was the integration of hand movements and the adaptation of the navigation control method, used in other GRAIL applications, to the specific purpose of navigating with a wheelchair. The proposed solution is very flexible: it can be adapted to any type of wheelchair, both manual and powered, and any type of joystick; it does not require long preparations for the patient and the environment; and it could be easily adapted to different tracking systems. Furthermore, since the simulator is integrated in a motion analysis lab, the system could also be used to analyze the movements necessary to drive a wheelchair by using a proper upper limb motion analysis protocol.

Finally, the use of immersive VR based on a 180° cylindrical screen, combined with platform movement to generate vestibular stimuli and with optic/acoustic feedback, could be considered a trade-off between driving difficulty and user experience. This simulator should thus provide a good level of immersion together with reduced user sickness (Mittelstaedt et al. 2018; Weech et al. 2019).

The authors are aware of the possible limitations of the present work. Unlike other works, the MW simulation system described in this manuscript does not provide force feedback or inertia; wheels are free to turn and extremely sensitive to every slight movement. Furthermore, the absence of force feedback would make training incomplete in terms of resistance and balance maintenance, especially for MW users. Nevertheless, the system reproduces the movements of a real wheelchair overground and could thus be used to teach abilities like obstacle avoidance, turning in open or tight spaces, passing through doors, and moving backward/forward while maneuvering.

Furthermore, in the absence of physics in D-flow, gravity cannot be simulated, and therefore neither can acceleration/deceleration on slopes. This could be solved by integrating specific hardware, i.e. a force feedback interface.

The absence of virtual hand in MW simulator could be considered another limitation, because both MW and PW users have the same training needs in approaching and catching objects. Nevertheless, as this was a first prototype, it was decided to ensure the minimum interference between the markers used for driving and those on the hand and to choose the simplest marker setup. For these reasons, the virtual hand was integrated in the PW simulator on the non-driving hand, and not in the MW simulator where both hands are required for driving. Hand integration in the MW model could be achieved, for example, by creating a Vicon model including markers on trunk, arm, wheels and wheelchair axis: This, however, would have required a very long setup and complex patient preparation.

Furthermore, the virtual hand simulation in PW mode includes exclusively translational movements in the 3D space. Three markers would have been necessary to identify the plane of the hand for simulating hand rotations along its axis. The hand rotation would undoubtedly have made the simulation more realistic and more effective for learning, in terms of approaching and taking an object. Nevertheless, in this prototype we wanted to demonstrate the feasibility of an integrated MW/PW simulator that could also (partially) integrate hand tracking, and therefore, the complete implementation of object dragging was out of scope.

Another limitation is that the PW simulator does not allow configuration of the joystick dead space found on real joysticks, so possible instabilities are not reduced.

Moreover, the implementation of the proposed setup requires a complex system integrating motion capture, moving platform and virtual reality, such as the GRAIL system whose cost is not negligible.

A final limitation of this work is that it lacks a description of the PW simulator validation. Nevertheless, the PW simulator development was mainly based on available modules that only required parameterization, which makes it reasonable to hypothesize that correct functionality was preserved.

Despite such limitations, we believe that our simulator is a reliable prototype that can be improved with further work. Future activities must be focused on the validation of the simulator with real end users assessing their experience in VR, possible cybersickness as well as effectiveness and safety of the simulator. Furthermore, quantitative tests for PW modality have to be planned to assess the validity of the simulator to reproduce power wheelchair movements.

For future prototypes, it will also be necessary to consider the integration of force feedback to make the simulator more realistic in both modalities.

Regarding the PW simulator, we will evaluate whether it is possible to take into account the dead space available in real joysticks to improve the performance and fidelity of joystick simulation.

Lastly, for future implementation, we will evaluate possible integration of the virtual hand in MW modality in order to guarantee environment interaction tasks also for manual wheelchair users.

To conclude, the future perspectives of the simulator described in this manuscript are the following. The system could be used to validate new virtual scenarios for wheelchair training, thanks to its independence of the environment used. This simulator has the potential to be a good wheelchair driving rehabilitation tool for MW and PW users in a safe and controlled environment. During the rehabilitation process, the simulator would also make it possible to evaluate the movements needed to drive a wheelchair, if integrated with a proper protocol for upper limb motion analysis. Finally, it could help test driving skills and the ability to reach objects, thus representing a useful tool to evaluate the appropriateness of a medical prescription for a specific wheelchair.

Data availability

The authors confirm that the data supporting the findings of this study are available within the article. The complete raw data that support the findings of this study are available from the corresponding author, upon reasonable request.

References

AlAbboudi M, Majed M, Hassan F, Nassif AB (2020) EEG Wheelchair for People of Determination. ArXiv.

Alkhateeb AM, Daher NS, Forrester BJ, Martin BD, Jaber HM (2019) Effects of adjustments to wheelchair seat to back support angle on head, neck, and shoulder postures in subjects with cerebral palsy. Assist Technol. https://doi.org/10.1080/10400435.2019.1641167

Arai K, Mardiyanto R (2011) A prototype of electric wheelchair controlled by eye-only for paralyzed user. J Robotics Mechatron 23(1):66

Archambault PS, Bigras C (2019) Improving wheelchair driving performance in a virtual reality simulator. International conference on virtual rehabilitation, ICVR, 2019-July. https://doi.org/10.1109/ICVR46560.2019.8994644

Archambault PS, Tremblay S, Cachecho S, Routhier F, Boissy P (2012) Driving performance in a power wheelchair simulator. Disabil Rehabil Assist Technol 7(3):226–233. https://doi.org/10.3109/17483107.2011.625072

Archambault PS, Blackburn É, Reid D, Routhier F, Miller WC (2017) Development and user validation of driving tasks for a power wheelchair simulator. Disabil Rehabil 39(15):1549–1556. https://doi.org/10.1080/09638288.2016.1226423

Arlati S, Colombo V, Ferrigno G, Sacchetti R, Sacco M (2019) Virtual reality-based wheelchair simulators: a scoping review. Assist Technol 00(00):1–12. https://doi.org/10.1080/10400435.2018.1553079

Bayley K, Parkinson S, Jacoby P, Cross D, Morris S, Vorster N, Schofield C, Kava M, Siafarikas A, Evans K, Gaynor O, Chiu L, Ryan MM, Cairns A, Clark D, Downs J (2020) Benefits of powered standing wheelchair devices for adolescents with duchenne muscular dystrophy in the first year of use. J Paediatr Child Health 56(9):1419–1425. https://doi.org/10.1111/jpc.14963

Bigras C, Owonuwa DD, Miller WC, Archambault PS (2020) A scoping review of powered wheelchair driving tasks and performance-based outcomes. Disabil Rehabil: Assist Tech 15(1):76–91

Blouin M, Lalumière M, Gagnon DH, Chénier F, Aissaoui R (2015) Characterization of the immediate effect of a training session on a manual wheelchair simulator with haptic biofeedback: Towards more effective propulsion. IEEE Trans Neural Syst Rehabil Eng 23(1):104–115. https://doi.org/10.1109/TNSRE.2014.2330837

Borg J, Larsson S, Östergren PO (2011) The right to assistive technology: for whom, for what, and by whom? Disability Soci 26(2):151–167. https://doi.org/10.1080/09687599.2011.543862

Brandt Å, Iwarsson S, Ståhle A (2004) Older people’s use of powered wheelchairs for activity and participation. J Rehabil Med 36(2):70–77. https://doi.org/10.1080/16501970310017432

Briley SJ, Vegter RJK, Goosey-Tolfrey VL, Mason BS (2020) Scapular kinematic variability during wheelchair propulsion is associated with shoulder pain in wheelchair users. J Biomech 113:110099. https://doi.org/10.1016/j.jbiomech.2020.110099

Carlozzi NE, Gade V, Rizzo A, Tulsky DS (2013) Using virtual reality driving simulators in persons with spinal cord injury: three screen display versus head mounted display. Disabil Rehabil Assist Technol 8(2):176–180. https://doi.org/10.3109/17483107.2012.699990

Chenier F, Bigras P, Aissaoui R (2014) A new wheelchair ergometer designed as an admittance-controlled haptic robot. IEEE/ASME Trans Mechatron 19(1):321–328. https://doi.org/10.1109/TMECH.2012.2235079

Cooper RA, Ferretti E, Oyster M, Kelleher A, Cooper R (2011) The relationship between wheelchair mobility patterns and community participation among individuals with spinal cord injury. Assist Technol 23(3):177–183. https://doi.org/10.1080/10400435.2011.588991

Corfman TA, Cooper RA, Fitzgerald SG, Cooper R (2003) Tips and falls during electric-powered wheelchair driving: effects of seatbelt use, legrests, and driving speed. Arch Phys Med Rehabil 84(12):1797–1802. https://doi.org/10.1016/S0003-9993(03)00467-2

Crichlow LR, Crichlow LR (2011) Development of a comprehensive mathematical model and physical interface for manual wheelchair simulation by development of a comprehensive mathematical model and wheelchair interface for manual wheelchair simulation

Crichlow LR, Fernie GR, Campos JL, Grant PR (2011) A full motion manual wheelchair simulator for rehabilitation research. Proc of the 2011 Annual RESNA Conference, 8–11

Davies A, De Souza LH, Frank AO (2003) Changes in the quality of life in severely disabled people following provision of powered indoor/outdoor chairs. Disabil Rehabil 25(6):286–290. https://doi.org/10.1080/0963828021000043734

Desbonnet MCSA (1998) Development and evaluation of a virtual reality based training system for disabled children; Proceedings of the 2nd European conference on disability, virtual reality and associated technologies (ECDVRAT 1998). Ecdvrat, 177–182

Devigne L, Babel M, Nouviale F, Narayanan VK, Pasteau F, Gallien P (2017) Design of an immersive simulator for assisted power wheelchair driving. IEEE Int Conf Rehabil Robot. https://doi.org/10.1109/ICORR.2017.8009379

Devitt R, Chau B, Jutai JW (2004) The effect of wheelchair use on the quality of life of persons with multiple sclerosis. Occupat Therapy Health Care 17(3–4):63–79. https://doi.org/10.1080/j003v17n03_05

Dieruf K, Ewer L, Boninger D (2008) The natural-fit handrim: factors related to improvement in symptoms and function in wheelchair users. J Spinal Cord Med 31(5):578–585. https://doi.org/10.1080/10790268.2008.11754605

Domingues I, Pinheiro J, Silveira J, Francisco P, Jutai J, Correia Martins A (2019) Psychosocial impact of powered wheelchair, users’ satisfaction and their relation to social participation. Technologies 7(4):73. https://doi.org/10.3390/technologies7040073

Domingues I, Pinheiro J, Silveira J, Martins AC (2020) Powered wheelchair impact – user-centered observational study. IFMBE Proceedings 76:947–953. https://doi.org/10.1007/978-3-030-31635-8_115

Evans S, Neophytou C, de Souza L, Frank AO (2007) Young people’s experiences using electric powered indoor-outdoor wheelchairs (EPIOCs): potential for enhancing users’ development? Disabil Rehabil 29(16):1281–1294. https://doi.org/10.1080/09638280600964406

Fadhil H, Hussam S, Sadoon Y (2019) Intelligent control system of a wheelchair for people with quadriplegia paralysis. IIUM Eng J 20(1):194–201

Field D (1999) Powered mobility: a literature review illustrating the importance of a multifaceted approach. Assist Technol 11(1):20–33. https://doi.org/10.1080/10400435.1999.10131982

Frost KL, Bertocci G, Smalley C (2020) Ramps remain a barrier to safe wheelchair user transit bus ingress/egress. Disabil Rehabil Assist Technol 15(6):629–636. https://doi.org/10.1080/17483107.2019.1604824

Geijtenbeek T, Steenbrink F, Otten B, Even-Zohar O (2011) D-Flow: Immersive virtual reality and real-time feedback for rehabilitation. Proceedings of VRCAI 2011: ACM SIGGRAPH Conference on virtual-reality continuum and its applications to industry, 201–208. https://doi.org/10.1145/2087756.2087785

Harrison CS, Dall PM, Grant PM, Granat MH, Maver TW, Conway BA (2000) Development of a wheelchair virtual reality platform for use in evaluating wheelchair access. The third international conference on disability, virtual reality and associated technologies, December 2016, 1–8

Harrison A, Derwent G, Enticknap A, Rose FD, Attree EA (2002) The role of virtual reality technology in the assessment and training of inexperienced powered wheelchair users. Disabil Rehabil 24(11–12):599–606. https://doi.org/10.1080/09638280110111360

Hasdai A, Jessel AS, Weiss PL (1998) Use of a computer simulator for training children with disabilities in the operation of a powered wheelchair. Am J Occup Ther 52(3):215–220. https://doi.org/10.5014/ajot.52.3.215

Hosseini SM, Oyster ML, Kirby RL, Harrington AL, Boninger ML (2012) Manual wheelchair skills capacity predicts quality of life and community integration in persons with spinal cord injury. Arch Phys Med Rehabil 93(12):2237–2243. https://doi.org/10.1016/j.apmr.2012.05.021

Karmarkar AM, Collins DM, Wichman T, Franklin A, Fitzgerald SG, Dicianno BE, Pasquina PF, Cooper RA (2009) Prosthesis and wheelchair use in veterans with lower-limb amputation. J Rehabil Res Dev 46(5):567–575. https://doi.org/10.1682/JRRD.2008.08.0102

Keeler L, Kirby RL, Parker K, McLean KD, Hayden JA (2019) Effectiveness of the wheelchair skills training program: a systematic review and meta-analysis. Disabil Rehabil: Assistive Tech 14(4):391–409

Kim G, Biocca F (2018) Immersion in Virtual Reality Can Increase Exercise Motivation and Physical Performance. Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), 10910 lncs, 94–102. https://doi.org/10.1007/978-3-319-91584-5_8

Kirby RL, Ackroyd-Stolarz SA, Brown MG, Kirkland SA, MacLeod DA (1994) Wheelchair-related accidents caused by tips and falls among noninstitutionalized users of manually propelled wheelchairs in Nova Scotia. Am J Phys Med Rehabil 73(5):319–330. https://doi.org/10.1097/00002060-199409000-00004

Kirby RL, Miller WC, Routhier F, Demers L, Mihailidis A, Polgar JM, Rushton PW, Titus L, Smith C, McAllister M, Theriault C, Thompson K, Sawatzky B (2015) Effectiveness of a wheelchair skills training program for powered wheelchair users: a randomized controlled trial. Arch Phys Med Rehabil 96(11):2017-2026.e3. https://doi.org/10.1016/j.apmr.2015.07.009

Kuntal K, Banerjee I, Lakshmi PP (2020) Design of Wheelchair based on Electrooculography. Proceedings of the 2020 ieee international conference on communication and signal processing, ICCSP 2020, 632–636. https://doi.org/10.1109/ICCSP48568.2020.9182157

Labbé D, Mortenson WB, Rushton PW, Demers L, Miller WC (2020) Mobility and participation among ageing powered wheelchair users: using a lifecourse approach. Ageing Soc 40(3):626–642. https://doi.org/10.1017/S0144686X18001228

Mahajan HP (2013) Development and validation of simulators for power wheelchair driving evaluations. Dissertation Abstracts International: Section B: The Sciences and Engineering 74(1-B(E))

Mahajan HP, Dicianno BE, Cooper RA, Ding D (2013) Assessment of wheelchair driving performance in a virtual reality-based simulator. J Spinal Cord Med 36(4):322–332. https://doi.org/10.1179/2045772313Y.0000000130

Maxhall M et al. (2004) Participants responses to a stroke training simulator. The 5th International conference on disability, virtual reality and associated technologies, Proceedings, 2004, Oxford, UK, 225–230

Miles-Tapping C (1996) Power wheelchairs and independent life styles. Canadian J Rehabi 10(2):137–145

Mittelstaedt J, Wacker J, Stelling D (2018) Effects of display type and motion control on cybersickness in a virtual bike simulator. Displays. https://doi.org/10.1016/j.displa.2018.01.002

Morgan KA, Engsberg JR, Gray DB (2017) Important wheelchair skills for new manual wheelchair users: health care professional and wheelchair user perspectives. Disabil Rehabil Assist Technol 12(1):28–38. https://doi.org/10.3109/17483107.2015.1063015

Mountain AD, Kirby RL, Eskes GA, Smith C, Duncan H, MacLeod DA, Thompson K (2010a) Ability of people with stroke to learn powered wheelchair skills: a pilot study. Arch Phys Med Rehabil 91(4):596–601. https://doi.org/10.1016/j.apmr.2009.12.011

Mountain AD, Smith C, Kirby RL (2010b) Are wheelchair-skills assessment and training relevant for long-standing wheelchair users? Two case reports. Disabil Rehabil Assist Technol 5(3):230–233. https://doi.org/10.3109/17483100903391145

Nunnerley J, Gupta S, Snell D, King M (2017) Training wheelchair navigation in immersive virtual environments for patients with spinal cord injury–end-user input to design an effective system. Disabil Rehabil Assist Technol 12(4):417–423. https://doi.org/10.1080/17483107.2016.1176259

Panadero CF, De La Cruz Barquero V, Kloos CD, Núñez DM (2014) PhyMEL-WS: physically experiencing the virtual world. Insights into mixed reality and flow state on board a wheelchair simulator. J Univ Computer Sci. https://doi.org/10.3217/jucs-020-12-1629

Pithon T, Weiss T, Richir S, Klinger E (2009) Wheelchair simulators: a review. Tech Disabil. https://doi.org/10.3233/TAD-2009-0268

Quilico EL, Harvey WJ, Caron JG, Bloom GA (2020) Interpretative phenomenological analysis of community exercise experiences after severe traumatic brain injury. Qualit Res Sport, Exercise Health. https://doi.org/10.1080/2159676X.2020.1778064

Revathi P, Prasanna Kumar G, Prashanthi K, Thoufeek Raja N (2008) Certain investigation and development of conventional wheelchair to improve the life of a severely disabled person with multiple sclerosis. Int Res J Eng Tech 6(4):1901–1903

Rice LA, Peters J, Sung J, Bartlo WD, Sosnoff JJ (2019) Perceptions of fall circumstances, recovery methods, and community participation in manual wheelchair users. Am J Phys Med Rehabil 98(8):649–656. https://doi.org/10.1097/PHM.0000000000001161

Richardson M, Frank AO (2009) Electric powered wheelchairs for those with muscular dystrophy: problems of posture pain and deformity. Disabil Rehabil: Assistive Tech. https://doi.org/10.1080/17483100802543114

Rodby-Bousquet E, Hägglund G (2010) Use of manual and powered wheelchair in children with cerebral palsy: a cross-sectional study. BMC Pediatr. https://doi.org/10.1186/1471-2431-10-59

Rousseau-Harrison K, Rochette A, Routhier F, Dessureault D, Thibault F, Côté O (2009) Impact of wheelchair acquisition on social participation. Disabil Rehabil Assist Technol 4(5):344–352. https://doi.org/10.1080/17483100903038550

Rozas Llontop DA, Cornejo J, Palomares R, Cornejo-Aguilar JA (2020) Mechatronics design and simulation of anthropomorphic robotic arm mounted on wheelchair for supporting patients with spastic cerebral palsy. IEEE Int Conf Eng Veracruz (ICEV). https://doi.org/10.1109/ICEV50249.2020.9289665

Ruzaij MF, Neubert S, Stoll N, Thurow K (2017) Design and implementation of low-cost intelligent wheelchair controller for quadriplegias and paralysis patient. SAMI 2017 - IEEE 15th International symposium on applied machine intelligence and informatics, Proceedings, 399–404. https://doi.org/10.1109/SAMI.2017.7880342

Silveira SL, Richardson EV, Motl RW (2020) Social cognitive theory as a guide for exercise engagement in persons with multiple sclerosis who use wheelchairs for mobility. Health Educ Res 35(4):270–282. https://doi.org/10.1093/her/cyaa013

Singh H, Scovil CY, Yoshida K, Oosman S, Kaiser A, Craven C, Jaglal S, Musselman KE (2020) Factors that influence the risk of falling after spinal cord injury: a qualitative photo-elicitation study with individuals that use a wheelchair as their primary means of mobility. BMJ Open 10(2):e034279. https://doi.org/10.1136/bmjopen-2019-034279

Spaeth DM, Mahajan H, Karmarkar A, Collins D, Cooper RA, Boninger ML (2008) Development of a wheelchair virtual driving environment: trials with subjects with traumatic brain injury. Arch Phys Med Rehabil 89(5):996–1003. https://doi.org/10.1016/j.apmr.2007.11.030

Torkia C, Reid D, Korner-Bitensky N, Kairy D, Rushton PW, Demers L, Archambault PS (2015) Power wheelchair driving challenges in the community: a users’ perspective. Disabil Rehabil Assist Technol 10(3):211–215. https://doi.org/10.3109/17483107.2014.898159

van den Akker LE, Holla JFM, Dadema T, Visser B, Valent LJ, de Groot S, Dallinga JM, Deutekom M (2020) Determinants of physical activity in wheelchair users with spinal cord injury or lower limb amputation: perspectives of rehabilitation professionals and wheelchair users. Disabil Rehabil 42(14):1934–1941. https://doi.org/10.1080/09638288.2019.1577503

Walford SL, Requejo PS, Mulroy SJ, Neptune RR (2019) Predictors of shoulder pain in manual wheelchair users. Clin Biomech 65:1–12. https://doi.org/10.1016/j.clinbiomech.2019.03.003

Webster JS, McFarland PT, Rapport LJ, Morrill B, Roades LA, Abadee PS (2001) Computer-assisted training for improving wheelchair mobility in unilateral neglect patients. Arch Phys Med Rehabil 82(6):769–775. https://doi.org/10.1053/apmr.2001.23201

Weech S, Kenny S, Barnett-Cowan M (2019) Presence and cybersickness in virtual reality are negatively related: a review. Front Psychol. https://doi.org/10.3389/fpsyg.2019.00158

WHO (2018) Assistive technology. https://www.who.int/news-room/fact-sheets/detail/assistive-technology

Xi L, Yamamoto Y, Mikata T, Shino M (2019) One-dimensional input device of electric wheelchair for persons with severe Duchenne muscular dystrophy. Technol Disabil 31(3):101–113. https://doi.org/10.3233/TAD-190225

Xiang H, Chany AM, Smith GA (2006) Wheelchair related injuries treated in US emergency departments. Inj Prev 12(1):8–11. https://doi.org/10.1136/ip.2005.010033

Funding

This study was supported by the National Institute for Insurance against Accidents at Work (INAIL), being part of the Rientr@ project (PDT2/1—“Rientr@: Ambienti Virtuali per facilitare il rientro al lavoro dopo incidente” [Virtual Environments to Facilitate the Return to Work after an Accident]), and by the Italian Ministry of Health (Ricerca Corrente 2020/2021 to Dr. E. Biffi).

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Genova, C., Biffi, E., Arlati, S. et al. A simulator for both manual and powered wheelchairs in immersive virtual reality CAVE. Virtual Reality 26, 187–203 (2022). https://doi.org/10.1007/s10055-021-00547-w

DOI: https://doi.org/10.1007/s10055-021-00547-w