Abstract

In this paper, we present a method of evaluating binary image dissimilarity based on tree representation and heuristic optimization. Starting from the image, a graph structure of a binary tree is constructed that splits the set of image foreground pixels into consecutive subsets attached to tree nodes. Next, instead of comparing two images themselves, one compares the trees and expresses image dissimilarity as tree dissimilarity, which can be characterized by a nonlinear function. The goal is to find its minimum, as it corresponds with the best match of compared trees. Searching for the minimum would be ineffective with analytical optimization methods. Hence, we have approached the issue with three meta-heuristic algorithms, namely genetic algorithm, particle swarm optimization (PSO) and simulated annealing. The presented results show that PSO achieved the best results. The proposed method is compared with other binary image comparison approaches. The performed tests that are described in the paper show that it outperforms its competitors and can be successfully applied to compare binary images.

Similar content being viewed by others

1 Introduction

The idea presented in this paper follows the approach of extracting and comparing image features to calculate image dissimilarity, which is understood as the extent of how two images are unlike, with scale, rotation and translation invariance. The image (dis)similarity is understood in visual terms. No semantic issues are raised. The proposed method is as follows. For a binary image, a tree (a connected, acyclic graph) is created. The tree represents the image. The dissimilarity between two images is expressed by the degree of dissimilarity of the trees, constructed for the images. To determine the dissimilarity value, the trees have to be matched, which is a nonlinear optimization problem. We address it with the use of meta-heuristic algorithms.

Determining the dissimilarity is based on tree representation of binary images and unlikeness between two images is expressed as unlikeness of the trees constructed upon the images [40–43]. In this paper, the proposed image comparison method has been juxtaposed with reference algorithms. Moreover genetic algorithm, particle swarm optimization and simulated annealing were analyzed as optimization methods for the need of tree matching in the presented approach.

Heuristic optimization methods (often biologically inspired) have constituted an important share in optimization studies recently. The interest in this area has been intensified by successful research, which has yielded many efficient algorithms. They are often used to cope with problems, which are too challenging for standard approaches. Namely, meta-heuristic methods effectively deal with nonlinear, multiple optima functions, with no derivative information, for both continuous and discrete domains. In this paper, we evaluate three meta-heuristic optimization algorithms on a function, which calculates dissimilarity of binary images.

The paper is organized as follows. In the next section, a short survey of previous works in binary image similarity is given. In the next one, the proposed tree-based binary image dissimilarity measure, which underlies the experiments, is described. In Sect. 4, we briefly present the examined optimization algorithms. The experimental results are shown and discussed in Sect. 5. Conclusions are drawn in Sect. 6.

2 Previous works

The problem of determining binary image similarity is an important one in the domain of image processing. The aim is to design a solution robust to basic image transformations, e.g., geometrical ones: scaling, rotation and translation. Moreover, the approach should be robust to basic image transformations. Many different measures have been proposed so far. Some of them are based on the assumption that considered images are of the same size and orientation, and comparison of corresponding pixels is performed. Such methods are usually simple and computationally inexpensive; however, they are not invariant to image modifications. For this reason, more sophisticated methods have been developed. Most of them take advantage of extracting and comparing image features like edges, shapes, etc.

The issue of shape similarity is addressed in, e.g., [3] and [37]. In [13], the inner distance is introduced to measure the shape similarity. The inner distance is defined as the length of the shortest path within the shape boundary to classify shape images. The approach presented in [22] is aimed at handling partially visible shapes. Some methods make use of curve alignment techniques [31]. In [21], curve correspondence is applied to distinguish between shapes. In [1] and [27], the problem is approached with the so-called curvature scale space. The paper [19] introduces a representation based on a recursive division of a shape. Some methods operate on edge images [30] or contours of objects [23]. Others take advantage of curvature trees [2], contour point histogram [34], deformable potential of contour [38] or curve adjustment procedure based on their minimal deformation cost [39]. There is a number of algorithms which are based on Hausdorff distance [10, 16] to compare binary images. These algorithms generally calculate inter-pixel distances between images. The original solution is sensitive to noise and some research [4, 29] was aimed at reducing this shortcoming. An adaptive measure of local Hausdorff distances between images is proposed in [5]. In [15], the image dissimilarity is determined with the use of moment invariants. Both Hausdorff and moment invariant approaches are universal and possible to be applied for various kinds of binary images.

3 Tree-based image dissimilarity

A binary image is usually perceived as a rectangular matrix of two-valued scalars. The main idea of the proposed method is to represent a binary image as a tree. A tree T is understood to be a connected graph with no cycles. Trees considered in the paper are rooted ones, which means that there exists one node in the tree designated as a root. A parent of a node is the node connected to it on the path to the root. |T| signifies the order (number of nodes) of T.

We present an image dissimilarity measure which in general can be applied to both binary [41] and gray-tone images [42]. To determine the dissimilarity of two images, trees created upon the images are compared. This is done in the process of adjusting one tree to the other. The problem itself is challenging and cannot be solved with the use of standard analytical optimization methods. Therefore, searching for the best match of the trees is performed by means of meta-heuristic optimization. This issue will be discussed in Sect. 4; the current one is focused on tree representation and comparison.

3.1 Constructing the tree

The tree construction algorithm works iteratively. Starting from the initial set of all image foreground pixels of a given binary image, its consecutive subsets are extracted. Their characteristic elements are than assigned to tree nodes. Let us define the set of image pixels \(S = \{p :I(p) = u \}\), where p stands for pixel coordinates of an image I, and u is a value of foreground pixels. In successive steps, subsets of the input set of foreground pixels are created. Until the desired tree level is reached, subsets are recursively determined. For every set, a characteristic element is calculated. The tree is constructed by connecting the characteristic elements. As they are bound with sets of pixels, the elements remain in parent–child relationship as well and a tree arises by linking the elements which fulfill the relation. To apply the algorithm, one has to provide the input set of pixels S, a function f which determines the characteristic element, a criterion c which allows to construct subsets upon a set and the height of the output tree. Algorithm 1 presents the idea.

To determine the characteristic element of a set, function f computes the arithmetic mean of all \(p \in S\). In other words, f calculates coordinates of the centroid (center of gravity) of all pixels in S. Each tree node is thus characterized by an appropriate centroid. A criterion c allowing to create two subsets of S is defined as follows. Let \(\rho (o_1, o_2)\) be the Euclidean distance between pixels’ coordinates \(o_1\) and \(o_2\). Let \(m = {\mathrm {median}}( \{ \rho (p, f(S)) :p \in S \})\). Presented in another way, m stands for a median distance between pixels in S and its centroid. Then, \(c(S) = (S_1, S_2)\), where \(S_1 = \{ p \in S :\rho (p, f(S)) \le m \}\) and \(S_2 = \{ p \in S :\rho (p, f(S)) \ge m \}\). In this way, \(S_1\) contains pixels closer (or equally distant) to the centroid than m and \(S_2\) contains pixels farther (or equally distant) from the centroid than m. Alternatively, instead of computing medians for consecutive sets of pixels, in the very beginning the quantiles may be calculated. Then, successive sets of pixels are determined with regard to the values of appropriate quantiles [40].

As there are two subsets created in a single step of our procedure, the output tree T is a perfect binary one. The algorithm successively applies function f to determine the characteristic elements for consecutive sets extracted with use of criterion c. The process stops when the tree is constructed to the desired level. Figure 1 illustrates the process of building a tree.

The way of creating a tree. In a, consecutive sets returned by criterion c are presented. The images are ordered as a tree, in accordance with the way the procedure works. The topmost image is the input image. Left child images (red arrows) are composed of pixels which are closer or equally distant to the centroid of the parent image. Right child images (blue arrows) are composed of pixels which are farther or equally distant to the centroid. For every set, a characteristic element is calculated. The elements are bound with corresponding nodes of a tree. Every element is linked with a characteristic element of a parent node. This way, the final tree (b) arises. The presented tree of height \(h = 2\) is enlarged in relation to the images in a. The color and the style of tree edges correspond to the color and the style of arrows in a (color figure online)

Apart from a centroid, with every set we associate information about pixel distribution of the set. The information comprises average pixel distance from the centroid and standard deviation of pixel distance from the centroid. This ensures comprehensive characterization of the set.

The trees created with the use of the above algorithm describe the content of images in such a way that for different images, depicting different content, corresponding centroids as well as pixel distribution values differ. On the other hand, centroids and pixel distribution values of corresponding nodes of trees created upon similar images remain similar, due to similar subsets returned by criterion c. Therefore, image similarity (or dissimilarity) can be expressed by tree similarity (or dissimilarity). We can estimate the extent of dissimilarity between two trees and therefore conclude upon the degree of difference between the images. Examples of trees created upon four various binary shapes are shown in Fig. 2.

Different binary images imply different trees. Test images of MPEG7 database [28]: a bat, b bird, c frog and d lizard exemplify the statement. The trees are of height \(h = 4\). Left edges (connecting the sets with subsets of closer pixels) are drawn in red, while right edges (connecting the sets with subsets of farther pixels) are drawn in blue (color figure online)

3.2 Comparing the trees

Following the main idea of the proposed approach, to find the dissimilarity of two binary images one computes the dissimilarity of trees obtained for images being compared. Since the way of creating the tree results in a strict structure of the tree, while investigating differences between two trees, nodes can be compared pairwise. This is due to the fact that every node has its corresponding one in another tree. Difference between the trees is computed as a sum of differences between pairs of nodes. This way, the tree dissimilarity is expressed as a single scalar value.

Let \(T_1\) and \(T_2\) be trees of height \(h_1\) and \(h_2\), respectively. The difference between the trees can be computed as:

where \(\delta _{i}\) stands for the difference for a pair of nodes i, \(l = 2^{h+1} - 1\) with \(h \in {\mathbb {N}}_{+}\) and \(h \le {\mathrm {min}}(h_1, h_2)\), and l is the number of nodes. The difference \(\delta _i\) can be viewed as a “work” needed to translate a centroid of a node i to the position of a centroid of a corresponding node of another tree and vice versa. The term “work” is an analog to the physical quantity, which is directly proportional to force and the Euclidean distance between the centroids of a pair of corresponding nodes. The force is proportional to the number of pixels \(z_{ei}\) upon which the centroid for a node i in tree \(T_e\) is calculated. This reasoning leads to the formula:

where \(Z_e = \sum _{i=1}^{l} z_{ei}\), d stands for the distance, \(\widehat{a}_i = | \widehat{a}_{1i} - \widehat{a}_{2i} |\) and \(\widehat{s}_i = | \widehat{s}_{1i} - \widehat{s}_{2i} |\), with \(\widehat{a}_{ei}\) meaning average pixel distance from the centroid i in tree \(T_{e}\) and \(\widehat{s}_{ei}\) symbolizing standard deviation of pixel distance from the centroid i in tree \(T_{e}\).

The above approach may be used directly only if the trees represent images of the same size and orientation. In case of images modified with the use of rotation or scaling, the trees must be matched prior to the computation of dissimilarity. To do this, first of all, trees are aligned, so that their roots are positioned in the origin of the coordinate system. It is achieved by translating all the nodes of a tree by the same vector applied to translate the root of the tree to the origin of the coordinate system. This ensures translation invariance of the trees. Then, tree \(T_1\) is rotated and scaled. This is achieved by transforming coordinates of all nodes of the tree to the polar coordinate system, in which each point is determined by an angle from a fixed direction and a distance from the pole. For every node of the tree, the angle is increased by \(\alpha\) and the distance is multiplied by s. This way, a new tree \(T_1\) arises. Next, the difference D between \(T_1\) and \(T_2\) is calculated.

Let us assume that the image upon which the tree \(T_2\) has been constructed has been scaled, so that the distances in the image increased by factor \(\kappa\) and the number of pixels by factor \(\kappa ^2\). The tree constructed for that image would be a version scaled by \(\kappa\) of an original tree. Then the formula goes as follows:

which is dependent on \(\kappa\). Obviously, a method with no dependence on scale is desired. Then the formula would have to be as follows:

Let us examine the \(\frac{z_{2i}}{Z_2}\) component. It cannot be divided by \(\kappa\), whose value is unknown. What is possible is to raise \(Z_2\) to the power of 1.5 and then the scaled component would be

The first component \(\frac{z_{1i}}{Z_1}\) cannot be divided by \(\kappa\) either. However, the value \(s'\) by which the tree \(T_1\) has been scaled in relation to the tree \(T_2\) is known. The scale equals \(s' = s \, \kappa\), where s is the scale difference between the compared images and is obviously dependent on \(\kappa\). Therefore, we achieve:

which eliminates, in the first component, the relation between \(\delta _i\) and \(\kappa\). However, for an image scaled by factor x, upon which the tree \(T_1\) has been created, the first component would equal

which in turn introduces the dependence on x. Removing the dependence is possible by raising \(Z_1\) to the power of 1.5. Then for an image scaled by x, for which the tree \(T_1\) has been built, the component would equal

and the final formula takes the following form:

which ensures invariance to scaling.

The goal is to find an angle \(\alpha\) and a scale s such that the the difference D is minimal. Then, D specifies the dissimilarity of trees, and thus the dissimilarity of images. When comparing trees created upon the same images even if rotated or scaled, D equals 0 (or almost 0 due to rotation and scaling approximation). The difference between the trees increases along with the growth of the difference between the images and is—theoretically—unlimited. Therefore, \(D \in \langle 0, \infty )\).

A significant feature of the method is that the geometrical transformation of an image refers to the transformation of the tree constructed upon the image. In effect, the trees created with the use of the presented approach can be adjusted by any geometrical transformation, e.g., a mirror flip. In such case, there will obviously be a need to optimize some additional parameters, specific for a particular transformation.

4 Optimization algorithms

In the transformation model introduced in the previous section, two parameters are considered, namely angle \(\alpha\) and scale s. Matching the trees with regard to these parameters is an optimization problem. The goal is to optimize the function expressed by the summation (1). The search space is a two parameters plane. In general, however, adjustment of several parameters may be required. Moreover, the function is nonlinear, it can have multiple minima and calculating its derivative is a very expensive task in computational terms. For such challenging functions, heuristic optimization algorithms, rather than the classical ones, are usually the best choice. Accordingly, in this research, the heuristic optimization approaches were applied. There is a constant necessity for deeper comprehension and further improvement of this type of method. Both external, computational needs and bottom-up algorithm examination appoint directions of advancement in the field of optimization. Heuristic optimization is applied in the current study to find the best match of two trees created upon binary images. This matching is necessary to compute the dissimilarity between the trees in view of evaluating the dissimilarity of two underlying images. In this research, we used three meta-heuristic optimization algorithms, namely, genetic algorithm, particle swarm optimization and simulated annealing. In the remainder of this section, the general view of applied optimization methods has been presented. Detailed descriptions, algorithm variants as well as parameter selection hints are available in a vast literature on the subject (e.g. [6, 7, 11, 12, 14, 18, 20, 26, 33]).

4.1 Genetic algorithm

Genetic algorithm (GA) [12, 14, 26] is a classic meta-heuristic optimization approach. It follows the concept of population advancement based on natural evolution. A population composed of individuals (also called chromosomes) evolves to find the best argument value of an objective function. Individuals undergo mechanisms derived from evolution, to lead the population to better results. The algorithm works as follows. At first, the population is randomly initiated in the search space. Then, in each iteration, chromosomes, each of which encodes a solution, are subjected to selection, gene recombination and mutation. These imitate evolution of a real, biological species. In effect, the next generation replaces the previous one. Selection determines pairs of parent chromosomes, upon which children arise by means of gene crossover. Generally, the better is the value of the fitness function for a particular individual, the greater are the chances for it to become a parent. Crossover operator defines how genes of parent individuals are recombined. Mutation decreases the risk of the population getting stuck in a local minimum. The main parameters are population size P, maximal number of generations G and fraction of the population which undergoes crossover and mutation.

In the issue of tree dissimilarity minimization, each chromosome encoded a scale s and an angle \(\alpha\). The population evolved in search for the optimal values of these parameters.

4.2 Particle swarm optimization

Particle swarm optimization (PSO) [18, 33] is a well-known optimization algorithm. It is an evolutionary computation method, which imitates social behavior of a swarm of creatures. The idea benefits from advancement of a population of particles, which rove in the search space. The position of each particle represents a solution. Particles adjust their movement parameters according to their own experience as well as the experience of other particles of the swarm. A basic variant of PSO works as follows. First of all, the population of particles is initiated. Particles, denoted below as x, are randomly positioned over a search space and are assigned random velocities. At iteration \(k+1,\) the position of a particle i is updated according to the formula:

with the velocity \(v_{k+1}^{i}\) calculated as follows:

where \(w_{k}\) stands for inertia weight and \(c_{1k}\) and \(c_{2k}\) stand for cognitive attraction and social attraction parameters, respectively. \(p_{k}^{i}\) is the best solution particle i ever found, \(p_{k}^{g}\) is the best solution ever found by any particle of the swarm, and \(r_{1}\) and \(r_{2}\) are random numbers uniformly distributed on the interval (0, 1). Inertia weight \(w_{k}\) as well as attraction parameters \(c_{1k}\) and \(c_{2k}\) can be constant or may be changed in the course of the run. The algorithm works and improves the solution until the stopping criterion is met, e.g., satisfactory solution is achieved, inconsiderable solution improvement is negligible or a maximum number of iterations is reached. PSO has gained an exceptional interest. There are many works introducing modifications to increase the performance of PSO and providing analysis of the algorithm, e.g., [7, 8, 11, 25]. Most of them relate to updating particle velocity.

In search of the best tree adjustment, we assumed that each particle’s position represents a scale s and an angle \(\alpha\). Velocity expresses the dynamics of parameter adjustment. Swarm proceeded to find the optimal configuration of the two parameters.

4.3 Simulated annealing

Simulated annealing (SA) [6, 20] is another meta-heuristic, global optimization approach. It is inspired by the process of annealing in metallurgy, where heating and cooling down the alloy is performed, to achieve the best structure of the material, therefore minimizing its energy. Heating shoves the molecules out from their initial positions. This prevents the algorithm getting stuck in a local minimum. On the other hand, cooling lets them go into the state of lower energy then before, which means they head toward an optimal solution. In each step of the algorithm, a new point is randomly generated. Its distance to the current point is based on a probability distribution, whose scale is proportional to the temperature. The current solution is replaced by a new one, when the latter lowers the objective (cooling down the material). However, with a certain probability, a new point may be accepted, even if it raises the objective (heating). The temperature is decreased in the run of the algorithm. This way, the extent of search is reduced in the final stages. The main parameters are the initial temperature and the cooling function, which determine the way of decreasing the temperature.

Similarly as in the other approaches, we optimized our objective function with respect to scale s and angle \(\alpha\).

5 Experiments

As it has been mentioned, the tree-based method allows to determine the dissimilarity between any pair of binary images. The following section presents a comparison of the proposed approach with two reference algorithms, namely the Hu moment invariant method [15] and a modified Hausdorff distance method [10]. All three compared methods are general purpose ones, able to deal with different types of binary images, and their outcome can be presented as a dissimilarity value.

The Hu moment invariants approach allows to calculate image characteristic (as a set of 7 numbers) invariant to scaling, translation and rotation. The methods based on Hausdorff distance are sensitive to such transformations. It is possible to deal with the issue by translating, resizing, as well as determining orientation [9] and rotating of the compared images. However, it significantly increases the computational cost.

Moreover, another set of experiments was performed. For the tree-based approach, three meta-heuristic optimization algorithms were evaluated and juxtaposed.

5.1 Methodology and results

We created a dataset of binary imagesFootnote 1, based on the MPEG7 shape database [28]. Our dataset is composed of 50 classes of images. Every class contains an original object as well as its variants, obtained by binary morphological operators of opening and closing [32]. Therefore, there are 150 images in the dataset. Every morphologically modified image was scaled by a random factor in the range \(\langle 0.5, 2 \rangle\) and rotated by a random factor in the range \(\langle 0, 2 \pi \rangle\). Class example is presented in the Fig. 3. Upon the images, trees of height \(h = 3\) were created. Evaluation procedure were as follows. For a given optimization algorithm, for its certain configuration, every possible pair of trees was compared. As a result, we obtained square matrix of dissimilarity values. If an effective optimization method was used, then the values calculated for objects of the same class were small, whereas those calculated for objects from among different classes were greater. To reason about the efficiency of the considered algorithm, we subjected the matrix of dissimilarity values to cluster analysis.

Clustering was performed with the use of k-medoids algorithm [17]. The method—on the basis of the dissimilarity matrix—partitions the set of observations into a predefined number of clusters k. It is similar to k-means algorithm [24, 36]. However, in contrast to k-means, k-medoids selects cluster centers from among the observations rather than calculates centroids. The chosen centers (called medoids) define clusters in such a way that each observation is assigned to the least dissimilar medoid. The objective of the algorithm is to find such a configuration of medoids for which the sum of dissimilarities between the observations and their centers is minimal. The parameter k was constant and equaled to 50—the number of classes of images.

We express the reliability as the percentage of correctly clustered images. It may be difficult, however, to determine the percentage. Clustering algorithms deliver data grouped into clusters, but without labels. To determine the reliability, it is necessary to find the best cluster labeling. However with different observations within a single cluster, it is tricky to assign the labels. For every case, we aimed at finding such a label arrangement which led to the highest percentage.

The comparative study of the algorithms follows the reasoning that the better an optimization method performed, the higher was the outcome percentage result. The successive tables present results obtained for the considered algorithms, for different parameter configuration. The parameters were arranged in such a way that a maximal number of function evaluation was fixed. In effect, the results of comparable computational effort for every method were achieved.

For comparative purposes, the proposed approach was confronted with two well-known binary image comparison algorithms, namely the Hu moment invariants [15] and the modified Hausdorff approach [10]. The results achieved for the Hu method reached 38.40 % and for the modified Hausdorff approach 36.80 %.

5.2 Comparison of meta-heuristics for the tree-based approach

GA has been applied with different values of population size P, maximal number of generations G and number of chromosomes which underwent crossover. Selection was performed by stochastic universal sampling. Gene recombination was accomplished by uniform crossover. Mutation was executed in an adaptive way [35]. Table 1 presents the results.

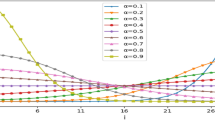

We have applied the PSO algorithm with different values of population size P, maximal number of generations K, cognitive attraction \(c_{1k}\) and social attraction \(c_{2k}\). Initial inertia weight \(w_1 = 0.9\) and \(w_k = w_1 - 0.4 \, (k-1)/(K-1)\), which means that \(w_k\) decreased linearly from 0.9 at the first iteration to 0.5 at iteration K. PSO was made to stop if there was no improvement in the objective function for 5 consecutive generations. Tables 2, 3 and 4 present the results.

The maximal number of function evaluations in a single run was fixed. This way, the algorithm was able to calculate the value of the function as many times as the tested population-based methods. The initial temperature was set to either \(t_1 = 50\) or \(t_1 = 100\) and its value at iteration k was calculated as \(t_k = 0.95^k \times t_1\). Table 5 shows the results for both \(t_1\) values and different numbers of objective function evaluations.

Experiments have been performed for small population sizes and numbers of generations, for both population-based methods. This limited the number of possible evaluations of the objective function. For the simulated annealing approach, the number of the objective function evaluations was also limited. In effect, every approach was able to calculate the objective function at most the same number of times. This way, results are comparable. The experiments show that PSO achieved the best results, which were satisfactory for small population sizes and numbers of generations. Simulated annealing was slightly worse. Both methods significantly outperformed the genetic algorithm.

It is clearly visible that the genetic algorithm achieved better results for greater population size and number of generations. Crossover fraction also played a significant role in the performance of the method. As for values \(f_1 = 0.7\) and \(f_1 = 0.8,\) the results have been similar, and increasing it to \(f_1 = 0.9\) led to the degradation of the results.

In the PSO evaluation, the size of the population has had a positive impact on the outcome. On the other hand, for the number of generations, social and cognitive attraction, we have observed no obvious influence of the parameters on the final outcome.

As one might expect, for the simulated annealing approach, generally, greater number of possible objective function evaluations yielded better optimization results.

6 Conclusions

In this paper, a generic binary image comparison approach with the use of heuristic optimization has been presented. The method is based on image tree representation. In the process of matching the trees constructed upon two images, a dissimilarity value is obtained. The value expresses how the images are unlike. The proposed approach is invariant to translation, scaling and rotation, as well as robust to basic image transformations. In this research, we also found out how heuristic optimization algorithms perform with the tree adjustment issue for two transformation parameters—scaling and rotation. We have evaluated the genetic algorithm, the particle swarm optimization and the simulated annealing approach.The tests have been applied for a different parameter values of the considered methods. The results show the advantage of the particle swarm optimization and the simulated annealing algorithms over the genetic algorithm. Nevertheless, the best results have been obtained for the PSO approach. It is worth noticing that geometrical image transformation corresponds to the transformation of the tree. Therefore, the presented method can be naturally developed to be robust to any geometrical image transformation. Despite higher complexity which will occur in such a case (more variables to optimize), thanks to the proposed heuristic optimization approach, tree adjustment process may be performed using exactly the same scheme. The proposed methods for measuring binary image dissimilarity has also been compared in this paper with other dissimilarity measures, showing its superiority over them. All the above experiments have proven that the proposed method can be successfully applied to binary image comparison.

Notes

Available at http://www.isep.pw.edu.pl/~iwanowsm/binary.zip.

References

Abbasi S, Mokhtarian F, Kittler J (2000) Enhancing CSS-based shape retrieval for objects with shallow concavities. Image Vis Comput 18(3):199–211

Alajlan N, Kamel MS, Freeman G (2006) Multi-object image retrieval based on shape and topology. Signal Process Image Commun 21(10):904–918

Ankerst M, Kriegel H-P, Seidl T (1998) A multistep approach for shape similarity search in image databases. IEEE Trans Knowl Data Eng 10(6):996–1004

Baddeley AJ (1992) An error metric for binary images. Robust computer vision: quality of vision algorithms, proceedings of international workshop on robust computer vision, pp 59–78

Baudrier E, Nicolier F, Millon G, Ruan S (2008) Binary-image comparison with local-dissimilarity quantification. Pattern Recognit 41(5):1461–1478

Cerny V (1985) Thermodynamical approach to the traveling salesman problem: an efficient simulation algorithm. J Optim Theory Appl 45(1):41–51

Clerc M, Kennedy J (2002) The particle swarm—explosion, stability, and convergence in a multidimensional complex space. IEEE Trans Evol Comput 6(1):58–73

Clerc M (2010) Beyond standard particle swarm optimisation. Int J Swarm Intell Res 1(4):46–61

Crespo J, Aguiar P (2011) Revisiting complex moments for 2-D shape representation and image normalization. IEEE Trans Image Process 20(10):2896–2911

Dubuisson MP, Jain AK (1994) A modified Hausdorff distance for object matching. International conference on pattern recognition, pp 566–568

Eberhart R, Shi Y (2000) Comparing inertia weights and constriction factors in particle swarm optimization. In: Proceedings of the 2000 congress on evolutionary computation, pp 84–88

Goldberg D (1989) Genetic algorithms in search. Optimization and machine learning. Addison-Wesley Longman Publishing Co., Inc., Boston

Haibin L, Jacobs DW (2007) Shape classification using the inner-distance. IEEE Trans Pattern Anal Mach Intell 29(2):286–299

Holland J (1975) Adaptation in natural and artificial systems. University of Michigan Press, Michigan

Hu MK (1962) Visual pattern recognition by moment invariants. IRE Trans Inf Theory 8(2):179–187

Huttenlocher DP, Klanderman GA, Rucklidge WJ (1993) Comparing images using the Hausdorff distance. IEEE Trans Pattern Anal Mach Intell 15(9):850–863

Kaufman L, Rousseeuw P (1990) Finding groups in data: an introduction to cluster analysis. Wiley-Interscience, New York

Kennedy J, Eberhart R (1995) Particle swarm optimization. Proc IEEE Int Conf Neural Netw 4:1942–1948

Kim H-K, Kim J-D (2000) Region-based shape descriptor invariant to rotation, scale and translation. Signal Process Image Commun 16(1):87–93

Kirkpatrick S, Gelatt C, Vecchi M (1983) Optimization by simulated annealing. Science 220(4598):671–680

Latecki LJ, Lakmper R (2000) Shape similarity measure based on correspondence of visual parts. IEEE Trans Pattern Anal Mach Intell 22(10):1185–1190

Latecki LJ, Lakmper R, Wolter D (2005) Optimal partial shape similarity. Image Vis Comput 23(2):227–236

Linhui J, Kitchen L (2000) Object-based image similarity computation using inductive learning of contour-segment relations. IEEE Trans Image Process 9(1):80–87

MacQueen J (1967) Some methods for classification and analysis of multivariate observations. Fifth Berkeley symposium on mathematical statistics and probability, pp 281–297

Mendes R, Kennedy J, Neves J (2004) The fully informed particle swarm: simpler, maybe better. IEEE Trans Evol Comput 8(3):204–210

Michalewicz Z (1996) Genetic algorithms + data structures = evolution programs. Springer, London

Mokhtarian F, Abbasi S, Kittler J (1997) Efficient and robust retrieval by shape content through curvature scale space. World Sci Publ Ser Softw Eng Knowl Eng 8:51–58

MPEG7 CE Shape-1 Part B. http://imageprocessingplace.com/downloads_V3 /root_downloads/image_databases/MPEG7_CE-Shape-1_Part_B.zip. Accessed 19 Nov 2014

Paumard J (1997) Robust comparison of binary images. Pattern Recognit Lett 18(10):1057–1063

Prieto MS, Allen AR (2003) A similarity metric for edge images. IEEE Trans Pattern Anal Mach Intell 25(10):1265–1273

Sebastian TB, Klein PN, Kimia BB (2003) On aligning curves. IEEE Trans Pattern Anal Machine Intell 25(1):116–125

Serra J (1982) Image analysis and mathematical morphology. Academic Press, London

Shi Y, Eberhart R (1999) Empirical study of particle swarm optimization. In: Proceedings of the 1999 congress on evolutionary computation, pp 1945–1950

Shu X, Wu X-J (2011) A novel contour descriptor for 2D shape matching and its application to image retrieval. Image Vis Comput 29(4):286–294

Srinivas M, Patnaik LM (1994) Adaptive probabilities of crossover and mutation in genetic algorithms. IEEE Trans Syst Man Cybern 24(4):656–667

Steinhaus H (1956) Sur la Division des Corps Materiéls en Parties. Bull Acad Polon Sci C1.III

Veltkamp RC (2001) Shape matching: similarity measures and algorithms. SMI 2001 international conference on shape modeling and applications, pp 188–197

Xu C, Liu J, Tang X (2009) 2D shape matching by contour flexibility. IEEE Trans Pattern Anal Mach Intell 31(1):180–186

Younes L (1999) Optimal matching between shapes via elastic deformations. Image Vis Comput 17(5):381–389

Zieliński B (2013) Division trees and their application to digital image comparison. PhD dissertation (in Polish), Warsaw University of Technology

Zieliński B, Iwanowski M (2013) Binary image comparison with use of tree-based approach. Adv Intell Syst Comput 184:171–177

Zieliński B, Iwanowski M (2012) Comparing image objects using tree-based approach. Lecture Notes Comput Sci 7594:702–709

Zieliński B, Iwanowski M (2013) Heuristic optimization algorithms for a tree-based image dissimilarity measure. Adv Intell Syst Comput 226:91–100

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zieliński, B., Iwanowski, M. Tree-based binary image dissimilarity measure with meta-heuristic optimization. Pattern Anal Applic 19, 1–10 (2016). https://doi.org/10.1007/s10044-015-0511-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10044-015-0511-1