Abstract

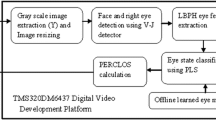

This paper presents a real-time vision-based system for detecting the eye state. The system runs on a consumer-grade computer with an uncalibrated web camera and passive illumination. It achieves vision-based eye state detection by applying previously established similarity measures between image regions, feature selection algorithms, and classifiers, without introducing a new methodology. From data extracted from 1,293 pair-of-eyes images and 2,322 individual eye images, such as histograms, projections, and contours, 186 similarity measures with three eye templates were computed. Two feature selection algorithms, the \( J_{5} (\xi ) \) criterion and sequential forward selection, and two classifiers, multi-layer perceptron and support vector machine, were studied intensively to select the best scheme for pair-of-eyes and individual eye state detection. The outputs of the two selected classifiers were combined to optimize eye state monitoring in video sequences. We tested the system on videos with different users, environments, and illumination conditions. It achieved an overall accuracy of 96.22 %, which outperforms previously published approaches. The system runs at 40 fps and can be used to monitor driver alertness robustly.

Acknowledgments

This work was partially supported by the Spanish Ministry of Science and Innovation under project TIN2010-20529 and by the Regional Government of Castilla y León (Spain) under project VA171A11-2.

Originality and contribution

The eye is a highly variable non-rigid object, and unconstrained vision-based eye state detection is still an open question. This paper proposes an eye state detection approach based on extracting many kinds of data from the rectangular pair-of-eyes region and the two individual eye regions in images: 1-D grayscale histograms, 2-D color histograms, horizontal and vertical projections, and contours. The same data were also extracted from three templates (open, nearly closed, and closed). A total of 186 similarity measures between the data of the eye regions and those of the templates were calculated. These similarity measures are the eye region features. We selected the features and the classifier independently for pair-of-eyes and for individual eye state detection, because some features characterize the pair-of-eyes region well but not the individual eye region, or vice versa. Another contribution of the paper is that feature and classifier selection is carried out by intensively comparing two feature selection algorithms, the \( J_{5} (\xi ) \) criterion and sequential forward selection (SFS), and two classifiers, multi-layer perceptron (MLP) and support vector machine (SVM). No previous work in the literature computes different features from the eye region and performs feature selection to determine the inputs of a vision-based eye state classifier. To compute the percentage of eyelid closure over time (PERCLOS), we characterized not only the open and closed eye states in images but also the nearly closed eye state, which is necessary for an accurate PERCLOS measure. The two selected classifiers, one for the pair of eyes and one for the individual eye, are combined to achieve real-time eye state monitoring in video sequences.
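As a rough illustration of how sequential forward selection works in general, the following Python sketch greedily adds, at each step, the feature that most improves a subset score. It is not the paper's implementation: the function name `sequential_forward_selection`, the `score` callback, and the toy weights are all hypothetical, and the criterion actually used to rank the 186 similarity measures is not stated in this text.

```python
def sequential_forward_selection(n_features, score, k):
    """Greedy SFS: grow a feature subset one feature at a time.

    n_features: total number of candidate features (indexed 0..n_features-1).
    score: hypothetical callback taking a list of feature indices and
           returning a number, where higher means a better subset
           (for example, cross-validated classifier accuracy).
    k: number of features to select.
    """
    selected = []
    remaining = set(range(n_features))
    while remaining and len(selected) < k:
        # Try each remaining feature together with the current subset and
        # keep the one giving the highest score (ties go to the lowest index).
        best = max(sorted(remaining), key=lambda f: score(selected + [f]))
        selected.append(best)
        remaining.remove(best)
    return selected


if __name__ == "__main__":
    # Toy score (an assumption for the demo): each feature has a fixed
    # usefulness and a subset is scored by the sum of its members.
    weights = [0.2, 0.9, 0.5, 0.7]
    toy_score = lambda subset: sum(weights[i] for i in subset)
    print(sequential_forward_selection(len(weights), toy_score, k=2))
```

In practice the `score` callback would wrap training and validating a classifier such as an MLP or SVM on the candidate subset, which is where most of the computation goes.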
The system was tested on varied videos to assess its consistency with people different from those used in the training stage, frontal and non-frontal faces, changes in gaze direction, translation and scale changes, different illumination conditions, and glasses. It achieved an overall accuracy of 96.22 %, which outperforms previously published approaches. It can be used to monitor driver alertness robustly in real time by computing PERCLOS and the continuous duration of non-frontal face position and of the closed eye state.
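One way PERCLOS could be computed from per-frame classifier outputs is sketched below in Python, as the fraction of frames in a sliding window whose state counts as closed. This is a minimal sketch under stated assumptions: the class name `PerclosMonitor`, the state labels, the window length, and the choice of which states count as closed are all hypothetical, and how the paper weights the nearly closed state is not specified in this text.

```python
from collections import deque


class PerclosMonitor:
    """Sliding-window fraction of frames classified as closed."""

    def __init__(self, window_frames, closed_states=("closed",)):
        if window_frames <= 0:
            raise ValueError("window_frames must be positive")
        self.window = deque(maxlen=window_frames)
        # Assumption: the caller decides which labels count as closed,
        # e.g. ("closed", "nearly_closed") for a stricter measure.
        self.closed_states = frozenset(closed_states)
        self._closed_count = 0

    def update(self, state):
        """Add one frame's state and return PERCLOS over the window."""
        # If the window is full, the oldest frame is about to be evicted.
        if len(self.window) == self.window.maxlen:
            if self.window[0] in self.closed_states:
                self._closed_count -= 1
        self.window.append(state)
        if state in self.closed_states:
            self._closed_count += 1
        return self._closed_count / len(self.window)


if __name__ == "__main__":
    # At the reported 40 fps, a 60 s window would hold 2400 frames;
    # a tiny window is used here only to keep the demo readable.
    monitor = PerclosMonitor(window_frames=4)
    for s in ["open", "closed", "closed", "open", "open", "open"]:
        print(s, monitor.update(s))
```

Keeping a running count makes each update constant time, which matters little at this scale but avoids rescanning the window on every frame.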

Cite this article

González-Ortega, D., Díaz-Pernas, F.J., Antón-Rodríguez, M. et al. Real-time vision-based eye state detection for driver alertness monitoring. Pattern Anal Applic 16, 285–306 (2013). https://doi.org/10.1007/s10044-013-0331-0

DOI: https://doi.org/10.1007/s10044-013-0331-0