Abstract

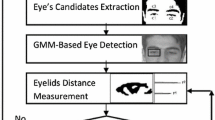

Two video-based human-computer interaction tools are introduced that can activate a binary switch and issue a selection command. The first tool, “BlinkLink,” automatically detects a user’s eye blinks and accurately measures their durations. The system is intended to provide an alternative input modality that allows people with severe disabilities to access a computer. Voluntary long blinks trigger mouse clicks, while involuntary short blinks are ignored. The system enables communication using “blink patterns”: sequences of long and short blinks that are interpreted as semiotic messages. The second tool, “EyebrowClicker,” automatically detects when a user raises his or her eyebrows and then triggers a mouse click. Both systems can initialize themselves, track the eyes at frame rate, and recover in the event of errors. No special lighting is required. The systems have been tested with interactive games and a spelling program. Results demonstrate overall detection accuracy of 95.6% for BlinkLink and 89.0% for EyebrowClicker.
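The distinction between long and short blinks can be illustrated with a minimal sketch. It is not the authors' implementation: the 0.5 s threshold, the `Blink` type, the function names, and the example codebook are all hypothetical, since the abstract states neither a duration threshold nor a specific pattern vocabulary. The sketch assumes that blink start and end times have already been measured by some detector.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical threshold in seconds; the abstract does not give a value.
LONG_BLINK_MIN_SECONDS = 0.5


@dataclass
class Blink:
    """One detected blink, with eye-closure start and end times in seconds."""
    start: float
    end: float

    @property
    def duration(self) -> float:
        return self.end - self.start


def classify(blink: Blink, threshold: float = LONG_BLINK_MIN_SECONDS) -> str:
    """Label a blink 'L' (long, treated as voluntary) or 'S' (short)."""
    return "L" if blink.duration >= threshold else "S"


def click_times(blinks: List[Blink],
                threshold: float = LONG_BLINK_MIN_SECONDS) -> List[float]:
    """Return the end times of long blinks; short blinks produce no click."""
    return [b.end for b in blinks if classify(b, threshold) == "L"]


def decode_pattern(blinks: List[Blink],
                   codebook: Dict[str, str],
                   threshold: float = LONG_BLINK_MIN_SECONDS) -> Optional[str]:
    """Map a sequence of blinks to a message via a long/short pattern string."""
    pattern = "".join(classify(b, threshold) for b in blinks)
    return codebook.get(pattern)


if __name__ == "__main__":
    observed = [Blink(0.0, 0.1), Blink(1.0, 1.8), Blink(3.0, 3.15)]
    print(click_times(observed))                     # one click, from the long blink
    print(decode_pattern(observed, {"SLS": "yes"}))  # hypothetical codebook entry
```

In this sketch the click is issued when a long blink ends, because only then is its duration known; a real system might instead fire as soon as the closure exceeds the threshold.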

Cite this article

Grauman, K., Betke, M., Lombardi, J. et al. Communication via eye blinks and eyebrow raises: video-based human-computer interfaces. UAIS 2, 359–373 (2003). https://doi.org/10.1007/s10209-003-0062-x

DOI: https://doi.org/10.1007/s10209-003-0062-x