Abstract

Ray tracing, combined with ideal retroreflection and mathematical simulation methods, has been used to design aerial imaging systems based on retroreflection. Although aerial images are blurred and have lower brightness than the light source, existing simulation methods do not focus on the appearance of these characteristics. In this study, we propose a computer graphics (CG)-based simulation using the ray tracing method to generate CG renditions of aerial images with reproduced luminance and blurring. CG models of three optical elements (light source, half-mirror, and retroreflector) were created on the basis of existing optical element models to simulate aerial images obtained through retroreflection in aerial imaging systems. By measuring the image formation position, we determined that the rendered aerial images consistently formed at a plane-symmetrical position relative to the axis of the half-mirror model, with a mean absolute error of \({0.55\,\textrm{mm}}\). We also compared rendered and actual aerial images in terms of luminance and sharpness characteristics, and found that the mean absolute percentage error of luminance remained within \(0.0376\). Furthermore, the directional dependence of blur was effectively reproduced using the retroreflector bidirectional reflectance distribution function developed in this study.

1 Introduction

Aerial imaging technology forms real images in mid-air. Augmented reality (AR) glasses and projection mapping are known examples of the fusion of computer graphics (CG) with the real physical space. AR glasses are optical systems that display virtual images. Interactions using AR glasses require users to wear special devices. Projection mapping requires prior investigation into the shape or reflectance of an object and the positional relationship between the projector and the object. In contrast, aerial imaging technology requires neither special wearable devices nor prior investigation of object shapes.

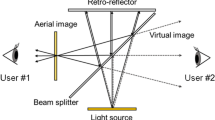

Aerial imaging by retroreflection (AIRR) is a technology comprising a light source, a half-mirror, and a retroreflector [1]. AIRR has better scalability than other aerial imaging technologies and produces images with a broader range of viewing angles [2]. The retroreflectors used in AIRR are easy to process and have a high degree of placement flexibility, making them viable candidates for applications in diverse fields, including biology [3], education [4], and traffic safety [5]. However, aerial images formed by AIRR are blurry and have low luminance relative to the light source. The luminance and blurring characteristics vary depending on various design parameters of the optical system, such as the distance between the half-mirror and the light source, and the angle of light incidence on the retroreflector. Therefore, designing an effective AIRR system without sufficient knowledge of such a system is difficult.

Reproducing the appearance of aerial images in CG is useful in the design of aerial imaging systems. Several simulation methods have been used in the design of AIRR systems [6, 7]. However, conventional AIRR simulation methods assume ideal retroreflection and overlook the blurring of aerial images, which is one of the problems of AIRR. Efforts have also been made to reproduce the appearance of aerial images using ray tracing methods [8]. However, such methods target an image formation mechanism that differs from that of AIRR, and they still fail to address the blurring of aerial images.

In this study, we propose a novel approach to reproducing the appearance of aerial images formed by AIRR in CG using a ray tracing method (Fig. 1). Specifically, for each elemental component of a retroreflective material, we model and integrate the bidirectional scattering distribution function (BSDF) and the bidirectional reflectance distribution function (BRDF), which respectively describe how light scatters from and reflects on a surface in CG. We used Mitsuba 2 [9], which is a physically based open source renderer widely used for academic purposes. The CG of an aerial image including blurring was created by physically based rendering and using a microfacet BRDF model, thus simulating the appearance of the AIRR aerial image before preparing the real one.

We defined three requirements for the realization of the simulation: (a) the aerial image must be formed at a position that is plane-symmetrical relative to the half-mirror axis, (b) the spatially varying luminance of the aerial image must be simulated, and (c) the degree of spatially varying blur of the aerial image must be simulated. In AIRR, the appearance of a real image at a position that is plane-symmetrical to the light source with respect to the half-mirror axis means that the real image formed is an aerial image. If the luminance characteristics can be reproduced, the appearance of the aerial image in relation to the lighting of the surrounding environment can be examined, and complex AIRR systems that combine multiple light sources and half-mirrors can be prototyped. In this study, we defined spatial variation as the change in the angle of incidence of light on the retroreflector, and we aimed to reproduce the blurring characteristics that change as the angle of incidence changes. If blur can be reproduced, the visibility of the aerial image can be verified before preparing the actual image. This enables adjustments in content details, such as font size and resolution, to create appropriate content. Reproducing aerial image blurring as a function of placement enables the design of aerial imaging systems when the aerial image is observed from directions other than the front, when the retroreflector is tilted to remove unwanted light, or when the retroreflector is deformed.

We compared rendered aerial images in CG with aerial images obtained in real space and verified the accuracy of CG simulations by evaluating features, such as luminance and blur. The experiments demonstrated that the CG simulation accurately reproduced the image formation position and luminance characteristics of the aerial image. Furthermore, we found that the proposed retroreflector CG model effectively simulates the sharpness characteristic and directional dependence of the aerial image.

The contributions of this study are as follows:

- Image formation position, luminance, and blurring of aerial images formed by AIRR were simulated by modeling the optical elements, and the aerial images were rendered using the ray tracing method.
- The optimal parameters for reproducing the luminance of each actual retroreflector were determined by adjusting the parameters governing the reflectance or transmittance of the retroreflector BRDFs.
- The directional dependence of blurring was effectively replicated by characterizing the BRDF model based on the structural attributes of the actual retroreflector used for AIRR, with its impact validated by evaluating image sharpness.

2 Related work

2.1 Aerial image interactions

AIRR [1] is a type of aerial imaging technology with a purpose similar to that of micro-mirror array plates (MMAPs) [10] and the dihedral corner reflector array [11]. AIRR consists of a light source, a beam splitter, and a retroreflector. Light rays emitted from the light source enter the retroreflector through the beam splitter and are retroreflected. The optical path of the retroreflected light rays is changed by the beam splitter. As a result, an aerial image is formed at a position that is plane-symmetrical to the light source with respect to the beam splitter. The retroreflectors used in AIRR produce aerial images even on curved surfaces because retroreflection is maintained in this case, which simplifies the installation of the retroreflectors. Kikuta created large aerial digital signage by bending a retroreflector to remove unwanted light by taking advantage of the high degree of freedom in the placement of the retroreflector [12].
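The plane-symmetry relation described above can be illustrated with a minimal Python sketch. It assumes the half-mirror is an ideal infinite plane; the function name `mirror_point` and the example layout (coordinates in mm) are hypothetical and are not taken from the authors' setup.

```python
import math

def mirror_point(point, plane_point, plane_normal):
    """Reflect `point` across the plane through `plane_point` with normal `plane_normal`."""
    norm = math.sqrt(sum(c * c for c in plane_normal))
    n = [c / norm for c in plane_normal]
    # Signed distance from the plane to the point, measured along the unit normal.
    dist = sum((p - q) * c for p, q, c in zip(point, plane_point, n))
    return tuple(p - 2.0 * dist * c for p, c in zip(point, n))

# Hypothetical layout (mm): the half-mirror passes through the origin and is
# tilted 45 degrees in the y-z plane; the light source sits 150 mm from it.
light_source = (0.0, -150.0, 0.0)
aerial_image = mirror_point(light_source, (0.0, 0.0, 0.0), (0.0, 1.0, -1.0))
# aerial_image is approximately (0, 0, -150): the y and z coordinates are swapped.
```

In an ideal AIRR system the aerial image is expected at this mirrored position regardless of where the retroreflector is placed, which is what Sect. 4.2 checks for the rendered images.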

Such aerial imaging technology can form real images on the users’ side; thus, users can touch the images in some interactions. Tokuda et al. developed R2D2 w/AIRR, which is an aerial imaging interaction system that recognizes user gestures, by attaching a translucent screen and projector for projection and a 3D camera for sensing AIRR [13]. Kim et al. developed MARIO, which is an interactive system that presents a depth-adjustable aerial image using linear actuators and simultaneously detects objects using infrared light [14]. In this system, an aerial image character bounces around a terrain that is created by the user by arranging actual blocks. Matsuura et al. developed Scoopirit, which is an aerial imaging system that enables interactions, by placing a water surface in front of an aerial image optical element and further sensing movements with an ultrasonic sensor [15]. However, these aerial image interactions are table-sized, and few life-sized interaction systems using aerial imaging optics have been developed. This is because research is lacking on the scaling up of AIRR devices to, for example, life-sized devices [16]. Other aerial imaging technologies have been used in several interactive applications because they can form clearer aerial images than AIRR. However, these technologies are substantially more expensive than AIRR because they require special optical elements to form the image.

Simulating aerial images using AIRR that includes blurring would be useful for designing various interaction systems. The main problem with AIRR is that the formed aerial images are blurred [17]. The effects of this blurring problem are more pronounced in AIRR than in other aerial imaging technologies because AIRR makes it easier to create large-scale systems. Although an approach has been developed that performs inverse convolution from the premeasured point spread function [18] to suppress the blurring of aerial images formed by AIRR, it cannot generate a totally blur-free aerial image. Therefore, if the blurred appearance of aerial images can be reproduced, the display content details, such as font size and character appearance, can be examined to match the blurring of the image. In addition, the placement of the optical elements can be considered to match the existing display content to the extent that image blurring is acceptable before preparing the actual content.

2.2 Simulation of aerial images

The simulation of aerial images can be used to verify the performance of a newly designed AIRR system before its implementation. Chiba et al. simulated aerial images formed by visualizing light rays to evaluate a brightness enhancement method in the formation of multiple aerial images, and they investigated the feasibility of the proposed optical system [6]. Fujii and Yamamoto added clear spheres to reduce the area of the retroreflector in AIRR and performed heat-map simulations to reproduce the position and shape of the aerial image formed [7]. However, these methods cannot determine the appearance or blur of the actual aerial image in advance.

Aerial images and stray light are rendered as CG images when using ray tracing methods, and examples of models that support the design of aerial imaging systems have been reported. Kiuchi and Koizumi implemented a polygonal model structurally similar to MMAP to simulate the appearance of aerial images using an MMAP in Blender [8]. The results of the comparison between the simulated and actual images showed that the method can achieve geometric consistency, simulate the luminance, and identify the location of stray light in MMAP aerial images. Hoshi et al. designed a novel MMAP optical system that is smaller than conventional systems and hides ghost images using this MMAP simulation [19]. The aerial imaging technology targeted in these studies differs from AIRR in both the features and structures of the optical elements. However, if the appearance of aerial images formed by AIRR is known in advance, a design that hides unwanted light outside the aerial image can be considered similarly to the MMAP ray tracing simulation.

2.3 BSDF and BRDF models

BSDF and BRDF models, which describe how light is scattered or reflected at a surface, are used to render photorealistic scenes in ray tracing simulations by describing the materials appropriately. In BSDFs and BRDFs, the microfacet theory is used when the reflected light is spread. The microfacet theory considers both micro- and macro-surface normals. Specular reflection is assumed to occur at each microfacet, and the microscopic reflections are described in statistical terms. This enables the photorealistic rendering of the spread of off-specular reflected light, as observed on metals and dielectrics with rough surfaces. Examples of models using the microfacet theory include the Cook–Torrance BRDF model [20], which is related to metals, and the BSDF model by Walter et al. [21], which describes reflection and transmission at rough dielectric surfaces.

Few BRDF models focus on retroreflectors. Spierings et al. investigated the reflective properties of retroreflectors used for white lines on roads and found that the Phong model with retroreflective properties is effective [22]. However, this model is an extension of the classical model in the retroreflective direction and does not focus on the structure of the retroreflectors. To the best of our knowledge, the only CG model of a retroreflector that focuses on the structure for the purpose of physically based rendering is the BRDF model developed by Guo et al. [23]. Their BRDF reproduces the directional dependence of the retroreflected light intensity of the corner reflectors by geometric optical analysis. However, the retroreflector in their work has a different structure from the retroreflector that is actually used for AIRR, and no quantitative comparison with the real retroreflector has been performed.

3 Modeling

3.1 Retroreflector

In this section, we provide an overview of the retroreflector BRDF implemented in this study. The mathematical symbols used herein are listed in Table 1. The subscripts \(\{1, 2, 3\}\) of \(\textbf{n}\) correspond to the sequence in which reflected light traverses through the corner surface, whereas those of \(\textbf{v}\) denote either the incoming (\(\mathbf {v_i}\)) or outgoing (\(\mathbf {v_o}\)) direction of the optical path.

We designed a BRDF that fits the structure of the retroreflector actually used in AIRR systems (Fig. 2) building upon the BRDF of Guo et al. [23], for an internally hollow retroreflector. Such retroreflectors are generally not used for AIRR systems because they are dotted with struts that do not retroreflect to support the air layer. Instead, retroreflectors that are typically used for AIRR have no air layer and their corner arrays consist of mirror-like surfaces. This difference in structure affects the reflecting component inside the object.

In the implemented BRDF, the outgoing light can be divided into three types: surface reflection, retroreflection, and diffuse reflection (Fig. 3). Surface reflection occurs from the incident surface plane. In AIRR, light rays reflected by the surface are undesired as they create virtual images at positions deeper than that of the retroreflector. Retroreflection entails light rays passing through the incident surface, reflected once on each of the three surfaces of the corner, and emitted back through the incident surface. In this study, diffuse reflections are defined as those that are neither surface reflections nor retroreflections. These include instances where light rays transmitted through the incident surface are reflected only on one or two corner surfaces before heading toward the incident plane, or, despite being reflected on the corner surfaces, do not pass through the incident plane but instead are reflected back.

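Furthermore, the implemented retroreflector BRDF \(f(\textbf{i},\textbf{o},\textbf{n})\) is the sum of the BRDFs for the three types of reflection:

\[f(\textbf{i},\textbf{o},\textbf{n}) = f_r(\textbf{i},\textbf{o},\textbf{n}) + f_{rr}(\textbf{i},\textbf{o},\textbf{n}) + f_d(\textbf{i},\textbf{o},\textbf{n}), \qquad (1)\]

where \(f_r\), \(f_{rr}\), and \(f_d\) denote the BRDFs for surface reflection, retroreflection, and diffuse reflection, respectively.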

We adopted the microfacet theory to reproduce reflected light rays that blur radially. According to Kakinuma et al. [17], diffraction, which spreads light rays radially, is the dominant factor in the blurring of aerial images formed by AIRR. In this study, we assumed that factors forcing outgoing light to spread radially, including diffraction, can be substituted with the standard microfacet model. Therefore, light ray blurring caused by diffraction and the effect of rough surfaces are described by the microfacet BSDF. As the microfacet model that describes each surface interaction occurring within the retroreflector, we used the BSDF proposed by Walter et al. [21], in which reflection and transmission, denoted as \(f_R\) and \(f_T\), respectively, are expressed as follows:

where \(\mathbf {h_r}\) and \(\mathbf {h_t}\) represent the half-vectors corresponding to the directions of incoming reflection and outgoing transmission, respectively.
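For reference, the reflection and transmission terms of Walter et al. [21] are usually written in the form below. This is a sketch in the notation of that paper rather than a transcription of Eqs. (2) and (3): the Fresnel term \(F\), the shadowing-masking term \(G\), the NDF \(D\), and the refractive indices \(\eta_i\) and \(\eta_o\) on the incident and transmitted sides are assumed symbols that may be named differently in Table 1.

```latex
f_R(\mathbf{i},\mathbf{o},\mathbf{n}) =
  \frac{F(\mathbf{i},\mathbf{h_r})\, G(\mathbf{i},\mathbf{o},\mathbf{h_r})\, D(\mathbf{h_r})}
       {4\, |\mathbf{i}\cdot\mathbf{n}|\, |\mathbf{o}\cdot\mathbf{n}|}

f_T(\mathbf{i},\mathbf{o},\mathbf{n}) =
  \frac{|\mathbf{i}\cdot\mathbf{h_t}|\, |\mathbf{o}\cdot\mathbf{h_t}|}
       {|\mathbf{i}\cdot\mathbf{n}|\, |\mathbf{o}\cdot\mathbf{n}|}\,
  \frac{\eta_o^{2}\, \bigl(1 - F(\mathbf{i},\mathbf{h_t})\bigr)\,
        G(\mathbf{i},\mathbf{o},\mathbf{h_t})\, D(\mathbf{h_t})}
       {\bigl(\eta_i\,(\mathbf{i}\cdot\mathbf{h_t}) + \eta_o\,(\mathbf{o}\cdot\mathbf{h_t})\bigr)^{2}}
```

Both terms share the same NDF \(D\), so the roughness parameter of \(D\) is the quantity that controls how widely the outgoing light spreads.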

In our model, the amount of outgoing spread light is controlled by adjusting the parameters of the normal distribution function (NDF). We assumed that the NDF of each surface follows the GGX distribution [21] as shown below:

where \(\theta _h\) denotes the angle of incidence of the half-vector and \(\alpha\) denotes a parameter related to the surface roughness (the higher its value, the rougher the plane surface and the greater the spread of the reflected light). In our model, the NDF roughness parameters associated with the incident plane and corner surfaces of the retroreflector are \(\alpha _i\) and \(\alpha _s\), respectively. As reflection occurs only on corner surfaces and transmission occurs only through the incident plane, the roughness of the NDF in Eq. (2) is \(\alpha _i\) and that in Eq. (3) is \(\alpha _s\).
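The effect of the roughness parameter can be explored numerically with the short Python sketch below. It assumes the isotropic GGX form given by Walter et al. [21], \(D = \alpha^2 / (\pi \cos^4\theta_h (\alpha^2 + \tan^2\theta_h)^2)\); the function names are hypothetical, and the code is independent of the authors' Mitsuba 2 implementation.

```python
import math

def ggx_ndf(theta_h, alpha):
    """GGX normal distribution D for a half-vector at polar angle theta_h (radians)."""
    if theta_h >= math.pi / 2 or alpha <= 0.0:
        # Back-facing half-vector, or the degenerate perfectly smooth case,
        # which this sketch does not model (D would be a delta function).
        return 0.0
    cos2 = math.cos(theta_h) ** 2
    # Algebraically equal to alpha^2 / (pi cos^4(theta) (alpha^2 + tan^2(theta))^2).
    denom = math.pi * ((alpha * alpha - 1.0) * cos2 + 1.0) ** 2
    return alpha * alpha / denom

def projected_area(alpha, steps=20000):
    """Midpoint-rule estimate of the integral of D * cos(theta) over the hemisphere."""
    d_theta = (math.pi / 2) / steps
    total = 0.0
    for k in range(steps):
        t = (k + 0.5) * d_theta
        total += ggx_ndf(t, alpha) * math.cos(t) * math.sin(t) * 2.0 * math.pi * d_theta
    return total
```

A valid NDF integrates to 1 when weighted by \(\cos\theta_h\), so `projected_area` serves as a sanity check; increasing `alpha` lowers the peak at \(\theta_h = 0\) and widens the lobe, which corresponds to a stronger blur.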

Two more assumptions were made when constructing the retroreflector BRDF. First, the effect of the corner cube size was considered insignificant. The distance between the positions of the formed aerial images and the retroreflector was substantially larger than the size of the corner cubes in the retroreflector, so the latter was sufficiently blurred when focused on the aerial image. Alternatively, it was assumed that the corner cubes were observed at a distance and resolution such that their size was below one pixel. Second, diffuse reflections were supposed to be equally diffused in all directions, such as in Lambert reflection; hence, the effect of unwanted light other than surface-reflected light was assumed to be negligible.

3.1.1 Surface reflection

The surface reflection term \(f_r\) was implemented as in the model proposed by Guo et al. [23]. As this surface interaction considers reflections on the incident plane of a prism sheet with a rough surface, it is described as

3.1.2 Retroreflection

Initially, we considered the BRDF implementation of Guo et al., but we found that it could not reproduce the directional dependence of the blurring in aerial images, as demonstrated in the experiments described in Sect. 4.4. Because Guo et al. targeted internally hollow retroreflectors, in which total internal reflection occurs inside the retroreflector, they approximated the BRDF by considering only the two transmissions through the incident plane. Because the interior surfaces constituting the corner arrays of the retroreflectors used in AIRR are mirror-like, reflection on metal surfaces is expected to occur on these surfaces instead of total reflection. We considered that this change in energy causes the blurring to depend on direction.

In this study, the retroreflection term was formulated by incorporating the reflections occurring inside the retroreflector as described by Weidlich and Wilkie [24]. They expressed the reflective properties of an object coated with a series of thin layers, which can be computed by sampling paths under the assumption that each surface is ideal. Because the BRDF of each layer was considered independent of those of other layers, the final BRDF was expressed as the sum of the BRDFs of all layers. In contrast, in the retroreflection term considered in this study, the BSDFs of all surfaces are interdependent; thus, we believe that the BRDF of the retroreflector can be implemented by taking the product of the BSDFs of the individual surfaces.

The main process flow in our calculation of the retroreflection term is shown in Fig. 4. We follow this flowchart to describe the concept behind constructing the retroreflection term.

In Fig. 4, \(j\) is an index representing the path of the retroreflection of interest. Considering all possible permutations for the order of the three surfaces constituting a single corner unit when each is reflected once, there exist six (\(3!=6\)) distinct paths along which retroreflection may occur. Furthermore, retroreflectors may feature corner units rotated by \(180^\circ\), doubling the total number of retroreflection paths to twelve.
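The path count can be checked with a small Python sketch. It assumes an ideal cube corner whose three face normals lie along the coordinate axes, with the sheet normal along \((1,1,1)\); this geometry and all names are illustrative assumptions, and the sketch does not test whether a ray would physically hit the faces in a given order.

```python
import itertools
import math

def reflect(d, n):
    """Mirror direction d about a plane with unit normal n."""
    k = 2.0 * sum(a * b for a, b in zip(d, n))
    return tuple(a - k * b for a, b in zip(d, n))

def rotate_180(v, axis):
    """Rotate v by 180 degrees about the unit vector `axis`."""
    k = 2.0 * sum(a * b for a, b in zip(v, axis))
    return tuple(k * b - a for a, b in zip(v, axis))

# Assumed ideal corner: mutually orthogonal faces with normals along x, y, z.
corner = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
sheet_normal = tuple(1.0 / math.sqrt(3.0) for _ in range(3))
rotated_corner = [rotate_180(n, sheet_normal) for n in corner]

# Each path is (orientation index, ordered face normals n1, n2, n3).
paths = []
for orientation, normals in enumerate((corner, rotated_corner)):
    for order in itertools.permutations(normals):
        paths.append((orientation, order))

def trace(d, order):
    """Apply the three mirror reflections of one path to direction d."""
    for n in order:
        d = reflect(d, n)
    return d
```

`len(paths)` is 12, and for every path `trace(d, order)` returns the reversed direction of `d`, which is the ideal retroreflection that the roughness parameters then perturb.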

To compute the BRDFs, representative normals and light paths were determined by averaging. First, for the relevant retroreflective paths, their directions were sampled assuming that the starting point corresponded to an ideal state wherein all incident planes are perfectly smooth and each corner plane is strictly orthogonal to the others. Two direction vectors were obtained per path: one starting from the incident direction and the other from the outgoing direction. The average direction of these vectors yielded a representative optical path for BRDF computation. Subsequently, the half-vectors in the microfacet model were determined using the representative optical paths.

\(F_o\) denotes the Fresnel term governing surface transmission in the outgoing direction. When an anomalous path is sampled, the elements of the path vector become non-numeric in Mitsuba 2, causing the Fresnel term to diverge to infinity. In this implementation, paths are calculated sequentially from the direction of incidence, facilitating the identification of reflectable paths by verifying whether the Fresnel term \(F_o\) diverges on the outgoing side. Because these instances are treated as diffuse reflections rather than retroreflections, they are represented by the term \(e\), which denotes the probability of anomalous sampling.

The BSDF of reflection or transmission at each surface is obtained by calculating the product using the model developed by Walter et al. [21]. If \(F_o\) diverges, the BSDF is 0 because the route of interest is invalid.

Finally, the retroreflection term is calculated as the weighted sum of the retroreflective BRDFs for all possible paths. The BRDF for the retroreflection term \(f_{rr}\) is as follows:

where \(E\) denotes the effective retroreflective area (ERA), which represents the percentage of geometrical retroreflection, as explained by Guo et al. [23]; and \(w_j\) is a weighting factor indicating the probability that a path of interest will be geometrically selected, which can be obtained from the inner product of the light paths and the normal at each corner surface.

3.1.3 Diffuse reflection

The implementation of the diffuse reflection BRDF followed a similar approach to that of the model proposed by Guo et al. The differences between their model and ours lie in our consideration of the sampled internal retroreflection paths and the incorporation of a correction factor to address anomalous samples as follows:

where

Here, \(F_d\) denotes the diffuse Fresnel reflectance, which in this study was approximated as described by d’Eon and Irving [25].

3.2 Half-mirror

In the context of AIRR, a half-mirror BSDF that enables direct adjustment of reflectance and transmittance was developed to serve as the beam splitter. When designing AIRR systems, half-mirrors are selected by considering their reflectance and transmittance. Although thin dielectric models in Mitsuba 2 can function as substitutes for half-mirrors owing to their capability to reflect and transmit light, configuring their reflectance and transmittance is not easy. Therefore, we devised a new BSDF model that uses the reflectance \(R\) and the transmittance \(T\) as parameters. This BSDF model is a simple model that transmits and reflects the incident light at a certain rate.
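The behavior of such a BSDF can be sketched in a few lines of Python. This is only an illustration of the idea of reflecting with probability \(R\) and transmitting with probability \(T\); it is not the authors' Mitsuba 2 plugin, and the function name and signature are hypothetical.

```python
import random

def sample_half_mirror(direction, normal, reflectance, transmittance, rng=random):
    """Return (new_direction, weight) for one ray hitting an ideal thin half-mirror."""
    if reflectance < 0 or transmittance < 0 or reflectance + transmittance > 1.0:
        raise ValueError("need R >= 0, T >= 0 and R + T <= 1")
    u = rng.random()
    if u < reflectance:
        # Specular reflection about the (unit) surface normal.
        k = 2.0 * sum(a * b for a, b in zip(direction, normal))
        return tuple(a - k * b for a, b in zip(direction, normal)), 1.0
    if u < reflectance + transmittance:
        # Thin plate: the transmitted ray continues undeviated.
        return tuple(direction), 1.0
    # The remaining fraction 1 - R - T is treated as absorbed.
    return None, 0.0
```

Choosing the event stochastically keeps the per-ray weight at 1, so the expected reflected and transmitted energies are \(R\) and \(T\) without any reference to a refractive index, which is the convenience the text describes.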

3.3 Light source

The light source model was implemented by modifying the area light plugins in Mitsuba 2 by adding a coefficient to multiply the light source luminance. Common displays are often used as light sources in designing AIRR systems. Displays can be implemented using the area light plugins in Mitsuba 2, but the brightness of the display cannot be adjusted in the scene file when the textures are set. Therefore, we added a new coefficient that can adjust the brightness.

4 Evaluation

After implementing each model in Mitsuba 2, we rendered aerial images in CG and compared them with aerial images that were formed by actual AIRR to confirm whether they reproduced the image formation position and luminance and blurring characteristics.

4.1 Unified measurement setup

In this study, each setup of optical elements was unified between the CG and real-space measurements. The setup is illustrated in Fig. 5. \(L\) indicates the distance between the optical elements or aerial images. \(L_a\), \(L_b\) and \(L_c\) are the distances between the light source and the half-mirror, between the half-mirror and the camera, and between the camera and (a) the aerial image or (b) the light source, respectively. \(\theta\) is the angle of incidence of the optical axis with respect to the retroreflector.

Next, we describe the setup in real space. The retroreflectors used in the measurements were RF-Ay and RF-AN in Fig. 2 (Nippon Carbide Industries). These retroreflectors are manufactured for aerial image display and have been used in various AIRR studies. The half-mirror was a plate-type beam splitter (Edmund Optics). The reflectance and transmittance of this half-mirror were both 50%. The half-mirror was installed such that the thin-film surface faced the light source side. We used a digital camera (SONY, \(\alpha\) R iii) and a lens (TAMRON, Di iii RX0). The focal length was \({35\,\textrm{mm}}\), the F-number was 2.8, the ISO was 50, and the exposure time was adjusted by trial and error while confirming no highlight clipping by the Zebra pattern function. The raw data were converted into the TIFF format without gamma correction for analysis.

The setup scenes for the CG measurements were designed to match the real ones. Distance 1 in Mitsuba 2 corresponded to \({1\,\textrm{mm}}\) in real space in this study. The number of samples and the resolution of the rendering were varied across the experiments.

4.2 Plane-symmetric evaluation

The position of the aerial image generated by the AIRR in the rendered image in CG space was measured in this experiment. Prior to evaluating the luminance and blur, it is necessary to verify that the aerial image in the rendered CG is formed as an aerial image in the first place; that is, the position of the aerial image changes with the position of the light source. Therefore, in the first experiment, we compared the aerial image generated by the AIRR in CG with the case in which the light source was placed at the position where the aerial image was originally formed.

4.2.1 Method

The depth position of the aerial image was measured by varying the distance between the light source and the half-mirror, \(L_a\), between \(150\) and \({300\,\textrm{mm}}\) with increments of \({25\,\textrm{mm}}\) and setting \(L_b = L_a + {300\,\textrm{mm}}\). The distance \(L_c\) from the aerial image to the image sensor was obtained by analyzing the rendered images. As a baseline, the depth position of the light source was also measured when placed at the position where the aerial image was originally formed. The light source had a texture consisting of \(4\times 4\) equally spaced circles, each with a diameter of \({13.3\,\textrm{mm}}\) and separated by \({26.6\,\textrm{mm}}\), as shown in Fig. 6. All roughness parameters of the retroreflector BRDF were set to 0 to avoid blurring of the aerial image, which would hinder the detection of the formed circle.

For each condition, two images were rendered with the camera set at \({50\,\textrm{mm}}\) to obtain parallax images. The depth of the image formed in the captured image was determined from these rendered parallax images. The rendered images were binarized and a median filter was applied to remove unwanted light for circle detection. Thereafter, the OpenCV bounding box detection function was used to determine the location of each circle. The depth position of the image \(L_c\) was then calculated using a stereo matching algorithm from the disparity of each circle between the two parallax images and the camera setup used to render them.
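The depth calculation from parallax can be sketched as follows, assuming an ideal pinhole camera that is translated horizontally between the two renders. The function name, the focal length in pixels, and the reading of the \({50\,\textrm{mm}}\) value as the camera baseline are assumptions made for illustration, not details confirmed by the text.

```python
def depth_from_disparity(x_left_px, x_right_px, focal_px, baseline_mm):
    """Depth (mm) of a feature seen at x_left_px and x_right_px in two parallax images.

    Uses the pinhole stereo relation Z = f * B / d, where d is the disparity in pixels.
    """
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_mm / disparity

# Hypothetical numbers: circle centre found at x = 1100 px and x = 1000 px,
# focal length 1000 px, assumed baseline 50 mm.
example_depth = depth_from_disparity(1100.0, 1000.0, 1000.0, 50.0)  # 500.0 mm
```

Applying this to each of the detected circle centres and averaging would give one estimate of \(L_c\) per condition.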

4.2.2 Results and discussion

The experimental results shown in Fig. 7 indicate that the rendered image was formed at a position that was plane-symmetrical to the light source with respect to the axis of the half-mirror. “Direct” denotes the baseline measurement results from the setup in Fig. 5(b), and “Ours” and “Guo et al.” were obtained from the setup in Fig. 5(a). The legend entries other than Direct indicate which model was used as the retroreflector BRDF. The MAEs between the Direct positions and the aerial image formation positions were \({0.550\,\textrm{mm}}\) for our BRDF and \({0.553\,\textrm{mm}}\) for the BRDF of Guo et al. [23]. These errors include noise and floating-point errors introduced during rendering. The slopes and intercepts of the fitted lines for the Direct and aerial images were almost identical; in particular, the slope was almost 1. Thus, the simulated aerial image reproduced the image formation position of the aerial image in the AIRR.
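The two summary statistics used here, the MAE and the slope and intercept of a fitted line, can be computed as in the following sketch. The helper names are hypothetical, and no measured values from the paper are reproduced.

```python
def mean_absolute_error(reference, measured):
    """Mean of |reference - measured| over paired samples."""
    return sum(abs(r - m) for r, m in zip(reference, measured)) / len(reference)

def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x
```

Here `reference` would hold the Direct depths and `measured` the aerial image depths for the seven values of \(L_a\); a slope close to 1 with matching intercepts indicates that the image moves one-to-one with the light source.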

4.3 Luminance evaluation

The luminance attenuation must also be reproduced to accurately render the appearance of the aerial image. This involved rendering images of the white light source alongside the corresponding aerial image, followed by evaluating the ratio of luminance of aerial images to that of the rendered light source. In addition, the reflectance of the appropriate retroreflector BRDF was investigated to simulate the luminance as closely as possible.

4.3.1 Method

In this experiment, we measured the luminance when the light source was viewed from the front and the luminance of the aerial image when the retroreflector was tilted, and we investigated the luminance ratio according to the viewing angle. First, the light source was captured using the setup shown in Fig. 5(b). Thereafter, aerial images were captured using the setup shown in Fig. 5(a). The polar angle \(\theta\) of the incident light on the retroreflector was varied from \(-45^\circ\) to \(45^\circ\) at \(5^\circ\) intervals.

We prepared a square light source with a square hole, as shown in Fig. 8. A light source with a hole in it prevents the superimposition of unwanted light from surface reflections on the aerial image. In the measurement conducted in real space, the light source was prepared by attaching copy paper and absorbers to a YN-900 (YONGNUO) LED light source. In the measurements conducted in CG space, two squares created by the rectangular plugin were prepared and superimposed to form the light source. The area light plugin was set to the large square and the diffuse BSDF with 0 reflectance was set to the small square.

The results from the images were analyzed to calculate the luminance ratio. First, the luminance values were obtained from RGB images using the ITU-R BT.709 grayscale conversion formula [26]. Subsequently, the area of \({50\,\textrm{px}}\times {50\,\textrm{px}}\) was manually trimmed to the area of the aerial image or that of the light source of the image. The ratio of the luminance of the aerial images to that of the light source in the trimmed area was calculated and the average was used for evaluation.
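A minimal sketch of this analysis is given below. It uses the ITU-R BT.709 luma weights on linear RGB values and takes the ratio of the mean luminances of the two cropped regions, which is one possible reading of the averaging step; the function names and the list-of-tuples pixel format are assumptions.

```python
def bt709_luminance(r, g, b):
    """Relative luminance from linear RGB using the ITU-R BT.709 weights."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def mean_luminance(pixels):
    """Average luminance of an iterable of (r, g, b) tuples from a cropped region."""
    values = [bt709_luminance(*p) for p in pixels]
    return sum(values) / len(values)

def luminance_ratio(aerial_pixels, source_pixels):
    """Ratio of the mean aerial-image luminance to the mean light-source luminance."""
    return mean_luminance(aerial_pixels) / mean_luminance(source_pixels)
```

Because the TIFF data were exported without gamma correction, the pixel values can be treated as linear, which is what the BT.709 weights assume.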

For the measurements in CG space, the reflectance and the transmittance values of the retroreflector BRDF were calculated and used for rendering so that the values at the point of \(\theta = 0^\circ\) matched the measurement results in real space. In the BRDF designed by Guo et al. [23], the transmittance of the incident plane is adjusted. In the newly designed BRDF, the reflectance of the surfaces in the corner array was set to that of aluminum and the additional reflectance coefficients were also adjusted.

4.3.2 Results and discussion

The results of the luminance ratio measurements are shown in Fig. 9, and the reflectance parameters and mean absolute percentage errors (MAPEs) are presented in Table 2. These MAPEs indicate that there was no significant difference between our BRDF and that of Guo et al. [23]. Our model produced an error of approximately \(3\%\), which is considered sufficiently accurate for the optical design of aerial images.
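For reference, the MAPE used here as the error metric can be computed as in the short sketch below. The function name and the sample values are illustrative assumptions and are not data from Table 2. The result is returned as a fraction, so a value of 0.03 corresponds to \(3\%\).

```python
def mape(simulated, measured):
    """Mean absolute percentage error, returned as a fraction.

    Each simulated value is compared with the measured value at the same
    condition (for example, the same polar angle).
    """
    if len(simulated) != len(measured) or not measured:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [abs((s - m) / m) for s, m in zip(simulated, measured)]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    # Hypothetical luminance ratios at three polar angles.
    measured_ratio = [0.20, 0.19, 0.17]
    rendered_ratio = [0.21, 0.19, 0.16]
    print(mape(rendered_ratio, measured_ratio))
```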

A possible source of the error is the effect of unwanted light from the actual AIRR optical system. Some of the unwanted light rays from the actual retroreflector were reflected once or twice on the corner surface and then exited from the retroreflector. These rays were directional and caused variation in the RF-Ay measurement results. In contrast, both retroreflector BRDF models approximated the unwanted light as a diffuse reflection term with no directivity. This discrepancy between the measurements and the models is believed to have resulted in a larger MAPE.

4.4 Sharpness evaluation

In replicating the appearance of the image, it is also imperative to accurately reproduce the blurring of the aerial image. According to a study by Kakinuma et al. [17], the aerial images formed by AIRR are so blurred that the modulation transfer function (MTF) is almost zero at spatial frequencies above \({1\,\mathrm{lp/mm}}\). As a preliminary experiment, we directly compared the MTF curves for a setup with \(L={150\,\textrm{mm}}\) and \(\theta =0^\circ\), as illustrated in Fig. 5. According to the results shown in Fig. 10, the MTF curves were effectively reproduced in both CG models, although some MTF oscillations caused by truncation errors were observed in the RF-Ay results obtained from real-space measurements.

Kakinuma et al. also noted that the blurring of AIRR images varies depending on the arrangement of the optics being designed, such as the distance between the half-mirror and the display, and the viewing angle of aerial images. Therefore, to accurately simulate the appearance of an aerial image, it is essential to account for these variations in blurring. In this study, we computed the average of the MTF and examined whether the trends in aerial image blurring caused by changes in the optical arrangement, such as the pop-up distance, were replicated by the CG simulations.

4.4.1 Method

The MTF of each setup was measured by varying either the pop-out distance between the aerial image and the half-mirror (\(L_a\)) or the polar angle of the incident light to the retroreflector \(\theta\). In the method involving the change in the pop-out distance of the aerial image, \(L_a\) was varied from \({150\,\textrm{mm}}\) to \({300\,\textrm{mm}}\) at intervals of \({25\,\textrm{mm}}\). The polar angle \(\theta\) was set to \(\theta =0^\circ\) in this case. In the method involving the change in the polar angle of the incident light to the retroreflector, \(\theta\) was varied from \(0^\circ\) to \(45^\circ\) at intervals of \(5^\circ\). The pop-out distance of the aerial image (\(L_a\)) was set to \({150\,\textrm{mm}}\) in this case.

We prepared a slanted edge that was tilted by approximately \(5^\circ\) as the light source. We used a slanted edge of the ISO 12233 resolution chart for the measurements in real space. The position was adjusted so that the slanted edge was at the center of the camera's field of view, and the chart was illuminated by an LED light source (YN-900). The camera exposure time was set to \({1/10\,\textrm{s}}\). In the measurement in CG space, a slanted edge was created by overlapping two rectangular shapes.

After the measurements, the averages of the MTF values below \({1\,\mathrm{lp/mm}}\) were calculated for comparison.
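The averaging step can be illustrated with the simplified sketch below. It is not the ISO 12233 slanted-edge procedure used for the measurements (it omits edge-angle estimation and oversampling) and assumes a one-dimensional edge profile sampled at a known pitch. The function names are hypothetical, and the choice to include the zero-frequency sample in the average is an assumption.

```python
import cmath
import math


def mtf_from_esf(esf, pitch_mm):
    """Return (frequencies in cycles/mm, MTF) from an edge spread function.

    esf is a 1-D list of intensities sampled across the edge and
    pitch_mm is the sample spacing in millimetres.
    """
    # Line spread function: finite difference of the edge profile.
    lsf = [b - a for a, b in zip(esf, esf[1:])]
    n = len(lsf)
    freqs, magnitudes = [], []
    # Plain discrete Fourier transform, non-negative frequencies only.
    for k in range(n // 2 + 1):
        coeff = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                    for i, v in enumerate(lsf))
        freqs.append(k / (n * pitch_mm))
        magnitudes.append(abs(coeff))
    dc = magnitudes[0]
    if dc == 0:
        raise ValueError("edge profile has no net intensity step")
    # Normalize so that the MTF equals 1 at zero frequency.
    return freqs, [m / dc for m in magnitudes]


def mean_mtf_below(freqs, mtf, cutoff=1.0):
    """Average the MTF samples whose frequency is below cutoff (lp/mm)."""
    selected = [m for f, m in zip(freqs, mtf) if f < cutoff]
    if not selected:
        raise ValueError("no MTF samples below the cutoff")
    return sum(selected) / len(selected)


if __name__ == "__main__":
    # Synthetic blurred edge sampled every 0.1 mm.
    edge = [0.0] * 4 + [0.25, 0.5, 0.75] + [1.0] * 5
    f, m = mtf_from_esf(edge, pitch_mm=0.1)
    print(mean_mtf_below(f, m))
```

A sharper edge yields a mean closer to 1, so this single number serves as a compact sharpness score that can be compared across conditions.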

In the CG measurements, aerial images were rendered by varying the parameters for the amount of spread of the retroreflected light \(\alpha\), \(\alpha _s\) and \(\alpha _i\); subsequently, the parameters that were closest to the real measurement results were determined. The roughness parameter of the NDF \(\alpha\) for the conventional model [23] was varied from \(0.00000\) to \(0.00150\) at intervals of \(0.00001\). Our retroreflector BRDF model has two roughness parameters: the incident surface roughness \(\alpha _s\) and the corner surface roughness \(\alpha _i\). The incident surface roughness \(\alpha _s\) was varied from \(0.00100\) to \(0.00400\) at intervals of \(0.00010\). The corner surface roughness \(\alpha _i\) was varied from \(0.00000\) to \(0.00200\) at intervals of \(0.00010\). The average MTF was obtained from the rendering result under each parameter setting, and the parameters with the lowest MAPE relative to the actual results for \(L_a = {150\,\textrm{mm}}\) and \(\theta =0^\circ\) were determined. Likewise, for each series of conditions (varying the aerial image pop-up distance \(L_a\) or the polar angle \(\theta\)), the set of parameters with the lowest average MAPE was determined and used for the corresponding results. In addition, first-order approximate curves were obtained and plotted for each condition to examine the trend of the changes in the amount of blur.
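The parameter search described above amounts to an exhaustive grid search, which might be sketched as follows. The callable `simulate_mean_mtf` is a hypothetical stand-in for rendering an aerial image with given roughness values and computing its average MTF; it is not part of any renderer API. The toy function in the demo exists only so the sketch runs.

```python
def grid(start, stop, step):
    """Inclusive list of values from start to stop, rounded to 5 decimals."""
    count = round((stop - start) / step)
    return [round(start + i * step, 5) for i in range(count + 1)]


def fit_roughness(simulate_mean_mtf, measured_mean_mtf,
                  alpha_s_values, alpha_i_values):
    """Return (error, alpha_s, alpha_i) with the lowest relative error.

    simulate_mean_mtf(alpha_s, alpha_i) is assumed to render one aerial
    image and return its average MTF below 1 lp/mm.
    """
    best = None
    for alpha_s in alpha_s_values:
        for alpha_i in alpha_i_values:
            simulated = simulate_mean_mtf(alpha_s, alpha_i)
            error = abs((simulated - measured_mean_mtf) / measured_mean_mtf)
            if best is None or error < best[0]:
                best = (error, alpha_s, alpha_i)
    return best


if __name__ == "__main__":
    # Ranges and steps taken from the text.
    alpha_s_values = grid(0.00100, 0.00400, 0.00010)
    alpha_i_values = grid(0.00000, 0.00200, 0.00010)

    # Toy stand-in for the renderer (assumption, for demonstration only).
    def toy_renderer(alpha_s, alpha_i):
        return 0.5 + 1.0e4 * ((alpha_s - 0.002) ** 2
                              + (alpha_i - 0.0005) ** 2)

    print(fit_roughness(toy_renderer, 0.5, alpha_s_values, alpha_i_values))
```

Building the grid from integer step counts and rounding each value avoids the drift that repeated floating-point addition of the step would introduce.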

4.4.2 Results and discussion

The results of varying the polar angle are shown in Fig. 11 and the MAPE for each condition is presented in Table 3. In Fig. 11, the horizontal axis represents the polar angle of the optical axis to the retroreflector, whereas the vertical axis represents the average MTF below \({1\,\mathrm{lp/mm}}\). Each result is accompanied by a dotted line representing the first-order approximation curve to the data. The previous model introduces a roughness coefficient to regulate the dispersion of retroreflected light. This coefficient decreases as the angle of incidence increases, resulting in clearer aerial images with greater tilt of the retroreflector. Conversely, in actual AIRR, reflections from corner surfaces are present but were disregarded in the previous model. Thus, in actual AIRR, the blurring of the aerial image increases as the polar angle of the incident light increases. Our model, however, incorporates corner surface reflections, thereby reproducing their behavior in CG simulation. The agreement in the sign of the slopes of the approximate curves between the real measurements (RF-Ay and RF-AN) and our model indicates that our model reproduces the directional dependence of blurring more accurately than the previous model. Note that this tilt is related to the appearance of the aerial image when the retroreflector is deformed or when the aerial image is viewed from an angle. Therefore, compared with the previous model, our new retroreflector BRDF model is better suited for complex aerial imaging systems and viewing aerial images from positions other than the front.

The results of varying the pop-up distance are shown in Fig. 12 and the MAPE for each condition is presented in Table 4. In Fig. 12, the horizontal axis represents the pop-up distance from the half-mirror to the aerial image, whereas the vertical axis represents the average MTF below \({1\,\mathrm{lp/mm}}\). Each result is accompanied by a dotted line representing the first-order approximation curve to the data. The slope coefficients of the fitted lines are listed in Table 5. A comparison of the slope coefficients reveals that all coefficients in CG space were at least 2.4 times larger than those measured in real space, although their signs remained consistent with those of the real-space measurements. This suggests that factors other than the angle need to be considered as contributors to the spread of retroreflected light. Specifically, it is essential to account for instances where the reflection inside the retroreflective material prevents the positions of incident and reflected light from coinciding on the surface of the reflective material. The maximum error rate observed was \(7\%\); however, this rate is expected to increase further as the scale of the AIRR system expands.
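The first-order approximation and the slope comparison can be reproduced with an ordinary least-squares line fit, as in the sketch below. The data values are hypothetical placeholders and are not the measurements plotted in Fig. 12 or listed in Table 5. Only the distance grid (150 mm to 300 mm in 25 mm steps) follows the text.

```python
def linear_fit(xs, ys):
    """Least-squares line y = slope * x + intercept through (xs, ys)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired samples")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    distances_mm = list(range(150, 301, 25))
    # Hypothetical average-MTF values, for demonstration only.
    real_mtf = [0.60, 0.59, 0.58, 0.57, 0.57, 0.56, 0.55]
    cg_mtf = [0.60, 0.58, 0.56, 0.54, 0.52, 0.50, 0.48]

    real_slope, _ = linear_fit(distances_mm, real_mtf)
    cg_slope, _ = linear_fit(distances_mm, cg_mtf)
    # A ratio above 1 with matching signs means the simulation loses
    # sharpness with distance faster than the real system does.
    print(real_slope, cg_slope, cg_slope / real_slope)
```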

4.5 Comparison of rendered images

Figure 1 shows a comparison of the actual aerial images and the aerial images from the CG simulation. For each retroreflector model, the CG parameters that most closely matched the experimental results for RF-Ay were used. The retroreflector was set straight (\(\theta =0^\circ\)) and at an angle of \(\theta =45^\circ\) against the optical axis. The upper side of the image represents a single display with the same luminance as that of the light source to the left of the aerial image, reproducing the diminishing luminance of the aerial image. The lower side of the image shows a magnified view of a portion of the flower. In the model developed by Guo et al., the aerial image did not blur significantly when the retroreflector was set to \(\theta =45^\circ\); in fact, the aerial image appeared sharper. In contrast, our model reproduced the blurring in the direction of the tilt of the retroreflector.

5 Discussion

5.1 Contributions

We summarize the three contributions of our proposed simulation method.

First, an appropriate BRDF model for the retroreflector was designed to render aerial images by ray tracing. Considering the structure of the actual retroreflector that is used in AIRR, the microfacet BRDF model was constructed so that light is transmitted twice through the incident plane and reflected three times by the corner surfaces. This modeling approach can improve the accuracy of the directional dependence of blur characteristics. Second, by adjusting the reflectance of the corner surfaces in our retroreflector BRDF (0.825 for RF-Ay and 0.761 for RF-AN), we found that this modeling method simulated the luminance characteristics with an error rate of approximately \(3\%\), as described in Sect. 4.3. Third, by adjusting the roughness parameters of each surface to match those of the actual retroreflector (in our model \(\alpha _s=0.00410, \alpha _i=0.00000\) for RF-Ay and \(\alpha _s=0.00100, \alpha _i=0.00110\) for RF-AN), we found that this modeling method simulated the sharpness characteristics more accurately than the conventional model, as described in Sect. 4.4.

The evaluation results demonstrated that all characteristics of aerial images in AIRR, such as plane symmetry, and luminance and blur characteristics, which depend on the observation angle, can be reproduced by our simulation method. Therefore, we confirmed that the three requirements described in Sect. 1 were satisfied with this simulation method.

5.2 Limitations and future works

Our simulation method presents three limitations:

First, it cannot accurately replicate blur characteristics that are contingent upon variations in the pop-up distance of the aerial image. As evidenced by the findings presented in Sect. 4.4, the effect of the optical path length is greater than it should be. To address this limitation, we intend to enhance the CG model to ensure that parameters remain consistent regardless of alterations in the pop-up distance of the aerial image.

Second, our retroreflector BRDF model does not strictly model aerial image blurring or account for the full effect of diffraction. According to Kikuta et al., diffraction is the main cause of blurring [18], but we assumed that diffraction can be treated as a radial blur. Investigating the impact of approximating the diffraction effects on microfacets and designing a retroreflector BRDF that considers the wavelength dependence of diffraction would provide a more precise simulation of the appearance of the aerial image in AIRR.

Third, it is not possible to simulate polarized AIRR (p-AIRR). The use of polarization enables us to eliminate unwanted light and improve the brightness of aerial images in AIRR systems. In fact, many such studies have been reported [27,28,29]. In future, an even more practical prototyping environment for AIRR systems can be prepared by describing the changes in the polarization state in the retroreflector BRDF and half-mirror BSDF.

Finally, one advantage of our method is that it can easily simulate the deformation of the retroreflector. By applying the retroreflector BRDF to an arbitrarily shaped mesh in our implementation, we can easily simulate the appearance of aerial images when the retroreflector is deformed. This makes it effective to use models based on this method when designing AIRR optical systems [3, 30] that take advantage of the easy processing characteristics of the retroreflector, such as bending and drilling. In future, we would like to consider applying the system to such applications.

6 Conclusions

We have developed a simulation method using ray tracing to design an aerial image system using retroreflection on a computer. Conventional simulation methods using AIRR assume that retroreflection occurs ideally, which makes it difficult to confirm the appearance of blurred aerial images. To solve this problem, CG models of the optical elements that constitute AIRR systems were designed and blurred aerial images were rendered by ray tracing. We designed the new retroreflector BRDF on the basis of the retroreflective material structure used in AIRR. We compared the simulated images with photographs of the actual aerial images to verify that the rendered aerial images simulated the position, brightness, and blurriness of the actual aerial images in AIRR. The experimental results demonstrated that our method reproduces the three characteristics of aerial images in AIRR: plane symmetry, the directional dependence of the luminance characteristics, and the amount of blur. We believe that our method can support the design of the display content of AIRR systems before preparing the actual optical elements. However, the amount of blur that depends on the distance of the aerial image pop-out could not be reproduced. Prototyping of aerial imaging systems that take advantage of the high scalability of AIRR with less cost will be possible by solving this problem. In future, we intend to determine whether the proposed system can be used to design size-independent aerial imaging systems by adding a direction-independent blur element to the retroreflection.

Data availability

There is no data to be made available.

References

Chiba, K., Yasugi, M., Yamamoto, H.: Floating aerial LED signage based on aerial imaging by retro-reflection (AIRR). Opt. Express 22(22), 26919–26924 (2014)

Tsuchiya, K., Koizumi, N.: Levitar: Real Space Interaction through Mid-Air CG Avatar. In: SIGGRAPH Asia 2019 Emerging Technologies, pp. 25–26 (2019)

Abe, E., Yasugi, M., Takeuchi, H., Watanabe, E., Kamei, Y., Yamamoto, H.: Development of omnidirectional aerial display with aerial imaging by retro-reflection (AIRR) for behavioral biology experiments. Opt. Rev. 26, 221–229 (2019)

Ohshima, T., Nishimoto, K.: HoloBurner: Mixed Reality Equipment for Learning Flame Color Reaction by Using Aerial Imaging Display. In: SIGGRAPH Asia 2021 Emerging Technologies, pp. 7:1–2 (2021)

Sakane, S., Kudo, D., Mukojima, N., Yasugi, M., Suyama, H., Yamamoto, H.: Formation of multiple aerial LED signs in multiple lanes formed with AIRR by use of two beam splitters. Opt. Rev. 30, 84–92 (2022)

Chiba, K., Yasugi, M., Yamamoto, H.: Multiple aerial imaging by use of infinity mirror and oblique retro-reflector. Jap. J. of Appl. Phys. 59(SO), SOOD08:1–7 (2020)

Fujii, K., Yamamoto, H.: Aerial Display on a Clear Sphere with Aerial Imaging by Retro-Reflection. In: 2019 24th Microoptics Conference (MOC), pp. 222–223 (2019)

Kiuchi, S., Koizumi, N.: Simulating the appearance of mid-air imaging with micro-mirror array plates. Computers & Graphics 96, 14–23 (2021)

Nimier-David, M., Vicini, D., Zeltner, T., Jakob, W.: Mitsuba 2: a retargetable forward and inverse renderer. ACM Trans. Graph. 38(6), 203:1–17 (2019)

Otsubo, M.: Optical imaging apparatus and optical imaging method using the same. US Patent 8,702,252 (2014)

Yoshimizu, Y., Iwase, E.: Radially arranged dihedral corner reflector array for wide viewing angle of floating image without virtual image. Opt. Express 27(2), 918–927 (2019)

Kikuta, H.: [Design points and latest trends for touchless/non-contact operation/inspection] Sousa / kensa no touchless ka / hi sesshokuka no tameno sekkei point to saisin doko (in Japanese). JOHOKIKO CO., LTD., Tokyo (2020)

Tokuda, Y., Hiyama, A., Hirose, M., Yamamoto, H.: R2D2 w/ AIRR: Real Time & Real Space Double-Layered Display with Aerial Imaging by Retro-Reflection. In: SIGGRAPH Asia 2015 Emerging Technologies, SA ’15, pp. 20:1–3. New York (2015)

Kim, H., Takahashi, I., Yamamoto, H., Maekawa, S., Naemura, T.: MARIO: Mid-air Augmented Reality Interaction with Objects. Entertainment Computing 5(4), 233–241 (2014)

Matsuura, Y., Koizumi, N.: Scoopirit: A Method of Scooping Mid-Air Images on Water Surface. In: Proceedings of the 2018 ACM International Conference on Interactive Surfaces and Spaces, pp. 227–235. New York (2018)

Yasugi, M., Yamamoto, H.: Exploring the combination of optical components suitable for the large device to form aerial image by AIRR. IDW ’19 pp. 1383–1384 (2019)

Kakinuma, R., Kawagishi, N., Yasugi, M., Yamamoto, H.: Influence of incident angle, anisotropy, and floating distance on aerial imaging resolution. OSA Continuum 4(3), 865–878 (2021)

Kikuta, H., Yasugi, M., Yamamoto, H.: Examination of deblur processing according to optical parameters in aerial image. Opt. Continuum 1(3), 462–474 (2022)

Hoshi, A., Kiuchi, S., Koizumi, N.: Simulation of mid-air images using combination of physically based rendering and image processing. Opt. Rev. 29(2), 106–117 (2022)

Cook, R.L., Torrance, K.E.: A Reflectance Model for Computer Graphics. ACM Trans. Graph. 1(1), 7–24 (1982)

Walter, B., Marschner, S.R., Li, H., Torrance, K.E.: Microfacet Models for Refraction through Rough Surfaces. pp. 195–206. Goslar (2007)

Spieringhs, R.M., Audenaert, J., Smet, K., Heynderickx, I., Hanselaer, P.: Road marking BRDF model applicable for a wide range of incident illumination conditions. J. Opt. Soc. Am. A 40(3), 590–601 (2023)

Guo, J., Guo, Y., Pan, J.: A retroreflective BRDF model based on prismatic sheeting and microfacet theory. Graphical Models 96, 38–46 (2018)

Weidlich, A., Wilkie, A.: Arbitrarily Layered Micro-Facet Surfaces. In: Proceedings of the 5th International Conference on Computer Graphics and Interactive Techniques in Australia and Southeast Asia, pp. 171–178 (2007)

D’Eon, E., Irving, G.: A quantized-diffusion model for rendering translucent materials. ACM Trans. Graph. 30(4), 56:1–14 (2011)

International Telecommunication Union: Recommendation ITU-R BT.709-6 - Parameter Values for the HDTV Standards for Production and International Programme Exchange BT Series Broadcasting service (2015)

Nakajima, M., Tomiyama, Y., Amimori, I., Yamamoto, H.: Evaluation methods of retro-reflector for polarized aerial imaging by retro-reflection. In: 2015 11th Conference on Lasers and Electro-Optics Pacific Rim (CLEO-PR), vol. 1, pp. 1–2 (2015)

Nakajima, M., Tomiyama, Y., Amimori, I., Yamamoto, H.: Aerial video-calling system with eye-matching feature based on polarization-modulated aerial imaging by retro-reflection (p-AIRR). Opt. Rev. 29(5), 429–439 (2022)

Sano, A., Makiguchi, M., Matsumoto, T., Matsukawa, H., Yamamoto, R.: Mirror-Transcending Aerial Imaging (MiTAi): An optical system that freely crosses the boundary between mirrored and real spaces. Journal of the Society for Information Display 31(5), 220–229 (2023)

Ridel, B., Mignard-Debise, L., Granier, X., Reuter, P.: EgoSAR: Towards a Personalized Spatial Augmented Reality Experience in Multi-user Environments. In: 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), pp. 64–69 (2016)

Acknowledgements

This study was supported by the Japan Science and Technology Agency (JST), through the Fusion Oriented REsearch for disruptive Science and Technology (FOREST) Program, grant No. JPMJFR216L, and The Canon Foundation.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Saito, A., Yue, Y. & Koizumi, N. Simulating the Appearance of Aerial Images formed by Aerial Imaging by Retroreflection. Opt Rev (2024). https://doi.org/10.1007/s10043-024-00895-3

DOI: https://doi.org/10.1007/s10043-024-00895-3