Abstract

Laser Powder Bed Fusion (L-PBF) has immense potential for the production of complex, lightweight, and high-performance components. The traditional optimization of process parameters is costly and time-intensive, due to reliance on experimental approaches. Current numerical analyses often model single-line scans, while it is necessary to model multiple fully scanned layers to optimize for bulk material quality. Here, we introduce a novel approach utilizing discrete element simulations with a ray tracing-modeled laser heat source. Our approach significantly reduces the cost and time consumption compared to conventional optimization methods. GPU acceleration enables efficient simulation of multiple layers, resulting in parameters optimized for bulk material. In a case study, parameters were optimized for AlSi10Mg in just 5 days, a process that would have taken over 8 months without GPU acceleration. Experimental validation affirms the quality of the optimized process parameters, achieving an optical density of 99.91%.

Graphical Abstract

Optimization using the accelerated simulation yielded an optimized parameter set within 5 days. This resulted in a part with an optical density of 99.91%.

1 Introduction

Laser powder bed fusion (L-PBF) is a cutting-edge manufacturing technique that has rapidly gained prominence in various industries [1, 2]. The great design freedom offered by the technique makes it instrumental for crafting lightweight, high-performance components in sectors such as aerospace, automotive, medical, and tooling [2,3,4,5,6,7]. Notably, L-PBF’s precise control of high-temperature processes enables the production of refractory metals [8,9,10], known for their exceptional heat resistance and durability, making them pivotal for extreme environment applications. In L-PBF, thin layers of powdered metal alloys are selectively melted by a high-energy laser, as shown schematically in Fig. 1. This technology has significant implications for the production of components with high-performance materials that can withstand extreme conditions. To fully leverage the capabilities of this technique, continuous exploration of new materials and optimization of process parameters is essential.

The L-PBF process is governed by various parameters, such as scanning speed, laser power, layer thickness, and hatch distance. These process parameters are generally optimized for productivity, part density, and various mechanical strength and ductility requirements [11,12,13]. The selected process parameters have a great influence on the quality of the part. A poor selection of process parameters can lead to various defects such as lack of fusion, gas porosities, and the balling phenomenon [8, 14]. These microscopic defects can in turn lead to poor mechanical properties or even failed prints.

The process involves a delicate interplay of multi-physics phenomena, such as powder kinematics, light-matter interactions, phase transitions (melting and evaporation), and metal solidification. This complexity makes it challenging to predict optimal process parameters [14]. Parameter development is therefore currently based on the experience of the process engineer and on extensive experimental research. Testing a wide range of process parameter sets experimentally makes it an expensive and time-consuming endeavor.

Researchers have proposed various empirical models to better predict the process parameters. One very common method is to define a process window based on the volumetric energy density (VED) \(E_{\text {V}}\) [10], defined as:

\[ E_{\text {V}} = \frac{P}{v\,h\,t} \tag{1} \]

In this equation, P is the laser power, v is the scanning speed of the laser, h is the hatch distance between scan vectors, and t is the thickness of the powder layer. The process windows proposed using these methods are typically quite wide. With the increased interest in manufacturing using refractory metals, more precisely defined process windows are desired. Due to the high cooling rates and high reflectivity of these metals, their process windows are quite narrow [8].
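As a small worked example, the sketch below evaluates the VED as laser power divided by the product of scan speed, hatch distance, and layer thickness. The function name and the choice of units (W, mm/s, mm, giving J/mm^3) are assumptions made for illustration; the input values are one of the parameter sets discussed later in the case study.

```python
def volumetric_energy_density(power_w, speed_mm_s, hatch_mm, layer_mm):
    """Return E_V = P / (v * h * t) in J/mm^3 (units are an assumption of this sketch)."""
    return power_w / (speed_mm_s * hatch_mm * layer_mm)


# P = 500 W, v = 1000 mm/s, h = 100 um, t = 60 um
e_v = volumetric_energy_density(500.0, 1000.0, 0.100, 0.060)
print(f"E_V = {e_v:.1f} J/mm^3")
```

Because only the product v h t enters, very different parameter sets can share the same VED, which is one reason process windows based on it tend to be wide.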

To predict process parameters with higher precision, considerable research effort has gone into the development of numerical simulations. Using numerical methods, the cost and time consumption of process parameter development can be greatly reduced. These numerical simulations can be roughly split into two categories: finite element methods (FEM) and discrete element methods (DEM). FEM has an advantage over DEM in terms of computational efficiency. However, it requires a high degree of homogenization [15, 16]. Furthermore, it cannot capture certain discrete aspects of the process, such as material deposition. Due to its discrete nature, DEM naturally addresses these issues. The drawback of DEM is that it is more computationally expensive.

Current parameter optimization studies utilizing numerical simulations often model a single melt track [16,17,18,19,20]. From the results, meltpool dimensions and porosity formation in this melt track are analyzed. The results of these simulations can be highly accurate, especially with modeling of the fluidic phase [21]. While this is a good method to calibrate or validate the numerical model, the process parameters that are found are not directly applicable for the production of full parts. The computational cost of these simulations is too high to expand the simulated geometry to multiple scan vectors.

When modeling only single-line scans, there is a large discrepancy between the scan pattern in a full part and the simulated geometry. The thermal boundary conditions for a single-line scan differ significantly from those of fully scanned layers. Optimizing process parameters using single-line scans results in parameters that are optimal for thin walls, which are known to differ from bulk parameters [22]. Furthermore, good process parameters typically do not melt only one layer but also remelt the previous layers [23]. These two aspects indicate the need to simulate multiple fully scanned layers to find optimal bulk parameters.

In this work, a framework is developed to efficiently find optimal bulk process parameters for L-PBF processes within a reasonable timeframe. In order to save computation time, some physics are simplified in the numerical model. The main goal is to compute whether sufficient energy is delivered to the powder to reach the melting temperature, so the physics after melting are simplified. The recoating of the powder is also not modeled, and a perfectly recoated layer is assumed. Accurate recoating would require a more detailed model of the particle dynamics, for example by including cohesive forces [24]; the powder shape also becomes more pertinent in that case [25, 26].

The framework in this work is an expansion and improvement of an existing simulation. The paper is organized as follows. Section 2 describes the numerical model of the process, including efficiency considerations and the post-processing of the simulation results. In Sect. 3 the optimization framework is described and subsequently applied to optimize process parameters for a specific material. The results of the optimization are then validated experimentally in Sect. 4. Finally, conclusions are discussed in Sect. 5.

2 Numerical model

In this section, the numerical model is described. The model consists of two main components: the DEM framework, which is used to model the solid and powder material, and the ray-tracing framework, which is used to model the laser heat source, as schematically shown in Fig. 2. Only an overview of the model is given here; a detailed description can be found in [27] and [28]. The efficiency of the accelerated simulation is demonstrated in a performance test. Lastly, post-processing of the simulation results is described.

2.1 DEM framework

The material of the L-PBF process is modeled using DEM, both for the powder phase and solid phase. The thermo-mechanical model is solved for the degrees of freedom (DOFs) of each particle for each time step. These degrees of freedom are rotations, displacements, and temperature. The particles are modeled as spheres for both the powder phase and the solid phase. The model used to solve each phase is different to comply with the difference in material structure. A particle will transition from the powder phase to the solid phase if it is in contact with another particle and both are above the melting temperature. The bond between the particles is then represented by a massless cylindrical beam.

The particles which are in contact will interact with each other. This interaction depends on whether the two particles in question are bonded. The forces and moments exerted by any two particles on each other are shown in Fig. 3. The exact definition of each force is explained in depth in [27]. On the left of the figure, the unbonded case is presented. The particles exert forces on each other via the contact area. This consists of a contact force \(\textbf{F}^\text {c}_{ij}\), which also results in a contact moment \(\textbf{M}^\text {c}_{ij}\). Then there are the dissipative forces and moments, which eliminate dynamic oscillations [27]. The damping force \(\textbf{F}^\text {d}_{ij}\) is dependent on the relative velocity vector between the particles. Similarly, the damping moment \(\textbf{M}^\text {d}_{ij}\) is dependent on the relative angular velocity vector of the two particles. On the right of Fig. 3, particle interactions between bonded particles are represented. The interaction reduces to just a bond force \(\textbf{F}^\text {b}_{ij}\) and a bond moment \(\textbf{M}^\text {b}_{ij}\). \(\textbf{F}^\text {b}_{ij}\) can transfer compressive and tensile forces, while \(\textbf{M}^\text {b}_{ij}\) can transfer bending and twisting moments. Both depend on the deformation of the beam. Since the beam deformation is assumed to be infinitesimally small, the analytical solutions of the Euler–Bernoulli beam equations can be used [29,30,31].

Other than interacting via forces and moments, the particles also conduct heat when they are in contact with each other. Conduction is the only heat transfer mechanism between particles in the model; convection and radiation are not taken into account. The heat exchange from particle j to i is defined as:

\[ \phi _{ij} = K A_{ij} \frac{T_j - T_i}{||\textbf{x}_{i-j}||} \tag{2} \]

where K is the thermal conductivity and T the particle temperature. It can be seen that the heat exchange depends on the conductive area between the particles \(A_{ij}\) and the distance between them \(||\textbf{x}_{i-j}||\).

When particles are not bonded, \(A_{ij}\) is the contact area at the center of the particle overlap. When particles are bonded, \(A_{ij}\) is larger and depends on the particle radii and the number of bonds each particle has [27].

When the temperature of a particle i increases its radius \(R_i\) increases according to:

Where \(R_i^0\) is the initial radius and \(\alpha\) is the coefficient of thermal expansion.

The total force \(\sum \textbf{F}_{i}\) acting on any particle i consists of the gravitational force \(\textbf{F}^\text {g}_i\) and the sum of interaction forces with all particles. The total moment \(\sum \textbf{M}_i\) acting on any particle i is similarly found to be the sum of all interaction moments with all particles. Lastly, the total heat transfer \(\sum \phi _i\) for any particle i depends on the sum of all heat transfer from/to other particles, as well as the heat added to the particle by the heat source \(\phi ^\text {h}_i\). For each particle and for each time increment \(\sum \textbf{F}_i\), \(\sum \textbf{M}_i\), and \(\sum \phi _i\) are computed and used to solve for the evolution of the DOFs. The translation and rotation of the particles are solved using Newton’s second law of motion:

\[ \sum \textbf{F}_i = m_i \textbf{a}_i \tag{4} \]

\[ \sum \textbf{M}_i = I_i \mathbf {\alpha }_i \tag{5} \]

Here \(\textbf{a}_i\) is the acceleration vector and \(\mathbf {\alpha }_i\) is the angular acceleration vector for particle i. \(m_i\) is the mass of particle i. Lastly, \(I_i\) is the mass moment of inertia of particle i. The second-order differential equations of Eqs. 4 and 5 are solved to find the change in the translational and rotational DOFs using the Velocity-Verlet integration scheme [32, 33]. The particle positions are updated using the velocity vector. The particle rotations are stored using quaternions, which are mathematical objects that can represent the rotation and orientation of a body in 3D space [34]. The angular velocity vector is used to update the quaternions; the exact manner in which this update occurs is described in [27].
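The translational update can be illustrated with a minimal one-dimensional velocity-Verlet sketch. It is not the implementation of [27]: the function name, the scalar state, and the linear-spring test force are assumptions chosen only to show the order of the position, force, and velocity updates.

```python
import math


def velocity_verlet(x, v, force, mass, dt, n_steps):
    """Integrate m * a = F(x) with the velocity-Verlet scheme (1D sketch)."""
    a = force(x) / mass
    for _ in range(n_steps):
        x = x + v * dt + 0.5 * a * dt * dt  # position update with current acceleration
        a_new = force(x) / mass             # force evaluated at the new position
        v = v + 0.5 * (a + a_new) * dt      # velocity update with averaged acceleration
        a = a_new
    return x, v


# Hypothetical test problem: linear spring F = -k x, integrated over one period.
k, m = 4.0, 1.0
period = 2.0 * math.pi * math.sqrt(m / k)
n = 10000
x_end, v_end = velocity_verlet(1.0, 0.0, lambda x: -k * x, m, period / n, n)
print(x_end, v_end)  # close to the initial state (1, 0)
```

In a DEM setting the force depends on all particles in contact, so the force evaluation in the middle of the loop is the expensive step that benefits from neighbor search and parallelization.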

The evolution of the temperature of a particle is found by solving the energy balance [18] with a first-order integration scheme:

As previously mentioned, most particles will not interact with each other, since any particle can only be in contact with a limited number of particles at one time. To speed up the computation of particle interactions, a cell-domain algorithm is implemented [35]. The domain is split up into smaller cubic cells with a side length larger than the largest particle diameter. Then, at each time increment, it is determined in which cell each particle is located. Due to the cell size, any particle is only able to interact with particles in its own cell and/or particles in neighboring cells. The interactions with particles outside of these cells no longer need to be computed. This reduces the computation time for \(N_\text {p}\) particles from \(\mathcal {O}(N_\text {p}^2)\) to \(\mathcal {O}(N_\text {p}\log {N_\text {p}})\) [27]. Performance is further increased by computing the particle interactions of all cells in parallel on the GPU.
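The idea of the cell-domain search can be sketched in a few lines of serial Python. This is an illustrative stand-in, not the GPU implementation of the paper: the function names, the dictionary-based cell storage, and the example particles are assumptions.

```python
from collections import defaultdict
from itertools import product


def build_cells(positions, cell_size):
    """Map each integer cell index to the list of particle indices it contains."""
    cells = defaultdict(list)
    for idx, (x, y, z) in enumerate(positions):
        key = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        cells[key].append(idx)
    return cells


def contact_pairs(positions, radii, cell_size):
    """Return overlapping particle pairs, checking only own and neighboring cells."""
    cells = build_cells(positions, cell_size)
    pairs = set()
    for (cx, cy, cz), members in cells.items():
        for dx, dy, dz in product((-1, 0, 1), repeat=3):
            neighbours = cells.get((cx + dx, cy + dy, cz + dz), ())
            for i in members:
                for j in neighbours:
                    if i < j:
                        d2 = sum((a - b) ** 2 for a, b in zip(positions[i], positions[j]))
                        if d2 < (radii[i] + radii[j]) ** 2:
                            pairs.add((i, j))
    return pairs


# Four hypothetical particles of radius 1; the cell size equals the largest diameter.
positions = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (2.5, 0.0, 0.0), (5.0, 5.0, 5.0)]
radii = [1.0, 1.0, 1.0, 1.0]
pairs = contact_pairs(positions, radii, cell_size=2.0)
print(sorted(pairs))
```

Because two particles in contact are never farther apart than one particle diameter, a cell side of at least the largest diameter guarantees that every contact is found within the 27 surrounding cells.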

2.2 Ray tracing framework

The material transitions from the powder phase to the solid phase in the model when a sufficient temperature is reached. The heat added to each particle from the heat source is found using a thermal ray-tracing model, which models individual photons originating from the laser. Interactions between light and the particles are assumed to be instantaneous, as the photons travel at the speed of light [28]. Light can be treated as rays as long as the objects it interacts with are sufficiently large compared to the photon wavelength. This is valid for the L-PBF process considered here, since the particles have a diameter of \(\mathcal {O}(10^{-5})\) m and the laser has a wavelength of \(\mathcal {O}(10^{-6})\) m [28]. The rays from the heat source are focused towards a target location on the powder bed, and the density of rays is dependent on the position with respect to the origin point in the laser. This density corresponds to a Gaussian intensity profile.

Fig. 4 Schematic overview of thermal ray tracing of a single ray originating from a laser through a domain with powder particles (left) and a detailed view of the interaction of the ray with particle i (right). The ray loses a part of its energy to the particles after each interaction. Energy is denoted here with the intensity of the red hue, which decreases in the ray and increases in the particles after each interaction.

Consider a single ray carrying energy \(E_1\) with origin \(\vec {o}_1\) and direction \(\vec {d}_1\) in a domain \(\Omega\) containing a number of spherical particles, see Fig. 4. The ray initially intersects with powder particle i. During the interaction, part of the ray is reflected off the surface of the particle, and part of the ray is refracted into the particle. It is assumed that the energy of the refracted ray is fully absorbed by the powder particle, because most of the ray energy is dissipated into the material before the ray reaches another surface [28, 36]. This means that the energy \(E_2\) in the reflected ray will be:

\[ E_2 = E_1 - E_\text {absorb} \tag{7} \]

where \(E_\text {absorb}\) is the energy that is absorbed by the particle from the refracted ray. This energy is transferred to the particle as heat and is included in the left-hand side of Eq. 6. The energy in the ray decreases after each interaction until a minimum energy is reached or no more interactions occur. The exact distribution of energy between the reflected and refracted ray depends on the reflection coefficient, which can be computed using the Fresnel equations [28]. The reflected ray now has origin \(\vec {o}_2\) and direction \(\vec {d}_2\). The direction is determined by the angle \(\theta _1\) between the direction \(\vec {d}_1\) of the incident ray and the surface normal \(\vec {n}\) at \(\vec {o}_2\): \(\vec {d}_2\) makes an angle \(\theta _2 = \theta _1\) with \(\vec {n}\), but on the opposite side of the surface normal. For the sphere of particle i, with position \(\vec {x}_i\) and radius \(R_i\), any point \(\vec {X}\) on its surface can be described by:

\[ ||\vec {X} - \vec {x}_i||^2 = R_i^2 \tag{8} \]

The location \(\vec {p}\) of the intersection between the ray and the powder particle is described as:

\[ \vec {p} = \vec {o}_1 + s\,\vec {d}_1 \tag{9} \]

Here s represents the distance between the ray origin and the surface of the particle. If it is assumed that \(\vec {p}\) is a point \(\vec {X}\) on the surface of particle i, the value for s can be found by substituting \(\vec {p}\) into Eq. 8 and solving for s:

where,

Depending on the value for s, it can be determined whether an intersection between the ray and particle i exists. Only positive values for s indicate an intersection. Since each ray can only intersect once before it is reflected, Eq. 10 needs to be evaluated for all particles; the particle with the lowest positive value for s is the actual intersection.
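The intersection test can be sketched as follows. The sketch uses the standard quadratic that results from substituting a point on the ray into the sphere equation, with a unit-length direction vector; the variable names b and c, the tolerance, and the example spheres are assumptions and need not match the notation of Eqs. 10 and 11.

```python
import math


def ray_sphere_distance(origin, direction, center, radius):
    """Smallest positive s with |origin + s * direction - center| = radius, else None.

    `direction` is assumed to be a unit vector, so s is a distance.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for s in (-b - root, -b + root):
        if s > 1e-12:  # ignore hits behind or at the ray origin
            return s
    return None


def first_hit(origin, direction, centers, radii):
    """Return (particle index, s) of the nearest intersection, or None."""
    best = None
    for idx, (center, radius) in enumerate(zip(centers, radii)):
        s = ray_sphere_distance(origin, direction, center, radius)
        if s is not None and (best is None or s < best[1]):
            best = (idx, s)
    return best


# Hypothetical example: a ray travelling downwards onto three unit spheres.
centers = [(0.0, 0.0, 0.0), (0.0, 0.0, 4.0), (5.0, 0.0, 0.0)]
hit = first_hit((0.0, 0.0, 10.0), (0.0, 0.0, -1.0), centers, [1.0, 1.0, 1.0])
print(hit)  # the upper sphere (index 1) shadows the one below it
```

The loop over all particles in `first_hit` is exactly the cost that makes a brute-force search expensive for large powder beds.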

For large systems of particles, the computation of s is very expensive, since it would need to be carried out for all particles for each ray. To optimize the ray tracing algorithm, it is essential to limit the number of intersections that need to be computed. One way this is done is by implementing a grid marching algorithm [37]. For this algorithm, the same cells can be used that were used for the DEM particle interactions. Using this algorithm, the computation time per ray is vastly reduced. Lastly, the ray tracing algorithm is accelerated using GPU parallelization, by computing the intersection of each ray in parallel.
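A common way to march a ray through a uniform grid is an incremental voxel traversal, sketched below. Whether the implementation referenced in [37] follows exactly this scheme is an assumption; the function name, arguments, and the example ray are illustrative only.

```python
import math


def march_cells(origin, direction, cell_size, max_cells):
    """Yield the integer cell indices visited by a ray, in order of traversal."""
    cell = [int(math.floor(o / cell_size)) for o in origin]
    step, t_max, t_delta = [], [], []
    for o, d, c in zip(origin, direction, cell):
        if d > 0.0:
            step.append(1)
            t_max.append(((c + 1) * cell_size - o) / d)  # distance to next upper face
            t_delta.append(cell_size / d)
        elif d < 0.0:
            step.append(-1)
            t_max.append((c * cell_size - o) / d)        # distance to next lower face
            t_delta.append(-cell_size / d)
        else:
            step.append(0)
            t_max.append(math.inf)                       # never crosses a face on this axis
            t_delta.append(math.inf)
    for _ in range(max_cells):
        yield tuple(cell)
        axis = t_max.index(min(t_max))  # axis whose cell face is reached first
        cell[axis] += step[axis]
        t_max[axis] += t_delta[axis]


# Hypothetical diagonal ray in the x-y plane through unit cells.
d = 1.0 / math.sqrt(2.0)
visited = list(march_cells((0.5, 0.25, 0.5), (d, d, 0.0), 1.0, 4))
print(visited)
```

Only the particles stored in the visited cells need to be tested for intersection, and the march can stop at the first cell in which a hit is found.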

2.3 GPU implementation and performance

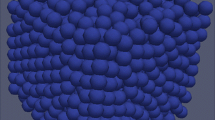

As discussed in the previous subsections, the most computationally expensive parts of the algorithms are accelerated using GPU parallelization, implemented in CUDA. To test the performance, a simulation is set up in which the printing of a small block of material is simulated. Details of the simulation geometry and the machine properties are shown in Table 1, and a visualization is shown in Fig. 5. Three performance measures are tracked: the duration of the full simulation, the duration of a time step during ray tracing, and the duration of a time step without ray tracing. The speedup for the latter two measures is shown in Fig. 6. The number of degrees of freedom is not constant during the simulation, because particles are added whenever a new layer is deposited. Overall, the accelerated simulation is 52 times faster than the original simulation, see Table 2. The speedup during ray tracing is significantly higher than for the particle interactions. This means the total speedup of a simulation will lie somewhere between 2 times and 80 times, depending on how much time is spent with the heat source activated.
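How the overall speedup depends on the share of ray-tracing steps can be illustrated with a simple weighted model. The sketch assumes that the bounds quoted above (about 2 times without ray tracing and about 80 times with ray tracing) act as constant per-phase speedups, which is a simplification; the function names are hypothetical.

```python
def total_speedup(ray_fraction, s_ray=80.0, s_dem=2.0):
    """Overall speedup if `ray_fraction` of the original runtime was spent in ray-tracing steps."""
    return 1.0 / (ray_fraction / s_ray + (1.0 - ray_fraction) / s_dem)


def ray_fraction_for(speedup, s_ray=80.0, s_dem=2.0):
    """Inverse of total_speedup: runtime share of ray-tracing steps for a given overall speedup."""
    return (1.0 / s_dem - 1.0 / speedup) / (1.0 / s_dem - 1.0 / s_ray)


fraction = ray_fraction_for(52.0)
print(f"ray-tracing share of original runtime: {fraction:.3f}")
```

Under these assumptions, the reported overall speedup of 52 corresponds to an unaccelerated simulation that spends almost all of its runtime in steps with the heat source active.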

2.4 Post-processing

In L-PBF process development, one of the key measures that is maximized is relative density. During the process, the powder melts and the goal is to produce a part with little or no porosity. The level of porosity can be assessed by computing the relative density \(\rho _\text {rel}\):

\[ \rho _\text {rel} = \frac{\rho _\text {p}}{\rho _\text {m}} \tag{12} \]

Here \(\rho _\text {p}\) is the density of the printed part, and \(\rho _\text {m}\) is the bulk density of the raw material. The part density is lower due to various defects in the fusion, such as gas porosities and lack of fusion defects, caused by incomplete melting of the powder. To find \(\rho _\text {rel}\) numerically from the simulation data it is first necessary to define an analysis volume \(V_\text {a}\). This volume is selected such that it is contained within a scanned layer, thus excluding any powder particles that were not meant to be scanned, see Fig. 7. \(V_\text {a}\) can be defined as the cuboid that is spanned by two vectors \(\vec {V}_\text {min}\) and \(\vec {V}_\text {max}\), see Fig. 8. The state of the particles is assessed at the final time step.

Fig. 8 Schematic 2D representation of a relative density analysis volume. Here particle i is partly inside the analysis volume, particle j is fully inside, and particle l is outside the volume. Particle k is inside the volume but has not bonded to any other particle, indicated here by the dashed outline. On the right, a detailed view of the enclosed mass computation for particle i is shown. The computation utilizes Monte Carlo integration.

In Fig. 8, a 2D representation of the analysis is shown. To compute the density, first the total mass \(m_\text {f}\) of all bonded particles within the volume is computed:

\[ m_\text {f} = \sum _{i \,:\, N_{\text {b}_i} > 0} f_i\, m_i \tag{13} \]

Here \(m_i\) is the mass of particle i, and \(N_{\text {b}_i}\) is the number of bonds of particle i. It can be seen in Eq. 13 that particles with \(N_\text {b}=0\) are disregarded from the sum, such as particle k in Fig. 8. \(f_i\) is the fraction of particle i that is enclosed within \(V_\text {a}\): \(f_i=0\) for particles fully outside \(V_\text {a}\), such as particle l in Fig. 8, and \(f_i=1\) for particles fully enclosed by the volume. To determine \(f_i\) for partially enclosed particles, the overlapping volume between the sphere of particle i and the cuboid \(V_\text {a}\) is computed using Monte Carlo integration.
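A minimal sketch of the Monte Carlo estimate of the enclosed fraction, together with the fused-mass sum over bonded particles, is given below. The sampling strategy (rejection sampling inside the sphere), the sample count, the fixed seed, and the function names are assumptions for illustration and are not taken from the paper.

```python
import random


def enclosed_fraction(center, radius, v_min, v_max, n_samples=20000, seed=0):
    """Estimate the fraction of a sphere's volume that lies inside an axis-aligned cuboid."""
    rng = random.Random(seed)
    inside = 0
    accepted = 0
    while accepted < n_samples:
        # Draw a point in the bounding cube and reject it if it falls outside the sphere.
        p = [rng.uniform(-radius, radius) for _ in range(3)]
        if sum(c * c for c in p) > radius * radius:
            continue
        accepted += 1
        point = [c + o for c, o in zip(p, center)]
        if all(lo <= x <= hi for x, lo, hi in zip(point, v_min, v_max)):
            inside += 1
    return inside / n_samples


def fused_mass(masses, bond_counts, fractions):
    """Sum f_i * m_i over particles with at least one bond."""
    return sum(f * m for m, n_b, f in zip(masses, bond_counts, fractions) if n_b > 0)


# Hypothetical check: a sphere centered on a face of the cuboid is half enclosed.
half = enclosed_fraction((0.0, 0.0, 0.0), 1.0, (-5.0, -5.0, 0.0), (5.0, 5.0, 5.0))
print(f"estimated enclosed fraction: {half:.3f}")
```

Dividing the resulting fused mass by the mass that a fully dense analysis volume would have gives a density measure in which unbonded powder does not contribute.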

After \(m_\text {f}\) is computed, the value for the fused mass-based numerical relative density \(\rho _\text {n}\) can be found as:

\[ \rho _\text {n} = \frac{m_\text {f}}{\rho _\text {m} V_\text {a}} \tag{14} \]

As the meltpool is not modeled explicitly, it is expected that the true relative density \(\rho _\text {rel}\) will be larger than the numerical relative density \(\rho _\text {n}\). The reason for this is that in the simulation the particles remain spherical after bonding. This means the upper limit for the numerical relative density is determined by the highest achievable packing density. In the actual process, the particles melt and lose their spherical shape. The value for \(\rho _\text {n}\) is therefore used as a quantity to optimize, and not as an absolute value that is expected from the real process.

3 Parameter optimization

In the previous section, a model was presented to simulate the L-PBF process. In this section, a practical use of the model is presented in a case study. A bulk process parameter set is developed for AlSi10Mg. The material is treated as a new alloy for which no process parameters are known in literature. In this way, the optimization process in this case study can be used for finding parameters for novel alloys as well. The parameter set will have a layer thickness t of 60 µm and will be produced with a scan system with a spot size D of 100 µm. The optimization objective will be the numerical relative density \(\rho _\text {n}\), under the assumption that maximizing it will also lead to the highest relative density \(\rho _\text {rel}\). Furthermore, the theoretical build rate \(B_\text {R}\) should be as high as possible. This build rate is defined as:

\[ B_\text {R} = v\,h\,t \tag{15} \]

where v is the scan speed, h is the hatch distance, and t is the layer thickness. The hatch distance is defined as the distance between two parallel scan vectors. An additional optimization condition is that the meltpool depth \(d\ge t\).
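The build rate can be checked with a short calculation, taking it as the product of scan speed, hatch distance, and layer thickness. The function name and the unit handling are assumptions of this sketch; the input values are parameter sets quoted in the optimization results.

```python
def build_rate_cm3_per_h(speed_mm_s, hatch_um, layer_um):
    """Theoretical build rate v * h * t, converted from mm^3/s to cm^3/h."""
    mm3_per_s = speed_mm_s * (hatch_um * 1e-3) * (layer_um * 1e-3)
    return mm3_per_s * 3600.0 / 1000.0


# v = 1000 mm/s, h = 100 um, t = 60 um
rate_a = build_rate_cm3_per_h(1000.0, 100.0, 60.0)
# v = 1250 mm/s, h = 110 um, t = 60 um
rate_b = build_rate_cm3_per_h(1250.0, 110.0, 60.0)
print(f"{rate_a:.1f} cm^3/h, {rate_b:.1f} cm^3/h")
```

The first value reproduces the build rate of 21.6 cm^3/h reported for the parameter set with v = 1000 mm/s and h = 100 µm.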

In these experiments, a zig-zag scan pattern will be used, which is the default setting on most machines. This leaves three process parameters to optimize: laser power P, scan speed v, and hatch distance h. The conditions and goals for the optimization are summarized in Table 3.

3.1 Simulation setup

For the simulation, a balance is struck between computational time and accuracy. Even with the significant acceleration using the GPU and the omission of the meltpool dynamics, a single simulation can still take multiple hours. There are multiple components contributing to the length of a simulation: First, the total simulated time, which is affected mostly by the simulated geometry and the scanning parameters. In addition, there is the computational cost per timestep, which depends on the total amount of DOFs in the simulation. The amount of DOFs is also affected by the simulated geometry. Lastly, there is the size of the timestep \(\Delta t\). There is a critical timestep \(\Delta t_\text {c}\), which is the upper limit of the size of \(\Delta t\) before the equations of motion in the DEM simulation become unstable [38, 39]:

If \(\Delta t\) is too large, particles may move too far into each other, causing them to jump apart in the next timestep due to the large force that is exerted. When a smaller timestep is taken, particles already exert a small force on each other when they first come into contact, which prevents them from overlapping too much.

To find optimal parameters, multiple parameter sets will be simulated. Since the parameter sets are the target of the optimization, only the size of \(\Delta t_\text {c}\) and the simulation geometry are adjusted to limit the run time. This makes it possible to limit the average run time to approximately 4 h. In this way, an optimized parameter set can be found within a reasonable time span.

3.1.1 Critical timestep

There are two approaches to increasing the size of the critical timestep: Increase the mass or decrease the stiffness [38, 39]. Both are dependent on the radius of the particle. However, the particle size is already set in accordance with Table 3. This leaves the possibility to alter either the material density \(\rho _\text {m}\) or the elastic modulus \(\lambda\).

The main focus of the simulation is modeling the fusion of the material correctly, which is mainly governed by the heat and temperature dynamics of the system. Particle mass plays a significant role in this, since a larger mass will be able to absorb and dissipate more heat, so the density should not be altered. For this reason, it was decided to reduce the elastic modulus of the material instead, since the magnitudes of the forces and stresses are not of interest for this use case.
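The effect of this choice can be illustrated with a scaling sketch. It assumes that the critical timestep scales as the square root of particle mass over contact stiffness, with the mass proportional to the density and the stiffness proportional to the elastic modulus; this is a common estimate for spring-mass contact models and is an assumption here, not necessarily the exact expression used in [38, 39]. The function name is hypothetical.

```python
import math


def timestep_gain(modulus_scale=1.0, density_scale=1.0):
    """Growth factor of the critical timestep under the assumed scaling dt_c ~ sqrt(m / k).

    Assumes m is proportional to the density and k to the elastic modulus.
    """
    return math.sqrt(density_scale / modulus_scale)


# Hypothetical example: reducing the elastic modulus by a factor of 100.
gain = timestep_gain(modulus_scale=0.01)
print(f"critical timestep grows by a factor of about {gain:.1f}")
```

Under this assumed scaling, softening the contacts buys a larger timestep without touching the thermal mass of the particles.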

3.1.2 Geometry

The goal of the optimization is to find bulk process parameters. In order to properly assess \(\rho _\text {n}\), an analysis volume should be selected that lies between two layers, so that the effect of remelting is fully captured. This means the minimum number of layers for the simulation is three.

The density of the printed specimen is usually experimentally assessed using cubes with a side length of 10 mm. It was found that a three-layer simulation for this material already has a run time of 4 h for a scanned area of 1 mm by 1 mm. Fully simulating an area of 10 mm by 10 mm would be too computationally expensive.

There is a significant difference in the heat added to the part for a smaller area since the material will have less time to cool down before a new scan vector passes again. A strategy was devised to simulate a smaller area, while more closely emulating the cooling behavior of a larger area, which is schematically shown in Fig. 9.

Consider a square part with a cross-sectional area of 10 mm by 10 mm and a volume \(V_\text {part}\). The machine instructions used to simulate the printing process for this part are adapted: the fusion of powder particles in the center of the 10 mm by 10 mm part is simulated for a smaller 1 mm by 1 mm area with volume \(V_\text {sim}\). To replicate the heating and cooling cycles, the full 10 mm by 10 mm area is scanned virtually, while the laser is only enabled within the 1 mm by 1 mm subsection. This means the time between scan vector passes within \(V_\text {sim}\) is identical to that for a part with volume \(V_\text {part}\).
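The timing of such a virtual scan can be sketched as a list of laser-on intervals. The sketch assumes scan vectors along one axis, a centered simulated window, and no jump or turnaround delays between vectors; the function name and these simplifications are illustrative and not taken from the machine instructions used in the paper.

```python
def virtual_scan_schedule(part_mm, sim_mm, hatch_mm, speed_mm_s):
    """Return (t_on, t_off) intervals in seconds during which the laser is enabled."""
    lo = 0.5 * (part_mm - sim_mm)      # start of the centered simulated window
    hi = lo + sim_mm                   # end of the simulated window
    t_vector = part_mm / speed_mm_s    # time to traverse one full-length scan vector
    n_vectors = int(round(part_mm / hatch_mm))
    intervals = []
    for k in range(n_vectors):
        y = (k + 0.5) * hatch_mm       # position of vector k across the part
        if not (lo <= y <= hi):
            continue                   # vector does not cross the simulated window
        t0 = k * t_vector
        # For a centered window the entry offset is the same in both zig-zag directions.
        intervals.append((t0 + lo / speed_mm_s, t0 + hi / speed_mm_s))
    return intervals


# 10 mm part, 1 mm window, h = 100 um, v = 1000 mm/s
schedule = virtual_scan_schedule(10.0, 1.0, 0.1, 1000.0)
print(len(schedule), schedule[1][0] - schedule[0][0])
```

With these numbers, consecutive passes through the window are 10 ms apart, whereas scanning only the 1 mm by 1 mm area directly would bring the laser back after 1 ms.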

The simulation domain makes use of periodic boundary conditions for the contact forces, which allows only a small part of the powder bed to be simulated. The simulation domain \(V_{\Omega }\) needs to be slightly larger than \(V_\text {sim}\) in order to prevent heat exchange from one side of the scanned area to the other via the periodic boundary. By adding a border of unscanned powder, the heat transfer across the periodic boundaries is significantly limited. A summary of the most important simulation settings is shown in Table 4.

3.1.3 Material properties

As discussed at the start of this section the material under investigation is AlSi10Mg and it will be treated as a new alloy. Most material properties for AlSi10Mg are well documented in literature. The properties that are unknown are computed using a weighted average of the alloy component properties. The weights used for the composition of the alloy are in accordance with [40, 41]: 89.8% Aluminum, 10% Silicon, and 0.2% Magnesium. Special care is taken to mainly use this computation for properties where it is known that the alloy component interactions have a linear effect on the property. The material properties used in the simulation are listed in Table 5.

3.2 Parameter optimization

The parameter optimization makes use of the design of experiments technique and response surface methodology (RSM) [49]. In the first stage of the optimization, an initial screening is made to narrow down the process window. Using the narrower process window from the initial screening, a definitive screening design (DSD) is made to find the optimal parameters. If these optimal parameters are located outside the tested design space, extra simulations are performed to validate the optimized parameter set.

The initial screening will be carried out using a half-fractional factorial design. A full factorial design tests all combinations of parameter levels. The number of tested parameter sets is then \(L^\eta\), where L is the number of levels per parameter and \(\eta\) is the number of parameters. In order to save time, a half-fractional factorial design is used. Factorial designs are not suitable for creating contour plots or response surfaces, because the gradient is only accurate around the center point [50]. Rather, they are used to cover a wide design space and narrow it down [51]. In the case of this optimization, there are three optimization parameters, which are each varied at three levels. The tested parameter sets for the initial screening are presented in Table 6. Since there are three design parameters, the design space can be visually represented in three dimensions. The locations of the tested parameter sets within the design space are displayed in Fig. 10.
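The size of the full factorial design can be made concrete by enumerating it. The sketch below labels each run in the style of the identifiers used for the screening runs (for example s_HMM), on the assumption that the three letters denote the high, medium, or low level of P, v, and h in that order. Which runs the authors kept for the half fraction is not specified here and is not reproduced.

```python
from itertools import product

levels = ("L", "M", "H")  # low, medium, high (assumed meaning of the identifiers)

# All combinations of three levels for the three parameters (P, v, h).
full_factorial = ["s_" + "".join(combo) for combo in product(levels, repeat=3)]

print(len(full_factorial))  # 3**3 runs in the full design
print(full_factorial[:5])
```

Running only about half of these combinations roughly halves the screening cost while still covering the corners and interior of the design space.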

Using the results from the initial screening, a narrower design space is selected, in which the definitive screening takes place. To find the curvature of the response surface, a Box-Behnken design is used [49], which is a common choice after an initial screening [52]. This design is focused more towards the center of the design space, rather than towards its edges as with the fractional factorial design.
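For three factors, a Box-Behnken design can be generated in coded units (-1, 0, +1) by varying each pair of factors over its extreme levels while holding the remaining factor at its center, and adding a center point. The sketch below follows that textbook construction; the function name and the use of a single center run are assumptions.

```python
from itertools import combinations, product


def box_behnken_three_factor():
    """Return the coded runs of a three-factor Box-Behnken design with one center point."""
    n_factors = 3
    runs = []
    for i, j in combinations(range(n_factors), 2):
        for a, b in product((-1, 1), repeat=2):
            run = [0] * n_factors   # remaining factor stays at its center level
            run[i], run[j] = a, b   # the chosen pair takes its extreme levels
            runs.append(tuple(run))
    runs.append((0,) * n_factors)   # center point
    return runs


design = box_behnken_three_factor()
print(len(design))
for run in design:
    print(run)
```

No run places all three factors at an extreme simultaneously, which is why the design concentrates on the interior of the design space rather than its corners.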

The visualization of the tested parameters for the definitive screening is shown in Fig. 10. The exact parameters used for the definitive screening are shown in Table 6. The values selected based on the initial screening results are already shown. The results of the initial screening as well as the reasoning behind the selected design space for definitive screening will be explained in the next subsection.

3.3 Optimization results

Thirteen simulations were performed for the initial screening, using the parameter sets in Table 6 and the simulation settings stated in Table 4. The total computation time for these simulations was 2 days and 20 h, with the longest simulation lasting 13 h and the shortest lasting only 32 min. The results for the initial screening are shown in Table 7.

It can be seen that several parameter sets achieve a numerical relative density of around 75%. From these parameter sets, there were three with a build rate above 20 \(\text {cm}^3/\text {h}\): s_HMM, s_HML, and s_MML. These parameter sets were used to define the design space for the definitive screening simulations.

All three selected parameter sets have a scan speed of 1250 mm/s. The laser power ranges from 275 to 450 W and the hatch distance from 80 to 100 µm. The best parameter set of the three is s_HMM, which uses \(P=450\) W, \(v=1250\) mm/s and \(h=100\) µm. It has the highest build rate of the three and the second-best numerical relative density.

The design space is narrowed down for the definitive screening design, based on the three indicated parameter sets. The ranges for the new design space are: \(P = [400, 500]\) W, \(v = [1000, 1500]\) mm/s, and \(h = [90, 110]\) µm. These new ranges have a different center point, located near the expected optimum.

The definitive screening simulations took 4 h on average, amounting to a total run time of 2 days and 4 h. The results from the definitive screening, together with the results from the initial screening, are used to fit a quadratic regression model. The resulting response surface is shown on the left of Fig. 11.
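A quadratic response surface of this kind can be fitted by ordinary least squares. The sketch below is a minimal illustration with NumPy: the source does not say which software or fitting procedure was used, and the data here are synthetic (generated from made-up coefficients on a coded 3-level grid), not the values from Table 7.

```python
from itertools import combinations_with_replacement, product

import numpy as np


def quadratic_features(X):
    """Columns: 1, each x_i, and every product x_i * x_j with i <= j."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    cols = [np.ones(n)]
    cols += [X[:, i] for i in range(k)]
    cols += [X[:, i] * X[:, j]
             for i, j in combinations_with_replacement(range(k), 2)]
    return np.column_stack(cols)


def fit_quadratic(X, y):
    """Least-squares coefficients of the full quadratic model."""
    coef, *_ = np.linalg.lstsq(quadratic_features(X), y, rcond=None)
    return coef


def predict(coef, X):
    return quadratic_features(X) @ coef


# Synthetic example: coded (P, v, h) grid and hypothetical coefficients.
grid = np.array(list(product((-1, 0, 1), repeat=3)), dtype=float)
true_coef = np.array([0.75, 0.02, -0.03, 0.01,             # intercept, linear
                      -0.04, 0.01, 0.0, -0.02, 0.005, -0.01])  # second order
y = quadratic_features(grid) @ true_coef
coef = fit_quadratic(grid, y)
print(np.allclose(coef, true_coef))  # True
```

For three parameters the model has 10 coefficients, so the combined screening runs leave degrees of freedom to spare for the fit.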

The initial regression model predicts a saddle point, with higher values for \(\rho _\text {n}\) at the upper left and lower right of the plot. Since the lower right of the plot has a higher build rate, two extra optimization runs are performed in this area. The parameter sets for these runs are shown in the second part of Table 6, with the identifiers OPT1 and OPT2. The final regression model, including the optimization runs, is shown on the right of Fig. 11. The response surface now does have an optimum, which shifts depending on the power level.
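Whether a fitted quadratic surface has a saddle point or a true optimum follows from the eigenvalues of its second-order coefficient matrix. The sketch below shows this standard check for a two-parameter slice (for example v and h at fixed power). The coefficient values are hypothetical and chosen only to produce one saddle and one maximum; they are not taken from the fitted model in Fig. 11.

```python
import numpy as np


def stationary_point(b, B):
    """Stationary point and type for y = b0 + b.x + x^T B x, with B symmetric.

    The gradient b + 2 B x vanishes at x_s = -0.5 * B^{-1} b.
    np.linalg.solve raises LinAlgError if B is singular.
    """
    b = np.asarray(b, dtype=float)
    B = np.asarray(B, dtype=float)
    x_s = -0.5 * np.linalg.solve(B, b)
    eig = np.linalg.eigvalsh(B)
    if np.all(eig < 0):
        kind = "maximum"
    elif np.all(eig > 0):
        kind = "minimum"
    else:
        kind = "saddle"  # eigenvalues of mixed sign
    return x_s, kind


b = [0.010, -0.004]                                # hypothetical linear terms
B_saddle = [[-0.020, 0.030], [0.030, -0.010]]      # hypothetical, mixed signs
B_max = [[-0.020, 0.005], [0.005, -0.010]]         # hypothetical, all negative

for name, B in (("initial", B_saddle), ("refined", B_max)):
    x_s, kind = stationary_point(b, B)
    print(name, kind, np.round(x_s, 3))
```

Adding runs in the region of interest changes the fitted coefficients, which is how a surface that first shows a saddle can end up with a maximum.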

The graph of \(\rho _\text {n}\) against VED seems to flatten roughly around 77%, see Fig. 12. The parameters that were tested in the definitive screening are located around the start of the plateau, as this is also where a good balance is found for high \(\rho _\text {n}\) and high \(B_{{\text {R}}}\).

3.4 Optimal parameter set selection

From the results of the parameter optimization, it is found that r_HLM (P = 500 W, v = 1000 mm/s, h = 100 µm) has the highest expected \(\rho _\text {n}\). It is located at the optimum of the lower right contour plot in Fig. 11. The optima for lower power levels are higher, but they come at a significantly lower \(B_{\text {R}}\). r_HLM has a \(B_\text {R}\) of 21.6 \(\text {cm}^3/\text {h}\). Another good parameter set, with a slightly lower \(\rho _\text {n}\) but a higher \(B_\text {R}\), is r_HMH (P = 500 W, v = 1250 mm/s, h = 110 µm). A summary of these two parameter sets is shown in Table 8.
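The quoted build rate can be reproduced with a small calculation. The sketch assumes the common definitions \(B_\text {R} = v \cdot h \cdot t\) and VED \(= P/(v \cdot h \cdot t)\), with t the layer thickness. The layer thickness of 60 µm is an assumption: it does not appear in this part of the text and is chosen because it reproduces the 21.6 cm³/h reported for r_HLM. The value printed for r_HMH follows from the same assumptions and is not quoted from the source.

```python
LAYER_UM = 60.0  # ASSUMPTION: layer thickness inferred from the reported build rate


def build_rate_cm3_per_h(v_mm_s, h_um, t_um=LAYER_UM):
    """Build rate B_R = v * h * t, converted from mm^3/s to cm^3/h."""
    mm3_per_s = v_mm_s * (h_um / 1000.0) * (t_um / 1000.0)
    return mm3_per_s * 3600.0 / 1000.0


def ved_j_per_mm3(p_w, v_mm_s, h_um, t_um=LAYER_UM):
    """Volumetric energy density P / (v * h * t) in J/mm^3."""
    return p_w / (v_mm_s * (h_um / 1000.0) * (t_um / 1000.0))


for name, p, v, h in (("r_HLM", 500, 1000, 100), ("r_HMH", 500, 1250, 110)):
    print(f"{name}: B_R = {build_rate_cm3_per_h(v, h):.1f} cm^3/h, "
          f"VED = {ved_j_per_mm3(p, v, h):.1f} J/mm^3")
# r_HLM: B_R = 21.6 cm^3/h, VED = 83.3 J/mm^3
# r_HMH: B_R = 29.7 cm^3/h, VED = 60.6 J/mm^3
```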

4 Experimental validation

In the previous section, two optimal parameter sets were found. This section validates whether the location of the optimum within the design space aligns with the optimum in the real process. A selection is made from process parameter sets at various locations in the design space. These parameter sets are subsequently fabricated and tested experimentally. The optimal parameters are expected to be found around the inflection point in the \(\rho _\text {n}-\)VED curve. For this reason, the selected parameters are centered around this point.

The selection consists of the two optimal parameter sets in Table 8, three parameter sets expected to have a slightly lower quality than the optimum, and two parameter sets expected to have poor quality. This selection is detailed in Table 9. It is expected that the experimentally measured relative density \(\rho _\text {rel}\) of the parameter sets will follow approximately the same order as in Table 9.

4.1 Experimental methodology

Density cubes with a 10 mm side length are fabricated using a MetalFab G2 from Additive Industries. The relative density of the cubes is assessed by means of the planar density, which is found by cutting a sample and polishing the cut plane. On this polished surface, pores can be distinguished from the solidified material using optical microscopy. The polished samples also give insight into the types of porosity defects that are present in the part.
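The planar (optical) density measurement amounts to classifying each pixel of the micrograph as matrix or pore and taking the matrix fraction. The sketch below illustrates this with a fixed grey-level threshold on a synthetic image. The threshold value and the image are made up for the example, and the source does not describe the classification procedure that was actually used.

```python
import numpy as np


def optical_density(gray, threshold):
    """Fraction of pixels classified as matrix (brighter than the threshold)."""
    gray = np.asarray(gray)
    matrix = gray > threshold  # True = matrix, False = pore
    return float(matrix.mean())


# Synthetic 100 x 100 micrograph: bright matrix with one dark pore.
image = np.full((100, 100), 200, dtype=np.uint8)
image[40:45, 60:64] = 30  # 5 x 4 = 20 pore pixels

od = optical_density(image, threshold=128)
print(f"OD = {100 * od:.2f}%")  # OD = 99.80%
```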

4.2 Density results

Fig. 13 Classified microscopy images of the polished density cubes produced with the seven parameter sets from Table 9. The 6.1 mm × 4.4 mm regions are classified into matrix (white) and pores (black). The optical density (OD) value is displayed per region

As discussed previously, the numerical density computed from the simulation is taken as a qualitative rather than a quantitative measure. The values for the numerical relative density and the optical density differ because meltpool dynamics are not modeled. As a result, the packing density of the powder is the upper limit of the numerical relative density.

The optical density results are shown in Fig. 13 and compared against the numerical prediction in Table 10. The predicted location of the optimum within the design space matches the experimental results: the parameter set r_HLM had the best properties in both the numerical simulations and the experimental validation. Furthermore, the predicted order of the parameter sets also matches the experiments, except for r_MHH. This parameter set was expected to have medium quality but ended up with a density of 99.83%. Of the seven tested parameter sets, four had an optical density above 99.50%, which is the internal requirement of Additive Industries for AlSi10Mg.

The numerical density can be brought closer to the real density. As previously discussed, the upper limit of the numerical density depends on the powder packing density, and a reduction in numerical density is mostly caused by unfused powder. It would therefore make more sense to change the computation of the numerical relative density from Eqs. 13 and 14 as follows.

Instead of computing the total fused mass within the volume, the total unfused mass \(m_\text {u}\) is computed. Let this new, unfused-mass-based definition of numerical density be called \(\rho _\text {n}^*\). The results using this new definition are shown in Table 10. The drop in density across the parameter sets is much larger for the previous definition than for the new one. The reason for this is that densification in the simulation proceeds in two stages: bonding, then neck growth.

The first stage is caused by the fusion of particles that are in contact and reach the melting temperature. The second stage depends on a few numerical parameters, such as the bond shrink ratio and the bond shrink time. It is not possible to predict the values of these parameters for a given material beforehand. For this reason, the values for these parameters from the previous simulation do not align with the real process, but they follow a similar trend. In the real process, neck growth does not occur; rather, the particles fully melt and form a meltpool. The unfused-mass-based numerical density \(\rho _\text {n}^*\) only takes the initial stage into account, which is the only stage that is analogous to the real process. It does assume that the rest of the analysis volume contains fully fused material, and gas porosities cannot be taken into consideration.

For correct modeling of the second stage, it will be necessary to take the fluid dynamics of the meltpool into consideration. Furthermore, a way to compute the formation of gas porosities should be included. When \(\rho _\text {n}^*\) is compared against the optical density results, it can be seen that the drop-off is faster in the optical density. This can be seen more clearly in Fig. 14. Specific point energy \(E_\text {SP}\) is defined as \(E_{\text {SP}} = \frac{P\cdot D}{v}\). Unlike VED, \(E_\text {SP}\) takes the spot size D into consideration. The parameter has previously been used as a design parameter in powder bed fusion [53]. Furthermore, it was found to have a strong relation to the resulting meltpool dimensions [54].
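The difference between the two energy measures can be illustrated numerically. In the sketch below, the spot sizes (80 and 160 µm) and the layer thickness (60 µm) are hypothetical values chosen for the example and are not taken from the source. The VED expression \(P/(v \cdot h \cdot t)\) is the common definition and is assumed here.

```python
def specific_point_energy_j(p_w, d_um, v_mm_s):
    """E_SP = P * D / v in joules (D converted from micrometres to mm)."""
    return p_w * (d_um / 1000.0) / v_mm_s


def ved_j_per_mm3(p_w, v_mm_s, h_um, t_um):
    """Volumetric energy density P / (v * h * t); note that it has no D term."""
    return p_w / (v_mm_s * (h_um / 1000.0) * (t_um / 1000.0))


P, V, H = 500.0, 1000.0, 100.0  # W, mm/s, um (values of r_HLM)
T_ASSUMED = 60.0                # um, hypothetical layer thickness

for d_um in (80.0, 160.0):      # hypothetical spot sizes
    e_sp = specific_point_energy_j(P, d_um, V)
    ved = ved_j_per_mm3(P, V, H, T_ASSUMED)
    print(f"D = {d_um:.0f} um: E_SP = {e_sp:.3f} J, VED = {ved:.1f} J/mm^3")
```

Doubling the spot size doubles \(E_\text {SP}\) while VED stays the same, which is the sense in which VED does not capture the spot size.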

The inflection point for the numerical density is at a lower \(E_\text {SP}\) than in the experimental results. This may be caused by simulation parameters that need to be tuned. However, the microscopy images in Figs. 14 and 15 suggest a different explanation. The top surfaces of the samples get progressively rougher for lower values of \(E_\text {SP}\). In the sample with the lowest \(E_\text {SP}\) this clearly results in the balling defect, which is caused by the high surface tension in molten material [8]. The effects of surface tension, and therefore this balling phenomenon, are not taken into account in the current simulation, which results in a higher predicted density.

Fig. 14 Comparison of numerically predicted density versus experimental results for parameter sets with various specific point energies. On the left including all numerical results and on the right a closer view with only the numerical results selected for validation. On the right plot, microscopy images are included of the etched top surfaces of each sample

Fig. 15 Microscopy images of the etched samples, with the meltpool cross-sections of the last layer visible. Experiments numbered according to Table 9

5 Conclusions

The current state of L-PBF process parameter optimization involves a high degree of experimentation. This increases the cost and time consumption of parameter development. Numerical models are available, which can be used to find optimal process parameters. Current attempts at applying numerical models mainly focus on finding parameters for single-line scans. The models used in these simulations are highly accurate but are computationally expensive.

To obtain optimal process parameters for the bulk material, it is necessary to simulate large multi-layered material volumes. To find process parameters within a reasonable time frame, the particle interactions and ray tracing are accelerated using GPU parallelization. Furthermore, some accuracy is sacrificed to decrease computational cost. More specifically, the meltpool dynamics are not included in the model, the stiffness of the material is reduced to allow for a larger time step, and only a subsection of the complete part is simulated. Nevertheless, the model still has excellent predictive properties.

The optimization method utilizes the often-used response surface methodology, with the experiments replaced by simulations. From the results, an optimum is found in the response surface.

This numerical approach to optimization has resulted in an optimal parameter set within a time frame of five days of run time. This is not only faster than the typical experimental approach, but also much less expensive to carry out.

In a case study, the optimization framework successfully found optimal bulk process parameters for AlSi10Mg. In the optimization process, 28 unique process parameter sets were simulated. The optimized parameter set was found to have an optical density of 99.91% in experimental validation. This indicates that, despite the assumptions, the model was able to find good-quality process parameters.

Seven process parameter sets from the simulations were selected for experimental validation. The predicted order in terms of performance was the same in the experiments and the simulation except for one process parameter set, which performed better than expected. The current framework can be used to replace the initial screening step in parameter optimization and arguably the definitive screening can also largely be replaced.

During the experimental validation, it was found that there is a difference between the numerically computed relative density and the relative density of the real process. These discrepancies are likely due to certain unmodelled effects, such as the formation of gas pores and balling defects. The meltpool depth was modeled closely when melting occurred in conduction mode. When the specific point energy gets too low, the balling effect becomes more dominant and the meltpool depth becomes inaccurate again. The inaccuracies in meltpool dimensions are likely due to the lack of a fluid phase in the numerical model.

There is room for improvement in computational efficiency. For example, there are still parts of the simulation that can be parallelized on the GPU, such as the solid phase forces. Another way to increase computational efficiency is by implementing implicit solvers at specific parts of the process. The particles are largely static outside of the deposition step. During these largely static phases, the forces can be computed using implicit solvers, which can be stable at much larger timesteps than the explicit solver in the current implementation.

The accuracy of the current model can be improved by implementing a fluid phase into the model. This is expected to increase the accuracy of the relative density as well as the meltpool dimensions. This fluid phase can be modeled using Smoothed Particle Hydrodynamics (SPH). This will allow for accurate modeling of the meltpool dynamics.

References

Khorasani, A., Gibson, I., Kozhuthala Veetil, J., Ghasemi, A.: A review of technological improvements in laser-based powder bed fusion of metal printers. Int. J. Adv. Manuf. Technol. 108, 1–19 (2020). https://doi.org/10.1007/s00170-020-05361-3

Bidulský, R., Gobber, F., Bidulská, J., Ceroni, M., Kvackaj, T., Actis Grande, M.: Coated metal powders for laser powder bed fusion (l-pbf) processing: a review. Metals 11, 1831 (2021). https://doi.org/10.3390/met11111831

Fujiki, A.: Present state and future prospects of powder metallurgy parts for automotive applications. Mater. Chem. Phys. 67, 298–306 (2001). https://doi.org/10.1016/S0254-0584(00)00455-7

Ramakrishnan, P.: Automotive applications of powder metallurgy, 493–519 (2013). https://doi.org/10.1533/9780857098900.4.493

Vicenzi, B., Boz, K., Aboussouan, L.: Powder metallurgy in aerospace-fundamentals of pm processes and examples of applications. Acta Metallur. Slov. 26, 144–160 (2020). https://doi.org/10.36547/ams.26.4.656

Pandian, V., Kannan, S., Koduru, V.: Recent developments in powder metallurgy based aluminium alloy composite for aerospace applications. Mater. Today Proc. 18, 5400–5409 (2019). https://doi.org/10.1016/j.matpr.2019.07.568

Perdomo, I.L.F., Ramos-Grez, J., Beruvides, G., Mujica, R.: Selective laser melting: lessons from medical devices industry and other applications. Rapid Prototyping J. (2021). https://doi.org/10.1108/RPJ-07-2020-0151. (ahead-of-print)

Bajaj, P., Wright, J., Todd, I., Jägle, E.: Predictive process parameter selection for selective laser melting manufacturing: applications to high thermal conductivity alloys. Addit. Manuf. (2018). https://doi.org/10.1016/j.addma.2018.12.003

Huber, F., Bartels, D., Schmidt, M.: In situ alloy formation of a WMoTaNbV refractory metal high entropy alloy by laser powder bed fusion (PBF-LB/M). Materials (2021). https://doi.org/10.3390/ma14113095

Guo, M., Gu, D., Xi, L., Zhang, H., Zhang, J., Yang, J., Wang, R.: Selective laser melting additive manufacturing of pure tungsten: role of volumetric energy density on densification, microstructure and mechanical properties. Int. J. Refract. Metals Hard Mater. (2019). https://doi.org/10.1016/j.ijrmhm.2019.105025

Karg, M., Hentschel, O., Ahuja, B., Junker, D., Hassler, U., Schäperkätter, C., Haimerl, A., Arnet, H., Merklein, M., Schmidt, M.: Comparison of process characteristics and resulting microstructures of maraging steel 1.2709 in additive manufacturing via laser metal deposition and laser beam melting in powder bed. In: Proceedings of 6th International Conference on Additive Technologies, Nürnberg (2016)

Kuo, C., Chua, C., Peng, P.C., Chen, Y., Sing, S.L., Huang, S., Su, Y.: Microstructure evolution and mechanical property response via 3d printing parameter development of Al-Sc alloy. Virtual Phys. Prototyp. 15, 120–129 (2020). https://doi.org/10.1080/17452759.2019.1698967

Du Plessis, A., Yelamanchi, B., Fischer, C., Miller, J., Beamer, C., Rogers, K., Cortes, P., Els, J., Macdonald, E.: Productivity enhancement of laser powder bed fusion using compensated shelled geometries and hot isostatic pressing. Adv. Ind. Manuf. Eng. (2021). https://doi.org/10.1016/j.aime.2021.100031

Ahmed, N., Barsoum, I., Haidemenopoulos, G., Abu Al-Rub, R.: Process parameter selection and optimization of laser powder bed fusion for 316l stainless steel: a review. J. Manuf. Process. 75, 415–434 (2022). https://doi.org/10.1016/j.jmapro.2021.12.064

Khairallah, S., Anderson, A., Rubenchik, A., King, W.: Laser powder-bed fusion additive manufacturing: physics of complex melt flow and formation mechanisms of pores, spatter, and denudation zones. Acta Mater. 108, 36–45 (2016). https://doi.org/10.1016/j.actamat.2016.02.014

King, W., Anderson, A., Ferencz, R., Hodge, N., Kamath, C., Khairallah, S., Rubenchik, A.: Laser powder bed fusion additive manufacturing of metals; physics, computational, and materials challenges. Appl. Phys. Rev. (2015). https://doi.org/10.1063/1.4937809

Dezfoli, A.R., Lo, Y.-L., Raza, M.M.: Prediction of epitaxial grain growth in single-track laser melting of in718 using integrated finite element and cellular automaton approach. Materials (2021). https://doi.org/10.3390/ma14185202

Ganeriwala, R., Zohdi, T.: A coupled discrete element-finite difference model of selective laser sintering. Granul. Matter (2016). https://doi.org/10.1007/s10035-016-0626-0

Liu, B., Li, B., Li, Z., Bai, P., Wang, Y., Kuai, Z.: Numerical investigation on heat transfer of multi-laser processing during selective laser melting of AlSi10Mg. Results Phys. (2018). https://doi.org/10.1016/j.rinp.2018.11.075

Chen, Q., Zhao, Y., Strayer, S., Zhao, Y., Aoyagi, K., Koizumi, Y., Chiba, A., Xiong, W., To, A.: Elucidating the effect of preheating temperature on melt pool morphology variation in inconel 718 laser powder bed fusion via simulation and experiment. Addit. Manuf. (2020). https://doi.org/10.1016/j.addma.2020.101642

Weirather, J., Rozov, V., Wille, M., Schuler, P., Seidel, C., Adams, N., Zaeh, M.: A smoothed particle hydrodynamics model for laser beam melting of Ni-based Alloy 718. Comput. Math. Appl. (2018). https://doi.org/10.1016/j.camwa.2018.10.020

Fournet-Fayard, L., Cayron, C., Koutiri, I., Lapouge, P., Guy, J., Dupuy, C., Obaton, A.-F.: Thermal analysis of parts produced by l-pbf and correlation with dimensional accuracy. Weld. World 67, 1–14 (2023). https://doi.org/10.1007/s40194-022-01452-9

Nath, S., Gupta, G., Kearns, M., Gulsoy, O., Atre, S.: Effects of layer thickness in laser-powder bed fusion of 420 stainless steel. Rapid Prototyping J. (2020). https://doi.org/10.1108/RPJ-10-2019-0279. (ahead-of-print)

Elekes, F., Parteli, E.: An expression for the angle of repose of dry cohesive granular materials on Earth and in planetary environments. Proc. Natl. Acad. Sci. 118, 2107965118 (2021). https://doi.org/10.1073/pnas.2107965118

Cheng, Z., Wang, J., Zhou, B., Xiong, W.: The micro-mechanical behaviour of sand-rubber mixtures under shear: a numerical study based on X-ray micro-tomography. Comput. Geotech. 163, 105714 (2023). https://doi.org/10.1016/j.compgeo.2023.105714

Cheng, Z., Wang, J., Xu, D.-S., Fan, X.: Dem study on the micromechanical behaviour of sand-clay mixtures. Powder Technol. 435, 119400 (2024). https://doi.org/10.1016/j.powtec.2024.119400

Dorussen, B., Geers, M., Remmers, J.: A discrete element framework for the numerical analysis of particle bed-based additive manufacturing processes. Eng. Comput. (2022). https://doi.org/10.1007/s00366-021-01590-6

Dorussen, B., Geers, M., Remmers, J.: An efficient ray tracing methodology for the numerical analysis of powder bed additive manufacturing processes. Addit. Manuf. (2023). https://doi.org/10.1016/j.addma.2023.103706

Jebahi, M., André, D., Terreros, I., Iordanoff, I.: Discrete element method to model 3D continuous materials, 145–159 (2015). https://doi.org/10.1002/9781119103042.biblio

André, D., Iordanoff, I., Charles, J.-L., Néauport, J.: Discrete element method to simulate continuous material by using the cohesive beam model. Comput. Methods Appl. Mech. Eng. 213–216, 113–125 (2012). https://doi.org/10.1016/j.cma.2011.12.002

Leclerc, W.: Discrete Element Method to simulate the elastic behavior of 3D heterogeneous continuous media. Int. J. Solids Struct. (2017). https://doi.org/10.1016/j.ijsolstr.2017.05.018

Steuben, J., Iliopoulos, A., Michopoulos, J.: Discrete element modeling of particle-based additive manufacturing processes. Comput. Methods Appl. Mech. Eng. (2016). https://doi.org/10.1016/j.cma.2016.02.023

Allen, M.P., Tildesley, D.J.: Computer Simulation of Liquids. Oxford University Press, London (2017). https://doi.org/10.1093/oso/9780198803195.001.0001

Unity Technologies: Unity - Manual: Rotation and Orientation in Unity

Zohdi, T.: Modeling and simulation of functionalized materials for additive manufacturing and 3D printing: continuous and discrete media, (2018)

Boley, C., Mitchell, S., Rubenchik, A., Wu, S.: Metal powder absorptivity: modeling and experiment. Appl. Opt. 55, 6496 (2016). https://doi.org/10.1364/AO.55.006496

Amanatides, J., Woo, A.: A fast voxel traversal algorithm for ray tracing. Proc. EuroGraphics 87 (1987). https://diglib.eg.org/items/60c72224-00f3-416d-9952-ee41e8c408da/full

Madan, N., Rojek, J., Nosewicz, S.: Convergence and stability of the deformable discrete element method. Int. J. Numer. Methods Eng. (2018). https://doi.org/10.1002/nme.6014

Luding, S.: Introduction to discrete element methods. Eur. J. Environ. Civ. Eng. 12(7–8), 785–826 (2008). https://doi.org/10.1080/19648189.2008.9693050

Carpenter Additive: PowderRange AlSi10Mg. Retrieved from: https://www.carpenteradditive.com/hubfs/Resources/Data%20Sheets/PowderRange_AlSi10Mg_DataSheet.pdf (2022). Accessed 10 July 2023

F42 Committee: For Additive Manufacturing Finished Part Properties Specification for AlSi10Mg with Powder Bed Fusion Laser Beam. West Conshohocken, PA (2018)

Properties and Selection: Nonferrous Alloys and Special-purpose Materials. ASM International (1990)

Sopra SA: Optical Data from Sopra SA. Available from: http://www.sspectra.com/sopra.html (2008). Accessed 10 July 2023

Palik, E.: Handbook of Optical Constants of Solids. Academic Press, College Park (1985)

Filmetrics Composite: Refractive Index of Mg-Smooth. https://www.filmetrics.com/refractive-index-database/. Accessed June 2023.

Zhou, J., Han, X., Li, H., Liu, S., Shen, S., Zhou, X., Zhang, D.: In-situ laser polishing additive manufactured AlSi10Mg: effect of laser polishing strategy on surface morphology, roughness and microhardness. Materials 14, 393 (2021). https://doi.org/10.3390/ma14020393

EOS: EOS Aluminium AlSi10Mg Material Data Sheet. Retrieved from: https://www.carpenteradditive.com/hubfs/Resources/Data%20Sheets/PowderRange_AlSi10Mg_DataSheet.pdf (2022). Accessed 10 July 2023

Ross, R.: Metallic Materials Specification Handbook (1992). https://doi.org/10.1007/978-1-4615-3482-2

Saleem, M., Soma, A.: Design of experiments based factorial design and response surface methodology for MEMS optimization. Microsyst. Technol. (2014). https://doi.org/10.1007/s00542-014-2186-8

Minitab: Factorial and fractional factorial designs. Available from: https://support.minitab.com/en-us/minitab/21/help-and-how-to/statistical-modeling/doe/supporting-topics/factorial-and-screening-designs/factorial-and-fractional-factorial-designs/ (2023). Accessed 1 July 2023

Wikipedia: Fractional factorial design. Available from: https://en.wikipedia.org/wiki/Fractional_factorial_design (2023). Accessed 1 July 2023

Minitab: What are response surface designs, central composite designs, and Box-Behnken designs? Available from: https://support.minitab.com/en-us/minitab/21/help-and-how-to/statistical-modeling/doe/supporting-topics/response-surface-designs/response-surface-central-composite-and-box-behnken-designs/ (2023). Accessed 1 July 2023

Zavala Arredondo, M.A., London, T., Allen, M., Maccio, T., Ward, S., Griffiths, D., Allison, A., Goodwin, P., Hauser, C.: Use of power factor and specific point energy as design parameters in laser powder-bed-fusion (l-pbf) of alsi10mg alloy. Mater. Des. (2019). https://doi.org/10.1016/j.matdes.2019.108018

Suder, W., Williams, S.: Investigation of the effects of basic laser material interaction parameters in laser welding. J. Laser Appl. (2012). https://doi.org/10.2351/1.4728136

Acknowledgements

Additive Industries is sincerely thanked for the production of the samples and access to experimental facilities in the laboratory.

Author information

Contributions

Marwan Aarab made improvements to the numerical model, conducted the experiments and analysis, and wrote the original and final article. The original numerical model was developed by Bram Dorussen as part of his PhD research. Sandra Poelsma gave guidance throughout the case study and reviewed the original article. Joris Remmers gave guidance throughout the code development and project management, and reviewed the original article.

Ethics declarations

Conflict of interest

The authors declare that there are no conflicts of interest regarding the research findings presented in this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aarab, M., Dorussen, B.J.A., Poelsma, S.S. et al. Development of optimal L-PBF process parameters using an accelerated discrete element simulation framework. Granular Matter 26, 69 (2024). https://doi.org/10.1007/s10035-024-01432-4

DOI: https://doi.org/10.1007/s10035-024-01432-4